Abstract

Capturing psychological, emotional, and physiological states, especially during a pandemic, and leveraging the captured sensory data within the pandemic management ecosystem is challenging. Recent advancements in the Internet of Medical Things (IoMT) have shown promising results in collecting diverse types of emotional and physical health-related data from the home environment. State-of-the-art deep learning (DL) applications can run in resource-constrained edge environments, which allows data from IoMT devices to be processed locally at the edge and in-home health inferences to be made there. Health data therefore remain in the vicinity of the user edge, which preserves privacy and security and keeps inference latency low. In this article, we develop an edge IoMT system that uses DL to detect diverse types of health-related COVID-19 symptoms and generates reports and alerts that can be used for medical decision support. Several COVID-19 applications have been developed, tested, and deployed to support clinical trials. We present the design of the framework, a description of our implemented system, and the accuracy results. The test results show the suitability of the system for in-home health management during a pandemic.

Keywords: Affective computing, deep learning (DL), emotion analysis, Internet of Medical Things (IoMT)

I. Introduction

Recent advancements in several domains, such as the health-related Internet of Things and AI, have made a significant contribution to health support during the COVID-19 pandemic. People are confined by travel restrictions, and healthcare facilities are overwhelmed by large numbers of critical patients. The elderly are particularly vulnerable to the pandemic. Hence, in-home health monitoring has become commonplace for both regular citizens and healthcare providers. Recent Internet of Medical Things (IoMT) devices can monitor physiological, emotional, activity-related, and vital states using wearable and noninvasive off-the-shelf hardware [1]. Furthermore, AI algorithms that interpret IoMT data have become remarkably accurate [2]. Among the different types of AI algorithms that have emerged, deep learning (DL) algorithms in particular have shown high accuracy in interpreting health-related data [3]. State-of-the-art DL algorithms can even detect and recognize phenomena from live camera or IoMT sensory data in real time. This has introduced a new generation of IoMT-supported DL applications [4].

Among IoMT hardware advancements, one innovation, the edge IoMT node, has been a breakthrough for the healthcare industry [1]. The current generation of IoMT nodes can be deployed independently at the edge, e.g., in the hospital or home where health data need to be monitored. These edge health nodes now run a full OS with an edge CPU and graphics processing unit (GPU), which allows them to perform complex DL computations locally. To leverage these increasingly capable edge IoMT nodes, DL applications have also been adapted to support edge learning and inferencing. Data originating from a subject or hospital therefore do not need to leave the owner’s vicinity or edge; instead, DL and event monitoring can take place at the edge. This enables private, secure, and low-latency health applications to run on user premises with the support of DL and the IoMT. The edge IoMT has also ushered in new DL paradigms, such as federated learning, in which learning takes place in a distributed fashion at the edge and only the model, not the raw data, is shared [5]. Low-cost GPU hardware allows IoMT nodes to act as federated learning nodes.
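The federated learning idea above can be illustrated with federated averaging (FedAvg), one widely used aggregation rule. The text does not specify an aggregation rule, so the following is a minimal sketch under that assumption. `federated_average` is a hypothetical helper that operates on flattened parameter vectors.

```python
from typing import List, Sequence


def federated_average(client_weights: Sequence[Sequence[float]],
                      client_sizes: Sequence[int]) -> List[float]:
    """FedAvg-style aggregation: average client parameter vectors,
    weighting each client by its number of local training samples."""
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need at least one client and one sample count per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(client_weights[0])
    if any(len(w) != dim for w in client_weights):
        raise ValueError("all clients must share the same model shape")

    global_weights = [0.0] * dim
    for weights, n_samples in zip(client_weights, client_sizes):
        share = n_samples / total  # nodes with more local data weigh more
        for i, w in enumerate(weights):
            global_weights[i] += share * w
    return global_weights


# Two hypothetical home nodes: only parameter vectors are shared, never raw data.
print(federated_average([[1.0, 2.0], [3.0, 4.0]], [100, 300]))  # [2.5, 3.5]
```

The aggregator only ever sees parameter vectors and sample counts. This is why the approach is attractive for in-home health data.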

The IoMT has been used in several health-related applications, such as physical therapy [1], mobile-edge computing [6], seizure detection [9], pandemic management [10], and social distancing monitoring [11], to cite a few. DL, in turn, has been used extensively to combat COVID-19 [7], [32] in several contexts, such as tracking the wounds of a person [12], diagnosing COVID-19 from X-rays [13], [14] and computerized tomography (CT) scans [15], [16], home health monitoring [17], detecting multiple objects in a single camera feed [19], and diagnosing diabetic retinopathy [21]. To reduce the latency of IoMT inferencing and to ensure the privacy and security of IoMT data, edge inferencing has been suggested [8]. In another development, federated learning has been proposed to support edge learning [5]. Finally, the IoMT and DL have been used together to deduce user emotions and affective states [23]. For example, user emotions were collected through the IoMT in [4], Jelodar et al. [25] developed a DL sentiment analysis system that could understand the emotional state of a patient, and the system presented in [26] used DL to classify activities of daily life.

Although much progress has been reported with the IoMT and DL, very few works accommodate different in-home Quality-of-Life (QoL) monitoring scenarios within one framework. QoL is the perceived health and emotional status of people. To this end, this study makes the following contributions.

1) We studied, implemented, and recommended a set of edge learning IoMT devices that can be used to develop DL edge solutions.

2) We have developed edge DL libraries for several IoMT devices that can be used for developing health applications at the edge, e.g., at home. Our IoMT nodes support a DL-based convolutional neural network (CNN) architecture with the assistance of edge TPU/GPU.

3) We have developed a set of QoL monitoring applications based on IoMT edge learning. The applications can monitor different phenomena and generate alerts in real time.

The remainder of this article is organized as follows. In Section II, we present some closely related works. In Section III, we show the design and modeling of the system. In Section IV, we show the implementation details, while in Section V, we present and discuss the test results. In Section VI, we conclude this article with our envisioned future works.

II. Related Works

To report the state of the art in this domain, we present advancements in four main areas: 1) IoMT devices; 2) DL applications; 3) affective computing; and 4) edge learning.

A. IoMT

Yang et al. [1] used the IoMT in the context of physical therapy at home. The IoMT was surveyed in the context of mobile-edge computing in [6]. An overview of IoMT sensors for patient monitoring was given in [7]. A comprehensive survey of the health IoT that examined the effect of the Internet of Nano Things and the 5G tactile Internet on healthcare quality of service was presented in [8]. Alhussein et al. [9] used the convergence of IoT and cloud to track patient health status in a Cognitive Healthcare IoT (CHIoT) system. A review of the IoMT for managing pandemics, such as COVID-19, was presented in [10]. Ahmed et al. [11] designed a framework that could monitor social distancing and generate alerts when it was violated.

B. Deep Learning

Explainable AI was used by Lin et al. [2] to combat the COVID-19 pandemic [16]. Federated learning was used in [12] to track the wounds of subjects. DL has been used to diagnose COVID-19 from X-rays [13], [14] and CT scans [15], [16]. A detailed survey of DL in the context of the IoMT was presented in [3]. To add semantic explanation and evidence, the authors of [18] added model explainability on top of the DL layers. DL has been used for object classification and to detect multiple objects in a single camera feed [19], [20]. DL has also been used to diagnose diabetic retinopathy [21]. A DL model proposed in [22] could detect cancer patients through data classification.

C. Affective Computing for Health

The usage of the IoMT was surveyed by Singh et al. [23]. Collecting user emotions using the IoMT was presented in [4]. Machorro-Cano et al. [24] used machine learning with the health IoT to support people with obesity in managing their health status. Jelodar et al. [25] developed a DL sentiment analysis system that could understand the emotional state of a patient [25], [26]. Hossain and Ghulam described a system in which emotion is recognized by an extreme learning machine (ELM) classifier. The system presented in [26] employed DL to classify the activities of daily life.

D. Edge Learning

A 5G-based edge learning framework for COVID-19 patient monitoring, in which the IoMT was used to monitor symptoms, was presented in [1]. To reduce the latency of IoMT inferencing and to add privacy and security to IoMT data, edge inferencing has been suggested [8], [35]. To avoid delays in reporting critical in-home patient health data, Hossain [27] proposed a cloud-assisted health monitoring system. Meanwhile, federated learning was proposed to support edge learning in [5]. In another effort, edge AI was investigated to support mobile edge learning and computing [28]. Rahman et al. [29] proposed an edge computing framework to provide secure medical therapy.

III. System Design

In this section, we show the detailed design of the in-home COVID-19 symptom management system. To present the design modularly, we describe it along the following four dimensions. We first show the high-level applications that can be built using the IoMT and edge learning. We then identify the IoMT edge hardware that supports these applications. Next, we show the complete end-to-end stack, followed by our model of the overall system workflow.

A. Edge Applications

1) In-Home Quality of Life Management (HRQoL in the Time of COVID-19):

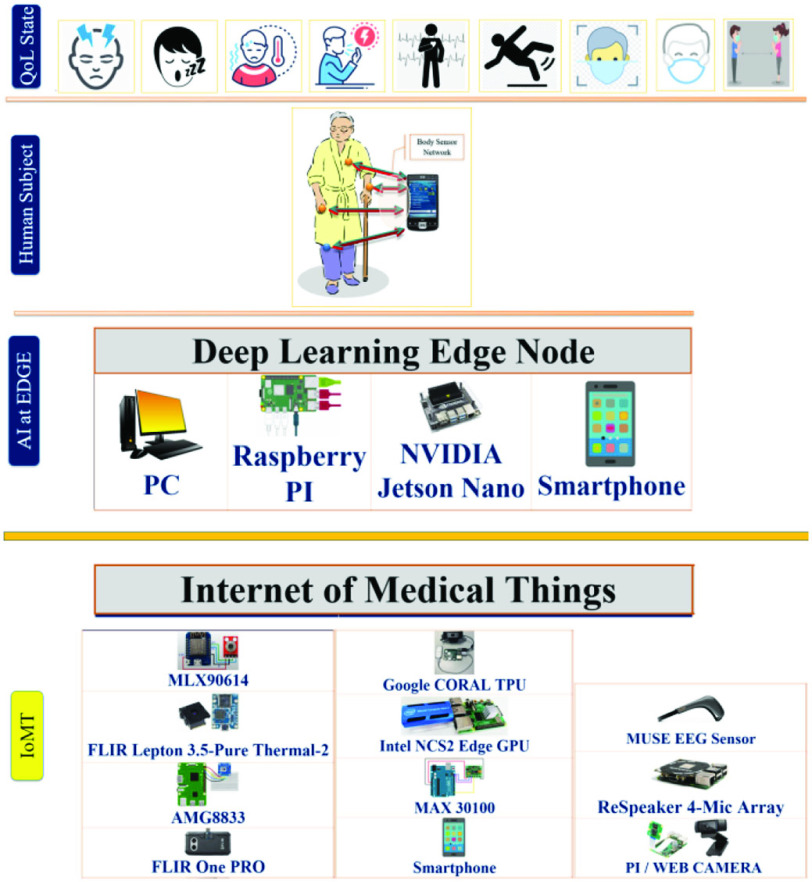

Health-Related QoL (HRQoL) is a metric by which subjects can give feedback to their healthcare providers on how effective a treatment is and how well they can enjoy a normal way of life [34]. Fig. 1 shows the various QoL states that were considered, implemented, and tested for effectiveness in the home environment.

Fig. 1.

Edge-capable IoMT devices connected with edge learning hardware to detect in-home user QoL data.

2) In-Home Pain/Depression and Facial Emotion Detection (Computer Vision):

This type of application uses a public-facing camera and DL on camera streams to recognize pain, depression, and facial emotions.

Electroencephalogram (EEG) Signal Detection: This type of application uses raw EEG signals to classify pain, depression, and emotion by measuring signals from an appropriate area of the scalp.

3) In-Home Self-Quarantine and Safety (Facial Mask Detection):

Because COVID-19 spreads through the air, this application uses DL-based facial mask detection to track high-risk visitors.

Social Distancing Detection: Appropriate social distancing can be monitored via wearable sensors or public-facing cameras.
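Below is a minimal sketch of the camera-based variant. It assumes that an upstream person detector and camera calibration (not shown) already provide each person's ground-plane position in metres. The 2.0 m threshold and the function name are illustrative assumptions, not values from this work.

```python
import math
from itertools import combinations
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def distancing_violations(positions: Dict[str, Point],
                          min_distance_m: float = 2.0) -> List[Tuple[str, str, float]]:
    """Return (id_a, id_b, distance) for every pair closer than min_distance_m.

    positions maps a hypothetical person/track ID to (x, y) ground-plane
    coordinates in metres (assumed to come from a calibrated camera).
    """
    violations = []
    for (id_a, p_a), (id_b, p_b) in combinations(sorted(positions.items()), 2):
        d = math.dist(p_a, p_b)  # Euclidean distance on the ground plane
        if d < min_distance_m:
            violations.append((id_a, id_b, round(d, 2)))
    return violations


print(distancing_violations({"A": (0.0, 0.0), "B": (1.5, 0.0), "C": (5.0, 5.0)}))
# [('A', 'B', 1.5)]
```

The pairwise check is quadratic in the number of people. That is acceptable for the handful of people visible in a home camera feed.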

4) In-Home Symptom Management [Electrocardiogram (ECG), Oxygen Saturation (SpO2), and Body Temperature Detection]:

Raw IoMT sensors can be used to detect different COVID-19-related symptoms, such as oxygen saturation level, body temperature, and heart rate.

Tiredness/Drowsiness Detection: Tiredness, excessive yawning, drowsiness, and other related symptoms can be classified using the IoMT and DL applications.

Speech Emotion Detection: Speech can be used to identify the emotional state of a subject.

Fever Detection: Fever can also be detected in a noninvasive way by using thermal cameras.

5) In-Home Treatment and Diagnosis (Pill Detection and Reminder):

DL can be used to identify the necessary pills using regular cameras.

Doctor-on-Demand Chatbot: AI-based chatbots can be used to follow up with patients; a subject asks COVID-19-related questions and receives answers to most of them. In addition, the chatbot can follow up on a doctor’s prescriptions.

Cough Sound: Cough sounds captured through a smartphone can be used to diagnose COVID-19.

B. Edge IoMT Device Selection

Fig. 1 shows a matrix of IoMT devices and their corresponding deployment scenarios. The bottom row shows the types of IoMT hardware tested in this research, and the second row, labeled "AI at edge," shows the hardware environment in which the edge IoMT hardware was deployed and interfaced. While regular PCs and the Raspberry Pi were interfaced with all the hardware shown in Fig. 1, the FLIR thermal camera was interfaced with a smartphone.

C. Edge Deep Learning Stack Design

Fig. 1 also shows the complete stack into which the edge IoMT hardware was incorporated and in which diverse emotional and physiological phenomena detection systems were developed. A sample QoL monitoring system was developed as described in Section III-A. As shown in Fig. 1, the IoMT layer consists of the sensory media that collect event data from the user's surroundings. This layer passes the raw sensory data to the edge AI layer, where either a Raspberry Pi or an NVIDIA Jetson platform captures the data, processes it on a CPU/GPU, and generates a report or alert depending on the QoL application that the sensory medium serves.
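The layered data flow described above (IoMT layer, then edge AI layer, then report or alert) can be sketched as follows. The class and function names are hypothetical. The threshold-based `fever_stub` only stands in for a trained DL model, and it reuses the 37.5 °C alarm threshold reported in Section V-F.

```python
from dataclasses import dataclass
from typing import Any, Callable, Set


@dataclass
class Reading:
    """Raw sample handed up from the IoMT layer."""
    sensor: str  # e.g. "thermal_camera" or "ecg" (illustrative names)
    value: Any   # raw payload: a frame, a signal window, a scalar, ...


@dataclass
class Report:
    """Output of the edge AI layer for one reading."""
    sensor: str
    label: str
    alert: bool


def edge_ai_layer(reading: Reading,
                  classify: Callable[[Any], str],
                  alert_labels: Set[str]) -> Report:
    """Run inference locally and flag the result as an alert if needed."""
    label = classify(reading.value)  # raw data never leaves the edge node
    return Report(sensor=reading.sensor, label=label,
                  alert=label in alert_labels)


def fever_stub(temp_c: float) -> str:
    """Stand-in for a trained model: thresholds a forehead temperature."""
    return "fever" if temp_c >= 37.5 else "normal"


report = edge_ai_layer(Reading("thermal_camera", 38.1), fever_stub, {"fever"})
print(report)  # Report(sensor='thermal_camera', label='fever', alert=True)
```

Passing the classifier in as a callable mirrors the idea that each QoL application plugs its own model into the same edge AI layer.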

D. System Workflow

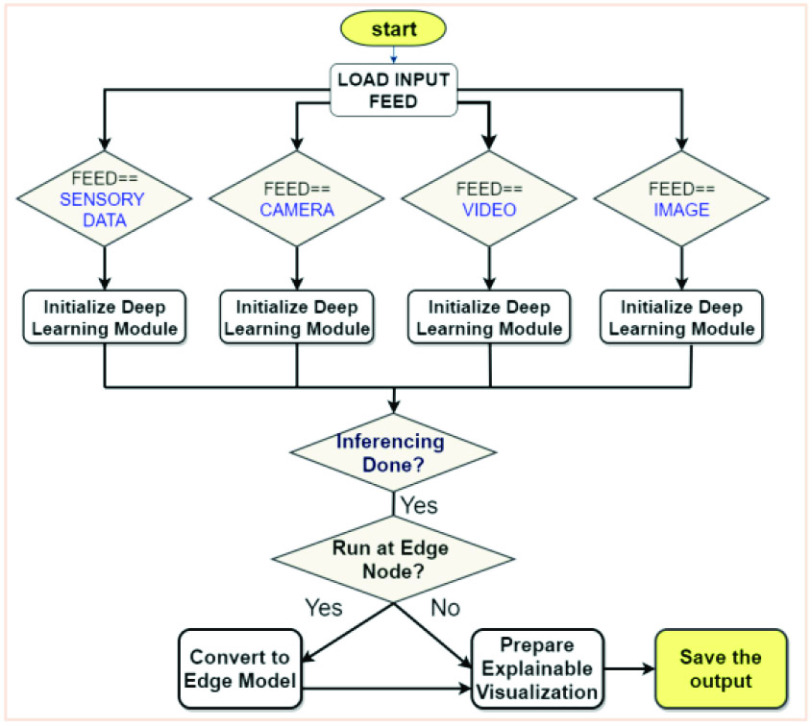

Fig. 2 shows the system workflow, in which all the edge IoMT hardware operates within a given scenario. The workflow starts by capturing the sensory data feed as input to the system. The input can belong to any of four categories: 1) image; 2) video; 3) live camera feed; and 4) sensory data. A dedicated DL library was developed for each input feed. Each library is responsible for training the DL algorithm on the raw data set, improving the accuracy of the module, and performing inference. If inference needs to be done at the client edge, the DL model is converted to an edge model, such as TensorFlow Lite (tflite). Finally, the inference results are visualized using either a Web or smartphone application.

Fig. 2.

Edge learning and processing flow for the IoMT.
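The ordering of the workflow stages in Fig. 2 can be summarized in a short sketch. The stage strings and function name are illustrative. The sketch only encodes the decision described in the text: the model is converted to TensorFlow Lite only when inference runs at the client edge.

```python
from enum import Enum
from typing import List


class InputKind(Enum):
    """The four input categories named in the workflow."""
    IMAGE = "image"
    VIDEO = "video"
    LIVE_CAMERA = "live camera feed"
    SENSORY = "sensory data"


def plan_workflow(kind: InputKind, infer_at_client_edge: bool,
                  frontend: str = "web") -> List[str]:
    """Ordered stages for one input feed, following the Fig. 2 workflow."""
    if frontend not in ("web", "smartphone"):
        raise ValueError("frontend must be 'web' or 'smartphone'")
    steps = [
        f"capture {kind.value}",
        f"train and validate the DL module for {kind.value}",
    ]
    if infer_at_client_edge:
        # Edge inference needs a lightweight model format such as TensorFlow Lite.
        steps.append("convert model to TensorFlow Lite")
    steps.append("run inference")
    steps.append(f"visualize results in the {frontend} application")
    return steps


for step in plan_workflow(InputKind.SENSORY, infer_at_client_edge=True, frontend="smartphone"):
    print(step)
```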

IV. Implementation

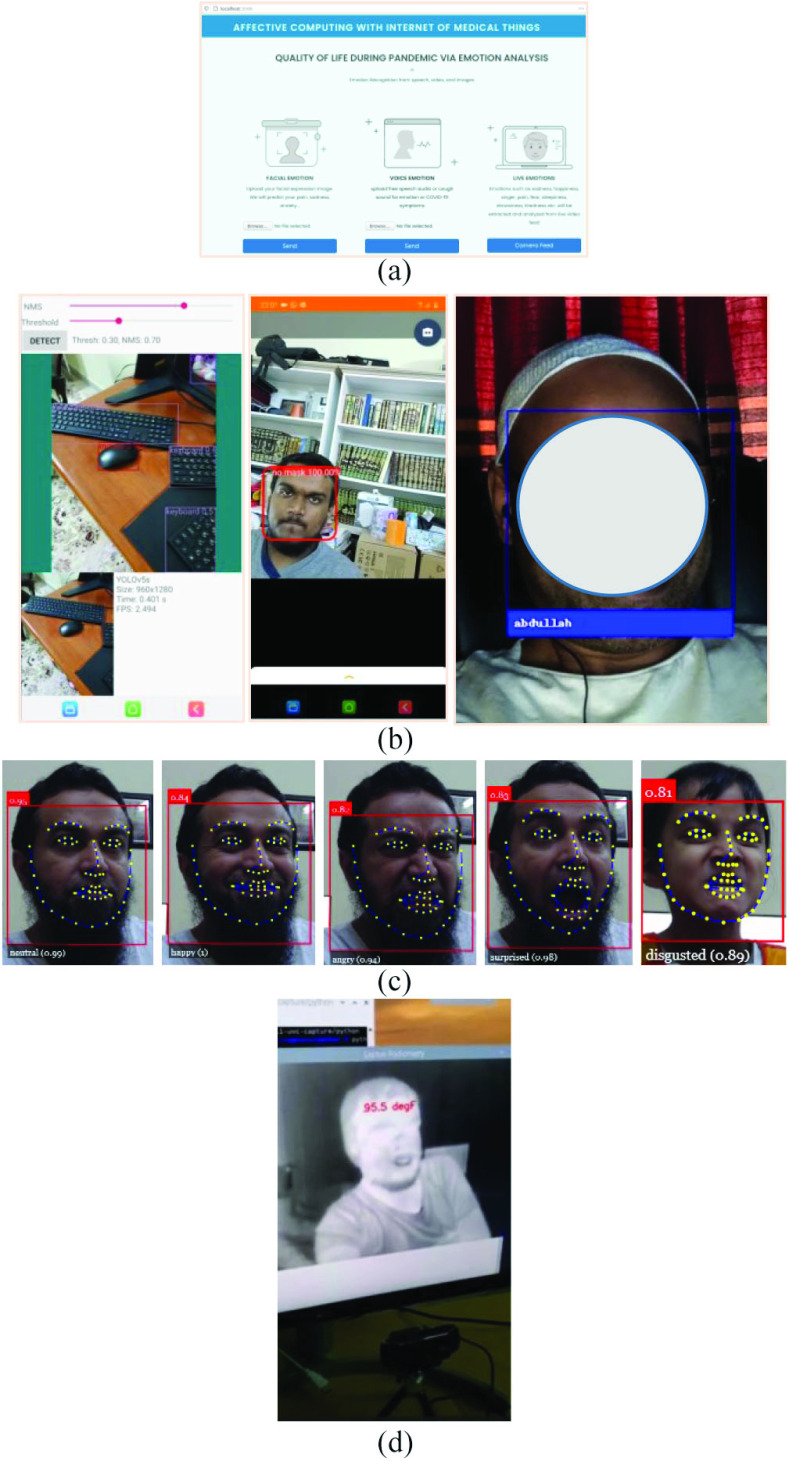

We implemented a wide range of edge learning applications that leverage the IoMT; they can be explored on our project homepage.1 We used the IoMT hardware shown in Fig. 1. For thermal imaging, we used the FLIR Lepton 3.5 module housed on a PureThermal 2 board, which provides a USB interface to the camera. Each application was developed end to end, from training on the training data set, through testing and validation, to deployment via Web or smartphone applications. Fig. 3(a) shows sample AI-based Web and smartphone application interfaces that we developed as a proof of concept for this research.

Fig. 3.

UI for the edge learning IoMT applications: (a) Web interface; (b) smartphone application showing, from left, surrounding objects, people around a subject with a mask warning, and their identities; (c) capturing different emotional states from a public-facing camera; and (d) fever detection using a thermal camera.

The DL applications use either TensorFlow/Keras or PyTorch. The applications were trained on a cluster of 10 NVIDIA RTX 2080 Ti GPUs. After each model was trained, we deployed it to run either on the Web or on a smartphone. For Web-based deployment, we used a Flask server behind an Nginx reverse proxy. The Flask applications and the DL model were deployed as a Docker container. To access the GPU from within the container, we used NVIDIA Docker with CUDA 9.0 and cudnn7-devel. Some applications required specialized CUDA support that was not available from the cloud host; for these, we used ngrok to securely tunnel data from our localhost DL server through any network address translation (NAT) or firewall. To deploy a DL application to the smartphone, we converted the DL model to a tflite model and then quantized it to run on the smartphone GPU/CPU.
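The text does not state which quantization scheme was applied. As a minimal sketch, the following shows the affine int8 mapping on which TensorFlow Lite's 8-bit quantization is built: real value = scale * (q - zero_point). The helper names and toy weights are hypothetical. In practice, the TensorFlow Lite converter performs this step rather than hand-written code.

```python
from typing import List, Sequence, Tuple

INT8_MIN, INT8_MAX = -128, 127


def affine_params(x_min: float, x_max: float) -> Tuple[float, int]:
    """Scale and zero point mapping the float range [x_min, x_max] onto int8."""
    # Extend the range to include 0.0 so that zero is exactly representable.
    x_min, x_max = min(x_min, 0.0), max(x_max, 0.0)
    if x_max == x_min:  # all-zero tensor
        return 1.0, 0
    scale = (x_max - x_min) / (INT8_MAX - INT8_MIN)
    zero_point = int(round(INT8_MIN - x_min / scale))
    return scale, max(INT8_MIN, min(INT8_MAX, zero_point))


def quantize(values: Sequence[float], scale: float, zero_point: int) -> List[int]:
    """q = clamp(round(x / scale) + zero_point, -128, 127)."""
    return [max(INT8_MIN, min(INT8_MAX, int(round(v / scale)) + zero_point))
            for v in values]


def dequantize(q_values: Sequence[int], scale: float, zero_point: int) -> List[float]:
    """x is approximately scale * (q - zero_point)."""
    return [scale * (q - zero_point) for q in q_values]


weights = [-0.8, -0.1, 0.0, 0.37, 0.95]  # toy float weights
scale, zp = affine_params(min(weights), max(weights))
q = quantize(weights, scale, zp)
restored = dequantize(q, scale, zp)
print(q)
print(max(abs(a - b) for a, b in zip(weights, restored)), "<=", scale / 2)
```

Each weight then occupies one byte instead of four, at the cost of a rounding error of at most half a quantization step. This trade-off is what makes the model practical on smartphone hardware.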

Fig. 3(b) shows a smartphone application that can be used by an elderly person with dementia or poor eyesight. The application recognizes objects and people around the subject and informs him/her through text-to-speech. Fig. 3(c) shows an emotion collection application that uses a Web-based edge learning client. Fig. 3(d) shows fever detection using a thermal camera. We also developed an alert system based on the IoMT hardware shown in Fig. 1.

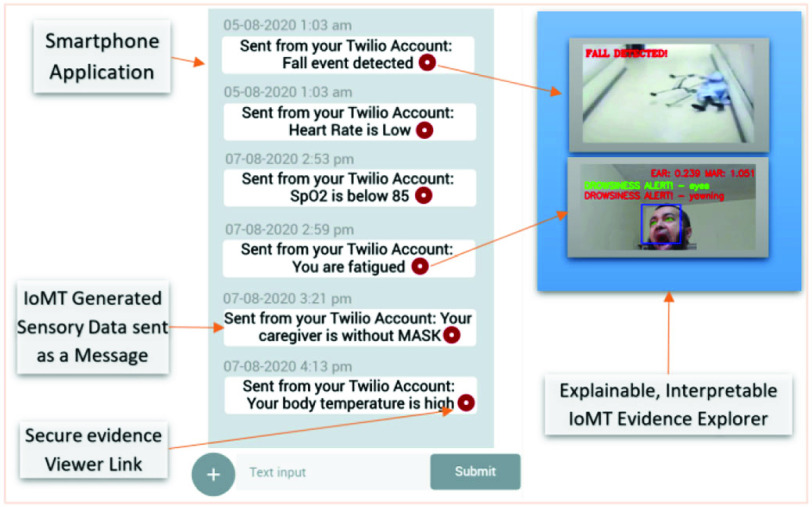

To ensure the privacy and security of IoMT data, we passed the transactional data through a blockchain framework based on Ethereum and Hyperledger to support both public and private networks. The raw health data were stored off-chain and linked to the blockchain for evidence purposes. Fig. 4 shows the AI alert generation system, in which critical events are captured, recognized, and shared with the appropriate authorities via a messaging system. The system also makes the IoMT-generated evidence available via the blockchain. In addition to the computer vision-based DL applications, sensory data, such as oxygen saturation and ECG values, were processed using a recurrent neural network (RNN).

Fig. 4.

IoMT data processed by the edge AI modules and alerts generated for further support of in-home subjects.
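Off-chain storage with an on-chain link, as described above, is commonly realized by anchoring a cryptographic digest of each record. The text does not say how the link is formed, so this is a sketch under that assumption. Only the digest computation and verification are shown. Submitting the anchor to Ethereum or Hyperledger is out of scope, and all names are hypothetical.

```python
import hashlib
import json
from typing import Any, Dict


def record_digest(record: Dict[str, Any]) -> str:
    """SHA-256 over a canonical JSON encoding (sorted keys, no extra spaces)."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def make_anchor(record_id: str, record: Dict[str, Any]) -> Dict[str, str]:
    """Compact payload that would be written on-chain; the record stays off-chain."""
    return {"record_id": record_id, "sha256": record_digest(record)}


def verify(record: Dict[str, Any], anchor: Dict[str, str]) -> bool:
    """True if the off-chain record still matches the digest anchored on-chain."""
    return record_digest(record) == anchor["sha256"]


record = {"subject": "home-07", "spo2": 97, "heart_rate": 72}  # hypothetical reading
anchor = make_anchor("evt-0001", record)
print(verify(record, anchor))                  # True
print(verify({**record, "spo2": 88}, anchor))  # False: tampered record
```

Canonical encoding (sorted keys) makes the digest independent of dictionary ordering. Any change to a stored value, however, breaks verification.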

V. Test Results

In this section, we describe the tests that we conducted to validate the efficacy of the different applications. Because the applications differ widely, details about the data set, testing mechanism, and test results are presented in the respective subsections.

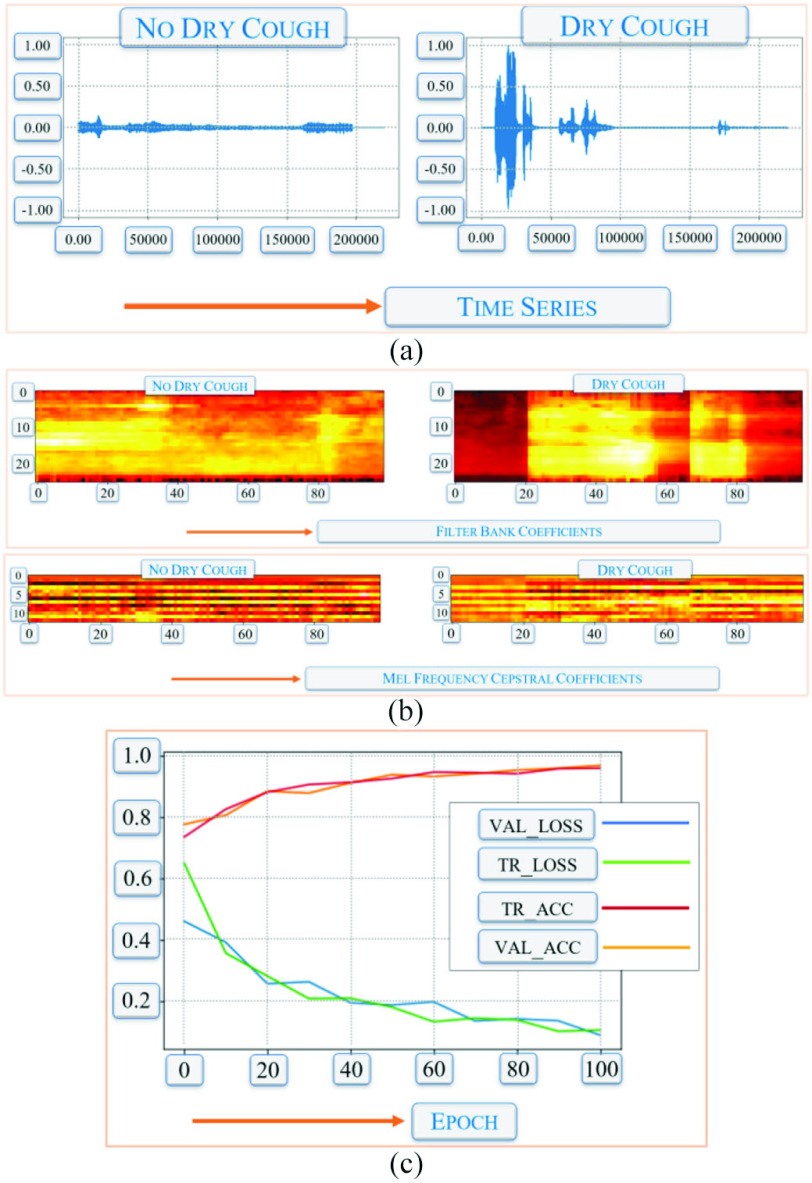

A. Sick or Nonsick From In-Home Cough Sound Analysis

We used a data set of 59 cough samples taken from 15 COVID-19 subjects who were confirmed by an RT-PCR test. The subjects belonged to different age groups. We also used around 219 cough samples from COVID-free subjects, which were labeled as pneumonia. Another 210 samples, from normal subjects, were collected from Google’s audio data set. We converted the cough sounds to images [see Fig. 5(a) and (b)] and then applied a CNN architecture with three Conv2D layers followed by a fully connected (FC) layer. The resulting training and validation loss and accuracy are shown in Fig. 5(c). The training and validation losses were 0.13 and 0.06, respectively, while the training and validation accuracies were 0.94 and 0.98, respectively.

Fig. 5.

QoL measurement through cough sound recording from a sound sensor: (a) time-series analysis of sample cough sound; (b) corresponding frequency analysis in the image domain; and (c) training and validation loss and accuracy.
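The text does not specify the cough-to-image conversion in detail. A common choice is a short-time Fourier transform (STFT) magnitude spectrogram, sketched below in dependency-free Python. The frame length, hop size, and window are illustrative assumptions. A production pipeline would typically use NumPy or librosa and add log or mel scaling before passing the image to the CNN.

```python
import cmath
import math
from typing import List, Sequence


def hann(n: int) -> List[float]:
    """Symmetric Hann window of length n (n >= 2)."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]


def magnitude_spectrogram(signal: Sequence[float], frame_len: int = 256,
                          hop: int = 128) -> List[List[float]]:
    """Short-time Fourier transform magnitudes.

    Returns one row per frame and one column per frequency bin k = 0..frame_len/2,
    where bin k corresponds to k * sample_rate / frame_len Hz.
    """
    window = hann(frame_len)
    rows = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        frame = [s * w for s, w in zip(signal[start:start + frame_len], window)]
        row = []
        for k in range(frame_len // 2 + 1):
            # Naive O(N^2) DFT keeps the sketch dependency-free.
            acc = sum(x * cmath.exp(-2j * math.pi * k * n / frame_len)
                      for n, x in enumerate(frame))
            row.append(abs(acc))
        rows.append(row)
    return rows


# Toy input: 1 kHz tone sampled at 8 kHz, standing in for a recorded cough.
sr = 8000
tone = [math.sin(2 * math.pi * 1000 * t / sr) for t in range(1024)]
spec = magnitude_spectrogram(tone)
peak_bins = [max(range(len(row)), key=row.__getitem__) for row in spec]
print(len(spec), peak_bins[0])  # 7 32
```

The energy of the 1 kHz tone lands in bin 1000 * 256 / 8000 = 32 in every frame. This frame-by-frequency grid is the kind of 2-D image a CNN can consume.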

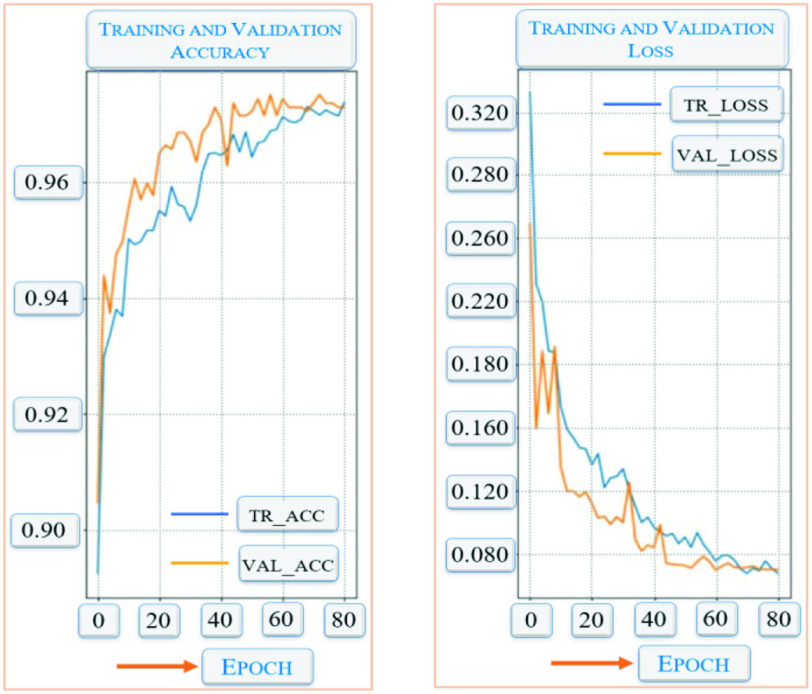

B. Drowsiness Analysis

We used the dlib library along with You Only Look Once (YOLO) v4 for face mapping and facial landmark detection. The sleeping pattern was estimated from the duration of eye closure, the frequency of eyelid closure, and the blinking frequency. The algorithm also tracked head tilting and yawning. We used the UTA drowsiness data set2 to train our algorithm. The training and validation accuracy and loss are shown in Fig. 6.

Fig. 6.

QoL measurement through tiredness and drowsiness detection via camera sensor: (left) training and validation accuracy and (right) training and validation loss.
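The text does not give the exact eye-closure measure. One widely used option with dlib's 68-point landmarks is the eye aspect ratio (EAR), which drops toward zero as the eyelid closes. The sketch below computes EAR and finds sustained closures. The 0.2 threshold and 15-frame minimum are hypothetical values, not parameters from this work.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR for six eye landmarks p1..p6, e.g. dlib 68-point indices 36-41 or 42-47.

    p1/p4 are the eye corners; (p2, p6) and (p3, p5) are upper/lower lid pairs.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def closed_eye_episodes(ear_series: Sequence[float], threshold: float = 0.2,
                        min_frames: int = 15) -> List[Tuple[int, int]]:
    """(start_frame, length) of runs where EAR stays below threshold >= min_frames."""
    episodes: List[Tuple[int, int]] = []
    start: Optional[int] = None
    # A trailing +inf sentinel closes a run that lasts until the last frame.
    for i, ear in enumerate(list(ear_series) + [math.inf]):
        if ear < threshold and start is None:
            start = i
        elif ear >= threshold and start is not None:
            if i - start >= min_frames:
                episodes.append((start, i - start))
            start = None
    return episodes


open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
closed_eye = [(0, 0), (1, 0.1), (2, 0.1), (3, 0), (2, -0.1), (1, -0.1)]
print(round(eye_aspect_ratio(open_eye), 3), round(eye_aspect_ratio(closed_eye), 3))
print(closed_eye_episodes([0.3] * 5 + [0.1] * 20 + [0.3] * 5))  # [(5, 20)]
```

Short runs below the threshold correspond to blinks, while long runs suggest drowsiness. Counting both gives the closure duration and blink frequency features mentioned in the text.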

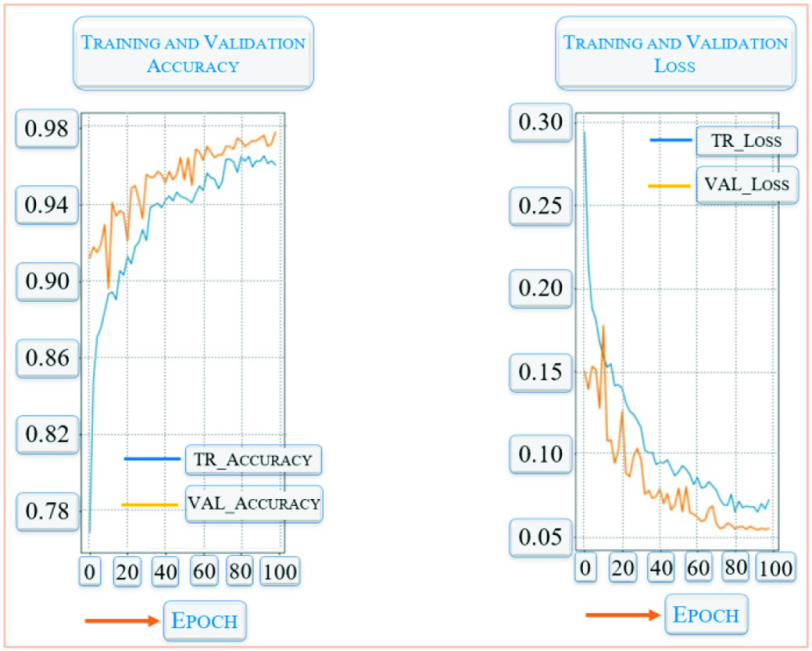

C. Face Mask Detection

To train our face mask detection algorithm, we first used a face detection algorithm based on the dlib library. We then used a total of 8541 images, 4250 with a face mask and the remaining 4291 without, downloaded via a Selenium-based Google search. We applied modified transfer learning using the MobileNetV2 architecture with a TensorFlow-based CNN. We achieved training and validation accuracies of 0.98 and 0.97, respectively, as shown in Fig. 7.

Fig. 7.

QoL measurement through social interaction using facial mask detection via camera sensor: (left) training and validation accuracy and (right) training and validation loss.

D. ECG

We used the Keras framework to implement a custom CNN architecture trained on the MIT-BIH Arrhythmia3 ECG data set. The ECG signal was first preprocessed into a 2-D spectrogram image. After augmentation, the 2-D image was passed to a CNN architecture with seven convolution layers followed by max pooling, global average pooling, a fully connected layer, and a softmax layer with four output classes. The resulting precision and recall values for training and validation are shown in Table I, while the accuracy and loss values are presented in Fig. 8. As shown in Table I, the training data set yielded precision and recall of 0.96 and 0.95, respectively, whereas the validation data set yielded 0.95 and 0.94, respectively.

TABLE I. Precision and Recall of ECG Data Classification.

| Type | Precision | Recall |
|---|---|---|
| Training | 0.96 | 0.95 |
| Validation | 0.95 | 0.94 |

Fig. 8.

QoL measurement through physiological vital information collected through ECG sensors: (left) training and validation accuracy and (right) training and validation loss.

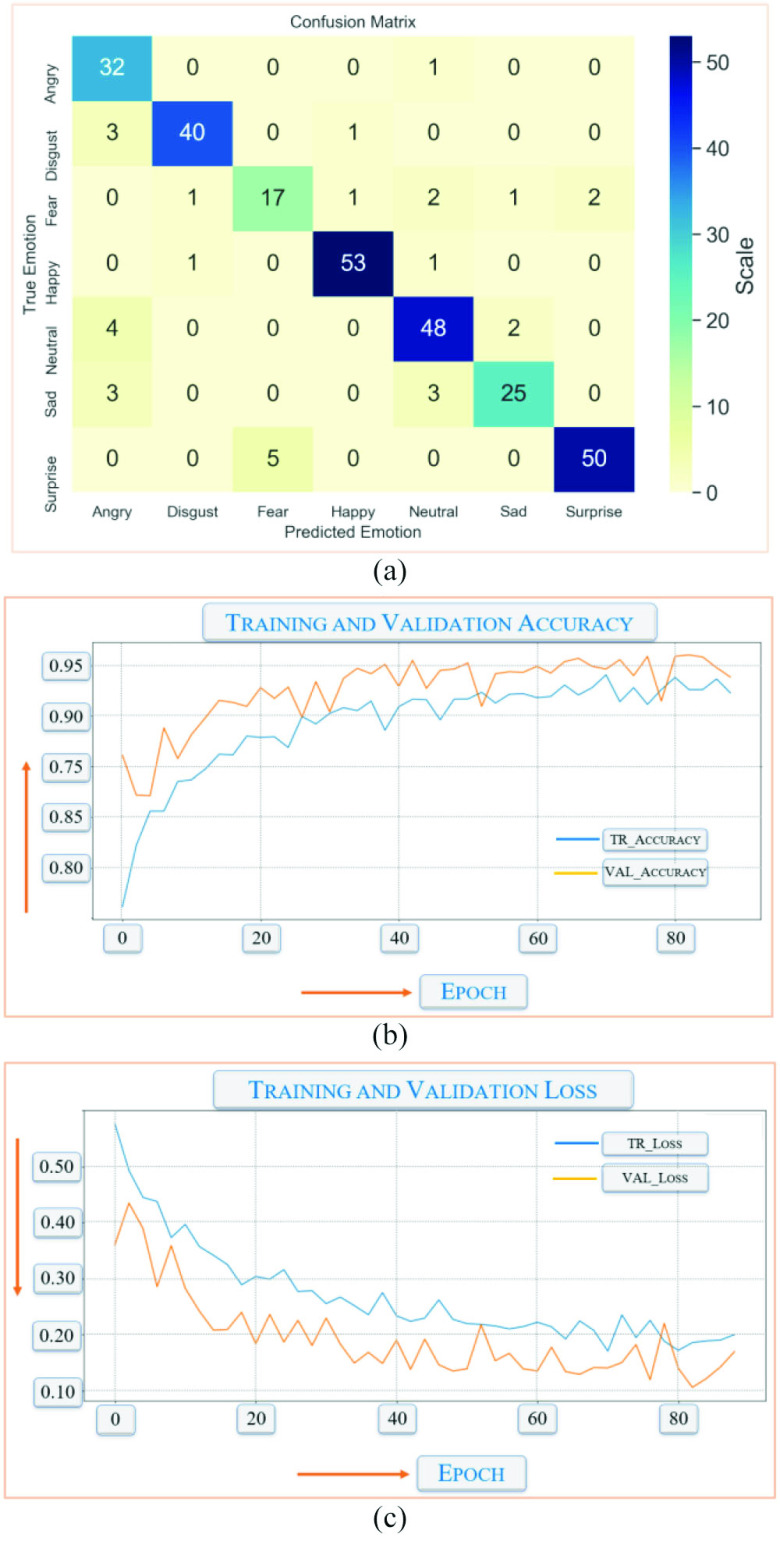

E. Physiological State Determination Using Emotion Analysis

For this test, we used 1028 training images, 100 validation images, and 172 testing images for each of the seven classes, as shown in Fig. 9(a). Fig. 9(a) shows the confusion matrix; the corresponding precision and recall values of the seven emotion types are listed in Table II. Fig. 9(b) and (c) show the training and validation accuracy and loss, respectively. Table III shows the precision and recall values for six types of activities of daily life, which were recorded through a smartphone.

Fig. 9.

QoL measurement through emotional state detection using camera sensor: (a) confusion matrix showing actual and predicted results; (b) training and validation accuracy; and (c) training and validation loss.
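Per-class precision and recall, as reported in Table II, are derived from a confusion matrix such as the one in Fig. 9(a). Precision divides the diagonal entry by its column sum (all predictions of that class). Recall divides it by its row sum (all true samples of that class). The sketch below assumes that rows are actual classes and columns are predictions, and it uses a toy two-class matrix rather than the data in Fig. 9(a).

```python
from typing import Dict, Sequence


def per_class_precision_recall(confusion: Sequence[Sequence[int]],
                               labels: Sequence[str]) -> Dict[str, Dict[str, float]]:
    """confusion[i][j] = number of samples of actual class i predicted as class j."""
    n = len(labels)
    metrics: Dict[str, Dict[str, float]] = {}
    for k, name in enumerate(labels):
        tp = confusion[k][k]
        predicted_k = sum(confusion[i][k] for i in range(n))  # column sum
        actual_k = sum(confusion[k])                          # row sum
        metrics[name] = {
            "precision": tp / predicted_k if predicted_k else 0.0,
            "recall": tp / actual_k if actual_k else 0.0,
        }
    return metrics


toy = [[8, 2],   # actual "happy": 8 correct, 2 predicted as "sad"
       [1, 9]]   # actual "sad": 1 predicted as "happy", 9 correct
for label, m in per_class_precision_recall(toy, ["happy", "sad"]).items():
    print(label, round(m["precision"], 3), round(m["recall"], 3))
```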

TABLE II. Precision and Recall of Emotion Classification.

| Emotion Type | Precision | Recall |
|---|---|---|
| Surprise | 0.962 | 0.909 |
| Happy | 0.964 | 0.964 |
| Fear | 0.798 | 0.748 |
| Angry | 0.762 | 0.970 |
| Disgust | 0.952 | 0.909 |
| Neutral | 0.873 | 0.889 |
| Sad | 0.893 | 0.806 |

TABLE III. Precision and Recall of Activities of Daily Life.

| Activity Type | Precision | Recall |
|---|---|---|
| Walking | 0.97 | 0.88 |
| Lying Down | 1.00 | 0.96 |
| Sitting | 0.84 | 0.78 |
| Going Upstairs | 0.83 | 0.93 |
| Going Downstairs | 0.89 | 0.97 |
| Standing | 0.84 | 0.85 |

F. Fever Detection

The application comprises several modules: object detection with YOLOv3; face, eye, and forehead detection using dlib; and a live thermal camera reading of the forehead, which is used to determine temperature, as shown in Fig. 3(d). The application was tested on an NVIDIA Jetson Nano. The alarm threshold was set at 37.5 °C. We trained on FLIR thermal camera images from a Kaggle data set.4 The precision and recall values recorded on the training and validation data sets are shown in Table IV, and the corresponding chart is shown in Fig. 10.

TABLE IV. Precision and Recall of Fever Classification.

| Type | Precision | Recall |
|---|---|---|
| Training | 0.97 | 0.95 |
| Validation | 0.88 | 0.78 |

Fig. 10.

QoL measurement through fever detection using thermal camera sensors: (a) training and validation accuracy and (b) training and validation loss.
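The forehead-temperature step can be sketched as follows. The sketch assumes that the FLIR Lepton 3.5 is configured for radiometric TLinear output in units of 0.01 K and that the dlib stage has already located the forehead bounding box. Taking the maximum pixel in the box is an illustrative choice, and the helper names and toy frame are hypothetical.

```python
from typing import Sequence, Tuple

ALARM_THRESHOLD_C = 37.5  # alarm threshold reported in Section V-F


def centikelvin_to_celsius(raw: int) -> float:
    """Convert a TLinear pixel (0.01 K units, assumed) to degrees Celsius."""
    return raw / 100.0 - 273.15


def forehead_temperature_c(frame: Sequence[Sequence[int]],
                           box: Tuple[int, int, int, int]) -> float:
    """Hottest pixel inside the forehead box (x0, y0, x1, y1), end-exclusive."""
    x0, y0, x1, y1 = box
    hottest = max(frame[y][x] for y in range(y0, y1) for x in range(x0, x1))
    return centikelvin_to_celsius(hottest)


def fever_alarm(frame: Sequence[Sequence[int]], box: Tuple[int, int, int, int],
                threshold_c: float = ALARM_THRESHOLD_C) -> bool:
    """Raise the alarm when the forehead temperature reaches the threshold."""
    return forehead_temperature_c(frame, box) >= threshold_c


# Toy 4x4 frame at 33.0 C with one 37.8 C pixel inside the forehead box.
frame = [[30615] * 4 for _ in range(4)]
frame[1][1] = 31095
print(round(forehead_temperature_c(frame, (0, 0, 2, 2)), 2), fever_alarm(frame, (0, 0, 2, 2)))
```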

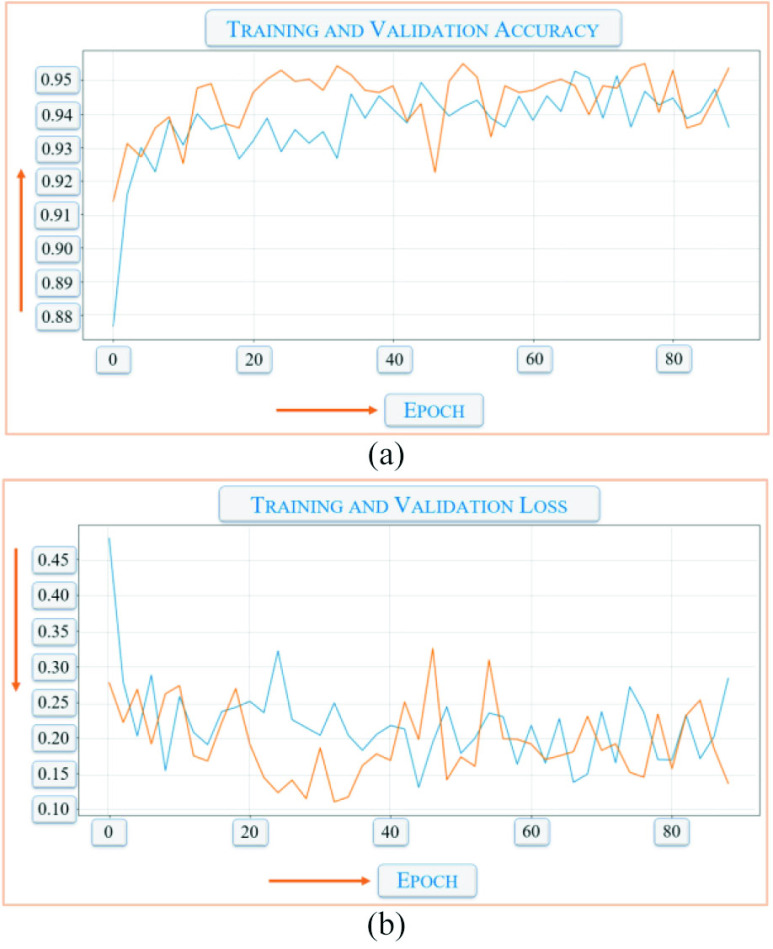

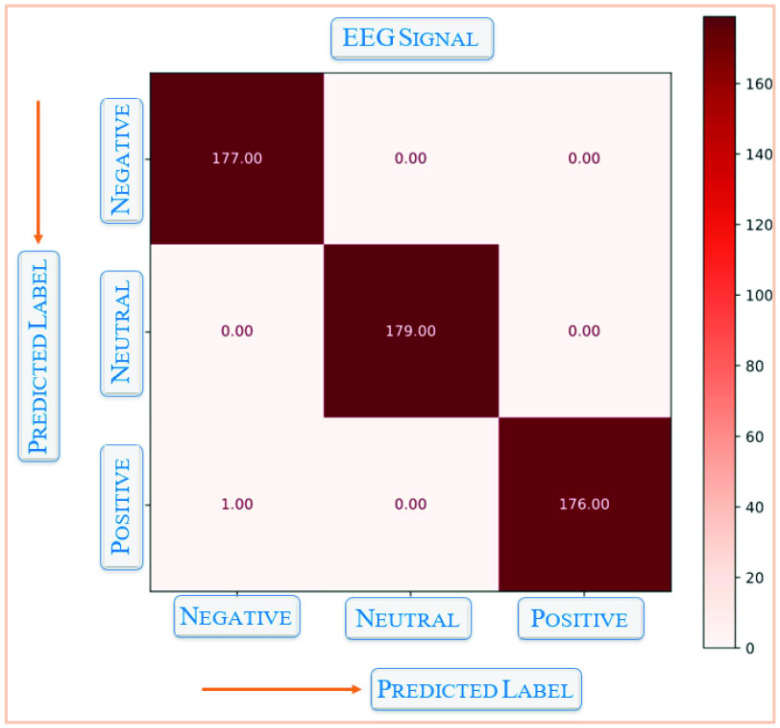

G. EEG Signal Classification

We trained our model using two data sets available from Kaggle.5,6 The data sets provide the mental and emotional states of subjects, captured through wearable brain-computer interface (BCI) hardware. As shown in Fig. 11, the trained random forest model could classify whether a person was feeling positive, negative, or neutral. The precision and recall values for the three emotion types are shown in Table V. Because the data types were clearly separated, we observed high precision and recall for each emotion class.

Fig. 11.

QoL measurement through emotion detection using EEG sensors: confusion matrix.

TABLE V. Precision and Recall of EEG Classification.

| Emotion Type | Precision | Recall |
|---|---|---|
| Positive | 0.99 | 0.99 |
| Neutral | 0.99 | 1.00 |
| Negative | 1.00 | 0.99 |

We were able to observe eight types of COVID-19-related phenomena from open-source data sets, namely, cough sounds, drowsiness, face masks, ECG and EEG data, emotional data, activities of daily life, and fever. Because the trained models had acceptable accuracy, precision, and recall, we are now working with three hospitals in Madinah to deploy these trained models in patients’ homes. The real challenge lies in collecting actual data in the home. For that, we plan to incorporate differential privacy, end-to-end encryption, and federated learning among the edge devices. We will also investigate ways to improve accuracy, precision, and recall.

VI. Conclusion and Future Work

In this article, we introduced an affective computing framework that leverages the IoMT deployed in the user edge environment, e.g., one’s home. The edge nodes use state-of-the-art edge GPUs to run DL applications at the edge, enabling data collection and alert generation for a multitude of COVID-19-related symptoms. The edge-GPU architecture allowed us to preserve user data privacy and security and to achieve low latency. Given the scarcity of specialized doctors and pandemic travel restrictions, especially for the elderly, in-home healthcare and symptom management would provide the next generation of healthcare support.

As future work, we plan to improve the accuracy of each application and to deploy the applications with real subjects. We are in contact with several hospitals where we have demonstrated our solutions. After we attain acceptable accuracy, we will plan clinical trials.

Biographies

Md. Abdur Rahman (Senior Member, IEEE) received the Ph.D. degree in electrical and computer engineering from the University of Ottawa, Ottawa, ON, Canada, in 2011.

He is an Associate Professor with the Department of Cyber Security and Forensic Computing and the Director of Scientific Research and Graduate Studies, University of Prince Mugrin, Madinah, Saudi Arabia. He has authored more than 120 publications. He has been granted one U.S. patent, and several more are pending.

Dr. Rahman has received more than 18 million SAR in research grants.

M. Shamim Hossain (Senior Member, IEEE) received the Ph.D. degree in electrical and computer engineering from the University of Ottawa, Ottawa, ON, Canada, in 2009.

He is currently a Professor with the Department of Software Engineering, College of Computer and Information Sciences, King Saud University, Riyadh, Saudi Arabia. He is also an Adjunct Professor with the School of Electrical Engineering and Computer Science, University of Ottawa. He has authored and coauthored more than 300 publications.

Prof. Hossain is the Chair of the IEEE Special Interest Group on Artificial Intelligence for Health with IEEE ComSoc eHealth Technical Committee. He is on the editorial board of the IEEE Transactions on Multimedia, IEEE Multimedia, IEEE Network, IEEE Wireless Communications, IEEE Access, Journal of Network and Computer Applications (Elsevier), and International Journal of Multimedia Tools and Applications (Springer). He is a Senior Member of ACM. He is an IEEE ComSoc Distinguished Lecturer.

Funding Statement

This work was supported by the Deanship of Scientific Research at King Saud University, Riyadh, Saudi Arabia, through the Vice Deanship of Scientific Research Chairs: Chair of Pervasive and Mobile Computing.

Footnotes

Contributor Information

Md. Abdur Rahman, Email: m.arahman@upm.edu.sa.

M. Shamim Hossain, Email: mshossain@ksu.edu.sa.

References

- [1] Yang Y., Wang X., Ning Z., Rodrigues J. J. P. C., Jiang X., and Guo Y., “Edge learning for Internet of Medical Things and its COVID-19 applications: A distributed 3C framework,” IEEE Internet Things Mag., to be published.

- [2] Lin H., Garg S., Hu J., Wang X., Piran Md. J., and Hossain M. S., “Privacy-enhanced data fusion for COVID-19 applications in intelligent Internet of Medical Things,” IEEE Internet Things J., early access, Oct. 22, 2020, doi: 10.1109/JIOT.2020.3033129.

- [3] Alom M. Z. et al., “A state-of-the-art survey on deep learning theory and architectures,” Electronics, vol. 8, no. 3, p. 292, 2019, doi: 10.3390/electronics8030292.

- [4] Hossain M. S. and Muhammad G., “Emotion-aware connected healthcare big data towards 5G,” IEEE Internet Things J., vol. 5, no. 4, pp. 2399–2406, Aug. 2018.

- [5] Lim W. Y. B. et al., “Federated learning in mobile edge networks: A comprehensive survey,” IEEE Commun. Surveys Tuts., vol. 22, no. 3, pp. 2031–2063, 3rd Quart., 2020.

- [6] Porambage P., Okwuibe J., Liyanage M., Ylianttila M., and Taleb T., “Survey on multi-access edge computing for Internet of Things realization,” IEEE Commun. Surveys Tuts., vol. 20, no. 4, pp. 2961–2991, 4th Quart., 2018.

- [7] Hossain M. S., Muhammad G., and Guizani N., “Explainable AI and mass surveillance system-based healthcare framework to combat COVID-19 like pandemics,” IEEE Netw., vol. 34, no. 4, pp. 126–132, Jul./Aug. 2020.

- [8] Greco L., Percannella G., Ritrovato P., Tortorella F., and Vento M., “Trends in IoT based solutions for health care: Moving AI to the edge,” Pattern Recognit. Lett., vol. 135, pp. 346–353, Jul. 2020.

- [9] Alhussein M., Muhammad G., Hossain M. S., and Amin S. U., “Cognitive IoT-cloud integration for smart healthcare: Case study for epileptic seizure detection and monitoring,” Mobile Netw. Appl., vol. 23, no. 6, pp. 1624–1635, Dec. 2018.

- [10] Yassine A. and Hossain M. S., “COVID-19 networking demand: An auction-based mechanism for automated selection of edge computing services,” IEEE Trans. Netw. Sci. Eng., early access, Sep. 24, 2020, doi: 10.1109/TNSE.2020.3026637.

- [11] Ahmed I., Ahmad M., Rodrigues J. J. P. C., Jeon G., and Din S., “A deep learning-based social distance monitoring framework for COVID-19,” Sustain. Cities Soc., vol. 65, Feb. 2020, Art. no. 102571.

- [12] Rahman M. A., Hossain M. S., Islam M. S., Alrajeh N. A., and Muhammad G., “Secure and provenance enhanced Internet of Health Things framework: A blockchain managed federated learning approach,” IEEE Access, vol. 8, pp. 205071–205087, 2020.

- [13] El Asnaoui K. and Chawki Y., “Using X-ray images and deep learning for automated detection of coronavirus disease,” J. Biomol. Struct. Dyn., to be published, doi: 10.1080/07391102.2020.1767212.

- [14] Shorfuzzaman M. and Hossain M. S., “MetaCOVID: A Siamese neural network framework with contrastive loss for n-shot diagnosis of COVID-19 patients,” Pattern Recognit., to be published, doi: 10.1016/j.patcog.2020.107700.

- [15] Li X., Deng Z., Deng Q., Zhang L., Niu T., and Kuang Y. U., “A novel deep learning framework for internal gross target volume definition from 4D computed tomography of lung cancer patients,” IEEE Access, vol. 6, pp. 37775–37783, 2018.

- [16] Rahman M. A., Hossain M. S., Alrajeh N. A., and Guizani N., “B5G and explainable deep learning assisted healthcare vertical at the edge: COVID-19 perspective,” IEEE Netw., vol. 34, no. 4, pp. 98–105, Jul./Aug. 2020.

- [17].Muhammad G., Hossain M. S., and Kumar N., “EEG-based pathology detection for home health monitoring,” IEEE J. Sel. Areas Commun., vol. 39, no. 2, pp. 603–610, Feb. 2021, doi: 10.1109/JSAC.2020.3020654. [DOI] [Google Scholar]

- [18]. Selvaraju R. R., Cogswell M., Das A., Vedantam R., Parikh D., and Batra D., “Grad-CAM: Visual explanations from deep networks via gradient-based localization,” Int. J. Comput. Vis., vol. 128, no. 2, pp. 336–359, 2020.

- [19]. Yang X., Zhang T., Xu C., Yan S., Hossain M. S., and Ghoneim A., “Deep relative attributes,” IEEE Trans. Multimedia, vol. 18, no. 9, pp. 1832–1842, Sep. 2016.

- [20]. Hossain M. S., Al-Hammadi M., and Muhammad G., “Automatic fruit classification using deep learning for industrial applications,” IEEE Trans. Ind. Informat., vol. 15, no. 2, pp. 1027–1034, Feb. 2019.

- [21]. Asiri N., Hussain M., Al Adel F., and Alzaidi N., “Deep learning based computer-aided diagnosis systems for diabetic retinopathy: A survey,” Artif. Intell. Med., vol. 99, Aug. 2019, Art. no. 101701.

- [22]. Shah S. H., Iqbal M. J., Ahmad I., Khan S., and Rodrigues J. J. P. C., “Optimized gene selection and classification of cancer from microarray gene expression data using deep learning,” Neural Comput. Appl., to be published. [Online]. Available: https://doi.org/10.1007/s00521-020-05367-8

- [23]. Singh R. P., Javaid M., Haleem A., and Suman R., “Internet of Things (IoT) applications to fight against COVID-19 pandemic,” Diabetes Metab. Synd. Clin. Res. Rev., vol. 14, no. 4, pp. 521–524, 2020.

- [24]. Machorro-Cano I., Alor-Hernández G., Paredes-Valverde M. A., Ramos-Deonati U., Sánchez-Cervantes J. L., and Rodríguez-Mazahua L., “PISIoT: A machine learning and IoT-based smart health platform for overweight and obesity control,” Appl. Sci., vol. 9, no. 15, p. 3037, 2019.

- [25]. Jelodar H., Wang Y., Orji R., and Huang H., “Deep sentiment classification and topic discovery on novel coronavirus or COVID-19 online discussions: NLP using LSTM recurrent neural network approach,” IEEE J. Biomed. Health Inform., vol. 24, no. 10, pp. 2733–2742, Oct. 2020.

- [26]. Hossain M. S. and Muhammad G., “Emotion recognition using secure edge and cloud computing,” Inf. Sci., vol. 504, pp. 589–601, Dec. 2019.

- [27]. Hossain M. S., “Cloud-supported cyber–physical localization framework for patients monitoring,” IEEE Syst. J., vol. 11, no. 1, pp. 118–127, Mar. 2017.

- [28]. Zhang Y., Ma X., Zhang J., Hossain M. S., Muhammad G., and Amin S. U., “Edge intelligence in the cognitive Internet of Things: Improving sensitivity and interactivity,” IEEE Netw., vol. 33, no. 3, pp. 58–64, May/Jun. 2019.

- [29]. Rahman M. A. et al., “Blockchain-based mobile edge computing framework for secure therapy applications,” IEEE Access, vol. 6, pp. 72469–72478, 2018.

- [30]. Chen M., Li M., Hao Y., Liu Z., Hu L., and Wang L., “The introduction of population migration to SEIAR for COVID-19 epidemic modeling with an efficient intervention strategy,” Inf. Fusion, vol. 64, pp. 252–258, Dec. 2020.

- [31]. Lin K., Song J., Luo J., Ji W., Hossain M. S., and Ghoneim A., “Green video transmission in the mobile cloud networks,” IEEE Trans. Circuits Syst. Video Technol., vol. 27, no. 1, pp. 159–169, Jan. 2017.

- [32]. da Cruz M. A. A., Rodrigues J. J. P. C., Lorenz P., Korotaev V., and de Albuquerque V. H. C., “In IoT–A new middleware for Internet of Things,” IEEE Internet Things J., early access, Dec. 1, 2020, doi: 10.1109/JIOT.2020.3041699.

- [33]. Hossain M. S. and Muhammad G., “Audio-visual emotion recognition using multi-directional regression and ridgelet transform,” J. Multimodal User Interfaces, vol. 10, no. 4, pp. 325–333, Dec. 2016.

- [34]. Hu L., Qiu M., Song J., Hossain M. S., and Ghoneim A., “Software defined healthcare networks,” IEEE Wireless Commun., vol. 22, no. 6, pp. 67–75, Dec. 2015.

- [35]. Hao Y. et al., “Smart-Edge-CoCaCo: AI-enabled smart edge with joint computation, caching, and communication in heterogeneous IoT,” IEEE Netw., vol. 33, no. 2, pp. 58–64, Mar./Apr. 2019.