Abstract

Security is a prevailing concern in communication as conventional encryption methods are challenged by increasingly powerful supercomputers. Here, we show that biometrics-protected optical communication can be constructed by synergizing triboelectric and nanophotonic technology. The synergy enables the loading of biometric information into the optical domain and the multiplexing of digital and biometric information at zero power consumption. The multiplexing process seals digital signals in a biometric envelope, which avoids disrupting the original high-speed digital information and enhances the complexity of the transmitted information. The system can perform demultiplexing, recover high-speed digital information, and implement deep learning to identify 15 users with around 95% accuracy, irrespective of the biometric data type (electrical, optical, or demultiplexed optical). After user identification, secure communication between users and the cloud is established for document exchange and smart home control. By integrating triboelectric and photonics technology, our system provides a low-cost, easy-to-access, and ubiquitous solution for secure communication.

Biometrics-protected communication is realized by superimposing biometric signals on optical data using a TENG/photonic interface.

INTRODUCTION

Wearable flexible sensors have developed rapidly in the past decade because of attractive characteristics such as flexibility, stretchability, light weight, and functional diversity, which suit widespread applications including personalized health care, soft robotics, prosthetics, and human-machine interfaces (HMIs) (1–5). In the era of the fifth-generation (5G) mobile network and the Internet of Things (IoT), numerous sensors are expected to be connected wirelessly (6, 7). These sensors serve as sensor nodes in a communication framework where the sensors and the cloud exchange data at ultrafast data rates (8, 9). Following this trend, a body area sensor network has been proposed to wirelessly connect wearable transmitters, receivers, and sensors with various functionalities to comprehensively monitor human health conditions (10, 11). Traditional wearable sensors are predominantly based on resistive and capacitive mechanisms (12, 13). Their reliance on external power supplies limits their widespread deployment in the IoT because of power consumption and battery replacement. Since its invention in 2012, the triboelectric nanogenerator (TENG) has been explored as an energy harvester to drive wearable sensors because of its high output and wide adaptability (14–18). Benefiting from versatile configurations and self-powered operation, TENGs have further been investigated and deployed as self-powered sensors for motion monitoring and health care monitoring (19–23). TENG-based sensors have been integrated into clothing to enable smart socks, belts, and wristbands for gait analysis, driving status monitoring, and arterial pulse detection, respectively (24–27).

In addition to monitoring functions, TENG-based sensors can also provide promising control functions when configured into HMIs (28–30). Wearable HMIs are in burgeoning demand as an advanced solution for human-machine interaction by virtue of their ability to track human states (31, 32). Versatile working mechanisms and structural configurations of TENGs have been used to implement different types of HMIs, such as touchpads, gloves, and glasses (33–35). To enhance the control capability, various coding methods for TENG-based HMIs have been developed (36–38). Shi and Lee (38) developed a highly scalable and wearable control interface by encoding multidigit binary information into a spider net–shaped electrode layout, achieving a multidirectional three-dimensional (3D) control system with only a single electrode. Besides improved control capability, TENG-based HMIs operate in a self-powered manner without external power supplies, making them ideal sensor nodes in the communication framework of IoT systems. However, these HMIs face security issues in communication: with current TENG-based HMIs, unauthorized users can also send commands and control designated entities. Potentially, TENGs themselves can address this security issue by incorporating a biometric identification function into HMIs (39, 40). Recently, the use of TENGs for biometric identification has been investigated. Deep learning (DL), a technique in artificial intelligence, has been used to enhance the analysis of TENG-based sensor signals to achieve biometric identification (41–44). Wu et al. (41) proposed a keystroke dynamics–based authentication system with TENG-based sensors that recognizes users through their unique typing habits, which could potentially push cybersecurity to a new level without the concern of leaking passwords. Shi et al. (42) developed a smart floor monitoring system that detects the walking position and gait-based identity of a user simultaneously by integrating DL analytics into TENG-based floor mats, enabling secure smart homes. Moving forward, it is desirable to combine the biometric identification function and the control function in a single TENG-based sensor, where control is allowed only after an authority check, so as to enhance system security.

To function as sensor nodes in IoT systems, TENG-based sensors should be able to transmit their signals to the cloud efficiently and robustly (45). Traditionally, signals from TENG-based sensors are captured by a microcontroller unit (MCU), which then controls a transmitter to send out the signals (46–48). This method involves many analog-to-digital and digital-to-analog conversions (ADC and DAC) and several communication interfaces, which limits the transmission efficiency and increases the power consumption at the sensor end. To address this issue, a method based on electromagnetic coupling has been proposed (49, 50). Two closed electrical circuits are placed in proximity: one hosts the TENG-based sensor and the other hosts the receiver circuit. Upon human interaction, the current flow in the TENG circuit induces a current in the receiver circuit via electromagnetic induction. Although removing the intermediate MCU improves transmission efficiency, the transmission distance is limited by the weak coupling between the two circuits. Moreover, electromagnetic coupling is vulnerable to electromagnetic interference (EMI), which limits its robustness in complex IoT systems where numerous electrical components generate strong EMI. In this regard, transmitting TENG signals over the optical communication infrastructure could be advantageous. Optical communication is immune to EMI and can transmit ultrahigh-speed signals with low attenuation and low dispersion over long distances. Nevertheless, it is challenging to load signals generated by TENG-based sensors into the optical domain efficiently and directly.

Combining triboelectric technology with nanophotonics technology could be a promising solution. Recently, the synergistic effect between triboelectric technology and aluminum nitride (AlN) photonics has been investigated (51, 52), and two main advantages have been demonstrated. First, thanks to the Pockels effect in AlN photonics (53, 54), coded control signals generated by TENG-based sensors in the form of high voltages can be loaded into the optical domain through the electro-optic effect without external circuits or power supplies, resulting in optical strings of “ones” and “zeros” that carry the control information (51). Second, when TENG-based sensors are used for monitoring, the sensory information can be recorded continuously and accurately because the capacitive nature of AlN photonic devices provides an open-circuit working condition for the TENG (52). This open-circuit condition arises because the impedance of AlN photonic devices is several orders of magnitude higher than that of TENG sensors, owing to their size difference. Benefiting from these two advantages, optical Morse code transmission and human-machine interaction in virtual reality/augmented reality (VR/AR) space have been demonstrated using the triboelectric/photonic interface. However, the monitoring and transmission of biometric information have not been investigated with this interface. Furthermore, previous works did not incorporate the triboelectric/photonic interface into the optical communication infrastructure to exploit its main advantage: transmitting information at ultrahigh data rates. Whether directly transmitting TENG-based sensor signals in the optical communication infrastructure disrupts the digital information already propagating in optical fibers remains an open question that may hinder practical applications.

Here, we present a biometrics-protected optical communication technology as a low-cost, easy-to-access, and ubiquitous solution for secure communication between users (sensor nodes) and the cloud by leveraging a DL-enhanced triboelectric/photonic synergistic interface. The interface fuses a flexible triboelectric biometric (TEB) scanner with an AlN photonics-based biometric-optical information multiplexer (BOIMUX). The TEB scanner is a single-electrode TENG-based sensor that provides both the biometric identification function for secure communication and the control function for human-machine interaction. The system enables the control function only after the user's authority has been checked by DL-assisted biometric identification. Upon user interaction, the synergistic effect between triboelectric and nanophotonic technology allows the interface to load biometric information into the optical domain and to multiplex it, in a self-sustainable manner, with the digital information already propagating in optical fibers. After multiplexing, the digital signals are sealed in a biometric envelope, which eliminates communication latency and enhances the complexity of the transmitted information. The multiplexed signal, in the form of a modulated wave packet, is then transmitted efficiently and robustly to the cloud via the optical communication infrastructure. Because of the large frequency difference, low-frequency biometric information does not disrupt high-frequency digital information in the optical domain. In the cloud, the high-frequency digital information and the low-frequency biometric information can be separated using fast Fourier transform (FFT) filters. Assisted by DL, biometric identification can identify 15 users with around 95% accuracy and 23 users with around 90% accuracy irrespective of the data type of the biometric information (electrical, optical, or demultiplexed optical), enabling secure communication between users and the cloud via the triboelectric/photonic interface. Secure exchange of high-speed documents and secure control of smart homes in VR space are both demonstrated to prove the practicality of the proposed system.

RESULTS

Operation principle and architecture of biometrics-protected optical communication

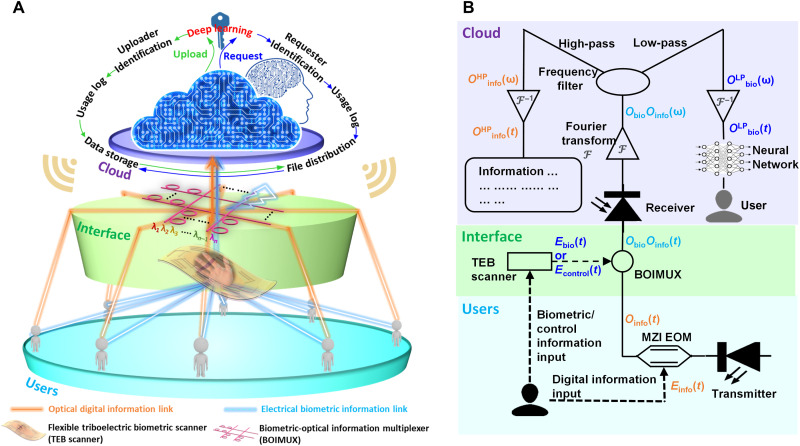

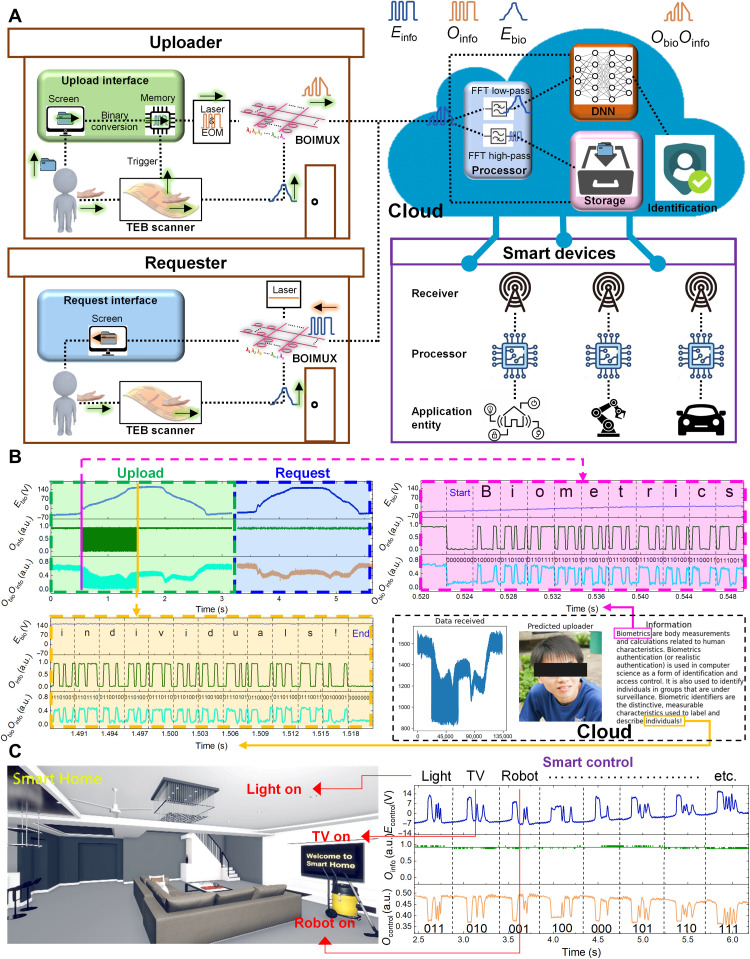

The system comprises three components: users, interface, and cloud (Fig. 1A). The users generate digital information, biometric information, and control information. The digital information is transmitted at a high data rate in the optical communication infrastructure. The biometric and control information is generated when the user interacts with the interface. The interface combines a TEB scanner and a BOIMUX, which synergistically load the biometric information into the optical domain and multiplex the digital information with the biometric and control information. The cloud performs demultiplexing, uses the biometric information for user identification through DL, and decodes the digital information. After the user identity is checked, control authority is granted to the user to control entities via the cloud.

Fig. 1. Architecture and operation principle of biometrics-protected optical communication.

(A) Architecture with three components: users, interface, and cloud. (B) Detailed operation principle. LP, low-pass; HP, high-pass.

The detailed operation principle in terms of information conversion and information flow is illustrated in Fig. 1B. Files to be uploaded are encoded as digital information Einfo in the electrical domain. A Mach-Zehnder interferometer electro-optic modulator (MZI EOM) converts Einfo into Oinfo in the optical domain, which serves as the optical input of the BOIMUX. Meanwhile, users interact with the TEB scanner to generate biometric information Ebio in the electrical domain via the coupling effect of contact electrification and electrostatic induction. Ebio is applied to the BOIMUX. Because of the Pockels effect, Ebio modulates Oinfo, resulting in ObioOinfo as the output of the BOIMUX, where Obio is the biometric information in the optical domain (i.e., the Ebio-dependent transmission of the BOIMUX that scales Oinfo). Since the frequency of the digital information is several orders of magnitude higher than that of the biometric information, ObioOinfo is a wave packet that simultaneously contains Oinfo as the signal and Obio as the envelope. Therefore, Oinfo and Obio can be separated in the frequency domain. In the cloud, ObioOinfo is received, and FFT filters are used to demultiplex it into a high-frequency component OHPinfo and a low-frequency component OLPbio. OLPbio represents the biometric information and is propagated through a deep neural network (DNN) for user identification. After the identity of a user is verified, OHPinfo is decoded to ensure secure document transmission. Meanwhile, control authority is granted so that the user can use the same TEB scanner to generate control signals Econtrol, which are converted to Ocontrol and transmitted to the cloud in the same way. With this system, in which a single TENG-based sensor is coupled to an AlN photonic device and further enhanced by DL analytics, document exchange and control can be implemented more securely.
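The demultiplexing step in the cloud can be illustrated with a minimal NumPy sketch. It assumes ideal brick-wall FFT filters, a synthetic 20-Hz envelope standing in for Obio, and a hypothetical Manchester-coded bit stream standing in for Oinfo; only the 1-kHz cutoff follows the low-pass filter quoted for Fig. 4, and all function names are illustrative. Manchester coding is chosen here because it is DC-balanced, so the digital data contribute almost nothing below the cutoff and the low-pass output is essentially half of Obio.

```python
import numpy as np


def fft_filter(x, fs, cutoff_hz, kind):
    """Ideal (brick-wall) FFT filter; treats the record as one period."""
    spectrum = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    if kind == "low":
        spectrum[freqs >= cutoff_hz] = 0.0
    elif kind == "high":
        spectrum[freqs < cutoff_hz] = 0.0
    else:
        raise ValueError("kind must be 'low' or 'high'")
    return np.fft.irfft(spectrum, n=len(x))


def manchester(bits, samples_per_chip):
    """One common Manchester convention: bit 1 -> (1, 0), bit 0 -> (0, 1)."""
    chips = np.column_stack([bits, 1 - bits]).ravel()
    return np.repeat(chips, samples_per_chip).astype(float)


def demultiplex(received, fs, cutoff_hz=1e3):
    """Split the wave packet into a low-pass (biometric) and a high-pass (digital) part."""
    return (fft_filter(received, fs, cutoff_hz, "low"),
            fft_filter(received, fs, cutoff_hz, "high"))


def decode_manchester(hp_signal, n_bits, samples_per_chip):
    """Sample each chip at its center; the first chip of each pair equals the bit."""
    centers = np.arange(2 * n_bits) * samples_per_chip + samples_per_chip // 2
    chips = (hp_signal[centers] > 0).astype(int)
    return chips[0::2]


# Hypothetical parameters: 200 kS/s, 5 samples per chip (20 kb/s), 0.1-s record.
fs, spc, n_bits = 200_000, 5, 2_000
t = np.arange(2 * n_bits * spc) / fs

rng = np.random.default_rng(0)
bits = rng.integers(0, 2, n_bits)
o_info = manchester(bits, spc)                    # on/off optical power
o_bio = 0.6 + 0.25 * np.sin(2 * np.pi * 20 * t)   # slow envelope, always > 0.25
received = o_bio * o_info                         # BOIMUX transmission scales Oinfo

o_lp_bio, o_hp_info = demultiplex(received, fs)
envelope_estimate = 2 * o_lp_bio                  # Manchester data have mean 0.5
recovered_bits = decode_manchester(o_hp_info, n_bits, spc)
print("bit errors:", int(np.sum(recovered_bits != bits)))
```

The high-pass output at each chip center is roughly Obio times plus or minus 0.5, so its sign recovers the data only while Obio stays well above zero, which is the same requirement that condition (A) below formalizes.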

Co-design and characterization of TEB scanner and BOIMUX

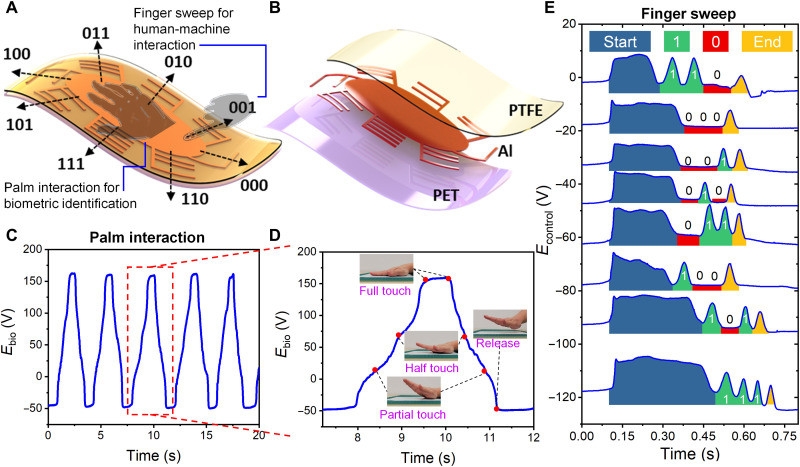

To enable both the biometric identification function and the control function in a single TENG-based sensor, and to load the corresponding signals into the optical domain efficiently and directly without disrupting the digital information in optical fibers, the triboelectric/photonic synergistic interface requires the co-design of the TEB scanner and the BOIMUX. The TEB scanner is a single-electrode TENG-based sensor that records both biometric and control information (Fig. 2, A and B). The central ellipse works in contact separation–based single-electrode mode (fig. S1) for palm monitoring. Eight digitated electrodes protrude from the central ellipse and work in sliding-based single-electrode mode (fig. S2) to record control commands. The optical image, power curve, and internal impedance of the fabricated TEB scanner are presented in figs. S3 and S4. The biometric information Ebio generated by palm interaction spans 200 V, from −50 to 150 V (Fig. 2C). Four characteristic states are identified within a 3-s interaction cycle, indicating that the TEB scanner can monitor palm characteristics (Fig. 2D). The control signal Econtrol generated by sweeping a finger across the eight digitated electrodes shows that the eight distinct three-digit binary codes can be distinguished (Fig. 2E). Econtrol spans approximately 10 V, an order of magnitude lower than Ebio. Although sweeping with more fingers at a faster speed can increase Econtrol, it rarely exceeds 20 V (fig. S5).

Fig. 2. Design and characterization of TEB scanner.

(A) Single-electrode TENG-based sensor with a central ellipse (contact separation–based single-electrode mode) for palm interaction to monitor biometric information and eight protruded digitated electrodes (sliding-based single-electrode mode) for finger sweep to record control information. (B) TEB scanner features an Al electrode sandwiched by a bottom polyethylene terephthalate (PET) layer for insulation and a top polytetrafluoroethylene (PTFE) layer as the triboelectric material, rendering low cost and ease of fabrication. (C) Self-generated Ebio spectrum under repeated human palm interaction. (D) Zoom-in of (C) to show the successful recording of palm characteristics with four characteristic states, namely, “partial touch,” “half touch,” “full touch,” and “release.” (E) Self-generated Econtrol spectrum when a finger sweeps across eight digitated electrodes. The resultant control signals are three-digit binary signals. “Start” corresponds to the moment when the finger touches the central ellipse and “End” corresponds to the moment when the finger sweeps across the outermost electrode bar.

The BOIMUX is an AlN microring resonator (MRR) EOM that performs electrical-to-optical (E-O) conversion of the biometric and control information generated by the TEB scanner. The BOIMUX is designed to operate around 1550 nm, the standard wavelength for optical communication because it offers the lowest propagation loss. Because the TEB scanner adopts a minimalist design with only one shared electrode for cost optimization, the BOIMUX should simultaneously have high sensitivity, to record control information with small voltages of up to 20 V, and a large sensing range, to measure biometric information spanning 200 V.

Consequently, to work with the TEB scanner, the BOIMUX must meet three conditions:

A) Nonzero transmission: For all t > 0, Obio(t) > 0 and Ocontrol(t) > 0. Otherwise, a small Obio or Ocontrol leads to ObioOinfo ≈ 0 or OcontrolOinfo ≈ 0. Digital information is lost.

B) High sensitivity: a small Econtrol of about 20 V must still change Ocontrol enough for the BOIMUX to generate clear three-digit binary codes.

C) Large sensing range: the sensitive voltage range of the BOIMUX must cover the full span of Ebio (−50 to 150 V). Otherwise, Obio saturates and biometric information is lost.

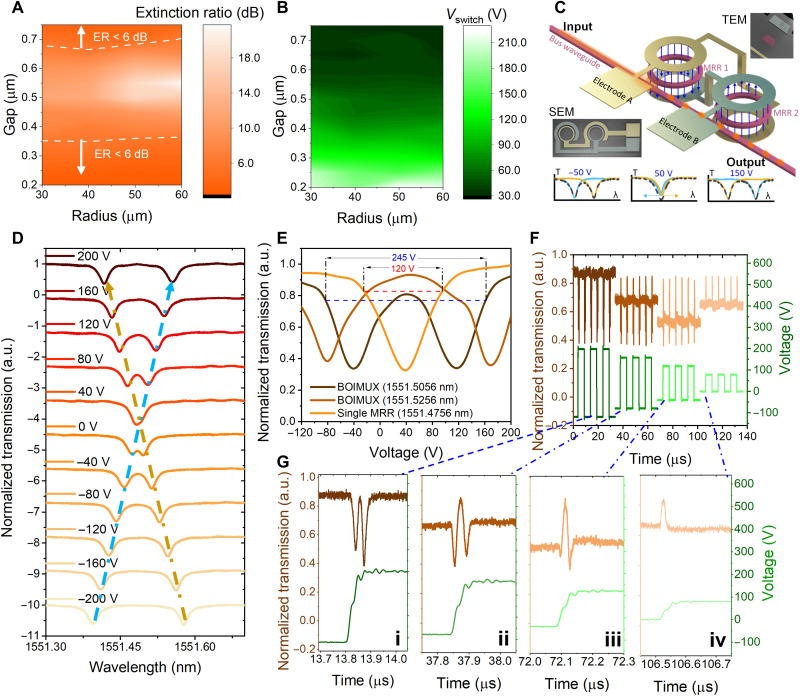

Condition (A) can be satisfied by engineering the radius R and coupling gap g of the MRRs so that the ratio between the highest and the lowest optical transmission, i.e., the extinction ratio (ER), is at most 6 dB. Hence, Obio and Ocontrol remain higher than 25% (equivalent to −6 dB), well above 0. Arrays of MRRs are systematically studied to obtain the ER map with respect to R and g. A total of 48 MRRs, formed by combining four radii (30, 40, 50, and 60 μm) with 12 gaps (0.2 to 0.75 μm in steps of 0.05 μm), are investigated (fig. S6, A and B). The measured free spectral ranges are inversely proportional to R and do not depend on g. Figure S6 (C to F) shows the spectra of all 48 MRRs, revealing high fabrication uniformity and a strong dependence of ER on g. The ER map shows that ER ≤ 6 dB is achieved in two regions: g ≤ 0.35 μm and g ≥ 0.7 μm (Fig. 3A). For condition (B), the switch voltage (Vswitch) is defined as the voltage required to realize ΔOcontrol = 3 dB. As shown in Fig. 3B, Vswitch decreases as g increases, and Vswitch ≤ 30 V is achieved when g ≥ 0.7 μm. Hence, selecting g ≥ 0.7 μm satisfies both condition (A) and condition (B) at every examined R. However, the large mismatch between Ebio and Econtrol makes it challenging to satisfy conditions (B) and (C) simultaneously, because the optical spectrum of any MRR has a Lorentzian line shape, for which sensitivity and sensing range trade off against each other. A resonant line shape with a 30-V Vswitch and a 6-dB ER has a sensing range of only 120 V (see text S1 and fig. S7), which cannot cover −50 to 150 V. To tackle this challenge, the BOIMUX adopts a cascaded MRR (CMRR) design with a push-pull electrode layout (Fig. 3C). As shown later, this design doubles the sensing range without compromising the sensitivity.
Therefore, using the CMRR design with push-pull electrode layout with g = 0.75 μm and R = 40 μm, the BOIMUX can satisfy all conditions (A), (B), and (C).
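The sensitivity/range trade-off of a single Lorentzian resonance can be illustrated with a toy model. The sketch below assumes a purely Lorentzian notch whose half-width is expressed in volts (optical half-linewidth divided by the electro-optic tuning rate) and defines the "sensing range" as the voltage span over which |dT/dV| stays above 30% of its peak; both are illustrative assumptions, not the definition used in text S1. It also converts the 6-dB ER into the roughly 25% transmission floor used for condition (A).

```python
import numpy as np


def min_transmission(er_db):
    """Lowest normalized transmission allowed by an extinction ratio given in dB."""
    return 10 ** (-er_db / 10)


def lorentzian_notch(v, depth, v0, width):
    """Normalized notch vs. voltage; `width` is the half-width expressed in volts
    (optical half-linewidth divided by the electro-optic tuning rate)."""
    return 1 - depth / (1 + ((v - v0) / width) ** 2)


def sensitivity_and_range(width, er_db=6.0, frac=0.3):
    """Peak |dT/dV| and the voltage span where |dT/dV| >= frac * peak.
    The `frac` criterion is an illustrative assumption, not the text S1 definition."""
    v = np.linspace(-20 * width, 20 * width, 200_001)
    depth = 1 - min_transmission(er_db)
    slope = np.abs(np.gradient(lorentzian_notch(v, depth, 0.0, width), v))
    peak = slope.max()
    usable = v[slope >= frac * peak]
    return peak, usable.max() - usable.min()


print(f"6-dB ER -> minimum transmission {min_transmission(6.0):.3f}")
results = {}
for width in (10.0, 20.0, 40.0):   # hypothetical half-widths in volts
    peak, span = sensitivity_and_range(width)
    results[width] = (peak, span)
    print(f"width {width:4.0f} V: peak slope {peak:.4f}/V, "
          f"range {span:6.1f} V, product {peak * span:.3f}")
```

In this model the product of peak slope and usable range does not change with the width: narrowing the resonance to lower Vswitch shrinks the range by the same factor, which is why a single MRR cannot meet conditions (B) and (C) together.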

Fig. 3. Design and characterization of BOIMUX.

All presented transmissions are normalized. (A) ER map. ER ≤ 6 dB is required to secure nonzero optical transmission. (B) Vswitch map. Small Vswitch is required for high sensitivity to record the 20-V small Econtrol. (C) CMRR design with push-pull electrode layout. Waveguides are sandwiched by the top and bottom electrodes (inset: TEM). The top electrode of MRR1 is connected to the bottom electrode of MRR2 and vice versa (inset: SEM). This design helps double the voltage sensing range without sacrificing sensitivity. (D) DC response of BOIMUX showing good linearity of resonant wavelength change with respect to applied voltages. a.u., arbitrary units. (E) Comparison of transmission-voltage curve in single MRR and BOIMUX. For a single MRR, only one trough is presented. The sensitive range is from −25 to 95 V. For BOIMUX, two troughs can be observed when the operation wavelength λop (1551.5056 or 1551.5256 nm) does not coincide with λmid (1551.4756 nm). The sensitive range is extended to cover −80 to 165 V at λop = 1551.5056 nm. (F) AC response of BOIMUX under different applied voltages. The working wavelength is 1551.5056 nm. (G) Zoom in of (F) for analyzing AC response in submicrosecond scale.

In the BOIMUX, the top electrode of MRR1 is connected to the bottom electrode of MRR2 and vice versa. Figure S8 (A to C) shows false-colored scanning electron microscope (SEM) images of the fabricated CMRRs at different magnifications, and fig. S8D shows a transmission electron microscope (TEM) image of the cross section labeled in fig. S8C. When Ebio or Econtrol is applied, electric fields of equal amplitude and opposite direction penetrate the two MRRs, leading to opposite resonant wavelength shifts (fig. S9). The DC response of the BOIMUX shows good linearity of the resonant wavelength change with respect to the applied voltage (Fig. 3D), with a sensitivity of 0.4 pm/V (fig. S10). The BOIMUX doubles the sensitive voltage range from 120 to 245 V without sacrificing sensitivity (Fig. 3E). Note that the normalized optical transmission is approximately symmetric about 50 V. This is by design, so that most of the TEB scanner output (−50 to 150 V) falls within the sensitive range of the BOIMUX. The AC response of the BOIMUX is studied by operating it at 1551.5056 nm and sequentially applying varying square waves (Fig. 3F). Figure 3G zooms in on Fig. 3F to analyze the dynamic response on the submicrosecond scale. The observed AC response is well explained by the DC results: Fig. 3G (i) replicates the “1551.5056-nm” spectrum in Fig. 3E because the voltage rises linearly with time. In Fig. 3G (ii) and (iii), the initial and final optical transmissions are lower than in Fig. 3G (i) because the starting voltage changes from −120 V to −80 V and then to −40 V, as suggested by Fig. 3E. In Fig. 3G (iv), a single peak is observed because the small voltage span covers only the peak centered at 40 V in Fig. 3E. In addition, the distinct features in Fig. 3G imply that different Ebio carrying distinct user traits can be recorded. Using a 1-MHz square wave with a 20-V peak-to-peak voltage (Vpp), the electrical information is accurately loaded into the optical domain (fig. S11). This high bandwidth guarantees that all palm interaction details can be recorded, since the temporal resolution of human motion is on the kilohertz scale (55). It also confirms that small voltages of 20 V are measurable.
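The range extension from the push-pull CMRR can be reproduced qualitatively by extending the same kind of Lorentzian toy model to two cascaded rings. In the sketch below (an illustrative assumption, not the authors' model), voltage is in units of the notch half-width, both rings share a 6-dB ER, and the voltages at which the two resonances cross the operating wavelength are offset by a hypothetical three half-widths, mimicking λop ≠ λmid. The cascade transmission is the product of the two notches because the light passes through both rings.

```python
import numpy as np


def notch(v, v_res, depth=0.75, width=1.0):
    """Lorentzian notch in normalized voltage units (6-dB ER -> depth 0.75)."""
    return 1 - depth / (1 + ((v - v_res) / width) ** 2)


def usable_span(v, transmission, threshold):
    """Voltage span over which |dT/dV| stays above `threshold`."""
    slope = np.abs(np.gradient(transmission, v))
    usable = v[slope >= threshold]
    return usable.max() - usable.min()


v = np.linspace(-15.0, 20.0, 350_001)    # voltage in units of the notch half-width

single = notch(v, v_res=0.0)
# Push-pull: MRR1 red-shifts and MRR2 blue-shifts at the same rate, so when the
# operating wavelength differs from their midpoint, each resonance crosses it at
# a different voltage. The 3-half-width offset is a hypothetical choice.
cascade = notch(v, v_res=0.0) * notch(v, v_res=3.0)   # light passes both rings

peak_single = np.abs(np.gradient(single, v)).max()
peak_cascade = np.abs(np.gradient(cascade, v)).max()
threshold = 0.3 * peak_single                          # same criterion for both
span_single = usable_span(v, single, threshold)
span_cascade = usable_span(v, cascade, threshold)
print(f"usable span: single {span_single:.2f}, cascade {span_cascade:.2f}")
print(f"peak slope:  single {peak_single:.3f}, cascade {peak_cascade:.3f}")
```

With this offset, the usable span grows by nearly a factor of two while the peak slope stays close to that of a single ring, and the cascade curve is symmetric about the midpoint between the two crossings, qualitatively matching the two troughs and the approximate symmetry about 50 V in Fig. 3E.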

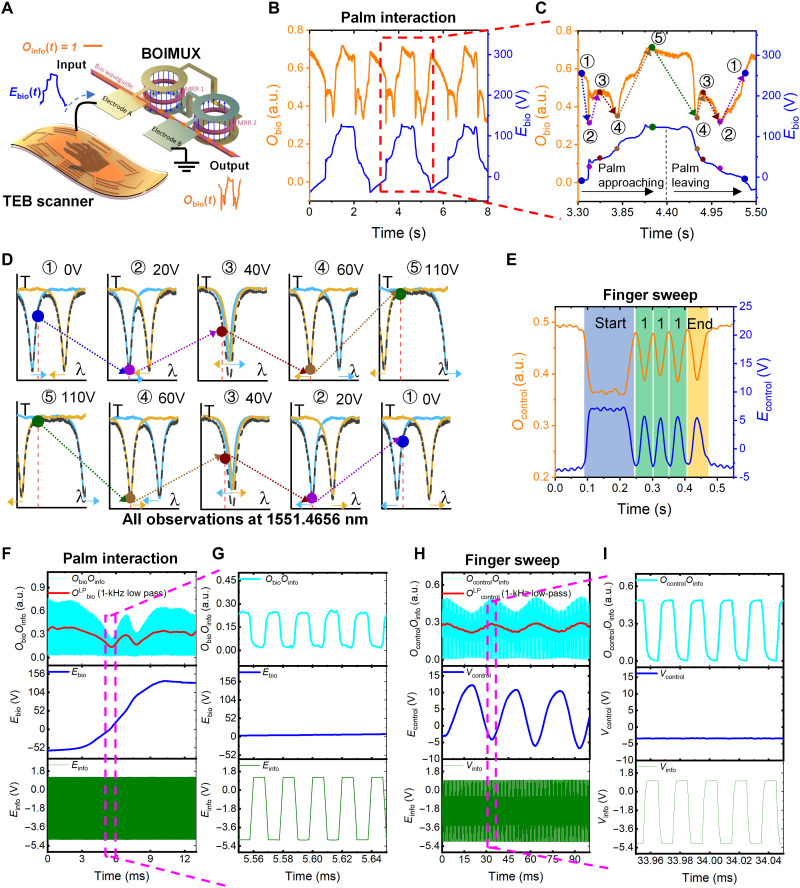

Loading biometric and control information into optical domain without disrupting digital information

The primary function of the triboelectric/photonic interface is to record biometric and control information and perform its E-O conversion. To this end, the top electrode of MRR1 of the BOIMUX is connected to the single electrode of the TEB scanner, while the top electrode of MRR2 is grounded (Fig. 4A). The biometric information Ebio and the corresponding converted Obio for three consecutive palm interaction cycles are plotted in Fig. 4B. Ebio retains the features shown in Fig. 2D. Meanwhile, Obio shows a dynamic response similar to Fig. 3G (i), indicating that the triboelectric/photonic interface preserves the performance of the TEB scanner and the BOIMUX in the E-O conversion process. Analysis of one interaction cycle (Fig. 4C) shows that the E-O conversion process can be explained by the push-pull mechanism (Fig. 4D). When the palm approaches the TEB scanner, Ebio increases and Obio passes through five characteristic states (① to ⑤). When the palm leaves the TEB scanner, Ebio decreases and the five characteristic states occur in reverse order (⑤ to ①). At state ①, Ebio is around 0 V and Obio is around 0.6. From state ① to state ②, Ebio rises from 0 V to around 20 V and λ1 (blue spectrum) redshifts to 1551.4656 nm, resulting in the lowest Obio of 0.3. As Ebio further increases from 20 to 40 V between state ② and state ③, λ1 and λ2 (yellow spectrum) coincide at 1551.4756 nm, leading to a moderate Obio of 0.5 at 1551.4656 nm. From state ③ to state ④, Ebio increases from 40 to 60 V and λ2 blueshifts to 1551.4656 nm, causing the lowest Obio again. As Ebio increases beyond 100 V at state ⑤, λ1 and λ2 are driven far away from 1551.4656 nm, resulting in the highest Obio of more than 0.7. After state ⑤, Ebio gradually falls from more than 100 V to −30 V, and the corresponding Obio spectra follow the reverse of the process from state ① to state ⑤, which is not discussed in detail here. Next, the loading of control information Econtrol generated by finger sweeps into the optical domain as Ocontrol is examined. Figure 4E demonstrates the conversion and recognition of the three-digit binary code “111.” The corresponding E-O conversion process is explained in fig. S12. The other seven three-digit binary codes can also be successfully converted and recognized (fig. S13), confirming the general control capability of the interface. In the current BOIMUX, the small Pockels coefficient of AlN limits the sensitivity, so only large biometric signals generated by strenuous human motion can be recorded. Other photonic materials with larger Pockels coefficients, such as lithium niobate (LiNbO3) or barium titanate (BaTiO3) (53), could be used to record subtle human motion (such as voice or eye motion) (35, 56), and the required footprint of the TEB scanner could be reduced accordingly.
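One way a three-digit code might be read back from Ocontrol is sketched below. The windowing rule (three equal windows between "Start" and "End"), the 0.05 threshold, and the synthetic traces are all hypothetical; the text does not specify how the codes in Fig. 4E and fig. S13 are recognized.

```python
import numpy as np


def decode_three_bits(o_control, start, end, threshold=0.05):
    """Hypothetical decoder: split the trace between the 'Start' and 'End'
    indices into three equal windows; a window whose transmission departs from
    the baseline (value at 'Start') by more than `threshold` reads as 1."""
    segment = np.asarray(o_control[start:end], dtype=float)
    baseline = segment[0]
    windows = np.array_split(segment, 3)
    return "".join("1" if np.max(np.abs(w - baseline)) > threshold else "0"
                   for w in windows)


def synthesize(code, n=300, baseline=0.6, dip=0.1):
    """Synthetic Ocontrol trace: a Gaussian dip in each window whose bit is 1."""
    t = np.arange(n)
    trace = np.full(n, baseline)
    window = n / 3
    for k, bit in enumerate(code):
        if bit == "1":
            center = (k + 0.5) * window
            trace -= dip * np.exp(-0.5 * ((t - center) / (window / 8)) ** 2)
    return trace


for code in (format(i, "03b") for i in range(8)):
    print(code, "->", decode_three_bits(synthesize(code), 0, 300))
```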

Fig. 4. Loading biometric and control information into optical domain without disrupting digital information.

The working wavelength is 1551.4656 nm. All presented transmissions are normalized. (A) Electrical connection between BOIMUX and TEB scanner. Electrode A of BOIMUX is connected to the single electrode of TEB scanner, while electrode B is grounded. (B) Conversion from Ebio to Obio upon palm interaction. (C) Zoom in of (B) for the analysis of Ebio to Obio conversion. (D) Explanation of the observed Ebio to Obio conversion using schematic spectra of Obio under different Ebio’s at different states. (E) Conversion from Econtrol to Ocontrol when a finger sweeps across the “111” digitated electrode. (F to I) Multiplexed ObioOinfo and OcontrolOinfo (cyan) generated by using Ebio and Econtrol (blue) to modulate Oinfo (green) and the corresponding extracted biometric information OLPbio and control information OLPcontrol (red) using a 1-kHz FFT low-pass filter. (F) Upon palm interaction. (G) Zoom in of (F). (H) Upon finger sweep. (I) Zoom in of (H).

While the biometric and control information is efficiently and directly loaded into the optical domain, it should not disrupt the digital information originally propagating in the optical fibers; otherwise, the high-speed data transmission advantage of optical communication would be lost. Through proper co-design, the triboelectric/photonic interface multiplexes digital information with biometric and control information during loading. Figure 4 (F and G) shows the individual digital information and biometric information in the electrical domain (Einfo, bottom green; Ebio, middle blue) and the multiplexed signal in the optical domain (ObioOinfo, top cyan). The upper envelope of ObioOinfo has characteristics similar to those of Obio in Fig. 4B, suggesting that ObioOinfo effectively carries the biometric information. The biometric information can be extracted with an FFT low-pass filter to obtain OLPbio (red curve). The digital information is well preserved in ObioOinfo (Fig. 4G, top), confirming the successful multiplexing of digital and biometric information. Beyond simple digital information, the multiplexing of modulated digital information with biometric information is also studied. In fig. S14, the original digital information is amplitude-, frequency-, and phase-modulated, respectively. In all three cases, the biometric information can be extracted as OLPbio using FFT low-pass filters, and the original digital information is not disturbed. For control information generated by finger sweeps, the multiplexing remains effective (Fig. 4, H and I, and fig. S15).

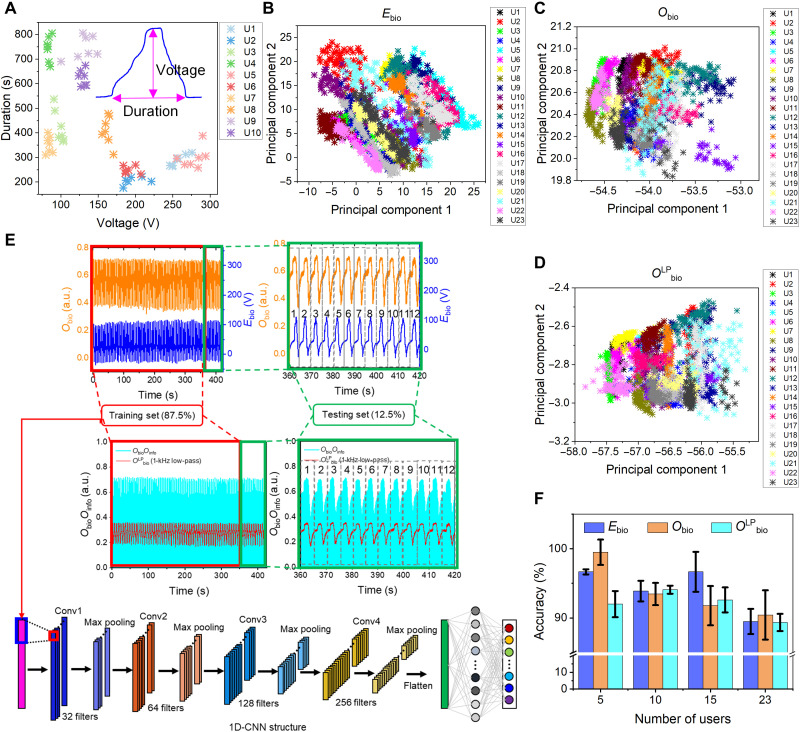

DL-enhanced biometrics-protected optical communication and its applications

To enhance security, the system requires users to upload their biometric information for identification before granting control authority. The biometric information is recorded by the TEB scanner upon palm interaction. In principle, the recorded biometric information is high-dimensional and embeds user traits, so DL based on convolutional neural networks (CNNs) could help with feature extraction and user identification. Before implementing DL, however, we evaluate whether user traits exist in the recorded behavioral biometric information. As physical evidence, the two most prominent features of Ebio in one palm interaction cycle, i.e., voltage span and duration, are analyzed (Fig. 5A, inset). Using the voltage-duration map, 10 users can be approximately distinguished (Fig. 5A). However, strong confusion occurs when 23 users are considered (fig. S16). 2D principal components analysis (2D-PCA) is then used to find statistical evidence. To obtain a dataset large enough for statistical analysis with 2D-PCA and the subsequent DL-enabled biometric identification, 96 samples are collected from each of the 23 users (labeled user 1 to user 23). Each sample has 1600 temporal data points. One typical sample of each data type (electrical, optical, or demultiplexed optical) generated by each user is presented in figs. S17 to S19. Ebio, Obio, and ObioOinfo are all recorded for comparison. The recorded spectra illustrate that the conversion and the FFT low-pass filters do not deteriorate data quality. For 2D-PCA, 84 of the 96 samples from each user are used, so the data matrix is 1600 × 1932 (fig. S20). The distribution of the 1932 samples (84 from each of 23 users) shows that the 23 users can be approximately distinguished in the PC1-PC2 space (Fig. 5B). This provides stronger evidence for the existence of user traits, justifying the use of DL-enabled analytics.
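The PCA step can be sketched with NumPy as below. The sketch assumes that "2D-PCA" means ordinary PCA whose first two components are plotted, and it runs on synthetic Gaussian pulses, so only the matrix dimensions (84 samples from each of 23 users, 1600 points each) follow the text. Samples are stored as rows, so the array is the transpose of the 1600 × 1932 matrix described above; `n_components_for` mirrors the 99% cumulative-variance criterion used for fig. S21.

```python
import numpy as np


def pca(samples):
    """PCA via SVD. `samples` is (n_samples, n_features). Returns the
    projections onto all PCs and the explained-variance ratio of each PC."""
    centered = samples - samples.mean(axis=0)
    u, s, _ = np.linalg.svd(centered, full_matrices=False)
    scores = u * s                       # coordinates in PC space
    explained = s ** 2 / np.sum(s ** 2)
    return scores, explained


def n_components_for(explained, target=0.99):
    """Smallest number of leading PCs whose cumulative variance reaches `target`."""
    return int(np.searchsorted(np.cumsum(explained), target) + 1)


# Synthetic stand-in for Ebio (hypothetical pulse shapes; only the
# dimensions 23 users x 84 samples x 1600 points follow the text).
rng = np.random.default_rng(1)
n_users, n_per_user, n_points = 23, 84, 1600
t = np.linspace(0.0, 3.0, n_points)      # one 3-s interaction cycle
rows, labels = [], []
for user in range(n_users):
    span = rng.uniform(80.0, 200.0)      # user-specific voltage span (V)
    width = rng.uniform(0.1, 0.3)        # user-specific contact time scale (s)
    template = span * np.exp(-0.5 * ((t - 1.5) / width) ** 2)
    for _ in range(n_per_user):
        rows.append(template + rng.normal(0.0, 2.0, n_points))
        labels.append(user)
samples = np.array(rows)                 # (1932, 1600): transpose of the text's matrix
labels = np.array(labels)

scores, explained = pca(samples)
pc1_pc2 = scores[:, :2]                  # coordinates for a PC1-PC2 scatter plot
print(samples.shape, pc1_pc2.shape, n_components_for(explained))
```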
Since the system involves E-O conversions, the multiplexing of Oinfo and Obio, and the FFT filtering of ObioOinfo, the data robustness is important to ensure that DL-enabled biometric identification can maintain a high accuracy if practically the FFT-filtered biometric information OLPbio is used. Figure 5C proves that the 2D-PCA classification can still be done if Obio is used. Even OLPbio can be distinguished (Fig. 5D). The cumulative variance plot shows that the first 20 PCs in Ebio and Obio can account for 99% total variance, while the first 10 PCs in OLPbio can account for the same variance (fig. S21). A 1D-CNN is used to implement DL. The dataset division and detailed 1D-CNN structure are shown in Fig. 5E. A total of 96 × 23 samples are divided into two portions, 84 × 23 (87.5%) as the training set, and 12 × 23 (12.5%) as the testing set. The effect of different numbers of users and different data formats (Ebio, Obio, and OLPbio) on identification accuracy is investigated by comparing 12 cases (four numbers of users and three data formats). To obtain reliable results for comparison, the DL analysis is implemented 20 times for each case. The mean accuracies are reported as columns in Fig. 5F with the SD as error bars. The three types of biometric information present similar accuracy when 5, 10, 15, or 23 users are involved, and all maintain around 90% accuracy in identifying 23 users. Typical confusion maps of all scenarios are presented in figs. S22 to S29. The implementation of DL-enabled biometric identification is thus successfully demonstrated. Besides directly using the instantaneous time-varying finite signal as the input to implement DL, wavelet transform–enhanced DL is also investigated (text S2 and figs. S30 to S32). The wavelet transform helps convert the original instantaneous time-varying finite signal into a 2D map that contains both the frequency and time information. The 2D map is then used as the input for a 2D-CNN. 
The identification accuracy obtained with the wavelet transform–enhanced DL method is comparable to that obtained with the original instantaneous time-varying finite signal. This may be because the biometric signals carry little frequency information: all of them lie at very low frequencies and therefore provide few spectral features for classifying different users.
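To make the time-frequency conversion concrete, the sketch below computes a continuous wavelet transform magnitude (a scalogram) with NumPy. Text S2 is not reproduced here, so the wavelet family is an assumption: we use a complex Morlet wavelet with center parameter `w0 = 6`, and the scale range is hypothetical. The output is a 2D array (scales × time) of the kind that could be fed to a 2D-CNN.

```python
import numpy as np

def morlet_scalogram(signal, scales, w0=6.0):
    """|CWT| of a 1D signal with a complex Morlet wavelet (assumed wavelet choice).

    scales are in samples; returns an array of shape (len(scales), len(signal)).
    """
    signal = np.asarray(signal, dtype=float)
    n = signal.size
    out = np.empty((len(scales), n))
    for i, s in enumerate(scales):
        # Sample the wavelet on +-4 scales, truncated so the kernel is never
        # longer than the signal (keeps np.convolve "same" output length = n).
        half = min(int(4 * s), (n - 1) // 2)
        u = np.arange(-half, half + 1) / s
        psi = np.pi ** -0.25 * np.exp(1j * w0 * u - 0.5 * u**2) / np.sqrt(s)
        # Convolving with the time-reversed conjugate wavelet is a correlation,
        # i.e. the CWT coefficient at every time shift.
        out[i] = np.abs(np.convolve(signal, np.conj(psi[::-1]), mode="same"))
    return out

# Usage on a hypothetical 1600-point trace: a sinusoid with a 50-sample period.
x = np.sin(2 * np.pi * np.arange(1600) / 50)
scales = np.arange(5, 101)
scalogram = morlet_scalogram(x, scales)
print(scalogram.shape)  # (96, 1600)
```

For a Morlet wavelet, scale s responds most strongly to a period of about 2πs/w0 samples, so the 50-sample sinusoid peaks near s ≈ 48. A signal whose energy sits at very low frequencies concentrates its response at the largest scales.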

Fig. 5. DL-enabled biometric identification: rationale and results.

All presented transmissions are normalized. (A) Distinguishing 10 users by the voltage span and duration of Ebio; each user contributes seven samples. (B to D) 2D-PCA results of biometric information in the form of (B) Ebio, (C) Obio, and (D) OLPbio. Eighty-four samples are collected from each of the 23 users, and each sample contains 1600 temporal data points; thus, 1600 × 1932 matrices are used for 2D-PCA. (E) Dataset division and detailed 1D-CNN structure. A total of 96 × 23 samples are divided into two portions: 84 × 23 (87.5%) as the training set and 12 × 23 (12.5%) as the testing set. (F) Accuracy of DL-enabled biometric identification for three biometric information data types (Ebio, Obio, and OLPbio) when 5, 10, 15, or 23 users are involved.

To demonstrate the practicality of the system, we implement biometrics-protected document exchange and smart home control in the VR space. As shown in Fig. 6A, in the file upload process, the uploader enters a file via the uploader interface; the file is converted to digital information Einfo and stored in memory. To ensure that the digital information and biometric information are generated simultaneously so that they can be multiplexed, a second TENG device (called the trigger TENG) with a structure similar to that of the TEB scanner is placed beneath the TEB scanner (fig. S33). The TEB scanner is connected to the BOIMUX, while the trigger TENG is connected to an MCU that controls the laser and EOM. When the user interacts with the TEB scanner, biometric information Ebio is generated. Meanwhile, the TEB scanner presses on the trigger TENG, producing a trigger pulse that activates the generation of digital information Oinfo. Ebio modulates Oinfo at the BOIMUX to generate ObioOinfo. When ObioOinfo arrives at the cloud, an FFT low-pass filter extracts OLPbio, which is then used for DNN inference. Upon successful authority identification, the digital information is extracted from ObioOinfo using an FFT high-pass filter and decoded. In the file request process, the requester delivers biometric information to the cloud in the same way as in the upload process, but no digital information is uploaded. The detailed upload and request mechanisms and real-life demonstrations are shown in movies S1 to S4. Exemplary upload and request waveforms, together with the receiving interface at the cloud, are shown in Fig. 6B. As highlighted by the pink and yellow boxes, which mark the start and end of the digital information, respectively, the words “Biometrics” and “individuals!” are successfully transmitted and displayed. The data transmission speed of the current system is limited by the bandwidth of the MCU.
However, with faster data acquisition systems, or potentially all-optical ADCs, the high-speed advantage of optical transmission could be fully exploited; for instance, optical links using wavelength-division multiplexing can already exceed 400 Gbps (57).
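The cloud-side decision flow described above (low-pass filtering, user identification, then high-pass filtering and decoding only for an authorized upload) can be sketched as a small gate object. This is a hypothetical structure for illustration: the class `CloudGate`, its field names, and the stub callables are our own, and the real filters and trained model would be plugged in as the callables.

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet, Optional, Sequence, Tuple

Signal = Sequence[float]

@dataclass
class CloudGate:
    """Hypothetical cloud-side handler for one received ObioOinfo trace."""
    lowpass: Callable[[Signal], Signal]   # FFT low-pass: ObioOinfo -> OLPbio
    highpass: Callable[[Signal], Signal]  # FFT high-pass: ObioOinfo -> digital part
    identify: Callable[[Signal], int]     # DNN inference on OLPbio -> user label
    decode: Callable[[Signal], str]       # threshold + ASCII decoding
    authorized: FrozenSet[int]

    def receive(self, obio_oinfo: Signal, is_upload: bool) -> Tuple[bool, Optional[str]]:
        user = self.identify(self.lowpass(obio_oinfo))
        if user not in self.authorized:
            return False, None  # authority check failed: terminate immediately
        if not is_upload:
            return True, None   # request: Oinfo is constant, nothing to decode
        return True, self.decode(self.highpass(obio_oinfo))

# Usage with stub callables standing in for the real filters and model.
gate = CloudGate(lowpass=lambda x: x, highpass=lambda x: x,
                 identify=lambda x: 7, decode=lambda x: "Biometrics",
                 authorized=frozenset({7}))
print(gate.receive([0.0, 1.0], is_upload=True))  # (True, 'Biometrics')
```

Keeping the filters, classifier, and decoder as injected callables makes the authorization logic testable independently of the optical hardware.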

Fig. 6. Demonstration of applications using biometrics-protected optical communication.

(A) Operation principle of the demonstrations. Uploaders use an upload interface, the TEB scanner, and BOIMUX to upload files to the cloud. The cloud performs data demultiplexing, identification, display, and storage. Requesters use the TEB scanner and BOIMUX to send their biometric information for identification. Upon a successful request, the requester receives the requested file (in document exchange) or is able to control smart devices via the cloud (in smart home control). (B) Exemplary upload and request waveforms and the receiving interface. User identification and authority check are successful. The text file, in the form of digital information, is embedded in the multiplexed waveform ObioOinfo (cyan). The information is correctly decoded and displayed as a paragraph starting with Biometrics (pink) and ending with individuals! (yellow). The difference between upload and request from the same user is the presence of meaningful Oinfo. During upload, Oinfo contains digital information that is carried by ObioOinfo and captured and recovered by the cloud. In contrast, Oinfo remains constantly 1 during the request process. (C) Exemplary control waveforms and the controlled smart home in the VR space. The three-digit binary codes 011, 010, and 001 are used for light, TV, and sweeping robot control, respectively.

Besides biometrics-protected document exchange, the system also enables biometrics-protected smart home control in the VR space. Upon successful authority identification, the user can send control signals Econtrol to the cloud using the control interface of the TEB scanner. Different control commands are defined by different digitate electrodes. As shown in Fig. 6C, the light, TV, and sweeping robot can be turned on by the control signals “011,” “010,” and “001,” respectively. The real-life demonstration of smart home control in the VR space is shown in movie S5.
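The command mapping in Fig. 6C amounts to a small lookup table gated by the authority check. The sketch below is illustrative only: the table contents come from the text, while the function name `dispatch`, the string encoding of codes, and the error handling are our own assumptions. How the scanner's electrode pulses are converted into the three-bit string is not shown here.

```python
from typing import Optional

# Command table as given in Fig. 6C; representing codes as strings is our choice.
COMMANDS = {"011": "light", "010": "TV", "001": "sweeping robot"}

def dispatch(code: str, authorized: bool) -> Optional[str]:
    """Map a three-digit binary control code to the device to switch on.

    Returns None when the biometric authority check has not succeeded.
    """
    if not authorized:
        return None
    if len(code) != 3 or set(code) - {"0", "1"}:
        raise ValueError(f"not a three-digit binary code: {code!r}")
    if code not in COMMANDS:
        raise KeyError(f"no device assigned to code {code}")
    return COMMANDS[code]

print(dispatch("011", authorized=True))  # light
```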

Traditionally, encryption algorithms have been adopted to secure optical communication. Although physiological biometrics based on human images have recently been used to improve security, they still raise privacy and cost issues. Our demonstrations show that behavioral biometrics assisted by DL can enhance optical communication security while preserving privacy and cost-effectiveness. Because digital and biometric information can be multiplexed through the triboelectric/photonic synergistic interface, biometrics-protected optical communication could be deployed on existing high-speed optical communication infrastructure as a value-added feature.

DISCUSSION

Biometrics-protected optical communication is realized by a system that uses a DL-enhanced triboelectric/photonic synergistic interface to superimpose biometric traits and control commands on digital data in high-speed optical communication infrastructure, enabling secure data access and transmission in the cloud. The system offers three main features: a single TENG-based sensor that records both biometric and control information at low cost and with minimal power consumption; the ability to load biometric and control information generated by TENG-based sensors into the optical domain without disrupting the original digital information propagating in optical fibers; and high identification accuracy across various types of biometric information using DL. First, the TEB scanner, a single TENG-based sensor, is designed with a central ellipse for monitoring biometric information and surrounding protruding digitate electrodes for recording control information. The signals are generated by contact electrification during user-scanner interaction, which converts human motion into electricity in a cost-effective manner. Second, the synergy between the triboelectric effect and the Pockels effect enables direct loading of biometric and control information into the optical domain without external circuits or power supplies, minimizing cost and power consumption. Because digital information occupies a much higher frequency band than biometric and control information, multiplexing can be realized through co-design of the TEB scanner and BOIMUX without disrupting the digital information. After multiplexing, digital signals are sealed within a biometric envelope, which increases the complexity of the transmitted information. Third, DL is used to analyze the complex, high-dimensional biometric information, achieving ~95% accuracy for identifying 15 users and ~90% for 23 users.
The accuracy does not degrade whether the biometric information takes the form of the original electrical signals, the converted optical signals, or even the demultiplexed optical signals. Although alternative technologies such as physical unclonable functions and quantum key distribution are regarded as theoretically unbreakable and may provide stronger security, they require complex, high-end, and expensive hardware, which impedes user-friendly and low-cost applications. In contrast, our system offers a low-cost, easy-to-access optical communication architecture with a high degree of security, making it a potential ubiquitous solution. Its potential to integrate numerous sensors via various optical multiplexing methods for DL-based multisensor data fusion (58), and to interface directly with accelerated photonic neural networks (59, 60) for efficient edge computing, could lead to more secure and accurate systems with greater computational power for communication in the DL-enabled 5G and IoT era.

MATERIALS AND METHODS

Fabrication of the TEB scanner

The TEB scanner was fabricated by a low-cost, facile process. First, an unmodified polytetrafluoroethylene (PTFE) film 100 μm thick was cut into an ellipse. Next, Al tape serving as the sensing electrode was cut into thin bars and an ellipse smaller than the PTFE film, sized to match typical human fingers and palms. The Al components were then attached to the back side of the PTFE film, with the ellipse-shaped electrode in the middle and the thin-bar electrodes around it according to the designed coding patterns. All the Al electrodes were connected, forming a single-electrode interface for both palm and finger interactions. A thin copper wire was then connected to the Al electrode to collect the output signals generated during various human interactions. After that, a full layer of double-sided adhesive tape was applied to the Al/PTFE part. Last, a polyethylene terephthalate (PET) film (thickness, 100 μm) of the same size as the PTFE layer was attached to serve as the insulation/protection layer and the supporting substrate of the TEB scanner. The final configuration was thus PET/Al/PTFE, from bottom to top.

Characterization of the TEB scanner

A programmable electrometer (Keithley, 6514) was used to measure the open-circuit voltage, and an oscilloscope (Agilent, DSO-X3034A) was connected to the electrometer via a BNC cable for real-time data acquisition. The positive terminal of the electrometer was connected to the single electrode of the TEB scanner, and the negative terminal was grounded.

Fabrication of BOIMUX

BOIMUX was fabricated by a standard complementary metal-oxide-semiconductor (CMOS) manufacturing process, starting from 8-inch SiO2-covered Si wafers. A 120-nm TiN layer and a 50-nm Si3N4 layer were deposited and patterned as bottom electrodes. A 2-μm SiO2 layer was deposited and planarized as the waveguide bottom cladding. Next, a 400-nm AlN layer was deposited, followed by a 200-nm SiO2 layer as the hard mask. The waveguide patterns were defined using deep ultraviolet lithography and then etched using deep reactive-ion etching. After waveguide formation, another 2-μm SiO2 layer was deposited and planarized as the waveguide top cladding. Contact holes to the bottom TiN electrodes were then etched. Last, a 2-μm Al layer was deposited and patterned as the top electrodes and as contacts to the bottom TiN electrodes.

Characterization of BOIMUX

Characterization of passive performance

A tunable laser (Keysight, 81960A) was used as the light source. The light entered a single mode–maintaining polarization controller for polarization control and was then focused into the on-chip AlN waveguide using a tapered fiber (OZ Optics, TSMJ-3A-1550-9/125-0.25-18-2.5-14-3-AR). After on-chip routing, the light was collected by another tapered fiber and directed to a power sensor (Keysight, 81636B). The tunable laser and the power sensor formed an integrated system provided by Keysight, and wavelength scanning was controlled using commercial software, also from Keysight (IL Engine, Photonic Application Package Manager, N7700A Photonic Application Suite). The computer was connected to the integrated laser/power-sensor system via a USB/GPIB adapter (Keysight, 82357B).

Characterization of active performance

For DC performance characterization, the optical setup was the same as in the “Characterization of passive performance” section. A tunable DC voltage supply (Agilent, E3631A) was used; its output was amplified 20-fold by a voltage amplifier (FLC Electronics, A400DI) and then applied to BOIMUX via a ground-signal-ground (GSG) probe (MPI, T26A GSG100). For AC performance characterization, a waveform generator (HP, 33120A) replaced the DC voltage supply, and the rest of the electrical setup was unchanged. In the optical setup, because the Keysight 81636B is not suited to high-speed measurement, a high-speed photodetector (Thorlabs, DET08CFC/M) was used instead. An erbium-doped fiber amplifier was placed before the high-speed photodetector so that the photodetector output was high enough to be captured by an oscilloscope (Agilent, DSO-X3034A) for dynamic analysis. The rest of the optical setup was the same as for DC performance characterization.

Converting biometric information from the electrical domain to the optical domain

The working wavelength was 1551.4656 nm. The setup was generally the same as in the “Characterization of active performance” section, except that the DC voltage supply, the waveform generator, and the voltage amplifier were not required. Electrode A of BOIMUX was connected to the single electrode of the TEB scanner, while electrode B was grounded. The TEB scanner thus applied its voltage directly to BOIMUX without external circuits or power supplies.

Multiplexing digital information and biometric information

The working wavelength was 1551.4656 nm. The setup was generally the same as in the “Converting biometric information from the electrical domain to the optical domain” section, except that the light emitted from the tunable laser first entered a commercial EOM (Thorlabs, LN81S-FC). A waveform generator (HP, 33120A) connected to the EOM converted the digital information from the electrical domain into the optical domain. The modulated light carrying the digital information then passed through a polarization controller into BOIMUX, where it was further modulated by the voltage from the TEB scanner carrying the biometric information. Consequently, the light output from BOIMUX carried both the digital information as a high-frequency signal and the biometric information as a low-frequency envelope.
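A simple numerical model helps visualize why the digital data end up "sealed" in a biometric envelope. The sketch below treats Oinfo as on-off keyed bits and the BOIMUX response as a linearized transmission 1 + depth × V(t). Both the linear small-signal model and the `depth` value are assumptions for illustration, not measured device parameters; the 300-μs bit period and 37.5-μs sample interval are taken from the Methods.

```python
import numpy as np

def multiplex(bits, bit_period_s, fs, envelope, depth=0.3):
    """Simulate ObioOinfo as Oinfo (on-off bits) times a slow BOIMUX transmission.

    envelope: callable giving the normalized TENG voltage at times t (stand-in for Ebio).
    depth: assumed small-signal modulation depth per unit voltage (hypothetical).
    """
    samples_per_bit = int(round(bit_period_s * fs))
    oinfo = np.repeat(np.asarray(bits, dtype=float), samples_per_bit)
    t = np.arange(oinfo.size) / fs
    transmission = 1.0 + depth * envelope(t)  # linearized electro-optic response
    return t, oinfo * transmission

fs = 1 / 37.5e-6  # one sample every 37.5 us, as at the receiver
bits = np.random.default_rng(1).integers(0, 2, 1000)
t, obio_oinfo = multiplex(bits, 300e-6, fs, lambda tt: np.sin(2 * np.pi * 2 * tt))
print(obio_oinfo.shape)  # (8000,)
```

Because the bits switch every 300 μs while the palm-driven envelope evolves over seconds, the two components occupy well-separated frequency bands, which is what allows FFT-based demultiplexing at the receiver.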

Data collection and DL training model

Data collection and dataset

In the data collection process, participants were asked to interact with the TEB scanner using their palms in a predefined sequence (partial touch, half touch, full touch, and release, as shown in Fig. 2D), which helps capture as much temporal palm-interaction information as possible. The sample data were collected by the same method as in the “Multiplexing digital information and biometric information” section, except that the real-time photodetector output was first sent to an amplifying circuit, then low-pass filtered to reduce noise, and finally acquired by the signal acquisition module of an MCU (STM32F746ZG) on a development board (STMicroelectronics, NUCLEO-F746ZG). The acquired signals were sent to a laptop over USB at a baud rate of 1.3824 Mbaud, limited by the MCU. Each sample spanned 5 s and was smoothed to 1600 data points. The data collection involved 23 users, labeled user 1 to user 23. For each user, 96 samples were collected, giving a total of 2208 samples, of which 1932 (87.5%) were used as the training set and the remaining 276 (12.5%) as the testing set.
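The preprocessing and per-user split can be sketched as follows. The text says only that each 5-s sample "was smoothed to 1600 data points" and that 84 of 96 samples per user went to training; the moving-average-then-interpolate method, the window length, and the random per-user shuffle with a fixed seed are our assumptions. The helper names are hypothetical.

```python
import numpy as np

def to_fixed_length(raw, n_points=1600, window=5):
    """Moving-average smoothing, then linear resampling to n_points (assumed method)."""
    raw = np.asarray(raw, dtype=float)
    kernel = np.ones(window) / window
    # mode="same" keeps the length; the first/last few points are attenuated by zero padding.
    smoothed = np.convolve(raw, kernel, mode="same")
    old_x = np.linspace(0.0, 1.0, raw.size)
    new_x = np.linspace(0.0, 1.0, n_points)
    return np.interp(new_x, old_x, smoothed)

def split_per_user(labels, n_train=84, seed=0):
    """Return train/test index arrays with exactly n_train samples per user in training."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    train, test = [], []
    for user in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == user))
        train.extend(idx[:n_train])
        test.extend(idx[n_train:])
    return np.array(train), np.array(test)

labels = np.repeat(np.arange(1, 24), 96)  # users 1..23, 96 samples each
train_idx, test_idx = split_per_user(labels)
print(len(train_idx), len(test_idx))  # 1932 276
```

Splitting within each user, rather than over the pooled 2208 samples, guarantees that every user is represented by exactly 84 training and 12 testing samples.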

DL training model

A 1D-CNN was trained on a standard consumer-grade computer to identify the 23 users from the biometric information (palm interaction) of each person. The 1D-CNN was developed in Python using Keras with a TensorFlow backend. The network consisted of four convolution layers, each followed by a MaxPooling1D layer. These layers extracted patterns from the time-series samples, and the resulting embedding was passed through a fully connected layer to output the predicted user. The network was trained to minimize the mean squared error between the predicted and labeled users using the adaptive moment estimation (Adam) optimizer, a variant of stochastic gradient descent, with a batch size of 50 and a learning rate of 0.0001. After 500 training epochs, inference was performed on the testing set.
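The exact layer hyperparameters are given in Fig. 5E rather than in the text, so the sketch below does not reproduce the model. It only illustrates the sequence-length bookkeeping for four Conv1D + MaxPooling1D blocks on a 1600-point input, using the standard output-length rules for "valid" padding, together with the one-hot targets that an MSE loss on class labels normally implies. The kernel size 3 and pool size 2 are assumptions, and the helper names are hypothetical.

```python
import numpy as np

def conv_pool_lengths(n_points=1600, n_blocks=4, kernel=3, pool=2):
    """Sequence length after each Conv1D + MaxPooling1D block (assumed sizes)."""
    lengths = []
    length = n_points
    for _ in range(n_blocks):
        length = length - kernel + 1          # Conv1D, padding="valid", strides=1
        length = (length - pool) // pool + 1  # MaxPooling1D, pool_size=strides=pool, "valid"
        lengths.append(length)
    return lengths

def one_hot(labels, n_classes):
    """One-hot targets (user k -> index k-1 if labels run 1..23)."""
    return np.eye(n_classes)[np.asarray(labels)]

def mse(pred, target):
    """Mean squared error between predicted scores and one-hot targets."""
    return float(np.mean((np.asarray(pred) - np.asarray(target)) ** 2))

print(conv_pool_lengths())  # [799, 398, 198, 98]
```

Under these assumptions, the final block leaves 98 time steps per filter, which sets the size of the flattened embedding fed to the fully connected layer.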

Demonstration of applications using biometrics-protected optical communication system

Document exchange (upload)

On the uploader side, the text document was converted to digital information following the American Standard Code for Information Interchange (ASCII), with each character encoded in 8 bits. The setup was generally the same as in the “Multiplexing digital information and biometric information” section, except that the commercial EOM was controlled by an MCU (Arduino, MEGA 2560) instead of a waveform generator. The Arduino generated the digital information with a bit period of 300 μs, so it could transmit 1/(300 × 10^-6 × 8) ≈ 417 characters per second.
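The uploader-side encoding and the throughput arithmetic can be checked with a short sketch. Transmitting the most significant bit first is our assumption; the text specifies only 8 bits per ASCII character and a 300-μs bit period. The function name `text_to_bits` is hypothetical.

```python
from typing import List

def text_to_bits(text: str) -> List[int]:
    """8-bit ASCII, most significant bit first (bit order is an assumption)."""
    bits: List[int] = []
    for byte in text.encode("ascii"):
        bits.extend((byte >> shift) & 1 for shift in range(7, -1, -1))
    return bits

BIT_PERIOD_S = 300e-6                     # uploader bit period from the text
bits_per_second = 1 / BIT_PERIOD_S        # ~3333.3 bit/s
chars_per_second = bits_per_second / 8    # ~416.7 characters per second

print(text_to_bits("B"))        # [0, 1, 0, 0, 0, 0, 1, 0]
print(round(chars_per_second))  # 417
```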

On the cloud side, a development board (STMicroelectronics, NUCLEO-F746ZG) collected the analog signal from the amplifying circuit after the photodetector and digitized it using its built-in ADC. The development board was connected to a PC via USB and transmitted voltage information (VI) at a rate of one VI every 37.5 μs; for stable and reliable transmission, every eight VIs represented one bit at the receiver side. The receiver therefore received 1 s/(37.5 μs × 8) ≈ 3333.3 bits/s, corresponding to 3333.3/8 ≈ 417 characters per second, the same as on the uploader side. The PC then processed the data. First, the acquired signals spanning 5 s were passed through an FFT low-pass filter (sampling frequency, 1 kHz; cutoff frequency, 20 Hz) implemented in Python to extract the biometric information OLPbio. OLPbio was smoothed to 1600 data points and classified by the trained DL model to predict the user. If the authority check failed, the document exchange process terminated immediately. If the authority check succeeded, the acquired signals were passed through an FFT high-pass filter and thresholded into high values (“1”) and low values (“0”) to obtain binary data. The binary data were split into 8-bit groups and decoded back to the original text using ASCII. The text was finally displayed and stored in the cloud.
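The receiver steps can be sketched with NumPy's real FFT routines. The 1-kHz sampling frequency, 20-Hz cutoff, and eight readings per bit come from the text; the ideal (brick-wall) frequency mask, averaging the eight readings before thresholding, the midpoint threshold, and MSB-first byte assembly are our assumptions. The helper names are hypothetical.

```python
import numpy as np

def fft_filter(signal, fs, cutoff, kind="low"):
    """Ideal FFT filter: keep bins at or below (low) or above (high) the cutoff."""
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    mask = freqs <= cutoff if kind == "low" else freqs > cutoff
    return np.fft.irfft(spectrum * mask, n=len(signal))

def bits_from_samples(samples, per_bit=8, threshold=None):
    """Average each group of per_bit voltage readings (VIs), then threshold to 0/1."""
    samples = np.asarray(samples, dtype=float)
    n_bits = samples.size // per_bit
    means = samples[: n_bits * per_bit].reshape(n_bits, per_bit).mean(axis=1)
    if threshold is None:
        threshold = 0.5 * (means.min() + means.max())  # assumed midpoint threshold
    return (means > threshold).astype(int)

def bits_to_text(bits):
    """Group bits into bytes (MSB first, assumed) and decode as ASCII."""
    chars = []
    for i in range(0, len(bits) - len(bits) % 8, 8):
        byte = 0
        for b in bits[i:i + 8]:
            byte = (byte << 1) | int(b)
        chars.append(chr(byte))
    return "".join(chars)

# Demultiplexing check on a synthetic 5-s trace sampled at 1 kHz.
fs = 1000.0
t = np.arange(5000) / fs
slow = np.sin(2 * np.pi * 2 * t)          # stand-in for the biometric envelope
fast = 0.5 * np.sin(2 * np.pi * 200 * t)  # stand-in for the digital content
recovered = fft_filter(slow + fast, fs, cutoff=20.0, kind="low")

# Decoding check: "Hi" sent as 16 bits, 8 readings per bit, with an offset.
samples = np.repeat([0, 1, 0, 0, 1, 0, 0, 0, 0, 1, 1, 0, 1, 0, 0, 1], 8) + 0.2
print(bits_to_text(bits_from_samples(samples)))  # Hi
```

A brick-wall mask is the simplest reading of "FFT low-pass filter"; on real data with non-integer periods in the window it causes ringing, so a tapered mask or windowing may be preferable.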

Document exchange (request)

The difference between upload and request from the same user was the presence of meaningful Oinfo. During upload, Oinfo contained digital information that was carried by ObioOinfo and then captured and recovered by the cloud. In contrast, Oinfo remained constantly “1” during the request process.

Smart home control in the VR space

The setup was generally the same as in the “Document exchange (upload)” section. A biometric sample was first sent to the cloud as a request, following the procedure in the “Document exchange (request)” section. If the request succeeded, i.e., the DL model prediction indicated control authority, control commands generated by the control interface of the TEB scanner were sent to the Unity 3D interface in the cloud to enable smart home control.

All experiments performed in this work complied with a protocol approved by the National University of Singapore Institutional Review Board. All participants were volunteers, and informed consent was obtained before participation in the experiments.

Acknowledgments

Funding: National Research Foundation Singapore grant NRF-CRP15-2015-02, Ministry of Education Singapore grant T2EP50220-0035, Agency for Science, Technology and Research Singapore grant RIE2020-AME-2019-BRENAIC, and Advanced Research and Technology Innovation Centre Singapore grant R-261-518-009-720.

Author contributions: Conceptualization: B.D. and C.L. Methodology: B.D., Z.Z., and Q.S. Investigation: B.D., Z.Z., Q.S., Z.X., J.W., and Y.M. Visualization: B.D. and Z.Z. Supervision: C.L. Writing (original draft): B.D. and C.L. Writing (review and editing): B.D., Z.Z., Q.S., J.W., and C.L.

Competing interests: B.D., Z.Z., and C.L. are inventors on a provisional patent application related to this work filed by the National University of Singapore (no. 10202107074W, filed 21 July 2021). The other authors declare that they have no competing interests.

Data and materials availability: All data needed to evaluate the conclusions in this paper are present in the paper and/or the Supplementary Materials.

Supplementary Materials

This PDF file includes:

Text S1 and S2

Figs. S1 to S33

Legends for movies S1 to S5

Other Supplementary Material for this manuscript includes the following:

Movies S1 to S5

REFERENCES AND NOTES

1. Kim J., Campbell A. S., de Ávila B. E. F., Wang J., Wearable biosensors for healthcare monitoring. Nat. Biotechnol. 37, 389–406 (2019).

2. Bariya M., Nyein H. Y. Y., Javey A., Wearable sweat sensors. Nat. Electron. 1, 160–171 (2018).

3. Ray T. R., Choi J., Bandodkar A. J., Krishnan S., Gutruf P., Tian L., Ghaffari R., Rogers J. A., Bio-integrated wearable systems: A comprehensive review. Chem. Rev. 119, 5461–5533 (2019).

4. Yang Y., Gao W., Wearable and flexible electronics for continuous molecular monitoring. Chem. Soc. Rev. 48, 1465–1491 (2019).

5. Yang J. C., Mun J., Kwon S. Y., Park S., Bao Z., Park S., Electronic skin: Recent progress and future prospects for skin-attachable devices for health monitoring, robotics, and prosthetics. Adv. Mater. 31, 1904765 (2019).

6. Li S., Ni Q., Sun Y., Min G., Al-Rubaye S., Energy-efficient resource allocation for industrial cyber-physical IoT systems in 5G era. IEEE Trans. Ind. Informatics 14, 2618–2628 (2018).

7. Dong B., Shi Q., Yang Y., Wen F., Zhang Z., Lee C., Technology evolution from self-powered sensors to AIoT enabled smart homes. Nano Energy 79, 105414 (2021).

8. Cai X., Geng S., Wu D., Cai J., Chen J., A multi-cloud model based many-objective intelligent algorithm for efficient task scheduling in internet of things. IEEE Internet Things J. 14, 9645–9653 (2020).

9. Stergiou C., Psannis K. E., Kim B. G., Gupta B., Secure integration of IoT and Cloud Computing. Futur. Gener. Comput. Syst. 78, 964–975 (2018).

10. Niu S., Matsuhisa N., Beker L., Li J., Wang S., Wang J., Jiang Y., Yan X., Yun Y., Burnett W., Poon A. S. Y., Tok J. B.-H., Chen X., Bao Z., A wireless body area sensor network based on stretchable passive tags. Nat. Electron. 2, 361–368 (2019).

11. Tian X., Lee P. M., Tan Y. J., Wu T. L. Y., Yao H., Zhang M., Li Z., Ng K. A., Tee B. C. K., Ho J. S., Wireless body sensor networks based on metamaterial textiles. Nat. Electron. 2, 243–251 (2019).

12. Lee S., Franklin S., Hassani F. A., Yokota T., Nayeem M. O. G., Wang Y., Leib R., Cheng G., Franklin D. W., Someya T., Nanomesh pressure sensor for monitoring finger manipulation without sensory interference. Science 370, 966–970 (2020).

13. Chun S., Kim J.-S., Yoo Y., Choi Y., Jung S. J., Jang D., Lee G., Song K.-I., Nam K. S., Youn I., Son D., Pang C., Jeong Y., Jung H., Kim Y.-J., Choi B.-D., Kim J., Kim S.-P., Park W., Park S., An artificial neural tactile sensing system. Nat. Electron. 4, 429–438 (2021).

14. Fan F. R., Tian Z. Q., Lin Wang Z., Flexible triboelectric generator. Nano Energy 1, 328–334 (2012).

15. Wang Z. L., On the first principle theory of nanogenerators from Maxwell’s equations. Nano Energy 68, 104272 (2020).

16. Xu W., Zheng H., Liu Y., Zhou X., Zhang C., Song Y., Deng X., Leung M., Yang Z., Xu R. X., Wang Z. L., Zeng X. C., Wang Z., A droplet-based electricity generator with high instantaneous power density. Nature 578, 392–396 (2020).

17. Hinchet R., Yoon H.-J., Ryu H., Kim M.-K., Choi E.-K., Kim D.-S., Kim S.-W., Transcutaneous ultrasound energy harvesting using capacitive triboelectric technology. Science 365, 491–494 (2019).

18. Song Y., Min J., Yu Y., Wang H., Yang Y., Zhang H., Gao W., Wireless battery-free wearable sweat sensor powered by human motion. Sci. Adv. 6, eaay9842 (2020).

19. Wu Z., Cheng T., Wang Z. L., Self-powered sensors and systems based on nanogenerators. Sensors 20, 2925 (2020).

20. Wang J., He T., Lee C., Development of neural interfaces and energy harvesters towards self-powered implantable systems for healthcare monitoring and rehabilitation purposes. Nano Energy 65, 104039 (2019).

21. Zhang B., Wu Z., Lin Z., Guo H., Chun F., Yang W., Wang Z. L., All-in-one 3D acceleration sensor based on coded liquid–metal triboelectric nanogenerator for vehicle restraint system. Mater. Today 43, 37–44 (2021).

22. Shi Y., Wang F., Tian J., Li S., Fu E., Nie J., Lei R., Ding Y., Chen X., Wang Z. L., Self-powered electro-tactile system for virtual tactile experiences. Sci. Adv. 7, eabe2943 (2021).

23. Ouyang H., Tian J., Sun G., Zou Y., Liu Z., Li H., Zhao L., Shi B., Fan Y., Fan Y., Wang Z. L., Li Z., Self-powered pulse sensor for antidiastole of cardiovascular disease. Adv. Mater. 29, 1703456 (2017).

24. Kwak S. S., Yoon H. J., Kim S. W., Textile-based triboelectric nanogenerators for self-powered wearable electronics. Adv. Funct. Mater. 29, 1804533 (2019).

25. Zhu M., Shi Q., He T., Yi Z., Ma Y., Yang B., Chen T., Lee C., Self-powered and self-functional cotton sock using piezoelectric and triboelectric hybrid mechanism for healthcare and sports monitoring. ACS Nano 13, 1940–1952 (2019).

26. Feng Y., Huang X., Liu S., Guo W., Li Y., Wu H., A self-powered smart safety belt enabled by triboelectric nanogenerators for driving status monitoring. Nano Energy 62, 197–204 (2019).

27. Fan W., He Q., Meng K., Tan X., Zhou Z., Zhang G., Yang J., Wang Z. L., Machine-knitted washable sensor array textile for precise epidermal physiological signal monitoring. Sci. Adv. 6, eaay2840 (2020).

- 28.Chen T., Shi Q., Zhu M., He T., Sun L., Yang L., Lee C., Triboelectric self-powered wearable flexible patch as 3D motion control interface for robotic manipulator. ACS Nano 12, 11561–11571 (2018). [DOI] [PubMed] [Google Scholar]

- 29.Zhao X., Zhang Z., Liao Q., Xun X., Gao F., Xu L., Kang Z., Zhang Y., Self-powered user-interactive electronic skin for programmable touch operation platform. Sci. Adv. 6, eaba4294 (2020). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Wu Z., Ding W., Dai Y., Dong K., Wu C., Zhang L., Lin Z., Cheng J., Wang Z. L., Self-powered multifunctional motion sensor enabled by magnetic-regulated triboelectric nanogenerator. ACS Nano 12, 5726–5733 (2018). [DOI] [PubMed] [Google Scholar]

- 31.Peng X., Dong K., Ye C., Jiang Y., Zhai S., Cheng R., Liu D., Gao X., Wang J., Wang Z. L., A breathable, biodegradable, antibacterial, and self-powered electronic skin based on all-nanofiber triboelectric nanogenerators. Sci. Adv. 6, eaba9624 (2020). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Dong C., Leber A., Das Gupta T., Chandran R., Volpi M., Qu Y., Nguyen-Dang T., Bartolomei N., Yan W., Sorin F., High-efficiency super-elastic liquid metal based triboelectric fibers and textiles. Nat. Commun. 11, 3537 (2020). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Pu X., Tang Q., Chen W., Huang Z., Liu G., Zeng Q., Chen J., Guo H., Xin L., Hu C., Flexible triboelectric 3D touch pad with unit subdivision structure for effective XY positioning and pressure sensing. Nano Energy 76, 105047 (2020). [Google Scholar]

- 34.Wen F., Sun Z., He T., Shi Q., Zhu M., Zhang Z., Li L., Zhang T., Lee C., Machine learning glove using self-powered conductive superhydrophobic triboelectric textile for gesture recognition in VR/AR applications. Adv. Sci. 7, 2000261 (2020). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Pu X., Guo H., Chen J., Wang X., Xi Y., Hu C., Wang Z. L., Eye motion triggered self-powered mechnosensational communication system using triboelectric nanogenerator. Sci. Adv. 3, e1700694 (2017). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Yuan Z., Du X., Li N., Yin Y., Cao R., Zhang X., Zhao S., Niu H., Jiang T., Xu W., Wang Z. L., Li C., Triboelectric-based transparent secret code. Adv. Sci. 5, 1700881 (2018). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Chen J., Pu X., Guo H., Tang Q., Feng L., Wang X., Hu C., A self-powered 2D barcode recognition system based on sliding mode triboelectric nanogenerator for personal identification. Nano Energy 43, 253–258 (2018). [Google Scholar]

- 38.Shi Q., Lee C., Self-powered bio-inspired spider-net-coding interface using single-electrode triboelectric nanogenerator. Adv. Sci. 6, 1900617 (2019). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Maharjan P., Shrestha K., Bhatta T., Cho H., Park C., Salauddin M., Rahman M. T., Rana S. S., Lee S., Park J. Y., Keystroke dynamics based hybrid nanogenerators for biometric authentication and identification using artificial intelligence. Adv. Sci. 8, e2100711 (2021). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Zhang W., Deng L., Yang L., Yang P., Diao D., Wang P., Wang Z. L., Multilanguage-handwriting self-powered recognition based on triboelectric nanogenerator enabled machine learning. Nano Energy 77, 105174 (2020). [Google Scholar]

- 41.Wu C., Ding W., Liu R., Wang J., Wang A. C., Wang J., Li S., Zi Y., Wang Z. L., Keystroke dynamics enabled authentication and identification using triboelectric nanogenerator array. Mater. Today 21, 216–222 (2018). [Google Scholar]

- 42.Shi Q., Zhang Z., He T., Sun Z., Wang B., Feng Y., Shan X., Salam B., Lee C., Deep learning enabled smart mats as a scalable floor monitoring system. Nat. Commun. 11, 4609 (2020). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.Zhang Z., He T., Zhu M., Sun Z., Shi Q., Zhu J., Dong B., Yuce M. R., Lee C., Deep learning-enabled triboelectric smart socks for IoT-based gait analysis and VR applications. npj Flex. Electron. 4, 29 (2020). [Google Scholar]

- 44.Wen F., Zhang Z., He T., Lee C., AI enabled sign language recognition and VR space bidirectional communication using triboelectric smart glove. Nat. Commun. 12, 5378 (2021). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45.Qiu C., Wu F., Lee C., Yuce M. R., Self-powered control interface based on Gray code with hybrid triboelectric and photovoltaics energy harvesting for IoT smart home and access control applications. Nano Energy 70, 104456 (2020).

- 46.Qiu C., Wu F., Shi Q., Lee C., Yuce M. R., Sensors and control interface methods based on triboelectric nanogenerator in IoT applications. IEEE Access 7, 92745–92757 (2019).

- 47.Lin Z., Yang J., Li X., Wu Y., Wei W., Liu J., Chen J., Yang J., Large-scale and washable smart textiles based on triboelectric nanogenerator arrays for self-powered sleeping monitoring. Adv. Funct. Mater. 28, 1704112 (2018).

- 48.Zhu M., Sun Z., Chen T., Lee C., Low cost exoskeleton manipulator using bidirectional triboelectric sensors enhanced multiple degree of freedom sensory system. Nat. Commun. 12, 2692 (2021).

- 49.Zhang C., Chen J., Xuan W., Huang S., You B., Li W., Sun L., Jin H., Wang X., Dong S., Luo J., Flewitt A. J., Wang Z. L., Conjunction of triboelectric nanogenerator with induction coils as wireless power sources and self-powered wireless sensors. Nat. Commun. 11, 58 (2020).

- 50.Wen F., Wang H., He T., Shi Q., Sun Z., Zhu M., Zhang Z., Cao Z., Dai Y., Zhang T., Lee C., Battery-free short-range self-powered wireless sensor network (SS-WSN) using TENG based direct sensory transmission (TDST) mechanism. Nano Energy 67, 104266 (2020).

- 51.Dong B., Shi Q., He T., Zhu S., Zhang Z., Sun Z., Ma Y., Kwong D., Lee C., Wearable triboelectric/aluminum nitride nano-energy-nano-system with self-sustainable photonic modulation and continuous force sensing. Adv. Sci. 7, 1903636 (2020).

- 52.Dong B., Yang Y., Shi Q., Xu S., Sun Z., Zhu S., Zhang Z., Kwong D.-L., Zhou G., Ang K.-W., Lee C., Wearable triboelectric-human-machine interface (THMI) using robust nanophotonic readout. ACS Nano 14, 8915–8930 (2020).

- 53.Li M., Tang H. X., Strong Pockels materials. Nat. Mater. 18, 9–11 (2019).

- 54.Li N., Ho C. P., Zhu S., Fu Y. H., Zhu Y., Lee L. Y. T., Aluminium nitride integrated photonics: A review. Nanophotonics 10, 2347–2387 (2021).

- 55.Varkey J. P., Pompili D., Walls T. A., Human motion recognition using a wireless sensor-based wearable system. Pers. Ubiquitous Comput. 16, 897–910 (2012).

- 56.Guo H., Pu X., Chen J., Meng Y., Yeh M.-H., Liu G., Tang Q., Chen B., Liu D., Qi S., Wu C., Hu C., Wang J., Wang Z. L., A highly sensitive, self-powered triboelectric auditory sensor for social robotics and hearing aids. Sci. Robot. 3, eaat2516 (2018).

- 57.Maeda H., Saito K., Sasai T., Hamaoka F., Kawahara H., Seki T., Kawasaki T., Kani J., Real-time 400 Gbps/carrier WDM transmission over 2,000 km of field-installed G.654.E fiber. Opt. Express 28, 1640–1646 (2020).

- 58.Fortino G., Galzarano S., Gravina R., Li W., A framework for collaborative computing and multi-sensor data fusion in body sensor networks. Inf. Fusion 22, 50–70 (2015).

- 59.Feldmann J., Youngblood N., Karpov M., Gehring H., Li X., Le Gallo M., Fu X., Lukashchuk A., Raja A. S., Liu J., Wright C. D., Sebastian A., Kippenberg T. J., Pernice W. H. P., Bhaskaran H., Parallel convolutional processing using an integrated photonic tensor core. Nature 589, 52–58 (2021).

- 60.Xu X., Tan M., Corcoran B., Wu J., Boes A., Nguyen T. G., Chu S. T., Little B. E., Hicks D. G., Morandotti R., Mitchell A., Moss D. J., 11 TOPS photonic convolutional accelerator for optical neural networks. Nature 589, 44–51 (2021).

Supplementary Materials

Text S1 and S2

Figs. S1 to S33

Legends for movies S1 to S5

Movies S1 to S5