Abstract

Since their selection as the Method of the Year in 2013, single-cell technologies have matured enough to answer complex research questions. With the growth of single-cell profiling technologies, the volume of data collected from single-cell profiling has also increased significantly, creating computational challenges in processing these massive and complicated datasets. To address these challenges, deep learning (DL) is positioned as a competitive alternative to traditional machine learning approaches for single-cell analyses. Here, we survey a total of 25 DL algorithms and their applicability to specific steps in the single-cell RNA-seq processing pipeline. Specifically, we establish a unified mathematical representation of variational autoencoder, autoencoder, generative adversarial network and supervised DL models, compare the training strategies and loss functions for these models, and relate the loss functions of these models to specific objectives of the data processing step. Such a presentation will allow readers to choose suitable algorithms for their particular objective at each step in the pipeline. We envision that this survey will serve as an important information portal for learning the application of DL for scRNA-seq analysis and inspire innovative uses of DL to address a broader range of new challenges in emerging multi-omics and spatial single-cell sequencing.

Keywords: deep learning, single-cell RNA-seq, imputation, dimensionality reduction, clustering, batch correction, cell-type identification, functional prediction, visualization

Introduction

Single cell sequencing technology has been a rapidly developing area to study genomics, transcriptomics, proteomics, metabolomics and cellular interactions at the single cell level for cell-type identification, tissue composition and reprogramming [1, 2]. Specifically, sequencing of the transcriptome of single cells, or single-cell RNA-sequencing (scRNA-seq), has become the dominant technology in many frontier research areas such as disease progression and drug discovery [3, 4]. One particular area where scRNA-seq has made a tangible impact is cancer, where scRNA-seq is becoming a powerful tool for understanding invasion, intratumor heterogeneity, metastasis, epigenetic alterations and therapeutic response, and for detecting rare cancer stem cells [5, 6]. Currently, scRNA-seq is applied to develop personalized therapeutic strategies that are potentially useful in cancer diagnosis, in addressing therapy resistance during cancer progression and in improving the survival of patients [5, 7]. scRNA-seq has also been adopted to combat COVID-19 by elucidating how the innate and adaptive host immune systems miscommunicate, worsening the immunopathology produced during the viral infection [8, 9].

These studies have led to a massive amount of scRNA-seq data deposited to public databases such as the 10× single-cell gene expression dataset, Human Cell Atlas and Mouse Cell Atlas. Expressions of millions of cells from 18 species have been collected and deposited, waiting for further analysis (Single Cell Expression Atlas, EMBL-EBI, October 2021). On the other hand, due to biological and technical factors, scRNA-seq data present several analytical challenges related to its complex characteristics such as missing expression values, high technical and biological variance, noise and sparse gene coverage, and elusive cell identities [1]. These characteristics make it difficult to directly apply commonly used bulk RNA-seq data analysis techniques and have called for novel statistical approaches for scRNA-seq data cleaning and computational algorithms for data analysis and interpretation. To this end, specialized scRNA-seq analysis pipelines such as Seurat [10] and Scanpy [11], along with a large collection of task-specific tools, have been developed to address the intricate technical and biological complexity of scRNA-seq data.

Recently, deep learning (DL) has demonstrated significant advantages in natural language processing and in speech and facial recognition with massive data [12–14]. Such advantages have initiated the application of DL in scRNA-seq data analysis as a competitive alternative to conventional machine learning (ML) approaches for cell clustering [15, 16], cell-type identification [15, 17], gene imputation [18–20] and batch correction [21]. Compared with conventional ML approaches, DL is more powerful in capturing complex features of high-dimensional scRNA-seq data. It is also more versatile, in that a single model can be trained to address multiple tasks or adapted and transferred to different tasks. Moreover, DL training scales more favorably with the number of cells in a scRNA-seq dataset, making it particularly attractive for handling the ever-increasing volume of single cell data. Indeed, the growing body of DL-based tools has demonstrated DL’s exciting potential as a learning paradigm to significantly advance the tools we use to interrogate scRNA-seq data.

In this paper, we present a comprehensive review of the recent advances of DL methods for solving the challenges in scRNA-seq data analysis (Table 1), from quality control (QC), normalization/batch effect correction, dimensionality reduction, visualization and feature selection to data interpretation, by surveying DL papers published up to April 2021. To maintain high quality for this review, we chose not to include any (bio)archival papers, although a proportion of these manuscripts contain important new findings that may be published once they complete peer review. Previous efforts to review the recent advances in ML methods focused on efficient integration of single cell data [22, 23]. A recent review of DL applications on single cell data summarized 21 DL algorithms that might be deployed in single cell studies [24]. It also evaluated the clustering and data correction effect of these DL algorithms using 11 datasets.

Table 1.

DL algorithms reviewed in the paper

| App | Algorithm | Models | Evaluation | Environment | Codes | Refs |
|---|---|---|---|---|---|---|
| Imputation | | | | | | |
| | DCA | AE | DREMI | Keras, Tensorflow, scanpy | https://github.com/theislab/dca | [18] |
| | SAVER-X | AE + TL | t-SNE, ARI | R/sctransfer | https://github.com/jingshuw/SAVERX | [58] |
| | DeepImpute | DNN | MSE, Pearson’s correlation | Keras/Tensorflow | https://github.com/lanagarmire/DeepImpute | [20] |
| | LATE | AE | MSE | Tensorflow | https://github.com/audreyqyfu/LATE | [59] |
| | scGMAI | AE | NMI, ARI, HS and CS | Tensorflow | https://github.com/QUST-AIBBDRC/scGMAI/ | [60] |
| | scIGANs | GAN | ARI, ACC, AUC and F-score | PyTorch | https://github.com/xuyungang/scIGANs | [19] |
| Batch correction | | | | | | |
| | BERMUDA | AE + TL | KNN batch-effect test (kBET), entropy of mixing, SI | PyTorch | https://github.com/txWang/BERMUDA | [63] |
| | DESC | AE | ARI, KL | Tensorflow | https://github.com/eleozzr/desc | [67] |
| | iMAP | AE + GAN | kBET, Local Inverse Simpson’s Index (LISI) | PyTorch | https://github.com/Svvord/iMAP | [70] |
| Clustering, latent representation, dimension reduction and data augmentation | | | | | | |
| | Dhaka | VAE | ARI, Spearman correlation | Keras/Tensorflow | https://github.com/MicrosoftGenomics/Dhaka | [72] |
| | scvis | VAE | KNN preservation, log-likelihood | Tensorflow | https://bitbucket.org/jerry00/scvis-dev/src/master/ | [75] |
| | scVAE | VAE | ARI | Tensorflow | https://github.com/scvae/scvae | [76] |
| | VASC | VAE | NMI, ARI, HS and CS | H5py, Keras | https://github.com/wang-research/VASC | [77] |
| | scDeepCluster | AE | ARI, NMI, clustering accuracy | Keras, Scanpy | https://github.com/ttgump/scDeepCluster | [79] |
| | cscGAN | GAN | t-SNE, marker genes, MMD, AUC | Scipy, Tensorflow | https://github.com/imsb-uke/scGAN | [82] |
| Multi-functional models (IM: imputation, BC: batch correction, CL: clustering) | | | | | | |
| | scVI | VAE | IM: L1 distance; CL: ARI, NMI, SI; BC: entropy of mixing | PyTorch, Anndata | https://github.com/YosefLab/scvi-tools | [17] |
| | LDVAE | VAE | Reconstruction errors | Part of scVI | https://github.com/YosefLab/scvi-tools | [86] |
| | SAUCIE | AE | IM: R2 statistics; CL: SI; BC: modified kBET; Visualization: Precision/Recall | Tensorflow | https://github.com/KrishnaswamyLab/SAUCIE/ | [15] |
| | scScope | AE | IM: reconstruction errors; BC: entropy of mixing; CL: ARI | Tensorflow, Scikit-learn | https://github.com/AltschulerWu-Lab/scScope | [92] |
| Cell-type identification | | | | | | |
| | DigitalDLSorter | DNN | Pearson correlation | R/Python/Keras | https://github.com/cartof/digitalDLSorter | [51] |
| | scCapsNet | CapsNet | Cell-type prediction accuracy | Keras, Tensorflow | https://github.com/wanglf19/scCaps | [52] |
| | netAE | VAE | Cell-type prediction accuracy, t-SNE for visualization | PyTorch | https://github.com/LeoZDong/netAE | [101] |
| | scDGN | DANN | Prediction accuracy | PyTorch | https://github.com/SongweiGe/scDGN | [53] |
| Function analysis | | | | | | |
| | CNNC | CNN | AUROC, AUPRC and accuracy | Keras, Tensorflow | https://github.com/xiaoyeye/CNNC | [54] |
| | scGen | VAE | Correlation, visualization | Tensorflow | https://github.com/theislab/scgen | [114] |

DL Model keywords: AE + TL: autoencoder with transfer learning, DANN: domain adversarial neural network, CapsNet: capsule neural network

In this review, we focus more on the DL algorithms, with more detailed explanation and comparison. Furthermore, to better understand the relationship of each surveyed DL model with the overall scRNA-seq analysis pipeline, we organize the survey according to the challenge each model addresses and discuss these DL models following the analysis pipeline. A unified mathematical description of the surveyed DL models is presented and the specific model features are discussed when reviewing each method. This also sheds light on the modeling connections among the surveyed DL methods and the recognition of the uniqueness of each model. Besides the models, we also summarize the evaluation metrics used by these DL algorithms and the methods that each DL algorithm was compared with. The online location of the code, the development platform and the datasets used for each method are also cataloged to facilitate their utilization and additional efforts to improve them. Finally, we also created a companion online version of the paper at https://huang-ai4medicine-lab.github.io/survey-of-DL-for-scRNA-seq-analysis/_book/, which includes expanded discussion as well as a survey of additional methods. We envision that this survey will serve as an important information portal for learning the application of DL for scRNA-seq analysis and inspire innovative use of DL to address a broader range of new challenges in emerging multi-omics and spatial single-cell sequencing.

Overview of the scRNA-seq processing pipeline

Various scRNA-seq techniques (such as SMART-seq, Drop-seq and 10× genomics sequencing) [25, 26] are available nowadays with their sets of advantages and disadvantages. Despite the differences in the scRNA-seq techniques, the data content and processing steps of scRNA-seq data are quite standard and conventional. A typical scRNA-seq dataset consists of three files: genes quantified (gene IDs), cells quantified (cellular barcode) and a count matrix (number of cells × number of genes), irrespective of the technology or pipeline used. A series of essential steps in the scRNA-seq data processing pipeline and optional tools for each step with both ML and DL approaches are illustrated in Figure 1.

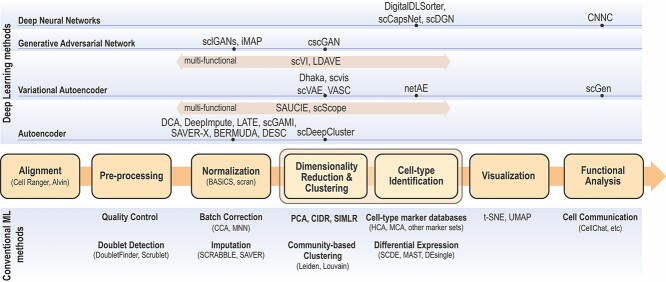

Figure 1.

Single cell data analysis steps for both conventional ML methods (bottom) and DL methods (top). Depending on the input data and analysis objectives, major scRNA-seq analysis steps are illustrated in the center flow chart (color boxes) with conventional ML approaches along with optional analysis modules below each analysis step. DL approaches are categorized as DNN, GAN, VAE, and AE. For each DL approach, optional algorithms are listed on top of each step.

Although cell barcodes and unique molecular identifiers (UMIs) make it possible to identify each cell and to quantify the expression of each gene within it, scRNA-seq data carry increased technical noise and biases [27]. QC is the first and key step to filter out dead cells, doublets, and cells with failed chemistry or other technical artifacts. The three most commonly adopted QC covariates are the number of counts (count depth) per barcode identifying each cell, the number of genes per barcode and the fraction of counts from mitochondrial genes per barcode [28].
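As a minimal sketch of the three QC covariates described above, the following pure-Python example computes count depth, genes detected and mitochondrial fraction per barcode from a small cells × genes count matrix. The function names, the `MT-` gene-name prefix and the filtering thresholds are illustrative assumptions, not values recommended by the surveyed tools.

```python
from typing import Dict, List


def qc_covariates(counts: List[List[int]], gene_names: List[str],
                  mito_prefix: str = "MT-") -> List[Dict[str, float]]:
    """Per-barcode count depth, number of detected genes and mito fraction.

    `counts` is a cells x genes matrix. `mito_prefix` is an assumed naming
    convention for mitochondrial genes (human gene symbols).
    """
    mito_idx = [g for g, name in enumerate(gene_names)
                if name.startswith(mito_prefix)]
    covariates = []
    for row in counts:
        depth = sum(row)                              # count depth
        n_genes = sum(1 for c in row if c > 0)        # genes with >= 1 count
        mito = sum(row[g] for g in mito_idx)          # mitochondrial counts
        covariates.append({
            "count_depth": depth,
            "n_genes": n_genes,
            "mito_fraction": mito / depth if depth > 0 else 0.0,
        })
    return covariates


def passes_qc(cov: Dict[str, float], min_counts: int = 500,
              min_genes: int = 200, max_mito: float = 0.2) -> bool:
    # Thresholds are placeholders; in practice they are chosen per dataset.
    return (cov["count_depth"] >= min_counts
            and cov["n_genes"] >= min_genes
            and cov["mito_fraction"] <= max_mito)


# Toy example: two barcodes, four genes (one mitochondrial).
genes = ["MT-CO1", "ACTB", "GAPDH", "CD3E"]
counts = [[5, 40, 30, 25], [60, 10, 0, 0]]
covs = qc_covariates(counts, genes)
kept = [passes_qc(c, min_counts=50, min_genes=3, max_mito=0.2) for c in covs]
```

In this toy input the second barcode is dominated by mitochondrial counts and detects only two genes, so it is the kind of barcode a QC filter would typically discard.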

Normalization is designed to remove the effects of imbalanced sampling, cell differentiation, viability and many other factors. Approaches tailored for scRNA-seq have been developed, including BASiCS, a Bayesian method coupled with spike-ins [29]; scran, a deconvolution approach [30]; and sctransform in Seurat, which uses regularized negative binomial regression [31]. Two important steps, batch correction and imputation, are carried out if required by the analysis.

Batch effects are a common source of technical variation in high-throughput sequencing experiments, arising from varying experimental conditions such as different technicians and experimental times, and they impose a major challenge in scRNA-seq data analysis. Batch effect correction algorithms include detection of mutual nearest neighbors (MNNs) [32], canonical correlation analysis (CCA) with Seurat [33] and the Harmony algorithm through cell-type representation [34].

The imputation step is necessary to handle the highly sparse data matrix caused by missing values or dropouts in scRNA-seq data. Several tools have been developed to ‘impute’ zero values in scRNA-seq data, such as SCRABBLE [35], SAVER [36] and scImpute [37].

Dimensionality reduction and visualization are essential steps to represent biologically meaningful variations and high dimensionality with significantly reduced computational cost. Dimensionality reduction methods, such as principal component analysis (PCA), are widely used in scRNA-seq data analysis to achieve that purpose. More advanced nonlinear approaches that preserve the topological structure and avoid overcrowding in lower dimension representation, such as LLE [38] (used in SLICER [39]), t-SNE [40] and UMAP [41], have also been developed and adopted as a standard in single-cell data visualization.

Clustering analysis is a key step to identify cell subpopulations or distinct cell types to unravel the extent of heterogeneity and their associated cell-type-specific markers. Unsupervised clustering is frequently used to categorize cells into clusters according to their similarity, often measured in the aforementioned dimensionality-reduced representations. Popular algorithms include the community detection algorithms Louvain [42] and Leiden [43], and SIMLR [44], which performs data-driven dimensionality reduction followed by k-means clustering.

Feature selection is another important step in single-cell RNA-seq analysis to select a subset of genes, or features, for cell-type identification and functional enrichment of each cluster. This step is achieved by differential expression analysis designed for scRNA-seq, such as MAST that used linear model fitting and likelihood ratio testing [45]; SCDE that adopted a Bayesian approach with a Negative Binomial model for gene expression and Poisson process for dropouts [46], or DEsingle that utilized a Zero-Inflated Negative Binomial (ZINB) model to estimate the dropouts [47].

Besides these key steps, downstream analyses include cell-type identification, coexpression analysis and prediction of perturbation response, where DL has also been applied. Other advanced analyses, including trajectory inference and velocity and pseudotime analysis, are not discussed here because most of the approaches on these topics are non-DL based.

Overview of common DL models for scRNA-seq analysis

We start our review by introducing the general formulations of widely used DL models. As most of the tasks, including batch correction, dimensionality reduction, imputation and clustering, are unsupervised learning tasks, we give special attention to unsupervised models including the variational autoencoder (VAE), the autoencoder (AE) and generative adversarial networks (GAN). We also discuss the general supervised and transfer learning formulations, which find their applications in cell-type predictions and functional studies. We discuss these models in the context of scRNA-seq, detailing the different features and training strategies of each model and bringing attention to their uniqueness.

Variational autoencoder

Let $x_n$ represent a $G \times 1$ vector of expression levels (UMI counts or normalized, log-transformed expression) of $G$ genes in cell $n$, where $x_{gn}$ follows some distribution $p(x_{gn} \mid \nu_{gn}, \alpha_{gn})$ (e.g. ZINB or Gaussian), where $\nu_{gn}$ and $\alpha_{gn}$ are distribution parameters (e.g. mean, variance or dispersion) (Figure 2A). We consider $\nu_{gn}$ to be of particular interest (e.g. the mean counts) and is thus further modeled by a decoder neural network $D_\theta$ (Figure 2A) as

$$\nu_n = D_\theta(z_n, s) \tag{1}$$

where the $g$th element of $\nu_n$ is $\nu_{gn}$ and $\theta$ is a vector of decoder weights, $z_n \in \mathbb{R}^d$ represents a latent representation of gene expression and is used for visualization and clustering and $s$ is an observed variable (e.g. the batch ID). For VAE, $z_n$ is commonly assumed to follow a multivariate standard Normal prior, i.e. $p(z_n) = \mathcal{N}(0, I_d)$ with $I_d$ being a $d \times d$ identity matrix. Furthermore, $\alpha_{gn}$ of $p(x_{gn} \mid \nu_{gn}, \alpha_{gn})$ is a nuisance parameter, which has a prior distribution $p(\alpha_{gn})$ and can be either estimated or marginalized in variational inference. Now define $\Theta = \{\theta, \alpha_{gn}\ \forall g, n\}$. Then, $p(x_{gn} \mid \nu_{gn}, \alpha_{gn})$ and (1) together define the likelihood $p(x_n \mid z_n, s, \Theta)$.
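To make the choice of data distribution concrete, the sketch below evaluates the log-probability of a single count under a ZINB distribution, one of the options named above. It assumes the common parameterization with mean `mu` (playing the role of $\nu_{gn}$), inverse dispersion `theta` (playing the role of $\alpha_{gn}$) and an additional zero-inflation (dropout) probability `pi`; these names and this parameterization are assumptions for illustration and may differ from those used by individual surveyed methods.

```python
import math


def nb_log_pmf(x: int, mu: float, theta: float) -> float:
    """Log-pmf of a negative binomial with mean mu > 0 and inverse
    dispersion theta > 0 (variance = mu + mu**2 / theta)."""
    return (math.lgamma(x + theta) - math.lgamma(theta) - math.lgamma(x + 1)
            + theta * math.log(theta / (theta + mu))
            + x * math.log(mu / (theta + mu)))


def zinb_log_pmf(x: int, mu: float, theta: float, pi: float) -> float:
    """Log-pmf of a zero-inflated NB with dropout probability 0 <= pi < 1.

    A zero is either a dropout (probability pi) or a genuine NB zero;
    a positive count can only come from the NB component.
    """
    if x == 0:
        return math.log(pi + (1.0 - pi) * math.exp(nb_log_pmf(0, mu, theta)))
    return math.log(1.0 - pi) + nb_log_pmf(x, mu, theta)


# Zero inflation raises the probability of observing a zero count.
p_zero_nb = math.exp(nb_log_pmf(0, mu=3.0, theta=2.0))
p_zero_zinb = math.exp(zinb_log_pmf(0, mu=3.0, theta=2.0, pi=0.3))
```

Summing `-zinb_log_pmf` over genes and cells gives the negative log-likelihood term that a ZINB-based reconstruction loss would minimize under these assumptions.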

Figure 2.

Graphical models of the major surveyed DL models including (A) VAE, (B) AE and (C) GAN.

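The goal of training is to compute the maximum likelihood estimate of $\Theta$

$$\hat{\Theta}_{ML} = \arg\max_{\Theta} \sum_{n=1}^{N} \log p(x_n \mid s, \Theta) \approx \arg\max_{\Theta} \mathcal{L}(\Theta) \tag{2}$$

where $\mathcal{L}(\Theta)$ is the evidence lower bound (ELBO)

$$\mathcal{L}(\Theta) = \sum_{n=1}^{N} \left\{ \mathbb{E}_{q(z_n \mid x_n, s)}\left[\log p(x_n \mid z_n, s, \Theta)\right] - D_{KL}\left(q(z_n \mid x_n, s) \,\|\, p(z_n)\right) \right\} \tag{3}$$

and $q(z_n \mid x_n, s)$ is an approximate to $p(z_n \mid x_n, s)$ and assumed as

$$q(z_n \mid x_n, s) = \mathcal{N}\left(\mu_{z_n}, \mathrm{diag}(\sigma^2_{z_n})\right) \tag{4}$$

with $\{\mu_{z_n}, \sigma^2_{z_n}\}$ given by an encoder network $E_\phi$ (Figure 2A) as

$$\{\mu_{z_n}, \sigma^2_{z_n}\} = E_\phi(x_n, s) \tag{5}$$

where $\phi$ is the weights vector. Now, $\Theta = \{\theta, \phi, \alpha_{gn}\ \forall g, n\}$ and Eq. (2) is solved by the stochastic gradient descent approach while a model is trained.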

All the surveyed papers that deploy VAE follow this general modeling process. However, a more general formulation has a loss function defined as

$$L(\Theta) = -\mathcal{L}(\Theta) + \sum_{k=1}^{K} \lambda_k L_k(\Theta) \tag{6}$$

where $L_k(\Theta)$ are losses for different functions (clustering, cell-type prediction, etc.) and $\lambda_k$s are the Lagrange multipliers. With this general formulation, for each paper, we examined the specific choices of data distribution $p(x_{gn} \mid \nu_{gn}, \alpha_{gn})$ that define $\mathcal{L}(\Theta)$, different $L_k$ designed for specific functions, and how the decoder and encoder were applied to model different aspects of scRNA-seq data.
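The VAE objective can be illustrated numerically. The sketch below, written under stated assumptions, estimates the ELBO for one cell using a unit-variance Gaussian likelihood, a diagonal Gaussian approximate posterior and a standard Normal prior, and then combines the negative ELBO with weighted task-specific losses. The helper names (`elbo_estimate`, `total_loss`), the Gaussian likelihood and the toy decoder are assumptions chosen for brevity; the surveyed methods typically use neural-network decoders and often ZINB or NB likelihoods.

```python
import math
import random
from typing import Callable, List, Sequence


def kl_to_standard_normal(mu: Sequence[float], log_var: Sequence[float]) -> float:
    """Closed-form KL( N(mu, diag(exp(log_var))) || N(0, I) )."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv
                     for m, lv in zip(mu, log_var))


def gaussian_log_lik(x: Sequence[float], mean: Sequence[float]) -> float:
    """log p(x | mean) for independent unit-variance Gaussians."""
    return sum(-0.5 * math.log(2.0 * math.pi) - 0.5 * (xi - mi) ** 2
               for xi, mi in zip(x, mean))


def elbo_estimate(x: Sequence[float], mu_z: Sequence[float],
                  log_var_z: Sequence[float],
                  decoder: Callable[[List[float]], List[float]],
                  rng: random.Random, n_samples: int = 1) -> float:
    """Monte Carlo ELBO: E_q[log p(x|z)] - KL(q(z|x) || p(z))."""
    recon = 0.0
    for _ in range(n_samples):
        # Reparameterization: z = mu + sigma * eps with eps ~ N(0, 1).
        z = [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
             for m, lv in zip(mu_z, log_var_z)]
        recon += gaussian_log_lik(x, decoder(z))
    return recon / n_samples - kl_to_standard_normal(mu_z, log_var_z)


def total_loss(elbo: float, extra_losses: Sequence[float],
               weights: Sequence[float]) -> float:
    """Negative ELBO plus weighted task-specific losses."""
    return -elbo + sum(w * l for w, l in zip(weights, extra_losses))


rng = random.Random(0)
toy_decoder = lambda z: [z[0], -z[0], 2.0 * z[0]]   # hypothetical linear decoder
elbo = elbo_estimate([0.4, -0.3, 1.1], mu_z=[0.5], log_var_z=[-1.0],
                     decoder=toy_decoder, rng=rng, n_samples=200)
loss = total_loss(elbo, extra_losses=[0.8], weights=[0.1])
```

The KL term pulls the encoder output toward the prior, while the reconstruction term rewards decoders that explain the observed expression; the weights on the extra losses trade these off against task-specific objectives.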

Autoencoders

AEs learn the low dimensional latent representation $z_n \in \mathbb{R}^d$ of expression $x_n$. The AE includes an encoder $E_\phi$ and a decoder $D_\theta$ (Figure 2B) such that

$$z_n = E_\phi(x_n); \quad \hat{x}_n = D_\theta(z_n) \tag{7}$$

where $\Theta = \{\theta, \phi\}$ are encoder and decoder weight parameters, respectively, and $\hat{x}_n$ defines the parameters (e.g. mean) of the likelihood $p(x_n \mid \Theta)$ (Figure 2B) and is often considered as imputed and denoised expressions. Additional design can be included in an AE model for batch correction, clustering and other objectives.

The training of an AE model is generally carried out by stochastic gradient descent algorithms to minimize the loss similar to Eq. (6) except $\mathcal{L}(\Theta) = \sum_{n} \log p(x_n \mid \Theta)$. When $p(x_n \mid \Theta)$ is the Gaussian, $-\mathcal{L}(\Theta)$ becomes the mean square error (MSE) loss

$$-\mathcal{L}(\Theta) = \sum_{n=1}^{N} \lVert x_n - \hat{x}_n \rVert_2^2 \tag{8}$$

Because different AE models differ in their AE architectures and loss functions, we will discuss the specific architecture and loss functions for each reviewed DL model in Section 4.
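The encoder-decoder mapping and the MSE reconstruction loss can be sketched with a linear AE in plain Python. This is an illustration only: the surveyed AE models use nonlinear multi-layer networks, and the weight matrices `W_enc` and `W_dec` below are hand-picked hypothetical values rather than trained parameters.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(W: Matrix, v: Vector) -> Vector:
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def encode(x: Vector, W_enc: Matrix) -> Vector:
    """Linear encoder: maps G genes to a d-dimensional latent vector."""
    return matvec(W_enc, x)


def decode(z: Vector, W_dec: Matrix) -> Vector:
    """Linear decoder: maps the latent vector back to G genes."""
    return matvec(W_dec, z)


def mse_loss(X: List[Vector], W_enc: Matrix, W_dec: Matrix) -> float:
    """Sum over cells of the squared reconstruction error."""
    total = 0.0
    for x in X:
        x_hat = decode(encode(x, W_enc), W_dec)
        total += sum((a - b) ** 2 for a, b in zip(x, x_hat))
    return total


# G = 3 genes, d = 1 latent dimension along the direction (1, 2, 2).
W_dec = [[1.0], [2.0], [2.0]]
W_enc = [[1.0 / 9.0, 2.0 / 9.0, 2.0 / 9.0]]   # projection onto that direction

on_axis = [[1.0, 2.0, 2.0], [-0.5, -1.0, -1.0]]   # lie in the latent subspace
off_axis = [[1.0, 0.0, 0.0]]                      # cannot be fully captured

loss_on = mse_loss(on_axis, W_enc, W_dec)
loss_off = mse_loss(off_axis, W_enc, W_dec)
```

Cells lying in the one-dimensional subspace are reconstructed essentially exactly, whereas the off-axis cell incurs a nonzero loss; training adjusts the weights to shrink such residuals across the dataset.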

Generative adversarial networks

GANs have been used for imputation, data generation and augmentation of the scRNA-seq analysis. Without loss of generality, the GAN, when applied to scRNA-seq, is designed to learn how to generate gene expression profiles from $p_x$, the distribution of $x_n$. The vanilla GAN consists of two deep neural networks (DNNs) [48]. The first network is the generator $G_\theta(z_n, y_n)$ with parameter $\theta$, a noise vector $z_n$ from the distribution $p_z$ and a class label $y_n$ (e.g. cell type), and is trained to generate $x_f$, a ‘fake’ gene expression (Figure 2C). The second network is the discriminator network $D_\phi$ with parameters $\phi$, trained to distinguish the ‘real’ $x$ from fake $x_f$ (Figure 2C). Both networks, $G_\theta$ and $D_\phi$, are trained to outplay each other, resulting in a minimax game, in which $G_\theta$ is forced by $D_\phi$ to produce better samples, which, upon convergence, can fool the discriminator $D_\phi$, thus becoming samples from $p_x$. The vanilla GAN suffers heavily from training instability and mode collapsing [49]. To that end, Wasserstein GAN (WGAN) [49] was developed with the WGAN loss [50]

$$L(\Theta) = \min_{\theta} \max_{\phi} \left\{ \sum_{n=1}^{N} D_\phi(x_n) - \sum_{n=1}^{N} D_\phi\left(G_\theta(z_n, y_n)\right) \right\} \tag{9}$$

Additional terms can also be added to Eq. (9) to constrain the functions of the generator. Training based on the WGAN loss in Eq. (9) amounts to a min-max optimization, which iterates between the discriminator and the generator, where each optimization is achieved by a stochastic gradient descent algorithm through back-propagation. The WGAN requires $D_\phi$ to be K-Lipschitz continuous [50], which can be satisfied by adding the gradient penalty to the WGAN loss [49]. Once the training is done, the generator $G_\theta$ can be used to generate gene expression profiles of new cells.
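The two sides of the WGAN objective can be written out for a toy critic. The sketch below assumes a linear critic $D(x) = w^\top x + b$, for which the gradient with respect to the input equals $w$ everywhere, so the gradient penalty reduces to a penalty on the norm of $w$. The function names, the use of batch means instead of sums and the penalty weight of 10 are illustrative assumptions; real implementations use neural-network critics and evaluate the penalty at interpolated samples via automatic differentiation.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def critic(w: Vector, b: float, x: Vector) -> float:
    """Linear critic score D(x) = w . x + b (toy stand-in for a DNN)."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def mean_score(w: Vector, b: float, batch: List[Vector]) -> float:
    return sum(critic(w, b, x) for x in batch) / len(batch)


def critic_loss(w: Vector, b: float, real: List[Vector], fake: List[Vector],
                gp_weight: float = 10.0) -> float:
    """Loss minimized by the critic: -(E[D(real)] - E[D(fake)]) + penalty.

    For a linear critic the input gradient is w, so the gradient penalty
    is gp_weight * (||w||_2 - 1)^2 regardless of where it is evaluated.
    """
    gap = mean_score(w, b, real) - mean_score(w, b, fake)
    grad_norm = math.sqrt(sum(wi * wi for wi in w))
    return -gap + gp_weight * (grad_norm - 1.0) ** 2


def generator_loss(w: Vector, b: float, fake: List[Vector]) -> float:
    """Loss minimized by the generator: -E[D(fake)]."""
    return -mean_score(w, b, fake)


real_cells = [[1.0, 0.0], [3.0, 0.0]]
fake_cells = [[0.0, 0.0], [0.0, 2.0]]
loss_unit = critic_loss([1.0, 0.0], 0.0, real_cells, fake_cells)
loss_steep = critic_loss([2.0, 0.0], 0.0, real_cells, fake_cells)
```

A steeper critic enlarges the score gap between real and fake cells but is penalized for violating the Lipschitz constraint, which is what keeps the min-max game well behaved in this formulation.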

Supervised DL models

Supervised DL models, including DNNs, convolutional neural networks (CNN) and capsule networks (CapsNet), have been used for cell-type identification [51–53] and functional predictions [54]. The general supervised DL model $F$ takes $x_n$ as an input and outputs $p(y_n \mid x_n)$, the probability of phenotype label $y_n$ (e.g., a cell type) as

$$p(y_n \mid x_n) = F(x_n) \tag{10}$$

where $F$ can be DNN, CNN or CapsNet. We omit the discussion of DNN and CNN as they are widely used in different applications and there are many excellent surveys on them [55]. We will focus our discussion on CapsNet next.

A CapsNet takes an expression $x_n$ to first form a feature extraction network (consisting of $L$ parallel single-layer neural networks) followed by a classification capsule network. Each of the $L$ parallel feature extraction layers generates a primary capsule $u_l \in \mathbb{R}^{d_p}$ as

$$u_l = \mathrm{ReLU}(W_{p,l}\, x_n), \quad l = 1, \ldots, L \tag{11}$$

where $W_{p,l} \in \mathbb{R}^{d_p \times G}$ is the weight matrix. Then, the primary capsules are fed into the capsule network to compute $K$ label capsules $v_k \in \mathbb{R}^{d_t}$, one for each label, as

$$v_k = \mathrm{squash}\left(\sum_{l=1}^{L} c_{kl} W_{kl} u_l\right), \quad k = 1, \ldots, K \tag{12}$$

where $\mathrm{squash}$ is the squashing function [56] to normalize the magnitude of its input vector to be less than one, $W_{kl}$ is another trainable weight matrix and $c_{kl}$ are the coupling coefficients that represent the probability distribution of each primary capsule’s impact on the predicted label $k$. Parameters $c_{kl}$ are not trained but computed through the dynamic routing process proposed in the original capsule networks [52]. The magnitude of each capsule $v_k$ represents the probability of predicting label $k$ for input $x_n$. Once trained, the important primary capsules for each label and then the most significant genes for each important primary capsule can be used to interpret biological functions associated with the prediction.
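A forward pass through such a capsule classifier can be sketched as follows, assuming the squashing function and routing-by-agreement procedure of the original capsule network formulation (coupling coefficients obtained by a softmax over labels, refined over a few iterations). The function `capsnet_forward`, the number of routing iterations and all weight values are hypothetical and untrained; the sketch only illustrates how primary capsules, coupling coefficients and label capsules relate.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(W: Matrix, v: Vector) -> Vector:
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def squash(s: Vector) -> Vector:
    """Shrink a vector so its norm lies in [0, 1) while keeping direction."""
    n2 = sum(a * a for a in s)
    if n2 == 0.0:
        return [0.0] * len(s)
    scale = n2 / (1.0 + n2) / math.sqrt(n2)
    return [scale * a for a in s]


def capsnet_forward(x: Vector, W_primary: List[Matrix],
                    W_label: List[List[Matrix]],
                    n_routing: int = 3) -> Tuple[List[Vector], Matrix]:
    """Return label capsules v[k] and coupling coefficients c[k][l]."""
    L, K = len(W_primary), len(W_label)
    # Primary capsules: u_l = ReLU(W_{p,l} x)
    u = [[max(0.0, a) for a in matvec(Wp, x)] for Wp in W_primary]
    # Prediction vectors W_{kl} u_l
    u_hat = [[matvec(W_label[k][l], u[l]) for l in range(L)] for k in range(K)]
    b = [[0.0] * L for _ in range(K)]            # routing logits
    v: List[Vector] = []
    c: Matrix = []
    for _ in range(n_routing):
        # Coupling coefficients: softmax over labels for each primary capsule.
        c = [[0.0] * L for _ in range(K)]
        for l in range(L):
            m = max(b[k][l] for k in range(K))
            e = [math.exp(b[k][l] - m) for k in range(K)]
            tot = sum(e)
            for k in range(K):
                c[k][l] = e[k] / tot
        # Label capsules: v_k = squash(sum_l c_kl * u_hat_kl)
        v = []
        for k in range(K):
            dim = len(u_hat[k][0])
            s = [sum(c[k][l] * u_hat[k][l][j] for l in range(L))
                 for j in range(dim)]
            v.append(squash(s))
        # Agreement update: raise logits where prediction and output align.
        for k in range(K):
            for l in range(L):
                b[k][l] += sum(p * q for p, q in zip(u_hat[k][l], v[k]))
    return v, c


x = [1.0, 2.0, 0.5]                                        # G = 3 genes
W_primary = [[[0.5, -0.2, 0.1], [0.3, 0.4, -0.6]],         # L = 2, d_p = 2
             [[-0.1, 0.2, 0.7], [0.6, -0.5, 0.2]]]
W_label = [[[[1.0, 0.0], [0.0, 1.0]], [[0.5, -0.5], [0.2, 0.3]]],   # K = 2
           [[[-0.3, 0.8], [0.4, 0.1]], [[0.9, 0.2], [-0.7, 0.6]]]]
v, c = capsnet_forward(x, W_primary, W_label)
label_scores = [math.sqrt(sum(a * a for a in vk)) for vk in v]
```

The norms in `label_scores` play the role of label probabilities, and the returned coupling coefficients indicate which primary capsules contributed most to each label, which is the quantity used for interpretation.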

The supervised classification models are overwhelmingly trained by minimizing the cross-entropy loss with stochastic gradient descent, with gradients computed by back-propagation.

Survey of DL models for scRNA-seq analysis

In this section, we survey applications of DL models for scRNA-seq analysis. To better understand the relationship between the problems that each surveyed work addresses and the key challenges in the general scRNA-seq processing pipeline, we divide the survey into sections according to steps in the scRNA-seq processing pipeline illustrated in Figure 1. For each DL model, we present the model details under the general model framework introduced in Section 3 and discuss the specific loss functions. We also survey the evaluation metrics and summarize the evaluation results. To facilitate cross-referencing, we summarize all algorithms reviewed in this section in Table 1 and tabulate the datasets and evaluation metrics used in each paper in Tables 3 and 8. We also list in Figure 3 all other algorithms against which each surveyed method was evaluated, highlighting how extensively these algorithms were assessed for their performance.

Table 3.

Simulated single-cell data/algorithms

| Title | Algorithm | # Cells | Simulation methods | Reference |
|---|---|---|---|---|
| Splatter | DCA, DeepImpute, BERMUDA, scDeepCluster, scVI, scScope, solo | ~2000 | Splatter/R | [84] |
| CIDR | scIGANs | 50 | CIDR simulation | [61] |
| NB + dropout | Dhaka | 500 | Hierarchical model of NB/Gamma + random dropout | |
| Bulk RNA-seq | SAUCIE | 1076 | 1076 CCLE bulk RNA-seq + dropout conditional on the expression level | |
| SIMLR | scScope | 1 million | SIMLR, high-dimensional data generated from latent vector | [44] |
| SUGAR | cscGAN | 3000 | Generating high dimensional data that follows a low dimensional manifold | [85] |

Table 8.

Evaluation metrics used in surveyed DL algorithms

| Evaluation Method | Equations | Explanation |

|---|---|---|

| Pseudobulk RNA-seq | Average of normalized (log2-transformed) scRNA-seq counts across cells is calculated and then correlation coefficient between the pseudobulk and the actual bulk RNA-seq profile of the same cell type is evaluated. | |

| Mean squared error (MSE) |

|

MSE assesses the quality of a predictor, or an estimator, from a collection of observed data x, with  being the predicted values. being the predicted values. |

| Pearson correlation |

|

where cov() is the covariance, σX and σY are the standard deviation of X and Y, respectively. |

| Spearman correlation |

|

The Spearman correlation coefficient is defined as the Pearson correlation coefficient between the rank variables, where rX is the rank of X. |

| Entropy of accuracy, Hacc [21] |

|

Measures the diversity of the ground-truth labels within each predicted cluster group. pi(xj) (or qi(xj)) are the proportions of cells in the jth ground-truth cluster (or predicted cluster) relative to the total number of cells in the ith predicted cluster (or ground-truth clusters), respectively. |

| Entropy of purity, Hpur [21] |

|

Measures the diversity of the predicted cluster labels within each ground-truth group. |

| Entropy of mixing [32] |

|

This metric evaluates the mixing of cells from different batches in the neighborhood of each cell. C is the number of batches, and  is the proportion of cells from batch is the proportion of cells from batch  among N nearest cells. among N nearest cells. |

| MI [162] |

|

where  and and  . Also, define the joint distribution probability is . Also, define the joint distribution probability is  . The MI is a measure of mutual dependency between two cluster assignments U and V. . The MI is a measure of mutual dependency between two cluster assignments U and V. |

| Normalized MI (NMI) [163] |

|

where  . The NMI is a normalization of the MI score between 0 and 1. . The NMI is a normalization of the MI score between 0 and 1. |

| KL divergence [164] |

|

where discrete probability distributions P and Q are defined on the same probability space χ. This relative entropy is the measure for directed divergence between two distributions. |

| Jaccard Index |

|

0 ≤ J(U,V) ≤ 1. J = 1 if clusters U and V are the same. If U are V are empty, J is defined as 1. |

| Fowlkes–Mallows Index for two clustering algorithms (FM) |

|

TP as the number of pairs of points that are present in the same cluster in both U and V; FP as the number of pairs of points that are present in the same cluster in U but not in V; FN as the number of pairs of points that are present in the same cluster in V but not in U and TN as the number of pairs of points that are in different clusters in both U and V. |

| Rand index (RI) | $RI = \frac{a + b}{\binom{n}{2}}$ | Measure of consistency between two clustering outcomes, where a (or b) is the count of pairs of cells that are in the same cluster (or different clusters) under one clustering algorithm and also in the same cluster (or different clusters) under the other clustering algorithm, and n is the number of cells. |

| Adjusted Rand index (ARI) [165] | $ARI = \frac{RI - E[RI]}{\max(RI) - E[RI]}$ | ARI is a corrected-for-chance version of RI, where E[RI] is the expected RI. |

| Silhouette index | $s(i) = \frac{b(i) - a(i)}{\max\{a(i),\, b(i)\}}$ | where a(i) is the average dissimilarity of the ith cell to all other cells in the same cluster, and b(i) is the average dissimilarity of the ith cell to all cells in the closest cluster. The range of s(i) is [−1,1], with 1 indicating a well-clustered cell and −1 a completely misclassified one. |

| MMD [64] | $MMD(F, p, q) = \lVert \mu_p - \mu_q \rVert_F$ | MMD is a non-parametric distance between two distributions p and q, measured between their mean embeddings μp and μq in a reproducing kernel Hilbert space F. |

| kBET [166] | $\kappa_j^k = \sum_{l=1}^{L}\frac{(N_{jl}^k - k f_l)^2}{k f_l} \sim \chi_{L-1}^2$ | Given a dataset of $N$ cells from $L$ batches with $n_l$ denoting the number of cells in batch $l$, $N_{jl}^k$ is the number of cells from batch $l$ in the $k$ nearest neighbors (KNNs) of cell $j$, $f_l$ is the global fraction of cells in batch $l$, or $f_l = n_l/N$, and $\chi_{L-1}^2$ denotes the $\chi^2$ distribution with $L-1$ degrees of freedom. It uses a $\chi^2$-based test for random neighborhoods of fixed size to determine the significance (‘well-mixed’). |

| LISI [34] | $LISI_j = \frac{1}{\sum_{l=1}^{L} p(l)^2}$ | This is the inverse Simpson’s Index in the KNNs of cell $j$ for all batches, where $p(l)$ denotes the proportion of batch $l$ in the KNNs. The score reports the effective number of batches in the KNNs of cell $j$. |

| Homogeneity | $HS = 1 - \frac{H(U \mid V)}{H(U)}$ | where H() is the entropy, U is the ground-truth assignment and V is the predicted assignment. The HS ranges from 0 to 1, where 1 indicates perfectly homogeneous labeling. |

| Completeness | $CS = 1 - \frac{H(V \mid U)}{H(V)}$ | Its values range from 0 to 1, where 1 indicates all members from a ground-truth label are assigned to a single cluster. |

| V-Measure [167] | $V_\beta = \frac{(1+\beta)\,HS \times CS}{\beta\,HS + CS}$ | where β indicates the weight of HS. V-Measure is symmetric, i.e. switching the true and predicted cluster labels does not change V-Measure. |

| Precision, recall | $Precision = \frac{TP}{TP+FP},\quad Recall = \frac{TP}{TP+FN}$ | TP: true positive, FP: false positive, FN: false negative. |

| Accuracy | $Accuracy = \frac{TP + TN}{N}$ | N: all samples tested, TN: true negative. |

| F1-score | $F_1 = \frac{2 \times Precision \times Recall}{Precision + Recall}$ | A harmonic mean of precision and recall. It can be extended to $F_\beta$, where $\beta$ is a weight between precision and recall (similar to V-measure). |

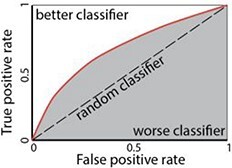

| AUC, AUROC |

|

Area Under Curve (grey area). Receiver operating characteristic (ROC) curve (red line). A similar measure can be performed on the Precision-Recall curve (PRC) or AUPRC. PRCs summarize the trade-off between the true positive rate and the positive predictive value for a predictive model (mostly for an imbalanced dataset). |
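The pair-counting metrics in the table (RI and FM) can be illustrated with a minimal Python sketch. It assumes two flat label lists of equal length and counts every pair of cells explicitly, which is quadratic in the number of cells and only meant for small examples; the function names are illustrative and not taken from any surveyed tool.

```python
from itertools import combinations
from math import sqrt


def pair_counts(u, v):
    """Classify every pair of cells by whether it shares a cluster in u and in v."""
    tp = fp = fn = tn = 0
    for i, j in combinations(range(len(u)), 2):
        same_u = u[i] == u[j]
        same_v = v[i] == v[j]
        if same_u and same_v:
            tp += 1  # same cluster in both assignments
        elif same_u:
            fp += 1  # same cluster in u only
        elif same_v:
            fn += 1  # same cluster in v only
        else:
            tn += 1  # different clusters in both assignments
    return tp, fp, fn, tn


def rand_index(u, v):
    """Fraction of cell pairs on which the two assignments agree."""
    tp, fp, fn, tn = pair_counts(u, v)
    return (tp + tn) / (tp + fp + fn + tn)


def fowlkes_mallows(u, v):
    """Geometric mean of pairwise precision and recall."""
    tp, fp, fn, _ = pair_counts(u, v)
    if tp == 0:
        return 0.0
    return sqrt(tp / (tp + fp) * tp / (tp + fn))


truth = [0, 0, 0, 1, 1, 1]
pred = [0, 0, 1, 1, 2, 2]
print(rand_index(truth, pred), fowlkes_mallows(truth, pred))
```

In practice, a library implementation based on the contingency table would be preferred for large datasets, since it avoids enumerating all pairs.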

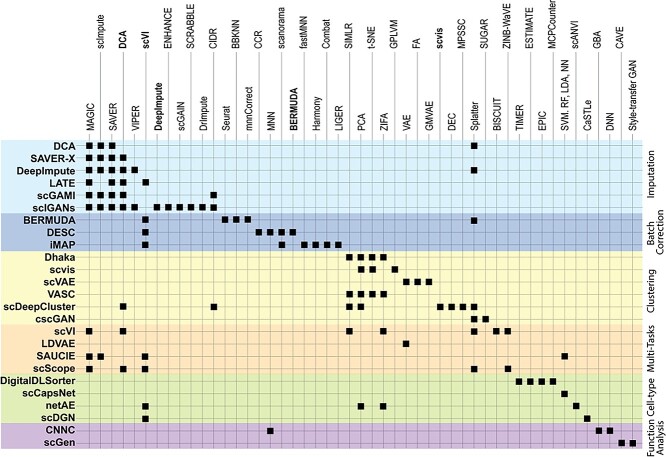

Figure 3.

Algorithm comparison grid. DL methods surveyed in the paper are listed in the rows, and the methods they were compared against are listed in the columns. Algorithms selected for comparison by each DL method are marked by ‘■’ at the corresponding cross-point.

Imputation

DCA: Deep count AE

DCA [18] is an AE for imputation (Figures 2B and 4B) and has been integrated into the Scanpy framework.

Figure 4.

DL model network illustration. (A) DNN, (B) AE, (C) VAE, (D) AE with recursive imputer, (E) GAN, (F) CNN and (G) domain adversarial neural network.

Model

DCA models UMI counts with missing values using the ZINB distribution

$$p(x_{gn}) = \pi_{gn}\,\delta_0(x_{gn}) + (1 - \pi_{gn})\,\mathrm{NB}(x_{gn};\, \nu_{gn}, \alpha_{gn}) \tag{13}$$

where $\delta_0(\cdot)$ is a Dirac delta function, $\mathrm{NB}(\cdot)$ denotes the negative binomial distribution and $\pi_{gn}$, $\nu_{gn}$ and $\alpha_{gn}$, representing dropout rate, mean and dispersion, respectively, are functions of the output ($\hat{x}_n$) of the decoder in the DCA as follows:

$$\pi_n = \mathrm{sigmoid}(W_\pi \hat{x}_n), \quad \nu_n = \exp(W_\nu \hat{x}_n), \quad \alpha_n = \exp(W_\alpha \hat{x}_n) \tag{14}$$

where $W_\pi$, $W_\nu$ and $W_\alpha$ are additional weights to be estimated. The DCA encoder and decoder follow the general AE formulation as in Eq. (7), but the encoder takes the normalized, log-transformed expression as input. To train the model, DCA uses a constrained log-likelihood as the loss function

$$L = -\sum_{n}\sum_{g} \log p(x_{gn}) + \lambda \sum_{n} \lVert \pi_n \rVert_2^2 \tag{15}$$

with $\lambda \ge 0$ weighting the penalty on the dropout rates. Once the DCA is trained, the mean counts $\nu_n$ are used as the denoised and imputed counts for cell $n$.
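To make the ZINB likelihood concrete, the following is a minimal Python sketch of the log-probability and the penalized negative log-likelihood. It assumes the common mean/dispersion parameterization of the negative binomial (variance equal to mean + mean²/dispersion) and a strictly positive mean; the function names and the `ridge` argument are illustrative and are not taken from the DCA code base.

```python
from math import exp, lgamma, log


def nb_log_pmf(x, mean, dispersion):
    """log NB(x; mean, dispersion), with variance = mean + mean**2 / dispersion."""
    return (lgamma(x + dispersion) - lgamma(dispersion) - lgamma(x + 1)
            + dispersion * log(dispersion / (dispersion + mean))
            + x * log(mean / (dispersion + mean)))


def zinb_log_pmf(x, dropout, mean, dispersion):
    """log of: dropout * [x == 0] + (1 - dropout) * NB(x; mean, dispersion)."""
    nb_part = (1.0 - dropout) * exp(nb_log_pmf(x, mean, dispersion))
    point_mass = dropout if x == 0 else 0.0
    return log(point_mass + nb_part)


def zinb_loss(counts, dropout, mean, dispersion, ridge=0.0):
    """Negative ZINB log-likelihood over entries plus a ridge penalty on dropout rates."""
    nll = -sum(zinb_log_pmf(x, p, m, a)
               for x, p, m, a in zip(counts, dropout, mean, dispersion))
    return nll + ridge * sum(p * p for p in dropout)


# One cell with three genes; all parameter values are made up for illustration.
print(zinb_loss([0, 3, 1], [0.5, 0.1, 0.2], [1.0, 2.5, 1.2], [2.0, 2.0, 2.0], ridge=0.1))
```

In a trained model, the three parameter vectors would come from the decoder output rather than being fixed by hand as they are here.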

Results

For evaluation, DCA was compared with other methods using simulated data (generated with the Splatter R package) and real bulk transcriptomics data from a developmental Caenorhabditis elegans time-course experiment, to which simulated single-cell-specific noise was added. Gene expression was measured from 206 developmentally synchronized young adult C. elegans over a 12-h period. Single-cell-specific noise was added in silico by genewise subtracting values drawn from the exponential distribution such that 80% of values were zeros. Because bulk data contain less noise than single-cell transcriptomics data, they can aid in evaluating single-cell denoising methods by providing a good ground truth. The authors also compared DCA with other methods including SAVER [36], scImpute [37] and MAGIC [57]. DCA denoising recovered the original time-course gene expression pattern while removing single-cell-specific noise. Overall, DCA demonstrated the strongest recovery of the top 500 genes most strongly associated with development in the original data without noise. DCA was also shown to outperform other existing methods in capturing cell population structure in real data (PBMC and CITE-seq), and its runtime scales linearly with the number of cells.

SAVER-X: Single-cell analysis via expression recovery harnessing external data

SAVER-X [58] is an AE model (Figures 2B and 4B) developed to denoise and impute scRNA-seq data with transfer learning from other data resources.

Model

SAVER-X decomposes the variation in the observed counts $x_{gn}$ with missing values into three components: (i) predictable structured component representing the shared variation across genes, (ii) unpredictable cell-level biological variation and gene-specific dispersions and (iii) technical noise. Specifically, $x_{gn}$ is modeled as a Poisson–Gamma hierarchical model

$$x_{gn} \sim \mathrm{Poisson}(l_n x'_{gn}), \quad x'_{gn} \sim \mathrm{Gamma}(\nu_{gn},\, \alpha_g \nu_{gn}^2) \tag{16}$$

where $l_n$ is the sequencing depth of cell n, $\nu_{gn}$ is the mean and $\alpha_g$ is the dispersion, with the Gamma distribution written here in terms of its mean and variance. This Poisson–Gamma mixture is an equivalent expression to the NB distribution, and thus, the ZINB distribution as Eq. (13) is adopted to model missing values.

The loss is similar to Eq. (15). However, $\nu_{gn}$ is initially learned by an AE pre-trained using external datasets from an identical or similar tissue and then transferred to the data $x_{gn}$ to be denoised. Such transfer learning can be applied to data between species (e.g. human and mouse in the study), cell types, batches and single-cell profiling technologies. After $\nu_{gn}$ is inferred, SAVER-X generates the final denoised data $\hat{x}_{gn}$ by an empirical Bayesian shrinkage.
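The shrinkage step can be illustrated with the standard conjugate Poisson–Gamma update. The sketch below is a simplified illustration rather than the SAVER-X implementation: it assumes a Gamma prior specified by its mean and a dispersion (variance equal to dispersion times the squared mean), and the function and argument names are hypothetical.

```python
def posterior_mean(count, depth, prior_mean, dispersion):
    """Posterior mean of the latent expression given one observed count.

    Prior: Gamma with mean `prior_mean` and variance `dispersion * prior_mean**2`,
    i.e. shape = 1 / dispersion and rate = 1 / (dispersion * prior_mean).
    Likelihood: count ~ Poisson(depth * expression).
    """
    shape = 1.0 / dispersion
    rate = 1.0 / (dispersion * prior_mean)
    # Conjugacy: the posterior is Gamma(shape + count, rate + depth).
    return (shape + count) / (rate + depth)


# A small dispersion trusts the predicted mean; a large one trusts the observed count.
for dispersion in (0.01, 0.5, 100.0):
    print(dispersion, posterior_mean(count=10, depth=1.0, prior_mean=2.0, dispersion=dispersion))
```

The estimate moves between the AE-predicted mean and the depth-normalized observed count, depending on how dispersed the gene is assumed to be.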

Results

SAVER-X was applied to multiple human single-cell datasets of different scenarios: (i) T-cell subtypes, (ii) a cell type (CD4+ regulatory T cells) that was absent from the pretraining dataset, (iii) gene-protein correlations of CITE-seq data and (iv) immune cells of primary breast cancer samples with a pretraining on normal immune cells. SAVER-X with pretraining on HCA and/or PBMCs outperformed the same model without pretraining and other denoising methods, including DCA [18], scVI [17], scImpute [37] and MAGIC [57]. The model achieved promising results even for genes with very low UMI counts. SAVER-X was also applied for a cross-species study in which the model was pre-trained on a human or mouse dataset and transferred to denoise another. The results demonstrated the merit of transferring public data resources to denoise in-house scRNA-seq data even when the study species, cell types or single-cell profiling technologies are different.

DeepImpute: DNN imputation

DeepImpute [20] imputes genes in a divide-and-conquer approach, using a bank of DNN models (Figure 4A), each with 512 outputs, to predict gene expression levels of a cell.

Model

For each dataset, DeepImpute imputes either a selected list of genes or highly variable genes (variance-over-mean ratio, default = 0.5). Each sub-neural network aims to understand the relationship between the input genes and a subset of target genes. Genes are first divided into $N$ random subsets of 512 target genes. For each subset, a two-layer DNN is trained where the input includes genes that are among the top 5 best-correlated genes to target genes but not part of the target genes in the subset. The loss is defined as the weighted MSE

$$L = \sum_{n}\sum_{g} x_{gn}\,(x_{gn} - \hat{x}_{gn})^2 \tag{17}$$

which gives higher weights to genes with higher expression values.
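A minimal Python sketch of such an expression-weighted MSE is given below. It assumes that each squared error is weighted by the observed expression value and that the result is averaged over genes; the normalization is an assumption made here for illustration.

```python
def weighted_mse(target, predicted):
    """Squared errors weighted by the target expression, averaged over genes."""
    return sum(t * (t - p) ** 2 for t, p in zip(target, predicted)) / len(target)


observed = [0.0, 1.0, 4.0]
imputed = [1.0, 2.0, 5.0]
# Every gene is off by 1, but the error on the unexpressed gene carries no weight.
print(weighted_mse(observed, imputed))
```

With this weighting, an error on a gene with zero observed expression contributes nothing to the loss, so training is driven by the entries that carry signal.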

Result

DeepImpute had the highest overall accuracy and offered shorter computation time with less demand on computer memory than other methods such as MAGIC, DrImpute, scImpute, SAVER, VIPER and DCA. Using simulated and experimental datasets (Table 3), DeepImpute showed benefits in improving clustering results and identifying significantly differentially expressed genes. DeepImpute and DCA showed overall advantages over the other methods, with DeepImpute performing the better of the two. The properties of DeepImpute that contribute to its superior performance include (i) a divide-and-conquer approach which, in contrast to a single AE as implemented in DCA, results in a lower complexity in each sub-model and stabilizes the neural networks and (ii) training the subnetworks without using the target genes as input, which reduces overfitting while forcing the network to learn true relationships between genes.

LATE: Learning with AE

LATE [59] is an AE (Figures 2B and 4B) whose encoder takes the log-transformed expression as input.

Model

LATE sets zeros for all missing values and generates the imputed expressions. LATE minimizes the MSE loss as Eq. (8). One issue is that some zeros could be real and reflect the actual lack of expressions.

Result

Using synthetic data generated from pre-imputed data followed by random dropout selection at different degrees, LATE outperforms other existing methods such as MAGIC, SAVER, DCA and scVI, particularly when the ground truth contains only a few or no zeros. However, when the data contain many zero expression values, DCA achieved a lower MSE than LATE, although LATE still has a smaller MSE than scVI. This result suggests that DCA likely does a better job identifying true zero expressions, partly because LATE does not make assumptions on the statistical distributions of the single-cell data that potentially have inflated zero counts.

scGMAI

Technically, scGMAI [60] is a model for clustering but it includes an AE (Figures 2B and 4B) in the first step to combat dropout.

Model

To impute the missing values, scGMAI applies an AE like LATE to reconstruct log-transformed expressions with dropout but chooses a smoother Softplus activation function instead. The MSE loss as in Eq. (8) is adopted.

After imputation, scGMAI uses fast independent component analysis (ICA) on the AE reconstructed expressions to reduce the dimension and then applies a Gaussian mixture model on the ICA reduced data to perform the clustering.

Results

To assess the performance, the AE in scGMAI was replaced by five other imputation methods including SAVER [36], MAGIC [57], DCA [18], scImpute [37] and CIDR [61]. A scGMAI implementation without the AE was also compared. Seventeen scRNA-seq datasets (some of which are listed in Tables 4 and 5, as marked) were used to evaluate cell clustering performance. The results indicated that the AE significantly improved the clustering performance in 8 of 17 scRNA-seq datasets.

Table 4.

Human single-cell data sources used by different DL algorithms

| Title | Algorithm | Cell origin | # Cells | Data sources | Reference |

|---|---|---|---|---|---|

| 68 k PBMCs | DCA, SAVER-X, LATE, scVAE, scDeepCluster, scCapsNet, scDGN | Blood | 68 579 | 10× Single Cell Gene Expression Datasets | |

| Human pluripotent | DCA | hESCs | 1876 | GSE102176 | [128] |

| CITE-seq | SAVER-X | Cord blood mononuclear cells | 8005 | GSE100866 | [129] |

| Midbrain and Dopaminergic Neuron Development | SAVER-X | Brain/ embryo ventral midbrain cells | 1977 | GSE76381 | [130] |

| HCA | SAVER-X | Immune cell, Human Cell Atlas | 500 000 | HCA data portal | |

| Breast tumor | SAVER-X | Immune cell in tumor micro-environment | 45 000 | GSE114725 | [131] |

| 293 T cells | DeepImpute, iMAP | Embryonic kidney | 13 480 | 10× Single Cell Gene Expression Datasets | |

| Jurkat | DeepImpute, iMAP | Blood/ lymphocyte | 3200 | 10× Single Cell Gene Expression Datasets | |

| ESC, Time-course | scIGANs | ESC | 350 758 | GSE75748 | [132] |

| Baron-Hum-1 | scGMAI, VASC | Pancreatic islets | 1937 | GSM2230757 | [133] |

| Baron-Hum-2 | scGMAI, VASC | Pancreatic islets | 1724 | GSM2230758 | [133] |

| Camp | scGMAI, VASC | Liver cells | 303 | GSE96981 | [134] |

| CEL-seq2 | BERMUDA, DESC | Pancreas/Islets of Langerhans | | GSE85241 | [135] |

| Darmanis | scGMAI, scIGANs, VASC | Brain/cortex | 466 | GSE67835 | [136] |

| Tirosh-brain | Dhaka, scvis | Oligodendroglioma | >4800 | GSE70630 | [137] |

| Patel | Dhaka | Primary glioblastoma cells | 875 | GSE57872 | [138] |

| Li | scGMAI, VASC | Blood | 561 | GSE146974 | [67] |

| Tirosh-skin | scvis | melanoma | 4645 | GSE72056 | [73] |

| xenograft 3, and 4 | Dhaka | Breast tumor | ~250 | EGAS00001002170 | [74] |

| Petropoulos | VASC, netAE | Human embryos | 1529 | E-MTAB-3929 | |

| Pollen | scGMAI, VASC | | 348 | SRP041736 | [139] |

| Xin | scGMAI, VASC | Pancreatic cells (α-, β-, δ-) | 1600 | GSE81608 | [140] |

| Yan | scGMAI, VASC | embryonic stem cells | 124 | GSE36552 | [141] |

| PBMC3k | VASC, scVI | Blood | 2700 | SRP073767 | [99] |

| CyTOF, Dengue | SAUCIE | Dengue infection | 11 M, ~42 antibodies | Cytobank, 82 023 | [15] |

| CyTOF, ccRCC | SAUCIE | Immune profile of 73 ccRCC patients | 3.5 M, ~40 antibodies | Cytobank: 875 | [142] |

| CyTOF, breast | SAUCIE | 3 patients | | Flow Repository: FR-FCM-ZYJP | [131] |

| Chung, BC | DigitalDLSorter | Breast tumor | 515 | GSE75688 | [93] |

| Li, CRC | DigitalDLSorter | Colorectal cancer | 2591 | GSE81861 | [94] |

| Pancreatic datasets | scDGN | Pancreas | 14 693 | SeuratData | |

| Kang, PBMC | scGen | PBMC stimulated by IFN-β | ~15 000 | GSE96583 | [115] |

Table 5.

Mouse single-cell data sources used by different DL algorithms

| Title | Algorithm | Cell origin | # Cells | Data Sources | Reference |

|---|---|---|---|---|---|

| Brain cells from E18 mice | DCA, SAUCIE | Brain Cortex | 1 306 127 | 10×: Single Cell Gene Expression Datasets | |

| Midbrain and Dopaminergic Neuron Development | SAVER-X | Ventral Midbrain | 1907 | GSE76381 | [130] |

| Mouse cell atlas | SAVER-X | | 405 796 | GSE108097 | [143] |

| neuron9k | DeepImpute | Cortex | 9128 | 10×: Single Cell Gene Expression Datasets | |

| Mouse Visual Cortex | DeepImpute | Brain cortex | 114 601 | GSE102827 | [144] |

| murine epidermis | DeepImpute | Epidermis | 1422 | GSE67602 | [145] |

| myeloid progenitors | LATE, DESC, SAUCIE | Bone marrow | 2730 | GSE72857 | [146] |

| Cell-cycle | scIGANs | mESC | 288 | E-MTAB-2805 | [147] |

| A single-cell survey | | Intestine | 7721 | GSE92332 | [119] |

| Tabula Muris | iMAP | Mouse cells | >100 K | | |

| Baron-Mou-1 | VASC | Pancreas | 822 | GSM2230761 | [133] |

| Biase | scGMAI, VASC | Embryos/SMARTer | 56 | GSE57249 | [148] |

| Biase | scGMAI, VASC | Embryos/Fluidigm | 90 | GSE59892 | [148] |

| Deng | scGMAI, VASC | Liver | 317 | GSE45719 | [149] |

| Klein | VASC, scDeepCluster, scIGANs | Stem Cells | 2717 | GSE65525 | [150] |

| Goolam | VASC | Mouse Embryo | 124 | E-MTAB-3321 | [151] |

| Kolodziejczyk | VASC | mESC | 704 | E-MTAB-2600 | [152] |

| Usoskin | VASC | Lumbar | 864 | GSE59739 | [153] |

| Zeisel | VASC, scVI, SAUCIE, netAE | Cortex, hippocampus | 3005 | GSE60361 | [154] |

| Bladder cells | scDeepCluster | Bladder | 12 884 | GSE129845 | [155] |

| HEMATO | scVI | Blood cell | >10 000 | GSE89754 | [156] |

| retinal bipolar cells | scVI, scCapsNet, SAUCIE | retinal | ~25 000 | GSE81905 | [100] |

| Embryo at 9 time points | LDVAE | embryos from E6.5 to E8.5 | 116 312 | GSE87038 | [157] |

| Embryo at 9 time points | LDVAE | embryos from E9.5 to E13.5 | ~2 million | GSE119945 | [158] |

| CyTOF | SAUCIE | Mouse thymus | 200 K, ~38 antibodies | Cytobank: 52942 | [159] |

| Nestorowa | netAE | hematopoietic stem and progenitor cells | 1920 | GSE81682 | [160] |

| small intestinal epithelium | scGen | Infected with Salmonella and worm H. polygyrus | 1957 | GSE92332 | [119] |

scIGANs

Imputation approaches based on information from cells with similar expressions suffer from oversmoothing, especially for rare cell types. scIGANs [19] is a GAN-based imputation algorithm (Figures 2C and 4E), which overcomes this problem by training a GAN model to generate samples with imputed expressions.

Model

scIGANs takes the image-like reshaped gene expression data $x_n$ as input. The model follows a BEGAN [62] framework, which replaces the GAN discriminator $D$ with a function $R$ that computes the reconstruction MSE. Then, the Wasserstein distance loss between the reconstruction errors of the real and generated samples is computed

|

(18) |

This framework forces the model to meet two computing objectives, i.e. reconstructing the real samples and discriminating between real and generated samples. Proportional Control Theory was applied to balance these two goals during the training [62].

After training, the decoder $G$ is used to generate new samples of a specific cell type. Then, the k-nearest neighbors (KNNs) approach is applied to the real and generated samples to impute the real samples’ missing expressions.

Results

scIGANs was first tested on simulated samples with different dropout rates. Its performance in rescuing the correct clusters was compared with 11 existing imputation approaches including DCA, DeepImpute, SAVER, scImpute, MAGIC, etc. scIGANs reported the best performance for all metrics. scIGANs was next evaluated for its ability to correctly cluster cell types on the human brain scRNA-seq data, where it again showed superior performance over existing methods. scIGANs was then evaluated for identifying cell-cycle states using scRNA-seq datasets from mouse embryonic stem cells. The results showed that scIGANs outperformed competing existing approaches for recovering subcellular states of cell cycle dynamics. scIGANs was further shown to improve the identification of differentially expressed genes and enhance the inference of cellular trajectory using time-course scRNA-seq data from the differentiation from H1 ESC to definitive endoderm cells (DECs). Finally, scIGANs was also shown to scale across scRNA-seq methods and data sizes.

Batch effect correction

BERMUDA: Batch Effect ReMoval Using Deep AEs

BERMUDA [63] deploys a transfer-learning method (Figures 2B and 4B) to remove the batch effect. It performs correction to the shared cell clusters among batches and therefore preserves batch-specific cell populations.

Model

BERMUDA is an AE that takes normalized, log-transformed expression as input. Its loss consists of two parts as

$$L = L_{recon} + \lambda L_{MMD} \tag{19}$$

where $L_{recon}$ is the MSE loss and $L_{MMD}$ is the maximum mean discrepancy (MMD) [64] loss that measures the differences in distributions between pairs of similar cell clusters shared among batches as

$$L_{MMD} = \sum_{k \ne l}\sum_{i,j} M_{ki,lj}\,\mathrm{MMD}(Z_{ki}, Z_{lj}) \tag{20}$$

where $Z_{ki}$ collects the latent variables $z_{ki}$ of $x_{ki}$, the input expression of a cell from cluster $i$ of batch $k$, and $M_{ki,lj}$ is 1 if cluster $i$ of batch $k$ and cluster $j$ of batch $l$ are determined to be similar by MetaNeighbor [65] and 0 otherwise. The MMD equals zero when the underlying distributions of the observed samples are the same.
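As an illustration of the MMD term, the sketch below computes a biased estimate of the squared MMD between two sets of latent vectors with a single Gaussian kernel and sums it over pairs of clusters flagged as similar. The kernel choice, the bandwidth and the names `transfer_loss` and `similar_pairs` are assumptions made for this example and are not taken from the BERMUDA implementation.

```python
from math import exp


def gaussian_kernel(a, b, bandwidth=1.0):
    """Gaussian (RBF) kernel between two latent vectors."""
    sq_dist = sum((x - y) ** 2 for x, y in zip(a, b))
    return exp(-sq_dist / (2.0 * bandwidth ** 2))


def mmd_squared(xs, ys, bandwidth=1.0):
    """Biased estimate of the squared MMD between two samples."""
    def mean_kernel(p, q):
        total = sum(gaussian_kernel(a, b, bandwidth) for a in p for b in q)
        return total / (len(p) * len(q))
    return mean_kernel(xs, xs) + mean_kernel(ys, ys) - 2.0 * mean_kernel(xs, ys)


def transfer_loss(clusters, similar_pairs, bandwidth=1.0):
    """Sum the squared MMD over cluster pairs judged similar across batches."""
    return sum(mmd_squared(clusters[a], clusters[b], bandwidth) for a, b in similar_pairs)


# Latent vectors keyed by (batch, cluster); values are made up for illustration.
clusters = {
    ("batch1", 0): [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]],
    ("batch2", 0): [[5.0, 5.0], [6.0, 5.0], [5.0, 6.0]],
}
print(transfer_loss(clusters, [(("batch1", 0), ("batch2", 0))]))
```

Minimizing this quantity with respect to the encoder weights pulls the latent distributions of matched clusters together while leaving unmatched, batch-specific clusters unconstrained.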

Results

BERMUDA was shown to outperform other methods such as MNNCorrect [32], BBKNN [66], Seurat [10] and scVI [17] in removing batch effects on simulated and human pancreas data while preserving batch-specific biological signals. BERMUDA provides several improvements compared with existing methods: (i) it can remove batch effects even when the cell population compositions across different batches are vastly different and (ii) it preserves batch-specific biological signals through transfer learning, which enables the discovery of new information that might be hard to extract by analyzing each batch individually.

DESC: Batch correction based on clustering

DESC [67] is an AE model (Figures 2B and 4B) that removes batch effect through clustering with the hypothesis that batch differences in expressions are smaller than true biological variations between cell types, and, therefore, properly performing clustering on cells across multiple batches can remove batch effects without the need to define batches explicitly.

Model

DESC has a conventional AE architecture. Its encoder takes normalized, log-transformed expression as input, and the decoder output $\hat{x}_n$ is used as the reconstructed gene expression, which is equivalent to a Gaussian data distribution with $\hat{x}_n$ being the mean. The loss function is similar to Eq. (19) except that the second loss is the clustering loss that regularizes the learned feature representations to form clusters, as in deep embedded clustering [68]. The model is first trained to minimize the reconstruction loss only, to obtain the initial weights, before minimizing the combined loss. After the training, each cell is assigned a cluster ID.
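The clustering loss of deep embedded clustering can be sketched as follows. The example assumes the commonly used formulation with a Student's t kernel (one degree of freedom) for soft assignments and a sharpened target distribution; the centroids and latent vectors are made-up values, and this is not the DESC code.

```python
from math import log


def soft_assign(latent, centroids):
    """Student's t similarity between each cell and each centroid (rows sum to 1)."""
    q = []
    for z in latent:
        sims = [1.0 / (1.0 + sum((a - b) ** 2 for a, b in zip(z, c))) for c in centroids]
        total = sum(sims)
        q.append([s / total for s in sims])
    return q


def target_distribution(q):
    """Sharpen q by squaring it and normalizing by the soft cluster frequency."""
    freq = [sum(row[j] for row in q) for j in range(len(q[0]))]
    p = []
    for row in q:
        weights = [row[j] ** 2 / freq[j] for j in range(len(row))]
        total = sum(weights)
        p.append([w / total for w in weights])
    return p


def clustering_loss(p, q):
    """KL(P || Q) summed over cells and clusters."""
    return sum(pi * log(pi / qi)
               for p_row, q_row in zip(p, q)
               for pi, qi in zip(p_row, q_row) if pi > 0)


latent = [[0.1, 0.0], [0.0, 0.2], [2.9, 3.0], [3.1, 3.2]]
centroids = [[0.0, 0.0], [3.0, 3.0]]
q = soft_assign(latent, centroids)
p = target_distribution(q)
labels = [row.index(max(row)) for row in q]  # cluster ID per cell
print(labels, clustering_loss(p, q))
```

During training, the target distribution is typically held fixed for a number of iterations while the encoder and centroids are updated to reduce the KL divergence.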

Table 6.

Single-cell data derived from other species

| Title | Algorithm | Species | Tissue | # Cells | SRA/GEO | Reference |

|---|---|---|---|---|---|---|

| Worm neuron cells a | scDeepCluster | C. elegans | Neuron | 4186 | GSE98561 | [161] |

| Cross species, stimulation with LPS and dsRNA | scGen | Mouse, rat, rabbit and pig | bone marrow-derived phagocyte | 5000–10 000 /species | 13 accessions in ArrayExpress | [120] |

aProcessed data are available at https://github.com/ttgump/scDeepCluster/tree/master/scRNA-seq%20data

Results

DESC was applied to the macaque retina dataset, which includes animal-level, region-level and sample-level batch effects. The results showed that DESC is effective in removing the batch effect, whereas CCA [33], MNN [32], Seurat 3.0 [10], scVI [17], BERMUDA [63] and scanorama [69] were all sensitive to batch definitions. DESC was then applied to human pancreas datasets to test its ability to remove batch effects from multiple scRNA-seq platforms and yielded the highest ARI among the compared approaches mentioned above. When applied to human PBMC data with interferon-beta stimulation, where biological variations are compounded by batch effect, DESC was shown to be the best in removing batch effect while preserving biological variations. DESC was also shown to remove batch effect for the monocytes and mouse bone marrow data while preserving the pseudotemporal structure. Finally, DESC scales linearly with the number of cells, and its running time is not affected by the increasing number of batches.

iMAP: Integration of Multiple single-cell datasets by Adversarial Paired-style transfer networks

iMAP [70] combines AE (Figures 2B and 4B) and GAN (Figures 2C and 4E) for batch effect removal. It is designed to remove batch biases while preserving dataset-specific biological variations.

Model

iMAP consists of two processing stages, each including a separate DL model. In the first stage, a special AE, whose decoder combines the outputs of two separate decoders, is trained such that

|

(21) |

where $b_n$ is the one-hot encoded batch number of cell $n$. The first decoder can be understood as decoding the batch noise, whereas the second decoder reconstructs batch-removed expression from the latent variable $z_n$. The training minimizes the loss in Eq. (19) except that the second loss is the content loss

|

(22) |

where $\tilde{b}_n$ is a random batch number. Minimizing the content loss further ensures that the reconstructed expression is batch agnostic and has the same content as the input expression $x_n$.

Table 7.

Large single-cell data source used by various algorithms

| Title | Sources | Notes |

|---|---|---|

| 10× Single-cell gene expression dataset | https://support.10xgenomics.com/single-cell-gene-expression/datasets | Contains a large collection of scRNA-seq datasets generated using the 10× system |

| Tabula Muris | https://tabula-muris.ds.czbiohub.org/ | Compendium of scRNA-seq data from mouse |

| HCA | https://data.humancellatlas.org/ | Human single-cell atlas |

| MCA | https://figshare.com/s/865e694ad06d5857db4b, or GSE108097 | Mouse single-cell atlas |

| scQuery | https://scquery.cs.cmu.edu/ | A web server for cell-type matching and key gene visualization. It is also a source for scRNA-seq collection (processed with a common pipeline) |

| SeuratData | https://github.com/satijalab/seurat-data | List of datasets, including PBMC and human pancreatic islet cells |

| cytoBank | https://cytobank.org/ | Community of big data cytometry |

However, due to the limitation of AE, this step is still insufficient for batch removal. Therefore, a second stage is included to apply a GAN model to make expression distributions of the shared cell types across different batches indistinguishable. To identify the shared cell types, an MNN strategy adapted from [32] was developed to identify MNN pairs across batches using the batch-effect-independent latent variable $z_n$ as opposed to the expression $x_n$. Then, a mapping generator $G$ is trained using MNN pairs based on GAN such that the mapped expression $G(x^{(S)})$ follows the same distribution as $x^{(A)}$, where $x^{(S)}$ and $x^{(A)}$ are the MNN pairs from batch $S$ and an anchor batch $A$. The WGAN-GP loss as in Eq. (9) was adopted for the GAN training. After training, $G$ is applied to all cells of a batch to generate batch-corrected expression.
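The search for MNN pairs can be sketched in a few lines of Python. This is a brute-force illustration, assuming Euclidean distance on low-dimensional representations and a small number of cells; the function names are hypothetical, and the iMAP implementation may differ in how neighbors are searched and filtered.

```python
def nearest(query, reference, k):
    """Indices of the k reference points closest to `query` (squared Euclidean)."""
    order = sorted(range(len(reference)),
                   key=lambda j: sum((a - b) ** 2 for a, b in zip(query, reference[j])))
    return set(order[:k])


def mnn_pairs(batch_a, batch_b, k=1):
    """Pairs (i, j) where cell i of batch_a and cell j of batch_b are mutual k-nearest neighbors."""
    a_to_b = [nearest(x, batch_b, k) for x in batch_a]
    b_to_a = [nearest(y, batch_a, k) for y in batch_b]
    return [(i, j)
            for i in range(len(batch_a))
            for j in sorted(a_to_b[i])
            if i in b_to_a[j]]


# The third cell of batch_a has no counterpart in batch_b and is left unpaired.
batch_a = [[0.0, 0.0], [10.0, 10.0], [50.0, 50.0]]
batch_b = [[0.5, 0.0], [10.0, 11.0]]
print(mnn_pairs(batch_a, batch_b, k=1))
```

Cells belonging to a batch-specific population tend not to appear in any pair, which is what allows the subsequent mapping to be learned from shared cell types only.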

Results

iMAP was first tested on benchmark datasets from human dendritic cells and Jurkat and 293 T cell lines and then human pancreas datasets from five different platforms. All the datasets contain both batch-specific cells and batch-shared cell types. iMAP was shown to separate the batch-specific cell types but mix batch-shared cell types and outperformed nine other existing batch correction methods including Harmony, scVI, fastMNN, Seurat, etc. iMAP was then applied to the large-scale Tabula Muris datasets containing over 100 K cells sequenced from two platforms. iMAP could not only reliably integrate cells from the same tissues but also identify cells from platform-specific tissues. Finally, iMAP was applied to datasets of tumor-infiltrating immune cells and shown to reduce the dropout ratio and the percentage of ribosomal genes and non-coding RNAs, thus improving the detection of rare cell types and ligand-receptor interactions. iMAP scales with the number of cells, showing minimal time cost increase after the number of cells exceeds thousands. Its performance is also robust against model hyperparameters.

Dimensionality reduction, latent representation, clustering and data augmentation

Dimensionality reduction is indispensable for many types of scRNA-seq data analysis, considering the limited number of cell types in each biospecimen. Furthermore, biological processes of interest often involve the complex coordination of many genes; therefore, latent representations that capture biological variation in reduced dimensions are useful in interpreting experimental conditions and cell heterogeneity. Both AE- and VAE-based models are capable of learning latent representations. VAE-based models have the benefit of regularity of the latent space and generative factors. GAN-based models can produce augmented data that may in turn enhance clustering, e.g. when certain cell types have low representation.

Dimensionality reduction by AEs with gene-interaction constrained architecture

This study [71] considers AEs (Figures 2B and 4B) for learning the low-dimensional representation and specifically explores the benefit of incorporating prior biological knowledge of gene–gene interactions to regularize the AE network architecture.

Model

Several AE models with one or two hidden layers that incorporate gene interactions reflecting transcription factor (TF) regulations and protein–protein interactions (PPIs) are implemented. The models take normalized, log-transformed expressions and follow the general AE structure, including dimension-reducing and reconstructing layers, but the network architectures are not symmetrical. Specifically, gene interactions are incorporated such that each node of the first hidden layer represents a TF or a protein in the PPI; only genes that are targeted by the TF or involved in the PPI are connected to the node. Thus, the weights of these connections are set to be trainable, and all other weights are fixed at zero throughout the training process. Both unsupervised (AE-like) and supervised (cell-type label) learning were studied.
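The connectivity constraint described above can be illustrated with a binary mask applied to the first-layer weight matrix. The following is a minimal NumPy sketch, not the implementation of [71]: the toy gene-to-node mask, the `tanh` activation, the learning rate and the helper names `encode` and `masked_update` are all assumptions introduced for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical prior knowledge: 6 genes, 3 hidden nodes (TFs or PPI proteins).
# mask[g, h] = 1 if gene g is targeted by / interacts with node h, else 0.
mask = np.array([
    [1, 0, 0],
    [1, 0, 0],
    [0, 1, 0],
    [0, 1, 1],
    [0, 0, 1],
    [0, 0, 1],
], dtype=float)

n_genes, n_nodes = mask.shape
# Masked initialization: disallowed connections start at exactly zero.
W = rng.normal(scale=0.1, size=(n_genes, n_nodes)) * mask
b = np.zeros(n_nodes)


def encode(x, W, b):
    """First hidden layer: each node only receives input from its connected genes."""
    return np.tanh(x @ W + b)  # tanh is an assumed activation


def masked_update(W, grad_W, mask, lr=0.01):
    """Gradient step that keeps disallowed connections fixed at zero."""
    return (W - lr * grad_W) * mask


x = rng.normal(size=(4, n_genes))   # 4 cells, stand-in for log-transformed expression
h = encode(x, W, b)

grad_W = rng.normal(size=W.shape)   # stand-in for a back-propagated gradient
W_new = masked_update(W, grad_W, mask)

n_trainable = int(mask.sum())       # number of trainable first-layer weights
n_dense = mask.size                 # weights in an unconstrained dense layer
```

Because the mask is reapplied after every update, the number of trainable first-layer weights equals the number of known gene-to-node interactions rather than the full genes-by-nodes product, which is where the reduction in model complexity comes from.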

Results

Regularizing encoder connections with TF and PPI information reduced the model complexity by almost 90% (from 7.5–7.6 M to 1.0–1.1 M). The clusters formed on the data representations learned from the models with or without TF and PPI information were compared with those from PCA, NMF, ICA, t-SNE and SIMLR [44]. The model with TF/PPI information and two hidden layers achieved the best performance by five of the six measures and the best average performance. In terms of the cell-type retrieval of single cells, the encoder models with and without TF/PPI information achieved the best performance in four and three cell types, respectively. PCA yielded the best performance in only two cell types. The DNN model with TF/PPI information and two hidden layers again achieved the best average performance across all cell types. In summary, this study demonstrated a biologically meaningful way to regularize AEs with prior biological knowledge when learning representations of scRNA-seq data for cell clustering and retrieval.

Dhaka: A VAE-based dimension reduction model

Dhaka [72] was proposed to reduce the dimension of scRNA-seq data for efficient stratification of tumor subpopulations.

Model

Dhaka adopts a general VAE formulation (Figures 2A and 4C). It takes the normalized, log-transformed expressions of a cell as input and outputs the low-dimensional representation.

Results

Dhaka was first tested on a simulated dataset containing 500 cells, each with 3 K genes, grouped into 5 clusters of 100 cells each. The clustering performance was compared with that of other methods including t-SNE, PCA, SIMLR, NMF, an AE, MAGIC and scVI. Dhaka was shown to have a higher ARI than most of the compared methods. Dhaka was then applied to the Oligodendroglioma data and could separate malignant cells from non-malignant microglia/macrophage cells. It also uncovered the shared glial lineage and differentially expressed genes for the lineages. Dhaka was also applied to the Glioblastoma data and revealed an evolutionary trajectory of the malignant cells in which cells gradually evolve from a stemlike state to a more differentiated state. In contrast, other methods failed to capture this underlying structure. Dhaka was next applied to the Melanoma cancer dataset [73] and uncovered two distinct clusters that showed the intra-tumor heterogeneity of the Melanoma samples. Dhaka was finally applied to copy number variation data [74] and shown to identify one major and one minor cell cluster, which other methods could not find.

scvis: A VAE for capturing low-dimensional structures

scvis [75] is a VAE network (Figures 2A and 4C) that learns low-dimensional representations capturing both local and global neighboring structures in scRNA-seq data.

Model

scvis adopts the generic VAE formulation described in section 3.1. However, it has a unique loss function defined as

$$L(\Theta) = -\mathcal{L}(\Theta) + L_t(\Theta), \qquad (23)$$

where $\mathcal{L}(\Theta)$ is the ELBO as in Eq. (3) and $L_t(\Theta)$ is a regularizer using the non-symmetrized t-SNE objective function [75], which is defined as

$$L_t(\Theta) = \sum_{i} \sum_{j \neq i} p_{j|i} \log \frac{p_{j|i}}{q_{j|i}}, \qquad (24)$$

where $i$ and $j$ are two different cells, $p_{j|i}$ measures the local cell relationship in the data space and $q_{j|i}$ measures such relationship in the latent space. Because the t-SNE algorithm preserves the local structure of the high-dimensional space, $L_t(\Theta)$ learns local structures of cells.
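To make the regularizer in Eq. (24) concrete, the sketch below evaluates a non-symmetrized t-SNE objective for a given data matrix and latent embedding. It is an illustration under stated assumptions rather than the scvis implementation: a fixed Gaussian bandwidth `sigma` is used for the data-space similarity (t-SNE normally calibrates it per cell from a perplexity), a Student-t kernel with `nu` degrees of freedom is used in the latent space, and all function names are hypothetical.

```python
import numpy as np


def conditional_p(x, sigma=1.0):
    """p_{j|i}: Gaussian-kernel similarity in data space, normalized over j != i."""
    d2 = np.sum((x[:, None, :] - x[None, :, :]) ** 2, axis=-1)
    logits = -d2 / (2.0 * sigma ** 2)
    np.fill_diagonal(logits, -np.inf)             # exclude j == i
    logits -= logits.max(axis=1, keepdims=True)   # numerical stability
    w = np.exp(logits)
    return w / w.sum(axis=1, keepdims=True)


def conditional_q(z, nu=1.0):
    """q_{j|i}: Student-t similarity in latent space, normalized over j != i."""
    d2 = np.sum((z[:, None, :] - z[None, :, :]) ** 2, axis=-1)
    w = (1.0 + d2 / nu) ** (-(nu + 1.0) / 2.0)
    np.fill_diagonal(w, 0.0)                      # exclude j == i
    return w / w.sum(axis=1, keepdims=True)


def tsne_regularizer(x, z, eps=1e-12):
    """Sum over i and j != i of p_{j|i} * log(p_{j|i} / q_{j|i})."""
    p = conditional_p(x)
    q = conditional_q(z)
    off_diag = ~np.eye(len(x), dtype=bool)
    return float(np.sum(p[off_diag] * np.log((p[off_diag] + eps) / (q[off_diag] + eps))))


# Toy data: two well-separated groups of 10 cells each in a 2-D "data space".
rng = np.random.default_rng(0)
centers = np.repeat(np.array([[0.0, 0.0], [5.0, 5.0]]), 10, axis=0)
x = centers + 0.5 * rng.normal(size=(20, 2))

z_good = x.copy()                 # embedding that keeps every cell's neighbors
z_bad = np.roll(x, 5, axis=0)     # embedding that mixes cells from both groups

reg_good = tsne_regularizer(x, z_good)
reg_bad = tsne_regularizer(x, z_bad)
```

An embedding that keeps neighboring cells together yields a smaller penalty than one that scatters them, which is the behavior that encourages the latent space to retain local structure.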

Results

scvis was tested on simulated data and outperformed t-SNE in a nine-dimensional space task. scvis preserved both local and global structure: the relative positions of all clusters were well kept, although outliers were scattered around the clusters. On the simulated data, scvis generally produced consistent and better patterns across different runs, whereas t-SNE did not. scvis also performed well when adding new data to an existing embedding, with median accuracy on new data of 98.1% for K = 5 and 94.8% for K = 65, where a classifier parameterized by K was trained on the original data and then tested on newly generated sample points. scvis was subsequently tested on four real datasets: metastatic melanoma, oligodendroglioma, mouse bipolar and mouse retina. In each dataset, scvis was shown to preserve both the global and local structure of the data.

scVAE: VAE for single-cell gene expression data

scVAE [76] includes multiple VAE models (Figures 2A and 4C) for denoising gene expression levels and learning the low-dimensional latent representation of cells. It investigates different choices of likelihood function in the VAE model to suit different datasets.

Model

scVAE is a conventional fully connected network. However, different distributions have been discussed for the likelihood of the observed expression to model different data behaviors. Specifically, scVAE considers Poisson, constrained Poisson and negative binomial distributions for count data, piece-wise categorical Poisson for data including both high and low counts, and zero-inflated versions of these distributions to model missing values. To model multiple modes in cell expressions, a Gaussian mixture is also considered for the latent variable, resulting in a GMVAE. The inference process still follows that of a VAE as discussed in section 3.1.
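The count likelihoods listed above differ mainly in how they treat overdispersion and excess zeros. As a hedged illustration (not scVAE's code), the sketch below writes out the log-probability mass functions of a Poisson, a negative binomial in a mean/inverse-dispersion parameterization, and a generic zero-inflated wrapper. The function names, the parameterization and the toy counts are assumptions made for this example.

```python
import math


def poisson_logpmf(k, rate):
    """log P(K = k) for a Poisson distribution with the given rate."""
    return k * math.log(rate) - rate - math.lgamma(k + 1)


def nb_logpmf(k, mean, r):
    """log P(K = k) for a negative binomial with mean `mean` and inverse dispersion `r`.

    Variance is mean + mean**2 / r, so small r means strong overdispersion.
    """
    return (math.lgamma(k + r) - math.lgamma(r) - math.lgamma(k + 1)
            + r * math.log(r / (r + mean))
            + k * math.log(mean / (r + mean)))


def zero_inflated_logpmf(k, pi, base_logpmf):
    """Mixture of a point mass at zero (weight pi) and a base count distribution."""
    base = base_logpmf(k)
    if k == 0:
        return math.log(pi + (1.0 - pi) * math.exp(base))
    return math.log(1.0 - pi) + base


# Toy counts for one gene across six cells (hypothetical values with many zeros).
counts = [0, 0, 3, 1, 0, 7]

ll_poisson = sum(poisson_logpmf(k, 2.0) for k in counts)
ll_nb = sum(nb_logpmf(k, 2.0, 1.5) for k in counts)
ll_zip = sum(
    zero_inflated_logpmf(k, 0.3, lambda c: poisson_logpmf(c, 2.0)) for k in counts
)
```

In a VAE, the decoder would output the distribution parameters (for example the mean and the zero-inflation weight) for each gene and cell, and the summed log-likelihood would serve as the reconstruction term of the ELBO.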

Results

scVAEs were evaluated on the PBMC data and compared with factor analysis (FA) models. The results showed that the GMVAE with a negative binomial distribution achieved the highest lower bound and ARI. The zero-inflated Poisson distribution performed second best. All scVAE models outperformed the baseline linear FA model, which suggested that a non-linear model is needed to capture single-cell genomic features. GMVAE was also compared with Seurat and shown to perform better on the withheld data. However, scVAE performed no better than scVI [17] or scvis [75], both of which are VAE models.

VASC: VAE for scRNA-seq

VASC [77] is another VAE (Figures 2A and 4C) for dimension reduction and latent representation, which additionally models dropout.

Model

VASC’s input is the log-transformed expression rescaled to the range [0, 1]. A dropout layer (dropout rate of 0.5) is added after the input layer to force subsequent layers to learn to avoid dropout noise. The encoder network has three fully connected layers; the first layer uses linear activation, which acts like an embedded PCA transformation, and the next two layers use the ReLU activation, which ensures a sparse and stable output. This model’s novelty is the zero-inflation layer (ZI layer), which is added after the decoder to model scRNA-seq dropout events. The probability of a dropout event is defined as $e^{-\tilde{x}^2}$, where $\tilde{x}$ is the recovered expression value obtained by the decoder network. Since back-propagation cannot deal with a stochastic network with categorical variables, a Gumbel-softmax distribution [78] is introduced to address the difficulty of the ZI layer. The loss function of the model combines two terms: the first is the binary cross-entropy, used because the input is scaled to [0, 1], and the second is a loss computed using the Kullback–Leibler (KL) divergence on the latent variables. After the model is trained, the latent code can be used as the dimension-reduced feature for downstream tasks and visualization.
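The role of the Gumbel-softmax relaxation in the ZI layer can be sketched as a forward pass in NumPy. This is an illustrative sketch, not the VASC implementation: it assumes the dropout probability takes the form exp(-x̃²) for the decoder output x̃, uses an arbitrary temperature `tau`, and the function names are hypothetical. In an actual model the same operations would be written in an automatic-differentiation framework so that gradients flow through the relaxed sample.

```python
import numpy as np


def gumbel_softmax_keep_weight(p_drop, tau, rng, eps=1e-20):
    """Relaxed sample of a binary (drop, keep) variable; returns the 'keep' weight in [0, 1]."""
    logits = np.stack([np.log(p_drop + eps), np.log(1.0 - p_drop + eps)], axis=-1)
    u = rng.uniform(size=logits.shape)
    g = -np.log(-np.log(u + eps) + eps)           # Gumbel(0, 1) noise
    y = (logits + g) / tau                        # lower tau -> closer to a hard 0/1 choice
    y = y - y.max(axis=-1, keepdims=True)         # stable softmax
    soft = np.exp(y) / np.exp(y).sum(axis=-1, keepdims=True)
    return soft[..., 1]


def zi_layer(x_tilde, tau=0.5, rng=None):
    """Zero-inflation layer: softly zero out decoder outputs with an assumed dropout probability."""
    rng = np.random.default_rng() if rng is None else rng
    p_drop = np.exp(-x_tilde ** 2)                # assumed form: low expression -> likely dropout
    keep = gumbel_softmax_keep_weight(p_drop, tau, rng)
    return x_tilde * keep


rng = np.random.default_rng(0)
x_tilde = np.array([[0.0, 0.2, 1.0, 5.0]])        # hypothetical decoder outputs for one cell
x_out = zi_layer(x_tilde, tau=0.5, rng=rng)
```

Under this assumed form, highly expressed genes are almost never dropped, whereas values near zero are dropped with probability close to one, mirroring the observation that dropout events mostly affect lowly expressed genes.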

Results

VASC was compared with PCA, t-SNE, ZIFA and SIMLR on 20 datasets. In the study of embryonic development from zygote to blast cells, all methods roughly re-established the development stages of different cell types in the dimension-reduced space, but VASC modeled the embryonic developmental progression better. In the Goolam, Biase and Yan datasets, where scRNA-seq data were generated across embryonic development stages from zygote to blast, VASC re-established the development stages from 1, 2, 4, 8 and 16 cells to blast, while other methods failed. In the Pollen, Kolodziejczyk and Baron datasets, VASC formed appropriate clusters, with homogeneous cell types, properly preserved relative positions or minimal batch influence. Interestingly, when tested on the PBMC dataset, VASC was shown to identify the major global structure (B cells, CD4+ and CD8+ T cells, NK cells and dendritic cells) and to detect subtle differences within monocytes (FCGR3A+ versus CD14+ monocytes), indicating that VASC can handle a large number of cells or cell types. Quantitative clustering performance in terms of NMI, ARI, homogeneity and completeness was also evaluated. VASC always ranked in the top two on all datasets. In terms of NMI and ARI, VASC performed best on 15 and 17 of the 20 datasets, respectively.

scDeepCluster

scDeepCluster [79] is an AE network (Figures 2B and 4B) that simultaneously learns feature representation and performs clustering via explicit modeling of cell clusters as in DESC.

Model

Similar to DCA, scDeepCluster adopts a ZINB distribution for the expression data as in Eqs. (13) and (15). The loss is similar to Eq. (19) except that the first term is the negative log-likelihood of the ZINB data distribution as defined in Eq. (15) and the second term is a clustering loss computed using the KL divergence as in the DESC algorithm. Compared with scvis, scDeepCluster focuses more on clustering assignment owing to the KL divergence.
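A KL-divergence clustering loss of this kind is commonly built from soft cluster assignments and a sharpened target distribution, as in DEC [81]. The sketch below follows that general recipe in NumPy and is meant only as an illustration: the Student-t kernel with `alpha = 1`, the toy latent points and centroids, and the function names are assumptions, and scDeepCluster's exact formulation may differ in detail.

```python
import numpy as np


def soft_assignment(z, centroids, alpha=1.0):
    """q_{ij}: Student-t similarity between latent point i and cluster centroid j."""
    d2 = np.sum((z[:, None, :] - centroids[None, :, :]) ** 2, axis=-1)
    w = (1.0 + d2 / alpha) ** (-(alpha + 1.0) / 2.0)
    return w / w.sum(axis=1, keepdims=True)


def target_distribution(q):
    """p_{ij}: square q and normalize by cluster frequency to emphasize confident assignments."""
    w = q ** 2 / q.sum(axis=0, keepdims=True)
    return w / w.sum(axis=1, keepdims=True)


def clustering_loss(q, eps=1e-12):
    """KL(P || Q) summed over all cells and clusters."""
    p = target_distribution(q)
    return float(np.sum(p * np.log((p + eps) / (q + eps))))


# Toy latent codes for four cells and two cluster centroids (hypothetical values).
z = np.array([[0.0, 0.0], [0.1, 0.0], [3.0, 3.0], [3.0, 3.1]])
centroids = np.array([[0.0, 0.0], [3.0, 3.0]])

q = soft_assignment(z, centroids)
p = target_distribution(q)
loss = clustering_loss(q)
```

Minimizing this loss with respect to both the encoder and the centroids pulls each cell toward the cluster it is already most confidently assigned to, which is why the resulting embedding emphasizes cluster assignment over neighborhood preservation.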

Results

scDeepCluster was first tested on simulated data and compared with seven other methods: DCA [18], two multi-kernel spectral clustering methods MPSSC [80] and SIMLR [44], CIDR [61], PCA + k-means, scvis [75] and DEC [81]. In simulations with different dropout rates, scDeepCluster consistently and significantly outperformed the other methods. In the signal strength, imbalanced sample size and scalability simulations, scDeepCluster outperformed all other algorithms, with the most notable advantage for weak signals; it was robust against different levels of data imbalance and scaled linearly with the number of cells. scDeepCluster was then tested on four real datasets (10X PBMC, mouse ES cells, mouse bladder cells and worm neuron cells) and shown to outperform all the other compared algorithms. In particular, MPSSC and SIMLR failed to process the full datasets owing to their quadratic complexity.

cscGAN: Conditional single-cell generative adversarial neural networks

cscGAN [82] is a GAN model (Figures 2C and 4E) designed to augment the existing scRNA-seq samples by generating expression profiles of specific cell types or subpopulations.

Model