Objectives:

Children with hearing loss (CHL) may exhibit spoken language delays and may also experience deficits in other cognitive domains including working memory. Consistent hearing aid use (i.e., more than 10 hours per day) ameliorates these language delays; however, the impact of hearing aid intervention on the neural dynamics serving working memory remains unknown. The objective of this study was to examine the association between the amount of hearing aid use and neural oscillatory activity during verbal working memory processing in children with mild-to-severe hearing loss.

Design:

Twenty-three CHL between 8 and 15 years old performed a letter-based Sternberg working memory task during magnetoencephalography (MEG). Guardians also completed a questionnaire describing the participants’ daily hearing aid use. Each participant’s MEG data were coregistered to their structural MRI, epoched, and transformed into the time–frequency domain using complex demodulation. Significant oscillatory responses corresponding to working memory encoding and maintenance were independently imaged using beamforming. Finally, these whole-brain source images were correlated with the total number of hours of weekly hearing aid use, controlling for degree of hearing loss.

Results:

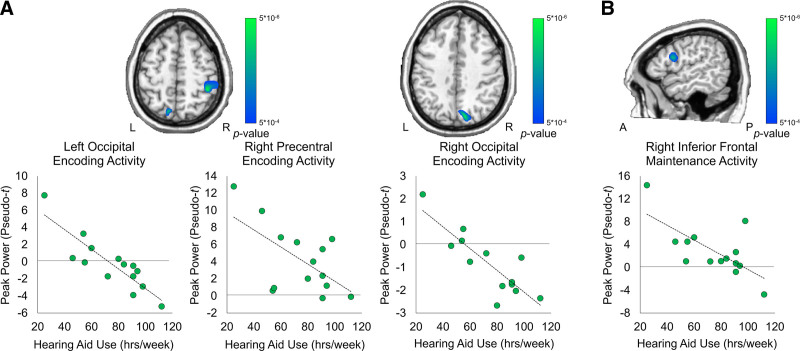

During the encoding period, hearing aid use negatively correlated with alpha-beta oscillatory activity in the bilateral occipital cortices and right precentral gyrus. In the occipital cortices, this relationship suggested that with greater hearing aid use, there was a larger suppression of occipital activity (i.e., more negative relative to baseline). In the precentral gyrus, greater hearing aid use was related to less synchronous activity (i.e., less positive relative to baseline). During the maintenance period, hearing aid use significantly correlated with alpha activity in the right prefrontal cortex, such that with greater hearing aid use, there was less right prefrontal maintenance-related activity (i.e., less positive relative to baseline).

Conclusions:

This study is the first to investigate the impact of hearing aid use on the neural dynamics that underlie working memory function. These data show robust relationships between the amount of hearing aid use and phase-specific neural patterns during working memory encoding and maintenance after controlling for degree of hearing loss. Furthermore, our data demonstrate that wearing hearing aids for more than ~8.5 hours/day may serve to normalize these neural patterns. This study also demonstrates the potential for neuroimaging to help determine the locus of variability in outcomes in CHL.

Keywords: Auditory experience, Cognitive development, Magnetoencephalography, Neurophysiology, Oscillations

INTRODUCTION

Hearing loss at birth or early childhood is associated with delays in spoken language development (Moeller 2000; Sininger et al. 2010; Yoshinaga-Itano et al. 1998). Research also suggests that hearing loss may lead to additional problems with executive function (Conway et al. 2009; Jones et al. 2020), reading (Cupples et al. 2014; Tomblin et al. 2020), psychosocial outcomes (Wong et al. 2017), and academic achievement (Calderon & Low 1998). Several recent studies have attempted to identify the underlying mechanisms and interventions that support resilience in these developmental domains (Ching et al. 2013; Moeller & Tomblin 2015). One key finding is that CHL who have a greater number of hours of device use (e.g., hearing aids or cochlear implants) have better outcomes than children with fewer hours of device use (Tomblin et al. 2015; Park et al. 2019). However, it remains unclear how these differences in consistent auditory access might influence the development of neural structures and function that are involved with cognitive and linguistic processes in CHL. To this end, we sought to investigate the impact of device use on the underlying neural dynamics serving working memory.

Early sensory experiences shape the neural circuitry of the auditory system. In animal models, the auditory cortex adapts to frequency-specific patterns of auditory stimulation (de Villers-Sidani et al. 2008; Kilgard et al. 2001). In humans, much of the research on the development of auditory cortex has focused on the effects of auditory deprivation related to hearing loss (see Kral & Eggermont 2007 for review) and on critical periods during early development when the auditory system is more malleable (Sharma et al. 2005). However, most previous work on the effects of early auditory experience on neural development has focused specifically on the central auditory system in children who have severe-to-profound hearing loss and who receive cochlear implants (Kral & Sharma 2012). Children with cochlear implants often show rapid maturation of neural responses to auditory stimulation following implantation. Intuitively, the contrast between auditory input with a profound hearing loss, where there is little to no residual hearing, and auditory input following cochlear implantation is so stark that it often results in large changes to the underlying neural responses and structures (Glennon et al. 2020).

Less is known about the impact of milder degrees of hearing loss and acoustic amplification with hearing aids on auditory neural development. In contrast to children who receive cochlear implants, children with mild-to-severe hearing loss often have considerable residual hearing. Children with mild-to-severe hearing loss in the current generation are often fitted with hearing aids within the first few months of life to improve their access to the acoustic cues needed to develop spoken language (Holte et al. 2012). Nonetheless, they show significant variability in the amount of time that they use their hearing aids; some children use their hearing aids nearly all waking hours, whereas others use them only an hour or two on an average day or not at all (Walker et al. 2013, 2015b). This individual variability in device use means that children with mild-to-severe hearing loss have considerable variation in the quantity of their auditory input over time.

Several studies have examined the impact of individual differences in hearing aid use on language and speech perception outcomes for children with mild-to-severe hearing loss (Tomblin et al. 2015; Walker et al. 2019, 2020; Persson et al. 2020). In general, the main finding is that device use contributes to a child’s cumulative auditory experience and that children with more consistent hearing aid use often have better spoken language outcomes than children with inconsistent device use, after controlling for other factors (e.g., maternal educational level and degree of hearing loss). For example, CHL in the mild range (20–40 dB HL) who wore their hearing aids consistently had better vocabulary and morphosyntactic development than children with similar degrees of hearing loss who did not wear hearing aids (Walker et al. 2015a). Tomblin et al. (2015) found that children who wore hearing aids for at least 10 hours/day during the preschool years had stronger language growth trajectories than peers who wore hearing aids for less than 10 hours/day. More recently, Walker et al. (2019) showed that children with higher auditory dosage, a measure that combines a child’s aided audibility for speech sounds and their device use, had better sentence recognition than peers with lower auditory dosage. Thus, consistent auditory access, as measured by hours of device use, predicts individual differences in language and speech perception outcomes for CHL. However, the underlying effects of this experience on neural circuitry remain unclear.

Language processing and speech recognition both rely on working memory, but findings on the effects of hearing loss and auditory experience on working memory are mixed. Some studies show working memory deficits across both verbal and visuospatial domains (Pisoni & Cleary 2003), others show deficits only in verbal domains (Davidson et al. 2019), and still others show comparable verbal and visuospatial working memory skills in CHL relative to children with normal hearing (CNH; Stiles et al. 2012). With the exception of work by Stiles et al. (2012) that included children with hearing aids, much of the previous literature has focused solely on children with profound hearing loss who received cochlear implants. Our recent research suggests that CHL who have greater auditory dosage, due in part to more consistent hearing aid use, have better outcomes on standardized measures of executive function than peers with less auditory dosage (McCreery & Walker 2022). However, the effects of hearing aid use on the underlying neural structure and dynamics related to working memory have not been examined in children with mild-to-severe hearing loss.

Fortunately, there has been a wealth of research on the locus of working memory processing in the human brain. Most of this work has used functional magnetic resonance imaging (fMRI). Indeed, a large meta-analysis of over 185 fMRI studies of working memory showed strong convergence of activity during working memory tasks in the bilateral frontoparietal network, including the inferior, superior, and dorsolateral prefrontal cortices; the superior and posterior parietal cortices and intraparietal sulci; and the supplementary motor area, cerebellum, thalamus, and basal ganglia (Rottschy et al. 2012). Furthermore, neural activation tended to be lateralized depending on the stimuli to be remembered: verbal working memory tasks resulted in more left-lateralized responses in the prefrontal and parietal cortices, whereas nonverbal working memory tasks activated more right-lateralized frontoparietal regions (Rottschy et al. 2012). A more recent meta-analysis of 42 verbal working memory fMRI tasks showed that visually presented verbal working memory stimuli consistently and robustly activated multiple regions of the bilateral (but predominantly left) inferior frontal gyrus, the medial cingulate, and regions of the rolandic operculum (e.g., superior temporal gyrus and pars opercularis), as well as other areas that were more heterogeneous, albeit significant across studies, including the left inferior parietal cortex and angular gyrus (Emch et al. 2019). Given the importance of the left frontoparietal network, especially the inferior frontal gyrus and angular gyrus, in language processing, it is unsurprising that these regions are also preferentially active during verbal working memory tasks.

While there has been a tremendous amount of work to identify the neural correlates of verbal working memory, it is difficult to determine which regions differentially serve each subprocess of working memory. Working memory can be divided into three phases: encoding, or the processing and loading of information into the memory store; maintenance, during which this information is actively rehearsed and retained for future use; and retrieval, or the recollection or use of the stored information. Recent work using electroencephalography (EEG) and magnetoencephalography (MEG) has helped to clarify which neural regions serve each stage of the working memory process. In particular, a number of recent MEG studies have characterized the nature of oscillatory activity during verbal working memory tasks. Briefly, population-level neuronal activity is rhythmic in nature; in other words, active neuronal populations oscillate at different frequencies. These frequency-specific increases and decreases in activity during the performance of a task are thought to reflect distinct stages of cognitive processing (Singer 2018). Indeed, there is evidence for a consistent, robust pattern of neural activity that falls into distinct frequency bands during each stage of working memory processing. In both adults and children, there is a strong alpha-beta (~10–18 Hz) decrease in activity that begins during the encoding period in the occipital cortices and is then sustained through the maintenance phase, during which it extends into the left lateral parietal cortex and the left inferior frontal gyrus (Heinrichs-Graham & Wilson 2015; Proskovec et al. 2016, 2019a). These alpha-beta neural dynamics increase in amplitude with memory load (Proskovec et al. 2019a), which underscores the importance of these left-hemisphere dynamics for proper encoding.
In addition, there is a strong, sustained increase in alpha (8–12 Hz) activity in parieto-occipital regions that emerges after the onset of the maintenance phase and persists until retrieval (Tuladhar et al. 2007; Bonnefond & Jensen 2012, 2013; Proskovec et al. 2019a; Wianda & Ross 2019). This strong parieto-occipital alpha increase has been reliably associated with the inhibition of distracting information during the maintenance of the items to be remembered (Bonnefond & Jensen 2012, 2013; Wianda & Ross 2019).

A number of studies have also sought to characterize how these neural oscillations change as a function of age, although these studies have predominantly focused on healthy aging (e.g., Proskovec et al. 2016) rather than child development. In fact, to our knowledge, only one study has investigated the developmental trajectory of neural oscillatory behavior during working memory encoding and maintenance. Embury et al. (2019) recorded MEG during a verbal working memory task in a large sample of youth between 9 and 15 years old. They found that these youth showed a pattern of neural activity similar to that seen in healthy adults, but that maintenance-related increases in alpha activity were notably diminished (Embury et al. 2019). They also found sex-by-age interactions in the right inferior frontal gyrus during encoding, as well as in the parieto-occipital cortices during maintenance. This pattern of results suggests that the neural dynamics serving each working memory phase are still developing through adolescence, and it demonstrates that working memory dynamics can be systematically studied using MEG in pediatric populations.

The goal of this study was to determine the impact of hearing aid use on the neural dynamics that serve verbal working memory processing in children. To this end, we recorded high-density MEG during the performance of a verbal working memory task in a group of children and adolescents with mild-to-severe hearing loss who used hearing aids. MEG is a neuroimaging method that records the minute magnetic fields that naturally emanate from active neuronal populations. Because MEG records these magnetic fields rather than the scalp electric potentials measured with EEG, it can improve spatial precision by an order of magnitude while maintaining millisecond temporal precision. Thus, MEG is the only neurophysiological recording instrument currently available that directly quantifies population-level neural activity with both excellent temporal precision and good spatial accuracy. These spatiotemporal qualities allow us to identify the oscillatory dynamics serving different subprocesses of working memory simultaneously with performance measures, which holds promise for determining the locus of variability in the current behavioral literature. We characterized the neural patterns underlying working memory encoding and maintenance separately, and then ran whole-brain correlations between brain activity during each phase and the amount of hearing aid use. We hypothesized that there would be significant correlations between hearing aid use and neural activity in key areas that have been shown to be important to working memory, including inferior frontal, superior parietal, and occipital regions, but that these patterns would be specific to the subphase of working memory (i.e., encoding versus maintenance).

MATERIALS AND METHODS

Participants

Twenty-three youth aged 8 to 15 years (mean = 11.96 years; SD = 1.93 years; age range: 8.25–15.6 years; 8 females, 2 left-handed) with bilateral mild-to-severe hearing loss (better-ear pure-tone average [BEPTA] of 25–79 dB) who were fitted with two hearing aids were recruited to participate in this study. Of note, a cohort of matched CNH was also recruited to participate, and between-group differences in the neural patterns serving this task are reported elsewhere (Heinrichs-Graham et al. 2021). We chose to focus solely on the CHL for this analysis, as we were specifically interested in the effects of hearing aid use on within-group variability in this population. While the participant sample, task, sensor-level methods, and beamforming methods are the same as those in the between-groups comparison paper (Heinrichs-Graham et al. 2021), the identification of oscillatory events and the whole-brain analyses in this article are unique to this article and include only the sample of CHL. Exclusionary criteria included any medical illness affecting central nervous system function, current or previous major neurological or psychiatric disorder, history of head trauma, current substance abuse, and/or the presence of irremovable ferromagnetic material in or on the body that may impact the MEG signal (e.g., dental braces, metal- or battery-operated implants). After a complete description of the study was given to participants, written informed consent was obtained from the parent/guardian of the participant and informed assent was obtained from the participant following the guidelines of the University of Nebraska Medical Center’s Institutional Review Board, which approved the study protocol.
Nine youth were excluded from analysis: two participants due to excessive movement or magnetic artifact, five participants due to processing (i.e., beamforming) artifacts, and two participants for an inability to perform the task; thus, 14 CHL were included in the final analysis.

Hearing Aid Use Measures

Degree of hearing loss (i.e., BEPTA) was identified from the participants’ most recent clinical audiogram, which was provided with parent consent. All audiograms were conducted within 12 months of the test visit. Briefly, audiograms consisted of unaided air-conduction audiometric thresholds measured with ER-3A insert earphones at octave frequencies from 250 to 8000 Hz. The thresholds at 500, 1000, 2000, and 4000 Hz were averaged to calculate the PTA for each ear, and the PTA for the better ear was used to represent the degree of hearing loss in the statistical analyses. Once parents consented and participants gave assent, parents or guardians filled out questionnaires regarding their child’s hearing aid use during the school year, summer, and weekends (e.g., “During the school year, how many hours a day does your child wear the aids Monday-Friday? Saturday-Sunday?”). We then calculated hearing aid use as the total number of hours per week, Monday through Sunday. For all analyses, we used the number of hours participants wore their hearing aids during the school year. Of note, we did not collect datalogging estimates of daily hearing aid use from the participants’ hearing aids for this particular study; however, large-scale studies have shown that while parents tend to overestimate their child’s hearing aid use, there is a significant linear correlation between parent report of use and hearing aid datalogging (all p’s < 0.001; Walker et al. 2013, 2015a, b). Thus, we are confident that reliable estimates of hearing aid use can be obtained from parent report measures.
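The two hearing metrics used in the analyses can be sketched as follows. This is a minimal illustration, not the study's scoring code: the function names are hypothetical, and the weekly total assumes that the questionnaire's hours/day answers for Monday to Friday and for Saturday and Sunday are simply weighted by 5 and 2 days.

```python
from statistics import mean

# Frequencies (Hz) averaged for the pure-tone average (PTA), per the text.
PTA_FREQS_HZ = (500, 1000, 2000, 4000)

def pure_tone_average(thresholds_db_hl):
    """Mean unaided air-conduction threshold (dB HL) at the PTA frequencies.

    thresholds_db_hl maps frequency in Hz to threshold in dB HL; other
    audiogram frequencies (e.g., 250 or 8000 Hz) are ignored.
    """
    return mean(thresholds_db_hl[f] for f in PTA_FREQS_HZ)

def better_ear_pta(left, right):
    """BEPTA: the lower (i.e., better-hearing) of the two ears' PTAs."""
    return min(pure_tone_average(left), pure_tone_average(right))

def weekly_hearing_aid_hours(weekday_hours_per_day, weekend_hours_per_day):
    """School-year weekly total, Monday through Sunday (assumed 5 + 2 days)."""
    return 5 * weekday_hours_per_day + 2 * weekend_hours_per_day

# Hypothetical audiogram, not a study participant.
left = {250: 30.0, 500: 40.0, 1000: 45.0, 2000: 55.0, 4000: 60.0, 8000: 65.0}
right = {500: 35.0, 1000: 40.0, 2000: 50.0, 4000: 55.0}
print(better_ear_pta(left, right))           # 45.0
print(weekly_hearing_aid_hours(12.0, 10.0))  # 80.0
```

For example, wearing hearing aids 16 hours on every day of the week corresponds to 112 hours/week.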

Neuropsychological Testing

All participants completed all four subtests of the Wechsler Abbreviated Scale of Intelligence, Second Edition (WASI-II; Wechsler & Zhou 2011) to characterize their level of verbal and nonverbal cognitive function. Briefly, the WASI-II consists of the following subtests: vocabulary, similarities, block design, and matrix reasoning, which can be used to calculate an individual’s verbal, nonverbal, and overall IQ. Scores on the vocabulary and similarities subtests are combined to create the verbal composite index, which is a metric of verbal intelligence, while the block design and matrix reasoning scores are combined to create the perceptual reasoning index, which is a measure of nonverbal intelligence. Staff trained in the proper administration of the test administered all subtests verbally while the children wore their hearing aids.

Experimental Paradigm

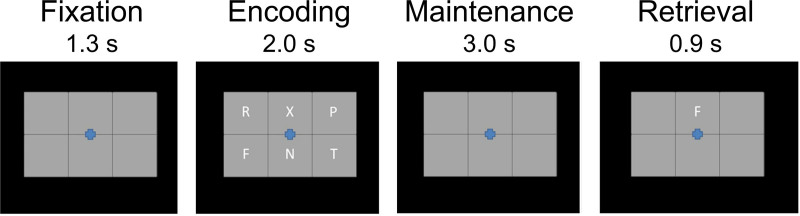

During MEG recording, participants were instructed to fixate on a centrally presented crosshair. A 19 cm wide × 13 cm tall, 3 × 2 grid containing six letters was initially presented for 2.0 s (encoding phase). The letters then disappeared, leaving an empty grid for 3.0 s (maintenance phase). Finally, a single “probe” letter appeared (retrieval phase) for 0.9 s. Participants were instructed to respond by pressing a button with their right index finger if the probe letter was one of the six letters previously presented in the stimulus encoding set, and with their right middle finger if it was not. The intertrial interval was 7.2 s; Figure 1 shows an example trial. Each participant completed 128 trials, which were pseudorandomized based on whether the probe letter was one of the previous six letters (i.e., 64 in set, 64 out of set). The task lasted approximately 16 minutes and included a 30-s break in the middle.
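The trial timing can be checked with a short sketch. The constants come from the text; treating the 7.2-s intertrial interval as the onset-to-onset time between trials, and the plain shuffle used for pseudorandomization, are assumptions (the text does not describe the randomization constraints), and the function names are hypothetical. Under the onset-to-onset assumption the total task length comes out at about 16 minutes, consistent with the text, and the end of retrieval at 5.9 s matches the 6.3-s epochs starting at −0.4 s described in the time–frequency methods.

```python
import random

# Timing (s) from the text; 0 s = onset of the encoding grid.
ENCODING_S, MAINTENANCE_S, RETRIEVAL_S = 2.0, 3.0, 0.9
ITI_S = 7.2  # assumed to be the onset-to-onset time between trials
N_TRIALS, BREAK_S = 128, 30.0

def phase_windows():
    """(start, end) in seconds for each phase of one trial."""
    maint_start = ENCODING_S
    retr_start = maint_start + MAINTENANCE_S
    return {
        "encoding": (0.0, ENCODING_S),
        "maintenance": (maint_start, retr_start),
        "retrieval": (retr_start, retr_start + RETRIEVAL_S),
    }

def task_duration_min(n_trials=N_TRIALS, iti=ITI_S, brk=BREAK_S):
    """Total task length in minutes, including the mid-task break."""
    return (n_trials * iti + brk) / 60.0

def probe_sequence(n_trials=N_TRIALS, seed=None):
    """Hypothetical scheme: half in-set, half out-of-set probes, shuffled."""
    half = n_trials // 2
    labels = ["in-set"] * half + ["out-of-set"] * (n_trials - half)
    random.Random(seed).shuffle(labels)
    return labels

print(phase_windows())
print(round(task_duration_min(), 2))  # 15.86
```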

Fig. 1.

Task paradigm. After a baseline period, participants were presented with six letter stimuli (encoding phase). After 2.0 s, the letter stimuli disappeared (i.e., maintenance), and then 3.0 s later a probe stimulus appeared (i.e., retrieval). Participants were asked to respond via button press whether the probe letter was one of the prior encoding stimuli.

MEG Data Acquisition

Neuromagnetic data were sampled continuously at 1 kHz using a Neuromag system with 306 sensors (Elekta/MEGIN, Helsinki, Finland) with an acquisition bandwidth of 0.1 to 330 Hz. All recordings were conducted in a one-layer magnetically shielded room with active shielding engaged. Before MEG measurement, four coils were attached to the subject’s head and localized, together with the three fiducial points and scalp surface, with a 3-D digitizer (Fastrak 3SF0002, Polhemus Navigator Sciences, Colchester, VT). Once the subject was positioned for MEG recording, an electric current with a unique frequency label (e.g., 322 Hz) was fed to each of the coils. This induced a measurable magnetic field and allowed each coil to be localized in reference to the sensors throughout the recording session, and thus head position was continuously monitored. MEG data from each subject were individually corrected for head motion off-line and subjected to noise reduction using the signal space separation method with a temporal extension (tSSS; Taulu et al. 2005; Taulu & Simola 2006).

MEG Coregistration and Structural MRI Processing

Because head position indicator coil locations were also known in head coordinates, all MEG measurements could be transformed into a common coordinate system. Using this coordinate system, each participant’s MEG data were coregistered with structural T1-weighted MRI data before source space analyses using BESA MRI (Version 2.0). Structural MRI data were aligned parallel to the anterior and posterior commissures and transformed into the Talairach coordinate system (Talairach & Tournoux 1988). Following source analysis (i.e., beamforming), each subject’s functional images were transformed into the same standardized space using the transform applied to the structural MRI volume.

MEG Time–Frequency Transformation and Statistics

MEG preprocessing and imaging used the Brain Electrical Source Analysis software (BESA version 7.0). Cardiac and eye-blink artifacts were removed from the data using signal space projection, which was accounted for during source reconstruction (Uusitalo & Ilmoniemi 1997). The continuous magnetic time series was divided into epochs of 6.3-s duration, with the baseline defined as −0.4 to 0.0 s before the initial stimulus onset. Both correct and incorrect trials were included in the analysis. Trials in which the participant did not respond or responded after the onset of the next trial were excluded. Epochs containing artifacts were rejected using a fixed-threshold method, supplemented with visual inspection. Briefly, the raw MEG signal amplitude is strongly affected by the distance between the brain and the MEG sensor array, as the magnetic field strength falls off rapidly as the distance from the current source increases. Thus, differences in head size or position within the array greatly affect the distribution of amplitudes of the neural signals as measured at the sensor array. To account for this source of variance across participants, as well as actual variance in neural response amplitude, we computed thresholds based on each participant’s signal distribution for both signal amplitude and gradient (i.e., change in amplitude as a function of time) and rejected artifacts in each participant individually. Across all participants, the average amplitude threshold was 1137.86 (SD = 251.83) fT/cm and the average gradient threshold was 254.29 (SD = 81.39) fT/(cm·ms). Across participants, an average of 95.79 (SD = 10.82) trials were used for further analysis. Artifact-free epochs were transformed into the time–frequency domain using complex demodulation (resolution: 2.0 Hz, 25 ms; Papp & Ktonas 1977). The resulting spectral power estimates per sensor were averaged over trials to generate time–frequency plots of mean spectral density.
These sensor-level data were normalized by dividing the power value of each time–frequency bin by the respective bin’s baseline power, which was calculated as the mean power during the −0.4 to 0.0 s time period. This normalization allowed task-related power fluctuations to be visualized in sensor space.
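The sensor-level steps above (per-participant artifact thresholds, complex demodulation, averaging over trials, and division by baseline power) can be sketched for a single sensor as follows. This is not BESA's implementation: the percentile rule for the artifact thresholds, the zero-phase Butterworth low-pass, and its 1-Hz cutoff (which passes roughly ±1 Hz around each center frequency) are illustrative assumptions, and all function names are hypothetical.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

def reject_epochs(epochs, percentile=95.0):
    """Drop epochs whose peak amplitude or peak gradient exceeds a
    participant-specific threshold (hypothetical percentile rule).

    epochs: (n_epochs, n_samples) data from one sensor, in fT/cm at 1 kHz.
    """
    peak_amp = np.abs(epochs).max(axis=1)
    # Largest sample-to-sample change; at 1 kHz one step is 1 ms.
    peak_grad = np.abs(np.diff(epochs, axis=1)).max(axis=1)
    keep = (peak_amp <= np.percentile(peak_amp, percentile)) & (
        peak_grad <= np.percentile(peak_grad, percentile)
    )
    return epochs[keep], keep

def complex_demodulation_power(epochs, fs, freqs, lowpass_hz=1.0, order=4):
    """Power envelope at each frequency via complex demodulation.

    Returns an array of shape (n_epochs, n_freqs, n_samples).
    """
    t = np.arange(epochs.shape[-1]) / fs
    sos = butter(order, lowpass_hz, btype="low", fs=fs, output="sos")
    power = np.empty((epochs.shape[0], len(freqs), epochs.shape[-1]))
    for k, f in enumerate(freqs):
        # Shift frequency f down to 0 Hz ...
        shifted = epochs * np.exp(-2j * np.pi * f * t)
        # ... and keep only the slow envelope (zero-phase low-pass filter).
        env = sosfiltfilt(sos, shifted.real, axis=-1) + 1j * sosfiltfilt(
            sos, shifted.imag, axis=-1
        )
        # Factor 2 restores the amplitude of a real sinusoid at frequency f.
        power[:, k, :] = np.abs(2 * env) ** 2
    return power

def baseline_normalize(power, times, baseline=(-0.4, 0.0)):
    """Average over trials, then divide each time-frequency bin by the
    mean power of the same frequency during the baseline window."""
    mean_power = power.mean(axis=0)  # (n_freqs, n_samples)
    in_base = (times >= baseline[0]) & (times < baseline[1])
    return mean_power / mean_power[:, in_base].mean(axis=1, keepdims=True)
```

A value of 1 in the normalized output means no change from baseline; values below 1 correspond to a desynchronization (ERD) and values above 1 to a synchronization (ERS).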

The time–frequency windows subjected to beamforming (i.e., imaging) in this study were derived through a purely data-driven approach. Briefly, the specific time–frequency windows used for imaging were determined by statistical analysis of the sensor-level spectrograms across the entire array of gradiometers during the 2-s “encoding” and 3-s “maintenance” time windows; see Figure 1. Each data point in the spectrogram was initially evaluated using a mass univariate approach based on the general linear model. To reduce the risk of false-positive results while maintaining reasonable sensitivity, a two-stage procedure was used to control for Type I error. In the first stage, one-sample t-tests were conducted on each data point, and the output spectrogram of t-values was thresholded at p < 0.05 to define time–frequency bins containing potentially significant oscillatory deviations across all participants. In the second stage, time–frequency bins that survived the threshold were clustered with temporally, spectrally, and/or spatially (i.e., within 4 cm) neighboring bins that were also above the p < 0.05 threshold, and a cluster value was derived by summing the t-values of all data points in the cluster. Nonparametric permutation testing was then used to derive a distribution of cluster values, and the significance level of each observed cluster (i.e., each cluster formed from the bins that survived the first-stage threshold) was tested directly against this distribution (Ernst 2004; Maris & Oostenveld 2007). For each comparison, at least 10,000 permutations were computed to build the distribution of cluster values. Based on these analyses, the time–frequency windows that contained significant oscillatory events across all participants during the encoding and maintenance phases were subjected to beamforming.
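The two-stage procedure can be sketched for a single time–frequency spectrogram. This is a simplified illustration, not the study's code: it clusters bins only across adjacent time and frequency points (the spatial clustering of sensors within 4 cm is omitted), it assumes random sign flipping of each participant's baseline-corrected data as the permutation scheme (the text does not say how permutations were drawn), and it builds the null distribution from the largest absolute cluster sum per permutation, as described by Maris & Oostenveld (2007). Function names are hypothetical.

```python
import numpy as np
from scipy import ndimage, stats

def find_clusters(tmap, t_crit):
    """Suprathreshold clusters of adjacent time-frequency bins.

    Positive and negative deviations are clustered separately; returns a
    list of (boolean mask, summed t-value) pairs.
    """
    clusters = []
    for sign in (1.0, -1.0):
        labels, n = ndimage.label(sign * tmap > t_crit)
        for k in range(1, n + 1):
            mask = labels == k
            clusters.append((mask, float(tmap[mask].sum())))
    return clusters

def cluster_permutation_test(data, alpha=0.05, n_perm=10_000, seed=0):
    """One-sample cluster-based permutation test on time-frequency data.

    data: (n_subjects, n_freqs, n_times) baseline-corrected values whose
    null mean is 0 (e.g., relative power minus 1). Returns a list of
    (mask, cluster sum, p-value) for every observed cluster.
    """
    n = data.shape[0]
    t_crit = stats.t.ppf(1.0 - alpha / 2.0, df=n - 1)  # stage 1: p < alpha
    t_obs = stats.ttest_1samp(data, 0.0, axis=0).statistic
    observed = find_clusters(t_obs, t_crit)            # stage 2: clustering

    rng = np.random.default_rng(seed)
    null_max = np.empty(n_perm)
    for i in range(n_perm):
        # Under H0 each participant's sign is exchangeable: flip at random.
        flips = rng.choice([-1.0, 1.0], size=n)[:, None, None]
        t_perm = stats.ttest_1samp(flips * data, 0.0, axis=0).statistic
        sums = [abs(s) for _, s in find_clusters(t_perm, t_crit)]
        null_max[i] = max(sums, default=0.0)

    return [
        (mask, s, (np.sum(null_max >= abs(s)) + 1) / (n_perm + 1))
        for mask, s in observed
    ]
```

Using the maximum cluster sum per permutation controls the family-wise error across all clusters at once, which is why a single null distribution suffices for every observed cluster.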

MEG Source Imaging and Statistics

Source images were constructed using a dynamic imaging of coherent sources beamformer (Gross et al. 2001), which applies spatial filters to time–frequency sensor data to calculate voxel-wise source power for the entire brain volume. Following convention, the source power in these images was normalized per participant using a separately averaged prestimulus noise period (i.e., baseline) of equal duration and bandwidth (Van Veen et al. 1997). Such images are typically referred to as pseudo-t maps, with units (i.e., pseudo-t) that reflect noise-normalized power differences between active and baseline periods per voxel. Normalized differential source power was computed for the selected time–frequency bands, using a common baseline, over the entire brain volume per participant at 4.0 × 4.0 × 4.0 mm resolution. Each participant’s functional images were then transformed into standardized space using the transform that was previously applied to the structural images and spatially resampled to 1.0 × 1.0 × 1.0 mm resolution. Then, whole-brain Pearson correlations were computed to assess the impact of hearing aid use on working memory dynamics. We controlled for degree of hearing loss in all analyses by including BEPTA as a covariate (i.e., partial correlations). All output statistical maps were then adjusted for multiple comparisons using a spatial extent threshold (i.e., cluster restriction; k = 300 contiguous voxels) based on the theory of Gaussian random fields (Poline et al. 1995; Worsley et al. 1996, 1999). Specifically, statistical maps were initially thresholded at p < 5 × 10−4, and a cluster was considered significant only if at least 300 contiguous voxels were significant at p < 5 × 10−4. These methods are consistent with standards in the field and our previous work (Heinrichs-Graham & Wilson 2015; Embury et al. 2018, 2019; McDermott et al. 2016; Proskovec et al. 2016, 2019a, b; Spooner et al. 2020).
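A voxel-wise version of this analysis can be sketched as a partial correlation: hearing aid use and each voxel's pseudo-t value are both residualized on BEPTA (plus an intercept), and the residuals are correlated, giving n − 3 degrees of freedom. The cluster-extent step then keeps only clusters of at least k = 300 voxels with p < 5 × 10−4. This is a hedged sketch under stated assumptions: k is taken as given rather than derived from Gaussian random field theory, "contiguous" is assumed to mean face-connected voxels (the default of `scipy.ndimage.label` in 3-D), and the function names are hypothetical.

```python
import numpy as np
from scipy import ndimage, stats

def partial_corr_maps(images, use, covariate):
    """Voxel-wise partial correlation between hearing aid use and source power.

    images: (n_subjects, x, y, z) pseudo-t maps; use: hours/week per subject;
    covariate: BEPTA per subject. Returns (r map, two-tailed p map).
    """
    n = images.shape[0]
    Z = np.column_stack([np.ones(n), covariate])  # intercept + covariate

    def residualize(Y):
        beta, *_ = np.linalg.lstsq(Z, Y, rcond=None)
        return Y - Z @ beta

    ry = residualize(images.reshape(n, -1))
    rx = residualize(np.asarray(use, dtype=float)[:, None])
    r = (rx * ry).sum(axis=0) / np.sqrt((rx ** 2).sum() * (ry ** 2).sum(axis=0))
    df = n - 3  # n - 2 for a correlation, minus 1 for the covariate
    with np.errstate(divide="ignore", invalid="ignore"):
        t = r * np.sqrt(df / (1.0 - r ** 2))
    p = 2.0 * stats.t.sf(np.abs(t), df)
    shape = images.shape[1:]
    return r.reshape(shape), p.reshape(shape)

def cluster_extent_mask(p_map, p_thresh=5e-4, k=300):
    """Keep only face-connected clusters of >= k voxels with p < p_thresh."""
    labels, _ = ndimage.label(p_map < p_thresh)
    sizes = np.bincount(labels.ravel())
    big = np.flatnonzero(sizes >= k)
    big = big[big > 0]  # label 0 is the background
    return np.isin(labels, big)
```

At the 1.0-mm resampled resolution, a 300-voxel cluster corresponds to 300 mm³ of tissue.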

RESULTS

Descriptive Statistics

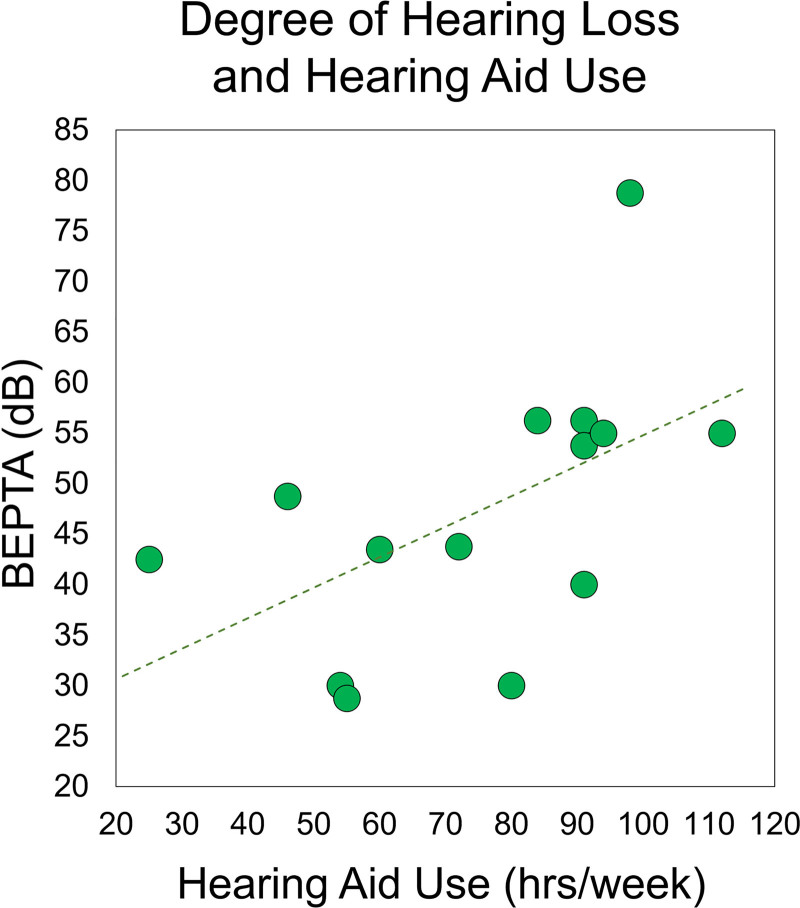

As described earlier, a total of nine participants were excluded from the final analysis. Individual demographic information, hearing history, neuropsychological test scores, and task performance for the remaining 14 participants are shown in Table 1. The remaining participants had an average BEPTA of 46.30 dB (SD = 12.98 dB, range 28.75–78.75 dB) and used their hearing aids an average of 75.24 hours/week (SD: 24.10 hours/week; range: 25–112 hours/week). There was a significant correlation between BEPTA and total hours of hearing aid use, r(14) = 0.540, p = 0.046, such that participants with a greater degree of hearing loss wore their hearing aids more often (Fig. 2). The 14 participants included in the final sample had an average accuracy of 61.77% (SD: 12.58%) and an average reaction time of 1103.97 ms (SD: 205.97 ms). There was also a significant correlation between age and accuracy, r(14) = 0.610, p = 0.021, such that accuracy improved with age in this sample. There were no other significant correlations between age, neuropsychological test performance, task behavior, or neural metrics (all p’s > 0.05). There were also no correlations between hearing metrics and behavioral performance on the task (all p’s > 0.05).
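The reported p-values can be reproduced from r and the sample size with the standard t transformation for a Pearson correlation, t = r√(df / (1 − r²)). A minimal sketch, assuming two-tailed tests and df = n − 2 = 12 for the 14 analyzed participants (the helper name is hypothetical):

```python
import math
from scipy import stats

def pearson_p(r, n):
    """Two-tailed p-value for a Pearson correlation r computed from n pairs."""
    df = n - 2
    t = r * math.sqrt(df / (1.0 - r ** 2))
    return 2.0 * stats.t.sf(abs(t), df)

for label, r in [("BEPTA vs. hearing aid use", 0.540), ("age vs. accuracy", 0.610)]:
    print(f"{label}: r = {r:.3f}, p = {pearson_p(r, 14):.3f}")
# BEPTA vs. hearing aid use: r = 0.540, p = 0.046
# age vs. accuracy: r = 0.610, p = 0.021
```

Both values match the text with df = 12, which suggests that the number in parentheses in r(14) denotes the sample size rather than the degrees of freedom.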

TABLE 1.

Demographics and task performance

| Age (yrs) | Sex (M/F) | Left PTA (dB) | Right PTA (dB) | Age of Diagnosis (mo) | Age of First Fitting (mo) | Hearing Aid Use (hrs/wk) | Task Accuracy (%) | Task Reaction Time (ms) | WASI-II VCI | WASI-II PRI | WASI-II FSIQ-4 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 12.42 | F | 55 | 56.25 | 55 | 57 | 112 | 78.13 | 895.25 | 110 | 116 | 115 |
| 10.79 | F | 40 | 41.25 | 1.5 | 11 | 91 | 51.56 | 812.6 | 94 | 103 | 98 |
| 10.96 | M | 48.75 | 51.25 | 44.5 | 45 | 46 | 74.22 | 952.76 | 96 | 108 | 102 |
| 11.44 | F | 56.25 | 65 | 25 | 27 | 84 | 52.34 | 1384.75 | 89 | 104 | 96 |
| 12.77 | M | 31.25 | 30 | 66 | 96 | 54 | 46.88 | 1315.49 | 105 | 120 | 114 |
| 11.97 | F | 43.75 | 42.5 | 2 | 6 | 25 | 68.75 | 1214.9 | 100 | 105 | 103 |
| 14.97 | F | 33.75 | 30 | — | — | 80 | 65.63 | 948.37 | 90 | 92 | 90 |
| 13.50 | M | 31.25 | 28.75 | 66 | 96 | 55 | 62.5 | 1009.72 | 116 | 96 | 107 |
| 8.31 | F | 43.75 | 47.5 | — | — | 72 | 43.75 | 1409.78 | 113 | 120 | 119 |
| 11.24 | M | 58.75 | 56.25 | 0.5 | 1 | 91 | 74.22 | 1282.92 | 90 | 101 | 95 |
| 12.67 | M | 53.75 | 53.75 | 5 | 6 | 91 | 62.5 | 1070.36 | 105 | 101 | 104 |
| 12.69 | F | 82.5 | 78.75 | 39 | 40 | 98 | 60.16 | 1222.13 | 90 | 109 | 99 |
| 10.03 | F | 43.75 | 46.25 | 2 | 3.5 | 60 | 43.75 | 1165.3 | 128 | 112 | 124 |
| 15.63 | M | 55 | 61.25 | 55 | 56 | 94 | 80.47 | 978.54 | 102 | 130 | 117 |

F, female; FSIQ-4, full-scale IQ based on all four WASI-II subtests; M, male; PRI, perceptual reasoning index; PTA, pure-tone average; VCI, verbal comprehension index; WASI-II, Wechsler Abbreviated Scale of Intelligence, Second Edition.

published online ahead of print July 20, 2021.

Fig. 2.

Relationship between BEPTA and hearing aid use. Degree of hearing loss (i.e., BEPTA, in dB) is shown on the y axis, while hearing aid use (in total number of hours per week) is denoted on the x axis. There was a significant correlation between degree of hearing loss and hearing aid use, such that participants with more severe hearing loss wore their hearing aids more often (p < 0.05). BEPTA, better-ear pure-tone average.

Identification of Significant Task-Related Time–Frequency Responses

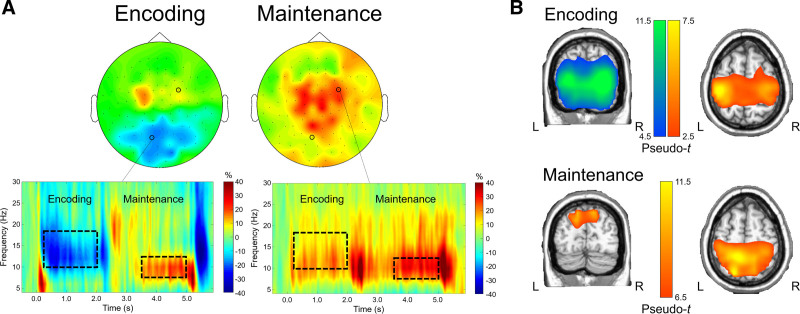

The two-stage statistical analysis of the time–frequency spectrograms across the sensor array resulted in two significant bins. During the encoding phase, there was a strong, sustained alpha-beta event-related desynchronization (ERD) that was strongest from about 8 to 18 Hz and lasted from about 200 ms after the initial presentation of the encoding set until the offset of the encoding grid (p < 1 × 10⁻⁴, corrected). This cluster was found largely in posterior and central sensors. There was also a strong event-related synchronization (ERS) in a narrower alpha band (i.e., 8–12 Hz) that was strongest from about 3400 ms (i.e., 1400 ms after maintenance onset) until it dissipated around the onset of the retrieval grid, in medial posterior, central, and right frontal sensors (p < 1 × 10⁻⁴, corrected). Of note, this ERS response was more widespread than typically reported in previous literature (e.g., Tuladhar et al. 2007; Heinrichs-Graham & Wilson 2015; Embury et al. 2019; Proskovec et al. 2019a) and also included significant time–frequency components in more central sensors (see Fig. 3A). These two responses (encoding alpha-beta ERD: 10–18 Hz, 200–1800 ms; maintenance alpha ERS: 8–12 Hz, 3400–5000 ms; 0 ms = encoding grid onset) were imaged using beamforming. Because of the limited baseline length, the beamformer images were computed in nonoverlapping 400 ms time windows and then averaged across each time window of interest, resulting in one encoding and one maintenance image per participant.
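A minimal sketch of the bookkeeping described above, assuming NumPy. It expresses power as percent change from baseline (the unit shown in the Fig. 3 color bars), splits each time window of interest into nonoverlapping 400 ms windows, and averages per-window source images into one image per participant and phase. The function names, the baseline interval used in the usage example, and the array layout are hypothetical, and the beamformer itself is not implemented; each per-window image is assumed to be supplied by an external beamforming step.

```python
import numpy as np


def percent_change_from_baseline(power, times, baseline):
    """Express power as percent change from mean baseline power.

    power: array of shape (n_freqs, n_times); times: 1-D array in ms;
    baseline: (start_ms, stop_ms), treated as a half-open interval.
    """
    mask = (times >= baseline[0]) & (times < baseline[1])
    base = power[:, mask].mean(axis=1, keepdims=True)  # one value per frequency
    return 100.0 * (power - base) / base


def split_into_windows(start_ms, stop_ms, width_ms=400):
    """Split [start_ms, stop_ms) into consecutive nonoverlapping windows."""
    if (stop_ms - start_ms) % width_ms != 0:
        raise ValueError("interval must contain a whole number of windows")
    return [(t, t + width_ms) for t in range(start_ms, stop_ms, width_ms)]


def average_window_images(window_images):
    """Average per-window source images (all with the same grid shape)."""
    return np.mean(np.stack(window_images), axis=0)


if __name__ == "__main__":
    # Time windows of interest from the text (0 ms = encoding grid onset).
    print(split_into_windows(200, 1800))   # encoding alpha-beta ERD
    print(split_into_windows(3400, 5000))  # maintenance alpha ERS
```

Both windows of interest span 1600 ms, so each splits into four 400 ms windows, e.g. `split_into_windows(200, 1800)` returns `[(200, 600), (600, 1000), (1000, 1400), (1400, 1800)]`.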

Fig. 3.

A, Time–frequency components serving working memory in CHL. Time–frequency spectrograms from representative sensors are shown on the bottom, with frequency (in Hz) shown on the y axis and time (in s; 0.0 s = encoding stimulus onset) shown on the x axis. Color bars denote the percentage change from baseline, with warmer colors reflecting increases in power from baseline (i.e., ERS) and cooler colors reflecting decreases in neural power from baseline (i.e., ERD). Dotted boxes denote time–frequency components that were selected for source imaging; the distribution of activity across sensors within these time–frequency windows is shown on top (left: encoding; right: maintenance). B, Neural dynamics serving encoding and maintenance phases. Grand-averaged beamformer images of encoding (top) and maintenance (bottom) responses are shown (pseudo-t). Coronal and axial slices of the same images for each phase are shown on the left and right, respectively. Warmer colors denote ERS responses, while cooler colors denote ERD responses.

Group-averaged whole-brain maps of each response showed distinct patterns of neural oscillatory activity during the working memory encoding and maintenance phases of the task. During encoding, there was a strong ERD response that peaked in the bilateral lateral occipital cortices and extended superiorly into bilateral parieto-occipital regions. Notably absent were robust ERD responses in the left inferior frontal gyrus and left supramarginal gyrus, which are typically elicited by this task in both adults and children (Proskovec et al. 2016; Heinrichs-Graham & Wilson 2015; Embury et al. 2019; Heinrichs-Graham et al. 2021). Interestingly, this time–frequency bin also contained a strong ERS response that peaked in the left and right precentral gyri, left superior parietal cortex, and supplementary motor area (SMA). During maintenance, a strong ERS response persisted in the bilateral precentral gyri, SMA, and superior parietal cortices and extended into the right inferior frontal gyrus and right superior temporal gyrus. In addition, there were robust ERS peaks in the parieto-occipital cortices bilaterally, as well as in the superior medial occipital cortex (Fig. 3B). As described earlier, a comparison of these neural dynamics between these CHL and a matched group of CNH was outside the scope of this study and is reported elsewhere (Heinrichs-Graham et al. 2021).

Whole-Brain Correlations With Hearing Aid Use

Our primary research goal was to determine whether encoding- and/or maintenance-related neural responses were significantly correlated with the consistency of hearing aid use. To this end, the total number of hours of hearing aid use (Monday–Sunday, during the school year) was entered into voxel-wise whole-brain correlation analyses with the encoding and maintenance maps separately, with BEPTA as a covariate. As described above, the resultant correlation maps were thresholded at p < 5 × 10⁻⁴ and corrected for multiple comparisons using a cluster threshold of k = 300 voxels. The individual contributions of BEPTA and hearing aid use at the peak voxel of each encoding-related cluster were as follows: left occipital: BEPTA semipartial r = 0.066, hearing aid use semipartial r = −0.786; right occipital: BEPTA semipartial r = 0.256, hearing aid use semipartial r = −0.856; right precentral: BEPTA semipartial r = 0.652, hearing aid use semipartial r = −0.863.
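The voxel-wise analysis with a covariate and a cluster-extent threshold can be sketched as follows, assuming NumPy and SciPy. This version computes a partial correlation (hearing aid use and each voxel are both residualized on BEPTA plus an intercept) and then keeps only suprathreshold clusters of at least 300 contiguous voxels at p < 5 × 10⁻⁴. The function names, the partial-correlation formulation, and the face-connectivity rule used by default in `scipy.ndimage.label` are assumptions for illustration; they are not necessarily the exact procedure used in this study.

```python
import numpy as np
from scipy import ndimage, stats


def residualize(values, covariate):
    """Remove the linear effect of a covariate (plus intercept) from each column."""
    design = np.column_stack([np.ones(len(covariate)), covariate])
    beta, *_ = np.linalg.lstsq(design, values, rcond=None)
    return values - design @ beta


def partial_correlation_map(images, use, covariate):
    """Voxel-wise partial correlation of images with `use`, controlling for `covariate`.

    images: (n_subjects, *grid_shape); use, covariate: (n_subjects,).
    Returns r and two-tailed p maps with the grid shape.
    """
    n = images.shape[0]
    grid_shape = images.shape[1:]
    y = residualize(images.reshape(n, -1), covariate)
    x = residualize(np.asarray(use, dtype=float), covariate)
    # Residuals have zero mean, so this normalized dot product is a correlation.
    r = (x @ y) / (np.linalg.norm(x) * np.linalg.norm(y, axis=0))
    df = n - 3  # n minus intercept, covariate, and predictor of interest
    t = r * np.sqrt(df / (1.0 - r**2))
    p = 2.0 * stats.t.sf(np.abs(t), df)
    return r.reshape(grid_shape), p.reshape(grid_shape)


def cluster_threshold(p_map, alpha=5e-4, min_voxels=300):
    """Keep suprathreshold voxels belonging to clusters of >= min_voxels."""
    mask = p_map < alpha
    labels, _ = ndimage.label(mask)
    sizes = np.bincount(labels.ravel())
    sizes[0] = 0  # label 0 is the background, never kept
    return np.isin(labels, np.flatnonzero(sizes >= min_voxels))
```

Residualizing both variables on the covariate is one standard way to obtain a partial correlation; the degrees of freedom drop by one relative to a simple correlation because of the covariate.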

Significant negative correlations between encoding-related activity and hearing aid use were found in the right precentral gyrus, as well as the bilateral occipital cortices (p < 5 × 10⁻⁴, corrected). In all of these regions, alpha-beta activity decreased with increased hearing aid use (Fig. 4A). During maintenance, there was a negative correlation between hearing aid use and activity in the right inferior frontal cortex, such that alpha activity in this area decreased with increased hearing aid use (p < 5 × 10⁻⁴, corrected; Fig. 4B). The individual contributions of BEPTA and hearing aid use at the peak voxel within the right inferior frontal cortex were: BEPTA semipartial r = 0.492, hearing aid use semipartial r = −0.845.
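The semipartial (part) correlations reported above express each predictor's unique association with peak-voxel activity. A minimal sketch, assuming NumPy and that peak-voxel values are available for each participant; it uses the standard formula sr = (r_yx − r_yz·r_xz) / √(1 − r_xz²), which removes the covariate z from the predictor x only (not from the outcome y). The function name is illustrative.

```python
import numpy as np


def semipartial_r(y, x, z):
    """Semipartial correlation of y with x, removing z from x only.

    sr = (r_yx - r_yz * r_xz) / sqrt(1 - r_xz**2)
    """
    r_yx = np.corrcoef(y, x)[0, 1]
    r_yz = np.corrcoef(y, z)[0, 1]
    r_xz = np.corrcoef(x, z)[0, 1]
    return (r_yx - r_yz * r_xz) / np.sqrt(1.0 - r_xz**2)
```

With y as peak-voxel activity, `semipartial_r(y, use, bepta)` gives the hearing aid use term and `semipartial_r(y, bepta, use)` gives the BEPTA term. The result equals the ordinary correlation between y and the residuals of x after regressing x on z.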

Fig. 4.

Whole-brain correlations between neural activity and hearing aid use during encoding and maintenance. Degree of hearing loss (in dB) acted as a covariate. Images are thresholded at p < 0.0005, corrected. Scatterplots denote peak activity values (pseudo-t) on the y axis and total hours of hearing aid use (hrs/week) on the x axis. A, There were significant negative correlations between hearing aid use and activity in the left and right occipital cortices and right precentral gyrus during encoding, controlling for degree of hearing loss (p < 0.0005, corrected). B, There was a significant negative correlation between hearing aid use and activity in the right inferior frontal gyrus during maintenance, controlling for degree of hearing loss (p < 0.0005, corrected).

DISCUSSION

Prior studies have demonstrated that consistent hearing aid use provides access to higher-quality auditory input, which is paramount to positive spoken language and cognitive outcomes in CHL even above and beyond the severity of the hearing loss (Tomblin et al. 2015; Park et al. 2019). Nonetheless, the impact of hearing aid use on the neural dynamics serving these behavioral outcomes in CHL has yet to be characterized. This is particularly important, as there is significant variability in the amount of time children wear their hearing aids (Walker et al. 2013, 2015b). To this end, this study sought to identify the impact of individual differences in average hearing aid use on the neural dynamics serving verbal working memory performance in a sample of children with mild-to-severe hearing loss using high-density MEG imaging. MEG is uniquely suited to this question, as it allows direct quantification of the spatiotemporal neural dynamics of different working memory subprocesses in real time during a working memory task, with good spatial and temporal precision. We hypothesized that CHL with more hours of hearing aid use would have neural responses more comparable to the patterns commonly elicited by this task in CNH (e.g., Embury et al. 2019; Heinrichs-Graham et al. 2021). Our hypothesis was based on previous evidence that cumulative auditory experience impacts language and cognition in CHL (Tomblin et al. 2015). We decomposed the neural activity into time–frequency components and imaged them using beamforming to localize the neural activity serving each phase of working memory. We then correlated the whole-brain maps of activity during encoding and maintenance, separately, with each participant’s hearing aid use, controlling for degree of hearing loss. We found significant correlations between encoding-related neural activity and hearing aid use in the bilateral occipital and right precentral regions.
During maintenance, we found a significant correlation between hearing aid use and activity in the right inferior frontal cortex, controlling for degree of hearing loss. While studies have shown changes in neural activity following cochlear implant stimulation (Kral & Tillein 2006; Gordon et al. 2011; Kral & Sharma 2012), to our knowledge these results are the first to characterize the associations between hearing aid use and neural function in CHL, and they provide convincing evidence of the importance of consistent hearing aid use for neural and cognitive health.

In healthy adults and children, working memory tasks have been shown to elicit widespread alpha-beta event-related desynchronization (ERD) during the encoding phase that starts in the bilateral occipital cortices and then spreads to the left superior parietal, superior temporal, and inferior frontal cortices throughout encoding and maintenance (Heinrichs-Graham & Wilson 2015; Embury et al. 2019; Proskovec et al. 2019b). This pattern of bilateral occipital encoding-related ERD activity was replicated in our recent article comparing the current sample of CHL group-wise to demographically matched CNH (Heinrichs-Graham et al. 2021). Notably, this ERD activity increases with working memory load throughout this left-lateralized network, underscoring the importance of these dynamics for verbal working memory processing (Proskovec et al. 2019a). Given the relative stability of this neural pattern, it is striking that, although there was a robust alpha-beta ERD that peaked in bilateral occipital regions on average across the CHL, a large percentage of CHL lacked ERD activity in bilateral occipital regions during the encoding phase (see pseudo-t values in the scatterplots in Fig. 4A). Notably, this activity was largely normalized (i.e., became negative) in participants with more consistent hearing aid use. Given the importance of occipital alpha oscillatory activity, and in particular the utility of alpha ERD as a higher-order cognitive resource in visual attention and working memory (Jensen & Mazaheri 2010; Klimesch 2012), this aberrant pattern of activity could result not only in eventual deficits in working memory but also, more broadly, in deficits in visual attention and memory processing. Indeed, studies show that CHL perform more poorly than CNH on tests of visual processing, attention, and memory (Bell et al. 2020; Jerger et al. 2020; Tharpe et al. 2008; Theunissen et al. 2014a,b), though the impact of hearing aid use on performance in these domains has been largely neglected.
In contrast, a large percentage of participants did show typical occipital alpha-beta ERD activity, and these participants also wore their hearing aids for the majority of their waking hours (i.e., more than about 60 hours/week). In sum, these results suggest that inconsistent hearing aid use may lead to aberrant occipital alpha-beta ERD responses, which are crucial to a variety of cognitive processes beyond working memory. Thus, the behavioral implications of this pattern of results could extend to many other cognitive domains.

Whole-brain correlation maps also showed a significant peak in right motor-related regions that negatively correlated with hearing aid use, such that alpha-beta ERS responses were more prominent in CHL who wore their hearing aids less often and were largely absent in those who consistently wore their hearing aids. This pattern suggests a relative “normalization” of activity, as right precentral ERS activity has not typically been elicited by this task in earlier studies of normal-hearing children and adults (Heinrichs-Graham & Wilson 2015; Embury et al. 2019; Heinrichs-Graham et al. 2021). Indeed, in a recent study comparing the same CHL group and a demographically matched CNH sample, these CHL showed significantly elevated right precentral activity relative to CNH during both encoding and maintenance (Heinrichs-Graham et al. 2021). Prior work suggests that cortical motor activation is likely important for subvocal rehearsal of verbal or speech-related stimuli (Cho et al. 2018), though this has been difficult to study using neuroimaging. Interestingly, synchronization (i.e., ERS activity) in motor cortex is typically associated with motor suppression, whereas desynchronization (i.e., ERD activity) is associated with motor activation (Neuper et al. 2006). Thus, this pattern suggests that CHL who wear their hearing aids less engage in more motor suppression during encoding of the letter stimuli. Though speculative, it is possible that these participants were suppressing an urge to physically vocalize or “mouth” (i.e., rehearse) the letter stimuli to aid encoding, and that with greater hearing aid use, subvocal rehearsal and the accompanying motor inhibition are used more efficiently.
From a clinical perspective, if CHL who do not consistently wear their hearing aids have less efficient subvocalization (rehearsal) during encoding, then a more difficult task or a more rapid influx of information (as is common in the real world) may degrade their ability to encode and lead to subsequent declines in working memory function. A tight relationship between faster (i.e., more efficient) subvocal rehearsal rates and better verbal working memory performance has been posited in children with profound hearing loss who use cochlear implants (Burkholder & Pisoni 2003), as well as in those with mild-to-severe hearing loss (Stiles et al. 2012). However, we did not record electromyography (EMG), and movement artifacts were excluded from the data, so future work is needed to directly test this hypothesis.

During the maintenance phase, there was a significant negative correlation between activity in the right inferior frontal gyrus and hearing aid use, such that children who wore their hearing aids less often exhibited stronger neural activity in this region than children who consistently wore their hearing aids. During verbal working memory tasks, there is typically strong alpha activity in the left inferior frontal gyrus and lateral parietal cortex that persists from encoding through maintenance. As described above, this activity is strongly left-lateralized. However, in healthy aging and in cognitive or psychiatric disorders, this activity becomes less lateralized, and strong bilateral activation emerges, especially in the inferior frontal gyri. In the healthy aging literature, this phenomenon is explained by the Compensation-Related Utilization of Neural Circuits Hypothesis (CRUNCH; Reuter-Lorenz & Cappell 2008; Schneider-Garces et al. 2010). CRUNCH posits that during moderately difficult tasks, aging adults recruit additional neural resources to maintain relatively intact performance; as task difficulty increases further, this compensatory activity is no longer sufficient, and performance declines. With this in mind, it is plausible that increased activity in the right inferior frontal gyrus during the maintenance phase of this task acts as a compensatory mechanism, and that this mechanism is less necessary, or unnecessary, in those who wear their hearing aids more consistently. From a functional perspective, right prefrontal activity has also been broadly related to executive function and/or decision-making ability during higher-order cognitive tasks (e.g., Edgcumbe et al. 2019; Ota et al. 2019).
Thus, in those who wore their hearing aids less often, this compensatory activity may serve to prepare the participant for the decision required at retrieval onset. Again, this negative correlation can be interpreted as a normalization of the neural dynamics serving working memory maintenance with regular hearing aid use. If CRUNCH applies to CHL, then deficits in working memory performance could emerge during more difficult working memory tasks in those who wear their hearing aids less consistently. Previous research has shown that increases in load lead to declines in working memory performance (Osman & Sullivan 2014; Sullivan et al. 2015; Proskovec et al. 2019a). Crucially, suboptimal auditory environments (e.g., noise) impair auditory working memory performance above and beyond the effects of working memory load (Osman & Sullivan 2014; Sullivan et al. 2015). It is unclear whether this pattern of results extends to those with hearing loss. Our paradigm did not allow for an investigation of the effects of load on these neural dynamics in CHL; future work could focus on load-related differences in the neural dynamics serving working memory as a function of hearing aid use.

Taken together, these data suggest that with more consistent hearing aid use, there is a normalization of the neural activity serving working memory processing in CHL. Specifically, bilateral occipital alpha-beta ERD activity has been shown to be crucial to successful working memory encoding (as well as a wealth of other cognitive functions), whereas right precentral and right inferior frontal activity during this task is uncommon (Heinrichs-Graham & Wilson 2015; Proskovec et al. 2019a; Embury et al. 2019). Consistent with this, participants with less hearing aid use showed more aberrant neural activity during this task than participants with more hearing aid use. Importantly, these relationships were significant above and beyond the effect of degree of hearing loss, which underscores the importance of consistent hearing aid use for the neural processes serving working memory function in children with mild-to-severe hearing loss.

Clinical Implications

Even with early intervention, a large percentage of CHL fall behind their peers in language and cognitive outcomes (Tomblin et al. 2015; McCreery et al. 2020; Walker et al. 2020). However, recent research suggests that consistent hearing aid use may ameliorate some of these deficits. For example, Tomblin et al. (2015) showed that, although CHL aged 2 to 6 years fell behind CNH on multiple language metrics, these deficits could be moderated by consistent hearing aid use: those who wore their hearing aids more than 10 hours/day showed a significant increase in language scores as a function of age, whereas those who wore their hearing aids less than 10 hours/day did not show significant developmental improvement in language scores. Walker et al. (2015a,b) showed that full-time hearing aid use (i.e., more than 8 hours/day) was significantly associated with better vocabulary and grammar scores in children aged 5 to 7 years with mild hearing loss. More recently, Walker et al. (2020) examined the impact of hearing aid use on outcomes in school-age children with mild hearing loss. They found that children with typical hearing outperformed children with mild hearing loss on language comprehension measures, but greater hearing aid use was significantly associated with better language comprehension scores. This evidence suggests that as CHL get older, they may be at greater risk of delays in higher-order measures of verbal cognition, but consistent hearing aid use may buffer these risks. Future studies should examine hearing aid use and neural activity in a large developmental cohort to determine how these effects change as a function of age.

Despite emerging evidence on the importance of consistent hearing aid use, to our knowledge no study has investigated the impact of hearing aid use on working memory or on neural activity in CHL. Our results suggest that CHL who wear their hearing aids less than 60 hours/week (~8.5 hours/day) show atypical neural activity in the bilateral occipital cortices during working memory encoding. Furthermore, CHL who wear their hearing aids more consistently show less putatively compensatory activity in the right precentral gyrus during encoding and in the right inferior frontal gyrus during maintenance. These data suggest that MEG neuroimaging is sensitive to differences in the neural patterns serving higher-order cognition within the CHL population, potentially to a greater extent than behavioral tests alone.
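For comparison with the daily-wear criteria in the studies cited above (more than 10 hours/day in Tomblin et al. 2015; more than 8 hours/day in Walker et al. 2015a,b), the weekly and daily figures convert as follows. This assumes wear time is spread evenly across all 7 days, which is a simplification, since use was reported as a weekly total:

$$
\frac{60\ \text{h/week}}{7\ \text{days/week}} \approx 8.57\ \text{h/day},\qquad
10\ \text{h/day} \times 7 = 70\ \text{h/week},\qquad
8\ \text{h/day} \times 7 = 56\ \text{h/week}.
$$

On this basis, the 60 hours/week figure falls between the two daily criteria used in prior work.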

Limitations

The current results are the first of their kind to show frequency-specific, spatially distinct neural responses that are associated with hearing aid use in CHL. Nonetheless, this study is not without its limitations. First, the participant sample was relatively small (n = 14). Future studies should extend this work to a larger and more diverse sample, which would allow additional variables such as vocabulary or socioeconomic status to be considered. In addition, recent work suggests that auditory dosage, which combines hearing aid use and the quality of hearing aid fit, may be a more important determinant of behavioral outcomes in CHL than hearing aid use alone (McCreery & Walker 2022; Walker et al. 2020). Future studies should include measures of hearing aid fit and other auditory experience parameters when determining the impact of hearing aid use on neural activity in CHL. We also collected hearing aid use data with a parent-report questionnaire, rather than directly from the participants’ hearing aids. Studies have shown that parents tend to overreport their child’s hearing aid use, so the raw hours of use reported here may be inflated. Nonetheless, parent reports of hearing aid use are significantly correlated with use data collected from the hearing aids (p’s < 0.001; Walker et al. 2013, 2015a,b), and thus we expect that the relationships reported here would hold, or be strengthened, with use data from the hearing aids instead of parent report. Finally, the current study did not examine load-related differences in working memory. Given the impact of working memory load on neural activity, as well as the potential relationship between load-related increases in activity and behavior in other populations, this is an important future direction.

CONCLUSIONS

This study examined the impact of hearing aid use on the oscillatory responses that are critical to successful working memory processing. We found robust relationships between consistency of hearing aid use and normalization of neural responses in the occipital and precentral regions during working memory encoding, as well as in the right prefrontal cortex during working memory maintenance. In general, those who wore their hearing aids more than ~8.5 hours/day showed a normalization of neural activity during this task, while those who wore their hearing aids less often showed a more aberrant oscillatory pattern. These data underscore the importance of consistent hearing aid use in CHL and provide convincing evidence that neuroimaging with MEG is a powerful and sensitive technique by which to assess the impact of hearing loss and subsequent intervention on brain and cognitive development in these children.

ACKNOWLEDGMENTS

We want to thank the families and children who participated in the research.

This work was supported by National Institutes of Health grants NIGMS U54-GM115458 (principal investigator, Matthew Rizzo, University of Nebraska Medical Center) and NIDCD R01-DC013591 (principal investigator, R.W.M., Boys Town National Research Hospital). The content of this project is solely the responsibility of the authors and does not represent the official views of the National Institutes of Health. Special thanks to Meredith Spratford and Timothy Joe for their support and assistance throughout the project.

*We ran this correlation with and without the participant with severe hearing loss. Without this participant, the correlation between hearing aid use and BEPTA was marginally significant, r(13) = 0.501, p = 0.081. All other analyses were significant with and without this participant.

The authors have no conflicts of interest to disclose.

REFERENCES

- Bell L., Scharke W., Reindl V., Fels J., Neuschaefer-Rube C., Konrad K. Auditory and visual response inhibition in children with bilateral hearing aids and children with ADHD. Brain Sci, (2020). 10, E307.

- Bonnefond M., Jensen O. Alpha oscillations serve to protect working memory maintenance against anticipated distracters. Curr Biol, (2012). 22, 1969–1974.

- Bonnefond M., Jensen O. The role of gamma and alpha oscillations for blocking out distraction. Commun Integr Biol, (2013). 6, e22702.

- Burkholder R. A., Pisoni D. B. Speech timing and working memory in profoundly deaf children after cochlear implantation. J Exp Child Psychol, (2003). 85, 63–88.

- Calderon R., Low S. Early social-emotional, language, and academic development in children with hearing loss. Families with and without fathers. Am Ann Deaf, (1998). 143, 225–234.

- Ching T. Y., Day J., Seeto M., Dillon H., Marnane V., Street L. Predicting 3-year outcomes of early-identified children with hearing impairment. B-ENT, (2013). Suppl 21, 99–106.

- Cho H., Dou W., Reyes Z., Geisler M. W., Morsella E. The reflexive imagery task: An experimental paradigm for neuroimaging. AIMS Neurosci, (2018). 5, 97–115.

- Conway C. M., Pisoni D. B., Kronenberger W. G. The importance of sound for cognitive sequencing abilities: The auditory scaffolding hypothesis. Curr Dir Psychol Sci, (2009). 18, 275–279.

- Cupples L., Ching T. Y., Crowe K., Day J., Seeto M. Predictors of early reading skill in 5-year-old children with hearing loss who use spoken language. Read Res Q, (2014). 49, 85–104.

- Davidson L. S., Geers A. E., Hale S., Sommers M. M., Brenner C., Spehar B. Effects of early auditory deprivation on working memory and reasoning abilities in verbal and visuospatial domains for pediatric cochlear implant recipients. Ear Hear, (2019). 40, 517–528.

- de Villers-Sidani E., Simpson K. L., Lu Y. F., Lin R. C., Merzenich M. M. Manipulating critical period closure across different sectors of the primary auditory cortex. Nat Neurosci, (2008). 11, 957–965.

- Edgcumbe D. R., Thoma V., Rivolta D., Nitsche M. A., Fu C. H. Y. Anodal transcranial direct current stimulation over the right dorsolateral prefrontal cortex enhances reflective judgment and decision-making. Brain Stimul, (2019). 12, 652–658.

- Embury C. M., Wiesman A. I., Proskovec A. L., Heinrichs-Graham E., McDermott T. J., Lord G. H., Brau K. L., Drincic A. T., Desouza C. V., Wilson T. W. Altered brain dynamics in patients with type 1 diabetes during working memory processing. Diabetes, (2018). 67, 1140–1148.

- Embury C. M., Wiesman A. I., Proskovec A. L., Mills M. S., Heinrichs-Graham E., Wang Y. P., Calhoun V. D., Stephen J. M., Wilson T. W. Neural dynamics of verbal working memory processing in children and adolescents. Neuroimage, (2019). 185, 191–197.

- Emch M., von Bastian C. C., Koch K. Neural correlates of verbal working memory: An fMRI meta-analysis. Front Hum Neurosci, (2019). 13, 180.

- Ernst M. D. Permutation methods: A basis for exact inference. Stat Sci, (2004). 19, 676–685.

- Glennon E., Svirsky M. A., Froemke R. C. Auditory cortical plasticity in cochlear implant users. Curr Opin Neurobiol, (2020). 60, 108–114.

- Gordon K. A., Wong D. D. E., Valero J., Jewell S. F., Yoo P., Papsin B. C. Use it or lose it? Lessons learned from the developing brains of children who are deaf and use cochlear implants to hear. Brain Topogr, (2011). 24, 204–219.

- Gross J., Kujala J., Hamalainen M., Timmermann L., Schnitzler A., Salmelin R. Dynamic imaging of coherent sources: Studying neural interactions in the human brain. Proc Natl Acad Sci U S A, (2001). 98, 694–699. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Heinrichs-Graham E., Walker E. A., Eastman J. A., Frenzel M. R., Joe T. R., McCreery R. W. The impact of mild-to-severe hearing loss on the neural dynamics serving verbal working memory processing in children. Neuroimage Clin, (2021). 30, 102647. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Heinrichs-Graham E., Wilson T. W. Spatiotemporal oscillatory dynamics during the encoding and maintenance phases of a visual working memory task. Cortex, (2015). 69, 121–130. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Holte L., Walker E., Oleson J., Spratford M., Moeller M. P., Roush P., Ou H., Tomblin J. B. Factors influencing follow-up to newborn hearing screening for infants who are hard of hearing. Am J Audiol, (2012). 21, 163–174. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jensen O., Mazaheri A. Shaping functional architecture by oscillatory alpha activity: Gating by inhibition. Front Hum Neurosci, (2010). 4, 186. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jerger S., Damian M. F., Karl C., Abdi H. Detection and attention for auditory, visual, and audiovisual speech in children with hearing loss. Ear Hear, (2020). 41, 508–520. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jones A., Atkinson J., Marshall C., Botting N., St Clair M. C., Morgan G. Expressive vocabulary predicts nonverbal executive function: A 2-year longitudinal study of deaf and hearing children. Child Dev, (2020). 91, e400–e414. [DOI] [PubMed] [Google Scholar]

- Kilgard M. P., Pandya P. K., Vazquez J. L., Rathbun D. L., Engineer N. D., Moucha R. Spectral features control temporal plasticity in auditory cortex. Audiol Neurootol, (2001). 6, 196–202. [DOI] [PubMed] [Google Scholar]

- Klimesch W. α-band oscillations, attention, and controlled access to stored information. Trends Cogn Sci, (2012). 16, 606–617.

- Kral A., Eggermont J. J. What’s to lose and what’s to learn: Development under auditory deprivation, cochlear implants and limits of cortical plasticity. Brain Res Rev, (2007). 56, 259–269.

- Kral A., Sharma A. Developmental neuroplasticity after cochlear implantation. Trends Neurosci, (2012). 35, 111–122.

- Kral A., Tillein J. Brain plasticity under cochlear implant stimulation. Adv Otorhinolaryngol, (2006). 64, 89–108.

- Maris E., Oostenveld R. Nonparametric statistical testing of EEG- and MEG-data. J Neurosci Methods, (2007). 164, 177–190.

- McCreery R. W., Miller M. K., Buss E., Leibold L. J. Cognitive and linguistic contributions to masked speech recognition in children. J Speech Lang Hear Res, (2020). 63, 3525–3538.

- McCreery R. W., Walker E. A. Variation in auditory experience affects language and executive function skills in children who are hard of hearing. Ear Hear, (2022). 43, 347–360.

- McDermott T. J., Badura-Brack A. S., Becker K. M., Ryan T. J., Khanna M. M., Heinrichs-Graham E., Wilson T. W. Male veterans with PTSD exhibit aberrant neural dynamics during working memory processing: an MEG study. J Psychiatry Neurosci, (2016). 41, 251–260.

- Moeller M. P. Early intervention and language development in children who are deaf and hard of hearing. Pediatrics, (2000). 106, E43.

- Moeller M. P., Tomblin J. B. An introduction to the outcomes of children with hearing loss study. Ear Hear, (2015). 36(Suppl 1), 4S–13S.

- Neuper C., Wortz M., Pfurtscheller G. ERD/ERS patterns reflecting sensorimotor activation and deactivation. Prog Brain Res, (2006). 159, 211–222.

- Osman H., Sullivan J. R. Children’s auditory working memory performance in degraded listening conditions. J Speech Lang Hear Res, (2014). 57, 1503–1511.

- Ota K., Shinya M., Kudo K. Transcranial direct current stimulation over dorsolateral prefrontal cortex modulates risk-attitude in motor decision-making. Front Hum Neurosci, (2019). 13, 297.

- Papp N., Ktonas P. Critical evaluation of complex demodulation techniques for the quantification of bioelectrical activity. Biomed Sci Instrum, (1977). 13, 135–145.

- Park L. R., Gagnon E. B., Thompson E., Brown K. D. Age at full-time use predicts language outcomes better than age of surgery in children who use cochlear implants. Am J Audiol, (2019). 28, 986–992.

- Persson A. E., Al-Khatib D., Flynn T. Hearing aid use, auditory development, and auditory functional performance in Swedish children with moderate hearing loss during the first 3 years. Am J Audiol, (2020). 29, 436–449.

- Pisoni D. B., Cleary M. Measures of working memory span and verbal rehearsal speed in deaf children after cochlear implantation. Ear Hear, (2003). 24(1 Suppl), 106S–120S.

- Poline J. B., Worsley K. J., Holmes A. P., Frackowiak R. S., Friston K. J. Estimating smoothness in statistical parametric maps: variability of p values. J Comput Assist Tomogr, (1995). 19, 788–796.

- Proskovec A. L., Heinrichs-Graham E., Wilson T. W. Aging modulates the oscillatory dynamics underlying successful working memory encoding and maintenance. Hum Brain Mapp, (2016). 37, 2348–2361.

- Proskovec A. L., Heinrichs-Graham E., Wilson T. W. Load modulates the alpha and beta oscillatory dynamics serving verbal working memory. Neuroimage, (2019a). 184, 256–265.

- Proskovec A. L., Wiesman A. I., Heinrichs-Graham E., Wilson T. W. Load effects on spatial working memory performance are linked to distributed alpha and beta oscillations. Hum Brain Mapp, (2019b). 40, 3682–3689.

- Reuter-Lorenz P. A., Cappell K. Neurocognitive aging and the compensation hypothesis. Curr Dir Psychol Sci, (2008). 17, 177–182.

- Rottschy C., Langner R., Dogan I., Reetz K., Laird A. R., Schulz J. B., Fox P. T., Eickhoff S. B. Modelling neural correlates of working memory: a coordinate-based meta-analysis. Neuroimage, (2012). 60, 830–846.

- Schneider-Garces N. J., Gordon B. A., Brumback-Peltz C. R., Shin E., Lee Y., Sutton B. P., Maclin E. L., Gratton G., Fabiani M. Span, CRUNCH, and beyond: working memory capacity and the aging brain. J Cogn Neurosci, (2010). 22, 655–669.

- Sharma A., Dorman M. F., Kral A. The influence of a sensitive period on central auditory development in children with unilateral and bilateral cochlear implants. Hear Res, (2005). 203, 134–143.

- Singer W. Neuronal oscillations: unavoidable and useful? Eur J Neurosci, (2018). 48, 2389–2398.

- Sininger Y. S., Grimes A., Christensen E. Auditory development in early amplified children: factors influencing auditory-based communication outcomes in children with hearing loss. Ear Hear, (2010). 31, 166–185.

- Spooner R. K., Wiesman A. I., Proskovec A. L., Heinrichs-Graham E., Wilson T. W. Prefrontal theta modulates sensorimotor gamma networks during the reorienting of attention. Hum Brain Mapp, (2020). 41, 520–529.

- Stiles D. J., McGregor K. K., Bentler R. A. Vocabulary and working memory in children fit with hearing aids. J Speech Lang Hear Res, (2012). 55, 154–167.

- Sullivan J. R., Osman H., Schafer E. C. The effect of noise on the relationship between auditory working memory and comprehension in school-age children. J Speech Lang Hear Res, (2015). 58, 1043–1051.

- Talairach J., Tournoux P. Co-Planar Stereotaxic Atlas of the Human Brain. (1988). Thieme.

- Taulu S., Simola J. Spatiotemporal signal space separation method for rejecting nearby interference in MEG measurements. Phys Med Biol, (2006). 51, 1759–1768.

- Taulu S., Simola J., Kajola M. Applications of the signal space separation method (SSS). IEEE Trans Signal Process, (2005). 53, 3359–3372.

- Tharpe A. M., Ashmead D., Sladen D. P., Ryan H. A., Rothpletz A. M. Visual attention and hearing loss: Past and current perspectives. J Am Acad Audiol, (2008). 19, 741–747.

- Theunissen S. C., Rieffe C., Kouwenberg M., De Raeve L. J., Soede W., Briaire J. J., Frijns J. H. Behavioral problems in school-aged hearing-impaired children: The influence of sociodemographic, linguistic, and medical factors. Eur Child Adolesc Psychiatry, (2014a). 23, 187–196.

- Theunissen S. C., Rieffe C., Netten A. P., Briaire J. J., Soede W., Schoones J. W., Frijns J. H. Psychopathology and its risk and protective factors in hearing-impaired children and adolescents: A systematic review. JAMA Pediatr, (2014b). 168, 170–177.

- Tomblin J. B., Harrison M., Ambrose S. E., Walker E. A., Oleson J. J., Moeller M. P. Language outcomes in young children with mild to severe hearing loss. Ear Hear, (2015). 36(Suppl 1), 76S–91S.

- Tomblin J. B., Oleson J., Ambrose S. E., Walker E. A., Moeller M. P. Early literacy predictors and second-grade outcomes in children who are hard of hearing. Child Dev, (2020). 91, e179–e197.

- Tuladhar A. M., ter Huurne N., Schoffelen J. M., Maris E., Oostenveld R., Jensen O. Parieto-occipital sources account for the increase in alpha activity with working memory load. Hum Brain Mapp, (2007). 28, 785–792.

- Uusitalo M. A., Ilmoniemi R. J. Signal-space projection method for separating MEG or EEG into components. Med Biol Eng Comput, (1997). 35, 135–140.

- Van Veen B. D., van Drongelen W., Yuchtman M., Suzuki A. Localization of brain electrical activity via linearly constrained minimum variance spatial filtering. IEEE Trans Biomed Eng, (1997). 44, 867–880.

- Walker E. A., Holte L., McCreery R. W., Spratford M., Page T., Moeller M. P. The influence of hearing aid use on outcomes of children with mild hearing loss. J Speech Lang Hear Res, (2015a). 58, 1611–1625.

- Walker E. A., McCreery R. W., Spratford M., Oleson J. J., Van Buren J., Bentler R., Roush P., Moeller M. P. Trends and predictors of longitudinal hearing aid use for children who are hard of hearing. Ear Hear, (2015b). 36(Suppl 1), 38S–47S.

- Walker E. A., Sapp C., Dallapiazza M., Spratford M., McCreery R. W., Oleson J. J. Language and reading outcomes in fourth-grade children with mild hearing loss compared to age-matched hearing peers. Lang Speech Hear Serv Sch, (2020). 51, 17–28.

- Walker E. A., Sapp C., Oleson J. J., McCreery R. W. Longitudinal speech recognition in noise in children: Effects of hearing status and vocabulary. Front Psychol, (2019). 10, 2421.

- Walker E. A., Spratford M., Moeller M. P., Oleson J., Ou H., Roush P., Jacobs S. Predictors of hearing aid use time in children with mild-to-severe hearing loss. Lang Speech Hear Serv Sch, (2013). 44, 73–88.

- Wechsler D., Zhou X. WASI-II: Wechsler abbreviated scale of intelligence. (2011). Psychological Corporation.

- Wianda E., Ross B. The roles of alpha oscillation in working memory retention. Brain Behav, (2019). 9, e01263.

- Wong C. L., Ching T. Y. C., Cupples L., Button L., Leigh G., Marnane V., Whitfield J., Gunnourie M., Martin L. Psychosocial development in 5-year-old children with hearing loss using hearing aids or cochlear implants. Trends Hear, (2017). 21, 2331216517710373.

- Worsley K. J., Andermann M., Koulis T., MacDonald D., Evans A. C. Detecting changes in nonisotropic images. Hum Brain Mapp, (1999). 8, 98–101.

- Worsley K. J., Marrett S., Neelin P., Vandal A. C., Friston K. J., Evans A. C. A unified statistical approach for determining significant signals in images of cerebral activation. Hum Brain Mapp, (1996). 4, 58–73.

- Yoshinaga-Itano C., Sedey A. L., Coulter D. K., Mehl A. L. Language of early- and later-identified children with hearing loss. Pediatrics, (1998). 102, 1161–1171.