Abstract

Purpose

While patient engagement is becoming more customary in developing health products, its monitoring and evaluation to understand processes and enhance impact are challenging. This article describes a patient engagement monitoring and evaluation (PEME) framework, co-created and tailored to the context of community advisory boards (CABs) for rare diseases in Europe. It can be used to stimulate learning and evaluate impacts of engagement activities.

Methods

A participatory approach was used in which data collection and analysis were iterative. The process was based on the principles of interactive learning and action and guided by the PEME framework. Data were collected via document analysis, reflection sessions, a questionnaire, and a workshop.

Results

The tailored framework consists of a theory of change model with metrics explaining how CABs can reach their objectives. Of 61 identified metrics, 17 metrics for monitoring the patient engagement process and short-term outcomes were selected, and a “menu” for evaluating long-term impacts was created. Example metrics include “Industry representatives’ understanding of patients’ unmet needs;” “Feeling of trust between stakeholders;” and “Feeling of preparedness.” “Alignment of research programs with patients’ needs” was the highest-ranked metric for long-term impact.

Conclusions

Findings suggest that process and short-term outcome metrics could be standardized across CABs, whereas long-term impact metrics may need to be tailored to the collaboration from a proposed menu. Accordingly, we recommend that others adapt and refine the PEME framework as appropriate. The next steps include implementing and testing the evaluation framework to stimulate learning and share impacts.

Keywords: patient engagement, patient participation, rare diseases, program evaluation, drug development

Patient engagement is increasingly integrated along the life cycle of research and development (R&D) for health products (ie, medicines, technologies and services) as an essential element in moving toward a more patient-centered R&D system.1–3 Patient engagement is defined as “the active, meaningful, and collaborative interaction between patients and researchers across all stages” of health product R&D, “where decision-making is guided by patients’ contributions as partners, recognising their specific experiences, values, and expertise.”4 Such interactions have rapidly increased in the last decade, taking many shapes and forms.1,5 However, little is known about how these interactions affect health product R&D processes and their stakeholders.6,7 This knowledge gap makes it difficult to understand best practices and the value of patient engagement, increasing the risk of tokenism and hindering successful embedding of practices in R&D processes.8,9 The need for monitoring and evaluation (M&E) to elucidate whether, how, and why the objectives of patient engagement are achieved has been repeatedly articulated.7,10

Despite the need, M&E of patient engagement initiatives in health product R&D is scarce, and current evidence is often considered anecdotal.6,10,11 Previous literature reviews discussing M&E tools and frameworks report concerns regarding their scientific rigor, lack of co-creation and impact assessment, and limited transferability to other settings.12–14 Various challenges underlie these limitations. First, patient engagement initiatives are highly contextualized and relational in nature, and it often takes years before impact can be seen.15,16 Consequently, many different factors influence the causal chains leading to impact, impeding the attribution of causality and the generalization of conclusions.10 Moreover, stakeholders have different needs and priorities, and therefore views vary on what constitutes successful patient engagement.7,14 Partly because of this, there is no consensus on relevant evaluation criteria and tools. While some seek standardization across the field to enable generalization and comparison,1,7,17 others argue that M&E frameworks and tools provide more meaningful insights when tailored to the initiative and co-created with those involved.12,14,18

This article presents a tailored M&E framework for community advisory boards (CABs) participating in the European Organisation for Rare Diseases (EURORDIS) program (collectively known as EuroCAB).19 CABs are autonomous groups of patient representatives (ie, CAB members), nominated and selected by their community, who offer their expertise to public and private sponsors of research in their disease area (mainly, the biopharmaceutical industry) through advisory meetings.20 They aim to align clinical research to community needs and realities.21 EuroCAB was established to provide training and support for CABs in the field of rare diseases.19 CAB members and industry representatives expressed the need for evaluation to gain a deeper understanding of the CAB processes and outcomes in order to identify strategies to enhance and demonstrate their impact. Accordingly, the objective of this study is to develop a meaningful and feasible M&E framework for these CABs.

METHODS

Theoretical Background

The development of a framework for CABs is supported by the patient engagement monitoring and evaluation (PEME) framework,22 which can be tailored — in co-creation with all stakeholders — to a specific initiative. The starting point is developing a theory of change model to collaboratively explain the steps toward the overarching objectives of the initiative, the relationships between these steps, and the underlying assumptions and context factors of how and why an initiative works.23–25 Iteratively, quantitative or qualitative metrics (sometimes referred to as “indicators” or “measures”) linked to the steps of the theory of change are identified and prioritized. Through discussion, the processes influencing success are described and illustrated, and consensus may be reached on a visual narrative model of the steps toward impact, accompanied by relevant metrics.26

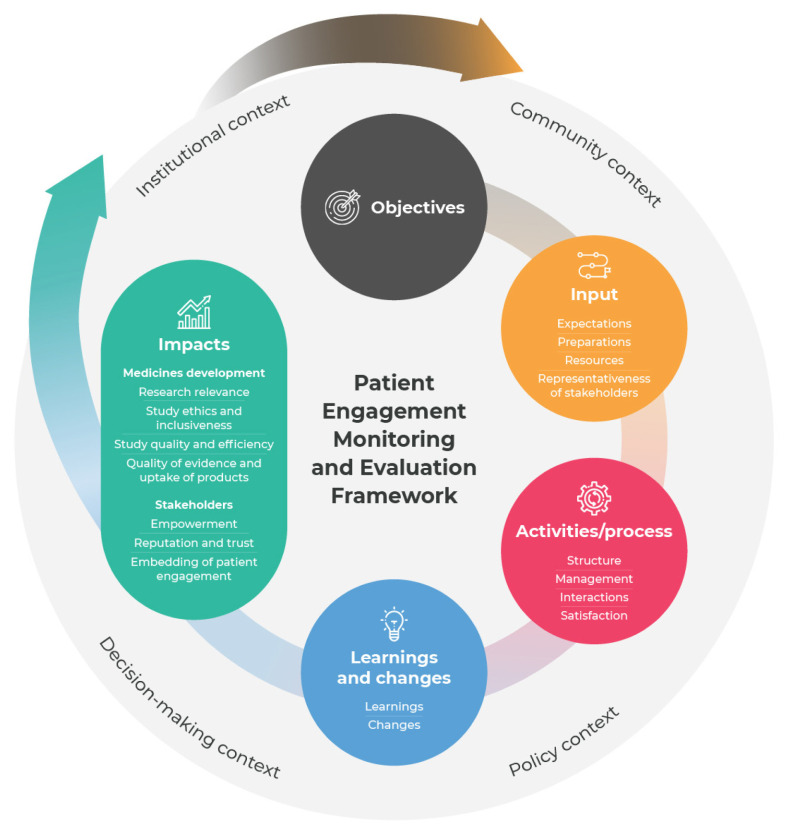

The model and metrics form the tailored framework, which is structured along 6 components: 1) objectives, 2) input, 3) activities/process, 4) learnings and changes, 5) impacts, and 6) context (Figure 1).22 Hence, the PEME framework focuses on generative causation to ensure that M&E stimulates reflection on how and why impacts are achieved, thereby inspiring change.27,28

Figure 1.

Patient engagement monitoring and evaluation (PEME) framework.

(Republished from Vat et al. Evaluation of patient engagement in medicine development: a multi-stakeholder framework with metrics. Health Expect. 2021;24:491–506; with permission from John Wiley & Sons Ltd.)

Setting

The study was conducted as part of the Patients Active in Research And Dialogue for an Improved Generation of Medicines (PARADIGM) consortium. The EuroCAB program was one of the case studies in this consortium. Currently, 5 CABs participate in the program: Duchenne Muscular Dystrophy, Cystic Fibrosis, Cystinosis, Lymphomas, and Hereditary Haemorrhagic Telangiectasia. CAB members and industry representatives collaboratively organize the CAB meetings, which strive for sustainable partnership that influences R&D decision-making by focusing on collective thinking and knowledge exchange between patient communities and researchers. The EuroCAB program applied and tailored a preliminary version of the PEME framework22 to develop an M&E framework for CABs in the rare disease context.

Participatory Approach

A participatory approach was adopted for framework development. This process was based on the principles of interactive learning and action29 and comprised 4 iterative data collection and analysis phases: 1) initiation and preparation; 2) collection, exchange, and integration of information; 3) priority setting; and 4) validation and planning (Table 1). Research activities took place between January 2019 and May 2020. The project team (authors S.E.F. and L.E.V., who gathered and analyzed the data, and R.C. and F.H., who guided the process and interpreted findings) held regular meetings to reflect on the findings, refine the framework, and plan next steps. Online Appendix A provides an overview of terms and definitions used throughout this study.

Table 1.

Phased Participatory Approach

| Phase | Objective | Stakeholders involved | Methods | Decision-making | Output |
|---|---|---|---|---|---|
| 1) Initiation and preparation | To understand the EuroCAB context and review existing M&E practices | Project team | Document analysis, informal conversations | Not applicable | Preliminary case description |
| 2) Collection, exchange, and integration of information | To reflect on the collaboration processes and experiences with M&E to identify meaningful metrics and iteratively develop and refine the framework | 23 CAB members; 4 industry representatives; project team | Reflection sessions: 2 group sessions and 6 one-to-one sessions | CAB members’ and industry representatives’ insights were used to develop the theory of change model and draft a set of potentially relevant metrics | Preliminary framework consisting of a theory of change and tailored set of possible metrics |
| 3) Priority setting | To prioritize the identified objectives and metrics to develop a set of meaningful metrics | 24 CAB members; 6 industry representatives | Questionnaire | CAB members’ and industry representatives’ rankings were used to cluster metrics into groups of low, medium, and high priority | Ranking of most important objectives and most relevant metrics |
| 4) Validation and planning | To validate the theory of change and set of metrics for M&E and to gather ideas for data collection and reporting | 3 CAB members; 5 industry representatives; project team | Digital dialogue workshop | CAB members and industry representatives discussed which metrics to include in the tailored framework and discussed how these could be measured and reported | Tailored framework with sets of metrics and ideas on how to implement M&E |

CAB, community advisory board; EuroCAB, a collection of CABs participating in this European Organisation for Rare Diseases program; M&E, monitoring and evaluation.

Vrije Universiteit Amsterdam’s ethics review committee determined that the research proposal complied with the ethical guidelines of its Faculty of Science.

Recruitment and Data Collection/Analysis

Phase 1 – Initiation and Preparation

In phase 1, the project team aimed to understand the context of the EuroCAB program and reviewed the existing M&E practices to develop a preliminary case description. This was based on document review (CAB description and agreements, meeting minutes, and feedback tools developed by EURORDIS) and informal discussions with the project team.

Phase 2 – Collection, Exchange, and Integration of Information

In phase 2, reflection sessions with CAB members and industry representatives were held to reflect on their objectives, collaboration processes, and experiences with M&E. The aim of this phase was to identify meaningful metrics to iteratively develop and refine a preliminary M&E framework. Participants were invited to take part via the EuroCAB coordinator (R.C.) using a convenience sampling method. Participation was voluntary. Two group reflection sessions (~2 hours) were held, one with Duchenne Muscular Dystrophy CAB members (n=11) and one with Cystic Fibrosis CAB members (n=11). In addition, one-to-one reflection conversations (~1 hour) were conducted with 4 industry representatives from different companies and 2 CAB chairs (from Duchenne Muscular Dystrophy CAB and Lymphomas CAB). The preliminary framework and preliminary findings from the feedback tools developed by EURORDIS were presented and discussed during a workshop at a EURORDIS event with patients and companies. Notes were taken for all sessions.

Phases 1 and 2 – Analysis

During data collection, the theory of change was drafted and iteratively refined to develop a detailed representation of the CAB collaborations. Using the software program ATLAS.ti (Scientific Software Development GmbH), documents and notes from phases 1 and 2 were first analyzed deductively by author S.E.F. based on components of the PEME framework. Objectives and metrics within these components were identified via an inductive approach of open coding (identifying and describing insights), followed by axial coding (creating subcategories) by S.E.F. and L.E.V. Identified metrics were linked to the steps in the theory of change, resulting in a preliminary framework.

Phase 3 – Prioritization

Phase 3 aimed to prioritize the objectives and metrics identified in phase 2 to develop a set of meaningful metrics through a priority-setting questionnaire consisting of 3 parts: 1) role and experiences; 2) selection of applicable objectives and ranking of those selected; and 3) selection of up to 5 most relevant metrics across the core components of PEME (input, activities/process, learnings and changes, and impact). The impact metrics were divided into short- and long-term impact based on whether they could be measured before or after the start of the discussed study. The questionnaire was reviewed by R.C., F.H., and one CAB chair. Industry representatives and members of all CABs were invited to participate in the questionnaire via the CAB chairs or the CAB coordinator. Participation was voluntary. Of the 30 respondents who completed parts 1 and 2 of the questionnaire (Table 2), 27 also completed part 3. Individual responses were anonymous and kept confidential.

Table 2.

Respondents to Questionnaire in Priority-Setting Phase

| Demographicsᵃ | Total |
|---|---|
| Stakeholder group | |
| CAB member | 24 |
| Industry representative | 6ᵇ |
| Total | 30 |
| Disease-specific CABᶜ | |
| Cystic Fibrosis | 7 |
| Duchenne Muscular Dystrophy | 6 |
| Cystinosis | 4 |
| Hereditary Haemorrhagic Telangiectasia | 7 |
| Total | 24 |
| Number of CAB meetings attended | |
| None (in the planning phase) | 6 |
| ≥1 | 24 |
| Total | 30 |

ᵃ 27 of 30 respondents completed part 3 of the questionnaire (selection of metrics).

ᵇ Industry representative respondents all collaborated with the Cystic Fibrosis CAB.

ᶜ Since all industry representative respondents worked with the Cystic Fibrosis CAB, their responses were excluded from stratification per disease area.

CAB, community advisory board.

Phase 3 – Analysis

Data from the questionnaire were analyzed via descriptive statistics using Microsoft Excel. The weighted average ranking of the objectives was calculated (rank 1 was assigned a weight of 7, etc). Unranked objectives were assigned a weight of 0. Metrics were ranked based on how often they were selected, resulting in a prioritized list of all metrics. Respondents could not indicate the relative weight for each of their priority metrics, so to do justice to the gross prioritization, clusters of high-, medium-, and low-priority metrics were created. Trends in prioritization of metrics among stakeholder roles, disease areas, and number of meetings attended were examined. Since industry representatives had only engaged with the Cystic Fibrosis CAB, their responses were excluded from the stratification by disease area to avoid potential bias.
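The calculation described above can be illustrated with a minimal sketch. The study itself used Microsoft Excel; the Python below is only an illustration, and it rests on several assumptions that are not stated in the text: that weights decrease by 1 per rank (rank 2 receives 6, down to rank 7 receiving 1), that the average is taken over all respondents, and that priority clusters are formed with simple cut-offs on the selection counts. The function names, cut-off parameters, and toy data are all hypothetical.

```python
from collections import Counter

N_OBJECTIVES = 7  # seven objectives were identified in the study


def rank_to_weight(rank, n_objectives=N_OBJECTIVES):
    """Convert a rank into a weight (rank 1 -> 7, ..., rank 7 -> 1).

    The linear step between ranks is an assumption; the text only states
    that rank 1 received weight 7 and unranked objectives weight 0.
    """
    if rank is None:
        return 0
    return n_objectives + 1 - rank


def weighted_average_ranking(responses, objectives):
    """Return the mean weight per objective across all respondents.

    `responses` is a list of dicts mapping objective -> rank. Objectives a
    respondent did not rank are absent from the dict and count as weight 0.
    """
    n = len(responses)
    if n == 0:
        return {obj: 0.0 for obj in objectives}
    return {
        obj: sum(rank_to_weight(r.get(obj)) for r in responses) / n
        for obj in objectives
    }


def cluster_by_selection(selections, high_cutoff, medium_cutoff):
    """Tally how often each metric was selected and bin it by priority.

    Both cut-offs are hypothetical parameters, not values from the study.
    """
    counts = Counter(metric for chosen in selections for metric in chosen)
    clusters = {}
    for metric, count in counts.items():
        if count >= high_cutoff:
            clusters[metric] = "high"
        elif count >= medium_cutoff:
            clusters[metric] = "medium"
        else:
            clusters[metric] = "low"
    return counts, clusters


# Toy data (invented for illustration, not study results).
toy_rankings = [
    {"objective A": 1, "objective B": 2},
    {"objective A": 2},
    {"objective B": 1, "objective C": 3},
]
toy_selections = [["m1", "m2"], ["m1"], ["m1", "m3"], ["m2"]]

averages = weighted_average_ranking(
    toy_rankings, ["objective A", "objective B", "objective C"]
)
counts, clusters = cluster_by_selection(
    toy_selections, high_cutoff=3, medium_cutoff=2
)
```

Because unranked objectives contribute a weight of 0, an objective that few respondents select scores low even when those respondents rank it highly, so the weighted average reflects both how often and how highly an objective was ranked.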

Phase 4 – Validation and Planning

In phase 4, a digital dialogue workshop was held to validate the theory of change and set of metrics for M&E and gather ideas for data collection and reporting. The 5 CAB chairs and 5 industry representatives were invited. Three CAB chairs participated; 2 could not. A summary of the questionnaire results and the drafted tailored framework were shared with participants in advance of the workshop. Using polling tools, participants were asked to what extent they agreed with the tailored framework and the “high-priority” metrics and about what reporting structures they preferred, followed by discussion. Postworkshop, detailed notes were used by the project team and co-authors of this article to make final adjustments to the tailored framework.

RESULTS

The participatory approach resulted in a tailored framework for the EuroCAB program, including a theory of change explaining how and why objectives are reached, 17 high-priority metrics (out of 61 identified), and a menu of possible long-term impact metrics. Herein, we describe each part of the tailored framework, including rationales for metric selection.

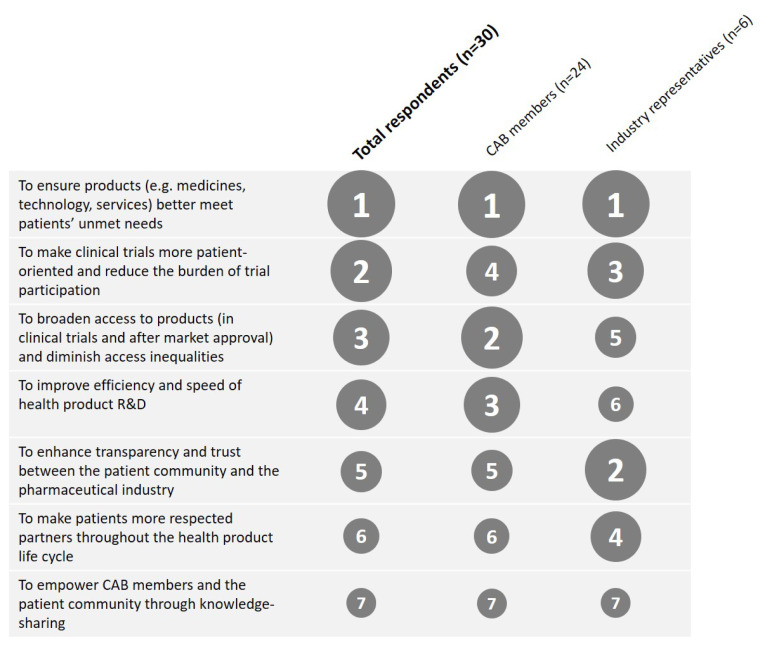

Objectives

Seven objectives for the CABs were identified. The prioritization questionnaire showed that, on average, CAB members and industry representatives considered the objective “to ensure products better meet patients’ unmet medical needs” as the most important (Figure 2). The relative importance of the other objectives differed somewhat by stakeholder group. For example, industry ranked “enhance transparency and trust” higher than did CAB members, whereas CAB members found “diminish access inequalities” and “improve efficiency and speed” more important. There were also small differences between disease areas. For example, the Cystic Fibrosis CAB found “diminish access inequalities” more important than other CABs did.

Figure 2.

Weighted average ranking of importance of the objectives. The size of the circles corresponds to the rank (1 = most important, 7 = least important).

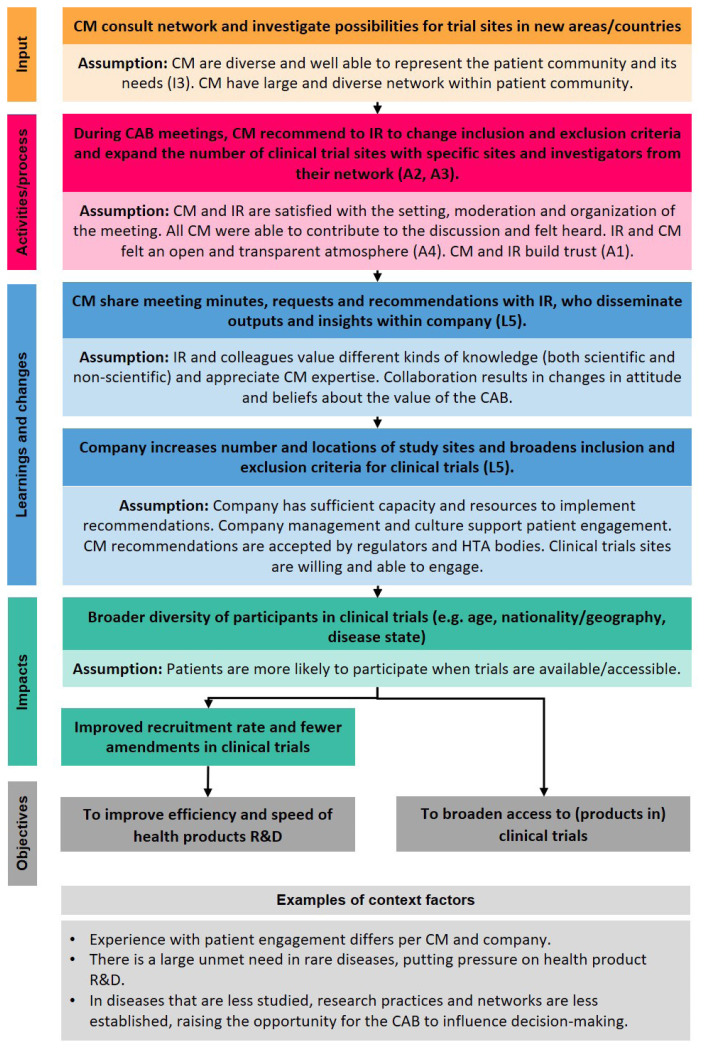

Theory of Change

Starting from the objectives, the actions needed to reach them, underlying assumptions, and context factors were iteratively mapped to develop a theory of change (Online Appendix B). Figure 3 shows an example of a theoretical pathway. The underlying assumptions describe the conditions that stakeholders deemed necessary for the proposed steps to take place. Also, several contextual factors influencing whether objectives of an initiative are achieved were identified and indicated. Each step in the theory of change can be evaluated with metrics (indicated with codes referring to the metrics in Table 3). In Table 3, data collection methods per metric are suggested. The following section highlights some rationales for selecting metrics and describes the links between them.

Figure 3.

Example of a pathway of the theory of change for community advisory boards in the EuroCAB program. Bright color boxes: actions needed to achieve the objectives. Faded color boxes: underlying assumptions (conditions deemed necessary for the steps to take place). Light gray box: context factors (external influences). (A2), (I3), (L5), etc link to that respective metric defined in Table 3. For the full theory of change, see Online Appendix B. CAB, community advisory board; CM, CAB member; HTA, health technology assessment; IR, industry representative; R&D, research and development.

Table 3.

Set of Metrics for the Community Advisory Boards (CABs) in EuroCAB

| Framework component | Metric | Codeᵃ | Number of stakeholders who selected metric (N=27) | Suggested data collection method |
|---|---|---|---|---|
| Input | Feeling of preparedness of CAB members and industry representatives | I1 | 21 | Postmeeting survey; success tracker |
| | Priorities for the meeting of CAB members and industry representatives | I2 | 21 | Success tracker |
| | Diversity of CAB members (eg, role within community, disease subtype, demographics) and industry representatives (eg, role, department, seniority level) | I3 | 18 | Postmeeting survey |
| | Quality and timing of preparation materials | I4 | 15 | Postmeeting survey |
| Activities / process | Feeling of trust between stakeholders | A1 | 22 | Postmeeting survey |
| | Degree to which expectations of the meeting were met | A2 | 19 | Postmeeting survey |
| | Usefulness of the meeting | A3 | 18 | Postmeeting survey |
| | Feeling of transparency and openness | A4 | 17 | Postmeeting survey |
| Learnings and changes | Industry representatives’ understanding of patients’ unmet needs | L1 | 21 | Postmeeting survey; annual reflection |
| | Degree to which the meeting helped to inform research goals of the company | L2 | 15 | Postmeeting survey; annual reflection |
| | Industry representatives’ understanding of patient-relevant outcome measures | L3 | 15 | Postmeeting survey; annual reflection |
| | CAB members’ understanding of decision-making in health product research and development | L4 | 9ᵇ | Postmeeting survey; annual reflection |
| | Number and type of planned or recommended actions and changes (not) implemented | L5 | 14 | Success tracker |
| Short-term impactᶜ | Feeling of trust between stakeholders | IM1 | 21 | Postmeeting survey; annual reflection |
| | Degree to which outcome measures of a trial are patient-centric | IM2 | 19 | Trial protocol review |
| | Expected study participant burden (eg, convenience of study visits, procedures) | IM3 | 18 | Trial protocol review; patient-facing material review |
| | Degree to which the collaboration helps to demonstrate the value of a product to regulators and health technology assessment bodies | IM4 | 14 | Trial protocol review; annual reflection |

ᵃ Codes link to the steps in the theory of change model (Figure 3, Online Appendix B).

ᵇ This metric was not categorized as high-priority but was included because it supports the idea of shared learning.

ᶜ In the prioritization questionnaire, impact was split into short- and long-term impact. Participants indicated that it is not feasible or meaningful for each CAB to measure all high-priority long-term impact metrics derived from the questionnaire. Therefore, it was proposed to create a “menu” of long-term impact metrics (Table 4). CAB members and industry partners will make a selection from the menu based on their CAB priorities, capacity, and resources.

Metrics

The metric “Feeling of trust between stakeholders” was selected most often. This metric was categorized under both activities/process and impact because, while some indicated that improved trust is itself an impact, others felt that it is more a means to an end, strongly related to the metric “Feeling of transparency and openness.” In phase 2, most participants indicated that trust is a precondition for sustainable long-term collaborations and that it takes time to develop:

“The more often you meet a company, you can tell that they have the feeling that we have important things to say, and that is exactly what we are trying to give them. They listen, they respect … they are very, very respectful of our opinion and how we interact with them, and that has led to the trust that is absolutely vital if you are going into confidential information … and it takes time for a relationship like that to really materialize.” (CAB chair 1)

The learnings metrics “Industry’s understanding of patients’ unmet needs,” “Degree to which the meeting helped to inform research goals,” and “Industry’s understanding of patient-relevant outcome measures” were considered indicative of whether patient needs and experiences are taken into account in R&D processes, aligning closely to the most important objective. The metric “CAB members’ understanding of decision-making in health product R&D” was selected less often. Nevertheless, this metric was included because mutual knowledge exchange is considered a key principle of the CABs and should therefore be assessed from both perspectives.

To improve industry’s understanding of patient needs and reach the objectives, it was often emphasized that the “right” people need to attend the meeting. Therefore, the metric “Diversity of stakeholders” was included. Diversity of CAB members ensures that the insights and recommendations reflect to some extent those of the overall patient population. Diversity of industry representatives (eg, in seniority level) indicates the scope of the influence of CAB members on decision-making, as participants noted that broad diversity helps to spread awareness and understanding of patient needs throughout the company.

The metric “Degree to which the collaboration helps to demonstrate the value of a product to regulators and health technology assessment bodies” was selected to capture whether industry representatives report an influence of the collaboration on decision-making beyond their company. Moreover, the metrics “Degree to which outcome measures of a trial are patient-centric” and “Expected study participant burden” were selected to assess whether a trial is well designed, both in terms of the quality of evidence generated and participant experience, as these topics are often discussed in the meetings and patient-centric design is important to companies seeking to recruit quickly, minimize and prevent study drop-outs, and finish trials on time. One participant emphasized that, compared to longer-term impact metrics, the expected burden on study participants could be relatively easily attributed to patient engagement, for example, by reducing the number of hospital visits in a trial. Therefore, this metric was thought to provide compelling evidence of the value of engaging patients.

To track whether the changes needed to reach the impacts are made, more operational metrics, such as “Number and type of planned or recommended actions and changes (not) implemented” were selected. Tracking follow-up after the meeting is essential to ensure progress and prevent repetition:

“In the end, we completely lost track of everything because people were not in charge of the same things. And if there are no clear action steps, and there weren’t any because of the lack of alignment on the topics discussed, then it is very challenging [to make progress].” (CAB chair 3)

Several CAB members pointed out that they would like to receive more feedback from industry on where change is (not) happening to help them understand potential limitations industry may have and reprioritize discussion topics. Likewise, industry representatives indicated that an open discussion on expectations helps to align concrete goals and timeframes and prevent disappointment and frustration:

“It [tracking priorities, discussions, and actions] can also support the discussion around is it realistic that an impact can happen on this priority. … If you see that you keep on hitting a block on certain type of topic you can just say: ‘Okay, we agree that we are not going to make progress here so we can just kind of focus on other topics.’” (Industry representative 1)

Accordingly, the metric “Stakeholders’ priorities for the meeting” was selected because it could indicate whether the most important topics are discussed. True co-creation of the agenda and thorough preparation were considered essential because these create belief in the value and ownership of engagement among all involved.

It was suggested to exclude the long-term impact metrics from the set and include them instead in the tailored framework via a “menu” approach, as presented in Table 4. Participants indicated that long-term impact metrics are valuable as aspirational targets, but many contextual influences may make it difficult to attribute causality. Measuring long-term impact would therefore require thorough and continuous tracking of decisions and changes. The menu allows stakeholders to prioritize, through dialogue, the most meaningful metric(s) for their collaboration while ensuring the burden of measurement remains feasible.

Table 4.

“Menu” of Long-Term Impact Metrics for EuroCABs

| Long-term impact metricsᵃ | Number of stakeholders who selected metric (N=27) |
|---|---|
| Perceptions on the alignment of research programs with patients’ needs | 16 |
| Time until regulatory approval and health technology assessment reimbursement decisions | 14 |
| Retention rate (number/percentage of participants retained in a trial and assessed) | 14 |
| Patient satisfaction with participation in a trial | 13 |
| Quality of a product (eg, drug, technology, service, information materials) | 12 |
| Time from start until the end of a trial (first participant in/last participant out) | 12 |
| Number of avoidable amendments (often costly changes to a trial protocol as a result of operational barriers) | 11 |
| Number of patients in a preapproval access program | 11 |
| Diversity of participants in a trial (demographics, disease subtype, hard to reach populations, etc) | 11 |
| Number of patients who have access to clinical trials | 8 |
| Recruitment rate (number of participants recruited per site per month) | 7 |
| Reputation of industry within the patient community | 6 |

ᵃ Long-term impact metrics were not coded and indicated in the theory of change, as no consensus was reached and no methods were discussed.

DISCUSSION

In this study, the preliminary PEME framework was tailored in co-creation with relevant stakeholders to the context of CABs in the EuroCAB program. The various information needs shaped the tailored framework. Each collaboration between patients and industry is unique, and tailoring M&E to the respective context can improve the relevance of the information collected, enhancing learning and reflexivity.12,30 Such continuous reflection is needed to understand under what conditions progress toward the objectives is made and to develop strategies to enhance impact.31 On the other hand, standardized M&E enables data aggregation, generalization, and comparison with other engagement initiatives, thereby better meeting accountability purposes.32,33 We aimed to balance these purposes by tailoring a framework to the CAB method and rare diseases context while also standardizing a set of metrics across all CABs in the EuroCAB program, combining metrics for learning and accountability. Our results suggest that “input,” “activities/process,” “learnings and changes,” and “short-term impact” metrics could be standardized across CABs, while the long-term impact metrics could best be tailored to the objectives, capacity, and resources of the collaboration. This resulted in a combination of fixed and flexible sets of metrics.

In line with earlier studies, the results suggest that stakeholders’ M&E priorities differ because of varying interests and needs.11,12,14 In alignment with the CABs’ focus on equal partnership and mutual knowledge exchange,20 the framework and metrics were constructed from the perspectives of both CAB members and industry and will be assessed from both perspectives when possible. The recognition that the metrics need to be meaningful for all stakeholders underlines the importance of tailoring M&E to enhance impact. Some of the selected metrics are in line with other studies, such as preparedness,17 diversity of patients,34 the recommendations (not) implemented and why,35 and the changes in research questions and design.36 These metrics might be applicable to patient engagement initiatives in various contexts. Other metrics may be particularly relevant in the rare disease context, such as “Number of patients in preapproval access programs” because of the high unmet medical need. Future studies could further explore the framework’s generalizability to other disease contexts outside of rare diseases.

The PEME framework supported the identification of relevant metrics and ensured that the tailored framework comprehensively captures the relationship between input and impacts. This improves understanding of the processes that influence success and can enable the attribution of causality.37 However, it remains difficult to prioritize metrics. The selected metrics do not fully reflect the potential value of the CABs, as they focus mainly on R&D while discussed topics and impacts may be broader. Despite excluding many steps of the theory of change from assessment, the set of metrics is still ambitious and implementation may prove challenging. This difficulty in prioritization could perhaps be overcome by urging participants to make their selection criteria more explicit (eg, relevant, feasible, transferable).26,27 It is important to note that the tailored framework is meant as a flexible tool for reflection — refined by each CAB/industry relationship — to enhance the collaboration processes. Next steps include developing and refining quantitative and qualitative data collection methods and implementing the evaluation framework to encourage learning and share impacts.38–40

Strengths and Limitations

During the process, we aimed to integrate stakeholders’ different perspectives while respecting diverse viewpoints through democratic participation complemented by discussions on the results. The collaborative approach created a common language and increased ownership of the tailored framework, which we see as a strength. It also supported the “relational work” of aligning expectations and developing a shared purpose.12,22,30 Thus, this process is itself an extension of the collaborative nature of the EuroCAB program.

A limitation was that CAB members and industry representatives of each of the 5 CABs could not participate in all phases, and the balance between CAB members and industry was not always exactly equal. However, all phases included a mix of perspectives. Also, the framework’s linear, success-oriented structure may be viewed as narrow because, in reality, patient engagement has many iterations, setbacks, and unintended outcomes.38–40 Although the metrics are formulated neutrally to capture both positive and negative effects, we acknowledge that this structure may limit the flexibility to adapt to changing needs and circumstances.

CONCLUSIONS

This study describes a tailored framework that could serve as a starting point for monitoring and evaluating patient engagement initiatives. We recommend adapting and refining the framework as appropriate to enhance its relevance to the specific context and encourage collaborative reflection. Such tailored frameworks can support learning and demonstration of impact, ultimately enhancing patient engagement in health product research and development.

Patient-Friendly Recap

Community advisory boards (CABs) are created by patient communities, health organizations, and industry sponsors to provide a structured way for disease-specific patient experts to interact with those involved in medical research and development.

Monitoring and evaluating CABs can 1) show whether their contributions reflect the needs of the broader patient community, 2) help both industry and patients achieve their goals, and 3) identify areas where challenges remain.

To be meaningful for those involved, CAB evaluation methods should be jointly created. Authors of this study identified a core set of data metrics, supplemented by a “menu” of flexible optional items, to monitor progress and evaluate specific impacts of individual CABs.

Acknowledgments

We thank all participants for their contributions to the project. Furthermore, we thank Sally Hofmeister for reviewing the prioritization questionnaire and Natacha Bolaños for reviewing the outline of the article.

Footnotes

Author Contributions

Study design: Fruytier, Vat, Camp, Houÿez, Pittens, Schuitmaker-Warnaar. Data acquisition or analysis: all authors. Manuscript drafting: Fruytier, Vat, Camp, Schuitmaker-Warnaar. Critical revision: all authors.

Conflicts of Interest

Elena Zhuravleva is a shareholder in F. Hoffmann-La Roche Ltd.

Funding Sources

This study was conducted within the framework of the PARADIGM project. PARADIGM is a public-private partnership and is co-led by the European Patients’ Forum and the European Federation of Pharmaceutical Industries and Associations (EFPIA). PARADIGM has received funding from the Innovative Medicines Initiative’s 2 Joint Undertaking (grant agreement no. 777450), which receives support from the European Union’s Horizon 2020 research and innovation program and EFPIA.

References

- 1. Domecq JP, Prutsky G, Elraiyah T, et al. Patient engagement in research: a systematic review. BMC Health Serv Res. 2014;14:89. doi: 10.1186/1472-6963-14-89.

- 2. Geissler J, Ryll B, di Priolo SL, Uhlenhopp M. Improving patient involvement in medicines research and development: a practical roadmap. Ther Innov Regul Sci. 2017;51:612–9. doi: 10.1177/2168479017706405.

- 3. Elberse JE. Changing the health research system. Patient participation in health research. PhD thesis. Published by Uitgeverij BoxPress; 2012.

- 4. Harrington RL, Hanna ML, Oehrlein EM, et al. Defining patient engagement in research: results of a systematic review and analysis: report of the ISPOR Patient-Centered Special Interest Group. Value Health. 2020;23:677–88. doi: 10.1016/j.jval.2020.01.019.

- 5. Epstein S. The construction of lay expertise: AIDS activism and the forging of credibility in the reform of clinical trials. Sci Technol Human Values. 1995;20:408–37. doi: 10.1177/016224399502000402.

- 6. Fergusson D, Monfaredi Z, Pussegoda K, et al. The prevalence of patient engagement in published trials: a systematic review. Res Involv Engagem. 2018;4:17. doi: 10.1186/s40900-018-0099-x.

- 7. Staniszewska S, Adebajo A, Barber R, et al. Developing the evidence base of patient and public involvement in health and social care research: the case for measuring impact. Int J Consum Stud. 2011;35:628–32. doi: 10.1111/j.1470-6431.2011.01020.x.

- 8. Bagley HJ, Short H, Harman NL, et al. A patient and public involvement (PPI) toolkit for meaningful and flexible involvement in clinical trials – a work in progress. Res Involv Engagem. 2016;2:15. doi: 10.1186/s40900-016-0029-8.

- 9. Hahn DL, Hoffmann AE, Felzien M, LeMaster JW, Xu J, Fagnan LJ. Tokenism in patient engagement. Fam Pract. 2017;34:290–5. doi: 10.1093/fampra/cmw097.

- 10. Staley K. ‘Is it worth doing?’ Measuring the impact of patient and public involvement in research. Res Involv Engagem. 2015;1:6. doi: 10.1186/s40900-015-0008-5.

- 11. Brett J, Staniszewska S, Mockford C, et al. Mapping the impact of patient and public involvement on health and social care research: a systematic review. Health Expect. 2014;17:637–50. doi: 10.1111/j.1369-7625.2012.00795.x.

- 12. Greenhalgh T, Hinton L, Finlay T, et al. Frameworks for supporting patient and public involvement in research: systematic review and co-design pilot. Health Expect. 2019;22:785–801. doi: 10.1111/hex.12888.

- 13. Boivin A, L’Espérance A, Gauvin FP, et al. Patient and public engagement in research and health system decision making: a systematic review of evaluation tools. Health Expect. 2018;21:1075–84. doi: 10.1111/hex.12804.

- 14. Vat LE, Finlay T, Schuitmaker-Warnaar TJ, et al. Evaluating the “return on patient engagement initiatives” in medicines research and development: a literature review. Health Expect. 2020;23:5–18. doi: 10.1111/hex.12951.

- 15. Staley K, Buckland SA, Hayes H, Tarpey M. ‘The missing links’: understanding how context and mechanism influence the impact of public involvement in research. Health Expect. 2014;17:755–64. doi: 10.1111/hex.12017.

- 16. Dillon EC, Tuzzio L, Madrid S, Olden H, Greenlee RT. Measuring the impact of patient-engaged research: how a methods workshop identified critical outcomes of research engagement. J Patient Cent Res Rev. 2017;4:237–46. doi: 10.17294/2330-0698.1458.

- 17. Feeney M, Evers C, Agpalo D, Cone L, Fleisher J, Schroeder K. Utilizing patient advocates in Parkinson’s disease: a proposed framework for patient engagement and the modern metrics that can determine its success. Health Expect. 2020;23:722–30. doi: 10.1111/hex.13064.

- 18. Popay J, Collins M, editors. PiiAF Study Group. The Public Involvement Impact Assessment Framework guidance. Published January 2014. Accessed September 29, 2020. https://piiaf.org.uk/documents/piiaf-guidance-jan14.pdf

- 19. EURORDIS. EURORDIS community advisory board (CAB) programme. Last updated February 12, 2020. Accessed September 29, 2020. https://www.eurordis.org/content/eurordis-community-advisory-board-cab-programme

- 20. Diaz A, Barbareschi G, Bruegger M, De Schryver D; PARADIGM. Working with community advisory boards: guidance and tools for patient communities and pharmaceutical companies. Accessed September 29, 2020. http://imi-paradigm.eu/petoolbox/community-advisory-boards/

- 21. Cox LE, Rouff JR, Svendsen KH, Markowitz M, Abrams DI; Terry Beirn Community Programs for Clinical Research on AIDS. Community advisory boards: their role in AIDS clinical trials. Health Soc Work. 1998;23:290–7. doi: 10.1093/hsw/23.4.290.

- 22. Vat LE, Finlay T, Robinson P, et al. Evaluation of patient engagement in medicine development: a multi-stakeholder framework with metrics. Health Expect. 2021;24:491–506. doi: 10.1111/hex.13191.

- 23. Ghate D. Developing theories of change for social programmes: co-producing evidence-supported quality improvement. Palgrave Commun. 2018;4:90. doi: 10.1057/s41599-018-0139-z.

- 24. Weiss CH. Nothing as practical as good theory: exploring theory-based evaluation for comprehensive community initiatives for children and families. In: Connell JP, Kubisch AC, Schorr LB, Weiss CH, editors. New Approaches to Evaluating Community Initiatives: Concepts, Methods, Context. The Aspen Institute; 1995. pp. 65–92.

- 25. Connell JP, Kubisch AC. Applying a Theory of Change Approach to the Evaluation of Comprehensive Community Initiatives: Progress, Prospects, and Problems. The Aspen Institute; 1995.

- 26. Weiss CH. Which links in which theories shall we evaluate? New Dir Eval. 2000;2000(87):35–45. doi: 10.1002/ev.1180.

- 27. Gooding K, Makwinja R, Nyirenda D, Vincent R, Sambakunsi R. Using theories of change to design monitoring and evaluation of community engagement in research: experiences from a research institute in Malawi. Wellcome Open Res. 2018;3:8. doi: 10.12688/wellcomeopenres.13790.1.

- 28. Davidoff F, Dixon-Woods M, Leviton L, Michie S. Demystifying theory and its use in improvement. BMJ Qual Saf. 2015;24:228–38. doi: 10.1136/bmjqs-2014-003627.

- 29. Broerse JE, Bunders JF. Requirements for biotechnology development: the necessity for an interactive and participatory innovation process. Int J Biotechnol. 2000;2:275–96.

- 30. Collins M, Long R, Page A, Popay J, Lobban F. Using the Public Involvement Impact Assessment Framework to assess the impact of public involvement in a mental health research context: a reflective case study. Health Expect. 2018;21:950–63. doi: 10.1111/hex.12688.

- 31. van Mierlo B, Regeer B, van Amstel M, et al. Reflexive Monitoring in Action. Wageningen: University/Athena Institute; 2010.

- 32. Stergiopoulos S, Michaels DL, Kunz BL, Getz KA. Measuring the impact of patient engagement and patient centricity in clinical research and development. Ther Innov Regul Sci. 2020;54:103–16. doi: 10.1177/2168479018817517.

- 33. Regeer BJ, de Wildt-Liesveld R, van Mierlo B, Bunders JFG. Exploring ways to reconcile accountability and learning in the evaluation of niche experiments. Evaluation. 2016;22:6–28. doi: 10.1177/1356389015623659.

- 34. Caron-Flinterman JF, Broerse JEW, Teerling J, et al. Stakeholder participation in health research agenda setting: the case of asthma and COPD research in the Netherlands. Sci Public Policy. 2006;33:291–304. doi: 10.3152/147154306781778993.

- 35. Slade M, Bird V, Chandler R, et al. The contribution of advisory committees and public involvement to large studies: case study. BMC Health Serv Res. 2010;10:323. doi: 10.1186/1472-6963-10-323.

- 36. Forsythe L, Heckert A, Margolis MK, Schrandt S, Frank L. Methods and impact of engagement in research and from theory to practice and back again: early findings from the Patient-Centered Outcomes Research Institute. Qual Life Res. 2018;27:17–31. doi: 10.1007/s11136-017-1581-x.

- 37. Rogers PJ. Using programme theory to evaluate complicated and complex aspects of interventions. Evaluation. 2008;14:29–48. doi: 10.1177/1356389007084674.

- 38. Staley K, Barron D. Learning as an outcome of involvement in research: What are the implications for practice, reporting and evaluation? Res Involv Engagem. 2019;5:14. doi: 10.1186/s40900-019-0147-1.

- 39. Russell J, Fudge N, Greenhalgh T. The impact of public involvement in health research: What are we measuring? Why are we measuring it? Should we stop measuring it? Res Involv Engagem. 2020;6:63. doi: 10.1186/s40900-020-00239-w.

- 40. Edelman N, Barron D. Evaluation of public involvement in research: time for a major re-think? J Health Serv Res Policy. 2016;21:209–11. doi: 10.1177/1355819615612510.

- 41. Yeoman G, Furlong P, Seres M, et al. Defining patient centricity with patients for patients and caregivers: a collaborative endeavour. BMJ Innov. 2017;3:76–83. doi: 10.1136/bmjinnov-2016-000157.

- 42. Stame N. Theory-based evaluation and types of complexity. Evaluation. 2004;10:58–76. doi: 10.1177/1356389004043135.
