Abstract

Social interactions occur in group settings and are mediated by communication signals that are exchanged between individuals, often using vocalizations. The neural representation of group social communication remains largely unexplored. We conducted simultaneous wireless electrophysiological recordings from the frontal cortices of groups of Egyptian fruit bats engaged in both spontaneous and task-induced vocal interactions. We found that the activity of single neurons distinguished between vocalizations produced by self and by others, as well as among specific individuals. Coordinated neural activity among group members exhibited stable bidirectional interbrain correlation patterns specific to spontaneous communicative interactions. Tracking social and spatial arrangements within a group revealed a relationship between social preferences and intra- and interbrain activity patterns. Combined, these findings reveal a dedicated neural repertoire for group social communication within and across the brains of freely communicating groups of bats.

For many animals, including humans, social interactions often occur in group settings. These interactions are commonly mediated by vocal communication signals that convey social information, such as participant identity, context, and social preferences (1–3). Substantial progress has been made toward investigating the neural representation of sensory, motor, and social aspects of vocalizations separately (4–8), but rarely have all aspects been examined together (9) or in a group setting where behavioral and neural activity were recorded from more than two animals simultaneously. As a result, our ability to connect behavior and neural activity has been limited to individuals or pairs, rather than groups; consequently, it has been difficult to explore the specific social and neural relationships that exist within a group (10). A combined approach will allow us to better understand how communication signals are represented within and across the brains of freely interacting group members.

We studied social-vocal communication in groups of Egyptian fruit bats (Rousettus aegyptiacus). Similar to other bats (11), this species lives in large colonies where individuals form long-term and persistent relationships (12–14) and communicate using vocalizations that contain socially relevant information, including individual identity and behavioral context (3). These vocalizations exclusively occur as part of close-range, direct interactions with conspecifics (3, 15, 16), providing a reliable indicator of social communication between group members. The social-vocal behavior of the Egyptian fruit bat thus presents an opportunity to investigate neural computations related to key aspects of group communication, including (i) representations of self versus others and individual identities, (ii) shared activity patterns across the brains of group members, (iii) influence of social context, and (iv) impact of social relationships between individuals.

To study social-vocal communication under group conditions, we allowed multiple bats to interact freely while monitoring their behavior in an enclosure in the dark (“free communication session”; Fig. 1A, group sizes of n = 4 and n = 5 male bats). Under these conditions, the entire group is in close proximity and vocalizations only occur during social interactions (3, 15, 16). We therefore considered all interactions that included a spontaneously occurring vocalization to be a social-vocal interaction involving all bats in the group. These interactions occurred hundreds of times per session and were nonstereotyped (see supplementary materials for a list of defined behavior types). Because video recordings alone are not sufficient to accurately and consistently assign the identity of the vocalizer (3, 15, 16), we developed an on-animal wireless vibration sensor that allows for unambiguous identity detection (Fig. 1B and fig. S1). We found that vocalizations varied within and across bats in their spectral and temporal features (fig. S2 and table S1) and that just 1% of vocalizations overlapped in time across bats, indicating that, with rare exceptions, only one bat vocalized at a time during social interactions (Fig. 1B and fig. S1).
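The overlap figure above can be illustrated with a minimal sketch. It assumes a hypothetical data layout in which each bat's detected calls are stored as (onset, offset) intervals in seconds, and it counts a call as overlapping if it shares any time with a call from a different bat; the study's exact overlap criterion is not stated here.

```python
from typing import Dict, List, Tuple

# Hypothetical layout (assumption): per-bat lists of (onset_s, offset_s) call intervals.
Interval = Tuple[float, float]


def overlap_fraction(calls: Dict[str, List[Interval]]) -> float:
    """Fraction of all calls that overlap in time with at least one call from a different bat."""
    # Flatten to (onset, offset, bat) tuples sorted by onset time.
    events = sorted((on, off, bat) for bat, ivs in calls.items() for on, off in ivs)
    overlapping = [False] * len(events)
    for i, (_, off_i, bat_i) in enumerate(events):
        for j in range(i + 1, len(events)):
            on_j, _, bat_j = events[j]
            if on_j >= off_i:
                break  # sorted by onset, so no later call can overlap call i
            if bat_j != bat_i:
                overlapping[i] = overlapping[j] = True
    return sum(overlapping) / len(events) if events else 0.0


# Example: bat B starts calling before bat A's second call has ended.
calls = {"A": [(0.0, 0.1), (1.0, 1.2)], "B": [(1.1, 1.3), (2.0, 2.1)]}
print(overlap_fraction(calls))  # 2 of 4 calls overlap -> 0.5
```

Sorting by onset lets the inner loop stop early, so the cost is dominated by the sort when calls are sparse, as they are when only one bat usually vocalizes at a time.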

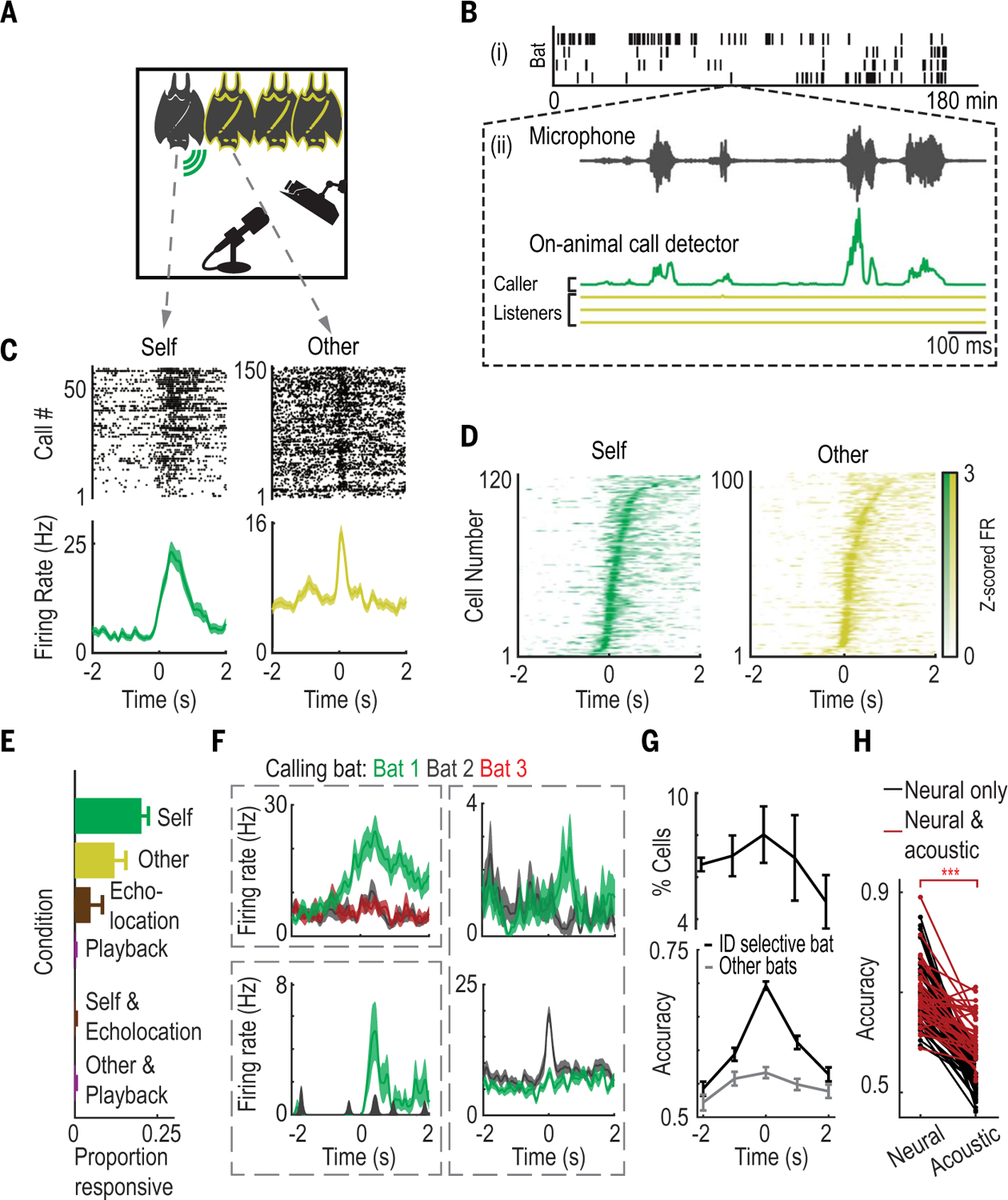

Fig. 1. Neural correlates of social-vocal interactions during free group vocal communication.

(A) Experimental setup. The calling bat (“self”) is indicated by the green symbol; other bats are indicated by a yellow outline. Bats were monitored using cameras and a microphone. (B) (i) Ticks indicate vocalizations from different bats on each row (n = 4) during an example session. (ii) Zoom-in on an example train of calls. Top: Microphone recording of example calls. Bottom: Corresponding filtered recordings from on-animal call detectors, colored according to (A). (C) Example call-aligned rasters (top) and peri-event time histograms (PETHs, bottom) of self- and other-responsive neurons (here and below, calls start at 0 s). (D) Maximum normalized average firing rates of all responsive cells, sorted by time of maximum firing rate, for self- and other-responsive neurons. (E) Average fraction of responsive neurons per condition. Error bars denote SEM (n = 7 bats). (F) Example identity-selective neurons modulating firing rates in response to one select bat’s calls, but not for others (colored traces are one neuron’s firing rates when listening to vocalizations from different bats). The two examples at right are identity-selective neurons recorded simultaneously from the same bat, each one selective for a different bat. Shaded areas indicate SEM. (G) Top: Percent of all cells that are significantly identity-selective, averaged across bats. Bottom: Identity-decoding accuracy over time averaged across all neurons that were identity-selective at the moment of vocalizations. Plotted are decoding accuracies for the individual bat that drove identity selectivity (black) and for all other bats (gray). Error bars denote SEM. (H) Decoding accuracy using neural data (left) and acoustic data (right). Shown are data only from neurons that exhibit significant neural identity selectivity. Instances with both significant neural and acoustic selectivity are in red; instances with only significant neural selectivity are in black. ***P < 10⁻²⁰ (paired t test).

Single-neuron activity distinguishes between vocalizations produced by self and others

We wirelessly recorded the activity of 1153 single neurons and local field potential (LFP) activity from the frontal cortices of multiple bats simultaneously in each free communication session (table S2 and figs. S3 and S4; electrophysiological data from n = 7 total bats from two separate groups). Activity in this area has previously been shown to relate to social behaviors across a range of mammalian species (17–21), including bats (22). We began by looking for modulation of neural activity when an individual bat vocalized (“self”) as well as when it listened to calls produced by other group members (“others”), in accordance with previous studies demonstrating vocalization-related frontal cortical activity in multiple species (23–26). We found that single neurons modulated their firing rates around the time of vocalizations, both in the vocalizing bat (“self-responsive neurons,” 20.7% of neurons; see tables S2 to S4 for subpopulations of neurons) and in the other bats in the group (“other-responsive neurons,” 11.7% of neurons) (Fig. 1, C and D, and figs. S4 and S5). These neuronal populations were primarily nonoverlapping (fig. S5D and table S2), indicating that the same area of frontal cortex contains distinct representations of self- and other-generated vocalizations.
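Call-aligned PETHs such as those in Fig. 1C summarize how a neuron's firing changes around vocalization onsets. The sketch below shows one way to compute a trial-averaged PETH, assuming spike and call-onset times in seconds; the ±1 s window and 50-ms bins are illustrative defaults, not the study's parameters, and the statistical criterion used to label neurons as self- or other-responsive is not reproduced here.

```python
from typing import List, Sequence


def peth(spike_times: Sequence[float], call_onsets: Sequence[float],
         window: float = 1.0, bin_width: float = 0.05) -> List[float]:
    """Trial-averaged firing rate (spikes/s) in bins spanning [-window, +window) around each call onset.

    window and bin_width are illustrative defaults (assumption), not the study's parameters.
    """
    n_bins = int(round(2 * window / bin_width))
    counts = [0] * n_bins
    for onset in call_onsets:
        for s in spike_times:
            rel = s - onset  # spike time relative to call onset (calls start at 0 s)
            if -window <= rel < window:
                idx = min(int((rel + window) / bin_width), n_bins - 1)  # guard float rounding
                counts[idx] += 1
    if not call_onsets:
        return [0.0] * n_bins
    # Divide summed counts by (number of calls x bin width) to get spikes per second.
    return [c / (len(call_onsets) * bin_width) for c in counts]
```

For self-responsive neurons the onsets would be the recorded bat's own calls; for other-responsive neurons they would be calls detected on the other bats' sensors.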

A complementary decoding analysis indicated that 26% of all neurons exhibited firing rates containing sufficient information to differentiate between calls made by self and others (table S2). Firing rate modulation occurred at short latencies, specifically during vocalizations, and followed the fast temporal dynamics of vocalization sequences (fig. S6). Furthermore, manual annotation of individual bats’ behavior around vocalizations showed that bats engaged in interactive and variable behavior before and after the time of the vocalizations, but that the type of behavior did not significantly affect firing rates for most call-responsive neurons (fig. S7).
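The decoder behind the self-versus-other analysis is not specified in this section. As a hypothetical stand-in, the sketch below decodes each call's label from a single neuron's firing rate with a nearest-class-mean rule under leave-one-out cross-validation; the study's actual decoder, features, and significance test may differ.

```python
from typing import Dict, Sequence


def loo_nearest_mean_accuracy(rates: Sequence[float], labels: Sequence[str]) -> float:
    """Leave-one-out accuracy of decoding each call's label from one neuron's firing rate.

    Hypothetical stand-in decoder (assumption): assign the label whose class-mean rate,
    computed without the held-out call, is closest to that call's rate.
    """
    correct = 0
    for i, (rate, label) in enumerate(zip(rates, labels)):
        sums: Dict[str, float] = {}
        counts: Dict[str, int] = {}
        for j, (r, lab) in enumerate(zip(rates, labels)):
            if j != i:  # exclude the held-out call from training
                sums[lab] = sums.get(lab, 0.0) + r
                counts[lab] = counts.get(lab, 0) + 1
        means = {lab: sums[lab] / counts[lab] for lab in sums}
        predicted = min(means, key=lambda lab: abs(rate - means[lab]))
        correct += predicted == label
    return correct / len(rates)


# Example: firing rates (spikes/s) around calls made by self versus by others.
rates = [10.0, 11.0, 12.0, 2.0, 3.0, 1.0]
labels = ["self", "self", "self", "other", "other", "other"]
print(loo_nearest_mean_accuracy(rates, labels))  # 1.0
```

Holding out each call before computing the class means keeps the accuracy estimate from being inflated by the very call being tested.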

Self- and other-responsive neurons are not modulated by specific acoustic features, playback, or echolocation production

The neuronal responses we observed could potentially be related primarily to the sensorimotor rather than social aspects of the social-vocal interactions. However, we found that neither self- nor other-responsive neurons modulated their firing rates in relation to the acoustic features of the vocalizations that were produced or heard (figs. S8 and S9). To further dissociate the sensorimotor and social aspects of vocalizations, we conducted an additional set of experiments where bats heard or produced acoustic signals in isolation (fig. S10). In contrast to group social communication, we did not observe any neurons that responded when an isolated bat was exposed to playback of prerecorded vocalizations (Fig. 1E, fig. S10, A and B, and table S2). Similarly, when solitary bats flew freely and produced tongue-click echolocation pulses (fig. S10, C and D), which recruit many of the same orofacial muscles as vocalizations (27, 28), we found few echolocation-responsive cells (5.9% of neurons). Notably, there was no overlap between echolocation- and self-responsive neurons (Fig. 1E, fig. S10E, and table S2). Collectively, the low rates of responsivity to nonsocial acoustic production and auditory stimulation, as well as the lack of neuronal modulation in relation to acoustic details of vocalizations, indicate that the observed neuronal responses to vocalizations produced by self or others were specific to vocal behavior occurring in a social context.

Single-neuron activity exhibits selectivity for calls produced by specific individuals

In addition to discriminating between self and others, the group setting of our experiments enabled us to test whether neural activity in the frontal cortex contains a representation of individual identity—an important aspect of social interactions that has been shown to be behaviorally relevant during vocal communication in this species of bat (3, 13, 14). We found that a subset of neurons in listening bats responded selectively to vocalizations produced by specific individuals within the group (Fig. 1F). Using the firing rates of these “identity-selective” neurons, we could decode the identity of one bat versus other bats present in the group specifically around the time of vocal interactions (9.3% of neurons; cross-validated and false discovery rate–corrected P < 0.05, bootstrap test; Fig. 1, F and G, fig. S11A, and tables S3 and S4). We found that for the majority of identity-selective neurons, acoustic features failed to provide significant classification of caller identity (Fig. 1H, 56% of identity-selective neurons), indicating that these results could not be accounted for solely by acoustic differences across individual bats. Similarly, we found that physical contact and participation in social-vocal interactions did not drive the identity selectivity of single neurons. In particular, single-neuron identity decoding accuracy generally did not significantly decrease, either when restricting our analyses to include only interactions where all bats were in close physical contact (fig. S11B) or when only including interactions not involving the bat from which the identity-selective neuron was recorded (fig. S11C). These analyses do not rule out contributions of unconsidered sensory, social, or multimodal factors that have been shown to modulate frontal cortical activity (29) but do indicate that identity selectivity was not solely dependent on proximity, participation in the vocal interaction, or acoustic details. 
Combined, these results indicate that frontal cortical activity contains sufficient information to distinguish vocalizations produced by self versus others as well as between individual group members.
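The identity-decoding results above are reported after false discovery rate correction across neurons. Assuming one p-value per neuron (for example, from the bootstrap test), a common FDR procedure, the Benjamini-Hochberg step-up rule, is sketched below; which FDR procedure the study applied is an assumption here.

```python
from typing import List, Sequence


def benjamini_hochberg(p_values: Sequence[float], alpha: float = 0.05) -> List[bool]:
    """Flag tests that remain significant under Benjamini-Hochberg false discovery rate control."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices by ascending p-value
    # Largest rank k whose sorted p-value satisfies p_(k) <= (k / m) * alpha.
    k_max = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * alpha:
            k_max = rank
    significant = [False] * m
    for rank, idx in enumerate(order, start=1):
        significant[idx] = rank <= k_max  # reject all hypotheses up to rank k_max
    return significant


# Example: hypothetical per-neuron p-values from an identity-decoding bootstrap test.
print(benjamini_hochberg([0.01, 0.04, 0.03, 0.20]))  # [True, False, False, False]
```

Unlike a per-test threshold of 0.05, which would accept three of these four neurons, the step-up rule controls the expected fraction of false positives among the neurons declared identity-selective.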

Vocal interactions elicit stable correlated neural activity across the brains of group members

Having considered the relationship between social-vocal communication and frontal cortical activity in the brains of individual bats, we next assessed the relationship in neural activity across the brains of group members during vocal interactions. Correlation in brain activity between pairs of individuals has been observed in studies in humans (30), duetting birds (9), and dyads of socially but not vocally interacting rodents (20) and bats (22). Interbrain correlation has further been shown to indicate, and possibly facilitate, successful vocal communication in humans (31–34). Previous findings in bats indicate that LFP activity was modulated on a long time scale (seconds to minutes) by nonvocal social interactions and was correlated across brains (22). We observed a strong, temporally precise modulation of LFP power on short time scales (milliseconds) around vocalizations in both low (<20 Hz) and high (>70 Hz) frequency bands (Fig. 2, A and B, and fig. S12). Moreover, we observed increased interbrain correlation precisely around the time of vocalizations between pairs of calling and listening bats as well as between pairs of listening bats (Fig. 2C and fig. S13; n = 8962 bat pairs and calls across two groups). Consistent with previous findings (22), pairwise interbrain correlation was found to be most pronounced in high-frequency LFP power (70 to 150 Hz; fig. S13). We therefore focused our subsequent analysis of interbrain relationships on power in the high-frequency LFP range.
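Call-aligned interbrain correlation (Fig. 2C) compares two bats' high-frequency LFP power within a short window around each vocalization. A minimal sketch follows, assuming both power traces were already computed in the 70 to 150 Hz band on a shared sample clock and that call onsets are given as sample indices; the window length is a hypothetical parameter, and the sketch returns a single window rather than the sliding, call-aligned time course shown in the figure.

```python
import math
from typing import Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation of two equal-length, non-constant sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def call_aligned_correlation(power_a: Sequence[float], power_b: Sequence[float],
                             call_samples: Sequence[int], half_window: int) -> float:
    """Mean interbrain correlation of two bats' LFP power traces in a window around each call.

    Assumes both traces are already high-frequency (e.g., 70 to 150 Hz) LFP power on a
    shared sample clock; call_samples are call-onset sample indices (hypothetical inputs).
    """
    rs = []
    for c in call_samples:
        lo, hi = c - half_window, c + half_window
        if lo < 0 or hi > len(power_a):
            continue  # skip calls too close to the edges of the recording
        rs.append(pearson(power_a[lo:hi], power_b[lo:hi]))
    return sum(rs) / len(rs) if rs else float("nan")
```

The same function applies to caller-listener and listener-listener pairs; only the choice of which two bats' traces are passed in changes.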

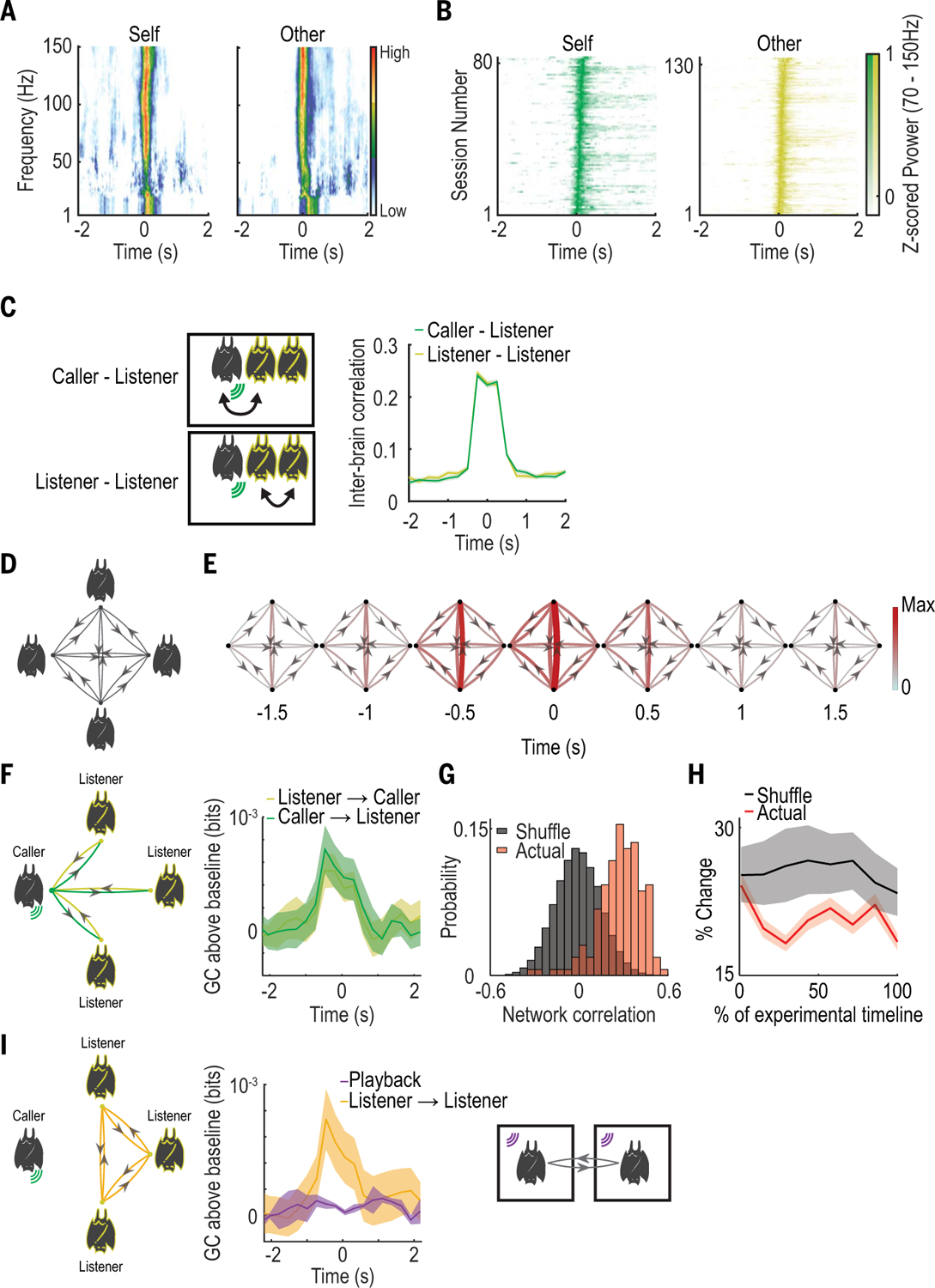

Fig. 2. Group interbrain activity patterns around social vocalizations produced during free group vocal interactions.

(A) Example average LFP spectrograms (normalized at each frequency bin) from one bat during one session. (B) Average power of high-frequency LFP (70 to 150 Hz) for all sessions, displayed as in (A). (C) Left: Schematics of interbrain correlation between bat pairs involving the calling bat (top) and involving only listening bats (bottom). Right: Call-aligned interbrain Pearson correlation of high-frequency (70 to 150 Hz) LFP power averaged across all vocalizations and bat pairs. Throughout this figure, shaded areas denote SEM. (D) Schematic illustrating all possible connections in a group of four bats. Nodes indicate bats, edges indicate interbrain relationships, and arrows indicate directionality. (E) Group interbrain Granger causality (GC) graphs using all vocalizations (both calling and listening), calculated using a sliding window of 1-s duration around call onset. GC magnitudes are represented by both color and line width. (F) Left: Illustration of the different directional relationships that exist when a given bat is vocalizing. Right: Baseline-subtracted GC values aligned to call onset, calculated separately for vocalizations from each bat and averaged across bats. Data are shown according to the relationships delineated in the diagram at left (colors). (G) Distribution of average correlation values between GC magnitudes calculated using vocalizations equally binned across all experimental days (red) compared to shuffled data (gray). High values indicate similarity over time. Actual values are significantly higher than shuffled (P = 0.03, Mantel test). (H) Bin-to-bin percent change in GC values over the duration of the experiment, presented as percentage of experimental timeline and compared to shuffled values for the same bins. (I) Left: Illustration of listener-to-listener relationships in the group setting. Right: Simultaneous playback experiment. Center: Average baseline-subtracted GC values during playback compared to listener-to-listener GC values during free communication.

The group setting of the experiment allowed us to measure directed interbrain relationships simultaneously between multiple pairs of bats while also accounting for potential spurious correlations driven by common input from other group members using conditional Granger causality (GC) (Fig. 2D). We found that GC magnitude sharply increased around the time of vocal interactions across all calls (Fig. 2E, n = 995 calls and n = 4 bats; fig. S14, A to C, n = 827 calls and n = 3 bats), and also when separately considering the caller-to-listener and listener-to-caller directions (Fig. 2F; n = 7 bats). This suggests a bidirectional interbrain relationship during spontaneous social-vocal interactions between group members, which may reflect vocal as well as other associated behavioral aspects of the interactions between the bats. However, these results do not imply a causal relationship between the behaviors of individuals. The increase in GC was significantly greater than that observed in trial-shuffled data, indicating precise coordination in activity rather than simple co-activation (fig. S14D). Next, we assessed whether the variability in GC we observed across bats, and by listener and caller direction (fig. S14, E and F), reflected random association or a stable pattern of interbrain activity. We found that the correlation in GC values throughout the experimental timeline was significantly higher than in data shuffled across bats (Fig. 2G) and that GC values changed less than what would be expected by chance throughout the experimental timeline (Fig. 2H and fig. S14G). Lastly, we found that providing shared auditory input to two bats in separate, isolated chambers (Fig. 2I) did not elicit an increase in GC magnitude, indicating that social context was necessary for the existence of coordinated interbrain activity between group members (Fig. 2I; n = 2 bats).
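Conditional GC asks whether the past of one bat's signal improves prediction of another bat's signal beyond what the target's own past and the other group members' pasts already provide. In the time domain this is commonly written as GC(y→x | z) = ln(σ²_restricted / σ²_full), the log ratio of residual variances of autoregressive models fit without and with y's past. The sketch below assumes NumPy, ordinary least-squares models with an illustrative lag order p = 2, and signals such as high-frequency LFP power on a common clock; it omits the sliding 1-s windows, baseline subtraction, and trial-shuffle control described above, and is not presented as the study's exact estimator.

```python
import numpy as np


def lagged(series: np.ndarray, p: int) -> np.ndarray:
    """Columns are lags 1..p of a 1-D series, aligned with the targets series[p:]."""
    n = len(series)
    return np.column_stack([series[p - k:n - k] for k in range(1, p + 1)])


def conditional_gc(x, y, others, p: int = 2) -> float:
    """Time-domain conditional Granger causality from y to x, given the signals in `others`.

    GC = ln(residual variance without y's past / residual variance with y's past);
    values near 0 mean y's past adds no predictive information about x.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    target = x[p:]
    intercept = np.ones((len(target), 1))
    # Restricted model: x's own past plus the past of every conditioning signal.
    restricted = np.hstack([intercept, lagged(x, p)]
                           + [lagged(np.asarray(z, dtype=float), p) for z in others])
    # Full model: additionally includes y's past.
    full = np.hstack([restricted, lagged(y, p)])

    def residual_variance(design: np.ndarray) -> float:
        coef, *_ = np.linalg.lstsq(design, target, rcond=None)
        return float(np.var(target - design @ coef))

    return float(np.log(residual_variance(restricted) / residual_variance(full)))


# Example: x is driven by y's past; z stands in for an unrelated third bat's signal.
rng = np.random.default_rng(0)
n = 2000
y = rng.standard_normal(n)
z = rng.standard_normal(n)
x = np.zeros(n)
for t in range(1, n):
    x[t] = 0.5 * x[t - 1] + 0.8 * y[t - 1] + 0.1 * rng.standard_normal()
print(conditional_gc(x, y, [z]) > conditional_gc(y, x, [z]))  # True
```

Passing the remaining group members in `others` is what makes the measure conditional: shared drive from a third bat is absorbed by the restricted model instead of being attributed to the y-to-x direction.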
To verify that these findings were not a result of our choice of parameters or the parametric assumptions of GC, we repeated the above GC analysis using the same data at different values of time lag and also calculated interbrain relationships using the transfer entropy between pairs of bats. Using these measures, we observed similar results (fig. S15).
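Transfer entropy is a model-free counterpart to GC that does not assume linear autoregressive dynamics. A minimal plug-in estimator is sketched below for discrete signals with a history length of one sample. It assumes, hypothetically, that each bat's LFP power has been discretized into two states (above or below its median); the study's actual discretization, history length, and estimator are not specified here.

```python
import math
from collections import Counter
from statistics import median
from typing import List, Sequence


def binarize(signal: Sequence[float]) -> List[int]:
    """Hypothetical discretization (assumption): 1 if above the median, else 0."""
    med = median(signal)
    return [int(v > med) for v in signal]


def transfer_entropy(source: Sequence[int], target: Sequence[int]) -> float:
    """Transfer entropy (bits) from source to target, history length 1, discrete symbols.

    TE = sum over (t1, t0, s0) of p(t1, t0, s0) * log2[p(t1 | t0, s0) / p(t1 | t0)],
    where t1 is the target's next value and t0, s0 are the current target and source values.
    """
    target, source = list(target), list(source)
    n = len(target) - 1
    triples = Counter(zip(target[1:], target[:-1], source[:-1]))
    target_source = Counter(zip(target[:-1], source[:-1]))
    target_pairs = Counter(zip(target[1:], target[:-1]))
    target_now = Counter(target[:-1])
    te = 0.0
    for (t1, t0, s0), count in triples.items():
        p_full = count / target_source[(t0, s0)]         # p(t1 | t0, s0)
        p_self = target_pairs[(t1, t0)] / target_now[t0]  # p(t1 | t0)
        te += (count / n) * math.log2(p_full / p_self)
    return te


# Example: the target copies a random binary source with a one-sample delay.
import random

rng = random.Random(1)
src = [rng.randint(0, 1) for _ in range(5000)]
tgt = [0] + src[:-1]
print(transfer_entropy(src, tgt), transfer_entropy(tgt, src))
```

In the example, information flows only from source to target, so the first value is close to 1 bit and the second close to 0; plug-in estimates like this are biased upward for short recordings, which is one reason a shuffle-based control is useful.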

Intra- and interbrain activity patterns are restructured when vocal behavior is trained outside the group social context

The results presented thus far show that social-vocal communication strongly modulates intra- and interbrain neural activity in groups of freely interacting bats. We next sought to dissociate vocalizations from their typical social context in order to assess the impact of engaging in spontaneous, communication-driven vocal interactions. To that end, we developed an operant conditioning task wherein pairs of bats learned to produce vocalizations for reward in a context where they would not normally vocalize. This permitted testing the influence of social context on neural activity by comparing trained, reward-driven vocal interactions to spontaneous group vocal interactions.

In the operant conditioning task, a pair of bats was placed in a divided cage such that they were physically separated but could still see, hear, and smell each other and even interact in a limited way across the mesh divider (n = 4 bats; two pairs of trained bats; Fig. 3A and table S3). Over an extensive course of training (fig. S16A), bats went from the naïve state of never calling in this context (Fig. 3B, Pre-training) to reliably producing hundreds of calls within a session (Fig. 3B, Trained). We found that under these conditions one bat in each pair spontaneously became the sole caller while the other became the listener, in clear contrast to the vocal behavior observed in the free-communication session (Fig. 3C and fig. S16B). Once conditioned, bats produced calls drawn from a vocal repertoire similar to that of the free-communication session, but with less variability (Fig. 3D and fig. S16, C and D). To enable a direct comparison of neural activity across contexts, we performed the operant session immediately prior to the free-communication session and recorded neural activity continuously across both sessions (tables S2 and S4). This comparison revealed marked differences between the spontaneous and reward-driven contexts at both the intra- and interbrain activity levels.

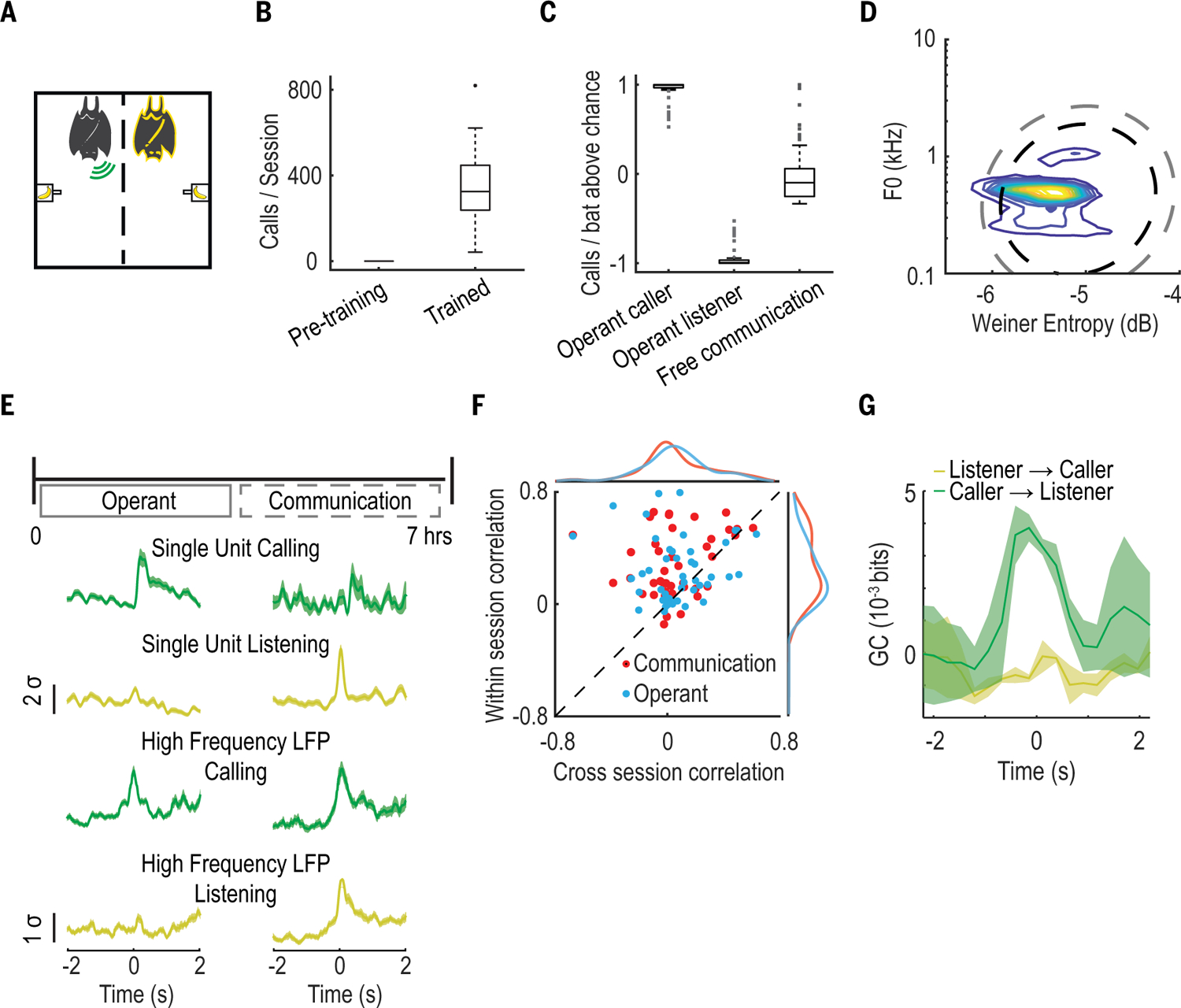

Fig. 3. Restructuring of neural activity during task-induced vocal behavior.

(A) Operant session schematic. As above, green and yellow represent the calling and listening bat, respectively. (B) Number of vocalizations per session before (17 sessions, n = 4 bats) and after training (28 sessions, n = 4 bats). Box plots display median, interquartile range, and ±1.5 SD. (C) Average number of calls compared to chance (assuming each bat is equally likely to call), produced by each bat and normalized by the number of bats in a given session. Calling and listening bats in the operant task are separated to illustrate the caller-listener dichotomy and to contrast with free communication, where all bats vocalized at similar rates, on average. Data are displayed in box plots as in (B). (D) Contour plots representing the distribution of operant session vocalizations across two acoustic features. Black and gray dashed lines correspond to areas encompassing 95% and 99% of a Gaussian distribution, respectively, fit to free communication session vocalizations. (E) Top: Experimental timeline over the course of a day. Operant and free communication sessions are performed in direct succession over the course of ~7 hours. Bottom: Normalized average firing rates from two example single units and normalized average high-frequency LFP power from two example tetrode channels. Shown is activity aligned to vocalizations in the operant (left) and free communication (right) sessions. Shaded areas denote SEM. (F) Cross- versus within-session correlation of the PETHs of individual responsive neurons, colored according to session exhibiting responsivity (n = 64 neurons). Marginal distributions of cross- and within-session correlations are also shown. Note the deviation from unity due to high within- but not cross-session correlations. (G) Comparison of interbrain GC values averaged across bats during the operant session, shown for both possible directional relationships. Shaded areas denote SEM.

At the level of the single brain, certain neurons exhibited “self”- or “other”-related response profiles during the operant session, as in the free-communication session (compare figs. S17 and S18A with fig. S4, A to C). However, we also saw substantial differences between the two sessions (fig. S18). Specifically, during the operant session, we observed increased variability in call-related response latencies (fig. S18E), decreased firing rate consistency when firing rates were aligned to vocalizations rather than to reward (fig. S18F), and an increase in neural activity modulation occurring during the reward period (fig. S18, A and C) relative to the free-communication session. Most strikingly, however, we observed a reorganization of the responsivity of single neurons between the free-communication and operant sessions. We found that 78% (50/64) of neurons responsive in either session were not similarly responsive in both sessions (Fig. 3E, fig. S18, G and H, and table S2), indicating that their responsivity was contextually dependent. This reorganization was evident across the population of responsive neurons, which showed a systematic lack of firing rate consistency across sessions compared to within sessions (Fig. 3F). These differences were not driven primarily by acoustic differences between the calls produced in either session, as indicated by comparing acoustically similar calls across sessions and acoustically different calls within sessions (fig. S18I).
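The cross- versus within-session comparison in Fig. 3F contrasts how well a neuron's PETH agrees with itself inside one session against how well it agrees across sessions. The sketch below uses a hypothetical split-half scheme (odd versus even calls) as the within-session measure and assumes each session's data are a list of per-call binned firing-rate rows; the study's exact consistency measure may differ.

```python
import math
from typing import List, Sequence, Tuple


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation of two equal-length, non-constant sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def mean_trace(trials: Sequence[Sequence[float]]) -> List[float]:
    """Average binned firing rate across calls (one row per call)."""
    return [sum(col) / len(trials) for col in zip(*trials)]


def within_and_cross_session(trials_a: Sequence[Sequence[float]],
                             trials_b: Sequence[Sequence[float]]) -> Tuple[float, float]:
    """Split-half within-session PETH correlation for session A and A-versus-B cross-session correlation.

    The odd/even split of calls is a hypothetical consistency measure (assumption).
    """
    within = pearson(mean_trace(trials_a[0::2]), mean_trace(trials_a[1::2]))
    cross = pearson(mean_trace(trials_a), mean_trace(trials_b))
    return within, cross


# Example: a neuron that peaks at call onset in one session but not in the other.
free_session = [[0, 1, 5, 1, 0], [0, 2, 5, 1, 0], [0, 1, 6, 1, 0], [1, 1, 5, 1, 0]]
operant_session = [[5, 1, 0, 0, 1]] * 4
print(within_and_cross_session(free_session, operant_session))
```

A context-dependent neuron of the kind described above would land far from the unity line: high within-session agreement but low or negative cross-session agreement.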

At the level of neural activity across brains, we compared interbrain GC values across session types. We found that the bidirectional interbrain relationship that we observed during communicative behavior breaks down during conditioned vocalizations. Instead of both caller and listener influencing one another (Fig. 2F), we observed no vocalization-related change in GC in the listener-to-caller direction during the operant session (Fig. 3G). As in the single-neuron activity, the observed differences in interbrain correlation were not driven primarily by acoustic differences across sessions (fig. S18J). Combined, these results suggest that the neural activity shifted away from the communication-related representation seen during spontaneous group social communication to a task-related representation in the reward-driven context.

Interbrain correlation and identity selectivity vary with social preferences of group members

Our findings thus far indicate that the neural activity patterns we identified during group communication depend on the social context at the group level. Next, we wanted to understand how these neural activity patterns vary according to social factors at the individual level. To do so, we used the fact that bats demonstrate robust spatial behavioral patterns that reflect social preferences of individuals within the group (11, 35). We developed a system to precisely track the individual positions of a group of eight bats moving freely in a large enclosure [1.2 × 1.2 × 0.6 m (width, length, height); Fig. 4, A and B, and fig. S19] as part of a complementary experimental session (termed the “social-space” session) performed prior to each free-communication session. This approach revealed stable social preferences of group members, which were reflected in the neural activity during vocal interactions in the free-communication session.

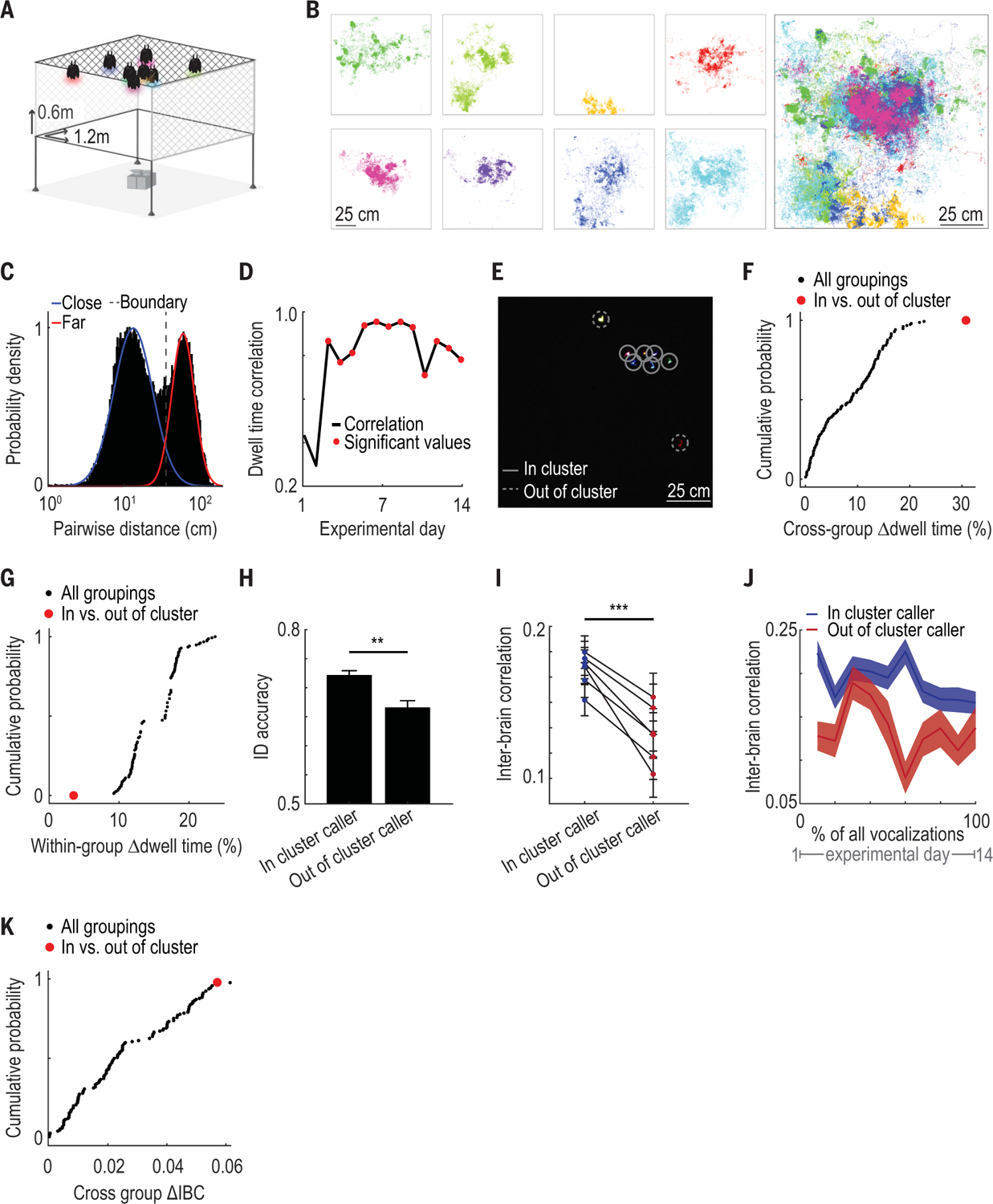

Fig. 4. Social-spatial patterns are related to both intra- and interbrain neural activity during group communication.

(A) Schematic of the “social space” enclosure: a large environment (1.2 × 1.2 × 0.6 m) where bats could move freely (bats and cameras not to scale). Transparent plexiglass on two sides and at the cage bottom allowed unobstructed video monitoring of all individuals (n = 8 bats). (B) Left: All tracked positions in an example session of each bat colored separately. Positions are shown on the xy plane corresponding to the enclosure ceiling where bats spent the majority of the time. Right: All bats’ positions overlaid. (C) Histogram of all measured instantaneous distances between pairs of bats displayed on a logarithmic scale. Blue and red lines indicate a two-component Gaussian mixture model fit to these data. Dashed line indicates the threshold for distances that were considered “close together” or “far apart.” (D) Correlation between dwell times for all bat pairs on a given day and the median pairwise dwell time across all other days. Statistically significant correlation values are indicated with red dots (P < 0.05, Mantel test). (E) Still image from the color video camera positioned below the cage. Each bat has an LED of a different color. Individual bat positions are indicated by circles. Lines are solid or dashed for in-cluster and out-of-cluster bats, respectively. (F) Cumulative probability distribution of the difference in mean dwell times between subgroups for all possible subgroupings of bats into two groups (n = 146 subgroupings). The in- and out-of-cluster subgrouping maximizes this value and is highlighted in red. (G) As in (F), showing the difference in mean dwell time within subgroups instead, which is minimized by the in- and out-of-cluster subgrouping. (H) Identity-decoding accuracy of identity-selective neurons when representing in-cluster versus out-of-cluster bats (**P = 0.006, likelihood ratio test; n = 68 neurons and bat pairs). Bars indicate mean decoding accuracy across neurons; error bars denote SEM. 
(I) Average interbrain correlation between bat pairs during vocalizations produced by in-cluster versus out-of-cluster bats (***P = 1.0 × 10⁻¹⁰, likelihood ratio test; n = 5758 calls and bat pairs). Each line indicates average interbrain correlation values for one bat pair; error bars indicate SEM. (J) Average interbrain correlation values separated as in (I) during all vocalizations across all free communication sessions, separated into equal-sized bins over time. Shaded areas denote SEM. Note that interbrain correlation values during calls from in- versus out-of-cluster bats are higher at every time point considered, indicating persistence of effect across days. (K) Cumulative probability of absolute differences in mean interbrain correlation between subgroups for all possible subgroupings of bats into two groups. Red dot indicates difference in interbrain correlation between the in- and out-of-cluster bat groups. Note that only one other subgrouping has a larger difference in interbrain correlation.

During the social-space session, most bats spent the majority of the time, and exclusively vocalized, near other bats (Fig. 4B and figs. S19B and S20). However, individual bats differed in their proximity preferences relative to other group members, resulting in a bimodal distribution of inter-bat distances (Fig. 4C and fig. S21). On the basis of this bimodal distribution, we derived a boundary to define distances between a pair of bats as either close together or far apart (Fig. 4C) and defined that pair’s “social dwell time” as the percentage of a session spent close together. Using this metric, we found that the way individuals positioned themselves relative to each other was highly stable over weeks (Fig. 4D and fig. S22). We observed two distinct social-spatial positioning preferences exhibited by different individuals: Some bats consistently spent time far apart from other group members, while others spent the majority of time close together (example shown in Fig. 4E). We quantified these preferences for each individual (Fig. 4, F and G, and fig. S23) and defined the “cluster status” of each bat as either “in-cluster” or “out-of-cluster,” reflecting their social-spatial preferences with respect to other group members.
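The close-together/far-apart boundary comes from a two-component Gaussian mixture fit to log-scaled inter-bat distances (Fig. 4C), and social dwell time is the percentage of a session a pair spends on the close side. The sketch below fits a 1-D mixture by expectation-maximization and, as an assumption not stated in the text, places the boundary where the two weighted component densities are equal; the use of log10 and the initialization are likewise illustrative choices.

```python
import math
from typing import List, Sequence, Tuple


def _weighted_density(v: float, w: float, mu: float, sd: float) -> float:
    # Unnormalized Gaussian density; the shared 1/sqrt(2*pi) factor cancels in comparisons.
    return w * math.exp(-0.5 * ((v - mu) / sd) ** 2) / sd


def fit_gmm_1d(x: Sequence[float], n_iter: int = 100) -> Tuple[List[float], List[float], List[float]]:
    """Fit a two-component 1-D Gaussian mixture by expectation-maximization.

    Returns (weights, means, standard deviations) with components ordered by mean.
    """
    xs = sorted(x)
    mu = [xs[len(xs) // 4], xs[3 * len(xs) // 4]]  # initialize at the quartiles
    sd = [max(1e-3, (xs[-1] - xs[0]) / 4)] * 2
    w = [0.5, 0.5]
    for _ in range(n_iter):
        # E-step: posterior responsibility of each component for each point.
        resp = []
        for v in x:
            dens = [_weighted_density(v, w[k], mu[k], sd[k]) for k in range(2)]
            total = sum(dens) or 1e-300
            resp.append([d / total for d in dens])
        # M-step: re-estimate weights, means, and standard deviations.
        for k in range(2):
            nk = sum(r[k] for r in resp)
            w[k] = nk / len(x)
            mu[k] = sum(r[k] * v for r, v in zip(resp, x)) / nk
            var = sum(r[k] * (v - mu[k]) ** 2 for r, v in zip(resp, x)) / nk
            sd[k] = max(1e-3, math.sqrt(var))
    order = sorted(range(2), key=lambda k: mu[k])
    return [w[k] for k in order], [mu[k] for k in order], [sd[k] for k in order]


def decision_threshold(w: List[float], mu: List[float], sd: List[float]) -> float:
    """Point between the two means where the weighted component densities are equal.

    Assumes the 'close' component dominates at mu[0] and the 'far' one at mu[1].
    """
    lo, hi = mu[0], mu[1]
    for _ in range(100):  # bisection
        mid = (lo + hi) / 2
        if _weighted_density(mid, w[0], mu[0], sd[0]) > _weighted_density(mid, w[1], mu[1], sd[1]):
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def social_dwell_time(distances_m: Sequence[float], threshold_log10: float) -> float:
    """Percentage of samples in which a bat pair's distance falls on the 'close together' side."""
    close = sum(1 for d in distances_m if math.log10(d) < threshold_log10)
    return 100.0 * close / len(distances_m)
```

Fitting in log space is what makes the two modes (bats huddled together versus spread across the ceiling) roughly Gaussian and comparable in width, so a single boundary separates them cleanly.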

During the corresponding free-communication sessions where all eight bats were close together, we recorded neural activity from the frontal cortex of four of the bats simultaneously. We found that the same neural activity patterns described in the previous smaller groups (n = 4 and n = 5 bats) were replicated in the larger group, including the representation of self versus others, individual identity, and interbrain relationships (n = 8 bats; fig. S24, A and B, and table S3). We additionally performed a group playback session with all eight bats present to test whether acoustic stimulation in a social context would drive other-responsive or identity-selective neurons. Consistent with our previous findings, we found that during group playback, none of the other-responsive or identity-selective neurons significantly modulated their activity (table S3), nor did we observe any increased interbrain correlation (fig. S24C). Furthermore, using position tracking during the free-communication session, we found that individual bats’ positions during calls could not account for the observed identity selectivity in single neurons (fig. S25). Next, we asked whether the cluster status of individual bats inferred from the social-space session was related to the neural activity during vocal interactions in the free-communication session. We focused our analysis on neural measures that intrinsically involve relationships between bats, namely the single-neuron identity selectivity and interbrain correlations.

We first examined whether the accuracy with which a calling bat could be decoded from the activity of identity-selective single neurons was related to that bat’s cluster status. We found that neurons selective for in-cluster bats had 34% higher decoding accuracy, on average, than neurons selective for out-of-cluster bats (Fig. 4H; n = 68 neuron and bat pairs; P = 0.006, likelihood ratio test; fig. S26). In- and out-of-cluster bats vocalized at similar rates across sessions (n = 14 sessions, P = 0.48, paired t test) and produced calls that could not be discriminated using acoustic features (n = 15,061 calls, P = 0.15, bootstrap test), which suggests that differences in acoustic content between the groups alone did not account for the neural differences we observed.

Next, we asked whether vocalization-related interbrain correlation in the free-communication session was related to the cluster status of the caller. Recent studies in humans have suggested that interbrain synchrony can be modulated by differences across individuals in verbal communication (32, 34, 36) as well as social group affiliation (37, 38). Consistent with these studies, we found that across all calls, interbrain correlation was 43% higher between all pairs of bats when in-cluster bats vocalized than when out-of-cluster bats vocalized (Fig. 4I; n = 5758 bat pairs and calls; P = 1.0 × 10⁻¹⁰, likelihood ratio test, controlling for bat pair and date of recording). This effect was persistent and stable over weeks and was not driven by day-to-day variability (Fig. 4J). Furthermore, differences in pairwise interbrain correlation according to the cluster status of the caller could not be accounted for by (i) the cluster status of the pair, (ii) whether the pair included the caller, or (iii) the physical distance between the bats in the free-communication session (fig. S27). Lastly, the difference in interbrain correlation during calls produced by in- versus out-of-cluster bats was greater than the difference in interbrain correlation for 98.6% of all other possible subgroupings of this group of eight bats (n = 146 subgroups; Fig. 4K), supporting the notion that social-spatial preferences influenced interbrain neural activity. Combined, these results indicate that intra- and interbrain neural activity patterns during group social communication were modulated by the social-spatial preferences of individual group members.
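The final comparison ranks the observed in-/out-of-cluster split against alternative ways of dividing the same eight bats. A minimal sketch, assuming each call is summarized by a caller label and one interbrain correlation value, and that the alternatives are all other subsets of the same size as the in-cluster group. How the 146 subgroupings in this study were defined is not stated here, so this enumeration is an assumption, and the function names are hypothetical.

```python
from itertools import combinations
from statistics import fmean


def split_difference(calls, group):
    """Mean interbrain correlation for calls by bats in `group` minus calls by the rest.

    calls: list of (caller_id, interbrain_correlation) tuples.
    Assumes every bat produced at least one call, so neither side is empty.
    """
    inside = [r for caller, r in calls if caller in group]
    outside = [r for caller, r in calls if caller not in group]
    return fmean(inside) - fmean(outside)


def rank_against_subgroupings(calls, bats, observed_group):
    """Fraction of alternative same-size subgroupings with a smaller difference.

    Returns (observed difference, fraction of alternatives below it, number of alternatives).
    """
    observed_group = frozenset(observed_group)
    observed = split_difference(calls, observed_group)
    alternatives = [
        frozenset(g)
        for g in combinations(sorted(bats), len(observed_group))
        if frozenset(g) != observed_group
    ]
    below = sum(split_difference(calls, g) < observed for g in alternatives)
    return observed, below / len(alternatives), len(alternatives)
```

With eight bats and a hypothetical in-cluster group of four, this enumerates 69 alternative splits (70 subsets of size four, minus the observed one); the complement of the observed split is included and necessarily ranks below it.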

Discussion

In this study, we recorded neural activity from the frontal cortex of groups of bats engaged in spontaneous social communication and studied neural processes both within and across the brains of individual group members. At the level of the individual brain, we found that frontal cortical neural activity distinguished not only between calls produced by self versus others, but also between individual identities. Furthermore, examining neural activity across the brains of group members revealed robust, bidirectional, stable interbrain neural activity patterns that emerged only during spontaneous group vocal interactions.

Previous studies have demonstrated auditory responses in the frontal auditory field, an anatomically restricted region of the frontal cortex in microbats (39–41), even under anesthesia (42), whereas we did not observe neural responses to vocalization playback. This apparent discrepancy may be explained by our recordings having been made outside the frontal auditory field, or possibly by the absence of a frontal auditory field in Egyptian fruit bats, especially considering the substantial neuroanatomical (43–45) and genomic (46) differences between micro- and megabats, as well as the auditory functional specializations of microbats (47–49). Future comparative studies will be crucial to further uncover the shared, as well as species-specific, neural circuits involved in vocal communicative behaviors. Yet collectively, our results are in line with findings in multiple species demonstrating the role of the frontal cortex in processing cognitive or associative aspects of acoustic information in a contextually dependent manner (50–53).

By comparing neural activity during a highly trained, task-based vocal behavior to activity during a more ethologically relevant social-vocal behavior, we found that single-neuron activity was highly contextually dependent and that interbrain correlation was entirely absent in the task-based context. This breakdown in interbrain correlation supports the hypotheses that bidirectional interbrain activity patterns are a feature of socially interactive behaviors (54, 55) and that shared interbrain activity patterns may play an important role in social communication between group members (19, 31, 33, 34, 54). These findings also underscore the importance of incorporating components of ethologically relevant spontaneous behavior to complement the prevailing approach of less ethologically relevant, and often highly trained, experimental designs (56, 57).

Consistent with the hypothesis that the repertoire of intra- and interbrain activity patterns we observed reflects (or possibly serves as the basis for) social interactions, we found that bats that chose to spend more time together elicited a more accurate representation of identity at the single-neuron level as well as higher levels of interbrain correlation between the group members. It is possible that the reduced interbrain correlation and identity accuracy elicited by out-of-cluster bats reflect differential communicative efficacy, as suggested by studies in humans (34, 36). This, in turn, may relate to the abilities of individuals to negotiate a place within a group. Therefore, an important avenue for future studies will be to follow the coevolution of neural and social dynamics during the formation and restructuring of social groups of varying compositions. Similarly, future studies that perturb neural activity during social interactions will be able to dissect the circuits supporting the social behaviors described here.

In addition to bats, a wide range of species naturally interact in groups and exhibit a diversity of social structures and forms of communication. Extending the approach taken here to other species in order to uncover similarities and differences in neural repertoires for social communication may provide important insight into the neural mechanisms that facilitate successful group living.

Materials and methods summary

To study social-vocal interactions under ethologically relevant group conditions, we allowed groups of bats to interact freely in a dark enclosure and spontaneously vocalize while recording audio, video, and neural data. We used wireless tetrode-based electrophysiology to record single-neuron activity and local field potentials from the frontal cortices of multiple Egyptian fruit bats (R. aegyptiacus) simultaneously (these experiments were repeated in three separate groups of size n = 4, 5, and 8). The identity of the vocalizing bat was determined using a custom-made on-animal call detector device. For certain subsets of bats, we also performed the following additional experiments: (i) playback of vocalizations from a loudspeaker to either individual isolated bats or bats in the group setting; (ii) production of echolocation signals by an individual bat while freely flying; (iii) pairs of bats engaged in an operant vocalization production task; and (iv) tracking the position of individual bats in a larger enclosure to determine social-spatial preferences of members in the group. Single-neuron firing rates were calculated and used to determine which neurons modulated their firing rates while producing a vocalization (self-responsive) or listening to other bats’ vocalizations (other-responsive). Single-neuron firing rates were also used to determine whether responsive neurons encoded the acoustic features of vocalizations. Identity selectivity of single neurons was assessed by training classifiers to use firing rates around vocalizations in order to predict the identity of the vocalizer. The power in the high-frequency band (70 to 150 Hz) of the local field potential was calculated and used to determine the level of interbrain correlation and Granger causality between the brains of individual bats around vocalizations.
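The local field potential measures summarized above can be illustrated as follows. This is a minimal sketch under stated assumptions, not the pipeline used in this study: the 70 to 150 Hz power is taken as the squared envelope of an ideal frequency-domain band-pass (the study's filter design is not given here); interbrain correlation is the Pearson correlation of two bats' power traces within a symmetric window around each call, with the window length as a hypothetical parameter; and Granger causality is the log ratio of residual variances from least-squares autoregressive fits with and without the other bat's past, a simplified pairwise estimator rather than a dedicated toolbox such as MVGC (72). All function names are hypothetical.

```python
import numpy as np


def high_frequency_power(lfp, fs, band=(70.0, 150.0)):
    """Instantaneous power of `lfp` within `band` (Hz), sampled at `fs` Hz.

    Builds the band-limited analytic signal in the frequency domain:
    positive-frequency bins inside the band are doubled, all others zeroed.
    """
    lfp = np.asarray(lfp, dtype=float)
    spectrum = np.fft.fft(lfp - lfp.mean())
    freqs = np.fft.fftfreq(lfp.size, d=1.0 / fs)
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    analytic = np.fft.ifft(np.where(in_band, 2.0 * spectrum, 0.0))
    return np.abs(analytic) ** 2


def interbrain_correlation(power_a, power_b, call_samples, half_window):
    """Pearson correlation of two bats' band power in a window around each call."""
    values = []
    for c in call_samples:
        lo, hi = c - half_window, c + half_window
        if lo < 0 or hi > len(power_a):
            continue  # skip calls too close to the start or end of the recording
        values.append(np.corrcoef(power_a[lo:hi], power_b[lo:hi])[0, 1])
    return np.array(values)


def granger_causality(target, source, order=5):
    """Pairwise Granger causality from `source` to `target`, in nats.

    Compares least-squares autoregressive fits of `target` using its own past
    only versus its own past plus the past of `source`.
    """
    target = np.asarray(target, dtype=float)
    source = np.asarray(source, dtype=float)
    n = target.size
    y = target[order:]
    # Column k - 1 holds the lag-k value for each predicted sample.
    own = np.column_stack([target[order - k:n - k] for k in range(1, order + 1)])
    other = np.column_stack([source[order - k:n - k] for k in range(1, order + 1)])
    ones = np.ones((n - order, 1))
    reduced = np.hstack([ones, own])
    full = np.hstack([ones, own, other])
    res_reduced = y - reduced @ np.linalg.lstsq(reduced, y, rcond=None)[0]
    res_full = y - full @ np.linalg.lstsq(full, y, rcond=None)[0]
    return float(np.log(res_reduced.var() / res_full.var()))
```

Computing `granger_causality(a, b)` and `granger_causality(b, a)` for the same pair gives the two directions separately, which is what a claim of bidirectional interbrain influence requires.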
Responsivity of single neurons, identity selectivity of single neurons, and interbrain correlation measures were compared between spontaneous and trained vocalizations. The identity-decoding accuracy and interbrain correlation elicited by calls were also compared between vocalizations produced by bats exhibiting one of two modes of social-spatial preference: (i) those preferring to position themselves close to other group members (in-cluster) or (ii) those preferring to remain farther away from other group members (out-of-cluster). Further details of the methods are provided in the supplementary materials.
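Identity selectivity is assessed by decoding the caller from a neuron's firing rates around each vocalization, and the in- versus out-of-cluster comparison needs that accuracy separately for each caller. The sketch below uses a leave-one-out nearest-centroid classifier as a stand-in; the classifier type, the features (here a vector of peri-call firing rates in time bins), and the function name are assumptions for illustration, since the summary does not specify which classifier was trained.

```python
import numpy as np


def loo_nearest_centroid_accuracy(rates, callers):
    """Leave-one-out decoding of caller identity from one neuron's peri-call rates.

    rates: array of shape (n_calls, n_time_bins) with firing rates around each call.
    callers: length-n_calls sequence of caller labels; each caller needs at least
    two calls so that its centroid is defined when one call is held out.
    Returns a dict mapping each caller to the fraction of its calls decoded correctly.
    """
    rates = np.asarray(rates, dtype=float)
    callers = np.asarray(callers)
    labels = np.unique(callers)
    correct = np.zeros(len(callers), dtype=bool)
    for i in range(len(callers)):
        train = np.arange(len(callers)) != i  # hold out call i
        centroids = np.stack(
            [rates[train & (callers == lab)].mean(axis=0) for lab in labels]
        )
        # Assign the held-out call to the caller with the closest mean response.
        dists = np.linalg.norm(centroids - rates[i], axis=1)
        correct[i] = labels[np.argmin(dists)] == callers[i]
    return {lab.item(): float(correct[callers == lab].mean()) for lab in labels}
```

Per-caller accuracies from such a decoder, grouped by the caller's cluster status, are the kind of values a group comparison like the likelihood ratio test described in the results would operate on.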


ACKNOWLEDGMENTS

We thank W. Zhang, W. A. Liberti, E. Azim, R. T. Knight, Z. M. Williams, and R. Báez-Mendoza for comments on the manuscript; J. W. Pillow, F. E. Theunissen, and S. Popham for advice on acoustic encoding modelling; W. Zhang and R. T. Knight for advice on interbrain analyses; D. Genzel for advice on acoustic recording and analysis; L. Loomis and J. Chase for animal training; A. Rakuljic and B. Shvareva for assistance with histology; and G. Lawson, K. Jensen, J. Frohlich and the staff of the Office of Laboratory Animal Care for support with animal husbandry and care.

Funding:

Supported by NIH (DP2-DC016163), NIMH (1-R01MH25387-01), the New York Stem Cell Foundation (NYSCF-R-NI40), the Alfred P. Sloan Foundation (FG-2017-9646), the Brain Research Foundation (BRFSG-2017-09), a Packard Fellowship (2017-66825), the Klingenstein Simons Fellowship, the Human Frontiers Science Program, the Pew Charitable Trust (00029645), the McKnight Foundation, the Dana Foundation (M.M.Y.), and a Human Frontiers Science Program postdoctoral fellowship (B.S.). M.M.Y. is a New York Stem Cell Foundation-Robertson Investigator.

Footnotes

Competing interests: The authors declare no competing interests.

SUPPLEMENTARY MATERIALS

Data and materials availability:

The data presented in this study are available at (58).

REFERENCES AND NOTES

- 1. Seyfarth RM, Cheney DL, Marler P, Vervet monkey alarm calls: Semantic communication in a free-ranging primate. Anim. Behav 28, 1070–1094 (1980). doi: 10.1016/S0003-3472(80)80097-2

- 2. Elie JE, Theunissen FE, The vocal repertoire of the domesticated zebra finch: A data-driven approach to decipher the information-bearing acoustic features of communication signals. Anim. Cogn 19, 285–315 (2016). doi: 10.1007/s10071-015-0933-6

- 3. Prat Y, Taub M, Yovel Y, Everyday bat vocalizations contain information about emitter, addressee, context, and behavior. Sci. Rep 6, 39419 (2016). doi: 10.1038/srep39419

- 4. Hage SR, Nieder A, Single neurons in monkey prefrontal cortex encode volitional initiation of vocalizations. Nat. Commun 4, 2409 (2013). doi: 10.1038/ncomms3409

- 5. Washington SD, Kanwal JS, DSCF neurons within the primary auditory cortex of the mustached bat process frequency modulations present within social calls. J. Neurophysiol 100, 3285–3304 (2008). doi: 10.1152/jn.90442.2008

- 6. Carruthers IM, Natan RG, Geffen MN, Encoding of ultrasonic vocalizations in the auditory cortex. J. Neurophysiol 109, 1912–1927 (2013). doi: 10.1152/jn.00483.2012

- 7. Okobi DE Jr., Banerjee A, Matheson AMM, Phelps SM, Long MA, Motor cortical control of vocal interaction in neotropical singing mice. Science 363, 983–988 (2019). doi: 10.1126/science.aau9480

- 8. Adreani NM, D’Amelio PB, Gahr M, Ter Maat A, Life-Stage Dependent Plasticity in the Auditory System of a Songbird Is Signal and Emitter-Specific. Front. Neurosci 14, 588672 (2020). doi: 10.3389/fnins.2020.588672

- 9. Hoffmann S et al., Duets recorded in the wild reveal that interindividually coordinated motor control enables cooperative behavior. Nat. Commun 10, 2577 (2019). doi: 10.1038/s41467-019-10593-3

- 10. Fichtel C, Manser M, in Animal Behaviour: Evolution and Mechanisms (Springer, 2010), pp. 29–54.

- 11. Kerth G, Causes and Consequences of Sociality in Bats. Bioscience 58, 737–746 (2008). doi: 10.1641/B580810

- 12. Kwiecinski GG, Griffiths TA, Rousettus egyptiacus. Mamm. Species 95, 1–9 (1999). doi: 10.2307/3504411

- 13. Harten L et al., Persistent producer-scrounger relationships in bats. Sci. Adv 4, e1603293 (2018). doi: 10.1126/sciadv.1603293

- 14. Harten L, Prat Y, Ben Cohen S, Dor R, Yovel Y, Food for Sex in Bats Revealed as Producer Males Reproduce with Scrounging Females. Curr. Biol 29, 1895–1900.e3 (2019). doi: 10.1016/j.cub.2019.04.066

- 15. Prat Y, Taub M, Yovel Y, Vocal learning in a social mammal: Demonstrated by isolation and playback experiments in bats. Sci. Adv 1, e1500019 (2015). doi: 10.1126/sciadv.1500019

- 16. Genzel D, Desai J, Paras E, Yartsev MM, Long-term and persistent vocal plasticity in adult bats. Nat. Commun 10, 3372 (2019). doi: 10.1038/s41467-019-11350-2

- 17. Nummela SU, Jovanovic V, de la Mothe L, Miller CT, Social Context-Dependent Activity in Marmoset Frontal Cortex Populations during Natural Conversations. J. Neurosci 37, 7036–7047 (2017). doi: 10.1523/JNEUROSCI.0702-17.2017

- 18. Romanski LM, Averbeck BB, Diltz M, Neural representation of vocalizations in the primate ventrolateral prefrontal cortex. J. Neurophysiol 93, 734–747 (2005). doi: 10.1152/jn.00675.2004

- 19. Kuhlen AK, Bogler C, Brennan SE, Haynes JD, Brains in dialogue: Decoding neural preparation of speaking to a conversational partner. Soc. Cogn. Affect. Neurosci 12, 871–880 (2017). doi: 10.1093/scan/nsx018

- 20. Kingsbury L et al., Correlated Neural Activity and Encoding of Behavior across Brains of Socially Interacting Animals. Cell 178, 429–446.e16 (2019). doi: 10.1016/j.cell.2019.05.022

- 21. Chang SWC, Gariépy JF, Platt ML, Neuronal reference frames for social decisions in primate frontal cortex. Nat. Neurosci 16, 243–250 (2013). doi: 10.1038/nn.3287

- 22. Zhang W, Yartsev MM, Correlated Neural Activity across the Brains of Socially Interacting Bats. Cell 178, 413–428.e22 (2019). doi: 10.1016/j.cell.2019.05.023

- 23. Hage SR, Nieder A, Audio-vocal interaction in single neurons of the monkey ventrolateral prefrontal cortex. J. Neurosci 35, 7030–7040 (2015). doi: 10.1523/JNEUROSCI.2371-14.2015

- 24. Silbert LJ, Honey CJ, Simony E, Poeppel D, Hasson U, Coupled neural systems underlie the production and comprehension of naturalistic narrative speech. Proc. Natl. Acad. Sci. U.S.A 111, E4687–E4696 (2014). doi: 10.1073/pnas.1323812111

- 25. Miller CT, Thomas AW, Nummela SU, de la Mothe LA, Responses of primate frontal cortex neurons during natural vocal communication. J. Neurophysiol 114, 1158–1171 (2015). doi: 10.1152/jn.01003.2014

- 26. Weineck K, García-Rosales F, Hechavarría JC, Neural oscillations in the fronto-striatal network predict vocal output in bats. PLOS Biol. 18, e3000658 (2020). doi: 10.1371/journal.pbio.3000658

- 27. Möhres FP, Kulzer E, Über die Orientierung der Flughunde (Chiroptera - Pteropodidae). Z. Vgl. Physiol 38, 1–29 (1956). doi: 10.1007/BF00338621

- 28. Lee WJ et al., Tongue-driven sonar beam steering by a lingual-echolocating fruit bat. PLOS Biol. 15, e2003148 (2017). doi: 10.1371/journal.pbio.2003148

- 29. Sugihara T, Diltz MD, Averbeck BB, Romanski LM, Integration of auditory and visual communication information in the primate ventrolateral prefrontal cortex. J. Neurosci 26, 11138–11147 (2006). doi: 10.1523/JNEUROSCI.3550-06.2006

- 30. Babiloni F, Astolfi L, Social neuroscience and hyperscanning techniques: Past, present and future. Neurosci. Biobehav. Rev 44, 76–93 (2014). doi: 10.1016/j.neubiorev.2012.07.006

- 31. Pérez A, Carreiras M, Duñabeitia JA, Brain-to-brain entrainment: EEG interbrain synchronization while speaking and listening. Sci. Rep 7, 4190 (2017). doi: 10.1038/s41598-017-04464-4

- 32. Stephens GJ, Silbert LJ, Hasson U, Speaker-listener neural coupling underlies successful communication. Proc. Natl. Acad. Sci. U.S.A 107, 14425–14430 (2010). doi: 10.1073/pnas.1008662107

- 33. Stolk A et al., Cerebral coherence between communicators marks the emergence of meaning. Proc. Natl. Acad. Sci. U.S.A 111, 18183–18188 (2014). doi: 10.1073/pnas.1414886111

- 34. Dikker S et al., Brain-to-Brain Synchrony Tracks Real-World Dynamic Group Interactions in the Classroom. Curr. Biol 27, 1375–1380 (2017). doi: 10.1016/j.cub.2017.04.002

- 35. Mann O et al., Finding Your Friends at Densely Populated Roosting Places: Male Egyptian Fruit Bats (Rousettus aegyptiacus) Distinguish between Familiar and Unfamiliar Conspecifics. Acta Chiropt. 13, 411–417 (2011). doi: 10.3161/150811011X624893

- 36. Schmälzle R, Häcker FEK, Honey CJ, Hasson U, Engaged listeners: Shared neural processing of powerful political speeches. Soc. Cogn. Affect. Neurosci 10, 1137–1143 (2015). doi: 10.1093/scan/nsu168

- 37. Hyon R et al., Similarity in functional brain connectivity at rest predicts interpersonal closeness in the social network of an entire village. Proc. Natl. Acad. Sci. U.S.A 117, 33149–33160 (2020). doi: 10.1073/pnas.2013606117

- 38. Yang J, Zhang H, Ni J, De Dreu CKW, Ma Y, Within-group synchronization in the prefrontal cortex associates with intergroup conflict. Nat. Neurosci 23, 754–760 (2020). doi: 10.1038/s41593-020-0630-x

- 39. Kobler JB, Isbey SF, Casseday JH, Auditory pathways to the frontal cortex of the mustache bat, Pteronotus parnellii. Science 236, 824–826 (1987). doi: 10.1126/science.2437655

- 40. Kanwal JS, Gordon M, Peng JP, Heinz-Esser K, Auditory responses from the frontal cortex in the mustached bat, Pteronotus parnellii. Neuroreport 11, 367–372 (2000). doi: 10.1097/00001756-200002070-00029

- 41. Eiermann A, Esser KH, Auditory responses from the frontal cortex in the short-tailed fruit bat Carollia perspicillata. Neuroreport 11, 421–425 (2000). doi: 10.1097/00001756-200002070-00040

- 42. López-Jury L, Mannel A, García-Rosales F, Hechavarria JC, Modified synaptic dynamics predict neural activity patterns in an auditory field within the frontal cortex. Eur. J. Neurosci 51, 1011–1025 (2020). doi: 10.1111/ejn.14600

- 43. Pettigrew JD, Maseko BC, Manger PR, Primate-like retinotectal decussation in an echolocating megabat, Rousettus aegyptiacus. Neuroscience 153, 226–231 (2008). doi: 10.1016/j.neuroscience.2008.02.019

- 44. Thiele A, Rübsamen R, Hoffmann KP, Anatomical and physiological investigation of auditory input to the superior colliculus of the echolocating megachiropteran bat Rousettus aegyptiacus. Exp. Brain Res 112, 223–236 (1996). doi: 10.1007/BF00227641

- 45. Hu K, Li Y, Gu X, Lei H, Zhang S, Brain structures of echolocating and nonecholocating bats, derived in vivo from magnetic resonance images. Neuroreport 17, 1743–1746 (2006). doi: 10.1097/01.wnr.0000239959.91190.c8

- 46. Nikaido M et al., Comparative genomic analyses illuminate the distinct evolution of megabats within Chiroptera. DNA Res. 27, dsaa021 (2020). doi: 10.1093/dnares/dsaa021

- 47. Covey E, Neurobiological specializations in echolocating bats. Anat. Rec. A 287, 1103–1116 (2005). doi: 10.1002/ar.a.20254

- 48. Ito T et al., Three forebrain structures directly inform the auditory midbrain of echolocating bats. Neurosci. Lett 712, 134481 (2019). doi: 10.1016/j.neulet.2019.134481

- 49. Kössl M, Hechavarria J, Voss C, Schaefer M, Vater M, Bat auditory cortex – model for general mammalian auditory computation or special design solution for active time perception? Eur. J. Neurosci 41, 518–532 (2015). doi: 10.1111/ejn.12801

- 50. Medalla M, Barbas H, Specialized prefrontal “auditory fields”: Organization of primate prefrontal-temporal pathways. Front. Neurosci 8, 77 (2014). doi: 10.3389/fnins.2014.00077

- 51. Plakke B, Romanski LM, Auditory connections and functions of prefrontal cortex. Front. Neurosci 8, 199 (2014). doi: 10.3389/fnins.2014.00199

- 52. Fritz JB, David SV, Radtke-Schuller S, Yin P, Shamma SA, Adaptive, behaviorally gated, persistent encoding of task-relevant auditory information in ferret frontal cortex. Nat. Neurosci 13, 1011–1019 (2010). doi: 10.1038/nn.2598

- 53. Cohen YE, Theunissen F, Russ BE, Gill P, Acoustic features of rhesus vocalizations and their representation in the ventrolateral prefrontal cortex. J. Neurophysiol 97, 1470–1484 (2007). doi: 10.1152/jn.00769.2006

- 54. Hasson U, Ghazanfar AA, Galantucci B, Garrod S, Keysers C, Brain-to-brain coupling: A mechanism for creating and sharing a social world. Trends Cogn. Sci 16, 114–121 (2012). doi: 10.1016/j.tics.2011.12.007

- 55. Barraza P, Pérez A, Rodríguez E, Brain-to-Brain Coupling in the Gamma-Band as a Marker of Shared Intentionality. Front. Hum. Neurosci 14, 295 (2020). doi: 10.3389/fnhum.2020.00295

- 56. Krakauer JW, Ghazanfar AA, Gomez-Marin A, MacIver MA, Poeppel D, Neuroscience Needs Behavior: Correcting a Reductionist Bias. Neuron 93, 480–490 (2017). doi: 10.1016/j.neuron.2016.12.041

- 57. Yartsev MM, The emperor’s new wardrobe: Rebalancing diversity of animal models in neuroscience research. Science 358, 466–469 (2017). doi: 10.1126/science.aan8865

- 58. Rose MC, Styr B, Schmid TA, Elie JE, Yartsev MM, Zenodo (2021). doi: 10.5281/zenodo.5152173

- 59. Neuweiler G, Bau und Leistung des Flughundauges (Pteropus giganteus gig. Brünn.). Z. Vgl. Physiol 46, 13–56 (1962). doi: 10.1007/BF00340352

- 60. Liu HQ et al., Sci. Rep 5, 1–13 (2015).

- 61. Lattenkamp EZ, Vernes SC, Wiegrebe L, Volitional control of social vocalisations and vocal usage learning in bats. J. Exp. Biol 221, jeb180729 (2018). doi: 10.1242/jeb.180729

- 62. Yartsev MM, Ulanovsky N, Representation of three-dimensional space in the hippocampus of flying bats. Science 340, 367–372 (2013). doi: 10.1126/science.1235338

- 63. Finkelstein A et al., Three-dimensional head-direction coding in the bat brain. Nature 517, 159–164 (2015). doi: 10.1038/nature14031

- 64. Fukushima M, Margoliash D, The effects of delayed auditory feedback revealed by bone conduction microphone in adult zebra finches. Sci. Rep 5, 8800 (2015). doi: 10.1038/srep08800

- 65. de Cheveigné A, Kawahara H, YIN, a fundamental frequency estimator for speech and music. J. Acoust. Soc. Am 111, 1917–1930 (2002). doi: 10.1121/1.1458024

- 66. Glerean E et al., Reorganization of functionally connected brain subnetworks in high-functioning autism. Hum. Brain Mapp 37, 1066–1079 (2016). doi: 10.1002/hbm.23084

- 67. Quiroga RQ, Nadasdy Z, Ben-Shaul Y, Unsupervised spike detection and sorting with wavelets and superparamagnetic clustering. Neural Comput. 16, 1661–1687 (2004). doi: 10.1162/089976604774201631

- 68. Page ES, Continuous inspection schemes. Biometrika 41, 100–115 (1954). doi: 10.1093/biomet/41.1-2.100

- 69. Koepcke L, Ashida G, Kretzberg J, Single and Multiple Change Point Detection in Spike Trains: Comparison of Different CUSUM Methods. Front. Syst. Neurosci 10, 51 (2016). doi: 10.3389/fnsys.2016.00051

- 70. Nunez-Elizalde AO, Huth AG, Gallant JL, Voxelwise encoding models with non-spherical multivariate normal priors. Neuroimage 197, 482–492 (2019). doi: 10.1016/j.neuroimage.2019.04.012

- 71. Musall S, Kaufman MT, Juavinett AL, Gluf S, Churchland AK, Single-trial neural dynamics are dominated by richly varied movements. Nat. Neurosci 22, 1677–1686 (2019). doi: 10.1038/s41593-019-0502-4

- 72. Barnett L, Seth AK, The MVGC multivariate Granger causality toolbox: A new approach to Granger-causal inference. J. Neurosci. Methods 223, 50–68 (2014). doi: 10.1016/j.jneumeth.2013.10.018

- 73. Barnett L, Barrett AB, Seth AK, Granger causality and transfer entropy are equivalent for Gaussian variables. Phys. Rev. Lett 103, 238701 (2009). doi: 10.1103/PhysRevLett.103.238701

- 74. Timme NM, Lapish C, A Tutorial for Information Theory in Neuroscience. eNeuro 5, 1–40 (2018). doi: 10.1523/ENEURO.0052-18.2018
