Abstract

Modern electrophysiological recordings simultaneously capture single-unit spiking activities of hundreds of neurons spread across large cortical distances. Yet, this parallel activity is often confined to relatively low-dimensional manifolds. This implies strong coordination also among neurons that are most likely not even connected. Here, we combine in vivo recordings with network models and theory to characterize the nature of mesoscopic coordination patterns in macaque motor cortex and to expose their origin: We find that heterogeneity in local connectivity supports network states with complex long-range cooperation between neurons that arises from multi-synaptic, short-range connections. Our theory explains the experimentally observed spatial organization of covariances in resting state recordings as well as the behaviorally related modulation of covariance patterns during a reach-to-grasp task. The ubiquity of heterogeneity in local cortical circuits suggests that the brain uses the described mechanism to flexibly adapt neuronal coordination to momentary demands.

Research organism: Rhesus macaque

Introduction

Complex brain functions require coordination between large numbers of neurons. Unraveling mechanisms of neuronal coordination is therefore a core ingredient towards answering the long-standing question of how neuronal activity represents information. Population coding is one classical paradigm (Georgopoulos et al., 1983) in which entire populations of similarly tuned neurons behave coherently, thus leading to positive correlations among their members. The emergence and dynamical control of such population-averaged correlations has been studied intensely (Ginzburg and Sompolinsky, 1994; Renart et al., 2010; Helias et al., 2014; Rosenbaum and Doiron, 2014). More recently, evidence accumulated that neuronal activity often evolves within more complex low-dimensional manifolds, which imply more involved ways of neuronal activity coordination (Gallego et al., 2017; Gallego, 2018; Gallego et al., 2020): A small number of population-wide activity patterns, the neural modes, are thought to explain most variability of neuronal activity. In this case, individual neurons do not necessarily follow a stereotypical activity pattern that is identical across all neurons contributing to a representation. Instead, the coordination among the members is determined by more complex relations. Simulations of recurrent network models indeed indicate that networks trained to perform a realistic task exhibit activity organized in low-dimensional manifolds (Sussillo et al., 2015). The dimensionality of such manifolds is determined by the structure of correlations (Abbott et al., 2011; Mazzucato et al., 2016) and tightly linked to the complexity of the task the network has to perform (Gao, 2017) as well as to the dimensionality of the stimulus (Stringer et al., 2019). Recent work has started to decipher how neural modes and the dimensionality of activity are shaped by features of network connectivity, such as heterogeneity of connections (Smith et al., 2018; Dahmen et al., 2019), block structure (Aljadeff et al., 2015; Aljadeff et al., 2016), and low-rank perturbations (Mastrogiuseppe and Ostojic, 2018) of connectivity matrices, as well as connectivity motifs (Recanatesi et al., 2019; Dahmen et al., 2021; Hu and Sompolinsky, 2020). Yet, these works neglected the spatial organization of network connectivity (Schnepel et al., 2015) that becomes more and more important with current experimental techniques that allow the simultaneous recording of ever more neurons. How distant neurons that are likely not connected can still be strongly coordinated to participate in the same neural mode is a widely open question.

To answer this question, we combine analyses of parallel spiking data from macaque motor cortex with the analytical investigation of a spatially organized neuronal network model. We here quantify coordination by Pearson correlation coefficients and pairwise covariances, which measure how temporal departures of the neurons’ activities away from their mean firing rate are correlated. We show that, even with only unstructured and short-range connections, strong covariances across distances of several millimeters emerge naturally in balanced networks if their dynamical state is close to an instability within a ‘critical regime’. This critical regime arises from strong heterogeneity in local network connections that is abundant in brain networks. Intuitively, it arises because activity propagates over a large number of different indirect paths. Heterogeneity, here in the form of sparse random connectivity, is thus essential to provide a rich set of such paths. While mean covariances are readily accessible by mean-field techniques and have been shown to be small in balanced networks (Renart et al., 2010; Tetzlaff et al., 2012), explaining covariances on the level of individual pairs requires methods from statistical physics of disordered systems. With such a theory, here derived for spatially organized excitatory-inhibitory networks, we show that large individual covariances arise at all distances if the network is close to the critical point. These predictions are confirmed by recordings of macaque motor cortex activity. The long-range coordination found in this study is not merely determined by the anatomical connectivity, but depends substantially on the network state, which is characterized by the individual neurons’ mean firing rates. This allows the network to adjust the neuronal coordination pattern in a dynamic fashion, which we demonstrate through simulations and by comparing two behavioral epochs of a reach-to-grasp experiment.

Results

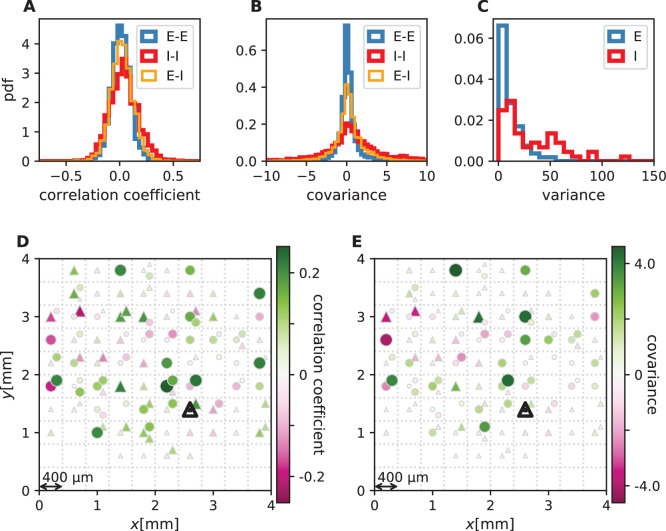

Macaque motor cortex shows long-range coordination patterns

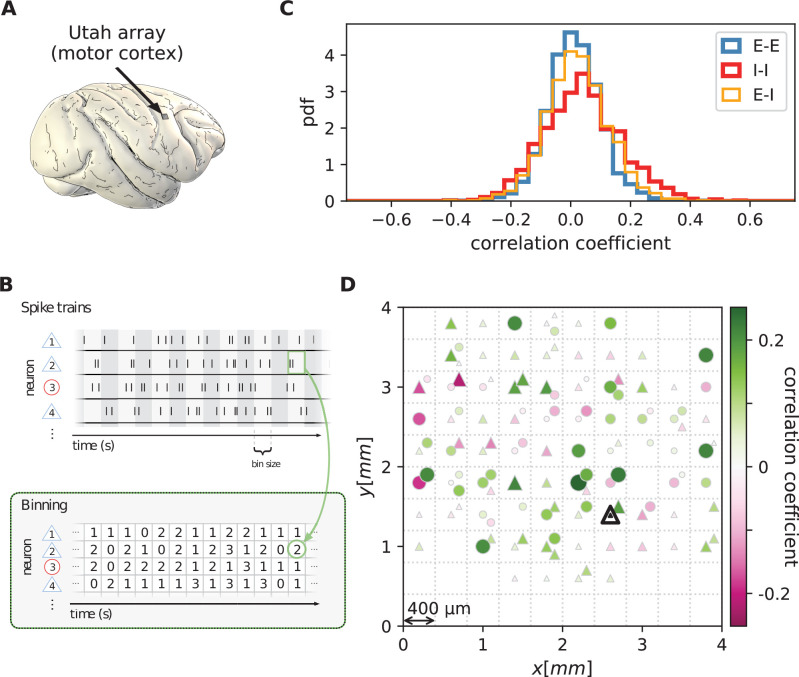

We first analyze data from motor cortex of macaques during rest, recorded with , 100-electrode Utah arrays with 400 µm inter-electrode distance (Figure 1A). The resting condition of motor cortex in monkeys is ideal to assess intrinsic coordination between neurons during ongoing activity. In particular, our analyses focus on true resting state data, devoid of movement-related transients in neuronal firing (see Materials and methods). Parallel single-unit spiking activity of neurons per recording session, sorted into putative excitatory and inhibitory cells, shows strong spike-count correlations across the entire Utah array, well beyond the typical scale of the underlying short-range connectivity profiles (Figure 1B and D). Positive and negative correlations form patterns in space that are furthermore seemingly unrelated to the neuron types. All populations show a large dispersion of both positive and negative correlation values (Figure 1C).

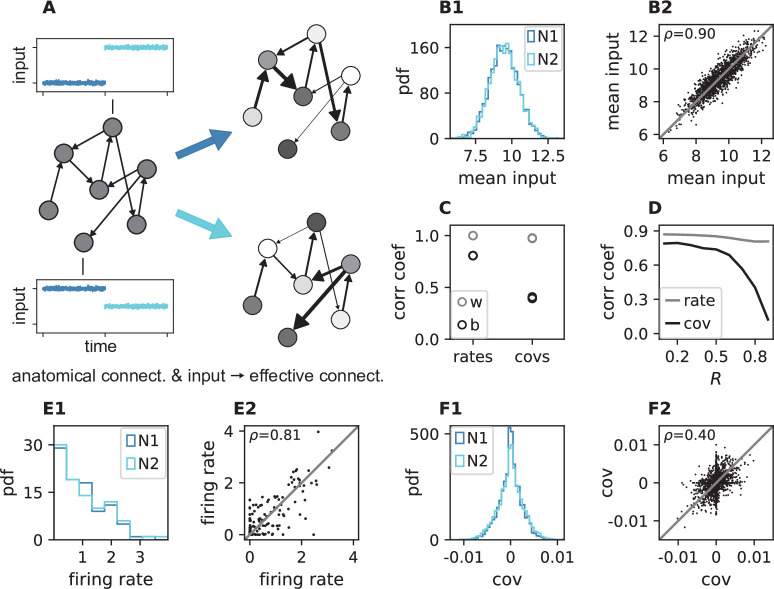

Figure 1. Salt-and-pepper structure of covariances in motor cortex.

(A) Sketch of 10 × 10 Utah electrode array recording in motor cortex of macaque monkey during rest. (B) Spikes are sorted into putative excitatory (blue triangles) and inhibitory (red circles) single units according to widths of spike waveforms (see Appendix 1 Section 2). Resulting spike trains are binned in 1 s bins to obtain spike counts. (C) Population-resolved distribution of pairwise spike-count Pearson correlation coefficients in session E2 (E-E: excitatory-excitatory, E-I: excitatory-inhibitory, I-I: inhibitory-inhibitory). (D) Pairwise spike-count correlation coefficients with respect to the neuron marked by black triangle in one recording (session E2, see Materials and methods). Grid indicates electrodes of a Utah array, triangles and circles correspond to putative excitatory and inhibitory neurons, respectively. Size as well as color of markers represent correlation. Neurons within the same square were recorded on the same electrode.

The classical view on pairwise correlations in balanced networks (Ginzburg and Sompolinsky, 1994; Renart et al., 2010; Pernice et al., 2011; Pernice et al., 2012; Tetzlaff et al., 2012; Helias et al., 2014) focuses on averages across many pairs of cells: average correlations are small if the network dynamics is stabilized by an excess of inhibitory feedback; dynamics known as the ‘balanced state’ arise (van Vreeswijk and Sompolinsky, 1996; Amit and Brunel, 1997; van Vreeswijk and Sompolinsky, 1998): Negative feedback counteracts any coherent increase or decrease of the population-averaged activity, preventing the neurons from fluctuating in unison (Tetzlaff et al., 2012). Breaking this balance in different ways leads to large correlations (Rosenbaum and Doiron, 2014; Darshan et al., 2018; Baker et al., 2019). Can the observation of significant correlations between individual cells across large distances be reconciled with the balanced state? In the following, we provide a mechanistic explanation.

Multi-synaptic connections determine correlations

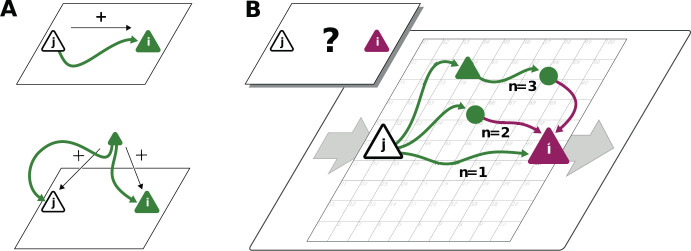

Connections mediate interactions between neurons. Many studies therefore directly relate connectivity and correlations (Pernice et al., 2011; Pernice et al., 2012; Trousdale et al., 2012; Brinkman et al., 2018; Kobayashi et al., 2019). From direct connectivity, one would expect positive correlations between excitatory neurons and negative correlations between inhibitory neurons and a mix of negative and positive correlations only for excitatory-inhibitory pairs. Likewise, a shared input from inside or outside the network only imposes positive correlations between any two neurons (Figure 2A). The observations that excitatory neurons may have negative correlations (Figure 1D), as well as the broad distribution of correlations covering both positive and negative values (Figure 1C), are not compatible with this view. In fact, the sign of correlations appears to be independent of the neuron types. So how do negative correlations between excitatory neurons arise?

Figure 2. Correlations from direct and indirect connections.

(A) Positive correlation (green neuron i) follows from direct excitatory connection (top) or shared input (middle). (B) Negative correlation (magenta) between two excitatory neurons cannot be explained by direct connections: Neuronal interactions are not only mediated via direct connections (; sign uniquely determined by presynaptic neuron type) but also via indirect paths of different length . The latter may have any sign (positive: green; negative: purple) due to intermediate neurons of arbitrary type (triangle: excitatory, circle: inhibitory).

The view that equates connectivity with correlation implicitly assumes that the effect of a single synapse on the receiving neuron is weak. This view, however, regards each synapse in isolation. Could there be states in the network where, collectively, many weak synapses cooperate, as perhaps required to form low-dimensional neuronal manifolds? In such a state, interactions may not only be mediated via direct connections but also via indirect paths through the network (Figure 2B). Such effective multi-synaptic connections may explain our observation that far apart neurons that are basically unconnected display considerable correlation of arbitrary sign.

Let us here illustrate the ideas first and corroborate them in subsequent sections. Direct connections yield correlations of a predefined sign, leading to correlation distributions with multiple peaks, for example a positive peak for excitatory neurons that are connected and a peak at zero for neurons that are not connected. Multi-synaptic paths, however, involve both excitatory and inhibitory intermediate neurons, which contribute to the interaction with different signs (Figure 2B). Hence, a single indirect path can contribute to the total interaction with arbitrary sign (Pernice et al., 2011). If indirect paths dominate the interaction between two neurons, the sign of the resulting correlation becomes independent of their type. Given that the connecting paths in the network are different for any two neurons, the resulting correlations can fall in a wide range of both positive and negative values, giving rise to the broad distributions for all combinations of neuron types in Figure 1C. This provides a hypothesis why there may be no qualitative difference between the distribution of correlations for excitatory and inhibitory neurons. In fact, their widths are similar and their mean is close to zero (see Materials and methods for exact values); the latter being the hallmark of the negative feedback that characterizes the balanced state. The subsequent model-based analysis will substantiate this idea and show that it also holds for networks with spatially organized heterogeneous connectivity.

To play this hypothesis further, an important consequence of the dominance of multi-synaptic connections could be that correlations are not restricted to the spatial range of direct connectivity. Through interactions via indirect paths the reach of a single neuron could effectively be increased. But the details of the spatial profile of the correlations in principle could be highly complex as it depends on the interplay of two antagonistic effects: On the one hand, signal propagation becomes weaker with distance, as the signal has to pass several synaptic connections. Along these paths mean firing rates of neurons are typically diverse, and so are their signal transmission properties (de la Rocha et al., 2007). On the other hand, the number of contributing indirect paths between any pair of neurons proliferates with their distance. With single neurons typically projecting to thousands of other neurons in cortex, this leads to involved combinatorics; intuition here ceases to provide a sensible hypothesis on what is the effective spatial profile and range of coordination between neurons. Also it is unclear which parameters these coordination patterns depend on. The model-driven and analytical approach of the next section will provide such a hypothesis.

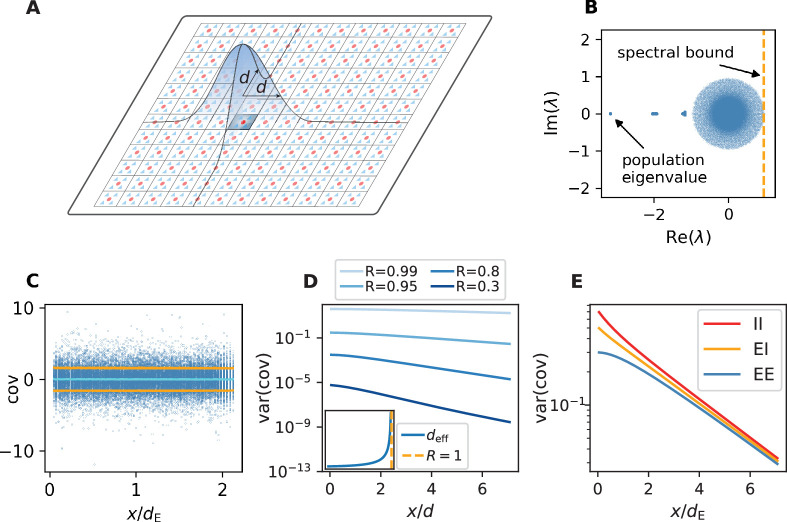

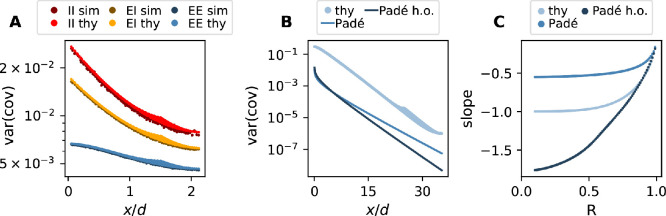

Networks close to instability show shallow exponential decay of covariances

We first note that the large magnitude and dispersion of individual correlations in the data and their spatial structure primarily stem from features in the underlying covariances between neuron pairs (Appendix 1—figure 1). Given the close relationship between correlations and covariances (Appendix 1—figure 1D and E), in the following we analyze covariances, as these are less dependent on single neuron properties and thus analytically simpler to treat. To gain an understanding of the spatial features of intrinsically generated covariances in balanced critical networks, we investigate a network of excitatory and inhibitory neurons on a two-dimensional sheet, where each neuron receives external Gaussian white noise input (Figure 3A). We investigate the covariance statistics in this model by help of linear-response theory, which has been shown to approximate spiking neuron models well (Pernice et al., 2012; Trousdale et al., 2012; Tetzlaff et al., 2012; Helias et al., 2013; Grytskyy et al., 2013; Dahmen et al., 2019). To allow for multapses, the connections between two neurons are drawn from a binomial distribution, and the connection probability decays with inter-neuronal distance on a characteristic length scale (for more details see Materials and methods). Previous studies have used linear-response theory in combination with methods from statistical physics and field theory to gain analytic insights into both mean covariances (Ginzburg and Sompolinsky, 1994; Lindner et al., 2005; Pernice et al., 2011; Tetzlaff et al., 2012) and the width of the distribution of covariances (Dahmen et al., 2019). Field-theoretic approaches, however, were so far restricted to purely random networks devoid of any network structure and thus not suitable to study spatial features of covariances. To analytically quantify the relation between the spatial ranges of covariances and connections, we therefore here develop a theory for spatially organized random networks with multiple populations. The randomness in our model is based on the sparseness of connections, which is one of the main sources of heterogeneity in cortical networks in that it contributes strongly to the variance of connections (see Appendix 1 Section 15).

Figure 3. Spatially organized E-I network model.

(A) Network model: space is divided into cells with four excitatory (triangles) and one inhibitory (circle) neuron each. Distance-dependent connection probabilities (shaded areas) are defined with respect to cell locations. (B) Eigenvalues λ of effective connectivity matrix for network in dynamically balanced critical state. Each dot shows the real part and imaginary part of one complex eigenvalue. The spectral bound (dashed vertical line) denotes the right-most edge of the eigenvalue spectrum. (C) Simulation: covariances of excitatory neurons over distance between cells (blue dots: individual pairs; cyan: mean; orange: standard deviation; sample of 150 covariances at each of 200 chosen distances). (D) Theory: variance of covariance distribution as a function of distance for different spectral bounds of the effective connectivity matrix. Inset: effective decay constant of variances diverges as the spectral bound approaches one. (E) For large spectral bounds, the variances of EE, EI, and II covariances decay on a similar length scale. Spectral bound . Other parameters see Appendix 1—table 3.

A distance-resolved histogram of the covariances in the spatially organized E-I network shows that the mean covariance is close to zero but the width or variance of the covariance distribution stays large, even for large distances (Figure 3C). Analytically, we derive that, despite the complexity of the various indirect interactions, both the mean and the variance of covariances follow simple exponential laws in the long-distance limit (see Appendix 1 Section 4 - Section 12). These laws are universal in that they do not depend on details of the spatial profile of connections. Our theory shows that the associated length scales are strikingly different for means and variances of covariances. They each depend on the reach of direct connections and on specific eigenvalues of the effective connectivity matrix. These eigenvalues summarize various aspects of network connectivity and signal transmission into a single number: Each eigenvalue belongs to a ‘mode’, a combination of neurons that act collaboratively, rather than independently, coordinating neuronal activity within a one-dimensional subspace. To start with, there are as many such subspaces as there are neurons. But if the spectral bound in Figure 3B is close to one, only a relatively small fraction of them, namely those close to the spectral bound, dominate the dynamics; the dynamics is then effectively low-dimensional. Additionally, the eigenvalue quantifies how fast a mode decays when transmitted through a network. The eigenvalues of the dominating modes are close to one, which implies a long lifetime. The corresponding fluctuations thus still contribute significantly to the overall signal, even if they passed by many synaptic connections. Therefore, indirect multi-synaptic connections contribute significantly to covariances if the spectral bound is close to one, and in that case we expect to see long-range covariances.

To quantify this idea, for the mean covariance we find that the dominant behavior is an exponential decay on a length scale . This length scale is determined by a particular eigenvalue, the population eigenvalue, corresponding to the mode in which all neurons are excited simultaneously. Its position solely depends on the ratio between excitation and inhibition in the network and becomes more negative in more strongly inhibition-dominated networks (Figure 3B). We show in Appendix 1 Section 9.4 that this leads to a steep decay of mean covariances with distance. The variance of covariances, however, predominantly decays exponentially on a length scale deff that is determined by the spectral bound , the largest real part among all eigenvalues (Figure 3B and D). In inhibition-dominated networks, is determined by the heterogeneity of connections. For we obtain the effective length scale

| (1) |

What this means is that precisely at the point where is close to one, when neural activity occupies a low-dimensional manifold, the length scale deff on which covariances decay exceeds the reach of direct connections by a large factor (Figure 3D). As the network approaches instability, which corresponds to the spectral bound going to one, the effective decay constant diverges (Figure 3D inset) and so does the range of covariances.

Our population-resolved theoretical analysis, furthermore, shows that the larger the spectral bound the more similar the decay constants between different populations, with only marginal differences for (Figure 3E). This holds strictly if connection weights only depend on the type of the presynaptic neuron but not on the type of the postsynaptic neuron. Moreover, we find a relation between the squared effective decay constants and the squared anatomical decay constants of the form

| (2) |

This relation is independent of the eigenvalues of the effective connectivity matrix, as the constant of order does only depend on the choice of the connectivity profile. For , this means that even though the absolute value of both effective length scales on the left hand side is large, their relative difference is small because it equals the small difference of anatomical length scales on the right hand side.

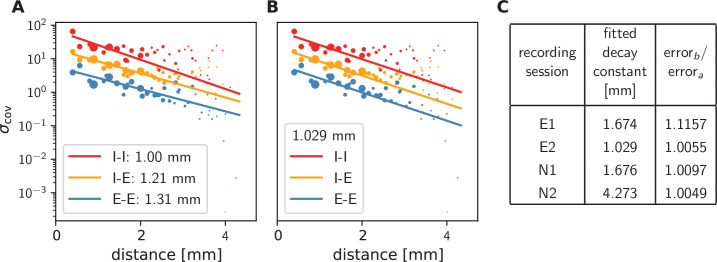

Pairwise covariances in motor cortex decay on a millimeter scale

To check if these predictions are confirmed by the data from macaque motor cortex, we first observe that, indeed, covariances in the resting state show a large dispersion over almost all distances on the Utah array (Figure 4). Moreover, the variance of covariances agrees well with the predicted exponential law: Performing an exponential fit reveals length constants above 1 mm. These large length constants have to be compared to the spatial reach of direct connections, which is about an order of magnitude shorter, in the range of 100-400 μm (Schnepel et al., 2015), so below the 400 μm inter-electrode distance of the Utah array. The shallow decay of the variance of covariances is, next to the broad distribution of covariances, a second indication that the network is in the dynamically balanced critical regime, in line with the prediction by Equation (1).

Figure 4. Long-range covariances in macaque motor cortex.

Variance of covariances as a function of distance. (A) Population-specific exponential fits (lines) to variances of covariances (dots) in session E2, with fitted decay constants indicated in the legend (I-I: putative inhibitory neuron pairs, I-E: inhibitory-excitatory, E-E: excitatory pairs). Dots show the empirical estimate of the variance of the covariance distribution for each distance. Size of the dots represents relative count of pairs per distance and was used as weighting factor for the fits to compensate for uncertainty at large distances, where variance estimates are based on fewer samples. Mean squared error 2.918. (B) Population-specific exponential fits (lines) analogous to (A), with slopes constrained to be identical. This procedure yields a single fitted decay constant of 1.029 mm. Mean squared error 2.934. (C) Table listing decay constants fitted as in (B) for all recording sessions and the ratios between mean squared errors of the fits obtained in procedures B and A.

The population-resolved fits to the data show a larger length constant for excitatory covariances than for inhibitory ones (Figure 4A). This is qualitatively in line with the prediction of Equation (2) given the – by tendency – longer reach of excitatory connections compared to inhibitory ones, as derived from morphological constraints (Reimann et al., 2017, Fig. S2). In the dynamically balanced critical regime, however, the predicted difference in slope for all three fits is practically negligible. Therefore, we performed a second fit where the slope of the three exponentials is constrained to be identical (Figure 4B). The error of this fit is only marginally larger than the ones of fitting individual slopes (Figure 4C). This shows that differences in slopes are hardly detectable given the empirical evidence, thus confirming the predictions of the theory given by Equation (1) and Equation (2).

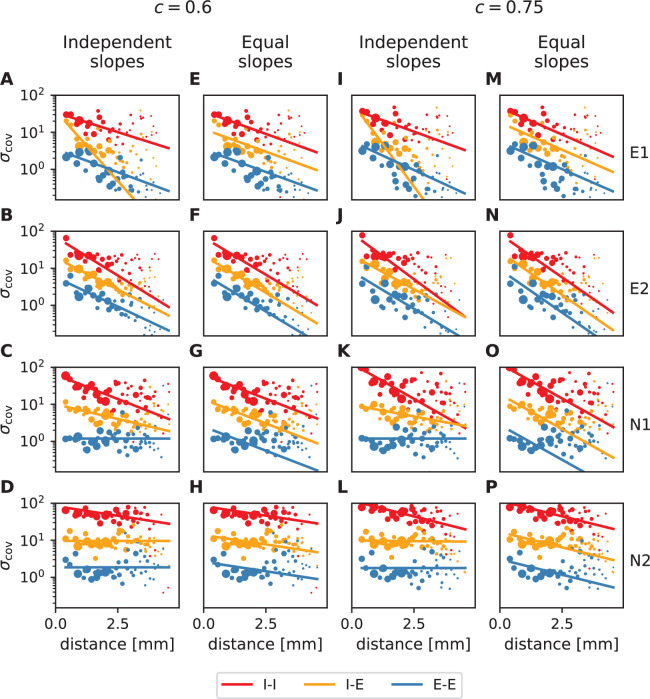

Firing rates alter connectivity-dependent covariance patterns

Since covariances measure the coordination of temporal fluctuations around the individual neurons’ mean firing rates, they are determined by how strong a neuron transmits such fluctuations from input to output (Abeles, 1991). To leading order this is explained by linear-response theory (Ginzburg and Sompolinsky, 1994; Lindner et al., 2005; Pernice et al., 2011; Tetzlaff et al., 2012): How strongly a neuron reacts to a small change in its input depends on its dynamical state, foremost the mean and variance of its total input, called ‘working point’ in the following. If a neuron receives almost no input, a small perturbation in the input will not be able to make the neuron fire. If the neuron receives a large input, a small perturbation will not change the firing rate either, as the neuron is already saturated. Only in the intermediate regime the neuron is susceptible to small deviations of the input. Mathematically, this behavior is described by the gain of the neuron, which is the derivative of the input-output relation (Abeles, 1991). Due to the non-linearity of the input-output relation, the gain is vanishing for very small and very large inputs and non-zero in the intermediate regime. How strongly a perturbation in the input to one neuron affects one of the subsequent neurons therefore not only depends on the synaptic weight but also on the gain and thereby the working point. This relation is captured by the effective connectivity . What is the consequence of the dynamical interaction among neurons depending on the working point? Can it be used to reshape the low-dimensional manifold, the collective coordination between neurons?

The first part of this study finds that long-range coordination can be achieved in a network with short-range random connections if effective connections are sufficiently strong. Alteration of the working point, for example by a different external input level, can affect the covariance structure: The pattern of coordination between individual neurons can change, even though the anatomical connectivity remains the same. In this way, routing of information through the network can be adapted dynamically on a mesoscopic scale. This is a crucial difference of such coordination as opposed to coordination imprinted by complex but static connection patterns.

Here, we first illustrate this concept by simulations of a network of 2000 sparsely connected threshold-linear (ReLU) rate neuron models that receive Gaussian white noise inputs centered around neuron-specific non-zero mean values (see Materials and methods and Appendix 1 Section 14 for more details). The ReLU activation function thereby acts as a simple model for the vanishing gain for neurons with too low input levels. Note that in cortical-like scenarios with low firing rates, neuronal working points are far away from the high-input saturation discussed above, which is therefore neglected by the choice of the ReLU activation function. For independent and stationary external inputs covariances between neurons are solely generated inside the network via the sparse and random recurrent connectivity. External inputs only have an indirect impact on the covariance structure by setting the working point of the neurons.

We simulate two networks with identical structural connectivity and identical external input fluctuations, but small differences in mean external inputs between corresponding neurons in the two simulations (Figure 5A). These small differences in mean external inputs create different gains and firing rates and thereby differences in effective connectivity and covariances. Since mean external inputs are drawn from the same distribution in both simulations (Figure 5B), the overall distributions of firing rates and covariances across all neurons are very similar (Figure 5E1, F1). But individual neurons’ firing rates do differ (Figure 5E2). For the simple ReLU activation used here, we in particular observe neurons that switch between non-zero and zero firing rate between the two simulations. This resulting change of working points substantially affects the covariance patterns (Figure 5F2): Differences in firing rates and covariances between the two simulations are significantly larger than the differences across two different epochs of the same simulation (Figure 5C). The larger the spectral bound, the more sensitive are the intrinsically generated covariances to the changes in firing rates (Figure 5D). Thus, a small offset of individual firing rates is an effective parameter to control network-wide coordination among neurons. As the input to the local network can be changed momentarily, we predict that in the dynamically balanced critical regime coordination patterns should be highly dynamic.

Figure 5. Changes in effective connectivity modify coordination patterns.

(A) Visualization of effective connectivity: A sparse random network with given structural connectivity (left network sketch) is simulated with two different input levels for each neuron (depicted by insets), resulting in different firing rates (grayscale in right network sketches) and therefore different effective connectivities (thickness of connections) in the two simulations. Parameters can be found in Appendix 1—table 4. (B1) Histogram of input currents across neurons for the two simulations (N1 and N2). (B2) Scatter plot of inputs to subset of 1500 corresponding neurons in the first and the second simulation (Pearson correlation coefficient ). (C) Correlation coefficients of rates and of covariances between the two simulations (b, black) and within two epochs of the same simulation (w, gray). (D) Correlation coefficient of rates (gray) and covariances (black) between the two simulations as a function of the spectral bound . (E1) Distribution of rates in the two simulations (excluding silent neurons with ). (E2) Scatter plot of rates in the first compared to the second simulation (Pearson correlation coefficient ). (F1) Distribution of covariances in the two simulation (excluding silent neurons). (F2) Scatter plot of sample of 5000 covariances in first compared to the second simulation (Pearson correlation coefficient ). Here silent neurons are included (accumulation of markers on the axes). Other parameters: number of neurons , connection probability , spectral bound for panels B, C, E, F is .

Coordination patterns in motor cortex depend on behavioral context

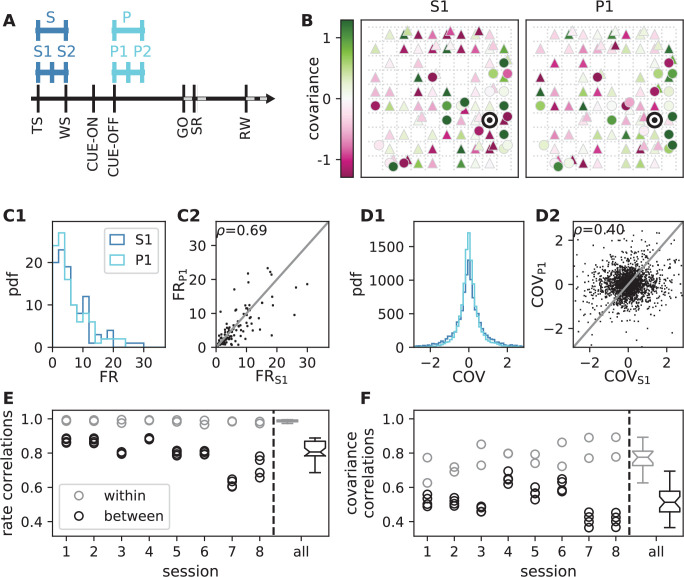

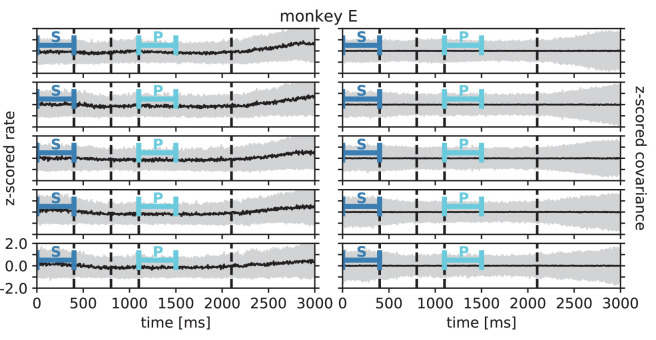

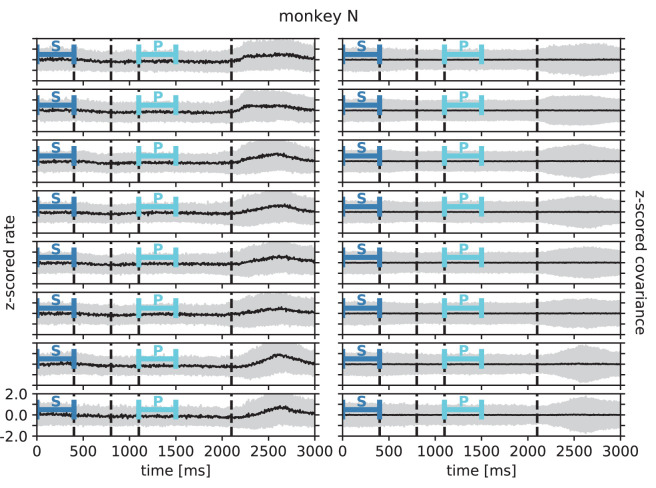

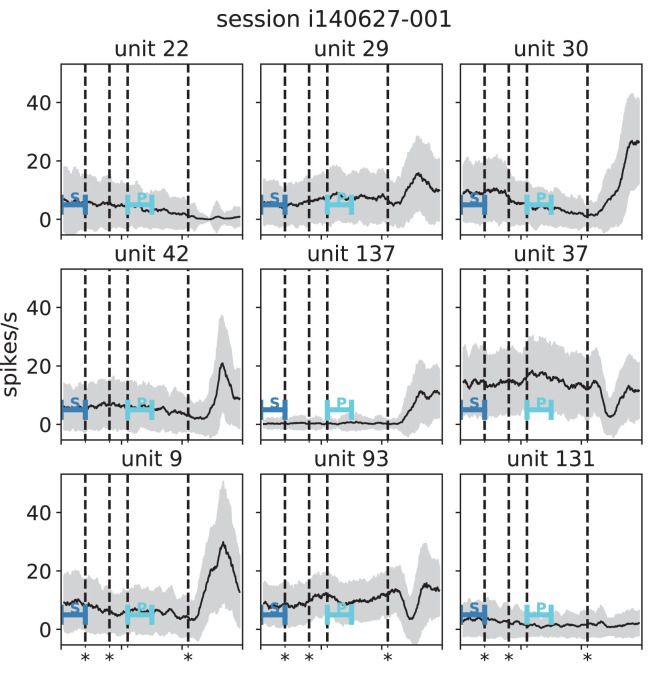

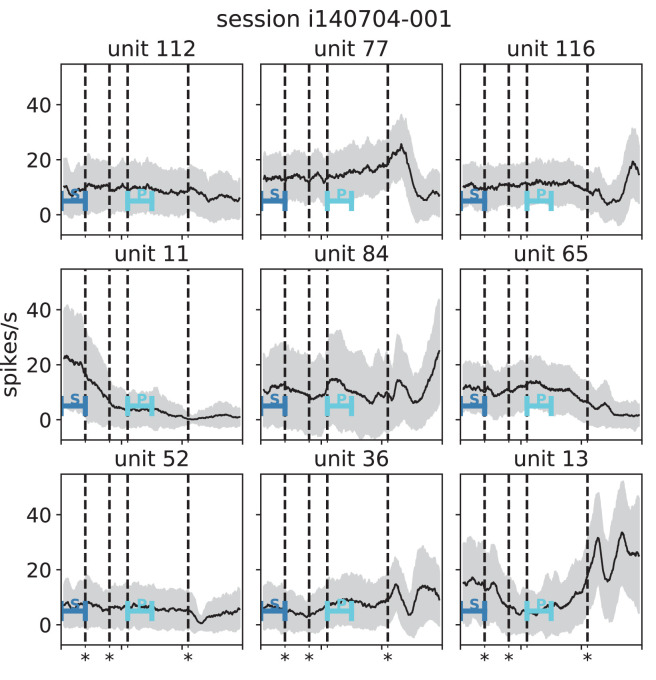

In order to test the theoretical prediction in experimental data, we analyze parallel spiking activity from macaque motor cortex, recorded during a reach-to-grasp experiment (Riehle et al., 2013; Brochier et al., 2018). In contrast to the resting state, where the animal was in an idling state, here the animal is involved in a complex task with periods of different cognitive and behavioral conditions (Figure 6A). We compare two epochs in which the animal is requested to wait and is sitting still but which differ in cognitive conditions. The first epoch is a starting period (S), where the monkey has self-initiated the behavioral trial and is attentive because it is expecting a cue. The second epoch is a preparatory period (P), where the animal has just received partial information about the upcoming trial and is waiting for the missing information and the GO signal to initiate the movement.

Figure 6. Behavioral condition reshapes mesoscopic neuronal coordination.

(A) Trial structure of the reach-to-grasp experiment (Brochier et al., 2018). Blue segments above the time axis indicate data pieces at trial start (dark blue: S (S1+ S2)) and during the preparatory period (light blue: P (P1+ P2)). (B) Salt-and-pepper structure of covariance during two different epochs (S1 and P1) of one recording session of monkey N (151 trials, 106 single units, Figure 1 for recording setup). For some neurons, the covariance completely reverses, while in the others it does not change. Inhibitory reference neuron indicated by black circle. (C1) Distributions of firing rates during S1 and P1. (C2) Scatter plot comparing firing rates in S1 and P1 (Pearson correlation coefficient ). (D1/D2) Same as panels C1/C2, but for covariances (Pearson correlation coefficient ). (E) Correlation coefficient of firing rates across neurons in different epochs of a trial for eight recorded sessions. Correlations between sub-periods of the same epoch (S1-S2, P1-P2; within-epoch, gray) and between sub-periods of different epochs (Sx-Py; between-epochs, black). Data shown in panels B-D is from session 8. Box plots to the right of the black dashed line show distributions obtained after pooling across all analyzed recording sessions per monkey. The line in the center of each box represents the median, box’s area represents the interquartile range, and the whiskers indicate minimum and maximum of the distribution (outliers excluded). Those distributions differ significantly (Student t-test, two-sided, ). (F) Correlation coefficient of covariances, analogous to panel E. The distributions of values pooled across sessions also differ significantly (Student t-test, two-sided, ). For details of the statistical tests, see Materials and methods. Details on number of trials and units in each recording session are provided in Appendix 1—table 1.

Within each epoch, S or P, the neuronal firing rates are mostly stationary, likely due to the absence of arm movements which create relatively large transient activities in later epochs of the task, which are not analyzed here (see Appendix 1 Section 3). The overall distributions of the firing rates are comparable for epochs S and P, but the firing rates are distributed differently across the individual neurons: Figure 6C shows one example session of monkey N, where the changes in firing rates between the two epochs are visible in the spread of markers around the diagonal line in panel C2. To assess the extent of these changes, we split each epoch, S and P, into two disjoint sub-periods, S1/S2 and P1/P2 (Figure 6A). We compute the correlation coefficient between the firing rate vectors of two sub-periods of different epochs (‘between’ markers in Figure 6E) and compare it to the correlation coefficient between the firing rate vectors of two sub-periods of the same epoch (‘within’ markers): Firing rate vectors in S1 are almost perfectly correlated with firing rate vectors in S2 ( for all of the five/eight different recording sessions from different recording days for monkey E/N, similarly for P1 and P2), confirming stationarity investigated in Appendix 1 Section 3. Firing rate vectors in S1 or S2, however, show significantly lower correlation to firing rate vectors in P1 and P2, confirming a significant change in network state between epochs S and P (Figure 6E).

The mechanistic model in the previous section shows a qualitatively similar scenario (Figure 5C and E). By construction it produces different firing rate patterns in the two simulations. While the model is simplistic and in particular not adapted to quantitatively reproduce the experimentally observed activity statistics, its simulations and our underlying theory make a general prediction: Differences in firing rates impact the effective connectivity between neurons and thereby evoke even larger differences in their coordination if the network is operating in the dynamically balanced critical regime (Figure 5D). To check this prediction, we repeat the correlation analysis between the two epochs, which we described above for the firing rates, but this time for the covariance patterns. Despite similar overall distributions of covariances in S and P (Figure 6D1), covariances between individual neuron pairs are clearly different between S and P: Figure 6B shows the covariance pattern for one representative reference neuron in one example recording session of monkey N. In both epochs, this covariance pattern has a salt-and-pepper structure as for the resting state data in Figure 1D. Yet, neurons change their individual coordination: a large number of neuron pairs even changes from positive covariance values to negative ones and vice versa. These neurons fire cooperatively in one epoch of the task while they show antagonistic firing in the other epoch. The covariances of all neuron pairs of that particular recording session are shown in Figure 6D2. Markers in the upper left and lower right quadrant show neuron pairs that switch the sign of their coordination (45 % of all neuron pairs). The extent of covariance changes between epochs is again quantified by correlation coefficients between the covariance patterns of two sub-periods (Figure 6F). As for the firing rates, we find rather large correlations between covariance patterns in S1 and S2 as well as between covariance patterns in P1 and P2. Note, however, that correlation coefficients are around 0.8 rather than 1, presumably since covariance estimates from 200 ms periods are noisier than firing rate estimates. The covariance patterns in S1 or S2 are, however, significantly more distinct from covariance patterns in P1 and P2, with correlation coefficients around 0.5 (Figure 6F). This more pronounced change of covariances compared to firing rates is predicted by a network whose effective connectivity has a large spectral bound, in the dynamically balanced critical state. In particular, the theory provides a mechanistic explanation for the different coordination patterns between neurons on the mesoscopic scale (range of a Utah array), which are observed in the two states S and P (Figure 6B). The coordination between neurons is thus considerably reshaped by the behavioral condition.

Discussion

In this study, we investigate coordination patterns of many neurons across mesoscopic distances in macaque motor cortex. We show that these patterns have a salt-and-pepper structure, which can be explained by a network model with a spatially dependent random connectivity operating in a dynamically balanced critical state. In this state, cross-covariances are shaped by a large number of parallel, multi-synaptic pathways, leading to interactions reaching far beyond the range of direct connections. Strikingly, this coordination on the millimeter scale is only visible if covariances are resolved on the level of individual neurons; the population mean of covariances quickly decays with distance and is overall very small. In contrast, the variance of covariances is large and predominantly decreases exponentially on length scales of up to several millimeters, even though direct connections typically only reach a few hundred micrometers.

Since the observed coordination patterns are determined by the effective connectivity of the network, they are dynamically controllable by the network state; for example, due to modulations of neuronal firing rates. Parallel recordings in macaque motor cortex during resting state and in different epochs of a reach-to-grasp task confirm this prediction. Simulations indeed exhibit a high sensitivity of coordination patterns to weak modulations of the individual neurons’ firing rates, providing a plausible mechanism for these dynamic changes.

Models of balanced networks have been investigated before (van Vreeswijk and Sompolinsky, 1996; Brunel, 2000; Renart et al., 2010; Tetzlaff et al., 2012) and experimental evidence for cortical networks operating in the balanced state is overwhelming (Okun and Lampl, 2008; Reinhold et al., 2015; Dehghani et al., 2016). Excess of inhibition in such networks yields stable and balanced population-averaged activities as well as low average covariances (Tetzlaff et al., 2012). Recently, the notion of balance has been combined with criticality in the dynamically balanced critical state that results from large heterogeneity in the network connectivity (Dahmen et al., 2019). Here, we focus on another ubiquitous property of cortical networks, their spatial organization, and study the interplay between balance, criticality, and spatial connectivity in networks of excitatory and inhibitory neurons. We show that in such networks, heterogeneity generates disperse covariance structures between individual neurons on large length-scales with a salt-and-pepper structure.

Spatially organized balanced network models have been investigated before in the limit of infinite network size, as well as under strong and potentially correlated external drive, as is the case, for example, in primary sensory areas of the brain (Rosenbaum et al., 2017; Baker et al., 2019). In this scenario, intrinsically generated contributions to covariances are much smaller than external ones. Population-averaged covariances then fulfill a linear equation, called the ‘balance condition’ (van Vreeswijk and Sompolinsky, 1996; Hertz, 2010; Renart et al., 2010; Rosenbaum and Doiron, 2014), that predicts a non-monotonous change of population-averaged covariances with distance (Rosenbaum et al., 2017). In contrast, we here consider covariances on the level of individual cells in finite-size networks receiving only weak inputs. While we cannot strictly rule out that the observed covariance patterns in motor cortex are a result of very specific external inputs to the recorded local network, we believe that the scenario of weak external drive is more suitable for non-sensory brain areas, such as, for example, the motor cortex in the resting state conditions studied here. Under such conditions, covariances have been shown to be predominantly generated locally rather than from external inputs: Helias et al., 2014 investigated intrinsic and extrinsic sources of covariances in ongoing activity of balanced networks and found that for realistic sizes of correlated external populations the major contribution to covariances is generated from local network interactions (Figure 7a in Helias et al., 2014). Dahmen et al., 2019 investigated the extreme case, where the correlated external population is of the same size as the local population (Fig. S6 in Dahmen et al., 2019). Despite sizable external input correlations projected onto the local circuit via potentially strong afferent connections, the dependence of the statistics of covariances on the spectral bound of the local recurrent connectivity is predicted well by the theory that neglects correlated external inputs (see supplement section 3 in Dahmen et al., 2019).

Our analysis of covariances on the single-neuron level goes beyond the balance condition and requires the use of field-theoretical techniques to capture the heterogeneity in the network (Dahmen et al., 2019; Helias and Dahmen, 2020). It relies on linear-response theory, which has previously been shown to faithfully describe correlations in balanced networks of nonlinear (spiking) units (Tetzlaff et al., 2012; Trousdale et al., 2012; Pernice et al., 2012; Grytskyy et al., 2013; Helias et al., 2013; Dahmen et al., 2019). These studies mainly investigated population-averaged correlations with small spectral bounds of the effective connectivity. Subsequently, Dahmen et al., 2019 showed the quantitative agreement of this linear-response theory for covariances between individual neurons in networks of spiking neurons for the whole range of spectral bounds, including the dynamically balanced critical regime. The long-range coordination studied in the current manuscript requires the inclusion of spatially non-homogeneous coupling to analyze excitatory-inhibitory random networks on a two-dimensional sheet with spatially decaying connection probabilities. This new theory allows us to derive expressions for the spatial decay of the variance of covariances. We primarily evaluate these expressions in the long-range limit, which agrees well with simulations for distances , which is fulfilled for most distances on the Utah array (Figure 3, Appendix 1—figure 7). For these distances, we find that the decay of covariances is dominated by a simple exponential law. Unexpectedly, its decay constant is essentially determined by only two measures, the spectral bound of the effective connectivity, and the length scale of direct connections. The length scale of covariances diverges when approaching the breakdown of linear stability. In this regime, differences in covariances induced by differences in length scales of excitatory and inhibitory connections become negligible. The predicted emergence of a single length scale of covariances is consistent with our data.

This study focuses on local and isotropic connection profiles to show that long-range coordination does not rely on specific connection patterns but can result from the network state alone. Alternative explanations for long-range coordination are based on specifically imprinted network structures: Anisotropic local connection profiles have been studied and shown to create spatio-temporal sequences (Spreizer et al., 2019). Likewise, embedded excitatory feed-forward motifs and cell assemblies via excitatory long-range patchy connections (DeFelipe et al., 1986) can create positive covariances at long distances (Diesmann et al., 1999; Litwin-Kumar and Doiron, 2012). Yet, these connections cannot provide an explanation for the large negative covariances between excitatory neurons at long distances (see e.g. Figure 1D). Long-range connectivity, for example arising from a salt-and-pepper organization of neuronal selectivity with connections preferentially targeting neurons with equal selectivity (Ben-Yishai et al., 1995; Hansel and Sompolinsky, 1998; Roxin et al., 2005; Blumenfeld et al., 2006), would produce salt-and-pepper covariance patterns even in networks with small spectral bounds where interactions are only mediated via direct connections. However, in this scenario, one would expect that neurons which have similar selectivity would throughout show positive covariance due to their mutual excitatory connections and due to the correlated input they receive. Yet, when analyzing two different epochs of the reach-to-grasp task, we find that a large fraction of neuron pairs actually switches from being significantly positively correlated to negatively correlated and vice versa (see Figure 6D2, upper left and lower right quadrant). This state-dependence of covariances is in line with the here suggested mechanism of long-range coordination by indirect interactions: Such indirect interactions depend on the effective strengths of various connections and can therefore change considerably with network state. In contrast, correlations due to imprinted network structures are static, so that a change in gain of the neurons will either strengthen or weaken the specific activity propagation, but it will not lead to a change of the sign of covariances that we see in our data. The static impact of these connectivity structures on covariances could nevertheless in principle be included in the presented formalism. Long-range coordination can also be created from short-range connections with random orientations of anisotropic local connection profiles (Smith et al., 2018). This finding can be linked to the emergence of tuning maps in the visual cortex. The mechanism is similar to ours in that it uses nearly linearly unstable modes that are determined by spatial connectivity structures and heterogeneity. Given the different source of heterogeneity, the modes and corresponding covariance patterns are different from the ones discussed here: Starting from fully symmetric networks with corresponding symmetric covariance patterns, Smith et al., 2018 found that increasing heterogeneity (anisotropy) yields more randomized, but still patchy regions of positive and negative covariances that are in line with low-dimensional activity patterns found in visual cortex. In motor cortex we instead find salt-and-pepper patterns that can be explained in terms of heterogeneity through sparsity. We provide the theoretical basis and explicit link between connectivity eigenspectra and covariances and show that heterogeneity through sparsity is sufficient to generate the dynamically balanced critical state as a simple explanation for the broad distribution of covariances in motor cortex, the salt-and-pepper structure of coordination, its long spatial range, and its sensitive dependence on the network state. Note that both mechanisms of long-range coordination, the one studied in Smith et al., 2018 and the one presented here, rely on the effective connectivity for the network to reside in the dynamically balanced critical regime. The latter regime is, however, not just one single point in parameter space, but an extended region that can be reached via a multitude of control mechanisms for the effective connectivity, for example by changing neuronal gains (Salinas and Sejnowski, 2001a; Salinas and Sejnowski, 2001b), synaptic strengths (Sompolinsky et al., 1988), and network microcircuitry (Dahmen et al., 2021).

What are possible functional implications of the coordination on mesoscopic scales? Recent work demonstrated activity in motor cortex to be organized in low-dimensional manifolds (Gallego et al., 2017; Gallego, 2018; Gallego et al., 2020). Dimensionality reduction techniques, such as PCA or GPFA (Yu et al., 2009), employ covariances to expose a dynamical repertoire of motor cortex that is comprised of neuronal modes. Previous work started to analyze the relation between the dimensionality of activity and connectivity (Aljadeff et al., 2015; Aljadeff et al., 2016; Mastrogiuseppe and Ostojic, 2018; Dahmen et al., 2019; Dahmen et al., 2021; Hu and Sompolinsky, 2020), but only in spatially unstructured networks, where each neuron can potentially be connected to any other neuron. The majority of connections within cortical areas, however, stems from local axonal arborizations (Schnepel et al., 2015). Here, we add this biological constraint and demonstrate that these networks, too, support a dynamically balanced critical state. This state in particular exhibits neural modes which are spanned by neurons spread across the experimentally observed large distances. In this state a small subset of modes that are close to the point of instability dominates the variability of the network activity and thus spans a low-dimensional neuronal manifold. As opposed to specifically designed connectivity spectra via plasticity mechanisms (Hennequin et al., 2014) or low-rank structures embedded into the connectivity (Mastrogiuseppe and Ostojic, 2018), the dynamically balanced critical state is a mechanism that only relies on the heterogeneity which is inherent to sparse connectivity and abundant across all brain areas.

While we here focus on covariance patterns in stationary activity periods, the majority of recent works studied transient activity during motor behavior (Gallego et al., 2017). How are stationary and transient activities related? During stationary ongoing activity states, covariances are predominantly generated intrinsically (Helias et al., 2014). Changes in covariance patterns therefore arise from changes in the effective connectivity via changes in neuronal gains, as demonstrated here in the two periods of the reach-to-grasp experiment and in our simulations for networks close to criticality (Figure 5D). During transient activity, on top of gain changes, correlated external inputs may directly drive specific neural modes to create different motor outputs, thereby restricting the dynamics to certain subspaces of the manifold. In fact, Elsayed et al., 2016 reported that the covariance structures during movement preparation and movement execution are unrelated and corresponding to orthogonal spaces within a larger manifold. Also, Luczak et al., 2009 studied auditory and somatosensory cortices of awake and anesthetized rats during spontaneous and stimulus-evoked conditions and found that neural modes of stimulus-evoked activity lie in subspaces of the neural manifold spanned by the spontaneous activity. Similarly, visual areas V1 and V2 seem to exploit distinct subspaces for processing and communication (Semedo et al., 2019), and motor cortex uses orthogonal subspaces capturing communication with somatosensory cortex or behavior-generating dynamics (Perich et al., 2021). Gallego, 2018 further showed that manifolds are not identical, but to a large extent preserved across different motor tasks due to a number of task-independent modes. This leads to the hypothesis that the here described mechanism for long-range cooperation in the dynamically balanced critical state provides the basis for low-dimensional activity by creating such spatially extended neural modes, whereas transient correlated inputs lead to their differential activation for the respective target outputs. The spatial spread of the neural modes thereby leads to a distributed representation of information that may be beneficial to integrate information into different computations that take place in parallel at various locations. Further investigation of these hypotheses is an exciting endeavor for the years to come.

Materials and methods

Experimental design and statistical analysis

Two adult macaque monkeys (monkey E - female, and monkey N - male) are recorded in behavioral experiments of two types: resting state and reach-to-grasp. The recordings of neuronal activity in motor and pre-motor cortex (hand/arm region) are performed with a chronically implanted Utah array (Blackrock Microsystems). Details on surgery, recordings, spike sorting and classification of behavioral states can be found in Riehle et al., 2013; Riehle et al., 2018; Brochier et al., 2018; Dąbrowska et al., 2020. All animal procedures were approved by the local ethical committee (C2EA 71; authorization A1/10/12) and conformed to the European and French government regulations.

Resting state data

During the resting state experiment, the monkey is seated in a primate chair without any task or stimulation. Registration of electrophysiological activity is synchronized with a video recording of the monkey’s behavior. Based on this, periods of ‘true resting state’ (RS), defined as no movements and eyes open, are chosen for the analysis. Eye movements and minor head movements are included. Each monkey is recorded twice, with a session lasting approximately 15 and 20 min for monkeys E (sessions E1 and E2) and N (sessions N1 and N2), respectively, and the behavior is classified by visual inspection with single second precision, resulting in 643 and 652 s of RS data for monkey E and 493 and 502 s of RS data for monkey N.

Reach-to-grasp data

In the reach-to-grasp experiment, the monkeys are trained to perform an instructed delayed reach-to-grasp task to obtain a reward. Trials are initiated by a monkey closing a switch (TS, trial start). After 400 ms a diode is illuminated (WS, warning signal), followed by a cue after another 400 ms(CUE-ON), which provides partial information about the upcoming trial. The cue lasts 300 ms and its removal (CUE-OFF) initiates a 1 s preparatory period, followed by a second cue, which also serves as GO signal. Two epochs, divided into 200 ms sub-periods, within such defined trials are chosen for analysis: the first 400 ms after TS (starting period, S1 and S2), and the 400 ms directly following CUE-OFF (preparatory period, P1 and P2) (Figure 6a). Five selected sessions for monkey E and eight for monkey N provide a total of 510 and 1111 correct trials, respectively. For detailed numbers of trials and single units per recording session see Appendix 1—table 1.

Separation of putative excitatory and inhibitory neurons

Offline spike-sorted single units (SUs) are separated into putative excitatory (broad-spiking) and putative inhibitory (narrow-spiking) based on their spike waveform width (Barthó et al., 2004; Kaufman et al., 2010; Kaufman et al., 2013; Peyrache, 2012; Peyrache and Destexhe, 2019). The width is defined as the time (number of data samples) between the trough and peak of the waveform. Widths of all average waveforms from all selected sessions (both resting state and reach-to-grasp) per monkey are collected. Thresholds for ‘broadness’ and ‘narrowness’ are chosen based on the monkey-specific distribution of widths, such that intermediate values stay unclassified. For monkey E the thresholds are 0.33 ms and 0.34 ms and for monkey N 0.40 ms and 0.41 ms. Next, a two-step classification is performed session by session. Firstly, the thresholds are applied to average SU waveforms. Secondly, the thresholds are applied to SU single waveforms and a percentage of single waveforms pre-classified as the same type as the average waveform is calculated. SU for which this percentage is high enough are marked classified. All remaining SUs are grouped as unclassified. We verify the robustness of our results with respect to changes in the spike sorting procedure in Appendix 1 Section 2.

Synchrofacts, that is, spike-like synchronous events across multiple electrodes at the sampling resolution of the recording system (1/30 ms) (Torre, 2016), are removed. In addition, only SUs with a signal-to-noise ratio (Hatsopoulos et al., 2004) of at least 2.5 and a minimal average firing rate of 1 Hz are considered for the analysis, to ensure enough and clean data for valid statistics.

Statistical analysis

All RS periods per resting state recording are concatenated and binned into 1 s bins. Next, pairwise covariances of all pairs of SUs are calculated according to the following formula:

| (3) |

with bi, bj - binned spike trains, , being their mean values, the number of bins, and the scalar product of vectors and . Obtained values are broadly distributed, but low on average in every recorded session: in session E1 E-E pairs: (M±SD), E-I: , I-I: , in session E2 E-E: , E-I , I-I , in session N1 E-E , E-I , I-I , in session N2 E-E , E-I , I-I .

To explore the dependence of covariance on the distance between the considered neurons, the obtained values are grouped according to distances between electrodes on which the neurons are recorded. For each distance the average and variance of the obtained distribution of cross-covariances is calculated. The variance is additionally corrected for bias due to a finite number of measurements (Dahmen et al., 2019). In most of cases, the correction does not exceed 0.01%.

In the following step, exponential functions are fitted to the obtained distance-resolved variances of cross-covariances ( corresponding to the variance and to distance between neurons), which yields a pair of values . The least squares method implemented in the Python scipy.optimize module (SciPy v.1.4.1) is used. Firstly, three independent fits are performed to the data for excitatory-excitatory, excitatory-inhibitory, and inhibitory-inhibitory pairs. Secondly, analogous fits are performed, with the constraint that the decay constant should be the same for all three curves.

Covariances in the reach-to-grasp data are calculated analogously but with different time resolution. For each chosen sub-period of a trial, data are concatenated and binned into 200 ms bins, meaning that the number of spikes in a single bin corresponds to a single trial. The mean of these counts normalized to the bin width gives the average firing rate per SU and sub-period. The pairwise covariances are calculated according to Equation (3). To assess the similarity of neuronal activity in different periods of a trial, Pearson product-moment correlation coefficients are calculated on vectors of SU-resolved rates and pair-resolved covariances. Correlation coefficients from all recording sessions per monkey are separated into two groups: using sub-periods of the same epoch (within-epoch), and using sub-periods of different epochs of a trial (between-epochs). These groups are tested for differences with significance level . Firstly, to check if the assumptions for parametric tests are met, the normality of each obtained distribution is assessed with a Shapiro-Wilk test, and the equality of variances with an F-test. Secondly, a t-test is applied to compare within- and between-epochs correlations of rates or covariances. Since there are two within and four between correlation values per recording session, the number of degrees of freedom equals: , which is 28 for monkey E and 46 for monkey N. To estimate the confidence intervals for obtained differences, the mean difference between groups and their pooled standard deviation are calculated for each comparison

with mwithin and mbetween being the mean, swithin and sbetween the standard deviation and and the number of within- and between-epoch correlation coefficient values, respectively.

This results in 95 % confidence intervals of for rates and for covariances in monkey E and for rates and for covariances in monkey N.

For both monkeys the within-epoch rate-correlations distribution does not fulfill the normality assumption of the t-test. We therefore perform an additional non-parametric Kolmogorov-Smirnov test for the rate comparison. The differences are again significant; for monkey E ; for monkey N .

For all tests we use the implementations from the Python scipy.stats module (SciPy v.1.4.1).

Mean and variance of covariances for a two-dimensional network model with excitatory and inhibitory populations

The mean and variance of covariances are calculated for a two-dimensional network consisting of one excitatory and one inhibitory population of neurons. The connectivity profile , describing the probability of a neuron having a connection to another neuron at distance decays with distance. We assume periodic boundary conditions and place the neurons on a regular grid (Figure 3A), which imposes translation and permutation symmetries that enable the derivation of closed-form solutions for the distance-dependent mean and variance of the covariance distribution. These simplifying assumptions are common practice and simulations show that they do not alter the results qualitatively.

Our aim is to find an expression for the mean and variance of covariances as functions of distance between two neurons. While the theory in Dahmen et al., 2019 is restricted to homogeneous connections, understanding the spatial structure of covariances here requires us to take into account the spatial structure of connectivity. Field-theoretic methods, combined with linear-response theory, allow us to obtain expressions for the mean covariance and variance of covariance

| (4) |

with identity matrix 1, mean and variance of connectivity matrix , input noise strength , and spectral bound . Since and have a similar structure, the mean and variance can be derived in the same way, which is why we only consider variances in the following.

To simplify Equation (4), we need to find a basis in which and therefore also , is diagonal. Due to invariance under translation, the translation operators and the matrix have common eigenvectors, which can be derived using that translation operators satisfy , where is the number of lattice sites in - or -direction (see Appendix 1). Projecting onto a basis of these eigenvectors shows that the eigenvalues of are given by a discrete two-dimensional Fourier transform of the connectivity profile

Expressing in the eigenvector basis yields , where is a discrete inverse Fourier transform of the kernel . Assuming a large network with respect to the connectivity profiles allows us to take the continuum limit

As we are only interested in the long-range behavior, which corresponds to , or , respectively, we can approximate the Fourier kernel around by a rational function, quadratic in the denominator, using a Padé approximation. This allows us to calculate the integral which yields

where denotes the modified Bessel function of second kind and zeroth order (Olver et al., 2010), and the effective decay constant deff is given by Equation (1). In the long-range limit, the modified Bessel function behaves like

Writing Equation (4) in terms of gives

with the double asterisk denoting a two-dimensional convolution. is a function proportional to the modified Bessel function of second kind and first order (Olver et al., 2010), which has the long-range limit

Hence, the effective decay constant of the variances is given by deff. Note that further details of the above derivation can be found in the Appendix 1 Section 4 - Section 12.

Network model simulation

The explanation of the network state dependence of covariance patterns presented in the main text is based on linear-response theory, which has been shown to yield results quantitatively in line with non-linear network models, in particular networks of spiking leaky integrate-and-fire neuron models (Tetzlaff et al., 2012; Trousdale et al., 2012; Pernice et al., 2012; Grytskyy et al., 2013; Helias et al., 2013; Dahmen et al., 2019). The derived mechanism is thus largely model independent. We here chose to illustrate it with a particularly simple non-linear input-output model, the rectified linear unit (ReLU). In this model, a shift of the network’s working point can turn some neurons completely off, while activating others, thereby leading to changes in the effective connectivity of the network. In the following, we describe the details of the network model simulation.

We performed a simulation with the neural simulation tool NEST (Jordan, 2019) using the parameters listed in Appendix 1—table 4. We simulated a network of inhibitory neurons (threshold_lin_rate_ipn, Hahne, 2017), which follow the dynamical equation

| (5) |

where zi is the input to neuron i, the output firing rate with (threshold linear activation function)

time constant , connectivity matrix , a constant external input , and uncorrelated Gaussian white noise , , with noise strength . The neurons were connected using the fixed_indegree connection rule, with connection probability , indegree , and delta-synapses (rate_connection_instantaneous) of weight .

The constant external input to each neuron was normally distributed, with mean , and standard deviation . It was used to set the firing rates of neurons, which, via the effective connectivity, influence the intrinsically generated covariances in the network. The two parameters and were chosen such that, in the stationary state, half of the neurons were expected to be above threshold. Which neurons are active depends on the realization of and is therefore different for different networks.

To assess the distribution of firing rates, we first considered the static variability of the network and studied the stationary solution of the noise-averaged input , which follows from Equation (5) as

| (6) |

Note that , through the nonlinearity , in principle depends on fluctuations of the system. This dependence is, however, small for the chosen threshold linear , which is only nonlinear in the point .

The derivation of is based on the following mean-field considerations: according to Equation (6) the mean input to a neuron in the network is given by the sum of external input and recurrent input

The variance of the input is given by

The mean firing rate can be calculated using the diffusion approximation (Tuckwell, 2009; Amit and Tsodyks, 2009), which is assuming a normal distribution of inputs due to the central-limit theorem, and the fact that a linear threshold neuron only fires if its input is positive

where denotes the probability density of the firing rate . The variance of the firing rates is given by

The number of active neurons is the number of neurons with a positive input, which we set to be equal to

which is only fulfilled for . Inserting this condition simplifies the equations above and leads to

For the purpose of relating synaptic weight and spectral bound , we can view the nonlinear network as an effective linear network with half the population size (only the active neurons). In the latter case, we obtain

For a given spectral bound , this relation allows us to derive the value

| (7) |

that, for a arbitrarily fixed (here ), makes half of the population being active. We were aiming for an effective connectivity with only weak fluctuations in the stationary state. Therefore, we fixed the noise strength for all neurons to the small value compared to the external input, such that the noise fluctuations did not have a large influence on the calculation above that determines which neurons were active.

To show the effect of a change in the effective connectivity on the covariances, we simulated two networks with identical connectivity, but supplied them with slightly different external inputs. This was realized by choosing

with

, and indexing the two networks. The main component of the external input was the same for both networks. But, the small component was drawn independently for the two networks. This choice ensures that the two networks have a similar external input distribution (Figure 5B1), but with the external inputs distributed differently across the single neurons (Figure 5B2). How similar the external inputs are distributed across the single neurons is determined by .

The two networks have a very similar firing rate distribution (Figure 5E1), but, akin to the external inputs, the way the firing rates are distributed across the single neurons differs between the two networks (Figure 5E2). As the effective connectivity depends on the firing rates

this leads to a difference in the effective connectivities of the two networks and therefore to different covariance patterns, as discussed in Figure 5.

We performed the simulation for spectral bounds ranging from 0.1 to 0.9 in increments of 0.1. We calculated the correlation coefficient of firing rates and the correlation coefficient of time-lag integrated covariances between neurons in the two networks (Figure 5D) and studied the dependence on the spectral bound.

To check whether the simulation was long enough to yield a reliable estimate of the rates and covariances, we split each simulation into two halves, and calculate the correlation coefficient between the rates and covariances from the first half of the simulation with the rates and covariances from the second half. They were almost perfectly correlated (Figure 5C). Then, we calculated the correlation coefficients comparing all halves of the first simulation with all halves of the second simulation, showing that the covariance patterns changed much more than the rate patterns (Figure 5C).

Acknowledgements

This work was partially supported by HGF young investigator’s group VH-NG-1028, European Union’s Horizon 2020 research and innovation program under Grant agreements No. 785,907 (Human Brain Project SGA2) and No. 945,539 (Human Brain Project SGA3), ANR grant GRASP and partially funded by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) - 368482240/GRK2416. We are grateful to our colleagues in the NEST and Elephant developer communities and for continuous collaboration. All network simulations were carried out with NEST 2.20.0 (http://www.nest-simulator.org). All data analyses performed with Elephant (https://neuralensemble.org/elephant/). We thank Sebastian Lehmann for help with the design of the figures.

Appendix 1

1 Correlations and covariances

A typical measure for the strength of neuronal coordination is the Pearson correlation coefficient, here applied to spike counts in bins. Correlation coefficients, however, comprise features of both auto- and cross-covariances. From a theoretical point of view, it is simpler to study cross-covariances separately. Indeed, linear-response theory has been shown to faithfully predict cross-covariances in spiking leaky integrate-and-fire networks (Tetzlaff et al., 2012; Pernice et al., 2012; Trousdale et al., 2012; Helias et al., 2013; Dahmen et al., 2019; Grytskyy et al., 2013). Appendix 1—figure 1 justifies the investigation of cross-covariances instead of correlation coefficients for the purpose of this study. It shows that the spatial organization of correlations closely matches the spatial organization of cross-covariances.

Appendix 1—figure 1. Correlations and covariances.

The shown data is taken from session E2. (E-E: excitatory-excitatory, E-I: excitatory-inhibitory, I-I: inhibitory-inhibitory). (A) Population-resolved distribution of pairwise spike-count Pearson correlation coefficients. Same data as in Figure 1C. (B) Population-resolved distribution of pairwise spike-count covariances. (C) Population-resolved distribution of variances. (D) Pairwise spike-count correlation coefficients with respect to the neuron marked by black triangle. Grid indicates electrodes of a Utah array, triangles and circles correspond to putative excitatory and inhibitory neurons, respectively. Size as well as color of markers represent correlation. Neurons within the same square were recorded on the same electrode. Same data as in Figure 1D. (E) Pairwise spike-count covariances with respect to the neuron marked by black triangle.

2 Robustness to E/I separation

The analysis of the experimental data involves a number of preprocessing steps, which may affect the resulting statistics. In our study one such critical step is the separation of putative excitatory and inhibitory units, which is partially based on setting thresholds on the widths of spike waveform, as described in the Methods section. We tested the robustness of our conclusions with respect to these thresholds.

As mentioned in the Methods, two thresholds for the width of a spike waveform are chosen, based on all SU average waveforms: A width larger than the ‘‘broadness’’ threshold indicates a putative excitatory neuron. A width lower than the ‘‘narrowness’’ threshold indicates a putative inhibitory neuron. Units with intermediate widths are unclassified. Additionally, to increase the reliability of the classification, we perform it in two steps: first on the SU’s average waveform, and second on all its single waveforms. We calculate the percentage of single waveforms classified as either type. Finally, only SUs showing a high enough percentage of single waveforms classified the same as the average waveform are sorted as the respective type. The minimal percentage required, referred to as consistency , is initially set to the lowest value which ensures no contradictions between average- and single-waveform thresholding results. While the ‘‘broadness’’ and ‘‘narrowness’’ thresholds are chosen based on all available data for a given monkey, the required consistency is determined separately for each recording session. For monkey N is set to 0.6 in all but one sessions: In resting state session N1 it is increased to 0.62. For monkey E the values of equals 0.6 in the resting state recordings and take the following values in five analyzed reach-to-grasp sessions: 0.6, 0.89, 0.65, 0.61, 0.64.

The only step of our analysis for which the separation of putative excitatory and inhibitory neurons is crucial is the fitting of exponentials to the distance-resolved covariances. This step only involves resting state data. To test the robustness of our conclusions, we manipulate the required consistency value for sessions E1, E2, N1, and N2 by setting it to 0.75. Appendix 1—figure 2 and Appendix 1—table 1 summarize the resulting fits.

Appendix 1—figure 2. Distance-resolved variance of covariance: robustness of decay constant estimation.

Exponential fits (lines) to variances of covariances (dots) analogous to Figure 4A and B in the main text (columns 1&3 and 2&4, respectively) for all analyzed resting state sessions. The two sets of plots differ in E/I separation consistency values chosen during data preprocessing. Panels A-H: default (lowest) required consistency (~0.6), used throughout the main analysis; panels I-P: The values of the obtained decay constants are listed in Appendix 1—table 1.

It turns out that increasing to 0.75, which implies disregarding about 20-25 percent of all data, does not have a strong effect on the fitting results. The obtained decay constants are smaller than for a lower value, but they stay in a range about an order of magnitude larger than the anatomical connectivity. We furthermore see that fitting individual slopes to different populations in some sessions leads to unreliable results (cf. yellow lines in Appendix 1—figure 2A, I and blue lines in Appendix 1—figure 2C,D,K,L). Therefore, the data is not sufficient to detect differences in decay constants for different neuronal populations. Fitting instead a single decay constant yields trustworthy results (cf. yellow lines in Appendix 1—figure 2E,M and blue lines in Appendix 1—figure 2G,H,O,P). Our data thus clearly expose that decay constants of covariances are in the millimeter range.

Appendix 1—table 1. Summary of exponential fits to distance-resolved variance of covariance.

For each value of E/I separation consistency the numbers of sorted putative neurons and the percentages of unclassified units, and therefore not considered for fitting SUs, are listed per resting state session, along with the resulting fits (Figure 4 in the main text)

| C | E1 | E2 | N1 | N2 | |

|---|---|---|---|---|---|

| 0.6 (default) | #exc/#inh | 56/50 | 67/56 | 76/45 | 78/62 |

| unclassified | 0.078 | 0.075 | 0.069 | 0.091 | |

| relative error | 1.1157 | 1.0055 | 1.0097 | 1.0049 | |

| 1-slope fit | 1.674 | 1.029 | 1.676 | 4.273 | |

| I-I | 1.919 | 0.996 | 1.647 | 4.156 | |

| I-E | 0.537 | 1.206 | 2.738 | 96100.688 | |

| E-E | 1.642 | 1.308 | 80308.482 | 94096.871 | |

| 0.75 | #exc/#inh | 45/42 | 47/48 | 70/36 | 74/48 |

| unclassified | 0.24 | 0.28 | 0.18 | 0.21 | |

| relative error | 1.1778 | 1.0141 | 1.0102 | 1.0090 | |

| 1-slope fit | 1.357 | 0.874 | 1.420 | 2.587 | |

| I-I | 1.794 | 0.809 | 1.394 | 2.550 | |

| I-E | 0.496 | 1.123 | 3.682 | 40.852 | |

| E-E | 1.390 | 1.199 | 80548.500 | 10310.780 |

3 Stationarity of behavioral data