Supplemental Digital Content is available in the text.

Abstract

Purpose

Continuing health provider education (HPE) is an important intervention supported by health policy to counter the opioid epidemic, yet knowledge regarding appropriate program design and evaluation is lacking. The authors aim to provide a comprehensive understanding of evaluations of opioid-related continuing HPE programs and their appropriateness as interventions to improve population health.

Method

In January 2020, the authors conducted a systematic search of 7 databases, seeking studies of HPE programs on opioid analgesic prescribing and overdose prevention. Reviewers independently screened the titles and abstracts of all studies and then assessed the full texts of all studies potentially eligible for inclusion. The authors extracted a range of data using categories for evaluating complex programs: the use of theory, program purpose, inputs, activities, outputs, outcomes, and industry involvement. Results were reported in a narrative synthesis.

Results

Thirty-nine reports on 32 distinct HPE programs met inclusion criteria. Of these 32, 31 (97%) were U.S./Canadian programs and 28 (88%) were reported after 2010. Measurements of changes in knowledge and confidence were common. Performance outcomes were less common and typically self-reported. Most studies (n = 27 [84%]) used concerns of opioid-related harms at the population health level to justify the educational intervention, but only 5 (16%) measured patient- or population-level outcomes directly related to the educational programs. Six programs (19%) had direct or indirect opioid manufacturer involvement.

Conclusions

Continuing HPE has been promoted as an important means of addressing population-level opioid-related harms by policymakers and educators, yet published evaluations of HPE programs focusing on opioid analgesics inadequately evaluate patient- or population-level outcomes. Instead, they primarily focus on self-reported performance outcomes. Conceptual models are needed to guide the development and evaluation of continuing HPE programs intended to have population health benefits.

Opioid-related harms, at epidemic levels throughout the United States and Canada, have worsened in the context of the COVID-19 pandemic. 1–3 While an increasing proportion of deaths is due to nonmedical opioids (e.g., heroin, fentanyl, fentanyl analogues) and to polysubstance use, prescribed opioids continue to contribute to this epidemic. 4,5

In response, numerous prescribing-focused policies have been implemented. These include prescription drug monitoring programs (PDMPs), 6 regulatory authority investigations of prescribers, and the development and dissemination of clinical practice guidelines. 7,8 Health provider education (HPE) has been central to the implementation of many of these interventions, and a variety of major policies, as well as national media reporting, have identified HPE as a pivotal strategy for countering the opioid epidemic. 9–12 The central role of HPE is driven by a recognition that, heretofore, HPE on pain has been deficient in terms of both quantity and quality across the health professions education continuum. 13,14 A compounding factor is the notable history of pharmaceutical industry involvement, which has influenced prescriber behavior not just through commercial promotions but also through heavy investments in HPE. 15,16

Although HPE is considered an important policy intervention for countering the opioid epidemic, there is a gap in understanding the appropriate design of these programs, how they are evaluated, and the manner in which program components affect outcomes. 17,18 This gap persists despite at least 2 systematic reviews that have assessed the effectiveness of HPE for opioid prescribing. 19,20 The role of educational theory in informing the development, delivery, and evaluation of these programs is particularly unclear, as is how educational theory should inform population health policy.

Objective and Research Questions

The objective of this scoping review is to provide a comprehensive understanding of evaluations of continuing HPE focused on opioid prescribing. The following 4 related questions guided our research:

What explicit educational, evaluative, or health policy frameworks; models; or theories are used to inform evaluative reports about opioid continuing HPE?

What evidence-based program components are included in the interventions?

What outcome types do these studies report?

What is the role of the pharmaceutical industry in opioid continuing HPE?

Method

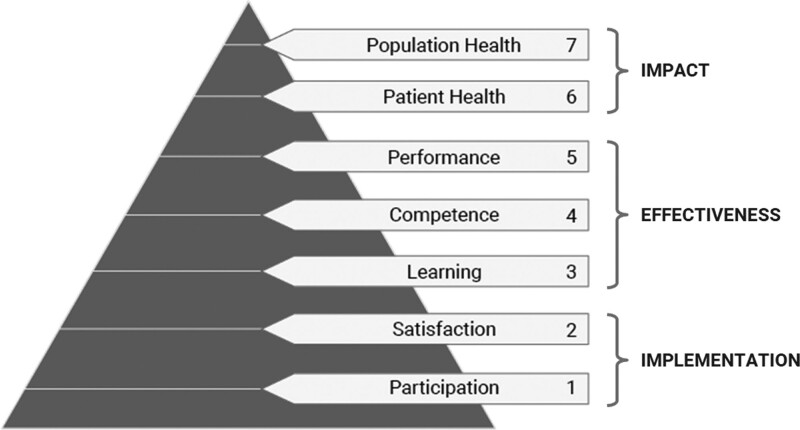

Two frameworks for scoping reviews 21,22 and a guide for conducting systematic reviews in medical education 23,24 informed this review. Additionally, we adapted a framework for applying theory to synthesize evidence from systematic reviews. 25 We used 2 related sets of knowledge on continuing HPE to synthesize the results. We used a review of systematic reviews on the effectiveness of continuing medical education to categorize evidence-based best practices in continuing HPE 26 and we applied an outcomes framework for planning and assessing continuing HPE to determine outcome types. 27,28 To facilitate consideration of HPE as health policy, we categorized these education outcomes as implementation, effectiveness, and impact outcomes according to frameworks for complex interventions (Figure 1). 29 We developed an a priori protocol for this review and made no amendments to the protocol.

Figure 1.

Mapping Moore and colleagues’ continuing health provider education outcome levels (left of figure) 27,28 to complex intervention outcome evaluation types (right of figure). 29 Reprinted with permission from Moore DE Jr, Chappell K, Sherman L, and Vinayaga-Pavan M. A conceptual framework for planning and assessing learning in continuing education activities designed for clinicians in 1 profession and/or clinical teams. Med Teach. 2018;40:904–913.

Inclusion/exclusion criteria

Participants.

We included studies of practicing health professionals involved in opioid prescribing for chronic pain, including physicians, nurses/nurse practitioners, dentists, and pharmacists. We excluded studies of continuing HPE whose primary participants were trainees (e.g., medical residents) or non–health professionals (e.g., patients, family members).

Interventions.

We included studies of educational programs relating to opioid analgesic or naloxone (opioid antagonist) prescribing. We excluded studies of opioid agonist and antagonist therapies, which are typically prescribed for the management of opioid use disorder rather than for pain. We used a broad and widely accepted definition of continuing education (CE) that encompasses interventions ranging from live, group-based lectures (“traditional CE”) to asynchronous online self-learning (e.g., archived webinars) to one-to-one, point-of-care learning (e.g., academic detailing). 30 The evaluations had to focus primarily on the educational intervention and include any kind of evaluative learning outcome. 26 We excluded evaluations of larger complex interventions that, while incorporating education, did not report specific evaluations of the continuing HPE component. We did not include any criteria about comparators.

Types of studies.

We included any kind of evaluative study design, such as quasi-experimental pre–post designs, prospective and retrospective observational designs, randomized controlled trials, and qualitative studies. We did not include systematic reviews or other evidence syntheses, nor did we include nonevaluative articles such as commentaries, editorials, or conceptual reports. We excluded studies focused only on needs assessments or program development that did not also incorporate a program evaluation. Meeting abstracts were not included in our review, but we used such records to identify possible full-length reports that would meet our inclusion criteria. To find these, we conducted manual searches and contacted authors of relevant meeting abstracts.

Context.

We did not set any limits in terms of timing, geography, or language given that problematic opioid prescribing has been a long-standing issue and a global phenomenon. 31 Based on our previous research, we anticipated that the majority of reports would likely be from the United States and written in English. 32 We included only studies of prescribers who worked in outpatient, ambulatory settings. We excluded studies based in hospital settings (e.g., perioperative clinical encounters) because priorities and practices of opioid prescribing in these contexts differ substantially from ambulatory settings where the majority of long-term opioid prescribing occurs. Additionally, more organizational (specifically noneducational) factors influence opioid prescribing in these inpatient settings. We excluded studies focused only on palliative and end-of-life care as these are different treatment contexts with different educational priorities.

Search strategy

Our search strategy followed the 3-step approach recommended by the Joanna Briggs Institute, 33 and we consulted with a professional librarian. The first step involved an initial limited search of MEDLINE, referenced against a set of high-relevance sentinel studies identified from 2 recent systematic reviews. 19,20 We then analyzed the words and language used in the titles, abstracts, and index terms of relevant studies to refine our search strategy (see Supplemental Digital Appendix 1 at http://links.lww.com/ACADMED/B127 for full database searches). The second step was to conduct a comprehensive search in the following databases:

Embase (Embase Classic + EMBASE)

Cumulative Index to Nursing and Allied Health (EBSCOHost)

MEDLINE (Medline-in-Process, Medline Epubs Ahead of Print)

PsycINFO

Dissertation Abstracts

Education Resources Information Centre (ProQuest)

Cochrane Trials and International Trials Registers and Cochrane Database of Systematic Reviews and Protocols (Wiley).

We used medical subject headings (MeSH) and textword terms to search for the concepts of “opioids” and “continuing health professional education.” We searched all databases from inception to January 20, 2020. The final step entailed a manual search of the references of the included studies to identify any additional relevant records.

Study selection

Search results were first uploaded into EndNote (Clarivate Analytics, Philadelphia, Pennsylvania), and duplicates were removed using a systematized process. 34 We then uploaded these records to the Covidence (Melbourne, Victoria, Australia) online data management platform for screening. Four reviewers (including A.S. and G.R.M.) independently screened titles and abstracts in duplicate and resolved most conflicts. When they could not reach an agreement, the duplicate reviewers discussed the record with a third reviewer, and we made a final decision by consensus. Next, 3 reviewers (including A.S. and G.R.M.) conducted a full-text review of the remaining records in duplicate, tracking their reasons for excluding studies using Covidence. Again, these reviewers discussed conflicts and consulted the third reviewer when they could not agree, and we made a final decision by consensus. We followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) extension for scoping reviews for manuscript preparation, 35 and we created a PRISMA flow diagram to present the number of records identified, screened, assessed for eligibility, and included or excluded (along with the reasons they were excluded).

Data extraction and charting

We developed a data extraction form using Excel software (Version 16.4, Microsoft, Redmond, Washington). We adapted a logic model for medical education programs, 36 and we applied both (1) Moore and colleagues’ outcomes framework for continuing HPE 27,28 and (2) the evidence-based continuing HPE practices from Cervero and Gaines’ review of systematic reviews. 26 We extracted the following data from each study:

Theory (explicit, implicit, or missing);

Program purpose (including the primary problem, target audience, and needs assessment);

Inputs (i.e., faculty, funding, registration fees, regulatory involvement, accreditation [whether learners earned CE credit], promotion/recruitment, program development);

Activities (i.e., duration, setting, synchronicity, group size [< 20 small, ≥ 20 large], number of interventions, number of exposures, interactivity);

Outputs (i.e., number of programs, location of programs, external factors identified [such as incentives for participation]);

Outcomes (i.e., participation, satisfaction, knowledge, confidence, attitudes, competence, performance, patient health, population health); and

Industry involvement.

Two of us (A.S. and G.R.M.) tested the form on 3 studies to ensure all relevant results were extracted, and we made adjustments to the form accordingly.
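As an illustration only, the extraction categories listed above could be represented as a structured record. The Python sketch below is not the Excel form used in this review; the class name `ExtractionRecord`, the field names, and the helper `group_size_category` are hypothetical, and only a subset of the form's fields is shown.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Categories taken from the extraction list above.
THEORY_STATUS = ("explicit", "implicit", "missing")
OUTCOME_TYPES = (
    "participation", "satisfaction", "knowledge", "confidence", "attitudes",
    "competence", "performance", "patient health", "population health",
)


def group_size_category(n_learners: int) -> str:
    """Apply the form's threshold: fewer than 20 is small, 20 or more is large."""
    return "small" if n_learners < 20 else "large"


@dataclass
class ExtractionRecord:
    """Hypothetical record holding a subset of the extracted fields."""
    program_id: str                       # illustrative identifier
    theory: str                           # one of THEORY_STATUS
    outcomes: List[str] = field(default_factory=list)
    group_size: Optional[int] = None      # None when not reported
    industry_involvement: bool = False

    def __post_init__(self) -> None:
        # Reject values that fall outside the predefined categories.
        if self.theory not in THEORY_STATUS:
            raise ValueError(f"unknown theory status: {self.theory!r}")
        unknown = [o for o in self.outcomes if o not in OUTCOME_TYPES]
        if unknown:
            raise ValueError(f"unknown outcome types: {unknown}")


# Hypothetical example values, not data from any included study.
example = ExtractionRecord(
    program_id="program-A",
    theory="implicit",
    outcomes=["participation", "knowledge"],
    group_size=15,
)
print(group_size_category(example.group_size))  # small
```

Validating categories when a record is created is one possible way to keep duplicate extraction consistent between reviewers; a spreadsheet with restricted cell values would serve the same purpose.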

Results

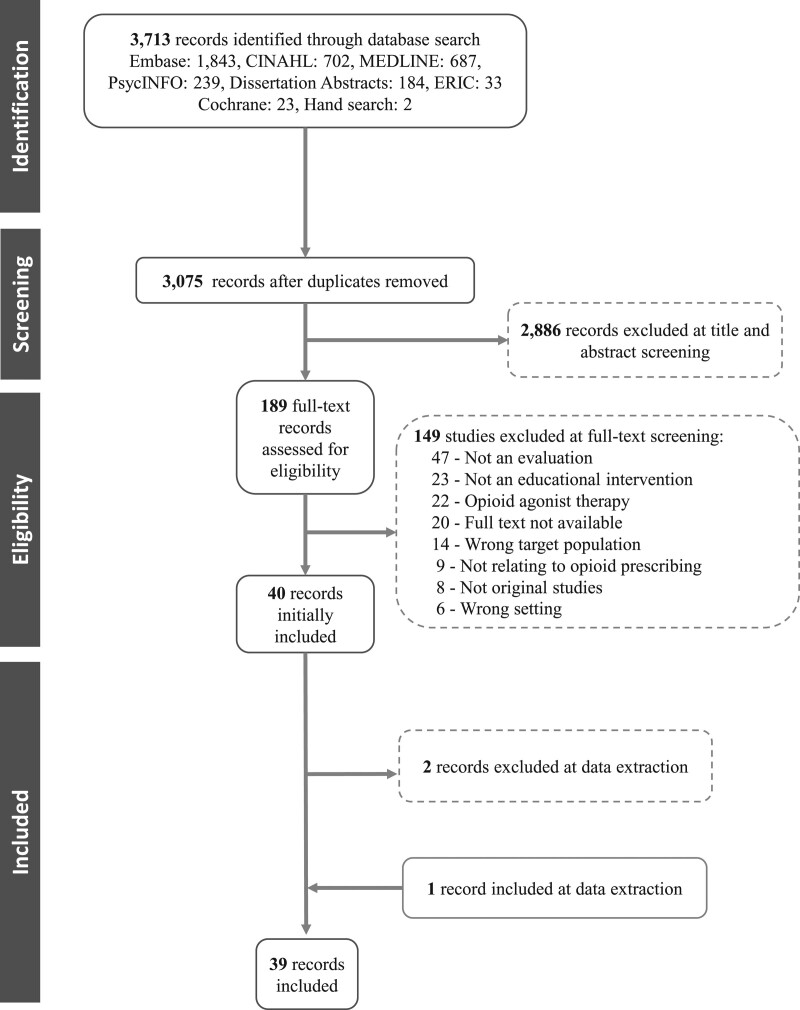

After removing 638 duplicates, the database search yielded 3,075 records. Of these, 2,886 were considered irrelevant and removed after title and abstract screening. We screened the full text of 189 articles, and we excluded 149 (Figure 2; see Supplemental Digital Appendix 2 at http://links.lww.com/ACADMED/B128 for the 149 articles excluded and the reasons for their exclusion). At the data extraction phase, we excluded 2 articles that were reviews of policies regarding opioid-related continuing HPE, rather than evaluations of specific programs. 37,38 At this stage, we also identified an additional study to include that provided more information about the delivery and development of a program described in another included study. Seven of the remaining 39 records 39–77 reported different outcomes for programs covered in other reports, so we analyzed these with the primary record; the denominators for the data reported below are therefore 32 unless otherwise stated.

Figure 2.

PRISMA 35 flow diagram presenting the number of records identified, screened, assessed for eligibility, and included or excluded (along with the reasons they were excluded). Abbreviations: PRISMA, Preferred Reporting Items for Systematic Reviews and Meta-Analyses; CINAHL, Cumulative Index to Nursing and Allied Health; ERIC, Education Resources Information Centre.
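The record counts reported above can be checked with simple arithmetic. The following Python sketch only restates the numbers given in the text; the variable names and the helper `percent_of_programs` are illustrative and are not part of the review protocol.

```python
# Counts as reported in the text above.
records_after_deduplication = 3075
excluded_at_title_abstract = 2886
excluded_at_full_text = 149
excluded_at_extraction = 2   # policy reviews rather than program evaluations
added_at_extraction = 1      # additional report on an already included program
secondary_reports = 7        # analyzed together with a primary record

full_text_screened = records_after_deduplication - excluded_at_title_abstract
included_reports = (
    full_text_screened
    - excluded_at_full_text
    - excluded_at_extraction
    + added_at_extraction
)
distinct_programs = included_reports - secondary_reports


def percent_of_programs(n: int, denominator: int = distinct_programs) -> float:
    """Unrounded percentage of programs, using 32 as the default denominator."""
    return 100 * n / denominator


print(full_text_screened, included_reports, distinct_programs)  # 189 39 32
print(percent_of_programs(28))  # 87.5, reported as 88% in the text
```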

Program descriptions

In total, we analyzed 39 evaluations of 32 continuing HPE programs from 28 distinct author groups. The reports spanned 1983 to 2020, though reports on 28 programs (88%) were published during or after 2010 (Appendix 1). The vast majority reported on U.S. or Canadian programs: 25 (78%) were based in the United States, 6 (19%) in Canada, and 1 (3%) in Turkey. 64 Physician participants were the primary learning targets of 28 (88%) of the programs and were the exclusive targets of 17 (53%). Only 2 of the programs that targeted exclusively physicians 50,66 (6% of the 32) focused on naloxone prescribing while the rest focused on opioid analgesics. Of the 32 programs, 9 (28%) also focused on nurse practitioners, but never exclusively. Physician assistants and dentists were included in, respectively, 2 programs 49,75 (6%) and 1 program 40 (3%). Four programs (13%) focused on only pharmacists and/or pharmacy technicians 41,55–57,68,69 and 2 other programs (6%) included pharmacists together with physicians. 40,66 Of the 6 programs that included pharmacists, 4 focused on naloxone prescribing or distribution. 55–57,66,68,69 Opioid-related continuing HPE targeted practitioners in a variety of settings, including community primary care (11 programs [34%]), community pharmacies (4 [12%]), academic internal medicine practices (2 [6%]), veterans and military health settings (2 [6%]), and the United States Indian Health Service (1 program [3%]). Reports on 10 programs (31%) did not describe a targeted setting.

Most programs (n = 19 [59%]) were delivered via traditional live group learning, including both large groups (15 programs [47%]) and small groups (4 [12%]). These were typically delivered as standalone programs, but we also found examples of delivery at the workplace 44–51,53,59,61,64,68 or in the context of a conference. 39,52,53,65,67,69,72 Some of these traditional live group programs also provided an alternative online individual asynchronous version 39,47,48,52,55–57,66,69; none of these blended in-person and online programs. One program, 67 which was the first to report any kind of virtual delivery of education, did blend group learning with cases emailed monthly to participants after the in-person intervention. Four programs (12%) 41,54,58,75 were delivered asynchronously online only and 1 (3%) 63 was delivered only synchronously online; the evaluations for all 4 of these were published recently (between 2016 and 2020). We found 4 examples of academic detailing 44–46,49,50,61 and 2 of audit and feedback 59,70—all of which were delivered at the workplace.

Articles on 9 of the 32 programs (28%) reported professional (medical or pharmacy) regulator involvement in the development or delivery of the program. Most of these programs involved traditional live group learning, though we also noted examples of other delivery modes. Of these 9 programs, 3 mandated physician participation in the program (e.g., due to identified inappropriate prescribing), 62,63,73,74 and another mixed mandated and voluntary participants. 61 None of the programs with pharmacist regulator involvement mandated pharmacist involvement. The single study evaluating the effect of an educational program for United States Indian Health Service providers 63 required participation, but by mandate of the employer, not of the medical regulator.

Articles on more than half of the programs (n = 17 [53%]) reported accreditation. Only 6 (19%) reported the specific number of credits participants could earn, which ranged from 0.2 to 20. In a further 6 programs (19%), the opioid-related continuing HPE was embedded within larger complex interventions. These included the following:

education embedded in a quality improvement program focused on the use of an electronic medical record (EMR)-based protocol, 51

a public health detailing program embedded in a jurisdiction-wide opioid crisis campaign, 61

an educational program that was part of a statewide campaign, which also included a media strategy, the release of prescribing guidelines, the formation of public health coalitions, and private industry–led public education campaigns, 53

the distribution of workplace naloxone toolkits together with the delivery of educational programs for pharmacists, 41,55–57 and

a statewide approach to facilitating naloxone utilization, delivered with pharmacist and pharmacy technician training in naloxone. 68

Evaluation design and evidence-based components

Articles on most programs (n = 27 [84%]) reported that population-level concerns of opioid-related harms driven by inappropriate prescribing were used to justify the continuing HPE program and its evaluation (Appendix 2). Studies of 5 programs (16%) reported, instead, focusing on performance-level problems such as practices relating to opioid prescribing and chronic pain. Articles on 3 of these programs 42–46,73,74 published outcomes in the 1980s and 1990s, before the recognition of widespread, population-level harms from opioids. A more recent study (from 2015) was based in Turkey, 64 where the epidemiology of opioid prescribing and related harms is distinct from that of the United States and Canada. The final of these 5 studies 65 focused on end-of-life and palliative care, rather than only chronic pain management.

Articles on only 6 programs (19%) identified an explicit model for the development or evaluation of the educational intervention. One set of authors 42,43 identified the Transtheoretic Model of Behavior Change as the primary orienting model for their intervention. This program also applied Moore and colleagues’ framework, 27 as did another program, using traditional live group learning. 72 Two programs applied the Theory of Planned Behavior, 41,55 and one of these also referenced the Kirkpatrick model in describing program development. 55–57 The single example of an explicitly interprofessional program 52 reported the use of frameworks for interprofessional education. 78–80 One intervention entailing academic detailing 47,48 applied the Medical Research Council’s framework for complex interventions for both program development and program evaluation. 81 Finally, 1 traditional live, small-group program with regulatory involvement was designed around models of physician behavior change. 73

While the studies of the other programs did not explicitly reference any theories, models, or frameworks for program development or evaluation, we could identify some implicit models. For example, 1 program 51 used quality improvement and change management as the overarching framework for its intervention. Likewise, an academic detailing intervention 44–46 applied a combination of communication theory and behavioral science for development. Another 2 programs 58,77 used a clear outcomes-based approach to evaluation congruent with Moore and colleagues’ framework, 27 focusing on the change in clinician knowledge, competence, confidence, and performance. Similarly, an additional program discussed an approach to physician behavior change driven by changes in confidence and knowledge. 66

In terms of evaluation, studies of more than half of the programs (n = 17 [53%]) used quasi-experimental pre–post designs. Of these 17, only 2 included comparator groups. 52,77 Evaluations of 6 programs 53,65,68,69,74,76 (19%) measured only postintervention outcomes without any baseline measures. The studies of 2 programs (6%) examining the effects of the educational interventions on physician prescribing used retrospective cohort studies, 50,60 and another one (3%) used a time series analysis. 59 We identified 5 controlled trials, 42–46,49,70,75 one of which 49 did not report clear randomization between groups. Two of the 4 randomized controlled trials were among the earlier studies published in the 1980s and 1990s. 42–46 Both of the audit and feedback studies 59,70 and 2 of the 4 academic detailing studies 44–46,49 used designs that allow for greater causal inference, namely controlled trials and time series analysis.

Articles on 4 programs (12%) reported use of all the evidence-based components of interventions likely to improve physician performance and patient health. 42,43,60,67,75 These programs also tended to use evaluation designs that allow for greater causal inference (randomized controlled trials and a retrospective cohort study). Studies on 20 of the programs (62%) reported interactivity, and studies on a different 20 (62%) reported use of multiple methods. About a third of the programs (12 [38%]) included more than 1 educational exposure. The duration of the interventions ranged from 13 minutes to 780 minutes; most reported under 300 minutes. The longer programs tended to be traditional live group learning, while the academic detailing interventions and several of the virtual-only programs tended to be the shortest. Studies of 6 programs (19%), including the 2 audit and feedback programs and 1 program that included only mailed information, did not specify the duration of the intervention. 50,59,64,70,73,74,76

All the evaluations of the programs reported participation as an evaluation outcome, but articles on most (n = 22 [69%]) did not include satisfaction. Of the 32 distinct programs, evaluation measures of 16 of them (50%) included changes in knowledge, 18 (56%) included changes in confidence, and a different 18 (56%) included changes in attitudes. The studies of only 5 programs (16%) included measures of competence. One of these was a study of an educational program to improve enrollment in a PDMP that included self-reported changes in competence. 58 Performance, evaluated in 23 (72%) of the programs, was much more commonly measured; however, 13 of these 23 studies included self-reported performance changes. Of the 8 programs for which objective performance changes (e.g., in opioid prescribing rates) were measured, 6 used either randomized controlled or retrospective cohort studies. The evaluation of only 1 of these 8 reported an implementation outcome besides participation. 44 The articles on 3 additional programs reported objective performance changes, not of the program participants but of the entire jurisdiction where the intervention was delivered. 61,62,74 Evaluations of 6 programs 49,51,55–57,59,68,70 (19%) reported patient health outcomes; however, the patient outcomes for one of these programs were not directly connected to patients cared for by program participants. 68 The studies of 3 programs (9%), all evaluating traditional live group learning programs for physicians or pharmacists, reported community health outcomes. 53,62,68 Yet, none of these outcomes related directly to program participants’ patients or communities; rather, programs reported overall patterns of opioid-related harms in the jurisdictions and during the time in which the intervention took place.

Industry involvement

The evaluations of 6 programs (19%), all of which were published between 2016 and 2018, reported either direct or indirect industry involvement in the development or evaluation of the continuing HPE intervention. All of these were opioid analgesic education programs; none were naloxone training programs. Three were developed under the opioid analgesic risk evaluation and mitigation strategy (REMS), which is funded by opioid manufacturers, and all 3 of these reported accreditation. 39,54,77 Another program used content from a REMS program and received an educational grant from a pharmaceutical manufacturer. 52 A further program, not related to REMS, also received an educational grant from a pharmaceutical manufacturer. 58 The sixth program was sponsored by a pharmaceutical manufacturer and a private insurer, and the study authors were compensated directly by the pharmaceutical company. 70

Discussion

Summary of findings

The published literature on opioid-related continuing HPE makes little explicit use of theory from any domain to guide either development or evaluation. This paucity is emblematic of the general field of continuing HPE, in which the development and use of theory, frameworks, and models are considered to be in their infancy. 26 This situation, though, is common to improvement programs even outside of HPE. As Davidoff and colleagues note,

Despite the potential value of theory, a striking feature of many improvement efforts is the tendency of its practitioners to move straight to implementation, skipping the critical working out of the programme theory; for example, sometimes only the source of the problem is identified but not an accompanying theory of change. 82

This assertion accurately characterizes the bulk of studies included in our review. Without attending to theory, opioid-related continuing HPE program developers miss opportunities for expanding and optimizing impact and for contributing to the advancement of the field—all of which should be considered priorities in the context of the public health crisis that the programs claim to address. 82

Despite the paucity of theory to guide continuing HPE development and delivery, the extant literature provides substantial knowledge about components of programs that are likely to drive changes in practice behaviors and patient outcomes. 26 Similarly, conceptual frameworks making use of this published knowledge have been developed and widely disseminated. 27 Continuing HPE programs focused on opioids have so far made only moderate use of these best practices, which include interactivity, multiple methods, and multiple exposures. This underuse is associated with minimal reporting about patient- or population-level health outcomes. Again, this problem is not specific to continuing HPE related to opioid analgesics but reflects the continuing HPE and health policy fields more broadly. 83–86 Some reasons for this dearth may include a lack of financial resources, poor data sources, or lack of methodological expertise. 87,88 Yet these omissions are hard to justify when the primary motivation for the development and support of the opioid-related continuing HPE programs is the mitigation of population-level harms resulting from opioid use. This weakness is nowhere more clear than in the dates of publication of the included studies: the vast majority were published from 2010 onward and none were published between 2000 and 2009. These dates indicate that continuing HPE focused on opioid analgesics became a topic of scientific and evaluative value only after population-level opioid-related harms were more widely acknowledged.

Among the few programs that explicitly referenced theory, the most frequently mentioned frameworks were the Transtheoretic Model and the Theory of Planned Behavior. This behavior change orientation was also implicit in a number of the included studies. For example, Leong and colleagues claimed,

There are many stages in the outcomes of CME [continuing medical education] training for physicians. The initial stage is for the physician to assimilate the new knowledge. The second stage is that the physician be convinced enough of the new information that they are willing to change their practice. Only then can the third stage of implementation of the new material be realized and improvements in patient care be achieved. 65

This implicit theory—that knowledge acquisition will lead to behavior change which will, in turn, result in improved patient care—appears to justify the assumption that measures of changes in knowledge or clinical performance are sufficient to presume patient- and population-level effects. Yet, this stance is not well supported either by good theory or strong evidence. 26

The few studies that did report objective measures of performance change tended not to measure implementation outcomes. As is common in other outcomes-based program evaluations, these studies seemed to treat the educational interventions as “black boxes”; that is, they aimed to determine primarily if the interventions worked but did not provide a good framework or data for understanding why or how the interventions worked or did not work. 89

That such frameworks are lacking was evident in many of the included studies examining patient- and population-level outcomes. Three of these studies 53,62,68 reported evaluative outcomes that did not link directly to program participants but instead to ecological trends in the jurisdictions in which the intervention was delivered. The U.S. Food and Drug Administration (FDA) identified this same serious methodological flaw in its review of the Extended-Release/Long-Acting Opioid Prescribing REMS program, a major U.S. continuing HPE initiative aimed at reducing opioid analgesic harms. 38

The pharmaceutical industry is known to have played an insidious role in the origin of the contemporary opioid epidemic, particularly by promoting opioid analgesic use, often via educational programs for prescribers. 90 Our review has demonstrated the persistent, though not always direct, role of the pharmaceutical industry in setting the U.S. and Canadian opioid-related continuing HPE agenda. This presence is more noticeable in the opioid analgesic studies than in the naloxone studies. Four of the opioid analgesic studies were evaluations of REMS-compliant programs. In addition to the poor evaluation of these programs from the U.S. FDA (mentioned above), the opioid REMS program has been criticized for program and blueprint developers’ ties to industry, for the nature and orientation of the content of the compliant programs, and for the possibility that the connections to industry are obscured upon delivery. 91

Recommendations for practice

Based on the findings of this review, we have 2 primary recommendations for the practice of continuing HPE programs (especially those that aim to effect improvements at the population health level): (1) adopting evaluation frameworks for complex interventions and (2) using conceptual models that link clinical practice and population health.

Evaluation frameworks for complex interventions.

Continuing HPE interventions meet many of the criteria of so-called “complex interventions.” They involve the actions of people, involve a complex chain of steps, are embedded in social systems shaped by context, and are open systems subject to change. 92,93 The following are examples of methods from the evaluation sciences that may be useful for understanding the effects of complex interventions such as continuing HPE programs: the development of program theories, the use of logic models, and the determination of types of outcomes—and their relationship to one another. 36 Though others have long identified the need for sequential or staged evaluation for continuing HPE, 94 the field has been slow to move in this direction. However, there is evidence that this trend may be changing. Moore and colleagues’ recent renewal of a previous outcomes-based framework categorizes outcome types as “summative,” “performance,” and “impact.” 28 Their perspective aligns well with the effectiveness and impact outcomes commonly used in the evaluative sciences but, importantly, overlooks implementation outcomes, which are vital for the evaluation of complex interventions. 95 Knowledge from the evaluative sciences, which has been successfully applied to a wide range of population health programs, including ones involving other forms of education, 96 can and should be considered for continuing HPE programs, including those focused on opioid prescribing. Applying this literature may enhance the reach and effectiveness of these programs, enabling them to improve population health as intended.

Conceptual models that link clinical practice and population health.

Models focused on the behavior changes of individuals may be insufficient for meeting the need to translate continuing HPE to population health outcomes. Even widely used population-focused behavior change models (e.g., socioecological models 97) may not be sufficient for this purpose, particularly because the effects of continuing HPE programs on patients and populations must be mediated first by clinician behavior change. As health systems have become increasingly concerned with population health outcomes, interest in the development of appropriate conceptual models for connecting clinical practice to population health has grown. 98,99 One promising paradigm is clinical population medicine (CPM), which Orkin defines as “the conscientious, explicit, and judicious application of population health approaches to care for individual patients and design of health care systems.” 100 In discussing population health improvement through a proposed new model of health service delivery in the province of Ontario, Canada, Orkin delineates the notion of a population of individuals within a defined clinical environment (e.g., a health team or a physician’s practice) against the notion of a population in a given community or jurisdiction, regardless of members’ affiliation with or access to clinical services. 101 He argues that CPM, besides maintaining its traditional focus on the clinical environment, also focuses on the community population, and he describes various means of achieving this change in orientation. While a comprehensive framework for population-focused continuing HPE is currently wanting, such a framework could serve the educational needs of CPM or similar paradigms that explicitly conceptualize, develop, and examine the connections between clinical practice and population health.

Our review emphasizes the ongoing needs to incorporate evidence-based practices into continuing HPE programs and to mitigate industry influence, both of which have already been broadly characterized in the existing literature. While the HPE community does not yet have good evidence that educational best practices (e.g., interactivity, multiple methods, multiple exposures) can drive population health improvements, we do have good evidence that such practices are associated with improvements in participants’ knowledge and performance—and, to some extent, improvements in the health of individual patients. Given, first, the absence of conceptual models for connecting individual patient health outcomes to population health outcomes and, second, the assumption of implicit models that improvements in patient health outcomes lead, in aggregate, to improvements in population health outcomes, we believe it is imperative that continuing HPE follow the best available evidence for driving intended outcomes.

Industry participation in medical practice, specifically continuing HPE, has long been problematized for the potential misalignments between commercial interests and patient and population welfare. 102,103 In describing recommended continuing HPE policy that would mitigate this risk, Brennan and colleagues have suggested creating central repositories of industry contributions that would be managed by academic medical centers independently of industry. 104 Indeed, the U.S. FDA attempted a variation of this model in enacting its opioids REMS program. Given the significant deficiencies identified in the program and its ongoing connection to a number of opioid education programs, it is clear that, as Brennan and colleagues state, “other ways of funding CME will have to be identified.” 104 Public funds from local, state, and national governments are the most evident source to support continuing HPE that aligns funder and population health interests. In our review, we identified a few programs that benefitted from the direct engagement of government and public health agencies at both the development and delivery stages. 49,53,61–63,68 These could serve as models for the development of population-focused continuing HPE. Publicly funded development and evaluation grants may also indirectly support opioid-related continuing HPE. Importantly, effective continuing HPE programs must consider sustainability and ultimately attain independence from public support to maintain their long-term relevance and impact.

Limitations

We note several limitations to this review. We did not conduct a systematic search of the gray literature for reports evaluating opioid-related continuing HPE programs. Given that some relevant programs may be conducted by health agencies or similar institutions, evaluations may be published as institutional reports rather than scientific studies. However, considering the more pragmatic nature of such gray literature, we presume that few of these publications will have used theories, models, or frameworks more extensively than the evaluations captured in this review. Furthermore, while we extracted data on the types of outcomes, we did not formally evaluate the effectiveness or impact of the included programs. Given the heterogeneity of the types of outcomes and outcome measures, a formal synthesis of these outcomes would be challenging. We note, though, that other methods (e.g., a realist synthesis) could be of value for this purpose. Other recently published reviews 19,20 have already aimed to study the effectiveness and impact of opioid HPE programs, especially vis-à-vis other opioid epidemic interventions.

Conclusions

Although opioids are recognized as causing patient- and population-level harms, and continuing HPE is clearly cast as an appropriate intervention to address these harms, programs have only occasionally followed best practices, have rarely applied educational or other theories, and have seldom included methodologically sound evaluations of effects on patient or population health outcomes. Direct or indirect pharmaceutical industry involvement in the development and evaluation of opioid-related continuing HPE programs persists, which constitutes an important source of potential bias that may impair the ability of these programs to benefit patients and populations. Further conceptual models are urgently needed to guide the development and evaluation of continuing HPE programs, especially those intended to have population health impacts.

Acknowledgments:

Ms. Elizabeth Uleryk provided professional librarian support for this study, including designing and executing the database search strategy. Ms. Melanie Eliot and Dr. Pamela Sabioni contributed to title and abstract screening. Drs. Whitney Berta, Kate Hodgson, and Pamela Sabioni provided helpful comments on earlier drafts of the report.

Supplementary Material

Appendix 1 Summary Descriptions of Articles Evaluating 32 Continuing Health Provider Education Programs Focused on Opioid Analgesic Prescribing and Overdose Prevention

Appendix 2 Justification; Theory, Model, or Framework; Evaluation Design; Evidence-Based Components; and Outcomes of Articles Evaluating Continuing Health Provider Education Programs Focused on Opioid Analgesic Prescribing and Overdose Prevention

Footnotes

Supplemental digital content for this article is available at http://links.lww.com/ACADMED/B127 and http://links.lww.com/ACADMED/B128.

Funding/Support: Work for this project was supported in part by the Substance Use and Addictions Program, Health Canada (1920-HQ-000031). Dr. Sud received research support from the Department of Family and Community Medicine and from the Medical Psychiatry Alliance, both at the University of Toronto.

Other disclosures: Dr. Sud is the director and a faculty member of Safer Opioid Prescribing, a Canadian chronic pain and opioid prescribing continuing education program. The authors have no conflicts of interest with commercial entities to declare.

Ethical approval: Reported as not applicable.

References

- 1. Special Advisory Committee on the Epidemic of Opioid Overdoses. National Report: Opioid-related Harms in Canada Web-based Report. Ottawa, ON: Public Health Agency of Canada. https://health-infobase.canada.ca/substance-related-harms/opioids. [No longer available.] Updated December 16, 2019.

- 2. Centers for Disease Control and Prevention, National Center for Injury Prevention and Control. Drug overdose death. https://www.cdc.gov/drugoverdose/data/statedeaths.html. Published March 19, 2020. Accessed May 6, 2021.

- 3. British Columbia Coroners Service. Illicit drug toxicity deaths in BC January 1, 2011–March 31, 2021. https://www2.gov.bc.ca/assets/gov/birth-adoption-death-marriage-and-divorce/deaths/coroners-service/statistical/illicit-drug.pdf. Published April 29, 2021. Accessed May 6, 2021.

- 4. Gomes T, Khuu W, Craiovan D, et al. Comparing the contribution of prescribed opioids to opioid-related hospitalizations across Canada: A multi-jurisdictional cross-sectional study. Drug Alcohol Depend. 2018;191:86–90.

- 5. Canadian Institute for Health Information. Opioid Prescribing in Canada: How Are Practices Changing? Ottawa, ON: Canadian Institute for Health Information; 2019. https://www.cihi.ca/sites/default/files/document/opioid-prescribing-canada-trends-en-web.pdf. Accessed May 6, 2021.

- 6. Sproule B. Prescription Monitoring Programs in Canada: Best Practice and Program Review. Ottawa, ON: Canadian Centre on Substance Abuse. https://campusmentalhealth.ca/wp-content/uploads/2018/03/CCSA-Prescription-Monitoring-Programs-in-Canada-Report-2015-en1.pdf. Published April 2015. Accessed May 6, 2021.

- 7. Dowell D, Haegerich TM, Chou R. CDC guideline for prescribing opioids for chronic pain—United States, 2016. JAMA. 2016;315:1624–1645.

- 8. Busse JW, Craigie S, Juurlink DN, et al. Guideline for opioid therapy and chronic noncancer pain. CMAJ. 2017;189:E659–E666.

- 9. Alford DP. Opioid prescribing for chronic pain—Achieving the right balance through education. N Engl J Med. 2016;374:301–303.

- 10. Canadian Centre on Substance Use and Addiction. Joint Statement of Action to Address the Opioid Crisis: A Collective Response (Annual Report 2016–2017). https://www.ccsa.ca/sites/default/files/2019-04/CCSA-Joint-Statement-of-Action-Opioid-Crisis-Annual-Report-2017-en.pdf. Published 2017. Accessed May 6, 2021.

- 11. Kahn N, Chappell K, Regnier K, Travlos DV, Auth D. A collaboration between government and the continuing education community tackles the opioid crisis: Lessons learned and future opportunities. J Contin Educ Health Prof. 2019;39:58–63.

- 12. Webster F, Rice K, Sud A. A critical content analysis of media reporting on opioids: The social construction of an epidemic. Soc Sci Med. 2020;244:112642.

- 13. Webster F, Bremner S, Oosenbrug E, Durant S, McCartney CJ, Katz J. From opiophobia to overprescribing: A critical scoping review of medical education training for chronic pain. Pain Med. 2017;18:1467–1475.

- 14. Lynch ME. The need for a Canadian pain strategy. Pain Res Manag. 2011;16:77–80.

- 15. Van Zee A. The promotion and marketing of OxyContin: Commercial triumph, public health tragedy. Am J Public Health. 2009;99:221–227.

- 16. Spithoff S. Industry involvement in continuing medical education: Time to say no. Can Fam Physician. 2014;60:694–696, 700–703.

- 17. Davis CS, Carr D. Physician continuing education to reduce opioid misuse, abuse, and overdose: Many opportunities, few requirements. Drug Alcohol Depend. 2016;163:100–107.

- 18. Barth KS, Guille C, McCauley J, Brady KT. Targeting practitioners: A review of guidelines, training, and policy in pain management. Drug Alcohol Depend. 2017;173(suppl 1):S22–S30.

- 19. Furlan AD, Carnide N, Irvin E, et al. A systematic review of strategies to improve appropriate use of opioids and to reduce opioid use disorder and deaths from prescription opioids. Can J Pain. 2018;2:218–235.

- 20. Moride Y, Lemieux-Uresandi D, Castillon G, et al. A systematic review of interventions and programs targeting appropriate prescribing of opioids. Pain Physician. 2019;22:229–240.

- 21. Arksey H, O’Malley L. Scoping studies: Towards a methodological framework. Int J Soc Res Methodol. 2005;8:19–32.

- 22. Levac D, Colquhoun H, O’Brien KK. Scoping studies: Advancing the methodology. Implement Sci. 2010;5:69.

- 23. Haig A, Dozier M. BEME guide no. 3: Systematic searching for evidence in medical education—Part 1: Sources of information. Med Teach. 2003;25:352–363.

- 24. Haig A, Dozier M. BEME guide no. 3: Systematic searching for evidence in medical education—Part 2: Constructing searches. Med Teach. 2003;25:463–484.

- 25. Gardner B, Whittington C, McAteer J, Eccles MP, Michie S. Using theory to synthesise evidence from behaviour change interventions: The example of audit and feedback. Soc Sci Med. 2010;70:1618–1625.

- 26. Cervero RM, Gaines JK. Effectiveness of continuing medical education: Updated synthesis of systematic reviews. Accreditation Council for Continuing Medical Education. https://www.accme.org/sites/default/files/652_20141104_Effectiveness_of_Continuing_Medical_Education_Cervero_and_Gaines.pdf. Published 2014. Accessed May 6, 2021.

- 27. Moore DE, Jr, Green JS, Gallis HA. Achieving desired results and improved outcomes: Integrating planning and assessment throughout learning activities. J Contin Educ Health Prof. 2009;29:1–15.

- 28. Moore DE, Jr, Chappell K, Sherman L, Vinayaga-Pavan M. A conceptual framework for planning and assessing learning in continuing education activities designed for clinicians in one profession and/or clinical teams. Med Teach. 2018;40:904–913.

- 29. Sud A, Doukas K, Hodgson K, et al. A retrospective quantitative implementation evaluation of Safer Opioid Prescribing, a Canadian continuing education program. BMC Med Educ. 2021;21:101.

- 30. Marinopoulos SS, Dorman T, Ratanawongsa N, et al. Effectiveness of continuing medical education, 2007 in Database of Abstracts of Reviews of Effects (DARE): Quality-assessed Reviews. York, UK: Centre for Reviews and Dissemination; 1995.

- 31. Portnow JM, Strassman HD. Medically induced drug addiction. Int J Addict. 1985;20:605–611.

- 32. Sud A, Armas A, Cunningham H, et al. Multidisciplinary care for opioid dose reduction in patients with chronic non-cancer pain: A systematic realist review. PLoS One. 2020;15:e0236419.

- 33. Peters MDJ, Godfrey C, McInerney P, Munn Z, Tricco AC, Khalil H. Chapter 11: Scoping reviews (2020 version). In Aromataris E, Munn Z, eds. JBI Manual for Evidence Synthesis. Adelaide, Australia: JBI; 2020. 10.46658/JBIMES-20-12. Accessed May 6, 2021.

- 34. Bramer WM, Giustini D, de Jonge GB, Holland L, Bekhuis T. De-duplication of database search results for systematic reviews in EndNote. J Med Libr Assoc. 2016;104:240–243.

- 35. Tricco AC, Lillie E, Zarin W, et al. PRISMA Extension for Scoping Reviews (PRISMA-ScR): Checklist and explanation. Ann Intern Med. 2018;169:467–473.

- 36. Melle EV. Using a logic model to assist in the planning, implementation, and evaluation of educational programs. Acad Med. 2016;91:1464.

- 37. Cepeda MS, Coplan PM, Kopper NW, Maziere JY, Wedin GP, Wallace LE. ER/LA opioid analgesics REMS: Overview of ongoing assessments of its progress and its impact on health outcomes. Pain Med. 2017;18:78–85.

- 38. Heyward J, Olson L, Sharfstein JM, Stuart EA, Lurie P, Alexander GC. Evaluation of the extended-release/long-acting opioid prescribing risk evaluation and mitigation strategy program by the US Food and Drug Administration: A review. JAMA Intern Med. 2020;180:301–309.

- 39. Alford DP, Zisblatt L, Ng P, et al. SCOPE of pain: An evaluation of an opioid risk evaluation and mitigation strategy continuing education program. Pain Med. 2016;17:52–63.

- 40. Allen M, Macleod T, Zwicker B, Chiarot M, Critchley C. Interprofessional education in chronic non-cancer pain. J Interprof Care. 2011;25:221–222.

- 41. Alley L, Novak K, Havlin T, et al. Development and pilot of a prescription drug monitoring program and communication intervention for pharmacists. Res Social Adm Pharm. 2020;16:1422–1430.

- 42. Anderson JF, McEwan KL, Hrudey WP. Effectiveness of notification and group education in modifying prescribing of regulated analgesics. CMAJ. 1996;154:31–39.

- 43. Anderson JF, McEwan KL. Modifying prescribing of regulated analgesics. CMAJ. 1997;156:636.

- 44. Avorn J, Soumerai SB. Improving drug-therapy decisions through educational outreach. A randomized controlled trial of academically based “detailing.” N Engl J Med. 1983;308:1457–1463.

- 45. Soumerai SB, Avorn J. Economic and policy analysis of university-based drug “detailing.” Med Care. 1986;24:313–331.

- 46. Soumerai SB, Avorn J. Predictors of physician prescribing change in an educational experiment to improve medication use. Med Care. 1987;25:210–221.

- 47. Barth KS, Ball S, Adams RS, et al. Development and feasibility of an academic detailing intervention to improve prescription drug monitoring program use among physicians. J Contin Educ Health Prof. 2017;37:98–105.

- 48. Larson MJ, Browne C, Nikitin RV, et al. Physicians report adopting safer opioid prescribing behaviors after academic detailing intervention. Subst Abus. 2018;39:218–224.

- 49. Behar E, Rowe C, Santos GM, Santos N, Coffin PO. Academic detailing pilot for naloxone prescribing among primary care providers in San Francisco. Fam Med. 2017;49:122–126.

- 50. Bounthavong M, Harvey MA, Wells DL, et al. Trends in naloxone prescriptions prescribed after implementation of a National Academic Detailing Service in the Veterans Health Administration: A preliminary analysis. J Am Pharm Assoc (2003). 2017;57:S68–S72.

- 51. Canada RE, DiRocco D, Day S. A better approach to opioid prescribing in primary care. J Fam Pract. 2014;63:E1–E8.

- 52. Cardarelli R, Elder W, Weatherford S, et al. An examination of the perceived impact of a continuing interprofessional education experience on opiate prescribing practices. J Interprof Care. 2018;32:556–565.

- 53. Cochella S, Bateman K. Provider detailing: An intervention to decrease prescription opioid deaths in Utah. Pain Med. 2011;12(suppl 2):S73–S76.

- 54. Donovan AK, Wood GJ, Rubio DM, Day HD, Spagnoletti CL. Faculty communication knowledge, attitudes, and skills around chronic non-malignant pain improve with online training. Pain Med. 2016;17:1985–1992.

- 55. Eukel HN, Skoy E, Werremeyer A, Burck S, Strand M. Changes in pharmacists’ perceptions after a training in opioid misuse and accidental overdose prevention. J Contin Educ Health Prof. 2019;39:7–12.

- 56. Strand MA, Eukel H, Burck S. Moving opioid misuse prevention upstream: A pilot study of community pharmacists screening for opioid misuse risk. Res Social Adm Pharm. 2019;15:1032–1036.

- 57. Skoy E, Eukel H, Werremeyer A, Strand M, Frenzel O, Steig J. Implementation of a statewide program within community pharmacies to prevent opioid misuse and accidental overdose. J Am Pharm Assoc (2003). 2020;60:117–121.

- 58. Finnell JT, Twillman RK, Breslan SA, Schultz J, Miller L. The role of continuing medical education in increasing enrollment in prescription drug monitoring programs. Clin Ther. 2017;39:1896–1902.e2.

- 59. Fisher JE, Zhang Y, Sketris I, Johnston G, Burge F. The effect of an educational intervention on meperidine use in Nova Scotia, Canada: A time series analysis. Pharmacoepidemiol Drug Saf. 2012;21:177–183.

- 60. Kahan M, Gomes T, Juurlink DN, et al. Effect of a course-based intervention and effect of medical regulation on physicians’ opioid prescribing. Can Fam Physician. 2013;59:e231–e239.

- 61. Kattan JA, Tuazon E, Paone D, et al. Public health detailing—A successful strategy to promote judicious opioid analgesic prescribing. Am J Public Health. 2016;106:1430–1438.

- 62. Katzman JG, Comerci GD, Landen M, et al. Rules and values: A coordinated regulatory and educational approach to the public health crises of chronic pain and addiction. Am J Public Health. 2014;104:1356–1362.

- 63. Katzman JG, Fore C, Bhatt S, et al. Evaluation of American Indian Health Service Training in pain management and opioid substance use disorder. Am J Public Health. 2016;106:1427–1429.

- 64. Kavukcu E, Akdeniz M, Avci HH, Altuğ M, Öner M. Chronic noncancer pain management in primary care: Family medicine physicians’ risk assessment of opioid misuse. Postgrad Med. 2015;127:22–26.

- 65. Leong L, Ninnis J, Slatkin N, et al. Evaluating the impact of pain management (PM) education on physician practice patterns—A continuing medical education (CME) outcomes study. J Cancer Educ. 2010;25:224–228.

- 66. Lockett TL, Hickman KL, Fils-Guerrier BJ, Lomonaco M, Maye JP, Rossiter AG. Opioid overdose and naloxone kit distribution: A quality assurance educational program in the primary care setting. J Addict Nurs. 2018;29:157–162.

- 67. Midmer D, Kahan M, Marlow B. Effects of a distance learning program on physicians’ opioid- and benzodiazepine-prescribing skills. J Contin Educ Health Prof. 2006;26:294–301.

- 68. Morton KJ, Harrand B, Floyd CC, et al. Pharmacy-based statewide naloxone distribution: A novel “top-down, bottom-up” approach. J Am Pharm Assoc (2003). 2017;57:S99–S106.e5.

- 69. Palmer E, Hart S, Freeman PR. Development and delivery of a pharmacist training program to increase naloxone access in Kentucky. J Am Pharm Assoc (2003). 2017;57:S118–S122.

- 70. Pasquale MK, Sheer RL, Mardekian J, et al. Educational intervention for physicians to address the risk of opioid abuse. J Opioid Manag. 2017;13:303–313.

- 71. Roy P, Jackson AH, Baxter J, et al. Utilizing a faculty development program to promote safer opioid prescribing for chronic pain in internal medicine resident practices. Pain Med. 2019;20:707–716.

- 72. Sanchez-Ramirez DC, Polimeni C. Knowledge and implementation of current opioids guideline among healthcare providers in Manitoba. J Opioid Manag. 2019;15:27–34.

- 73. Spickard AJ, Dodd D, Swiggart W, Dixon GL, Pichert JW. A CME course for physicians who misprescribe controlled substances: An alternative to sanction. Fed Bull: J Fed State Med Boards. 1998;85:8–19.

- 74. Spickard A, Jr, Dodd D, Dixon GL, Pichert JW, Swiggart W. Prescribing controlled substances in Tennessee: Progress, not perfection. South Med J. 1999;92:51–54.

- 75. Trudeau KJ, Hildebrand C, Garg P, Chiauzzi E, Zacharoff KL. A randomized controlled trial of the effects of online pain management education on primary care providers. Pain Med. 2017;18:680–692.

- 76. Young A, Alfred KC, Davignon PP, Hughes LM, Robin LA, Chaudhry HJ. Physician survey examining the impact of an educational tool for responsible opioid prescribing. J Opioid Manag. 2012;8:81–87.

- 77. Zisblatt L, Hayes SM, Lazure P, Hardesty I, White JL, Alford DP. Safe and competent opioid prescribing education: Increasing dissemination with a train-the-trainer program. Subst Abus. 2017;38:168–176.

- 78. World Health Organization. Framework for action on interprofessional education and collaborative practice. https://www.who.int/hrh/resources/framework_action/en. Published 2010. Accessed May 6, 2021.

- 79. Englander R, Cameron T, Ballard AJ, Dodge J, Bull J, Aschenbrener CA. Toward a common taxonomy of competency domains for the health professions and competencies for physicians. Acad Med. 2013;88:1088–1094.

- 80. Interprofessional Education Collaborative. Core Competencies for Interprofessional Collaborative Practice. Washington, DC: Interprofessional Education Collaborative. https://hsc.unm.edu/ipe/resources/ipec-2016-core-competencies.pdf. Updated 2016. Accessed May 6, 2021.

- 81. Craig P, Dieppe P, Macintyre S, Michie S, Nazareth I, Petticrew M. Developing and evaluating complex interventions: The new Medical Research Council guidance. Int J Nurs Stud. 2013;50:587–592.

- 82. Davidoff F, Dixon-Woods M, Leviton L, Michie S. Demystifying theory and its use in improvement. BMJ Qual Saf. 2015;24:228–238.

- 83. Davis D, O’Brien MA, Freemantle N, Wolf FM, Mazmanian P, Taylor-Vaisey A. Impact of formal continuing medical education: Do conferences, workshops, rounds, and other traditional continuing education activities change physician behavior or health care outcomes? JAMA. 1999;282:867–874.

- 84. Bloom BS. Effects of continuing medical education on improving physician clinical care and patient health: A review of systematic reviews. Int J Technol Assess Health Care. 2005;21:380–385.

- 85. Mansouri M, Lockyer J. A meta-analysis of continuing medical education effectiveness. J Contin Educ Health Prof. 2007;27:6–15.

- 86. Tian J, Atkinson NL, Portnoy B, Gold RS. A systematic review of evaluation in formal continuing medical education. J Contin Educ Health Prof. 2007;27:16–27.

- 87. Webster F, Krueger P, MacDonald H, et al. A scoping review of medical education research in family medicine. BMC Med Educ. 2015;15:79.

- 88. Mackenzie M, O’Donnell C, Halliday E, Sridharan S, Platt S. Evaluating complex interventions: One size does not fit all. BMJ. 2010;340:401–403.

- 89. Astbury B, Leeuw FL. Unpacking black boxes: Mechanisms and theory building in evaluation. Am J Eval. 2010;31:363–381.

- 90. Hadland SE, Rivera-Aguirre A, Marshall BDL, Cerdá M. Association of pharmaceutical industry marketing of opioid products with mortality from opioid-related overdoses. JAMA Netw Open. 2019;2:e186007.

- 91. Lurie J. Doctors receive opioid training. Big pharma funds it. What could go wrong? Mother Jones. https://www.motherjones.com/politics/2018/04/doctors-are-required-to-receive-opioid-training-big-pharma-funds-it-what-could-go-wrong. Published April 27, 2018. Accessed May 6, 2021.

- 92. Pawson R, Greenhalgh T, Harvey G, Walshe K. Realist Synthesis: An Introduction. RMP Methods Paper 2. ESRC Research Methods Programme, University of Manchester; 2004.

- 93. Sridharan S, Nakaima A. Ten steps to making evaluation matter. Eval Program Plann. 2011;34:135–146.

- 94. Curran VR, Fleet L. A review of evaluation outcomes of web-based continuing medical education. Med Educ. 2005;39:561–567.

- 95. Datta J, Petticrew M. Challenges to evaluating complex interventions: A content analysis of published papers. BMC Public Health. 2013;13:568.

- 96. Cross AB, Gottfredson DC, Wilson DM, Rorie M, Connell N. Implementation quality and positive experiences in after-school programs. Am J Community Psychol. 2010;45:370–380.

- 97. Golden SD, Earp JA. Social ecological approaches to individuals and their contexts: Twenty years of health education & behavior health promotion interventions. Health Educ Behav. 2012;39:364–372.

- 98. Committee on Integrating Primary Care and Public Health, Board on Population Health and Public Health Practice, Institute of Medicine. Primary Care and Public Health: Exploring Integration to Improve Population Health. Washington, DC: National Academies Press; March 28, 2012.

- 99. Shahzad M, Upshur R, Donnelly P, et al. A population-based approach to integrated healthcare delivery: A scoping review of clinical care and public health collaboration. BMC Public Health. 2019;19:708.

- 100. Orkin AM, Bharmal A, Cram J, Kouyoumdjian FG, Pinto AD, Upshur R. Clinical population medicine: Integrating clinical medicine and population health in practice. Ann Fam Med. 2017;15:405–409.

- 101. Orkin A. Clinical population medicine: A population health roadmap for Ontario Health Teams. https://www.longwoods.com/content/26010/clinical-population-medicine-a-population-health-roadmap-for-ontario-health-teams. Published November 2019. Accessed May 6, 2021.

- 102. Steinman MA, Bero LA, Chren MM, Landefeld CS. Narrative review: The promotion of gabapentin: An analysis of internal industry documents. Ann Intern Med. 2006;145:284–293.

- 103. Mintzes B, Swandari S, Fabbri A, Grundy Q, Moynihan R, Bero L. Does industry-sponsored education foster overdiagnosis and overtreatment of depression, osteoporosis and over-active bladder syndrome? An Australian cohort study. BMJ Open. 2018;8:e019027.

- 104. Brennan TA, Rothman DJ, Blank L, et al. Health industry practices that create conflicts of interest: A policy proposal for academic medical centers. JAMA. 2006;295:429–433.
