Abstract

Individuals can be characterized within a population by their brain measurements and activity, given the inter-subject variability in brain anatomy, structure-function relationships, or life experience. Many neuroimaging studies have demonstrated the potential of functional network connectivity patterns estimated from resting-state functional magnetic resonance imaging (fMRI) to discriminate groups and predict information about individual subjects. However, the predictive signal present in the spatial heterogeneity of brain connectivity networks has yet to be studied extensively. In this study, we investigate, for the first time, the use of pairwise relationships between resting-state independent spatial maps to characterize individuals. To do this, we develop a deep Siamese framework comprising three-dimensional convolutional neural networks for contrastive learning based on individual-level spatial maps estimated via a fully automated fMRI independent component analysis approach. The proposed framework evaluates whether pairs of spatial networks (e.g., a visual network and an auditory network) are capable of subject identification and assesses how the predictive power of different network pairs varies spatially in an extensive whole-brain analysis. Our analysis of nearly 12,000 unaffected individuals from the UK Biobank study demonstrates that the proposed approach can discriminate subjects with an accuracy of up to 88% for a single network pair on the test set (best model, after several runs), and with 82% average accuracy at the subcortical domain level, the highest average accuracy among all domains. Further investigation of our network’s learned features revealed higher spatial variability in predictive accuracy among younger brains and significantly higher discriminative power among males.
In sum, the relationship among spatial networks appears to be both informative and discriminative of individuals and should be studied further as putative brain-based biomarkers.

1. Introduction

Studies have investigated variability in human brain structure via standard assessment measures such as cortical thickness, sulcal depth, and cortical folding across individuals [1–4]. Later studies discovered the existence of relatively unique patterns in the human brain’s functional organization. In particular, significant variability in functional connectivity patterns (i.e., temporal dependence among brain network timecourses) between groups of subjects (e.g., controls and patients) has been reported in the past decade [5–7]. Other studies have shown that functional connectivity patterns can be used to predict individual traits [8]. Despite these findings, it is still unclear whether spatial relationships among functional networks in the brain can be linked to individuals uniquely. Motivated by that, we investigate the spatial maps of functional networks in this study and evaluate if the relationship between any two network spatial maps can predict whether or not the maps come from the same subject.

Brain neural activity can be indirectly recorded by functional magnetic resonance imaging (fMRI) through the ensuing fluctuations in blood oxygenation level dependent (BOLD) signals [9, 10]. Whole-brain resting-state fMRI (rs-fMRI) measures BOLD fluctuations while an individual is at rest, i.e., not performing an explicit task [11]. Resting-state fMRI has attracted significant interest due to its lower design complexity and ease of acquisition relative to task fMRI, in which an individual must be able to perform a certain task [12]. These advantages have made rs-fMRI a widely used technology in many studies, including those of patients with particular conditions (e.g., comatose individuals or Alzheimer’s patients) [13]. Multiple studies have also shown that rs-fMRI data can be used to estimate subject-level differences [14]. Various model- and data-driven methods (such as seed-based correlation analysis [15], independent component analysis (ICA) [16, 17], graph methods [18], and clustering algorithms [19]) analyze whole-brain rs-fMRI scans to identify spatially distinct but functionally correlated regions, also called resting-state networks (RSNs). Compared to other methods, ICA can identify maximally statistically independent RSNs with less prior information. It can also capture artifacts and noise and separate them from the RSNs [9]. These advantages have led to the widespread use of ICA to analyze rs-fMRI data.

Studies that use ICA to process rs-fMRI data often analyze temporal patterns of brain activity, such as the pairwise correlation between RSN-specific timecourses [7, 20–22]. Despite the predominance of studies on the temporal characteristics of rs-fMRI data, the spatial characteristics of functional networks also carry considerable information and possess distinctive patterns for characterizing subjects [23–25]. Recent studies have reported that ICA patterns can be used to classify group membership (e.g., patients versus controls) [26]. In this article, we shed more light on a newer and more challenging problem, namely, the discrimination of subjects solely from their underlying spatial map networks by learning inter-network relationships in a verifiable way. To the best of our knowledge, this problem has not been studied before, yet it has important implications for future studies of brain-based biomarkers and distributed networks.

The majority of studies that analyze resting-state connectivity rely on comparing functional patterns between groups of people in some way. Some of these studies have tried to distinguish unaffected control subjects from symptomatic ones, such as those with Alzheimer’s disease [7, 27], schizophrenia [8, 28, 29], language impairment [5], and autism [30, 31]. Others have provided models to classify subjects according to their sex [32, 33] or age [34] using functional connectivity. Altogether, although these studies make individual-level predictions, they ultimately rely on group-level (dis)similarity. However, as mentioned above, there has been much less focus on individual-level spatial heterogeneity in brain functional interactions.

A few studies have shifted the focus to linking such patterns to individuals [6, 8, 14, 35]. More specifically, Finn and colleagues [6] identified subjects based on their whole-brain and network-based functional connectivity. They scanned 126 subjects over six sessions while the subjects performed working memory, motor, language, and emotion tasks, as well as at rest. Then, for each subject, the functional connectivity derived from each session was compared (using Pearson correlation) with the functional connectivity of all subjects from the other sessions. Another study [14] computed the variability in intrinsic functional connectivity in a small rs-fMRI dataset of 23 healthy subjects collected over six months, with five scan sessions per individual. Individual variability was evaluated across brain regions and shown to be higher in the frontal, temporal, and parietal lobes than in other regions. In a quite different approach, a linear model was proposed in [35] to predict the time series of one region of interest (ROI) from that of another ROI. The model was fit to assess whether the prediction is unique to each subject, i.e., whether the prediction pattern was distinctive among 27 individuals. A key concern in these works is test-retest reliability, i.e., the consistency of predictions across all sessions of the same subject, since brain images acquired in longitudinal experiments can vary over time (this is not a concern in our single-session study). Such models suffer from a few fundamental shortcomings. First, they usually require multiple scans from each subject for proper training, which is costly and time-consuming; for instance, the authors in [14] needed over six months to complete data acquisition. Second, due to the lack of large-sample datasets for such longitudinal models, it is hard, if not impossible, to train high-capacity models, which are typically better at capturing complex features, without overfitting, which limits their generalizability [36]. Furthermore, all these studies disregard the spatial maps of functional brain networks in their analysis, although several studies have shown that such information can be used as biomarkers to characterize individuals [37–39].

To address the shortcomings above, we use a large resting-state fMRI dataset to identify features of brain activity that discriminate between unaffected individuals. Our goal is to characterize individuals based on high-level features learned from the spatial patterns of their functional brain networks and from pairwise comparisons between subjects given a preselected network pair. We are also interested in determining which specific networks are most informative for this task. Accordingly, we develop a deep neural network-based framework that can identify subjects based on high-level difference features in the spatial patterns of their functional networks, when the two networks compared (e.g., auditory and visual networks) are different. Our assumption is that there exists some high-level spatial pattern that underlies all such functional networks but is unique to each person. Thus, we hypothesize that subjects can be differentiated from each other by contrasting such a pattern, if it can be extracted and represented in a latent feature space where distances make biological sense. In other words, our aim is to learn a functional brain pattern that 1) is unique to the individual brain and 2) can be mapped to a unified feature space where differences in subject labels translate, by design, to L1 distances. In that respect, it does not matter which network we select from an individual as long as we can infer the latent feature(s) from it, which can then be used to capture differences. Given the potentially complex and unpredictable nature of such spatial (dis)similarity features, it is reasonable to conjecture that they can be learned by deep neural network models.

Our approach of characterizing brain samples using a pair of functional brain networks differs from the few previous studies in several ways. First, we develop a framework to capture complex functional patterns in the brain and do so in a region-agnostic manner (i.e., regardless of the selected brain network pair). Second, we transform the problem of subject identification into subject comparison by taking advantage of the Siamese architecture [40, 41], thus reducing the original multiclass problem to a binary classification problem (class 1, or positive, if the two input spatial maps are from the same subject; class 2, or negative, otherwise), which is easier to train. Third, we leverage the nature of this task to obtain a relatively large augmented dataset of subject pairs, effectively boosting the predictive power of our end-to-end trained models. Furthermore, our models do not require multiple scans per subject, a limitation of some previous studies. Finally, as will be discussed, each trained model works on a preselected pair of functional networks and can thus capture relationships between brain regions, unlike previous work, which is limited in this regard.

This paper is organized as follows. In section 2, we describe the data and the procedure underlying data collection and preprocessing and subsequently introduce our model in more detail. In section 3, we evaluate our model on a held-out test set using the Monte Carlo cross-validation approach and shed more light on age and sex differences in its performance. Section 4 reflects on our observations and the model’s performance. Finally, section 5 concludes our paper and suggests future directions to continue this line of research.

2. Materials & methods

2.1. Participants

We retrieved the resting-state fMRI dataset from the UK Biobank [42]. At the time of retrieval, this dataset included 19,831 subjects, of whom 13,668 were self-reported as healthy (unaffected) adult participants. The subjects’ fMRI scans underwent quality control, and subjects were excluded if any of the following criteria applied: the scan was marked as unusable by UK Biobank; visual inspection of the mean maps revealed gross anomalies; absolute framewise displacement (FD) was higher than 0.3 mm; the Matthews correlation coefficient (MCC) between the binarized study-specific mask and the subject mask was lower than 0.8; or ICA estimation failed to complete. After quality control, 11,754 subjects were retained for the analysis, with ages ranging from 45 to 80 (62.56 ± 7.38) years. The dataset was well balanced in terms of the participants’ sex (cf. Table 1).
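The exclusion rules above amount to a simple per-subject filter. The sketch below is illustrative only: the record fields are hypothetical names (not UK Biobank field IDs), and we assume FD is a single per-subject summary value compared against the 0.3 mm threshold.

```python
from dataclasses import dataclass

# Hypothetical per-subject QC record; field names are illustrative, not UK Biobank field IDs.
@dataclass
class SubjectQC:
    subject_id: str
    unusable_flag: bool       # marked as unusable by UK Biobank
    gross_anomaly: bool       # gross anomaly found on visual inspection of mean maps
    framewise_disp_mm: float  # absolute framewise displacement (assumed per-subject summary)
    mask_mcc: float           # MCC between binarized study-specific mask and subject mask
    ica_completed: bool       # whether ICA estimation finished

FD_MAX_MM = 0.3   # exclude if FD is higher than this
MCC_MIN = 0.8     # exclude if MCC is lower than this

def passes_qc(s: SubjectQC) -> bool:
    """Return True if the subject meets none of the exclusion criteria."""
    return (
        not s.unusable_flag
        and not s.gross_anomaly
        and s.framewise_disp_mm <= FD_MAX_MM
        and s.mask_mcc >= MCC_MIN
        and s.ica_completed
    )

def apply_qc(subjects):
    """Keep only the subjects that pass every QC check."""
    return [s for s in subjects if passes_qc(s)]
```

Values exactly at the thresholds are kept, since the text excludes only FD *higher than* 0.3 mm and MCC *lower than* 0.8.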

Table 1. Subjects’ demographics (age statistics in years).

| Population | Number (%) | Mean age | SD | Min. | 25% | 50% | 75% | Max. |
|---|---|---|---|---|---|---|---|---|
| All | 11754 (100%) | 62.56 | 7.38 | 45 | 57 | 63 | 68 | 80 |
| Male | 5772 (49%) | 63.08 | 7.54 | 45 | 57 | 64 | 69 | 80 |
| Female | 5982 (51%) | 62.07 | 7.19 | 46 | 56 | 62 | 68 | 80 |

2.2. Data acquisition & preprocessing

All participants were scanned once on a 3-Tesla (3T) Siemens Skyra scanner with a 32-channel receive head coil, all at one site. A gradient-echo echo planar imaging (GE-EPI) sequence was used to obtain the resting-state fMRI scans. The EPI acquisition parameters were: multiband acceleration factor of 8 (i.e., eight slices acquired simultaneously), no iPAT, fat saturation, flip angle (FA) = 52°, spatial resolution = 2.4×2.4×2.4 mm, field-of-view (FOV) matrix = 88×88×64, repetition time (TR) = 0.735 s, echo time (TE) = 39 ms, and 490 volumes. Subjects were instructed to passively fixate on a crosshair and remain relaxed, not thinking about anything, during the six-minute, ten-second resting-state scan.

UK Biobank performed the following preprocessing steps. The intra-modal motion correction tool MCFLIRT [43] was applied to minimize distortions due to head motion. Grand-mean intensity normalization scaled the entire 4D dataset by a single multiplicative factor so that brain scans could be compared between subjects. The data were filtered by a high-pass temporal filter (Gaussian-weighted least-squares straight-line fitting, with σ = 50.0 s) to remove residual temporal drifts. Geometric distortions of the EPI scans were corrected using FSL’s Topup tool [44], and EPI unwarping was followed by a gradient distortion correction (GDC) unwarping step. Structured artefacts were then removed by ICA+FIX processing (Independent Component Analysis followed by FMRIB’s ICA-based X-noiseifier [45–47]), with no low-pass temporal or spatial filtering up to this point. More details on the UK Biobank imaging protocol and preprocessing steps can be found in [42]. In addition, the data were subsequently normalized to an MNI EPI template using FLIRT, followed by the SPM12 old normalization module. Finally, the data were smoothed using a Gaussian kernel with FWHM = 6 mm.
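FSL’s `fslmaths -bptf` takes filter sigmas in volumes rather than seconds, so σ = 50.0 s has to be divided by the TR. The sketch below shows this conversion and builds (but does not run) an illustrative command; the file names are hypothetical, and the 100 s cutoff follows FEAT’s convention of σ = cutoff / 2, which we assume here rather than take from the UK Biobank documentation.

```python
TR_S = 0.735        # repetition time in seconds (Section 2.2)
HP_SIGMA_S = 50.0   # high-pass Gaussian sigma in seconds (Section 2.2)

def sigma_in_volumes(sigma_s: float, tr_s: float) -> float:
    """fslmaths -bptf expects filter sigmas in volumes, not seconds."""
    return sigma_s / tr_s

def bptf_command(in_path: str, out_path: str, tr_s: float = TR_S) -> str:
    """Build an illustrative high-pass-only fslmaths command string."""
    hp = sigma_in_volumes(HP_SIGMA_S, tr_s)
    # A negative low-pass sigma (-1) skips low-pass filtering, matching the text.
    return f"fslmaths {in_path} -bptf {hp:.2f} -1 {out_path}"

# Assumed FEAT convention: sigma (s) = cutoff (s) / 2, i.e. a 100 s high-pass cutoff.
cutoff_s = 2 * HP_SIGMA_S
```

With TR = 0.735 s, the sigma is about 68.03 volumes.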

2.3. Group independent component analysis

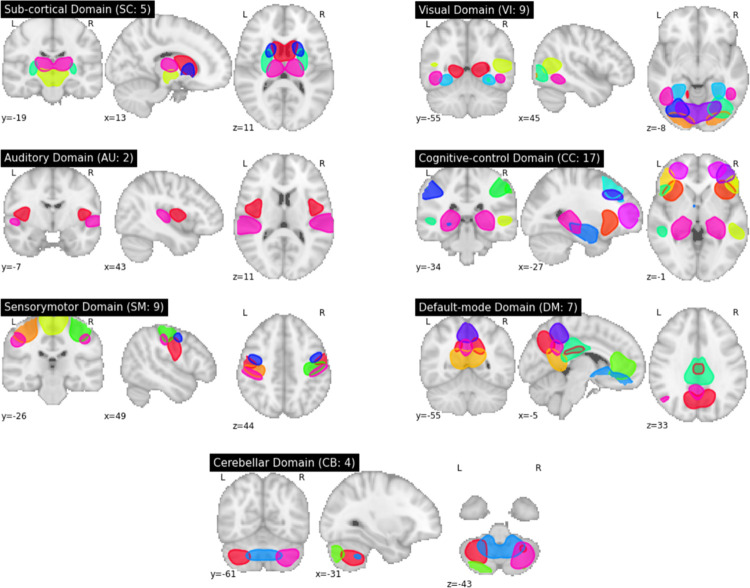

We applied fully automated spatially constrained ICA using the NeuroMark approach [48] to the 4D preprocessed UK Biobank rs-fMRI data from Section 2.2. In the NeuroMark approach, a template of replicable independent components (ICs) was constructed by spatially matching correlated group-level ICs between two healthy control fMRI datasets: the Genomics Superstruct Project (GSP) and the Human Connectome Project (HCP). The estimated network template was then used as a prior for a spatially constrained ICA algorithm applied to each UK Biobank subject individually. This identified, for each individual, 53 functionally relevant resting-state networks (RSNs) that are maximally spatially independent (see S1 Fig and S1 Table). Each subject-specific RSN is represented by a spatial map of 53×63×52 voxels and an associated time course of 490 time points. The RSNs are grouped by functional similarity into seven domains, namely subcortical (SC), auditory (AU), sensory-motor (SM), visual (VI), cognitive control (CC), default mode (DM), and cerebellar (CB) (see Fig 1). This work used the subject-specific spatial maps (henceforth referred to as networks) as input to our model and as the basis for all subsequent analyses.

Fig 1. Group ICA-derived spatial maps.

Spatial maps are grouped into seven domains, subcortical (SC), auditory (AU), sensory-motor (SM), visual (VI), cognitive control (CC), default mode (DM), and cerebellar (CB), each of which contains 5, 2, 9, 9, 17, 7, and 4 networks, respectively.
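Because each model is trained on a pair of distinct networks, the number of models behind each cell of a domain-domain summary follows directly from the domain sizes in Fig 1. The short sketch below counts them; for instance, the AU-AU cell contains a single network pair, while SC-SC contains ten.

```python
from itertools import combinations_with_replacement
from math import comb

# Domain sizes from Fig 1, in the order used throughout the paper.
DOMAINS = {"SC": 5, "AU": 2, "SM": 9, "VI": 9, "CC": 17, "DM": 7, "CB": 4}

def pairs_per_domain_pair(domains):
    """Number of unordered pairs of distinct networks for each domain-domain cell."""
    counts = {}
    for a, b in combinations_with_replacement(domains, 2):
        if a == b:
            counts[(a, b)] = comb(domains[a], 2)        # within-domain pairs
        else:
            counts[(a, b)] = domains[a] * domains[b]    # between-domain pairs
    return counts

counts = pairs_per_domain_pair(DOMAINS)
n_networks = sum(DOMAINS.values())    # 53 networks in total
total_pairs = sum(counts.values())    # equals comb(53, 2) = 1378
```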

2.4. Generating input pairs

We split our preprocessed data by subject into training, validation, and test sets with proportions of 60%, 20%, and 20%, respectively (see S2–S4 Tables for the statistics of subjects in each set). For each set, the number of same-subject pairs equaled the number of subjects in that set, and all these pairs were labeled ‘class 1.’ Likewise, an equal number of different-subject pairs (labeled ‘class 2’) were created for each set by randomly selecting subjects from that set (i.e., N pairs sampled from all possible different-subject pairs, where N is the number of subjects in the set). As discussed before, each input pair comprises two different networks rather than the same network twice. This is important because, otherwise, instead of learning patterns that govern the relationships between networks, the model gradually (i.e., over the course of training) learns to make an elementwise comparison of the input voxels. We trained one model for each pair of the 53 functional networks, i.e., 53 × 52 / 2 = 1,378 models in total, using the TReNDS high-performance computing GPU cluster.
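The split and pairing scheme can be sketched as follows. The function names, the seeding, storing each pair as a tuple with a `same_subject` flag, and sampling ordered different-subject pairs without repetition are our own illustrative assumptions, not details of the study’s code.

```python
import random

def split_subjects(subject_ids, fractions=(0.6, 0.2, 0.2), seed=0):
    """Subject-level split, so no subject appears in more than one set."""
    ids = list(subject_ids)
    random.Random(seed).shuffle(ids)
    n_train = round(fractions[0] * len(ids))
    n_val = round(fractions[1] * len(ids))
    return ids[:n_train], ids[n_train:n_train + n_val], ids[n_train + n_val:]

def make_pairs(subjects, net_a, net_b, seed=0):
    """Balanced pairs (subject_1, net_a, subject_2, net_b, same_subject).

    same_subject=True corresponds to 'class 1', False to 'class 2'.
    """
    if len(subjects) < 2:
        raise ValueError("need at least two subjects")
    rng = random.Random(seed)
    # One same-subject pair per subject: two different networks of that subject.
    same = [(s, net_a, s, net_b, True) for s in subjects]
    diff, seen = [], set()
    while len(diff) < len(subjects):
        s1, s2 = rng.sample(subjects, 2)   # two distinct subjects
        if (s1, s2) in seen:
            continue                       # avoid duplicate pairs
        seen.add((s1, s2))
        diff.append((s1, net_a, s2, net_b, False))
    return same + diff
```

Usage: `train, val, test = split_subjects(ids)` followed by `make_pairs(train, net_a, net_b)` for each network pair, repeated separately for the validation and test sets.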

2.5. Model architecture

We provide an end-to-end trainable deep learning model that learns a non-linear dissimilarity metric based on the patterns of spatial relationships in functional networks that characterize each subject. We train on balanced (i.e., same-subject vs. different-subject) preprocessed input pairs, and the objective is to adjust the network weights such that input pairs from the same subject produce a low dissimilarity score, whereas pairs from different subjects produce a high score. Our proposed framework comprises two major building blocks: one based on convolutional neural networks (CNNs), which excel at capturing high-level features from imaging data [49], and the other based on a Siamese network, which can efficiently generate features for differentiating inputs [40, 41]. In the following subsections, we describe the architecture of each module in more detail.

2.6. Convolutional Neural Networks (CNNs)

2D CNNs and, more recently, 3D CNNs have gained attention in various domains that involve image processing. CNNs can pick up high-level yet subtle task-specific features if trained with task-appropriate loss functions. For our analysis, the desired features stem from patterns of functional brain relationships that help characterize subjects in a relatively network-agnostic way. In other words, these features should be comparable between different subjects, even if they are derived from different spatial maps. This is a crucial component of our design, since using spatial maps of the same network drives training towards a point in parameter space that corresponds to a verbatim voxel-to-voxel comparison. That is, instead of learning a pattern that characterizes brain activity, the model would tend to check whether the two images are identical voxel-wise. We prevent this by using spatial maps of two different networks as input, together with two CNN-based child networks whose features are linked via a Siamese architecture, as discussed below.

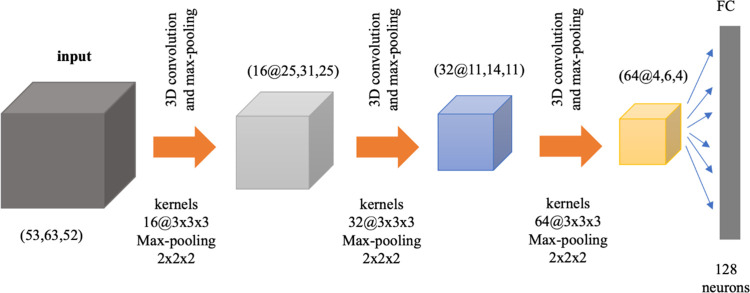

Fig 2 depicts the architecture of the 3D ConvNet that extracts features from a supplied spatial map with a resolution of 53×63×52 voxels. We used three convolutional layers with 16, 32, and 64 filters, respectively, each with 3×3×3 kernels. We used ReLU activations for both the convolutional and the subsequent fully connected layers. Each convolutional layer is followed by a max-pooling layer (kernel size 2×2×2, stride 2) to reduce the dimensionality of the feature maps. The last feature map is flattened and fed into a single fully connected layer of 128 ReLU units. We used 3D and 1D batch normalization as regularization for all layers, which is especially important given the large dimensionality and complexity of the model. The resulting encodings (i.e., the final feature values) summarize the input 3D spatial map and, when trained with a loss that quantitatively encodes our goal (as discussed in the next section), capture the non-linear pattern of functional relationships underlying the input brain network pair.

Fig 2. CNN architecture.

The CNN block consists of three 3D convolutional layers with 3×3×3 kernels, each followed by a max-pooling layer with a 2×2×2 kernel.
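The text does not state the convolution padding, so the size of the flattened feature vector depends on an assumption. The sketch below traces tensor shapes through the three conv/max-pool stages and counts convolution and fully connected weights (batch-normalization parameters excluded), assuming stride-1 convolutions with "same" padding (padding = 1) by default and floor rounding in pooling, as in common deep learning frameworks.

```python
def conv3d_out(size, kernel=3, padding=1, stride=1):
    """Output length of one spatial axis after a 3D convolution."""
    return (size + 2 * padding - kernel) // stride + 1

def pool3d_out(size, kernel=2, stride=2):
    """Output length of one spatial axis after max pooling (floor rounding)."""
    return (size - kernel) // stride + 1

def encoder_shapes(input_shape=(53, 63, 52), filters=(16, 32, 64), k=3, padding=1, fc_units=128):
    """Trace shapes through conv -> pool stages; count weights plus biases."""
    shape, in_ch, conv_params = tuple(input_shape), 1, []
    for out_ch in filters:
        shape = tuple(conv3d_out(s, kernel=k, padding=padding) for s in shape)
        shape = tuple(pool3d_out(s) for s in shape)
        conv_params.append(out_ch * in_ch * k ** 3 + out_ch)  # kernels + biases
        in_ch = out_ch
    flat = in_ch * shape[0] * shape[1] * shape[2]
    fc_params = flat * fc_units + fc_units
    return shape, flat, conv_params, fc_params
```

Under "same" padding the final feature map is 64×6×7×6 (16,128 values feeding the 128-unit layer); with no padding it would be 64×4×6×4 (6,144 values), so the fully connected layer dominates the parameter count either way.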

2.7. Siamese architecture

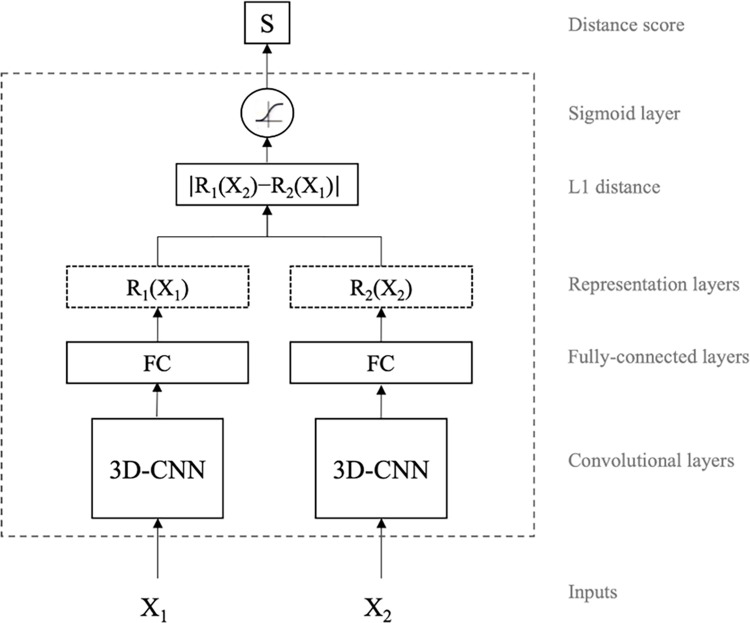

For our brain-network classification task, we use a modified Siamese CNN model that maps input image pairs to a shared target space in which both spatial map images are comparable. In other words, we learn separate mappings that transform the (spatial) input spaces of two different functional brain network maps into a shared high-level space. In that space, the distance between subjects (irrespective of the brain network region) represents how closely related their corresponding functional networks are. By training specifically on pairs of different brain networks, we ensure that closely related network representations learned by the model are not merely driven by spatial similarity but, rather, by originating from the same subject. Fig 3 shows the block diagram of our proposed model. It comprises two CNN-based child networks (hereafter referred to as sub-networks) with identical architectures but without shared weights. Each sub-network takes a different functional brain network map, X1 and X2 (e.g., a visual network and an auditory network), and generates consistent and comparable representations R1(X1) and R2(X2), respectively. Optimizing this Siamese architecture with the binary cross-entropy loss then drives the parameters of the CNN sub-networks towards a point in parameter space where projections lie far apart when the subjects are different. Our experiments suggest that this is more easily attained with independent, rather than tied, weights for each child network (see Fig 4C). This choice is also driven by the problem at hand. Shared weights are a common choice when the objective is to learn a generic representation for both input data modes (here, brain network spatial maps), whereas independent weights are more suitable for learning mode-specific representations. Thus, separate weights better suit our objective of training models that learn whether two modes (i.e., brain network spatial maps) correspond to the same subject. Additionally, we assume that a weighted L1 distance passed through a sigmoid, trained with the binary cross-entropy loss, will induce both sub-networks to converge to a shared feature space.

Fig 3. High-level architecture of the proposed model.

Siamese networks take two images, X1 and X2, and learn a distance function between the vector representations of the two input images. The model outputs a distance score close to one if the two inputs are from different objects (in this case, different subjects) and close to zero otherwise. The weights of the CNN child networks are updated independently and are not tied/shared.

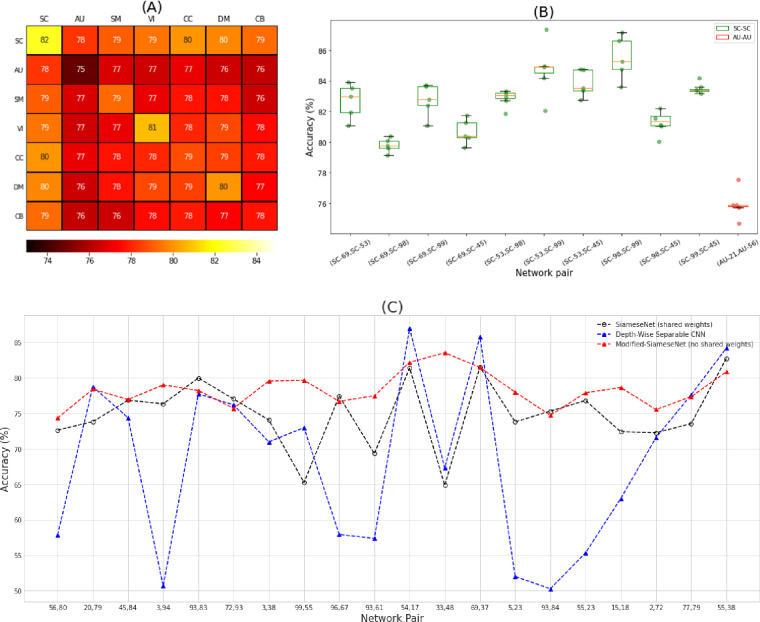

Fig 4.

(A) Mean domain accuracy heatmap. Each cell shows the mean accuracy of all models trained on spatial networks belonging to the indicated domain-domain pair. The mean accuracies show that domains differ in their predictive power for characterizing subjects based on brain activity. Compared to the other domains, SC networks yield the most discriminative features, whereas the AU domain appears to produce the least discriminative features. (B) Boxplot of the accuracies from the MCCV evaluation. The results show low variation across the five repeats for network pairs in the SC-SC and AU-AU domain pairs, which supports the generalizability of the proposed model. Green and red boxes represent SC and AU network pairs, respectively. The dots are the MCCV results for each network pair. The x-axis indicates the NeuroMark network IDs. (C) Comparison between our proposed model and two baseline models. We randomly selected 20 network pairs and compared our proposed model to a Siamese model with shared weights and a depth-wise separable CNN. The proposed model performs consistently better than the baselines in terms of both accuracy and performance variance.

We train this network for up to 200 epochs using stochastic gradient descent with a learning rate of 0.001 and the binary cross-entropy loss

$$\mathcal{L} = -t\log S - (1-t)\log(1-S), \qquad S = \operatorname{sigmoid}\Big(\sum_i w_i d_i\Big), \qquad d_i = \big|R_1(X_1)_i - R_2(X_2)_i\big|,$$

where t is the true label, w_i are learned weights, and i indexes the dimensions of the feature space. Training is stopped early if validation accuracy does not improve for 14 consecutive epochs. Furthermore, we repeat the training three times and select the model with the highest validation accuracy for testing on the held-out test data.
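The scoring head, loss, and stopping rule can be written in a few lines. This is a framework-free sketch on plain Python lists, not the training code: the optional bias term is an assumption, and we take t = 1 to denote a different-subject pair so that S behaves as the dissimilarity score described in the Fig 3 caption. The early-stopping helper assumes that any strict improvement in validation accuracy resets the patience counter.

```python
import math

def l1_components(r1, r2):
    """Element-wise absolute difference d_i = |R1(X1)_i - R2(X2)_i|."""
    if len(r1) != len(r2):
        raise ValueError("embeddings must have the same dimensionality")
    return [abs(a - b) for a, b in zip(r1, r2)]

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def dissimilarity_score(r1, r2, weights, bias=0.0):
    """S = sigmoid(sum_i w_i d_i + b); the bias b is an assumption, not from the text."""
    d = l1_components(r1, r2)
    return sigmoid(sum(w * di for w, di in zip(weights, d)) + bias)

def bce_loss(t, s, eps=1e-12):
    """-t log S - (1 - t) log(1 - S), with S clipped to avoid log(0)."""
    s = min(max(s, eps), 1.0 - eps)
    return -t * math.log(s) - (1 - t) * math.log(1 - s)

def early_stop_epoch(val_accuracies, patience=14):
    """Index of the epoch at which training stops (patience epochs without improvement)."""
    best, since_best = -math.inf, 0
    for epoch, acc in enumerate(val_accuracies):
        if acc > best:
            best, since_best = acc, 0
        else:
            since_best += 1
            if since_best >= patience:
                return epoch
    return len(val_accuracies) - 1
```

Identical embeddings give d = 0 and S = 0.5 before training; learning positive weights pushes S towards one as embeddings move apart.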

3. Experiments

In this section, we evaluate the performance of the proposed model, trained on different pairs of functional brain networks, on unseen (held-out) test data. In section 3.1, we compare the accuracy achieved when networks are selected from different domains. In addition, we analyze prediction performance on three held-out test cohorts, defined by the class labels we attempt to predict: network pairs from the same subjects, network pairs from different subjects, and the entire (aggregate) set of network pairs (same- and different-subject samples together). These cohorts let us evaluate the model under different scenarios and provide insight into brain function. In sections 3.2 and 3.3, we analyze the relationship between demographic features (sex and age) and model performance.

3.1. Analysis of performance in different functional network domains

To investigate potential variation in model performance across network pairs, we evaluated the proposed model on the entire held-out test set (i.e., the cohort containing all subject-pair samples), which comprises an equal number of same-subject and different-subject network-pair samples. Fig 4A shows a heatmap of the mean model accuracies between domains (see S2 Fig for the fine-grained accuracies of individual network pairs). According to the heatmap, spatial network relationship patterns with strong predictive capability stem from the SC-SC, SC-CC, SC-DM, SC-VI, VI-VI, and DM-DM domain pairs. On the other hand, network pairs including an auditory network appear to contain less discriminative (yet still significant) features. Overall, the amount of discriminative information that can be captured from a given network pair is at least partly domain-dependent (see S5 Table for two-sided two-sample t-tests of the mean prediction accuracy between domains and the corresponding p-values). To assess the reproducibility and generalizability of the results, we repeated training and testing using Monte Carlo cross-validation (MCCV) with five repeats, drawing a new held-out test set for each repeat. Fig 4B shows a boxplot of the corresponding accuracies for each network pair in the SC-SC and AU-AU domain pairs (i.e., the highest- and lowest-performing domain pairs according to Fig 4A, respectively). The figure shows only small variation in model performance across repeats, indicating that our results are reproducible.
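Unlike k-fold cross-validation, Monte Carlo cross-validation draws an independent random split for every repeat, so test sets may overlap across repeats. A minimal sketch of the five-repeat, subject-level 60/20/20 scheme, with hypothetical function names and seeds:

```python
import random
import statistics

def mccv_splits(subject_ids, n_repeats=5, fractions=(0.6, 0.2, 0.2), seed=0):
    """Yield (train, val, test) subject lists, with a fresh random split per repeat."""
    ids = sorted(subject_ids)
    n_train = round(fractions[0] * len(ids))
    n_val = round(fractions[1] * len(ids))
    for r in range(n_repeats):
        shuffled = ids[:]
        random.Random(seed + r).shuffle(shuffled)   # independent split each repeat
        yield (shuffled[:n_train],
               shuffled[n_train:n_train + n_val],
               shuffled[n_train + n_val:])

def summarize(accuracies):
    """Mean and sample standard deviation of per-repeat test accuracies."""
    return statistics.mean(accuracies), statistics.stdev(accuracies)
```

Each split would feed the pair-generation and training steps for a given network pair; `summarize` then gives the spread that a boxplot such as Fig 4B visualizes.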

We also compared our proposed model to two baseline models: a Siamese network with shared weights, and a depth-wise separable CNN (in which each channel, i.e., each original input modality, is processed by a separate set of convolutional kernels) [50]. Where applicable, we used the same architecture and model configurations so that the results remain comparable. In the depth-wise separable CNN, each network pair was treated as a single two-channel image, with each channel holding the spatial map of one network. This image was fed into the first depth-wise convolutional layer, which we augmented with a point-wise convolutional layer with a 1×1×1 kernel, to predict whether the two maps come from the same subject.
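To make the depth-wise separable baseline concrete: a standard 3D convolution mixes all input channels inside every kernel, whereas a depth-wise separable one applies one kernel per channel and then mixes channels with a 1×1×1 point-wise convolution. The weight counts below illustrate the difference for the two-channel network-pair input; biases are ignored, and a depth multiplier of 1 and 16 output filters are assumptions for illustration.

```python
def standard_conv3d_weights(in_ch, out_ch, k=3):
    """Every output filter sees all input channels through a k×k×k kernel."""
    return in_ch * out_ch * k ** 3

def depthwise_separable_conv3d_weights(in_ch, out_ch, k=3, depth_multiplier=1):
    """Per-channel k×k×k kernels followed by a 1×1×1 point-wise channel mix."""
    depthwise = in_ch * depth_multiplier * k ** 3   # one kernel per input channel
    pointwise = in_ch * depth_multiplier * out_ch   # 1×1×1 convolution across channels
    return depthwise + pointwise

# Two channels: the two spatial maps of a network pair stacked as one image.
standard = standard_conv3d_weights(2, 16)                # 864 weights
separable = depthwise_separable_conv3d_weights(2, 16)    # 54 + 32 = 86 weights
```

Keeping the two maps in separate depth-wise kernels until the point-wise layer is what lets this baseline treat each network (modality) separately at the first stage.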

Fig 4C compares the prediction accuracy achieved by each of the three models for 20 random network pairs. The proposed model performs consistently better than the baselines in terms of both accuracy and performance variance. We attribute this to the robustness that the Siamese learning pattern offers against the bias that exists between network pairs. This bias stems from correlations between voxel intensities that stand out when the two networks belong to the same brain. While the depth-wise CNN tries to address this bias by applying separate convolutional kernels to each input modality, it does not succeed in all cases. The proposed model, on the other hand, addresses this shortcoming by learning comparable patterns through independent weights for each child network. The independent (i.e., untied) weights yield representations of each input modality in the same latent space, which we expect to be largely free of irrelevant information and bias. This is corroborated by the fact that the same architecture with tied weights often performs worse than our model, while mostly performing better than the other baseline.

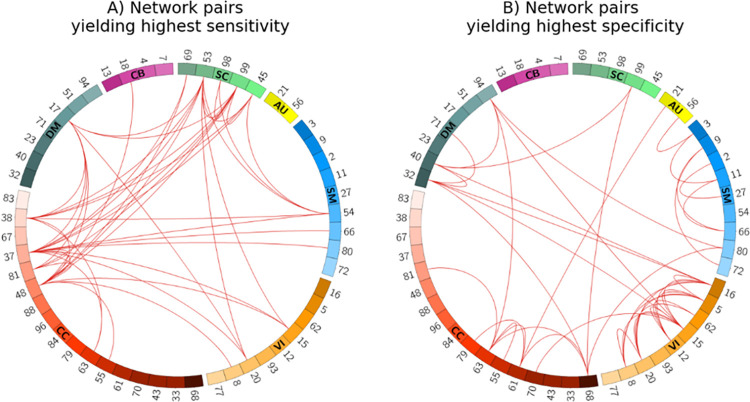

We also investigated whether any network pairs were more feature-rich for either the same- or the different-subject cohort in the classification task. This is an important question, as significant differences could support guidelines for choosing network pairs optimally for different goals. To this end, we partitioned the test samples into a cohort of network pairs from the same subjects and a cohort of pairs from different subjects. Fig 5A and 5B depict the spatial connectograms of the top 2% of network pairs with the highest sensitivity (treating same-subject samples as the positive class) and specificity, respectively (see S3A and S3B Fig for the full results). We grouped connectograms by functional domain, i.e., SC, AU, SM, VI, CC, DM, and CB, which contain n = 5, 2, 9, 9, 17, 7, and 4 networks, respectively. Fig 5A shows that the highest sensitivities mostly come from networks in the subcortical and cognitive control domains, whereas Fig 5B shows that networks in the visual domain exhibit the highest specificities. Comparing the two connectograms thus suggests that different domains contribute distinct patterns towards identifying same- vs. different-subject pairs.

Fig 5.

Spatial connectogram of sensitivity (A) and specificity (B) highlighting the top 2% network pairs. Sensitivity and specificity give the percentage of network pair samples correctly identified as belonging to the same- or different-subject cohort, respectively. Fig 5A suggests that networks from the subcortical (green) and cognitive control (red) domains are among the most useful for characterizing same-subject samples, both intra- and inter-domain. On the other hand, the high specificity of VI-VI network pairs (orange) in Fig 5B indicates better characterization of different-subject samples. The numbers shown on the connectograms represent the specific Neuromark RSN network indices (see S1 Table for a list of the network names). The connectograms were generated using the Circos tool [51].

3.2. Impact of sex on performance

We also evaluated the role of sex in prediction performance separately for the same- and different-subject cohorts. First, we considered the same-subject cohort and compared its male and female subcohorts. The model's sensitivity was higher when the two networks came from male subjects than when they came from female subjects for 71% of network pairs (and reliably so for the network pairs showing the largest differences; see S4A Fig). This suggests that male functional networks carry more uniquely identifying individual patterns than female networks, especially in the cerebellar domain (see S6A Fig). This finding is consistent with studies of brain structure reporting greater variability in male than in female brains [52, 53]. Greater structural variability may, in turn, give rise to more distinct patterns in males, which would help characterize male subjects' identities more accurately.
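The two quantities behind this comparison, sensitivity within a sex-based subcohort and the share of network pairs in which one subcohort scores higher (71% here), can be sketched as follows. The sample layout, helper names, and scores are hypothetical, and counting ties as favoring neither cohort is an assumption.

```python
def subcohort_sensitivity(samples, sex):
    """Sensitivity (%) within one sex-based subcohort of same-subject pairs.

    samples: dicts with 'sex' ('M' or 'F') and 'pred' (1 = predicted same subject).
    Every same-subject pair is a positive, so sensitivity is the share predicted 1."""
    preds = [s["pred"] for s in samples if s["sex"] == sex]
    return 100.0 * sum(preds) / len(preds)

def percent_pairs_higher(scores_a, scores_b):
    """Percentage of network pairs whose score in cohort A strictly exceeds cohort B."""
    common = scores_a.keys() & scores_b.keys()
    return 100.0 * sum(scores_a[k] > scores_b[k] for k in common) / len(common)

# Toy per-pair sensitivities (%) for the male and female subcohorts.
male = {("SM27", "CC70"): 90.0, ("CB18", "DM32"): 70.0, ("VI5", "SC69"): 60.0}
female = {("SM27", "CC70"): 80.0, ("CB18", "DM32"): 75.0, ("VI5", "SC69"): 50.0}
print(percent_pairs_higher(male, female))  # two of three pairs favor males
```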

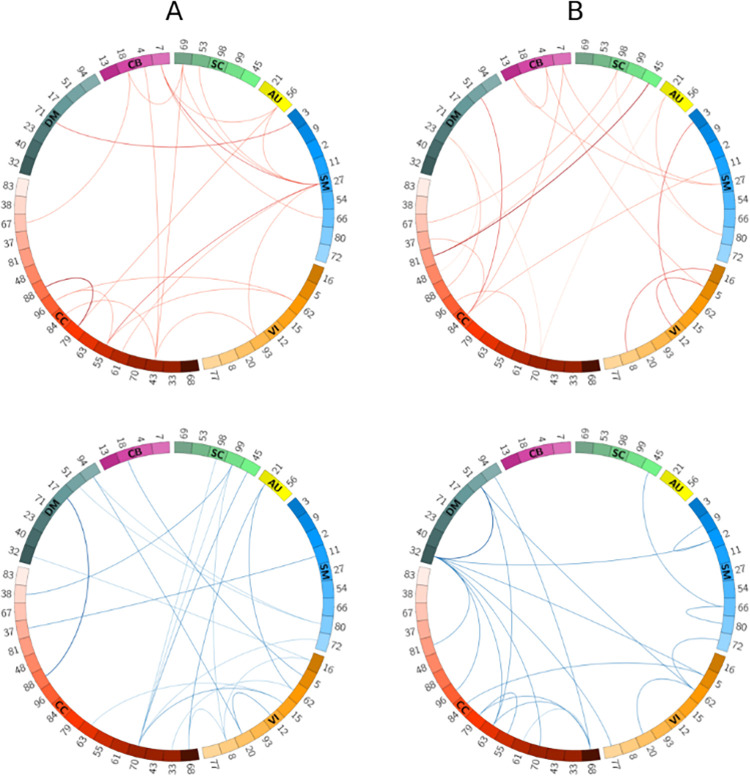

To better visualize the difference in performance, we subtracted the female subcohort's sensitivity from the male subcohort's sensitivity for each network pair and selected the network pairs with the highest (positive) and lowest (negative) 1% of score differences, as illustrated in Fig 6A. Among the pairs in which males have higher sensitivity (i.e., the red arcs), the superior parietal lobule (SPL) network (indicated as SM27) stands out with more outgoing arcs in the connectogram. This may suggest that SPL network activity varies more (or is more uniquely identifiable) in males than in females. To the best of our knowledge, this pattern has not been reported before. Studies have shown that the SPL network is linked to spatial processing tasks, especially mental rotation [54, 55], and another study has reported stronger activity of these networks among males [56]. Taken together, these observations tentatively suggest that, although men may perform better than women on spatial orientation tasks, their functional brain engagement in such tasks is also more variable. Hence, SM27 may merit consideration as a functional brain biomarker for distinguishing males from females. Likewise, among the network pairs with the lowest (most negative) sensitivity difference between males and females (i.e., the blue arcs in Fig 6A), CC70 has the most outgoing arcs, suggesting that this network is more discriminative in females.
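Selecting the top and bottom 1% of male-minus-female differences, and counting how often each network appears among the selected pairs, might look like the sketch below. Reading a network's "outgoing arcs" as the number of selected pairs that contain it is our interpretation of the connectogram rather than a documented procedure; function names and scores are illustrative.

```python
from collections import Counter
from math import ceil

def extreme_differences(scores_a, scores_b, frac=0.01):
    """Rank network pairs by score_a - score_b and return the top and bottom
    `frac` share (at least one pair each)."""
    diff = {k: scores_a[k] - scores_b[k] for k in scores_a.keys() & scores_b.keys()}
    k = max(1, ceil(frac * len(diff)))
    ranked = sorted(diff, key=diff.get)      # most negative difference first
    return ranked[::-1][:k], ranked[:k]      # (highest, lowest)

def arc_counts(pairs):
    """Number of selected pairs each network takes part in (its arcs)."""
    return Counter(net for pair in pairs for net in pair)

male = {("SM27", "CC70"): 80.0, ("SM27", "VI5"): 85.0,
        ("CC70", "DM32"): 60.0, ("VI5", "DM32"): 70.0}
female = {("SM27", "CC70"): 70.0, ("SM27", "VI5"): 74.0,
          ("CC70", "DM32"): 72.0, ("VI5", "DM32"): 69.0}
highest, lowest = extreme_differences(male, female, frac=0.5)
print(arc_counts(highest).most_common(1))  # [('SM27', 2)]
```

The same two helpers apply unchanged to the same-sex minus different-sex specificities and to the young minus old comparisons, since only the two score dictionaries change.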

Fig 6.

Spatial connectogram of the difference in sensitivity (A) and the difference in specificity (B) between sex-based groups, highlighting the top 1% positive (top row) and negative (bottom row) differences. Fig 6A: scores are calculated by subtracting the sensitivities of the female subcohort from those of the male subcohort. SM27, when paired with networks from other domains, produces higher sensitivity in males, while CC70 yields higher sensitivity in females. Fig 6B: scores are calculated by subtracting the specificities of the different-sex subcohort from those of the same-sex subcohort. The SM and CB networks produce higher specificities for different-sex samples, whereas the CC networks predict better in the same-sex subcohort. Blue and red lines represent the two ends of the difference spectrum. Numbers represent indices of the ICA-derived Neuromark RSNs. The connectograms were generated using the Circos tool [51].

Finally, we considered the set of different-subject samples. This set naturally splits into same-sex (male-male or female-female pairings) and different-sex (male-female pairings) subcohorts, for which we conducted assessments analogous to the male vs. female comparison above. Interestingly, specificity was higher in the different-sex subcohort for 86% of network pairs (and reliably so for the network pairs showing the largest differences; see S4B and S6B Figs). That is, for most network pairs, the model performs better (higher specificity) when the two samples belong to subjects of different sexes. This is likely because our model learns sex-rich features from the network spatial map patterns, even though sex was not provided as an input to the model. The corresponding highest and lowest 1% specificity differences are visualized in Fig 6B. For the most negative differences (same-sex specificity lower than different-sex specificity), network pairs including either a default mode network (especially DM32 and DM51) or a cognitive control network had the most outgoing links, suggesting more unique variations in the different-sex subcohort. For network pairs with positive specificity differences, on the other hand, CC networks, especially CC84, appear most frequently in the connectogram, suggesting that more unique patterns occur in the same-sex subcohort when network pairs include a CC network.
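Splitting the different-subject cohort by sex pairing and computing specificity within each subcohort could be sketched as follows. The record layout and function name are hypothetical, and the samples are made up.

```python
def specificity_by_sex_pairing(samples):
    """Specificity (%) for the same-sex and different-sex subcohorts.

    samples: different-subject test pairs as dicts with 'sex_a', 'sex_b'
    ('M' or 'F') and 'pred' (0 = predicted different subjects)."""
    groups = {"same-sex": [], "different-sex": []}
    for s in samples:
        key = "same-sex" if s["sex_a"] == s["sex_b"] else "different-sex"
        # Every sample is a different-subject pair (a negative), so pred 0 is correct.
        groups[key].append(s["pred"] == 0)
    return {g: 100.0 * sum(hits) / len(hits) for g, hits in groups.items() if hits}

samples = [
    {"sex_a": "M", "sex_b": "M", "pred": 0},
    {"sex_a": "F", "sex_b": "F", "pred": 1},
    {"sex_a": "M", "sex_b": "F", "pred": 0},
    {"sex_a": "F", "sex_b": "M", "pred": 0},
]
print(specificity_by_sex_pairing(samples))
```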

Furthermore, we assessed the results of the previous experiments statistically (see S6 Table). We performed a two-sided two-sample t-test to evaluate the significance of the difference in mean sensitivity or specificity between the two sub-cohorts of each sex-based assessment. The mean sensitivity of the male sub-cohort differed significantly from that of the female sub-cohort, and the mean specificity of the different-sex sub-cohort differed significantly from that of the same-sex sub-cohort. This appears especially true for the cognitive control domain.
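A minimal, dependency-free sketch of a two-sided two-sample t-test is shown below. Assumptions: each sample is one network pair's sensitivity (or specificity) within a domain, variances are pooled (Student's test; the text does not state whether a Welch correction was used), and the p-value comes from a normal approximation to the t distribution rather than the exact distribution. The data are synthetic.

```python
import random
from math import sqrt
from statistics import NormalDist, fmean, variance

def two_sample_t(a, b):
    """Student's two-sample t statistic (pooled variance) with a two-sided p-value.

    The p-value uses a normal approximation to the t distribution, which is
    reasonable only when len(a) + len(b) - 2 is large; scipy.stats.ttest_ind
    returns the exact p-value for the same statistic."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    t = (fmean(a) - fmean(b)) / sqrt(pooled_var * (1 / na + 1 / nb))
    p_approx = 2 * (1 - NormalDist().cdf(abs(t)))
    return t, p_approx

# Synthetic per-pair sensitivities for two sub-cohorts (made-up numbers).
rng = random.Random(0)
male_sens = [rng.gauss(80, 5) for _ in range(300)]
female_sens = [rng.gauss(78, 5) for _ in range(300)]
t, p = two_sample_t(male_sens, female_sens)
print(f"t = {t:.2f}, approximate two-sided p = {p:.2g}")
```

The sign of t carries the direction of the difference (positive here when the first sub-cohort scores higher), which is the same sign convention used for the signed scores in the supporting tables.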

3.3. Impact of age on performance

Next, we analyzed the relationship between age and model performance. As in the previous section, we divided the test set into same- and different-subject cohorts. Within the same-subject cohort, we computed sensitivity for subjects younger than 52 and older than 72 years, yielding groups of 260 and 268 subjects, respectively. Prediction performance in the younger group was higher than in the older group for 66% of network pairs (and reliably so for the network pairs showing the largest differences; see S5A and S6C Figs). This is especially the case for the cognitive control (p < 2e−22; see S7 Table), sensory-motor (p < 6e−18), and default mode (p < 1e−11) domains. In a similar experiment, we computed the specificity of all network pairs within the different-subject cohort for 139 subjects younger than 57 and 158 subjects older than 69 years (see S5B and S6D Figs). For most domains, model performance appears stronger for younger brains than for older ones, especially in the cognitive control (p < 4e−8) and cerebellar (p < 2e−8) domains.
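The age analysis compares the two tails of the age distribution rather than splitting it at a single cut point. A sketch of that grouping follows. Subject IDs and ages are made up, the thresholds are passed in (52/72 for sensitivity and 57/69 for specificity in the text), and the rule that both subjects of a test pair must fall in the same group is our assumption.

```python
def split_extreme_age_groups(ages, young_below, old_above):
    """ages: dict subject_id -> age in years. Subjects between the two
    thresholds are excluded so that the two groups are well separated."""
    young = {s for s, a in ages.items() if a < young_below}
    old = {s for s, a in ages.items() if a > old_above}
    return young, old

def pairs_within(pairs, group):
    """Keep test pairs whose two subjects both belong to `group` (assumed rule)."""
    return [p for p in pairs if p[0] in group and p[1] in group]

ages = {"s1": 45, "s2": 60, "s3": 75, "s4": 51, "s5": 80}
young, old = split_extreme_age_groups(ages, young_below=52, old_above=72)
test_pairs = [("s1", "s1"), ("s1", "s3"), ("s4", "s1"), ("s3", "s5")]
print(pairs_within(test_pairs, young), pairs_within(test_pairs, old))
```

Same-subject pairs such as ("s1", "s1") trivially satisfy the rule, so the assumption only matters for the different-subject cohort.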

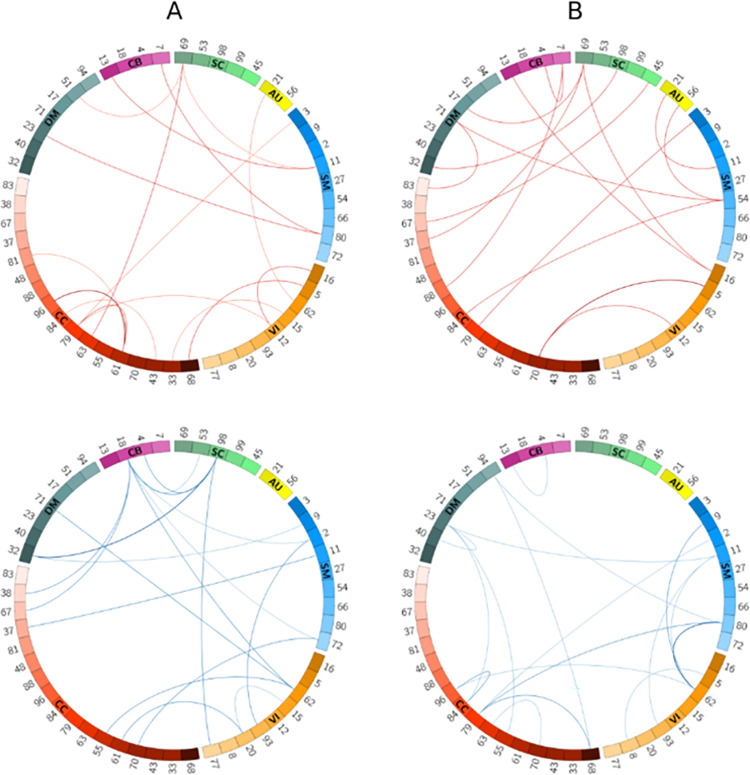

To shed more light on the role of age in model performance, we computed the sensitivity and specificity differences between the young and old cohorts for each network pair and selected the highest and lowest 1% of the resulting scores. Fig 7A and 7B show these differences for sensitivity and specificity, respectively. Among the pairs with the largest sensitivity differences (red arcs), networks CC61, CC79, and SC69, when paired with a number of other networks, appear to be more discriminative in young subjects. Conversely, among the pairs with the lowest sensitivity differences (blue arcs), networks CB18 and SC98 are linked by many outgoing arcs, suggesting that these networks contain more unique patterns within the cohort of old subjects. These networks may therefore be useful in age-related tasks that compare networks within subjects. Likewise, according to Fig 7B, networks from the CC and SM domains when linked with other networks (bottom row), along with the (CC70, VI5) network pair (top row), appear well suited for age-related tasks that use patterns of networks between subjects (e.g., classifying younger vs. older individuals).

Fig 7.

Spatial connectogram of the difference in sensitivity (A) and the difference in specificity (B) between young and old groups, with the highest (top row) and lowest (bottom row) 1% values. All connectograms show difference scores computed by subtracting the old group's scores from the young group's scores. Fig 7A suggests that the CC61, CC79, and SC69 networks contain more discriminative features in young subjects, whereas CB18 and SC98 perform better when subjects are old. Fig 7B shows that the network pair CC70-VI5 has the largest specificity difference between young and old subjects. In contrast, combinations involving the CC and SM networks better predict dissimilarity when subjects are older. The connectograms were generated using the Circos tool [51].

4. Conclusion

In this study, we showed for the first time that pairwise relationships between ICA-based spatial maps can predict whether or not two networks belong to the same subject. To this end, we proposed a Siamese-based model that extracts individualized patterns by pairwise comparison of spatial network maps (e.g., an auditory and a visual network). Our model combines a Siamese architecture with convolutional neural networks to learn high-level features describing 3D functional brain network maps that are suitable for the downstream comparison of subjects in our prediction task. Using the features extracted by the CNNs, the model produces a non-linear distance metric reflecting the likelihood that the two networks come from the same or different subjects. Training the proposed model on all possible network pairs showed that different pairs of functional networks contribute differently to the prediction task. This was especially evident when networks were selected from the subcortical domain (highest accuracy) or the auditory domain (lowest accuracy). The model's high performance on subcortical networks suggests that these networks may be preferable for future end-point tasks under the proposed framework, such as age classification or brain fingerprinting.

We also provided guidelines for neuroimaging-based prediction tasks by investigating which network pairs were more feature-rich in the same-subject and different-subject cohorts. Networks from the subcortical and cognitive control domains, and especially their combinations, showed more variability in the cohort of same-subject samples. We therefore suggest that such network pairs are well suited for analyzing spatial interactions, for example, in disease prediction. In the different-subject cohort, on the other hand, network pairs from the visual domain yielded more accurate models and could later be used for tasks that compare networks between subjects, e.g., identifying same-sex samples.

Further analysis revealed that the performance of our model depends on subjects' age and sex. In the sex-based assessments, the model performed better for males in most cases (71% of network pairs), corroborating previous findings that males' brain networks have larger variability (i.e., more distinct patterns) than females' [52, 53]. Notably, to our knowledge, this is the first demonstration that functional activity in the SPL network varies more among males than among females. Potential sex bias in these findings warrants further study, and approaches such as [57] could be readily adapted to our framework for this purpose. Moreover, for 86% of network pairs, specificity was higher among different-sex subjects than among same-sex subjects. This suggests that networks from subjects of different sexes are more readily discriminated than networks from subjects of the same sex. Regarding age, prediction performance in the younger cohort was higher than in the older cohort for most network pairs. Overall, our age- and sex-related findings indicate that the model learned sex- and age-rich features from the spatial maps, even though neither sex nor age was explicitly used as an input.

Overall, despite the widespread use of timecourse-derived information (i.e., functional connectivity) in prediction-focused neuroimaging studies, spatial maps are feature-rich data sources that can serve as surrogates for timecourse data or as a complementary input. We see room for future research in at least two directions. First, in this study, we aimed to show that single-scan datasets are sufficient to learn features that characterize functional patterns; an interesting direction would be to adapt such a model to leverage multi-scan longitudinal datasets for more robust predictions, for which aggregation techniques such as boosting or its derivatives could potentially be used. Second, we encourage researchers to apply our approach to investigate whether similar patterns exist among diseased and healthy individuals. Moreover, we hypothesize that our model can serve as a first step towards brain fingerprinting by discovering functional patterns that uniquely characterize individuals.

Supporting information

(PDF)

Each entry in the heatmap indicates the accuracy of a network pair on the entire test set. Network pairs are grouped by functional domain, i.e., SC, AU, SM, VI, CC, DM, and CB, which contain 5, 2, 9, 9, 17, 7, and 4 networks, respectively. The results suggest that different networks carry different amounts of discriminative information for characterizing subjects. For example, the subcortical and visual networks appear to be more predictive (i.e., to carry more discriminative information) than auditory networks when paired with a broad range of networks across all domains.

(PDF)
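Domain-level summaries, such as the average accuracy of network pairs that include at least one network of a domain, could be computed from a per-pair accuracy map as sketched below. Parsing the domain from the label prefix and counting a within-domain pair only once are assumptions; the accuracies are toy values.

```python
import re
from collections import defaultdict
from statistics import fmean

def domain_of(network):
    """Domain prefix of a Neuromark-style label, e.g. 'SC69' -> 'SC'."""
    return re.match(r"[A-Z]+", network).group(0)

def domain_average_accuracy(pair_acc):
    """Mean accuracy over all network pairs that include at least one
    network of each domain; a within-domain pair is counted once."""
    per_domain = defaultdict(list)
    for (net_a, net_b), acc in pair_acc.items():
        for dom in {domain_of(net_a), domain_of(net_b)}:
            per_domain[dom].append(acc)
    return {dom: fmean(accs) for dom, accs in per_domain.items()}

pair_acc = {("SC69", "CC61"): 80.0, ("SC98", "VI5"): 90.0, ("CC70", "VI5"): 60.0}
print(domain_average_accuracy(pair_acc))
```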

S3A and S3B Fig depict heatmaps of sensitivity and specificity, respectively, expressed as percentages. Comparing the two heatmaps reveals that certain domains (here, the subcortical domain) appear to contribute more when classifying ‘same subjects,’ while a different set of networks (especially VI-VI and SM-SM network pairs in S3B Fig) contributes the most toward classifying ‘different subjects’.

(PDF)

Panel A shows the sensitivity differences computed by subtracting the sensitivity of females from that of males. Network pair samples from male subjects perform better (i.e., attain higher sensitivity) than samples from female subjects in most cases of same-subject prediction. Panel B depicts the specificity differences computed by subtracting different-sex specificities from same-sex specificities; when subjects are of different sexes, the model performs better (higher specificity) in different-subject prediction than when subjects are of the same sex. Note that sensitivity and specificity are expressed as percentages. (C-D) Percentage of bootstrapped differences larger/smaller than 0: (C) corresponds to panel A and (D) to panel B. Sensitivity and specificity differences were bootstrapped 1,000,000 times (resampling with replacement) to assess how likely it is to observe a non-zero sensitivity/specificity difference per network pair in the direction of the original difference shown in panels (A) and (B). Large percentages indicate that the direction of the observed difference replicates reliably. We report the percentage of resamples in which values are greater or smaller than zero (whichever is larger); thus, percentages close to 50% indicate unreliable (chance-level) replication of the original result. (E-F) Coefficient of variation. Panels E and F report the ratio of the standard deviation to the absolute (unsigned) mean of the bootstrapped samples for sensitivity and specificity differences, respectively. For bootstrapped mean differences whose absolute value is below 1, if the standard deviation is 1.6 times the absolute mean or more, we simply report −log10(1.6) ≈ −0.2. As illustrated, differences with larger absolute values have lower bootstrapped variability, further supporting their reliability.

(PNG)
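The bootstrap summaries described in these captions (sign-consistency percentage and coefficient of variation) can be sketched as follows. Assumptions: each subcohort's 0/1 correctness flags are resampled independently with replacement, the panels plot −log10 of the coefficient of variation (consistent with the −log10(1.6) floor), and far fewer resamples are drawn than the 1,000,000 used in the study, for speed. Function names and data are hypothetical.

```python
import random
from math import log10
from statistics import fmean, pstdev

def bootstrap_differences(correct_a, correct_b, n_boot=10_000, seed=0):
    """Bootstrap the difference (A - B, in percentage points) between two
    subcohort metrics. correct_a / correct_b hold 0/1 flags marking whether
    each test sample was classified correctly, so their means equal the
    sensitivity (or specificity) of each subcohort."""
    rng = random.Random(seed)
    diffs = []
    for _ in range(n_boot):
        ra = rng.choices(correct_a, k=len(correct_a))  # resample with replacement
        rb = rng.choices(correct_b, k=len(correct_b))
        diffs.append(100.0 * (fmean(ra) - fmean(rb)))
    return diffs

def sign_consistency(diffs):
    """Percentage of resamples above or below zero, whichever is larger."""
    pos = sum(d > 0 for d in diffs)
    neg = sum(d < 0 for d in diffs)
    return 100.0 * max(pos, neg) / len(diffs)

def neg_log_cov(diffs, cap=1.6):
    """-log10(std / |mean|) of the bootstrapped differences. Small mean
    differences (|mean| < 1) whose CoV reaches `cap` are floored at -log10(cap)."""
    m, sd = fmean(diffs), pstdev(diffs)
    if abs(m) < 1 and (m == 0 or sd / abs(m) >= cap):
        return -log10(cap)
    if sd == 0:
        return float("inf")  # perfectly stable difference
    return -log10(sd / abs(m))

male_correct = [1] * 90 + [0] * 10    # toy subcohort with 90% sensitivity
female_correct = [1] * 60 + [0] * 40  # toy subcohort with 60% sensitivity
d = bootstrap_differences(male_correct, female_correct, n_boot=2000)
print(sign_consistency(d), neg_log_cov(d))
```

A sign-consistency value near 100% and a positive −log10(CoV) both indicate a difference whose direction and size are stable under resampling, matching how panels C to F are read.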

Panels A and B show the sensitivity and specificity differences obtained by subtracting the scores of old subjects from those of young subjects. For most network pairs, sensitivity and specificity are higher in young subjects than in old subjects. Note that sensitivity and specificity are expressed as percentages. (C-D) Percentage of bootstrapped differences larger/smaller than 0: (C) corresponds to panel A and (D) to panel B. Sensitivity and specificity differences were bootstrapped 1,000,000 times (resampling with replacement) to assess how likely it is to observe a non-zero sensitivity/specificity difference per network pair in the direction of the original difference shown in panels (A) and (B). Large percentages indicate that the direction of the observed difference replicates reliably. We report the percentage of resamples in which values are greater or smaller than zero (whichever is larger); thus, percentages close to 50% indicate unreliable (chance-level) replication of the original result. (E-F) Coefficient of variation. Panels E and F report the ratio of the standard deviation to the absolute (unsigned) mean of the bootstrapped samples for sensitivity and specificity differences, respectively. For bootstrapped mean differences whose absolute value is below 1, if the standard deviation is 1.6 times the absolute mean or more, we simply report −log10(1.6) ≈ −0.2. As illustrated, in (E) sensitivity differences with larger absolute values have lower bootstrapped variability, further supporting their reliability, while in (F) large negative specificity differences (old > young) appear to have lower bootstrapped variability than equally large positive specificity differences.

(PNG)

Panel A shows that the model's sensitivity in males is higher than in females in all brain domains, especially in CB, where 87% of network pairs have higher sensitivity in males than in females. (B) Different-sex brains are more variable than same-sex brains. Panel B shows that, within each domain, most network pairs have higher specificity in the different-sex cohort than in the same-sex cohort, especially in DM, SM, VI, and SC. (C-D) Younger brains are more variable than older brains. According to panels C and D, sensitivity and specificity are higher in young brains than in old brains.

(PDF)

(DOCX)

(DOCX)

(DOCX)

(DOCX)

Numbers in parentheses refer to the average accuracy of network pairs with one network of the domain. Numbers in cells are −log(p-value) × sign(t) scores obtained from a two-sided two-sample t-test between mean domain accuracies. Using Bonferroni correction and a significance level of 0.05, the mean accuracies of different domains are mostly significantly different (bold cells are significant). Lowest and highest scores are color-coded in blue and red, respectively. The table is symmetric about the main diagonal except for the sign, which reflects the direction of the difference. (B) Cohen's d associated with Table A. Numbers in cells are the effect sizes (Cohen's d) between domain accuracies, rounded to three digits.

(DOCX)
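The table entries combine a p-value, the sign of the t statistic, a Bonferroni threshold, and Cohen's d. The helpers below sketch these quantities. Assumptions: the logarithm is base 10 (the caption does not state the base), Cohen's d uses the pooled sample standard deviation, and the number of corrected comparisons is taken as all 21 pairs among the seven domains. Inputs are toy values.

```python
from math import log10, sqrt
from statistics import fmean, variance

def cohens_d(a, b):
    """Cohen's d using the pooled sample standard deviation."""
    na, nb = len(a), len(b)
    pooled_sd = sqrt(((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2))
    return (fmean(a) - fmean(b)) / pooled_sd

def signed_log_p(p_value, t_stat):
    """-log10(p) carrying the sign of t, so swapping the two domains only flips the sign."""
    sign = (t_stat > 0) - (t_stat < 0)
    return -log10(p_value) * sign

def bonferroni_significant(p_value, n_tests, alpha=0.05):
    """True if p stays below alpha after Bonferroni correction for n_tests comparisons."""
    return p_value < alpha / n_tests

n_tests = 7 * 6 // 2  # all pairs among the seven domains (assumed test count)
print(cohens_d([1, 2, 3], [4, 5, 6]), signed_log_p(0.01, -2.5),
      bonferroni_significant(0.001, n_tests))
```

Because both the signed score and Cohen's d change sign when the two domains are swapped, a full domain-by-domain table built this way is antisymmetric, which matches the caption's note that the table is symmetric except for the sign.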

P-values were computed using a two-sided two-sample t-test to compare the male vs. female or the same- vs. different-sex cohorts. The results show that the mean sensitivities of the male and female sub-cohorts are significantly different, especially in the CC and CB domains, each of which includes CC-x and SM-x network pairs. Similarly, the p-values comparing the same- and different-sex cohorts indicate a significant mean specificity difference, especially for the CC and SM domains.

(DOCX)

P-values were computed using a two-sided two-sample t-test to compare the young vs. old cohorts. The results indicate that the mean sensitivity and specificity differ significantly between the young and old sub-cohorts.

(DOCX)

Data Availability

The data underlying the results presented in the study are available from https://biobank.ctsu.ox.ac.uk/crystal/label.cgi?id=111. Furthermore, the processing protocols are described at https://biobank.ctsu.ox.ac.uk/crystal/crystal/docs/brain_mri.pdf.

Funding Statement

The study was funded in part by NIH grants R01MH118695, R01MH118695-03S1, and RF1AG063153. These funds were awarded to Prof. Vince D. Calhoun. The funders had no role in the study design, data collection and analysis, decision to publish, or preparation of the manuscript. The grants listed above constitute all the funding or sources of support received during this study; there was no additional external funding received for this study.

References

- 1. Mangin J. F., Rivière D., Cachia A., Duchesnay É., Cointepas Y., Papadopoulos-Orfanos D., et al., "A framework to study the cortical folding patterns," Neuroimage, vol. 23, pp. S129–S138, 2004. doi: 10.1016/j.neuroimage.2004.07.019

- 2. Bürgel U., Amunts K., Hoemke L., Mohlberg H., Gilsbach J. M. and Zilles K., "White matter fiber tracts of the human brain: Three-dimensional mapping at microscopic resolution, topography and intersubject variability," Neuroimage, vol. 29, no. 4, pp. 1092–1105, 2006. doi: 10.1016/j.neuroimage.2005.08.040

- 3. Smith S., Duff E., Groves A., Nichols T. E., Jbabdi S., Westlye L. T., et al., "Structural variability in the human brain reflects fine-grained functional architecture at the population level," Journal of Neuroscience, vol. 39, no. 31, pp. 6136–6149, 2019. doi: 10.1523/JNEUROSCI.2912-18.2019

- 4. Alexander-Bloch A., Giedd J. N. and Bullmore E., "Imaging structural co-variance between human brain regions," Nature Reviews Neuroscience, vol. 14, no. 5, pp. 322–336, 2013. doi: 10.1038/nrn3465

- 5. Gupta L., Besseling R. M., Overvliet G. M., Hofman P. A., Louw A. d., Vaessen M. J., et al., "Spatial heterogeneity analysis of brain activation in fMRI," NeuroImage: Clinical, vol. 5, pp. 266–276, 2014. doi: 10.1016/j.nicl.2014.06.013

- 6. Finn E. S., Shen X., Scheinost D., Rosenberg M. D., Huang J., Chun M. M., et al., "Functional connectome fingerprinting: identifying individuals using patterns of brain connectivity," Nature neuroscience, vol. 18, no. 11, pp. 1664–1671, 2015. doi: 10.1038/nn.4135

- 7. Wang K., Liang M., Wang L., Tian L., Zhang X., Li K., et al., "Altered functional connectivity in early Alzheimer’s disease: A resting‐state fMRI study," Human brain mapping, vol. 28, no. 10, pp. 967–978, 2007. doi: 10.1002/hbm.20324

- 8. Arbabshirani M. R., Kiehl K., Pearlson G. and Calhoun V. D., "Classification of schizophrenia patients based on resting-state functional network connectivity," Frontiers in neuroscience, vol. 7, p. 133, 2013. doi: 10.3389/fnins.2013.00133

- 9. Cole D. M., Smith S. M. and Beckmann C. F., "Advances and pitfalls in the analysis and interpretation of resting-state FMRI data," Frontiers in systems neuroscience, vol. 4, p. 8, 2010. doi: 10.3389/fnsys.2010.00008

- 10. Crosson B., Ford A., McGregor K. M., Cheshkov S., Li X., Walker-Batson D., et al., "Functional Imaging and Related Techniques: An Introduction for Rehabilitation Researchers," Journal of rehabilitation research and development, vol. 47, no. 2, 2010. doi: 10.1682/jrrd.2010.02.0017

- 11. Lee M. H., Smyser C. D. and Shimony J. S., "Resting state fMRI: A review of methods and clinical applications," American Journal of neuroradiology, vol. 34, no. 10, pp. 1866–1872, 2013. doi: 10.3174/ajnr.A3263

- 12. Di X., Gohel S., Kim E. H. and Biswal B. B., "Task vs. rest—different network configurations between the coactivation and the resting-state brain networks," Frontiers in Human Neuroscience, vol. 7, p. 493, 2013. doi: 10.3389/fnhum.2013.00493

- 13. O’Connor E. E. and Zeffiro T. A., "Why is Clinical fMRI in a Resting State?," Frontiers in neurology, vol. 10, p. 420, 2019. doi: 10.3389/fneur.2019.00420

- 14. Mueller S., Wang D., Fox M. D., Yeo B. T., Sepulcre J., Sabuncu M. R., et al., "Individual Variability in Functional Connectivity Architecture of the Human Brain," Neuron, vol. 77, no. 3, pp. 586–595, 2013. doi: 10.1016/j.neuron.2012.12.028

- 15. Biswal B., Zerrin Yetkin F., Haughton V. M. and Hyde J. S., "Functional connectivity in the motor cortex of resting human brain using echo-planar MRI," Magnetic resonance in medicine, vol. 34, no. 4, pp. 537–541, 1995. doi: 10.1002/mrm.1910340409

- 16.Beckmann C. F., DeLuca M., Devlin J. T. and Smith S. M., "Investigations into resting-state connectivity using independent component analysis," Philosophical Transactions of the Royal Society B: Biological Sciences, vol. 360, no. 1457, pp. 1001–1013, 2005. doi: 10.1098/rstb.2005.1634 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Calhoun V. D. and Adali T., "Multisubject Independent Component Analysis of fMRI: A Decade of Intrinsic Networks, Default Mode, and Neurodiagnostic Discovery," IEEE reviews in biomedical engineering, vol. 5, pp. 60–73, 2012. doi: 10.1109/RBME.2012.2211076 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Power J. D., Cohen A. L., Nelson S. M., Wig G. S., Barnes K. A., Church J. A., et al. , "Functional network organization of the human brain," Neuron, vol. 72, no. 4, pp. 665–678, 2011. doi: 10.1016/j.neuron.2011.09.006 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Lee M. H., Hacker C. D., Snyder A. Z., Corbetta M., Zhang D., Leuthardt E. C. et al. "Clustering of resting state networks," PloS one, vol. 7, no. 7, p. e40370, 2012. doi: 10.1371/journal.pone.0040370 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Damaraju E., Allen E. A., Belger A., Ford J. M., McEwen S. J., Mathalon D., et al. "Dynamic functional connectivity analysis reveals transient states of dysconnectivity in schizophrenia," NeuroImage: Clinical, vol. 5, pp. 298–308, 2014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Van Den Heuvel M. P. and Pol H. E. H., "Exploring the brain network: A review on resting-state fMRI functional connectivity," European neuropsychopharmacology, vol. 20, no. 8, pp. 519–534, 2010. doi: 10.1016/j.euroneuro.2010.03.008 [DOI] [PubMed] [Google Scholar]

- 22.Roy A. K., Shehzad Z., Margulies D. S., Kelly A. C., Uddin L. Q., Gotimer K., et al. , "Functional connectivity of the human amygdala using resting state fMRI," Neuroimage, vol. 45, no. 2, pp. 614–626, 2009. doi: 10.1016/j.neuroimage.2008.11.030 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Niu Y.-W., Lin Q.-H., Qiu Y., Kuang L.-D. and Calhoun V. D., "Sample Augmentation for Classification of Schizophrenia Patients and Healthy Controls Using ICA of fMRI Data and Convolutional Neural Networks," in 2019 Tenth International Conference on Intelligent Control and Information Processing (ICICIP), 2019. [Google Scholar]

- 24.Qiu Y., Lin Q.-H., Kuang L.-D., Zhao W.-D., Gong X.-F., Cong F. et al. , "Classification of schizophrenia patients and healthy controls using ICA of complex-valued fMRI data and convolutional neural networks," in International Symposium on Neural Networks, 2019. [Google Scholar]

- 25.Hassanzadeh R. and Calhoun V. D., "Individualized Prediction of Brain Network Interactions using Deep Siamese Networks," in 2020 IEEE 20th International Conference on Bioinformatics and Bioengineering (BIBE), 2020. [Google Scholar]

- 26.Arbabshirani M. R., Plis S., Sui J. and Calhoun V. D., "Single subject prediction of brain disorders in neuroimaging: Promises and pitfalls," Neuroimage, vol. 145, pp. 137–165, 2017. doi: 10.1016/j.neuroimage.2016.02.079 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Chen G., Ward B. D., Xie C., Li W., Wu Z., Jones J. L., et al. , "Classification of Alzheimer Disease, Mild Cognitive Impairment, and Normal Cognitive Status with Large-Scale Network Analysis Based on Resting-State Functional MR Imaging," Radiology, vol. 259, no. 1, pp. 213–221, 2011. doi: 10.1148/radiol.10100734 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Du W., Calhoun V. D., Li H., Ma S., Eichele T., Kiehl K. A., et al. , "High classification accuracy for schizophrenia with rest and task fMRI data," Frontiers in human neuroscience, vol. 6, p. 145, 2012. doi: 10.3389/fnhum.2012.00145 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Tang Y., Wang L., Cao F. and Tan L., "Identify schizophrenia using resting-state functional connectivity: an exploratory research and analysis," Biomedical engineering online, vol. 11, no. 1, p. 50, 2012. doi: 10.1186/1475-925X-11-50 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Iidaka T., "Resting state functional magnetic resonance imaging and neural network classified autism and control," Cortex, vol. 63, pp. 55–67, 2015. doi: 10.1016/j.cortex.2014.08.011 [DOI] [PubMed] [Google Scholar]

- 31.Khosla M., Jamison K., Kuceyeski A. and Sabuncu M. R., "Ensemble learning with 3D convolutional neural networks for functional connectome-based prediction," Neuroimage, vol. 199, pp. 651–662, 2019. doi: 10.1016/j.neuroimage.2019.06.012 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Weis S., Patil K. R., Hoffstaedter F., Nostro A., Yeo B. T. and Eickhoff S. B., "Sex classification by resting state brain connectivity," Cerebral cortex, vol. 30, no. 2, pp. 824–835, 2020. doi: 10.1093/cercor/bhz129 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Zhang C., Dougherty C. C., Baum S. A., White T. and Michael A. M., "Functional connectivity predicts gender: Evidence for gender differences in resting brain connectivity," Human brain mapping, vol. 39, no. 4, pp. 1765–1776, 2018. doi: 10.1002/hbm.23950 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Pruett J. R. Jr, Kandala S., Hoertel S., Snyder A. Z., Elison J. T., Nishino T., et al. , "Accurate age classification of 6 and 12 month-old infants based on resting-state functional connectivity magnetic resonance imaging data," Developmental cognitive neuroscience, vol. 12, pp. 123–133, 2015. doi: 10.1016/j.dcn.2015.01.003 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Miranda-Dominguez O., Mills B. D., Carpenter S. D., Grant K. A., Kroenke C. D., Nigg J. T. et al. , "Connectotyping: Model Based Fingerprinting of the Functional Connectome," PloS one, vol. 9, no. 11, p. e111048, 2014. doi: 10.1371/journal.pone.0111048 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Button K. S., Ioannidis J. P. A., Mokrysz C., Nosek B. A., Flint J., Robinson E. S. J. et al. , "Power failure: why small sample size undermines the reliability of neuroscience," Nature reviews neuroscience, vol. 14, no. 5, pp. 365–376, 2013. doi: 10.1038/nrn3475 [DOI] [PubMed] [Google Scholar]

- 37. Ma S., Correa N. M., Li X.-L., Eichele T., Calhoun V. D. and Adali T., "Automatic Identification of Functional Clusters in fMRI Data Using Spatial Dependence," IEEE Transactions on Biomedical Engineering, vol. 58, no. 12, pp. 3406–3417, 2011. doi: 10.1109/TBME.2011.2167149

- 38. Liu J., Liao X., Xia M. and He Y., "Chronnectome fingerprinting: Identifying individuals and predicting higher cognitive functions using dynamic brain connectivity patterns," Human Brain Mapping, vol. 39, no. 2, pp. 902–915, 2018. doi: 10.1002/hbm.23890

- 39. Kong R., Li J., Orban C., Sabuncu M. R., Liu H., Schaefer A., et al., "Spatial topography of individual-specific cortical networks predicts human cognition, personality, and emotion," Cerebral Cortex, vol. 29, no. 6, pp. 2533–2551, 2019. doi: 10.1093/cercor/bhy123

- 40. Bromley J., Guyon I., LeCun Y., Säckinger E. and Shah R., "Signature verification using a 'siamese' time delay neural network," in Advances in Neural Information Processing Systems, 1994.

- 41. Chung Y.-A. and Weng W.-H., "Learning Deep Representations of Medical Images using Siamese CNNs with Application to Content-Based Image Retrieval," arXiv preprint arXiv:1711.08490, 2017.

- 42. Miller K. L., Alfaro-Almagro F., Bangerter N. K., Thomas D. L., Yacoub E., Xu J., et al., "Multimodal population brain imaging in the UK Biobank prospective epidemiological study," Nature Neuroscience, vol. 19, no. 11, pp. 1523–1536, 2016. doi: 10.1038/nn.4393

- 43. Jenkinson M., Bannister P., Brady M. and Smith S., "Improved optimization for the robust and accurate linear registration and motion correction of brain images," NeuroImage, vol. 17, no. 2, pp. 825–841, 2002. doi: 10.1016/s1053-8119(02)91132-8

- 44. Andersson J. L., Skare S. and Ashburner J., "How to correct susceptibility distortions in spin-echo echo-planar images: application to diffusion tensor imaging," NeuroImage, vol. 20, no. 2, pp. 870–888, 2003. doi: 10.1016/S1053-8119(03)00336-7

- 45. Salimi-Khorshidi G., Douaud G., Beckmann C. F., Glasser M. F., Griffanti L. and Smith S. M., "Automatic denoising of functional MRI data: Combining independent component analysis and hierarchical fusion of classifiers," NeuroImage, vol. 90, pp. 449–468, 2014. doi: 10.1016/j.neuroimage.2013.11.046

- 46. Beckmann C. F. and Smith S. M., "Probabilistic independent component analysis for functional magnetic resonance imaging," IEEE Transactions on Medical Imaging, vol. 23, no. 2, pp. 137–152, 2004. doi: 10.1109/TMI.2003.822821

- 47. Griffanti L., Salimi-Khorshidi G., Beckmann C. F., Auerbach E. J., Douaud G., Sexton C. E., et al., "ICA-based artefact removal and accelerated fMRI acquisition for improved resting state network imaging," NeuroImage, vol. 95, pp. 232–247, 2014. doi: 10.1016/j.neuroimage.2014.03.034

- 48. Du Y., Fu Z., Sui J., Gao S., Xing Y., Lin D., et al., "NeuroMark: An automated and adaptive ICA based pipeline to identify reproducible fMRI markers of brain disorders," NeuroImage: Clinical, vol. 28, 2020. doi: 10.1016/j.nicl.2020.102375

- 49. Brosch T., Tam R. and the Alzheimer's Disease Neuroimaging Initiative, "Manifold Learning of Brain MRIs by Deep Learning," in International Conference on Medical Image Computing and Computer-Assisted Intervention, Berlin, Heidelberg, 2013.

- 50. Chollet F., "Xception: Deep Learning with Depthwise Separable Convolutions," in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2017.

- 51. Krzywinski M., Schein J., Birol İ., Connors J., Gascoyne R., Horsman D., et al., "Circos: an information aesthetic for comparative genomics," Genome Research, vol. 19, no. 9, pp. 1639–1645, 2009. doi: 10.1101/gr.092759.109

- 52. Wierenga L. M., Doucet G. E., Dima D., Agartz I., Aghajani M., Akudjedu T. N., et al., "Greater male than female variability in regional brain structure across the lifespan," bioRxiv, 2020. doi: 10.1002/hbm.25204

- 53. Forde N. J., Jeyachandra J., Joseph M., Jacobs G. R., Dickie E., Satterthwaite T. D., et al., "Sex Differences in Variability of Brain Structure Across the Lifespan," Cerebral Cortex, vol. 30, no. 10, pp. 5420–5430, 2020. doi: 10.1093/cercor/bhaa123

- 54. Save E. and Poucet B., "Hippocampal-parietal cortical interactions in spatial cognition," Hippocampus, vol. 10, no. 4, pp. 491–499, 2000.

- 55. Jagaroo V., "Mental rotation and the parietal question in functional neuroimaging: A discussion of two views," European Journal of Cognitive Psychology, vol. 16, no. 5, pp. 717–728, 2004.

- 56. Koscik T., O’Leary D., Moser D. J., Andreasen N. C. and Nopoulos P., "Sex differences in parietal lobe morphology: Relationship to mental rotation performance," Brain and Cognition, vol. 69, no. 3, pp. 451–459, 2009. doi: 10.1016/j.bandc.2008.09.004

- 57. Adeli E., Zhao Q., Pfefferbaum A., Sullivan E. V., Fei-Fei L., Niebles J. C., et al., "Representation Learning With Statistical Independence to Mitigate Bias," in Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, 2021. doi: 10.1109/wacv48630.2021.00256

Associated Data


Supplementary Materials

(PDF)

Each entry in the heatmap indicates the accuracy of a network pair on the entire test set. The heatmap is grouped by functional domain, i.e., SC, AU, SM, VI, CC, DM, and CB, which contain 5, 2, 9, 9, 17, 7, and 4 networks, respectively. The results suggest that different networks carry different amounts of discriminative information for characterizing subjects. For example, the subcortical and visual networks appear to be more predictive (i.e., carry more discriminative information) than the auditory networks when paired with a broad range of networks across all domains.

(PDF)
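The heatmap above is organized by domain, and domain-level accuracies elsewhere in the supplement are averages over network pairs that involve a given domain. The following is a minimal sketch of that aggregation, assuming a symmetric matrix of pair accuracies laid out in the domain order listed in the caption; the function names, the block ordering, and the choice to skip same-network (diagonal) entries are illustrative assumptions, not the authors' code.

```python
import itertools

# Domain sizes taken from the caption (53 networks in total). The order in
# which domains are laid out along the matrix axes is an assumption.
DOMAIN_SIZES = {"SC": 5, "AU": 2, "SM": 9, "VI": 9, "CC": 17, "DM": 7, "CB": 4}


def network_domains(domain_sizes):
    """Return one domain label per network index, in block order."""
    labels = []
    for domain, size in domain_sizes.items():
        labels.extend([domain] * size)
    return labels


def domain_average_accuracy(acc, labels):
    """Average accuracy over distinct network pairs (i < j) that include at
    least one network from each domain.

    `acc` is a symmetric N x N list of lists holding pair accuracies
    (hypothetical input); diagonal (same-network) entries are ignored.
    """
    sums, counts = {}, {}
    for i, j in itertools.combinations(range(len(labels)), 2):
        # A cross-domain pair counts toward both domains; a within-domain pair once.
        for domain in {labels[i], labels[j]}:
            sums[domain] = sums.get(domain, 0.0) + acc[i][j]
            counts[domain] = counts.get(domain, 0) + 1
    return {domain: sums[domain] / counts[domain] for domain in sums}


# Toy example: every pair that touches a subcortical network scores 0.9,
# all other pairs score 0.7 (made-up numbers, not results from the study).
labels = network_domains(DOMAIN_SIZES)
n = len(labels)
acc = [[0.9 if "SC" in (labels[i], labels[j]) else 0.7 for j in range(n)]
       for i in range(n)]
averages = domain_average_accuracy(acc, labels)
print(n, round(averages["SC"], 3))  # 53 0.9
```

Whether the published heatmap also includes same-network pairs on its diagonal is not stated in this caption, so including or excluding them is a design choice a reader would need to confirm.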

S3A and S3B Fig depict heatmaps of sensitivity and specificity, respectively, expressed as percentages. Comparing the two heatmaps reveals that certain domains (here, the subcortical domain) appear to contribute more to classifying ‘same subjects,’ while a different set of networks (especially VI-VI and SM-SM network pairs in S3B Fig) contributes the most toward classifying ‘different subjects’.

(PDF)
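Sensitivity here is the hit rate on 'same subject' pairs and specificity the hit rate on 'different subjects' pairs. A minimal sketch of both metrics follows, assuming the Siamese model outputs an embedding distance per pair that is thresholded into a binary decision; `predict_same` and its 0.5 threshold are hypothetical and are not taken from the paper.

```python
def predict_same(distances, threshold=0.5):
    """Hypothetical decision rule: call a pair 'same subject' (1) when the
    embedding distance falls below the threshold, else 'different' (0)."""
    return [1 if d < threshold else 0 for d in distances]


def sensitivity_specificity(y_true, y_pred):
    """Sensitivity and specificity in percent, with 'same subject' (1) as the
    positive class and 'different subjects' (0) as the negative class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    sensitivity = 100.0 * tp / (tp + fn) if tp + fn else float("nan")
    specificity = 100.0 * tn / (tn + fp) if tn + fp else float("nan")
    return sensitivity, specificity


y_true = [1, 1, 1, 0, 0, 0, 0]  # toy labels for seven network-pair samples
distances = [0.1, 0.3, 0.8, 0.9, 1.2, 0.4, 0.7]
sens, spec = sensitivity_specificity(y_true, predict_same(distances))
print(round(sens, 1), round(spec, 1))  # 66.7 75.0
```

Computing the two rates separately per network pair is what allows S3A and S3B Fig to show that some domains help mostly on same-subject pairs and others on different-subject pairs.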

Fig 4A shows the sensitivity differences computed by subtracting the sensitivity of females from that of males. According to the figure, network pair samples from male subjects perform better (i.e., attain higher sensitivity) than samples from female subjects in most cases of same-subject prediction. Fig 4B depicts the specificity differences computed by subtracting the different-sex specificities from the same-sex specificities. This figure shows that the model performs better in different-subject prediction (higher specificity) when the two subjects differ in sex than when they are of the same sex. Note that sensitivity and specificity are expressed as percentages. (C-D) Percentage of bootstrapped differences larger/smaller than 0: (C) corresponds to panel (A) and (D) corresponds to panel (B). Sensitivity and specificity differences were bootstrapped 1,000,000 times (resampling with replacement) to assess how likely it is to observe a non-zero sensitivity/specificity difference per network pair in the direction of the original difference shown in panels (A) and (B). Large percentages indicate that the direction of the observed difference replicates reliably. Note that we report the percentage of times the values are greater or smaller than zero (whichever is larger). Thus, percentages closer to 50% indicate unreliable (chance-level) replication of the original result. (E-F) Coefficient of variation. Fig 4E and 4F report the ratio of the standard deviation to the absolute (unsigned) mean of the bootstrapped samples for sensitivity and specificity differences, respectively. For bootstrapped mean differences whose absolute value is below 1, if the standard deviation is 1.6 times the absolute mean or more, we simply report -log10(1.6) ≈ -0.2. As illustrated, differences with larger absolute values have lower bootstrapped variability, further supporting their reliability.

(PNG)
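Panels (C-D) summarize how often a bootstrapped difference keeps the sign of the observed one. The sketch below illustrates that directional count under stated assumptions: each cohort is represented as per-sample correctness (1 or 0) for a single network pair, each cohort is resampled with replacement, and only 2,000 resamples are drawn for speed instead of the 1,000,000 reported in the caption. The function name and input layout are hypothetical.

```python
import random


def bootstrap_direction(correct_a, correct_b, n_boot=2000, seed=0):
    """Bootstrap the difference in hit rate (cohort A minus cohort B).

    correct_a / correct_b hold 1 (correct) or 0 (wrong) per test sample of one
    network pair, e.g. same-subject predictions for male and female subjects.
    Each cohort is resampled with replacement; the rate difference is taken
    in percentage points. Returns (percent > 0, percent < 0, reported value),
    where the reported value is the larger of the two percentages.
    """
    rng = random.Random(seed)
    positive = negative = 0
    for _ in range(n_boot):
        a = rng.choices(correct_a, k=len(correct_a))
        b = rng.choices(correct_b, k=len(correct_b))
        diff = 100.0 * (sum(a) / len(a) - sum(b) / len(b))
        if diff > 0:
            positive += 1
        elif diff < 0:
            negative += 1
    pct_pos = 100.0 * positive / n_boot
    pct_neg = 100.0 * negative / n_boot
    return pct_pos, pct_neg, max(pct_pos, pct_neg)


# Perfectly separated toy cohorts: every resample keeps a positive difference.
pos, neg, reported = bootstrap_direction([1] * 20, [0] * 20)
print(pos, neg, reported)  # 100.0 0.0 100.0
```

Resamples with an exact zero difference count toward neither side, so with small or tied cohorts the two percentages need not sum to 100.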

Fig 5A and 5B show the sensitivity and specificity differences obtained by subtracting the scores of old subjects from those of young subjects. According to the results, for most network pairs the sensitivity and specificity scores are higher in young subjects than in old subjects. Note that sensitivity and specificity are expressed as percentages. (C-D) Percentage of bootstrapped differences larger/smaller than 0: (C) corresponds to panel (A) and (D) corresponds to panel (B). Sensitivity and specificity differences were bootstrapped 1,000,000 times (resampling with replacement) to assess how likely it is to observe a non-zero sensitivity/specificity difference per network pair in the direction of the original difference shown in panels (A) and (B). Large percentages indicate that the direction of the observed difference replicates reliably. Note that we report the percentage of times the values are greater or smaller than zero (whichever is larger). Thus, percentages closer to 50% indicate unreliable (chance-level) replication of the original result. (E-F) Coefficient of variation. Fig 5E and 5F report the ratio of the standard deviation to the absolute (unsigned) mean of the bootstrapped samples for sensitivity and specificity differences, respectively. For bootstrapped mean differences whose absolute value is below 1, if the standard deviation is 1.6 times the absolute mean or more, we simply report -log10(1.6) ≈ -0.2. As illustrated, in (E) sensitivity differences with larger absolute values have lower bootstrapped variability, further supporting their reliability, while in (F) large negative specificity differences (old > young) appear to have lower bootstrapped variability than equally large positive specificity differences.

(PNG)
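Panels (E-F) rest on the coefficient of variation (CV) of the bootstrapped differences and a clipping rule for near-zero means. One reading of the caption is sketched below: the plotted value is taken to be -log10(CV), which is consistent with the quoted clip value of -log10(1.6) ≈ -0.2, but that transform, the helper name `cv_score`, and the handling of zero spread are assumptions rather than the authors' code.

```python
import math
import statistics

CV_CAP = 1.6  # cap quoted in the caption


def cv_score(boot_diffs, cap=CV_CAP):
    """Plot value for the coefficient of variation of bootstrapped differences.

    CV = sample standard deviation / |mean|. Assumption: the value shown is
    -log10(CV), and for |mean| < 1 with CV >= cap it is clipped to
    -log10(cap), i.e. about -0.2 for cap = 1.6.
    """
    mean = statistics.mean(boot_diffs)
    sd = statistics.stdev(boot_diffs)
    if abs(mean) < 1 and (mean == 0 or sd / abs(mean) >= cap):
        return -math.log10(cap)
    if sd == 0:
        return math.inf  # no bootstrap variability at all
    return -math.log10(sd / abs(mean))


print(round(cv_score([10.0, 12.0, 8.0, 10.0]), 3))  # 0.787
print(round(cv_score([0.5, -0.5, 0.6, -0.4]), 1))   # -0.2 (clipped)
```

Under this reading, larger plotted values mean lower relative variability, so large-magnitude differences with tight bootstrap spread stand out as the reliable ones.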

Fig A shows that the model’s sensitivity in males is higher than in females in all brain domains, especially in CB, where 87% of network pairs have higher sensitivity in males than in females. (B) Different-sex brains are more variable than same-sex brains. Fig B shows that, within domains, most network pairs in the different-sex cohort have higher specificity than those in the same-sex cohort, especially in DM, SM, VI, and SC. (C-D) Younger brains are more variable than older brains. According to Fig C and D, sensitivity and specificity in young brains are higher than in old brains.

(PDF)
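Statements such as "87% of network pairs have higher sensitivity in males" reduce to counting, within a domain, how many network pairs favor one cohort. A minimal sketch follows, assuming a pair belongs to a domain when at least one of its two networks does; that membership rule, the function name, and the toy values are illustrative assumptions.

```python
def percent_pairs_higher(pair_domains, metric_a, metric_b, domain):
    """Percentage of network pairs involving `domain` for which cohort A
    scores strictly higher than cohort B.

    pair_domains: one (domain_i, domain_j) tuple per network pair.
    metric_a, metric_b: per-pair scores for the two cohorts, e.g. male and
    female sensitivity (hypothetical inputs).
    """
    wins = [a > b
            for (d_i, d_j), a, b in zip(pair_domains, metric_a, metric_b)
            if domain in (d_i, d_j)]
    if not wins:
        return float("nan")
    return 100.0 * sum(wins) / len(wins)


pair_domains = [("CB", "CB"), ("CB", "VI"), ("CB", "SM"), ("VI", "SM")]
male_sens = [80.0, 75.0, 70.0, 60.0]    # toy values, not study results
female_sens = [70.0, 76.0, 65.0, 90.0]
print(round(percent_pairs_higher(pair_domains, male_sens, female_sens, "CB"), 1))  # 66.7
```

If "within domains" is instead meant as pairs whose two networks both lie in the domain, the filter would change to `d_i == domain and d_j == domain`.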

(DOCX)

(DOCX)

(DOCX)

(DOCX)

Numbers in parentheses refer to the average accuracy of the network pairs that include a network from that domain. Numbers in cells are the -log(p-value)×sign(t) scores obtained from a two-sided two-sample t-test between mean domain accuracies. The results show that, using Bonferroni correction and a significance level of 0.05, most pairs of domains have significantly different mean accuracies (bold cells are significant). The lowest and highest scores are color-coded in blue and red, respectively. The table is antisymmetric about the main diagonal: mirrored entries have the same magnitude and opposite sign, which reflects the direction of the difference. (B) Cohen’s d associated with Table A. Numbers in cells are the effect sizes (Cohen’s d) between domain accuracies, rounded to 3 digits.

(DOCX)
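The table combines three quantities: a two-sample t statistic, a signed -log(p) score, and Cohen's d. The standard-library sketch below shows how they relate. Assumptions: the logarithm is base 10, variances are pooled (Student's t), and Bonferroni correction is applied over all 21 pairs of the 7 domains. The p-value itself requires the t distribution, which this sketch does not implement; in practice it could come from `scipy.stats.ttest_ind`.

```python
import math
import statistics


def pooled_sd(x, y):
    """Pooled sample standard deviation of two groups."""
    nx, ny = len(x), len(y)
    pooled_var = ((nx - 1) * statistics.variance(x)
                  + (ny - 1) * statistics.variance(y)) / (nx + ny - 2)
    return math.sqrt(pooled_var)


def student_t(x, y):
    """Two-sample t statistic assuming equal variances."""
    se = pooled_sd(x, y) * math.sqrt(1 / len(x) + 1 / len(y))
    return (statistics.mean(x) - statistics.mean(y)) / se


def cohens_d(x, y):
    """Cohen's d effect size using the pooled standard deviation."""
    return (statistics.mean(x) - statistics.mean(y)) / pooled_sd(x, y)


def signed_log_p(p_value, t):
    """-log10(p) multiplied by the sign of t (0 when t == 0)."""
    sign = (t > 0) - (t < 0)
    return -math.log10(p_value) * sign


# Bonferroni threshold on the score, assuming all 21 pairs of the 7 domains
# are tested at an overall alpha of 0.05.
N_TESTS = math.comb(7, 2)
SCORE_THRESHOLD = -math.log10(0.05 / N_TESTS)

x = [1.0, 2.0, 3.0, 4.0, 5.0]  # toy accuracies, not data from the study
y = [2.0, 3.0, 4.0, 5.0, 6.0]
print(round(student_t(x, y), 3), round(cohens_d(x, y), 3), round(SCORE_THRESHOLD, 3))
# -1.0 -0.632 2.623
```

Because swapping the two groups flips the sign of t but leaves p unchanged, the signed score explains why mirrored cells in the table differ only in sign. A cell would count as significant when the absolute score exceeds the threshold.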

P-values were computed using the two-sided two-sample t-test to compare the male vs. female or same- vs. different-sex cohorts. The results show that the mean sensitivities of the male and female sub-cohorts differ significantly, especially in the CC and CB domains, each of which includes CC-x and SM-x network pairs. Similarly, the p-values comparing the same- and different-sex cohorts indicate significant differences in mean specificity, especially for the CC and SM domains.

(DOCX)
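The same- vs. different-sex comparison requires splitting the different-subject test pairs by the sexes of the two subjects before computing specificity. A minimal sketch follows, assuming each pair is available as a hypothetical `(sex_1, sex_2, predicted_same)` record; this record layout and the function name are illustrative and are not taken from the paper.

```python
def specificity_by_sex_cohort(pairs):
    """Split different-subject test pairs by the sexes of the two subjects.

    `pairs` is an iterable of (sex_1, sex_2, predicted_same) tuples, one per
    different-subject pair (hypothetical record layout). A pair is correct
    when the model predicts 'different' (predicted_same is False).
    Returns specificity in percent for each cohort.
    """
    counts = {"same-sex": [0, 0], "different-sex": [0, 0]}  # [correct, total]
    for sex_1, sex_2, predicted_same in pairs:
        cohort = "same-sex" if sex_1 == sex_2 else "different-sex"
        counts[cohort][1] += 1
        if not predicted_same:
            counts[cohort][0] += 1
    return {cohort: 100.0 * correct / total if total else float("nan")
            for cohort, (correct, total) in counts.items()}


toy_pairs = [
    ("M", "M", False), ("M", "M", True), ("F", "F", False),
    ("M", "F", False), ("F", "M", False), ("M", "F", True), ("F", "M", False),
]
print(specificity_by_sex_cohort(toy_pairs))  # same-sex about 66.7, different-sex 75.0
```

Per-network-pair specificities obtained this way for each cohort are what a two-sample t-test, as described in the caption, would then compare within each domain.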

P-values were computed using the two-sided two-sample t-test to compare the young vs. old cohorts. The results show that the mean sensitivity and specificity of the young and old sub-cohorts differ significantly.

(DOCX)

Data Availability Statement

The data underlying the results presented in the study are available from https://biobank.ctsu.ox.ac.uk/crystal/label.cgi?id=111. Furthermore, the processing protocols are described at https://biobank.ctsu.ox.ac.uk/crystal/crystal/docs/brain_mri.pdf.