Abstract

The purpose of this paper was to assess the performance of an artificial intelligence (AI) algorithm based on a deep convolutional neural network (D-CNN) model for the segmentation of apical lesions on dental panoramic radiographs. A total of 470 anonymized panoramic radiographs were used to develop the D-CNN AI model based on the U-Net algorithm (CranioCatch, Eskisehir, Turkey) for the segmentation of apical lesions. The radiographs were obtained from the Radiology Archive of the Department of Oral and Maxillofacial Radiology of the Faculty of Dentistry of Eskisehir Osmangazi University. A U-Net model implemented with PyTorch (version 1.4.0) was used for the segmentation of apical lesions. In the test data set, the AI model segmented 63 periapical lesions on 47 panoramic radiographs. The sensitivity, precision, and F1-score for segmentation of periapical lesions at a 70% IoU threshold were 0.92, 0.84, and 0.88, respectively. AI systems have the potential to overcome clinical problems. AI may facilitate the assessment of periapical pathology based on panoramic radiographs.

1. Introduction

Chronic apical periodontitis is an infection of the tissues surrounding the dental apex induced by pulpal disease, mostly because of bacterial infection of the root canal system developing from untreated or incorrectly treated dental caries [1–3]. Apical periodontitis is common, and its prevalence increases with age. Epidemiological studies have reported that apical periodontitis is present in 7% of teeth and 70% of the general population. The diagnosis of acute apical periodontitis is made clinically, whereas chronic apical periodontitis is detected by radiography [4]. In general, following root canal treatment, complete healing of periapical lesions is expected, or at least improvement in the form of a decrease in lesion size [1, 5]. Radiographically, apical periodontitis manifests as a widened periodontal ligament space or visible lesions. Such radiolucencies, also called apical lesions, tend to be detected incidentally or during radiographic follow-up of endodontically treated teeth [6, 7]. Radiolucency on radiographs is an important feature of apical periodontitis [2]. Apical periodontitis can be detected on periapical and panoramic radiographs and by cone-beam computed tomography (CBCT). CBCT has superior discriminatory power but is costly and exposes the patient to a higher radiation burden [6, 8]. Periapical and panoramic radiographs are the most frequently used techniques in the diagnosis and treatment of apical lesions [2]. Panoramic radiography generates two-dimensional (2D) tomographic images of the entire maxillomandibular area [9], enabling the evaluation of all teeth simultaneously. Panoramic radiography also requires a far lower dose of radiation than CBCT imaging [6, 10]. In addition, panoramic radiography is painless, unlike intraoral radiography, and is thus well tolerated by patients [9, 11].
Artificial intelligence (AI) is one of many recent technological advances, and its applications are expanding rapidly, including in medical management and medical imaging [12]. AI uses computational networks (neural networks (NNs)) that mimic biological nervous systems [13]. NNs were developed as one of the first types of AI algorithms. The computing power of NNs varies depending on the character and amount of training data. Networks using many large layers are termed deep learning NNs [14]. Deep convolutional neural networks (D-CNNs) are used to process large and complex images [15]. Deep learning networks, including CNNs, have displayed superior performance in object, face, and activity recognition [16]. Organ and lesion segmentation is an important application in medical imaging [17, 18]. The detection and classification performance of deep learning-based CNNs for diabetic retinopathy, skin cancer, and tuberculosis is very high [19, 20]. CNNs have also been applied in dentistry for tooth detection and numbering, as well as for the assessment of periodontal bone loss and periapical pathology [21–25]. U-Net, an architecture built from CNN layers for pixel-based image segmentation, is more successful than classical models even when few training images are available. The architecture was first presented for biomedical images. The traditional U-Net architecture, which has also been extended to handle volumetric input, has two phases: an encoder portion, in which the network learns representational features at different scales and gathers context-dependent information, and a decoder portion, in which the network extracts information from the observed context and the previously learned features. The skip connections between the corresponding encoder and decoder layers allow deep parts of the network to be trained efficiently and combine the same features at different receptive fields [26].

This study aimed to assess the diagnostic performance of a U-Net approach for the segmentation of apical lesions in panoramic images.

2. Material and Methods

2.1. Radiographic Data Preparation

The panoramic radiographs used in the study were retrieved from the archives of the Faculty of Dentistry of Eskisehir Osmangazi University; 470 anonymized panoramic radiographs were used. The radiographs had been obtained between January 2018 and January 2019 for a variety of reasons. Images with artifacts of any type were excluded. The study design was approved by the Non-Interventional Clinical Research Ethics Committee of Eskisehir Osmangazi University (decision date and number: 06.08.2019/14). The study was conducted in accordance with the Declaration of Helsinki. The Planmeca Promax 2D (Planmeca, Helsinki, Finland) panoramic imaging system was used to obtain the panoramic radiographs with the following parameters: 68 kVp, 16 mA, and 13 s.

2.2. Image Annotation

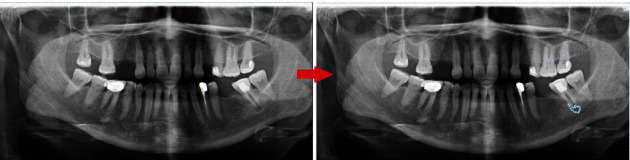

Three dental radiologists (I.S.B. and E.B. with 10 years of experience and F.A.K. with 3 years of experience) annotated the ground truth on all images by consensus using CranioCatch Annotation software (Eskisehir, Turkey). Polygonal boxes were used to mark the locations of the apical lesions (Figure 1).

2.3. Deep CNN Architecture

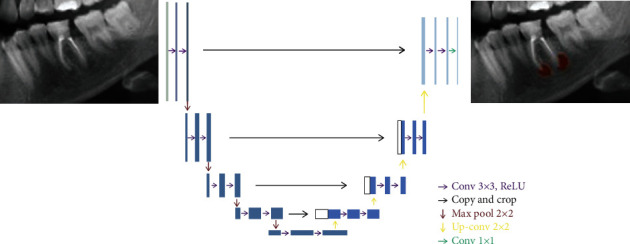

Deep learning was performed using a U-Net model implemented with PyTorch (version 1.4.0). The U-Net architecture is used for semantic segmentation tasks (Figure 2).

Figure 1.

Annotation of the apical lesion using polygonal box method.

The U-Net architecture consists of four block levels, each including two convolutional layers with batch normalization and a rectified linear unit (ReLU) activation function. There is a max-pooling layer in the encoding section and up-convolution layers in the decoding section. The four block levels have 32, 64, 128, and 256 convolutional filters, respectively. In addition, the bottleneck layer comprises 512 convolutional filters. Skip connections from the encoding layers to the corresponding layers are present in the decoding part [26]. The Adam optimizer was used to train the U-Net.
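To make the block layout above concrete, the following minimal sketch traces the feature-map shapes through such a network for a 512 × 256 input. It is an illustration only: the filter counts come from the text, whereas the function name `trace_unet_shapes`, the "same"-padded convolutions, the 2 × 2 pooling and up-convolution factors, and the single-channel input and output are assumptions that the paper does not state.

```python
# Filter counts taken from the text; everything else is an assumption.
ENCODER_FILTERS = [32, 64, 128, 256]  # one entry per encoder block level
BOTTLENECK_FILTERS = 512


def trace_unet_shapes(height, width, in_channels=1):
    """Return (stage, channels, height, width) tuples for a U-Net forward pass.

    Assumes 'same'-padded convolutions (spatial size unchanged inside a block),
    2x2 max pooling after each encoder block, and 2x2 up-convolutions in the
    decoder. These choices are hypothetical, not details given in the paper.
    """
    stages = [("input", in_channels, height, width)]
    h, w = height, width
    skips = []
    for level, filters in enumerate(ENCODER_FILTERS, start=1):
        stages.append((f"enc{level}", filters, h, w))
        skips.append((filters, h, w))      # kept for the skip connection
        if h % 2 or w % 2:
            raise ValueError("each dimension must be divisible by 16")
        h, w = h // 2, w // 2              # max pooling halves each dimension
    stages.append(("bottleneck", BOTTLENECK_FILTERS, h, w))
    for level, (filters, skip_h, skip_w) in zip(range(4, 0, -1), reversed(skips)):
        h, w = h * 2, w * 2                # up-convolution doubles each dimension
        assert (h, w) == (skip_h, skip_w)  # skip connection shapes must match
        stages.append((f"dec{level}", filters, h, w))
    stages.append(("output", 1, h, w))     # one-channel lesion mask (assumed)
    return stages


# 512 x 256 (width x height) is the resized crop size used in the pipeline.
stages = trace_unet_shapes(height=256, width=512)
for name, channels, h, w in stages:
    print(f"{name:<10} {channels:>4} channels  {h} x {w}")
```

Under these assumptions the bottleneck operates on 16 × 32 feature maps, and each decoder level recovers the resolution of the encoder level it is connected to.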

2.4. Model Pipeline

The PyTorch library was used for model development in the Python open-source programming language (v. 3.6.1; Python Software Foundation, Wilmington, DE, USA; retrieved on August 1, 2019, from https://www.python.org/). An AI model (CranioCatch, Eskisehir, Turkey) was developed to automatically segment apical lesions on panoramic radiographs. The training process was performed on a personal computer equipped with 16 GB RAM and an NVIDIA GeForce GTX 1060Ti graphics card.

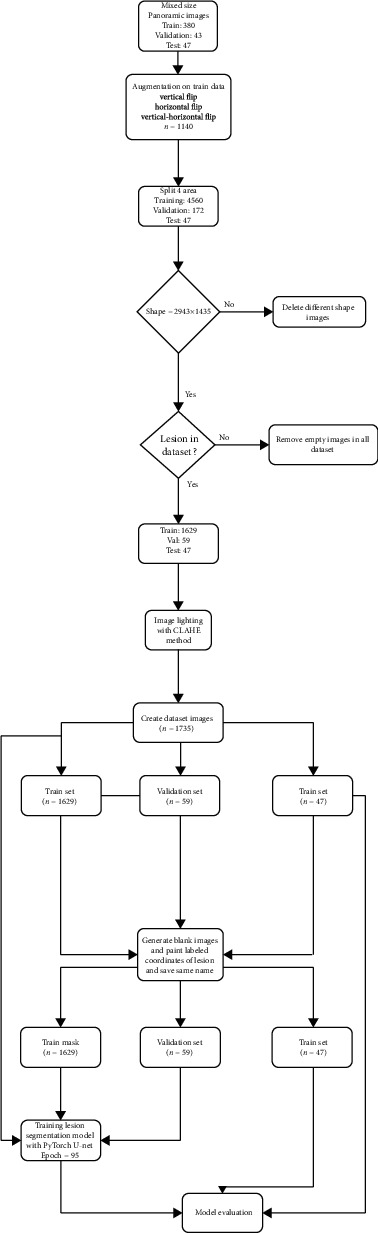

(i) Split: the 470 panoramic radiographs were divided into training, validation, and test groups

Training group: 380

Validation group: 43

Test group: 47

(ii) Augmentation: data augmentation was applied to the training data set using horizontal and vertical flips, so that 1140 images (= 380 × 3) were derived from the 380 original training images (size: 2943 × 1435)

(iii) Cropping (preprocessing step): all images of the training and validation groups were then divided into 4 parts: upper right, upper left, lower right, and lower left (size: 1000 × 530)

Training group: 1140 × 4 = 4560

Validation group: 43 × 4 = 172

Test group: 47

(iv) Remove full black masks (preprocessing step): crops without lesions, i.e., those with a completely black mask, were removed from the training and validation groups

Training group: 1629

Validation group: 59

Test group: 47

(v) Contrast Limited Adaptive Histogram Equalization (CLAHE) (preprocessing step): CLAHE was applied to all images to improve image contrast and facilitate the identification of apical lesions

Training group: 1629

Validation group: 59

Test group: 47

(vi) Resize (preprocessing step): each of the 4 crops (1000 × 530) was resized to 512 × 256

Training group: 1629

Validation group: 59

Test group: 47
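Steps (ii) to (iv) can be sketched in plain Python on images stored as nested lists. This is a hedged illustration rather than the CranioCatch implementation: the helper names are hypothetical, and the crops are taken as simple halves of each dimension, which is an assumption because the reported crop size (1000 × 530) is not exactly half of the original image size.

```python
def hflip(img):
    """Mirror an image left to right."""
    return [row[::-1] for row in img]


def vflip(img):
    """Mirror an image top to bottom."""
    return [row[:] for row in img[::-1]]


def quadrants(img):
    """Split an image into upper-left, upper-right, lower-left, lower-right."""
    mid_h, mid_w = len(img) // 2, len(img[0]) // 2
    top, bottom = img[:mid_h], img[mid_h:]
    return [
        [row[:mid_w] for row in top],
        [row[mid_w:] for row in top],
        [row[:mid_w] for row in bottom],
        [row[mid_w:] for row in bottom],
    ]


def has_lesion(mask):
    """A mask crop is kept only if at least one pixel is annotated."""
    return any(any(row) for row in mask)


def prepare_training_pairs(image, mask):
    """Augment (original + two flips), crop into 4, drop fully black masks."""
    pairs = []
    for transform in (lambda x: x, hflip, vflip):
        aug_image, aug_mask = transform(image), transform(mask)
        for img_crop, mask_crop in zip(quadrants(aug_image), quadrants(aug_mask)):
            if has_lesion(mask_crop):
                pairs.append((img_crop, mask_crop))
    return pairs


# Toy 4 x 4 "radiograph" with a single annotated pixel in the upper-left corner.
image = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
mask = [[1, 0, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0]]
pairs = prepare_training_pairs(image, mask)

# Bookkeeping for the group sizes reported above.
train_after_augmentation = 380 * 3                 # original + 2 flips
train_crops = train_after_augmentation * 4         # 4 crops per image
val_crops = 43 * 4
```

In the toy example, 12 crops are generated (3 augmented images × 4 quadrants) and only the 3 crops containing the annotated pixel survive the black-mask filter, mirroring the reduction from 4560 to 1629 training crops in step (iv).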

The PyTorch U-Net segmentation model was trained for 95 epochs; the model obtained at epoch 43 showed the best performance and was thus used in the experiment. The model pipeline is summarized in Figure 3.

Figure 2.

The U-Net architecture for the semantic segmentation task.

2.5. Statistical Analysis

A confusion matrix was used to assess the performance of the model. This matrix is a table that summarizes the predicted and actual conditions. The performance of a model is frequently assessed using the data in the confusion matrix [27]. The metrics used to evaluate the model were as follows:

True Positive (TP): an apical lesion was present and was segmented correctly

False Positive (FP): no apical lesion was present, but a lesion was nevertheless segmented

False Negative (FN): an apical lesion was present but was not detected

TP, FP, and FN were determined; then, the following metrics were computed:

Sensitivity (recall): TP/(TP + FN)

Precision: TP/(TP + FP)

F1 score: 2TP/(2TP + FP + FN)
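The results are reported at a 70% IoU value. One common reading, assumed here because the matching rule is not spelled out in the text, is that a predicted lesion counts as a True Positive when the intersection over union (IoU) between its mask and the ground truth mask reaches 0.7. The sketch below computes the IoU of two binary masks and applies that threshold; the function names and the toy masks are illustrative.

```python
def mask_iou(pred, truth):
    """Intersection over union of two equally sized binary masks."""
    intersection = union = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            intersection += 1 if (p and t) else 0
            union += 1 if (p or t) else 0
    return intersection / union if union else 0.0


def is_true_positive(pred, truth, threshold=0.7):
    """Assumed matching rule: a prediction counts if IoU >= threshold."""
    return mask_iou(pred, truth) >= threshold


# Toy masks: the ground truth lesion covers 8 pixels.
truth = [[0, 1, 1, 1, 1],
         [0, 1, 1, 1, 1]]
good_pred = [[0, 1, 1, 1, 0],   # 7 of the 8 lesion pixels, nothing outside
             [0, 1, 1, 1, 1]]
poor_pred = [[1, 1, 1, 0, 0],   # shifted prediction, only 4 pixels overlap
             [1, 1, 1, 0, 0]]

good_iou = mask_iou(good_pred, truth)   # 7 / 8
poor_iou = mask_iou(poor_pred, truth)   # 4 / 10
```

With these masks the first prediction passes the 0.7 threshold and the second does not, so only the first would contribute to TP under the assumed rule.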

3. Results and Discussion

3.1. Results

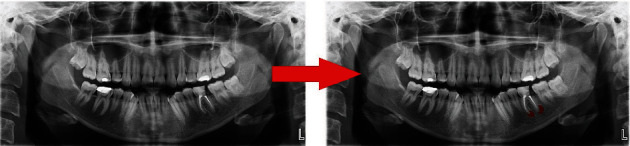

The AI model correctly segmented 63 apical lesions on 47 radiographs in the test data set (True Positives) (Figures 4 and 5).

Figure 3.

Model pipeline for apical lesion segmentation (CranioCatch, Eskisehir, Turkey).

Figure 4.

Automatic apical lesion segmentation using the AI model (CranioCatch, Eskisehir, Turkey).

Figure 5.

An example real-prediction image comparison.

Twelve apical lesions were not detected (False Negatives). In 5 cases without apical lesions, lesions were nevertheless segmented by the AI model (False Positives) (Table 1).

Table 1.

The number of apical lesions segmented by the AI model (CranioCatch, Eskisehir, Turkey).

| Metrics | Number |
|---|---|
| True Positives (TP) | 63 |
| False Negatives (FN) | 12 |
| False Positives (FP) | 5 |

The sensitivity, precision, and F1-score values at 70% IoU value were 0.92, 0.84, and 0.88, respectively (Table 2).

Table 2.

The prediction performance measurement of the AI model (CranioCatch, Eskisehir, Turkey).

| Measure | Value | Derivation |
|---|---|---|
| Sensitivity (recall) | 0.92 | TP/(TP + FN) |
| Precision | 0.84 | TP/(TP + FP) |
| F1 score | 0.88 | 2TP/(2TP + FP + FN) |
| IoU value | 0.79 | TP/(TP + FP + FN) |
| Dice coefficient | 0.88 | 2TP/(2TP + FP + FN) |
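As a minimal cross-check, the formulas in Table 2 can be evaluated directly on the counts in Table 1. The function name below is illustrative. With the counts as labeled in Table 1 (FN = 12, FP = 5), the formulas give a sensitivity of 0.84 and a precision of about 0.93, whereas the reported pair of 0.92 and 0.84 is obtained when the two error counts are interchanged. The F1 score, Dice coefficient, and IoU are symmetric in FP and FN and come out the same either way.

```python
def detection_metrics(tp, fp, fn):
    """Evaluate the Table 2 formulas for given TP, FP, and FN counts."""
    return {
        "sensitivity": tp / (tp + fn),
        "precision": tp / (tp + fp),
        "f1": 2 * tp / (2 * tp + fp + fn),   # identical to the Dice coefficient
        "iou": tp / (tp + fp + fn),
    }


# Counts as labeled in Table 1.
as_labeled = detection_metrics(tp=63, fp=5, fn=12)

# Same counts with the FP and FN labels interchanged.
interchanged = detection_metrics(tp=63, fp=12, fn=5)

for name, result in (("as labeled", as_labeled), ("interchanged", interchanged)):
    print(name, {key: round(value, 3) for key, value in result.items()})
```

Which assignment reflects the actual test outcome cannot be determined from the text alone, so the sketch only shows how each reading maps onto the reported values.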

3.2. Discussion

AI has rapidly improved the interpretation of medical and dental images, including via the application of deep learning models and CNNs [28, 29]. Deep learning has been developing rapidly and has recently attracted considerable attention [28–34]. The deep CNN architecture appears to be the most widely used deep learning approach. This is most likely due to its effective self-learning models and high computing capacity, which provide superior classification, detection, and quantitative performance based on imaging data [28–35]. CNNs have been used in dentistry for cephalometric landmark detection, dental structure segmentation, tooth classification, and apical lesion detection [36–39].

Tuzoff et al. presented a novel CNN algorithm for automatic tooth detection and numbering on panoramic radiographs. They reported a sensitivity and specificity for tooth numbering of 0.9893 and 0.9997, respectively. The findings showed the ability of current CNN architectures to support automatic dental radiographic interpretation and diagnosis on panoramic radiographs [25]. Chen et al. detected and numbered teeth in dental periapical films using faster region-based CNN networks (faster R-CNN). Faster R-CNN performed remarkably well for tooth detection and localization, showing good precision and recall and an overall performance similar to that of a junior dentist [24]. Miki et al. assessed the utility of a deep CNN for classifying teeth based on dental CBCT images; the accuracy was 91.0%. The system rapidly and automatically produces dental charts for forensic identification [38]. Previous studies have investigated the utility of AI systems for detecting periapical lesions. Ekert et al. investigated the capability of a deep CNN algorithm to detect apical lesions on dental panoramic radiographs. The CNN detected the lesions despite the small data set [6]. Orhan et al. [39] compared the diagnostic ability of a deep CNN algorithm to that of volume measurements based on CBCT images in the context of periapical pathology. The rate of detection of periapical lesions by the CNN model was 92.8%, and the volumetric and manual segmentation measurements were similar [39]. Endres et al. [40] created a model using 2902 deidentified panoramic radiographs. The presence of periapical radiolucencies on panoramic radiographs was evaluated by 24 oral and maxillofacial surgeons. They showed that the deep learning algorithm outperformed 14 of the 24 oral and maxillofacial surgeons. The performance metrics for this model were as follows: a precision of 0.60 and an F1 score of 0.58, corresponding to a positive predictive value of 0.67 and a True Positive rate of 0.51. Setzer et al. used a deep learning approach based on the U-Net architecture for the automatic segmentation of periapical lesions on CBCT images [41]. Lesion detection accuracy was 0.93, with a specificity of 0.88, a positive predictive value of 0.87, and a negative predictive value of 0.93. They concluded that the deep learning algorithm, trained on a limited number of CBCT images, achieved excellent lesion detection accuracy.

In the present study, we created a segmentation model with a PyTorch U-Net AI architecture on panoramic radiographs. It segmented 63 apical lesions on 47 radiographs in the test data set. Twelve apical lesions were not detected. In 5 cases without apical lesions, lesions were nevertheless segmented by the AI model. The sensitivity, precision, and F1-score values at the 70% IoU value were 0.92, 0.84, and 0.88, respectively. Our results showed that AI deep learning algorithms can be useful in the clinical dental setting. However, the present study had some limitations. Only one radiography machine with standard parameters was used for image acquisition. In addition, the study groups included periapical lesions of all sizes. No external test group was used to assess the model's performance. Only the U-Net algorithm was used for model development. Future studies should use larger study samples and images taken with different radiography equipment. Comparative experiments should be planned using different CNN algorithms, and AI model performance should be compared with that of human observers with different levels of professional experience.

4. Conclusions

Deep learning AI models enable the evaluation of periapical pathology based on panoramic radiographs. The application of AI for apical lesion detection and segmentation can reduce the burden on clinicians.

Data Availability

The data used to support the findings of this study are available from the corresponding author upon request.

Conflicts of Interest

The authors declare that there is no conflict of interest regarding the publication of this paper.

References

- 1. Segura-Egea J., Martín-González J., Castellanos-Cosano L. Endodontic medicine: connections between apical periodontitis and systemic diseases. International Endodontic Journal. 2015;48(10):933–951. doi: 10.1111/iej.12507.

- 2. Velvart P., Hecker H., Tillinger G. Detection of the apical lesion and the mandibular canal in conventional radiography and computed tomography. Oral Surgery, Oral Medicine, Oral Pathology, Oral Radiology, and Endodontology. 2001;92(6):682–688. doi: 10.1067/moe.2001.118904.

- 3. Ridao-Sacie C., Segura-Egea J., Fernández-Palacín A., Bullón-Fernández P., Ríos-Santos J. Radiological assessment of periapical status using the periapical index: comparison of periapical radiography and digital panoramic radiography. International Endodontic Journal. 2007;40(6):433–440. doi: 10.1111/j.1365-2591.2007.01233.x.

- 4. Patel S., Durack C. Radiology of apical periodontitis. In: Essential Endodontology: Prevention and Treatment of Apical Periodontitis. Third Edition. Blackwell; 2019. pp. 179–210.

- 5. Connert T., Truckenmüller M., ElAyouti A., et al. Changes in periapical status, quality of root fillings and estimated endodontic treatment need in a similar urban German population 20 years later. Clinical Oral Investigations. 2019;23(3):1373–1382. doi: 10.1007/s00784-018-2566-z.

- 6. Ekert T., Krois J., Meinhold L., et al. Deep learning for the radiographic detection of apical lesions. Journal of Endodontics. 2019;45(7):917–922.e5. doi: 10.1016/j.joen.2019.03.016.

- 7. Kanagasingam S., Hussaini H., Soo I., Baharin S., Ashar A., Patel S. Accuracy of single and parallax film and digital periapical radiographs in diagnosing apical periodontitis–a cadaver study. International Endodontic Journal. 2017;50(5):427–436. doi: 10.1111/iej.12651.

- 8. Patel S., Brown J., Semper M., Abella F., Mannocci F. European Society of Endodontology position statement: use of cone beam computed tomography in Endodontics. International Endodontic Journal. 2019;52(12):1675–1678. doi: 10.1111/iej.13187.

- 9. Du X., Chen Y., Zhao J., Xi Y. A convolutional neural network based auto-positioning method for dental arch in rotational panoramic radiography. 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC); 2018; Honolulu, HI, USA. pp. 2615–2618.

- 10. Leonardi Dutra K., Haas L., Porporatti A. L., et al. Diagnostic accuracy of cone-beam computed tomography and conventional radiography on apical periodontitis: a systematic review and meta-analysis. Journal of Endodontics. 2016;42(3):356–364. doi: 10.1016/j.joen.2015.12.015.

- 11. White S. C., Pharoah M. J. Oral radiology-E-Book: Principles and interpretation. St. Louis: Elsevier Health Sciences; 2014.

- 12. Allen B., Seltzer S. E., Langlotz C. P., et al. A road map for translational research on artificial intelligence in medical imaging: from the 2018 National Institutes of Health/RSNA/ACR/The Academy Workshop. Journal of the American College of Radiology. 2019;16(9):1179–1189. doi: 10.1016/j.jacr.2019.04.014.

- 13. Wong S., al-Hasani H., Alam Z., Alam A. Artificial intelligence in radiology: how will we be affected? European Radiology. 2019;29(1):141–143. doi: 10.1007/s00330-018-5644-3.

- 14. Burt J. R., Torosdagli N., Khosravan N., et al. Deep learning beyond cats and dogs: recent advances in diagnosing breast cancer with deep neural networks. The British Journal of Radiology. 2018;91(1089). doi: 10.1259/bjr.20170545.

- 15. Hwang J. J., Jung Y. H., Cho B. H., Heo M. S. An overview of deep learning in the field of dentistry. Imaging Science in Dentistry. 2019;49(1):1–7. doi: 10.5624/isd.2019.49.1.1.

- 16. Sklan J. E., Plassard A. J., Fabbri D., Landman B. A. Toward content-based image retrieval with deep convolutional neural networks. Proceedings of SPIE--the International Society for Optical Engineering. 2015;9417:p. 94172C. doi: 10.1117/12.2081551.

- 17. Neri E., de Souza N., Brady A., et al. What the radiologist should know about artificial intelligence–an ESR white paper. Insights into Imaging. 2019;10(1). doi: 10.1186/s13244-019-0738-2.

- 18. Lee J. H., Kim D. H., Jeong S. N., Choi S. H. Detection and diagnosis of dental caries using a deep learning-based convolutional neural network algorithm. Journal of Dentistry. 2018;77:106–111. doi: 10.1016/j.jdent.2018.07.015.

- 19. Gulshan V., Peng L., Coram M., et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA. 2016;316(22):2402–2410. doi: 10.1001/jama.2016.17216.

- 20. Esteva A., Kuprel B., Novoa R. A., et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017;542(7639):115–118. doi: 10.1038/nature21056.

- 21. Krois J., Ekert T., Meinhold L., et al. Deep learning for the radiographic detection of periodontal bone loss. Scientific Reports. 2019;9(1):1–6. doi: 10.1038/s41598-019-44839-3.

- 22. Davies A., Mannocci F., Mitchell P., Andiappan M., Patel S. The detection of periapical pathoses in root filled teeth using single and parallax periapical radiographs versus cone beam computed tomography - a clinical study. International Endodontic Journal. 2015;48(6):582–592. doi: 10.1111/iej.12352.

- 23. Zakirov A., Ezhov M., Gusarev M., Alexandrovsky V., Shumilov E. End-to-end dental pathology detection in 3D cone-beam computed tomography images. 2018. http://arxiv.org/abs/1810.10309.

- 24. Chen H., Zhang K., Lyu P., et al. A deep learning approach to automatic teeth detection and numbering based on object detection in dental periapical films. Scientific Reports. 2019;9(1):1–11. doi: 10.1038/s41598-019-40414-y.

- 25. Tuzoff D. V., Tuzova L. N., Bornstein M. M., et al. Tooth detection and numbering in panoramic radiographs using convolutional neural networks. Dentomaxillofacial Radiology. 2019;48(4). doi: 10.1259/dmfr.20180051.

- 26. Ronneberger O., Fischer P., Brox T. U-net: convolutional networks for biomedical image segmentation. International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer; 2015. pp. 234–241.

- 27. Osmanoğlu U. Ö., Atak O. N., Çağlar K., Kayhan H., Can T. C. Sentiment analysis for distance education course materials: a machine learning approach. Journal of Educational Technology and Online Learning. 2020;3(1):31–48. doi: 10.31681/jetol.663733.

- 28. He K., Zhang X., Ren S., Sun J. Delving deep into rectifiers: surpassing human-level performance on imagenet classification. Proceedings of the IEEE International Conference on Computer Vision (ICCV); 2015; Santiago, Chile. pp. 1026–1034.

- 29. Lakhani P., Sundaram B. Deep learning at chest radiography: automated classification of pulmonary tuberculosis by using convolutional neural networks. Radiology. 2017;284(2):574–582. doi: 10.1148/radiol.2017162326.

- 30. Zhang L., Arefan D., Guo Y., Wu S. Fully automated tumor localization and segmentation in breast DCEMRI using deep learning and kinetic prior. SPIE Medical Imaging; 2020; Houston, Texas, USA. p. 113180Z.

- 31. Zhang L., Mohamed A. A., Chai R., Guo Y., Zheng B., Wu S. Automated deep learning method for whole-breast segmentation in diffusion-weighted breast MRI. Journal of Magnetic Resonance Imaging. 2020;51(2):635–643. doi: 10.1002/jmri.26860.

- 32. Zhang L., Luo Z., Chai R., Arefan D., Sumkin J., Wu S. Deep-learning method for tumor segmentation in breast DCE-MRI. SPIE Medical Imaging; 2019; San Diego, California, USA. p. 6.

- 33. Russakovsky O., Deng J., Su H., et al. Imagenet large scale visual recognition challenge. International Journal of Computer Vision. 2015;115(3):211–252. doi: 10.1007/s11263-015-0816-y.

- 34. Hricak H. 2016 New Horizons Lecture: Beyond Imaging—Radiology of Tomorrow. Radiology. 2018;286(3):764–775. doi: 10.1148/radiol.2017171503.

- 35. Soffer S., Ben-Cohen A., Shimon O., Amitai M. M., Greenspan H., Klang E. Convolutional neural networks for radiologic images: a radiologist’s guide. Radiology. 2019;290(3):590–606. doi: 10.1148/radiol.2018180547.

- 36. Arik S. Ö., Ibragimov B., Xing L. Fully automated quantitative cephalometry using convolutional neural networks. Journal of Medical Imaging. 2017;4(1). doi: 10.1117/1.JMI.4.1.014501.

- 37. Wang C. W., Huang C. T., Lee J. H., et al. A benchmark for comparison of dental radiography analysis algorithms. Medical Image Analysis. 2016;31:63–76. doi: 10.1016/j.media.2016.02.004.

- 38. Miki Y., Muramatsu C., Hayashi T., et al. Classification of teeth in cone-beam CT using deep convolutional neural network. Computers in Biology and Medicine. 2017;80:24–29. doi: 10.1016/j.compbiomed.2016.11.003.

- 39. Orhan K., Bayrakdar I. S., Ezhov M., Kravtsov A., Ozyurek T. Evaluation of artificial intelligence for detecting periapical pathosis on cone-beam computed tomography scans. International Endodontic Journal. 2020;53(5):680–689. doi: 10.1111/iej.13265.

- 40. Endres M. G., Hillen F., Salloumis M., et al. Development of a deep learning algorithm for periapical disease detection in dental radiographs. Diagnostics. 2020;10(6):p. 430. doi: 10.3390/diagnostics10060430.

- 41. Setzer F. C., Shi K. J., Zhang Z., et al. Artificial intelligence for the computer-aided detection of periapical lesions in cone-beam computed tomographic images. Journal of Endodontics. 2020;46(7):987–993. doi: 10.1016/j.joen.2020.03.025.
