Summary

Artificial intelligence (AI) algorithms are being applied across a large spectrum of everyday life activities. The implementation of AI algorithms in clinical practice has been met with some skepticism and concern, mainly because of the uneasiness that stems, in part, from a lack of understanding of how AI operates, together with the role of physicians and patients in the decision-making process; uncertainties regarding the reliability of the data and the outcomes; as well as concerns regarding the transparency, accountability, liability, handling of personal data, and monitoring and system upgrades. In this viewpoint, we take these issues into consideration and offer an integrated regulatory framework to AI developers, clinicians, researchers, and regulators, aiming to facilitate the adoption of AI that rests within the FDA’s pathway, in research, development, and clinical medicine.

Highlights

• Concerns hamper the implementation of augmented intelligence in clinical practice

• Transparency and external validation increase trust in developed algorithms

• A regulatory framework is needed for adoption of augmented intelligence in medicine

Bazoukis et al. propose a regulatory framework for the implementation of augmented intelligence in clinical practice that pertains to core domains: reliability, accountability, liability, equity, system failure reporting, protection of personal data, system upgrading, and cybersecurity.

Introduction

Artificial intelligence (AI) algorithms are being applied across a large spectrum of everyday life activities.1 Some of the most popular applications are in navigation, where they can predict traffic delays; language translation in real-time using cameras; social media applications, which help people discover new content of personal value or interest; facial recognition; movie recommendations; email spam and malware filtering; online customer support; and many others.

Augmented intelligence algorithms, that is, intelligence that augments human cognition but does not replace human labor, are also being applied to different aspects of medicine.2,3 Notable examples of these applications include early disease detection,4,5 improvement of diagnostic accuracy,6, 7, 8, 9 identification of new physiological observations or patterns,10 development of personalized diagnostics and/or therapeutic approaches,11,12 and the detection of bias in clinical trials,12 among others.

Despite the inclusion and benefits of augmented intelligence in healthcare, early adoption has been met with some skepticism and concern. The uneasiness stems in part from the lack of understanding of how augmented intelligence operates, together with the role of physicians and patients in the decision-making process;13 uncertainties regarding the reliability of the data and the outcomes;13 as well as concerns regarding the transparency,14 accountability,14 liability,15 handling of personal data, and monitoring and system upgrades.16, 17, 18

Here, we take these issues into consideration and offer an integrated regulatory framework to augmented intelligence developers, clinicians, researchers, and regulators, aiming to facilitate the adoption of augmented intelligence in research, development, and clinical medicine.19

Critical issues pertaining to the use of augmented intelligence algorithms in medicine

AI-based technologies have the potential to transform healthcare by deriving new and important insights from the vast amount of data that can now be analyzed, managed, and organized. The ability of augmented intelligence to learn (train) continuously and improve performance (refinement/adaptation) uniquely positions this technology as software that constitutes a rapidly expanding area of research and development.

The International Medical Device Regulators’ Forum (IMDRF) defines “software as a medical device (SaMD)” as software intended to be used for one or more medical purposes without being part of medical device hardware.20 The Food and Drug Administration (FDA) has made significant strides in developing policies that are appropriately tailored for SaMD to ensure that safe and effective technology reaches users, including patients and healthcare professionals.18

Manufacturers need to submit a marketing application to the FDA prior to initial distribution of a medical device. Medical devices are classified into three distinct classes according to the device’s risk to the patient. Low-risk devices are classified as class I, while high-risk devices are classified as class III. The process that is followed before a medical device gets to the market depends on the risk category to which the product belongs. For example, class II products require FDA clearance but not FDA approval before going to the market. FDA clearance means that the device has undergone a 510(k) submission, which the FDA has reviewed and cleared. In other words, the manufacturer has demonstrated that the product is “substantially equivalent” to another similar device that has already been approved, and the FDA has not taken any other action toward the device. On the other hand, high-risk products require FDA approval, which means that manufacturers need to submit a premarket approval application and clinical testing results in order to obtain approval. It should be noted that ML software is FDA cleared but not approved. For changes in design that are specific to software that has been reviewed and cleared under a 510(k) notification, the FDA’s Center for Devices and Radiological Health (CDRH) has published guidance21 that describes a risk-based approach to assist in determining when a premarket submission is required.22

In China, the “New Generation Artificial Intelligence Development Plan” was released by China’s State Council in 2017, placing emphasis on transparency, privacy, accountability, and respect for human welfare.23 The European Commission published a regulatory framework proposal for high-risk AI systems only, while non-high-risk AI systems should follow a code of conduct. In the European Commission’s proposal, prohibited AI practices are described mainly to prevent physical or psychological harm. Interestingly, the proposal also requires that AI tools be effectively overseen by natural persons.24 On the other hand, the UK has released a seven-point framework pertinent to the use of AI, which includes: (1) avoidance of any unintended outcomes or consequences, (2) delivery of fair services to the users, (3) accountability issues, (4) data safety and protection of the citizens’ interests, (5) information for the users and citizens about the potential impact of AI in their life, (6) compliance with the law, and (7) continuous monitoring of the algorithm or system.25

Accordingly, common data structures such as the Informatics for Integrating Biology and the Bedside (i2b2), Patient-Centered Clinical Research Network (PCORNet), Observational Medical Outcomes Partnership (OMOP), and Sentinel aim to format, clean, harmonize, and standardize data for subsequent use in AI algorithm training.26 Some of these common data structures have an international presence and focus, which may contribute to increased diversity and reduced bias, and may also promote AI algorithm transparency, reproducibility, and compatibility assessment across countries.27

Finally, for semantic meaning to be accurately added to data structure representations across countries, common data models such as the Logical Observation Identifiers Names and Codes (LOINC) and the International Classification of Diseases, Tenth Revision, Clinical Modification (ICD-10-CM) should be employed to increase data and algorithm interoperability.

Physician involvement in the decision-making process

The transition from paper to electronic medical records allowed us to leverage the electronic medical record data safely and securely in a timely manner, and to enable better communication between patient and physician. Furthermore, it is possible that augmented intelligence based on sound data, and with the inclusion of checks and balances, may aid clinicians in the decision-making process, especially when conventional approaches cannot be applied safely with the greatest confidence. In that context, the implementation of augmented intelligence algorithms may be accepted with less skepticism in areas where clinical decisions are controversial or where decisions based only on clinical judgment are precarious. Subsequently, as with any other technology or product, the more augmented intelligence models demonstrate their ability to perform their intended tasks accurately, the less the limited interpretability behind the negatively biased “black box” concept will be an issue, and the more widespread their adoption will be, irrespective of whether or not a physician will eventually choose to adhere to their findings and/or recommendations.

On the other hand, if society (i.e., regulators, clinicians, and patients) expects clinical augmented intelligence algorithms to be fully interpretable, it is possible that it would restrict the ability of augmented intelligence developers to use state-of-the-art augmented intelligence technologies that are not fully interpretable, yet perform better than older augmented intelligence technologies that are more interpretable. Thus, regulatory oversight should take into consideration the balance of interpretability and performance (Table 1).

Table 1.

Clinical challenges associated with the implementation of the augmented intelligence algorithms in clinical practice

| Clinical challenges |

|---|

| Problem identification |

| Oversight and regulation |

| Interpretability |

| Clinical staff education |

| Performance assessment |

| Patient engagement and access |

| Accountability |

Reliability, accountability, and liability

Reliability

Reliability constitutes the cornerstone of successful implementation of augmented intelligence algorithms in clinical practice. Three steps need to be considered for achieving a reliable augmented intelligence algorithm: (1) construction of efficient algorithms that are constantly refined and modified with new data; (2) construction of a framework for achieving real-time reliability monitoring, and identification of potential failures and their cause; and (3) capability for rapid and efficient correction of potential failure (Table 2). The performance of an augmented intelligence algorithm should first be tested and compared with the existing systems (clinical scores, etc.) by conducting a randomized clinical trial.28

Table 2.

Developer challenges involving the implementation of augmented intelligence algorithms in clinical practice

| Developer challenges |

|---|

| Use state-of-the-art algorithms |

| Capacity to intervene |

| Risk failures/adverse events |

| Data reliability |

| Acceptable accuracy |

| Interpretability |

| Accountability |

| Liability |

| User education |

To develop trust in the utilization of augmented intelligence algorithms in healthcare, stakeholders must aim for reproducibility of outcomes.29 However, a reproducibility requirement should not impinge on progress in this area. It is therefore unlikely that a rule can be applied whereby a third party must be able to obtain the same results by using the same data, models, and code (software packages, libraries, and software products): data may not be available without proper institutional patient-privacy safeguards, and code sharing may not be feasible because commercial entities view their code as proprietary information.

The efficacy of augmented intelligence algorithms should be “labeled” by the FDA with respect to the subject populations that have been evaluated. As new patient groups are studied—thereby reducing sample bias—such descriptions should be entered into the augmented intelligence algorithm label. It should be recognized, however, that deterioration of algorithm performance may occur as a consequence of natural evolution of clinical environments resulting from changes in the demographics of the treated patients or updated clinical practice evidence and outcomes.30
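The deterioration described above could, in principle, be detected by comparing recent performance against the performance reported at validation. The following is a minimal sketch under stated assumptions, not a method taken from any regulatory guidance: the class name `RollingAccuracyMonitor`, the baseline value, the tolerance, and the window size are all hypothetical, and accuracy stands in for whatever metric appears on the algorithm's label.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Track accuracy over the most recent cases and flag deterioration.

    baseline:  accuracy reported at validation time (hypothetical value).
    tolerance: allowed absolute drop before deterioration is flagged.
    window:    number of most recent adjudicated cases to consider.
    """

    def __init__(self, baseline, tolerance=0.05, window=200):
        self.baseline = baseline
        self.tolerance = tolerance
        self.outcomes = deque(maxlen=window)  # oldest cases drop out automatically

    def record(self, predicted, observed):
        """Store whether one prediction matched the adjudicated outcome."""
        self.outcomes.append(predicted == observed)

    def accuracy(self):
        """Return rolling accuracy, or None before any case is recorded."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def deteriorated(self):
        """True only when the window is full and accuracy fell below the floor."""
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.accuracy() < self.baseline - self.tolerance
```

Waiting for a full window before flagging is a deliberate simplification that avoids alerts driven by a handful of early cases; a deployed system would more plausibly use a statistical test and track each labeled subpopulation separately.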

Accountability

As with other technologies and/or products, the implementation of augmented intelligence algorithms in clinical practice is expected to consist of distinct components with distinct responsibilities (Table 1, Table 2, Table 3). The source organization has the responsibility (1) for the quality and efficacy of the produced augmented intelligence algorithm, considering the indications and adverse effects of its use; and (2) to provide adequate training to both physicians and personnel who will handle a specific augmented intelligence algorithm. Physicians should be responsible for the adequacy of their training in using augmented intelligence algorithms, as well as for the proper use of these algorithms according to the FDA-approved indications and the current evidence-based practice. Complete understanding of the complex nature of augmented intelligence algorithms’ function is not necessary for accountability. For example, the complex mechanisms of a specific action of a drug may not be fully understood; yet physicians may use this drug according to its labeling owing to its observed beneficial actions.

Table 3.

Main components of the augmented intelligence regulatory framework in clinical practice

| Regulatory challenges |

|---|

| Oversight and regulation |

| Safety and efficacy surveillance |

| Accountability |

| Liability |

| Equity and inclusion |

| Transparency |

| Data |

| Algorithm |

| Architecture |

| Explainability |

| Education |

| Patient engagement |

| Cybersecurity and privacy |

| Ethics and fairness |

| Financial incentives |

Overall, the clinical effectiveness and safety of high-risk augmented intelligence algorithms, from either a non-inferiority or superiority viewpoint, are of particular significance even when other parameters, such as the user experience, are taken into consideration. It is likely that high-risk augmented intelligence algorithms will necessitate both a higher level of evidence from more rigorous studies, as well as an increased level of monitoring after implementation. By contrast, for low-risk augmented intelligence algorithms, evaluation may simply need to focus on metrics involving the algorithms’ use in order to measure their positive/negative effects and experience.

Liability

Monitoring liability among augmented intelligence algorithm users (i.e., physicians) is crucial when assessing risks and benefits. Regulators must engage all stakeholders (AI developers, clinicians, and researchers) in order to evaluate augmented intelligence algorithms continuously for their safety and effectiveness.

An organization that proves that the designed augmented intelligence algorithm is effective and safe for the specific purpose for which it was developed should file an application with the FDA to allow marketing of the algorithm. After approval, there will be postmarket safety monitoring similar to phase-IV drug development evaluation. In this ongoing phase, if the use of the algorithm results in potential adverse events/system failure, it would be the responsibility of the augmented intelligence developers to report and investigate such outcomes. Therefore, the critical issue of a physician’s professional liability in case of an incorrect decision and a potentially harmful outcome,15 as with any other medical product, narrows down to a responsibility to use such an algorithm as “labeled,” which minimizes liability concerns (Table 3).

Equity and bias

The World Health Organization defines equity as the absence of avoidable, unfair, or remediable differences among groups of people, whether the groups are defined socially, economically, demographically, or geographically.31 Health equity is met when every citizen has a reasonable opportunity to achieve access to fully available healthcare.

Yet, disparities in morbidity and mortality between rural and urban community residents in the United States are well documented.32,33 Furthermore, a report by the Centers for Disease Control and Prevention has pointed to disparities across racial/ethnic populations within rural communities.34 Importantly, all racial/ethnic minority populations are less likely than non-Hispanic whites to report having a personal healthcare provider in rural areas. While all rural populations experience health issues, the issues may differ by race/ethnicity.35, 36, 37, 38 With such an inhomogeneous patient population receiving health care, it is apparent that AI-based algorithms are liable to bias driven by data (i.e., in this case, the nature of participating patient population).

For the aspiration of health equity to be fulfilled, population-representative datasets must be included in augmented intelligence algorithm development; if not, the scaling that augmented intelligence makes possible may further exacerbate existing inequities in health outcomes. Therefore, prioritizing equity should be a clearly articulated goal in health care augmented intelligence algorithm development. Augmented intelligence can, in turn, aid the recognition of geographical locations and/or population demographics where high incidences of disease or high-risk behaviors exist. National efforts should also focus on deployment of augmented intelligence algorithms in lower-resource and lower-income areas with less-developed information technology capabilities.

Precision medicine employs augmented intelligence to provide clinicians with tools that may help improve the understanding of complex biological, behavioral, and environmental mechanisms underlying a patient’s health, condition, or disease, and enable the prediction of treatments that may be most appropriate for that patient.39 Therefore, as scientific advances in augmented intelligence and precision medicine are expected to impact positively all population groups, research must include such diverse populations as racial/ethnic minorities, societally structurally disadvantaged population subgroups, sex and sexual-identity minorities, people with different levels of functional ability, rural residents, as well as other marginalized groups;40, 41, 42 and do so without conflating social identity constructs with biology and biological determinism. Furthermore, as genetic background and differences in environmental factors (i.e., in the Americas, versus Europe, Africa, or Asia, etc.) are expected to affect the performance of augmented intelligence algorithms, population-representative data have to be used during the research and development, as well as the validation phase of the augmented intelligence algorithms. One of the key components to ensuring the long-term success of a precision medicine strategy is implementation science. Implementation science is defined as the study and use of methods aiming to promote the systematic uptake of research findings and other evidence-based practices into routine practice, thereby improving the quality and effectiveness of health services among all people.43 In this regard, with appropriate consent, augmented intelligence may link personal and public data toward precision medicine implementation in health care.

Even at a point in time at which there have been marked gains in terms of the detection of, and explanations for, cardiovascular health outcome disparities, efforts to develop, implement, sustain, and evaluate interventions to reduce such disparities have not produced the desired results.44,45 Similarly, while implementation science has made significant gains with the development of frameworks, theories, strategies, and measures, major gaps remain in the application of this knowledge toward a decrease in disparities and the achievement of health equity,46 either because groups that include racial/ethnic minorities, rural populations, sexual and sex-identity minorities, socioeconomically disadvantaged persons, and persons with disabilities have not been adequately represented in clinical trials, or because such groups were not under consideration when interventions and implementation strategies were planned and effected.

Development of algorithm auditing processes that can recognize a group (or even an individual) for which a decision may not be reliable can reduce the implications of biased decisions.47 Health care-related augmented intelligence algorithms have the capacity to influence confidence in a health care system, particularly if these tools result in worse outcomes or increased inequities for some groups. Therefore, ensuring at a national level that these tools address present inequities will require thoughtful planning that is not only driven by profit, but also by indicators of health care costs, quality, and access.
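An auditing process of the kind described here can be illustrated with a simple per-group comparison. This is a sketch under stated assumptions rather than an established auditing standard: the function name `audit_by_group`, the group labels, and the thresholds `min_cases` and `max_gap` are hypothetical, and accuracy is used only as a placeholder for a clinically appropriate metric.

```python
from collections import defaultdict


def audit_by_group(records, min_cases=30, max_gap=0.05):
    """Flag groups whose accuracy is unreliable or lags the overall accuracy.

    records: iterable of (group, predicted, observed) tuples.
    min_cases and max_gap are illustrative thresholds, not regulatory values.
    Returns {group: {"n", "accuracy", "flag"}} where flag is None,
    "too_few_cases", or "underperforms".
    """
    counts = defaultdict(lambda: [0, 0])  # group -> [correct, total]
    for group, predicted, observed in records:
        counts[group][0] += int(predicted == observed)
        counts[group][1] += 1

    total = sum(n for _, n in counts.values())
    if total == 0:
        return {}
    overall = sum(c for c, _ in counts.values()) / total

    report = {}
    for group, (correct, n) in counts.items():
        accuracy = correct / n
        if n < min_cases:
            flag = "too_few_cases"   # estimate too noisy to trust
        elif overall - accuracy > max_gap:
            flag = "underperforms"   # lags the pooled accuracy
        else:
            flag = None
        report[group] = {"n": n, "accuracy": accuracy, "flag": flag}
    return report
```

Reporting small groups as "too few cases" rather than silently pooling them makes under-representation visible, which is the situation the text identifies as a source of bias.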

The ever-increasing use of augmented intelligence in developing new study designs and diagnostic and treatment frameworks as well as multistakeholder approaches in implementation science are expected not only to reduce bias incrementally, depending on data derived from the augmented intelligence algorithms, but also to facilitate, if affordable, the improvement of evidence-based interventions in disparity populations and thereby promote health equity.48

Adverse event/system failure reporting

Some AI algorithms approved and deployed for clinical use may be designed to continue to learn and refine their internal model. This ongoing development of these systems increases the difficulty of applying a regulatory framework. The clinical evaluation of the SaMD by FDA includes three steps: (1) the presence of a valid clinical association between the SaMD output and the targeted clinical condition; (2) analytical validation that the input data generate accurate, reliable, and precise output data; and, finally, (3) clinical validation of whether the output data achieve the intended use of SaMD in the targeted population.18

As with phase IV trials in drug development, postmarket surveillance is required for SaMD. This is an important step that ensures the effectiveness and safety of an SaMD after its approval for clinical use. Moreover, the postmarket surveillance not only reduces the potential risk for the patients, but benefits the manufacturer by identifying areas in which to improve product quality. Postmarket surveillance can be achieved by implementing the following mechanisms: (1) customer surveys/complaints that should be reported by the end users (physicians, clinical care teams), (2) launch of user-friendly online platforms for reporting any device-related deaths or serious adverse effects/malfunctions, and (3) automatic diagnostic tools for identifying algorithm malfunctions and transmission of the code error to the manufacturing company.
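The third mechanism, automatic identification of malfunctions, might look like the following minimal sketch. It assumes a model that returns a probability between 0 and 1; the function name `run_with_malfunction_report` and the report fields are hypothetical, and the transmission step to the manufacturer is deliberately left out. The report omits the model inputs so that no patient data leave the clinical site.

```python
import traceback
from datetime import datetime, timezone


def run_with_malfunction_report(model, inputs, software_version):
    """Run a model and return (output, report).

    report is None on success; on failure it is a dict that a manufacturer's
    reporting endpoint could receive (transmission itself is out of scope).
    """
    try:
        output = model(inputs)
        # Treat an out-of-range probability as a malfunction, not a result.
        if not 0.0 <= output <= 1.0:
            raise ValueError(f"output {output!r} outside [0, 1]")
        return output, None
    except Exception as exc:
        report = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "software_version": software_version,
            "error_type": type(exc).__name__,
            "message": str(exc),
            "traceback": traceback.format_exc(),
        }
        return None, report
```

Returning no output on failure, instead of a best guess, keeps a malfunctioning algorithm from silently influencing a clinical decision.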

Today, surveillance of the manufacturing companies by the FDA is achieved by implementing the Case for Quality and Pre-Cert programs (Box 1).49 The FDA has upgraded its role from a regulatory agency to a collaborative industry partner by implementing the Case for Quality program. Through this program, the FDA is working with industry, health-care providers, patients, payers, and investors to improve the quality of medical products by transforming the emphasis from “regulatory compliance” to “focus on quality.”50 It consists of three core components: (1) focus on quality (promote manufacturers’ implementation of critical-to-quality practices during device design and production), (2) enhancement of data transparency (device data can be retrieved from https://open.fda.gov/), and (3) stakeholder engagement (continued collaboration with stakeholders). The Pre-Cert program aims to evaluate and monitor a software product from its premarket development to postmarket performance and, additionally, to demonstrate and verify the organization’s excellence.51 Algorithm transparency may not be necessary in all cases, and will, instead, depend upon regulatory agencies, developers, and users to delineate collaboratively guidelines aiming to determine the degree of transparency required across a wide spectrum of risk of augmented intelligence algorithms in a framework within which data, algorithms, and algorithm-performance are reported separately.

Box 1. Software Pre-Certification Program goal.

“The goal of the program is to have tailored, pragmatic, and least burdensome regulatory oversight that assesses organizations (large and small) to establish trust that they have a culture of quality and organizational excellence such that they can develop high quality SaMD products, leverages transparency of organizational excellence and product performance across the entire lifecycle of SaMD, uses a tailored streamlined premarket review, and leverages unique postmarket opportunities available in software to verify the continued safety, effectiveness, and performance of SaMD in the real world.

The Software Pre-Cert Program is intended to build stakeholder confidence that participating organizations have demonstrated capabilities to build, test, monitor, and proactively maintain and improve the safety, efficacy, performance, and security of their medical device software products, so that they meet or exceed existing FDA standards of safety and effectiveness.”49

Accordingly, the augmented intelligence manufacturing company is expected to implement a systematic process to collect the information from any source of postmarket surveillance, to assess the collected data in a proper way (continuous reassessment of the benefit-risk analysis and assessment of the significance of an increase in an adverse event), to communicate directly with the end users, and to design service packs in cases of malfunction that can be provided directly to the end users.

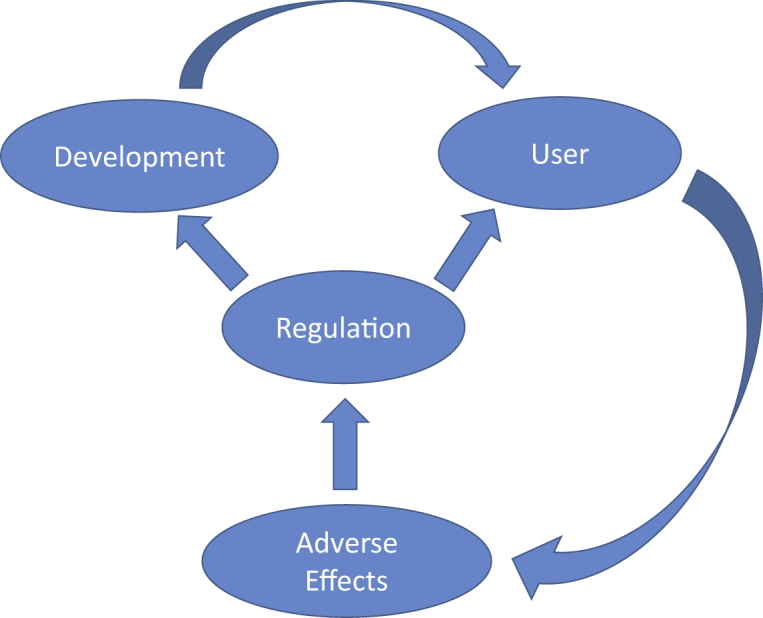

The adverse events/system failures should be managed by augmented intelligence experts from the manufacturing company (Figure 1). Service packs will be provided as automatic updates to resolve the existing issues. However, the FDA should be informed about the system failure and the provided service packs that can be categorized as low-, intermediate-, and high-risk. The low-risk updates can be incorporated in the algorithm without premarket review; the intermediate- and high-risk updates are further tested before their approval for clinical use. Enhanced postmarket surveillance may allow a more streamlined premarket review process of augmented intelligence algorithms and more frequent product modification over time.52

Figure 1.

Relationships between regulators, users (clinicians or researchers), and developers

Patient rights and protection of personal data

The White House Precision Medicine Initiative, announced at the 2015 State of the Union address by President Obama, identified patient engagement as a fundamental component for achieving the promise of precision medicine. For effective patient engagement, there are some common themes that emerge across various resources that include the recognition of the importance of integrity, trust, relationship building, and reciprocity.

As patients are encouraged to participate actively in the decision-making process and give informed consent before the implementation of augmented intelligence algorithms (for diagnosis, risk stratification, appropriate treatment, etc.), different instruments have been developed for assessing the implementation and quality of the informed consent process in clinical practice.53 Although the General Data Protection Regulation provides guidance regarding the processing of personal data in augmented intelligence algorithms,14 a key determinant for data integration is the lack of adequate regulatory processes of the secondary use of otherwise routinely collected patient data, as well as the complex interactions of augmented intelligence algorithms with privacy concerns and privacy law,54 with many of the laws around data ownership and profitability being country-specific and based on evolving cultural norms. For example, today in the US, fully de-identified health care data (although there is disagreement over what constitutes sufficiently de-identified data) may also be used for other tasks without the need of obtaining additional consent, a policy that is not uniformly accepted internationally.

System upgrading

Another important aspect concerns the approach followed for system manufacturer-initiated upgrading, especially in the case of an urgent update. Regarding the locked algorithms (same inputs lead to same outcomes), we propose that any modification of an already approved algorithm should be examined by a regulatory committee regarding potential harmful outcomes to the patients. If a modification is characterized as low-risk for potential harmful outcomes, then it can be approved by the FDA for immediate implementation in clinical practice. In cases of intermediate- or high-risk updates for potential harmful outcomes, the update should be further evaluated before its approval for clinical use. Regarding adaptive algorithms (same inputs lead to different outcomes before and after system update), changes should be typically implemented and validated through a well-defined and possibly fully automated process. This process should consist of two stages—learning and updating.18 Specifically, the algorithm should learn how to change from the addition of new cases (inputs) and then an update should occur, which results in different outputs with the same inputs (compared to outputs before the update). In this case, frequent real-world performance monitoring, as mentioned above, should be implemented.
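The distinction between locked and adaptive algorithms, and the two-stage learn-then-update process, can be made concrete with a toy threshold classifier. This is an illustrative sketch only: the classes `LockedModel` and `AdaptiveModel`, the midpoint rule for proposing a new threshold, and the validation criterion are assumptions chosen for brevity, not a description of any cleared device.

```python
class LockedModel:
    """Same input always gives the same output."""

    def __init__(self, threshold):
        self.threshold = threshold

    def predict(self, score):
        return score >= self.threshold


class AdaptiveModel(LockedModel):
    """Two-stage adaptation: learn from new cases, then update if validated."""

    def __init__(self, threshold):
        super().__init__(threshold)
        self.new_cases = []  # (score, label) pairs seen since the last update

    def learn(self, score, label):
        """Learning stage: collect a labelled case; outputs do not change yet."""
        self.new_cases.append((score, label))

    def update(self, validation_cases):
        """Updating stage: adopt a new threshold only if validation accuracy
        does not drop. Returns True when the deployed threshold changed."""
        positives = [s for s, y in self.new_cases if y]
        negatives = [s for s, y in self.new_cases if not y]
        if not positives or not negatives or not validation_cases:
            return False
        # Candidate: midpoint between mean positive and mean negative score.
        candidate = (sum(positives) / len(positives)
                     + sum(negatives) / len(negatives)) / 2

        def accuracy(threshold):
            hits = sum((s >= threshold) == y for s, y in validation_cases)
            return hits / len(validation_cases)

        changed = False
        if candidate != self.threshold and accuracy(candidate) >= accuracy(self.threshold):
            self.threshold = candidate
            changed = True
        self.new_cases.clear()
        return changed
```

Separating `learn` from `update` means that outputs change only at a well-defined, auditable moment, which is what makes real-world performance monitoring before and after each update practical.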

The system upgrades can: (1) modify the performance without change to the intended use or new input type, (2) modify the inputs without change to the intended use, or (3) modify the intended use. In the first case, the modifications aim to improve the performance of the algorithm by retraining it with a larger dataset or by modifying the algorithm itself; because these modifications do not change the intended use, they can be approved for immediate implementation in clinical practice. The second type of modification leaves the intended use unchanged but alters the type and number of inputs. These modifications, after appropriate performance testing, should be implemented in clinical practice, followed by adequate training of their users to ensure proper use. Finally, the third type of modification, which changes the intended use, should pass all of the premarket phases before the new labeling is approved. Augmented intelligence manufacturers must be responsible for the compatibility of the supporting devices, accessories, or non-device components after any modification. Users should be informed of the availability of upgrades with program notifications and e-mail alerts that will include adequate information about the provided upgrade.
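The three upgrade types and the handling proposed for each can be summarized as a small decision rule. The sketch below is an assumption-laden illustration of that proposal: the `Modification` fields and the returned pathway strings are hypothetical names, not FDA terminology.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Modification:
    """Hypothetical description of a manufacturer-initiated upgrade."""
    changes_intended_use: bool
    changes_inputs: bool


def review_pathway(mod):
    """Map a modification to the handling proposed in the text."""
    # Type 3: a new intended use overrides every other consideration.
    if mod.changes_intended_use:
        return "full premarket review before new labeling"
    # Type 2: same intended use, different type or number of inputs.
    if mod.changes_inputs:
        return "performance testing, then deployment with user training"
    # Type 1: performance-only change (retraining or algorithm refinement).
    return "immediate implementation"
```

Checking the intended use first reflects the ordering of risk in the proposal: a change in intended use triggers the most demanding pathway regardless of what else changed.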

Cybersecurity

AI systems could be (and have been) a potential target for hacking.55,56 This is a major issue that should be thoroughly examined and tested prior to the wide implementation of these systems in clinical practice. Hacking could lead to wrong decisions, which may be harmful to patients. As a result, cybersecurity should be a priority for augmented intelligence algorithms, which should be protected by anti-malware software that is able to receive automatic updates.

In particular, cloud computing that allocates assets broadly across locations and often in multiple countries remains, for the most part, a challenging matter since it may result in serious cybersecurity breaches as augmented intelligence developers attempt to uphold compliance across many diverse local, national,57 and international laws and regulations.58

Conclusions

At organizations that range from universities to small/large corporations, scientists and physicians use augmented intelligence algorithms to guide the development of precision treatments for complex diseases59 by aiming to extract from increasingly massive datasets knowledge of what makes individual patients healthy. Augmented intelligence algorithms have the capability not only to improve current clinical decision support methodologies, but also to empower a broad range of innovations with the potential to disrupt health care. Augmented intelligence algorithms are expected to change health care delivery to a lesser degree by replacing physicians, but mostly by supporting or augmenting physicians’ work. Augmented intelligence is expected to support less-trained physicians in performing tasks that are presently referred to specialists, or to filter out noncomplex cases such that specialists will be able to focus on more challenging cases. Augmented intelligence algorithms are also expected to facilitate screening and evaluation in areas with restricted access to medical expertise.

For the successful deployment of augmented intelligence applications, effective information technology governance is required. For objective governance, a neutral agency within existing governmental or nongovernmental structures, supported by all stakeholders, could manage the review of health-care-related augmented intelligence algorithms while guarding developers’ intellectual property and patients’ privacy rights. The clinical benefit of augmented intelligence algorithms, manifested by improved patient outcomes and/or decreased medical costs, will also be assessed by relevant stakeholders (e.g., hospital administrators, insurers, physicians, and patients). Looking forward, cultivating healthy growth of health-care augmented intelligence applications will require comprehensive review, evaluation, and expansion of relevant professional health education focused on health care, medicine, data sciences, AI, and ethics.

Effective data standardization, harmonization, and quality assessment methodologies are likely to enhance interoperability and will be critical across all layers of a health care system, and even between different health care systems.18,24 While government initiatives in the US18 and Europe24 attempt to guide the health care community toward interoperability, such efforts have not yet achieved widespread acceptance and use. Ultimately, for augmented intelligence algorithms to be trusted, the semantics and the methods used to generate the data from which these algorithms are derived must be transparent and available for external validation. However, the conflation of algorithm and data transparency complicates the evolution of the augmented intelligence ecosystem; therefore, in this viewpoint, we propose a clear separation of these topics.

While periods of unbridled enthusiasm can promote general societal interest in the short term, they may inadvertently hinder progress when disillusionment mounts from unmet expectations. Thus, the greatest near-term risk in applying augmented intelligence algorithms in medicine is that they cannot meet the improbable expectations created by such enthusiasm. Accurate algorithm performance will allow humans to begin to trust augmented intelligence algorithms, which will then progressively require less transparency.

In conclusion, beyond fear and mystery pertaining to the use of augmented intelligence in health care, the prudent and sensible growth of health-care-related augmented intelligence is expected to involve all stakeholders, and be guided by a stepwise approach and the necessity to serve every patient.

Acknowledgments

The work was supported by the Institute of Precision Medicine (17UNPG33840017) from the AHA, the RICBAC Foundation, and NIH grants 1 R01 HL135335-01, 1 R21 HL137870-01, and 1 R21 EB026164-01.

Declaration of interests

The authors declare no competing interests.

References

- 1. Cai Y., Abascal J. Springer, Berlin, Heidelberg; 2006. Ambient Intelligence in Everyday Life. Part of Lecture Notes in Computer Science and Lecture Notes in Artificial Intelligence.

- 2. Sevakula R.K., Au-Yeung W.M., Singh J.P., Heist E.K., Isselbacher E.M., Armoundas A.A. State-of-the-art machine learning techniques aiming to improve patient outcomes pertaining to the cardiovascular system. J. Am. Heart Assoc. 2020;9:e013924. doi: 10.1161/JAHA.119.013924.

- 3. Handelman G.S., Kok H.K., Chandra R.V., Razavi A.H., Lee M.J., Asadi H. eDoctor: machine learning and the future of medicine. J. Intern. Med. 2018;284:603–619. doi: 10.1111/joim.12822.

- 4. Kerut E.K., To F., Summers K.L., Sheahan C., Sheahan M. Statistical and machine learning methodology for abdominal aortic aneurysm prediction from ultrasound screenings. Echocardiography. 2019;36:1989–1996. doi: 10.1111/echo.14519.

- 5. Le S., Hoffman J., Barton C., Fitzgerald J.C., Allen A., Pellegrini E., Calvert J., Das R. Pediatric severe sepsis prediction using machine learning. Front Pediatr. 2019;7:413. doi: 10.3389/fped.2019.00413.

- 6. Erickson B.J., Korfiatis P., Akkus Z., Kline T.L. Machine learning for medical imaging. Radiographics. 2017;37:505–515. doi: 10.1148/rg.2017160130.

- 7. Alizadehsani R., Roshanzamir M., Abdar M., Beykikhoshk A., Khosravi A., Panahiazar M., Koohestani A., Khozeimeh F., Nahavandi S., Sarrafzadegan N. A database for using machine learning and data mining techniques for coronary artery disease diagnosis. Sci. Data. 2019;6:227. doi: 10.1038/s41597-019-0206-3.

- 8. Serag A., Ion-Margineanu A., Qureshi H., McMillan R., Saint Martin M.J., Diamond J., O’Reilly P., Hamilton P. Translational AI and deep learning in diagnostic pathology. Front. Med. (Lausanne) 2019;6:185. doi: 10.3389/fmed.2019.00185.

- 9. Wu C., Zhao X., Welsh M., Costello K., Cao K., Abou Tayoun A., Li M., Sarmady M. Using machine learning to identify true somatic variants from next-generation sequencing. Clin. Chem. 2019;6:239–246. doi: 10.1373/clinchem.2019.308213.

- 10. Quitadamo L.R., Cavrini F., Sbernini L., Riillo F., Bianchi L., Seri S., Saggio G. Support vector machines to detect physiological patterns for EEG and EMG-based human-computer interaction: a review. J. Neural Eng. 2017;14:011001. doi: 10.1088/1741-2552/14/1/011001.

- 11. Mo X., Chen X., Li H., Li J., Zeng F., Chen Y., He F., Zhang S., Li H., Pan L., et al. Early and accurate prediction of clinical response to methotrexate treatment in juvenile idiopathic arthritis using machine learning. Front. Pharmacol. 2019;10:1155. doi: 10.3389/fphar.2019.01155.

- 12. Ahmad T., Lund L.H., Rao P., Ghosh R., Warier P., Vaccaro B., Dahlström U., O’Connor C.M., Felker G.M., Desai N.R. Machine learning methods improve prognostication, identify clinically distinct phenotypes, and detect heterogeneity in response to therapy in a large cohort of heart failure patients. J. Am. Heart Assoc. 2018;7:e008081. doi: 10.1161/JAHA.117.008081.

- 13. Grote T., Berens P. On the ethics of algorithmic decision-making in healthcare. J. Med. Ethics. 2019;46:205–211. doi: 10.1136/medethics-2019-105586.

- 14. Blacklaws C. Algorithms: Transparency and accountability. Phil. Trans. R. Soc. A. 2018;376:20170351. doi: 10.1098/rsta.2017.0351.

- 15. Price W.N., 2nd, Gerke S., Cohen I.G. Potential liability for physicians using artificial intelligence. JAMA. 2019;322:1765–1766. doi: 10.1001/jama.2019.15064.

- 16. Veale M., Binns R., Edwards L. Algorithms that remember: Model inversion attacks and data protection law. Phil. Trans. R. Soc. A. 2018;376:20180083. doi: 10.1098/rsta.2018.0083.

- 17. Saeb S., Lonini L., Jayaraman A., Mohr D.C., Kording K.P. The need to approximate the use-case in clinical machine learning. Gigascience. 2017;6:1–9. doi: 10.1093/gigascience/gix019.

- 18. Rosamond W., Flegal K., Furie K., Go A., Greenlund K., Haase N., Hailpern S.M., Ho M., Howard V., Kissela B., et al. American Heart Association Statistics Committee and Stroke Statistics Subcommittee Heart disease and stroke statistics--2008 update: a report from the American Heart Association Statistics Committee and Stroke Statistics Subcommittee. Circulation. 2008;117:e25–e146. doi: 10.1161/CIRCULATIONAHA.107.187998.

- 19. Matheny M.E., Whicher D., Thadaney Israni S. Artificial intelligence in health care: A report from the National Academy of Medicine. JAMA. 2019;323:509–510. doi: 10.1001/jama.2019.21579.

- 20. International Medical Device Regulators Forum. Software as a Medical Device (SaMD): Key Definitions. 2013. https://www.imdrf.org/sites/default/files/docs/imdrf/final/technical/imdrf-tech-131209-samd-key-definitions-140901.pdf

- 21. US Food and Drug Administration. 2017. Deciding when to submit a 510(k) for a software change to an existing device. Guidance for industry and Food and Drug Administration staff. https://www.fda.gov/regulatory-information/search-fda-guidance-documents/deciding-when-submit-510k-software-change-existing-device

- 22. US Food and Drug Administration. 2008. Modifications to devices subject to premarket approval (PMA) - The PMA supplement decision-making process. Guidance for Industry and Food and Drug Administration Staff. https://www.fda.gov/regulatory-information/search-fda-guidance-documents/modifications-devices-subject-premarket-approval-pma-pma-supplement-decision-making-process

- 23. Chinese State Council. 2017. New generation of Artificial Intelligence Development Plan. State Council Document No. 35. https://flia.org/notice-state-council-issuing-new-generation-artificial-intelligence-development-plan/

- 24. European Commission. 2021. Proposal for a regulation of the European Parliament and of the Council laying down harmonised rules on artificial intelligence (Artificial Intelligence Act) and amending certain union legislative acts {SEC(2021) 167 final} - {SWD(2021) 84 final} - {SWD(2021) 85 final} https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX%3A52021PC0206

- 25. UK Department of Health & Social Care. 2021. A guide to good practice for digital and data-driven health technologies. https://www.gov.uk/government/publications/code-of-conduct-for-data-driven-health-and-care-technology/initial-code-of-conduct-for-data-driven-health-and-care-technology

- 26. Rosenbloom S.T., Carroll R.J., Warner J.L., Matheny M.E., Denny J.C. Representing knowledge consistently across health systems. Yearb. Med. Inform. 2017;26:139–147. doi: 10.15265/IY-2017-018.

- 27. Hripcsak G., Duke J.D., Shah N.H., Reich C.G., Huser V., Schuemie M.J., Suchard M.A., Park R.W., Wong I.C., Rijnbeek P.R., et al. Observational health data sciences and informatics (OHDSI): Opportunities for observational researchers. Stud. Health Technol. Inform. 2015;216:574–578.

- 28. Shimabukuro D.W., Barton C.W., Feldman M.D., Mataraso S.J., Das R. Effect of a machine learning-based severe sepsis prediction algorithm on patient survival and hospital length of stay: a randomised clinical trial. BMJ Open Respir. Res. 2017;4:e000234. doi: 10.1136/bmjresp-2017-000234.

- 29. Stodden V., Bailey D.H., Borwein J.M., LeVeque R.J., Rider W.J., Stein W.A. 2013. Setting the default to reproducible: reproducibility in computational and experimental mathematics. http://stodden.net/icerm_report.pdf

- 30. Davis S.E., Lasko T.A., Chen G., Matheny M.E. Calibration drift among regression and machine learning models for hospital mortality. AMIA Annu. Symp. Proc. 2018;2017:625–634.

- 31. World Health Organization. 2013. Closing the health equity gap: Policy options and opportunities for action. https://apps.who.int/iris/bitstream/handle/10665/78335/9789241505178_eng.pdf?sequence=1&isAllowed=y

- 32. Cosby A.G., McDoom-Echebiri M.M., James W., Khandekar H., Brown W., Hanna H.L. Growth and persistence of place-based mortality in the United States: The rural mortality penalty. Am. J. Public Health. 2019;109:155–162. doi: 10.2105/AJPH.2018.304787.

- 33. Singh G.K., Siahpush M. Widening rural-urban disparities in all-cause mortality and mortality from major causes of death in the USA, 1969-2009. J. Urban Health. 2014;91:272–292. doi: 10.1007/s11524-013-9847-2.

- 34. James C.V., Moonesinghe R., Wilson-Frederick S.M., Hall J.E., Penman-Aguilar A., Bouye K. Racial/ethnic health disparities among rural adults - United States, 2012-2015. MMWR Surveill. Summ. 2017;66:1–9. doi: 10.15585/mmwr.ss6623a1.

- 35. Liao Y., Bang D., Cosgrove S., Dulin R., Harris Z., Taylor A., White S., Yatabe G., Liburd L., Giles W., Division of Adult and Community Health, National Center for Chronic Disease Prevention and Health Promotion. Centers for Disease Control and Prevention (CDC) Surveillance of health status in minority communities - Racial and ethnic approaches to community health across the U.S. (REACH U.S.) risk factor survey, United States, 2009. MMWR Surveill. Summ. 2011;60:1–44.

- 36. Howard G., Prineas R., Moy C., Cushman M., Kellum M., Temple E., Graham A., Howard V. Racial and geographic differences in awareness, treatment, and control of hypertension: The reasons for geographic and racial differences in stroke study. Stroke. 2006;37:1171–1178. doi: 10.1161/01.STR.0000217222.09978.ce.

- 37. Yoon S.S., Carroll M.D., Fryar C.D. Hypertension Prevalence and Control Among Adults: United States, 2011-2014. NCHS Data Brief. 2015;220:1–8.

- 38. Todd K.H., Samaroo N., Hoffman J.R. Ethnicity as a risk factor for inadequate emergency department analgesia. JAMA. 1993;269:1537–1539.

- 39. Lyles C.R., Lunn M.R., Obedin-Maliver J., Bibbins-Domingo K. The new era of precision population health: insights for the All of Us Research Program and beyond. J. Transl. Med. 2018;16:211. doi: 10.1186/s12967-018-1585-5.

- 40. Dankwa-Mullan I., Bull J., Sy F. Precision medicine and health disparities: Advancing the science of individualizing patient care. Am. J. Public Health. 2015;105(Suppl 3):S368. doi: 10.2105/AJPH.2015.302755.

- 41. Rajkomar A., Hardt M., Howell M.D., Corrado G., Chin M.H. Ensuring fairness in machine learning to advance health equity. Ann. Intern. Med. 2018;169:866–872. doi: 10.7326/M18-1990.

- 42. Adams S.A., Petersen C. Precision medicine: Opportunities, possibilities, and challenges for patients and providers. J. Am. Med. Inform. Assoc. 2016;23:787–790. doi: 10.1093/jamia/ocv215.

- 43. Eccles M.P., Mittman B.S. Welcome to implementation science. Implement. Sci. 2006;1:1.

- 44. Manrai A.K., Funke B.H., Rehm H.L., Olesen M.S., Maron B.A., Szolovits P., Margulies D.M., Loscalzo J., Kohane I.S. Genetic misdiagnoses and the potential for health disparities. N. Engl. J. Med. 2016;375:655–665. doi: 10.1056/NEJMsa1507092.

- 45. Chen I.Y., Szolovits P., Ghassemi M. Can AI help reduce disparities in general medical and mental health care? AMA J. Ethics. 2019;21:E167–E179. doi: 10.1001/amajethics.2019.167.

- 46. Chinman M., Woodward E.N., Curran G.M., Hausmann L.R.M. Harnessing implementation science to increase the impact of health equity research. Med. Care. 55:S16–S23.

- 47. Schulam P., Saria S. Reliable decision support using counterfactual models. Adv. Neural Inf. Process. Syst. 2017;December 2017:1698–1709.

- 48. McNulty M., Smith J.D., Villamar J., Burnett-Zeigler I., Vermeer W., Benbow N., Gallo C., Wilensky U., Hjorth A., Mustanski B., et al. Implementation research methodologies for achieving scientific equity and health equity. Ethn. Dis. 2019;29(Suppl 1):83–92. doi: 10.18865/ed.29.S1.83.

- 49. US Food and Drug Administration. 2019. Pre-Cert Program Version 1.0 working model. https://www.fda.gov/media/119722/download?utm_campaign=Digital%20Health%20Update%3A%20New%20FDA%20Pre-Cert%20Working%20Model%20Now%20Available&utm_medium=email&utm_source=Eloqua

- 50. Mauco K.L., Scott R.E., Mars M. Development of an eHealth Readiness Assessment Framework for Botswana and other developing countries: Interview study. JMIR Med. Inform. 2019;7:e12949. doi: 10.2196/12949.

- 51. Lavallee D.C., Lee J.R., Semple J.L., Lober W.B., Evans H.L. Engaging patients in co-design of mobile health tools for surgical site infection surveillance: Implications for research and implementation. Surg. Infect. (Larchmt.) 2019;20:535–540. doi: 10.1089/sur.2019.148.

- 52. US Food and Drug Administration. Proposed regulatory framework for modifications to artificial intelligence/machine learning (AI/ML)-based software as a medical device (SaMD). Discussion paper and request for feedback. https://www.fda.gov/files/medical%20devices/published/US-FDA-Artificial-Intelligence-and-Machine-Learning-Discussion-Paper.pdf. (accessed December 22, 2019).

- 53. Vučemilo L., Milošević M., Dodig D., Grabušić B., Đapić B., Borovečki A. The quality of informed consent in Croatia-a cross-sectional study and instrument development. Patient Educ. Couns. 2016;99:436–442. doi: 10.1016/j.pec.2015.08.033.

- 54. Loukides G., Denny J.C., Malin B. The disclosure of diagnosis codes can breach research participants’ privacy. J. Am. Med. Inform. Assoc. 2010;17:322–327. doi: 10.1136/jamia.2009.002725.

- 55. Klonoff D., Han J. The first recall of a diabetes device because of cybersecurity risks. J. Diabetes Sci. Technol. 2019;13:817–820. doi: 10.1177/1932296819865655.

- 56. Muddy Waters Research. MW is short St. Jude Medical (STJ: US), August 25, 2016. http://www.muddywatersresearch.com/research/stj/mw-is-short-stj/. Accessed April 26, 2020.

- 57. Stern A.D., Gordon W.J., Landman A.B., Kramer D.B. Cybersecurity features of digital medical devices: an analysis of FDA product summaries. BMJ Open. 2019;9:e025374. doi: 10.1136/bmjopen-2018-025374.

- 58. Swedish National Board of Trade. 2012. How borderless is the Cloud? An introduction to Cloud computing and international trade. https://www.wto.org/english/tratop_e/serv_e/wkshop_june13_e/how_borderless_cloud_e.pdf

- 59. Topol E.J. High-performance medicine: The convergence of human and artificial intelligence. Nat. Med. 2019;25:44–56. doi: 10.1038/s41591-018-0300-7.