Abstract

In clinical practice, assessing digital health literacy is important for identifying patients who may have difficulty adapting to and using digital health technologies and services. We developed the Digital Health Technology Literacy Assessment Questionnaire (DHTL-AQ) to assess the ability to use digital health technology, services, and data. The DHTL-AQ was developed in three phases. In the first phase, the conceptual framework, domains, and items were generated from a systematic literature review of relevant theories and surveys. In the second phase, a cross-sectional survey of 590 adults aged ≥ 18 years was conducted at an academic hospital in Seoul, Korea, in January and February 2020 to test the face validity of the items. In the third phase, psychometric validation was conducted to determine the final items and cut-off scores of the DHTL-AQ. The eHealth Literacy Scale, the Newest Vital Sign, and 10 mobile app task ability assessments were used to test validity. The final DHTL-AQ includes 34 items in two domains (digital functional and digital critical literacy) and four categories (Information and Communications Technology [ICT] terms, ICT icons, use of an app, and evaluating the reliability and relevance of health information). The DHTL-AQ had excellent internal consistency (overall Cronbach’s α = 0.95; 0.87–0.94 for subtotals) and acceptable model fit (CFI = 0.821, TLI = 0.807, SRMR = 0.065, RMSEA = 0.090). The DHTL-AQ was highly correlated with task ability assessment (r = 0.7591) and moderately correlated with the eHealth Literacy Scale (r = 0.5265) and the Newest Vital Sign (r = 0.5929). The DHTL-AQ is a reliable and valid instrument for measuring digital health technology literacy.

Supplementary Information

The online version contains supplementary material available at 10.1007/s10916-022-01800-8.

Keywords: Digital health technology literacy, Digital health literacy, Performance-based task ability, Tool development, Measurement scale

Introduction

Despite the rapidly increasing number of mobile-device interventions that support education and self-management for patients with chronic disease, the utilization of digital health has remained relatively low [1, 2]. Barriers to using digital health include lack of interest, unfamiliarity with technology, inexperience with user interaction, and lack of skills related to digital health use [3, 4]. Compared with users, non-users exhibited lower technology-related health literacy, more concerns about data security, and lower perceived value of using the app [5]. A pragmatic trial of a mobile app for diabetes self-management reported that only 18.2% of participants used the app consistently during the 182-day study period. In that trial, about half of the participants used the app for 10 days or less, and patients who were older, lacked individual motivation, or held weaker beliefs about the usefulness of digital health were significantly less likely to use mobile devices [3]. The success of digital therapeutics, the most common interventions delivered on mobile devices, has been reported to depend on users’ age and their ability to manage the technology. This dependence may introduce bias into online healthcare services [6].

Digital health literacy (DHL) refers to the specific skills and abilities necessary to use digital health technology and services [7, 8]. Although DHL requires various skills and abilities, most studies have treated DHL as equivalent to eHealth literacy, which is “the ability to seek, find, understand, and appraise health information from electronic sources and apply the knowledge gained to addressing or solving a health problem” [9–11]. However, DHL has a broader technological scope, encompassing mobile devices, health information technology (IT), telehealth, and personalized medicine.¹ Assessing DHL is important for identifying patients who may have difficulty adapting to and using digital health [5], and examining DHL requires a valid measurement of these skills and abilities. Most studies have employed self-reported evaluations of individuals’ ability to use the Internet, such as the eHealth Literacy Scale (eHEALS), or have added only a few questions about digital technology on weak theoretical foundations [12–14]. Moreover, studies have been limited to assessing the literacy of specific groups, such as students [11]. We therefore developed the Digital Health Technology Literacy Assessment Questionnaire (DHTL-AQ), which assesses the ability to use digital health technology, services, and data.

Methods

Questionnaire development process

The DHTL-AQ was developed in three phases (Fig. 1). In the first phase, the conceptual framework was defined, and items were generated to represent its dimensions. To explore the construct and specify the dimensions, we conducted a systematic literature review following the PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) statement [15]. Using the health behavior model and the unified theory of acceptance and use of technology (UTAUT) [16, 17], we identified relevant items from existing or newly developed eHealth literacy instruments [9, 10, 18–20] and other relevant surveys, including the Health Information National Trends Survey (HINTS) from the National Cancer Institute in the United States,² the Wisconsin Health Literacy questionnaire,³ and a survey on Korean digital literacy.⁴ After the item pool was integrated, each item was reevaluated for content relevance and representativeness by three experts in digital health (JC, ML, and JY). Five domains were finalized: digital functional, digital communicative, digital critical, device, and data literacy (Supplementary Fig. 1). The first three domains were based on the three aspects of health literacy (functional, communicative, and critical) [21], and the remaining two, device and data literacy, represented digital health (Supplementary Table 1). Whereas most eHealth literacy scales asked only about Internet use, the DHTL-AQ covered various digital tools, such as computers (e.g., Web browser, Domain/URL, and Cloud), tablet PCs, smartphones (e.g., mobile apps; Update, Bluetooth, QR code, Play Store, or App Store), wearable devices, and chatbots. A pilot test was conducted with 10 graduate students majoring in digital health, and the items were reviewed and modified on the basis of their feedback. A total of 115 candidate items were identified.

Fig. 1.

Development process of Digital Health Technology Literacy Assessment Questionnaire (DHTL-AQ)

In the second phase, a survey was conducted at an academic hospital in Seoul, Korea, in January and February 2020. The inclusion criteria were age ≥ 18 years, owning a smartphone, and being able to read and understand Korean. People with cognitive problems or psychiatric diseases were excluded. We determined the sample size by estimating the sample required for an exploratory factor analysis (EFA). For EFA, the literature⁵ offers rules of thumb expressed either as minimum absolute sample sizes, such as 100–250 [22, 23], 300 [24], or 500 or more [25], or as ratios. The traditional ratio is the N:p ratio of participants (N) to variables (p), set at 5:1, that is, 5 participants per item [26]. We therefore aimed to recruit 600 participants, allowing for 5% missing data.
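The N:p arithmetic can be made explicit. The sketch below is a minimal illustration, assuming that the 5:1 ratio was applied to the full pool of 115 candidate items and that the 5% allowance for missing data inflates the requirement by dividing by 0.95. The helper name `efa_sample_target` is hypothetical, and the authors' exact calculation is not reported.

```python
import math


def efa_sample_target(n_items: int, ratio: int = 5, missing_rate: float = 0.05) -> int:
    """Participants needed for an N:p rule, inflated for expected missing data.

    Hypothetical helper: assumes the ratio is applied to every candidate item
    and that missing data are handled by dividing by (1 - missing_rate).
    """
    if not 0.0 <= missing_rate < 1.0:
        raise ValueError("missing_rate must be in [0, 1)")
    base = n_items * ratio  # N:p rule, e.g. 5 participants per item
    return math.ceil(base / (1.0 - missing_rate))


if __name__ == "__main__":
    print(efa_sample_target(115))                    # 575 / 0.95, rounded up
    print(efa_sample_target(115, missing_rate=0.0))  # plain 5:1 requirement
    print(round(590 / 115, 2))                       # achieved participants per item
```

Under these assumptions the target is about 606, close to the round figure of 600 in the text, and the 590 participants actually enrolled correspond to roughly 5.1 participants per candidate item.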

We used convenience sampling. We aimed for two-thirds of the sample to be patients with chronic disease, because the utilization of digital health among these patients has been relatively low despite the growing number of mobile interventions that support their education and self-management. The remaining one-third were to be people without chronic disease, because digital health technology is also used for prevention. We also aimed for equal proportions of participants by sex and age group. In addition, we aimed for 30% of participants to have a lower educational level, as this group may be vulnerable with respect to digital health technology.
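The planned quotas translate into simple target counts. This sketch compares them with the composition reported in Table 1; it assumes that “lower education” corresponds to the “≤ High school” row and derives the “no chronic disease” count as 590 − 377, and the helper name `quota_report` is hypothetical.

```python
TARGET_N = 600      # recruitment target stated in the text
ACHIEVED_N = 590    # participants analysed (Table 1)

# Planned shares from the sampling plan (quota targets, not strict constraints).
PLANNED_SHARES = {
    "chronic disease": 2 / 3,
    "no chronic disease": 1 / 3,
    "high school or less": 0.30,  # assumed mapping of "lower education"
}

# Achieved counts: chronic disease and education come from Table 1; the
# "no chronic disease" count is derived as 590 - 377.
ACHIEVED_COUNTS = {
    "chronic disease": 377,
    "no chronic disease": ACHIEVED_N - 377,
    "high school or less": 220,
}


def quota_report(target_n, shares, achieved_n, counts):
    """Compare planned quota counts with the achieved sample composition."""
    report = {}
    for group, share in shares.items():
        report[group] = {
            "target": round(target_n * share),
            "achieved": counts[group],
            "achieved_share": round(counts[group] / achieved_n, 3),
        }
    return report


if __name__ == "__main__":
    report = quota_report(TARGET_N, PLANNED_SHARES, ACHIEVED_N, ACHIEVED_COUNTS)
    for group, row in report.items():
        print(f"{group:22s} target={row['target']:4d} "
              f"achieved={row['achieved']:4d} ({row['achieved_share']:.1%})")
```

On these figures the sample ended slightly below the two-thirds target for chronic disease (63.9%) and above the 30% target for lower education (37.3%).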

All participants provided informed consent at the outset. After the survey, trained researchers conducted a face-to-face task ability assessment (Supplementary Table 2). Participants were asked to perform 10 tasks using the same app (a breast cancer information app developed by the research team in 2019).⁶ The researchers helped participants only when they hesitated or had difficulty completing a task, and then moved on to the next task. Performance on each task was recorded and scored according to the degree of help the researchers provided, from 1 (“do not know”) to 4 (“self-implementation”). We reviewed all items with experts on the basis of the statistical results, and items were reduced or adjusted according to face validity.

In the third phase, psychometric procedures were conducted to determine the final items and cut-off scores of the DHTL-AQ. The Korean version of eHEALS [18], health literacy measured with the Newest Vital Sign (NVS)⁷ [27], and the Korean adaptation of the General Self-Efficacy Scale⁸ were assessed as reference measures. In addition, we assessed health behaviors, such as drinking, smoking, and exercise, and sociodemographic factors, including age, sex, educational level, family income, and marital status. We also collected clinical information, such as comorbidities and cancer type.

Statistical analysis

Descriptive statistics and item analyses were performed to assess face validity. Based on the mean score of the mobile app task ability assessment (Supplementary Table 3), we examined the results for each of the 115 items across three groups: high (39–40), moderate (35–38), and low (< 35).
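A minimal sketch of the scoring and grouping follows, assuming that each of the 10 tasks is scored 1–4 and that the group boundaries apply to the unweighted sum across tasks, so totals range from 10 to 40. The function names are hypothetical; the actual scoring details are in Supplementary Table 3, which is not reproduced here.

```python
from typing import Sequence

N_TASKS = 10
MIN_SCORE, MAX_SCORE = 1, 4  # 1 = "do not know" ... 4 = "self-implementation"


def task_total(scores: Sequence[int]) -> int:
    """Sum the per-task help scores (assumed unweighted)."""
    if len(scores) != N_TASKS:
        raise ValueError(f"expected {N_TASKS} task scores, got {len(scores)}")
    if any(not MIN_SCORE <= s <= MAX_SCORE for s in scores):
        raise ValueError(f"each task score must be between {MIN_SCORE} and {MAX_SCORE}")
    return sum(scores)


def ability_group(total: int) -> str:
    """Map a total task score to the high / moderate / low groups used for item analysis."""
    if total >= 39:
        return "high"      # 39-40
    if total >= 35:
        return "moderate"  # 35-38
    return "low"           # < 35


if __name__ == "__main__":
    # One respondent needing some help on two tasks: 8 * 4 + 3 + 2 = 37.
    scores = [4, 4, 4, 3, 4, 4, 2, 4, 4, 4]
    total = task_total(scores)
    print(total, ability_group(total))
```

Checking the range of each score before summing keeps a data-entry error (for example, a 5) from silently shifting a respondent into a higher group.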

Exploratory factor analysis (EFA) was performed using principal component analysis (PCA) to extract common factors and select items, under the PCA assumption that there is no unique variance and that the total variance equals the common variance. Within each category, factors with an eigenvalue > 1.0 were retained, and orthogonal (varimax) rotation was used. The Kaiser–Meyer–Olkin (KMO) measure of sampling adequacy and Bartlett’s test of sphericity were used to verify whether each category was suitable for factor analysis; KMO > 0.5 and Bartlett p < 0.05 were taken to indicate suitability. Cronbach’s α coefficients ≥ 0.7 were considered reliable. Confirmatory factor analysis (CFA) was performed on the factor structure and items extracted through the EFA, using structural equation modeling (SEM) with maximum likelihood estimation. Model goodness of fit was assessed with the standardized root mean square residual (SRMR), root mean square error of approximation (RMSEA), comparative fit index (CFI), and Tucker–Lewis index (TLI). In addition, receiver operating characteristic (ROC) analysis was conducted to determine the cutoff points of the final DHTL-AQ. All data were analyzed using Stata 14.
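The three suitability checks named above can be sketched with NumPy and SciPy using their textbook formulas: Bartlett’s statistic −(n − 1 − (2p + 5)/6)·ln|R| with p(p − 1)/2 degrees of freedom, the overall KMO computed from anti-image (partial) correlations, and the Kaiser count of correlation-matrix eigenvalues above 1. This is an illustrative sketch on simulated data, not the Stata routines the authors used, and the varimax rotation step is omitted.

```python
import numpy as np
from scipy import stats


def _corr(data: np.ndarray) -> np.ndarray:
    """Item-by-item Pearson correlation matrix (columns are items)."""
    return np.corrcoef(data, rowvar=False)


def bartlett_sphericity(data: np.ndarray) -> tuple[float, float]:
    """Bartlett's test that the correlation matrix is an identity matrix."""
    n, p = data.shape
    _, logdet = np.linalg.slogdet(_corr(data))
    statistic = -(n - 1 - (2 * p + 5) / 6) * logdet
    dof = p * (p - 1) / 2
    return float(statistic), float(stats.chi2.sf(statistic, dof))


def kmo(data: np.ndarray) -> float:
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy."""
    corr = _corr(data)
    inv_corr = np.linalg.inv(corr)
    scale = np.sqrt(np.diag(inv_corr))
    partial = -inv_corr / np.outer(scale, scale)  # anti-image (partial) correlations
    off_diag = ~np.eye(corr.shape[0], dtype=bool)
    r2 = np.sum(corr[off_diag] ** 2)
    a2 = np.sum(partial[off_diag] ** 2)
    return float(r2 / (r2 + a2))


def kaiser_n_factors(data: np.ndarray) -> int:
    """Number of components with eigenvalue > 1.0 (Kaiser criterion)."""
    eigenvalues = np.linalg.eigvalsh(_corr(data))
    return int(np.sum(eigenvalues > 1.0))


if __name__ == "__main__":
    # Simulated responses: two latent factors, three items each, 500 respondents.
    rng = np.random.default_rng(0)
    factors = rng.normal(size=(500, 2))
    loadings = np.array([[0.8, 0.0]] * 3 + [[0.0, 0.8]] * 3)  # 6 items x 2 factors
    items = factors @ loadings.T + 0.6 * rng.normal(size=(500, 6))
    stat, p_value = bartlett_sphericity(items)
    print(f"Bartlett chi2 = {stat:.1f}, p = {p_value:.3g}")
    print(f"KMO = {kmo(items):.3f}")
    print("factors retained:", kaiser_n_factors(items))
```

`slogdet` is used instead of `det` so that the log-determinant stays numerically stable when many items are strongly correlated.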

Results

Item reduction

A total of 590 individuals participated in this study. Their mean age was 46.5 years (SD, 13.0; range, 20–84 years), and 273 (46.3%) were men. Overall, 370 (62.7%) had a college degree or above, and 337 (57.1%) were employed. A total of 377 (63.9%) participants had at least one chronic disease, including cancer (Table 1).

Table 1.

Participant characteristics (N = 590)

| Characteristic | N (%) |
|---|---|
| Age, mean (SD), range | 46.5 (13.0), 20–84 |
| < 40 | 143 (24.3) |
| 41–50 | 152 (25.7) |
| 51–60 | 161 (27.3) |
| > 61 | 154 (22.6) |
| Sex | |
| Male | 273 (46.3) |
| Female | 317 (53.7) |
| Marital status | |
| Married | 459 (77.8) |
| Single/divorced/widowed | 130 (22.0) |
| Unknown | 1 (0.2) |
| Education | |
| ≤ High school | 220 (37.3) |
| ≥ College | 370 (62.7) |
| Employment status | |
| Employed | 337 (57.1) |
| Unemployed | 252 (42.7) |
| Unknown | 1 (0.2) |
| Monthly household income | |
| < $2,000 | 63 (10.7) |
| $2,000–$3,999 | 134 (22.7) |
| $4,000–$5,999 | 179 (30.3) |
| ≥ $6,000 | 205 (34.8) |
| Unknown | 9 (1.5) |
| Status of disease | |
| Chronic disease (yes) | 377 (63.9) |
| Number of households | |
| None | 33 (5.6) |
| 1 | 150 (25.4) |
| ≥ 2 | 376 (63.7) |
| Unknown | 31 (5.3) |

On the basis of face validity, all items in the digital communicative, device, and data literacy domains, as well as four of the eight categories in the digital functional literacy domain, were excluded. Exclusions were based on the descriptive survey results across all participants (for example, low usage, < 55%, or no statistically significant differences between groups) and on expert review (Fig. 1). The version used for psychometric validation consisted of 40 items, covering “ICT terms,” “ICT icons,” and “use of an app” in the digital functional literacy domain, as well as “evaluating the reliability and relevance of health information” and “degree of autonomy in the use of digital health.”

Item construction

In the EFA, six of the 40 items were excluded. The item retention and deletion criteria were (1) retaining items with a factor loading > 0.5 and (2) removing cross-loaded items with a loading > 0.4 on two or more factors. After an initial PCA for each domain, the item “degree of autonomy in the use of digital health” (loading 0.4324) and three items in “use of an app” that cross-loaded on two factors (transferring photos, deleting an app, and app login) were excluded.

The KMO ranged from 0.832 to 0.933, and Bartlett’s p-values were < 0.05. The EFA initially indicated a five-factor solution, with the ICT-related terms splitting into two factors, whereas the instrument had been designed with four factors. The factor structure was examined further using the five-factor solution, and two more items were removed: the app menu icon in “ICT icons,” because of a low factor loading (-0.4082), and app installation (“I can download the app”) in “use of an app,” because it cross-loaded on two factors (0.5736 and 0.4998; Table 2).

Table 2.

Initial results of exploratory factor analysis (36 items)

| Domain / category | Item | Factor 1 | Factor 2 | Factor 3 | Factor 4 | Factor 5 |
|---|---|---|---|---|---|---|
| Digital functional literacy | | | | | | |
| Use of an app | 1. I can record the amount of activity (steps), weight, and meals through the app | 0.8613 | -0.114 | 0.0842 | 0.1531 | -0.1728 |
| | 2. I can check the amount of activity (steps), weight, and meals recorded through the app | 0.8586 | -0.125 | 0.0817 | 0.1457 | -0.165 |
| | 3. I can use the recorded health information for my health through the app | 0.8327 | -0.133 | 0.0624 | 0.2196 | -0.1836 |
| | 4. I can record my health information through the app | 0.8212 | -0.1368 | 0.0916 | 0.2494 | -0.1829 |
| | 5. I can set preferences (sound, security, display, notification, etc.) for the app | 0.7303 | -0.2218 | 0.2897 | 0.099 | -0.1306 |
| | 6. I can find more reliable apps by comparing different apps | 0.6588 | -0.1734 | 0.1425 | 0.2405 | -0.1974 |
| | 7. I can update the app | 0.6466 | -0.2097 | 0.4645 | 0.1638 | -0.0382 |
| | 8. I can easily find the app to help my health | 0.6455 | -0.0959 | 0.2159 | 0.0882 | -0.1991 |
| | 9. I can sign up to use the app (create ID, password, etc.) | 0.6098 | -0.2432 | 0.4272 | 0.1212 | -0.0635 |
| | 10. I can download the app.* | 0.5736 | -0.3082 | 0.4998 | 0.1477 | -0.0005 |
| ICT icons | 11. Icon (Download) | -0.1213 | 0.7535 | -0.1113 | -0.1194 | 0.123 |
| | 12. Icon (Security file) | -0.2204 | 0.7452 | -0.169 | -0.1415 | 0.1206 |
| | 13. Icon (Search bar) | -0.1604 | 0.7199 | -0.1415 | -0.2682 | 0.0107 |
| | 14. Icon (Synchronization) | -0.2823 | 0.7179 | -0.12 | -0.1744 | 0.059 |
| | 15. Icon (Voice assistant) | -0.163 | 0.6882 | -0.0895 | -0.229 | 0.1161 |
| | 16. Icon (Social media) | -0.0717 | 0.6815 | -0.1821 | 0.0159 | 0.1305 |
| | 17. Icon (Bluetooth) | -0.2348 | 0.669 | -0.3076 | -0.2574 | 0.0588 |
| | 18. Icon (URL) | -0.1535 | 0.6603 | -0.1904 | -0.0873 | 0.0125 |
| | 19. Icon (QR code) | -0.1497 | 0.5973 | -0.3424 | 0.0752 | 0.0754 |
| | 20. Icon (App menu-hamburger icon)* | -0.3725 | 0.288 | -0.1231 | -0.4082 | 0.069 |
| ICT terms | 21. Internet-related term (Update, synchronization) | 0.2006 | -0.1822 | 0.7682 | 0.2136 | -0.0709 |
| | 22. Internet-related term (Application) | 0.1312 | -0.1904 | 0.7601 | 0.2038 | -0.0876 |
| | 23. Internet-related term (Bluetooth) | 0.2124 | -0.2715 | 0.6523 | 0.1531 | -0.0846 |
| | 24. Internet-related term (QR code) | 0.2977 | -0.2147 | 0.5938 | 0.3141 | -0.0949 |
| | 25. Internet-related term (Play store, app store) | 0.3197 | -0.3631 | 0.5239 | 0.3642 | -0.0459 |
| | 26. Internet-related term (Wearable device) | 0.2321 | -0.146 | 0.128 | 0.7235 | -0.1454 |
| | 27. Internet-related term (Search bar) | 0.1886 | -0.1377 | 0.1952 | 0.6952 | -0.1046 |
| | 28. Internet-related term (Web browser) | 0.2877 | -0.2191 | 0.2406 | 0.6807 | -0.1251 |
| | 29. Internet-related term (Domain, URL) | 0.3381 | -0.2273 | 0.2221 | 0.6732 | -0.115 |
| | 30. Internet-related term (Cloud) | 0.3844 | -0.1605 | 0.2583 | 0.5835 | -0.1358 |
| | 31. Internet-related term (Chatbot, voice assistant) | 0.282 | -0.1538 | 0.2598 | 0.5722 | -0.0864 |
| Digital critical literacy | | | | | | |
| Evaluating reliability and relevance of health information | 32. I can judge whether the information on the Internet or digital health was used for commercial benefit | -0.104 | 0.0006 | -0.0222 | -0.0327 | 0.8162 |
| | 33. I can judge whether the information I find on the Internet or in digital health is properly used for myself | -0.2207 | 0.0592 | -0.0946 | -0.1169 | 0.8082 |
| | 34. I can judge whether the information I got from the Internet or digital health is reliable | -0.1581 | 0.1422 | -0.043 | -0.0084 | 0.7944 |
| | 35. I can check if the same information is provided by other websites or on the Internet | -0.2197 | 0.0886 | -0.0799 | -0.155 | 0.7609 |
| | 36. I can use the information I find on the Internet or digital health to make health-related decisions | -0.2174 | 0.1172 | -0.0381 | -0.1561 | 0.7398 |

*These items were deleted from the final EFA
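The two retention criteria can be written as a small screening function. This is a minimal sketch of the rules as stated in the text (largest absolute loading > 0.5; flag items with absolute loadings > 0.4 on two or more factors), applied to three rows copied from Table 2; the function and constant names are hypothetical.

```python
PRIMARY_MIN = 0.5  # retain only if the largest absolute loading exceeds 0.5
CROSS_MIN = 0.4    # flag as cross-loaded if |loading| > 0.4 on two or more factors


def screen_item(loadings: list[float]) -> str:
    """Apply the two EFA retention criteria to one item's factor loadings."""
    magnitudes = [abs(x) for x in loadings]
    if max(magnitudes) <= PRIMARY_MIN:
        return "drop: low loading"
    if sum(m > CROSS_MIN for m in magnitudes) >= 2:
        return "drop: cross-loading"
    return "retain"


# Five-factor loadings copied from Table 2 for three illustrative items.
TABLE2_EXAMPLES = {
    "1. record activity, weight, meals": [0.8613, -0.114, 0.0842, 0.1531, -0.1728],
    "10. download the app": [0.5736, -0.3082, 0.4998, 0.1477, -0.0005],
    "20. app menu (hamburger) icon": [-0.3725, 0.288, -0.1231, -0.4082, 0.069],
}

if __name__ == "__main__":
    for item, loadings in TABLE2_EXAMPLES.items():
        print(f"{item:35s} {screen_item(loadings)}")
```

Applied literally, the same function also flags items 7 and 9 (secondary loadings 0.4645 and 0.4272), which remain in the 34-item instrument, so the published selection appears to have combined these thresholds with judgment, as it did for the “Bluetooth” and “Play store, App store” items.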

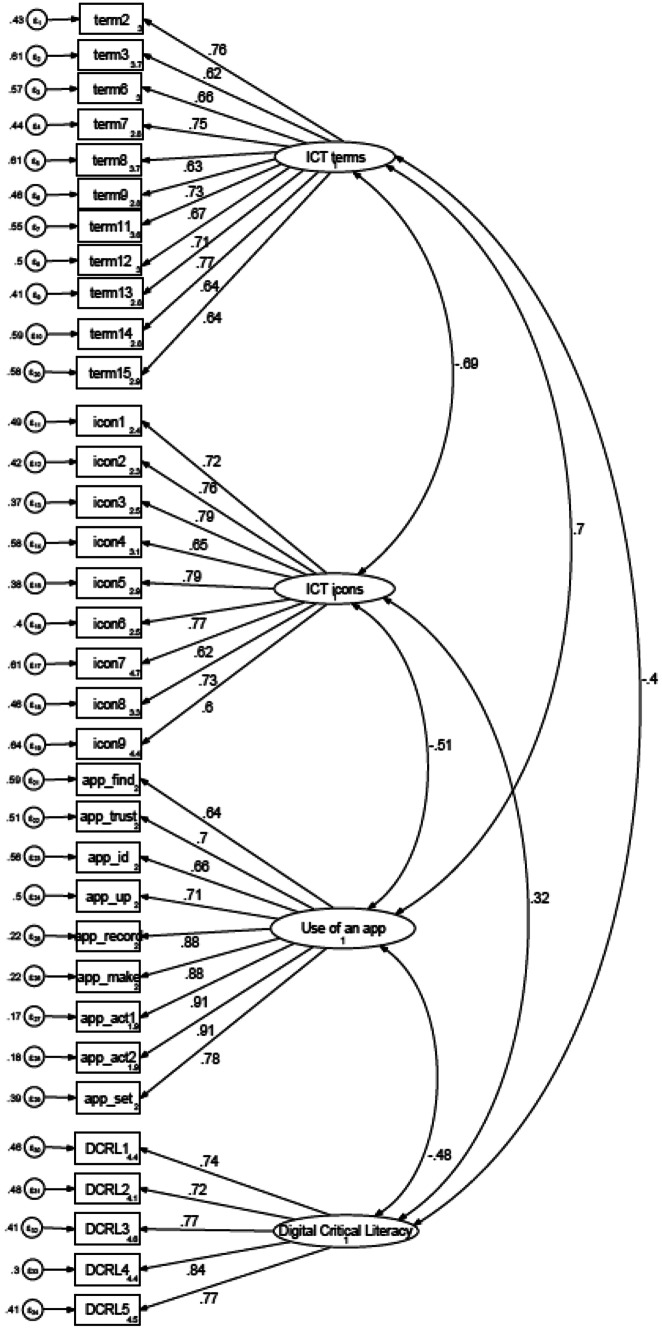

A four-factor solution was subsequently adopted for the CFA (Supplementary Table 4). In the four-factor solution, “Bluetooth” loaded at 0.466, and “Play store, App store” cross-loaded on two factors (-0.4298, 0.5655). Both items were nevertheless retained on the basis of the descriptive results and expert review, because pairing a digital device with a mobile phone and downloading apps are necessary for chronic disease management. The final DHTL-AQ consists of four categories (34 items; Supplementary Fig. 2). Cronbach’s α was 0.95 for the total scale, 0.94 for use of an app, 0.91 for ICT icons, 0.91 for ICT terms, and 0.87 for evaluating the reliability and relevance of health information. The chi-squared test of overall model fit was significant (χ² = 2938.252, p < 0.001). The fit indices indicated acceptable fit: SRMR = 0.065, RMSEA = 0.090 (CI, 0.087–0.094), TLI = 0.807, and CFI = 0.821 (Fig. 2).
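For reference, Cronbach’s α for k items is k/(k − 1) · (1 − Σ item variances / variance of the total score). A minimal NumPy sketch of that formula follows; it assumes complete data in a respondents × items array and is not the authors’ code.

```python
import numpy as np


def cronbach_alpha(responses) -> float:
    """Cronbach's alpha for a (respondents x items) array with no missing values."""
    x = np.asarray(responses, dtype=float)
    n_items = x.shape[1]
    if n_items < 2:
        raise ValueError("alpha needs at least two items")
    sum_item_var = x.var(axis=0, ddof=1).sum()  # sum of per-item sample variances
    total_var = x.sum(axis=1).var(ddof=1)       # sample variance of the summed score
    return float(n_items / (n_items - 1) * (1.0 - sum_item_var / total_var))


if __name__ == "__main__":
    # Toy data: three respondents answering two items.
    print(cronbach_alpha([[1, 2], [2, 3], [3, 5]]))  # 18/19, about 0.947
```

The same function applied to each category’s columns gives the subtotal coefficients, and applied to all item columns gives the overall coefficient.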

Fig. 2.

Confirmatory factor analysis
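The reported fit indices follow standard definitions. The sketch below writes out common textbook forms of RMSEA, CFI, and TLI from the model and baseline (independence-model) chi-square statistics. It is illustrative only: the model degrees of freedom and the baseline chi-square for the DHTL-AQ are not reported in the text, so the example values are hypothetical, and software packages differ in details (for example, some use N rather than N − 1 in the RMSEA denominator).

```python
import math


def rmsea(chi2: float, df: int, n: int) -> float:
    """Root mean square error of approximation (N - 1 form)."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def cfi(chi2_m: float, df_m: int, chi2_b: float, df_b: int) -> float:
    """Comparative fit index of a model (m) against the baseline (b) model."""
    num = max(chi2_m - df_m, 0.0)
    den = max(chi2_m - df_m, chi2_b - df_b, 0.0)
    return 1.0 if den == 0 else 1.0 - num / den


def tli(chi2_m: float, df_m: int, chi2_b: float, df_b: int) -> float:
    """Tucker-Lewis index (non-normed fit index)."""
    ratio_b = chi2_b / df_b
    ratio_m = chi2_m / df_m
    return (ratio_b - ratio_m) / (ratio_b - 1.0)


if __name__ == "__main__":
    # Hypothetical values for illustration only (not the DHTL-AQ model).
    chi2_m, df_m, chi2_b, df_b, n = 60.0, 40, 1000.0, 45, 590
    print(f"RMSEA = {rmsea(chi2_m, df_m, n):.3f}")
    print(f"CFI   = {cfi(chi2_m, df_m, chi2_b, df_b):.3f}")
    print(f"TLI   = {tli(chi2_m, df_m, chi2_b, df_b):.3f}")
```

All three indices reward a model chi-square close to its degrees of freedom; CFI and TLI additionally express that improvement relative to a baseline model in which the items are uncorrelated.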

The DHTL-AQ was strongly correlated with the task ability assessment (r = 0.7591), and “ICT terms” showed a particularly high correlation (r = 0.7084). The NVS (r = 0.5168) and eHEALS (r = 0.4076) were moderately correlated with the task ability assessment (Table 3). The DHTL-AQ was moderately correlated with the NVS (r = 0.5929) and eHEALS (r = 0.5265), whereas the correlation between eHEALS and the NVS was low (r = 0.3298) (Fig. 3).

Table 3.

Concurrent and discriminant validity (correlations)

| | Task ability assessment (total) | DHTL (total) | DHTL: ICT terms | DHTL: ICT icons | DHTL: use of an app | DHTL: evaluating reliability and relevance of health information | NVS (total) |
|---|---|---|---|---|---|---|---|
| DHTL (total) | 0.7591 | | | | | | |
| ICT terms | 0.7084 | 0.8963 | | | | | |
| ICT icons | 0.6796 | 0.7559 | 0.6341 | | | | |
| Use of an app | 0.6407 | 0.8800 | 0.7031 | 0.5033 | | | |
| Evaluating reliability and relevance of health information | 0.3360 | 0.6241 | 0.3913 | 0.3137 | 0.5032 | | |
| NVS (total) | 0.5168 | 0.5929 | 0.5837 | 0.5430 | 0.4537 | 0.2745 | |
| eHEALS (total) | 0.4076 | 0.5265 | 0.4240 | 0.3557 | 0.4620 | 0.4774 | 0.3298 |

DHTL, digital health technology literacy; eHEALS, eHealth Literacy Scale; NVS, Newest Vital Sign

Fig. 3.

Correlations of digital health technology literacy with task ability, eHealth literacy, and health literacy. *eHealth literacy was assessed using the Korean version of eHEALS [18], and health literacy was measured using the Newest Vital Sign [27]

In the ROC analysis, the cutoff score was defined as the point that maximized the sum of sensitivity and specificity (sensitivity, 86.4%; specificity, 86.4%). The resulting cutoff for the total score was 22 out of 34.
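Choosing the cutoff that maximizes sensitivity + specificity is equivalent to maximizing Youden’s J (sensitivity + specificity − 1). The sketch below applies that rule to a toy dataset. The reference standard (here a hypothetical binary label of adequate task ability) and the rule that a score at or above the cutoff predicts the positive class are assumptions, since the text does not state which outcome the ROC analysis used.

```python
import numpy as np


def best_cutoff(scores, adequate):
    """Return (cutoff, sensitivity, specificity) maximizing sensitivity + specificity.

    A respondent is predicted 'adequate' when score >= cutoff (assumed rule).
    Ties keep the lowest cutoff reached first.
    """
    scores = np.asarray(scores)
    adequate = np.asarray(adequate, dtype=bool)
    best = None
    for cutoff in np.unique(scores):  # candidate cutoffs, in ascending order
        predicted = scores >= cutoff
        sensitivity = predicted[adequate].mean()       # true-positive rate
        specificity = (~predicted[~adequate]).mean()   # true-negative rate
        if best is None or sensitivity + specificity > best[1] + best[2]:
            best = (int(cutoff), float(sensitivity), float(specificity))
    return best


if __name__ == "__main__":
    # Toy DHTL-AQ totals (out of 34) with a hypothetical adequacy label.
    totals = [10, 15, 20, 22, 25, 30, 18, 21, 23, 33]
    labels = [False, False, False, True, True, True, False, False, True, True]
    print(best_cutoff(totals, labels))
```

Scanning only the observed score values is sufficient, because sensitivity and specificity change only when the cutoff crosses an observed score.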

Short version

A 20-item short version of the DHTL-AQ was developed, covering two categories of the digital functional literacy domain, “ICT terms” and “use of an app” (Table 2), which were selected by inspecting content validity ratings from expert review together with the factor analysis. Model fit indices were acceptable (SRMR = 0.076, RMSEA = 0.131, TLI = 0.784, CFI = 0.808), and the cutoff for the total score was 11 out of 20.

Discussion

We developed an instrument to assess digital health technology literacy (DHTL) for use in clinical settings. The DHTL-AQ consists of 34 items in four categories: “ICT terms,” “ICT icons,” and “use of an app” in the digital functional literacy domain, and “evaluating reliability and relevance of health information” in the digital critical literacy domain. These categories showed excellent internal consistency (overall Cronbach’s α = 0.95; 0.87–0.94 for subtotals) and acceptable model fit. The “ICT terms” and “ICT icons” categories cover the basic terms and icons needed to understand mobile devices; “use of an app” covers the more advanced skills needed to use mobile apps effectively; and “evaluating reliability and relevance of health information” focuses on the cognitive skills needed to critically analyze health information and data from digital technology and to use them effectively in health-related decisions.

During the initial development stage, we conceptualized DHTL as covering five domains based on the literature review. However, items in the digital communicative, device, and data literacy domains showed low overall usage and no statistically significant differences between groups in the face validity assessment. This may be because these domains mainly concerned social media, wearable devices, and personal health records (PHRs), which were still nascent, with low retention rates reflecting individual preferences in Korea [28–30], and because most of these items addressed perceived abilities, such as attitudes, preferences, and expectations, rather than performance-related abilities, such as knowledge and skills. In contrast, the items showing the most statistically significant differences between groups in this study were knowledge- and skill-based. Although individuals’ attitudes and preferences toward digital health are still the most commonly measured constructs, they are subjective, and measuring objective knowledge and function-based technical skills is necessary to assess DHL. Several researchers have recently created combined measures that include an objective evaluation of performance skills; however, most do not fully reflect aspects of digital technology such as mobile devices [9–11]. Ultimately, we included only the two domains focused on knowledge- and skill-based items, to measure the competencies individuals need to use and adopt digital health in routine clinical settings. Future measures need to reflect a variety of state-of-the-art technologies and services and to update their content to improve the quality of DHTL assessment.

As expected, the DHTL-AQ was more strongly associated with actual performance on the mobile app tasks (r = 0.7591) than were the NVS (r = 0.5168) or eHEALS (r = 0.4076). These results support our hypothesis that mobile technology skills are essential to assessing digital health literacy. While developing the scale, we found that digital health literacy had a broader technological scope and that the mobile health (mHealth) sector has been growing in importance, offering numerous health services with diverse functions. Hospitals and institutions have recently been increasingly encouraging patients to use mobile apps, such as digital therapeutics, to manage their illness and treatment. The DHTL-AQ could help identify populations that are vulnerable in the use of digital health. However, administration time and resources may be important considerations when adopting the tool; in such cases, we recommend the 20-item short form of the DHTL-AQ. In the future, the DHTL-AQ may also help assess how the clinical effectiveness of digital health varies with patients’ level of DHTL.

Most DHTL-AQ items loaded clearly on their own factor under the criterion of a factor loading > 0.5 and did not cross-load (loading > 0.4) on two or more factors. In CFA, the fit indices indicated acceptable model fit. While most items within each factor were correlated, the ICT-related terms loaded onto two factors: app-related terms (Update, Bluetooth, QR code, Play Store, or App Store) and web-related terms (Web browser, Domain/URL, and Cloud) together with newer technology terms (wearable device and chatbot). This may be because constructs in digital health differ substantially across technologies and services. Most studies have treated DHL as “eHealth literacy” or “digital literacy,” focusing only on the Internet or computers (Supplementary Table 5). For example, the multifaceted eHealth Literacy Assessment toolkit (eHLA) [20] and the Digital Health Literacy Instrument (DHLI), used to assess individuals’ ability to engage with digital health, also mainly emphasize computer-based technology and performance-based skills for navigating the Internet and web pages [19]. Indeed, whereas the DHTL-AQ was strongly associated with actual digital task skills, it was only moderately associated with health literacy and eHealth literacy as assessed by the NVS and eHEALS, respectively. Further research should explore the expansion of digital technologies and services and how these factors affect and interact with DHTL.

Because it uses simple questions about terms and icons, the DHTL-AQ can be easily administered to, and completed by, groups that are vulnerable in digital health. In our study, approximately half of the participants were aged > 50 years, more than one-third (37.3%) had a high school education or less, and over 60% had chronic diseases. In the digital era, people need to be self-reliant and to participate actively in managing their health using digital health. However, according to a previous review, people with low DHL had low technical readiness, a lack of trust, and data privacy concerns related to digital health [31], and they were less likely to use digital health tools such as diabetes mobile apps [3]. A valid assessment of DHL can therefore provide insight into the current population level of digital health literacy and inform interventions to improve it. We also developed a 20-item short version consisting of “ICT terms” and “use of an app” and confirmed that it was also valid for evaluating DHTL. The short version of the DHTL-AQ would be helpful for evaluating DHTL in busy clinical settings.

Furthermore, digital technologies and services such as mobile apps became more important during the COVID-19 pandemic. Mobile apps are commonly used for reporting symptoms and monitoring patients with COVID-19 (REF). For example, in Korea, scanning a QR code with a mobile app has been required to enter certain public places, such as restaurants, stores, and hospitals. These changes may have improved people’s DHTL; however, some people may experience greater social or health disparities because of low DHTL. During the COVID-19 pandemic, the DHTL-AQ could help screen for people with low DHTL so that this digitally vulnerable population can also benefit from digital healthcare. Further research should validate the DHTL-AQ as an assessment and evaluation tool and determine how well it predicts the outcomes of digital health education and interventions, such as digital therapeutics.

This study has some limitations. First, we may have missed items and content that are important for assessing DHL. DHL includes a wide range of skills, and its components make up a complex, technology-related health literacy in the digital era. In particular, approaches to DHTL have changed markedly over the past decade and will continue to change; the DHTL-AQ should therefore be updated to reflect significant advances. Second, because we used a convenience sample recruited at a university hospital in Seoul, Korea, additional validation studies in other populations and settings are needed before the DHTL-AQ is used elsewhere. Third, we did not assess the test–retest reliability of the DHTL-AQ, which will be needed to establish reproducibility. Finally, when we compared the DHTL-AQ with actual mobile app skills, we used only one app, designed for patients with breast cancer. Because features and functions differ across apps, task results may vary depending on the app used.

Conclusion

We developed an instrument to measure DHTL. The instrument can be used in clinical practice to screen and assess patients’ level of digital health technology literacy, and it can provide insight into the health equity impacts of implementing and adopting digital health in the community.


Authors' contributions

Study conception and design: all authors; data acquisition: J.H.Y. and M.G.L.; analysis and interpretation of results: J.H.Y., M.G.L., and J.H.C.; drafting of the manuscript: J.H.Y. and J.H.C.; critical revision of the manuscript: J.H.Y., M.G.L., and J.H.C. All authors reviewed the results and approved the final version of the manuscript.

Funding

This research was supported by the Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (NRF-2018R1D1A1B07047833).

Availability of data and material

Data are available from Samsung Medical Center.

Declarations

Ethics approval

The study was reviewed and approved by the Samsung Medical Center Institutional Review Board (2019-07-045-006).

Consent to participate

All participants provided written informed consent at the onset for this study.

Consent for publication

Not applicable.

Conflicts of interest

None declared.

Footnotes

1. U.S. Food and Drug Administration. What is Digital Health? Sep 22, 2020. Available from: https://www.fda.gov/medical-devices/digital-health-center-excellence/what-digital-health.

2. National Cancer Institute. Health Information National Trends Survey (HINTS). Available from: https://hints.cancer.gov/.

3. Wisconsin Health Literacy. Health Online: Finding Information You Can Trust. Available from: https://wisconsinliteracy.org/health-literacy/programs/health-literacy-programs/health-online-finding-information-you-can-trust.html

4. ICT. Korean Digital Literacy in 2018. Available from: https://www.nia.or.kr/site/nia_kor/ex/bbs/List.do?cbIdx=81623

5. Kyriazos, T.A. Applied Psychometrics: Sample Size and Sample Power Considerations in Factor Analysis (EFA, CFA) and SEM in General. Psychology, 2018. 9(8). Available from: https://www.scirp.org/journal/paperinformation.aspx?paperid=86856

6. BRAVO App. 2019. Available from: http://bravoproject.or.kr/.

7. Korean version of NVS: Kim, J. Measuring the Level of Health Literacy and Influence Factors: Targeting the Visitors of a University Hospital's Outpatient Clinic. 2011. Available from: https://www.koreascience.or.kr/article/JAKO201108161395188.page

8. Korean Adaptation of the General Self-Efficacy Scale. 1994. Available from: http://userpage.fu-berlin.de/~health/korean.htm.

This article is part of the Topical Collection on Mobile & Wireless Health.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

1. Lee J-A, et al. Effective behavioral intervention strategies using mobile health applications for chronic disease management: a systematic review. BMC Med Inform Decis Mak. 2018;18(1):12. doi: 10.1186/s12911-018-0591-0.

2. Mahmood A, et al. Use of mobile health applications for health-promoting behavior among individuals with chronic medical conditions. Digit Health. 2019;5:2055207619882181. doi: 10.1177/2055207619882181.

3. Agarwal P, et al. Mobile App for Improved Self-Management of Type 2 Diabetes: Multicenter Pragmatic Randomized Controlled Trial. JMIR Mhealth Uhealth. 2019;7(1):e10321. doi: 10.2196/10321.

4. Dunn P, Conard S. Digital health literacy in cardiovascular research. Int J Cardiol. 2018;269:274–275. doi: 10.1016/j.ijcard.2018.07.011.

5. Price-Haywood EG, et al. eHealth Literacy: Patient Engagement in Identifying Strategies to Encourage Use of Patient Portals Among Older Adults. Popul Health Manag. 2017;20(6):486–494. doi: 10.1089/pop.2016.0164.

6. Yan K, Balijepalli C, Druyts E. The Impact of Digital Therapeutics on Current Health Technology Assessment Frameworks. Front Digit Health. 2021;3:667016. doi: 10.3389/fdgth.2021.667016.

7. Robbins D, Dunn P. Digital health literacy in a person-centric world. Int J Cardiol. 2019;290:154–155. doi: 10.1016/j.ijcard.2019.05.033.

8. Harris K, Jacobs G, Reeder J. Health Systems and Adult Basic Education: A Critical Partnership in Supporting Digital Health Literacy. Health Lit Res Pract. 2019;3(3 Suppl):S33–S36. doi: 10.3928/24748307-20190325-02.

9. van der Vaart R, Drossaert C. Development of the Digital Health Literacy Instrument: Measuring a Broad Spectrum of Health 1.0 and Health 2.0 Skills. J Med Internet Res. 2017;19(1):e27. doi: 10.2196/jmir.6709.

10. Kayser L, et al. A Multidimensional Tool Based on the eHealth Literacy Framework: Development and Initial Validity Testing of the eHealth Literacy Questionnaire (eHLQ). J Med Internet Res. 2018;20(2):e36. doi: 10.2196/jmir.8371.

11. Griebel L, et al. eHealth literacy research-Quo vadis? Inform Health Soc Care. 2018;43(4):427–442. doi: 10.1080/17538157.2017.1364247.

12. Holt KA, et al. Differences in the Level of Electronic Health Literacy Between Users and Nonusers of Digital Health Services: An Exploratory Survey of a Group of Medical Outpatients. Interact J Med Res. 2019;8(2):e8423. doi: 10.2196/ijmr.8423.

13. Paige SR, et al. Proposing a Transactional Model of eHealth Literacy: Concept Analysis. J Med Internet Res. 2018;20(10):e10175. doi: 10.2196/10175.

14. Chang A, Schulz PJ. The Measurements and an Elaborated Understanding of Chinese eHealth Literacy (C-eHEALS) in Chronic Patients in China. Int J Environ Res Public Health. 2018;15(7). doi: 10.3390/ijerph15071553.

15. Liberati A, et al. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate healthcare interventions: explanation and elaboration. BMJ. 2009;339:b2700. doi: 10.1136/bmj.b2700.

16. Naslund JA, et al. Health behavior models for informing digital technology interventions for individuals with mental illness. Psychiatr Rehabil J. 2017;40(3):325–335. doi: 10.1037/prj0000246.

17. Venkatesh V, Thong JYL, Xu X. Unified Theory of Acceptance and Use of Technology: A Synthesis and the Road Ahead. J Assoc Inf Syst. 2016;17:1. doi: 10.17705/1jais.00428.

18. Chung S, Park BK, Nahm ES. The Korean eHealth Literacy Scale (K-eHEALS): Reliability and Validity Testing in Younger Adults Recruited Online. J Med Internet Res. 2018;20(4):e138. doi: 10.2196/jmir.8759.

19. Norman CD, Skinner HA. eHEALS: The eHealth Literacy Scale. J Med Internet Res. 2006;8(4):e27. doi: 10.2196/jmir.8.4.e27.

20. Karnoe A, et al. Assessing Competencies Needed to Engage With Digital Health Services: Development of the eHealth Literacy Assessment Toolkit. J Med Internet Res. 2018;20(5):e178. doi: 10.2196/jmir.8347.

21. Nutbeam D. Health literacy as a public health goal: a challenge for contemporary health education and communication strategies into the 21st century. Health Promot Int. 2000;15(3):259–267. doi: 10.1093/heapro/15.3.259.

22. Cattell RB. The Scientific Use of Factor Analysis in Behavioral and Life Sciences. New York: Plenum; 1978. doi: 10.1007/978-1-4684-2262-7.

23. Gorsuch R. Factor Analysis. Hillsdale, NJ: L. Erlbaum Associates; 1983.

24. Tabachnick B, Fidell L. Using Multivariate Statistics. Boston, MA: Pearson Education Inc.; 2013.

25. Comrey AL, Lee HB. A First Course in Factor Analysis. Hillsdale, NJ: Lawrence Erlbaum Associates; 1992.

26. Johanson GA, Brooks GP. Initial Scale Development: Sample Size for Pilot Studies. Educ Psychol Meas. 2009;70(3):394–400. doi: 10.1177/0013164409355692.

27. Weiss BD. The Newest Vital Sign: Frequently Asked Questions. Health Lit Res Pract. 2018;2(3):e125–e127. doi: 10.3928/24748307-20180530-02.

28. Park Y, Yoon H-J. Understanding Personal Health Record and Facilitating its Market. Healthc Inform Res. 2020;26(3):248–250. doi: 10.4258/hir.2020.26.3.248.

29. Lee SM, Lee D. Healthcare wearable devices: an analysis of key factors for continuous use intention. Serv Bus. 2020;14(4):503–531. doi: 10.1007/s11628-020-00428-3.

30. Park J, Kim KN. Structural Influence of SNS Social Capital on SNS Health Information Utilization Level. The Korean Journal of Health Service Management. 2020 Jun;14(2):1–14. doi: 10.12811/kshsm.2020.14.2.001.

31. Rasche P, et al. Prevalence of Health App Use Among Older Adults in Germany: National Survey. JMIR Mhealth Uhealth. 2018;6(1):e26. doi: 10.2196/mhealth.8619.
