Abstract

The reliable and rapid identification of COVID-19 has become crucial to prevent the rapid spread of the disease, ease lockdown restrictions, and reduce pressure on public health infrastructure. Recently, several methods and techniques have been proposed to detect the SARS-CoV-2 virus using different images and data. However, to the best of our knowledge, this is the first study to explore the use of deep convolutional neural network (CNN) models to detect COVID-19 from electrocardiogram (ECG) trace images. In this work, COVID-19 and other cardiovascular diseases (CVDs) were detected using deep learning techniques. A public dataset of 1937 ECG images from five distinct categories, namely normal, COVID-19, myocardial infarction (MI), abnormal heartbeat (AHB), and recovered myocardial infarction (RMI), was used in this study. Six different deep CNN models (ResNet18, ResNet50, ResNet101, InceptionV3, DenseNet201, and MobileNetV2) were used to investigate three classification schemes: (i) two-class classification (normal vs COVID-19); (ii) three-class classification (normal, COVID-19, and other CVDs); and (iii) five-class classification (normal, COVID-19, MI, AHB, and RMI). For two-class and three-class classification, DenseNet201 outperforms the other networks with accuracies of 99.1% and 97.36%, respectively, while for five-class classification, InceptionV3 performs best with an accuracy of 97.83%. Score-CAM visualization confirms that the networks learn from the relevant areas of the trace images. Since the proposed method uses ECG trace images, which can be captured with smartphones and require only facilities that are readily available in low-resource countries, this study can help enable faster computer-aided diagnosis of COVID-19 and other cardiac abnormalities.

Keywords: Electrocardiogram (ECG), COVID-19, Deep learning, Convolutional neural networks, Cardiovascular diseases (CVDs)

Introduction

Coronavirus disease 2019 (COVID-19) has spread rapidly across the world with increasing fatalities, leading to a long-lasting global pandemic. As of May 21, 2021, over 166 million cases and over 3.4 million deaths had been recorded worldwide [1]. The severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2) virus mostly affects the respiratory system, but it can also lead to multi-organ failure and has a severe impact on the cardiovascular system [2–6]. Advances in artificial intelligence for biomedical applications have helped in developing trained networks for reliable computer-aided diagnostic decisions, thus reducing the pressure on healthcare facilities and staff, such as medical doctors [7].

Several deep learning models have been proposed in recent studies to identify abnormalities from medical images, including chest X-ray (CXR) images and computed tomography (CT) scans [8]. Degerli et al. [9] introduced a novel approach for the joint localization, severity grading, and detection of COVID-19 from 15,495 CXR images by constructing so-called infection maps, which localize and grade the severity of COVID-19 infection with 98.69% accuracy. Kesim et al. [10] proposed a novel convolutional neural network (CNN) model for chest X-ray image classification. Because pre-trained CNN models are difficult to deploy in practical applications, the authors designed a small CNN architecture that performed promisingly in classifying 12 different abnormalities from chest X-ray images (atelectasis, cardiomegaly, consolidation, edema, effusion, emphysema, fibrosis, infiltration, mass, nodule, pleural thickening, and pneumothorax), with a reported accuracy of 86%. Liu et al. [11] proposed a tuberculosis (TB) detection technique using chest X-ray images and deep learning models.
The authors proposed a new CNN model, used shuffle sampling to address the imbalanced dataset, and reported an accuracy of 85.68%. Rahman et al. [12] applied various pre-trained CNNs to classify CXR images as showing pulmonary TB or as healthy. A dataset of 3500 infected and 3500 normal CXR images was used to train the models, and the best-performing model, DenseNet201, achieved a sensitivity of 98.57% and a specificity of 98.56%. Chowdhury et al. [13] created a public dataset of normal, viral pneumonia, and COVID-19 chest X-ray images and used deep CNN models for binary and three-class classification. Transfer learning with the pre-trained SqueezeNet, MobileNetV2, InceptionV3, CheXNet, ResNet, and DenseNet201 models was examined on this dataset, achieving accuracies of 99.7% for binary classification and 97.9% for three-class classification. Xu et al. [14] devised a method for detecting abnormalities in chest X-ray images. To avoid overfitting in transfer learning, the authors proposed a hierarchical CNN model called CXNet-m1, which is shallower than the pre-trained CNN models, and introduced a novel loss function and an improved CNN kernel, achieving an overall accuracy of 67.6%. Rahman et al. [15] reported three classification schemes, normal vs. pneumonia, bacterial vs. viral pneumonia, and normal vs. bacterial vs. viral pneumonia, with classification accuracies of 98%, 95%, and 93.3%, respectively.

Chouhan et al. [16] detected pneumonia in chest X-ray images using five deep transfer learning models and their ensemble; the ensemble model achieved an accuracy of 96.4%. Rajpurkar et al. [17] created CheXNet, a 121-layer CNN for classifying fourteen distinct lung diseases from chest X-ray images, by training the DenseNet-121 CNN model on a chest X-ray dataset. This was the first publicly released ImageNet-pretrained model re-trained on chest X-ray images, and it achieved area under the curve (AUC) values ranging from 0.704 to 0.944. Li et al. [18] proposed a multi-resolution CNN (MR-CNN) for lung nodule identification, using a patch-based MR-CNN model for feature extraction and multiple fusion approaches for classification. Evaluated with the free-response receiver operating characteristic (FROC) curve, the method achieved AUC and Refined Competition Performance Metric (R-CPM) values of 0.982 and 0.987, respectively.

Bhandary et al. [19] modified the AlexNet model to detect lung anomalies in chest X-ray images, deploying a new threshold filter and a feature ensemble technique to achieve a classification accuracy of 96%. Ucar et al. [20] employed Laplacian of Gaussian filters to improve the performance of CNN models in chest X-ray image classification, achieving an accuracy of 82.43%. Ismael and Şengür [21] evaluated different deep learning approaches for detecting COVID-19 from chest X-ray images using a Kaggle dataset and obtained their highest accuracy, 92.63%, with the ResNet50 model. They also applied multiresolution techniques (the contourlet, wavelet, and shearlet transforms) with an Extreme Learning Machine (ELM) classifier, and their experiments showed that the wavelet and shearlet transforms achieved higher accuracy (92%).

COVID-19 infection can cause acute myocarditis in apparently healthy people [22]. In early reports from China, up to 27.8% of COVID-19 patients had troponin levels above the 99th-percentile upper reference limit, indicating acute myocardial injury [23, 24]. This is about ten times the rate observed with influenza (2.9%) [25]. Most COVID-19 patients, even those with biochemical evidence of acute myocardial damage, have a moderate illness course and recover without overt cardiac problems. It is unclear whether COVID-19 survivors without overt cardiac signs have subclinical or hidden cardiac injury that might impair long-term outcomes. As the pandemic slows, it is critical to determine whether cardiac monitoring of COVID-19 survivors is necessary. Automated 12-lead electrocardiogram (ECG) diagnostic techniques offer the potential to screen the general population and to provide a second opinion for healthcare practitioners. Attempts to automate the interpretation of ECG recordings have been made since 1957, with a focus on findings linked to atrial fibrillation (AF); however, the performance of currently available automated methods has been mediocre [26]. Moreover, despite recent technological advances, notably in sophisticated machine learning and artificial intelligence (AI) methods, the clinical value of automated ECG interpretation remains very limited [27, 28], and cardiologists continue to analyze and interpret 12-lead ECG recordings using traditional methods.

In recent work, Angeli et al. [29] evaluated ECG features of 50 hospitalized patients with COVID-19 pneumonia, 96% of whom were Caucasian. They found that, in older patients, COVID-19 produces ECG abnormalities that can be treated at an early stage. However, the study relied on standard laboratory techniques, and its results may not extend to other ethnic groups. Du et al. [30] proposed a Fine-grained Multi-label ECG (FM-ECG) framework that applies deep learning, including a recurrent neural network (RNN), to ECG trace images to detect abnormalities effectively in real clinical ECGs. Ozdemir et al. [31] proposed hexaxial feature mapping, which represents 12-lead ECGs as 2D colour images, for detecting COVID-19, and applied the Gray-Level Co-Occurrence Matrix (GLCM) for feature extraction, obtaining a test accuracy of 93.20%.

Several machine learning approaches have been applied to diagnose COVID-19 from medical images [32–34]. Our main contribution is a comprehensive study on the detection of COVID-19 and other cardiac abnormalities from ECG images using deep convolutional neural network (CNN) techniques. We applied six different CNN models to two-class, three-class, and five-class classification to determine which model detects COVID-19 from ECG images most accurately.

The remainder of this paper is organized as follows. ‘Methodology’ discusses the materials and methods of the study, and ‘Experiments’ outlines the experimental pipeline and evaluation metrics. Results are presented in ‘Results’ and discussed in ‘Discussion’. Finally, ‘Conclusion’ concludes the paper.

Deep convolutional neural network-based transfer learning

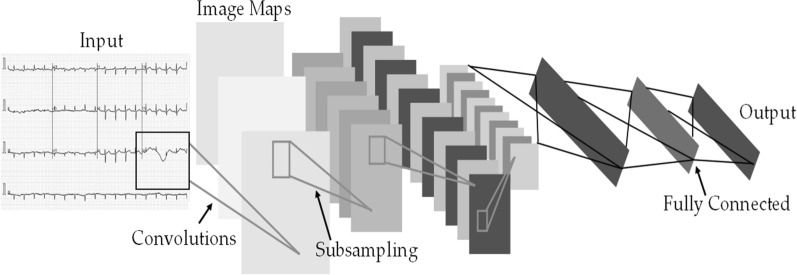

Six popular pre-trained deep CNN models, all initially trained on the ImageNet database, were used for COVID-19 detection from ECG trace images: ResNet18, ResNet50, ResNet101 [6], DenseNet201 [35], InceptionV3 [36], and MobileNetV2 [37]. The residual network (ResNet) was created to overcome the vanishing gradient and degradation problems [6]. ResNet has several variants based on the number of layers in the network: ResNet18, ResNet50, ResNet101, and ResNet152. ResNet is widely used for transfer learning in biomedical image classification. During training, the layers of a plain deep neural network learn low- or high-level features directly, whereas ResNet layers learn residuals with respect to their inputs [22]. Figure 1 shows the architecture of a convolutional neural network.
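The residual idea can be illustrated with a minimal, framework-free sketch. It is not the ResNet implementation used in this study: small dense weight matrices stand in for the convolutions, batch normalization is omitted, and the function names are hypothetical. The block computes y = ReLU(F(x) + x), so it only has to learn the residual F(x); with all-zero weights it reduces to an identity mapping for non-negative inputs.

```python
def relu(v):
    """Element-wise ReLU on a list of floats."""
    return [max(0.0, x) for x in v]


def matvec(weights, v):
    """Multiply a weight matrix (list of rows) by the vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in weights]


def residual_block(x, w1, w2):
    """Toy residual block: y = ReLU(F(x) + x) with F(x) = W2 . ReLU(W1 . x).

    Dense matrices replace the two 3x3 convolutions of a ResNet basic block,
    and batch normalization is left out to keep the example small.
    """
    f = matvec(w2, relu(matvec(w1, x)))          # residual branch F(x)
    return relu([fi + xi for fi, xi in zip(f, x)])  # identity shortcut + ReLU
```

Because a zero residual branch leaves the signal unchanged, stacking more such blocks does not force the network to relearn an identity mapping, which is the intuition behind ResNet's answer to the degradation problem.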

Fig. 1.

Architecture of a convolutional neural network

A Dense Convolutional Network (DenseNet) [35] requires fewer parameters than a traditional CNN because it does not need to relearn redundant feature maps. DenseNet layers are very narrow, each adding only a small number of new feature maps. Four well-known DenseNet variants exist: DenseNet121, DenseNet169, DenseNet201, and DenseNet264. Each layer has direct access to the original input image and to the gradients from the loss function. As a result, the computational cost of DenseNet is significantly reduced, making it a strong choice for image classification.
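Dense connectivity can be made concrete by tracking channel counts. The sketch below assumes the standard configuration from the DenseNet paper (growth rate k = 32, 64 stem channels, transition layers that halve the channel count, and dense blocks of 6, 12, 48, and 32 layers for DenseNet201); the function names are hypothetical, and the bottleneck convolutions inside each layer are not modelled.

```python
def dense_block_channels(in_channels, num_layers, growth_rate):
    """Input channels seen by each layer of a dense block, and the block output.

    Layer l receives the concatenation of the block input and the k new
    feature maps produced by every earlier layer, i.e. in_channels + (l - 1) * k.
    """
    per_layer_inputs = [in_channels + i * growth_rate for i in range(num_layers)]
    out_channels = in_channels + num_layers * growth_rate
    return per_layer_inputs, out_channels


def densenet_feature_channels(block_config, growth_rate=32,
                              init_channels=64, compression=0.5):
    """Number of feature channels produced after the last dense block."""
    channels = init_channels
    for i, num_layers in enumerate(block_config):
        _, channels = dense_block_channels(channels, num_layers, growth_rate)
        if i != len(block_config) - 1:
            # Transition layer between blocks compresses the channel count.
            channels = int(channels * compression)
    return channels


print(densenet_feature_channels((6, 12, 48, 32)))  # DenseNet201 block layout
```

The result for DenseNet201, 1920 channels, is the feature dimension that feeds the network's classifier, which a transfer-learning setup would replace with a new layer for 2, 3, or 5 classes.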

In contrast, MobileNetV2 [37] is a compact network rather than a very deep one. It builds on the original MobileNet design, which is based on depthwise separable convolutions, except for the first layer, which is a full convolution. All layers are followed by batch normalization and a rectified linear unit (ReLU) nonlinearity, except the final fully connected layer, which has no nonlinearity and feeds into a softmax layer for classification. Before the fully connected layer, a final average pooling layer reduces the spatial resolution to 1 × 1. Counting depthwise and pointwise convolutions as separate layers, MobileNet has 28 layers.

Inception networks use inception blocks, which enable deeper networks and more efficient computation by reducing dimensionality with 1 × 1 convolutions.
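The parameter savings of depthwise separable convolutions follow from simple arithmetic. A minimal sketch, with hypothetical function names, that ignores biases and batch-normalization parameters and does not model the additional 1 × 1 expansion layers of MobileNetV2's inverted residual blocks:

```python
def standard_conv_params(k, c_in, c_out):
    """Weights of a k x k convolution mapping c_in to c_out channels."""
    return k * k * c_in * c_out


def depthwise_separable_params(k, c_in, c_out):
    """Weights of a depthwise k x k convolution followed by a pointwise 1 x 1 one."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes the channels
    return depthwise + pointwise


std = standard_conv_params(3, 256, 256)
sep = depthwise_separable_params(3, 256, 256)
print(std, sep, round(sep / std, 3))
```

The ratio equals 1/c_out + 1/k², so with 3 × 3 kernels a separable layer needs roughly 8 to 9 times fewer weights than a standard convolution, which is why MobileNet-style networks stay compact.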

Visualization Techniques

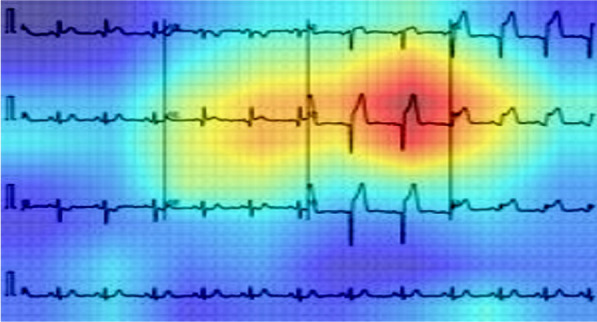

There is growing interest in the internal mechanics of CNNs and in the rationale behind their classification decisions. Visualization techniques aid the interpretation of CNN decision-making by representing it visually. They also improve model transparency by presenting the reasons behind an inference in a form that humans can easily understand, thereby increasing confidence in the network's conclusions. SmoothGrad [23], Grad-CAM [24], Grad-CAM++ [25], and Score-CAM [38] are examples of visualization approaches. Because of its promising performance, Score-CAM was used in this study. Score-CAM forms its output as a linear combination of activation maps, where the weight of each activation map is determined by its forward-pass score on the target class, eliminating the dependence on gradients. Figure 2 shows a sample ECG image visualized with Score-CAM, where the heat map indicates the regions that contributed most to the CNN's decision. This helps in understanding how the network makes decisions and increases end-user confidence when it can be confirmed that the network consistently bases its decisions on the important segments of the ECG trace image.
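The Score-CAM weighting described above can be sketched without a deep learning framework. This is a simplified illustration under stated assumptions, not the implementation used in this study: the activation maps are assumed to be already upsampled to the input size, `class_score` is a hypothetical callable standing in for a forward pass, and the raw target-class score is used as the weight, whereas the Score-CAM paper uses the softmax probability of the target class relative to a baseline input.

```python
def min_max_normalize(a):
    """Scale a 2D map to [0, 1]; a constant map becomes all zeros."""
    flat = [v for row in a for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        return [[0.0 for _ in row] for row in a]
    return [[(v - lo) / (hi - lo) for v in row] for row in a]


def score_cam(image, activation_maps, class_score):
    """Simplified Score-CAM saliency map.

    image           -- 2D list (H x W) holding the input
    activation_maps -- list of 2D lists, assumed already upsampled to H x W
    class_score     -- callable returning the target-class score of an image
    """
    weights = []
    for a in activation_maps:
        mask = min_max_normalize(a)
        # Mask the input with the normalized map; the forward-pass score
        # on the target class becomes this map's weight (no gradients used).
        masked = [[p * m for p, m in zip(prow, mrow)]
                  for prow, mrow in zip(image, mask)]
        weights.append(class_score(masked))

    h, w = len(image), len(image[0])
    cam = [[0.0] * w for _ in range(h)]
    for wk, a in zip(weights, activation_maps):
        for i in range(h):
            for j in range(w):
                cam[i][j] += wk * a[i][j]
    # ReLU keeps only the regions that increase the target-class score.
    return [[max(0.0, v) for v in row] for row in cam], weights


# Toy model whose "COVID-19 score" depends only on the top-left pixel.
cam, weights = score_cam([[1.0, 1.0], [1.0, 1.0]],
                         [[[1.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 1.0]]],
                         lambda img: img[0][0])
```

In the toy example, only the activation map covering the pixel the model actually uses receives a non-zero weight, so the heat map highlights exactly that region.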

Fig. 2.

Score-CAM heat map on ECG trace images to show the important region for making the decision by the CNN

Different abnormalities in ECG images

Five distinct types of ECG trace images were used in this study: four abnormal (COVID-19, myocardial infarction, abnormal heartbeat, and recovered myocardial infarction) and one normal. In clinical terms, a normal ECG trace image represents the ECG of a healthy person with no abnormality in the ECG trace. Myocardial infarction (MI), commonly known as a heart attack, is a form of acute coronary syndrome in which a sudden or short-term reduction or interruption of blood flow to the heart causes significant damage to the heart; it can be detected by ECG for correct patient diagnosis [39]. Chest pain or discomfort is the most prevalent symptom, and it may spread to the shoulder, arm, back, neck, or jaw. In addition to the MI ECG trace images, the dataset includes ECG trace images of patients who have recently recovered from COVID-19 and are experiencing shortness of breath or respiratory illness, and of patients suffering from other abnormal heartbeats. ECG trace images of patients who have recently recovered from myocardial infarction are also included.

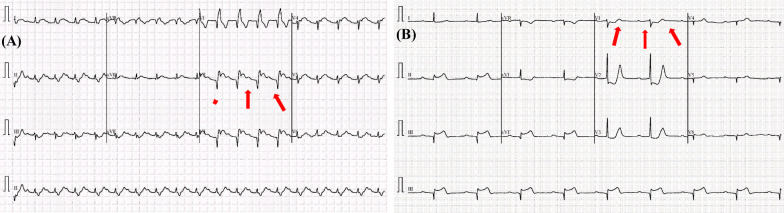

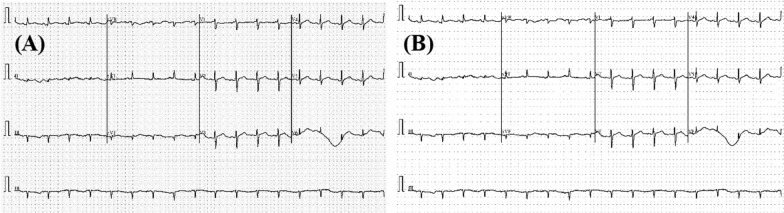

Most cardiac abnormalities produce only slight variations in the ECG signal; nonetheless, these small differences (e.g., a peak-to-peak interval or a particular wave) are frequently used to define the features for abnormality classification, such as ST-segment changes, P-wave height, and T-wave abnormalities. Figure 3 shows two examples of abnormal ECGs that can be recognized from such key components. The effectiveness of deep CNN models on such image data is limited when they cannot efficiently capture these subtle but discriminative features.
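The peak-to-peak (RR) interval mentioned above can be read from an ECG trace image once the R-peak positions are known. A minimal sketch, assuming the R peaks have already been located and are expressed in small grid divisions of 0.04 s each (the grid spacing given for the sample images in Fig. 5); the function names are hypothetical, and this measurement is not part of the CNN pipeline used in this study.

```python
SMALL_BOX_SECONDS = 0.04  # duration of one small grid division on the trace


def rr_intervals(r_peak_boxes):
    """Convert R-peak positions (in small grid divisions) to RR intervals in seconds."""
    return [(b - a) * SMALL_BOX_SECONDS
            for a, b in zip(r_peak_boxes, r_peak_boxes[1:])]


def heart_rate_bpm(r_peak_boxes):
    """Mean heart rate in beats per minute from consecutive R peaks."""
    rr = rr_intervals(r_peak_boxes)
    return 60.0 / (sum(rr) / len(rr))


print(heart_rate_bpm([0, 20, 40, 60]))  # R peaks 20 small divisions apart
```

Irregular RR intervals, or a rate far outside the normal range, are examples of the small timing differences that separate abnormal heartbeats from normal traces.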

Fig. 3.

Illustration of two example ECG classes: abnormal ECG trace images for a COVID-19 and b myocardial infarction patients. The subtle signs identified as key parts for detecting the abnormalities are highlighted in red and indicated by arrows

Methodology

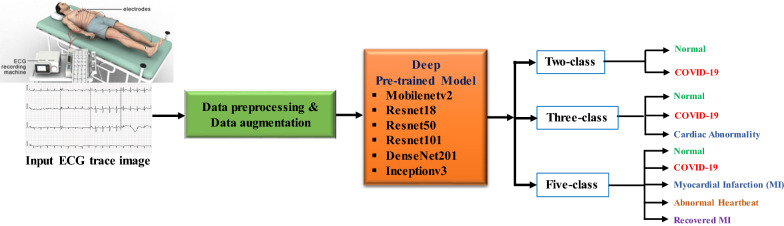

Figure 4 summarizes the methodology of this study. As explained earlier, this work considers three experimental schemes: (i) binary or two-class classification (normal vs COVID-19); (ii) three-class classification (normal, COVID-19, and cardiac abnormalities); and (iii) five-class classification (normal, COVID-19, MI, AHB, and RMI). For each classification scheme, six state-of-the-art CNN models were trained, validated, and tested to detect abnormalities from ECG trace images.

Fig. 4.

Overview of the methodology

The methodology of this research work is described in the following subsections.

Dataset description

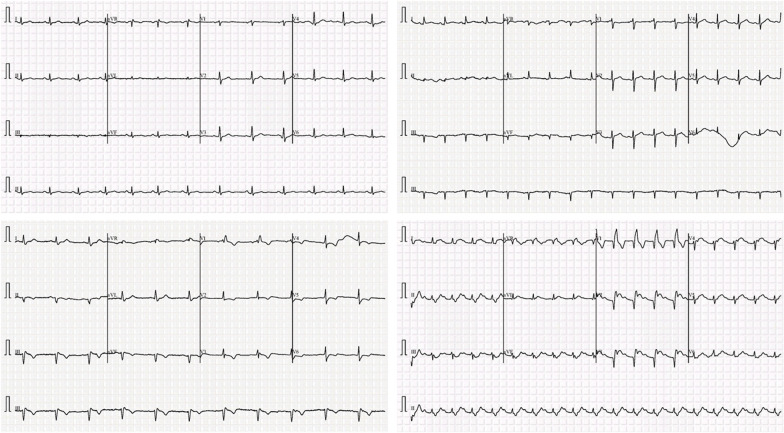

In this study, an ECG image dataset [40] of cardiac and COVID-19 patients was used; it consists of 1937 distinct patient records in five categories: normal, COVID-19, myocardial infarction (MI), abnormal heartbeat (AHB), and recovered myocardial infarction (RMI). All data were collected using the ECG device ‘EDAN SERIES-3’ installed in the Cardiac Care and Isolation Units of different healthcare institutes across Pakistan. The twelve-lead ECG trace images were manually reviewed by medical professors using a telehealth ECG diagnostic system, under the supervision of senior medical professionals experienced in ECG interpretation. Table 1 shows the number of images in each category, and Fig. 5 shows some sample images.

Table 1.

Dataset description

| Category | Number of images | Sample rate (Hz) | Leads |
|---|---|---|---|
| Normal | 859 | 500 | 12 |
| COVID-19 | 250 | 500 | 12 |
| Myocardial infarction | 77 | 500 | 12 |
| Abnormal heartbeat | 548 | 500 | 12 |
| Recovered MI | 203 | 500 | 12 |

Fig. 5.

Sample ECG trace images from the dataset. The horizontal axis represents time, and each vertical grid line marks a 0.04 s step. The vertical axis represents signal magnitude in millivolts (mV)

Preprocessing

To improve ECG image quality, the images are preprocessed using a gamma correction enhancement technique [41]. In linear image normalization, individual pixels are typically subjected to operations such as scalar multiplication, addition, and subtraction. Gamma correction, by contrast, is a non-linear operation applied to the pixels of a source image. To enhance the image, gamma correction uses a projection relationship between the pixel value and the gamma value according to an internal map; its effect is shown in Fig. 6. Let A represent a pixel value in the range 0–255, which is associated with an angle value, and let X be the grayscale value of pixel A; then Eqs. (1)–(5) hold. Let Xm be the midpoint of the range [0, 255], and let P be the linear map from the group Ω, which consists of the following elements:

| 1 |

Fig. 6.

Preprocessing the input image: original ECG trace image (A) and Gamma corrected image (B)

The mapping from Ω to Γ is defined as:

| 2 |

| 3 |

| 4 |

Based on this map, the pixel values A can be related to the pixel values of group Γ, and any pixel value can then be corrected for a given gamma value. Letting γ(X) = h(X), the gamma correction function is:

| 5 |

where s(X) is the gamma-corrected output grayscale value. After gamma correction, the ECG images are resized to the input sizes required by the CNNs (e.g., 224 × 224 for the residual and dense networks and 299 × 299 for the Inception network). Finally, Z-score normalization was applied using the mean and standard deviation of the images [42].
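The two intensity operations in this subsection can be sketched as follows. The sketch makes explicit assumptions: it applies the common power-law form s = 255 · (x/255)^(1/γ) with a single fixed γ through a lookup table, rather than the pixel-dependent γ(X) of Eqs. (1)–(5), and it computes the Z-score statistics over whatever pixel values are passed in (a single image or a whole training set). Images are flattened lists of 8-bit grayscale values, and resizing is left to an image library; the function names are hypothetical.

```python
def gamma_correct(pixels, gamma):
    """Fixed-gamma power-law correction of 8-bit values via a lookup table.

    s = 255 * (x / 255) ** (1 / gamma); gamma > 1 brightens mid-tones.
    """
    lut = [round(255 * (v / 255) ** (1.0 / gamma)) for v in range(256)]
    return [lut[p] for p in pixels]


def z_score(values):
    """Standardize values to zero mean and unit (population) standard deviation."""
    n = len(values)
    mean = sum(values) / n
    std = (sum((v - mean) ** 2 for v in values) / n) ** 0.5
    if std == 0:
        raise ValueError("constant input cannot be Z-score normalized")
    return [(v - mean) / std for v in values]


enhanced = gamma_correct([0, 64, 128, 255], gamma=2.0)
normalized = z_score([float(p) for p in enhanced])
```

Because gamma correction only depends on the input value, a 256-entry lookup table is computed once and reused for every pixel.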

Augmentation

Because the dataset is imbalanced, with different numbers of images in each category, training on it directly can produce a biased model. Augmenting the training set so that all classes have a similar number of images can therefore provide more reliable results, as reported in many recent publications [12, 13, 15, 41, 43, 44]. In this study, three augmentation strategies (rotation, scaling, and translation) were used to balance the training images. Rotation was performed by rotating the images clockwise and counterclockwise by an angle between 5° and 10°. Scaling magnifies or reduces the frame size of the image; magnifications of 2.5% to 10% were used in this work. Translation was performed by shifting the images horizontally and vertically by 5% to 20%.
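The augmentation ranges above can be expressed as a random affine transform. A minimal sketch, assuming rotation and scaling about the image centre followed by translation given as a fraction of the image size; the function names are hypothetical, and the actual warping of pixels would be done with an image library (for example, PyTorch users could pass equivalent parameters to `torchvision.transforms.functional.affine`).

```python
import math
import random


def _random_sign(rng):
    return rng.choice((-1.0, 1.0))


def sample_augmentation(rng):
    """Draw one set of augmentation parameters in the ranges stated above."""
    angle = _random_sign(rng) * rng.uniform(5.0, 10.0)  # degrees, either direction
    scale = 1.0 + rng.uniform(0.025, 0.10)              # 2.5%-10% magnification
    tx = _random_sign(rng) * rng.uniform(0.05, 0.20)    # fraction of image width
    ty = _random_sign(rng) * rng.uniform(0.05, 0.20)    # fraction of image height
    return angle, scale, tx, ty


def affine_matrix(angle_deg, scale, tx, ty, width, height):
    """2x3 matrix: rotate and scale about the image centre, then translate."""
    theta = math.radians(angle_deg)
    cx, cy = width / 2.0, height / 2.0
    a, b = scale * math.cos(theta), scale * math.sin(theta)
    # p' = R (p - c) + c + t, with R = [[a, -b], [b, a]]
    return [
        [a, -b, cx - a * cx + b * cy + tx * width],
        [b, a, cy - b * cx - a * cy + ty * height],
    ]


def apply_affine(m, x, y):
    """Map the point (x, y) through the 2x3 affine matrix m."""
    return (m[0][0] * x + m[0][1] * y + m[0][2],
            m[1][0] * x + m[1][1] * y + m[1][2])


rng = random.Random(42)
matrix = affine_matrix(*sample_augmentation(rng), width=224, height=224)
```

Sampling each parameter independently per image means repeated augmentation of the same minority-class image yields distinct variants.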

Experiments

As discussed in ‘Methodology’, three classification schemes were investigated in this study using different deep learning algorithms: two-class (normal vs COVID-19), three-class (normal, COVID-19, and cardiac abnormality), and five-class (normal, COVID-19, myocardial infarction, abnormal heartbeat, and recovered myocardial infarction). Five-fold cross-validation was used, so in each fold 80% of the data were used for training and 20% for testing. Of the training subset, 10% was held out for validation to avoid overfitting [45]. The reported results are the weighted average over the five folds. Table 2 details the number of training, validation, and test ECG images used.
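The fold construction described above can be sketched as a stratified split. The exact splitting code used in this study is not given, so this is an assumption-laden illustration: each class is shuffled once with a fixed seed, cut into five nearly equal folds, and 10% of the remaining images of each class (rounded down) become the validation set. For the first fold it yields 619/68/172 normal and 180/20/50 COVID-19 training/validation/test images. The function names are hypothetical.

```python
import random


def fold_sizes(n, k):
    """Split n items into k nearly equal folds; the first n % k folds get one extra."""
    return [n // k + (1 if i < n % k else 0) for i in range(k)]


def stratified_split(labels, fold, k=5, val_fraction=0.10, seed=0):
    """Return (train, val, test) index lists for one cross-validation fold.

    The same seed is used for every fold, so the k test folds are disjoint
    and together cover the whole dataset.
    """
    rng = random.Random(seed)
    by_class = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)

    train, val, test = [], [], []
    for idxs in by_class.values():
        idxs = idxs[:]
        rng.shuffle(idxs)
        sizes = fold_sizes(len(idxs), k)
        start = sum(sizes[:fold])
        stop = start + sizes[fold]
        test += idxs[start:stop]
        rest = idxs[:start] + idxs[stop:]
        n_val = int(len(rest) * val_fraction)  # held out to monitor overfitting
        val += rest[:n_val]
        train += rest[n_val:]                  # augmentation is applied to these only
    return train, val, test


def weighted_average(fold_scores, fold_test_sizes):
    """Average per-fold scores, weighting each fold by its number of test images."""
    total = sum(fold_test_sizes)
    return sum(s * n for s, n in zip(fold_scores, fold_test_sizes)) / total
```

Splitting per class keeps the class proportions of each test fold close to those of the full dataset, which matters for the small myocardial infarction class.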

Table 2.

Details of training, validation, and test set for different classification problem

| Classification | Types | Total no. of images/class | Train set count/fold (original × augmentation factor = augmented) | Validation set count/fold | Test set count/fold |
|---|---|---|---|---|---|
| Two-class | Normal | 859 | 619 × 4 = 2476 | 68 | 172 |
| | COVID-19 | 250 | 180 × 14 = 2520 | 20 | 50 |
| Three-class | Normal | 859 | 619 × 4 = 2476 | 68 | 172 |
| | COVID-19 | 250 | 180 × 14 = 2520 | 20 | 50 |
| | Abnormal | 828 | 597 × 4 = 2388 | 66 | 165 |
| Five-class | Normal | 859 | 619 × 4 = 2476 | 68 | 172 |
| | COVID-19 | 250 | 180 × 14 = 2520 | 20 | 50 |
| | Myocardial infarction | 77 | 56 × 43 = 2408 | 6 | 15 |
| | Abnormal HB | 548 | 395 × 6 = 2370 | 44 | 109 |
| | Recovered MI | 203 | 147 × 17 = 2499 | 16 | 40 |

The networks were implemented with the PyTorch library and Python 3.7 on an Intel® Xeon® CPU E5-2697 v4 @ 2.30 GHz with 64 GB RAM and a 16 GB NVIDIA GeForce GTX 1080 GPU. All networks were trained using the Adam optimizer with a learning rate of 10⁻³, a dropout rate of 0.2, a momentum of 0.9, and a mini-batch size of 16 images for 15 epochs, with early stopping if the validation loss did not improve for 8 epochs. Table 3 summarizes the training settings used in the classification experiments.
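The early-stopping rule can be sketched independently of the framework. A minimal illustration with hypothetical names: the loop consumes precomputed validation losses instead of training a real network, and "no improvement" is taken to mean the loss does not drop below the best value seen so far. In PyTorch, the optimizer itself would typically be created with `torch.optim.Adam(model.parameters(), lr=1e-3)`, whose default `betas=(0.9, 0.999)` presumably correspond to the momentum of 0.9 mentioned above.

```python
class EarlyStopping:
    """Signal a stop after `patience` consecutive epochs without a lower validation loss."""

    def __init__(self, patience=8, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


def run_epochs(val_losses, max_epochs=15, patience=8):
    """Hypothetical driver: return how many epochs run before stopping."""
    stopper = EarlyStopping(patience)
    for epoch in range(max_epochs):
        # A real loop would train one epoch and evaluate here.
        if stopper.step(val_losses[epoch]):
            return epoch + 1
    return max_epochs
```

With a 15-epoch budget and a patience of 8, early stopping only triggers if the best validation loss is reached within the first 7 epochs.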

Table 3.

Summary of training parameters for classification experiments

| Learning rate | Batch size | Epochs | Epoch patience | Stopping criteria | Optimizer |
|---|---|---|---|---|---|
| 0.001 | 16 | 15 | 8 | 8 | Adam |

Table 4 lists the depth, number of learnable parameters, and input image size of each pre-trained deep CNN considered. DenseNet201 has the deepest architecture, while ResNet101 has the most parameters (44.6 million). All the considered pre-trained networks have identical input image sizes.

Table 4.

The architectures of the pre-trained CNN models

| Network | Depth | Parameters (millions) | Input image size |
|---|---|---|---|
| MobileNetV2 | 53 | 3.5 | 224 × 224 |
| ResNet18 | 18 | 11.7 | 224 × 224 |
| ResNet50 | 50 | 25.6 | 224 × 224 |
| ResNet101 | 101 | 44.6 | 224 × 224 |
| DenseNet201 | 201 | 20 | 224 × 224 |
| InceptionV3 | 48 | 23.9 | 224 × 224 |

Performance metrics for classification

In this study, the six CNN models were trained and assessed using five-fold cross-validation. After training, the performance of the networks on the test dataset was assessed and compared using five performance metrics: accuracy, sensitivity (recall), specificity, precision (positive predictive value, PPV), and F1 score. Equations (6)–(10) [41] define these metrics:

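$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{6}$$

$$\mathrm{Precision} = \frac{TP}{TP + FP} \tag{7}$$

$$\mathrm{Sensitivity} = \frac{TP}{TP + FN} \tag{8}$$

$$\mathrm{F1\ score} = \frac{2 \times \mathrm{Precision} \times \mathrm{Sensitivity}}{\mathrm{Precision} + \mathrm{Sensitivity}} \tag{9}$$

$$\mathrm{Specificity} = \frac{TN}{TN + FP} \tag{10}$$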

Here, for two-class classification, true positives (TP) are the correctly classified COVID-19 ECG images and true negatives (TN) are the correctly classified normal images; false positives (FP) are normal images misclassified as COVID-19, and false negatives (FN) are COVID-19 ECG images misclassified as normal. For three-class classification, TP is the number of correctly classified COVID-19 ECG images, and TN is the number of correctly classified images from the other two classes (normal and abnormal); FP counts images from these two classes misclassified as COVID-19, and FN counts COVID-19 ECG images misclassified as another class. For five-class classification, TP is the number of correctly classified COVID-19 ECG images, and TN is the number of correctly classified images from the other four classes (normal, myocardial infarction, abnormal heartbeat, and recovered myocardial infarction); FP counts images from these four classes misclassified as COVID-19, and FN counts COVID-19 ECG images misclassified as another class.
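The one-vs-rest counting described above, and the standard metric definitions, can be sketched from a confusion matrix. The function names are hypothetical; the matrix is assumed to have true classes as rows and predicted classes as columns, and the support-weighted averaging is an assumption about how "overall weighted" results are formed.

```python
def _safe_div(num, den):
    return num / den if den else 0.0


def one_vs_rest_counts(confusion, positive):
    """TP, TN, FP, FN for class `positive` (rows = true class, columns = predicted)."""
    n = len(confusion)
    tp = confusion[positive][positive]
    fn = sum(confusion[positive][j] for j in range(n)) - tp  # positives predicted as others
    fp = sum(confusion[i][positive] for i in range(n)) - tp  # others predicted as positive
    total = sum(sum(row) for row in confusion)
    tn = total - tp - fn - fp
    return tp, tn, fp, fn


def binary_metrics(tp, tn, fp, fn):
    """Accuracy, precision, sensitivity, F1 score, and specificity from counts."""
    precision = _safe_div(tp, tp + fp)
    sensitivity = _safe_div(tp, tp + fn)
    return {
        "accuracy": _safe_div(tp + tn, tp + tn + fp + fn),
        "precision": precision,
        "sensitivity": sensitivity,
        "f1": _safe_div(2 * precision * sensitivity, precision + sensitivity),
        "specificity": _safe_div(tn, tn + fp),
    }


def weighted_over_classes(confusion, name):
    """Average a metric over all classes, weighted by each class's support."""
    n = len(confusion)
    supports = [sum(confusion[c]) for c in range(n)]
    scores = [binary_metrics(*one_vs_rest_counts(confusion, c))[name] for c in range(n)]
    return sum(s * w for s, w in zip(scores, supports)) / sum(supports)


# Illustrative two-class matrix: classes [normal, COVID-19], COVID-19 is index 1.
cm = [[170, 2], [10, 40]]
covid = binary_metrics(*one_vs_rest_counts(cm, positive=1))
```

A useful property of this weighting is that support-weighted sensitivity (recall) always equals overall accuracy, because each class's correct predictions are counted exactly once.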

The performance of the deep CNNs was assessed using these evaluation metrics with 95% confidence intervals (CIs); the CI for each metric was computed as shown in Eq. (11):

$$\mathrm{CI} = \mathrm{metric} \pm z\sqrt{\frac{\mathrm{metric}\,(1 - \mathrm{metric})}{N}} \tag{11}$$

where N is the number of test samples and z is the critical value of the standard normal distribution (1.96 for a 95% CI).
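A minimal sketch of the interval half-width, assuming the usual normal-approximation form z·sqrt(m(1 − m)/N) with the metric m expressed as a fraction in [0, 1] and N and z as defined above; the function name is hypothetical.

```python
import math


def ci_half_width(metric, n, z=1.96):
    """Normal-approximation half-width z * sqrt(m * (1 - m) / n) for a metric m in [0, 1]."""
    if not 0.0 <= metric <= 1.0:
        raise ValueError("metric must be a fraction between 0 and 1")
    return z * math.sqrt(metric * (1.0 - metric) / n)


half = ci_half_width(0.9, 100)  # e.g. 90% accuracy on 100 test images
print(f"90.00 ± {100 * half:.2f} %")
```

Under this approximation a metric of exactly 1 yields a zero-width interval, which is consistent with the 100 ± 0 entry in Table 5.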

In addition to the above metrics, the classification networks were compared in terms of elapsed time per image, i.e., the time each network took to classify an input image, as shown in Eq. (12).

$$\Delta T = T_2 - T_1 \tag{12}$$

In this equation, T1 is the time at which a network starts classifying an image I, and T2 is the time at which it has finished classifying the same image I.
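The elapsed time T2 − T1 can be measured with a monotonic clock around a single prediction. A minimal sketch in which `predict` is a hypothetical stand-in for a trained network's forward pass; when a GPU is used, one would typically call `torch.cuda.synchronize()` before reading T2, because CUDA kernels run asynchronously.

```python
import time


def timed_predict(predict, image):
    """Return (prediction, elapsed seconds) for one image, i.e. T2 - T1."""
    t1 = time.perf_counter()   # T1: start of classification
    prediction = predict(image)
    t2 = time.perf_counter()   # T2: classification finished
    return prediction, t2 - t1


label, seconds = timed_predict(lambda img: "normal", [[0.0]])
```

`time.perf_counter` is preferred over wall-clock time here because it is monotonic and has the highest available resolution.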

Results

This section describes the performance of the different classification networks on ECG trace image classification. Table 5 compares the performance of the CNNs for the two-class, three-class, and five-class classification schemes. DenseNet201 performs best for the two- and three-class schemes, while InceptionV3 performs best for the five-class scheme.

Table 5.

Comparison of the performances of the different CNN models for different classification schemes (best result is presented as bold)

Overall weighted results (%) with 95% CI, and inference time per image

| Classification | Model | Accuracy | Precision | Sensitivity | F1-score | Specificity | Inference time (s) |
|---|---|---|---|---|---|---|---|
| 2 class | MobileNetV2 | 98.74 ± 0.52 | 98.74 ± 0.52 | 98.74 ± 0.52 | 98.73 ± 0.52 | 96.23 ± 0.88 | 0.18 |
| | ResNet18 | 98.62 ± 0.45 | 98.56 ± 0.35 | 98.6 ± 0.4 | 98.8 ± 0.44 | 96.21 ± 0.78 | 0.26 |
| | ResNet50 | 98.92 ± 0.48 | 98.93 ± 0.48 | 98.92 ± 0.48 | 98.91 ± 0.48 | 96.28 ± 0.87 | 0.44 |
| | ResNet101 | 99.01 ± 0.46 | 99.02 ± 0.46 | 99.01 ± 0.46 | 99 ± 0.46 | 96.59 ± 0.84 | 0.85 |
| | DenseNet201 | **99.1 ± 0.44** | **99.11 ± 0.43** | 99.1 ± 0.44 | 99.09 ± 0.44 | **96.9 ± 0.8** | 1.34 |
| | InceptionV3 | 98.78 ± 0.52 | 98.62 ± 0.54 | **100 ± 0** | **99.31 ± 0.38** | 95.2 ± 0.99 | 1.26 |
| 3 class | MobileNetV2 | 90.79 ± 1.34 | 91.26 ± 1.3 | 90.79 ± 1.34 | 90.76 ± 1.34 | 92.75 ± 1.2 | 0.22 |
| | ResNet18 | 92.81 ± 1.19 | 92.83 ± 1.19 | 92.81 ± 1.19 | 92.8 ± 1.19 | 94.44 ± 1.06 | 0.31 |
| | ResNet50 | 93.01 ± 1.18 | 93.09 ± 1.17 | 93.01 ± 1.18 | 93.01 ± 1.18 | 94.59 ± 1.05 | 0.48 |
| | ResNet101 | 93.02 ± 1.18 | 93.23 ± 1.16 | 93.01 ± 1.18 | 92.99 ± 1.18 | 94.54 ± 1.05 | 0.88 |
| | DenseNet201 | **97.36 ± 0.74** | **97.4 ± 0.74** | **97.36 ± 0.74** | **97.36 ± 0.74** | **97.93 ± 0.66** | 1.4 |
| | InceptionV3 | 96.89 ± 0.8 | 96.9 ± 0.8 | 96.9 ± 0.8 | 96.89 ± 0.8 | 97.6 ± 0.71 | 1.36 |
| 5 class | MobileNetV2 | 96.22 ± 0.88 | 96.29 ± 0.87 | 96.22 ± 0.88 | 96.2 ± 0.88 | 97.73 ± 0.69 | 0.25 |
| | ResNet18 | 95.34 ± 0.97 | 95.44 ± 0.96 | 95.34 ± 0.97 | 95.28 ± 0.98 | 97.02 ± 0.79 | 0.33 |
| | ResNet50 | 96.43 ± 0.86 | 96.43 ± 0.86 | 96.43 ± 0.86 | 96.4 ± 0.86 | 97.93 ± 0.66 | 0.52 |
| | ResNet101 | 97 ± 0.79 | 97.07 ± 0.78 | 97 ± 0.79 | 96.95 ± 0.79 | 97.97 ± 0.65 | 0.92 |
| | DenseNet201 | 97.2 ± 0.76 | 97.2 ± 0.76 | 97.21 ± 0.76 | 97.2 ± 0.76 | 98.63 ± 0.54 | 1.61 |
| | InceptionV3 | **97.83 ± 0.67** | **97.82 ± 0.67** | **97.83 ± 0.67** | **97.82 ± 0.67** | **98.86 ± 0.49** | 1.68 |

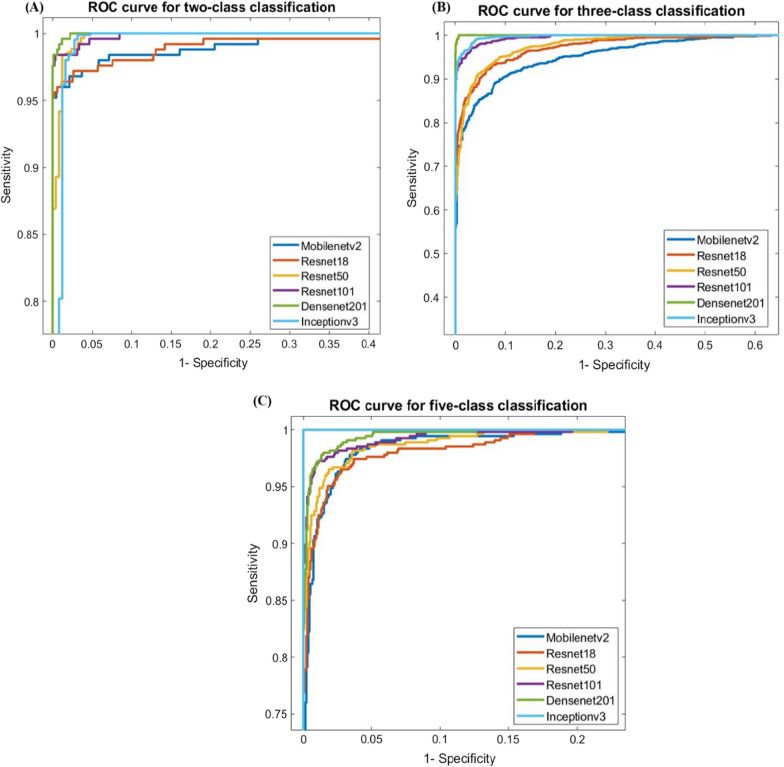

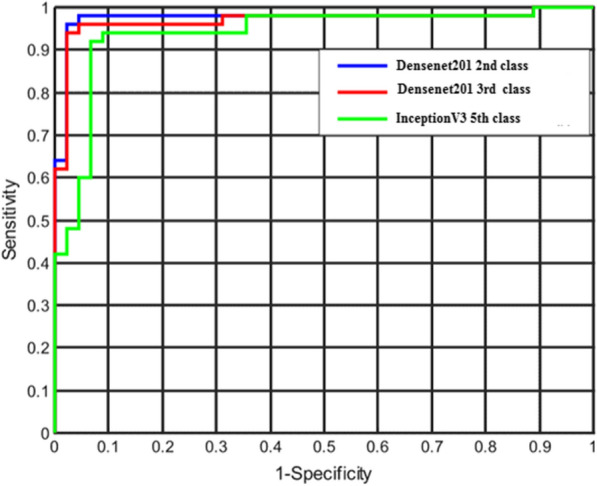

For two-class (normal vs COVID-19) classification, the overall test accuracy was 99.1% using DenseNet201; for three-class (normal, COVID-19, and other cardiac abnormality) classification, it was 97.36% using DenseNet201; and for five-class (normal, COVID-19, myocardial infarction, abnormal heartbeat, and recovered MI) classification, it was 97.83% using InceptionV3. Figure 7 depicts the receiver operating characteristic (ROC) curves for the different classification schemes; the area under the ROC curve (AUC, also known as AUROC) is one of the most important metrics for assessing a classification model. As shown in Fig. 8, DenseNet201 outperforms the other techniques for two-class and three-class classification, whereas InceptionV3 outperforms the other algorithms for five-class classification.
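The AUC summarized by the ROC curves can be computed directly from classifier scores. A minimal sketch for the binary case (for example, COVID-19 vs normal) using the rank, or Mann-Whitney, formulation: the AUC is the probability that a randomly chosen positive image receives a higher score than a randomly chosen negative one, with ties counted as one half. The function name is hypothetical; multi-class AUCs are commonly obtained by repeating this one class against the rest.

```python
def roc_auc(labels, scores):
    """Binary ROC AUC via pairwise comparison of positive and negative scores."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for q in neg:
            if p > q:
                wins += 1.0   # positive ranked above negative
            elif p == q:
                wins += 0.5   # tie counts as half
    return wins / (len(pos) * len(neg))


print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))
```

This quadratic version is meant for clarity; for large test sets, sorting the scores once gives the same value in O(n log n).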

Fig. 7.

ROC curves for the deep CNN approaches

Fig. 8.

ROC curves for two-class, three-class, and five-class classifications for ECG images

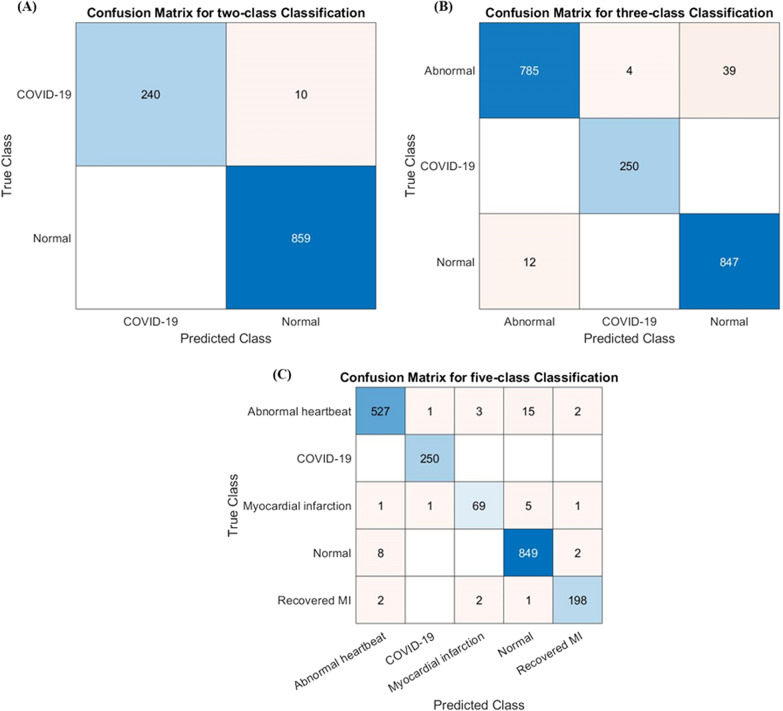

Figure 9 shows the confusion matrices of the best-performing models for each ECG trace image classification scheme: two-class (DenseNet201), three-class (DenseNet201), and five-class (InceptionV3). Notably, with the top-performing network, 10 of the 250 COVID-19 ECG images were misclassified as normal in two-class classification, whereas none of the COVID-19 ECG images was misclassified as normal or as another class in three-class or five-class classification. This is a strong result for a computer-aided classifier and could significantly help clinicians diagnose COVID-19 quickly, immediately after acquiring the ECG images.

Fig. 9.

Confusion matrix for A normal and COVID-19, B normal, COVID-19, and abnormal classification for the DenseNet201 model, and C normal, COVID-19, myocardial infarction, abnormal heartbeat, and recovered MI classification for the InceptionV3 model

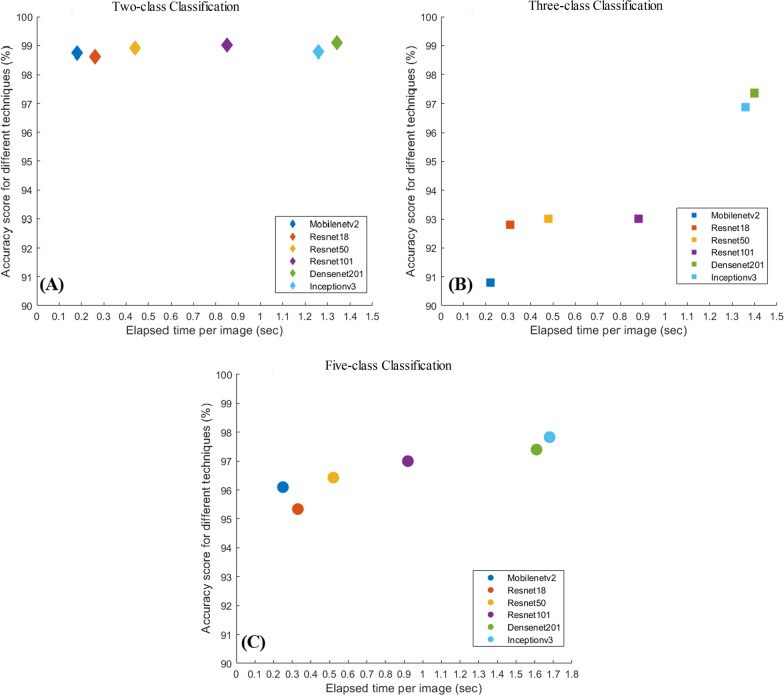

Figure 10 compares accuracy against elapsed time per image for the different CNN models in two-class, three-class, and five-class classification. Although DenseNet201 (two- and three-class) and InceptionV3 (five-class) achieve the best performance, they are also the slowest networks; nevertheless, they take only about 1.3–1.7 s per image to reach a decision (Table 5). For two-class and five-class classification, all networks perform comparably, whereas for three-class classification DenseNet201 outperforms the ResNet and MobileNetV2 models by about 4–7 percentage points (and InceptionV3 by about 0.5 percentage points).

Fig. 10.

Accuracy vs inference time plot for two-class (A), three-class (B), and five-class (C) classifications

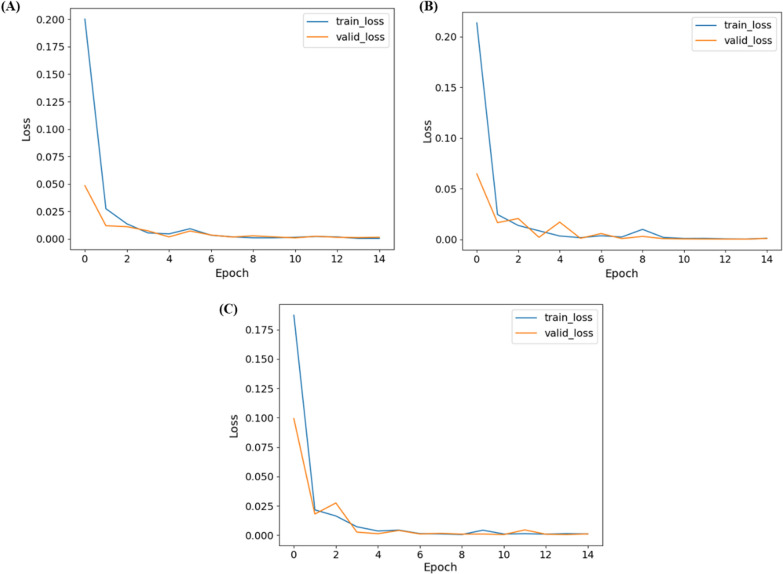
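The per-image elapsed time plotted in Fig. 10 can be measured by timing repeated single-image predictions. The sketch below assumes a hypothetical `predict` callable standing in for a trained network's forward pass; it is not the timing procedure used in the study, which this section does not describe. Warm-up calls are excluded because the first inference often carries one-off setup costs.

```python
import time
from typing import Any, Callable, Sequence


def mean_inference_time(predict: Callable[[Any], Any], images: Sequence[Any],
                        warmup: int = 1) -> float:
    """Average wall-clock seconds per image for single-image predictions.

    `predict` is a hypothetical stand-in for a trained network's forward pass.
    The first `warmup` images are run once beforehand and not timed, because
    the first call often includes one-off setup costs.
    """
    if not images:
        raise ValueError("need at least one image")
    for img in images[:warmup]:
        predict(img)                      # untimed warm-up call(s)
    start = time.perf_counter()           # monotonic, high-resolution clock
    for img in images:
        predict(img)
    return (time.perf_counter() - start) / len(images)
```

On a GPU, frameworks typically execute asynchronously, so the device must be synchronized (for example with `torch.cuda.synchronize()` in PyTorch) before the clock is read; otherwise the measured time understates the real latency.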

Figure 11 shows the training and validation losses versus epoch for the best-performing networks: DenseNet201 for two-class, DenseNet201 for three-class, and InceptionV3 for five-class classification. In each case, the losses drop quickly and stabilize at their lowest values within a few epochs.

Fig. 11.

Training and validation losses versus epoch for (A) two-class, (B) three-class, and (C) five-class classification

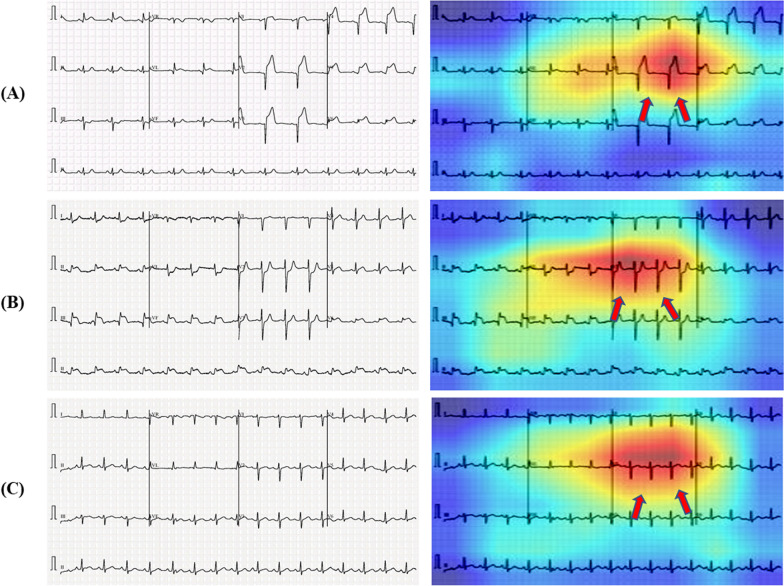
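This section does not state whether early stopping was used. As an illustrative assumption, the sketch below shows one common way to make "the loss stabilizes within a few epochs" operational: patience-based early stopping, which halts training once the validation loss has not improved by more than `min_delta` for `patience` consecutive epochs. Keras's `EarlyStopping` callback (`monitor`, `patience`, `min_delta`) implements the same idea; the function here is a hypothetical stand-alone version that replays a recorded loss curve.

```python
from typing import Optional, Sequence


def early_stopping_epoch(val_losses: Sequence[float], patience: int = 3,
                         min_delta: float = 0.0) -> Optional[int]:
    """Return the 0-based epoch at which patience-based early stopping would halt.

    An epoch counts as an improvement only if its validation loss is lower than
    the best loss so far by more than `min_delta`. Training stops after
    `patience` consecutive epochs without improvement; None means it never stops
    within the recorded epochs.
    """
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            best = loss                       # new best: reset the counter
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return None
```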

As mentioned previously, it is critical to determine whether the network bases its decisions on the relevant areas of the ECG trace images or on unrelated regions. Heat maps based on the Score-CAM technique were therefore generated for the different classes of ECG trace images. Figure 12 shows sample ECG trace images for three-class classification together with the heat maps produced by the best-performing DenseNet201 model. The CNN learns from the regions where the ECG waves change between classes, which are also the areas most important for identifying abnormal ECG images. In Fig. 12A–C, ST-segment and J-point elevation and abnormal heartbeats can be seen in the COVID-19, myocardial infarction, and abnormal heartbeat images. Showing how the network reaches its classification decisions is important for increasing end-user confidence in the AI system. The network clearly learned from the areas where the ECG waves differ from those of normal ECG images, rather than from regions outside the ECG waves.

Fig. 12.

Score-CAM visualization of abnormal (COVID-19, myocardial infarction, and abnormal heartbeat) ECG images using the best-performing model. Subtle signs identified as key to detecting the abnormalities are highlighted in red and indicated by arrows
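Score-CAM [38] does not use gradients: each activation map of a chosen convolutional layer is upsampled to the input size, min-max normalized, and used as a mask on the input; the model's confidence in the target class on the masked input becomes that map's weight, and the class map is the ReLU of the weighted sum of the upsampled activation maps. The pure-Python sketch below follows that recipe under simplifying assumptions: a single-channel image, nearest-neighbour upsampling by an integer factor instead of bilinear interpolation, a hypothetical `model` callable that returns class logits, and the softmax probability of the target class as each map's weight (a common simplification of the "increase of confidence" defined in [38]). It is not the authors' implementation; in a real pipeline the activations would be captured from a DenseNet201 layer with framework hooks.

```python
import math
from typing import Callable, List

Matrix = List[List[float]]


def upsample_nearest(a: Matrix, factor: int) -> Matrix:
    """Nearest-neighbour upsampling by an integer factor."""
    return [[a[i // factor][j // factor] for j in range(len(a[0]) * factor)]
            for i in range(len(a) * factor)]


def is_constant(a: Matrix) -> bool:
    return min(min(row) for row in a) == max(max(row) for row in a)


def minmax_normalize(a: Matrix) -> Matrix:
    """Rescale values to [0, 1]; callers must ensure the map is not constant."""
    lo = min(min(row) for row in a)
    hi = max(max(row) for row in a)
    return [[(v - lo) / (hi - lo) for v in row] for row in a]


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)                           # subtract max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def score_cam(image: Matrix, activations: List[Matrix],
              model: Callable[[Matrix], List[float]], target: int,
              factor: int) -> Matrix:
    """Score-CAM saliency map for class `target`, rescaled to [0, 1]."""
    h, w = len(image), len(image[0])
    cam = [[0.0] * w for _ in range(h)]
    for act in activations:
        up = upsample_nearest(act, factor)
        if is_constant(up):
            continue                          # a flat map carries no spatial information
        mask = minmax_normalize(up)
        masked = [[image[i][j] * mask[i][j] for j in range(w)] for i in range(h)]
        weight = softmax(model(masked))[target]   # confidence on the masked input
        for i in range(h):
            for j in range(w):
                cam[i][j] += weight * up[i][j]
    cam = [[max(v, 0.0) for v in row] for row in cam]   # ReLU
    if is_constant(cam):
        return [[0.0] * w for _ in range(h)]
    return minmax_normalize(cam)
```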

Discussion

Numerous deep learning-based studies have used radiographic images to detect COVID-19, many of them achieving excellent classification results. For example, Al-Waisy et al. [46] achieved 99.99% accuracy, Dhiman et al. [47] 98.54%, Ozturk et al. [48] 98.08%, and Ahuja et al. [49] 99.4%. These results are likely explained by the fact that lung involvement is the most common manifestation of COVID-19 [50] and its signs can be seen clearly on radiographic lung images [51].

By contrast, several studies that used CT and X-ray images to diagnose COVID-19 reported lower accuracy than our technique. For example, Ismael and Şengür [21] achieved 94.7% accuracy, Pathak et al. [52] 93.02%, Song et al. [53] 86%, Amyar et al. [54] 94.67%, and Wang et al. [55] 82.9%. Furthermore, given the drawbacks of radiological imaging discussed in the Background section, the proposed ECG-based COVID-19 diagnosis approach could be more useful than radiological image-based detection methods, particularly because ECG is more easily accessible.

Several studies have also used multi-lead ECGs to classify cardiac arrhythmias [56, 57]. An arrhythmia may not be present in every ECG lead; it may appear in only a few. Information from all leads should therefore be preserved, especially in AI-based classification of multi-lead ECGs: if a prediction is based on a lead in which no abnormality is visible, an abnormal ECG may be misclassified. Because the suggested hexaxial mapping method incorporates data from all 12 leads, no lead exhibiting an arrhythmia is overlooked.

In this investigation, COVID-19 and other cardiovascular diseases (CVDs) were detected from ECG images using deep-learning techniques. A public dataset of 1937 ECG images from five distinct categories, namely normal, COVID-19, myocardial infarction (MI), abnormal heartbeat (AHB), and recovered myocardial infarction (RMI), was used. Six different deep CNN models (ResNet18, ResNet50, ResNet101, InceptionV3, DenseNet201, and MobileNetV2) were used to investigate three classification schemes: two-class (normal vs COVID-19), three-class (normal, COVID-19, and other CVDs), and five-class (normal, COVID-19, MI, AHB, and RMI).
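The three schemes can be derived from the five original labels. The sketch below makes one reading of the text explicit, as an assumption: the three-class scheme merges MI, AHB, and RMI into a single "other CVD" class, and the two-class scheme simply leaves those images out. The label strings, file names, and function names are hypothetical.

```python
from typing import List, Optional, Tuple

FIVE_CLASSES = ("Normal", "COVID-19", "MI", "AHB", "RMI")
KEPT_AS_IS = ("Normal", "COVID-19")   # classes present unchanged in every scheme


def scheme_label(label: str, scheme: int) -> Optional[str]:
    """Map a five-class label to its label in the 2-, 3-, or 5-class scheme.

    Returns None when the image is not used in that scheme.
    """
    if label not in FIVE_CLASSES:
        raise ValueError(f"unknown label: {label}")
    if scheme == 5:
        return label
    if scheme == 3:
        return label if label in KEPT_AS_IS else "Other CVD"   # merge MI, AHB, RMI
    if scheme == 2:
        return label if label in KEPT_AS_IS else None          # drop MI, AHB, RMI
    raise ValueError("scheme must be 2, 3 or 5")


def relabel(samples: List[Tuple[str, str]], scheme: int) -> List[Tuple[str, str]]:
    """Relabel (path, five-class label) pairs, dropping images a scheme excludes."""
    out = []
    for path, label in samples:
        new_label = scheme_label(label, scheme)
        if new_label is not None:
            out.append((path, new_label))
    return out
```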

Limitations

Despite the low resolution of the images and the limited size of the dataset in our study and experiments, all statistical analyses of gray-level co-occurrence matrix (GLCM) characteristics identified a significant difference between COVID-19 ECG images and the other classes (MI, abnormal heartbeat, and no cardiac abnormality). However, we emphasize that a wider range of ECG datasets, particularly ECGs from patients with moderate or asymptomatic COVID-19, is required to confirm our results and findings.
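The GLCM characteristics mentioned above are not defined in this section. As an illustration only, the sketch below builds a normalized gray-level co-occurrence matrix for one offset (each pixel paired with its right-hand neighbour) and derives three classic Haralick-style texture features: contrast, homogeneity, and angular second moment (ASM). The offset, the number of gray levels, and the feature set are assumptions, not the study's settings; scikit-image provides `graycomatrix` and `graycoprops` for production use.

```python
from typing import Dict, List


def glcm(image: List[List[int]], levels: int, dx: int = 1, dy: int = 0) -> List[List[float]]:
    """Normalized gray-level co-occurrence matrix for one pixel offset.

    Entry [i][j] is the fraction of pixel pairs in which a pixel of gray level i
    has its neighbour at (row + dy, col + dx) with gray level j. Pixel values
    must be integers in range(levels). The matrix is not symmetrized.
    """
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    total = 0
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:   # skip pairs falling off the image
                counts[image[r][c]][image[r2][c2]] += 1
                total += 1
    if total == 0:
        raise ValueError("offset leaves no pixel pairs inside the image")
    return [[v / total for v in row] for row in counts]


def glcm_features(p: List[List[float]]) -> Dict[str, float]:
    """Contrast, homogeneity, and angular second moment of a normalized GLCM."""
    n = len(p)
    pairs = [(i, j) for i in range(n) for j in range(n)]
    return {
        "contrast": sum(p[i][j] * (i - j) ** 2 for i, j in pairs),
        "homogeneity": sum(p[i][j] / (1 + (i - j) ** 2) for i, j in pairs),
        "asm": sum(p[i][j] ** 2 for i, j in pairs),
    }
```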

Conclusion

This paper presents a transfer learning strategy based on deep convolutional neural networks for the automated diagnosis of COVID-19 and other cardiac disorders from ECG trace images. Six different CNN models were evaluated on three classification schemes: two-class (normal and COVID-19), three-class (normal, COVID-19, and cardiac abnormality), and five-class (normal, COVID-19, myocardial infarction (MI), abnormal heartbeat (AHB), and recovered MI). The DenseNet201 model outperforms the other deep CNN models for two-class and three-class classification, whereas InceptionV3 outperforms the other networks for five-class classification. The best classification accuracy, precision, and recall were 99.1%, 99.11%, and 99.1% for two-class classification and 97.36%, 97.4%, and 97.36% for three-class classification. For five-class classification, the best classification accuracy, precision, and recall were 97.82%, 97.83%, and 97.82%, respectively. The Score-CAM visualizations demonstrate that the relevant signal changes in the ECG trace drive the network's decisions. Automatic abnormality detection from ECG images is a crucial application of computer-aided diagnosis for critical healthcare problems such as this one. This state-of-the-art performance suggests that the approach could become a fast and useful diagnostic tool, potentially saving many of the lives lost every year to delayed or improper diagnosis of COVID-19 and other comorbidities.
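In each scheme the reported recall equals the reported accuracy (99.1%, 97.36%, and 97.82%). That is what one would expect if precision and recall were averaged across classes with weights proportional to class support: weighted recall, the sum over classes of (n_c/N)(TP_c/n_c), reduces to (sum of TP_c)/N, which is accuracy. Whether the study used support-weighted averaging is an assumption. The sketch below, with a hypothetical function name, computes accuracy together with weighted precision and recall from a confusion matrix; scikit-learn's `precision_score` and `recall_score` with `average="weighted"` compute the same averages.

```python
from typing import List, Tuple


def weighted_precision_recall(cm: List[List[int]]) -> Tuple[float, float, float]:
    """Return (accuracy, weighted precision, weighted recall).

    cm[i][j] counts samples of true class i predicted as class j. Per-class
    scores are averaged with weights equal to each class's share of true
    samples; a class that is never predicted contributes precision 0.
    """
    k = len(cm)
    total = sum(sum(row) for row in cm)
    support = [sum(cm[i]) for i in range(k)]                      # true samples per class
    predicted = [sum(cm[i][j] for i in range(k)) for j in range(k)]  # predictions per class
    accuracy = sum(cm[i][i] for i in range(k)) / total
    precision = sum(
        support[c] / total * (cm[c][c] / predicted[c] if predicted[c] else 0.0)
        for c in range(k))
    recall = sum(
        support[c] / total * (cm[c][c] / support[c] if support[c] else 0.0)
        for c in range(k))
    return accuracy, precision, recall
```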

Contributor Information

Alex Akinbi, Email: o.a.akinbi@ljmu.ac.uk

Aras M. Ismael, Email: aras.masood@spu.edu.iq

References

1. Christodoulidis S, Anthimopoulos M, Ebner L, Christe A, Mougiakakou S. Multisource transfer learning with convolutional neural networks for lung pattern analysis. IEEE J Biomed Health Inform. 2017. doi: 10.1109/JBHI.2016.2636929.

2. Yang H, Mei S, Song K, Tao B, Yin Z. Transfer-learning-based online mura defect classification. IEEE Trans Semicond Manuf. 2018. doi: 10.1109/TSM.2017.2777499.

3. Akçay S, Kundegorski ME, Devereux M, Breckon TP. Transfer learning using convolutional neural networks for object classification within X-ray baggage security imagery. In: Proceedings—International Conference on Image Processing, ICIP, 2016. doi: 10.1109/ICIP.2016.7532519.

4. Tajbakhsh N, Shin JY, Gurudu SR, Hurst RT, Kendall CB, Gotway MB, Liang J. Convolutional neural networks for medical image analysis: full training or fine tuning? IEEE Trans Med Imaging. 2016. doi: 10.1109/TMI.2016.2535302.

5. Pan SJ, Yang Q. A survey on transfer learning. IEEE Trans Knowl Data Eng. 2010;22:1345–1359. doi: 10.1109/TKDE.2009.191.

6. CV-Tricks.com. ResNet, AlexNet, VGGNet, Inception: understanding various architectures of convolutional networks. 2021. https://cv-tricks.com/cnn/understand-resnet-alexnet-vgg-inception/. Accessed 16 May 2021.

7. Dahmani M, Chowdhury MEH, Khandakar A, Rahman T, Al-Jayyousi K, Hefny A, Kiranyaz S. An intelligent and low-cost eye-tracking system for motorized wheelchair control. Sensors (Switzerland). 2020. doi: 10.3390/s20143936.

8. Qiblawey Y, Tahir A, Chowdhury MEH, Khandakar A, Kiranyaz S, Rahman T, Ibtehaz N, Mahmud S, Al Maadeed S, Musharavati F, Ayari MA. Detection and severity classification of COVID-19 in CT images using deep learning. Diagnostics. 2021;11:893. doi: 10.3390/diagnostics11050893.

9. Degerli A, Ahishali M, Yamac M, Kiranyaz S, Chowdhury MEH, Hameed K, Hamid T, Mazhar R, Gabbouj M. COVID-19 infection map generation and detection from chest x-ray images. Health Inf Sci Syst. 2020. doi: 10.1007/s13755-021-00146-8.

10. Kesim E, Dokur Z, Olmez T. X-ray chest image classification by a small-sized convolutional neural network. In: Proceedings of the 2019 Scientific Meeting on Electrical-Electronics and Biomedical Engineering and Computer Science, EBBT 2019. 2019. doi: 10.1109/EBBT.2019.8742050.

11. Liu C, Cao Y, Alcantara M, Liu B, Brunette M, Peinado J, Curioso W. TX-CNN: detecting tuberculosis in chest X-ray images using convolutional neural network. In: Proceedings—International Conference on Image Processing, ICIP. 2018. doi: 10.1109/ICIP.2017.8296695.

12. Rahman T, Khandakar A, Kadir MA, Islam KR, Islam KF, Mazhar R, Hamid T, Islam MT, Kashem S, Bin Mahbub Z, Ayari MA, Chowdhury MEH. Reliable tuberculosis detection using chest X-ray with deep learning, segmentation and visualization. IEEE Access. 2020. doi: 10.1109/ACCESS.2020.3031384.

13. Chowdhury MEH, Rahman T, Khandakar A, Mazhar R, Kadir MA, Bin Mahbub Z, Islam KR, Khan MS, Iqbal A, Al Emadi N, Reaz MBI, Islam MT. Can AI help in screening viral and COVID-19 pneumonia? IEEE Access. 2020. doi: 10.1109/ACCESS.2020.3010287.

14. Xu S, Wu H, Bie R. CXNet-m1: anomaly detection on chest X-rays with image-based deep learning. IEEE Access. 2019. doi: 10.1109/ACCESS.2018.2885997.

15. Rahman T, Chowdhury MEH, Khandakar A, Islam KR, Islam KF, Mahbub ZB, Kadir MA, Kashem S. Transfer learning with deep Convolutional Neural Network (CNN) for pneumonia detection using chest X-ray. Appl Sci (Switzerland). 2020. doi: 10.3390/app10093233.

16. Chouhan V, Singh SK, Khamparia A, Gupta D, Tiwari P, Moreira C, Damaševičius R, de Albuquerque VHC. A novel transfer learning based approach for pneumonia detection in chest X-ray images. Appl Sci (Switzerland). 2020. doi: 10.3390/app10020559.

17. Rajpurkar P, Irvin J, Ball RL, Zhu K, Yang B, Mehta H, Duan T, Ding D, Bagul A, Langlotz CP, Patel BN, Yeom KW, Shpanskaya K, Blankenberg FG, Seekins J, Amrhein TJ, Mong DA, Halabi SS, Zucker EJ, Ng AY, Lungren MP. Deep learning for chest radiograph diagnosis: a retrospective comparison of the CheXNeXt algorithm to practicing radiologists. PLoS Med. 2018. doi: 10.1371/journal.pmed.1002686.

18. Li X, Shen L, Xie X, Huang S, Xie Z, Hong X, Yu J. Multi-resolution convolutional networks for chest X-ray radiograph based lung nodule detection. Artif Intell Med. 2020. doi: 10.1016/j.artmed.2019.101744.

19. Bhandary A, Prabhu GA, Rajinikanth V, Thanaraj KP, Satapathy SC, Robbins DE, Shasky C, Zhang YD, Tavares JMRS, Raja NSM. Deep-learning framework to detect lung abnormality—a study with chest X-Ray and lung CT scan images. Pattern Recogn Lett. 2020. doi: 10.1016/j.patrec.2019.11.013.

20. Uçar M, Uçar E. Computer-aided detection of lung nodules in chest X-rays using deep convolutional neural networks. Sakarya Univ J Comput Inf Sci. 2019. doi: 10.35377/saucis.02.01.538249.

21. Ismael AM, Şengür A. Deep learning approaches for COVID-19 detection based on chest X-ray images. Expert Syst Appl. 2021. doi: 10.1016/j.eswa.2020.114054.

22. LeCun Y, Kavukcuoglu K, Farabet C. Convolutional networks and applications in vision. In: Proceedings of the ISCAS 2010—2010 IEEE International Symposium on Circuits and Systems: Nano-Bio Circuit Fabrics and Systems. 2010. doi: 10.1109/ISCAS.2010.5537907.

23. Smilkov D, Thorat N, Kim B, Viégas F, Wattenberg M. SmoothGrad: removing noise by adding noise. ArXiv. 2017. https://arxiv.org/pdf/1706.03825.pdf. Accessed 30 Dec 2021.

24. Selvaraju RR, Cogswell M, Das A, Vedantam R, Parikh D, Batra D. Grad-CAM: visual explanations from deep networks via gradient-based localization. Int J Comput Vis. 2020. doi: 10.1007/s11263-019-01228-7.

25. Chattopadhay A, Sarkar A, Howlader P, Balasubramanian VN. Grad-CAM++: generalized gradient-based visual explanations for deep convolutional networks. In: Proceedings—2018 IEEE Winter Conference on Applications of Computer Vision, WACV 2018. 2018. doi: 10.1109/WACV.2018.00097.

26. Ruan Q, Yang K, Wang W, Jiang L, Song J. Clinical predictors of mortality due to COVID-19 based on an analysis of data of 150 patients from Wuhan, China. Intensive Care Med. 2020. doi: 10.1007/s00134-020-05991-x.

27. Nabil Ibtehaz TR, Chowdhury MEH, Khandakar A, Kiranyaz S, Sohel Rahman M, Tahir A, Qiblawey Y. EDITH: ECG biometrics aided by Deep learning for reliable Individual auTHentication. ArXiv.Org. 2021. https://arxiv.org/abs/2102.08026v2. Accessed 30 Dec 2021.

28. Muhammad Uzair Zahid MG, Kiranyaz S, Ince T, Can Devecioglu O, Chowdhury MEH, Khandakar A, Tahir A. Robust R-peak detection in low-quality holter ECGs using 1D convolutional neural network. ArXiv.Org. 2020. https://arxiv.org/abs/2101.01666. Accessed 30 Dec 2021.

29. Angeli F, Spanevello A, de Ponti R, Visca D, Marazzato J, Palmiotto G, Feci D, Reboldi G, Fabbri LM, Verdecchia P. Electrocardiographic features of patients with COVID-19 pneumonia. Eur J Internal Med. 2020. doi: 10.1016/j.ejim.2020.06.015.

30. Du N, Cao Q, Yu L, Liu N, Zhong E, Liu Z, Shen Y, Chen K. FM-ECG: a fine-grained multi-label framework for ECG image classification. Inf Sci. 2021. doi: 10.1016/j.ins.2020.10.014.

31. Ozdemir MA, Ozdemir GD, Guren O. Classification of COVID-19 electrocardiograms by using hexaxial feature mapping and deep learning. BMC Med Inf Decis Mak. 2021. doi: 10.1186/s12911-021-01521-x.

32. Gomes JC, Barbosa VAF, Santana MA, Bandeira J, Valença MJS, de Souza RE, Ismael AM, dos Santos WP. IKONOS: an intelligent tool to support diagnosis of COVID-19 by texture analysis of X-ray images. Res Biomed Eng. 2020. doi: 10.1007/s42600-020-00091-7.

33. Gomes JC, Masood AI, Silva LHS, da Cruz Ferreira JRB, Freire Júnior AA, Rocha ALS, de Oliveira LCP, da Silva NRC, Fernandes BJT, dos Santos WP. Covid-19 diagnosis by combining RT-PCR and pseudo-convolutional machines to characterize virus sequences. Sci Rep. 2021. doi: 10.1038/s41598-021-90766-7.

34. Ismael AM, Şengür A. The investigation of multiresolution approaches for chest X-ray image based COVID-19 detection. Health Inf Sci Syst. 2020. doi: 10.1007/s13755-020-00116-6.

35. Huang G, Liu Z, Van Der Maaten L, Weinberger KQ. Densely connected convolutional networks. In: Proceedings—30th IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017. 2017. doi: 10.1109/CVPR.2017.243.

36. Szegedy C, Vanhoucke V, Ioffe S, Shlens J, Wojna Z. Rethinking the inception architecture for computer vision. In: Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition. 2016. doi: 10.1109/CVPR.2016.308.

37. Sandler M, Howard A, Zhu M, Zhmoginov A, Chen LC. MobileNetV2: inverted residuals and linear bottlenecks. In: Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition. 2018. doi: 10.1109/CVPR.2018.00474.

38. Wang H, Wang Z, Du M, Yang F, Zhang Z, Ding S, Mardziel P, Hu X. Score-CAM: score-weighted visual explanations for convolutional neural networks. In: Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops. 2020. doi: 10.1109/CVPRW50498.2020.000.

39. Morrow DA. Myocardial infarction: a companion to Braunwald's heart disease. 1st ed. New York: Elsevier; 2016.

40. Khan AH, Hussain M, Malik MK. ECG images dataset of cardiac and COVID-19 patients. Data Brief. 2021. doi: 10.1016/j.dib.2021.106762.

41. Rahman T, Khandakar A, Qiblawey Y, Tahir A, Kiranyaz S, Bin Abul Kashem S, Islam MT, Al Maadeed S, Zughaier SM, Khan MS, Chowdhury MEH. Exploring the effect of image enhancement techniques on COVID-19 detection using chest X-ray images. Comput Biol Med. 2021. doi: 10.1016/j.compbiomed.2021.104319.

42. Liu P, Wang JY, Yin ZK. Study on illumination compensation method for images. Xi Tong Gong Cheng Yu Dian Zi Ji Shu/Systems Engineering and Electronics. 2008.

43. Anas Tahir MEHC, Qiblawey Y, Khandakar A, Tawsifur Rahman, Khurshid U, Musharavati F, Islam MT, Kiranyaz S. Coronavirus: comparing COVID-19, SARS and MERS in the eyes of AI. ArXiv.Org. 2021.

44. Chowdhury MEH, Rahman T, Khandakar A, Al-Madeed S, Zughaier SM, Doi SAR, Hassen H, Islam MT. An early warning tool for predicting mortality risk of COVID-19 patients using machine learning. ArXiv. 2020.

45. Elite Data Science. Overfitting in machine learning: what it is and how to prevent it. 2021. https://elitedatascience.com/overfitting-in-machine-learning. Accessed 16 May 2021.

46. Al-Waisy AS, Al-Fahdawi S, Mohammed MA, Abdulkareem KH, Mostafa SA, Maashi MS, Arif M, Garcia-Zapirain B. COVID-CheXNet: hybrid deep learning framework for identifying COVID-19 virus in chest X-rays images. Soft Comput. 2020. doi: 10.1007/s00500-020-05424-3.

47. Dhiman G, Chang V, Kant Singh K, Shankar A. ADOPT: automatic deep learning and optimization-based approach for detection of novel coronavirus COVID-19 disease using X-ray images. J Biomol Struct Dynam. 2021. doi: 10.1080/07391102.2021.1875049.

48. Ozturk T, Talo M, Yildirim EA, Baloglu UB, Yildirim O, Rajendra Acharya U. Automated detection of COVID-19 cases using deep neural networks with X-ray images. Comput Biol Med. 2020. doi: 10.1016/j.compbiomed.2020.103792.

49. Ahuja S, Panigrahi BK, Dey N, Rajinikanth V, Gandhi TK. Deep transfer learning-based automated detection of COVID-19 from lung CT scan slices. Appl Intell. 2021;51:571–585. doi: 10.1007/s10489-020-01826-w.

50. Feng Z, Yu Q, Yao S, Luo L, Zhou W, Mao X, Li J, Duan J, Yan Z, Yang M, Tan H, Ma M, Li T, Yi D, Mi Z, Zhao H, Jiang Y, He Z, Li H, Nie W, Liu Y, Zhao J, Luo M, Liu X, Rong P, Wang W. Early prediction of disease progression in COVID-19 pneumonia patients with chest CT and clinical characteristics. Nat Commun. 2020. doi: 10.1038/s41467-020-18786-x.

51. Li F, Michelson AP, Foraker R, Zhan M, Payne PRO. Computational analysis to repurpose drugs for COVID-19 based on transcriptional response of host cells to SARS-CoV-2. BMC Med Inf Decis Mak. 2021. doi: 10.1186/s12911-020-01373-x.

52. Pathak Y, Shukla PK, Tiwari A, Stalin S, Singh S. Deep transfer learning based classification model for COVID-19 disease. IRBM. 2020. doi: 10.1016/j.irbm.2020.05.003.

53. Song Y, Zheng S, Li L, Zhang X, Zhang X, Huang Z, Chen J, Wang R, Zhao H, Zha Y, Shen J, Chong Y, Yang Y. Deep learning enables accurate diagnosis of novel coronavirus (COVID-19) with CT images. IEEE/ACM Trans Comput Biol Bioinf. 2021. doi: 10.1109/TCBB.2021.3065361.

54. Amyar A, Modzelewski R, Li H, Ruan S. Multi-task deep learning based CT imaging analysis for COVID-19 pneumonia: classification and segmentation. Comput Biol Med. 2020. doi: 10.1016/j.compbiomed.2020.104037.

55. Wang S, Kang B, Ma J, Zeng X, Xiao M, Guo J, Cai M, Yang J, Li Y, Meng X, Xu B. A deep learning algorithm using CT images to screen for Corona virus disease (COVID-19). Eur Radiol. 2021. doi: 10.1007/s00330-021-07715-1.

56. Huang J, Chen B, Yao B, He W. ECG arrhythmia classification using STFT-based spectrogram and convolutional neural network. IEEE Access. 2019. doi: 10.1109/ACCESS.2019.2928017.

57. Le TQ, Chandra V, Afrin K, Srivatsa S, Bukkapatnam S. A dynamic systems approach for detecting and localizing of infarct-related artery in acute myocardial infarction using compressed paper-based electrocardiogram (ECG). Sensors (Switzerland). 2020. doi: 10.3390/s20143975.