Abstract

Technology has advanced surgery, especially minimally invasive surgery (MIS), including laparoscopic surgery and robotic surgery. It has led to an increase in the number of technologies in the operating room. They can provide further information about a surgical procedure, e.g. instrument usage and trajectories. Among these surgery‐related technologies, the amount of information extracted from a surgical video captured by an endoscope is especially great. Therefore, the automation of data analysis is essential in surgery to reduce the complexity of the data while maximizing its utility to enable new opportunities for research and development. Computer vision (CV) is the field of study that deals with how computers can understand digital images or videos and seeks to automate tasks that can be performed by the human visual system. Because this field deals with all the processes of real‐world information acquisition by computers, the terminology “CV” is extensive, and ranges from hardware for image sensing to AI‐based image recognition. In recent years, AI‐based image recognition for simple tasks, such as recognizing snapshots, has advanced to the point where it is comparable to human performance. Although surgical video recognition is a more complex and challenging task, if it can be applied effectively to MIS, it could lead to future surgical advancements, such as intraoperative decision‐making support and image navigation surgery. Ultimately, automated surgery might be realized. In this article, we summarize the recent advances and future perspectives of AI‐related research and development in the field of surgery.

Keywords: artificial intelligence, computer vision, decision‐making, machine learning, minimally invasive surgery

In recent years, image recognition by artificial intelligence (AI) has advanced to the point where it is comparable to that of humans. If AI‐based image recognition technology can be effectively applied to surgery, it will lead to the realization of future surgical advancements, such as intraoperative decision‐making support, image navigation surgery, and automated surgery. In this article, the recent advances and future perspectives of AI‐related research and development in the field of surgery are summarized.

1. INTRODUCTION

Computer‐based automation that captures and analyzes a wide variety of data on its own is advancing and rapidly penetrating all aspects of daily life, as exemplified by self‐driving cars 1 ; artificial intelligence (AI) is being used to make this large amount of data interpretable and usable. Technology has advanced surgery, especially minimally invasive surgery (MIS), including laparoscopic surgery and robotic surgery. It has led to an increase in the number of technological devices in the operating room. These devices can provide further information about a surgical procedure, e.g. instrument usage and trajectories. Among these surgery‐related technologies, the amount of information extracted from a surgical video captured by an endoscope is especially great. Therefore, the automation of data analysis is also essential in surgery to reduce the complexity of the data while maximizing its utility to enable new opportunities for research and development.

Computer vision (CV) is the field of study that deals with how computers can understand digital images or videos and seeks to automate tasks that can be performed by the human visual system. Because this field deals with all the processes of real‐world information acquisition by computers, the term “CV” ranges from hardware for image sensing to AI‐based image recognition. 2 In recent years, AI‐based image recognition for simple tasks such as recognizing snapshots has advanced to the point where it is comparable to human performance. 3 Although surgical video recognition is a more complex and challenging task, it could lead to future surgical advancements, such as intraoperative decision‐making support and image navigation surgery, if effectively applied to MIS. Ultimately, automated surgery might be realized. In this article, we summarize the recent advances and future perspectives of AI‐related research and development in the field of surgery.

2. AI IN MINIMALLY INVASIVE SURGERY

Technical errors are the leading cause of preventable harm in surgical patients. 4 Technical skills vary significantly among individual surgeons, and they can have a drastic impact on patient outcomes. 5 , 6 , 7 , 8 On the other hand, diagnostic and judgment errors also play important roles in patient outcomes. The causes of a notable proportion of surgical complications can be traced back to a lapse in judgment or an error in decision‐making during the surgery. 9 , 10 A previous analysis of surgical errors in closed malpractice claims at several liability insurers found that the most frequent factors that contributed to surgical errors were related to cognition, such as judgment errors and vigilance or memory failures. 9 A separate analysis of the causes of bile duct injuries during laparoscopic cholecystectomy found that the main sources of error were visual perceptual illusions, whereas faults in technical skills were present in only 3% of these injuries. 10 Furthermore, time constraints and uncertainty force individuals to rely on cognitive shortcuts that could lead to errors in judgment, and surgeons have identified this as one of the most common causes of major error. 11 , 12 However, the surgeon's decision‐making process depends on the circumstances of the surgery; usually, there is no obvious answer, and this makes the process considerably complex to define and measure.

AI can play an important role in making high‐quality MIS available in every hospital, and there are several reasons for this. First, in MIS, both the surgical procedure and the intraoperative decision‐making rely more heavily on visual information than in open surgery. Therefore, future technological improvement of AI‐based image recognition might reduce the burden of intraoperative decision‐making on surgeons. Second, many surgical videos can be acquired through MIS. These videos can be utilized as a training dataset for machine learning (ML), one of the subdomains of AI. Third, fine anatomical structures, such as vessels or nerves, can be visualized during MIS under magnified view. Surgery is therefore expected to be performed more skillfully than ever, with an understanding of these complicated structures. AI‐based image navigation would also be invaluable in meeting these demands.

In an era of increased emphasis on patient safety, issues related to human error have become more pronounced. Because the capacity of human decision‐making is biologically limited, developing an approach to support surgeons’ decision‐making processes during surgery would be highly valuable. It might lead to technology‐augmented, real‐time intraoperative decision‐making support. Many surgical videos could be the foundation for creating an AI with collective surgical consciousness that comprises the knowledge and experience of global expert surgeons.

3. AI‐BASED COMPUTER VISION

Technological innovations have been linked to clinical benefits within the field of surgery. The navigation and planning of complex surgeries have been made possible through the use of both preoperative and intraoperative imaging techniques, such as ultrasonography, computed tomography, and magnetic resonance imaging. 13 Furthermore, the development of surgical devices and instruments related to endoscopic surgery has greatly contributed to the introduction and penetration of MIS. 14 Postoperative care has also been improved through the use of sophisticated sensors and monitors. 15 Following the introduction of these measures, AI will play an important role in intraoperative surgical decision‐making and automation. The recent introduction of AI‐based CV in MIS focuses on imaging, navigation, and guidance. 16 , 17

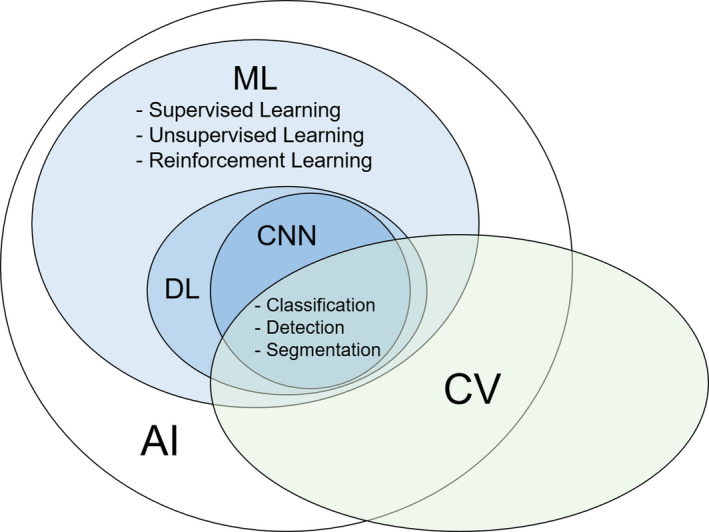

As shown in Figure 1, deep learning (DL) is a class of ML methods based on neural networks, and its most important feature is that it automatically extracts, during the learning process, the features that should be focused on for analysis. Convolutional neural networks (CNNs) are the DL models most commonly applied to CV. Major CV tasks that use CNNs can broadly be divided into image classification, object detection, semantic segmentation, and instance segmentation.

FIGURE 1.

Relationships between the terminologies mentioned in this article. AI, artificial intelligence; CNN, convolutional neural network; CV, computer vision; DL, deep learning; ML, machine learning

3.1. Image classification

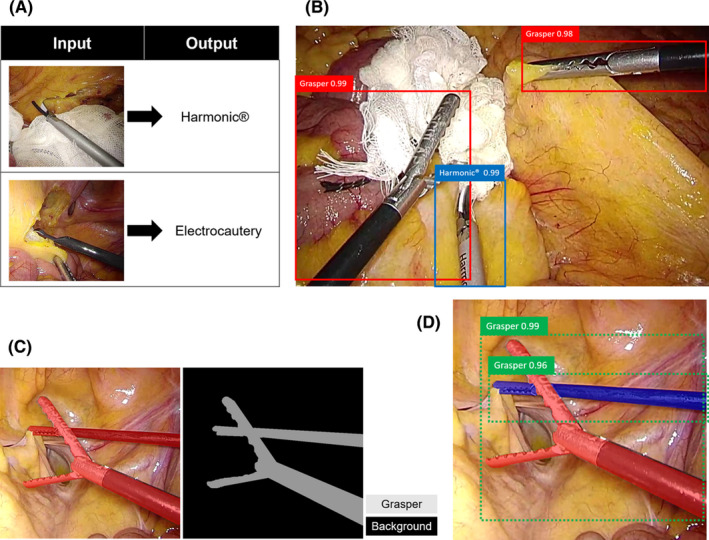

In an image classification task, the DL model is provided with examples of each class (i.e. training data with annotation labels). From these examples, it learns the visual appearance of each class (Figure 2A). Current efforts towards image classification using CV in MIS focus on automated step identification in surgical videos. Due to their readily available video feed and stable field of view, laparoscopic surgeries lend themselves to CV analysis, with work currently being done on cholecystectomies, 18 sleeve gastrectomies, 19 sigmoid colectomies, 20 and peroral endoscopic myotomies (POEM). 21 Real‐time automatic surgical workflow recognition is considered an essential technology for the development of context‐aware, computer‐assisted surgical systems.

FIGURE 2.

Reference images of A, Image classification, B, Object detection, C, Semantic segmentation, and D, Instance segmentation
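As a rough illustration of the image classification task described above, the following Python sketch turns the raw class scores that a trained model might output for one video frame into a predicted surgical step, and computes frame‐level accuracy against annotation labels. The step names, the score values, and the function names are assumptions made for illustration only; they are not taken from any of the cited studies.

```python
import math

# Hypothetical step names, used only for illustration.
STEPS = ["preparation", "dissection", "closure"]


def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify_frame(scores):
    """Return the most probable step name and its probability."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return STEPS[best], probs[best]


def frame_accuracy(predicted, annotated):
    """Fraction of frames whose predicted step matches the annotation."""
    matches = sum(p == a for p, a in zip(predicted, annotated))
    return matches / len(annotated)


# Assumed scores for a single frame; a real model would produce these.
step, confidence = classify_frame([0.1, 2.0, -1.0])
```

Overall accuracy figures for step recognition are typically of this frame‐level kind, although the exact evaluation protocol differs between studies.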

3.2. Object detection

In an object detection task, the DL model outputs bounding boxes and labels for individual objects within images, i.e. when there are multiple objects in an image, the same number of bounding boxes appears in the image (Figure 2B). A typical example of the application of object detection in the medical field is AI‐assisted diagnosis, such as polyp detection during colonoscopy 22 ; it has attracted increased attention for its potential to reduce human error. In surgery, there have been several reports on surgical instrument detection. 23 , 24 In addition, kinematic information on a surgeon's movements can be useful for workflow recognition and skill assessment, 25 , 26 and intraoperative tracking of surgical instruments is a prerequisite for computer‐ and robotic‐assisted interventions 26 , 27 , 28 ; object detection for surgical instruments can be used to extract such data.
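Detected bounding boxes are commonly compared with annotated boxes using intersection over union (IoU). The sketch below is a minimal Python version, assuming boxes are given as `(x_min, y_min, x_max, y_max)` tuples in pixel coordinates; this box format and the function name are illustrative assumptions rather than details from the cited reports.

```python
def box_iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Width and height of the overlapping region (zero if the boxes are disjoint).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h

    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


# Two hypothetical boxes: a predicted instrument box and an annotated one.
overlap = box_iou((0, 0, 2, 2), (1, 1, 3, 3))
```

A detection is usually counted as correct when its IoU with an annotated box exceeds a chosen threshold; the threshold itself is a design choice that varies between studies.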

3.3. Semantic segmentation

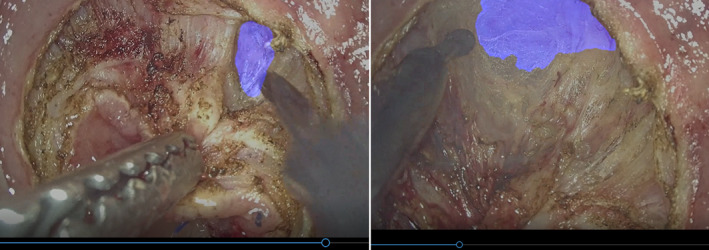

The process of segmentation, which divides whole images into pixel groupings that can then be labeled and classified, is central to CV. In particular, semantic segmentation attempts to specifically understand the role of each pixel in an image. The boundaries of each object can be delineated; therefore, dense pixel‐based predictions can be achieved (Figure 2C). Semantic segmentation is highly useful for intraoperative guidance, and one application is to avoid biliary tract injury during laparoscopic cholecystectomy. 16 , 17 Urethral injury is also a crucial intraoperative adverse event that is related to transanal total mesorectal excision (TaTME). During TaTME, the prostate is used as a landmark to determine the dissection line, and information on the location of the prostate can help surgeons recognize the location of the urethra and avoid urethral injury. Previously, our team developed a real‐time automatic prostate segmentation system to reduce the risk of urethral injury 29 (Figure 3). Although developing AI that can recognize anatomical structures is considerably more challenging than developing AI that recognizes surgical instruments, applying semantic segmentation to anatomical structures as surgical landmarks is a promising approach; therefore, further developments are expected.

FIGURE 3.

Endoscopic images of semantic segmentation for prostate during transanal total mesorectal excision
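Pixel‐level predictions of the kind produced by semantic segmentation are often scored against an annotated mask with the Dice coefficient. The following Python sketch assumes binary masks stored as nested lists of 0 and 1; the mask values and the function name are hypothetical, and the metric is shown as a common choice rather than as the one used in any particular cited study.

```python
def dice_coefficient(pred_mask, true_mask):
    """Dice = 2 * |A and B| / (|A| + |B|) for two binary masks of equal shape."""
    intersection = 0
    pred_area = 0
    true_area = 0
    for pred_row, true_row in zip(pred_mask, true_mask):
        for p, t in zip(pred_row, true_row):
            intersection += p * t
            pred_area += p
            true_area += t
    if pred_area + true_area == 0:
        return 1.0  # both masks are empty, so they agree trivially
    return 2.0 * intersection / (pred_area + true_area)


# Hypothetical 2 x 3 masks: 1 marks pixels labeled as the target structure.
predicted = [[0, 1, 1],
             [0, 1, 0]]
annotated = [[0, 1, 0],
             [0, 1, 1]]
score = dice_coefficient(predicted, annotated)
```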

3.4. Instance segmentation

The only available information in an object detection task is whether the object is inside or outside the bounding box (Figure 2B), and it is impossible to distinguish between overlapped objects with the same label in a semantic segmentation task (Figure 2C). In an instance segmentation task, even multiple overlapping objects with the same label, including their boundaries, differences, and relationships to one another, can be recognized (Figure 2D). This approach is highly useful to recognize surgical instruments, which intersect in a complex manner during surgery. 27
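The difference between the two segmentation outputs can be made concrete with a small Python sketch. It assumes an instance map in which each pixel stores an instance identifier (0 for background) and a lookup table from identifier to class label; both the data layout and the names are illustrative assumptions. Collapsing the instance map to class labels yields the semantic map, in which two touching instruments of the same class can no longer be told apart.

```python
from collections import Counter

BACKGROUND = "background"


def to_semantic(instance_map, instance_class):
    """Replace each instance id with its class label, discarding instance identity."""
    return [[instance_class.get(i, BACKGROUND) for i in row] for row in instance_map]


def instances_per_class(instance_class):
    """Count how many separate objects of each class the instance output contains."""
    return Counter(instance_class.values())


# Hypothetical 2 x 3 image with two adjacent instruments of the same class.
instance_map = [[1, 1, 2],
                [0, 2, 2]]
instance_class = {1: "grasper", 2: "grasper"}

semantic_map = to_semantic(instance_map, instance_class)
counts = instances_per_class(instance_class)
```

In the semantic map, all instrument pixels carry the same label, whereas the instance output still records that two separate instruments are present.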

AI technology is advancing daily, and new AI‐based CV approaches will continue to emerge. To pursue novel surgical treatments using AI, cooperation among medical professionals, engineers, and other multidisciplinary professionals, i.e. medical‐industrial cooperation, is expected to become increasingly important in future surgical research and development fields.

4. TRAINING DATASET CONSTRUCTION

Surgical data science was recently defined as an interdisciplinary research field that aims to improve the quality and value of interventional healthcare through the capture, organization, analysis, and modeling of data. 30 The ultimate goal is to derive an AI‐based approach that provides physicians with the right assistance at the right time. One active field of research explores the analysis of surgical video data to provide context‐aware intraoperative assistance to the surgical team during MIS. 31

In our previous studies on laparoscopic colorectal surgical step classification that used 60 cases from a single institution and 240 cases from multiple institutions as the training datasets, the overall accuracies were 91.9% and 81.0%, respectively. 20 , 32 Although the latter training dataset was four times larger than the former, its accuracy was lower. These results demonstrated that the execution of an image recognition task on a multi‐institutional surgical video dataset, i.e. closer to the real‐world setting, was more difficult due to the data diversity. Therefore, to realize the aforementioned goal, large (in terms of the number of images), diverse (in terms of the different institutions, procedures, and image quality levels included), and extensively annotated (e.g. surgical step data or anatomical area segmentations) datasets are required; this should accelerate the development of robust AI algorithms. Currently, publicly available large annotated surgical video datasets include the Cholec120, 33 Bypass170, 33 and HeiCo datasets. 31 These datasets contain videos of 120 laparoscopic cholecystectomies, 170 laparoscopic bariatric bypass surgeries, and 30 laparoscopic colorectal surgeries, respectively. However, these are all single‐institution datasets; thus, the complexity of the data is limited to the variability of the procedures that are executed by surgeons from the same institution. Training an AI with a dataset from a single institution can reduce generalizability and cause overfitting. Overfitting means that although AI can analyze and output with high accuracy on a known training dataset, its accuracy may be low on a new dataset; an overfitted AI is of little use in a real‐world setting. To obtain more generalizable networks, videos from multiple medical institutions should be included to ensure higher variability within the dataset.
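One way to check for the overfitting described above is to hold out all videos from one or more institutions and compare accuracy on the held‐out institutions with accuracy on the training data. The Python sketch below illustrates the idea under stated assumptions: the record fields (`id`, `institution`), the institution names, and the accuracy values passed to `generalization_gap` are hypothetical and are not taken from the studies cited here.

```python
def split_by_institution(videos, held_out):
    """Split video records so that held-out institutions never appear in training."""
    train = [v for v in videos if v["institution"] not in held_out]
    test = [v for v in videos if v["institution"] in held_out]
    return train, test


def generalization_gap(train_accuracy, test_accuracy):
    """Difference between accuracy on known data and accuracy on unseen data."""
    return train_accuracy - test_accuracy


# Hypothetical records; the field names and values are illustrative only.
videos = [
    {"id": "v1", "institution": "A"},
    {"id": "v2", "institution": "A"},
    {"id": "v3", "institution": "B"},
    {"id": "v4", "institution": "C"},
]
train, test = split_by_institution(videos, held_out={"C"})
```

Splitting at the institution level, rather than at the frame or video level, keeps footage from the same site out of both sets, so a large gap is more likely to reflect limited generalizability than leakage between the sets.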

At the National Cancer Center Hospital East (Chiba, Japan), we have worked on the “S‐access Japan” project to construct a large multi‐institutional laparoscopic surgical video database. This study was supported by the Japan Agency for Medical Research and Development and Japan Society for Endoscopic Surgery. Based on the large amount of video data that have been collected from all over the country, large, diverse, and high‐quality training datasets for AI algorithms can be constructed. This will accelerate the development of generalizable solutions for numerous AI‐based image recognition tasks.

5. QUALITY ASSURANCE OF ANNOTATION DATA

As shown in Figure 1, ML‐based approaches are divided into the following three types of learning: supervised, unsupervised, and reinforcement learning.

5.1. Supervised learning

In supervised learning, AI learns rules and patterns from a substantial amount of data that has been annotated in advance with ground‐truth labels. Unknown data are then input, and recognition and prediction are performed using the rules and patterns determined during the learning process (e.g. regression, classification).
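A deliberately simple Python sketch of this learn‐then‐predict pattern is a nearest‐centroid classifier: the labeled examples are summarized as one mean vector per class, and an unknown sample receives the label of the closest mean. This is a toy stand‐in chosen for brevity, not the CNN‐based approach used in the surgical studies; the feature vectors and labels are made up.

```python
def fit_centroids(samples, labels):
    """Learn one mean feature vector (centroid) per annotated class."""
    sums = {}
    counts = {}
    for x, y in zip(samples, labels):
        acc = sums.setdefault(y, [0.0] * len(x))
        for i, value in enumerate(x):
            acc[i] += value
        counts[y] = counts.get(y, 0) + 1
    return {y: [s / counts[y] for s in acc] for y, acc in sums.items()}


def predict(centroids, x):
    """Assign an unknown sample to the class with the nearest centroid."""
    def squared_distance(center):
        return sum((a - b) ** 2 for a, b in zip(x, center))
    return min(centroids, key=lambda y: squared_distance(centroids[y]))


# Hypothetical annotated training data: two features per sample.
samples = [[0, 0], [0, 1], [5, 5], [6, 5]]
labels = ["a", "a", "b", "b"]
centroids = fit_centroids(samples, labels)
```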

5.2. Unsupervised learning

Unlike supervised learning, unsupervised learning does not require training on a large amount of annotated data. Instead, unsupervised learning analyzes the structure and characteristics of the data itself to group and simplify the data (e.g. clustering, dimension reduction).
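Clustering can be illustrated with a minimal one‐dimensional k‐means sketch in Python that uses two clusters and no labels at all. The initialization from the smallest and largest value, the fixed iteration count, and the input numbers are simplifying assumptions made for illustration.

```python
def two_means_1d(values, iterations=10):
    """Group unlabeled numbers into two clusters by repeatedly updating two centers."""
    low_center, high_center = min(values), max(values)
    low_group, high_group = list(values), []
    for _ in range(iterations):
        # Assignment step: each value joins the nearer center.
        low_group = [v for v in values if abs(v - low_center) <= abs(v - high_center)]
        high_group = [v for v in values if abs(v - low_center) > abs(v - high_center)]
        if not high_group:
            break  # all values are identical, so there is only one cluster
        # Update step: move each center to the mean of its group.
        low_center = sum(low_group) / len(low_group)
        high_center = sum(high_group) / len(high_group)
    return low_group, high_group


# Hypothetical unlabeled measurements that form two obvious groups.
low, high = two_means_1d([1, 2, 3, 10, 11, 12])
```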

5.3. Reinforcement learning

Reinforcement learning is an approach in which AI learns a strategy for choosing actions. A reward is set for the result of each series of actions taken, and AI improves its strategy through trial and error and repeated learning to maximize the reward.
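The trial‐and‐error loop can be sketched in Python with an epsilon‐greedy strategy on a toy problem in which each action always yields a fixed reward. The reward values, the exploration rate, the step count, and the random seed are all assumptions chosen for illustration; real reinforcement learning problems involve states and uncertain rewards that this sketch leaves out.

```python
import random


def epsilon_greedy(rewards, steps=500, epsilon=0.2, seed=0):
    """Estimate the reward of each action by trial and error.

    With probability epsilon a random action is explored; otherwise the
    action with the highest current estimate is exploited.
    """
    rng = random.Random(seed)
    estimates = [0.0] * len(rewards)
    pulls = [0] * len(rewards)
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(len(rewards))  # explore
        else:
            action = max(range(len(rewards)), key=estimates.__getitem__)  # exploit
        pulls[action] += 1
        # Incremental mean of the rewards observed for this action.
        estimates[action] += (rewards[action] - estimates[action]) / pulls[action]
    return estimates, pulls


# Hypothetical fixed rewards for three possible actions.
estimates, pulls = epsilon_greedy([0.2, 1.0, 0.5])
best_action = max(range(len(estimates)), key=estimates.__getitem__)
```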

Supervised learning is considered the most suitable approach for teaching expert surgeons’ tacit surgical knowledge to AI. For supervised learning in the field of MIS, data quantity and diversity alone are insufficient; a standardized annotation process performed by an expert surgeon or an annotator with appropriate surgical knowledge is essential to ensure the quality of the training dataset. In most previous studies that applied supervised learning‐based CV to MIS (e.g. surgical step recognition in laparoscopic colorectal surgery, 32 laparoscopic sleeve gastrectomy, 19 and POEM 21 ; surgical instrument recognition in laparoscopic gastrectomy 23 ; and prostate recognition in TaTME 29 ), the annotation procedure was performed by two or three surgeons. In the HeiCo dataset, the annotation was performed by non‐surgeons, i.e. 14 engineers and four medical students 31 ; however, the annotation targets were surgical instruments, so deep surgical knowledge may not have been essential for the annotation task. When the annotation targets are surgical workflow or anatomical information, the annotation task may be too difficult to perform without surgeons. In addition, the way in which inter‐annotator consensus was achieved varied between studies, and this is one of the issues that needs to be standardized in this field. Although most studies managed inter‐annotator discrepancies through discussion or double checking, several papers calculated inter‐annotator reliability/agreement using agreement coefficients, such as Krippendorff's alpha or Fleiss’ kappa. 19 , 21
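For readers unfamiliar with these agreement statistics, the Python sketch below computes Fleiss’ kappa from scratch. It assumes that every item (e.g. a video frame) was labeled by the same number of annotators and that labels are given as plain strings; the example labels are invented, and the cited studies may have used different tooling or Krippendorff's alpha instead.

```python
from collections import Counter


def fleiss_kappa(ratings):
    """Fleiss' kappa for a list of items, each a list of labels (one per annotator).

    Every item must have the same number of annotators (at least two).
    """
    n_items = len(ratings)
    n_raters = len(ratings[0])
    if n_raters < 2 or any(len(item) != n_raters for item in ratings):
        raise ValueError("each item needs the same number (>= 2) of labels")

    category_totals = Counter()
    agreement_sum = 0.0
    for item in ratings:
        counts = Counter(item)
        category_totals.update(counts)
        # Proportion of annotator pairs that agree on this item.
        agreeing = sum(c * c for c in counts.values()) - n_raters
        agreement_sum += agreeing / (n_raters * (n_raters - 1))

    observed = agreement_sum / n_items
    total = n_items * n_raters
    # Agreement expected by chance from the overall label frequencies.
    expected = sum((c / total) ** 2 for c in category_totals.values())
    if expected == 1.0:
        raise ValueError("kappa is undefined when only one category is used")
    return (observed - expected) / (1.0 - expected)


# Hypothetical step labels from three annotators for two frames.
kappa = fleiss_kappa([
    ["dissection", "dissection", "closure"],
    ["dissection", "closure", "closure"],
])
```

A value of 1 indicates perfect agreement, 0 indicates agreement no better than chance, and negative values indicate agreement worse than chance.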

The research field of “AI in surgery” is relatively new, and how to assure the quality of surgical annotation data (e.g. who performs the annotation, how many annotators there are, and how inter‐annotator consensus is achieved) has not yet been standardized. The standardization of the surgical annotation process is clearly an important future challenge in the research field related to AI in surgery.

6. ETHICAL AND LEGAL CONSIDERATIONS

Ethical and legal considerations will play a major role in the research field of AI in surgery and should be carefully considered. Acquiring large amounts of surgical video data is paramount to the scalability and sustainability of the use of AI in surgery. 34 The considerations are as follows.

6.1. Privacy

Patients’ medical records, including diagnosis, operation process, and intra‐/postoperative complication data, must be highly protected. In addition, whether the surgical video itself should be treated as private information is controversial.

6.2. Verification

The impact of AI‐based surgical system failures on patients must be minimized. AI‐based surgical systems should be verified and certified with full consideration of all the possible risks. Currently, it is necessary to consider appropriate methods for each AI‐based surgical system, other than clinical trials, to evaluate its safety and efficacy.

6.3. Ethics

The responsible use of new technologies and the gradual building of trust between humans and AI should be considered, including the effects of over‐reliance on AI‐based surgical systems and the potential negative impact on surgical education. The handling of short‐ or long‐term adverse events caused by AI‐based surgery should also be ensured. 35

To address these issues, it is advocated that broad policies governing the acquisition, storage, sharing, and use of data need to be developed and agreed upon by surgeons, engineers, lawmakers, ethicists, patients, etc. 34

7. FUTURE CHALLENGES

AI‐based image recognition, such as anatomical landmark navigation, is expected to bring value to future surgery in recognizing the correct dissection plane and avoiding accidental organ injury. However, current AI based on supervised learning faces the following challenges in demonstrating its value in actual surgery.

7.1. Anatomical factors

Anatomical structures that are easy for surgeons to recognize can also be recognized by AI with high accuracy, whereas structures that are difficult for surgeons to recognize are also difficult for AI. However, the more difficult an anatomical structure is for surgeons to recognize, such as tiny nerves and vessels covered by fat tissue, the more valuable AI‐based image navigation becomes. One of the future challenges is annotating targets that are difficult for even surgeons to recognize and making AI learn them. Integration with other technologies, such as indocyanine green (ICG) and near‐infrared light, could be necessary to improve the recognition accuracy for difficult targets.

7.2. Patient factors

Compared to standard cases, AI‐based image navigation could be valuable in difficult cases, such as patients with severe obesity, severe adhesions, and anatomical abnormalities. However, considering the statistics, it is assumed that most data in the training set consists of standard cases, and AI trained on such datasets will not be applicable in cases that deviate from the standard. In particular, the accumulation of cases with anatomical abnormalities could be an extremely difficult future challenge.

7.3. Surgeon factors

As mentioned in patient factors, it is assumed that most datasets in training consist of standard scenes, i.e. a situation where the surgery is performed properly and without trouble, and AI trained on such datasets will not be sufficient in a trouble‐shooting situation, such as the occurrence of massive bleeding and straying into the wrong dissection plane. To ensure sufficient recognition accuracy in difficult cases mentioned earlier and trouble‐shooting situations, it could be necessary to strategically select cases and scenes from the phase of training dataset construction.

Despite these challenges, the vision of surgeons, especially novice surgeons, tends to be narrow and focused on the operating point during MIS. We believe that highlighting target anatomical structures in the peripheral vision has a certain value even if they are fully exposed in standard cases and scenes. In addition, as in any research and development field, further advances in technology can be expected. Therefore, we believe it is important first to apply the new technology to standard cases and scenes, identify issues, and then continue to expand its application.

8. FUTURE PERSPECTIVES

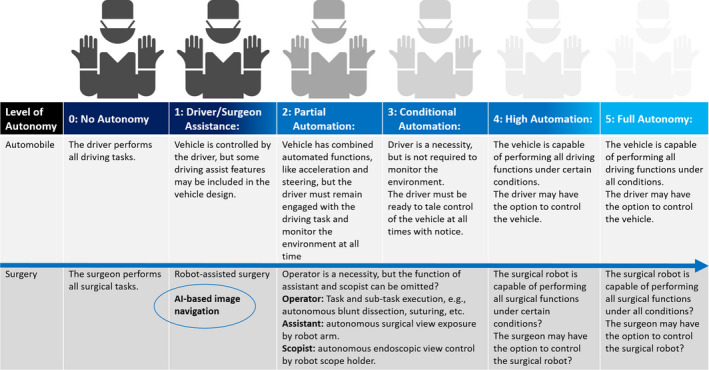

As shown in Figure 4, when the future of surgery is likened to technological innovation in automobiles, we believe that autonomous surgery lies beyond image navigation surgery. There is no doubt that AI‐based image recognition will become an essential fundamental technology to realize autonomous surgery.

FIGURE 4.

Autonomy level of surgery likened to autonomy level of automobile

In the automobile field, autonomy level 1 is defined as “vehicle is controlled by the driver, but some driving assist features may be included in the vehicle design.” Similarly, AI‐based image navigation and robot‐assisted surgery still fall under autonomy level 1. For surgery to achieve the next level of autonomy, AI should at least analyze and output surgical steps, anatomy, instruments, etc., in real time and with robust accuracy in every situation. A much higher level of autonomy would be required to replace the operator's function, and the nearest‐term challenge in the field of AI in surgery is expected to be partially replacing the functions of the assistant and the scopist.

9. SUMMARY

In this article, we summarized the recent advances and future perspectives of AI‐related research and development in the field of surgery. There are several issues that need to be addressed before AI can be seamlessly integrated into the future of surgery in terms of technical feasibility, accuracy, safety, cost, and ethical and legal considerations. However, it is reasonable to expect that future surgical AI and robots will be able to perceive and understand complicated surroundings, conduct real‐time decision‐making, and perform desired tasks with increased precision, safety, and efficiency. Moreover, we believe that this vision will be realized in the not‐so‐distant future.

DISCLOSURE

Conflict of Interest: The authors declare no conflicts of interest regarding this article.

ACKNOWLEDGEMENTS

This study was supported by the Japan Agency for Medical Research and Development (AMED) and the Japan Society for Endoscopic Surgery (JSES).

Kitaguchi D, Takeshita N, Hasegawa H, Ito M. Artificial intelligence‐based computer vision in surgery: Recent advances and future perspectives. Ann Gastroenterol Surg. 2022;6:29–36. 10.1002/ags3.12513

REFERENCES

- 1. Feußner H, Park A. Surgery 4.0: the natural culmination of the industrial revolution? Innov Surg Sci. 2017;2(3):105–8.

- 2. Hashimoto DA, Rosman G, Rus D, Meireles OR. Artificial intelligence in surgery: promises and perils. Ann Surg. 2018;268(1):70–6.

- 3. He K, Zhang X, Ren S, Sun J. Deep Residual Learning for Image Recognition. arXiv 2015;1512.03385.

- 4. Healey MA, Shackford SR, Osler TM, Rogers FB, Burns E. Complications in surgical patients. Arch Surg. 2002;137(5):611–7.

- 5. Birkmeyer JD, Finks JF, O'Reilly A, Oerline M, Carlin AM, Nunn AR, et al. Surgical skill and complication rates after bariatric surgery. N Engl J Med. 2013;369(15):1434–42.

- 6. Begg CB, Riedel ER, Bach PB, Kattan MW, Schrag D, Warren JL, et al. Variations in morbidity after radical prostatectomy. N Engl J Med. 2002;346(15):1138–44.

- 7. Furuya S, Tominaga K, Miyazaki F, Altenmüller E. Losing dexterity: patterns of impaired coordination of finger movements in musician's dystonia. Sci Rep. 2015;29(5):13360.

- 8. Carmeli E, Patish H, Coleman R. The aging hand. J Gerontol A Biol Sci Med Sci. 2003;58(2):146–52.

- 9. Rogers SO, Gawande AA, Kwaan M, Puopolo AL, Yoon C, Brennan TA, et al. Analysis of surgical errors in closed malpractice claims at 4 liability insurers. Surgery. 2006;140(1):25–33.

- 10. Way LW, Stewart L, Gantert W, Liu K, Lee CM, Whang K, et al. Causes and prevention of laparoscopic bile duct injuries: analysis of 252 cases from a human factors and cognitive psychology perspective. Ann Surg. 2003;237(4):460–9.

- 11. Shanafelt TD, Balch CM, Bechamps G, Russell T, Dyrbye L, Satele D, et al. Burnout and medical errors among American surgeons. Ann Surg. 2010;251(6):995–1000.

- 12. Madani A, Grover K, Watanabe Y. Measuring and teaching intraoperative decision‐making using the visual concordance test: deliberate practice of advanced cognitive skills. JAMA Surg. 2020;155(1):78–9.

- 13. Vitiello V, Lee SL, Cundy TP, Yang G‐Z. Emerging robotic platforms for minimally invasive surgery. IEEE Rev Biomed Eng. 2013;6:111–26.

- 14. Troccaz J, Dagnino G, Yang GZ. Frontiers of medical robotics: from concept to systems to clinical translation. Annu Rev Biomed Eng. 2019;21:193–218.

- 15. Michard F, Biais M, Lobo SM, Futier E. Perioperative hemodynamic management 4.0. Best Pract Res Clin Anaesthesiol. 2019;33(2):247–55.

- 16. Madani A, Namazi B, Altieri MS, Hashimoto DA, Rivera AM, Pucher PH, et al. Artificial intelligence for intraoperative guidance: using semantic segmentation to identify surgical anatomy during laparoscopic cholecystectomy. Ann Surg. 2020. 10.1097/SLA.0000000000004594. Online ahead of print.

- 17. Mascagni P, Vardazaryan A, Alapatt D, Urade T, Emre T, Fiorillo C, et al. Artificial intelligence for surgical safety: automatic assessment of the critical view of safety in laparoscopic cholecystectomy using deep learning. Ann Surg. 2020. 10.1097/SLA.0000000000004351. Online ahead of print.

- 18. Jin Y, Dou QI, Chen H, Yu L, Qin J, Fu C‐W, et al. SV‐RCNet: workflow recognition from surgical videos using recurrent convolutional network. IEEE Trans Med Imaging. 2018;37(5):1114–26.

- 19. Hashimoto DA, Rosman G, Witkowski ER, Stafford C, Navarette‐Welton AJ, Rattner DW, et al. Computer vision analysis of intraoperative video: automated recognition of operative steps in laparoscopic sleeve gastrectomy. Ann Surg. 2019;270(3):414–21.

- 20. Kitaguchi D, Takeshita N, Matsuzaki H, Takano H, Owada Y, Enomoto T, et al. Real‐time automatic surgical phase recognition in laparoscopic sigmoidectomy using the convolutional neural network‐based deep learning approach. Surg Endosc. 2020;34(11):4924–31.

- 21. Ward TM, Hashimoto DA, Ban Y, Rattner DW, Inoue H, Lillemoe KD, et al. Automated operative phase identification in peroral endoscopic myotomy. Surg Endosc. 2020;27:1–8.

- 22. Misawa M, Kudo SE, Mori Y, Hotta K, Ohtsuka K, Matsuda T, et al. Development of a computer‐aided detection system for colonoscopy and a publicly accessible large colonoscopy video database (with video). Gastrointest Endosc. 2020.

- 23. Yamazaki Y, Kanaji S, Matsuda T, Oshikiri T, Nakamura T, Suzuki S, et al. Automated surgical instrument detection from laparoscopic gastrectomy video images using an open source convolutional neural network platform. J Am Coll Surg. 2020;230(5):725–32.e1.

- 24. Zhao Z, Cai T, Chang F, Cheng X. Real‐time surgical instrument detection in robot‐assisted surgery using a convolutional neural network cascade. Healthc Technol Lett. 2019;6(6):275–9.

- 25. Khalid S, Goldenberg M, Grantcharov T, Taati B, Rudzicz F. Evaluation of deep learning models for identifying surgical actions and measuring performance. JAMA Netw Open. 2020;3(3):e201664.

- 26. Zhang J, Gao X. Object extraction via deep learning‐based marker‐free tracking framework of surgical instruments for laparoscope‐holder robots. Int J Comput Assist Radiol Surg. 2020;15(8):1335–45.

- 27. Roß T, Reinke A, Full PM, Wagner M, Kenngott H, Apitz M, et al. Comparative validation of multi‐instance instrument segmentation in endoscopy: Results of the ROBUST‐MIS 2019 challenge. Med Image Anal. 2021. https://doi.org/10.1016/j.media.2020.101920. Online ahead of print.

- 28. Wijsman PJM, Broeders IAMJ, Brenkman HJ, Szold A, Forgione A, Schreuder HWR, et al. First experience with THE AUTOLAP™ SYSTEM: an image‐based robotic camera steering device. Surg Endosc. 2018;32(5):2560–6.

- 29. Kitaguchi D, Takeshita N, Matsuzaki H, Hasegawa H, Honda R, Teramura K, et al. Computer‐assisted real‐time automatic prostate segmentation during TaTME: a single‐center feasibility study. Surg Endosc. 2021;35:2493–9.

- 30. Maier‐Hein L, Vedula SS, Speidel S, Navab N, Kikinis R, Park A, et al. Surgical data science for next‐generation interventions. Nat Biomed Eng. 2017;1(9):691–6.

- 31. Maier‐Hein L, Wagner M, Ross T, Reinke A, Bodenstedt S, Full PM, et al. Heidelberg colorectal data set for surgical data science in the sensor operating room. Sci Data. 2021;8(1):101. 10.1038/s41597-021-00882-2

- 32. Kitaguchi D, Takeshita N, Matsuzaki H, Oda T, Watanabe M, Mori K, et al. Automated laparoscopic colorectal surgery workflow recognition using artificial intelligence: experimental research. Int J Surg. 2020;1(79):88–94.

- 33. Twinada AP, Yengera G, Mutter D, Marescaux J, Padoy N. IEEE Trans Med Imaging. 2019;38(4):1069–1078.

- 34. Hashimoto DA, Ward TM, Meireles OR. The role of artificial intelligence in surgery. Adv Surg. 2020;1(54):89–101.

- 35. Zhou X‐Y, Guo Y, Shen M, Yang G‐Z, et al. Application of artificial intelligence in surgery. Front Med. 2020;14(4):417–30.