Abstract

Background

To study the utility of a teleophthalmology program to diagnose and triage common ophthalmic complaints presenting to an ophthalmic emergency room.

Methods

Prospective, observational study of 258 eyes of 129 patients presenting to the Massachusetts Eye and Ear Infirmary Emergency Ward (MEE EW) who completed a questionnaire to gather chief complaint (CC), history of present illness, and medical history. Anterior and posterior segment photographs were collected via an iPhone 5C camera and a Canon non-mydriatic fundus camera, respectively. Ophthalmic vital signs were collected. All information was reviewed remotely by three ophthalmologists; a diagnosis and urgency designation were recorded. The remote assessment was compared with the gold-standard in-person assessment.

Results

The 129 recruited patients collectively contributed 220 visual complaints, of which 121 (55%) were from females; mean age was 56.5 years (range 24–89). Sensitivities and specificities for telemedical triage were as follows: eye pain (n = 56; sensitivity: 0.58, CI [0.41, 0.74]; specificity: 0.91, CI [0.80, 1]), eye redness (n = 54; sensitivity: 0.68, CI [0.50, 0.86]; specificity: 0.93, CI [0.84, 1]), blurry vision (n = 68; sensitivity: 0.73, CI [0.60, 0.86]; specificity: 0.91, CI [0.80, 1]), and eyelid complaints (n = 42; sensitivity: 0.67, CI [0.43, 0.91]; specificity: 0.96, CI [0.89, 1]). The remote diagnostic accuracies, stratified by CC, were eye pain (27/56; 48.21%), eye redness (32/54; 59.26%), blurry vision (30/68; 44.12%), and eyelid complaints (24/42; 57.14%).

Conclusions

Telemedical examination of emergent ophthalmic complaints consisting of a patient questionnaire, anterior segment and fundus photos, and ophthalmic vital signs, may be useful to reliably triage eye disease based on presenting complaint.

Subject terms: Physical examination, Eye manifestations

Introduction

The use of telemedicine for triage, diagnosis, and management of disease has increased substantially in recent years across all of medicine, in large part due to the COVID-19 pandemic. Prior to the pandemic, fewer than 2% of clinicians provided any outpatient care via telemedicine [1]. There was a subsequent 23-fold increase in telemedicine use from January to June 2020 across medical specialties. Ophthalmology, however, lagged in telemedicine uptake, with only 9.3% of ophthalmologists using telemedicine at least once.

Historically, ophthalmic telemedicine has been limited to remote screening for diseases within at-risk populations, such as diabetic retinopathy [2–7], retinopathy of prematurity [8–10], and glaucoma [11]. Telemedicine has also been studied for diagnostic and ongoing management of routine ophthalmic problems, but with little uptake [12–15]. While telemedicine for urgent ophthalmic complaints has been trialed in remote international settings [16–19] or primary care settings [20], telemedicine has been used less frequently for triage or diagnostic purposes in the context of urgent or emergent ophthalmic care within the United States. Limited ophthalmology coverage in emergency department (ED) settings across the country and ED physician discomfort in assessing eye complaints lend further support for testing teleophthalmology in urgent and emergent care settings [21, 22].

Patients with ophthalmic complaints commonly present to emergency departments in hopes of prompt evaluation and management. Innovation in tele-ophthalmic approaches to triage and diagnose urgent conditions would improve patient access to specialty evaluation. This study assessed the utility of a teleophthalmology program to triage and diagnose ophthalmic complaints presenting to an ophthalmic emergency room. We hypothesize that such a protocol can effectively triage urgent eye conditions and serve as a proof of concept for the telemedical evaluation of urgent eye complaints.

Methods

This is a prospective cross-sectional study performed at a single academic center ophthalmic emergency room that processes an average of 40–50 eye patients daily. Patients were consented and enrolled by a study coordinator. Inclusion criteria were patients 18 years of age or older presenting to the ophthalmic emergency room. Exclusion criteria included severe eye trauma (facial lacerations, eyelid lacerations, acute orbital fractures, and open globe injuries), non-English speakers, and inability to provide informed consent. Despite the availability of advanced technology for imaging, anterior segment photos were taken with an iPhone 5C (Apple, Cupertino, CA, USA).

Primary outcomes of the study included sensitivity and specificity of triage status and diagnostic accuracy via virtual examination of patients with the following common ophthalmic complaints: eye pain, eye redness, blurry vision, eyelid complaint. Secondary analyses of the primary outcome included difference of means for age and diagnostic confidence level along with the difference of proportions for sex, triage status urgency, and diagnostic accuracy. Analysis of inter-rater reliability for triage status across the three virtual examiners was also performed.

Patient recruitment and data capture

Two study coordinators without prior medical or ophthalmology experience were trained by a comprehensive ophthalmologist to recruit patients and capture clinical information. Coordinators were instructed to provide a survey to patients for completion without further explanation or guidance. Coordinators were provided a protocol sheet instructing them how to capture ophthalmic photography (Fig. 1) with full face, external gaze, and nine cardinal gaze directions in room light without fluorescein dye. They were also instructed to place fluorescein dye in each eye and use the iPhone camera for anterior segment photography of the ocular surface of both eyes in primary gaze under a blue light. Lastly, coordinators used a Canon non-mydriatic fundus camera (Melville, NY, USA) to capture one macula-centered, 45-degree photo of the posterior pole of each eye. They were allowed discretion to take as many photos as needed until they felt sufficient focus was achieved. The training lasted 30 min and consisted of ophthalmologist instruction and supervised image capture with all imaging modalities for one complete patient session. Images were stored within REDCap and uploaded onto an encrypted, password-protected computer drive. Eligible subjects completed a standardized survey about their medical and ocular history, chief complaint (CC), and history of present illness. If a patient was unable to read the survey, the coordinators read the survey aloud and recorded responses.

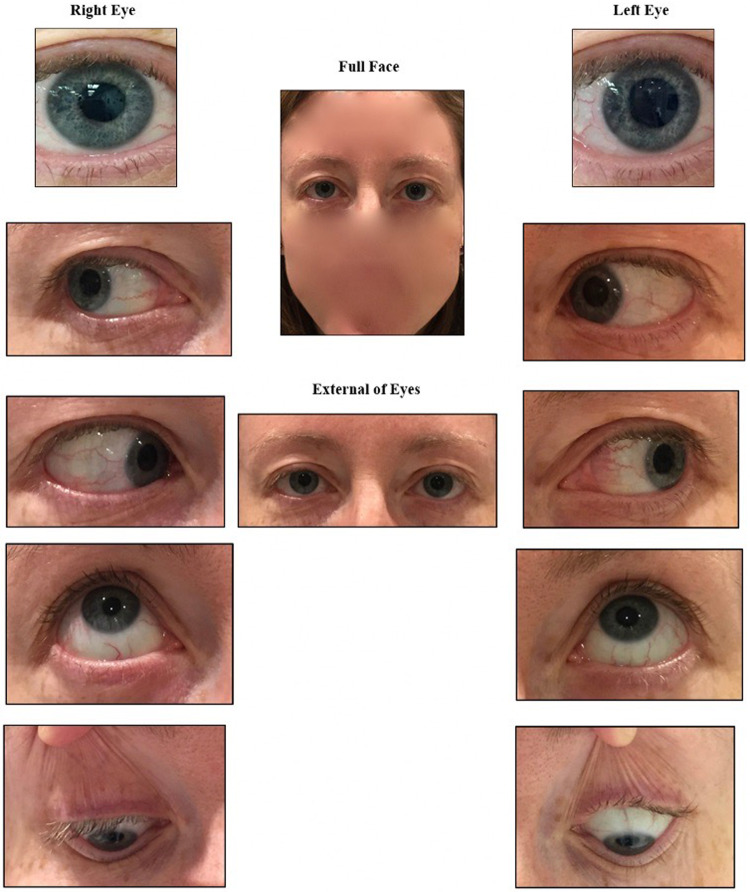

Fig. 1. Smartphone anterior segment photo protocol.

iPhone photographs were taken using this template, with a full face image, image of eyes in primary gaze, and images of individual eyes in cardinal gazes.

Patients then underwent a comprehensive ophthalmic evaluation by an attending ophthalmologist. The ophthalmologist subsequently recorded the triage status of each patient, specifically answering the following question for each patient: “If this problem were seen in a primary care office first, when would you suggest referral to ophthalmology, and if so is the referral urgent (should be seen on the same or following day) or non-urgent (could be referred for follow-up clinic visit)?”

Remote telemedical evaluation

Three attending ophthalmologists who did not personally evaluate any of the study patients in the emergency room subsequently reviewed remotely, in an asynchronous fashion, the de-identified patient survey data, anterior segment photos, and fundus photos as well as the patient’s visual acuity, pupillary response, and extraocular movements. Each remote reviewer provided a triage status (urgent or non-urgent, as defined above) and diagnosis for each patient, as well as a degree of confidence in their diagnosis on a 10-point Likert scale ranging from 1 (very unsure) to 10 (extremely confident). The consensus of at least 2 of 3 virtual examiners was used as the triage status for virtual examination.
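The consensus rule described above can be sketched in a few lines of Python. This is an illustration only, not the authors' implementation: the function names and the string labels `"urgent"` / `"non-urgent"` are hypothetical, and averaging the three reviewers' confidence scores is an assumption suggested by the fractional confidence values reported in the tables rather than something the text states.

```python
from statistics import mean


def consensus_triage(votes):
    """Return the triage status chosen by at least 2 of the 3 remote reviewers."""
    if len(votes) != 3:
        raise ValueError("expected exactly three reviewer votes")
    urgent_votes = sum(1 for vote in votes if vote == "urgent")
    return "urgent" if urgent_votes >= 2 else "non-urgent"


def mean_confidence(scores):
    """Average the reviewers' 1-10 confidence scores (assumed aggregation)."""
    if any(score < 1 or score > 10 for score in scores):
        raise ValueError("confidence scores must lie between 1 and 10")
    return mean(scores)


# Hypothetical reviewer input for a single complaint.
status = consensus_triage(["urgent", "non-urgent", "urgent"])
confidence = mean_confidence([6, 7, 8])
```

With three reviewers and two categories a tie is impossible, so the rule always yields a single status.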

Statistical plan and analysis

The sample size was calculated with the goal of detecting a sensitivity and specificity of 80% for telemedicine examination as considered a new diagnostic test. Expected sensitivity and specificity were based on two previous studies; one of referable diabetic retinopathy and concomitant ocular diseases, which achieved 90% sensitivity and 69% specificity for diagnosis [23]; and another study using smartphone technology in an emergency room reporting 92.85% and 81.94% diagnostic sensitivity and specificity, respectively [19].

Statistical analysis consisted of calculation of overall sensitivity and specificity of the telemedical test. The study was not powered for subgroup analysis, but sensitivity and specificity were calculated for the most common CCs of eye pain, eye redness, blurred vision, and eyelid complaints. Overall diagnostic accuracy was calculated, as well as for each CC category, comparing the final diagnosis made by remote reading to the final diagnosis made by the in-person examination. Two out of three remote readers needed to have their diagnosis match with that of the in-person evaluator for the remote diagnosis to be considered accurate. Given the non-parametric nature of these data, Kruskal–Wallis [24] tests were used to compare (1) the mean age, (2) the mean confidence level of virtual diagnosis indicated by remote examiners, (3) the mean confidence level of virtual diagnosis indicated by remote examiners for which the triage was correct, and (4) the mean confidence level of virtual diagnosis indicated by remote examiners for which the triage was incorrect, across each CC category with p < 0.05 used for statistical significance. For significant results, Dunn’s test [25] was used for multiple comparisons, using the Benjamini–Hochberg method for p-value adjustment [26]. A test for the equality of proportions was used to compare (1) the proportion of females vs. males, (2) the proportion of urgent vs. non-urgent triage status, and (3) the proportion of accurately diagnosed conditions vs. non-accurately diagnosed conditions across CC categories. For significant results at the 0.05 level, pairwise tests of proportions were used with the Benjamini–Hochberg method for p-value adjustment (Table 1). The same tests were run across urgent vs. non-urgent triage status (Table 2). Kappa values for inter-rater reliability for triage status were determined between each pair of virtual examiners. 
All analyses were performed using R statistical programming software (version 4.0.3) [27].
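For readers unfamiliar with the Benjamini–Hochberg adjustment named above, the following Python sketch shows the step-up calculation. It is a minimal illustration, not the authors' code: the analysis was run in R, the text does not say which R function was used, and the p-values below are invented for demonstration.

```python
def benjamini_hochberg(p_values):
    """Return Benjamini-Hochberg adjusted p-values in the original order."""
    m = len(p_values)
    # Indices of the p-values sorted from smallest to largest.
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down, enforcing monotonicity.
    for rank in range(m, 0, -1):
        index = order[rank - 1]
        running_min = min(running_min, p_values[index] * m / rank)
        adjusted[index] = running_min
    return adjusted


# Invented pairwise p-values, for illustration only.
raw = [0.01, 0.04, 0.03, 0.005]
adjusted = benjamini_hochberg(raw)
```

Each raw p-value is scaled by the number of comparisons divided by its rank, and the running minimum keeps the adjusted values from decreasing as the raw values increase.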

Table 1.

Telemedical evaluation data stratified by complaint.

| Parameters | Overall (N = 220) | Eye Redness (N = 54) | Eye Pain (N = 56) | Blurred Vision (N = 68) | Eyelid Complaint (N = 42) | P |
|---|---|---|---|---|---|---|
| Virtual evaluation parameters | | | | | | |
| Triage sensitivity (CI) | 0.71 (0.61–0.81) | 0.68 (0.50–0.86) | 0.58 (0.41–0.74) | 0.73 (0.60–0.86) | 0.67 (0.43–0.91) | |
| Triage specificity (CI) | 0.92 (0.86–0.98) | 0.93 (0.84–1.0) | 0.91 (0.80–1.0) | 0.91 (0.80–1.0) | 0.96 (0.89–1.0) | |
| Diagnostic accuracy | 113/220 (51.4%) | 32/54 (59.3%) | 27/56 (48.2%) | 30/68 (44.1%) | 24/42 (57.1%) | 0.31 |
| Mean confidence level ± SD | 5.6 ± 1.8 | 6.1 ± 1.6 | 5.4 ± 1.9 | 5.0 ± 1.7 | 6.0 ± 1.8 | 0.012 |
| Range | 1.0–9.7 | 2.3–9.7 | 1.0–9.3 | 1.0–9.0 | 2.3–9.3 | |
| Mean conf. level (Correct Dx) | 6.6 ± 1.6 | 6.8 ± 1.5 | 6.3 ± 1.7 | 6.1 ± 1.5 | 7.0 ± 1.5 | 0.13 |
| Mean conf. level (Incorrect Dx) | 4.5 ± 1.4 | 5.0 ± 1.1 | 4.5 ± 1.6 | 4.2 ± 1.3 | 4.6 ± 1.1 | 0.28 |
| “Urgent” triage (in-person) | 118/220 (53.6%) | 25/54 (46.3%) | 33/56 (58.9%) | 45/68 (66.2%) | 15/42 (35.7%) | 0.009 |
| “Urgent” triage (virtual) | 86/220 (39.1%) | 19/54 (35.2%) | 21/56 (37.5%) | 35/68 (51.5%) | 11/42 (26.2%) | 0.052 |

Table 2.

Telemedical evaluation data stratified by in-person triage status.

| Parameters | Overall (N = 220) | Non-urgent (N = 102) | Urgent (N = 118) | P |
|---|---|---|---|---|
| Virtual evaluation parameters | | | | |
| Mean confidence level ± SD | 5.6 ± 1.8 | 5.9 ± 1.8 | 5.3 ± 1.7 | 0.018 |
| Range | 1.0–9.7 | 1.7–9.7 | 1.0–9.0 | |
| Mean conf. level (Correct Triage) | 5.6 ± 1.8 | 5.9 ± 1.9 | 5.2 ± 1.7 | 0.012 |
| Mean conf. level (Incorrect Triage) | 5.6 ± 1.7 | 5.5 ± 1.8 | 5.6 ± 1.7 | 0.76 |
| Diagnostic accuracy | 113/220 (51.4%) | 60/102 (58.8%) | 53/118 (44.9%) | 0.054 |

The study was approved by the Institutional Review Board of the Mass General Brigham and adhered to the tenets of the Declaration of Helsinki.

Results

The 129 recruited patients collectively contributed 220 visual complaints with the most common being: “eye redness”, “eye pain”, “blurred vision”, and “eyelid complaint”. Of the 220 visual complaints (each patient could contribute multiple complaints), 121 (55%) were from females with mean age 56.5 years (range 24–89). Notably, patients had been sent by their PCP for same day evaluation for 50 (23%) of the 220 complaints (Table 3). At the CC-level, there were no significant differences in age, sex, or “presentation from PCP” status between the four CC groups.

Table 3.

Demographic data stratified by complaint.

| Parameters | Overall (N = 220) | Eye redness (N = 54) | Eye pain (N = 56) | Blurred vision (N = 68) | Eyelid complaint (N = 42) | P |
|---|---|---|---|---|---|---|
| Demographics | | | | | | |
| Eyes | 220 | 54 | 56 | 68 | 42 | |
| Female sex, N (%) | 121 (55) | 29 (54) | 30 (54) | 37 (54) | 25 (60) | 0.93 |
| Mean age ± SD | 56.5 ± 17.9 | 53.9 ± 18.5 | 55.2 ± 17.3 | 58.3 ± 17.5 | 58.7 ± 18.6 | 0.46 |
| Range | 24–89 | 25–88 | 24–84 | 24–89 | 24–88 | |
| Presenting from PCP (%) | 50/220 (23) | 10/54 (19) | 13/56 (23) | 16/68 (24) | 11/42 (26) | 0.83 |

The sensitivity and specificity for telemedical triage status were calculated using the in-person examination as a reference standard (Table 1). Fifty-six patients (43.4%) were deemed non-urgent by both tests (true negative), 4 (3.1%) were non-urgent by reference standard but urgent by virtual examination (false positive), 22 (17.1%) were urgent by reference standard but non-urgent by virtual examination (false negative), and 47 (36.4%) were urgent by both reference standard and virtual examination (true positive). Overall, the telemedical protocol had a sensitivity of 0.71 (95% CI 0.61–0.81) and a specificity of 0.92 (95% CI 0.86–0.98) in its ability to determine appropriate triage level. The positive predictive value of an urgent telemedical triage was 0.93 (95% CI 0.88–0.99), while the negative predictive value of a non-urgent telemedical triage was 0.67 (95% CI 0.56–0.77). Sensitivity and specificity were also calculated for telemedical triage of the most common eye complaints, including blurred vision (n = 68), eye pain (n = 56), eye redness (n = 54), and eyelid complaints (n = 42) (Table 1).
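The four measures named above follow from a 2 × 2 table of virtual versus in-person triage. The Python sketch below shows the arithmetic under stated assumptions: the function names are hypothetical, and the normal-approximation interval clipped to [0, 1] is an assumed method, since the text does not say how the confidence intervals were derived. The example call uses the four patient-level counts quoted in the paragraph; because those counts total 129 patients while Table 1 is reported over 220 complaints, the values this sketch returns need not match the published estimates.

```python
from math import sqrt


def proportion_with_ci(successes, total, z=1.96):
    """Return (estimate, lower, upper) using a normal-approximation interval."""
    if total <= 0:
        raise ValueError("total must be positive")
    p = successes / total
    half_width = z * sqrt(p * (1 - p) / total)
    # Clip to [0, 1], since a proportion cannot leave that range.
    return p, max(0.0, p - half_width), min(1.0, p + half_width)


def triage_metrics(tp, fp, fn, tn):
    """Treat 'urgent' as the positive class; in-person triage is the reference."""
    return {
        "sensitivity": proportion_with_ci(tp, tp + fn),
        "specificity": proportion_with_ci(tn, tn + fp),
        "ppv": proportion_with_ci(tp, tp + fp),
        "npv": proportion_with_ci(tn, tn + fn),
    }


# Patient-level counts as quoted in the text.
metrics = triage_metrics(tp=47, fp=4, fn=22, tn=56)
for name, (estimate, low, high) in metrics.items():
    print(f"{name}: {estimate:.2f} ({low:.2f}, {high:.2f})")
```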

The most common diagnoses by in-person examination were conjunctivitis (12), posterior vitreous detachment (10), chalazion/hordeolum (9), anterior uveitis (6), dry eye (5), retinal detachment/hole/tear (5), vitreous hemorrhage (4), blepharitis (4), corneal abrasion (4), contact lens keratitis (3), and subconjunctival hemorrhage (2). These common diagnoses accounted for 64 of 129 cases (49.6%).

An “urgent” triage designation was assigned to a greater proportion of the total presenting visual complaints by in-person examination than by virtual assessment (in-person: 53.6%, virtual: 39.1%; p = 0.003) (Table 1). Among the four CC groups, the proportion of complaints triaged as “urgent” did not differ significantly between in-person examination and virtual evaluation except for “eye pain” (in-person: 58.9%, virtual: 37.5%; p = 0.038). When assessed in person, “blurred vision” was the CC most frequently assigned urgent status (66%), whereas “eyelid complaint” was the least frequently assigned urgent status (36%). The pairwise comparison of proportions between these two complaint groups was statistically significant (p = 0.021).

Twenty-two cases of ‘false negative’ triage status (i.e., considered urgent by in-person examination but non-urgent by virtual examination) had the following diagnoses as determined by in-person examination: anterior uveitis (2), chemical burn, superficial punctate keratopathy with abrasion, corneal abrasion (2), chemosis with dellen and corneal abrasion, corneal infiltrate (2), superficial keratitis, hordeolum with preseptal cellulitis, filamentary keratitis, microhyphema with elevated pressure, lower lid entropion (with incidental finding of chronic retinal detachment), multiple hordeola, conjunctival lesion, herpes zoster ophthalmicus, posterior vitreous detachment, central serous chorioretinopathy, blurred vision of unknown etiology, amaurosis fugax, and headache. Seven out of 22 cases (32%) with “false negative” triage status had diagnostic concordance between the in-person examination and virtual examination: filamentary keratitis, conjunctival lesion, lower lid entropion (with incidental finding of chronic retinal detachment), corneal abrasion, headache, hordeolum, and chemosis. Blepharitis and dry eye were always triaged correctly as non-urgent. Retinal detachment/hole/tear, vitreous hemorrhage, and contact lens keratitis were always triaged correctly as urgent.

Overall, 113 of 220 complaints (51.4%) had virtual diagnostic concordance with in-person assessment. Virtual diagnostic accuracies, as stratified by most common CC, were eye redness: (32/54; 59.3%), eyelid complaint (24/42; 57.1%), eye pain (27/56; 48.2%), and blurred vision: (30/68; 44.1%), with p = 0.31 (Table 1).

Mean overall confidence level for all virtual diagnoses was 5.6 ± 1.8 (range 1.0–9.7). The confidence levels for virtual diagnoses made correctly and incorrectly were 6.6 ± 1.6 and 4.5 ± 1.4, respectively. The difference in average confidence level across all CC categories was statistically significant (p = 0.012). Dunn’s test of multiple comparisons revealed that the difference in average confidence level between the “blurred vision” group (average confidence = 5.04) and the “eye redness” group (average confidence = 6.06) was statistically significant (adjusted p-value = 0.023). Similarly, the difference in average confidence level between the “blurred vision” group (average confidence = 5.04) and the “eyelid complaint” group (average confidence = 5.99) was statistically significant (adjusted p-value = 0.041). All other pairwise comparisons were not statistically significant at the 0.05 significance level. On average, virtual examiners reported a higher diagnostic confidence level for correctly diagnosed complaints than for incorrectly diagnosed complaints across each of the four complaint categories (all p-values < 0.001).

Virtual graders indicated significantly greater mean diagnostic confidence for non-urgent presentations (5.9) as compared to urgent presentations (5.3) (p = 0.018, Table 2). This trend was also appreciated among patients for whom a correct virtual triage was obtained (p = 0.012) but not for patients with incorrect virtually triaged complaints (p = 0.76). However, there was no significant difference in virtual diagnostic accuracy between non-urgent and urgent presentations (p = 0.054).

Kappa values for inter-rater reliability for triage status were determined between each pair of virtual examiners. Agreement was poor for every pair: examiner 1 vs. examiner 2, κ = 0.139 (SE 0.057, 95% CI 0.028–0.250); examiner 1 vs. examiner 3, κ = 0.246 (SE 0.061, 95% CI 0.127–0.366); examiner 2 vs. examiner 3, κ = 0.379 (SE 0.082, 95% CI 0.219–0.540).
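A pairwise kappa of this kind compares observed agreement with the agreement expected by chance. The sketch below assumes Cohen's kappa for two raters, which the text does not name explicitly; the function name and the example ratings are hypothetical and are not study data.

```python
def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who labelled the same cases."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("raters must label the same non-empty set of cases")
    n = len(rater_a)
    # Proportion of cases on which the two raters gave the same label.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    labels = set(rater_a) | set(rater_b)
    # Agreement expected if each rater labelled independently at their own rates.
    expected = sum(
        (rater_a.count(label) / n) * (rater_b.count(label) / n) for label in labels
    )
    if expected == 1.0:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (observed - expected) / (1 - expected)


# Invented ratings, for illustration only.
examiner_1 = ["urgent", "urgent", "non-urgent", "non-urgent"]
examiner_2 = ["urgent", "non-urgent", "non-urgent", "non-urgent"]
kappa = cohens_kappa(examiner_1, examiner_2)
```

A value of 0 indicates agreement no better than chance, and 1 indicates perfect agreement.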

Additional triage sensitivities and specificities were calculated following adjustment of 6 “false negative” triage cases to “true negative” based on the authors’ post-hoc clinical assessment that the agreed upon diagnoses were indeed non-urgent. Following the adjustment, overall triage sensitivity improved from 0.71 to 0.79 (95% CI 0.69–0.88) (Table 4). A similar adjustment was performed for cases with low diagnostic confidence levels (less than 4) to determine the effect of employing a conservative approach that automatically triaged cases with low virtual confidence as “urgent”. Triage sensitivity following adjustment of 10 low confidence cases improved from 0.71 to 0.76 (95% CI 0.67–0.85). The combined effect of both of these adjustments yielded an overall adjusted sensitivity of 0.84 (95% CI 0.75–0.92) (Table 4).
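The low-confidence adjustment amounts to a simple override rule, sketched below in Python. The threshold of 4 comes from the text; the function names, label strings, and toy cases are hypothetical and do not reproduce the study data.

```python
LOW_CONFIDENCE_THRESHOLD = 4.0  # "less than 4" on the 10-point scale


def adjust_for_low_confidence(virtual_triage, confidence,
                              threshold=LOW_CONFIDENCE_THRESHOLD):
    """Escalate any low-confidence virtual read to 'urgent'."""
    return "urgent" if confidence < threshold else virtual_triage


def sensitivity(reference, virtual):
    """Share of reference-urgent cases that the virtual read also called urgent."""
    urgent_pairs = [(r, v) for r, v in zip(reference, virtual) if r == "urgent"]
    if not urgent_pairs:
        raise ValueError("no urgent reference cases")
    return sum(v == "urgent" for _, v in urgent_pairs) / len(urgent_pairs)


# Invented toy cases, for illustration only.
reference = ["urgent", "urgent", "urgent", "non-urgent"]
virtual = ["urgent", "non-urgent", "non-urgent", "non-urgent"]
confidence = [7.0, 3.0, 6.0, 3.5]

adjusted = [adjust_for_low_confidence(v, c) for v, c in zip(virtual, confidence)]
before = sensitivity(reference, virtual)
after = sensitivity(reference, adjusted)
```

In the toy data the override recovers one missed urgent case but also escalates a non-urgent one, which mirrors the trade-off between higher sensitivity and lower specificity seen in the adjusted results.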

Table 4.

Adjusted telemedical sensitivity & specificity.

| Parameters | Overall (N = 220) | Eye redness (N = 54) | Eye pain (N = 56) | Blurred vision (N = 68) | Eyelid complaint (N = 42) |
|---|---|---|---|---|---|
| Low diagnostic confidence adjustment | | | | | |
| Triage sensitivity (CI) | 0.76 (0.67–0.85) | 0.68 (0.50–0.86) | 0.67 (0.51–0.83) | 0.78 (0.66–0.90) | 0.73 (0.51–0.96) |
| Triage specificity (CI) | 0.80 (0.71–0.89) | 0.90 (0.79–1.00) | 0.78 (0.61–0.95) | 0.78 (0.61–0.95) | 0.93 (0.83–1.00) |
| Triage disagreement adjustment | | | | | |
| Triage sensitivity (CI) | 0.79 (0.69–0.88) | 0.80 (0.64–0.96) | 0.73 (0.58–0.88) | 0.82 (0.71–0.93) | 0.80 (0.60–1.00) |
| Triage specificity (CI) | 0.92 (0.86–0.98) | 0.93 (0.84–1.00) | 0.91 (0.80–1.00) | 0.91 (0.80–1.00) | 0.96 (0.89–1.00) |
| Combined overall adjustment | | | | | |
| Triage sensitivity (CI) | 0.84 (0.75–0.92) | 0.80 (0.64–0.96) | 0.82 (0.69–0.95) | 0.87 (0.77–0.97) | 0.87 (0.69–1.00) |
| Triage specificity (CI) | 0.80 (0.71–0.89) | 0.90 (0.79–1.00) | 0.78 (0.61–0.95) | 0.78 (0.61–0.95) | 0.93 (0.83–1.00) |

Discussion

Teleophthalmology for the diagnosis of urgent complaints has the potential to expand access to subspecialty care and minimize costly subspecialty visits. This study examines the sensitivity and specificity of a smartphone teleophthalmology protocol, augmented by non-mydriatic posterior segment photos, for both triage and diagnosis of urgent ophthalmic complaints.

The study used a combination of a patient survey, clinical information, and imaging data to assess any complaint presenting to an ophthalmic emergency room, often as referred by a primary care provider. Sensitivity and specificity for triage status using this protocol were 71% and 92%, respectively, which is similar to what has been reported in smaller international studies [16–19]. Moreover, we found similar telemedical triage sensitivities and specificities for the most common eye complaints, with eye redness and eyelid complaints demonstrating the highest specificities (0.93 and 0.96, respectively) and blurry vision and eye redness the highest sensitivities (0.73 and 0.68, respectively). This is encouraging given that the testing relied solely on a brief history, iPhone photography, and fundus photography gathered by individuals without clinical training.

It is likely that some of the disparity between in-person and virtual triage status is due to variability in clinical opinion. Indeed, there is a lack of a true gold standard for triage status. The “false negative” group (i.e., patients that were triaged as urgent by in-person examination but non-urgent by virtual examination) is at the highest risk in our protocol. These are the patients who theoretically would have missed receiving appropriate care if they had been assessed by virtual examination alone. There are several diagnoses, including hordeolum, conjunctival lesion, filamentary keratitis, superficial keratitis, lower lid entropion, headache, and chemosis about which clinicians might disagree as to whether an urgent triage designation is warranted. Therefore, a robust telemedical evaluation program needs to establish uniform clinical criteria for which conditions constitute urgent diagnosis.

Our reference standard of the in-person examiner represents a single clinical opinion, which could vary according to clinical acumen, seniority, and attitude towards risk. This is highlighted by the fact that 32% of patients who were “false negatives” for urgent triage status actually had diagnostic concordance; that is, the in-person and virtual examiners agreed on the diagnosis but disagreed on whether that diagnosis was urgent or non-urgent. We sought to address this limitation by calculating hypothetical triage sensitivities and specificities after adjusting 6 of the 7 “false negative” triage cases to “true negatives”. This change maintained the diagnosis of the in-person examiner but changed their triage status to non-urgent based on the authors’ clinical assessment of these diagnoses. Another explanation for the poor telemedical triage sensitivity may be low virtual diagnostic confidence. This could potentially be compensated for by automatically assigning an urgent triage status to any case with a virtual diagnostic confidence below a given threshold. The substantial improvement in triage sensitivity (overall adjusted triage sensitivity of 0.84) following correction for low diagnostic confidence and diagnosis-specific triage status disagreement demonstrates the potential uses and parameters for telemedicine in the assessment of urgent eye complaints.

A telemedicine approach for the triage of urgent eye complaints may vary according to patient symptoms that closely correspond to specific aetiologies. For example, all 19 patients who reported a CC of “flashes and floaters” were designated a triage status of “urgent” by the in-person examiner. This finding highlights a well-established practice pattern, namely, to urgently triage patients presenting with reports of “flashes and floaters” due to the urgent nature of the potential retinal aetiologies. Conversely, there is no anterior segment-related clinical history equivalent of “flashes and floaters” to indicate the urgency of anterior segment disease [28]. Further exploration, therefore, is needed to determine the extent to which virtual triage status can be determined with survey information alone.

Examination of common CCs suggests that it is possible to correctly triage such complaints, though the ability to accurately diagnose a specific etiology through our teleophthalmology program is limited. This is especially true for “eye pain” (48%) and “blurry vision” (44%), for which diagnostic accuracy in this study fell below 50%. It is possible that higher quality imaging modalities are required to obtain accurate diagnoses for these CCs. Recent advances in technology, including remotely-operated slit lamp cameras [29, 30] capable of detecting anterior chamber cell and flare and ultrawide field retinal imaging [31], may prove useful in this regard.

The choice of smartphone technology for anterior segment imaging in this study was pursued because smartphones are ubiquitously available, thereby improving the generalizability of our findings [32, 33]. However, smartphones do not have a slit beam and as such cannot image the anterior chamber structures with stereoscopic depth cues [34]. Also, handheld photos from a smartphone do not allow for the same fine-tuned, adjustable focus as a traditional slit lamp camera. Such imaging limitations potentially contributed to reduced diagnostic confidence among virtual examiners. Notably, sensitivity and specificity of virtual examination may increase with the emergence of newer imaging solutions, particularly for anterior segment pathology [34].

We found low agreement in triage designation between remote reviewers, which suggests that sensitivity and specificity vary depending on the individual clinician reading the virtual data. Virtual evaluation data were considered in aggregate for this study for the purpose of testing a representative diagnostic opinion rather than that of one individual. However, low kappa values for triage status may indicate that further work should be done to properly train physicians in the use of ophthalmic telemedicine. As telemedicine is further integrated into ophthalmologic care, education in best telemedicine practices should be made available for current physicians as well as for trainees. Telemedical education throughout one’s training and clinical practice could potentially increase levels of confidence in remote triage and diagnosis.

This study has several limitations, one of which is selection bias. Presumably, patients with lower acuity complaints who were not in significant pain were more likely to agree to participate. The study was not powered to compare groups according to acuity of ophthalmic diagnosis, nor was it powered for separate analysis of individual CCs. As mentioned, there is a lack of a true gold standard for triage status. Lastly, our choice to perform posterior segment photography with a standard non-mydriatic fundus camera may not generalize to settings in which such equipment is cost-prohibitive; there, greater reliance on survey information for posterior complaints would be needed.

Overall, this study illustrates that a protocol combining a survey, clinical information, smartphone anterior segment photos, and standard fundus photos can produce reasonable sensitivity and specificity for triaging the complaint as urgent or non-urgent, suggesting that this technique could serve as a helpful clinical tool in emergency settings. This protocol, however, cannot reliably produce high levels of diagnostic accuracy remotely for urgent ophthalmic complaints. This study illustrates the need for thoughtful validation of any telemedicine protocol.

Summary

What was known before

Urgent ophthalmic complaints presenting to emergency rooms are often misdiagnosed or mismanaged by non-ophthalmic providers. Telemedicine is a model of care capable of improving patient access to eye care in emergent settings. However, the utility of telemedical diagnosis and triage of urgent ophthalmic complaints has been poorly studied.

What this study adds

This study assesses the sensitivity and specificity of a teleophthalmology program to both triage and diagnose urgent ophthalmic complaints presenting to an ophthalmic emergency room. The telemedical examination consisted of a patient questionnaire, photographs of both the anterior segment and fundus, as well as ophthalmic vital signs. We show that this model of telemedical eye care can reliably triage eye disease based on presenting complaints and ophthalmic testing.

Acknowledgements

The authors acknowledge the Department of Ophthalmology at Massachusetts Eye and Ear Infirmary.

Author contributions

RSM and ACL had full access to all the data in the study and take responsibility for the integrity of the data and the accuracy of the data analysis. Concept and design: ACL, MBH, EJR, RSM, GWA. Acquisition, analysis, or interpretation of data: NEH, RSM, GWA, ACL, EJR. Drafting of the manuscript: RSM, GWA, ACL, NEH. Critical revision of the manuscript for important intellectual content: All authors. Statistical analysis: NEH. Supervision: ACL and RSM.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Patel SY, Mehrotra A, Huskamp HA, Uscher-Pines L, Ganguli I, Barnett ML. Variation in telemedicine use and outpatient care during the COVID-19 pandemic in the United States. Health Aff. 2021;40:349–58. doi: 10.1377/hlthaff.2020.01786. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Silva PS, Aiello LP. Telemedicine and eye examinations for diabetic retinopathy: a time to maximize real-world outcomes. JAMA Ophthalmol. 2015;133:525–6. doi: 10.1001/jamaophthalmol.2015.0333. [DOI] [PubMed] [Google Scholar]

- 3.Chasan JE, Delaune B, Maa AY, Lynch MG. Effect of a teleretinal screening program on eye care use and resources. JAMA Ophthalmol. 2014;132:1045–51. doi: 10.1001/jamaophthalmol.2014.1051. [DOI] [PubMed] [Google Scholar]

- 4.Kirkizlar E, Serban N, Sisson JA, Swann JL, Barnes CS, Williams MD. Evaluation of telemedicine for screening of diabetic retinopathy in the veterans health administration. Ophthalmology. 2013;120:2604–10. doi: 10.1016/j.ophtha.2013.06.029. [DOI] [PubMed] [Google Scholar]

- 5. Owsley C, McGwin G, Lee DJ, Lam BL, Friedman DS, Gower EW, et al. Diabetes eye screening in urban settings serving minority populations: detection of diabetic retinopathy and other ocular findings using telemedicine. JAMA Ophthalmol. 2015;133:174. doi: 10.1001/jamaophthalmol.2014.4652.

- 6. Ogunyemi O, George S, Patty L, Teklehaimanot S, Baker R. Teleretinal screening for diabetic retinopathy in six Los Angeles urban safety-net clinics: final study results. AMIA Annu Symp Proc AMIA Symp. 2013;2013:1082–8.

- 7. Silva PS, Cavallerano JD, Tolson AM, Rodriguez J, Rodriguez S, Ajlan R, et al. Real-time ultrawide field image evaluation of retinopathy in a diabetes telemedicine program. Diabetes Care. 2015;38:1643–9. doi: 10.2337/dc15-0161.

- 8. Chiang MF, Wang L, Kim D, Scott K, Richter G, Kane S, et al. Diagnostic performance of a telemedicine system for ophthalmology: advantages in accuracy and speed compared to standard care. AMIA Annu Symp Proc AMIA Symp. 2010;2010:111–5.

- 9. Daniel E, Quinn GE, Hildebrand PL, Ells A, Hubbard GB, Capone A, et al. Validated system for centralized grading of retinopathy of prematurity: telemedicine approaches to evaluating acute-phase retinopathy of prematurity (e-ROP) study. JAMA Ophthalmol. 2015;133:675. doi: 10.1001/jamaophthalmol.2015.0460.

- 10. Jackson KM. Cost-utility analysis of telemedicine and ophthalmoscopy for retinopathy of prematurity management. Arch Ophthalmol Chic Ill 1960. 2008;126:493–9. doi: 10.1001/archopht.126.4.493.

- 11. Pasquale LR, Asefzadeh B, Dunphy RW, Fisch BM, Conlin PR, Ocular TeleHealth Team. Detection of glaucoma-like optic discs in a diabetes teleretinal program. Optom St Louis Mo. 2007;78:657–63. doi: 10.1016/j.optm.2007.04.101.

- 12. Maa AY, Evans C, DeLaune WR, Patel PS, Lynch MG. A novel tele-eye protocol for ocular disease detection and access to eye care services. Telemed J E-Health J Am Telemed Assoc. 2014;20:318–23. doi: 10.1089/tmj.2013.0185.

- 13. Gupta SC, Sinha SK, Dagar AB. Evaluation of the effectiveness of diagnostic & management decision by teleophthalmology using indigenous equipment in comparison with in-clinic assessment of patients. Indian J Med Res. 2013;138:531–5.

- 14. Tan J, Poh E, Sanjay S, Lim T. A pilot trial of tele-ophthalmology for diagnosis of chronic blurred vision. J Telemed Telecare. 2013;19:65–9. doi: 10.1177/1357633X13476233.

- 15. Wright HR, Diamond JP. Service innovation in glaucoma management: using a web-based electronic patient record to facilitate virtual specialist supervision of a shared care glaucoma programme. Br J Ophthalmol. 2015;99:313–7. doi: 10.1136/bjophthalmol-2014-305588.

- 16. Peeler CE, Dhakhwa K, Mian SI, Blachley T, Patel S, Musch DC, et al. Telemedicine for corneal disease in rural Nepal. J Telemed Telecare. 2014;20:263–6. doi: 10.1177/1357633X14537769.

- 17. John S, Sengupta S, Reddy SJ, Prabhu P, Kirubanandan K, Badrinath SS. The Sankara Nethralaya mobile teleophthalmology model for comprehensive eye care delivery in rural India. Telemed J E-Health J Am Telemed Assoc. 2012;18:382–7. doi: 10.1089/tmj.2011.0190.

- 18. Johnson KA, Meyer J, Yazar S, Turner AW. Real-time teleophthalmology in rural Western Australia. Aust J Rural Health. 2015;23:142–9. doi: 10.1111/ajr.12150.

- 19. Ribeiro AG, Rodrigues RAM, Guerreiro AM, Regatieri CVS. A teleophthalmology system for the diagnosis of ocular urgency in remote areas of Brazil. Arq Bras Oftalmol. 2014;77:214–8. doi: 10.5935/0004-2749.20140055.

- 20. Lamminen H, Lamminen J, Ruohonen K, Uusitalo H. A cost study of teleconsultation for primary-care ophthalmology and dermatology. J Telemed Telecare. 2001;7:167–73. doi: 10.1258/1357633011936336.

- 21. Deaner JD, Amarasekera DC, Ozzello DJ, Swaminathan V, Bonafede L, Meeker AR, et al. Accuracy of referral and phone-triage diagnoses in an eye emergency department. Ophthalmology. 2021;128:471–3. doi: 10.1016/j.ophtha.2020.07.040.

- 22. Dettori J, Taravati P. Diagnostic accuracy of emergency medicine provider eye exam. Invest Ophthalmol Vis Sci. 2012;53:1434–1434.

- 23. Conlin PR, Asefzadeh B, Pasquale LR, Selvin G, Lamkin R, Cavallerano AA. Accuracy of a technology-assisted eye exam in evaluation of referable diabetic retinopathy and concomitant ocular diseases. Br J Ophthalmol. 2015;99:1622–7. doi: 10.1136/bjophthalmol-2014-306536.

- 24. Hollander M, Wolfe DA. Nonparametric Statistical Methods. Wiley; 1973.

- 25. Dunn OJ. Multiple comparisons using rank sums. Technometrics. 1964;6:241–52. doi: 10.1080/00401706.1964.10490181.

- 26. Benjamini Y, Hochberg Y. Controlling the false discovery rate: a practical and powerful approach to multiple testing. J R Stat Soc Ser B Methodol. 1995;57:289–300.

- 27. R Core Team. R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing; 2020. https://www.R-project.org/

- 28. Woodward MA, Valikodath NG, Newman-Casey PA, Niziol LM, Musch DC, Lee PP. Eye symptom questionnaire to evaluate anterior eye health. Eye Contact Lens. 2018;44:384–9. doi: 10.1097/ICL.0000000000000403.

- 29. Parel J-MA, Rowaan C, Gonzalez A, Silgado J, Aguilar MC, Alawa KA, et al. Second generation robotic remote controlled stereo slit-lamp. Invest Ophthalmol Vis Sci. 2016;57:1721. doi: 10.1167/tvst.7.4.1.

- 30. Lahaie Luna G, Parel J-M, Gonzalez A, Hopman W, Rowaan C, Khimdas S, et al. Validating the use of a stereoscopic robotized teleophthalmic drone slit lamp. Can J Ophthalmol. 2021;56:191–6. doi: 10.1016/j.jcjo.2020.10.005.

- 31. Aiello LP, Odia I, Glassman AR, Melia M, Jampol LM, Bressler NM, et al. Comparison of early treatment diabetic retinopathy study standard 7-field imaging with ultrawide-field imaging for determining severity of diabetic retinopathy. JAMA Ophthalmol. 2019;137:65. doi: 10.1001/jamaophthalmol.2018.4982.

- 32. Berenguer A, Goncalves J, Hosio S, Ferreira D, Anagnostopoulos T, Kostakos V. Are smartphones ubiquitous? An in-depth survey of smartphone adoption by seniors. IEEE Consum Electron Mag. 2017;6:104–10. doi: 10.1109/MCE.2016.2614524.

- 33. Gao W, Liu Z, Guo Q, Li X. The dark side of ubiquitous connectivity in smartphone-based SNS: An integrated model from information perspective. Comput Hum Behav. 2018;84:185–93. doi: 10.1016/j.chb.2018.02.023.

- 34. Armstrong GW, Kalra G, De Arrigunaga S, Friedman DS, Lorch AC. Anterior segment imaging devices in ophthalmic telemedicine. Semin Ophthalmol. 2021;36:149–56. doi: 10.1080/08820538.2021.1887899.