Abstract

With the increase of COVID-19 cases worldwide, an effective way to diagnose COVID-19 patients is required. The primary problem in diagnosing COVID-19 patients is the shortage and limited reliability of testing kits: because the virus spreads quickly, medical practitioners face difficulty in identifying positive cases. The second real-world problem is sharing data among hospitals globally while respecting the privacy concerns of the organizations. Building a collaborative model and preserving privacy are the major concerns when training a global deep learning model. This paper proposes a framework that collects a small amount of data from different sources (various hospitals) and trains a global deep learning model using blockchain-based federated learning. Blockchain technology authenticates the data, and federated learning trains the model globally while preserving the privacy of the organizations. First, we propose a data normalization technique that deals with the heterogeneity of the data, which are gathered from different hospitals that use different kinds of Computed Tomography (CT) scanners. Second, we use Capsule Network-based segmentation and classification to detect COVID-19 patients. Third, we design a method that collaboratively trains a global model using blockchain technology with federated learning while preserving privacy. Additionally, we collected real-life COVID-19 patient data and made it openly available to the research community. The proposed framework can utilize up-to-date data, which improves the recognition of CT images. Finally, we conducted comprehensive experiments to validate the proposed method. Our results demonstrate better performance in detecting COVID-19 patients.

Keywords: COVID-19, privacy-preserved data sharing, deep learning, federated-learning, blockchain

I. Introduction

A. Background

The massive and sudden spread of the coronavirus disease (COVID-19) has overwhelmed the world for more than a year since the initial cases were reported. The coronavirus causes an acute respiratory infection of the lungs that has led to a large number of deaths. Because of its highly contagious nature, COVID-19 detection remains a high-priority task. Currently, a nucleic acid test on throat and nasopharyngeal swab samples is the most feasible means of diagnosis. However, sampling errors and low viral loads reduce the accuracy of the diagnosis. In contrast, antigen tests are comparatively faster but have poor sensitivity.

Besides pathological tests, radiological examinations in the form of chest Computed Tomography (CT) and X-ray imaging also contribute to recognizing the infection in patients. To improve detection accuracy, a significant number of deep learning models have been proposed that detect different types of infection by analyzing CT and X-ray images [1]–[6]. These models are trained and refined on the small set of available infected samples. However, their sensitivity and accuracy are still compromised by the lack of training data. Traditional federated learning is the natural solution to this problem. Federated learning collects locally trained models from different sources (in our case, hospitals or health care centers) and collaboratively trains a global model over a decentralized network [7]–[9]. However, sharing such confidential data is not possible due to the absence of a privacy-preserving approach for health care centers [7], [10]–[19].

To address this issue, Shokri and Shmatikov [20] proposed a distributed model in which users share gradients rather than data to protect data privacy. However, their method was shown to be vulnerable even to passive attackers [21], [22]. Bonawitz et al. [23] designed a privacy-preserving framework for the secure aggregation of gradients in a federated learning global model. Zhang et al. [24] presented homomorphic encryption (HE) and threshold secret sharing schemes to secure the gradients. However, the shared model provides no assurance that the participating users are authentic. In other words, the trust problem between different sources still exists, which threatens the quality of the data and leads to poor training of the model.

To overcome the trust problem in decentralized networks, blockchain technology provides trust mechanisms in various ways [13], [25]–[28]. The private data-sharing framework in [13] authenticates data at its source. Qu et al. [27] proposed a blockchain-based algorithm for aggregating local deep learning models that ensures data reliability. However, such data-sharing techniques increase the risk of data leakage because they do not consider the privacy of the gradients. Furthermore, these schemes require multiple rounds in each aggregation, which is not suitable for a distributed blockchain network.

B. Motivation

This study is motivated by several fundamental problems. COVID-19 is spreading rapidly and presents different symptoms in different patients, so hospitals can benefit from sharing their data for the accurate diagnosis of COVID-19 patients. Sharing data securely (without leaking users' private information) and training a global model to detect positive cases is a challenging task. Moreover, existing studies are not capable of sharing data collaboratively while training the model accurately. Collecting data from various sources is a major challenge and a bottleneck in the advancement of Artificial Intelligence (AI)-based techniques. Such confidential data are not available because of the absence of a privacy-preserving approach for health care centers [7], [10]–[18]. Furthermore, training a deep learning model collaboratively over a public network is another challenge.

The latest report of the World Health Organization (WHO) reveals that COVID-19 is an infectious disease that, like SARS, primarily affects the lungs, giving them a honeycomb-like appearance [29]. Even after recovering from COVID-19, some patients have to live with permanent lung damage [30], [31]. The first motivation of our work is to find small infected areas in the lungs caused by COVID-19. Such detection can help professional radiologists accurately identify infected areas. The second motivation is to share data to train a better deep learning model while respecting the privacy concerns of the data providers. Sharing data helps to develop a robust deep learning-based model for the automatic detection of COVID-19 patients.

The main challenges are as follows:

- Unbalanced Data: The data are collected from different hospitals and CT scanners, and the scanners support different resolutions, sizes, and other properties.
- Privacy: Another challenge is the lack of an appropriate dataset for training. It is difficult to collect enough training data and train a robust prediction model while respecting the privacy concerns of hospitals.
- Detection of COVID-19 in CT scans: Recognizing the patterns of infection in the lungs and screening for COVID-19 are also challenging tasks.
- Collaborative learning: Training a global model over a decentralized network and sending the aggregated model back to the clients is challenging.

C. Proposed Approach

To address these challenges, we propose a framework that builds an accurate collaborative model using data from multiple hospitals to recognize the CT scans of COVID-19 patients. The proposed blockchain-based federated learning framework learns collaboratively from multiple hospitals that use different kinds of CT scanners. First, we propose a data normalization process to normalize the data obtained from the different sources. Then, we employ deep learning models to recognize COVID-19 patterns in lung CT scans. We use SegCaps [32] for image segmentation and further train a Capsule Network [33] for better generalization. We found that the Capsule Network achieved better performance than the other learning models we compared. Finally, we train the global model and address the privacy issue using federated learning. The proposed framework collects the data, collaboratively trains an intelligent model, and then shares this model in a decentralized manner over the public network. With federated learning, the weights of the various local models are aggregated while the hospitals' data remain private. To preserve privacy, hospitals share only gradients with the blockchain network; the blockchain-based federated learning aggregates the gradients and distributes the updated model to the verified hospitals. This decentralized blockchain architecture shares data among multiple hospitals securely, without leaking the hospitals' private information.

Additionally, this article introduces a new dataset, named CC-19, related to the latest coronavirus disease, COVID-19. The dataset contains Computed Tomography (CT) scan slices for 89 subjects. Of these 89 subjects, 68 were confirmed patients (positive cases) of the COVID-19 virus, and the remaining 21 were negative cases. The dataset contains 34,006 CT scan slices (images) belonging to the 89 subjects. The data for these patients were collected on different days, yielding about 231 CT scan volumes in total.

The main contributions of this paper include the following:

1) This paper proposes a data normalization technique (to accurately train the federated learning model), because the data are collected from different sources (i.e., hospitals) and devices (CT scanner machines).
2) The proposed technique detects the patterns of COVID-19 in lung CT scans using Capsule Network-based segmentation and classification.
3) This paper proposes a blockchain-empowered method that collaboratively collects locally trained model weights from different sources while respecting the organizations' privacy concerns. Federated learning is employed to protect the organizations' data privacy and to train the global deep learning model from less accurate local models.
4) Additionally, we introduce a new dataset of 89 subjects, 68 of whom are confirmed COVID-19 patients. The dataset contains 34,006 CT scan slices (images) belonging to the 89 subjects.

D. Applications

The proposed approach is practical for big data analysis (e.g., lung CT scans), and it efficiently processes the data using blockchain and deep learning models. Consider a real-time use case in which a hospital observes new symptoms of COVID-19. To discover new symptoms or new information about COVID-19, the data must be stored on a decentralized network without leaking patients' private information, and knowledge of the latest symptoms must be shared securely. Federated learning secures the data through the decentralized network and distributes the training task to train a better model using the latest available patient data.

The proposed framework collects a small amount of data from various sources and trains a collaborative deep learning model. Federated learning combines all the individually trained models into a global model over the blockchain. The collaboratively trained global model provides more accurate predictions because it holds the latest information about COVID-19 symptoms.

E. Structure of Paper

The rest of this paper is organized as follows: In Section II, we describe the system model and its workflow. Section III describes the proposed data normalization process and the capsule network-based classification and segmentation model. In Section IV, we provide details for the global federated learning model. We present the experiment details and results in Section V followed by a conclusion in Section VI.

II. System Model

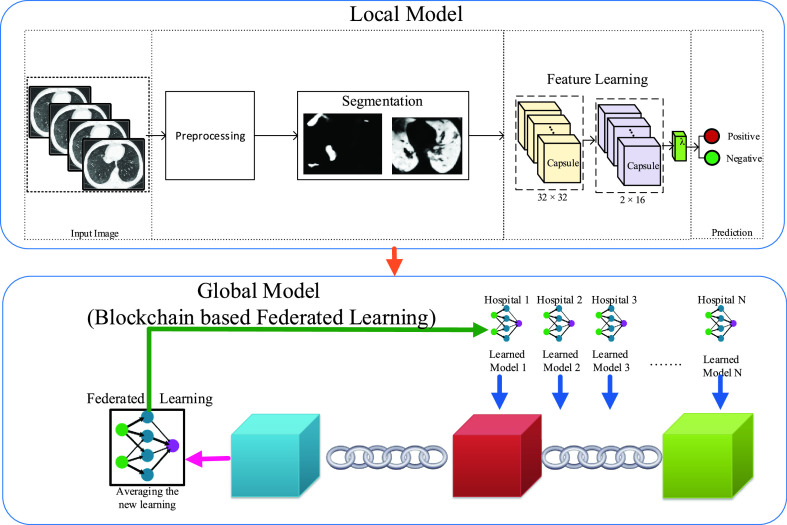

In this paper, the data are distributed among multiple hospitals. Each hospital shares its data to train the global model, as shown in Fig. 1. The main goal of this paper is to share data from multiple sources and collaboratively train a deep learning model. Because the data are collected from multiple sources, we design a normalization technique to deal with data from different kinds of CT scanners (Brilliance iCT, SOMATOM Definition Edge, Brilliance 16P CT). After normalizing the data, we segment the images and then train a Capsule Network to recognize COVID-19 suspects. We utilize a blockchain-based federated learning framework to train and share a collaborative model. The purpose of federated learning is to combine the weights of the locally trained models. After the locally trained model weights are aggregated, the global model is returned to the hospitals or organizations.

Fig. 1.

Overview of the federated learning process.

The privacy of the data providers is the most important factor, and the privacy and leakage of sensitive data are managed by the blockchain. We store two types of transactions in the blockchain ledger: i) data sharing transactions and ii) data retrieval transactions. To ensure data privacy, this paper uses a permissioned blockchain to manage access to the data. The main advantage of the permissioned blockchain is that it records all transactions that retrieve data from the global model. The second objective is to achieve data collaboration: the proposed blockchain federated learning method combines the local model weights and sends the updated weights back to the local models. Finally, we design a local model for the heterogeneous or imbalanced data using the spatial normalization process.

The proposed model is divided into two parts: i) the local model and ii) blockchain-based federated learning. First, we solve the problem of heterogeneous CT scan data. Then, we use SegCaps [32] for segmentation and train the local model to detect the patterns of COVID-19. Finally, we share the local model weights with the blockchain network to train the global model.

III. Local Client Model

A. Data Normalization

A major challenge in federated learning is dealing with input data from multiple sources and various machines with different parameters. Most existing techniques are not efficient enough to deal with this problem in federated learning. To overcome this challenge, we propose a normalization technique that handles different types of CT scans and brings the images to the same standard. With this normalization, federated learning can deal with the heterogeneity of the dataset and train a better model. The normalization method has two phases: i) spatial normalization and ii) signal normalization. Spatial normalization deals with the dimensions and resolution of the CT scan. Signal normalization deals with the intensity of each voxel, based on the lung window of each CT scanner.

1). Spatial Normalization:

As already discussed, different CT scanners use different parameters for CT scans; for example, a scan volume may be acquired at high or at low resolution. In our case, we use federated learning on data obtained from multiple sources, so every scan is brought to a standardized volume for the human lung. We use Lanczos interpolation [26] to resample the scans to the standard resolution.
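The resampling step can be sketched as follows. This is a minimal NumPy illustration, assuming a separable Lanczos kernel with support a = 3 applied along each axis in turn; the kernel size and implementation are not stated in the text, and the target shape in the example is a hypothetical placeholder, not the paper's standardized volume.

```python
import numpy as np


def lanczos_kernel(x, a=3):
    """Lanczos window: sinc(x) * sinc(x / a) for |x| < a, zero elsewhere."""
    x = np.asarray(x, dtype=float)
    # np.sinc is the normalized sinc, sin(pi x) / (pi x).
    return np.where(np.abs(x) < a, np.sinc(x) * np.sinc(x / a), 0.0)


def lanczos_matrix(n_in, n_out, a=3):
    """Build an (n_out, n_in) matrix that resamples a 1D signal of length n_in to n_out."""
    # Widen the kernel when downsampling so that it also acts as a low-pass filter.
    scale = max(n_in / n_out, 1.0)
    # Map each output sample centre to a (fractional) input coordinate.
    centres = (np.arange(n_out) + 0.5) * n_in / n_out - 0.5
    dist = (np.arange(n_in)[None, :] - centres[:, None]) / scale
    weights = lanczos_kernel(dist, a)
    # Normalize rows so constant regions keep their intensity (this also handles borders).
    return weights / weights.sum(axis=1, keepdims=True)


def resample_volume(volume, target_shape, a=3):
    """Resample a 3D CT volume to target_shape, one axis at a time."""
    out = np.asarray(volume, dtype=float)
    for axis, n_out in enumerate(target_shape):
        w = lanczos_matrix(out.shape[axis], n_out, a)
        # Contract the weight matrix with the current axis, then move the new axis back.
        out = np.moveaxis(np.tensordot(w, out, axes=([1], [axis])), 0, axis)
    return out


# Hypothetical example: bring a small volume onto a common grid.
scan = np.random.default_rng(0).normal(size=(20, 64, 64))
standard = resample_volume(scan, (40, 32, 32))
print(standard.shape)  # (40, 32, 32)
```

Applying the 1D kernel axis by axis keeps the cost proportional to the volume size times the kernel width, instead of building a full 3D kernel.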

2). Signal Normalization:

Every CT scan is expressed in Hounsfield Units (HU), and the data collected from different hospitals use different HU ranges (e.g., −400 HU to −600 HU). In medical practice, radiologists set a lung window for every CT scanner. A window is characterized by its window level ($W_L$) and window width ($W_W$), where $W_L$ is the central signal value and $W_W$ defines the width of the window. Equation (1) normalizes each voxel with respect to this window, whose lower and upper bounds are $W_L - W_W/2$ and $W_L + W_W/2$:

$$I_{\text{normalized}} = \frac{I_{\text{original}} - W_L}{W_W} \tag{1}$$

where $I_{\text{original}}$ is the intensity of the data and $I_{\text{normalized}}$ is the final intensity. We set the range of the lung window to $[-0.5, 0.5]$ to standardize the embedding space.
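A minimal sketch of the signal normalization, assuming Equation (1) has the common windowing form (HU minus window level, divided by window width) followed by clipping to the window bounds. The window values come from Table II for scanners 1 and 2; the function name is hypothetical.

```python
import numpy as np


def normalize_window(hu, window_level, window_width):
    """Map HU values into [-0.5, 0.5] relative to a lung window.

    Voxels at the window level map to 0; values outside the window
    [WL - WW/2, WL + WW/2] are clipped to the bounds -0.5 and 0.5.
    """
    hu = np.asarray(hu, dtype=float)
    return np.clip((hu - window_level) / window_width, -0.5, 0.5)


# Lung window of scanners 1 and 2 in Table II: WL = -600 HU, WW = 1200 HU.
voxels = np.array([-1200.0, -1000.0, -600.0, 0.0, 700.0])
print(normalize_window(voxels, window_level=-600, window_width=1200))
```

With this window, air (−1000 HU) maps to about −0.33, while bone-range values saturate at 0.5. Scanners with a different window (for example WL = −500, WW = 1500 for scanner 6) end up on the same [−0.5, 0.5] scale.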

B. Segmentation and Classification Model

This section describes the segmentation method, which is based on [32]. The Capsule Network is then trained to detect COVID-19 using the segmented CT scan images.

The proposed method takes 2D slices as input for segmentation. A standardized volume

for human lung segmentation is used. Each CT scan volume (3D) has three planes

for human lung segmentation is used. Each CT scan volume (3D) has three planes

,

,

, and

, and

. We formalize the

. We formalize the

or

or

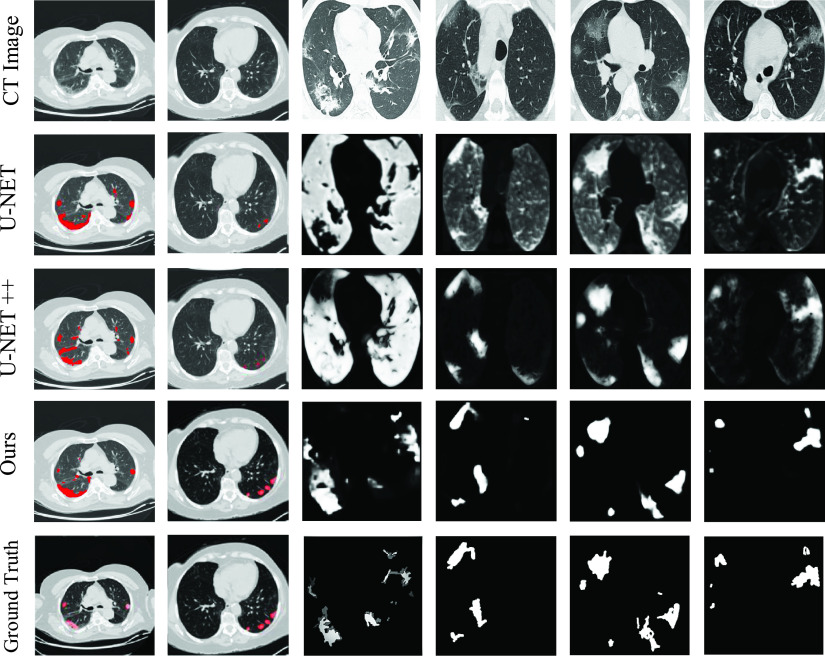

planes to easily differentiate the lung infection (as shown in the first row of Fig. 8).

planes to easily differentiate the lung infection (as shown in the first row of Fig. 8).

|

Fig. 8.

A visual comparison (segmentation) of our method with other studies. The first row (CT-Images) represents the original images taken from different datasets. The first two columns show the overlay segmentation result while the rest of the columns represent the masks.

Where

denotes the probability and

denotes the probability and

is the infection point.

is the infection point.

is the method to define the voxel of three dimensions views.

is the method to define the voxel of three dimensions views.

is aggregation function to predict the

is aggregation function to predict the

,

,

, and

, and

voxel. Thus, the traditional method is time-consuming, so, we modify the Equation (2) to:

voxel. Thus, the traditional method is time-consuming, so, we modify the Equation (2) to:

|
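The three-view formulation of Equation (2) can be illustrated with a small sketch. It assumes a hypothetical 2D segmenter `segment_slice` (a soft threshold stand-in, not SegCaps) and takes the aggregation function A to be the mean of the three per-plane probability maps; it does not reproduce the paper's choice of A or its faster modified form.

```python
import numpy as np


def segment_slice(slice_2d):
    """Hypothetical stand-in for a 2D segmenter (e.g. SegCaps) returning per-pixel probabilities.

    Here: a soft threshold on normalized intensity, purely for illustration.
    """
    return 1.0 / (1.0 + np.exp(-20.0 * slice_2d))


def three_view_probability(volume, segment=segment_slice):
    """P(v) = A(F_xy(v), F_xz(v), F_yz(v)) with A taken as the mean (an assumption)."""
    volume = np.asarray(volume, dtype=float)
    views = []
    for axis in range(3):
        # Slicing along each axis yields one of the three orthogonal 2D views;
        # segment every slice of that view and restack into a 3D probability map.
        slices = [segment(s) for s in np.moveaxis(volume, axis, 0)]
        views.append(np.moveaxis(np.stack(slices), 0, axis))
    # Aggregate the three per-view probability maps voxel by voxel.
    return np.mean(views, axis=0)


vol = np.random.default_rng(0).normal(scale=0.2, size=(8, 16, 16))
prob = three_view_probability(vol)
print(prob.shape)  # (8, 16, 16)
```

Running the 2D segmenter three times per volume is what makes this formulation expensive, which is the motivation the text gives for modifying Equation (2).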

1). Capsule Networks for Classification of COVID-19:

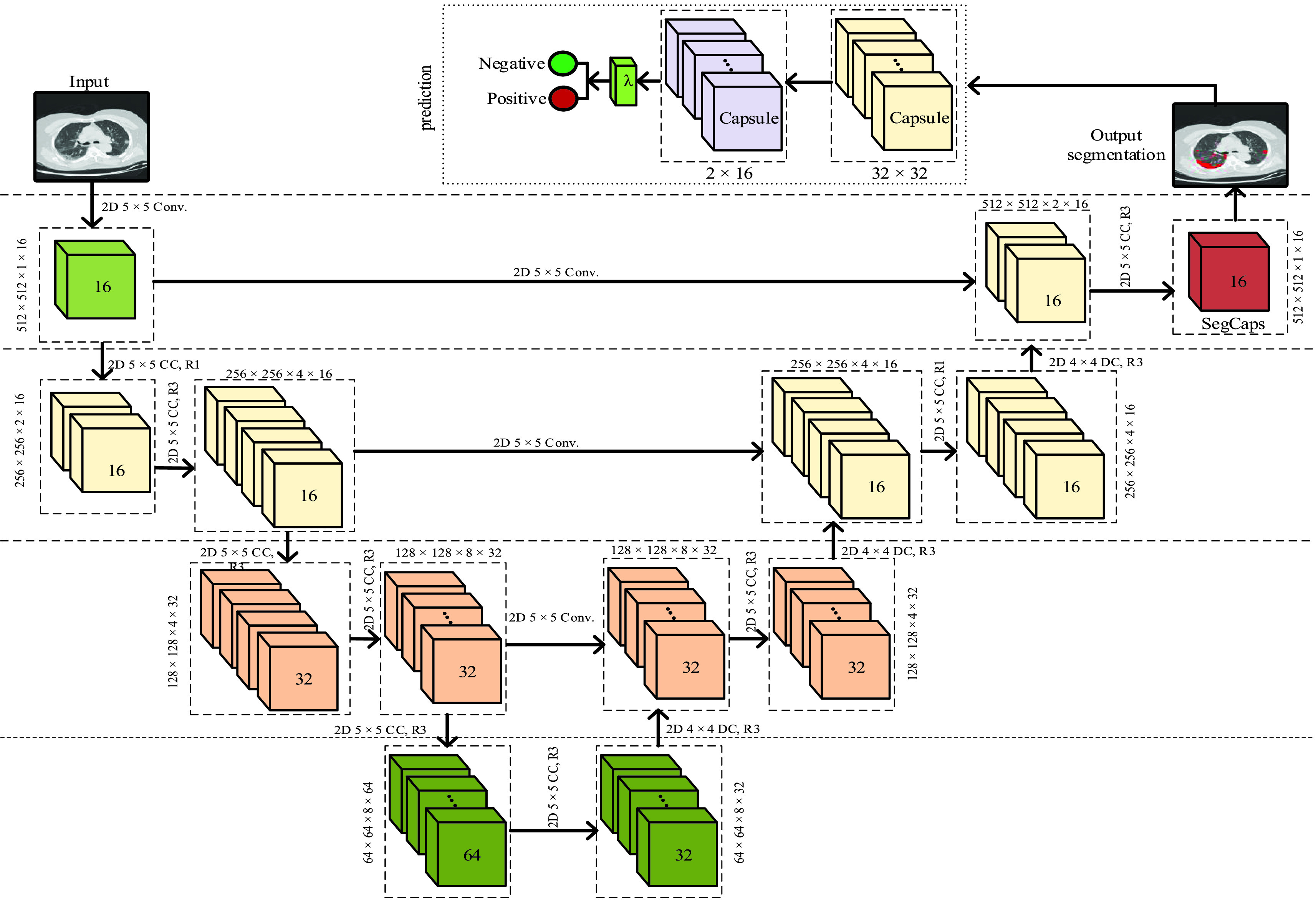

A deep learning framework usually has a feature extraction pipeline that estimates and extracts prominent features. Afterward, a learning process such as an MLP (multi-layer perceptron) is applied to the extracted features to learn the appropriate class. Over the past few years, researchers have used and fine-tuned the feature extraction pipelines of these robust deep learning frameworks. We design a Capsule Network because such networks have achieved high performance in detecting diseases in medical images. Previous techniques need large amounts of data to train an accurate model. The Capsule Network instead improves performance through changes inside the internal layers of the network. The architecture of our modified Capsule Network, which is similar to Hinton's Capsule Network, is shown in Fig. 2. The Capsule Network contains four layers: i) a convolutional layer, ii) a hidden layer, iii) a PrimaryCaps layer, and iv) a DigitCaps layer.

Fig. 2.

Our proposed capsule-based (SegCaps) segmentation and classification model. CC, DC, and Conv. represent convolution capsule, deconvolution capsule, and convolution, respectively.

Capsules are formed from the input features of the lower layer, and each layer of the Capsule Network contains many capsules. The activation of a capsule represents the instantiation parameters of an entity, and the length of the capsule's output vector is used to re-compute the score of that part. Capsule Networks have been proposed as an alternative to conventional Artificial Neural Networks (ANNs), with the capsule playing the role of the neuron. Unlike an ANN, in which a neuron outputs a scalar value, a Capsule Network describes an image at the component level and associates a vector with each component. The length of this vector represents the probability that the component exists, and max-pooling is replaced with "routing by agreement." Because capsules are independent, the probability of correct classification increases when multiple capsules agree on the same parameters. Every component can be represented by a pose vector $u_i$ that is rotated and translated by a weight matrix $W_{ij}$ into a prediction vector $\hat{u}_{j|i}$:

$$\hat{u}_{j|i} = W_{ij}\, u_i \tag{4}$$

The input $s_j$ of the next higher-level capsule is the sum of the predictions from all lower-level capsules, weighted by the coupling coefficients $c_{ij}$:

$$s_j = \sum_i c_{ij}\, \hat{u}_{j|i} \tag{5}$$

where $c_{ij}$ is given by the routing softmax function over the routing logits $b_{ij}$:

$$c_{ij} = \frac{\exp(b_{ij})}{\sum_k \exp(b_{ik})} \tag{6}$$

A squashing function is then applied so that the length of each output vector lies between 0 and 1 and can be interpreted as a probability:

$$v_j = \frac{\lVert s_j \rVert^2}{1 + \lVert s_j \rVert^2}\, \frac{s_j}{\lVert s_j \rVert} \tag{7}$$

For further details, refer to the original study [33]. We perform routing by agreement using Algorithm 1.

Algorithm 1 Routing Algorithm

1: for all capsules $i$ in layer $l$ and capsules $j$ in layer $(l+1)$ do: $b_{ij} \leftarrow 0$

2: for $k$ iterations do

3: for all capsules $i$ in layer $l$ do: $c_i \leftarrow \mathrm{softmax}(b_i)$

4: for all capsules $j$ in layer $(l+1)$ do: $s_j \leftarrow \sum_i c_{ij}\, \hat{u}_{j|i}$

5: for all capsules $j$ in layer $(l+1)$ do: $v_j \leftarrow \mathrm{squash}(s_j)$

for all capsules $i$ in layer $l$ and capsules $j$ in layer $(l+1)$ do: $b_{ij} \leftarrow b_{ij} + \hat{u}_{j|i} \cdot v_j$

6: return $v_j$

Here, $c$ is the array of coupling coefficients produced by the softmax, and it is determined by dynamic routing by agreement. In essence, over several iterations, the distribution of the outputs of the low-level capsules to the high-level capsules is gradually adjusted according to the outputs of the high-level capsules, until an ideal distribution is reached. The detailed training algorithm is given in [34]. We use the Capsule Network to train the model and compare it with state-of-the-art deep learning networks. Table I shows the differences between traditional neural networks and Capsule Networks. In Section V, we compare traditional deep learning classifiers with the Capsule Network classifier.
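Equations (4) to (7) and Algorithm 1 can be sketched in NumPy as shown below. This follows the dynamic routing of [33] for a single example; the tensor shapes, the three routing iterations, and the function names are illustrative choices, not the configuration used in this paper.

```python
import numpy as np


def squash(s, axis=-1, eps=1e-9):
    """Equation (7): shrink vector length into [0, 1) while keeping its direction."""
    norm_sq = np.sum(s * s, axis=axis, keepdims=True)
    return (norm_sq / (1.0 + norm_sq)) * s / np.sqrt(norm_sq + eps)


def softmax(b, axis=-1):
    """Equation (6): routing softmax, numerically stabilized."""
    e = np.exp(b - b.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


def dynamic_routing(u, W, iterations=3):
    """Route lower-level capsules u (n_in, d_in) to higher-level capsules.

    W has shape (n_in, n_out, d_out, d_in); returns v with shape (n_out, d_out).
    """
    # Equation (4): prediction vectors u_hat[i, j] = W[i, j] @ u[i].
    u_hat = np.einsum("ijkl,il->ijk", W, u)
    b = np.zeros(u_hat.shape[:2])  # routing logits b_ij, initialized to 0 (step 1)
    for _ in range(iterations):  # step 2
        c = softmax(b, axis=1)  # step 3: coupling coefficients over capsules j
        s = np.einsum("ij,ijk->jk", c, u_hat)  # step 4 / Equation (5)
        v = squash(s)  # step 5 / Equation (7)
        b = b + np.einsum("ijk,jk->ij", u_hat, v)  # agreement update
    return v


rng = np.random.default_rng(0)
u = rng.normal(size=(8, 4))         # 8 primary capsules with 4D poses
W = rng.normal(size=(8, 2, 16, 4))  # 2 output capsules (e.g. COVID-19 / non-COVID) with 16D poses
v = dynamic_routing(u, W)
print(np.linalg.norm(v, axis=-1))   # capsule lengths, read as class scores
```

The agreement term $\hat{u}_{j|i} \cdot v_j$ increases the logit of a route whenever a lower capsule's prediction points in the same direction as the resulting higher-level capsule. This is how the coupling coefficients converge over the iterations.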

TABLE I. A Comparison Between Capsule and Traditional Neural Network.

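| Operation | Neuron (scalar) | Capsule (vector) |
|---|---|---|
| Affine transformation | NA | $\hat{u}_{j\mid i} = W_{ij} u_i$ |
| Weighted sum | $a_j = \sum_i w_i x_i + b$ | $s_j = \sum_i c_{ij} \hat{u}_{j\mid i}$ |
| Activation | $h_j = f(a_j)$ | $v_j = \mathrm{squash}(s_j)$ |
| Output | scalar | vector |
| Graphical representation | | |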

IV. Decentralized Global Federated Learning Model

In this section, we consider a decentralized data-sharing scenario with multiple hospitals. Each hospital is willing to share its locally trained model (weights); our proposed method hides the user data and shares the model over a decentralized network. Federated learning is then used to combine the models shared by the different hospitals. The main goal is to use federated learning to share the data among the hospitals without leaking private information.

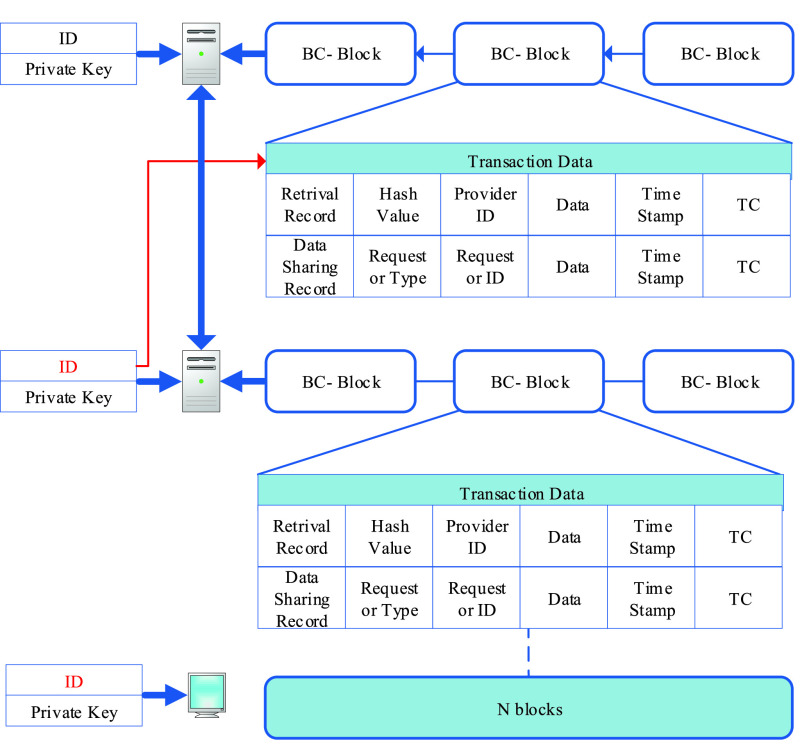

A. Blockchain Based Fast and Effective Federated Learning

Patients' data are sensitive and occupy a large amount of disk space. Placing such data on the blockchain, with its limited storage space, is expensive both financially and computationally. Thus, the actual CT scan data are stored by each hospital, while the blockchain helps to retrieve the trained model. When a new hospital provides data, it stores a transaction in a block to verify the owner of the data; the transaction records the type and size of the hospital's data. The transactions for the data sharing and retrieval processes are shown in Fig. 3. The proposed model handles data-sharing and retrieval requests. Multiple hospitals can collaboratively share data and train a collaborative model that can be used to produce better predictions. The retrieval mechanism does not violate the privacy of hospitals. Inspired by Maymounkov and Mazières [35], we present a multi-organization architecture using blockchain technology. All hospitals are partitioned according to the categories of data they share. Each category has a different community, and each community maintains its own log table. The blockchain stores the unique ID of every hospital.

Fig. 3.

Overview of the blockchain record storing process. TC represents the transaction count.

Data retrieval among the physically present nodes is expressed by Equation (8), which measures the distance between two nodes. In Equation (8), the data categories determine which data are retrieved among the hospitals, the distance between two nodes is measured for data retrieval, and the attributes of the weight matrices of the two nodes enter the measurement. Every hospital generates its unique ID according to this logic and the distance between nodes.

|

The relation between two nodes and their unique IDs is shown in Equation (9).

|
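Because the architecture is inspired by Kademlia [35], a sketch of Kademlia-style node IDs and distances may help. Here the ID is assumed to be a 160-bit SHA-1 hash of a hypothetical hospital name, and the distance is Kademlia's XOR metric; Equations (8) and (9) additionally involve data categories and weight-matrix attributes, which this sketch does not model.

```python
import hashlib


def node_id(name: str) -> int:
    """Hypothetical unique ID: 160-bit SHA-1 digest of a hospital identifier."""
    return int.from_bytes(hashlib.sha1(name.encode("utf-8")).digest(), "big")


def xor_distance(id_a: int, id_b: int) -> int:
    """Kademlia distance between two node IDs."""
    return id_a ^ id_b


def closest_nodes(target: int, ids, k=2):
    """Return the k IDs closest to `target` under the XOR metric."""
    return sorted(ids, key=lambda i: xor_distance(i, target))[:k]


hospitals = {name: node_id(name) for name in ["hospital-A", "hospital-B", "hospital-C"]}
key = node_id("lung-ct-category")  # hypothetical key for a data category
print(closest_nodes(key, hospitals.values(), k=2))
```

The XOR metric is symmetric, is zero only for identical IDs, and satisfies the triangle inequality, which is what lets Kademlia route lookups toward the nodes responsible for a key.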

To maintain data privacy in a decentralized manner, the randomized mechanism for two hospital nodes is defined by Equation (10), where $\mathcal{M}$ is the randomized mechanism, $D$ and $D'$ are neighboring data records, $O$ is a set of outcomes, and $\epsilon$ is the privacy parameter (the smaller $\epsilon$, the stronger the privacy guarantee). For all neighboring $D$, $D'$ and all $O$:

$$\Pr[\mathcal{M}(D) \in O] \le e^{\epsilon}\, \Pr[\mathcal{M}(D') \in O] \tag{10}$$

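To achieve data privacy across multiple hospitals, Laplace noise is added during local model training:

$$\mathcal{M}(D) = f(D) + \mathrm{Lap}\!\left(\frac{\Delta f}{\epsilon}\right) \tag{11}$$

where $f(D)$ is the output of local training on data $D$, $\mathcal{M}$ is the randomized mechanism, $\epsilon$ is the privacy parameter, and $\Delta f$ represents the sensitivity, expressed by Equation (12):

$$\Delta f = \max_{D, D'} \lVert f(D) - f(D') \rVert_1 \tag{12}$$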
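Equations (11) and (12) can be sketched as a Laplace mechanism applied to local model weights before they are shared. This is a minimal illustration: it assumes the sensitivity is bounded by clipping each update to a fixed L1 norm, and the function names and numbers are hypothetical.

```python
import numpy as np


def clip_l1(update, bound):
    """Scale an update so that its L1 norm is at most `bound`.

    Any two clipped updates then differ by at most 2 * bound in L1 norm,
    which upper-bounds the sensitivity in Equation (12).
    """
    norm = np.abs(update).sum()
    return update if norm <= bound else update * (bound / norm)


def laplace_mechanism(weights, sensitivity, epsilon, rng):
    """Equation (11): add Laplace noise with scale sensitivity / epsilon."""
    scale = sensitivity / epsilon
    return weights + rng.laplace(loc=0.0, scale=scale, size=np.shape(weights))


rng = np.random.default_rng(42)
local_update = rng.normal(size=100)
bound = 1.0
private_update = laplace_mechanism(
    clip_l1(local_update, bound), sensitivity=2 * bound, epsilon=0.5, rng=rng
)
```

Lowering `epsilon` increases the noise scale proportionally, so each hospital trades model utility for a stronger privacy guarantee.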

A consensus algorithm is executed to train the global model from the local models. Because all nodes collaboratively train the model, we use proof of work to share the data between the different nodes. During the training phase, the consensus algorithm checks the quality of the local models, measuring accuracy by the mean absolute error (MAE) between the predicted data and the original data; a highly accurate model has a low MAE. The consensus algorithm (voting process) among the hospitals is represented by Equations (13) and (14), where Equation (13) concerns the locally trained models and Equation (14) concerns the global model weights.

|
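The MAE-based quality check can be sketched as follows. The approval rule, the threshold, the shared validation set, and all names below are hypothetical; the text only states that local-model quality is measured by MAE during consensus.

```python
import numpy as np


def mean_absolute_error(y_true, y_pred):
    """MAE = (1/n) * sum |y_pred - y_true|."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.mean(np.abs(y_pred - y_true)))


def approve_local_models(predictions, y_true, threshold):
    """Return the IDs of local models whose MAE is at most `threshold`.

    `predictions` maps a (hypothetical) hospital ID to that model's predicted
    probabilities on a validation set with labels `y_true`.
    """
    return [
        hospital
        for hospital, y_pred in predictions.items()
        if mean_absolute_error(y_true, y_pred) <= threshold
    ]


labels = [1, 0, 1, 1]
preds = {
    "A": [0.9, 0.1, 0.8, 0.7],  # MAE = 0.175
    "B": [0.4, 0.6, 0.5, 0.3],  # MAE = 0.6
}
print(approve_local_models(preds, labels, threshold=0.3))  # ['A']
```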

To preserve the hospitals' data privacy, all data are encrypted and signed using public and private keys. The MAE is calculated for every model transaction and broadcast to the nodes. If all transactions are approved, the record is stored in the distributed ledger. More precisely, the consensus process proceeds as follows:

1) Node $N_i$ submits its local model transaction.
2) Node $N_j$ transfers its local model to the leader.
3) The leader broadcasts the block to the nodes.
4) The nodes verify the block and wait for approval.
5) Finally, the blocks are stored in the retrieval blockchain database.
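The five consensus steps can be mimicked with a toy hash-chained ledger. This is a hypothetical sketch: it uses SHA-256 hashes, a single leader, and unanimous approval by every node's ledger copy, and it omits the encryption, signatures, MAE voting, and proof of work described above.

```python
import hashlib
import json
from dataclasses import dataclass, field


def digest(payload):
    """SHA-256 over a canonical JSON encoding of `payload`."""
    return hashlib.sha256(json.dumps(payload, sort_keys=True).encode("utf-8")).hexdigest()


@dataclass
class Ledger:
    """One node's copy of the chain of blocks."""
    blocks: list = field(default_factory=list)

    def last_hash(self):
        return self.blocks[-1]["hash"] if self.blocks else "0" * 64


def leader_build_block(ledger, transactions):
    """Steps 1-3: nodes submit local-model transactions; the leader packs them into a block."""
    body = {"prev": ledger.last_hash(), "txs": transactions}
    return {**body, "hash": digest(body)}


def node_verifies(ledger, block):
    """Step 4: check the link to this node's last block and recompute the block hash."""
    body = {"prev": block["prev"], "txs": block["txs"]}
    return block["prev"] == ledger.last_hash() and block["hash"] == digest(body)


def commit(replicas, block):
    """Step 5: store the block on every replica only if all nodes approve it."""
    if all(node_verifies(r, block) for r in replicas):
        for r in replicas:
            r.blocks.append(block)
        return True
    return False


replicas = [Ledger() for _ in range(3)]  # one ledger copy per hospital node
txs = [
    {"node": "N_i", "model": digest({"w": [0.1, 0.2]})},
    {"node": "N_j", "model": digest({"w": [0.3, 0.4]})},
]
block = leader_build_block(replicas[0], txs)
print(commit(replicas, block))  # True
```

Storing only a hash of each local model in the transaction mirrors the design choice in the text: the ledger records who contributed what, while the bulky model and image data stay off-chain.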

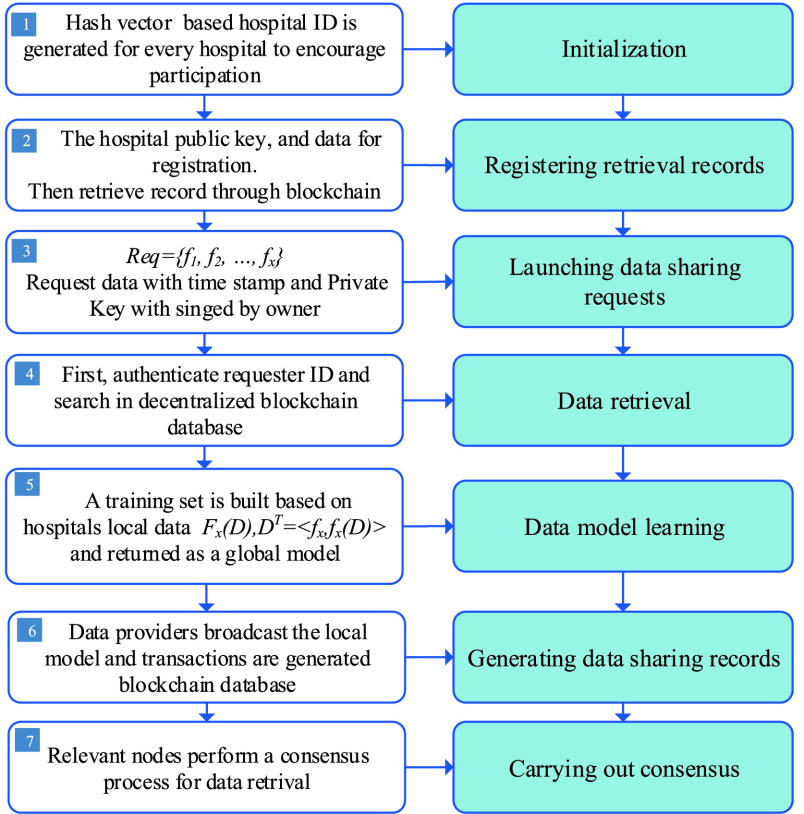

1). Data Sharing Process:

Current approaches use encryption to protect data, yet sharing personal data remains risky for data providers because of possible security attacks. A simple solution is to transmit only legitimate details to the requester while preserving the data holders' privacy: instead of sharing the original data, data providers such as hospitals exchange only locally learned model weights with the requester. Fig. 4 shows the data sharing process. The nodes communicate with each other, and the consensus process learns from the federated data. The provider and requester search for and store data in the blockchain nodes; the detailed steps are shown in Fig. 4. Integrating the blockchain with federated learning allows data to be retrieved securely across multiple hospitals worldwide, which supports effective prediction.

Fig. 4.

Data sharing process.

To protect the privacy of the data, we share the trained model instead of the original image data. The objective of the proposed architecture is to train the global model using locally trained models. Secure data sharing is illustrated in Fig. 5. In the first phase, we select the training data and then use the private federated learning algorithm for collaborative multi-hospital learning. In other words, each hospital shares its locally trained model weights with the blockchain network, and federated learning combines the local models into a global model.

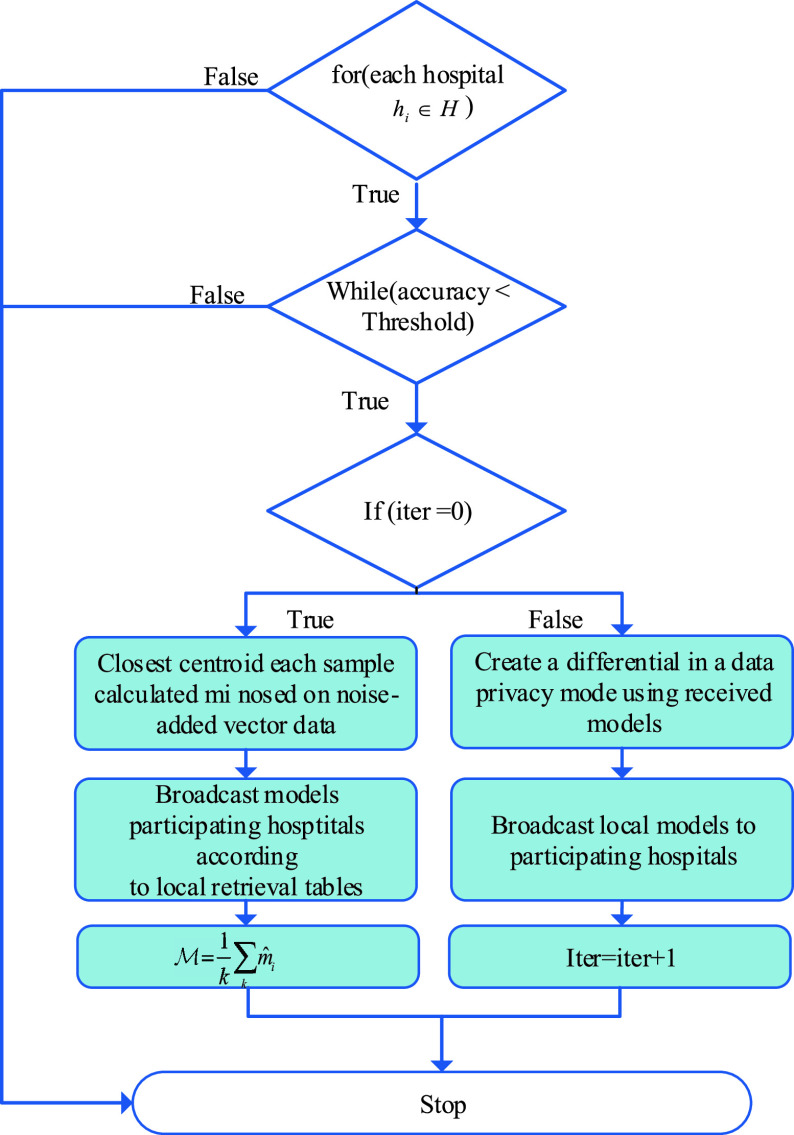

Fig. 5.

Private federated learning algorithm.
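Combining local models into a global model is commonly done with federated averaging (FedAvg), which weights each hospital's parameters by its number of training samples. The sketch below assumes FedAvg as the combination rule; it is a common choice rather than necessarily the exact rule used here, and the sample counts are hypothetical.

```python
import numpy as np


def federated_average(local_weights, sample_counts):
    """FedAvg: per-layer average of local weights, weighted by local dataset size.

    local_weights: list (one entry per hospital) of lists of NumPy arrays (one per layer).
    sample_counts: number of training samples at each hospital.
    """
    total = float(sum(sample_counts))
    n_layers = len(local_weights[0])
    return [
        sum(w[layer] * (n / total) for w, n in zip(local_weights, sample_counts))
        for layer in range(n_layers)
    ]


# Two layers per model; three hypothetical hospitals with different data sizes.
hospital_models = [
    [np.full((2, 2), 1.0), np.array([1.0])],
    [np.full((2, 2), 2.0), np.array([2.0])],
    [np.full((2, 2), 4.0), np.array([4.0])],
]
global_model = federated_average(hospital_models, sample_counts=[100, 100, 200])
print(global_model[1])  # [2.75]
```

Weighting by sample count keeps a hospital with few scans from pulling the global model as strongly as one with many, which matters for the heterogeneous data described above.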

B. Federated Deep Learning Model for Node Selection

The data collected from various hospitals are heterogeneous, so the global model requires an effective and fast aggregation process. To improve the aggregated global model, we select which nodes' trained models to aggregate so as to maximize the accuracy of the aggregated global model.

We formulate the node selection problem by introducing, for each time slot, a binary selection vector over the hospitals: if a hospital's entry is 1, that hospital is selected in the time slot; otherwise, it is not. We define cost metrics for the node selection process. The local Capsule Network model of each hospital in a time slot is denoted as:

|

where the model depends on the hospital's training data and on the number of CPU cycles required to train it. The cost is measured by:

|

Given the size of the trained model in each time slot, the time cost is described as:

|

We describe the time cost function as:

|

The accuracy loss of the learned model in each time slot is calculated as:

|

Here, the aggregated model is evaluated with the loss function on the images in the training data of each hospital. In our scheme, the quality of the learned model is measured for each hospital. The total cost of federated learning in each time slot is given by:

|
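The node-selection idea can be sketched with a simple cost model. The paper's own equations define the exact cost terms; this sketch instead assumes a formulation common in the federated learning literature, where computation time is CPU cycles per sample times the number of samples divided by CPU frequency, and communication time is model size divided by uplink bandwidth. The greedy rule of picking the k cheapest hospitals, the weight `lam`, and all numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Hospital:
    name: str
    samples: int              # size of the local training set
    cycles_per_sample: float  # CPU cycles needed to process one sample
    cpu_hz: float             # CPU frequency
    uplink_bps: float         # upload bandwidth in bits per second
    accuracy_loss: float      # loss of the local model (lower is better)


def time_cost(h, model_bits):
    """Assumed cost: local computation time plus model upload time, in seconds."""
    compute = h.cycles_per_sample * h.samples / h.cpu_hz
    upload = model_bits / h.uplink_bps
    return compute + upload


def select_nodes(hospitals, model_bits, k, lam=1.0):
    """Return a 0/1 selection vector that picks the k hospitals with the lowest total cost.

    `lam` (hypothetical) trades off time cost against accuracy loss.
    """
    scores = [time_cost(h, model_bits) + lam * h.accuracy_loss for h in hospitals]
    chosen = set(sorted(range(len(hospitals)), key=lambda i: scores[i])[:k])
    return [1 if i in chosen else 0 for i in range(len(hospitals))]


hospitals = [
    Hospital("A", 12000, 1e6, 2e9, 1e7, 0.20),
    Hospital("B", 3000, 1e6, 2e9, 1e6, 0.35),
    Hospital("C", 8000, 1e6, 1e9, 5e7, 0.25),
]
print(select_nodes(hospitals, model_bits=8e7, k=2))  # [1, 0, 1]
```

In this example hospital B is dropped even though it has the least computation, because its slow uplink dominates the time cost.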

V. Experiments and Results

A. CC-19 Dataset

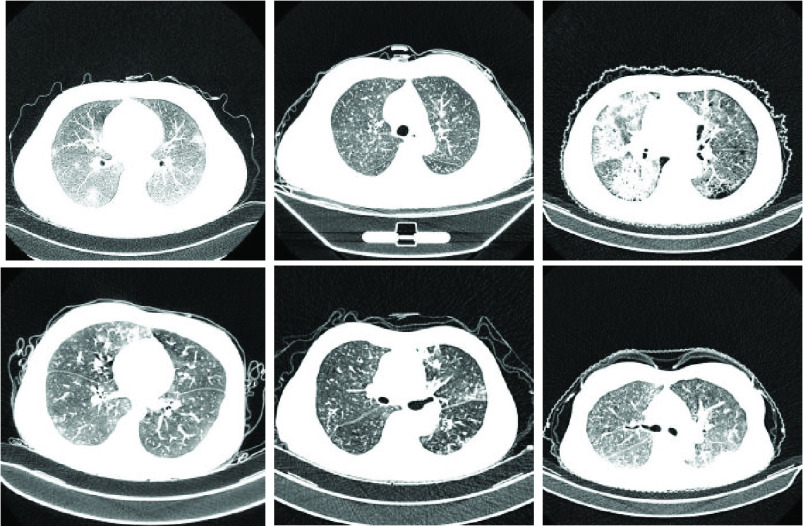

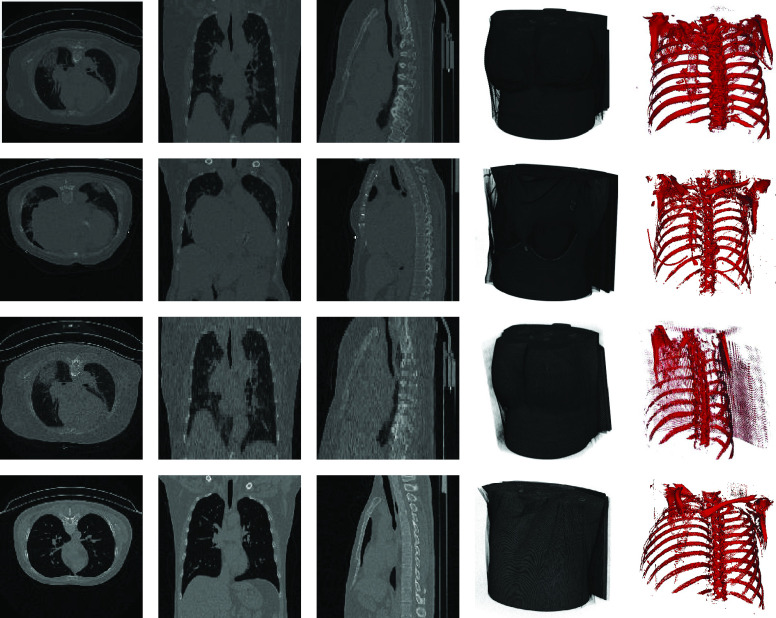

In recent years, AI has gained a reputable position in the field of clinical medicine. In chaotic situations such as this pandemic, AI can help medical practitioners validate the disease detection process, increasing the reliability of diagnosis methods and saving precious human lives. Currently, the biggest challenge faced by AI-based methods is the availability of relevant data; AI cannot progress without abundant and relevant data. In this paper, we collected CT scan data comprising 34,006 slices from three different hospitals. The data were acquired with six different scanners, as shown in Table II. In addition, we collected third-party datasets [36], [37] from different sources to validate the federated learning methods. The collected dataset is publicly available via GitHub (https://github.com/abdkhanstd/COVID-19). It contains Computed Tomography (CT) scan slices for 89 subjects. Of these 89 subjects, 68 were confirmed patients (positive cases) of the COVID-19 virus, and the remaining 21 were negative cases. The proposed CC-19 dataset contains 34,006 CT scan slices (images) belonging to 89 subjects, of which 28,395 slices belong to positive COVID-19 patients. Fig. 6 shows some 2D slices taken from CT scans in the CC-19 dataset, and some selected 3D samples from the dataset are shown in Fig. 7. The Hounsfield unit (HU) is a measure of radiodensity in CT scans; typical values for different substances are shown in Table III. CT scanning devices are usually carefully calibrated to measure HU, and this unit can be used to extract relevant information from CT scan slices. The CT scan slices have cylindrical scanning bounds. For unknown reasons, the pixel information that lies outside this cylindrical bound was automatically discarded by the CT scanner system. Fortunately, discarding these outer pixels eliminates some preprocessing steps.

TABLE II. CC-19 Dataset Collected From Three Different Hospitals (A, B, and C).

| Hospital ID | A | A | B | B | C | C |
|---|---|---|---|---|---|---|
| CT scanner ID | 1 | 2 | 3 | 4 | 5 | 6 |
| Number of patients | 30 | 10 | 13 | 7 | 20 | 9 |
| Infection annotation | Voxel-level | Voxel-level | Voxel-level | Voxel-level | Voxel-level | Voxel-level |
| CT scanner | SOMATOM Scope | SOMATOM Definition Edge | Brilliance 16P iCT | Brilliance iCT | Brilliance iCT | GE 16-slice CT scanner |
| Lung window level, LW (HU) | −600 | −600 | −600 | −600 | −600 | −500 |
| Lung window width, WW (HU) | 1200 | 1200 | 1600 | 1600 | 1600 | 1500 |
| Slice thickness (mm) | 5 | 5 | 5 | 5 | 5 | 5 |
| Slice increment (mm) | 5 | 5 | 5 | 5 | 5 | 5 |
| Collimation (mm) | 128 × 0.6 | 16 × 1.2 | 128 × 0.625 | 16 × 1.5 | 128 × 0.6 | 16 × 1.25 |
| Rotation time (s) | 1.2 | 1.0 | 0.938 | 1.5 | 1.0 | 1.75 |
| Pitch | 1.0 | 1.0 | 1.2 | 0.938 | 1.75 | 1.0 |
| Matrix | 512 × 512 | 512 × 512 | 512 × 512 | 512 × 512 | 512 × 512 | 512 × 512 |
| Tube voltage (kVp) | 120 | 120 | 120 | 110 | 120 | 120 |
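Table II shows that the scanners use different lung windows (LW of −600 or −500 HU, WW of 1200 to 1600 HU), so the same tissue can appear at different display intensities depending on the scanner. One simple way to place slices from different scanners on a common intensity scale is to clip every slice to a single shared window and rescale to [0, 1]. The sketch below shows that idea; the shared window (LW = −600, WW = 1500) is a hypothetical choice, and this is not necessarily the normalization technique proposed in this paper.

```python
from typing import List


def apply_window(hu: List[float], level: float, width: float) -> List[float]:
    """Clip HU values to [level - width/2, level + width/2] and rescale to [0, 1]."""
    lo = level - width / 2.0
    hi = level + width / 2.0
    return [(min(max(v, lo), hi) - lo) / (hi - lo) for v in hu]


# The same -600 HU voxel under two scanners' lung windows from Table II:
print(apply_window([-600.0], level=-600, width=1200))  # scanner 1: [0.5]
print(apply_window([-600.0], level=-500, width=1500))  # scanner 6: about 0.433

# A single shared window (hypothetical values) maps every scanner consistently:
SHARED_LEVEL, SHARED_WIDTH = -600, 1500
print(apply_window([-1000.0, -600.0, 150.0, 500.0], SHARED_LEVEL, SHARED_WIDTH))
```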

Fig. 6.

Some random samples of 2D CT scan slices taken from the CC-19 dataset.

Fig. 7.

This figure shows some selected samples from the CC-19 dataset. Each row represents a different patient sample, displayed with various Hounsfield unit (HU) ranges. The first three columns show the XY, XZ, and YZ planes of the 3D volume, respectively. The fourth column shows the whole 3D volume, and the fifth column shows the bone structure.

TABLE III. Various Values of Hounsfield Unit (HU) for Different Substances.

| S/No | Substance | Hounsfield Unit (HU) |
|---|---|---|
| 1 | Air | −1000 |
| 2 | Bone | +700 to +3000 |
| 3 | Lungs | −500 |
| 4 | Water | 0 |
| 5 | Kidney | 30 |
| 6 | Blood | +30 to +45 |
| 7 | Grey matter | +37 to +45 |
| 8 | Liver | +40 to +60 |
| 9 | White matter | +20 to +30 |
| 10 | Muscle | +10 to +40 |
| 11 | Soft tissue | +100 to +300 |
| 12 | Fat | −100 to −50 |
| 13 | Cerebrospinal fluid (CSF) | 15 |

Collecting datasets is a challenging task, as hospitals and medical practitioners observe many ethical and privacy norms. Keeping these norms in view, this dataset was collected in the early days of the epidemic from various hospitals in Chengdu, the capital city of Sichuan Province. Initially, the dataset was in an extremely raw form. While preprocessing the data, we found discrepancies in most of the collected CT scans, and the CT scans with discrepancies were discarded from the proposed dataset. The remaining CT scans differ from each other; in particular, they contain different numbers of slices for different patients. We believe that the varying number of slices is due to differences in the height and body structure of the patients. Moreover, upon inspecting the literature, we found that the lung volume of an adult female is ten to twelve percent smaller than that of a male of the same height and age [38].
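The slice counts reported in this section also show how the number of slices varies across subjects and how the slices split between classes. The arithmetic below uses only the CC-19 totals given above (34,006 slices, 28,395 of them from the 68 positive subjects, 21 negative subjects); the function name is hypothetical.

```python
TOTAL_SLICES = 34_006     # all CC-19 slices
POSITIVE_SLICES = 28_395  # slices from COVID-19-positive subjects
POSITIVE_SUBJECTS = 68
NEGATIVE_SUBJECTS = 21


def slice_statistics() -> dict:
    """Average slices per subject and the slice-level class balance."""
    negative_slices = TOTAL_SLICES - POSITIVE_SLICES
    return {
        "mean_slices_per_subject": TOTAL_SLICES / (POSITIVE_SUBJECTS + NEGATIVE_SUBJECTS),
        "mean_slices_per_positive": POSITIVE_SLICES / POSITIVE_SUBJECTS,
        "mean_slices_per_negative": negative_slices / NEGATIVE_SUBJECTS,
        "positive_slice_fraction": POSITIVE_SLICES / TOTAL_SLICES,
    }


for name, value in slice_statistics().items():
    print(f"{name}: {value:.3f}")
```

Roughly 382 slices per subject on average, with about 83.5% of all slices coming from positive subjects, so the data are imbalanced at the slice level.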

B. Evaluation Measures

Sensitivity and specificity measure how correctly a model identifies subjects with and without the disease, respectively. In our case, sensitivity is critical, because missing a COVID-19 patient can have disastrous consequences. The formulas of the measures are given as follows:

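$$\text{Precision} = \frac{TP}{TP + FP},$$

$$\text{Sensitivity} = \text{Recall} = \frac{TP}{TP + FN},$$

$$\text{Specificity} = \frac{TN}{TN + FP}, \text{ and}$$

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN},$$

where TP, TN, FP, and FN denote the numbers of true positives, true negatives, false positives, and false negatives, respectively.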
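The measures named in this subsection, together with the precision and accuracy reported in Tables IV and V, follow the standard confusion-matrix definitions. The sketch below computes them from counts; the class and function names are hypothetical, and returning 0.0 for an empty denominator is a design choice rather than something the paper specifies.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    tp: int  # COVID-19 cases correctly detected
    tn: int  # non-COVID cases correctly cleared
    fp: int  # non-COVID cases wrongly flagged
    fn: int  # COVID-19 cases missed


def _ratio(num: int, den: int) -> float:
    # Report 0.0 when the denominator is empty instead of raising.
    return num / den if den else 0.0


def evaluation_measures(c: ConfusionCounts) -> dict:
    return {
        "precision": _ratio(c.tp, c.tp + c.fp),
        "sensitivity": _ratio(c.tp, c.tp + c.fn),  # also called recall
        "specificity": _ratio(c.tn, c.tn + c.fp),
        "accuracy": _ratio(c.tp + c.tn, c.tp + c.tn + c.fp + c.fn),
    }


# Hypothetical counts, not taken from the experiments.
print(evaluation_measures(ConfusionCounts(tp=90, tn=80, fp=20, fn=10)))
```

Because sensitivity ignores TN and FP, a classifier that labels nearly everything positive can reach very high sensitivity while its specificity collapses, which is why both are reported.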

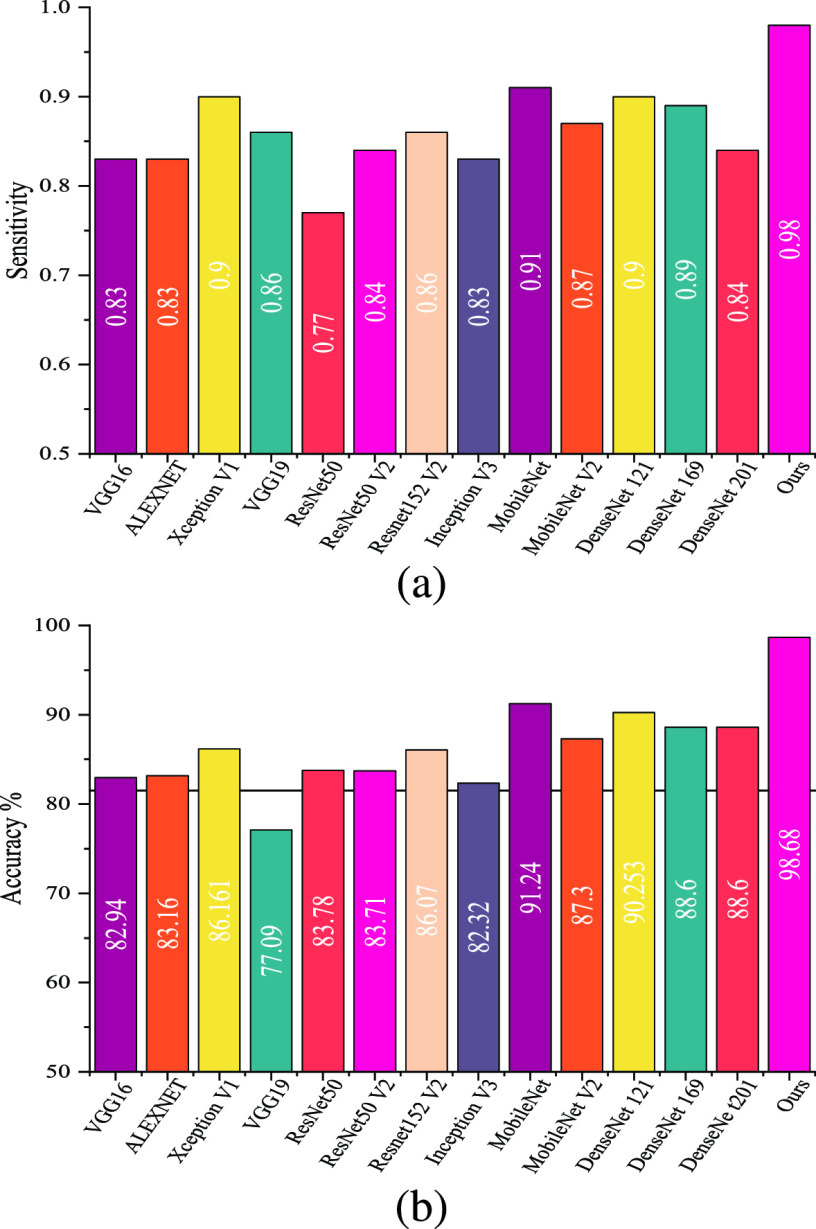

A medical diagnosis system needs high sensitivity (recall). Table IV compares the performance of several well-known deep learning networks. The results indicate that the proposed method is superior in sensitivity (0.987), the most important measure here, although its specificity (0.004) is the lowest among the compared networks.

TABLE IV. The Performance of Some Famous Deep Learning Networks. The Bold Values Represent the Best Performance. It Can Be Seen That the Capsule Network Exhibited the Highest Sensitivity While ResNet Has the Best Specificity.

| Feature extraction network | Learnable node | Pre-trained | Precision | Sensitivity / Recall | Specificity |
|---|---|---|---|---|---|
| VGG16 [39] | MLP | ImageNet | 0.8269 | 0.8294 | 0.1561 |
| AlexNet [40] | MLP | Scratch | **0.833** | 0.831 | 0.191 |
| Xception V1 [41] | MLP | ImageNet | 0.830 | 0.894 | 0.110 |
| VGG19 [39] | MLP | ImageNet | 0.827 | 0.8616 | 0.128 |
| ResNet50 [42] | MLP | ImageNet | **0.833** | 0.771 | **0.249** |
| ResNet50 V2 [43] | MLP | ImageNet | 0.830 | 0.837 | 0.166 |
| ResNet152 V2 [43] | MLP | ImageNet | 0.828 | 0.861 | 0.134 |
| Inception V3 [44] | MLP | ImageNet | 0.828 | 0.833 | 0.159 |
| MobileNet [45] | MLP | ImageNet | 0.830 | 0.912 | 0.089 |
| MobileNet V2 [46] | MLP | ImageNet | 0.828 | 0.873 | 0.118 |
| DenseNet121 [47] | MLP | ImageNet | 0.832 | 0.903 | 0.113 |
| DenseNet169 [47] | MLP | ImageNet | 0.831 | 0.886 | 0.126 |
| DenseNet201 [47] | MLP | ImageNet | 0.829 | 0.844 | 0.152 |
| Ours (SegCaps) | Capsule Network | – | 0.830 | **0.987** | 0.004 |

C. Deep Learning Implementation Details

We fine-tuned deep learning networks such as VGG16, Inception V3, ResNet 50–152, MobileNet, and DenseNet pre-trained on the ImageNet dataset, using the pre-trained weights provided by the Keras library; AlexNet was trained from scratch (Table IV). The Adam optimizer was used to fine-tune the networks with a learning rate of $10^{-5}$, a decay of $10^{-6}$, and an early-stopping mechanism with a patience of five epochs.
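The two training controls above can be sketched without a deep learning framework. This assumes that "decay" refers to the time-based decay of the legacy Keras optimizer argument, where the rate after t optimizer steps is lr_0 / (1 + decay · t), and that early stopping monitors validation loss. `keras_legacy_lr` and `EarlyStopper` are hypothetical helpers that mirror the behaviour, not Keras APIs.

```python
def keras_legacy_lr(initial_lr: float, decay: float, iteration: int) -> float:
    """Learning rate after `iteration` optimizer steps under time-based decay:
    lr_t = lr_0 / (1 + decay * t)."""
    return initial_lr / (1.0 + decay * iteration)


class EarlyStopper:
    """Stop once the validation loss has not improved for `patience`
    consecutive epochs (the same idea as Keras EarlyStopping)."""

    def __init__(self, patience: int = 5, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.wait = 0

    def update(self, val_loss: float) -> bool:
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.wait = 0
        else:
            self.wait += 1
        return self.wait >= self.patience


# Settings from this subsection: lr = 1e-5, decay = 1e-6, patience = 5.
print(keras_legacy_lr(1e-5, 1e-6, 100_000))  # learning rate after 100k steps

stopper = EarlyStopper(patience=5)
val_losses = [0.90, 0.70, 0.60, 0.61, 0.62, 0.60, 0.65, 0.63, 0.64]  # hypothetical
for epoch, loss in enumerate(val_losses, start=1):
    if stopper.update(loss):
        print(f"early stop after epoch {epoch}")
        break
```

With these hypothetical losses the best value is reached at epoch 3, and training stops after epoch 8, once five epochs pass without improvement.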

D. Comparison With Benchmark Methods

We performed comprehensive experiments using different kinds of deep learning models, i.e., VGG16, AlexNet, Inception V3, ResNet (50–152 layers), MobileNet, and DenseNet, and compared their performance on the COVID-19 dataset in Table IV. We also evaluate how accurately the Capsule Network detects COVID-19 from lung CT images. Fig. 9(a) compares the deep learning models: the Capsule Network achieves high sensitivity but lower specificity, giving high detection performance for positive cases. Fig. 9(b) shows that the SegCaps-based Capsule Network achieves the best performance, with the highest sensitivity and the lowest specificity. These models were tested using three different test lists containing about 11,450 CT scan slices. The COVID-19 infection segmentation results shown in Fig. 8 indicate that our method outperforms the baseline methods, with results close to the ground truth. Among the baselines, U-Net's performance is closest to ours.
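This subsection states that the segmentations are close to the ground truth but does not name the overlap measure behind Fig. 8. The Dice similarity coefficient is a common choice for lesion segmentation; the sketch below computes it on flattened binary masks as an illustration only, not as the metric used in these experiments.

```python
from typing import Sequence


def dice(pred: Sequence[int], truth: Sequence[int]) -> float:
    """Dice similarity 2|P ∩ G| / (|P| + |G|) for two flattened binary masks."""
    if len(pred) != len(truth):
        raise ValueError("masks must contain the same number of voxels")
    overlap = sum(1 for p, g in zip(pred, truth) if p and g)
    size_sum = sum(1 for p in pred if p) + sum(1 for g in truth if g)
    # Two empty masks agree perfectly; return 1.0 instead of dividing by zero.
    return 1.0 if size_sum == 0 else 2.0 * overlap / size_sum


predicted = [0, 1, 1, 1, 0, 0]     # hypothetical predicted lesion mask
ground_truth = [0, 1, 1, 0, 1, 0]  # hypothetical annotated lesion mask
print(dice(predicted, ground_truth))
```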

Fig. 9.

(a) Sensitivity/recall on the COVID-19 dataset over the decentralized network. (b) Accuracy on the COVID-19 images.

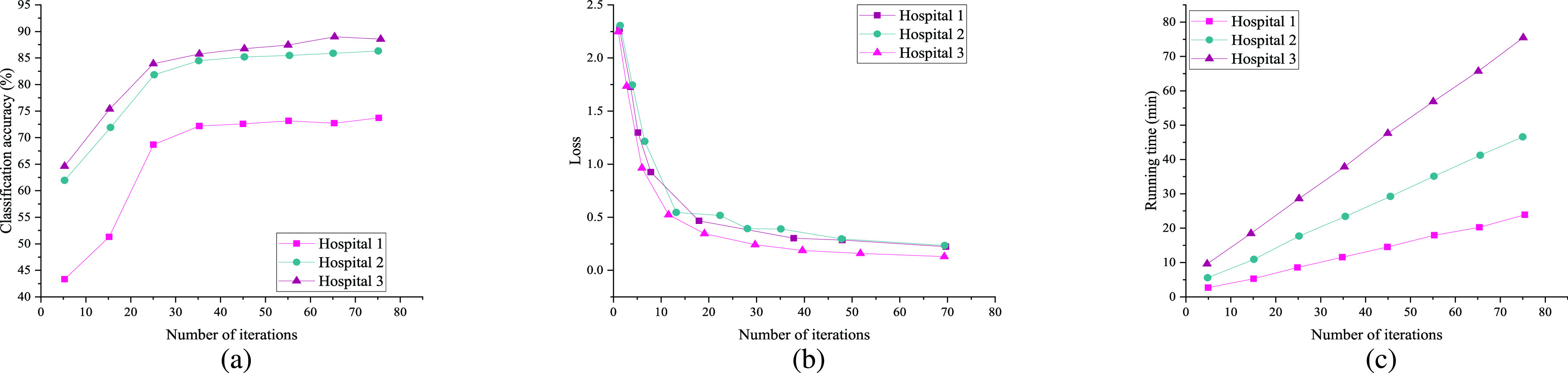

E. Federated Learning Security Analysis

The dataset was gathered from different sources, namely different hospitals using various kinds of CT scanners. To measure the performance of federated learning, we distribute the datasets over three hospitals. In this setting, multiple hospitals can share data and learn collaboratively through federated learning. Fig. 10(a) shows the performance of the proposed distributed model: the accuracy changes as the number of hospitals (data providers) increases, and more data providers generally give better results. Fig. 10(b) shows the convergence of the model loss. As Fig. 10(a) shows, the accuracy does not change smoothly because the samples from different hospitals differ; the accuracy depends on the number of patients or slices each hospital contributes. The same holds for the model loss as the number of providers increases. The global model aggregates all the local models, each of which is trained by a hospital on its own normalized data, so the number of hospitals affects the performance of the collaborative model. Fig. 10(c) shows the running time, which varies with the dataset and with the number of iterations on the different sub-datasets.
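The aggregation step can be sketched as follows. The exact aggregation rule is not given in this section, so the sketch assumes FedAvg-style averaging in which each hospital's parameters are weighted by its number of training samples, which fits the observation that accuracy depends on the number of patients or slices. Weights are plain per-layer lists to keep the example self-contained; `federated_average` is a hypothetical name, not the paper's code.

```python
from typing import Dict, List

Weights = Dict[str, List[float]]  # layer name -> flattened parameters


def federated_average(local_models: List[Weights], num_samples: List[int]) -> Weights:
    """Sample-weighted average of local models (FedAvg-style aggregation).

    Every local model must have the same layers and layer sizes.
    """
    if not local_models or len(local_models) != len(num_samples):
        raise ValueError("need at least one model and one sample count per model")
    total = sum(num_samples)
    if total <= 0:
        raise ValueError("sample counts must sum to a positive number")
    global_model: Weights = {}
    for layer, params in local_models[0].items():
        acc = [0.0] * len(params)
        for model, n in zip(local_models, num_samples):
            share = n / total  # this hospital's weight in the average
            for i, w in enumerate(model[layer]):
                acc[i] += share * w
        global_model[layer] = acc
    return global_model


hospitals = [{"fc": [1.0, 0.0]}, {"fc": [0.0, 1.0]}, {"fc": [1.0, 1.0]}]  # toy weights
patients = [40, 20, 29]  # per-hospital patient counts summed from Table II
print(federated_average(hospitals, patients))
```

With the Table II patient counts (A: 40, B: 20, C: 29), hospital A's update carries about 45% of the weight, so unequal hospital sizes directly shape the global model.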

Fig. 10.

(a) Accuracy on the COVID-19 dataset for different numbers of providers. (b) Loss on the COVID-19 dataset for different numbers of providers. (c) Running time on the COVID-19 dataset for different numbers of providers.

We compare federated learning with the local model in Fig. 9(b). The local model is trained on the whole dataset, whereas the federated learning model learns from the local models. Figs. 10(a) and 10(b) indicate that performance improves as the number of data providers increases. Moreover, federated learning does not degrade accuracy, while it preserves privacy when sharing data.

- Differential privacy: Fig. 5 describes the differential-privacy analysis, a principled approach that enables organizations to learn from most of the data while ensuring that the results cannot be used to distinguish or re-identify any individual. Equation (11) is applied to the data values to ensure strong data security.

- Trust: The decentralized trust mechanism of the blockchain allows everything to run automatically through a preset program, which improves data security. Relying on a strict set of algorithms, decentralized blockchain technology can ensure that the recorded data are authentic, transparent, traceable, and tamper-proof.

- Data security: Data providers have the authority to control their data. Actual data is uploaded to the blockchain database with the owner's signature, and the owner can control and change the data's policy through a smart contract. The blockchain uses cryptographic algorithms to secure the data.
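The differential-privacy item refers to Equation (11), which is not reproduced in this section. As a generic illustration only, the sketch below uses the standard Laplace mechanism: to release a vector whose L1 sensitivity is Δ with ε-differential privacy, add independent Laplace(0, Δ/ε) noise to every coordinate. Applying it to a local model update, and the values of Δ and ε, are assumptions here, not the paper's settings.

```python
import random
from typing import List, Optional


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw one Laplace(0, scale) sample as the difference of two exponential
    draws with mean `scale` (expovariate takes the rate 1/scale)."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def privatize(values: List[float], sensitivity: float, epsilon: float,
              rng: Optional[random.Random] = None) -> List[float]:
    """Laplace mechanism: add Laplace(0, sensitivity / epsilon) noise to every
    coordinate. `sensitivity` must be the L1 sensitivity of the whole vector,
    which in practice requires clipping the update beforehand (not shown)."""
    if sensitivity <= 0 or epsilon <= 0:
        raise ValueError("sensitivity and epsilon must be positive")
    rng = rng if rng is not None else random.Random()
    scale = sensitivity / epsilon
    return [v + laplace_noise(scale, rng) for v in values]


# Hypothetical local update and privacy budget, for illustration only.
update = [0.50, -0.20, 0.10]
noisy_update = privatize(update, sensitivity=0.1, epsilon=1.0, rng=random.Random(42))
print(noisy_update)
```

A smaller ε gives a larger noise scale and stronger privacy at the cost of a noisier aggregated model.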

F. Comparison With Other Methods

Many studies have addressed COVID-19 detection, such as [2], [57]–[60], but these techniques do not consider data sharing to train a better prediction model. Some techniques use GANs and data augmentation to generate synthetic images, but the performance of such methods is not reliable for medical images. Owing to the small number of patients [61], data analysis is difficult. Our proposed model collects real-time data from multiple hospitals to build a better prediction model. First, we compare our method with state-of-the-art studies in Table V. Moreover, we compare federated learning with state-of-the-art deep learning models such as VGG, ResNet, MobileNet, DenseNet, and Capsule Network. The results show that the accuracy is similar whether a local model is trained on the whole dataset or the data are divided among hospitals and the model weights are combined using blockchain-based federated learning.

TABLE V. A Comparison With the State-of-the-Art Studies Related to COVID-19 Patients’ Detection. In the Performance Column, A, S, and SP Represent Accuracy, Sensitivity, and Specificity, Respectively.

| Study | Backbone method | Tasks | Number of cases | Performance | Sharing | Privacy protection |
|---|---|---|---|---|---|---|
| Chen et al. [48] | 2D UNet++ | COVID-19, viral, bacterial and Pneu. classification | 106 cases | 95.2% (A), 100% (S), 93.6% (SP) | No | No |
| Shi et al. [49] | Random forest | COVID-19, viral/bacterial Pneu. and normal classification | 2685 cases | 87.9% (A), 90.7% (S), 83.3% (SP) | No | No |
| Zheng et al. [50] | 2D UNet and 2D CNN | COVID-19, viral, bacterial and Pneu. classification | 499 training / 132 validation | 90.7% (S), 91.1% (SP) | No | No |
| Li et al. [51] | 2D ResNet-50 | COVID-19, viral/bacterial Pneu. and normal classification | 3920 training / 436 testing | 90.0% (S), 96.0% (SP) | No | No |
| Jin et al. [52] | 2D UNet++ and 2D CNN | COVID-19, viral, bacterial and Pneu. classification | 1136 training / 282 testing | 97.4% (S), 92.2% (SP) | No | No |
| Song et al. [53] | 2D ResNet-50 | COVID-19, bacterial Pneu. and normal classification | 164 training / 27 validation / 83 testing | 86.0% (A) | No | No |
| Xu et al. [54] | 2D CNN | Normal, Influenza-A and viral/bacterial Pneu. classification | 528 training / 90 testing | 86.7% (A) | No | No |
| Jin et al. [55] | 2D CNN | COVID-19, viral, bacterial and Pneu. classification | 312 training / 104 validation / 1255 testing | 94.1% (S), 95.5% (SP) | No | No |
| Wang et al. [56] | 2D CNN | COVID-19 and viral Pneu. classification | 250 cases | 82.9% (A) | No | No |
| Wang et al. [6] | 3D ResNet + attention | COVID-19, viral Pneu. and normal classification | 3997 (5-fold validation) / 60 validation / 600 testing | 93.3% (A), 87.6% (S), 95.5% (SP) | No | No |
| Ours | Federated blockchain and Capsule Network | COVID-19, viral Pneu. and normal classification | 182 training / 45 testing (patients per hospital) | 98.68% (A), 98% (S) | Yes | Yes |

Finally, we compare our work with blockchain-based data sharing techniques. The authors of [62] proposed a deep learning and blockchain-based technique to share medical images, but the main weakness of that model is that it is not based on federated learning and does not aggregate the neural network weights over the blockchain. Moreover, [13] and [19] design frameworks based on federated learning, but they consider only vehicular [13] and industrial IoT [19] data. In contrast, our proposed framework collects data from different hospitals and trains a collaborative global model.
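Aggregating weights "over the blockchain" relies on hash linking: each block stores the hash of its predecessor, so altering any recorded update invalidates the chain. The toy ledger below illustrates only that property under simplifying assumptions. It has no consensus protocol, owner signatures, or smart contracts, it records a SHA-256 digest of each hospital's weights rather than the weights themselves, and all names are hypothetical rather than taken from the proposed framework.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import List


def digest(obj) -> str:
    """SHA-256 hex digest of a canonical (sorted-key) JSON encoding."""
    return hashlib.sha256(json.dumps(obj, sort_keys=True).encode("utf-8")).hexdigest()


@dataclass
class Block:
    index: int
    prev_hash: str
    payload: dict
    hash: str = ""


class Ledger:
    """Toy append-only hash chain (hypothetical; no consensus or signatures)."""

    def __init__(self) -> None:
        genesis = Block(0, "0" * 64, {"note": "genesis"})
        genesis.hash = self._block_hash(genesis)
        self.chain: List[Block] = [genesis]

    @staticmethod
    def _block_hash(block: Block) -> str:
        # The stored hash covers the index, the predecessor's hash, and the payload.
        return digest({"index": block.index, "prev": block.prev_hash, "payload": block.payload})

    def append(self, payload: dict) -> Block:
        prev = self.chain[-1]
        block = Block(prev.index + 1, prev.hash, payload)
        block.hash = self._block_hash(block)
        self.chain.append(block)
        return block

    def is_valid(self) -> bool:
        """Recompute every hash and check each block points at its predecessor."""
        if self.chain[0].hash != self._block_hash(self.chain[0]):
            return False
        for prev, cur in zip(self.chain, self.chain[1:]):
            if cur.prev_hash != prev.hash or cur.hash != self._block_hash(cur):
                return False
        return True


ledger = Ledger()
ledger.append({"hospital": "A", "round": 1, "weights_sha256": digest([0.12, -0.05])})
ledger.append({"hospital": "B", "round": 1, "weights_sha256": digest([0.10, -0.02])})
print(ledger.is_valid())  # True
ledger.chain[1].payload["hospital"] = "C"  # tamper with a recorded update
print(ledger.is_valid())  # False
```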

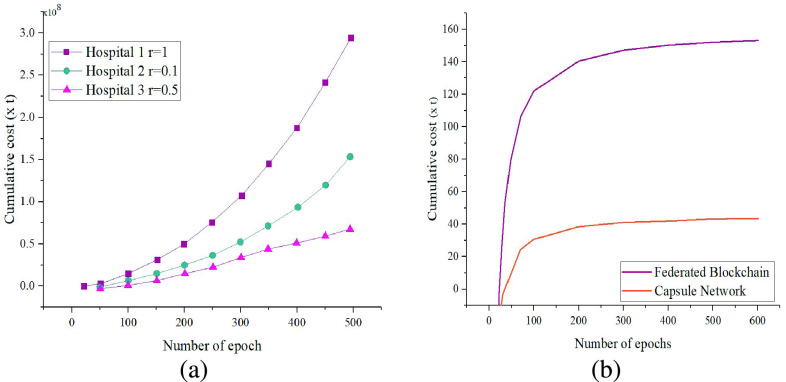

G. Computational Cost

We compare the proposed blockchain-based federated learning with a local Capsule Network. Blockchain-based federated models offer high security and privacy; however, Fig. 11(a) shows that the running cost increases with the number of hospitals (transactions) because of the increased communication load. Moreover, as Fig. 11(b) shows, our proposed scheme outperforms the local Capsule Network model.
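Fig. 11(a) attributes the growing running cost to communication load. As a rough, assumption-laden illustration (not the cost model behind Fig. 11), the sketch below counts the bytes moved per federated round if each hospital uploads its local model once and downloads the global model once, with 4-byte (float32) parameters. The optional term models the extra traffic if every local update is also broadcast to every other peer for ledger replication. The function and parameter names are hypothetical.

```python
def bytes_per_round(n_hospitals: int, n_params: int, bytes_per_param: int = 4,
                    replicate_on_ledger: bool = False) -> int:
    """Rough per-round traffic estimate for federated learning (hypothetical model).

    Each hospital uploads one local model and downloads one global model.
    If `replicate_on_ledger` is True, each local update is additionally
    broadcast to every other hospital, adding n * (n - 1) model transfers.
    """
    model_bytes = n_params * bytes_per_param
    traffic = 2 * n_hospitals * model_bytes  # upload + download, linear in n
    if replicate_on_ledger:
        traffic += n_hospitals * (n_hospitals - 1) * model_bytes  # quadratic in n
    return traffic


for n in (1, 2, 3):
    mb = bytes_per_round(n, n_params=1_000_000, replicate_on_ledger=True) / 1e6
    print(f"{n} hospital(s): {mb:.0f} MB per round")
```

Under these assumptions the traffic grows linearly with the number of hospitals for plain aggregation and quadratically once every update is replicated to every peer.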

Fig. 11.

(a) Cost of the proposed blockchain-based federated learning. (b) Performance comparison of the Capsule Network and federated learning.

VI. Conclusion

This paper proposed a framework that can utilize up-to-date data to improve the recognition of computed tomography (CT) images and share the data among hospitals while preserving privacy. A data normalization technique handles the heterogeneity of the data. Further, Capsule Network-based segmentation and classification is used to detect COVID-19 patients, together with a method that collaboratively trains a global model using blockchain technology with federated learning. We also collected real-life COVID-19 patients’ data and made it publicly available to the research community. Extensive experiments were performed with various deep learning models on the datasets, and the Capsule Network achieved the highest accuracy. The proposed model can learn from data shared among various hospitals. In conclusion, the proposed model can help detect COVID-19 patients through lung screening, as hospitals share their private data to train a better global model.

Biographies

Rajesh Kumar received the B.S. and M.S. degrees in computer science from the University of Sindh, Jamshoro, Pakistan, and the Ph.D. degree in computer science and engineering from the University of Electronic Science and Technology of China (UESTC), where he is currently pursuing the Ph.D. degree in information security. He has published more than 30 articles in various international journals and conference proceedings. His research interests include machine learning, deep learning, malware detection, the Internet of Things (IoT), and blockchain technology.

Abdullah Aman Khan received the master’s degree in computer engineering from the National University of Science and Technology (NUST), Punjab, Pakistan, in 2014. He is currently pursuing the Ph.D. degree in computer science and technology with the School of Computer Science and Engineering, University of Electronic Science and Technology of China, Chengdu, China. His main research interests include electronics design, machine vision, and intelligent systems.

Jay Kumar received the master’s degree from Quaid-i-Azam University, Islamabad, in 2018. He is currently pursuing the Ph.D. degree with the Data Mining Laboratory, School of Computer Science and Engineering, University of Electronic Science and Technology of China. His research work has been published in the top conference ACL, the journal Information Sciences, and IEEE Transactions on Cybernetics. His main research interests include text mining, data stream mining, and natural language processing.

Zakria received the M.S. degree in computer science and information from N. E. D. University in 2017, and the Ph.D. degree from the School of Information and Software Engineering, University of Electronic Science and Technology of China, where he is currently pursuing the Ph.D. degree. He has vast academic, technical, and professional experience in Pakistan. His research interests include artificial intelligence and computer vision, particularly vehicle re-identification.

Noorbakhsh Amiri Golilarz received the M.Sc. degree from Eastern Mediterranean University, Cyprus, in 2017. He is currently pursuing the Ph.D. degree with the School of Computer Science and Engineering, University of Electronic Science and Technology of China (UESTC). His main research interests include image processing, satellite and hyper-spectral image de-noising, biomedical, signal processing, optimization algorithms, control, systems, pattern recognition, neural networks, and deep learning.

Simin Zhang received the master’s degree from Sichuan University, China. She is currently pursuing the Ph.D. degree in medical imaging and nuclear medicine with the West China Hospital, Sichuan University. Her research interests include machine learning and multimodal MRI applied to glioma.

Yang Ting received the bachelor’s degree in communication engineering from the University of Electronic Science and Technology of China (UESTC), the master’s degree from the School of Computer Science and Engineering, UESTC, and the Ph.D. degree in electronics engineering from UESTC. His research interests include computer network architecture, software defined networking, and the Internet of Things (IoT).

Chengyu Zheng graduated from the Huazhong University of Science and Technology, majoring in computer software design. He received the executive master’s degree in business administration from the Southwest University of Finance and Economics. He was selected by the China Telecom Corporation to study at Stanford University, USA. He is working as a Senior Engineer and the General Manager of China Telecom Company Ltd. His main research interests include information technology system architecture design, mobile communication networks, and building business models.

Wenyong Wang received the B.S. degree in computer science from Beihang University, Beijing, China, in 1988, and the M.S. and Ph.D. degrees from the University of Electronic Science and Technology of China (UESTC), Chengdu, China, in 1991 and 2011, respectively. He was a Professor with the School of Computer Science and Engineering, UESTC, in 2009. From 2003 to 2009, he worked as the Director of the Information Center, UESTC, and the Chairman of the UESTC-Dongguan Information Engineering Research Institute. He is currently a Visiting Professor with the Macau University of Science and Technology. His main research interests include next-generation Internet, software-defined networks, software engineering, and artificial intelligence. He is a member of the expert board of CERNET and China Next-Generation Internet Committee and a Senior Member of the Chinese Computer Federation.

Funding Statement

This work was supported in part by the National Natural Science Foundation of China under Grant U2033212 and in part by the University of Electronic Science and Technology of China under Project Y03019023601016201.

Contributor Information

Rajesh Kumar, Email: rajakumarlohano@gmail.com.

Abdullah Aman Khan, Email: abdkhan@hotmail.com.

Jay Kumar, Email: jay_tharwani1992@yahoo.com.

Zakria, Email: zakria.uestc@hotmail.com.

Noorbakhsh Amiri Golilarz, Email: noorbakhsh.amiri@std.uestc.edu.cn.

Simin Zhang, Email: 835408876@qq.com.

Yang Ting, Email: yting@uestc.edu.cn.

Chengyu Zheng, Email: 18081000808@189.cn.

Wenyong Wang, Email: wywang@must.edu.mo.

References

- [1] Wang G. et al., “A noise-robust framework for automatic segmentation of COVID-19 pneumonia lesions from CT images,” IEEE Trans. Med. Imag., vol. 39, no. 8, pp. 2653–2663, Aug. 2020.

- [2] Wang X. et al., “A weakly-supervised framework for COVID-19 classification and lesion localization from chest CT,” IEEE Trans. Med. Imag., vol. 39, no. 8, pp. 2615–2625, Aug. 2020.

- [3] Han Z. et al., “Accurate screening of COVID-19 using attention-based deep 3D multiple instance learning,” IEEE Trans. Med. Imag., vol. 39, no. 8, pp. 2584–2594, Aug. 2020.

- [4] Ouyang X. et al., “Dual-sampling attention network for diagnosis of COVID-19 from community acquired pneumonia,” IEEE Trans. Med. Imag., vol. 39, no. 8, pp. 2595–2605, Aug. 2020.

- [5] Kang H. et al., “Diagnosis of coronavirus disease 2019 (COVID-19) with structured latent multi-view representation learning,” IEEE Trans. Med. Imag., vol. 39, no. 8, pp. 2606–2614, Aug. 2020.

- [6] Wang J. et al., “Prior-attention residual learning for more discriminative COVID-19 screening in CT images,” IEEE Trans. Med. Imag., vol. 39, no. 8, pp. 2572–2583, Aug. 2020.

- [7] Xu G., Li H., Liu S., Yang K., and Lin X., “VerifyNet: Secure and verifiable federated learning,” IEEE Trans. Inf. Forensics Security, vol. 15, pp. 911–926, 2020.

- [8] Lu Y., Huang X., Dai Y., Maharjan S., and Zhang Y., “Blockchain and federated learning for privacy-preserved data sharing in industrial IoT,” IEEE Trans. Ind. Informat., vol. 16, no. 6, pp. 4177–4186, Jun. 2020.

- [9] Jiang W., Li H., Xu G., Wen M., Dong G., and Lin X., “PTAS: Privacy-preserving thin-client authentication scheme in blockchain-based PKI,” Future Gener. Comput. Syst., vol. 96, pp. 185–195, Jul. 2019.

- [10] Azad M. A. et al., “A first look at privacy analysis of COVID-19 contact tracing mobile applications,” IEEE Internet Things J., early access, Sep. 17, 2020, doi: 10.1109/JIOT.2020.3024180.

- [11] Xu H., Zhang L., Onireti O., Fang Y., Buchanan W. J., and Imran M. A., “BeepTrace: Blockchain-enabled privacy-preserving contact tracing for COVID-19 pandemic and beyond,” IEEE Internet Things J., vol. 8, no. 5, pp. 3915–3929, Mar. 2021.

- [12] Chiu T.-C., Shih Y.-Y., Pang A.-C., Wang C.-S., Weng W., and Chou C.-T., “Semisupervised distributed learning with non-IID data for AIoT service platform,” IEEE Internet Things J., vol. 7, no. 10, pp. 9266–9277, Oct. 2020.

- [13] Lu Y., Huang X., Zhang K., Maharjan S., and Zhang Y., “Blockchain empowered asynchronous federated learning for secure data sharing in Internet of vehicles,” IEEE Trans. Veh. Technol., vol. 69, no. 4, pp. 4298–4311, Apr. 2020.

- [14] Pang J., Huang Y., Xie Z., Han Q., and Cai Z., “Realizing the heterogeneity: A self-organized federated learning framework for IoT,” IEEE Internet Things J., vol. 8, no. 5, pp. 3088–3098, Mar. 2021.

- [15] Sun H., Li S., Yu F. R., Qi Q., Wang J., and Liao J., “Toward communication-efficient federated learning in the Internet of Things with edge computing,” IEEE Internet Things J., vol. 7, no. 11, pp. 11053–11067, Nov. 2020.

- [16] Yang K., Jiang T., Shi Y., and Ding Z., “Federated learning via over-the-air computation,” IEEE Trans. Wireless Commun., vol. 19, no. 3, pp. 2022–2035, Mar. 2020.

- [17] Zhou Z., Yang S., Pu L., and Yu S., “CEFL: Online admission control, data scheduling, and accuracy tuning for cost-efficient federated learning across edge nodes,” IEEE Internet Things J., vol. 7, no. 10, pp. 9341–9356, Oct. 2020.

- [18] Xu G., Li H., Dai Y., Yang K., and Lin X., “Enabling efficient and geometric range query with access control over encrypted spatial data,” IEEE Trans. Inf. Forensics Security, vol. 14, no. 4, pp. 870–885, Apr. 2019.

- [19] Kong L., Liu X.-Y., Sheng H., Zeng P., and Chen G., “Federated tensor mining for secure industrial Internet of Things,” IEEE Trans. Ind. Informat., vol. 16, no. 3, pp. 2144–2153, Mar. 2020.

- [20] Shokri R. and Shmatikov V., “Privacy-preserving deep learning,” in Proc. 22nd ACM SIGSAC Conf. Comput. Commun. Secur., Oct. 2015, pp. 1310–1321.

- [21] Tang W., Ren J., and Zhang Y., “Enabling trusted and privacy-preserving healthcare services in social media health networks,” IEEE Trans. Multimedia, vol. 21, no. 3, pp. 579–590, Mar. 2019.

- [22] Dai H.-N., Zheng Z., and Zhang Y., “Blockchain for Internet of Things: A survey,” IEEE Internet Things J., vol. 6, no. 5, pp. 8076–8094, Oct. 2019.

- [23] Bonawitz K. et al., “Practical secure aggregation for privacy-preserving machine learning,” in Proc. ACM SIGSAC Conf. Comput. Commun. Secur., Oct. 2017, pp. 1175–1191.

- [24] Zhang X., Ji S., Wang H., and Wang T., “Private, yet practical, multiparty deep learning,” in Proc. IEEE 37th Int. Conf. Distrib. Comput. Syst. (ICDCS), Jun. 2017, pp. 1442–1452.

- [25] Liu Y., Yu J. J. Q., Kang J., Niyato D., and Zhang S., “Privacy-preserving traffic flow prediction: A federated learning approach,” IEEE Internet Things J., vol. 7, no. 8, pp. 7751–7763, Aug. 2020.

- [26] Qu Y., Pokhrel S. R., Garg S., Gao L., and Xiang Y., “A blockchained federated learning framework for cognitive computing in industry 4.0 networks,” IEEE Trans. Ind. Informat., vol. 17, no. 4, pp. 2964–2973, Apr. 2021.

- [27] Qu Y. et al., “Decentralized privacy using blockchain-enabled federated learning in fog computing,” IEEE Internet Things J., vol. 7, no. 6, pp. 5171–5183, Jun. 2020.

- [28] Kumar R. et al., “Privacy-preserved blockchain-federated-learning for medical image analysis towards multiple parties,” 2021, arXiv:2104.10903. [Online]. Available: http://arxiv.org/abs/2104.10903

- [29] Zhang W., “Imaging changes of severe COVID-19 pneumonia in advanced stage,” in Intensive Care Medicine. New York, NY, USA: Springer, 2020.

- [30] Ai T. et al., “Correlation of chest CT and RT-PCR testing for coronavirus disease 2019 (COVID-19) in China: A report of 1014 cases,” Radiology, vol. 296, no. 2, pp. E32–E40, 2020.

- [31] Khan A. A., Shafiq S., Kumar R., Kumar J., and Haq A. U., “H3DNN: 3D deep learning based detection of COVID-19 virus using lungs computed tomography,” in Proc. 17th Int. Comput. Conf. Wavelet Act. Media Technol. Inf. Process. (ICCWAMTIP), 2020, pp. 183–186.

- [32] LaLonde R. and Bagci U., “Capsules for object segmentation,” 2018, arXiv:1804.04241. [Online]. Available: http://arxiv.org/abs/1804.04241

- [33] Sabour S., Frosst N., and Hinton G. E., “Dynamic routing between capsules,” in Proc. Adv. Neural Inf. Process. Syst., Annu. Conf. Neural Inf. Process. Syst., Long Beach, CA, USA, Dec. 2017, pp. 3856–3866.

- [34] Wang Y. et al., “Capsule networks showed excellent performance in the classification of hERG blockers/nonblockers,” Frontiers Pharmacol., vol. 10, p. 1631, Jan. 2020. [Online]. Available: https://www.frontiersin.org/article/10.3389/fphar.2019.01631

- [35] Maymounkov P. and Mazières D., Kademlia: A Peer-to-Peer Information System Based on the XOR Metric (Lecture Notes in Computer Science: Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics). New York, NY, USA: Springer, 2002.

- [36] Rahimzadeh M., Attar A., and Sakhaei S. M., “A fully automated deep learning-based network for detecting COVID-19 from a new and large lung CT scan dataset,” medRxiv, vol. 68, 2021, Art. no. 102588. [Online]. Available: https://www.medrxiv.org/content/early/2020/06/12/2020.06.08.20121541

- [37] Yang X., He X., Zhao J., Zhang Y., Zhang S., and Xie P., “COVID-CT-dataset: A CT scan dataset about COVID-19,” 2020, arXiv:2003.13865. [Online]. Available: http://arxiv.org/abs/2003.13865

- [38] Bellemare F., Jeanneret A., and Couture J., “Sex differences in thoracic dimensions and configuration,” Amer. J. Respiratory Crit. Care Med., vol. 168, pp. 305–312, Sep. 2003.

- [39] Simonyan K. and Zisserman A., “Very deep convolutional networks for large-scale image recognition,” in Proc. 3rd Int. Conf. Learn. Represent. (ICLR), Bengio Y. and LeCun Y., Eds., San Diego, CA, USA, May 2015. [Online]. Available: https://iclr.cc

- [40] Krizhevsky A., Sutskever I., and Hinton G. E., “ImageNet classification with deep convolutional neural networks,” in Proc. Adv. Neural Inf. Process. Syst., 26th Annu. Conf. Neural Inf. Process. Syst., Bartlett P. L., Pereira F. C. N., Burges C. J. C., Bottou L., and Weinberger K. Q., Eds. Lake Tahoe, NV, USA: ACM, 2012, pp. 1106–1114.

- [41] Chollet F., “Xception: Deep learning with depthwise separable convolutions,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), Honolulu, HI, USA, Jul. 2017, pp. 1800–1807.

- [42] He K., Zhang X., Ren S., and Sun J., “Deep residual learning for image recognition,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), Las Vegas, NV, USA, Jun. 2016, pp. 770–778.

- [43] He K., Zhang X., Ren S., and Sun J., “Identity mappings in deep residual networks,” in Proc. 14th Eur. Conf. Comput. Vis. (ECCV), Lecture Notes in Computer Science, vol. 9908. Amsterdam, The Netherlands: Springer, Oct. 2016, pp. 630–645.

- [44] Szegedy C., Vanhoucke V., Ioffe S., Shlens J., and Wojna Z., “Rethinking the inception architecture for computer vision,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR). Las Vegas, NV, USA: IEEE Computer Society, Jun. 2016, pp. 2818–2826.

- [45] Howard A. G. et al., “MobileNets: Efficient convolutional neural networks for mobile vision applications,” 2017, arXiv:1704.04861. [Online]. Available: https://arxiv.org/abs/1704.04861

- [46] Sandler M., Howard A., Zhu M., Zhmoginov A., and Chen L.-C., “MobileNetV2: Inverted residuals and linear bottlenecks,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit., Salt Lake City, UT, USA. Washington, DC, USA: IEEE Computer Society, Jun. 2018, pp. 4510–4520.

- [47] Huang G., Liu Z., Van Der Maaten L., and Weinberger K. Q., “Densely connected convolutional networks,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), Jul. 2017, pp. 4700–4708.

- [48] Chen J. et al., “Deep learning-based model for detecting 2019 novel coronavirus pneumonia on high-resolution computed tomography: A prospective study,” medRxiv, vol. 10, no. 1, pp. 1–11, 2020.

- [49] Shi F. et al., “Large-scale screening of COVID-19 from community acquired pneumonia using infection size-aware classification,” 2020, arXiv:2003.09860. [Online]. Available: http://arxiv.org/abs/2003.09860

- [50] Zheng C. et al., “Deep learning-based detection for COVID-19 from chest CT using weak label,” medRxiv, 2020.

- [51].Li L.et al. , “Using artificial intelligence to detect COVID-19 and community-acquired pneumonia based on pulmonary CT: Evaluation of the diagnostic accuracy,” Radiology, vol. 296, no. 2, pp. E65–E71, Aug. 2020. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [52].Jin S.et al. , “Ai-assisted CT imaging analysis for COVID-19 screening: Building and deploying a medical AI system in four weeks,” medRxiv, 2020. [DOI] [PMC free article] [PubMed]

- [53]. Song Y. et al., “Deep learning enables accurate diagnosis of novel coronavirus (COVID-19) with CT images,” medRxiv, 2020.

- [54]. Xu X. et al., “A deep learning system to screen novel coronavirus disease 2019 pneumonia,” Engineering, vol. 6, no. 10, pp. 1122–1129, Oct. 2020.

- [55]. Jin C. et al., “Development and evaluation of an AI system for COVID-19 diagnosis,” medRxiv, vol. 11, pp. 1–14, 2020.

- [56]. Wang S. et al., “A deep learning algorithm using CT images to screen for corona virus disease (COVID-19),” medRxiv, vol. 31, no. 6, pp. 1–9, 2020.

- [57]. Kuzdeuov A. et al., “A network-based stochastic epidemic simulator: Controlling COVID-19 with region-specific policies,” IEEE J. Biomed. Health Informat., vol. 24, no. 10, pp. 2743–2754, Oct. 2020.

- [58]. Wang D. et al., “Clinical characteristics of 138 hospitalized patients with 2019 novel coronavirus–infected pneumonia in Wuhan, China,” JAMA, vol. 323, no. 11, p. 1061, Mar. 2020.

- [59]. Zhou L. et al., “A rapid, accurate and machine-agnostic segmentation and quantification method for CT-based COVID-19 diagnosis,” IEEE Trans. Med. Imag., vol. 39, no. 8, pp. 2638–2652, Aug. 2020.

- [60]. Sun L. et al., “Adaptive feature selection guided deep forest for COVID-19 classification with chest CT,” vol. 24, no. 10, pp. 2798–2805, 2020, arXiv:2005.03264. [Online]. Available: http://arxiv.org/abs/2005.03264

- [61]. Inciardi R. M. et al., “Cardiac involvement in a patient with coronavirus disease 2019 (COVID-19),” JAMA Cardiol., vol. 5, no. 7, pp. 819–824, 2020.

- [62]. Kumar R. et al., “An integration of blockchain and AI for secure data sharing and detection of CT images for the hospitals,” Comput. Med. Imag. Graph., vol. 87, Jan. 2021, Art. no. 101812.