Abstract

In today’s world, technology has become an integral part of human life. During the Covid-19 pandemic, everything from the corporate world to educational institutes shifted from offline to online. This has led to an exponential increase in intrusions and attacks on Internet-based technologies. One of the most lethal threats to surface is the Distributed Denial of Service (DDoS) attack, which can cripple Internet-based services and applications in no time. Attackers continuously update their strategies and hence evade existing detection mechanisms. Because the volume of data generated and stored has increased manifold, traditional detection mechanisms are not appropriate for detecting novel DDoS attacks. This paper systematically reviews the prominent literature on deep learning approaches for detecting DDoS attacks. We explored four extensively used digital libraries (IEEE, ACM, ScienceDirect, Springer) and one scholarly search engine (Google Scholar) to search the recent literature. We analyzed the relevant studies and categorized the results of the SLR into five main research areas: (i) the different types of DL approaches for DDoS attack detection; (ii) the methodologies, strengths, and weaknesses of existing DL approaches for DDoS attack detection; (iii) the benchmark datasets and classes of attacks in the datasets used in the existing literature; (iv) the preprocessing strategies, hyperparameter values, experimental setups, and performance metrics used in the existing literature; and (v) the research gaps and future directions.

Keywords: Deep learning, Distributed Denial of Service attacks, Datasets, Performance metrics

Introduction

In today’s fast-paced world, one cannot imagine life without the Internet, which is required in diverse fields, namely communication, education, business, shopping, and the list goes on. Despite its many advantages, many crimes have proliferated over the Internet, viz. the spreading of misinformation, hacking, and attacks. A Denial of Service (DoS) attack occurs when services, machines, or networks are made unavailable to their legitimate users (https://www.cloudflare.com/en-in/learning/ddos/glossary/denial-of-service/). A DDoS attack is a subcategory of DoS attack that occurs when the attacker compromises multiple computing devices to disrupt the regular traffic of a targeted victim (https://www.cloudflare.com/en-in/learning/ddos/what-is-a-ddos-attack/). In February 2021, the cryptocurrency exchange EXMO was hit with 30 GB of traffic per second and was unavailable for 2 h (https://portswigger.net/daily-swig/uk-cryptocurrency-exchange-exmo-knocked-offline-by-massive-ddos-attack; Han et al. 2012). In December 2020, the popular website tracker Down Detector reported many outages caused by DDoS attacks (https://www.livemint.com/technology/apps/google-services-youtube-gmail-google-drive-face-outage-11607947475759.html). Other DDoS attacks from 2018 to 2020 are detailed in (https://www.livemint.com/technology/apps/google-services-youtube-gmail-google-drive-face-outage-11607947475759.html; https://www.vxchnge.com/blog/recent-ddos-attacks-on-companies; https://www.thesslstore.com/blog/largest-ddos-attack-in-history/; https://securelist.com/ddos-report-q4-2019/96154/).

According to NETSCOUT’s ATLAS Security Engineering & Response Team (ASERT), threat actors launched approximately 2.9 million DDoS attacks in the first quarter of 2021, a 31% increase over the same period in 2020 (https://www.netscout.com/blog/asert/beat-goes). This underscores how essential it is to detect DDoS attacks.

The incidents cited above call for an effective method to detect DDoS attacks. Several families of techniques, viz. statistical methods, shallow machine learning, and deep learning, have been used to detect DDoS attacks. Of these, deep learning is the most suitable, because the other methods have limitations, explained below:

Statistical Methods Limitations: Statistical detection methods rely on prior knowledge of network flows (Catak and Mustacoglu 2019). However, malicious network flows have become a moving target, so characterizing network traffic correctly is challenging. Most statistical DDoS detection methods depend heavily on user-defined thresholds, which must be adjusted dynamically to keep up with changes in the network (Hoque et al. 2017). Entropy-based statistical methods require extensive knowledge of the network and experimentation to choose suitable statistical characteristics (Li and Lu 2019). Shannon entropy, an entropy measure used to detect DDoS attacks, builds the detection model from only one feature, such as the source IP address. Because attackers can easily manipulate source IP addresses with tools like scapy and hping, the diversity of this single feature is not a reliable basis for detecting DDoS attacks (Catak and Mustacoglu 2019). Finally, most statistical approaches, such as entropy and correlation, are computationally expensive during DDoS attack detection and therefore cannot be run in real time (Hoque et al. 2017).
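For reference, the Shannon entropy of a traffic window is H = -Σ_i p_i log2 p_i, where p_i is the fraction of packets carrying source address i. The minimal sketch below assumes hypothetical address windows and hand-picked thresholds (the names `flag_window`, `low` and `high` are illustrative, not from any cited work). It flags a window whose source-IP entropy leaves a fixed band, and it shows both weaknesses discussed above: the thresholds must be chosen by hand, and an attacker who spoofs a spread of source addresses resembling normal traffic produces exactly the same entropy as benign traffic.

```python
import math
from collections import Counter


def shannon_entropy(values):
    """Shannon entropy (in bits) of the empirical distribution of `values`."""
    counts = Counter(values)
    total = len(values)
    if total == 0:
        return 0.0
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def flag_window(src_ips, low=1.0, high=5.0):
    """Flag a window whose source-IP entropy falls outside [low, high].

    `low` and `high` are hypothetical user-defined thresholds; a real
    deployment would have to retune them as the network changes.
    """
    h = shannon_entropy(src_ips)
    return (h < low or h > high), h


# Hypothetical windows of 64 packets each.
normal = ["10.0.0.%d" % (i % 8) for i in range(64)]       # 8 clients, evenly spread
flood = ["203.0.113.7"] * 60 + ["10.0.0.1"] * 4           # one source dominates
spoofed = ["198.51.100.%d" % (i % 8) for i in range(64)]  # attacker mimics the spread

print(flag_window(normal))   # (False, 3.0)
print(flag_window(flood))    # flagged: entropy far below `low`
print(flag_window(spoofed))  # not flagged: same entropy as normal traffic
```

The spoofed window evades the detector because entropy summarizes only how addresses are distributed, not whether they are genuine.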

Shallow Machine Learning (SML) Limitations: SML works well when rules are applied to small amounts of data. It identifies attacks from statistical features (Yuan et al. 2017) and then determines the class or value. The model must also be updated regularly as attacks change (Yuan et al. 2017). SML approaches break a problem into small subproblems, solve each of them, and combine the results into the final answer (Xin et al. 2018). Moreover, some SML algorithms train quickly but take a long time at test time (Xin et al. 2018).

DL methods are suitable for detecting DDoS attacks for several reasons. First, they can perform both feature extraction and classification. Second, a detection system must cope with missing data: labels for legitimate traffic are generally available, but labelled malicious traffic is scarce, and DL approaches can extract information from incomplete data (Van et al. 2017). Third, DL approaches are suited to identifying low-rate attacks, which requires historical information (Yuan et al. 2017); DL approaches can learn long-term dependencies in temporal patterns (Vinayakumar et al. 2017). Finally, DL approaches execute complex mathematical operations through multiple hidden layers with many parameters during training (Aldweesh et al. 2020) and rely on more matrix operations than traditional machine learning approaches. Because GPUs handle matrix operations efficiently, the availability of GPU machines makes DL training computationally efficient and fast.

Quantum computing also shows promise in many areas, viz. artificial intelligence (AI), cybersecurity, and medical research. In AI, one possibility is to create quantum algorithms that outperform classical algorithms in learning, decision problems, quantum search, and quantum game theory (https://research.aimultiple.com/quantum-ai/). Quantum computing can also provide a computational boost for tackling more complex AI problems, for example faster training or other improvements in SML and DL models (https://research.aimultiple.com/quantum-ai/). Thus, quantum computing may extend the capabilities of deep learning for complex problems that involve large datasets and high computational requirements.

The abbreviations used in this article are summarized in Table 1, and a detailed comparison with other review articles is provided in Table 2. Table 2 shows that most existing review articles do not discuss the preprocessing strategies, strengths, or types of attack drawn from the datasets used in the existing literature. Our systematic review also differs from the reviews in Table 2 in that it presents the various types of DL approaches for DDoS attack detection. Moreover, to the best of our knowledge, no existing systematic literature review covers DDoS attack detection using DL approaches.

Table 1.

List of abbreviations

| Acronym | Meaning | Acronym | Meaning |
|---|---|---|---|
| AE | Autoencoder | MLP | Multilayer Perceptron |
| ANN | Artificial Neural Network | MSDA | Marginalized Stacked De-noising Auto-encoder |
| BOW | Bag of Words | MSE | Mean Squared Error |
| CH | Cluster Head | NB | Naïve Bayes |
| CIC | Canadian Institute for Cybersecurity | NID | Network Intrusion Detection |
| CL | Convolutional Layer | NIDS | Network Intrusion Detection System |
| CNN | Convolutional Neural Network | NN | Neural Network |
| DDoS | Distributed Denial of Service | NS2 | Network Simulator 2 |
| DL | Deep Learning | OBS | Optical Burst Switching |
| DNN | Deep Neural Network | PCA | Principal Component Analysis |
| DoS | Denial of Service | PCC | Pearson’s Correlation Coefficient |
| DT | Decision Tree | PDR | Packet Delivery Ratio |
| FC | Fully Connected | PL | Pooling Layer |
| FCNN | Fully Connected Neural Network | RBF | Radial Basis Function |
| FFBP | Feed-Forward Back-Propagation | RE | Reconstruction Error |
| FNR | False Negative Rate | ReLU | Rectified Linear Unit |
| FPR | False Positive Rate | ResNet | Residual Network |
| GD | Gradient Descent | RF | Random Forest |
| GPU | Graphics Processing Unit | RMS | Root Mean Square |
| GRU | Gated Recurrent Unit | RNN | Recurrent Neural Network |
| GT | Game Theory | RQ | Research Question |
| IDS | Intrusion Detection System | SCC | Sparse Categorical Cross-entropy |
| IoT | Internet of Things | SDN | Software Defined Network |
| IP | Internet Protocol | SGD | Stochastic Gradient Descent |
| KNN | k-Nearest Neighbours | SLR | Systematic Literature Review |
| LR | Logistic Regression | SML | Shallow Machine Learning |
| LSTM | Long Short-Term Memory | SVM | Support Vector Machine |
| LUCID | Lightweight Usable CNN in DDoS Detection | TCP | Transmission Control Protocol |
| MANETs | Mobile Ad-hoc Networks | TL | Transfer Learning |
| MCC | Mission Control Center | TNR | True Negative Rate |
| MKL | Multiple Kernel Learning | TPR | True Positive Rate |
| MKLDR | Multiple Kernel Learning for Dimensionality Reduction | UDP | User Datagram Protocol |
| ML | Machine Learning | WSN | Wireless Sensor Network |

Table 2.

A detailed comparison with other review articles: (: Yes, : No)

| Review article | Ferrag et al. (2020) | Aleesa et al. (2020) | Ahmad et al. (2021) | Gamage and Samarabandu (2020) | Ahmad and Alsmadi (2021) | This article |
|---|---|---|---|---|---|---|
| Focused security domain | Cyber security intrusion detection | IDS | IDS | NID | IoT security | DDoS |
| ML/DL | DL | DL | ML/DL | DL | ML/DL | DL |
| Systematic study | | | | | | |
| Taxonomy | | | | | | |
| Preprocessing strategy | | | | | | |
| Types of attack used in existing literature from the datasets | | | | | | |
| Strengths | | | | | | |
| Weaknesses | | | | | | |
| Research gaps | | | | | | |

In this paper, we use the SLR protocol to review DDoS attack detection systems based on DL approaches and make the following contributions:

The state-of-the-art deep learning approaches for DDoS attack detection have been identified and categorized based on common parameters.

The methodologies, strengths, and weaknesses of existing deep learning approaches for DDoS attack detection have been summarized.

The available DDoS benchmark datasets and the classes of attacks in the datasets used in the existing literature have been summarized.

The preprocessing strategies, hyperparameter values, experimental setups, and performance metrics that existing deep learning approaches have used for DDoS attack detection have been examined.

The research gaps have been highlighted, and future directions in this area have been identified.

The rest of the paper is organized as follows: Sect. 2 explains the SLR protocol; Sect. 3 discusses the state-of-the-art DL approaches for DDoS attack detection used in the existing literature; Sect. 4 analyzes the methodologies, strengths, and weaknesses of the existing literature; Sect. 5 describes the available DDoS benchmark datasets and the classes of attacks in the datasets used in the existing literature; Sect. 6 details the preprocessing strategies, hyperparameter values, experimental setups, and performance metrics; Sect. 7 presents the research gaps in the existing literature; and Sect. 8 gives the conclusion and future directions of this review.

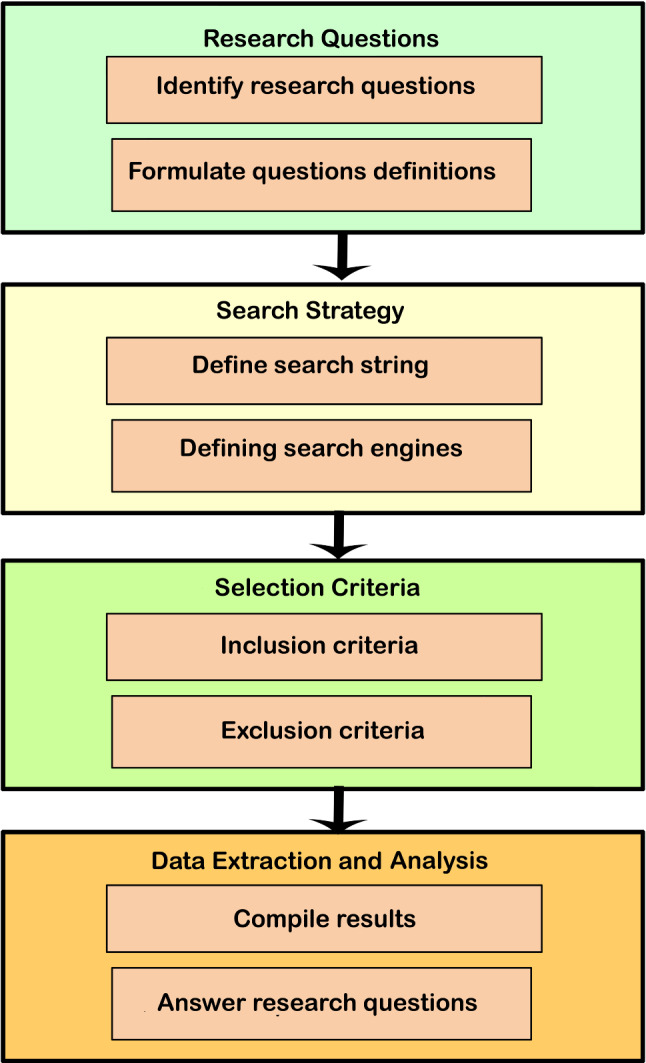

Systematic literature review protocol

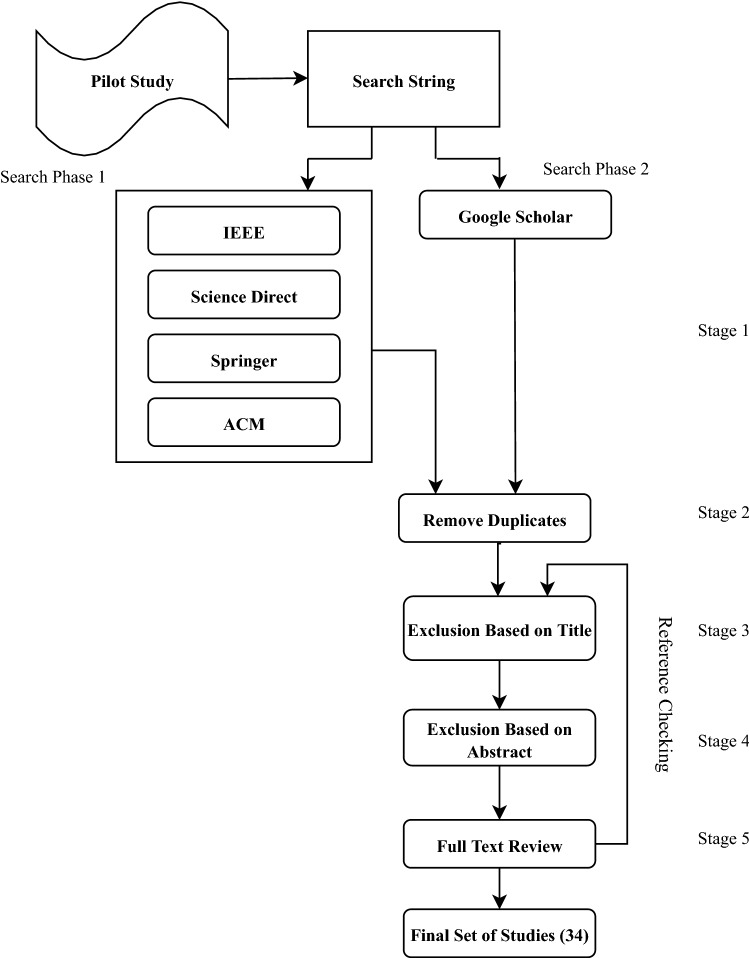

An SLR provides a comprehensive approach to understanding a problem and is considered an effective method for evaluating the literature related to it. A systematic survey follows a step-by-step methodology. The SLR in this research work follows the guidelines of Keele et al. (2007) and focuses on deep learning-based solutions for DDoS attack detection published from 2018 to 2021. The SLR yields a set of research articles, categorized according to the taxonomy of the DL approaches used. Its purpose is to identify research gaps in the existing literature that point to promising future research directions. Figure 1 gives an overview of the survey protocol, whose steps are explained below.

Fig. 1.

Survey protocol overview

Research questions

The main objective of the systematic review is to define research questions and answer them by evaluating the data extracted from the final set of selected research papers. The research questions addressed in this work are as follows:

What are the state-of-the-art DL approaches for DDoS attack detection, and how can they be categorized?

What are the methodologies, strengths, and weaknesses of existing deep learning approaches for DDoS attacks detection?

What are the available DDoS benchmarked datasets and classes of attacks in datasets that have been used in the existing literature?

What are the preprocessing strategies, hyperparameter values, experimental setups, and performance metrics that the existing DL approaches have used for DDoS attack detection?

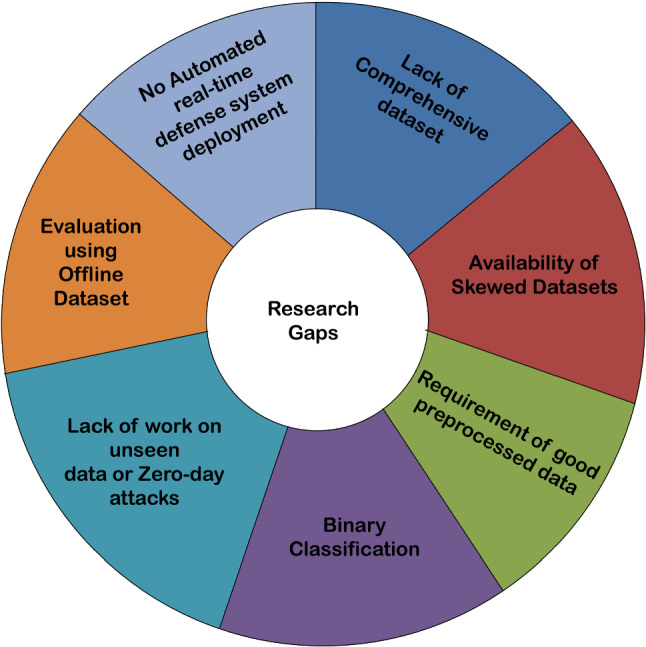

What are the research gaps in the existing literature?

Search strategy

A systematic survey begins with a suitable search strategy, which is a prerequisite for any research. Therefore, a suitable set of databases was selected to retrieve the appropriate literature. In the present work, the search, covering 2018 to 2021, was carried out in two phases. Phase 1 covered four digital libraries (ACM Digital Library, IEEE Xplore, Springer, and ScienceDirect), and Phase 2 covered the Google Scholar academic search engine. Adding Google Scholar helped prevent the omission of relevant literature. In addition, a pilot study was carried out to refine the search string: during the pilot study, the ten most cited and most suitable articles were selected from a set of pre-collected articles kept in the database. The common search query, adapted slightly for each digital library, was:

(Detection of DDoS attacks using deep learning OR DDoS attack detection using deep learning approaches)

The results obtained from the chosen digital libraries were refined using each library’s filtering options. Figure 2 depicts the flow of the steps of the survey protocol.

Fig. 2.

Systematic literature review process

Study selection criteria

The main objective of study selection was to exclude literature irrelevant to the defined RQs, using inclusion and exclusion criteria. Research articles that extended previous related work were also included. Search phase 1 produced 3039 entries, and from search phase 2 we took only the first 1000 entries, giving 4039 entries in stage 1. Of these, 178 duplicate entries were removed in stage 2. In the subsequent stages, articles were removed on the basis of their titles (3130), abstracts (581), and full texts (118), respectively. Finally, 32 research articles were selected after stage 5. The inclusion and exclusion criteria were specified to eliminate studies not related to the defined research questions, and are as follows:

Inclusion criteria:

All articles that provide a new approach for DDoS attack detection using deep learning.

All studies that focus only on deep learning approaches.

Studies that are closely associated but vary in essential parameters were included as distinct primary studies.

Studies that address the research questions.

Studies that extend previous related work.

Articles published between 2018 and 2021.

Exclusion criteria:

Articles not in the English language.

Articles not related to the research topic.

Review articles, Editorials, Discussion, Data articles, Short communications, Software publications, Encyclopedia, Poster, Abstract, Tutorial, Work in progress, Keynote, Invited talk.

Articles that did not provide an adequate amount of information.

Duplicate research studies.

Reference checking

The references of the 32 articles retained after full-text review were checked to avoid omitting any relevant work. The resulting 76 articles were then assessed against the inclusion and exclusion criteria on the basis of title, abstract, and full text. In the subsequent stages, 11, 51, and 12 articles were removed on the basis of titles, abstracts, and full texts, respectively. In the end, reference checking removed 74 articles and yielded two finalised articles.
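The stage counts reported for study selection and reference checking can be verified with simple arithmetic. The short sketch below replays both selection funnels using only the numbers stated above; the helper name `funnel` is purely illustrative.

```python
def funnel(start, removals):
    """Return the number of records remaining after each removal stage."""
    remaining = [start]
    for _stage, removed in removals:
        remaining.append(remaining[-1] - removed)
    return remaining


# Main search: phase 1 (digital libraries) + phase 2 (first 1000 Google Scholar hits).
phase1, phase2 = 3039, 1000
main = funnel(phase1 + phase2, [("duplicates", 178), ("titles", 3130),
                                ("abstracts", 581), ("full texts", 118)])
print(main)  # [4039, 3861, 731, 150, 32]

# Reference checking of the 32 selected articles.
refs = funnel(76, [("titles", 11), ("abstracts", 51), ("full texts", 12)])
print(refs)  # [76, 65, 14, 2]
```

Both funnels are internally consistent: 32 primary studies remain after stage 5, and 74 of the 76 reference-checked articles are removed.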

Data extraction

The data required to answer the research questions were extracted after studying the full text of each article. The data extracted from each study were used to fill in a pre-designed form. The form has fields for the title, approach used, datasets used, number of features, attack and legitimate classes identified, preprocessing strategy, experiment setup/performance optimization of the model, performance metrics, strength, weakness, and a summary. The completed forms were used to critically analyze the final set of articles and to simplify answering the research questions. The data extraction fields are detailed in Table 3.

Table 3.

Data extraction fields

| Field | Objective |
|---|---|
| Title | Provides the title of the research paper |
| Approach used | Lists the DL approaches used in the paper |
| Datasets | Lists the datasets used in the paper for evaluation |
| Number of features | Lists the features selected from the datasets |
| Attack and legitimate classes identified | Provides the names of the attacks used in the paper |
| Preprocessing strategy | Describes the preprocessing applied before training the model |
| Experiment setup/performance optimization of the model | Explains how the experiment was conducted and lists the parameter values at which the model performs best |
| Performance metrics | Provides the results, through which one model can be compared with another |
| Strength | Lists the strengths of the model |
| Weakness | Lists the weaknesses of the model |
| Summary | A concise summary of the above fields |

State-of-the-art DDoS attack detection Deep learning approaches

Deep learning is a subset of ML within artificial intelligence (https://www.investopedia.com/terms/d/deep-learning.asp) that can learn from supervised or unsupervised data. Because deep learning uses multi-layer networks, it is also called deep neural network or deep neural learning (Aldweesh et al. 2020). The layers are linked through neurons, which carry out the mathematical computations of the learning process (Goodfellow et al. 2016).

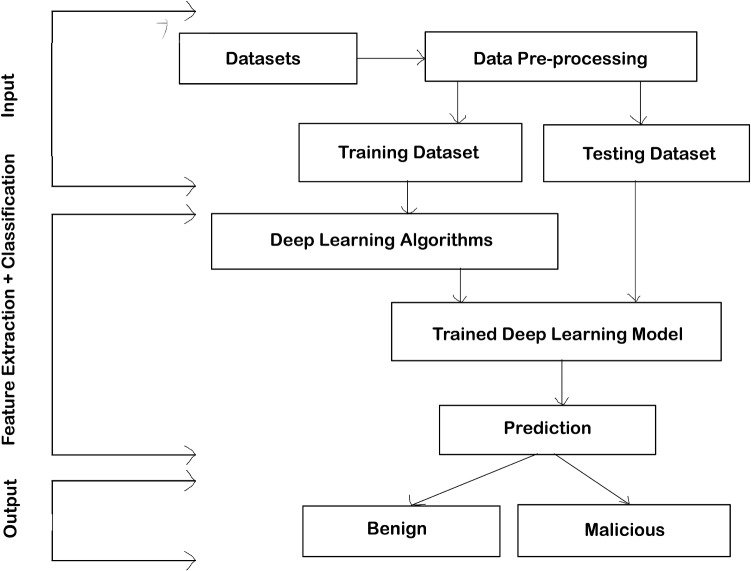

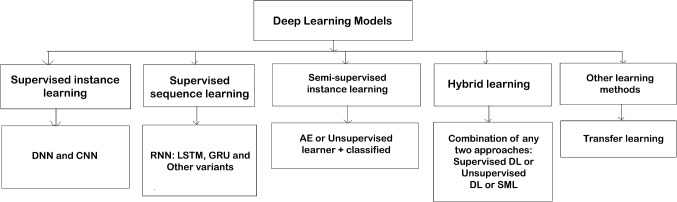

As shown in Fig. 3, DL algorithms take preprocessed data as input, perform both feature extraction and classification, and output a prediction of benign or malicious for each sample. Based on common parameters of the DL approaches, the taxonomy in Fig. 4 classifies DL models for DDoS attack detection into five categories: supervised instance learning, supervised sequence learning, semi-supervised learning, hybrid learning, and other learning methods. Each category is briefly described below:

- Supervised instance learning: Supervised instance learning operates on individual flow instances (Gamage and Samarabandu 2020) and is trained on labelled instances. The most commonly used methods in this category are described below:

- Convolutional neural network: A CNN consists of convolutional, pooling, flattening, and FC layers (https://www.ibm.com/cloud/learn/convolutional-neural-networks). The convolutional layer is the main building block of a CNN (Gopika et al. 2020). It performs a convolution (https://www.analyticsvidhya.com/blog/2021/05/convolutional-neural-networks-cnn/) by applying filters to the input to produce a convolved feature, or feature map. The filters slide as a moving window over the height and width of the input, spanning its depth. The pooling layer follows the convolutional layer (Gopika et al. 2020) and reduces the dimensionality of the feature maps (Zhu et al. Jan 2018; Ke et al. 2018) by taking a summary value, such as the maximum, over each region. The flattening layer converts the multidimensional output of the pooling layer into a 1-D vector that is fed into an FC layer. The FC layer computes the probability of each class label to classify the samples (Yamashita et al. 2018).

- Supervised sequence learning: Supervised sequence learning operates on sequences of flows (Gamage and Samarabandu 2020). Models of this type learn from sequential data by retaining previous input states in memory. The most commonly used models of this type are described below:

- Recurrent neural networks (RNN): A feed-forward neural network comprises input, hidden, and output layers. In a feed-forward network, all inputs and outputs are independent of each other (Nisha et al. 2021), so it cannot use previous information. It is therefore unsuitable for tasks such as predicting the next word of a sentence (https://towardsdatascience.com/illustrated-guide-to-recurrent-neural-networks-9e5eb8049c9). In an RNN, the state computed at the previous step is fed to the current step along with the current input, so the network can predict the next word of a sentence by retaining previous information. However, RNNs suffer from vanishing and exploding gradients (Nisha et al. 2021) and have difficulty processing long sequences (https://www.mygreatlearning.com/blog/types-of-neural-networks/).

- Long short-term memory (https://www.analyticsvidhya.com/blog/2017/12/fundamentals-of-deep-learning-introduction-to-lstm/): The LSTM addresses these problems of RNNs. An LSTM network comprises memory blocks, or cells, and passes two states, the hidden state and the cell state, to the next cell. Each memory block decides which information to remember or forget through three mechanisms called gates: the forget, input, and output gates (https://purnasaigudikandula.medium.com/recurrent-neural-networks-and-lstm-explained-7f51c7f6bbb9). The forget gate removes information from the cell state that the LSTM no longer needs. The input gate adds new information to the cell state, and the output gate extracts useful information from the current cell state and emits it as the output.

- Gated recurrent unit (Alom et al. 2018): The GRU combines the forget and input gates into a single update gate and merges the cell state and hidden state, along with a few other changes.

- Semi-supervised learning: Semi-supervised methods include a pre-training stage on unlabelled data (Gamage and Samarabandu 2020), so both labelled and unlabelled records are used to train the model. In this category, an autoencoder is used for feature extraction, and another deep or shallow machine learning model performs the classification.

- Autoencoders (Aldweesh et al. 2020): An AE is a deep neural network used for dimensionality reduction and feature extraction. It comprises an input layer (for encoding) and an output layer (for decoding), with a hidden layer in between. The encoder and decoder are trained jointly using back-propagation. The encoder takes the raw features and compresses the input into a low-dimensional representation, from which the decoder reconstructs the original features.

Hybrid learning: Hybrid methods combine any two approaches among supervised DL, unsupervised DL, and shallow machine learning. In the existing literature, researchers have used CNN-LSTM (Roopak et al. 2019, 2020; Nugraha and Murthy 2020), LSTM-Bayes (Li and Lu 2019), RNN-AE (Elsayed et al. 2020), and other combinations.

Other learning methods: This category covers transfer learning, which reuses a model already pre-trained and available from a repository (Gamage and Samarabandu 2020). Researchers have trained DL approaches on one attack domain and later applied the trained model to another domain.
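The CNN layer sequence described under supervised instance learning (convolution, pooling, flattening, FC) can be sketched as a 1-D forward pass in plain Python, treating a vector of flow features as a one-channel signal. This is a minimal illustration under stated assumptions: the weights are hand-set rather than trained, the activation is ReLU, pooling is non-overlapping max pooling, and the feature values, function names, and two-class output (benign, malicious) are hypothetical.

```python
import math


def conv1d(x, kernels, bias):
    """Valid 1-D convolution (cross-correlation, as in most DL libraries).

    x: list of input channels, each a list of floats of length L.
    kernels: kernels[f][c] is the weight list of filter f for channel c.
    Returns one ReLU-activated feature map per filter, of length L - k + 1.
    """
    k = len(kernels[0][0])
    length = len(x[0])
    maps = []
    for f, filt in enumerate(kernels):
        fmap = []
        for i in range(length - k + 1):          # slide the window along the input
            s = bias[f]
            for c, w in enumerate(filt):         # each filter spans all channels
                s += sum(w[j] * x[c][i + j] for j in range(k))
            fmap.append(max(0.0, s))             # ReLU activation
        maps.append(fmap)
    return maps


def max_pool1d(maps, size=2):
    """Non-overlapping max pooling; a trailing partial window is dropped."""
    return [[max(m[i:i + size]) for i in range(0, len(m) - size + 1, size)]
            for m in maps]


def flatten(maps):
    """Concatenate all pooled feature maps into one 1-D vector."""
    return [v for m in maps for v in m]


def dense_softmax(v, weights, bias):
    """Fully connected layer followed by softmax over the class scores."""
    scores = [b + sum(w_i * v_i for w_i, v_i in zip(w, v))
              for w, b in zip(weights, bias)]
    top = max(scores)                            # subtract max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical normalized features of one flow, as a single input channel.
x = [[0.1, 0.9, 0.8, 0.2, 0.7, 0.3]]
kernels = [[[1.0, 0.0, -1.0]], [[0.5, 0.5, 0.5]]]   # two hand-set filters of width 3
maps = conv1d(x, kernels, [0.0, 0.0])               # 2 maps of length 4
pooled = max_pool1d(maps)                            # 2 maps of length 2
v = flatten(pooled)                                  # vector of length 4
probs = dense_softmax(v, [[-1.0, 0.0, 1.0, 0.0], [1.0, 0.0, -1.0, 0.0]], [0.0, 0.0])
print(probs)  # [P(benign), P(malicious)] under these made-up weights
```

In a real CNN the filter and FC weights are learned by back-propagation; the sketch only shows how each layer reshapes the data on its way to the class probabilities.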
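The RNN recurrence and its vanishing-gradient problem, described under supervised sequence learning, can be made concrete with a single-unit (scalar) Elman RNN, h_t = tanh(w_x x_t + w_h h_{t-1} + b). By the chain rule, the sensitivity of the last state to the initial state is the product of w_h (1 - h_t^2) over all steps. The sketch below assumes hypothetical weights and a constant hypothetical input sequence; real RNN layers use weight matrices, but the same product structure appears.

```python
import math


def rnn_forward(xs, w_x, w_h, b, h0=0.0):
    """Scalar Elman RNN: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)."""
    hs = []
    h = h0
    for x in xs:
        h = math.tanh(w_x * x + w_h * h + b)   # previous state feeds the current step
        hs.append(h)
    return hs


def grad_first_state(hs, w_h):
    """d h_T / d h_0 = prod_t w_h * (1 - h_t**2) for the scalar RNN above.

    If every factor has magnitude below 1 the product shrinks geometrically
    (vanishing gradient); factors above 1 make it grow (exploding gradient).
    """
    g = 1.0
    for h in hs:
        g *= w_h * (1.0 - h * h)
    return g


xs = [0.5] * 50                     # hypothetical per-interval feature, 50 steps
hs = rnn_forward(xs, w_x=1.0, w_h=0.5, b=0.0)
print(grad_first_state(hs[:5], 0.5))   # small after 5 steps
print(grad_first_state(hs, 0.5))       # vanishingly small after 50 steps
```

With these weights each factor is at most 0.5, so the influence of early inputs on the gradient fades quickly with sequence length, which is the limitation the LSTM and GRU gates are designed to mitigate.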
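The LSTM gate mechanism described above can be sketched as one time step of a single-unit (scalar) cell, using the standard formulation in which each gate is a sigmoid of a weighted sum of the current input and previous hidden state. The parameter values and the `lstm_step` name are hypothetical; a real LSTM layer applies the same equations with weight matrices over vectors.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, p):
    """One step of a single-unit LSTM cell.

    p maps each gate name to a (w_x, w_h, b) triple of hypothetical weights.
    Returns the new hidden state h and cell state c.
    """
    def pre(gate):
        w_x, w_h, b = p[gate]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre("forget"))        # fraction of the old cell state to keep
    i = sigmoid(pre("input"))         # fraction of new information to write
    g = math.tanh(pre("candidate"))   # candidate values to write
    o = sigmoid(pre("output"))        # fraction of the cell state to expose
    c = f * c_prev + i * g            # forget old content, add new content
    h = o * math.tanh(c)              # hidden state passed on as the output
    return h, c


# Forget gate saturated open, input gate closed: the cell keeps its memory.
keep = {"forget": (0.0, 0.0, 10.0), "input": (0.0, 0.0, -10.0),
        "candidate": (1.0, 0.0, 0.0), "output": (0.0, 0.0, 0.0)}
# Forget gate closed, input gate open: the cell overwrites its memory.
overwrite = {"forget": (0.0, 0.0, -10.0), "input": (0.0, 0.0, 10.0),
             "candidate": (1.0, 0.0, 0.0), "output": (0.0, 0.0, 0.0)}

print(lstm_step(5.0, 0.0, 0.8, keep))       # cell state stays close to 0.8
print(lstm_step(5.0, 0.0, 0.8, overwrite))  # cell state moves to about tanh(5)
```

Because the cell state is updated additively and scaled only by the forget gate, information can persist over many steps without being repeatedly squashed, unlike in the plain RNN.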
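The GRU simplification described above can be sketched in the same scalar style. The sketch assumes the common formulation with an update gate z and a reset gate r and a single state with no separate cell state. Conventions differ between references and libraries on whether z or 1 - z weights the previous state; here z weights the new candidate. The parameter values and the `gru_step` name are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def gru_step(x, h_prev, p):
    """One step of a single-unit GRU; p maps gate name -> (w_x, w_h, b)."""
    def pre(gate, h):
        w_x, w_h, b = p[gate]
        return w_x * x + w_h * h + b

    z = sigmoid(pre("update", h_prev))   # update gate: plays the forget + input role
    r = sigmoid(pre("reset", h_prev))    # reset gate: how much past state the candidate sees
    h_tilde = math.tanh(pre("candidate", r * h_prev))
    # Convention used here: z weights the candidate, 1 - z weights the old state.
    return (1.0 - z) * h_prev + z * h_tilde


# Update gate closed: the state is carried over almost unchanged.
hold = {"update": (0.0, 0.0, -10.0), "reset": (0.0, 0.0, 0.0),
        "candidate": (1.0, 1.0, 0.0)}
# Update gate open: the state is replaced by the candidate.
replace = {"update": (0.0, 0.0, 10.0), "reset": (0.0, 0.0, 0.0),
           "candidate": (1.0, 1.0, 0.0)}

print(gru_step(2.0, 0.3, hold))     # close to 0.3
print(gru_step(2.0, 0.3, replace))  # close to tanh(2.0 + 0.5 * 0.3)
```

A single update gate deciding both how much to keep and how much to write is what lets the GRU use fewer parameters than the LSTM.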
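The autoencoder described under semi-supervised learning is often used for detection through its reconstruction error (RE): an AE trained only on benign traffic reconstructs benign samples well and unfamiliar samples poorly. The sketch below is a deliberately tiny linear AE (2 inputs, 1-unit code, no nonlinearity) trained by full-batch gradient descent with hand-written back-propagation. The benign data, the symmetric initial weights (chosen to keep the run deterministic), the learning rate, and the 0.1 threshold are all hypothetical assumptions, not a published configuration.

```python
def encode(a, x):
    return a[0] * x[0] + a[1] * x[1]          # 2-D input -> 1-D code


def decode(d, z):
    return [d[0] * z, d[1] * z]               # 1-D code -> 2-D reconstruction


def reconstruction_error(a, d, x):
    """Mean squared error between a sample and its reconstruction."""
    x_hat = decode(d, encode(a, x))
    return sum((xh - xi) ** 2 for xh, xi in zip(x_hat, x)) / len(x)


def train(data, a, d, lr=0.5, epochs=200):
    """Full-batch gradient descent on the mean reconstruction error."""
    a, d = list(a), list(d)
    n = len(data)
    for _ in range(epochs):
        ga, gd = [0.0, 0.0], [0.0, 0.0]
        for x in data:
            z = encode(a, x)
            x_hat = decode(d, z)
            # dL/dx_hat_k for the per-sample loss 0.5 * sum_k (x_hat_k - x_k)^2
            err = [x_hat[k] - x[k] for k in range(2)]
            for k in range(2):
                gd[k] += err[k] * z               # gradient for decoder weights
            dz = err[0] * d[0] + err[1] * d[1]    # back-propagate to the code
            for k in range(2):
                ga[k] += dz * x[k]                # gradient for encoder weights
        for k in range(2):
            a[k] -= lr * ga[k] / n
            d[k] -= lr * gd[k] / n
    return a, d


# Hypothetical benign flows whose two normalized features move together.
benign = [[t, t] for t in (-1.0, -0.5, 0.0, 0.5, 1.0)]
a, d = train(benign, [0.5, 0.5], [0.5, 0.5])

threshold = 0.1                                   # hypothetical RE threshold
for sample in ([0.8, 0.8], [1.0, -1.0]):          # benign-like vs. anomalous
    re = reconstruction_error(a, d, sample)
    print(sample, re, "attack" if re > threshold else "benign")
```

The anomalous sample breaks the correlation the AE learned from benign traffic, so its reconstruction error stays high while benign reconstruction error drops to nearly zero after training.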

Fig. 3.

A deep learning process

Fig. 4.

Taxonomy of deep learning models

Methodologies, strengths, and weaknesses

This section summarizes the methodologies, strengths, and weaknesses of the existing papers according to the proposed taxonomy:

- Supervised instance learning


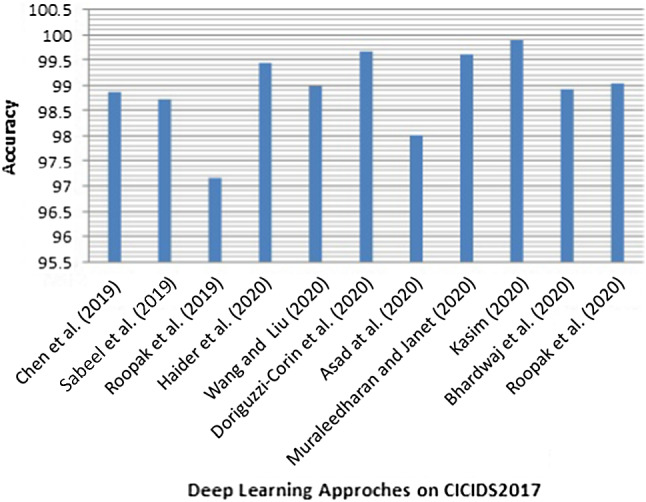

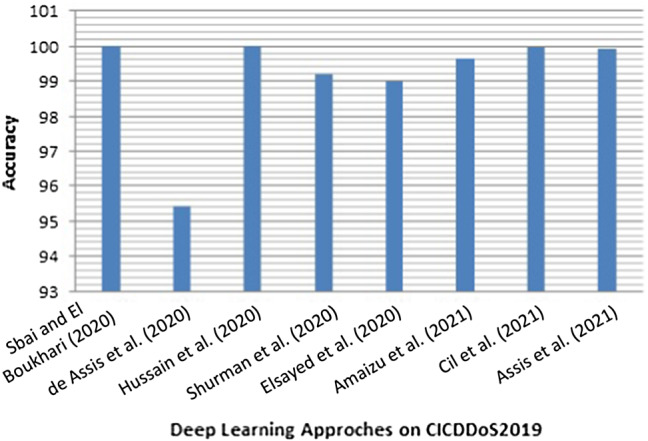

Deep neural networks: Sabeel et al. (2019) proposed two models, DNN and LSTM, for predicting unknown DoS/DDoS attacks. The authors first trained their models on the preprocessed DoS/DDoS samples of the CICIDS2017 dataset and then evaluated them on the synthesized ANTS2019 dataset to measure accuracy. In the second part, they merged the synthesized dataset with CICIDS2017, retrained the models, and evaluated their detection performance on newly synthesized unknown attacks. Performance improved greatly in the second part of the experiment, with DNN and LSTM achieving accuracies of 98.72% and 96.15%, respectively, and AUC values of 0.987 and 0.989, respectively. The ANTS2019 dataset was created synthetically to mimic real-life attacks. Only binary classification was performed, and no real-time detection setup was used.

In private clouds, DDoS is one of the causes of service degradation. Virupakshar et al. (2020) focus on bandwidth and connection flooding DDoS attacks. The authors used DT, KNN, NB, and DNN algorithms to detect DDoS attacks in an OpenStack-based cloud, compared the classifiers, and selected the model with the best precision and accuracy. The DNN model was chosen because it achieved higher accuracy and precision on the dynamically generated dataset: 96% precision and higher accuracy than DT, KNN, and NB on the cloud datasets. However, the authors used an old dataset, KDDCUP99, and gave no details about the LAN and cloud datasets. Moreover, on KDDCUP99 the precision of the DNN is lower than that of the other algorithms.

Asad et al. (2020) introduced a DNN architecture, DeepDetect, based on a feed-forward back-propagation architecture, to protect services from application-layer DDoS attacks. The approach was evaluated on the CICIDS2017 dataset and compared with RF and DeepGFL. DeepDetect achieved an F1-score of 0.99, outperforming the other approaches, and an AUC very close to 1, indicating strong detection performance. The authors performed multiclass classification and deployed the approach on the cloud as a web service to protect against application-layer DDoS attacks. However, the approach was evaluated only on application-layer DDoS attacks.

Muraleedharan and Janet (2020) proposed a flow-based deep neural classification model to detect slow DoS attacks on HTTP. The model is a fully connected feed-forward deep network, evaluated on the CICIDS2017 dataset using only its DoS samples, and it can identify the type of DoS attack. It classifies the attacks with an overall accuracy of 99.61%. However, the approach was evaluated only on the HTTP slow DoS attacks (Slowloris, SlowHTTP, Hulk, GoldenEye) in CICIDS2017.

Sbai and El Boukhari (2020) proposed a DNN model (with two hidden layers and 6- epochs) to detect data flooding (UDP flooding) attacks in MANETs, and trained and evaluated it on the CICDDoS2019 dataset. The model obtained very promising results: recall 1, precision 0.99, F1-score 0.99, and accuracy 0.99. However, the authors considered only the data flooding (UDP flooding) attack of CICDDoS2019.

Amaizu et al. (2021) proposed an efficient DL-based DDoS attack detection framework for 5G and B5G environments. The framework concatenates two differently designed DNN models, coupled with a feature extraction algorithm (PCC), and detects both DDoS attacks and their type. The authors evaluated it in four scenarios on an industry-recognized dataset, CICDDoS2019, where it detected DDoS attacks with an accuracy of 99.66% and a loss of 0.011. The framework was also compared with existing approaches (KNN, SVM, DeepDefense, and a CNN ensemble) and outperformed all of them except the CNN ensemble, which had better precision and recall. The complex structure of the model may increase detection time and thus affect its performance in real-time scenarios.

Cil et al. (2021) proposed a DL model whose structure includes both feature extraction and classification. The DNN has an input layer of 69 units, three hidden layers of 50 units each, and an output layer of two units. The authors divided the CICDDoS2019 dataset into two datasets: Dataset1, which labels traffic as normal or attack, and Dataset2, which labels the types of DDoS attacks. The DNN achieved nearly 100% accuracy for DDoS attack detection on Dataset1, a reliable result for early action that is suitable for real-time scenarios. It also classified DDoS attack types with approximately 95% accuracy on Dataset2, so its accuracy is lower for multiclass classification.


Convolutional neural network

Optical Burst Switching (OBS) networks are often victims of DDoS attacks known as Burst Header Packet (BHP) flooding attacks. According to Hasan et al. (2018), because the available datasets contain few records, conventional machine learning techniques such as NB, KNN, and SVM cannot examine the data efficiently. The authors therefore proposed a Deep CNN model, which outperformed the three ML methods on the given dataset with fewer features. They performed multiclass classification and obtained good results across 11 performance metrics. However, the evaluation dataset has a small number of instances and does not contain all traffic types.

Amma and Subramanian (2019) introduced a Vector Convolutional Deep Feature Learning (VCDeepFL) approach to identify DoS attacks. VCDeepFL combines a vector CNN (VCNN) and an FCNN and has two phases, training and testing. The training phase consists of unsupervised pre-training with the VCNN, which operates on the vector form of the data, followed by supervised training of the FCNN, a multiclass classifier, on the features from the pre-training module. Testing uses the weights learned during the training phase. The approach was tested on the NSL-KDD dataset and compared with base classifiers (MLP, SVM) and state-of-the-art attack detection systems, achieving higher accuracy, a lower false alarm rate, and an improved detection rate. However, the study uses an old dataset, and the authors have not shown experiments on detecting unknown attacks.

Chen et al. (2019) proposed DAD-MCNN, a multichannel CNN (MC-CNN) framework to detect DDoS attacks, in which the number of feature groups determines the number of channels. The authors split the features into levels such as packet level, host level, and traffic level, and trained the MC-CNN incrementally. They conducted tests on the KDDCUP99 and CICIDS2017 datasets, performing binary classification on both and multiclass classification on KDDCUP99 only. MC-CNN was compared with CNN, a 3-layer LSTM, and shallow ML methods (RF, SVM, C4.5, and KNN) and outperformed them in all binary and multiclass tasks. The authors also varied the training set size when evaluating CNN and MC-CNN; MC-CNN performed better on the restricted dataset, which makes it helpful for building DDoS detection systems when training data are scarce. However, the multichannel and single-channel models differ only slightly in their results, and multichannel models increase complexity, so they might not be suitable in real-time scenarios.

Shaaban et al. (2019) proposed a CNN model to detect DDoS attacks and compared it with DT, SVM, KNN, and NN classifiers on two datasets: dataset 1 (simulated network traffic) and dataset 2 (NSL-KDD). The proposed model outperformed the four classifiers and achieved 99% accuracy on both datasets. However, one-column padding was used to convert the data into matrix form, which can affect what the model learns.

Haider et al. (2020) proposed a deep CNN ensemble framework for detecting DDoS attacks in Software Defined Networks (SDN) and evaluated it on the CICIDS2017 dataset.
This solution was compared with state-of-the-art DL-based ensembles and hybrid approaches (RNN, LSTM, RL). The ensemble CNN performed better than the other three DL approaches, although there is a trade-off in training and testing time. It also outperformed existing competing approaches, reaching an accuracy of 99.45%. However, its training and testing times are higher than those of other approaches, which can delay mitigation and allow attacks to cause more damage.

Wang and Liu (2020) proposed a method combining information entropy and DL to detect DDoS attacks in SDN environments. The technique uses two-level detection: first, the controller inspects suspicious traffic through information entropy detection; then a CNN model performs fine-grained packet-based detection to distinguish normal traffic from attack traffic. Compared with DNN, SVM, and DT, the CNN achieved the highest precision, accuracy, F1-score, and recall, with an accuracy of 98.98%. The ROC curve of the CNN is steeper than those of DNN, SVM, and DT, and its AUC is 0.949. However, a threshold value must be set for the entropy-based detection.

Kim et al. (2020) developed a CNN-based model to detect DoS attacks using the DoS records of the CSE-CIC-IDS 2018 and KDD datasets. The authors designed the CNN by considering the number of CLs and the kernel size. They evaluated the model in 18 scenarios that vary the hyperparameters, the image type (greyscale or RGB), the number of CLs, and the kernel size, each for both binary and multiclass classification, and then suggested the optimal scenarios with the highest performance.
The CNN model was also compared with an RNN and identified specific DoS attacks with similar characteristics better than the RNN model. Kernel size did not significantly affect either binary or multiclass classification. However, the preprocessing time for converting features into RGB and greyscale images was not considered, although it matters in real-time validation.

The LUCID technique (Doriguzzi-Corin et al. 2020) detects DDoS attacks with lightweight execution, low processing overhead, and short detection time. Its traffic preprocessing mechanism is designed to feed network traffic to the CNN model for online DDoS attack detection. The authors compared LUCID with DeepDefense 3LSTM on ISCX2012, CIC2017, CSECIC2018, and UNB201X and obtained comparable results, although LUCID outperformed 3LSTM in detection time. LUCID was further compared against state-of-the-art works (DeepDefense, TR-IDS, E3ML) on ISCX2012 and against DeepGFL, MLP, LSTM, 1D-CNN, and 1D-CNN LSTM on the CIC2017 dataset, matching the existing state-of-the-art performance. The authors also demonstrated the model's suitability for resource-constrained environments and showed, by calculating each feature's kernel activations, that LUCID learns the correct domain information. LUCID's training time on the GPU development board is 40 times faster than the authors' implementation of DeepDefense 3LSTM, and a feasibility test was also performed. However, padding is used to make every flow n packets long, which may hinder the CNN in learning patterns; there are trade-offs between accuracy and memory requirements; and the preprocessing time, which is important for real-time scenarios, has not been calculated.

In de Assis et al. (2020), the authors proposed an SDN defence system that detects and mitigates DDoS attacks against both an external targeted server and the controller. The detection module uses a DL-based CNN to detect DDoS attacks by inspecting SDN traffic behaviour. The method works in near real time: IP flow data are extracted and analyzed at one-second intervals to reduce the effect of DDoS on genuine users. The CNN was compared with three other anomaly detection approaches, namely LR, an MLP network, and a Dense MLP, in two test scenarios: the first uses simulated SDN data and the second the CICDDoS 2019 dataset. Overall, the CNN detected DDoS attacks efficiently in both scenarios. In the mitigation module, a GT-based technique applied in the SDN controller efficiently restores the SDN's regular operation. The system operates autonomously to speed up detection and mitigation. However, the model shows lower accuracy on the CICDDoS 2019 dataset.

Hussain et al. (2020) proposed a method that transforms non-image network traffic into three-channel images and evaluated it with ResNet-18, an existing state-of-the-art CNN model, for detecting recent DoS and DDoS attacks. The method converts the cleaned and normalized features into images without any encoding or transformation techniques. Using ResNet-18, it outperformed a state-of-the-art solution on the same dataset and achieved 99.99% accuracy in binary classification.
It also achieved an accuracy of 87.06% for the 11 types of DoS and DDoS attacks in the CICDDoS2019 dataset. However, the preprocessing time for converting non-image data into images, an important metric for real-time validation, was not calculated, and the paper does not describe how the original 60 × 60 × 3 images were transformed into the 224 × 224 × 3 input expected by the ResNet model.
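The first-level entropy screen of Wang and Liu (2020) can be illustrated with a minimal sketch. It assumes the Shannon entropy is computed over destination IP addresses in one traffic window and that an entropy below a fixed threshold marks the window as suspicious (traffic concentrating on few victims). The choice of field, the direction of the test, and the threshold are assumptions for illustration, not details reported in the paper; suspicious windows would then be passed to the CNN for fine-grained classification.

```python
import math
from collections import Counter
from typing import Hashable, Iterable


def shannon_entropy(values: Iterable[Hashable]) -> float:
    """Shannon entropy (in bits) of the empirical distribution of `values`."""
    counts = Counter(values)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def is_suspicious(dst_ips: Iterable[Hashable], threshold: float) -> bool:
    """Hypothetical first-level check: flag a window whose destination-IP
    entropy falls below `threshold`."""
    return shannon_entropy(dst_ips) < threshold
```

A window in which four destinations are equally frequent has an entropy of 2 bits, whereas a window where 9 of 10 packets target the same host has roughly 0.47 bits and would be flagged with a threshold of 1.0. This also shows why the threshold must be tuned per network, as noted above.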


Supervised sequence learning


Long short-term memory

Li et al. (2018) proposed a deep learning model to detect DDoS attacks in SDN environments. The model comprises input, forward recursive, reverse recursive, FC hidden, and output layers, and uses RNN, LSTM, and CNN components. The authors thereby formed four models: LSTM, CNN/LSTM, GRU, and 3LSTM. The detection accuracy on the ISCX dataset is 98%. The DDoS attack detection and defence system was built on the Ubuntu 14.04 operating system and verified against real-time DDoS attacks. However, it was tested on only a few types of real-time DDoS attacks: the Ping of Death attack, ARP flooding attack, SYN flooding attack, Smurf attack, and UDP flooding attack.

Priyadarshini and Barik (2019) designed a DL-based model to protect against DDoS attacks in a fog network, using an LSTM to detect network/transport-level DDoS attacks. The LSTM's parameters were varied, and the model was implemented in two scenarios. In the first, it was applied to the CTU-13 Botnet and ISCX2012 IDS datasets; in the second, it was trained on the Hogzilla dataset and tested on 10% of it and on a few real-time DDoS attacks. The authors also compared the model with other approaches, and the LSTM model achieved 98.88% accuracy across all test scenarios. A DDoS defender module blocks infected packets from being transmitted to the cloud server through the OpenFlow switch in the SDN. However, no real-time feasibility analysis of the proposed model has been done, and only network/transport-level DDoS attacks have been detected.

Liang and Znati (2019) proposed a four-layer architecture consisting of two LSTM layers, a dropout layer, and an FC layer.
This approach obviates handcrafted feature engineering and learns network traffic behaviour directly from a short sequence of packets. The paper reports three experiments comparing the model with three other algorithms (DT, ANN, SVM) on the Wednesday and Friday subsets of CICIDS 2017. Experiment 1 showed that the LSTM-based scheme successfully learned the complex flow-level feature descriptions embedded in raw input and performed better than the other approaches. Experiment 2 showed that the scheme accurately captures the dynamic behaviour of unknown network traffic. Experiment 3 showed that allowing the model to inspect more packets per flow, by increasing n, does not always improve performance. Overall, the scheme outperforms traditional machine learning methods on unknown traffic. The model uses a subsequence S of n packets from each flow; if a flow does not have enough packets, S is padded with fake packets. These padding values can affect learning and degrade performance.

Shurman et al. (2020) proposed two methodologies to detect DoS/DDoS attacks: a hybrid IDS and a DL model based on LSTM. The first, an IDS framework defined as an application, can detect malicious network traffic from any network device by running datasets of IPs against it, and can block unwelcome IPs. The second, an LSTM model, is trained on the CICDDoS2019 dataset with several types of DrDoS attacks and outperformed other existing models, with an accuracy of 99.19%. However, only the reflection-based portion of CICDDoS2019 has been used, and the hybrid IDS and LSTM methods are independent of each other.
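The fixed-length input used by Liang and Znati (2019), and the flow padding criticized for LUCID above, can be sketched as follows. The sketch keeps the first n packets of a flow and pads short flows with "fake" packets; it assumes the fake packets are all-zero feature vectors and that each packet is already a numeric feature vector, which are illustrative assumptions rather than the papers' exact encodings. The function name is hypothetical.

```python
from typing import List, Sequence

Packet = List[float]  # one packet's feature vector (hypothetical layout)


def fixed_length_flow(packets: Sequence[Packet], n: int, n_features: int) -> List[Packet]:
    """Truncate a flow to its first n packets and pad short flows with
    all-zero 'fake' packets, so every sample has shape n x n_features."""
    window = [list(p) for p in packets[:n]]
    padding = [[0.0] * n_features for _ in range(n - len(window))]
    return window + padding
```

A two-packet flow padded to n = 4 gains two rows of zeros, so half of that sample is artificial. This illustrates why heavy padding of short flows can distort what an LSTM or CNN learns.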


Gated recurrent unit

Assis et al. (2021) proposed a defence system against DDoS and intrusion attacks in SDN environments. The system consists of two essential modules: detection and mitigation. The detection module uses a DL-based GRU to detect DDoS and intrusion attacks by analyzing single IP flow records, and the mitigation module takes effective actions against the detected attacks. The authors compared their model with seven other approaches (DNN, CNN, LSTM, SVM, LR, KNN, and GD) in two test scenarios, the first using the CICDDoS 2019 dataset and the second the CICIDS 2018 dataset. In both scenarios, they measured accuracy, precision, recall, F-measure, and the effectiveness of classification for normal and attack flows separately. The GRU detected DDoS and intrusion attacks in all test scenarios. A feasibility test was also performed by calculating the average number of flows per second each detection method can analyze and classify, using actual IP flow data collected at the State University of Londrina; the results indicate that the GRU approach is viable. The average of accuracy, recall, precision, and F-measure is 99.94% on CICDDoS2019 and 97.09% on CICIDS2018. However, detection and training times were not calculated, and only offline analysis of the datasets has been done.
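Evaluating normal and attack flows separately, as Assis et al. (2021) do, amounts to computing precision, recall, and F1 per class rather than once for the whole test set. The minimal sketch below shows the standard per-class definitions; how the paper averages these values into a single figure is not stated here, so no averaging is assumed.

```python
from typing import Dict, Hashable, Sequence


def per_class_metrics(y_true: Sequence[Hashable],
                      y_pred: Sequence[Hashable]) -> Dict[Hashable, Dict[str, float]]:
    """Precision, recall and F1 computed separately for each class label."""
    out: Dict[Hashable, Dict[str, float]] = {}
    for label in sorted(set(y_true) | set(y_pred)):
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == label and p == label)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != label and p == label)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == label and p != label)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        out[label] = {"precision": precision, "recall": recall, "f1": f1}
    return out
```

For true labels `["attack", "attack", "normal", "normal"]` and predictions `["attack", "normal", "normal", "normal"]`, the attack class has precision 1.0 but recall 0.5, while the normal class has recall 1.0. A single overall score would hide this asymmetry.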



Semi-supervised learning

Catak and Mustacoglu (2019) proposed combining two models: an AE and a deep ANN. The AE layer learns a representation of the network flows, and the DNN determines the exact malicious activity class. The authors evaluated the model on the UNSW-NB15 and KDDCUP99 datasets with different activation functions. The best F1-score, 0.8985, was obtained with the ReLU activation function. The overall accuracy and precision on KDDCUP'99 are approximately 99% for the softplus, softsign, ReLU, and tanh activation functions. However, the study focuses only on activation functions.
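The four activation functions compared by Catak and Mustacoglu (2019) have simple closed forms, sketched below as scalar Python functions for reference. These are the standard textbook definitions; the softplus is written in an algebraically equivalent, overflow-safe form.

```python
import math


def relu(x: float) -> float:
    """ReLU: max(0, x)."""
    return max(0.0, x)


def softplus(x: float) -> float:
    """Softplus: log(1 + e^x), rewritten as max(x, 0) + log(1 + e^-|x|)
    so that large positive x does not overflow math.exp."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def softsign(x: float) -> float:
    """Softsign: x / (1 + |x|), bounded in (-1, 1)."""
    return x / (1.0 + abs(x))


def tanh(x: float) -> float:
    """Hyperbolic tangent, bounded in (-1, 1)."""
    return math.tanh(x)
```

ReLU is unbounded above and zero for negative inputs, whereas softsign and tanh saturate in (-1, 1); softplus is a smooth approximation of ReLU.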

Ali and Li (2019) proposed using deep AEs for feature learning and an MKL framework for detection model learning and classification. The authors first trained multiple deep AEs to learn features from the training data in an unsupervised manner. These features are then combined automatically by an MKL algorithm called MKLDR and used to build a DDoS detection model in a supervised fashion. The method was evaluated on the ISCXIDS2012 and UNSW-NB15 datasets and their subsets and compared with NB, DT, KNN, LSVM, RF, and LSTM, achieving higher accuracy than all of them. However, the detection time of the model has not been calculated; because the model is very complex, it may respond slowly, allowing attacks to cause significant damage to the system.

Yang et al. (2020) designed a five-layer AE model for effective, unsupervised DDoS detection. It requires only normal data to build the detection model, which then classifies traffic as attack or normal. Through experiments on different datasets (public and synthetic), the authors demonstrated that knowledge learned in one network environment cannot be applied to another. They also showed that a supervised ML approach, DT, cannot effectively detect new attacks that did not appear in its training set, whereas the AE performed well on unknown and new attacks. Moreover, the AE-based DDoS attack detection framework (AE-D3F) achieved comparable performance with all 27 features and with 16 features selected using PCC, so fewer features suffice. Because only normal traffic is used for training, the approach is useful when labelled attack data are unavailable. The AE performs both feature learning and traffic classification, with classification based on a reconstruction error (RE) threshold. AE-D3F achieves nearly 100% DR with less than 0.5% FPR on both known and unknown attack test sets, but the RE threshold value must be set.
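The RE-threshold classification step of an AE trained only on normal traffic, as in AE-D3F, can be sketched as follows. The sketch assumes mean squared error as the reconstruction error and sets the threshold at a percentile of the errors observed on normal training traffic (nearest-rank method). Both choices, the 99th-percentile default, and the function names are assumptions for illustration, since the paper's exact threshold rule is not given here. The AE itself is omitted; only its outputs are used.

```python
import math
from typing import List, Sequence


def reconstruction_error(x: Sequence[float], x_hat: Sequence[float]) -> float:
    """Mean squared error between a sample and its AE reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)


def fit_threshold(normal_errors: List[float], percentile: float = 99.0) -> float:
    """Hypothetical rule: the given percentile (nearest-rank) of the
    reconstruction errors measured on normal training traffic."""
    ranked = sorted(normal_errors)
    k = max(1, math.ceil(percentile * len(ranked) / 100))
    return ranked[k - 1]


def classify(error: float, threshold: float) -> str:
    """Traffic the AE reconstructs poorly is labelled as an attack."""
    return "attack" if error > threshold else "normal"
```

The percentile directly trades detection rate for false positives: a higher percentile lowers the FPR on normal traffic but lets more low-error attacks through, which is why the RE threshold has to be chosen carefully.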

In Kasim (2020), the author proposed an AE-SVM approach and evaluated it in the following test scenarios: (1) training on 16,902 records; (2) testing on 15,000 randomly selected records from the CICIDS dataset; (3) testing on a dataset of 6957 DDoS attack records created in a Kali Linux environment; (4) training on the NSL-KDD training set with ten-fold cross-validation; and (5) testing on NSL-KDD. The AE-SVM method outperformed other methods in terms of a low false-positive rate and rapid anomaly discovery. However, its accuracy on the NSL-KDD dataset is lower than on the other two datasets.
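The ten-fold cross-validation in scenario (4) partitions the training set into ten folds, each used once for validation while the other nine are used for training. The sketch below produces such index splits with contiguous, near-equal folds; it is a generic illustration, not Kasim's setup. In practice the indices would usually be shuffled (and possibly stratified by class) before splitting.

```python
from typing import List, Tuple


def k_fold_indices(n_samples: int, k: int = 10) -> List[Tuple[List[int], List[int]]]:
    """Split indices 0..n_samples-1 into k contiguous folds of near-equal
    size; each fold is the validation set once, the rest form the training set."""
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    splits: List[Tuple[List[int], List[int]]] = []
    start = 0
    for size in fold_sizes:
        val = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        splits.append((train, val))
        start += size
    return splits
```

Every sample appears in exactly one validation fold, so averaging the ten validation scores uses all the training data for evaluation without ever testing a model on samples it was trained on.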

Bhardwaj et al. (2020) proposed an approach that combines a stacked sparse AE for feature learning with a DNN for network traffic classification. A naive AE and DNN with random hyperparameter values were first taken as the baseline and then optimized for further improvement. The proposed approach was compared with ten state-of-the-art approaches: SAE-SMR, AE-Gaussian NB, RNN, MLP, AE-SVM, and SAVAER-DNN on the NSL-KDD dataset, and DT, ANN, SVM, SAVAER-DNN, and LSTM on the CICIDS2017 dataset. It outperformed the existing approaches on NSL-KDD with 98.43% accuracy and produced competitive results on CICIDS2017 with 98.92% accuracy. The method addresses both feature learning and overfitting: features are learned by training the AE on random samples of the training data, and overfitting is prevented by a sparsity parameter. However, the article does not evaluate a recent dataset, performs only offline analysis, and does not report the detection time of the model.
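The sparsity parameter of a sparse AE is commonly enforced with a Kullback-Leibler (KL) divergence penalty that pushes each hidden unit's average activation ρ̂_j towards a small target ρ. The sketch below computes this standard penalty; whether Bhardwaj et al. (2020) use exactly this form, and how it is weighted in their loss, is an assumption here.

```python
import math
from typing import Sequence


def kl_sparsity_penalty(rho: float, rho_hat: Sequence[float]) -> float:
    """Sum over hidden units j of KL(rho || rho_hat_j), where rho is the target
    average activation and rho_hat_j is unit j's observed average activation.
    Both must lie strictly between 0 and 1."""
    total = 0.0
    for r in rho_hat:
        total += rho * math.log(rho / r) + (1 - rho) * math.log((1 - rho) / (1 - r))
    return total
```

The penalty is zero when every unit's average activation equals the target and grows as units become more active, so adding it (times a weight) to the reconstruction loss keeps most hidden units near-inactive and limits overfitting.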

Premkumar and Sundararajan (2020) proposed a DLDM framework to detect DoS attacks in WSNs, which uses RBF-based neural deep learning to classify the data. The authors set the simulation parameters, simulated the experiments in NS2, and presented the detection performance for a single CH. With a single CH and the proportion of attackers varied from 5% to 15%, the detection ratio is between 86% and 99%, and the average false alarm rate is 15%. DLDM showed a higher detection rate and a lower false alarm rate than MAS throughout the data forwarding phase, and node lifetime is extended because the nodes consume less energy. Feasibility was analyzed in NS2 by calculating PDR, energy consumption, and throughput. However, the DLDM framework is valid only for nodes with little or no mobility, whereas WSN nodes can be highly dynamic and move frequently. Also, only a generated dataset has been used for model evaluation.
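An RBF neural network, the building block of DLDM, computes a weighted sum of radial basis functions centred on prototype points. The sketch below shows the forward pass of a single-output network with Gaussian hidden units. The Gaussian kernel and all parameter names are assumptions; the centres, widths, and weights would normally be learned, and DLDM's actual configuration is not described here.

```python
import math
from typing import Sequence


def rbf_network(x: Sequence[float], centers: Sequence[Sequence[float]],
                widths: Sequence[float], weights: Sequence[float],
                bias: float = 0.0) -> float:
    """Single-output RBF network with Gaussian hidden units:
    y = bias + sum_i w_i * exp(-||x - c_i||^2 / (2 * sigma_i^2))."""
    y = bias
    for c, sigma, w in zip(centers, widths, weights):
        dist2 = sum((a - b) ** 2 for a, b in zip(x, c))
        y += w * math.exp(-dist2 / (2 * sigma ** 2))
    return y
```

Each hidden unit responds strongly only to inputs near its centre, so the network's output is a local blend of prototypes, for example prototypes of normal and attack traffic patterns.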


Hybrid learning

Roopak et al. (2019) proposed four DL models, i.e. MLP, CNN, LSTM, and a hybrid CNN-LSTM model, and compared them with ML algorithms (SVM, Bayes, and RF). They evaluated the models on the CICIDS2017 dataset, which is imbalanced and was balanced by duplicating data. The hybrid CNN-LSTM model performed better than the other DL and ML models, with an accuracy of 97.16% and a recall of 99.1%. However, the exact balancing method is not described, and the model, intended for IoT networks, was analyzed only offline.
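Because Roopak et al. (2019) do not describe how the data were duplicated, the following is only a minimal sketch of one common option, random oversampling: minority-class samples are duplicated at random until every class matches the largest one. The function name and the fixed seed are hypothetical.

```python
import random
from collections import Counter
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def oversample(samples: Sequence[T], labels: Sequence[str],
               seed: int = 0) -> Tuple[List[T], List[str]]:
    """Balance classes by duplicating randomly chosen samples of each smaller
    class until every class has as many samples as the largest class."""
    rng = random.Random(seed)  # fixed seed for reproducibility
    counts = Counter(labels)
    target = max(counts.values())
    out_x, out_y = list(samples), list(labels)
    for label, count in counts.items():
        pool = [x for x, y in zip(samples, labels) if y == label]
        for _ in range(target - count):
            out_x.append(rng.choice(pool))
            out_y.append(label)
    return out_x, out_y
```

Duplication should be applied only to the training split. If it is done before the train/test split, copies of the same record can end up on both sides and inflate the reported accuracy.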

Li and Lu (2019) proposed LSTM-BA, a combination of an LSTM and a Bayes method. The LSTM first learns the DDoS attack pattern from network traffic and outputs a predicted probability of a DDoS attack. Traffic with a high prediction value (greater than 0.5) is treated as a DDoS attack and traffic with a low value (less than 0.5) as normal; for prediction values between 0.2 and 0.8, the Bayes method re-detects the traffic to improve accuracy. The authors evaluated LSTM-BA and the LSTM module without Bayes on the ISCX2012 intrusion detection dataset, and LSTM-BA achieved a better F1-score than LSTM alone. Compared with existing methods, i.e. DeepDefense and Random Forest, LSTM-BA achieved the highest F1-score and accuracy. The authors also verified the generalization of LSTM-BA on data from the 5th day of ISCX2012: the performance indicators declined slightly on the new data but remained good. However, LSTM-BA may take longer to detect an attack, which makes it less suitable for real-time scenarios, and it improves accuracy by only 0.16% over DeepDefense. Moreover, the preprocessing time has not been calculated, even though BOW and feature hashing are used to convert IP addresses into real vectors.
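The two-stage decision of LSTM-BA can be sketched as a simple rule: trust the LSTM when its probability is outside the uncertain band and defer to the Bayes classifier inside it. The Bayes classifier is passed in as a hypothetical callable, and treating the band as exclusive of 0.2 and 0.8 is an assumption. Outside the band the rule agrees with the 0.5 cutoff, because any p ≥ 0.8 exceeds 0.5 and any p ≤ 0.2 is below it.

```python
from typing import Callable, Sequence


def lstm_ba_decision(p_attack: float,
                     bayes_classifier: Callable[[Sequence[float]], bool],
                     features: Sequence[float],
                     low: float = 0.2, high: float = 0.8) -> bool:
    """Return True for DDoS. Confident LSTM outputs are used directly;
    outputs strictly between `low` and `high` are re-detected by the
    (hypothetical) Bayes classifier."""
    if p_attack >= high:
        return True
    if p_attack <= low:
        return False
    return bayes_classifier(features)
```

Only the uncertain samples pay the cost of the second classifier, but every such sample needs two models, which is one source of the extra detection time noted above.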

Roopak et al. (2020) applied multi-objective optimization, namely the non-dominated sorting genetic algorithm (NSGA), for feature selection on the preprocessed dataset, and used a combination of CNN and LSTM to classify the attacks. Experiments were run on the CICIDS2017 dataset using a GPU. The method achieved an accuracy of 99.03% and an F1-score of 99.36%, and outperformed MLP, SVM, RF, Bayes, and other state-of-the-art techniques. Its training time is 11 times lower than that of other DL methods. However, most of the compared state-of-the-art techniques were not evaluated on CICIDS2017, so the comparison may not be appropriate.
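The core idea behind NSGA-based feature selection is Pareto dominance between candidate feature subsets. The sketch below extracts the first non-dominated front, assuming two objectives (higher accuracy and fewer features) for illustration. A full NSGA-II run additionally ranks later fronts, uses crowding distance, and evolves subsets through selection, crossover, and mutation, none of which is shown here.

```python
from typing import List, Tuple

# (accuracy, number_of_features) of a candidate feature subset (assumed objectives)
Candidate = Tuple[float, int]


def dominates(a: Candidate, b: Candidate) -> bool:
    """a dominates b if it is no worse on both objectives (higher accuracy,
    fewer features) and strictly better on at least one."""
    no_worse = a[0] >= b[0] and a[1] <= b[1]
    strictly_better = a[0] > b[0] or a[1] < b[1]
    return no_worse and strictly_better


def first_front(cands: List[Candidate]) -> List[Candidate]:
    """Candidates not dominated by any other: the first front of non-dominated sorting."""
    return [c for c in cands if not any(dominates(o, c) for o in cands)]
```

For candidates (0.99, 20), (0.98, 10), (0.97, 15), and (0.99, 25), the front is (0.99, 20) and (0.98, 10): each is a different accuracy-versus-size trade-off, and the other two are strictly worse than one of them.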

Elsayed et al. (2020) proposed DDoSNet, a DL-based technique that combines an RNN with an AE, to detect DDoS attacks in SDNs. The model was evaluated on the recent CICDDoS2019 dataset and compared with six classical ML techniques (DT, NB, RF, SVM, Booster, and LR), outperforming them in accuracy, recall, precision, and F-score. It achieved 99% accuracy and an AUC of 98.8 on the CICDDoS2019 dataset. However, only offline analysis of the dataset was performed, and no multiclass classification was done.
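Several of the reviewed studies report the area under the ROC curve (AUC). The AUC equals the probability that a randomly chosen attack sample receives a higher score than a randomly chosen normal sample, which the minimal sketch below computes directly by counting pairs (ties count one half). It runs in O(P × N) time, which is fine for illustration but not for large test sets.

```python
from typing import Sequence


def auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """ROC AUC as the probability that a random positive (label 1) scores
    higher than a random negative (label 0); ties count as one half."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))
```

An AUC of 1 means every attack is ranked above every normal sample, and 0.5 corresponds to random ranking. Because the AUC depends only on the ranking, it is independent of any particular decision threshold.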

Nugraha and Murthy (2020) proposed a DL-based approach using a hybrid CNN-LSTM to detect slow DDoS attacks in SDNs. Because slow DDoS attack traffic datasets are not publicly available, the authors first created synthetic datasets of slow DDoS attacks and benign flows, with UDP and HTTP flows as benign traffic and HTTP flows as slow DDoS attack traffic. The CNN-LSTM model was then trained, validated, and tested on the generated datasets and compared with a DL model (MLP) and an ML technique (one-class SVM). It outperformed both, exceeding 99% on all performance metrics. However, the model is used only for detecting slow DDoS attacks.


Other learning methods

He et al. (2020) proposed a deep transfer learning method to detect small-sample DDoS attacks. First, several neural networks are trained using DL techniques, and their transfer performance is compared using a transferability metric; the network with the best transfer performance among the four is selected. The parameters of the transferred network's layers are then fine-tuned and trained on the target domain. The authors reported a 20.8% improvement in detection for the 8LANN network in the target domain compared with a network whose layer parameters are all initialized randomly, for which the final detection performance drops from 99.28% to 67%. Thus, combining the deep transfer network with fine-tuning mitigates the deterioration in detection performance caused by small samples of DDoS attacks. However, only one attack is used in each of the source and target domains for model evaluation.

Available DDoS benchmarked datasets and classes of attacks in datasets

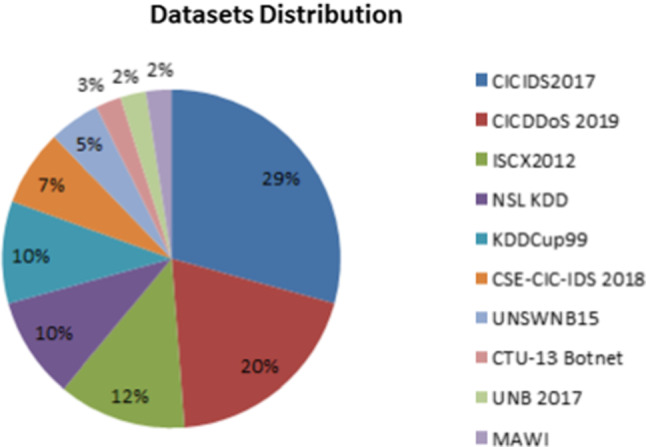

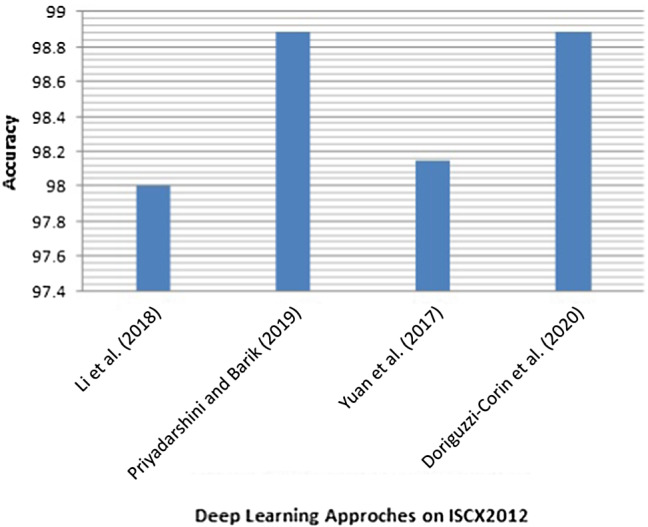

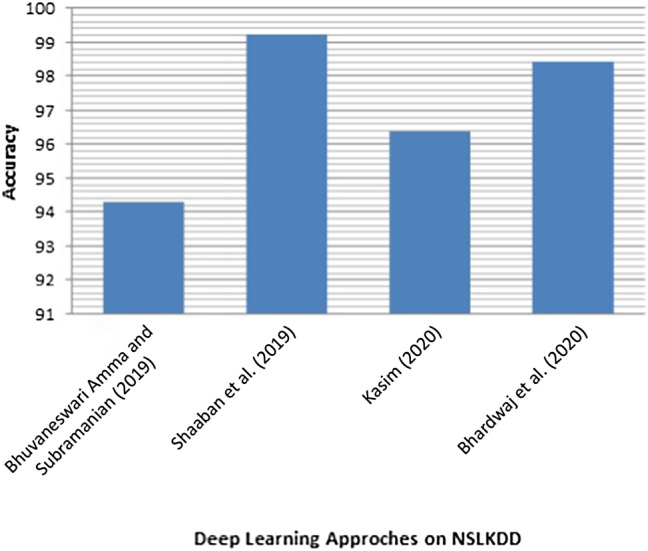

Table 4 lists the datasets and attack classes used by the reviewed papers on DDoS attack detection. Most of the papers used seven datasets: CICIDS2017, CICDDoS2019, ISCX2012, KDDCUP 1999, NSL-KDD, CSE-CIC-IDS2018, and UNSW-NB15. These datasets are described below.

Table 4.

Recent DL-based DDoS attack detection studies, their methods, datasets, and classes of attacks used

| Taxonomy | References | Date of publication | Approach used | Dataset used | Classes of attacks in the studies |
|---|---|---|---|---|---|
| Supervised instance learning | Hasan et al. (2018) | 2018 | Deep CNN | Optical Burst Switching (OBS) network dataset | – |
| | Amma and Subramanian (2019) | 2019 | CNN | NSL-KDD | DoS |
| | Chen et al. (2019) | 2019 | Multichannel CNN | KDDCUP99 and CICIDS2017 | KDDCUP99: Normal, DoS, R2L, U2R, Probe. CICIDS2017: DoS/DDoS: Hulk, Heartbleed, Slowloris, Slowhttptest, GoldenEye |
| | Shaaban et al. (2019) | 2019 | CNN model | 1. Captured from a simulated MCC network by Wireshark 2. NSL-KDD | Dataset 1: TCP and HTTP flood DDoS attack. NSL-KDD: DoS, Probe, R2L, U2R |
| | Sabeel et al. (2019) | 2019 | DNN, LSTM | CICIDS2017 and ANTS2019 | CICIDS2017: Benign, DoS GoldenEye, DoS Slowloris, DoS Hulk, DoS Slowhttptest, DDoS. ANTS2019: DDoS attack and Benign |
| | Virupakshar et al. (2020) | 2020 | DT, KNN, NB, and DNN | KDDCUP, LAN, and Cloud | KDDCUP99: Normal, DoS, R2L, U2R, Probe. Cloud: ICMP flooding, TCP flooding, and HTTP flooding |
| | Haider et al. (2020) | 2020 | Ensemble RNN, LSTM, CNN, and hybrid RL | CICIDS2017 | Slowloris, Slowhttptest, Hulk, GoldenEye, Heartbleed, and DDoS |
| | Wang and Liu (2020) | 2020 | Information entropy and CNN | CICIDS2017 | Benign, BForce, SFTP and SSH, Slowloris, Slowhttptest, Heartbleed, Web BForce, Hulk, GoldenEye, XSS and SQL Inject, Infiltration Dropbox Download, Botnet ARES, Cool disk, DDoS LOIT, PortScans |
| | Kim et al. (2020) | 2020 | CNN | KDDCUP99 and CSE-CIC-IDS 2018 | KDDCUP99: Benign, Neptune and Smurf attack. CSE-CIC-IDS 2018: Benign, DoS-SlowHTTPTest, DoS-Hulk attack, DoS-GoldenEye, DoS-Slowloris, DDoS-HOIC, DDoS-LOIC-HTTP |
| | Doriguzzi-Corin et al. (2020) | 2020 | CNN | ISCX2012, CIC2017, and CSECIC2018 | ISCX2012: DDoS attack based on an IRC botnet. CIC2017: HTTP DDoS generated with LOIC. CSECIC2018: HTTP DDoS generated with HOIC |
| | Asad et al. (2020) | 2020 | DNN | CICIDS2017 | Benign, DoS Slowloris, DoS Hulk, DoS SlowHTTPTest and DoS GoldenEye |
| | Muraleedharan and Janet (2020) | 2020 | DNN | CICIDS2017 | Benign, Slowloris, SlowHTTP, Hulk, GoldenEye |
| | Sbai and El Boukhari (2020) | 2020 | DNN | CICDDoS2019 | Data flooding or UDP flooding attack |
| | de Assis et al. (2020) | 2020 | CNN | Simulated SDN data and CICDDoS 2019 | SDN dataset: DDoS attack. CICDDoS2019: Twelve DDoS attacks on the training day and seven attacks during the testing day |
| | Hussain et al. (2020) | 2020 | CNN model, i.e. ResNet | CICDDoS2019 | Syn, TFTP, DNS, LDAP, UDP Lag, MSSQL, NetBIOS, SNMP, SSDP, NTP, UDP, and Normal traffic |
| | Amaizu et al. (2021) | 2021 | DNN | CICDDoS2019 | UDP LAG, SYN, DNS, MSSQL, NTP, SSDP, TFTP, NetBIOS, LDAP, UDP and Benign |
| | Cil et al. (2021) | 2021 | DNN | CICDDoS2019 | Twelve DDoS attacks on the training day and seven attacks during the testing day |
| Supervised sequence learning | Li et al. (2018) | 2018 | LSTM, CNN/LSTM, GRU, 3LSTM | ISCX2012 dataset and generated DDoS attacks | Generated DDoS attacks: ARP flood inundation attack, Smurf attack, SYN flood inundation attack, Ping of Death attack, and UDP flood inundation attack. ISCX2012: HTTP Denial of Service and Distributed Denial of Service using an IRC botnet |
| | Priyadarshini and Barik (2019) | 2019 | LSTM | CTU-13 Botnet, ISCX 2012, and some real DDoS attacks | ISCX2012: Infiltrating the network from the inside, DDoS using an IRC botnet, HTTP DoS, SSH brute force. CTU-13: IRC, Port Scan, FastFlux, spam, ClickFraud, US. Some real DDoS attacks: TCP, UDP and ICMP |
| | Liang and Znati (2019) | 2019 | LSTM | CICIDS2017 | Slowloris, Hulk, Slowhttptest, GoldenEye and LOIC |
| | Shurman et al. (2020) | 2020 | Hybrid IDS and LSTM | Reflection-based CICDDoS2019 | MSSQL, SSDP, CharGen, LDAP, NTP, TFTP, DNS, SNMP, NETBIOS, and PORTMAP |
| | Assis et al. (2021) | 2021 | GRU | CICDDoS2019 and CICIDS2018 | CICDDoS2019: Twelve DDoS attacks on the training day and seven attacks during the testing day. CICIDS2018: Infiltration of the network from inside, HTTP denial of service, Collection of web application attacks, Brute force attacks, Last updated attacks |
| Semi-supervised instance learning | Catak and Mustacoglu (2019) | 2019 | AE and a deep ANN | UNSWNB15 and KDDCUP99 | UNSWNB15: Normal, Analysis, Fuzzers, Backdoors, Exploits, DoS, Reconnaissance, Shellcode and Worm. KDDCUP99: Neptune, Smurf, Teardrop |
| | Ali and Li (2019) | 2019 | Deep AE and MKL | ISCXIDS2012 and UNSWNB15 | ISCXIDS2012: Normal Activity. UNSWNB15: Fuzzers, Backdoors, Analysis, DoS, Exploits, Generic, Shellcode, Reconnaissance and Worms |
| | Yang et al. (2020) | 2020 | AE | Synthetic dataset, UNB2017 and MAWI | Synthetic dataset: Excessive get post-attack, Recursive get attack, SlowLoris attack, and Slow post-attack. UNB2017: Slow HTTP attack, Hulk attack, Slowloris attack, and GoldenEye. MAWI: Normal samples |
| | Kasim (2020) | 2020 | AE-SVM | CICIDS2017, NSL-KDD and 6957 data set of DDoS attacks | CIC-IDS2017: Slowloris, Slowhttptest, Hulk, GoldenEye, DDoS LOIT. NSL-KDD: Back, Land, Pod, Smurf, Neptune, Teardrop, Processtable, Udpstorm, Apache2, Mailbomb, Worm. 6957 data set of DDoS attacks |
| | Bhardwaj et al. (2020) | 2020 | AE with DNN | NSL-KDD and CICIDS2017 | NSL-KDD: Back, Land, Teardrop, Mailbomb, Processtable, Udpstorm, Neptune, Pod, Smurf, Apache2, and Worm. CICIDS2017: Slowloris, Hulk, Slowhttptest, GoldenEye, DDoS LOIT |
| | Premkumar and Sundararajan (2020) | 2020 | RBF | Generated dataset | Data Flooding, Jamming, Exhaustion, Sinkhole, Eavesdropping and Packet dropping attack |
| Hybrid learning | Roopak et al. (2019) | 2019 | MLP, CNN, LSTM, and hybrid CNN-LSTM | CICIDS2017 | Slowloris, Slowhttptest, Hulk, GoldenEye, DDoS LOIT |
| | Li and Lu (2019) | 2019 | LSTM and Bayes | ISCX2012 | HTTP Denial of Service and Normal Activity |
| | Roopak et al. (2020) | 2020 | CNN with LSTM | CICIDS2017 | DDoS |
| | Elsayed et al. (2020) | 2020 | RNN-AE | CICDDoS2019 | Twelve DDoS attacks on the training day and seven attacks during the testing day |
| | Nugraha and Murthy (2020) | 2020 | CNN-LSTM | Synthetically generated | Slow DDoS attack: HTTP flows. Benign traffic: UDP and HTTP flows |

| Transfer learning | He et al. (2020) | 2020 | 6LANN, 7LANN, 8LANN, 9LANN | – | SYN-type, and LDAP-type DDoS attacks |

KDDCUP 1999 The KDDCUP99 dataset (http://kdd.ics.uci.edu/databases/kddcup99/kddcup99.html) is a standard intrusion detection dataset based on the DARPA'98 dataset provided by the Massachusetts Institute of Technology (MIT) Lincoln Laboratory. It contains 1,033,372 normal and 4,176,086 attack records in total, split into 4,898,431 training and 311,027 testing records (Tavallaee et al. 2009). Each record has 41 features of three types, i.e. basic, traffic, and content features (Tavallaee et al. 2009). The dataset consists of emulated records and is labelled and imbalanced (Ring et al. 2019). It has four types of attacks, i.e. Denial of Service (DoS), Remote to Local (R2L), User to Root (U2R), and Probe attacks. The details are given below (Gamage and Samarabandu 2020):

Probe: ipsweep, nmap, satan, portsweep.

DoS: back, land, smurf, neptune, pod, teardrop.

U2R: buffer_overflow, perl, loadmodule, rootkit.

R2L: ftp_write, guess_passwd, imap, multihop, phf, spy, warezmaster, warezclient.
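The category lists above can be applied to raw records with a small lookup table. The sketch below is a minimal illustration, not a method from the cited studies. It assumes the layout of the raw UCI files (41 comma-separated feature values followed by a label that ends with a period, e.g. `smurf.`) and the raw label spellings such as `guess_passwd` and `warezclient`; the names `CATEGORY_OF` and `parse_record` are hypothetical.

```python
from typing import Dict, List, Tuple

# Attack-to-category mapping from the lists above (Gamage and Samarabandu 2020),
# written with the raw label spellings assumed to appear in the UCI files.
CATEGORY_OF: Dict[str, str] = {
    **{a: "Probe" for a in ("ipsweep", "nmap", "satan", "portsweep")},
    **{a: "DoS" for a in ("back", "land", "smurf", "neptune", "pod", "teardrop")},
    **{a: "U2R" for a in ("buffer_overflow", "perl", "loadmodule", "rootkit")},
    **{a: "R2L" for a in ("ftp_write", "guess_passwd", "imap", "multihop",
                          "phf", "spy", "warezmaster", "warezclient")},
}

N_FEATURES = 41  # each KDDCUP99 record has 41 features


def parse_record(line: str) -> Tuple[List[str], str]:
    """Split one comma-separated record into its 41 feature values and a category."""
    fields = line.strip().split(",")
    if len(fields) != N_FEATURES + 1:
        raise ValueError(f"expected {N_FEATURES + 1} fields, got {len(fields)}")
    features, raw_label = fields[:N_FEATURES], fields[N_FEATURES]
    label = raw_label.rstrip(".")  # assumed: labels end with '.', e.g. "smurf."
    if label == "normal":
        return features, "Normal"
    # Labels outside the four lists (e.g. attacks that occur only in the
    # test set) are reported as "Unknown" rather than guessed.
    return features, CATEGORY_OF.get(label, "Unknown")


if __name__ == "__main__":
    record = ",".join(["0"] * N_FEATURES) + ",smurf."
    print(parse_record(record)[1])  # DoS
```

Returning "Unknown" instead of raising keeps a pass over the test file running when it meets labels that the training-time mapping does not cover.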

NSL-KDD dataset (https://www.unb.ca/cic/datasets/nsl.html; Protić 2018) This dataset is a refined version of the KDDCUP99 dataset. Because KDDCUP99 contains many redundant and duplicate records, NSL-KDD was proposed to remove them, which also keeps the number of records in the training and test sets reasonable. It contains approximately 150,000 data points and, like KDDCUP99, consists of emulated records (Ring et al. 2019). The dataset is labelled and imbalanced (Ring et al. 2019), with 125,973 training records and 22,544 testing records (Gamage and Samarabandu 2020). It also includes four types of attacks (Protić 2018):
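The duplicate problem that motivated NSL-KDD can be illustrated with a short sketch. It shows only exact-duplicate removal over whole records (features and label); NSL-KDD was built with additional record-selection steps that this sketch does not reproduce, and `remove_duplicates` is a hypothetical helper name.

```python
from typing import Iterable, List, Sequence, Tuple


def remove_duplicates(records: Iterable[Sequence[str]]) -> List[Tuple[str, ...]]:
    """Return records with exact duplicates dropped, keeping first occurrences in order."""
    seen = set()
    unique: List[Tuple[str, ...]] = []
    for record in records:
        key = tuple(record)  # whole record (features and label) as a hashable key
        if key not in seen:
            seen.add(key)
            unique.append(key)
    return unique


if __name__ == "__main__":
    raw = [
        ("0", "icmp", "ecr_i", "smurf"),
        ("0", "icmp", "ecr_i", "smurf"),  # exact duplicate of the row above
        ("2", "tcp", "http", "normal"),
    ]
    kept = remove_duplicates(raw)
    print(f"kept {len(kept)} of {len(raw)} records")  # kept 2 of 3 records
```

Keeping the first occurrence and preserving order makes the result deterministic, so the same filtered file is produced on every run.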

DoS: Back, Land, Pod, Smurf, Apache2, Neptune, Teardrop, Mailbomb, Processtable, Udp storm, Worm.

Probe: IPsweep, Satan, Nmap, Mscan, Portsweep, Saint.

R2L: Ftp write, Imap, Guess password, Phf, Multihop, Warezmaster, Xlock, Xsnoop, Snmpguess, Snmpgetattack, Httptunnel, Named, Sendmail.

U2R: Buffer overflow, Perl, Loadmodule, Rootkit, Sqlattack, Ps, Xterm.

UNSWNB15 dataset (Moustafa and Slay 2015) This dataset was generated in the Cyber Range Lab of the Australian Centre for Cyber Security (ACCS). Four tools were used to create it: IXIA PerfectStorm, Argus, Bro-IDS, and tcpdump. The IXIA PerfectStorm tool generates a hybrid of normal and abnormal network traffic, including nine types of attacks: fuzzers, reconnaissance attacks, exploits, backdoors, generic attacks, shellcode, DoS attacks, worms, and analysis attacks (Gümüşbaş et al. 2020). The tcpdump tool captured the network traffic as packets; 100 GB of traffic was captured over a total of 31 h, i.e. 16 h on 22-01-2015 and 15 h on 17-02-2015. The Argus and Bro-IDS tools extracted reliable features from the pcap files, and the dataset has 49 features. In addition, twelve algorithms were developed in C# to analyse the flow of the connection packets. The dataset contains 2,540,044 records: 2,218,761 benign and 321,283 malicious.

ISCX2012 (https://www.unb.ca/cic/datasets/ids.html) The ISCX2012 dataset was created in 2012 by Shiravi et al. (2012) and covers 7 days of network activity (11-06-2010 to 17-06-2010), including normal and malicious traffic with full-packet network data. The malicious traffic includes infiltrating the network from inside, Distributed Denial of Service, HTTP Denial of Service, and Brute Force SSH. The dataset was created in an emulated network environment and is labelled and imbalanced (Ring et al. 2019). Two general kinds of profiles are used: profiles that characterize attack behaviour and profiles that characterize normal user scenarios (Ring et al. 2019). It has a total of 2,381,532 benign and 68,792 malicious records (Ahmad and Alsmadi 2021).

CICIDS2017 (https://www.unb.ca/cic/datasets/ids-2017.html) The CICIDS2017 dataset was created by Sharafaldin et al. in an emulated environment from 03-07-2017 to 07-07-2017 (Ring et al. 2019). It provides network traffic in both packet-based and bidirectional flow-based formats. It contains normal activity and the following attacks: DoS, DDoS, Heartbleed, Brute Force SSH, Brute Force FTP, Web Attack, Botnet, and Infiltration (Gümüşbaş et al. 2020; Panigrahi et al. 2018). The CICFlowMeter tool extracted more than 80 features for each flow from the generated network traffic. The dataset models the abstract behaviour of 25 users based on the FTP, SSH, HTTP, HTTPS, and email protocols. It has 2,273,097 benign records and 557,646 malicious records (Ahmad and Alsmadi 2021).
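The benign and malicious counts quoted for UNSW-NB15, ISCX2012, and CICIDS2017 make the class imbalance concrete. This short sketch simply recomputes each total, the malicious share, and the benign-to-malicious ratio from those figures; the helper names are illustrative.

```python
from typing import Dict, Tuple

# (benign, malicious) record counts as quoted in the dataset descriptions above.
COUNTS: Dict[str, Tuple[int, int]] = {
    "UNSW-NB15": (2_218_761, 321_283),
    "ISCX2012": (2_381_532, 68_792),
    "CICIDS2017": (2_273_097, 557_646),
}


def malicious_fraction(benign: int, malicious: int) -> float:
    """Share of malicious records in the whole dataset."""
    return malicious / (benign + malicious)


def imbalance_ratio(benign: int, malicious: int) -> float:
    """Number of benign records per malicious record."""
    return benign / malicious


if __name__ == "__main__":
    for name, (benign, malicious) in COUNTS.items():
        print(f"{name}: total={benign + malicious:,}, "
              f"malicious={malicious_fraction(benign, malicious):.1%}, "
              f"benign:malicious={imbalance_ratio(benign, malicious):.1f}:1")
```

With these counts, malicious records make up roughly 12.6% of UNSW-NB15, 2.8% of ISCX2012, and 19.7% of CICIDS2017, so a classifier that always predicts "benign" would already reach high accuracy on each of them.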

CSE-CIC-IDS2018 dataset (https://www.unb.ca/cic/datasets/ids-2018.html) This dataset was created by the Communications Security Establishment (CSE) and the Canadian Institute for Cybersecurity (CIC) and was collected over 10 days, from Wednesday (14-02-2018) to Friday (02-03-2018). It was generated on a large network and includes seven types of attack scenarios: Heartbleed, Botnet, Brute-force, DoS, Web attacks, DDoS, and infiltration of the network from inside. The CICFlowMeter tool extracted 80 features from the captured network traffic.

CICDDoS2019 (https://www.unb.ca/cic/datasets/ddos-2019.html) The CICDDoS2019 dataset was generated by Sharafaldin et al. (2019). More than 80 traffic features were extracted from the raw data using the CICFlowMeter-V3 tool. CICDDoS2019 comprises benign traffic and up-to-date common DDoS attacks; it was generated using real traffic and contains a large number of different DDoS attacks carried out through TCP/UDP-based protocols. The attack taxonomy includes reflection-based and exploitation-based attacks. The reflection-based attacks use Microsoft SQL Server (MSSQL), Network Time Protocol (NTP), Simple Service Discovery Protocol (SSDP), CharGen, Trivial File Transfer Protocol (TFTP), Lightweight Directory Access Protocol (LDAP), Domain Name System (DNS), Simple Network Management Protocol (SNMP), Network Basic Input/Output System (NetBIOS), and PortMap. The exploitation-based attacks include UDP flood, UDP-Lag, and SYN flood. The dataset was collected over 2 days, one for training and one for testing evaluation, and is provided both as PCAP files and in flow-based format. The twelve DDoS attacks on the training day, captured on January 12th, 2019, are DNS, LDAP, NTP, MSSQL, UDP, UDP-Lag, NetBIOS, SNMP, SSDP, WebDDoS, TFTP, and SYN; the seven attacks on the testing day, captured on March 11th, 2019, are NetBIOS, PortScan, LDAP, UDP, UDP-Lag, MSSQL, and SYN.
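Because the two capture days of CICDDoS2019 do not contain the same attacks, it helps to see exactly where they overlap. The sketch below uses only the label lists and taxonomy given above and makes the overlap explicit with set operations; the label spellings (e.g. `UDP-Lag`) and the helper names `family` and `split_report` are illustrative and need not match the column values in the released CSV files.

```python
from typing import Dict, Set

# Attack labels per capture day, as listed in the description above.
TRAINING_DAY: Set[str] = {"DNS", "LDAP", "NTP", "MSSQL", "UDP", "UDP-Lag", "NetBIOS",
                          "SNMP", "SSDP", "WebDDoS", "TFTP", "SYN"}
TESTING_DAY: Set[str] = {"NetBIOS", "PortScan", "LDAP", "UDP", "UDP-Lag", "MSSQL", "SYN"}

# Taxonomy from the same description; labels in neither group map to "other".
REFLECTION: Set[str] = {"MSSQL", "NTP", "SSDP", "CharGen", "TFTP", "LDAP", "DNS",
                        "SNMP", "NetBIOS", "PortMap"}
EXPLOITATION: Set[str] = {"UDP", "UDP-Lag", "SYN"}


def family(label: str) -> str:
    """Return the attack family of a label according to the taxonomy above."""
    if label in REFLECTION:
        return "reflection"
    if label in EXPLOITATION:
        return "exploitation"
    return "other"


def split_report() -> Dict[str, Set[str]]:
    """Group attack labels by the capture day(s) on which they occur."""
    return {
        "both days": TRAINING_DAY & TESTING_DAY,
        "training day only": TRAINING_DAY - TESTING_DAY,
        "testing day only": TESTING_DAY - TRAINING_DAY,
    }


if __name__ == "__main__":
    for name, labels in split_report().items():
        print(name, sorted((label, family(label)) for label in labels))
```

Applied to these lists, six attack types (LDAP, MSSQL, NetBIOS, SYN, UDP, and UDP-Lag) appear on both days, six (DNS, NTP, SNMP, SSDP, TFTP, and WebDDoS) appear only on the training day, and PortScan appears only on the testing day.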

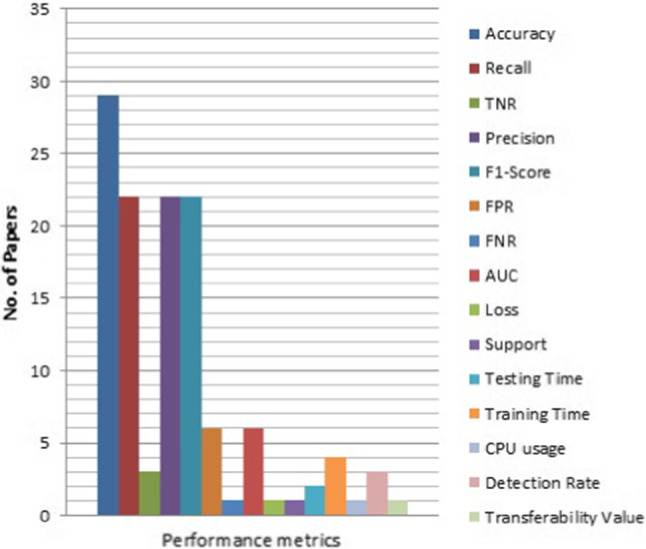

Preprocessing strategies, hyperparameter values, experimental setups, and performance metrics

Table 5 shows the preprocessing strategies, hyperparameter values, experimental setups, and performance metrics that the existing DL approaches have used for DDoS attack detection.

Table 5.

The recent DL-based DDoS attack detection studies with their preprocessing strategies, hyperparameter values, experimental setups, and performance metrics

| Taxonomy | References | Preprocessing strategies | Hyperparameter values | Experimental setups | Performance metrics |
|---|---|---|---|---|---|
| Supervised instance learning | Hasan et al. (2018) | – | The architecture consists of two convolutional layers followed by a max-pooling layer, ReLU, a fully connected layer (250 neurons), ReLU, a dropout layer, and an FC layer (four neurons). The stride is 1 × 1 for the CLs and 1 × 2 for the PL. Trained with back-propagation and a softmax loss function | – | Accuracy 99%, Sensitivity 99%, Specificity 99%, Precision 99%, F1-score 99%, False positive rate 1%, and False negative rate 1% |
| | Amma and Subramanian (2019) | Min–max normalization | The pre-training module has two training stages, each comprising a CL and a PL; the filter size is 3 and the max-pooling size is 2. Training module: the FCNN comprises an input layer, two hidden layers, and an output layer with 11, 9, 7, and 6 nodes, respectively, and uses the ReLU activation function | – | Accuracy: Normal 99.3%, Back 97.8%, Neptune 99.1%, Smurf 99.2%, Teardrop 83.3%, Others 87.1%. Precision: Normal 99.6%, Back 95.9%, Neptune 97.9%, Smurf 91.1%, Teardrop 19.6%, Others 97.8%. Recall: Normal 99.3%, Back 97.8%, Neptune 99.1%, Smurf 99.2%, Teardrop 83.3%, Others 87.1%. F1-score: Normal 99.4%, Back 96.8%, Neptune 98.5%, Smurf 95.0%, Teardrop 31.7%, Others 92.1%. False alarm: Normal 0.7, Back 2.2, Neptune 0.9, Smurf 0.8, Teardrop 16.7, Others 12.9. AUC: Normal 0.993, Back 0.978, Neptune 0.991, Smurf 0.992, Teardrop 0.833, Others 0.871 |
| | Chen et al. (2019) | – | An incremental training method is used to train the MC-CNN | – | Accuracy: KDDCUP99 (2 class) 99.18%, KDDCUP99 (5 class) 98.54%, CICIDS2017 98.87% |
| | Shaaban et al. (2019) | Features are converted into matrix form, i.e., 8 and 41 features into 3 × 3 and 6 × 7 matrices using padding | The CNN model has three stages: the first stage comprises the input layer and two CLs whose output is fed to a PL; the second stage has two CLs and a PL; the third stage consists of an FC network and the output layer. ReLU is used in all layers except the output layer, which uses softmax | Keras and TensorFlow libraries | Dataset1: Accuracy 0.9933, Loss 0.0067. Dataset2 (NSL-KDD): Accuracy 0.9924, Loss 0.0076 |
| | Sabeel et al. (2019) | Z-score normalization | The input layer of the DNN/LSTM model has size 25 and is followed by a dense/recurrent layer with 60 neurons and a dropout rate of 0.2, an FC dense layer with 60 neurons and a dropout rate of 0.2, and another dense layer with 60 neurons. All these layers use ReLU; the dense FC output layer uses the sigmoid activation function. The learning rate is 0.0001 and the batch size is set to 0.0001 | GPU: NVIDIA Quadro K2200; CPU: Intel(R) Xeon(R) E5-2630 v3 @ 2.40GHz; 240GB SSD; 64-bit Windows 10 Pro 1809. Software: Python 3.7.3, TensorFlow 1.13.0, Keras 1.1.0, and NVIDIA CUDA Toolkit 10.0.130 with cuDNN 7.6.0 | Accuracy 98.72%, TPR 0.998, Precision 0.949, F1-score 0.974, AUC 0.987 |
| | Virupakshar et al. (2020) | – | – | Controller: 1 GB RAM, 50 GB memory, 2-core processor. Neutron: 1 GB RAM, 20 GB memory, 2-core processor. Computer-1: 1 GB RAM, 20 GB memory, 2-core processor. Computer-2: 1 GB RAM, 20 GB memory, 2-core processor | KDDCUP99: Recall 0.99, F1-score 0.98, Support 2190. LAN dataset: Recall 0.91, F1-score 0.91, Support 2140. Cloud dataset: Recall 0.91, F1-score 0.91, Support 2138, Precision 96% |
| | Haider et al. (2020) | Z-score normalization | The ensemble CNN model (M1 and M2) contains three 2-D CLs (with 128, 64, and 32 filters, respectively), 2 max PLs, 1 flatten layer, and 2 dense FC layers, with ReLU in the hidden layers and sigmoid at the output layer | System manufacturer: Lenovo; processor: Intel Core i7-6700 CPU @ 3.4 GHz; memory: 8 GB; operating system: Microsoft Windows 10. Software: Keras library with TensorFlow | Accuracy 99.45%, Precision 99.57%, Recall 99.64%, F1-score 99.61%, Testing time 0.061 min, Training time 39.52 min, CPU usage 6.025% |
| | Wang and Liu (2020) | Each byte of a packet is converted into a pixel, and the pixels are gathered into a picture | The model includes two CLs, two PLs, and two FC layers. The information-entropy threshold is set to 100 packets/s | Mininet emulator, POX controller, and a PC with an Intel Core i5-7300HQ CPU, 8 GB RAM, and Ubuntu 5.4.0-6. The experimental topology comprises six switches, one server, and a controller. Software: Hping3, TensorFlow framework | Accuracy 98.98%, Precision 98.99%, Recall 98.96%, F1-score 98.97%, Training time 72.81 s, AUC 0.949 |
| | Kim et al. (2020) | One-hot encoding; 117 features are converted into 13 × 9 pixel images and 78 features into 13 × 6 images | The model comprises 1, 2, or 3 CLs, and the number of kernels is set to 32, 64, and 128, respectively. The kernel size is set to 2 × 2, 3 × 3, or 4 × 4, and the stride is 1 | Python with TensorFlow | Accuracy: KDDCUP99 99%, CSE-CIC-IDS2018 91.5% |
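Two preprocessing steps recur in Table 5: feature scaling (min–max normalization in Amma and Subramanian 2019; Z-score normalization in Sabeel et al. 2019 and Haider et al. 2020) and laying a flat feature vector out as a 2-D grid for a CNN (Shaaban et al. 2019; Kim et al. 2020). The sketch below is a minimal, dependency-free illustration under stated assumptions: scaling is applied per feature column, the Z-score uses the population standard deviation, and the grid is filled row by row with zero padding at the end. The cited studies may differ on any of these points. It also includes the F1-score from the performance-metrics column; for example, the Teardrop precision (19.6%) and recall (83.3%) reported by Amma and Subramanian give an F1-score of about 31.7%, matching the table. All function names are hypothetical.

```python
import math
from typing import List, Sequence


def min_max(column: Sequence[float]) -> List[float]:
    """Rescale one feature column to [0, 1]; a constant column maps to 0."""
    lo, hi = min(column), max(column)
    span = hi - lo
    return [0.0 if span == 0 else (x - lo) / span for x in column]


def z_score(column: Sequence[float]) -> List[float]:
    """Standardise one feature column to zero mean and unit (population) std."""
    n = len(column)
    mean = sum(column) / n
    std = math.sqrt(sum((x - mean) ** 2 for x in column) / n)
    return [0.0 if std == 0 else (x - mean) / std for x in column]


def to_grid(features: Sequence[float], rows: int, cols: int,
            pad: float = 0.0) -> List[List[float]]:
    """Lay a feature vector out row by row in a rows x cols grid, padding the tail."""
    if len(features) > rows * cols:
        raise ValueError("grid too small for the feature vector")
    padded = list(features) + [pad] * (rows * cols - len(features))
    return [padded[r * cols:(r + 1) * cols] for r in range(rows)]


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    print(min_max([0.0, 5.0, 10.0]))                 # [0.0, 0.5, 1.0]
    grid = to_grid(list(range(41)), rows=6, cols=7)  # 41 features -> 6 x 7, one pad cell
    print(grid[-1])                                  # [35, 36, 37, 38, 39, 40, 0.0]
    print(round(f1_score(0.196, 0.833), 3))          # 0.317
```

In practice the scaling statistics (minimum, maximum, mean, standard deviation) would be computed on the training split only and then reused on the test split, so that no information from the test data leaks into preprocessing.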