Summary

Three of the most robust functional landmarks in the human brain are the selective responses to faces in the fusiform face area (FFA), scenes in the parahippocampal place area (PPA), and bodies in the extrastriate body area (EBA). Are the selective responses of these regions present early in development, or do they require many years to develop? Prior evidence leaves this question unresolved. We designed a new 32-channel infant MRI coil and collected high-quality functional magnetic resonance imaging (fMRI) data from infants (2–9 months of age) while they viewed stimuli from four conditions – faces, bodies, objects, and scenes. We find that infants have face-, scene-, and body-selective responses in the location of the adult FFA, PPA, and EBA, respectively, powerfully constraining accounts of cortical development.

eToc Blurb

Portions of the ventral visual pathway in adults selectively respond to faces, scenes, and bodies. Here, Kosakowski et al. show that 2- to 9-month-old human infants have face-, scene- and body-selective responses in FFA, PPA, and EBA, respectively, constraining theories of cortical development.

Introduction

The human mind is not homogeneous and equipotential but structured, containing a set of highly specialized mechanisms for processing particular domains of information, from perceiving faces to understanding language to thinking about other people’s thoughts1. Much of this domain-specific structure of the mind is mirrored in the functional organization of the brain, which features cortical regions selectively engaged in processing these content domains1. How does this functional organization arise over development? Is our cognitive and neural machinery built slowly, over years of experience, or is much of that structure already present early in development? Here, we approach this question by asking whether the domain-specific organization of high-level visual cortex is already present in young infants.

A number of prior fMRI studies in children have argued that category-selective regions of the ventral visual pathway (VVP), including the fusiform face area (FFA)2, parahippocampal place area (PPA)3, and extrastriate body area (EBA)4, develop very slowly, increasing in size throughout childhood and into adolescence5–11. But those studies have not tested children younger than 3 years of age, focusing instead primarily on children aged 7 and up. To understand development, we need to know whether category-selective responses are present in infants. Studies using electroencephalography (EEG), magnetoencephalography (MEG), and functional near-infrared spectroscopy (fNIRS) have reported neural responses in the infant brain to faces12–14, scenes14, and bodies15,16, but these studies used only a single control stimulus type, providing only weak tests of selectivity. Further, these methods lack the spatial resolution to determine whether the recorded responses come from the same cortical locations as the adult FFA, PPA, and EBA. Only two publications have reported fMRI responses in the VVP of awake infants, one in humans (n=6)17 and one in macaques (n=3)18. These studies observed an adultlike spatial organization of responses to faces in both species17,18 and to scenes in human infants17. However, the activated regions did not show selective responses, instead responding as strongly to objects as to faces or scenes. Thus, no prior studies have reported selective responses in infants to faces, bodies, or scenes that can be localized to the FFA, PPA, or EBA, respectively.

Taken together, these results have led to the hypothesis that infant cortex is organized by feature-based protomaps that extend from primary sensory areas to higher-level cortical regions18–22, and that selective responses to specific visual categories, such as faces, scenes, and bodies, develop later based on extensive visual experience with those categories. This hypothesis predicts that category-selective responses will emerge in regions of cortex that correspond to overlapping protomap features. For example, because faces tend to be foveated and have substantial low spatial frequency and curvilinear content, the FFA will develop in a region of the protomap that responds preferentially to foveal, low spatial frequency, and curvilinear input. Conversely, scenes extend into the periphery and tend to have high spatial frequency and rectilinear content, so the PPA will develop in a peripheral region of the protomap that overlaps with preferences for high spatial frequency and rectilinear content. Importantly, this hypothesis predicts that responses to features of the protomaps, such as retinotopy, curvilinearity, rectilinearity, and spatial frequency, would arise earlier in development than responses to high-level visual categories such as faces, scenes, and bodies20.

Much of the evidence for this hypothesis comes from the absence of category-selective responses in the VVP as measured with fMRI17,18. We reasoned, to the contrary, that the FFA, PPA, and EBA in infants might be category-selective, but that this selectivity had gone undetected in prior infant studies due to limitations in the quality and amount of data obtained. It is extremely difficult to obtain high-quality fMRI data from awake infants because they have short attention spans23, cannot follow verbal instructions, and tend to move in the scanner. Further, the tools used for collecting and processing infant data are less advanced than those for adults. To test our hypothesis, we scanned a much larger number of infants and devised a variety of technical innovations to increase both the quantity and quality of data we could obtain (see Fig. 1a & Methods). To sustain infants’ attention, we used engaging, colorful stimuli depicting faces, bodies, objects, and scenes (Fig. 1b). We recruited 87 infants and were able to collect usable fMRI data from 52 of them (2.1–9.7 months; see Methods). The primary results reported here reflect data from 26 infants scanned on a second-generation custom 32-channel infant head coil (referred to here as “Coil 2021”), which has a higher signal-to-noise ratio (SNR) than an equivalent adult coil24, while wearing MR-safe headphones that provided better hearing protection. Together, these improvements enabled the collection of infant fMRI data at a resolution commonly used in adult studies.

Figure 1. Infant scanning procedures and stimuli.

a) Infants were swaddled, wore custom MR-safe headphones to protect their hearing, and were scanned in a newly designed 32-channel head coil (“Coil 2021”24) that was shaped like a cradle and had adjustable frontal coils. b) Examples of face, body, object, scene, and baseline stimuli. Videos were 2.7s long. To enhance infant attention, each video was followed by a 300ms presentation of a still image from the same condition. Stimuli were displayed on a mirror over the infants’ eyes and played continuously while the infant was awake, content, and attending.

Results

Do human infants have face-, scene-, or body-selective responses in the VVP? As an initial exploratory analysis, we conducted voxelwise whole-brain analyses testing for higher responses to each condition of interest (i.e., faces, scenes, and bodies) than to objects. Individual-subject activation maps and group random effects analyses (n=29) found higher responses to faces than objects in the fusiform gyrus, higher responses to scenes than objects in the parahippocampal gyrus, and higher responses to bodies than objects in lateral-occipital cortex (Fig. 2), areas that correspond to the locations of the FFA, PPA, and EBA in adults. While suggestive, these responses did not reach statistical significance when corrected for multiple comparisons across the whole brain.

Figure 2. Stereotyped location of category responses in the infant brain.

Representative individual subject statistical maps (rows 1–3, threshold P<0.01 transformed to Z for visualization) and group random effects analyses (n=23, bottom row, threshold −log(P)=2.0) revealed higher responses to faces>objects in the fusiform gyrus (a, location of adult FFA outlined in purple), to scenes>objects in the parahippocampal gyrus (b, location of adult PPA outlined in green), and to bodies>objects in lateral-occipital cortex (c, location of adult EBA outlined in pink). Individual and group maps displayed on representative infant anatomical image collected on the same coil (left hemisphere shown on the left and right on right). See Fig. S1 for multiple slices through each region from the group maps.
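The two display thresholds in Fig. 2 express the same nominal P value on different scales. As a minimal sketch, assuming the individual-subject P values are one-tailed and that −log(P) in the group maps is base 10, the conversions can be checked with the Python standard library; the helper names below are hypothetical and not taken from the analysis code.

```python
from statistics import NormalDist


def p_to_z(p: float) -> float:
    """One-tailed conversion: the z value whose upper-tail probability is p."""
    return NormalDist().inv_cdf(1.0 - p)


def neglog10_to_p(neglog_p: float) -> float:
    """Convert a -log10(P) threshold back to a P value (base 10 is an assumption)."""
    return 10.0 ** (-neglog_p)


# Threshold used for the individual-subject maps (P<0.01, shown as Z).
individual_threshold_z = p_to_z(0.01)
# Threshold used for the group maps (-log(P)=2.0).
group_threshold_p = neglog10_to_p(2.0)

print(f"P<0.01 -> Z>{individual_threshold_z:.3f}")
print(f"-log10(P)=2.0 -> P={group_threshold_p:.3f}")
```

Under these assumptions both maps are thresholded at a nominal P of 0.01 (Z of about 2.33), which is why neither threshold corrects for multiple comparisons across the whole brain.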

FFA, PPA, and EBA are category-selective in infancy.

To test the anatomical location and selectivity of these regions more directly and stringently, we next conducted a standard functional region of interest (fROI) analysis using previously described anatomical parcels that constrain the localization of the FFA, PPA, and EBA in adults25. To accommodate the imperfect registration of infant functional data to adult anatomical templates, we uniformly enlarged each parcel by a small amount (see Table S1 for similar fROI results with unexpanded parcels). Then, in each participant and using one subset of the data, we selected the top 5% of voxels in each parcel that responded numerically more to the parcel's preferred category (e.g., faces for the FFA) than to objects. Finally, in independent data from the same participant, we quantified the response of those voxels to each of the four conditions using linear mixed effects models (see Methods). In the main dataset, 19 infants had enough data to be included in this analysis.
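The within-participant part of this procedure (select voxels in one subset of the data, measure them in another) can be sketched as follows. This is a minimal illustration, not the authors' code: the function names are hypothetical, ranking voxels by a simple preferred-minus-objects difference is an assumption (the original analysis may rank by a contrast statistic), and the across-participant linear mixed effects step is not shown. The synthetic parcel at the end is invented purely for demonstration.

```python
import numpy as np

CONDITIONS = ("faces", "bodies", "objects", "scenes")


def select_froi(pref, objects, fraction=0.05):
    """Indices of the top `fraction` of parcel voxels ranked by pref - objects,
    keeping only voxels whose difference is numerically positive."""
    diff = np.asarray(pref, dtype=float) - np.asarray(objects, dtype=float)
    n_top = max(1, int(round(fraction * diff.size)))
    top = np.argsort(diff)[::-1][:n_top]  # largest differences first
    return top[diff[top] > 0]


def froi_responses(define_half, test_half, preferred, fraction=0.05):
    """Select voxels in `define_half`, then average every condition in `test_half`.

    Each half is a dict mapping condition name -> 1-D array of per-voxel
    responses (vs. baseline) for one parcel in one participant."""
    idx = select_froi(define_half[preferred], define_half["objects"], fraction)
    if idx.size == 0:  # no voxel passed the numerical criterion
        return {c: float("nan") for c in CONDITIONS}
    return {c: float(np.mean(test_half[c][idx])) for c in CONDITIONS}


# Synthetic parcel (hypothetical): 400 voxels, the first 40 prefer faces.
rng = np.random.default_rng(0)


def fake_half():
    half = {c: rng.normal(0.0, 1.0, 400) for c in CONDITIONS}
    half["faces"][:40] += 3.0
    return half


define_half, test_half = fake_half(), fake_half()
example = froi_responses(define_half, test_half, preferred="faces")
print(example)
```

Keeping the selection and measurement data separate is what prevents the selected voxels' responses from being inflated by the same noise that got them selected.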

This fROI analysis identified voxels in the FFA parcel whose responses to faces were significantly greater than their responses to each of the other three categories (all Ps<0.001; Fig. 3a; Table S1), voxels in the PPA parcel whose responses to scenes were significantly greater than their responses to each of the other three categories (all Ps<0.01; Fig. 4a; Table S1), though not higher than baseline, and voxels in the EBA parcel whose responses to bodies were significantly greater than their responses to each of the other three categories (all Ps<0.05; Fig. 5a; Table S1).

Figure 3. Face-selectivity in the infant brain.

An fROI analysis (n=20) with Coil 2021 data was conducted with adult-constrained parcels of the FFA25, OFA25, and ATL33 that were uniformly enlarged. All parcels are displayed on an anatomical image of an infant brain with face parcels shaded purple. Bar charts show the average response across participants in each fROI to each stimulus category (compared to baseline) in data independent of that used to define the fROI. Error bars are standard error of the mean accounting for within-subject variability50. Symbols used to report one-tailed statistics from linear mixed effect models: †P<0.1, *P<0.05, **P<0.01, ***P<0.001. Statistics reported in Table S1. Line graphs show selectivity analysis with different proportions of voxels selected with the average response in independent data for faces plotted in purple, bodies plotted in pink, objects plotted in teal, and scenes plotted in green. The vertical dashed line marks the top 5% and corresponds to the bar charts. The highest proportion of voxels with face-selective responses is indicated with a black triangle. Error bars are standard error of the mean. See Fig. S3 and Table S2 for comparison of Coil 2011 and Coil 2021 datasets.

Figure 4. Scene-selectivity in the infant brain.

An fROI analysis (n=20) with Coil 2021 data was conducted with adult-constrained parcels of the PPA25, OPA25, and RSC25 that were uniformly enlarged. All parcels are displayed on an anatomical image of an infant brain with scene parcels shaded green. Bar charts show the average response across participants in each fROI to each stimulus category (compared to baseline) in data independent of that used to define the fROI. Error bars are standard error of the mean accounting for within-subject variability50. Symbols used to report one-tailed statistics from linear mixed effect models: †P<0.1, *P<0.05, **P<0.01, ***P<0.001. Statistics reported in Table S1. Line graphs show selectivity analysis with different proportions of voxels selected with the average response in independent data for faces plotted in purple, bodies plotted in pink, objects plotted in teal, and scenes plotted in green. The vertical dashed line marks the top 5% and corresponds to the bar charts. The highest proportion of voxels with scene-selective responses is indicated with a black triangle. Error bars are standard error of the mean. See Fig. S3 and Table S2 for comparison of Coil 2011 and Coil 2021 datasets.

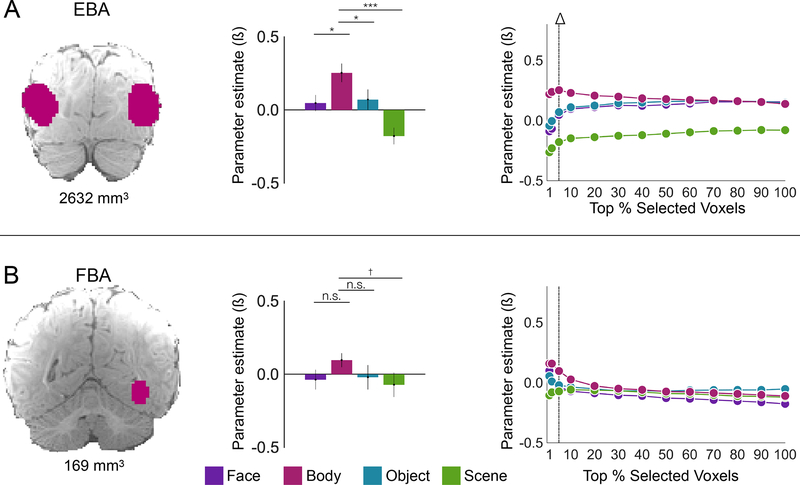

Figure 5. Body-selectivity in the infant brain.

An fROI analysis (n=20) with Coil 2021 data was conducted with adult-constrained parcels of the FBA25 and EBA25 that were uniformly enlarged. All parcels are displayed on an anatomical image of an infant brain with body parcels shaded pink. Bar charts show the average response across participants in each fROI to each stimulus category (compared to baseline) in data independent of that used to define the fROI. Error bars are standard error of the mean accounting for within-subject variability50. Symbols used to report one-tailed statistics from linear mixed effect models: †P<0.1, *P<0.05, **P<0.01, ***P<0.001. Statistics reported in Table S1. Line graphs show selectivity analysis with different proportions of voxels selected with the average response in independent data for faces plotted in purple, bodies plotted in pink, objects plotted in teal, and scenes plotted in green. The vertical dashed line marks the top 5% and corresponds to the bar charts. The highest proportion of voxels with body-selective responses is indicated with a black triangle. Error bars are standard error of the mean. See Fig. S3 and Table S2 for comparison of Coil 2011 and Coil 2021 datasets.

How much of each cortical parcel shows the predicted pattern of selectivity? We repeated the fROI analysis in each parcel, varying the proportion of voxels selected from the top 1% to 100%. In the FFA, the response to faces in held-out data is selective when anywhere from 1% to 30% of voxels are selected (Fig. 3a), suggesting that face-selective responses in infant FFA span about 217.5 mm³ of cortical territory. In the PPA, the scene response is greater than the response to all other conditions even when 100% of voxels are included (Fig. 4a), suggesting that scene responses in infant PPA cover at least 1320 mm³. Finally, in the EBA, the response to bodies is selective only when the top 1%, 2%, or 5% of voxels are selected (Fig. 5a), corresponding to approximately 131.6 mm³. (Note that these are volume estimates, not cortical surface areas.) Thus, selective responses in infant fROIs are robust to the choice of voxel-selection threshold, and face-, scene-, and body-selective responses are present in the locations predicted from prior studies of adults.
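The threshold sweep and the volume estimates can be illustrated with a short sketch. It makes several assumptions that are not in the text: "selective" is reduced to a numerical comparison within one participant (the paper tests significance across participants), the reported extent is taken as the largest fraction reached before selectivity first fails (mirroring the black triangles), and the 2 mm isotropic voxel size is hypothetical. All function names are illustrative.

```python
import numpy as np


def sweep_fractions(define_half, test_half, preferred, fractions):
    """For each fraction, pick the top voxels by (preferred - objects) in
    define_half and check, in test_half, whether the preferred response is
    numerically larger than every other condition."""
    diff = (np.asarray(define_half[preferred], dtype=float)
            - np.asarray(define_half["objects"], dtype=float))
    order = np.argsort(diff)[::-1]  # voxels ranked from largest difference
    others = [c for c in test_half if c != preferred]
    rows = []
    for f in sorted(fractions):
        n = max(1, int(round(f * diff.size)))
        idx = order[:n]
        pref = float(np.mean(test_half[preferred][idx]))
        best_other = max(float(np.mean(test_half[c][idx])) for c in others)
        rows.append((f, n, pref > best_other))
    return rows


def largest_contiguous_fraction(rows):
    """Largest fraction reached before the first non-selective step."""
    best = None
    for f, _, ok in rows:
        if not ok:
            break
        best = f
    return best


def volume_mm3(n_voxels, voxel_size_mm):
    """Volume covered by n_voxels voxels of size (dx, dy, dz) in millimetres."""
    dx, dy, dz = voxel_size_mm
    return n_voxels * dx * dy * dz


# Toy 10-voxel parcel (hypothetical values chosen so selectivity fades at 100%).
define_half = {"faces": np.arange(9.0, -1.0, -1.0), "objects": np.zeros(10)}
test_half = {
    "faces": np.array([5.0] * 3 + [0.0] * 7),
    "bodies": np.array([1.0] * 3 + [2.0] * 7),
    "objects": np.zeros(10),
    "scenes": np.zeros(10),
}
rows = sweep_fractions(define_half, test_half, "faces", [0.1, 0.3, 0.5, 1.0])
print(rows)
print(largest_contiguous_fraction(rows))
print(volume_mm3(rows[2][1], (2.0, 2.0, 2.0)))  # hypothetical 2 mm voxels
```

Because the volume is simply the selected voxel count times the voxel volume, the estimates scale with the acquisition resolution and describe volume rather than cortical surface area.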

Are face-, scene-, and body-selective responses found distinctively in their predicted locations? In each of the three parcels (FFA, PPA, and EBA), we selected voxels using each of the three contrasts (faces>objects, scenes>objects, bodies>objects) at each voxel-selection threshold (top 1% to 100%), and then computed the selectivity of these voxels in held-out data. In the FFA parcel, there were many face-selective voxels and no scene- or body-selective voxels (Fig. 6a). In the PPA parcel, there were many scene-selective voxels and no body- or face-selective voxels (Fig. 6b). In the EBA parcel, both the bodies>objects and faces>objects contrasts identified reliable voxels (Fig. 6c); however, while the top 1–5% of body-responding voxels were body-selective (responding significantly more to bodies than to faces, objects, and scenes, each tested independently), most face-responding voxels in the EBA parcel responded to both faces and bodies. In the EBA parcel as a whole, the majority of voxels responded more to faces, bodies, and objects than to scenes (Fig. 5a).

Figure 6. Predicted selectivities are the highest in predicted regions.

The top n% of voxels for three contrasts (faces>objects, scenes>objects, bodies>objects) were selected in the FFA (a), PPA (b), and EBA (c) parcels. Responses were measured in held-out data. Face index: Faces>(Bodies, Scenes, Objects); Scene index: Scenes>(Faces, Bodies, Objects); Body index: Bodies>(Faces, Scenes, Objects). a) In the FFA parcel, voxels selected by the faces>objects contrast, up to the top 30% of the parcel, had a significant face index in held-out data. No other contrast identified significant category preferences at any threshold. b) In the PPA parcel, voxels selected by the scenes>objects contrast had a significant scene index in held-out data. No other contrast identified significant category preferences at any threshold. c) In the EBA parcel, voxels selected by bodies>objects had a significant body index, and voxels selected by faces>objects had a significant face index. Error bars are standard error of the mean.
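The cross-contrast analysis behind Fig. 6 can be sketched as a table: for each selection contrast, compute all three indices in held-out data. The caption gives the indices only in contrast notation, so the definition used here (preferred response minus the mean of the other three conditions) is an assumption; a [3, −1, −1, −1] weighting would differ only by a factor of 3. Function names are hypothetical, and the toy parcel reuses one dict for both halves purely to keep the illustration short.

```python
import numpy as np

CONDITIONS = ("faces", "bodies", "objects", "scenes")
CATEGORIES = ("faces", "scenes", "bodies")


def category_index(resp, category):
    """Assumed index: response to `category` minus the mean of the other three."""
    others = [resp[c] for c in CONDITIONS if c != category]
    return float(resp[category] - np.mean(others))


def cross_contrast_indices(define_half, test_half, fraction):
    """For each X>objects contrast, select the top `fraction` of voxels in
    define_half and compute all three category indices from test_half."""
    n_vox = len(define_half["objects"])
    n_top = max(1, int(round(fraction * n_vox)))
    table = {}
    for selector in CATEGORIES:
        diff = (np.asarray(define_half[selector], dtype=float)
                - np.asarray(define_half["objects"], dtype=float))
        idx = np.argsort(diff)[::-1][:n_top]
        resp = {c: float(np.mean(test_half[c][idx])) for c in CONDITIONS}
        table[selector] = {cat: category_index(resp, cat) for cat in CATEGORIES}
    return table


# Toy 4-voxel parcel (hypothetical): each of the first three voxels prefers one category.
# A real analysis would use independent define/test halves.
half = {
    "faces": np.array([3.0, 0.0, 0.0, 0.0]),
    "bodies": np.array([0.0, 3.0, 0.0, 0.0]),
    "scenes": np.array([0.0, 0.0, 3.0, 0.0]),
    "objects": np.zeros(4),
}
table = cross_contrast_indices(half, half, fraction=0.25)
for selector, indices in table.items():
    print(selector, indices)
```

A region is "distinctively" selective in this scheme when only the diagonal entries (e.g., faces selected, face index) are positive and reliable.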

Taken together, these results indicate that the FFA, PPA, and EBA are present in infancy, in the same anatomical location and with a selective response to the same preferred category as adults.

Higher quality fMRI data led to observation of selective responses.

Why do we find face-, scene-, and body-selective responses when Deen et al.17 did not? The face, body, object, and scene videos used in the present study were the same as in Deen et al., and the average age of the infants is similar across the studies. However, in contrast to Deen et al.17, the current study used a new coil (“Coil 2021”), a different pulse sequence producing higher-resolution data, and included data from more subjects; there are also some differences in the analyses. To test which of these factors explained the difference in results between the present study and that of Deen et al.17, we analyzed a third dataset that we had collected on the first-generation custom 32-channel infant head coil (referred to as “Coil 2011”) before Coil 2021 was built. For all subjects in the Coil 2011 dataset (which did not overlap with the current data or with the data published by Deen et al.), we used the same pulse sequence and coil as Deen et al. This enabled us to test whether the use of Coil 2021 and/or the higher-resolution acquisition sequence could explain the observed difference in results. Indeed, in matched fROI analyses of the Coil 2021 data and the Coil 2011 data (n=39), we found significant selectivity for faces, places, and bodies in the Coil 2021 data but not the Coil 2011 data, and a significant Experiment (Coil 2011 vs. Coil 2021) by stimulus condition by fROI interaction (Fig. S3c; F(6,108)=2.38, η²=0.013, P=0.013). These results indicate that it is the new coil and pulse sequence of the current study that enabled us to find selectivity when Deen et al. did not.

Visual statistics are not sufficient to explain category-selective responses.

Are face- and scene-selective responses in infants driven by low-level visual features of the stimuli? The protomap hypothesis predicts that the region that will develop into FFA initially responds preferentially to low spatial frequency and curvilinear input. In our paradigm, the stimuli with the most curvilinear and low spatial frequency content were the object videos and the swirly baseline (Fig. S2). Yet the response to faces in FFA is significantly greater than the response to both baseline and object videos (Ps<0.05; Table S1). Therefore, curvilinearity and low spatial frequency are unlikely to account for the selective response to faces in FFA.

Similarly, the protomap hypothesis predicts that the region that will develop into PPA initially responds preferentially to high spatial frequency and rectilinear input. In our stimuli, rectilinear input was highest in the object movies (Fig. S2), yet the PPA responded significantly more to scenes than objects. High spatial frequency input was highest in the scene movies, and so might explain early PPA responses. However, one unexpected feature of the PPA response could not be explained by either a response to high spatial frequencies or category selectivity: the response in PPA was similar for scene movies and for the swirly baseline (P>0.2; Table S1), despite scenes containing substantially more high spatial frequency (as well as semantic scene) content. It is thus unclear why the scene and swirly baseline stimuli evoked similar responses in PPA; future research should investigate the optimal stimuli for infant PPA.
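Claims about which stimuli carry more high spatial frequency content rest on image statistics (Fig. S2). The rectilinearity27 and curvilinearity28,29 measures used for the stimuli come from the cited work and are not reproduced here. As a generic, swapped-in illustration only, the share of Fourier power above a cutoff can be computed per grayscale frame; the cutoff of 8 cycles per image and the function name are hypothetical choices, and averaging over video frames is left out.

```python
import numpy as np


def high_sf_fraction(image, cutoff_cpi):
    """Fraction of (non-DC) Fourier power above `cutoff_cpi` cycles per image."""
    img = np.asarray(image, dtype=float)
    img = img - img.mean()  # remove mean luminance (the DC component)
    power = np.abs(np.fft.fft2(img)) ** 2
    # Frequencies in cycles per image along each axis.
    fy = np.fft.fftfreq(img.shape[0]) * img.shape[0]
    fx = np.fft.fftfreq(img.shape[1]) * img.shape[1]
    radius = np.hypot(*np.meshgrid(fy, fx, indexing="ij"))
    total = power.sum()
    if total == 0:  # a uniform image has no spatial structure
        return 0.0
    return float(power[radius > cutoff_cpi].sum() / total)


# Vertical gratings with 2 and 20 cycles across a 64-pixel frame.
x = np.arange(64)
low = np.tile(np.sin(2 * np.pi * 2 * x / 64), (64, 1))
high = np.tile(np.sin(2 * np.pi * 20 * x / 64), (64, 1))
print(high_sf_fraction(low, 8), high_sf_fraction(high, 8))
```

A measure of this kind would rank the scene movies above the swirly baseline, which is why a similar PPA response to the two is hard to explain by spatial frequency alone.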

In summary, the responses of the FFA and PPA in infants cannot be easily accounted for in terms of lower-level visual features or “protomaps”18,20–22,26–30 (see Discussion).

Selectivity observed in some, but not all, high-level visual areas that are selective in adults.

In addition to the FFA, PPA, and EBA, other occipitotemporal regions exist in adults that respond selectively to faces, scenes, and bodies. Are these regions also present in infants? The occipital face area (OFA) and occipital place area (OPA) are located in lateral occipital cortex (with EBA between them) and are thought to reside earlier in the visual hierarchy than FFA31 and PPA32, respectively. Thus, a posterior to anterior development of face- and scene-selectivity would predict that OFA and OPA would acquire characteristic functional specificity prior to FFA and PPA21. However, fROI analyses using adult parcels for OFA and OPA, with the same method just described, did not find significant face-selectivity in the OFA or scene-selectivity in the OPA (all Ps>0.05 except OFA faces>scenes P=0.004; Figs. 3b & 4b; Table S1).

What about regions thought to reside later in the visual hierarchy, such as the face-selective region in the anterior temporal lobe (ATL)? An fROI analysis with an adult ATL parcel33 found that face responses were selective (Fig. 3c; Table S1). However, an fROI analysis using an adult parcel for the fusiform body area (FBA), a body-selective region thought to lie later in the visual hierarchy than EBA34, found responses to bodies that were only numerically, not significantly, greater than responses to the other three conditions (all Ps>0.05; Fig. 5b; Table S1). Similarly, the scene response in the retrosplenial cortex (RSC) was numerically greater than the response to each of the other conditions but significantly greater only than the response to objects (S>F P=0.3, S>B P=0.1, S>O P=0.02; Fig. 4c; Table S1).

Thus, we find significant category-selective responses in infant FFA and ATL for faces, in PPA for scenes, and in EBA for bodies, but not in the other regions tested.

Discussion

Here we report that the FFA, PPA, and EBA are present in infants, in the same location, and with qualitatively similar selectivities, as adults. Continued development may expand and refine these regions over subsequent years5–11, but the existence of category-selective mechanisms in the brain evidently does not require years of visual experience and maturation.

Our finding of face-, scene-, and body-selectivity in infant FFA, PPA, and EBA differs from the earlier findings of Deen et al.17, who found preferential but not fully category selective responses in these regions in infants. The most likely account of this difference is that the current study benefited from the higher quality data afforded by our new infant coil24 and the higher resolution pulse sequence.

Our findings inform ongoing debates about cortical development, including the question of how visual experience influences the emergence of functionally selective regions in VVP. These regions appear in approximately the same cortical location in every individual, implying that patches of cortex are predisposed for specific functions. However, the nature of these predispositions is unknown and disputed. On one view, visual cortex is organized at birth into “protomaps”: smooth, orthogonal spatial gradients of neurons tuned along low-level visual feature dimensions like retinotopy, spatial frequency, and curvilinearity18,20–22,26–30. The natural statistics of early visual experience cause frequent co-activation of neurons with certain responses (e.g., faces are low frequency and curvy, and typically foveated; scenes are high frequency and boxy and experienced in the periphery). Extensive visual experience thus leads to the slow emergence of cortical regions selectively responsive to these stimulus categories in cortical regions already tuned for their low-level correlates. Evidence for this view comes from fMRI studies with macaques. In early infancy (~30 days), V1 and regions that later become face-selective respond similarly to intact faces and pixelated faces18. In juvenile macaques (~200 days of age), the response profiles of V1 and face-selective regions begin to differentiate as face-selective regions (but not V1) begin to respond less to pixelated faces18.

A key prediction of the protomap hypothesis is that protomaps for spatial frequency and curvilinearity are present across the primate brain prior to functionally specialized responses20. However, our infant FFA responses do not show a pattern that corresponds to either low spatial frequency or curvilinearity, and our infant PPA responses do not show a pattern that corresponds to either high spatial frequency or rectilinearity. Thus, our data place important constraints on the protomap framework. If an underlying protomap combined with visual experience drives the selectivity of domain-specific regions like the FFA, PPA, and EBA, only a few months of visual experience must be sufficient to acquire that selectivity.

Relatedly, some theorists have proposed that selectivity in cortical regions might emerge in sequence, following the hierarchy of bottom-up visual processing in VVP. On this view, low-level representations in early visual cortex might emerge first, followed by mid-level representations in lateral-occipital cortical regions, culminating in higher-level visual representations in the VVP. For example, fMRI studies of infant macaques found that BOLD responses to motion emerged first in V1, followed by V4, and then MT35. A feedforward pattern of development has similarly been suggested for VVP such that OFA and OPA would develop prior to FFA and PPA, respectively, because they are presumed to lie earlier in the visual hierarchy36–38. (The hierarchical relationship of FBA and EBA is unclear39–43.) In contrast to this prediction, we observed scene-selectivity in PPA, but not its “precursor” OPA, and we observed face-selectivity in FFA, but this selectivity did not reach significance in its “precursor” OFA. These results should be interpreted with caution, though, given that null results in infant fMRI are always suspect, and the OPA and OFA are smaller and less robust regions even in adults. Nevertheless, it is intriguing that our data show no evidence of feedforward hierarchical emergence of category selectivity in VVP in infants.

If not entirely driven by low-level feature protomaps and bottom-up input from earlier visual areas, what else might constrain the development of functionally selective regions in infants? One possibility is that in infants, VVP regions have distinctive preexisting long-range connectivity not only to early visual regions, but also to non-visual regions (e.g., in parietal and frontal cortices). These patterns of connectivity can be used to accurately predict the location of functionally selective regions in infants44 and children45. Further, these connections exist prior to the emergence of selective responses in at least some cases: for example, patterns of connectivity in pre-readers can be used to predict the future location of the visual word form area when the same child learns to read45. As further evidence for connectivity from non-visual areas, the putative VWFA has stronger functional correlations with putative language areas compared to functional correlations between nearby FFA and putative language areas46. Finally, congenitally blind humans have auditory and tactile responses in the FFA that are selective for faces47,48, and distinctive patterns of resting functional correlation between the “blind FFA” and both visual and nonvisual regions47. Thus, we speculate that the location of FFA, PPA, and EBA may be influenced by pre-existing long-range connectivity to both early visual regions and also regions in parietal and frontal cortex.

Many fundamental open questions remain. First, although we found clear evidence of category selectivity at a much earlier age than previously reported, five-month-old infants already have hundreds of hours of visual experience. What, if any, visual experience is necessary for the construction or maintenance of category-selective regions? Would purely visual exposure to faces, places, and bodies be sufficient to evoke category-selective responses49? Or must infants experience faces paired with social interaction, bodies paired with goal-directed actions, and places paired with self-motion through the environment? Second, are the representations extracted and computations conducted in these regions similar to those of adults, or do these regions undergo major functional change between infancy and adulthood, and if so, what experience is necessary to produce that change? Research into these questions will ultimately provide scientific answers to the long-standing philosophical question of the origins of the human mind.

STAR METHODS

RESOURCE AVAILABILITY

Lead contact

Further information and requests for resources should be directed to and will be fulfilled by the lead contact, Heather L. Kosakowski (hlk@mit.edu).

Materials availability

This study did not generate new unique reagents.

Data and code availability

De-identified results from the functional region of interest analyses have been deposited at osf.io and are publicly available as of the date of publication. Accession numbers are listed in the key resources table.

Group maps for the three published contrasts have been deposited at osf.io and are publicly available as of the date of publication. Accession numbers are listed in the key resources table.

All original code has been deposited at osf.io and is publicly available as of the date of publication. DOIs are listed in the key resources table.

Any additional information required to reanalyze the data reported in this paper is available from the lead contact upon request.

KEY RESOURCES TABLE.

| REAGENT or RESOURCE | SOURCE | IDENTIFIER |
|---|---|---|
| Antibodies | | |
| Bacterial and virus strains | | |
| Biological samples | | |
| Chemicals, peptides, and recombinant proteins | | |
| Critical commercial assays | | |
| Deposited data | | |
| Group random effects analyses | This paper | https://osf.io/jnx5a/?view_only=2db4898abffa424fbe9b96e7e2d7e161 |
| Functional region of interest analyses | This paper | https://osf.io/jnx5a/?view_only=2db4898abffa424fbe9b96e7e2d7e161 |
| Visual statistics of stimuli | This paper | https://osf.io/jnx5a/?view_only=2db4898abffa424fbe9b96e7e2d7e161 |
| Experimental models: Cell lines | | |
| Experimental models: Organisms/strains | | |
| Oligonucleotides | | |
| Recombinant DNA | | |
| Software and algorithms | | |
| All pre-processing software | This paper, previous work17, FSL (https://fsl.fmrib.ox.ac.uk/fsl/fslwiki/), Freesurfer (http://surfer.nmr.mgh.harvard.edu/) | https://osf.io/jnx5a/?view_only=2db4898abffa424fbe9b96e7e2d7e161 |
| All data analysis software | This paper, FSL (https://fsl.fmrib.ox.ac.uk/fsl/fslwiki/), Freesurfer (http://surfer.nmr.mgh.harvard.edu/) | https://osf.io/jnx5a/?view_only=2db4898abffa424fbe9b96e7e2d7e161 |
| Visual statistics of stimuli | This paper; rectilinearity27; curvilinearity28,29 | https://osf.io/jnx5a/?view_only=2db4898abffa424fbe9b96e7e2d7e161 |
| Stimuli presentation | This paper and previous work17 | https://osf.io/jnx5a/?view_only=2db4898abffa424fbe9b96e7e2d7e161 |
| MATLAB 2020a/2020b | MathWorks | https://www.mathworks.com/products/matlab.html |
| Freesurfer 6.0.0 | Massachusetts General Hospital | https://surfer.nmr.mgh.harvard.edu |
| FSL 5.0.9 | FMRIB Software Library | https://fsl.fmrib.ox.ac.uk/fsl/fslwiki/ |
| Other | | |

EXPERIMENTAL MODEL AND SUBJECT DETAILS

Infants were recruited from the Boston metro area through word of mouth, fliers, and social media. Parents of participants were provided parking or, when travel was a constraint for participation, reimbursed for travel expenses. Participants received a $50 Amazon gift card for each visit and, whenever possible, printed images of their brain. We recruited 87 infants (2.1–11.9 months; mean age = 5.0 months; 48 female) who participated in a total of 162 visits (the scanner was booked for two hours for each visit). Forty-two of these infants (2.1–9.7 months, mean = 5.5 months, 21 female) were scanned on Coil 2021 24, and we recovered usable data (see Data Selection) from 26 infants (2.1–9.7 months, 11 female). The other 47 infants (2.5–11.9 months, mean = 4.5 months, 26 female) were scanned on Coil 2011 51, and we recovered usable data from 26 infants (2.5–8.7 months, mean = 4.8 months, 12 female). For full subject, data, and inclusion details, see Table S3. We were unable to collect data from 15 subjects (included in the full count) due to technical error or lack of subject compliance. One subject (included in the full count) was excluded due to experimenter error. Infants were scanned with Coil 2011 until Coil 2021 became available. We stopped all data collection due to the COVID-19 pandemic. Ethical approval for this research was obtained through the Institutional Review Board at MIT.

METHOD DETAILS

Stimuli.

Infants watched blocks of videos and still images from five categories (faces, objects, bodies, scenes, and a curvy baseline; Fig. 1b). This paradigm differs from the one used previously17 in four important ways. First, all infants saw videos from all four conditions. Second, we selected a subset of videos that made each block more visually and categorically homogeneous within blocks and more heterogeneous across blocks (described below). Third, each block consisted of 2.7 s videos interleaved with 300 ms still images from the same category (not drawn from the videos). We added the interleaved still images in the hope of 1) increasing the BOLD response by mitigating adaptation and 2) increasing infant attention. We chose a 300 ms presentation because one previous EEG study indicated that 300 ms still images elicited the greatest neural response52. We also wanted to maximize looking time to each video, so we shortened the 3 s videos from previous research17,53 to 2.7 s. Finally, the baseline condition in previous research17 consisted of scrambled scenes, whereas in this study the baseline consists of curvy abstract scenes. Scrambled objects and scenes control for a variety of low-level features of the object and scene blocks, but not of the face blocks. We therefore used curvy abstract scenes rather than scrambled objects or scrambled scenes to control for curvilinear visual features (see below for visual features of stimuli). As such, if the response to faces is substantially greater than the response to baseline, we can have some confidence that the face response is not merely due to curvilinear features.

All blocks were 18 s long and included 6 videos and 6 images. Face videos featured a single child's face on a black background. Body stimuli focused on the hands and feet of children wearing shorts, t-shirts, jeans, or dresses, with or without socks and shoes. Object blocks displayed toys (e.g., magnetic toys, plastic cars and planes, balls) on a black background, and scene blocks displayed mountain or pastoral scenes. Baseline blocks were also 18 s long and consisted of six 2.7 s videos and six still images featuring abstract, colorful scenes such as liquid bubbles or tie-dyed patterns. Video and image order was randomized within blocks, and block order was pseudorandomized by category. There were 3 face blocks (frontal, averted, expressive), 2 body blocks, 3 object blocks, 3 scene blocks, and 1 block depicting hand-object interactions (not included in the analysis).
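As an arithmetic check on the block structure, six 2.7 s videos plus six 300 ms still images fill exactly 18 s (16.2 s + 1.8 s), which is 6 TRs at TR = 3 s. The minimal sketch below builds one such block; it assumes each video is immediately followed by one still image (the text only says the two are interleaved and randomized within blocks), and the filenames are hypothetical placeholders.

```python
import random

VIDEO_S = 2.7   # video duration (s)
IMAGE_S = 0.3   # still-image duration (s)
TR_S = 3.0      # repetition time (s)

def build_block(videos, images, seed=None):
    """Return (kind, name, onset_s, duration_s) events for one 18 s block.

    Assumption: video, image, video, image, ... ordering; the source states
    only that videos and images were interleaved and randomized.
    """
    if len(videos) != 6 or len(images) != 6:
        raise ValueError("each block uses 6 videos and 6 still images")
    rng = random.Random(seed)
    videos, images = videos[:], images[:]
    rng.shuffle(videos)   # randomize order within the block
    rng.shuffle(images)
    events, t = [], 0.0
    for v, im in zip(videos, images):
        events.append(("video", v, round(t, 3), VIDEO_S))
        t += VIDEO_S
        events.append(("image", im, round(t, 3), IMAGE_S))
        t += IMAGE_S
    return events

# Hypothetical filenames for a face block
block = build_block([f"face_vid{i}.mp4" for i in range(6)],
                    [f"face_img{i}.jpg" for i in range(6)], seed=1)
total_s = sum(d for *_, d in block)
print(round(total_s, 6), round(total_s / TR_S, 6))  # block length in s and in TRs
```

The same arithmetic explains the earlier Coil 2011 paradigm: six 3 s videos per 18 s block, with no still images.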

The first 31 infants of the Coil 2011 dataset (8 of whom were included in the Coil 2011 fROI analysis) viewed face, body, object, scene, and abstract curvy videos. Video blocks were 18 s long, each video was 3 s long, and there were no still images. The videos played continuously for as long as the infant was in the scanner, awake, and attending. Additionally, instead of 12 condition blocks there were 10 (3 face blocks, 3 scene blocks, 2 body blocks, and 2 object blocks), and the blocks played in a random order. Although the contents of each block were slightly different from those in the main paradigm, the videos came from the same stimulus set53.

A run began as soon as the infant was in the scanner, and videos played continuously for as long as the infant was content, attentive, and awake. All stimuli are available on OSF (osf.io).

Data Collection.

Infants were swaddled if possible. A parent or researcher went into the scanner with the infant while a second adult “scanner buddy” stood outside the bore of the scanner. The scanner buddy monitored infant attention and the TRs in which the infant was not looking at the stimuli were recorded for exclusion during preprocessing. Infants heard lullabies (https://www.pandora.com/artist/jammy-jams-childrens/) played through custom infant headphones for the duration of the scan.

Custom Head Coils.

Forty-two infants were scanned in a newly designed 32-channel coil (Coil 2021) built to cradle the infant comfortably, while wearing custom infant MR-safe headphones24 (Fig. 1a). The headphones attenuated scanner noise (attenuation statistics are reported in 24) and allowed infants to listen to music at a comfortable volume for the duration of the scan. An adjustable coil design increased infant comfort, accommodated the headphones, and suited a variety of infant head sizes (Fig. 1a). Coil 2021 and the infant headphones were designed for a Siemens Prisma 3T scanner and enabled the use of a standard-trajectory EPI sequence with 44 near-axial slices (repetition time, TR = 3 s; echo time, TE = 30 ms; flip angle = 90°; field of view, FOV = 160 mm; matrix = 80×80; slice thickness = 2 mm; slice gap = 0 mm). A few subjects were scanned with a different standard-trajectory EPI sequence with near-axial slices (TR = 3 s; TE = 30 ms; flip angle = 90°; FOV = 208 mm; matrix = 104×104; slice thickness = 2 mm; slice gap = 0 mm). Four of these subjects were included in the fROI analysis.

Data from 47 infants were collected with Coil 2011 with the same data collection methods reported by Deen et al17. We used a custom 32-channel infant coil designed for a Siemens Trio 3T scanner51 (Coil 2011) and a quiet EPI with sinusoidal trajectory54 with 22 near-axial slices (repetition time, TR = 3s, echo time, TE = 43 ms, flip angle = 90°, field of view, FOV = 192 mm, matrix = 64×64, slice thickness = 3 mm, slice gap = 0.6 mm). The sinusoidal acquisition sequence caused substantial distortions in the functional images (Fig. S3b).
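The acquisition parameters above imply the effective voxel geometry: in-plane resolution is FOV divided by matrix size, and slab coverage is the slice count times slice thickness plus the gaps between slices. The sketch below does that arithmetic; it assumes contiguous slice stacking with the stated gap. Both Coil 2021 sequences give 2 mm in-plane voxels (160/80 and 208/104), whereas Coil 2011 gives 3 mm.

```python
def epi_geometry(fov_mm, matrix, n_slices, thickness_mm, gap_mm):
    """Return (in-plane voxel size in mm, slab coverage in mm).

    Assumes slices are stacked contiguously with `gap_mm` between neighbours.
    """
    in_plane = fov_mm / matrix
    coverage = n_slices * thickness_mm + (n_slices - 1) * gap_mm
    return in_plane, coverage

protocols = {
    # name: (FOV mm, matrix, slices, thickness mm, gap mm), from the text above
    "Coil 2021 (main EPI)": (160, 80, 44, 2.0, 0.0),
    "Coil 2011 (sinusoidal EPI)": (192, 64, 22, 3.0, 0.6),
}

for name, params in protocols.items():
    ip, cov = epi_geometry(*params)
    print(f"{name}: {ip:.1f} mm in-plane, {params[3]:.1f} mm slices, ~{cov:.1f} mm coverage")
```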

Data Selection (subrun creation).

To be included in the analysis, data had to meet the low-head-motion criteria reported by Deen et al17. Data were cleaved between consecutive timepoints with more than 2 degrees or 2 mm of motion, creating "subruns," each containing at least 24 consecutive low-motion volumes. All volumes included in a subrun were extracted from the original "run" data (a "run" began when the subject went into the scanner and ended when the subject fell asleep or became fussy) and combined into a new NIfTI file for each subrun. Paradigm files were updated accordingly for each subrun. Volumes with more than 0.5 degrees or 0.5 mm of motion relative to the previous volume were scrubbed. We used highly conservative motion thresholds23, identical to those we used previously17, to ensure that only the highest-quality data were included in our analysis. If a subject had more than one dataset (data collected within a 30-day window were analyzed as a single dataset), we included only the dataset with more volumes that met our inclusion criteria. One subject had two datasets, but the dataset with more volumes was missing a large portion of the temporal lobe due to motion; for this subject, we used the dataset with fewer volumes but an intact temporal lobe. This procedure resulted in 514.3 minutes of usable data (mean = 5.9 minutes, s.d. = 11.3) from the Coil 2021 dataset and 566.00 minutes of usable data (mean = 6.5 minutes, s.d. = 12.6) from the Coil 2011 dataset.
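The subrun-creation rule can be sketched as follows: compute volume-to-volume motion, start a new segment wherever it exceeds 2 mm or 2 degrees, and keep segments of at least 24 volumes. This is a minimal sketch, not the authors' code. It assumes a motion-parameter array whose first three columns are translations in mm and last three are rotations in degrees, and it summarizes motion as the largest absolute change across the six parameters. Motion files differ in column order and units (MCFLIRT, for instance, reports rotations in radians), so convert before use.

```python
import numpy as np

def volume_motion(params):
    """Per-volume motion relative to the previous volume (0 for the first).

    params: (n_vols, 6) array; assumed columns 0-2 translations (mm),
    columns 3-5 rotations (degrees).
    """
    params = np.asarray(params, dtype=float)
    step = np.abs(np.diff(params, axis=0)).max(axis=1)
    return np.concatenate([[0.0], step])

def make_subruns(params, cleave=2.0, min_len=24):
    """Cleave a run wherever motion exceeds `cleave` and keep long segments.

    Returns a list of index arrays, one per retained subrun.
    """
    motion = volume_motion(params)
    breaks = np.flatnonzero(motion > cleave)   # volume i starts a new segment
    starts = np.concatenate([[0], breaks])
    ends = np.concatenate([breaks, [len(motion)]])
    return [np.arange(s, e) for s, e in zip(starts, ends) if e - s >= min_len]
```

Applied to a 100-volume run with a single 3 mm jump at volume 50, this yields two 50-volume subruns. Any segment shorter than 24 volumes after a second jump is discarded.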

To be included in the group random effects analysis, subjects had to have at least 96 volumes that met the above motion criteria. Twenty-three subjects from the Coil 2021 dataset (2.1–9.7 months, mean = 5.7 months) and 29 subjects from the Coil 2011 dataset (2.5–8.8 months, mean = 4.8 months) met these criteria.

To be included in the fROI analysis, subjects had to have at least two subruns with at least 96 volumes each (one to select voxels showing the relevant contrast, and the other to independently extract response magnitudes from the selected voxels). This yielded a final fROI sample of 20 datasets for the Coil 2021 dataset (3.0–9.7 months; mean = 5.8 months) and 19 datasets (18 unique subjects) for the Coil 2011 dataset (2.5–8.8 months, mean = 4.8 months). Because the amount of data varied across subruns, which could affect the reliability of GLM parameter estimates, we first combined or split subruns to approximately equate the amount of data across subruns within subjects. For example, if a subject had three subruns of 35, 57, and 220 volumes, we concatenated the first two into one subrun and split the third into two, resulting in three subruns of approximately 100 volumes each.
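The text gives an example of combining and splitting subruns but not the exact rule. The sketch below is one hypothetical rule that reproduces that example. It assumes a target of about 100 volumes and proceeds in two steps: consecutive subruns shorter than the target are concatenated until they reach it, then any chunk is split into round(length / target) near-equal parts. The target value and the rule itself are illustrative assumptions, not the authors' procedure.

```python
def equalize_subruns(lengths, target=100):
    """Hypothetical rule: merge consecutive short subruns, then split long ones.

    lengths: subrun lengths in volumes, in acquisition order.
    Returns new subrun lengths; the total number of volumes is preserved.
    """
    # Step 1: concatenate runs of consecutive subruns shorter than the target.
    merged, buffer = [], 0
    for n in lengths:
        if n < target:
            buffer += n
            if buffer >= target:
                merged.append(buffer)
                buffer = 0
        else:
            if buffer:
                merged.append(buffer)
                buffer = 0
            merged.append(n)
    if buffer:
        merged.append(buffer)

    # Step 2: split each chunk into round(n / target) near-equal pieces.
    out = []
    for n in merged:
        k = max(1, round(n / target))
        base, extra = divmod(n, k)
        out.extend([base + 1] * extra + [base] * (k - extra))
    return out

print(equalize_subruns([35, 57, 220]))  # the example from the text
```

For the example in the text, the first two subruns merge into one 92-volume subrun and the 220-volume subrun splits into two 110-volume halves.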

Preprocessing.

Each subrun was processed individually. First, a single functional image was extracted from the middle of the subrun for registering the subruns to one another in later analyses. Then, each subrun was motion corrected using FSL MCFLIRT. If more than 3 consecutive images had more than 0.5 mm or 0.5 degrees of motion, the low-motion volumes following the last high-motion volume were included in the analysis only if there were at least 7 of them in a row. Additionally, each subrun had to retain at least 24 volumes after excluding high-motion and sleep TRs. Functional data were skull-stripped (FSL BET2), intensity normalized, and spatially smoothed with a 3 mm FWHM Gaussian kernel (FSL SUSAN).
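The scrubbing rule above can be written as a keep-mask over volumes. Under one reading of the rule, every volume with more than 0.5 mm or 0.5 degrees of motion is dropped. After a burst of more than 3 consecutive high-motion volumes, the low-motion volumes that follow are kept only if at least 7 of them occur in a row. The function name and this interpretation are assumptions for illustration. `motion` is assumed to be a per-volume summary such as the volume-to-volume maximum across motion parameters.

```python
import numpy as np

def keep_mask(motion, thresh=0.5, burst=3, recover=7):
    """Boolean mask of volumes to keep (hypothetical reading of the rule).

    motion: per-volume motion (mm or degrees) relative to the previous volume.
    """
    high = np.asarray(motion, dtype=float) > thresh
    keep = ~high                          # high-motion volumes are always scrubbed
    n = len(high)
    i = 0
    while i < n:
        if not high[i]:
            i += 1
            continue
        j = i
        while j < n and high[j]:
            j += 1                        # high[i:j] is one run of high-motion volumes
        if j - i > burst:                 # more than `burst` consecutive bad volumes
            k = j
            while k < n and not high[k]:
                k += 1                    # [j:k) is the calm stretch that follows
            if k - j < recover:
                keep[j:k] = False         # too short to trust: drop it as well
        i = j
    return keep
```

A subrun would then be retained only if `keep.sum()` (after also removing inattentive "sleep" TRs) is at least 24.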

Data registration.

Due to the substantial distortion in these data and the lack of an anatomical image for many subjects, we registered all functional data to a representative functional image collected with the same coil and acquisition parameters (referred to as the functional template image). As there is no standard approach to the use of common space in infant MRI research55, we elected to use a template image collected with the same acquisition parameters as each subject. For Coil 2011 data, the functional template image was the same image used by Deen and colleagues17. For Coil 2021 data, we selected a representative functional image from a representative subject for whom we also had a high-quality anatomical image. All subruns were registered within subjects to a target image from that subject, and each subject's target image was then registered to the template functional image collected using the same coil and acquisition parameters. Specifically, the middle image of each subrun was extracted and used as an example image for registration; if the middle image was corrupted by motion or distortion, a better image was selected instead. The example image from the middle subrun of the first visit was used as the target image, and all other subruns from that subject were registered to that subject's target image. Subrun and target image registrations were concatenated so that each subrun was individually registered to template space. We attempted to register each image using a rigid, an affine, and a partial affine registration with FSL FLIRT. The best of the three registrations was selected by eye and manually tuned with the Freesurfer GUI for the best possible data alignment. To display group results, images were transformed to the anatomical space of the template image.
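Concatenating the subrun-to-target and target-to-template registrations amounts to multiplying their 4×4 affine matrices, with the transform applied first placed rightmost. The NumPy sketch below shows this composition on hypothetical matrices (the translation and rotation values are invented for illustration). For FLIRT matrices, FSL's `convert_xfm -omat AtoC.mat -concat BtoC.mat AtoB.mat` performs the equivalent step on the command line.

```python
import numpy as np

def translation(tx, ty, tz):
    """4x4 homogeneous translation matrix (mm)."""
    T = np.eye(4)
    T[:3, 3] = [tx, ty, tz]
    return T

def rot_z(deg):
    """4x4 homogeneous rotation about the z axis."""
    th = np.deg2rad(deg)
    c, s = np.cos(th), np.sin(th)
    R = np.eye(4)
    R[:2, :2] = [[c, -s], [s, c]]
    return R

def compose(*affines):
    """Compose affines right-to-left: compose(B, A) maps x to B @ (A @ x)."""
    out = np.eye(4)
    for A in affines:
        out = out @ A
    return out

# Hypothetical registrations for one subrun
subrun_to_target = translation(2.0, -1.0, 0.5)
target_to_template = rot_z(10.0) @ translation(0.0, 3.0, 0.0)

# One matrix takes the subrun straight to template space
subrun_to_template = compose(target_to_template, subrun_to_target)
point = np.array([10.0, 20.0, 30.0, 1.0])   # homogeneous coordinate (mm)
print(subrun_to_template @ point)
```

Composing once and resampling once also avoids interpolating the data twice.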

Parcels / Search Spaces.

Group-constrained parcels from previous adult research were used to localize areas of selectivity25,33. Face-selective parcels included OFA, FFA, and ATL. Scene-selective parcels included OPA, PPA, and RSC. Body-selective parcels included EBA and FBA. After transforming the FFA parcel to individual subject space, we noticed that the peak face activation in the fusiform gyrus often fell just anterior to the parcel. We attributed this offset to difficulties in obtaining reliable registrations with infant functional data and used the -dilM option in fslmaths to uniformly increase the size of all parcels. Because this was an unplanned change to our analysis, we report fROI results from both the original parcels (Table S1) and the dilated parcels (Figs. 3–5; Table S1). The large ventral and lateral parcels are the same ones we used in Deen et al17; results for these parcels are reported in Figure S3c and Table S2. All parcels will be available on OSF upon publication.
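For a binary parcel, a mean dilation such as `fslmaths -dilM` should behave like a binary dilation: each empty voxel with a non-zero neighbour takes the neighbours' mean, which is 1. The SciPy sketch below illustrates that growth with a 3×3×3 box kernel, which we understand to be the fslmaths default. The number of dilation passes the authors used is not stated, so `iterations` is an illustrative parameter.

```python
import numpy as np
from scipy import ndimage

def dilate_parcel(mask, iterations=1):
    """Grow a binary parcel by one voxel per iteration (3x3x3 box neighbourhood)."""
    structure = np.ones((3, 3, 3), dtype=bool)
    return ndimage.binary_dilation(mask.astype(bool), structure=structure,
                                   iterations=iterations)

# A single-voxel parcel grows to a 3x3x3 cube, then a 5x5x5 cube
mask = np.zeros((9, 9, 9), dtype=bool)
mask[4, 4, 4] = True
print(dilate_parcel(mask).sum(), dilate_parcel(mask, iterations=2).sum())
```

Dilation is applied uniformly in all directions. It therefore absorbs registration error in any direction, at the cost of admitting more voxels from neighbouring regions into each search space.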

QUANTIFICATION AND STATISTICAL ANALYSIS

Subject-level Beta and Contrast Maps.

Functional data were analyzed with a whole-brain voxel-wise general linear model (GLM) using custom MATLAB scripts. The GLM included 4 condition regressors (faces, bodies, objects, and scenes), 6 motion regressors, a linear trend regressor, and 5 PCA noise regressors. Condition regressors were defined as a boxcar function for the duration of the stimulus presentation (18 s blocks). Infant inattention or sleep was accounted for using a single impulse nuisance ('sleep') regressor. The sleep regressor was defined as a boxcar function with a 1 for each TR in which the infant was not looking at the stimuli, and the corresponding TR was set to 0 for all condition regressors. Boxcar condition and sleep regressors were convolved with an infant hemodynamic response function (HRF), which has a longer time to peak and a deeper undershoot than the standard adult HRF 56. PCA noise regressors were computed using a method similar to GLMDenoise 57, as described in 17. Data and regressors were demeaned for each subrun. Next, demeaned data and regressors were concatenated across subruns, run regressors were added to account for differences between runs, and beta values were computed for each condition in a whole-brain voxel-wise GLM. Three subject-level contrast maps were computed as the difference between the beta for the condition of interest (face, body, or scene) and the object beta for each voxel using in-house MATLAB code. Results from subject-level contrast maps are reported in Fig. 2.
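The core of the GLM described above can be sketched for a single subrun in a few steps: build boxcar condition regressors, zero them at inattentive TRs, and add a sleep regressor. Then convolve with an HRF, demean, and solve by least squares. This is a simplified sketch, not the authors' MATLAB code. The double-gamma HRF is a placeholder, **not** the infant HRF of reference 56. Motion, PCA noise, and run regressors are omitted, and the onsets in the usage example are hypothetical.

```python
import numpy as np
from scipy.stats import gamma

TR = 3.0
BLOCK_TRS = 6   # 18 s blocks at TR = 3 s

def placeholder_hrf(tr=TR, length_s=30.0):
    """Hypothetical double-gamma HRF sampled at the TR (NOT the infant HRF)."""
    t = np.arange(0.0, length_s, tr)
    h = gamma.pdf(t, 7.0) - 0.35 * gamma.pdf(t, 17.0)
    return h / h.sum()

def design_matrix(onsets, n_vols, not_looking):
    """onsets: one list of block-onset TR indices per condition.

    Columns: convolved conditions..., convolved sleep regressor, linear trend.
    """
    cond = np.zeros((n_vols, len(onsets)))
    for j, ons in enumerate(onsets):
        for o in ons:
            cond[o:o + BLOCK_TRS, j] = 1.0
    sleep = np.zeros(n_vols)
    sleep[not_looking] = 1.0
    cond[not_looking, :] = 0.0               # inattentive TRs removed from conditions
    boxcars = np.column_stack([cond, sleep])
    h = placeholder_hrf()
    conv = np.column_stack([np.convolve(b, h)[:n_vols] for b in boxcars.T])
    trend = np.linspace(-1.0, 1.0, n_vols)
    X = np.column_stack([conv, trend])
    return X - X.mean(axis=0)                 # demean regressors

def fit_glm(X, Y):
    """Least-squares betas for demeaned data Y of shape (n_vols, n_voxels)."""
    betas, *_ = np.linalg.lstsq(X, Y - Y.mean(axis=0), rcond=None)
    return betas

# Hypothetical schedule: faces, bodies, objects, scenes; baseline fills the gaps
onsets = [[0, 30, 60, 90], [6, 36, 66, 96], [12, 42, 72, 102], [18, 48, 78, 108]]
X = design_matrix(onsets, n_vols=120, not_looking=[50, 51, 100])
true = np.array([[2.0], [1.0], [0.5], [1.5], [0.3], [0.2]])
b = fit_glm(X, X @ true)                      # noise-free toy data
face_minus_object = b[0] - b[2]               # subject-level contrast for this voxel
```

Zeroing condition regressors at inattentive TRs and modelling those TRs separately keeps non-looking periods from diluting the condition betas.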

Group Random Effects Analyses.

To test whether there was systematic overlap between areas of activation across subjects, we conducted group random effects analyses on eligible data. For visualization and reporting purposes, subject-level voxelwise statistical maps were transformed to coil-specific functional template space (see Data registration above), and group random effects analyses were performed using Freesurfer mri_concat and Freesurfer mri_glmfit. Each group map was then transformed to coil-specific anatomical space. We did not combine data across coils because of the difficulty of registering the two different image types. Results from group random effects analyses are reported in Fig. 2 and Fig. S1.
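A group random effects analysis of a single contrast reduces, at each voxel, to a one-sample t-test of subjects' contrast values against zero. In mri_glmfit this corresponds to a one-sample group mean design (`--osgm`); the exact options the authors used are not stated. The SciPy sketch below runs that test on hypothetical template-space data.

```python
import numpy as np
from scipy import stats

def group_random_effects(contrast_maps):
    """Voxelwise one-sample t-test across subjects.

    contrast_maps: (n_subjects, n_voxels) contrast values (e.g., face minus
    object) already in a common template space. Returns (t, two-tailed p).
    """
    t, p = stats.ttest_1samp(contrast_maps, popmean=0.0, axis=0)
    return t, p

# Hypothetical data: 23 subjects, 500 voxels, a true effect in the first 50
rng = np.random.default_rng(0)
maps = rng.normal(0.0, 1.0, size=(23, 500))
maps[:, :50] += 2.0
t, p = group_random_effects(maps)
```

Because the subject is the unit of observation, the resulting maps generalize to the infant population rather than to the sampled scans.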

Functional Region of Interest.

To constrain search areas for voxel selection, we used anatomically defined parcels (see Parcels / Search Spaces) transformed to subject native space. We used an iterative leave-one-subrun-out procedure: in each fold, data were concatenated across all subruns except one before the whole-brain voxel-wise GLM, and contrasts were computed (as described above). The top 5% of voxels with the greatest difference between the category of interest (faces, bodies, or scenes) and objects within an anatomical constraint parcel were selected as the fROI for that subject, and parameter estimates were extracted from a GLM on the left-out subrun. Beta values were averaged across folds within a participant, and weighted betas were averaged across participants. The choice of the top 5% of voxels and of weighted betas followed previous research17. Selecting the top 5% of voxels samples all subjects equally, so that every subject contributes to the estimate of the overall pattern of response across subjects. Because a fixed proportion of voxels is always selected, however, an fROI is defined even where no reliable selectivity exists, and the left-out data can reveal an unreliable response or even the reverse preference (see OPA in Fig. 4b as an example: voxels were selected that had a greater response to scenes than to objects, but in independent data the response to objects was greater than the response to scenes, indicating that across infants there was no reliable scene response in OPA).
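The leave-one-subrun-out fROI logic can be sketched on per-subrun beta maps. In each fold, voxels are selected on the training subruns, the top 5% by the preferred-minus-object contrast within the parcel. Responses are then read out from the held-out subrun and averaged across folds. As a simplifying assumption, this sketch averages the training subruns' betas instead of refitting a GLM on the concatenated training data as the text describes. Array shapes and names are hypothetical.

```python
import numpy as np

def loso_froi(betas, parcel, cond_idx, ref_idx, frac=0.05):
    """Leave-one-subrun-out fROI responses for one subject.

    betas: (n_subruns, n_conditions, n_voxels) per-subrun beta estimates.
    parcel: boolean (n_voxels,) search-space mask.
    Returns the mean held-out response per condition, averaged across folds.
    """
    betas = np.asarray(betas, dtype=float)
    n_subruns = betas.shape[0]
    vox = np.flatnonzero(parcel)
    # Number of voxels in the top `frac`; tolerance guards float round-up
    k = max(1, int(np.ceil(frac * vox.size - 1e-9)))
    fold_responses = []
    for test in range(n_subruns):
        train = [s for s in range(n_subruns) if s != test]
        # Stand-in for refitting the GLM on the concatenated training subruns
        train_betas = betas[train].mean(axis=0)
        contrast = train_betas[cond_idx, vox] - train_betas[ref_idx, vox]
        top = vox[np.argsort(contrast)[::-1][:k]]            # top-k voxels
        fold_responses.append(betas[test][:, top].mean(axis=1))
    return np.mean(fold_responses, axis=0)

# Toy subject: 3 subruns, 4 conditions (faces, bodies, objects, scenes),
# 200 parcel voxels of which 10 respond only to faces
betas = np.zeros((3, 4, 200))
betas[:, 0, :10] = 2.0
parcel = np.ones(200, dtype=bool)
response = loso_froi(betas, parcel, cond_idx=0, ref_idx=2)
```

Because selection and read-out use different subruns, a positive training contrast that reflects only noise will tend to vanish, or reverse as in the OPA example, in the held-out data.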

Category-Selectivity.

To determine whether a region’s response was category-selective, we fit the beta-values using a linear mixed effects model. In each region, we had an a priori hypothesis about which of the four conditions would elicit the largest response. So, in each model, we dummy-coded the other three control conditions, to test the hypotheses that the response to each control condition was lower than to the predicted preferred condition. For example, for regions predicted to be face-selective, we fit a model in MATLAB with the expression:

beta ~ f1 + f2 + f3 + age + motion + sex + (1 | subject)

where the three dummy-coded condition regressors are f1 (bodies), f2 (objects) and f3 (scenes). Fixed effects parameters of no interest were age, motion, and sex. Motion was the fraction of scrubbed volumes. Subject was coded as a random intercept for all models. In the Coil 2011 analyses, we also included a fixed effect paradigm parameter (“para”) because two slightly different versions of the experimental paradigm were used during data collection.

The response in a parcel was deemed selective if the fixed effect coefficient for each of the three control conditions was significantly negative, using a t-test. Because predictions are unidirectional, reported p-values are one-tailed. For example, a face parcel was only deemed face-selective if the parameter estimates for the body, object, and scene regressors were significantly negative. Results for the fROI analyses are reported in Figs. 3–5, Fig. S3, and Tables S1–S2.
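The dummy coding and the selectivity criterion can be sketched as follows. The three control conditions become indicator columns f1 to f3, so the intercept estimates the preferred condition. A region counts as selective only if all three control coefficients are significantly negative in a one-tailed test. The coefficients, standard errors, and degrees of freedom would come from the fitted mixed model (the authors used MATLAB); here they are hypothetical inputs, and the example numbers are invented.

```python
import numpy as np
from scipy import stats

CONDITIONS = ["faces", "bodies", "objects", "scenes"]

def dummy_code(conditions, preferred):
    """Indicator columns for the three control conditions.

    The preferred condition is the reference level (all zeros), so the
    model intercept estimates its response.
    """
    controls = [c for c in CONDITIONS if c != preferred]
    X = np.array([[1.0 if cond == c else 0.0 for c in controls] for cond in conditions])
    return X, controls

def one_tailed_p_negative(coefs, ses, df):
    """One-tailed p for H1: coefficient < 0 (control response below preferred)."""
    t = np.asarray(coefs, dtype=float) / np.asarray(ses, dtype=float)
    return stats.t.cdf(t, df)

def is_selective(coefs, ses, df, alpha=0.05):
    """Selective only if every control coefficient is significantly negative."""
    p = one_tailed_p_negative(coefs, ses, df)
    return bool(np.all(p < alpha)), p

X, controls = dummy_code(CONDITIONS, preferred="faces")
selective, p = is_selective([-0.5, -0.4, -0.6], [0.1, 0.1, 0.1], df=30)
```

Requiring all three comparisons to succeed makes the criterion conservative. A region that responds as strongly to objects as to faces fails even if it exceeds bodies and scenes.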

Models of stimulus visual statistics.

Each frame was extracted from each video. Frames and still images were converted to grayscale and normalized. To obtain spatial frequency information, we computed a Fourier transform of each image. Low- and high-spatial-frequency content was extracted from each frame of each stimulus using the methods and cut-offs described by 58 and averaged across all images within a category. Each category value was then normalized relative to the baseline value. Nasr and colleagues27 found that scene and object stimuli are characterized by the presence of angles and that straight lines and right angles drive activation in the PPA. Similarly, Yue and colleagues28,29 found that face stimuli are characterized by the presence of curves and activate areas along the fusiform gyrus. We therefore also extracted rectilinear and curvilinear information using code provided by 27 and methods described by 28,29. Briefly, the normalized grayscale images from each block (each block was processed separately) were reduced to 140 × 210 pixels and averaged together. For rectilinear information, angled Gabor filters (90° and 180°) at four spatial frequencies (1, 2, 4, and 8) were applied to each pixel to assess the amount of angular content. Rectilinear values were averaged across pixels and blocks. Curvilinear values were computed in a similar manner, except that each angled Gabor filter (30°, 60°, 90°, 120°, 150°, and 180°) had five different curve depths. Curvilinear values for each frame from each block were averaged together. All visual statistics results for this analysis are reported in Fig. S2.
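The spatial-frequency step can be illustrated as follows. After normalizing a grayscale image, take its 2D Fourier power spectrum, then measure the fraction of power below a low cut-off and above a high cut-off in radial frequency (cycles per image). Average these fractions over a category's images and divide by the baseline category's values. This is a minimal sketch: the cut-offs of 8 and 24 cycles per image are placeholders, not the values from reference 58.

```python
import numpy as np

def sf_fractions(img, low_cut=8.0, high_cut=24.0):
    """Fractions of Fourier power at low and high radial spatial frequency.

    Frequencies are in cycles per image; the cut-offs are placeholders.
    """
    img = np.asarray(img, dtype=float)
    img = (img - img.mean()) / (img.std() + 1e-12)   # normalize (removes DC)
    power = np.abs(np.fft.fftshift(np.fft.fft2(img))) ** 2
    h, w = img.shape
    fy = np.fft.fftshift(np.fft.fftfreq(h)) * h       # vertical cycles per image
    fx = np.fft.fftshift(np.fft.fftfreq(w)) * w       # horizontal cycles per image
    FX, FY = np.meshgrid(fx, fy)                      # both shaped (h, w)
    radius = np.hypot(FX, FY)
    total = power.sum()
    if total == 0:
        return 0.0, 0.0
    return power[radius <= low_cut].sum() / total, power[radius >= high_cut].sum() / total

def category_relative_to_baseline(category_imgs, baseline_imgs, **cuts):
    """Mean (low, high) fractions for a category divided by baseline's."""
    cat = np.mean([sf_fractions(im, **cuts) for im in category_imgs], axis=0)
    base = np.mean([sf_fractions(im, **cuts) for im in baseline_imgs], axis=0)
    return cat / base

# Synthetic gratings: 2 and 40 cycles across a 128-pixel image
x = np.arange(128)
low_img = np.tile(np.sin(2 * np.pi * 2 * x / 128), (128, 1))
high_img = np.tile(np.sin(2 * np.pi * 40 * x / 128), (128, 1))
mix_img = low_img + high_img
```

Expressing each category relative to the baseline makes clear whether, for example, faces carry more low-frequency energy than the curvy baseline they are contrasted against.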

Supplementary Material

Highlights.

Category-selective responses are present within the first year of life.

FFA, PPA, and EBA are face-, scene-, and body-selective, respectively, in infancy.

Selective responses did not reach significance in infant OFA, OPA, RSC, or FBA.

Category-selective responses are not explained by visual “protomaps.”

Acknowledgments:

This research was carried out at the Athinoula A. Martinos Imaging Center at the McGovern Institute for Brain Research at MIT. The authors thank Lyneé Herrera for help collecting the Coil 2011 dataset and Isabel Nichoson for help collecting the Coil 2021 dataset. We also thank Steven Shannon, members of the Saxe Lab, and members of the Kanwisher Lab for help during recruitment and data collection; Stefano Anzellotti, Dana Boebinger, Tyler Bonnen, Katharina Dobs, Anna Ivanova, Frederik Kamps, Owen Kunhardt, and Somaia Saba for helpful comments on the manuscript; Kirsten Lydic for code and statistics transposition review; Hannah A. LeBlanc for everything; and all the infants and their families.

Funding:

We gratefully acknowledge support for this project from the National Science Foundation (graduate fellowship to HLK; Collaborative Research Award #1829470 to MAC), the NIH (#1F99NS124175 to HLK; #R21-HD090346-02 to RS; #DP1HD091947 to NK; shared instrumentation grant S10OD021569 for the MRI scanner), the McGovern Institute for Brain Research at MIT, and the Center for Brains, Minds and Machines (CBMM), funded by an NSF STC award (CCF-1231216).

Footnotes

Declaration of Interests: Nancy Kanwisher served on the advisory board of Current Biology.

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- 1. Kanwisher N. Functional specificity in the human brain: A window into the functional architecture of the mind. Proc Natl Acad Sci. 2010;107(25):11163–70.

- 2. Kanwisher N, McDermott J, Chun MM. The Fusiform Face Area: A Module in Human Extrastriate Cortex Specialized for Face Perception. J Neurosci. 1997;17(11):4302–11.

- 3. Epstein R, Kanwisher N. A cortical representation of the local visual environment. Nature. 1998;392(6676):598–601.

- 4. Downing PE, Jiang Y, Shuman M, Kanwisher N. A cortical area selective for visual processing of the human body. Science. 2001;293:2470–3.

- 5. Grill-Spector K, Golarai G, Gabrieli J. Developmental neuroimaging of the human ventral visual cortex. Trends Cogn Sci. 2008;12(4):152–62.

- 6. Golarai G, Liberman A, Grill-Spector K. Experience Shapes the Development of Neural Substrates of Face Processing in Human Ventral Temporal Cortex. Cereb Cortex. 2015;(27):1229–44.

- 7. Golarai G, Ghahremani DG, Whitfield-Gabrieli S, Reiss A, Eberhardt JL, Gabrieli JDE, et al. Differential development of high-level visual cortex correlates with category-specific recognition memory. Nat Neurosci. 2007;10(4):512–22.

- 8. Meissner TW, Nordt M, Weigelt S. Prolonged functional development of the parahippocampal place area and occipital place area. Neuroimage. 2019;191:104–15.

- 9. Cohen MA, Dilks DD, Koldewyn K, Weigelt S, Feather J, Kell AJ, et al. Representational similarity precedes category selectivity in the developing ventral visual pathway. Neuroimage. 2019 Aug 15;197:565–74.

- 10. Peelen MV, Glaser B, Vuilleumier P, Eliez S. Differential development of selectivity for faces and bodies in the fusiform gyrus. Dev Sci. 2009;12(6):16–25.

- 11. Kamps FS, Hendrix CL, Brennan PA, Dilks DD. Connectivity at the origins of domain specificity in the cortical face and place networks. Proc Natl Acad Sci U S A. 2020;117(11):6163–9.

- 12. de Heering A, Rossion B. Rapid categorization of natural face images in the infant right hemisphere. eLife. 2015;4:1–14.

- 13. Kobayashi M, Otsuka Y, Kanazawa S, Yamaguchi MK, Kakigi R. The processing of faces across non-rigid facial transformation develops at 7 month of age: A fNIRS-adaptation study. BMC Neurosci. 2014;15(1):1–8.

- 14. Powell LJ, Deen B, Saxe R. Using individual functional channels of interest to study cortical development with fNIRS. Dev Sci. 2018;21(4):e12595.

- 15. Meltzoff AN, Ramírez RR, Saby JN, Larson E, Taulu S, Marshall PJ. Infant brain responses to felt and observed touch of hands and feet: an MEG study. Dev Sci. 2018;21(5):1–13.

- 16. Lisboa IC, Queirós S, Miguel H, Sampaio A, Santos JA, Pereira AF. Infants’ cortical processing of biological motion configuration – A fNIRS study. Infant Behav Dev. 2020.

- 17. Deen B, Richardson H, Dilks DD, Takahashi A, Keil B, Wald LL, et al. Organization of high-level visual cortex in human infants. Nat Commun. 2017;8(13995):1–10.

- 18. Livingstone MS, Vincent JL, Arcaro MJ, Srihasam K, Schade PF, Savage T. Development of the macaque face-patch system. Nat Commun. 2017;8:1–12.

- 19. Powell LJ, Kosakowski HL, Saxe R. Social Origins of Cortical Face Areas. Trends Cogn Sci. 2018;22(9):752–63.

- 20. Arcaro MJ, Livingstone MS. On the relationship between maps and domains in inferotemporal cortex. Nat Rev Neurosci. 2021.

- 21. Arcaro MJ, Schade PF, Livingstone MS. Universal Mechanisms and the Development of the Face Network: What You See Is What You Get. Annu Rev Vis Sci. 2019;5(1).

- 22. Arcaro MJ, Livingstone MS. A hierarchical, retinotopic proto-organization of the primate visual system at birth. eLife. 2017;6:1–24.

- 23. Ellis CT, Skalaban LJ, Yates TS, Bejjanki VR, Córdova NI, Turk-Browne NB. How to read a baby’s mind: Re-imagining fMRI for awake, behaving infants. Nat Commun. 2020.

- 24. Ghotra A, Kosakowski HL, Takahashi A, Etzel R, May MW, Scholz A, et al. A size-adaptive 32-channel array coil for awake infant neuroimaging at 3 Tesla MRI. Magn Reson Med. 2021;86(3):1773–85.

- 25. Julian JB, Fedorenko E, Webster J, Kanwisher N. An algorithmic method for functionally defining regions of interest in the ventral visual pathway. Neuroimage. 2012.

- 26. Hasson U, Levy I, Behrmann M, Hendler T, Malach R. Eccentricity bias as an organizing principle for human high-order object areas. Neuron. 2002;34(3):479–90.

- 27. Nasr S, Echavarria CE, Tootell RBH. Thinking Outside the Box: Rectilinear Shapes Selectively Activate Scene-Selective Cortex. J Neurosci. 2014;34(20):6721–35.

- 28. Yue X, Pourladian IS, Tootell RBH, Ungerleider LG. Curvature-processing network in macaque visual cortex. Proc Natl Acad Sci U S A. 2014;111(33).

- 29. Yue X, Robert S, Ungerleider LG. Curvature processing in human visual cortical areas. Neuroimage. 2020;222.

- 30. Livingstone MS, Arcaro MJ, Schade PF. Cortex Is Cortex: Ubiquitous Principles Drive Face-Domain Development. Physiol Behav. 2016;176(1):139–48.

- 31. Pitcher D, Walsh V, Duchaine B. The role of the occipital face area in the cortical face perception network. Exp Brain Res. 2011;209(4):481–93.

- 32. Kamps FS, Julian JB, Kubilius J, Kanwisher N, Dilks DD. The occipital place area represents the local elements of scenes. Neuroimage. 2016;132:417–24.

- 33. Kriegeskorte N, Formisano E, Sorger B, Goebel R. Individual faces elicit distinct response patterns in human anterior temporal cortex. Proc Natl Acad Sci U S A. 2007;104(51):20600–5.

- 34. Bratch A, Engel S, Burton P, Kersten D. The Fusiform Body Area Represents Spatial Relationships Between Pairs of Body Parts. J Vis. 2018.

- 35. Van Grootel TJ, Meeson A, Munk MHJ, Kourtzi Z, Movshon JA, Logothetis NK, et al. Development of visual cortical function in infant macaques: A BOLD fMRI study. PLoS One. 2017;12(11):1–30.

- 36. Tsantani M, Kriegeskorte N, Storrs K, Williams AL, McGettigan C, Garrido L. FFA and OFA encode distinct types of face identity information. J Neurosci. 2021 Jan 15;JN-RM-1449–20.

- 37. Henriksson L, Mur M, Kriegeskorte N. Faciotopy-A face-feature map with face-like topology in the human occipital face area. Cortex. 2015;72:156–67.

- 38. Henriksson L, Mur M, Kriegeskorte N. Rapid Invariant Encoding of Scene Layout in Human OPA. Neuron. 2019;103(1):161–171.e3.

- 39. Taylor JC, Wiggett AJ, Downing PE. Functional MRI analysis of body and body part representations in the extrastriate and fusiform body areas. J Neurophysiol. 2007;98(3):1626–33.

- 40. Brandman T, Yovel G. Bodies are Represented as Wholes Rather Than Their Sum of Parts in the Occipital-Temporal Cortex. Cereb Cortex. 2016;26(2):530–43.

- 41. Pitcher D, Ianni G, Ungerleider LG. A functional dissociation of face-, body- and scene-selective brain areas based on their response to moving and static stimuli. Sci Rep. 2019;9(1):1–9.

- 42. Pitcher D, Ungerleider LG. Evidence for a Third Visual Pathway Specialized for Social Perception. Trends Cogn Sci. 2020.

- 43. Ewbank MP, Lawson RP, Henson RN, Rowe JB, Passamonti L, Calder AJ. Changes in “top-down” connectivity underlie repetition suppression in the ventral visual pathway. J Neurosci. 2011;31(15):5635–42.

- 44. Cabral L, Zubiaurre L, Wild C, Linke A, Cusack R. Category-Selective Visual Regions Have Distinctive Signatures of Connectivity in Early Infancy. bioRxiv. 2019.

- 45. Saygin ZM, Osher DE, Norton ES, Youssoufian DA, Beach SD, Feather J, et al. Connectivity precedes function in the development of the visual word form area. Nat Neurosci. 2016;19(9):1250–5.

- 46. Li J, Osher DE, Hansen HA, Saygin ZM. Innate connectivity patterns drive the development of the visual word form area. Sci Rep. 2020;10(1):1–12.

- 47. Murty NAR, Teng S, Beeler D, Mynick A, Oliva A, Kanwisher NG. Visual Experience is not Necessary for the Development of Face Selectivity in the Lateral Fusiform Gyrus. bioRxiv. 2020.

- 48. van den Hurk J, Van Baelen M, Op de Beeck HP. Development of visual category selectivity in ventral visual cortex does not require visual experience. Proc Natl Acad Sci. 2017;114(22):E4501–10.

- 49. Dobs K, Martinez J, Kell AJE, Kanwisher N. Brain-like functional specialization emerges spontaneously in deep neural networks. 2021;1–30.

- 50. Cousineau D. Confidence intervals in within-subject designs: A simpler solution to Loftus and Masson’s method. Tutor Quant Methods Psychol. 2005;1(1):42–5.

- 51. Keil B, Alagappan V, Mareyam A, McNab JA, Fujimoto K, Tountcheva V, et al. Size-optimized 32-channel brain arrays for 3 T pediatric imaging. Magn Reson Med. 2011 Dec 1;66(6):1777–87.

- 52. Kouider S, Stahlhut C, Gelskov SV, Barbosa LS, Dutat M, de Gardelle V, et al. A Neural Marker of Perceptual Consciousness in Infants. Science. 2013;340(6130):376–80.

- 53. Pitcher D, Dilks DD, Saxe RR, Triantafyllou C, Kanwisher N. Differential selectivity for dynamic versus static information in face-selective cortical regions. Neuroimage. 2011;56(4):2356–63.

- 54. Zapp J, Schmitter S, Schad LR. Sinusoidal echo-planar imaging with parallel acquisition technique for reduced acoustic noise in auditory fMRI. J Magn Reson Imaging. 2012;36(3):581–8.

- 55.Dufford AJ, Hahn CA, Peterson H, Gini S, Mehta S, Alfano A, et al. (Un)common space in infant neuroimaging studies: a systematic review of infant templated. bioRvix. 2021;1:1–30. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 56.Arichi T, Fagiolo G, Varela M, Melendez-Calderon A, Allievi A, Merchant N, et al. Development of BOLD signal hemodynamic responses in the human brain. Neuroimage. 2012;63(2):663–73. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 57.Kay KN, Rokem A, Winawer J, Dougherty RF, Wandell BA. GLMdenoise: A fast, automated technique for denoising task-based fMRI data . Front Neurosci. 2013;7(7 DEC):1–15. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 58.Rajimehr R, Devaney KJ, Bilenko NY, Young JC, Tootell RBH. The “parahippocampal place area” responds preferentially to high spatial frequencies in humans and monkeys. PLoS Biol. 2011;9(4). [DOI] [PMC free article] [PubMed] [Google Scholar]



Data Availability Statement

De-identified results from the functional region of interest analyses have been deposited at osf.io and are publicly available as of the date of publication. Accession numbers are listed in the key resources table.

Group maps for the three published contrasts have been deposited at osf.io and are publicly available as of the date of publication. Accession numbers are listed in the key resources table.

All original code has been deposited at osf.io and is publicly available as of the date of publication. DOIs are listed in the key resources table.

Any additional information required to reanalyze the data reported in this paper is available from the lead contact upon request.

KEY RESOURCES TABLE.

| REAGENT or RESOURCE | SOURCE | IDENTIFIER |
|---|---|---|
| Antibodies | | |
| Bacterial and virus strains | | |
| Biological samples | | |
| Chemicals, peptides, and recombinant proteins | | |
| Critical commercial assays | | |
| Deposited data | | |
| Group random effects analyses | This paper | https://osf.io/jnx5a/?view_only=2db4898abffa424fbe9b96e7e2d7e161 |
| Functional region of interest analyses | This paper | https://osf.io/jnx5a/?view_only=2db4898abffa424fbe9b96e7e2d7e161 |
| Visual statistics of stimuli | This paper | https://osf.io/jnx5a/?view_only=2db4898abffa424fbe9b96e7e2d7e161 |
| Experimental models: Cell lines | | |
| Experimental models: Organisms/strains | | |
| Oligonucleotides | | |
| Recombinant DNA | | |
| Software and algorithms | | |
| All pre-processing software | This paper, previous work17, FSL (https://fsl.fmrib.ox.ac.uk/fsl/fslwiki/), Freesurfer (http://surfer.nmr.mgh.harvard.edu/) | https://osf.io/jnx5a/?view_only=2db4898abffa424fbe9b96e7e2d7e161 |
| All data analysis software | This paper, FSL (https://fsl.fmrib.ox.ac.uk/fsl/fslwiki/), Freesurfer (http://surfer.nmr.mgh.harvard.edu/) | https://osf.io/jnx5a/?view_only=2db4898abffa424fbe9b96e7e2d7e161 |
| Visual statistics of stimuli | This paper; rectilinearity27; curvilinearity28,29 | https://osf.io/jnx5a/?view_only=2db4898abffa424fbe9b96e7e2d7e161 |
| Stimuli presentation | This paper and previous work17 | https://osf.io/jnx5a/?view_only=2db4898abffa424fbe9b96e7e2d7e161 |
| MATLAB 2020a/2020b | MathWorks | https://www.mathworks.com/products/matlab.html |
| Freesurfer 6.0.0 | Massachusetts General Hospital | https://surfer.nmr.mgh.harvard.edu |
| FSL 5.0.9 | FMRIB Software Library | https://fsl.fmrib.ox.ac.uk/fsl/fslwiki/ |
| Other | | |