Abstract

Aversive motivation plays a prominent role in driving individuals to exert cognitive control. However, the complexity of behavioral responses attributed to aversive incentives creates significant challenges for developing a clear understanding of the neural mechanisms of this motivation-control interaction. We review the animal learning, systems neuroscience, and computational literatures to highlight the importance of experimental paradigms that incorporate both motivational context manipulations and mixed motivational components (e.g., bundling of appetitive and aversive incentives). Specifically, we postulate that to understand aversive incentive effects on cognitive control allocation, a critical contextual factor is whether such incentives are associated with negative reinforcement or punishment. We further illustrate how the inclusion of mixed motivational components in experimental paradigms enables increased precision in the measurement of aversive influences on cognitive control. A sharpened experimental and theoretical focus regarding the manipulation and assessment of distinct motivational dimensions promises to advance understanding of the neural, monoaminergic, and computational mechanisms that underlie the interaction of motivation and cognitive control.

Keywords: Aversive motivation, Cognitive control, Mixed motivation, Negative reinforcement, Punishment, Serotonin, Habenula

1. Introduction

In daily life, individuals demonstrate an impressive ability to weigh the relevant incentives when deciding the amount and type of effort to invest when completing cognitively demanding tasks (Shenhav et al., 2017). These incentives can include both the potential positive outcomes obtained from task completion (e.g., bonus earned, social praise), as well as potential negative outcomes that can be avoided by completing the task (e.g., job termination, social admonishment). The ability to successfully adjust cognitive control based on diverse motivational incentives is highly significant for determining one’s future academic, career, and social goals (Bonner and Sprinkle, 2002; Duckworth et al., 2007; Mischel et al., 1989), as well as providing a necessary intermediary step for informing how motivational and cognitive deficits may arise in clinical disorders (Barch et al., 2015; Jean-Richard-Dit-Bressel et al., 2018).

Importantly, individuals often face a mixture, or “bundle,” of positive and negative incentives that may jointly occur in relation to their behavior (e.g., the motivation to earn a good salary and to avoid being fired may jointly drive a worker to allocate more effort to optimize their performance relative to each incentive alone). A crucial factor often neglected in cognitive neuroscience studies of motivation and cognitive control is that the impact of a negative incentive on behavior may depend strongly on the context of how it is bundled (e.g., a good salary plus the fear of job termination may motivate an individual to increase their effort, whereas a good salary accompanied by frequent and harsh criticism from a supervisor may cause that same person to decrease their effort). In this review, we provide a detailed examination of how contextual factors moderate bundled incentive effects to better elucidate the mechanisms that underlie interactions of motivation and cognitive control.

Recent empirical research has shed some light on the neural mechanisms of motivation and cognitive control interactions (Botvinick and Braver, 2015; Braver et al., 2014; Yee and Braver, 2018). In particular, dopamine has been widely postulated as a key neurotransmitter (Cools, 2008, 2019; Westbrook and Braver, 2016), and a broad network of brain regions has been shown to underlie these interactions (Parro et al., 2018). Extant studies in this domain have almost exclusively focused on the impact of expected rewards (e.g., monetary bonuses, social praise) on higher-order cognition and cognitive control (Aarts et al., 2011; Bahlmann et al., 2015; Braem et al., 2014; Chiew and Braver, 2016; Duverne and Koechlin, 2017; Etzel et al., 2015; Fröber and Dreisbach, 2016; Frömer et al., 2021; Kouneiher et al., 2009; Locke and Braver, 2008; Small et al., 2005). In contrast, much less is known about the mechanisms through which negative outcomes (e.g., monetary losses, shocks) interact with cognitive control (Braem et al., 2013; Fröbose and Cools, 2018). Although this dissociation by motivational valence (e.g., rewarding vs. aversive) in decision-making is not new (Pessiglione and Delgado, 2015; Plassmann et al., 2010), it remains a significant challenge to determine whether rewarding and aversive motivational values are processed in common or separate neural circuits (Hu, 2016; Morrison and Salzman, 2009).

A recent theoretical framework that shows great promise for integrating the role of aversive motivation in cognitive control is the Expected Value of Control (EVC) model (Shenhav et al., 2013, 2017). The EVC model utilizes a computationally explicit formulation of cognitive control in terms of reinforcement learning and decision-making processes in order to characterize how diverse motivational incentives (e.g., rewards, penalties) impact cognitive control allocation. Critically, EVC reframes adjustments in cognitive control as a fundamentally motivated process, determined by weighing effort costs against potential benefits of control to yield the integrated, net expected value. Although the EVC model has been successfully applied to characterize how rewarding incentives offset the cost of exerting cognitive control, the current cost-benefit analysis needs to be expanded to account for the diversity of strategies for control allocation that arise from aversive motivational incentives.
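The cost-benefit logic described above can be illustrated with a toy sketch. This is not the published EVC model: the logistic accuracy function, the quadratic effort cost, and all parameter values are assumptions chosen only for illustration. The sketch picks the control intensity that maximizes expected payoff minus effort cost, first with a reward for correct responses only, and then with a penalty for errors bundled with that reward.

```python
import math


def accuracy(control: float) -> float:
    """Assumed logistic mapping from control intensity (0..1) to P(correct)."""
    return 1.0 / (1.0 + math.exp(-4.0 * (control - 0.5)))


def effort_cost(control: float) -> float:
    """Assumed quadratic effort cost of exerting control."""
    return 2.0 * control ** 2


def net_expected_value(control: float, reward: float, penalty: float) -> float:
    """Expected payoff (reward if correct, penalty if wrong) minus effort cost."""
    p_correct = accuracy(control)
    expected_payoff = p_correct * reward - (1.0 - p_correct) * penalty
    return expected_payoff - effort_cost(control)


def optimal_control(reward: float, penalty: float, steps: int = 101) -> float:
    """Grid search over control intensities for the highest net expected value."""
    candidates = [i / (steps - 1) for i in range(steps)]
    return max(candidates, key=lambda c: net_expected_value(c, reward, penalty))


# Hypothetical incentive magnitudes, in arbitrary units.
reward_only = optimal_control(reward=1.0, penalty=0.0)
bundled = optimal_control(reward=1.0, penalty=1.0)
print(f"reward only: {reward_only:.2f}, reward + error penalty: {bundled:.2f}")
```

Under these assumptions, a penalty that can be avoided through better performance raises the optimal control intensity. Capturing contexts in which aversive incentives instead reduce effort would require additional terms that this sketch does not include.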

These important gaps in the literature highlight a ripe opportunity and unique challenge for expanding the investigation of motivation and cognitive control interactions. But why have researchers not yet made significant inroads into characterizing the mechanisms underlying aversive motivation effects on cognitive control? We argue that obstacles to progress can be attributed to two main factors. First, much of the contemporary neuroscience literature has neglected to consider the motivational context through which aversive incentives influence different strategies for allocating cognitive control. Here, motivational context is operationalized as the degree to which motivation to attain or avoid an outcome will increase (e.g., reinforcement) or decrease (e.g., punishment) behavioral responding. For example, whereas rewarding incentives typically increase behavioral responding to approach the expected reward, aversive incentives can lead an organism to either invigorate or attenuate behavioral responses to avoid the aversive outcome, depending on the motivational context (e.g., see Levy and Schiller, 2020; Mobbs et al., 2020). Second, current experimental paradigms rarely include bundled incentives (i.e., mixed motivation, when both appetitive and aversive outcomes are associated with a behavior), despite the intuition that people likely integrate diverse motivational incentives when deciding how much cognitive control to allocate in mentally demanding tasks. A particular challenge is the lack of well-controlled experimental assays that can explicitly quantify the diverse effects of aversive incentives on cognitive control.

In this review, our primary objective is to identify and highlight critical motivational dimensions (e.g., motivational context and mixed motivation), which for the most part have been neglected in prior treatments. In our opinion, these dimensions have strong potential to advance understanding regarding the neural, monoaminergic, and computational mechanisms of aversive motivation and cognitive control. In particular, we demonstrate how incorporating these motivational dimensions, which have played a prominent role in animal learning experimental paradigms, into experimental studies with humans can improve the granularity and precision with which we can measure aversive incentive effects on cognitive control allocation. Specifically, we hypothesize that stronger consideration of the motivational context of aversive incentives can clarify the putative dissociable neural pathways and computational mechanisms through which aversive motivation may guide cognitive control allocation. Similarly, the inclusion of mixed motivational components in experimental paradigms will facilitate increased precision in measuring the aversive influences on cognitive control. In sum, we anticipate this review will invigorate greater appreciation for foundational learning and motivation theories that have guided the cornerstone discoveries over the past century, as well as catalyze innovative, groundbreaking research into the computations, brain networks, and neurotransmitter systems associated with aversive motivation and cognitive control.

2. Historical perspectives on aversively motivated behavior

2.1. Pavlovian vs. instrumental control of aversive outcomes

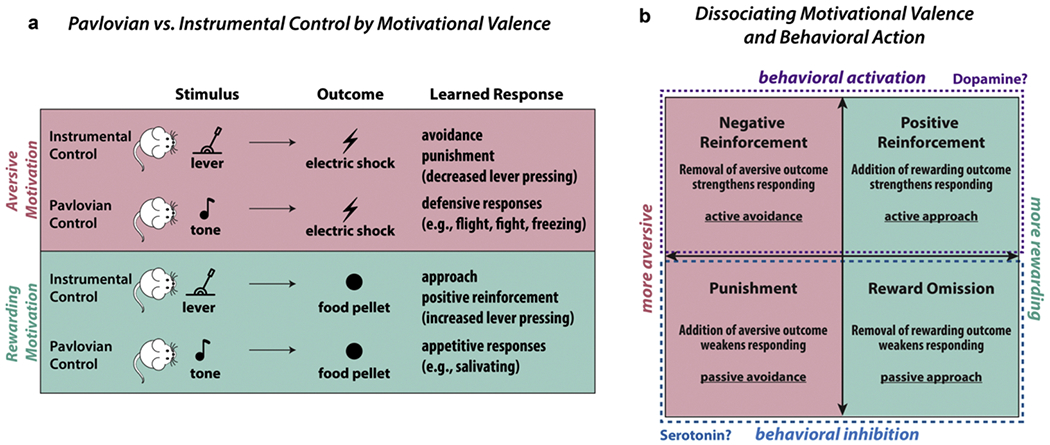

The dichotomy between Pavlovian and instrumental control of behavior has long played an influential role in our contemporary understanding of motivation (Guitart-Masip et al., 2014; Mowrer, 1947; Rescorla and Solomon, 1967). Here, Pavlovian control refers to when a conditioned stimulus (CS) elicits a conditioned response (CR) that is typically associated with an unconditioned stimulus (US) (Dickinson and Mackintosh, 1978; Pavlov, 1927; Rescorla, 1967, 1988). For example, a rat will learn to salivate when it hears a tone that predicts delivery of a food pellet, or alternatively learn to produce defensive responses (e.g., freezing, panic, anxiety) when it hears a tone that predicts electric shocks. In contrast to Pavlovian control, instrumental control describes when an ongoing behavior is “controlled by its consequences,” such that the likelihood of a behavioral response increases when an organism receives a reinforcing outcome for performing that response (Skinner, 1937; Staddon and Cerutti, 2003). For example, a rat will increase its rate of lever pressing if that action is followed by a food reward (e.g., reinforcement), whereas a rat will decrease its rate of lever pressing if that action is followed by an electric shock. Importantly, although both examples illustrate how Pavlovian and instrumental control lead to changes in behavior, the key distinction is that in the former, the appetitive or aversive outcome (US) is presented independent of an organism’s behavior, whereas, in the latter, an organism must perform a specific action in order to successfully attain or avoid a certain outcome. This distinction is detailed in Table 1 and Fig. 1a.

Table 1.

Pavlovian vs. Instrumental Control. Detailed comparison of key differences between Pavlovian and Instrumental Control.

| Pavlovian Control (e.g., Classical, Respondent) | Instrumental Control (e.g., Operant) |
|---|---|
| Behavior is controlled by stimulus preceding response | Behavior is controlled by consequences of response |
| Responses are elicited by neutral stimuli repeatedly associated with an appetitive or aversive unconditioned outcome | Responses are driven by the motivation to attain a rewarding outcome or avoid/escape from an aversive outcome |
| Goal is to increase the probability of a response (CR) to an initially neutral stimulus (CS) by associating the neutral stimulus with an unconditioned stimulus (US) | Goal is to increase the probability of a response in the presence of a discriminative stimulus (SD) by following a desired response with a reinforcing outcome or following the undesired response with a punishing outcome |
| Stimulus-Stimulus contingencies | Response-Outcome contingencies |
| US follows CS during training regardless of whether or not CR occurs. CR is brought under the control of a stimulus event (CS) that precedes the response, rather than one that follows it | Reinforcer or Punisher follows the response only if the organism performs the voluntary action |

CR = conditioned response; CS = conditioned stimulus; US = unconditioned stimulus; SD = discriminative stimulus.

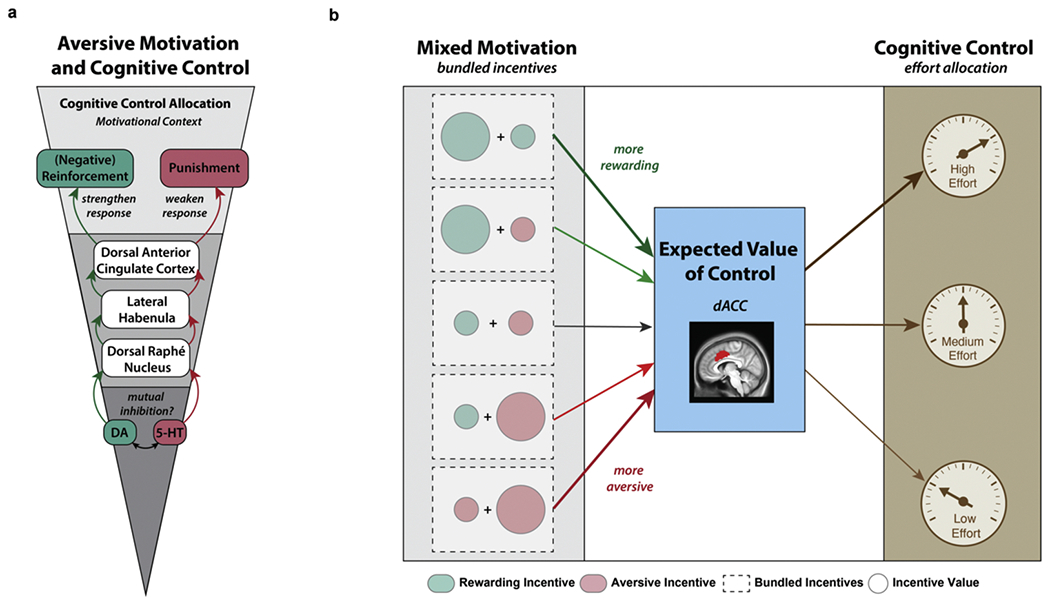

Fig. 1.

Pavlovian vs. Instrumental Control of Motivated Behavior. a) Schematic of how rewarding vs. aversive motivation may elicit various behavioral responses under Pavlovian vs. instrumental control paradigms. b) Instrumentally controlled responses by motivational valence (rewarding vs. aversive) and behavioral responding (activation vs. inhibition). Given that the same outcome may strengthen or weaken responses based on the context, consideration of both the motivational valence of the expected outcome and its impact on instrumental responding is of critical importance. In the case of aversive motivation, we highlight how avoidance motivation may lead to either behavioral activation (active avoidance) or behavioral inhibition (passive avoidance), depending on the context (negative reinforcement for the former, punishment for the latter).

Despite the utility of the Pavlovian-instrumental distinction in explaining the influence of rewarding and aversive incentives on behavior in the animal literature (e.g., conditioned responses), this distinction has largely been neglected in human cognitive neuroscience studies of motivation and cognitive control. This neglect may be a primary factor contributing to the contradictory findings associated with aversive motivation in the contemporary literature, which we describe in the subsequent sections.

2.2. Aversive outcomes may strengthen or weaken behavioral responses, depending on the motivational context

A large source of confusion in aversive motivation stems from the misuse of proper terms (i.e., the conflation between “aversive outcome” and “punishment”). This misunderstanding likely stems from insufficient clarity regarding reinforcement theory. Based on Thorndike’s “law of effect,” the theory posits that responses that produce a satisfying state will be strengthened, whereas responses that produce a discomforting state will be weakened (Thorndike, 1927, 1933). Formally, a reinforcer is anything that strengthens an immediately preceding instrumental response, whereas a punisher is anything that weakens an immediately preceding instrumental response (Premack, 1971; Skinner, 1953). Reinforcement is produced by denying the subject the opportunity to occupy a pleasant state as long as it would choose to, thus strengthening instrumental responding to approach or maintain that pleasant state; whereas punishment is produced by forcing the subject to occupy an unpleasant state longer than it would choose to, thus suppressing instrumental responding to avoid or escape from an unpleasant state (Estes, 1944; Solomon, 1964). A key insight arising from this distinction is that an aversive outcome can either reinforce (i.e., strengthen) or punish (i.e., weaken) an instrumentally conditioned response, depending on the context by which that outcome is presented (Crosbie, 1998; McConnell, 1990; Terhune and Premack, 1974). See Fig. 1b for illustration.

The distinction between negative reinforcement and punishment has great potential to provide insight into the interactions between aversive motivation and cognitive control. Typically, an individual will desire to avoid aversive outcomes. In these scenarios, Pavlovian conditioned stimuli can either strengthen or weaken instrumental responses to facilitate avoidance (Bull and Overmier, 1968; Overmier et al., 1971). Specifically, negative reinforcement refers to when successful escape from an aversive outcome strengthens instrumental responding in future trials (Masterson, 1970) and will produce a pleasant or rewarding affective response (H. Kim et al., 2006). Conversely, punishment refers to when the presence of aversive outcomes weakens instrumental responding in an approach motivation context (Dickinson and Pearce, 1976; Estes and Skinner, 1941) and will potentiate defensive responses such as anxiety, stress, arousal, vigilance, panic, or freezing (Hagenaars et al., 2012; Sege et al., 2017). Importantly, we suggest that the inclusion of aversive incentives can provide greater granularity into how distinct motivational factors can bias individuals to use different strategies for allocating cognitive control to accomplish behavioral goals. For example, a clear representation of the motivational context in which an aversive outcome will be encountered (e.g., punishment or negative reinforcement) can help individuals determine the amount of effort required to achieve their goal, as well as discern the strategy through which they will adjust their cognitive control allocation to meet that goal.
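The distinction drawn above can be summarized as a small lookup from outcome valence and response-outcome contingency to the resulting motivational context. The sketch below is illustrative only: the function name, labels, and string encoding are assumptions, and the fourth cell (a response that prevents an appetitive outcome) follows standard operant terminology rather than anything discussed in this section.

```python
from typing import Literal, Tuple

Valence = Literal["appetitive", "aversive"]
Contingency = Literal["produces", "prevents"]

# (valence, contingency) -> (context label, effect on instrumental responding)
_CONTEXTS = {
    ("appetitive", "produces"): ("positive reinforcement", "strengthens"),
    ("aversive", "prevents"): ("negative reinforcement", "strengthens"),
    ("aversive", "produces"): ("punishment", "weakens"),
    # Not discussed in the text; included so the lookup covers all four cells.
    ("appetitive", "prevents"): ("negative punishment (omission)", "weakens"),
}


def motivational_context(valence: Valence, contingency: Contingency) -> Tuple[str, str]:
    """Return the context label and its expected effect on responding."""
    return _CONTEXTS[(valence, contingency)]


# The same aversive outcome (e.g., shock) under two different contingencies.
print(motivational_context("aversive", "prevents"))
print(motivational_context("aversive", "produces"))
```

The two printed cases show the key point of this section: an identical aversive outcome maps onto opposite effects on responding depending on whether the response prevents it or produces it.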

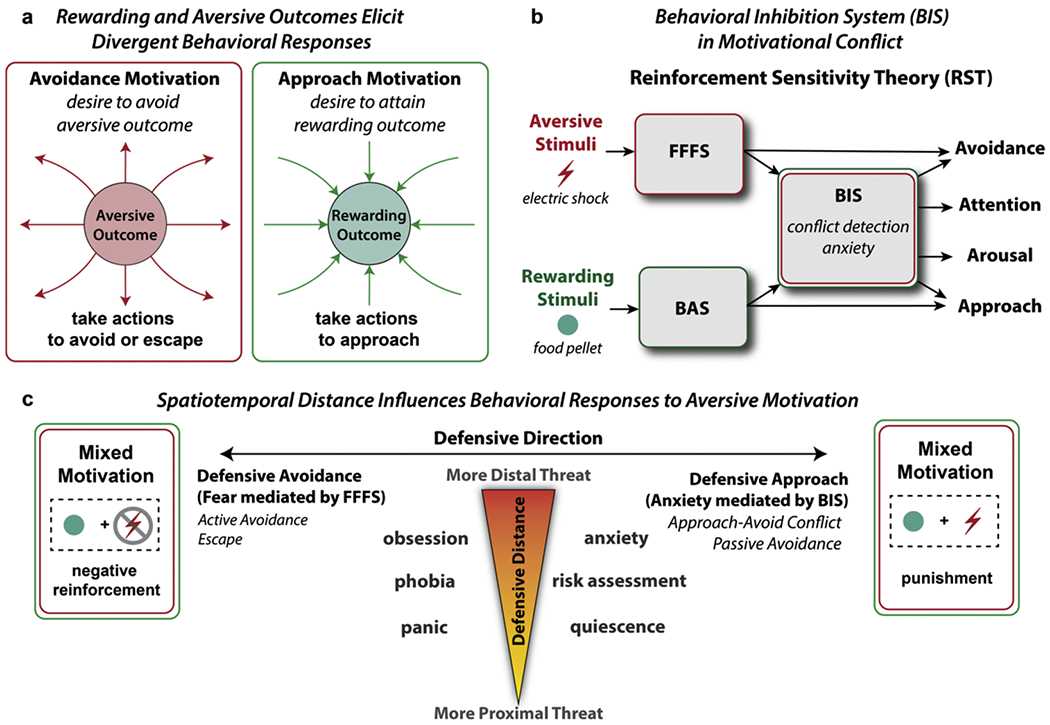

2.3. Mixed motivation: a key ingredient for motivational conflict and mutual inhibition

One particular challenge for quantifying the effects of aversive motivation is that its influence on behavior is much less parsimonious than that of appetitive motivation. Whereas approach-related motivation typically produces purely appetitive or consummatory responses to pursue a rewarding outcome, avoidance-related motivation typically engenders a wide range of behavioral responses to avoid or escape from detected threats (Fanselow, 1994; Fanselow and Lester, 1988; Masterson and Crawford, 1982) (see Fig. 2a). For example, in order to avoid receiving an electric shock (e.g., active avoidance), an organism may freeze, run (e.g., escape), produce a stress or fear response, or engage in a combination of such behaviors (Church, 1963).

Fig. 2.

a) Approach and avoidance motivation elicit divergent behavioral responses, with the former associated with actions to approach the rewarding outcome and the latter associated with actions to avoid or escape from the aversive outcome. b) According to Reinforcement Sensitivity Theory (RST), three core systems underlie human emotion: 1) fight-flight-freeze system (FFFS), 2) behavioral approach system (BAS), and 3) behavioral inhibition system (BIS). Adapted from Gray, 1982 & Gray and McNaughton, 2000. Relevant to the current proposal, the BIS system mediates the resolution of goal conflict (e.g., approach-avoidance motivational conflict). The intensity of this conflict is associated with increased subjective anxiety. c) Recent extensions of RST have suggested defensive distance and defensive direction as two important dimensions that may help organize defensive responses to aversive motivation. Defensive distance describes the perceived distance from a threat (proximal to distal) that influences the intensity of a defensive response. Defensive direction describes the range of responses between actively avoiding or escaping a threat (defensive avoidance) to cautiously approaching a threat (defensive approach). Relevant to the current review, this delineation between defensive avoidance and defensive approach reveals how the critical distinction between negative reinforcement and punishment may underlie distinct fear-mediated and anxiety-mediated defensive responses to aversive motivation. Simplified adaptation from McNaughton and Corr, 2004.

Although approach and avoidance motivation have long been theorized to be mediated by distinct systems (Carver, 2006; Carver and White, 1994; Elliot and Covington, 2001), the extent to which individuals exert mental and physical effort to complete behavioral goals is almost certainly determined by mixed motivation, i.e., the combined or integrated net value of multiple incentives which potentially increase or decrease behavior depending on the motivational context (Aupperle et al., 2015; Corr and McNaughton, 2012; Yee and Braver, 2018). For example, an individual who is motivated both to increase their likelihood of attending a good medical school and to avoid the consequences of failing their course (e.g., academic probation) may exert more effort when studying for a final exam than they would under a single motivation (e.g., approach or avoidance motivation only). Conversely, an individual who is motivated to perform well on their exam but also finds the content aversive (e.g., a student who has strong disgust reactions when studying human anatomy) may be overall less motivated to study relative to an exam on a less aversive topic (e.g., fly genetics).

Over the years, researchers have attempted to organize the diversity of defensive responses precipitated by aversive motivation (LeDoux and Pine, 2016; McNaughton, 2011). One well-established framework that has played an influential role is reinforcement sensitivity theory (RST) (Gray, 1982; Gray and McNaughton, 2000). According to RST, three core systems underlie human emotion: 1) a fight-flight-freeze system (FFFS) that is responsible for mediating behaviors in response to aversive stimuli (e.g., avoidance, escape, panic, phobia), 2) a behavioral approach system (BAS) that is responsible for mediating reactions to all appetitive stimuli (e.g., reward-orientation, impulsiveness), and 3) a behavioral inhibition system (BIS) which mediates the resolution of goal conflict (i.e., between FFFS and BAS, or even FFFS-FFFS and BAS-BAS conflict) (Pickering and Corr, 2008). Critically, the BIS is hypothesized to play a key role in generating anxiety during mixed motivational contexts. For example, during approach-avoidance conflict, activation of the BIS will increase attention to the environment and arousal, with the level of anxiety that is elicited tracking the intensity of conflict evoked by such attention (See Fig. 2b). Although approach-avoidance conflicts are more commonly observed, RST proposes that anxiety can also arise from approach-approach and avoidance-avoidance conflicts.

Recent extensions of the RST have postulated two relevant dimensions that may help organize the variety of defensive responses to aversive motivation (McNaughton, 2011; McNaughton and Corr, 2004). As illustrated in Fig. 2c, defensive direction describes the functional distinction between leaving a dangerous situation (active avoidance or escape; fear mediated by the FFFS system) and increasing caution to avoid a dangerous outcome (approach-avoidance conflict or passive avoidance; anxiety mediated by the BIS system). Conversely, defensive distance describes how an organism’s intensity of responding is associated with one’s perceived distance to the threat. For example, more proximal threats would elicit more overt reactive behavioral responses (e.g., panic, defensive quiescence). In contrast, distal threats may elicit more covert non-defensive behavior (e.g., obsessive attention towards the potential threat may drive compulsions to avoid that threat and increased anxiety).

The extended RST framework suggests the importance of mixed motivation for understanding incentive effects on behavior. In particular, the joint consideration of rewarding and aversive incentives associated with an outcome could have the effect of either further strengthening or competitively inhibiting motivational influences on instrumental or goal-directed behavior (Dickinson and Dearing, 1979; Dickinson and Pearce, 1977; Konorski, 1967). In addition to the impact on behavior, an important open question in this domain is whether and how the interaction between different motivational systems increases (Barker et al., 2019) or reduces (Solomon, 1980; Solomon and Corbit, 1974) affective or emotional responses. To further glean insight into the neural and computational mechanisms long associated with defensive responses to aversive motivation (Hofmann et al., 2012; Mobbs et al., 2009, 2020; Steimer, 2002), we argue that future work incorporating mixed motivation will help clarify how aversive motivation modulates the intensity or frequency of an individual’s effort allocation in mentally challenging tasks.

3. Experimental paradigms to investigate aversive motivation and cognitive control

The perspectives that arise from the animal learning literature suggest that a significant gap in characterizing the effects of aversive motivation on cognitive control is the lack of validated experimental paradigms to probe such interactions. Therefore, to make progress in this area of research, it is necessary to develop sensitive and specific task paradigms that allow researchers to systematically manipulate and measure how aversive outcomes influence goal-directed cognitive control. In the next section, we highlight several prominent experimental paradigms that have provided great insight into appetitive-aversive motivation interactions across animals and humans. Next, we describe several task paradigms that hold great promise for investigating aversive motivation and cognitive control interactions. In these paradigms, we show how the inclusion of both mixed motivation and motivational context can help quantify the extent to which aversive incentives may differentially guide cognitively controlled behavior, an important intermediary step for characterizing the engagement of underlying neural and computational mechanisms.

3.1. Experimental paradigms of aversive motivation on goal-directed behavior

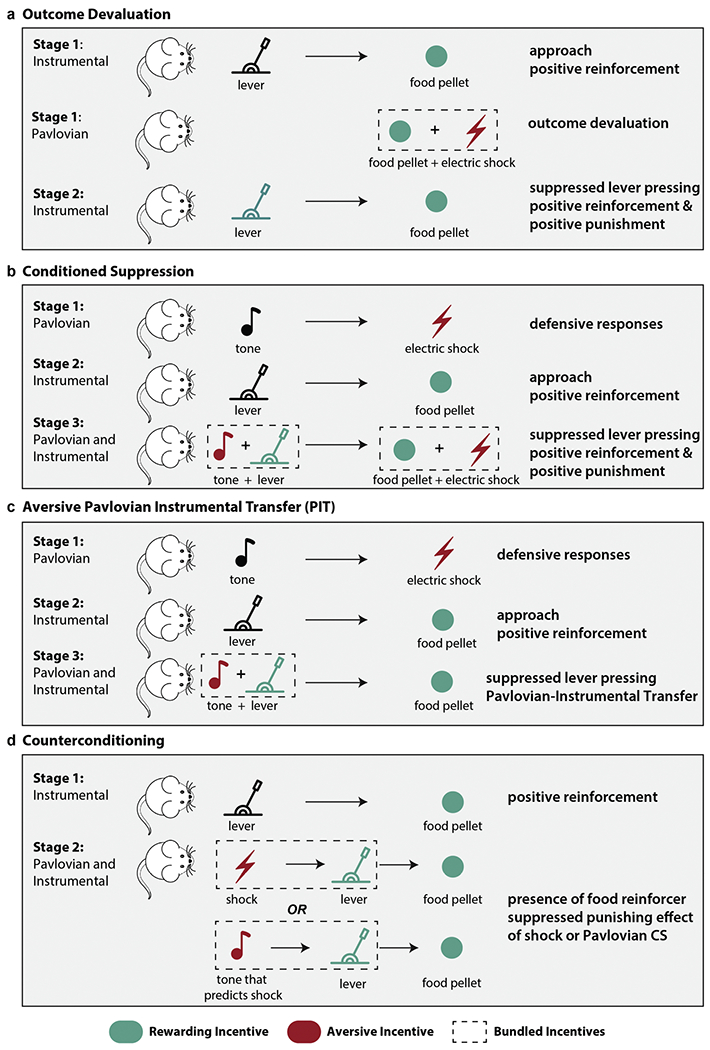

Researchers in the animal learning domain have dedicated significant time and effort towards examining how aversive outcomes act as behavioral inhibitors of the response strength conditioned to appetitive outcomes (Dickinson and Dearing, 1979; Dickinson and Pearce, 1977; Nasser and McNally, 2012, 2013). Although the combination of aversive and appetitive motivational incentives is known to produce mutual inhibitory effects on instrumentally controlled responses (Dickinson and Pearce, 1977), researchers rarely consider the myriad of ways through which this mutual inhibition occurs when manipulating aversive motivation in behavioral tasks. As illustrated in Fig. 3, we describe four classic experimental paradigms that utilize distinct approaches to manipulate how combining rewarding and aversive incentives mutually inhibit instrumental responding in animals and humans. Importantly, our brief synthesis of such foundational paradigms aims to inspire novel insight for future experimental research that probes how aversive motivation influences the cognitive control processes guiding incentive-modulated goal-directed behavior (as described in Section 3.2).

Fig. 3.

Experimental Paradigms of Appetitive-Aversive Interactions. Four established paradigms to investigate approach-avoidance motivational conflict are illustrated. These tasks highlight how including both appetitive and aversive incentives (e.g., mixed motivation) may exhibit mutual inhibition on instrumental behavior. A key distinction between these procedures is whether the aversive stimuli are conditioned in a Pavlovian or instrumental manner, as well as whether the presence of the aversive stimulus strengthens or weakens instrumental behavior (e.g., motivational context). The four paradigms are labeled as follows: a) Outcome devaluation. b) Conditioned Suppression. c) Pavlovian Instrumental Transfer. d) Counterconditioning. The black stimuli indicate a neutral stimulus, which is initially not paired with an unconditioned stimulus (e.g., food pellet or shock). The green stimuli indicate a rewarding incentive (e.g., food pellet) or a conditioned stimulus associated with a rewarding outcome (e.g., the rat learns that pulling the lever leads to a food pellet). In contrast, the red stimuli indicate an aversive incentive (e.g., shock) or a conditioned stimulus associated with an aversive outcome (e.g., the rat learns that a tone predicts the shock). The dashed rectangle indicates which incentives are bundled to facilitate mixed motivation.

3.1.1. Outcome devaluation

One classic approach for measuring aversive motivation effects on instrumental responding is outcome devaluation (also called reinforcer devaluation), a phenomenon in which the bundling of an aversive outcome (e.g., an electric shock) with a rewarding outcome will weaken instrumental responding towards the expected reward (e.g., a food pellet). Rachlin (1972) first demonstrated these punishment effects on pre-conditioned baseline excitatory responses in rats and pigeons. In these studies, the animals first learned to increase pressing a lever to obtain food rewards (e.g., positive reinforcement). Next, the food rewards were paired with electric shocks (e.g., appetitive-aversive motivation), and the decreased overall value of the bundled incentives suppressed the animal’s rate of lever pressing. Critically, this paradigm demonstrates how approach motivated behavior can be inhibited by including an additional aversive incentive in a measurable and systematic manner (Dickinson and Pearce, 1977). For example, the strength of an additional aversive incentive will determine the degree to which that aversive incentive inhibits approach-related behavior (e.g., greater suppression of instrumental responding occurs when food rewards are paired with more frequent and/or more intense shocks). Although some prior studies in rodents found that overtraining reduced the effect of outcome devaluation (Adams and Dickinson, 1981), recent work suggests that the degree to which additional aversive incentives may inhibit behavioral responding may depend on the training duration (Araiba et al., 2018). 
Interestingly, however, although outcome devaluation paradigms have been found to robustly elicit behavioral inhibition effects in rodents and monkeys (Balleine et al., 2005; Izquierdo and Murray, 2010; Murray and Rudebeck, 2013), there is mixed evidence regarding the degree to which overtraining impacts outcome devaluation in humans (Tricomi et al., 2009; de Wit et al., 2018), suggesting a need to examine the degree to which the findings obtained from this paradigm in animals are transferable to human studies. Nevertheless, outcome devaluation is a well-established paradigm that provides a promising approach to investigate how the bundling of rewarding and aversive incentives can modulate the strength of action-outcome contingencies (see Fig. 3a).

3.1.2. Conditioned suppression

Another approach for manipulating aversive motivation is via Pavlovian mechanisms, such that the presence of an aversive Pavlovian conditioned stimulus (CS) will inhibit instrumental behavior (Dickinson and Balleine, 2002; Mowrer, 1947, 1956). One such type of paradigm is conditioned suppression, which describes how a Pavlovian CS (e.g., a tone) paired with a noncontingent aversive stimulus (e.g., electric shock) may suppress instrumental responding (e.g., lever pressing) for a food reward (Lyon, 1968). In this paradigm, animals receive Pavlovian and instrumental training in separate phases. In the first phase, they learn an association between the Pavlovian CS and an aversive outcome (e.g., tone that predicts an electric shock) and develop a Pavlovian conditioned response to the aversive Pavlovian CS. In the second phase, they learn an association between performing an action and receiving a rewarding outcome (e.g., pressing a lever will lead to a food reward), and receipt of the food reward reinforces instrumental responding (e.g., positive reinforcement). The key manipulation that evaluates the extent to which the aversive Pavlovian CS inhibits responding is the test phase, through which both conditioning procedures are combined. Specifically, when both the Pavlovian CS and the lever are present, one can measure the extent to which the presence of the aversive Pavlovian CS (e.g., tone that predicts the shock) may suppress an animal’s desire to press the lever to receive a food reinforcer (Bouton and Bolles, 1980; Estes and Skinner, 1941). Notably, although some versions of this paradigm superimpose the Pavlovian conditioned aversive stimulus on an instrumentally controlled response (Blackman, 1970; Dickinson, 1976), others have noted that these conditioned suppression effects may also arise even during extinction of the aversive stimulus, similar to the aversive Pavlovian instrumental transfer paradigms discussed in the next section. 
This paradigm is illustrated in Fig. 3b.
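The extent of suppression during the test phase is often summarized with a suppression ratio. The minimal sketch below assumes the conventional form of this index, which is not defined in the text above: responses during the CS divided by the sum of responses during the CS and during an equal-length pre-CS period. The function name and the lever-press counts are hypothetical.

```python
def suppression_ratio(cs_responses: float, pre_cs_responses: float) -> float:
    """Responses during the CS as a fraction of CS + pre-CS responses.

    Under this convention, 0.5 indicates no suppression and 0.0 indicates
    complete suppression of instrumental responding during the CS.
    """
    total = cs_responses + pre_cs_responses
    if total == 0:
        raise ValueError("no responses in either period; ratio is undefined")
    return cs_responses / total


# Hypothetical lever-press counts in equal-length pre-CS and CS periods.
print(suppression_ratio(cs_responses=5, pre_cs_responses=20))   # 0.2
print(suppression_ratio(cs_responses=20, pre_cs_responses=20))  # 0.5
```

Lower values correspond to stronger inhibition of lever pressing by the aversive Pavlovian CS.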

3.1.3. Aversive Pavlovian Instrumental Transfer (PIT)

Aversive Pavlovian Instrumental Transfer (PIT; also referred to as transfer-of-control paradigms) is nearly identical to conditioned suppression, in that animals receive Pavlovian and instrumental training in separate phases. However, the main difference is that the test phase (transfer) occurs under extinction (Campese et al., 2013, 2020; Cartoni et al., 2016; Estes, 1943). Specifically, during the test (transfer) phase, the animal is presented with the Pavlovian CS in extinction (e.g., tone but no shock) while it performs the instrumental task (Scobie, 1972). This manipulation is important because the ‘transfer’ effect of the aversive motivation on instrumental responding is not conflated with the sensory properties of the aversive outcome. Some have even argued that because of this feature, aversive PIT is a ‘purer’ approach (than conditioned suppression) to study Pavlovian-instrumental interactions (Campese, 2021; Campese et al., 2013). The PIT paradigm is illustrated in Fig. 3c.

The aversive PIT paradigm has recently garnered much attention in human cognitive neuroscience, as it has been well documented to measure the effects of aversive motivation on instrumentally controlled behavior (Garofalo and Robbins, 2017; Geurts et al., 2013a; Lewis et al., 2013; Rigoli et al., 2012). In this human adaptation, participants first undergo instrumental conditioning, through which they learn to push a button (approach-go) or do nothing (approach-no-go) to approach a rewarding stimulus (monetary gain), or to push a button (withdrawal-go) or do nothing (withdrawal-no-go) to avoid an aversive stimulus (monetary loss). Participants then undergo Pavlovian conditioning, through which unfamiliar audiovisual stimuli (pure tone and fractal) are paired with various appetitive or aversive liquids. Finally, during the testing phase (PIT), participants perform the same task as during instrumental training, except that now the Pavlovian stimuli are tiled in the background. Critically, these PIT trials are performed in extinction, such that the liquid incentives are not presented. Interestingly, prior findings have demonstrated that aversive Pavlovian CSs inhibit approach-related instrumental responding and invigorate withdrawal-related instrumental responding, consistent with a successful PIT effect (Geurts et al., 2013a; Millner et al., 2018).

3.1.4. Counterconditioning

One important consideration not yet discussed is that an aversive stimulus may counterintuitively become less effective in suppressing instrumental responding when it predicts a rewarding outcome (i.e., it may reinforce instrumental responding). In the counterconditioning procedure (Dickinson and Pearce, 1976), the animal first learns an association between lever pressing and a food reward, which results in positive reinforcement of the lever pressing response. Next, an aversive stimulus (e.g., electric shock) is introduced and always precedes the positive food reinforcer (e.g., pressing a lever for a food reward). When the animal learns the association between the aversive stimulus (e.g., shock) and the food reinforcer, the aversive stimulus becomes less effective in its ability to act as a punisher (compared to without the food reinforcement) because it predicts a food reward. Interestingly, a separate experiment in this study replaced the electric shocks with an aversive Pavlovian CS (e.g., a tone predicting a shock) and found the same counterconditioning effects, confirming that the inhibitory effects of the positive reinforcement on the aversive Pavlovian CS were not simply due to the stimulus properties of the shock (Nasser and McNally, 2013). The counterconditioning paradigm is illustrated in Fig. 3d.

3.2. Experimental paradigms of aversive motivation and cognitive control

Despite the extensive history and foundational establishment of well-designed animal learning paradigms to characterize appetitive-aversive motivation interactions, this work has primarily been carried out in rodents. Conversely, there is much less work adapting these paradigms to investigate how mixed motivation impacts decision making in primates (Amemori et al., 2015; Amemori and Graybiel, 2012; Leathers and Olson, 2012; Roesch and Olson, 2004) and humans (Aupperle et al., 2011; Kirlic et al., 2017). Even when such paradigms have been implemented to examine how animals and humans make decisions based on “bundles” of rewarding and aversive incentives, only a very few studies have explicitly examined how mixed motivation impacts the allocation of cognitive control. Moreover, to account for the variety of behavioral strategies that arise from aversive incentives, e.g., penalties can facilitate enhancement or avoidance of cognitive control (Fröbose and Cools, 2018; Yee and Braver, 2018), it is imperative to design innovative experimental paradigms that can accurately characterize the full range of cognitively controlled behaviors that arise from these interactions.

Here, we draw inspiration from classical reinforcement theory and describe several recent paradigms that have examined the influence of aversive incentives on cognitive control. Similar to the classical paradigms previously described, these aversive motivation-control paradigms also incorporate mixed motivation, the combined influence of multiple incentives. In contrast to prior studies which have only looked at aversive incentives on conditioned behavioral responses (Bradshaw et al., 1979; Reynolds, 1968; Weiner, 1989), these paradigms explicitly manipulate how rewards and aversive motivational incentives combine to impact cognitive control. Moreover, the inclusion of multiple diverse types of motivational incentives is crucial for studying these interactions by valence, as they enable us to precisely quantify the relative influence of aversive incentives (e.g., monetary losses, shocks, saltwater) in terms of their recruitment and allocation of cognitive control in goal-directed tasks. Lastly, while we acknowledge that these paradigms are certainly not exhaustive, we hope that consideration of these motivational dimensions (e.g., motivational context, mixed motivation) will provide a broad foundation from which to drive future research that investigates the specific and nuanced ways through which aversive motivational value interacts with cognitive control.

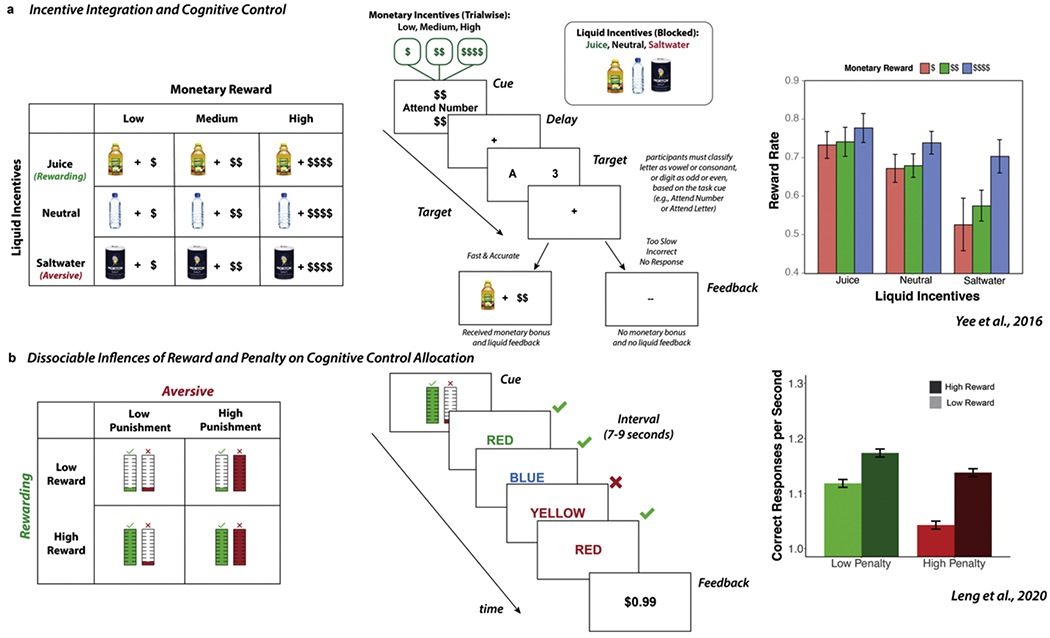

3.2.1. Incentive integration and cognitive control

An experimental paradigm that holds great promise for investigating the effects of aversive motivation on cognitive control is the incentive integration and cognitive control paradigm (Yee et al., 2016, 2019, 2021). This paradigm, illustrated in Fig. 4a, parallels the outcome devaluation paradigm described earlier but replaces the instrumental conditioning procedure (e.g., lever pressing for a food reward) with a cognitive control task (cued task-switching). On each trial, a letter-number pair is visually presented (e.g., one letter and one number on the screen), and participants are tasked with categorizing the target symbol based on the task instruction briefly presented at the beginning of each trial (e.g., a randomized cue indicates whether the participant should classify the letter as a vowel or consonant or classify the number as odd or even). A monetary reward cue is also randomly presented on each trial and indicates whether participants can earn low, medium, or high reward value (displayed as $, $$, or $$$$) for fast and accurate task performance (e.g., faster than a subjective RT criterion established during baseline blocks with no incentives present). Importantly, successful attainment of monetary reward is indicated by oral liquid delivery to the participant’s mouth as post-trial performance feedback. In contrast, participants receive neither money nor liquid if they are incorrect, too slow, or do not respond. Additionally, the type of liquid is blocked, such that the liquid feedback can be rewarding (apple juice), neutral (tasteless isotonic solution), or aversive (saltwater). However, as the symbolic meaning of the liquid is kept constant across conditions (i.e., always indicating performance success), any behavioral differences observed can be attributed to the differential subjective evaluation of the bundled monetary and liquid incentives.
Previous results across multiple studies have consistently demonstrated that humans integrate the motivational value of monetary and liquid incentives to modulate cognitive task performance and self-reported motivation, such that greater performance improvements were observed with more rewarding bundled incentives (high monetary reward + juice) relative to less rewarding bundled incentives (low monetary reward + neutral), while impairments were found for the most aversive bundles (low monetary reward + saltwater).
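The feedback contingency described above can be sketched in a few lines. This is an illustrative reading of the trial structure rather than the task code used in the cited studies: the monetary units assigned to each cue, the RT criterion value, and all names below are assumptions.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Liquid type is fixed within a block; labels follow the text.
LIQUIDS = ("juice", "neutral", "saltwater")
# Hypothetical monetary units for the $, $$, $$$$ cues (not specified in the text).
MONEY_UNITS = {"$": 1, "$$": 2, "$$$$": 4}


@dataclass
class Trial:
    reward_cue: str          # "$", "$$", or "$$$$"
    correct: bool            # was the categorization correct?
    rt_ms: Optional[float]   # response time in ms; None if no response


def trial_feedback(trial: Trial, rt_criterion_ms: float,
                   block_liquid: str) -> Tuple[int, Optional[str]]:
    """Return (money earned, liquid delivered) for one trial."""
    if block_liquid not in LIQUIDS:
        raise ValueError(f"unknown liquid: {block_liquid}")
    # Success requires a response that is both accurate and faster than criterion.
    success = (trial.rt_ms is not None and trial.correct
               and trial.rt_ms < rt_criterion_ms)
    if not success:
        return 0, None  # neither money nor liquid
    # The liquid always signals success, whatever its valence in this block.
    return MONEY_UNITS[trial.reward_cue], block_liquid


fast_correct = Trial(reward_cue="$$$$", correct=True, rt_ms=520.0)
too_slow = Trial(reward_cue="$$$$", correct=True, rt_ms=910.0)
print(trial_feedback(fast_correct, rt_criterion_ms=700.0, block_liquid="saltwater"))
print(trial_feedback(too_slow, rt_criterion_ms=700.0, block_liquid="saltwater"))
```

Because the same rule applies in every block, only the valence of the bundled liquid differs between conditions, which is what allows behavioral differences to be attributed to the subjective value of the bundle.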

Fig. 4.

Experimental Paradigms for Investigating Aversive Motivation and Cognitive Control. a) Incentive Integration and Cognitive Control Paradigm. Participants performed cued task-switching and could earn monetary rewards and liquid incentives for fast and accurate performance (Yee et al., 2016). Manipulating the motivational value of the monetary and liquid incentives across bundled incentive conditions ensured a clear comparison of how the relative motivational value of these incentives influenced cognitive control. b) Dissociable Influences of Reward and Penalty on Cognitive Control Allocation. Participants performed a self-paced incentivized mental effort task (Leng et al., 2020). They were rewarded with monetary gains for correct responses and were penalized with monetary losses for incorrect responses. The motivational value of the rewards and penalties was varied, which enabled clear dissociation between how expected rewards increased response rate (via faster response times while maintaining accuracy) and expected penalties decreased response rate (via slower response times and increased accuracy). Together, these paradigms demonstrate the utility of using mixed motivation to more precisely evaluate how aversive motivation influences cognitive control.

Importantly, by manipulating both monetary and liquid incentives across rewarding and aversive domains, this paradigm enables straightforward examination of how much the aversive motivational value of the saltwater impacts cognitive control task performance relative to the neutral solution or apple juice. Moreover, manipulating the valence of the liquid incentives across bundled incentive conditions ensures that the comparison is related to the motivational value of the liquids on cognitive control rather than salience properties that are commonly associated with primary incentives. Broadly, the incentive integration paradigm demonstrates the utility of using bundled primary and secondary incentives to evaluate how the mutual inhibition between rewarding and aversive motivational processes influences cognitive control task performance. Moreover, the motivational manipulations in this paradigm hold great promise for examining how aversive incentives of different categories (Crawford et al., 2020) may similarly or differently impact performance in other cognitive control tasks (e.g., Flanker, Stroop, Simon, AX-CPT) and across the lifespan (Yee et al., 2019).

3.2.2. Dissociable influences of reward and penalty on cognitive control

Another approach for investigating the effect of aversive incentives on cognitive control is to examine the dissociable (rather than the integrated) influence of multiple incentives on cognitive control. Our group recently developed a novel task that examines how expected rewards and penalties influence the allocation of cognitive control on a self-paced Stroop task (Leng et al., 2020). Specifically, in contrast to previous studies that have primarily measured motivation in terms of performance on a fixed number of obligatory task trials, this task contains fixed time intervals through which a person can choose how much effort to invest based upon the expected rewards for success and penalties for failure (Schmidt et al., 2012). Critically, in addition to estimating the amount of effort invested within a given interval, this task enables us to measure the extent to which different incentives influence different types of mental effort investment (e.g., attentional control, response caution). In this paradigm, subjects earn monetary rewards for each correct response (high or low) and are penalized with monetary losses for each incorrect response (high or low). Each interval is preceded by a cue that indicates the consequences for correct and incorrect responses and is followed by feedback with the total reward and loss incurred for that interval. See Fig. 4b for an illustration of the task.

Because individuals need to consider the motivational value of both rewards and penalties when deciding how much cognitive control to allocate within a given interval, this paradigm enables an explicit comparison of the dissociable influences of reward and penalty on cognitive control allocation. Behavioral results revealed that participants maximized their net reward per unit time (i.e., reward rate) based on the bundled expected value of rewards and penalties, with better performance for higher expected rewards and worse performance for higher expected penalties. Post-hoc analyses of speed and accuracy revealed dissociable strategies for allocating effort based on both incentives. Higher rewards resulted in faster response times without a change in accuracy, whereas higher penalties resulted in slower but more accurate responses. Importantly, these data suggest the promise of this paradigm as another approach for evaluating the influence of aversive incentives on cognitive control.
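To make the reward-rate logic concrete, the sketch below computes net earnings per unit time for a single fixed-duration interval. It is a simplified illustration rather than the model fitted by Leng et al. (2020); the function name, the response counts, and the incentive magnitudes are all hypothetical.

```python
def net_reward_rate(n_correct: int, n_incorrect: int,
                    reward: float, penalty: float,
                    interval_s: float) -> float:
    """Net earnings per second over one fixed-duration interval."""
    if interval_s <= 0:
        raise ValueError("interval_s must be positive")
    return (n_correct * reward - n_incorrect * penalty) / interval_s


# Hypothetical response profiles for a 10 s interval (illustrative numbers only).
fast = {"n_correct": 12, "n_incorrect": 2}     # quicker, with some errors
careful = {"n_correct": 9, "n_incorrect": 0}   # slower, with no errors

for penalty in (1, 5):  # low vs. high penalty per error; reward fixed at 1
    r_fast = net_reward_rate(**fast, reward=1, penalty=penalty, interval_s=10)
    r_careful = net_reward_rate(**careful, reward=1, penalty=penalty, interval_s=10)
    print(penalty, r_fast, r_careful)
```

With these made-up numbers, the faster profile yields the higher rate when errors are cheap, whereas the slower and more accurate profile yields the higher rate when errors are costly, which parallels the shift toward response caution reported under higher penalties.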

4. Neural mechanisms of aversive motivation and cognitive control

In the next section, we propose that considering the motivational context of how aversive incentives influence behavior may help organize the wide range of neural processes underpinning aversive motivation and cognitive control. Although the neurobiological mechanisms of aversive motivation have been of longstanding interest (Campese et al., 2015; Jean-Richard-Dit-Bressel et al., 2018; Kobayashi, 2012; Levy and Schiller, 2020; Schiller et al., 2008; Seymour et al., 2007; Umberg and Pothos, 2011), and other regions such as orbitofrontal cortex, ventromedial PFC, insula, and amygdala have been broadly implicated in aversive processing (Atlas, 2019; Gehrlach et al., 2019; Kobayashi, 2012; Maren, 2001; Michely et al., 2020), we primarily focus on neural circuits implicated in motivation and cognitive control interactions. In particular, a significant challenge for developing a clear understanding of the mechanisms that underlie aversive motivation and cognitive control has been the perplexing spectrum of neural findings from extant studies involving aversive outcomes. Prior research has shown that active avoidance (e.g., increased behavioral responding to escape from the aversive outcome) is associated with increased dopamine (DA) release (Bromberg-Martin et al., 2010; Wenzel et al., 2018) as well as activation in the striatum and dorsal anterior cingulate cortex (Boeke et al., 2017; Delgado et al., 2009). In contrast, the anticipation of aversive incentives facilitates behavioral inhibition (e.g., decreased behavioral responding to avoid an aversive outcome) and is associated with increased serotonin (5-HT) release (Crockett et al., 2009, 2012; Geurts et al., 2013b) as well as activation in the lateral habenula (Jean-Richard-Dit-Bressel and McNally, 2014, 2015; Lawson et al., 2014; Webster et al., 2020) and dorsal anterior cingulate cortex (Fujiwara et al., 2009; Monosov, 2017). 
Importantly, we believe that greater emphasis on the distinction between how aversive incentives promote behavioral activation (e.g., negative reinforcement) versus behavioral inhibition (e.g., punishment) may help organize the diverse neural processes associated with aversive motivation and cognitive control. Below, we review the monoaminergic and neural mechanisms associated with negative reinforcement and punishment and present a novel framework (see Fig. 6a) that describes how the motivational context may delineate potential distinct neural pathways through which aversive incentives modulate cognitive control allocation.

Fig. 6.

Aversive Motivation and Cognitive Control. a) Neural mechanisms underlying aversive motivation and cognitive control. This framework considers the motivational context through which aversive incentives may facilitate either behavioral activation or behavioral inhibition. Dissociable monoaminergic mechanisms may underlie these two effort strategies (e.g., DA may promote negative reinforcement, 5-HT may promote punishment). The arrows represent information coding, such that reward-related information is passed along the green arrows to support reinforcement-related behavior. In contrast, aversive-related information is passed along the red arrows to support punishment-related behavior. Additionally, motivational opponency between DA and 5-HT (e.g., mutual inhibition; approach-avoidance motivational conflict) may help understand how “bundled incentives” (e.g., mixed motivation) signals are transmitted to the dorsal raphe nucleus, lateral habenula, and dorsal anterior cingulate cortex to promote divergent strategies for cognitive control allocation. b) Dorsal anterior cingulate cortex (dACC) integrates Expected Value of Control (EVC)-relevant information (e.g., expected positive and negative outcomes) to determine the allocation of cognitive control. Our current framework extends the EVC model from Shenhav et al., 2013 by including mixed motivation (e.g., the dotted rectangle indicates summed value of bundled incentives) to determine the EVC and cognitive control allocation (e.g., how much effort to exert). Thus, the inclusion of multiple diverse types of incentives is crucial for studying these interactions by valence. Specifically, they enable us to precisely quantify the relative influence of aversive incentives (e.g., monetary losses, shocks, saltwater) on the recruitment and allocation of cognitive control in goal-directed tasks.

4.1. Monoaminergic mechanisms of aversive motivation

4.1.1. Dopamine, behavioral activation, and negative reinforcement

It is unequivocal that dopamine (DA) is a key neurotransmitter involved in motivation-cognitive control interactions. Prior work has shown that the enhancement of cognitive performance (e.g., attentional processes, task-switching) by monetary rewards is specifically linked with increased dopamine release in the striatum and prefrontal cortex (Aarts et al., 2011; Braver and Cohen, 2000; Cools, 2008; Schouwenburg et al., 2010; Westbrook and Braver, 2016). However, while there is abundant evidence demonstrating the causal link between dopamine and exerting effort to obtain rewards (Hamid et al., 2016; Salamone, 2009; Walton and Bouret, 2019; Westbrook et al., 2020), there is also extensive literature on dopamine facilitating the avoidance of aversive outcomes (Lloyd and Dayan, 2016; Menegas et al., 2018; Nuland et al., 2020). Notably, although the role of dopamine in active avoidance seems somewhat counterintuitive, one plausible explanation may be that the successful avoidance of an aversive outcome may be intrinsically rewarding and thus drive active defensive strategies that increase effort to continually avoid the aversive outcome (McCullough et al., 1993; Sokolowski et al., 1994).

One compelling hypothesis that may reconcile these seemingly paradoxical results is that dopamine may modulate the reinforcement-related responses associated with motivational incentives (Dayan and Balleine, 2002; Wise, 2004). This idea is consistent with prior research, which has shown that dopamine modulates both positive reinforcement (Heymann et al., 2020; Steinberg et al., 2013, 2014) and negative reinforcement (Gentry et al., 2018; Navratilova et al., 2012; Pignatelli and Bonci, 2015). Others have observed that mesolimbic dopamine is associated with avoidance learning at the neural circuit level (Antunes et al., 2020; Ilango et al., 2012; Stelly et al., 2019; Wenzel et al., 2018), but there is not yet evidence that shows that dopamine modulates negative reinforcement in humans. Critically, validating this putative dopamine-reinforcement relationship in humans would provide an important stepping stone towards clarifying the putative role of dopamine in aversively motivated cognitive control.

4.1.2. Serotonin, behavioral inhibition, and punishment

Serotonin, also known as 5-Hydroxytryptamine (5-HT), has long been linked to aversive processes (Dayan and Huys, 2009; Deakin and Graeff, 1991; Soubrié, 1986), as well as a broad range of behavioral functions, including behavioral suppression, neuroendocrine function, feeding behavior, and aggression (Lucki, 1998). These diverse processes may be largely related to the numerous (at minimum 14) serotonin receptors in the brain (Carhart-Harris, 2018; Carhart-Harris and Nutt, 2017; Cools et al., 2008a; Cowen, 1991; Homberg, 2012), making it challenging to map serotonin’s specific role in motivational and cognitive processing. Prior work has shown that serotonin is linked to reward and punishment processing (Cohen et al., 2015; Hayes and Greenshaw, 2011; Kranz et al., 2010), coordinating defense mechanisms (Deakin and Graeff, 1991; Graeff, 2004), behavioral suppression (Soubrié, 1986), aversive learning (Cools et al., 2008b; Daw et al., 2002; Dayan and Huys, 2008; Ogren, 1982), cognitive flexibility (Clarke et al., 2004, 2005; Matias et al., 2017), impulsivity (Desrochers et al., 2020; Ranade et al., 2014), and motor control (Jacobs and Fornal, 1993; Wei et al., 2014), to name a few.

Perhaps one of the greatest challenges for developing a unified theory of 5-HT’s functional role relates to the observation that different 5-HT pathways mediate distinct adaptive responses to aversive outcomes (Deakin, 2013). For example, 5-HT projections from the dorsal raphe nucleus (DRN) to the amygdala facilitate anticipatory anxiety that can guide an organism away from the threat, whereas 5-HT projections to the periaqueductal gray (PAG) facilitate a reflexive fight/flight mechanism in response to unconditioned proximal threats (e.g., panic). It may initially seem paradoxical that 5-HT is engaged to facilitate both anticipatory anxiety and panic, behavioral responses that appear to be at odds with one another (e.g., anticipatory anxiety should inhibit panic). However, what is abundantly clear is that a functional topography underlies when and how 5-HT is released, and the adaptive behavioral response depends on the spatiotemporal distance of the anticipated or imminent aversive outcome or threat (Paul et al., 2014; Paul and Lowry, 2013).

Despite these neurobiological complexities associated with 5-HT, one promising motivational hypothesis that has gained traction over the years is that serotonin relates to aversive-related behavioral inhibition or punishment (Robinson and Roiser, 2016). Researchers have found evidence for this hypothesis in recent years using acute tryptophan depletion (ATD), a pharmacological challenge that reduces the availability of the essential amino acid and serotonin precursor tryptophan. ATD is hypothesized to selectively target the serotonin system (Fernstrom, 1979; Hood et al., 2005; Young, 2013, though see also Donkelaar et al., 2011). In particular, prior research has demonstrated that serotonin specifically modulates punishment-related behavioral inhibition in humans (Crockett et al., 2009, 2012) and attenuates the influence of aversive Pavlovian cues on instrumental behavior (Geurts et al., 2013b; den Ouden et al., 2015). Together, these human pharmacological studies demonstrate that serotonin plays a central role in punishment by linking Pavlovian-aversive predictions with behavioral inhibition (Crockett and Cools, 2015; Faulkner and Deakin, 2014), suggesting a potential mechanism through which aversive motivation may inhibit effort when allocating cognitive control.

4.1.3. Mutual inhibition between dopamine and serotonin in the dorsal raphé nucleus

The independent roles of dopamine and serotonin in modulating motivational valence and adaptive behavior (Hu, 2016; Rogers, 2011) are consistent with the idea that the motivational opponency between the two systems is what modulates activation responses and higher cognitive functioning (Boureau and Dayan, 2011; Cools et al., 2011; Daw et al., 2002; Samanin and Garattini, 1975). However, empirical studies attempting to validate this hypothesis have met with limited success (Fischer and Ullsperger, 2017; Seymour et al., 2012), although the neural mechanisms through which this mutual inhibition occurs remain an active area of research (Moran et al., 2018).

Recent evidence from the animal literature suggests that the dorsal raphe nucleus (DRN) may play a central role in modulating mutual inhibition between rewarding and aversive processes (Hayashi et al., 2015; Li et al., 2016; Nakamura, 2013; Nakamura et al., 2008). The DRN contains high concentrations of serotonin neurons (Huang et al., 2019; Kirby et al., 2003; Marinelli et al., 2004; Michelsen et al., 2008) as well as dopamine neurons (Cho et al., 2021; Lin et al., 2021; Matthews et al., 2016; Stratford and Wirtshafter, 1990; Yoshimoto and McBride, 1992). Some have shown that serotonergic DRN neurons play a key modulatory role in reward processing (Browne et al., 2019; Liu et al., 2020; Luo et al., 2015; Nagai et al., 2020; Ren et al., 2018), while dopaminergic DRN neurons appear to encode the motivational salience of incentives (Cho et al., 2021). Additionally, serotonergic DRN neurons project to the dopamine-rich ventral tegmental area (VTA) (Chang et al., 2021; Gervais and Rouillard, 2000), revealing its potentially crucial role in providing a more comprehensive understanding of mutual inhibition between DA and 5-HT. Taken together, one possible interpretation of these findings is that DRN may represent the benefits and costs of motivational incentives (Luo et al., 2016), and this signal may be relayed to cortical brain regions (e.g., frontal cortex) to drive behavioral control (Azmitia and Segal, 1978).

4.2. Neural circuit mechanisms of aversive motivation and cognitive control

4.2.1. Lateral habenula and aversive motivational value

The lateral habenula (LHb) has recently gained much attention as a promising candidate brain region involved in processing aversive motivational value (Hu et al., 2020; Lawson et al., 2014; Matsumoto and Hikosaka, 2009) due to its anatomical connections to motivational and emotional brain regions and influences of dopamine and serotonin neurons (Hikosaka et al., 2008). In particular, the LHb has been found to inhibit dopamine neurons (Brown and Shepard, 2016; Hikosaka, 2010; Lammel et al., 2012), but its activity is also suppressed by serotonin neurons (Shabel et al., 2012; Xie et al., 2016). These findings present provocative evidence that LHb serves as a critical functional hub for regulating how monoaminergic systems modulate motivated behavior and affective states (Namboodiri et al., 2016).

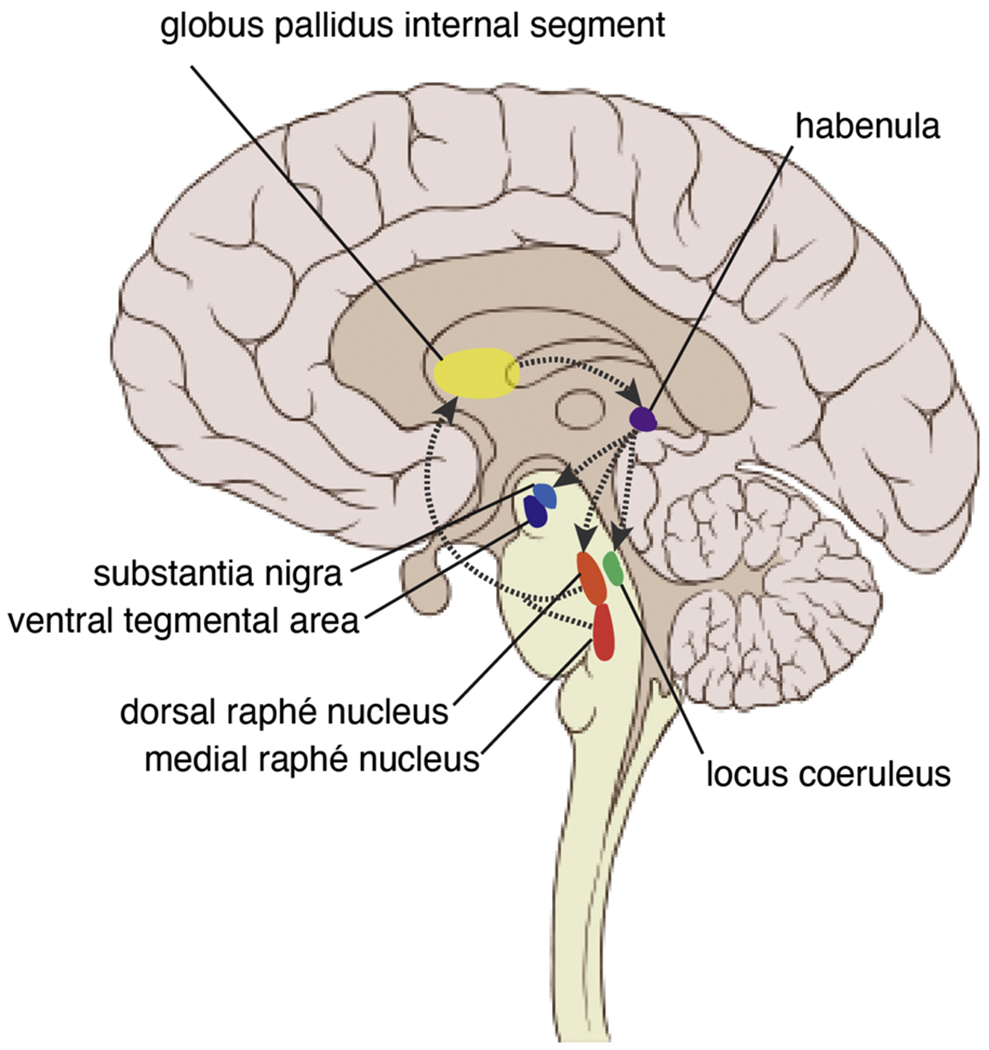

The LHb is part of a larger neural circuit, as illustrated in Fig. 5, and is highly connected to various subcortical brain structures (e.g., the septum, hypothalamus, basal ganglia, globus pallidus) as well as dopamine and serotonin systems (Metzger et al., 2017). Within this putative aversive motivational value neural circuit, the LHb receives afferent projections from the ventral pallidum, globus pallidus internal segment (GPi), and ventral tegmental area (Haber and Knutson, 2010; Hong and Hikosaka, 2008; Root et al., 2014; Wulff et al., 2019). The LHb then sends efferent projections to brainstem nuclei, including dorsal and median raphe nuclei, ventral tegmental area, substantia nigra, and locus coeruleus (Akagi and Powell, 1968; Quina et al., 2015; Sutherland, 1982; Wang and Aghajanian, 1977; Zahm and Root, 2017). Importantly, these connections suggest that the LHb likely serves an important role in regulating dopamine and serotonin (as well as norepinephrine).

Fig. 5.

Lateral Habenula and Aversive Motivational Value. The lateral habenula (LHb) receives excitatory afferent projections from the globus pallidus internal segment (GPi). The GPi is located more laterally but is placed in the same slice for illustration purposes. The LHb sends efferent projections to target the substantia nigra, ventral tegmental area, dorsal and median raphé nuclei, and locus coeruleus, brainstem nuclei with high concentrations of dopamine, serotonin, and norepinephrine. These modulatory signals are mediated by the rostromedial tegmental nucleus (not pictured). Serotonin neurons send inhibitory projections to the GPi that suppress the excitatory projections from GPi to the LHb.

Recent evidence from the animal neuroscience literature lends support to the putative role of LHb in aversive motivational value, as LHb neurons in primates are strongly excited by aversive outcomes (e.g., absence of a liquid reward or presence of an air puff punisher). Interestingly, these “negative reward” signals from the LHb are mediated by the rostromedial tegmental nucleus (RMTg), a brain structure speculated to modulate both reward-related behaviors of DA neurons in the SNc/VTA and aversive-related behaviors of 5-HT neurons in the DRN (Hong et al., 2011; Jhou and Vento, 2019). Others have observed that presentation of aversive stimuli to rodents increased LHb projections to RMTg neurons (Stamatakis and Stuber, 2012) and that stimulation of LHb-RMTg transmission in rodents reduced motivation to exert effort to earn rewards (Proulx et al., 2018). Moreover, recent studies have shown that LHb inactivation alters both choice flexibility and willingness to exert physical effort, demonstrating that this region is likely a key contributor in guiding behavior during mentally and/or physically demanding tasks (Baker et al., 2015; Sevigny et al., 2021). Finally, the LHb both receives direct projections from the dorsal anterior cingulate cortex (dACC; a critical brain region involved in cognitive control described in the next section) (Chiba et al., 2001; U. Kim and Lee, 2012) and indirectly influences dACC by inhibiting the activity of midbrain dopamine neurons that project to dACC (Haber, 2014; Lammel et al., 2012; Williams and Goldman-Rakic, 1995). Thus, it is highly likely that both regions communicate with each other to support the transmission of aversive motivational value, in service of monitoring action outcomes and signaling necessary behavioral adjustments (Baker et al., 2016).
Recent evidence from primates has shown that LHb represents negative outcomes in ongoing trials, while the dACC represents outcome information from past trials and signals behavioral adjustments in subsequent trials (Kawai et al., 2015). Yet, despite these promising results suggesting complementary roles for LHb and dACC in processing aversive outcomes for behavioral control, many open questions remain regarding the nature of how these neural signals jointly interact in the brain.

These studies demonstrate that the habenula plays a prominent role in the neural pathway through which aversive motivation interacts with cognitive control (Baker and Mizumori, 2017; Mizumori and Baker, 2017). However, a significant limitation for investigating the LHb in humans is its relatively small size, which is around 30 mm³ in volume (Boulos et al., 2017; Lawson et al., 2013; Strotmann et al., 2014). While some early fMRI studies suggest that the human habenula is activated for negative outcomes and negative reward prediction errors (Salas et al., 2010; Shepard et al., 2006; Ullsperger and Cramon, 2003), a potential limitation of this early work may be the lack of spatial specificity due to the available MRI methods at the time. Fortunately, more recent developments in 7 T MRI have enabled researchers to define the human habenula and associated functional networks with greater precision (Lawson et al., 2013; Torrisi et al., 2017). Although these high-resolution imaging techniques have demonstrated great promise in providing preliminary evidence in humans that the habenula is activated by aversive stimuli more broadly (Hennigan et al., 2015; Lawson et al., 2014; Shelton et al., 2012; Weidacker et al., 2021), much remains to be elucidated regarding its specific functional role in motivation and cognitive control interactions.

Finally, while we have emphasized the role of LHb in aversive motivational value, an important adjacent brain structure also relevant for aversive processing is the medial habenula (MHb), which some have argued is functionally distinct from the LHb (Namboodiri et al., 2016). Specifically, neuroanatomical studies suggest that the MHb sends afferent projections to the amygdala, a region long implicated in representing Pavlovian conditioned values of threatening or noxious stimuli (Campese et al., 2015; Moscarello and LeDoux, 2013), or conditioned approach and avoidance behavior (Choi et al., 2010; Fernando et al., 2013; Schlund and Cataldo, 2010). Although much less is known about MHb’s impact on aversive motivational processing relative to the LHb, we speculate that one potential critical factor that may contribute to these functional differences is the set of parallel pathways through which DA and 5-HT project from the dorsal and median raphé nuclei to distinct cortical brain regions (Azmitia and Segal, 1978). Moreover, while many open questions remain regarding how these distinct pathways impact aversive processing, future work clarifying the neural circuitry between LHb and MHb may help elucidate the mechanisms by which organisms develop adaptive behavioral responses to aversive motivation.

4.2.2. Dorsal anterior cingulate cortex and the expected value of control

The dorsal anterior cingulate cortex (dACC) has long been implicated in cognitive control (Botvinick et al., 2001; Ridderinkhof et al., 2004; Sheth et al., 2012), as well as various cognitive, motor, and affective functions (Heilbronner and Hayden, 2016; Vassena et al., 2020; Vega et al., 2016), including affect (Braem et al., 2017; Etkin et al., 2011) and emotion-control interactions (Inzlicht et al., 2015; Pessoa, 2008). In recent years, growing evidence suggests that dACC plays a central role in modulating the interaction between motivation and cognitive control (Botvinick and Braver, 2015; Parro et al., 2018). However, despite dACC’s indisputable role in motivation/affect and cognitive control, surprisingly few studies have investigated aversive motivation and cognitive control in the brain (Cubillo et al., 2019). This provides a unique challenge and opportunity to develop a greater mechanistic understanding of exactly how aversive motivational value is transmitted to dACC to guide cognitive control (Yee and Braver, 2018).

As mentioned at the beginning of this review, the Expected Value of Control (EVC) model is a promising framework for addressing this crucial gap in the literature. In particular, the EVC model, which attempts to integrate these broad neuroscientific findings, posits that dACC serves as a central hub that integrates motivational values to modulate cognitive control (Shenhav et al., 2013, 2016). Recent evidence from the animal literature is consistent with the EVC account, as some studies have shown how rodent medial prefrontal cortex (one putative rodent analog of human dACC; but see Heukelum et al., 2020; Vogt et al., 2013) plays a central role in integrating rewarding and aversive motivational incentives to modulate effort and attention (Hosking et al., 2014; Schneider et al., 2020). Moreover, as illustrated in Fig. 6a, incorporating the motivational context through which these incentives operate may help clarify how aversive incentives promote dissociable strategies for cognitive control allocation (e.g., DA may promote behavioral activation/negative reinforcement while 5-HT may promote behavioral inhibition/punishment). Although these neural pathways are still somewhat speculative and not yet validated in humans, future research combining innovative experimental tasks with high-resolution MRI or deep-brain stimulation could help fill this crucial gap in the literature (Boulos et al., 2017; Lawson et al., 2013).

Additionally, an important core assumption of the EVC model is that dACC “bundles” expected positive and negative outcomes into a net motivational value (e.g., mixed motivation) that modulates cognitive control signals in the brain (see Fig. 6b). Recent work from a human fMRI study provides evidence in support of dACC’s role in value integration and cognitive control (Yee et al., 2021). In particular, we used the incentive integration and cognitive control task (see Fig. 4a) to explicitly test the hypothesis that bundled neural signals in dACC encoded the motivational value of monetary and liquid incentives in terms of their influence on cognitive performance and self-reported motivation ratings. In other words, dACC selectively encoded the integrated subjective motivational value of bundled incentives, and more importantly, the bundled neural signal was associated with motivated task performance (e.g., juice + monetary rewards increased dACC signals and boosted performance, whereas saltwater + monetary rewards decreased dACC signals and impaired performance). However, while these current results lend support for how mixed motivation may modulate cognitive control in an instrumental manner, it remains unknown how this integrated value signal may differentially impact cognitive control when incentives are conditioned in a Pavlovian or even combined (e.g., Pavlovian-Instrumental Transfer) manner.
Future studies could explicitly examine the degree to which dACC activity reflects the integrated motivational value of different combinations of motivational incentives on cognitive control processes (e.g., does receiving monetary loss + saltwater as performance feedback elicit lower activation relative to monetary loss + juice as performance feedback?), or alternatively consider how the motivational context of incentives modulates cognitive control allocation (e.g., are there dissociable dACC neural signals underlying whether aversive motivation elicits negative reinforcement vs. punishment behavior?).

5. Computational mechanisms of aversive motivation and cognitive control

5.1. Dissociable influences of reinforcement and punishment on cognitive control allocation

In this section, we highlight recent theoretical work demonstrating how the inclusion of aversive motivational incentives enables us to reconceptualize cognitive control allocation, not as a one-dimensional problem, in which motivation monotonically influences cognitive control (e.g., higher or lower effort allocation), but instead as a multidimensional one. For example, both the amount of effort (i.e., how much) and the type of effort strategy (i.e., what kind) used for allocating cognitive control must be computed. Specifically, we describe an instantiation of the EVC model that offers an account of 1) how mixed motivation may influence the interaction of motivation and cognitive control, and 2) how the motivational context of aversive incentives can elicit dissociable effort strategies for cognitive control allocation. Notably, while the motivation to avoid negative outcomes might engage control processes during mentally challenging tasks, the context of how that outcome can be avoided may drive different kinds of control signals. For example, whereas the motivation to avoid or escape from expected negative outcomes may boost effort allocation on a mentally challenging task (e.g., negative reinforcement) via increasing attentional control, the motivation to avoid being penalized with negative outcomes may instead reduce effort allocation on a mentally challenging task (e.g., punishment) through increased response caution. This example clearly illustrates how the motivational context through which aversive motivation facilitates behavioral activation (Evans et al., 2019) or behavioral inhibition (Verharen et al., 2019) has significant implications for understanding how aversive incentives might drive divergent effort strategies for cognitive control allocation.

Recent theoretical work has demonstrated how these different forms of control adjustment (e.g., attentional control vs. response caution) can be formalized within models of evidence accumulation (Danielmeier et al., 2011; Danielmeier and Ullsperger, 2011; Ritz et al., 2021). In particular, the drift diffusion model (DDM) provides a useful framework for explicitly quantifying how different types of incentives (e.g., reward, penalty) can guide distinct adjustments in cognitive control allocation (Ratcliff et al., 2016; Ratcliff and McKoon, 2008). Moreover, normative models have been developed that incorporate such DDM parameters into an objective function which putatively accounts for how individuals optimally vary the intensity of their physical or mental effort to maximize their expected reward rate (Bogacz et al., 2006; Niv et al., 2007). However, an important gap in this theoretical research relates to characterizing the degree to which various motivational incentives might modulate similar or dissociable strategies for mental effort allocation.

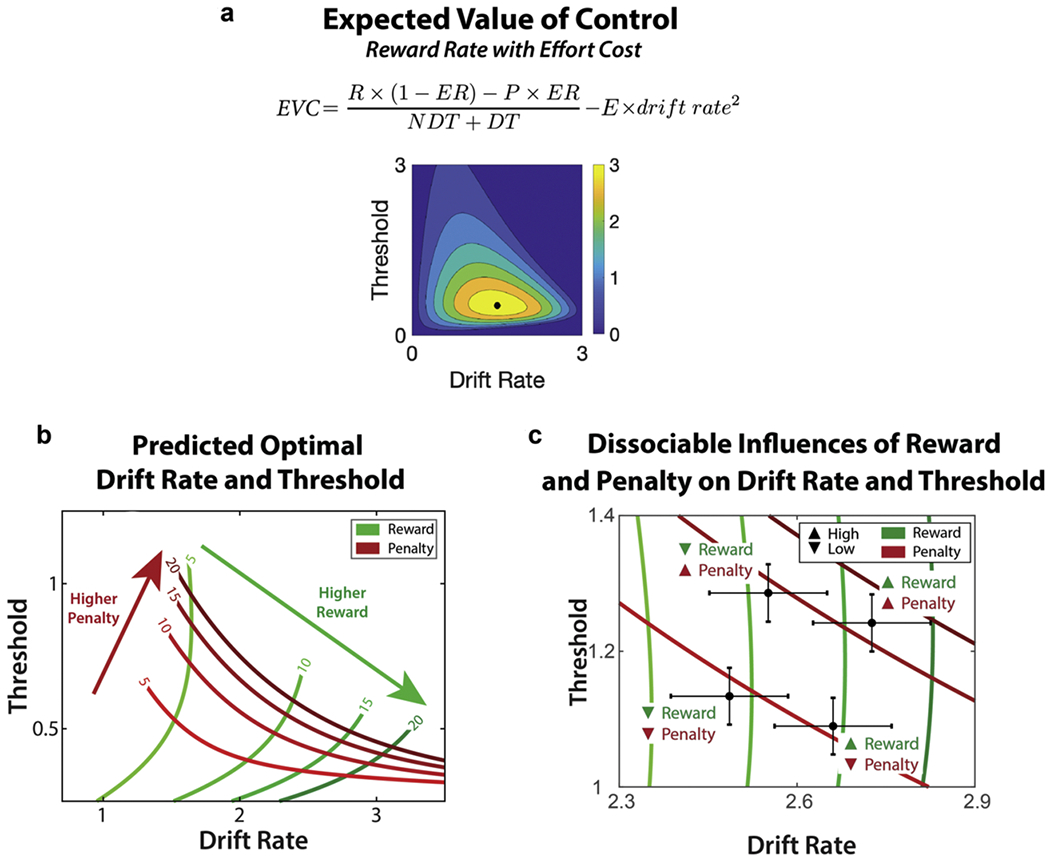

The following implementation of the Expected Value of Control (EVC) model extends the existing reward rate framework to describe how individuals determine the appropriate amount of cognitive control to allocate in a given situation. A core assumption of the model is that individuals will allocate the amount and type of cognitive control that maximizes their expected reward rate while simultaneously minimizing the effort costs associated with exerting cognitive control (Lieder et al., 2018; Musslick et al., 2015). The difference between these two quantities, referred to as the Expected Value of Control (EVC; See Eq. 1), indexes the extent to which benefits outweigh the costs (Shenhav et al., 2013, 2017). The EVC model predicts that an individual will adjust control allocation based upon the expected benefits (e.g., the net value of monetary rewards and monetary losses earned for exerting control) and the expected costs (i.e., the mental effort required to exert control).

$$\mathrm{EVC} = \mathrm{Reward\ Rate} - \mathrm{Cost} \tag{1}$$

In order to maximize EVC, cognitive control can be adjusted to modify specific parameters of the DDM, which govern how incentives may influence predicted behavior. For example, increased attentional control from expected reinforcers may correspond to the rate of evidence accumulation (e.g., drift rate), whereas increased response caution from expected punishers may correspond to the response threshold. Importantly, changes in drift rate and threshold may predict distinct patterns of changes in response time RT (which is a combination of both decision-related and decision-unrelated factors, i.e., decision time [DT] and non-decision time [NDT]) and error likelihood. For example, increases in drift rate result in faster RT and decreased likelihood of error responses, whereas increases in threshold result in slower RT and increased accuracy (Bogacz et al., 2006). As described in Eq. 2, Reward Rate can be estimated as a function of resulting performance (e.g., error rate ER and response time RT), as well as the reinforcement for a correct response R and punishment for an incorrect response P. Critically, by integrating the influence of multiple incentives, this formulation accounts for contexts involving mixed motivation and thus has the potential to provide a more comprehensive picture of the explicit ways through which diverse forms of motivation can influence different strategies for allocating cognitive control.

$$\mathrm{Reward\ Rate} = \frac{R \times (1 - ER) - P \times ER}{RT} \tag{2}$$
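To make the link between DDM parameters and reward rate concrete, the following Python sketch uses the standard closed-form expressions for the error rate and mean decision time of an unbiased DDM (Bogacz et al., 2006). It is an illustration under stated assumptions rather than the implementation used in the work reviewed here: the function names, the unit noise level, the 0.3 s non-decision time, and the assumed reward rate form (R × (1 − ER) − P × ER) / RT are all hypothetical choices.

```python
import math


def ddm_performance(drift, threshold, noise=1.0, ndt=0.3):
    """Return (error rate, mean RT) for an unbiased two-boundary DDM.

    Boundaries sit at +/- threshold and evidence starts at 0.
    noise and ndt (non-decision time, in seconds) are illustrative defaults.
    """
    k = drift * threshold / noise ** 2
    error_rate = 1.0 / (1.0 + math.exp(2.0 * k))
    decision_time = (threshold / drift) * math.tanh(k)
    return error_rate, decision_time + ndt  # RT = DT + NDT


def reward_rate(drift, threshold, R=1.0, P=0.0, noise=1.0, ndt=0.3):
    """Assumed reward rate: net expected payoff per unit response time."""
    er, rt = ddm_performance(drift, threshold, noise, ndt)
    return (R * (1.0 - er) - P * er) / rt
```

Under these assumptions, raising the drift rate lowers both ER and RT, whereas raising the threshold lowers ER at the expense of a slower RT; adding a punishment P for errors lowers the reward rate for any fixed pair of settings.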

An exciting feature of this normative account is the ability to explicitly stipulate distinct parameters for positive reinforcement and negative reinforcement. For instance, in scenarios where accurate responses lead to obtaining rewarding incentives, this formulation can be used to explicitly estimate the effects of positive reinforcement [RPos] on reward rate. Conversely, in scenarios where accurate responses lead to successful avoidance of aversive incentives, the equation can instead account for the effects of negative reinforcement [RNeg] on reward rate, which may potentially be distinct from how positive reinforcement may modulate drift rate and threshold parameters during reward rate optimization. This distinction allows us to delineate the motivational context of whether an aversive incentive should be treated as negative reinforcement or punishment. Importantly, this formulation dictates divergent predictions for how an aversive incentive may modulate the intensity of mental effort allocated in a given cognitive control task based upon this motivational context. Moreover, the model has the potential to elucidate the degree to which negative reinforcement may produce similar patterns as positive reinforcement effects versus punishment effects on cognitive control allocation.

The other key component in the EVC model is the Cost of cognitive control, which refers to the aversiveness of the mental effort required to exert cognitive control and successfully perform the task (Kool and Botvinick, 2018; Shenhav et al., 2017). This cost is assumed to be a monotonic but likely non-linear function (e.g., quadratic) of the intensity of control being allocated (Massar et al., 2020; Petitet et al., 2021; Soutschek and Tobler, 2020; Vogel et al., 2020). Because the model assumes that it is optimal to maximize drift rate, the drift rate would not be constrained without a cost function. Thus, the inclusion of a cost function represented as a squared function of the drift rate, scaled by parameter E, allows for a more constrained set of parameter values for drift rate and threshold for reward rate maximization (Leng et al., 2020; shown in Eq. 3). For additional discussion about the potential forms and source of this cost function, see Kool and Botvinick (2018), Ritz et al. (2021), and Westbrook and Braver (2015). Integrating across considerations of expected reward rates and effort costs, the model can estimate the EVC of each possible combination of drift rate and threshold (shown as a heatmap in Fig. 7a) and then determine the settings of these control signals that are optimal (i.e., that maximize EVC).
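The optimization described above can be sketched as a simple grid search. This is a minimal illustration, not the authors' code: it assumes an unbiased DDM with the closed-form error rate and decision time from Bogacz et al. (2006), a reward rate of the form (R × (1 − ER) − P × ER) / RT, and a quadratic cost E × drift². The grid ranges, the value E = 0.05, the noise level, and the non-decision time are hypothetical values chosen only for demonstration.

```python
import math


def evc(drift, threshold, R, P, effort_cost=0.05, noise=1.0, ndt=0.3):
    """Expected Value of Control = reward rate - cost (illustrative form)."""
    k = drift * threshold / noise ** 2
    er = 1.0 / (1.0 + math.exp(2.0 * k))             # error rate
    rt = (threshold / drift) * math.tanh(k) + ndt    # RT = DT + NDT
    rr = (R * (1.0 - er) - P * er) / rt              # reward rate
    return rr - effort_cost * drift ** 2             # quadratic cost on drift


def optimal_control(R, P, **kwargs):
    """Grid-search the (drift, threshold) pair that maximizes EVC."""
    drifts = [0.05 * i for i in range(1, 121)]       # 0.05 ... 6.0
    thresholds = [0.05 * j for j in range(1, 21)]    # 0.05 ... 1.0
    grid = ((v, a) for v in drifts for a in thresholds)
    return max(grid, key=lambda va: evc(va[0], va[1], R, P, **kwargs))


if __name__ == "__main__":
    for label, R, P in [("baseline", 1.0, 0.0),
                        ("more reinforcement", 2.0, 0.0),
                        ("added punishment", 1.0, 1.0)]:
        v, a = optimal_control(R, P)
        print(f"{label}: drift={v:.2f}, threshold={a:.2f}")
```

With these illustrative values, increasing the reinforcement R should shift the optimum toward a higher drift rate, whereas adding a punishment P should shift it toward a higher threshold, in line with the distinction between attentional control and response caution drawn in the text. Whether this pattern holds for other parameter settings is not guaranteed by the sketch.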

Fig. 7.