Abstract

Objective:

To evaluate the feasibility and potential efficacy of a technology-assisted education program in teaching adults at high risk of opioid overdose about opioids, opioid overdose, and medications for opioid use disorder.

Method:

A within-subject, repeated-measures design was used to evaluate the effects of a novel technology-assisted education program. Participants (N=40) were out-of-treatment adults with opioid use disorder, recruited in Baltimore, Maryland, from May 2019 through January 2020. The self-paced education program contained three courses, each of which presented information and required participants to answer multiple-choice questions. The program was evaluated with a 50-item test administered before the program began and again after each course was completed. Each test was divided into three subtests, each containing questions from one course. We measured accuracy on each subtest before and after completion of each course and used piecewise mixed-effects models to analyze changes in accuracy across tests.

Results:

The technology-assisted education program required a median of 91 min of activity to complete, and most participants completed it in a single day. Accuracy on each subtest increased only after completion of the corresponding course, and these increases were significant (p<.001) for all subtests. Accuracy on each subtest did not change significantly before completion of the relevant course, and gains in accuracy were retained across subsequent tests. Learning was similar regardless of participant education, employment, and poverty status.

Conclusions:

Technology-assisted education programs can provide at-risk adults with access to effective education on opioids, opioid overdose, and opioid use disorder medications.

Keywords: health education, opioids, out-of-treatment adults, overdose prevention, technology-assisted education

Introduction

Drug overdose remains a major contributor to accidental deaths in the United States (Hedegaard et al., 2020), and among some populations, such as out-of-treatment adults with opioid use disorder, the risk of death from opioid overdose is disproportionately high (Sordo et al., 2017). Prior research has shown that in-person health education can be an effective strategy to reduce opioid overdose deaths (Walley et al., 2013), but logistical and financial constraints can make in-person education impractical (Davis et al., 2013; Hewlett & Weemeling, 2013; Gupta et al., 2016; Winstanley et al., 2016).

The application of technology to administer health education has emerged as a promising way to navigate these barriers. Technology-assisted education programs can be beneficial because they can be disseminated with high fidelity across patients, completed at an individualized pace (e.g., Pollard et al., 2014), delivered more cost-effectively than some therapist-delivered interventions (Henny et al., 2018), and provided to individuals in underserved or difficult-to-reach communities (Masson et al., 2019). Prior research suggests that technology-assisted health education can produce efficient learning with high ratings of patient acceptability compared with written instruction (Dunn et al., 2017) or education delivered by therapists (Marsch & Bickel, 2004). Technology-assisted education can also be designed to incorporate principles of effective instruction (Barrett et al., 1991), such as repeated presentation of critical information with opportunities for overt responses (Silverman et al., 1990), frequent opportunities for feedback (Prue & Fairbank, 1981), and performance-based incentives (Koffarnus et al., 2013), thereby eliminating the need to train instructors and staff in these procedures.

Technology-assisted health education has been explored using computer-based applications to provide instruction about opioids and techniques to prevent opioid overdose to people using illicit opioids (Dunn et al., 2016), people undergoing opioid detoxification (Dunn et al., 2017), and people who reported using opioids for pain management (Huhn et al., 2018). Results from these studies indicate that technology-assisted opioid education can be effective and socially acceptable. Technology-assisted health education could, therefore, be expanded to include education on medications that treat opioid use disorder and leveraged to educate out-of-treatment adults who are at an increased risk of opioid overdose. There are several medications for opioid use disorder (i.e., methadone, buprenorphine, and naltrexone) that can be effective at reducing illicit opioid use and related mortality (Jarvis et al., 2018; Larochelle et al., 2018; Sordo et al., 2017; Wakeman et al., 2020). However, these medications remain underutilized for several potential reasons, including misperceptions and lack of knowledge about the efficacy and safety of these medications (Hay et al., 2019; Olsen & Sharfstein, 2014).

The focus of the present study was to evaluate the feasibility and potential efficacy of a technology-assisted education program to teach out-of-treatment adults with opioid use disorder about opioids; preventing, detecting, and responding to an opioid overdose; and FDA-approved medications used in the treatment of opioid use disorder. A within-subject, repeated-measures design was used to evaluate effects of the program on overall learning and performance across different course content areas. This study focused on out-of-treatment adults with opioid use disorder because they are at high risk for opioid overdose and could benefit from effective interventions to reduce their opioid overdose risk.

Method

Participants and Setting

Participants in this education program were 40 out-of-treatment adults with opioid use disorder who had been enrolled in a randomized controlled trial (Clinical Trial Number: NCT03677986). An additional participant who was enrolled in the trial and completed the education program was excluded from the present analysis because results from two tests were lost. After completing the technology-assisted education program, participants continued into the main trial. Inclusion criteria for the main trial required that an individual was at least 18 years old; met DSM-5 criteria for opioid use disorder; provided an opioid-positive urine sample, as judged by a urinalysis conducted by FRIENDS Medical Laboratory, Inc. in Baltimore, MD (FRIENDS Medical Laboratory, Inc., 2020); reported not receiving any type of drug abuse treatment in the past 30 days; and was interested in receiving buprenorphine treatment. Participants were excluded from the trial if they had current suicidal or homicidal ideation; were pregnant or nursing; or were unwilling or unable to use their own smartphone for the trial. Participants in this study were recruited between May 2019 and January 2020 through community agencies that served the target population and a referral system in which trial participants were paid for successfully referring others to the trial.

The education program was administered via computer at individual workstations at the Center for Learning and Health, a research-treatment unit located on the Johns Hopkins Bayview Medical Center Campus (Baltimore, MD). Each workstation had a chair, desk, computer, monitor, mouse, and keyboard (see Silverman et al., 2007 for a detailed description of the Center for Learning and Health setting and procedures). All procedures were approved by the Johns Hopkins University School of Medicine Institutional Review Board, and all participants provided written informed consent.

Technology-Assisted Education Program

Participants were invited to take part in a technology-assisted education program designed to teach them about opioids; preventing, detecting, and responding to an opioid overdose; and FDA-approved medications for opioid use disorder. The education program was created and delivered using ATTAIN, our computer-based training, authoring, and course presentation system. ATTAIN is customizable software that allows instructional designers to develop courses with multimedia stimuli without computer programming. The software supports multiple-choice and fill-in-the-blank questions, immediate feedback on responses to those questions, random and repeated presentation of questions, and continued presentation of questions until the participant meets a criterion based on speed or accuracy of responding. The system also integrates the delivery of financial incentives based on participant performance, which can motivate engagement and progression through training. These features have been applied successfully to teach use of the computer keyboard and numeric keypad to low-income, unemployed adults (Koffarnus et al., 2013), information about HIV and PrEP to people with substance use disorders (Getty et al., 2018), and information about HIV and HIV treatment to people living with HIV (Subramaniam et al., 2019).

Courses

The opioid education program was based on materials made available to the public by the Centers for Disease Control and Prevention (CDC; CDC, 2020) and the Substance Abuse and Mental Health Services Administration (SAMHSA; SAMHSA, 2018, 2020). The program was divided into three courses, each intended to describe one general content area in a direct and concise manner. Course 1 provided an introduction to opioids: it defined the term “opioids,” gave examples and non-examples of opioids, and described opioid tolerance and withdrawal (see Supplementary Materials A–C). Course 2 provided information about opioid overdose risks and symptoms and described strategies for identifying and responding effectively to an opioid overdose (see Supplementary Materials D–F). Course 3 taught participants the names, forms, and functions of the three FDA-approved medications used to treat opioid use disorder (i.e., methadone, buprenorphine, and naltrexone; see Supplementary Materials G–L).

The opioid education program consisted of 12 Modules (3 in Course 1, 3 in Course 2, and 6 in Course 3) administered in a fixed sequence, such that each participant completed Course 1 before Course 2, and Course 2 before Course 3. To increase accessibility, all text presented in a Module was accompanied by an audio recording that played automatically and could be replayed.

Each Module consisted of Presentation and Mastery Units. Presentation Units were designed to introduce new material. To advance through slides in Presentation Units, participants were required to read or listen to the presented information and answer multiple-choice questions. For each multiple-choice question, the question appeared in the upper part of the screen, and two to four answer choices were distributed horizontally across the bottom part of the screen. The answer choices were presented in evenly spaced boxes of equal size, with the text of each choice centered in its box. Each question had exactly one correct answer; all other choices were incorrect. Participants responded by clicking a choice box with the mouse. A correct answer changed the border of the selected choice to green, displayed the feedback statement “Correct” for 1 second, added $0.05 to the participant’s earnings, and advanced the slide. An incorrect answer changed the border of the selected choice to red, displayed the feedback statement “X,” earned no money, shuffled the locations of the correct and incorrect choices, and required the participant to answer again. A correct answer that followed an incorrect answer was treated like any other correct answer: it produced money and feedback and advanced the slide as described above. On completing each Presentation Unit, the participant was awarded a $2.00 bonus, which served as an incentive for progressing through the program.
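The contingencies for Presentation Unit questions can be summarized as a short loop. The following Python sketch is a hypothetical illustration, not ATTAIN's code: the `Question` type, the `answer` callback standing in for a participant's mouse click, and the bookkeeping in integer cents are all assumptions. Visual feedback (green or red border, “Correct” or “X”) is noted only in comments.

```python
import random
from dataclasses import dataclass
from typing import Callable, List

CENTS_PER_CORRECT = 5    # $0.05 per correct answer
UNIT_BONUS_CENTS = 200   # $2.00 bonus for completing a Presentation Unit


@dataclass
class Question:
    prompt: str
    choices: List[str]   # two to four answer choices
    correct: str         # exactly one choice is correct


def run_presentation_unit(questions: List[Question],
                          answer: Callable[[str, List[str]], str],
                          rng: random.Random) -> int:
    """Return the cents earned in one hypothetical Presentation Unit."""
    earned = 0
    for q in questions:
        layout = list(q.choices)
        while True:
            choice = answer(q.prompt, layout)
            if choice == q.correct:
                # Green border + "Correct"; pays even after earlier errors; advance.
                earned += CENTS_PER_CORRECT
                break
            # Red border + "X"; no money; reshuffle positions and ask again.
            rng.shuffle(layout)
    # Completing the unit earns the progression bonus.
    return earned + UNIT_BONUS_CENTS
```

With two questions, each eventually answered correctly, the unit pays 2 × 5 + 200 = 210 cents regardless of how many errors preceded each correct answer, mirroring the rule that a correct answer after an error is treated like any other correct answer.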

After completing each Presentation Unit, the participant was exposed to a Mastery Unit, which allowed participants to practice answering questions about that material. In Mastery Units, questions from the previous Presentation Unit were presented again in a random order for 1 minute. The location of correct and incorrect choices (e.g., left, middle, or right for questions that included three choices) was shuffled each time a question was presented. During the minute, participants could answer multiple-choice questions repeatedly. Each correct answer produced feedback as described above, added $0.05 to the participant’s earnings, and advanced the slide to the next question. Incorrect answers produced feedback as described above, did not produce money, shuffled the location of correct and incorrect choices, and required the participant to answer again. After completing the Mastery Unit, the participant was shown the number of correct and incorrect answers and the amount of money that they earned. At this time, the Module was complete and the participant advanced to the next Module or test.
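A Mastery Unit adds a 1-minute time limit and random re-presentation of the unit's questions. In this hypothetical sketch (again not ATTAIN's implementation), `respond` returns the selected choice and the seconds the response took, questions are re-presented by reshuffling the unit's question list whenever it is exhausted, and a response that would end after the minute expires is assumed not to count.

```python
import random
from typing import Callable, Dict, List, Tuple

CENTS_PER_CORRECT = 5   # $0.05 per correct answer
TIME_LIMIT_S = 60.0     # Mastery Units lasted 1 minute


def run_mastery_unit(correct_answers: Dict[str, str],
                     choices: Dict[str, List[str]],
                     respond: Callable[[str, List[str]], Tuple[str, float]],
                     rng: random.Random) -> Tuple[int, int, int]:
    """Return (n_correct, n_incorrect, cents) for one hypothetical Mastery Unit."""
    n_correct = n_incorrect = 0
    elapsed = 0.0
    queue: List[str] = []
    while True:
        if not queue:
            # Re-present the unit's questions in a fresh random order.
            queue = list(correct_answers)
            rng.shuffle(queue)
        prompt = queue.pop()
        layout = list(choices[prompt])
        rng.shuffle(layout)  # choice positions shuffled on every presentation
        while True:
            choice, seconds = respond(prompt, layout)
            if elapsed + seconds > TIME_LIMIT_S:
                # Assumed rule: a response finishing after the minute is not scored.
                return n_correct, n_incorrect, n_correct * CENTS_PER_CORRECT
            elapsed += seconds
            if choice == correct_answers[prompt]:
                n_correct += 1
                break  # advance to the next question
            n_incorrect += 1
            rng.shuffle(layout)  # reshuffle and require another answer
```

The returned tuple corresponds to the summary shown at the end of the unit (numbers of correct and incorrect answers and money earned). A participant answering correctly every 2 seconds, for instance, would complete 30 questions and earn 150 cents in the minute.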

Testing

A 50-item test was developed to assess knowledge prior to and following training. All items on the test were multiple-choice questions that were taken directly from the Modules of each education course. Participants earned $0.05 for each correct answer; however, no feedback was provided until the test was completed. After each test, participants were told the number of answers that were correct and the amount of money that was earned.

Experimental Design

We used a multiple-probe design to evaluate learning in the education program (Horner & Baer, 1978). Each participant completed the 50-item test described above four times (Test 1, Test 2, Test 3, and Test 4): once before beginning the education program and again after completing each of the three courses. Each test consisted of three subtests, each containing questions from one of the courses. Test items 1–16 (i.e., Subtest 1) corresponded to Course 1; items 17–32 (i.e., Subtest 2) corresponded to Course 2; and items 33–50 (i.e., Subtest 3) corresponded to Course 3. These four tests allowed us to measure participant performance before and after each course and to evaluate the effects of each course on performance on each subtest. All participants completed every test.
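Scoring a test into subtests requires only the item ranges given above. The sketch below assumes a hypothetical input format, a list of 50 booleans (one per item, in test order), and returns percent correct per subtest and overall.

```python
from typing import Dict, Sequence

# Item ranges from the text (1-based, inclusive ends expressed as range stops).
SUBTEST_ITEMS = {
    "Subtest 1": range(1, 17),   # items 1-16, Course 1
    "Subtest 2": range(17, 33),  # items 17-32, Course 2
    "Subtest 3": range(33, 51),  # items 33-50, Course 3
}


def score_test(correct: Sequence[bool]) -> Dict[str, float]:
    """Percent correct on each subtest and overall for one 50-item test."""
    if len(correct) != 50:
        raise ValueError("expected results for exactly 50 items")
    scores = {
        name: 100.0 * sum(correct[i - 1] for i in items) / len(items)
        for name, items in SUBTEST_ITEMS.items()
    }
    scores["Overall"] = 100.0 * sum(correct) / len(correct)
    return scores
```

Because the subtests differ in length (16, 16, and 18 items), the overall score is an item-weighted average of the subtest scores rather than their simple mean.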

We hypothesized that scores on each subtest would increase in the test administered immediately after completion of the relevant course (i.e., the learning period). Additionally, we expected that scores on each subtest would be maintained in the test administrations following the learning period (for Course 1 and Course 2) and would not improve in the tests administered before exposure to the relevant course (for Course 2 and Course 3). For example, we expected that scores on Subtest 2 would be lower in Test 1 and Test 2 (administered before Course 2) than in Test 3 and Test 4 (administered after Course 2). Furthermore, we expected that scores on Subtest 2 would not improve from Test 1 to Test 2 and would be maintained from Test 3 to Test 4.

Statistical Analysis

The primary goal of this study was to evaluate learning in the education program. The main dependent variable was percent correct on the subtest related to each course. Accuracy on Subtests 1, 2, and 3 was entered into three separate piecewise mixed-effects models (for Courses 1, 2, and 3, respectively), each with a random intercept for participant. Each piecewise model estimated separate parameters describing the change in accuracy from the test immediately before to the test immediately after the relevant course, whether accuracy was maintained across subsequent tests, and whether accuracy was stable across the tests taken before the relevant course. For Subtest 1, the model estimated improvement in accuracy during the learning period (Test 1 to Test 2) and whether accuracy was maintained after the learning period (Test 2 through Test 4). For Subtest 2, the model estimated the stability of accuracy before the learning period (Test 1 to Test 2), improvement during the learning period (Test 2 to Test 3), and whether accuracy was maintained after the learning period (Test 3 to Test 4). For Subtest 3, the model estimated the stability of accuracy before the learning period (Test 1 through Test 3) and improvement during the learning period (Test 3 to Test 4).
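One way to encode these piecewise segments is with segment-specific time codes, where each code counts the test-to-test steps completed within one segment, so each segment's coefficient is directly the change in percent correct per test step. This is a sketch under an assumed parameterization; the exact coding of the `time`, `time1`, and `time2` terms in the authors' models is not described here, and the function names are hypothetical.

```python
from typing import Dict, List, Sequence


def piecewise_codes(test: int, knots: Sequence[int],
                    first: int = 1, last: int = 4) -> List[int]:
    """Segment-specific time codes for a 1-based test number.

    Each code counts the steps taken so far within one segment, capped at
    the segment's length, so a segment's coefficient is its slope.
    """
    edges = [first, *knots, last]
    return [min(max(test - lo, 0), hi - lo) for lo, hi in zip(edges[:-1], edges[1:])]


# Segment boundaries implied by the text (tests bracketing each course).
KNOTS: Dict[str, List[int]] = {
    "Subtest 1": [2],     # learning (Test 1-2), maintenance (Test 2-4)
    "Subtest 2": [2, 3],  # baseline (1-2), learning (2-3), maintenance (3-4)
    "Subtest 3": [3],     # baseline (1-3), learning (3-4)
}


def predicted_change(subtest: str, slopes: Sequence[float], test: int) -> float:
    """Model-implied change in percent correct from Test 1 to `test`."""
    codes = piecewise_codes(test, KNOTS[subtest])
    return sum(b * c for b, c in zip(slopes, codes))
```

Under this coding, the Subtest 2 slopes in Table 2 (2.03, 5.78, −0.63) imply a change of about 7.18 percentage points from Test 1 to Test 4. Long-format rows carrying these codes and a participant identifier could then be fit with a random-intercept routine such as statsmodels' `mixedlm` (with `groups` set to the participant), though that is an illustration, not the authors' stated software.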

The piecewise mixed-effects model permitted analyses of covariates and their interaction with performance related to each course. The model was adjusted to include education (< 12th grade vs ≥ 12th grade), employment (normally unemployed vs normally employed), and poverty (reported income was above vs below the poverty level of $1063/month; U.S. Department of Health and Human Services, 2020) as dichotomous covariates. For all covariates, we evaluated the interaction between those variables and changes in accuracy following each course. This interaction analysis allowed us to determine which factors, if any, were related to learning in the education program.
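The covariate coding and the interaction logic can be sketched as follows. The 0/1 coding (with the first-listed category of each covariate as the reference), the `>=` boundary choices, and the function names are assumptions for illustration. Under this coding, the learning-period slope for the reference group is the learning coefficient, and the slope for the other group adds the learning-by-covariate interaction, so the interaction equals the between-group difference in learning.

```python
from typing import Dict, Tuple

POVERTY_LINE_MONTHLY = 1063.0  # USD per month, the threshold stated in the text


def code_covariates(years_education: int, normally_employed: bool,
                    monthly_income: float) -> Dict[str, int]:
    """Hypothetical dichotomous coding; 0 marks the reference category."""
    return {
        "education": int(years_education >= 12),                   # 1 = >=12th grade
        "employment": int(normally_employed),                      # 1 = normally employed
        "poverty": int(monthly_income >= POVERTY_LINE_MONTHLY),    # 1 = above poverty level
    }


def group_learning_slopes(beta_learning: float,
                          beta_interaction: float) -> Tuple[float, float]:
    """Learning-period slopes for covariate = 0 and covariate = 1."""
    return beta_learning, beta_learning + beta_interaction
```

The Subtest 1 education entries in Table 4 are consistent with this reading: a reference-group slope of 6.91 plus a learning comparison of 3.00 gives the 9.91 reported for participants with at least 12 years of education.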

As a secondary evaluation, we assessed the relation between the covariates described above and baseline knowledge of opioids; preventing, detecting, and responding to an opioid overdose; and FDA-approved medications used to treat opioid use disorder. Accuracy on the first administration of the 50-item test (Test 1, collapsing across subtests) represented baseline knowledge on opioids, overdose, and medications. Because all covariates were dichotomous, relations between accuracy on Test 1 and participant characteristics were evaluated with independent-samples t-tests.
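A minimal sketch of an independent-samples t statistic follows, assuming Student's pooled-variance form; the text does not state whether a pooled or Welch test was used, and the function name is hypothetical.

```python
import math
from statistics import mean, variance
from typing import Sequence, Tuple


def pooled_t_test(a: Sequence[float], b: Sequence[float]) -> Tuple[float, int]:
    """Student's independent-samples t statistic (equal variances) and its df."""
    n1, n2 = len(a), len(b)
    df = n1 + n2 - 2
    # Pool the two sample variances, weighting each by its degrees of freedom.
    pooled_var = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean(a) - mean(b)) / se, df
```

For the education comparison (n=11 vs n=29), df = 11 + 29 − 2 = 38, matching the t38 values in Table 3, which is consistent with (but does not confirm) a pooled-variance test. SciPy's `scipy.stats.ttest_ind(a, b)`, which assumes equal variances by default, returns the same statistic together with a two-sided p-value.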

Results

Participant Characteristics

Table 1 shows participant characteristics at study intake. The median (IQR) age of participants was 46 (40–57) years, and participants had a median (IQR) of 14 (4–20) years of prior heroin use. Most participants reported being male (77%), Black (63%), normally unemployed (60%), and living below the poverty level (85%), and having completed at least 12 years of education (73%). Most participants (85%) reported having previously been enrolled in treatment for opioid use disorder, including buprenorphine (63%), methadone (48%), or naltrexone/Vivitrol (5%) treatment. At study intake, all participants (100%) provided a urine sample that tested positive for an opioid. Most urine samples were positive for fentanyl (90%), opiates (88%), and cocaine (65%); some were positive for benzodiazepines (8%), buprenorphine (13%), hydrocodone/hydromorphone (10%), methadone (13%), or THC/cannabis (23%).

Table 1.

Participant characteristics at study intake.

| Characteristics | |
|---|---|
| ASI measuresᵃ | Median (IQR) |
| Age in years | 46 (40–57) |
| Prior heroin use in years | 14 (4–20) |
| | N (%) |
| Maleᵇ | 30 (77) |
| Race/ethnicity | |
| Black | 25 (63) |
| White | 11 (28) |
| Otherᶜ | 4 (10) |
| ≥12 years of education | 29 (73) |
| Normally unemployed | 24 (60) |
| Below poverty levelᵈ | 34 (85) |
| Prior opioid treatment | 34 (85) |
| Buprenorphine | 25 (63) |
| Methadone | 19 (48) |
| Naltrexone | 2 (5) |
| Urinalysis positive forᵉ | N (%) |
| Amphetamines | 0 (0) |
| Barbiturates | 0 (0) |
| Benzodiazepines | 3 (8) |
| Buprenorphine | 5 (13) |
| Cocaine | 26 (65) |
| Fentanyl | 36 (90) |
| Hydrocodone/Hydromorphone | 4 (10) |
| Methadone | 5 (13) |
| Opiates | 35 (88) |
| Oxycodone | 0 (0) |
| THC/Cannabis | 9 (23) |
| Any opioid | 40 (100) |
| Percent correct at intake | Mean (SD) |
| Subtest 1 | 85 (12) |
| Subtest 2 | 90 (12) |
| Subtest 3 | 81 (13) |
| Overall | 85 (10) |

ᵃ Results from the Addiction Severity Index-Lite (ASI-Lite; McLellan et al., 1985) conducted at study intake.

ᵇ All participants identified as either male or female.

ᶜ Race/ethnicity of “Other” includes participants who reported being “Mixed,” “Black and White,” “Asian,” or “American Indian or Alaskan Native.”

ᵈ Below poverty level was calculated using income reported in the ASI at study intake and the 2020 Poverty Guidelines from the U.S. Department of Health and Human Services (U.S. Department of Health and Human Services, 2020).

ᵉ Results from the urinalysis conducted at study intake.

Completion of the Course

The technology-assisted education program required a median time of 91 min of activity (range 59–219 min) to complete. Participants were free to complete the courses at their own pace. They could take breaks as desired or leave the building and resume the course on another day. Most participants (n=38) completed the program in a single day, but one participant split the education program across two days (spaced 4 days apart) and one split the program across three days (spaced 2 and 16 days apart). Money earned while completing the program was aggregated across the education program and delivered to the participants at the completion of the program by a staff member who loaded the earned money onto a reloadable credit card that was issued to each participant during study enrollment.

Participant Earnings

Participants earned a mean (SD) of $43.56 ($2.54) overall for completing the education program and tests: $9.06 ($0.84) for the four tests and $34.50 ($2.60) for the three courses.
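The reported earnings can be cross-checked against the payment schedule described in the Method. The sketch below works in integer cents and assumes one Presentation Unit (and therefore one $2.00 bonus) per Module; under that assumption, the implied quantities are derived estimates, not reported results.

```python
CENTS_PER_CORRECT = 5          # $0.05 per correct answer (tests, Presentation and Mastery Units)
ITEMS_PER_TEST = 50
N_TESTS = 4
N_MODULES = 12
BONUS_CENTS_PER_MODULE = 200   # assumed: one $2.00 Presentation Unit bonus per Module

# Maximum possible test earnings: 4 tests x 50 items x $0.05 = $10.00.
max_test_cents = N_TESTS * ITEMS_PER_TEST * CENTS_PER_CORRECT

# Reported mean earnings, converted to cents.
mean_test_cents = 906          # $9.06 for the four tests
mean_course_cents = 3450       # $34.50 for the three courses

total_cents = mean_test_cents + mean_course_cents         # should equal the reported $43.56
implied_test_accuracy = mean_test_cents / max_test_cents  # mean share of test items answered correctly
bonus_cents = N_MODULES * BONUS_CENTS_PER_MODULE          # $24.00 under the assumption
implied_course_answers = (mean_course_cents - bonus_cents) / CENTS_PER_CORRECT

print(f"total ${total_cents / 100:.2f}, test accuracy {implied_test_accuracy:.1%}, "
      f"about {implied_course_answers:.0f} paid correct answers in the courses")
```

The two reported components sum to $43.56; the test earnings correspond to about 90.6% of the 200 test items answered correctly on average; and, if the bonus assumption holds, the remaining course earnings correspond to about 210 paid correct answers across Presentation and Mastery Units.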

Baseline (Test 1) Performance

The mean overall score on Test 1 was 85 percent (SD: 10%; range 58–100%). A one-sample t test confirmed that overall Test 1 scores were significantly higher than chance (i.e., the accuracy expected from random guessing, 37%; t39=30.43, p<.001, d=4.9). Similarly, baseline scores on Subtests 1 (85%), 2 (90%), and 3 (82%) were significantly higher than chance (40%, 36%, and 35%, respectively; t39=24.24, p<.001, d=3.9; t39=29.37, p<.001, d=4.7; t39=22.52, p<.001, d=3.6). Thus, before completing the education program, participants already had some knowledge about opioids; preventing, detecting, and responding to an opioid overdose; and FDA-approved medications to treat opioid use disorder.
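The chance levels and test statistics can be checked with simple arithmetic. Assuming chance is the mean of 1/k over items with k answer choices (two to four in this program), an item-weighted combination of the three subtest chance levels (16, 16, and 18 items) reproduces the 37% overall value. The one-sample t and Cohen's d helpers below work from rounded summary statistics and use d = (mean − chance)/SD; because the reported values were computed from unrounded data (and conventions for d differ), results will not match the reported statistics exactly. All function names are hypothetical.

```python
import math
from typing import Sequence, Tuple


def chance_level(choices_per_item: Sequence[int]) -> float:
    """Expected proportion correct from random guessing: mean of 1/k over items."""
    return sum(1 / k for k in choices_per_item) / len(choices_per_item)


def combine_chance(parts: Sequence[Tuple[int, float]]) -> float:
    """Item-weighted chance level from (n_items, chance) pairs, e.g. subtests."""
    total_items = sum(n for n, _ in parts)
    return sum(n * c for n, c in parts) / total_items


def one_sample_t(mean: float, sd: float, n: int, mu0: float) -> Tuple[float, float]:
    """One-sample t statistic and Cohen's d against a fixed value mu0."""
    d = (mean - mu0) / sd
    return d * math.sqrt(n), d
```

For the overall Test 1 score (mean 85, SD 10, n=40, chance 37), these inputs give d = 4.8 and t ≈ 30.36 with 39 degrees of freedom, close to the reported t39=30.43.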

Performance during the Opioid Education Program

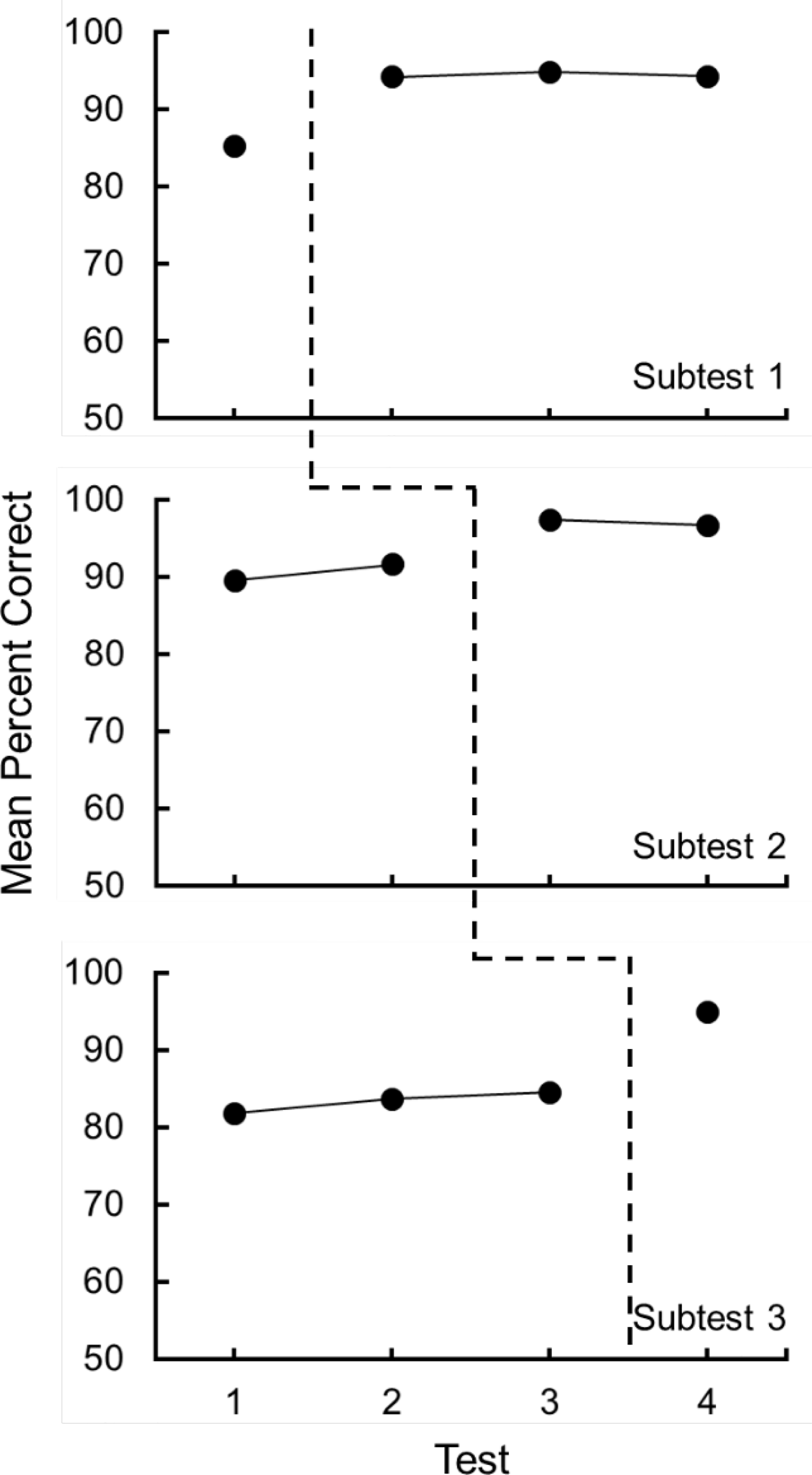

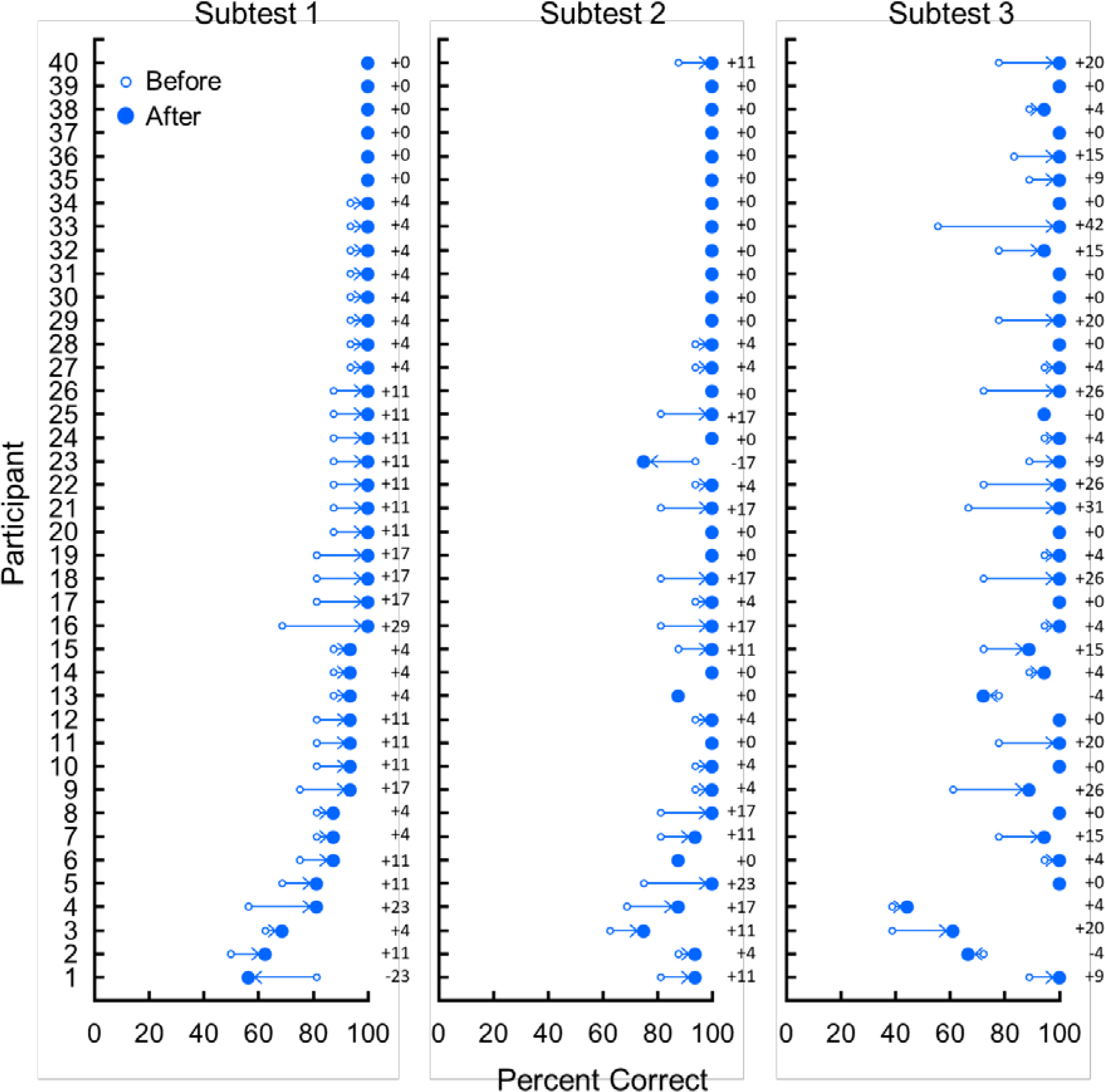

As shown in Figure 1, accuracy on subtests increased only after participants completed the course relevant to each subtest. Figure 2 provides a more detailed account of changes to individual participants’ accuracy on Subtests 1, 2, and 3 in the tests immediately before and after the relevant course. In most cases shown in Figure 2, participant accuracy increased after completion of the relevant course. Together, Figures 1 and 2 illustrate that the completion of each course generally increased accuracy on the corresponding subtest, that accuracy was stable before training, and that accuracy was maintained following training. See Supplementary Materials M for results in the test immediately before and after the relevant course arranged by test question.

Figure 1.

The mean percent correct on content from Subtests 1, 2, and 3 across the four tests. The dashed lines show the administration of the relevant education course.

Figure 2.

The percent correct on content from Subtests 1, 2, and 3 in the test immediately before (unfilled dots) and after (filled dots) the administration of the relevant education course. Arrows and numerals show the direction and extent of changes in accuracy for individual participants.

Table 2 shows the fitted change in scores (i.e., expected slopes) for each subtest across tests that preceded the relevant course (baseline), from the test immediately before to the test immediately after the relevant course (learning period), and across tests that followed the learning period (maintenance), based on the piecewise mixed-effects models. Changes are expressed in percentage points. Accuracy on Subtest 1 increased significantly following Course 1 (+9.09, p<.001) and did not change significantly across subsequent tests (+0.08, p=.900). Accuracy on Subtest 2 increased significantly following Course 2 (+5.78, p<.001) and did not change significantly across tests before the course (+2.03, p=.094) or across tests after the learning period (−0.63, p=.606). Accuracy on Subtest 3 increased significantly following Course 3 (+10.14, p<.001) and did not change significantly across tests before the course (+1.39, p=.114). See Supplementary Materials N for estimates from the adjusted piecewise mixed-effects models and the adjusted interactions with each covariate.

Table 2.

Fitted change in scores during baseline, the learning period, and maintenance.

| | Course 1 | Course 2 | Course 3 |
|---|---|---|---|
| Baselineᵃ | --- | 2.03 (1.21), .094 | 1.39 (0.88), .114 |
| Learning Periodᵇ | **9.09 (1.20), <.001** | **5.78 (1.21), <.001** | **10.14 (1.68), <.001** |
| Maintenanceᶜ | 0.08 (0.63), .900 | −0.63 (1.21), .606 | --- |

Note. The change in scores was calculated from coefficients in the piecewise mixed-effects models (adjusted for education, employment, and poverty) and is shown above as the expected slope (SE), p-value. Statistically significant slopes (p<.05) are in bold.

ᵃ Baseline represents the change in scores on the subtest across tests taken before the relevant course.

ᵇ Learning Period represents the change in scores on the subtest from the test immediately before the relevant course to the test immediately after its completion.

ᶜ Maintenance represents the change in scores on the subtest across tests taken after the learning period.

Although overall increases in accuracy on subtests were sometimes small (e.g., about 6 percentage points for Subtest 2), large increases were measured on some items that have the potential to save lives. Course 2 was the critical course for teaching information about opioid overdose prevention, detection, and response. As described in the Baseline (Test 1) Performance subsection, participants generally had high accuracy on content from Course 2 before completing it; however, on two notable items about responding to an opioid overdose, participants performed relatively poorly before taking the course. These items specified that, when responding to an opioid overdose, participants should put the individual who may be overdosing into the “recovery position” and should perform rescue breathing. Before completing Course 2, only 68% and 75% of participants, respectively, answered these questions correctly. After completing Course 2, 98% and 95% answered correctly, increases of 30 and 20 percentage points, respectively.

Factors Related to Performance and Learning in the Opioid Education Program

Table 3 shows accuracy on each subtest across tests and comparisons of baseline scores, arranged by analyzed covariates. Participants who completed fewer than 12 years of education scored lower than those who completed at least 12 years in the Baseline test for Subtests 1 (79% vs 88%; t38=−2.19, p=.035) and 2 (82% vs 92%; t38=−2.58, p=.014). No other baseline comparisons yielded significant results.

Table 3.

Mean (SD) percent correct on Subtests 1, 2, and 3 in each test, and comparisons of baseline scores, arranged by analyzed covariate.

| Covariate | Subtest 1 | Subtest 2 | Subtest 3 |
|---|---|---|---|
| Education | | | |
| <12th Grade (n=11) | | | |
| Test 1 (Baseline) | 79 (13) | 82 (12) | 80 (12) |
| Test 2 | 85 (15) | 86 (11) | 77 (9) |
| Test 3 | 88 (13) | 93 (9) | 78 (15) |
| Test 4 | 86 (13) | 93 (13) | 89 (14) |
| ≥12th Grade (n=29) | | | |
| Test 1 (Baseline) | 88 (10) | 92 (10) | 83 (13) |
| Test 2 | 98 (5) | 94 (8) | 86 (14) |
| Test 3 | 98 (5) | 99 (3) | 87 (16) |
| Test 4 | 98 (6) | 98 (6) | 97 (10) |
| Baseline Comparisons (<12th Grade vs ≥12th Grade) | **t38=−2.19, p=.035** | **t38=−2.58, p=.014** | t38=−0.59, p=.558 |
| Employment | | | |
| Normally unemployed (n=24) | | | |
| Test 1 (Baseline) | 85 (11) | 89 (13) | 82 (13) |
| Test 2 | 94 (11) | 91 (11) | 84 (14) |
| Test 3 | 93 (11) | 96 (7) | 86 (17) |
| Test 4 | 93 (11) | 95 (11) | 94 (14) |
| Normally employed (n=16) | | | |
| Test 1 (Baseline) | 86 (12) | 90 (9) | 82 (13) |
| Test 2 | 95 (9) | 93 (7) | 82 (13) |
| Test 3 | 97 (5) | 99 (3) | 83 (14) |
| Test 4 | 96 (8) | 99 (3) | 97 (9) |
| Baseline Comparisons (Normally unemployed vs employed) | t38=−0.10, p=.920 | t38=−1.14, p=.891 | t38=−0.05, p=.957 |
| Poverty | | | |
| Below poverty level (n=34) | | | |
| Test 1 (Baseline) | 86 (12) | 90 (12) | 82 (13) |
| Test 2 | 94 (11) | 92 (10) | 83 (13) |
| Test 3 | 95 (10) | 97 (7) | 85 (16) |
| Test 4 | 94 (10) | 97 (9) | 94 (10) |
| Above poverty level (n=6) | | | |
| Test 1 (Baseline) | 82 (7) | 89 (8) | 83 (13) |
| Test 2 | 95 (5) | 89 (7) | 85 (15) |
| Test 3 | 95 (8) | 99 (5) | 83 (15) |
| Test 4 | 95 (9) | 98 (7) | 99 (10) |
| Baseline Comparisons (Below vs above poverty level) | t38=0.68, p=.504 | t38=0.23, p=.823 | t38=−0.31, p=.762 |

Note. Statistically significant comparisons (p<.05) are in bold.

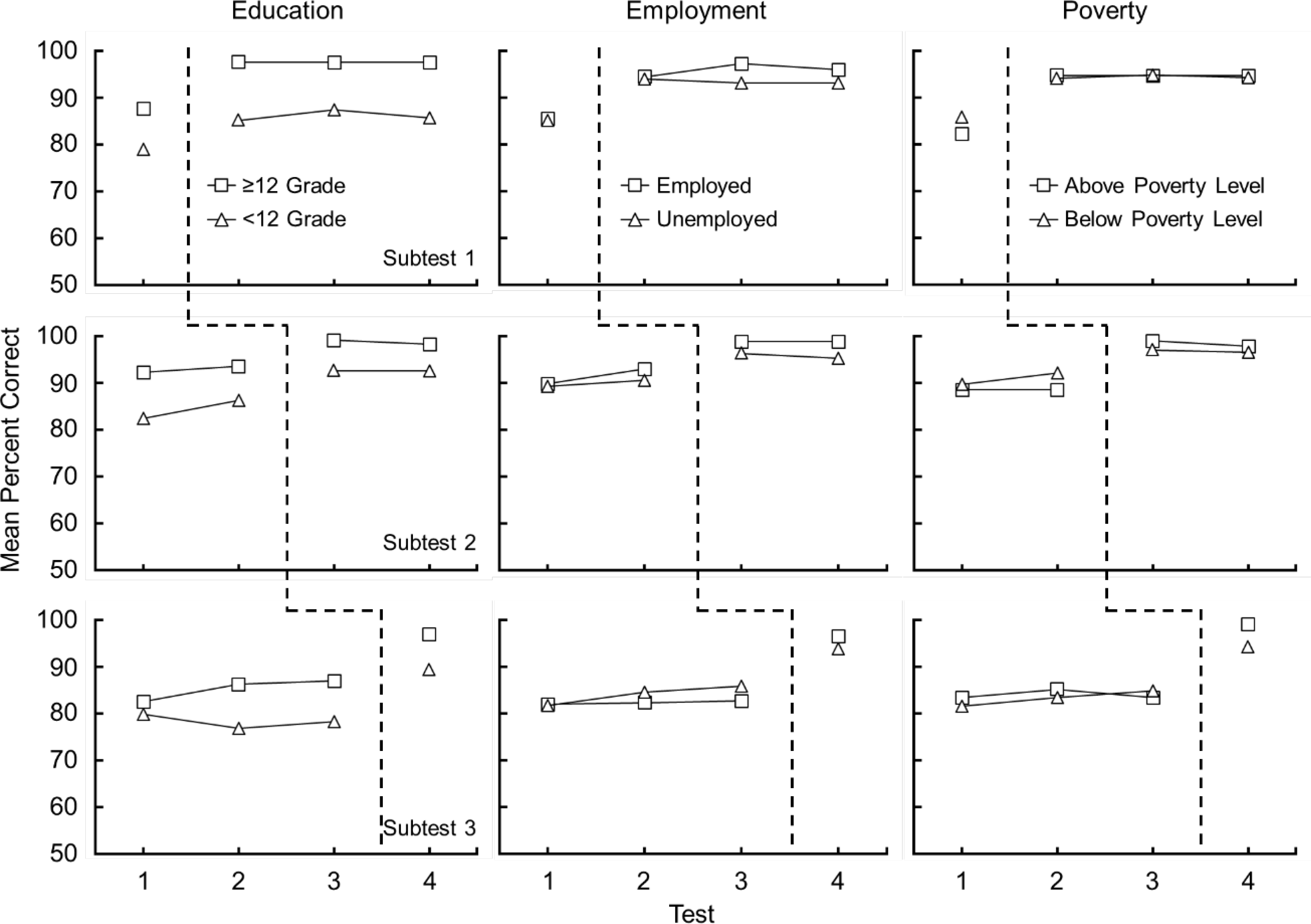

Table 4 shows the relation between covariates and changes in accuracy during the learning period, based on the piecewise mixed-effects model interaction analyses. The change in accuracy (i.e., expected slope) during the learning period for each category of each dichotomous covariate is listed below that covariate. All slopes are positive (range 4.96–15.12), indicating that each course improved scores on the corresponding subtest within every covariate category. None of the learning comparisons was significant, suggesting that, although participants who had completed 12 or more years of education were more accurate on Subtests 1 and 2 in the baseline test, participant learning was similar regardless of education, employment, and poverty. These results are displayed graphically in Figure 3, which shows accuracy on each subtest across tests, organized by the analyzed covariates.

Table 4.

The relation between covariates and changes in accuracy during the learning period.

| | Subtest 1 | Subtest 2 | Subtest 3 |
|---|---|---|---|
| Education | | | |
| < 12th Gradeᵃ | 6.91 (2.27) | 6.25 (2.29) | 11.87 (3.18) |
| ≥ 12th Gradeᵃ | 9.91 (1.40) | 5.60 (1.41) | 9.48 (1.96) |
| Learning comparisonᵇ | 3.00 (2.66), .260 | 2.04 (4.65), .662 | −5.35 (5.03), .288 |
| Employment | | | |
| Normally unemployedᵃ | 8.72 (1.53) | 5.73 (1.56) | 7.64 (2.14) |
| Normally employedᵃ | 9.64 (1.88) | 5.86 (1.91) | 13.89 (2.62) |
| Learning comparisonᵇ | 0.91 (2.42), .706 | −1.69 (4.26), .692 | 7.99 (4.57), .080 |
| Poverty | | | |
| Below poverty levelᵃ | 8.49 (1.29) | 4.96 (1.30) | 9.26 (1.81) |
| Above poverty levelᵃ | 12.50 (3.07) | 10.42 (3.09) | 15.12 (4.31) |
| Learning comparisonᵇ | 4.01 (3.32), .228 | 7.84 (5.80), .176 | 7.50 (6.31), .234 |

Note. The change in scores was calculated from coefficients in the piecewise mixed-effects models (adjusted for education, employment, and poverty). Slopes for each covariate category are shown as expected slope (SE); learning comparisons are shown as estimate (SE), p-value.

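Interaction model for Course 1 comparisons:

$$E[y_{ij}] = \beta_0 + \beta_1\,\mathit{time} + \beta_2\,\mathit{time}_1 + \beta_3\,\mathit{covariate} + \beta_4\,\mathit{time}\times\mathit{covariate} + \beta_5\,\mathit{time}_1\times\mathit{covariate}$$

Interaction model for Course 2 comparisons:

$$E[y_{ij}] = \beta_0 + \beta_1\,\mathit{time} + \beta_2\,\mathit{time}_1 + \beta_3\,\mathit{time}_2 + \beta_4\,\mathit{covariate} + \beta_5\,\mathit{time}\times\mathit{covariate} + \beta_6\,\mathit{time}_1\times\mathit{covariate} + \beta_7\,\mathit{time}_2\times\mathit{covariate}$$

Interaction model for Course 3 comparisons:

$$E[y_{ij}] = \beta_0 + \beta_1\,\mathit{time} + \beta_2\,\mathit{time}_1 + \beta_3\,\mathit{covariate} + \beta_4\,\mathit{time}\times\mathit{covariate} + \beta_5\,\mathit{time}_1\times\mathit{covariate}$$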

ᵃ The expected change in percent correct following completion of the relevant education course for participants with the analyzed characteristic.

ᵇ Results of the analysis comparing participant learning between categories of the analyzed covariate.

Figure 3.

The mean percent correct on content from Subtests 1, 2, and 3 across the four tests, arranged by analyzed covariates. The dashed lines show the administration of the relevant computer-based education course.

Discussion

The present study evaluated the feasibility and potential efficacy of a technology-assisted education program designed to improve knowledge about opioids; preventing, detecting, and responding to an opioid overdose; and FDA-approved medications for opioid use disorder among out-of-treatment adults with opioid use disorder. All participants completed the education program, which required a median of 91 min of activity. Most participants completed the program in a single day. The program was effective in improving participants’ knowledge about opioids, opioid overdose, and medications to treat opioid use disorder. Accuracy on subtests increased significantly following completion of each relevant course. Furthermore, accuracy was stable across tests taken before the relevant courses and was maintained at high levels across tests taken after the courses, and increases in accuracy were similar regardless of education, employment, and poverty level. Thus, the technology-assisted program appears to be a feasible and potentially efficacious approach to providing overdose prevention-related education to out-of-treatment adults with opioid use disorder. Although education alone is not sufficient, it is a critical component of promoting the uptake of overdose prevention-related behaviors (Arlinghaus & Johnson, 2018).

The technology-assisted education program was delivered in ATTAIN, which allowed us to present questions reliably and consistently, provide frequent opportunities for participants to answer questions, give immediate feedback on whether questions were answered correctly, and deliver performance-based incentives. The present study builds upon prior research using ATTAIN (Getty et al., 2018; Subramaniam et al., 2019) by showing that technology-assisted education can also effectively teach out-of-treatment adults with opioid use disorder about opioids; preventing, detecting, and responding to an opioid overdose; and medications to treat opioid use disorder. It also builds upon prior research on technology-assisted opioid education (Bergeria et al., 2019; Dunn et al., 2017; Huhn et al., 2018) in two ways. First, to our knowledge, the present study is the first to design a technology-assisted education program to teach out-of-treatment adults with opioid use disorder, a population at high risk of opioid overdose, about opioid overdose and medications to treat opioid use disorder. Second, the software incorporated principles of effective instruction and monitored participants' retention of learning across several tests before and after completion of the courses.

Participants in this study were all out-of-treatment adults with opioid use disorder who provided opioid-positive urine samples at study intake. These participants had relatively high levels of existing knowledge about opioids, opioid overdose, and opioid treatment. On the first test, which represented baseline knowledge, participants scored significantly higher than chance, showing that they already had some knowledge of the topics the program was designed to teach. Despite this high level of baseline knowledge, the education program produced significant increases in accuracy, which provides evidence that computerized education can improve the performance of people who already have some knowledge of the material. From a clinical standpoint, these improvements could be important. For example, Course 2 was the critical course for teaching how to prevent, detect, and respond to an opioid overdose. After completing Course 2, participants learned that, when responding to an opioid overdose, they should perform rescue breathing (+20%) and place the person in the recovery position (+30%; see Supplementary Materials M). These results indicate that, although overall increases in accuracy may have been modest (e.g., 6–10%), the education program produced substantial increases in accuracy for some information relevant to reducing the risk of death from opioid overdose.
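This section does not describe how the comparison with chance was carried out, so the following is only an illustration of what "higher than chance" means for a single multiple-choice test score. The Python sketch runs an exact one-sided binomial test: it computes the probability of getting at least a given number of items correct by guessing alone. The 50-item length comes from the study's test, but the assumption that every item has four answer options (chance = 0.25), the independence of guesses, and the example score are hypothetical; a group-level analysis of many participants would use a different test.

```python
# Hypothetical sketch: exact one-sided binomial test of one test score
# against chance-level guessing.
# ASSUMPTIONS (not from the study): every item has 4 answer options, so the
# chance probability per item is 0.25, and guesses are independent.
from math import comb


def p_at_least(k: int, n: int, p: float) -> float:
    """P(X >= k) for X ~ Binomial(n, p): the probability of getting k or
    more of n items correct by guessing alone."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


n_items = 50    # the full test had 50 items
chance = 0.25   # assumed: 4 answer options per item
score = 30      # hypothetical baseline number correct

p_value = p_at_least(score, n_items, chance)
print(f"P(>= {score}/{n_items} correct by guessing) = {p_value:.2e}")
```

A small tail probability indicates that a score this high is very unlikely to arise from guessing alone, which is the sense in which baseline scores can be "higher than chance."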

This study had limitations. First, the study did not determine whether increased knowledge about opioid overdose prevention and medications to treat opioid use disorder increased opioid overdose prevention behavior, including enrollment in opioid use disorder treatment. However, because this study showed experimentally that the education program can increase knowledge about opioid overdose and opioid treatment, future research could determine whether similar technology-assisted education can increase adherence to medications that treat opioid use disorder and other opioid overdose prevention behaviors.

Second, the present study did not evaluate how effective the procedure would be if implemented on a wide scale, or which barriers to such implementation (e.g., the need to conserve resources used for financial incentives) would need to be examined further. Future research might investigate methods to make the education program more attractive for wide-scale implementation. For example, researchers could use participants' scores on the first test to select those most in need of education. Offering the program only to participants with relatively low scores on the initial test would reduce the number of participants who take the courses and could thereby stretch available resources while targeting the people most in need of education. It should be noted, however, that some modifications of the incentive intervention may not produce successful wide-scale education. For example, the behavioral effects of interventions that employ financial incentives generally depend on the magnitude of the incentives and on how soon they are delivered after the behavior targeted by the intervention, with higher-magnitude (e.g., Dallery et al., 2001) and more immediate incentives (e.g., Toegel et al., 2020) being more effective. These findings suggest that procedures that reduce or delay financial incentives may reduce the overall effectiveness of the program.

Third, although we examined the retention of participant knowledge across several tests, we did not examine how knowledge was maintained across long periods of time. Future research might consider conducting a follow-up test several months after the completion of the education program.

Some sociodemographic characteristics predicted individual differences in test scores. On the first test, accuracy on Subtests 1 and 2 was positively related to education, but accuracy on Subtest 3 was not. Employment and poverty status did not predict accuracy at baseline. This finding is notable because employment and poverty are widely recognized as being interrelated with drug addiction (Henkel, 2011; Center for Behavioral Health Statistics and Quality, 2018). Although education predicted some baseline scores, none of the covariates predicted how much participants learned: the modeled increases in accuracy during the learning period did not differ significantly between covariate groups, indicating that participants learned similarly independent of education, employment, or poverty. Future research might evaluate methods to accelerate learning among participants who completed fewer than 12 years of education, to promote educational equity.

Conclusions

The technology-assisted education program used in this study produced significant improvements in knowledge about opioids; preventing, detecting, and responding to an opioid overdose; and FDA-approved medications used to treat opioid use disorder. The program incorporated principles of effective instruction into a computerized health education program, which could be integrated with mobile technology to disseminate opioid education widely while avoiding the time and resources required to train staff to deliver in-person education. Learning comparisons showed that completion of each course was associated with increased accuracy on the material from that course, that learned content was retained across subsequent tests, and that participants learned the content similarly independent of education, employment, and poverty. Although the aim of the study was to evaluate the feasibility and potential efficacy of the program in improving knowledge, we hope that this study will contribute to the body of research aimed at promoting behavior that can prevent harms from opioid overdose and help to combat the opioid epidemic.

Supplementary Material

Public Health Significance Statements:

Technology-assisted education can teach adults at a high risk of opioid overdose about opioids, opioid overdose, and opioid use disorder treatment.

Each course in the technology-assisted education program produced significant increases in participant accuracy, and learning was retained across tests after courses were completed.

Education, employment, and poverty were unrelated to how much participants learned.

Acknowledgements

We thank Meghan Arellano, Jackie Hampton, India Harper, Calvin Jackson, and Sarah Pollock for their work recruiting participants and facilitating the technology-assisted education program.

Sources of support

This journal article was supported by Grants T32DA07209 funded by the National Institute on Drug Abuse and R01CE003069 funded by the Centers for Disease Control and Prevention. Its contents are solely the responsibility of the authors and do not necessarily represent the official views of the National Institute on Drug Abuse, the Centers for Disease Control and Prevention, or the Department of Health and Human Services.

Footnotes

Conflict of Interest Statement

On behalf of all authors, the corresponding author states that there are no conflicts of interest.

Compliance with Ethical Standards

Research involving human participants and/or animals statement

The research described herein was conducted in accordance with the Code of Ethics of the World Medical Association (Declaration of Helsinki). All procedures were approved by the Johns Hopkins University School of Medicine Institutional Review Board.

Informed consent

All participants provided written informed consent.

Availability of Data and Materials

Data supporting the findings can be obtained by contacting the corresponding author. Materials used in the education course have been uploaded as part of a supplementary materials file, and can also be obtained by contacting the corresponding author.

References

- Arlinghaus KR, & Johnston CA (2018). Advocating for behavior change with education. American Journal of Lifestyle Medicine, 12(2), 113–116. https://doi.org/10.1177/1559827617745479

- Barrett BH, Beck R, Binder C, Cook DA, Engelmann S, Greer RD, Jane S, Kyrklund SJ, Johnson KR, Maloney M, McCorkle N, Vargas JS, & Watkins CL (1991). The right to effective education. The Behavior Analyst, 14, 79–82. https://doi.org/10.1007/BF03392556

- Bergeria CL, Huhn AS, & Dunn KE (2019). Randomized comparison of two web-based interventions on immediate and 30-day opioid overdose knowledge in three unique risk groups. Preventive Medicine, 128, 105718. https://doi.org/10.1016/j.ypmed.2019.05.006

- Center for Behavioral Health Statistics and Quality. (2018). 2017 National Survey on Drug Use and Health: Detailed Tables. Substance Abuse and Mental Health Services Administration, Rockville, MD. Accessed on February 13, 2019. https://www.samhsa.gov/data/nsduh/reports-detailed-tables-2017-NSDUH

- Centers for Disease Control and Prevention. (2020). Preventing an opioid overdose. Accessed June 30, 2020. https://www.cdc.gov/drugoverdose/pdf/patients/Preventing-an-Opioid-Overdose-Tip-Card-a.pdf.

- Dallery J, Silverman K, Chutuape MA, Bigelow GE, & Stitzer ML (2001). Voucher-based reinforcement of opiate plus cocaine abstinence in treatment-resistant methadone patients: Effects of reinforcer magnitude. Experimental and Clinical Psychopharmacology, 9, 317–325. https://doi.org/10.1037//1064-1297.9.3.317

- Davis C, Webb D, & Burris S (2013). Changing law from barrier to facilitator of opioid overdose prevention. The Journal of Law, Medicine & Ethics, 41, 33–36. https://doi.org/10.1111/jlme.12035

- Dunn KE, Barrett FS, Yepez-Laubach C, Meyer AC, Hruska BJ, Sigmon SC, Fingerhood M, & Bigelow GE (2016). Brief Opioid Overdose Knowledge (BOOK): A questionnaire to assess overdose knowledge in individuals who use illicit or prescribed opioids. Journal of Addiction Medicine, 10, 314–323. https://doi.org/10.1097/ADM.0000000000000235

- Dunn KE, Yepez-Laubach C, Nuzzo PA, Fingerhood M, Kelly A, Berman S, & Bigelow GE (2017). Randomized controlled trial of a computerized opioid overdose education intervention. Drug and Alcohol Dependence, 173, S39–S47. https://doi.org/10.1016/j.drugalcdep.2016.12.003

- FRIENDS Medical Laboratory, Inc. Accessed September 2020. https://friendslab.com/

- Getty CA, Subramaniam S, Holtyn AF, Jarvis BP, Rodewald A, & Silverman K (2018). Evaluation of a computer-based training program to teach adults at risk for HIV about pre-exposure prophylaxis. AIDS Education and Prevention, 30, 287–300. https://doi.org/10.1521/aeap.2018.30.4.287

- Gupta R, Shah ND, & Ross JS (2016). The rising price of naloxone — risks to efforts to stem overdose deaths. New England Journal of Medicine, 375, 2213–2215. https://doi.org/10.1056/NEJMp1609578

- Hay KR, Huhn AS, Tompkins DA, & Dunn KE (2019). Recovery goals and long-term treatment preference in persons who engage in non-medical opioid use. Journal of Addiction Medicine, 13(4), 300. https://doi.org/10.1097/ADM.0000000000000498

- Hedegaard H, Miniño AM, & Warner M (2020). Drug overdose deaths in the United States, 1999–2018. NCHS Data Brief, no. 356. Hyattsville, MD: National Center for Health Statistics. Accessed October 22, 2020. https://stacks.cdc.gov/view/cdc/84647

- Henkel D (2011). Unemployment and substance use: A review of the literature (1990–2010). Current Drug Abuse Reviews, 4, 4–27. https://doi.org/10.2174/1874473711104010004

- Henny KD, Wilkes AL, McDonald CM, Denson DJ, & Neumann MS (2018). A rapid review of eHealth interventions addressing the continuum of HIV care (2007–2017). AIDS and Behavior, 22, 43–63. https://doi.org/10.1007/s10461-017-1923-2

- Hewlett L, & Wermeling DP (2013). Survey of naloxone legal status in opioid overdose prevention and treatment. Journal of Opioid Management, 9, 369–377. https://doi.org/10.5055/jom.2013.0179

- Horner RD, & Baer DM (1978). Multiple-probe technique: A variation of the multiple baseline. Journal of Applied Behavior Analysis, 11, 189–196. https://doi.org/10.1901/jaba.1978.11-189

- Huhn AS, Garcia-Romeu AP, & Dunn KE (2018). Opioid overdose education for individuals prescribed opioids for pain management: Randomized comparison of two computer-based interventions. Frontiers in Psychiatry, 9, 34. https://doi.org/10.3389/fpsyt.2018.00034

- Jarvis BP, Holtyn AF, Subramaniam S, Tompkins DA, Oga EA, Bigelow GE, & Silverman K (2018). Extended-release injectable naltrexone for opioid use disorder: a systematic review. Addiction, 113(7), 1188–1209. https://doi.org/10.1111/add.14180

- Koffarnus MN, DeFulio A, Sigurdsson SO, & Silverman K (2013). Performance pay improves engagement, progress, and satisfaction in computer-based job skills training of low-income adults. Journal of Applied Behavior Analysis, 46, 395–406. https://doi.org/10.1002/jaba.51

- Larochelle MR, Bernson D, Land T, Stopka TJ, Wang N, Xuan Z, ... & Walley AY (2018). Medication for opioid use disorder after nonfatal opioid overdose and association with mortality: a cohort study. Annals of Internal Medicine, 169(3), 137–145. https://doi.org/10.7326/m17-3107

- Marsch LA, & Bickel WK (2004). Efficacy of computer-based HIV/AIDS education for injection drug users. American Journal of Health Behavior, 28, 316–327. https://doi.org/10.5993/AJHB.28.4.3

- Masson CL, Chen IQ, Levine JA, Shopshire MS, & Sorenson JL (2019). Health-related internet use among opioid treatment patients. Addictive Behaviors Reports, 9, 100157. https://doi.org/10.1016/j.abrep.2018.100157

- McLellan AT, Luborsky L, Cacciola J, Griffith J, Evans F, Barr HL, & O'Brien CP (1985). New data from the addiction severity index: reliability and validity in three centers. Journal of Nervous and Mental Disease, 173, 412–423. https://doi.org/10.1097/00005053-198507000-00005

- Olsen Y, & Sharfstein JM (2014). Confronting the stigma of opioid use disorder—and its treatment. JAMA, 311(14), 1393–1394. https://doi.org/10.1001/jama.2014.2147

- Pollard JS, Higbee TS, Akers JS, & Brodhead MT (2014). An evaluation of interactive computer training to teach instructors to implement discrete trials with children with autism. Journal of Applied Behavior Analysis, 47, 765–776. https://doi.org/10.1002/jaba.152

- Prue DM, & Fairbank JA (1981). Performance feedback in organizational behavior management: A review. Journal of Organizational Behavior Management, 3, 1–16. https://doi.org/10.1300/J075v03n01_01

- Silverman K, Lindsley OR, & Porter KL (1990). Overt responding in computer-based training. Current Psychology, 9, 373–384. https://doi.org/10.1007/BF02687193

- Silverman K, Wong CJ, Needham M, Diemer KN, Knealing T, Crone-Todd D, Fingerhood M, Nuzzo P, & Kolodner K (2007). A randomized trial of employment-based reinforcement of cocaine abstinence in injection drug users. Journal of Applied Behavior Analysis, 40, 387–410. https://doi.org/10.1901/jaba.2007.40-387

- Sordo L, Barrio G, Bravo MJ, Indave BI, Degenhardt L, Wiessing L, Ferri M, & Pastor-Barriuso R (2017). Mortality risk during and after opioid substitution treatment: systematic review and meta-analysis of cohort studies. BMJ, 357, 1–14. https://doi.org/10.1136/bmj.j1550

- Subramaniam S, Getty CA, Holtyn AF, Rodewald A, Katz B, Jarvis BP, Leoutsakos JS, Fingerhood M, & Silverman K (2019). Evaluation of a computer-based HIV education program for adults living with HIV. AIDS and Behavior, 23, 3125–3164. https://doi.org/10.1007/s10461-019-02474-z

- Substance Abuse and Mental Health Services Administration (SAMHSA). (2020). Medications for opioid use disorder for healthcare and addiction professionals, policymakers, patients, and families. Treatment Improvement Protocol (TIP) 63. Accessed June 1, 2020. https://store.samhsa.gov/product/TIP-63-Medications-for-Opioid-Use-Disorder-Full-Document/PEP20-02-01-006

- Substance Abuse and Mental Health Services Administration (SAMHSA). (2018). Opioid overdose prevention toolkit. Accessed June 1, 2020. https://store.samhsa.gov/product/Opioid-Overdose-Prevention-Toolkit/SMA18-4742

- Toegel F, Holtyn AF, & Silverman K (2020). Increased reinforcer immediacy can promote employment-seeking in unemployed homeless adults with alcohol use disorder. The Psychological Record, Online First. https://doi.org/10.1007/s40732-020-00431-0

- U.S. Department of Health and Human Services (HHS). (2020). Poverty Guidelines for 2020. Accessed September 1, 2020. https://aspe.hhs.gov/2020-poverty-guidelines

- Wakeman SE, Larochelle MR, Ameli O, Chaisson CE, McPheeters JT, Crown WH, ... & Sanghavi DM (2020). Comparative effectiveness of different treatment pathways for opioid use disorder. JAMA Network Open, 3(2), e1920622. https://doi.org/10.1001/jamanetworkopen.2019.20622

- Walley AY, Xuan Z, Hackman HH, Quinn E, Doe-Simkins M, Sorensen-Alawad A, Ruiz S, & Ozonoff A (2013). Opioid overdose rates and implementation of overdose education and nasal naloxone distribution in Massachusetts: Interrupted time series analysis. BMJ, 346, 1–13. https://doi.org/10.1136/bmj.f174

- Winstanley EL, Clark A, Feinberg J, & Wilder CM (2016). Barriers to implementation of opioid overdose prevention programs in Ohio. Substance Abuse, 37, 42–46. https://doi.org/10.1080/08897077.2015.1132294
