Abstract

Background

The benefits of involving those with lived experience in the design and development of health technology are well recognized, and the reporting of co-design best practices has increased over the past decade. However, it is important to recognize that the methods and protocols behind patient and public involvement and co-design vary depending on the patient population accessed. This is especially important when considering individuals living with cognitive impairments, such as dementia, who are likely to have needs and experiences unique to their cognitive capabilities. We worked alongside individuals living with dementia and their care partners to co-design a mobile health app. This app aimed to address a gap in our knowledge of how cognition fluctuates over short, microlongitudinal timescales. The app requires users to interact with built-in memory tests multiple times per day, meaning that co-designing a platform that is easy to use, accessible, and appealing is particularly important. Here, we discuss our use of Agile methodology to enable those living with dementia and their care partners to be actively involved in the co-design of a mobile health app.

Objective

The aim of this study is to explore the benefits of co-design in the development of smartphone apps. Here, we share our co-design methodology and reflections on how this benefited the completed product.

Methods

Our app was developed using Agile methodology, which allowed for patient and care partner input to be incorporated iteratively throughout the design and development process. Our co-design approach comprised 3 core elements, aligned with the values of patient co-design and adapted to meaningfully involve those living with cognitive impairments: end-user representation at research and software development meetings via a patient proxy; equal decision-making power for all stakeholders based on their expertise; and continuous user consultation, user-testing, and feedback.

Results

This co-design approach resulted in multiple patient and care partner–led software alterations, which, without consultation, would not have been anticipated by the research team. This included 13 software design alterations, renaming of the product, and removal of a cognitive test deemed to be too challenging for the target demographic.

Conclusions

We found patient and care partner input to be critical throughout the development process for early identification of design and usability issues and for identifying solutions not previously considered by our research team. As issues addressed in early co-design workshops did not recur subsequently, we believe this process made our product more user-friendly and acceptable, and we will formally test this assumption through future pilot-testing.

Keywords: agile, dementia, co-design, cognition, mHealth, patient public involvement, software development, mobile phone

Introduction

Background

In January 2019, the National Health Service published its long-term plan, setting out key ambitions for the next 10 years. One of the most ambitious targets of this plan was in the field of digital technology, with a vision toward increasing care at home using remote monitoring and digital tools [1]. This move will likely be expedited by the need for social distancing brought about by the COVID-19 pandemic. Therefore, with the impetus and growing necessity for distance health care, it is important to consider how this new type of service will meet the needs of patients. A way to ensure that new technologies are usable, acceptable, and tailored toward the patients they aim to support is to ensure that the patients themselves are central to the design and development process. Co-design offers a way to ensure that new technologies and interventions are tailored to patient needs [2]. Indeed, there is growing support for the benefits of co-design in health care [3] but less evidence as to how these approaches can be tailored to the needs of diverse patient populations [4].

Within the context of software development, co-design can be defined as a process that draws on the shared creativity of software developers and people not trained in software working together [5]. To this end, special attention is given to involving end users and ensuring that their input as experts through experience is central to the design process and that their specific needs are understood and met [5-7]. This is in line with existing literature suggesting that integrating patient voice with software development is achievable and can provide valuable feedback to improve the intuitive design and usability of software outcomes [8,9].

In dementia research, patient and public involvement (PPI) and co-design is still a developing field [10], although it has been suggested to confer benefits to research outcomes, researchers, and members of the public who play a part in the process [11,12]. The capacities, needs, and preferences of those living with cognitive impairments can be diverse [13]. Therefore, standard co-design and PPI methodologies often need to be adapted to suit this population. This may be particularly important in the field of digital technology and software development, as studies suggest that there is utility and an appetite for assistive technology for older people and those living with cognitive impairments. However, despite there being motivation for older people to use digital technologies, barriers exist around usability and lack of experience [14-17].

An area in which digital technology can help with the care and management of dementia is through the monitoring of cognitive change and variability, which is an issue that is considered important for this population [18,19]. For instance, many people with dementia experience worsening cognitive and neuropsychiatric symptoms in later periods of the day, a phenomenon known as sundowning [20,21]. Current practice bases the diagnosis of dementia on a combination of clinical history, biomarker detection, and examination, of which cognitive testing is a key part [22]. However, conventional cognitive assessments cannot detect short-term fluctuations in cognition that might be relevant to understanding or managing individuals’ cognitive, functional, and behavioral symptoms.

Recent developments in computerized cognitive testing have made it possible to measure microlongitudinal patterns of cognitive function [23]. However, although these tools have been tested in cognitively healthy older adults [14], they have not yet been used in populations with cognitive impairment. Furthermore, these tasks were designed for use on large, touchscreen tablet devices and have not yet been adapted for use on smaller, more mobile devices, such as smartphones, which are used by an increasing number of older people [23]. Therefore, there is an impetus to adapt such tasks for use on smartphone devices and meet the needs of those living with clinical conditions that affect their cognitive abilities [24].

Despite the diverse and divergent lived experiences of those living with dementia, software apps are rarely designed with this patient population in mind [15]. It is even rarer to find software codeveloped alongside those living with dementia [4]. This can result in poorer quality technology that can be difficult to use for those living with dementia [25]. However, research indicates that those living with dementia have an interest in assistive technology and are capable of using touchscreen technology [17,26]. Therefore, we approached the adaptation of microlongitudinal computerized cognitive tests to the needs of people living with cognitive impairments through an iterative Agile process with patient co-design at its center.

Agile software development focuses on collaboration with users and rapid software deployment [27]. Scaling tests to a mobile device requires regular input and development iterations from end users, with an understanding that direct translation between devices may be unsuitable. The Agile methodology is best suited to such projects where requirements may not be clearly defined at the outset and emerge over time [28]. This is especially relevant in this case, where iterative co-design workshops spaced throughout the development process meant that the final product was not clearly defined early in the process and, instead, emerged based on consultations with experts through experience via regular workshops.

Objectives

In this paper, we describe how we modified co-design approaches to involve members of the public living with dementia and their care partners in the production of a smartphone app. Although we worked specifically with people with dementia, the principles could be applied to other patient groups who do not find it easy to engage with standard co-design approaches. We also explain the benefits of the Scrum development methodology as a way of integrating user feedback into the design and development process.

Methods

PPI and Co-design: Theoretical Framework

Overview

Public involvement in research is defined by the National Institute for Health Research’s INVOLVE as research being conducted with or by members of the public rather than to, about, or for them. Tambuyzer et al [29] also recognize that, given the heterogeneity of research protocols and patient populations, involvement is not a one-size-fits-all concept and is better defined by values rather than protocols. These values include participation in decision-making and giving contributors some control and responsibility over research outcomes, active involvement that goes beyond consultation or receiving information, involvement in a range of activities, being recognized as experts by experience, and collaboration with professionals.

Working alongside individuals living with cognitive impairments necessitates a tailored approach to involvement and co-design, in which facilitating meaningful involvement is balanced against individuals’ capacity, capability, and preferences.

We therefore approached the challenge of co-design alongside individuals with cognitive impairments by adopting the following three methodological steps: (1) end-user representation at research and software development meetings via a patient proxy; (2) equal decision-making power for all stakeholders based on their expertise; and (3) continual user consultation, user-testing, and feedback.

Step 1: End-user Representation

Given the short timescale for app development, the limited availability of clinical advisors, and a desire to reduce unnecessary burden on contributors living with dementia and their care partners, we chose to represent the patient or public voice at research group meetings via a proxy. Our proxy was a PPI officer who worked alongside our research group. They were responsible for developing and facilitating co-design workshops and representing end users at research group meetings. This ensured that the patient voice was represented in all important decisions and was given the same weight as the voices of other research team members.

Step 2: Equality of Expertise

Input from those with lived experience of cognitive impairments was integral to the development of this app. Therefore, those involved were encouraged to contribute to all elements of the design process. As a result, input from those with lived experience led to 13 design alterations across the life of the project (listed in the following sections). Feedback from co-design workshops also led to the removal of 1 cognitive test, which was deemed too challenging for those living with dementia, and to the rebranding of the app.

Step 3: Continued Input

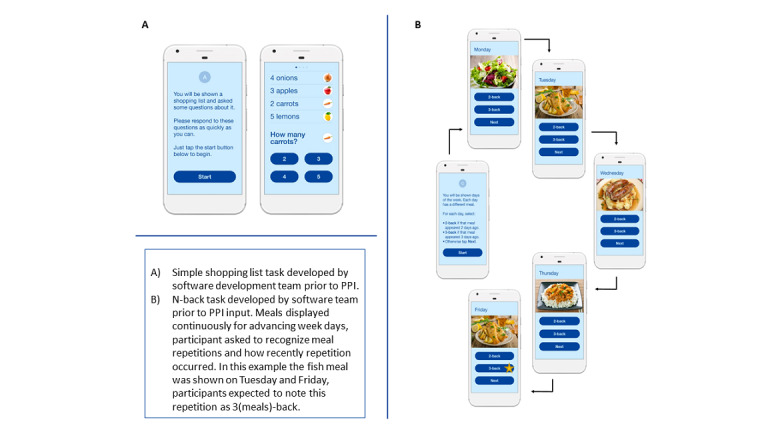

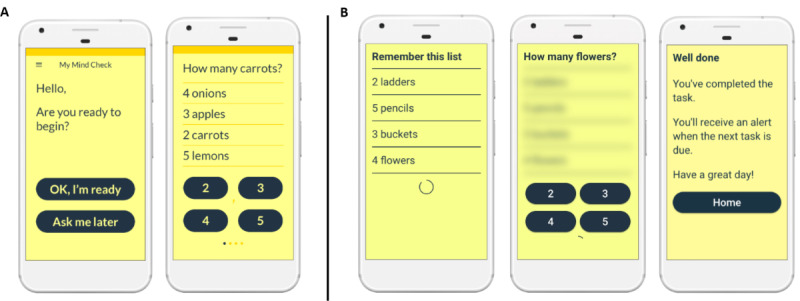

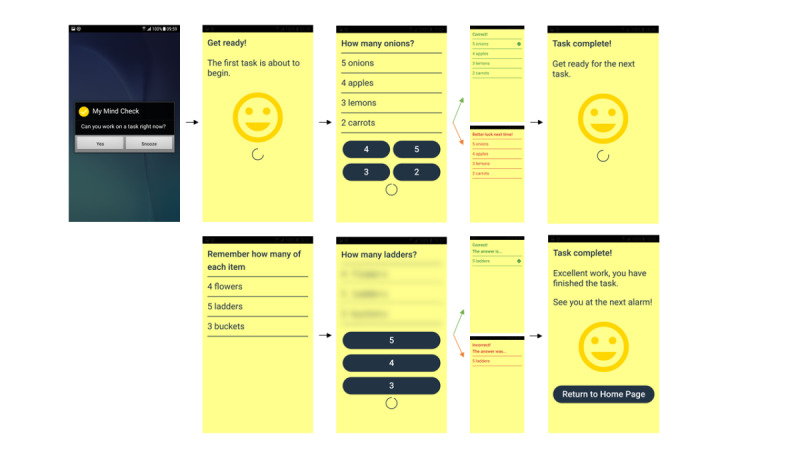

Following the development of an initial prototype app, which was designed to act as a scaffolding example app for use in the first co-design workshop, all subsequent software development sprints were based on end-user feedback. This ensured that any emerging design or software features were reviewed and modified by end users before being added to the following sprint. The extent of the end-user modifications adopted in the creation of this app can be visualized by comparing Figure 1 (the research team’s prototype app) with Figure 2 (alterations made following our first co-design workshop) and Figure 3 (the final product based on feedback from 4 co-design workshops).

Figure 1.

(A) Simple test of cognitive processing speed and (B) a more cognitively demanding test of working memory developed by the software development team before patient and public involvement (PPI) input.

Figure 2.

(A) Redesigned shopping list task and (B) new shopping list+ task following first patient and public involvement workshop.

Figure 3.

Final software alterations following second patient and public involvement workshop showing flow through the app.

PPI, Co-design Process, and Methodology

Overview

A total of 4 co-design workshops were run collaboratively with community dementia support groups and were tailored to those living with cognitive impairments. Workshops were planned around familiar venues and, in some cases, to coincide with existing support group meetings. The materials used were dementia-friendly [30] and in line with INVOLVE recommendations [31]; the budget was ring-fenced to cover attendee travel, attendance fees, and refreshments.

Participants of workshops 1 and 2 comprised a mix of individuals living with a dementia diagnosis and current and past care providers of people living with dementia (workshop 1: 5/7, 71% with dementia and 2/7, 29% current or past carers; workshop 2: 3/6, 50% with dementia and 3/6, 50% current or past carers). Participants were recruited from 2 local dementia support groups following informal visits and presentations from the members of the research team. Approximately 30% (3/10) of the participants (1/3, 33% living with dementia, and 2/3, 67% current or past carers) attended both workshops 1 and 2.

Workshops 1 and 2 adopted a similar format: each workshop lasted approximately 2 hours and included (1) lunch and informal ice-breaker conversations; (2) a short, accessible project discussion and feedback; (3) introduction and testing of a visual working prototype; and (4) the collection of informal one-to-one and group feedback on the prototype. Workshop 1 also included an activity in which participants were encouraged to discuss their views on and responses to candidate words and phrases for the app’s name. This was achieved via a discussion of flashcards containing keywords associated with the cognitive testing app (eg, cognition, test, training, research, brain, e, memory, noggin, and mobile) alongside our prototype name Health-e-Mind. This discussion generated the name MyMindCheck, which was considered meaningful and acceptable to workshop attendees. This name was later presented to the participants of workshop 2, 7 months after workshop 1, and was received positively.

These workshops were designed to involve patients in the co-design of the MyMindCheck app rather than being structured research or focus groups. Therefore, feedback from participants was not treated as research data. Consistent with common involvement practice [32], participant feedback was collected as written field notes by 2 workshop facilitators (direct quotes were not included); these notes were collated, and key points were identified and fed back to the research team.

Workshops 3 and 4 took place 2 months after workshop 2 and spanned a week-long period of user-testing. Participants were recruited by the research team from a local community dementia support group with a focus on technology; these were older individuals with current or past experience of supporting someone living with dementia (n=4 current or past carers). This group was targeted as we expected individuals attending a technology-focused group to be inclined to take part in our week-long user-testing phase.

Potential participants from this group were approached during one of the group’s regular meetings, at which the project was introduced and the app was demonstrated. From this meeting, 4 individuals consented to the 7-day testing period, whereas 4 declined, citing time commitments as a barrier to participation. We returned to the group the following week to distribute phones preloaded with the MyMindCheck app, a short instruction manual, and optional paper diaries. The paper diaries were used as an aide-mémoire for participants to record their day-to-day experiences using the app.

Workshop 4 took place after the week-long user-testing period and comprised a short informal discussion regarding participants’ experiences of using the software. Participants were asked to comment not only on their own experience with the software but also on its suitability for someone living with a dementia diagnosis. Paper diaries were referred to during this discussion as a memory prompt; data from these diaries were not stored or analyzed further outside this workshop.

Feedback from each workshop was reviewed and discussed by the project team shortly after each workshop. This resulted in an agreed set of changes for the subsequent shippable products. As with any feedback of this nature, the project team prioritized changes based on both the effort required to implement and the likely impact on the end user.
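The effort-versus-impact prioritization described above can be illustrated with a minimal sketch. The source reports this as a team discussion and does not describe any scoring scheme, so the 1 to 5 rating scales, the `ChangeRequest` and `prioritize` names, and the example backlog entries below are all assumptions made purely for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChangeRequest:
    """A candidate change raised in a workshop (hypothetical structure)."""
    description: str
    impact: int  # assumed scale: 1 (low) to 5 (high) benefit to the end user
    effort: int  # assumed scale: 1 (low) to 5 (high) cost to implement


def prioritize(requests):
    """Order requests by highest impact first, then by lowest effort."""
    return sorted(requests, key=lambda r: (-r.impact, r.effort))


# Illustrative entries only; these ratings are not taken from the study.
backlog = [
    ChangeRequest("example change A", impact=5, effort=3),
    ChangeRequest("example change B", impact=5, effort=1),
    ChangeRequest("example change C", impact=2, effort=1),
]

for request in prioritize(backlog):
    print(request.description, request.impact, request.effort)
```

Sorting on impact before effort is one possible convention; a team could equally weight the two ratings or agree the order by discussion alone.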

The Software Development Process

The MyMindCheck app was developed using a co-design approach [5-7] involving three key groups of stakeholders: the research team (including clinical input), the target user group (people living with dementia and individuals with direct experience of caring for those with dementia), and the software development team. The software team adopted the Scrum framework for development [33]. Scrum is a modern, Agile software development methodology that fits well with the co-design approach used to develop this app. It focuses on the regular delivery of working software (shippable products) to users and depends on user feedback throughout the software development.

Scrum uses sprints, which are timeboxed development efforts, usually 1 to 4 weeks in duration [33]. The software team used 3-week sprints for this project, as this presented a suitable balance between the need to be able to respond flexibly to changing requirements and the delivery of sufficient functionality within each sprint. Sprint planning sessions were attended by members of the research and software development team, including a PPI specialist (research team member) who facilitated public workshops and acted as a customer proxy during these planning sessions. The customer proxy acted as the Scrum product owner in this instance and was responsible for being the voice of the customer. In Scrum, the product owner is responsible for defining and prioritizing the requirements for the product, which, in this case, was the MyMindCheck app [34]. The PPI specialist was chosen as the product owner as they worked closely with the PPI participants to capture the requirements for the app.

Visual working prototypes were used during the initial PPI workshop events. These prototypes allowed users to see interactive screens that portrayed key design elements and the flow through the app and were produced by a user experience designer embedded in the software team. This enabled early testing with the research team and target user group without requiring significant investment in software development.

The initial prototypes were based on a validated computerized cognitive task, in which participants were presented with a short list of grocery items (eg, carrots) and a quantity for each [23]. Participants were then asked to report the quantity of a given item (Figure 1). This task had previously been validated as a measure of cognitive processing speed in community-living older people [23]. An additional task was also included to place a higher demand on memory, which was based on clinical input. This task was based on an N-back test of working memory [35] and presented participants with a meal for each day of the week. When a participant was shown a meal that had previously been seen, they needed to recall how many days back the meal was first seen (Figure 1).
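To make the structure of the shopping list task concrete, the following is a minimal sketch of how a single trial might be generated and scored. It is not the MyMindCheck implementation: the function and class names, the item pool (other than carrots), the list length, and the quantity range are assumptions chosen for illustration.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Trial:
    """One shopping list trial (hypothetical structure)."""
    shopping_list: dict  # maps item name to quantity
    probe_item: str      # the item whose quantity the user must report
    correct_answer: int


def make_trial(items, list_length=3, max_quantity=9, rng=None):
    """Build a trial: pick items, assign quantities, choose one item to probe."""
    rng = rng or random.Random()
    chosen = rng.sample(items, list_length)
    shopping_list = {item: rng.randint(1, max_quantity) for item in chosen}
    probe_item = rng.choice(chosen)
    return Trial(shopping_list, probe_item, shopping_list[probe_item])


def score_response(trial, response, reaction_time_s):
    """Record whether the reported quantity was correct and how long it took."""
    return {
        "correct": response == trial.correct_answer,
        "reaction_time_s": reaction_time_s,
    }


# Assumed item pool; only "carrots" appears in the source text.
ITEM_POOL = ["carrots", "apples", "eggs", "milk", "bread"]

trial = make_trial(ITEM_POOL, rng=random.Random(0))
print(trial.shopping_list, "How many", trial.probe_item + "?")
```

Recording reaction time alongside correctness reflects the task's stated use as a measure of processing speed; how the app actually stores responses is not described in the source.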

Before the first PPI workshop, research team members, who had experience in working with people with cognitive impairment, reviewed the prototypes. They suggested modifications to simplify these tasks, making them more visually appealing and quicker to navigate to maintain user engagement. These modifications included the following:

- The addition of images to both tasks

- Start screens containing instructions on how to complete the tasks

- Simplification of the second, harder, N-back task by introducing images of meals and the use of the days of the week, as these were familiar items (Figure 1)

- The team also generated the provisional name Health-e-Mind for the prototype app

These prototypes were then presented for review to the target user group during co-design workshops arranged by the team’s public involvement lead. On the basis of initial feedback on the visual working prototypes, the software team began the development of version 1 of the app. This process continued in an iterative manner for each of the key components of the app.

Learning From the Approach

Throughout the project, the research and software development teams met on an approximately monthly basis to review the approach being taken. This enabled improvements to be made to the process within the project and resulted in some key learning points that could be applied to future projects.

Results

Initial Prototype and Feedback From Workshop 1

The initial prototype comprised a shopping list task and an N-back task (Figure 1).

Much of the participant feedback collected during workshop 1 diverged from the research team’s prior assumptions. Some of the key feedback from workshop 1 and the actions taken to address this feedback are presented in Table 1.

Table 1.

Feedback and resulting software modifications from workshop 1.

| Item and feedback | Software modification |
|---|---|
| Appearance | |
| | From this feedback, the software development team chose to remove images from both tasks. |
| | The display was altered to black text on a yellow background. |
| Instructions | |
| | The development team removed the introduction text from this task, replacing it with a simple “Are you ready to start <yes>, <no>” structure. |
| | Text flow was altered in line with workshop preferences in the next design iteration (Figure 2). |
| | To encourage users to complete the shopping list task as quickly as possible, the development team added a circular bar countdown timer to the bottom of the task screen. |
| N-back task | |
| | It was decided that the N-back task was too complicated and not fit for purpose. Therefore, the development team removed this task and replaced it with a more memory-intensive variant of the shopping list task, subsequently referred to as shopping list+, in which the shopping list was removed from the screen before and during each probe question (Figure 2). |
| Feedback | |
| | It was decided that a generic positive feedback message would be added to the tasks, that is, “Great job, well done.” |
| Name | |
| | From this feedback, the team chose to change the name to MyMindCheck. |

Overall, workshop participants seemed positively disposed to the purpose of the app and said that, assuming certain alterations were made, they would be willing to interact with such a program several times a day.

Second Prototype and Feedback From Workshop 2

Building on feedback from workshop 1, the software team undertook a second development sprint, updating the original prototype to incorporate this feedback, including the removal of the N-back task and its replacement with the shopping list+ task (Figure 2).

Feedback from workshop 2 and the actions taken are listed in Table 2.

Table 2.

Feedback and resulting software modifications from workshop 2.

| Item and feedback | Software modification |
|---|---|
| Instructions | |
| For the new shopping list+ task, a number of participants noted that until they reached the screen containing the question and multiple-choice answers, they did not realize that they had to remember both the objects listed and the associated number of items. | This was addressed by altering the prompt used on the first screen of this task to read “Remember how many of each item.” |
| Appearance | |
| For the shopping list+ task, several participants were unable to read the entire list of 4 items displayed on the first screen before it timed out and moved on to the probe question. | To address this, the team increased the display duration of the first screen to give users more time to read the instruction and object list. They also reduced the list length from 4 items to 3. |
| This version included a countdown timer on both tasks, specifically, a circular bar countdown timer. Although most testers said that they did not notice this timer, they did note that they had been trying to respond quickly. However, 1 tester did say that she noticed the timer and felt stressed about completing the task in time. | The countdown timer remains in the app as a visual cue to complete in a timely manner. However, the timer was altered from a model that showed a finite time counting down to a timer that did not count down to a finite point. It was hoped that this would maintain a sense of urgency while mitigating the stress caused by a finite countdown. |
| Feedback | |
| This version of the app included a generic positive feedback message after each task that was not linked to performance, that is, “Well done.” This was included to avoid user discouragement because of low scores. However, participants did not appreciate being given positive feedback when they were aware that they had performed badly. | Feedback was altered to maintain a positive tone while also remaining performance neutral: “Task complete! You have finished the task. See you at the next alarm.” |

In line with feedback from the first workshop, no major objections were raised to the usability and acceptability of the MyMindCheck app. Indeed, 1 attendee who stated at the beginning of the workshop that she did not use mobile phones was particularly fast to pick up both tasks and noted at the end of the workshop that she had enjoyed testing the app.

Final Prototype and Feedback From Workshops 3 and 4

Workshops 3 and 4 aimed to test the software alterations implemented as a result of workshop 2 and to trial new functionality, including prompts and alarms. Feedback from these workshops and the actions taken to address this feedback are listed as follows:

Although most participants complied with the assigned in-app tests, the most common reasons for noncompliance were as follows:

- The alarm was not loud or long enough.

- Fear of breaking the phone if they took it out of the house.

- Fatigue at being asked to complete tasks 4 times a day.

To address these concerns, the software team implemented the following modifications:

- Increased the alarm volume and duration

- Decided to provide phone cases when using a study phone to reduce fear of dropping or damaging phones

- Decided to implement further PPI regarding prompt number and frequency before further implementation or testing

One tester also noted in her diary that, for the first 2 days of testing, the phone did not register her responses and was therefore timing out on the tests. Similar issues had surfaced with other testers to a lesser extent in some of the preceding workshops. On the basis of this feedback and previous observations, it was noted that some participants were holding the response buttons rather than tapping them, perhaps reflecting a level of unfamiliarity with mobile technology among this group. To address this issue, the software was modified to treat both on-press and on-hold events as valid answers.
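The press-or-hold fix can be sketched in a framework-agnostic way. The class and method names below are hypothetical and do not correspond to any particular mobile toolkit or to the MyMindCheck code; the sketch only shows the idea that either gesture registers the answer, and that a single touch producing both events is counted once.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ResponseButton:
    """An answer button that accepts either a tap or a hold (hypothetical)."""
    value: int
    _answered: bool = field(default=False, init=False)

    def on_press(self) -> Optional[int]:
        """Handler for a short tap."""
        return self._register()

    def on_hold(self) -> Optional[int]:
        """Handler for a long press; treated exactly like a tap."""
        return self._register()

    def _register(self) -> Optional[int]:
        # Ignore repeat events so one touch cannot submit two answers.
        if self._answered:
            return None
        self._answered = True
        return self.value


button = ResponseButton(value=3)
print(button.on_hold())   # a held button still registers the answer
print(button.on_press())  # a follow-up event from the same touch is ignored
```

The guard against repeat events is an added design assumption: many touch toolkits can emit more than one event for a single gesture, and without it a hold might be recorded twice.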

Testers were confident using both tasks, although they noted that they found the shopping list+ task harder and that it required more concentration. Testers with experience caring for someone with dementia stated that they believed that these tasks could be completed by someone living with dementia, assuming they had support from a care partner.

Discussion

Principal Findings

We used a co-design approach to develop the MyMindCheck app involving three key groups of stakeholders: the research team (including clinical input), the target user group (people living with dementia or individuals with direct experience of caring for others with dementia), and the software development team. As patient involvement and co-design in dementia research is in its relative infancy across Europe [10], this study will make an important contribution toward a model of best practice for related research and provide an exemplar for others wishing to adopt and modify this approach. Our report conforms to the Guidance for Reporting Involvement of Patients and the Public-2 international reporting guidelines for PPI [36]. Therefore, findings from this study will be comparable with those of other studies following these guidelines and will address concerns raised by some researchers regarding the lack of consistent reporting in co-design research [37], especially in regard to those living with dementia [4].

By adopting Scrum in this context, we were able to realize the benefits of an iterative co-design approach, with the software evolving throughout each of the 4 workshops. In addition, our use of prototype designs in the first 2 workshops provided the team with a low-cost opportunity to receive feedback and evaluate the idea before commencing software development.

Participants in the workshops gave positive feedback about the experience, showed strong engagement during the sessions, and provided constructive comments on the app. Notably, points raised in early workshops did not resurface in the week-long test undertaken by a different participant group at a later stage. This could be because users in the week-long test focused on different aspects of the app. However, it could also suggest that our approach was effective in addressing design issues at an early stage of development.

Although the co-design methodology enabled the team to iteratively develop the app, we still had to overcome several challenges. For instance, it was agreed early on that embedding an end user (in this case, someone living with a dementia diagnosis) into the Scrum team, as per co-design best practice [38], would not be feasible because of the burden that regular meetings could place on those living with a dementia diagnosis and their care partners, as well as the power differentials and communication difficulties associated with involving lay members in technical discussions [39]. Instead, we took the pragmatic approach of running workshops throughout the project to garner regular feedback from the user group and providing end-user representation through a proxy (in our study, the proxy was a public engagement officer who developed and facilitated all co-design workshops). Ideally, the project would have benefited from more regular contact with the end-user group. However, given the vulnerable nature of this group and the fast-turnaround, intensive nature of the development project, our approach seemed to be an appropriate compromise.

There were some logistical challenges in running Agile development alongside a co-design process. Specifically, recruiting participants for workshops required multiple interactions with community groups to garner interest in the project and plan suitable times and venues for workshops. Therefore, it was necessary to set the date for each workshop several weeks in advance. However, software development does not always run according to plan, as it is not possible to estimate development tasks with a high degree of accuracy. Therefore, there is a risk that a date could be set for a workshop only for the software not to be ready in time. We mitigated this risk by setting workshop dates that allowed suitable leeway for any unexpected delays. We also worked with an experienced and established Scrum team, meaning that the estimates could usually be provided with a reasonable level of confidence.

Conclusions

Given the need for health research, particularly the development of health technology, to be approached in a patient-centered manner [37], we developed a methodology that combines Agile software development with integrated patient co-design. This approach facilitated meaningful user involvement in a manner that was easily manageable by our project team, who were working on a short timescale with budget constraints, a challenge experienced by many developers [40].

We also highlighted several instances where input provided by people with lived experience of dementia helped our team to identify and address usability issues early in the development process, speeding up delivery and reducing software development waste. Our experience evidences how co-design can benefit the software development process and be sustainably tailored to the needs of diverse patient populations [4].

The next steps for the MyMindCheck app are to undertake a large pilot trial and to adapt the app for use in other health conditions with fluctuating cognitive states. Patient groups will continue to be involved throughout this future work to ensure that the developed software is fit for purpose.

Acknowledgments

This work was funded by the Medical Research Council Momentum Award: Institutional pump-priming award for dementia research (MC_PC_16033). The authors would like to thank the Alzheimer’s Society and their patient network for support in the setup and design of workshops and all the participants who contributed to this project, with special thanks to the Peter Quinn Friendship Group, Together Dementia Support, and the Humphrey Booth Resource Centre.

Abbreviations

- PPI: patient and public involvement

Footnotes

Conflicts of Interest: None declared.

References

- 1. The NHS long term plan. NHS. [2021-12-13]. https://www.longtermplan.nhs.uk/

- 2. Dobson J. Co-production helps ensure that new technology succeeds. BMJ. 2019 Jul 25;41(6):l4833–50. doi: 10.1136/bmj.l4833.

- 3. Palumbo R. Contextualizing co-production of health care: a systematic literature review. Int J Public Sector Manag. 2016 Jan 11;29(1). doi: 10.1108/IJPSM-07-2015-0125.

- 4. Topo P. Technology studies to meet the needs of people with dementia and their caregivers. J Appl Gerontol. 2008 Oct 01;28(1):5–37. doi: 10.1177/0733464808324019.

- 5. Martin A, Caon M, Adorni F, Andreoni G, Ascolese A, Atkinson S, Bul K, Carrion C, Castell C, Ciociola V, Condon L, Espallargues M, Hanley J, Jesuthasan N, Lafortuna CL, Lang A, Prinelli F, Puidomenech Puig E, Tabozzi SA, McKinstry B. A mobile phone intervention to improve obesity-related health behaviors of adolescents across Europe: iterative co-design and feasibility study. JMIR Mhealth Uhealth. 2020 Mar 02;8(3):e14118. doi: 10.2196/14118. https://mhealth.jmir.org/2020/3/e14118/

- 6. Sanders E, Stappers P. Co-creation and the new landscapes of design. CoDesign. 2008 Mar;4(1):5–18. doi: 10.1080/15710880701875068.

- 7. Steen M, Manschot M, Koning N. Benefits of co-design in service design projects. Int J Design. 2011 Aug;5(2):53–60. https://www.researchgate.net/publication/254756409_Benefits_of_Co-design_in_Service_Design_Projects

- 8. Cavalcanti L, Holden R, Karanam Y. Applying participatory design with dementia stakeholders: challenges and lessons learned from two projects. Proceedings of the 12th EAI International Conference on Pervasive Computing Technologies for Healthcare – Demos, Posters, Doctoral Colloquium; May 21-24, 2018; New York, United States. 2018.

- 9. Kerkhof Y, Pelgrum-Keurhorst M, Mangiaracina F, Bergsma A, Vrauwdeunt G, Graff M, Dröes R-M. User-participatory development of FindMyApps; a tool to help people with mild dementia find supportive apps for self-management and meaningful activities. Digit Health. 2019;5:2055207618822942. doi: 10.1177/2055207618822942. https://journals.sagepub.com/doi/10.1177/2055207618822942?url_ver=Z39.88-2003&rfr_id=ori:rid:crossref.org&rfr_dat=cr_pub%3dpubmed

- 10. Miah J, Dawes P, Edwards S, Leroi I, Starling B, Parsons S. Patient and public involvement in dementia research in the European Union: a scoping review. BMC Geriatr. 2019 Aug 14;19(1):220. doi: 10.1186/s12877-019-1217-9. https://bmcgeriatr.biomedcentral.com/articles/10.1186/s12877-019-1217-9

- 11. Pickett J, Murray M. Editorial: patient and public involvement in dementia research: setting new standards. Dementia (London). 2018 Nov;17(8):939–43. doi: 10.1177/1471301218789290.

- 12. Morgan N, Grinbergs-Saull A, Murray M. 'We can make our research meaningful': the impact of the Alzheimer's Society Research Network. Alzheimer's Society. [2021-12-13]. https://www.alzheimers.org.uk/sites/default/files/2018-04/Research%20Network%20Report%20low-res.pdf

- 13. Hudson JM, Pollux PM. The Cognitive Daisy - A novel method for recognising the cognitive status of older adults in residential care: Innovative Practice. Dementia (London). 2019 Jul;18(5):1948–1958. doi: 10.1177/1471301216673918. https://journals.sagepub.com/doi/10.1177/1471301216673918?url_ver=Z39.88-2003&rfr_id=ori:rid:crossref.org&rfr_dat=cr_pub%3dpubmed

- 14. Van der Roest HG, Wenborn J, Pastink C, Dröes R-M, Orrell M. Assistive technology for memory support in dementia. Cochrane Database Syst Rev. 2017 Jun 11;6:CD009627. doi: 10.1002/14651858.CD009627.pub2. http://europepmc.org/abstract/MED/28602027

- 15. Bateman DR, Srinivas B, Emmett TW, Schleyer TK, Holden RJ, Hendrie HC, Callahan CM. Categorizing health outcomes and efficacy of mHealth apps for persons with cognitive impairment: a systematic review. J Med Internet Res. 2017 Aug 30;19(8):e301. doi: 10.2196/jmir.7814. https://www.jmir.org/2017/8/e301/

- 16. Andrews JA, Brown LJ, Hawley MS, Astell AJ. Older Adults' Perspectives on Using Digital Technology to Maintain Good Mental Health: Interactive Group Study. J Med Internet Res. 2019 Feb 13;21(2):e11694. doi: 10.2196/11694. https://www.jmir.org/2019/2/e11694/

- 17. Hassan L, Swarbrick C, Sanders C, Parker A, Machin M, Tully MP, Ainsworth J. Tea, talk and technology: patient and public involvement to improve connected health 'wearables' research in dementia. Res Involv Engagem. 2017 Aug 01;3:12. doi: 10.1186/s40900-017-0063-1. https://researchinvolvement.biomedcentral.com/articles/10.1186/s40900-017-0063-1

- 18. May C, Hasher L, Stoltzfus E. Optimal time of day and the magnitude of age differences in memory. Psychol Sci. 2017 Apr 25;4(5):326–30. doi: 10.1111/j.1467-9280.1993.tb00573.x. http://www.jstor.org/stable/40063056

- 19. Mortamais M, Ash JA, Harrison J, Kaye J, Kramer J, Randolph C, Pose C, Albala B, Ropacki M, Ritchie CW, Ritchie K. Detecting cognitive changes in preclinical Alzheimer's disease: a review of its feasibility. Alzheimers Dement. 2017 Apr 01;13(4):468–92. doi: 10.1016/j.jalz.2016.06.2365.

- 20. Menegardo CS, Friggi FA, Scardini JB, Rossi TS, Vieira TD, Tieppo A, Morelato RL. Sundown syndrome in patients with Alzheimer's disease dementia. Dement Neuropsychol. 2019 Sep 01;13(4):469–74. doi: 10.1590/1980-57642018dn13-040015. http://europepmc.org/abstract/MED/31844502

- 21. Khachiyants N, Trinkle D, Son SJ, Kim KY. Sundown syndrome in persons with dementia: an update. Psychiatry Investig. 2011 Dec 01;8(4):275–87. doi: 10.4306/pi.2011.8.4.275. http://psychiatryinvestigation.org/journal/view.php?doi=10.4306/pi.2011.8.4.275

- 22. Dementia assessment and diagnosis. National Institute for Health and Care Excellence. [2021-12-13]. https://pathways.nice.org.uk/pathways/dementia/dementia-assessment-and-diagnosis

- 23. Brown LJ, Adlam T, Hwang F, Khadra H, Maclean LM, Rudd B, Smith T, Timon C, Williams EA, Astell AJ. Computer-based tools for assessing micro-longitudinal patterns of cognitive function in older adults. Age (Dordr). 2016 Aug;38(4):335–50. doi: 10.1007/s11357-016-9934-x. http://europepmc.org/abstract/MED/27473748

- 24. Muniz-Terrera G, Watermeyer T, Danso S, Ritchie C. Mobile cognitive testing: opportunities for aging and neurodegeneration research in low- and middle-income countries. J Glob Health. 2019 Dec;9(2):020313. doi: 10.7189/jogh.09.020313.

- 25. O'Connor S. Co-designing technology with people with dementia and their carers: exploring user perspectives when co-creating a mobile health application. Int J Older People Nurs. 2020 Sep;15(3):e12288. doi: 10.1111/opn.12288.

- 26. Yousaf K, Mehmood Z, Awan IA, Saba T, Alharbey R, Qadah T, Alrige MA. A comprehensive study of mobile-health based assistive technology for the healthcare of dementia and Alzheimer's disease (AD). Health Care Manag Sci. 2020 Jun 01;23(2):287–309. doi: 10.1007/s10729-019-09486-0.

- 27. Roberts M. Successful public health information system database integration projects: a qualitative study. Online J Public Health Inform. 2018;10(2):e207. doi: 10.5210/ojphi.v10i2.9221. http://europepmc.org/abstract/MED/30349625

- 28. Raghu A, Praveen D, Peiris D, Tarassenko L, Clifford G. Engineering a mobile health tool for resource-poor settings to assess and manage cardiovascular disease risk: SMARThealth study. BMC Med Inform Decis Mak. 2015 Apr 29;15:36. doi: 10.1186/s12911-015-0148-4. https://bmcmedinformdecismak.biomedcentral.com/articles/10.1186/s12911-015-0148-4

- 29. Tambuyzer E, Pieters G, Van Audenhove C. Patient involvement in mental health care: one size does not fit all. Health Expect. 2014 Feb 01;17(1):138–50. doi: 10.1111/j.1369-7625.2011.00743.x.

- 30. Dementia-friendly communities. Alzheimer's Society. [2021-12-13]. https://www.alzheimers.org.uk/get-involved/dementia-friendly-communities

- 31. Good practice for payment and recognition - things to consider. NIHR|INVOLVE. 2019. [2021-08-01]. https://www.invo.org.uk/good-practice-for-payment-and-recognition-things-to-consider/

- 32. Turk A, Boylan A, Locock L. A researchers guide to patient public involvement. Healthtalk. [2021-12-13]. https://oxfordbrc.nihr.ac.uk/wp-content/uploads/2017/03/A-Researchers-Guide-to-PPI.pdf

- 33. Schwaber K. Business Object Design and Implementation. London: Springer; 1997. SCRUM development process.

- 34. Oomen S, Benny DW, Ademar A, Pascal R. How can scrum be successful? Competences of the scrum product owner. Proceedings of the 25th European Conference on Information Systems (ECIS); Jun 5-10, 2017; Guimarães, Portugal. 2017.

- 35. Lezak M, Howieson D, Bigler E, Tranel D. Neuropsychological Assessment 5th edition. Oxford, UK: Oxford University Press; 2012.

- 36. Staniszewska S, Brett J, Simera I, Seers K, Mockford C, Goodlad S, Altman DG, Moher D, Barber R, Denegri S, Entwistle A, Littlejohns P, Morris C, Suleman R, Thomas V, Tysall C. GRIPP2 reporting checklists: tools to improve reporting of patient and public involvement in research. Res Involv Engagem. 2017 Aug 2;3:13. doi: 10.1186/s40900-017-0062-2. https://researchinvolvement.biomedcentral.com/articles/10.1186/s40900-017-0062-2

- 37. Slattery P, Saeri AK, Bragge P. Research co-design in health: a rapid overview of reviews. Health Res Policy Syst. 2020 Feb 11;18(1):17. doi: 10.1186/s12961-020-0528-9. https://health-policy-systems.biomedcentral.com/articles/10.1186/s12961-020-0528-9

- 38. Guidance on co-producing a research project. NIHR. 2018 Apr 01. [2021-12-13]. https://www.invo.org.uk//wp-content//uploads//2019//04//Copro_Guidance_Feb19.pdf

- 39. Brown LJ, Dickinson T, Smith S, Brown Wilson C, Horne M, Torkington K, Simpson P. Openness, inclusion and transparency in the practice of public involvement in research: a reflective exercise to develop best practice recommendations. Health Expect. 2018 Apr;21(2):441–7. doi: 10.1111/hex.12609.

- 40. Nan N, Harter DE. Impact of budget and schedule pressure on software development cycle time and effort. IEEE Trans Software Eng. 2009 Sep 01;35(5):624–37. doi: 10.1109/tse.2009.18.