Abstract

Brain–machine interfaces (BMIs) for reaching have enjoyed continued performance improvements, yet there remains significant need for BMIs that control other movement classes. Recent scientific findings suggest that the intrinsic covariance structure of neural activity depends strongly on movement class, potentially necessitating different decode algorithms across classes. To address this possibility, we developed a self-motion BMI based on cortical activity as monkeys cycled a hand-held pedal to progress along a virtual track. Unlike during reaching, we found no high-variance dimensions that directly correlated with to-be-decoded variables. This was due to no neurons having consistent correlations between their responses and kinematic variables. Yet we could decode a single variable—self-motion—by nonlinearly leveraging structure that spanned multiple high-variance neural dimensions. Resulting online BMI-control success rates approached those during manual control. These findings make two broad points regarding how to build decode algorithms that harmonize with the empirical structure of neural activity in motor cortex. First, even when decoding from the same cortical region (e.g., arm-related motor cortex), different movement classes may need to employ very different strategies. Although correlations between neural activity and hand velocity are prominent during reaching tasks, they are not a fundamental property of motor cortex and cannot be counted on to be present in general. Second, although one generally desires a low-dimensional readout, it can be beneficial to leverage a multidimensional high-variance subspace. Fully embracing this approach requires highly nonlinear approaches tailored to the task at hand, but can produce near-native levels of performance.

SIGNIFICANCE STATEMENT Many brain–machine interface decoders have been constructed for controlling movements normally performed with the arm. Yet it is unclear how these will function beyond the reach-like scenarios where they were developed. Existing decoders implicitly assume that neural covariance structure, and correlations with to-be-decoded kinematic variables, will be largely preserved across tasks. We find that the correlation between neural activity and hand kinematics, a feature typically exploited when decoding reach-like movements, is essentially absent during another task performed with the arm: cycling through a virtual environment. Nevertheless, the use of a different strategy, one focused on leveraging the highest-variance neural signals, supported high performance real-time brain–machine interface control.

Keywords: BMI, motor cortex, prosthetics, subspaces

Introduction

Intracortical brain–machine interfaces (BMIs) that support reach-like tasks have proved successful in primates and human clinical trials (Ethier et al., 2012; Gilja et al., 2012, 2015; Collinger et al., 2013; Shenoy and Carmena, 2014; Wodlinger et al., 2015; Ajiboye et al., 2017; Shanechi et al., 2017). Yet it is unclear whether current decode algorithms will generalize well to nonreaching applications, even within the domain of arm movements. Early reach-based BMIs (Chapin et al., 1999; Wessberg et al., 2000; Serruya et al., 2002; Taylor et al., 2002; Carmena et al., 2003; Velliste et al., 2008) employed a strategy of inverting the ostensible neural encoding of kinematic variables, primarily hand velocity and direction. Despite evidence against literal kinematic encoding (Scott et al., 2001; Sergio et al., 2005; Churchland et al., 2012; Sussillo et al., 2015; Michaels et al., 2016; Russo et al., 2018), studies have continued to leverage robust correlations between neural activity and reach kinematics. Improvements have derived from honing this strategy (Gilja et al., 2012), from stabilizing the neural subspace containing the useful correlations (Degenhart et al., 2020; Gallego et al., 2020) and/or from better estimating the neural state (Aghagolzadeh and Truccolo, 2016; Makin et al., 2018). For example, Kao et al. (2015) leveraged dynamics, spanning many neural dimensions, to denoise activity in dimensions where correlations with reach kinematics were strong.

Successful decoding both leverages (Chase and Schwartz, 2011; Shenoy et al., 2011) and serves to validate scientific assumptions, including whether population activity evolves according to dynamics (Kao et al., 2015) and how neural responses change during learning (Taylor et al., 2002; Ganguly et al., 2011; Sadtler et al., 2014). The assumption that neural activity correlates robustly with external parameters (Taylor et al., 2002; Kandel et al., 2021) has been near-universal, yet is based almost entirely on observations during reach-like movements of the arm or wrist. Correlations between neural activity and hand velocity are perhaps not surprising during reaching; both are phasic. A natural question is whether this empirical foundation can be relied on when decoding movements of the arm that are not reach-like. Do strong correlations remain, or do other response features become prominent, requiring different decode strategies?

BMIs designed for different effectors (e.g., for tongue and speech decoding) have already had to contend with the possibility that strong correlations between neural activity and to-be-decoded variables may be absent, requiring highly nonlinear approaches (Anumanchipalli et al., 2019; Liu et al., 2019). Even within the realm of reaching, performance is improved if a recurrent network precedes linear decoding of kinematics (Sussillo et al., 2012, 2016). Much of this benefit may be due to denoising (similar to Kao et al., 2015), but this finding also suggests meaningful nonlinear relationships. What then should we expect during non–reach-like arm movements? Will strong linear correlations with kinematics persist, or will other more nonlinear relationships become dominant? This question is intertwined with our evolving understanding of motor cortex covariance structure. During a given task, a handful of neural dimensions typically captures considerable variance (Churchland et al., 2007; Sadtler et al., 2014; Gallego et al., 2017). These high-variance dimensions are useful for decoding because higher variance implies better noise resistance. Within a given task, neural covariance structure remains surprisingly fixed even when the decoder is altered (Sadtler et al., 2014; Golub et al., 2018) and can remain similar across related tasks (Gallego et al., 2018). If covariance remains fixed across tasks, then the nature of neural-vs-kinematic correlations would presumably also be stable. One might still need different decode strategies for very different modalities (e.g., arm movements vs speech), but one could hope to use a unified strategy within a modality.

Yet there is increasing experimental evidence that covariance is often not stable across tasks. Covariance changes dramatically when cycling forward versus backward (Russo et al., 2018), when using one arm versus another (Ames and Churchland, 2019), when preparing versus moving (Kaufman et al., 2014; Elsayed et al., 2016; Inagaki et al., 2020), when reaching versus walking (Miri et al., 2017), and when co-contracting versus alternating muscle activity (Warriner et al., under review). Thus, in a new task, there is no guarantee the high-variance dimensions will be the same or will show the same correlations with kinematics. Indeed, the separation of computations related to different tasks into different dimensions may be a common network property (Duncker et al., 2020). While such a property would impair the use of the same decode strategy across tasks, it could also allow the design of decoders that leverage task-specific relationships between neural activity and kinematic variables.

To investigate, we employed a simple task in which monkeys cycle a hand-held pedal to move along a virtual track. The class of neural activity evoked by this task (rhythmic activity with frequency reflecting movement speed) could potentially be leveraged by future BMI devices that guide self-motion. Prior explorations of self-motion-decoding borrowed from the strategies employed by reach-based BMIs, and decoded a whole-body directional vector (Rajangam et al., 2016) or classified the direction of a joystick intermediary (Libedinsky et al., 2016). This is a promising approach, but we wished to explore whether a task-specific approach might also be promising. Cycling is overtly different from reach- or joystick-based tasks, and might both require and allow different decode strategies.

During cycling, activity that correlated with kinematics was relegated to low-variance dimensions. Put differently, most neurons displayed activity that did not correlate particularly well with kinematics. While there existed linear readouts of activity that did correlate well with movement kinematics, they were based on low-variance dimensions and thus susceptible to noise. In contrast, there existed high-variance subspaces where neural activity had reliable nonlinear relationships with intended self-motion. The ability to decode a one-dimensional command for virtual self-motion, from activity spanning multiple high-variance dimensions, produced both high accuracy and low latency. Success rates and acquisition times were close to those achieved under manual control. As a result, almost no training was needed; monkeys appeared to barely notice transitions from manual to BMI control. These findings make two points regarding how BMI decoding should interact with the basic properties of motor cortex activity. First, the neural relationships leveraged by traditional decoders are empirically reliable only during some behaviors. Second, even when those traditional relationships are absent, other task-specific relationships will be present and can support very accurate decoding.

Materials and Methods

Experimental design

All procedures were approved by the Columbia University Institutional Animal Care and Use Committee. Subjects G and E were 2 adult male macaque monkeys (Macaca mulatta). Monkeys sat in a primate chair facing an LCD monitor (144 Hz refresh rate) that displayed a virtual environment generated by the Unity engine (Unity Technologies). The head was restrained via a titanium surgical implant. While the monkey's left arm was comfortably restrained, the right arm grasped a hand pedal. Cloth tape was used to ensure consistent placement of the hand on the pedal. The pedal connected via a shaft to a motor (Applied Motion Products), which contained a rotary encoder that measured the position of the pedal with a precision of 1/10,000 of the cycle. The motor was also used to apply forces to the pedal, endowing it with virtual mass and viscosity.

Although our primary focus was on BMI performance, we also employed multiple sessions where the task was performed under manual control. Manual-control sessions allowed us to document the features of neural responses we used for decoding. They documented “normal” performance, against which BMI performance could be compared. Monkey G performed eight manual-control sessions (average of 229 trials/session), each within the same day as 1 of the 20 BMI-controlled sessions (average of 654 trials/session). Monkey E performed five manual-control sessions (average of 231 trials/session) on separate days from the 17 BMI-controlled sessions (average of 137 trials/session).

BMI-controlled sessions were preceded by 50 “decoder-training trials” performed under manual control. These were used to train the decoder on that day. Decoder-training trials employed only a subset of all conditions subsequently performed during BMI control. Thus, BMI-controlled performance had to generalize to sequences of different distances not present during the decoder-training trials. Because they did not include all conditions, we do not analyze performance for decoder-training trials. All performance comparisons are made between manual-control and BMI-control sessions, which employed identical conditions, trial parameters, and definitions of success versus failure. We describe the task below from the perspective of manual control. The task was identical under BMI control, except that position in the virtual environment was controlled by the output of a decoder rather than the pedal. We did not prevent or discourage the monkey from cycling during BMI control, and he continued to do so as normal.

The cycling task required that the monkey cycle the pedal in the instructed direction to move through the virtual environment, and stop on top of lighted targets to collect juice reward. The color of the landscape indicated whether cycling must be “forward” (green landscape, the hand moved away from the body at the top of the cycle) or “backward” (tan landscape, the hand moved toward the body at the top of the cycle). In the primary task, cycling involved moving between stationary targets (in a subsequent section, we describe an additional task used to evaluate speed control). There were 6 total conditions, defined by cycling direction (forward or backward) and target distance (2, 4, or 7 cycles). Distance conditions were randomized within same-direction blocks (three trials of each distance per block), and directional blocks were randomized over the course of each session. Trials began with the monkey stationary on a target. A second target appeared in the distance. To obtain reward, the monkey had to cycle to that target, come to a halt “on top” of it (in the first-person perspective of the task), and remain stationary for a hold period of 1000-1500 ms (randomized). A trial was aborted without reward if the monkey began moving before target onset (or in the 170 ms after, which would indicate attempted anticipation), if the monkey moved past the target without stopping, or if the monkey moved while awaiting reward. The next trial began 100 ms after the variable hold period. Monkeys performed until they received enough liquid reward that they chose to desist. As their motivation waned, they would at times take short breaks. For both manual-control and BMI-control sessions, we discarded any trials in which monkeys made no attempt to initiate the trial, and did not count them as “failed.” These trials occurred 2 ± 2 times per session (mean and SD, Monkey G, maximum 10) and 3 ± 3 times per session (Monkey E, maximum 11).

For Monkey G, an additional three manual-control sessions (189, 407, and 394 trials) were employed to record EMG activity from the muscles. We recorded from 5 to 7 muscles per session, yielding a total of 19 recordings. We made one or more recordings from the three heads of the deltoid, the lateral and long heads of triceps brachii, the biceps brachii, trapezius, and latissimus dorsi. These muscles were selected due to their clear activation during the cycling task.

Surgery and neural/muscle recordings

Neural activity was recorded using chronic 96-channel Utah arrays (Blackrock Microsystems), implanted in the left hemisphere using standard surgical techniques. In each monkey, an array was placed in the region of primary motor cortex (M1) corresponding to the upper arm. In Monkey G, a second array was placed in dorsal premotor cortex (PMd), just anterior to the first array. Array locations were selected based on MRI scans and anatomical landmarks observed during surgery. Experiments were performed 1-8 months (Monkey G) and 3-4 months (Monkey E) after surgical implantation. Neural responses both during the task and during palpation confirmed that arrays were in the proximal-arm region of cortex.

Electrode voltages were filtered (bandpass 0.3 Hz to 7.5 kHz) and digitized at 30 kHz using Digital Headstages, Digital Hubs, and Cerebus Neural Signal Processors from Blackrock Microsystems. Digitized voltages were high-pass filtered (250 Hz) and spike events were detected based on threshold crossings. Thresholds were set to between −4.5 and −3 times the RMS voltage on each channel, depending on the array quality on a given day. On most channels, threshold crossings included clear action-potential waveforms from one or more neurons, but no attempt was made to sort action potentials.

Intramuscular EMG recordings were made using pairs of hook-wire electrodes inserted with 30 mm × 27 gauge needles (Natus Neurology). Raw voltages were amplified and filtered (bandpass 10 Hz to 10 kHz) with ISO-DAM 8A modules (World Precision Instruments), and digitized at 30 kHz with the Cerebus Neural Signal Processors. EMG was then digitally bandpass filtered (50 Hz to 5 kHz) prior to saving for offline analysis. Offline, EMG recordings were rectified, low-pass filtered by convolving with a Gaussian (SD: 25 ms), downsampled to 1 kHz, and then fully normalized such that the maximum value achieved on each EMG channel was 1.

A real-time target computer (Speedgoat) running the Simulink Real-Time environment (The MathWorks) processed behavioral and neural data and controlled the decoder output in online experiments. It also streamed variables of interest to another computer that saved these variables for offline analysis. Stateflow charts were implemented in the Simulink model to control task state flow as well as the decoder state machine. Real-time control had millisecond precision.

Spike trains were causally converted to firing rates by convolving each spike with a β kernel. The β kernel was defined by temporally scaling a β distribution (shape parameters: α = 3 and β = 5) to be defined over the interval [0, 275] ms and normalizing the kernel such that the firing rates would be in units of spikes/s. The same filtering was applied for online decoding and offline analyses. Firing rates were also mean centered (subtracting the mean rate across all times and conditions) and normalized. During online decoding, the mean and normalization factor were values that had been computed from the training data. We used soft normalization (Russo et al., 2018): the normalization factor was the firing rate range plus a constant (5 spikes/s).
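The filtering and normalization steps above can be sketched in a few lines. This is a minimal illustration, assuming 1 ms bins and plain Python lists; the function names (`beta_kernel`, `causal_rate`, `soft_normalize`) are hypothetical and not taken from the original implementation.

```python
def beta_kernel(alpha=3.0, beta=5.0, duration_ms=275, dt_ms=1):
    """Causal kernel: a Beta(alpha, beta) density stretched over [0, duration_ms]."""
    n = int(duration_ms / dt_ms) + 1
    u = [i / (n - 1) for i in range(n)]                  # rescaled time in [0, 1]
    shape = [x ** (alpha - 1) * (1 - x) ** (beta - 1) for x in u]
    area_s = sum(shape) * dt_ms / 1000.0                 # kernel area, in seconds
    return [s / area_s for s in shape]                   # unit area -> spikes/s


def causal_rate(spikes, kernel):
    """spikes: 0/1 per bin. Each spike adds the kernel to current and later bins only."""
    rate = [0.0] * len(spikes)
    for t, s in enumerate(spikes):
        if s:
            for k, w in enumerate(kernel):
                if t + k < len(rate):
                    rate[t + k] += s * w
    return rate


def soft_normalize(rate, mean, rate_range, constant=5.0):
    """Mean-center, then divide by (firing rate range + constant)."""
    return [(r - mean) / (rate_range + constant) for r in rate]
```

In online use, `mean` and `rate_range` would be the values computed from the training data, as described above.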

Computing trial-averaged firing rates

Analyses of BMI performance are based on real-time decoding during online performance, with no need to consider trial-averaged firing rates. However, we still wished to compute trial-averaged traces of neural activity and kinematics for two purposes. First, some aspects of decoder training benefited from analyzing trial-averaged firing rates. Second, we employ analyses that document basic features of single-neuron responses and of the population response (e.g., plotting neural activity within task-relevant dimensions). These analyses benefit from the denoising that comes from computing a time-varying firing rate across many trials. Due to the nature of the task, trials could be quite long (up to 20 cycles in the speed-tracking task), rendering the traditional approach of aligning all trials to movement onset insufficient for preserving alignment across all subsequent cycles. It was thus necessary to modestly adjust the time base of each individual trial (e.g., stretching time slightly for a trial where cycling was faster than typical). We employed two alignment methods. Method A is a simplified procedure that was used prior to parameter fitting when training the decoder before online BMI control. This method aligns only times during the movement. Method B is a more sophisticated alignment procedure that was used for all offline analyses. This method aligns the entire trial, including premovement and postmovement data. For visualization, conditions with the same target distance (e.g., 7 cycles), but different directions, were also aligned to the same time base. Critically, any data processing that relied on temporal structure was completed in the original, unstretched time base prior to alignment.

Method A

The world position for each trial resembles a ramp between movement onset and offset. First, we identify the portion of each trial starting ¼ cycle into the movement and ending ¼ cycle before the end of the movement. We fit a line to the world position in this period and then extend that line until it intercepts the starting and ending positions. The data between these two intercepts are considered the movement data for each trial and are extracted. These movement data are then uniformly stretched in time to match the average trial length for each trial's associated condition. This approach compresses slower than average movements and stretches faster than average movements within a condition, such that they can be averaged while still preserving many of the cycle-specific features of the data.
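A minimal sketch of Method A follows, assuming NumPy, world position expressed in cycles, and position that increases over the trial (a backward trial would need its sign flipped first). The function names and the `margin` argument are hypothetical.

```python
import numpy as np


def movement_window(t, pos, start_pos, end_pos, margin=0.25):
    """t: (T,) times in s; pos: (T,) world position in cycles, rising from
    start_pos to end_pos. Fits a line to the middle of the ramp and returns the
    times at which that line meets the starting and ending positions."""
    mid = (pos >= start_pos + margin) & (pos <= end_pos - margin)
    slope, intercept = np.polyfit(t[mid], pos[mid], 1)
    return (start_pos - intercept) / slope, (end_pos - intercept) / slope


def stretch_to_length(t, data, t_on, t_off, n_samples):
    """Uniformly resample data between t_on and t_off onto n_samples points
    (n_samples would be the average movement length for the condition)."""
    return np.interp(np.linspace(t_on, t_off, n_samples), t, data)
```

Because every trial in a condition is resampled to the same number of points, the stretched trials can be averaged sample by sample.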

Method B

This method consists of a mild, nonuniform stretching of time in order to match each trial to a condition-specific template (for complete details, see Russo et al., 2018).

Statistical analysis

Statistical analysis relating to neural activity during manual control (see Fig. 2) is described in the following sections. Analysis related to performance of the decoders during the main BMI task and the speed-control task variant is given subsequently in the associated Materials and Methods and Results sections.

Figure 2.

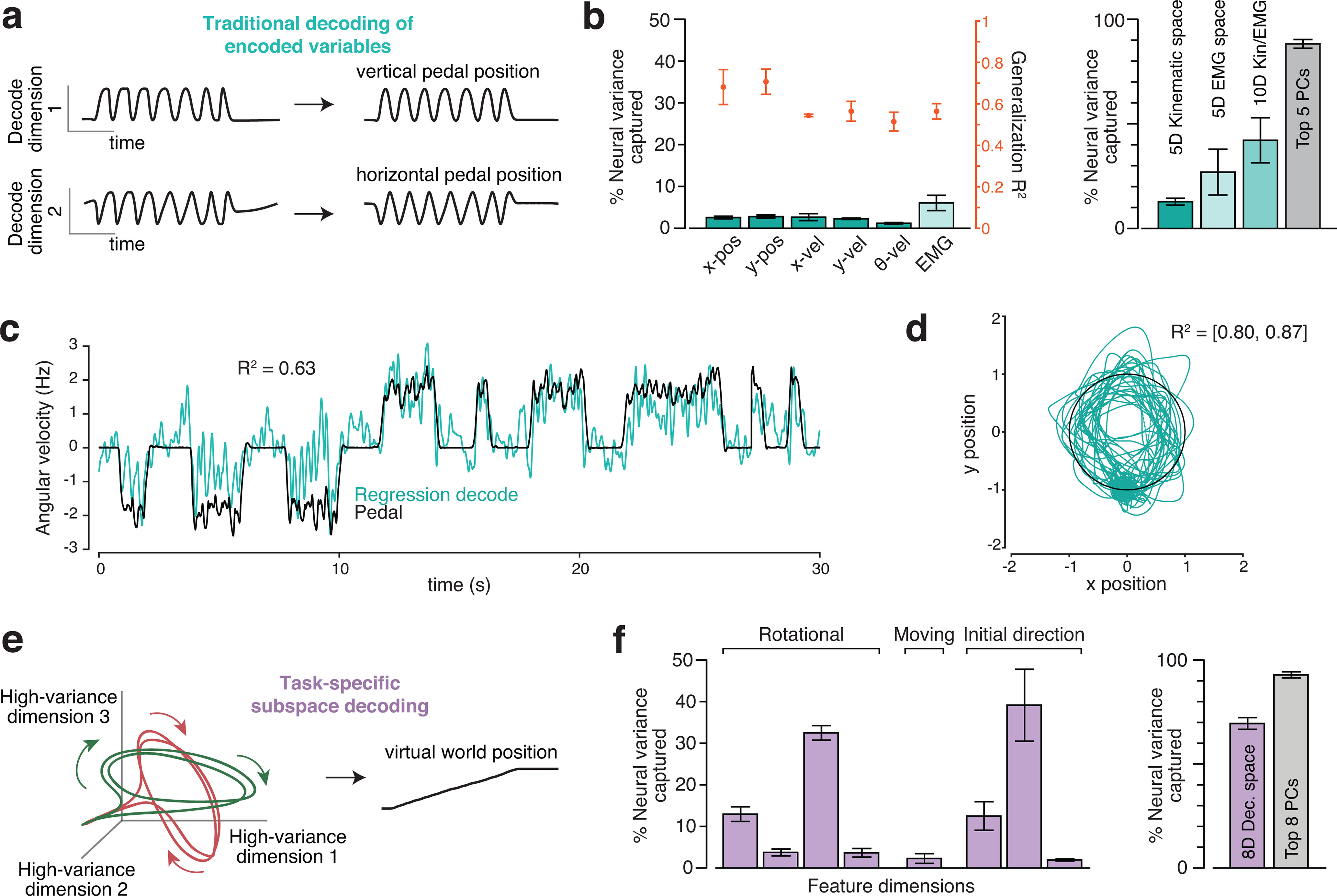

Different decode strategies leverage neural signals with different magnitudes. a, In the traditional decoding strategy, neural firing rates are assumed to predominantly encode the key variables. The encoding model is usually assumed to be roughly linear when variables are expressed appropriately. For example, cosine tuning for reach velocity is equivalent to a linear dependence on horizontal and vertical velocity. The goal of decoding is to invert encoding. Thus, decoding dimensions should capture the dominant signals in the neural data (because those are what is encoded). b, Variance of the neural population response captured by dimensions used to decode kinematic parameters (teal bars) and muscle activity (light teal bar). Variance captured was computed based on trial-averaged firing rates, and thus quantifies the degree to which each neuron's firing rate (as opposed to its noisy spiking) contains a contribution from the signal captured by each dimension. Left subpanel, Neural variance captured (left axis) for dimensions correlating with kinematic variables (individual variables shown separately) and muscles (average across 19 recordings, SE computed across recordings). Also shown are the associated generalized R2 values (right axis) for each decoder. Right subpanel, Total variance captured by subspaces spanned by kinematic-decoding dimensions, muscle-decoding dimensions, or both. (These are not the sum of the individual variances as dimensions were not always orthogonal.) We had different numbers of EMG recordings per day and thus always selected a subset of 5. Variance captured by the top five principal components is shown for comparison. In both subpanels, data are from three manual-control sessions where units (192 channels per day) and muscles (5-7 channels per day) were recorded simultaneously. Each bar/point with error bars plots the average and SE across sessions.
c, Example cross-validated regression performance for offline decoding of angular velocity. R2 is the coefficient of determination for the segment of data shown. d, Example cross-validated regression performance for offline decoding of horizontal and vertical pedal position. R2 is the coefficient of determination for the segment of data shown, same time period as in c. e, A new strategy that can be applied, even if the dominant signals do not have the goal of encoding. This strategy seeks to find neural response features that have a robust relationship with the variable one wishes to decode. That relationship may be complex or even incidental but is useful if it involves high-variance response features. f, Similar plot to b, but for the dimensions on which our decoder was built. Left subpanel, Neural variance captured for each of these eight dimensions. Right subpanel, Neural variance captured by the eight-dimensional subspace spanned by those dimensions. Variance captured by the top eight principal components is shown for comparison.

Neural variance captured analysis

Analysis of neural variance captured was based on successful trials from the three sessions, performed under manual control, with simultaneous neural and muscle recordings. Each session was split into a training set (250 trials for two of the sessions, 300 trials for the third) and testing set (100 trials for two of the sessions, 200 trials for the third). Neural dimensions were identified using a subset of training trials in order to match the size of the training sets used for online decoding (25 forward 7 cycle trials and 25 backward 7 cycle trials). Neural variance captured was computed (as described below) using the trial-averaged neural activity from the full training set for all conditions. We considered data from the full duration of each trial, including times before movement onset and after movement offset. We analyzed the variance captured by neural dimensions of three types: (1) neural dimensions where activity correlated strongly with kinematic features; (2) neural dimensions where activity correlated strongly with muscle activity; and (3) neural dimensions that captured robust “features” leveraged by our decoder.

Dimensions of the third type were found as detailed in a dedicated section below. Dimensions of the first two types were found with ridge regression, using the model $z(r,t) = c + \mathbf{w}^\top \mathbf{y}(r,t)$, where $z(r,t)$ is the kinematic or muscle variable at time $t$ during trial $r$, and $\mathbf{y}(r,t)$ is the corresponding N-dimensional vector of neural firing rates. The vector $\mathbf{w}$ defines a direction in neural space where activity correlates strongly with the variable $z$. It is found by minimizing the objective function $\sum_{r,t} \left( z(r,t) - c - \mathbf{w}^\top \mathbf{y}(r,t) \right)^2 + \lambda \lVert \mathbf{w} \rVert_2^2$, where $\lambda$ is a free parameter that serves to regularize the weights $\mathbf{w}$. We found multiple such vectors; for instance, $\mathbf{w}_{x\text{-vel}}$ is a dimension where neural activity correlates with horizontal velocity and $\mathbf{w}_{\text{biceps}}$ is a dimension where neural activity correlates with biceps activity. For each kinematic or muscle variable, we swept $\lambda$ to find the value that yielded the largest coefficient of determination on held-out data, and then used that $\lambda$ for all analyses. Because filtering of neural activity introduces a net lag, this analysis naturally assumes a ∼100 ms lag between neural activity and the variables of interest. Results were extremely similar if we considered longer or shorter lags. Although we present the results of a ridge regression (L2 penalty), a lasso regression (L1 penalty) was also attempted and found to yield similar results (R2 performance within 3% and even less neural variance captured).
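The ridge fit and regularization sweep can be illustrated as follows. This is a sketch under stated assumptions: NumPy, a linear model with an unpenalized offset, the closed-form ridge solution, and a single train/test split. The function names and the candidate penalty values are hypothetical.

```python
import numpy as np


def fit_ridge(Y, z, lam):
    """Y: (N, T) firing rates; z: (T,) kinematic or muscle variable.
    Returns weights w (N,) and offset c for the model z ~ c + w @ y."""
    y_mean, z_mean = Y.mean(axis=1, keepdims=True), z.mean()
    Yc, zc = Y - y_mean, z - z_mean                  # centering keeps the offset unpenalized
    w = np.linalg.solve(Yc @ Yc.T + lam * np.eye(Y.shape[0]), Yc @ zc)
    c = z_mean - w @ y_mean[:, 0]
    return w, c


def r_squared(Y, z, w, c):
    """Coefficient of determination of the prediction c + w @ Y."""
    resid = z - (c + w @ Y)
    total = z - z.mean()
    return 1.0 - (resid @ resid) / (total @ total)


def sweep_lambda(Y_train, z_train, Y_test, z_test, lambdas):
    """Return the penalty with the largest held-out R^2, and that R^2."""
    scores = [r_squared(Y_test, z_test, *fit_ridge(Y_train, z_train, lam))
              for lam in lambdas]
    best = int(np.argmax(scores))
    return lambdas[best], scores[best]
```

Scaling the returned `w` to unit norm gives a candidate decoding dimension in neural space.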

Before computing neural variance explained, each vector $\mathbf{w}$ was scaled to have unity norm, yielding a dimension $\hat{\mathbf{w}}$ in neural space. We wished to determine whether that dimension captured large response features that were reliable across trials. Thus, variance captured was always computed based on trial-averaged neural responses (averages taken across all trials in the data used to identify $\mathbf{w}$). We considered the matrix $\bar{Y} \in \mathbb{R}^{N \times T}$, where $T$ is the total number of time points across all conditions. Each row of $\bar{Y}$ contains the trial-averaged firing rate of one neuron. We computed an $N \times N$ covariance matrix $\Sigma = \mathrm{cov}(\bar{Y})$ by treating rows of $\bar{Y}$ as random variables and columns as observations. The proportion of total neural variance captured by a given dimension, $\hat{\mathbf{w}}$, is therefore as follows:

$$\frac{\hat{\mathbf{w}}^\top \Sigma \, \hat{\mathbf{w}}}{\mathrm{tr}(\Sigma)}$$

Some analyses considered the variance captured by a subspace spanned by a set of dimensions. To do so, we took the sum of the variance captured by orthonormal dimensions spanning that space.
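As a concrete illustration, the variance captured by a single dimension or by a subspace can be computed as below. This sketch assumes NumPy and uses a QR decomposition to obtain orthonormal dimensions spanning the subspace; the function name is hypothetical.

```python
import numpy as np


def variance_captured(Y_bar, dims):
    """Y_bar: (N, T) trial-averaged rates; dims: (N,) or (N, k) dimensions.
    Returns the fraction of total variance captured by the space they span."""
    dims = np.atleast_2d(dims.T).T               # ensure shape (N, k)
    Q, _ = np.linalg.qr(dims)                    # orthonormal basis for the span
    cov = np.cov(Y_bar)                          # rows = neurons, columns = observations
    return np.trace(Q.T @ cov @ Q) / np.trace(cov)
```

Because the basis is orthonormalized first, non-orthogonal decoding dimensions are not double counted, which is why subspace totals need not equal the sum of the individual variances.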

Single-channel correlation analysis

To assess whether kinematic signals make a large contribution to single-unit firing rates during this task, we performed two correlation analyses. First, for each unit, we took single-trial neural firing rates (during the same trials and sessions as in the previous section) and found the maximum cross-correlation with horizontal and vertical pedal velocity (lags between −1000 ms and 1000 ms). Second, for each unit, we performed a regression of the firing rate on horizontal and vertical pedal velocity, and then found the maximum cross-correlation between the recorded firing rate and that predicted by the pedal velocity (lags between −1000 ms and 1000 ms).

Identifying neural dimensions

The response features leveraged by the decode algorithm are clearly visible in the top principal components of the population response, but individual features are not necessarily aligned with individual principal component dimensions. To find neural dimensions that cleanly isolated features, we employed dedicated preprocessing and dimensionality reduction approaches tailored to each feature. Dimensions were found based on the 50 decoder-training trials, collected at the beginning of each BMI-controlled session, before switching to BMI control.

We sought a “moving-sensitive dimension” where activity reflected whether the monkey was stopped or moving. We computed spike counts in time bins both when the monkey was moving (defined as angular velocity > 1 Hz) and when he was stopped (defined as angular velocity < 0.05 Hz). We ignored time bins that did not fall into either category. Spike counts were square-root transformed so that a Gaussian distribution becomes a more reasonable approximation (Thacker and Bromiley, 2001). This resulted, for each time-bin, in an activity vector (one element per recorded channel) of transformed spike counts and a label (stopped or moving). Linear discriminant analysis yielded a hyperplane that discriminated between stopped and moving. The moving-sensitive dimension, $\mathbf{w}_{\text{move}}$, was the vector normal to this hyperplane.
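For two classes, the normal to the linear-discriminant hyperplane has a closed form (the pooled-covariance Fisher discriminant), which the following sketch uses. It assumes NumPy; the function name and the small `ridge` term added for invertibility are hypothetical and not part of the described method.

```python
import numpy as np


def moving_sensitive_dimension(counts_moving, counts_stopped, ridge=1e-6):
    """counts_*: (n_bins, n_channels) spike counts for each label.
    Returns a unit vector normal to the two-class LDA hyperplane."""
    a = np.sqrt(counts_moving)                   # square-root transform
    b = np.sqrt(counts_stopped)
    pooled = ((len(a) - 1) * np.cov(a, rowvar=False) +
              (len(b) - 1) * np.cov(b, rowvar=False)) / (len(a) + len(b) - 2)
    pooled += ridge * np.eye(pooled.shape[0])    # assumed jitter, for invertibility only
    w = np.linalg.solve(pooled, a.mean(axis=0) - b.mean(axis=0))
    return w / np.linalg.norm(w)
```

Projecting transformed counts onto the returned vector gives a scalar that is larger, on average, when the monkey is moving.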

We sought four neural dimensions that captured rotational trajectories during steady-state cycling. Spike time-series were filtered to yield firing rates (as described above), then high-pass filtered (second-order Butterworth, cutoff frequency: 1 Hz) to remove any slow drift. Single-trial movement-period responses were aligned (Method A, above) and averaged to generate an $N \times T$ matrix $\bar{Y}_{\text{fw}}$ containing responses during forward cycling, and an $N \times T$ matrix $\bar{Y}_{\text{bw}}$ containing responses during backward cycling. We sought a four-dimensional projection that would maximally capture rotational trajectories while segregating forward and backward data into different planes. Whereas the standard PCA cost function finds dimensions that maximize variance captured, we opted instead for an objective function that assesses the difference in variance captured during forward and backward cycling as follows:

$$q(W) = \frac{\mathrm{tr}\left(W^\top \Sigma_{\text{fw}} W\right)}{\mathrm{tr}\left(\Sigma_{\text{fw}}\right)} - \frac{\mathrm{tr}\left(W^\top \Sigma_{\text{bw}} W\right)}{\mathrm{tr}\left(\Sigma_{\text{bw}}\right)}$$

where $\Sigma_{\text{fw}} = \mathrm{cov}(\bar{Y}_{\text{fw}})$, $\Sigma_{\text{bw}} = \mathrm{cov}(\bar{Y}_{\text{bw}})$, and $W$ is constrained to be orthonormal. This objective function will be maximized when projecting the data onto $W$ captures activity during forward cycling but not backward cycling. It will be minimized when the opposite is true: the projection captures activity during backward but not forward cycling. We thus define the forward rotational plane as the N × 2 matrix $W_{\text{fw}}$ that maximizes $q(W)$. Similarly, the backward rotational plane was the N × 2 matrix $W_{\text{bw}}$ that minimizes $q(W)$. Iterative optimization was used to find $W_{\text{fw}}$ and $W_{\text{bw}}$ using the Manopt toolbox (Boumal et al., 2014) as detailed by Cunningham and Ghahramani (2015). Optimization naturally results in $W_{\text{fw}}$ and $W_{\text{bw}}$ being orthogonal to one another.
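To make the idea concrete, suppose the objective is the difference between the fraction of forward variance and the fraction of backward variance captured by an orthonormal N × 2 projection (this normalized form is an assumption of the sketch). Such an objective is a trace of a symmetric quadratic form, so its maximizer and minimizer can be read off an eigendecomposition. The sketch below uses that shortcut with NumPy rather than the iterative Manopt optimization described above; the function name is hypothetical.

```python
import numpy as np


def rotational_planes(Y_fw, Y_bw):
    """Y_fw, Y_bw: (N, T) trial-averaged rates for forward and backward cycling.
    Returns (W_fw, W_bw), each (N, 2) with orthonormal columns."""
    S_fw, S_bw = np.cov(Y_fw), np.cov(Y_bw)
    # Assumed objective: q(W) = trace(W.T @ M @ W), with M defined as below.
    M = S_fw / np.trace(S_fw) - S_bw / np.trace(S_bw)
    evals, evecs = np.linalg.eigh(M)             # eigenvalues in ascending order
    W_fw = evecs[:, -2:]                         # two largest  -> maximizes q
    W_bw = evecs[:, :2]                          # two smallest -> minimizes q
    return W_fw, W_bw
```

Because the two planes are built from different eigenvectors of the same symmetric matrix, they come out orthogonal to one another without any additional constraint.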

Finally, we sought dimensions where neural responses were maximally different, when comparing forward and backward cycling, in the moments just after movement onset. To identify the relevant epoch, we determined the time, $t_{\text{init}}$, when the state machine would have entered the INIT state during online control. We then considered trial-averaged neural activity, for forward and backward cycling, from … through … ms. We applied PCA and retained the top three dimensions: $\mathbf{w}_{\text{init},1}$, $\mathbf{w}_{\text{init},2}$, and $\mathbf{w}_{\text{init},3}$. Such dimensions capture both how activity evolves during the analyzed epoch and how it differs between forward and backward cycling.

Computing probability of moving

Based on neural activity in the moving-sensitive dimension, a hidden Markov model (HMM) inferred the probability of being in each of two behavioral states: “moving” or “stopped” (Kao et al., 2017a). As described above, square-rooted spike counts in the decoder-training data were already separated into “moving” and “stopped” sets for the purposes of identifying $\mathbf{w}_{\text{move}}$. We projected those counts onto $\mathbf{w}_{\text{move}}$ and fit a Gaussian distribution to each set.

When under BMI control, we computed the probability of being in the “moving” state, given the entire sequence of current and previously observed square-rooted spike counts. This probability was computed with a recursive algorithm that used the state transition matrix:

and knowledge of the Gaussian distributions. encodes assumptions about the probability of transitioning from one state to the next at any given bin. For each monkey, we chose reasonable values for based on preliminary data. For Monkey G, we set and . For Monkey E, we set and These values were used for all BMI-controlled sessions.
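The recursive computation can be illustrated with a standard two-state HMM forward (filtering) recursion. This is a sketch under stated assumptions, not the study's code: the transition probabilities, Gaussian parameters, and all names below are hypothetical placeholders, and the observation is taken to be the scalar projection onto the moving-sensitive dimension.

```python
import math

def gaussian_pdf(x, mean, sd):
    z = (x - mean) / sd
    return math.exp(-0.5 * z * z) / (sd * math.sqrt(2.0 * math.pi))

class MoveHMM:
    """Two-state HMM (0 = stopped, 1 = moving) with Gaussian emissions."""

    def __init__(self, p_start, p_stop, stopped, moving):
        # p_start: P(stopped -> moving) per bin; p_stop: P(moving -> stopped).
        # Both values are hypothetical here.
        self.trans = [[1.0 - p_start, p_start], [p_stop, 1.0 - p_stop]]
        self.emis = [stopped, moving]  # (mean, sd) of the projection per state
        self.belief = [1.0, 0.0]       # assume the session begins stopped

    def update(self, y):
        """Fold in one new observation; return P(moving | all observations so far)."""
        # Predict: propagate the previous belief through the transition matrix.
        pred = [sum(self.belief[i] * self.trans[i][j] for i in range(2))
                for j in range(2)]
        # Correct: weight by the likelihood of the observation in each state.
        post = [pred[j] * gaussian_pdf(y, *self.emis[j]) for j in range(2)]
        total = sum(post)
        # If both likelihoods underflow, fall back to the prediction.
        self.belief = [p / total for p in post] if total > 0.0 else pred
        return self.belief[1]

hmm = MoveHMM(p_start=0.001, p_stop=0.001, stopped=(0.0, 1.0), moving=(4.0, 1.0))
observations = [0.1, -0.3, 0.2, 4.1, 3.8, 4.3, 4.0]
p_move = [hmm.update(y) for y in observations]
```

Small transition probabilities make the estimate sticky: a single ambiguous bin moves the probability only modestly, whereas several consistent bins drive it close to 0 or 1.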

Computing steady-state direction and speed

During BMI control, we wished to infer the neural state in the four “rotational” dimensions, spanned by the forward and backward rotational planes. We began with a vector containing the firing rate of every neuron at the present time t, computed by causally filtering spikes and preprocessing as described above. For this computation only, preprocessing included high-pass filtering to remove any drift on timescales slower than the rotations (the same filter used when identifying the rotational planes). We applied a Kalman filter of the form:

where , and . In these equations, represents the true underlying neural state in the rotational dimensions and the firing rates are a noisy reflection of that underlying state. We employed filtered firing rates, rather than binned spike counts, for purely opportunistic reasons: it consistently yielded better performance.

The parameters of the Kalman filter were fit based on data from the decoder-training trials. We make the simplifying assumption that the “ground truth” state is well described by the trial-averaged firing rates projected onto the rotational dimensions. If so, the parameters describing how the state evolves can be inferred directly from the evolution of that state, and the observation noise reflects the degree to which single-trial firing rates differ from their idealized values:

where

where and are the trial-averaged activity when cycling forward and backward, respectively, is the neural activity for the -th trial in the training set, and indexing uses MATLAB notation. Online inference yields an estimate at each millisecond , computed recursively using the steady-state form of the Kalman filter (Malik et al., 2011).
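For illustration, the steady-state form of a Kalman filter can be sketched as follows. The sketch uses the conventional state-space names (A for state dynamics, C for the observation matrix, Q and R for state and observation noise covariances); these symbols, the toy parameter values, and the function names are assumptions rather than the study's fitted quantities. The gain is obtained by iterating the Riccati recursion to convergence, after which each online update is a fixed linear operation.

```python
import numpy as np

def steady_state_gain(A, C, Q, R, n_iter=1000):
    """Iterate the Riccati recursion until the Kalman gain stops changing."""
    P = Q.copy()
    K = None
    for _ in range(n_iter):
        P_pred = A @ P @ A.T + Q                  # predicted state covariance
        S = C @ P_pred @ C.T + R                  # innovation covariance
        K = P_pred @ C.T @ np.linalg.inv(S)       # Kalman gain
        P = (np.eye(A.shape[0]) - K @ C) @ P_pred  # corrected covariance
    return K

def kalman_step(x_prev, y, A, C, K):
    """One online update using the fixed (steady-state) gain."""
    x_pred = A @ x_prev
    return x_pred + K @ (y - C @ x_pred)

# Toy model: a 2 Hz rotation sampled every 1 ms, observed through 10 noisy units.
rng = np.random.default_rng(1)
theta = 2 * np.pi * 2.0 * 0.001
A = np.array([[np.cos(theta), -np.sin(theta)],
              [np.sin(theta),  np.cos(theta)]])
C, _ = np.linalg.qr(rng.standard_normal((10, 2)))  # 10 x 2, orthonormal columns
Q = 1e-4 * np.eye(2)
R = 0.5 * np.eye(10)
K = steady_state_gain(A, C, Q, R)

x_true = np.array([1.0, 0.0])
x_hat = np.zeros(2)
err_kf = 0.0
err_naive = 0.0
for _ in range(2000):
    x_true = A @ x_true + 0.01 * rng.standard_normal(2)
    y = C @ x_true + np.sqrt(0.5) * rng.standard_normal(10)
    x_hat = kalman_step(x_hat, y, A, C, K)
    err_kf += np.sum((x_hat - x_true) ** 2)
    err_naive += np.sum((C.T @ y - x_true) ** 2)  # instantaneous projection only
```

Precomputing the gain avoids updating a covariance matrix at every millisecond, which is the practical appeal of the steady-state form for real-time use.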

Angular momentum was computed in each plane as the cross product between the estimated neural state and its derivative, which (up to a constant scaling) can be written:

where the superscript indexes the elements of . We fit two-dimensional Gaussian distributions to these angular momentums for each of three behaviors in the training data: “stopped” (speed < 0.05 Hz), “pedaling forward” (velocity > 1 Hz), and “pedaling backward” (velocity < −1 Hz). Online, the likelihood of the observed angular momentums with respect to each of these three distributions dictated the steady-state estimates of direction and speed. We denote these three likelihoods , , and .
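As a concrete illustration of angular momentum within a single plane, the sketch below uses the two-dimensional cross product between the state and a finite-difference estimate of its derivative. The finite-difference derivative, the unit-radius toy trajectory, and the function name are assumptions made for illustration.

```python
import math

def angular_momentum(prev_xy, curr_xy, dt_s):
    """2D cross product of the current state with its estimated derivative."""
    dx = (curr_xy[0] - prev_xy[0]) / dt_s
    dy = (curr_xy[1] - prev_xy[1]) / dt_s
    return curr_xy[0] * dy - curr_xy[1] * dx

def circle_point(freq_hz, t_s, sign=1.0):
    """Point on a unit circle rotating at freq_hz (sign=-1 reverses rotation)."""
    phase = 2.0 * math.pi * freq_hz * t_s
    return (math.cos(phase), sign * math.sin(phase))

dt = 0.001
# Counterclockwise and clockwise rotations at 2 Hz give opposite signs.
L_ccw = angular_momentum(circle_point(2.0, 0.0), circle_point(2.0, dt), dt)
L_cw = angular_momentum(circle_point(2.0, 0.0, -1.0), circle_point(2.0, dt, -1.0), dt)
```

For a circular trajectory of radius r and angular frequency ω, this quantity approaches r²ω, so it grows with both the size and the speed of the rotation and is near zero when no rotation is present.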

In principle, one could render a simple 3-valued decode (stopped, moving forward, moving backward) based on the highest likelihood. However, we wanted the decoder to err on the side of withholding movement, and for movement speed to reflect certainty regarding direction. We set to zero unless the value of was below a conservative threshold, set to correspond to a Mahalanobis distance of 3 between and the distribution of angular momentums when stopped. (In one dimension, this would be equivalent to being >3 SDs from the mean neural angular momentum when stopped.) When was below threshold, we decoded direction and speed as follows:

where varies between 0 and 1 depending on the relative sizes of the likelihoods (yielding a slower velocity if the direction decode is uncertain) and is a direction-specific constant whose purpose is simply to scale up the result to match typical steady-state cycling speed. In practice, certainty regarding direction was high at most moments and was thus close to the maximal value set by .

Computing initial direction and speed

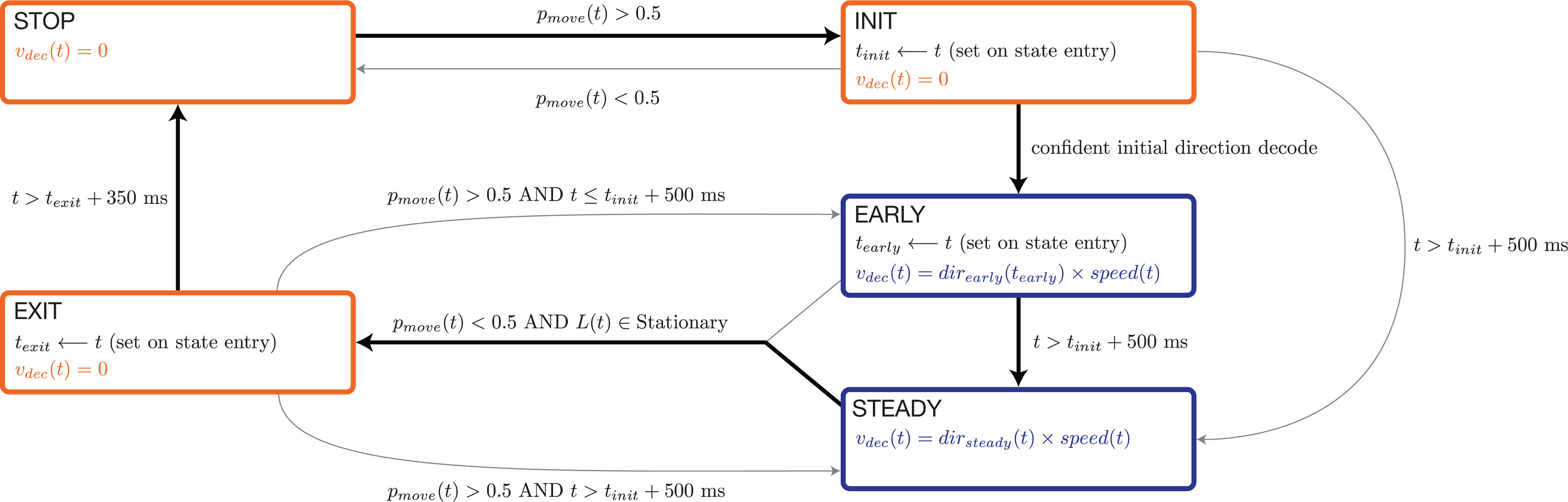

Decoded motion was determined by a state machine with four states: STOP, INIT, EARLY, and STEADY. Transitions between these states were determined primarily by . Decoded velocity was zero for STOP and INIT and was determined by (as described above) when in STEADY. Because rotational features were not yet robustly present in the EARLY state, we employed a different method to infer initial direction and speed. Both were determined at , the moment the EARLY state was entered. These values then persisted throughout the remainder of the EARLY state. Decoded direction was determined by projecting the vector of firing rates, at the moment the EARLY state was entered, onto the three initial-direction dimensions, , , and . These directions were computed based on decoder-training trials (see above) as were the Gaussian distributions of single-trial projections when cycling forward versus backward (using the moment the EARLY state would have been entered). This allowed us to compute the likelihoods, and , of the present projection given each distribution. If the observed projection was not an outlier (>10 Mahalanobis distance units) with respect to both distributions, initial direction and speed were computed as:

If the observed neural state was an outlier, we assumed rotational structure was likely already present and initial direction and speed were set to and as described above.

Smoothing of decoded velocity

The decoder state machine produced an estimate of velocity, , at every millisecond. During the STOP and INIT states, this estimate was always zero and the monkey's position in the virtual environment was held constant. During the EARLY and STEADY states, this estimate was smoothed with a trailing average:

where ; i.e., the trailing average extended in history up to 500 ms or to the moment the EARLY state was entered, whichever was shorter. was integrated every millisecond to yield decoded position in the virtual environment. In the speed-tracking experiment (described below), there was no need to smooth prior to integration because the speed estimate had already been smoothed.
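The trailing average and subsequent integration can be sketched as below, assuming one velocity sample per millisecond and velocity expressed in cycles per second. The class and method names are hypothetical; clearing the buffer on entry to the EARLY state implements the "whichever was shorter" rule.

```python
from collections import deque

class TrailingVelocitySmoother:
    """Trailing average over at most window_ms samples, then integration."""

    def __init__(self, window_ms=500):
        self.buf = deque(maxlen=window_ms)  # old samples drop out automatically
        self.position = 0.0

    def reset(self):
        """Call on entry to EARLY so that no earlier history is averaged."""
        self.buf.clear()

    def step(self, v_raw, dt_s=0.001):
        """Add one raw velocity sample; return the smoothed velocity."""
        self.buf.append(v_raw)
        v_smooth = sum(self.buf) / len(self.buf)
        self.position += v_smooth * dt_s  # integrate to get decoded position
        return v_smooth

# One second of constant 2 Hz decoded velocity advances position by 2 cycles.
smoother = TrailingVelocitySmoother()
smoother.reset()
smoothed = [smoother.step(2.0) for _ in range(1000)]

# A step from 0 to 1 is half absorbed after 250 ms of the new value.
step_smoother = TrailingVelocitySmoother()
for _ in range(500):
    step_smoother.step(0.0)
for _ in range(250):
    last = step_smoother.step(1.0)
```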

Design and statistical analysis of the speed-tracking task

In addition to the primary task (where the monkey traveled 2 to 7 cycles between stationary targets), we employed a speed-tracking task, in which the monkey was required to match his virtual speed to an instructed speed. Speed was instructed implicitly, via the relative position of two moving targets. The primary target was located a fixed distance in front of the monkey's location in virtual space: the secondary target fell “behind” the first target when cycling was too slow, and pulled “ahead” if cycling was too fast. This separation saturated for large errors, but for small errors was proportional to the difference between the actual and instructed speed. This provided sufficient feedback to allow the monkey to track the instructed speed, even when it was changing. Because there was no explicit cue regarding the absolute instructed speed, monkeys began cycling on each trial unaware of the true instructed speed profile and “honed in” on that speed over the first ∼2 cycles.

We quantify instructed speed not in terms of the speed of translation through the virtual environment (which has arbitrary units) but in terms of the physical cycling velocity necessary to achieve the desired virtual speed. For example, an instructed speed of 2 Hz necessitated cycling at an angular velocity of 2 Hz to ensure maximal reward. Under BMI control, the output of the decoder had corresponding units. For example, a 2 Hz angular velocity of the neural trajectory produced movement at the same speed as 2 Hz physical cycling (see Neural features for speed-tracking for details of decoder). Reward was given throughout the trial so long as the monkey's speed was within 0.2 Hz of the instructed speed. We employed both constant and ramping instructed-speed profiles.

Constant profiles were at either 1 or 2 Hz. Trials lasted 20 cycles. After 18 cycles, the primary and secondary targets (described above) disappeared and were replaced by a final stationary target two cycles in front of the current position. Speed was not instructed during these last two cycles; the monkey simply had to continue cycling and stop on the final target to receive a large reward. Analyses of performance were based on the ∼16 cycle period starting when the monkey first honed in on the correct speed (within 0.2 Hz of the instructed speed) and ending when the speed-instructing cues disappeared 2 cycles before the trial's end.

Ramping profiles began with 3 s of constant instructed speed to allow the monkey to hone in on the correct initial speed. Instructed speed then ramped, over 8 s to a new value, and remained constant thereafter. As for constant profiles, speed-instructing cues disappeared after 18 cycles and the monkey cycled two further cycles before stopping on a final target. Again, analyses of performance were based on the period from when the monkey first honed in on the correct speed, to when the speed-instructing cues disappeared. There were two ramping profiles: one ramping up from 1 to 2 Hz, and one ramping down from 2 to 1 Hz. There were thus four total speed profiles (two constant and two ramping). These were performed for both cycling directions (presented in blocks and instructed by color as in the primary task) yielding eight total conditions. This task was performed by Monkey G, who completed an average of 166 trials/session over 2 manual-control sessions and an average of 116 trials/session over three BMI-control sessions. BMI-control sessions were preceded by 61 to 74 decoder-training trials performed under manual control. Decoder-training trials employed the two constant speeds and not the ramping profiles. Thus, subsequent BMI-controlled performance had to generalize to these situations.

As will be described below, the speed decoded during BMI control was low-pass filtered to remove fluctuations due to noise. This had the potential to make the task easier under BMI control, given that changes in instructed speed were slow within a trial (excepting the onset and offset of movement). We did not wish to provide BMI control with an “unfair” advantage compared with manual control. We therefore also low-pass filtered virtual speed while under manual control. Filtering (exponential, time constant: 1 s) was applied only when speed was >0.2 Hz, so that movement onset and offset could remain brisk. This aided the monkey's efforts to track slowly changing speeds under manual control.

In manual-control sessions, trials were aborted if there was a large discrepancy between actual and instructed speed. This ensured that monkeys tried their best to consistently match speed at all times. A potential concern is that this could also mask, under BMI control, errors that would have been observed had the trial not aborted. To ensure that such errors were exposed, speed discrepancies did not cause trials to abort when under BMI control. This potentially puts BMI performance at a disadvantage relative to manual control, where large errors could not persist. In practice, this was not an issue as large errors were rare.

Neural features for speed-tracking

Although the speed-tracking experiment leveraged the same dominant neural responses that were used in the primary experiment, some quantities were computed slightly differently. These changes reflected a combination of small improvements (the speed-tracking task was performed after the primary experiment described above) and modifications to allow precise control of speed. The probability of moving, , was calculated using a different set of parameters, largely due to changes in recording quality in the intervening time between data collection from the primary experiment and data collection for the speed-tracking experiment. The bin size was increased to 100 ms, and the following state transition values were used: and . In addition, we observed that the square-root transform seemed to be having a negligible impact at this bin size, so we removed it for simplicity. To avoid losing rotational features at slower speeds, we dropped the cutoff frequency of the high-pass filter, applied to the neural firing rates, from 1 to 0.75 Hz.

In computing , the same computations were performed as for the primary experiment, with one exception: a new direction was not necessarily decoded every millisecond. In order to decode a new direction, the following conditions needed to be met: (1) the observed angular momentums had a Mahalanobis distance of <4 to the distribution corresponding to the decoded direction; and (2) the observed angular momentums had a Mahalanobis distance of >6 to the distribution corresponding to the opposite direction. These criteria ensured that a new steady-state direction was only decoded when the angular momentums were highly consistent with a particular direction. When these criteria were not met, the decoder continued to decode the same direction from the previous time step. This improved decoding but did not prevent the decoder from accurately reversing direction when the monkey stopped and reversed his physical direction (e.g., between a forward and backward trial).
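The two criteria amount to a hysteresis rule on the decoded direction, which can be sketched as follows. The thresholds (4 and 6) are taken from the text; the two-dimensional Gaussian parameters, the toy angular-momentum values, and all names are hypothetical.

```python
import math

def mahalanobis_2d(x, mean, cov):
    """Mahalanobis distance of a 2D point from a Gaussian (mean, 2x2 cov)."""
    dx, dy = x[0] - mean[0], x[1] - mean[1]
    (a, b), (c, d) = cov
    det = a * d - b * c
    # x' inv(cov) x, written out for the 2x2 case
    q = (d * dx * dx - (b + c) * dx * dy + a * dy * dy) / det
    return math.sqrt(q)

def update_direction(prev_dir, L, dists, near=4.0, far=6.0):
    """Return +1 (forward) or -1 (backward); keep prev_dir unless confident."""
    for cand in (+1, -1):
        d_cand = mahalanobis_2d(L, *dists[cand])
        d_opp = mahalanobis_2d(L, *dists[-cand])
        if d_cand < near and d_opp > far:
            return cand
    return prev_dir  # criteria not met: hold the previous direction

identity = ((1.0, 0.0), (0.0, 1.0))
dists = {+1: ((10.0, 0.0), identity),   # toy "pedaling forward" distribution
         -1: ((0.0, 10.0), identity)}   # toy "pedaling backward" distribution

d1 = update_direction(-1, (9.5, 0.5), dists)  # clearly forward
d2 = update_direction(d1, (5.0, 5.0), dists)  # ambiguous: hold previous
d3 = update_direction(d2, (0.5, 9.0), dists)  # clearly backward
```

Because the two conditions cannot both hold for opposite candidates, at most one direction can be newly decoded at any time step.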

Speed was computed identically in the EARLY and STEADY states, by decoding directly from the rotational plane corresponding to the decoded direction. A coarse estimate of speed was calculated as the derivative of the phase of rotation:

where and are the phases within the two planes of the neural state estimate , direction corresponds to while in the EARLY state and while in the STEADY state, and the derivative is computed in units of Hz. The coarse speed estimate, , was then smoothed with an exponential moving average ( = 500 ms) to generate the variable speed. Saturation limits were set such that, when moving, speed never dropped below 0.5 Hz or exceeded 3.5 Hz, to remain in the range at which monkeys can cycle smoothly. On entry into EARLY or STEADY from either INIT or EXIT, speed was reset to a value of 1.5 Hz. Thus, when starting to move, the initial instantaneous value of speed was set to an intermediate value and then relaxed to a value reflecting neural angular velocity.
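A sketch of this speed computation is shown below: phase is taken from the state within one plane, its wrapped difference gives a coarse speed in Hz, and the result is exponentially smoothed and clipped. The 500 ms time constant, the 0.5 to 3.5 Hz limits, and the 1.5 Hz reset value come from the text. The 1 ms update interval, the use of the unsigned phase change (with direction handled separately), the point at which saturation is applied, and all names are assumptions.

```python
import math

class SpeedDecoder:
    def __init__(self, dt_s=0.001, tau_s=0.5, lo_hz=0.5, hi_hz=3.5, reset_hz=1.5):
        self.dt_s = dt_s
        self.alpha = 1.0 - math.exp(-dt_s / tau_s)  # EMA weight for one step
        self.lo_hz, self.hi_hz, self.reset_hz = lo_hz, hi_hz, reset_hz
        self.prev_phase = 0.0
        self.speed = reset_hz

    def reset(self, xy):
        """Call on entry into EARLY or STEADY when starting to move."""
        self.prev_phase = math.atan2(xy[1], xy[0])
        self.speed = self.reset_hz

    def step(self, xy):
        """xy: estimated neural state within the plane for the decoded direction."""
        phase = math.atan2(xy[1], xy[0])
        # Wrap the phase change into [-pi, pi) before differentiating.
        d = (phase - self.prev_phase + math.pi) % (2.0 * math.pi) - math.pi
        self.prev_phase = phase
        coarse_hz = abs(d) / (2.0 * math.pi * self.dt_s)
        self.speed += self.alpha * (coarse_hz - self.speed)
        self.speed = min(max(self.speed, self.lo_hz), self.hi_hz)
        return self.speed

def run(freq_hz, duration_s, dt_s=0.001):
    """Decode speed from an idealized rotation at freq_hz."""
    dec = SpeedDecoder(dt_s=dt_s)
    dec.reset((1.0, 0.0))
    out = dec.speed
    for k in range(1, int(duration_s / dt_s) + 1):
        ph = 2.0 * math.pi * freq_hz * k * dt_s
        out = dec.step((math.cos(ph), math.sin(ph)))
    return out

speed_2hz = run(2.0, 3.0)   # relaxes from 1.5 Hz toward 2 Hz
speed_fast = run(5.0, 5.0)  # saturates at the upper limit
```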

The speed-tracking task employed two new conditions for decoder state transitions. First, transitions from INIT to EARLY required that a condition termed “confident initial direction decode” was obtained. This condition was met when the Mahalanobis distance from the neural state in the initial-direction subspace to either the forward or backward distributions dropped below 4. Second, transitions into the EXIT state required (in addition to a drop in ) that the observed angular momentums (L) belong to a set termed “Stationary.” This set was defined as all L with a Mahalanobis distance of <4 to the “stopped” distribution of angular momentums, which was learned from the training set.

Data and code accessibility

Datasets and code implementing the decoders are available on request.

Results

Behavior

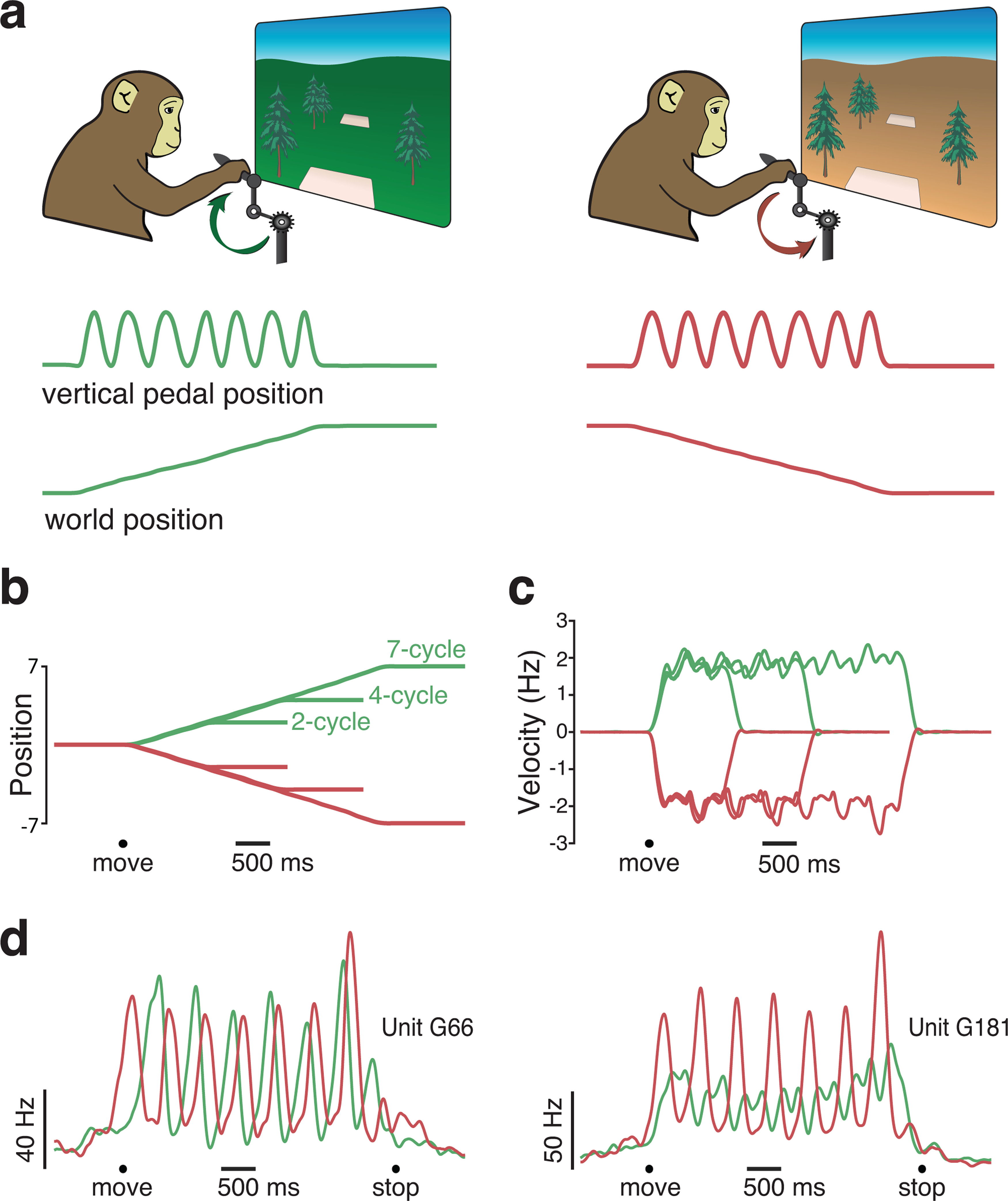

We trained 2 monkeys (Monkeys G and E) to rotate a hand-held pedal to move through a virtual environment (Fig. 1). All motion was along a linear track—no steering was necessary. Consistent with this, a single pedal was cycled with the right arm only. Our goal when decoding was to reconstruct the virtual self-motion produced by that single pedal. On each trial, a target appeared in the distance. To acquire that target, monkeys produced virtual velocity in proportion to the rotational velocity of the pedal. The color of the environment (lush and green vs desert-like and tan) instructed cycling direction. When the environment was green (Fig. 1a, left), forward virtual motion was produced by cycling “forward” (i.e., with the hand moving away from the body at the top of the cycle). When the environment was tan (Fig. 1a, right), forward virtual motion was produced by cycling “backward” (the hand moving toward the body at the top of the cycle). Cycling in the wrong direction produced motion away from the target. Trials were presented in blocks of forward or backward trials. Within each block, targets were separated by a randomized distance of 2, 4, or 7 cycles. Acquisition of a target was achieved by stopping and remaining stationary “on top” of the virtual target for a specified time. Reward was then given and the next target appeared.

Figure 1.

A cycling task that elicits rhythmic movements. a, Monkeys rotated a hand-held pedal forward (left; cued by a green background) or backward (right; cued by a tan background) to progress through a virtual environment. Bottom traces, Pedal kinematics (vertical position) and the resulting virtual world position for two example manual-control trials. On both of these trials (one forward and one backward), the monkey progressed from one target to another by cycling 7 cycles. b, Trial-averaged virtual position from a typical manual-control session. Each trace plots the change in virtual position (from a starting position of zero) for one of six conditions: forward or backward for 2, 4, or 7 cycles. Black circle represents the time of movement onset. Trials were averaged after being aligned to movement onset, and then scaled such that the duration of each trial matched the average duration for that condition. c, Trial-averaged pedal rotational velocity from the same session, for the same six conditions. d, Firing rates of two example units. Trial-averaged firing rates (computed after temporally aligning trials) are shown for two conditions: forward (green) and backward (red) for 7 cycles. Black circles represent the timing of movement onset and offset.

Monkeys performed the task well, moving swiftly between targets, stopping accurately on each target, and remaining stationary until the next target was shown. Monkeys cycled at a pace that yielded nearly linear progress through the virtual environment (Fig. 1b). Although not instructed to cycle at any particular angular velocity, monkeys adopted a brisk ∼2 Hz rhythm (Fig. 1c). Small ripples in angular velocity were present during steady-state cycling; when cycling with one hand, it is natural for velocity to increase on the downstroke and decrease on the upstroke. Success rates were high, exceeding 95% in every session (failures typically involved overshooting or undershooting the target location). This excellent performance under manual control provides a stringent bar by which to judge performance under BMI control.

BMI control was introduced after monkeys were adept at performing the task under manual control. Task structure and the parameters for success were unchanged under BMI control, and no cue was given regarding the change from manual to BMI control. For a BMI session, the switch to BMI control was made after completion of 50 decoder-training trials performed under manual control (25 forward and 25 backward 7-cycle trials). The decoder was trained on these trials, at which point the switch was made to BMI control for the remainder of the session. For Monkey G, we occasionally included a session of manual-control trials later in the day to allow comparison between BMI and manual performance. For Monkey E, we used separate (interleaved) sessions to assess manual-control performance because he was willing to perform fewer total trials per day.

During both BMI control and manual control, the monkey's ipsilateral (noncycling) arm was restrained. The contralateral (cycling) arm was never restrained. We intentionally did not dissuade the monkey from continuing to physically cycle during BMI control. Indeed, our goal was that the transition to BMI control would be sufficiently seamless to be unnoticed by the monkey, such that he would still believe that he was in manual control. An advantage of this strategy is that we are decoding neural activity when the subject attempts to actually move, as a patient presumably would. Had we insisted the arm remain stationary, monkeys would have needed to actively avoid patterns of neural activity that drive movement—something a patient would not have to do. Allowing the monkey to continue to move normally also allowed us to quantify decoder performance via direct comparisons with intended (i.e., actual) movement. This is often not possible when using other designs. For example, in Rajangam et al. (2016), performance could only be assessed via indirect measures (e.g., time to target) because what the monkey was actually intending to do at each moment was unclear. We considered these advantages to outweigh a potential concern: a decoder could potentially “cheat” by primarily leveraging activity driven by proprioceptive feedback (which would not be present in a paralyzed patient). This is unlikely to be a large concern. Recordings were made from motor cortex, where robust neural responses precede movement onset. Furthermore, we have documented that motor cortex population activity during cycling is quite different from that within the proprioceptive region of primary somatosensory cortex (Russo et al., 2018). 
Thus, while proprioceptive activity is certainly present in motor cortex (Lemon et al., 1976; Fetz et al., 1980; Suminski et al., 2009; Schroeder et al., 2017), especially during perturbations (Pruszynski et al., 2011), the dominant features of M1 activity that we leverage are unlikely to be primarily proprioceptive.

Our goal was to use healthy animals to determine strategies for leveraging the dominant structure of neural activity. This follows the successful strategy of BMI studies that leveraged the well-characterized structure of activity during reaching. Of course, the nature of the training data used to specify decode parameters (e.g., the weights defining the key neural dimensions) will necessarily be different for a healthy animal that cannot understand verbal instructions and an impaired human that can. We thus stress that our goal is to determine a robust and successful decode strategy that works in real time during closed-loop performance. We do not attempt to determine the best approach to parameter specification, which in a patient would necessarily involve intended or imagined movement.

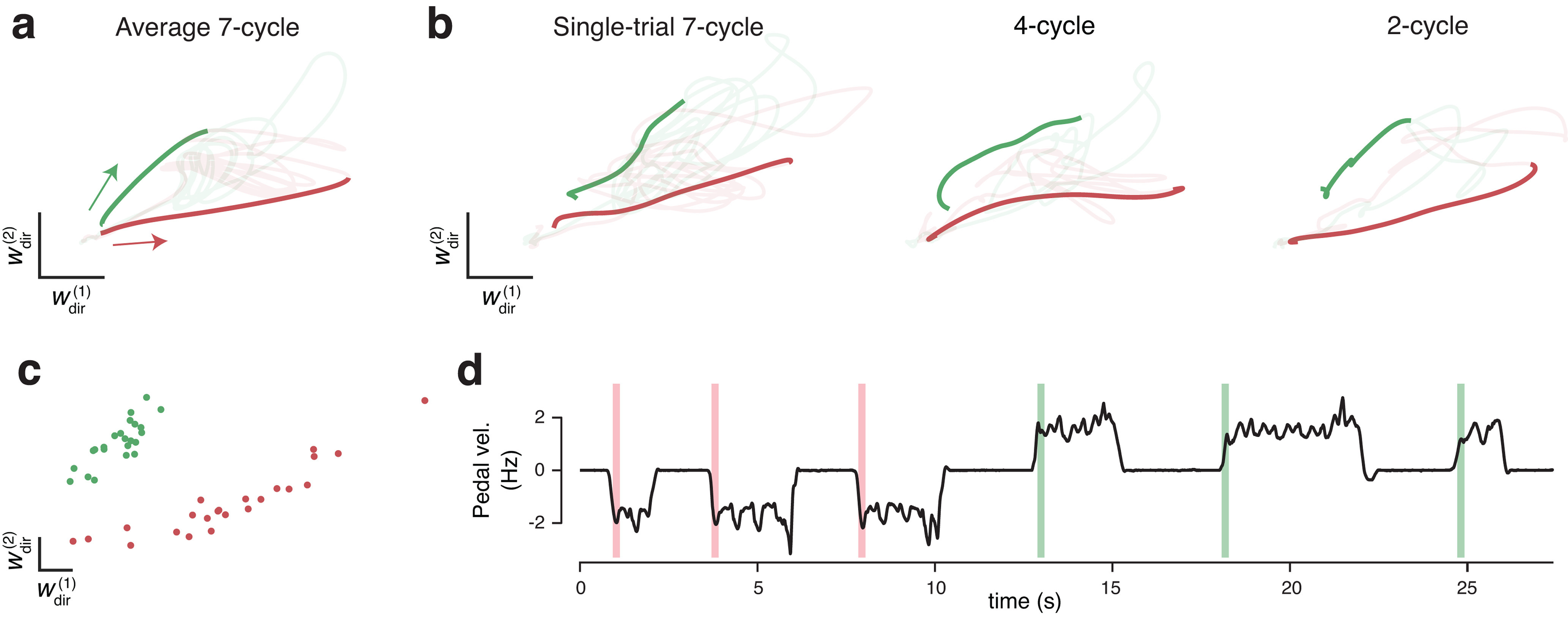

Neural activity and decoding strategy

We recorded motor cortical activity using 96-channel Utah arrays. For Monkey G, one array was implanted in M1 and a second in PMd. For Monkey E, a single array was implanted in M1. For each channel, we recorded times when the voltage crossed a threshold. Threshold crossings typically reflected individual spikes from a small handful of neurons (a multiunit). Spikes from individual neurons could be clearly seen on many channels, but no attempt was made to spike-sort. The benefit of sorting is typically modest when controlling a prosthetic device (Christie et al., 2015), and reduced-dimension projections of motor cortex population activity are similar whether based on single units or multiunits (Trautmann et al., 2019). Unit activity was strongly modulated during cycling (Fig. 1d). The phase, magnitude, and temporal pattern of activity depended on whether cycling was stopped, moving forward (green traces) or moving backward (red traces). A key question is how these unit-level features translate into population-level features that might be leveraged to estimate intended motion through the virtual environment.

In traditional decoding approaches (Fig. 2a), neural activity is hypothesized (usefully if not literally) to encode kinematic signals, which can be decoded by inverting the encoding scheme. Although nonlinear methods (e.g., Kalman filtering of the neural state) are often used to estimate neural activity, the final conversion to a kinematic command is typically linear or roughly so. To explore the feasibility of this approach in the present task, we used ridge regression to identify neural dimensions in which activity correlated well with kinematics. For each kinematic variable, the degree of regularization was chosen to maximize generalization R², that is, how well the kinematic variable could be reconstructed from single-trial activity in that neural dimension (Fig. 2b, orange symbols). We then computed the neural variance captured, which quantifies the magnitude of the neural signals in that dimension (Fig. 2b, dark teal bars). By definition, a high-variance signal makes a large contribution to the response of many neurons while a low-variance signal makes a small contribution (i.e., most aspects of neural responses do not resemble that signal). To illustrate expectations, consider the “population vector” hypothesis in which a neuron's firing rate is the dot product of a preferred direction with a velocity vector (Moran and Schwartz, 1999). Under that hypothesis, velocity-decoding dimensions would capture considerable neural variance. Given the heterogeneity of neural responses (Churchland and Shenoy, 2007), few would expect velocity-encoding dimensions to capture all neural variance. Yet variance captured should be reasonably high given that correlations between firing rates and external parameters are thought to be prevalent (Kandel et al., 2021) and useful (Schwartz, 2007). Indeed, this is precisely why kinematic-encoding dimensions have been heavily leveraged for BMI decoding during reach-like tasks.
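The logic of this analysis can be sketched on synthetic data in which a kinematic signal is decodable yet occupies a low-variance neural dimension. The sketch selects the ridge penalty by held-out R² and then measures the fraction of total neural variance along the resulting (unit-normalized) decode dimension. The data, penalty grid, single train/test split, and function names are all assumptions for illustration; a full analysis would use cross-validation on recorded activity.

```python
import numpy as np

def ridge_weights(X, y, lam):
    """Closed-form ridge solution; X is (n_samples, n_units)."""
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)

def r_squared(y, y_hat):
    return 1.0 - np.sum((y - y_hat) ** 2) / np.sum((y - y.mean()) ** 2)

def fit_decode_dimension(X_tr, y_tr, X_te, y_te, lams):
    """Pick the penalty that maximizes held-out R2; return (weights, penalty)."""
    best = max(lams, key=lambda lam: r_squared(y_te, X_te @ ridge_weights(X_tr, y_tr, lam)))
    return ridge_weights(X_tr, y_tr, best), best

def fraction_variance_captured(X, w):
    """Share of total neural variance lying along the direction of w."""
    u = w / np.linalg.norm(w)
    return np.var(X @ u) / np.sum(np.var(X, axis=0))

# Synthetic population: two large rotational signals plus one small
# kinematic-like signal, each along its own orthonormal neural dimension.
rng = np.random.default_rng(2)
t = np.arange(2000) * 0.005
U, _ = np.linalg.qr(rng.standard_normal((20, 3)))
kin = np.sin(2 * np.pi * 0.8 * t)
X = (5.0 * np.outer(np.cos(2 * np.pi * 2 * t), U[:, 0])
     + 5.0 * np.outer(np.sin(2 * np.pi * 2 * t), U[:, 1])
     + 0.5 * np.outer(kin, U[:, 2])
     + 0.1 * rng.standard_normal((2000, 20)))

w, lam = fit_decode_dimension(X[:1000], kin[:1000], X[1000:], kin[1000:],
                              [1e-3, 1e-1, 10.0, 1e3])
r2 = r_squared(kin[1000:], X[1000:] @ w)
frac = fraction_variance_captured(X, w)
```

In this toy case the kinematic variable is reconstructed well even though its decode dimension holds well under 5% of the total variance, which is the dissociation the two measures are designed to expose.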

We found that, during cycling, the dimensions that best reconstructed kinematic signals all captured very little neural variance (Fig. 2b). The neural variance captured could not be increased via regularization without decreasing generalization R² (i.e., without reducing the correlation with kinematics). Neural variance captured was also low for dimensions found to correlate well with muscle activity (Fig. 2b, light teal bars). Results were similar (with slightly less variance captured) if we identified dimensions using L1 regularization or no explicit regularization. This analysis was performed at the population level and was based on trial-averaged firing rates, to give decode dimensions the best possible chance of capturing variance. A related effect was observed when analyzing single-neuron, single-trial responses. If the decoding dimension for a given variable (e.g., horizontal velocity) captures little population-level variance, then that variable makes only a weak contribution to the activity of most neurons and firing rates should typically correlate weakly with that variable. This was indeed the case. For example, across neurons, the median correlations of activity with horizontal and vertical velocity were 0.09 and 0.33, respectively. Even the “best” neurons did not show strong correlations (the 95th percentile correlations were 0.19 and 0.52). Correlations increased only slightly if, for each neuron, we correlated activity with velocity in its “preferred direction” (found via regression). The median correlation was 0.37, and the 95th percentile correlation was 0.57. These weak correlations were not due to neural activity leading kinematic parameters; we independently optimized the lead/lag of each neuron.

The strikingly low (<5%) neural variance captured in kinematic-correlating dimensions was initially surprising because single-neuron responses were robustly sinusoidally modulated, just like many kinematic variables. Yet sinusoidal response features were often superimposed on other response features (e.g., overall shifts in rate when moving vs not moving). Sinusoidal features also displayed phase relationships, across forward and backward cycling, inconsistent with kinematic encoding. This underscores that, when correlations are incidental, one cannot count on them being consistently present across behaviors. Furthermore, which correlations are more prevalent can also change. During reaching, correlations are typically strongest with velocity (a consequence of phasic activity), while in the present case correlations were slightly higher for position, both in terms of generalization R² and in terms of neural variance captured.

These findings extend those of Russo et al. (2018), who found that the largest components of neural activity during cycling (the top two principal components, which together captured ∼35% of the neural variance) did not resemble velocity. Yet that result did not rule out the possibility that kinematic signals could still be sizeable. As pointed out by Jackson (2018) and acknowledged by Russo et al. (2018), the dominant signals could still have reflected a joint representation of kinematic signals and their derivatives (e.g., vertical hand position and velocity). Furthermore, a signal can be sizeable even if it is not isolated in the top two principal components; indeed, the majority of variance lies outside those two dimensions. The present data reveal that both velocity- and position-correlating signals are very small: 10-fold smaller than the top two PCs. The fact that kinematic-correlating signals are small during some tasks (cycling) but sizeable in others (reaching) supports the view that they are most likely incidental.

Of course, even an incidental correlation could be useful. Yet the fact that kinematic-correlating neural dimensions are low-variance makes them a challenging substrate for decoding. For example, we identified a dimension where the projection of trial-averaged neural activity correlated with angular velocity, which is conveniently proportional to the quantity we wish to decode (velocity of virtual self-motion). However, because that dimension captured relatively little variance (1.2 ± 0.2% of the overall population variance; SE across three sessions), the relevant signal was variable on single trials (Fig. 2c) and the correlation with angular velocity was poor. Generalization R² was 0.63 for the segment of data shown, and was even lower when all data were considered (R² = 0.51 ± 0.15, SE across three sessions). Any decode based on this signal would result in many false starts and false stops. This example illustrates a general property: single-trial variability is expected for any low-variance signal estimated from a limited number of neurons.

We also considered the alternative strategy of first decoding horizontal and vertical hand position. We chose position rather than velocity (which typically dominates reach-BMI decoding) because correlations with position were slightly stronger (compare orange symbols in Fig. 2b). A reasonable strategy would be to convert a two-dimensional position decode (Fig. 2d) into a one-dimensional self-motion command. Possible approaches for doing so include deciphering where on the circle the hand is most likely to be at each moment, computing the angular velocity of the neural state, or computing its angular momentum. However, consideration of such approaches raises a deeper question. If we are willing to nonlinearly decode a one-dimensional quantity (self-motion) from a two-dimensional subspace, why stop at two dimensions? Furthermore, why not leverage dimensions that capture most of the variance in the population response, rather than dimensions that capture a small minority? Projections onto high-variance dimensions have proportionally less contribution from spiking variability. They are also less likely to be impacted by electrical interference or recording instabilities which, while of little concern in a controlled laboratory environment, are relevant to the clinical goals of prosthetic devices. One should thus, when possible, leverage multiple dimensions that jointly capture as much variance as possible. We term this approach (Fig. 2e) task-specific subspace decoding because it seeks one or more subspaces that capture robust response features as the subject attempts to move within the context of a particular task (in the present case, while generating a rhythmic movement to produce self-motion). Both the population-level response features and the subspaces themselves may be specific to that task. 
The necessity of task-specific decoding is already well recognized when tasks are very different (e.g., reaching vs speaking), but has not been explored within the context of different tasks normally performed with the same limb.

To pursue this strategy, we identified three high-variance subspaces. The first was spanned by four “rotational” dimensions (two each for forward and backward cycling), which captured elliptical trajectories present during steady-state cycling (Russo et al., 2018). The second was a single “moving-sensitive” dimension, in which the neural state distinguished whether the monkey was stopped or moving regardless of movement direction (Kaufman et al., 2016). The third was a triplet of “initial-direction” dimensions, in which cycling direction could be transiently distinguished in the moments after cycling began. In subsequent sections, we document the specific features present in these high-variance subspaces. Here we concentrate on the finding that the eight-dimensional subspace spanned by these dimensions captured 70.9 ± 2.3% of the firing-rate variance (Fig. 2f). Note that because dimensions are not orthogonal, the total captured variance is not the sum of that for each dimension. The “initial-direction” dimensions, for example, overlap considerably with the “rotational” dimensions. Nevertheless, the total captured variance was only modestly less than that captured by the top eight PCs (which capture the most variance possible), and much greater than that captured by spaces spanned by dimensions where activity correlated with kinematics and/or muscle activity (Fig. 2b). We thus based our BMI decode on activity in these eight high-variance dimensions. It is likely that, within the remaining 29.1% of the variance, there exist additional features that could be leveraged in other ways. We chose not to pursue that possibility as BMI performance (documented below) was already excellent.
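
The point that captured variance does not simply add across non-orthogonal dimensions can be illustrated with a short sketch. The function names and array layout below are assumptions made for illustration, not the analysis code used in the study. The subspace is orthonormalized before projecting so that overlapping dimensions are not double-counted, and the top-k principal components give the upper bound against which the subspace can be compared.

```python
import numpy as np

def captured_variance_fraction(rates, dims):
    """Fraction of total variance captured by the subspace spanned by `dims`.

    rates: (n_samples, n_neurons) firing rates.
    dims:  (n_neurons, k) decoder dimensions; columns need not be orthogonal.
    """
    centered = rates - rates.mean(axis=0)
    # Orthonormal basis for the span of `dims` (drops redundant directions),
    # so that overlapping dimensions are counted only once.
    u, s, _ = np.linalg.svd(dims, full_matrices=False)
    basis = u[:, s > 1e-10 * s[0]]
    projected = centered @ basis
    return projected.var(axis=0).sum() / centered.var(axis=0).sum()

def top_pc_variance_fraction(rates, k):
    """Upper bound: fraction of variance captured by the top-k principal components."""
    centered = rates - rates.mean(axis=0)
    s = np.linalg.svd(centered, compute_uv=False)
    return (s[:k] ** 2).sum() / (s ** 2).sum()
```

For any set of k dimensions, `captured_variance_fraction` cannot exceed `top_pc_variance_fraction(rates, k)`, which is the sense in which the top eight PCs capture the most variance possible.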

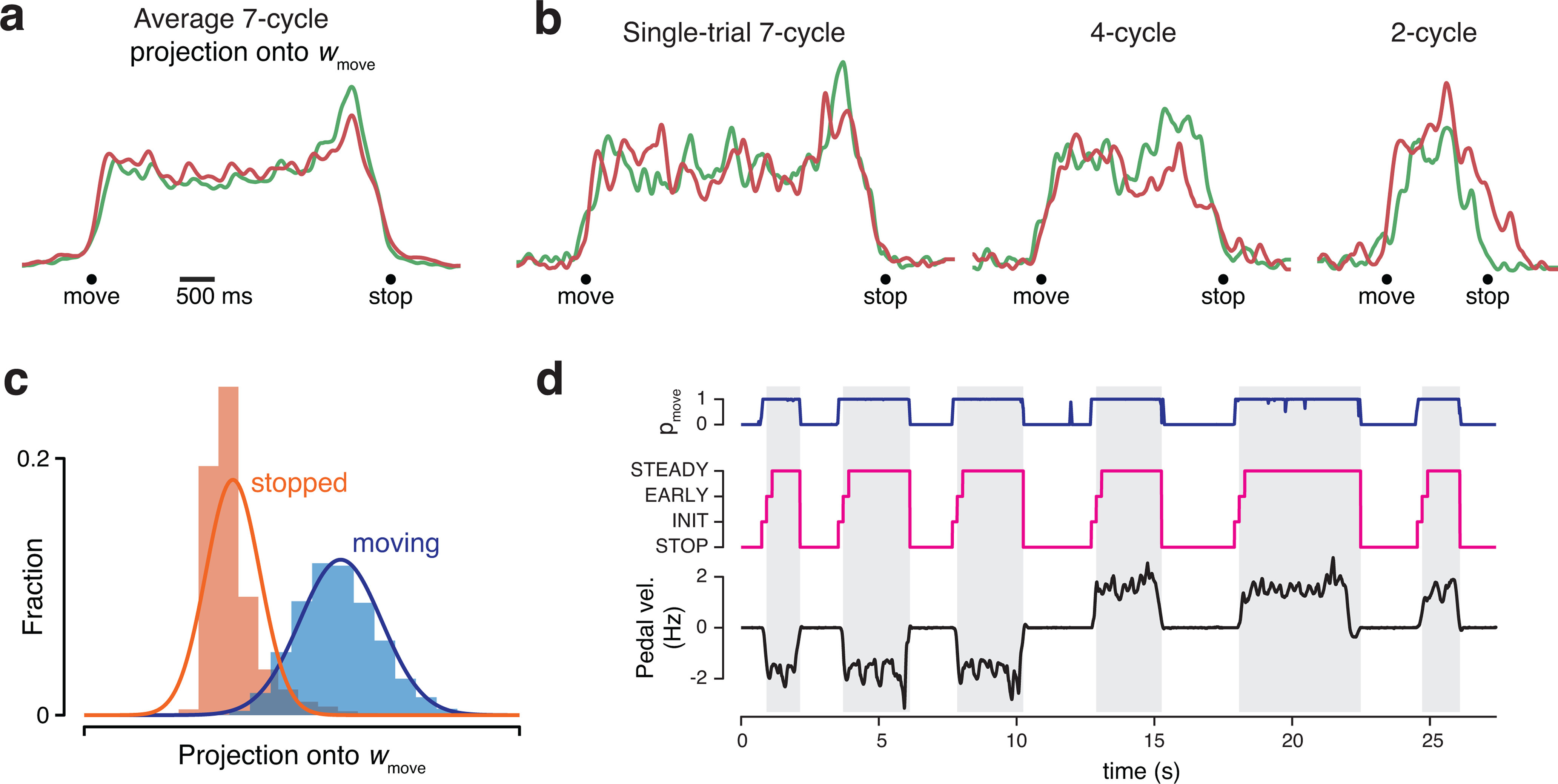

Direction of steady-state movement inferred from rotational structure

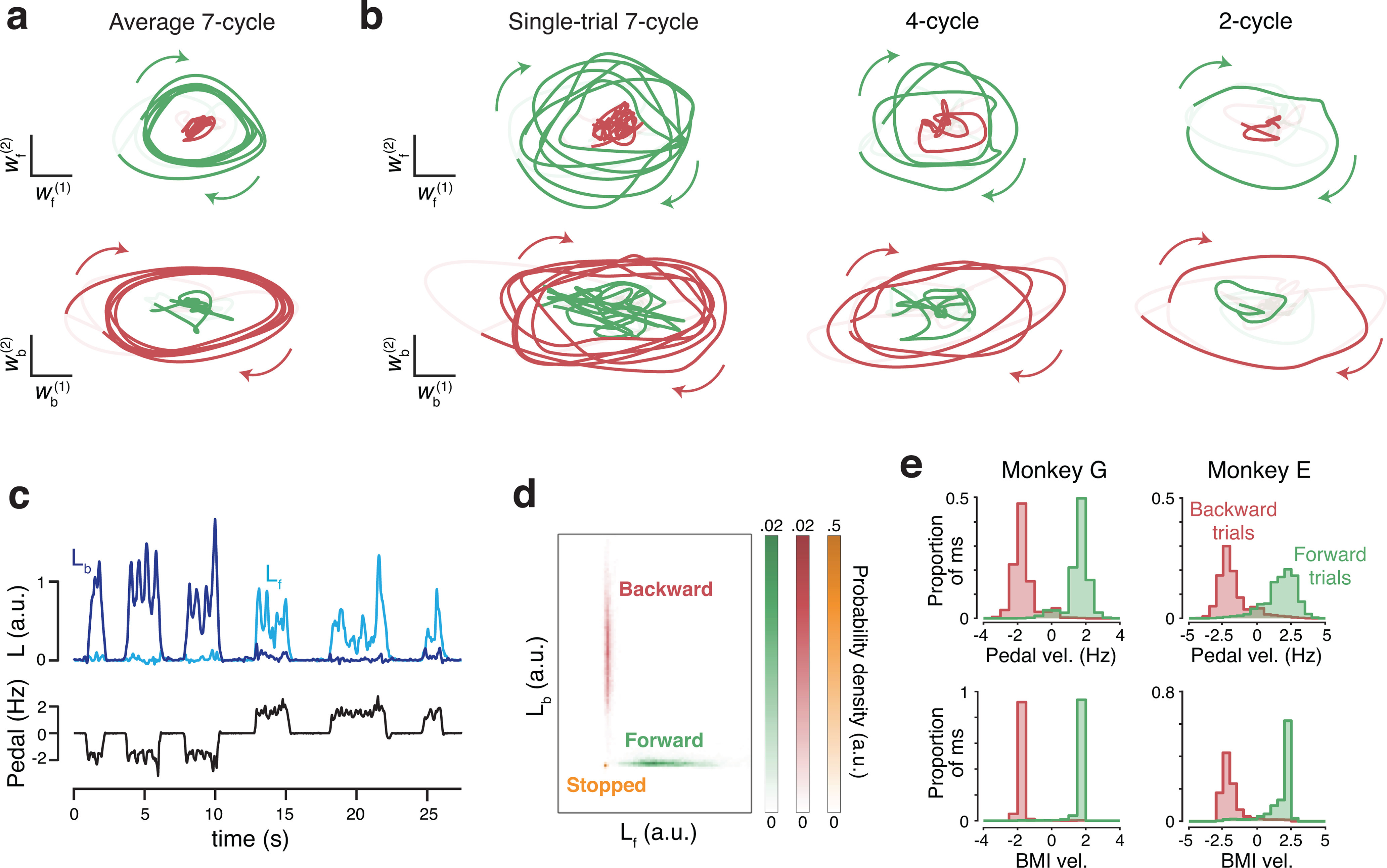

The dominant feature of the population neural response during steady-state cycling was a repeating elliptical trajectory (Russo et al., 2018). Thus, the core of our decoder was built on this feature, leveraging the fact that forward-cycling and backward-cycling trajectories occurred in nonidentical subspaces (Fig. 3a). We employed an optimization procedure to find a two-dimensional “forward plane” that maximized the size of the forward trajectory relative to the backward trajectory. We similarly found an analogous “backward plane.” These two planes cleanly captured rotations of the trial-averaged neural state (Fig. 3a) and, with filtering (see Materials and Methods), continued to capture rotations on individual trials (Fig. 3b). Forward and backward trajectories were not perfectly orthogonal. Nevertheless, the above procedure identified orthogonal planes where strongly elliptical trajectories were present for only one cycling direction. The data in Figure 3a, b are from a manual-control session (which used multiple distances) to illustrate this design choice. In practice, planes were found based on decoder-training trials (7 cycle only) after trial-averaging.
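
A minimal sketch of the idea behind the plane-finding step follows. It does not reproduce the optimization procedure described in Materials and Methods. As a stand-in, it maximizes the variance of the favored condition minus the variance of the other condition within a plane, which reduces to an eigendecomposition of the difference of the two covariance matrices. The function names and the synthetic data are hypothetical.

```python
import numpy as np

def find_plane(favored, other):
    """Return a (n_neurons, 2) orthonormal basis favoring one condition.

    favored, other: (n_samples, n_neurons) trial-averaged activity.
    Stand-in objective (an assumption): maximize variance of `favored`
    minus variance of `other` within the plane.
    """
    c_fav = np.cov(favored, rowvar=False)
    c_oth = np.cov(other, rowvar=False)
    # eigh returns eigenvalues in ascending order; keep the two largest.
    _, vecs = np.linalg.eigh(c_fav - c_oth)
    return vecs[:, -2:]

def plane_variance(data, plane):
    """Variance of `data` captured within `plane`."""
    return ((data - data.mean(axis=0)) @ plane).var(axis=0).sum()

# Synthetic example: forward rotations live in neurons 0-1,
# backward rotations (opposite sense) live in neurons 2-3.
rng = np.random.default_rng(1)
t = np.linspace(0, 10 * np.pi, 500)
fwd = 0.05 * rng.normal(size=(500, 10))
bwd = 0.05 * rng.normal(size=(500, 10))
fwd[:, 0] += np.cos(t)
fwd[:, 1] += np.sin(t)
bwd[:, 2] += np.cos(t)
bwd[:, 3] -= np.sin(t)

fwd_plane = find_plane(fwd, bwd)
bwd_plane = find_plane(bwd, fwd)
```

With this particular objective, the forward and backward planes are spanned by eigenvectors at opposite ends of the same symmetric matrix, so they are orthogonal by construction whenever there are at least four neurons.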

Figure 3.

Leveraging rotational trajectories to decode velocity. a, Trial-averaged population activity, during a manual-control session, projected onto the forward (top) and backward (bottom) rotational planes. Data are from 7 cycle forward (green) and backward (red) conditions. By design, the forward plane primarily captures rotational trajectories during forward cycling, and vice versa. Boldly colored portions of each trace highlight rotations during the middle cycles (a period that excludes the first and last half cycle of each movement). Colored arrows indicate rotation direction. Light portion of each trace corresponds to the rest of the trial. In addition to smoothing with a causal filter, neural data have been high-pass filtered to match what was used during BMI control. Data are from Monkey G. b, As in a, but for six example single trials, one for each of the three distances in the two directions. c, Example angular momentum (L) in the backward plane (dark blue) and forward plane (bright blue) during six trials of BMI control. Black represents velocity of the pedal. Although the pedal was disconnected from the task, this provides a useful indication of how the monkey was intending to move. Data are from the same day shown in a and b. d, Probability densities of angular momentums found from the decoder training trials collected on the same day. e, Histograms of BMI-control velocity (bottom) and (disconnected) pedal velocity (top) for all times the decoder was in the STEADY state (see Materials and Methods), across all BMI-control sessions.

To convert the neural state in these two planes into a one-dimensional decode of self-motion, we compared angular momentum (the cross product of the state vector with its derivative) between the two planes. When moving backward (first 3 cycling bouts in Fig. 3c), angular momentum was sizeable in the backward plane (dark blue) but not the forward plane (bright blue). The opposite was true when moving forward (subsequent 3 bouts). Using the decoder-training trials, we considered the joint distribution of forward-plane and backward-plane angular momentums. We computed distributions when stopped (Fig. 3d, orange), when cycling forward (green), and when cycling backward (red) and fit a Gaussian to each. During BMI control, we computed the likelihood of the observed angular momentums under each of the three distributions. If likelihood under the stopped distribution was high, decoded velocity was zero. Otherwise, likelihoods under the forward and backward distributions were converted to a virtual velocity that was maximal when one likelihood was much higher and slower when likelihoods were more similar. Maximum decoded velocity was set based on the typical virtual velocity under manual control (∼2 Hz).
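
The steps above can be sketched as follows, under stated assumptions. The angular momentum and Gaussian likelihoods follow the description in the text. The rule for declaring "stopped" (the stopped distribution is the most likely of the three) and the mapping from likelihoods to velocity (a tanh of half the log-likelihood ratio) are illustrative choices, not the mapping used in the study. All function names are hypothetical.

```python
import numpy as np

def angular_momentum(xy, dt):
    """Causal planar angular momentum, x*dy/dt - y*dx/dt, for (n_samples, 2) states."""
    d = np.diff(xy, axis=0, prepend=xy[:1]) / dt  # backward difference
    return xy[:, 0] * d[:, 1] - xy[:, 1] * d[:, 0]

def fit_gaussian(samples):
    """Mean and covariance of (n_samples, 2) forward/backward angular-momentum pairs."""
    samples = np.asarray(samples)
    return samples.mean(axis=0), np.cov(samples, rowvar=False)

def log_likelihood(l, mean, cov):
    """Log density of a 2D Gaussian evaluated at the pair `l`."""
    diff = np.asarray(l) - mean
    maha = diff @ np.linalg.inv(cov) @ diff
    _, logdet = np.linalg.slogdet(cov)
    return -0.5 * (maha + logdet + 2 * np.log(2 * np.pi))

def decode_velocity(l, stop, fwd, bwd, v_max=2.0):
    """Map an angular-momentum pair to a signed virtual velocity (Hz).

    Assumed mapping: zero if the stopped Gaussian is the most likely;
    otherwise v_max * (p_fwd - p_bwd), where p_fwd and p_bwd are the
    forward and backward likelihoods normalized to sum to one.
    """
    ll_stop, ll_fwd, ll_bwd = (log_likelihood(l, *g) for g in (stop, fwd, bwd))
    if ll_stop > max(ll_fwd, ll_bwd):
        return 0.0
    # p_fwd - p_bwd equals tanh of half the log-likelihood ratio.
    return v_max * np.tanh(0.5 * (ll_fwd - ll_bwd))
```

Under this mapping, decoded speed saturates at `v_max` when one direction is far more likely than the other and shrinks toward zero as the two likelihoods become similar, matching the qualitative behavior described above.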

Distributions of decoded velocity when moving under BMI control (Fig. 3e, bottom) were similar to the distributions of velocity that would have resulted were the pedal still operative (Fig. 3e, top). Importantly, forward and backward distributions overlapped little; the direction of decoded motion was almost always correct. Decoded velocity was near maximal at most times, especially for Monkey G. The decoded velocity obtained from these four dimensions constituted the core of our decoder. We document the performance of that decoder in the next section. Later sections describe how aspects of the decoder were fine-tuned by leveraging the remaining four dimensions.

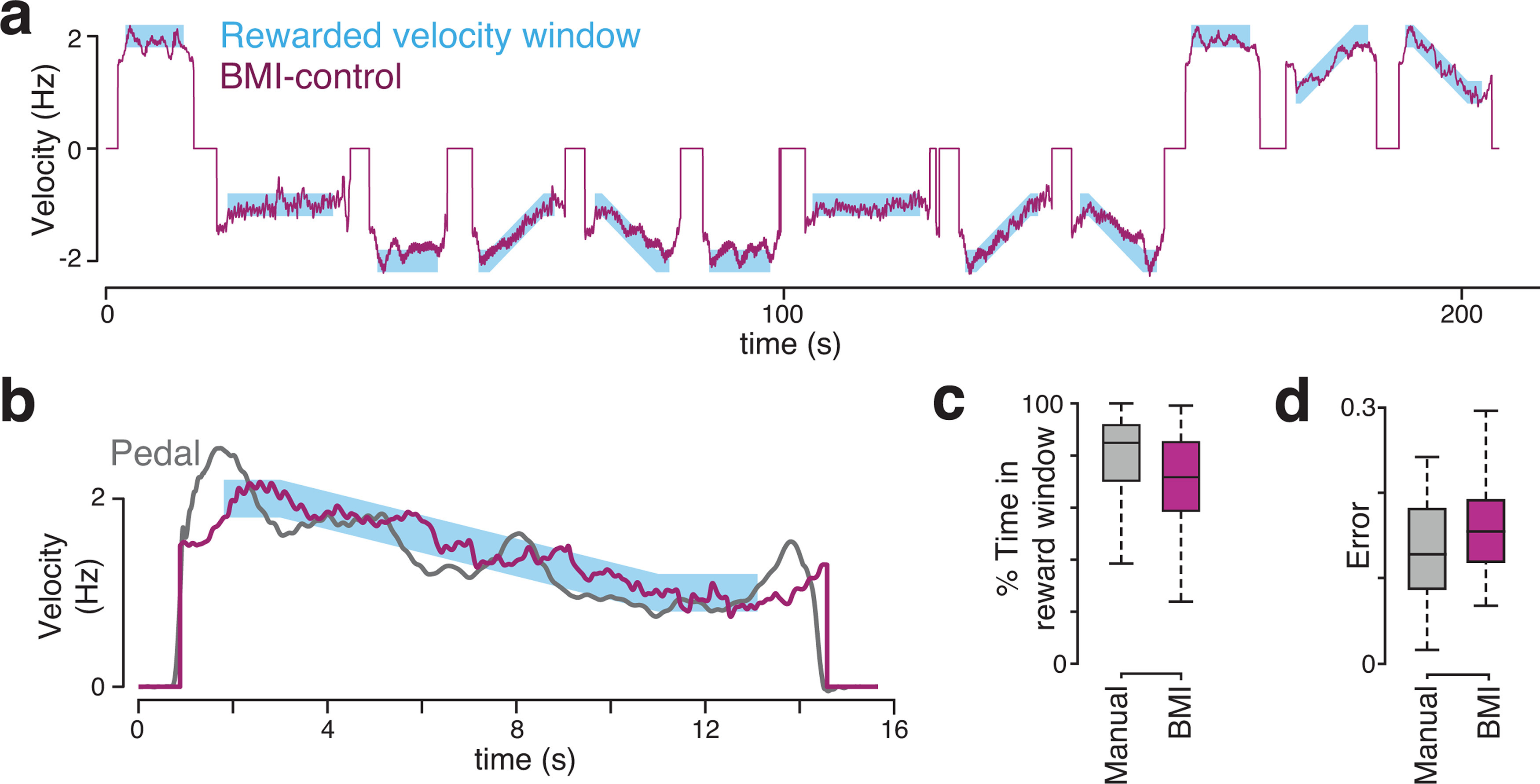

Performance

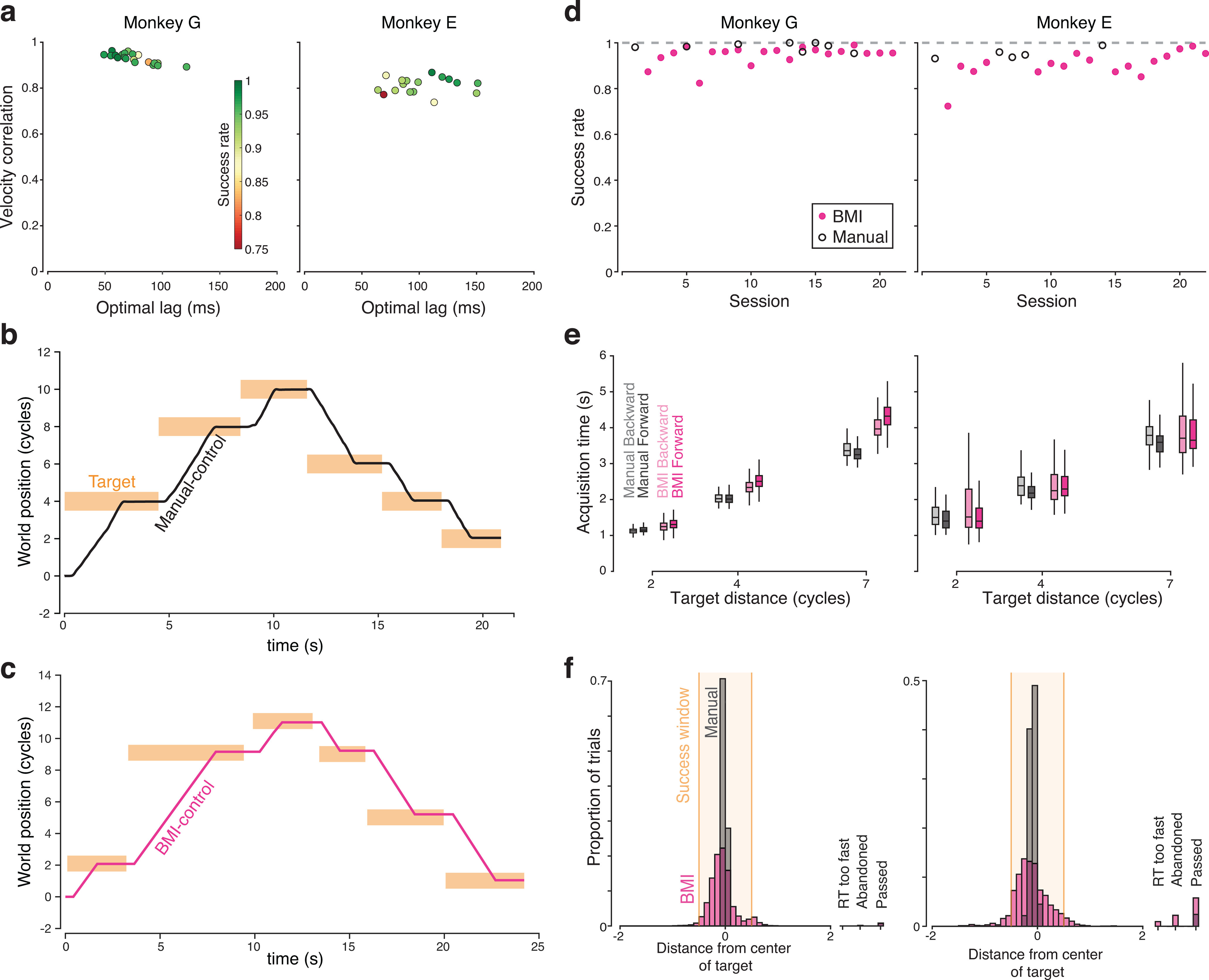

Monkeys performed the task very well under closed-loop BMI control (Fig. 4 and Movie 1). Monkeys continued to cycle as normal, presumably not realizing that the pedal had been disconnected from the control system. The illusion that the pedal still controlled the task was supported by a high similarity between decoded virtual velocity and intended virtual velocity (i.e., what would have been produced by the pedal were it still controlling the task). The cross-correlation between these was 0.93 ± 0.02 and 0.81 ± 0.03 (Monkeys G and E, mean ± SD across sessions) with a short lag: 76 ± 4 ms and 102 ± 7 ms (Fig. 4a). There were also few false starts; it was exceedingly rare for decoded motion to be non-zero when the monkey was attempting to remain stationary on top of a target. False starts occurred on 0.29% and 0.09% of trials (Monkeys G and E), yielding an average of 1.9 and 0.12 occurrences per day. This is notable because combatting unintended movement is a key challenge for BMI decoding. The above features—high correlation with intended movement, low latency, and few false starts—led to near-normal performance under BMI control (Fig. 4b,c). Success rates under BMI control (Fig. 4d, magenta symbols) were almost as high as under manual control (open symbols), and the time to move from target to target was only slightly greater under BMI control (Fig. 4e).
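
For concreteness, the peak cross-correlation and its lag could be computed along the following lines. This is a sketch: the function name, the normalization, and the 0.5 s search window are assumptions rather than details taken from the study.

```python
import numpy as np

def peak_cross_correlation(decoded, intended, dt, max_lag_s=0.5):
    """Peak normalized cross-correlation and the lag (s) at which it occurs.

    Positive lag means `decoded` trails `intended`.
    """
    a = (decoded - decoded.mean()) / decoded.std()
    b = (intended - intended.mean()) / intended.std()
    n = len(a)
    max_lag = int(round(max_lag_s / dt))
    lags = np.arange(-max_lag, max_lag + 1)
    # For lag k, pair decoded[t + k] with intended[t] over the overlapping samples.
    corr = np.array([
        np.mean(a[max(k, 0):n + min(k, 0)] * b[max(-k, 0):n - max(k, 0)])
        for k in lags
    ])
    best = np.argmax(corr)
    return corr[best], lags[best] * dt
```

Applied to one session's decoded and pedal-derived velocity traces sampled at interval `dt`, the two returned values correspond to the quantities summarized per session in Fig. 4a.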

Figure 4.

Decoder performance. a, Summary of the cross-correlation between decoded virtual velocity under BMI control, and the virtual velocity that would have been produced by the pedal (which monkeys continued to manipulate normally). Each symbol corresponds to one BMI-control session and plots the peak of the cross-correlation versus the lag where that peak occurred. Colors represent success rate during that session. b, Example manual-control performance for 6 consecutive trials (3 forward and 3 backward). World position is expressed in terms of the number of cycles of the pedal needed to move that distance. For plotting purposes, the position at the beginning of this stretch of behavior was set to zero. Bars represent the time that targets turned on and off (horizontal span) and the size of the acceptance window (vertical span). c, Similar plot during BMI control. For ease of comparison, world position is still expressed in terms of the number of physical cycles that would be needed to travel that far, although physical cycling no longer had any impact on virtual velocity. d, Success rate for both monkeys. Each symbol plots, for one session, the proportion of trials where the monkey successfully moved from the initial target to the final target, stopped within it, and remained stationary until reward delivery. Dashed line at 1 for reference. e, Target acquisition times for successful trials. Middle lines indicate median. Box edges represent the first and third quartiles. Whiskers include all nonoutlier points (points <1.5 times the interquartile range from the box edges). Data are shown separately for the three target distances. f, Histograms of stopping location from both monkeys. Analysis considers both successful and failed trials. Far right, Bars represent the proportion of trials where the monkey failed for reasons other than stopping accuracy per se. This included trials where monkeys disrespected the reaction time limits, abandoned the trial before approaching the target, or passed through the target without stopping.

BMI and manual control of primary task (Monkey G, October 4, 2018). Both manual (left) and BMI control (right) sessions were performed on the same day, which was the fourth day of BMI control. Manual and BMI control success rates were very similar on this day (see Fig. 4d). The task required that the monkey turn the hand pedal in the instructed direction to move through the virtual environment, stop on top of a lighted target, and remain still while collecting juice reward. The color of the landscape indicated whether cycling must be “forward” (green landscape) or “backward” (tan landscape). There were six total conditions, defined by cycling direction and target distance (2, 4, or 7 cycles).

Although the BMI decoder was trained using only data from 7 cycle movements, monkeys successfully used it to perform random sequences with different distances between targets (specifically, random combinations of 2, 4, and 7 cycles). For example, in Figure 4c, the monkey successfully performs a sequence involving 2, 7, and 2 cycle forward movements, followed by 2, 4, and 4 cycle backward movements. The only respect in which BMI control suffered noticeably was accuracy in stopping on the middle of the target. Under manual control, monkeys stopped very close to the target center (Fig. 4f, gray histogram), which always corresponded to the “pedal-straight-down” position. Stopping was less accurate under BMI control (magenta histogram). This was partly because virtual self-motion was swift, so small errors in decoded stopping time became relevant. A 100 ms error corresponded to ∼0.2 cycles of physical motion. The average SD of decoded stopping time (relative to actual stopping time) was 133 ms (Monkey G) and 99 ms (Monkey E). Greater stopping error in BMI-control trials was also due to an incidental advantage of manual control: the target center was aligned with the pedal-straight-down position, a fact that monkeys leveraged to stop very accurately. This strategy was unavailable during BMI control because the correct moment to stop rarely aligned perfectly with the pedal-straight-down position (this occurred only if decoded and intended virtual velocity matched perfectly when averaged across the cycling bout).
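
The correspondence between timing error and positional error follows from simple arithmetic, assuming that decoded velocity is close to its ∼2 Hz maximum at the moment of stopping:

```latex
\Delta x \;\approx\; v \,\Delta t \;=\; 2\ \text{cycles/s} \times 0.1\ \text{s} \;=\; 0.2\ \text{cycles}
```

Under the same assumption, the reported SDs of decoded stopping time (133 ms and 99 ms) correspond to roughly 0.27 and 0.20 cycles, respectively.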

Performance was modestly better for Monkey G than for Monkey E. This was likely due to the implantation of two arrays rather than one. Work ethic may also have been a factor; Monkey E performed fewer trials under both BMI and manual control. Still, both monkeys could use the BMI successfully starting on the first day, with success rates of 0.87 and 0.74 (Monkeys G and E). Monkey G's performance approached his manual-control success rate within a few sessions. Monkey E's performance also improved quickly, although his manual-control and BMI-control success rates were mostly lower than Monkey G's. The last five sessions involved BMI success rates of 0.97 and 0.96 (Monkeys G and E). This compares favorably with the overall averages of 0.98 and 0.95 under manual control. Although this improvement over time may relate to adaptation, the more likely explanation is simply that monkeys learned not to be annoyed or discouraged by the small differences between decoded and intended velocity.

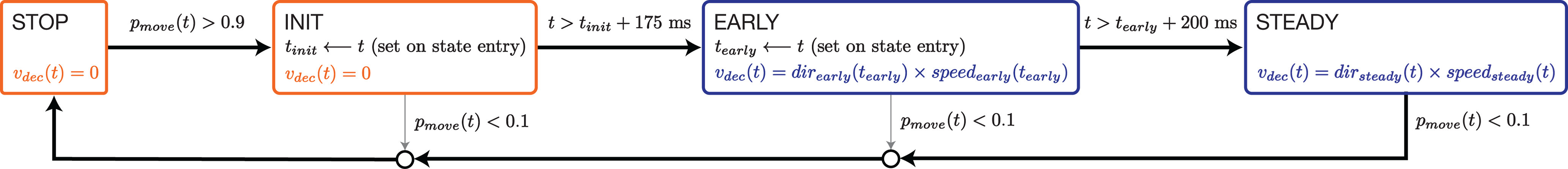

State machine

The performance documented above was achieved using a state-dependent decode (Fig. 5). The rotational-feature-based strategy (described above) determined virtual self-motion in the “STEADY” state. Thus, self-motion could be forward, backward, or stopped while in STEADY. The use of other states was not strictly necessary but helped fine-tune performance. State transitions were governed by activity in the moving-sensitive dimension, which was translated into a probability of moving, computed as described in the next section. If this probability was low, the STOP state was active and decoded virtual velocity was forced to be zero. For almost all times when the STOP state was active, the angular-momentum-based strategy would have estimated zero velocity, even if it were not enforced. Nevertheless, the use of an explicit STOP state helped nearly eliminate false starts.
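
The gating role of the STOP state can be sketched as below. This is a deliberately simplified, memoryless illustration covering only the two states named so far. The variable name `p_move`, the single threshold, and its value are hypothetical, and the actual transition rules (Fig. 5) may depend on the previous state and involve additional states.

```python
from enum import Enum

class State(Enum):
    STOP = "STOP"
    STEADY = "STEADY"

def step(p_move, steady_velocity, threshold=0.5):
    """One decoder update: choose the state, then the output velocity.

    p_move:          probability of moving from the moving-sensitive dimension.
    steady_velocity: velocity estimate from the rotational (angular-momentum) decode.
    threshold:       hypothetical cutoff below which STOP is active.
    """
    state = State.STEADY if p_move >= threshold else State.STOP
    # In STOP the velocity is forced to zero regardless of the rotational decode.
    velocity = steady_velocity if state is State.STEADY else 0.0
    return state, velocity
```

For example, `step(0.1, 1.7)` yields the STOP state with zero velocity even though the rotational decode suggested motion, which is how an explicit STOP state can suppress false starts.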

Figure 5.