Highlights

- Despite attempts to improve diversity in healthcare, many populations continue to be underrepresented in medicine (URM).

- Blinded interviews, which de-emphasize the written application, may reduce bias in application review.

- We found that blinded interviewers were more likely to rank URM applicants more highly.

- Other techniques to limit bias, such as standardized questions and implicit bias training, should be considered.

Keywords: Underrepresented in Medicine, Blinded applications, Fellowship interviews

Abstract

Biases in application review may limit access of applicants who are underrepresented in medicine (URM) to graduate medical training opportunities. We aimed to evaluate the association between blinding interviewers to written applications and final ranking of all applicants and URM applicants for Gynecologic Oncology fellowship. During 2020 virtual Gynecologic Oncology fellowship interviews, we blinded one group of interviewers to written applications, including self-reported URM status. Blinded interviewers visually interacted with the applicants but did not review their applications. Interviewers submitted independent rank lists. We compared pooled rankings of blinded and non-blinded interviewers for all applicants and for URM applicants using appropriate bivariate statistics.

We received 94 applications for two positions through the National Resident Matching Program, of which 18 (19%) self-identified as URM. We invited 40 applicants to interview and interviewed 30 applicants over six sessions. Ten interviewees (33%) self-identified as URM. Of 12 or 13 faculty interviewers during each interview session, 3 or 4 were blinded to the written application. There was no statistically significant difference in rank order when comparing blinded to non-blinded interviewers overall. However, blinded interviewers ranked URM applicants higher than non-blinded interviewers (p = 0.04). Blinding of written application metrics may allow for higher ranking of URM individuals.

1. Introduction

Although Hispanic and Black populations have grown compared to non-minority groups in the United States, they are proportionally more underrepresented in medicine (URM) today compared to thirty years ago despite concerted attempts to improve diversity in healthcare (Lett et al., 2018). Studies have demonstrated that URM medical students are motivated to enter healthcare by factors such as inclusion and support from role models (Hadinger, 2017, Agawu et al., 2019). Lack of URM trainees continues to be a concern, from medical school through fellowship training (Auseon et al., 2013). Furthermore, experiences of URM trainees tend to be worse, with greater risk for depression in programs with less diversity (Elharake et al., 2020).

Reasons for underrepresentation of minority groups in medicine are complex and multifactorial, but implicit bias, a concept that recognizes unconscious preferences or aversions to people or groups, is an increasingly recognized factor (Qt et al., 2017). Blinded interviews include the selective withholding of aspects of candidates’ written applications that have been shown to be particularly susceptible to bias, with the intent of reducing implicit biases and potentially altering overall final ranking (Miles et al., 2001). An examination of processes in Gynecologic Oncology fellowship for recruiting and interviewing trainees is paramount to improve diversity.

We aimed to evaluate the association between blinding interviewers to written applications and final ranking of all applicants and of URM applicants for a Gynecologic Oncology fellowship.

2. Methods

Through the National Resident Matching Program for the 2020 Gynecologic Oncology fellowship match, we received 94 applications for two positions. Applicants self-identified as URM, defined by the Association of American Medical Colleges as “racial and ethnic populations that are underrepresented in the medical profession relative to their numbers in the general population.” The Program Director (PD) and Associate Program Director (APD) reviewed non-blinded applications and agreed on 40 applicants to invite for interview. There was no minimum cutoff for standardized test scores; academic performance, letters of reference, personal statements, and research experience were considered, and all applicants who were invited to interview met a minimum academic standard. The PD and APD were not blinded to URM status. Due to the COVID-19 pandemic, video interviewing was employed.

Thirty applicants accepted interview invitations. Depending on availability, 12 or 13 faculty interviewed during each session, and three or four of them were blinded to the entire written application, including self-reported URM status. Faculty were offered the opportunity to be blinded, and the same faculty were blinded at each interview session. The PD and APD were not blinded. By chance, all blinded faculty were women. None of the faculty or fellows self-identified as URM (Table 1).

Table 1.

Characteristics of fellowship applicants (n = 94) and interviewers (n = 13).

| Applicants | n (%) |
|---|---|
| Self-identified URM | 18 (19) |
| Allopathic medical school | 92 (98) |
| Foreign medical school | 8 (8.5) |
| Residency program location | |
| Southeast | 18 (19) |
| Midwest | 30 (32) |
| Northeast | 25 (26.5) |
| Southwest | 8 (8.5) |
| West | 11 (12) |
| Puerto Rico | 2 (2) |
| Interviewers | |
| Self-identified URM | 0 (0) |
| Gender | |
| Female | 7 (54) |
| Male | 6 (46) |
| Rank | |
| Professor | 2 (15) |
| Associate Professor | 3 (23) |
| Assistant Professor | 5 (38) |
| Fellow | 3 (23) |

Abbreviations: URM, underrepresented in medicine.

Each applicant interviewed with all faculty present. Interviews were conducted with one to three faculty and one applicant, with each applicant completing six interviews. When multiple faculty were present, they were either all blinded or all non-blinded. There were no predetermined or required questions in the twenty-minute time allotment. A scoring sheet was provided as a guide, but no formal scores were assigned. Each faculty member interviewed four to six applicants per session and ranked the applicants they interviewed in order, with one being their top choice from that session. Interviewers were unaware that ranking of URM applicants was being assessed.

This study was designated by our institutional review board as exempt. We computed descriptive statistics, including tests of normality, and used appropriate bivariate statistics to compare pooled rankings of blinded and non-blinded interviewers. A p-value < 0.05 was considered significant. This study was not powered for significance as it was exploratory in nature with a fixed sample size. IBM® SPSS version 27 was used for statistical analyses.
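The pooling of rankings described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' analysis (which was run in SPSS): the function name `average_rank_by_group`, the record layout, and the example ranks are all hypothetical.

```python
from collections import defaultdict
from statistics import mean


def average_rank_by_group(rankings):
    """Pool ranks per applicant, separately for blinded and non-blinded interviewers.

    `rankings` is an iterable of (applicant_id, interviewer_is_blinded, rank)
    tuples, where rank 1 is an interviewer's top choice in a session.
    This record layout is an assumption made for illustration.
    """
    pooled = defaultdict(lambda: {"blinded": [], "non_blinded": []})
    for applicant, is_blinded, rank in rankings:
        group = "blinded" if is_blinded else "non_blinded"
        pooled[applicant][group].append(rank)
    # Average the pooled ranks; skip a group if no interviewer of that type ranked the applicant.
    return {
        applicant: {group: mean(ranks) for group, ranks in groups.items() if ranks}
        for applicant, groups in pooled.items()
    }


# Hypothetical ranks, not study data.
example = [
    ("A", True, 2), ("A", True, 3), ("A", False, 1), ("A", False, 2),
    ("B", True, 1), ("B", False, 3), ("B", False, 4),
]
averages = average_rank_by_group(example)
```

Because each interviewer ranks only the applicants seen in a given session, averaging within the blinded and non-blinded groups gives one comparable number per applicant per group.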

3. Results

Of 94 applicants, 18 (19%) self-identified as URM. Self-identified URM applicants comprised 10/30 (33%) interviewees. Interviewee characteristics are shown in Table 1. Of ten applicants who declined or cancelled their interview, one (10%) identified as URM.

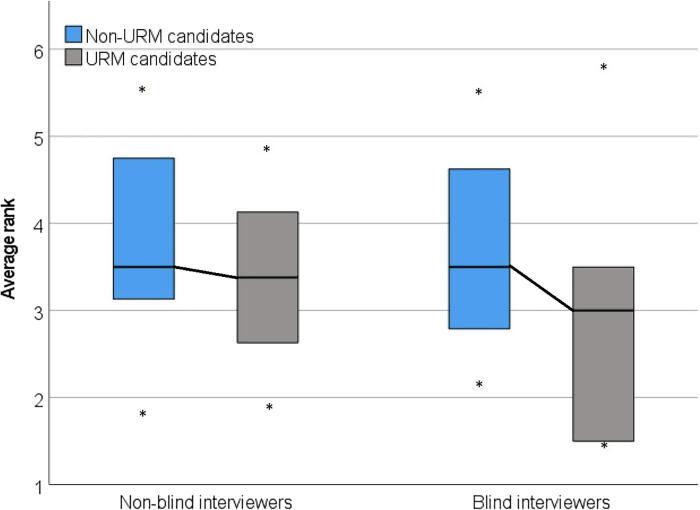

Medians of average rank are shown in Table 2. Overall, there was no difference in the average rank assigned to each applicant by blinded compared with non-blinded interviewers. We also compared differences in medians of average rank between non-blinded and blinded interviewers for non-URM and URM applicants, calculated as non-blind average rank minus blind average rank. For example, if the average rank of one applicant was 2.4 by non-blinded interviewers and 2.6 by blinded interviewers, the difference in average ranks would be −0.2. The average difference for non-URM applicants was −0.1 and for URM applicants was 0.4; because a lower rank number is better, a positive difference indicates that blinded interviewers were more likely to rank URM applicants higher (Fig. 1, p = 0.04). One of the two matched applicants to the program self-identified as URM.
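The difference calculation described above (non-blind average rank minus blind average rank) can be sketched as follows. The function names and the per-applicant values are hypothetical; only the 2.4 versus 2.6 worked example comes from the text. Summarizing each group by its median is an assumption based on the caption of Fig. 1.

```python
from statistics import median


def rank_difference(non_blinded_avg, blinded_avg):
    """Non-blind minus blind average rank.

    Rank 1 is the top choice, so a positive value means the blinded
    interviewers ranked the applicant higher (gave a smaller rank number).
    """
    return non_blinded_avg - blinded_avg


def median_difference_by_urm(applicants):
    """Summarize differences per group.

    `applicants` is an iterable of (is_urm, non_blinded_avg, blinded_avg).
    """
    diffs = {"URM": [], "non-URM": []}
    for is_urm, non_blinded_avg, blinded_avg in applicants:
        group = "URM" if is_urm else "non-URM"
        diffs[group].append(rank_difference(non_blinded_avg, blinded_avg))
    return {group: median(values) for group, values in diffs.items() if values}


# Worked example from the text: 2.4 (non-blinded) vs 2.6 (blinded), about -0.2.
worked = rank_difference(2.4, 2.6)

# Hypothetical average ranks, not study data.
summary = median_difference_by_urm([
    (True, 3.0, 2.5), (True, 2.0, 2.0), (True, 4.0, 3.0),
    (False, 2.0, 2.5), (False, 3.0, 3.0),
])
```

A significance test on these differences would be a separate step; the source does not name the specific bivariate test used, so none is assumed here.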

Table 2.

Median of average rank for blinded and non-blinded interviewers.

| | Blinded interviewers (n = 3–4), median (range) | Non-blinded interviewers (n = 8), median (range) |
|---|---|---|
| Overall | 2.7 (1.0–5.3) | 2.9 (1.3–5.0) |
| Non-URM | 3.3 (1.7–5.0) | 2.9 (1.3–5.0) |
| URM | 2.5 (1.0–5.3) | 2.9 (1.4–4.4) |

Abbreviations: URM, underrepresented in medicine.

Fig. 1.

Differences in median of average rank between non-blinded and blinded interviewers for non-URM and URM applicants. Difference calculated by (non-blind – blind) average rankings, demonstrating that URM applicants were ranked higher by blinded interviewers, p = 0.04. * minimum/maximum.

4. Discussion

We evaluated the association between blinding interviewers to written applications and final rank overall and of URM applicants for Gynecologic Oncology fellowship. We found no significant differences in rank order overall, but blinded interviewers were more likely to rank URM applicants higher compared to non-blinded interviewers.

Research has shown that blinded interviews and standardized questions improve interview utility and accuracy (McDaniel et al., 1994). Huffcutt outlines seven principles for conducting employment interviews, including the suggestion that interviewers should “know as little about the candidate as possible.” Huffcutt posits that when interviewers review candidate information beforehand, they tend to form general impressions before even meeting the applicant and only confirm these initial impressions through selective treatment during the interview (Huffcutt, 2010). These best practices have not completely translated to the field of medicine: only 8–20% of residency programs report using blinding during interviews, and virtually no data exist on blinding during subspecialty fellowship interviews (Kasales et al., 2019, Kim et al., 2016).

In contrast to prior studies that primarily evaluated blinding of academic performance, in our study interviewers were also blinded to self-reported URM status. On initial review of applications, all applicants who were invited to interview met a minimum academic standard. Interestingly, we found that blinded interviewers were more likely to rank URM applicants higher. One explanation for this difference may be that written application materials were perceived less positively for URM applicants, so interviewers who had access to all materials may have already formed an unconscious judgment about the quality of the applicant.

While the difference in rank may be due to the strength of the applicant, implicit bias may also play a role in this observed difference, as it has been shown to pervade both medical school admissions (Qt et al., 2017) and residency selection (Maxfield et al., 2020). Studies have used implicit association tests (IAT) in an attempt to mitigate this bias (Maxfield et al., 2020). We acknowledge the limitations of IAT and implicit bias training, which in isolation are not viable solutions to institutionalized racism and the larger structural problems at hand but may be a step in the right direction.

There are several limitations to our study. First, although interviewers were blinded to the written application, they were still able to see the applicants on the video interview. Blinding of the written application removes the bias that URM applicants may experience in aspects such as letters of recommendation, fewer research opportunities, and lower test scores. Nevertheless, incomplete blinding may confound the results of our analysis. Additionally, biases may exist in the selection of faculty interviewers that may confound our results, as faculty were not randomized to be blinded and initial review of applications was completed by only two faculty members (the PD and APD). Although all faculty complete implicit bias training, differences in applicant rank may be attributable to differences among interviewers. Because each interviewer assigned a rank (not a score) to each applicant, the resulting distribution is the same for every interviewer; thus, it is not possible to compare inter-interviewer differences. Finally, our study had a fixed sample size and was not powered for significance. We are limited in our ability to repeat this study to increase sample size, as faculty are now aware of the reason for blinding.

Educating physicians on implicit bias and offering testing of one’s own biases may be one step towards recognizing and mitigating unconscious influences in the interview process (Sukhera et al., 2018). Future work may assess the use of standardized questions and formalized scoring sheets that include diversity. Programs may also consider blinding pieces of the written application once an applicant meets the set minimal criteria. Simply recruiting a more diverse physician workforce will not solve the problem of bias in medicine but is a critical step. Recruitment efforts must occur alongside increased support and mentorship of URM trainees, education on health disparities, partnership with community, and advocacy from the local to national level (Edwards, 2021, Bonifacino et al., 2021).

We have demonstrated that blinded interviewers were more likely to rank URM applicants higher than non-blinded interviewers. Blinding of written application metrics may remove implicit bias for traditional markers of success and allow for higher ranking of URM individuals.

CRediT authorship contribution statement

Jennifer Haag: Investigation, Writing – original draft. Brooke Sanders: Investigation, Writing – original draft. Joseph Walker Keach: Conceptualization, Methodology, Writing – review & editing. Carolyn Lefkowits: Conceptualization, Methodology, Writing – review & editing. Jeanelle Sheeder: Conceptualization, Formal analysis, Writing – review & editing. Kian Behbakht: Conceptualization, Data curation, Methodology, Supervision, Writing – review & editing.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgements

The authors would like to thank Dr. Shanta Zimmer, Associate Dean for Diversity and Inclusion, for her invaluable feedback on the review of this manuscript.

Footnotes

This project was presented as a poster at the Society of Gynecologic Oncology Virtual Annual Meeting on Women’s Cancer, March 19–25, 2021.

References

- Lett L.A., Orji W.U., Sebro R. Declining racial and ethnic representation in clinical academic medicine: A longitudinal study of 16 US medical specialties. PLoS ONE. 2018;13(11) doi: 10.1371/journal.pone.0207274.

- Hadinger M.A. Underrepresented Minorities in Medical School Admissions: A Qualitative Study. Teach Learn Med. 2017;29(1):31–41. doi: 10.1080/10401334.2016.1220861.

- Agawu A., Fahl C., Alexis D., Diaz T., Harris D., Harris M.C., et al. The Influence of gender and underrepresented minority status on medical student ranking of residency programs. J. Natl Med. Assoc. 2019;111(6):665–673. doi: 10.1016/j.jnma.2019.09.002.

- Auseon A.J., Kolibash A.J., Jr., Capers Q. Successful efforts to increase diversity in a cardiology fellowship training program. J. Graduate Med. Educat. 2013;5(3):481–485. doi: 10.4300/JGME-D-12-00307.1.

- Elharake J.A., Frank E., Kalmbach D.A., Mata D.A., Sen S. Racial and ethnic diversity and depression in residency programs: a prospective cohort study. J. Gen. Intern. Med. 2020;35(4):1325–1327. doi: 10.1007/s11606-019-05570-x.

- Qt C., Clinchot D., McDougle L., Greenwald A.G. Implicit racial bias in medical school admissions. Academic Med.: J. Assoc. Am. Medical Colleges. 2017;92(3):365–369. doi: 10.1097/ACM.0000000000001388.

- Miles W.S., Shaw V., Risucci D. The role of blinded interviews in the assessment of surgical residency candidates. Am. J. Surg. 2001;182(2):143–146. doi: 10.1016/s0002-9610(01)00668-7.

- McDaniel M.A., Whetzel D.L., Schmidt F.L., Maurer S.D. The validity of employment interviews: a comprehensive review and meta-analysis. J. Appl. Psychol. 1994;79(4):599–616.

- Huffcutt A. From science to practice: Seven principles for conducting employment interviews. Appl. HRM Res. 2010;12:121–136.

- Kasales C., Peterson C., Gagnon E. Interview techniques utilized in radiology resident selection- a survey of the APDR. Acad. Radiol. 2019;26(7):989–998. doi: 10.1016/j.acra.2018.11.002.

- Kim R.H., Gilbert T., Suh S., Miller J.K., Eggerstedt J.M. General surgery residency interviews: are we following best practices? Am. J. Surg. 2016;211(2):476–481.e3. doi: 10.1016/j.amjsurg.2015.10.003.

- Maxfield C.M., Thorpe M.P., Desser T.S., Heitkamp D., Hull N.C., Johnson K.S., et al. Awareness of implicit bias mitigates discrimination in radiology resident selection. Med. Educ. 2020;54(7):637–642. doi: 10.1111/medu.14146.

- Sukhera J., Milne A., Teunissen P.W., Lingard L., Watling C. The actual versus idealized self: exploring responses to feedback about implicit bias in health professionals. Academic Med.: J. Assoc. Am. Medical Colleges. 2018;93(4):623–629. doi: 10.1097/ACM.0000000000002006.

- Edwards J.A. Mentorship of underrepresented minorities and women in surgery. Am. J. Surg. 2021;221(4):768–769. doi: 10.1016/j.amjsurg.2020.09.012.

- Bonifacino E., Ufomata E.O., Farkas A.H., Turner R., Corbelli J.A. Mentorship of underrepresented physicians and trainees in academic medicine: a systematic review. J. Gen. Intern. Med. 2021;36(4):1023–1034. doi: 10.1007/s11606-020-06478-7.