Abstract

Background

Numerous wrist-wearable devices to measure physical activity are currently available, but there is a need to unify the evidence on how they compare in terms of acceptability and accuracy.

Objective

The aim of this study is to perform a systematic review of the literature to assess the accuracy and acceptability (willingness to use the device for the task it is designed to support) of wrist-wearable activity trackers.

Methods

We searched MEDLINE, Embase, the Cochrane Central Register of Controlled Trials, and SPORTDiscus for studies measuring physical activity in the general population using wrist-wearable activity trackers. We screened articles for inclusion and, for the included studies, reported data on the studies’ setting and population, outcome measured, and risk of bias.

Results

A total of 65 articles were included in our review. Accuracy was assessed for 14 different outcomes, which can be classified into the following categories: count of specific activities (including step counts), time spent being active, intensity of physical activity (including energy expenditure), heart rate, distance, and speed. Substantial clinical heterogeneity precluded a meta-analysis of the results. The outcomes assessed most frequently were step counts, heart rate, and energy expenditure. For step counts, the Fitbit Charge (or the Fitbit Charge HR) had a mean absolute percentage error (MAPE) <25% across 20 studies. For heart rate, the Apple Watch had a MAPE <10% in 2 studies. For energy expenditure, the MAPE was >30% for all the brands, showing poor accuracy across devices. Acceptability was most frequently measured through data availability and wearing time. Data availability was ≥75% for the Fitbit Charge HR, Fitbit Flex 2, and Garmin Vivofit. The wearing time was 89% for both the GENEActiv and Nike FuelBand.

Conclusions

The Fitbit Charge and Fitbit Charge HR consistently showed good accuracy for step counts, as did the Apple Watch for heart rate. None of the tested devices measured energy expenditure accurately. Efforts should be made to reduce the heterogeneity among studies.

Keywords: diagnosis, measurement, wrist-wearable devices, mobile phone

Introduction

Background

Tracking, measuring, and documenting one’s physical activity can be a way of monitoring and encouraging oneself to participate in daily physical activity; increased activity is thought to translate into important positive health outcomes, both physical and mental [1]. In the past, most physical activity tracking was done manually by oneself or an external assessor, through records, logbooks, or using questionnaires. These are indirect methods to quantify physical activity, meaning that they do not measure movement directly as it occurs [2]. The main disadvantages of such methods are the administrative burden on either the self-assessor or the external assessor and the potential imprecision because of recall bias [2,3]. Direct methods to assess physical activity [2], such as accelerometers or pedometers that digitally record movement, are preferred because they eliminate recall bias and are convenient. This process of activity tracking has become automated, accessible, and digitized with wearable tracking technology such as wristband sensors and smartwatches that can be linked to computer apps on other devices such as smartphones, tablets, and PCs. When data are uploaded to these devices, users can review their physical activity log and potentially use this feedback to make behavior changes with regard to physical activity.

The ideal device should be acceptable to the end user, affordable, easy to use, and accurate in measuring physical activity. Accuracy can be defined as the closeness of the measured value to the actual value. It can be quantified using measures of agreement, sensitivity and specificity, receiver operating characteristic curves, or absolute and percentage differences [4]. Agreement can be defined as “the degree of concordance between two or more sets of measurements” [5]. It can be measured as percentage agreement, that is, the percentage of cases in which 2 methods of measuring the same variable lead to classification in the same category. Another measure of agreement is the κ statistic, which quantifies agreement beyond that expected by chance [6]. Sensitivity and specificity are the true positive and true negative proportions, respectively. These proportions are calculated using the measurement method under evaluation as the index test and another method, known to be accurate, as the reference standard [4]. Receiver operating characteristic curves are obtained by plotting sensitivity against the complement of specificity (1 – specificity) and can be used to find optimal cutoff points for the index test. Absolute and percentage differences quantify how far the index test measurement is from the reference standard or from the average of the two measurements [4]. Acceptability can be broadly defined as “the demonstrable willingness within a user group to employ information technology for the task it is designed to support” [7]. It can be assessed qualitatively (eg, through questionnaires or interviews) or quantitatively (eg, as the percentage of time during which the device is worn or the data are available, or with ad hoc scales). According to a 2019 review, acceptability or acceptance of wrist-wearable activity-tracking devices depends on the type of user and the context of use [8].
This same review indicates that research on accuracy has not kept up with the plethora of wearable physical activity–tracking devices in the market [8]. This may be because of the rapidly changing landscape as companies continue to upgrade models with different technical specifications and features. The purpose of this systematic review is to assess the acceptability and accuracy of these wrist-wearable activity-tracking devices through a focused in-depth review of primary studies assessing these 2 characteristics.
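The agreement and accuracy measures defined above can be illustrated with a short Python sketch. It is an illustrative example, not the procedure used by any included study: the function names and the minute-by-minute "active"/"inactive" data are hypothetical, κ is computed as Cohen's κ for 2 methods, and the receiver operating characteristic cutoff is chosen with the Youden index (sensitivity + specificity – 1), which is only one of several common criteria.

```python
from collections import Counter


def percentage_agreement(index, reference):
    """Proportion of cases that index test and reference standard place in the same category."""
    if len(index) != len(reference) or not index:
        raise ValueError("need two non-empty classification lists of equal length")
    return sum(a == b for a, b in zip(index, reference)) / len(index)


def cohens_kappa(index, reference):
    """Agreement beyond chance: (observed - expected) / (1 - expected)."""
    n = len(index)
    observed = percentage_agreement(index, reference)
    count_i, count_r = Counter(index), Counter(reference)
    # Expected chance agreement from the marginal proportions of each category.
    expected = sum(count_i[c] * count_r[c] for c in count_i.keys() | count_r.keys()) / n**2
    if expected == 1:
        raise ValueError("kappa is undefined when both methods use a single category")
    return (observed - expected) / (1 - expected)


def sensitivity_specificity(index, reference, positive):
    """True positive and true negative proportions, with `reference` as the reference standard."""
    pairs = list(zip(index, reference))
    tp = sum(i == positive and r == positive for i, r in pairs)
    fn = sum(i != positive and r == positive for i, r in pairs)
    tn = sum(i != positive and r != positive for i, r in pairs)
    fp = sum(i == positive and r != positive for i, r in pairs)
    return tp / (tp + fn), tn / (tn + fp)


def best_cutoff(scores, truth):
    """Use each observed score as a cutoff (score >= cutoff means positive); return the
    ROC points and the cutoff maximizing Youden's J = sensitivity + specificity - 1."""
    n_pos = sum(truth)
    n_neg = len(truth) - n_pos
    roc = []
    for cut in sorted(set(scores)):
        tp = sum(s >= cut and y for s, y in zip(scores, truth))
        tn = sum(s < cut and not y for s, y in zip(scores, truth))
        roc.append((cut, tp / n_pos, 1 - tn / n_neg))  # (cutoff, sensitivity, 1 - specificity)
    cutoff = max(roc, key=lambda point: point[1] - point[2])[0]
    return roc, cutoff


# Hypothetical minute-by-minute classifications: tracker (index test) vs direct observation.
tracker = ["active", "active", "inactive", "inactive", "active", "inactive", "inactive", "inactive"]
observed = ["active", "inactive", "inactive", "inactive", "active", "inactive", "active", "inactive"]
print(percentage_agreement(tracker, observed))                        # 0.75
print(round(cohens_kappa(tracker, observed), 3))                      # 0.467
print(sensitivity_specificity(tracker, observed, positive="active"))  # (0.6666666666666666, 0.8)

# Hypothetical continuous tracker scores with reference-standard labels, for an ROC scan.
roc, cutoff = best_cutoff([5, 15, 25, 35, 45, 55], [False, False, False, True, False, True])
print(cutoff)  # 35
```

In this toy example, the 2 methods agree on 75% of minutes, but κ is lower (about 0.47) because part of that agreement would be expected by chance alone given how often each method reports "inactive."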

Objectives

The first objective of this systematic review is to assess the accuracy of wrist-wearable activity-tracking devices for measuring physical activity.

The second objective is to assess the acceptability of wrist-wearable activity-tracking devices for measuring physical activity.

Methods

The methods used for this systematic review have been registered in the PROSPERO database (CRD42019137420).

Search Strategy

The databases searched were MEDLINE, Embase, the Cochrane Central Register of Controlled Trials, and SPORTDiscus from inception to May 28, 2019. Search strategies were developed to retrieve content on wearable activity trackers, their accuracy, and the reproducibility of their results. We used search terms such as Wearable device and Fitness tracker to identify studies on the use of consumer-based wearable activity trackers, whereas terms such as data accuracy and reproducibility of results were included to retrieve content focused on activity tracker validation. The search strategy is available on the web in the PROSPERO record. A snowball search was conducted by checking the references of relevant studies and systematic reviews on this topic that were identified in our original search.

Selection of Studies

For the acceptability objective, the population was the general population, without sex or age restrictions. The intervention was the use of a wrist-wearable activity tracker. The outcome was any quantitative measure of acceptability, including wearing time, data availability, and questionnaires to assess acceptability.

For the accuracy objective, the population was again the general population, the index test had to be a wrist-wearable activity tracker, and the reference standard could be another device or any method used to measure physical activity, including questionnaires and direct observation. The outcome could be any measure of physical activity, including but not limited to step count, heart rate, distance, speed, activity count, activity time, and intensity of physical activity.

For both objectives, this review examined both research-grade devices (activity trackers available only for research purposes) and commercial devices (those available to the general public). The included studies were limited to community-based, everyday-life settings. Laboratory studies were included as long as they reproduced everyday settings. This restriction excluded patients who were institutionalized or hospitalized. We set no restrictions on the length of observation in the original studies.

The exclusion criteria included the following: device not worn on the wrist, studies measuring sleep, and studies on patients who were institutionalized or hospitalized.

All studies reporting primary data were considered for inclusion, with the exception of case reports and case series.

Using the aforementioned inclusion and exclusion criteria and a piloted form, we first screened the titles and abstracts of the retrieved articles using the web-based software Rayyan (Rayyan Systems Inc) [9]. We then screened the full texts of the studies judged potentially eligible at the title and abstract stage.

Data Extraction and Risk-of-Bias Assessment

Data were extracted into an Excel (Microsoft Corp) file. The data extraction form was based on a previous publication on the same topic [8] and adapted to the needs of this review. The following data were extracted: general study information: first author’s name, publication year, type of study (prospective vs retrospective and observational vs interventional), duration of follow-up (in days), and setting (laboratory vs field); characteristics of the population: number of participants, underlying health condition (eg, healthy participants, people with severe obesity, and people with chronic joint pain), gender, and age distribution (mean and SD, or median and minimum–maximum or first and third quartiles); measures of accuracy: step count, distance, speed, heart rate, activity count, time spent being active, and intensity of physical activity; and acceptability of the device, including but not limited to data availability, wearing time, and ease of use. The risk of bias was assessed using the Quality Assessment of Diagnostic Accuracy Studies, version 2, tool [10]. This tool guides the assessment of the risk of bias in diagnostic accuracy studies across 4 domains: patient selection, index test, reference standard, and flow and timing. We rated the risk of bias in each domain as high, probably high, probably low, or low. When necessary, the study authors were contacted for additional information.

Title and abstract screening, full-text screening, and data extraction were each performed independently and in duplicate by 2 reviewers (NN and BAP). Disagreements were resolved through discussion and, when needed, with the intervention of a third reviewer (FG, VBD, or DP). The reviewers were trained with calibration exercises until adequate performance was achieved for each of these steps.

Diagnostic Accuracy Measures

When available, we extracted the mean absolute percentage error (MAPE) or the mean percentage error. When these were not available, we extracted other measures in the following order of priority: mean difference, mean bias (Bland–Altman), accuracy determined through intraclass correlation coefficient, and correlation coefficient (Pearson or Spearman). When the outcome was dichotomized and sensitivity and specificity were calculated, we reported on these values. When available, we reported measures of variability or 95% CIs for all the aforementioned measures. The formulas used for calculating the MAPE, mean percentage error, mean difference, and mean bias are reported in Multimedia Appendix 1 [11-75].
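For orientation, the following Python sketch computes the main measures listed above under their standard definitions. It assumes that percentage errors are expressed relative to the reference standard and that differences are taken as device minus reference; the formulas in Multimedia Appendix 1 take precedence where they differ, and the step counts are hypothetical. Under these definitions, the MAPE is nonnegative because over- and underestimates cannot cancel out, whereas the signed mean percentage error can be negative or close to 0 even when individual errors are large. The Bland–Altman bias equals the mean difference; the method adds 95% limits of agreement.

```python
from statistics import mean, stdev


def percentage_errors(device, reference):
    """Per-observation error of the device, as a percentage of the reference value."""
    return [(d - r) / r * 100 for d, r in zip(device, reference)]


def mean_percentage_error(device, reference):
    # Signed: overestimates and underestimates can cancel each other out.
    return mean(percentage_errors(device, reference))


def mean_absolute_percentage_error(device, reference):
    # Absolute values: the result is always >= 0.
    return mean(abs(e) for e in percentage_errors(device, reference))


def mean_difference(device, reference):
    # Average device-minus-reference difference, in the outcome's own units (eg, steps).
    return mean(d - r for d, r in zip(device, reference))


def bland_altman(device, reference):
    """Bias (mean difference) and 95% limits of agreement (bias +/- 1.96 SD of differences)."""
    diffs = [d - r for d, r in zip(device, reference)]
    bias = mean(diffs)
    half_width = 1.96 * stdev(diffs)  # assumes approximately normally distributed differences
    return bias, (bias - half_width, bias + half_width)


# Hypothetical step counts from a tracker and from manual counting (4 walking bouts).
device = [1050, 980, 1200, 900]
reference = [1000, 1000, 1000, 1000]
print(round(mean_percentage_error(device, reference), 2))           # 3.25
print(round(mean_absolute_percentage_error(device, reference), 2))  # 9.25
print(mean_difference(device, reference))                           # 32.5
bias, (lower, upper) = bland_altman(device, reference)
print(bias, round(lower, 1), round(upper, 1))                       # 32.5 -217.2 282.2
```

The example shows why the two percentage measures are not interchangeable: the signed mean percentage error (3.25%) is much smaller than the MAPE (9.25%) because the +20% and –10% errors partly offset each other.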

Synthesis of Results

Because of the substantial heterogeneity in the studies’ populations, settings, devices assessed, reference standards, outcomes assessed, and outcome measures reported, we did not perform a quantitative synthesis and instead provide a narrative synthesis of the results for both objectives. For the accuracy objective, given the high number of studies retrieved, we summarized results only for devices that were included in at least 2 studies reporting the same outcome. All the remaining results are reported in Multimedia Appendix 1.
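The inclusion rule for the narrative summary (a device must appear in at least 2 studies reporting the same outcome) can be sketched as a simple grouping step. The record layout, the function name, and the study identifiers below are hypothetical; counting distinct studies with a set ensures that a study reporting several conditions for the same device and outcome is counted only once.

```python
from collections import defaultdict


def eligible_for_summary(records, min_studies=2):
    """Group (study, device, outcome) records and keep device-outcome pairs
    reported by at least `min_studies` distinct studies."""
    studies_by_pair = defaultdict(set)
    for study, device, outcome in records:
        studies_by_pair[(device, outcome)].add(study)  # a set counts each study once
    return {
        pair: sorted(studies)
        for pair, studies in studies_by_pair.items()
        if len(studies) >= min_studies
    }


# Hypothetical extraction records; a study may contribute several rows
# (eg, several walking conditions) for the same device and outcome.
records = [
    ("S1", "Fitbit Flex", "step count"),
    ("S1", "Fitbit Flex", "step count"),
    ("S2", "Fitbit Flex", "step count"),
    ("S2", "Polar A300", "step count"),
    ("S3", "Fitbit Flex", "MVPA"),
]
print(eligible_for_summary(records))
# {('Fitbit Flex', 'step count'): ['S1', 'S2']}
```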

Ethics Approval

This systematic review was based on published data and therefore did not require a submission to a research ethics board.

Availability of Data and Materials

Most of the data that support the findings of this study are available in Multimedia Appendix 1. A guide on how to use the database provided in Multimedia Appendix 1 can be found in Multimedia Appendix 2. The full data set can be made available upon reasonable request.

Code Availability

This is not applicable to this systematic review because no quantitative data synthesis was performed.

Results

Overview

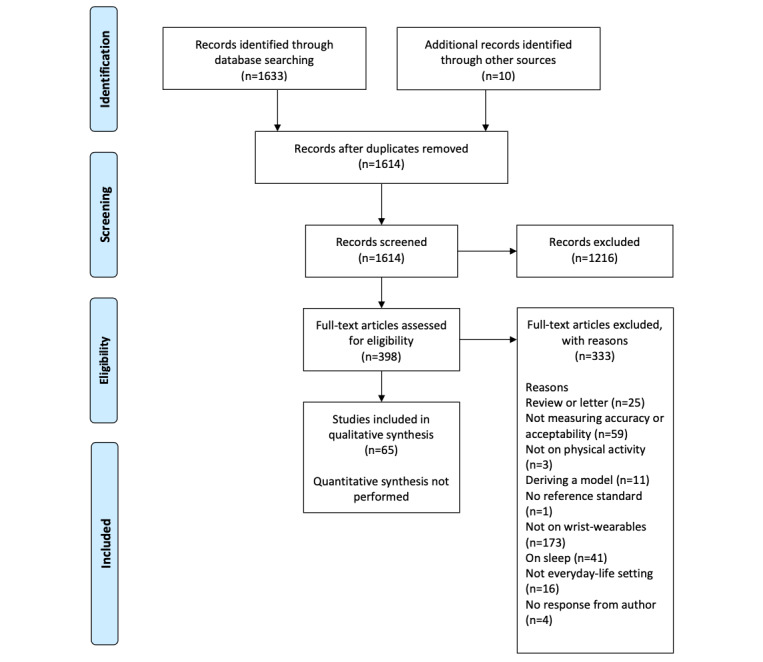

The search identified 1633 records, of which 1614 (98.84%) remained after the removal of duplicates. The study flow diagram is presented in Figure 1. After screening the full texts of 398 articles, we included 65 (16.3%) in the systematic review. The characteristics of the included studies are summarized in Table 1 and Multimedia Appendix 3 [11-67] for the accuracy objective and in Table 2 for the acceptability objective. All the included studies were single-center studies with a prospective, observational design. The complete results for accuracy and acceptability are reported in Multimedia Appendix 1.

Figure 1.

PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) 2009 study flow diagram.

Table 1.

Characteristics of the studies reporting on accuracy (N=57).

| First author, year | Setting | FUPa time, days | Sample, n | Age (years), mean (SD) | Female, % | Underlying health condition | Outcome | Device brand and model |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Alharbi [11], 2016 | Laboratory | <1 | 48 | 66 (7) | 48 | Patients undergoing cardiac rehabilitation | Step count and MVPAb | Fitbit Flex |
| Alsubheen [12], 2016 | Field | 5 | 13 | 40 (12) | 38 | Healthy | Step count and energy expenditure | Garmin Vivofit |
| An [13], 2017 | Laboratory and field | 1 | 35 | 31 (12) | 51 | Healthy | Step count | Fitbit Flex, Garmin Vivofit, Polar Loop, Basis B1 Band, Misfit Shine, Jawbone UP24, and Nike FuelBand SE |
| An [14], 2017 | Field | <1 | 62 | 24 (5) | 40 | Healthy | Active time | ActiGraph GT3X |
| Blondeel [15], 2018 | Field | 14 | 8 | 65 (8) | 25 | COPDc | Step count | Fitbit Alta |
| Boeselt [16], 2016 | Field | 3 | 20 | 66 (7) | 15 | COPD | Step count, energy expenditure, and MVPA | Polar A300 |
| Bruder [17], 2018 | Laboratory and field | 395 | 32 | —d | — | Rehabilitation after radial fracture | Activity count | ActivPAL |
| Bulathsinghala [18], 2014 | Laboratory | 1 | 20 | 70 (10) | — | COPD | Physical activity intensity | ActiGraph GT3X+ |
| Burton [19], 2018 | Laboratory | <1 | 31 | 74 (6) | 65 | Healthy older adults | Step count | Fitbit Flex and Fitbit Charge HR |
| Choi [20], 2010 | Laboratory | <1 | 76 | 13 (2) | 62 | Healthy | Energy expenditure | ActiGraph GT1M |
| Chow [21], 2017 | Laboratory | 1.5 | 31 | 24 (5) | 39 | Healthy | Step count | ActiGraph wGT3xBT-BT, Fitbit Flex, Fitbit Charge HR, and Jawbone UP24 |
| Chowdhury [22], 2017 | Laboratory and field | 2 | 30 | 27 (6) | 50 | Healthy | Energy expenditure | Microsoft Band; Apple Watch, series not specified; Jawbone Up24; and Fitbit Charge |
| Cohen [23], 2010 | Laboratory and field | 3 | 57 | 70 (10) | — | COPD | Speed | ActiGraph Mini Motionlogger |
| Compagnat [24], 2018 | Laboratory | <1 | 46 | 65 (13) | — | Stroke | Energy expenditure | ActiGraph GT3X+ |
| Dondzila [25], 2018 | Laboratory and field | 3-62 | 40 | 22 (2) | 58 | Healthy | Step count, energy expenditure, and heart rate | Fitbit Charge HR and Mio Fuse |
| Dooley [26], 2017 | Laboratory | 1 | 62 | 23 (4) | 58 | Healthy | Heart rate and energy expenditure | Apple Watch, series not specified; Fitbit Charge HR; and Garmin Forerunner 225 |
| Durkalec-Michalski [27], 2013 | Laboratory | 2 | 20 | 26 (5) | 55 | Healthy | Energy expenditure | ActiGraph GT1M |
| Falgoust [28], 2018 | Laboratory | 1 | 30 | — | — | Healthy | Step count | Fitbit Charge HR, Fitbit Surge, and Garmin Vivoactive HR |
| Ferguson [29], 2015 | Field | 2 | 21 | 33 (10) | 52 | Healthy | Step count, MVPA, and energy expenditure | Nike FuelBand, Misfit Shine, and Jawbone UP |
| Gaz [30], 2018 | Laboratory and field | <1 | 32 | 36 (8) | 69 | Healthy | Step count and distance | Fitbit Charge HR; Apple Watch, series not specified; Garmin Vivofit 2; and Jawbone UP2 |
| Gillinov [31], 2017 | Laboratory | <1 | 50 | 38 (12) | 54 | Healthy | Heart rate | Garmin Forerunner 235; TomTom Spark; Apple Watch, series not specified; and Fitbit Blaze |
| Gironda [32], 2007 | Laboratory | <1 | 3 | 43e | 31 | Pain syndromes | Activity count | Actiwatch Score |
| Hargens [33], 2017 | Laboratory and field | 7 | 21 | 31e | 68 | Healthy | MVPA, energy expenditure, and step count | Fitbit Charge |
| Hernandez-Vicente [34], 2016 | Field | 7 | 18 | 21 (1) | 50 | Healthy | Energy expenditure, vigorous active time, active time, and step count | Polar V800 |
| Huang [35], 2016 | Laboratory | 1 | 40 | 24 (3) | 25 | Healthy | Step count and distance | Jawbone UP24, Garmin Vivofit, Fitbit Flex, and Nike FuelBand |
| Imboden [36], 2018 | Laboratory | <1 | 30 | 49 (19) | 50 | Healthy | Energy expenditure, MVPA, and step count | Fitbit Flex, Jawbone Up24, and Fitbit Flex |
| Jo [37], 2016 | Laboratory | <1 | 24 | 25e | 50 | Healthy | Heart rate | Basis Peak K and Fitbit Charge |
| Jones [38], 2018 | Laboratory | 118 | 30 | 33e | — | Healthy | Step count | Fitbit Flex |
| Kaewkannate [39], 2016 | Laboratory | <1 | 7 | 31 (0) | 14 | Healthy | Step count | Fitbit Flex, Jawbone UP24, Withings Pulse, and Misfit Shine |
| Lamont [40], 2018 | Laboratory | <1 | 33 | 67 (8) | 64 | Parkinson disease | Step count | Garmin Vivosmart HR and Fitbit Charge HR |
| Lauritzen [41], 2013 | Laboratory and field | <1 | 18 | — | 56 | Older adults | Step count | Fitbit Ultra |
| Lawinger [42], 2015 | Laboratory | <1 | 30 | 26 (6) | 70 | Healthy | Activity count | ActiGraph GT3X+ |
| Lemmens [43], 2018 | Laboratory | <1 | 40 | 31 (5) | 100 | Parkinson disease | Energy expenditure | Philips optical heart rate monitor |
| Magistro [44], 2018 | Laboratory | <1 | 40 | 74 (7) | 60 | Healthy | Step count | ADAMO Care Watch |
| Mandigout [45], 2017 | Laboratory | <1 | 24 | 68 (14) | 60 | Stroke | Energy expenditure | Actical and ActiGraph GTX |
| Manning [46], 2016 | Laboratory | <1 | 9 | 15 (1) | — | Severe obesity | Step count | Fitbit One, Fitbit Flex, Fitbit Zip, ActiGraph GT3x+, and Jawbone UP |
| Montoye [47], 2017 | Laboratory | <1 | 30 | 24 (1) | 47 | Healthy | Step count, energy expenditure, and heart rate | Fitbit Charge HR |
| Powierza [48], 2017 | Field | <1 | 22 | 22 (2) | 55 | Healthy | Heart rate | Fitbit Charge |
| Price [49], 2017 | Laboratory | <1 | 14 | 23e | 21 | Healthy | Energy expenditure | Fitbit One, Garmin Vivofit, and Jawbone UP |
| Redenius [50], 2019 | Laboratory | 4 | 65 | 42 (12) | 72 | Healthy | MVPA | Fitbit Flex |
| Reid [51], 2017 | Field | 4 | 22 | 21 (2) | 100 | Healthy | MVPA and step count | Fitbit Flex |
| Roos [52], 2017 | Laboratory | 2 | 20 | 24 (2) | 40 | Runners | Energy expenditure | Suunto Ambit, Garmin Forerunner 920XT, and Polar V800 |
| Schaffer [53], 2017 | Laboratory | <1 | 24 | 54 (13) | 42 | Stroke | Step count | Garmin Vivofit |
| Scott [54], 2017 | Field | 7 | 89 | — | 54 | Healthy | Daily mean activity and MVPA | GENEActiv |
| Semanik [55], 2020 | Laboratory | 7 | 35 | 52e | 69 | Chronic joint pain | MVPA | Fitbit Flex |
| Sirard [56], 2017 | Laboratory and field | 7 | 14 | 9 (2) | 50 | Healthy | Energy expenditure, MVPA, and step count | Movband and Sqord |
| St-Laurent [57], 2018 | Laboratory | 7 | 16 | 33 (4) | 100 | Pregnant | Step count and MVPA | Fitbit Flex |
| Stackpool [58], 2013 | Laboratory | <1 | 20 | 22 (1) | 50 | Healthy | Step count and energy expenditure | Jawbone UP, Nike FuelBand, Fitbit Ultra, and Adidas miCoach |
| Stiles [59], 2013 | Laboratory | 1 | 10e | 39 (6) | 100 | Healthy premenopausal women | Loading rate (BWf/s) | GENEActiv and ActiGraph GT3X+ |
| Støve [60], 2019 | Laboratory | <1 | 29 | 29 (9) | 41 | Healthy | Heart rate | Garmin Forerunner |
| Tam [61], 2018 | Laboratory | <1 | 30 | 32 (9) | 50 | Healthy | Step count | Fitbit Charge HR and Xiaomi Mi Band 2 |
| Thomson [62], 2019 | Laboratory | <1 | 30 | 24 (3) | 50 | Healthy | Heart rate | Apple Watch, series not specified; and Fitbit Charge HR2 |
| Wahl [63], 2017 | Laboratory | <1 | 20 | 25 (3) | 50 | Healthy | Step count, energy expenditure, and distance | Polar Loop, Beurer AS80, Fitbit Charge HR, Fitbit Charge, Bodymedia Sensewear, Garmin Vivofit, Garmin Vivosmart, Garmin Vivoactive, Garmin Forerunner 920XT, Xiaomi Mi Band, and Withings Pulse |
| Wallen [64], 2016 | Laboratory | <1 | 22 | 24 (6) | 50 | Healthy | Heart rate, energy expenditure, and step count | Apple Watch, series not specified; Samsung Gear S; Mio Alpha; and Fitbit Charge |
| Wang [65], 2017 | Laboratory | <1 | 9 | 22 (1) | 44 | Healthy | Step count | Huawei B1, Xiaomi Mi Band, Fitbit Charge, Polar Loop, Garmin Vivofit 2, Misfit Shine, and Jawbone UP |
| Woodman [66], 2017 | Laboratory | <1 | 28 | 25 (4) | 29 | Healthy | Energy expenditure | Garmin Vivofit, Withings Pulse, and Basis Peak |
| Zhang [67], 2012 | Laboratory | 1 | 60 | 49 (7) | 62 | Healthy | Activity classification (sedentary, household, walking, and running) | GENEActiv |

aFUP: follow-up.

bMVPA: moderate- to vigorous-intensity physical activity.

cCOPD: chronic obstructive pulmonary disease.

dNot available.

eSD not reported.

fBW: body weight.

Table 2.

Characteristics of the studies reporting on acceptability (N=11).

| First author, year | Setting | FUPa time, days | Sample, n | Age (years), mean (SD) | Female, % | Underlying health condition | Outcome assessed | Device brand and model |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Boeselt [16], 2016 | Laboratory and field | 7 | 20 | 66 (7) | 15 | COPDb | Ease of use and other characteristics | Polar A300 |
| Deka [68], 2018 | Field | 5 | 46 | 65 (12) | 67 | CHFc | Data availability | Fitbit Charge HR |
| Farina [69], 2019 | Field | 2 | 26; 26 | 80 (6); 76 (6) | 39; 73 | Dementia; caregivers of patients with dementia | Wearing time | GENEActiv |
| Fisher [70], 2016 | Field | 7 | 34 | 69d | —e | Parkinson disease | Ease of use and other characteristics | AX3 data logger |
| Kaewkannate [39], 2016 | Field | <1 | 7 | 31 (0) | 14 | Healthy | Ease of use and other characteristics | Fitbit Flex, Jawbone UP24, Withings Pulse, and Misfit Shine |
| Lahti [71], 2017 | Laboratory | 120 | 40 | — | — | Schizophrenia | Data availability | Garmin Vivofit |
| Marcoux [72], 2019 | Field | 46 | 20 | 73 (7) | 20 | Idiopathic pulmonary fibrosis | Data availability | Fitbit Flex 2 |
| Naslund [73], 2015 | Field | 80-133 | 5 | 48 (9) | 90 | Serious mental illness | Wearing time | Nike FuelBand |
| Speier [74], 2018 | Laboratory | 90 | 186 | — | — | Coronary artery disease | Wearing time | Fitbit Charge HR2 |
| St-Laurent [57], 2018 | Laboratory | 1 | 16 | 33 (4) | 100 | Pregnant | Ease of use and other characteristics | Fitbit Flex |
| Rowlands [75], 2018 | Field | 425 | 1724 | 13 (1) | 100 | Healthy | Data availability | GENEActiv |

aFUP: follow-up.

bCOPD: chronic obstructive pulmonary disease.

cCHF: congestive heart failure.

dSD not reported.

eNot available.

Accuracy

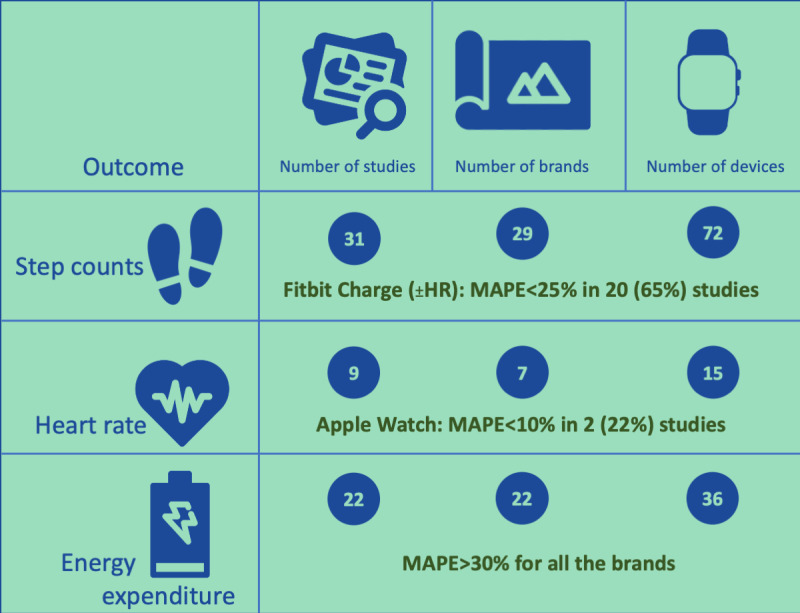

The accuracy of wrist-wearable activity trackers was assessed in 57 studies on 72 devices from 29 brands. Step count, heart rate, and energy expenditure (EE) were the most commonly assessed outcomes in the appraised literature. The results of these outcomes are summarized in Figure 2 (icons by Nikhil Bapna, Yoyon Pujiyono, Chintuza, Gregor Cresnar, Andrejs Kirma, and Yigit Pinarbasi from the Noun Project [76]), in which we have highlighted the standout device for the most frequently reported outcomes.

Figure 2.

Summary of the results for the main accuracy outcomes. MAPE: mean absolute percentage error.

Step Counts

A total of 31 studies on 72 devices from 29 brands reported data on step counts. The reference standards used were manual count (directly observed or on video, usually with the help of a tally counter) or automated count through video analysis, an activity tracker (8 different devices), or a photoelectric cell.

The ActiGraph wGT3xBT-BT, tested against manual count, showed a mean percentage error of –41.7% (SD 13.5%) [21]. The ActiGraph GT3x+ showed no statistically significant correlation with the same reference standard [46].

The Apple Watch (series not specified) was evaluated in 6% (2/31) of studies using manual count as the reference standard [30,64]. The mean difference between the device and the manual count varied from –47 (SD 470) steps to 39.44 (SD 151.81) steps in different walking conditions.

For the Fitbit Alta, the mean step count was 773 (SD 829) steps higher (P=.009) than that obtained with the reference standard, an accelerometer [15]. For the Fitbit Charge, the mean difference was –59 (SD 704) steps compared with direct observation [64]. The MAPE for the same device ranged from –4.4% to 20.7%, using different automated step count methods as the reference standard [33,63,65]. The Fitbit Charge HR was assessed in 29% (9/31) of studies, using direct observation [19,21,28,30,61] or an automated method of step count [25,40,47,63] as the reference standard. The MAPE ranged from –12.7% to 24.1%. The accuracy of the Fitbit Flex in measuring steps was assessed in 35% (11/31) of studies, using manual count [13,19,21,35,36,38,39,46] or an ActiGraph device [11,51,57] as the reference standard. The mean percentage error ranged from –23% to 13%. For the Fitbit One and Fitbit Zip, no statistically significant correlation with direct observation, the reference standard, was found for step counts [46]; the correlation coefficient was not reported. For the Fitbit Surge, the mean difference compared with direct observation was –86.0 steps (P=.004) [28]. For the Fitbit Ultra, the MAPE was 99.6% (SD 0.8%) in one study [41], and in another, the Pearson correlation coefficient against manual count ranged from 0.44 to 0.99 across exercise conditions [58].

The accuracy of the Garmin Vivofit was assessed in 16% (5/31) of studies [12,13,35,53,63], with a MAPE ranging from –41% to 18% [13,53,63]. For the Vivofit 2, one study reported a MAPE of 4% [65], and another reported a mean difference ranging from 5.09 (SD 8.38) steps to 98.06 (SD 137.49) steps in different walking conditions (over a maximum distance of 1.6 km) [30].

In a study by Wahl et al [63], the MAPE against automated step counting with a photoelectric cell as the reference standard, across different exercise types and conditions, ranged from –2.7% to 1.5% for the Garmin Forerunner 920XT, from –1.5% to 0.6% for the Garmin Vivoactive, and from –1.1% to –0.3% for the Garmin Vivosmart. For the Garmin Vivoactive HR, the mean difference against manual step count was –19.7 steps (P=.03) [28]. For the Garmin Vivosmart HR, the mean difference ranged from –39.7 (SD 54.9) steps to 5.4 (SD 5.8) steps for different walking speeds and locations (outdoor vs indoor) over a total of 111-686 steps [40].

For the Jawbone UP, the MAPE was –6.73% in one study [65], and the mean absolute difference was 806 steps over an average of 9959 steps in another [29]. For the Jawbone UP2, the mean difference ranged from 16.19 (SD 29.14) steps to 64 (SD 66.32) steps for different walking conditions over a maximum distance of 1.6 km [30]. For the Jawbone UP24, the mean percentage error ranged from –28% to –0.8% [21,35,36].

For the Misfit Shine, the MAPE ranged from –13% to 23% [13,65].

For the Mio Fuse, the MAPE ranged from –16% to –5% at different treadmill speeds [25]. In another study, the mean percentage error was <5% for the Xiaomi Mi Band 2 [61].

For the Nike FuelBand, the mean percentage error ranged from –34.3% (SD 26.8%) to –16.7% (SD 16.5%) [35], whereas for the FuelBand SE, the MAPE ranged from 10.2% to 45.0% [13].

The MAPE for the Polar Loop ranged from –13% to 27% in 3 studies [13,63,65]. For 2 other Polar devices, a Pearson correlation coefficient of 0.96 (P<.01) was reported for the A300 [16], whereas for the V800, the Bland–Altman bias was 2487 (SD 2293) steps per day, against a mean of 10,832 (SD 4578) steps per day measured with the reference standard [34].

For the Withings Pulse, the MAPE for step count ranged from –16.0% to –0.4% [63] and the accuracy from 97.2% to 99.9% [39]. All the remaining devices were only used in 1 study each, and the results are reported in Multimedia Appendix 1.

Heart Rate

A total of 9 studies on 15 devices from 7 brands evaluated the accuracy of activity-tracking devices to measure the participants’ heart rates. The reference standards used were electrocardiography, pulse oximetry, or another activity tracker (4 different devices).

For the Apple Watch, the MAPE for measuring heart rate ranged from 1% (SD ~1%) to 7% (SD ~11%) [26,31].

In the Fitbit family of devices, for the Fitbit Charge, the mean bias estimated with the Bland–Altman method ranged from –6 (SD 10) bpm to –9 (SD 8) bpm [37,48,64]. For the Fitbit Charge HR or Fitbit Charge HR2, the MAPE for measuring heart rate ranged from 2.4% (SD ~1.5%) to 17% (SD ~20%) [26,47,62]. For the Fitbit Blaze, the MAPE ranged from 6% (SD 6%) to 16% (SD 18%) for different activities [31].

Active Time: Time Spent in Moderate- to Vigorous-Intensity Physical Activity and Other Outcomes

A total of 13 studies on 11 devices from 8 brands reported on the time spent being active, most frequently defined as the time spent in moderate- to vigorous-intensity physical activity (MVPA; 11 studies), expressed in minutes per day. The reference standard for MVPA was another activity tracker (3 different devices). Other outcomes were time spent being active (standing+walking+running), time spent running, or time spent on different types of physical activity, with each of these outcomes being reported in only 1 study.

For the Fitbit Flex, the MAPE for measuring the time spent in MVPA varied from 7% (SD 6%) to 74% (SD 13%) [50] and the mean percentage error ranged from –65% to 10% [11,36]. All the other devices were only used in 1 study each, and the results are reported in Multimedia Appendix 1.

Intensity of Activity: EE and Other Outcomes

A total of 24 studies on 42 devices from 23 brands focused on measuring the intensity of physical activity. The most frequent measure of intensity was EE, expressed as kcal, evaluated in 92% (22/24) of studies. The less frequent measures of intensity included loading rate and the classification of physical activity (sedentary, household, walking, and running). For EE, the reference standard used most commonly was indirect calorimetry (6 different instruments). Less common reference standards included EE estimated with other wearable activity trackers (5 different devices), estimated based on the treadmill settings, or direct room calorimetry.

Among the ActiGraph family, the mean percentage difference in EE compared with the reference standard in people with previous stroke was 3% for participants who walked and 47% for participants using a wheelchair with the ActiGraph GT3X+ [24]. With the ActiGraph GTX, the Spearman correlation coefficient was 0.08 (P=.71) when the device was worn on the plegic side and 0.20 (P=.34) when it was worn on the nonplegic side [45]. With the ActiGraph GT1M, the mean percentage difference was 0.5% (SD 8.0%) in one study [20], whereas another study found that the device overestimated EE by 60% at moderate intensity and underestimated it by 40% at vigorous intensity, while being 86% accurate at light intensity [27].

For the Apple Watch, the MAPE for EE ranged from 15% (SD 10%) to 211% (SD ~96%) [22,26].

In the Fitbit family, the percentage error for EE ranged across studies from –4.5% to 75.0% for the Charge [22,33,63] and from –12% to 89% for the Charge HR [25,26,47,63]. For the Fitbit Flex, a mean percentage bias of –13% was reported [36]. For the Fitbit One, a study reported a mean bias of 2.91 (SD 4.35) kcal per minute [49], whereas for the Fitbit Ultra, the Pearson correlation coefficient ranged from 0.24 to 0.67 across physical activities [58].

Among the Garmin devices, the percentage error for EE ranged from –21% to 45% for the Vivofit [63,66], from –36% to –2% for the Vivosmart [63], and from 5% to 37% for the Vivoactive [63].

For the Garmin Forerunner, the percentage error for EE ranged from –27% to 49% for the 920XT [52,63], and the MAPE ranged from 31% (SD ~26%) to 155% (SD ~164%) for the 225 [26].

In the Polar family, the MAPE for EE ranged from 10% to 40% for the V800 [52]; another study of the V800 reported a Bland–Altman bias of 957.5 (SD 679.9) kcal against a mean reference-standard EE of 1456.48 (SD 731.40) kcal [34]. For the Polar Loop, the MAPE for EE ranged from 6% to 56% [63]. For the Polar A300, the Pearson correlation coefficient was 0.74 (P<.01) [16].
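Because the V800 Bland–Altman bias is reported in kcal, it is easier to interpret relative to the reference mean: 957.5 kcal is roughly 66% of 1456.48 kcal. The sketch below shows, under the usual Bland–Altman convention (differences taken as device minus reference, 95% limits of agreement at the bias ± 1.96 SD of the differences), how such a bias is computed from paired measurements and how it can be expressed as a percentage of the reference mean. The function names and the paired values are hypothetical illustrations, not data from the included studies.

```python
from statistics import mean, stdev


def bland_altman(device, reference):
    """Bland-Altman bias and 95% limits of agreement for paired measurements.

    Differences are device minus reference, so a positive bias means the
    device overestimates. Limits of agreement are bias +/- 1.96 SD of the
    differences (this assumes roughly normally distributed differences).
    """
    if len(device) != len(reference) or len(device) < 2:
        raise ValueError("need at least two paired measurements")
    diffs = [d - r for d, r in zip(device, reference)]
    bias = mean(diffs)
    sd = stdev(diffs)  # sample SD of the paired differences
    return bias, bias - 1.96 * sd, bias + 1.96 * sd


def relative_bias(bias, reference_mean):
    """Express an absolute bias as a percentage of the reference-standard mean."""
    return 100.0 * bias / reference_mean


# Hypothetical paired EE values (kcal) for three participants
bias, lower, upper = bland_altman([110, 205, 290], [100, 200, 300])

# Summary values reported for the Polar V800 [34]: about 66% of the reference mean
v800_pct = relative_bias(957.5, 1456.48)
```

In a full Bland–Altman analysis each difference is also plotted against the mean of the pair, which shows whether the error grows with the magnitude of EE.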

For the Withings Pulse, the percentage error for EE ranged from –39% to 64% [63,66].

Outcomes Reported Less Frequently

Other outcomes were evaluated less frequently. Distance was reported in 3 studies on 15 devices from 7 brands, always with the measured distance as the reference standard [30,35,63]. Speed was reported in 1 study using 1 device, with the actual (treadmill) speed as the reference standard [23]. Activity count, defined as the number of activities (eg, the number of arm or body movements based on observation or measured acceleration data), was reported in 4 studies on 4 devices from 4 different brands, with manual counting of video recordings, automated video analysis, or an activity tracker as the reference standard [17,32,42,54].

Risk of Bias

The risk-of-bias assessment for each outcome is reported in Multimedia Appendix 1. In summary, all the studies were at high or probably high risk of bias in the Patient selection domain because they used convenience sampling. Almost all the studies were at low risk of bias in the Index test and Reference standard domains because the 2 measurement methods were applied at the same time and each was interpreted without knowledge of the results of the other. A small number of studies were rated at high risk in the Flow and timing domain because of a high percentage (>25%) of missing data for the index test or reference standard.
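The missing-data criterion used for the Flow and timing domain can be written as a simple rule. The sketch below assumes per-study counts of enrolled participants and of participants missing index-test or reference-standard data; the function name is hypothetical, and it covers only the >25% missing-data criterion described above, not the other QUADAS-2 signalling questions for this domain (such as the interval between the index test and the reference standard).

```python
def flow_and_timing_high_risk(n_enrolled, n_missing_index, n_missing_reference,
                              threshold=0.25):
    """Return True when missing data exceed the threshold for either measurement.

    Simplified sketch: only the missing-data criterion (>25% by default) is
    checked; other QUADAS-2 Flow and timing questions are not modelled.
    """
    if n_enrolled <= 0:
        raise ValueError("n_enrolled must be positive")
    share_index = n_missing_index / n_enrolled
    share_reference = n_missing_reference / n_enrolled
    # Strictly greater than the threshold, matching the ">25%" wording
    return max(share_index, share_reference) > threshold


# Hypothetical study: 40 enrolled, 12 missing device data (30%), 2 missing reference data
print(flow_and_timing_high_risk(40, 12, 2))  # True
```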

Acceptability

The acceptability of wrist-wearable activity trackers was assessed in 11 studies on 10 devices from 9 brands.

Data Availability

In all, 36% (4/11) of studies focused on data availability, expressed as the proportion of time for which data were available, and each of these studies used a different device. The denominator for the proportion could be the study duration or the time spent exercising. Rowlands et al [75] found that data availability was 52% in a healthy pediatric population wearing the GENEActiv for 14 months. Deka et al [68] focused on data availability during exercise time: adults with heart failure activated their Fitbit Charge HR in 75% of the exercise sessions (over 5 days), and data were available for 99% of the time when the device was activated. Marcoux et al [72] studied the Fitbit Flex 2 in adults with idiopathic pulmonary fibrosis over 46 days; of the 20 patients, 2 did not succeed in activating the device, and among the remaining participants, data were available for a mean of 91% (SD 20%) of the time. Lahti et al [71] studied the Garmin Vivofit in adults with schizophrenia and found data available for 97% of the time over 4 months.
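The choice of denominator can change the reported figure considerably. The sketch below uses hypothetical session data (four 60-minute exercise sessions, one never recorded because the device was not activated) to compute data availability against all scheduled exercise time and against activated sessions only. The function and variable names are illustrative, and treating a 0-minute recording as "not activated" is a simplifying assumption.

```python
def data_availability(recorded_min, denominator_min):
    """Percentage of the denominator period for which data were recorded."""
    if denominator_min <= 0:
        raise ValueError("denominator must be positive")
    return 100.0 * recorded_min / denominator_min


# Hypothetical (scheduled minutes, recorded minutes) per exercise session;
# a recording of 0 min stands in for "device not activated" (simplification).
sessions = [(60, 59), (60, 60), (60, 0), (60, 57)]
activated = [(s, r) for s, r in sessions if r > 0]

# Denominator 1: all scheduled exercise time
all_sessions = data_availability(sum(r for _, r in sessions),
                                 sum(s for s, _ in sessions))
# Denominator 2: exercise time in sessions where the device was activated
activated_only = data_availability(sum(r for _, r in activated),
                                   sum(s for s, _ in activated))

print(f"{all_sessions:.1f}% vs {activated_only:.1f}%")  # 73.3% vs 97.8%
```

The same recordings yield about 73% or about 98% depending on the denominator, which is why the denominator needs to be reported alongside the percentage.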

Wearing Time

In all, 27% (3/11) of studies reported on wearing time. Farina et al [69], using the GENEActiv, found that 89% of the participants with dementia and 86% of their caregivers wore the device for the whole study duration (28 days). Speier et al [74] enrolled participants with coronary artery disease who used the Fitbit Charge 2; the median time spent wearing the activity tracker ranged from 44% to 90% over 90 days. Finally, in a study of patients with schizophrenia using the Nike FuelBand, the mean wearing time was 89% (SD 13%) over 80 to 133 days [73].

Ease of Use and Other Characteristics

In all, 36% (4/11) of studies focused on the ease of use and similar characteristics of wrist-worn devices. The Polar A300 was assessed in patients with chronic obstructive pulmonary disease who wore the device for 3 days, using the Post-Study System Usability Questionnaire, which yields scores ranging from 1 to 7 (lower is better) for 3 subdomains [16]. The mean scores were 1.46 (SD 0.23) for system quality, 2.41 (SD 0.53) for information quality, and 3.35 (SD 0.62) for interface quality. The AX3 data logger was assessed in people with Parkinson disease who wore the device for 7 days [70]. In an ad hoc questionnaire, 94% of the participants agreed that they were willing to wear the sensors at home, and 85% agreed that they were willing to wear them in public; however, some participants reported problems with the strap fit and the material (number not reported). The Fitbit Flex was assessed with an ad hoc questionnaire in a study of pregnant women followed for 7 days [57]: 31% of the participants found it inconvenient, 6% found it unattractive, and 12% found it uncomfortable. Kaewkannate et al [39] asked healthy participants to wear 4 different devices over 28 days and compared them using an ad hoc questionnaire; the Withings Pulse had the highest satisfaction score, followed by the Misfit Shine, Jawbone UP24, and Fitbit Flex.

Discussion

Study Findings

We systematically reviewed the available evidence on the acceptability and accuracy of wrist-wearable activity-tracking devices for measuring physical activity across different devices and measures. We found substantial heterogeneity among the included studies. The main sources of heterogeneity were the studies’ population and setting, the device used, the reference standard, the outcome assessed, and the outcome measure reported.

Acceptability was evaluated in 11 studies on 10 devices from 9 brands. Data availability was ≥75% for the Fitbit Charge HR, Fitbit Flex 2, and Garmin Vivofit. Data availability is the amount of data captured over a given period, here the period defined by each study (the study duration or, in some studies, the time spent exercising). It can indicate how reliably a device captures data while it is worn: for example, if an individual wears the device for 8 hours but only 4 hours of data are available, the device's ability to capture information accurately is open to question. The wearing time was 89% for both the GENEActiv and Nike FuelBand. Wearing time is the amount of time the device is worn over a predetermined period in each study, and it may have been assessed differently across studies; for example, one study may measure wearing time over a day, whereas another may measure it over a week. Both data availability and wearing time offer insight into acceptability because participants are likely to wear a more acceptable device more often and, ultimately, to have more data available.

Accuracy was assessed in 57 studies on 72 devices from 29 brands. Among the 14 outcomes assessed, step counts, heart rate, and EE were the most frequent. For step counts, the Fitbit Charge (or the Fitbit Charge HR) had a MAPE <25% across 20 studies. For heart rate, the Apple Watch had a MAPE <10% in 2 studies. For EE, the MAPE was >30% for all the brands, showing poor accuracy across devices.

Comparison With Other Systematic Reviews

Feehan et al [77] conducted a systematic review on the accuracy of Fitbit devices for measuring physical activity. The review did not focus specifically on wrist-wearable activity trackers; it also included studies using activity trackers worn on other body locations (torso, ankle, or hip). It reported good accuracy of Fitbit devices in measuring steps, with 46% of the included studies reporting a measurement error within –3% to +3%. Regarding EE, the authors concluded that “Fitbit devices are unlikely to provide accurate measures of energy expenditure.” Studies on heart rate were not included in the review. Evenson et al [78] performed a systematic review of Fitbit and Jawbone devices; again, wearing the device on the wrist was not an inclusion criterion. The authors concluded that, for step counts, the included studies often showed a high correlation (correlation coefficient ≥0.80) between devices from both brands and the reference standards, whereas the correlation was frequently low for EE. As in the review by Feehan et al [77], heart rate was not included as an outcome. The results of these systematic reviews are consistent with our findings for the devices and outcomes assessed.

Strengths and Limitations

The main strengths of our systematic review include the inclusion of all the devices reported in the literature, the reporting of all outcomes related to acceptability and accuracy without restriction, and the assessment of the risk of bias of the included studies. These characteristics make this review unique for this topic. However, we excluded studies in which a wearable device was not positioned on the wrist. Some devices can be positioned both on the wrist and at other sites (torso, hip, ankle, arm, or brassiere), and the acceptability and accuracy of the same device can vary with its position, increasing heterogeneity [77,78]. Therefore, our results cannot be generalized to devices worn at sites other than the wrist. Acceptability is defined and measured in many different ways in the literature on wearable devices and on information technology in general [79]. These definitions are often broad and nonspecific, and published literature suggests that acceptability research should become more robust [80]. For the purpose of our paper, acceptability was operationalized using proxies such as wearing time and data availability. However, other definitions relate acceptability more to the extent to which individuals receiving a health care intervention find it appropriate, based on their cognitive and emotional responses to the intervention [80]. Acceptability may thus be a holistic and subjective construct rather than an objective one, and wearing time or data availability may not fully capture it. Although these metrics are relatively easy to obtain and reproduce, allowing for quantitative comparisons, they are only proxies for a more nuanced concept.
For example, low wearing time could mean that a person wears the device only a few hours each day or only on weekends, or that they stopped wearing it altogether after some time. Moreover, wearing time is more likely to offer valuable information in studies with a long follow-up, whereas 2 out of 3 studies reporting on this outcome had a follow-up of <1 week. Because of substantial heterogeneity among studies, we were not able to perform a meta-analysis.
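The ambiguity described above can be made concrete with a per-participant daily wear log. In the sketch below, two hypothetical participants wear the device on half of the study days, one on alternate days and one every day until abandoning it; a single wear-time percentage cannot tell them apart, whereas the last day of wear and the number of trailing non-wear days can. The 10-hour cutoff for counting a day as worn is an assumption for illustration, and the function name is hypothetical.

```python
def wear_summary(daily_hours, valid_day_hours=10.0):
    """Summarize a daily wear-time log for one participant.

    daily_hours: hours the device was worn on each study day, in order.
    valid_day_hours: assumed minimum hours for a day to count as worn.
    Returns (share of days worn, index of last day worn or None,
    number of consecutive non-wear days at the end of the study).
    """
    if not daily_hours:
        raise ValueError("daily_hours must not be empty")
    worn = [h >= valid_day_hours for h in daily_hours]
    share = sum(worn) / len(worn)
    worn_days = [i for i, w in enumerate(worn) if w]
    last_day = worn_days[-1] if worn_days else None
    trailing_gap = len(worn) - (last_day + 1) if last_day is not None else len(worn)
    return share, last_day, trailing_gap


intermittent = [12, 0] * 5        # worn on alternate days
abandoned = [12] * 5 + [0] * 5    # worn daily, then stopped

print(wear_summary(intermittent))  # (0.5, 8, 1)
print(wear_summary(abandoned))     # (0.5, 4, 5)
```

Reporting the trailing gap (or the day of last wear) alongside the overall percentage is one simple way to capture abandonment over time.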

Regardless, the comprehensive reporting in this review will allow researchers to assess the available evidence and inform future studies, either to further assess the accuracy of wearable devices or to choose a device for interventional studies. To facilitate these choices, we have provided readers with the database of results from the individual included studies and a synthesis of the 3 outcomes reported most frequently (step counts, heart rate, and EE).

Future Research

Further high-quality studies are needed to determine the accuracy and acceptability of wearable devices for measuring physical activity. Given the number of devices available (72 included in this review), it is unlikely that a single study will be able to answer this question. This makes it particularly important to standardize some aspects of these studies, reducing the heterogeneity among them and allowing meta-syntheses that compare results across studies, devices, and outcomes. If heterogeneity were acceptable, a network meta-analysis would also allow researchers to make indirect comparisons. The main sources of heterogeneity that could be controlled are the study setting, the population, the reference standard used, and the outcome definition and measure. A first step in this direction would be to convene a task force of experts to issue guidelines on how to report these experiments, similar to the reporting guidelines collected by the EQUATOR network. A second step would be to issue recommendations on how these experiments should be conducted, starting with the accepted reference standards against which devices should be tested for each outcome, the conditions in which the experiment should be conducted, and the way in which the outcomes should be measured and analyzed. Some reference standards are more accurate than others. In this review, we took accuracy to mean criterion and convergent validity, but once there is consensus on the acceptable reference standard, comparisons against other standards should not be included in a meta-synthesis. For reporting the accuracy of continuous variables (the more common type in this field), we suggest the following order of priority: MAPE, mean percentage error, mean difference, Bland–Altman mean bias, and, least preferred, a measure of correlation.
This is because the percentage error gives the reader a better sense of the importance of the error (a mean error of 50 steps is much more relevant if the total step count was 100 than if it was 10,000). We prefer the MAPE over the mean percentage error because, when the absolute value is not used, negative and positive errors can balance each other, leading to an overestimation of accuracy. We prefer the mean difference over the Bland–Altman mean bias because, in an accuracy study, the reference standard is supposed to be more accurate than the index test; therefore, the latter should be tested against the former, not against their mean. For acceptability, consensus should also be reached on how to define and measure it; for example, defining a minimum set of outcomes to be reported, such as the percentage of abandonment over time, might help. Furthermore, as new devices become available, their acceptability and accuracy should be tested, because these could differ from those of other devices, even devices produced by the same company. Regarding the choice of device for interventional studies (eg, studies that aim to increase physical activity in a certain population), there is no one-device-fits-all answer. This choice should be based on the available data on acceptability and accuracy and tailored to the outcome to be measured. In a study with step count as the main outcome, the Fitbit Charge and Fitbit Charge HR might be appropriate choices. The Apple Watch might be preferred if the main outcome is heart rate. Active time was most often measured as time spent in MVPA, and the Fitbit Flex is the only device that was used in 3 studies, showing good results in 2 of these. Regarding EE, we do not feel comfortable recommending any device based on the current evidence because accuracy was poor across devices.
The decision should probably be driven by the other outcomes used. Broader recommendations should be issued in the form of guidelines from a panel of experts using this systematic review as a knowledge base.
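The priority order proposed above can be illustrated with hypothetical step counts. The sketch below (function names are illustrative, not taken from any included study) computes the percentage-based and absolute metrics and a Pearson correlation on three toy cases: errors of opposite sign that cancel in the mean percentage error and mean difference but not in the MAPE; the same 50-step error on totals of 100 and 10,000 steps; and a device that systematically doubles the reference, which still correlates perfectly with it.

```python
from statistics import mean


def percentage_errors(device, reference):
    """Signed percentage error of each device reading relative to the reference."""
    if any(r == 0 for r in reference):
        raise ValueError("reference values must be nonzero")
    return [100.0 * (d - r) / r for d, r in zip(device, reference)]


def mape(device, reference):
    """Mean absolute percentage error: over- and underestimates cannot cancel."""
    return mean(abs(e) for e in percentage_errors(device, reference))


def mean_percentage_error(device, reference):
    """Mean signed percentage error: over- and underestimates can cancel."""
    return mean(percentage_errors(device, reference))


def mean_difference(device, reference):
    """Mean signed difference in the original units (eg, steps)."""
    return mean(d - r for d, r in zip(device, reference))


def pearson_r(x, y):
    """Pearson correlation coefficient: measures association, not agreement."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


# +20% and -20% errors: MAPE is 20%, both signed summaries are zero
ref, dev = [1000, 1000], [1200, 800]
print(mape(dev, ref), mean_percentage_error(dev, ref), mean_difference(dev, ref))

# The same 50-step error on very different totals
print(mean_percentage_error([150], [100]), mean_percentage_error([10050], [10000]))  # 50.0 0.5

# A device that doubles every reading: correlation of 1 despite a MAPE of 100%
ref3, dev3 = [1000, 2000, 3000], [2000, 4000, 6000]
print(pearson_r(dev3, ref3), mape(dev3, ref3))
```

On these toy data, only the MAPE flags the ±20% errors, only the percentage metrics distinguish 50 steps out of 100 from 50 out of 10,000, and the correlation hides a systematic doubling.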

Conclusions

We reported on the acceptability and accuracy of 72 wrist-wearable devices for measuring physical activity produced by 29 companies. The Fitbit Charge and Fitbit Charge HR were consistently shown to have a good accuracy for step counts and the Apple Watch for measuring heart rate. None of the tested devices proved to be accurate in measuring EE. Efforts should be made to reduce the heterogeneity among studies.

Abbreviations

- EE: energy expenditure
- MAPE: mean absolute percentage error
- MVPA: moderate- to vigorous-intensity physical activity

Database of result characteristics of the studies reporting on accuracy and acceptability.

Guide for accessing database in Multimedia Appendix 1.

Result characteristics of the studies reporting on accuracy.

Footnotes

Authors' Contributions: FG and AI conceived this review. FG is the guarantor of the review and drafted the manuscript. TN and VBD developed the search strategy. FG, NN, VBD, APB, and DP screened the articles and extracted the data. All the authors read, provided feedback, and approved the final manuscript.

Conflicts of Interest: None declared.

References

- 1. Seifert A, Schlomann A, Rietz C, Schelling HR. The use of mobile devices for physical activity tracking in older adults' everyday life. Digit Health. 2017 Nov 09;3:2055207617740088. doi: 10.1177/2055207617740088. https://journals.sagepub.com/doi/10.1177/2055207617740088?url_ver=Z39.88-2003&rfr_id=ori:rid:crossref.org&rfr_dat=cr_pub%3dpubmed

- 2. Ainsworth BE. How do I measure physical activity in my patients? Questionnaires and objective methods. Br J Sports Med. 2009 Jan;43(1):6–9. doi: 10.1136/bjsm.2008.052449.

- 3. Rütten A, Ziemainz H, Schena F, Stahl T, Stiggelbout M, Auweele YV, Vuillemin A, Welshman J. Using different physical activity measurements in eight European countries. Results of the European Physical Activity Surveillance System (EUPASS) time series survey. Public Health Nutr. 2003 Jun;6(4):371–6. doi: 10.1079/PHN2002450.

- 4. Rothwell PM. Analysis of agreement between measurements of continuous variables: general principles and lessons from studies of imaging of carotid stenosis. J Neurol. 2000 Nov;247(11):825–34. doi: 10.1007/s004150070068.

- 5. Ranganathan P, Pramesh CS, Aggarwal R. Common pitfalls in statistical analysis: measures of agreement. Perspect Clin Res. 2017;8(4):187–91. doi: 10.4103/picr.PICR_123_17. http://www.picronline.org/article.asp?issn=2229-3485;year=2017;volume=8;issue=4;spage=187;epage=191;aulast=Ranganathan

- 6. Viera AJ, Garrett JM. Understanding interobserver agreement: the kappa statistic. Fam Med. 2005 May;37(5):360–3. http://www.stfm.org/fmhub/fm2005/May/Anthony360.pdf

- 7. Dillon A, Morris M. User acceptance of information technology: theories and models. Annual Review of Information Science and Technology (ARIST). 1996. https://eric.ed.gov/?id=EJ536186 (accessed 2021-12-30).

- 8. Shin G, Jarrahi MH, Fei Y, Karami A, Gafinowitz N, Byun A, Lu X. Wearable activity trackers, accuracy, adoption, acceptance and health impact: a systematic literature review. J Biomed Inform. 2019 May;93:103153. doi: 10.1016/j.jbi.2019.103153.

- 9. Ouzzani M, Hammady H, Fedorowicz Z, Elmagarmid A. Rayyan-a web and mobile app for systematic reviews. Syst Rev. 2016 Dec 05;5(1):210. doi: 10.1186/s13643-016-0384-4.

- 10. Whiting PF, Rutjes AW, Westwood ME, Mallett S, Deeks JJ, Reitsma JB, Leeflang MM, Sterne JA, Bossuyt PM, QUADAS-2 Group. QUADAS-2: a revised tool for the quality assessment of diagnostic accuracy studies. Ann Intern Med. 2011 Oct 18;155(8):529–36. doi: 10.7326/0003-4819-155-8-201110180-00009.

- 11. Alharbi M, Bauman A, Neubeck L, Gallagher R. Validation of Fitbit-Flex as a measure of free-living physical activity in a community-based phase III cardiac rehabilitation population. Eur J Prev Cardiol. 2016 Feb 23;:1476–85. doi: 10.1177/2047487316634883.

- 12. Alsubheen SA, George AM, Baker A, Rohr LE, Basset FA. Accuracy of the vivofit activity tracker. J Med Eng Technol. 2016 Aug;40(6):298–306. doi: 10.1080/03091902.2016.1193238.

- 13. An H, Jones GC, Kang S, Welk GJ, Lee J. How valid are wearable physical activity trackers for measuring steps? Eur J Sport Sci. 2017 Apr;17(3):360–8. doi: 10.1080/17461391.2016.1255261.

- 14. An H, Kim Y, Lee J. Accuracy of inclinometer functions of the activPAL and ActiGraph GT3X+: a focus on physical activity. Gait Posture. 2017 Jan;51:174–80. doi: 10.1016/j.gaitpost.2016.10.014. http://europepmc.org/abstract/MED/27780084

- 15. Blondeel A, Loeckx M, Rodrigues F, Janssens W, Troosters T, Demeyer H. Wearables to coach physical activity in patients with COPD: validity and patient's experience. Am J Respir Crit Care Med. 2018;197:A7072. https://www.atsjournals.org/doi/abs/10.1164/ajrccm-conference.2018.197.1_MeetingAbstracts.A7072

- 16. Boeselt T, Spielmanns M, Nell C, Storre JH, Windisch W, Magerhans L, Beutel B, Kenn K, Greulich T, Alter P, Vogelmeier C, Koczulla AR. Validity and usability of physical activity monitoring in patients with chronic obstructive pulmonary disease (COPD). PLoS One. 2016;11(6):e0157229. doi: 10.1371/journal.pone.0157229. http://dx.plos.org/10.1371/journal.pone.0157229

- 17. Bruder AM, McClelland JA, Shields N, Dodd KJ, Hau R, van de Water AT, Taylor NF. Validity and reliability of an activity monitor to quantify arm movements and activity in adults following distal radius fracture. Disabil Rehabil. 2018 Jun;40(11):1318–25. doi: 10.1080/09638288.2017.1288764.

- 18. Bulathsinghala C, Tejada J, Wallack RZ. Validating output from an activity monitor worn on the wrist in patients with COPD. Chest. 2014 Oct;146(4):25A. doi: 10.1378/chest.1989979.

- 19. Burton E, Hill KD, Lautenschlager NT, Thøgersen-Ntoumani C, Lewin G, Boyle E, Howie E. Reliability and validity of two fitness tracker devices in the laboratory and home environment for older community-dwelling people. BMC Geriatr. 2018 May 03;18(1):103. doi: 10.1186/s12877-018-0793-4. https://bmcgeriatr.biomedcentral.com/articles/10.1186/s12877-018-0793-4

- 20. Choi L, Chen KY, Acra SA, Buchowski MS. Distributed lag and spline modeling for predicting energy expenditure from accelerometry in youth. J Appl Physiol (1985). 2010 Mar;108(2):314–27. doi: 10.1152/japplphysiol.00374.2009. https://journals.physiology.org/doi/10.1152/japplphysiol.00374.2009?url_ver=Z39.88-2003&rfr_id=ori:rid:crossref.org&rfr_dat=cr_pub%3dpubmed

- 21. Chow JJ, Thom JM, Wewege MA, Ward RE, Parmenter BJ. Accuracy of step count measured by physical activity monitors: the effect of gait speed and anatomical placement site. Gait Posture. 2017 Sep;57:199–203. doi: 10.1016/j.gaitpost.2017.06.012.

- 22. Chowdhury EA, Western MJ, Nightingale TE, Peacock OJ, Thompson D. Assessment of laboratory and daily energy expenditure estimates from consumer multi-sensor physical activity monitors. PLoS One. 2017;12(2):e0171720. doi: 10.1371/journal.pone.0171720. http://dx.plos.org/10.1371/journal.pone.0171720

- 23. Cohen MD, Cutaia M. A novel approach to measuring activity in chronic obstructive pulmonary disease: using 2 activity monitors to classify daily activity. J Cardiopulm Rehabil Prev. 2010;30(3):186–94. doi: 10.1097/HCR.0b013e3181d0c191.

- 24. Compagnat M, Mandigout S, Chaparro D, Daviet JC, Salle JY. Validity of the Actigraph GT3x and influence of the sensor positioning for the assessment of active energy expenditure during four activities of daily living in stroke subjects. Clin Rehabil. 2018 Dec;32(12):1696–704. doi: 10.1177/0269215518788116.

- 25. Dondzila C, Lewis C, Lopez J, Parker T. Congruent accuracy of wrist-worn activity trackers during controlled and free-living conditions. Int J Exerc Sci. 2018;11(7):584. https://www.researchgate.net/publication/323701487_Congruent_Accuracy_of_Wrist-Worn_Activity_Trackers_During_Controlled_and_Free-Living_Conditions

- 26. Dooley EE, Golaszewski NM, Bartholomew JB. Estimating accuracy at exercise intensities: a comparative study of self-monitoring heart rate and physical activity wearable devices. JMIR Mhealth Uhealth. 2017 Mar 16;5(3):e34. doi: 10.2196/mhealth.7043. https://mhealth.jmir.org/2017/3/e34/

- 27. Durkalec-Michalski K, Wozniewicz M, Bajerska J, Jeszka J. Comparison of accuracy of various non-calorimetric methods measuring energy expenditure at different intensities. Hum Mov. 2013;14(2):167. doi: 10.2478/humo-2013-0019.

- 28. Falgoust B, Handwerger B, Rouly L, Blaha O, Pontiff M, Eason J. Accuracy of wearable activity monitors in the measurement of step count and distance. Cardiopulm Phys Ther J. 2018;29(1):36. https://journals.lww.com/cptj/Fulltext/2018/01000/CSM_2018_Platform_Presentation.4.aspx

- 29. Ferguson T, Rowlands AV, Olds T, Maher C. The validity of consumer-level, activity monitors in healthy adults worn in free-living conditions: a cross-sectional study. Int J Behav Nutr Phys Act. 2015;12:42. doi: 10.1186/s12966-015-0201-9. http://www.ijbnpa.org/content/12//42

- 30. Gaz DV, Rieck TM, Peterson NW, Ferguson JA, Schroeder DR, Dunfee HA, Henderzahs-Mason JM, Hagen PT. Determining the validity and accuracy of multiple activity-tracking devices in controlled and free-walking conditions. Am J Health Promot. 2018 Nov;32(8):1671–8. doi: 10.1177/0890117118763273.

- 31. Gillinov AM, Etiwy M, Gillinov S, Wang R, Blackburn G, Phelan D, Houghtaling P, Javadikasgari H, Desai MY. Variable accuracy of commercially available wearable heart rate monitors. J Am Coll Cardiol. 2017 Mar;69(11):336. doi: 10.1016/s0735-1097(17)33725-7.

- 32. Gironda RJ, Lloyd J, Clark ME, Walker RL. Preliminary evaluation of reliability and criterion validity of Actiwatch-Score. J Rehabil Res Dev. 2007;44(2):223–30. doi: 10.1682/jrrd.2006.06.0058. https://www.rehab.research.va.gov/jour/07/44/2/pdf/Gironda.pdf

- 33. Hargens TA, Deyarmin KN, Snyder KM, Mihalik AG, Sharpe LE. Comparison of wrist-worn and hip-worn activity monitors under free living conditions. J Med Eng Technol. 2017 Apr;41(3):200–7. doi: 10.1080/03091902.2016.1271046.

- 34. Hernández-Vicente A, Santos-Lozano A, De Cocker K, Garatachea N. Validation study of Polar V800 accelerometer. Ann Transl Med. 2016 Aug;4(15):278. doi: 10.21037/atm.2016.07.16.

- 35. Huang Y, Xu J, Yu B, Shull PB. Validity of FitBit, Jawbone UP, Nike+ and other wearable devices for level and stair walking. Gait Posture. 2016 Dec;48:36–41. doi: 10.1016/j.gaitpost.2016.04.025.

- 36. Imboden MT, Nelson MB, Kaminsky LA, Montoye AH. Comparison of four Fitbit and Jawbone activity monitors with a research-grade ActiGraph accelerometer for estimating physical activity and energy expenditure. Br J Sports Med. 2017 May 08;:844–50. doi: 10.1136/bjsports-2016-096990.

- 37. Jo E, Lewis K, Directo D, Kim MJ, Dolezal BA. Validation of biofeedback wearables for photoplethysmographic heart rate tracking. J Sports Sci Med. 2016 Sep;15(3):540–7. http://europepmc.org/abstract/MED/27803634

- 38. Jones D, Crossley K, Dascombe B, Hart HF, Kemp J. Validity and reliability of the Fitbit Flex™ and ActiGraph GT3X+ at jogging and running speeds. Int J Sports Phys Ther. 2018 Aug;13(5):860–70. http://europepmc.org/abstract/MED/30276018

- 39. Kaewkannate K, Kim S. A comparison of wearable fitness devices. BMC Public Health. 2016 May 24;16:433. doi: 10.1186/s12889-016-3059-0. https://bmcpublichealth.biomedcentral.com/articles/10.1186/s12889-016-3059-0

- 40. Lamont RM, Daniel HL, Payne CL, Brauer SG. Accuracy of wearable physical activity trackers in people with Parkinson's disease. Gait Posture. 2018 Jun;63:104–8. doi: 10.1016/j.gaitpost.2018.04.034.

- 41. Lauritzen J, Muñoz A, Sevillano JL, Civit BA. The usefulness of activity trackers in elderly with reduced mobility: a case study. Stud Health Technol Inform. 2013;192:759–62.

- 42. Lawinger E, Uhl T, Abel M, Kamineni S. Assessment of accelerometers for measuring upper-extremity physical activity. J Sport Rehabil. 2015 Aug;24(3):236–43. doi: 10.1123/jsr.2013-0140.

- 43. Lemmens PM, Sartor F, Cox LG, den Boer SV, Westerink JH. Evaluation of an activity monitor for use in pregnancy to help reduce excessive gestational weight gain. BMC Pregnancy Childbirth. 2018 Jul 31;18(1):312. doi: 10.1186/s12884-018-1941-8. https://bmcpregnancychildbirth.biomedcentral.com/articles/10.1186/s12884-018-1941-8

- 44. Magistro D, Brustio PR, Ivaldi M, Esliger DW, Zecca M, Rainoldi A, Boccia G. Validation of the ADAMO Care Watch for step counting in older adults. PLoS One. 2018;13(2):e0190753. doi: 10.1371/journal.pone.0190753. https://dx.plos.org/10.1371/journal.pone.0190753

- 45.Mandigout S, Lacroix J, Ferry B, Vuillerme N, Compagnat M, Daviet J. Can energy expenditure be accurately assessed using accelerometry-based wearable motion detectors for physical activity monitoring in post-stroke patients in the subacute phase? Eur J Prev Cardiol. 2017 Dec;24(18):2009–16. doi: 10.1177/2047487317738593. [DOI] [PubMed] [Google Scholar]

- 46.Manning M, Tune B, Völgyi E, Smith W. Agreement of activity monitors during treadmill walking in teenagers with severe obesity. Pediatr Exerc Sci. 2016;28:44. https://web.s.ebscohost.com/abstract?direct=true&profile=ehost&scope=site&authtype=crawler&jrnl=08998493&AN=120431809&h=BTNCdwlg33Qq5cKOuDkyZyDSvt%2bwyVM7v%2fErTZiAtAUBh7Flobkb5gJM3cLaqaHNe7tvV%2fm1lizqDk0h2PC2kw%3d%3d&crl=c&resultNs=AdminWebAuth&resultLocal=ErrCrlNotAuth&crlhashurl=login.aspx%3fdirect%3dtrue%26profile%3dehost%26scope%3dsite%26authtype%3dcrawler%26jrnl%3d08998493%26AN%3d120431809 . [Google Scholar]

- 47.Montoye AH, Mitrzyk JR, Molesky MJ. Comparative accuracy of a wrist-worn activity tracker and a smart shirt for physical activity assessment. Meas Phys Educ Exerc Sci. 2017 Jun 08;21(4):201–11. doi: 10.1080/1091367x.2017.1331166. [DOI] [Google Scholar]

- 48. Powierza CS, Clark MD, Hughes JM, Carneiro KA, Mihalik JP. Validation of a self-monitoring tool for use in exercise therapy. PM R. 2017 Nov;9(11):1077–84. doi: 10.1016/j.pmrj.2017.03.012. http://europepmc.org/abstract/MED/28400221

- 49. Price K, Bird SR, Lythgo N, Raj IS, Wong JY, Lynch C. Validation of the Fitbit One, Garmin Vivofit and Jawbone UP activity tracker in estimation of energy expenditure during treadmill walking and running. J Med Eng Technol. 2017 Apr;41(3):208–15. doi: 10.1080/03091902.2016.1253795.

- 50. Redenius N, Kim Y, Byun W. Concurrent validity of the Fitbit for assessing sedentary behavior and moderate-to-vigorous physical activity. BMC Med Res Methodol. 2019 Feb 07;19(1):29. doi: 10.1186/s12874-019-0668-1. https://bmcmedresmethodol.biomedcentral.com/articles/10.1186/s12874-019-0668-1

- 51. Reid RE, Insogna JA, Carver TE, Comptour AM, Bewski NA, Sciortino C, Andersen RE. Validity and reliability of Fitbit activity monitors compared to ActiGraph GT3X+ with female adults in a free-living environment. J Sci Med Sport. 2017 Jun;20(6):578–82. doi: 10.1016/j.jsams.2016.10.015.

- 52. Roos L, Taube W, Beeler N, Wyss T. Validity of sports watches when estimating energy expenditure during running. BMC Sports Sci Med Rehabil. 2017 Dec 20;9(1):22. doi: 10.1186/s13102-017-0089-6. https://bmcsportsscimedrehabil.biomedcentral.com/articles/10.1186/s13102-017-0089-6

- 53. Schaffer SD, Holzapfel SD, Fulk G, Bosch PR. Step count accuracy and reliability of two activity tracking devices in people after stroke. Physiother Theory Pract. 2017 Oct;33(10):788–96. doi: 10.1080/09593985.2017.1354412.

- 54. Scott JJ, Rowlands AV, Cliff DP, Morgan PJ, Plotnikoff RC, Lubans DR. Comparability and feasibility of wrist- and hip-worn accelerometers in free-living adolescents. J Sci Med Sport. 2017 Dec;20(12):1101–6. doi: 10.1016/j.jsams.2017.04.017.

- 55. Semanik P, Lee J, Pellegrini CA, Song J, Dunlop DD, Chang RW. Comparison of physical activity measures derived from the Fitbit Flex and the ActiGraph GT3X+ in an employee population with chronic knee symptoms. ACR Open Rheumatol. 2020 Jan 02;2(1):48–52. doi: 10.1002/acr2.11099.

- 56. Sirard JR, Masteller B, Freedson PS, Mendoza A, Hickey A. Youth oriented activity trackers: comprehensive laboratory- and field-based validation. J Med Internet Res. 2017 Jul 19;19(7):e250. doi: 10.2196/jmir.6360. https://www.jmir.org/2017/7/e250/

- 57. St-Laurent A, Mony MM, Mathieu M, Ruchat SM. Validation of the Fitbit Zip and Fitbit Flex with pregnant women in free-living conditions. J Med Eng Technol. 2018 May;42(4):259–64. doi: 10.1080/03091902.2018.1472822.

- 58. Stackpool CM. Accuracy of various activity trackers in estimating steps taken and energy expenditure. UW-L Master's Theses. 2013. [2021-12-31]. https://minds.wisconsin.edu/handle/1793/66292

- 59. Stiles VH, Griew PJ, Rowlands AV. Use of accelerometry to classify activity beneficial to bone in premenopausal women. Med Sci Sports Exerc. 2013 Dec;45(12):2353–61. doi: 10.1249/MSS.0b013e31829ba765.

- 60. Støve MP, Haucke E, Nymann ML, Sigurdsson T, Larsen BT. Accuracy of the wearable activity tracker Garmin Forerunner 235 for the assessment of heart rate during rest and activity. J Sports Sci. 2019 Apr;37(8):895–901. doi: 10.1080/02640414.2018.1535563.

- 61. Tam KM, Cheung SY. Validation of electronic activity monitor devices during treadmill walking. Telemed J E Health. 2018 Oct;24(10):782–9. doi: 10.1089/tmj.2017.0263.

- 62. Thomson EA, Nuss K, Comstock A, Reinwald S, Blake S, Pimentel RE, Tracy BL, Li K. Heart rate measures from the Apple Watch, Fitbit Charge HR 2, and electrocardiogram across different exercise intensities. J Sports Sci. 2019 Jun;37(12):1411–9. doi: 10.1080/02640414.2018.1560644.

- 63. Wahl Y, Düking P, Droszez A, Wahl P, Mester J. Criterion-validity of commercially available physical activity tracker to estimate step count, covered distance and energy expenditure during sports conditions. Front Physiol. 2017 Sep;8:725. doi: 10.3389/fphys.2017.00725.

- 64. Wallen MP, Gomersall SR, Keating SE, Wisløff U, Coombes JS. Accuracy of heart rate watches: implications for weight management. PLoS One. 2016;11(5):e0154420. doi: 10.1371/journal.pone.0154420. http://dx.plos.org/10.1371/journal.pone.0154420

- 65. Wang L, Liu T, Wang Y, Li Q, Yi J, Inoue Y. Evaluation on step counting performance of wristband activity monitors in daily living environment. IEEE Access. 2017;5:13020–7. doi: 10.1109/access.2017.2721098.

- 66. Woodman JA, Crouter SE, Bassett DR, Fitzhugh EC, Boyer WR. Accuracy of consumer monitors for estimating energy expenditure and activity type. Med Sci Sports Exerc. 2017 Feb;49(2):371–7. doi: 10.1249/MSS.0000000000001090.

- 67. Zhang S, Murray P, Zillmer R, Eston RG, Catt M, Rowlands AV. Activity classification using the GENEA: optimum sampling frequency and number of axes. Med Sci Sports Exerc. 2012 Nov;44(11):2228–34. doi: 10.1249/MSS.0b013e31825e19fd.

- 68. Deka P, Pozehl B, Norman JF, Khazanchi D. Feasibility of using the Fitbit Charge HR in validating self-reported exercise diaries in a community setting in patients with heart failure. Eur J Cardiovasc Nurs. 2018 Oct;17(7):605–11. doi: 10.1177/1474515118766037.

- 69. Farina N, Sherlock G, Thomas S, Lowry RG, Banerjee S. Acceptability and feasibility of wearing activity monitors in community-dwelling older adults with dementia. Int J Geriatr Psychiatry. 2019 Apr;34(4):617–24. doi: 10.1002/gps.5064.

- 70. Fisher JM, Hammerla NY, Rochester L, Andras P, Walker RW. Body-worn sensors in Parkinson's disease: evaluating their acceptability to patients. Telemed J E Health. 2016 Jan;22(1):63–9. doi: 10.1089/tmj.2015.0026. http://europepmc.org/abstract/MED/26186307

- 71. Lahti A, White D, Katiyar N, Pei H, Baker S. 325. Clinical utility study towards the use of continuous wearable sensors and patient reported surveys for relapse prediction in patients at high risk of relapse in schizophrenia. Biol Psychiatry. 2017 May;81(10):S133. doi: 10.1016/j.biopsych.2017.02.340.

- 72. Marcoux V, Fell C, Johannson K. Daily activity trackers and home spirometry in patients with idiopathic pulmonary fibrosis. Am J Respir Crit Care Med. 2018;197:A1586. https://www.atsjournals.org/doi/abs/10.1164/ajrccm-conference.2018.197.1_MeetingAbstracts.A1586

- 73. Naslund JA, Aschbrenner KA, Barre LK, Bartels SJ. Feasibility of popular m-health technologies for activity tracking among individuals with serious mental illness. Telemed J E Health. 2015 Mar;21(3):213–6. doi: 10.1089/tmj.2014.0105.

- 74. Speier W, Dzubur E, Zide M, Shufelt C, Joung S, Van Eyk JE, Merz CN, Lopez M, Spiegel B, Arnold C. Evaluating utility and compliance in a patient-based eHealth study using continuous-time heart rate and activity trackers. J Am Med Inform Assoc. 2018 Oct 01;25(10):1386–91. doi: 10.1093/jamia/ocy067.

- 75. Rowlands AV, Harrington DM, Bodicoat DH, Davies MJ, Sherar LB, Gorely T, Khunti K, Edwardson CL. Compliance of adolescent girls to repeated deployments of wrist-worn accelerometers. Med Sci Sports Exerc. 2018 Jul;50(7):1508–17. doi: 10.1249/MSS.0000000000001588.

- 76. Noun Project: Free Icons & Stock Photos for Everything. [2021-09-11]. https://thenounproject.com/

- 77. Feehan LM, Geldman J, Sayre EC, Park C, Ezzat AM, Yoo JY, Hamilton CB, Li LC. Accuracy of Fitbit devices: systematic review and narrative syntheses of quantitative data. JMIR Mhealth Uhealth. 2018 Aug 09;6(8):e10527. doi: 10.2196/10527. http://mhealth.jmir.org/2018/8/e10527/

- 78. Evenson KR, Goto MM, Furberg RD. Systematic review of the validity and reliability of consumer-wearable activity trackers. Int J Behav Nutr Phys Act. 2015;12(1):159. doi: 10.1186/s12966-015-0314-1. http://www.ijbnpa.org/content/12/1/159

- 79. Nadal C, Doherty G, Sas C. Technology acceptability, acceptance and adoption-definitions and measurement. Proceedings of the CHI 2019 Conference on Human Factors in Computing Systems; CHI 2019 Conference on Human Factors in Computing Systems; May 4–9, 2019; Glasgow, Scotland UK. 2019. https://www.researchgate.net/publication/331787774_Technology_acceptability_acceptance_and_adoption_-_definitions_and_measurement

- 80. Sekhon M, Cartwright M, Francis JJ. Acceptability of healthcare interventions: an overview of reviews and development of a theoretical framework. BMC Health Serv Res. 2017 Jan 26;17(1):1–13. doi: 10.1186/s12913-017-2031-8. https://bmchealthservres.biomedcentral.com/articles/10.1186/s12913-017-2031-8

Associated Data

Supplementary Materials

Database of result characteristics of the studies reporting on accuracy and acceptability.

Guide for accessing the database in Multimedia Appendix 1.

Result characteristics of the studies reporting on accuracy.

Data Availability Statement

Most of the data that support the findings of this study are available in Multimedia Appendix 1. A guide on how to use the database provided in Multimedia Appendix 1 can be found in Multimedia Appendix 2. The full data set can be made available upon reasonable request.

In all, 36% (4/11) of the studies focused on data availability, expressed as the proportion of time for which data were available, and each of these studies used a different device. The denominator of this proportion was either the study duration or the time spent exercising. Rowlands et al [75] found that data availability was 52% in a healthy pediatric population using the GENEActiv for 14 months. Deka et al [68] focused on data availability during exercise time: adult patients with heart failure activated their Fitbit Charge HR in 75% of the exercise sessions (over 5 days), and data were available for 99% of the time when the device was activated. Marcoux et al [72] studied the Fitbit Flex 2 in adults with idiopathic pulmonary fibrosis over 46 days. Of the 20 patients, 2 did not succeed in activating the device; among the remaining participants, data were available for a mean of 91% (SD 20%) of the time. Lahti et al [71] studied the Garmin Vivofit in adults with schizophrenia and found that data were available for 97% of the time over 4 months.
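Because the data availability figures above use different denominators, the same wear record can yield different percentages. The Python sketch below illustrates this under stated assumptions: wear is assumed to be logged in minutes, `data_availability` is a hypothetical helper rather than a method used by any included study, and all numbers are invented for illustration, not taken from the cited studies.

```python
from statistics import mean, stdev


def data_availability(minutes_with_data: float, denominator_minutes: float) -> float:
    """Percentage of the denominator window for which tracker data exist.

    denominator_minutes may be the whole study duration or only the time
    spent exercising; the two choices give percentages that are not
    directly comparable across studies.
    """
    if denominator_minutes <= 0:
        raise ValueError("denominator_minutes must be positive")
    if not 0 <= minutes_with_data <= denominator_minutes:
        raise ValueError("minutes_with_data must lie within the denominator window")
    return 100.0 * minutes_with_data / denominator_minutes


# Hypothetical participant monitored for 5 days (invented values).
study_minutes = 5 * 24 * 60          # study-duration denominator: 7200 min
exercise_minutes = 150               # exercise-time denominator
recorded_overall = 5400              # minutes with data over the whole study
recorded_during_exercise = 120       # minutes with data while exercising

print(data_availability(recorded_overall, study_minutes))             # 75.0
print(data_availability(recorded_during_exercise, exercise_minutes))  # 80.0

# Cohort summary as mean (SD) of per-participant percentages (hypothetical values).
cohort = [data_availability(m, study_minutes) for m in (7200, 6480, 5400, 3600)]
print(f"mean {mean(cohort):.1f}% (SD {stdev(cohort):.1f}%)")
```

A related design choice affects cohort summaries: participants who never activated the device can either be counted as 0% or excluded. Marcoux et al [72] reported the mean among the participants who activated the device, and including nonactivators as 0% would lower that mean.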