Abstract

Adults with hearing loss demonstrate a reduced range of emotional responses to nonspeech sounds compared to their peers with normal hearing. The purpose of this study was to evaluate two possible strategies for addressing the effects of hearing loss on emotional responses: (a) increasing overall level and (b) hearing aid use (with and without nonlinear frequency compression, NFC). Twenty-three adults (mean age = 65.5 years) with mild-to-severe sensorineural hearing loss and 17 adults (mean age = 56.2 years) with normal hearing participated. All adults provided ratings of valence and arousal without hearing aids in response to nonspeech sounds presented at a moderate and a high level. Adults with hearing loss also provided ratings while using individually fitted study hearing aids with two settings (NFC-OFF or NFC-ON). Hearing loss and hearing aid use affected ratings of valence but not arousal. Listeners with hearing loss rated pleasant sounds as less pleasant than their peers did, confirming findings in the extant literature. For both groups, increasing the overall level resulted in lower ratings of valence. For listeners with hearing loss, the use of hearing aids (NFC-OFF) also resulted in lower ratings of valence, but to a lesser extent than increasing the overall level. Activating NFC yielded ratings similar to those obtained without hearing aids at the moderate presentation level but did not bring ratings up to those of listeners with normal hearing. These findings suggest that current interventions do not ameliorate the effects of hearing loss on emotional responses to sound.

Keywords: valence, arousal, nonlinear frequency compression, affect, hearing impairment

Introduction

Permanent hearing loss, which is highly prevalent in older adults (Lin et al., 2011; Stevens et al., 2013), can disrupt emotion perception (for a review, see Picou et al., 2018). The effects of hearing loss on emotion perception are evident in the ability to recognize emotion in the voices of others (Christensen et al., 2019; Singh et al., 2018) and in the emotion experienced in response to sound (Husain et al., 2014; Picou, 2016; Picou & Buono, 2018). Because recognizing emotion in others and experiencing a full range of emotions are both important to adults’ social and psychological function (Arthaud-Day et al., 2005; Luo et al., 2018; Picou & Buono, 2018; Singh et al., 2018), it is important to understand the effects of hearing loss and hearing loss interventions on emotion perception. This paper focuses specifically on emotion experienced in response to sounds, within the context of the dimensional view of emotion. The dimensional view posits that emotions can be described by a combination of two or more dimensions, most often hedonic valence (pleasant to unpleasant) and emotional arousal (exciting to calming; Bradley & Lang, 1994; Russell, 1980; Russell & Mehrabian, 1977).

To investigate the effects of hearing loss on experienced emotion, Picou (2016) assessed emotional responses to nonspeech sounds in participants with normal hearing or mild to moderately severe hearing loss. Participants rated their experienced valence and arousal after listening to sounds that varied in expected valence (neutral, pleasant, unpleasant) or arousal (neutral, exciting, calming). Although ratings of arousal were largely unaffected by hearing loss, older participants with hearing loss demonstrated a reduced range of valence ratings relative to their similarly aged peers with (near-)normal hearing. Specifically, participants with hearing loss rated pleasant sounds as less pleasant (lower on the valence scale) and rated unpleasant sounds as less unpleasant (higher on the valence scale) than their peers did.

These changes in experienced emotion might be explained by central mechanisms, as well as by peripheral factors. Centrally, hearing loss has been associated with changes in cognitive and emotional control (Zinchenko et al., 2018), changes in resting state neural networks (Luan et al., 2019), and alterations in cerebellar–cerebral connections (Xu et al., 2019), all of which have been implicated in emotion perception. Furthermore, Husain et al. (2014) reported that participants with hearing loss use different cortical regions to process emotional sounds than do participants with normal hearing. Using functional magnetic resonance imaging, the authors reported that, relative to participants with normal hearing, participants with hearing loss showed reduced limbic system engagement (involved in lower-level, reactive responses) and increased activity in the parietal cortices and precuneus (involved in memory and decision-making).

Changes in experienced emotion could also be the result of peripheral factors, such as reduced audibility. It is possible that hearing loss reduces audibility of acoustic cues that are important for emotion perception, for example, fundamental frequency for speech (e.g., Pell et al., 2009) or spectral centroid for music (Brattico et al., 2011). Indeed, the degree of hearing loss has been related to performance on emotion recognition tasks (Singh et al., 2018) and on experienced emotion tasks (Picou & Buono, 2018), suggesting that reduction in audibility is related to emotion perception.

To explicitly evaluate the relationship between audibility and experienced emotion, Buono et al. (2021) presented low-pass (800 Hz) and high-pass (2,000 Hz) filtered nonspeech sounds to participants with normal hearing and recorded their ratings of valence and arousal. Their results demonstrate that, relative to unfiltered sounds, filtered sounds elicited ratings of valence that were less pleasant (for pleasant stimuli) and less unpleasant (for unpleasant stimuli). Although their results do not provide insight into the frequency-specific nature of acoustic cues important for emotion and were possibly influenced by the unnaturalness of filtered sounds, their data do suggest that reduced audibility of high- or low-frequency cues disrupts emotion perception. Consequently, it might be expected that improving audibility, for example, through hearing aid provision or increasing the overall presentation level, could improve emotion perception for adults with hearing loss.
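To make the filtering manipulation concrete, the sketch below passes pure tones through a low-pass filter at 800 Hz and a high-pass filter at 2,000 Hz and reports how much of each tone survives. The filter type and order used by Buono et al. (2021) are not given here, so a second-order Butterworth response is assumed; the coefficients follow the widely used RBJ audio-EQ-cookbook biquad formulas, and all function names are hypothetical.

```python
import math


def biquad_coefficients(kind, cutoff_hz, fs_hz, q=1 / math.sqrt(2)):
    """Normalized (b0, b1, b2, a1, a2) for a 2nd-order RBJ-cookbook filter.

    kind is "lowpass" or "highpass"; q = 1/sqrt(2) gives a Butterworth response.
    """
    w0 = 2 * math.pi * cutoff_hz / fs_hz
    cos_w0 = math.cos(w0)
    alpha = math.sin(w0) / (2 * q)
    if kind == "lowpass":
        b0 = b2 = (1 - cos_w0) / 2
        b1 = 1 - cos_w0
    elif kind == "highpass":
        b0 = b2 = (1 + cos_w0) / 2
        b1 = -(1 + cos_w0)
    else:
        raise ValueError("kind must be 'lowpass' or 'highpass'")
    a0 = 1 + alpha
    return b0 / a0, b1 / a0, b2 / a0, (-2 * cos_w0) / a0, (1 - alpha) / a0


def apply_biquad(samples, coeffs):
    """Filter a list of samples with a direct-form-I biquad."""
    b0, b1, b2, a1, a2 = coeffs
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x in samples:
        y = b0 * x + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
        x2, x1 = x1, x
        y2, y1 = y1, y
        out.append(y)
    return out


def rms(values):
    """Root-mean-square value of a list of samples."""
    return math.sqrt(sum(v * v for v in values) / len(values))


def tone(freq_hz, fs_hz, seconds):
    """Unit-amplitude sine tone as a list of samples."""
    n = int(fs_hz * seconds)
    return [math.sin(2 * math.pi * freq_hz * i / fs_hz) for i in range(n)]


if __name__ == "__main__":
    fs = 16_000
    skip = fs // 10  # discard the first 100 ms (filter start-up transient)
    filters = {
        "low-pass 800 Hz": biquad_coefficients("lowpass", 800, fs),
        "high-pass 2,000 Hz": biquad_coefficients("highpass", 2000, fs),
    }
    for freq in (200, 4000):
        x = tone(freq, fs, 0.5)
        for name, coeffs in filters.items():
            y = apply_biquad(x, coeffs)
            print(f"{freq} Hz tone, {name}: gain {rms(y[skip:]) / rms(x[skip:]):.3f}")
```

A 200-Hz tone passes the low-pass filter almost untouched but is strongly attenuated by the high-pass filter, and the reverse holds for a 4,000-Hz tone; real stimuli lose whichever spectral cues fall in the stop band.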

Although one might expect hearing aids to improve emotion perception because they improve the audibility of the incoming signals, the expected hearing aid benefits for emotion perception have not been shown in research studies (Goy et al., 2018; Schmidt et al., 2016; Singh et al., 2018). For example, Singh et al. (2018) reported no differences in emotion recognition performance between participants with hearing loss who did or did not wear hearing aids. Similarly, Goy et al. (2018) reported no difference between emotion recognition performance with and without hearing aids for adults with hearing loss, despite demonstrable improvements in audibility and word recognition performance. In these studies, participants used their own hearing aids during testing. Although the hearing aids were verified to be generally appropriate, as indicated by reported participant satisfaction with sound quality (Goy et al., 2018) or by being within 8 dB of prescriptive targets (Singh et al., 2018), it is possible the results would have been more favorable if the hearing aids had been fit with more careful control over prescriptive target matching and hearing aid feature use. In addition, these studies focused on vocal emotion recognition. Although emotion recognition and experienced emotion are intertwined (Chan et al., 2013; Hess et al., 1999; Livingstone et al., 2016), evidence suggests that these two types of emotion perception are differentially affected by hearing loss, acoustic cues, and aging (for a review, see Picou et al., 2018).

Data have yet to be reported regarding the effects of hearing aid use on experienced emotion, particularly for nonspeech sounds. Based on existing literature, two competing hypotheses could be proposed. First, it is possible that hearing aids will exacerbate, rather than ameliorate, the effects of hearing loss on experienced emotion. In the study of Picou (2016), participants with hearing loss rated stimuli presented at 80 dBA as significantly less pleasant (lower ratings of valence) than those presented at 60 dBA. Instead of expanding the range of valence ratings, increasing the overall level reduced the ratings of valence of all stimuli while maintaining the same range of ratings. It is not clear what contributed to the reduction in valence ratings for stimuli presented at the higher presentation level, which were concomitantly more audible. Recent evidence suggests that even stimuli that are intended to be pleasant are perceived as unpleasant if they are intense and presented without context (Atias et al., 2019), as would be the case for nonspeech sounds presented at 80 dBA. Thus, by increasing the intensity of sounds, hearing aids might exacerbate the effects of hearing loss on experienced emotion.

Alternatively, it is possible that hearing aids counteract the effects of reduced audibility by providing individualized, frequency-specific gain, while avoiding excessive loudness. For example, a common prescriptive target, National Acoustic Laboratories NonLinear 2 (NAL-NL2; Keidser et al., 2012), is designed to maximize audibility while giving perceived loudness similar to, or lower than, what would be expected for those with normal hearing. Thus, if loudness is a contributing factor to the negative effects of higher presentation level on ratings of valence reported by Picou (2016), an individualized hearing aid fitting might expand the range of valence ratings for participants with hearing loss. Indeed, in the study of Buono et al. (2021), the unfiltered stimuli were perceived as louder than the filtered stimuli, yet pleasant stimuli were rated as more pleasant, and unpleasant stimuli were rated as more unpleasant in the unfiltered condition. These results suggest that, by amplifying only specific frequencies, rather than by amplifying all frequencies equally, hearing aids might ameliorate the effects of hearing loss on experienced emotion, resulting in ratings of valence with a wider range relative to ratings without hearing aids.

Beyond individualized hearing aid fittings matched to validated prescriptive targets, another method for improving audibility without increasing loudness is the activation of nonlinear frequency compression (NFC). The purpose of NFC is to compress the hearing aid output frequency range relative to the hearing aid input frequency range (McDermott, 2011). NFC is theoretically most beneficial for patients with steeply sloping hearing losses, for frequencies where the hearing aid receiver is output-limited and the patient's thresholds are poor. By compressing the frequency output, high-frequency information is presented at lower frequencies, presumably where the hearing aid receiver has greater output, and the patient has more usable hearing. Recent systematic reviews demonstrate some benefit of NFC on speech perception (Akinseye et al., 2018; Mao et al., 2017; Simpson et al., 2018), but there is a lack of existing research that has investigated the impact of NFC on experienced emotion. The findings of Buono et al. (2021) suggest that selectively improving high-frequency audibility, which is the goal of NFC, could improve ratings of valence for participants with hearing loss by resulting in a broader range of experienced emotion.
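A commonly used description of NFC maps each input frequency above a cutoff (the compression threshold, CT) onto a lower output frequency by dividing its log-frequency distance above CT by the compression ratio (CR): f_out = CT · (f_in / CT)^(1/CR) for f_in > CT, with frequencies at or below CT left unchanged. The sketch below assumes this mapping; a manufacturer's actual implementation (for example, SoundRecover) may differ in detail, and the function name is hypothetical.

```python
def nfc_output_frequency(f_in_hz, cutoff_hz, ratio):
    """Output frequency (Hz) for an input frequency under an assumed NFC model.

    Frequencies at or below the cutoff are passed unchanged; above it, the
    log-frequency distance from the cutoff is divided by the compression ratio.
    """
    if ratio < 1:
        raise ValueError("compression ratio must be >= 1")
    if f_in_hz <= cutoff_hz:
        return f_in_hz
    return cutoff_hz * (f_in_hz / cutoff_hz) ** (1 / ratio)


if __name__ == "__main__":
    # Mean settings reported for this study: CR = 2.61:1, CT = 3,910 Hz.
    for f in (2000, 4000, 6300, 8000):
        print(f, "Hz ->", round(nfc_output_frequency(f, 3910, 2.61)), "Hz")
```

Under this model and the mean settings used in this study, a 6,300-Hz input component would be delivered at roughly 4,690 Hz, where the receiver output and the listener's residual hearing are presumably better.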

The purpose of this study was two-fold. First, this study was designed to confirm the effects of hearing loss and presentation level on emotional responses to nonspeech sounds with a larger sample than that of Picou (2016), which included only 10 participants in each group. Based on this early work, it was expected that participants with hearing loss would demonstrate a reduced range of valence ratings in response to stimuli presented at a moderate overall level, as evidenced by lower (less pleasant) ratings of valence for pleasant stimuli and higher (less unpleasant) ratings of valence for unpleasant stimuli. It was also expected that increasing the overall level would result in lower (less pleasant) ratings of valence for participants with hearing loss, regardless of sound category. Based on the evidence that increased overall level results in higher (more exciting) ratings of arousal (Goudbeek & Scherer, 2010; Ilie & Thompson, 2006; Laukka et al., 2005; Ma & Thompson, 2015; Weninger et al., 2013), it was expected that increasing the presentation level would increase ratings of arousal. However, no effect of hearing loss was expected on ratings of arousal, consistent with previous work (Picou, 2016).

Second, this study was intended to evaluate the effect of hearing aid use on emotional responses to nonspeech sounds. It was expected that by providing individualized gain fit to a validated prescriptive formula (NFC-OFF), hearing aids would overcome the reduced audibility associated with hearing loss and result in a wider range of ratings of valence than unaided listening. It was also expected that activating NFC would further improve ratings of valence because the technology provides users with additional information about high-frequency components. Finally, ratings of arousal were expected to be higher in both aided conditions and at the higher overall presentation level as a result of the increased loudness associated with those conditions.

Materials and Methods

Participants

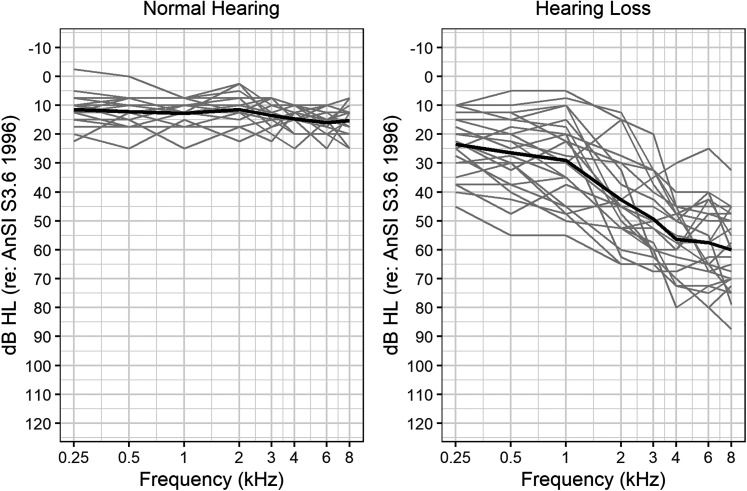

Forty adults participated: 17 with normal hearing (mean [M] = 56.2 years, standard deviation [SD] = 7.7, range = 50–80 years; 6 males) and 23 with acquired sensorineural hearing loss (M = 65.5 years, SD = 7.3, range = 49–74 years; 16 males). Four additional participants were recruited for the group with normal hearing; they completed the study but were later determined to have mild, high-frequency hearing loss, so their data are not considered further in this paper. Figure 1 displays individual and mean audiometric data for both groups. All participants denied the use of antidepressants and passed a screening for clinical depression using the Hospital Anxiety and Depression Scale (HADS; Zigmond & Snaith, 1983). For the participants with normal hearing, scores on the anxiety subscale ranged from 0 to 9 (M = 4.4, SD = 2.6) and scores on the depression subscale ranged from 0 to 4 (M = 0.9, SD = 1.1), where the maximum possible score on each subscale is 21.

Figure 1.

Air Conduction Audiometric Hearing Thresholds as a Function of Frequency (Hz) for Participants With Normal Hearing (Left Panel) and Hearing Loss (Right Panel). Individual participants are indicated by gray lines and the black line indicates the mean threshold for each group.

Participants with hearing loss had long-standing hearing loss (M = 14.9 years, SD = 12.9, range = 4–50); 18 were experienced hearing aid users (M = 4.3 years, SD = 5.0, range = 0.3–17). With one exception (a 69-year-old female), all hearing aid users wore bilateral hearing aids. Hearing aid style was predominantly receiver-in-the-canal (n = 11), followed by traditional behind-the-ear (n = 4), completely-in-the-canal (n = 3), and half-shell in-the-ear (n = 1). Five of the hearing aid users were using noncustom, nonoccluding eartips; the remaining users were accustomed to relatively occluding hearing aids, either because they used custom instruments or noncustom, occluding eartips with behind-the-ear instruments. Five participants had no previous hearing aid experience. For participants with hearing loss, scores on the anxiety subscale of the HADS ranged from 1 to 9 (M = 4.8, SD = 2.5) and scores on the depression subscale ranged from 0 to 7 (M = 2.4, SD = 2.1), consistent with low risk of anxiety or depression.
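For readers unfamiliar with the HADS, each subscale (anxiety, depression) has seven items scored 0 to 3, giving the 0 to 21 range noted above. The sketch below sums one subscale and labels it with the conventional bands (0–7 normal, 8–10 borderline, 11–21 abnormal) commonly traced to Zigmond and Snaith (1983). The paper does not state which cutoff defined a passed screening, so these bands are an assumption for illustration only, and the function names are hypothetical.

```python
def hads_subscale_score(item_scores):
    """Sum the seven 0-3 item scores of one HADS subscale (range 0-21)."""
    if len(item_scores) != 7:
        raise ValueError("each HADS subscale has 7 items")
    if any(s not in (0, 1, 2, 3) for s in item_scores):
        raise ValueError("item scores must be integers 0-3")
    return sum(item_scores)


def hads_band(score):
    """Label a subscale score with conventional bands (assumed cutoffs)."""
    if not 0 <= score <= 21:
        raise ValueError("subscale score must be 0-21")
    if score <= 7:
        return "normal"
    if score <= 10:
        return "borderline"
    return "abnormal"


if __name__ == "__main__":
    depression_items = [0, 1, 0, 2, 1, 0, 0]  # hypothetical responses
    score = hads_subscale_score(depression_items)
    print(score, hads_band(score))
```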

All participants had normal middle ear function, as indicated by normal middle ear immittance findings (as defined in Margolis & Heller, 1987) and by the absence of significant air–bone gaps during audiometric testing. In addition, all hearing was bilaterally symmetrical, as indicated by interaural threshold differences less than 15 dB at any three consecutive audiometric frequencies, or less than 30 dB at a single audiometric test frequency. Participants also denied a history of mental health disorders. Participants were compensated for their time at an hourly rate. All procedures were conducted with ethical approval from Vanderbilt University Medical Center's Institutional Review Board (#150523). Most participants also completed questionnaires regarding isolation and hearing handicap. Scores on the questionnaires and ratings of valence in response to sounds in the unaided condition with a moderate presentation level are part of a larger data set also reported elsewhere (Picou & Buono, 2018). De-identified ratings of valence and arousal are available to interested researchers in a repository (https://osf.io/ky4hx/).

Hearing Aid Fitting

Participants with normal hearing were tested only without hearing aids. All participants with hearing loss were tested with and without bilateral Phonak Audéo V90 behind-the-ear, receiver-in-the-canal hearing aids with occluding, noncustom eartips. Two hearing aid conditions were tested: (a) nonlinear frequency compression off (NFC-OFF) and (b) nonlinear frequency compression on (NFC-ON; SoundRecover 1). In both conditions, the microphone mode was set to omnidirectional, and all digital features were deactivated (e.g., digital noise reduction, reverberation reduction, wind noise reduction), except feedback suppression, which was set to “moderate.”

The hearing aids were fit in the laboratory to NAL-NL2 (Keidser et al., 2012) and were verified using the Audioscan Verifit and recorded speech (the carrot passage). The hearing aids were first fit to NAL-NL2 targets in the NFC-OFF setting and were verified to be within 4 dB (rms deviation) of prescriptive targets from 250 to 6,000 Hz. The NFC-ON setting was created by copying the NFC-OFF settings and then activating NFC. The NFC was set to be minimally aggressive while still improving high-frequency audibility, based on evidence that more aggressive NFC settings can be undesirable (Souza et al., 2013). Specifically, the lowest compression ratio and highest compression threshold possible were used, as long as NFC activation improved the audibility of high-frequency sounds (4,000, 5,000, or 6,300 Hz) by at least 5 dB for at least one ear, as measured using the filtered stimuli available in the Audioscan Verifit. The goal was to improve audibility of 6,300 Hz for all participants; however, this was only possible for 21 of the 23 participants. For the remaining two participants, audibility was only improved for 4,000 Hz. The mean frequency compression ratio used was 2.61:1 (range: 2.2–3.0) and the mean frequency compression threshold used was 3,910 Hz (range: 2,800–5,000 Hz). NFC parameters were matched across ears for each participant.
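The two fitting checks above, an rms deviation of no more than 4 dB from NAL-NL2 targets and an NFC audibility gain of at least 5 dB at one or more high-frequency test bands in at least one ear, reduce to simple arithmetic. The sketch below expresses both; all numbers are hypothetical, the audibility values stand in for whatever the Verifit reported, and the function names are illustrative rather than part of any verification software.

```python
import math


def rms_deviation_db(measured_db, target_db):
    """RMS difference (dB) between measured hearing aid output and targets."""
    if not measured_db or len(measured_db) != len(target_db):
        raise ValueError("need equal-length, non-empty sequences")
    squared = [(m - t) ** 2 for m, t in zip(measured_db, target_db)]
    return math.sqrt(sum(squared) / len(squared))


def nfc_criterion_met(ears, min_gain_db=5.0):
    """True if NFC-ON improves audibility by >= min_gain_db at any band in any ear.

    ears: list of (off, on) pairs, one per ear; each is a dict mapping a test
    frequency (Hz) to a hypothetical audibility value in dB measured with NFC
    off or on.
    """
    return any(on[f] - off[f] >= min_gain_db for off, on in ears for f in off)


if __name__ == "__main__":
    # Hypothetical values at 250, 500, 1,000, 2,000, 4,000, and 6,000 Hz.
    target = [60, 65, 70, 72, 68, 60]
    measured = [62, 63, 71, 70, 65, 58]
    dev = rms_deviation_db(measured, target)
    print(f"rms deviation = {dev:.2f} dB; within 4 dB: {dev <= 4}")

    left = ({4000: 2.0, 5000: -3.0, 6300: -10.0}, {4000: 4.0, 5000: 3.0, 6300: -4.0})
    right = ({4000: 1.0, 5000: -5.0, 6300: -12.0}, {4000: 2.0, 5000: -1.0, 6300: -8.0})
    print("NFC criterion met:", nfc_criterion_met([left, right]))
```

Squaring before averaging means a single large miss at one frequency counts more heavily than several small ones, which is why rms deviation is a stricter summary than the mean absolute error.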

Stimuli

Acoustic stimuli were a subset of 75 sounds from the International Affective Digitized Sounds corpus (IADS-2; Bradley & Lang, 2007). These stimuli are 1.5-s segments of nonspeech sounds, including animal sounds, human communication, body functions, music, and environmental sounds. The specific sounds used are reported in the Appendix (supplemental digital content). Each sound was assigned to a category, based on the category assignments in Picou (2016). Categories were neutral, pleasant/high arousal, pleasant/low arousal, unpleasant/high arousal, and unpleasant/low arousal. Consistent with earlier work, sounds all had the same peak level (−3.01 dB relative to the sound card maximum; Buono et al., 2021; Picou, 2016; Picou & Buono, 2018). In the current study, sounds were presented at either 60 or 80 dBA overall level. Equipment was calibrated prior to testing using a steady noise with the same long-term average spectral shape as the concatenated IADS-2 sounds used for testing, as measured at the position of a participant's ear (with the participant absent).
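Two pieces of level arithmetic underlie this paragraph: a peak of −3.01 dB relative to full scale corresponds to a linear amplitude of about 0.707 (approximately 1/√2), and the 20-dB step from 60 to 80 dBA corresponds to a tenfold increase in sound pressure. The sketch below shows both, plus a peak normalization routine; it assumes floating-point audio with full scale at 1.0, and the function names are hypothetical, since the authors' processing tools are not described.

```python
def db_to_amplitude_ratio(db):
    """Linear amplitude (sound pressure) ratio for a level difference in dB."""
    return 10 ** (db / 20)


def normalize_peak(samples, peak_dbfs=-3.01):
    """Scale samples so the largest absolute sample sits at peak_dbfs.

    Full scale is assumed to be 1.0 (floating-point audio).
    """
    peak = max(abs(s) for s in samples)
    if peak == 0:
        raise ValueError("cannot normalize a silent signal")
    gain = db_to_amplitude_ratio(peak_dbfs) / peak
    return [s * gain for s in samples]


if __name__ == "__main__":
    print(round(db_to_amplitude_ratio(-3.01), 4))  # peak re: full scale, about 1/sqrt(2)
    print(db_to_amplitude_ratio(80 - 60))          # 60 -> 80 dBA as a pressure ratio
```

Matching peaks does not equate loudness across sounds; in this study, the overall presentation level was set separately through calibration with a speech-shaped steady noise.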

Participants used the Self-Assessment Manikin (SAM; Bradley & Lang, 1994), a pictorial, visual analog scale, to assist with ratings of valence and arousal. For each scale, the SAM includes five cartoon figures that vary along the dimension. For valence, the figures range from smiling (far left) to frowning (far right). For arousal, figures range from portraying excitement (far left) to calm (far right). Beneath the figures, evenly spaced integers are displayed from 9 (far left) to 1 (far right).

Procedure

Prior to data collection, participants provided informed consent, underwent audiometric testing, and completed the HADS. In addition, participants with hearing loss were fit with the research hearing aids. Then, they provided ratings of valence and arousal in the test conditions. Because there is no evidence that rating order affects ratings, participants provided two ratings after each sound and always rated valence before arousal (Bradley & Lang, 2007; Buono et al., 2021; Picou, 2016; Picou & Buono, 2018). During testing, a small, black fixation cross (2 cm × 2 cm) appeared on a white background. Immediately after the sound was played, the SAM figures related to valence were displayed on the monitor. The participant entered their valence rating on a USB keypad (Targus) and advanced the program by pressing “Enter,” which immediately triggered the presentation of the SAM arousal figures. The participant again entered their rating using the keypad and pressed “Enter” to continue. Before testing, participants received the instructions used by Picou (2016). Briefly, participants were told to rate the way they felt while listening to the sound. Participants were encouraged to make their ratings as quickly as possible but were also told that they could change their answers by pressing a different number; the most recent button press was accepted as the final rating. For sounds they did not hear, participants were instructed to skip the rating by pressing “Enter” without entering a number.
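The response rules above (the most recent key press wins, and "Enter" with no number records no rating) can be sketched as a small function. This is a hypothetical illustration of the logic, not the study's Presentation script; it assumes keypad presses arrive as strings and that only the digits 1 to 9 are valid SAM responses.

```python
VALID_KEYS = {str(n): n for n in range(1, 10)}  # SAM ratings 1-9


def final_rating(keypresses):
    """Rating recorded for one SAM screen, or None if the sound was skipped.

    keypresses: ordered keys pressed before "Enter" (hypothetical format).
    The most recent valid digit is kept; no valid digit means no rating.
    """
    rating = None
    for key in keypresses:
        if key in VALID_KEYS:
            rating = VALID_KEYS[key]
    return rating


if __name__ == "__main__":
    print(final_rating(["7", "3"]))  # participant changed their answer
    print(final_rating([]))          # "Enter" only: sound not heard
```

Recording skipped sounds as missing (rather than as a neutral 5) keeps inaudible sounds from biasing the category means toward the middle of the scale.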

Participants listened to and rated the 75 sounds in random order either twice (participants with normal hearing) or four times (participants with hearing loss). Participants with normal hearing provided ratings of valence and arousal of sounds presented at 60 and 80 dBA. Participants with hearing loss also provided ratings of valence and arousal of sounds presented at 60 and 80 dBA with no hearing aids in their ears, and of sounds presented at 60 dBA while listening through hearing aids with NFC-OFF and NFC-ON. The test order was counterbalanced but blocked such that participants rated sounds in one condition before moving on to another condition. Within a condition, sounds were presented in random order and not blocked by sound category. Participants with normal hearing completed all testing during a single test session. Participants with hearing loss completed test procedures over the course of two test sessions, separated by at least a half-day.
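The blocked design can be sketched as follows: every sound appears once per condition block, block order varies across participants, and sounds are shuffled within each block without grouping by category. The counterbalancing scheme is not specified, so a simple cyclic rotation of the condition list is assumed here; the condition labels, sound IDs, and function names are hypothetical, and the split into two sessions is not modeled.

```python
import random

CONDITIONS_HL = ["unaided 60 dBA", "unaided 80 dBA", "NFC-OFF 60 dBA", "NFC-ON 60 dBA"]


def condition_order(participant_index, conditions):
    """Rotate the condition list so block order varies across participants.

    A simple cyclic rotation (assumed); the study's actual scheme may differ.
    """
    k = participant_index % len(conditions)
    return conditions[k:] + conditions[:k]


def session_plan(participant_index, conditions, sound_ids, seed=None):
    """Blocked plan: every sound once per condition, shuffled within each block."""
    rng = random.Random(seed)
    plan = []
    for condition in condition_order(participant_index, conditions):
        order = list(sound_ids)
        rng.shuffle(order)  # random order, not grouped by sound category
        plan.append((condition, order))
    return plan


if __name__ == "__main__":
    for condition, order in session_plan(1, CONDITIONS_HL, range(1, 76), seed=1):
        print(condition, order[:5], "...")
```

Seeding the generator per participant makes each presentation order reproducible, which is useful when auditing trial logs.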

Test Environment

Data collection occurred in a double-walled, audiometric test booth. IADS-2 stimuli were presented from a computer (Dell) via custom programming with Presentation software (Neurobehavioral Systems v 12). From the computer, the stimuli were routed through a soundcard (Echo Layla), through an audiometer for level control (Madsen Orbiter), to an amplifier (Russound Crown) and to a loudspeaker (Bowers & Wilkins 685 S2) located 1.25 m directly in front of the participant.

Data Analysis

Prior to analysis, mean ratings were calculated for each participant in each condition for each sound category (pleasant/high arousal, pleasant/low arousal, neutral, unpleasant/high arousal, unpleasant/low arousal). To assess the internal consistency of the items in each category, raw Cronbach's α was calculated using the alpha function of the psych package (Revelle, 2018) in R (3.6.1; R Core Team, 2019). According to the recommendations of Streiner (2003), the reliability analysis revealed good internal consistency (raw α ≥ 0.71 for every category) for ratings of valence and arousal (see Table 1). The Appendix displays the expected α if each item were dropped from the total score, revealing that excluding ratings of any single sound would not notably improve internal consistency. Therefore, ratings of all 75 sounds were included in the subsequent analyses.
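Raw Cronbach's α compares the sum of the item variances with the variance of the summed score: α = k/(k − 1) · (1 − Σσ²ᵢ / σ²_total), where k is the number of items. The sketch below computes this quantity from a respondents × items table, which should correspond to the covariance-based raw α reported by psych::alpha. How the ratings were arranged for the published analysis (for example, which rows counted as respondents) is not stated, so the layout here is an assumption and the function name is hypothetical.

```python
from statistics import variance


def cronbach_raw_alpha(rows):
    """Raw Cronbach's alpha for a respondents x items table of scores.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    if len(rows) < 2 or len(rows[0]) < 2:
        raise ValueError("need at least two items and two respondents")
    k = len(rows[0])
    items = list(zip(*rows))  # columns = items (e.g., sounds in one category)
    item_var_sum = sum(variance(col) for col in items)
    total_var = variance([sum(r) for r in rows])
    return k / (k - 1) * (1 - item_var_sum / total_var)


if __name__ == "__main__":
    ratings = [[1, 2], [2, 1], [3, 3]]  # hypothetical: 3 respondents x 2 items
    print(round(cronbach_raw_alpha(ratings), 3))
```

When all items move together perfectly, the total-score variance grows with k² and α reaches 1; uncorrelated items push α toward 0.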

Table 1.

Internal Consistency (Cronbach's Raw α Scores) for Ratings of Valence (Left Column) and Arousal (Right Column) for Sounds in Each Category.

| Category | Valence | Arousal |
|---|---|---|
| Neutral | 0.81 | 0.86 |
| Pleasant/High | 0.91 | 0.92 |
| Pleasant/Low | 0.84 | 0.88 |
| Unpleasant/High | 0.87 | 0.96 |
| Unpleasant/Low | 0.71 | 0.93 |

Ratings of valence and arousal were analyzed separately using linear mixed-effects models, with a separate analysis for each research question (effects of hearing loss/presentation level and effects of hearing aids). For the effects of hearing loss and presentation level, the independent variables were category (neutral, pleasant/high, pleasant/low, unpleasant/high, unpleasant/low), condition (unaided 60 dB, unaided 80 dB), and group (normal hearing, hearing loss). Initially, HADS scores were included in the analyses; however, their inclusion did not change the results. Therefore, HADS scores were excluded from subsequent analyses for simplicity. For the effects of hearing aids, the independent variables were sound category and condition (unaided 60 dB, unaided 80 dB, aided with NFC-OFF, aided with NFC-ON).

For both questions, participant was included as a random intercept, and degrees of freedom were based on Satterthwaite's method (Satterthwaite, 1941). Linear mixed-effects models were constructed using the lmer function in the lme4 package (Bates et al., 2015). F statistics for each model constructed with lmer were determined using the analysis of variance function in base R. Residuals were inspected visually and did not reveal clear violations of normality or homoscedasticity. Following the recommendations of Matuschek et al. (2017) and Bates et al. (2015), the most parsimonious model for each research question, containing only the significant main effects and interactions, was retained and subjected to post hoc comparisons. Pairwise comparisons were calculated using the emmeans function in the emmeans package in R (Lenth, 2019), and comparisons were adjusted to control the false discovery rate (Benjamini & Hochberg, 1995). All analyses were conducted in R (3.6.1; R Core Team, 2019).
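The false discovery rate adjustment (Benjamini & Hochberg, 1995) scales each of m sorted p-values by m / rank and then enforces monotonicity from the largest p-value downward. The sketch below is a stand-alone Python version of that procedure, intended to be equivalent in principle to R's p.adjust(method = "BH"); it is not the emmeans code path itself, and the input values in the usage example are hypothetical.

```python
def benjamini_hochberg(p_values):
    """Return Benjamini-Hochberg (FDR) adjusted p-values in the input order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices by ascending p
    adjusted = [0.0] * m
    running_min = 1.0  # adjusted values are capped at 1
    # Walk from the largest p-value down so adjusted values never decrease with rank.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, p_values[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


if __name__ == "__main__":
    raw = [0.01, 0.04, 0.03, 0.005]  # hypothetical unadjusted p-values
    print([round(p, 3) for p in benjamini_hochberg(raw)])
```

Unlike a Bonferroni correction, which multiplies every p-value by m, this procedure penalizes the smallest p-value most and the largest least, trading strict familywise error control for greater power.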

Results

Hearing Loss and Presentation Level

Valence

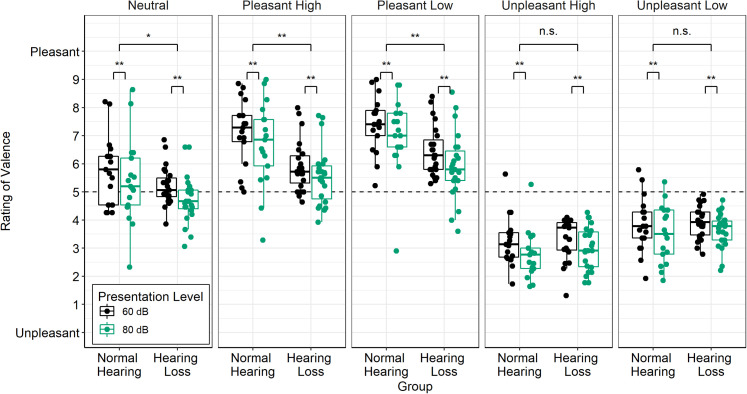

Figure 2 displays ratings of valence, averaged within each category, for each group, and in each condition without hearing aids. Analysis of ratings of valence revealed significant effects of condition (F1,342 = 32.97, p < .0001), category (F4,342 = 393.01, p < .0001), and group (F1,38 = 4.68, p = .037), as well as a significant group × category interaction (F4,342 = 15.51, p < .0001). No other two- or three-way interactions were significant. As a result, the final parsimonious model included main effects of category, condition, and group, and a group × category interaction.

Figure 2.

Mean Ratings of Valence for Participants With Normal Hearing and Hearing Loss in the Two Conditions Without Hearing Aids (60 and 80 dBA). Ratings have been averaged across sounds within each category for each participant. Boxes represent first to third quartile; solid lines indicate median; circles indicate data from individual participants. Significant differences are indicated by *p < .05 or **p < .01. NS = nonsignificant comparison.

These findings indicate that the increased presentation level resulted in lower (less pleasant) ratings of valence, independent of group or category (M difference = 0.41, 95% CI [0.27, 0.54], t(351) = 5.86, p < .00001). In addition, ratings of valence from participants with normal hearing were different from those from participants with hearing loss, although the effects of group depended on sound category (see Table 2). For sounds in the two unpleasant categories (low and high arousal), ratings of valence did not differ significantly between groups. However, ratings from participants with normal hearing were significantly higher (more pleasant) than ratings from participants with hearing loss for sounds in the neutral and both pleasant (low and high arousal) categories (0.63, 1.19, and 1.02 points, respectively).

Table 2.

Results of Pairwise Comparisons of Ratings of Valence for Participants With Normal Hearing and Hearing Loss. For All Comparisons, Standard Error = 0.27, and Degrees of Freedom = 68.80.

| Category | Estimated difference | 95% confidence interval | t ratio | p | Significance |
|---|---|---|---|---|---|
| Neutral | 0.63 | [0.08, 1.18] | 2.28 | .026 | * |
| Pleasant/High | 1.19 | [0.64, 1.74] | 4.32 | <.001 | *** |
| Pleasant/Low | 1.02 | [0.47, 1.56] | 3.69 | <.001 | *** |
| Unpleasant/High | −0.17 | [−0.72, 0.38] | −0.61 | .544 | NS |
| Unpleasant/Low | −0.11 | [−0.66, 0.44] | −0.39 | .695 | NS |

NS = nonsignificant.

*p < .05, **p < .01, ***p < .001.

Arousal

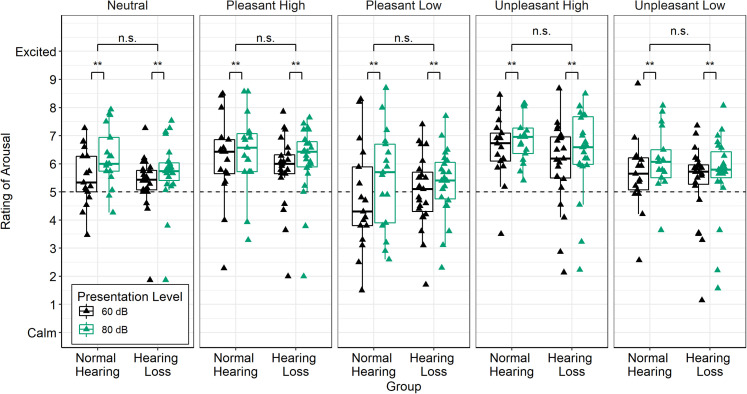

Figure 3 displays ratings of arousal, averaged within each category, for each group, and in each condition without hearing aids. Analysis revealed only main effects of condition (F1,342 = 20.42, p < .0001) and category (F4,342 = 23.18, p < .001); there was no main effect of group, and there were no significant interactions. The final parsimonious model included the main effects of condition and category. As expected, ratings of arousal varied across categories; ratings differed between all pairs of categories (p < .05), except that ratings of neutral and unpleasant/low arousal sounds did not differ from each other (p > .05). In addition, ratings of arousal were higher (more exciting) in response to sounds at the higher presentation level (M difference = 0.41, standard error (SE) = 0.09, 95% CI [0.23, 0.59], t(355) = 4.47, p < .001). Combined, these data indicate that ratings of arousal varied across categories and presentation level, but ratings from the two participant groups were not different from each other.

Figure 3.

Mean Ratings of Arousal for Participants With Normal Hearing and Hearing Loss in the Two Conditions Without Hearing Aids (60 and 80 dBA). Ratings have been averaged across sounds within each category for each participant. Boxes represent first to third quartile; solid lines indicate median; triangles indicate data from individual participants. Significant differences are indicated by *p < .05 or **p < .01. NS = nonsignificant comparison.

Effect of Hearing Aids for Hearing Loss Group

Valence

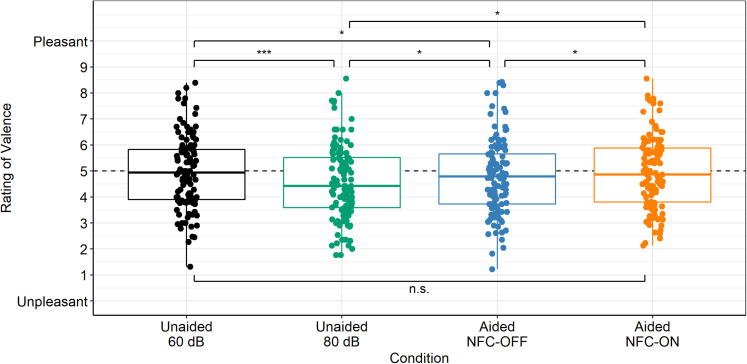

Figure 4 displays ratings of valence for participants with hearing loss with and without hearing aids. Analysis revealed significant main effects of condition (F3,437 = 10.05, p < .001) and category (F4,437 = 368.03, p < .0001), but a nonsignificant condition × category interaction. As a result, the final parsimonious model included only the main effects of condition and category.

Figure 4.

Ratings of Valence for Participants With Hearing Loss in the Unaided and Aided Conditions. Data are collapsed across category because the condition × category interaction was not statistically significant. Boxes represent first to third quartile; solid lines indicate median; circles indicate data from individual participants. Each participant is represented five times per condition (once for each sound category). Significant differences are indicated by *p < .05 or **p < .01. NS = nonsignificant comparison; NFC-OFF = nonlinear frequency compression is not activated; NFC-ON = nonlinear frequency compression is activated.

These results confirm that ratings of valence for participants with hearing loss varied with category as expected: ratings of pleasant sounds were higher than ratings of neutral or unpleasant sounds, and ratings of neutral sounds were higher than ratings of unpleasant sounds (all comparisons p < .05). Results also indicate that condition affected ratings of valence and that these effects were independent of category. Collapsed across all categories (see Table 3), ratings were highest (most pleasant) without hearing aids at the 60 dB presentation level and with hearing aids in the NFC-ON condition. Conversely, ratings were lowest (least pleasant) without hearing aids at the 80 dB presentation level. Collectively, these data demonstrate that, independent of category, hearing aid use without NFC lowered ratings of valence (by 0.20 points), but less so than increasing the overall level did (by 0.40 points). In addition, activating NFC counteracted the negative effects on ratings of valence of both hearing aid use without NFC (0.19 points) and increasing the overall level (0.39 points).
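Because each row of Table 3 is a difference between two condition means, the NFC contrasts described in the preceding paragraph can be recovered from the three differences relative to the 60 dB condition. The worked arithmetic below is only a consistency check on the reported (rounded) estimates, not an additional analysis; the symbol $\hat{\mu}$, an estimated mean rating of valence collapsed across category, is notation introduced here for illustration.

$$
\begin{aligned}
\hat{\mu}_{80} - \hat{\mu}_{60} &= -0.40, \qquad \hat{\mu}_{\text{OFF}} - \hat{\mu}_{60} = -0.20, \qquad \hat{\mu}_{\text{ON}} - \hat{\mu}_{60} = -0.01,\\
\hat{\mu}_{\text{ON}} - \hat{\mu}_{\text{OFF}} &= (-0.01) - (-0.20) = 0.19,\\
\hat{\mu}_{\text{ON}} - \hat{\mu}_{80} &= (-0.01) - (-0.40) = 0.39,\\
\hat{\mu}_{\text{OFF}} - \hat{\mu}_{80} &= (-0.20) - (-0.40) = 0.20.
\end{aligned}
$$

These values match the NFC-OFF–NFC-ON (−0.19), 80–NFC-ON (−0.39), and 80–NFC-OFF (−0.20) rows of Table 3, which report the same contrasts with the opposite sign.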

Table 3.

Results of Pairwise Comparisons Between Conditions for Ratings of Valence for Participants With Hearing Loss. For All Comparisons, Standard Error = 0.09, and Degrees of Freedom = 430.

| Comparison | Estimated difference (points) | 95% confidence interval | t ratio | p | Significance |
|---|---|---|---|---|---|
| 60–80 | 0.40 | [0.18, 0.63] | 4.70 | <.0001 | *** |
| 60–NFC-OFF | 0.20 | [−0.03, 0.43] | 2.32 | .031 | * |
| 60–NFC-ON | 0.01 | [−0.22, 0.24] | 0.12 | .904 | NS |
| 80–NFC-OFF | −0.20 | [−0.43, 0.02] | −2.38 | .031 | * |
| 80–NFC-ON | −0.39 | [−0.62, −0.17] | −4.58 | <.0001 | *** |
| NFC-OFF–NFC-ON | −0.19 | [−0.42, 0.04] | −2.20 | .033 | * |

NFC-OFF = nonlinear frequency compression is not activated; NFC-ON = nonlinear frequency compression is activated; NS = nonsignificant.

*p < .05, **p < .01, ***p < .001.
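The reference list includes Benjamini and Hochberg (1995). The p values in Table 3, including the identical values for the 60–NFC-OFF and 80–NFC-OFF comparisons, are what a Benjamini-Hochberg false discovery rate adjustment of the six comparisons would produce. This section does not state which correction was applied, so treat that as an assumption. The sketch below implements the step-up adjustment in plain Python. The helper names are hypothetical, and the two-sided p values are computed from the t ratios with a normal approximation to the t distribution (reasonable with 430 df), which is also an assumption.

```python
from statistics import NormalDist


def benjamini_hochberg(p_values):
    """Return Benjamini-Hochberg (1995) adjusted p values in the input order."""
    m = len(p_values)
    # Indices of the p values, sorted from smallest to largest raw p.
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Step up from the largest p value; the running minimum keeps the
    # adjusted values nondecreasing in the raw p values.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, p_values[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


def two_sided_p(t):
    # Assumption: normal approximation to the t distribution (df = 430).
    return 2 * (1 - NormalDist().cdf(abs(t)))


# t ratios from Table 3, in table order.
t_ratios = {
    "60-80": 4.70,
    "60-NFC-OFF": 2.32,
    "60-NFC-ON": 0.12,
    "80-NFC-OFF": -2.38,
    "80-NFC-ON": -4.58,
    "NFC-OFF-NFC-ON": -2.20,
}
raw = [two_sided_p(t) for t in t_ratios.values()]
adjusted = dict(zip(t_ratios, benjamini_hochberg(raw)))
for name, p in adjusted.items():
    print(f"{name:>15}: {p:.4f}")
```

With these inputs, the 60–NFC-OFF and 80–NFC-OFF comparisons receive the same adjusted value (about .03). The procedure forces adjusted values to be nondecreasing in the raw p values, so the smaller raw p value (t = −2.38) inherits the adjusted value of its neighbor (t = 2.32).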

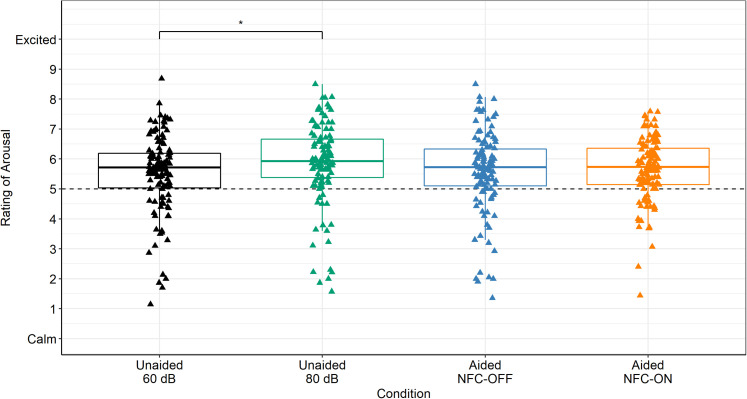

Arousal

Figure 5 displays ratings of arousal for participants with hearing loss with and without hearing aids. Analysis revealed main effects of condition (F(3, 418) = 3.49, p = .016) and category (F(4, 418) = 23.23, p < .0001), and a nonsignificant category × condition interaction. As a result, the final parsimonious model included only the main effects of condition and category. Pairwise comparisons revealed that ratings of arousal differed across all five categories (p < .05), except that ratings were similar for neutral and unpleasant/low arousal stimuli (p > .05). Pairwise comparisons between conditions revealed that ratings of arousal were higher in the 80 dB condition than in the 60 dB condition (M difference = 0.34, SE = 0.11, 95% CI [0.05, 0.62], t(430) = 3.10, p = .012) and higher in the 80 dB condition than in the NFC-OFF condition (M difference = 0.27, SE = 0.11, 95% CI [−0.02, 0.56], t(430) = 2.247, p = .041). There were no other significant differences between conditions. Combined, these data indicate that ratings of arousal were highest without hearing aids with sounds presented at 80 dB and that hearing aid use did not affect ratings of arousal relative to unaided listening at 60 dB.
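The 80 dB versus NFC-OFF comparison reports p = .041 alongside a 95% CI, [−0.02, 0.56], that includes zero. One reading consistent with the numbers is that the intervals were adjusted for six pairwise comparisons (Bonferroni) while the p values were adjusted with a less conservative procedure. This is an assumption, not something this section states. The sketch below uses a hypothetical helper, `confidence_interval`, and a normal approximation to t with 430 df. It shows that a Bonferroni-adjusted interval reproduces the reported bounds, whereas an unadjusted interval would exclude zero.

```python
from statistics import NormalDist


def confidence_interval(estimate, se, n_comparisons=1, level=0.95):
    """Two-sided CI for a difference; Bonferroni-adjusted when n_comparisons > 1.

    Assumption: the standard normal stands in for the t distribution (df = 430).
    """
    alpha = (1 - level) / n_comparisons          # Bonferroni split of alpha
    crit = NormalDist().inv_cdf(1 - alpha / 2)   # two-sided critical value
    half_width = crit * se
    return estimate - half_width, estimate + half_width


# 80 dB vs. NFC-OFF arousal comparison: M difference = 0.27, SE = 0.11.
unadjusted = confidence_interval(0.27, 0.11)
bonferroni = confidence_interval(0.27, 0.11, n_comparisons=6)
print("unadjusted:", [round(x, 2) for x in unadjusted])
print("Bonferroni (6 comparisons):", [round(x, 2) for x in bonferroni])
```

The same pattern appears in Table 3 (e.g., the 60–NFC-OFF interval includes zero while p = .031). If that reading is correct, the intervals and the p values answer slightly different questions and need not agree.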

Figure 5.

Mean Ratings of Arousal for Participants With Hearing Loss in the Conditions With and Without Hearing Aids. Data are collapsed across category because the condition × category interaction was not statistically significant. Boxes represent first to third quartile; solid lines indicate median; circles indicate data from individual participants. Each participant is represented five times per condition (once for each sound category). Significant differences are indicated by *p < .05 or **p < .01. NFC-OFF = nonlinear frequency compression is not activated; NFC-ON = nonlinear frequency compression is activated.

Discussion

The purpose of this study was (a) to confirm the effects of hearing loss and presentation level on emotional responses to nonspeech sounds and (b) to evaluate the effect of hearing aid use on emotional responses to nonspeech sounds. Hearing loss, presentation level, and hearing aid use affected ratings, but the effects were different for ratings of arousal and valence.

Ratings of Arousal

Consistent with previous work (Picou, 2016), the effects of hearing loss and presentation level were more apparent for ratings of valence than for arousal. Ratings of arousal were affected only by presentation level; participants’ ratings of arousal were lower for sounds presented at the moderate, rather than the high, presentation level. This finding is consistent with literature that documents the effects of overall level (or loudness) on arousal (Goudbeek & Scherer, 2010; Ilie & Thompson, 2006; Laukka et al., 2005; Ma & Thompson, 2015; Weninger et al., 2013). Also consistent with previous literature (Picou, 2016), mild-to-severe sensorineural hearing loss did not affect ratings of arousal; participants with normal and impaired hearing rated arousal similarly across all conditions and sound categories. Given the nonsignificant effects of hearing loss and hearing aid use on ratings of arousal, they will not be discussed further.

Ratings of Valence

Unlike ratings of arousal, ratings of valence were affected by hearing loss, presentation level, and hearing aid use. The effects of hearing loss depended on sound category. Participants with hearing loss rated pleasant and neutral sounds as less pleasant than participants with normal hearing but rated unpleasant sounds in a similar manner. This finding is consistent with a growing body of work documenting the effects of hearing loss on emotion perception, as evidenced by the ability to identify vocal emotion in others (Christensen et al., 2019; Singh et al., 2018) and by ratings of experienced emotion (Picou, 2016; Picou & Buono, 2018).

Somewhat surprisingly, the effects of hearing loss in the current study were limited to the pleasant sounds (both low and high arousal), whereas previous data suggest that hearing loss affects responses to both pleasant and unpleasant sounds (Husain et al., 2014; Picou, 2016). The reasons for the discrepancy are not clear. One potential explanation is that participant gender confounds ratings of unpleasant stimuli; Picou (2016) did not control for gender between participant groups, with more females in the groups with normal hearing and more males in the group with hearing loss. Some investigators have found effects of gender on ratings of valence, with females rating unpleasant stimuli as lower in valence than males do (e.g., Grossman & Wood, 1993; Natale et al., 1983; Vrana & Rollock, 2002), although others have not confirmed this effect (e.g., Morgan, 2019). Specific to the IADS stimuli, effects of gender on ratings of valence have also been mixed. For example, Picou and Buono (2018) reported no effect of hearing loss on ratings of unpleasant stimuli, only an effect of gender; females rated unpleasant sounds as less unpleasant than did their male counterparts. Conversely, others have reported nonsignificant relationships between gender and ratings of valence (Bradley & Lang, 2000). Furthermore, exploratory analyses of the current data set did not reveal effects of gender on ratings of unpleasant sounds. Thus, future work is warranted to disentangle the effects of hearing loss, audibility, and gender on emotional responses to unpleasant sounds.

Another surprising finding in the current study is the effect of presentation level on ratings of valence for all participants. Picou (2016) reported that increasing the overall presentation level affected only participants with hearing loss. However, the current data demonstrate that participants with and without hearing loss were similarly and negatively affected by an increase in presentation level. The reasons for this discrepancy are also not clear. It is possible that the study of Picou (2016) was underpowered to detect the effect of presentation level for participants with normal hearing; only 10 older participants with normal hearing rated stimuli at a moderate and a high presentation level. Indeed, participants in that study provided ratings of valence that were approximately 0.5 points lower for sounds presented at 80 dBA than for stimuli presented at 50 dBA. This difference is somewhat larger than the effect noted in the current study (0.41 points) but was not statistically significant in the earlier study. Thus, it is likely that increasing the overall level of sounds results in less pleasant ratings of valence for most listeners, independent of hearing acuity. This conclusion is consistent with the work of Atias et al. (2019), which demonstrated that high-intensity, ambiguous sounds result in low (unpleasant) ratings of valence, even when the sounds were intended to be pleasant. Combined, these results do not support frequency-nonspecific amplification as a way to remediate reduced audibility for the purpose of affecting experienced emotion. Instead, such a strategy (e.g., turning up the volume on music players or televisions, or using personal sound amplifiers with frequency-nonspecific gain) might result in even less pleasant experienced emotions.

Hearing Aids

The results of the study do not support improvements in emotion perception with hearing aid use relative to unaided listening. Notably, simply increasing the overall level resulted in the lowest ratings of valence of any condition. Hearing aid use without NFC reduced ratings of valence by half as much as an overall increase in presentation level did (0.20 vs. 0.40 points). These data suggest that personalized, frequency-specific fitting has smaller negative consequences than increasing the overall level. This is consistent with existing work demonstrating that, relative to uniform settings, individualized amplification settings can result in higher speech recognition performance (Mackersie et al., 2009) and higher ratings of sound quality (Kam et al., 2017). The finding in the current study that ratings of valence were higher with fitted hearing aids than with an overall increase in level extends the existing work into the domain of emotional responses to sound.

Activation of NFC further counteracted the negative effects of listening to amplified sounds for participants with hearing loss. In this study, ratings of valence returned to unaided levels only when high-frequency sounds were made accessible via frequency compression: ratings of valence were nearly identical in the NFC-ON and 60 dB conditions (0.01-point difference). The reasons for this improvement relative to NFC-OFF warrant further investigation and could be related to improved access to high-frequency information without a concomitant increase in loudness.

Collectively, these data suggest that improving audibility for emotional sounds does not counteract the effects of hearing loss on emotion perception. This finding supports the hypothesis that the consequences of hearing loss on experienced emotion are not adequately addressed by hearing aids alone. It could be that additional interventions are necessary to address peripheral and central changes in the auditory system that are unaddressed by modern hearing aids, or that further hearing aid processing strategies are necessary. Future insights from additional clinical tests of the auditory system might help identify specific characteristics of auditory function relating to the effects of hearing loss and related interventions on emotion perception.

Future Directions

Future work is needed to explore the extent to which these findings are generalizable. For example, it is possible that a more aggressive NFC setting would have different effects on emotion perception. The NFC used in the current study was programmed at a weak setting to minimize distortion while improving access to at least some high-frequency information. A more aggressive NFC setting might have resulted in larger benefits by providing additional high-frequency information or, conversely, in more negative consequences by introducing distortions or artifacts.
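To make "weak" versus "more aggressive" NFC concrete, the sketch below uses one common description of nonlinear frequency compression: frequencies below a cutoff pass unchanged, and frequencies above it are compressed on a logarithmic frequency scale by a compression ratio. This mapping, the function name `nfc_output_frequency`, and the cutoff and ratio values are illustrative assumptions, not the algorithm or settings of the study hearing aids. A lower cutoff or a higher ratio moves high-frequency energy further down in frequency, where it may be more audible but also more distorted.

```python
def nfc_output_frequency(f_in, cutoff_hz, ratio):
    """Map an input frequency (Hz) through a log-domain NFC rule (assumed, illustrative).

    Below the cutoff the frequency is unchanged; above it, the distance from the
    cutoff on a log-frequency scale is divided by `ratio`.
    """
    if f_in <= cutoff_hz:
        return f_in
    return cutoff_hz * (f_in / cutoff_hz) ** (1 / ratio)


# Hypothetical (cutoff in Hz, compression ratio) pairs, for comparison only.
settings = {"weak": (5000.0, 1.5), "aggressive": (2500.0, 3.0)}
for name, (cutoff, ratio) in settings.items():
    mapped = [round(nfc_output_frequency(f, cutoff, ratio)) for f in (2000, 4000, 8000)]
    print(name, mapped)
```

Under the hypothetical weak setting, only the 8000 Hz input is moved; under the aggressive setting, both 4000 Hz and 8000 Hz inputs are lowered, and the spacing between them shrinks.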

It will be interesting to evaluate whether the results of this study generalize to different hearing aid settings. For example, one might expect significantly more disrupted emotion perception with fast-acting, wide dynamic range compression, based on the work of Arefi et al. (2017). However, fast-acting amplitude compression could further improve audibility for the entire dynamic range of the sounds, which might have a positive effect on experienced emotion.
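Wide dynamic range compression applies more gain to soft inputs than to loud ones, which is why it could extend audibility across a sound's dynamic range. The static input-output rule below sketches that idea. The function name `wdrc_output_level` and the gain, kneepoint, and ratio values are hypothetical. The sketch also omits attack and release times, which are what make compression fast- or slow-acting, the property the paragraph above contrasts.

```python
def wdrc_output_level(input_db, gain_db=20.0, kneepoint_db=45.0, ratio=2.0):
    """Static WDRC input-output rule (levels in dB SPL; parameters hypothetical).

    Linear gain below the kneepoint; above it, each `ratio` dB of input
    increase produces only 1 dB of output increase.
    """
    if input_db <= kneepoint_db:
        return input_db + gain_db
    return kneepoint_db + gain_db + (input_db - kneepoint_db) / ratio


soft, loud = 30.0, 85.0
# With linear gain, the output range equals the input range.
linear_range = (loud + 20.0) - (soft + 20.0)
# With compression, the same inputs span a narrower output range.
compressed_range = wdrc_output_level(loud) - wdrc_output_level(soft)
print(linear_range, compressed_range)
```

In this example, soft portions still receive the full 20 dB of gain while loud portions receive none, so a 55 dB input range is squeezed into 35 dB at the output.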

In addition, hearing aid experience might have affected the current results. Because this study was the first step toward evaluating the effects of hearing aids on experienced emotion, a convenience sample of participants was used. Participants included both established and relatively new hearing aid users, although the majority (n = 18) were established users. Moreover, the specific hearing aids used were new to all participants because they were fitted for the purpose of the study. It is possible that long-term improvements in audibility could counteract some effects of hearing loss (Purdy et al., 2001). Perhaps, with long-term experience, the study hearing aids would positively affect ratings of valence in response to nonspeech sounds.

Finally, more work is warranted to investigate the extent to which the laboratory findings generalize to typical listening circumstances. Specifically, it is not clear what size of change on the nine-point SAM scale would be meaningful in daily life. On one hand, the largest differences noted in the study were small (<1.2 points). In addition, the participants were community-dwelling adults who were not depressed or taking pharmacological medications. Perhaps the reduced range of valence responses does not noticeably affect participants' emotional experiences. On the other hand, valence ratings in response to pleasant sounds have been linked to isolation and social connectedness; adults who provide lower ratings in the laboratory also report more isolation and disconnectedness in their lives (Picou & Buono, 2018). Moreover, in the current study, adults with mild-to-severe hearing loss provided ratings of valence that were 16% lower than those of adults with bilaterally normal hearing, suggesting that hearing loss has a relatively large effect on ratings of valence within the context of the SAM.

Investigation into the meaningfulness of differences in valence ratings is also important for interpreting the effects of hearing aids in this study, in which ratings of pleasant stimuli were 3% lower in the NFC-OFF condition than without hearing aids, and ratings in the NFC-ON condition were approximately 3% higher than in the NFC-OFF condition. It is not clear whether a 3% change in ratings would be noticeable to hearing aid users in their daily lives.

Conclusion

The current study confirms existing findings that hearing loss disrupts emotional responses to nonspeech sounds for adults. It extends the knowledge base by demonstrating that the current standard of care for this population, individualized hearing aid fittings, does not counteract the effects of hearing loss for nonspeech sounds. Instead, ratings of valence were lower (less pleasant) with hearing aids (NFC-OFF) than without them. Although ratings of valence were higher (more pleasant) with the activation of NFC, even with NFC-ON, participants with hearing loss rated pleasant stimuli approximately 1 point lower (less pleasant) than did their peers with normal hearing. These findings suggest that personalized amplification, with or without NFC activated, would be preferable to a uniform increase in amplification, as might occur with television or stereo volume controls or personal sound amplifiers. The results also suggest that enabling NFC might be preferable to not enabling it. Further work is warranted to evaluate additional interventions (e.g., more aggressive NFC, fast-acting amplitude compression) that might affect experienced emotion in response to sounds for listeners with hearing loss.

Supplementary Material

Acknowledgments

The authors wish to thank Sarah Alfieri for assistance with data collection and Todd Ricketts for insightful comments during project planning. Portions of this project were presented at the American Academy of Audiology Conference (April 2016, Phoenix, AZ), the World Congress of Audiology (October 2016, Vancouver, British Columbia, Canada), Acoustics Week in Canada (October 2016, Vancouver, British Columbia, Canada), and the Audiology Research Conference of the American Academy of Audiology (April 2019, Columbus, Ohio).

Footnotes

Authors’ Note: Lori Rakita is now at Starkey Hearing Technologies in Eden Prairie, MN. Gabrielle Buono is now at Nashville ENT Audiology, St. Thomas Medical Group, Nashville, TN.

Declaration of Conflicting Interests: The authors declared the following potential conflicts of interest with respect to the research, authorship, and/or publication of this article: Erin M. Picou received funding to conduct this research; Lori Rakita is a former Sonova AG employee.

Funding: The authors disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This work was supported by Sonova AG.

ORCID iD: Erin M. Picou https://orcid.org/0000-0003-3083-0809

References

- Akinseye G. A., Dickinson A.-M., Munro K. J. (2018). Is non-linear frequency compression amplification beneficial to adults and children with hearing loss? A systematic review. International Journal of Audiology, 57(4), 262–273. 10.1080/14992027.2017.1420255

- Arefi H. N., Sameni S. J., Jalilvand H., Kamali M. (2017). Effect of hearing aid amplitude compression on emotional speech recognition. Auditory and Vestibular Research, 26(4), 223–230. https://avr.tums.ac.ir/index.php/avr/article/view/651

- Arthaud-Day M. L., Rode J. C., Mooney C. H., Near J. P. (2005). The subjective well-being construct: A test of its convergent, discriminant, and factorial validity. Social Indicators Research, 74(3), 445–476. 10.1007/s11205-004-8209-6

- Atias D., Todorov A., Liraz S., Eidinger A., Dror I., Maymon Y., Aviezer H. (2019). Loud and unclear: Intense real-life vocalizations during affective situations are perceptually ambiguous and contextually malleable. Journal of Experimental Psychology: General, 148(10), 1842–1848. 10.1037/xge0000535

- Bates D., Maechler M., Bolker B., Walker S. (2015). Fitting linear mixed-effects models using lme4. Journal of Statistical Software, 67(1), 1–48. 10.18637/jss.v067.i01

- Benjamini Y., Hochberg Y. (1995). Controlling the false discovery rate: A practical and powerful approach to multiple testing. Journal of the Royal Statistical Society: Series B (Methodological), 57(1), 289–300. 10.1111/j.2517-6161.1995.tb02031.x

- Bradley M. M., Lang P. J. (1994). Measuring emotion: The self-assessment manikin and the semantic differential. Journal of Behavior Therapy and Experimental Psychiatry, 25(1), 49–59. 10.1016/0005-7916(94)90063-9

- Bradley M. M., Lang P. J. (2000). Affective reactions to acoustic stimuli. Psychophysiology, 37(2), 204–215. 10.1111/1469-8986.3720204

- Bradley M. M., Lang P. J. (2007). The International Affective Digitized Sounds (IADS-2): Affective ratings of sounds and instruction manual. University of Florida, Gainesville, FL, Tech. Rep. B-3.

- Brattico E., Alluri V., Bogert B., Jacobsen T., Vartiainen N., Nieminen S. K., Tervaniemi M. (2011). A functional MRI study of happy and sad emotions in music with and without lyrics. Frontiers in Psychology, 2, 308. 10.3389/fpsyg.2011.00308

- Buono G. H., Crukley J., Hornsby B. W., Picou E. M. (2021). Loss of high- or low-frequency audibility can partially explain effects of hearing loss on emotional responses to non-speech sounds. Hearing Research, 401, 108153. 10.1016/j.heares.2020.108153

- Chan L. P., Livingstone S. R., Russo F. A. (2013). Facial mimicry in response to song. Music Perception: An Interdisciplinary Journal, 30(4), 361–367. 10.1525/mp.2013.30.4.361

- Christensen J. A., Sis J., Kulkarni A. M., Chatterjee M. (2019). Effects of age and hearing loss on the recognition of emotions in speech. Ear and Hearing, 40(5), 1069–1083. 10.1097/AUD.0000000000000694

- Goudbeek M., Scherer K. (2010). Beyond arousal: Valence and potency/control cues in the vocal expression of emotion. The Journal of the Acoustical Society of America, 128(3), 1322–1336. 10.1121/1.3466853

- Goy H., Pichora-Fuller K. M., Singh G., Russo F. A. (2018). Hearing aids benefit recognition of words in emotional speech but not emotion identification. Trends in Hearing, 22, 1–16. 10.1177/2331216518801736

- Grossman M., Wood W. (1993). Sex differences in intensity of emotional experience: A social role interpretation. Journal of Personality and Social Psychology, 65(5), 1010–1022. 10.1037/0022-3514.65.5.1010

- Hess U., Blairy S., Philippot P. (1999). Facial mimicry. New York: Cambridge University Press.

- Husain F. T., Carpenter-Thompson J. R., Schmidt S. A. (2014). The effect of mild-to-moderate hearing loss on auditory and emotion processing networks. Frontiers in Systems Neuroscience, 8, 1–13. 10.3389/fnsys.2014.00010

- Ilie G., Thompson W. F. (2006). A comparison of acoustic cues in music and speech for three dimensions of affect. Music Perception: An Interdisciplinary Journal, 23(4), 319–330. 10.1525/mp.2006.23.4.319

- Kam A. C. S., Sung J. K. K., Lee T., Wong T. K. C., van Hasselt A. (2017). Improving mobile phone speech recognition by personalized amplification: Application in people with normal hearing and mild-to-moderate hearing loss. Ear and Hearing, 38(2), e85–e92. 10.1097/AUD.0000000000000371

- Keidser G., Dillon H., Carter L., O’Brien A. (2012). NAL-NL2 empirical adjustments. Trends in Amplification, 16, 211–223. 10.1177/1084713812468511

- Laukka P., Juslin P., Bresin R. (2005). A dimensional approach to vocal expression of emotion. Cognition & Emotion, 19(5), 633–653. 10.1080/02699930441000445

- Lenth R. (2019). emmeans: Estimated marginal means, aka least-squares means. R package version 1.4. https://CRAN.R-project.org/package=emmeans

- Lin F. R., Thorpe R., Gordon-Salant S., Ferrucci L. (2011). Hearing loss prevalence and risk factors among older adults in the United States. The Journals of Gerontology Series A: Biological Sciences and Medical Sciences, 66(5), 582–590. 10.1093/gerona/glr002

- Livingstone S. R., Vezer E., McGarry L. M., Lang A. E., Russo F. A. (2016). Deficits in the mimicry of facial expressions in Parkinson's Disease. Frontiers in Psychology, 7, Article 780. 10.3389/fpsyg.2016.00780

- Luan Y., Wang C., Jiao Y., Tang T., Zhang J., Teng G.-J. (2019). Dysconnectivity of multiple resting-state networks associated with higher-order functions in sensorineural hearing loss. Frontiers in Neuroscience, 13, 55. 10.3389/fnins.2019.00055

- Luo X., Kern A., Pulling K. R. (2018). Vocal emotion recognition performance predicts the quality of life in adult cochlear implant users. The Journal of the Acoustical Society of America, 144(5), EL429–EL435. 10.1121/1.5079575

- Ma W., Thompson W. F. (2015). Human emotions track changes in the acoustic environment. Proceedings of the National Academy of Sciences, 112(47), 14563–14568. 10.1073/pnas.1515087112

- Mackersie C. L., Qi Y., Boothroyd A., Conrad N. (2009). Evaluation of cellular phone technology with digital hearing aid features: Effects of encoding and individualized amplification. Journal of the American Academy of Audiology, 20(2), 109–118. 10.3766/jaaa.20.2.4

- Mao Y., Yang J., Hahn E., Xu L. (2017). Auditory perceptual efficacy of nonlinear frequency compression used in hearing aids: A review. Journal of Otology, 12(3), 97–111. 10.1016/j.joto.2017.06.003

- Margolis R. H., Heller J. W. (1987). Screening tympanometry: Criteria for medical referral. Audiology, 26(4), 197–208. 10.1080/00206098709081549

- Matuschek H., Kliegl R., Vasishth S., Baayen H., Bates D. (2017). Balancing type I error and power in linear mixed models. Journal of Memory and Language, 94, 305–315. 10.1016/j.jml.2017.01.001

- McDermott H. J. (2011). A technical comparison of digital frequency-lowering algorithms available in two current hearing aids. PLoS One, 6(7), e22358. 10.1371/journal.pone.0022358

- Morgan S. D. (2019). Categorical and dimensional ratings of emotional speech: Behavioral findings from the Morgan Emotional Speech Set. Journal of Speech, Language, and Hearing Research, 62(11), 4015–4029. 10.1044/2019_JSLHR-S-19-0144

- Natale M., Gur R. E., Gur R. C. (1983). Hemispheric asymmetries in processing emotional expressions. Neuropsychologia, 21(5), 555–565. 10.1016/0028-3932(83)90011-8

- Pell M. D., Paulmann S., Dara C., Alasseri A., Kotz S. A. (2009). Factors in the recognition of vocally expressed emotions: A comparison of four languages. Journal of Phonetics, 37(4), 417–435. 10.1016/j.wocn.2009.07.005

- Picou E. M. (2016). How hearing loss and age affect emotional responses to nonspeech sounds. Journal of Speech, Language, and Hearing Research, 59(5), 1233–1246. 10.1044/2016_JSLHR-H-15-0231

- Picou E. M., Buono G. H. (2018). Emotional responses to pleasant sounds are related to social disconnectedness and loneliness independent of hearing loss. Trends in Hearing, 22, 1–15. 10.1177/2331216518813243

- Picou E. M., Singh G., Goy H., Russo F. A., Hickson L., Oxenham A. J., Buono G. H., Ricketts T. A., Launer S. (2018). Hearing, emotion, amplification, research, and training workshop: Current understanding of hearing loss and emotion perception and priorities for future research. Trends in Hearing, 22, 1–24. 10.1177/2331216518803215

- Purdy S. C., Kelly A. S., Thorne P. R. (2001). Auditory evoked potentials as measures of plasticity in humans. Audiology and Neurotology, 6(4), 211–215. 10.1159/000046835

- R Core Team (2019). R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing.

- Revelle W. (2018). psych: Procedures for Personality and Psychological Research. Northwestern University.

- Russell J. A. (1980). A circumplex model of affect. Journal of Personality and Social Psychology, 39(6), 1161–1178. 10.1037/h0077714

- Russell J. A., Mehrabian A. (1977). Evidence for a three-factor theory of emotions. Journal of Research in Personality, 11(3), 273–294. 10.1016/0092-6566(77)90037-X

- Satterthwaite F. E. (1941). Synthesis of variance. Psychometrika, 6(5), 309–316.

- Schmidt J., Herzog D., Scharenborg O., Janse E. (2016). Do hearing aids improve affect perception? In van Dijk P., Başkent D., Gaudrain E., Kleine E. D., Wagner A., Lanting C. (Eds.), Physiology, psychoacoustics and cognition in normal and impaired hearing: Advances in experimental medicine and biology (Vol. 894, pp. 47–55). Springer.

- Simpson A., Bond A., Loeliger M., Clarke S. (2018). Speech intelligibility benefits of frequency-lowering algorithms in adult hearing aid users: A systematic review and meta-analysis. International Journal of Audiology, 57(4), 249–261. 10.1080/14992027.2017.1375163

- Singh G., Liskovoi A., Launer S., Russo F. A. (2018). The emotional communication in hearing questionnaire (EMO-CHeQ): Development and evaluation. Ear and Hearing, 40(2), 260–272. 10.1097/AUD.0000000000000611

- Souza P., Arehart K., Kates J., Croghan N., Gehani N. (2013). Exploring the limits of frequency lowering. Journal of Speech, Language, and Hearing Research, 56(5), 1349–1363. 10.1044/1092-4388(2013/12-0151)

- Stevens G., Flaxman S., Brunskill E., Mascarenhas M., Mathers C. D., Finucane M. (2013). Global and regional hearing impairment prevalence: An analysis of 42 studies in 29 countries. The European Journal of Public Health, 23(1), 146–152. 10.1093/eurpub/ckr176

- Streiner D. L. (2003). Starting at the beginning: An introduction to coefficient alpha and internal consistency. Journal of Personality Assessment, 80(1), 99–103. 10.1207/S15327752JPA8001_18

- Vrana S. R., Rollock D. (2002). The role of ethnicity, gender, emotional content, and contextual differences in physiological, expressive, and self-reported emotional responses to imagery. Cognition & Emotion, 16(1), 165–192. 10.1080/02699930143000185

- Weninger F., Eyben F., Schuller B. W., Mortillaro M., Scherer K. R. (2013). On the acoustics of emotion in audio: What speech, music, and sound have in common. Frontiers in Psychology, 4, Article 292. 10.3389/fpsyg.2013.00292

- Xu X.-M., Jiao Y., Tang T.-Y., Zhang J., Lu C.-Q., Luan Y., … Teng G.-J. (2019). Dissociation between cerebellar and cerebral neural activities in humans with long-term bilateral sensorineural hearing loss. Neural Plasticity, 2019, Article 8354849. 10.1155/2019/8354849

- Zigmond A. S., Snaith R. P. (1983). The Hospital Anxiety and Depression Scale. Acta Psychiatrica Scandinavica, 67(6), 361–370. 10.1111/j.1600-0447.1983.tb09716.x

- Zinchenko A., Kanske P., Obermeier C., Schröger E., Villringer A., Kotz S. A. (2018). Modulation of cognitive and emotional control in age-related mild-to-moderate hearing loss. Frontiers in Neurology, 9, 783. 10.3389/fneur.2018.00783
