Abstract

Rapid diagnosis of COVID-19 with high reliability is essential in the early stages. To this end, recent research often uses medical imaging combined with machine vision methods to diagnose COVID-19. However, the scarcity of medical images and the inherent differences in existing datasets that arise from different medical imaging tools, methods, and specialists may affect the generalization of machine learning-based methods. Also, most of these methods are trained and tested on the same dataset, reducing the generalizability and causing low reliability of the obtained model in real-world applications. This paper introduces an adversarial deep domain adaptation-based approach for diagnosing COVID-19 from lung CT scan images, termed ADA-COVID. Domain adaptation-based training process receives multiple datasets with different input domains to generate domain-invariant representations for medical images. Also, due to the excessive structural similarity of medical images compared to other image data in machine vision tasks, we use the triplet loss function to generate similar representations for samples of the same class (infected cases). The performance of ADA-COVID is evaluated and compared with other state-of-the-art COVID-19 diagnosis algorithms. The obtained results indicate that ADA-COVID achieves classification improvements of at least 3%, 20%, 20%, and 11% in accuracy, precision, recall, and F1 score, respectively, compared to the best results of competitors, even without directly training on the same data. The implementation source code of the ADA-COVID is publicly available at https://github.com/MehradAria/ADA-COVID.

1. Introduction

As of November 2021, nearly 268 million people worldwide have officially been infected with COVID-19, and more than 5.2 million have died [1] since the epidemic was declared in March 2020. These figures underline the need for rapid and highly reliable diagnosis of COVID-19 in its early stages, not only to save human lives but also to reduce the social and economic burden on the communities involved. Although the RT-PCR (reverse transcription polymerase chain reaction) test is the reference standard for confirming COVID-19, some studies show that this laborious method cannot diagnose the disease in the early stages [2–5], and others report a high false-negative rate [6].

One standard way to identify morphological patterns of lung lesions associated with COVID-19 is to use chest scan images. There are two common techniques for scanning the chest: X-rays and computed tomography (CT). Detection of COVID-19 from chest images by a radiologist is time-consuming, and the accuracy of the diagnosis depends strongly on the radiologist's opinion [7, 8]. Also, manually checking every image might not be feasible in emergency cases. Recently, deep learning-based methods [9, 10] have been applied to help the medical community diagnose COVID-19 quickly, accurately, and automatically.

Using CT images to diagnose COVID-19 has recently drawn researchers' interest because of the key advantages these images offer: more accurate depictions of bones, organs, blood vessels, and soft tissues. Using these images, radiologists can identify internal structures in more detail and evaluate their texture, density, size, and shape. Chest images obtained by CT scan usually give a much more accurate picture of the patient's condition than X-rays. Therefore, in recent works based on deep learning, CT scan images are used more than plain radiographs [11–14].

Deep learning-based methods usually require large datasets to achieve better results and overcome overfitting [15]. In the accurate detection of COVID-19 using chest images, the lack of comprehensive, high-quality datasets is a fundamental problem in this research area. The COVID CT dataset was first introduced in [16] and has been used in recent works [11–13]. The next SARS-CoV-2 CT scan dataset [17] contains 2,482 CT scan images collected from hospitals in São Paulo, Brazil. Another large dataset includes 7495 positive corona samples and 944 negative ones [18]. The mentioned datasets are the largest and most common datasets used in this field, while researchers also use other small public datasets [19–21].

Evaluation results on test samples drawn from the same dataset used for training are significantly better than results on other datasets [22]. In other words, model performance is artificially good when the train and test sets belong to the same dataset, and it drops dramatically when the trained model is evaluated on another dataset. Numerous studies demonstrate that the most recent approaches in the literature are unreliable [23, 24]. For example, two well-known studies [10, 25] in this field show a performance close to random classification on unseen data (i.e., datasets on which the model has not been trained). Similarly, the classification accuracy in [22] decreases from 98.5% on the test set to 59.12% on unseen datasets. The cause of this issue is the structural and inherent differences between images in the available datasets, which arise from different tools and medical imaging methods. Upon closer inspection, we found that most of the proposed methods for detecting and classifying COVID-19 are trained and tested on images from the same dataset. Using a single dataset during network training reduces model generalization. This is an instance of shortcut learning, one of the fundamental problems of deep learning [26]: shortcuts are decision rules that perform well on typical benchmarks but fail to transfer to more complex testing situations, such as real-world scenarios [26].

This paper proposes an adversarial deep domain adaptation-based approach for diagnosing COVID-19 from lung CT scan images, termed ADA-COVID. In ADA-COVID, two datasets with different input domains are used in the network training process. The goal is to generate similar representations for images belonging to two different domains. This model can perform the correct classification regardless of the specific features of each input distribution. In other words, the generated representations are domain invariant, and overall better representations are generated. Also, due to the excessive structural similarity of medical images compared to other image data in machine vision, we use the triplet loss function for the training model. Using this loss function, similar (dissimilar) representations are generated for samples of the same class (different classes) in the embedded space.

The contributions of this work are twofold:

The effect of structural and intrinsic differences in images obtained from different medical imaging tools and methods is minimized as a result of the introduced domain adaptation-based approach for CT images.

A custom deep model is designed based on this approach to make corona case detection more reliable. Therefore, the generalization of the ADA-COVID for other new datasets and in the real-world application is high.

The performance of the ADA-COVID method is evaluated on two standard datasets, and extensive experiments are performed to examine the effectiveness of each solution proposed. The results show that our approach achieves higher performance than the existing competitors.

The rest of the paper is organized as follows. Section 2 gives a brief review of the related work; in Section 3, the proposed ADA-COVID is described in detail. In Section 4, the experimental results are presented. In Section 5, the conclusions and possible future works are discussed.

2. Related Work

With the prevalence of COVID-19, various methods were introduced to diagnose COVID-19 [14, 27–29]. These methods can be classified into three general categories: (1) methods that have developed customized network architectures specifically for COVID-19 detection, such as COVID-Net [10], COVID CT-Net [30], and CVR-Net [31], (2) methods that use pretrained networks and transfer learning to detect COVID-19, such as COVID-ResNet [32], CoroNet [33], COVID-CAPS [25], and convolutional CapsNet [34], and (3) very few studies employed handcrafted feature extraction approaches and conventional classifiers. In the following, we review each of these categories.

2.1. Methods Based on Customized Network Architectures

COVID-Net [10] is one of the earliest methods based on convolutional neural networks designed to detect COVID-19 using X-ray images. CVR-Net (Coronavirus Recognition Network) [31] is a customized model with convolutional layers trained and tested on a combination of CT and X-ray images. In CVR-Net, an average accuracy of 78% was reported for the CT image dataset. Further improvements were added to COVID-Net to improve its representational ability for one specific image modality and to make the network computationally more efficient [35]. CovidCTNet [36] is a set of open-source algorithms used to differentiate COVID-19 from community-acquired pneumonia (CAP) and other lung diseases. This model is designed to work with heterogeneous and small samples independently of the CT imaging hardware. In [13], an autoencoder-based architecture was used to simultaneously segment and classify CT images. Their proposed architecture consists of an encoder and three decoders; these decoders are used for image reconstruction, image segmentation, and classification, respectively. COVID CT-Net [30] is an attentional CNN, which can focus on the infected areas of the chest. All of the introduced approaches in this category propose a customized architecture for detecting infected cases without utilizing any well-established pretrained networks.

2.2. Methods Based on Pretrained Models

In contrast to the first group, methods based on pretrained models have recently gained more attention, where standard pretrained CNN models are used to detect COVID-19 [37] based on CT images. In [38], convolutional networks and transfer learning have been used to classify the samples into three categories: COVID-19, bacterial pneumonia, and normal. This study aims to evaluate the performance of standard CNN models proposed in recent years, such as VGG19 [39], MobileNet v2 [40], Inception [41], Xception [42], and InceptionResNetV2 [41]. In [43], transfer learning with fine-tuning was used to detect COVID-19, and the proposed method was evaluated on four popular CNN architectures: ResNet18 [44], ResNet50 [44], SqueezeNet [45], and DenseNet-121 [46]. The authors prepared a dataset of around 5000 X-ray images for COVID-19 detection. Brunese et al. [47] used transfer learning on a pretrained VGG-16 [39] network to automatically detect COVID-19. Also, [48] applied a pretrained DenseNet201 [46] model on chest CT images to distinguish COVID-19 from non-COVID-19.

In [49], deep learning models are applied to chest CT images to differentiate coronavirus pneumonia from influenza pneumonia. This study was performed on CT images collected from various hospitals in China, and its results demonstrate the effectiveness of CT images in diagnosing COVID-19. DeepPneumonia [50] was designed to classify COVID-19, bacterial pneumonia, and healthy cases based on CT images. Their proposed model achieved an accuracy of 86.5% for differentiating bacterial pneumonia and COVID-19 and 94% for distinguishing COVID-19 and healthy cases.

In [51], a new method called CONVNet based on the 3D deep learning framework for COVID-19 identification has been developed. This method can extract three-dimensional and two-dimensional representations. This method used ResNet [44] architecture. In [52], a deep transfer learning algorithm was introduced that used X-ray and CT scan images to accelerate the detection of COVID-19 cases. In [53], an attention-based deep learning model using the attention module with VGG-16 has been proposed. This method captures the spatial relationship between the ROIs in chest X-ray images. In [54], a new method based on BoVW (Bag of Visual Words) features has been proposed, which by removing the feature map normalization step and adding the deep features normalization step on the raw feature maps helps preserve the semantics of each feature map that may have important clues to differentiate COVID-19 from pneumonia.

2.3. Methods Based on Handcrafted Feature Extraction

Some COVID-19 detection methods used handcrafted feature extraction approaches. In [55], first, different texture features are extracted from the images by popular texture descriptors, and then, these texture features are combined with the extracted features from the pretrained Inception-v3 [56] model. In [57], a method for classifying the positive and negative cases of COVID-19 based on CT scan images was proposed. Different texture features were extracted from CT images using the Gabor filter, and then, the SVM method was used to classify these images. In [58], to reduce intensity variations between CT slices, a preprocessing step was applied on CT slices. Then, a long short-term memory (LSTM) classifier was used to discriminate between COVID-19, pneumonia, and healthy cases.

Other related methods based on the combination of feature extraction approaches and deep learning models are introduced in [59].

Most of the mentioned methods are highly dependent on the image domain of the datasets on which they were trained. If the test set is from the same domain as the training set, the model performance will be acceptable. However, when the domain of the evaluation dataset is different, model performance is significantly reduced. In real-world applications, the domain of the inference image is not always the same as that of the training set. In other words, unseen data are often independent of the training set, so the results would not be reliable.

3. Proposed Approach: ADA-COVID

To overcome the problems mentioned in Section 1, we use the domain adaptation technique during model training. With this technique, the generated representations do not depend on the domain of a particular dataset. We also use the triplet loss function in the training phase. With this loss function, the distance in the embedded space between pairs of samples from the same class is smaller than the distance between samples from different classes. Therefore, the representations extracted by the ADA-COVID model are very discriminative and domain invariant.

3.1. Overview

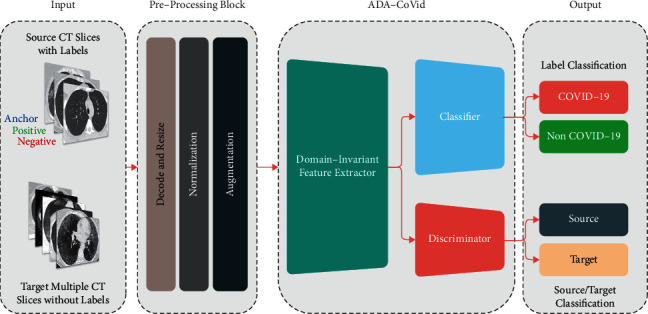

Figure 1 shows a general overview of the proposed method. As shown in this figure, different input domains are used in the COVID-19 detection process. The aim is to bring the statistical distribution of these domains closer together in the embedded space. The proposed method uses two different input domains named source and target. The source dataset is used to train the network, and the target dataset is applied for better generalizability of the network on new datasets. The next step is preprocessing, which includes decoding and resize, normalization, and augmentation. The output images from the preprocessing stage are entered into the ADA-COVID architecture. ADA-COVID consists of three modules as follows—(1) domain-invariant feature extractor: this module is responsible for extracting features, (2) classifier: this module is responsible for classifying data into two groups: COVID-19 and non-COVID-19, and (3) discriminator: this module is responsible for distinguishing and differentiating source data from target data.

Figure 1.

Diagram of the proposed approach.

The main aim of the proposed method is to generate similar representations for images belonging to two different domains. This model can perform the correct classification regardless of the specific features of each input distribution. Therefore, the model's generalizability increases, and it has the best performance for images belonging to different input domains.

The preprocessing step is introduced in Section 3.2, and the proposed ADA-COVID framework is described in Section 3.3.

3.2. Preprocessing

The preprocessing stage prepares the data for training and evaluation of the model. The following paragraphs describe the steps carried out in this stage.

3.2.1. Decode and Resize

CT scan images are saved in DICOM file format, the widely used format in medical imaging. We need to convert DICOM format images to standard image formats such as JPG or PNG to work with such images. In the proposed method, we convert the images to grayscale PNG format.

In deep neural networks, input images are often resized to maintain compatibility with the network architecture and reduce computations [60]. The proposed method uses the pretrained ResNet50 architecture as a feature extractor with an input size of 224 × 224. Therefore, all images are resized into 224 × 224 pixels for training, validation, and testing.

3.2.2. Normalization

To reduce the effect of intensity variations between CT slices, we normalize the data through (1) in the range [0, 1].

$$Z_i = \frac{X_i - \mu}{\sigma + \varepsilon} \tag{1}$$

where Xi represents the i-th image in the train set, i is the index of each training sample, and μ and σ represent the pixel-level mean and standard deviation over all images in the train set, respectively. ε = 1e−10 is a small constant that prevents division by zero, and Zi is the normalized version of Xi.
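The normalization step can be sketched in a few lines of NumPy. This is a minimal illustration rather than the authors' implementation: it assumes that μ and σ are scalar statistics computed over every pixel of the training set, and the function names and the toy data are hypothetical. The output of this step is zero-centered with unit variance; any further rescaling is not shown.

```python
import numpy as np

EPSILON = 1e-10  # small constant from the text, prevents division by zero


def fit_normalizer(train_images):
    """Return the pixel-level mean and standard deviation of the training set.

    Assumption: both statistics are scalars computed over all pixels.
    """
    return float(train_images.mean()), float(train_images.std())


def normalize(images, mean, std):
    """Apply Z = (X - mean) / (std + eps) with training-set statistics."""
    return (images - mean) / (std + EPSILON)


# Toy data standing in for grayscale CT slices (assumed shape: N x H x W).
rng = np.random.default_rng(0)
train = rng.uniform(0.0, 255.0, size=(8, 224, 224))

mu, sigma = fit_normalizer(train)
train_norm = normalize(train, mu, sigma)

# A constant image has zero variance; epsilon keeps the division finite.
flat_norm = normalize(np.ones((2, 4, 4)), 1.0, 0.0)
```

The same `mu` and `sigma` would be reused for validation and test images so that no statistics leak from held-out data.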

3.2.3. Data Augmentation

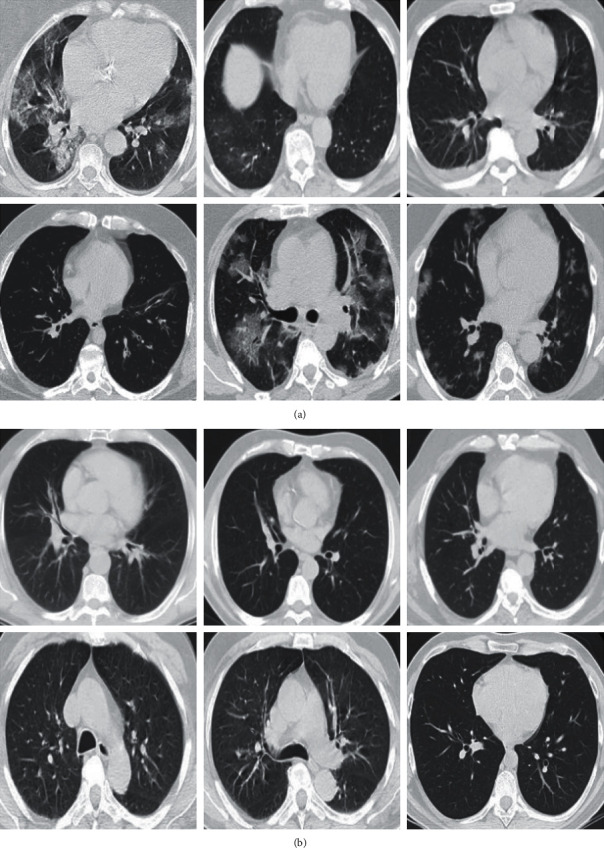

Data augmentation means increasing the number of training samples by transforming images without losing semantic information. We use five transformations that are randomly applied to samples of the training set. These transformations are selected so that they do not lead to different interpretations by radiologists. The details of these transformations are summarized in Table 1. Figure 2 shows some images after the preprocessing is applied.

Table 1.

Transformations.

| Augmentation | Range/type |
|---|---|
| Brightness | [−10%, +10%] |
| Contrast | [−10%, +10%] |
| Rotation | [−20°, +20°] |
| JPEG noise | [50, 100] |
| Flip | Horizontal |

Figure 2.

Preprocessed samples. (a) COVID-19 and (b) non-COVID-19.
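As a rough illustration of the augmentation step, the sketch below applies three of the five transformations (brightness, contrast, and horizontal flip) with NumPy. It is not the authors' pipeline: the function name is hypothetical, the ±10% ranges are interpreted as multiplicative factors in [0.9, 1.1], the flip probability of 0.5 is an assumption, and rotation and JPEG noise are omitted because they need an image library.

```python
import numpy as np


def augment(image, rng):
    """Randomly apply brightness, contrast, and horizontal-flip transforms.

    Assumes a 2-D grayscale image with intensities in [0, 1].
    """
    out = image.astype(np.float64)

    # Brightness: scale intensities by a factor in [0.9, 1.1].
    out = out * rng.uniform(0.9, 1.1)

    # Contrast: scale deviations from the mean by a factor in [0.9, 1.1].
    mean = out.mean()
    out = (out - mean) * rng.uniform(0.9, 1.1) + mean

    # Horizontal flip with probability 0.5 (assumed value).
    if rng.random() < 0.5:
        out = out[:, ::-1]

    # Keep intensities in the valid range after the random changes.
    return np.clip(out, 0.0, 1.0)


rng = np.random.default_rng(42)
sample = rng.uniform(0.0, 1.0, size=(224, 224))
augmented = augment(sample, rng)
```

All three operations preserve the image size and the anatomical content, which matches the requirement that augmentation must not change how a radiologist would read the image.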

3.3. ADA-COVID Framework

As shown in Figure 1, the ADA-COVID framework consists of three main modules: domain-invariant feature extractor, classifier, and discriminator. The following paragraphs describe these modules in detail. Also, the training procedure is provided in this section.

3.3.1. Domain-Invariant Feature Extractor

This module is applied for extracting image features. Common CNN models such as VGG16 and ResNet are often used to extract features from images. These models require many training data to generate better representations. However, in the COVID-19 detection task, the size of the available datasets for network training is very small. Using transfer learning techniques is a practical solution to overcome this limitation. Transfer learning is a well-known representation learning technique in which the models trained on large image datasets (such as ImageNet [61]) are used to initialize the models for tasks for which the dataset is small. There are two general approaches to use transfer learning from pretrained models: feature extraction and fine-tuning [62]. In the first approach, only the weights of some newly added layers (classification layers) are optimized during training, while in the second approach, all the weights (or part of the weights) are optimized for the new task. In the proposed framework, we use the fine-tuning approach.

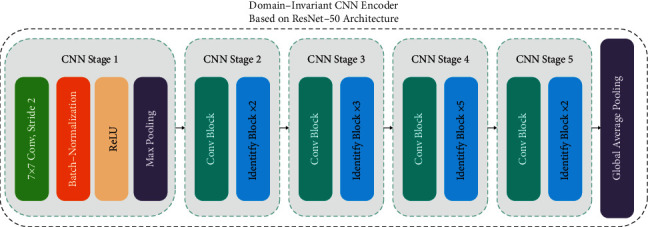

In the proposed framework, we use the pretrained ResNet50 [44] convolutional model trained on the ImageNet dataset. The ResNet50 has been selected after testing the most common pretrained CNN models. This architecture is smaller than other ResNet-based models (such as ResNet101 [44] and ResNet152 [44]) and has fewer parameters. Therefore, network training time is less than other models. An overview of the ResNet50 model is shown in Figure 3.

Figure 3.

Domain-invariant CNN encoder based on ResNet-50 architecture.

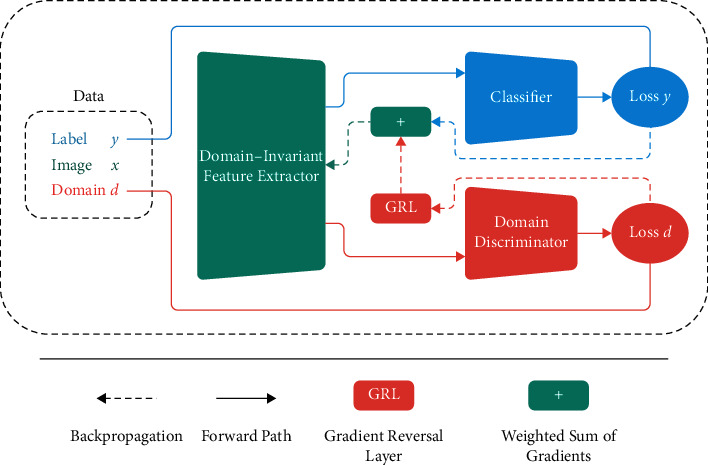

3.3.2. Discriminator and Classifier

As described in Section 3.1, different input domains (source and target datasets) are used in the COVID-19 detection process. The discriminator module is responsible for differentiating source data from target data, and the classifier module is responsible for classifying data into two groups: COVID-19 and non-COVID-19. Figure 4 gives a graphical overview of the model using the adversarial training approach in a multisource transfer learning setting. The classifier and discriminator use the features extracted by the feature extractor module at the same time to predict the class label and the domain from which the data came. The output predictor (classifier) and the domain classifier (discriminator) are trained classically by backpropagating their respective losses. When the gradient of the domain classifier's loss reaches the feature extractor module, the gradient reversal layer reverses it (multiplies it by −1). As a result, while the feature extractor learns a feature representation that is beneficial for output prediction, it also learns a representation that does not discriminate between the domains from which the data come, promoting a more generalized one. The goal of learning representations using this joint architecture is to (1) generate representations that are indistinguishable from each other; (2) increase the model's generalizability; (3) learn representations that are based on essential features that are independent of the specific domain and dataset; and (4) distinguish COVID-19 cases from non-COVID-19 cases with high accuracy.

Figure 4.

General representation of a deep model trained with the adversarial training methodology in the multisource transfer learning setting.
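The behavior of a gradient reversal layer can be illustrated without a deep learning framework. The sketch below is a conceptual NumPy example, not the code used in ADA-COVID: the class name, the `scale` parameter, and the numeric values are made up for illustration. The layer is an identity in the forward pass and flips the sign of the gradient in the backward pass.

```python
import numpy as np


class GradientReversal:
    """Identity in the forward pass; negates gradients in the backward pass."""

    def __init__(self, scale=1.0):
        # Hypothetical scaling factor; 1.0 gives a plain sign flip.
        self.scale = scale

    def forward(self, features):
        # Features pass through unchanged on the way to the discriminator.
        return features

    def backward(self, grad_output):
        # The discriminator's gradient is reversed before it reaches
        # the feature extractor.
        return -self.scale * grad_output


grl = GradientReversal()
features = np.array([[0.5, -1.0, 2.0]])

# Gradient of the domain loss w.r.t. the discriminator input (made-up values).
grad_from_discriminator = np.array([[0.1, -0.2, 0.3]])

passed_forward = grl.forward(features)
grad_to_extractor = grl.backward(grad_from_discriminator)
```

Because the sign is flipped only on the path into the feature extractor, the discriminator still learns to separate the domains while the extractor is pushed toward features that make this separation harder.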

The architecture of the discriminator and classifier modules is almost the same. In the discriminator module, we pass the extracted features into two consecutive blocks consisting of dense, batch normalization, ReLU, and dropout layers. On top of the discriminator module, we use the sigmoid function. The output of this function indicates the probability of assigning each sample to the source or target class. In the classifier module, in addition to two consecutive blocks consisting of dense, batch normalization, ReLU, and dropout layers, an embedding layer is added on top of the classifier module. This embedding layer is dense and has 64 neurons.

After training the network and learning the appropriate embedding, in the test phase, a dense layer with two neurons and a softmax activation function is added on top of the classifier module so that the network can be used independently as a classifier. Also, the discriminator module is no longer needed in the test phase, so this module is removed in the final application.

3.3.3. Loss Function

Inspired by [63], we use two losses simultaneously in the network training process to increase the generalizability and transferability of the model. (2) represents the loss of the proposed method. This loss is a combination of a classifier loss (ℒy) and a discriminator loss (ℒd). λd and λy are the coefficients given to the discriminator and classifier losses, respectively. These coefficients are used to find the optimal balance between variance and bias for better generalizability of the model.

$$\mathcal{L} = \lambda_y \mathcal{L}_y + \lambda_d \mathcal{L}_d \tag{2}$$

We use the triplet loss function to calculate the classifier output loss (ℒy) and the crossentropy loss function to calculate the discriminator domain loss (ℒd).

Triplet loss was first introduced in FaceNet [64]. The idea is that pairs of samples in a class should have similar representations, and pairs of samples in different classes should have different representations in the embedded space. In triplet loss, a positive sample and a negative sample are selected for each sample (anchor). The positive sample is selected from the same class of the anchor sample, and the negative sample is selected from the opposite class of the anchor sample. Positive and negative samples are selected in each batch, and the loss function is calculated by (3).

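$$\mathcal{L}(A, P, N) = \max\left(\lVert f_\theta(A) - f_\theta(P) \rVert^2 - \lVert f_\theta(A) - f_\theta(N) \rVert^2 + \alpha,\ 0\right) \tag{3}$$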

where, in (3), the function fθ represents the data in embedded space and θ are parameters that must be learned. Thus, fθ(A), fθ(P), and fθ(N) represent embedded representations for the anchor, positive, and negative samples, respectively. ‖‖2 represents the Euclidean distance, and α is a margin used to ensure that the model does not make the embeddings fθ(A), fθ(P), and fθ(N) equal to each other to trivially satisfy the equation.
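The triplet loss can be sketched for a batch of embeddings as follows. This is a minimal illustration under stated assumptions: it uses squared Euclidean distances as in FaceNet, averages the loss over the batch, and uses a margin of 0.2, none of which are values confirmed by the text. The function name and the toy embeddings are hypothetical.

```python
import numpy as np


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Mean triplet loss over a batch of embeddings (one row per sample).

    Assumptions: squared Euclidean distance and margin = 0.2.
    """
    pos_dist = np.sum((anchor - positive) ** 2, axis=1)
    neg_dist = np.sum((anchor - negative) ** 2, axis=1)
    # The loss is zero once the negative is farther than the positive
    # by at least the margin.
    return float(np.mean(np.maximum(pos_dist - neg_dist + margin, 0.0)))


anchor = np.array([[0.0, 0.0]])
positive = np.array([[1.0, 0.0]])

# Easy triplet: the negative is far from the anchor, so the loss vanishes.
easy_loss = triplet_loss(anchor, positive, np.array([[3.0, 0.0]]))

# Hard triplet: the negative is closer to the anchor than the positive.
hard_loss = triplet_loss(anchor, positive, np.array([[0.5, 0.0]]))
```

Only triplets that violate the margin contribute to the gradient, which is why the choice of positives and negatives within each batch matters in practice.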

Due to the many inherent and structural similarities in medical images, this loss function can help to better differentiate data from two different classes in our task. The strong results obtained with this function in the present application support this hypothesis.

We use the crossentropy loss function to calculate the discriminator domain loss (ℒd). The crossentropy loss function is calculated by (4). In this equation, y represents the actual class, and ŷ represents the model output prediction.

$$\mathcal{L}_d = -\left(y \log \hat{y} + (1 - y) \log (1 - \hat{y})\right) \tag{4}$$
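The discriminator loss and the weighted combination of the two losses can be sketched as follows. This is an illustrative NumPy example, not the authors' code: it assumes the binary form of crossentropy (the discriminator ends in a sigmoid), clips predictions for numerical stability, and uses λy = 4 and λd = 1 as reported in the experimental settings. The function names are hypothetical.

```python
import numpy as np


def binary_cross_entropy(y_true, y_pred, eps=1e-7):
    """Mean binary crossentropy between domain labels and sigmoid outputs."""
    y_true = np.asarray(y_true, dtype=np.float64)
    # Clip predictions so that log(0) never occurs (eps is an assumption).
    y_pred = np.clip(np.asarray(y_pred, dtype=np.float64), eps, 1.0 - eps)
    losses = y_true * np.log(y_pred) + (1.0 - y_true) * np.log(1.0 - y_pred)
    return float(-np.mean(losses))


def total_loss(classifier_loss, domain_loss, lambda_y=4.0, lambda_d=1.0):
    """Weighted sum of the classifier loss and the discriminator loss."""
    return lambda_y * classifier_loss + lambda_d * domain_loss


# Domain labels: 0 = source, 1 = target (the label assignment is an assumption).
domain_labels = [0, 1]

# A discriminator that cannot tell the domains apart outputs 0.5 everywhere.
confused_loss = binary_cross_entropy(domain_labels, [0.5, 0.5])

# A confident, correct discriminator gets a much smaller loss.
confident_loss = binary_cross_entropy(domain_labels, [0.01, 0.99])

combined = total_loss(classifier_loss=0.5, domain_loss=0.25)
```

A discriminator loss close to log 2 means the domains are indistinguishable in the embedded space, which is the state the adversarial training aims for.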

4. Experiments

In this section, the performance of the ADA-COVID model is evaluated. The results are compared with the following groups of methods:

Methods that have developed customized networks, such as COVID CT-Net [30], contrastive learning [35], Amyar et al. [13], Javaheri [36], xDNN [17], Wang et al. [65], Dadario et al. [66], Wu et al. [67], Liu [68], Singh et al. [69], He et al. [11], Zheng et al. [70], and Song et al. [50]

Methods that use pretrained networks and transfer learning to detect COVID-19, such as DenseNet201-based [48], modified VGG19 [52], ResNet-101-based [71], DenseNet-169-based [11], decision function [72], Chen et al. [29], Cifci [73], Jin et al. [74], Yousefzadeh et al. [75], DenseNet-169-based [76], and Wang et al. [77]

Methods that use handcrafted feature extraction approaches and conventional classifiers, such as Hasan et al. [58], Farid et al. [78], Xu et al. [79], Elghamrawy [80], and DenseNet-121 + SVM [4]

The batch size and the maximum number of iterations are common to the implemented methods and are set to 32 and 2 × 10⁴, respectively. The learning rate in the ADA-COVID framework is set to 10⁻², and the Adam optimizer is used. The loss coefficients of the proposed algorithm are set to λy = 4 and λd = 1. Due to the small number of images, overfitting may occur. To mitigate this problem, dropout is used along with the data augmentation technique, with the dropout rate set to 0.5. Also, k-fold crossvalidation with k = 5 is used in the ADA-COVID framework. The experiments are conducted using the Keras framework on a computer with an Intel (R) Core (TM) i7-7700K CPU, 16 GB RAM, and an Nvidia GTX 1080 GPU.
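The reported settings and the 5-fold crossvalidation scheme can be summarized in a short sketch. The values in the dictionary are taken from the text, but the key names, the helper function, the random seed, and the sample count of 100 are illustrative assumptions; the text does not say whether the folds are shuffled or stratified.

```python
import numpy as np

# Hyperparameters reported in the text (key names are hypothetical).
HYPERPARAMS = {
    "batch_size": 32,
    "max_iterations": 20_000,
    "learning_rate": 1e-2,
    "optimizer": "adam",
    "dropout_rate": 0.5,
    "lambda_y": 4.0,
    "lambda_d": 1.0,
    "k_folds": 5,
}


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_idx, val_idx) pairs for shuffled k-fold crossvalidation."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_samples), k)
    for i in range(k):
        val_idx = folds[i]
        # Every fold except the i-th one is used for training.
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, val_idx


splits = list(k_fold_indices(n_samples=100, k=HYPERPARAMS["k_folds"]))
```

Each sample appears in exactly one validation fold, so averaging the metrics over the five folds uses every image once for validation.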

To maximize the reliability of the proposed model, several slices of a patient's CT scan are given to the network, and the average results are reported. In contrast, most of the compared methods reported the best results among different slices of a patient's CT scan.

4.1. Dataset

To train and evaluate the model's performance and compare it with other methods, we use the SARS-CoV-2 CT scan dataset [17] as the source dataset and the COVID19-CT dataset [11] as the target dataset. The details of datasets are summarized in Table 2. 80% of the dataset is selected as a training set, and 20% of the dataset is selected as a test set.

Table 2.

Characteristics of the utilized datasets.

| Datasets | No. of samples | No. of COVID-19 samples | No. of non-COVID-19 samples | Image size (pixels) |
|---|---|---|---|---|
| SARS-CoV-2 CT scan (source dataset) | 2482 | 1252 | 1230 | 119 × 104 to 416 × 512 |
| COVID-19 CT (target dataset) | 746 | 349 | 397 | 124 × 153 to 1485 × 1853 |

4.2. Performance Metrics

The following five metrics are used to measure the performance in the experiments.

Accuracy is the number of correct predictions divided by the total number of samples [81]. This metric is calculated as follows.

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{5}$$

Precision is the ratio of correct positive predictions to the number of positive results predicted. This metric is calculated as follows.

$$\text{Precision} = \frac{TP}{TP + FP} \tag{6}$$

Recall is the ratio of the number of correct positive predictions to the number of all relevant samples. This metric is calculated as follows.

$$\text{Recall} = \frac{TP}{TP + FN} \tag{7}$$

F1 score is the harmonic mean of precision and recall [82, 83]. This metric is calculated as follows.

$$F_1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{8}$$

Specificity rate corresponds to the proportion of negative samples that are correctly considered negative with respect to all negative samples. This metric is calculated by (9).

$$\text{Specificity} = \frac{TN}{TN + FP} \tag{9}$$

In equations (5) to (9), TP, FP, TN, and FN represent true positive, false positive, true negative, and false negative, respectively.

In our experiments, these metrics are expressed as a percentage. A high percentage indicates a better performance.
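The five metrics can be computed directly from the entries of a confusion matrix, as in the sketch below. The function name and the example counts are hypothetical and are not results from the paper; the sketch also assumes that no denominator is zero.

```python
def classification_metrics(tp, fp, tn, fn):
    """Return accuracy, precision, recall, F1, and specificity as percentages.

    Assumes every denominator is nonzero (no handling of empty classes).
    """
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    specificity = tn / (tn + fp)
    return {
        "accuracy": 100 * accuracy,
        "precision": 100 * precision,
        "recall": 100 * recall,
        "f1": 100 * f1,
        "specificity": 100 * specificity,
    }


# Made-up confusion-matrix counts, for illustration only.
metrics = classification_metrics(tp=90, fp=10, tn=80, fn=20)
```

Here COVID-19 is treated as the positive class, so recall measures how many infected cases are found and specificity measures how many non-COVID-19 cases are correctly ruled out.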

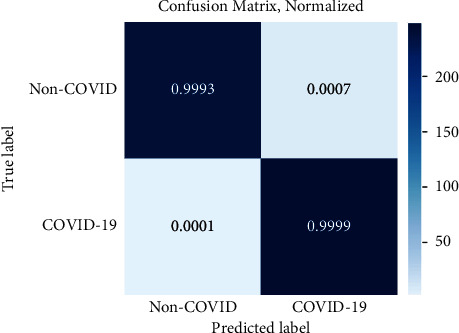

4.3. Experiment 1: Evaluation on the Source Dataset

This section compares the performance of the proposed approach with state-of-the-art methods on the source dataset; the results are shown in Table 3. The results of other methods have been quoted directly from the relevant publications. For the proposed method, the confusion matrix of the evaluation on the test set of the source dataset is illustrated in Figure 5. Table 3 and Figure 5 show that ADA-COVID performs better than the other methods and achieves the best result for every evaluation metric. The average accuracy, precision, recall, and F1 metrics of ADA-COVID are 99.9%, 99.9%, 99.8%, and 99.9%, respectively. The recall of 99.8% indicates that, on average, only one COVID-19 image is incorrectly predicted as non-COVID-19. Likewise, the proposed model correctly identifies all but one non-COVID-19 case, giving a single false positive. After ADA-COVID, the EfficientNetB0, xDNN, DenseNet201-based, and ShuffleNet methods perform relatively well. With the EfficientNetB0 architecture, on average, two COVID-19 images are incorrectly predicted as non-COVID-19.

Table 3.

Performance comparison of different models for detecting COVID-19 on the source dataset (the best rates are bold-faced)

| Model/method | Accuracy (%) | Precision (%) | Recall (%) | F1 (%) |
|---|---|---|---|---|
| AdaBoost | 95.1 | 93.6 | 96.7 | 95.1 |
| AlexNet | 93.7 | 94.9 | 92.2 | 93.6 |
| Decision tree | 79.4 | 76.8 | 83.1 | 79.8 |
| EfficientNetB0 | 98.9 | 99.1 | 98.9 | 99.0 |
| GoogleNet | 91.7 | 90.2 | 93.5 | 91.8 |
| ResNet50 | 94.9 | 93.0 | 97.1 | 95.0 |
| ResNet50V2 | 94.2 | 92.8 | 96.7 | 94.1 |
| ShuffleNet | 97.5 | 96.1 | 99.0 | 97.5 |
| SqueezeNet | 95.1 | 94.2 | 96.2 | 95.2 |
| VGG-16 | 94.9 | 94.0 | 95.4 | 94.9 |
| Xception | 98.8 | 99.0 | 98.6 | 98.8 |
| Contrastive learning [35] | 90.8 | 95.7 | 85.8 | 90.8 |
| COVID CT-Net [30] | 90.7 | 88.5 | 85.0 | 90.0 |
| DenseNet201-based [48] | 96.2 | 96.2 | 96.2 | 96.2 |
| Modified VGG19 [52] | 95.0 | 95.3 | 94.0 | 94.3 |
| xDNN [17] | 97.3 | 99.1 | 95.5 | 97.3 |
| ADA-COVID | **99.9** | **99.9** | **99.8** | **99.9** |

Figure 5.

Confusion matrix of evaluation on the test set of the source dataset.

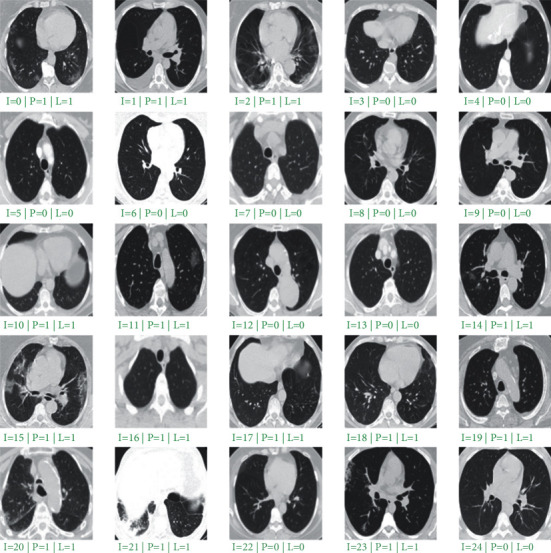

The visual evaluation results of ADA-COVID on 25 random samples from the test dataset are shown in Figure 6. Due to the model's high precision, there was no unsuccessful sample prediction in random results to examine the model's possible weaknesses.

Figure 6.

Performance evaluation on 25 random samples from the test set. “I” is the image index, “P” is the predicted value, and “L” is the ground truth label.

4.4. Experiment 2: Evaluation on the Target Dataset

In this section, we evaluate the performance of the proposed approach on the target dataset once without training and once with training. In the first case, the model is trained on the source dataset and evaluated on the target dataset. In the second case, the proposed model is trained and evaluated independently on the target dataset. In other words, the second dataset input part and discriminator module are not considered in the second case, and the network is trained and evaluated on the target dataset. The performance of the different methods and models is shown in Table 4. The results of other methods have been quoted directly from the relevant publications. The compared methods are trained and evaluated on the target dataset.

Table 4.

Performance comparison of different models for detecting COVID-19 on the target dataset.

| Model/Method | Accuracy (%) | Recall (%) | Specificity (%) | F1 (%) |
|---|---|---|---|---|
| AlexNet | 74.5 | 70.4 | 79.0 | 75.0 |
| DenseNet-121 | 88.9 | 88.8 | 88.9 | 88.2 |
| DenseNet-169 | 91.2 | 93.3 | 88.9 | 90.8 |
| DenseNet-201 | 91.7 | 88.6 | 94.1 | 91.9 |
| GoogleNet | 78.9 | 75.9 | 82.3 | 79.0 |
| Inception-ResNet-v2 | 86.3 | 88.1 | 84.2 | 87.0 |
| Inception-v3 | 89.4 | 90.0 | 88.9 | 88.8 |
| MobileNet-v2 | 87.2 | 93.2 | 77.6 | 89.0 |
| NasNet-large | 85.2 | 79.3 | 91.9 | 84.0 |
| NasNet-Mobile | 83.4 | 84.8 | 81.9 | 85.0 |
| ResNet-101 | 89.7 | 82.2 | 89.2 | 89.0 |
| ResNet-18 | 90.1 | 89.4 | 90.9 | 91.0 |
| ResNet-50 | 90.8 | 90.0 | 91.0 | 90.1 |
| ResNeXt-101 | 90.9 | 93.1 | 88.9 | 90.6 |
| ResNeXt-50 | 90.6 | 93.4 | 88.2 | 90.3 |
| ShuffleNet | 86.1 | 83.5 | 89.0 | 86.0 |
| SqueezeNet | 78.5 | 86.5 | 63.8 | 82.0 |
| VGG-16 | 78.5 | 74.6 | 82.8 | 76.0 |
| VGG-19 | 83.2 | 90.7 | 74.7 | 85.0 |
| Xception | 85.6 | 88.3 | 80.6 | 87.7 |
| Contrastive learning [35] | 78.6 | 78.0 | 77.0 | 78.8 |
| Decision function [72] | 88.3 | 87.0 | 87.9 | 86.7 |
| DenseNet-121 + SVM [4] | 85.9 | 84.9 | 86.8 | 86.2 |
| DenseNet-169-based [11] | 83.0 | 84.8 | 85.5 | 81.0 |
| DenseNet-169-based [76] | 87.7 | 85.6 | 86.9 | 87.8 |
| ResNet-101-based [71] | 80.3 | 85.7 | 86.0 | 81.8 |
| ADA-COVID (without training) | 92.5 | 93.5 | 94.2 | 93.0 |
| ADA-COVID (with training) | 95.8 | 94.9 | 96.0 | 95.2 |

As shown in Table 4, ADA-COVID with training on the target dataset performs best, with average accuracy, recall, specificity, and F1 of 95.8%, 94.9%, 96.0%, and 95.2%, respectively. ADA-COVID without training is second best, with average accuracy, recall, specificity, and F1 of 92.5%, 93.5%, 94.2%, and 93.0%, respectively. In the without-training mode, the average recall of 93.5% corresponds to, on average, eight COVID-19 images incorrectly predicted as non-COVID-19, and the average specificity of 94.2% corresponds to only seven false-positive samples among the non-COVID-19 cases. In the with-training mode, the average recall of 94.9% corresponds to, on average, six COVID-19 images incorrectly predicted as non-COVID-19, and the average specificity of 96.0% corresponds to only five false-positive samples.
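The link between the reported rates and the error counts can be made concrete with a small sketch. The confusion-matrix counts used here are hypothetical: they are chosen only so that recall and specificity round to the 93.5% and 94.2% quoted for the without-training mode, with eight false negatives and seven false positives. They are not the paper's actual test-set counts, so the other metrics they produce need not match Table 4.

```python
def metrics_from_confusion(tp, fn, tn, fp):
    """Return standard binary-classification metrics as fractions in [0, 1]."""
    recall = tp / (tp + fn)        # share of COVID-19 images correctly detected
    specificity = tn / (tn + fp)   # share of non-COVID-19 images correctly rejected
    precision = tp / (tp + fp)     # share of positive predictions that are correct
    accuracy = (tp + tn) / (tp + fn + tn + fp)
    f1 = 2 * precision * recall / (precision + recall)
    return {
        "accuracy": accuracy,
        "recall": recall,
        "specificity": specificity,
        "precision": precision,
        "f1": f1,
    }


# Hypothetical counts (an assumption for illustration, not taken from the paper).
counts = {"tp": 115, "fn": 8, "tn": 113, "fp": 7}
m = metrics_from_confusion(**counts)

for name, value in m.items():
    print(f"{name}: {100 * value:.1f}%")
```

Read in the other direction, a recall of r on P positive images implies roughly (1 - r) * P false negatives, which is how a rate can be translated into a count of missed COVID-19 cases once the test-set size is known.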

In ADA-COVID without training mode, although the proposed approach is not trained on the target dataset, it has better results than other compared methods. This indicates that the proposed method has significantly increased generalizability, independent of the source dataset.

After ADA-COVID with and without training modes, the ResNet-50, ResNeXt-101, and ResNeXt-50 architectures have relatively good performance. In these architectures, the average recall of 92.16% indicates that, on average, more than 12 COVID-19 images are incorrectly predicted as non-COVID-19. Also, the average specificity of 90.2% indicates that all non-COVID-19 cases are detected with 15 false-positive samples. Among the reported results, AlexNet has the worst performance.

4.5. Experiment 3: Crossdataset Evaluation

To investigate the effect of domain adaptation on the final results, we evaluate the proposed approach once with and once without domain adaptation. We also train the model once on the source dataset and evaluate it on the target dataset, and once on the target dataset and evaluate it on the source dataset. The method proposed by Silva et al. [22] serves as the baseline. As shown in Table 5, the proposed method performs better when trained on the source dataset and evaluated on the target dataset than in the reverse case. The reason is that the target dataset is much smaller than the source dataset, and its data come from different sources, with different contrasts and visual features; it is therefore not well suited to model training. Compared with the baseline [22], the proposed method improves the results by an average of 30%.

Table 5.

Crossdataset evaluation results.

| Method | Training dataset | Test dataset | Accuracy (%) | Recall (%) | Precision (%) |
|---|---|---|---|---|---|
| Baseline | Source | Target (train set) | 59.12 | 64.14 | 54.95 |
| Baseline | Source | Target (test set) | 56.16 | 53.06 | 54.74 |
| Baseline | Source | Target (all data) | 58.31 | 61.03 | 54.90 |
| Baseline | Target | Source | 45.25 | 54.39 | 46.36 |
| ADA-COVID (without domain adaptation) | Source | Target (train set) | 64.28 | 65.10 | 64.12 |
| ADA-COVID (without domain adaptation) | Source | Target (test set) | 62.05 | 63.30 | 62.00 |
| ADA-COVID (without domain adaptation) | Source | Target (all data) | 65.37 | 66.80 | 65.22 |
| ADA-COVID (without domain adaptation) | Target | Source | 57.00 | 59.41 | 56.88 |
| ADA-COVID (with domain adaptation) | Source | Target (train set) | 91.04 | 92.70 | 92.11 |
| ADA-COVID (with domain adaptation) | Source | Target (test set) | 90.88 | 91.00 | 91.90 |
| ADA-COVID (with domain adaptation) | Source | Target (all data) | 92.49 | 93.53 | 92.47 |
| ADA-COVID (with domain adaptation) | Target | Source | 83.07 | 87.51 | 84.26 |

As shown in Table 5, the proposed approach performs better with domain adaptation than without it: the results improve by an average of 44.10%. We therefore conclude that domain adaptation yields better representations and, as a result, better diagnoses, particularly for unseen samples.
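The text does not spell out how the average improvement is computed. One plausible reading, offered here as an assumption, is the mean relative gain of the domain-adaptation rows over the corresponding rows without domain adaptation, taken across all four train/test settings and all three metrics of Table 5. The sketch below computes that quantity from the table values; under this reading the result is about 44%, close to the reported figure.

```python
from statistics import mean

# (accuracy, recall, precision) in percent, copied from Table 5.
# Keys are (training dataset, test dataset).
without_da = {
    ("Source", "Target (train set)"): (64.28, 65.10, 64.12),
    ("Source", "Target (test set)"): (62.05, 63.30, 62.00),
    ("Source", "Target (all data)"): (65.37, 66.80, 65.22),
    ("Target", "Source"): (57.00, 59.41, 56.88),
}
with_da = {
    ("Source", "Target (train set)"): (91.04, 92.70, 92.11),
    ("Source", "Target (test set)"): (90.88, 91.00, 91.90),
    ("Source", "Target (all data)"): (92.49, 93.53, 92.47),
    ("Target", "Source"): (83.07, 87.51, 84.26),
}


def relative_gain(new, old):
    """Relative improvement of `new` over `old`, in percent."""
    return 100.0 * (new - old) / old


# One gain per (setting, metric) pair: 4 settings x 3 metrics = 12 values.
gains = [
    relative_gain(new, old)
    for setting in without_da
    for new, old in zip(with_da[setting], without_da[setting])
]
mean_gain = mean(gains)
print(f"mean relative gain over {len(gains)} values: {mean_gain:.2f}%")
```

Averaging absolute differences in percentage points instead would give a smaller number, so the choice of relative versus absolute gain matters when comparing such summary figures.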

4.6. Experiment 4: ADA-COVID vs. Pretrained Models

We compare the proposed method with methods whose models are pretrained on the ImageNet dataset. As shown in Table 6, the proposed algorithm outperforms other successful methods in this field. Notably, the proposed method is trained on the small SARS-CoV-2 CT scan dataset, whereas the other methods are often trained on large datasets. Therefore, apart from the qualitative contributions and the proposed innovations that offer a low-cost and practical solution to the shortcut learning problem [26], the proposed method achieves significant improvements using only a small set of training samples, without suffering from overfitting.

Table 6.

ADA-COVID vs. pretrained models.

| Reference | Data sources | No. of samples | Model | Performance |
|---|---|---|---|---|
| Ardakani et al. [84] | Real-time data from the hospital environment. | Total: 1,020; COVID-19: 510; Non-COVID-19: 510 | AlexNet, VGG-16, VGG-19,… | Accuracy: 99.51; Recall: 100; Specificity: 99.02 |
| Chen et al. [29] | Renmin Hospital of Wuhan University. | Total: 35,355 | UNet++ | Accuracy: 98.85; Recall: 94.34; Specificity: 99.16 |
| Cifci [73] | Kaggle benchmark dataset [85] | Total: 5,800 | AlexNet, Inception-V4 | Accuracy: 94.74; Recall: 87.37; Specificity: 87.45 |
| Javaheri et al. [36] | Five medical centers in Iran, SPIE-AAPM-NCI [86], LUNGx [87] | Total: 89,145; COVID-19: 32,230; Non-COVID-19: 56,915 | BCDU-Net (U-Net) | Accuracy: 91.66; Recall: 87.5; Specificity: 94 |
| Jin et al. [74] | Wuhan Union Hospital, LIDC-IDRI [88], ILD-HUG [89] | Total: 1,881; COVID-19: 496; Non-COVID-19: 1,385 | ResNet152 | Accuracy: 94.98; Recall: 94.06; Specificity: 95.47; F1: 92.78 |
| Jin et al. [65] | Five different hospitals of China. | Total: 1,391; COVID-19: 850; Non-COVID-19: 541 | DPN-92, Inception-v3, ResNet-50 | Recall: 97.04; Specificity: 92.2 |
| Li et al. [66] | Multiple hospitals environment. | Total: 4,536; COVID-19: 1,296; Non-COVID-19: 1,325 | ResNet50 | Recall: 90; Specificity: 96 |
| Wu et al. [67] | China Medical University, Beijing Youan Hospital. | Total: 495; COVID-19: 368; Non-COVID-19: 127 | ResNet50 | Accuracy: 76; Recall: 81.1; Specificity: 61.5 |
| Xu et al. [79] | Zhejiang University, Hospital of Wenzhou, Hospital of Wenling. | Total: 618; COVID-19: 219; Non-COVID-19: 399 | ResNet18 | Accuracy: 86.7; Recall: 81.5; F1: 81.1 |
| Yousefzadeh et al. [75] | Real-time data from the hospital environment. | Total: 2,124; COVID-19: 706; Non-COVID-19: 1,418 | DenseNet, ResNet, Xception, EfficientNetB0 | Accuracy: 96.4; Recall: 92.4; Specificity: 98.3; F1: 95.3 |
| ADA-COVID | SARS-CoV-2 CT scan dataset | Total: 2,482; COVID-19: 1,252; Non-COVID-19: 1,229 | ResNet50 | Accuracy: 99.96; Recall: 99.80; Specificity: 99.80; F1: 99.90 |

The method presented by Ardakani et al. [84] achieves a slightly higher recall than ADA-COVID (100 vs. 99.80); however, it suffers from low reliability: its performance decreases dramatically on unseen data.

4.7. Experiment 5: ADA-COVID vs. Customized Models

This section compares the proposed method with methods that have developed customized architectures specifically to detect COVID-19. In these methods, transfer learning is not used, and the network is trained from scratch.

Table 7 shows the results for ADA-COVID and other compared approaches. As shown in this table, the ADA-COVID method, for all metrics, has the best results. After ADA-COVID, Elghamrawy and Hassanien [80] have the second-best results. Moreover, Hasan et al. [58] have relatively good performance. Wang et al. [77] have the worst performance among the reported results.

Table 7.

ADA-COVID vs. customized models.

| Reference | Data sources | No. of samples | Model | Performance |
|---|---|---|---|---|
| Amyar et al. [13] | COVID CT [16], COVID-19 CT segmentation dataset [90], Henri Becquerel Center | Total: 1,044; COVID-19: 449; Non-COVID-19: 595 | Encoder-decoder with multilayer perceptron | Accuracy: 86.0; Recall: 94.0; Specificity: 79.0 |
| Elghamrawy and Hassanien [80] | COVID-19 database [91], COVID CT [16] | Total: 583; COVID-19: 432; Non-COVID-19: 151 | WOA-CNN | Accuracy: 96.40; Recall: 97.25; Precision: 97.3 |
| Farid et al. [78] | Kaggle benchmark dataset [85] | Total: 102; COVID-19: 51; Non-COVID-19: 51 | CNN | Accuracy: 94.11; Precision: 99.4; F1: 94.0 |
| Hasan et al. [58] | COVID-19 [92], SPIE-AAPM-NCI lung nodule classification challenge dataset [86] | Total: 321; COVID-19: 118; Non-COVID-19: 203 | QDE–DF | Accuracy: 99.68 |
| He et al. [11] | COVID-19 database [91], COVID-19 [92], Eurorad [93], corona cases [94] | Total: 746; COVID-19: 349; Non-COVID-19: 397 | CRNet | Accuracy: 86.0; F1: 85.0; AUC: 94.0 |
| Liu et al. [68] | Ten designated COVID-19 hospitals in China | Total: 1,993; COVID-19: 920; Non-COVID-19: 1,073 | Modified DenseNet-264 | Accuracy: 94.3; Recall: 93.1; Specificity: 95.1 |
| Singh et al. [69] | COVID-19 patient chest CT images [95] | Total: 150; COVID-19: 75; Non-COVID-19: 75 | MODE-CNN | Accuracy: 93.25; Recall: 90.70; Specificity: 90.72 |
| Wang et al. [77] | Xi'an Jiaotong University, Nanchang University, Xi'an Medical College | Total: 1,065; COVID-19: 740; Non-COVID-19: 325 | Modified inception | Accuracy: 79.3; Recall: 83.0; Specificity: 67.0 |
| Song et al. [50] | Hospital of Wuhan University, third affiliated hospital | Total: 1,990; COVID-19: 777; Non-COVID-19: 1,213 | DRE-Net | Accuracy: 94.3; Recall: 93.0; Precision: 96.0 |
| Zheng et al. [70] | Union Hospital, Tongji Medical College, Huazhong University of Science and Technology | Total: 630 | DeCoVNet | Accuracy: 90.1; Recall: 90.7; Specificity: 91.1 |
| ADA-COVID | SARS-CoV-2 CT scan dataset | Total: 2,482; COVID-19: 1,252; Non-COVID-19: 1,229 | ResNet50 | Accuracy: 99.96; Recall: 99.80; Specificity: 99.80; F1: 99.90 |

5. Conclusion

Rapid diagnosis of COVID-19 with high reliability is vital in the early stages of the infection. Using the transfer learning technique, this paper proposed an adversarial deep domain adaptation-based approach (ADA-COVID) for COVID-19 diagnosis from lung CT scan images. Previous studies suffer from shortcut learning when the model is trained on limited training data; furthermore, state-of-the-art approaches fail to generalize to new samples, achieving poor performance or behaving like random predictors. Thanks to the proposed domain adaptation between the source and unseen target samples, ADA-COVID guarantees that the generated representations do not depend on the domain of a specific dataset. In addition, since medical images have a high structural resemblance compared to other image data in machine vision tasks, we used the triplet loss function to train the proposed model and improve discrimination between positive and negative samples. Finally, the proposed approach can be easily extended to similar applications that use medical imaging, such as radiography. ADA-COVID's performance was tested and compared with many state-of-the-art approaches. The results demonstrated that ADA-COVID achieves significant classification improvements, up to 60%, compared to the best results of competitors, even without directly training on the same dataset.
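For readers unfamiliar with the triplet loss mentioned above, the following is a minimal sketch of its generic form as introduced in FaceNet [64], using squared Euclidean distances. It is not the ADA-COVID implementation: the two-dimensional embeddings and the margin of 0.2 are hypothetical values chosen only for illustration.

```python
def squared_distance(u, v):
    """Squared Euclidean distance between two equal-length embeddings."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Generic triplet loss: max(d(a, p) - d(a, n) + margin, 0).

    The loss is zero once the negative is farther from the anchor than the
    positive by at least `margin`; otherwise it penalizes the violation.
    """
    d_pos = squared_distance(anchor, positive)
    d_neg = squared_distance(anchor, negative)
    return max(d_pos - d_neg + margin, 0.0)


# Hypothetical embeddings (illustration only).
anchor = [0.0, 1.0]          # e.g., a COVID-19 scan
positive = [0.1, 0.9]        # another scan of the same class
easy_negative = [1.0, 0.0]   # other class, already far from the anchor
hard_negative = [0.2, 0.9]   # other class, but close to the anchor

easy = triplet_loss(anchor, positive, easy_negative)
hard = triplet_loss(anchor, positive, hard_negative)
print(f"easy triplet loss: {easy:.3f}")
print(f"hard triplet loss: {hard:.3f}")
```

Only the hard triplet contributes a nonzero loss, which is why this objective is a natural fit when images from different classes look structurally similar.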

Data Availability

Previously reported image data were used to support this study and are available at https://doi.org/10.1101/2020.04.24.20078584 and https://doi.org/10.1101/2020.04.13.20063941. These prior studies (and datasets) are cited at relevant places within the text as references [11, 17].

Conflicts of Interest

The authors declare that there are no conflicts of interest regarding the publication of this paper.

References

- 1. Worldometer - real time world statistics. 2021. https://www.worldometers.info/

- 2. Ai T., Yang Z., Hou H., et al. Correlation of chest CT and RT-PCR testing for coronavirus disease 2019 (COVID-19) in China: a report of 1014 cases. Radiology. 2020;296(2):E32–E40. doi: 10.1148/radiol.2020200642.

- 3. Alshazly H., Linse C., Barth E., Martinetz T. Explainable covid-19 detection using chest ct scans and deep learning. Sensors. 2021;21(2):p. 455. doi: 10.3390/s21020455.

- 4. Shamsi A., Asgharnezhad H., Jokandan S. S., et al. An uncertainty-aware transfer learning-based framework for COVID-19 diagnosis. IEEE Transactions on Neural Networks and Learning Systems. 2021;32(4):1408–1417. doi: 10.1109/TNNLS.2021.3054306.

- 5. Ghaderzadeh M., Aria M., Asadi F. X-ray equipped with artificial intelligence: changing the COVID-19 diagnostic paradigm during the pandemic. BioMed Research International. 2021;2021:16. doi: 10.1155/2021/9942873.

- 6. Long C., Xu H., Shen Q., et al. Diagnosis of the Coronavirus disease (COVID-19): rRT-PCR or CT? European Journal of Radiology. 2020;126:108961. doi: 10.1016/j.ejrad.2020.108961.

- 7. Ghaderzadeh M., Aria M. Management of covid-19 detection using artificial intelligence in 2020 pandemic. Proceedings of the 2021 5th International Conference on Medical and Health Informatics; May 2021; Kyoto, Japan. pp. 32–38.

- 8. Ng M.-Y., Lee E. Y. P., Yang J., et al. Imaging profile of the COVID-19 infection: radiologic findings and literature review. Radiology: Cardiothoracic Imaging. 2020;2(1):e200034. doi: 10.1148/ryct.2020200034.

- 9. Luz E., Silva P., Silva R., et al. Towards an effective and efficient deep learning model for COVID-19 patterns detection in X-ray images. Research on Biomedical Engineering. 2021:1–14. doi: 10.1007/s42600-021-00151-6.

- 10. Wang L., Lin Z. Q., Wong A. Covid-net: a tailored deep convolutional neural network design for detection of covid-19 cases from chest x-ray images. Scientific Reports. 2020;10(1):19549. doi: 10.1038/s41598-020-76550-z.

- 11. He X. Sample-Efficient Deep Learning for COVID-19 Diagnosis Based on CT Scans. IEEE transactions on medical imaging. 2020.

- 12. Polsinelli M., Cinque L., Placidi G. A light CNN for detecting COVID-19 from CT scans of the chest. Pattern Recognition Letters. 2020;140:95–100. doi: 10.1016/j.patrec.2020.10.001.

- 13. Amyar A., Modzelewski R., Li H., Ruan S. Multi-task deep learning based CT imaging analysis for COVID-19 pneumonia: classification and segmentation. Computers in Biology and Medicine. 2020;126:104037. doi: 10.1016/j.compbiomed.2020.104037.

- 14. Wang S., Kang B., Ma J., et al. A deep learning algorithm using CT images to screen for Corona virus disease (COVID-19). European Radiology. 2021;31(8):6096–6104. doi: 10.1007/s00330-021-07715-1.

- 15. Roh Y., Heo G., Whang S. E. A survey on data collection for machine learning: a big data-ai integration perspective. IEEE Transactions on Knowledge and Data Engineering. 2019;33.

- 16. Yang X., He X., Zhao J., Zhang Y., Zhang S., Xie P. COVID-CT-dataset: A CT Scan Dataset about COVID-19. 2020. https://paperswithcode.com/dataset/covid-ct

- 17. Angelov P., Almeida Soares E. SARS-CoV-2 CT-scan Dataset: A Large Dataset of Real Patients CT Scans for SARS-CoV-2 Identification. 2020. https://www.researchgate.net/publication/341338748_SARS-CoV-2_CT-scan_dataset_A_large_dataset_of_real_patients_CT_scans_for_SARS-CoV-2_identification

- 18. Ghaderzadeh M., Asadi F., Jafari R., Bashash D., Abolghasemi H., Aria M. Deep convolutional neural network-based computer-aided detection system for COVID-19 using multiple lung scans: design and implementation study. Journal of Medical Internet Research. 2021;23(4):e27468. doi: 10.2196/27468.

- 19. Afshar P., Heidarian S., Enshaei N., et al. COVID-CT-MD, COVID-19 computed tomography scan dataset applicable in machine learning and deep learning. Scientific Data. 2021;8(1):1–8. doi: 10.1038/s41597-021-00900-3.

- 20. Cohen J. P., Morrison P., Dao L., Roth K., Duong T., Ghassemi M. MELBA; 2020. COVID-19 Image Data Collection: Prospective Predictions Are the Future. https://www.researchgate.net/publication/342377621_COVID-19_Image_Data_Collection_Prospective_Predictions_Are_the_Future .18272

- 21. Rahimzadeh M., Attar A., Sakhaei S. M. A fully automated deep learning-based network for detecting covid-19 from a new and large lung ct scan dataset. Biomedical Signal Processing and Control. 2021;68:102588. doi: 10.1016/j.bspc.2021.102588.

- 22. Silva P., Luz E., Silva G., et al. COVID-19 detection in CT images with deep learning: a voting-based scheme and cross-datasets analysis. Informatics in Medicine Unlocked. 2020;20:100427. doi: 10.1016/j.imu.2020.100427.

- 23. Tartaglione E., Barbano C. A., Berzovini C., Calandri M., Grangetto M. Unveiling covid-19 from chest x-ray with deep learning: a hurdles race with small data. International Journal of Environmental Research and Public Health. 2020;17(18):p. 6933. doi: 10.3390/ijerph17186933.

- 24. Tabik S., Gomez-Rios A., Martin-Rodriguez J. L., et al. COVIDGR dataset and COVID-SDNet methodology for predicting COVID-19 based on Chest X-Ray images. IEEE Journal of Biomedical and Health Informatics. 2020;24(12):3595–3605. doi: 10.1109/jbhi.2020.3037127.

- 25. Afshar P., Heidarian S., Naderkhani F., Oikonomou A., Plataniotis K. N., Mohammadi A. COVID-CAPS: a capsule network-based framework for identification of COVID-19 cases from X-ray images. Pattern Recognition Letters. 2020;138:638–643. doi: 10.1016/j.patrec.2020.09.010.

- 26. Geirhos R., Jacobsen J.-H., Michaelis C., et al. Shortcut learning in deep neural networks. Nature Machine Intelligence. 2020;2(11):665–673. doi: 10.1038/s42256-020-00257-z.

- 27. Li L., Qin L., Xu Z., et al. Using artificial intelligence to detect COVID-19 and community-acquired pneumonia based on pulmonary CT: evaluation of the diagnostic accuracy. Radiology. 2020;296(2):E65–E71. doi: 10.1148/radiol.2020200905.

- 28. Xu X., Jiang X., Ma C., et al. A deep learning system to screen novel coronavirus disease 2019 pneumonia. Engineering. 2020;6(10):1122–1129. doi: 10.1016/j.eng.2020.04.010.

- 29. Chen J., Wu L., Zhang J., et al. Deep learning-based model for detecting 2019 novel coronavirus pneumonia on high-resolution computed tomography. Scientific Reports. 2020;10(1):19196–19211. doi: 10.1038/s41598-020-76282-0.

- 30. Yazdani S., Minaee S., Kafieh R., Saeedizadeh N., Sonka M. Covid Ct-Net: Predicting Covid-19 from Chest Ct Images Using Attentional Convolutional Network. 2020. https://www.researchgate.net/publication/344234635_COVID_CT-Net_Predicting_Covid-19_From_Chest_CT_Images_Using_Attentional_Convolutional_Network

- 31. Hasan M., Alam M., Elahi M., Toufick E., Roy S., Wahid S. R. CVR-net: A Deep Convolutional Neural Network for Coronavirus Recognition from Chest Radiography Images. 2020. https://www.researchgate.net/publication/343179127_CVR-Net_A_deep_convolutional_neural_network_for_coronavirus_recognition_from_chest_radiography_images

- 32. Farooq M., Hafeez A. Covid-resnet: A Deep Learning Framework for Screening of Covid19 from Radiographs. 2020. https://www.researchgate.net/publication/348137582_COVID-ResNet_A_Deep_Learning_Framework_for_Screening_of_COVID19_from_Radiographs

- 33. Khan A. I., Shah J. L., Bhat M. M. CoroNet: a deep neural network for detection and diagnosis of COVID-19 from chest x-ray images. Computer Methods and Programs in Biomedicine. 2020;196:105581. doi: 10.1016/j.cmpb.2020.105581.

- 34. Toraman S., Alakus T. B., Turkoglu I. Convolutional capsnet: a novel artificial neural network approach to detect COVID-19 disease from X-ray images using capsule networks. Chaos, Solitons & Fractals. 2020;140:110122. doi: 10.1016/j.chaos.2020.110122.

- 35. Wang Z., Liu Q., Dou Q. Contrastive cross-site learning with redesigned net for covid-19 ct classification. IEEE Journal of Biomedical and Health Informatics. 2020;24(10):2806–2813. doi: 10.1109/jbhi.2020.3023246.

- 36. Javaheri T., Homayounfar M., Amoozgar Z., et al. CovidCTNet: an open-source deep learning approach to diagnose covid-19 using small cohort of CT images. NPJ digital medicine. 2021;4(1):1–10. doi: 10.1038/s41746-021-00399-3.

- 37. Singh D., Kumar V., Vaishali, Kaur M. Classification of COVID-19 patients from chest CT images using multi-objective differential evolution-based convolutional neural networks. European Journal of Clinical Microbiology & Infectious Diseases. 2020;39(7):1379–1389. doi: 10.1007/s10096-020-03901-z.

- 38. Apostolopoulos I. D., Mpesiana T. A. Covid-19: automatic detection from X-ray images utilizing transfer learning with convolutional neural networks. Physical and Engineering Sciences in Medicine. 2020;43(2):635–640. doi: 10.1007/s13246-020-00865-4.

- 39. Simonyan K., Zisserman A. Very Deep Convolutional Networks for Large-Scale Image Recognition. 2015. https://www.semanticscholar.org/paper/Very-Deep-Convolutional-Networks-for-Large-Scale-Simonyan-Zisserman

- 40. Howard A. G., Zhu M., Chen B., et al. Mobilenets: Efficient Convolutional Neural Networks for mobile Vision Applications. 2017. https://www.researchgate.net/publication/316184205_MobileNets_Efficient_Convolutional_Neural_Networks_for_Mobile_Vision_Applications

- 41. Szegedy C., Ioffe S., Vanhoucke V., Alemi A. A. Inception-v4, inception-resnet and the impact of residual connections on learning. Proceedings of the Thirty-first AAAI Conference on Artificial Intelligence; February 2017; California, CA, USA.

- 42. Chollet F. Xception: deep learning with depthwise separable convolutions. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; July 2017; Honolulu, HI, USA. pp. 1251–1258.

- 43. Minaee S., Kafieh R., Sonka M., Yazdani S., Jamalipour Soufi G. Deep-COVID: predicting COVID-19 from chest X-ray images using deep transfer learning. Medical Image Analysis. 2020;65:101794. doi: 10.1016/j.media.2020.101794.

- 44. He K., Zhang X., Ren S., Sun J. Deep residual learning for image recognition. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; June 2016; Las Vegas, NV, USA. pp. 770–778.

- 45. Iandola F. N., Han S., Moskewicz M. W., Ashraf K., Dally W. J., Keutzer K. SqueezeNet: AlexNet-Level Accuracy with 50x Fewer Parameters and <0.5 MB Model Size. 2016. https://www.researchgate.net/publication/301878495_SqueezeNet_AlexNet-level_accuracy_with_50x_fewer_parameters_and_05MB_model_size

- 46. Huang G., Liu Z., Van Der Maaten L., Weinberger K. Q. Densely connected convolutional networks. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; July 2017; Honolulu, HI, USA. pp. 4700–4708.

- 47. Brunese L., Mercaldo F., Reginelli A., Santone A. Explainable deep learning for pulmonary disease and coronavirus COVID-19 detection from X-rays. Computer Methods and Programs in Biomedicine. 2020;196:105608. doi: 10.1016/j.cmpb.2020.105608.

- 48. Jaiswal A., Gianchandani N., Singh D., Kumar V., Kaur M. Classification of the COVID-19 infected patients using DenseNet201 based deep transfer learning. Journal of Biomolecular Structure and Dynamics. 2020;39(15):5682–5689. doi: 10.1080/07391102.2020.1788642.

- 49. Zhou M., Chen Y., Yang D., et al. Improved Deep Learning Model for Differentiating Novel Coronavirus Pneumonia and Influenza Pneumonia. 2020. https://www.medrxiv.org/content/10.1101/2020.03.24.20043117v1

- 50. Song Y., Zheng S., Li L., et al. Deep learning enables accurate diagnosis of novel coronavirus (COVID-19) with CT images. IEEE/ACM Transactions on Computational Biology and Bioinformatics. 2021;18(6):2775–2780. doi: 10.1109/tcbb.2021.3065361.

- 51. Li L., Qin L., Xu Z., et al. Artificial Intelligence Distinguishes COVID-19 from Community Acquired Pneumonia on Chest CT. Radiology. 2020. doi: 10.1148/radiol.2020200905.

- 52. Panwar H., Gupta P. K., Siddiqui M. K., Morales-Menendez R., Bhardwaj P., Singh V. A deep learning and grad-CAM based color visualization approach for fast detection of COVID-19 cases using chest X-ray and CT-Scan images. Chaos, Solitons & Fractals. 2020;140:110190. doi: 10.1016/j.chaos.2020.110190.

- 53. Sitaula C., Hossain M. B. Attention-based VGG-16 model for COVID-19 chest X-ray image classification. Applied Intelligence. 2021;51(5):2850–2863. doi: 10.1007/s10489-020-02055-x.

- 54. Sitaula C., Aryal S. New bag of deep visual words based features to classify chest x-ray images for covid-19 diagnosis. Health Information Science and Systems. 2021;9(1):1–12. doi: 10.1007/s13755-021-00152-w.

- 55. Pereira R. M., Bertolini D., Teixeira L. O., Silla C. N., Costa Y. M. G. COVID-19 identification in chest X-ray images on flat and hierarchical classification scenarios. Computer Methods and Programs in Biomedicine. 2020;194:105532. doi: 10.1016/j.cmpb.2020.105532.

- 56. Szegedy C., Vanhoucke V., Ioffe S., Shlens J., Wojna Z. Rethinking the inception architecture for computer vision. Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); June 2016; Las Vegas, NV, USA. pp. 2818–2826.

- 57. Al-Karawi D., Al-Zaidi S., Polus N., Jassim S. Machine Learning Analysis of Chest CT Scan Images as a Complementary Digital Test of Coronavirus (COVID-19) Patients. 2020.

- 58. Hasan A. M., Al-Jawad M. M., Jalab H. A., Shaiba H., Ibrahim R. W., Al-Shamasneh A. a. R. Classification of Covid-19 coronavirus, pneumonia and healthy lungs in CT scans using Q-deformed entropy and deep learning features. Entropy. 2020;22(5):p. 517. doi: 10.3390/e22050517.

- 59. Farid A. A., Selim G. I., Khater H. A. A. A Novel Approach of CT Images Feature Analysis and Prediction to Screen for corona Virus Disease (COVID-19). International Journal of Scientific and Engineering Research. 2020;11.

- 60. Ghaderzadeh M., Aria M., Hosseini A., Asadi F., Bashash D., Abolghasemi H. A fast and efficient CNN model for B‐ALL diagnosis and its subtypes classification using peripheral blood smear images. International Journal of Intelligent Systems. 2021.

- 61.Deng J., Dong W., Socher R., Li L.-J., Li K., Fei-Fei L. Imagenet: a large-scale hierarchical image database. Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition; June 2009; Miami, FL. pp. 248–255. [DOI] [Google Scholar]

- 62.Alshazly H., Linse C., Barth E., Martinetz T. Ensembles of deep learning models and transfer learning for ear recognition. Sensors . 2019;19(19):p. 4139. doi: 10.3390/s19194139. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 63.Ganin Y., Ustinova E., Ajakan H., et al. Domain-adversarial training of neural networks. Journal of Machine Learning Research . 2016;17(1):2096–2030. [Google Scholar]

- 64.Schroff F., Kalenichenko D., Philbin J. Facenet: a unified embedding for face recognition and clustering. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; June 2015; Boston, MA, USA. pp. 815–823. [DOI] [Google Scholar]

- 65.Wang B., Jin S., Yan Q., et al. AI-assisted CT imaging analysis for COVID-19 screening: building and deploying a medical AI system. Applied Soft Computing . 2021;98 doi: 10.1016/j.asoc.2020.106897.106897 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 66.Dadário A. M. V., Paiva J. P. Q., Chate R. C., Machado B. S., Szarf G. Regarding artificial intelligence distinguishes COVID-19 from community acquired pneumonia on chest CT. Radiology . 2020;296(2)201178 [Google Scholar]

- 67.Wu X., Hui H., Niu M., et al. Deep learning-based multi-view fusion model for screening 2019 novel coronavirus pneumonia: a multicentre study. European Journal of Radiology . 2020;128:p. 109041. doi: 10.1016/j.ejrad.2020.109041.109041 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 68.Liu B., Liu P., Dai L., et al. Assisting scalable diagnosis automatically via CT images in the combat against COVID-19. Scientific Reports . 2021;11(1):1–8. doi: 10.1038/s41598-021-83424-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 69.Singh D., Kumar V., Kaur M. Classification of COVID-19 Patients from Chest CT Images Using Multi-Objective Differential Evolution–Based Convolutional Neural Networks. European Journal of Clinical Microbiology & Infectious Diseases . 2020;39:1–11. doi: 10.1007/s10096-020-03901-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 70.Zheng C., Deng X., Fu Q., et al. Deep Learning-Based Detection for COVID-19 from Chest CT Using Weak Label. 2020.

- 71.Saqib M., Anwar S., Anwar A., Blumenstein M. COVID19 Detection from Radiographs: Is Deep Learning Able to Handle the Crisis? 2020.

- 72.Mishra A. K., Das S. K., Roy P., Bandyopadhyay S. Identifying COVID19 from chest CT images: a deep convolutional neural networks based approach. Journal of Healthcare Engineering. 2020;2020:7. doi: 10.1155/2020/8843664.

- 73.Cifci M. A. Deep learning model for diagnosis of corona virus disease from CT image. International Journal of Scientific and Engineering Research. 2020;11(4):273–278.

- 74.Jin C., Chen W., Cao Y., et al. Development and evaluation of an artificial intelligence system for COVID-19 diagnosis. Nature Communications. 2020;11(1):5088–5114. doi: 10.1038/s41467-020-18685-1.

- 75.Yousefzadeh M., Esfahanian P., Movahed S. M. S., et al. ai-corona: radiologist-assistant deep learning framework for COVID-19 diagnosis in chest CT scans. PLoS One. 2021;16(5):e0250952. doi: 10.1371/journal.pone.0250952.

- 76.Martinez A. R. Classification of COVID-19 in CT scans using multi-source transfer learning. 2020.

- 77.Wang S., Kang B., Ma J., et al. A Deep Learning Algorithm Using CT Images to Screen for Corona Virus Disease (COVID-19). European Radiology. 2021;31:1–9. doi: 10.1007/s00330-021-07715-1.

- 78.Farid A. A., Selim G. I., Awad H., Khater A. A novel approach of CT images feature analysis and prediction to screen for corona virus disease (COVID-19). International Journal of Scientific Engineering and Research. 2020;11(3):1–9. doi: 10.14299/ijser.2020.03.02.

- 79.Xu X., Jiang X., Ma C., et al. A Deep Learning System to Screen Novel Coronavirus Disease 2019 Pneumonia. Engineering. 2020;6. doi: 10.1016/j.eng.2020.04.010.

- 80.ELGhamrawy S. M. Diagnosis and Prediction Model for COVID19 Patients Response to Treatment Based on Convolutional Neural Networks and Whale Optimization Algorithm Using CT Images. 2020.

- 81.Hashemzadeh M., Golzari Oskouei A., Farajzadeh N. New fuzzy C-means clustering method based on feature-weight and cluster-weight learning. Applied Soft Computing. 2019;78:324–345. doi: 10.1016/j.asoc.2019.02.038.

- 82.Golzari Oskouei A., Hashemzadeh M., Asheghi B., Balafar M. A. CGFFCM: cluster-weight and Group-local Feature-weight learning in Fuzzy C-Means clustering algorithm for color image segmentation. Applied Soft Computing. 2021;113:108005. doi: 10.1016/j.asoc.2021.108005.

- 83.Golzari Oskouei A., Balafar M. A., Motamed C. FKMAWCW: categorical fuzzy k-modes clustering with automated attribute-weight and cluster-weight learning. Chaos, Solitons & Fractals. 2021;153:111494. doi: 10.1016/j.chaos.2021.111494.

- 84.Ardakani A. A., Kanafi A. R., Acharya U. R., Khadem N., Mohammadi A. Application of deep learning technique to manage COVID-19 in routine clinical practice using CT images: results of 10 convolutional neural networks. Computers in Biology and Medicine. 2020;121:103795. doi: 10.1016/j.compbiomed.2020.103795.

- 85.Jun M., Cheng G., Yixin W., et al. COVID-19 CT lung and infection segmentation dataset. Zenodo. 2020.

- 86.Armato S. G., III, Hadjiiski L., Tourassi G. D., et al. SPIE-AAPM-NCI lung nodule classification challenge dataset. Cancer Imaging Arch. 2015;10.

- 87.Armato S. G., III, Drukker K., Li F., et al. LUNGx Challenge for computerized lung nodule classification. Journal of Medical Imaging. 2016;3(4):044506. doi: 10.1117/1.JMI.3.4.044506.

- 88.Armato S. G., III, McLennan G., Bidaut L., et al. The lung image database consortium (LIDC) and image database resource initiative (IDRI): a completed reference database of lung nodules on CT scans. Medical Physics. 2011;38(2):915–931. doi: 10.1118/1.3528204.

- 89.Depeursinge A., Vargas A., Platon A., Geissbuhler A., Poletti P.-A., Müller H. Building a reference multimedia database for interstitial lung diseases. Computerized Medical Imaging and Graphics. 2012;36(3):227–238. doi: 10.1016/j.compmedimag.2011.07.003.

- 90.COVID-19 CT segmentation dataset. 2020. http://medicalsegmentation.com/covid19/

- 91.Ko H., Chung H., Kang W. S., et al. COVID-19 pneumonia diagnosis using a simple 2D deep learning framework with a single chest CT image: model development and validation. Journal of Medical Internet Research. 2020;22(6):p. e19569. doi: 10.2196/19569.

- 92.Covid-19. 2020. https://radiopaedia.org/

- 93.Eurorad. 2020. https://www.eurorad.org/

- 94.Coronacases. 2020. https://coronacases.org/

- 95.Li X., Zeng X., Liu B., Yu Y. COVID-19 infection presenting with CT halo sign. Radiology: Cardiothoracic Imaging. 2020;2(1):e200026. doi: 10.1148/ryct.2020200026.

Data Availability Statement

Previously reported image data were used to support this study and are available at https://doi.org/10.1101/2020.04.24.20078584 and https://doi.org/10.1101/2020.04.13.20063941. These prior studies (and their datasets) are cited at the relevant places in the text as references [11, 17].