Abstract

During the COVID-19 pandemic, we have been confronted with faces covered by surgical-like masks. This raises the question of how our brains process this kind of visual information. Thus, the aims of the current study were twofold: (1) to investigate the role of attention in the processing of different types of faces with masks, and (2) to test whether such partial information about faces is treated similarly to fully visible faces. Participants were tasked with the simple detection of self-, close-other's, and unknown faces with and without a mask; this task relies on attentional processes. Event-related potential (ERP) findings revealed a similar impact of surgical-like masks for all faces: the amplitudes of early (P100) and late (P300, LPP) attention-related components were higher for faces with masks than for fully visible faces. N170 amplitudes were similar for covered and fully visible faces, and the sources of brain activity were located in the fusiform gyri in both cases. Linear Discriminant Analysis (LDA) revealed that, whether the algorithm was trained to discriminate the three types of faces with masks or without masks, it was able to effectively discriminate faces from the condition that was not presented in the training phase.

Keywords: SARS-CoV-2, Mask-covered faces, EEG, Self-face, Attention

Recently, the world has encountered a unique challenge. The COVID-19 pandemic has brought many changes to our daily lives. Since March 2020, we have been instructed to take special precautions to avoid virus transmission. One of the newly introduced safety requirements was covering our mouths and noses with surgical-like protective masks. For a whole year now, in most of our public social encounters, we have been observing other people's faces through the thin veil of their masks. This raises the question of whether such a dramatic change to our public face perception has any effect on the cognitive aspects of face processing.

So far, there have been few studies on this topic. Carragher and Hancock (2020) found that surgical face masks have a significant negative effect on face matching performance in a task where participants had to decide whether two simultaneously presented faces belonged to the same person. Interestingly, this detrimental effect did not depend on whether one or both faces in each pair were masked, and it was similar in size for familiar and unfamiliar faces. Furthermore, Calbi et al. (2021) presented participants with different facial expressions (angry, happy, neutral) covered by a surgical-like mask or by a scarf. The participants were then asked to recognize the expressed emotions and to estimate the degree to which they would maintain social distancing measures for each face. The results revealed that even though the faces were covered, participants were still able to correctly decode the facial expressions of emotions. When assessing social distancing, women's choices were driven mostly by the emotional valence of the stimuli, whereas men's choices were influenced by the type of face cover.

Noyes et al. (2021) likewise explored the effects of masks and sunglasses (i.e., an occlusion that individuals tend to have more experience with) on familiar and unfamiliar face matching, as well as on emotion categorization. Compared with fully visible faces, partially visible faces were associated with reduced accuracy in all three tasks. There was little difference in performance between masked faces and faces in sunglasses. Moreover, matching accuracy was lower for the mask condition than for unconcealed faces, regardless of face familiarity. This finding was later confirmed by Estudillo et al. (2021), who reported that, compared with a full-view condition, matching performance decreased when a face mask was superimposed on (1) one face and (2) both faces in a pair. In addition, participants with better performance in the full-view condition generally showed a stronger negative impact of mask presence. Freud et al. (2020), in turn, used a modified version of the Cambridge Face Memory Test (CFMT), in which faces were presented in both masked and unmasked conditions. Their results showed that face masks led to a robust decrease in face processing abilities. Similar changes were found whether masks were included in the study phase or in the test phase of the experiment. Moreover, masked faces showed a reduced inversion effect compared with unmasked faces. This result suggests that the processing of masked faces relies less on holistic processing and more on the available features (e.g., eyes, eyebrows). The substantial decrease in performance for masked faces in a modified version of the CFMT was recently replicated by Stajduhar et al. (2021).

The studies mentioned above show that wearing surgical-like masks indeed influences our ability to process faces. Face masks disrupt configural/holistic face processing and instead promote local, feature-based processing. Importantly, similar effects were found for familiar and unfamiliar masked faces (Carragher & Hancock, 2020; Noyes et al., 2021). However, the impact of a mask on the processing of one's own face has not yet been investigated. Thus, the question arises whether covering such a highly familiar face with a surgical-like mask alters its processing in the same way as it alters the processing of other faces, familiar or not. One's own face differs from other faces not only in its extreme familiarity but also in its saliency (Apps et al., 2015; Brédart et al., 2006; Gray et al., 2004; Lavie et al., 2003; Pannese & Hirsch, 2011; Wójcik et al., 2018, 2019; Żochowska et al., 2021). The self-face, in comparison to other faces, benefits from a stronger and more robust mental representation (Bortolon & Raffard, 2018; Tong & Nakayama, 1999). Moreover, in contrast to other faces, self-face processing draws upon both configural and featural information (Keyes & Brady, 2010; Keyes et al., 2012). It is a unique piece of self-referential information that is strongly linked to physical self-identity (Estudillo, 2017; McNeill, 1998) and consistently shows a processing advantage over both unfamiliar and familiar faces (e.g., Sui et al., 2006; Tacikowski & Nowicka, 2010; Żochowska et al., 2021). One account of this self-face preference refers to attentional mechanisms: one's own face captures, holds, and biases attention in various conditions and at different levels of processing (for a review, see Humphreys & Sui, 2016; Sui & Rotshtein, 2019).

Therefore, in the current study, we were interested in whether such attention-related effects can also appear for the self-face when covered by a surgical-like mask. Attention is a multifaceted construct composed of distinct stages (Petersen & Posner, 2012). First, people reflexively orient to relevant signals/stimuli because they initially capture attention (Posner, 1980). Second, salient stimuli trigger a state of general alertness that helps to sustain attention (Sturm et al., 1999). Lastly, executive control involves shifting attention to target stimuli and executing a behavioral response (Duncan, 1980). Thus, the first aim of our study was to investigate the early and late stages of attentional mechanisms involved in the processing of one's own face and other faces (familiar, unfamiliar) when covered by surgical-like masks.

We used the event-related potential (ERP) method to achieve this goal. Analyses focused on early and late ERP components: (i) P100 (a positive ERP component with occipito-parietal distribution, occurring approximately 100 msec after visual stimulus onset), linked to early, stimulus-driven attentional processes (Luck et al., 2000; Mangun, 1995; Mangun & Hillyard, 1991); (ii) P300 (a positive ERP component with a centro-parietal distribution and a latency of about 300 msec), viewed as a neural marker of subsequent attention allocation (Asanowicz et al., 2020; Polich, 2007); (iii) the Late Positive Potential (LPP) (a positive, sustained ERP component starting around 500 msec after stimulus onset with a wide frontal-central topography), reflecting a non-specific (i.e., global) temporary increase in attention that serves to facilitate the processing of salient stimuli (Brown et al., 2012). We hypothesized that the amplitudes of attention-related ERP components (P100, P300, LPP) would be enhanced for the self-face covered by a surgical-like mask, indicating preferential capture and allocation of attention.

Besides the issue of attentional processes associated with the processing of mask-covered faces (self versus others), we attempted to address a more general question: whether partial information about faces is treated by the human brain similarly to fully visible faces. Therefore, the second aim of the current study was to examine similarities and dissimilarities between fully visible faces and mask-covered faces using the following methods: ERPs (with a focus on the N170 component), source analysis (LORETA), and linear discriminant analysis (LDA).

The N170 (a negative ERP component with parietal-occipital topography and a latency of approximately 170 msec) reflects the operation of a neural mechanism tuned not only to detect human faces but also to discriminate faces from other object categories (Bentin et al., 1996; Eimer et al., 2000; Rossion et al., 2000; Schweinberger & Neumann, 2015). It is typically regarded as a marker of the structural encoding of faces (for a review, see Eimer, 2011). Based on the functional role of this ERP component, a similar N170 response to faces with and without surgical-like masks would indicate that the upper part of a face and a fully visible face are not differentiated at a categorical level.

Moreover, we investigated whether, in the case of visual stimulation with images of faces covered by surgical-like masks, the sources of recorded brain activity were located in the fusiform gyrus. Common sources within the fusiform gyrus for both uncovered faces and the upper parts of faces would indicate that such partial information about faces is sufficient to activate a highly specialized brain region that is strongly involved in face processing in general (Haxby et al., 2000, 2001; Rossion, 2014).

LDA, in turn, served as a tool to assess: (i) whether an algorithm trained to discriminate different types of unmasked faces (based on the neural activity associated with processing such faces) could discriminate faces with surgical-like masks; and (ii) whether an algorithm trained to discriminate different types of faces with surgical-like masks could discriminate faces without masks. Both approaches seem to be ecologically valid: the first for the self-face and the close-other's face, the second for unfamiliar faces. The reasoning is as follows. Before the COVID-19 pandemic, people had long-term experience of viewing their own and their close-other's faces without any mask. Therefore, the representations of both faces are rich and highly elaborated, which enables configural processing of such robustly represented faces (Keyes, 2012). This is not the case for unfamiliar faces. Moreover, during the pandemic, because faces have to be covered with masks, only partial information about facial features is available. We were therefore curious whether an algorithm trained to discriminate full images of highly familiar faces from unfamiliar faces would be able to discriminate the different types of faces on the basis of the partial information available for processing. Faces of unknown people, in turn, are nowadays viewed with surgical-like masks, and it is sometimes necessary to recognize or identify people with masked faces. For this reason, we were also interested in whether an algorithm trained on masked faces would discriminate unmasked faces.

Previous studies explored the impact of masks on the processing of celebrity and unknown faces (Carragher & Hancock, 2020; Noyes et al., 2021). In the current study, we decided to use a close-other's face (freely chosen by each participant) instead of famous faces. The face of a close-other is frequently encountered on an everyday basis and its level of familiarity is as high as in the case of the self-face. Thus, the self-face, a close-other's face, and unfamiliar faces were presented to participants in two conditions: with and without surgical-like masks.

Participants were tasked with the simple detection of presented faces. This task is considered to be a purely attentional task as it depends mostly on attentional resources involved in the processing of incoming visual information (Bortolon & Raffard, 2018). It is worth noting that an advantage of such tasks is that the observed patterns of findings are not likely to be driven by decision making processes (there was no specific decision to be made, just a simple detection of a stimulus) or by stimulus-response (S-R) links (regardless of the observed face participants always pressed the same button).

1. Materials and methods

1.1. Participants

Thirty-two participants (16 females, 16 males) between the ages of 21 and 34 (M = 27.6; SD = 3.1) took part in the study. Twenty-nine participants were right-handed and 3 were left-handed, as verified with the Edinburgh Handedness Inventory (Oldfield, 1971). Only participants with normal vision or vision corrected to normal with contact lenses, and with no distinctive facial marks, were recruited. This restriction was introduced to ensure uniform visual stimulus standards, as the photograph of each participant was matched with photographs from the Chicago Face Database (CFD; Ma et al., 2015), whose images present faces without glasses and without any visible marks. All participants reported no history of mental or neurological diseases.

The required sample size was estimated using the MorePower software (Campbell & Thompson, 2002). The estimation was conducted for the main factor of ‘stimuli’ (faces with a surgical-like mask, faces without a surgical-like mask) in a two-way repeated-measures ANOVA with the factors ‘stimuli’ and ‘type of face’ (self, close-other's, unknown): estimated effect size η² = .25, α = .05, power (1 − β) = .90. The result indicated a required sample size of 30 participants.
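As a worked illustration (our addition, not part of the original power analysis), the assumed effect size can be re-expressed as Cohen's f with the standard conversion below, which is the input some other power-analysis tools expect; MorePower's own computation is not reproduced here.

$$
f = \sqrt{\frac{\eta^2}{1 - \eta^2}} = \sqrt{\frac{.25}{.75}} \approx .58, \qquad 1 - \beta = .90 \;\Rightarrow\; \beta = .10
$$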

As the study was conducted during the COVID-19 pandemic, it should be noted that all our participants (PhD students and employees at the Nencki Institute), as well as the researchers involved in this study, were tested for the SARS-CoV-2 virus on a weekly basis. This was done within the SONAR-II project (www.nencki.edu.pl), which aimed at evaluating the effectiveness of the pooled testing strategy developed at the Nencki Institute (in cooperation with the University of Warsaw). SONAR-II covers the asymptomatic population of people who do not meet the criteria for SARS-CoV-2 testing under epidemiological regulations but who may come into contact with infected people. All our participants and researchers had negative results at the time of the study.

1.2. Ethics statement

All experimental procedures were approved by the Human Ethics Committee of the Institute of Applied Psychology at Jagiellonian University. The work described here has been carried out in accordance with The Code of Ethics of the World Medical Association (Declaration of Helsinki) for experiments involving humans. Written informed consent was obtained from each participant prior to the study and all participants received financial compensation for their participation.

1.3. Stimuli

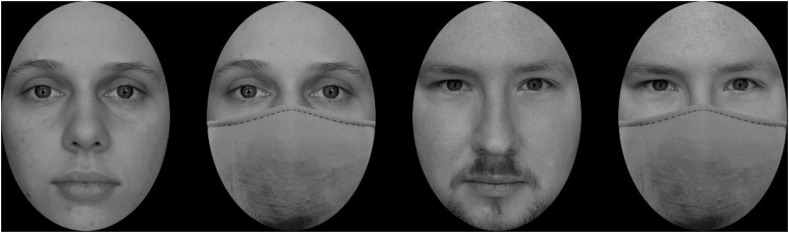

As in our previous studies on self-face processing, the set of stimuli in the current study was individually tailored for each participant (Tacikowski et al., 2011; Tacikowski & Nowicka, 2010; Wójcik et al., 2018, 2019; Bola et al., 2021; Żochowska et al., 2021). It consisted of single face images of three types: the self-face, a close-other's face, and an unknown face. Each type of face was presented with and without a surgical-like mask. An image of a surgical-like mask (Freud et al., 2020) was fitted to each face using Photoshop® CS5 (Adobe, San Jose, CA), fully covering the nose and the mouth. Examples of faces with and without a surgical-like mask are shown in Fig. 1.

Fig. 1.

Examples of faces with and without a surgical-like mask. The images show two of the co-authors of this study.

Self-face photographs were taken prior to the experiment. Participants were asked to maintain a neutral facial expression when photographed. The close-other was freely chosen by each participant to avoid a situation in which a predefined close-other would not really be a significant person for the participant. This approach was applied in our earlier studies (Cygan et al., 2014; Kotlewska et al., 2017; Kotlewska & Nowicka, 2015, 2016). The only restriction was that the close-other had to be of the same gender as the participant. A photograph of the close-other's face (with a neutral facial expression) was provided by the participant. Finally, photographs of unknown faces were taken from the Chicago Face Database (CFD; Ma et al., 2015). The gender of the CFD faces was matched to each participant's gender to control for between-category variability. Different images of unknown faces were used in the individual stimulus sets to avoid a possible influence of any one selected image on the pattern of brain activity.

Pictures of faces within each stimulus set (i.e., images of the self-face, a close-other's face, and a selected CFD face) were extracted from the background, grey-scaled, cropped to include only the facial features (i.e., the face oval without hair), and resized to subtend 6.7° × 9.1° of visual angle using Photoshop® CS5 (Adobe, San Jose, CA). The mean luminance of all visual stimuli was equalized using the SHINE toolbox (Willenbockel et al., 2010), and faces were presented against a black background. None of the stimuli were shown to the participants before the experiment.
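As a rough illustration of the stimulus geometry, the sketch below converts the reported visual angles into on-screen size at the 57-cm viewing distance given in the Procedure, and shows a simplified mean-luminance equalization. The luminance function is an assumption-laden stand-in: it only shifts each image so that the mean inside a face mask matches a common target, whereas the study used the SHINE toolbox, whose routines are not reproduced here. All function names are hypothetical.

```python
import math

import numpy as np

VIEWING_DISTANCE_CM = 57.0  # from the Procedure section


def visual_angle_to_cm(angle_deg, distance_cm=VIEWING_DISTANCE_CM):
    """On-screen extent (cm) that subtends `angle_deg` degrees at `distance_cm`."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


def match_mean_luminance(images, face_masks, target=None):
    """Shift each grey-scale image (0-255) so that the mean inside its face mask
    equals a common target (default: the average of the original means).
    Simplified illustration only; it does not reproduce the SHINE toolbox."""
    means = [float(img[m].mean()) for img, m in zip(images, face_masks)]
    if target is None:
        target = sum(means) / len(means)
    matched = []
    for img, m, mu in zip(images, face_masks, means):
        out = img.astype(float)          # astype returns a copy
        out[m] += target - mu
        # Clipping to the display range can move the mean slightly if pixels saturate.
        matched.append(np.clip(out, 0.0, 255.0))
    return matched


face_w_cm = visual_angle_to_cm(6.7)   # about 6.67 cm
face_h_cm = visual_angle_to_cm(9.1)   # about 9.07 cm
cross_cm = visual_angle_to_cm(0.5)    # fixation cross, about 0.50 cm
```

At 57 cm, one degree of visual angle corresponds to almost exactly 1 cm on screen, which is why this viewing distance is a common choice: the 6.7° × 9.1° faces come out at roughly 6.7 × 9.1 cm.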

1.4. Procedure

Participants were seated comfortably in a dimly lit, sound-attenuated room at a constant viewing distance of 57 cm from the computer screen (DELL Alienware AW2521HFL, Round Rock, Texas, USA). After electrode cap placement (ActiCAP, Brain Products, Munich, Germany), the participants used an adjustable chinrest to maintain a stable head position. Presentation software (Version 18.2, Neurobehavioral Systems, Albany, CA) was used for stimulus presentation. Participants completed a simple detection task: regardless of the image presented (self-face with/without surgical-like mask, close-other's face with/without surgical-like mask, unknown face with/without surgical-like mask), they were asked to press the same response button (Cedrus response pad RB-830, San Pedro, USA) as quickly as possible. After reading the instructions displayed on the screen, participants initiated the experiment by pressing a response button. Each trial started with a blank screen shown for 1500 msec. Next, a white cross (subtending .5° × .5° of visual angle) was displayed centrally for 100 msec, followed by a blank screen lasting 100, 200, 300, 400, 500, or 600 msec (chosen at random). Subsequently, a stimulus was presented for 500 msec, followed by a blank screen for 1000 msec. Each stimulus type was repeated 50 times. The order of stimulus presentation was pseudo-randomized, i.e., no more than two stimuli of the same category were displayed consecutively. A break was scheduled in the middle of the experiment to enable participants to rest. It lasted 1 min, unless the participant decided to start the second part of the experiment earlier. Participants needed on average 20 min to complete the whole task.
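The trial structure and the ordering constraint described above can be sketched in Python as follows. This is an illustrative assumption, not the authors' Presentation script: the category labels, the function names, and the greedy "restart on dead end" strategy are ours. It builds a sequence of 6 × 50 trials in which no category appears more than twice in a row, and it computes the expected task duration from the reported timings.

```python
import random

CATEGORIES = ["self", "self_mask", "close", "close_mask", "unknown", "unknown_mask"]
N_REPS = 50       # repetitions per stimulus type
MAX_RUN = 2       # no more than two consecutive trials of the same category

# Trial timing in msec: blank, fixation cross, jittered blank, face, blank.
BLANK_MS, FIX_MS, STIM_MS, POST_MS = 1500, 100, 500, 1000
JITTER_MS = [100, 200, 300, 400, 500, 600]


def pseudo_random_order(categories=CATEGORIES, n_reps=N_REPS, max_run=MAX_RUN, seed=None):
    """Draw categories one at a time, weighted by how many repetitions remain,
    never extending a run beyond `max_run`; restart on the rare dead end."""
    rng = random.Random(seed)
    n_total = n_reps * len(categories)
    while True:
        remaining = {c: n_reps for c in categories}
        order = []
        while len(order) < n_total:
            tail = order[-max_run:]
            blocked = tail[0] if len(tail) == max_run and len(set(tail)) == 1 else None
            options = [c for c in categories if remaining[c] > 0 and c != blocked]
            if not options:
                break  # only the blocked category is left: start over
            pick = rng.choices(options, weights=[remaining[c] for c in options])[0]
            order.append(pick)
            remaining[pick] -= 1
        else:
            return order


def trial_duration_ms(jitter_ms):
    """Duration of one trial for a given jittered blank interval."""
    return BLANK_MS + FIX_MS + jitter_ms + STIM_MS + POST_MS


def expected_task_minutes(n_trials=N_REPS * len(CATEGORIES)):
    """Expected duration of all trials, excluding instructions and the break."""
    mean_trial = sum(trial_duration_ms(j) for j in JITTER_MS) / len(JITTER_MS)
    return n_trials * mean_trial / 60000.0
```

With a mean jitter of 350 msec, each trial lasts 3,450 msec on average, so the 300 trials take about 17 min; together with the break and the instructions, this is roughly in line with the reported average of 20 min. Weighting the draw by the remaining counts keeps the categories balanced, which makes dead ends near the end of the sequence rare.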

1.5. EEG recording

The EEG was continuously recorded with 62 Ag–AgCl electrically shielded electrodes mounted on an elastic cap (ActiCAP, Brain Products, Munich, Germany) and positioned according to the extended 10–20 system. Two additional electrodes were placed on the left and right earlobes. The data were amplified using a 64-channel amplifier (BrainAmp MR plus; Brain Products, Germany) and digitized at a 500-Hz sampling rate, using BrainVision Recorder software (Brain Products, Munich, Germany). EEG electrode impedances were kept below 10 kΩ.

1.6. Behavioural analysis

Responses within a 100–1000 msec time window after stimulus onset were analysed using SPSS (Version 26, IBM Corporation), and the reported results were cross-checked with Statcheck (http://statcheck.io/index.php). A two-way repeated-measures ANOVA was performed with type of stimulus (faces with mask, faces without mask) and type of face (self, close-other's, unknown) as within-subject factors. The results are reported with reference to an alpha level of .05.
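To make the 2 (type of stimulus) × 3 (type of face) repeated-measures design concrete, here is a minimal NumPy sketch of the uncorrected F tests computed from per-participant condition means. It is not SPSS's implementation: it assumes one mean RT per participant and condition (after keeping only responses in the 100–1000 msec window) and omits sphericity corrections and p-values. Function names are hypothetical.

```python
import numpy as np


def keep_valid_rts(rts_ms, lo=100.0, hi=1000.0):
    """Keep responses that fall inside the 100-1000 msec post-stimulus window."""
    rts_ms = np.asarray(rts_ms, dtype=float)
    return rts_ms[(rts_ms >= lo) & (rts_ms <= hi)]


def rm_anova_2way(y):
    """Uncorrected two-way repeated-measures ANOVA.

    y: array (n_subjects, a_levels, b_levels) of per-participant condition means,
       e.g. a = type of stimulus (mask / no mask), b = type of face (3 levels).
    Returns {effect: (F, df1, df2, partial_eta_squared)}.
    """
    y = np.asarray(y, dtype=float)
    n, a, b = y.shape
    gm = y.mean()
    m_s, m_a, m_b = y.mean(axis=(1, 2)), y.mean(axis=(0, 2)), y.mean(axis=(0, 1))
    m_sa, m_sb, m_ab = y.mean(axis=2), y.mean(axis=1), y.mean(axis=0)

    ss_a = n * b * np.sum((m_a - gm) ** 2)
    ss_b = n * a * np.sum((m_b - gm) ** 2)
    ss_ab = n * np.sum((m_ab - m_a[:, None] - m_b[None, :] + gm) ** 2)
    # Error terms: each effect is tested against its interaction with subjects.
    ss_as = b * np.sum((m_sa - m_s[:, None] - m_a[None, :] + gm) ** 2)
    ss_bs = a * np.sum((m_sb - m_s[:, None] - m_b[None, :] + gm) ** 2)
    resid = (y - m_ab[None] - m_sa[:, :, None] - m_sb[:, None, :]
             + m_a[None, :, None] + m_b[None, None, :] + m_s[:, None, None] - gm)
    ss_abs = np.sum(resid ** 2)

    results = {}
    for name, ss, ss_err, df1 in [("A", ss_a, ss_as, a - 1),
                                  ("B", ss_b, ss_bs, b - 1),
                                  ("AxB", ss_ab, ss_abs, (a - 1) * (b - 1))]:
        df2 = df1 * (n - 1)
        f_val = (ss / df1) / (ss_err / df2)
        results[name] = (float(f_val), df1, df2, float(ss / (ss + ss_err)))
    return results
```

With 31 participants (one of the 32 was excluded, as described in the behavioral results), the interaction has 2 and 60 degrees of freedom, matching the F(2, 60) reported there. For the two-level factor, F equals the squared paired t statistic on the participants' mask minus no-mask differences.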

1.7. ERP analysis

Off-line analysis of the EEG data was performed using custom scripts written in Python (Version 3.5, Python Software Foundation). EEG data from 62 channels were re-referenced off-line to the algebraic average of the signals recorded at the left and right earlobes, notch filtered at 50 Hz, and band-pass filtered from .01 to 30 Hz using a 2nd-order Butterworth filter. After re-referencing and filtering, ocular artefacts were corrected using Independent Component Analysis (ICA; Bell & Sejnowski, 1995). After each data set had been decomposed into maximally statistically independent components, components representing eye blinks were rejected based on visual inspection of the components' topographies (Jung et al., 2001). The remaining ICA components were then multiplied by the reduced component-mixing matrix and back-projected to the data, resulting in a set of ocular-artefact-free EEG data. Subsequently, the EEG signal was segmented into 1,700-msec-long epochs, from 200 msec before to 1,500 msec after stimulus onset. Next, a semi-automatic artefact rejection procedure rejected trials in which the maximum absolute difference between two values in a segment exceeded 100 μV. Two data sets had to be excluded from the sample during preprocessing because too few trials remained after artefact rejection (data sets with fewer than 50% of trials remaining were excluded). The mean numbers of segments that were subsequently averaged for each category of stimuli were as follows: self-face – 37.5 (SD = 12.0), self-face with a surgical-like mask – 37.6 (SD = 11.8), close-other's face – 37.6 (SD = 13.5), close-other's face with a surgical-like mask – 38.1 (SD = 12.2), unknown face – 37.4 (SD = 12.1), and unknown face with a surgical-like mask – 37.4 (SD = 12.1). The number of epochs used to obtain ERPs did not differ significantly between the types of stimuli. Finally, the epochs were baseline-corrected by subtracting the mean of the pre-stimulus period.
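The non-filtering steps of this pipeline can be sketched with NumPy as below. The filters are omitted (a zero-phase Butterworth band-pass would typically use `scipy.signal.butter` and `scipy.signal.filtfilt`), ICA is assumed to have been fitted elsewhere, and applying the 100 μV criterion per channel (rejecting an epoch if any channel exceeds it) is our assumption. Function names are hypothetical.

```python
import numpy as np

FS = 500                    # Hz, sampling rate reported for the recording
TMIN_S, TMAX_S = -0.2, 1.5  # epoch limits relative to stimulus onset (s)
PTP_LIMIT_UV = 100.0        # maximum allowed max-min difference within an epoch


def rereference_to_earlobes(data, left_idx, right_idx):
    """data: (n_channels, n_samples) in µV. Subtract the mean of the two earlobe channels."""
    ref = (data[left_idx] + data[right_idx]) / 2.0
    return data - ref[None, :]


def remove_ica_components(data, mixing, unmixing, bad):
    """Back-project every ICA component except those listed in `bad` (e.g. blinks).
    mixing: (n_channels, n_components); unmixing: (n_components, n_channels)."""
    sources = unmixing @ data
    bad = set(bad)
    keep = [k for k in range(mixing.shape[1]) if k not in bad]
    return mixing[:, keep] @ sources[keep]


def epoch(data, onsets, fs=FS, tmin=TMIN_S, tmax=TMAX_S):
    """Cut (n_epochs, n_channels, n_times) segments around stimulus-onset samples."""
    start, stop = int(round(tmin * fs)), int(round(tmax * fs))
    return np.stack([data[:, o + start:o + stop] for o in onsets])


def reject_and_baseline(epochs, fs=FS, tmin=TMIN_S, limit=PTP_LIMIT_UV):
    """Drop epochs in which any channel's max-min range exceeds `limit`,
    then subtract each channel's mean pre-stimulus value."""
    ptp = epochs.max(axis=2) - epochs.min(axis=2)      # (n_epochs, n_channels)
    good = np.all(ptp <= limit, axis=1)
    n_base = int(round(-tmin * fs))                     # samples before onset
    clean = epochs[good]
    clean = clean - clean[:, :, :n_base].mean(axis=2, keepdims=True)
    return clean, good


def enough_trials(good, min_fraction=0.5):
    """Participant-level criterion: keep the data set if >= 50% of trials survive."""
    return float(np.mean(good)) >= min_fraction
```

At 500 Hz, the −200 to 1,500 msec epochs contain 850 samples, the first 100 of which form the baseline.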

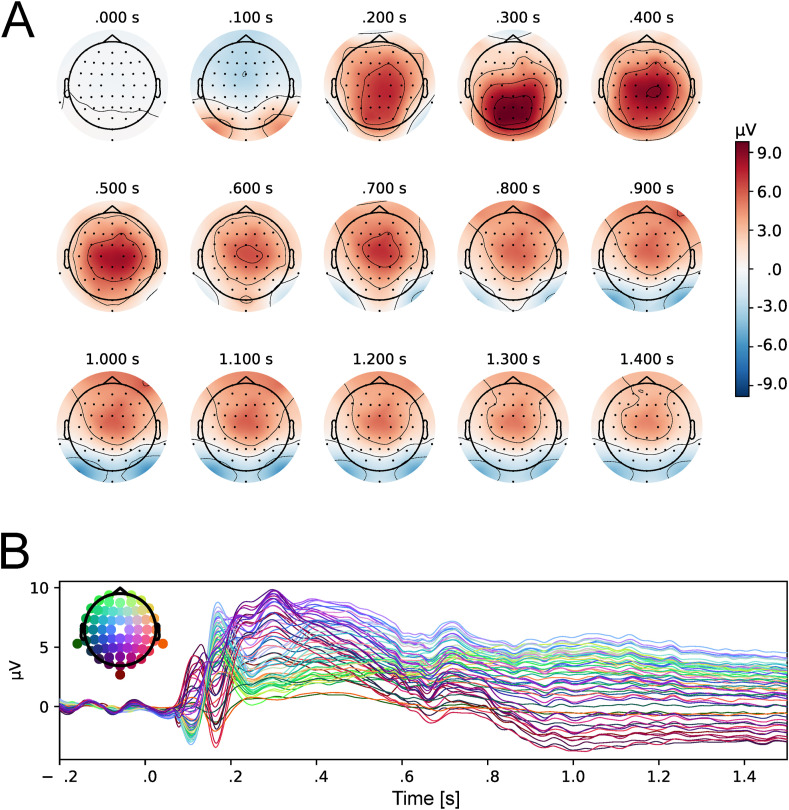

The selection of electrodes for ERP analyses has to be orthogonal to potential differences between experimental conditions (Kriegeskorte & Kievit, 2013). Therefore, it must be based on the topographical distribution of brain activity (in the time window corresponding to a given component) averaged across all experimental conditions. Based on the topographical distribution of activity as well as the grand-averaged ERPs collapsed across all conditions (self-face, close-other's face, unknown face, self-face with a surgical-like mask, close-other's face with a surgical-like mask, unknown face with a surgical-like mask), the following windows were chosen for the analysis of the ERP components of interest: 90–150 msec for P100, 140–200 msec for N170, 300–600 msec for P300, and 400–900 msec for LPP (Fig. 2). Six clusters of electrodes within the region of maximal activity were selected: for P100, left: O1 and PO3, right: O2 and PO4; for N170, left: P7 and PO7, right: P8 and PO8; for P300: CPz, CP1, CP2, and Pz; for LPP: Fz, F2, F4, and FCz. The data were pooled within each cluster. This step is justified by the limited spatial resolution of EEG and the high correlation between neighbouring electrodes. Peak amplitudes were analyzed for P100 and N170, whereas for P300 and LPP the mean amplitude across all time points within the respective time window was used. For ERP components that lack a clear peak, this measure is less affected by a possibly low signal-to-noise ratio than peak measures are (Luck, 2005).
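The two amplitude measures can be sketched as follows, assuming ERPs stored as NumPy arrays of shape (channels, samples) with the 500 Hz sampling rate and the −200 msec epoch start described above. Window limits and electrode clusters are taken from the text; the helper names are hypothetical.

```python
import numpy as np

FS = 500          # Hz
TMIN_S = -0.2     # epoch start relative to stimulus onset (s)

# Analysis windows (msec) and electrode clusters as listed in the text.
WINDOWS_MS = {"P100": (90, 150), "N170": (140, 200), "P300": (300, 600), "LPP": (400, 900)}
CLUSTERS = {
    "P100_left": ["O1", "PO3"], "P100_right": ["O2", "PO4"],
    "N170_left": ["P7", "PO7"], "N170_right": ["P8", "PO8"],
    "P300": ["CPz", "CP1", "CP2", "Pz"], "LPP": ["Fz", "F2", "F4", "FCz"],
}


def window_slice(lo_ms, hi_ms, fs=FS, tmin=TMIN_S):
    """Sample indices covering [lo_ms, hi_ms] relative to stimulus onset."""
    start = int(round((lo_ms / 1000.0 - tmin) * fs))
    stop = int(round((hi_ms / 1000.0 - tmin) * fs)) + 1
    return slice(start, stop)


def pooled(erp, ch_names, cluster):
    """Average an ERP of shape (n_channels, n_times) over one electrode cluster."""
    idx = [ch_names.index(ch) for ch in cluster]
    return erp[idx].mean(axis=0)


def peak(wave, lo_ms, hi_ms, polarity, fs=FS, tmin=TMIN_S):
    """Peak amplitude and latency (msec); polarity=+1 for P100, -1 for N170."""
    sl = window_slice(lo_ms, hi_ms, fs, tmin)
    seg = wave[sl]
    i = int(np.argmax(polarity * seg))
    latency_ms = (sl.start + i) * 1000.0 / fs + tmin * 1000.0
    return float(seg[i]), latency_ms


def mean_amplitude(wave, lo_ms, hi_ms, fs=FS, tmin=TMIN_S):
    """Mean voltage over all samples in the window (used for P300 and LPP)."""
    return float(wave[window_slice(lo_ms, hi_ms, fs, tmin)].mean())
```

The `polarity` argument lets one function serve both the positive P100 and the negative N170: multiplying by −1 turns the most negative sample into the maximum.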

Fig. 2.

Maps of the topographical distribution of activity collapsed across all experimental conditions (self-face, close-other's face, unknown face, self-face with a surgical-like mask, close-other's face with a surgical-like mask, unknown face with a surgical-like mask) (A), and a butterfly plot presenting grand-average ERPs collapsed across all experimental conditions at all 62 active electrodes (B).

All statistical analyses were performed using the JASP software and custom Python scripts (Version 3.5, Python Software Foundation). Reported results were cross-checked with Statcheck (http://statcheck.io/index.php). For P100 and N170 amplitudes, three-way repeated-measures ANOVAs were performed with hemisphere (left, right), type of stimulus (faces with masks, faces without masks), and type of face (self, close-other's, unknown) as within-subject factors. For P300 amplitudes, two-way repeated-measures ANOVAs were performed with type of stimulus (faces with masks, faces without masks) and type of face (self, close-other's, unknown) as within-subject factors. For the early ERP components (P100, N170), analyses of amplitudes were complemented by analogous ANOVAs on latencies.

All effects with more than one degree of freedom in the numerator were adjusted for violations of sphericity (Greenhouse & Geisser, 1959). Bonferroni correction for multiple comparisons was applied to post-hoc analyses. All results are reported with alpha levels equal to .05.
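For reference, the Greenhouse–Geisser epsilon can be estimated from the covariance matrix of the levels of a within-subject factor, and the Bonferroni adjustment simply scales each p-value by the number of comparisons. The NumPy sketch below assumes scores arranged as participants × levels and is not the JASP implementation; function names are hypothetical. The corrected test uses ε·df1 and ε·df2 as degrees of freedom, which would then be passed to an F-distribution survival function such as `scipy.stats.f.sf`.

```python
import numpy as np


def greenhouse_geisser_epsilon(x):
    """x: (n_subjects, k_levels) scores of one within-subject factor.
    Returns the Greenhouse-Geisser estimate, bounded by 1/(k-1) and 1."""
    x = np.asarray(x, dtype=float)
    k = x.shape[1]
    s = np.cov(x, rowvar=False)                 # k x k covariance of the levels
    h = np.eye(k) - np.ones((k, k)) / k         # centring matrix
    s_dc = h @ s @ h                            # double-centred covariance
    return float(np.trace(s_dc) ** 2 / ((k - 1) * np.trace(s_dc @ s_dc)))


def gg_adjusted_df(df1, df2, eps):
    """Degrees of freedom used for the corrected F test."""
    return eps * df1, eps * df2


def bonferroni(p_values):
    """Bonferroni-adjusted p-values: multiply by the number of comparisons, cap at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]
```

For a two-level factor such as type of stimulus, ε is always 1, so the correction only matters for effects involving the three-level type-of-face factor. For the stimulus × face interaction, the same function can be applied to the three mask minus no-mask difference scores.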

1.8. Linear discriminant analysis (LDA)

Briefly, LDA identifies a linear combination of features that optimally separates two or more classes of data (Balakrishnama & Ganapathiraju, 1998; Fisher, 1936). In the current study, the scikit-learn Python library was used (https://scikit-learn.org/stable/). LDA was applied to assess whether (1) an algorithm trained to differentiate faces with surgical-like masks could also discriminate faces without such masks, and (2) an algorithm trained to differentiate faces without surgical-like masks could discriminate faces with such masks. We also investigated the possible time dynamics of these effects.
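The cross-decoding logic can be sketched as follows. To keep the example dependency-light, it uses a small NumPy LDA (class means plus a shared, slightly regularized covariance) rather than the scikit-learn `LinearDiscriminantAnalysis` class the study used. The choice of single-trial channel amplitudes at each time point as features, the ridge value, and all names are assumptions. Training on one condition (e.g. masked faces) and testing on the other (unmasked faces) at every time point yields an accuracy time course; chance level for three face types is 1/3.

```python
import numpy as np


class SimpleLDA:
    """Minimal linear discriminant classifier: per-class means and a shared
    (pooled) covariance with a small ridge term. Illustrative stand-in for
    sklearn.discriminant_analysis.LinearDiscriminantAnalysis."""

    def __init__(self, ridge=1e-3):
        self.ridge = ridge

    def fit(self, X, y):
        X, y = np.asarray(X, dtype=float), np.asarray(y)
        self.classes_ = np.unique(y)
        self.means_ = np.stack([X[y == c].mean(axis=0) for c in self.classes_])
        # Pooled within-class covariance.
        centred = X - self.means_[np.searchsorted(self.classes_, y)]
        cov = centred.T @ centred / (len(X) - len(self.classes_))
        # Shrink towards a scaled identity so the matrix stays invertible.
        n_feat = cov.shape[0]
        cov = cov + self.ridge * np.trace(cov) / n_feat * np.eye(n_feat)
        priors = np.array([np.mean(y == c) for c in self.classes_])
        # Discriminant: x @ inv(cov) @ mu_k - 0.5 * mu_k @ inv(cov) @ mu_k + log(prior_k)
        self.coef_ = np.linalg.solve(cov, self.means_.T).T
        self.intercept_ = -0.5 * np.sum(self.coef_ * self.means_, axis=1) + np.log(priors)
        return self

    def predict(self, X):
        scores = np.asarray(X, dtype=float) @ self.coef_.T + self.intercept_
        return self.classes_[np.argmax(scores, axis=1)]

    def score(self, X, y):
        return float(np.mean(self.predict(X) == np.asarray(y)))


def cross_decode_over_time(X_train, y_train, X_test, y_test):
    """X_*: (n_trials, n_channels, n_times); y_*: face-type labels.
    Fit on one mask condition and score on the other, separately per time point."""
    n_times = X_train.shape[2]
    return np.array([
        SimpleLDA().fit(X_train[:, :, t], y_train).score(X_test[:, :, t], y_test)
        for t in range(n_times)
    ])
```

Swapping the training and test arguments gives the reverse direction (trained on unmasked faces, tested on masked faces).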

1.9. Source analysis

Brain Electrical Source Analysis (BESA v.7.1, MEGIS Software GmbH, Munich, Germany) was used to model the sources of the ERP signal. Source estimations were performed on the averaged data of 30 participants. This analysis focused solely on differentiating two conditions: faces covered and faces uncovered by surgical-like masks. To create these two conditions, trials for the three types of face (self, close-other's, unknown) were averaged together, separately for faces with masks and faces without masks. Source estimation was performed on 200-msec-long post-stimulus segments extracted from the averaged data. Two clear components were observed in this interval: a positive component peaking at a latency of 110 msec (P100) and a negative component peaking at approximately 170 msec (N170). Only the peak-to-peak interval between these components (110–160 msec for faces without surgical-like masks, 110–168 msec for faces with surgical-like masks) was included in the model fit, as this interval is thought to reflect the actual neural postsynaptic activity (Key et al., 2005). Two methods of source analysis were applied: discrete source analysis (dipole fitting) and the distributed source imaging method CLARA (Classical LORETA Analysis Recursively Applied).

1.9.1. Discrete source analysis

Regional sources composed of three single dipoles at one location oriented orthogonal to each other were used to model three-dimensional ERP current waveforms originating from within a certain brain region (Paul-Jordanov et al., 2016). Two regional sources were fit bilaterally and symmetrically in the area of the fusiform gyrus, which is recognized as one of the most crucial structures in face processing (Burns et al., 2019; Haxby et al., 2000, 2001; Rossion, 2014). Symmetry constraints with respect to location were applied to the pair of lateral sources in order to limit the number of parameters being estimated (Schweinberger et al., 2002). No other constraints with respect to localization were applied. The fit interval assigned to the source model was dominated by a single PCA component. The final source solution required a residual variance of less than 10% (Berg & Scherg, 1994; Tarkka & Mnatsakanian, 2003), i.e., a goodness of fit over 90%.
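The two fit criteria mentioned above can be illustrated with NumPy: residual variance compares measured and model-predicted potentials over the fit interval, and the dominance of a single component can be checked from the singular values of the channel × time data. This is a sketch under our own conventions (no mean removal before the decomposition, hypothetical function names), not BESA's internal computation.

```python
import numpy as np


def residual_variance(measured, modelled):
    """RV in %: squared model error relative to squared data, summed over the
    channels and samples of the fit interval."""
    measured = np.asarray(measured, dtype=float)
    modelled = np.asarray(modelled, dtype=float)
    return 100.0 * np.sum((measured - modelled) ** 2) / np.sum(measured ** 2)


def goodness_of_fit(measured, modelled):
    """GOF in % = 100 - RV; an RV below 10% corresponds to a GOF above 90%."""
    return 100.0 - residual_variance(measured, modelled)


def first_pc_share(measured):
    """Share (%) of the signal power carried by the first component of an SVD of
    the (n_channels, n_samples) fit-interval data (no mean removal)."""
    s = np.linalg.svd(np.asarray(measured, dtype=float), compute_uv=False)
    return 100.0 * s[0] ** 2 / np.sum(s ** 2)
```

A fit interval in which one spatial pattern simply waxes and wanes over time gives a first-component share close to 100%, which is the situation the text describes.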

1.9.2. Distributed source analysis

Compared to the abovementioned method, distributed source analysis estimates the underlying generators without any prior assumptions on the number and locations of the sources. The distributed sources volume-based method CLARA (Beniczky et al., 2016) is an iterative application of the Low Resolution Electromagnetic Tomography (LORETA) algorithm (Pascual-Marqui et al., 1994), with an implicit reduction of the source space in each iteration (Paul-Jordanov et al., 2016). CLARA was used to automatically identify sources and verify the hypothesis regarding the fusiform gyrus activation and differences between processing of faces with and without surgical-like masks.

2. Results

2.1. Behavioral results

The RTs of one participant exceeded the group mean by more than 3 SD in every condition, so this participant was excluded from further analysis. The mean RTs (in msec) to all types of stimuli were as follows (mean ± standard deviation): self-face (289.9 ± 57.5), self-face with surgical-like mask (297.3 ± 64.0), close-other's face (294.3 ± 65.6), close-other's face with surgical-like mask (291.7 ± 62.8), unknown face (293.1 ± 70.8), and unknown face with surgical-like mask (292.9 ± 62.2).
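The participant-level exclusion rule can be written as a short check. We assume that the group mean and SD for each condition include the tested participant and that the sample SD is used; the text specifies neither detail, and the names are hypothetical.

```python
import statistics


def slow_outliers(cond_means, k=3.0):
    """cond_means: {participant: {condition: mean RT in msec}}.
    Flag participants whose mean RT lies more than k SD above the group mean
    in every condition (group statistics include all participants)."""
    conditions = list(next(iter(cond_means.values())))
    cutoffs = {}
    for c in conditions:
        values = [rts[c] for rts in cond_means.values()]
        cutoffs[c] = statistics.mean(values) + k * statistics.stdev(values)
    return [pid for pid, rts in cond_means.items()
            if all(rts[c] > cutoffs[c] for c in conditions)]
```

In small samples a single extreme value cannot exceed 3 SD at all: with n participants, the largest possible z-score is (n − 1)/√n, about 5.5 for n = 32, so the rule is usable at this sample size.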

A repeated-measures ANOVA conducted on mean RTs revealed a significant two-way face × surgical-like mask interaction (F(2, 60) = 3.450; P = .038; η² = .103). Neither the main effect of face nor the main effect of surgical-like mask was significant. Post-hoc tests of the face × surgical-like mask interaction showed that RTs to the self-face without a surgical-like mask were significantly shorter than RTs to the self-face with a surgical-like mask (P = .014). The other comparisons were non-significant.

2.2. ERPs results

2.2.1. P100

Statistical analysis of P100 amplitudes showed significant main effects of ‘hemisphere’ (F(1, 29) = 6.438, P = .017, η² = .068) and ‘type of stimulus’ (F(1, 29) = 9.798, P = .004, η² = .039). These findings indicated that (1) P100 amplitudes recorded over the left occipito-parietal region were higher than those recorded over the right occipito-parietal region (6.79 ± 3.71 μV vs. 5.74 ± 3.48 μV), and (2) P100 amplitudes were enhanced for all types of faces covered by surgical-like masks in comparison to faces without masks (6.66 ± 3.73 μV vs. 5.89 ± 3.46 μV).

Analysis of P100 latencies showed a significant main effect of ‘type of stimulus’ (F(1, 29) = 15.589, P < .001, η² = .108). P100 latency for faces with surgical-like masks was longer than for faces without masks (129.2 ± 17.7 msec vs. 122.7 ± 17.6 msec). All other effects and interactions were non-significant. Fig. 3 (panel A) illustrates the P100 results.

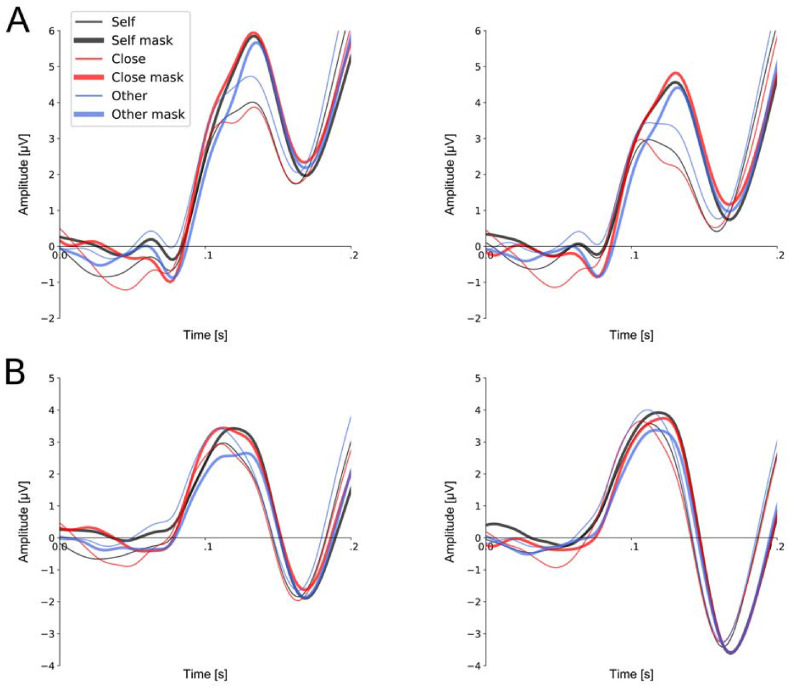

Fig. 3.

Grand-average ERPs in the P100 and N170 time windows for the self-face, close-other's face, and unknown face with and without a surgical-like mask. Upper panel A: P100 component for pooled electrodes O2 and PO4 within the right occipital-parietal region and pooled electrodes O1 and PO3 within the left occipital-parietal region. Lower panel B: N170 component for pooled electrodes P8 and PO8 within the right parietal region. The peak amplitude of this component was analyzed in the 140–200 msec time window.

2.2.2. N170

Grand-average ERPs in the N170 time window are presented in Fig. 3 (panel B). Analysis of N170 amplitudes showed that neither the main effects nor their interaction reached statistical significance. Analysis of N170 latencies, in turn, showed a significant main effect of ‘type of stimulus’ (F(1, 29) = 43.115, P < .001, η² = .217). As with P100, N170 latency was longer for faces with surgical-like masks than for faces without masks (171.3 ± 9.6 ms vs. 163.9 ± 11.3 ms).
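
Peak amplitude and latency are typically read off the averaged waveform of the pooled electrodes within a fixed window (140–200 msec for N170, per the Fig. 3 caption). The NumPy sketch below shows one way to do this. The 500 Hz sampling rate, the Gaussian-shaped synthetic waveforms standing in for P8 and PO8, the P100 window, and the function names are assumptions for illustration; the source does not state the P100 window.

```python
import numpy as np


def peak_in_window(times_ms, erp_uv, t_start, t_end, polarity):
    """Return (amplitude, latency) of the most extreme sample in [t_start, t_end].

    polarity=+1 looks for a positive peak (e.g., P100), -1 for a negative one (N170).
    """
    times_ms = np.asarray(times_ms, dtype=float)
    erp_uv = np.asarray(erp_uv, dtype=float)
    in_window = (times_ms >= t_start) & (times_ms <= t_end)
    if not in_window.any():
        raise ValueError("time window contains no samples")
    idx = np.argmax(polarity * erp_uv[in_window])
    return erp_uv[in_window][idx], times_ms[in_window][idx]


# Synthetic example: 2 ms steps (assumed 500 Hz), P100-like and N170-like deflections
times = np.arange(0, 402, 2)


def component(peak_ms, amp_uv, width_ms=10.0):
    """Gaussian-shaped deflection used only to simulate an ERP."""
    return amp_uv * np.exp(-((times - peak_ms) ** 2) / (2 * width_ms ** 2))


p8 = component(126, 6.0) + component(170, -5.5)
po8 = component(126, 5.0) + component(170, -4.5)
pooled = np.mean([p8, po8], axis=0)  # pool electrodes before peak picking

amp, lat = peak_in_window(times, pooled, 140, 200, polarity=-1)
print(f"N170: {amp:.2f} uV at {lat:.0f} ms")
```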

2.2.3. P300

Analysis of P300 amplitudes revealed significant main effects of ‘type of stimulus’ (F(1, 29) = 12.704, P = .001, η² = .073) and ‘type of face’ (F(2, 58) = 25.085, P < .001, η² = .284). The interaction of these two factors was non-significant. The main effect of ‘type of stimulus’ indicated that P300 amplitudes to faces with surgical-like masks were higher than to faces without such masks (8.63 ± 4.54 μV vs. 7.45 ± 4.34 μV).

In addition, post hoc tests on the ‘type of face’ factor showed that: (1) P300 amplitude to the self-face was higher than to the close-other's face (9.55 ± 4.76 μV vs. 7.84 ± 4.39 μV, P < .001); (2) P300 amplitude to the self-face was higher than to the unknown face (9.55 ± 4.76 μV vs. 6.73 ± 4.27 μV, P < .001); and (3) P300 amplitude to the close-other's face was higher than to the unknown face (7.84 ± 4.39 μV vs. 6.73 ± 4.27 μV, P = .023). Fig. 4 (panel A) presents grand-average ERPs at pooled CPz, CP1, CP2, and Pz electrodes.

Fig. 4.

Late ERP components P300 (Panel A) and LPP (Panel B). Left panel A: P300 component for pooled electrodes Pz, CPz, CP2, and P2 that were within the region of maximal activity in the topographical distribution of brain activity, averaged across all experimental conditions. Right panel B: LPP for pooled electrodes FCz, Fz, FC2, and C2 that were within the region of maximal activity in the topographical distribution of brain activity, averaged across all experimental conditions. The analyzed time windows are marked by light-blue rectangles.

2.2.4. LPP

Analysis of LPP amplitudes showed significant main effects of ‘type of stimulus’ (F(1, 29) = 4.550, P = .041, η² = .026) and ‘type of face’ (F(2, 58) = 16.285, P < .001, η² = .228). The interaction of these two factors was non-significant. The main effect of ‘type of stimulus’ indicated that LPP amplitudes to faces with surgical-like masks were higher than to faces without such masks (6.02 ± 3.44 μV vs. 5.33 ± 3.12 μV).

Post hoc tests on the ‘type of face’ factor showed enhanced LPP amplitude to the self-face in comparison to the close-other's face (7.04 ± 3.76 μV vs. 5.45 ± 3.11 μV, P = .002) and unknown face (7.04 ± 3.76 μV vs. 4.54 ± 2.97 μV, P < .001). Fig. 4 (panel B) presents grand-average ERPs at pooled Fz, F2, F4, and FCz electrodes.
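
Late components such as P300 and LPP are often quantified as the mean amplitude within a time window over a pool of electrodes, and group results are then summarized as mean ± SD across participants, as in the values reported above. The sketch below assumes mean-amplitude scoring and a sample (n − 1) SD. The source marks the analysed windows only graphically in Fig. 4, so the 300–600 ms window, the electrode values, and the helper names are hypothetical.

```python
import numpy as np
from statistics import mean, stdev


def window_mean_amplitude(times_ms, channels_uv, t_start, t_end):
    """Mean amplitude in [t_start, t_end] ms after pooling electrodes.

    channels_uv: array of shape (n_channels, n_times) with one participant's
    averaged ERP for each electrode in the pool.
    """
    times_ms = np.asarray(times_ms, dtype=float)
    pooled = np.asarray(channels_uv, dtype=float).mean(axis=0)  # pool first
    in_window = (times_ms >= t_start) & (times_ms <= t_end)
    return float(pooled[in_window].mean())


def mean_sd(values):
    """Format participant-level values as 'mean ± SD μV' (sample SD)."""
    return f"{mean(values):.2f} ± {stdev(values):.2f} μV"


# Hypothetical four-electrode pool for one participant, 2 ms resolution
times = np.arange(0, 1000, 2)
channels = np.vstack([np.where((times >= 300) & (times <= 600), amp, 0.0)
                      for amp in (8.0, 9.0, 10.0, 11.0)])
print(window_mean_amplitude(times, channels, 300, 600))
print(mean_sd([1.0, 2.0, 3.0]))
```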

2.3. LDA results

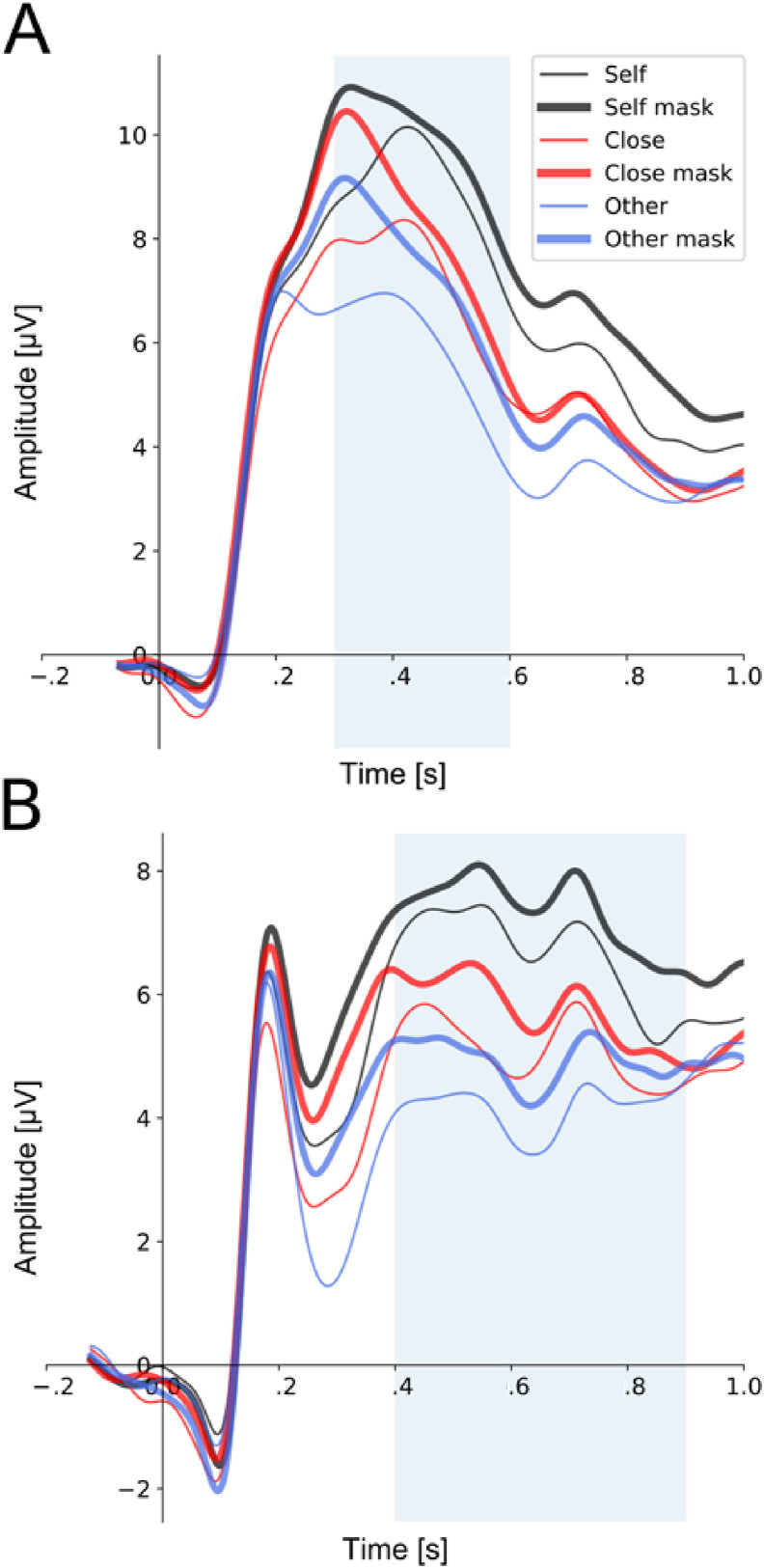

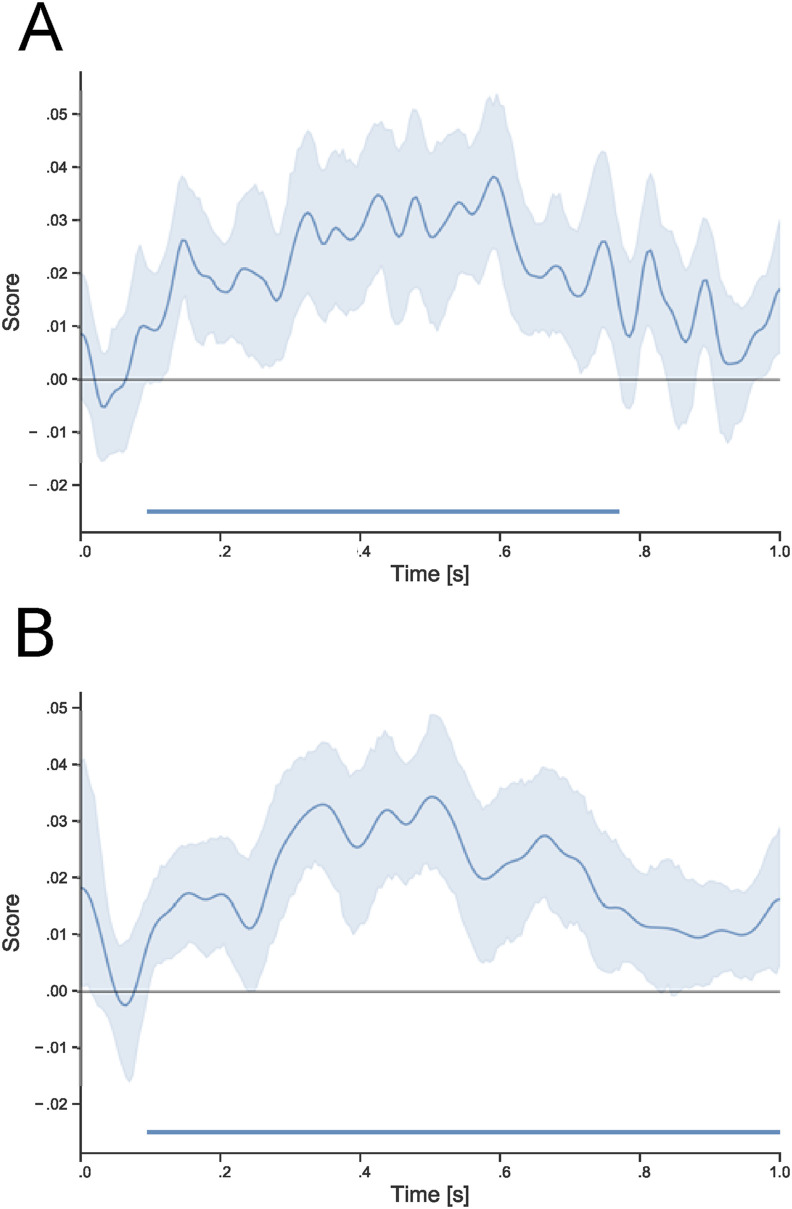

The algorithm trained to discriminate self-, close-other's, and unknown faces without surgical-like masks efficiently discriminated the same types of faces when they were covered by surgical-like masks; LDA revealed a significant cluster in the 95–770 msec time window (P < .001). Conversely, the algorithm trained to discriminate all types of faces with surgical-like masks (self-face vs. close-other's face vs. unknown face) also properly discriminated these faces without surgical-like masks; LDA revealed a significant cluster in the 95–1000 msec time window (P < .001). All LDA results are presented in Fig. 5.
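
The cross-condition decoding described above can be sketched as follows: at each time point, a three-class LDA is fit on trials from one condition and scored on trials from the other. This is a minimal NumPy sketch under our own assumptions: a hand-written LDA with a pooled covariance and a fixed shrinkage of 0.1, channel amplitudes at a single time point as features, and simulated data. It is not the authors' pipeline, and the cluster-level statistics behind the reported significant clusters are not shown.

```python
import numpy as np


def fit_lda(X, y, shrinkage=0.1):
    """Fit multi-class LDA: one mean per class plus a shared (pooled) covariance."""
    classes = np.unique(y)
    means = np.array([X[y == c].mean(axis=0) for c in classes])
    residuals = X - means[np.searchsorted(classes, y)]
    cov = residuals.T @ residuals / (len(X) - len(classes))
    # Shrink toward a scaled identity so the covariance is well conditioned.
    n_features = X.shape[1]
    target = np.trace(cov) / n_features * np.eye(n_features)
    cov = (1.0 - shrinkage) * cov + shrinkage * target
    log_priors = np.log(np.array([np.mean(y == c) for c in classes]))
    return classes, means, np.linalg.inv(cov), log_priors


def predict_lda(model, X):
    """Assign each row of X to the class with the largest linear discriminant score."""
    classes, means, cov_inv, log_priors = model
    weights = means @ cov_inv                                  # (n_classes, n_features)
    bias = -0.5 * np.sum(weights * means, axis=1) + log_priors
    scores = X @ weights.T + bias                              # (n_trials, n_classes)
    return classes[np.argmax(scores, axis=1)]


def cross_condition_accuracy(train_X, train_y, test_X, test_y):
    """Train at each time point on one condition and test on the other.

    *_X arrays have shape (n_trials, n_channels, n_times).
    """
    n_times = train_X.shape[2]
    accuracy = np.empty(n_times)
    for t in range(n_times):
        model = fit_lda(train_X[:, :, t], train_y)
        accuracy[t] = np.mean(predict_lda(model, test_X[:, :, t]) == test_y)
    return accuracy


# Simulated data: 3 face types, 8 channels, 5 time points (illustration only)
rng = np.random.default_rng(0)
n_per_class, n_channels = 40, 8
y = np.repeat([0, 1, 2], n_per_class)              # self, close-other, unknown
patterns = 3.0 * rng.normal(size=(3, n_channels))   # class-specific topographies
signal = np.array([0.0, 0.0, 1.0, 1.0, 1.0])        # identity information from t = 2 on
clean = patterns[y][:, :, None] * signal[None, None, :]
X_unmasked = clean + rng.normal(size=clean.shape)
X_masked = 0.8 * clean + rng.normal(size=clean.shape)  # weaker but similar signal

acc = cross_condition_accuracy(X_unmasked, y, X_masked, y)
print(np.round(acc, 2))
```

In this simulation, accuracy stays near chance (1/3) at time points without identity information and rises well above it once the class-specific topographies appear, even though the simulated "masked" test trials carry a weaker version of the signal.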

Fig. 5.

Results of Linear Discriminant Analysis. Panel A: LDA algorithm trained to discriminate uncovered self-face, close-other's face, and unknown faces properly discriminates those faces covered by surgical-like masks. Panel B: LDA algorithm trained to discriminate three types of faces covered by surgical-like masks discriminates uncovered self-, close-other's, and unknown faces. Horizontal blue bars indicate statistically significant effects. Shaded areas indicate 95% CI.

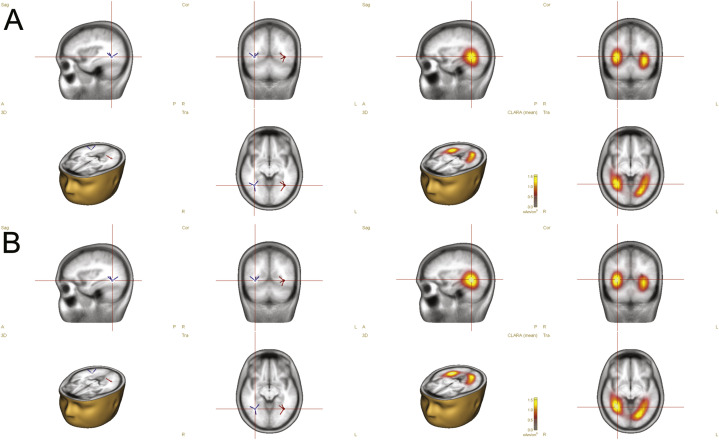

2.4. Source analysis results

The discrete source analysis revealed ERP dipole sources fitted at symmetrical bilateral locations (Talairach coordinates x, y, z): (x = −35.4, y = −54.0, z = −5.3) and (x = 35.4, y = −54.0, z = −5.3) for faces without surgical-like masks, and (x = −34.2, y = −56.6, z = −7.3) and (x = 34.2, y = −56.6, z = −7.3) for faces with surgical-like masks. These solutions explained 97.41% and 97.83% of the variance in the data, respectively. Moreover, the Talairach Client 2.4.2 (Lancaster et al., 2000) identified the obtained coordinates as the fusiform gyrus within a ± 2 mm cube range.
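
The percentage of explained variance reported for a dipole solution is commonly computed as goodness of fit, i.e., 100% minus the residual variance between the recorded scalp data and the potentials predicted by the fitted dipoles over the fit interval. A minimal sketch of that definition follows. Whether the source-analysis software used here computes it exactly this way is an assumption, and the arrays are toy values.

```python
import numpy as np


def goodness_of_fit(data, model):
    """Percent variance explained: 100 * (1 - sum(residual**2) / sum(data**2)).

    data, model: arrays of shape (n_channels, n_times) holding the recorded
    and the dipole-model (forward-computed) potentials in the fit interval.
    """
    data = np.asarray(data, dtype=float)
    residual = data - np.asarray(model, dtype=float)
    residual_variance = np.sum(residual ** 2) / np.sum(data ** 2)
    return 100.0 * (1.0 - residual_variance)


recorded = np.array([[1.0, -2.0, 3.0], [0.5, 0.0, -1.5]])
print(goodness_of_fit(recorded, 0.9 * recorded))  # close to 99.0
```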

The distributed source analysis using CLARA estimated the strongest activity at bilateral locations in response to (1) faces without surgical-like masks: (x = −24.5, y = −65.9, z = −11.3) and (x = 31.5, y = −51.9, z = −4.3), and (2) faces with surgical-like masks: (x = −17.5, y = −72.9, z = −11.3) and (x = 31.5, y = −51.9, z = −4.3). The Talairach Client identified these locations as the fusiform gyrus within a ± 5 mm cube range around the peak of a given activation. Fig. 6 presents all of the aforementioned results.
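
To compare the two solutions directly, the Euclidean distance between the masked and unmasked source locations can be computed from the Talairach coordinates reported above, and a simple box test mirrors the ± N mm cube criterion used for anatomical labelling. The helper name is illustrative, and the sketch does not reproduce the Talairach Client's own atlas lookup.

```python
from math import dist  # Python 3.8+

# Talairach coordinates (x, y, z) in mm, left hemisphere, as reported above.
# The right-hemisphere CLARA peak (31.5, -51.9, -4.3) is identical in both conditions.
dipole_unmasked = (-35.4, -54.0, -5.3)
dipole_masked = (-34.2, -56.6, -7.3)
clara_unmasked = (-24.5, -65.9, -11.3)
clara_masked = (-17.5, -72.9, -11.3)


def within_cube(point, center, half_width_mm):
    """True if point lies inside the axis-aligned cube center ± half_width_mm."""
    return all(abs(p - c) <= half_width_mm for p, c in zip(point, center))


print(f"discrete dipoles: {dist(dipole_unmasked, dipole_masked):.2f} mm apart")
print(f"CLARA peaks: {dist(clara_unmasked, clara_masked):.2f} mm apart")
print(within_cube(dipole_masked, dipole_unmasked, 2.0))
```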

Fig. 6.

Source analysis of ERP responses. Distributed source imaging with CLARA (Classical LORETA Analysis Recursively Applied) points to the fusiform gyrus as the most active generator of the signal elicited by masked faces (Panel A) and unmasked faces (Panel B). Two dipoles fitted within the fusiform gyri explain almost 98% of the variance in the data.

3. Discussion

Faces are one of the most critical classes of visual stimuli from which people acquire social information. Faces inform us about a person's age, sex, mood, gaze direction, identity, etc. The ability to extract this kind of information within a fraction of a second plays a crucial role in our social lives, and humans have developed specialized cognitive and neural mechanisms dedicated to the processing of faces (Kanwisher & Yovel, 2006). Due to safety requirements during the COVID-19 pandemic, we have been confronted daily with faces covered by surgical-like masks. This raises several questions about how our brains process this kind of socially relevant visual information.

Previous behavioral studies showed that surgical-like masks exert a strong influence on our ability to efficiently match (Carragher & Hancock, 2020; Estudillo et al., 2021; Noyes et al., 2021) and remember faces (Freud et al., 2020; Stajduhar et al., 2021). In the current study, we investigated the impact of surgical-like masks on the neural underpinnings of personally familiar (self, close-other's) and unfamiliar face processing. We found that the effects related to surgical-like masks were similar for all faces. This is in line with previous studies investigating the effects of masks on familiar and unfamiliar face processing (Carragher & Hancock, 2020; Noyes et al., 2021). Our results showed that early and late attention-related ERP components were substantially increased not only for the self-face with a surgical-like mask but also for other masked faces (close-other's, unfamiliar) in comparison to faces without masks. In addition, the prioritized processing of one's own face was observed, irrespective of presence or absence of a surgical-like mask, as revealed by enhanced P300 and LPP. The detailed results were as follows.

In the earliest time window after the onset of visual stimuli, P100 amplitudes were higher and P100 latencies longer for all covered than for uncovered faces. In the subsequent time window, N170 latencies were longer for all faces with surgical-like masks. Thereafter, P300 amplitudes differentiated both faces with and without masks and the different types of faces (self-, close-other's, unfamiliar): P300 was enhanced for all faces covered with surgical-like masks, and it was higher to the self-face than to the close-other's and unfamiliar faces (the latter two also differed). However, the lack of a significant ‘type of stimulus’ × ‘type of face’ interaction indicated that the P300 effects of surgical-like masks were similar for personally relevant and personally irrelevant faces. Finally, the late ERP component (LPP) showed a pattern of findings analogous to that of P300 (i.e., increased LPP amplitudes for covered vs. uncovered faces and for the self-face vs. other faces). LDA results, in turn, indicated that the partial information available about the three types of masked faces was sufficient to train an algorithm that subsequently discriminated uncovered self-, close-other's, and unknown faces efficiently. Moreover, LDA trained on full images of faces also correctly discriminated images of those faces with surgical-like masks.

Enhanced P100, P300, and LPP amplitudes for covered versus uncovered faces may reflect amplified attentional processing of faces with surgical-like masks. Specifically, P100 is traditionally related to the early processes of stimulus detection and to sensory gain control (Mangun, 1995). This sensory gain control mechanism is manifested either as attentional suppression or as attentional facilitation, occurring at an early stage of information processing (Hillyard et al., 1998). Based on the notion that the P100 amplitude is proportional to the amount of attentional resources required for initial processing of visual information (Hillyard & Anllo-Vento, 1998; Mangun & Hillyard, 1991), increased P100 amplitudes and delayed P100 latencies to faces with surgical-like masks may indicate stronger but slightly delayed involvement of early selective attention.

Increased P100 to faces with masks may also be directly related to the view that visual ambiguity is associated with enhanced P100 (Schupp et al., 2008). In line with this notion, P100 amplitude was larger for morphed faces than for unaltered faces (Dering et al., 2011) and for inverted faces when compared to upright faces (Hileman et al., 2011). However, some studies reported not only larger P100 amplitude but also a longer P100 latency for inverted faces when compared to upright faces (Itier & Taylor, 2002). An analogous pattern of P100 results was observed in the present study. Both the inversion of a face and its covering with a surgical-like mask lead to a disruption of its configural processing, making it more difficult to identify a face as a face and thus requiring increased attention (Itier & Taylor, 2002). In addition, it has also been proposed that the P100 component may serve as a sign of processing effort (Hileman et al., 2011). Thus, the higher P100 amplitude and the longer latency to faces with masks than to faces without masks may reflect the need for engagement of additional brain resources.

The P300 component, in turn, reflects the cognitive evaluation of stimulus significance, a process that can be elicited by both active and passive attention (Picton & Hillyard, 1988). As the functional role of P300 is associated mainly with the allocation of attentional resources (Polich, 2007), enhanced P300 amplitudes for covered faces may reflect increased attention allocation. The LPP, in turn, is linked to a global, temporary increase in attention that facilitates the in-depth processing of salient stimuli (Brown et al., 2012; Hajcak et al., 2010). In light of this, increased LPP to faces with surgical-like masks may reflect enhanced processing and sustained global attention. In addition, as far as stimulus saliency is concerned, larger LPP amplitudes to all faces with surgical-like masks than to faces without masks may be a consequence of increased arousal associated with processing covered faces (Cuthbert et al., 2000). All in all, the detection of faces with surgical-like masks was associated with more elaborate attentional processing, suggesting that participants attended to mask-covered faces to a greater extent than to fully visible faces.

Whereas the early ERP components (P100, N170) were not modulated by the type of presented face, the late ERP components (P300, LPP) were. Our results corroborate the findings of previous studies reporting enhanced P300 (Cygan et al., 2014; Kotlewska & Nowicka, 2015; Ninomiya et al., 1998; Sui et al., 2006; Tacikowski & Nowicka, 2010) and enhanced LPP (Żochowska et al., 2021) to the self-face in comparison to other (either familiar or unfamiliar) faces. However, in the current study we showed for the first time that both the full view of the self-face and only its upper part resulted in increased P300 and LPP amplitudes in comparison to other (personally relevant and personally irrelevant) faces. This self-preference effect may also be attributed to highly elaborated attentional processing of the self-face (Tacikowski & Nowicka, 2010; Żochowska et al., 2021). Thus, the mechanisms boosting the prioritized processing of self-relevant information seem to be driven by automatic capture of attention and prioritized allocation of attention to self-related stimuli (review: Humphreys & Sui, 2016; Sui & Rotshtein, 2019). Indeed, several studies found that the self-face automatically captures attention (e.g., Alexopoulos et al., 2012; Alzueta et al., 2020; Brédart et al., 2006; Tong & Nakayama, 1999), and numerous ERP studies revealed a greater P300 amplitude in response to one's own face (e.g., Knyazev, 2013; Ninomiya et al., 1998; Sui et al., 2006; Tacikowski & Nowicka, 2010; Żochowska et al., 2021).

Enhanced LPP to the self-face reported in the present study may be attributed either to the global, temporary increase in attention (Brown et al., 2012; Hajcak et al., 2010) or to the process of self-reflection. The latter interpretation is based on the commonly reported larger LPP when participants make judgments about themselves compared to judgments about others (Kotlewska & Nowicka, 2016; Nowicka et al., 2018; Yu et al., 2010; Zhang et al., 2013). It is worth noting that in the current study a single experimental trial was long enough to allow for such mental activity, i.e., some reflection about oneself. Although no self-reflection was required to accomplish the behavioral task (a simple detection of faces), one may assume that multiple presentations of one's own face may automatically evoke such a process. This notion is supported by the findings of fMRI studies (Heatherton et al., 2006; Keenan et al., 2000; Kircher et al., 2001) reporting increased activation of the medial prefrontal cortex and anterior cingulate cortex to images of self-faces compared with images of others' faces. These findings may indicate that exposure to the self-face effectively induces introspection and emotional reactions.

We would also like to point out the non-significant interactions of face type (self, close-other's, unfamiliar) and stimulus type (face with mask, face without mask) in our analyses of P300 and LPP amplitudes, indicating that the patterns of P300 and LPP results across face types were analogous for faces with and without masks. Whether or not the faces were covered by masks, P300 and LPP amplitudes were largest to one's own face, followed by the close-other's face and, lastly, unfamiliar faces. This pattern of findings for fully visible faces was reported in previous studies on self-face processing (e.g., Cygan et al., 2014; Kotlewska & Nowicka, 2015; Żochowska et al., 2021). However, the observed impact of familiarity on P300 and LPP to faces with masks seems to suggest some differentiation of faces even when they were processed in a feature-based way.

The next issue we would like to comment on concerns similarities between the neural correlates of faces with and without surgical-like masks. First, N170 amplitudes did not differ between faces with and without surgical-like masks. Numerous studies have shown that the N170 component is enhanced for faces compared to non-face objects (e.g., Rossion & Jacques, 2011). Thus, it is claimed to be face-specific (e.g., Bentin et al., 1996; Sagiv & Bentin, 2001; Carmel & Bentin, 2002) and to reflect the structural encoding of faces (e.g., Eimer, 2000; for a review, see Eimer, 2011). The N170 is usually linked to the activation of perceptual face representations (Eimer, 2000; Sagiv & Bentin, 2001). Moreover, the N170 involves the detection of a face at a categorical level, i.e., its discrimination from other object categories (Schweinberger & Neumann, 2015). Thus, similar amplitudes for covered and uncovered faces may indicate similar levels of structural encoding and that faces with surgical-like masks are likewise categorized as faces. Some earlier findings (Eimer, 2000) indicated attenuated N170 amplitudes for faces lacking some of their natural features (e.g., eyes, nose, etc.). In contrast, disrupting the use of configural information by inverting faces was associated with a larger N170 than for upright faces (e.g., Rossion et al., 2000; Civile et al., 2018). Thus, although both removing essential parts of face images and presenting face images in an atypical orientation disturb the holistic processing of faces, these two manipulations were associated with opposite N170 effects: a decrease or an increase in N170 amplitude, respectively. In the present study, in turn, N170 amplitudes were similar for fully visible faces and for faces with masks, which are processed in a more featural manner.
It may be hypothesized that covering the lower part of a face with a surgical-like mask is nowadays so common that such a face image may be treated as an ecologically valid stimulus and viewed as a face. It should be pointed out that although all of the aforementioned face manipulations promote feature-based processing, there is one crucial difference between them. In the case of inverted faces, all pieces of information about a face (eyes, nose, mouth, forehead, face shape, cheeks, etc.) are still available for processing, whereas in the case of faces with masks and faces lacking some internal features, only partial information about the face is available. Therefore, outcomes of studies with different face manipulations may differ.

In addition, there is strong evidence that only the self-face (but no other familiar face) is processed using featural information (Keyes, 2012; Keyes & Brady, 2010). However, we did not observe any differences in N170 amplitude between the mask-covered self-face and other faces. Nor did we find differences between fully visible images of the self-, close-other's, and unfamiliar faces. The latter is in line with studies showing that the N170 is largely unaffected by face familiarity: similar N170 potentials were elicited by famous and unfamiliar faces (Gosling & Eimer, 2011; Tacikowski et al., 2011), both when they were relevant and irrelevant to the task (Bentin & Deouell, 2000). Although some studies have presented evidence that this component is larger (i.e., more negative) to the self-face than to other faces, familiar or not (Caharel et al., 2002; Keyes et al., 2010), this pattern of findings was not confirmed by other studies (Gunji et al., 2009; Parketny et al., 2015; Pierce et al., 2011; Sui et al., 2006; Tanaka et al., 2006).

The results of the two LDA models also revealed some similarities in processing faces with and without surgical-like masks. Specifically, irrespective of whether the algorithm was trained to discriminate self-, close-other's, and unknown faces with or without surgical-like masks, it was able to effectively discriminate faces that were not presented in the training phase. Based on the LDA results, one may conclude that neural activity associated with processing the upper part of the face was sufficient to decode full images of faces. It should be stressed, however, that the LDA algorithm may not directly reflect how the human brain works. Thus, the fact that LDA can effectively discriminate faces that were not presented in the training phase does not necessarily imply that humans can do so. Our LDA findings are generally in line with a recent study that investigated facial expressions of different emotions on faces covered by masks or scarves (Calbi et al., 2021). Despite the covering of the lower part of the face, participants correctly recognized the facial expressions of emotions. Although we tested different face identities and Calbi et al. (2021) tested different facial emotions, both studies found that the upper part of the face provided enough information for adequate processing.

Similarities in the processing of faces with and without surgical-like masks were also revealed by source analyses of the recorded activity. In both cases, sources were located in the fusiform gyri, which are typically involved in the processing of faces (Kanwisher et al., 1997; Kanwisher & Yovel, 2006; Rossion et al., 2003). This is in line with findings of some fMRI studies showing equally strong activations in this region both for entire human faces and for faces with eyes occluded (Tong et al., 2000). Our results showing sources located in the fusiform gyri for covered faces may be viewed as evidence that the upper part of a face is treated just as a face and for that reason activates the fusiform gyri. An alternative explanation refers to the expertise hypothesis (Burns et al., 2019; Gauthier et al., 2000), which proposes that the fusiform face area responds not only to faces but to any stimuli for which observers have gained substantial perceptual expertise. In light of the expertise hypothesis, our findings may be explained by newly developed expertise in processing and recognizing partially visible faces.

On the behavioral level, we found that RTs were not modulated by face type in general. Specifically, RTs to the self-face did not significantly differ from RTs to other faces. This result is in line with a recent meta-analysis across a large number of studies (Bortolon & Raffard, 2018). Bortolon and Raffard (2018) stressed that the employed task and, more precisely, the cognitive function on which that task rests may affect patterns of RT findings. When performing a detection or visual search task that relies on attentional processes, participants responded equally quickly to their own face and to other people's faces. On the other hand, when requested to perform an identification task, participants were faster when responding to their own face than to other people's faces (Bortolon & Raffard, 2018).

However, we observed a clear differentiation of the self-face with and without a surgical-like mask, with shorter RTs in the unmasked condition. We hypothesize that this slowing of reactions to one's own face when covered by a mask may correspond to an emotional Stroop-like RT effect (Dresler et al., 2009). The slowing of responses to the color of emotional stimuli in comparison to neutral ones indicates a bias of attentional resources towards emotionally salient information (González-Villar et al., 2014). Thus, longer RTs to the self-face when covered by a mask may be a consequence of a specific attentional bias toward this unusual image of one's own face. At this point, it should be stressed that our behavioral task did not require overt recognition of the presented faces, so we cannot directly infer that face recognition occurred. Nevertheless, one may assume that face recognition is a rather automatic process that happens involuntarily. In a similar vein, Bortolon and Raffard (2018) noted that the extraction of semantic information (e.g., face identity or face familiarity) also takes place during detection (attentional) tasks, because we automatically attach meaning to what we see, even though the task itself does not require the extraction of this information to be successful.

In conclusion, early (P100) and late (P300, LPP) ERP components revealed stronger involvement of attentional mechanisms in the processing of faces covered by surgical-like masks. However, N170 amplitudes as well as the results of LDA and source analyses pointed to some similarities between the neural underpinnings of processing faces with and without surgical-like masks.

Funding

This study was funded by the National Science Center Poland, grant 2018/31/B/HS6/00461 awarded to AN.

Credit author statement

Anna Żochowska: Conceptualization, Investigation, Formal analysis, Visualization, Writing - Review & Editing. Paweł Jakuszyk: Conceptualization, Investigation, Formal analysis, Visualization, Writing - Review & Editing. Maria Nowicka: Data Curation, Formal analysis, Software, Visualization, Writing - Review & Editing. Anna Nowicka: Conceptualization, Methodology, Resources, Supervision, Project administration, Funding acquisition, Writing - Original Draft, Writing - Review & Editing.

Others

- Data, experiment code and analysis code can be found here: https://osf.io/a9wef/

- Legal copyright restrictions do not permit us to publicly archive the stimuli from The Chicago Face Database (CFD) used in this experiment. Readers seeking access to the stimuli are advised to visit https://www.chicagofaces.org/. CFD stimuli will be provided on request without restriction. Furthermore, a subset of experimental stimuli presenting images of participants' faces and their close-others' faces has not been archived in a publicly accessible repository, to maintain participant anonymity and for ethical reasons (participants were assured that their faces would neither be made publicly available nor used in other studies).

- No part of the study procedures and analyses was pre-registered in a time-stamped, institutional registry prior to the research being conducted.

- We report how we determined our sample size, all data exclusions, all inclusion/exclusion criteria, whether inclusion/exclusion criteria were established prior to data analysis, all manipulations, and all measures in the study.

Open practices

The study in this article earned an Open Data badge for transparent practices. Data for this study is available at https://osf.io/a9wef/?view_only=63bc4f0eef6a41359fce6f0976db516c.

Declaration of competing interest

None.

Action editor Holger Wiese

Reviewed 3 October 2021

References

- Alexopoulos T., Muller D., Ric F., Marendaz C. I, me, mine: Automatic attentional capture by self-related stimuli. European Journal of Social Psychology. 2012;42:770–779. [Google Scholar]

- Alzueta E., Melcón M., Jensen O., Capilla A. The ‘Narcissus Effect’: Top-down alpha-beta band modulation of face-related brain areas during self-face processing. NeuroImage. 2020;213:116754. doi: 10.1016/j.neuroimage.2020.116754. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Apps M.A.J., Tajadura-Jiménez A., Sereno M., Blanke O., Tsakiris M. Plasticity in unimodal and multimodal brain areas reflects multisensory changes in self-face identification. Cerebral Cortex. 2015;25:46–55. doi: 10.1093/cercor/bht199. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Asanowicz D., Gociewicz K., Koculak M., Finc K., Bonna K., Cleeremans A., Binder M. The response relevance of visual stimuli modulates the P3 component and the underlying sensorimotor network. Scientific Reports. 2020;10:3818. doi: 10.1038/s41598-020-60268-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Balakrishnama S., Ganapathiraju A. Linear discriminantanalysis-a brief tutorial. Institute Signal Information Process. 1998;18:1–8. [Google Scholar]

- Bell A.J., Sejnowski T.J. An information–maximization approach to blind separation and blind deconvolution. Neural Computation. 1995;7:1129–1159. doi: 10.1162/neco.1995.7.6.1129. [DOI] [PubMed] [Google Scholar]

- Beniczky S., Rosenzweig I., Scherg M., Jordanov T., Lanfer B., Lantz G., Larsson P.G. Ictal EEG source imaging in presurgical evaluation: high agreement between analysis methods. Seizure. 2016;43:1–5. doi: 10.1016/j.seizure.2016.09.017. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bentin S., Allison T., Puce A., Perez E., McCarthy G. Electrophysiological studies of face perception in humans. Journal of Cognitive Neuroscience. 1996;8:551–565. doi: 10.1162/jocn.1996.8.6.551. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bentin S., Deouell L.Y. Structural encoding and identification in face processing: ERP evidence for separate mechanisms. Cognitive Neuropsychology. 2000;17:35–54. doi: 10.1080/026432900380472. [DOI] [PubMed] [Google Scholar]

- Berg P., Scherg M. A fast method for forward computation of multiple-shell spherical head models. Electroencephalography Clinical Neurophysiology. 1994;90:58–64. doi: 10.1016/0013-4694(94)90113-9. [DOI] [PubMed] [Google Scholar]

- Bola M., Paź M., Doradzińska Ł., Nowicka A. The self-face captures attention without consciousness: Evidence from the N2pc ERP component analysis. Psychophysiology. 2021;58 doi: 10.1111/psyp.13759. [DOI] [PubMed] [Google Scholar]

- Bortolon C., Raffard S. Self-face advantage over familiar and unfamiliar faces: A three-level meta-analytic approach. Psychonomic B Review. 2018;25:1287–1300. doi: 10.3758/s13423-018-1487-9. [DOI] [PubMed] [Google Scholar]

- Brédart S., Delchambre M., Laureys S. One's own face is hard to ignore. Quarterly Journal of Experimental Psychology. 2006;59:46–52. doi: 10.1080/17470210500343678. [DOI] [PubMed] [Google Scholar]

- Brown S.B., van Steenbergen H., Band G.P., de Rover M., Nieuwenhuis S. Functional significance of the emotion-related late positive potential. Frontiers Human Neuroscience. 2012;6:33. doi: 10.1111/1467-839X.00101. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Burns E.J., Arnold T., Bukach C.M. P-curving the fusiform face area: Meta-analyses support the expertise hypothesis. Neuroscience and Biobehavioral Reviews. 2019;104:209–221. doi: 10.1016/j.neubiorev.2019.07.003. [DOI] [PubMed] [Google Scholar]

- Caharel S., Poiroux S., Bernard C., Thibaut F., Lalonde R., Rebai M. ERPs associated with familiarity and degree of familiarity during face recognition. International Journal of Neuroscience. 2002;112:1499–1512. doi: 10.1080/00207450290158368. [DOI] [PubMed] [Google Scholar]

- Calbi M., Langiulli N., Ferroni F., Montalti M., Kolesnikov A., Gallese V., Umiltà M.A. The consequences of COVID-19 on social interactions: An online study on face covering. Scientific Reports. 2021;11:2601. doi: 10.1038/s41598-021-81780-w. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Campbell J.I.D., Thompson V.A. More power to you: Simple power calculations for treatment effects with one degree of freedom. Behaviour Research Methods Instrumentation. 2002;34:332–337. doi: 10.3758/bf03195460. [DOI] [PubMed] [Google Scholar]

- Carmel D., Bentin S. Domain specificity versus expertise: Factors influencing distinct processing of faces. Cognition. 2002;83:1–29. doi: 10.1016/s0010-0277(01)00162-7. [DOI] [PubMed] [Google Scholar]

- Carragher D.J., Hancock P.J.B. Surgical face masks impair human face matching performance for familiar and unfamiliar faces. Cognitive Research. 2020;5:59. doi: 10.1186/s41235-020-00258-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Civile C., Elchlepp H., McLaren R., Galang C.M., Lavric A., McLaren I. The effect of scrambling upright and inverted faces on the N170. Quartely Journal Experiment Psychology. 2018;71:2464–2476. doi: 10.1177/1747021817744455. [DOI] [PubMed] [Google Scholar]

- Cuthbert B.N., Schupp H.T., Bradley M.M., Birbaumer N., Lang P.J. Brain potentials in affective picture processing: Covariation with autonomic arousal and affective report. Biological Psychology. 2000;52:95–111. doi: 10.1016/s0301-0511(99)00044-7. [DOI] [PubMed] [Google Scholar]

- Cygan H.B., Tacikowski P., Ostaszewski P., Chojnicka I., Nowicka A. Neural correlates of own name and own face detection in autism spectrum disorder. PLoS One. 2014;9:e86020. doi: 10.1371/journal.pone.0086020.

- Dering B., Martin C.D., Moro S., Pegna A.J., Thierry G. Face-sensitive processes one hundred milliseconds after picture onset. Frontiers in Human Neuroscience. 2011;5:93. doi: 10.3389/fnhum.2011.00093.

- Dresler T., Mériau K., Heekeren H.R., van der Meer E. Emotional Stroop task: Effect of word arousal and subject anxiety on emotional interference. Psychological Research. 2009;73:364–371. doi: 10.1007/s00426-008-0154-6.

- Duncan J. The locus of interference in the perception of simultaneous stimuli. Psychological Review. 1980:272–300.

- Eimer M. The face-specific N170 component reflects late stages in the structural encoding of faces. Neuroreport. 2000;11:2319–2324. doi: 10.1097/00001756-200007140-00050.

- Eimer M. In: The Oxford handbook of face perception. Calder A.J., Rhodes G., Johnson M.H., Haxby J.V., editors. Oxford University Press; Oxford: 2011. The face-sensitive N170 component of the event-related brain potential; pp. 329–344.

- Estudillo A.J. Commentary: My face or yours? Event-related potential correlates of self-face processing. Frontiers in Psychology. 2017;8:608. doi: 10.3389/fpsyg.2017.00608.

- Estudillo A.J., Hills P.J., Keat W.H. The effect of face masks on forensic face matching: An individual differences study. Journal of Applied Research in Memory and Cognition. 2021. doi: 10.1016/j.jarmac.2021.07.002.

- Fisher R.A. The use of multiple measurements in taxonomic problems. Annals of Eugenics. 1936;7:179–188.

- Freud E., Stajduhar A., Rosenbaum R.S., Avidan G., Ganel T. The COVID-19 pandemic masks the way people perceive faces. Scientific Reports. 2020;10:22344. doi: 10.1038/s41598-020-78986-9.

- Gauthier I., Skudlarski P., Gore J.C., Anderson A.W. Expertise for cars and birds recruits brain areas involved in face recognition. Nature Neuroscience. 2000;3:191–197. doi: 10.1038/72140.

- González-Villar A.J., Triñanes Y., Zurrón M., Carrillo-de-la-Peña M.T. Brain processing of task-relevant and task-irrelevant emotional words: An ERP study. Cognitive, Affective & Behavioral Neuroscience. 2014;14:939–950. doi: 10.3758/s13415-013-0247-6.

- Gosling A., Eimer M. An event-related brain potential study of explicit face recognition. Neuropsychologia. 2011;49:2736–2745. doi: 10.1016/j.neuropsychologia.2011.05.025.

- Gray H.M., Ambady N., Lowenthal W.T., Deldin P. P300 as an index of attention to self-relevant stimuli. Journal of Experimental Social Psychology. 2004;40:216–224.

- Greenhouse S.W., Geisser S. On methods in the analysis of profile data. Psychometrika. 1959;24:95–112.

- Gunji A., Inagaki M., Inoue Y., Takeshima Y., Kaga M. Event-related potentials of self-face recognition in children with pervasive developmental disorders. Brain & Development. 2009;31:139–147. doi: 10.1016/j.braindev.2008.04.011.

- Hajcak G., MacNamara A., Olvet D.M. Event-related potentials, emotion, and emotion regulation: An integrative review. Developmental Neuropsychology. 2010;35:129–155. doi: 10.1080/87565640903526504.

- Haxby J.V., Gobbini M.I., Furey M.L., Ishai A., Schouten J.L., Pietrini P. Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science. 2001;293:2425–2430. doi: 10.1126/science.1063736.

- Haxby J.V., Hoffman E.A., Gobbini M.I. The distributed human neural system for face perception. Trends in Cognitive Sciences. 2000;4:223–233. doi: 10.1016/s1364-6613(00)01482-0.

- Heatherton T.F., Wyland C.L., Macrae C.N., Demos K.E., Denny B.T., Kelley W.M. Medial prefrontal activity differentiates self from close others. Social Cognitive and Affective Neuroscience. 2006;1:18–25. doi: 10.1093/scan/nsl001.

- Hileman C.M., Henderson H., Mundy P., Newell L., Jaime M. Developmental and individual differences on the P1 and N170 ERP components in children with and without autism. Developmental Neuropsychology. 2011;36:214–236. doi: 10.1080/87565641.2010.549870.

- Hillyard S.A., Anllo-Vento L. Event-related brain potentials in the study of visual selective attention. Proceedings of the National Academy of Sciences of the United States of America. 1998;95:781–787. doi: 10.1073/pnas.95.3.781.

- Hillyard S.A., Vogel E.K., Luck S.J. Sensory gain control (amplification) as a mechanism of selective attention: Electrophysiological and neuroimaging evidence. Philosophical Transactions of the Royal Society of London. Series B, Biological Sciences. 1998;353:1257–1270. doi: 10.1098/rstb.1998.0281.

- Humphreys G.W., Sui J. Attentional control and the self: The self-attention network (SAN). Cognitive Neuroscience. 2016;7:5–17. doi: 10.1080/17588928.2015.1044427.

- Itier R.J., Taylor M.J. Inversion and contrast polarity reversal affect both encoding and recognition processes of unfamiliar faces: A repetition study using ERPs. NeuroImage. 2002;15:353–372. doi: 10.1006/nimg.2001.0982.

- Jung T., Makeig S., Westerfield M., Townsend J., Courchesne E., Sejnowski T. Analysis and visualization of single-trial event-related potentials. Human Brain Mapping. 2001;14:166–185. doi: 10.1002/hbm.1050.

- Kanwisher N., McDermott J., Chun M.M. The fusiform face area: A module in human extrastriate cortex specialized for face perception. The Journal of Neuroscience. 1997;17(11):4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- Kanwisher N., Yovel G. The fusiform face area: A cortical region specialized in the perception of faces. Philosophical Transactions of the Royal Society of London. Series B, Biological Sciences. 2006;361:2109–2128. doi: 10.1098/rstb.2006.1934.

- Keenan J.P., Wheeler M.A., Gallup G.G., Jr., Pascual-Leone A. Self-recognition and the right prefrontal cortex. Trends in Cognitive Sciences. 2000;4:338–344. doi: 10.1016/s1364-6613(00)01521-7.

- Key A.P., Dove G.O., Maguire M.J. Linking brainwaves to the brain: An ERP primer. Developmental Neuropsychology. 2005;27:183–215. doi: 10.1207/s15326942dn2702_1.

- Keyes H. Categorical perception effects for facial identity in robustly represented familiar and self-faces: The role of configural and featural information. Quarterly Journal of Experimental Psychology. 2012;65(4):760–772. doi: 10.1080/17470218.2011.636822.

- Keyes H., Brady N. Self-face recognition is characterized by "bilateral gain" and by faster, more accurate performance which persists when faces are inverted. Quarterly Journal of Experimental Psychology. 2010;63(5):840–847. doi: 10.1080/17470211003611264.

- Kircher T.T., Senior C., Phillips M.L., Rabe-Hesketh S., Benson P.J., Bullmore E.T., Brammer M., Simmons A., Bartels M., David A.S. Recognizing one’s own face. Cognition. 2001;78:B1–B15. doi: 10.1016/s0010-0277(00)00104-9.

- Knyazev G. EEG correlates of self-referential processing. Frontiers in Human Neuroscience. 2013;7:264. doi: 10.3389/fnhum.2013.00264.

- Kotlewska I., Nowicka A. Present self, past self and close-other: Event-related potential study of face and name detection. Biological Psychology. 2015;110:201–211. doi: 10.1016/j.biopsycho.2015.07.015.

- Kotlewska I., Nowicka A. Present-self, past-self and the close-other: Neural correlates of assigning trait adjectives to oneself and others. The European Journal of Neuroscience. 2016;44:2064–2071. doi: 10.1111/ejn.13293.

- Kotlewska I., Wójcik M.J., Nowicka M.M., Marczak K., Nowicka A. Present and past selves: A steady-state visual evoked potentials approach to self-face processing. Scientific Reports. 2017;7:1–9. doi: 10.1038/s41598-017-16679-6.

- Kriegeskorte N., Kievit R.A. Representational geometry: Integrating cognition, computation, and the brain. Trends in Cognitive Sciences. 2013;17:401–412. doi: 10.1016/j.tics.2013.06.007.

- Lancaster J.L., Woldorff M.G., Parsons L.M., Liotti M., Freitas C.S., Rainey L., Kochunov P.V., Nickerson D., Mikiten S.A., Fox P.T. Automated Talairach atlas labels for functional brain mapping. Human Brain Mapping. 2000;10(3):120–131. doi: 10.1002/1097-0193(200007)10:3<120::AID-HBM30>3.0.CO;2-8.

- Lavie N., Ro T., Russell C. The role of perceptual load in processing distractor faces. Psychological Science. 2003;14:510–515. doi: 10.1111/1467-9280.03453.

- Luck S.J. In: Event-related potentials: A methods handbook. Handy T.C., editor. The MIT Press; Cambridge, MA: 2005. Ten simple rules for designing ERP experiments; pp. 209–227.

- Luck S.J., Woodman G.E., Vogel E.K. Event-related potential studies of attention. Trends in Cognitive Sciences. 2000;4:432–440. doi: 10.1016/s1364-6613(00)01545-x.

- Ma D.S., Correll J., Wittenbrink B. The Chicago face database: A free stimulus set of faces and norming data. Behavior Research Methods. 2015;47:1122–1135. doi: 10.3758/s13428-014-0532-5.

- Mangun G.R. Neural mechanisms of visual selective attention. Psychophysiology. 1995;32:4–18. doi: 10.1111/j.1469-8986.1995.tb03400.x.

- Mangun G.R., Hillyard S.A. Modulations of sensory-evoked brain potentials indicate changes in perceptual processing during visual-spatial priming. Journal of Experimental Psychology: Human Perception and Performance. 1991;17:1057–1074. doi: 10.1037//0096-1523.17.4.1057.

- McNeill D. The face. 1st ed. Little, Brown and Company; Boston, MA: 1998.

- Ninomiya H., Onitsuka T., Chen C.H., Sato E., Tashiro N. P300 in response to the subject's own face. Psychiatry and Clinical Neurosciences. 1998;52:519–522. doi: 10.1046/j.1440-1819.1998.00445.x.

- Nowicka M.M., Wójcik M.J., Kotlewska I., Bola M., Nowicka A. The impact of self-esteem on the preferential processing of self-related information: Electrophysiological correlates of explicit self versus other evaluation. PLoS One. 2018;13:e0200604. doi: 10.1371/journal.pone.0200604.

- Noyes E., Davis J.P., Petrov N., Gray K.L.H., Ritchie K.L. The effect of face masks and sunglasses on identity and expression recognition with super-recognizers and typical observers. Royal Society Open Science. 2021;8:201169. doi: 10.1098/rsos.201169.

- Oldfield R.C. The assessment and analysis of handedness: The Edinburgh inventory. Neuropsychologia. 1971;9:97–113. doi: 10.1016/0028-3932(71)90067-4.

- Pannese A., Hirsch J. Self-face enhances processing of immediately preceding invisible faces. Neuropsychologia. 2011;49:564–573. doi: 10.1016/j.neuropsychologia.2010.12.019.

- Parketny J., Towler J., Eimer M. The activation of visual face memory and explicit face recognition are delayed in developmental prosopagnosia. Neuropsychologia. 2015;75:538–547. doi: 10.1016/j.neuropsychologia.2015.07.009.

- Pascual-Marqui R.D., Michel C.M., Lehmann D. Low resolution electromagnetic tomography: A new method for localizing electrical activity in the brain. International Journal of Psychophysiology. 1994;18:49–65. doi: 10.1016/0167-8760(84)90014-x.

- Paul-Jordanov I., Hoechstetter K., Bornfleth H., Waelkens A., Scherg M. BESA Research 6.1 user manual. 2016.

- Petersen S.E., Posner M.I. The attention system of the human brain: 20 years after. Annual Review of Neuroscience. 2012;35:73–89. doi: 10.1146/annurev-neuro-062111-150525.

- Picton T.W., Hillyard S.A. In: Human event-related potentials. Picton T.W., editor. Elsevier; Amsterdam: 1988. Endogenous event-related potentials; pp. 376–390.

- Pierce L.J., Scott L., Boddington S., Droucker D., Curran T., Tanaka J. The N250 brain potential to personally familiar and newly learned faces and objects. Frontiers in Human Neuroscience. 2011;5:111. doi: 10.3389/fnhum.2011.00111.

- Polich J. Updating P300: An integrative theory of P3a and P3b. Clinical Neurophysiology. 2007;118:2128–2148. doi: 10.1016/j.clinph.2007.04.019.

- Posner M.I. Orienting of attention. Quarterly Journal of Experimental Psychology. 1980;32(1):3–25. doi: 10.1080/00335558008248231.

- Rossion B. Understanding face perception by means of human electrophysiology. Trends in Cognitive Sciences. 2014;18:310–318. doi: 10.1016/j.tics.2014.02.013.

- Rossion B., Gauthier I., Tarr M.J., Despland P., Bruyer R., Linotte S., Crommelinck M. The N170 occipito-temporal component is delayed and enhanced to inverted faces but not to inverted objects: An electrophysiological account of face-specific processes in the human brain. Neuroreport. 2000;11(1):69–72. doi: 10.1097/00001756-200001170-00014.