Abstract

Mammals localize sounds using information from their two ears. Localization in real-world conditions is challenging, as echoes provide erroneous information, and noises mask parts of target sounds. To better understand real-world localization we equipped a deep neural network with human ears and trained it to localize sounds in a virtual environment. The resulting model localized accurately in realistic conditions with noise and reverberation. In simulated experiments, the model exhibited many features of human spatial hearing: sensitivity to monaural spectral cues and interaural time and level differences, integration across frequency, biases for sound onsets, and limits on localization of concurrent sources. But when trained in unnatural environments without either reverberation, noise, or natural sounds, these performance characteristics deviated from those of humans. The results show how biological hearing is adapted to the challenges of real-world environments and illustrate how artificial neural networks can reveal the real-world constraints that shape perception.

Introduction

Why do we see or hear the way we do? Perception is believed to be adapted to the world – shaped over evolution and development to help us survive in our ecological niche. Yet adaptedness is often difficult to test. Many phenomena are not obviously a consequence of adaptation to the environment, and perceptual traits are often proposed to reflect implementation constraints rather than the consequences of performing a task well. Well-known phenomena attributed to implementation constraints include aftereffects1,2, masking3,4, poor visual motion and form perception for equiluminant color stimuli5, and limits on the information that can be extracted from high-frequency sound6–8.

Evolution and development can be viewed as an optimization process that produces a system that functions well in its environment. The consequences of such optimization for perceptual systems have traditionally been revealed by ideal observer models – systems that perform a task optimally under environmental constraints9,10, and whose behavioral characteristics can be compared to actual behavior. Ideal observers are typically derived analytically, but as a result are often limited to simple psychophysical tasks11–16. Despite recent advances, such models remain intractable for many real-world behaviors. Rigorously evaluating adaptedness has thus remained out of reach for many domains. Here we extend ideas from ideal observer theory to investigate the environmental constraints under which human behavior emerges, using contemporary machine learning to optimize models for behaviorally relevant tasks in simulated environments. Human behaviors that emerge from machine learning under a set of naturalistic environmental constraints, but not under alternative constraints, are plausibly a consequence of optimization for those natural constraints (i.e., adapted to the natural environment) (Fig. 1A).

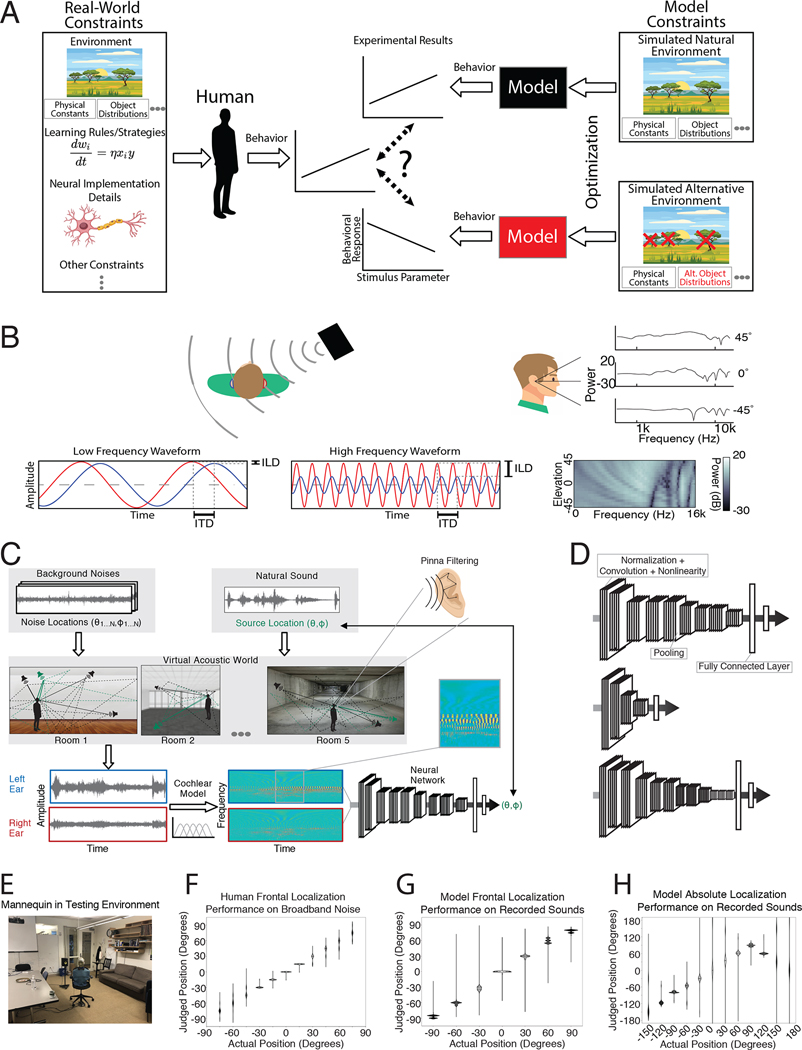

Figure 1.

Overview of approach. A. Illustration of method. A variety of constraints (left) shape human behavior. Models optimized under particular environmental constraints (right) illustrate the effect of these constraints on behavior. Environment simulators can instantiate naturalistic environments as well as alternative environments in which particular properties of the world are altered, to examine the constraints that shape human behavior. B. Cues to sound location available to humans: interaural time and level differences (left and center) and spectral differences (right). Time and level differences are shown for low and high frequency sinusoids (left and center, respectively). The level difference is small for the low frequency, and the time difference is ambiguous for the high frequency. C. Training procedure. Natural sounds (green) were rendered at a location in a room, with noises (natural sound textures, black) placed at other locations. Rendering included direction-specific filtering by the head/torso/pinnae, using head-related transfer functions from the KEMAR mannequin. Neural networks were trained to classify the location of the natural sound source (azimuth and elevation) into one of a set of location bins (spaced 5 degrees in azimuth and 10 degrees in elevation). D. Example neural network architectures from the architecture search. Architectures consisted of sequences of “blocks” (a normalization layer, followed by a convolution layer, followed by a nonlinearity layer) and pooling layers, culminating in fully connected layers followed by a classifier that provided the network’s output. Architectures varied in the total number of layers, the kernel dimensions for each convolutional layer, the number of blocks that preceded each pooling layer, and the number of fully connected layers preceding the classifier. Labels indicate an example block, pooling layer, and fully connected layer. The model’s behavior was taken as the average of the results for the 10 best architectures (assessed by performance on a held-out validation set of training examples). E. Recording setup for real-world test set. Mannequin was seated on a chair and rotated relative to the speaker to achieve different azimuthal positions. Sound was recorded from microphones in the mannequin ears. F. Free-field localization of human listeners, replotted from a previous publication154. Participants heard a sound played from one of 11 speakers in the front horizontal plane and pointed to the location. Graph plots kernel density estimate of participant responses for each actual location. G. Localization judgments of the trained model for the real-world test set. Graph plots kernel density estimates of response distribution. For ease of comparison with F, in which all locations were in front of the listener, positions were front-back folded. H. Localization judgments of the model without front-back folding. Model errors are predominantly at front-back reflections of the correct location.

Sound localization is one domain of perception where the relationship of behavior to environmental constraints has not been straightforward to evaluate. The basic outlines of spatial hearing have been understood for decades17–20. Time and level differences in the sound that enters the two ears provide cues to a sound’s location, and location-specific filtering by the ears, head, and torso provide monaural cues that help resolve ambiguities in binaural cues (Fig. 1B). However, in real-world conditions, background noise masks or corrupts cues from sources to be localized, and reflections provide erroneous cues to direction21. Classical models based on these cues thus cannot replicate real-world localization behavior22–24. Instead, modeling efforts have focused on accounting for observed neuronal tuning in early stages of the auditory system rather than behavior25–31, or have modeled behavior in simplified experimental conditions using particular cues24,30,32–36. Engineering systems must solve localization in real-world conditions, but typically adopt approaches that diverge from biology, using more than two microphones and/or not leveraging cues from ear/head filtering37–44. As a result we lack quantitative models of how biological organisms localize sounds in realistic conditions. In the absence of such models, the science of sound localization has largely relied on intuitions about optimality. Those intuitions were invaluable in stimulating research, but on their own are insufficient for quantitative predictions.

Here we exploit the power of contemporary artificial neural networks to develop a model optimized to localize sounds in realistic conditions. Unlike much other contemporary work using neural networks to investigate perceptual systems45–50, our primary interest is not in potential correspondence between internal representations of the network and the brain. Instead, we aim to use the neural network as a way to find an optimized solution to a difficult real-world task that is not easily specified analytically, for the purpose of comparing its behavioral characteristics to those of humans. Our approach is thus analogous to the classic ideal observer approach, but harnesses modern machine learning in place of an ideal observer for a problem where one is not analytically tractable.

To obtain sufficient labeled data with which to train the model, and to enable the manipulation of training conditions, we used a virtual acoustic world51. The virtual world simulated sounds at different locations with realistic patterns of surface reflections and background noise that could be eliminated to yield unnatural training environments. To give the model access to the same cues available to biological organisms, we trained it on a high-fidelity cochlear representation of sound, leveraging recent technical advances52 to train the large models that are required for such high-dimensional input. Unlike previous generations of neural network models24,37,40,42,44, which were reliant on hand-specified sound features, we learn all subsequent stages of a sound localization system to obtain good performance in real-world conditions.

When tested on stimuli from classic laboratory experiments, the resulting model replicated a large and diverse array of human behavioral characteristics. We then trained models in unnatural conditions to simulate evolution and development in alternative worlds. These alternative models deviated notably from human-like hearing. The results suggest that the characteristics of human hearing are indeed adapted to the constraints of real-world localization, and that the rich panoply of sound localization phenemona can be explained as consequences of this adaptation. The approach we employ is broadly applicable to other sensory modalities, providing a way to test the adaptedness of aspects of human perception to the environment and to understand the conditions in which human-like perception arises.

Results

Model construction

We began by building a system that could localize sounds using the information available to human listeners. The system thus had outer ears (pinnae), and a simulated head and torso, along with a simulated cochlea. The outer ears and head/torso were simulated using head-related impulse responses recorded from a standard physical model of the human53. The cochlea was simulated with a bank of bandpass filters modeled on the frequency selectivity of the human ear54,55, whose output was rectified and low-pass filtered to simulate the presumed upper limit of phase locking in the auditory nerve56. The inclusion of a fixed cochear front-end (in lieu of trainable filters) reflected the assumption that the cochlea evolved to serve many different auditory tasks rather than being primarily driven by sound localization. As such, the cochlea seemed a plausible biological constraint on localization.

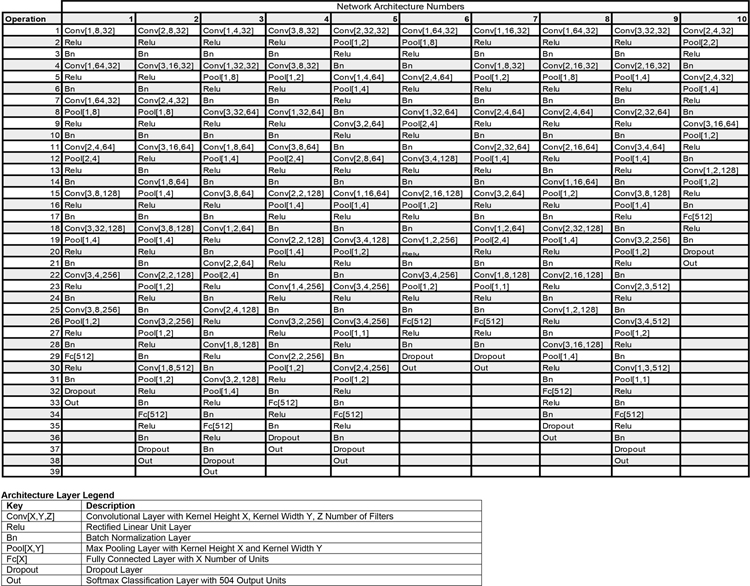

The output of the two cochlea formed the input to a standard convolutional neural network (Fig. 1C). This network instantiated a cascade of simple operations – filtering, pooling, and normalization – culminating in a softmax output layer with 504 units corresponding to different spatial locations (spaced 5° in azimuth and 10° in elevation). The parameters of the model were tuned to maximize localization performance on the training data. The optimization procedure had two phases: an architecture search in which we searched over architectural parameters to find a network architecture that performed well (Fig. 1D), and a training phase in which the filter weights of the selected architectures were trained to asymptotic performance levels using gradient descent.

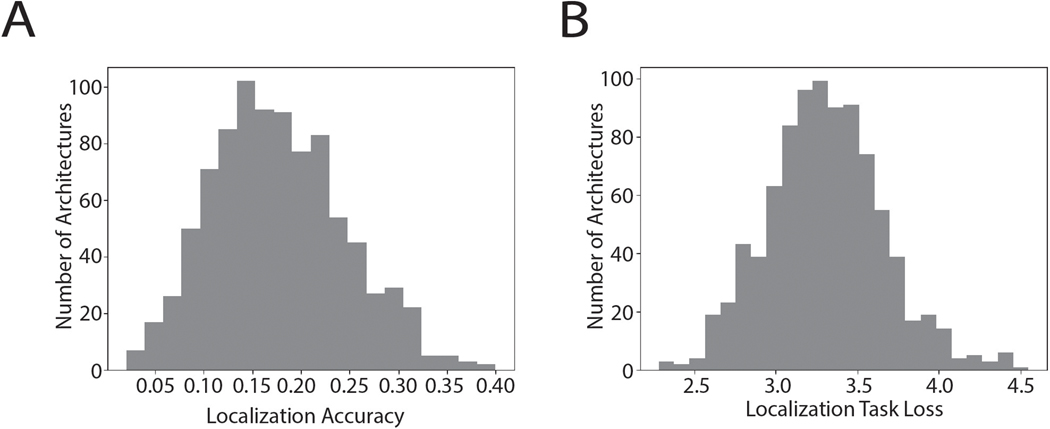

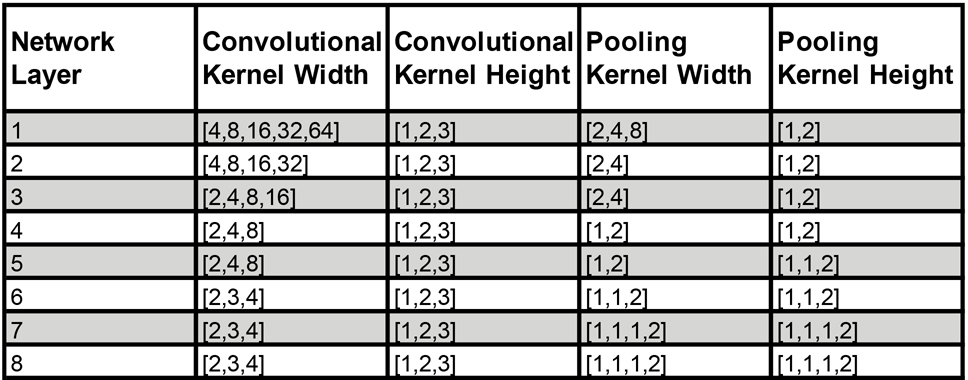

The architecture search consisted of training each of a large set of possible architectures for 15000 training steps with 16 1s stimulus examples per step (240k total examples; see Extended Data Fig. 1 for distribution of localization performance across architectures, and Extended Data Fig. 2 for the distributions from which architectures were chosen). We then chose the 10 networks that perfomed best on a validation set of data not used during training (Extended Data Fig. 3). The parameters of these 10 networks were then reinitialized and each trained for 100k training steps (1.6M examples). Given evidence that internal representations can vary somewhat across different networks trained on the same task57, we present results aggregated across the top 10 best-performing architectures, treated akin to different participants in an experiment58. Most results graphs present the average results for these 10 networks, which we collectively refer to as “the model”.

The training data was based on a set of ~500,000 stereo audio signals with associated 3D locations relative to the head (on average 988 examples for each of the 504 location bins; see Methods). These signals were generated from 385 natural sound source recordings (Extended Data Fig. 4) rendered at a spatial location in a simulated room. The room simulator used a modified source-image method51,59 to simulate the reflections off the walls of the room. Each reflection was then filtered by the (binaural) head-related impulse response for the direction of the reflection53. Five different rooms were used, varying in their dimensions and in the material of the walls (Extended Data Fig. 5). To mimick the common presence of noise in real-world environments, each training signal also contained spatialized noise. Background noise was synthesized from the statistics of a natural sound texture60, and was rendered at between 3 and 8 randomly chosen locations using the same room simulator, in order to produce noise that was diffuse but non-uniform, intended to replicate common real-world sources of noise. At each training step the rendered natural sound sources were randomly paired with rendered background noises. The neural networks were trained to map the binaural audio to the location of the sound source (specified by its azimuth and elevation relative to the model’s “head”).

Model evaluation in real-world conditions

The trained networks were first evaluated on a held-out set of 70 sound sources rendered using the same pipeline used to generate the training data (yielding a total of ~47,000 stereo audio signals). The best-performing networks produced accurate localization for this validation set (the mean error was 5.3 degrees in elevation and 4.4 degrees in azimuth, front-back folded, i.e. reflected about the coronal plane, to discount front-back confusions).

To assess whether the model would generalize to real-world stimuli outside the training distribution, we made binaural recordings in an actual conference room using a mannequin with in-ear microphones (Fig. 1E. Humans localize relatively well in such free-field conditions (Fig. 1F). The trained networks also localized real-world recordings relatively well (Fig. 1G), on par with human free-field localization, with errors limited to the front-back confusions that are common to humans when they cannot move their heads (Fig. 1H)61,62.

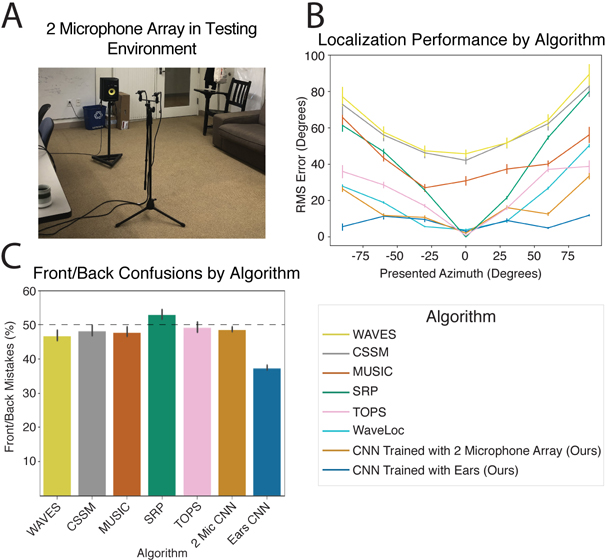

For comparison, we also assessed the performance of a standard set of two-microphone localization algorithms from the engineering literature63–68. In addition, we trained the same set of neural networks to localize sounds from a two-microphone array that lacked the filtering provided to biological organisms by the ears/head/torso but that included the simulated cochlea (Extended Data Fig. 6A). Our networks that had been trained with biological pinnae/head/torso outperformed the set of standard two-microphone algorithms from the engineering community, as well as the neural networks trained with stereo microphone input without a head and ears (Extended Data Fig. 6B&C). This latter result confirms that the head and ears provide valuable cues for localization. Overall, performance on the real-world test set demonstrates that training a neural network in a virtual world produces a model that can accurately localize sounds in realistic conditions.

Model behavioral characteristics

To assess whether the trained model replicated the characteristics of human sound localization, we simulated a large set of behavioral experiments from the literature, intended to span many of the best-known and largest effects in spatial hearing. We replicated the conditions of the original experiments as closely as possible (e.g. when humans were tested in anechoic conditions, we rendered experimental stimuli in an anechoic environment). We emphasize that the networks were not fit to human data in any way. Despite this, the networks reproduced the characteristics of human spatial hearing across this broad set of experiments.

Sensitivity to interaural time and level differences

We began by assessing whether the networks learned to use the binaural cues known to be important for biological sound localization. We probed the effect of interaural time and level differences (ITDs and ILDs, respectively) on localization behavior using a paradigm in which additional time and level differences are added to high- and low-frequency sounds rendered in virtual acoustic space69 (Fig. 2A). This paradigm has the advantage of using realistically externalized sounds and an absolute localization judgment (rather than the left/right lateralization judgments of simpler stimuli that are common to many other experiments70–73).

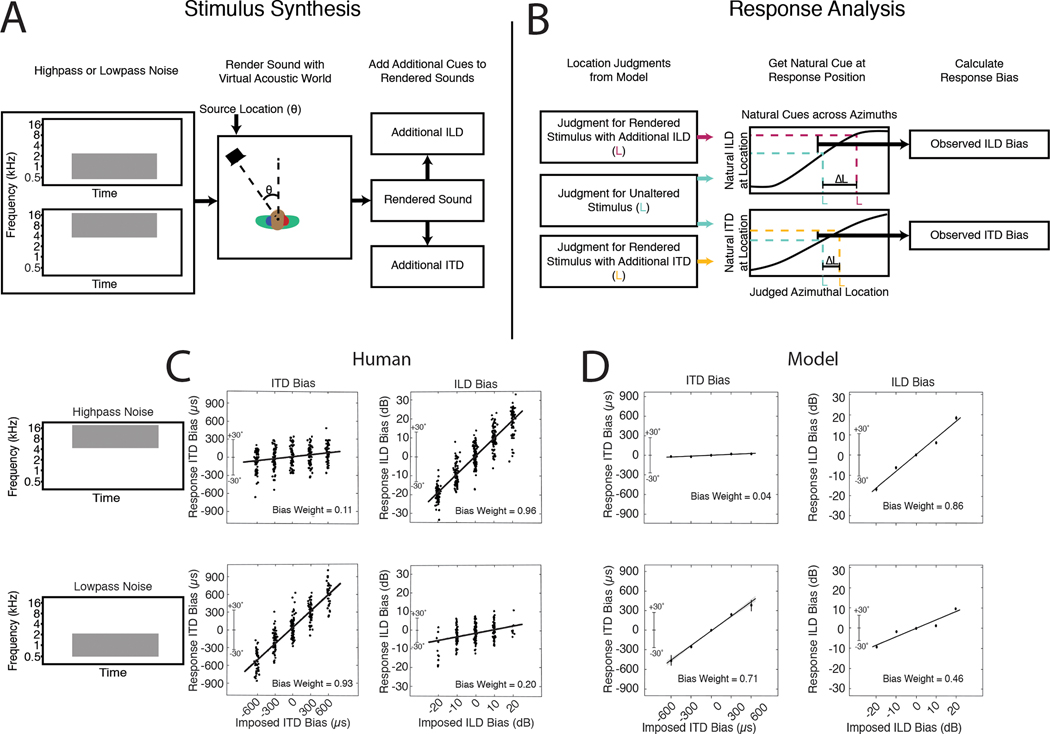

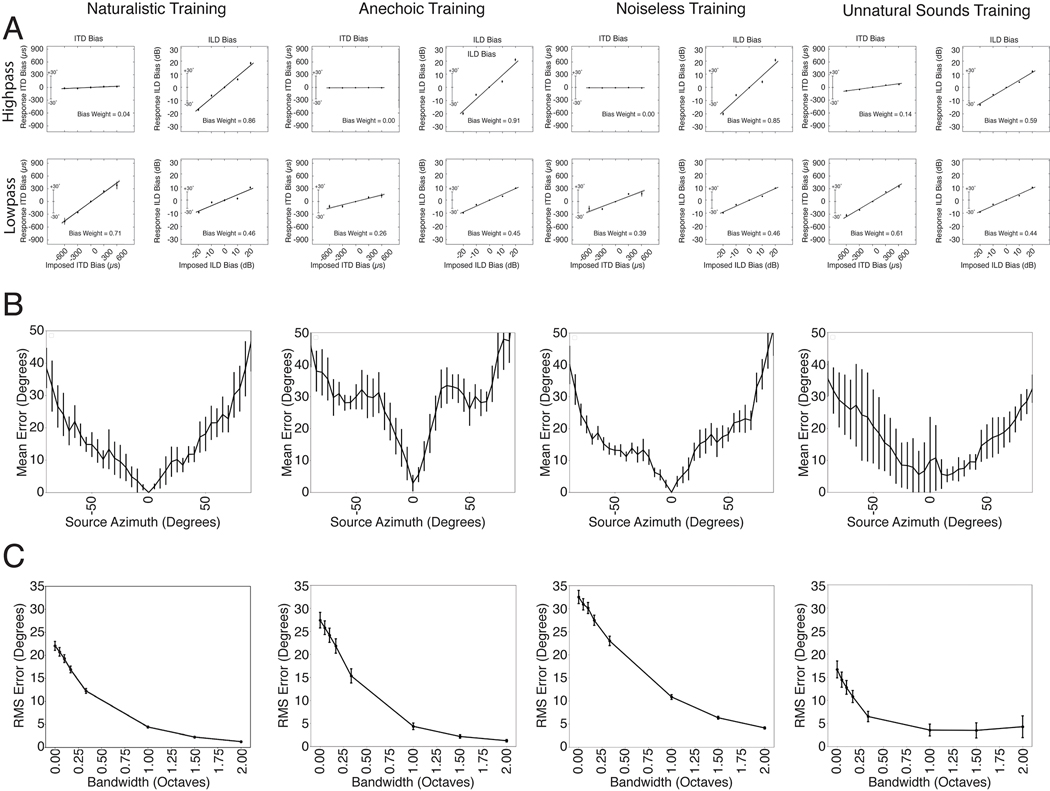

Figure 2.

Sensitivity to interaural time and level differences. A. Schematic of stimulus generation. Noise bursts filtered into high or low frequency bands were rendered at a particular azimuthal position, after which an additional ITD or ILD was added to the stereo audio signal. B. Schematic of response analysis. Responses were analyzed to determine the amount by which the perceived location (L) was altered (ΔL) by the additional ITD/ILD, expressed as the amount by which the ITD/ILD would have changed if the actual sound’s location changed by ΔL. C. Effect of additional ITD and ILD on human localization. Y axis plots amount by which the perceived location was altered, expressed in ITD/ILD as described above. Each dot plots a localization judgment from one trial. Data reproduced from a previous publication69. D. Effect of additional ITD and ILD on model localization. Same conventions as B. Error bars plot SEM, bootstrapped across the 10 networks.

In the original experiment69, the change to perceived location imparted by the additional ITD or ILD was expressed as the amount by which the ITD or ILD would change in natural conditions if the actual location were changed by the perceived amount (Fig. 2B). This yields a curve whose slope indicates the efficacy of the manipulated cue (ITD or ILD). We reproduced the stimuli from the original study, rendered them in our virtual acoustic world, added ITDs and ILDs as in the original study, and analyzed the model’s localization judgments in the same way.

For human listeners, ITD and ILD have opposite efficacies at high and low frequencies (Fig. 2C), as predicted by classical “duplex” theory17. An ITD bias imposed on low-frequency sounds shifts the perceived location of the sound substantially (bottom left), whereas an ITD imposed on high-frequency sound does not (top left). The opposite effect occurs for ILDs (right panels), although there is a weak effect of ILDs on low-frequency sound. This latter effect is inconsistent with the classical duplex story but consistent with more modern measurements indicating small but reliable ILDs at low frequencies74 that are used by the human auditory system75–77.

As shown in Fig. 2D, the model qualitatively replicated the effects seen in humans. Added ITDs and ILDs had the largest effect at low and high frequencies, respectively, but ILDs had a modest effect at low frequencies as well. This produced an interaction between the type of cue (ITD/ILD) and frequency range (difference of differences between slopes significantly greater than 0; p<.001, evaluated by bootstrapping across the 10 networks). However, the effect of ILD at low frequencies was also significant (slope significantly greater than 0; p<.001, via bootstrap). Thus, a model optimized for accurate localization both exhibits the dissociation classically associated with duplex theory, but also its refinements in the modern era.

Azimuthal localization of broadband sounds

We next measured localization accuracy of broadband noise rendered at different azimuthal locations (Fig. 3A). In humans, localization is most accurate near the midline (Fig. 3B), and becomes progressively less accurate as sound sources move to the left or right of the listener78–80. One explanation is that the first derivatives of ITD and ILD with respect to azimuthal location decrease as the source moves away from the midline21, providing less information about location28. The model qualitatively reproduced this result (Fig. 3C).

Figure 3.

Azimuthal localization is most accurate at the midline and improves with stimulus bandwidth. A. Schematic of stimuli from experiment measuring localization accuracy at different azimuthal positions. B. Localization accuracy of human listeners for broadband noise at different azimuthal positions. Data were scanned from a previous publication80, which measured discriminability of noise bursts separated by 15 degrees (quantified as d’). Error bars plot SEM. C. Localization accuracy of our model for broadband noise at different azimuthal positions. Graph plots mean absolute localization error of the same noise bursts used in the human experiment in B. Error bars plot SEM across the 10 networks. D. Schematic of stimuli from experiment measuring effect of bandwidth on localization accuracy. Noise bursts varying in bandwidth were presented at particular azimuthal locations; participants indicated the azimuthal position with a keypress. E. Effect of bandwidth on human localization of noise bursts. Error bars plot SD. Data are replotted from a previous publication82. F. Effect of bandwidth on model localization of noise bursts. Networks were constrained to report only the azimuth of the stimulus. Error bars plot SEM across the 10 networks.

Integration across frequency

Because biological hearing begins with a decomposition of sound into frequency channels, binaural cues are thought to be initially extracted within these channels20,25. However, organisms are believed to integrate information across frequency to achieve more accurate localization than could be mediated by any single frequency channel. One signature of this integration is improvement in localization accuracy as the bandwidth of a broadband noise source is increased (Fig. 3D&E)81,82. We replicated one such experiment on the networks and they exhibited a similar effect, with accuracy increasing with noise bandwidth (Fig. 3F).

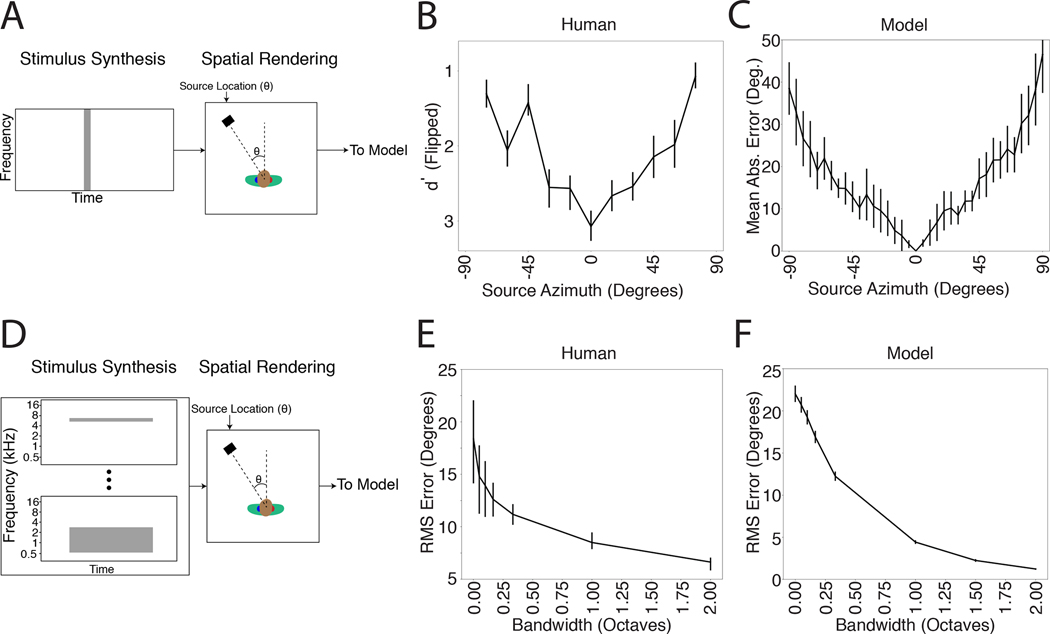

Use of ear-specific cues to elevation

In addition to the binaural cues that provide information about azimuth, organisms are known to make use of the direction-specific filtering imposed on sound by the ears, head and torso18,83. Each individual’s ears have resonances that “color” a sound differently depending on where it comes from in space. Individuals are believed to learn the specific cues provided by their ears. In particular, if forced to listen with altered ears, either via molds inserted into the ears84 or via recordings made in a different person’s ears85, localization in elevation degrades even though azimuthal localization is largely unaffected (Fig. 4A–C).

Figure 4.

Dependence of elevation perception on ear-specific transfer functions. A. Photographs of ear alteration in humans (reproduced from a previous publication84). B. Sound localization by human listeners with unmodified ears. Graph plots mean and SEM of perceived locations for 4 participants, superimposed on grid of true locations (dashed lines). Data scanned from original publication84. C. Effect of ear alteration on human localization. Same conventions as B. D. Sound localization in azimuth and elevation by the model, using the ears (head-related impulse responses) from training, with broadband noise sound sources. Graph plots mean locations estimated by the 10 networks. Tested locations differed from those in the human experiment to conform to the location bins used for network training. E. Effect of ear alteration on model sound localization. Ear alteration was simulated by substituting an alternative set of head-related impulse responses into the sound rendered following training. Graph plots average results across all 45 sets of alternative ears (averaged across the 10 networks). F. Effect of individual sets of alternative ears on localization in azimuth. Graph shows results for a larger set of locations than in D and E to illustrate the generality of the effect. G. Effect of individual sets of alternative ears on localization in elevation. Bolded lines show ears at 5th, 25th, 75th, and 95th percentiles when the 45 sets of ears were ranked by accuracy. H. Smoothing of head-related transfer functions, produced by varying the number of coefficients in a discrete cosine transform. Reproduced from original publication86. I. Effect of spectral smoothing on human perception. Participants heard two sounds, one played from a speaker in front of them, and one played through open-backed earphones, and judged which was which. The earphone-presented sound was rendered using HRTFs smoothed by various degrees. In practice participants performed the task by noting changes in sound location. Data scanned from original publication86. Error bars plot SEM. Conditions with 4, 2, and 1 cosine coefficients were omitted from the experiment, but are included on the x-axis to facilitate comparison with the model results in J. J. Effect of spectral smoothing on model sound localization accuracy (measured in both azimuth and elevation). Conditions with 512 and 1024 cosine components were not realizable given the length of the impulse responses we used. K. Effect of spectral smoothing on model accuracy in azimuth. L. Effect of spectral smoothing on model accuracy in elevation. M. Stimuli from experiment in N and O. Noise bursts varying in low- or high-pass cutoff were presented at particular elevations. N. Effect of low-pass and high-pass cutoff on accuracy in humans. Data scanned from original publication90; error bars were not provided in the original publication. O. Effect of low-pass and high-pass cutoff on model accuracy. Networks were constrained to report only elevation. Here and in J, K, and L, error bars plot SEM across the 10 networks.

To test whether the trained networks similarly learned to use ear-specific elevation cues, we measured localization accuracy in two conditions: one where sounds were rendered using the head-related impulse response set used for training the networks, and another where the impulse responses were different (having been recorded in a different person’s ears). Because we have unlimited ability to run experiments on the networks, in the latter condition we evaluated localization with 45 different sets of impulse responses, each recorded from a different human. As expected, localization of sounds rendered with the ears used for training was good in both azimuth and elevation (Fig. 4D). But when tested with different ears, localization in elevation generally collapsed (Fig. 4E), much like what happens to human listeners when molds are inserted in their ears (Fig. 4C), even though azimuthal localization was nearly indistinguishable from that with the trained ears. Results for individual sets of alternative ears revealed that elevation performance transferred better across some ears than others (Fig. 4F&G), consistent with anecdotal evidence that sounds rendered with HRTFs other than one’s own can sometimes be convincingly localized in three dimensions.

Limited spectral resolution of elevation cues

Elevation perception is believed to rely on the peaks and troughs introduced to a sound’s spectrum by the ears/head/torso18,21,83 (Fig. 1B, right). In humans, however, perception is dependent on relatively coarse spectral features – the transfer function can be smoothed substantially before human listeners notice abnormalities86 (Fig. 4 H&I), for reasons that are unclear. In the original demonstration of this phenomenon, human listeners discriminated sounds with and without smoothing, a judgment that was in practice made by noticing changes in the apparent location of the sound. To test whether the trained networks exhibited a similar effect, we presented sounds to the networks with similarly smoothed transfer functions and measured the extent to which the localization accuracy was affected. The effect of spectral smoothing on the networks’ accuracy was similar to the measured sensitivity of human listeners (Fig. 4J). The effect of the smoothing was most prominent for localization in elevation, as expected, but there was also some effect on localization in azimuth for the more extreme degrees of smoothing (Fig. 4K&L), consistent with evidence that spectral cues affect azimuthal space encoding87.

Dependence on high-frequency spectral cues to elevation

The cues used by humans for localization in elevation are primarily in the upper part of the spectrum88,89. To assess whether the trained networks exhibited a similar dependence, we replicated an experiment measuring the effect of high-pass and low-pass filtering on the localization of noise bursts90 (Fig. 4M). Model performance varied with the frequency content of the noise in much the same way as human performance (Fig. 4N&O).

The precedence effect

Another hallmark of biological sound localization is that judgments are biased towards information provided by sound onsets21,91. The classic example of this bias is known as the “precedence effect”92–94. If two clicks are played from speakers at different locations with a short delay (Fig. 5A), listeners perceive a single sound whose location is determined by the click that comes first. The effect is often hypothesized to be an adaptation to the common presence of reflections off of environmental surfaces (Fig. 1C) – reflections arrive from an erroneous direction but traverse longer paths and arrive later, such that basing location estimates on the earliest arriving sound might avoid errors21. To test whether our model would exhibit a similar effect, we simulated the classic precedence experiment, rendering two clicks at different locations. When clicks were presented simultaneously, the model reported the sound to be centered between the two click locations, but when a small inter-click delay was introduced, the reported location switched to that of the leading click (Fig. 5B). This effect broke down as the delay was increased, as in humans, though with the difference that the model cannot report hearing two sounds, and so instead reported a single location intermediate between those of the two clicks.

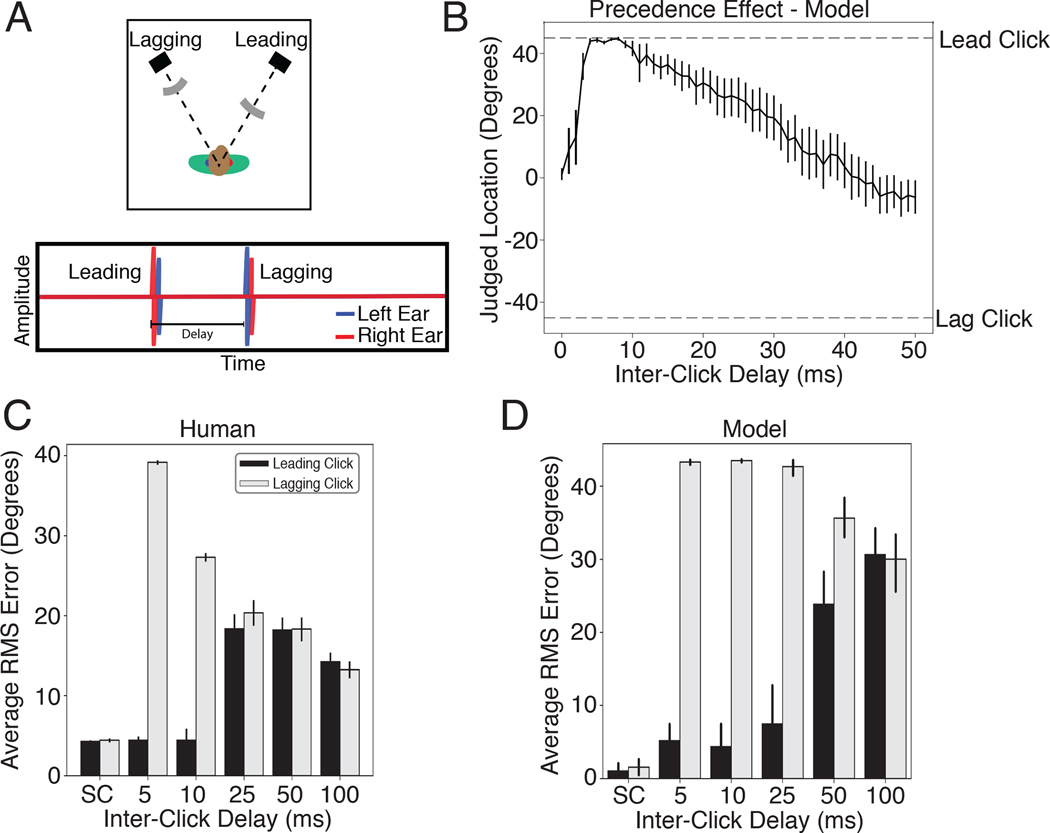

Figure 5.

The precedence effect. A. Diagram of stimulus. Two clicks are played from two different locations relative to the listener. The time interval between the clicks is manipulated and the listener is asked to localize the sound(s) that they hear. When the delay is short but non-zero, listeners perceive a single click at the location of the first click. At longer delays listeners hear two distinct sounds. B. Localization judgments of the model for two clicks at +45 and −45 degrees. The model exhibits a bias for the leading click when the delay is short but non-zero. At longer delays the model judgments (which are constrained to report the location of a single sound, unlike humans), converge to the average of the two click locations. Error bars plots SEM across the 10 networks. C. Error in localization of the leading and lagging clicks by humans as a function of delay. SC denotes a single click at the leading or lagging location. Error bars plot SD. Data scanned from original publication95. D. Error in localization of the leading and lagging clicks by the model as a function of delay. Error bars plots SEM across the 10 networks.

To compare the model results to human data, we simulated an experiment in which participants reported the location of both the leading and lagging click as the inter-click delay was varied95. At short but non-zero delays, humans accurately localize the leading but not the lagging click (Fig. 5C; because a single sound is heard at the location of the leading click). At longer delays the lagging click is more accurately localized, and listeners start to mislocalize the leading click, presumably because they confuse which click is first95. The model qualitatively replicated both effects, in particular the large asymmetry in localization accuracy for the leading and lagging sound at short delays (Fig. 5D).

Multi-source localization

Humans are able to localize multiple concurrent sources, but only to a point96–98. The reasons for the limits on multi-source localization are unclear97. These limitations could reflect human-specific cognitive constraints. For instance, reporting a localized source might require attending to it, which could be limited by central factors not specific to localization. Alternatively, localization could be fundamentally limited by corruption of spatial cues by concurrent sources or other ambiguities intrinsic to the localization problem. To assess whether the model would exhibit limitations like those observed in humans, we replicated an experiment98 in which humans judged both the number and location of a set of speech signals played from a subset of an array of speakers (Fig. 6A). To enable the model to report multiple sources we fine-tuned the final fully-connected layer to indicate the probability of a source at each of the location bins, and set a probability criterion above which we considered the model to report a sound at the corresponding location (see Methods). The weights in all earlier layers were “frozen” during this fine-tuning, such that all other stages of the model were identical to those used in all other experiments. We then tested the model on the experimental stimuli.

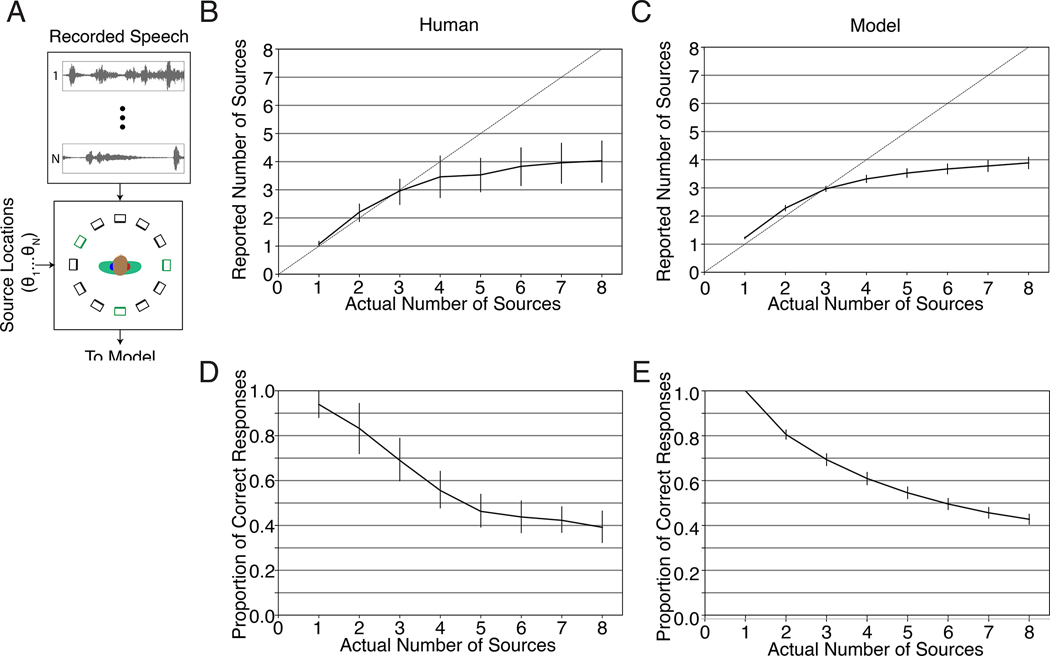

Figure 6.

Multi-source localization. A. Diagram of experiment. On each trial, between 1 and 8 speech signals (each spoken by a different talker) was played from a subset of the speakers in a 12-speaker circular array. The lower panel depicts an example trial in which three speech signals were presented, with the corresponding speakers in green. Participants reported the number of sources and their locations. B. Average number of sources reported by human listeners, plotted as a function of the actual number of sources. Error bars plot standard deviation across participants. Here and in D, graph is reproduced from original paper98 with permission of the authors. C. Same as B, but for the model. Error bars plot standard deviation across the 10 networks D. Localization accuracy (measured as the proportion of sources correctly localized to the actual speaker from which they were presented), plotted as a function of the number of sources. Error bars plot standard deviation across participants. E. Same as D, but for the model. Error bars plot standard deviation across the 10 networks.

Humans accurately report the number of sources up to three, after which they undershoot, only reporting about four sources in total regardless of the actual number (Fig. 6B). The model reproduced this effect, also being limited to approximately four sources (Fig. 6C). Human localization accuracy also systematically drops with the number of sources (Fig. 6D); the model again quantitatively reproduced this effect (Fig. 6E). The model-human similarity suggests that these limits on sound localization are intrinsic to the constraints of the localization problem, rather than reflecting human-specific central factors.

Effect of optimization for unnatural environments

Despite having no previous exposure to the stimuli used in the experiments, and despite not being fit to match human data in any way, the model qualitatively replicated a wide range of classic behavioral effects found in humans. These results raise the possibility that the characteristics of biological sound localization may be understood as a consequence of optimization for real-world localization. However, given these results alone, the role of the natural environment in determining these characteristics is left unclear.

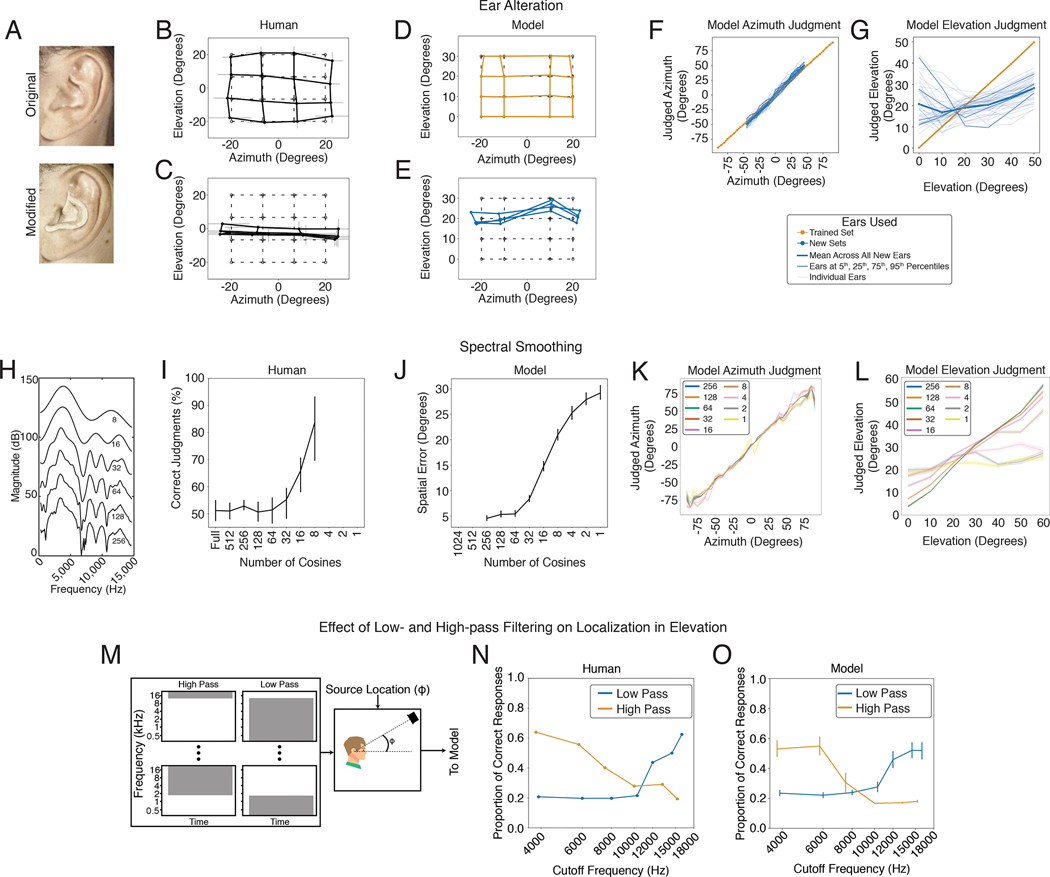

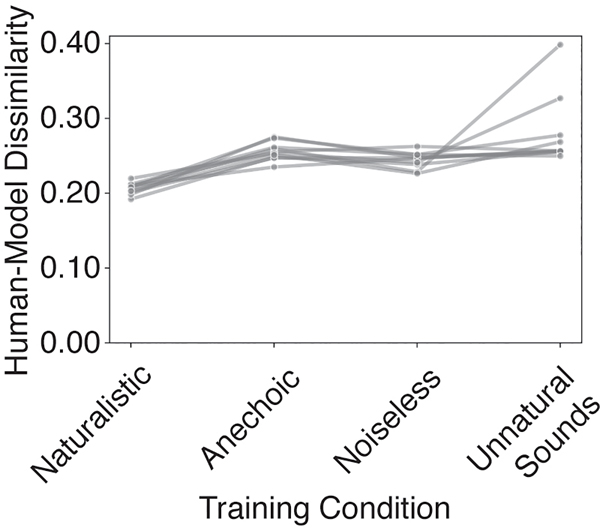

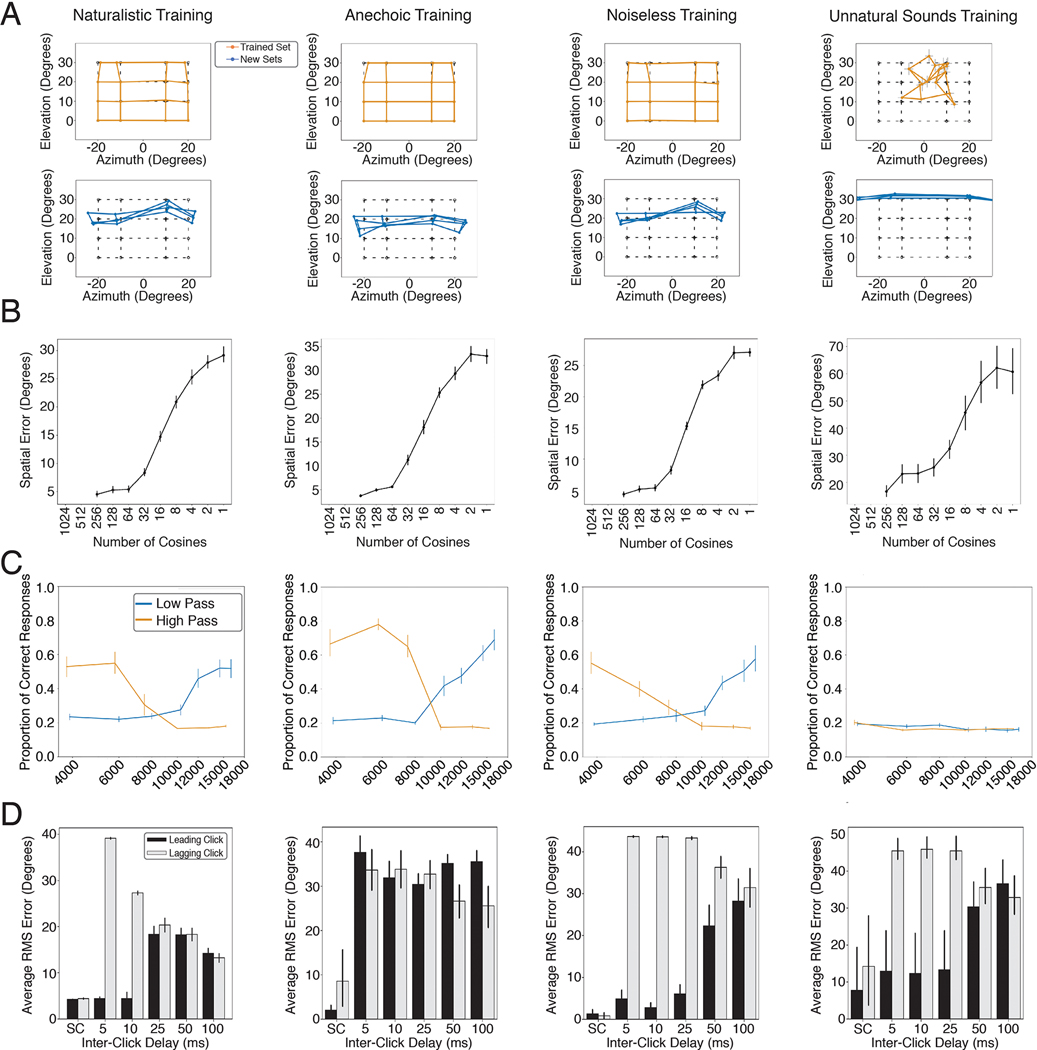

To assess the extent to which the properties of biological hearing are adapted to the constraints of localization in natural environments, we took advantage of the ability to optimize models in virtual worlds altered in various ways, intended to simulate the optimization that would occur over evolution and/or development in alternative environments (Fig. 1A). We altered the training environment in one of three ways (Fig. 7A): 1) by eliminating reflections (simulating surfaces that absorb all sound that reaches them, unlike real-world surfaces), 2) by eliminating background noise, and 3) by replacing natural sound sources with artificial sounds (narrowband noise bursts). In each case we trained the networks to asymptotic performance, then froze their weights and ran them on the full suite of psychophysical experiments described above. The psychophysical experiments were identical for all training conditions; the only difference was the strategy learned by the model during training, as might be reflected in the experimental results. We then quantified the dissimilarity between the model psychophysical results and those of humans as the mean squared error between the model and human results, averaged across experiments (normalized to have uniform axis limits; see Methods).

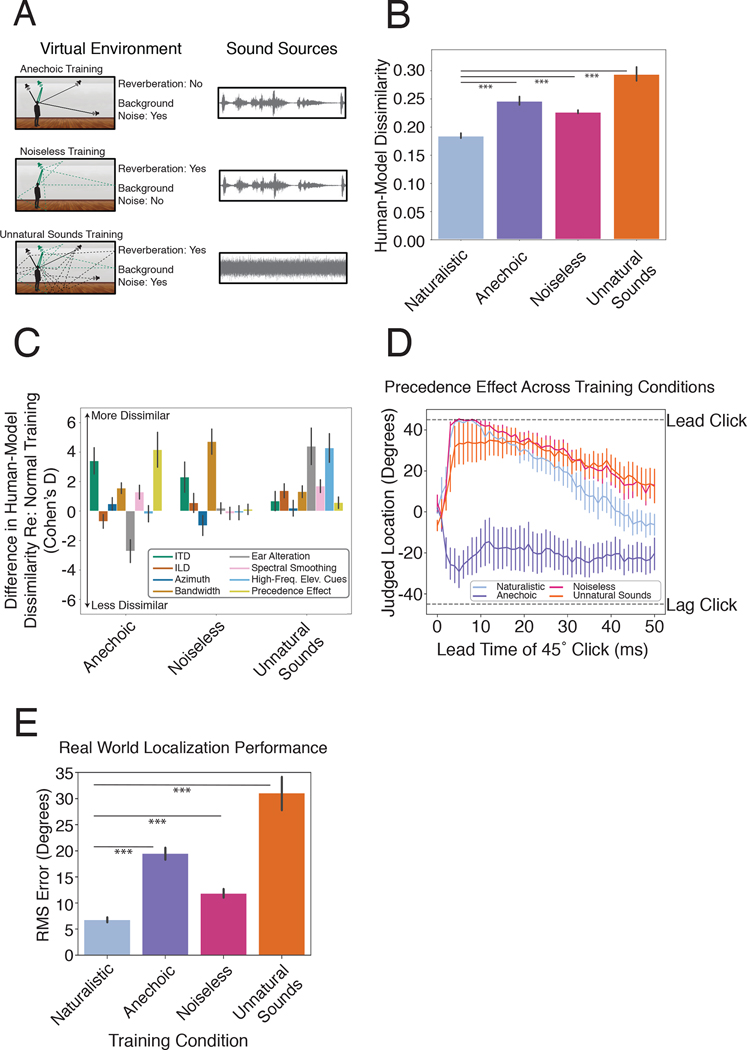

Figure 7.

Effect of unnatural training conditions. A. Schematic depiction of altered training conditions, eliminating echoes or background noise, or using unnatural sounds. B. Overall human-model dissimilarity for natural and unnatural training conditions. Error bars plot SEM, bootstrapped across networks. Asterisks denote statistically significant differences between conditions (p<.001, two-tailed), evaluated by comparing the human-model dissimilarity for each unnatural training condition to a bootstrapped null distribution of the dissimilarity for the natural training condition. C. Effect of unnatural training conditions on human-model dissimilarity for individual experiments, expressed as the effect size of the difference in dissimilarity between the natural and each unnatural training condition (Cohen’s d, computed between human-model dissimilarity for networks in normal and modified training conditions). Positive numbers denote a worse resemblance to human data compared to the model trained in normal conditions. Error bars plot SEM, bootstrapped across the 10 networks D. The precedence effect in networks trained in alternative environments. E. Real-world localization accuracy of networks for each training condition. Error bars plot SEM, bootstrapped across the 10 networks. Asterisks denote statistically significant differences between conditions (p<.001, two-tailed), evaluated by comparing the mean localization error for each unnatural training condition to a bootstrapped null distribution of the localization error for the natural training condition.

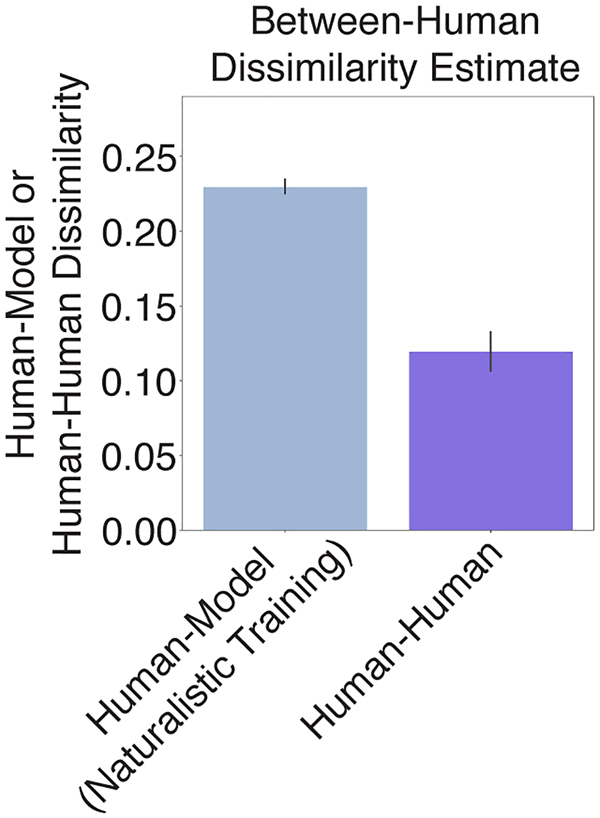

Fig. 7B shows the average dissimilarity between the human and model results on the suite of psychophysical experiments, computed separatedly for each model training condition. The dissimilarity was lowest for the model trained in natural conditions, and significantly higher for each of the alternative conditions (p<.001 in each case, obtained by comparing the dissimilarity of the alternative conditions to a null distribution obtained via bootstrap across the 10 networks trained in the naturalistic condition; results were fairly consistent across networks, Extended Data Fig. 7). The effect size of the difference in dissimilarity between the naturalistic training condition results and each of the other training conditions was large in each case (d=3.06, Anechoic; d=3.05, Noiseless; d=3.01, Unnatural Sounds). This result provides additional evidence that the properties of spatial hearing are consequences of adaptation to the natural environment – human-like spatial hearing emerged from task optimization only for naturalistic training conditions.

To get insight into how the environment influences perception, we examined the human-model dissimilarity for each experiment individually (Fig. 7C). Because the absolute dissimilarity is not meaningful (in that it is limited by the reliability of the human results, which is not perfect; see Extended Data Fig. 8), we assessed the differences in human-model dissimilarity between the natural training condition and each unnatural training condition. These differences were most pronounced for a subset of experiments in each case.

The anechoic training condition produced most abnormal results for the precedence effect, but also produced substantially different results for ITD cue strength. The effect size for the difference in human-model dissimilarity between anechoic and natural training conditions was significantly greater in both these experiments (precedence effect: d=4.16; ITD cue strength: d=3.41) than in the other experiments (p<0.001, by comparing the effect sizes of one experiment to the distribution of the effect size for another experiment obtained via bootstrap across networks). The noiseless training condition produced most abnormal results for the effect of bandwidth (d=4.71; significantly greater than that for other experiments, p<0.001, via bootstrap across networks). We confirmed that this result was not somehow specific to the absence of internal neural noise in our cochlear model, by training an additional model in which noise was added to each frequency channel (see Methods). We found that the results of training in noiseless environments remained very similar. The training condition with unnatural sounds produced most abnormal results for the experiment measuring elevation perception (d=4.4 for the ear alteration experiment; d=4.28 for the high-frequency elevation cue experiment; p<0.001 in both cases, via bootstrap across networks), presumably because without the pressure to localize broadband sounds, the model did not acquire sensitivity to spectral cues to elevation. These results indicate that different worlds would lead to different perceptual systems with distinct localization strategies.

The most interpretable example of environment-driven localization strategies is the precedence effect. This effect is often proposed to render localization robust to reflections, but others have argued that its primary function might instead be to eliminate interaural phase ambiguities, independent of reflections99. This effect is shown in Fig. 7D for models trained in each of the four virtual environments. Anechoic training completely eliminated the effect, even though it was largely unaffected by the other two unnatural training conditions. This result substantiates the hypothesis that the precedence effect is an adaptation to reflections in real-world listening conditions. See Extended Data Figs. 9 and 10 for full psychophysical results for models trained in alternative conditions.

In addition to diverging from the perceptual strategies found in human listeners, the models trained in unnatural conditions performed more poorly at real-world localization. When we ran models trained in alternative conditions on our real-world test set of recordings from mannequin ears in a conference room, localization accuracy was substantially worse in all cases (Fig. 7E; p<.0.001 in all cases). This finding is consistent with the common knowledge in engineering that training systems in noisy and otherwise realistic conditions aids performance37,42,44,100. Coupled with the abnormal psychophysical results of these alternatively trained models, this result indicates that the classic perceptual characteristics of spatial hearing reflect strategies that are important for real-world localization, in that systems that deviate from these characteristics localize poorly.

Model predictions of sound localizability

One advantage of a model that can mediate actual localization behavior is that one can run large numbers of experiments on the model, searching for “interesting” predictions that might then be tested in human listeners. Here we used the model to estimate the accuracy with which different natural sounds would be localized in realistic conditions. We chose to examine musical instrument sounds as these are both diverse and available as clean recordings in large numbers. We took a large set of instrument sounds101 and rendered them at a large set of randomly selected locations. We then measured the average localization error for each instrument.

As shown in Fig. 8A, there was reliable variation in the accuracy with which instrument sounds were localized by the model. The median error was as low as 1.06 degrees for Reed Instrument #3 and as high as 40.02 degrees for Mallet #1 (folded to discount front-back confusions; without front-back folding the overall error was larger, but the ordinal relations among instruments was similar). The human voice was also among the most acurately localized sounds in the set we examined, with a mean error of 2.39 degrees (front-back folded).

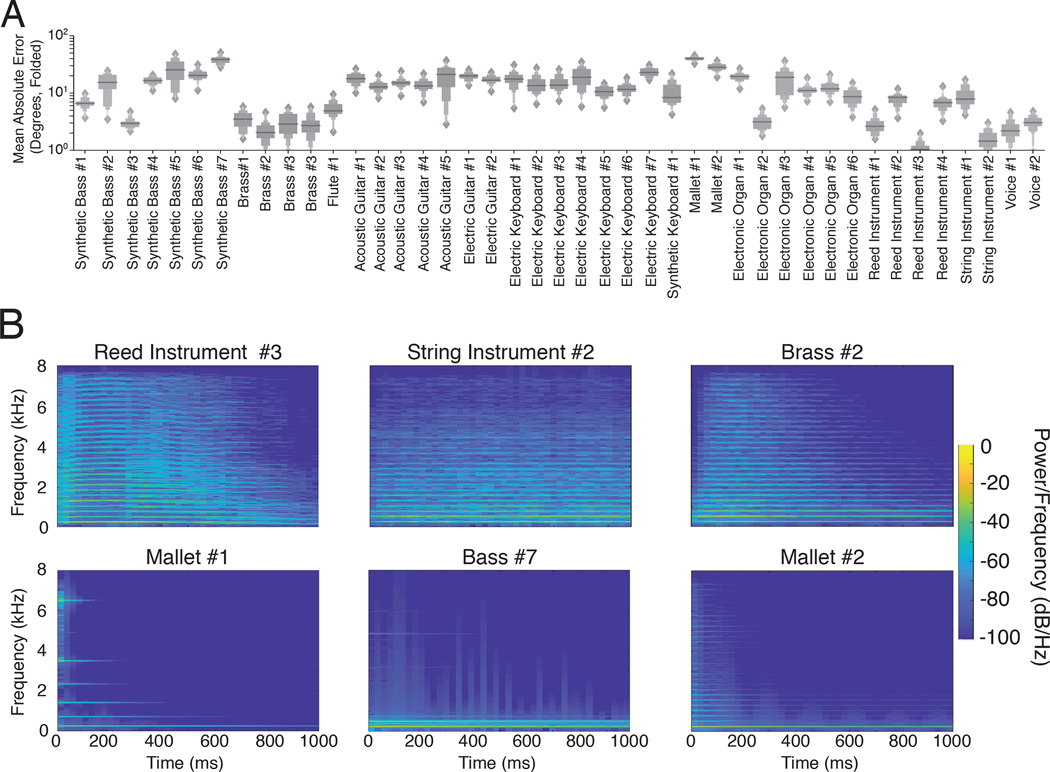

Figure 8.

Model localization accuracy for musical instrument sounds. A. Mean model localization error for each of 43 musical instruments. Each of a set of instrument notes was rendered at randomly selection locations. Graph shows letter-value plots157 of the mean localization error across notes, measured after actual and judged positions were front-back folded. Letter-value plots are boxplots with additional quantiles. The widest box depicts the middle two quartiles (1/4) of the data distribution, as in a box plot, the second widest box depicts the next two octiles (1/8), the third widest box depicts the next two hexadeciles (1/16), etc., up to the upper and lower 1/64 quantiles. Horizontal line plots median value and diamonds denote outliers. B. Spectrograms of example note (middle C) for the three most and least accurately localized instruments (top and bottom, respectively).

Fig. 8B displays spectrograms for example notes for the three best- and worst-localized instruments. The best-localized instruments are spectrally dense, and thus presumably take advantage of cross-frequency integration (which improve localization accuracy in both humans and the model; Fig. 3E&F). This result is consistent with the common idea that narrowband sounds are less well localized, but the model provides a quantitative metric of localizability that we would not otherwise have.

To assess whether the results could be predicted by simple measures of spectral sparsity, we measured the spectral flatness102 of each instrument sound (the ratio of the geometric mean of the power spectrum to the arithmetic mean of the power spectrum). The average spectral flatness of an instrument was significantly correlated with the model’s localization accuracy (rs = .77, p<.001), but this correlation was well below the split-half reliability of the model’s accuracy for an instrument (rs = .99). This difference suggests that there are sound features above and beyond spectral density that determine a sound’s localizability, and illustrates the value of an optimized system to make perceptual predictions.

We had intentions of running a free-field localization experiment in humans to test these predictions, but had to halt experiments due to COVID-19. We have hopes of running the experiment in the future. However, we note that informal observation by the authors listening in free-field conditions suggest that the sounds that are poorly localized by the model are also difficult for humans to localize.

Discussion

We trained artificial neural networks to localize sounds from binaural audio rendered in a virtual world and heard through simulated ears. When the virtual world mimicked natural auditory environments, with surface reflections, background noise, and natural sound sources, the trained networks replicated many attributes of spatial hearing found in biological organisms. These included the frequency-dependent use of interaural time and level differences, the integration of spatial information across frequency, the use of ear-specific high-frequency spectral cues to elevation and robustness to spectral smoothing of these cues, localization dominance of sound onsets, and limitations on the ability to localize multiple concurrent sources. The model successfully localized sounds in an actual real-world environment better than alternative algorithms that lacked ears. The model also made predictions about the accuracy with which different types of real-world sounds could be localized. But when the training conditions were altered to deviate from the natural environment by eliminating surface reflections, background noise, or natural sound source structure, the behavioral characteristics of the model deviated notably from human-like behavior. The results suggest that most of the key properties of mammalian spatial hearing can be understood as consequences of optimization for the task of localizing sounds in natural environments. Our approach extends classical ideal observer analysis to new domains, where provably optimal analytic solutions are difficult to attain but where supervised machine learning can nonetheless provide optimized solutions in different conditions.

The general method involves two nested levels of computational experiments: optimization of a model under particular conditions, followed by a suite of psychophysical experiments to characterize the resulting behavioral phenotype. This approach provides an additional tool with which to examine the constraints that yield biological solutions103,104, and thus to understand evolution105. It also provides a way to link experimental results with function. In some cases these links had been hypothesized but not definitively established. For example, the precedence effect was often proposed to be an adaptation to reverberation21,92, though other functional explanations were also put forth99. Our results suggest it is indeed an adaptation to reverberation (Fig. 7D). We similarly provide evidence that sensitivity to spectral cues to elevation emerges only with the demands of localizing somewhat broadband sounds106. In other cases the model provided explanations for behavioral characteristics that previously had none. One such example is the relatively coarse spectral resolution of elevation perception (Fig. 4H–J), which evidently reflects the absence of reliable information at finer resolutions. Another is the number of sources that can be concurrently localized (Fig. 6B–C), and the dependence of localization accuracy on the number of sources (Fig. 6D–E). Without an optimized model there would be no way to ascertain whether these effects reflect intrinsic limitations of localization cues in auditory scenes or some other human-specific cognitive limit.

Prior models of sound localization required cues to be hand-coded and provided to the model by the experimenter22–24,36. In some cases previous models were able to derive optimal encoding strategies for such cues28, which could be usefully compared to neural data107. In other cases models were able to make predictions of behavior in simplified conditions using idealized cues36. However, the idealized cues that such models work with are not well-defined for arbitrary real-world stimuli108, preventing the modeling of general localization behavior. In addition, ear-specific spectral cues to elevation (Fig. 1B, right) are not readily hand-coded, and as a result have remained largely absent from previous models. It has thus not previously been possible to derive optimal behavioral characteristics for real-world behavior.

Our results highlight the power of contemporary machine learning coupled with virtual training environments to achieve realistic behavioral competence in computational models. Supervised learning has traditionally been limited by the need for large amounts of labeled data, typically acquired via painstaking human annotation. Virtual environments allow the scientist to generate the data, with the labels coming for free (as the parameters used to generate the data), and have the potential to greatly expand the settings in which supervised learning can be used to develop models of the brain109. Virtual environments also allow tests of optimality that would be impossible in biological systems, because they enable environmental conditions to be controlled, and permit optimization on rapid timescales.

Our approach is complementary to the long tradition of mechanistic modeling of sound localization. In contrast with mechanistic modeling, we do not produce specific hypotheses about underlying neural circuitry. However, the model gave rise to rich predictions of real-world behavior, and normative explanations of a large suite of perceptual phenomena. It should be possible to merge these two approaches, both by training model classes that are more faithful to biology (e.g. spiking neural networks)110,111, and by building in additional known biological structures to the neural network (e.g. replicating brainstem circuitry)112,113.

One limitation of our approach is that optimization of biological systems occurs in two distinct stages of evolution and development, which are not obviously mirrored in our model optimization procedure. The procedure we used had separate stages of architectural selection and weight training, but these do not cleanly map onto evolution and development in biological systems. This limitation is shared by classical ideal observers, but limits the ability to predict effects that might be specific to one stage or the other, for instance involving plasticity114.

Our model also shares many limitations common to current deep neural network models of the brain115. The learning procedure is unlikely to have much in common with biological learning, both in the extent and nature of supervision (which involves millions of explicitly labeled examples) and in the learning algorithm, which is often argued to lack biological plausibility110. The model class is also not fully consistent with biology, and so does not yield detailed predictions of neural circuitry. The analogies with the brain thus seem most promising at the level of behavior and representations. Our results add to growing evidence that task-optimized models can produce human-like behavior for signals that are close to the manifold of natural sounds or images50,116,117. However, artificial neural networks also often exhibit substantial representational differences with humans, particularly for unnatural signals derived in various ways from a network118–122, and our model may exhibit similar divergences.

We chose to train models on a fixed representation of the ear. This choice was motivated by the assumption that the evolution of the ear was influenced by many different auditory tasks, such that it may not have been strongly influenced by the particular demands of sound localization, instead primarily serving as a constraint on biological solutions to the sound localization problem117. However, the ear itself undoutedly reflects properties of the natural environment123. It could thus be fruitful to “evolve” ears along with the rest of the auditory system, particularly in a framework with multiple tasks50. Our cochlear model also does not replicate the fine details of cochlear physiology124–126 due to practical constraints of limited memory resources. These differences could in principle influence the results, although the similarity of the model results to those of humans suggests that the details of peripheral physiology beyond those that we modeled do not figure critically in the behavioral traits we examined.

The virtual world we used to train our models also no doubt differs in many ways from real-world acoustic environments. The rendering assumed point sources in space, which is inaccurate for many natural sound sources. The distribution of source locations was uniform relative to the listener, and both the listener and the sound sources were static, all of which are often not true of real-world conditions. And although the simulated reverberation replicated many aspects of real-world reverberation, it probably did not perfectly replicate the statistical properties of natural environmental impulse responses127, or their distribution across environments. Our results suggest that the virtual world approximates the actual world in many of the respects that matter for spatial hearing, but the discrepancies with the real world could make a difference for some behaviors.

We also emphasize that despite presenting our approach as an alternative to ideal observer analysis9,10, the resulting model almost surely differs in some respects from a fully ideal observer. The solutions reached by our approach are not provably optimal like classic ideal observers, and the model class and optimization methods could impose biases on the solutions. It is also likely that the architecture search was not extensive enough to find the best architectures for the task. Those caveats aside, the similarity to human behavior, along with the strong dependence on the training conditions, provides some confidence that the optimization procedure is succeeding to a degree that is scientifically useful.

Our focus in this paper has been to study behavior, as there is a rich set of auditory localization behaviors for which normative explanations have traditionally been unavailable. However, it remains possible that the model we trained could be usefully compared to neural data. There is a large literature detailing binaural circuitry in the brainstem128 that could be compared to the internal responses of the model. The model could also be used to probe for functional organization in the auditory cortex, for instance by predicting brain responses using features from different model stages45–50, potentially helping to reveal hierarchical stages of localization circuitry.

A model that can predict human behavior should also have useful applications. Our model showed some transfer of localization for specific sets of ears (Fig. 4G), and could be used to make predictions about the extent to which sound rendering in virtual acoustic spaces (which may need to use a generic set of head-related transfer functions) should work for a particular listener. It can also predict which of a set of sound sources will be most compellingly localized, or worst localized (Fig. 8). Such predictions could be valuable in enabling better virtual reality, or in synthesizing signals that humans cannot pinpoint in space.

One natural extension of our model would be to incorporate moving sound sources and head movements. We modeled sound localization in static conditions because the vast majority of experimental data has been collected in this setting. But in real-world conditions sound sources often move relative to the listener, and listeners move their head129,130, often to better disambiguate front from back62 and more accurately localize. Our approach could be straightforwardly expanded to moving sound sources in the virtual training environment, and a model that can learn to move its head42, potentially yielding explanations of auditory motion perception131–133. The ability to train models that can localize in realistic conditions also underscores the need for additional measurements of human localization behavior – front-back confusions, localization of natural sounds in actual rooms, localization with head movements etc. – with which to further evaluate models.

Another natural next step is to instantiate both recognition and localization in the same model, potentially yielding insight into the segregation of these functions in the brain134, and to the role of spatial cues in the ‘cocktail party problem’135–141. More generally, the approach we take here – using deep learning to derive optimized solutions to perceptual or cognitive problems in different operating conditions – is broadly applicable to understanding the forces that shape complex, real-world, human behavior.

Methods

Training Data Generation

Virtual acoustic simulator - Image/Source method

We used a room simulator51 to render Binaural Room Impulse Responses (BRIRs). This simulator used the image-source method, which approaches an exact solution to the wave equation if the walls are assumed to be rigid59, as well as an extension to that method that allowed for more accurate calculation of the arrival time of a wave142. This enabled the simulator to correctly render the relative timing between the signals received by the two simulated ears, including reflections (enabling both the direct sound and all reflections to be rendered with the correct spatial cues). Our specific implementation was identical to that used in the original paper51, except for some custom optimization to take advantage of vectorized operations and parallel computation.

The room simulator operated in three separate stages. First, the simulator calculated the positions of reflections of the source impulse forward in time for 0.5s. For each of these positions, the simulator placed an image symmetrically reflected about the wall of last contact. Second, the simulator accounted for the absorption spectra of the reflecting walls for each image location and filtered a broadband impulse sequentially using the absorption spectrum of the simulated wall material. Third, the simulator found the direction of arrival for each image and convolved the filtered impulse with the head-related impulse response in the recorded set whose position was closest to the computed direction. This resulted in a left and right channel signal pair for each path from the source to the listener. Lastly, each of these signal pairs was summed together, factoring in both the delay from the time of arrival and the level attenuation based on the total distance traveled by each reflection. The original authors of the simulator previously assessed this method’s validity and found that simulated BRIRs were good physical approximations to recorded BRIRs provided that sources were rendered more than one meter from the listener51.

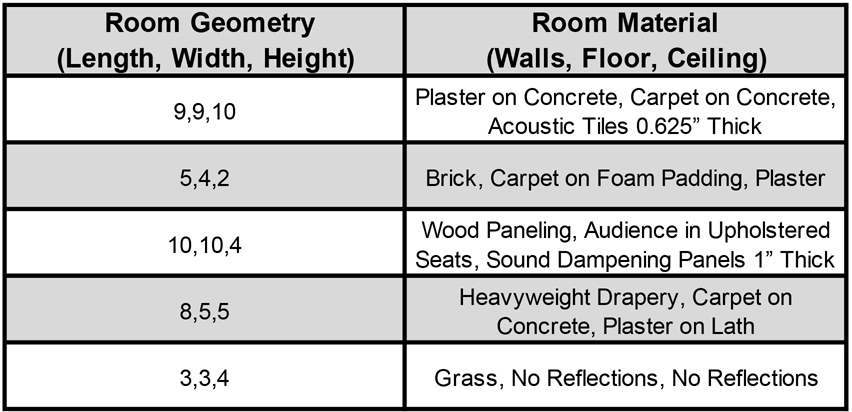

We used this room simulator to render BRIRs at each of a set of listener locations in 5 different rooms varying in size and material (listed in Extended Data Fig. 5) for each of the source location bins in the output layer of the networks: all pairings of 7 elevations (between 0° and 60°, spaced 10°), and 72 azimuths (spaced 5° in a circle around the listener), at a distance of 1.4 meters. This yielded 504 source positions per listener location and room. Listener locations were chosen subject to three constraints. First, the listener location had to be at least 1.4 meters from the nearest wall (because sounds were rendered 1.4 meters from the listener). Second, the listener locations were located on a grid whose axes ran parallel to the walls of the room, with locations spaced 1 meter apart in each dimension. Third, the grid was centered in the room. These constraints yielded 4 listener locations for the smallest room and 81 listener locations for the largest room. This resulted in 71,064 pairs of BRIRs, each corresponding to a possible source-listener-room spatial configuration. Each BRIR took approximately 4 minutes to generate when parallelized across 16 cores. We parallelized143 the generation of the full set of BRIRs across approximately 1000 cores on the MIT OpenMind Cluster, which allowed us to generate the full set of BRIRs in approximately 4 days.

Virtual acoustic simulator - HRIRs

The simulator relied on empirically derived Head Related Impulse Responses (HRIRs) to incorporate the effect of pinna filtering, head shadowing, and time delays without solving wave equations for the ears/head/torso. Specifically, the simulator used a set of HRIRs recorded with KEMAR – a mannequin designed to replicate the acoustic effects of head and torso filtering on auditory signals. These recordings consisted of 710 positions ranging from −40° to +90° elevation at 1.4 meters53. A subset of these positions corresponded to the location bins into which the network classified source locations.

Virtual acoustic simulator - Two-microphone array

For comparison with the networks trained with simulated ears, we also trained the same neural network architectures to localize sounds using audio recorded from a two-microphone array (Extended Data Fig. 6). To train these networks, we simulated audio received from a two-microphone array by replacing each pair of HRIRs in the room simulator with a pair of fractional delay filters (i.e, that delayed the signal by a fraction of a sample). These filters consisted of 127 taps and were constructed via a sinc function windowed with a Blackman window, offset in time by the desired delay. Each pair of delay filters also incorporated signal attenuation from a distance according to the inverse square law, with the goal of replicating the acoustics of a two-microphone array. After substituting these filters for the HRIRs used in our main training procedure, we simulated a set of BRIRs as described above.

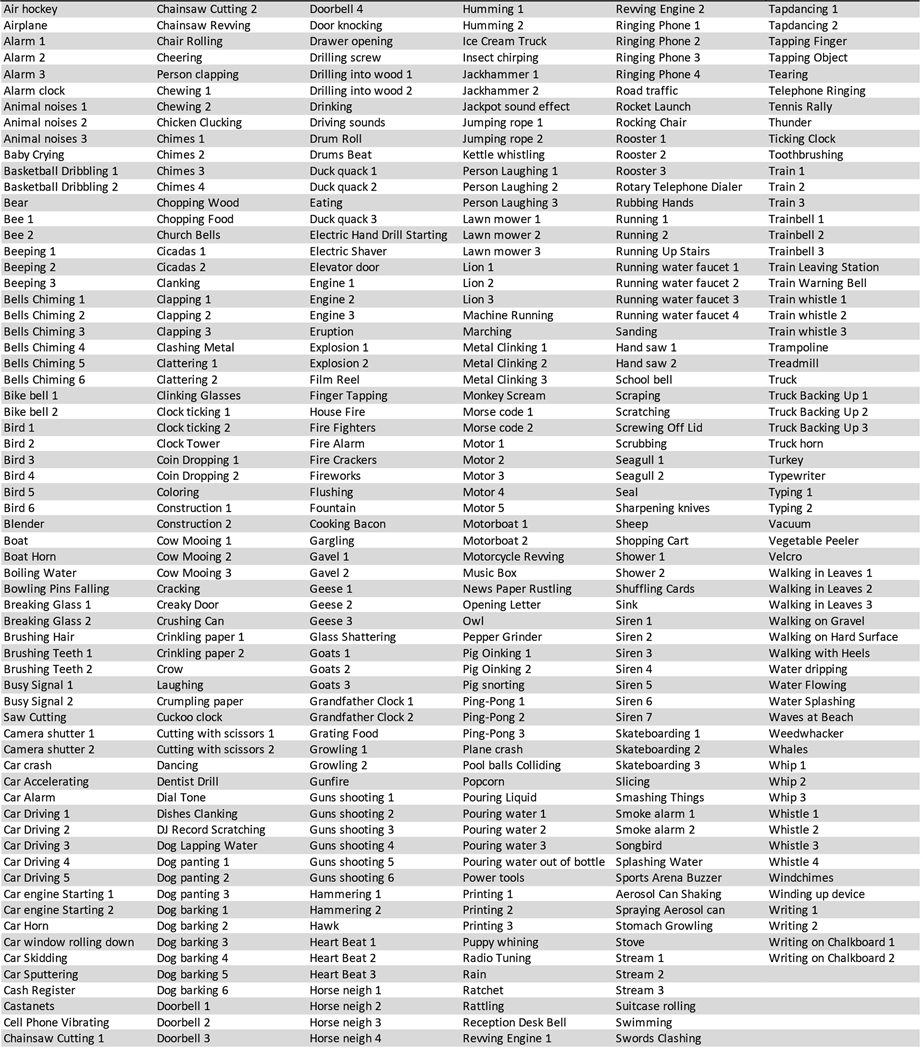

Natural sound sources

We collected a set of 455 natural sounds, each cut to two seconds in length. 300 of these sounds were drawn from a set used in previous work in the lab144. Another 155 sounds were drawn from the BBC Sounds Effects Database, selected by the first author to be easily identifiable. The sounds included human and animal vocalizations, human actions (chopping, chewing, clapping, etc.), machine sounds (cars, trains vacuums, etc.), and nature sounds (thunder, insects, running water). The full list of sounds is given in Extended Data Fig. 4. All sounds were sampled at 44.1 kHz. Of this set, 385 sounds were used for training and another 70 sounds were withheld for model validation and testing. To augment the dataset, each of these was bandpass filtered with a two-octave-wide second-order Butterworth filter with center frequencies spaced in one-octave steps starting from 100 Hz. This yielded 2,492 (2,110 training, 382 testing) sound sources in total.

Background noise sources

Background noise sources were synthesized using a previously described texture generation method that produced texture excerpts rated as highly realistic55. The specific implementation of the synthesis algorithm was that used in60, with a sampling rate of 44.1 kHz. We used 50 different source textures obtained from in-lab collections145. Textures were selected that synthesized successfully, both subjectively (sounding perceptually similar to the original texture) and objectively (the ratio between mean squared statistic values for the original texture and the mean squared error between the statistics of the synthesized and original texture was greater than 40dB SNR). We then rendered 1,000 5-second exemplars for each texture, cut to 2 seconds in length, for a total of 50,000 unique waveforms (1000 exemplars x 50 textures). Background noises were created by spatially rendering between 3 and 8 exemplars of the same texture at randomly chosen locations using the virtual acoustic simulator described above. We made this choice on grounds of ecological validity, based on the intuition that noise sources are typically not completely spatially uniform96 despite being more diffuse than sounds made by single organisms or objects. By adding noises rendered at different locations we obtained background noise that was not as precisely localized as the target sound sources, which seemed a reasonable approximation of common real-world conditions.

Generating training exemplars

To reduce the storage footprint of the training data, we separately rendered the sound sources to be localized, and the background noise, and then randomly combined them to generate training exemplars. For each source, room, and listener location we randomly rendered each of the 504 positions with a probability . We used a base probability of 2.5% to limit the overall size of the training set and normalized by the number of listener locations in the room being used to render the current stimulus so that each room was represented equally in the dataset. This yielded 545,566 spatialized natural sound source stimuli in total (497,935 training, 47,631 testing). This resulted in 988 examples per training location, on average.

For each training example, the audio from one spatialized natural sound source and one spatialized background texture scene was combined (with a signal-to-noise ratio sampled uniformly from 5 to 30 dB SNR) to create a single auditory scene that was used as a training example for the neural network. The resulting waveform was then normalized to have an rms amplitude of 0.1. Each training example was passed through the cochlear model before being fed to the neural network.

Stimulus preprocessing: Cochlear model

Training examples were pre-processed with a cochlear model to simulate the human auditory periphery. The output of the cochlear model is a time-frequency representation intended to represent the instantaneous mean firing rates in the auditory nerve. The cochlear model was chosen to approximate the time and frequency information in the human cochlea subject to practical constraints on the memory footprint of the model and the dataset. Cochleagrams were generated using a filter bank like that in previous work from our lab55. However, the cochleagrams we used provided fine timing information to the neural network by passing rectified subbands of the signal instead of the envelopes of the subbands. This came at the cost of substantially increasing the dimensionality of the input relative to an envelope-based cochleagram. The dimensionality was nonetheless considerably lower than what would have resulted from a spiking model of the auditory nerve, which would have been prohibitive given our hardware.

The waveforms for the left and right channels were first upsampled to 48 kHz, then separately passed through a bank of 36 bandpass filters. These filters were regularly spaced on an equivalent rectangular bandwidth (ERB)N scale54 with bandwidths matched to those expected in a healthy human ear. Filter center frequencies ranged from 45 Hz to 16,975 Hz. Filters were zero-phase, with transfer functions in the frequency domain shaped as the positive portion of a cosine function. These filters perfectly tiled the frequency axis such that the summed squared response of all filters was flat and allowed for reconstruction of the signal in the covered frequency range. Filtering was performed by multiplication in the frequency domain, yielding a set of subbands. The subbands were then transformed with a power function (0.3 exponent) to simulate the outer hair cells’ nonlinear compression. The results were then half-wave rectified to simulate auditory nerve firing rates and were lowpass filtered with a cutoff frequency of 4 kHz to simulate the upper limit of phase-locking in the auditory nerve56, using a Kaiser-windowed sinc function with 4097 taps. The results of the lowpass filtering were then downsampled to 8 kHz to reduce the dimensionality of the neural network input (without information loss because the Nyquist limit matched the lowpass filter cutoff frequency). Because the lowpass filtering and downsampling were applied to rectified filter outputs, the representation retained information at all audible frequencies, just with limits on fidelity that were approximately matched to those believed to be present in the ear. We note also that the input was not divided into “frames” as are common in audio engineering applications, as these do not have an obvious analogue in biological auditory systems. All operations were performed in Python but made heavy use of the NumPy and SciPy library optimization to decrease processing time. Code to generate cochleagrams in this way is available on the McDermott lab webpage (http://mcdermottlab.mit.edu).

To minimize artificial onset cues at the beginning and end of the cochleagram that would not be available to a human listener in everyday listening conditions, we removed the first and last .35 seconds of the computed cochleagram and then randomly excerpted a 1-second segment from the remaining 1.3 seconds. The neural network thus received 1s of input from the cochlear model, as a 36×8000×2 tensor (36 frequency channels × 8000 samples at 8kHz × 2 ears).

For reasons of storage and implementation efficiency, the cochlear model stage was in practice implemented as follows, taking advantage of the linearity of the filter bank. First, the audio from each spatialized natural sound source and each spatialized background texture scene was run through the cochlear filter bank. Second, we excerpted a 1-second segment from the resulting subbands as described in the previous pararaph. Third, the two sets of subbands were stored in separate data structures. Fourth, during training, the subbands for a spatialized natural sound source and a spatialized background scene were loaded, scaled to achieve the desired SNR (sampled uniformly from 5 to 30 dB), summed, and scaled to correspond to a waveform with rms amplitude of 0.1. The resulting subbands were then half-wave rectified, raised to the power of 0.3 to simulate cochlear compression, and downsampled to 8 kHz to simulate the upper limit of auditory nerve phase locking. This “cochleagram” was the input to the neural networks.

Environment Modification for Unnatural Training Conditions

In each unnatural training condition, one aspect of the training environment was modified.

Anechoic environment

All echoes and reflections in this environment were removed. This was accomplished by setting the room material parameters for the walls, floor, and ceiling to completely absorb all frequencies. This can be conceptualized as simulating a perfect anechoic chamber.

Noiseless environment

In this environment, the background noise was removed by setting the SNR of the scene to 85dB. No other changes were made.

Unnatural sound sources

In this environment, we replaced the natural sound sources with unnatural sounds consisting of repeating bandlimited noise bursts. For each 2 second sound source, we first generated a 200 ms 0.5 octave-wide noise burst with a 2 ms half-Hanning window at the onset and offset. We then repeated that noise burst separated by 200 ms of silence for the duration of the signal. The noise bursts in a given source signal always had the same center frequency. The center frequencies (the geometric mean of the upper and lower cutoffs) across the set of sounds were uniformly distributed on a log scale between 60 Hz and 16.8 kHz.

Neural Network Models

The 36×8000×2 cochleagram representation (representing 1s of binaural audio) was passed to a convolutional neural network (CNN), which instantiated a feedforward, hierarchically organized set of linear and nonlinear operations. The components of the CNNs were standard; they were chosen because they have been shown to be effective in a wide range of sensory classification tasks. In our CNNs, there were four different kinds of layers, each performing a distinct operation: (1) convolution with a set of filters, (2) a point-wise nonlinearity, (3) batch normalization, and (4) pooling. The first three types of layers always occurred in a fixed order (batch normalization, convolution, and a point-wise nonlinearity). We refer to a sequence of these three layers in this order as a “block”. Each block was followed by either another block or a pooling layer. Each network ended with either one or two fully connected layers feeding into the final classification layer. Below we define the operations of each type of layer.

Convolutional layer

A convolutional layer consists of a bank of K linear filters, each convolved with the input to produce K separate filter responses. Convolution performs the same operation at each point in the input, which in our case was the cochleagram. Convolution in time is natural for models of sensory systems as the input is a temporal sequence whose statistics are translation invariant. Convolution in frequency is less obviously natural, as translation invariance does not hold in frequency. However, approximate translation invariance holds locally in the frequency domain for many types of sound signals, and convolution in frequency is often present, implicitly or explicitly, in auditory models146,147. Moreover, imposing convolution greatly reduces the number of parameters to be learned, and we have found that neural network models train more readily when convolution in frequency is used, suggesting that it is a useful form of model regularization.

The input to a convolutional layer is a three-dimensional array with shape (nin,min,din) where nin and min are the spectral and temporal dimensions of the input, respectively, and din is the number of filters. In the case of the first convolutional layer, nin = 36 and min = 16,000, corresponding to the temporal and spectral dimensions of the cochleagram, and din = 2, corresponding to the left and right audio channels.

A convolution layer is defined by five parameters:

nk: The height of the convolutional kernels (i.e., their extent in the frequency dimension)

mk: The width of the convolutional kernels (i.e., their extent in the time dimension)

K: The number of different kernels

W: The kernel weights for each of the K kernels; this is an array of dimensions (nk, mk, din, K).

B: The bias vector, of length K

For any input array X of shape (nin,min,din), the output of this convolutional layer is an array Y of shape (nin,min - mk +1,K) (due to the boundary handling choices described below):

where i ranges from (1, …, nin), j ranges (1, …, min), ⊙ represents pointwise array multiplication.

Boundary handling via valid padding in time