Abstract

Recent technological developments have paved the way for deep learning-based techniques to be used in almost every domain of life. The precision of deep learning techniques makes them suitable for use in the medical field for the classification and detection of various diseases. The coronavirus (COVID-19) pandemic has put a lot of pressure on health systems all around the world. COVID-19 can be diagnosed by PCR testing and medical imaging. Since COVID-19 is highly contagious, diagnosis using chest X-rays is considered safe in various situations. In this study, a deep learning-based technique is proposed to distinguish COVID-19 infection from other non-COVID-19 infections. To classify COVID-19, three pre-trained models, EfficientNetB1, NasNetMobile, and MobileNetV2, are used. An augmented dataset is used for training the deep learning models, and two different training strategies are used for classification. In this study, not only are the deep learning models fine-tuned, but the hyperparameters are also tuned, which significantly improves the performance of the fine-tuned models. Moreover, the classification head is regularized to improve performance. Several performance parameters are used to evaluate the proposed technique. EfficientNetB1 with a regularized classification head outperforms the other models. The proposed technique classifies four classes (COVID-19, viral pneumonia, lung opacity, and normal) with an accuracy of 96.13%. The proposed technique shows superiority in terms of accuracy when compared with recent techniques in the literature.

Keywords: COVID-19, deep learning, classification, transfer learning, chest X-rays

1. Introduction

COVID-19 is the most recent viral outbreak and started in the city of Wuhan, China. Within the span of a few months, it spread to almost all parts of the world [1]. It is a highly contagious disease that is transmitted through droplets exhaled during respiration. The sudden spike of this highly contagious infection created a global health crisis [2]. According to recorded data, COVID-19 caused over 0.3 million deaths globally within two months of being recognized as a pandemic by the World Health Organization (WHO) [3,4]. The disease is commonly known as COVID-19; the virus that causes it is severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2), which belongs to the Coronaviridae family [2]. By 8 December 2021, it had infected over 267 million people around the globe and caused over 5.2 million deaths [3]. COVID-19 has negatively affected every sector of life around the globe because of restrictions enforced by governments to limit people's exposure to this contagious infection [5]. Illness caused by COVID-19 ranges from mild to severe and critical. COVID-19 can cause pneumonia. The name pneumonia originates from the Greek word pneumon, which means the lungs; pneumonia is therefore a disease of the lungs. Pneumonia causes inflammation in the lungs, which hinders respiration [6]. Exposure to chemicals and aspiration of food are other causes of pneumonia. The inflammation leads to the lungs’ alveoli being filled with fluid or a sticky substance (i.e., pus). This fluid hinders the exchange of carbon dioxide and oxygen between the blood and the lungs, which hampers the ability to breathe [7].

Different pathogens, such as bacteria, fungi, and viruses, cause pneumonia, and each type of pneumonia is treated differently. Antibiotics are used to treat bacterial pneumonia, antifungal drugs are used to treat pneumonia caused by fungi, and antiviral drugs are used to treat viral pneumonia [8]. A number of techniques have been adopted for the diagnosis of pneumonia, including CT scans, chest X-rays, sputum tests, complete blood picture, blood gas analysis, and others. However, for the detection of COVID-19, reverse transcription-polymerase chain reaction (RT-PCR) testing is considered reliable, although it is not 100% accurate. An RT-PCR test is used to detect the genetic material of SARS-CoV-2 in samples from the upper respiratory tract [9].

There is a need to develop a technique that can help medical staff in the diagnosis of COVID-19. Early diagnosis of COVID-19 can save a patient’s life by enabling timely medical attention. Recently, deep learning has emerged as one of the techniques used for image processing tasks. It has produced significant outcomes in different fields, including agriculture [10], medicine [11,12], gesture recognition [13], and remote sensing [14]. In the medical field, it is used for the detection and classification of different diseases, including skin diseases [15,16], different types of ulcers, and cancer [17]. These deep learning techniques significantly help doctors to diagnose diseases efficiently. Human errors can also be avoided by using deep learning techniques for the detection of different diseases. As mentioned earlier, CT scans and chest X-rays can be used to detect pneumonia. Pneumonia caused by COVID-19 is intense and affects the lungs significantly and very quickly. The major difference between typical pneumonia and pneumonia caused by COVID-19 is that the latter affects the whole lungs, while typical pneumonia damages only part of the lungs.

2. Related Work

Mahmud et al. [18] proposed a deep learning-based technique for the classification of COVID-19 and pneumonia infection. Features are extracted using a deep CNN model named CovXNet. A public dataset containing 1493 samples of non-COVID-19 pneumonia and 305 samples of COVID-19 pneumonia is used for training. The model classified non-COVID-19 pneumonia and COVID-19 pneumonia with an accuracy of 96.9%. Umair et al. [19] presented a technique for the binary classification of COVID-19. A publicly available dataset consisting of 7232 chest X-ray images is used for training and evaluation of the technique. Four deep learning models are compared in this study, and various evaluation parameters are used to validate the results. Li et al. [20] proposed a technique for the detection of COVID-19 infection that differentiates COVID-19 pneumonia from community-acquired pneumonia (CAP). The deep learning model used for training is named COVNet and is a three-dimensional CNN architecture. A publicly available dataset containing CT scan samples of COVID-19 and CAP is used. The COVNet model attained a sensitivity of 90% and a specificity of 96%.

Abbas [21] proposes another convolutional neural network-based technique for the classification of COVID-19 infection using chest X-ray images. A CNN model named decompose, transfer, and compose, commonly known as DeTrac, was used. Multiple datasets from various hospitals throughout the world were used in this research. The DeTrac model attained an accuracy of 93.1% and a sensitivity of 100%. To classify COVID-19 and typical pneumonia, Wang et al. [22] presented a deep learning technique based on the Inception model [23]. The fully connected layers of Inception are modified before training the network. In this study, 1053 images were used. The model gave an accuracy of 73.1% with a sensitivity of 74% and a specificity of 67%. Sankar et al. [24] proposed a deep learning technique for the classification of COVID-19 infected chest X-rays. A Gaussian filter was used for preprocessing, while the local binary pattern (LBP) was used to extract texture features. The extracted LBP features are then fused with the CNN model InceptionV3 to improve the performance. The classification is carried out using a multi-layer perceptron. The model was validated on an X-ray dataset and attained an accuracy of 94.08%. Panwar [25] proposes a convolutional neural network-based technique in which a 24-layer CNN model, named nCOVnet by the author, is used for the classification of COVID-19 and normal images. An X-ray dataset was used for training nCOVnet. The model attains an accuracy of up to 97%. Zheng [26] presents a segmentation-based classification technique. A two-dimensional U-Net [27] is trained on CT images to generate lung masks. The mask generated by U-Net is then fed to DeCoVNet for the classification of COVID-19.
The architecture of DeCoVNet consists of three parts: (1) the stem network, consisting of a vanilla 3D convolution along with a batch norm and pooling layer; (2) two 3D ResBlocks in the second stage, which are used for feature map generation; (3) the third part of DeCoVNet, which is used for classification based on probabilities. A progressive classifier is used for the binary classification of COVID-19.

Xu et al. [28] proposed a technique for the detection of COVID-19 infection using a deep learning-based model. Two ResNet-based [29] models were used in this study: (1) ResNet18; (2) a modified ResNet18 with a localization mechanism. CT scan images were used for training the models. The final evaluation is performed using a noisy-OR Bayesian function. The overall accuracy of the proposed technique is cited as 88.7%. Hussain et al. [30] proposed a system called CoroDet, which is based on convolutional neural networks, for the detection of COVID-19 infection. The proposed CNN model comprises 22 layers and is trained on chest X-rays and CT scan images. The model is able to classify COVID-19 and non-COVID-19. Moreover, it can classify three different classes: COVID-19, pneumonia, and normal. The 22-layer model shows good classification results. Khan et al. [31] presented a technique for the classification of COVID-19 disease. The proposed technique used a CNN for the classification; the well-known deep learning model Xception is modified for this purpose. The modified model is named CoroNet by the authors. The dataset used for training consists of four classes: COVID-19, normal, viral pneumonia, and fungal pneumonia. The model is trained using different combinations of this dataset. The model gave 89.6% accuracy. Choudary et al. [32] adopted a deep learning technique to classify COVID-19 and viral pneumonia. Various deep learning models have been used for training in this work. In addition, the transfer learning approach is exploited for training the deep learning models. A public dataset is used for training the models. The dataset contains samples of COVID-19, typical viral pneumonia, and chest X-rays of healthy people. The models attained good classification accuracies. Ozturk et al. [33] presented a 17-layer Darknet model for the detection of COVID-19 infection.
Different sizes of filters were employed at CNN layers. The presented technique classifies binary classes (COVID-19 and no finding) and multiple classes (COVID-19, pneumonia, and no findings). For model training, raw chest X-ray images were used. The model attained an accuracy of 98.08% for binary classification, while for multiple classes an accuracy of 87.02% is achieved.

For the detection of COVID-19, the majority of research has been performed using chest X-rays, which shows the importance of chest X-rays in diagnosing chest infections and, specifically, COVID-19. Chest X-rays were found to be the primary tool in medical image analysis. Traditional image processing-based feature extraction techniques are complex compared to deep learning techniques, and deep learning techniques have recently surpassed traditional techniques in terms of performance. However, traditional techniques can be used alongside deep learning techniques as an aid [24]. Moreover, deep learning techniques require a large amount of data for training and testing. Deep learning models trained on limited datasets do not generalize well; thus, such models are not reliable. The literature shows that data augmentation techniques can be used to resolve small dataset issues [34]. Furthermore, the available research is more focused on the binary classification of COVID-19 [18,19,20,21,22], and limited research has been conducted on the multiclass classification of COVID-19 [28,29,30,31,32,33]. The performance of multiclass classification is not yet adequate and needs to be improved.

3. Materials and Methods

In this section, the dataset used for training and testing is discussed along with the deep learning models used in this study. The dataset, acquired from Kaggle, is composed of multiple datasets and is discussed further in Section 3.1. The proposed methodology for the classification of COVID-19 infection is discussed in Section 3.2.

3.1. Dataset

The dataset used for training and evaluation of the proposed technique is publicly available on Kaggle [32,35]. This dataset has been revised thrice; the dataset used in this work is acquired from Kaggle after recent revisions. The mentioned dataset is composed of multiple sub-datasets, with four different classes, including COVID-19, lung opacity, normal and viral pneumonia. It is important to discuss the composition of the used dataset in detail as it is made by merging different datasets. Each class is created by merging different sub-datasets. The class COVID-19 contains a total of 3616 images, which are gathered from four different sources. The BIMCV-COVID19+ [36] dataset largely contributes to the used COVID-19 dataset with 2473 images. It is one of the largest independent datasets that is publicly available. Other datasets, which contribute to this COVID-19 dataset, are the German Medical School dataset [37] with 183 chest X-ray images, while 560 chest X-ray images are gathered from SIRM, GitHub, Kaggle, and Twitter [38,39,40,41]. In addition, another dataset is available on GitHub [42] containing 400 chest X-ray images which have been merged.

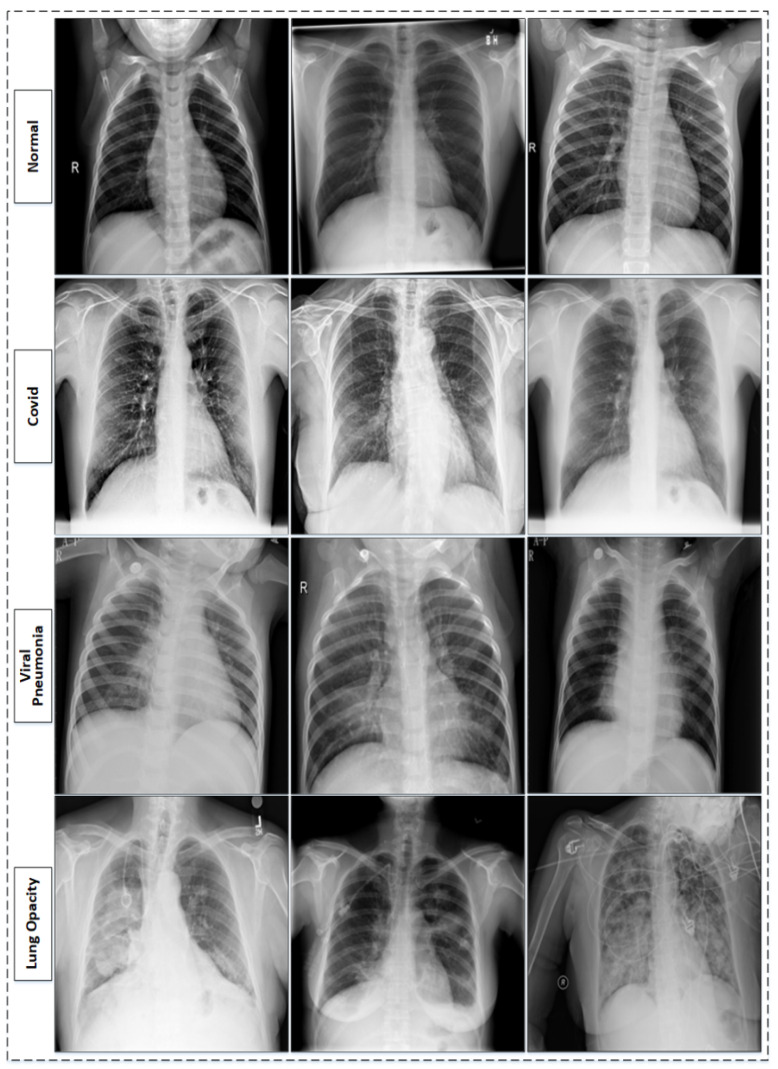

The RSNA pneumonia challenge dataset is one of the well-known chest X-ray datasets [43]. It consists of chest X-rays of lungs with different abnormalities and of normal (healthy) lungs. The abnormalities range from different lung infections to lung cancer. It contains 26,684 chest X-ray images in the DICOM format, divided into three major categories. The largest of these categories contains 11,821 images with different lung infections and, of these, 6012 images are categorized as non-COVID-19 lung infection (lung opacity), while 8851 images are normal and healthy lungs. The dataset is examined by medical experts based on key symptoms, and clinical history is also considered during inspection, as it is important to know whether or not the patient has previously suffered from any correlated infections. The dataset is further extended by adding 1341 normal chest X-ray images, along with 1345 viral pneumonia chest X-ray images, which are sourced from [44]. Sample images from the dataset [35] are shown in Figure 1. The composition of the dataset [35] is also presented in Table 1.
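The class totals described in this section can be cross-checked with a few lines of arithmetic. The following is only an illustrative sketch: the dictionary layout, the key names, and the variable names are assumptions made here, while the counts are the ones quoted in the text.

```python
# Per-source image counts as quoted in Section 3.1.
# The grouping and key names are illustrative, not part of the dataset itself.
sources = {
    "COVID-19": {
        "BIMCV-COVID19+": 2473,
        "German Medical School": 183,
        "SIRM, GitHub, Kaggle, Twitter": 560,
        "GitHub": 400,
    },
    "Lung opacity": {"RSNA": 6012},
    "Normal": {"RSNA": 8851, "Additional normal images": 1341},
    "Viral pneumonia": {"Viral pneumonia images": 1345},
}

# Sum the contributing sources to obtain the size of each class.
class_totals = {name: sum(parts.values()) for name, parts in sources.items()}
largest = max(class_totals.values())

for name, total in class_totals.items():
    # The ratio to the largest class gives a rough sense of the imbalance.
    print(f"{name:16s} {total:6d}  ({total / largest:.2f} of largest class)")
```

The COVID-19 sources add up to the 3616 images stated above, and the ratios make the class imbalance discussed later in the methodology easy to see.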

Figure 1.

Table 1.

3.2. Proposed Methodology

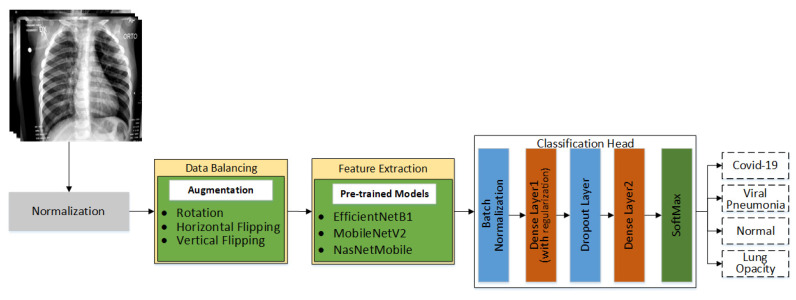

In this work, a multiclass classification technique for chest X-rays is proposed. The main goal is to identify chest X-rays that show COVID-19 infection. A significant amount of research on the binary classification of COVID-19 is present in the literature, but research on the multiclass classification of COVID-19 is still difficult to find. Multiclass classification of X-rays is a challenging task because of inter-class similarities. In addition, the availability of datasets is a major problem, and the publicly available datasets are highly class imbalanced, which is another challenge for multiclass classification. Different pre-trained models are used in this work to evaluate their performance on chest X-rays for the classification of chest infections, including COVID-19. The proposed workflow of this research is shown in Figure 2. The details of every step shown in Figure 2 are discussed in the following subsections.

Figure 2.

Detailed workflow of proposed technique.

3.2.1. Data Normalization and Augmentation

Acquiring datasets for training deep learning models is not easy, as datasets are not always readily available. Deep learning models require quality datasets with a large number of samples for efficient training [10]. The dataset is normalized to the range of 0 to 1: every pixel of every image in the dataset is multiplied by a factor of 1/255. This is done to make the dataset consistent in terms of pixel intensity. The acquired dataset is class imbalanced, that is, it contains a different number of images in each class. Deep learning models cannot be trained efficiently on such datasets, as they become biased toward one or more classes, which significantly affects the performance of the model. Moreover, deep learning models require a significant amount of data for training; otherwise, overfitting can deteriorate the performance of the model.
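The pixel normalization step can be illustrated with a minimal sketch. It assumes 8-bit grayscale images stored as nested lists; the function name `normalize_image` and the toy image are hypothetical and are used only for illustration.

```python
def normalize_image(image):
    """Scale 8-bit pixel values into the range [0, 1] by multiplying by 1/255."""
    scale = 1.0 / 255.0
    return [[pixel * scale for pixel in row] for row in image]


# A tiny 2 x 3 grayscale "image" with 8-bit intensities (toy data).
toy_image = [[0, 128, 255],
             [64, 192, 32]]

normalized = normalize_image(toy_image)
for row in normalized:
    print([round(value, 3) for value in row])
```

In practice the same scaling is usually applied to whole image arrays at once by the data loading pipeline; the element-wise version above only makes the operation explicit.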

To overcome both of the above-mentioned problems, the image augmentation approach is adopted. Image augmentation not only increases the size of the dataset but also helps in making the dataset class balanced. It enables the addition of more examples to the classes that originally have fewer examples, thus enhancing the quality and size of the original dataset, which significantly affects the performance of the model. There are a number of image augmentation techniques, which are chosen according to requirements. In this work, an image dataset is used for training. Choosing a good image augmentation technique is critical; otherwise, it can impact the model performance negatively. A good augmentation technique preserves the information that is present in the original data while increasing the size of the dataset.

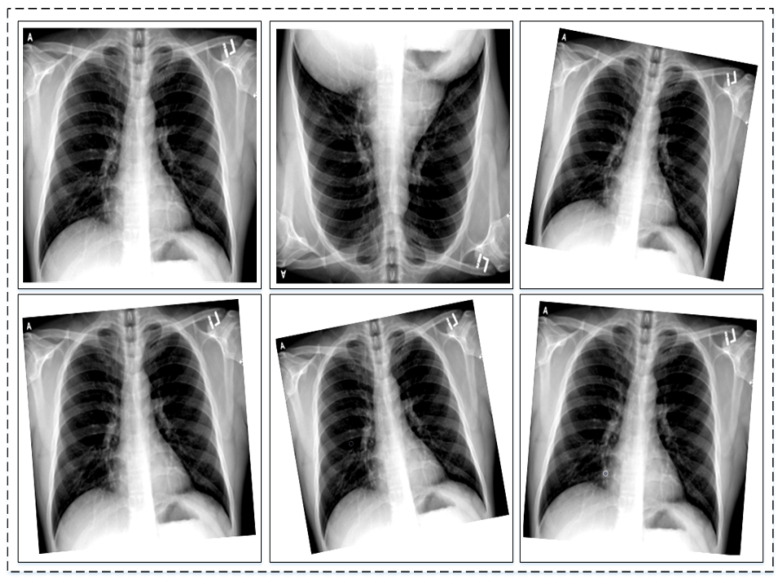

To address the class imbalance challenge, data augmentation is applied to three classes: COVID-19, viral pneumonia, and lung opacity. Augmentation carried out on images is known as image augmentation, and it has various types, including geometric or positional augmentation, random erasing, color space transformation, mixing images, and kernel filter-based augmentation [45]. Geometric augmentation has been found suitable for datasets that are similar to the one used in this work. Geometric augmentation also has various types, including rotation, translation, scaling, cropping, and flipping, which can be used depending on the need and the nature of the original dataset. In this work, the original dataset has inter-class similarities; keeping this in mind, only two geometric augmentations, rotation and flipping, have been found to be useful. In rotational augmentation, images can be rotated clockwise and anti-clockwise by different angles. Practically, it is possible to rotate images from 1 to 359 degrees, but to keep the augmentation safe, that is, label preserving, rotation within 20 degrees is suitable. Flipping, on the other hand, can be horizontal or vertical. It has been found to be useful when applied to datasets such as CIFAR [46] and ImageNet [47]. Flipping is label preserving except for text, but it is important to check the augmentation manually. The nature of the dataset is important when applying augmentation so that the labels of the dataset are preserved. Generally, geometric augmentation is suitable and effective where position bias is present in the dataset. A few examples of augmentation are shown in Figure 3.
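The two geometric augmentations discussed above can be sketched in plain Python as follows. This is a minimal illustration rather than the pipeline used in this work: the function names, the nearest-neighbour rotation, the choice of a horizontal flip, and the 0.5 flip probability are all assumptions; only the rotation limit of 20 degrees comes from the text.

```python
import math
import random


def flip_horizontal(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def flip_vertical(image):
    """Mirror the image top to bottom."""
    return [row[:] for row in image[::-1]]


def rotate(image, degrees, fill=0):
    """Rotate about the image centre using nearest-neighbour sampling."""
    height, width = len(image), len(image[0])
    cy, cx = (height - 1) / 2.0, (width - 1) / 2.0
    theta = math.radians(degrees)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    out = [[fill] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # Inverse mapping: find the source pixel that lands on (x, y).
            dx, dy = x - cx, y - cy
            src_x = round(cos_t * dx + sin_t * dy + cx)
            src_y = round(-sin_t * dx + cos_t * dy + cy)
            if 0 <= src_x < width and 0 <= src_y < height:
                out[y][x] = image[src_y][src_x]
    return out


def augment(image, rng, max_degrees=20):
    """Apply a random rotation within +/- max_degrees and a random horizontal flip."""
    result = rotate(image, rng.uniform(-max_degrees, max_degrees))
    if rng.random() < 0.5:  # assumed flip probability
        result = flip_horizontal(result)
    return result


toy = [[1, 2, 3],
       [4, 5, 6],
       [7, 8, 9]]
augmented = augment(toy, random.Random(0))
```

Pixels that rotate in from outside the frame are filled with a constant here; real pipelines often offer other fill modes, and the augmented samples should still be inspected manually, as noted above.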

Figure 3.

Sample images of original dataset after performing data augmentation.

3.2.2. Feature Extraction and Classification of Chest X-rays

Deep learning models extract features from images using convolutional layers and classify the images based on these features. The initial layers of deep learning models extract edges, contours, etc., while later layers extract more detailed attributes of images. In this study, three different deep learning models have been used for the feature extraction and classification of chest X-rays: EfficientNetB1 [48], NasNetMobile [49], and MobileNetV2 [50]. The architecture of these models is discussed briefly. MobileNetV2 is a lightweight model consisting of 17 bottlenecks. These bottlenecks are made of pointwise and depth-wise convolution layers along with ReLU6 and batch normalization layers. The model consists of a total of 53 layers. NasNetMobile, on the other hand, is made up of two different cells, called normal and reduction cells. The reduction cells are followed by four normal cells throughout the architecture. The reduction cells reduce the dimension of the feature map by a factor of 2. The third model used in this work is EfficientNetB1, which is a variant of the EfficientNetB0 baseline. The core of EfficientNetB1 is the MBConv block, an inverted residual block used to reduce the number of trainable parameters. The squeeze and excitation block is the part of the MBConv block that aids feature extraction by assigning weights to the channels of the MBConv block. The EfficientNetB0 baseline uses compound scaling, which is a combination of width, depth, and resolution scaling. In EfficientNetB1, the swish activation function is used, which combines the linear and sigmoid activation functions. The swish activation function helps retain negative values. EfficientNetB1 uses an input size of 240 × 240. The architecture of the EfficientNetB0 baseline is presented in Table 2.
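The two activation functions mentioned in this subsection can be written out in a few lines. The sketch below uses the standard definitions, swish(x) = x · sigmoid(x) and ReLU6(x) = min(max(x, 0), 6); the function names and sample inputs are chosen here for illustration.

```python
import math


def sigmoid(x):
    """Logistic sigmoid."""
    return 1.0 / (1.0 + math.exp(-x))


def swish(x):
    """Swish: the linear (identity) term multiplied by a sigmoid gate."""
    return x * sigmoid(x)


def relu6(x):
    """ReLU6: ReLU clipped at 6, as used in the MobileNetV2 bottlenecks."""
    return min(max(x, 0.0), 6.0)


for x in (-2.0, -0.5, 0.0, 2.0, 8.0):
    print(f"x={x:5.1f}  swish={swish(x):8.4f}  relu6={relu6(x):4.1f}")
```

For negative inputs, swish returns a small negative value instead of zero, which is what is meant by retaining negative values, whereas ReLU6 maps every negative input to zero.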

Table 2.

Architecture of EfficientNetB0 Baseline.

| Stage | Operator | Resolution | Channels | Layers |
|---|---|---|---|---|
| 1 | Conv3 × 3 | 224 × 224 | 32 | 1 |
| 2 | MBConv1, k3 × 3 | 112 × 112 | 16 | 1 |
| 3 | MBConv6, k3 × 3 | 112 × 112 | 24 | 2 |
| 4 | MBConv6, k5 × 5 | 56 × 56 | 40 | 2 |
| 5 | MBConv6, k3 × 3 | 28 × 28 | 80 | 3 |
| 6 | MBConv6, k5 × 5 | 14 × 14 | 112 | 3 |
| 7 | MBConv6, k5 × 5 | 14 × 14 | 192 | 4 |
| 8 | MBConv6, k3 × 3 | 7 × 7 | 320 | 1 |
| 9 | Conv1 × 1 & Pooling & FC | 7 × 7 | 1280 | 1 |

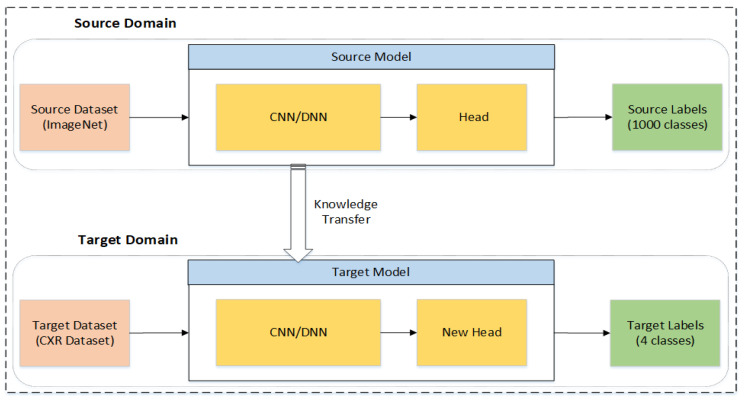

These models were previously trained on the large ImageNet dataset [38]. All three models have been retrained using the concept of transfer learning (TL). TL helps a deep learning model obtain better outcomes by reusing the information that the model previously gained on huge datasets [51]. The TL technique not only improves results but also reduces the training time significantly. The concept of TL is illustrated in Figure 4, where there are two domains: the source domain and the target domain. The source domain transfers knowledge to the target domain. Both domains have three parts: the model, the dataset, and the labels [52]. The models used in the source domain are trained from scratch on a large dataset, where the labels are the categories of that dataset, as in the case of the ImageNet dataset, which has 1000 categories. The models are retrained after performing augmentation on the original dataset.

Figure 4.

Illustration of transfer learning.

Later, the models are fine-tuned according to the needs of this study. The classifier head, which consists of fully connected layers and global average pooling layers, is removed from all three networks. Two new fully connected layers, named Dense layer1 and Dense layer2, of size 256 and 4, respectively, are added. The last fully connected layer is activated using the softmax function, while the Adam optimizer is used in this work. The batch normalization layer is employed before the fully connected layers in order to normalize the output of the previous layers. Introducing the batch normalization layer not only reduces the convergence time but also improves the accuracy. The dropout layer is employed after the first fully connected layer to make the model generalize and to avoid overfitting. The cross-entropy function is used as the cost function. It is represented mathematically in the following equation:

$$\mathrm{CE} = -\sum_{l=1}^{N} P(o, l)\,\log Q(o, l) \qquad (1)$$

where N represents the total number of classes, the class labels are denoted by l, P(o, l) is the true probability of observation o over class l, and Q(o, l) is the predicted probability of observation o over class l. Hyperparameter tuning plays an important role in training deep learning models. The learning rate is one of the most important hyperparameters; instead of using one learning rate throughout the training, a learning rate scheduler is used in this work. It is designed in such a way that whenever the validation loss stops reducing, the learning rate is divided by a factor of 2. The starting learning rate used in this work is 0.0001. It has been found during experimentation that small learning rates are better when using pre-trained models, as this helps to retain much of the information from the previously trained model. Higher learning rates make the training faster but can also cause the weights to explode during training, which affects the training process adversely.
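The cost function and the learning rate schedule described above can be illustrated with a short, framework-free sketch. It assumes the standard categorical cross-entropy over softmax outputs; the class name `HalveOnPlateau`, the `patience` parameter (the number of non-improving epochs tolerated before halving), and the sample values are assumptions made here, since the text does not specify them.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    shift = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(true_probs, predicted_probs, eps=1e-12):
    """Minus the sum over classes of P(o, l) * log Q(o, l)."""
    return -sum(p * math.log(max(q, eps))
                for p, q in zip(true_probs, predicted_probs))


class HalveOnPlateau:
    """Divide the learning rate by 2 when the validation loss stops improving."""

    def __init__(self, initial_lr=1e-4, patience=1):
        self.lr = initial_lr
        self.patience = patience  # assumed value; not stated in the text
        self.best = float("inf")
        self.stale_epochs = 0

    def step(self, val_loss):
        """Record one epoch's validation loss and return the learning rate to use next."""
        if val_loss < self.best:
            self.best = val_loss
            self.stale_epochs = 0
        else:
            self.stale_epochs += 1
            if self.stale_epochs >= self.patience:
                self.lr /= 2.0
                self.stale_epochs = 0
        return self.lr


# Four-class example: the true class is the first one (one-hot target).
probs = softmax([2.0, 0.5, 0.1, -1.0])
loss = cross_entropy([1, 0, 0, 0], probs)

# Made-up validation losses, used only to exercise the scheduler.
scheduler = HalveOnPlateau()
history = [scheduler.step(v) for v in (0.9, 0.7, 0.7, 0.6, 0.65)]
```

With a one-hot target, the loss reduces to the negative logarithm of the probability assigned to the true class. Deep learning frameworks typically provide an equivalent reduce-on-plateau callback, which would normally be preferred over a hand-written scheduler.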

3.2.3. Experimental Setup

As mentioned earlier, the chest X-ray dataset with four classes is taken from Kaggle. The dataset went through the preprocessing stage, which addresses the problem of class imbalance. This is an important step, as an imbalanced dataset adversely affects model training by introducing bias towards one or more classes. Later, the dataset is split into three subsets (training, validation, and testing) with a ratio of 70:20:10, respectively. The testing subset is unseen during training and is used for the evaluation of the models after training. The experiments are performed on an Intel Core i7 system with 16 gigabytes of RAM and an NVIDIA GTX 1070 Ti GPU. Three different deep learning models are used to compute the results using TL. Moreover, two different training strategies have been adopted in this work. In Strategy I, all three models are trained using TL on the preprocessed dataset (normalization and data augmentation) and classified using the classification head without the batch normalization and dropout layers, and also without the L2 regularization technique. In Strategy II, all three models are trained again and classification is performed using a classification head with the batch normalization and dropout layers. Moreover, Dense layer1 is also regularized using the L2 regularization technique, which is also known as weight decay. The results of the proposed technique are validated using several evaluation parameters: accuracy, precision, FPR, sensitivity, and specificity.
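The 70:20:10 split can be sketched as follows. The text does not say whether the split was stratified by class; the sketch below assumes that it was, and the function name `stratified_split`, the seed, and the toy labels are hypothetical.

```python
import random


def stratified_split(labels, percentages=(70, 20, 10), seed=0):
    """Split sample indices into train/validation/test subsets class by class."""
    rng = random.Random(seed)
    by_class = {}
    for index, label in enumerate(labels):
        by_class.setdefault(label, []).append(index)

    train, val, test = [], [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        # Integer arithmetic avoids floating-point rounding surprises.
        n_train = len(indices) * percentages[0] // 100
        n_val = len(indices) * percentages[1] // 100
        train += indices[:n_train]
        val += indices[n_train:n_train + n_val]
        test += indices[n_train + n_val:]  # the remainder goes to the test set
    return train, val, test


# Toy labels: 50 samples for each of the four classes.
labels = (["COVID-19"] * 50 + ["Lung opacity"] * 50
          + ["Normal"] * 50 + ["Viral pneumonia"] * 50)
train_idx, val_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(val_idx), len(test_idx))
```

Splitting per class keeps the class proportions the same in all three subsets, so the test subset remains representative of every class.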

4. Results

In this section, the classification performance of the proposed technique on a multiclass chest X-ray image dataset is discussed in detail. The classification results are presented in Section 4.1 while Section 4.1.1 presents the findings of experiments along with comparisons of other techniques.

4.1. Classification Results

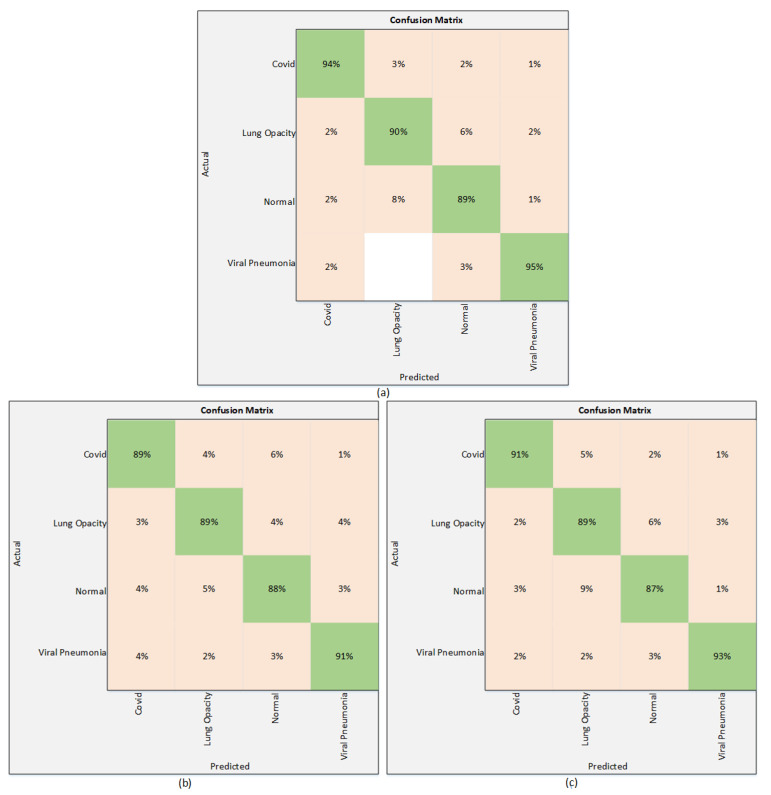

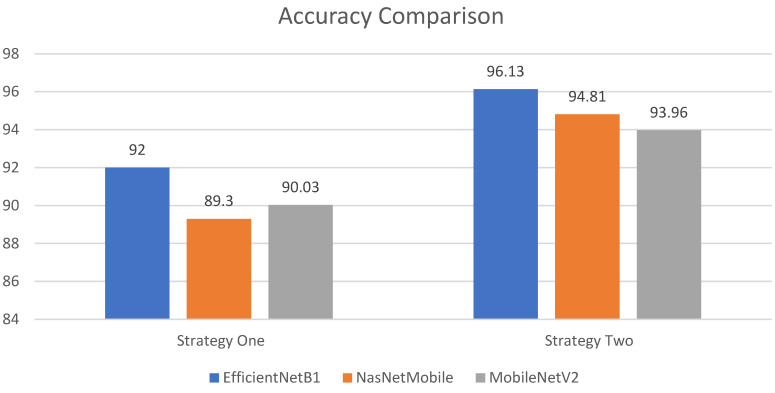

The results of the proposed technique are presented in this section. The results obtained using Strategy I are presented in Table 3. All three models are trained without employing any regularization technique. Table 3 shows that among these three deep learning models, EfficientNetB1 outperforms the others with the highest test accuracy of 92%. It attains a precision of 91.75%, while the sensitivity and F1 score are 94.5% and 92.75%, respectively. NasNetMobile, on the other hand, provides an accuracy of 89.30% and a precision of 89.25%; its sensitivity is recorded as 91.75% and its F1 score as 91%. The third model used in this work is MobileNetV2, which performs relatively better than NasNetMobile. MobileNetV2 achieves an accuracy of 90.03%, whereas the precision is recorded as 92.25%. The sensitivity attained using MobileNetV2 is 92% and the F1 score is recorded as 91.75%. Table 3 clearly shows that EfficientNetB1 is superior to the other models in terms of accuracy, along with other parameters. These results are also validated using confusion matrices, which are shown in Figure 5.
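The evaluation parameters can be derived from a confusion matrix in a one-vs-rest manner, as sketched below. The text does not state how the per-class values are aggregated into the reported figures; macro-averaging is assumed here, and the confusion matrix is made-up toy data, not a result of this study.

```python
def per_class_metrics(confusion):
    """Compute one-vs-rest metrics from a square confusion matrix.

    confusion[i][j] counts samples of true class i predicted as class j.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    metrics = []
    for k in range(n):
        tp = confusion[k][k]
        fn = sum(confusion[k]) - tp
        fp = sum(confusion[i][k] for i in range(n)) - tp
        tn = total - tp - fn - fp
        precision = tp / (tp + fp) if tp + fp else 0.0
        sensitivity = tp / (tp + fn) if tp + fn else 0.0
        specificity = tn / (tn + fp) if tn + fp else 0.0
        f1 = (2 * precision * sensitivity / (precision + sensitivity)
              if precision + sensitivity else 0.0)
        metrics.append({"precision": precision, "sensitivity": sensitivity,
                        "specificity": specificity, "fpr": 1.0 - specificity,
                        "f1": f1})
    return metrics


def accuracy(confusion):
    """Fraction of samples on the diagonal of the confusion matrix."""
    correct = sum(confusion[k][k] for k in range(len(confusion)))
    return correct / sum(sum(row) for row in confusion)


def macro_average(metrics, key):
    """Unweighted mean of one metric over all classes (assumed aggregation)."""
    return sum(m[key] for m in metrics) / len(metrics)


# Toy 4-class confusion matrix (made-up counts, not the paper's results).
toy = [[48, 1, 1, 0],
       [2, 45, 3, 0],
       [1, 2, 47, 0],
       [0, 0, 1, 49]]

metrics = per_class_metrics(toy)
print(f"accuracy          {accuracy(toy):.4f}")
for key in ("precision", "sensitivity", "specificity", "fpr", "f1"):
    print(f"macro {key:12s} {macro_average(metrics, key):.4f}")
```

The FPR is computed as one minus the specificity, so only one of the two needs to be reported to recover the other.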

Table 3.

Classification results using Strategy I.

| Deep Learning Model | Accuracy | Precision | Sensitivity | F1 Score |
|---|---|---|---|---|
| EfficientNetB1 | 92% | 91.75% | 94.50% | 92.75% |
| NasNetMobile | 89.30% | 89.25% | 91.75% | 91% |
| MobileNetV2 | 90.03% | 92.25% | 92% | 91.75% |

Figure 5.

Confusion matrix using Strategy I. (a) EfficientNetB1 (b) NasNetMobile (c) MobileNetV2.

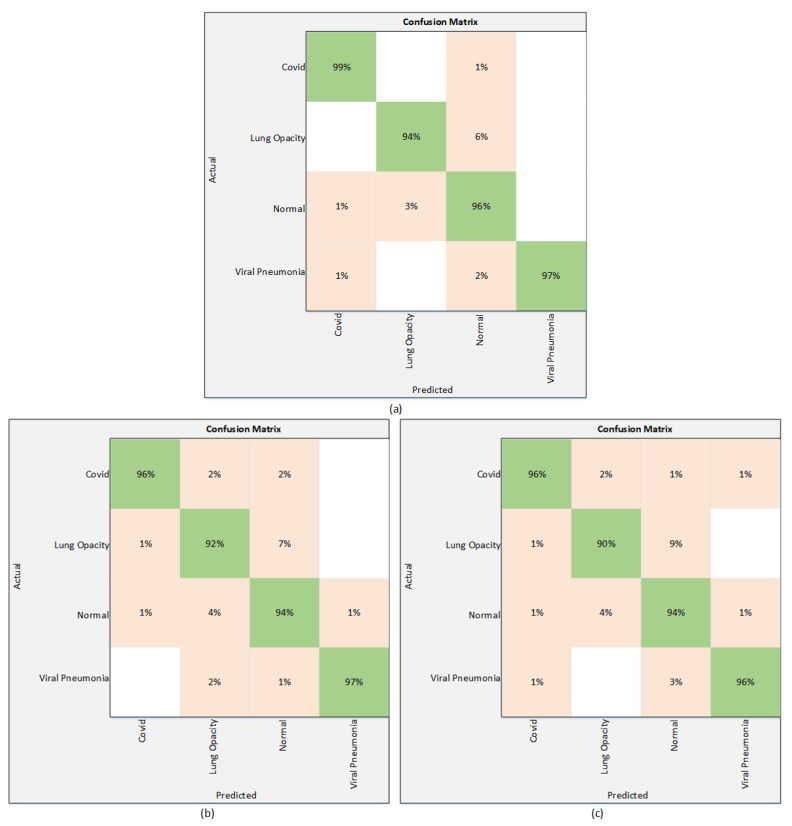

The results of the proposed technique using Strategy II are presented in Table 4. As mentioned in Section 3, to improve the performance of the models used in Strategy I, the classification head is modified by employing a batch normalization layer along with a dropout layer. This strategy significantly improves the results of all three models. According to Table 4, EfficientNetB1 attains the highest test accuracy of 96.13%, while the precision, sensitivity, and F1 score are 97.25%, 96.50%, and 97.50%, respectively. Of the other two models, NasNetMobile performs better than MobileNetV2 and attains an accuracy of 94.81%. Its precision is 95.5%, whereas its sensitivity and F1 score are 95% and 95.25%, respectively. The accuracy and precision attained by MobileNetV2 are 93.96% and 94.50%, while the sensitivity and F1 score are 95% and 94.50%, respectively. The results of Table 4 can also be validated through confusion matrices. Figure 6 presents the confusion matrices of all three models.

Table 4.

Classification results using Strategy II.

| Deep Learning Model | Accuracy | Precision | Sensitivity | F1 Score |
|---|---|---|---|---|
| EfficientNetB1 | 96.13% | 97.25% | 96.50% | 97.50% |
| NasNetMobile | 94.81% | 95.50% | 95% | 95.25% |
| MobileNetV2 | 93.96% | 94.50% | 95% | 94.50% |

Figure 6.

Confusion matrix using Strategy II. (a) EfficientNetB1 (b) NasNetMobile (c) MobileNetV2.

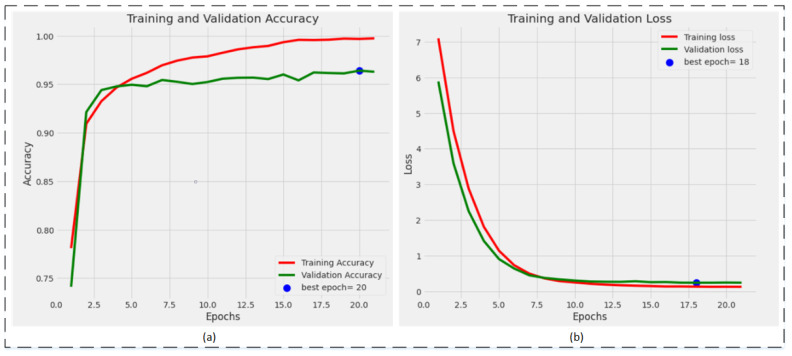

The training plots of the best-performing model, EfficientNetB1, are shown in Figure 7. Figure 7a shows the training and validation accuracy plot; the best validation accuracy is achieved by the model at the 20th epoch. Figure 7b shows the training and validation loss plot. The minimum validation loss is attained at the 18th epoch; training continues until the 21st epoch, but the loss does not reduce further after the 18th epoch.

Figure 7.

Training plots of the best performing model, EfficientNetB1: (a) accuracy plot; (b) loss plot.

4.1.1. Analysis and Comparison

In this subsection, the observations made during training are stated. In this work, three different models are trained using two different strategies. It has been observed that the performance of all three models improves significantly after employing regularization techniques. Although EfficientNetB1 performs well in both scenarios, regularization techniques significantly improve its performance. Moreover, it has also been observed that the number of parameters in a model is not directly related to its performance: in Strategy I, MobileNetV2 has fewer parameters than NasNetMobile and yet attains better accuracy. A comparison of the performance of the models is shown in Figure 8. It is clearly seen that the modified EfficientNetB1 outperforms the other models in both strategies. In addition, a comparison of performance with recent work presented in the literature is shown in Table 5.

Figure 8.

Performance comparison between Strategy I and Strategy II.

Table 5.

Comparison with other techniques.

5. Conclusions

A deep learning-based technique is proposed for the classification of different chest infections. The proposed automated system differentiates chest infections by evaluating chest X-ray images. Because the data are gathered from different sources, pixel normalization is used as a preprocessing step to normalize the pixel intensity of the images. Moreover, image augmentation is adopted to resolve the class imbalance problem. Three pre-trained deep learning models (EfficientNetB1, NasNetMobile, and MobileNetV2) are fine-tuned and later retrained to classify four chest X-ray classes. The results show that the performance of all three models improves after regularization techniques are applied. The regularized EfficientNetB1 model outperforms the other models with a classification accuracy of 96.13%, and it also achieves higher accuracy than the recent techniques with which it is compared.
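The two preprocessing ideas summarized above, pixel normalization and augmentation to balance the classes, can be sketched as follows. This is a minimal illustration under stated assumptions: min-max scaling to [0, 1], a small random horizontal shift as the augmentation, and the helper names `normalize_pixels`, `random_shift`, and `balance_by_augmentation` are all choices made for this example and are not confirmed as the exact procedure used in the study.

```python
import numpy as np

def normalize_pixels(image):
    """Min-max scale one image to the range [0, 1]."""
    image = np.asarray(image, dtype=np.float32)
    lo, hi = image.min(), image.max()
    if hi == lo:                      # constant image: avoid division by zero
        return np.zeros_like(image)
    return (image - lo) / (hi - lo)

def random_shift(image, rng, max_shift=2):
    """Shift an image horizontally by a few pixels, padding with zeros."""
    shift = int(rng.integers(-max_shift, max_shift + 1))
    if shift == 0:
        return image.copy()
    shifted = np.zeros_like(image)
    if shift > 0:
        shifted[:, shift:] = image[:, :-shift]
    else:
        shifted[:, :shift] = image[:, -shift:]
    return shifted

def balance_by_augmentation(images, labels, rng):
    """Add augmented copies of minority-class images until all classes
    contain as many samples as the largest class."""
    labels = list(labels)
    counts = {c: labels.count(c) for c in set(labels)}
    target = max(counts.values())
    out_images, out_labels = list(images), list(labels)
    for cls, count in counts.items():
        pool = [img for img, lab in zip(images, labels) if lab == cls]
        for _ in range(target - count):
            source = pool[int(rng.integers(len(pool)))]
            out_images.append(random_shift(source, rng))
            out_labels.append(cls)
    return out_images, out_labels

# Hypothetical toy data: five 8x8 images of class 0 and two of class 1.
rng = np.random.default_rng(0)
raw = [rng.integers(0, 256, size=(8, 8)) for _ in range(7)]
labels = [0, 0, 0, 0, 0, 1, 1]

normalized = [normalize_pixels(img) for img in raw]
out_images, out_labels = balance_by_augmentation(normalized, labels, rng)
print({c: out_labels.count(c) for c in sorted(set(out_labels))})
```

In practice, augmented copies should be generated only for the training split so that the validation and test sets remain free of synthetic samples.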

In the future, this study can be extended to a larger database with more than four classes. In addition, other lightweight deep learning models can be used to reduce the computational time required. Moreover, optimization techniques, specifically metaheuristic techniques, can be employed to select optimal features for classification and thereby improve performance.

Author Contributions

Conceptualization, E.K. and M.Z.U.R.; methodology, F.A.; software, M.Z.U.R. and F.A.; validation, E.K., F.A. and J.A.; formal analysis, M.Z.U.R.; investigation, F.A.A. and N.M.A.; resources, E.K.; data curation, F.A.; writing—original draft preparation, M.Z.U.R.; writing—review and editing, F.A., F.A.A. and N.M.A.; visualization, E.K.; supervision, F.A. and J.A.; project administration, J.A.; funding acquisition, F.A.A. and N.M.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The dataset used in this research is publicly available on Kaggle under the name “COVID-19 Radiography Database” at https://www.kaggle.com/tawsifurrahman/covid19-radiography-database.

Conflicts of Interest

The authors declare no conflict of interest.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

