Abstract

This work presents an automated contactless fingerprint recognition system for smartphones. We provide a comprehensive description of the entire recognition pipeline and discuss important requirements for a fully automated capturing system. In addition, our implementation is made publicly available for research purposes. In a database acquisition, a total of 1360 contactless and contact-based samples of 29 subjects are captured in two different environmental settings. Experiments on the acquired database show that, under constrained environmental influences, our contactless scheme performs comparably to the contact-based baseline scheme. A comparative usability study on both capturing device types indicates that the majority of subjects prefer the contactless capturing method. Based on our experimental results, we analyze the impact of the current COVID-19 pandemic on fingerprint recognition systems. Finally, implementation aspects of contactless fingerprint recognition are summarized.

Keywords: biometrics, fingerprint recognition, contactless fingerprint, usability, biometric performance

1. Introduction

Fingerprints are one of the most important biometric characteristics due to their known uniqueness and persistence properties. Fingerprint recognition systems are not only used worldwide by law enforcement and forensic agencies; they are also deployed in mobile devices as well as in nationwide applications. The vast majority of fingerprint capturing schemes require contact between the finger and the capturing device's surface. These systems suffer from distinct problems, e.g., low contrast caused by dirt or humidity on the capturing device plate or latent fingerprints of previous users (ghost fingerprints). Especially in multi-user applications, hygienic concerns lower the acceptability of contact-based fingerprint systems and hence limit their deployment. In a comprehensive study, Okereafor et al. [1] analyzed the risk of an infection by contact-based fingerprint recognition schemes and the hygienic concerns of their users. The authors concluded that contact-based fingerprint recognition carries a high risk of an infection if a previous user has contaminated the capturing device surface, e.g., with the SARS-CoV-2 virus.

To tackle these shortcomings of contact-based schemes, contactless fingerprint recognition systems have been researched for more than a decade. Contactless capturing schemes operate without any contact between the finger and the capturing device. Several contributions to the research area have paved the way for a practical implementation of contactless capturing schemes. Specialized stationary capturing devices based on multi-camera setups combined with powerful processing have already been implemented in a practical way [2]. However, to the best of the authors’ knowledge, no approach to a comprehensive usability-oriented mobile contactless fingerprint recognition scheme based on off-the-shelf components such as smartphones has been documented so far.

In this work, we propose a mobile contactless fingerprint recognition scheme for smartphones. Our contributions can be summarized as follows:

We present the first fully automated four-finger capturing and preprocessing scheme with integrated quality assessment in the form of an Android app. A description of every implementation step of the preprocessing pipeline is given.

To benchmark our proposed system, we acquired a database under real-life conditions. A total of 29 subjects were captured with two contactless capturing devices in different environmental settings. Contact-based samples were also acquired as a baseline.

We further evaluate the biometric performance of our acquired database and measure the interoperability between both capturing device types.

We provide a first comparative study of the usability of contactless and contact-based fingerprint recognition schemes. The study was conducted after the capture sessions and reports the users' experiences in terms of hygiene and convenience.

Based on our experimental results, we elaborate on the impact of the current COVID-19 pandemic on fingerprint recognition in terms of biometric performance and user acceptance. Furthermore, we summarize implementation aspects which we consider as beneficial for mobile contactless fingerprint recognition.

The whole capturing, processing, and recognition pipeline discussed in this work is made publicly available for research purposes1. Moreover, interested researchers are welcome to submit their algorithms for benchmarking on our acquired database2.

The rest of the paper is structured as follows: Section 2 gives an overview of contactless end-to-end schemes proposed in the scientific literature. In Section 3, the proposed processing pipeline is presented. In Section 4, we describe our experimental setup and provide details about the captured database and the usability study. The results of our experiments are reported in Section 5. The influence of the COVID-19 pandemic on fingerprint recognition is discussed in Section 6. Section 7 discusses implementation aspects. Finally, Section 8 concludes.

2. Related Work

In this section, we present an overview of contactless fingerprint recognition workflows. Here, we focus on end-to-end solutions that present a whole recognition pipeline from capturing to comparison. Table 1 summarizes the most relevant related works and their implementation aspects. As Table 1 indicates, the proposed methods differ considerably in terms of capturing device, fingerprint processing, recognition pipeline, and user convenience. In addition, the acquired databases vary in terms of size, illumination, and environmental influences. For this reason, a direct comparison of the biometric performance reported in the listed works would be misleading and is therefore avoided.

Table 1.

Overview of selected recognition workflows with implementation aspects (Device Type: P = prototypical hardware, S = smartphone, W = webcam; Mobile/Stationary: M = mobile, S = stationary; Y = yes, N = no).

| Authors | Year | Device Type | Mobile/Stationary | Multi-Finger Capturing | Automatic Capturing | Free Finger Positioning | Quality Assessment | On-Device Processing | Usability Evaluation |
|---|---|---|---|---|---|---|---|---|---|
| Hiew et al. [3] | 2007 | P | S | N | N | N | N | N | N |
| Piuri and Scotti [6] | 2008 | W | S | N | N | N | N | N | N |
| Wang et al. [4] | 2009 | P | S | N | N | N | N | N | N |
| Kumar and Zhou [7] | 2011 | W | S | N | N | N | N | N | N |
| Noh et al. [8] | 2011 | P | S | Y | Y | N | N | N | Y |
| Derawi et al. [9] | 2012 | S | S | N | N | N | N | N | N |
| Stein et al. [10] | 2013 | S | M | N | Y | Y | N | Y | N |
| Raghavendra et al. [11] | 2014 | P | S | N | N | Y | N | N | N |
| Tiwari and Gupta [12] | 2015 | S | M | N | N | Y | N | N | N |
| Sankaran et al. [13] | 2015 | S | M | N | Y | N | N | N | N |
| Carney et al. [14] | 2017 | S | M | Y | Y | N | N | Y | N |
| Deb et al. [15] | 2018 | S | M | N | Y | Y | Y | Y | N |
| Weissenfeld et al. [16] | 2018 | P | M | Y | Y | Y | N | Y | Y |
| Birajadar et al. [17] | 2019 | S | M | N | Y | N | N | N | N |
| Attrish et al. [5] | 2021 | P | S | N | N | N | N | Y | N |
| Kauba et al. [18] | 2021 | S | M | Y | Y | Y | N | Y | N |
| Our method | 2021 | S | M | Y | Y | Y | Y | Y | Y |

Research on contactless fingerprint recognition has evolved from bulky single-finger devices to more convenient multi-finger capturing schemes. The first end-to-end approaches with prototypical hardware setups were presented by Hiew et al. [3] and Wang et al. [4]. Both works employed large capturing devices that acquire a single finger placed in a hole-like guide. A more recent approach by Attrish et al. [5] also used a box-like capturing setup and implemented the processing on an embedded hardware unit.

For remote user authentication, Piuri and Scotti [6] as well as Kumar and Zhou [7] investigated the use of webcams as fingerprint-capturing devices. Both schemes showed a very low equal error rate (EER) in their experiments. However, the database capturing process was not reported in detail. In addition, the usability and user acceptance of such an approach require further investigation.

More recent works use smartphones for contactless fingerprint capturing. In some of these works, a finger image is taken with a photo app and manually transferred to a remote device where the processing is performed [12,13]. Improvements in the cameras and processing power of current smartphones have made it possible to capture multiple fingers in a single capture attempt and to process them on the device. Stein et al. [10] showed that automated capturing of a single finger image with a smartphone is feasible. Carney et al. [14] presented the first four-finger capturing scheme. Weissenfeld et al. [16] proposed a system with free positioning of four fingers in a mobile prototypical hardware setup. In a later work, Kauba et al. [18] showed that this recognition workflow also works on a smartphone.

In summary, Table 1 indicates that the evolution of contactless fingerprint technologies has moved towards mobile out-of-the-box devices. It can also be observed that a more convenient and practically relevant recognition process is increasingly becoming the focus of research. For a comprehensive overview of contactless fingerprint recognition, including publications that consider only parts of the recognition pipeline, the reader is referred to [19,20].

3. Mobile Contactless Recognition Pipeline

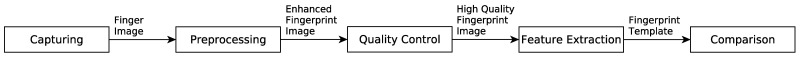

An unconstrained and automated contactless fingerprint recognition system usually requires more elaborate processing than contact-based schemes. Figure 1 gives an overview of the key processing steps of the proposed recognition pipeline. Our method features on-device capturing, preprocessing, and quality assessment, whereas the biometric identification workflow is implemented on a back-end system. This section describes each component of the recognition pipeline in detail. The proposed method combines four implementation aspects that we consider beneficial for an efficient and convenient recognition:

An Android application running on a smartphone which continuously captures finger images as candidates for the final fingerprints and provides user feedback.

Free positioning of the four inner-hand fingers without guidelines or a frame.

An integrated quality assessment which selects the best-suited finger image from the list of candidates.

A fully automated processing pipeline that converts the selected candidate into fingerprints ready for the recognition workflow.

Figure 1.

Overview of the most relevant steps of our proposed method.

3.1. Capturing

The vast majority of mobile contactless recognition schemes rely on state-of-the-art smartphones as capturing devices. Smartphones offer a high-resolution camera unit, a powerful processor, integrated user feedback via display and speaker, and a mobile internet connection for on-demand comparison against centrally stored databases.

In our case, both the capturing and the processing are embedded in an Android app. Once the recognition process is started, the application analyzes the live-view image and automatically captures a finger image if the quality parameters meet the requirements. The application is designed to automatically capture and process up to six images per second. The capturing module resizes each captured image to a fixed size of 1920 × 1080 pixels. This makes the processing pipeline more robust to differences in the native resolution of the camera sensor and ensures that the capturing device can process the input images with a moderate system load. During capturing, the user can see his/her fingers in a live view on the screen and adjust the finger position. In addition, the capturing progress is displayed.
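The throttled capture loop and the fixed-size resize described above can be sketched as follows. This is a minimal, hypothetical Python illustration rather than the app's Android code: `FrameThrottle` and `resize_nearest` are names introduced here, images are plain row-major lists, and nearest-neighbour interpolation is an assumption because the text does not state which interpolation is used.

```python
import time

TARGET_SIZE = (1920, 1080)  # (width, height) of every processed frame, per Section 3.1
MAX_FPS = 6                 # the app captures and processes up to six images per second


class FrameThrottle:
    """Admits at most `max_fps` live-view frames per second and drops the rest."""

    def __init__(self, max_fps=MAX_FPS, clock=time.monotonic):
        self.min_interval = 1.0 / max_fps
        self.clock = clock  # injectable clock, e.g. for testing
        self.last = None    # timestamp of the last admitted frame

    def admit(self):
        now = self.clock()
        if self.last is None or now - self.last >= self.min_interval:
            self.last = now
            return True
        return False


def resize_nearest(image, size=TARGET_SIZE):
    """Nearest-neighbour resize of a row-major 2D list to (width, height)."""
    src_h, src_w = len(image), len(image[0])
    dst_w, dst_h = size
    return [[image[y * src_h // dst_h][x * src_w // dst_w] for x in range(dst_w)]
            for y in range(dst_h)]
```

One benefit of fixing the frame size, under this sketch's assumptions, is that pixel-based parameters later in the pipeline (crop margins, patch sizes) no longer depend on the sensor resolution.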

3.2. Segmentation of the Hand Area

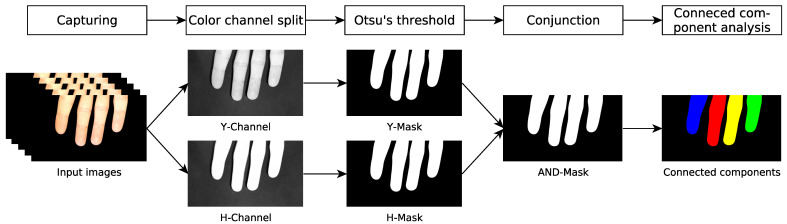

Segmentation strategies proposed in the literature mainly rely on color and contrast. Many works use color models to separate the skin color of the hand from the background. Here, Otsu's thresholding, which adapts to the histogram of each image, is preferable to static thresholding. Combinations of different color channels also show superior results compared to single-channel schemes [21,22,23,24].

Figure 2 presents an overview of the segmentation workflow. We adopt such a multi-channel approach and analyze the Cr component of the YCbCr color model and the H component of the HSV color model. As a first step, we normalize each color channel to the full range of the histogram. Subsequently, Otsu's method determines the threshold that best separates the two modes of the histogram, i.e., the threshold that maximizes the between-class variance. A binary mask is then created in which all pixels below the threshold are set to black and all pixels above the threshold are set to white.
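A minimal pure-Python sketch of the per-channel step (normalization, Otsu's threshold, binary mask) is shown below. It operates on one 8-bit channel given as a flat list, for example the Cr or the H channel; how the two channel masks are combined is not specified in the text, so that step is left out. The function names are illustrative. In practice, a library routine such as OpenCV's `cv2.threshold` with the `cv2.THRESH_OTSU` flag would typically be used instead.

```python
def normalize_channel(channel):
    """Stretch an 8-bit channel (flat list of ints) to the full 0..255 range."""
    lo, hi = min(channel), max(channel)
    if hi == lo:
        return [0] * len(channel)
    return [round((v - lo) * 255 / (hi - lo)) for v in channel]


def otsu_threshold(values):
    """Return the threshold t maximizing the between-class variance of {v <= t} and {v > t}."""
    hist = [0] * 256
    for v in values:
        hist[v] += 1
    total = len(values)
    sum_all = sum(i * count for i, count in enumerate(hist))
    w0 = 0    # number of pixels in the lower class
    sum0 = 0  # intensity sum of the lower class
    best_t, best_var = 0, -1.0
    for t in range(256):
        w0 += hist[t]
        sum0 += t * hist[t]
        if w0 == 0:
            continue
        w1 = total - w0
        if w1 == 0:
            break
        mean0, mean1 = sum0 / w0, (sum_all - sum0) / w1
        # Unnormalized between-class variance (proportional to the textbook definition).
        between_var = w0 * w1 * (mean0 - mean1) ** 2
        if between_var > best_var:
            best_t, best_var = t, between_var
    return best_t


def segment_channel(channel):
    """Normalize, threshold with Otsu, and return a binary mask (1 = white, 0 = black)."""
    norm = normalize_channel(channel)
    t = otsu_threshold(norm)
    return [1 if v > t else 0 for v in norm]
```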

Figure 2.

Overview of the segmentation of connected components from a continuous stream of input images.

Additionally, our algorithm analyzes the largest connected components within the segmentation mask. Ideally, the segmentation mask contains only one to four dominant components, ranging from a single connected hand area to four separate finger areas. Our method also implements a plausibility check of the size, shape, and position of the segmented areas.
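The dominant-component analysis can be illustrated with a breadth-first labeling of 4-connected foreground regions. In this hypothetical sketch, a component counts as dominant if it covers at least `min_fraction` of the image; this cut-off is an assumption, and the shape and position checks mentioned above are omitted.

```python
from collections import deque


def connected_components(mask):
    """Label 4-connected foreground (1) regions; return one pixel list per region."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    components = []
    for y in range(h):
        for x in range(w):
            if not mask[y][x] or seen[y][x]:
                continue
            seen[y][x] = True
            queue, pixels = deque([(y, x)]), []
            while queue:
                cy, cx = queue.popleft()
                pixels.append((cy, cx))
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            components.append(pixels)
    return components


def dominant_components(mask, min_fraction=0.01):
    """Components covering at least `min_fraction` of the image, largest first."""
    area = len(mask) * len(mask[0])
    large = [c for c in connected_components(mask) if len(c) >= min_fraction * area]
    return sorted(large, key=len, reverse=True)


def plausible_segmentation(mask, min_fraction=0.01):
    """Count check only: one (whole hand) to four (separated fingers) dominant components."""
    return 1 <= len(dominant_components(mask, min_fraction)) <= 4
```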

3.3. Rotation Correction, Fingertip Detection, and Normalization

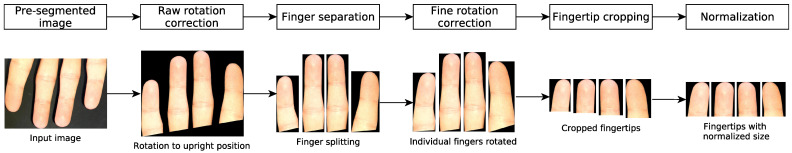

The rotation correction transforms every finger image such that the final fingerprint image is oriented upright.

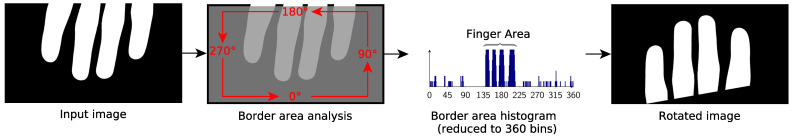

Figure 3 presents an overview of the rotation correction, fingertip detection, and normalization. Our method features two rotation steps: first, a coarse rotation of the full hand, and second, a fine rotation of each separated finger. A robust separation and identification of the fingers requires that the hand be rotated to an upright position. To this end, the border of the binary segmentation mask is analyzed: many white pixels along one border indicate that the hand enters the sensor area from this direction. We therefore search for the border area with the most white pixels and calculate a rotation angle from its coordinate. Figure 4 illustrates this method.
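One way to turn the border analysis into an angle is sketched below, under assumptions not stated in the text: the border with the most white pixels is taken as the hand's entry side, the mean position of those pixels serves as the entry point, and the angle is measured from the image center. Rotating by the returned angle with `rotate_point` (a helper introduced here for illustration) moves the entry point to the bottom of the image, so that the fingers point upward.

```python
import math


def dominant_border_point(mask):
    """Mean (x, y) of the white pixels on the image border that has the most of them."""
    h, w = len(mask), len(mask[0])
    sides = {
        "top": [(x, 0) for x in range(w) if mask[0][x]],
        "bottom": [(x, h - 1) for x in range(w) if mask[h - 1][x]],
        "left": [(0, y) for y in range(h) if mask[y][0]],
        "right": [(w - 1, y) for y in range(h) if mask[y][w - 1]],
    }
    points = max(sides.values(), key=len)
    if not points:
        return None  # the hand does not touch any border
    return (sum(p[0] for p in points) / len(points),
            sum(p[1] for p in points) / len(points))


def coarse_rotation_angle(mask):
    """Angle (degrees) between the downward direction and the entry point, seen from the center."""
    entry = dominant_border_point(mask)
    if entry is None:
        return None
    cx, cy = (len(mask[0]) - 1) / 2, (len(mask) - 1) / 2
    return math.degrees(math.atan2(entry[0] - cx, entry[1] - cy))


def rotate_point(x, y, cx, cy, angle_deg):
    """Rotate (x, y) about (cx, cy) in image coordinates (y down), i.e. clockwise on screen."""
    a = math.radians(angle_deg)
    dx, dy = x - cx, y - cy
    return (cx + dx * math.cos(a) - dy * math.sin(a),
            cy + dx * math.sin(a) + dy * math.cos(a))
```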

Figure 3.

Overview of the coarse rotation correction, separation of fingerprint images from each other, fine rotation correction, fingertip cropping, and normalization of the fingerprint size.

Figure 4.

Detailed workflow of the coarse rotation correction.

After the coarse rotation, the fingertips are separated. To this end, the number of sufficiently large contours is compared to a preconfigured value. If there are fewer contours than expected, the finger image most likely still contains part of the palm, which connects the fingers. In this case, pixel rows are cut off from the bottom of the image and the sample is tested again. If there are more large contours than expected, the finger image is discarded in order to avoid assigning wrong finger-IDs.
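The contour-count loop can be sketched as follows. The cut step, the minimum contour size, and the maximum cut fraction are hypothetical parameters; `expected` stands for the preconfigured value (e.g., four inner-hand fingers). A small component counter is included so that the sketch is self-contained.

```python
def count_large_components(mask, min_size):
    """Count 4-connected foreground regions with at least `min_size` pixels."""
    h = len(mask)
    w = len(mask[0]) if h else 0
    seen, count = set(), 0
    for y in range(h):
        for x in range(w):
            if not mask[y][x] or (y, x) in seen:
                continue
            seen.add((y, x))
            stack, size = [(y, x)], 0
            while stack:
                cy, cx = stack.pop()
                size += 1
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and (ny, nx) not in seen:
                        seen.add((ny, nx))
                        stack.append((ny, nx))
            if size >= min_size:
                count += 1
    return count


def separate_fingers(mask, expected=4, min_size=50, step=10, max_cut_fraction=0.5):
    """Cut rows from the bottom of an upright hand mask until `expected` large contours remain.

    Returns the cut mask, or None if the sample should be discarded.
    """
    h = len(mask)
    cut = 0
    while cut <= max_cut_fraction * h:
        current = mask[:h - cut]
        n = count_large_components(current, min_size)
        if n == expected:
            return current
        if n > expected:
            return None  # too many contours: finger-IDs would be unreliable
        cut += step      # too few contours: the palm likely still connects the fingers
    return None
```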

An upright hand area does not necessarily mean that the individual fingers are upright, because fingers can be spread. A fine rotation is therefore computed on every finger image to correct such cases: a minimum-area rotated rectangle is fitted around every dominant contour, and the finger is rotated such that this rectangle is upright.
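The fine rotation can be approximated as sketched below. Note that this swaps in a different technique: instead of a minimum-area rectangle (as computed, e.g., by OpenCV's `cv2.minAreaRect`), it estimates the finger's principal axis from second-order central image moments, which yields a similar angle for elongated, roughly symmetric finger blobs. A positive angle means the axis runs from top-left to bottom-right; rotating by this angle with the matrix [[cos, -sin], [sin, cos]] in image coordinates (y axis pointing down) makes the axis vertical.

```python
import math


def finger_tilt(mask):
    """Angle (degrees, in (-90, 90]) between a finger blob's major axis and the vertical.

    Principal-axis estimate from second-order central moments; a stand-in for
    the minimum-area rectangle used in the described pipeline.
    """
    pts = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    n = len(pts)
    mx = sum(x for x, _ in pts) / n
    my = sum(y for _, y in pts) / n
    mu20 = sum((x - mx) ** 2 for x, _ in pts)
    mu02 = sum((y - my) ** 2 for _, y in pts)
    mu11 = sum((x - mx) * (y - my) for x, y in pts)
    # Orientation measured from the y axis (vertical) towards the x axis.
    return math.degrees(0.5 * math.atan2(2 * mu11, mu02 - mu20))
```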

Additionally, the height of each finger image needs to be reduced to the area that contains the fingerprint impression. Other works have proposed algorithms that search for the first finger knuckle [25,26]. We implemented a simpler method that cuts the height of the finger image to twice its width. In our use case, this method leads to slightly less accurate results but is much more robust against outliers.

Contactless fingerprint images captured in different sessions or processed by different workflows do not necessarily have the same size. The distance between sensor and finger defines the scale of the resulting image. For a minutiae-based comparison, it is crucial that both samples have the same size. Moreover, in a contactless-to-contact-based interoperability scenario, the sample size has to be aligned to the standardized resolution, e.g., 500 dpi. Therefore, we normalize the fingerprint image to a width of 300 pixels. This size refers to a ridge-to-ridge distance of approximately seven pixels, which corresponds to the distance of contact-based fingerprints captured with 500 dpi.
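The height cut described above (twice the width) and the width normalization can be combined into a short sketch. It assumes an upright finger image with the fingertip in the top row and uses nearest-neighbour resampling, which is an assumption since the interpolation method is not specified; the function names are illustrative.

```python
TARGET_WIDTH = 300  # normalized fingerprint width in pixels, as stated in the text


def crop_fingertip(finger):
    """Keep the top part of an upright finger image so that height = 2 * width."""
    width = len(finger[0])
    return finger[:2 * width]


def normalize_width(image, target_width=TARGET_WIDTH):
    """Nearest-neighbour rescale to `target_width`, preserving the aspect ratio."""
    h, w = len(image), len(image[0])
    scale = target_width / w
    new_h = max(1, round(h * scale))
    return [[image[min(h - 1, int(y / scale))][min(w - 1, int(x / scale))]
             for x in range(target_width)]
            for y in range(new_h)]
```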

Together with the information regarding which hand is captured, an accurate rotation correction also enables a robust identification of the finger-ID, e.g., index, middle, ring, or little finger. Assuming that the capture subject holds the capturing device in an upright position, we analyze whether a left or right hand is presented. Subsequently, our algorithm automatically labels the fingerprint images with the corresponding finger-ID.
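The labeling step can be sketched with the finger position codes of ISO/IEC 19794-4 (2–5 for the right and 7–10 for the left index to little finger), which match the finger-IDs used in this work. The sketch assumes that the hand side is already known, that the palm side faces the camera, and that the image is not mirrored; under these assumptions, the right index finger is the rightmost finger and the left index finger the leftmost. The `mirrored` flag and the function name are hypothetical.

```python
# ISO/IEC 19794-4 finger positions for the inner-hand fingers (index, middle, ring, little)
FINGER_IDS = {"right": [2, 3, 4, 5], "left": [7, 8, 9, 10]}


def label_fingers(centroid_xs, hand, mirrored=False):
    """Assign finger IDs to four upright fingers given their centroid x coordinates.

    Assumption: palm side facing the camera, so in a non-mirrored image the right
    index finger is rightmost and the left index finger is leftmost.
    """
    if hand not in FINGER_IDS:
        raise ValueError("hand must be 'left' or 'right'")
    if len(centroid_xs) != 4:
        raise ValueError("expected exactly four fingers")
    index_is_rightmost = (hand == "right") != mirrored
    order = sorted(range(4), key=lambda i: centroid_xs[i], reverse=index_is_rightmost)
    labels = [0] * 4
    for rank, finger in enumerate(order):
        labels[finger] = FINGER_IDS[hand][rank]
    return labels
```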

3.4. Fingerprint Processing

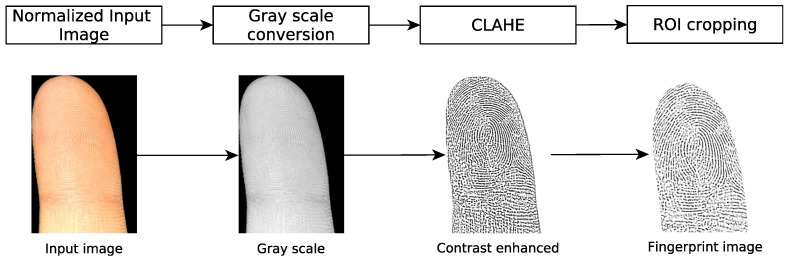

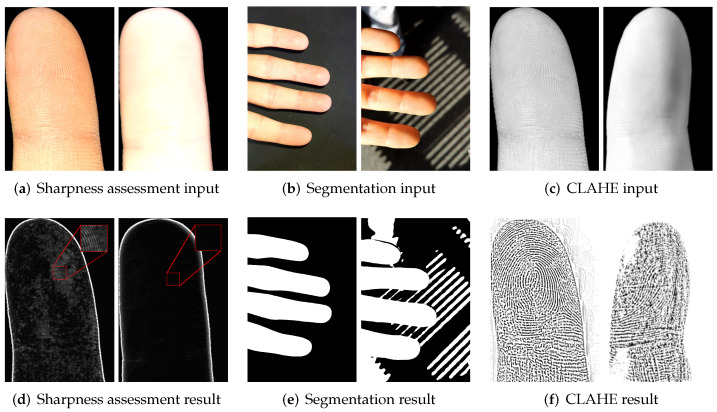

The preprocessed fingerprint image is then processed further to resemble the impression of a contact-based fingerprint. Figure 5 presents the conversion from a finger image to a contactless fingerprint. We apply Contrast Limited Adaptive Histogram Equalization (CLAHE) to the grayscale-converted fingerprint image to emphasize the ridge-line characteristics.
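The contrast enhancement can be illustrated with a simplified, single-tile version of CLAHE. This sketch only shows the clip-and-redistribute idea; full CLAHE additionally divides the image into tiles and bilinearly interpolates between their mappings, which is omitted here. The clip-limit formula (clip limit × tile area / number of bins) follows OpenCV's convention, and the ITU-R BT.601 luma weights for the grayscale conversion are an assumption. In practice, `cv2.createCLAHE` would typically be used.

```python
def to_gray(rgb_pixels):
    """Convert (r, g, b) tuples to 8-bit luma with ITU-R BT.601 weights."""
    return [round(0.299 * r + 0.587 * g + 0.114 * b) for r, g, b in rgb_pixels]


def clip_limited_equalize(gray, clip_limit=2.0, bins=256):
    """Contrast-limited histogram equalization of a single tile (flat list of 0..255 ints)."""
    n = len(gray)
    hist = [0] * bins
    for v in gray:
        hist[v] += 1
    limit = max(1, int(clip_limit * n / bins))
    excess = sum(max(0, count - limit) for count in hist)
    hist = [min(count, limit) for count in hist]
    # Redistribute the clipped counts evenly over all bins (remainder to the first bins).
    share, rest = divmod(excess, bins)
    hist = [count + share + (1 if i < rest else 0) for i, count in enumerate(hist)]
    cdf, acc = [], 0
    for count in hist:
        acc += count
        cdf.append(acc)
    # Map each value through the normalized cumulative histogram.
    return [round(255 * cdf[v] / n) for v in gray]
```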

Figure 5.

Overview of grayscale conversion, application of CLAHE, and cropping of the fingerprint region of interest (ROI). This process is executed on every separated finger.

Preliminary experiments showed that the feature extractor used detects many false minutiae in the border region of contactless fingerprint samples. For this reason, we remove a border region of approximately 15 pixels; that is, the fingerprint area of the segmentation mask is shrunk (equivalently, its background is dilated), which reduces the size of the fingerprint image.
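Removing the border region amounts to a morphological erosion of the fingerprint (foreground) mask. The sketch below uses a square structuring element, implemented separably as row-wise and then column-wise minimum filters, and fills removed pixels with white; the square shape, the fill value, and the function names are assumptions for illustration.

```python
def _erode_line(line, r):
    """1D minimum filter of width 2r + 1; positions whose window leaves the line become 0."""
    n = len(line)
    return [min(line[i - r:i + r + 1]) if r <= i < n - r else 0 for i in range(n)]


def erode(mask, radius=15):
    """Binary erosion with a (2r+1) x (2r+1) square, treating outside pixels as background."""
    rows = [_erode_line(row, radius) for row in mask]
    cols = [_erode_line(list(col), radius) for col in zip(*rows)]
    return [list(row) for row in zip(*cols)]


def apply_mask(gray, mask, background=255):
    """Keep grayscale pixels inside the mask and set the rest to the background value."""
    return [[g if m else background for g, m in zip(g_row, m_row)]
            for g_row, m_row in zip(gray, mask)]
```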

3.5. Quality Assessment

Quality assessment is a crucial task for contact-based and contactless fingerprint recognition schemes. We distinguish between two types of quality assessment: An integrated plausibility check at certain points of the processing pipeline and a quality assessment on the final sample.

The integrated plausibility check is an essential precondition for a successful completion of an automated recognition scheme. It ensures that only samples which passed the check of a processing stage are handed over to the next stage. In the proposed preprocessing pipeline, we implement three plausibility checks:

Segmentation: Analysis of the dominant components in the binary mask. Here, the number of dominant contours, as well as their shape, size, and position, is analyzed. In addition, their positions relative to each other are inspected.

Capturing: Evaluation of the fingerprint sharpness. A Sobel filter evaluates the sharpness of the processed grayscale fingerprint image. A square of 32 × 32 pixels at the center of the image is considered. A histogram analysis then assesses the sharpness of the image.

Rotation, cropping: Assessment of the fingerprint size. The size of the fingerprint image after the cropping stage shows whether the fingerprint image is of sufficient quality.
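The sharpness check from the list above can be sketched as follows. The text does not detail the histogram analysis, so this sketch reduces it to the fraction of central pixels whose Sobel gradient magnitude exceeds a threshold (effectively a two-bin histogram); both thresholds are hypothetical values, and the image must be at least 32 × 32 pixels.

```python
import math

PATCH_SIZE = 32  # central square analysed by the sharpness check


def central_patch(img, size=PATCH_SIZE):
    """Return the size x size square at the image center."""
    h, w = len(img), len(img[0])
    y0, x0 = (h - size) // 2, (w - size) // 2
    return [row[x0:x0 + size] for row in img[y0:y0 + size]]


def sobel_magnitudes(p):
    """Sobel gradient magnitudes of all interior pixels of a 2D grayscale patch."""
    mags = []
    for y in range(1, len(p) - 1):
        for x in range(1, len(p[0]) - 1):
            gx = (p[y - 1][x + 1] + 2 * p[y][x + 1] + p[y + 1][x + 1]
                  - p[y - 1][x - 1] - 2 * p[y][x - 1] - p[y + 1][x - 1])
            gy = (p[y + 1][x - 1] + 2 * p[y + 1][x] + p[y + 1][x + 1]
                  - p[y - 1][x - 1] - 2 * p[y - 1][x] - p[y - 1][x + 1])
            mags.append(math.hypot(gx, gy))
    return mags


def is_sharp(img, edge_threshold=100.0, min_edge_fraction=0.2):
    """Accept the image if enough central pixels have a strong gradient (hypothetical thresholds)."""
    mags = sobel_magnitudes(central_patch(img))
    strong = sum(1 for m in mags if m >= edge_threshold)
    return strong / len(mags) >= min_edge_fraction
```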

The combination of these plausibility checks has proven to be robust and accurate in our processing pipeline. Every sample passing all three checks is considered a candidate for the final sample. For every finger-ID, five samples are captured and processed. All five samples are finally assessed by NFIQ2.0 [27], and the sample with the highest quality score is selected as the final sample. An assessment of the applicability of NFIQ2.0 to contactless fingerprint samples is presented in [28].
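The candidate selection can be sketched as below. `passes_checks` and `quality_score` are hypothetical callables: the former would bundle the three plausibility checks, and the latter would wrap an NFIQ2.0 implementation returning a score between 0 and 100; no specific NFIQ2.0 binding is implied.

```python
def select_final_sample(candidates, passes_checks, quality_score, n_required=5):
    """Collect the first `n_required` candidates passing all checks and return the best one.

    Returns None while fewer than `n_required` valid candidates are available.
    """
    accepted = []
    for candidate in candidates:
        if passes_checks(candidate):
            accepted.append(candidate)
            if len(accepted) == n_required:
                break
    if len(accepted) < n_required:
        return None
    return max(accepted, key=quality_score)
```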

3.6. Feature Extraction and Comparison

As mentioned earlier, the presented contactless fingerprint processing pipeline is designed in a way that obtained fingerprints are compatible with existing contact-based minutiae extractors and comparators. This enables the application of existing feature extraction and comparator modules within the proposed pipeline and facilitates a contact-based-to-contactless fingerprint comparison. Details of the employed feature extractor and comparator are provided in Section 5.

4. Experimental Setup

To benchmark our implemented app, we conducted a data acquisition along with a usability study. Each volunteer first participated in a data acquisition session and was then asked to answer a questionnaire.

4.1. Database Acquisition

We acquired a database to evaluate our proposed recognition pipeline under real-life conditions. The database was captured during the COVID-19 pandemic. For this reason, the acquisition setup had to meet institutional regulations; e.g., the capture subjects had to handle the capturing devices without close interaction with the instructor. This simulated a semisupervised capturing process and fulfilled the hygienic regulations during the database acquisition. It should be mentioned that recruiting participants was challenging due to general hygienic concerns. Therefore, the captured database is rather small compared to others, e.g., that of Lin and Kumar [29].

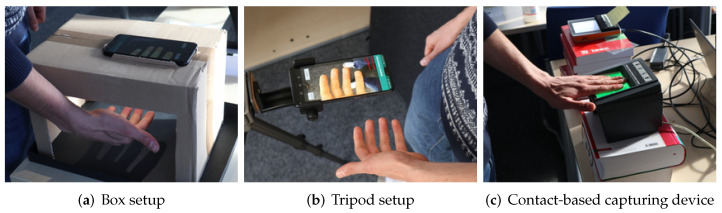

For the capturing of contactless samples, two different setups were used. First, a box setup simulates a predictable dark environment; nevertheless, the subjects were still able to place their fingers freely, cf. Figure 6a. Second, a tripod setup simulates an unconstrained capturing setup in which the instructor or the subject holds the capturing device, cf. Figure 6b.

Figure 6.

Capturing device setups during our experiments.

For the contactless database capturing, we used two different smartphones: the Huawei P20 Pro (tripod setup) and the Google Pixel 4 (box setup). The finger images are captured at the highest possible resolution and downscaled as described in Section 3.1. Our proposed application is designed to run on most state-of-the-art Android devices. Downscaling the input images reduces the influence of different camera resolutions and keeps the system load at a moderate level. For this reason, we consider the influence of the specific smartphones on our experimental results to be minor. The technical specifications of the contactless capturing devices are listed in Table 2. Both devices captured and processed six frames per second, which resulted in an average system load of approximately 84% on the Google Pixel 4 and 73% on the Huawei P20 Pro.

Table 2.

Technical specifications of the contactless capturing devices used during the data acquisition.

| Device | Google Pixel 4 | Huawei P20 Pro |
|---|---|---|
| Chipset | Snapdragon 855 | Kirin 970 |
| CPU | Octa-core | Octa-core |
| RAM | 6 GB | 6 GB |
| Camera | 12.2 MP, f/1.7, 27 mm | 40 MP, f/1.8, 27 mm |
| Flash mode | Always on | Always on |
| Avg. system load | ∼84% | ∼73% |

In addition, contact-based samples were captured to compare the results of the proposed setup against an established system. On every capturing device, the four inner-hand fingers (finger-IDs 2–5 and 7–10 according to ISO/IEC 19794-4 [30]) were captured. The capturing with the three capturing devices was conducted in two rounds. Figure 6 illustrates the capturing setups.

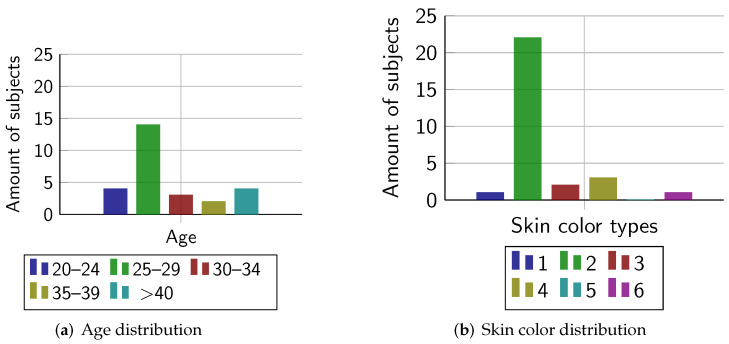

To measure the biometric performance of the proposed system, we captured a database of 29 subjects. The age and skin color distribution can be seen in Figure 7. Table 3 summarizes the database-capturing method. During the capturing of one subject, failure-to-acquire (FTA) errors according to ISO/IEC 19795-1 [31] occurred on both contactless capturing devices. Interestingly, this was most likely caused by the length of the subject’s fingernails. For more information, the reader is referred to Section 7.6. In total, we captured and processed 1360 fingerprints.

Figure 7.

Distribution of age and skin color (according to the Fitzpatrick scale [32]) of the subjects.

Table 3.

Overview of the acquired database.

| Type | Setup | Device | Subjects Captured | Rounds | Samples |
|---|---|---|---|---|---|
| Contactless | box | Google Pixel 4 | 28 | 2 | 448 |
| Contactless | tripod | Huawei P20 Pro | 28 | 2 | 448 |
| Contact-based | - | Crossmatch Guardian 100 | 29 | 2 | 464 |

4.2. Usability Study Design

A usability study was conducted with each subject after they had interacted with the capturing devices. Each subject was asked about their individual preferences in terms of hygiene and convenience during the capturing process. Parts of our usability study are based on [16,33]. We kept the questionnaire as short as possible and formulated it as clearly as possible so that the participants understood all questions correctly [34].

The questionnaire is provided as supplemental material and contains three parts. The first part contains questions about the subject's personal preferences; questions 1.2b and 1.2c are aligned with Furman et al. [33]. These questions address the subjects' perception of personal hygiene before and during the COVID-19 pandemic. The answer options of question 1.5 were rated by the capture subjects using the Rohrmann scale [35] (strongly disagree, fairly disagree, undecided, fairly agree, strongly agree). The questions were intended to find out the subjects' perception of hygienic concerns during the fingerprint capturing process.

The second part of the questionnaire contains questions about the usability of each capturing device. The subjects answered the same questions for both devices, grouped in a separate block per capturing device, in order to enable comparisons between the capturing devices. Again, the Rohrmann scale was used, and the sub-questions were arranged in random order. In the last part of the questionnaire, the subjects were asked about their personal preference between both capturing devices and had to choose one preferred capturing device.

5. Results

This section presents the biometric performance achieved by the entire recognition pipeline and the outcome of our usability study.

5.1. Biometric Performance

In our experiments, we first estimate the distributions of NFIQ2.0 scores for the captured dataset. Additionally, the biometric performance is evaluated using open-source fingerprint recognition systems. The features (minutiae triplets: 2D location and angle) are extracted with a neural network-based approach, namely the feature extraction method of Tang et al. [36], for which the authors provide pretrained models. To compare the extracted templates, the minutiae pairing and scoring algorithm of the SourceAFIS system by Važan [37] is used3. We provide a script to set up this recognition pipeline along with our capturing and preprocessing pipeline.

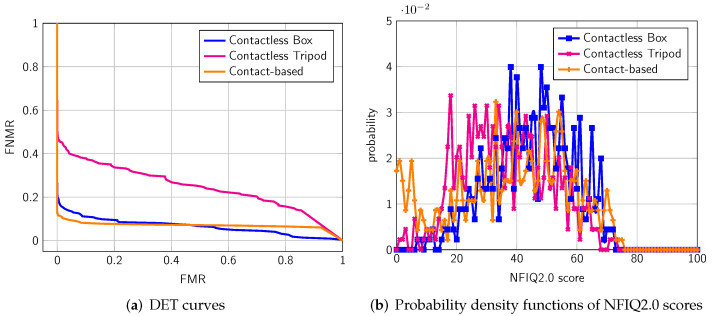

In the first experiment, we compare the biometric performance on all fingers across the different sub-datasets. Table 4 shows that the contactless box setup obtains an equal error rate (EER) of 10.71%, which is comparable to that of the contact-based setup (8.19%). Figure 8a presents the corresponding detection error trade-off (DET) curves, whereas Figure 8b shows the probability density functions of the NFIQ2.0 scores. In contrast, the performance of the open (tripod) setup drops massively, to an EER of 30.41%. The corresponding NFIQ2.0 scores do not reflect this drop: all three datasets have comparable average scores.
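For reference, an EER can be estimated from lists of mated (genuine) and non-mated (impostor) comparison scores with a simple threshold sweep, as sketched below. The text does not state which tool computed the reported EERs, so this is only an illustrative approximation: it assumes similarity scores (higher means more similar, as produced by SourceAFIS) and returns the mean of the false match rate (FMR) and the false non-match rate (FNMR) at the threshold where the two are closest. Multiplying the result by 100 gives a percentage as used in the tables.

```python
def error_rates(genuine, impostor, threshold):
    """False match rate and false non-match rate when accepting scores >= threshold."""
    fnmr = sum(1 for s in genuine if s < threshold) / len(genuine)
    fmr = sum(1 for s in impostor if s >= threshold) / len(impostor)
    return fmr, fnmr


def equal_error_rate(genuine, impostor):
    """Approximate EER: mean of FMR and FNMR at the threshold where they are closest."""
    thresholds = sorted(set(genuine) | set(impostor))
    thresholds.append(thresholds[-1] + 1)  # a threshold above all scores (FMR = 0)
    best_gap, best_eer = None, None
    for t in thresholds:
        fmr, fnmr = error_rates(genuine, impostor, t)
        gap = abs(fmr - fnmr)
        if best_gap is None or gap < best_gap:
            best_gap, best_eer = gap, (fmr + fnmr) / 2
    return best_eer
```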

Table 4.

Overview of the NFIQ2.0 quality scores and the EER of all captured fingers (finger-IDs 2–5 and 7–10) per capturing device.

| Capturing Device | Subset | Avg. NFIQ2.0 Score | EER (%) |
|---|---|---|---|
| Contactless box | All fingers | 44.80 (±13.51) | 10.71 |
| Contactless tripod | All fingers | 36.15 (±14.45) | 30.41 |
| Contact-based | All fingers | 38.15 (±19.33) | 8.19 |

Figure 8.

NFIQ2.0 score distribution and biometric performance obtained from single finger comparisons.

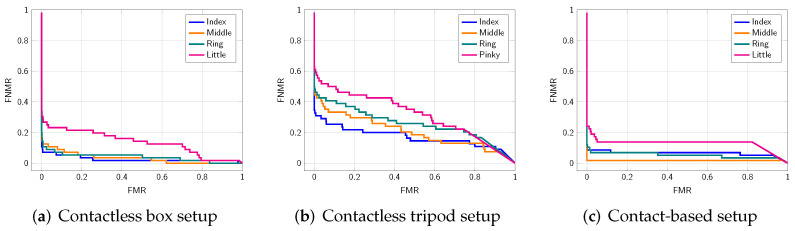

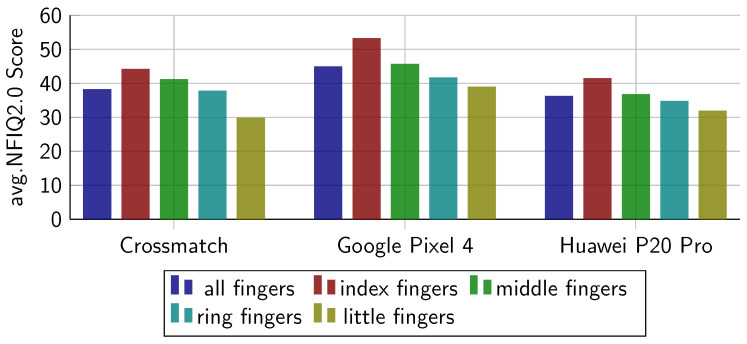

In the second experiment, we compute the biometric performance for every finger separately4. Table 5 and Figure 9 show that on all subsets, the performance of the little finger drops compared to the other fingers. On the contact-based sub-dataset, the middle finger has a much lower EER (1.72%) than the other fingers. A possible explanation is that users find it easiest to apply the correct pressure with the middle finger. Figure 10 shows only a small drop in the NFIQ2.0 quality score for the little finger.

Table 5.

Overview of the NFIQ2.0 quality scores and the EER of individual fingers: index fingers (IDs 2, 7), middle fingers (IDs 3, 8), ring fingers (IDs 4, 9), and little fingers (IDs 5, 10).

| Capturing Device | Fingers | Avg. NFIQ2.0 Score | EER (%) |
|---|---|---|---|
| Contactless box | Index fingers | 53.16 (±11.27) | 7.14 |
| Contactless box | Middle fingers | 45.59 (±11.06) | 8.91 |
| Contactless box | Ring fingers | 41.57 (±12.89) | 7.14 |
| Contactless box | Little fingers | 38.88 (±14.21) | 21.43 |
| Contactless tripod | Index fingers | 41.38 (±14.29) | 21.81 |
| Contactless tripod | Middle fingers | 36.68 (±13.01) | 28.58 |
| Contactless tripod | Ring fingers | 34.68 (±14.28) | 29.62 |
| Contactless tripod | Little fingers | 31.79 (±14.63) | 38.98 |
| Contact-based | Index fingers | 44.06 (±17.53) | 8.62 |
| Contact-based | Middle fingers | 41.08 (±19.71) | 1.72 |
| Contact-based | Ring fingers | 37.68 (±17.08) | 6.90 |
| Contact-based | Little fingers | 29.78 (±19.94) | 13.79 |

Figure 9.

DET curves obtained from individual finger comparisons: index fingers (IDs 2, 7), middle fingers (IDs 3, 8), ring fingers (IDs 4, 9), and little fingers (IDs 5, 10).

Figure 10.

Averaged NFIQ2.0 scores obtained from the considered databases: average over all fingers (IDs 2–5, 7–10), index fingers (IDs 2, 7), middle fingers (IDs 3, 8), ring fingers (IDs 4, 9), and little fingers (IDs 5, 10).

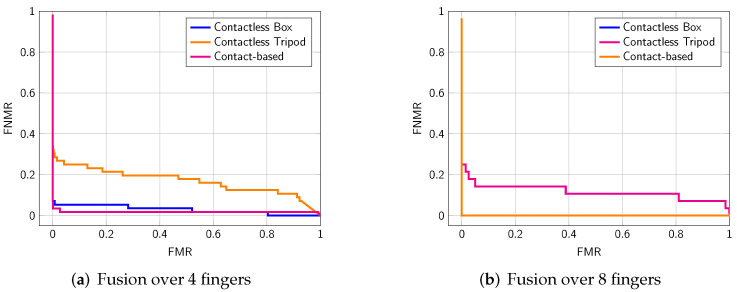

Further, we applied score-level fusion over four and eight fingers. The obtained EERs are summarized in Table 6. As expected, fusion improves the EER on all sub-datasets. In particular, the fusion of eight fingers yields a large performance gain (see Figure 11). The box setup and the contact-based sensor reach an EER of 0%, which means that the mated and non-mated comparison scores are completely separated. The open (tripod) setup also gains considerably through fusion, reaching an EER of 14.29% with eight fingers compared to 30.41% for single fingers. Here, the inclusion of all fingers makes the process much more robust, especially in challenging environmental situations.
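The text does not specify the fusion rule. A common choice, shown here as an assumption, is the mean (sum) rule over the per-finger comparison scores of one comparison pair; since all scores come from the same comparator, this sketch applies no score normalization. Fingers without a score, for example after a failure to acquire, are skipped. The finger groups follow the ISO/IEC 19794-4 IDs used in this work.

```python
RIGHT_HAND = (2, 3, 4, 5)
LEFT_HAND = (7, 8, 9, 10)
BOTH_HANDS = RIGHT_HAND + LEFT_HAND


def fuse_comparison(scores_by_finger, finger_ids):
    """Mean-rule fusion of per-finger comparison scores over the requested finger IDs."""
    available = [scores_by_finger[f] for f in finger_ids if f in scores_by_finger]
    if not available:
        raise ValueError("no scores for the requested fingers")
    return sum(available) / len(available)
```

The fused scores of all comparison pairs would then be fed into the same EER computation as the single-finger scores.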

Table 6.

Overview of the EER in a fingerprint fusion approach: fusion over the four inner-hand fingers of one hand (right hand: IDs 2–5; left hand: IDs 7–10) and fusion over all eight inner-hand fingers of both hands (IDs 2–5, 7–10).

| Capturing Device | Fusion Approach | EER (%) |
|---|---|---|
| Contactless box | 4 fingers | 5.36 |
| Contactless box | 8 fingers | 0.00 |
| Contactless tripod | 4 fingers | 21.42 |
| Contactless tripod | 8 fingers | 14.29 |
| Contact-based | 4 fingers | 2.22 |
| Contact-based | 8 fingers | 0.00 |

Figure 11.

DET curves obtained in a fingerprint fusion approach: (a) fusion over the four inner-hand fingers of one hand (right hand: IDs 2–5; left hand: IDs 7–10) and (b) fusion over all eight inner-hand fingers of both hands (IDs 2–5, 7–10).

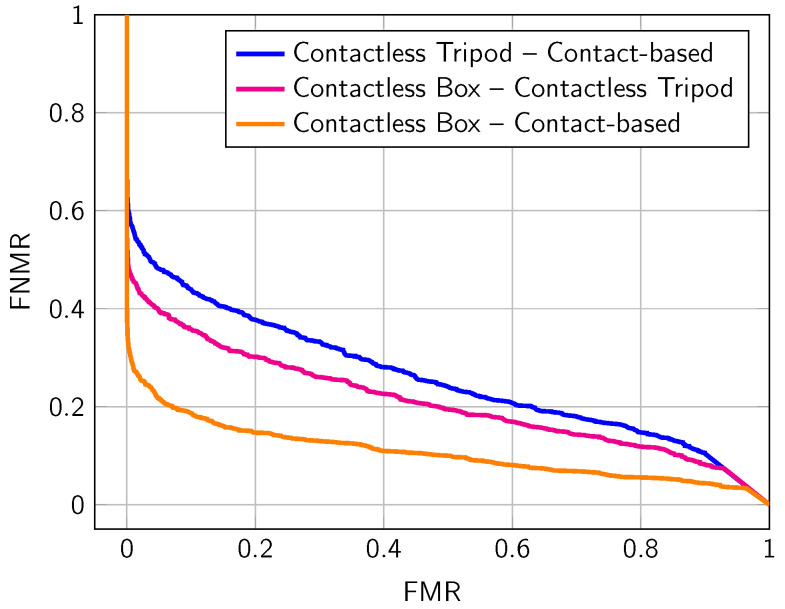

In our last experiment, we analyze the interoperability between the different subsets of the collected data. Table 7 summarizes the EERs achieved by comparing samples from different setups, and Figure 12 presents the corresponding DET curves. The contactless box setup shows good interoperability with the contact-based setup (EER of 15.71%). The open (tripod) setup again performs considerably worse, with EERs of 27.27% against the contactless box setup and 32.02% against the contact-based setup.

Table 7.

Overview of the interoperability between different subsets of the collected data: comparison of fingerprints captured with different setups. All captured fingers (finger-IDs 2–5 and 7–10) are considered.

| Capturing Device A | Capturing Device B | EER (%) |
|---|---|---|
| Contactless box | Contactless tripod | 27.27 |
| Contactless box | Contact-based | 15.71 |
| Contactless tripod | Contact-based | 32.02 |

Figure 12.

DET curves obtained from the interoperability between different subsets of the collected data: comparison of fingerprints captured with different setups. All captured fingers (finger-IDs 2–5 and 7–10) are considered.

Table 8 compares the biometric performance and the average NFIQ2.0 scores of our proposed system with those obtained on other publicly available databases. The contactless finger images were processed into fingerprint samples using the algorithms of our method.

Table 8.

Average NFIQ2.0 scores and biometric performance obtained from contactless and contact-based databases including the fingerprint subcorpus of the MCYT Database [38], the FVC2006 Database [39] and the Hong Kong Polytechnic University Contactless 2D to Contact-based 2D Fingerprint Images Database Version 1.0 [40].

| Database | Subset | Avg. NFIQ2.0 Score | EER (%) |
|---|---|---|---|
| MCYT | dp | 37.58 (±15.17) | 0.48 |
| MCYT | pb | 33.02 (±13.99) | 1.35 |
| FVC06 | DB2-A | 36.07 (±9.07) | 0.15 |
| PolyU | Contactless session 1 | 47.71 (±10.86) | 3.91 |
| PolyU | Contactless session 2 | 47.08 (±13.21) | 3.17 |
| Our database | Contact-based | 38.15 (±19.33) | 8.19 |
| Our database | Contactless box | 44.80 (±13.51) | 10.71 |

The fingerprint subcorpus of the MCYT bimodal database [38] and the database of the Fingerprint Verification Competition 2006 (FVC06) [39] both yield a good biometric performance. Moreover, the contactless subset of the Hong Kong Polytechnic University Contactless 2D to Contact-based 2D Fingerprint Images Database Version 1.0 (PolyU) [40] shows a competitive performance. Compared to these baselines, the performance achieved on our database is inferior, which is most likely due to the semisupervised acquisition scenario, as well as the use of hand disinfection measures during the COVID-19 pandemic. Section 6.1 further elaborates on these findings. It should also be noted that the PolyU database was captured under very constrained environmental conditions with a single-finger capturing scenario and for a different purpose. For this reason, its biometric performance cannot be directly compared to that of our method.

5.2. Usability Study

We present the results of our usability study based on the questionnaire introduced in Section 4.2. The questionnaire was answered by 27 subjects (8 female, 19 male). The subjects were between 22 and 60 years old (average age: years, median age: 28 years). The age distribution is presented in Figure 7. The majority of subjects have used professional fingerprint scanners before this study. A large proportion of the 27 data subjects also use some type of fingerprint capturing device regularly (at least once per week), e.g., to unlock mobile devices.

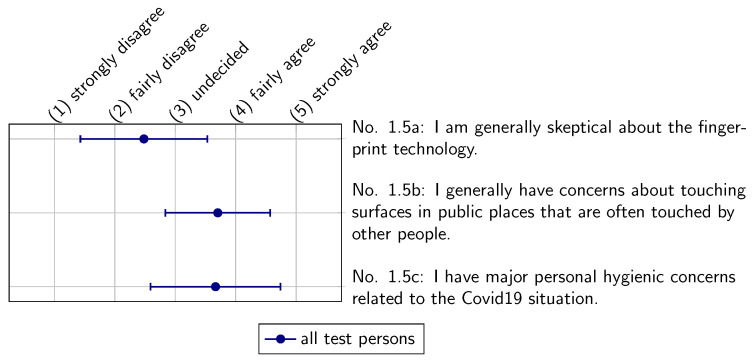

Figure 13 presents the perceptions of the subjects regarding general hygiene. The subjects in our study tend to have general concerns about touching surfaces in public places (Statement 1.5b). Moreover, the majority of the surveyed subjects have personal concerns related to the COVID-19 pandemic (Statement 1.5c). The small difference between the perceptions before and during the COVID-19 pandemic suggests that the pandemic might have had only a small influence on the general hygienic awareness of the subjects in our study.

Figure 13.

General assessment of fingerprint technology and hygienic concerns.

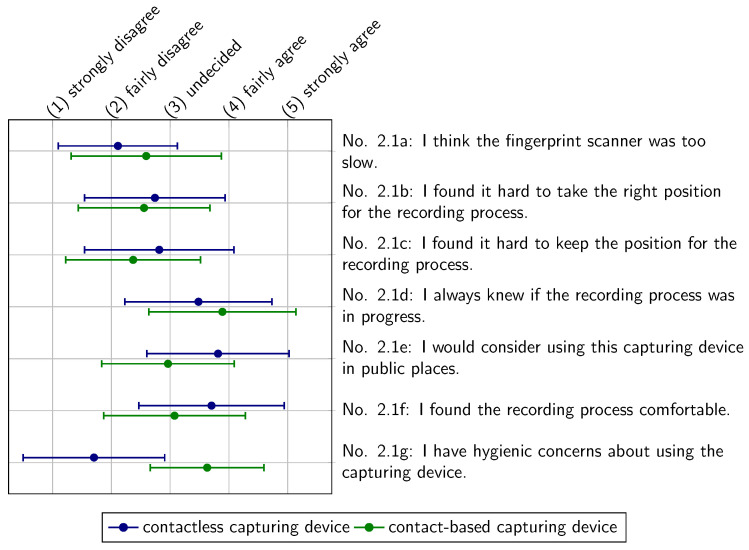

The usability assessment of the contactless and contact-based capturing devices is presented in Figure 14. For most statements, both capturing devices were rated fairly similarly by the surveyed subjects. The contactless capturing device has a slight advantage in terms of capturing speed (Statement 2.1a). The contact-based capturing device tends to be rated better regarding taking and keeping the capturing position during the whole process (Statements 2.1b and 2.1c). In addition, the subjects in our study found it slightly easier to assess whether the capturing process was running when using the contact-based device (Statement 2.1d). Moreover, the tested group prefers the comfort of the contactless device (Statement 2.1f). Most notably, the surveyed subjects might have fewer hygienic concerns when using the contactless device in public places (Statements 2.1e and 2.1g). For these statements, a Mann–Whitney U test [41] shows a two-sided significance with a level of .
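The text reports a two-sided U test [41] on the questionnaire ratings but does not state the exact variant. The sketch below assumes independent samples and uses the normal approximation of the Mann–Whitney U statistic, with average ranks for ties and the standard tie correction of the variance (no continuity correction). The ratings in the usage lines are hypothetical. SciPy's `scipy.stats.mannwhitneyu(x, y, alternative="two-sided")` implements the same test, but its p-values can differ slightly, e.g., because of its default continuity correction or its exact method for small samples.

```python
import math


def mann_whitney_u(x, y):
    """Two-sided Mann-Whitney U test (normal approximation, tie-corrected).

    Returns (U statistic of sample x, two-sided p-value). No continuity correction.
    """
    n1, n2 = len(x), len(y)
    if n1 == 0 or n2 == 0:
        raise ValueError("both samples must be non-empty")
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y])
    ranks = [0.0] * len(pooled)
    tie_term = 0.0  # sum of (t^3 - t) over groups of tied values
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        for k in range(i, j + 1):
            ranks[k] = (i + j) / 2 + 1  # average of the 1-based ranks i+1 .. j+1
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    r1 = sum(r for r, (_, group) in zip(ranks, pooled) if group == 0)
    u1 = r1 - n1 * (n1 + 1) / 2
    n = n1 + n2
    mu = n1 * n2 / 2
    sigma = math.sqrt(n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1))))
    if sigma == 0:
        return u1, 1.0  # all values tied: no evidence of a difference
    z = (u1 - mu) / sigma
    return u1, math.erfc(abs(z) / math.sqrt(2))


# Hypothetical 5-point ratings of one statement for both device types.
contactless = [5, 4, 5, 4, 5, 3, 4]
contact_based = [3, 2, 4, 3, 2, 3, 3]
u, p = mann_whitney_u(contactless, contact_based)
print(f"U = {u}, p = {p:.3f}")
```

With only 27 respondents and many tied Likert ratings, an exact or permutation-based p-value would be a more conservative choice than the normal approximation shown here.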

Figure 14.

Usability assessment of the contactless and contact-based capturing device in comparison to each other.

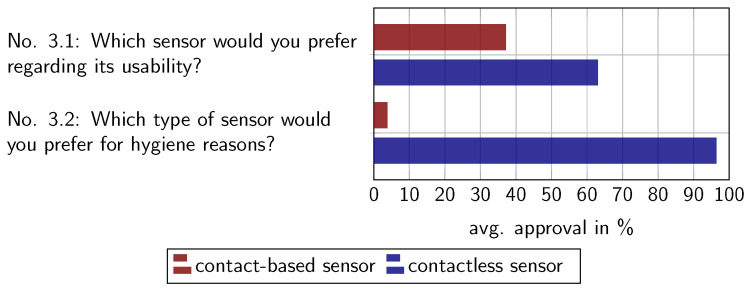

Figure 15 illustrates the comparative results. In a direct comparison of the two capturing device types, the hygienic advantage might outweigh the disadvantages in hand positioning. A slight majority of the subjects in our study might prefer the contactless capturing device over a contact-based one in terms of general usability (Question 3.1). Considering hygienic aspects, the majority of the surveyed subjects would choose the contactless capturing device over the contact-based one (Question 3.2). This is consistent with the concerns expressed in Statement 1.5c.

Figure 15.

Comparative assessment of the capturing device type preference.

It should be noted that this study includes only 27 subjects, which might not be statistically sufficient to draw reliable conclusions. In addition, as Figure 7 indicates, the age and skin color of the subjects are not evenly distributed. For this reason, the results might not represent the general perception in society and should be treated with care.

6. Impact of the COVID-19 Pandemic on Fingerprint Recognition

The accuracy of some biometric characteristics may be negatively impacted by the COVID-19 pandemic. The pandemic and its related measures have no direct impact on the operation of fingerprint recognition. Nevertheless, there are important factors that may indirectly reduce the recognition performance and user acceptance of fingerprint recognition.

6.1. Impact of Hand Disinfection on Biometric Performance

Dry and worn-out fingertips reduce the biometric performance. Olsen et al. [42] showed that the level of moisture has a significant impact on the biometric performance of contact-based fingerprint recognition systems. The authors tested five capturing devices with normal, wet, and dry fingers, and dry fingers proved to be especially challenging. In addition, medical studies have shown that frequent hand disinfection causes dermatological problems [43,44]: the disinfection liquids dry out the skin and cause cracks in the epidermis and dermis.

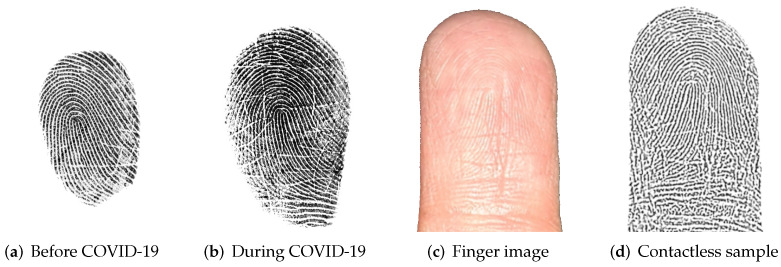

Thus, we can infer that regular hand disinfection leads to two interconnected problems that reduce the recognition performance: dry fingers show low contrast during capturing due to insufficient moisture, and disinfection liquids cause cracks in the finger surface. Figure 16 shows contact-based fingerprints captured before (a) and during (b) the COVID-19 pandemic. Both samples were captured from the same subject using the same capturing device. Sample (b) exhibits more impairments in the ridge-line pattern than sample (a). Moreover, finger image (c) clearly shows cracks in the finger surface, which are likely caused by hygienic measures. The processed contactless sample (d) shows these impairments, too.

Figure 16.

Four samples of the same subject: Sample (a) was captured before the COVID-19 pandemic using a contact-based capturing device, whereas samples (b–d) were captured during the COVID-19 pandemic. Samples (a,b) were captured with the same capturing device, whereas (c,d) were captured and processed using our method.

The biometric performances reported for different databases presented in Table 8 also support these observations. Compared to the baseline of databases acquired before the COVID-19 pandemic, the performance achieved on our database is inferior. This is most likely caused by the impact of our semisupervised acquisition scenario, as well as the use of hand disinfection measures.

6.2. User Acceptance

Viruses such as SARS-CoV-2 have four main transmission routes: droplet, airborne, direct contact, and indirect contact via surfaces. In the last case, an infected individual contaminates a surface by touching it, and a susceptible individual who touches the surface afterwards is at high risk of infection via this indirect route. Otter et al. [45] present an overview of the transmission of different viruses (including SARS coronaviruses) via dry surfaces. The authors conclude that SARS coronaviruses can survive on surfaces for extended periods and therefore pose a high risk of infection.

In large-scale implementations, e.g., the Schengen Entry/Exit System (EES) [46], where many individuals touch the surface of a capturing device, users are exposed to an especially high risk of infection. The only way to implement safe contact-based fingerprint recognition in such application scenarios is to disinfect the capturing device after every subject.

Nevertheless, the requirement of touching a surface can lower the users’ acceptance of contact-based fingerprint recognition. The results of our usability study in Section 5.2 show that the surveyed individuals are fairly skeptical about touching capturing device surfaces in public places and that, in a direct comparison, they would prefer a contactless capturing device. For this reason, contactless capturing schemes could lead to a higher user acceptance. However, it should be noted that our tested group was very small and that user acceptance depends on the capturing device design.

7. Implementation Aspects

This section summarizes aspects which are considered beneficial for practical implementation.

7.1. Four-Finger Capturing

As in previous works, our proposed recognition pipeline demonstrates that four fingerprints can be processed from a continuous stream of input images. This requires more elaborate processing but has two major advantages:

Faster and more accurate recognition process: Because the fingers cover a larger proportion of the image, focusing algorithms work more precisely. This results in fewer misfocusing and segmentation issues.

Improved biometric performance: The direct capturing of four fingerprints in one single capturing attempt is highly suitable for biometric fusion. As shown in Table 6, this lowers the EER without any additional capturing and with very little additional processing.

However, a major obstacle for contactless schemes is to capture the thumbs accurately and conveniently. In most environments, the best results are achieved with the inner-hand fingers facing upwards. This is ergonomically hard to achieve with thumbs.

7.2. Automatic Capturing and On-Device Processing

State-of-the-art smartphones feature powerful processing units that are capable of executing the described processing pipeline in a reasonable amount of time. We have shown that robust and convenient capturing relies on automatic capturing with integrated plausibility checks. In addition, on-device processing reduces the amount of data that has to be transferred to a remote recognition workflow, and the recognition workflow can be based on standard components.
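Automatic capturing with integrated plausibility checks can be organized as a loop over the camera stream that accepts a frame only once all checks pass. The sketch below is hypothetical: the `Frame` fields, the thresholds, and the rule of requiring several consecutive passing frames are illustrative assumptions, not the checks implemented in the presented app.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Optional


@dataclass
class Frame:
    """Hypothetical per-frame measurements produced by earlier pipeline stages."""
    index: int
    sharpness: float   # e.g., mean Sobel gradient magnitude of the hand area
    finger_count: int  # number of fingertip regions found in the segmentation mask


Check = Callable[[Frame], bool]


def auto_capture(frames: Iterable[Frame], checks: list[Check],
                 required_consecutive: int = 3,
                 max_frames: int = 300) -> Optional[Frame]:
    """Return the frame that completes the first run of `required_consecutive`
    frames passing every check, or None if no such run occurs in time."""
    streak = 0
    for n, frame in enumerate(frames):
        if n >= max_frames:
            break  # timeout: the app could ask the user to reposition the hand
        if all(check(frame) for check in checks):
            streak += 1
            if streak >= required_consecutive:
                return frame
        else:
            streak = 0
    return None


# Hypothetical thresholds and a short simulated stream.
CHECKS = [lambda f: f.sharpness >= 20.0, lambda f: f.finger_count == 4]
stream = [Frame(0, 5.0, 4), Frame(1, 25.0, 4), Frame(2, 30.0, 3),
          Frame(3, 28.0, 4), Frame(4, 27.0, 4), Frame(5, 26.0, 4)]
captured = auto_capture(stream, CHECKS)
print(captured)
```

Requiring a short run of passing frames, rather than a single one, is one way to avoid accepting a frame captured while the hand is still moving into position.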

In a biometric authentication scenario, it can be especially beneficial to integrate the feature extraction and comparison into the mobile device. In this case, an authentication of a previously enrolled subject can be implemented on a standalone device.

7.3. Environmental Influences

Contactless fingerprint recognition in unconstrained environmental situations may be negatively affected by varying and heterogeneous influences. In our experiments, we showed that our contactless setup performs rather well in a semicontrolled environment, whereas the performance of the same recognition pipeline drops drastically in an uncontrolled environment. Here, we observed that several stages of the processing pipeline suffer from challenging environments:

Focusing of the hand area needs to be very accurate and fast in order to provide sharp finger images. A focus point that is missed by a few millimeters causes a blurred and unusable image. Figure 17a,d illustrate the difference between a sharp finger image and a slightly unfocused image with the help of a Sobel filter. Additionally, the focus has to follow the hand movement in order to achieve a continuous stream of sharp images. The focus of our tested devices tends to fail under challenging illumination, which was not the case in the constrained environment.
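A common scalar measure of the sharpness visualized with the Sobel filter in Figure 17a,d is the mean gradient magnitude: defocus blur suppresses the high spatial frequencies of the ridge-valley transitions, so the score drops. The sketch below assumes this measure and uses a 3×3 box filter as a crude stand-in for defocus. It is written in plain Python for self-containment; a real implementation would typically use an optimized routine such as OpenCV's `cv2.Sobel`. An acceptance threshold on this score would have to be calibrated and is not given in the text.

```python
import math


def sobel_sharpness(img):
    """Mean Sobel gradient magnitude over the interior pixels of a grayscale image."""
    h, w = len(img), len(img[0])
    if h < 3 or w < 3:
        raise ValueError("image must be at least 3x3 pixels")
    total = 0.0
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # Horizontal and vertical 3x3 Sobel responses.
            gx = (img[y - 1][x + 1] + 2 * img[y][x + 1] + img[y + 1][x + 1]
                  - img[y - 1][x - 1] - 2 * img[y][x - 1] - img[y + 1][x - 1])
            gy = (img[y + 1][x - 1] + 2 * img[y + 1][x] + img[y + 1][x + 1]
                  - img[y - 1][x - 1] - 2 * img[y - 1][x] - img[y - 1][x + 1])
            total += math.hypot(gx, gy)
    return total / ((h - 2) * (w - 2))


def box_blur(img):
    """3x3 mean filter (border pixels copied), a crude stand-in for defocus."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            out[y][x] = sum(img[y + dy][x + dx]
                            for dy in (-1, 0, 1) for dx in (-1, 0, 1)) / 9
    return out


# Synthetic "ridge" pattern: vertical stripes, 2 pixels bright, 2 pixels dark.
ridges = [[255.0 if (x // 2) % 2 == 0 else 0.0 for x in range(16)] for _ in range(16)]
print(sobel_sharpness(ridges), sobel_sharpness(box_blur(ridges)))
```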

Segmentation, rotation, and finger separation rely on a binary mask in which the hand area is clearly separated from the background. Figure 17b,e show examples of a successful and an unsuccessful segmentation. Impurities in the segmentation mask lead to connected areas between the fingertips and to artifacts at the border region of the image. This causes inaccurate detection and separation of the fingertips as well as incorrect rotation results. Because of heterogeneous backgrounds, this occurs more often in unconstrained setups.
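Impurities such as bridges between fingertips or foreground touching the image border can be detected by counting the connected regions of the binary mask. The following hypothetical check assumes that a horizontal band across the four fingertips has already been cropped from the hand mask; the 4-connectivity, the minimum region area, and the border rule are illustrative assumptions, not the plausibility checks of the presented pipeline.

```python
from collections import deque


def connected_components(mask):
    """Return the pixel count of each 4-connected foreground region of a binary mask."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    sizes = []
    for sy in range(h):
        for sx in range(w):
            if not mask[sy][sx] or seen[sy][sx]:
                continue
            # Breadth-first flood fill of the region containing (sy, sx).
            queue, size = deque([(sy, sx)]), 0
            seen[sy][sx] = True
            while queue:
                y, x = queue.popleft()
                size += 1
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            sizes.append(size)
    return sizes


def fingertips_plausible(tip_band, expected=4, min_area=3):
    """Hypothetical check: no foreground on the left/right border and exactly
    `expected` separate fingertip regions of at least `min_area` pixels."""
    if any(row[0] or row[-1] for row in tip_band):
        return False  # artifact touching the image border
    regions = [s for s in connected_components(tip_band) if s >= min_area]
    return len(regions) == expected


ok_row = [0, 1, 1, 0, 1, 1, 0, 1, 1, 0, 1, 1, 0]
bridged_row = [0, 1, 1, 1, 1, 1, 0, 1, 1, 0, 1, 1, 0]  # impurity joins two tips
print(fingertips_plausible([ok_row] * 3))
print(fingertips_plausible([ok_row, bridged_row, ok_row]))
```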

Finger image enhancement using the CLAHE algorithm normalizes dark and bright areas on the finger image. Figure 17c,f show that this also works on samples of high contrast. Nevertheless, the enhanced versions of challenging images may become blurrier.
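CLAHE equalizes the histograms of small image tiles after clipping each tile histogram at a limit, so that noise in homogeneous regions is not over-amplified. For brevity, the sketch below applies only the clipping and equalization step to the whole image, i.e., a global variant without tiles and without the bilinear interpolation between tile mappings of full CLAHE. The clip limit is expressed as a multiple of the mean bin height, similar to OpenCV's `clipLimit`. The parameters used in this work are not stated here; with OpenCV, full CLAHE would be applied via `cv2.createCLAHE(clipLimit=2.0, tileGridSize=(8, 8)).apply(gray)`.

```python
def clip_limited_equalize(img, clip_limit=2.0, levels=256):
    """Global contrast-limited histogram equalization of an integer grayscale image.

    This is the per-tile step of CLAHE; full CLAHE additionally splits the image
    into tiles and bilinearly interpolates between the tile mappings.
    """
    pixels = [p for row in img for p in row]
    n = len(pixels)
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    # Clip each bin at clip_limit times the mean bin height and redistribute the
    # excess uniformly over all bins (single pass).
    limit = max(1.0, clip_limit * n / levels)
    excess = sum(max(0.0, h - limit) for h in hist)
    clipped = [min(h, limit) + excess / levels for h in hist]
    # Cumulative distribution -> lookup table over the full output range.
    cdf, running = [], 0.0
    for h in clipped:
        running += h
        cdf.append(running)
    lut = [round((levels - 1) * c / cdf[-1]) for c in cdf]
    return [[lut[p] for p in row] for row in img]


def spread(img):
    """Difference between the brightest and darkest pixel."""
    flat = [p for row in img for p in row]
    return max(flat) - min(flat)


# A low-contrast patch using only four grey levels (100-103).
low = [[100, 101, 102, 103] * 4 for _ in range(4)]
mild = clip_limited_equalize(low, clip_limit=2.0)
strong = clip_limited_equalize(low, clip_limit=100.0)
print(spread(low), spread(mild), spread(strong))
```

A low clip limit stretches the narrow grey-level range far less than a high one, which is the mechanism CLAHE uses to avoid amplifying noise in nearly uniform regions.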

Figure 17.

Illustration of accurate and challenging input images and corresponding result images for sharpness assessment (a,d), segmentation (b,e), and contrast adjustment (c,f). In each block, the left image shows an accurate input and the right image a challenging one.

The discussed challenges lead to longer capturing times and therefore lower the usability and user acceptance. Furthermore, the recognition performance in unconstrained environments is limited. Here, a trade-off between usability and performance should be made based on the intended use case of the capturing device. The quality assessments implemented in our scheme detect these circumstances and discard finger images with such shortcomings. More elaborate methods could directly adapt to challenging images, e.g., by changing the focusing method or segmentation scheme. This approach could lead to more robustness and hence improved usability in different environments.

7.4. Feature Extraction Strategies

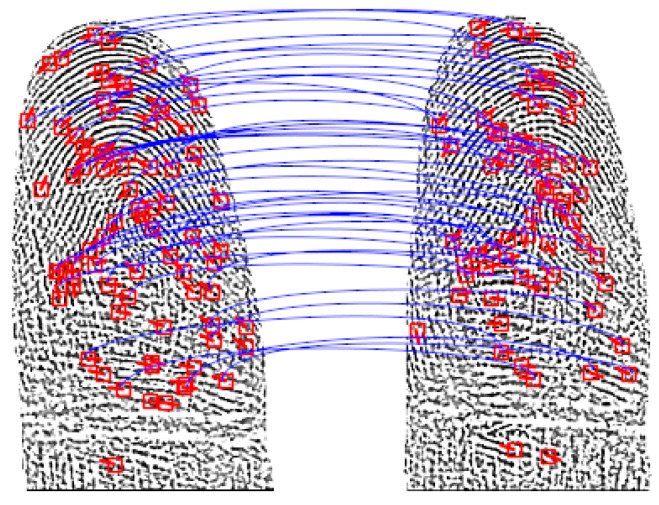

Feature extraction techniques are vital to achieve a high biometric performance. In our experiments, we used an open-source feature extractor which is able to process contact-based and contactless samples. Figure 18 shows an example of this minutiae-based feature extraction and comparison scheme. With this method, we were able to test the interoperability between capturing device types. Nevertheless, the overall performance may be improved by more sophisticated methods, e.g., commercial off-the-shelf systems such as the VeriFinger SDK [47].

Figure 18.

Illustration of a minutiae-based comparison of two contactless fingerprint samples. The features are extracted using the method described in Section 4. The blue lines indicate mated minutiae.
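The final step of a minutiae-based comparison, pairing minutiae as illustrated by the blue lines in Figure 18, can be sketched as follows. This is not the open-source comparator used in the experiments (which, per the notes, was adapted to minutiae triplets): the sketch assumes that both templates have already been aligned, pairs minutiae greedily within hypothetical distance and angle tolerances, and reports the share of mated minutiae as an illustrative score.

```python
import math
from typing import NamedTuple


class Minutia(NamedTuple):
    x: float      # position in pixels
    y: float
    angle: float  # ridge direction in radians


def angle_diff(a, b):
    """Smallest absolute difference between two angles, in [0, pi]."""
    d = abs(a - b) % (2 * math.pi)
    return min(d, 2 * math.pi - d)


def pair_minutiae(probe, reference, max_dist=15.0, max_angle=math.radians(20)):
    """Greedily pair minutiae of two templates that are ALREADY aligned.

    Candidate pairs within the distance and angle tolerances are accepted in
    order of increasing distance; each minutia is used at most once.
    """
    candidates = []
    for i, p in enumerate(probe):
        for j, r in enumerate(reference):
            dist = math.hypot(p.x - r.x, p.y - r.y)
            if dist <= max_dist and angle_diff(p.angle, r.angle) <= max_angle:
                candidates.append((dist, i, j))
    used_probe, used_ref, pairs = set(), set(), []
    for _, i, j in sorted(candidates):
        if i not in used_probe and j not in used_ref:
            used_probe.add(i)
            used_ref.add(j)
            pairs.append((i, j))
    return pairs


def similarity(probe, reference, **tolerances):
    """Illustrative score: share of minutiae that are mated, in [0, 1]."""
    if not probe or not reference:
        return 0.0
    mated = len(pair_minutiae(probe, reference, **tolerances))
    return 2 * mated / (len(probe) + len(reference))


probe = [Minutia(10, 10, 0.0), Minutia(50, 50, 1.0), Minutia(90, 20, 2.0)]
reference = [Minutia(12, 11, 0.1), Minutia(52, 49, 1.1), Minutia(200, 200, 0.0)]
print(pair_minutiae(probe, reference), similarity(probe, reference))
```

Real comparators additionally estimate the alignment (rotation and translation) between the templates, which is the harder part of the problem and is omitted here.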

Dedicated contactless feature-extraction methods can increase the performance, as shown in [13,48]. Here, the authors were able to tune their feature extractor to their capturing and processing and they proposed an end-to-end recognition system.

Contactless fingerprint images do not correspond to the standardized 500 dpi resolution of contact-based capturing devices because of a varying distance between the capturing device and the fingertip. This challenge can be addressed in different ways:

Feature extractors and comparison algorithms that are robust against resolution differences enable an efficient capturing process. Here, metric scaling approaches or deep learning methods could be beneficial implementation strategies. A normalization to a predefined width of the fingerprint image, as proposed in this work (cf. Figure 3), is also considered beneficial, especially if off-the-shelf comparison algorithms are used. Countermeasures could also be implemented in the capturing stage: a fixed focal length calibrated to a suitable sensor-to-finger distance could reduce the variance in terms of size. This method could also be combined with an on-screen finger guidance such as that proposed by Carney et al. [14]. It should be noted that these approaches might impair the system’s usability.
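Normalization to a predefined fingerprint width can be sketched as an aspect-preserving resize. The target width used in this work is not stated in this section, so `target_width` is a free parameter here. Nearest-neighbour sampling is chosen for brevity; area or bilinear interpolation (e.g., `cv2.resize` with `interpolation=cv2.INTER_AREA`) would reduce aliasing of the ridge pattern. A fixed width only approximates a fixed resolution such as 500 dpi, since physical finger widths vary between fingers and subjects.

```python
def resize_to_width(img, target_width):
    """Nearest-neighbour resize of a grayscale image (list of rows) to a fixed
    width, preserving the aspect ratio."""
    if target_width <= 0:
        raise ValueError("target_width must be positive")
    h, w = len(img), len(img[0])
    scale = target_width / w
    target_height = max(1, round(h * scale))
    # Map each output pixel centre back to the nearest source pixel.
    return [
        [img[min(h - 1, int((y + 0.5) / scale))][min(w - 1, int((x + 0.5) / scale))]
         for x in range(target_width)]
        for y in range(target_height)
    ]


# Hypothetical 2x4 "fingerprint" upscaled to a width of 8 pixels.
small = [[1, 2, 3, 4], [5, 6, 7, 8]]
for row in resize_to_width(small, 8):
    print(row)
```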

7.5. Visual Instruction

According to the results presented in Section 5.2, the visual feedback of the contactless capturing device has also been rated inferior to that of the contact-based one. Here, the smartphone display is well suited to show further information about the capturing process. Additionally, actionable feedback on the positioning of the fingers can be given, as suggested in [14].

7.6. Robust Capturing of Different Skin Colors and Finger Characteristics

An important implementation aspect of biometric systems is that they must not discriminate against certain user groups based on skin color or other characteristics. During our database acquisition, subjects of different skin color types were successfully captured. Nevertheless, it must be noted that the number of subjects is too small to make a general statement about the fairness of the presented approach.

As already mentioned in Section 4.1, we observed a single failure-to-acquire (FTA) during our database acquisition. The most likely cause was that the subject had very long fingernails, which were segmented as finger area. Here, the plausibility check during the segmentation failed, and the subject could not be captured. To overcome this flaw, a fingernail detection could be integrated into the segmentation workflow.

8. Conclusions

In this work, we proposed a fingerprint recognition workflow for state-of-the-art smartphones. The method automatically captures the four inner-hand fingers of a subject and processes them into separate fingerprint images. With this scheme, we captured a database of 1360 fingerprints from 29 subjects. Here, we used two different setups: a box setup with constrained environmental influences and a tripod setup. Additionally, we captured contact-based fingerprints as a baseline. In a usability study after the capturing, the subjects were asked about their experience with the different capturing device types.

Our investigations show that the overall biometric performance of the contactless box setup is comparable to the contact-based baseline, whereas the unconstrained contactless tripod setup shows inferior results. All setups benefit from a biometric fusion. A further experiment on the interoperability between contactless and contact-based samples (box setup) shows that the performance drops only slightly.

The presented usability study shows that the majority of users prefer a contactless recognition system over a contact-based one for hygienic reasons. In addition, the usability of the contactless capturing device was seen as slightly better. Nevertheless, the user experience of the tested contactless devices can be further improved.

The COVID-19 pandemic also has an influence on the performance and acceptance of fingerprint recognition systems. Here, hygienic measures lower the recognition performance and users show more concern regarding touching surfaces in public areas.

Our proposed method forms a baseline for a mobile automatic contactless fingerprint recognition system and is made publicly available. Researchers are encouraged to integrate their algorithms into our system and contribute to a more accurate, robust, and secure contactless fingerprint recognition scheme.

Acknowledgments

This research work was funded by the German Federal Ministry of Education and Research and the Hessian Ministry of Higher Education, Research, Science and the Arts within their joint support of the National Research Center for Applied Cybersecurity ATHENE.

Author Contributions

Conceptualization, methodology: J.P. and C.R.; software, data curation, investigation, validation, formal analysis: J.P.; usability analysis: R.H.; writing—original draft preparation: J.P. and C.R.; writing—review and editing: C.R., N.B., and C.B.; visualization: J.P., C.R., and R.H.; supervision: N.B. and C.B. All authors have read and agreed to the published version of the manuscript.

Funding

This work was partially funded by the German Federal Ministry of Education and Research and the Hessen State Ministry for Higher Education, Research, and the Arts within their joint support of the National Research Center for Applied Cybersecurity ATHENE.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study. Written informed consent has been obtained from the patient(s) to publish this paper.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

Notes

Source code will be made available at https://gitlab.com/jannispriesnitz/mtfr (accessed on 8 December 2021).

Due to privacy regulations, it is not possible to make the database collected in this work publicly available.

The original algorithm uses minutiae quadruplets, i.e., it additionally considers the minutiae type (e.g., ridge ending or bifurcation). As the minutiae extractors used only provide minutiae triplets, the algorithm was modified to ignore the type information.

In this experiment, only comparisons with the same finger-ID of a different subject are considered as nonmated comparisons.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

1. Okereafor K., Ekong I., Okon Markson I., Enwere K. Fingerprint Biometric System Hygiene and the Risk of COVID-19 Transmission. JMIR Biomed. Eng. 2020;5:e19623. doi: 10.2196/19623.

2. Chen Y., Parziale G., Diaz-Santana E., Jain A.K. 3D touchless fingerprints: Compatibility with legacy rolled images; Proceedings of the 2006 Biometrics Symposium: Special Session on Research at the Biometric Consortium Conference; Baltimore, MD, USA. 21 August–19 September 2006; pp. 1–6.

3. Hiew B.Y., Teoh A.B.J., Pang Y.H. Digital camera based fingerprint recognition; Proceedings of the International Conference on Telecommunications and Malaysia International Conference on Communications; Penang, Malaysia. 14–17 May 2007; pp. 676–681.

4. Wang L., El-Maksoud R.H.A., Sasian J.M., Kuhn W.P., Gee K., Valencia V.S. A novel contactless aliveness-testing (CAT) fingerprint capturing device; Proceedings of the Novel Optical Systems Design and Optimization XII; San Diego, CA, USA. 3–4 August 2009; p. 742915.

5. Attrish A., Bharat N., Anand V., Kanhangad V. A Contactless Fingerprint Recognition System. arXiv. 2021. arXiv:2108.09048.

6. Piuri V., Scotti F. Fingerprint Biometrics via Low-cost capturing devices and Webcams; Proceedings of the Second International Conference on Biometrics: Theory, Applications and Systems (BTAS); Washington, DC, USA. 28 September–1 October 2008; pp. 1–6.

7. Kumar A., Zhou Y. Contactless fingerprint identification using level zero features; Proceedings of the Conference on Computer Vision and Pattern Recognition Workshops (CVPRW); Colorado Springs, CO, USA. 20–25 June 2011; pp. 114–119.

8. Noh D., Choi H., Kim J. Touchless capturing device capturing five fingerprint images by one rotating camera. Opt. Eng. 2011;50:113202. doi: 10.1117/1.3646327.

9. Derawi M.O., Yang B., Busch C. Fingerprint Recognition with Embedded Cameras on Mobile Phones; Proceedings of the Security and Privacy in Mobile Information and Communication Systems (ICST); Frankfurt, Germany. 25–27 June 2012; pp. 136–147.

10. Stein C., Bouatou V., Busch C. Video-based fingerphoto recognition with anti-spoofing techniques with smartphone cameras; Proceedings of the International Conference of the Biometric Special Interest Group (BIOSIG); Darmstadt, Germany. 5–6 September 2013; pp. 1–12.

11. Raghavendra R., Raja K.B., Surbiryala J., Busch C. A low-cost multimodal biometric capturing device to capture finger vein and fingerprint; Proceedings of the IEEE International Joint Conference on Biometrics; Clearwater, FL, USA. 29 September–2 October 2014; pp. 1–7.

12. Tiwari K., Gupta P. A touch-less fingerphoto recognition system for mobile hand-held devices; Proceedings of the International Conference on Biometrics (ICB); Phuket, Thailand. 19–22 May 2015; pp. 151–156.

13. Sankaran A., Malhotra A., Mittal A., Vatsa M., Singh R. On smartphone camera based fingerphoto authentication; Proceedings of the 7th International Conference on Biometrics Theory, Applications and Systems (BTAS); Arlington, VA, USA. 8–11 September 2015; pp. 1–7.

14. Carney L.A., Kane J., Mather J.F., Othman A., Simpson A.G., Tavanai A., Tyson R.A., Xue Y. A Multi-Finger Touchless Fingerprinting System: Mobile Fingerphoto and Legacy Database Interoperability; Proceedings of the 4th International Conference on Biomedical and Bioinformatics Engineering (ICBBE); Seoul, Korea. 12–14 November 2017; pp. 139–147.

15. Deb D., Chugh T., Engelsma J., Cao K., Nain N., Kendall J., Jain A.K. Matching Fingerphotos to Slap Fingerprint Images. arXiv. 2018. arXiv:1804.08122.

16. Weissenfeld A., Strobl B., Daubner F. Contactless finger and face capturing on a secure handheld embedded device; Proceedings of the 2018 Design, Automation & Test in Europe Conference & Exhibition (DATE); Dresden, Germany. 19–23 March 2018; pp. 1321–1326.

17. Birajadar P., Haria M., Kulkarni P., Gupta S., Joshi P., Singh B., Gadre V. Towards smartphone-based touchless fingerprint recognition. Sādhanā. 2019;44:161. doi: 10.1007/s12046-019-1138-5.

18. Kauba C., Söllinger D., Kirchgasser S., Weissenfeld A., Fernández Domínguez G., Strobl B., Uhl A. Towards Using Police Officers’ Business Smartphones for Contactless Fingerprint Acquisition and Enabling Fingerprint Comparison against Contact-Based Datasets. Sensors. 2021;21:2248. doi: 10.3390/s21072248.

19. Priesnitz J., Rathgeb C., Buchmann N., Busch C. An Overview of Touchless 2D Fingerprint Recognition. EURASIP J. Image Video Process. 2021;2021:25. doi: 10.1186/s13640-021-00548-4.

20. Yin X., Zhu Y., Hu J. A Survey on 2D and 3D Contactless Fingerprint Biometrics: A Taxonomy, Review, and Future Directions. IEEE Open J. Comput. Soc. 2021;2:370–381. doi: 10.1109/OJCS.2021.3119572.

21. Hiew B.Y., Teoh A.B.J., Ngo D.C.L. Automatic Digital Camera Based Fingerprint Image Preprocessing; Proceedings of the International Conference on Computer Graphics, Imaging and Visualisation (CGIV); Sydney, Australia. 26–28 July 2006; pp. 182–189.

22. Sisodia D.S., Vandana T., Choudhary M. A conglomerate technique for finger print recognition using phone camera captured images; Proceedings of the International Conference on Power, Control, Signals and Instrumentation Engineering (ICPCSI); Chennai, India. 21–22 September 2017; pp. 2740–2746.

23. Wang K., Cui H., Cao Y., Xing X., Zhang R. Biometric Recognition. Springer; Cham, Switzerland: 2016. A Preprocessing Algorithm for Touchless Fingerprint Images; pp. 224–234.

24. Malhotra A., Sankaran A., Mittal A., Vatsa M., Singh R. Human Recognition in Unconstrained Environments. Elsevier; Amsterdam, The Netherlands: 2017. Fingerphoto authentication using smartphone camera captured under varying environmental conditions; pp. 119–144.

25. Raghavendra R., Busch C., Yang B. Scaling-robust fingerprint verification with smartphone camera in real-life scenarios; Proceedings of the Sixth International Conference on Biometrics: Theory, Applications and Systems (BTAS); Arlington, VA, USA. 29 September–2 October 2013; pp. 1–8.

26. Stein C., Nickel C., Busch C. Fingerphoto recognition with smartphone cameras; Proceedings of the International Conference of Biometrics Special Interest Group (BIOSIG); Darmstadt, Germany. 6–7 September 2012; pp. 1–12.

27. NIST. NFIQ2.0: NIST Fingerprint Image Quality 2.0. Available online: https://github.com/usnistgov/NFIQ2 (accessed on 9 January 2022).

28. Priesnitz J., Rathgeb C., Buchmann N., Busch C. Touchless Fingerprint Sample Quality: Prerequisites for the Applicability of NFIQ2.0; Proceedings of the International Conference of the Biometrics Special Interest Group (BIOSIG); Online. 16–18 September 2020; pp. 1–5.

29. Lin C., Kumar A. Contactless and Partial 3D Fingerprint Recognition using Multi-view Deep Representation. Pattern Recognit. 2018;83:314–327. doi: 10.1016/j.patcog.2018.05.004.

30. ISO/IEC 19794-4:2011. Standard, International Organization for Standardization; Geneva, Switzerland: 2011. Information Technology—Biometric Data Interchange Formats—Part 4: Finger Image Data.

31. ISO/IEC 19795-1:2021. Standard, International Organization for Standardization; Geneva, Switzerland: 2021. Information Technology–Biometric Performance Testing and Reporting–Part 1: Principles and Framework.

32. Fitzpatrick T.B. The Validity and Practicality of Sun-Reactive Skin Types I Through VI. Arch. Dermatol. 1988;124:869–871. doi: 10.1001/archderm.1988.01670060015008.

33. Furman S.M., Stanton B.C., Theofanos M.F., Libert J.M., Grantham J.D. Contactless Fingerprint Devices Usability Test. National Institute of Standards and Technology; Gaithersburg, MD, USA: 2017. Technical Report NIST IR 8171.

34. Porst R. Fragebogen: Ein Arbeitsbuch. 4th ed. Springer VS; Wiesbaden, Germany: 2014. Studienskripten zur Soziologie. OCLC: 870294421.

35. Rohrmann B. Empirische Studien zur Entwicklung von Antwortskalen für die sozialwissenschaftliche Forschung. Z. Für Sozialpsychologie. 1978;9:222–245.

36. Tang Y., Gao F., Feng J., Liu Y. FingerNet: An unified deep network for fingerprint minutiae extraction; Proceedings of the International Joint Conference on Biometrics (IJCB); Denver, CO, USA. 1–4 October 2017; pp. 108–116.

37. Važan R. SourceAFIS—Opensource Fingerprint Matcher. 2019. Available online: https://sourceafis.machinezoo.com/ (accessed on 9 January 2022).

38. Ortega-Garcia J., Fierrez-Aguilar J., Simon D., Gonzalez J., Faundez-Zanuy M., Espinosa V., Satue A., Hernaez I., Igarza J., Vivaracho C., et al. MCYT baseline corpus: A bimodal biometric database. IEE Proc. Vis. Image Signal Process. 2003;150:395–401. doi: 10.1049/ip-vis:20031078.

39. Cappelli R., Ferrara M., Franco A., Maltoni D. Fingerprint Verification Competition 2006. Biom. Technol. Today. 2007;15:7–9. doi: 10.1016/S0969-4765(07)70140-6.

40. Kumar A. The Hong Kong Polytechnic University Contactless 2D to Contact-Based 2D Fingerprint Images Database Version 1.0. 2017. Available online: http://www4.comp.polyu.edu.hk/csajaykr/fingerprint.htm (accessed on 9 January 2022).

41. Mann H.B., Whitney D.R. On a Test of Whether one of Two Random Variables is Stochastically Larger than the Other. Ann. Math. Stat. 1947;18:50–60. doi: 10.1214/aoms/1177730491.

42. Olsen M.A., Dusio M., Busch C. Fingerprint skin moisture impact on biometric performance; Proceedings of the 3rd International Workshop on Biometrics and Forensics (IWBF 2015); Gjovik, Norway. 3–4 March 2015; pp. 1–6.

43. O’Connell K.A., Enos C.W., Prodanovic E. Case Report: Handwashing-Induced Dermatitis During the COVID-19 Pandemic. Am. Fam. Physician. 2020;102:327–328.

44. Tan S.W., Oh C.C. Contact Dermatitis from Hand Hygiene Practices in the COVID-19 Pandemic. Ann. Acad. Med. Singap. 2020;49:674–676. doi: 10.47102/annals-acadmedsg.2020136.

45. Otter J.A., Donskey C., Yezli S., Douthwaite S., Goldenberg S.D., Weber D.J. Transmission of SARS and MERS coronaviruses and influenza virus in healthcare settings: The possible role of dry surface contamination. J. Hosp. Infect. 2016;92:235–250. doi: 10.1016/j.jhin.2015.08.027.

46. European Union. Commission Implementing Decision (EU) 2019/329 of 25 February 2019 laying down the specifications for the quality, resolution and use of fingerprints and facial image for biometric verification and identification in the Entry/Exit System (EES). Off. J. Eur. Union. 2019;57:18–28.

47. Neurotechnology. VeriFinger SDK. Neurotechnology; Vilnius, Lithuania: 2010.

48. Vyas R., Kumar A. A Collaborative Approach using Ridge-Valley Minutiae for More Accurate Contactless Fingerprint Identification. arXiv. 2019. arXiv:1909.06045.
