Abstract

Working memory (WM) supports the persistent representation of transient sensory information. Visual and auditory stimuli place different demands on WM and recruit different brain networks. Separate auditory- and visual-biased WM networks extend into the frontal lobes, but several challenges confront attempts to parcellate human frontal cortex, including fine-grained organization and between-subject variability. Here, we use differential intrinsic functional connectivity from 2 visual-biased and 2 auditory-biased frontal structures to identify additional candidate sensory-biased regions in frontal cortex. We then examine direct contrasts of task functional magnetic resonance imaging during visual versus auditory 2-back WM to validate those candidate regions. Three visual-biased and 5 auditory-biased regions are robustly activated bilaterally in the frontal lobes of individual subjects (N = 14, 7 women). These regions exhibit a sensory preference during passive exposure to task stimuli, and that preference is stronger during WM. Hierarchical clustering analysis of intrinsic connectivity among novel and previously identified bilateral sensory-biased regions confirms that they functionally segregate into visual and auditory networks, even though the networks are anatomically interdigitated. We also observe that the frontotemporal auditory WM network is highly selective and exhibits strong functional connectivity to structures serving non-WM functions, while the frontoparietal visual WM network hierarchically merges into the multiple-demand cognitive system.

Keywords: attention, fMRI, frontal cortex, functional connectivity, multisensory

Introduction

Sensory working memory (WM) recruits a network of brain structures that spans frontal and posterior cortical areas as well as subcortical regions (Postle et al. 2000; Crottaz-Herbette et al. 2004; Arnott et al. 2005; Owen et al. 2005; Kastner et al. 2007; Harrison and Tong 2009; Christophel et al. 2012; Jerde et al. 2012; Huang et al. 2013; Lewis-Peacock et al. 2015; Michalka et al. 2015; Brissenden et al. 2018). Each sensory modality receives information from a unique set of receptors that is then processed by modality-specific subcortical and primary cortical regions, and each sensory modality possesses distinct strengths and weaknesses in the resolution and fidelity with which information can be encoded (Welch and Warren 1980; Alais and Burr 2004; Noyce et al. 2016). One leading model of WM has proposed multiple WM components, including specialized visuospatial and auditory/phonological stores (Baddeley and Hitch 1974; Baddeley 2010). Although frontal lobe WM mechanisms have long been viewed as agnostic to sensory modality (Duncan and Owen 2000; Postle et al. 2000; Duncan 2010; Fedorenko et al. 2013; Tamber-Rosenau et al. 2013; Assem et al. 2020), a growing body of research reveals substantial influences of sensory modality on the functional organization of WM structures throughout the brain, even in frontal cortex (Romanski and Goldman-Rakic 2002; Hagler and Sereno 2006; Kastner et al. 2007; Romanski 2007; Michalka et al. 2015; Kumar et al. 2016; Mayer et al. 2017; Noyce et al. 2017). Work from our laboratory has previously identified both sensory-specialized areas for WM (Michalka et al. 2015; Noyce et al. 2017; Tobyne et al. 2017) and areas that seem to be recruited independent of sensory modality (Noyce et al. 2017). Although these regions are relatively modest in size, their characteristic pattern of organization is repeated across individual subject cortical hemispheres. Moreover, these sensory-biased frontal lobe regions form specific functional connectivity networks with traditionally identified sensory-specific regions in parietal and temporal cortices.

In prior work examining sensory-biased attention and WM structures in frontal lobes, we reported 2 visual-biased and 2 auditory-biased regions in nearly all individual subject hemispheres (Michalka et al. 2015; Noyce et al. 2017). We subsequently leveraged our small in-lab dataset to examine sensory-biased functional connectivity networks (Tobyne et al. 2017) by using resting-state functional magnetic resonance imaging (fMRI) data from 469 subjects of the Human Connectome Project (Glasser et al. 2016). This analysis examined differential functional connectivity from parieto-occipital visual and temporal auditory attention regions to frontal cortex. The results not only confirmed that the previously identified sensory-biased frontal regions reside within sensory-specific functional networks but also suggested that these sensory-specific networks might extend more anteriorly on the lateral surface of frontal cortex and to the medial surface of frontal cortex (Tobyne et al. 2017). However, these analyses were performed with group data and without the benefit of sensory-specific WM task activation in individual subjects. Here, we investigate more fully whether and how these sensory-biased attention networks might extend within frontal cortex.

We are motivated to examine this question for 3 reasons. First, our previous results focused on caudolateral frontal cortex, ignoring evidence of specialization in frontal opercular regions as well as on the medial surface. Second, we know from both our own and others’ work that small regions whose exact anatomical location varies across subjects can be difficult to identify and characterize (Mueller et al. 2013; Braga and Buckner 2017; Tobyne et al. 2018). Third, we previously observed variability within regions that we characterized as “multiple demand,” and we now seek to more closely examine the functional properties of these different regions.

We used differential functional connectivity (Tobyne et al. 2017; Lefco et al. 2020) from sensory-biased frontal seed regions to identify 13 candidate sensory-biased regions bilaterally throughout the frontal lobe. After assessing their reliability in individual subjects’ task activation, we drew subject-specific labels for 8 bilateral regions. These were further characterized in terms of 1) their sensory and WM recruitment; 2) their consistency of location across individual subjects; and 3) their network organization. We found that 3 bilateral visual-biased WM regions and 5 bilateral auditory-biased WM regions in frontal cortex occur with high consistency in individual subjects. These regions exhibit sensory-biased activation both during passive exposure to visual and auditory stimuli and during 2-back WM.

Materials and Methods

All procedures were approved by the Institutional Review Board of Boston University.

Overview

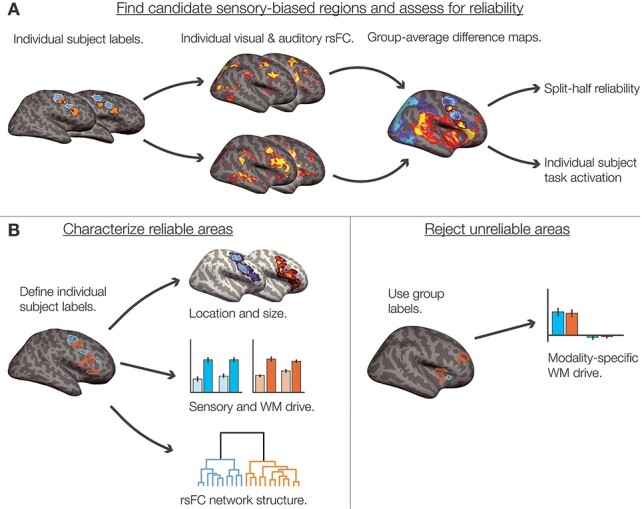

After collecting task (visual and auditory, WM and sensorimotor control) and resting fMRI data, we drew subject-specific labels for 2 bilateral visual-biased and 2 bilateral auditory-biased regions (Fig. 1A). These served as seeds for the differential connectivity analysis. From the group-level differential connectivity results, we defined candidate sensory-biased regions, whose task activation was then assessed in individual subjects. Eight regions met our criteria for investigation via individual subject labels (Fig. 1B); for those, we report their typical location and size, their degree of sensory and WM-specific recruitment, and their network organization structure. Five candidate regions were not reliable in individual subjects (Fig. 1C); we report sensory-specific WM recruitment in each of these rejected candidates.

Figure 1.

Overview of analytical steps. (A) We began with previously identified sensory-biased regions in caudolateral frontal cortex, along the PCS and IFS (Michalka et al. 2015; Noyce et al. 2017). Within each individual subject, that subject’s auditory- and visual-biased regions were used as seeds to compute seed-to-whole-hemisphere resting-state functional connectivity. We thresholded (see Methods) and z-transformed the resulting connectivity maps before taking the difference between auditory-biased and visual-biased connectivity. The resulting differential connectivity maps were used to identify candidate sensory-biased regions (Fig. 3A). We assessed each candidate region using split-half reliability of task activation (Fig. 3B) and hand-scoring the region’s appearance in individual subjects (Supplementary Table S1). (B) Regions that exhibited consistent sensory-biased WM recruitment both within and across subjects were denoted as reliable regions; we drew subject-specific labels for each region in each subject for analysis (Fig. 4). We reported the mean location and size (Fig. 4 and Table 1), the degree of WM-specific recruitment (Fig. 5), and used hierarchical clustering of seed-to-seed functional connectivity to investigate the structure of sensory-biased networks (Fig. 6). (C) Regions that did not exhibit consistent sensory-biased recruitment were investigated using the candidate search space labels; we again reported the degree of WM-specific recruitment in each (Fig. 7).

Subjects

Sixteen members of the Boston University community (aged 24–35; 9 men and 7 women) participated in this study. All experiments were approved by the Institutional Review Board of Boston University. All subjects gave written informed consent to participate and were paid for their time. Two authors (A.L.N. and J.A.B.) participated as subjects. One subject was excluded from analysis due to technical difficulties with the auditory stimulus presentation; another participated in task scans but not resting-state scans and is included only in task activation analyses.

Experimental Task

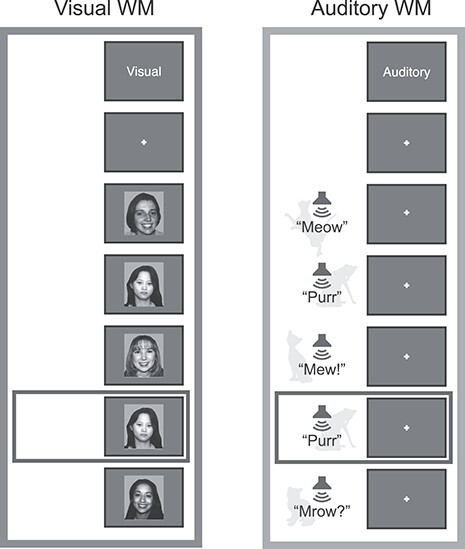

Subjects performed a 2-back WM task for visual (photographs of faces) and auditory (recordings of animal sounds) stimuli, in separate blocks (Fig. 2). Each block contained 32 stimuli and lasted 40 s; onsets of successive stimuli were 1.25 s apart. Visual stimuli were each presented for 1 s; auditory stimuli ranged from 300 to 600 ms in duration. Images were presented at 300 × 300 pixels, spanning approximately 6.4° visual angle, using a liquid crystal display projector illuminating a rear-projection screen within the scanner bore. Auditory stimuli were natural recordings of cat and dog vocalizations. Stimuli were presented diotically. The audio presentation system (Sensimetrics, http://www.sens.com) included an audio amplifier, S14 transformer, and MR-compatible in-ear earphones. At the beginning of each block, subjects were cued to perform either the visual or the auditory 2-back task or to perform a sensorimotor control condition. Each run comprised eight 40-s blocks: 2 auditory 2-back, 2 visual 2-back, 2 auditory sensorimotor control, and 2 visual sensorimotor control. Eight seconds of fixation was recorded at the beginning, midpoint, and end of each run. Block order was counterbalanced across runs; run order was counterbalanced across subjects. During 2-back blocks, participants were instructed to decide whether each stimulus was an exact repeat of the stimulus two prior and to make either a “2-back repeat” or “new” response via button press. Sensorimotor control blocks consisted of the same stimuli and timing, but no 2-back repeats were included, and participants were instructed to make a random button press to each stimulus. Responses were collected using an MR-compatible button box. All stimulus presentation and task control were managed by custom software using Matlab PsychToolbox (Brainard 1997; Cornelissen et al. 2002; Kleiner et al. 2007).

Figure 2.

Diagram of the 2-back WM task used to identify and characterize sensory-biased and sensory-independent WM structures. Subjects observed a stream of visual or auditory stimuli and reported 2-back repeats via button press. Sensorimotor control blocks consisted of the same stimuli, without repeats included. (A) The visual stimuli comprised black-and-white photographs of faces, drawn from a corpus of student ID photos. (B) The auditory stimuli comprised recordings of cat and dog vocalizations.

Subjects (n = 15) were able to perform both the visual 2-back task (90.1% correct, standard deviation [SD] = 7.5%) and the auditory 2-back task (87.5% correct, SD = 10.9%) at a high level. There was no significant difference in accuracy between the 2 tasks (t(14) = 1.463, P = 0.17).

Eye Tracking

All subjects were trained to hold fixation at a central point. Eye movements during task performance were recorded using an Eyelink 1000 MR-compatible eye tracker (SR Research) sampling at 500 Hz. Eye tracking recordings were unavailable for 4 subjects due to technical problems; eye gaze in these subjects was monitored via camera to confirm acceptable fixation performance. We operationalized fixation as eye gaze remaining within 1.5° visual angle of the central fixation point. Eye position data were smoothed through an 80-Hz low-pass first-order Butterworth filter, after which excursions from fixation were counted within each block. To rule out differences in eye movements as a potential confound, we compared the frequency with which subjects broke fixation between visual and auditory 2-back blocks, between visual and auditory sensorimotor control blocks, and between each sensory modality’s 2-back and sensorimotor control blocks. There were no significant differences in any of these comparisons (paired t-tests, all P > 0.35).
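As an illustration of the fixation criterion, the sketch below counts excursions beyond 1.5° from the fixation point in a gaze trace. It is a minimal example, not the analysis code used here: the function name is hypothetical, the low-pass filtering step is omitted, and treating each inside-to-outside transition as one excursion is an assumption about how excursions were counted.

```python
import numpy as np

def count_fixation_breaks(x_deg, y_deg, limit_deg=1.5):
    """Count excursions of gaze beyond `limit_deg` from central fixation.

    x_deg, y_deg: gaze position in degrees of visual angle relative to the
    fixation point (hypothetical input format), one sample per element.
    """
    eccentricity = np.hypot(np.asarray(x_deg, float), np.asarray(y_deg, float))
    outside = eccentricity > limit_deg
    # Assumption: an excursion begins wherever gaze moves from inside to outside.
    previous = np.concatenate([[False], outside[:-1]])
    return int((outside & ~previous).sum())

# Toy trace with two separate excursions along the horizontal axis.
breaks = count_fixation_breaks([0, 0, 2, 2, 0, 0, 3, 0], [0] * 8)
```

Per-block counts obtained this way could then be compared across conditions with paired t-tests, as described above.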

MR Imaging and Analysis

MR Scanning

All scans were performed on a Siemens TIM Trio 3T MR imager, with a 32-channel matrix head coil. Each subject participated in multiple MRI scanning sessions, including collection of high-resolution structural images, task-based functional MRI, and resting-state functional MRI. High-resolution (1.0 × 1.0 × 1.3 mm) magnetization-prepared rapid gradient echo (MP-RAGE) T1-weighted structural MR images were collected for each subject. Functional T2*-weighted gradient-echo echo-planar images were collected using a slice-accelerated EPI sequence that permits simultaneous multislice acquisition via the blipped-CAIPI technique (Setsompop et al. 2012). Sixty-nine slices (0% skip; echo time [TE] 30 ms; repetition time [TR] 2000 ms; 2.0 × 2.0 × 2.0 mm) were collected with a slice acceleration factor of 3. Partial Fourier acquisition (6/8) was used to keep TE in a range with acceptable signal-to-noise ratios. We collected 8 runs of task-based functional data per subject and 2–3 runs (360 s per run) of eyes-open resting-state functional data per subject. MRI data collection was performed at the Harvard University Center for Brain Science neuroimaging facility. The structural and task data reported here were also included in a previous report (Noyce et al. 2017).

Structural Processing

The cortical surface of each hemisphere was reconstructed from the MP-RAGE structural images using FreeSurfer software (http://surfer.nmr.mgh.harvard.edu, Version 5.3.0, details in Dale et al. 1999; Fischl, Sereno, and Dale 1999a; Fischl, Sereno, Tootell, et al. 1999b; Fischl et al. 2001; Fischl 2004). Each cortical reconstruction was manually checked for accuracy.

Functional Preprocessing and GLM

Functional data were analyzed using FreeSurfer’s FS-FAST analysis tools (version 5.3.0). Analyses were performed on subject-specific anatomy unless noted otherwise. All task and resting state data were registered to individual subject anatomy using the middle timepoint of each run. Data were slice-time corrected, motion corrected by run, intensity normalized, resampled onto the individual’s cortical surface (voxels to vertices), and spatially smoothed on the surface with a 3-mm full-width at half-maximum (FWHM) Gaussian kernel. Task data were also resampled to the FreeSurfer fsaverage surface for group analysis.

To analyze task data, we used standard functions from the FS-FAST pipeline to fit a general linear model (GLM) to each cortical vertex. The regressors of the GLM matched the time course of the experimental conditions. The timepoints of the cue period were excluded by assigning them to a regressor of no interest. The canonical hemodynamic response function was modeled by a gamma function (δ = 2.25 s, τ = 1.25); this hemodynamic response function was convolved with the regressors before fitting.

Resting-state data (N = 14) were collected using the same T2*-weighted sequences that were used to collect task fMRI. For each subject, we collected an average of 483 timepoints (range 220–540 timepoints, or 440–1080 s) of eyes-open resting state, during which subjects maintained fixation at the center of the display. (All subjects were weighted equally in any group-level analyses, regardless of their number of timepoints.) Data were preprocessed similarly to the task data: slice-time corrected, motion corrected by run, intensity normalized, resampled onto the individual’s cortical surface, and spatially smoothed on the surface with a 3-mm FWHM Gaussian kernel. Multiple resting-state acquisitions for each subject were temporally demeaned and concatenated to create a single timeseries. In order to attenuate artifacts that could induce spurious correlations, resting-state data were further preprocessed using custom scripts in MATLAB. The following preprocessing steps were performed: linear interpolation across high-motion timepoints (>0.5 mm framewise displacement; Power et al. 2012; Carp 2013), application of a fourth-order Butterworth temporal bandpass filter to extract frequencies between 0.009 and 0.08 Hz, mean “grayordinate” signal regression (Burgess et al. 2016), and censoring of high-motion timepoints by deletion (Power et al. 2012). On average, 6.0 timepoints were censored (1.54% of data, range 0–48 timepoints).
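The four resting-state denoising steps listed above can be sketched as follows. This is an illustrative Python reimplementation, not the custom MATLAB scripts used in the study. Several details are assumptions: framewise displacement follows the common convention of converting rotations to millimetres on a 50-mm sphere, the filter is applied forward and backward (zero phase), and `butter(2, ..., btype="bandpass")` is taken as the fourth-order bandpass (SciPy doubles the order for bandpass designs). Function and variable names are hypothetical.

```python
import numpy as np
from scipy.signal import butter, filtfilt

def framewise_displacement(motion, radius_mm=50.0):
    """motion: (n_timepoints, 6) array, 3 translations (mm) then 3 rotations (rad)."""
    params = np.array(motion, dtype=float)
    params[:, 3:] *= radius_mm                  # rotations -> arc length in mm
    fd = np.abs(np.diff(params, axis=0)).sum(axis=1)
    return np.concatenate([[0.0], fd])          # first frame has no predecessor

def denoise(ts, fd, tr=2.0, fd_thresh=0.5, band=(0.009, 0.08)):
    """ts: (n_timepoints, n_vertices). Returns filtered data with bad frames deleted."""
    good = fd <= fd_thresh
    t = np.arange(ts.shape[0])
    data = np.array(ts, dtype=float)
    # 1) Linear interpolation across high-motion timepoints, vertex by vertex.
    for v in range(data.shape[1]):
        data[~good, v] = np.interp(t[~good], t[good], data[good, v])
    # 2) Butterworth temporal bandpass filter (zero phase is an assumption).
    b, a = butter(2, band, btype="bandpass", fs=1.0 / tr)
    data = filtfilt(b, a, data, axis=0)
    # 3) Regress the mean signal across vertices out of every vertex.
    g = data.mean(axis=1, keepdims=True)
    g = g - g.mean()
    beta = (g.T @ data) / (g.T @ g)
    data = data - g @ beta
    # 4) Censor high-motion timepoints by deletion.
    return data[good]

# Toy data: 240 frames, 20 vertices, one abrupt 2-mm translation at frame 100.
rng = np.random.default_rng(0)
toy_ts = rng.standard_normal((240, 20))
toy_motion = rng.normal(0.0, 0.0005, (240, 6))
toy_motion[100:, 0] += 2.0
toy_fd = framewise_displacement(toy_motion)
toy_clean = denoise(toy_ts, toy_fd)
```

Interpolating before filtering keeps the motion spike from being smeared into neighbouring frames by the filter; the interpolated frames themselves are discarded in the final step.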

Differential Connectivity

In order to identify candidate sensory-biased structures within the hypothesized extended networks, we computed differential functional connectivity (Tobyne et al. 2017; Lefco et al. 2020) from previously defined visual-biased (superior precentral sulcus [sPCS] and inferior precentral sulcus [iPCS]) and auditory-biased (transverse gyrus intersecting precentral sulcus [tgPCS] and caudal inferior frontal sulcus/gyrus [cIFS/G]) frontal seeds (Fig. 1A). For each subject, the 2 visual-biased frontal labels from Noyce et al. (2017) were combined into one single functional connectivity seed per hemisphere, as were the 2 auditory-biased frontal labels. For each seed in each individual subject hemisphere, we calculated a mean resting-state time course over all vertices within the seed. We then computed the correlation (Pearson’s r) between each seed’s time course and that of every vertex within the same cortical hemisphere.

Visual seed and auditory seed correlation maps for each subject hemisphere were resampled to the FreeSurfer fsaverage surface and subjected to Fisher’s r-to-z transformation, before submission to a group-level analysis. Group-average z maps were standardized within each cortical hemisphere by subtracting the mean z-value from each vertex and then dividing by the SD. Difference maps were then created by subtracting the visual-seed connectivity map from the auditory-seed connectivity map (Fig. 3A).
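The seed correlation, Fisher transformation, within-hemisphere standardization, and subtraction described above can be sketched as follows. This is a minimal NumPy illustration with hypothetical function names; it assumes all maps have already been resampled to a common surface, and it omits the significance masking.

```python
import numpy as np

def seed_correlation_map(vertex_ts, seed_mask):
    """Pearson r between the mean seed time course and every vertex.

    vertex_ts: (n_timepoints, n_vertices); seed_mask: boolean (n_vertices,).
    """
    seed_ts = vertex_ts[:, seed_mask].mean(axis=1)
    # With z-scored series, the mean product over time equals Pearson's r.
    vz = (vertex_ts - vertex_ts.mean(axis=0)) / vertex_ts.std(axis=0)
    sz = (seed_ts - seed_ts.mean()) / seed_ts.std()
    return vz.T @ sz / len(sz)

def differential_connectivity(aud_r_maps, vis_r_maps):
    """Inputs: (n_subjects, n_vertices) arrays of Pearson r in template space."""
    def group_standardized(r_maps):
        z = np.arctanh(np.clip(r_maps, -0.999999, 0.999999))  # Fisher r-to-z
        group = z.mean(axis=0)                                # group average
        return (group - group.mean()) / group.std()           # standardize map
    # Positive values indicate stronger auditory-seed connectivity.
    return group_standardized(aud_r_maps) - group_standardized(vis_r_maps)

# Toy example: one subject's correlation map, then a 14-subject difference map.
rng = np.random.default_rng(0)
toy_ts = rng.standard_normal((200, 50))
toy_seed = np.zeros(50, dtype=bool)
toy_seed[:5] = True
toy_r = seed_correlation_map(toy_ts, toy_seed)
toy_diff = differential_connectivity(rng.uniform(-0.5, 0.5, (14, 50)),
                                     rng.uniform(-0.5, 0.5, (14, 50)))
```

Standardizing each group map before subtraction puts the two seeds' maps on a common scale, so the difference reflects relative rather than absolute connectivity strength.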

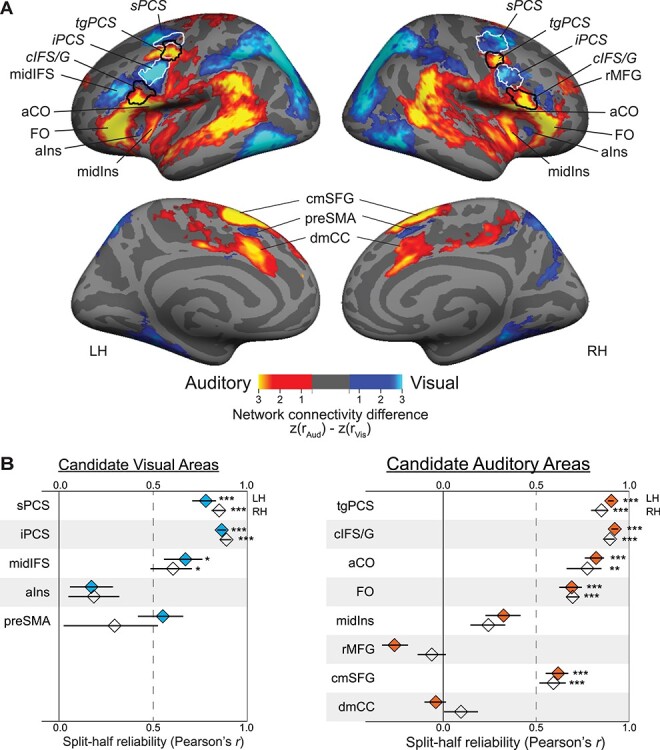

Figure 3.

(A) Group average (N = 14) differential functional connectivity to visual-biased frontal regions (seeds outlined in white; significantly greater connectivity shown in blue) and auditory-biased frontal regions (seeds outlined in black; significantly greater connectivity shown in yellow). The 4 bilateral seed regions were defined in individual subjects from task activation. (Seed label outlines shown here are regions where individual subject labels overlap in at least 20% of subjects.) Note clear preferential connectivity between visual frontal seeds and pVis attention regions (parietal and occipital cortex) and between auditory frontal seeds and pAud attention regions (superior temporal cortex). Within frontal cortex, we observe regions of preferential visual network connectivity to bilateral aIns and preSMA as well as to left midIFS; we observe regions of preferential auditory connectivity to bilateral aCO, FO, midIns, cmSFG, and dmCC, as well as to right rMFG. Maps are thresholded at P < 0.05 after correcting for multiple comparisons (Smith and Nichols 2009). (B) Split-half reliability (Pearson’s r) of task activation (Aud WM vs. Vis WM) for each candidate visual-biased (left, blue) and auditory-biased (right, orange) region. Three bilateral visual-biased regions (sPCS, iPCS, and midIFS) and 5 bilateral auditory-biased regions (tgPCS, cIFS/G, aCO, FO, and cmSFG) are significantly non-zero. Left preSMA is above r = 0.5 but does not survive multiple comparisons correction. Error bars are within-subject standard error of the Fisher’s z-transformed mean correlation (Loftus and Masson 1994; Cousineau 2005; Morey 2008). *P < 0.05, **P < 0.01, ***P < 0.001, Holm–Bonferroni corrected for 26 tests.

In order to exclude any vertices that were uncorrelated or anticorrelated with both seeds, we restricted this map to only vertices that were significantly positively correlated with the visual-biased seed, the auditory-biased seed, or both. For each single-seed correlation map, we used permutation testing (Eklund et al. 2016) to generate a nonparametric null distribution of threshold-free cluster enhancement (TFCE) values (Smith and Nichols 2009). First, we computed Fisher z-transformed correlations for each vertex for each subject. Group statistic maps were generated via voxel-wise t tests with variance smoothing (s = 4 mm) followed by TFCE transformation. For each permutation (n = 1000), the signs of the z-values were flipped for a random selection of subjects and a group average map was computed. A null distribution was then generated by recording the image-wise maximum TFCE statistic for each permuted group map. Using this null distribution, the original group map was thresholded at P < 0.05, one-sided. The union of vertices that survived correction in the visual and auditory connectivity maps was used to mask the final differential connectivity map, excluding any vertices that were anticorrelated or uncorrelated with both sets of seeds. From this difference map, we identified 7 new bilateral and 2 new unilateral candidate sensory-biased frontal regions, in addition to the original 4 bilateral seeds.
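The logic of the sign-flipping permutation test with a maximum-statistic null distribution can be illustrated with a simplified sketch. Note the substitution: the code below uses a plain one-sample t statistic at each vertex in place of the variance-smoothed, TFCE-transformed statistic, so it demonstrates only the permutation scheme, not TFCE itself. The function name and toy data are hypothetical.

```python
import numpy as np

def signflip_max_stat_threshold(z_maps, n_perm=1000, alpha=0.05, seed=0):
    """One-sided familywise-corrected threshold via subject-wise sign flipping.

    z_maps: (n_subjects, n_vertices) Fisher z-transformed correlations.
    Returns (observed t map, critical value, boolean mask of surviving vertices).
    """
    rng = np.random.default_rng(seed)
    n_sub = z_maps.shape[0]

    def t_map(data):
        return data.mean(axis=0) / (data.std(axis=0, ddof=1) / np.sqrt(n_sub))

    observed = t_map(z_maps)
    null_max = np.empty(n_perm)
    for i in range(n_perm):
        # Flip the sign of every vertex for a random subset of subjects.
        signs = rng.choice([-1.0, 1.0], size=(n_sub, 1))
        # Record the map-wise maximum statistic for this permutation.
        null_max[i] = t_map(z_maps * signs).max()
    critical = np.quantile(null_max, 1.0 - alpha)
    return observed, critical, observed > critical

# Toy data: 14 subjects, 100 vertices, the first 10 carrying a positive effect.
rng = np.random.default_rng(1)
toy_z = rng.normal(0.0, 0.3, (14, 100))
toy_z[:, :10] += 1.5
toy_t, toy_crit, toy_mask = signflip_max_stat_threshold(toy_z, n_perm=200)
```

Because each permutation contributes only its maximum across the whole map, thresholding at the 95th percentile of that distribution controls the familywise error rate across vertices.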

Assessing Candidate Regions

We used 2 methods to determine which candidate sensory-biased frontal regions (from the differential connectivity analysis) were reliable enough to characterize. First, we computed split-half reliability of task activation in each region. Group-space labels from the differential connectivity analysis (Supplementary Fig. S1) were projected back into individual subjects to serve as search spaces. Where a region only appeared unilaterally, the mirrored label was also created. Each individual subject’s data were divided into odd- and even-numbered runs, and a univariate first-level analysis of auditory 2-back versus visual 2-back was performed. Vertices within each candidate region were masked to include only those which showed the expected direction of activation in at least one set of runs (to exclude any contribution from adjacent, opposite-biased regions). We then computed the vertex-wise correlation (Pearson’s r) between odd-numbered and even-numbered runs. Correlation values were Fisher’s z-transformed for averaging and computing standard error and transformed back to r values for visualization (Fig. 3B).
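A minimal sketch of the split-half computation for one candidate region is given below, assuming the odd-run and even-run contrast values have already been extracted for the vertices of the search space. Function names and the sign convention (`expected_sign` is +1 if the region's expected bias corresponds to positive contrast values, −1 otherwise) are illustrative assumptions.

```python
import numpy as np

def split_half_reliability(odd_map, even_map, expected_sign):
    """Vertex-wise Pearson r between odd- and even-run contrast maps.

    Only vertices showing the expected direction of activation in at least
    one half of the runs are retained.
    """
    odd = np.asarray(odd_map, dtype=float)
    even = np.asarray(even_map, dtype=float)
    keep = (expected_sign * odd > 0) | (expected_sign * even > 0)
    if keep.sum() < 3:
        return float("nan")          # too few vertices for a meaningful r
    return float(np.corrcoef(odd[keep], even[keep])[0, 1])

def mean_reliability(r_values):
    """Average correlations in Fisher z space, then transform back to r."""
    z = np.arctanh(np.asarray(r_values, dtype=float))
    return float(np.tanh(np.nanmean(z)))

# Toy region: the last two vertices show the opposite bias in both halves.
toy_r = split_half_reliability([1, 2, 3, -1, -2], [1.5, 2.5, 2.0, -0.5, -3], +1)
toy_mean = mean_reliability([0.2, 0.8])
```

Averaging in z space rather than r space avoids the compression of correlation values near ±1, which is why the back-transformed mean of 0.2 and 0.8 is somewhat greater than 0.5.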

Second, 2 authors (A.L.N. and D.C.S.) visually inspected task activation maps of the univariate first-level auditory 2-back versus visual 2-back contrast (see below). We independently scored the strength of each region of interest (ROI) in each subject, considering its size, activation intensity, position, and compactness (Supplementary Table S1). Regions were scored as strong (1), weak (0.5), or absent (0). Again, although 2 regions only appeared unilaterally in the corrected differential connectivity map, we assessed them in both hemispheres at this stage.

Task Activation and Labels

For each subject, we directly contrasted blocks in which the subject performed visual WM against blocks in which the subject performed auditory WM. This contrast was liberally thresholded at P < 0.05, uncorrected. This threshold was set to maximally capture frontal lobe vertices showing a bias for auditory or visual WM. For regions that occurred reliably in individual subjects (mean correlation above 0.5 and mean scoring above 0.7), we drew labels based on each individual subject’s activation pattern in this contrast. This resulted in a set of 3 bilateral visual-biased frontal regions and 5 bilateral auditory-biased frontal regions for further analysis. Note that sPCS, iPCS, tgPCS, and cIFS/G were previously identified as sensory-biased frontal regions (Michalka et al. 2015; Noyce et al. 2017) and were used as seeds for the differential connectivity analysis, above. We also drew large posterior visual (pVis, including parietal and occipital regions) and posterior auditory (pAud, including superior temporal gyrus and sulcus) labels for each subject, to capture canonical sensory attention structures; these labels were used in the functional connectivity analysis (see below).

Probabilistic ROIs (Fig. 4B,C) were constructed as in Tobyne et al. (2017), by projecting individual subject task activation (binarized at P < 0.05, uncorrected) to the fsaverage template surface via spherical registration and trilinear interpolation (Fischl, Sereno, and Dale 1999a). For each surface vertex, we computed the proportion of subjects for whom that vertex was included in a given region of interest.
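Once every subject's binarized label has been projected to the template surface, the probabilistic map reduces to a per-vertex proportion. The sketch below assumes that projection has already been done and uses hypothetical function names.

```python
import numpy as np

def probabilistic_roi(labels):
    """labels: boolean (n_subjects, n_vertices) array in template space.

    Returns, for each vertex, the proportion of subjects whose label includes it.
    """
    return np.asarray(labels, dtype=float).mean(axis=0)

def overlap_mask(labels, min_subjects):
    """Vertices included in the region for at least `min_subjects` subjects."""
    return np.asarray(labels, dtype=bool).sum(axis=0) >= min_subjects

# Toy example: 15 subjects, 4 vertices included by 0, 2, 3, and 5 subjects.
toy_labels = np.zeros((15, 4), dtype=bool)
toy_labels[:2, 1] = True
toy_labels[:3, 2] = True
toy_labels[:5, 3] = True
toy_prob = probabilistic_roi(toy_labels)
toy_mask = overlap_mask(toy_labels, min_subjects=3)
```

With 15 subjects, a cutoff of 3 subjects corresponds to 20% agreement and a cutoff of 5 subjects to 33%.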

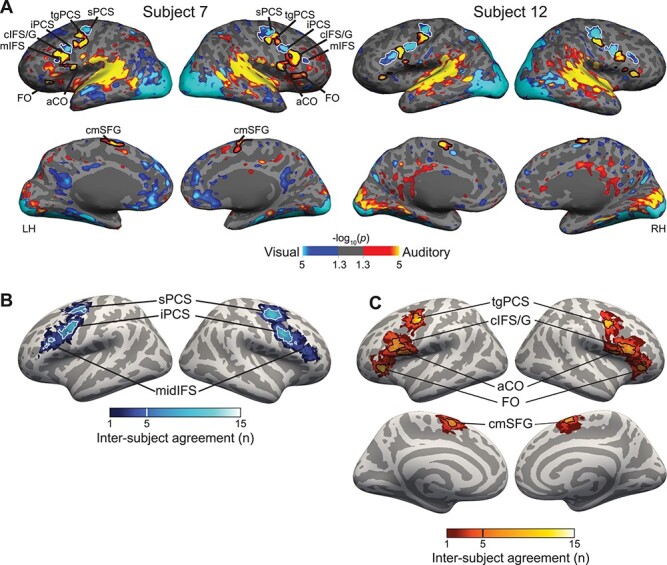

Figure 4.

(A) Contrast of auditory 2-back (yellow) versus visual 2-back (blue) WM task activation in 2 representative subjects (all subjects are shown in Supplementary Fig. S2). In lateral frontal cortex, we observe 3 visual-biased structures: sPCS, iPCS, and midIFS. They are interleaved with auditory-biased structures including the transverse gyrus intersecting precentral sulcus (tgPCS), cIFS/G, aCO, and FO. On the medial surface, we observe auditory-biased cmSFG. (B) Probabilistic overlap map of visual-biased ROI labels projected into fsaverage space. Although there is great variability in the exact positioning of these regions, they overlap into 3 clear “hot spots.” Thick outlines are drawn around vertices that are included in 5 or more subjects (33% of the participants); for right midIFS, thin outlines are drawn around vertices that are included in 3 or more subjects (20% of the participants). (C) Probabilistic overlap map of auditory-biased ROI labels projected into fsaverage space. Outlines are drawn around vertices that are included in 5 or more subjects (33% of the participants).

Within each subject-specific region, we computed mean % blood oxygen level-dependent (BOLD) signal change in auditory sensorimotor control versus visual sensorimotor control (Figs 1B and 5A), capturing the degree of sensory drive in each region in the absence of the WM task. In order to estimate the magnitude of WM-specific sensory bias (Fig. 5B), we performed a split-half analysis, so that region labels and activation estimates were calculated on independent pools of data. For each region, we defined a search space using the probabilistic ROIs at 20% agreement. That is, the search space comprised all vertices that were included in that region for at least 3 subjects. Within the search space, each subject’s region label consisted of all vertices that were significant (uncorrected P < 0.05) in the visual WM > auditory WM (or vice versa, for auditory-biased regions) contrast. These labels were computed for each subject using half of their data (even-numbered and odd-numbered runs, separately). In order to estimate the increase in sensory bias during WM relative to sensorimotor control, we used these labels and the other half of each subject’s data (odd-numbered and even-numbered runs, separately) to contrast the difference between visual 2-back and visual sensorimotor control against the difference between auditory 2-back and auditory sensorimotor control. Finally, we took the mean of the 2 estimates for each subject and region.
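The cross-validated estimate for one subject and one region can be sketched as below. The dictionary layout and key names are hypothetical; the point of the example is that labels are defined on one half of the runs and the interaction contrast is evaluated on the other half, in both directions, before averaging.

```python
import numpy as np

def crossvalidated_wm_bias(halves, search_space, visual_biased=True, alpha=0.05):
    """Split-half estimate of the WM-specific increase in sensory bias.

    halves: list of 2 dicts (odd runs, even runs) of per-vertex arrays with
    hypothetical keys: 'p' (uncorrected P of the visual vs. auditory 2-back
    contrast), 'diff' (visual minus auditory 2-back effect), and % signal
    change in 'vis_2back', 'vis_ctrl', 'aud_2back', 'aud_ctrl'.
    """
    sign = 1.0 if visual_biased else -1.0
    estimates = []
    for define, test in ((0, 1), (1, 0)):
        d = halves[define]
        # Label: significant vertices with the expected bias, inside the search space.
        label = search_space & (d["p"] < alpha) & (sign * d["diff"] > 0)
        if not label.any():
            continue
        t = halves[test]
        # (visual 2-back - visual control) - (auditory 2-back - auditory control)
        interaction = (t["vis_2back"] - t["vis_ctrl"]) - (t["aud_2back"] - t["aud_ctrl"])
        estimates.append(sign * interaction[label].mean())
    return float(np.mean(estimates)) if estimates else float("nan")

# Toy example with 6 vertices and identical halves.
toy_half = {
    "p": np.array([0.01, 0.20, 0.01, 0.01, 0.01, 0.01]),
    "diff": np.array([1.0, 1.0, -1.0, 1.0, 1.0, 1.0]),
    "vis_2back": np.full(6, 2.0), "vis_ctrl": np.full(6, 1.0),
    "aud_2back": np.full(6, 1.0), "aud_ctrl": np.full(6, 0.5),
}
toy_space = np.array([True, True, True, True, False, False])
toy_vis = crossvalidated_wm_bias([toy_half, toy_half], toy_space, visual_biased=True)
toy_aud = crossvalidated_wm_bias([toy_half, toy_half], toy_space, visual_biased=False)
```

Keeping label definition and estimation on separate halves prevents the vertex selection step from inflating the bias estimate.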

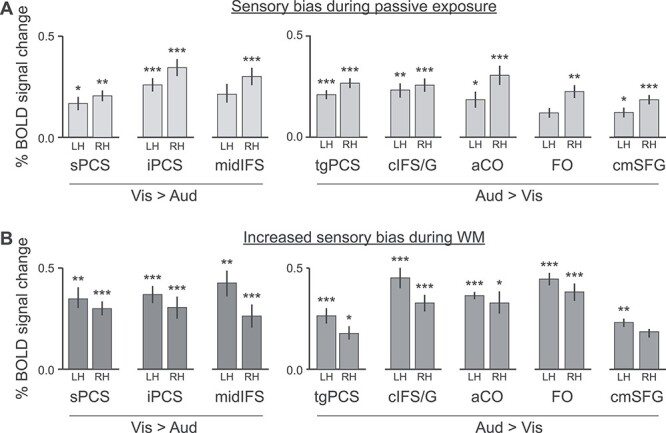

Figure 5.

Stimulus-driven (top) and WM-driven (bottom) sensory bias in frontal lobe activation. (A) Average sensory bias in visual-biased regions (left, visual sensorimotor control > auditory sensorimotor control) and auditory-biased regions (right, auditory sensorimotor control > visual sensorimotor control). Bilateral sPCS and iPCS and right midIFS show significant preference for visual stimuli in the absence of the 2-back task; bilateral tgPCS, cIFS/G, aCO, and cmSFG, and right FO show significant preference for auditory stimuli in the absence of the 2-back task. (B) Average WM increase in bias in visual-biased regions (left, visual 2-back − visual sensorimotor control > auditory 2-back − auditory sensorimotor control) and auditory-biased regions (right, auditory 2-back − auditory sensorimotor control > visual 2-back − visual sensorimotor control). Bilateral sPCS, iPCS, and midIFS are significantly more sensory-biased during the 2-back task than during sensorimotor control; bilateral tgPCS, cIFS/G, aCO, and FO, and left cmSFG are significantly more sensory-biased during the 2-back task than during sensorimotor control. ROIs for B were defined from a split-half analysis (see text for details). *P < 0.05, **P < 0.01, ***P < 0.001; Holm–Bonferroni corrected for 16 comparisons.

Network Clustering Analysis

To compute seed-to-seed or seed-to-whole-brain connectivity among the ROIs, we took the mean resting-state timecourse across all vertices in each region. Pairwise correlations between timecourses measured connectivity between seeds, or between a seed and each surface vertex.

For each ROI, its connectivity to each other seed gave a connectivity profile across the network; these connectivity profiles were averaged across subjects and then fed into a hierarchical clustering analysis (HCA) to characterize the network structure (Dosenbach et al. 2007; Michalka et al. 2015; Tobyne et al. 2017; Brissenden et al. 2018). We computed the pairwise Euclidean distance between the connectivity profiles of every pair of regions. We then applied Ward’s linkage algorithm to these distances, which forms each new cluster by merging the 2 clusters that lead to the minimum possible increase in the total sum of squares of the node to centroid distances. Reliability of the clusters was assessed via a bootstrap approach: On each of 10 000 iterations, 14 datasets were sampled (with replacement) and the HCA was performed. This yielded a bootstrapped distribution of network structures. For each subtree in the original structure, we counted how frequently it appeared in the bootstrapped distribution.
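The clustering and bootstrap procedure can be sketched with SciPy's `linkage` function, which applies Ward's criterion to Euclidean distances between the rows of its input. Function names are hypothetical, and two simplifications are assumptions: each region's profile here is its full row of the seed-to-seed correlation matrix (including the diagonal), and correlations are averaged without Fisher transformation.

```python
import numpy as np
from scipy.cluster.hierarchy import linkage

def subtrees(profiles):
    """Set of leaf-index sets, one for each merge in a Ward-linkage tree."""
    n = profiles.shape[0]
    merges = linkage(profiles, method="ward")   # Euclidean distance between rows
    members = {i: frozenset([i]) for i in range(n)}
    found = set()
    for k, (a, b, _dist, _size) in enumerate(merges):
        merged = members[int(a)] | members[int(b)]
        members[n + k] = merged                 # new cluster gets index n + k
        found.add(merged)
    return found

def bootstrap_subtree_support(subject_conn, n_boot=1000, seed=0):
    """subject_conn: (n_subjects, n_rois, n_rois) seed-to-seed correlations.

    Returns {subtree: fraction of bootstrap trees that contain it}.
    """
    rng = np.random.default_rng(seed)
    n_sub = subject_conn.shape[0]
    original = subtrees(subject_conn.mean(axis=0))
    counts = {s: 0 for s in original}
    for _ in range(n_boot):
        # Resample subjects with replacement and recluster the group average.
        sample = subject_conn[rng.integers(0, n_sub, size=n_sub)]
        boot = subtrees(sample.mean(axis=0))
        for s in original:
            counts[s] += s in boot
    return {s: c / n_boot for s, c in counts.items()}

# Toy data: 14 subjects, 6 regions forming two strongly connected triplets.
rng = np.random.default_rng(0)
base = np.full((6, 6), 0.1)
base[:3, :3] = 0.8
base[3:, 3:] = 0.8
np.fill_diagonal(base, 1.0)
toy_conn = base + rng.normal(0.0, 0.05, (14, 6, 6))
toy_support = bootstrap_subtree_support(toy_conn, n_boot=200)
```

A subtree counts as reproduced only if exactly the same set of regions is merged in the bootstrap tree, which makes the support value a conservative measure of cluster stability.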

Results

Candidate Sensory-Biased Frontal Structures Identified from Differential Functional Connectivity

We began by examining functional connectivity within frontal cortex in order to identify “candidate” regions that are likely members of either visual-biased or auditory-biased WM networks. Previously, we examined functional connectivity of frontal cortex to visual-biased parieto-occipital cortex and to auditory-biased temporal cortex (Tobyne et al. 2017). Many regions in frontal cortex exhibited preferential connectivity to visual or auditory posterior cortex. That analysis, performed on publicly available HCP data, lacked the task data necessary to validate the candidate network regions (Tobyne et al. 2017).

Here, we examined differential functional connectivity within frontal cortex by contrasting connectivity from visual-biased frontal regions (sPCS and iPCS combined as a single seed; Michalka et al. 2015; Noyce et al. 2017) against connectivity from previously identified auditory-biased frontal regions (tgPCS and cIFS/G combined as a single seed; Michalka et al. 2015; Noyce et al. 2017). We constructed connectivity maps for each subject hemisphere from each seed; maps were combined across subjects (using TFCE and nonparametric randomization tests to create significance masks; Smith and Nichols 2009) and the 2 sensory-biased connectivity maps were subtracted from each other to yield a differential connectivity map (Fig. 3A). The differential connectivity map shows several expected patterns. First, this contrast identifies preferential connectivity between visual-biased frontal regions and posterior parietal and occipital areas, and between auditory-biased frontal regions and posterior areas in superior temporal lobe, similar to the results of Michalka et al. (2015) and Tobyne et al. (2017). Second, this contrast recapitulates the seed regions that went into the connectivity analysis (visual-biased sPCS and iPCS; auditory-biased tgPCS and cIFS/G).

More interestingly, this contrast suggests extended sensory-biased structures within frontal, insular, and cingulate cortices. The visual-biased frontal seeds (sPCS and iPCS) are preferentially connected to bilateral regions in anterior insula (aIns) and pre-supplementary motor area (preSMA), as well as to left middle inferior frontal sulcus (midIFS). The auditory-biased frontal seeds (tgPCS and cIFS/G) are preferentially connected to bilateral regions in anterior central operculum (aCO), frontal operculum (FO), middle insula (midIns), caudomedial superior frontal gyrus (cmSFG), and dorsal middle cingulate cortex (dmCC), as well as to right rostral middle frontal gyrus (rMFG). Therefore, the differential functional connectivity analysis yields 3 novel (and 2 previously identified) frontal regions as candidates for the visual-biased network, along with 6 novel (and 2 previously identified) frontal candidates for the auditory-biased network.

Defining Sensory-Biased Frontal Structures

To examine whether these candidate sensory-biased regions occurred reliably, we first tested whether they exhibited consistent patterns of task activation in the expected direction. Labels from the group-average differential connectivity map (Fig. 3A and Supplementary Fig. S1) were projected into individual subjects’ anatomical space (including mirrored labels for candidate regions that occurred unilaterally) and used to test split-half (odd- and even-numbered runs) reliability. For each half of the task runs, we computed auditory 2-back versus visual 2-back contrasts and computed the vertex-wise correlation of task activation across the ROI, between halves (Fig. 3B). Bilaterally, candidate visual regions sPCS, iPCS, and midIFS exhibited significant correlations (P < 0.05, Holm–Bonferroni corrected for 26 comparisons), as did candidate auditory regions tgPCS, cIFS/G, aCO, FO, and cmSFG.
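The two computational ingredients of this reliability test, a vertex-wise correlation between halves and a Holm–Bonferroni correction across ROIs, can be illustrated as follows. This is a hedged sketch: the helper names `pearson_r` and `holm_bonferroni` are introduced here for illustration, and the example takes the uncorrected P values as given rather than assuming how they were derived in the study.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of vertex values."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def holm_bonferroni(p_values):
    """Return Holm-adjusted P values in the original order, capped at 1."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, i in enumerate(order):
        # The k-th smallest P value (k = rank + 1) is multiplied by m - k + 1;
        # the running maximum keeps the adjusted values monotone.
        running_max = max(running_max, (m - rank) * p_values[i])
        adjusted[i] = min(1.0, running_max)
    return adjusted


# Hypothetical vertex-wise contrast values for one ROI in each half of the runs.
odd_runs = [0.2, 1.1, 0.9, 1.8, 0.4]
even_runs = [0.1, 1.3, 0.7, 1.6, 0.6]
r = pearson_r(odd_runs, even_runs)
```

In the analysis above, the correction would span 26 P values (13 candidate regions in each of 2 hemispheres).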

In a separate analysis, we inspected each individual subject’s task activation map of auditory WM (auditory 2-back) contrasted with visual WM (visual 2-back) to assess the robustness of sensory-biased WM activation in each candidate structure. Figure 4A shows maps for 2 representative subjects; the complete set of subjects is shown in Supplementary Figure S2. Each region from Figure 3 was scored by 2 authors as “Strong” (1.0), “Weak” (0.5), or “Absent” (0.0) in each subject; Supplementary Table S1 reports the average scores for each rater and region.

All regions with significant split-half reliability of vertex-by-vertex activation also were scored 0.7 or higher by both raters. Regions with significant split-half reliability and high mean ratings were thus identified as reliable sensory-biased WM regions. By “sensory-biased,” we mean that these regions have significantly stronger activation during visual WM than during auditory WM, or vice versa. Candidate visual-biased region bilateral preSMA was low in split-half reliability (LH r = 0.55, corrected P = 0.112; RH r = 0.29, corrected P > 0.99) and moderate in visual scoring (LH Rater 1 score = 0.60, Rater 2 score = 0.53; RH Rater 1 score = 0.77, Rater 2 score = 0.63), and therefore, there was not sufficient evidence to support classifying this as a sensory-biased region. All other regions with low split-half reliability were visually scored 0.30 or lower (Table S1) and were rejected as sensory-biased regions.

This resulted in a set of 8 bilateral sensory-biased regions for subsequent analysis. For each subject and region, a label was drawn using that subject’s activation in the auditory 2-back versus visual 2-back contrast, thresholded at P < 0.05 (uncorrected). Previously, we reported 2 bilateral visual-biased structures lying in the sPCS and iPCS (Michalka et al. 2015; Noyce et al. 2017; Tobyne et al. 2017); in addition, a third visual-biased structure reliably occurs ventral and anterior to iPCS, lying in the midIFS. We also observe bilateral auditory-biased structures lying on the tgPCS and in the cIFS/G, as previously reported (Michalka et al. 2015; Noyce et al. 2017). A third auditory-biased structure lies on the aCO and a fourth occurs on the FO. On the medial surface, an auditory-biased structure occurs in the caudomedial portion of the superior frontal gyrus (cmSFG). Table 1 summarizes the modality preference, location, and size of these regions. Each ROI was evident in at least 14 of the 15 subjects.

Table 1.

Size and position of sensory-biased ROIs

| Hemi | Location | ROI | Sensory bias | N | MNI coordinates, mean | MNI coordinates, SD | Area (mm²), mean | Area (mm²), SD |
|---|---|---|---|---|---|---|---|---|
| LH | Lateral | sPCS | Visual | 14 | (−30, −5, 50) | 7, 5, 4 | 284 | 264 |
| LH | Lateral | tgPCS | Auditory | 15 | (−43, −3, 45) | 7, 5, 2 | 293 | 182 |
| LH | Lateral | iPCS | Visual | 15 | (−43, 3, 31) | 6, 5, 5 | 257 | 223 |
| LH | Lateral | cIFS/G | Auditory | 14 | (−45, 13, 20) | 5, 7, 5 | 358 | 206 |
| LH | Lateral | aCO | Auditory | 14 | (−52, 8, 10) | 3, 5, 6 | 303 | 185 |
| LH | Lateral | midIFS | Visual | 14 | (−39, 26, 17) | 6, 7, 6 | 170 | 148 |
| LH | Lateral | FO | Auditory | 15 | (−40, 30, −1) | 5, 5, 6 | 316 | 215 |
| LH | Medial | cmSFG | Auditory | 14 | (−7, 0, 62) | 2, 6, 4 | 172 | 143 |
| RH | Lateral | sPCS | Visual | 15 | (34, −3, 50) | 7, 4, 4 | 505 | 366 |
| RH | Lateral | tgPCS | Auditory | 15 | (47, −1, 43) | 5, 4, 5 | 277 | 198 |
| RH | Lateral | iPCS | Visual | 15 | (44, 6, 29) | 5, 5, 4 | 353 | 180 |
| RH | Lateral | cIFS/G | Auditory | 15 | (47, 16, 20) | 5, 6, 6 | 403 | 226 |
| RH | Lateral | aCO | Auditory | 14 | (52, 7, 6) | 3, 6, 4 | 223 | 198 |
| RH | Lateral | midIFS | Visual | 14 | (45, 30, 15) | 4, 8, 8 | 203 | 131 |
| RH | Lateral | FO | Auditory | 15 | (45, 28, −3) | 4, 5, 5 | 260 | 219 |
| RH | Medial | cmSFG | Auditory | 14 | (7, 3, 62) | 2, 6, 3 | 154 | 130 |

Note: Sensory bias is given as visual (V > A) or auditory (A > V). N is out of 15 total subjects. MNI coordinates are of mean centroid position. Areas are spatial extent on the cortical surface (FreeSurfer). MNI, Montreal Neurological Institute.

Each subject’s labels for the 8 bilateral structures were projected into fsaverage space to create a probabilistic ROI map that visualizes anatomical consistency across subjects. Figure 4B,C shows the degree of overlap of these labels for visual-biased and auditory-biased regions. For each structure, there is variability in its exact positioning across participants; moreover, this variability tends to be greater for more-anterior ROIs (particularly midIFS). Outlines in Figure 4B,C show the range of vertices that occur in at least 5 subjects (33%). Note that despite substantial positional variability in right midIFS (Fig. 4B), that region was robustly identified in individual subjects (Table 1; Supplementary Table S1 and Supplementary Fig. S2).
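The probabilistic overlap map amounts to counting, at each surface vertex, how many subjects' labels include that vertex. The sketch below assumes each label has already been projected to a common surface and is represented as a list of vertex indices; the function names and the toy data are hypothetical.

```python
def overlap_counts(subject_labels, n_vertices):
    """Count, for each vertex, the number of subjects whose label contains it."""
    counts = [0] * n_vertices
    for label in subject_labels:
        for vertex in set(label):  # set() guards against repeated indices
            counts[vertex] += 1
    return counts


def outline_vertices(counts, min_subjects=5):
    """Vertices included in at least `min_subjects` subjects' labels."""
    return [v for v, c in enumerate(counts) if c >= min_subjects]


# Toy example: 5 subjects' labels for one ROI on a 5-vertex "surface".
labels = [[0, 1, 2], [1, 2], [2, 3], [2], [1, 2]]
counts = overlap_counts(labels, n_vertices=5)
outline = outline_vertices(counts, min_subjects=5)
```

The default `min_subjects=5` mirrors the 5-subject (33% of 15) criterion used for the outlines in Figure 4B,C.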

Quantifying Sensory and WM Drive in Each Region

We examined whether the sensory-biased activation reflects simple sensory drive, WM-specific processes, or both. First, using the subject-specific region labels described in Figure 4 and Table 1, we computed the average % BOLD signal change in auditory versus visual sensorimotor control (Fig. 5A). In these conditions, stimuli were presented to subjects and they were asked to press a button on each trial, but there were no other task requirements. This contrast thus captures the degree of sensory preference that each region exhibits in the absence of the 2-back WM task. We observe that the majority of regions (bilateral sPCS, iPCS, tgPCS, cIFS/G, aCO, and cmSFG, and right midIFS and FO) show a significant sensory preference. (All regions exhibit sensory drive in the expected direction, and all are significant before correction for multiple comparisons.)

In order to examine changes in activation when WM task demands are added, we performed a split-half analysis, so that the ROI labels were estimated independently of the activation measurement. Even-numbered runs were used to define labels and odd-numbered runs were used to estimate BOLD signal change, or vice versa (see Methods). During 2-back WM, the degree of sensory specialization increases in all but 1 region in 1 hemisphere (Fig. 5B). Bilateral sPCS, iPCS, midIFS, tgPCS, cIFS/G, aCO, and FO, and left cmSFG are all driven significantly more strongly by the 2-back task than by sensorimotor control. (Again, all comparisons are significant before correction for multiple comparisons.)
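The logic of this split-half procedure is that the vertices defining an ROI are chosen from one half of the data and the signal is measured in the other half, so that selection cannot inflate the measurement. The sketch below illustrates that logic under several assumptions that are not taken from the Methods: ROIs are defined by thresholding a vertex-wise statistic, the two fold estimates are averaged, and all names and values are hypothetical.

```python
def cross_validated_signal(stat_even, stat_odd, signal_even, signal_odd, threshold):
    """Define the ROI on one half of the runs and measure on the other half.

    stat_*   : vertex-wise contrast statistic from even or odd runs
    signal_* : vertex-wise % signal change from even or odd runs
    Returns the mean of the two fold estimates, or None if no ROI is found.
    """

    def measure(define_map, measure_map):
        roi = [v for v, value in enumerate(define_map) if value > threshold]
        if not roi:
            return None
        return sum(measure_map[v] for v in roi) / len(roi)

    folds = [measure(stat_even, signal_odd), measure(stat_odd, signal_even)]
    folds = [f for f in folds if f is not None]
    return sum(folds) / len(folds) if folds else None


# Toy data for a 4-vertex patch of cortex.
estimate = cross_validated_signal(
    stat_even=[3.0, 0.5, 2.5, 0.1],
    stat_odd=[2.8, 2.2, 0.3, 0.2],
    signal_even=[1.0, 0.2, 0.8, 0.0],
    signal_odd=[0.9, 0.7, 0.1, 0.0],
    threshold=2.0,
)
```

In the toy data, the even-run ROI (vertices 0 and 2) is measured on odd-run signal and the odd-run ROI (vertices 0 and 1) on even-run signal, so no vertex's own selection data contribute to its measured value.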

Network Structure of Visual-Biased and Auditory-Biased Regions

Above, we employed differential functional connectivity to identify candidate sensory-biased regions and then employed task data to test whether each candidate region was truly sensory-biased. Now, to test whether these confirmed sensory-biased regions, whose labels were drawn according to task activation boundaries, do indeed form discrete sensory-specific networks, we performed 2 resting-state functional connectivity analyses (n = 14, as 1 subject lacked resting-state data). First, we mapped each subject’s individual sensory-biased frontal structures as well as broad posterior visual and auditory attention regions in order to define subject-specific ROI seeds. We then measured connectivity from each frontal structure (seeds) to the 2 posterior regions (targets). We ran a repeated-measures linear model with fixed effects of target region (pVis, pAud) and seed region sensory bias (visual, auditory), and random intercepts for subject and seed region. Connectivity to posterior regions varied strongly as an interaction between target region and seed sensory bias (F(1,13) = 248.8229, P < 0.0001), such that visual-biased regions were connected more strongly to pVis (r = 0.583) than to pAud (r = 0.440) and auditory-biased regions were connected more strongly to pAud (r = 0.604) than to pVis (r = 0.312). Overall connectivity was slightly stronger to pAud (r = 0.503) than to pVis (r = 0.415; F(1,13) = 30.736, P = 0.0002), and there was no main effect of seed region sensory bias (F(1,13) = 0.0006).

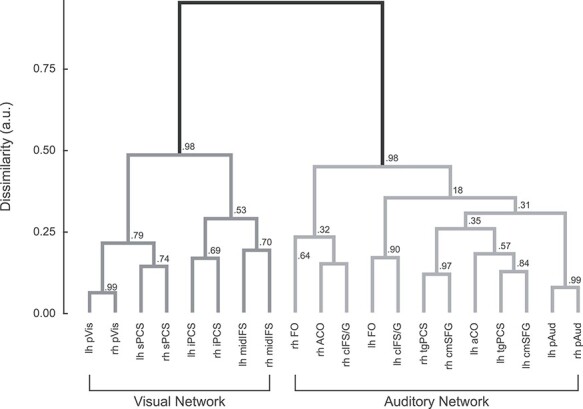

Second, HCA was performed to more closely examine the network structure of these ROIs. A distance matrix was constructed using each seed’s connectivity to each other region in the set (16 frontal and 4 posterior, with separate seeds in each hemisphere). HCA applied to these connectivity profiles confirmed that these regions assort into 2 discrete sensory-biased networks (Fig. 6). All of the visual-biased regions (as identified by task activation) formed one network, while all of the auditory-biased regions formed the other network. A bootstrap test of the reliability of this behavior confirmed that on more than 97% of bootstrap samples, the auditory and visual subtrees were perfectly segregated from one another.

Figure 6.

HCA of seed-to-seed resting-state functional connectivity confirms that frontal visual and auditory WM structures assort into 2 discrete networks along with their respective posterior sensory attention structures. Values at each branch point show the reliability of each exact subtree, calculated as the proportion of bootstrapped datasets in which that subtree occurred.

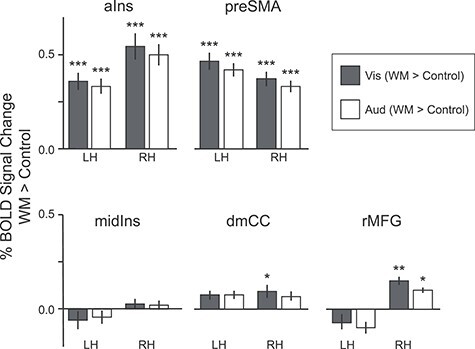

Finally, we examined the WM-related behavior of regions that appeared in the differential functional connectivity analysis (Fig. 3A), but that did not meet our criterion for reliable, sensory-biased WM recruitment (Figs 3B and 4). These “rejected candidate” regions included visual-connected candidate regions in bilateral aIns and preSMA and auditory-connected candidate regions in rMFG and bilateral midIns and dmCC. Each region was rejected because we failed to reliably identify sensory-biased task activation on the maps of individual subject hemispheres. In order to further characterize WM task activation in these regions, we drew ROI labels based on the group-average connectivity maps and projected them back into each individual subject’s anatomical space. We then computed the % BOLD signal change between 2-back WM and passive exposure to the same stimuli for each region. Figure 7 shows the results.

Figure 7.

Task activation in rejected candidate regions; that is, extended network regions that do not show a sensory bias in WM recruitment. Rejected candidate ROIs are divided into members of the visual network (aIns and preSMA, top panel) and auditory network (midIns, dmCC, and rMFG, bottom panel). Bar plot shows mean BOLD % signal change in visual (blue) and auditory (orange) 2-back WM blocks, contrasted against sensorimotor control blocks. Note that aIns and preSMA, connected to the visual network, are robustly recruited in both WM tasks, while midIns, dmCC, and rMFG are at best minimally recruited in either. Error bars are repeated-measures standard error of the mean. *P < 0.05, **P < 0.01, ***P < 0.001, Holm–Bonferroni corrected for 20 comparisons.

Bilaterally, aIns and preSMA, regions that are preferentially connected to the visual network, appear to be truly multisensory during WM, with robust (corrected P < 0.001) recruitment in both tasks. This is in contrast to the regions that are preferentially connected to the auditory network (rMFG, midIns, and dmCC), which exhibit at most very modest activation, and often none, in either WM modality. We ran a repeated-measures linear model with fixed effects of task (auditory WM; visual WM) and preferential connectivity (pAud > pVis; pVis > pAud), and random intercepts for subject, hemisphere, and region. This model confirmed that task activation differed with preferential connectivity (F(1,13) = 81.3227, P < 0.0001) but with neither the WM task (F(1,13) = 3.1139) nor the task-by-connectivity interaction (F(1,13) = 0.6747).

Discussion

Our results indicate that sensory modality is a driving factor in the functional organization of a substantial portion of frontal cortex. In both lateral and medial frontal cortices, we observed swaths of bilateral cortex, running caudodorsal to rostroventral, containing multiple regions that exhibit a preference for one sensory modality during N-back WM tasks. Our analysis began with 13 bilateral candidate sensory-biased frontal lobe regions identified by intrinsic connectivity. Split-half reliability and scoring in individual subjects found that 8 of these were sufficiently robust to draw individualized labels for further investigation. We tested their consistency of anatomical location, their degree of sensory and WM-specific drive, and their participation in sensory-specific resting state networks. In each individual subject, the frontal lobes in both hemispheres exhibited multiple regions that were activated more strongly for visual WM than for auditory WM and multiple regions that exhibited the opposite preference. Across our subjects, 8 bilateral sensory-biased regions in frontal cortex exhibited a high degree of anatomical consistency, both in terms of stereotactic coordinates and in terms of relational position to other sensory-biased regions within the individual hemisphere (Fig. 4B,C, Table 1). Although visual-biased and auditory-biased regions interleave across frontal cortex, HCA of resting-state data demonstrates that they form 2 distinct functional connectivity networks, one containing all of the visual-biased regions and the other containing all of the auditory-biased regions (Fig. 6). In prior work, we had identified 4 bilateral sensory-biased frontal lobe regions, visual-biased sPCS and iPCS and auditory-biased tgPCS and cIFS/G (Michalka et al. 2015; Noyce et al. 2017). Here, we extend those findings to report 4 additional sensory-biased frontal regions, bilaterally.
One new visual-biased frontal region was identified: midIFS, a region midway along the inferior frontal sulcus. Visual-biased preSMA, a region on the medial surface, exhibited some properties of the other visual-biased regions, but fell short of our criteria for a reliable sensory-biased region. Three new auditory-biased frontal regions were identified: aCO, on the anterior portion of the central operculum; FO, a region on the frontal operculum; and cmSFG, a medial surface region lying in the caudal portion of the superior frontal gyrus. On the lateral surface, visual-biased sPCS is the most caudal and dorsal region. Running rostroventral from sPCS are tgPCS (aud), iPCS (vis), cIFS/G (aud), midIFS (vis), and FO (aud). aCO lies caudoventral to cIFS/G. On the medial surface, we reliably observed auditory-biased cmSFG. These results confirm the existence of these regions, and their sensory bias, as posited in Tobyne et al. (2017).

Although sensory modality is widely accepted to be a major organizing principle for occipital, temporal, and parietal lobes, only a handful of human or nonhuman primate studies have examined sensory modality as a factor in the organization of the frontal lobes (Barbas and Mesulam 1981; Petrides and Pandya 1999; Romanski and Goldman-Rakic 2002; Romanski 2007; Braga et al. 2013, 2017; Michalka et al. 2015; Mayer et al. 2017; Noyce et al. 2017; Tobyne et al. 2017). In human neuroimaging studies, activation within the frontal lobes is typically much weaker than activation within other cortical lobes. As a result, it is very common for frontal lobe studies to report only group-averaged activity in order to increase statistical power. However, frontal cortex displays a high degree of inter-subject variability (Mueller et al. 2013; Tobyne et al. 2018), which may mask fine-grained sensory-specific organization. Numerous studies have concluded that frontal lobe organization is independent of sensory modality (e.g., Duncan and Owen 2000; Ivanoff et al. 2009; Krumbholz et al. 2009; Duncan 2010). Other studies have identified patterns of cortical organization based on biases toward visual versus auditory processing (Crottaz-Herbette et al. 2004; Fedorenko et al. 2013; Mayer et al. 2017) or connectivity (e.g., Blank et al. 2014; Glasser et al. 2016; Braga et al. 2017); however, none of these approaches have identified the pattern of interdigitated visual- and auditory-biased structures that is so striking in individual subjects here. More recent work has localized multiple-demand regions within lateral frontal cortex with a higher degree of precision and less intersubject smearing (Assem et al. 2020).

Our present analysis was initially guided by contrasting the intrinsic functional connectivity of the 2 visual-biased regions that we had previously identified, sPCS and iPCS, with that of the 2 auditory-biased regions that we had previously identified, tgPCS and cIFS/G. This analysis revealed not only the expected sensory-biased regions in temporal, occipital, and parietal cortices and the 4 frontal seed regions, but also 6 regions on the lateral surface and insula and 3 structures on the medial surface. Examination of task activation led us to reject 5 of these 9 candidate regions, which did not show consistent patterns of activity across subjects, while supporting the 4 others. Of these 4 regions, the 3 lateral structures, midIFS (vis), FO (aud), and aCO (aud), were each robustly observed in 80% or more of individual hemispheres. Our observed visual-biased and auditory-biased regions, particularly in caudolateral frontal cortex, tend to lie posterior and adjacent to the multiple-demand regions found by Assem et al. (2020).

We tested the degree of pure sensory drive and of sensory-specific WM activation in each region of interest. The vast majority of regions exhibited both a significant preference for auditory or visual activity during sensorimotor control blocks and a significant increase in activity during 2-back WM. These regions thus appear to be recruited both for perceptual processing, and also to support demanding cognitive tasks such as WM in their preferred modality. These 8 frontal lobe regions, similar to sensory WM structures in posterior cortical areas, exhibit both sensitivity for the modality of physically present stimuli and enhanced selectivity during sensory WM processes. Our findings suggest that these frontal lobe regions, rather than being amodal and disjoint from posterior cortical WM regions in parieto-occipital and temporal lobes, are integral components of 2 sensory-biased WM networks that stretch across the brain.

One interesting result in the HCA is that within the visual network, the lowest level clusters first tend to group the same regions in the 2 hemispheres, such as left and right sPCS, or left and right midIFS; in contrast, within the auditory network, the left cIFS/G and FO group together, as do the right cIFS/G, aCO, and FO; similarly, the left aCO, tgPCS, and SMA group together, as do the right tgPCS and SMA. This may be evidence for greater hemispheric specialization within the auditory network, perhaps related to language and/or speech processing, than in the visual network.

The contrast of resting-state functional connectivity from visual-biased and auditory-biased frontal seed regions generated some candidate regions (aIns, preSMA, midIns, rMFG, dmCC) that the task analysis rejected. This result indicates that the seed regions (sPCS, iPCS, tgPCS, cIFS/G) not only belong to visual-biased and auditory-biased attention and WM networks, respectively, but also to other functional networks. Note that univariate analyses may spuriously reject some regions that do discriminate among conditions (as demonstrated by e.g., Harrison and Tong 2009); however, the stimuli and task used here are not sufficiently well controlled to be good candidates for a multivariate classifier-based approach. Future work should more carefully assess this question.

Previously, we noted that the visual-biased frontal regions (sPCS, iPCS) exhibited a significantly greater responsiveness to the nonpreferred modality than did the auditory-biased frontal regions (tgPCS, cIFS/G) (Noyce et al. 2017); that is, the visual-biased regions tended to exhibit a degree of “multiple demand” functionality. In that work, we also characterized aIns and preSMA as full “multiple demand” regions that were strongly recruited for WM tasks in both sensory modalities (see also Duncan and Owen 2000; Duncan 2010; Fedorenko et al. 2013; Assem et al. 2020). Here, we confirmed that aIns and preSMA respond robustly to WM tasks in both sensory modalities in individual subjects and thus reaffirm the claim of multiple-demand functionality. However, aIns and preSMA exhibited stronger resting-state connectivity with the visual-biased than with the auditory-biased frontal regions.

In contrast, the rejected auditory candidate regions, midIns, dmCC, and rMFG, exhibited little response to the WM demands in either sensory modality. Noyce et al. (2017) observed that the visual-biased frontal structures exhibited some degree of multisensory WM recruitment, while the auditory-biased frontal structures were strictly selective for auditory tasks. Our observation that regions with preferential connectivity to those structures show related asymmetries between the visual and auditory networks is consistent with that result. It may be that visual-biased frontal structures are more multisensory exactly because they are more strongly connected to modality-general WM regions.

The present data do not provide a basis for drawing any further conclusions about the functionality of the auditory-connected regions; however, it is worth noting that midIns and dmCC appear to anatomically correspond to regions identified as part of the speech production network (Bohland and Guenther 2006; Guenther 2016). Additionally, auditory-biased attention regions aCO, FO, and cmSFG, which were revealed here with a nonspeech task, also appear to anatomically correspond to regions recruited when subjects are asked to listen to and repeat back spoken syllables (Turkeltaub et al. 2002; Brown et al. 2005; Bohland and Guenther 2006; Guenther 2016; Markiewicz and Bohland 2016; Scott and Perrachione 2019). The possible overlap of speech production regions and auditory-biased attention regions should be examined in individual subjects; however, given the auditory WM demands of the speech production tasks, such an overlap would not be surprising.

Taken together, these observations suggest that the visual-biased attentional network hierarchically merges into or at least overlays with a multiple-demand network, with the degree of visual bias varying across the network, finally disappearing in aIns and preSMA. Similarly, the auditory-biased attentional network may overlay with substantial portions of the speech production network; however, further direct studies are required to confirm this. Further studies are also needed to better understand the specific functional roles contributed by each specific region in these extensive sensory-biased attention networks.

We used a group-average analysis of differential functional connectivity to guide the definition of ROIs from fMRI task activation in individual subjects. We find that an extended frontal network of 8 bilateral sensory-biased regions is robust and replicable across individual subjects and that both task activation and resting-state functional connectivity affirm the sensory-biased identities of these regions. We further find that these regions exhibit both sensory drive in their preferred modality and significant increases in activation during WM. These results highlight the importance of understanding cortical organization on the individual subject level, because this fine-scale structure can vary slightly across individuals, smearing or blurring group-level effects. Finally, our results provide support for an emerging hypothesis that the auditory and visual cortical processing networks support fundamentally different kinds of computations for human cognition, with the auditory network providing very specialized processing and the visual network participating more generally across a range of tasks.

Supplementary Material

Contributor Information

Abigail L Noyce, Neuroscience Institute, Carnegie Mellon University, Pittsburgh, PA 15213, USA; Department of Psychological and Brain Sciences, Boston University, Boston, MA 02215, USA.

Ray W Lefco, Graduate Program in Neuroscience, Boston University, Boston, MA 02215, USA.

James A Brissenden, Department of Psychological and Brain Sciences, Boston University, Boston, MA 02215, USA; Department of Psychology, University of Michigan, Ann Arbor, MI 48109, USA.

Sean M Tobyne, Graduate Program in Neuroscience, Boston University, Boston, MA 02215, USA.

Barbara G Shinn-Cunningham, Neuroscience Institute, Carnegie Mellon University, Pittsburgh, PA 15213, USA.

David C Somers, Department of Psychological and Brain Sciences, Boston University, Boston, MA 02215, USA.

Funding

National Institutes of Health (grants R01-EY022229, R21-EY027703 to D.C.S., F31-NS103306 to S.M.T., F32-EY026796 to A.L.N.); National Science Foundation (grants DGE-1247312 to J.A.B., BCS-1829394 to D.C.S.); Center of Excellence for Learning in Education Science and Technology, National Science Foundation Science of Learning Center (Grant SMA-0835976 to B.G.S.-C). The views expressed in this article do not necessarily represent the views of the NIH, NSF, or the US Government.

Notes

We thank Ilona Bloem, Allen Chang, Kathryn Devaney, Emily Levin, David Osher, and Maya Rosen for assistance with data collection, and David Beeler, Ryan Marshall, Ningcong Tong, and Vaibhav Tripathi for discussions and feedback. Conflict of Interest: The authors declare no competing financial interests.

References

- Alais D, Burr D. 2004. The ventriloquist effect results from near-optimal bimodal integration. Curr Biol. 14(3):257–262. 10.1016/j.cub.2004.01.029. [DOI] [PubMed] [Google Scholar]

- Arnott SR, Grady CL, Hevenor SJ, Graham S, Alain C. 2005. The functional organization of auditory working memory as revealed by fMRI. J Cogn Neurosci. 17(5):819–831. 10.1162/0898929053747612. [DOI] [PubMed] [Google Scholar]

- Assem M, Glasser MF, Van Essen DC, Duncan J. 2020. A domain-general cognitive core defined in multimodally parcellated human cortex. Cereb Cortex. 30(8):4361–4380. 10.1093/cercor/bhaa023. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Baddeley A. 2010. Working memory. Curr Biol. 20(4):R136–R140. [DOI] [PubMed] [Google Scholar]

- Baddely AD, Hitch G. 1974. Working memory. In: Bower GH, editor. The psychology of learning and motivation. Vol 8. New York: Academic Press, pp. 47–89. [Google Scholar]

- Barbas H, Mesulam M-M. 1981. Organization of afferent input to subdivisions of area 8 in the rhesus monkey. J Comp Neurol. 200(3):407–431. 10.1002/cne.902000309. [DOI] [PubMed] [Google Scholar]

- Blank I, Kanwisher N, Fedorenko E. 2014. A functional dissociation between language and multiple-demand systems revealed in patterns of BOLD signal fluctuations. J Neurophysiol. 112(5):1105–1118. 10.1152/jn.00884.2013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bohland JW, Guenther FH. 2006. An fMRI investigation of syllable sequence production. Neuroimage. 32(2):821–841. 10.1016/j.neuroimage.2006.04.173. [DOI] [PubMed] [Google Scholar]

- Braga RM, Buckner RL. 2017. Parallel interdigitated distributed networks within the individual estimated by intrinsic functional connectivity. Neuron. 95(2):457–471.e5. 10.1016/j.neuron.2017.06.038. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Braga RM, Hellyer PJ, Wise RJS, Leech R. 2017. Auditory and visual connectivity gradients in frontoparietal cortex: frontoparietal audiovisual gradients. Hum Brain Mapp. 38(1):255–270. 10.1002/hbm.23358 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Braga RM, Wilson LR, Sharp DJ, Wise RJS, Leech R. 2013. Separable networks for top-down attention to auditory non-spatial and visuospatial modalities. Neuroimage. 74:77–86. 10.1016/j.neuroimage.2013.02.023. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Brainard D. 1997. The psychophysics toolbox. Spat Vis. 10(4):433–436. 10.1163/156856897X00357. [DOI] [PubMed] [Google Scholar]

- Brissenden JA, Tobyne SM, Osher DE, Levin EJ, Halko MA, Somers DC. 2018. Topographic cortico-cerebellar networks revealed by visual attention and working memory. Curr Biol. 28(21):3364–3372.e5. 10.1016/j.cub.2018.08.059.

- Brown S, Ingham RJ, Ingham JC, Laird AR, Fox PT. 2005. Stuttered and fluent speech production: an ALE meta-analysis of functional neuroimaging studies. Hum Brain Mapp. 25(1):105–117. 10.1002/hbm.20140.

- Burgess GC, Kandala S, Nolan D, Laumann TO, Power JD, Adeyemo B, Harms MP, Petersen SE, Barch DM. 2016. Evaluation of denoising strategies to address motion-correlated artifacts in resting-state functional magnetic resonance imaging data from the Human Connectome Project. Brain Connect. 6(9):669–680. 10.1089/brain.2016.0435.

- Carp J. 2013. Optimizing the order of operations for movement scrubbing: comment on Power et al. Neuroimage. 76:436–438. 10.1016/j.neuroimage.2011.12.061.

- Christophel TB, Hebart MN, Haynes J-D. 2012. Decoding the contents of visual short-term memory from human visual and parietal cortex. J Neurosci. 32(38):12983–12989. 10.1523/JNEUROSCI.0184-12.2012.

- Cornelissen FW, Peters EM, Palmer J. 2002. The Eyelink toolbox: eye tracking with MATLAB and the psychophysics toolbox. Behav Res Methods Instrum Comput. 34(4):613–617. 10.3758/BF03195489.

- Cousineau D. 2005. Confidence intervals in within-subject designs: a simpler solution to Loftus and Masson’s method. Tutor Quant Methods Psychol. 1(1):42–45. 10.20982/tqmp.01.1.p042.

- Crottaz-Herbette S, Anagnoson RT, Menon V. 2004. Modality effects in verbal working memory: differential prefrontal and parietal responses to auditory and visual stimuli. Neuroimage. 21(1):340–351. 10.1016/j.neuroimage.2003.09.019.

- Dale AM, Fischl B, Sereno MI. 1999. Cortical surface-based analysis I: segmentation and surface reconstruction. Neuroimage. 9:179–194.

- Dosenbach NUF, Fair DA, Miezin FM, Cohen AL, Wenger KK, Dosenbach RAT, Fox MD, Snyder AZ, Vincent JL, Raichle ME, et al. 2007. Distinct brain networks for adaptive and stable task control in humans. Proc Natl Acad Sci. 104(26):11073–11078. 10.1073/pnas.0704320104.

- Duncan J. 2010. The multiple-demand (MD) system of the primate brain: mental programs for intelligent behaviour. Trends Cogn Sci. 14(4):172–179. 10.1016/j.tics.2010.01.004.

- Duncan J, Owen AM. 2000. Common regions of the human frontal lobe recruited by diverse cognitive demands. Trends Neurosci. 23(10):475–483. 10.1016/S0166-2236(00)01633-7.

- Eklund A, Nichols TE, Knutsson H. 2016. Cluster failure: why fMRI inferences for spatial extent have inflated false-positive rates. Proc Natl Acad Sci. 113(28):7900–7905. 10.1073/pnas.1602413113.

- Fedorenko E, Duncan J, Kanwisher N. 2013. Broad domain generality in focal regions of frontal and parietal cortex. Proc Natl Acad Sci. 110(41):16616–16621. 10.1073/pnas.1315235110.

- Fischl B. 2004. Automatically parcellating the human cerebral cortex. Cereb Cortex. 14(1):11–22. 10.1093/cercor/bhg087.

- Fischl B, Liu A, Dale AM. 2001. Automated manifold surgery: constructing geometrically accurate and topologically correct models of the human cerebral cortex. IEEE Trans Med Imaging. 20(1):70–80. 10.1109/42.906426.

- Fischl B, Sereno MI, Dale AM. 1999a. Cortical surface-based analysis II: inflation, flattening, and a surface-based coordinate system. Neuroimage. 9:195–207.

- Fischl B, Sereno MI, Tootell RBH, Dale AM. 1999b. High-resolution intersubject averaging and a coordinate system for the cortical surface. Hum Brain Mapp. 8(4):272–284.

- Glasser MF, Coalson TS, Robinson EC, Hacker CD, Harwell J, Yacoub E, Ugurbil K, Andersson J, Beckmann CF, Jenkinson M, et al. 2016. A multi-modal parcellation of human cerebral cortex. Nature. 536(7615):171–178. 10.1038/nature18933.

- Guenther FH. 2016. Neural control of speech. Cambridge (MA): The MIT Press.

- Hagler DJ, Sereno MI. 2006. Spatial maps in frontal and prefrontal cortex. Neuroimage. 29(2):567–577. 10.1016/j.neuroimage.2005.08.058.

- Harrison SA, Tong F. 2009. Decoding reveals the contents of visual working memory in early visual areas. Nature. 458(7238):632–635. 10.1038/nature07832.

- Huang S, Seidman LJ, Rossi S, Ahveninen J. 2013. Distinct cortical networks activated by auditory attention and working memory load. Neuroimage. 83:1098–1108. 10.1016/j.neuroimage.2013.07.074.

- Ivanoff J, Branning P, Marois R. 2009. Mapping the pathways of information processing from sensation to action in four distinct sensorimotor tasks. Hum Brain Mapp. 30(12):4167–4186. 10.1002/hbm.20837.

- Jerde TA, Merriam EP, Riggall AC, Hedges JH, Curtis CE. 2012. Prioritized maps of space in human frontoparietal cortex. J Neurosci. 32(48):17382–17390. 10.1523/JNEUROSCI.3810-12.2012.

- Kastner S, DeSimone K, Konen CS, Szczepanski SM, Weiner KS, Schneider KA. 2007. Topographic maps in human frontal cortex revealed in memory-guided saccade and spatial working-memory tasks. J Neurophysiol. 97(5):3494–3507. 10.1152/jn.00010.2007.

- Kleiner M, Brainard D, Pelli D. 2007. What’s new in PsychToolbox 3? Italy: Arezzo.

- Krumbholz K, Nobis EA, Weatheritt RJ, Fink GR. 2009. Executive control of spatial attention shifts in the auditory compared to the visual modality. Hum Brain Mapp. 30(5):1457–1469. 10.1002/hbm.20615.

- Kumar S, Joseph S, Gander PE, Barascud N, Halpern AR, Griffiths TD. 2016. A brain system for auditory working memory. J Neurosci. 36(16):4492–4505. 10.1523/JNEUROSCI.4341-14.2016.

- Lefco RW, Brissenden JA, Noyce AL, Tobyne SM, Somers DC. 2020. Gradients of functional organization in posterior parietal cortex revealed by visual attention, visual short-term memory, and intrinsic functional connectivity. Neuroimage. 219:117029. 10.1016/j.neuroimage.2020.117029.

- Lewis-Peacock JA, Drysdale AT, Postle BR. 2015. Neural evidence for the flexible control of mental representations. Cereb Cortex. 25(10):3303–3313. 10.1093/cercor/bhu130.

- Loftus GR, Masson MEJ. 1994. Using confidence intervals in within-subject designs. Psychon Bull Rev. 1(4):476–490. 10.3758/BF03210951.

- Markiewicz CJ, Bohland JW. 2016. Mapping the cortical representation of speech sounds in a syllable repetition task. Neuroimage. 141:174–190. 10.1016/j.neuroimage.2016.07.023.

- Mayer AR, Ryman SG, Hanlon FM, Dodd AB, Ling JM. 2017. Look hear! The prefrontal cortex is stratified by modality of sensory input during multisensory cognitive control. Cereb Cortex. 27(5):2831–2840. 10.1093/cercor/bhw131.

- Michalka SW, Kong L, Rosen ML, Shinn-Cunningham BG, Somers DC. 2015. Short-term memory for space and time flexibly recruit complementary sensory-biased frontal lobe attention networks. Neuron. 87(4):882–892. 10.1016/j.neuron.2015.07.028.

- Morey RD. 2008. Confidence intervals from normalized data: a correction to Cousineau (2005). Tutor Quant Methods Psychol. 4(2):61–64. 10.20982/tqmp.04.2.p061.

- Mueller S, Wang D, Fox MD, Yeo BTT, Sepulcre J, Sabuncu MR, Shafee R, Lu J, Liu H. 2013. Individual variability in functional connectivity architecture of the human brain. Neuron. 77(3):586–595. 10.1016/j.neuron.2012.12.028.

- Noyce AL, Cestero N, Michalka SW, Shinn-Cunningham BG, Somers DC. 2017. Sensory-biased and multiple-demand processing in human lateral frontal cortex. J Neurosci. 37(36):8755–8766. 10.1523/JNEUROSCI.0660-17.2017.

- Noyce AL, Cestero N, Shinn-Cunningham BG, Somers DC. 2016. Short-term memory stores organized by information domain. Atten Percept Psychophys. 78(3):960–970. 10.3758/s13414-015-1056-5.

- Owen AM, McMillan KM, Laird AR, Bullmore E. 2005. N-back working memory paradigm: a meta-analysis of normative functional neuroimaging studies. Hum Brain Mapp. 25(1):46–59. 10.1002/hbm.20131.

- Petrides M, Pandya DN. 1999. Dorsolateral prefrontal cortex: comparative cytoarchitectonic analysis in the human and the macaque brain and corticocortical connection patterns: dorsolateral prefrontal cortex in human and monkey. Eur J Neurosci. 11(3):1011–1036. 10.1046/j.1460-9568.1999.00518.x.

- Postle BR, Stern CE, Rosen BR, Corkin S. 2000. An fMRI investigation of cortical contributions to spatial and nonspatial visual working memory. Neuroimage. 11(5):409–423. 10.1006/nimg.2000.0570.

- Power JD, Barnes KA, Snyder AZ, Schlaggar BL, Petersen SE. 2012. Spurious but systematic correlations in functional connectivity MRI networks arise from subject motion. Neuroimage. 59(3):2142–2154. 10.1016/j.neuroimage.2011.10.018.

- Romanski LM. 2007. Representation and integration of auditory and visual stimuli in the primate ventral lateral prefrontal cortex. Cereb Cortex. 17(suppl 1):i61–i69. 10.1093/cercor/bhm099.

- Romanski LM, Goldman-Rakic PS. 2002. An auditory domain in primate prefrontal cortex. Nat Neurosci. 5(1):15–16. 10.1038/nn781.

- Scott TL, Perrachione TK. 2019. Common cortical architectures for phonological working memory identified in individual brains. Neuroimage. 202:116096. 10.1016/j.neuroimage.2019.116096.

- Setsompop K, Gagoski BA, Polimeni JR, Witzel T, Wedeen VJ, Wald LL. 2012. Blipped-controlled aliasing in parallel imaging for simultaneous multislice echo planar imaging with reduced g-factor penalty. Magn Reson Med. 67(5):1210–1224. 10.1002/mrm.23097.

- Smith S, Nichols T. 2009. Threshold-free cluster enhancement: addressing problems of smoothing, threshold dependence and localisation in cluster inference. Neuroimage. 44(1):83–98. 10.1016/j.neuroimage.2008.03.061.

- Tamber-Rosenau BJ, Dux PE, Tombu MN, Asplund CL, Marois R. 2013. Amodal processing in human prefrontal cortex. J Neurosci. 33(28):11573–11587. 10.1523/JNEUROSCI.4601-12.2013.

- Tobyne SM, Osher DE, Michalka SW, Somers DC. 2017. Sensory-biased attention networks in human lateral frontal cortex revealed by intrinsic functional connectivity. Neuroimage. 162:362–372. 10.1016/j.neuroimage.2017.08.020.

- Tobyne SM, Somers DC, Brissenden JA, Michalka SW, Noyce AL, Osher DE. 2018. Prediction of individualized task activation in sensory modality-selective frontal cortex with ‘connectome fingerprinting’. Neuroimage. 183:173–185. 10.1016/j.neuroimage.2018.08.007.

- Turkeltaub PE, Eden GF, Jones KM, Zeffiro TA. 2002. Meta-analysis of the functional neuroanatomy of single-word reading: method and validation. Neuroimage. 16(3):765–780. 10.1006/nimg.2002.1131.

- Welch RB, Warren DH. 1980. Immediate perceptual response to intersensory discrepancy. Psychol Bull. 88(3):638–667.

Associated Data
