Abstract

Background and Aims:

We need more pragmatic trials of interventions to improve care and outcomes for people living with Alzheimer’s disease and related dementias. However, these trials present unique methodological challenges in their design, analysis, and reporting — often, due to the presence of one or more sources of clustering. Failure to account for clustering in the design and analysis can lead to increased risks of type I and type II errors. We conducted a review to describe key methodological characteristics and obtain a “baseline assessment” of methodological quality of pragmatic trials in dementia research, with a view to developing new methods and practical guidance to support investigators and methodologists conducting pragmatic trials in this field.

Methods:

We used a published search filter in MEDLINE to identify trials more likely to be pragmatic and identified a subset that focused on people living with Alzheimer’s disease or other dementias or included them as a defined subgroup. Pairs of reviewers extracted descriptive information and key methodological quality indicators from each trial.

Results:

We identified N=62 eligible primary trial reports published across 36 different journals. There were 15 (24%) individually randomized, 38 (61%) cluster randomized, and 9 (15%) individually randomized group treatment designs; 54 (87%) trials used repeated measures on the same individual and/or cluster over time and 17 (27%) had a multivariate primary outcome (e.g., due to measuring an outcome on both the patient and their caregiver). Of the 38 cluster randomized trials, 16 (42%) did not report sample size calculations accounting for the intracluster correlation and 13 (34%) did not account for intracluster correlation in the analysis. Of the 9 individually randomized group treatment trials, 6 (67%) did not report sample size calculations accounting for intracluster correlation and 8 (89%) did not account for it in the analysis. Of the 54 trials with repeated measurements, 45 (83%) did not report sample size calculations accounting for repeated measurements and 19 (35%) did not utilize at least some of the repeated measures in the analysis. No trials accounted for the multivariate nature of their primary outcomes in sample size calculation; only one did so in the analysis.

Conclusions:

There is a need and opportunity to improve the design, analysis, and reporting of pragmatic trials in dementia research. Investigators should pay attention to the potential presence of one or more sources of clustering. While methods for longitudinal and cluster randomized trials are well-developed, accessible resources and new methods for dealing with multiple sources of clustering are required. Involvement of a statistician with expertise in longitudinal and clustered designs is recommended.

Keywords: Cluster randomized trials, longitudinal data analysis, multilevel modeling, missing data, intracluster correlation, pragmatic trials, Alzheimer’s disease, dementia

Introduction

There is a critical need for more pragmatic trials of interventions to improve care and clinical outcomes for people living with Alzheimer’s disease or related dementias and their caregivers.1 This vulnerable population is increasing in prevalence but is at risk of poor health outcomes and poor quality of life due to the relative absence of high quality pragmatic randomized controlled trials evaluating interventions and programs to meet their complex needs. Pragmatic trials aim to generate evidence that can directly inform decision-making by embedding the research in clinical practice and deviating as little as possible from usual care conditions.2 The recently launched US National Institute on Aging Imbedded Pragmatic Alzheimer’s disease or Alzheimer’s disease and related dementia Collaboratory (IMPACT) aims to build capacity to conduct pragmatic trials for people living with dementia and their caregivers, and to develop statistical methodology and guidance through its Design and Statistics Core.3,4 As a first step, we undertook a review of the existing landscape of pragmatic trials in this field.

Pragmatic trials involving people living with dementia and their caregivers raise unique statistical complications for several reasons. First, the nature of interventions and the setting (e.g., health system interventions implemented in nursing homes) may necessitate or encourage the use of cluster randomization; alternatively, individual randomization may be feasible but the intervention may need to be delivered by a common provider or therapist within a group setting.5 Cluster randomized trials and individually randomized group treatment trials have special requirements for design and analysis due to the presence of intracluster correlation, i.e., the nonindependence of observations from multiple individuals belonging to the same cluster.6 Second, repeated outcome assessments on the same individual may be necessary to examine the effect of an intervention over time.7 In cluster randomized trials, the need for greater statistical efficiency may encourage adoption of stepped wedge or other multiple period designs that measure outcomes repeatedly in the same cluster (and/or the same individuals) over time.8 To reap the benefits of repeated measures, statistical methods that incorporate all available measurements and account for correlations over time are required. Third, the intervention may specifically target the patient-caregiver dyad with outcomes assessed on each patient and their caregiver.9 For example, depressive symptoms or quality of life may be assessed on both the patient and their caregiving spouse to allow for mutual influences of patients and caregivers on the response to an intervention. Ideally, such trials should be designed and analyzed using multivariate approaches that take the correlation between the outcomes into account.10 In the presence of missing data — a particular challenge in aging research — multivariate approaches can also help mitigate loss of information due to missing values for one member of the dyad.11 Fourth, the intervention may be tested for its effect on a range of outcomes, e.g., multiple domains within a questionnaire-based scale.12 An estimate of the intervention effect can be obtained with greater precision by taking the correlation between the outcomes into account.13,14 Furthermore, co-primary endpoints may be necessary,15 for example, to demonstrate an intervention’s effect on both cognitive and functional endpoints; analyzing such outcomes using a multivariate approach has several advantages. In summary, pragmatic trials in dementia research may be characterized by one or more sources of clustering due to the choice of cluster randomization, delivery of treatment in groups, use of repeated measures, interventions targeting patient-caregiver dyads, and multivariate primary trial outcomes. Failing to account for these sources of clustering can lead to increased risks of either type I or type II errors. Table 1 summarizes these sources of clustering, and their implications for sample size calculation and analysis.

Table 1.

Potential sources of clustering and consequences of ignoring the intracluster correlation in the sample size calculation and analysis

| Type of clustering | Explanation | Implications for calculated sample size when correlation is ignoreda | Implications for analysis when correlation is ignoreda |

|---|---|---|---|

| Cluster randomized trial | Observations from multiple individuals belonging to the same cluster are usually positively correlated | Too small | Increased risk of Type I error |

| Individually randomized group treatment trial | Multiple observations from individuals receiving treatment in the same group or by the same interventionist are usually positively correlated | Too small | Increased risk of Type I error |

| Individually randomized parallel arm trial with repeated measures on the same individual after intervention (treatment is between-subject effect) | Multiple repeated measures on the same individual are usually positively correlated | Too smallb | Increased risk of Type I errorb |

| Individually randomized cross-over trial (treatment is within-subject effect) | Multiple repeated measures on the same individual are usually positively correlated | Too largeb | Increased risk of Type II errorb |

| Individually randomized parallel arm trial with repeated measures on the same individual before and after intervention | Multiple repeated measures on the same individual are usually positively correlated | Either too large or too smallc | Increased risk of Type I or Type II errorc |

| Dyadic outcome with both members of the pair allocated to the same intervention (treatment is a between-dyads effect) | Measurements on two individuals in a dyad (e.g., patient-caregiver) may be positively or negatively correlated | Too small if correlation is positived Too large if correlation is negatived |

Increased risk of Type I error if correlation is positived Increased risk of Type II error if correlation is negatived |

| Multivariate or co-primary endpoints when the trial is designed to evaluate a joint effect on all the endpoints | Multiple components of the multivariate outcome are usually positively correlated. Power decreases as the number of endpoints being evaluated increases. To maintain nominal power, the sample size should be increased. Accounting for the correlation can lessen the increase in the sample size. | Too large | Increased risk of Type II error |

Note: we consider here a superiority trial design with a continuous or binary endpoint and a single source of clustering; we further consider an analysis involving all measurements but ignoring the intracluster correlations.

Assumes all measurements are analyzed, ignoring the correlation. Failing to utilize available repeated measures (i.e., basing the sample size calculation or analysis on a single measurement per subject) has the opposite effect: it means that the sample size may be larger than required and the analysis may be statistically inefficient.

Depending on the number of repeated measurements and the strength of the within-subject correlation.

Assumes all measurements are analyzed, ignoring the correlation. Failing to utilize the available pairwise measurements (i.e., basing the sample size calculation or analysis on a single member of the dyad when the observations are positively correlated) has the opposite effect: the sample size may be larger than required and the analysis may be statistically inefficient..

This manuscript describes the results from a systematic literature survey to assess the methodological conduct and reporting of pragmatic trials in people living with dementia published over the past five years with a special emphasis on how sources of clustering were handled and reported. CONSORT reporting guidelines for cluster randomized and stepped wedge designs16,17 require clear descriptions of how clustering was accounted for in the sample size calculation and analysis. They also require estimates for the intracluster correlation coefficient and correlations in repeated measures over time to be reported as these correlation coefficients can usefully inform sample size calculations for future trials. Our main objectives were to a) describe characteristics and design features of pragmatic trials in this field; b) assess the extent to which clustering was accommodated in sample size calculations; c) assess the extent to which clustering was accommodated in the analysis; and d) describe the prevalence of reporting estimates for correlation coefficients. We also examined the extent to which covariates used in the randomization were adjusted for in the analysis,18 and whether methods to mitigate effects of missing data were used.

Methods

Database of pragmatic trials

This study was embedded within the scope of a larger project about ethical and methodological issues in pragmatic trials.19 As part of that project, we derived and validated a search filter to locate pragmatic trials in MEDLINE,20 implemented the filter on 3 April 2019 to cover the period 1 January 2014 to that date, and conducted a landscape analysis of the resulting set of 4337 eligible trials.21 We chose 2014 as the start date as this was the year that the National Library of Medicine began indexing articles as “pragmatic clinical trial as publication type” as well as topic. The methods for identifying eligible trials and flow diagram for trials included in the larger landscape analysis have been described in detail elsewhere.21 In brief, we included primary reports of pragmatic randomized controlled trials with at least 100 individuals (excluding pilot or feasibility studies, protocols, and secondary analyses).

Identification of pragmatic trials in Alzheimer’s disease and related dementias

For the present manuscript, we aimed to identify —within the larger pragmatic trials database — trials that a) specifically focused on people living with dementia, or b) focused on a broader cohort of older adults which included a subgroup with dementia and conducted a stratified or subgroup analysis on that cohort. To locate trials of type a), we applied a search filter from the Cochrane dementia and cognitive improvement group.22 To efficiently identify trials of type b), we used MeSH terms to identify trials exclusively in the elderly (aged 65 and over). Appendix 1 in the supplemental material provides full details of our search. Five reviewers (BQ, CC, LZ, MT and FL) then jointly screened 15 potentially eligible trials as part of a training process. After discussing discrepancies, the remaining trials were distributed amongst the first three reviewers who independently screened them with one reviewer per trial. Each trial screened in was classified as an individually randomized, cluster randomized, or individually randomized group treatment trial.

Data extraction

The data extraction form was pilot tested on samples of three individually randomized trials, three cluster randomized trials, and three individually randomized group treatment trials as part of a training and calibration exercise. All five reviewers, (BQ, CC, LZ, MT and FL) completed these extractions and then met to review discrepancies and refine the extraction form. Thereafter, the remaining studies were distributed amongst three reviewers (BQ, CC, LZ) with two reviewers per trial independently extracting information from each trial. The reviewers met to discuss discrepancies; MT and FL were consulted and reached agreement when discrepancies could not be resolved.

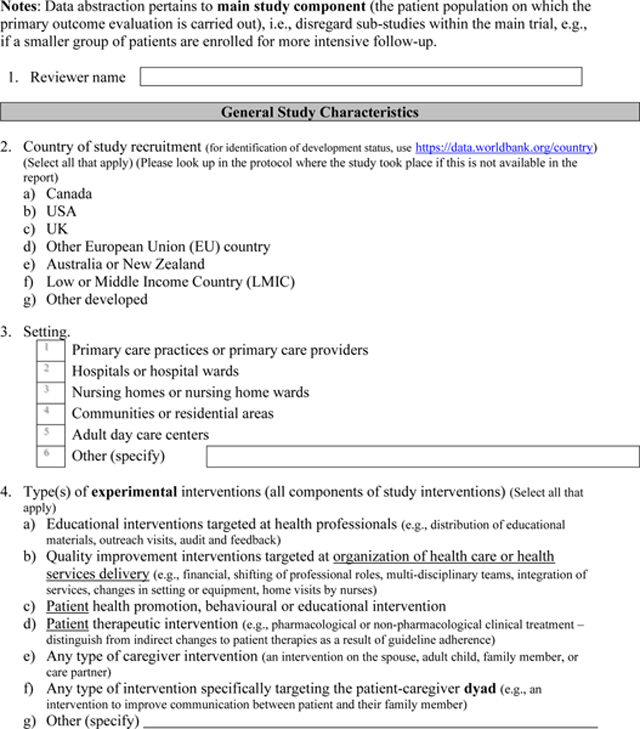

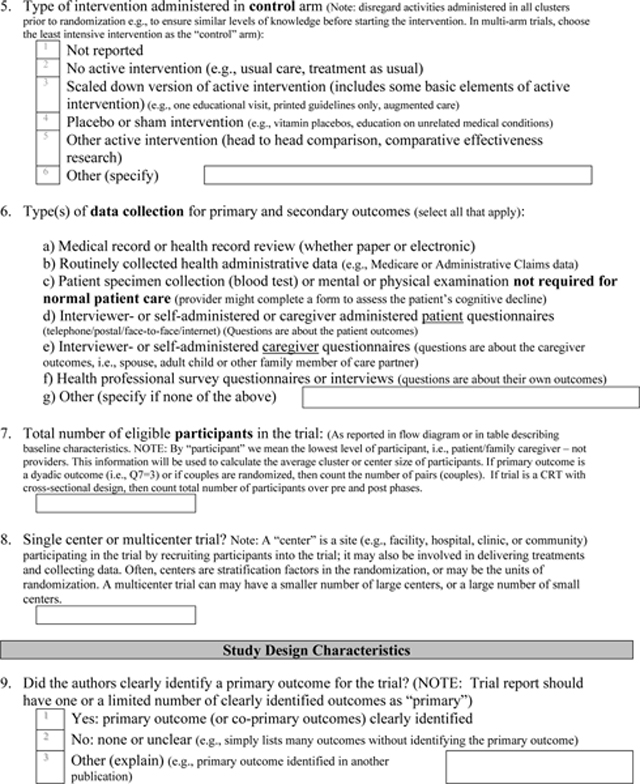

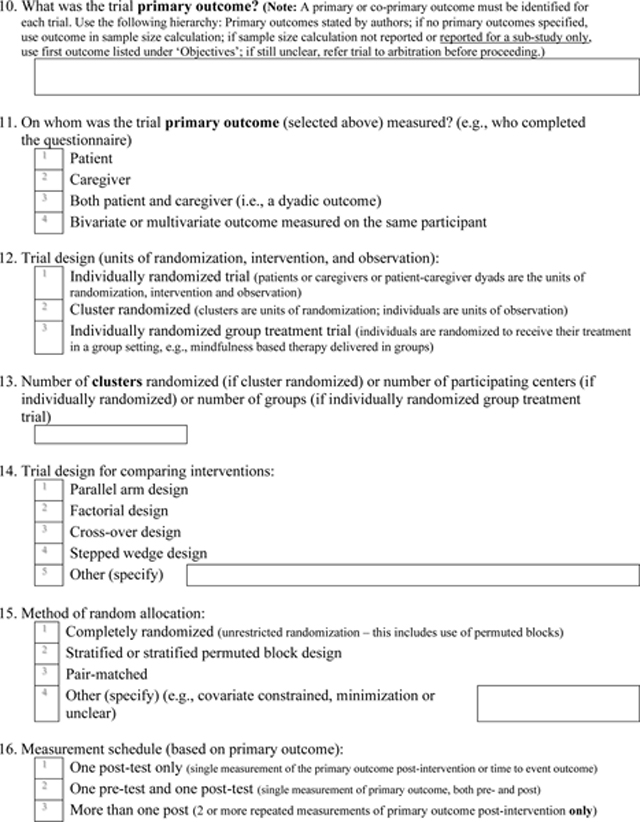

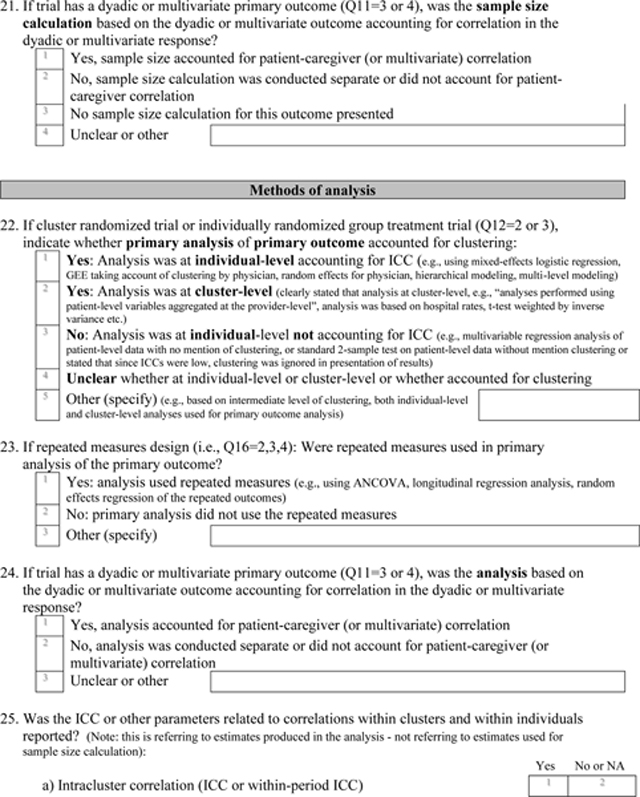

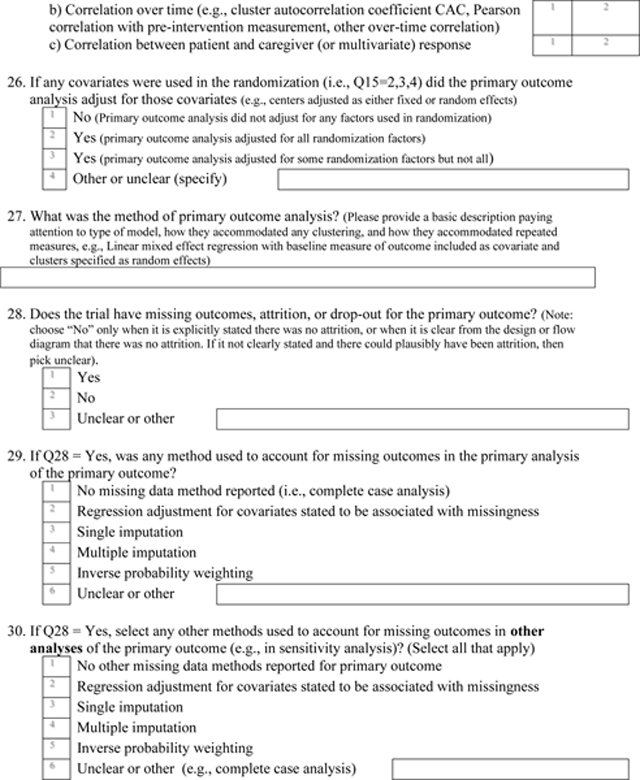

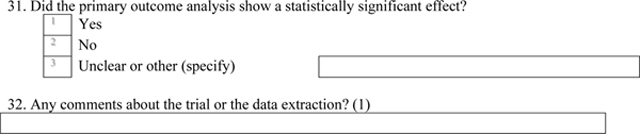

The data extraction form is attached as Appendix 2. It included sections on general study characteristics, study design, sample size calculation, and analysis. General study characteristics included country of study conduct, setting, study and control interventions, data collection procedures, number of individuals (or dyads) randomized, and journal impact factor. The study design section included items on the unit of randomization, method of allocation, and type of primary outcome. The primary outcome was classified as a univariate outcome measured on either the patient or caregiver, bivariate outcome measured on both the patient and caregiver, or multivariate outcome measured on the same participant. If a primary outcome was not identified, we used the outcome in the sample size calculation, and if a sample size calculation was not provided, the first outcome listed under the objectives. Cluster randomized trials with repeat measurements were classified as closed cohort (i.e., the same individuals measured over time), open cohort (i.e., a mixture of the same and different individuals), or cross-sectional (i.e., different individuals measured each time).

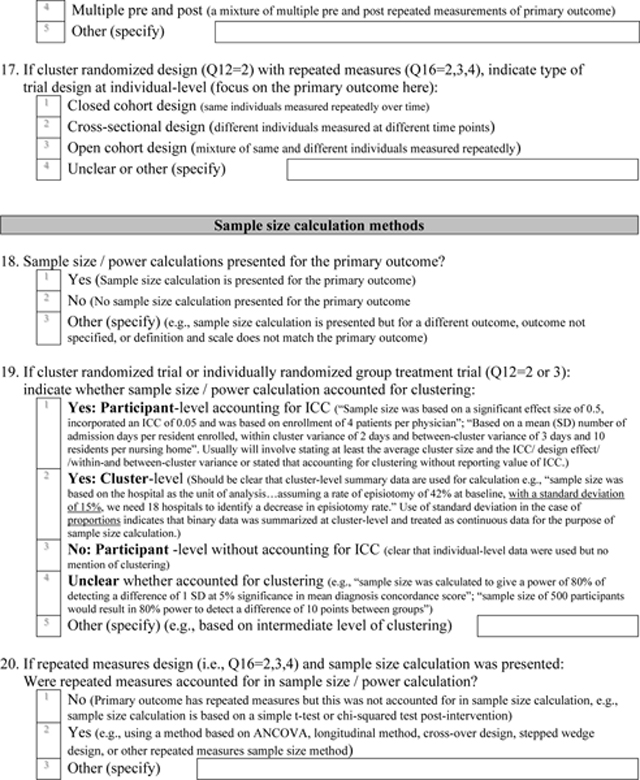

We extracted whether a sample size or power calculation was presented. If a cluster randomized or individually randomized group treatment trial, we classified whether clustering was accounted for in the calculation. Clustering was considered accounted for if the calculation was done at the individual-level and clearly stated that an intracluster correlation coefficient or design effect was used to adjust for clustering, or if the calculation was done at the cluster-level. If the trial had repeated measures, we extracted whether the sample size calculation accounted for them (e.g., by assuming a correlation with baseline, or by using design effects for longitudinal designs such as cluster cross-over or stepped wedge designs). If the trial had a dyadic or multivariate primary outcome, we extracted whether the sample size calculation was based on the dyadic or multivariate outcome accounting for correlation in the outcomes.

For cluster randomized trials and individually randomized group treatment trials, we extracted whether the analysis accounted for clustering. Clustering was considered accounted for if analysis was at individual-level and clearly accounted for intracluster correlation or was conducted at the cluster-level. For repeated measures designs, we extracted whether the analysis was based on repeated measures (e.g., using analysis of covariance or longitudinal regression accounting for correlation in repeated measures). If the trial had a dyadic or multivariate primary outcome, we extracted whether the analysis used a multivariate approach accounting for correlation in outcomes. We also extracted whether any correlation coefficients (within clusters, over time, or between multivariate outcomes) were reported. Adjustment for any covariates used in the randomization, presence of missing data, and use of any missing data method in both the primary analysis of the primary outcome, and secondary or sensitivity analyses for the primary outcome were recorded.

Analysis

We described categorical variables using frequencies and percentages, and continuous variables using range, mean and standard deviation, and median and interquartile range (Q1-Q3). Average cluster size was calculated as the number of randomized individuals or dyads divided by the number of clusters.

Results

Identification of eligible trials

From our database of 4337 pragmatic trials, 488 trials were identified as potentially relevant to Alzheimer’s disease and related dementias using a composite of either the Cochrane filter (273 trials) and the MeSH aged filter (281 trials). From these trials, reviewers screened in N=62 as eligible: 60 focused solely on people living with dementia and 2 included such populations as a defined subgroup. These trials were published across 36 different journals (see Appendix 3) with the highest frequency in the Journal of the American Geriatrics Society (7 trials).

Study characteristics

Table 2 presents a summary of the study characteristics. The majority were conducted in the European Union, United Kingdom or United States, and took place in a nursing home setting. The types of experimental interventions were diverse: most commonly patient non-pharmacological interventions (47%), followed by educational interventions targeted at health professionals (31%), interventions targeted at the health care organization (27%), interventions targeting the caregiver (19%) and the patient-caregiver dyad (13%), and patient pharmacological interventions (5%). The most commonly used data collection procedures were patient-focused questionnaires (73%); more than half used mental or physical examinations not required for normal patient care (53%); 36% used caregiver-focused questionnaires; 34% used routinely collected data or reviews of patient medical records. The majority were multicenter trials and enrolled a median of 267 individuals or dyads.

Table 2.

General characteristics of trials included in the review (N=62)

| Characteristic | Frequency (%)a |

|---|---|

|

| |

| Country b | |

| Canada | 2 (3.2%) |

| United States of America | 13 (21.0%) |

| United Kingdom | 14 (22.6%) |

| European Union | 17 (27.4%) |

| Australia or New Zealand | 5 (8.1%) |

| Low and Middle-Income Country | 4 (6.5%) |

| Other | 9 (14.5%) |

|

| |

| Setting | |

| Primary care | 8 (12.9%) |

| Hospital care | 6 (9.7%) |

| Nursing homes | 28 (45.2%) |

| Communities | 15 (24.2%) |

| Other | 5 (8.1%) |

|

| |

| Intervention b | |

| Educational intervention targeting health professionals | 19 (30.6%) |

| Quality improvement targeting organization/health care system | 17 (27.4%) |

| Patient non-pharmacological intervention | 29 (46.8%) |

| Patient pharmacological intervention | 3 (4.8%) |

| Any intervention targeting caregiver only | 12 (19.4%) |

| Any intervention targeting the patient-caregiver dyad | 8 (12.9%) |

|

| |

| Control arm(s) | |

| No active intervention (usual care) | 40 (64.5%) |

| Scaled down version of active intervention | 14 (22.5%) |

| Placebo or sham intervention | 3 (4.8%) |

| Other active intervention (i.e., head to head comparison) | 3 (4.8%) |

| Otherc | 2 (3.2%) |

|

| |

| Data collection b | |

| Review of medical records | 21 (33.9%) |

| Routinely collected health administrative data | 2 (3.2%) |

| Mental or physical examination not required for normal care | 33 (53.2%) |

| Patient-focused questionnaires completed by patient and/or caregiver | 45 (72.6%) |

| Caregiver-focused questionnaires | 22 (35.5%) |

| Health professional questionnaires | 4 (6.5%) |

| Direct observation | 3 (4.8%) |

|

| |

| Single center or multicenter trial? | |

| Single center | 5 (8.1%) |

| Multicenter | 56 (90.0%) |

| Unclear | 1 (1.6%) |

|

| |

| Number of individuals (or dyads) randomized | |

| Mean (Standard deviation) | 574 (1958) |

| Median (Q1 to Q3) | 267 (140 to 402) |

| Range | 101 to 15,574 |

|

| |

| Journal impact factor | |

| Median (Q1 to Q3) | 3.6 (2.8 to 5.0) |

| Range | 0.9 to 27.6 |

Entries are frequency (%) unless otherwise indicated

A trial can belong to multiple categories; thus, numbers don’t add up to 100%

One study had two control arms: sham and usual care; another study had two control arms: scaled down version of active intervention and usual care

Types of trial designs

Table 3 presents the types of trial designs, methods of random allocation and use of repeated measures. There were 15 (24%) individually randomized, 38 (61%) cluster randomized, and 9 (15%) individually randomized group treatment designs. The majority were parallel arm designs; two used a stepped wedge design. Over a third (39%) did not use stratification or some other type of balancing constraint. Twelve trials (19%) did not clearly identify a primary trial outcome. The nature of the primary outcome was most often continuous (81%), and univariate assessed on the patient (63%) or caregiver (10%); 5 trials (8%) had a dyadic primary outcome assessed on both the patient and their caregiver, and 12 (19%) a multivariate primary outcome measured on the same participant. Only 8 trials (13%) did not have repeated measures on the primary outcome. Among the 34 cluster randomized trials with repeated measures, almost all (94%) were closed cohort designs. The median number of clusters randomized was 22 ranging from 2 to 168. The median cluster size was 14 (3 to 708).

Table 3.

Trial design features (N=62) based on the identified primary trial outcome

| Characteristic | Frequency (%)a |

|---|---|

|

| |

| Type of design (unit of randomization) | |

| Individually randomized | 15 (24.2%) |

| Cluster randomized | 38 (61.3%) |

| Individually randomized group treatment trial | 9 (14.5%) |

|

| |

| Trial design for comparing interventions | |

| Parallel arm | 55 (88.7%) |

| Factorial | 4 (6.5%) |

| Cross-over | 1 (1.6%) |

| Stepped wedge | 2 (3.2%) |

|

| |

| Method of random allocation | |

| All trials (N=62) | |

| Completely randomized | 24 (38.7%) |

| Stratified | 27 (43.5%) |

| Pair-matched | 9 (14.5%) |

| Stratified and minimization | 2 (3.2%) |

| Cluster randomized trials (N=38) | |

| Completely randomized | 15 (39.5%) |

| Stratified | 13 (34.2%) |

| Pair-matched | 9 (23.7%) |

| Stratified and minimization | 1 (2.6%) |

|

| |

| Primary or co-primary outcome(s) clearly identified? | |

| Yes | 50 (80.6%) |

| No or unclear | 12 (19.4%) |

|

| |

| Type of primary outcome variable b | |

| Continuous | 50 (80.6%) |

| Binary | 9 (14.5%) |

| Time to event | 3 (4.8%) |

|

| |

| Nature of the primary outcome b | |

| Univariate outcome measured on the patient | 39 (62.9%) |

| Univariate outcome measured on the caregiver | 6 (9.7%) |

| Bivariate outcome measured on both the patient and caregiver | 5 (8.1%) |

| Multivariate outcome measured on the same participant | 12 (19.4%) |

|

| |

| Measurement schedule for the primary outcome b | |

| One post-intervention measurement only | 8 (12.9%) |

| One pre- and one post-intervention measurement | 12 (19.4%) |

| More than one post-intervention measurement only | 4 (6.5%) |

| More than one pre- and post-intervention measurements | 38 (61.3%) |

|

| |

| If cluster randomized design with repeated measures (N=34), type of trial design at individual-level | |

| Closed cohort | 32 (94.1%) |

| Cross-sectional | 1 (2.9%) |

| Open cohort | 1 (2.9%) |

|

| |

| Size of cluster randomized trials (N=38) | |

| Number of clusters randomized | |

| Not reported | 1 |

| Mean (Standard deviation) | 31 (31) |

| Median (Q1 to Q3) | 22 (15 to 34) |

| Range | 2 to 168 |

| Cluster size (number of individuals or dyads per cluster) | |

| Mean (Standard deviation) | 43 (11) |

| Median (Q1 to Q3) | 14 (8 to 29) |

| Range | 3 to 708 |

Entries are frequency (%) unless otherwise indicated

If primary outcome was not identified in the report, reviewers nevertheless attempted to identify a “primary” outcome, using the outcome used in sample size calculation, or if sample size calculation was not reported, the first outcome listed under ‘Objectives’.

Sample size calculation methods

Table 4 presents details about reporting and conduct of sample size calculation procedures. Fifty trials (81%) reported a sample size or power calculation. Among the 38 cluster randomized trials, 22 (58%) accounted for the intracluster correlation while 6 (16%) did not; the remaining 10 (26%) did not present sample size calculations. Among the 9 individually randomized group treatment trials, all presented sample size calculations but 6 (67%) did not account for the intracluster correlation. Among 54 trials with repeated measurements, 9 (17%) accounted for repeated measures in the sample size calculation, while 33 (61%) did not; the remaining 12 (22%) did not present sample size calculations. Among 17 trials with dyadic or multivariate outcomes, none presented sample size calculations that accounted for the dyadic or multivariate nature of their primary outcomes.

Table 4.

Conduct and reporting of sample size or power calculations (N=62)

| Characteristic | Frequency (%) |

|---|---|

|

| |

| Sample size / power calculations presented? | |

| Yesa | 50 (80.6%) |

| No | 12 (19.4%) |

|

| |

| Did sample size / power calculation account for clustering? | |

| Cluster randomized trial (N=38) | |

| Yes: Participant-level accounting for clustering | 20 (52.6%) |

| Yes: Cluster-level | 2 (5.3%) |

| No: Participant -level without accounting for clustering | 5 (13.2%) |

| Unclear whether accounted for clustering | 1 (2.6%) |

| No sample size calculation presented | 10 (26.3%) |

| Individually randomized group treatment trial (N=9) | |

| Yes: Participant-level accounting for clustering | 3 (33.3%) |

| No: Participant -level without accounting for clustering | 6 (66.7%) |

| No sample size calculation presented | 0 |

|

| |

| Did sample size/ power calculation account for repeated measures? (N=54) | |

| Yes | 9 (16.7%) |

| No | 33 (61.1%) |

| No sample size calculation presented | 12 (22.2%) |

|

| |

| Was correlation in dyadic or multivariate outcome accounted for in sample size calculation? (N=17) | |

| Yes | 0 |

| No | 12 (70.6%) |

| No sample size calculation presented | 5 (29.4%) |

Includes one trial presenting a sample size calculation based on an unspecified outcome

Methods of analysis

Table 5 presents details about the methods of analysis for the primary outcome and reporting of correlation coefficients. Among the 38 cluster randomized trials, 13 (34%) did not clearly account for the intracluster correlation in the analysis; among the 9 individually randomized group treatment trials, 8 (89%) did not account for intracluster correlation in the analysis. Among the 54 trials with repeated measures, 19 (35%) did not utilize at least some of the repeated measures in the analysis. Among the 17 trials with dyadic or multivariate outcomes, 16 (94%) did not account for the dyadic or multivariate nature of the outcome in the analysis. Reporting of measures of correlation was poor: 17 (36%) of studies using cluster randomization and group treatment reported an estimate of the intracluster correlation; no studies reported measures of correlation over time and no studies reported measures of correlation between patient and caregiver or multivariate outcomes. The most frequently used method of analysis was mixed effects regression.

Table 5.

Methods of analysis and reporting of correlation coefficients (N=62)

| Characteristic | Frequency (%) |

|---|---|

|

| |

| Did primary analysis of primary outcome account for clustering? | |

| Cluster randomized trials (N=38) | |

| Yes: Analysis was at individual-level accounting for clustering | 20 (52.6%) |

| Yes: Analysis was at cluster-level | 3 (7.9%) |

| Yes: Othera | 2 (5.3%) |

| No: Analysis was at individual-level not accounting for clustering | 11 (28.9%) |

| No: Clustering effect was small; proceeded without accounting for clustering | 1 (2.6%) |

| Unclear whether accounted for clustering | 1 (2.6%) |

| Individually randomized group treatment trial (N=9) | |

| Yes: Analysis was at individual-level accounting for clustering | 1 (11.1%) |

| No: Analysis was at individual-level not accounting for clustering | 7 (77.8%) |

| No: Clustering effect was small; proceeded without accounting for clustering | 1 (11.1%) |

|

| |

| Were repeated measures utilized in primary analysis of the primary outcome? (N=54) | |

| Yes | 35 (64.8%) |

| No | 19 (35.2%) |

|

| |

| Was correlation in dyadic or multivariate outcome accounted for in analysis? (N=17) | |

| Yes | 1 (5.9%) |

| No | 16 (94.1%) |

|

| |

| Reported any correlation coefficients? | |

| Trials with potential intracluster correlation (N=47) | 17 (36.2%) |

| Trials with potential correlation over time (N=54) | 0 |

| Trials with potential correlation between multivariate outcomes (N=17) | 0 |

|

| |

| Methods of analysis | |

| Simple method (e.g., t-test, chi-squared test) | 9 (14.5%) |

| Generalized Estimating Equations (GEE) | 11 (17.7%) |

| Mixed-effects regression | 22 (35.5%) |

| Fixed-effects regression | 16 (25.8%) |

| Other (e.g., structural equation modeling, MANCOVA, two-stage method) | 4 (6.5%) |

One trial conducted analyses at both individual-level accounting for clustering and cluster-level; one trial used a two-stage method: in stage one ANCOVA was used within each cluster-pair; in stage two random effects meta-analysis was used

Covariate adjustment and missing data

Table 6 presents details about adjustment for covariates used in the randomization and missing data. Among the 38 trials using restricted randomization methods to balance allocations between the arms, 14 (37%) adjusted for all balancing factors as covariates in the analysis. Nearly all trials (95%) had missing data on the primary outcome; 34 of these (58%) did not use any method to account for missing data in the primary analysis of the primary outcome. When considering both primary and sensitivity analyses, 32 trials (54%) did not use any method to account for missing data. Nearly half reported a statistically significant result for the primary outcome.

Table 6.

Covariate adjustment and missing data treatment (N=62)

| Characteristic | Frequency (%) |

|---|---|

|

| |

| If covariates were used in the randomization, did primary outcome analysis adjust for those covariates? (N=38) | |

| Yes, for all relevant covariates | 14 (36.8%) |

| Yes, but not for all relevant covariates | 4 (10.5%) |

| No | 18 (47.4%) |

| Uncleara | 2 (3.2%) |

|

| |

| Does the trial have missing outcomes or attrition for the primary outcome? | |

| Yes | 59 (95.2%) |

| No | 2 (3.2%) |

| Unclear | 1 (1.6%) |

|

| |

| Method used to account for missing data in the primary analysis of the primary outcome (N=59) | |

| None | 34 (57.6%) |

| Regression adjustment for covariates stated to be associated with missingness | 6 (9.7%) |

| Single imputation | 9 (14.5%) |

| Multiple imputation | 9 (14.5%) |

| Otherb | 1 (1.7%) |

|

| |

| Method used to account for missing data in sensitivity analyses of the primary outcome (N=59) | |

| None | 52 (88.1%) |

| Regression adjustment for covariates stated to be associated with missingness | 3 (5.1%) |

| Single imputation | 2 (3.4%) |

| Multiple imputation | 1 (1.7%) |

| Otherc | 1 (1.7%) |

|

| |

| Any method used to account for missing data in primary or sensitivity analyses for the primary outcome? | |

| Yes | 27 (45.8%) |

| No | 32 (54.2%) |

|

| |

| Did the trial report a statistically significant effect for the primary outcome(s)? | |

| Yes | 30 (48.4%) |

| No | 29 (46.8%) |

| Mixed resultsd | 3 (4.8%) |

One trial presented both adjusted and unadjusted analyses without clearly stating the primary analysis; one trial used stratification but did not define the stratification variables

Primary outcome assessed in “modified intent to treat population” consisting only of those residents with available data at 8 months

Single imputation for primary analysis followed by complete case analysis as sensitivity analysis

One trial did not present results for the overall outcome score, but only for the individual components; one three-arm trial had two co-primary outcomes with mixed results; one trial had three co-primary outcomes with mixed results

Handling of clustering by number and sources of clustering

We conducted a post-hoc analysis tabulating the adequacy of sample size and analysis methods according to the number and source of clustering (see Appendix 4). Three trials (5%) had no sources of clustering; 12 (19%) had one, 38 (61%) two, and 9 (24%) had three sources of clustering. Among trials with one, two, and three sources of clustering, the percentages accounting for all sources of clustering in their sample size calculations were 33%, 11% and 0% respectively, while the percentages accounting for all sources of clustering in the analysis were 75%, 24% and 0% respectively.

Discussion

Summary of principal findings

We reviewed recently conducted pragmatic trials in Alzheimer’s disease and related dementias focusing on their key statistical and reporting requirements. Trials commonly had multiple sources of clustering. Despite several decades of availability of methods to account for intracluster correlation, many cluster randomized trials and individually randomized group treatment trials did not account for intracluster correlation in their sample size calculations and analysis. Trials with repeated measures were common but this design aspect was often ignored during sample size calculation and analysis. Few trials with dyadic or multiple primary outcome domains used methods for multivariate outcomes. Sample size and analysis methods were particularly poor when there were multiple sources of clustering. Estimates for relevant correlation coefficients were seldom reported. Nearly all trials were subject to missing data but methods to account for missing data were infrequently used.

Comparison with other studies

We are unaware of any other published methodological reviews of pragmatic trials in this field. Previous reviews of cluster randomized trials, focused on single sources of clustering, have consistently found that quality tends to be poor. Diaz-Ordaz and colleagues23 reviewed 73 cluster randomized trials in nursing homes until the end of 2010, of which 27% reported accounting for clustering in sample size calculations and 74% in the analyses (compared to our review of 62 trials published 2014–2019 in which 58% reported accounting for clustering in sample size calculation and 66% in the analyses); they found that only 11% of trials reported intracluster correlation coefficients (compared to 36% in our review). Kahan and colleagues18 found that 26% of 258 individually randomized trials published in four major medical journals in 2010 adjusted for all balancing factors in their primary analyses (compared to 37% in our review). We are unaware of any published reviews of clustered longitudinal trials or trials with multiple sources of clustering.

Limitations

Locating pragmatic trial reports in the literature is challenging. We used our published search filter to locate trials that are more likely to be pragmatic.20 The search filter was designed to capture not only trials that self-declare their pragmatic intention in the title or abstract, but also trials that only have design features suggesting a pragmatic intention (without explicit identification as pragmatic in the title or abstract). We did not score each trial (e.g., using the PRECIS-2 tool)24 to confirm that the trial was mostly pragmatic. Even if retrospective scoring of a large database of trials were feasible, there is no objective threshold for determining when a trial can be considered sufficiently “pragmatic”.25 Furthermore, no reporting guidelines require authors to label their trials as “pragmatic” in the title or abstract;26 thus, our search filter may not have captured all trials with pragmatic intentions in this field.

We extracted data mainly from the primary trial report and did not access protocols. It is possible that appropriate methods were used in the design; however, CONSORT guidelines are clear in their requirements to provide explicit details about methods in the trial report.

Implications of our findings

There is a recognized need for more pragmatic trials in Alzheimer’s disease and related dementias and there is now an opportunity to build capacity through the IMPACT Collaboratory.27 Our review of the existing landscape of pragmatic trials in this field reveals a critical need for improvement. Failure to account for clustering in sample size calculations may mean that many trials are under-powered, while failure to account for repeated measures may imply over-recruitment in a vulnerable population and a waste of resources. Failure to account for intracluster correlation in analysis may mean that some trials have overstated the statistical significance of their findings, while failure to adopt methods that account for repeated measures imply a missed opportunity for statistical efficiency, and potentially useful treatments being declared ineffective. While our focus has been on pragmatic trials in dementia research, we expect similar considerations to apply in pragmatic trials in other clinical areas involving vulnerable populations and their caregivers for example, in hemodialysis where attempts are being made to advance the conduct of more pragmatic trials.28

Recommendations

We make several recommendations based on these results:

Trialists conducting clustered designs should collaborate with statisticians experienced in methods for these designs and should ensure that all sources of clustering are accounted for in the sample size calculation and analysis.29 When cluster randomization is necessary, trialists should consider the use of more efficient multiple period designs which can substantially reduce the required number of clusters, although this should be balanced against the increase in the number of measurements.

When a single primary outcome is not adequate to inform a decision about effectiveness of an intervention, a limited number of co-primary outcomes may be considered. However, multiple primary outcomes raise complex challenges for type I and type II error control during the design and analysis. An important consideration is whether the intervention will be declared a success if at least one primary outcome is significant or whether joint significance on all primary outcomes is required. When joint significance is required, power decreases as the number of outcomes being evaluated increases. It is useful to consider the correlation between the outcomes during sample size calculation as it may lessen the increase in the required sample size. When the outcomes have very different effect sizes and the correlation is not very strong, there may not be much practical benefit to incorporating correlations between the outcomes in the sample size calculation as the smallest effect size will primarily determine the sample size.30,31,32 Nevertheless, adopting multivariate methods which account for correlation between the outcomes has additional advantages, for example, when there are missing values for one outcome but not others.

Restricted randomization techniques such as covariate-constrained randomization should be considered to improve balance in cluster randomized trials with few clusters33 and variables balanced by design should be adjusted in the primary analysis to obtain correct p-values and improve power and efficiency.34

Methods to account for missing data should be used in the analysis and where relevant, should account for sources of clustering.35,36,37

There is a need for new methods for trials with multiple sources of clustering, for example, cluster randomized trials with dyadic or multivariate outcomes and longitudinal trials with multivariate outcomes. Practical tools including statistical software and tutorial-style manuscripts should accompany more theoretical work to promote the uptake of appropriate methods in practice.38 Appendix 5 summarizes available references for multiple sources of clustering.

Journal editors and reviewers should require adherence to minimum reporting requirements16,17,26 and should especially insist that estimates of correlation are provided to inform the design of future studies. Investigators with access to routinely collected health administrative data should consider producing databases of correlation estimates for outcomes of potential interest in future trials and making them publicly available.39

Conclusions

There is a need and opportunity to improve the design, analysis, and reporting of pragmatic trials in dementia research. These trials often have multiple sources of clustering that need special consideration in the design, analysis, and reporting. While methods for longitudinal and cluster randomized designs are well-developed, accessible resources and new methods for dealing with multiple sources of clustering are required. Involvement of a statistician with expertise in longitudinal and clustered designs is recommended.

Acknowledgements

We thank Hayden Nix and Jennifer Zhang who contributed to the creation of the large database of pragmatic trials. We thank Dr. Spencer Hey who helped with database management aspects.

Funding

The author(s) disclosed receipt of the following financial support for the research of this article: This work was supported by the Canadian Institutes of Health Research through the Project Grant competition (competitive, peer-reviewed), award number PJT-153045; and by the National Institute of Aging (NIA) of the National Institutes of Health under Award Number U54AG063546, which funds NIA Imbedded Pragmatic Alzheimer’s Disease and AD-Related Dementias Clinical Trials Collaboratory (NIA IMPACT Collaboratory). The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Appendix 1:

Search filters used in the identification of N=62 eligible trials

| Search strategies for pragmatic trials in Ovid MEDLINE 1 | |

| # | Search Statement |

| Trial design terms | |

| 1 | (((pragmatic$ OR naturalistic OR real world OR real life OR unblinded OR unmasked OR cluster OR step$ wedge$ OR point of care OR factorial OR switchback OR switch back OR phase 4 OR phase IV) adj 10 (study OR trial)) OR (practical trial OR effectiveness trial OR ((cluster$ or communit$) adj2 randomi$))).tw. |

| Trial attribute terms | |

| 2 | (general practice$ OR primary care OR registry based OR health record$ OR medical record$ OR EHR OR EMR OR administrative data OR routinely collected data OR (communit$ adj2 intervention$) OR quality improvement OR implementation OR decision support OR health service$ OR health system$ OR comparative effectiveness OR CER OR usual care OR evidence based OR practice guideline$ OR (guideline$ adj1 recommend$) OR knowledge translation OR health technology assessment OR HTA OR cost effectiveness OR process evaluation OR economic evaluation OR patient oriented).tw. |

| Limit to records likely to be RCTs | |

| 3 | randomized controlled trial.pt. OR ((comparative effectiveness OR randomi?ed) adj10 (trial OR study)).ti. |

| 4 | (comment on OR phase 1 OR phase I OR phase 2 OR phase II OR non-randomi?ed OR quasi-randomi?ed OR pseudo-randomi?ed).ti. OR (clinical trial, phase I OR clinical trial, phase II OR systematic review OR meta-analysis OR review OR editorial).pt. |

| Include records tagged as pragmatic trials | |

| 5 | pragmatic clinical trial.pt. |

| Sensitivity-maximizing search (combines trial design terms or attribute terms with RCT terms) | |

| 6 | ((1 OR 2) AND (3 NOT 4)) OR 5 |

| 7 | exp Animals/ NOT Humans/ |

| 8 | 6 NOT 7 |

RCT=randomized controlled trial

Taljaard M, McDonald S, Nicholls SG, Carroll K, Hey SP, Grimshaw JM, Fergusson DA, Zwarenstein M, McKenzie JE. A search filter to identify pragmatic trials in MEDLINE was highly specific but lacked sensitivity. J Clin Epidemiol. 2020 Aug;124:75–84. doi: 10.1016/j.jclinepi.2020.05.003. Epub 2020 May 11. PMID: 32407765

Cochrane Dementia and Cognitive Improvement Group PubMed search filter to identify trials specifically focused on Alzheimer’s and dementia disease2

((((((((Dementia[Mesh]) OR “Neurocognitive Disorders”[Mesh:NoExp]) OR dement*[tiab]) OR alzheimer*[tiab]) OR AD[tiab]) OR ((“lewy bod*”[tiab] OR DLB[tiab] OR LBD[tiab] OR FTD[tiab] OR FTLD[tiab] OR “frontotemporal lobar degeneration”[tiab] OR “frontaltemporal dement*”[tiab]))) OR “cognit* impair*”[tiab]) OR ((cognit*[tiab] AND (disorder*[tiab] OR declin*[tiab] OR fail*[tiab] OR function*[tiab] OR degenerat*[tiab] OR deteriorat*[tiab])))) OR ((memory[tiab] AND (complain*[tiab] OR declin*[tiab] OR function*[tiab] OR disorder*[tiab])))

Airtable filter to identify trials in the elderly based on MeSH terms3

AND(IF(OR(FIND(“Aged”,{MeSH_Terms}),FIND(“aged”,{MeSH_Terms})),”True”,”False”)=“True”,IF(OR(FIND(“Middle”,{MeSH_Terms}),FIND(“middle”,{MeSH_Terms})),”False”, “True”)=“True”,IF(OR(FIND(“Adult”,{MeSH_Terms}),FIND(“adult”,{MeSH_Terms})), “False”,”True”)=“True”)

Appendix 2: Data extraction form

Appendix 3:

Journals where N=62 eligible trials were published

| Journal title | Number of trials |

|---|---|

| Age & Ageing | 1 |

| Aging & Mental Health | 2 |

| Alzheimer’s Research & Therapy | 1 |

| American Journal of Geriatric Psychiatry | 6 |

| American Journal of Physical Medicine & Rehabilitation | 1 |

| American Journal of Psychiatry | 1 |

| Annals of Internal Medicine | 1 |

| Applied Nursing Research | 1 |

| Biological Research for Nursing | 1 |

| BMC Medicine | 1 |

| BMJ | 1 |

| BMJ Open | 1 |

| British Journal of Psychiatry | 1 |

| Clinical Interventions In Aging | 1 |

| Dementia & Geriatric Cognitive Disorders | 1 |

| Deutsches Arzteblatt International | 1 |

| Emergency Medicine Journal | 1 |

| Gerontologist | 2 |

| Health Technology Assessment (Winchester, England) | 1 |

| International Journal of Geriatric Psychiatry | 4 |

| International Journal of Nursing Studies | 2 |

| International Psychogeriatrics | 3 |

| JAMA Internal Medicine | 2 |

| JAMA Psychiatry | 1 |

| Journal of Aging & Health | 1 |

| Journal of Comparative Effectiveness Research | 1 |

| Journal of Medical Internet Research | 1 |

| Journal of Neurology, Neurosurgery & Psychiatry | 1 |

| Journal of the American Geriatrics Society | 7 |

| Journal of the American Medical Directors Association | 2 |

| Journals of Gerontology Series B-Psychological Sciences & Social Sciences | 1 |

| Palliative Medicine | 2 |

| PLoS Medicine / Public Library of Science | 3 |

| PLoS ONE [Electronic Resource] | 3 |

| The Lancet. Psychiatry | 1 |

| Zeitschrift fur Gerontologie und Geriatrie | 1 |

Appendix 4:

Post-hoc analysis examining the extent to which clustering was accounted for in sample size and analysis according to number and sources of clustering

| Accounted for all sources of clustering? | ||

|---|---|---|

| Sources of clustering | Sample size calculation | Analysis |

| No sources of clustering (N=3) | NA | NA |

| One source of clustering (N=12) | 4 (33.3%) | 9 (75.0%) |

| Repeated measures only (N=8) | 2 (25%) | 8 (100%) |

| Cluster randomization only (N=3) | 2 (66.7%) | 1 (33.3%) |

| Group treatment only (N=1) | 0 | 0 |

| Two sources of clustering (N=38) | 4 (10.5%) | 9 (23.7%) |

| Repeated measures and multivariate/dyadic outcome (N=4) | 0 | 0 |

| Cluster randomization and repeated measures (N=25) | 3 (12.0%) | 8 (32.0%) |

| Cluster randomization and multivariate/dyadic outcome (N=1) | 0 | 0 |

| Group treatment and repeated measures (N=8) | 1 (12.5%) | 1 (12.5%) |

| Three sources of clustering (N=9) | 0 | 0 |

| Cluster randomization and repeated measures and multivariate/dyadic outcome (N=9) | 0 | 0 |

NA: Not applicable

Appendix 5: Key references describing methods for trials with multiple sources of clustering

Cluster randomized trials with repeated measures on the same cluster and/or the same individual over time:

Murray DM, Hannan PJ, Wolfinger RD et al. Analysis of data from group-randomized trials with repeat observations on the same groups. Statistics in Medicine 1998; 17: 1581–1600.

Ukoumunne OC, Thompson SG. Analysis of cluster randomized trials with repeated cross-sectional binary measurements. Statistics in Medicine. 2001.,15;20(3):417–33.

Liu, A., Shih, W.J. and Gehan, E, Sample size and power determination for clustered repeated measurements. Statist. Med 2002., 21: 1787–1801. https://doi.org/10.1002/sim.1154

Localio AR, Berlin JA, Have TR. Longitudinal and repeated cross-sectional cluster-randomization designs using mixed effects regression for binary outcomes: bias and coverage of frequentist and Bayesian methods. Statistics in Medicine. 2006., 30;25(16):2720–36.

Teerenstra S, Lu B, Preisser JS et al. Sample size considerations for GEE analyses of three-level cluster randomized trials. Biometrics 2010; 66(4): 1230–1237.

Hooper R, Teerenstra S, de Hoop E et al. Sample size calculation for stepped wedge and other longitudinal cluster randomised trials. Statistics in Medicine 2016; 35(26): 4718–4728.

Hemming K, Kasza J, Hooper R, Forbes A, Taljaard M. A tutorial on sample size calculation for multiple-period cluster randomized parallel, cross-over and stepped-wedge trials using the Shiny CRT Calculator, International Journal of Epidemiology, Volume 49, Issue 3, June 2020, Pages 979–995, https://doi.org/10.1093/ije/dyz237

Li F, Hughes JP, Hemming K, Taljaard M, Melnick ER, Heagerty PJ. Mixed-effects models for the design and analysis of stepped wedge cluster randomized trials: An overview. Stat Methods Med Res. 2020 Jul 6:962280220932962. Doi: 10.1177/0962280220932962. Epub ahead of print. PMID: 32631142.

Clustered dyadic data:

Moerbeek, M., & Teerenstra, S. (2016). Power analysis of trials with multilevel data. Boca Raton, FL: CRC Press

Kenny, D. A., Kashy, D. A., & Cook, W. L. (2006). Dyadic data analysis. New York, NY: Guilford Press

Clustered multivariate outcomes:

Turner RM, Omar RZ, Thompson SG. Modelling multivariate outcomes in hierarchical data, with application to cluster randomised trials. Biom J. 2006 Jun;48(3):333–45. Doi: 10.1002/bimj.200310147. PMID: 16845899.

Li D, Cao J, Zhang S. Power analysis for cluster randomized trials with multiple binary co-primary endpoints. Biometrics. 2019 Dec 24.

Multivariate longitudinal data:

Verbeke G, Fieuws S, Molenberghs G, Davidian M. The analysis of multivariate longitudinal data: a review. Stat Methods Med Res. 2014;23(1):42–59. doi:10.1177/0962280212445834

Footnotes

ALOIS A comprehensive, open-access register of dementia studies. Available at: [https://alois.medsci.ox.ac.uk/about-alois]. Accessed: 4 December 2020.

Syntax applied in Airtable [https://airtable.com/product] to identify trials exclusively focused on the elderly population and may include people living with dementia as a defined subgroup. Syntax identifies trials tagged with MeSH terms that include “aged”, but not “middle” or “adult”.

Declaration of conflicting interests

None declared.

Contributor Information

Monica Taljaard, Clinical Epidemiology Program, Ottawa Hospital Research Institute, Ottawa, Ontario, Canada; School of Epidemiology and Public Health, University of Ottawa, Ottawa, Canada.

Fan Li, Department of Biostatistics, Yale School of Public Health, Yale University, New Haven, Connecticut, USA.

Bo Qin, Department of Biostatistics, Yale School of Public Health, Yale University, New Haven, Connecticut, USA.

Caroline Cui, Department of Biostatistics, Yale School of Public Health, Yale University, New Haven, Connecticut, USA.

Leyi Zhang, Department of Biostatistics, Yale School of Public Health, Yale University, New Haven, Connecticut, USA.

Stuart G Nicholls, Clinical Epidemiology Program, Ottawa Hospital Research Institute, Ottawa, Canada.

Kelly Carroll, Clinical Epidemiology Program, Ottawa Hospital Research Institute, Ottawa, Canada.

Susan L Mitchell, Hebrew Senior Life Marcus Institute for Aging Research, Boston, Massachusetts, USA.

References

- 1.Baier RR, Mitchell SL, Jutkowitz E, et al. Identifying and Supporting Nonpharmacological Dementia Interventions Ready for Pragmatic Trials: Results From an Expert Workshop. J Am Med Dir Assoc 2018;19(7):560–562. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Zwarenstein M ‘Pragmatic’ and ‘explanatory’ attitudes to randomised trials. Journal of the Royal Society of Medicine 2017; 110(5), 208–218. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Mitchell SL, Mor V, Harrison J, et al. Embedded Pragmatic Trials in Dementia Care: Realizing the Vision of the NIA IMPACT Collaboratory. J Am Geriatr Soc 2020; 68: S1–S7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Allore HG, Goldfeld KS, Gutman R, et al. Statistical Considerations for Embedded Pragmatic Clinical Trials in People Living with Dementia. J Am Geriatr Soc 2020; 68: S68–S73. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Andridge RR, Shoben AB, Muller KE, et al. Analytic methods for individually randomized group treatment trials and group-randomized trials when subjects belong to multiple groups. Stat Med 2014;33(13):2178–90. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Donner A and Klar N. Design and Analysis of Cluster Randomization Trials in Health Research New York. New York: Oxford University Press, 2000. [Google Scholar]

- 7.Fitzmaurice GM, Laird NM and Ware JH. Applied Longitudinal Analysis, Second Edition, by: Hoboken, NJ: Wiley, 2011, ISBN 978–0-470–38027-7, xxv + 701 pp. [Google Scholar]

- 8.Hooper R and Bourke L. Cluster randomised trials with repeated cross sections: alternatives to parallel group designs. BMJ 2015; 350: h2925. [DOI] [PubMed] [Google Scholar]

- 9.Reed RG, Butler EA and Kenny DA. Dyadic Models for the Study of Health. Soc Personal Psychol Compass 2013; 7: 228–245. [Google Scholar]

- 10.Kenny DA. The effect of nonindependence on significance testing in dyadic research. Personal Relationships 1995; 2: 67–75. [Google Scholar]

- 11.Hardy SE, Allore H and Studenski SA. Missing data: a special challenge in aging research. J Am Geriatr Soc 2009; 57(4):722–729. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Van Ness PH, Charpentier PA, Ip EH, et al. Gerontologic biostatistics: the statistical challenges of clinical research with older study participants. J Am Geriatr Soc 2010; 58(7):1386–92. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Turner RM, Omar RZ and Thompson SG. Modelling multivariate outcomes in hierarchical data, with application to cluster randomised trials. Biom J 2006; 48(3): 333–345. [DOI] [PubMed] [Google Scholar]

- 14.Li D, Cao J and Zhang S. Power analysis for cluster randomized trials with multiple binary co-primary endpoints. Biometrics 2020; 76(4): 1064–1074. [DOI] [PubMed] [Google Scholar]

- 15.Food and Drug Administration. Guidance for industry: Alzheimer’s disease: developing drugs for the treatment of early stage disease. U.S. Department of Health and Human Services Food and Drug Administration, Rockville, MD, USA, 2013. [Google Scholar]

- 16.Campbell MK, Piaggio G, Elbourne DR, et al. Consort 2010 Statement: extension to cluster randomised trials. BMJ 2012; 345: e5661. [DOI] [PubMed] [Google Scholar]

- 17.Hemming K, Taljaard M, McKenzie JE, et al. Reporting of stepped wedge cluster randomised trials: extension of the CONSORT 2010 Statement with explanation and elaboration. BMJ 2018; 363: k1614. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Kahan Brennan C and Morris Tim P. Reporting and analysis of trials using stratified randomisation in leading medical journals: review and reanalysis BMJ 2012; 345: e5840. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Taljaard M, Weijer C, Grimshaw JM, et al. Developing a framework for the ethical design and conduct of pragmatic trials in healthcare: a mixed methods research protocol. Trials 2018; 19: 525. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Taljaard M, McDonald S, Nicholls SG, et al. A search filter to identify pragmatic trials in MEDLINE was highly specific but lacked sensitivity. J Clin Epidemiol 2020; 124: 75–84. [DOI] [PubMed] [Google Scholar]

- 21.Nicholls SG, Carroll K, Hey SP, et al. A review of pragmatic trials found a high degree of diversity in design and scope, deficiencies in reporting and trial registry data, and poor indexing. J Clin Epidemiol 2021; 137: 45–57. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.ALOIS A comprehensive, open-access register of dementia studies, https://alois.medsci.ox.ac.uk/about-alois. (Accessed 4 December 2020).

- 23.Diaz-Ordaz K, Froud R, Sheehan B, et al. A systematic review of cluster randomised trials in residential facilities for older people suggests how to improve quality. BMC Med Res Methodol 2013; 13: 127. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Loudon K, Treweek S, Sullivan F, et al. The PRECIS-2 tool: Designing trials that are fit for purpose BMJ 2015, 350: h2147. [DOI] [PubMed] [Google Scholar]

- 25.Nicholls SG, Zwarenstein M, Hey SP, et al. The importance of decision intent within descriptions of pragmatic trials. J Clin Epidemiol 2020; 125: 30–37. [DOI] [PubMed] [Google Scholar]

- 26.Zwarenstein M, Treweek S, Gagnier JJ, et al. Improving the reporting of pragmatic trials: an extension of the CONSORT statement. BMJ 2008; 337: a2390. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.NIA IMPACT Collaboratory: Transforming Dementia Care, https://impactcollaboratory.org/ (Accessed 13 December 2020). [Google Scholar]

- 28.Lee EJ, Patel A, Acedillo RR, et al. Cultivating Innovative Pragmatic Cluster-Randomized Registry Trials Embedded in Hemodialysis Care: Workshop Proceedings From 2018. Can J Kidney Health Dis 2019; 6:2054358119894394, e collection 2019. doi: 10.1177/2054358119894394 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Murray DM, Taljaard M, Turner EL, et al. Essential Ingredients and Innovations in the Design and Analysis of Group-Randomized Trials. Annu Rev Public Health 2020; 41: 1–19. [DOI] [PubMed] [Google Scholar]

- 30.Xiong C, Yu K, Gao F, et al. Power and sample size for clinical trials when efficacy is required in multiple endpoints: application to an Alzheimer’s treatment trial. Clin Trials 2005; 2(5): 387–93. [DOI] [PubMed] [Google Scholar]

- 31.Sozu T, Sugimoto T and Hamasaki T. Sample Size Determination in Superiority Clinical Trials With Multiple Co-Primary Correlated Endpoints. J Biopharm Stat 2011; 21(4): 650–668. [DOI] [PubMed] [Google Scholar]

- 32.Lafaye de Micheaux P, Liquet B, Marque S, et al. Power and sample size determination in clinical trials with multiple primary continuous correlated endpoints. J Biopharm Stat 2014; 24(2): 378–397. [DOI] [PubMed] [Google Scholar]

- 33.Moulton LH. Covariate-based constrained randomization of group-randomized trials. Clin Trials 2004; 1(3): 297–305. [DOI] [PubMed] [Google Scholar]

- 34.Li F, Lokhnygina Y, Murray DM, et al. An evaluation of constrained randomization for the design and analysis of group-randomized trials. Stat Med 2016, 35(10): 1565–1579. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Hossain A, Diaz-Ordaz K and Bartlett JW. Missing continuous outcomes under covariate dependent missingness in cluster randomised trials. Stat Methods Med Res 2016; 26: 1543–1562. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Hossain A, DiazOrdaz K and Bartlett JW. Missing binary outcomes under covariate-dependent missingness in cluster randomised trials. Stat Med 2017; 36: 3092–3109. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Turner EL, Yao L, Li F, et al. (2020). Properties and pitfalls of weighting as an alternative to multilevel multiple imputation in cluster randomized trials with missing binary outcomes under covariate-dependent missingness. Stat Methods Med Res 2020; 29(5): 1338–1353. [DOI] [PubMed] [Google Scholar]

- 38.Hemming K, Kasza J, Hooper R, et al. A tutorial on sample size calculation for multiple-period cluster randomized parallel, cross-over and stepped-wedge trials using the Shiny CRT Calculator. Int J Epidemiol 2020; 49(3): 979–995. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Korevaar E, Kasza J, Taljaard M, et al. Intra-cluster correlations from the CLustered OUtcome Dataset bank to inform the design of longitudinal cluster trials. Clin Trials. 2021. Jun 4:17407745211020852. doi: 10.1177/17407745211020852. Epub ahead of print. [DOI] [PubMed] [Google Scholar]