Abstract

Background

Computational biology provides software tools for testing and making inferences about biological data. In the face of increasing volumes of data, heuristic methods that trade accuracy for software speed may be employed. We have studied these trade-offs using the results of a large number of independent software benchmarks, and evaluated whether external factors, including speed, author reputation, journal impact, recency and developer efforts, are indicative of accurate software.

Results

We find that software speed, author reputation, journal impact, number of citations and age are unreliable predictors of software accuracy. This is unfortunate because these are frequently cited reasons for selecting software tools. However, GitHub-derived statistics and high version numbers show that accurate bioinformatic software tools are generally the product of many improvements over time. We also find an excess of slow and inaccurate bioinformatic software tools, and this is consistent across many sub-disciplines. There are few tools that are middle-of-road in terms of accuracy and speed trade-offs.

Conclusions

Our findings indicate that accurate bioinformatic software is primarily the product of long-term commitments to software development. In addition, we hypothesise that bioinformatics software suffers from publication bias. Software that is intermediate in terms of both speed and accuracy may be difficult to publish, possibly due to author, editor and reviewer practices. This leaves an unfortunate hole in the literature, as ideal tools may fall into this gap. High accuracy tools are not always useful if they are slow, while high speed is not useful if the results are also inaccurate.

Supplementary Information

The online version contains supplementary material available at (10.1186/s13059-022-02625-x).

Background

Computational biology software is widely used and has produced some of the most cited publications in the entire scientific corpus [1–3]. These highly cited software tools include implementations of methods for sequence alignment and homology inference [4–7], phylogenetic analysis [8–12], biomolecular structure analysis [13–17], and visualisation and data collection [18, 19]. However, the popularity of a software tool does not necessarily mean that it is accurate or computationally efficient; instead, usability, ease of installation, operating system support or other indirect factors may play a greater role in a software tool’s popularity. Indeed, there have been several notable incidents where convenient, yet inaccurate software has caused considerable harm [20–22].

There is an increasing reliance on technological solutions for automating biological data generation (e.g. next-generation sequencing, mass-spectroscopy, cell-tracking and species tracking); therefore, the biological sciences have become increasingly dependent upon software tools for processing large quantities of data [23]. As a consequence, the computational efficiency of data processing and analysis software is of great importance to decrease the energy, climate impact, and time costs of research [24]. Furthermore, as datasets become larger, even small error rates can have major impacts on the number of false inferences [25].

The gold-standard for determining accuracy is for researchers independent of individual tool development to conduct benchmarking studies; these benchmarks can serve a useful role in reducing the over-optimistic reporting of software accuracy [26–28] and the self-assessment trap [29, 30]. Benchmarking typically involves the use of a number of positive and negative control datasets, so that predictions from different software tools can be partitioned into true or false groups, allowing a variety of metrics to be used to evaluate performance [28, 31, 32]. The aim of these benchmarks is to robustly identify tools that make acceptable compromises in terms of balancing speed with discriminating true and false predictions, and are therefore suited for wide adoption by the community.

For common computational biology tasks, a proliferation of software-based solutions often exists [33–35]. While this is a good problem to have, and points to a diversity of options from which practical solutions can be selected, having many possible options creates a dilemma for users. In the absence of any recent gold-standard benchmarks, how should scientific software be selected? In the following we presume that the “biological accuracy” of predictions is the most desirable feature for a software tool. Biological accuracy is the degree to which predictions or measurements reflect the biological truths based on expert-derived curated datasets (see Methods for the mathematical definition used here).

A number of possible predictors of software quality are used by the community of computational biology software users [36–38]. Some accessible, quantifiable and frequently used proxies for identifying high quality software include: (1) Recency: recently published software tools may have built upon the results of past work, or be an update to an existing tool. Therefore these may be more accurate and faster. (2) Wide adoption: a software tool may be widely used because it is fast and accurate, or because it is well-supported and user-friendly. In fact, “large user base”, “word-of-mouth”, “wide-adoption”, “personal recommendation”, and “recommendation from a close colleague” were frequent responses to surveys of “how do scientists select software?” [36–38]. (3) Journal impact: many believe that high profile journals are run by editors and reviewers who carefully select and curate the best manuscripts. Therefore, high impact journals may be more likely to select manuscripts describing good software [39]. (4) Author/group reputation: the key to any project is the skills of the people involved, including maintaining a high collective intelligence [37, 40, 41]. As a consequence, an argument could be made that well respected and high-profile authors may write better software [42, 43]. (5) Speed: software tools frequently trade accuracy for speed. For example, heuristic software such as the popular homology search tool, BLAST, compromises the mathematical guarantee of optimal solutions for more speed [4, 7]. Some researchers may naively interpret this fact as implying that slower software is likely to be more accurate. But speed may also be influenced by the programming language [44], and the level of hardware optimisation [45, 46]; however, the specific method of implementation generally has a greater impact (e.g. brute-force approaches versus rapid and sensitive pre-filtering [47–49]). 
(6) Effective software versioning: with the wide adoption of public version-control systems like GitHub, quantifiable data on software development time and intensity indicators, such as the number of contributors to code, number of code changes and versions, are now available [50–52].

In the following study, we explore factors that may be indicative of software accuracy. This, in our opinion, should be one of the prime reasons for selecting a software tool. We have mined the large and freely accessible PubMed database [53] for benchmarks of computational biology software, and manually extracted accuracy and speed rankings for 498 unique software tools. For each tool, we have collected measures that may be predictive of accuracy, and may be subjectively employed by the research community as a proxy for software quality. These include relative speed, relative age, the productivity and impact of the corresponding authors, journal impact, number of citations and GitHub activity.

Results

We have collected relative accuracy and speed ranks for 498 distinct software tools. This software has been developed for solving a broad cross-section of computational biology tasks. Each software tool was benchmarked in at least one of 68 publications that satisfy the Boulesteix criteria [54]. In brief, the Boulesteix criteria are (1) the main focus of the article is a benchmark, (2) the authors are reasonably neutral, and (3) the test data and evaluation criteria are sensible.

For each of the publications describing these tools, we have (where possible) collected the journal’s H5-index (Google Scholar Metrics), the maximum H-index and corresponding M-indices [42] for the corresponding authors for each tool, and the number of times the publication(s) associated with a tool has been cited using Google Scholar (data collected over a 6-month period in late 2020). Note that citation metrics are not static and will change over time. In addition, where possible we also extracted the version number, the number of commits, number of contributors, total number of “issues”, the proportion of issues that remain open, the number of pull requests, and the number of times the code was forked from public GitHub repositories.

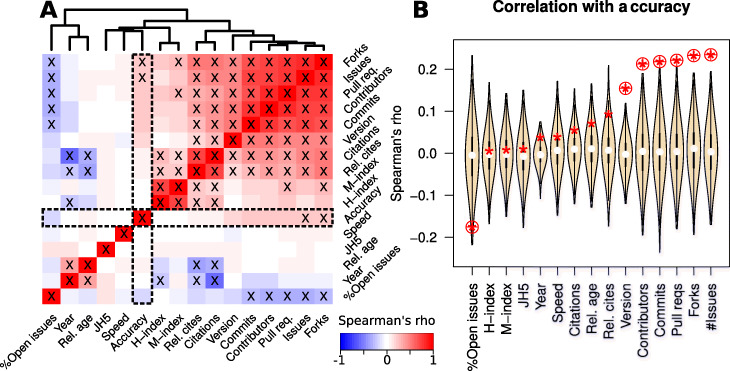

We have computed the Spearman’s correlation coefficient for each pairwise combination of the mean normalised accuracy and speed ranks, with the year published, mean relative age (compared to software in the same benchmarks), journal H5 metrics, the total number of citations, the relative number of citations (compared to software in the same benchmarks) and the maximum H- and corresponding M-indices for the corresponding authors, version number, and the GitHub statistics commits, contributors, pull requests, issues, % open issues and forks. The results are presented in Fig. 1A, B, and Additional file 1: Figs. S5&S6. We find significant associations between most of the citation-based metrics (journal H5, citations, relative citations, H-index and M-index). There is also a negative correlation between the year of publication, the relative age and many of the citation-based metrics.
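As an illustration of the statistic used above, the following minimal Python sketch computes Spearman's rho as the Pearson correlation of mid-ranked values. It is not the authors' R code: the function names (`midranks`, `pearson`, `spearman_rho`) and the choice to skip tools with a missing value (`None`) are assumptions made for this example.

```python
from statistics import mean


def midranks(values):
    """Rank values from 1..n; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / (vx * vy) ** 0.5


def spearman_rho(x, y):
    """Spearman's rho; pairs with a missing value (None) are skipped (assumption)."""
    pairs = [(a, b) for a, b in zip(x, y) if a is not None and b is not None]
    xs, ys = zip(*pairs)
    return pearson(midranks(xs), midranks(ys))
```

Skipping incomplete pairs reflects the fact that not every feature could be collected for every tool, so each pairwise correlation may rest on a different subset of tools.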

Fig. 1.

A A heatmap indicating the relationships between different features of bioinformatic software tools. Spearman’s rho is used to infer correlations between metrics such as citation-based metrics, the year and relative age of publication, version number, GitHub-derived activity measures, and the mean relative speed and accuracy rankings. Red colours indicate a positive correlation, blue colours indicate a negative correlation. Correlations with a P value less than 0.05 (corrected for multiple-testing using the Benjamini-Hochberg method) are indicated with an ‘X’ symbol. The correlations with accuracy are illustrated in more detail in B; the relationship between speed and accuracy is shown in more detail in Fig. 2. B Violin plots of Spearman’s correlations for permuted accuracy ranks and different software features. The unpermuted correlations are indicated with a red asterisk. For each benchmark, 1000 permuted sets of accuracy and speed ranks were generated, and the ranks were normalised to lie between 0 and 1 (see Methods for details). Circled asterisks are significant (empirical P value < 0.05, corrected for multiple-testing using the Benjamini-Hochberg method).

Data on the number of updates to software tools from GitHub, such as the version number and the numbers of contributors, commits, forks and issues, were significantly correlated with software accuracy (respective Spearman’s rhos = 0.15, 0.21, 0.22, 0.23, 0.23 and respective Benjamini & Hochberg corrected P values = $6.7\times10^{-4}$, $1.1\times10^{-3}$, $8.4\times10^{-4}$, $3.4\times10^{-4}$, $3.1\times10^{-4}$; Additional file 1: Fig. S6). The significance of these features was further confirmed with a permutation test (Fig. 1B). These features were not correlated with speed, however (see Fig. 1A & Additional file 1: Figures S5 & S6). We also found that reputation metrics such as citations, author and journal H-indices, and the age of tools were generally not correlated with either tool accuracy or speed (Fig. 1A, B).
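The Benjamini-Hochberg correction applied to these P values can be sketched in a few lines of Python. This is a generic implementation of the standard step-up procedure, intended to mirror what R's `p.adjust(p, method = "BH")` returns; the function name `benjamini_hochberg` is an assumption for this example and the code is not taken from the authors' repository.

```python
def benjamini_hochberg(pvalues):
    """Return Benjamini-Hochberg adjusted P values in the input order."""
    m = len(pvalues)
    # Visit P values from largest to smallest so a running minimum
    # enforces monotonicity of the adjusted values.
    order = sorted(range(m), key=lambda i: pvalues[i], reverse=True)
    adjusted = [0.0] * m
    running_min = 1.0
    for pos, idx in enumerate(order):
        rank = m - pos  # ascending rank of this P value (1 = smallest)
        running_min = min(running_min, pvalues[idx] * m / rank)
        adjusted[idx] = running_min
    return adjusted
```

For example, the raw P values 0.01, 0.04, 0.03 and 0.005 are adjusted to 0.02, 0.04, 0.04 and 0.02, so all four would still pass a 0.05 threshold.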

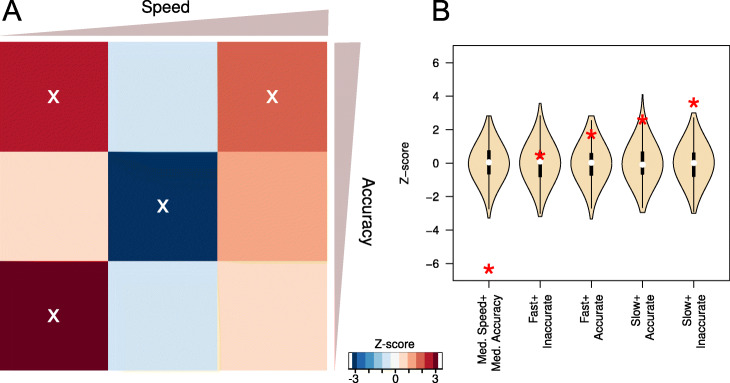

In order to gain a deeper understanding of the distribution of available bioinformatic software tools on a speed versus accuracy landscape, we ran a permutation test. The ranks extracted from each benchmark were randomly permuted, generating 1000 randomised speed and accuracy ranks. In the cells of a 3×3 grid spanning the normalised speed and accuracy ranks we computed a Z-score for the observed number of tools in a cell, compared to the expected distributions generated by 1000 randomised ranks. The results of this are shown in Fig. 2. We identified 4 of 9 bins where there was a significant excess or dearth of tools. For example, there was an excess of “slow and inaccurate” software (Z = 3.40, P value = $3.3\times10^{-4}$), with a more moderate excess of “slow and accurate” and “fast and accurate” software (Z = 2.49 and 1.7, P = $6.3\times10^{-3}$ and 0.04, respectively). We find that only the “fast and inaccurate” extreme class is at approximately the expected proportions based upon the permutation test (Fig. 2B).

Fig. 2.

A A heatmap indicating the relative paucity or abundance of software in the range of possible accuracy and speed rankings. Redder colours indicate an abundance of software tools in an accuracy and speed category, while bluer colours indicate scarcity of software in an accuracy and speed category. The abundance is quantified using a Z-score computation for each bin; this is derived from 1000 random permutations of speed and accuracy ranks from each benchmark. Mean normalised ranks of accuracy and speed have been binned into 9 classes (a 3×3 grid) that range from comparatively slow and inaccurate to comparatively fast and accurate. Z-scores with a P value less than 0.05 are indicated with an ‘X’. B The Z-score distributions from the permutation tests (indicated with the wheat coloured violin plots) compared to the Z-score for the observed values for each of the corner and middle squares of the heatmap.

The largest difference between the observed and expected software ranks is the reduction in the number of software tools that are classed as intermediate in terms of both speed and accuracy based on permutation tests (see Methods for details, Fig. 2). The middle cell of Fig. 2A and left-most violin plot of Fig. 2B highlight this extreme (Z = −6.38, P value = $9.0\times10^{-11}$).
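The grid-based permutation test described above can be sketched in Python as follows. This is an illustrative reading of the procedure, not the authors' R implementation. It assumes that rank 1 is the best rank, that ranks are rescaled as 1 − (r − 1)/(n − 1), that the 3×3 grid uses equal-width bins, and that each benchmark is a dictionary mapping a tool name to an `(accuracy_rank, speed_rank)` pair. All function names are hypothetical.

```python
import random
from collections import defaultdict
from statistics import mean, stdev


def normalise(rank, n):
    """Map rank 1..n onto [0, 1], with 1 for the top rank (assumed rescaling)."""
    return 1 - (rank - 1) / (n - 1)


def bin3(x):
    """Assign a value in [0, 1] to one of three equal-width bins (assumption)."""
    return min(int(x * 3), 2)


def grid_counts(benchmarks):
    """Count tools per (accuracy_bin, speed_bin) using mean normalised ranks."""
    acc, spd = defaultdict(list), defaultdict(list)
    for bench in benchmarks:
        n = len(bench)
        for tool, (a_rank, s_rank) in bench.items():
            acc[tool].append(normalise(a_rank, n))
            spd[tool].append(normalise(s_rank, n))
    counts = [[0] * 3 for _ in range(3)]
    for tool in acc:
        counts[bin3(mean(acc[tool]))][bin3(mean(spd[tool]))] += 1
    return counts


def permute(bench, rng):
    """Reassign accuracy and speed ranks to tools at random within a benchmark."""
    tools = list(bench)
    a = [bench[t][0] for t in tools]
    s = [bench[t][1] for t in tools]
    rng.shuffle(a)
    rng.shuffle(s)
    return {t: (a[i], s[i]) for i, t in enumerate(tools)}


def grid_z_scores(benchmarks, n_perm=1000, seed=0):
    """Z-score per grid cell: observed count versus permuted counts."""
    rng = random.Random(seed)
    observed = grid_counts(benchmarks)
    null = [grid_counts([permute(b, rng) for b in benchmarks])
            for _ in range(n_perm)]
    z = [[None] * 3 for _ in range(3)]
    for i in range(3):
        for j in range(3):
            sample = [g[i][j] for g in null]
            sd = stdev(sample)
            # A cell whose permuted counts never vary has no defined Z-score.
            z[i][j] = (observed[i][j] - mean(sample)) / sd if sd > 0 else None
    return observed, z
```

Permuting within each benchmark, rather than across the pooled data, preserves the number of tools per benchmark, so the null distribution reflects only the pairing of speed and accuracy ranks.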

Conclusion

We have gathered data on the relative speeds and accuracies of 498 bioinformatic tools from 68 benchmarks published between 2005 and 2020. Our results provide significant support for the suggestion that there are major benefits to the long-term support of software development [55]. The finding of a strong relationship between the number of commits and code contributors to GitHub (i.e. software updates) and accuracy highlights the benefits of long-term or at least intensive development.

Our study finds little evidence that impact-based metrics have any relationship with software quality, which is unfortunate, as these are frequently cited reasons for selecting software tools [38]. This implies that high citation rates for bioinformatic software [1–3] are more a reflection of other factors, such as user-friendliness or the Matthew Effect [56, 57], than of accuracy. Specifically, software tools published early are more likely to appear in high impact journals due to their perceived novelty and need. Without sustained maintenance these may be outperformed by subsequent tools, yet early publications still accrue citations from users and from all subsequent software publications, as tools need to be compared in order to publish. Subsequent tools are not perceived to be as novel, and hence appear in “lower” tier journals, despite being more reliable. Hence, the “rich” early publishers get richer in terms of citations. Indeed, citation counts are mainly predictive of age (Fig. 1A).

We found the lack of a correlation between software speed and accuracy surprising. The slower software tools are over-represented at both high and low levels of accuracy, with older tools enriched in this group (Fig. 2 and Additional file 1: Figure S7). In addition, there is a large under-representation of software that has intermediate levels of both accuracy and speed. A possible explanation for this is that bioinformatic software tools are bound by a form of publication bias [58, 59]. That is, the probability of a study being published is influenced by the results it contains [60]. The community of developers, reviewers and editors may be unwilling to publish software that is not highly ranked on speed or accuracy. If correct, this may have unfortunate consequences as these tools may nevertheless have further uses.

While we have taken pains to mitigate many issues with our analysis, some limitations nevertheless remain. For example, it has proven difficult to verify if the gap in medium accuracy and medium speed software is genuinely the result of publication bias, or due to additional factors that we have not taken into account. In addition, all of the features we have used here are moving targets. For example, as software tools are refined, their relative accuracies and speeds will change; the citation metrics, ages, and version control derived measures also change over time. Here we report a snapshot of values from 2020. The benchmarks themselves may also introduce biases into the study. For example, there are issues with a potential lack of independence between benchmarks (e.g. shared datasets, metrics and tools), there are heterogeneous measures of accuracy and speed, and there are often unclear processes for including different tools.

We propose that the full spectrum of software tool accuracies and speeds serves a useful purpose to the research community. Like negative results, this information, if honestly reported, illustrates to the research community that certain approaches are not practical research avenues [61]. The current novelty-seeking practices of many publishers, editors, reviewers and authors of software tools therefore may be depriving our community of tools for building effective and productive workflows. Indeed, the drive for novelty may be an actively harmful criterion for the software development community, just as it is for reliable and reproducible research [62]. Novelty criteria for publication may, in addition, discourage continual, incremental improvements in code post-publication in favour of splashy new tools that are likely to accrue more citations.

In addition, we suggest that further efforts be made to encourage continual updates to software tools. To paraphrase some of the suggestions of Siepel (2019), these efforts may include more secure positions for developers, institutional promotion criteria that include software maintenance, lower publication barriers for significant software updates, and further funding for software maintenance and improvement, not just new tools [55]. If these issues were recognised by research managers, funders and reviewers, then perhaps the future bioinformatic software tool landscape would be much improved.

The most reliable way to identify accurate software tools remains through neutral software benchmarks [54]. We are hopeful that this, along with steps to reduce the publication-bias we have described, will reduce the over-optimistic and misleading reporting of tool accuracy [26, 27, 29].

Methods

In order to evaluate predictors of computational biology software accuracy, we mined the published literature, extracted data from articles, connected these with bibliometric databases, and tested for correlates with accuracy. We outline these steps in further detail below.

Criteria for inclusion

We are interested in using computational biology benchmarks that satisfy Boulesteix’s (ALB) three criteria for a “neutral comparison study” [54]. Firstly, the main focus of the article is the comparison and not the introduction of a new tool, as these can be biased [30]. Secondly, the authors should be reasonably neutral, which means that the authors should not generally have been involved in the development of the tools included in the benchmark. Thirdly, the test data and evaluation criteria should be sensible. This means that the test data should be independent of data that tools have been trained upon, and that the evaluation measures appropriately quantify correct and incorrect predictions. In addition, we excluded benchmarks with too few tools (≤3), or those where the results were inaccessible (no supplementary materials or poor figures).

Literature mining

We identified an initial list of 10 benchmark articles that satisfy the ALB-criteria. These were identified based upon previous knowledge of published articles and were supplemented with several literature searches (e.g. [“benchmark” AND “cputime”] was used to query both GoogleScholar and PubMed [53, 63]). We used these articles to seed a machine-learning approach for identifying further candidate articles and to identify new search terms to include. This is outlined in Additional file 1: Fig. S1.

For our machine-learning-based literature screening, we computed a score, s(a), for each article that tells us the likelihood that it is a benchmark. In brief, our approach uses 3 stages:

1. Remove high frequency words from the title and abstract of candidate articles (e.g. ‘the’, ‘and’, ‘of’, ‘to’, ‘a’, …)
2. Compute a log-odds score for the remaining words
3. Use a sum of log-odds scores to give a total score for candidate articles

For stage 1, we identified a list of high frequency (e.g. f(word) > 1/10,000) words by pooling the content of two control texts [64, 65].

For stage 2, in order to compute a log-odds score for bioinformatic words, we computed the frequency of words that were not removed by our high frequency filter in two different groups of articles: bioinformatics-background and bioinformatics-benchmark articles. The text from bioinformatics-background articles was drawn from the bioinformatics literature, but these were not necessarily associated with benchmark studies. For background text we used PubMed [53, 63] to select 8908 articles that contained the word “bioinformatics” in the title or abstract and were published between 2013 and 2015. We computed frequencies for each word by combining text from titles and abstracts for the background and training articles. A log-odds score was computed for each word using the following formula:

$$\mathrm{lo}(word) = \log\left(\frac{f_{tr}(word) + \delta}{f_{bg}(word) + \delta}\right)$$

Where $\delta$ was a pseudo-count added for each word ($\delta = 10^{-5}$, by default), and $f_{bg}(word)$ and $f_{tr}(word)$ were the frequencies of a word in the background and training datasets respectively. Word frequencies were computed by counting the number of times a word appears in the pool of titles and abstracts; the counts were normalised by the total number of words in each set. Additional file 1: Figure S2 shows exemplar word scores.

Thirdly, we also collected a group of candidate benchmark articles by mining PubMed for articles that were likely to be benchmarks of bioinformatic software; these match the terms: “((bioinformatics) AND (algorithms OR programs OR software)) AND (accuracy OR assessment OR benchmark OR comparison OR performance) AND (speed OR time)”. Further terms used in this search were progressively added as relevant enriched terms were identified in later iterations. The final query is given in Additional file 1.

A score is computed for each candidate article by summing the log-odds scores for the words in title and abstract, i.e. $s(a) = \sum_{word \in a} \mathrm{lo}(word)$, where $\mathrm{lo}(word)$ is the log-odds score of a word. The high scoring candidate articles are then manually evaluated against the ALB-criteria. Accuracy and speed ranks were extracted from the articles that met the criteria, and these were added to the set of training articles. The evaluated candidate articles that did not meet the ALB-criteria were incorporated into the set of background articles. This process was iterated and resulted in the identification of 68 benchmark articles, containing 133 different benchmarks. Together these ranked 498 distinct software packages.
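The three screening stages can be sketched in Python as below. This is a toy illustration under stated assumptions, not the authors' pipeline: the stop-word list is a small stand-in for the high frequency filter, the tokeniser is a simple regular expression, natural logarithms are used because the base is not specified here (a different base only rescales the scores), and words unseen in both article sets contribute zero. All function names are hypothetical.

```python
import math
import re
from collections import Counter

# Toy stand-in for the high frequency word filter (assumption).
STOPWORDS = {"the", "and", "of", "to", "a", "in", "for", "is"}


def tokenise(text):
    """Stage 1: lower-case, split into words, drop high frequency words."""
    return [w for w in re.findall(r"[a-z0-9]+", text.lower())
            if w not in STOPWORDS]


def word_frequencies(texts):
    """Word counts normalised by the total number of words in the set."""
    counts = Counter(w for t in texts for w in tokenise(t))
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()}


def log_odds_table(training_texts, background_texts, delta=1e-5):
    """Stage 2: log-odds of training versus background frequency per word."""
    f_tr = word_frequencies(training_texts)
    f_bg = word_frequencies(background_texts)
    vocab = set(f_tr) | set(f_bg)
    return {w: math.log((f_tr.get(w, 0.0) + delta) / (f_bg.get(w, 0.0) + delta))
            for w in vocab}


def article_score(text, table):
    """Stage 3: s(a), the sum of log-odds scores over the words in an article."""
    return sum(table.get(w, 0.0) for w in tokenise(text))
```

In use, `training_texts` would hold the pooled titles and abstracts of known benchmark articles and `background_texts` those of the background set; candidates are then sorted by `article_score` for manual review, and the two sets grow with each iteration.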

There is a potential for bias to have been introduced into this dataset. Some possible forms of bias include converging on a niche group of benchmark studies due to the literature mining technique that we have used. A further possibility is that benchmark studies themselves are biased, either including very high performing or very low performing software tools. To address each of these concerns we have attempted to be as comprehensive as possible in terms of benchmark inclusion, as well as including comprehensive benchmarks (i.e., studies that include all available software tools that address a specific biological problem).

Data extraction and processing

For each article that met the ALB-criteria and contained data on both the accuracy and speed from their tests, we extracted ranks for each tool. Until published datasets are made available in consistent, machine-readable formats this step is necessarily a manual process: ranks were extracted from a mixture of manuscript figures, tables and supplementary materials, and each data source is documented in Additional file 2: Table S1. In addition, a variety of accuracy metrics are reported, e.g. “accuracy”, “AUROC”, “F-measure”, “Gain”, “MCC”, “N50”, “PPV”, “precision”, “RMSD”, “sensitivity”, “TPR”, and “tree error”. Our analysis makes the necessarily pragmatic assumption that highly ranked tools on one accuracy metric will also be highly ranked on other accuracy metrics. Many articles contained multiple benchmarks; in these cases we recorded ranks from each of these, the provenance of which is stored with the accuracy metric and raw speed and accuracy rank data for each tool (Additional file 2: Table S1). In line with rank-based statistics, the cases where tools were tied were resolved by using a midpoint rank (e.g. if tools ranked 3 and 4 are tied, the rank 3.5 was used) [66]. Each rank extraction was independently verified by at least one other co-author to ensure both that the provenance of the data could be established and that the ranks were correct. The ranks for each benchmark were then normalised to lie between 0 and 1 using the formula $1 - \frac{r-1}{n-1}$, where ‘r’ is a tool’s rank and ‘n’ is the number of tools in the benchmark. For tools that were benchmarked multiple times with multiple metrics (e.g. BWA was evaluated in 6 different articles [67–72]) a mean normalised rank was used to summarise the accuracy and speed performance. Or, more formally:

$$\text{mean normalised rank} = \frac{1}{N}\sum_{i=1}^{N}\left(1 - \frac{r_i - 1}{n_i - 1}\right)$$

where $N$ is the number of benchmarks in which the tool was ranked, and $r_i$ and $n_i$ are the tool’s rank and the number of tools in benchmark $i$.
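The rank processing described above can be illustrated with a short Python sketch. It assumes that rank 1 denotes the best tool and that ranks are rescaled linearly as 1 − (r − 1)/(n − 1), so that the best tool scores 1 and the worst scores 0. Tied tools are expected to carry midpoint ranks already (e.g. 3.5). The function names and the dictionary layout are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def normalise_ranks(ranks):
    """Rescale the ranks of one benchmark to [0, 1] (1 = best, assumed form).

    `ranks` maps tool name -> rank, where tied tools share a midpoint rank.
    """
    n = len(ranks)
    if n < 2:
        raise ValueError("a benchmark needs at least two tools")
    return {tool: 1 - (r - 1) / (n - 1) for tool, r in ranks.items()}


def mean_normalised_rank(benchmarks):
    """Average each tool's normalised rank over every benchmark it appears in."""
    per_tool = defaultdict(list)
    for ranks in benchmarks:
        for tool, value in normalise_ranks(ranks).items():
            per_tool[tool].append(value)
    return {tool: mean(values) for tool, values in per_tool.items()}
```

Normalising before averaging keeps a tool's result in a five-tool benchmark comparable with its result in a twenty-tool benchmark, which raw ranks would not allow.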

For each tool we identified the corresponding publications in GoogleScholar; the total number of citations was recorded, the corresponding authors were also identified, and if they had public GoogleScholar profiles, we extracted their H-index and calculated an M-index ($H/y$), where ‘H’ is the H-index and ‘y’ is the number of years since their first publication. The journal quality was estimated using the H5-index from GoogleScholar Metrics.

The year of publication was also recorded for each tool. “Relative age” and “relative citations” were also computed for each tool. For each benchmark, software was ranked by year of first publication (or number of citations), ranks were assigned and then normalised as described above. Tools ranked in multiple evaluations were then assigned a mean value for “relative age” and “relative citations”.

The papers describing tools were checked for information on version numbers and links to GitHub. Google was also employed to identify GitHub repositories. When a repository was matched with a tool, the number of “commits” and number of “contributors” were collected; when details of version numbers were provided, these were also harvested. Version numbers are inconsistently used between groups, and may begin at either 0 or 1. To counter this issue we have added ‘1’ to all versions less than ‘1’; for example, version 0.31 becomes 1.31. In addition, multiple point releases may be used, e.g. ‘version 5.2.6’; these have been mapped to the nearest decimal value ‘5.26’.
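One way to express this version-number mapping in Python is sketched below. It is an interpretation of the two rules stated above rather than the authors' code: the digits after the first point are concatenated (so ‘5.2.6’ becomes 5.26), and 1 is added to any value below 1. The function name `version_to_number` is hypothetical.

```python
import re


def version_to_number(version):
    """Map a version string to a single decimal value.

    Rules (as interpreted from the text): concatenate all point-release
    digits after the major number, then add 1 to values below 1.
    """
    parts = re.findall(r"\d+", version)
    if not parts:
        raise ValueError(f"no digits found in version string: {version!r}")
    major, rest = parts[0], "".join(parts[1:])
    value = float(f"{major}.{rest}" if rest else major)
    return value + 1 if value < 1 else value
```

A limitation of this reading is that multi-digit components collapse, so ‘1.10.2’ and ‘1.1.02’ would map to the same value; for a rank-based correlation this loss of resolution is likely to matter little.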

Statistical analysis

For each tool we manually collected up to 12 different statistics from GoogleScholar, GitHub and directly from literature describing tools ((1) corresponding author’s H-index, (2) corresponding author’s M-index, (3) journal H5 index, (4) normalised accuracy rank, (5) normalised speed rank, (6) number of citations, (7) relative age, (8) relative number of citations, (9) year first published, (10) version, (11) number of commits to GitHub, (12) number of contributors to GitHub). These were evaluated in a pairwise fashion to produce Fig. 1A, B; the R code used to generate these is given in a GitHub repository (linked below).

For each benchmark of three or more tools, we extracted the published accuracy and speed ranks. In order to identify whether there was an enrichment of certain accuracy and speed pairings we constructed a permutation test. The individual accuracy and speed ranks were reassigned to tools in a random fashion and each new accuracy and speed rank pairing was recorded. For each benchmark this procedure was repeated 1000 times. These permuted rankings were normalised and compared to the real rankings to produce the ‘X’ points in Fig. 1B and the heatmap and histograms in Fig. 2. The heatmap in Fig. 2 is based upon Z-scores ($Z = \frac{x - \bar{x}}{s}$, where $x$ is the observed number of tools in a cell, and $\bar{x}$ and $s$ are the mean and standard deviation of the corresponding counts in the permuted data). For each cell in a 3×3 grid a Z-score and corresponding P value are computed, the latter either with the ‘pnorm’ distribution function in R (Fig. 2A) or empirically (Fig. 2B), to illustrate the abundance or lack of tools in a cell relative to the permuted data.
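The two ways of turning a permutation result into a P value can be sketched in Python. The first function is a standard-library analogue of a one-tailed normal tail probability, as R's `pnorm` would give; a one-tailed form appears consistent with the Z and P values reported in the Results, but that is an inference. The second is one common empirical estimator, (k + 1)/(N + 1), chosen here as an assumption because the exact estimator is not stated. Both function names are hypothetical.

```python
import math
from statistics import mean


def normal_tail_p(z):
    """One-tailed P value for a Z-score under the standard normal.

    Uses the upper tail for positive z and the lower tail for negative z.
    """
    return 0.5 * math.erfc(abs(z) / math.sqrt(2))


def empirical_p(observed, permuted):
    """One-tailed empirical P value from permuted statistics.

    Counts permuted values at least as extreme as the observed value, in the
    direction in which the observation departs from the permuted mean, and
    adds 1 to numerator and denominator so the estimate is never zero.
    """
    centre = mean(permuted)
    if observed >= centre:
        extreme = sum(1 for v in permuted if v >= observed)
    else:
        extreme = sum(1 for v in permuted if v <= observed)
    return (extreme + 1) / (len(permuted) + 1)
```

With 1000 permutations the empirical estimate cannot fall below 1/1001, which is why very small P values such as that of the middle grid cell can only come from the normal approximation.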

The distributions for each feature and permuted accuracy or speed ranks are shown in Additional file 1: Figures S3 & S4. Scatter-plots for each pair of features are shown in Additional file 1: Figure S5. Plots showing the sample sizes for each tool and feature are shown in Additional file 1: Figure S8, which also illustrates a power analysis showing what effect sizes we are likely to detect for our sample sizes.

Supplementary Information

Additional file 1 Supplementary Figures S1-S8 and the reference list of neutral software benchmarks from which the accuracy and speed data are derived [1-66].

Additional file 2 Supplementary Tables S1-S7.

Acknowledgements

The authors acknowledge invaluable discussions with Anne-Laure Boulesteix, Shinichi Nakagawa, Suetonia Palmer and Jason Tylianakis. Murray Cox, Raquel Norel, Alexandros Stamatakis, Jens Stoye, Tandy Warnow, Luis Pedro Coelho and three anonymous reviewers provided valuable feedback on drafts of the manuscript.

This work was largely conducted on the traditional territory of Kāi Tahu.

Peer review information

Andrew Cosgrove was the primary editor of this article and managed its editorial process and peer review in collaboration with the rest of the editorial team.

Review history

The review history is available as Additional file 3.

Authors’ contributions

PPG conceived the study. PPG, JMP, SM, FAG and SUU contributed with assessing manuscripts against inclusion criteria, data extraction and data validation. PPG, AP, AG and MAB contributed to the design and coordination of the study. The authors read and approved the final manuscript.

Funding

PPG is supported by a Rutherford Discovery Fellowship, administered by the Royal Society Te Apārangi. PPG, AG and MAB acknowledge support from a Data Science Programmes grant (UOAX1932).

Availability of data and materials

Raw datasets, software and documents are available under a CC-BY license at GitHub [73] and FigShare [74].

Declarations

Competing interests

The authors declare that they have no competing interests.

Change history

2/28/2022

The review history for this article has been added.

Contributor Information

Paul P. Gardner, Email: paul.gardner@otago.ac.nz

James M. Paterson, Email: james.paterson@canterbury.ac.nz

Stephanie McGimpsey, Email: srm88@cam.ac.uk

Fatemeh Ashari-Ghomi, Email: fatgho@food.dtu.dk

Sinan U. Umu, Email: sinan.ugur.umu@kreftregisteret.no

Aleksandra Pawlik, Email: PawlikA@landcareresearch.co.nz

Alex Gavryushkin, Email: alex@biods.org

Michael A. Black, Email: mik.black@otago.ac.nz

References

- 1. Perez-Iratxeta C, Andrade-Navarro MA, Wren JD. Evolving research trends in bioinformatics. Brief Bioinform. 2007;8(2):88–95. doi: 10.1093/bib/bbl035.

- 2. Van Noorden R, Maher B, Nuzzo R. The top 100 papers. Nature. 2014;514(7524):550–53. doi: 10.1038/514550a.

- 3. Wren JD. Bioinformatics programs are 31-fold over-represented among the highest impact scientific papers of the past two decades. Bioinformatics. 2016;32(17):2686–91. doi: 10.1093/bioinformatics/btw284.

- 4. Altschul SF, Gish W, Miller W, Myers EW, Lipman DJ. Basic local alignment search tool. J Mol Biol. 1990;215(3):403–10. doi: 10.1016/S0022-2836(05)80360-2.

- 5. Thompson JD, Higgins DG, Gibson TJ. CLUSTAL w: improving the sensitivity of progressive multiple sequence alignment through sequence weighting, position-specific gap penalties and weight matrix choice. Nucleic Acids Res. 1994;22(22):4673–80. doi: 10.1093/nar/22.22.4673.

- 6. Thompson JD, Gibson TJ, Plewniak F, Jeanmougin F, Higgins DG. The CLUSTAL_X windows interface: flexible strategies for multiple sequence alignment aided by quality analysis tools. Nucleic Acids Res. 1997;25(24):4876–82. doi: 10.1093/nar/25.24.4876.

- 7. Altschul SF, Madden TL, Schäffer AA, Zhang J, Zhang Z, Miller W, Lipman DJ. Gapped BLAST and PSI-BLAST: a new generation of protein database search programs. Nucleic Acids Res. 1997;25(17):3389–402. doi: 10.1093/nar/25.17.3389.

- 8. Felsenstein J. Confidence limits on phylogenies: An approach using the bootstrap. Evolution. 1985;39(4):783–91. doi: 10.1111/j.1558-5646.1985.tb00420.x.

- 9. Saitou N, Nei M. The neighbor-joining method: a new method for reconstructing phylogenetic trees. Mol Biol Evol. 1987;4(4):406–25. doi: 10.1093/oxfordjournals.molbev.a040454.

- 10. Posada D, Crandall KA. MODELTEST: testing the model of DNA substitution. Bioinformatics. 1998;14(9):817–18. doi: 10.1093/bioinformatics/14.9.817.

- 11. Ronquist F, Huelsenbeck JP. MrBayes 3: Bayesian phylogenetic inference under mixed models. Bioinformatics. 2003;19(12):1572–74. doi: 10.1093/bioinformatics/btg180.

- 12. Tamura K, Dudley J, Nei M, Kumar S. MEGA4: Molecular evolutionary genetics analysis (MEGA) software version 4.0. Mol Biol Evol. 2007;24(8):1596–99. doi: 10.1093/molbev/msm092.

- 13. Sheldrick GM. Phase annealing in SHELX-90: direct methods for larger structures. Acta Crystallogr A. 1990;46(6):467–73.

- 14. Sheldrick GM. A short history of SHELX. Acta Crystallogr A. 2008;64(Pt 1):112–22. doi: 10.1107/S0108767307043930.

- 15. Jones TA, Zou JY, Cowan SW, Kjeldgaard M. Improved methods for building protein models in electron density maps and the location of errors in these models. Acta Crystallogr A. 1991;47(Pt 2):110–19. doi: 10.1107/s0108767390010224.

- 16. Laskowski RA, MacArthur MW, Moss DS, Thornton JM. PROCHECK: a program to check the stereochemical quality of protein structures. J Appl Crystallogr. 1993;26(2):283–91.

- 17. Otwinowski Z, Minor W. Processing of X-ray diffraction data collected in oscillation mode. Methods Enzymol. 1997;276:307–26. doi: 10.1016/S0076-6879(97)76066-X.

- 18. Kraulis PJ. MOLSCRIPT: a program to produce both detailed and schematic plots of protein structures. J Appl Crystallogr. 1991;24(5):946–50.

- 19. Berman HM, Westbrook J, Feng Z, Gilliland G, Bhat TN, Weissig H, Shindyalov IN, Bourne PE. The protein data bank. Nucleic Acids Res. 2000;28(1):235–42. doi: 10.1093/nar/28.1.235.

- 20. Leveson NG, Turner CS. An investigation of the therac-25 accidents. Computer. 1993;26(7):18–41.

- 21. Cummings M, Britton D. Living with Robots. London: Elsevier; 2020. Regulating safety-critical autonomous systems: past, present, and future perspectives.

- 22. Herkert J, Borenstein J, Miller K. The boeing 737 max: Lessons for engineering ethics. Sci Eng Ethics. 2020;26(6):2957–74. doi: 10.1007/s11948-020-00252-y.

- 23. Marx V. Biology: The big challenges of big data. Nature. 2013;498(7453):255–60. doi: 10.1038/498255a.

- 24. Gombiner J. Carbon footprinting the internet. Consilience-J Sustain Dev. 2011;5(1).

- 25. Storey JD, Tibshirani R. Statistical significance for genomewide studies. Proc Natl Acad Sci USA. 2003;100(16):9440–45. doi: 10.1073/pnas.1530509100.

- 26. Boulesteix A. Over-optimism in bioinformatics research. Bioinformatics. 2010;26(3):437–39. doi: 10.1093/bioinformatics/btp648.

- 27. Jelizarow M, Guillemot V, Tenenhaus A, Strimmer K, Boulesteix A. Over-optimism in bioinformatics: an illustration. Bioinformatics. 2010;26(16):1990–98. doi: 10.1093/bioinformatics/btq323.

- 28. Weber LM, Saelens W, Cannoodt R, Soneson C, Hapfelmeier A, Gardner PP, Boulesteix AL, Saeys Y, Robinson MD. Essential guidelines for computational method benchmarking. Genome Biol. 2019;20(1):125. doi: 10.1186/s13059-019-1738-8.

- 29. Norel R, Rice JJ, Stolovitzky G. The self-assessment trap: can we all be better than average? Mol Syst Biol. 2011;7(1):537. doi: 10.1038/msb.2011.70.

- 30. Buchka S, Hapfelmeier A, Gardner PP, Wilson R, Boulesteix AL. On the optimistic performance evaluation of newly introduced bioinformatic methods. Genome Biol. 2021;22(1):152. doi: 10.1186/s13059-021-02365-4.

- 31. Egan JP. Signal Detection Theory and ROC-analysis. Series in Cognition and Perception. New York: Academic Press; 1975.

- 32. Hall T, Beecham S, Bowes D, Gray D, Counsell S. A systematic literature review on fault prediction performance in software engineering. IEEE Trans Software Eng. 2012;38(6):1276–304.

- 33. Felsenstein J. Phylogeny programs. 1995. http://evolution.gs.washington.edu/phylip/software.html. Accessed Nov 2020.

- 34. Altschul S, Demchak B, Durbin R, Gentleman R, Krzywinski M, Li H, Nekrutenko A, Robinson J, Rasband W, Taylor J, Trapnell C. The anatomy of successful computational biology software. Nat Biotechnol. 2013;31(10):894–97. doi: 10.1038/nbt.2721.

- 35. Henry VJ, Bandrowski AE, Pepin A, Gonzalez BJ, Desfeux A. OMICtools: an informative directory for multi-omic data analysis. Database. 2014;2014.

- 36. Hannay JE, MacLeod C, Singer J, Langtangen HP, Pfahl D, Wilson G. Proceedings of the 2009 ICSE Workshop on Software Engineering for Computational Science and Engineering. SECSE ’09. Washington: IEEE Computer Society; 2009. How do scientists develop and use scientific software?

- 37. Joppa LN, McInerny G, Harper R, Salido L, Takeda K, O’Hara K, Gavaghan D, Emmott S. Troubling trends in scientific software use. Science. 2013;340(6134):814–15. doi: 10.1126/science.1231535.

- 38. Loman N, Connor T. Bioinformatics infrastructure and training survey. 2015. Figshare. Dataset. https://doi.org/10.6084/m9.figshare.1572287.v2.

- 39. Garfield E. Citation indexes for science; a new dimension in documentation through association of ideas. Science. 1955;122(3159):108–11. doi: 10.1126/science.122.3159.108.

- 40. Woolley AW, Chabris CF, Pentland A, Hashmi N, Malone TW. Evidence for a collective intelligence factor in the performance of human groups. Science. 2010;330(6004):686–88. doi: 10.1126/science.1193147.

- 41. Cheruvelil KS, Soranno PA, Weathers KC, Hanson PC, Goring SJ, Filstrup CT, Read EK. Creating and maintaining high-performing collaborative research teams: the importance of diversity and interpersonal skills. Front Ecol Environ. 2014;12(1):31–38.

- 42. Hirsch JE. An index to quantify an individual’s scientific research output. Proc Natl Acad Sci USA. 2005;102(46):16569–72. doi: 10.1073/pnas.0507655102.

- 43. Bornmann L, Mutz R, Daniel H. Are there better indices for evaluation purposes than the h-index? a comparison of nine different variants of the h-index using data from biomedicine. J Am Soc Inf Sci. 2008;59(5):830–37.

- 44. Fourment M, Gillings MR. A comparison of common programming languages used in bioinformatics. BMC Bioinformatics. 2008;9:82. doi: 10.1186/1471-2105-9-82.

- 45. Farrar M. Striped Smith–Waterman speeds database searches six times over other SIMD implementations. Bioinformatics. 2007;23(2):156–61. doi: 10.1093/bioinformatics/btl582.

- 46. Dematté L, Prandi D. GPU computing for systems biology. Brief Bioinform. 2010;11(3):323–33. doi: 10.1093/bib/bbq006.

- 47. Schaeffer J. The history heuristic and alpha-beta search enhancements in practice. IEEE Trans Pattern Anal Mach Intell. 1989;11(11):1203–12.

- 48. Papadimitriou CH. Encyclopedia of Computer Science. Chichester: John Wiley and Sons Ltd.; 2003. Computational complexity.

- 49. Leiserson CE, Thompson NC, Emer JS, Kuszmaul BC, Lampson BW, Sanchez D, Schardl TB. There’s plenty of room at the top: What will drive computer performance after moore’s law? Science. 2020;368(6495).

- 50. Ray B, Posnett D, Filkov V, Devanbu P. Proceedings of the 22nd ACM SIGSOFT International Symposium on Foundations of Software Engineering. New York: Association for Computing Machinery; 2014. A large scale study of programming languages and code quality in github.

- 51. Dozmorov MG. Github statistics as a measure of the impact of open-source bioinformatics software. Front Bioeng Biotechnol. 2018;6:198. doi: 10.3389/fbioe.2018.00198.

- 52. Mangul S, Mosqueiro T, Abdill RJ, Duong D, Mitchell K, Sarwal V, Hill B, Brito J, Littman RJ, Statz B, Lam AK, Dayama G, Grieneisen L, Martin LS, Flint J, Eskin E, Blekhman R. Challenges and recommendations to improve the installability and archival stability of omics computational tools. PLoS Biol. 2019;17(6):3000333. doi: 10.1371/journal.pbio.3000333.

- 53. Sayers EW, Barrett T, Benson DA, Bolton E, Bryant SH, Canese K, Chetvernin V, Church DM, Dicuccio M, Federhen S, Feolo M, Geer LY, Helmberg W, Kapustin Y, Landsman D, Lipman DJ, Lu Z, Madden TL, Madej T, Maglott DR, Marchler-Bauer A, Miller V, Mizrachi I, Ostell J, Panchenko A, Pruitt KD, Schuler GD, Sequeira E, Sherry ST, Shumway M, Sirotkin K, Slotta D, Souvorov A, Starchenko G, Tatusova TA, Wagner L, Wang Y, John W W, Yaschenko E, Ye J. Database resources of the national center for biotechnology information. Nucleic Acids Res. 2010;38(Database issue):5–16. doi: 10.1093/nar/gkp967.

- 54. Boulesteix A, Lauer S, Eugster MJA. A plea for neutral comparison studies in computational sciences. PLoS ONE. 2013;8(4):61562. doi: 10.1371/journal.pone.0061562.

- 55. Siepel A. Challenges in funding and developing genomic software: roots and remedies. Genome Biol. 2019;20(1):1–14. doi: 10.1186/s13059-019-1763-7.

- 56. Larivière V, Gingras Y. The impact factor’s Matthew Effect: A natural experiment in bibliometrics. J Am Soc Inf Sci. 2010;61(2):424–27.

- 57. Merton RK. The Matthew Effect in Science. Science. 1968;159(3810):56–63.

- 58. Boulesteix A, Stierle V, Hapfelmeier A. Publication bias in methodological computational research. Cancer Inform. 2015;14(Suppl 5):11–19. doi: 10.4137/CIN.S30747.

- 59. Nissen SB, Magidson T, Gross K, Bergstrom CT. Publication bias and the canonization of false facts. Elife. 2016;5:21451. doi: 10.7554/eLife.21451.

- 60. Sterling TD, Rosenbaum WL, Weinkam JJ. Publication decisions revisited: The effect of the outcome of statistical tests on the decision to publish and vice versa. Am Stat. 1995;49(1):108–12.

- 61. Fanelli D. Negative results are disappearing from most disciplines and countries. Scientometrics. 2012;90(3):891–904.

- 62. Brembs B. Reliable novelty: New should not trump true. PLoS Biol. 2019;17(2):3000117. doi: 10.1371/journal.pbio.3000117.

- 63. McEntyre J, Lipman D. PubMed: bridging the information gap. CMAJ. 2001;164(9):1317–19.

- 64. Carroll L. Alice’s Adventures in Wonderland. London: Macmillan and Co.; 1865.

- 65. Tolkien JRR. The Hobbit, Or, There and Back Again. UK: George Allen & Unwin; 1937.

- 66. Mann HB, Whitney DR. On a test of whether one of two random variables is stochastically larger than the other. Ann Math Stat. 1947;18(1):50–60.

- 67. Bao S, Jiang R, Kwan W, Wang B, Ma X, Song Y. Evaluation of next-generation sequencing software in mapping and assembly. J Hum Genet. 2011;56(6):406–14. doi: 10.1038/jhg.2011.43.

- 68. Caboche S, Audebert C, Lemoine Y, Hot D. Comparison of mapping algorithms used in high-throughput sequencing: application to ion torrent data. BMC Genomics. 2014;15:264. doi: 10.1186/1471-2164-15-264.

- 69. Hatem A, Bozdağ D, Toland AE, Çatalyürek ÜV. Benchmarking short sequence mapping tools. BMC Bioinformatics. 2013;14:184. doi: 10.1186/1471-2105-14-184.

- 70. Schbath S, Martin V, Zytnicki M, Fayolle J, Loux V, Gibrat J. Mapping reads on a genomic sequence: an algorithmic overview and a practical comparative analysis. J Comput Biol. 2012;19(6):796–813. doi: 10.1089/cmb.2012.0022.

- 71. Ruffalo M, LaFramboise T, Koyutürk M. Comparative analysis of algorithms for next-generation sequencing read alignment. Bioinformatics. 2011;27(20):2790–96. doi: 10.1093/bioinformatics/btr477.

- 72. Holtgrewe M, Emde A, Weese D, Reinert K. A novel and well-defined benchmarking method for second generation read mapping. BMC Bioinformatics. 2011;12:210. doi: 10.1186/1471-2105-12-210.

- 73. Gardner PP, Paterson JM, McGimpsey S, Ashari-Ghomi F, Umu SU, Pawlik A, Gavryushkin A, Black MA. Sustained software development, not number of citations or journal choice, is indicative of accurate bioinformatic software. GitHub. 2022. https://github.com/Gardner-BinfLab/speed-vs-accuracy-meta-analysis. Accessed Jan 2022.

- 74. Gardner PP, Paterson JM, McGimpsey S, Ashari-Ghomi F, Umu SU, Pawlik A, Gavryushkin A, Black MA. Sustained software development, not number of citations or journal choice, is indicative of accurate bioinformatic software. FigShare. 2022. doi: 10.6084/m9.figshare.15121818.v2.

Supplementary Materials

Additional file 1: Supplementary Figures S1–S8 and the reference list of neutral software benchmarks from which the accuracy and speed data are derived [1–66].

Additional file 2: Supplementary Tables S1–S7.