Abstract

Internally generated imagery and externally triggered perception rely on overlapping sensory processes. This overlap poses a challenge for perceptual reality monitoring: determining whether sensory signals reflect reality or imagination. In this study, we used psychophysics to investigate how imagery and perception interact to determine visual experience. Participants were instructed to detect oriented gratings that gradually appeared in noise while simultaneously either imagining the same grating, a grating perpendicular to the to-be-detected grating, or nothing. We found that, compared to both incongruent imagery and no imagery, congruent imagery caused a leftward shift of the psychometric function relating stimulus contrast to perceptual threshold. We discuss how this effect can best be explained by a model in which imagery adds sensory signal to the perceptual input, thereby increasing the visibility of perceived stimuli. These results suggest that, in contrast to changes in sensory signals caused by self-generated movement, the brain does not discount the influence of self-generated sensory signals on perception.

Keywords: mental imagery, reality monitoring, perception

Introduction

Visual signals can be triggered externally, by the appearance of a stimulus in the outside world (i.e., perception), or internally, via top-down processes such as mental imagery. Neuroimaging evidence has shown that neural representations of internally and externally generated signals are highly similar (Dijkstra, Bosch, & van Gerven, 2019; Horikawa, Tamaki, Miyawaki, & Kamitani, 2013; Pearson, 2019). This overlap might complicate perceptual reality monitoring: determining whether a given sensory signal reflects external reality or internal imagination (Dijkstra, Kok, et al., 2022). In order to understand how the brain is able to keep imagined and perceived signals separate, it is necessary to gain insight into how internally and externally generated sensory signals interact to determine visual experience.

One line of research has shown that mental imagery can bias perception of subsequent ambiguous stimuli toward the imagined stimulus (Pearson, 2014; Pearson, Clifford, & Tong, 2008) and that the strength of this effect is proportional to the vividness of the imagery (Bergmann et al., 2016; Keogh et al., 2020; Keogh & Pearson, 2014). However, results obtained during simultaneous imagery and perception, a situation in which perceptual reality monitoring is particularly challenging, have been more mixed. Early studies found that simultaneous imagery decreased the likelihood that participants detected external stimuli, which was interpreted as showing that the external input was erroneously being attributed to imagery (Perky, 1910; Segal & Fusella, 1969; Segal & Glicksman, 1967; Segal & Nathan, 1964). This line of evidence suggests that during imagery, the brain explains away or suppresses incoming sensory signals. However, an imagery-induced increase in misses could also be due to distraction or decreases in (sensory) processing capacity (Craver-Lemley & Reeves, 1992; Reeves et al., 2020; Segal & Fusella, 1970). In contrast, later experiments reported that when the same stimulus was simultaneously imagined and perceived, participants were more likely to report perceptual presence (Dijkstra & Fleming, 2021; Dijkstra, Mazor, et al., 2021; Farah, 1985, 1989; Moseley et al., 2016; Saad & Silvanto, 2013). These results have been interpreted as showing that imagined signals are incorporated into perception (Dijkstra, Mazor, et al., 2021). These latter observations are inconsistent with the brain explaining away or suppressing sensory signals when engaging in imagery and instead suggest that imagined signals are combined with incoming sensory signals, increasing the probability of reporting external stimulus presence.

Increases or decreases in reports of perceptual presence—a change in detection criterion—can be caused by several different underlying mechanisms, not all of them reflective of changes in sensory processing (Gold & Ding, 2013; Witt et al., 2015). Within a signal detection theoretic framework, a criterion shift can be due to a change in response bias, a shift in the underlying sensory signals, or both (Wyart, Nobre & Summerfield, 2012). To shed light on this question, here we quantified the effects of imagery on the psychometric function for detection to dissociate three hypothesized mechanisms for how simultaneous imagery and perception interact. Participants detected tilted gratings that gradually appeared in noise and simultaneously imagined the same grating, a grating perpendicular to the to-be-detected grating, or nothing (Fig. 1A). Importantly, stimulus contrast was varied parametrically, allowing us to estimate the full psychometric function relating stimulus strength to the probability of detecting an external grating in each imagery condition (Wichmann & Hill, 2001a, 2001b).

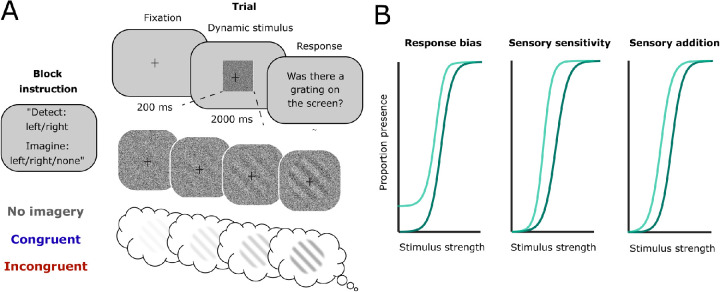

Figure 1.

Experimental design and hypotheses. (A) Experimental paradigm. At the start of each block, participants were instructed whether they would be detecting left- or right-tilted gratings and what they had to imagine this block: nothing, a left-tilted grating, or a right-tilted grating. Trials consisted of 200-ms fixation followed by 2 s of dynamically fluctuating noise in which a stimulus gradually ramped up until a certain visibility level. The task was to indicate whether a grating was present or not. After each block, participants were asked what they had imagined during this block to check whether they accurately followed instructions. (B) Hypotheses. Imagery might increase or decrease perceptual presence response via different mechanisms. Left: a response bias would be reflected in a change in guess rate and mean. Middle: a change in sensory sensitivity would be reflected in a change in slope. Right: a subtraction or addition to the sensory signal would be reflected in only a change in mean.

We hypothesized that imagery could lead to an increase or decrease in perceptual presence responses via different mechanisms (Fig. 1B). First, simultaneous imagery might cause a response bias in favor or against the imagined stimulus, without changing sensory processing. This would be reflected by a vertical shift of the psychometric curve for the congruent condition, quantified by a change in both the mean and the guess rate—as such a response bias should cause an increase in presence responses independently of stimulus contrast. Furthermore, such a stimulus-independent response bias should be observed most prominently at low contrast values (equivalent to classical false alarms) and then be washed out by an increasing influence of the stimulus as contrast increases (Fig. 1B, left). Second, top-down imagery might change the sensitivity to external sensory signals by amplifying signals proportionally to their strength. This would be reflected by a change in both the slope (variance) and the mean of the psychometric curve (Reynolds & Heeger, 2009; Fig. 1B, middle). Finally, imagined signals might simply be added or subtracted from the perceived signal, reflected by a horizontal shift in the psychometric curve, associated most strongly with a change in the mean and potentially with a small change in guess rate if the curve does not converge to zero presence responses at zero contrast (Fig. 1B, right).

Methods

Participants

Based on power calculations of a previous experiment with the same paradigm (Dijkstra, Mazor, et al., 2021), we required 34 participants to capture differences in detection criterion and accuracy between conditions. Given that we are interested in slightly more subtle effects in the current study, we decided to recruit 50 participants. Participants were recruited online via Prolific (www.prolific.co), and data were collected on an institutional server managed by the JATOS tool (Lange et al., 2015). All participants gave written informed consent to participate in the study, and all procedures were approved by the University College London ethics committee. The experiment took approximately 40 min to complete, and participants were paid £5 for their participation, equivalent to an hourly rate of £7.50. Data from two participants were not obtained due to technical issues. Furthermore, we excluded five participants because they failed the imagery check (see below) and an additional two because of poor psychometric curve fits. The final sample consisted of 41 participants (mean age = 29.14 years, SD = 11.5).

Experimental procedure

The experimental paradigm is depicted in Figure 1A. The experiment was programmed in JavaScript using jsPsych (de Leeuw, 2015). Participants were instructed to detect gratings that gradually appeared in dynamic noise while simultaneously imagining the same grating (congruent condition), a grating perpendicular to the presented grating (incongruent), or nothing (no imagery). Prior to the main experiment, participants filled out the VVIQ2 (Marks, 1995), which we used to instruct participants what we meant by the concept of mental imagery. After this, participants first practiced detecting gratings with a high contrast until they responded correctly on at least 75% of the trials, making sure they understood the task. Then, participants practiced imagining the gratings while looking at the dynamic noise for 20 trials in a row, 10 per orientation. They were instructed to imagine the grating “as if it was actually presented.” After each trial, participants were asked to indicate the vividness of their imagery using a scale from 1 (not vivid at all) to 5 (perfectly clear and as vivid as real seeing), similar to the scale used in the VVIQ2.

For the main task, participants were instructed that a grating of one orientation would be presented on some of the trials and that in some cases, the grating would be quite hard to see. In order to avoid visual priming, no trial-wise cues were delivered, and instead the different conditions were implemented in a block-wise fashion such that during the entire block, participants detected one specific orientation and imagined one specific orientation. At the onset of each experimental block, the participant was instructed which grating orientation would be shown and what they had to imagine during the block (Fig. 1A) as follows: “During this block you will see right/left tilted gratings. Please do not/also imagine left/right-tilted gratings as vividly as possible.” To start with a block, participants had to press the space bar. There were 12 blocks, two per condition (orientation × imagery), each consisting of 42 trials. The order of the blocks was randomized within each participant. Each trial started with a 200-ms fixation cross followed by 2 s of either pure dynamic white noise or dynamic white noise within which a gradually appearing stimulus was embedded. The task of the participants was to indicate whether or not a grating was present on each trial. After each block, participants were asked which, if any, stimulus they had imagined during this block, to ensure that they had correctly followed the instructions. Blocks were removed prior to analyses if the answer to this imagery check was incorrect.

At the end of the experiment, after filling out their age, we also asked participants whether they thought imagining the gratings had altered their responses on the detection task. The replies varied, with comments such as “I think imagining either left or right tilted gratings helped me see the ones that were actually there” and “I made some mistakes in the answers because I got confused between the pattern that I imagined and the one that was displayed.”

Stimuli

The stimuli were generated in MATLAB (version R2018b; The MathWorks, Natick, MA, USA) and consisted of a sinusoidal grating at an orientation of 45o or 135o masked with an annulus and embedded in white noise (Fig. 1A). The visibility of the stimulus indicated the probability that a given pixel contained the grating stimulus rather than noise (Mazor et al., 2020), that is, the percentage signal relative to noise. Seven visibility levels were used: 0%, 3.7%, 4.8%, 5.3%, 6.1%, 7.3%, and 14% (Fig. 1B). The exact values of the visibility levels were predetermined via piloting to allow for accurate estimation of the psychometric curves.

For each orientation separately, 50 stimulus images were generated and distributed equally in log space across the seven visibility levels (from 0% to 14%). For the pure noise trials, an additional 20 images of pure white noise were generated. For the trials with a visibility level above zero, 20 stimulus images ranging from zero visibility to that specific visibility level were presented over the course of 2 s, giving the impression that the stimulus was gradually appearing in the noise. This ramping up was done to mimic the gradual nature of mental image generation (Perky, 1910). During zero visibility trials, 20 noise images were presented in a random order.

Data analysis

Per imagery condition, we fit the detection responses for the separate visibility levels using the following cumulative gaussian function:

where Ppresent is the probability of reporting stimulus presence; μ is the mean of the normal distribution, reflecting the horizontal offset of the psychometric curve/how much signal is needed to achieve 50% presence responses; σ is the standard deviation, reflecting the slope or sensitivity of presence responses to increases in signal; and g is the guess rate, reflecting the vertical offset at the zero point, or how likely presence responses are in the complete absence of signal. We did not include a lapse rate (Wichmann & Hill, 2001a) because we did not have prior hypotheses about this parameter, and therefore including it would unnecessarily increase the complexity of the model. Fitting was performed using maximum likelihood via the binomial link function. The curve parameters μ and σ were initialized at 0.05 (5%), and g was bounded to be between 0 and 1 by transforming it to another parameter, θ, such that , where θ was initialized at −8, corresponding to a guess rate close to zero. Statistical inference was performed by comparing the three curve parameters between conditions using analyses of variance.

Results

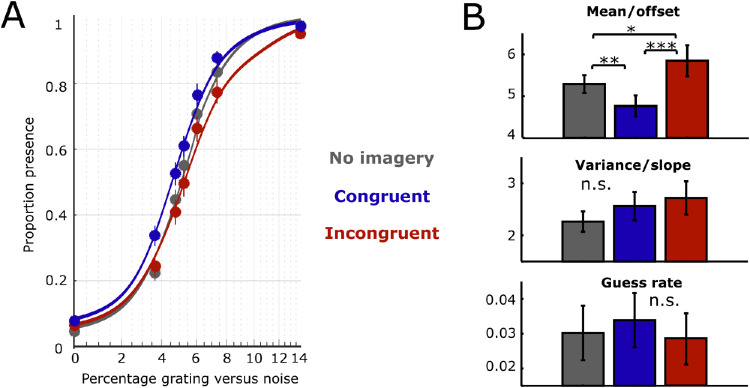

The curve fits and proportion of presence responses per visibility level for the three conditions are shown in Figure 2. Mental imagery caused a horizontal shift of the psychometric curve, reflected in a significant effect of condition on the mean (Fig. 1C; F(39, 2) = 7.34, p = 0.002, ηp2 = 0.273). Post hoc comparisons revealed that the amount of signal needed to achieve 50% presence responses was significantly lower during congruent imagery (M = 4.76, SD = 1.61) than during no imagery (M = 5.29, SD = 1.36; t(40) = 2.97, p = 0.005) or during incongruent imagery (M = 5.85, SD = 2.39; t(40) = 3.63, p = 0.0007). Furthermore, the mean was also significantly higher during incongruent imagery compared to no imagery (t(40) = 2.12, p = 0.038). In contrast, there was no significant effect of imagery condition on either the slope (F(39, 2) = 1.325, p = 0.277) or the guess rate (F(39, 2) = 0.169, p = 0.845). We further quantified the evidence for the presence or absence of a condition difference using Bayesian statistics in JASP (JASP & JASP Team, 2019). In keeping with the interpretation of Bayes factors from Jeffreys (1961) and Lee and Wagenmakers (2014), there was extreme evidence for an effect on mean (BF = 109.85), moderate evidence for the absence of an effect on slope (BF = 0.29), and strong evidence for the absence of an effect on guess rate (BF = 0.08).

Figure 2.

Results. (A) Psychometric function per condition. Solid points represent proportion presence trials at the different visibility levels for each condition averaged over participants. Vertical lines reflect SEM for these points. (B) Curve parameter estimates. Top: mean, the signal value that is associated with a 0.5 probability of reporting stimulus presence. Middle: variance/slope, the sensitivity of presence responses to changes in signal. Bottom: guess rate, the proportion of presence responses for zero signal. *p < 0.05; **p < 0.01; ***p < 0.001.

Discussion

In this study, we set out to characterize how internally and externally generated visual signals interact when stimuli are simultaneously imagined and perceived. To this end, we estimated psychometric functions while participants were simultaneously detecting and imagining oriented gratings. We found that congruent imagery was associated with a leftward shift of the psychometric function compared to both no imagery and incongruent imagery. In contrast, imagery did not have an effect on either the slope or the guess rate of the psychometric function. These results suggest that imagery adds sensory evidence to perceptual signals, thereby increasing the visibility of perceived stimuli.

Imagery did not influence the guess rate of the psychometric function. This makes it unlikely that imagery led to a response bias toward reporting stimulus presence, for instance, due to demand characteristics. Such a bias in the context of our experiment might have caused participants to blindly respond “yes” more often during congruent imagery, even in the absence of any changes in sensory processing (Dijkstra, Mazor, et al., 2021). However, if this were the case, it would have led to an increase in presence responses across the full range of stimulus strengths and therefore also a shift in the guess rate parameter. In contrast, we found that the influence of imagery on perceptual detection was best captured by a change in the horizontal offset without a change in guess rate, indicating imagery interacts with sensory input such that the effect of imagery was largest at intermediate levels of sensory evidence.

While the absence of an effect on guess rate rules out a simple response bias, a change in mean could be caused by effects at different levels of sensory processing. The effect could be due to either a change in low-level sensory representations and/or by a change in the decision variable in more high-level evidence accumulation circuits (Gold & Ding, 2013). Previous neuroimaging research has shown that imagery activates perception-like neural representations in low-level sensory areas (Albers, Kok, Toni, Dijkerman, & De Lange, 2013; Dijkstra, Bosch, & van Gerven, 2017; Lee, Kravitz, & Baker, 2012; Ragni, Tucciarelli, Andersson, & Lingnau, 2020), even in the presence of external stimuli (Rademaker et al., 2019). Furthermore, the imagery-induced horizontal shift in the psychometric function reported here is similar to previously observed effects of microstimulation of stimulus-preferred sensory neurons (Fetsch et al., 2014). Therefore, we believe that the most likely explanation for our findings is that imagery adds sensory signals to a perceptual input stage, creating an intermixed sensory representation, which is strongest when the imagined and perceived content match.

The precise implementation of how exactly imagery adds to the sensory signal remains unclear. One intriguing possibility is that imagery, like expectation, not only enhances activity of neural populations congruent with the imagined stimulus (Wyart et al., 2012) but also suppresses activity of incongruent populations—thereby “sharpening” the neural representation in line with imagination (Kok et al., 2012). This idea could neatly explain the pattern of results observed here: an increase in perceptual presence responses for congruent imagery would be due to enhancement of stimulus-specific activity, whereas incongruent imagery would instead suppress stimulus-specific activity (and enhance incongruent activity), thereby decreasing the probability of detecting the stimulus. This would suggest that expectation and imagery rely on similar neural mechanisms, in line with previous proposals (Feuerriegel et al., 2021; Pearson, 2019). Future research should further investigate the neural implementation of imagery's influence on perception and to what extent it is similar to effects of expectation.

The consistent increase in perceptual presence responses for congruent imagery reported here and elsewhere (Dijkstra, Mazor, et al., 2021; Farah, 1989; Moseley et al., 2016) suggests that the brain does not account for self-generated sensory signals during imagery. This observation seems to be in contrast to analogous mechanisms hypothesized to discount self-generated changes in sensory input caused by action. During action, the nervous system is thought to send a copy of the motor signal to sensory areas, leading to cancellation of the expected sensory change (Crapse & Sommer, 2008). The better the change in sensory input can be predicted by the movement, the stronger the agent has the feeling that it is self-generated rather than being caused by a change in the outside world (Haggard, 2017). However, recent behavioral and neuroimaging studies have found evidence for enhancement rather than suppression of self-generated, expected sensory consequences of actions (Dogge et al., 2018; Yon et al., 2018, 2020), raising doubts about whether sensory attenuation is necessary to distinguish self-generated from externally generated sensory signals during action. One important open question is how these effects of enhancement and suppression are related to source attribution. It is possible that these two effects (sensory attenuation and self-attribution) are dissociable—for instance, whether or not sensory changes are inferred to be self-generated may still be determined by the predictability of sensorimotor consequences, even if attribution to self is associated with an enhancement rather than a suppression of the sensory signals. This would mean that sensory signals predicted from self-generated actions would be more likely to be perceived than unexpected ones, in line with recent findings (Yon et al., 2020) and seemingly in line with the current results.

However, there is an important difference between reality monitoring of sensory signals during action and reality monitoring during imagery. During action, the sensory signals are always assumed to reflect real changes in external input—either caused by our own movement or by changes in the outside world. In other words, reality monitoring during action is not about whether there is a change in the external visual input of our hand but about whether that change is caused by us moving our hand or by some outside source. In contrast, reality monitoring during imagery involves a lower-level decision about whether there was any change in external visual input or whether such signals were generated by imagination—that is, in the current experiment, answering the question, “Was there a grating presented on the screen?” Answering yes to this question already implies that the sensory signal has an external source and was not imagined. In other words, attributing sensory changes during action to self can be associated with an increase in perceptual presence responses, whereas attributing sensory changes during imagery to self should be associated with a decrease in perceptual presence responses. Such decreases have been observed in the past (Perky, 1910), but recent evidence suggests that these effects might have been specific to historical context (Dijkstra & Fleming, 2021). A failure to correct for self-generated visual signals during imagery might play a role in reality monitoring errors causing hallucinations (Dijkstra, Kok, et al., 2022). More research is necessary to further investigate the relationship between self-attribution and changes in perceptual detection during imagery versus action.

Conclusions

Taken together, our results suggest that congruent imagery increases the probability of reporting perceptual presence by adding sensory evidence in favor of the imagined stimulus. These findings indicate that the brain does not discount the impact of self-generated sensory signals generated during imagery on perception, instead treating it as additional evidence for the presence of external input.

Acknowledgments

Supported by a Rubicon grant from the Netherlands Organization for Scientific Research (NWO; 019.192SG.003) to N.D., a Wellcome/Royal Society Sir Henry Dale Fellowship (218535/Z/19/Z) and a European Research Council (ERC) Starting Grant (948548) to P.K., and a Wellcome/Royal Society Sir Henry Dale Fellowship (206648/Z/17/Z) and a Philip Leverhulme Prize from the Leverhulme Trust to S.M.F. The Wellcome Centre for Human Neuroimaging is supported by core funding from the Wellcome Trust (203147/Z/16/Z). For the purpose of open access, the author has applied a CC BY public copyright license to any author accepted manuscript arising from this submission.

Commercial relationships: none.

Corresponding author: Nadine Dijkstra.

Email: n.dijkstra@ucl.ac.uk.

Address: Wellcome Centre for Human Neuroimaging, Queen Square Institute of Neurology, University College London, London, UK.

References

- Albers, A. M., Kok, P., Toni, I., Dijkerman, H. C., & De Lange, F. P. (2013). Shared representations for working memory and mental imagery in early visual cortex. Current Biology, 23, 1427–1431, 10.1016/j.cub.2013.05.065. [DOI] [PubMed] [Google Scholar]

- Bergmann, J., Genç, E., Kohler, A., Singer, W., & Pearson, J. (2016). Smaller primary visual cortex is associated with stronger, but less precise mental imagery. Cerebral Cortex, 26(9), 3838–3850, 10.1093/cercor/bhv186. [DOI] [PubMed] [Google Scholar]

- Crapse, T. B., & Sommer, M. A. (2008). Corollary discharge across the animal kingdom. Nature Reviews Neuroscience, 9(8), 587–600, 10.1038/nrn2457. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Craver-Lemley, C., & Reeves, A. (1992). How visual imagery interferes with vision. Psychological Review, 99(4), 633–649, 10.1037/0033-295X.99.4.633. [DOI] [PubMed] [Google Scholar]

- de Leeuw, J. R. (2015). jsPsych: A javascript library for creating behavioral experiments in a web browser. Behavior Research Methods, 47(1), 1–12, doi: 10.3758/s13428-014-0458-y. [DOI] [PubMed] [Google Scholar]

- Dijkstra, N., Bosch, S. E., & van Gerven, M. A. J. (2017). Vividness of visual imagery depends on the neural overlap with perception in visual areas. Journal of Neuroscience, 37(5), 1367–1373, 10.1523/jneurosci.3022-16.2016. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dijkstra, N., Bosch, S. E., & van Gerven, M. A. J. (2019). Shared neural mechanisms of visual perception and imagery. Trends in Cognitive Sciences, 23, 18–29, 10.1016/j.tics.2019.02.004. [DOI] [PubMed] [Google Scholar]

- Dijkstra, N., & Fleming, S. (2021). Fundamental constraints on distinguishing reality from imagination. PsyArXiv, 10.31234/OSF.IO/BW872. [DOI] [Google Scholar]

- Dijkstra, N., Kok, P., & Fleming, S. M. (2022). Perceptual reality monitoring: Neural mechanisms dissociating imagination from reality. Neuroscience & Biobehavioral Reviews, 135, 104557, 10.1016/J.NEUBIOREV.2022.104557. [DOI] [PubMed] [Google Scholar]

- Dijkstra, N., Mazor, M., Kok, P., & Fleming, S. (2021). Mistaking imagination for reality: Congruent mental imagery leads to more liberal perceptual detection. Cognition, 212, 104719, 10.1016/j.cognition.2021.104719. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dogge, M., Gayet, S., Custers, R., & Aarts, H. (2018). The influence of action-effect anticipation on bistable perception: differences between onset rivalry and ambiguous motion. Neuroscience of Consciousness, 2018(1), niy004, 10.1093/nc/niy004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Farah, M. J. (1985). Psychophysical evidence for a shared representational medium for mental images and percepts. Journal of Experimental Psychology: General, 114(1), 91–103, 10.1037/0096-3445.114.1.91. [DOI] [PubMed] [Google Scholar]

- Farah, M. J. (1989). Mechanisms of imagery-perception interaction. Journal of Experimental Psychology: Human Perception and Performance, 15(2), 203–211, 10.1037/0096-1523.15.2.203. [DOI] [PubMed] [Google Scholar]

- Fetsch, C. R., Kiani, R., Newsome, W. T., & Shadlen, M. N. (2014). Effects of cortical microstimulation on confidence in a perceptual decision. Neuron, 83(4), 797–804, 10.1016/j.neuron.2014.07.011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Feuerriegel, D., Blom, T., & Hogendoorn, H. (2021). Predictive activation of sensory representations as a source of evidence in perceptual decision-making. Cortex, 136, 140–146, 10.1016/j.cortex.2020.12.008. [DOI] [PubMed] [Google Scholar]

- Gold, J. I., & Ding, L. (2013). How mechanisms of perceptual decision-making affect the psychometric function. Progress in Neurobiology, 103, 98–114, 10.1016/j.pneurobio.2012.05.008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Haggard, P. (2017). Sense of agency in the human brain. Nature Reviews Neuroscience, 18(4), 196–207, 10.1038/nrn.2017.14. [DOI] [PubMed] [Google Scholar]

- Horikawa, T., Tamaki, M., Miyawaki, Y., & Kamitani, Y. (2013). Neural decoding of visual imagery during sleep. Science, 340(6132), 639–642, 10.1126/science.1234330. [DOI] [PubMed] [Google Scholar]

- JASP & JASP Team. (2019). JASP [Computer software].

- Jeffreys, H. (1961). The theory of probability (3rd ed.). Oxford, UK: Oxford University Press, https://jasp-stats.org/faq/how-do-i-cite-jasp/. [Google Scholar]

- Keogh, R., Bergmann, J., & Pearson, J. (2020). Cortical excitability controls the strength of mental imagery. ELife, 9, e50232, 10.7554/eLife.50232. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Keogh, R., & Pearson, J. (2014). The sensory strength of voluntary visual imagery predicts visual working memory capacity. Journal of Vision, 14(12), 1427–1431, 10.1167/14.12.7. [DOI] [PubMed] [Google Scholar]

- Kok, P., Jehee, J. F. M., & de Lange, F. P. (2012). Less is more: Expectation sharpens representations in the primary visual cortex. Neuron, 75, 265–270, 10.1016/j.neuron.2012.04.034. [DOI] [PubMed] [Google Scholar]

- Lange, K., Kühn, S., & Filevich, E. (2015). “Just Another Tool for Online Studies” (JATOS): An easy solution for setup and management of web servers supporting online studies. PLoS ONE, 10(6), e0130834, 10.1371/journal.pone.0130834. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lee, M. D., & Wagenmakers, E. J. (2014). Bayesian cognitive modeling: A practical course . Cambridge, UK: Cambridge University Press. [Google Scholar]

- Lee, S.-H., Kravitz, D. J., & Baker, C. I. (2012). Disentangling visual imagery and perception of real-world objects. NeuroImage, 59(4), 4064–4073, 10.1016/j.neuroimage.2011.10.055. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Marks, D. F. (1995). New directions for mental imagery research. Journal of Mental Imagery, 19(3–4), 153–167, 10.1177/0963721414532287. [DOI] [Google Scholar]

- Mazor, M., Friston, K., & Fleming, S. (2020). Distinct neural contributions to metacognition for detecting, but not discriminating visual stimuli. ELife, 9, 1–34, 10.1101/853366. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Moseley, P., Smailes, D., Ellison, A., & Fernyhough, C. (2016). The effect of auditory verbal imagery on signal detection in hallucination-prone individuals. Cognition, 146, 206–216, 10.1016/j.cognition.2015.09.015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Pearson, J. (2014). New directions in mental-imagery research: The binocular-rivalry technique and decoding fMRI patterns. Current Directions in Psychological Science, 23(3), 178–183, 10.1177/0963721414532287. [DOI] [Google Scholar]

- Pearson, J. (2019). The human imagination: The cognitive neuroscience of visual mental imagery. Nature Reviews Neuroscience, 20, 624–634, 10.1038/s41583-019-0202-9. [DOI] [PubMed] [Google Scholar]

- Pearson, J., Clifford, C. W. G., & Tong, F. (2008). The functional impact of mental imagery on conscious perception. Current Biology, 18(13), 982–986, 10.1016/j.cub.2008.05.048. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Perky, C. W. (1910). An experimental study of imagination. American Journal of Psychology, 21(3), 422–452. [Google Scholar]

- Rademaker, R. L., Chunharas, C., & Serences, J. T. (2019). Coexisting representations of sensory and mnemonic information in human visual cortex. Nature Neuroscience, 22(8), 1336–1344, 10.1038/s41593-019-0428-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ragni, F., Tucciarelli, R., Andersson, P., & Lingnau, A. (2020). Decoding stimulus identity in occipital, parietal and inferotemporal cortices during visual mental imagery. Cortex, 127, 371–387, 10.1016/j.cortex.2020.02.020. [DOI] [PubMed] [Google Scholar]

- Reeves, A., Grayhem, R., & Craver-Lemley, C. (2020). The Perky effect revisited: Imagery hinders perception at high levels, but aids it at low. Vision Research, 167, 8–14, 10.1016/j.visres.2019.12.004. [DOI] [PubMed] [Google Scholar]

- Reynolds, J. H., & Heeger, D. J. (2009). The normalization model of attention. Neuron, 61(2), 168–185, 10.1016/j.neuron.2009.01.002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Saad, E., & Silvanto, J. (2013). Contrast and strength of visual memory and imagery differentially affect visual perception. PLoS ONE, 8(12), e84827, 10.1371/journal.pone.0084827. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Segal, S. J., & Fusella, V. (1969). Effects of imaging of signal-to-noise ratio, with varying signal conditions. British Journal of Psychology, 60(4), 459–464, 10.1111/j.2044-8295.1969.tb01219.x. [DOI] [PubMed] [Google Scholar]

- Segal, S. J., & Fusella, V. (1970). Influence of imagined pictures and sounds on detection of visual and auditory signals. Journal of Experimental Psychology, 83(3), 458–464. [DOI] [PubMed] [Google Scholar]

- Segal, S. J., & Glicksman, M. (1967). Relaxation and the Perky effect: The influence of body position on judgments of imagery. American Journal of Psychology, 80(2), 257–262, 10.2307/1420985. [DOI] [PubMed] [Google Scholar]

- Segal, S. J., & Nathan, S. (1964). The Perky effect: Incorporation of an external stimulus into an imagery experience under placebo and control conditions. Perceptual and Motor Skills, 18(2), 385–395, 10.2466/pms.1964.18.2.385. [DOI] [PubMed] [Google Scholar]

- Wichmann, F. A., & Hill, N. J. (2001a). The psychometric function: II. Bootstrap-based confidence intervals and sampling. Perception and Psychophysics, 63(8), 1314–1329, 10.3758/BF03194545. [DOI] [PubMed] [Google Scholar]

- Wichmann, F. A., & Hill, N. J. (2001b). The psychometric function: I. Fitting, sampling, and goodness of fit. Perception and Psychophysics, 63(8), 1293–1313, 10.3758/BF03194544. [DOI] [PubMed] [Google Scholar]

- Witt, J. K., Taylor, J. E. T., Sugovic, M., & Wixted, J. T. (2015). Signal detection measures cannot distinguish perceptual biases from response biases. Perception, 44(3), 289–300, 10.1068/p7908. [DOI] [PubMed] [Google Scholar]

- Wyart, V., Nobre, A. C., Summerfield, C. (2012). Dissociable prior influences of signal probability and relevance on visual contrast sensitivity. Proceedings of the National Academy of Sciences of the United States of America, 109(9), 3593–3598, 10.1073/pnas.1120118109. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Yon, D., Gilbert, S. J., de Lange, F. P., & Press, C. (2018). Action sharpens sensory representations of expected outcomes. Nature Communications, 9(1), 1–8, 10.1038/s41467-018-06752-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Yon, D., Zainzinger, V., de Lange, F. P., Eimer, M., & Press, C. (2020). Action biases perceptual decisions toward expected outcomes. Journal of Experimental Psychology: General, 150(6), 1225–1236, 10.1037/xge0000826. [DOI] [PMC free article] [PubMed] [Google Scholar]