Abstract

Brain networks store new memories using functional and structural synaptic plasticity. Memory formation is generally attributed to Hebbian plasticity, while homeostatic plasticity is thought to have an ancillary role in stabilizing network dynamics. Here we report that homeostatic plasticity alone can also lead to the formation of stable memories. We analyze this phenomenon using a new theory of network remodeling, combined with numerical simulations of recurrent spiking neural networks that exhibit structural plasticity based on firing rate homeostasis. These networks are able to store repeatedly presented patterns and recall them upon the presentation of incomplete cues. Storage is fast, governed by the homeostatic drift. In contrast, forgetting is slow, driven by a diffusion process. Joint stimulation of neurons induces the growth of associative connections between them, leading to the formation of memory engrams. These memories are stored in a distributed fashion throughout the connectivity matrix, and individual synaptic connections have only a small influence. Although memory-specific connections are increased in number, the total number of inputs and outputs of neurons undergoes only small changes during stimulation. We find that homeostatic structural plasticity induces a specific type of “silent memories”, different from conventional attractor states.

Author summary

Memories are thought to be stored in groups of strongly connected neurons, or engrams. Much effort has been put into understanding engrams, but currently there is no definitive consensus about how they are formed. Hebbian plasticity was proposed to underlie engram formation. Hebbian plasticity occurs when synaptic weights are strengthened between pairs of neurons with correlated activity. Hebbian plasticity, therefore, is thought to rely on a mechanism that can detect correlation between neurons. However, the increased synaptic weight implies increased correlation, which creates a positive feedback loop that can lead to runaway growth. Avoiding such an unfavorable condition would require regulatory mechanisms that are much faster than the ones that have been observed in the brain. Here we show that a structural plasticity rule that is based on firing rate homeostasis can also lead to the formation of engrams. In this case, engram formation does not rely on a mechanism that traces correlation. Instead, stronger connectivity between correlated neurons emerges as a network effect, based on self-organized rewiring. Our work proposes an alternative way in which engrams could be created. This new perspective could be instrumental for better understanding the process of memory formation.

Introduction

Memories are thought to be stored in the brain using cell assemblies that emerge through coordinated synaptic plasticity [1]. Cell assemblies with strong enough recurrent connections lead to bistable firing rates, which allows a network to encode memories as dynamic attractor states [2, 3]. If strong excitatory recurrent connections are counteracted by inhibitory plasticity, “silent” memories are formed [4–6]. In principle, assemblies can be generated by strengthening already existing synapses [2, 3], but potentially also by increasing connectivity among neurons. It has been shown that attractor networks can emerge through the creation of such neuronal clusters [7].

The creation of clusters through changes in connectivity between cells would require synaptic rewiring, or structural plasticity. Structural plasticity has been frequently reported in different areas of the brain, and sprouting and pruning of synaptic contacts was found to be often activity-dependent [8–10]. Sustained turnover of synapses, however, poses a severe challenge to the idea of memories being stored in synaptic connections [11]. Interestingly, recent theoretical work has shown that stable assemblies can be maintained despite ongoing synaptic rewiring [12, 13].

The formation of neuronal assemblies, or clusters, is traditionally attributed to Hebbian plasticity, driven by the correlation between pre- and postsynaptic neuronal activity on a certain time scale. For a typical Hebbian rule, a positive correlation in activity causes an increase in synaptic weight, which in turn increases the correlation between neuronal firing. This positive feedback cycle can result in unbounded growth, runaway activity and an essential dynamic instability of the network, if additional regulatory mechanisms are lacking. In fact, neuronal networks of the brain appear to employ homeostatic control mechanisms that regulate neuronal activity [14], and actively stabilize the firing rate of individual neurons at specific target levels [15, 16]. However, even though homeostatic mechanisms have been reported in experiments to operate on a range of different time scales, they seem to be too slow to trap the instabilities caused by Hebbian learning rules [17]. All things considered, the exact roles of Hebbian and homeostatic plasticity, and how these different processes interact to form cell assemblies in a robust and stable way, remain to be elucidated [18].

Concerning the interplay between Hebbian and homeostatic plasticity, we have recently demonstrated by simulations that homeostatic structural plasticity alone can lead to the formation of assemblies of strongly interconnected neurons [19]. Moreover, we found that varying the strength of the stimulation and the fraction of stimulated neurons in combination with repetitive protocols can lead to even stronger assemblies [20]. In both papers, we used a structural plasticity model based on firing rate homeostasis, which had been used before to study synaptic rewiring linked with neurogenesis [21, 22], and the role of structural plasticity after focal stroke [23, 24] and after retinal lesion [25]. This model has also been used to study the emergence of criticality in developing networks [26] and other topological aspects of plastic networks [27]. Similarly to models with inhibitory plasticity [4–6], the memories formed in networks of this type represent a form of silent memory that is not in any obvious way reflected by neuronal activity.

The long-standing discussion about memory engrams in the brain has been revived recently. Researchers were able to identify and manipulate engrams [28], and to allocate memories to specific neurons during classical conditioning tasks [29]. These authors have also emphasized that an engram is not yet a memory, but merely the physical substrate of a potential memory in the brain [28]. Similar to the idea of a memory trace, it should provide the necessary conditions for a retrievable memory to emerge. Normally, the process of engram formation is thought to involve the strengthening of already existing synaptic connections. Here, we propose that new engrams could also be formed by a special form of synaptic clustering, in which synaptic connectivity among participating neurons increases while the total number of incoming and outgoing synapses remains approximately constant.

To demonstrate the feasibility of the idea, we performed numerical simulations of a classical conditioning task in a recurrent network with structural plasticity based on firing rate homeostasis. We were able to show that the cell assemblies formed share all characteristics of a memory engram. We further explored the properties of the formed engrams and developed a mean-field theory to explain the mechanisms of memory formation with homeostatic structural plasticity. We showed that these networks are able to effectively store repeatedly presented patterns. The formed engrams implement a special type of silent memory, which normally exists in a quiescent state and can be successfully retrieved using incomplete cues.

Results

Formation of memory engrams by homeostatic structural plasticity

We simulated a classical conditioning paradigm using a recurrent network. The network was composed of excitatory and inhibitory leaky integrate-and-fire neurons, and the excitatory-to-excitatory connections were subject to structural plasticity regulated by firing rate homeostasis. All neurons in the network received a constant background input in the form of independent Poisson spike trains. Three different non-overlapping subsets of neurons were sampled randomly from the network. The various stimuli considered here were conceived as increased external input to one of the specific ensembles, or combinations thereof. As the stimuli were arranged exactly as in behavioral experiments, we also adopted their terminology “unconditioned stimulus” (US) and “conditioned stimulus” (C1 and C2). The unconditioned response (UR) was conceived as the activity of a single readout neuron, which received input from the ensemble of excitatory neurons associated with the US (Fig 1A, top).

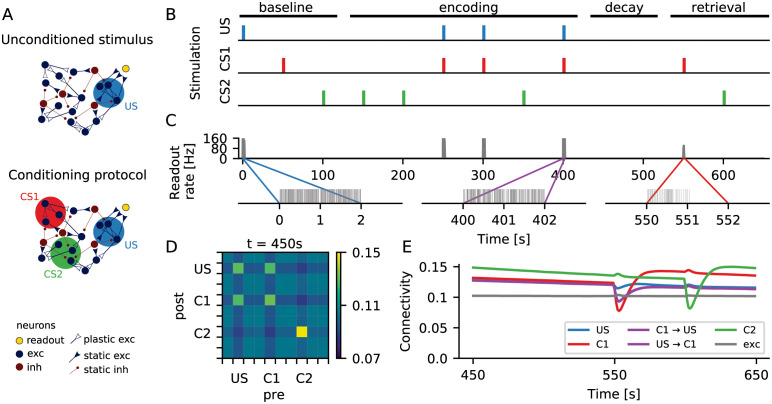

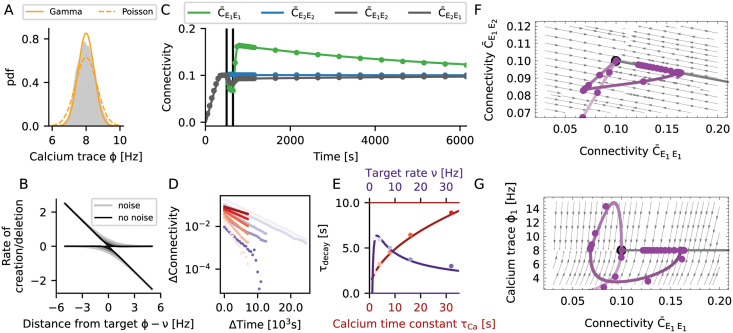

Fig 1. Formation of memory engrams in a neuronal network with homeostatic structural plasticity.

(A) In a classical conditioning scenario, an unconditioned stimulus (US) was represented by a group of neurons that were connected to a readout neuron (yellow) via static synapses. The readout neuron spiked whenever neuronal activity representing the US was above a certain threshold (top). During a conditioning protocol, two other groups of neurons (C1 and C2) were chosen to represent two different conditioned stimuli (bottom). (B) During stimulation, the external input to a specific group of neurons was increased. The color marks indicate when each specific group was being stimulated. During the “encoding” phase, C1 was always stimulated together with US, and C2 was always stimulated alone. The time axis matches that of panel C (top). (C) Firing rate (top) and spike train (bottom) of the readout neuron. Before the paired stimulation (“baseline”), the readout neuron responded strongly only upon direct stimulation of the neuronal ensemble corresponding to the US. After the paired stimulation (“retrieval”), however, a presentation of C1 alone triggered a strong response of the readout neuron. This was not the case for a presentation of C2 alone. (D) Coarse-grained connectivity matrix. Neurons are divided into 10 groups of 100 neurons each; shown is the average connectivity within each group. After encoding, the connectivity matrix indicates that engrams were formed, and we found enhanced connectivity within all three ensembles as a consequence of repeated stimulation. Bidirectional inter-connectivity across different engrams, however, is only observed for the pair C1-US that experienced paired stimulation. (E) Average connectivity as a function of time. The connectivity dynamics show that engram identity was strengthened with each stimulus presentation, and that engrams decayed during unspecific external stimulation.

Fig 1B illustrates the protocol of the conditioning experiment simulated here. During a baseline period, the engrams representing US, C1 and C2 were stimulated once, one after the other. The stimulation consisted of increasing the rate of the background input by 40% for a period of 2 s. In this phase, the activity of the US ensemble was high only upon direct stimulation (Fig 1B, middle). The baseline period was followed by an encoding period, in which the C1 engram was always stimulated together with the US engram, while the C2 engram was always stimulated in isolation. Simultaneous stimulation of neurons in a recurrent network with homeostatic structural plasticity can lead to the formation of reinforced ensembles [19], which are strengthened by repetitive stimulation [20]. This is also what happened here: After the encoding period, each of the three neuronal ensembles had increased within-ensemble connectivity, as compared to baseline. Memory traces, or engrams, were formed (Fig 1D). Moreover, the US and C1 engrams also had higher bidirectional across-ensemble connectivity, representing an association between their corresponding memories.

Between encoding and retrieval, memory traces remained in a dormant state. Due to the homeostatic nature of network remodeling, the ongoing activity after encoding was very similar to the activity before encoding, but specific rewiring of input and output connections led to the formation of structural engrams. It turns out that these “silent memories” are quite persistent, as “forgetting” is much slower than “learning” them. In later sections, we will present a detailed analysis of this phenomenon. Any silent memory can be retrieved with a cue. In our case, this is a presentation of the conditioned stimulus. Stimulation of C1 alone, but not of C2 alone, triggered a conditioned response (Fig 1C) that was similar to the unconditioned response. Inevitably, stimulation of C1 and C2 during recall briefly destabilized the corresponding cell assemblies (seen as a drop in connectivity in Fig 1E), as homeostatic plasticity was still ongoing. The corresponding engrams then went through a reconsolidation period, during which the within-assembly connectivity grew even higher than before retrieval (Fig 1E, red and green). As a consequence, stored memories got stronger with each recall. Interestingly, as in our case the retrieval involved stimulation of either C1 or C2 alone, the connectivity between the US and C1 engrams decreased a bit after the recall (Fig 1E, purple).

Memories and associations were formed by changes in synaptic wiring, triggered by neuronal activity during the encoding period. They persisted in a dormant state and could be reactivated by a retrieval cue that reflected the activity experienced during encoding. This setting exactly characterizes a memory engram [28]. In the following sections, we will further characterize the process of formation of a single engram. We will also explore the nature and stability of the formed engram in more detail.

Engrams represent silent memories, not attractors

Learned engrams have a subtle influence on network activity. For a demonstration, we first grew a network under the influence of homeostatic structural plasticity (see Section Grown networks). All neurons received a baseline stimulation in the form of independent Poisson spike trains. We then randomly selected an ensemble E1 of excitatory neurons and stimulated it repeatedly. Each stimulation cycle comprised a 150 s period of increased input to E1, followed by a 150 s relaxation period with no extra input. After 8 such stimulation cycles, the within-engram connectivity had increased above its baseline value. At this point, the ongoing activity of the network exhibited no apparent difference to the activity before engram encoding (Fig 2A). Due to the homeostatic nature of structural plasticity, neurons fired on average at their target rate, even though massive rewiring had led to higher within-engram connectivity. Looking closer, however, revealed a conspicuous change in the second-order properties of neuronal ensemble activity. We quantified this phenomenon using the overlap mμ. This quantity reflects the similarity of the neuron-by-neuron activity in a given time bin with a specified pattern, for example a pattern with all stimulated neurons being active and the remaining ones being silent (see Section Overlap measure for a more detailed explanation of the concept). Fig 2B depicts the time-dependent (bin by bin) overlap of ongoing network activity with the engram E1 (mE1, see Table 1 for a complete list of symbols). It also shows the overlap with 10 different random ensembles x (mx), which are of the same size as E1 but have no neurons in common with it. The variance of mE1 is slightly larger than that of mx (Fig 2C). This indicates that the increased connectivity also increased the tendency of neurons belonging to the same learned engram to synchronize their activity, in comparison to other pairs of neurons. An increase in pairwise correlations within the engram, in turn, leads to increased fluctuations of the population activity [30], which also affects population measures such as the overlap used here.
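To make the idea of an overlap concrete, the following is a minimal sketch of one common way to define it. The normalization used here is an assumption borrowed from the attractor-network literature, not necessarily the definition given in Section Overlap measure. The function name `overlap` and its arguments are hypothetical.

```python
def overlap(active, pattern, n_neurons):
    """Overlap of the activity in one time bin with a binary pattern.

    active:    set of indices of neurons that spiked in the bin
    pattern:   set of indices of neurons belonging to the ensemble
    n_neurons: total number of neurons considered

    Assumed definition: m = sum_i (xi_i - f) * s_i / (N * f * (1 - f)),
    where xi_i marks pattern membership, s_i marks activity in the bin,
    and f is the fraction of neurons in the pattern.
    """
    f = len(pattern) / n_neurons
    total = 0.0
    for i in active:
        xi = 1.0 if i in pattern else 0.0
        total += xi - f
    return total / (n_neurons * f * (1.0 - f))


engram = set(range(10))                   # hypothetical ensemble of 10 neurons
m_full = overlap(engram, engram, 100)     # exactly the pattern is active
m_all = overlap(set(range(100)), engram, 100)  # every neuron is active
m_none = overlap(set(), engram, 100)      # no neuron is active
```

With this normalization the overlap is 1 when exactly the pattern neurons are active and 0 when activity is unrelated to the pattern, so fluctuations of the overlap around 0 reflect coordinated activity within the ensemble.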

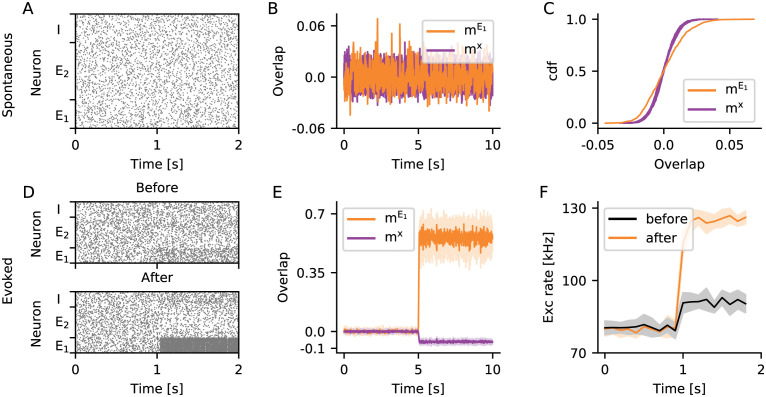

Fig 2. Silent memory based on structural engrams.

(A–C) The ongoing activity of neurons belonging to the engram E1 can hardly be distinguished from the activity of the rest of the network. (A) Raster plot showing the spontaneous activity of 50 neurons randomly selected from E1, 100 neurons randomly selected from E2 but not belonging to E1, and 50 neurons randomly selected from the pool I of inhibitory neurons. (B) Overlap of ongoing network activity with the learned engram E1 (mE1, orange), and separately for 10 different random ensembles x disjoint with E1 (mx, purple). (C) Cumulative distribution of mμ shown in (B). (D–F) The activity evoked upon stimulation of E1 is higher if the within-engram connectivity is large enough as a consequence of learning. (D) Same as A for evoked activity; the stimulation starts at t = 1 s. The neurons belonging to engram E1 are stimulated before (top) and after (bottom) engram encoding. (E) Overlap with the learned engram (mE1, orange) and with random ensembles (mx, purple) during specific stimulation of engram E1. (F) Population rate of all excitatory neurons during stimulation of E1 before (black) and after (orange) engram encoding. (E, F) Solid line and shading depict mean and standard deviation across 10 independent simulation runs, respectively. In all panels, the bin size for calculating overlaps is 10 ms, and the bin size for calculating population rates is 100 ms.

Table 1. List of symbols.

| Symbol | Description |
|---|---|
| ϕ(t) | Calcium trace |
| τCa | Calcium time constant |
| S(t) | Spike train |
| r(t) | Instantaneous firing rate |
| ν | Target rate |
| a(t) | Number of axonal elements |
| d(t) | Number of dendritic elements |
| βd | Dendritic growth parameter |
| βa | Axonal growth parameter |
| Cij(t) | Number of synaptic connections from neuron j (presynaptic) to neuron i (postsynaptic) |
| | Expected value of the number of synaptic connections Cij(t) |
| E | Excitatory neurons |
| E1 | Stimulated excitatory neurons |
| E2 | Non-stimulated excitatory neurons |
| I | Inhibitory neurons |
| τdrift | Effective time constant of encoding |
| τdiffusion | Effective time constant of forgetting |
| mx(t) | Overlap of network activity with pattern x |
| τrate | Relaxation time of rate dynamics |

During specific stimulation, the differences between the evoked activity of learned engrams and random ensembles were more pronounced. Fig 2D shows raster plots of network activity during stimulation of E1 before and after the engram was encoded. The high recurrent connectivity within the E1 assembly after encoding amplified the effect of stimulation, leading to much higher firing rates of E1 neurons. This effect could even be seen in the population activity of all excitatory neurons in the network (Fig 2F). During stimulation, the increase in firing rate of engram neurons was accompanied by a suppression of activity of all other excitatory neurons not belonging to the engram. This suppression underlay the conspicuous decrease in the overlap mx with random ensembles x during stimulation (Fig 2E).

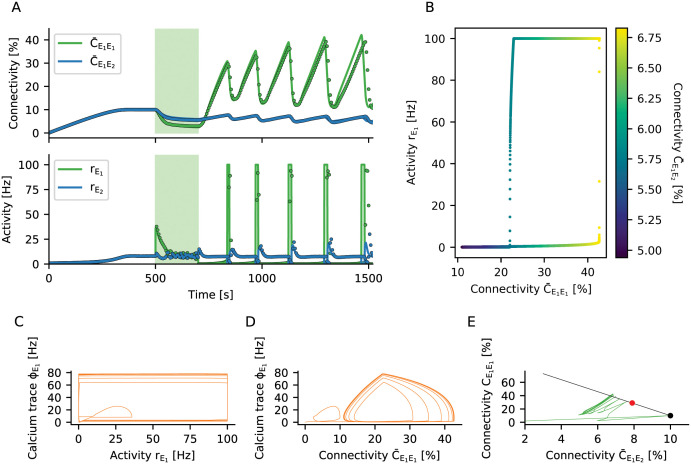

How does the evoked response of an engram depend on the connectivity within? To answer this question, we looked into evoked activity at different points in time during stimulation. The within-engram connectivity increased with every stimulation cycle (Fig 3A), and so did the population activity of excitatory neurons during stimulation (Fig 3B). The in-degree of excitatory neurons, in contrast, was kept at a fixed level by the homeostatic controller, even after engram encoding (Fig 4C). This behavior was well captured by a simple mean-field firing rate model (grey line in Fig 3B), in which the within-engram connectivity was varied and all the remaining excitatory connections were adjusted to maintain a fixed in-degree of excitatory neurons.

Fig 3. Evoked activity depended on the strength of a memory.

(A) Starting from a random network grown under the influence of unstructured stimulation (black dot), we repeatedly stimulated the same ensemble of excitatory neurons E1 to eventually form an engram. Multiple stimulation cycles increased the recurrent connectivity within the engram. (B) Population activity of all excitatory neurons upon stimulation of E1, for different levels of engram connectivity. Crosses depict the population rate observed in a simulation. Colors indicate engram connectivity, matching the colors used in panels (A) and (D). The grey line outlines the expectation from a simple mean-field theory. (C) Firing rate of E1 engram neurons upon stimulation of 50% of its neurons. Shown is the mean firing rate of the stimulated engram neurons (E1S, orange), the mean firing rate of the non-stimulated engram neurons (E1N, grey), and the mean firing rate of excitatory neurons not belonging to the engram (E2, blue). Solid line and shading depict mean and standard deviation for 10 independent simulation runs, respectively. (D) Time-averaged overlap mE1, for different fractions of E1 being stimulated. The recurrent nature of memory engrams enabled them to perform pattern completion. The degree of pattern completion depended monotonically on engram strength. (E) Two engrams (orange and green) were encoded in a network. The two engrams differed in strength with regard to their within-engram connectivity (green stronger than orange). A simple readout neuron received input from a random sample comprising 9% of all excitatory and 9% of all inhibitory neurons in the network. (F) Raster plot for the activity of 10 different readout neurons during the stimulation of learned engrams and random ensembles, respectively. Readout neurons were active when an encoded engram was stimulated (orange and green), and they generally responded with higher firing rates for stronger engrams (green). The activity of a readout neuron was low in the absence of a stimulus (white), or upon stimulation of a random ensemble of neurons (purple and blue).

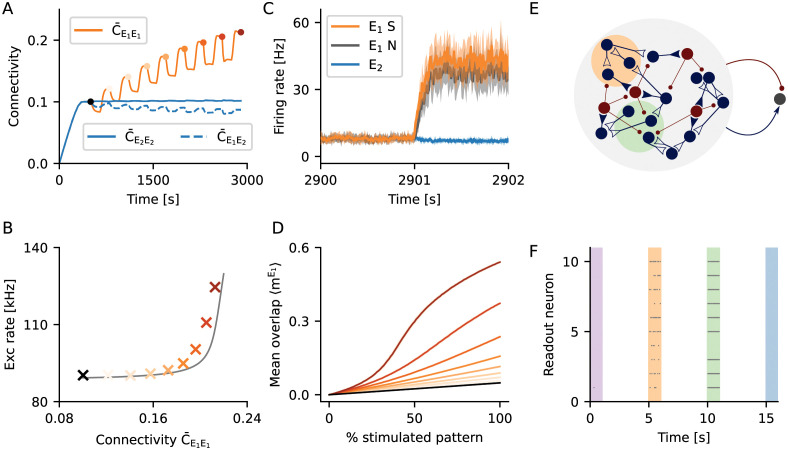

Fig 4. Hebbian properties emerge through the interaction of selective input and homeostatic control.

(A) The activity of the neuronal network was subject to homeostatic control. For increased external input, it transiently responded with a higher firing rate. With a certain delay, the rate was down-regulated to the imposed set-point. When the stimulus was turned off, the network transiently responded with a lower firing rate, which was eventually up-regulated to the set-point again. The activity was generally characterized by irregular and asynchronous spike trains. (B) It was assumed that the intracellular calcium concentration followed the spiking dynamics, according to a first-order low-pass characteristic. Dots correspond to numerical simulations of the system, and solid lines reflect theoretical predictions from a mean-field model of dynamic network remodeling. (C) Dendritic elements (building blocks of synapses) were generated until an in-degree of Kin = 1000 was reached. This number slightly decreased during specific stimulation, but then recovered after the stimulus was removed. (D) Synaptic connectivity closely followed the dynamics of dendritic elements until the recovery phase, when the recurrent connectivity within the stimulated group E1 overshot. (A–D) Black vertical lines indicate beginning and end of the stimulation. (E, F) Phase space representation of the activity. The purple lines are projections of the full, high-dimensional dynamics to different two-dimensional subspaces: (E) within-engram connectivity vs. across-ensemble connectivity and (F) within-engram connectivity vs. engram calcium trace. The dynamic flow is represented by the gray arrows. The steady state of the plastic network was characterized by a line attractor (thick gray line), defined by a fixed total in-degree and out-degree. The ensemble of stimulated neurons formed a stable engram, and the strength of the engram was encoded by its position on the line attractor. (G) The effective “instantaneous” learning rule for the expected connectivity between a pair of neurons is homeostatic in nature. It could also be viewed as an “inverse covariance rule” with baseline at the set-point of the homeostatic controller. The emerging Hebbian properties result from the more long-term combinatorial properties of rewiring across the whole network.

We were interested to know whether engrams performed pattern completion, and how this feature depended on the connectivity within. Following the formation of the engram E1 through 8 stimulation cycles (Fig 3A), we tested pattern completion by stimulating only 50% of the neurons in E1 (E1S), while the remaining neurons in E1 (E1N) were not directly stimulated. Fig 3C shows that, as expected, stimulation led to an increase in the activity of the directly stimulated neurons (E1S, orange). It also shows, however, a very similar increase of the activity of the remaining non-stimulated neurons (E1N, grey), indicating pattern completion. In order to quantitatively assess pattern completion at different levels of the connectivity within, we measured how the overlap of network activity with the engram, mE1, depended on partial stimulation. For an unstructured random network, mE1 increased at a certain rate with the fraction of stimulated neurons (Fig 3D, black line). We speak of “pattern completion” if mE1 was systematically larger than in an unstructured random network. Fig 3D demonstrates clearly that the degree of pattern completion associated with a specific engram increased monotonically with the within-engram connectivity.

The evoked activity in learned engrams differs from that in random ensembles of the same size. This feature can be taken as a marker for the existence of a stored memory. To demonstrate the potential of this idea, we employed a simple readout neuron for this task (Fig 3E). This neuron had the same properties as any other neuron in the network, and it received input from a random sample comprising a certain fraction (here 9%) of all excitatory and the same fraction of all inhibitory neurons in the network. We encoded two engrams in the same network, one being slightly stronger (green) than the other one (orange). We recorded the firing of the readout neuron during spontaneous activity, during specific stimulation of the engrams, and during stimulation of random ensembles of the same size. Fig 3F shows a raster plot of the activity of 10 different readout neurons, each of them sampling a different random subset of the network. With the parameters chosen here, the activity of a readout neuron was generally very low, except when a learned memory engram was stimulated. Due to the gradual increase in population activity with memory strength (Fig 3B), readout neurons responded with higher rates upon the stimulation of stronger engrams (green).

Homeostatic structural plasticity enables memories based on neuronal ensembles with increased within-ensemble connectivity, or engrams. Memories are acquired quickly and can persist for a long time. Moreover, the specific network configuration considered here admits a gradual response to the stimulation of an engram reflecting the strength of the memory. As we will show later, the engram connectivity lies on a line attractor, which turns into a slow manifold if fluctuations are taken into consideration. This configuration allows the network to simultaneously learn to recognize a stimulus (“Does the current stimulation correspond to a known memory?”) and to assess its confidence of the recognition (“How strong is the memory trace of this pattern?”). Such graded behavior would not be possible in a system that relies on bistable firing rates (attractors) to define engrams. Details of our analysis will be explained later in Section Fluctuation-driven decay of engrams.

The mechanism of engram formation

We have shown how homeostatic structural plasticity created and maintained memory engrams, and we now further elucidate the mechanisms underlying this process. We considered a minimal stimulation protocol [19] to study the encoding process for a single engram E1. We performed numerical simulations and developed a dynamical network theory to explain the emergence of associative (Hebbian) properties. In Fig 4, the results of numerical simulations are plotted together with the results of our theoretical analysis (see Section Mean-field approximation of population dynamics). Upon stimulation, the firing rate followed the typical homeostatic dynamics [31]. In the initial phase, the network stabilized at the target rate (Fig 4A). Upon external stimulation, it transiently responded with a higher firing rate. With a certain delay, the rate was down-regulated to the set-point. When the stimulus was turned off, the network transiently responded with a lower firing rate, which was eventually up-regulated to the set-point again.

Firing rate homeostasis relied on the intracellular calcium concentration ϕi(t) of each neuron i (Fig 4B), which can be considered as a proxy for its firing rate. In our simulations, it was obtained as a low-pass filtered version of the spike train Si(t) of the neuron

$$\tau_{\mathrm{Ca}}\,\dot{\phi}_i(t) = -\phi_i(t) + S_i(t) \tag{1}$$

with time constant τCa. Each excitatory neuron i used its own calcium trace ϕi(t) to control its number of synaptic elements. Deviations of the instantaneous firing rate (calcium concentration) ϕi(t) from the target rate νi triggered either creation or deletion of elements according to

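$$\dot{a}_i(t) = \beta_a\,\bigl(\nu_i - \phi_i(t)\bigr), \qquad \dot{d}_i(t) = \beta_d\,\bigl(\nu_i - \phi_i(t)\bigr) \tag{2}$$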

where ai(t) and di(t) are the numbers of axonal and dendritic elements, respectively. The parameters βa and βd are the associated growth parameters (see Section Plasticity model for more details). These equations describe homeostatic control in our model. When activity is larger than the set-point, excitatory synapses are deleted. This tends to reduce activity. When activity is lower than the set-point, synaptic elements are created and new excitatory synapses are formed. This tends to increase activity. The numbers of elements are represented by continuous variables. A floor operation ⌊x⌋ is used to create the integers required for simulations.
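The control loop described above can be illustrated with a minimal sketch for a single neuron. It makes several assumptions that are not part of the model in the text: the spike train is replaced by a firing rate, the neuron's rate is taken to grow linearly with its number of synaptic elements (the hypothetical `gain` parameter stands in for the recurrent network), and all parameter values are illustrative only.

```python
import math


def run_homeostat(ext_rate, nu=8.0, tau_ca=10.0, beta=2.0, gain=0.01,
                  dt=0.1, t_end=1000.0):
    """Euler-integrate one neuron's calcium trace and element count.

    ext_rate: function t -> externally driven firing rate in Hz.
    gain: hypothetical rate increase (Hz) per synaptic element, a toy
          stand-in for the recurrent network (assumption).
    Returns the final calcium trace and the final integer element count.
    """
    phi = 0.0   # calcium trace, in units of firing rate
    z = 0.0     # continuous number of synaptic elements
    for step in range(int(round(t_end / dt))):
        t = step * dt
        n_elements = math.floor(z)              # integer count used for wiring
        rate = ext_rate(t) + gain * n_elements  # toy rate model (assumption)
        phi += dt * (rate - phi) / tau_ca       # low-pass filter of activity
        z += dt * beta * (nu - phi)             # grow below target, prune above
        z = max(z, 0.0)                         # counts cannot become negative
    return phi, math.floor(z)


phi_end, n_end = run_homeostat(lambda t: 2.0)
print(phi_end, n_end)
```

With these illustrative values the calcium trace settles at the target rate, and the element count settles close to (ν − 2)/gain = 600, the number of elements at which the toy rate model fires exactly at the set-point.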

During the initial growth phase, the number of elements increased to values corresponding to an in-degree Kin = ϵN, which was the number of excitatory inputs to the neuron that are necessary to sustain firing at the target rate (Fig 4C). Upon stimulation (during the learning phase), the number of connections was down-regulated due to the transiently increased firing rate of neurons. After the stimulus was turned off, the activity returned to its set-point. During the growing and learning phases, connectivity closely followed the dynamics of synaptic elements (Fig 4D), and connectivity was proportional to the number of available synaptic elements (Fig 4D). After removal of the stimulus, however, in the reconsolidation phase, the recurrent connectivity within the stimulated group E1 was found to overshoot (Fig 4D). Then the average connectivity in the network returned to baseline (Fig 4C). While recurrent connectivity of the engram E1 increased, both the connectivity to the rest of the network and from the rest of the network decreased, keeping the mean input to all neurons fixed. This indicates that although the network was globally subject to homeostatic control, local changes effectively exhibited associative features, as already pointed out in [19]. Our theoretical predictions generally match the simulations very well (Fig 4), with the exception that they predicted a larger overshoot. This discrepancy will be resolved in Section Fluctuation-driven decay of engrams.

Deriving a theoretical framework of network remodeling (see Section Mathematical re-formulation of the algorithm) for the algorithm suggested by [25] posed a great challenge due to the large number of variables of both continuous (firing rates, calcium trace) and discrete (spike times, number of elements, connectivity, rewiring step) nature. The dimensionality of the system was effectively reduced by using a mean-field approach, which conveniently aggregated discrete counting variables into continuous averages (see Section Time-continuous limit and Section Mean-field approximation of population dynamics for more details of derivation).

Synaptic elements are accounted for by the number of free axonal a+(t) and free dendritic d+(t) elements, while the number of deleted elements is denoted by a−(t) and d−(t), respectively. Free axonal elements are paired with free dendritic elements in a completely random fashion to form synapses. The deletion of dendritic or axonal elements in neuron i automatically induces the deletion of incoming or outgoing synapses of that neuron, respectively. We employed a stochastic differential equation (Section Time-continuous limit) to describe the time evolution of connectivity from neuron j to neuron i

| (3) |

In this equation, , is the rate of creation/deletion of the axonal and dendritic elements of neuron i, respectively, and ρ(t) is the rate of creation of elements in the whole network. Note that ρ′+ is a corrected version of ρ+ (see Section Time-continuous limit for details). The stochastic process described by Eq 3 decomposes into a deterministic drift process and a diffusive noise process. The noise process has two sources. The first source derives from the stochastic nature of the spike trains, and the second source is linked with the stochastic nature of axon-dendrite bonding. In this section, we ignore the noise and discuss only the deterministic part of the equation. This is equivalent to reducing the spiking dynamics to a firing rate model and, at the same time, treating connectivity in terms of its expectation values.
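The random axon-dendrite bonding mentioned above can be illustrated with a minimal sketch. It is not the simulation code used for the results reported here. The function name `pair_free_elements`, the toy element counts, and the choice to allow self-connections are assumptions made purely for illustration.

```python
import numpy as np

def pair_free_elements(free_axonal, free_dendritic, rng):
    """Randomly pair free axonal with free dendritic elements (illustrative).

    free_axonal[j]    : number of free axonal elements of neuron j
    free_dendritic[i] : number of free dendritic elements of neuron i
    Returns a matrix new_syn with new_syn[i, j] = number of newly formed
    synapses from neuron j to neuron i. Self-connections are not excluded
    (an assumption of this sketch).
    """
    # One entry per free element, labelled by the neuron that owns it.
    axons = np.repeat(np.arange(len(free_axonal)), free_axonal)
    dendrites = np.repeat(np.arange(len(free_dendritic)), free_dendritic)
    # Shuffling both lists makes the pairing completely random.
    rng.shuffle(axons)
    rng.shuffle(dendrites)
    # Only as many synapses can form as the scarcer element type allows.
    n_pairs = min(len(axons), len(dendrites))
    new_syn = np.zeros((len(free_dendritic), len(free_axonal)), dtype=int)
    # add.at accumulates correctly when the same (i, j) pair occurs twice.
    np.add.at(new_syn, (dendrites[:n_pairs], axons[:n_pairs]), 1)
    return new_syn

rng = np.random.default_rng(0)
a_free = np.array([2, 0, 1, 3])  # toy numbers of free axonal elements
d_free = np.array([1, 1, 1, 1])  # toy numbers of free dendritic elements
new_syn = pair_free_elements(a_free, d_free, rng)
```

In this toy example all four dendritic elements are used, while two of the six axonal elements remain free. The probability that a synapse forms between two neurons grows with the product of their free element counts, which is the origin of the product structure discussed below.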

Stable steady-state solutions of the system described by Eq 3 represent a hyperplane in the space of connectivity (see Eq 17 in Section Line attractor of the deterministic system). These steady-state solutions are (random) network configurations with a fixed in-degree and out-degree such that . We will show that these states are attractive in Section Network stability and constraints on growth parameters and Section Linear stability analysis. The hyperplane solution in our mean-field approach also generalizes to population variables (Section Mean-field approximation of population dynamics and Section Line attractor of the deterministic system). In the case of only one engram stored in the network, there is the stimulated population and the “rest” of the network. In this case, the hyperplane from Eq 17 reduces to a line attractor of Eq 16 (Fig 4E and 4F, gray line). Memories are stored in the network in the following way: When a group of neurons is stimulated, the network diverges from the line attractor and takes a different path back during reconsolidation. The new position on the line encodes the strength of the memory. Stronger memories increase the connectivity within the engram at the expense of other connections. Furthermore, as the attractor is a skewed hyperplane in the space of connectivity, the memory is distributed across the whole neural network, and not only in recurrent connections among stimulated neurons in E1. As a reflection of this, other connectivity parameters (, , , cf. Fig 4D) are also slightly changed.

To understand why changes in recurrent connectivity are associative, we note that the creation part of Eq 3 is actually a product and , similar to a pre-post pair in a typical Hebbian rule. The main difference from a classical Hebbian rule is that only neurons firing below their target rate create new synapses. The effective rule is depicted in Fig 4G. Only neurons with free axonal or dendritic elements, respectively, can form new synapses, and those neurons are mostly the ones with low firing rates. The deletion part of Eq 3 depends linearly on and , reducing to a simple multiplicative homeostasis. Upon excitatory stimulation, the homeostatic part is dominant and the number of synaptic elements decreases. Once the stimulus is terminated, the Hebbian part takes over, inducing a post-stimulation overshoot in connectivity. This leads to peculiar bimodal dynamics, in which connectivity first decreases and then overshoots, an important signature of this rule. We summarize this process in an effective rule

| (4) |

where ΔIi is the input perturbation of neuron i. The term ΔIiΔIj is explicitly Hebbian with regard to input perturbations. Eq 4 only holds, however, if the stimulus is presented for a long enough time such that the calcium concentration tracks the change in activity and connectivity drops.

Fluctuation-driven decay of engrams

The qualitative aspects of memory formation have been explored in Section The mechanism of engram formation. We now investigate the process of memory maintenance. A noticeable discrepancy between theory and numerical simulations was pointed out in Fig 4D: in the noise-free theory, the overshoot is exaggerated and memories last forever. We found that this discrepancy was resolved when the spiking nature of neurons was taken into account (Section Spiking noise).

Neurons use discrete spike trains for signaling, and we conceived them here as stochastic point processes. We found that Gamma processes (Section Spiking noise) could reproduce the first two moments of the spike train statistics of the simulated networks with sufficient precision. The homeostatic controller in our model uses the trace of the calcium concentration ϕi(t) as a proxy for the actual firing rate of the neuron. As the calcium trace ϕi(t) is just a filtered version of the stochastic spike train Si(t), it is a stochastic process in its own right. In Fig 5A we show the stationary distribution of the time-dependent calcium concentration ϕi(t). A filtered Gamma process (purple line) provided a better fit to the simulated data than a filtered Poisson process (red line). The reason is that Gamma processes have an extra degree of freedom to match the irregularity of spike trains (coefficient of variation, here CV ≈ 0.7) as compared to Poisson processes (always CV = 1).
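The effect of spike train irregularity on the calcium trace can be illustrated with a small numerical sketch. It is not the code used for Fig 5A. The function names, the exponential filter with kernel normalized by 1/τCa (so that the mean of the trace equals the firing rate), the time step, and the simulated duration are assumptions of this sketch. The rate of 8 Hz, τCa = 10 s and CV = 0.7 are taken from the text and the caption of Fig 5.

```python
import numpy as np

def gamma_spike_train(rate, cv, duration, rng):
    """Renewal process with Gamma-distributed inter-spike intervals.

    For a Gamma distribution, CV = 1/sqrt(shape), so shape = 1/cv**2.
    cv = 1 gives exponential intervals, i.e. a Poisson process.
    """
    shape = 1.0 / cv**2
    scale = 1.0 / (rate * shape)          # mean interval = shape*scale = 1/rate
    n_draws = int(2 * rate * duration) + 100  # generous surplus of intervals
    times = np.cumsum(rng.gamma(shape, scale, size=n_draws))
    return times[times < duration]

def calcium_trace(spike_times, tau_ca, duration, dt):
    """Exponentially filtered spike train (assumed kernel exp(-t/tau)/tau)."""
    n_steps = int(round(duration / dt))
    counts = np.histogram(spike_times, bins=n_steps, range=(0.0, duration))[0]
    decay = np.exp(-dt / tau_ca)
    phi = np.empty(n_steps)
    x = 0.0
    for k in range(n_steps):
        x = x * decay + counts[k] / tau_ca  # each spike adds 1/tau_ca
        phi[k] = x
    return phi

rng = np.random.default_rng(1)
rate, tau_ca, duration, dt = 8.0, 10.0, 2000.0, 0.01
gamma_spikes = gamma_spike_train(rate, 0.7, duration, rng)
poisson_spikes = gamma_spike_train(rate, 1.0, duration, rng)
phi_gamma = calcium_trace(gamma_spikes, tau_ca, duration, dt)
phi_poisson = calcium_trace(poisson_spikes, tau_ca, duration, dt)
burn_in = int(5 * tau_ca / dt)  # discard the initial transient of the filter
print(phi_gamma[burn_in:].var(), phi_poisson[burn_in:].var())
```

Both traces fluctuate around the same mean, but the more regular Gamma spike train produces a narrower calcium distribution than the Poisson spike train of the same rate.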

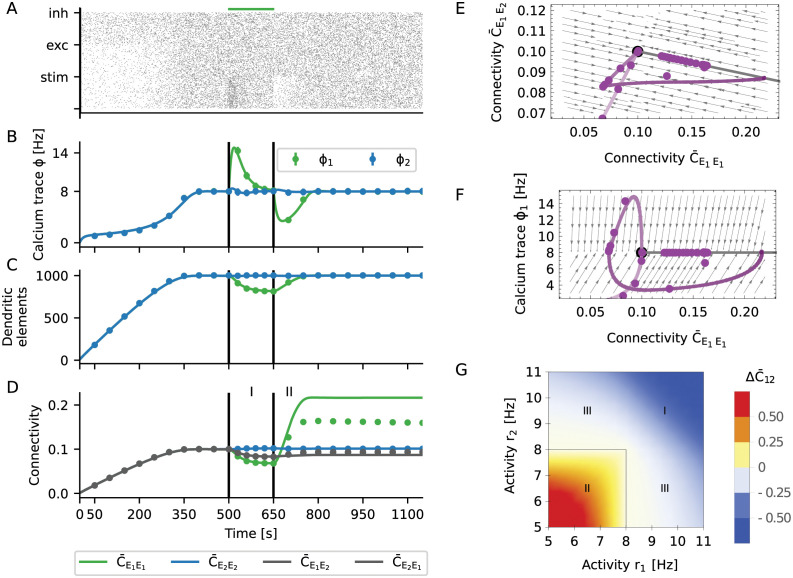

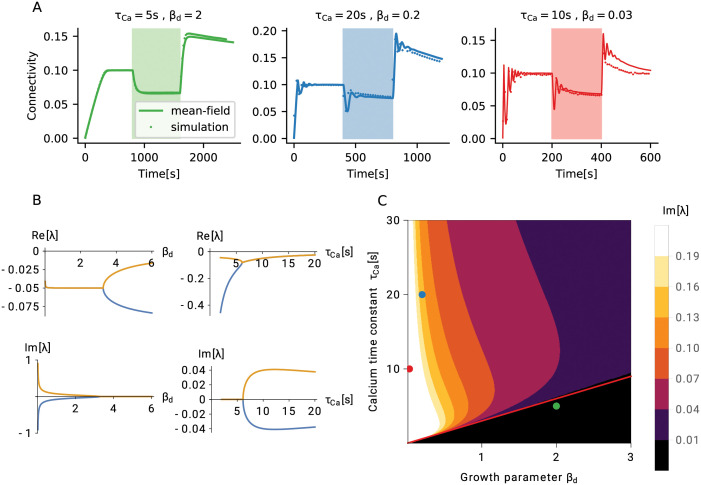

Fig 5. Noisy spiking induces fluctuations that lead to memory decay.

(A) The gray histogram shows the distribution of calcium levels for a single neuron across 5000 s of simulation. The yellow lines resulted from modeling the spike train as a Poisson process (dotted line) or a Gamma process (solid line), respectively. (B) The rate of creation or deletion of synaptic elements depends on the difference between the actual firing rate and the target rate (set-point), for different levels of spiking noise. The negative gain (slope) of the homeostatic controller in presence of noise is transformed into two separate processes of creation and deletion of synaptic elements. In the presence of noise (grey lines, lighter colors correspond to stronger noise), even when the firing rate is on target, residual fluctuations of the calcium signal induced a continuous rewiring of the network, corresponding to a diffusion process. (C) If noisy spiking and the associated diffusion were included in the model, our mean-field theory matched the simulation results very well. This concerns the initial decay, the overshoot and the subsequent slow decay. Vertical lines indicate the beginning and the end of the stimulation. (D) Change in connectivity during the decay period, for different values of the calcium time constant (different shades of red, from light to dark τCa = 1, 2, 4, 8, 16, 32 s, ν = 8 Hz) and the target rate (different shades of purple, from light to dark ν = 2, 4, 8, 16, 32 Hz, τCa = 10 s). We generally observed exponential relaxation as a consequence of a constant rewiring rate. (E) Time constant of the diffusive decay as a function of the calcium time constant and the target rate. Lines show our predictions from theory, and dots represent the values extracted from numerical simulations of plastic networks. The decay time τdecay increases with . The memory was generally more stable for small target rates ν, but collapsed for very small rates. This indicates an optimum for low firing rates, at about 3 Hz.
(F, G) Same phase diagrams as shown in Fig 4E and 4F, but taking noise into consideration. (F) The spiking noise compromises the stability of the line attractor, which turns into a slow manifold. (G) The relaxation to the high-entropy connectivity configuration during the decay phase is indeed confined to a constant firing rate manifold.

The homeostatic controller (Eq 2) strives to stabilize ϕi(t) at a fixed target value ν, but ϕi(t) fluctuates (Fig 5A) due to the random nature of the spiking. These fluctuations result in some degree of random creation and deletion of connections. Our theory (Section Spiking noise) reflects this aspect by an effective rule (Eq 12), which was obtained by averaging Eq 3 over the spiking noise (Fig 5B, red lines). The shape of this function indicates that connections are randomly created and deleted even when neurons are firing at their target rate. The larger the amplitude of the noise, the larger the asymptotic variance of the process and the amount of spontaneous rewiring taking place.

We now extend our mean-field model of the rewiring process (see Section The mechanism of engram formation) to account for the spiking noise (see Section Spiking noise). According to the enhanced model, both the overshoot and the decay matched very well with numerical simulations of plastic networks (Fig 5C). The decay of connectivity following its overshoot was exponential (Fig 5D), and Eq 3 revealed that the homeostasis was multiplicative and that the decay rate should be constant. The exponential nature of the decay is best understood by inspecting the phase space (Fig 5F and 5G). In terms of connectivity, the learning process was qualitatively the same as in the noise-less case (Fig 4E), where a small perturbation led to a fast relaxation to the line attractor (see Eq 16 in Section Line attractor of the deterministic system). In the presence of spiking noise (Fig 5F), however, the line attractor was deformed into a slow stochastic manifold (see Eq 18 in Section Slow manifold and diffusion to the global fixed point). The process of memory decay corresponded to a very slow movement toward the most entropic stable configuration compatible with firing rates clamped at their target value (Fig 5G). In our case, this led to a constraint on the in-degree Kin = ϵNE, where ϵ is the mean equilibrium connectivity of excitatory neurons and NE is the number of excitatory neurons. In equilibrium, the connectivity matrix relaxed to a uniform connection probability . The realized connections, however, were constantly fluctuating. At every moment, the network had the configuration of an Erdős-Rényi random graph. We refer to this state as “the” most entropic configuration. It will only change as a result of external stimulation. We summarize the memory decay process by the equation

| (5) |

The fast “drift” process of relaxation back toward the slow manifold corresponds to the deterministic part of Eq 3. In contrast, the slow “diffusion” process of memory decay along the slow manifold corresponds to the stochastic part of Eq 3. In the equation above, τdrift represents the time scale of the fast drift process, and τdiffusion is the time scale of the slow diffusion process.
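To illustrate why a constant rewiring rate implies exponential forgetting, consider the following toy recursion for the expected within-engram connectivity. It is a sketch under simplifying assumptions, not the mean-field model of this paper. In every step, a fixed fraction of synapses is deleted and replaced by synapses between uniformly chosen partners. The function name and all numerical values (initial connectivity 0.15, uniform connectivity 0.10, rewiring fraction 0.01 per step) are hypothetical.

```python
import numpy as np

def relax_connectivity(c0, c_uniform, rewire_fraction, n_steps):
    """Expected engram connectivity under random rewiring at a constant rate.

    Per step, a fraction `rewire_fraction` of the synapses is removed, and
    the freed elements reconnect uniformly at random, which contributes the
    uniform connectivity `c_uniform` in expectation.
    """
    c = np.empty(n_steps + 1)
    c[0] = c0
    for t in range(n_steps):
        c[t + 1] = (1.0 - rewire_fraction) * c[t] + rewire_fraction * c_uniform
    return c

c = relax_connectivity(c0=0.15, c_uniform=0.10, rewire_fraction=0.01, n_steps=500)
excess = c - 0.10                  # memory-specific excess connectivity
slopes = np.diff(np.log(excess))   # constant slope means exponential decay
```

The excess connectivity shrinks by the same factor in every step, so its logarithm decays linearly with slope log(1 − 0.01). The corresponding time constant is the inverse of the rewiring rate, which is why slower spontaneous rewiring yields longer-lasting memories.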

The drift process is strongly non-linear, and its bandwidth is limited by the time constant of the calcium filter τCa, but also by the growth parameters of the dendritic elements βd and axonal elements βa. The diffusion process, on the other hand, is essentially constrained to the slow manifold of constant in-degree and firing rate (Section Slow manifold and diffusion to the global fixed point). Analytic calculations yield the relation

| (6) |

where NE is the number of excitatory neurons, c is the average connectivity between excitatory neurons, and η is a correction factor to account for the reduced irregularity of spike trains as compared to a Poisson process. Both size and connectivity of the network increase the longevity of stored memories. Assuming that neurons rewire at a constant speed, it takes more time to rewire more elements. There is an interesting interference with the noise process, as memory longevity depends on the time constant of calcium in proportion to (Fig 5E). On the other hand, the time scale of learning τdrift is limited by the low-pass characteristics of calcium, represented by τCa. Increasing τCa leads to more persistent memories, but it also makes learning slower. In the extreme case of τCa → ∞ the system is unresponsive and exhibits no learning. As the calcium time constant is finite, however, we do not consider this possibility. We also find that the longevity of memories depends as on the target rate. Therefore, making the target rate small enough should lead to very persistent memories. This path to very stable memories is not viable, though, because the average connectivity c implicitly changes with the target rate. In Fig 5E the longevity of the memory is depicted as a function of the target rate with corrected connectivity c = c(ν), and we find that for very small target rates memory longevity tends to zero instead. This suggests that there is an optimal range of target rates centered at a few spikes per second, fully consistent with experimental recordings from cortical neurons [32].

To summarize, the process of forming an engram (“learning”, see Section The mechanism of engram formation) exploits the properties of a line attractor. Taking spiking noise into account, the structure of the line attractor is deformed into a slow stochastic manifold. This still allows learning, but introduces controlled “forgetting” as a new feature. Forgetting is not necessarily an undesirable property of a memory system. In a dynamic environment, it might be an advantage for the organism to forget non-persistent or unimportant aspects of it. Homeostatic plasticity implements a mechanism of forgetting with an exponential time profile. However, psychological forgetting curves are often found to follow a power law [33]. The presented model cannot account for this directly, and additional mechanisms (e.g., replay, repeated stimulation, or alternative forms of plasticity) might be necessary to get this. Strong memories are sustained for a longer period, but they will eventually also be forgotten. In the framework of this model, the only way to keep memories forever is to repeat the corresponding stimulus from time to time, as illustrated in Fig 1D. If we think of the frequency of occurrence as a measure of the relevance of a stimulus, this implies that irrelevant memories decay but the relevant ones remain. Memories are stored in a way where the increase in connectivity within the engram is accompanied by a decrease of connectivity with non-engram neurons. This means that storage is linked with distributed changes in the connectivity matrix, instead of being stored in specific and localized synaptic connections [11]. Forgetting is reflected by a diffusion to the most entropic network configuration along the slow manifold. As a result, the system performs continuous inference from a persistent stream of information about the environment. 
Already stored memories are constantly refreshed in terms of a movement in directions away from the most entropic point of the slow manifold (novel memories define new directions), while diffusion pushes the system back to the most entropic configuration.

Network stability and constraints on growth parameters

So far, we have described the process of forming and maintaining memory engrams based on homeostatic structural plasticity. We have explained the mechanisms behind the striking associative properties of the system. Now, we will explore the limits of stability of networks with homeostatic structural plasticity and derive meaningful parameter regimes for a robust memory system. A homeostatic controller that operates on the basis of firing rates can be expected to be very stable by construction. Indeed we find that, whenever parameters are assigned meaningful values, the Jacobian obtained by linearization of the system around the stable mean connectivity ϵ has only eigenvalues λ with non-positive real parts Re[λ] ≤ 0 (see Section Linear stability analysis). As a demonstration, we consider the real part of the two “most unstable” eigenvalues as a function of two relevant parameters, the dendritic/axonal growth parameter βd and the calcium time constant τCa (Fig 6B, upper panel). The real part of these eigenvalues remains negative for any meaningful choice of time constants. It should be noted at this point, however, that the system under consideration is strongly non-linear, and linear stability alone does not guarantee global stability. We will discuss an interesting case of non-linear instability in Section Loss of control leads to bursts of high activity. Oscillatory transients represent another potential issue in general control systems, and we will now explore the damped oscillatory phase of activity in more detail.

Fig 6. Linear stability of a network with homeostatic structural plasticity.

(A) For a wide parameter regime, the structural evolution of the network has a single fixed point, which is also stable. Three typical types of homeostatic growth responses are depicted for this configuration: non-oscillatory (left), weakly oscillatory (middle), and strongly oscillatory (right) network remodeling. (B) All eigenvalues of the linearized system have a negative real part, for all values of the growth parameters of dendritic (axonal) elements βd and calcium τCa. In the case of fast synaptic elements (small βd) or slow calcium (large τCa), the system exhibits oscillatory responses. Shown are real parts and imaginary parts of the two “most unstable” eigenvalues, for different values of βd (left column, τCa = 10 s) and τCa (right column, βd = 2). (C) Phase diagram of the linear response. The black region below the red line indicates non-oscillatory responses, which corresponds to the configuration τCa ≤ 3βd s. Dots indicate the parameter configurations shown in panel (A), with matching colors.

In Fig 6A we depict three typical cases of homeostatic growth responses: non-oscillatory (left, green), weakly oscillatory (middle, blue), and strongly oscillatory (right, red) network remodeling. The imaginary parts of the two eigenvalues shown in Fig 6B (bottom left), which are actually responsible for the oscillations, become non-zero when the dendritic and axonal growth parameters are too small (for other parameters, see Section The mechanism of engram formation), that is, when both creation and deletion of elements are too fast. Oscillations also occur for large values of the calcium time constant τCa (Fig 6B, bottom right). The system oscillates if the low-pass filter is too slow as compared to the turnover of synaptic elements. The combination of βd and τCa that leads to the onset of oscillations can be derived from the condition Im[λ] = 0. We can further exploit the fact that the two oscillatory eigenvalues are complex conjugates of each other, and that the imaginary part is zero when the real part bifurcates.

To elucidate the relative importance of the two parameters βd and τCa, we now explore how they together contribute to the emergence of oscillations (Fig 6C). The effect of parameters on oscillations is a combination of the two mechanisms discussed above: low-pass filtering and agility of control. We use the bifurcation of the real part of the least stable eigenvalues as a criterion for the emergence of Im[λ] = 0, which yields the boundary between the oscillatory and the non-oscillatory region (Fig 6C, red line). The black region of the phase diagram corresponds to a simple fixed point with no oscillations, and the green point corresponds to the case shown in Fig 6A, left. From Fig 6B, bottom, we conclude that the fastest oscillations are created when βd is very small, as the oscillation frequency then exhibits a asymptotic dependence. The calcium dependence is a slowly changing function. The specific case indicated by a red point in Fig 6C corresponds to the dynamics shown in Fig 6A, right. Although intermediate parameter values result in damped oscillations (Fig 6C, blue dot; Fig 6A, middle), their amplitude remains relatively small. This link between the two parameters can be used to predict a meaningful range of values for βd: experimentally reported values for τCa are combined with the heuristic of not exhibiting strong oscillations.

The analysis outlined in the previous paragraph clearly suggests that, in order to avoid excessive oscillations, the calcium signal (a proxy for neuronal activity) has to be faster than the process which creates elements. Oscillations in network growth are completely suppressed if it is at least three times faster. Strong oscillations can compromise non-linear stability, as we will show in Section Loss of control leads to bursts of high activity. A combination of parameters that leads to oscillations could also imply a loss of stability for certain stimuli, for reasons explained later. We assume that the calcium time constant is in the range between 1 s and 10 s, in line with the values reported for somatic calcium transients in experiments [34–37]. This indicates that homeostatic structural plasticity should not use element growth parameters smaller than around 0.4. Faster learning must be based on other types of synaptic plasticity (e.g. spike-timing dependent plasticity, or fast synaptic scaling).
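The boundary stated in the caption of Fig 6C (τCa ≤ 3βd for non-oscillatory responses) can be turned into a small helper for checking parameter choices. This is only a sketch of that inequality. The function names are hypothetical, and the linear boundary is taken at face value from the caption rather than recomputed from the eigenvalues.

```python
def is_non_oscillatory(tau_ca, beta_d):
    """True if (tau_ca, beta_d) satisfies the criterion tau_ca <= 3 * beta_d.

    tau_ca is given in seconds and beta_d in the units used in Fig 6.
    """
    return tau_ca <= 3.0 * beta_d

def min_growth_parameter(tau_ca):
    """Smallest beta_d that fully suppresses oscillations for a given tau_ca."""
    return tau_ca / 3.0

# Lower and upper end of the assumed calcium time constant range (1 s to 10 s).
for tau_ca in (1.0, 10.0):
    print(tau_ca, min_growth_parameter(tau_ca))
```

Under this criterion, the fastest calcium time constant considered (1 s) requires a growth parameter of at least about 0.33 to suppress oscillations completely, which is of the same order as the bound of around 0.4 quoted above.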

We now use this analysis framework to compute turnover rates (TOR) and compare them to the values typically found in experiments. In Sections The mechanism of engram formation and Fluctuation-driven decay of engrams we use a calcium time constant of 10 s and growth parameters for synaptic elements of βa = βd = 2, which results in a TOR of around 18% per day (see Methods). Interestingly, [38] measured TOR in the barrel cortex of young mice and found TOR values of around 20% per day. After sensory deprivation, the TOR increased to a maximum of around 30% per day in the barrel cortex (but not elsewhere). In our model, stimulus-dependent rewiring is strongest in the directly stimulated engram E1 (Fig 4C). This particular ensemble rewires close to 25% of its dendritic elements per stimulation cycle. This very large turnover is a result of our experimental design involving a very strong stimulus. In principle, we could choose weaker stimuli, but then we would need many more encoding episodes, as described in [20]. The main results of the paper, however, would remain the same.

Loss of control leads to bursts of high activity

A network whose connectivity is subject to homeostatic regulation generally exhibits robust linear stability around the fixed point of connectivity ϵ, as explained in detail in Section Network stability and constraints on growth parameters and Section Linear stability analysis. But what happens if the system is forced far away from its equilibrium? To illustrate the new phenomena arising, we repeat the stimulation protocol described in Section The mechanism of engram formation with one stimulated ensemble E1. However, we now increase both the strength and the duration of the stimulation (Fig 7A). The network behaves as before during the growth and the stimulation phase (Fig 7A, upper panel), but during the reconsolidation phase connectivity gets out of control. New recurrent synapses are formed at a very high rate until excessive feedback of activity triggered by input from the non-stimulated ensemble causes an explosion of firing rates (Fig 7A, bottom panel). The homeostatic response of the network to such seizure-like activity can only be a brisk decrease in recurrent connectivity. As a consequence, the activity quickly drops to zero and the deregulated growth cycle starts all over.

Fig 7. Non-linear stability of a network with homeostatic structural plasticity.

(A) The engram E1 is stimulated with a very strong external input. As the homeostatic response triggers excessive pruning of recurrent connections, the population E1 is completely silenced after the stimulus is turned off. This, in turn, initiates a strong compensatory overshoot of connectivity and consecutive runaway population activity. The dots with corresponding color show the results of a plastic network simulation, and the solid lines indicate the corresponding predictions from our theory. The theoretical instantaneous firing rate is clipped at 100 Hz. (B) The network settles in a limit cycle of connectivity dynamics. The hysteresis-like behavior is caused by the faster growth of within-engram connectivity as compared to connectivity from the non-engram ensemble . During the initial phase of the cycle, the increase of has no effect on the activity of population E1 yet, as its neurons are not active. Only when the input from population E2 through gets large enough, the rate becomes non-zero and rises to very high values quickly due to already large recurrent connectivity. (C) The calcium signal ϕ adds an additional delay to the cycle. (D) This leads to smoother trajectories when scattering calcium concentration against connectivity. (E) Connectivity within the stimulated group plotted against input connectivity from the non-engram population . The black line shows configurations with constant in-degree, of which the black dot represents the most entropic one. The red dot corresponds to critical connectivity, beyond which the limit cycle behavior is triggered. The limit cycle transients in connectivity space are orthogonal to the line attractor, indicating that the total in-degree is oscillating and no homeostatic equilibrium can be established.

We explore the mechanism underlying this runaway process by plotting the long-term dynamics in a phase plane spanned by recurrent connectivity and the activity of the engram (Fig 7B). A special type of limit cycle emerges, and we can track it using the input connectivity from the rest of the network to the learned engram (, see Fig 7B). The cycle is started when the engram E1 is stimulated with a very strong external input. As the homeostatic response of the network triggers excessive pruning of its recurrent connections, the population E1 is completely silenced, , after the stimulus has been turned off. Then, homeostatic plasticity sets in and tries to compensate the activity below target by increasing the recurrent excitatory input to the engram E1. The growth of intra-ensemble connectivity is faster than the changes in inter-ensemble connectivity , as the growth rate of is quadratic in the rate (Fig 4G), but depends only linearly on it. However, while there are no recurrent spikes, , the increase in intra-ensemble connectivity cannot restore the activity to its target value. As soon as input from the rest of the network via is strong enough to increase the rate to non-zero values, engram neurons very quickly increase their own rate by activating recurrent connectivity . At this point, however, the network has entered a state in which the population activity is bistable, and there is a transition to high activity state, coinciding with a pronounced outbreak of population activity . The increase in rate is then immediately counteracted by the homeostatic controller. Due to the seizure-like activity burst, a high amount of calcium is accumulated in all participating cells. As a consequence, neurons delete many excitatory connections, and the firing rates are driven back to zero. This hysteresis-like cycle of events is repeated over and over again (Fig 7B), even if the stimulus has meanwhile been turned off. 
The period of the limit cycle is strongly influenced by the calcium variable, which lags behind activity (Fig 7C). Replacing activity by recurrent connectivity , a somewhat smoother picture emerges (Fig 7D).

Two aspects are important for the emergence of the limit cycle. Firstly, the specific relation between the time scales of calcium and synaptic elements gives rise to different types of instabilities (see Figs 6, 7C and 7D). Secondly, the rates of creation and deletion of elements do not have the same bounds. While the rate with which elements are created ρ+ is limited by , the rate of deletion ρ− is limited by . This peculiar asymmetry causes the observed brisk decrease in connectivity after an extreme seizure-like burst of activity. An appropriate choice of the calcium time constant, in combination with a strict limit on the rate of deletion, might lead to a system without the (pathological) limit cycle behavior observed in simulations. Finally, we have derived a criterion for bursts of population activity to arise, related to the loss of stability due to excessive recurrent connectivity (see Section Linear stability analysis, Eq 22). Indeed, a network with fixed in-degrees becomes dynamically unstable if the connectivity of subpopulation E1 exceeds the critical value , which for our parameters is at about 29%. The neurons comprising the engram E1 receive too much recurrent input (Fig 7E), and the balance of excitation and inhibition breaks. In this configuration, only one attractive fixed point exists for high firing rates, and a population burst is inevitable. In simulations, the stochastic nature of the system tends to elicit population bursts even earlier, at about 22% connectivity in our hands. We conclude that 22–29% connectivity is a region of bi-stability, with two attractive fixed points coexisting. Early during limit cycle development, the total in-degree is less than ϵN (the connectivity is in the region below the black line in Fig 7E), and the excitation-inhibition balance is broken by positive feedback. Later, the limit cycle settles into a configuration where the total in-degree exceeds ϵN (the black line is crossed from below in Fig 7E).
We have shown before that stable learning leads to silent memories in the network (Section Formation of memory engrams by homeostatic structural plasticity and Engrams represent silent memories, not attractors), but in the case discussed here, sustained high activity is at odds with stable homeostatic control of network growth.

Discussion

We have demonstrated by numerical simulations and by mathematical analysis that structural plasticity controlled by firing rate homeostasis can implement a memory system based on the emergence and the decay of engrams. Input patterns are defined by a stimulation of the corresponding ensemble of neurons in a recurrent network. Presenting two patterns concurrently leads to their association by newly formed synaptic connections between the involved neurons. This mechanism can be used, among other things, to effectively implement classical conditioning. The memories are stored in network connectivity in a distributed fashion, defined by the engram as a whole and not by isolated individual connections. The memories are dynamic. They decay if previously learned stimuli are no longer presented, but they get stronger with every single recall. The memory does not affect the firing rates during spontaneous activity, but even weak memory traces can be identified by the correlation of activity. Upon external stimulation, however, memories become visible as a firing rate increase of a specific pattern. The embedding network is able to perform pattern completion if a partial cue is presented. Finally, we have devised a simple recognition memory mechanism, in which downstream neurons respond with a higher firing rate if any of the previously learned patterns is stimulated.

Memory engrams emerge because the homeostatic rule acts as an effective Hebbian rule with associative properties. This unexpected behavior is achieved by an interaction between the temporal dynamics of homeostatic control and a network-wide distributed formation of synapses. Memory formation is a fast process, exploiting degrees of freedom orthogonal to a line attractor while it reacts to the stimulus, and storing memories as positions on the line attractor. The spiking of neurons introduces fluctuations, which lead to the decay of memory on a slow time scale through diffusion along the line attractor. In absence of specific stimulation, the network slowly relaxes to the most entropic configuration of uniform connectivity across all pairs of neurons. In contrast, multiple repetitions of a stimulus push the system to states of lower entropy, corresponding to stronger memories. The dynamics of homeostatic networks is, by construction, very robust for a wide parameter range. Instabilities occur when the time scales of creating and deleting synaptic elements are much smaller than the time scales of the calcium trace, which feeds the homeostatic controller. Under these conditions, the network displays oscillations of fast decaying amplitudes, but it remains linearly stable. Stability is lost, though, when the stimulus is too strong. In this case, the compensatory forces lead to a limit cycle dynamics with pathologically large amplitudes.

Experiments involving engram manipulation have increased our current knowledge about this type of memory [28], and some recent findings are actually in accordance with our model. For example, memory re-consolidation was disrupted if a protein synthesis inhibitor was administered immediately after the retrieval cue during an auditory fear conditioning experiment [39]. In our model, engram connectivity initially decreases upon stimulation, and memories are briefly destabilized and consolidated again after every retrieval. Interfering with plasticity during or after retrieval could, therefore, also lead to active forgetting. Our model also predicts a decreased connectivity following cue retrieval, which should be visible if the disruption is imposed at the right point in time. We are, however, not aware of any experiments demonstrating this decreased connectivity. It is conceivable, therefore, that additional mechanisms exist in biological networks that actively counteract such decay. Another example of experiments that are in accordance with our model refers to excitability and engram allocation. Recent experiments have shown that neurons are more likely to be allocated to an engram if they are more excitable before stimulation [40, 41]. In our model, more excitable neurons would fire more during stimulation, making them more likely to become part of an engram as a result of the increased synaptic turnover. Moreover, our analysis of the model suggests that decreasing excitability of some neurons soon after stimulation should also increase the likelihood that they become part of the engram. Further research with our model of homeostatic engram formation might include even more specific predictions for comparison with experiments involving engram manipulation. This would also help to better characterize and understand the process of engram formation in the brain.

The exact rules governing the sprouting and pruning of spines and boutons, and how these depend on neuronal activity, are unknown. While some studies show a constant turnover of spines, but fixed spine density [38, 42], other studies report either an increased spine density after stimulation in LTP protocols [43–45], or a loss of spines after persistent depolarization [46, 47]; see [48] for a review on activity dependent structural plasticity. These different results might seem contradictory at first, but our model suggests that they could all be related. This is because the specific connectivity within the stimulated population undergoes a bimodal change over time (Fig 4D), and whether one encounters an increase or a decrease in spine density would depend on when the measurements were performed. More specifically, our model predicts that the overall spine density remains unchanged before and after stimulation (Fig 4C), notwithstanding a small temporary decrease during stimulation. Most importantly, however, our model emphasizes a reallocation of synapses, resulting in higher connectivity between stimulated neurons and reduced connectivity to non-stimulated neurons. This means that the overall number of spines is the same before and after stimulation (same number of dendritic elements before and after stimulation on Fig 4). However, the number of spines connecting to other stimulated neurons is higher after stimulation (green line on Fig 4D) and the number of spines connecting to non-stimulated neurons is smaller (grey lines on Fig 4D). Demonstrating such specific effects experimentally would require establishing, for each synapse separately, whether the presynaptic and the postsynaptic neurons were stimulated or not. To our knowledge, there is currently no experimental study available reporting such labeled connectivity data.
As the model is inherently stochastic, it is not necessary that a synapse is deleted each time its presynaptic or postsynaptic neuron is stimulated. Instead, random deletions between any pair of stimulated neurons might lead to a small change in overall engram connectivity. Although small, these decrements in connectivity accumulate over time, as a result of repetitive stimulation [20].

High turnover rates of synapses increase the volatility of network structure. This, in turn, poses a grand challenge to any synaptic theory of memory [11], and it is not yet clear how memories can persist at all in a system that is constantly rewiring [49]. In our model, the desired relative stability of memories is achieved by storing them with the help of a slow manifold mechanism. An estimation of turnover rates in our model amounts to about 18% per day, which is comparable to the 20% per day that has been measured in mouse barrel cortex [38]. In general, however, adult mice have more persistent synapses, with much lower turnover rates of as little as 4% per month [10, 50, 51]. This can be accounted for in our model, as increased growth parameters of axonal, βa, and dendritic, βd, elements would lead to smaller synaptic turnover rates and, consequently, to more persistent spines (see Methods). The downside of increasing the growth parameters is that the learning process becomes slower. The turnover rate of 18% per day corresponds to a specific value of the parameter βd. It is conceivable, however, to implement an age-dependent parameter βd. For example, one could have a high turnover rate in the beginning and let the growth become slower with time. This would reflect the idea that the brains of younger animals are more plastic than the brains of older ones. As animals grow older, synapses become more persistent. Similar to certain machine learning strategies (“simulated annealing”), this could be the optimal strategy for an animal, which first explores a given environment and then exploits the acquired adaptations to thrive in it.
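
To compare the turnover rates quoted above, which are given per day and per month, it helps to put them on a common time base. The following sketch does this under two assumptions that are not taken from the cited studies: every synapse is replaced independently with a constant hazard, and a month has 30 days. The function name `convert_turnover` is hypothetical.

```python
def convert_turnover(fraction, from_days, to_days):
    """Convert a turnover fraction measured over `from_days` into the
    equivalent fraction over `to_days`.

    Assumption: each synapse is replaced independently with a constant
    hazard, so the surviving fraction decays geometrically in time."""
    survival = (1.0 - fraction) ** (to_days / from_days)
    return 1.0 - survival

DAYS_PER_MONTH = 30  # assumption made for this illustration

# 4% per month (persistent synapses in adult mice), expressed per day
adult_per_day = convert_turnover(0.04, DAYS_PER_MONTH, 1)
# 18% per day (estimate for the model), expressed per month
model_per_month = convert_turnover(0.18, 1, DAYS_PER_MONTH)

if __name__ == "__main__":
    print(f"adult turnover per day:   {100 * adult_per_day:.3f}%")
    print(f"model turnover per month: {100 * model_per_month:.2f}%")
```

Under these assumptions, 4% per month corresponds to roughly 0.14% per day, about two orders of magnitude below the 18% per day estimated for the model. This gives a sense of how strongly the growth parameters would have to change to account for the persistent synapses of adult animals.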

Recently, [12] showed that Hebbian structural plasticity could be the force behind memory consolidation through a process of stabilization of connectivity, which is based on the existence of an attractive fixed point in the plastic network structure. In our model, because of the decay along the slow manifold, memories are never permanent, and repeated stimulation is necessary to refresh them. We would argue, though, that forgetting is an important aspect of any biological system. In our case, we observe an exponential decay, if the stimulus is no longer presented. Furthermore, [25] has shown that a network can repair itself after lesion using a structural plasticity model similar to the one used in our current paper. Together with our results, this suggests that a structural perturbation of engrams (e.g. by removing connections or deleting neurons) could actually trigger a “healing” process and eventually rescue the memory. In the case of unspecific lesions, however, such perturbation might also lead to the formation of “fake” memories, or to the false association of actually unconnected memory items.

It appears that the attractor metaphor of persistent activity is not consistent with our model of homeostatic plasticity. As explained in Section Loss of control leads to bursts of high activity, homeostatic control tends to delete connections between neurons which are persistently active, and in extreme cases could even lead to pathological oscillations. In the case discussed in Section Engrams represent silent memories, not attractors, in contrast, the memories formed are “silent” (elsewhere classified as “transient” [52]), very different from the persistent activity usually considered in working memory tasks (elsewhere classified as “persistent”, or “dynamic” [52]). It was previously shown that silent memories can emerge in networks with both excitatory and inhibitory plasticity [4–6]. In all these cases, inhibitory plasticity allows the memory to be silent, but memory formation still relies on explicit Hebbian plasticity rules of excitatory connections. Some authors [5, 6] apply STDP to the excitatory connections, and others [4] just impose an increase in weight of excitatory-to-excitatory connections between neurons forming the assembly. As we understand it, such an increase could be achieved by Hebbian plasticity of excitatory connections. In contrast, our work shows that silent memories could potentially also be formed without correlation-based Hebbian plasticity on excitatory connections. Persistent activity, on the other hand, seems to be exclusively consistent with Hebbian plasticity models [2, 3, 12]. One consequence of the lack of persistent activity in our model is that pattern completion is restricted to the stimulation period. As previously seen, in our model, stimulating partial patterns leads to the subsequent activation of the full pattern (Fig 3C and 3D). Given that there is no persistent activity, however, the firing rates of all neurons go back to their baseline values as soon as the stimulus is gone. 
In models with persistent activity, on the other hand, a brief stimulation of partial patterns leads to activation of the full pattern, which remains active even after the stimulus is removed.

One possible way to integrate both mechanisms in a single network would be to keep their characteristic time scales separate. This could be accomplished, for example, by choosing faster time constants for Hebbian functional plasticity, and slower ones for homeostatic structural plasticity. An effective separation of time scales could also be obtained if homeostatic structural plasticity used somatic calcium as a signal, but did not exert any control over the intermediate calcium levels in dendritic spines [53]. This might eliminate the need to specify a target rate in the model, and fast functional plasticity would shape connectivity in the allowed range of values where neurons have a distribution of firing rates reflecting previous experience. This induces a natural separation of time scales, where memories encoded by homeostatic plasticity would last much longer than in the present model, as only extreme transients would trigger rewiring. Homeostatic plasticity would perform Bayesian-like inference similar to structure learning, while functional plasticity would perform fast associative learning, similar to the system proposed by [54]. Integrating both functional and structural plasticity opens the possibility that different information is represented by the number of synapses and by the synaptic weights between pairs of neurons. This would allow for the encoding of more complex patterns. If the mean connectivity among neurons establishes the engram, the synaptic weights would offer additional degrees of freedom to encode information and to modulate the activity of individual neurons within the engram in an independent way. Different plasticity rules could thus be used to encode different temporal aspects of neuronal activity in either synaptic weights or synaptic connectivity.

Synaptic plasticity also influences the joint activity dynamics of neurons, which can be assessed with appropriate data analysis methods. Functional and effective connectivity, for example, are inferred from measured neuronal activity [55–57]. One implication of our results is that different activity dependent plasticity rules can lead to the same changes in functional and effective connectivity. Specifically, changes in network connectivity triggered by homeostatic plasticity also lead to changes in correlation. Therefore, these changes appear to be driven by correlation, and a correlation detector for each synapse might be postulated to implement it. This conclusion would be wrong, however. The increased correlation and the Hebbian properties of the homeostatic model emerge as a network property due to the availability of free elements and random self-organization. It remains a challenge to devise experiments that can differentiate between these fundamentally different possibilities.

We showed that very strong stimulation can damage the network by deleting too many synapses in a short time. The compensatory processes, which normally guarantee stability, get out of control and lead to seizure-like bursts of very high activity. This pathological behaviour of the overstimulated system could contribute to the etiology of certain brain diseases, such as epilepsy. The disruption of healthy stable activity is caused by a broken excitation-inhibition balance due to the high activity of one subgroup (Fig 7A). This, in turn, leads to the emergence of a runaway connectivity cycle (Fig 7E). Strategies for intervention in this case must take the whole cycle into account, and not just the phase of extreme activity. Inhibiting neurons during the high-activity phase, for example, could have an immediate effect, but it would not provide a sustainable solution to the problem of runaway connectivity. Our results suggest, perhaps counterintuitively, that additional excitation of the highly active neurons could actually terminate the vicious cycle quite efficiently. It is important to note, however, that our system has not been designed as a model of epilepsy, and therefore does not reproduce all of its features [58]. In particular, seizure occurrence is stochastic in nature, but the limit cycle we describe here implies periodic activity and periodic dynamics of connectivity. Although increased mossy-fibre connectivity among granule cells is known to be one of the structural changes related to epilepsy [59], there is currently no evidence for cyclic changes of this recurrent connectivity. Furthermore, the process of epileptogenesis in real brains is accompanied by other structural changes, such as neuronal death and glia-related tissue reorganization. In any case, our results shed light on a novel mechanism of pathological structural overcompensation and could potentially instruct alternative approaches in future epilepsy research.