Abstract

An analysis of scar tissue is necessary to understand the pathological tissue conditions during or after the wound healing process. Hematoxylin and eosin (HE) staining has conventionally been applied to understand the morphology of scar tissue. However, scar lesions are difficult to analyze across a whole slide image. The current study aimed to develop a method for the rapid and automatic characterization of scar lesions in HE-stained scar tissues using supervised and unsupervised learning algorithms. The supervised learning used a Mask region-based convolutional neural network (RCNN), implemented with MMDetection tools, to learn a pattern from a data representation. The K-means algorithm characterized the HE-stained tissue and extracted the main features, such as the collagen density and directional variance of the collagen. The Mask RCNN model effectively predicted scar images using various backbone networks (e.g., ResNet50, ResNet101, ResNeSt50, and ResNeSt101) with high accuracy. The K-means clustering method successfully characterized the HE-stained tissue by separating the main features in terms of the collagen fiber and dermal mature components, namely, the glands, hair follicles, and nuclei. A quantitative analysis of the scar tissue in terms of the collagen density and directional variance of the collagen confirmed differences of approximately 50% between the normal and scar tissues. The proposed methods were utilized to characterize the pathological features of scar tissue for an objective histological analysis. The trained model is time-efficient when used for detection in place of a manual analysis. Machine learning-assisted analysis is expected to aid in understanding scar conditions, and to help establish an optimal treatment plan.

Keywords: scar tissue, machine learning, structural analysis, pathological features, histological analysis

1. Introduction

Scar tissue develops as a result of tissue damage caused by injury, surgery, or burns. Inflammation, proliferation, and remodeling are the three stages of the healing process [1]. Scars can be faded or removed using a variety of techniques, including corticosteroid injections [2], cryotherapy [3], and laser therapy [4]. Identifying and evaluating scar tissue is the most crucial step in determining the extent of tissue damage and planning a suitable treatment pathway for scar management and removal [5]. However, determining and analyzing the scar regions in the skin tissue remains challenging. The limited field of view often restricts analysis of the entire scar lesion in the whole slide image (WSI). For instance, recent studies have manually located scar lesions to analyze a region of interest (ROI) in the scar area [6,7]. An in-depth analysis of a scar region is also difficult, owing to the comparable color distributions in hematoxylin and eosin (HE)-stained images. Although morphological changes in the scar region can be identified and distinguished, histological analyses often suffer from ambiguous classifications of tissue components in the scar region [8,9]. Additionally, HE-stained images require high memory usage, and their manual analysis is often labor-intensive.

The orientation of collagen fibers is an important factor in distinguishing the tissue characteristics between normal and scar tissue [10,11]. Several studies have used second harmonic generation (SHG) microscopy to examine collagen fiber formation [12,13]. Bayan et al. [14] used SHG imaging combined with the Hough transform to characterize the important features and orientation of collagen fibers in collagen gels for disease diagnosis. Histological analysis has also been used to visualize and analyze collagen fiber formation [15]. Several histological dyes, including HE [16], picrosirius red [17] and Masson’s trichrome (MT) [18] have been used to stain tissue components, to improve tissue contrast, and to highlight cellular features for in-depth analysis. MT is one of the most commonly used staining dyes in histological images for identifying the distribution of collagen [19]. MT stains collagen fibers in blue using three staining colors, so as to distinguish them from the other microstructures [20]. Tri Tram et al. used a convolutional neural network (CNN) model to classify both normal and scar tissues, as well as to visually characterize collagen fiber microstructures based on features extracted from the developed model, such as the collagen density and directional variance (DV) [21].

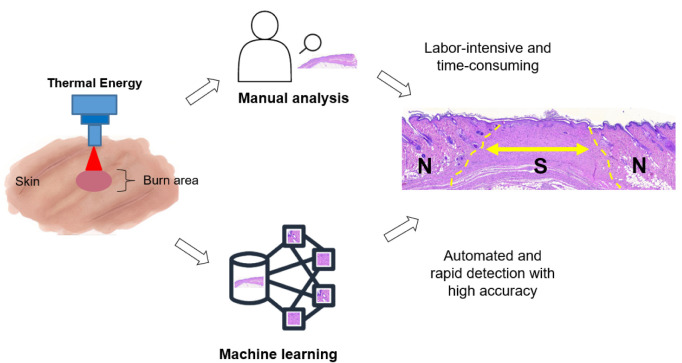

Machine learning and deep learning techniques have been used to analyze medical images from X-rays, computed tomography, ultrasound, and magnetic resonance imaging, and have shown high accuracy and reliability [22]. Machine learning techniques can help increase performance (i.e., speed, automation, and accuracy) and provide reliable results from iterative calculations, making machine learning analysis more effective than conventional methods (Figure 1) [23]. Unsupervised machine learning, such as K-means, is used to segment and cluster unlabeled data [24]. The CNN has become the most popular deep learning model, as it is capable of recognizing key features by learning high-level features through convolutional and pooling processes [25]. CNN methods have been widely applied in the classification and segmentation of a vast number of medical images [26]. The class activation mapping method is useful for understanding the predictions by mapping important features based on the convolution output. Transfer learning and progressive resizing methods could also increase the performance of CNN model training [27].

Figure 1.

Schematic comparison between manual detection and machine learning for histology analysis of scar tissue (ML = Machine learning; N = Normal; S = Scar).

In view of the above, this study developed machine and deep learning techniques based on object segmentation to classify and characterize scar lesions in a WSI with a Mask region-based CNN (Mask RCNN), and to overcome the limitations of current histology analysis. The Mask RCNN extends the Faster RCNN, which performs classification and box regression, by adding a branch that predicts a mask for each ROI [28]. The feature pyramid network (FPN) has also been used to increase the accuracy and speed of the analysis [29].

2. Materials and Methods

2.1. In Vivo Scar Model

Four male Sprague Dawley rats (7 weeks; 200–250 g) were raised in a controlled room (temperature = 25 ± 2 °C and relative humidity = 40–70%) with an alternating 12 h light/dark (wake-sleep) cycle (i.e., on at 7 a.m. and off at 7 p.m.). During the tests, all animals were under respiratory anesthesia using a vaporizer system (Classic T3, SurgiVet, Waukesha, WI, USA). Initially, 3% isoflurane (Terrell™ isoflurane, Piramal Critical Care, Bethlehem, PA, USA) in 1 L/min oxygen was delivered into an anesthesia induction chamber, and 1.5% of isoflurane was supplied to maintain anesthesia via a nosecone. Then, an electric hair clipper and waxing cream (Nair Sensitive Hair Removal Cream, Nair, Australia) were used to completely remove the hair on the buttocks of the anesthetized animals for maximum light absorption by the skin. All animal experiments were approved by the Institutional Animal Care and Use Committee of Pukyong National University (Number: PKNUIACUC-2019-31). The current study used laser-induced thermal coagulation to fabricate a reliable mature scar model on the animal skin, according to previous research [30]. A 1470-nm wavelength laser system (FC-W-1470, CNI Optoelectronics Tech. Co., China) was employed in a continuous-wave mode to induce the thermal wound, owing to the strong water absorption (absorption coefficient = 28.4 cm⁻¹ at 1470 nm) and short optical penetration depth in the skin. A 600-µm flat optical fiber was used to deliver the laser light to the target tissue. Before laser irradiation, a laser power thermal sensor (L50(150)A-BB-35, Ophir, Jerusalem, Israel) in conjunction with a power meter (Nova II, Ophir, Jerusalem, Israel) was used to measure the output power of the optical fiber. For testing, the flat fiber was situated 25 mm above the skin surface, and the beam spot size on the surface was 0.3 cm². The targeted tissue was irradiated at 5 W (power density = 16.7 W/cm²) for 30 s (energy density = 500 J/cm²) to generate a circular thermal wound with minimal or no carbonization. As a result, a 1–2 mm thick section of coagulated tissue, 10 mm in diameter, was created in the epidermis and dermis on each side of the animal buttocks (N = 8). Four weeks after irradiation, the thermal wound became dense scar tissue via wound healing.
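The irradiation parameters quoted above can be cross-checked with a short calculation. The sketch below assumes that the reported beam spot value of 0.3 is an area in cm², which is the reading that reproduces the stated power and energy densities; the variable names are illustrative only.

```python
# Cross-check of the stated laser dosimetry (values taken from the text).
power_w = 5.0          # laser output power (W)
spot_area_cm2 = 0.3    # beam spot on the skin, ASSUMED to be an area in cm^2
exposure_s = 30.0      # irradiation time (s)

power_density = power_w / spot_area_cm2        # W/cm^2
energy_density = power_density * exposure_s    # J/cm^2

print(f"power density  = {power_density:.1f} W/cm^2")
print(f"energy density = {energy_density:.0f} J/cm^2")
```

Under this assumption the calculation gives about 16.7 W/cm² and 500 J/cm², matching the values reported for the protocol.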

2.2. Histology Preparation

All of the tested scar tissues were harvested from animals 30 days after laser irradiation. The extracted scar tissues included both the scar tissue and the surrounding normal tissue. After tissue harvesting, all samples were fixed in 10% neutral buffered formalin (Sigma Aldrich, St. Louis, MO, USA) for two days. The fixed samples were dehydrated, cleared, and infiltrated sequentially using an automatic tissue processor (Leica TP1020; Leica, Wetzlar, Germany). Paraffin blocks were fabricated and divided into slices with a thickness of 5 µm to prepare histology slides. The histology slides were serially sectioned (N = 6 slides per block; total of 48 slides) at 50-µm intervals to monitor morphological variations. All of the histological slides were stained with HE (American MasterTech, Lodi, CA, USA) to qualitatively assess the morphological changes and visualize the collagen fiber distribution in the tissue. A Motic digital slide assistant system (Richmond, British Columbia, Canada; 40X and 0.26 µm/pixel resolution) was employed to capture microscopic images of the HE-stained histology slides (Figure 2) and to prepare the datasets for machine learning.

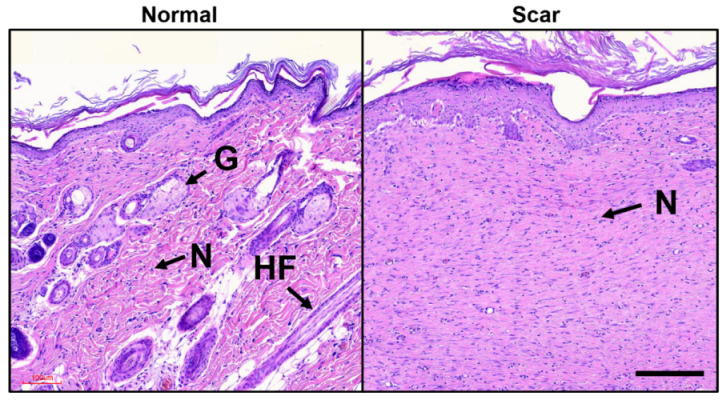

Figure 2.

Morphology of skin tissue stained with hematoxylin and eosin (HE): normal tissue (left) shows hair follicles (HFs), glands (Gs), and nuclei (N), whereas scar tissue (right) shows the absence of both HFs and Gs (scale bar = 100 µm; 10×).

2.3. Scar Recognition: Mask Region-Based Convolutional Neural Network (RCNN)

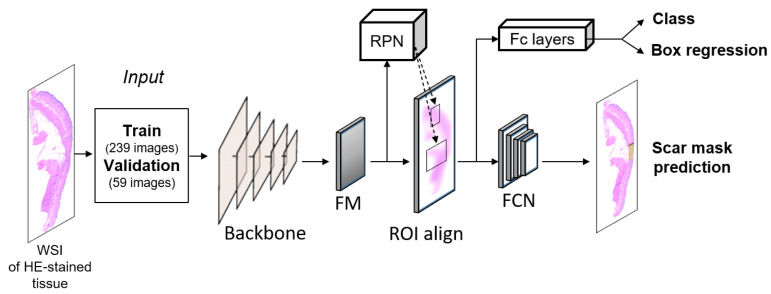

In this study, the Mask RCNN was selected for object detection and instance segmentation to recognize the scar in the WSIs of the HE-stained tissues. Mask RCNN provides classification, localization, and mask prediction, thereby providing an essential benchmark for object detection [31]. The main idea is to label each pixel corresponding to each detected object by adding a parallel mask branch [32]. The Mask RCNN was adapted from MMDetection tools, a toolset comprising numerous methods for object detection [33]. Although the detection methods differ, the model architectures in MMDetection share typical components, such as a convolutional backbone, neck, and head. Here, the convolutional backbone was used to extract the features from the entire image [34], and four backbones were selected to extract the features from the WSI: ResNet 50, ResNet 101, ResNeSt 50, and ResNeSt 101. Next, a region proposal network (RPN) was used as a neck stage to provide a sliding-window, class-agnostic object detector [35]. The RPN was developed to predict both the bounding box and class labels from the extracted features [36]. Object detection models can fail when objects vary in size or have low resolution. The RPN in the Mask RCNN uses multi-scale anchor boxes to enhance the detection accuracy by extracting features at multiple convolution levels [37,38]. The ROI head generated mask predictions, classifications, and bounding box predictions. The entire process is shown in the Mask RCNN diagram in Figure 3.

Figure 3.

Block diagram of Mask region-based convolutional neural network (RCNN) algorithm for scar identification from histology slides (FM = feature maps; RPN = region proposal network; FCN = fully convolutional network; FC layer = fully connected layer).

2.4. Machine Learning

Obtaining medical data is difficult, which leads to small sample sizes. Data augmentation addresses this problem by generating more samples, for example through image manipulation or a generative adversarial network model [39]. Here, image manipulation by pure rotation was used to generate 372 whole slide images from the original 93 slide images. These images were randomly assigned to train (239 images, 64%), validate (59 images, 16%), and test (74 images, 20%) the neural network model. This train-validate-test split was used to validate the deep learning method: the training set was used to train the model, the validation set was used to assess the performance of the model during the training process, and the test set was used for the final validation. As the dataset had images of various sizes, the Mask RCNN model automatically resized the images to a maximum scale of 1333 × 800 [40]. The scar detection Mask RCNN model was trained through the entire set of stages for 600 epochs, and was implemented in Python with the PyTorch framework. The use of fine-tuning affected the computational time and memory cost in each epoch. The fine-tuning employed an adjustment parameter to enhance the effectiveness of the training process, and was repeated frequently to increase the accuracy of the model [41,42], as shown in Table 1. After the training was completed, checkpoint files were obtained containing the Mask RCNN model parameters for the target detection and identification. The test set of scar data was then run through the Mask RCNN testing program with the trained model parameters. Regarding the implementation details, an NVIDIA GeForce RTX 2080 Ti GPU was used for the entire process. Torchvision 0.7.0, OpenCV 4.5.2, mmcv 1.3.5, and MMDetection 2.13.0 were installed as the environment for all models.
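The augmentation and split sizes described above can be reproduced with a minimal sketch. It assumes that the four-fold increase (93 to 372 images) comes from rotations of 0°, 90°, 180°, and 270°, which the text does not state; the function names `rotate_ids` and `split` are hypothetical.

```python
import random

def rotate_ids(slide_ids, angles=(0, 90, 180, 270)):
    """Pair every slide ID with each rotation angle (ASSUMED angles)."""
    return [(sid, ang) for sid in slide_ids for ang in angles]

def split(items, val_frac=0.16, test_frac=0.20, seed=0):
    """Shuffle and split into train/validation/test subsets."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_test = int(len(items) * test_frac)   # 20% for the final test
    n_val = int(len(items) * val_frac)     # 16% for validation
    test = items[:n_test]
    val = items[n_test:n_test + n_val]
    train = items[n_test + n_val:]         # remainder (about 64%)
    return train, val, test

samples = rotate_ids(range(93))
train, val, test = split(samples)
print(len(samples), len(train), len(val), len(test))
```

With these fractions the sketch yields 372 samples split into 239, 59, and 74 images. Splitting after augmentation can place rotated copies of one slide in different subsets; splitting by slide ID before rotation would avoid that.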

Table 1.

Hyperparameter setting.

| Hyperparameter | Configuration |
|---|---|
| Optimizer | Stochastic gradient descent (SGD) |
| Learning rate | 0.0025 |
| Epochs | 600 |
| Batch size | 2 |
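For illustration, the settings in Table 1 could be expressed as an MMDetection 2.x style configuration fragment. This is a sketch, not the configuration used in the study: the base file name, the single "scar" class, and the momentum and weight decay values (common MMDetection defaults) are assumptions.

```python
# Sketch of an MMDetection 2.x style config; config files are plain Python.
# ASSUMED: base config file name and a single foreground class ("scar").
_base_ = "./mask_rcnn_r101_fpn_1x_coco.py"

model = dict(
    roi_head=dict(
        bbox_head=dict(num_classes=1),   # one class: scar
        mask_head=dict(num_classes=1),
    )
)

# From Table 1: SGD optimizer with a learning rate of 0.0025.
# ASSUMED: momentum and weight_decay, which Table 1 does not report.
optimizer = dict(type="SGD", lr=0.0025, momentum=0.9, weight_decay=0.0001)

# From Table 1: 600 epochs and a batch size of 2 (single GPU).
runner = dict(type="EpochBasedRunner", max_epochs=600)
data = dict(samples_per_gpu=2, workers_per_gpu=2)
```

Swapping the backbone would then amount to pointing `_base_` at a different backbone configuration while keeping the remaining settings unchanged.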

2.5. Evaluation Metrics

The precision, recall, loss, accuracy, and confidence score were obtained as evaluation metrics using the MMDetection tools. In general, the multi-task loss function ($L$) of a Mask RCNN combines the losses of the classification ($L_{cls}$), localization ($L_{box}$), and segmentation mask ($L_{mask}$), as follows:

$$L = L_{cls} + L_{box} + L_{mask} \tag{1}$$

The loss function for classification and localization ($L_{cls} + L_{box}$) is defined as follows:

$$L_{cls} + L_{box} = \frac{1}{N_{cls}} \sum_i \ell_{cls}(p_i, p_i^*) + \frac{\lambda}{N_{box}} \sum_i p_i^* \, L_1^{smooth}(t_i - t_i^*) \tag{2}$$

In the above, $i$ is the index of an anchor in the mini-batch, $p_i$ and $p_i^*$ are the predicted probability and ground-truth label, respectively, $t_i$ and $t_i^*$ are vectors representing the four coordinates of the predicted box and of the ground-truth box associated with the positive anchor, $\ell_{cls}$ is the per-anchor log loss, $N_{cls}$ and $N_{box}$ are normalization terms, and $\lambda$ is a balancing parameter. The model learned a mask for each class, and no competition occurred among the classes for generating the masks. The mask loss was defined as the average binary cross-entropy loss, as follows:

$$L_{mask} = -\frac{1}{m^2} \sum_{1 \le i,j \le m} \left[ y_{ij} \log \hat{y}_{ij}^{k} + (1 - y_{ij}) \log\left(1 - \hat{y}_{ij}^{k}\right) \right] \tag{3}$$

Here, $y_{ij}$ is the label of a cell $(i, j)$ in the true mask for the region of size $m \times m$, and $\hat{y}_{ij}^{k}$ is the predicted value of the same cell in the mask learned for the ground-truth class $k$ [43]. To obtain the confidence score, the SoftMax classifier converted the class scores into probabilities [44]. All of the evaluation metrics were computed using MMDetection tools [33], and were adapted to a scar detection artificial intelligence (AI) model.
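The mask loss described above, an average binary cross-entropy over an m × m region, can be sketched in a few lines of NumPy. The function name `mask_bce_loss` and the clipping constant `eps` are illustrative choices and are not part of MMDetection.

```python
import numpy as np

def mask_bce_loss(y_true, y_pred, eps=1e-7):
    """Average binary cross-entropy between a true mask and a predicted mask.

    y_true: m x m array of 0/1 labels.
    y_pred: m x m array of predicted probabilities for the ground-truth class.
    eps:    clipping constant (ASSUMED) that keeps log() finite.
    """
    y_true = np.asarray(y_true, dtype=np.float64)
    y_pred = np.clip(np.asarray(y_pred, dtype=np.float64), eps, 1.0 - eps)
    per_cell = y_true * np.log(y_pred) + (1.0 - y_true) * np.log(1.0 - y_pred)
    return float(-per_cell.mean())   # mean over the m^2 cells

true_mask = np.array([[1, 1], [0, 0]])
good = mask_bce_loss(true_mask, [[0.99, 0.99], [0.01, 0.01]])
uninformative = mask_bce_loss(true_mask, np.full((2, 2), 0.5))
print(good, uninformative)
```

A prediction close to the true mask gives a loss near zero, whereas a uniform prediction of 0.5 gives a loss of ln 2 per cell.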

2.6. Scar Extraction

To evaluate the performance of the scar mask prediction, the scar area measurements from the conventional method and machine learning were compared. In the conventional method, the WSI was cropped, and non-scar areas were selectively removed. ImageJ (National Institutes of Health, Bethesda, MD, USA) was used to estimate the scar area by obtaining the scar pixel values [45]. The scar measurements for mask prediction were automatically calculated by using the proposed model for each backbone. An analysis of variance (ANOVA) was performed to determine the statistical significance of the difference between the conventional method and the proposed method.

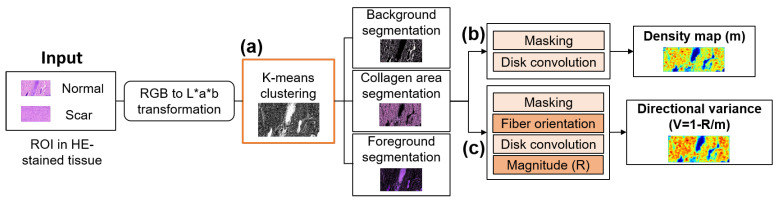

2.7. Tissue Segmentation: K-Means Clustering

The HE-stained tissue images were segmented to distinguish and clarify the morphologies of the normal and scar tissues. Tissue segmentation using K-means clustering was deployed to cluster the color of each pixel in each tissue image, and then to segment the main features, such as the collagen, hair follicles (HFs), glands (Gs), and nuclei (N). ROI inputs of various sizes were tested, i.e., 886 × 1614, 750 × 500, 500 × 500, and 250 × 250 pixels. Figure 4 describes the entire scar characterization process. The first step comprised the transformation of the tissue color space (Figure 4a). As the ROI input had an RGB color space that was unstable in terms of chrominance and luminance, the image was converted from RGB to CIE L*a*b*, a more stable color space [46]. All of the color information was in the ‘a*’ and ‘b*’ layers [47], and L* was used to adjust the lightness and darkness of the image. After the color space transformation, the K-means clustering algorithm separated the data points from the tissue image into three groups: collagen area, foreground, and background. The algorithm labeled these groups with a number (0, 1, or 2). It measured the distance between each data point and each of the three centroids, and then assigned each data point to its closest centroid. After grouping, the K-means clustering algorithm provided the collagen area segmentation (CAS), foreground segmentation (FS), and background segmentation.

Figure 4.

Block diagram of collagen density extractor using (a) K-Means clustering, (b) collagen density extractor, and (c) directional variance of collagen.
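The color segmentation step in Figure 4a can be sketched as an RGB to CIE L*a*b* conversion followed by K-means clustering with three centroids. The version below is a NumPy-only illustration under stated assumptions: a D65 white point, clustering on the a* and b* channels only, and a deterministic farthest-first initialization. None of these details are specified in the text, and the function names are hypothetical.

```python
import numpy as np

def rgb_to_lab(rgb):
    """Convert an sRGB image (uint8, H x W x 3) to CIE L*a*b* (ASSUMED D65)."""
    c = rgb.astype(np.float64) / 255.0
    lin = np.where(c <= 0.04045, c / 12.92, ((c + 0.055) / 1.055) ** 2.4)
    m = np.array([[0.4124564, 0.3575761, 0.1804375],
                  [0.2126729, 0.7151522, 0.0721750],
                  [0.0193339, 0.1191920, 0.9503041]])
    xyz = lin @ m.T / np.array([0.95047, 1.0, 1.08883])  # normalize by white
    f = np.where(xyz > (6 / 29) ** 3, np.cbrt(xyz),
                 xyz / (3 * (6 / 29) ** 2) + 4 / 29)
    L = 116 * f[..., 1] - 16
    a = 500 * (f[..., 0] - f[..., 1])
    b = 200 * (f[..., 1] - f[..., 2])
    return np.stack([L, a, b], axis=-1)

def kmeans_ab(lab, k=3, n_iter=50):
    """Cluster pixels on their (a*, b*) values; returns a label map and centroids."""
    pts = lab[..., 1:].reshape(-1, 2)
    # Farthest-first initialization (ASSUMED): deterministic, spreads centroids.
    cent = [pts[0]]
    for _ in range(k - 1):
        d = np.min([np.linalg.norm(pts - c, axis=1) for c in cent], axis=0)
        cent.append(pts[d.argmax()])
    cent = np.array(cent)
    for _ in range(n_iter):
        # Distance from every data point to every centroid, then nearest one.
        dist = np.linalg.norm(pts[:, None, :] - cent[None, :, :], axis=2)
        labels = dist.argmin(axis=1)
        new = np.array([pts[labels == j].mean(axis=0) if np.any(labels == j)
                        else cent[j] for j in range(k)])
        if np.allclose(new, cent):
            break
        cent = new
    return labels.reshape(lab.shape[:2]), cent

# Synthetic test image: pink (collagen-like), purple (nuclei-like), white.
img = np.zeros((6, 6, 3), dtype=np.uint8)
img[:, 0:2] = (230, 150, 180)
img[:, 2:4] = (90, 40, 130)
img[:, 4:6] = (255, 255, 255)
labels, centroids = kmeans_ab(rgb_to_lab(img))
print(labels)
```

On this synthetic image each color block receives its own label. Which label number corresponds to collagen, foreground, or background depends on the initialization and would need to be resolved from the centroid colors.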

2.8. Collagen Density and Directional Variance of Collagen

The CAS, as characterized by K-means clustering, was selected as a feature for generating a collagen density map (CDM) (Figure 4b). A collagen mask was created by masking the collagen-positive pixels, and was convolved with an Airy disk kernel to produce a map of the fiber density ($m$) [48]. Moreover, a vector summation method [49] was adopted to calculate the fiber orientation (Figure 4c). The developed algorithm defined the fiber orientation by identifying the variability of the image intensity along the different directions surrounding each pixel within an image. All of the positive pixels passing through the center pixel and the angles associated with these orientation vectors were calculated. After the X and Y components of the orientation were acquired, a spatial convolution using the Airy disk kernel was conducted to obtain the vector summation and determine the magnitude of the resultant orientation ($R$). Lastly, normalization was performed to attain the DV of the collagen, as follows:

$$V = 1 - \frac{R}{m} \tag{4}$$

Here, $V$, $R$, and $m$ denote the DV, magnitude of the resultant orientation, and CDM, respectively.
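The vector summation behind the DV can be illustrated with a minimal sketch, assuming the normalization V = 1 − R/m. Two simplifications are made and should be read as assumptions: a uniform box kernel stands in for the Airy disk kernel, and the orientation angles are doubled before summation so that θ and θ + π count as the same fiber direction. The helper names are hypothetical.

```python
import numpy as np

def box_sum(img, radius):
    """Sum over a (2r+1) x (2r+1) window with zero padding.
    ASSUMED stand-in for the Airy disk kernel described in the text."""
    padded = np.pad(img, radius)
    out = np.zeros(img.shape, dtype=np.float64)
    size = 2 * radius + 1
    for dy in range(size):
        for dx in range(size):
            out += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out

def directional_variance(theta, mask, radius=2):
    """Return (V, m): directional variance and local collagen density.

    theta: per-pixel fiber orientation in radians.
    mask:  boolean collagen mask (True = collagen-positive pixel).
    """
    w = mask.astype(np.float64)
    # Angle doubling (ASSUMED) makes orientations theta and theta + pi equal.
    x = box_sum(w * np.cos(2 * theta), radius)
    y = box_sum(w * np.sin(2 * theta), radius)
    m = box_sum(w, radius)                 # density term
    R = np.hypot(x, y)                     # magnitude of the resultant vector
    V = np.where(m > 0, 1.0 - R / np.maximum(m, 1e-12), np.nan)
    return V, m

yy, xx = np.indices((9, 9))
mask = np.ones((9, 9), dtype=bool)
aligned = np.zeros((9, 9))                              # all fibers at 0 rad
mixed = np.where((yy + xx) % 2 == 0, 0.0, np.pi / 2)    # two orthogonal sets
v_aligned, _ = directional_variance(aligned, mask)
v_mixed, _ = directional_variance(mixed, mask)
print(v_aligned[4, 4], v_mixed[4, 4])
```

Perfectly aligned fibers give a variance near 0, whereas an even mixture of orthogonal orientations gives a variance near 1, consistent with the interpretation that lower values indicate more directional collagen.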

2.9. Statistical Analysis

All data were expressed as mean ± standard deviation. The data were analyzed using Student’s t-test for two-group comparisons, or a one-way ANOVA for multiple-group comparisons. The statistical significance was set at p < 0.05.

3. Results

3.1. Scar Recognition

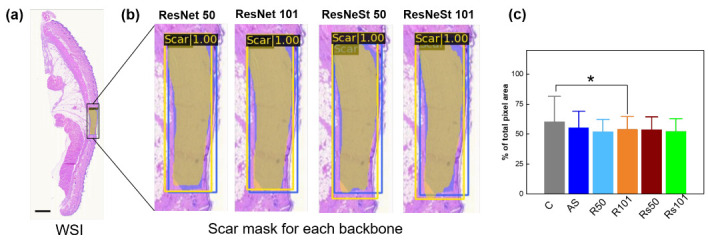

Figure 5 shows the scar identification and a comparison of the scar areas obtained using the various Mask RCNN backbones. Figure 5a shows a WSI of HE-stained tissue with the scar prediction highlighted in a black box. The annotated and predicted scar masks are depicted with blue and yellow colors, respectively. Each image has a class label and a confidence score in each scar bounding box. The function of the confidence score is to eliminate false positive detections of the bounding box [50]. The confidence score in this model ranges from 0 to 1, and the dataset has a high value (1) in each bounding box. A higher confidence score denotes a more appropriate AI model for predicting the scar area in the WSI. However, overconfidence can occur owing to the class prediction for scar and non-scar areas, which are often rare and ambiguous classes [44]. Accordingly, a threshold (0.5), set automatically by the MMDetection tools, was used to balance a high detection rate against few false positives.

Figure 5.

Scar identification with Mask RCNN using various backbones: (a) whole slide image with scar mask in yellow color, (b) magnified images of scar area, and (c) statistical comparison of measured scar areas (in pixel numbers) between conventional and machine learning methods. Blue and yellow regions in (a,b) represent the annotated answers (blue) and the predicted scar areas (yellow), respectively (scale bar = 3000 µm; (c) C = conventional method; AS = annotated scar; R50 = ResNet 50; R101 = ResNet 101; Rs50 = ResNeSt 50; Rs101 = ResNeSt 101; * p < 0.05 C vs. R101).
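The confidence thresholding described above amounts to discarding detections whose score falls below the threshold. A minimal sketch follows; the dictionary layout for a detection (`bbox`, `score`) is hypothetical and is not the MMDetection output format.

```python
def filter_detections(detections, score_thr=0.5):
    """Keep detections whose confidence score meets the threshold."""
    return [d for d in detections if d["score"] >= score_thr]

# Hypothetical detections: bounding box as (x1, y1, x2, y2) plus a score.
detections = [
    {"bbox": (120, 80, 640, 420), "score": 0.98},
    {"bbox": (700, 300, 760, 350), "score": 0.31},
]
kept = filter_detections(detections)
print(len(kept), kept[0]["score"])
```

Raising the threshold removes more false positives at the cost of possibly missing low-confidence scar regions, which is the trade-off the 0.5 setting is meant to balance.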

Figure 5b shows the four backbones, along with the scar masks and bounding box predictions. Regardless of the backbone, the Mask RCNN successfully recognizes the scar area in the WSI of the HE-stained tissue. ResNet 101 outperforms the other backbones in terms of the mask branch, and its bounding box prediction fits the annotation data (the ground truth of this model; Table 2). Figure 5c compares the measured scar areas between the conventional and machine-learning methods. The measurement is based on the total pixel area of the scar lesions for each backbone. The statistical analysis of the scar measurement confirms that the area measured with ResNet 101 is slightly higher than that measured with the other backbones. The machine learning method yields consistent values across measurements, as indicated by the standard deviations. Meanwhile, the conventional method includes non-scar regions, thereby increasing the mean and standard deviation values compared to the machine learning method. Furthermore, regarding the evaluation metrics, such as the precision and recall for the mask and bounding box, ResNet 101 shows higher values than the other backbones, implying that ResNet 101 comes closest to the total pixel value of the annotated scar when predicting the mask and bounding box. The mean average precision (mAP) and mean average recall (mAR) from the mask prediction and bounding box confirm the results of the ResNet 101 visualization, as shown in Figure 5b.

Table 2.

Detection and segmentation results of scar by using Mask region-based convolutional neural network (RCNN) with feature pyramid network (FPN) and various backbones (ResNet50, ResNet101, ResNeSt50, and ResNeSt101): mean average precision (mAP) and mean average recall (mAR).

| Backbone | Box mAP | Box mAR | Mask mAP | Mask mAR | Time (s) |
|---|---|---|---|---|---|
| ResNet 50 | 0.598 | 0.666 | 0.619 | 0.672 | 0.05 |
| ResNet 101 | 0.620 | 0.680 | 0.631 | 0.677 | 0.07 |
| ResNeSt 50 | 0.564 | 0.641 | 0.613 | 0.659 | 0.07 |
| ResNeSt 101 | 0.597 | 0.672 | 0.587 | 0.645 | 0.09 |

The computational time for predicting the scar area in the tissue was also acquired for the total number of test images (74 images; Table 2). ResNet 50 is superior in terms of time performance, ResNet 101 and ResNeSt 50 have comparable times, and ResNeSt 101 is relatively slower. Table 2 summarizes the results of the evaluation metrics for all of the backbone networks.

3.2. Scar Characterization

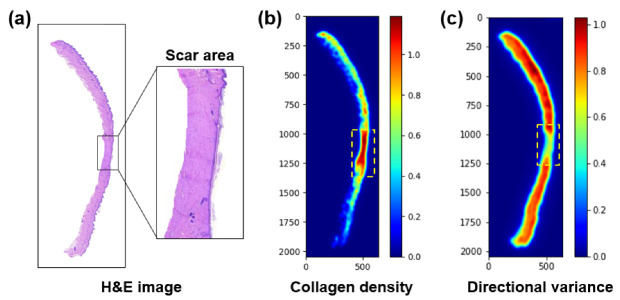

Figure 6 shows a characterization of a scar in the HE-stained tissue. Figure 6a illustrates the dermal regions acquired from the WSI with a scar region highlighted by a black box. Markedly, the scar region is full of dense fibrotic collagen, arranged in an organized manner. Figure 6b presents a CDM corresponding to the WSI in Figure 6a. The scar region is evidently identified in a red color (yellow dashed box) to highlight its higher collagen density, relative to the surrounding normal tissue. Figure 6c shows a DV map indicating the orientation(s) of the collagen fibers. Lower variance values indicate that the collagen fibers are highly oriented (directional). Similar to Figure 6b, the scar region shows a lower variance, owing to the abundant presence of the collagen.

Figure 6.

Tissue characterization from whole histology slide image: (a) HE-stained tissue (dermis area), (b) corresponding collagen density map (CDM), and (c) directional variance map. Yellow dashed lines represent the corresponding scar area.

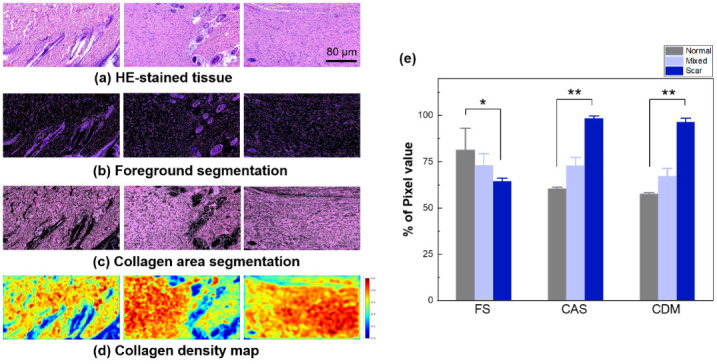

Figure 7 demonstrates a tissue characterization of three regions (normal, mixed, and scar) using K-means clustering-based color segmentation. The mixed region indicates a mixture of normal (right) and scar (left) tissues. Figure 7a represents the original input from the three regions with a size of 886 × 1614 pixels (acquired from HE-stained tissue). Figure 7b presents the results of the FS on the images from Figure 7a. The K-means clustering vividly segments the HFs, Gs, and N in purple. The absence of HFs and Gs in the scar tissue indicates irreversible thermal injury, as well as an excessive formation of collagen in the tissue. However, the mixed region (middle) contains HFs, Gs, and N in the normal region (non-scar) but no HFs, Gs, and N in the scar area in the same ROI. Both the CAS and CDM show that the normal tissue is associated with more black spots, representing HFs, Gs, and N (Figure 7c,d). In contrast, the scar tissue shows no or minimal black areas, indicative of the presence of dense and excessive collagen. Figure 7e shows the percentage of pixel values from each segmentation and CDM. The FS shows that the normal tissue has a greater number of HFs, Gs, and N than the scar and mixed tissues (p < 0.05). The difference between the normal and scar tissues in the FS is insignificant, because the scar tissue still has N distributed throughout the entire tissue. Both CAS and CDM show that the scar area has more collagen than the normal and mixed tissues (p < 0.005).

Figure 7.

Tissue analysis with color segmentation with K-means clustering: (a) original dataset (HE-stained tissue), (b) foreground segmentation (FS; HFs, Gs, and N), (c) collagen area segmentation (CAS), (d) CDM, and (e) statistical comparison of FS, CAS, and CDM (input size = 886 × 1614; scale bar = 80 µm; 16X; * p < 0.05 and ** p < 0.005 between normal and scar).

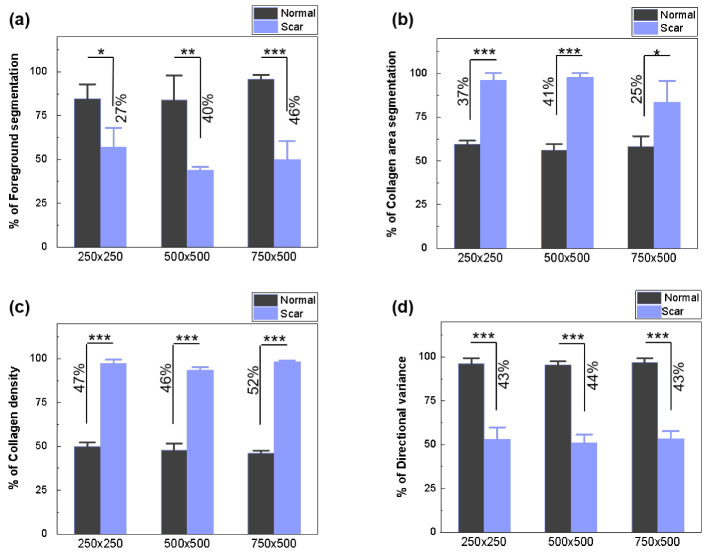

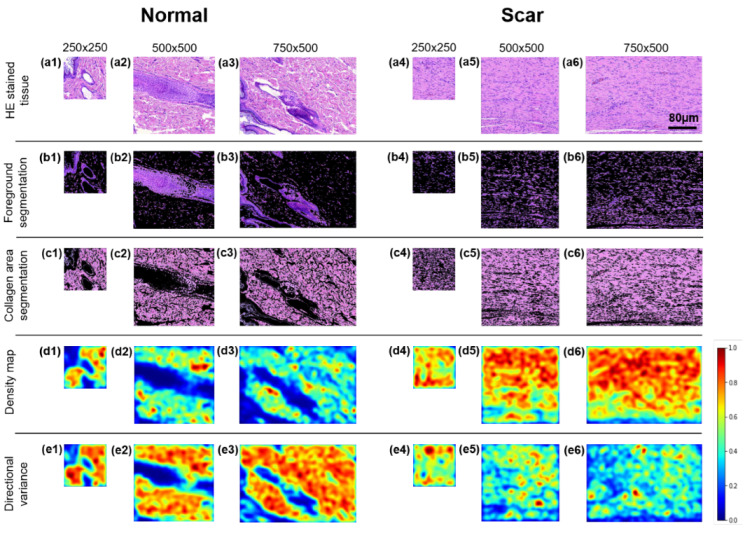

Figure 8 compares the scar characterizations of three different ROI sizes (250 × 250, 500 × 500, and 750 × 500) in terms of the FS, CAS, CDM, and DV, so as to validate the applicability of the developed algorithm to various image sizes. Regardless of the image size, the characterization algorithm identifies the excessive presence of densely oriented collagen without HFs, Gs, and N in the scar areas.

Figure 8.

Region of interest (ROI) image comparison between normal (left) and scar tissues (right) in different image sizes (250 × 250, 500 × 500, and 750 × 500 pixels): (a) HE-stained tissue (a1–a3 for normal and a4–a6 for scar), (b) FS (b1–b6), (c) CAS (c1–c6), (d) CDM (d1–d6), and (e) directional variance map (e1–e6).

Figure 9 quantifies the images in Figure 8 in terms of the FS (Figure 9a), CAS using K-means clustering (Figure 9b), CDM (Figure 9c), and DV (Figure 9d). Regardless of size, the FS shows that scar tissue has fewer pixel areas representing HF, G, and N (50%) than normal tissue (88%; p < 0.001 for 750 × 500; Figure 9a). The reduction occurs owing to the absence of HF and G in the scar tissue resulting from the irreversible thermal injury. According to the CAS (Figure 9b), the scar tissue has larger collagen areas (up to 92%; magenta color in Figure 8c) than the normal tissue (58%; p < 0.001) for all sizes, representing the collagen expansion owing to fibrotic activity from tissue injury. The CDM demonstrates that the scar tissue has a two-fold higher collagen density than the normal tissue (96% for scar vs. 48% for normal; p < 0.001), irrespective of the image size. However, the DV shows that normal tissue is associated with higher variances than scar tissue (96% for normal vs. 52% for scar; p < 0.001). Both the CDM and DV validate that upon tissue injury, the collagen formation in the scar tissue is densely oriented in a relatively organized manner.

Figure 9.

Statistical comparison between normal and scar tissues: (a) FS, (b) CAS, (c) CDM, and (d) directional variance of collagen (* p < 0.05, ** p < 0.01, *** p < 0.001).

4. Discussion

In the current study, the AI models trained by the Mask RCNN show outstanding performance for scar recognition in various unstructured sizes of HE-stained tissues. A previous study classified normal and scar tissues by using the modified “VGG” model for an ROI image of MT-stained tissue, as commonly used for collagen extraction [21]. In this study, the Mask RCNN was able to classify and localize the scar area in a WSI under challenging conditions, such as a limited input size in the Mask RCNN (1333 × 800 pixels) [51], and staining that is not specific to collagen extraction [52]. Hence, the Mask RCNN attained the best results for scar recognition in HE-stained tissues, with high accuracy. The features extracted from the scar regions depended on the backbone performance. The combination of the backbone and RPN aided in increasing the performance in terms of the feature alignment and computational time [53]. All backbones showed comparable performance in predicting scar lesions in the WSI using the Mask RCNN method. However, ResNet 101 had a slightly better result (depending on the evaluation metrics) than the other backbones for scar lesion prediction. The advantage of ResNet is that its ability to extract features and train the network does not degrade, even as the network becomes deeper than other architectures [54]. The ResNet model achieves an advanced performance in image classification relative to other models [55]. The residual mapping and shortcut connections of ResNet produce better outcomes than those of very deep plain networks, and training is also easier [31]. ResNeSt represents a structure modified from ResNet [56], and can obtain better (or nearly equivalent) results than ResNet.

Unlike conventional methods, the present study successfully extracted the scar mask prediction from the Mask RCNN, confirming that scar area assessment using Mask RCNN is more effective than approaches in previous studies [57]. One limitation of the conventional method is its need to remove the non-scar area to calculate the entire scar area in the dermis; thus, it takes time to calculate the scar area in the WSI. In contrast, the scar area was properly quantified using Mask RCNN during the validation process, and took less than a minute to analyze 74 images in total (including model loading and all other processing times). In addition, the results from the scar measurements in both approaches show that the Mask RCNN can measure the scar area more precisely than the conventional method, based on the standard deviation (which determines the stabilization of the data distribution).

HE staining has been widely used as a conventional tissue technique for histopathological analysis. Different tissue types often have ambiguous boundaries in the stained sections under a microscope, compared to high-precision cell imaging and fluorescence imaging techniques [58]. The K-means clustering algorithm successfully characterized the HE-stained tissue using the FS and CAS for various image sizes (Figure 8). A previous study reported that K-means clustering could be used to quantify collagen changes in chronologically aged skin by using Herovici’s polychrome stain to investigate the collagen dynamics and differentiation of the collagen by age [59]. The current study was able to analyze the changes in collagen patterns and the absence of FS as the major histological features of scar tissue. The decrease in the level of FS in the scar tissue was an indication of irreversible tissue damage, as the current findings showed a 38% decrease in the FS from the scar tissue relative to normal tissue.

The collagen density and DV of the collagen are essential features for distinguishing between normal and scar tissues. To analyze the quality of the collagen with regard to the fiber density and fiber orientation, the current research used the CAS as the second result of K-means clustering. The CAS was analyzed by using the CDM and DV to determine the collagen density and fiber orientation in both normal and scar tissues. The automated pixel-wise fiber orientation analysis within the histology images showed an increase in the collagen fiber alignment and collagen density during scar formation after eschar detachment. An increase in the amount of CDM in the scar tissue indicates that the inflammatory process produces additional collagen to restore the damage caused by the injury. In addition, the collagen orientation was disorganized into cellular tissues throughout the wound in a random distribution. The decreased DV of the collagen in the scar tissue caused a temporary loss of tissue elasticity during the inflammatory process.
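The relationship between fiber alignment and DV can be made concrete with a small sketch. It assumes that DV is computed as the circular variance of doubled orientation angles, a common convention for axial data in which 0 means perfectly aligned fibers and 1 means no preferred direction; the exact definition used in this study follows [49] and may differ. The density function simply takes the fraction of collagen pixels in a binary mask. All function names and input values are hypothetical.

```python
import math


def directional_variance(angles_deg):
    """Circular variance of axial orientations (0 = aligned, 1 = dispersed)."""
    # Fiber orientations are axial (theta and theta + 180 degrees describe the
    # same fiber), so the angles are doubled before averaging.
    n = len(angles_deg)
    c = sum(math.cos(2 * math.radians(a)) for a in angles_deg) / n
    s = sum(math.sin(2 * math.radians(a)) for a in angles_deg) / n
    return 1.0 - math.hypot(c, s)


def collagen_density(mask):
    """Fraction of pixels flagged as collagen in a binary mask (list of rows)."""
    total = sum(len(row) for row in mask)
    return sum(sum(row) for row in mask) / total


aligned = [28, 30, 32, 29, 31]   # toy orientations clustered around 30 degrees
dispersed = [0, 45, 90, 135]     # toy orientations spread over all directions
dv_aligned = directional_variance(aligned)
dv_dispersed = directional_variance(dispersed)
density = collagen_density([[1, 1, 0], [1, 0, 0]])
print(round(dv_aligned, 4), round(dv_dispersed, 4), density)
```

Under this definition, a region with strongly aligned fibers yields a DV close to 0, which is consistent with the lower DV reported above for scar tissue.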

Although rodent tissue has different tissue components and structures from human tissue, the current study suggests the feasibility of a histopathological analysis assisted by machine learning. The proposed method was able to classify and characterize the collagen structures representing the main features of scar tissue. However, limitations to the proposed method remain. Real clinical data are still needed to improve the model performance for clinical translation. The training time should be reduced further by optimizing the hyperparameters of the proposed model. The model should also be robust to skewed datasets, as clinical data tend to be sparse and imbalanced. Several methods can be used to overcome the current problems, e.g., changing the training data to reduce the data imbalance, or modifying the learning or decision-making process of the model to increase the sensitivity to minority classes. These methods can be grouped into data-level, algorithm-level, and hybrid approaches.
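As an illustration of an algorithm-level approach to class imbalance, the sketch below computes inverse-frequency class weights using the widely used heuristic n_samples / (n_classes × class_count). Such weights are typically passed to a weighted loss so that errors on the minority class cost more. The class names and counts are hypothetical, and this is not a method evaluated in this study.

```python
from collections import Counter


def inverse_frequency_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * cnt) for cls, cnt in counts.items()}


# Hypothetical imbalanced dataset: 90 normal patches and 10 scar patches.
labels = ["normal"] * 90 + ["scar"] * 10
weights = inverse_frequency_weights(labels)
print(weights)
```

A data-level alternative would resample the training set instead (for example, oversampling the minority class), and a hybrid approach would combine both.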

Monitoring scar tissue remodeling in clinical applications is crucial for better understanding the wound healing process and evaluating treatment alternatives. The findings of this study reveal that it is possible to recognize and characterize scar tissue in a short time. Because of the modest input requirements of the model, the characteristics derived by the proposed machine learning model may allow pathologists to perform less labor-intensive analyses of histological images. Collagen structures can be utilized as imaging biomarkers in various areas of pathological research, including cancer, aging, and wound healing. By combining data from multiple stages of scar development, it is feasible to establish an objective and quantifiable histological database for research purposes. Thus, the information can aid in planning post-injury treatment that is personalized to the patient’s specific circumstances. Therefore, the proposed method in this work, which assesses the recognition and characterization of scar tissue, may prove to be a useful diagnostic tool.

5. Conclusions

The proposed object detection model, the Mask RCNN, accurately detects scar lesions in the WSI of HE-stained tissue. Among the tested backbones, ResNet 101 performed slightly better than the others for extracting features from HE-stained tissues. The detected ROI fed to the K-means image clustering can be used to automatically separate the tissue structures and characterize the density and orientation of the collagen fibers. Further research will attempt to improve the Mask RCNN model so that it can handle various datasets, such as rat skin prepared with different staining methods or clinical data.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| HE | Hematoxylin and eosin |
| WSI | Whole slide image |
| SHG | Second harmonic generation |
| MT | Masson’s trichrome |
| CNN | Convolutional neural network |
| RCNN | Region-based CNN |
| RPN | Region proposal network |
| ANOVA | Analysis of variance |
| ROI | Region of interest |
| CAS | Collagen area segmentation |
| CDM | Collagen density map |
| DV | Directional variance |
| AI | Artificial intelligence |
| FS | Foreground segmentation |
| HF | Hair follicle |
| G | Gland |
| N | Nuclei |

Author Contributions

Conceptualization, H.W.K. and M.Y.; methodology, L.M., H.K. (Hyeonsoo Kim), Y.L., Y.C. and H.K. (Hyunjung Kim); software, L.M., Y.C. and H.K. (Hyunjung Kim); validation, L.M.; formal analysis, L.M., Y.C. and H.K. (Hyunjung Kim); investigation, L.M.; resources, H.W.K. and M.Y.; data curation, L.M.; writing—original draft preparation, L.M., H.K. (Hyeonsoo Kim) and Y.L.; writing—review and editing, H.W.K. and M.Y.; visualization, L.M.; supervision, H.W.K. and M.Y.; project administration, H.W.K. and M.Y.; funding acquisition, H.W.K. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Korea Medical Device Development Fund grant funded by the Korea government (the Ministry of Science and ICT, the Ministry of Trade, Industry and Energy, the Ministry of Health & Welfare, the Ministry of Food and Drug Safety) (Project Number: 202016B01) and Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (No. 2021R1A6A1A03039211).

Institutional Review Board Statement

All animal experiments were approved by the Institutional Animal Care and Use Committee of Pukyong National University (Number: PKNUIACUC-2019-31).

Informed Consent Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Chow L., Yick K.L., Sun Y., Leung M.S., Kwan M.Y., Ng S.P., Yu A., Yip J., Chan Y.F. A Novel Bespoke Hypertrophic Scar Treatment: Actualizing Hybrid Pressure and Silicone Therapies with 3D Printing and Scanning. Int. J. Bioprint. 2021;7:327. doi: 10.18063/ijb.v7i1.327.

- 2. Liu X.G., Zhang D. Evaluation of efficacy of corticosteroid and corticosteroid combined with botulinum toxin type A in the treatment of keloid and hypertrophic scars: A meta-analysis. Aesthetic Plast. Surg. 2021;45:3037–3044. doi: 10.1007/s00266-021-02426-w.

- 3. Salem S.A.M., Abdel Hameed S.M., Mostafa A.E. Intense pulsed light versus cryotherapy in the treatment of hypertrophic scars: A clinical and histopathological study. J. Cosmet. Dermatol. 2021;20:2775–2784. doi: 10.1111/jocd.13971.

- 4. Zhang C., Yin K., Shen Y.m. Efficacy of fractional carbon dioxide laser therapy for burn scars: A meta-analysis. J. Dermatol. Treat. 2021;32:845–850. doi: 10.1080/09546634.2019.1704679.

- 5. Machado B.H.B., Zhang J., Frame J., Najlah M. Treatment of Scars with Laser-Assisted Delivery of Growth Factors and Vitamin C: A Comparative, Randomised, Double-blind, Early Clinical Trial. Aesthetic Plast. Surg. 2021;45:2363–2374. doi: 10.1007/s00266-021-02232-4.

- 6. Fayzullin A., Ignatieva N., Zakharkina O., Tokarev M., Mudryak D., Khristidis Y., Balyasin M., Kurkov A., Churbanov S., Dyuzheva T. Modeling of Old Scars: Histopathological, Biochemical and Thermal Analysis of the Scar Tissue Maturation. Biology. 2021;10:136. doi: 10.3390/biology10020136.

- 7. Huang T.Y., Wang Z.Z., Gong Y.F., Liu X.C., Zhang X.M., Huang X.Y. Scar-reducing effects of gambogenic acid on skin wounds in rabbit ears. Int. Immunopharmacol. 2021;90:107200. doi: 10.1016/j.intimp.2020.107200.

- 8. Hsu W.C., Spilker M.H., Yannas I.V., Rubin P.A.D. Inhibition of conjunctival scarring and contraction by a porous collagen-glycosaminoglycan implant. Investig. Ophthalmol. Vis. Sci. 2000;41:2404–2411.

- 9. Limandjaja G.C., van den Broek L.J., Waaijman T., van Veen H.A., Everts V., Monstrey S., Scheper R.J., Niessen F.B., Gibbs S. Increased epidermal thickness and abnormal epidermal differentiation in keloid scars. Br. J. Dermatol. 2017;176:116–126. doi: 10.1111/bjd.14844.

- 10. Sivaguru M., Durgam S., Ambekar R., Luedtke D., Fried G., Stewart A., Toussaint K.C. Quantitative analysis of collagen fiber organization in injured tendons using Fourier transform-second harmonic generation imaging. Opt. Express. 2010;18:24983–24993. doi: 10.1364/OE.18.024983.

- 11. Yang S.W., Geng Z.J., Ma K., Sun X.Y., Fu X.B. Comparison of the histological morphology between normal skin and scar tissue. J. Huazhong Univ. Sci. Technol. Med. Sci. 2016;36:265–269. doi: 10.1007/s11596-016-1578-7.

- 12. Mostaço-Guidolin L., Rosin N.L., Hackett T.L. Imaging collagen in scar tissue: Developments in second harmonic generation microscopy for biomedical applications. Int. J. Mol. Sci. 2017;18:1772. doi: 10.3390/ijms18081772.

- 13. Wang Q., Liu W., Chen X., Wang X., Chen G., Zhu X. Quantification of scar collagen texture and prediction of scar development via second harmonic generation images and a generative adversarial network. Biomed. Opt. Express. 2021;12:5305–5319. doi: 10.1364/BOE.431096.

- 14. Bayan C., Levitt J.M., Miller E., Kaplan D., Georgakoudi I. Fully automated, quantitative, noninvasive assessment of collagen fiber content and organization in thick collagen gels. J. Appl. Phys. 2009;105:102042. doi: 10.1063/1.3116626.

- 15. Clift C.L., Su Y.R., Bichell D., Smith H.C.J., Bethard J.R., Norris-Caneda K., Comte-Walters S., Ball L.E., Hollingsworth M.A., Mehta A.S. Collagen fiber regulation in human pediatric aortic valve development and disease. Sci. Rep. 2021;11:9751. doi: 10.1038/s41598-021-89164-w.

- 16. Fereidouni F., Todd A., Li Y., Chang C.W., Luong K., Rosenberg A., Lee Y.J., Chan J.W., Borowsky A., Matsukuma K. Dual-mode emission and transmission microscopy for virtual histochemistry using hematoxylin-and eosin-stained tissue sections. Biomed. Opt. Express. 2019;10:6516–6530. doi: 10.1364/BOE.10.006516.

- 17. Coelho P.G.B., de Souza M.V., Conceição L.G., Viloria M.I.V., Bedoya S.A.O. Evaluation of dermal collagen stained with picrosirius red and examined under polarized light microscopy. An. Bras. De Dermatol. 2018;93:415–418. doi: 10.1590/abd1806-4841.20187544.

- 18. Suvik A., Effendy A.W.M. The use of modified Masson’s trichrome staining in collagen evaluation in wound healing study. Mal. J. Vet. Res. 2012;3:39–47.

- 19. Rieppo L., Janssen L., Rahunen K., Lehenkari P., Finnilä M.A.J., Saarakkala S. Histochemical quantification of collagen content in articular cartilage. PLoS ONE. 2019;14:e0224839. doi: 10.1371/journal.pone.0224839.

- 20. Tanaka R., Fukushima S.i., Sasaki K., Tanaka Y., Murota H., Matsumoto K., Araki T., Yasui T. In vivo visualization of dermal collagen fiber in skin burn by collagen-sensitive second-harmonic-generation microscopy. J. Biomed. Opt. 2013;18:061231. doi: 10.1117/1.JBO.18.6.061231.

- 21. Pham T.T.A., Kim H., Lee Y., Kang H.W., Park S. Deep Learning for Analysis of Collagen Fiber Organization in Scar Tissue. IEEE Access. 2021;9:101755–101764. doi: 10.1109/ACCESS.2021.3097370.

- 22. Erickson B.J., Korfiatis P., Akkus Z., Kline T.L. Machine learning for medical imaging. Radiographics. 2017;37:505–515. doi: 10.1148/rg.2017160130.

- 23. Rajula H.S.R., Verlato G., Manchia M., Antonucci N., Fanos V. Comparison of conventional statistical methods with machine learning in medicine: Diagnosis, drug development, and treatment. Medicina. 2020;56:455. doi: 10.3390/medicina56090455.

- 24. Li M., Cheng Y., Zhao H. Unlabeled data classification via support vector machines and k-means clustering; Proceedings of the International Conference on Computer Graphics, Imaging and Visualization, 2004. CGIV 2004; Penang, Malaysia. 2 July 2004; pp. 183–186.

- 25. Yamashita R., Nishio M., Do R.K.G., Togashi K. Convolutional neural networks: An overview and application in radiology. Insights Imaging. 2018;9:611–629. doi: 10.1007/s13244-018-0639-9.

- 26. Hashemzehi R., Mahdavi S.J.S., Kheirabadi M., Kamel S.R. Detection of brain tumors from MRI images base on deep learning using hybrid model CNN and NADE. Biocybern. Biomed. Eng. 2020;40:1225–1232. doi: 10.1016/j.bbe.2020.06.001.

- 27. Bhatt A.R., Ganatra A., Kotecha K. Cervical cancer detection in pap smear whole slide images using convnet with transfer learning and progressive resizing. PeerJ Comput. Sci. 2021;7:e348. doi: 10.7717/peerj-cs.348.

- 28. Johnson J.W. Adapting mask-rcnn for automatic nucleus segmentation. arXiv. 2018. arXiv:1805.00500.

- 29. Ghiasi G., Lin T.Y., Le Q.V. Nas-fpn: Learning scalable feature pyramid architecture for object detection; Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition; Long Beach, CA, USA. 15–20 June 2019; pp. 7036–7045.

- 30. Kim M., Kim S.W., Kim H., Hwang C.W., Choi J.M., Kang H.W. Development of a reproducible in vivo laser-induced scar model for wound healing study and management. Biomed. Opt. Express. 2019;10:1965–1977. doi: 10.1364/BOE.10.001965.

- 31. He K., Gkioxari G., Dollár P., Girshick R. Mask r-cnn; Proceedings of the IEEE International Conference on Computer Vision; Venice, Italy. 22–29 October 2017; pp. 2961–2969.

- 32. Kim Y., Park H. Deep learning-based automated and universal bubble detection and mask extraction in complex two-phase flows. Sci. Rep. 2021;11:8940. doi: 10.1038/s41598-021-88334-0.

- 33. Chen K., Wang J., Pang J., Cao Y., Xiong Y., Li X., Sun S., Feng W., Liu Z., Xu J. MMDetection: Open mmlab detection toolbox and benchmark. arXiv. 2019. arXiv:1906.07155.

- 34. Amjoud A.B., Amrouch M. International Conference on Image and Signal Processing. Springer; Berlin/Heidelberg, Germany: 2020. Convolutional neural networks backbones for object detection; pp. 282–289.

- 35. Lin T.Y., Dollár P., Girshick R., He K., Hariharan B., Belongie S. Feature pyramid networks for object detection; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; Honolulu, HI, USA. 21–26 July 2017; pp. 2117–2125.

- 36. Jaiswal A., Wu Y., Natarajan P., Natarajan P. Class-agnostic object detection; Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision; Waikoloa, HI, USA. 5–9 January 2021; pp. 919–928.

- 37. Varadarajan V., Garg D., Kotecha K. An Efficient Deep Convolutional Neural Network Approach for Object Detection and Recognition Using a Multi-Scale Anchor Box in Real-Time. Future Internet. 2021;13:307. doi: 10.3390/fi13120307.

- 38. Garg D., Jain P., Kotecha K., Goel P., Varadarajan V. An Efficient Multi-Scale Anchor Box Approach to Detect Partial Faces from a Video Sequence. Big Data Cogn. Comput. 2022;6:9. doi: 10.3390/bdcc6010009.

- 39. Chaudhari P., Agrawal H., Kotecha K. Data augmentation using MG-GAN for improved cancer classification on gene expression data. Soft Comput. 2020;24:11381–11391. doi: 10.1007/s00500-019-04602-2.

- 40. Kozlov A. Working with scale: 2nd place solution to Product Detection in Densely Packed Scenes [Technical Report]. arXiv. 2020. arXiv:2006.07825.

- 41. Cetinic E., Lipic T., Grgic S. Fine-tuning convolutional neural networks for fine art classification. Expert Syst. Appl. 2018;114:107–118. doi: 10.1016/j.eswa.2018.07.026.

- 42. Rukmangadha P.V., Das R. Computational Intelligence in Pattern Recognition. Springer; Berlin/Heidelberg, Germany: 2022. Representation-Learning-Based Fusion Model for Scene Classification Using Convolutional Neural Network (CNN) and Pre-trained CNNs as Feature Extractors; pp. 631–643.

- 43. Shaodan L., Chen F., Zhide C. A ship target location and mask generation algorithms base on Mask RCNN. Int. J. Comput. Intell. Syst. 2019;12:1134–1143. doi: 10.2991/ijcis.d.191008.001.

- 44. Neumann L., Zisserman A., Vedaldi A. Relaxed Softmax: Efficient Confidence Auto-Calibration for Safe Pedestrian Detection. 2018. [(accessed on 22 July 2021)]. Available online: https://openreview.net/forum?id=S1lG7aTnqQ.

- 45. Rasband W.S. ImageJ, US National Institutes of Health, Bethesda, Maryland, USA. 2009. [(accessed on 5 August 2021)]. Available online: http://rsb.info.nih.gov/ij/

- 46. Kaur A., Kranthi B.V. Comparison between YCbCr color space and CIELab color space for skin color segmentation. Int. J. Appl. Inf. Syst. 2012;3:30–33.

- 47. Dey R., Roy K., Bhattacharjee D., Nasipuri M., Ghosh P. An Automated system for Segmenting platelets from Microscopic images of Blood Cells; Proceedings of the 2015 International Symposium on Advanced Computing and Communication (ISACC); Silchar, India. 14–15 September 2015; pp. 230–237.

- 48. Quinn K.P., Golberg A., Broelsch G.F., Khan S., Villiger M., Bouma B., Austen W.G., Jr., Sheridan R.L., Mihm M.C., Jr., Yarmush M.L. An automated image processing method to quantify collagen fibre organization within cutaneous scar tissue. Exp. Dermatol. 2015;24:78–80. doi: 10.1111/exd.12553.

- 49. Quinn K.P., Georgakoudi I. Rapid quantification of pixel-wise fiber orientation data in micrographs. J. Biomed. Opt. 2013;18:46003. doi: 10.1117/1.JBO.18.4.046003.

- 50. Ivasic-Kos M., Pobar M. Building a labeled dataset for recognition of handball actions using mask R-CNN and STIPS; Proceedings of the 2018 7th European Workshop on Visual Information Processing (EUVIP); Tampere, Finland. 26–28 November 2018; pp. 1–6.

- 51. Qin Z., Li Z., Zhang Z., Bao Y., Yu G., Peng Y., Sun J. ThunderNet: Towards real-time generic object detection on mobile devices; Proceedings of the IEEE/CVF International Conference on Computer Vision; Seoul, Korea. 27–28 October 2019; pp. 6718–6727.

- 52. Bautista P.A., Abe T., Yamaguchi M., Yagi Y., Ohyama N. Digital staining for multispectral images of pathological tissue specimens based on combined classification of spectral transmittance. Comput. Med. Imaging Graph. 2005;29:649–657. doi: 10.1016/j.compmedimag.2005.09.003.

- 53. Zhang Y., Wang Z., Mao Y. RPN Prototype Alignment for Domain Adaptive Object Detector; Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition; Nashville, TN, USA. 20–25 June 2021; pp. 12425–12434.

- 54. Sarwinda D., Paradisa R.H., Bustamam A., Anggia P. Deep Learning in Image Classification using Residual Network (ResNet) Variants for Detection of Colorectal Cancer. Procedia Comput. Sci. 2021;179:423–431. doi: 10.1016/j.procs.2021.01.025.

- 55. He K., Zhang X., Ren S., Sun J. Deep residual learning for image recognition; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; Las Vegas, NV, USA. 27–30 June 2016; pp. 770–778.

- 56. Zhang H., Wu C., Zhang Z., Zhu Y., Lin H., Zhang Z., Sun Y., He T., Mueller J., Manmatha R. Resnest: Split-attention networks. arXiv. 2020. arXiv:2004.08955.

- 57. Mehl A.A., Schneider B., Schneider F.K., Carvalho B.H.K.D. Measurement of wound area for early analysis of the scar predictive factor. Rev. Lat.-Am. Enferm. 2020;28 doi: 10.1590/1518-8345.3708.3299.

- 58. Shi P., Zhong J., Huang R., Lin J. Automated quantitative image analysis of hematoxylin-eosin staining slides in lymphoma based on hierarchical Kmeans clustering; Proceedings of the 2016 8th International Conference on Information Technology in Medicine and Education (ITME); Fuzhou, China. 23–25 December 2016; pp. 99–104.

- 59. Osman O.S., Selway J.L., Harikumar P.E., Jassim S., Langlands K. BIOIMAGING. SCITEPRESS—Science and Technology Publications, Lda; Angers, France: 2014. Automated Analysis of Collagen Histology in Ageing Skin; pp. 41–48.