Abstract

The evaluation of white blood cells is essential to assess the quality of the human immune system; however, the assessment of the blood smear depends on the pathologist’s expertise. Most machine learning tools make a one-level classification for white blood cell classification. This work presents a two-stage hybrid multi-level scheme that efficiently classifies four cell groups: lymphocytes and monocytes (mononuclear) and segmented neutrophils and eosinophils (polymorphonuclear). At the first level, a Faster R-CNN network is applied for the identification of the region of interest of white blood cells, together with the separation of mononuclear cells from polymorphonuclear cells. Once separated, two parallel convolutional neural networks with the MobileNet structure are used to recognize the subclasses in the second level. The results obtained using Monte Carlo cross-validation show that the proposed model has a performance metric of around 98.4% (accuracy, recall, precision, and F1-score). The proposed model represents a good alternative for computer-aided diagnosis (CAD) tools for supporting the pathologist in the clinical laboratory in assessing white blood cells from blood smear images.

Keywords: white blood cells classification, deep learning, multi-level classification, multi-source datasets

1. Introduction

The peripheral blood smear represents a routine laboratory test that provides the physician with a great deal of information about a patient’s general condition. It provides a qualitative and quantitative assessment of blood components, mainly cells and platelets. Blood cells can be divided into white blood cells (WBC) or leukocytes and red blood cells (RBC) or erythrocytes [1]. Leukocytes, in turn, comprise five types of nucleated cells: monocytes, basophils, eosinophils, neutrophils, and lymphocytes. The total WBC count as the difference of percentage between the subtypes provides critical information in infectious diseases and chronic processes such as anemia, leukemia, and malnutrition [2].

The manual WBC count is based on the microscopic observation of the blood smear by the analyst, who can differentiate the subtypes mainly based on the morphological characteristics of the cell nucleus and cytosol. However, this process strongly depends on the analyst’s time and experience, leading to errors if the analyst is not adequately trained [3]. In addition, since this hematological evaluation is a routine test, it is often in high demand in clinical laboratories, representing an increased workload that affects performance. Thus, providing computer-aided diagnosis (CAD) tools for diagnostic assistance in the laboratory is required. For instance, CAD systems have been developed that use image processing techniques to classify the differential white blood cell count [4,5]. This automatic leukocyte classification allows a faster and more reproducible result to be generated while reducing bias and inter-observer variability.

On the other hand, due to the inherent complexity of observing medical examinations, their automation is still a challenge for researchers today. Achieving levels of precision comparable to those of a professional in the area is critical. The automatization is prone to errors or biases in recognizing and classifying these images. These will directly impact the diagnosis, increasing the treatment costs and negatively affecting the recovery and survival of patients.

The main component of the computer-aided system for WBC classification is the cell detection and segmentation algorithm. Based on image processing analysis, it will identify the different elements of interest considering various aspects associated with cell morphology: size, shape, texture, nucleus, etc. [4]. Commonly, cell segmentation is a complex task in tissue samples. However, this task is straightforward in cell smears, given the dark nucleus in leukocyte staining. The challenge is mainly in delineating cell borders, separating overlapping cells, and removing noise and artifacts in the image during acquisition [6]. Given the advantages of artificial intelligence in image processing, several machine learning (ML) alternatives have been evaluated to classify and segment leukocytes. These methods range from the support vector machine (SVM) [7,8] and Naïve Bayesian [9,10] to more complex algorithms such as deep learning (DL) models [11,12,13].

Within DL models, convolutional neural networks (CNNs) have shown exemplary performance in medical image classification [14,15,16,17]. CNNs are feed-forward neural networks that can be divided into two main parts: deep convolutional feature extraction and classification. This model extracts features by applying multiple convolutional and pooling layers that include linear operations (called kernels) that emphasize an input image’s characteristic. A fully connected dense layer does the classification step to learn the model using the extracted features [18,19]. Following this structure, several types of CNN models have been proposed for specific tasks such as classification, among which are AlexNet [20], ResNet [21], VGG [22], and GoogLeNet [23], and segmentation, highlighting the fully connected network (FCN) [24], U-Net [25], and Faster-RCNN [26], these being applied in the processing of blood smear images for differential WBC counting, achieving good performance results. Recently, an efficient network architecture called MobileNet was proposed as a small, lightweight, and low latency model for mobile and embedded vision applications [27]. This model has been demonstrated to be effective when applied to various tasks.

Most researchers have used these methods as one-level designs or single models built on the entire dataset. In other fields, these single models may experience difficulty in handling the increasingly complex data distribution [28] or large-scale visual recognition [29]. Given the characteristics of leukocytes, better performance in WBC classification could be obtained if a multi-level scheme is developed. In the first level of this scheme, the polymorphonuclears are separated from the mononuclear. Afterward, on the one hand, the monocytes and lymphocytes (mononuclear) and, on the other hand, the neutrophils, eosinophils, and basophils (polymorphonuclears) are classified in the second level. Thus, more features could be extracted from each cell image, and the classification performance could be increased.

On the other hand, medical datasets acquired from several institutions may have different quality, contrast, and acquisition mechanisms. Thus, the datasets are prone to an inherent bias caused by various confounding factors [30]. In machine learning, the dataset bias may lead to a difference between the estimated and the true value of desired model parameters. A possible solution to this problem is combining multi-source datasets such that the model will be robust to the unseen domains with better generalization performance.

Therefore the main objective of this research is to develop a multi-level convolutional neural network (ML-CNN) model to improve the automatic detection and classification of individual white blood cells by mitigating the dataset bias with the combination of multi-source datasets. The main contribution of this work is twofold. First, a new multi-level deep learning algorithm separates the leukocyte detection and classification processes into two levels. In the first level, the Faster R-CNN network is applied to identify the region of interest of white blood cells, together with the separation of mononuclear cells from polymorphonuclear cells. Once separated, two parallel CNN models are used to classify the subclasses in the second level. Second, the ML-CNN proposal was implemented using the MobileNet architecture as the base model. It is an efficient model with an adequate balance between high performance and structural complexity, making its implementation in automated equipment such as a CAD system feasible. Furthermore, the MobileNet applies the depthwise separable convolution to extract relevant features from each channel, which better uses the information contained in the images to improve leukocyte classification.

The article is structured as follows. In Section 2, we present a brief revision of the state of the art. In Section 3, the proposed method is presented. Results and discussion are presented in Section 4. Finally, in Section 5, we give some concluding remarks, and we outline some future works.

2. State of the Art

Traditional machine learning (ML) and deep learning (DL) models have been extensively proposed as alternatives for the automatic classification of leukocytes [5,31,32]. Such is the case of Abou et al. [33], who developed a CNN model to identify WBC. Likewise, Togacar et al. [34] proposed a subclass separation of WBC images using the AlexNet model. In addition, Hegde et al. [13] proposed a deep learning approach for the classification of white blood cells in peripheral blood smear images. Wang et al. [35] applied deep convolution networks on microscopy hyperspectral images to learn spectral and spatial features. This form makes full use of three-dimensional hyperspectral data for WBC classification. Basnet et al. [36] optimized the WBC CNN classification enhancing loss function with regularization and weighted loss, decreasing time processing. Jiang et al. [37] constructed a new CNN model called WBCNet that can fully extract features of the WBC image by combining the batch normalization algorithm, residual convolution architecture, and improved activation function. Other authors include steps to improve the feature extraction process. Thus, Yao et al. [38] proposed the two-module weighted optimized deformable convolutional neural networks (TWO-DCNN) white blood classification, characterized as two-module transfer learning and deformable convolutional layers for the betterment of robustness.

A novel blood-cell classification framework named MGCNN, which combines a modulated Gabor wavelet with CNN kernels, was proposed by Huang et al. [39]. In this model, multi-scale and orientation Gabor operators are taken dot product with initial CNN kernels for each convolutional layer. Experimental results showed that MGCNN outperformed traditional SVM and single CNN networks. Likewise, Khan et al. [40] presented a new model called MLANet-FS, which combined an AlexNet network with a feature selection strategy for WBC-type identification. Razzak et al. [41] proposed a WBC segmentation and classification using a deep contour aware CNN and extreme machine learning (ELM).

Another interesting strategy is to apply hybrid methods such as the proposal of Çınar and Tuncer [23]. They presented an approach combining AlexNet and GoogleNet to extract features from WBC images. Then, these features are concatenated and classified using SVM. In the same way, Özyurt [42] proposed a fused CNN model for WBC detection where the pre-trained architectures AlexNet, VGG-16, GoogleNet, and ResNet were used as feature extractors. Then, the features obtained were combined and later classifier using ELM. Patil et al. [43] presented a deep hybrid model, CNN and recurrent neural networks (RNN), with canonical correlation analysis to extract overlapping and multiple nuclei patches from blood cell images. As a novel proposal, Baydilli and Atila [44], to overcome the problem of data sets, developed a leukocyte classification via capsule networks, an enhanced deep learning approach. A capsule network consists of the decoder (used to reconstruct the image) and the encoder (responsible for extracting features and classifying the image).

Research presenting a multi-level scheme in the WBC classification is scarce. Although Baghel et al. [45] do not perform a plan, they propose a CNN classification model whose performance is evaluated in two phases: an initial step, binary discrimination between the mononuclear and polymorphonuclears, and a second phase that corresponds to the classification of subtypes. Tran et al. [46] presented as an initial setup a DL semantic segmentation between WBC and RBC, as initial steps for classifying leukocytes. However, none of these methods has a similar scheme to the one proposed in our research, so we consider that our algorithm is novel and can efficiently contribute to leukocyte classification.

Table 1 summarizes the main methods that use deep learning models found in the literature. The main advantage of deep learning models is that they are highly efficient since they extract the most important information available in the data to generate the prediction. The models presented above have shown an excellent performance higher than 90% [23,35,40], which makes them a helpful tool in the WBC classification. However, their architecture implies a high computational cost due to the use of complex architecture and large volumes of data, this being its main disadvantage. Simpler models that maintain good performance with fewer trainable parameters in their architecture could be generated. However, these methods require image processing techniques for feature extraction, making them slower [45,47]. Therefore, to implement deep learning models in a CAD system, methods should have a reasonable trade-off between performance and complexity without affecting the processing speed.

Table 1.

Summary of the state-of-the-art models for white blood cells classification.

| Authors | Model Description |

|---|---|

| Abou et al. [33] | CNN model with ad hoc structure. |

| Baghel et al. [45] | CNN model. |

| Banik et al. [47] | CNN with fusing features in the first and last convolutional layer. |

| Basnet et al. [36] | DCNN model with image pre-processing and a modified loss function. |

| Baydilli et al. [44] | WBC classification using a small dataset via capsule networks. |

| Çınar et al. [23] | Hybrid AlexNet, GoogleNet networks, and support vector machine. |

| Hegde et al. [48] | AlexNet and CNN model with ad hoc structure. |

| Huang et al. [39] | MFCNN CNN with hyperspectral imaging with modulated Gabor wavelets. |

| Jiang et al. [37] | Residual convolution architecture. |

| Khan et al. [40] | AlexNet model with feature selection strategy and extreme learning machine (ELM). |

| Kutlu et al. [49] | Regional CNN with a Resnet50. |

| Liang et al. [50] | Combining Xception-LSTM. |

| Özyurt [42] | Ensemble of CNN models (AlexNet, VGG16, GoogleNet, ResNet) for feature extraction combined with the MRMR feature selection algorithm and ELM classifier. |

| Patil et al. [43] | Combining canonical correlation analysis CCANet and convolutional neural networks (Inception V3, VGG16, ResNet50, Xception) with recursive neural network (LSTM). |

| Razzak [41] | CNN combined with extreme learning machine (ELM). |

| Togacar et al. [34] | AlexNet with QDA. |

| Wang et al. [35] | Three-dimensional attention networks for hyperspectral images. |

| Yao et al. [38] | Two-module weighted optimized deformable convolutional neural networks. |

| Yu et al. [51] | Ensemble of CNN (Inception V3, Xception, VGG19, VGG16, ResNet50). |

| ML-CNN (Our proposal) |

Multi-level convolutional neural network approach with multi-source datasets. Combines Faster R-CNN for cell detection with a MobileNet for type classification. |

3. Materials and Methods

In this research, a multi-level convolutional neural network (ML_CNN) was developed to detect and classify individual WBC obtained from blood smear images.

3.1. White Blood Cell Images Datasets

In this work, five different datasets were used. The description of these sources follows.

Blood Cell Detection (BCD) dataset (Aslan [52]): Contains 100 annotated images in png format, with 2237 labeled as Red Blood Cells and only 103 as White Blood Cells. Each image consists of 256 pixels in height and width of RGB channels. (More information can be found at https://github.com/draaslan/blood-cell-detection-dataset. Accessed date: 30 June 2020)

Complete Blood Count (CBC) dataset (Alam et al. [53]): Contains 360 blood smear images along with their annotation files. (More information can be found at [54], https://github.com/MahmudulAlam/Complete-Blood-Cell-Count-Dataset. Accessed date: 20 June 2021)

White Blood Cells (WBC) dataset (Zheng et al. [55,56]): Contains 300 images of size , and 100 color images of size . (More information can be found at http://www.doi.org/10.17632/w7cvnmn4c5.1. Accessed date: 15 May 2019)

Kaggle Blood Cell Images (KBC) dataset (Mooney [57]): Contains 12,500 augmented images of blood cells (JPEG) with accompanying cell type labels (CSV). There are approximately 3000 images for each of 4 different cell types. (More information can be found at https://www.kaggle.com/paultimothymooney/blood-cells. Accessed date: 15 May 2019)

Leukocyte Images for Segmentation and Classification (LISC) dataset (Rezatofighi et al. [58]): Corresponds to a dataset of 250 blood smear images in BMP format. It contains a set of 25 basophil images. (More information can be found at http://users.cecs.anu.edu.au/~hrezatofighi/Data/Leukocyte%20Data.htm. Accessed date: 15 May 2019)

In the previous datasets, there was an insufficient number of basophils. Therefore, the algorithm was developed using images that contain monocytes, lymphocytes, segmented, and eosinophils.

For developing the proposal, a human specialist used the LabelImg (https://github.com/tzutalin/labelImg, Accessed date: 10 September 2019) graphical annotation tool to label the blood smear images. For the first level, a subset of images obtained from the KBC data set was selected, the cells were detected with the identification of the region of interest (ROI) using bounding boxes, and the cells were labeled as polymorphonuclear or mononuclear. A total of 365 labeled images with the bounding boxes of the cells were used to train the Faster R-CNN of the first level. A human specialist used the LabelImg tool to label the cell images into lymphocytes, monocytes, segmented, and eosinophils. On the one hand, a CNN model was trained with the mononuclear cells to classify between the lymphocytes and monocytes, and a total of 2282 and 2134 images were used, respectively. On the other hand, a CNN model was trained with the polymorphonuclear cells to classify between the segmented neutrophils and eosinophils, and a total of 2416 and 2477 images were used, respectively.

3.2. A Multi-Level Convolutional Neural Network Approach

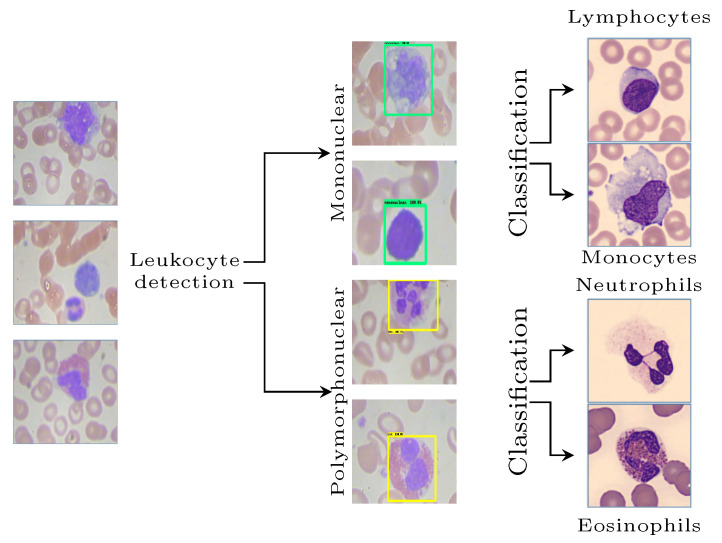

For WBC classification, a multi-level and hybrid scheme is proposed, in which the first step corresponds to the detection and separation of leukocytes into mononuclear and polymorphonuclear. Subsequently, the classification of the subtypes in the second level, monocytes, lymphocytes, eosinophils, and neutrophils, is performed, as shown in Figure 1.

Figure 1.

Scheme of identification and classification of white blood cells by the proposed method.

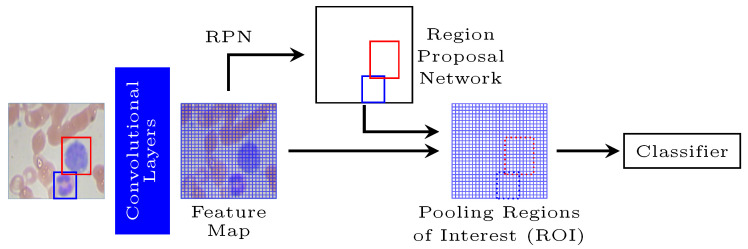

A Faster R-CNN allows individual white blood cells to be detected and extracted in the first level. This model is an object detection system that improves on Fast R-CNN by utilizing a region proposal network (RPN) with the CNN model [59]. Thus, this network is structured in two distinct modules: a CNN that proposes regions and a Fast R-CNN detector that uses the proposed regions (Figure 2).

Figure 2.

Representation of Faster R-CNN segmentation.

In the Faster R-CNN, the RPN from an image generates a set of proposed rectangular objects, each of which has an objectivity score. These proposals are developed by sliding a small network over the feature map emitted by the last shared convolutional layer, considering an spatial window mapped to a lower-dimensional feature. Multiple region proposals are simultaneously predicted at each sliding window location, where the number of maximum possible suggestions for each area is denoted as k. Each of the k proposals is parameterized relative to k reference boxes, marked as anchors, and associated with a specific scale and aspect ratio. Each anchor is assigned a binary class (an object or not). The positive or negative labels depend on the most significant overlap of intersection over union values to minimize the objective function. Finally, the Fast R-CNN network inputs the evaluated image and the set of object proposals already obtained [60]. First, the whole image is processed with several convolutional and maxima clustering layers to produce a feature map. Then, for each object proposal, a region of interest (RoI) clustering layer extracts a fixed-length feature vector from the feature map. Each feature vector is fed into a sequence of fully connected layers that ultimately branch into two sister output layers: one that produces softmax probability estimates over K object classes plus a general “background” type. Another layer makes four real-valued numbers for each of the K object classes. Each set of 4 values encodes the refined positions of the enclosures for one of the K classes.

The softmax function takes an input vector and normalizes it into a probability distribution for the K classes:

| (1) |

In the proposed model, the Faster R-CNN is trained to detect leukocytes by separating these cells into two distinct classes according to the morphology of their nuclei, creating two labels at this stage:

Mononuclear (MN), whose nuclei show morphological unity, and includes lymphocytes and monocytes.

Polymorphonuclear (PMN), whose nuclei are divided, and includes segmented neutrophils and eosinophils.

After the first level, the dataset was separated into two cell groups according to the segmentation of the nucleus. In the second level of the proposal, two CNNs were developed: one for classifying mononuclear cells into lymphocytes and monocytes and another for separating polymorphonuclear cells into segmented neutrophils and eosinophils. The CNNs implemented have the MobileNet architecture as a base model with the application of transfer learning, where the weights were pretrained for the ImageNet classification. The output layer was discarded and replaced with a new fully connected classifier.

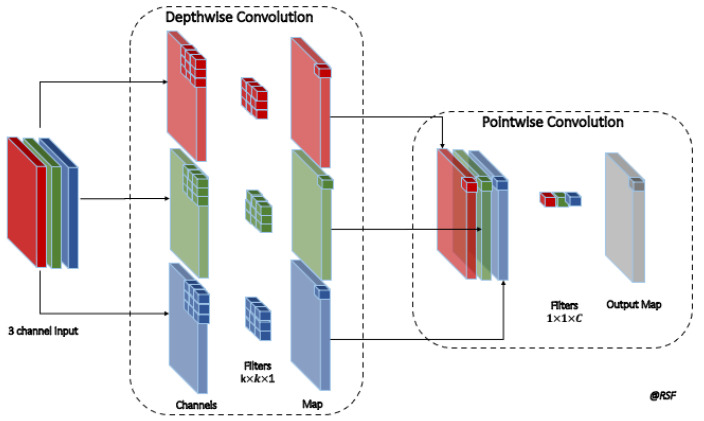

The MobileNet uses a depthwise separable convolution that reduces the number of parameters compared to conventional CNN. The depthwise separable convolutions factorize the convolution into a depthwise (dw) and a pointwise (pw) (see Figure 3). On the one hand, the dw convolution applied a single filter to each input channel. On the other hand, the pw convolution applied a convolution to combine the outputs of the pw convolution.

Figure 3.

Depthwise separable convolution of the MobileNet, which factorizes the convolution into depthwise and pointwise convolutions.

The filtered matrix is obtained by applying the classical convolution between the kernel and the input matrix with the following equation:

| (2) |

where is the bias introduced to the convolution product.

Table 2 schematically shows the architecture of the MobileNet. In this article, we set the hyperparameters of the width multiplier and the resolution multiplier . However, the width multiplier reduces the input and output channels by half in this work. The first layer uses the classical convolution. The following layers are depthwise separable convolutions followed by batch-normalization and ReLU activation functions. The ReLU activation function has the following equation:

| (3) |

Table 2.

Architecture of the MobileNet with transfer learning.

| Layer | Layer Type | Stride | Kernel Size | Input Size | N°Parameters | ||

|---|---|---|---|---|---|---|---|

| MobileNet Base Model | 1 | Conv. 2D | s2 | 496 | |||

| 2 | Conv. dw | s1 | 208 | ||||

| 3 | Conv. pw | s1 | 640 | ||||

| 4 | Conv. dw | s2 | 416 | ||||

| 5 | Conv. pw | s1 | 2304 | ||||

| 6 | Conv. dw | s1 | 832 | ||||

| 7 | Conv. pw | s1 | 4352 | ||||

| 8 | Conv. dw | s2 | 832 | ||||

| 9 | Conv. pw | s1 | 8704 | ||||

| 10 | Conv. dw | s1 | 1664 | ||||

| 11 | Conv. pw | s1 | 16,896 | ||||

| 12 | Conv. dw | s2 | 1664 | ||||

| 13 | Conv. pw | s1 | 33,792 | ||||

| 14–23 | Conv. dw | s1 | |||||

| Conv. pw | s1 | ||||||

| 24 | Conv. dw | s2 | 3328 | ||||

| 25 | Conv. pw | s1 | 133,120 | ||||

| 26 | Conv. dw | s1 | 6656 | ||||

| 27 | Conv. pw | s1 | 264,192 | ||||

| Dense | – | Global Avg. Pool | s1 | Pool | - | ||

| 28 | FC | – | – | 512 | 262,656 | ||

| – | Softmax | – | Output | 2 | 1026 | ||

| Total Parameters: 1,093,218 | |||||||

| Trainable Parameters: 263,682 | |||||||

The stride is a parameter that controls the movement of the filter over the image and induces the kernels’ downsampling. For instance, a stride of 2 (s2) reduces the image to half the original size. A final average pooling reduces the spatial resolution to 1 before the fully connected layer.

The model was trained using the backpropagation algorithm with the Adam optimizer and the binary cross-entropy loss function:

| (4) |

where and are the i-th target and the predictions, respectively. N is the total number of samples.

The hyper-parameters of the learning algorithm were set to , , , and .

In this work, we have used a web-based virtual experimental environment. We have run the models using the Kaggle and Google Colab environment with GPU, and the models were implemented with Keras and TensorFlow.

3.3. Performance Metrics

The confusion matrix corresponds to a summary of the prediction results obtained with the machine learning model. Given n samples, the is the number of true positives; the is the number of true negatives; is the number of false negatives; and is the number of false positives.

We use the classification metrics obtained from the confusion matrix to evaluate the performance. These metrics are accuracy, precision, recall, and F1-score, and they are described below.

- Accuracy: the accuracy value refers to how close a measurement is to the true value, and the equation is given by

where are true negatives.(5) - Recall: the measure of sensitivity or recall is the percentage of positive cases that were correctly labeled by the model. The recall equation is given by

where is the ratio of true positives, is the ratio of false positives, and are the false negatives.(6) - Precision: precision is the percentage of correct classifications. This metric is defined with the following equation:

(7) - F_Score: F_score corresponds to the harmonic mean between precision and sensitivity and gives a trade-off measure between the recall and the precision:

(8)

4. Results and Discussion

As mentioned in the previous sections, a two-level hybrid model was run to classify white blood cells in blood smears. To evaluate the performance of our proposed model, we have used Monte Carlo cross-validation with 10 repetitions, with a split of 70% for training and 30% for testing. The averages and standard deviations of the metrics computed for the test set are shown in Table 3. It can be seen that a good performance was obtained, with metrics from 96% reaching up to 100%. All the performance metrics were around 98.4%, highlighting the model’s discriminatory power developed for classifying leukocytes.

Table 3.

Performance obtained in the classification model for each of the WBC cell types considered in the validation set.

| Cells | Classification Model | Accuracy | Recall | Precision | F_Score |

|---|---|---|---|---|---|

| Mononuclear | Lymphocytes | ||||

| Monocytes | |||||

| Polymorphonuclear | Eosinophils | ||||

| Segmented Neutrophils | |||||

| Average |

Table 4 shows a comparative analysis of the results with those obtained in the state of the art. Our proposed model achieved higher performance than those reported by Abou et al. [33], Baydilli [44], Banik et al [47], Huang et al. [39], Jiang et al. [37], Kutlu et al. [49], Liang et al. [50], Özyurt [42], Patil et al. [43], Togacar et al. [34], Wang et al. [35], Yao et al. [38], and Yu et al. [51], who reported accuracy between 83% and 98%. However, it should be noted that the average performance of our proposal was lower than those reported by Baghel et al. [45] and Basnet et al. [36], where they have included image processing for feature extraction to enhance the prediction performance. Likewise, the works of Çınar et al. [23], Hedge et al. [48], and Khan et al. [40] have reported accuracy values higher than 99%. Nevertheless, it should be noted that the latter models have more complex structures and a larger number of trainable parameters, which represents a disadvantage in terms of computational cost.

Table 4.

Comparison of WBC classification results with models in the sate of the art. (NI denotes not informed.)

| Authors | Accuracy (%) | Recall (%) | F Score(%) | Layers | Parameters |

|---|---|---|---|---|---|

| Abou et al. [33] | 96.8 | NI | NI | 5 | NI |

| Baghel et al. [45] | 98.9 | 97.7 | 97.6 | 7 | 519,860 |

| Baydilli et al. [44] | 96.9 | 92.5 | 92.3 | 6 | 8,238,608 |

| Banik et al. [47] | 97.9 | 98.6 | 97.0 | 10 | |

| Basnet et al. [36] | 98.9 | 97.8 | 97.7 | 4 | NI |

| Çınar et al. [23] | 99.7 | 99 | 99 | 8 22 |

(AlexNet) (GoogleNet) |

| Hegde et al. [48] | 98.7 | 99 | 99 | 8 | (AlexNet) |

| Huang et al. [39] | 97.7 | NI | NI | 4 | NI |

| Jiang et al. [37] | 83.0 | NI | NI | 33 | NI |

| Khan et al. [40] | 99.1 | 99 | 99 | 8 3 |

(AlexNet) (ELM) |

| Kutlu et al. [49] | 97 | 99 | 98 | 50 | (Resnet50) |

| Liang et al. [50] | 95.4 | 96.9 | 94 | 71 | (Xception) |

| Özyurt [42] | 96.03 | NI | NI | 8 22 16 50 |

(AlexNet) (GoogleNet) (VGG16) (Resnet) |

| Patil et al. [43] | 95.9 | 95.8 | 95.8 | 71 | (Xception) |

| Razzak et al. [41] | 98.8 | 95.9 | 96.4 | 3 | NI |

| Togacar et al. [34] | 97.8 | 95.7 | 95.6 | 8 | (AlexNet) |

| Wang et al. [35] | 97.7 | NI | NI | 18 | |

| Yao et al. [38] | 95.7 | 95.7 | 95.7 | 55 | |

| Yu et al. [51] | 90.5 | 92.4 | 86.6 | 48 71 19 50 |

(InceptionV3) (Xception) (VGG19) (Resnet50) |

| ML-CNN (Our proposal) |

98.4 | 98.4 | 98.4 | 28 | (MobileNet) |

It is essential to highlight some advantages in terms of the functionality of our proposal. The first one is that the ML-CNN is a method that involves simpler models and obtains comparable performances. This fact differentiates it, for example, from Wang’s proposal [35], which requires microscopy hyperspectral images and the architecture of a 3D residual block used in the deep hyper model. Likewise, Khan et al. [40] presents a more complex model involving convolutional features followed by a selection strategy to identify cellular subtypes. Alternatively, Yao et al. [38] propose a model based on two modules involving transfer learning. All these models are more complex than our proposal. Therefore, the multi-level structure presented is simpler to implement without affecting the classification performance on a large scale.

Another noteworthy aspect of the functionality of the proposed model is that the multi-level strategy allows for the first phase of cell detection. It is possible to identify the regions of interest in the images to extract the white blood cells to be subsequently classified. With the exception of [41,49], none of the reviewed works focuses on this detection scheme. It should be noted that the proposed multi-level scheme not only allows efficient cell element classification but also reduces processing times by running CNN networks in parallel during stage two. This hybrid scheme is one of the differentiating aspects of the proposal compared to the methods discussed in the state of the art, which are primarily single-level. This makes our proposal functionally efficient for use in automated equipment as a CAD system.

Figure 4 and Figure 5 are examples of the operation of the ML-CNN for mononuclear and polymorphonuclear cells, respectively, showing in both cases examples of correctly and erroneously classified images. The excellent classification performance obtained for the mononuclear may be due to the morphological characteristics being well-differentiated between monocytes and lymphocytes, where the former has an irregularly shaped nucleus, and the latter has a wholly rounded shape [1]. If a partial comparison is made, it can be observed that the hybrid model developed obtained a better mononuclear classification accuracy compared to the proposals of Wang et al. [35], Baydilli and Atila [44], and Huang et al. [39]. Although it is ideal to have a model that efficiently achieves a global classification of leukocytes, a model that classifies mononuclear cells very well could help screen viral infections since the count of these subclasses acquires greater relevance in these infections [2].

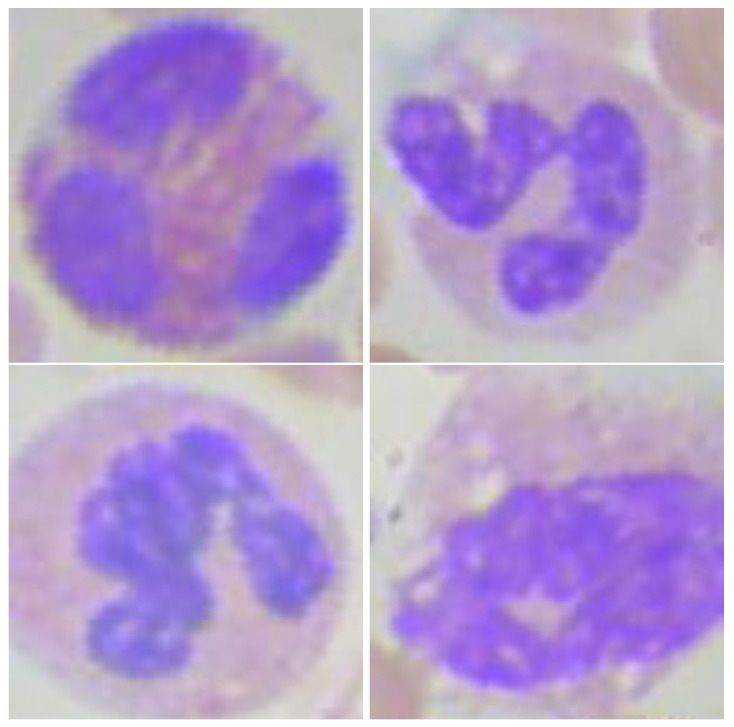

Figure 4.

Mononuclear cells classified by the proposed multi-level convolutional neural network. (upper-left) Lymphocytes correctly classified; (upper-right) monocytes Correctly classified; (lower-left) lymphocytes incorrectly classified as monocytes; (lower-right) monocytes incorrectly classified as lymphocytes.

Figure 5.

Polymorphonuclear cells classified by the proposed multi-level convolutional neural network. (upper-left) Eosinophils correctly classified; (upper-right) neutrophils correctly classified; (lower-left) eosinophils incorrectly classified as neutrophils; (lower-right) neutrophils incorrectly classified as eosinophils.

In the case of polymorphonuclear cells (Figure 5), a good classification of the cellular subtypes was also observed. However, in this group, the identification is more complex since the cellular characteristics are not as well differentiated as in the case of mononuclear cells. In this case, the differentiating aspect is not the nucleus but the cell cytoplasm. Therefore, the staining of the blood smear and the acquisition of the image for its correct identification play a fundamental role. Another aspect that could contribute to misclassification is the shape of the cell nucleus. Although mononuclear cells have a rounded core, in the case of polymorphonuclear cells, a slight indentation generates a lobule shape that is not always constant.

Another aspect to highlight is the image labeling process for the model developed. A medical technologist with extensive professional experience performed the manual labeling, thus minimizing the risk of using erroneous datasets. Likewise, we worked with a subset of random images from four databases that were subsequently labeled for the validation set. Thus, we have a new database whose labels are verified by a professional and that in the future will be available for use in other investigations.

A limitation of the model developed is that, on the one hand, basophils were not considered among the polymorphonuclears due to the small number of cases available in the databases. This issue is a challenge to be faced in future work since basophils are part of a routine blood smear but are found in a shallow frequency, which prevents having enough data to run the training of a machine learning model. It should be noted that in the first instance, it was considered to use a generative network to increase the basophil data. Still, the available cases were scarce; therefore, it was decided not to do so in order not to bias the sample. On the other hand, it is necessary to remember that the proposed model was developed to run a classification of mature leukocytes in blood smears, so it does not include the identification of immature cells that could lead to some pathology such as leukemia. The model would have to be extended to identify these cell subtypes. We intend to include more images for working with this cellular subclass in the classification model for future research.

At the particular point of the images present in the blood smear, which is the type of examination on which this work focuses, we have the additional difficulty that each particulate component of the blood has its shapes, characteristics, internal arrangement, and even color, which are relevant for the classification. Of these components, leukocytes (white blood cells), due to their structural complexity, represent a problem when developing algorithms that have a good level of precision in their classification and detection of each of the cell types that make up this group (lymphocytes, monocytes, eosinophils, segmented, and basophils).

5. Conclusions

This paper presents a hybrid multi-level approach for automatically detecting and classifying white blood cells into mononuclear (lymphocytes and monocytes) and polymorphonuclear (segmented neutrophils and eosinophils) types from blood smear images. At the first level, a Faster R-CNN network is applied to identify the region of interest of white blood cells, together with the separation of mononuclear cells from polymorphonuclear cells. Once separated, two parallel convolutional neural networks with the MobileNet structure are used to recognize the subclasses. The model achieved good performance metrics, achieving an average accuracy, precision, recall, and F-score of 98.4%, indicating that the proposal represents an excellent tool for clinical and diagnostic laboratories. Moreover, the proposed ML-CNN approach allows obtaining better accuracy results while optimizing the cost of computational resources, thus allowing the creation, evaluation, and retraining of each neural algorithm in an isolated way, without affecting those that achieve the expected levels of performance.

For further work, on the one hand, we intend to use data augmentation tools to include basophil images in the polymorphonuclear group in training and extend the model for the classification of immature leukocytes. On the other hand, it is also intended to develop machine learning techniques that include expert knowledge to improve performance [61,62].

Author Contributions

Conceptualization, C.C. and R.T.; methodology, C.C., R.L., R.T. and R.S.; software, C.C., R.L. and R.S.; validation, C.C., R.L. and R.S.; investigation, C.C. and M.Q.; data curation, C.C.; writing—original draft preparation, C.C., M.Q. and R.L.; writing—review and editing, M.Q., R.S. and R.T.; visualization, R.L.; supervision, R.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable due to the data is publicly available and de-identified.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data that support the findings of this study are openly available in a public repositories.

Conflicts of Interest

The authors declare that they have no conflict of interest.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Adewoyin A. Peripheral blood film-a review. Ann. Ib. Postgrad. Med. 2014;12:71–79. [PMC free article] [PubMed] [Google Scholar]

- 2.Bonilla M., Menell J. Lanzkowsky’s Manual of Pediatric Hematology and Oncology. Elsevier; Amsterdam, The Netherlands: 2016. Chapter 13–Disorders of White Blood Cells; pp. 209–238. [Google Scholar]

- 3.Gurcan M.N., Boucheron L.E., Can A., Madabhushi A., Rajpoot N.M., Yener B. Histopathological image analysis: A review. IEEE Rev. Biomed. Eng. 2009;2:147–171. doi: 10.1109/RBME.2009.2034865. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Xing F., Yang L. Robust nucleus/cell detection and segmentation in digital pathology and microscopy images: A comprehensive review. IEEE Rev. Biomed. Eng. 2016;9:234–263. doi: 10.1109/RBME.2016.2515127. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Saraswat M., Arya K. Automated microscopic image analysis for leukocytes identification: A survey. Micron. 2014;65:20–33. doi: 10.1016/j.micron.2014.04.001. [DOI] [PubMed] [Google Scholar]

- 6.Janowczyk A., Madabhushi A. Deep learning for digital pathology image analysis: A comprehensive tutorial with selected use cases. J. Pathol. Inform. 2016;7:29. doi: 10.4103/2153-3539.186902. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Su M.C., Cheng C.Y., Wang P.C. A neural-network-based approach to white blood cell classification. Sci. World J. 2014;2014:796371. doi: 10.1155/2014/796371. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Hegde R.B., Prasad K., Hebbar H., Singh B.M.K., Sandhya I. Automated decision support system for detection of leukemia from peripheral blood smear images. J. Digit. Imaging. 2020;33:361–374. doi: 10.1007/s10278-019-00288-y. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Prinyakupt J., Pluempitiwiriyawej C. Segmentation of white blood cells and comparison of cell morphology by linear and naïve Bayes classifiers. Biomed. Eng. Online. 2015;14:63. doi: 10.1186/s12938-015-0037-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Gautam A., Singh P., Raman B., Bhadauria H. Automatic classification of leukocytes using morphological features and naïve Bayes classifier; Proceedings of the 2016 IEEE Region 10 Conference (TENCON); Singapore. 22–25 November 2016; pp. 1023–1027. [Google Scholar]

- 11.Acevedo A., Alférez S., Merino A., Puigví L., Rodellar J. Recognition of peripheral blood cell images using convolutional neural networks. Comput. Methods Programs Biomed. 2019;180:105020. doi: 10.1016/j.cmpb.2019.105020. [DOI] [PubMed] [Google Scholar]

- 12.Tiwari P., Qian J., Li Q., Wang B., Gupta D., Khanna A., Rodrigues J.J., de Albuquerque V.H.C. Detection of subtype blood cells using deep learning. Cogn. Syst. Res. 2018;52:1036–1044. doi: 10.1016/j.cogsys.2018.08.022. [DOI] [Google Scholar]

- 13.Hegde R.B., Prasad K., Hebbar H., Singh B.M.K. Feature extraction using traditional image processing and convolutional neural network methods to classify white blood cells: A study. Australas. Phys. Eng. Sci. Med. 2019;42:627–638. doi: 10.1007/s13246-019-00742-9. [DOI] [PubMed] [Google Scholar]

- 14.Ullah A., Muhammad K., Hussain T., Baik S.W. Conflux LSTMs network: A novel approach for multi-view action recognition. Neurocomputing. 2021;435:321–329. doi: 10.1016/j.neucom.2019.12.151. [DOI] [Google Scholar]

- 15.Mellado D., Saavedra C., Chabert S., Torres R., Salas R. Self-improving generative artificial neural network for pseudorehearsal incremental class learning. Algorithms. 2019;12:206. doi: 10.3390/a12100206. [DOI] [Google Scholar]

- 16.Castro J.S., Chabert S., Saavedra C., Salas R.F. Convolutional neural networks for detection intracranial hemorrhage in CT images. CRoNe. 2019;2564:37–43. [Google Scholar]

- 17.Chabert S., Mardones T., Riveros R., Godoy M., Veloz A., Salas R., Cox P. Applying machine learning and image feature extraction techniques to the problem of cerebral aneurysm rupture. Res. Ideas Outcomes. 2017;3:e11731. doi: 10.3897/rio.3.e11731. [DOI] [Google Scholar]

- 18.Gao J., Yang J., Zhang J., Li M. Natural scene recognition based on convolutional neural networks and deep Boltzmannn machines; Proceedings of the 2015 IEEE International Conference on Mechatronics and Automation (ICMA); Beijing, China. 2–5 August 2015; pp. 2369–2374. [Google Scholar]

- 19.Mellado D., Saavedra C., Chabert S., Salas R. Pseudorehearsal approach for incremental learning of deep convolutional neural networks; Proceedings of the Computational Neuroscience: First Latin American Workshop, LAWCN 2017; Porto Alegre, Brazil. 22–24 November 2017; Cham, Switzerland: Springer; 2017. pp. 118–126. [Google Scholar]

- 20.Yildirim M., Cinar A.C. Classification of White Blood Cells by Deep Learning Methods for Diagnosing Disease. Rev. d’Intell. Artif. 2019;33:335–340. doi: 10.18280/ria.330502. [DOI] [Google Scholar]

- 21.Toğaçar M., Ergen B., Cömert Z. Classification of white blood cells using deep features obtained from Convolutional Neural Network models based on the combination of feature selection methods. Appl. Soft Comput. 2020;97:106810. doi: 10.1016/j.asoc.2020.106810. [DOI] [Google Scholar]

- 22.Honnalgere A., Nayak G. ISBI 2019 C-NMC Challenge: Classification in Cancer Cell Imaging. Springer; Singapore: 2019. Classification of normal versus malignant cells in B-ALL white blood cancer microscopic images; pp. 1–12. [Google Scholar]

- 23.Çınar A., Tuncer S.A. Classification of lymphocytes, monocytes, eosinophils, and neutrophils on white blood cells using hybrid Alexnet-GoogleNet-SVM. SN Appl. Sci. 2021;3:503. doi: 10.1007/s42452-021-04485-9. [DOI] [Google Scholar]

- 24.H Mohamed E., H El-Behaidy W., Khoriba G., Li J. Improved White Blood Cells Classification based on Pre-trained Deep Learning Models. J. Commun. Softw. Syst. 2020;16:37–45. doi: 10.24138/jcomss.v16i1.818. [DOI] [Google Scholar]

- 25.Lu Y., Qin X., Fan H., Lai T., Li Z. WBC-Net: A white blood cell segmentation network based on UNet++ and ResNet. Appl. Soft Comput. 2021;101:107006. doi: 10.1016/j.asoc.2020.107006. [DOI] [Google Scholar]

- 26.Khouani A., Daho M.E.H., Mahmoudi S.A., Chikh M.A., Benzineb B. Automated recognition of white blood cells using deep learning. Biomed. Eng. Lett. 2020;10:359–367. doi: 10.1007/s13534-020-00168-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Howard A.G., Zhu M., Chen B., Kalenichenko D., Wang W., Weyand T., Andreetto M., Adam H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv. 20171704.04861 [Google Scholar]

- 28.Zhong W., Gu F. A multi-level deep learning system for malware detection. Expert Syst. Appl. 2019;133:151–162. doi: 10.1016/j.eswa.2019.04.064. [DOI] [Google Scholar]

- 29.Kuang Z., Yu J., Li Z., Zhang B., Fan J. Integrating multi-level deep learning and concept ontology for large-scale visual recognition. Pattern Recognit. 2018;78:198–214. doi: 10.1016/j.patcog.2018.01.027. [DOI] [Google Scholar]

- 30.Zhang Y., Wu H., Liu H., Tong L., Wang M.D. Improve Model Generalization and Robustness to Dataset Bias with Bias-regularized Learning and Domain-guided Augmentation. arXiv. 20191910.06745 [Google Scholar]

- 31.Khan S., Sajjad M., Hussain T., Ullah A., Imran A.S. A Review on Traditional Machine Learning and Deep Learning Models for WBCs Classification in Blood Smear Images. IEEE Access. 2020;9:10657–10673. doi: 10.1109/ACCESS.2020.3048172. [DOI] [Google Scholar]

- 32.Deshpande N.M., Gite S., Aluvalu R. A review of microscopic analysis of blood cells for disease detection with AI perspective. PeerJ Comput. Sci. 2021;7:e460. doi: 10.7717/peerj-cs.460. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Abou El-Seoud S., Siala M., McKee G. Detection and Classification of White Blood Cells Through Deep Learning Techniques. Int. J. Online Biomed. Eng. (iJOE). 2020;16:15. doi: 10.3991/ijoe.v16i15.15481. [DOI] [Google Scholar]

- 34.Togacar M., Ergen B., Sertkaya M.E. Subclass separation of white blood cell images using convolutional neural network models. Elektron. Elektrotech. 2019;25:63–68. doi: 10.5755/j01.eie.25.5.24358. [DOI] [Google Scholar]

- 35.Wang Q., Wang J., Zhou M., Li Q., Wen Y., Chu J. A 3D attention networks for classification of white blood cells from microscopy hyperspectral images. Opt. Laser Technol. 2021;139:106931. doi: 10.1016/j.optlastec.2021.106931. [DOI] [Google Scholar]

- 36.Basnet J., Alsadoon A., Prasad P., Al Aloussi S., Alsadoon O.H. A novel solution of using deep learning for white blood cells classification: Enhanced loss function with regularization and weighted loss (ELFRWL) Neural Process. Lett. 2020;52:1517–1553. doi: 10.1007/s11063-020-10321-9. [DOI] [Google Scholar]

- 37.Jiang M., Cheng L., Qin F., Du L., Zhang M. White blood cells classification with deep convolutional neural networks. Int. J. Pattern Recognit. Artif. Intell. 2018;32:1857006. doi: 10.1142/S0218001418570069. [DOI] [Google Scholar]

- 38.Yao X., Sun K., Bu X., Zhao C., Jin Y. Classification of white blood cells using weighted optimized deformable convolutional neural networks. Artif. Cells Nanomed. Biotechnol. 2021;49:147–155. doi: 10.1080/21691401.2021.1879823. [DOI] [PubMed] [Google Scholar]

- 39.Huang Q., Li W., Zhang B., Li Q., Tao R., Lovell N.H. Blood cell classification based on hyperspectral imaging with modulated Gabor and CNN. IEEE J. Biomed. Health Inform. 2019;24:160–170. doi: 10.1109/JBHI.2019.2905623. [DOI] [PubMed] [Google Scholar]

- 40.Khan A., Eker A., Chefranov A., Demirel H. White blood cell type identification using multi-layer convolutional features with an extreme-learning machine. Biomed. Signal Process. Control. 2021;69:102932. doi: 10.1016/j.bspc.2021.102932. [DOI] [Google Scholar]

- 41.Imran Razzak M., Naz S. Microscopic blood smear segmentation and classification using deep contour aware CNN and extreme machine learning; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops; Honolulu, HI, USA. 21–26 July 2017; Piscataway, NJ, USA: IEEE Press; 2017. pp. 49–55. [Google Scholar]

- 42.Özyurt F. A fused CNN model for WBC detection with MRMR feature selection and extreme learning machine. Soft Comput. 2020;24:8163–8172. doi: 10.1007/s00500-019-04383-8. [DOI] [Google Scholar]

- 43.Patil A., Patil M., Birajdar G. White blood cells image classification using deep learning with canonical correlation analysis. IRBM. 2021;42:378–389. doi: 10.1016/j.irbm.2020.08.005. [DOI] [Google Scholar]

- 44.Baydilli Y.Y., Atila Ü. Classification of white blood cells using capsule networks. Comput. Med. Imaging Graph. 2020;80:101699. doi: 10.1016/j.compmedimag.2020.101699. [DOI] [PubMed] [Google Scholar]

- 45.Baghel N., Verma U., Nagwanshi K.K. WBCs-Net: Type identification of white blood cells using convolutional neural network. Multimed. Tools Appl. 2021:1–17. doi: 10.1007/s11042-021-11449-z. [DOI] [Google Scholar]

- 46.Tran T., Kwon O.H., Kwon K.R., Lee S.H., Kang K.W. Blood cell images segmentation using deep learning semantic segmentation; Proceedings of the 2018 IEEE International Conference on Electronics and Communication Engineering (ICECE); Xi’an, China. 10–12 December 2018; pp. 13–16. [Google Scholar]

- 47.Banik P.P., Saha R., Kim K.D. An automatic nucleus segmentation and CNN model based classification method of white blood cell. Expert Syst. Appl. 2020;149:113211. doi: 10.1016/j.eswa.2020.113211. [DOI] [Google Scholar]

- 48.Hegde R.B., Prasad K., Hebbar H., Singh B.M.K. Comparison of traditional image processing and deep learning approaches for classification of white blood cells in peripheral blood smear images. Biocybern. Biomed. Eng. 2019;39:382–392. doi: 10.1016/j.bbe.2019.01.005. [DOI] [Google Scholar]

- 49.Kutlu H., Avci E., Özyurt F. White blood cells detection and classification based on regional convolutional neural networks. Med. Hypotheses. 2020;135:109472. doi: 10.1016/j.mehy.2019.109472. [DOI] [PubMed] [Google Scholar]

- 50.Liang G., Hong H., Xie W., Zheng L. Combining convolutional neural network with recursive neural network for blood cell image classification. IEEE Access. 2018;6:36188–36197. doi: 10.1109/ACCESS.2018.2846685. [DOI] [Google Scholar]

- 51.Yu W., Chang J., Yang C., Zhang L., Shen H., Xia Y., Sha J. Automatic classification of leukocytes using deep neural network; Proceedings of the 2017 IEEE 12th International Conference on ASIC (ASICON); Guiyang, China. 25–28 October 2017; pp. 1041–1044. [Google Scholar]

- 52.Aslan A. WBC & RBC Detection Dataset from Peripheral Blood Smears. 2020. [(accessed on 10 June 2020)]. Available online: https://github.com/draaslan/blood-cell-detection-dataset.

- 53.Alam M.M., Islam M.T. Machine learning approach of automatic identification and counting of blood cells. Healthc. Technol. Lett. 2019;6:103–108. doi: 10.1049/htl.2018.5098. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 54.Alam M., Islam M. Complete Blood Count (CBC) Dataset. 2019. [(accessed on 10 June 2020)]. Available online: https://github.com/MahmudulAlam/Complete-Blood-Cell-Count-Dataset.

- 55.Zheng X., Wang Y., Wang G., Liu J. Fast and Robust Segmentation of White Blood Cell Images by Self-supervised Learning. Micron. 2018;107:55–71. doi: 10.1016/j.micron.2018.01.010. [DOI] [PubMed] [Google Scholar]

- 56.Zheng X. Data for: Fast and Robust Segmentation of Cell Images by Self-Supervised Learning. Mendeley Data, V1. 2018. [(accessed on 10 June 2020)]. Available online: https://data.mendeley.com/datasets/w7cvnmn4c5/1.

- 57.Mooney P. Blood Cell Images. 2018. [(accessed on 10 June 2020)]. Available online: https://www.kaggle.com/paultimothymooney/blood-cells.

- 58.Rezatofighi S.H., Soltanian-Zadeh H. Automatic recognition of five types of white blood cells in peripheral blood. Comput. Med. Imaging Graph. 2011;35:333–343. doi: 10.1016/j.compmedimag.2011.01.003. [DOI] [PubMed] [Google Scholar]

- 59.Ren S., He K., Girshick R., Sun J. Faster r-cnn: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015;28:91–99. doi: 10.1109/TPAMI.2016.2577031. [DOI] [PubMed] [Google Scholar]

- 60.Girshick R. Fast r-cnn. arXiv. 20151504.08083 [Google Scholar]

- 61.Chabert S., Castro J.S., Muñoz L., Cox P., Riveros R., Vielma J., Huerta G., Querales M., Saavedra C., Veloz A., et al. Image Quality Assessment to Emulate Experts’ Perception in Lumbar MRI Using Machine Learning. Appl. Sci. 2021;11:6616. doi: 10.3390/app11146616. [DOI] [Google Scholar]

- 62.Cantor E., Salas R., Rosas H., Guauque-Olarte S. Biological knowledge-slanted random forest approach for the classification of calcified aortic valve stenosis. BioData Min. 2021;14:35. doi: 10.1186/s13040-021-00269-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Data Availability Statement

The data that support the findings of this study are openly available in a public repositories.