Abstract

Kidney disease has recently emerged as a major public health concern. The kidneys remove toxins from the body through urine. In the early stages of the disease, patients have no symptoms, but recovery is difficult in the later stages. Doctors must therefore recognize the condition early to save their patients' lives. Researchers have used a variety of methods to detect the disease early, and prediction analysis based on machine learning has been reported to be more accurate than other methodologies. This research can help us better understand global disparities in kidney disease, what can be done to address them, and how to coordinate efforts to achieve global kidney health equity. This study proposes an effective feature-based prediction model for detecting kidney disease. Various machine learning algorithms, including k-nearest neighbors (KNN), artificial neural networks (ANN), support vector machines (SVM), naive Bayes (NB), and others, together with the Recursive Feature Elimination (RFE) and Chi-Square feature-selection techniques, were used to build and analyze prediction models on a publicly available dataset of healthy people and kidney disease patients. The experiments showed that a logistic regression-based prediction model with optimal features chosen by the Chi-Square technique achieved the highest accuracy, 98.75%. The selected features are White Blood Cell Count (Wbcc), Blood Glucose Random (bgr), Blood Urea (Bu), Serum Creatinine (Sc), Packed Cell Volume (Pcv), Albumin (Al), Hemoglobin (Hemo), Age, Sugar (Su), Hypertension (Htn), Diabetes Mellitus (Dm), and Blood Pressure (Bp).

Keywords: usability score, artificial intelligence, medical information systems, image matching, machine learning algorithms, morphological operations

1. Introduction

Kidney disease affects more than 750 million people worldwide, and the figure is growing. Although the disease occurs all over the world, its prevalence, identification, and treatment vary widely. Kidney disease is better understood in developed countries, yet new research indicates that it is more prevalent in developing countries. Renal failure is one of the leading causes of death in modern society, and cigarette smoking, excessive alcohol consumption, high cholesterol, and a variety of other risk factors all play a role in the disease. The kidney is a vital organ that performs several essential functions; its primary function is to filter waste and excess fluid from the blood and excrete them in urine. If kidney function is compromised, the accumulation of harmful fluids and wastes in the body may have disastrous consequences [1]. There are two kinds of kidney disease: acute kidney disease and chronic (long-term) kidney disease [2]. The most common type is acute renal disease, which occurs when the kidney's blood supply is cut off, when the flow of urine is obstructed (for example, by an enlarged prostate), or when the kidney itself is injured and stops working. Chronic kidney failure, by contrast, is characterized by a progressive decline in kidney function over time (usually years), so kidney failure does not occur overnight. In the early stages of chronic disease, the patient exhibits no signs or symptoms. Patients who have had diabetes and high blood pressure for a long time are more likely to develop the condition, as are patients who have been exposed for an extended period to lead-based medications and poisons.
According to a poll, this condition affects a large number of people in our country, and thousands of people die from it each year. Treatment for renal failure is widely available only in the most affluent countries; according to the World Health Organization, only 11% of the world's population receives adequate treatment for renal failure. Low-income patients die of renal failure because they cannot afford dialysis or a kidney transplant. Patients who are identified and treated early have a better chance of avoiding renal failure entirely, and scientists have developed a number of methods for detecting kidney disease at an early stage [3,4]. Early detection allows doctors to inform patients in advance, so that preventive measures can be taken before the disease progresses.

Chronic Kidney Disease

Humans have two kidneys, each roughly the size of a fist. Their primary purpose is to filter blood: they remove waste and excess water, which become urine. They also help to maintain the body's chemical balance, control blood pressure, and produce hormones. Chronic kidney disease means that the kidneys are damaged and cannot filter blood as effectively as they should. This damage can cause waste to accumulate in the body and lead to other health problems. The most common causes of chronic kidney disease are diabetes and high blood pressure. Kidney damage occurs gradually over a long period, and many people have no symptoms until their kidney disease is advanced; only blood and urine tests can reveal it. Treatments cannot cure chronic kidney disease, but they can slow its progression; they include medications that control blood pressure, blood sugar, and cholesterol. Chronic kidney disease can worsen over time and occasionally results in kidney failure, in which case dialysis or a kidney transplant is required. Based on population studies from developed countries, a systematic review found a mean prevalence of 7.2% in individuals older than 30 years. According to WHO data, CKD affects approximately 10% of the adult population and more than 20% of those over the age of 60, and it is undoubtedly underdiagnosed. The prevalence of CKD can reach 35–40% in patients followed up in primary care for diseases as common as high blood pressure (HBP) or diabetes mellitus (DM). The magnitude of the problem is amplified by the increase in morbidity and mortality, particularly cardiovascular mortality, caused by renal deterioration. CKD is considered the common final outcome of a group of pathologies that affect the kidney chronically and irreversibly.
Once the diagnostic and therapeutic options for the primary kidney disease have been exhausted, CKD requires common management protocols that are, in general, independent of the underlying cause. More than one cause frequently coexists, which worsens kidney damage.

In this work, the primary objective is to identify the best early-stage prediction model [5] for renal disease based on the optimal set of attributes [6]. The sub-goals are as follows:

Review the existing approaches for the detection of kidney disease.

Determine the best features by applying various feature selection techniques.

Build various prediction models on a kidney dataset using different machine learning algorithms and analyze their accuracy in the detection of kidney disease.

The rest of the article is organized as follows: Section 2 reviews the literature on the detection of kidney disease. Section 3 describes the support vector machine. Section 4 presents the kidney dataset and the proposed method for detecting kidney disease, which combines machine learning with feature selection. Section 5 presents the experimental results and comparisons with existing methods. The final section presents the conclusion and future work.

2. Related Works

The diagnosis of kidney disease using machine learning algorithms is an emerging research area in healthcare. These methods are gaining prominence because of their high accuracy in identifying diseases. Researchers have developed several methods for identifying kidney disease using machine learning algorithms such as decision trees (e.g., J48) and Support Vector Machines (SVM). This section describes previous work proposed by various researchers.

Boukenze, B. et al. [6] proposed a machine learning-based method for identifying renal disorders using the k-nearest neighbors (KNN), support vector machine (SVM), decision tree, and artificial neural network (ANN) algorithms. They evaluated the prediction models with several performance measures and observed that the decision tree-based model outperformed all other models in diagnosing chronic kidney failure, with an accuracy of 63%.

A. Salekin and colleagues built prediction models with the SVM, KNN, and random forest techniques on a dataset of 400 cases, each with 24 attributes. Models based on different algorithms produced different levels of accuracy; the decision tree-based model achieved 98%, which was higher than that of earlier models.

H. Polat et al. [7] predicted renal disease using the SVM technique and achieved an accuracy of 97.5%. To enhance accuracy, they applied a variety of feature selection methods, which improved accuracy by 1%.

Panwong, P. et al. [8] proposed an approach using KNN, NB, and decision tree classifiers, and reduced the number of features with a wrapper technique. The decision tree technique achieved their maximum accuracy of 85%.

Dulhare, U. N. et al. [9] proposed a technique for diagnosing kidney disease using the naive Bayes algorithm combined with the R attribute selector, achieving an accuracy of 97.5%.

Vasquez-Morales et al. [10] developed a neural network classifier trained on a large amount of CKD data, and the model was 95% accurate in its predictions. Makino et al. [11] extracted patient diagnoses and treatment information from textual data to predict the progression of diabetic kidney disease.

Ren et al. [12] developed a prediction model for diagnosing chronic kidney disease (CKD) from electronic health record (EHR) data. Based on a neural network architecture, the model encoded and decoded both the textual and the numerical EHR data. Ma F. et al. [13] developed a deep neural network model for identifying chronic renal disease that achieved higher accuracy than ANN and SVM.

Almansour and colleagues [14] used machine learning to develop a technique for predicting chronic kidney disease, applying classification methods such as SVM and ANN. In their experiments, ANN outperformed SVM, with an accuracy of 99.75%.

J. Qin and colleagues [15] presented a machine learning strategy for diagnosing CKD in its early stages. They built models with logistic regression, random forest, SVM, a naive Bayes classifier, KNN, and a feedforward neural network; the random forest model was the most accurate, at 99.75%.

Z. Segal and colleagues [16] used an ensemble tree-based machine learning algorithm (XGBoost) to diagnose kidney disease in its early stages and compared it with random forest, CatBoost, and regularized regression models. The proposed model improved all metrics, achieving a c-statistic of 0.93, a sensitivity of 0.715, and a specificity of 0.958.

Khamparia et al. [17] developed a deep learning model for the early identification of chronic kidney disease (CKD), published in Nature Communications, that used a stacked autoencoder to extract features from multimedia data and a SoftMax classifier to predict the final class. On the UC Irvine Machine Learning Repository (UCI) CKD dataset [18], the proposed model outperformed standard classification algorithms.

Ebiaredoh-Mienye Sarah A. et al. [19] developed a robust model for predicting CKD by combining an enhanced sparse autoencoder (SAE) with Softmax regression. The autoencoder achieved sparsity by penalizing the weights, and because the Softmax regression model was tailored specifically to the classification task, the model performed well in testing. On the CKD dataset, it achieved a precision of 98% and outperformed existing approaches.

Zhiyong Pang et al. [20] proposed a fully automated computer-aided diagnostic approach that used breast magnetic resonance imaging to differentiate between malignant and benign masses. Texture features were selected by combining the ReliefF feature selection method with a support vector machine, and the method achieved an accuracy of 92.3%.

Chen, G. et al. [21] developed a model for identifying hepatitis C virus infection that combined Fisher discriminant analysis with an SVM classifier to obtain a more accurate diagnosis. Compared with existing methods, this hybrid method achieved the highest classification accuracy, 96.77%. The authors of [22] developed a breast cancer diagnosis model in which artificial neural networks classify breast cancer using features selected by sequential forward and backward selection processes; the sequential backward selection process (SBSP) obtained the highest accuracy, 98.75%.

Table 1 summarizes prior studies. As the table shows, researchers have employed many machine learning-based prediction models to predict renal disorders, and their accuracy varied and was often inadequate. We also noticed that many researchers did not preprocess their data and used no feature selection strategy.

Table 1.

Summary of related work.

| Sr. No. | Author | Year | Machine Learning Algorithms and Accuracy (%) |
|---|---|---|---|
| 1. | A. J. Aljaaf et al. [1] | 2018 | Naïve Bayes: 83.4%, J48: 86.23% |
| 2. | N. Borisagar, D. Barad, and P. Raval [5] | 2017 | ANN: 99.5% |
| 3. | B. Boukenze, A. Haqiq, and H. Mousannif [6] | 2018 | SVM: 63.5%, LR: 64.0%, C4.5: 63%, KNN: 55.15% |
| 4. | H. Polat, H. D. Mehr, and A. Cetin [7] | 2019 | SVM: 97.5% |
| 5. | P. Panwong and N. Iam-On [8] | 2016 | KNN: 86.32%, naïve Bayes: 60.46%, ANN: 83.24%, RF: 86.60%, J48: 79.52% |
| 6. | Makino et al. [11] | 2019 | KNN, Naïve Bayes + LDA + random subspace + Tree-based decision: 94% |
| 7. | Ren et al. [12] | 2019 | SVM + ReliefF: 92.7% |
| 8. | Ma F. et al. [13] | 2019 | Fisher discriminant analysis and SVM: 96.7% |
| 9. | Almansour and colleagues [14] | 2020 | KNN and SVM: 99% |
| 10. | J. Qin and colleagues [15] | 2019 | SVM, KNN, and naïve Bayes decision tree: 99.7% |
| 11. | Z. Segal and colleagues [16] | 2019 | SVM, KNN, and decision tree: 99.1% |
| 12. | Khamparia et al. [17] | 2020 | Logistic regression, KNN, SVM, random forest, naive Bayes, and ANN: 99.7% |
| 13. | Ebiaredoh-Mienye Sarah A. et al. [18] | 2017 | SVM: 98.5% |
| 14. | Zhiyong Pang et al. [19] | 2020 | Softmax regression: 98% |
| 15. | Tabassum, Mamatha et al. [23] | 2017 | DT: 85%, RF: 85% |
| 16. | K. R. A. Padmanaban and G. Parthiban [24] | 2016 | DT: 91%, naïve Bayes: 86% |
| 17. | Sahil Sharma, Vinod Sharma, and Atul Sharma [25] | 2018 | ANN: 80.4%, RF: 78.6% |
| 18. | Pratibha Devishri [26] | 2019 | ANN: 86.40%, SVM: 77.12% |
| 19. | Sujata Drall, G. Singh Drall, S. Singh, Bharat Naib [27] | 2018 | Naïve Bayes: 94.8%, KNN: 93.75%, SVM: 96.55% |

LR: Logistic Regression; KNN: k-Nearest Neighbors; SVM: Support Vector Machines; CART: Classification and Regression Trees; ANN: Artificial Neural Networks; LDA: Linear Discriminant Analysis; DT: Decision Tree; RF: Random Forest.

3. Support Vector Machine

The concepts and foundational principles of SVM come from statistical learning theory (structural risk minimization). SVM can be used for classification and nonlinear regression, and it is broadly divided into two subcategories: linear SVM and nonlinear SVM [28].

Linear SVM (L-SVM) [29] classifies training data of two types by assigning the label +1 to Class 1 and the label −1 to Class 2, and separates them with the hyperplane

$$\mathbf{w}^{T}\mathbf{x} + b = 0 \tag{1}$$

where w is the weight vector, x is the input vector, and b is the bias (also called the displacement) of the hyperplane. The bias shifts the hyperplane [11] so that it is positioned correctly, and its value is learned during training. The parameters of the hyperplane are therefore w and b. When SVM is used for classification, the decision surface is expressed as a function (G. Chen et al. (2020) [29]):

$$f(\mathbf{x}) = \mathbf{w}^{T}\mathbf{x} + b \tag{2}$$

SVM maximizes the margin between the classes in the dataset, which strengthens the discriminating function and allows better classification. Finding the hyperplane that maximizes the margin is a quadratic programming problem:

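$$\min_{\mathbf{w},\,b}\ \frac{1}{2}\lVert\mathbf{w}\rVert^{2} \quad \text{s.t.} \quad y_i\left(\mathbf{w}^{T}\mathbf{x}_i + b\right) \ge 1,\quad i = 1,\dots,n \tag{3}$$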

To solve this primal minimization problem, Lagrange multiplier theory is applied:

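$$L(\mathbf{w}, b, \boldsymbol{\alpha}) = \frac{1}{2}\lVert\mathbf{w}\rVert^{2} - \sum_{i=1}^{n}\alpha_i\left[y_i\left(\mathbf{w}^{T}\mathbf{x}_i + b\right) - 1\right],\quad \alpha_i \ge 0 \tag{4}$$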

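Setting the derivatives of L with respect to w and b to zero gives w = Σ αi yi xi and Σ αi yi = 0; solving the resulting dual problem for the optimal multipliers αi* yields the linear decision function: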

$$f(\mathbf{x}) = \sum_{i=1}^{n}\alpha_i^{*}y_i\,\mathbf{x}_i^{T}\mathbf{x} + b^{*} \tag{5}$$

In other words, if f(x) > 0, the sample is assigned the label +1 and belongs to the same category as the samples labeled +1; otherwise, it is assigned the label −1. When the training data contain noise, a linear hyperplane [30] cannot separate all data points correctly. Slack variables ξi are therefore introduced into the constraints, which modifies problem (3) as follows:

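$$\min_{\mathbf{w},\,b,\,\boldsymbol{\xi}}\ \frac{1}{2}\lVert\mathbf{w}\rVert^{2} + C\sum_{i=1}^{n}\xi_i \quad \text{s.t.} \quad y_i\left(\mathbf{w}^{T}\mathbf{x}_i + b\right) \ge 1 - \xi_i,\quad \xi_i \ge 0,\quad i = 1,\dots,n \tag{6}$$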

Here ξi measures how far a classification point deviates beyond its margin boundary, and C, specified by the user, is the cost of misclassifying training data. A larger C penalizes errors more heavily, giving a narrower margin and lower fault tolerance [31,32]; a smaller C gives a wider margin and higher fault tolerance. As C → ∞, the linearly inseparable (soft-margin) problem degenerates into the linearly separable problem. The parameters and the optimal solution of the objective function can again be found with Lagrange multipliers [33,34], which leads to the following dual optimization problem for the linearly inseparable case:

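$$\max_{\boldsymbol{\alpha}}\ \sum_{i=1}^{n}\alpha_i - \frac{1}{2}\sum_{i=1}^{n}\sum_{j=1}^{n}\alpha_i\alpha_j y_i y_j\,\mathbf{x}_i^{T}\mathbf{x}_j \quad \text{s.t.} \quad \sum_{i=1}^{n}\alpha_i y_i = 0,\quad 0 \le \alpha_i \le C \tag{7}$$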

Finally, the linear decision function is

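$$f(\mathbf{x}) = \sum_{i=1}^{n}\alpha_i^{*}y_i\,\mathbf{x}_i^{T}\mathbf{x} + b^{*},\quad 0 \le \alpha_i^{*} \le C \tag{8}$$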
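As a sketch of how the dual solution relates to a fitted model, the snippet below trains scikit-learn's `SVC` with a linear kernel on synthetic data (an assumption; the kidney dataset is not bundled here) and rebuilds the decision function of Eq. (8) by hand. In scikit-learn, for a binary problem, `dual_coef_` holds the products yi αi* for the support vectors and `intercept_` holds b*, so the manual sum can be checked against `decision_function`, along with the constraints 0 ≤ αi ≤ C and Σ αi yi = 0.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.svm import SVC

# Synthetic two-class data standing in for the kidney dataset.
X, y = make_classification(n_samples=200, n_features=5, random_state=0)

C = 1.0
clf = SVC(kernel="linear", C=C).fit(X, y)

alpha_y = clf.dual_coef_.ravel()   # y_i * alpha_i* for each support vector
sv = clf.support_vectors_          # the support vectors x_i
b = clf.intercept_[0]              # b*

# Eq. (8): f(x) = sum_i alpha_i* y_i x_i^T x + b*, evaluated for every row of X.
f_manual = X @ sv.T @ alpha_y + b
f_sklearn = clf.decision_function(X)

# w = sum_i alpha_i y_i x_i recovers the primal weight vector of Eqs. (1) and (2).
w = alpha_y @ sv
f_primal = X @ w + b

print("Eq. (8) matches decision_function:", np.allclose(f_manual, f_sklearn))
print("box constraint |y_i alpha_i| <= C:", np.all(np.abs(alpha_y) <= C + 1e-9))
print("sum of y_i alpha_i:", alpha_y.sum())
```

Only support vectors (αi* > 0) contribute to the sum, which is why the decision function depends on a small subset of the training records.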

Nonlinear SVM extends the method to inputs that are not linearly separable. When the training samples cannot be separated by a linear SVM, a feature transformation φ can map the original (e.g., 2-D) data into a new, higher-dimensional feature space in which the problem becomes linearly separable. With the kernel trick, SVM performs this nonlinear classification efficiently: the inner product φ(xi)ᵀφ(xj) is computed directly by a kernel function K(xi, xj), without constructing φ explicitly. Many kernel functions have been proposed, and choosing the kernel according to the characteristics of the data allows more efficient computation with SVMs [7]. Four commonly used kernel functions are:

Linear kernel function:

$$K(\mathbf{x}_i, \mathbf{x}_j) = \mathbf{x}_i^{T}\mathbf{x}_j \tag{9}$$

Polynomial kernel function:

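$$K(\mathbf{x}_i, \mathbf{x}_j) = \left(\gamma\,\mathbf{x}_i^{T}\mathbf{x}_j + r\right)^{d},\quad \gamma > 0 \tag{10}$$

where γ, r, and d (the polynomial degree) are kernel parameters.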

Radial basis kernel function:

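$$K(\mathbf{x}_i, \mathbf{x}_j) = \exp\left(-\gamma\lVert\mathbf{x}_i - \mathbf{x}_j\rVert^{2}\right),\quad \gamma > 0 \tag{11}$$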

Sigmoid kernel function:

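$$K(\mathbf{x}_i, \mathbf{x}_j) = \tanh\left(\gamma\,\mathbf{x}_i^{T}\mathbf{x}_j + r\right) \tag{12}$$

where γ and r are kernel parameters.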
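The four kernels in Eqs. (9)–(12) can be written directly in NumPy. This is an illustrative sketch; the parameter defaults (γ, r, d) are assumptions, not values used in the study.

```python
import numpy as np


def linear_kernel(xi, xj):
    """Eq. (9): plain inner product."""
    return float(np.dot(xi, xj))


def polynomial_kernel(xi, xj, gamma=1.0, r=1.0, d=3):
    """Eq. (10): (gamma * x_i^T x_j + r)^d."""
    return float((gamma * np.dot(xi, xj) + r) ** d)


def rbf_kernel(xi, xj, gamma=0.5):
    """Eq. (11): exp(-gamma * ||x_i - x_j||^2)."""
    diff = np.asarray(xi, dtype=float) - np.asarray(xj, dtype=float)
    return float(np.exp(-gamma * np.dot(diff, diff)))


def sigmoid_kernel(xi, xj, gamma=0.1, r=0.0):
    """Eq. (12): tanh(gamma * x_i^T x_j + r)."""
    return float(np.tanh(gamma * np.dot(xi, xj) + r))


xi, xj = [1.0, 2.0], [3.0, 0.0]
print(linear_kernel(xi, xj))      # → 3.0
print(polynomial_kernel(xi, xj))  # → 64.0
print(rbf_kernel(xi, xj), sigmoid_kernel(xi, xj))
```

The RBF kernel always returns 1 for identical inputs and decays towards 0 as the points move apart, with γ controlling how fast.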

This study uses the radial basis function (RBF) kernel, because tuning its parameters γ and C can increase computational efficiency and lower SVM complexity.
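One common way to tune γ and C for an RBF-kernel SVM is an exhaustive grid search with cross-validation. The sketch below uses scikit-learn's `GridSearchCV` on synthetic data; the grid values and the standardization step are assumptions, not the settings used in this study.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic stand-in with the same shape as the kidney data (400 records, 24 features).
X, y = make_classification(n_samples=400, n_features=24, n_informative=8, random_state=42)

# Scaling matters for the RBF kernel because it depends on Euclidean distances.
pipe = make_pipeline(StandardScaler(), SVC(kernel="rbf"))
param_grid = {
    "svc__C": [0.1, 1, 10, 100],          # misclassification cost C in Eq. (6)
    "svc__gamma": [0.001, 0.01, 0.1, 1],  # kernel width gamma in Eq. (11)
}

search = GridSearchCV(pipe, param_grid, cv=10, scoring="accuracy").fit(X, y)
print(search.best_params_, round(search.best_score_, 3))
```

Small γ makes the kernel smooth (close to linear behavior), while large γ lets the boundary follow individual points; C trades margin width against training errors, so the two are usually tuned together.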

4. Materials and Methods

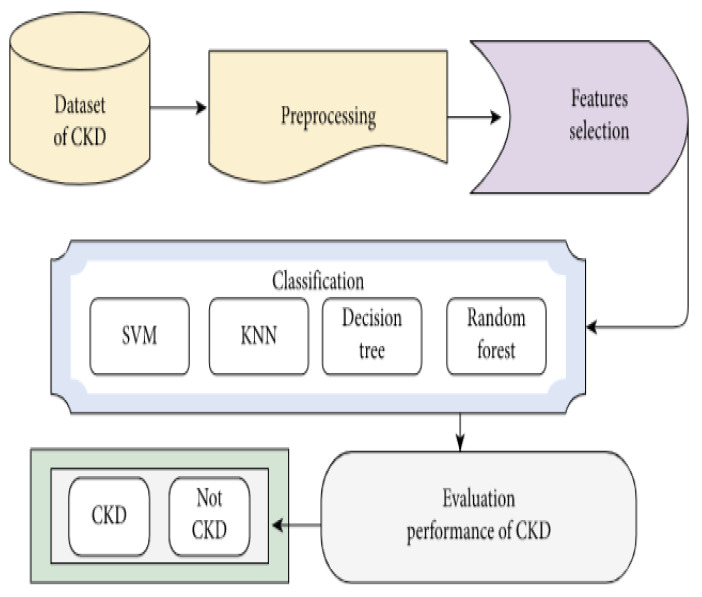

The proposed strategy is based on the data mining framework shown in Figure 1. Data mining uses computational approaches at the intersection of artificial intelligence, machine learning, statistics, and database systems [35], and it is predicated on the idea that data can be analyzed from a variety of perspectives. This study employs the Knowledge Discovery in Databases (KDD) process to extract previously unknown patterns from web data [36]. This section describes the proposed method for detecting kidney disease. The availability of kidney disease care is directly affected by each country's public policies and financial situation; for example, more affluent countries have a higher rate of kidney transplantation, as reflected in a lower dialysis-to-transplant ratio.

Figure 1.

Detection of chronic kidney disease using recursive feature elimination and classification algorithms. CKD: Chronic Kidney Disease; SVM: Support Vector Machine; KNN: K-Nearest Neighbors.

4.1. Kidney Disease Dataset

In this work, we used a dataset of 400 patients, each with 24 attributes [18,37]. The dataset contains 250 records of patients with kidney disease and 150 records of healthy people. It covers different age groups: 50 records of people younger than 30 years, 55 records of people older than 70 years, and the remaining 295 records of people aged 31–69. Previous studies have found that people of any age group may suffer from kidney disease, and because the dataset covers all of these age groups, the risk of age-related bias in evaluating the prediction models is low. Table 2 details the kidney disease-related attributes.

Table 2.

Details of the various kidney disease-related attributes.

| Name | Feature | Description |
|---|---|---|
| Age | Age | Patient’s age |
| Blood pressure | Bp | Blood pressure of the patient |
| Sugar level | Su | Sugar level of the patient |
| Bacteria | Ba | Presence of bacteria in the blood |
| Ratio of the density of urine | Sg | Ratio of the density of urine |
| Albumin level in the blood | Al | Ratio of the albumin level in the blood |
| Pedal edema | Pe | Does the patient have pedal edema or not |
| Red blood cells | Rbc | Whether the patient’s red blood cells are normal or abnormal |
| Patient class | Class | Does the patient have kidney disease or not |
| Pus cell clumps | Pcc | Presence of pus cell clumps in the blood |
| Anemia | Ane | Does the patient have anemia or not |
| Red blood cell count | Rc | Red blood cell count of the patient |
| Hypertension | Htn | Does the patient have hypertension or not |
| Serum creatinine | Sc | Serum creatinine level in the blood |
| Diabetes mellitus | Dm | Does the patient have diabetes or not |
| Blood urea | Bu | Blood urea level of the patient |
| Blood glucose | Bgr | Blood glucose random count |
| Sodium | Sod | Sodium level in the blood |
| White blood cell count | Wc | White blood cell count of the patient |
| Hemoglobin | Hemo | Hemoglobin level in the blood |
| Packed cell volume | Pcv | Packed cell volume in the blood |
| Pus cell | Pc | Whether the patient’s pus cells are normal or abnormal |
| Potassium | Pot | Potassium level in the blood |
| Appetite | Appet | Patient’s appetite |
| Coronary artery disease | Cad | Does the patient have coronary artery disease or not |

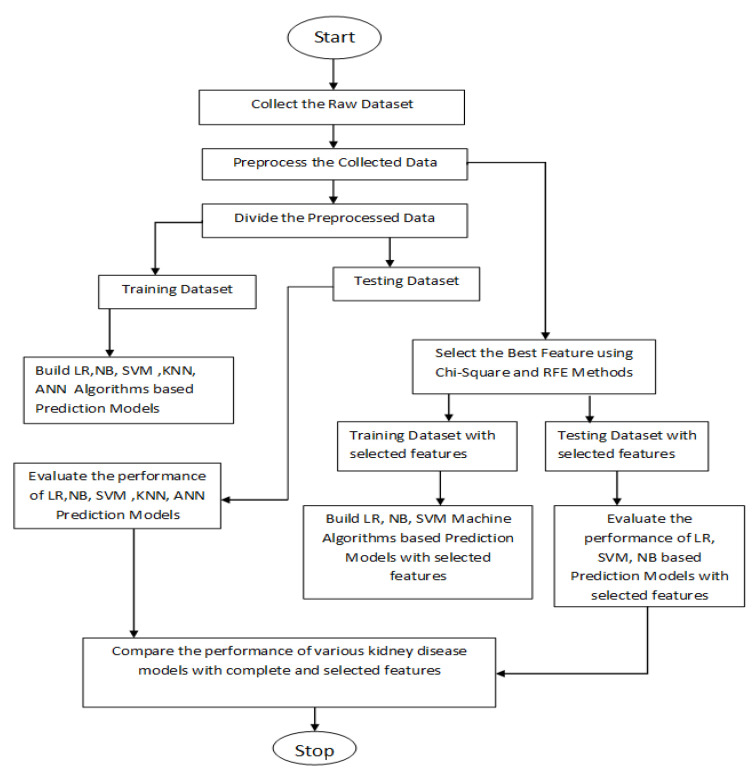

To explain the proposed approach clearly, Figure 2 gives a flow chart of the whole procedure, and the steps are explained one by one as follows:

Figure 2.

Flow chart of the proposed model. LR: Logistic Regression; NB: Naïve Bayes; SVM: Support Vector Machine; KNN: K-Nearest Neighbors; ANN: Artificial Neural Network; RFE: Recursive Feature Elimination.

4.2. Proposed Algorithm

Procedure: The proposed approach for the detection of kidney disease

Input: Dataset of kidney disease records

Output: Performance of the prediction models in detecting kidney disease.

It has the following steps:

Step 1: The Glomerular Filtration Rate (GFR) is the most widely used measure of kidney function in the medical management of CKD. It is estimated from the patient's serum creatinine, age, race, sex, and other variables, and the most widely accepted standard formula is the Modification of Diet in Renal Disease (MDRD) study equation:

| (13) |
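The MDRD equation referred to in Step 1 can be sketched as follows, assuming the widely used 4-variable, IDMS-traceable version: eGFR = 175 × Scr^−1.154 × age^−0.203 × 0.742 (if female) × 1.212 (if Black), with serum creatinine in mg/dL and eGFR in mL/min/1.73 m². Whether the authors used these constants (or the original version with a coefficient of 186) is an assumption, and the function name `mdrd_egfr` is hypothetical.

```python
def mdrd_egfr(serum_creatinine_mg_dl: float, age_years: float,
              female: bool, black: bool) -> float:
    """Estimated GFR in mL/min/1.73 m^2 (assumed 4-variable, IDMS-traceable MDRD)."""
    egfr = 175.0 * serum_creatinine_mg_dl ** -1.154 * age_years ** -0.203
    if female:
        egfr *= 0.742   # sex coefficient
    if black:
        egfr *= 1.212   # race coefficient
    return egfr


# Example: a 60-year-old woman with serum creatinine 1.2 mg/dL.
print(round(mdrd_egfr(1.2, 60, female=True, black=False), 1))
```

Because the exponents are negative, eGFR falls as serum creatinine or age rises, which is why a high Sc value is a strong indicator of reduced kidney function.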

Then preprocess the collected data. In the original dataset, the ‘rbc’ and ‘pc’ columns contain ‘normal’, ‘abnormal’, and empty values, with 150 and 120 empty entries, respectively. The ‘pcc’ and ‘ba’ columns contain ‘present’ and ‘not present’ values; the ‘cad’, ‘pe’, ‘htn’, ‘dm’, and ‘ane’ columns contain ‘yes’ and ‘no’; and the ‘appet’ column contains ‘poor’ and ‘good’. Preprocessing is therefore mandatory for correct results. First, the empty values are replaced by NaN. Nominal values are then converted to binary values as follows:

In the ‘rbc’ and ‘pc’ columns, ‘normal’ and ‘abnormal’ nominal values are replaced with 1 and 0, respectively.

In the ‘pcc’ and ‘ba’ columns, the ‘present’ and ‘not present’ values are replaced with 1 and 0, respectively.

In the ‘htn’, ‘pe’, ‘ane’, ‘dm’, and ‘cad’ columns, the values ‘yes’ and ‘no’ are replaced with 1 and 0, respectively.

Finally, in the ‘appet’ column, ‘good’ and ‘poor’ are replaced with 1 and 0, respectively.

Next, the remaining null values are replaced by the mean of the corresponding column.
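A minimal pandas sketch of the preprocessing in Step 1, applied to the feature columns only (the class label is assumed to be encoded separately). The exact spellings of the raw values, such as ‘notpresent’, are assumptions about the data file, and `preprocess` and `BINARY_MAPS` are hypothetical names.

```python
import numpy as np
import pandas as pd

# Nominal-to-binary mappings from Step 1 (raw spellings such as "notpresent" are assumed).
BINARY_MAPS = {
    "rbc": {"normal": 1, "abnormal": 0},
    "pc": {"normal": 1, "abnormal": 0},
    "pcc": {"present": 1, "notpresent": 0, "not present": 0},
    "ba": {"present": 1, "notpresent": 0, "not present": 0},
    "htn": {"yes": 1, "no": 0},
    "pe": {"yes": 1, "no": 0},
    "ane": {"yes": 1, "no": 0},
    "dm": {"yes": 1, "no": 0},
    "cad": {"yes": 1, "no": 0},
    "appet": {"good": 1, "poor": 0},
}


def preprocess(features: pd.DataFrame) -> pd.DataFrame:
    """Clean the feature columns (the class label is handled separately)."""
    df = features.copy()
    # 1. Empty or whitespace-only cells become NaN.
    df = df.replace(r"^\s*$", np.nan, regex=True)
    # 2. Nominal values become 1/0; values missing from a mapping also become NaN.
    for col, mapping in BINARY_MAPS.items():
        if col in df.columns:
            df[col] = df[col].map(mapping)
    # 3. Force numeric dtypes, then replace remaining nulls with the column mean.
    df = df.apply(pd.to_numeric, errors="coerce")
    return df.fillna(df.mean())


raw = pd.DataFrame({
    "age": [48, 62, ""],
    "rbc": ["normal", "", "abnormal"],
    "pcc": ["present", "notpresent", "notpresent"],
    "htn": ["yes", "no", "yes"],
    "appet": ["good", "poor", ""],
})
print(preprocess(raw))
```

Mean imputation keeps all 400 records, at the cost of shrinking the variance of heavily incomplete columns such as ‘rbc’.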

Step 2. Observe the relationship between features. In this step, we examine the relationship between the input features and the target feature. We found that ‘pot’ and ‘ba’ are only weakly related to the target feature.
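Step 2 can be sketched with pandas' `DataFrame.corr` (Pearson correlation by default), ranking features by the absolute value of their correlation with the target so that strong negative relationships also count. The 0.1 cut-off for "weakly related" is an assumption, since the paper does not state a threshold, and `relation_to_target` is a hypothetical helper; the toy data are not from the kidney dataset.

```python
import pandas as pd


def relation_to_target(df: pd.DataFrame, target: str, threshold: float = 0.1):
    """Pearson correlation of each input feature with the target column.

    Returns the correlations ordered by absolute strength and the names of
    features whose |correlation| is below `threshold` (weakly related).
    """
    corr = df.corr()[target].drop(target)
    corr = corr.reindex(corr.abs().sort_values(ascending=False).index)
    weak = corr[corr.abs() < threshold].index.tolist()
    return corr, weak


# Toy data: "inverse" is perfectly (negatively) related to the target, "weak" is unrelated.
demo = pd.DataFrame({
    "target": [0, 0, 1, 1] * 25,
    "inverse": [1, 1, 0, 0] * 25,
    "weak": [0, 1, 0, 1] * 25,
})
corr, weak = relation_to_target(demo, "target")
print(corr)
print("weakly related:", weak)
```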

Step 3. Divide the dataset. In this step, the dataset is divided into training and testing sets in an 80:20 ratio, i.e., 80% of the data are used for training and 20% for testing.

Step 4. Set the parameters of the machine learning algorithms. In this step, the processed features of the kidney disease dataset are used with machine learning algorithms to build prediction models: Logistic Regression, Naive Bayes, Support Vector Machine, K-Nearest Neighbors (KNN), and Artificial Neural Network (ANN). We applied 10-fold cross-validation to build the prediction models.
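Steps 3 and 4 could look roughly like this in scikit-learn. Synthetic data from `make_classification` stand in for the kidney dataset, `MLPClassifier` stands in for the ANN and `GaussianNB` for naive Bayes, and the hyperparameters, feature scaling, and stratified split are all assumptions rather than the authors' settings.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic stand-in: 400 records, 24 features, roughly a 150/250 class split.
X, y = make_classification(n_samples=400, n_features=24, n_informative=10,
                           weights=[0.375], random_state=0)

# Step 3: 80:20 train/test split (stratified so both parts keep the class ratio).
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0)

# Step 4: the five classifiers, each wrapped with feature standardization.
models = {
    "LR": LogisticRegression(max_iter=1000),
    "NB": GaussianNB(),
    "SVM": SVC(kernel="rbf"),
    "KNN": KNeighborsClassifier(n_neighbors=5),
    "ANN": MLPClassifier(hidden_layer_sizes=(32,), max_iter=2000, random_state=0),
}

results = {}
for name, model in models.items():
    pipe = make_pipeline(StandardScaler(), model)
    cv_scores = cross_val_score(pipe, X_train, y_train, cv=10, scoring="accuracy")
    test_acc = pipe.fit(X_train, y_train).score(X_test, y_test)
    results[name] = (cv_scores.mean(), cv_scores.std(), test_acc)
    print(f"{name}: CV {cv_scores.mean():.3f} ± {cv_scores.std():.3f}, test {test_acc:.3f}")
```

Cross-validation runs on the training part only, so the 20% test split remains untouched until the final evaluation.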

The use of an ANN is supported by the universal approximation theorem. Let ϕ(·) be a nonconstant, bounded, and monotonically increasing continuous function (the ridge basis function). Let K be a compact subset of ℝ^n, and let f(x1, …, xn) be a real-valued continuous function on K. Then, for an arbitrary ε > 0, there exist an integer N and real parameters ci, θi, and wij (i = 1, …, N; j = 1, …, n) such that

$$\tilde{f}(x_1, \dots, x_n) = \sum_{i=1}^{N} c_i\,\phi\left(\sum_{j=1}^{n} w_{ij}x_j - \theta_i\right) \tag{14}$$

satisfies the condition

$$\max_{(x_1, \dots, x_n) \in K}\left|f(x_1, \dots, x_n) - \tilde{f}(x_1, \dots, x_n)\right| < \varepsilon \tag{15}$$

In other words, for every ε > 0 there exists a three-layer network whose hidden layer uses the ridge basis function ϕ and whose input–output function f˜(x1, …, xn) approximates f(x1, …, xn) to within ε everywhere on K.
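To make the form of Eq. (14) concrete, the sketch below builds a one-input network f˜(x) = Σ ci ϕ(wi x − θi) with the logistic sigmoid as ϕ. As an illustrative choice (not part of the theorem, which only asserts that suitable parameters exist), the hidden weights wi and thresholds θi are drawn at random and only the output weights ci are fitted by least squares. The target f(x) = sin x on K = [0, π] is an arbitrary example.

```python
import numpy as np


def sigmoid(z):
    """Logistic function: nonconstant, bounded, monotonically increasing, continuous."""
    return 1.0 / (1.0 + np.exp(-z))


rng = np.random.default_rng(0)
N = 50                                      # number of hidden units in Eq. (14)
w = rng.uniform(-10, 10, size=N)            # hidden weights w_i
theta = w * rng.uniform(0, np.pi, size=N)   # thresholds theta_i, so unit centres lie in K

x = np.linspace(0, np.pi, 200)              # evaluation grid on K = [0, pi]
f = np.sin(x)                               # continuous target function

H = sigmoid(np.outer(x, w) - theta)         # hidden outputs phi(w_i x - theta_i)
c, *_ = np.linalg.lstsq(H, f, rcond=None)   # output weights c_i by least squares
f_tilde = H @ c

max_error = np.max(np.abs(f - f_tilde))     # left-hand side of Eq. (15) on the grid
print(f"max |f - f~| on the grid: {max_error:.2e}")
```

The grid error is only an empirical check of Eq. (15) on sample points, not a proof over all of K.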

Step 5. Feature selection. In this step, we select the best features using the Recursive Feature Elimination (RFE) and Chi-Square feature selection methods. Because the kidney disease dataset is labeled, we used supervised feature selection: a wrapper technique (RFE) and a filter technique (Chi-Square). Supervised feature selection techniques fall into three categories (filter, wrapper, and embedded methods), each containing different methods.

For feature selection, we used and , where S is the set of attributes of feature and attribute set with respect to the conditional attribute subset , then the evaluation function for feature selection is defined by

| (16) |

In this case, N is the number of decision classes generated by the decision attribute set D, and is equal to , reflecting the uncertainty measure of each decision class, and describes the integrated uncertainty degree of blocks .

Recursive Feature Elimination (RFE) is a wrapper-type feature selection algorithm: it wraps a machine learning model and uses it to select features, although internally it ranks features by importance scores in a way similar to filter-based methods. It has two important configuration options: (a) the number of features to be selected, and (b) the machine learning algorithm used to rank the features. RFE starts with all of the features in the training dataset, fits the model, ranks the features by importance [38], removes the least important ones, and refits the model. This process is repeated until the specified number of features remains.
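A sketch of RFE with scikit-learn's `RFE` class, which exposes both configuration options described above: `n_features_to_select` (the number of features to keep) and `estimator` (the model whose coefficients rank the features). Logistic regression as the ranking model, 12 retained features, and the synthetic data are assumptions for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.feature_selection import RFE
from sklearn.linear_model import LogisticRegression
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in: 24 features, of which only 6 carry class information.
X, y = make_classification(n_samples=400, n_features=24, n_informative=6,
                           n_redundant=0, random_state=1)
X = StandardScaler().fit_transform(X)   # comparable scales make coefficients comparable

# Option (a): keep 12 features; option (b): rank them with logistic regression.
# step=1 removes the single least important feature per iteration.
rfe = RFE(estimator=LogisticRegression(max_iter=1000), n_features_to_select=12, step=1)
rfe.fit(X, y)

selected = [i for i, keep in enumerate(rfe.support_) if keep]
print("selected feature indices:", selected)
print("ranking (1 = kept):", rfe.ranking_)
```

In a full evaluation, RFE would be fitted inside each cross-validation training fold rather than on all the data, to avoid an optimistic bias.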

The Chi-Square feature selection method investigates the relationship between each input feature and the target class. The Chi-Square value (χ²) between each input feature and the target class is calculated with the formula below, and only the required number of input features with the highest Chi-Square scores are selected.

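Consider a feature with m attribute values and an output with k class labels. The value of χ² is then

$$\chi^{2} = \sum_{i=1}^{m}\sum_{j=1}^{k}\frac{\left(O_{ij} - E_{ij}\right)^{2}}{E_{ij}} \tag{17}$$

where Oij is the observed frequency of attribute value i in class j, and Eij is the expected frequency under independence, i.e., the product of the corresponding row and column totals divided by the total number of records.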
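Eq. (17) can be computed from a contingency table of feature values against class labels. This sketch assumes categorical features (continuous features would first need to be binned), and the helper names are hypothetical. Note that scikit-learn's `sklearn.feature_selection.chi2` computes a related statistic that treats non-negative feature values as frequencies, so its scores need not equal Eq. (17).

```python
import numpy as np


def chi_square_from_table(observed):
    """Eq. (17): sum of (O_ij - E_ij)^2 / E_ij over an m x k contingency table."""
    observed = np.asarray(observed, dtype=float)
    total = observed.sum()
    # Expected frequency under independence: row total * column total / grand total.
    expected = np.outer(observed.sum(axis=1), observed.sum(axis=0)) / total
    return float(((observed - expected) ** 2 / expected).sum())


def chi_square(feature, labels):
    """Build the m x k table of one categorical feature against the class labels."""
    _, f_idx = np.unique(feature, return_inverse=True)
    _, c_idx = np.unique(labels, return_inverse=True)
    f_idx, c_idx = f_idx.ravel(), c_idx.ravel()
    observed = np.zeros((f_idx.max() + 1, c_idx.max() + 1))
    np.add.at(observed, (f_idx, c_idx), 1)   # count each (value, class) pair
    return chi_square_from_table(observed)


def select_k_best_chi2(X, y, k):
    """Indices of the k columns of X with the highest chi-square scores."""
    scores = np.array([chi_square(X[:, j], y) for j in range(X.shape[1])])
    return np.argsort(scores)[::-1][:k], scores


print(chi_square_from_table([[10, 20], [30, 40]]))  # 50/63 ≈ 0.794
```

A feature that is independent of the class yields χ² = 0, while a feature that perfectly separates the classes yields the largest possible score for its table size.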

Step 6. Build the prediction models using the selected features. In this step, we again applied 10-fold cross-validation with the selected features and the various machine learning algorithms to build different prediction models.

Step 7. Finally, the performance of the prediction models with all features and with the selected features is compared.
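Steps 6 and 7 can be sketched as two scikit-learn pipelines evaluated with 10-fold cross-validation: one using all features and one using the 12 features with the highest Chi-Square scores. Placing the selector inside the pipeline means it is re-fitted on each training fold, which keeps test-fold information out of feature selection. The min-max scaling, k = 12, logistic regression, and synthetic data are assumptions, and scikit-learn's `chi2` is a frequency-based variant of Eq. (17).

```python
from sklearn.datasets import make_classification
from sklearn.feature_selection import SelectKBest, chi2
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import MinMaxScaler

X, y = make_classification(n_samples=400, n_features=24, n_informative=8, random_state=3)

pipelines = {
    "all 24 features": Pipeline([
        ("scale", MinMaxScaler()),
        ("clf", LogisticRegression(max_iter=1000)),
    ]),
    "12 chi-square features": Pipeline([
        ("scale", MinMaxScaler()),                       # chi2 requires non-negative inputs
        ("select", SelectKBest(score_func=chi2, k=12)),  # re-fitted inside every training fold
        ("clf", LogisticRegression(max_iter=1000)),
    ]),
}

results = {}
for name, pipe in pipelines.items():
    scores = cross_val_score(pipe, X, y, cv=10, scoring="accuracy")
    results[name] = scores
    print(f"{name}: {scores.mean():.3f} ± {scores.std():.3f}")
```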

5. Results and Analysis

Researchers use a variety of performance metrics to assess machine learning approaches. To evaluate and compare the proposed prediction models, we used precision, recall, F-measure, and accuracy.

5.1. Performance Measures

Accuracy is calculated by dividing the number of correctly classified records by the total number of test records. Precision is the ratio of true positive (TP) records to all records predicted as belonging to a class, i.e., true positives plus false positives (FP). Recall is the ratio of true positives to all records that actually belong to the class, i.e., true positives plus false negatives (FN). Precision, recall, and the F-measure were calculated using the following formulas:

$$P = \frac{TP}{TP + FP} \tag{18}$$

$$R = \frac{TP}{TP + FN} \tag{19}$$

$$F_{\beta} = \frac{\left(1 + \beta^{2}\right)P\,R}{\beta^{2}P + R} \tag{20}$$

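where P is precision, R is recall, and β weights the relative importance of recall and precision: β > 1 favors recall, β < 1 favors precision, and β = 1 gives the F1 score, the harmonic mean of P and R.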

Accuracy is a common measure for evaluating classification techniques:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{21}$$

where TP is the number of records correctly classified as belonging to the kidney disease class, FP is the number of records incorrectly classified as having kidney disease, FN is the number of kidney disease records incorrectly classified as not having kidney disease, and TN is the number of records correctly classified as not having kidney disease.

Precision (P) quantifies the proportion of correct positive predictions among all positive predictions. It is computed as follows:

$$P = \frac{TP}{TP + FP} \tag{22}$$

Specificity: This measures the system’s ability to correctly recognize the absence of kidney disease. It is obtained by dividing the number of true negatives by the total number of records without kidney disease (true negatives plus false positives):

$$\text{Specificity} = \frac{TN}{TN + FP} \tag{23}$$
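The following sketch, again using hypothetical helper names and toy labels rather than the study's data, derives the four confusion-matrix counts from true and predicted labels and then evaluates accuracy and specificity. It assumes that the label 1 marks kidney disease and 0 marks a healthy record.

```python
from typing import Sequence, Tuple


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int],
                     positive: int = 1) -> Tuple[int, int, int, int]:
    """Return (TP, FP, FN, TN), treating `positive` as the kidney-disease label."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    tp = fp = fn = tn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive:
            if actual == positive:
                tp += 1  # disease correctly detected
            else:
                fp += 1  # healthy record flagged as disease
        elif actual == positive:
            fn += 1      # disease missed
        else:
            tn += 1      # healthy record correctly cleared
    return tp, fp, fn, tn


def accuracy(tp: int, fp: int, fn: int, tn: int) -> float:
    """Correctly classified records divided by all records."""
    return (tp + tn) / (tp + fp + fn + tn)


def specificity(tn: int, fp: int) -> float:
    """Share of records without kidney disease that are correctly recognized."""
    return tn / (tn + fp) if (tn + fp) else 0.0


# Toy labels (1 = kidney disease, 0 = healthy); not data from the study.
y_true = [1, 1, 1, 0, 0, 1, 0, 1]
y_pred = [1, 1, 0, 0, 1, 1, 0, 1]
tp, fp, fn, tn = confusion_counts(y_true, y_pred)
print(tp, fp, fn, tn, accuracy(tp, fp, fn, tn), specificity(tn, fp))
```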

Mean: The arithmetic mean is a simple summary statistic that is widely used in mathematics, data analysis, and computing, and many other kinds of means have been defined for specific purposes. In image processing, mean filtering is also used for noise reduction.

$$\bar{x} = \frac{1}{n} \sum_{i=1}^{n} x_i \tag{24}$$

The standard deviation is the most widely used measure of variability or dispersion in statistics. It indicates how widely the values are spread around their mean. A low standard deviation indicates that the data points tend to be close to the mean, whereas a high standard deviation indicates that they are spread out over a wide range of values.

$$\sigma = \sqrt{\frac{1}{n} \sum_{i=1}^{n} \left(x_i - \bar{x}\right)^{2}} \tag{25}$$
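A short sketch of the mean and standard deviation in plain Python. The paper does not state whether the population form (divide by n) or the sample form (divide by n - 1) was used; this sketch assumes the population form and cross-checks it against the standard library. The input values are illustrative only.

```python
import math
import statistics


def mean(values):
    """Arithmetic mean: sum of the values divided by their count."""
    return sum(values) / len(values)


def std_dev(values):
    """Population standard deviation (divides by n); use n - 1 for the sample estimate."""
    m = mean(values)
    return math.sqrt(sum((x - m) ** 2 for x in values) / len(values))


values = [2, 4, 4, 4, 5, 5, 7, 9]  # illustrative numbers only
print(mean(values), std_dev(values))  # 5.0 2.0
# Cross-check against the standard library's population standard deviation.
assert math.isclose(std_dev(values), statistics.pstdev(values))
```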

We used the Anaconda data science platform with the Spyder IDE (Python 3.6) to build and analyze the prediction approaches. To evaluate the proposed method's performance, we downloaded a kidney disease dataset containing 400 patient records. We pre-processed the data to remove null values, among other steps. The dataset was divided into a training set containing 80% of the records and a test set containing the remaining 20%. Using machine learning algorithms, namely Logistic Regression (LR), Naïve Bayes (NB), Support Vector Machine (SVM), K-Nearest Neighbors (KNN), and Artificial Neural Network (ANN), we developed a variety of prediction models.
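The paper specifies an 80/20 train/test split but not how it was drawn. As one possible way to do it, the sketch below performs a stratified hold-out split so that both parts keep the same class proportions. The class counts (250 kidney disease and 150 healthy records, assumed here to match the commonly used UCI chronic kidney disease data), the function name, and the seed are all assumptions. In scikit-learn, `train_test_split(X, y, test_size=0.2, stratify=y, random_state=...)` from `sklearn.model_selection` offers equivalent behavior.

```python
import random
from collections import defaultdict
from typing import Hashable, List, Sequence, Tuple


def stratified_holdout(labels: Sequence[Hashable], test_fraction: float = 0.2,
                       seed: int = 0) -> Tuple[List[int], List[int]]:
    """Split record indices into (train, test), keeping each class's share similar in both."""
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    train_idx, test_idx = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_test = round(len(indices) * test_fraction)
        test_idx.extend(indices[:n_test])
        train_idx.extend(indices[n_test:])
    return sorted(train_idx), sorted(test_idx)


# Assumed class balance: 250 "ckd" and 150 "notckd" records (400 in total).
labels = ["ckd"] * 250 + ["notckd"] * 150
train_idx, test_idx = stratified_holdout(labels)
print(len(train_idx), len(test_idx))  # 320 80
```

Stratification matters when the classes are imbalanced: a purely random split could, by chance, place noticeably more or fewer healthy records in an 80-record test set.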

The data correlation matrix was visualized as a heatmap [6], which shows how the features relate to one another and to the target variable, so the correlation between any two features can be compared at a glance. A positive correlation indicates that, as the value of a feature increases, so does the value of the target variable; a negative correlation indicates that increasing the value of a feature decreases the value of the target variable. The heatmap was created with the seaborn library, and its color tones make it easy to see which correlations are stronger or weaker. The heatmap correlation matrix of the kidney disease data showed that the Ane, Bgr, Bu, Sc, Pcv, Al, Hemo, Age, Su, Htn, Dm, and Bp features were highly correlated with the target variable (shown in green). For the features with a positive correlation, higher values are associated with a higher risk of kidney disease.
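The paper does not state which correlation coefficient underlies the heatmap; pandas `DataFrame.corr()` uses Pearson's r by default, and seaborn's `heatmap` can render the resulting matrix. The sketch below assumes Pearson correlation and ranks a few features by the strength of their correlation with the target. The function names and feature values are made up for illustration; note that a strongly negative r (here for hemoglobin) also signals a strong relationship with the target.

```python
import math
from typing import Dict, List, Sequence, Tuple


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def rank_by_target_correlation(features: Dict[str, List[float]],
                               target: List[int]) -> List[Tuple[str, float]]:
    """Return (name, r) pairs sorted by |r| with the target, strongest first."""
    scored = [(name, pearson(col, target)) for name, col in features.items()]
    return sorted(scored, key=lambda pair: abs(pair[1]), reverse=True)


# Made-up values for illustration: 1 = kidney disease, 0 = healthy.
target = [0, 0, 0, 1, 1, 1]
features = {
    "sc": [0.9, 1.0, 1.1, 3.2, 4.0, 5.1],        # serum creatinine
    "hemo": [15.1, 14.0, 13.8, 9.5, 10.2, 8.7],  # hemoglobin
    "pot": [4.2, 4.6, 4.0, 4.4, 4.1, 4.5],       # potassium
}
ranked = rank_by_target_correlation(features, target)
for name, r in ranked:
    print(f"{name}: r = {r:+.2f}")
```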

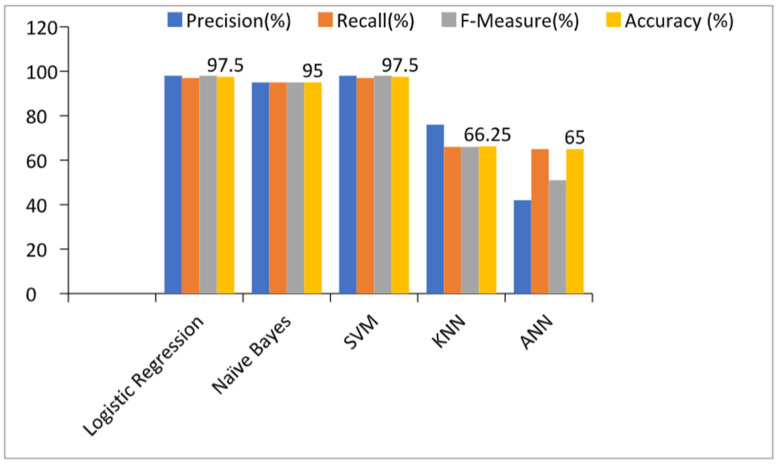

5.2. Prediction Models with All Features

Table 3 and Figure 3 show the performance of the prediction models using all features, that is, without applying any feature-selection technique. The accuracies of the Logistic Regression, Naïve Bayes, SVM, KNN, and ANN-based prediction models with all features were 97.5%, 95%, 97.5%, 66.25%, and 65%, respectively.

Table 3.

Results of the prediction models with all features.

| Machine Learning Algorithm | Precision (%) | Recall (%) | F-Measure (%) | Accuracy (%) |
|---|---|---|---|---|
| Logistic Regression | 98 | 97 | 98 | 97.5 |
| Naïve Bayes | 95 | 95 | 95 | 95 |
| Support Vector Machines | 98 | 97 | 98 | 97.5 |
| k-Nearest Neighbors | 76 | 66 | 66 | 66.25 |
| Artificial Neural Networks | 42 | 65 | 51 | 65 |

Figure 3.

Results of the prediction models with all features. SVM: Support Vector Machine; KNN: K-Nearest Neighbors; ANN: Artificial Neural Network.

These results also show that the Logistic Regression and SVM-based prediction models achieved the highest accuracy, 97.5%, while the ANN-based model achieved the lowest accuracy in detecting kidney disease. Because Logistic Regression and SVM performed identically on this test set, either could be used for the early detection of kidney disease. The precision, recall, and F-measure values were also highest for the Logistic Regression and SVM-based prediction models.
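Because 20% of 400 records gives 80 test records, each reported accuracy corresponds to a whole number of correctly classified records. The sketch below, which assumes that no records were dropped during pre-processing, converts the Table 3 accuracies into counts. It shows, for example, that 97.5% means 78 of 80 records, so Logistic Regression and SVM each misclassified two test records.

```python
TEST_SIZE = 80  # assumption: 20% of 400 records, with no rows dropped in pre-processing

# Accuracies (%) reported in Table 3 for the models trained on all features.
reported_accuracy = {
    "Logistic Regression": 97.5,
    "Naive Bayes": 95.0,
    "SVM": 97.5,
    "KNN": 66.25,
    "ANN": 65.0,
}


def correct_predictions(accuracy_pct: float, n_test: int = TEST_SIZE) -> int:
    """Number of correctly classified test records implied by an accuracy percentage."""
    return round(accuracy_pct * n_test / 100)


for model, acc in reported_accuracy.items():
    correct = correct_predictions(acc)
    print(f"{model}: {correct}/{TEST_SIZE} correct, {TEST_SIZE - correct} misclassified")
```

Every accuracy in Table 3 is a multiple of 1.25% (one record out of 80), which is consistent with this assumption; it also means that a gap of 1.25 percentage points between two models corresponds to a single test record.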

5.3. Prediction Models with RFE Feature Selection Technique

Recursive Feature Elimination (RFE) is a wrapper-type feature selection algorithm, although it uses filter-based feature ranking internally, which distinguishes it from a pure filter approach. It has two important configuration options: (a) the number of features to select and (b) the machine learning algorithm used to rank the features. RFE starts with all features in the training dataset, fits the chosen model, and ranks the features by their importance. It then discards the least important features and refits the model on the remaining ones. This process is repeated until the specified number of features remains.
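The elimination loop described above can be sketched in a few lines of Python. The `importance_fn` callback is a hypothetical stand-in for refitting a model and reading its feature importances; in scikit-learn, `sklearn.feature_selection.RFE(estimator, n_features_to_select=..., step=...)` performs this loop using the estimator's `coef_` or `feature_importances_`. The toy importances below are invented for illustration and do not come from this study.

```python
from typing import Callable, Dict, List, Sequence

# Hypothetical callback: refit a model on the given features and return one
# importance score per feature (e.g. |coefficient| of a logistic regression).
ImportanceFn = Callable[[Sequence[str]], Dict[str, float]]


def recursive_feature_elimination(features: Sequence[str], importance_fn: ImportanceFn,
                                  n_features_to_select: int, step: int = 1) -> List[str]:
    """Repeatedly refit and drop the `step` least important features until
    `n_features_to_select` remain; survivors keep their original order."""
    kept = list(features)
    while len(kept) > n_features_to_select:
        scores = importance_fn(kept)  # refit on the surviving features only
        n_drop = min(step, len(kept) - n_features_to_select)
        weakest = set(sorted(kept, key=lambda f: scores[f])[:n_drop])
        kept = [f for f in kept if f not in weakest]
    return kept


# Toy stand-in for model refitting: fixed, made-up importances.
TOY_IMPORTANCE = {"sg": 0.05, "sc": 0.90, "pot": 0.10, "hemo": 0.80, "al": 0.60}


def toy_importance(kept: Sequence[str]) -> Dict[str, float]:
    return {f: TOY_IMPORTANCE[f] for f in kept}


print(recursive_feature_elimination(["sg", "sc", "pot", "hemo", "al"], toy_importance, 3))
```

With a real model the importances change after each refit, which is why the callback is called inside the loop rather than once up front.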

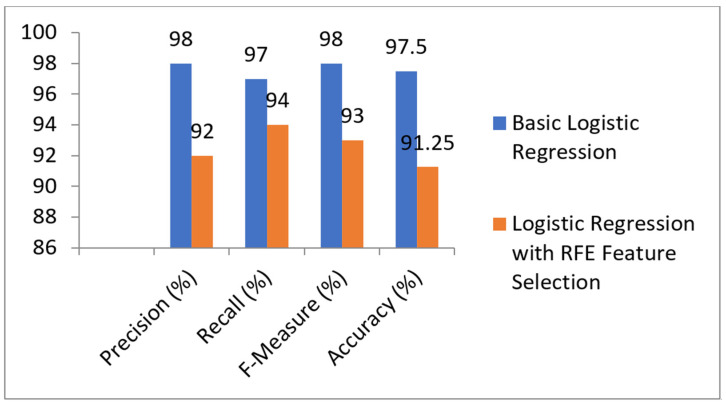

Table 4 and Figure 4 show the results of the prediction models built with basic logistic regression and with logistic regression using RFE feature selection. The basic model achieved 97.5% accuracy, whereas the model with RFE feature selection achieved 91.25%. Precision, recall, and F-measure were also higher without RFE feature selection. Therefore, we conclude that the basic logistic regression model is more accurate than the model with RFE feature selection. Table 5 shows the results of the prediction models built with basic SVM and with SVM using RFE feature selection.

Table 4.

Results of the LR model with RFE feature selection technique.

| Performance Measure | Basic Logistic Regression | Logistic Regression with RFE Feature Selection |
|---|---|---|
| Precision (%) | 98 | 92 |
| Recall (%) | 97 | 94 |
| F-Measure (%) | 98 | 93 |
| Accuracy (%) | 97.5 | 91.25 |

RFE: Recursive Feature Elimination.

Figure 4.

Comparison of LR models with and without RFE feature selection. LR: Logistic Regression; RFE: Recursive Feature Elimination.

Table 5.

Results of the SVM model with the RFE feature selection technique.

| Performance Measure | Basic SVM | SVM with RFE Feature Selection |
|---|---|---|
| Precision (%) | 98 | 98 |
| Recall (%) | 97 | 96 |
| F-Measure (%) | 98 | 97 |
| Accuracy (%) | 97.5 | 96.25 |

SVM: Support Vector Machine; RFE: Recursive Feature Elimination.

Table 5 shows that the SVM model achieved 97.5% accuracy without feature selection and 96.25% accuracy with RFE feature selection. Recall and F-measure were also slightly higher without RFE feature selection, while precision was the same (98%). Therefore, we conclude that the basic SVM model is more accurate than the SVM model with RFE feature selection.

5.4. Performance of Prediction Models with Chi-Square Feature Selection

Table 3 shows that the Logistic Regression-based model achieved the highest accuracy (tied with SVM) among the models built for kidney disease detection. Because feature selection can improve a model's performance, in this subsection we applied the Chi-Square (chi2) statistical test to select the K best features from the kidney disease-prediction dataset.

The Chi-Square feature selection method tests the relationship between each input feature and the target class. The Chi-Square statistic is computed between each input feature and the target class, and only the required number of features with the highest Chi-Square scores is retained. The scikit-learn library provides the SelectKBest class, which selects a specified number of top-scoring features according to a chosen statistical test. Table 6 shows the scores of the various features: the Wbcc, Bgr, Bu, Sc, Pcv, Al, Hemo, Age, Su, Htn, Dm, and Bp features have high scores compared with the other features.
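To make the scoring concrete, the sketch below computes a chi-square score per feature in the same observed-versus-expected way as scikit-learn's `chi2` function (observed = per-class sum of a feature, expected = the feature's total times the class frequency) and then keeps the K highest-scoring features, as `SelectKBest(chi2, k=K)` does. It is a plain-Python illustration with hypothetical function names and made-up data. Like scikit-learn's `chi2`, it assumes non-negative feature values, so categorical features must first be encoded as non-negative numbers.

```python
from typing import List, Sequence


def chi2_scores(X: Sequence[Sequence[float]], y: Sequence[int]) -> List[float]:
    """Chi-square score of each non-negative feature column against the class labels.

    For each feature, the observed value is the per-class sum of that feature and the
    expected value is the feature's total sum times the class frequency.
    """
    n = len(y)
    classes = sorted(set(y))
    class_freq = {c: sum(1 for label in y if label == c) / n for c in classes}
    scores = []
    for j in range(len(X[0])):
        total = sum(row[j] for row in X)
        score = 0.0
        for c in classes:
            observed = sum(row[j] for row, label in zip(X, y) if label == c)
            expected = total * class_freq[c]
            if expected > 0:  # an all-zero column carries no information
                score += (observed - expected) ** 2 / expected
        scores.append(score)
    return scores


def select_k_best(names: Sequence[str], scores: Sequence[float], k: int) -> List[str]:
    """Names of the k features with the highest scores, best first."""
    ranked = sorted(zip(names, scores), key=lambda pair: pair[1], reverse=True)
    return [name for name, _ in ranked[:k]]


# Tiny made-up example: feature "bgr" differs by class, "sg" does not.
X = [[1, 5], [0, 5], [4, 5], [3, 5]]
y = [0, 0, 1, 1]
scores = chi2_scores(X, y)
print(scores, select_k_best(["bgr", "sg"], scores, k=1))  # [4.5, 0.0] ['bgr']
```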

Table 6.

Features and their scores by the Chi-Square test.

| Features | Score |
|---|---|
| Wbcc | 12,733.73 |
| Bgr | 2428.328 |
| Bu | 2336.005 |
| Sc | 354.4105 |
| Pcv | 324.7065 |
| Al | 228.1047 |
| Hemo | 125.0657 |
| Age | 113.4602 |
| Su | 100.95 |
| Htn | 86.29181 |
| Dm | 82.2 |
| Bp | 80.02432 |
| Pe | 45.10802 |
| Ane | 35.6116 |
| Sod | 28.7933 |
| Pcc | 24.07546 |
| Rbcc | 20.848 |
| Cad | 19.93604 |
| Pc | 14.16913 |
| Ba | 12.58705 |
| Appet | 12.58703 |
| Rbc | 9.416036 |
| Pot | 4.071145 |
| Sg | 0.005035 |

Wbcc: White Blood Cell Count; Bgr: Blood Glucose Random; Bu: Blood Urea; Sc: Serum Creatinine; Pcv: Packed Cell Volume; Al: Albumin; Hemo: Hemoglobin; Su: Sugar; Htn: Hypertension; Dm: Diabetes Mellitus; Bp: Blood Pressure; Pe: Pedal edema; Ane: Anemia; Sod: Sodium; Pcc: Pus cell clumps; Rbcc: Red blood cell count; Cad: Coronary artery disease; Pc: Pus cell; Ba: Bacteria; Appet: Appetite; Rbc: Red blood cells; Pot: Potassium; Sg: Specific gravity (ratio of the density of urine to that of water).

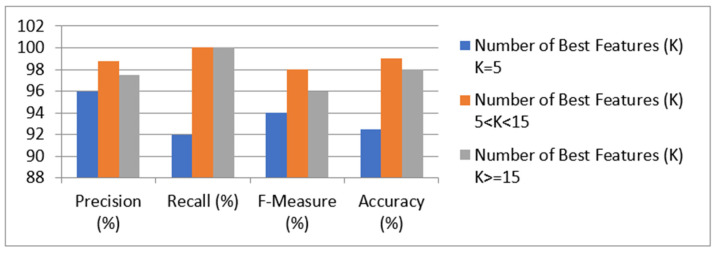

Table 7 and Figure 5 show the performance of the LR prediction model with the Chi-Square feature-selection technique.

Table 7.

Results of the LR prediction model with Chi-Square feature selection.

| Performance Measure | K = 5 | 5 < K < 15 | K ≥ 15 |
|---|---|---|---|
| Precision (%) | 96 | 100 | 100 |
| Recall (%) | 92 | 98 | 96 |
| F-Measure (%) | 94 | 99 | 98 |
| Accuracy (%) | 92.5 | 98.75 | 97.5 |

K: number of selected features.

Figure 5.

Results of the LR prediction model with Chi-Square feature selection.

We evaluated the technique with different numbers (K) of best features. When K ranged from 6 to 14, the model achieved its best precision, recall, F-measure, and accuracy, i.e., 100%, 98%, 99%, and 98.75%, respectively. With K = 5 or fewer features, the model had its lowest accuracy, and with 15 or more features its performance also decreased. Therefore, the model with more than 5 and fewer than 15 features provided the highest accuracy in detecting kidney disease. The performance of the SVM prediction model with the Chi-Square feature-selection technique is shown in Table 8.

Table 8.

Comparative analysis of existing models on a dataset of 400 patients each with 24 attributes [2,27].

| Method | Accuracy (%) | Recall | Precision | F-Measure |
|---|---|---|---|---|
| Logistic regression [28] | 91.8 | 1.00 | 0.98 | 0.98 |
| KNN [29] | 92.7 | 0.88 | 0.98 | 0.92 |
| Naïve Bayes [30] | 95.21 | 0.92 | 1.00 | 0.94 |
| SVM [31] | 92.32 | 0.87 | 0.96 | 0.93 |
| Decision tree [32] | 93.45 | 0.95 | 1.00 | 0.96 |
| Proposed method [33] | 97.54 | 0.99 | 1.00 | 1.00 |

KNN: k-nearest neighbors algorithm; SVM: support vector machines.

We evaluated the technique with different numbers of best features. The model achieved its best precision, recall, F-measure, and accuracy, i.e., 100%, 96%, 98%, and 97.5%, respectively, when K was greater than 15, and its lowest accuracy when K = 5 or fewer features were selected. Performance also decreased whenever fewer than 15 features were used. Therefore, applying the Chi-Square test did not increase the accuracy of the SVM model.

5.5. Comparison of Models with and without Feature Selection Technique

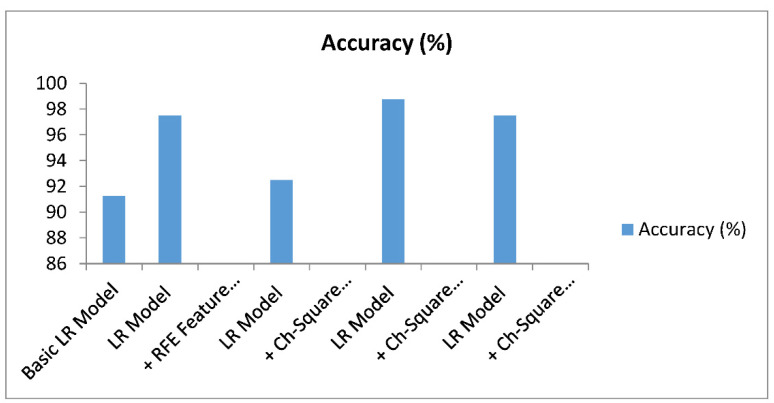

Across all of the results, the Logistic Regression model with Chi-Square feature selection achieved the best accuracy in detecting kidney disease among the approaches evaluated. Table 9 shows the results of the various LR model combinations, and Figure 6 graphically compares their accuracy.

Table 9.

Prediction models with and without various feature-selection techniques.

| Prediction Model | Accuracy (%) |
|---|---|
| Basic LR model | 97.5 |
| LR model + RFE feature selection | 91.25 |
| LR model + Chi-Square feature selection (K = 5) | 92.5 |
| LR model + Chi-Square feature selection (5 < K < 15) | 98.75 |
| LR model + Chi-Square feature selection (K ≥ 15) | 97.5 |

Figure 6.

Results of the models with and without feature selection. LR: Logistic Regression; RFE: Recursive Feature Elimination.

As shown in Table 9, the accuracies of the basic LR model, the LR model with RFE feature selection, and the LR model with Chi-Square feature selection for K = 5, 5 < K < 15, and K ≥ 15 were 97.5%, 91.25%, 92.5%, 98.75%, and 97.5%, respectively. This demonstrates that the Chi-Square method outperformed the RFE method in terms of accuracy. It is also worth noting that the model produced good results using between 5 and 15 of the best features out of a total of 24. In summary, we achieved 98.75% accuracy in detecting kidney disease, the highest accuracy among the approaches compared in this study.

The random forest algorithm correctly identified 250 positive samples (TP) and 150 negative samples (TN). The SVM, KNN, and Decision Tree algorithms scored 94.74 percent on positive (TP) samples, with an error rate of 5.26 percent each, and 97.37 percent, with an error rate of 1.32 percent each. Table 6 shows the results of the four classifiers that were used. The random forest method outperformed the other classifiers on all metrics, including accuracy, precision, recall, and F1-score. The decision tree algorithm came second, with accuracy, precision, recall, and F1-score values of 99.17 percent, 100 percent, 98.68 percent, and 99.34 percent, respectively. The KNN algorithm achieved 98.33 percent accuracy, precision, and recall, and an F1-score of 98.67 percent. The final SVM accuracy, precision, recall, and F1-score were 96 percent, 92 percent, 93 percent, and 97 percent, respectively.

6. Conclusions and Future Work

In this paper, we developed several prediction models using different machine learning algorithms and feature-selection techniques. We used a publicly available dataset of 400 records of healthy patients and patients with kidney disease. We used LR, SVM, and other classifiers to develop the prediction models, and we evaluated them with the Recursive Feature Elimination (RFE) and Chi-Square test feature-selection techniques. The results showed that the Logistic Regression model with Chi-Square feature selection achieved the best result in detecting kidney disease among the approaches evaluated. The model also achieved good results with 5 to 15 of the best features out of 24. The Wbcc, Bgr, Bu, Sc, Pcv, Al, Hemo, Age, Su, Htn, Dm, and Bp features were found to be the most significant for detecting kidney disease. In future work, we will develop a hybrid approach to improve detection accuracy so that the disease can be identified before it develops.

Acknowledgments

The authors extend their appreciation to the Deanship of Scientific Research at King Khalid University for funding this work under grant number (RGP 1/190/43), Princess Nourah bint Abdulrahman University Researchers Supporting Project number (PNURSP2022R191), and Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia. The authors would like to acknowledge the support of Prince Sultan University for paying the Article Processing Charges (APC) of this publication.

Author Contributions

Conceptualization, methodology; validation and writing—review and editing, R.C.P. and T.B.; formal analysis, M.K.G.; investigation, I.A.; resources, data curation, A.A.A.; visualization, M.A.H.; funding acquisition, F.N.A.-W. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Deanship of Scientific Research, King Khalid University, Saudi Arabia under grant number (RGP 1/190/43).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from all participants involved in the study.

Data Availability Statement

Data is available on reasonable request.

Conflicts of Interest

The authors declare no conflict of interest.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Aljaaf A.J. Early Prediction of Chronic Kidney Disease Using Machine Learning Supported by Predictive Analytics; Proceedings of the IEEE Congress on Evolutionary Computation (CEC); Wellington, New Zealand. 8–13 July 2018.

- 2. Nishanth A., Thiruvaran T. Identifying Important Attributes for Early Detection of Chronic Kidney Disease. IEEE Rev. Biomed. Eng. 2018;11:208–216. doi: 10.1109/RBME.2017.2787480.

- 3. Ogunleye A., Wang Q.-G. XGBoost Model for Chronic Kidney Disease Diagnosis. IEEE/ACM Trans. Comput. Biol. Bioinform. 2020;17:2131–2140. doi: 10.1109/TCBB.2019.2911071.

- 4. Aqlan F., Markle R., Shamsan A. Data Mining for Chronic Kidney Disease Prediction; Proceedings of the 67th Annual Conference and Expo of the Institute of Industrial Engineers; Pittsburgh, PA, USA. 20–23 May 2017.

- 5. Borisagar N., Barad D., Raval P. Chronic Kidney Disease Prediction Using Back Propagation Neural Network Algorithm; Proceedings of the International Conference on Communication and Networks; Ahmedabad, India. 19–20 February 2017; pp. 295–303.

- 6. Boukenze B., Haqiq A., Mousannif H. Predicting Chronic Kidney Failure Disease Using Data Mining Techniques. In: El-Azouzi R., Menasche D.S., Sabir E., De Pellegrini F., Benjillali M., editors. Advances in Ubiquitous Networking. Volume 2. Springer; New York, NY, USA: 2018. pp. 701–712.

- 7. Polat H., Mehr H.D., Cetin A. Diagnosis of chronic kidney disease based on support vector machine by feature selection methods. J. Med. Syst. 2017;41:55. doi: 10.1007/s10916-017-0703-x.

- 8. Panwong P., Iam-On N. Predicting transitional interval of kidney disease stages 3 to 5 using data mining method; Proceedings of the 2016 Second Asian Conference on Defence Technology (ACDT); Chiang Mai, Thailand. 21–23 January 2016; pp. 145–150.

- 9. Dulhare U.N., Ayesha M. Extraction of action rules for chronic kidney disease using Naïve bayes classifier; Proceedings of the 2016 IEEE International Conference on Computational Intelligence and Computing Research (ICCIC); Chennai, India. 15–17 December 2016; pp. 1–5.

- 10. Vasquez-Morales G.R., Martinez-Monterrubio S.M., Moreno-Ger P., Recio-Garcia J.A. Explainable Prediction of Chronic Renal Disease in the Colombian Population Using Neural Networks and Case-Based Reasoning. IEEE Access. 2019;7:152900–152910. doi: 10.1109/ACCESS.2019.2948430.

- 11. Makino M., Yoshimoto R., Ono M., Itoko T., Katsuki T., Koseki A., Kudo M., Haida K., Kuroda J., Yanagiya R., et al. Artificial intelligence predicts the progression of diabetic kidney disease using big data machine learning. Sci. Rep. 2019;9:11862. doi: 10.1038/s41598-019-48263-5.

- 12. Ren Y., Fei H., Liang X., Ji D., Cheng M. A hybrid neural network model for predicting kidney disease in hypertension patients based on electronic health records. BMC Med. Inf. Decis. Mak. 2019;19:131–138. doi: 10.1186/s12911-019-0765-4.

- 13. Ma F., Sun T., Liu L., Jing H. Detection and diagnosis of chronic kidney disease using deep learning-based heterogeneous modified artificial neural network. Future Gener. Comput. Syst. 2020;111:17–26. doi: 10.1016/j.future.2020.04.036.

- 14. Almansour N., Syed H.F., Khayat N.R., Altheeb R.K., Juri R.E., Alhiyafi J., Alrashed S., Olatunji S.O. Neural network and support vector machine for the prediction of chronic kidney disease: A comparative study. Comput. Biol. Med. 2019;109:101–111. doi: 10.1016/j.compbiomed.2019.04.017.

- 15. Qin J., Chen L., Liu Y., Liu C., Feng C., Chen B. A Machine Learning Methodology for Diagnosing Chronic Kidney Disease. IEEE Access. 2019;8:20991–21002. doi: 10.1109/ACCESS.2019.2963053.

- 16. Segal Z., Kalifa D., Radinsky K., Ehrenberg B., Elad G., Maor G., Lewis M., Tibi M., Korn L., Koren G. Machine learning algorithm for early detection of end-stage renal disease. BMC Nephrol. 2020;21:518. doi: 10.1186/s12882-020-02093-0.

- 17. Khamparia A., Saini G., Pandey B., Tiwari S., Gupta D., Khanna A. KDSAE: Chronic kidney disease classification with multimedia data learning using deep stacked autoencoder network. Multimed. Tools Appl. 2020;79:35425–35440. doi: 10.1007/s11042-019-07839-z.

- 18. Dua D., Graff C. UCI Machine Learning Repository. 2019. Available online: http://archive.ics.uci.edu/ml (accessed on 6 December 2021).

- 19. Ebiaredoh-Mienye S.A., Esenogho E., Swart T.G. Integrating Enhanced Sparse Autoencoder-Based Artificial Neural Network Technique and Softmax Regression for Medical Diagnosis. Electronics. 2020;9:1963. doi: 10.3390/electronics9111963.

- 20. Pang Z., Zhu D., Chen D., Li L., Shao Y. A Computer-Aided Diagnosis System for Dynamic Contrast-Enhanced MR Images Based on Level Set Segmentation and ReliefF Feature Selection. Comput. Math. Methods Med. 2015;2015:450531. doi: 10.1155/2015/450531.

- 21. Chen G., Ding C., Li Y., Hu X., Li X., Ren L., Ding X., Tian P., Xue W. Prediction of Chronic Kidney Disease Using Adaptive Hybridized Deep Convolutional Neural Network on the Internet of Medical Things Platform. IEEE Access. 2020;8:100497–100508. doi: 10.1109/ACCESS.2020.2995310.

- 22. Khan B., Naseem R., Muhammad F., Abbas G., Kim S. An Empirical Evaluation of Machine Learning Techniques for Chronic Kidney Disease Prophecy. IEEE Access. 2020;8:55012–55022. doi: 10.1109/ACCESS.2020.2981689.

- 23. Tabassum M., Bai B.G., Majumdar J. Analysis and Prediction of Chronic Kidney Disease using Data Mining Techniques. Int. J. Eng. Res. Comput. Sci. Eng. 2018;4:25–31.

- 24. Padmanaban K.R.A., Parthiban G. Applying Machine Learning Techniques for Predicting the Risk of Chronic Kidney Disease. Indian J. Sci. Technol. 2016;9. doi: 10.17485/ijst/2016/v9i29/93880.

- 25. Sharma S., Sharma V., Sharma A. Performance Based Evaluation of Various Machine Learning Classification Techniques for Chronic Kidney Disease Diagnosis. Int. J. Mod. Comput. Sci. 2018;4:11–15.

- 26. Devishri P., Ragin S., Anisha O.R. Comparative Study of Classification Algorithms in Chronic Kidney Disease. Int. J. Recent Technol. Eng. 2019;8:180–184.

- 27. Drall S., Drall G.S., Singh S. Chronic Kidney Disease Prediction Using Machine Learning: A New Approach. Int. J. Manag. 2018;8:278.

- 28. Dang B.V., Taylor R.A., Charlton A.J., Le-Clech P., Barber T.J. Toward Portable Artificial Kidneys: The Role of Advanced Microfluidics and Membrane Technologies in Implantable Systems. IEEE Rev. Biomed. Eng. 2020;13:261–279. doi: 10.1109/RBME.2019.2933339.

- 29. Cheng L.C., Hu Y.H., Chiou S.H. Applying the Temporal Abstraction Technique to the Prediction of Chronic Kidney Disease Progression. J. Med. Syst. 2019;41:85. doi: 10.1007/s10916-017-0732-5.

- 30. Hodneland E. In Vivo Detection of Chronic Kidney Disease Using Tissue Deformation Fields from Dynamic MR Imaging. IEEE Trans. Biomed. Eng. 2019;66:1779–1790. doi: 10.1109/TBME.2018.2879362.

- 31. Marsh J.N., Matlock M.K., Kudose S., Liu T.-C., Stappenbeck T.S., Gaut J.P., Swamidass S.J. Deep Learning Global Glomerulosclerosis in Transplant Kidney Frozen Sections. IEEE Trans. Med. Imaging. 2018;37:2718–2728. doi: 10.1109/TMI.2018.2851150.

- 32. Antony L., Azam S., Ignatious E., Quadir R., Beeravolu A.R., Jonkman M., De Boer F. A Comprehensive Unsupervised Framework for Chronic Kidney Disease Prediction. IEEE Access. 2021;9:126481–126501. doi: 10.1109/ACCESS.2021.3109168.

- 33. Hossain M., Detwiler R.K., Chang E.H., Caughey M.C., Fisher M.W., Nichols T.C., Merricks E.P., Raymer R.A., Whitford M., Bellinger D.A., et al. Mechanical Anisotropy Assessment in Kidney Cortex Using ARFI Peak Displacement: Preclinical Validation and Pilot In Vivo Clinical Results in Kidney Allografts. IEEE Trans. Ultrason. Ferroelectr. Freq. Control. 2018;66:551–562. doi: 10.1109/TUFFC.2018.2865203.

- 34. Hussain M.A., Hamarneh G., Garbi R. Cascaded Localization Regression Neural Nets for Kidney Localization and Segmentation-free Volume Estimation. IEEE Trans. Med. Imaging. 2021;40:1555–1567. doi: 10.1109/TMI.2021.3060465.

- 35. Shehata M., Khalifa F., Soliman A., Ghazal M., Taher F., El-Ghar M.A., Dwyer A.C., Gimel’Farb G., Keynton R.S., El-Baz A. Computer-Aided Diagnostic System for Early Detection of Acute Renal Transplant Rejection Using Diffusion-Weighted MRI. IEEE Trans. Biomed. Eng. 2018;66:539–552. doi: 10.1109/TBME.2018.2849987.

- 36. Bhaskar N., Manikandan S.M.S. A Deep-Learning-Based System for Automated Sensing of Chronic Kidney Disease. IEEE Sens. Lett. 2019;3:1–4. doi: 10.1109/LSENS.2019.2942145.

- 37. Chittora P., Chaurasia S., Chakrabarti P., Kumawat G., Chakrabarti T., Leonowicz Z., Jasinski M., Jasinski L., Gono R., Jasinska E., et al. Prediction of Chronic Kidney Disease—A Machine Learning Perspective. IEEE Access. 2021;9:17312–17334. doi: 10.1109/ACCESS.2021.3053763.

- 38. Zollner F.G., Kocinski M., Hansen L., Golla A.-K., Trbalic A.S., Lundervold A., Materka A., Rogelj P. Kidney Segmentation in Renal Magnetic Resonance Imaging—Current Status and Prospects. IEEE Access. 2021;9:71577–71605. doi: 10.1109/ACCESS.2021.3078430.
