Abstract

Objectives.

The present study examined whether remote microphone (RM) systems improved listening-in-noise performance in youth with autism. We explored effects of RM system use on both listening-in-noise accuracy and behaviorally measured listening effort in a well-characterized sample of participants with confirmed clinical diagnoses of autism. We hypothesized that listening-in-noise accuracy would be enhanced and listening effort reduced, on average, when participants used the RM system. Furthermore, we predicted that effects of RM system use on listening-in-noise accuracy and listening effort would vary according to participant characteristics. Specifically, we hypothesized that participants who were chronologically older, had greater nonverbal cognitive and language ability, displayed fewer features of autism, and presented with more typical sensory and multisensory profiles might exhibit greater benefits of RM system use than participants who were younger, had less nonverbal cognitive or language ability, displayed more features of autism, and presented with greater sensory and multisensory disruptions.

Design.

We implemented a within-subjects design to investigate our hypotheses, wherein 32 youth with autism completed listening-in-noise testing with and without an RM system. Listening-in-noise accuracy and listening effort were evaluated simultaneously using a dual-task paradigm for stimuli varying in complexity (i.e., syllable-, word-, sentence-, and passage-level). In addition, several putative moderators of RM system effects (i.e., sensory and multisensory function, language, nonverbal cognition, and broader features of autism) on outcomes of interest were evaluated.

Results.

Overall, RM system use resulted in higher listening-in-noise accuracy in youth with autism compared to no RM system use. The observed benefits were all large in magnitude, though the benefits on average were greater for more complex stimuli (e.g., key words embedded in sentences) and relatively smaller for less complex stimuli (e.g., syllables). Notably, none of the putative moderators significantly influenced the effects of the RM system on listening-in-noise accuracy, indicating that RM system benefits did not vary according to any of the participant characteristics assessed. On average, RM system use did not have an effect on listening effort across all youth with autism compared to no RM system use, but instead yielded effects that varied according to participant profile. Specifically, moderated effects indicated that RM system use was associated with (a) decreased listening effort for youth with average- to above-average nonverbal cognitive and language ability and (b) increased listening effort for youth who were less cognitively and linguistically able.

Conclusions.

This study extends prior work by showing that RM systems have the potential to boost listening-in-noise accuracy for youth with autism, as well as to reduce listening effort for youth with average to above-average cognitive and linguistic skills. In contrast, we found that, for those with below-average cognitive and linguistic skills, listening effort as indexed by reaction time increased with RM system use, perhaps suggesting greater engagement in the listening-in-noise tasks when using versus not using the RM system for this subgroup of youth with autism. These findings have important implications for clinical practice, suggesting RM system use in classrooms could potentially improve listening-in-noise performance for at least some youth with autism.

Introduction

Autism is a highly prevalent neurodevelopmental condition (Maenner et al., 2020) defined by early-emerging social communication challenges and the presence of restricted, repetitive patterns of behavior, interests, or activities (American Psychiatric Association, 2013). Youth with autism additionally display differences in sensory function, including alterations in the perception of audiovisual information (Feldman et al., 2020a; Irwin & DiBlasi, 2017; Megnin et al., 2012; Mongillo et al., 2008; Patten et al., 2014; Smith et al., 2017) and difficulties perceiving and understanding speech in the presence of background noise (e.g., Alcántara et al., 2004; Irwin et al., 2011; Russo et al., 2009). Furthermore, youth with autism do not benefit as much as their neurotypical peers when given increased access to visual speech cues (Foxe et al., 2015; Smith & Bennetto, 2007; Stevenson et al., 2017, 2018). Thus, technology meant to improve listening-in-noise performance could benefit those with autism.

One established method for supporting listening in noise is to improve the signal-to-noise ratio (SNR) with the use of a wireless remote microphone (RM) system. An RM system improves the SNR by providing low-delay and reliable broadband audio broadcast to listeners with a microphone near the talker of interest and an ear-level device on, or loudspeaker near, the listener (Ching et al., 2005; Holt et al., 2005; Schafer & Thibodeau, 2006). Benefits of RM system use in school and home environments for children with hearing loss have been widely reported (e.g., Anderson & Goldstein, 2004; Anderson et al., 2005; Benítez-Barrera et al., 2018, 2019; Flynn et al., 2005; Thompson et al., 2020).

Likewise, recent work has suggested benefits of RM systems for youth with autism. Rance and colleagues (2014, 2017) reported that use of an RM system in mainstream classrooms by school-age children with autism resulted in improved listening-in-noise accuracy, social interactions, and educational achievements, as well as reduced physiologic stress levels. Similarly, Schafer and colleagues (2013, 2016, 2019) reported that improving SNRs via use of personal RM systems both in school and at home facilitated speech recognition in noise, observed listening behaviors, teacher observations, and auditory processing.

Cumulatively, the aforementioned findings suggest that by improving the audio signal, an RM system has the potential to improve several aspects of listening-in-noise performance in youth with autism. However, there are a number of limitations to the work that has been conducted to date (see van der Kruk et al., 2017 for a recent review). For example, most previous studies of RM system effects in youth with autism did not confirm the diagnostic status of their participants utilizing gold-standard assessment procedures (e.g., Autism Diagnostic Observation Schedule [ADOS]; Lord et al., 2012). Additionally, the extant literature provides little insight into individual variability that might influence RM system benefits. Given that autism is highly heterogeneous, it is unlikely that any single intervention will uniformly benefit this entire clinical population.

A number of participant characteristics, such as chronological age, language ability, nonverbal cognitive ability, and/or broader features of autism, might predict differential effects of RM system use on listening-in-noise performance in youth with autism. It has also been noted, anecdotally, that sensory profiles of youth with autism could moderate effects of RM systems on listening-in-noise performance. Specifically, several past studies have suggested that youth with autism with altered tactile responsiveness might not benefit from (or even tolerate wearing) RM systems (Rance et al., 2014; Schafer et al., 2016, 2019). Furthermore, it is possible that the effects of RM system use could vary according to one’s ability to integrate auditory information provided via an RM system with the corresponding visual speech cues (Feldman et al., 2018; Stevenson et al., 2014, 2018).

Finally, previous studies did not explore whether the effects of RM systems varied according to the complexity of the stimuli employed in listening-in-noise tasks. For example, listening-in-noise performance in children with hearing loss is known to suffer with increased complexity of the linguistic stimulus (Lewis et al., 2015), and there is some evidence that listening-in-noise performance of youth with autism similarly differs according to stimulus complexity (Stevenson et al., 2017). Thus, it is important to evaluate systematically whether the benefits of RM system use vary according to stimulus complexity.

The previous literature on RM system use in those with autism to date has centered largely on listening-in-noise accuracy, some broader classroom behaviors, and physiologic indicators of stress. Though prior studies suggest that listening in noise is easier with RM systems (e.g., Schafer et al., 2019), most of these studies relied on self-reports of listening effort, or on parent or teacher reports of listening difficulties. Listening effort is defined as the allocation of mental resources, such as attention, to assist with understanding spoken language (Hicks & Tharpe, 2002; McGarrigle et al., 2014). According to the Framework for Effortful Listening (Pichora-Fuller et al., 2016) and Ease of Language Understanding model (Rönnberg et al., 2008, 2013), human cognitive capacity is fixed, but is required for recognizing and understanding speech signals. When factors, such as background noise, interfere with a listener’s ability to recognize the incoming signal easily, additional cognitive resources must be deployed to achieve understanding. The cost of this deployment is fewer resources available for other tasks, such as memory encoding or comprehension of complex passages.

Self-report measures of listening effort (e.g., questionnaires), such as those used in previous work with RM systems for youth with autism, are thought to reflect a different construct than behavioral measures of listening effort (e.g., dual-task paradigms; Strand et al., 2018), and data from the two types of measures often do not converge (Feuerstein, 1992; Fraser et al., 2010; Hicks & Tharpe, 2002; Picou & Ricketts, 2017). This lack of convergence likely reflects a difference between one’s perceived and exerted effort (Francis & Love, 2019). Indices of effort derived from self-report are thought to reflect the subjective assessment of one’s own exerted effort while listening, but might be biased by listening-in-noise accuracy (Moore & Picou, 2018). Behavioral indices of listening effort are thought to reflect the attentional resources necessary for speech recognition and are based on the limited capacity of human resources (Kahneman, 1973). A commonly used behavioral measure of listening effort is the dual-task paradigm, wherein an individual listens to and/or repeats speech while performing a secondary task, such as responding to a visual probe as quickly as possible (Gagne et al., 2017). Consistent with the aforementioned frameworks for understanding listening effort, interventions that improve audibility or the SNR also reduce listening effort (i.e., reduce reaction times) as measured behaviorally (Desjardins & Doherty, 2014; Picou et al., 2017a), even in the absence of concomitant improvements in listening-in-noise accuracy (Gustafson et al., 2014; Sarampalis et al., 2009). Thus, listening-in-noise accuracy and behavioral listening effort are related but distinct constructs.

Purpose

The present investigation explored whether RM systems improved listening-in-noise performance in a well-characterized sample of youth with confirmed clinical diagnoses of autism. We tested effects of RM system use on both listening-in-noise accuracy and behaviorally measured listening effort. The research questions for this study of youth with autism were:

In the presence of background noise, does RM system use affect (i) listening-in-noise accuracy and/or (ii) listening effort?

Do any effects of RM system use vary according to the complexity of the stimuli?

Do any effects of RM system use vary according to chronological age, language, nonverbal cognitive ability, tactile responsiveness, audiovisual integration, and/or broader features of autism?

We hypothesized that in youth with autism: (a) listening-in-noise accuracy would be enhanced and listening effort would be reduced through the use of RM system technology; (b) any effects of RM system use would vary across levels of stimulus complexity, with greater benefits likely observed for more complex stimuli; (c) effects of the RM system on listening-in-noise accuracy and listening effort would vary according to participant characteristics, such that participants who (i) were chronologically older, (ii) had greater nonverbal cognitive and/or language ability, and/or presented with (iii) fewer core features of autism, (iv) fewer alterations in tactile responsiveness, and/or (v) more intact and acute audiovisual integration would demonstrate greater benefit from RM system use.

Materials and Methods

To test our hypotheses, we employed a within-subjects design wherein youth with autism completed listening-in-noise testing with and without an RM system in a classroom-like environment. Listening-in-noise accuracy and listening effort were evaluated simultaneously using a dual-task paradigm for stimuli varying in complexity from syllable to passage levels. In addition, several putative moderators of RM system effects on outcomes of interest were evaluated in a quiet, clinic-like environment.

Participants

Thirty-two youth with autism (26 males, 6 females; Mage = 14.3 years) were recruited from an ongoing study of multisensory processing (see Table 1 for a summary of participant characteristics). As a part of the larger study, participants completed a comprehensive psychoeducational evaluation that included the ADOS-2, the Leiter International Performance Scale, 3rd edition (Leiter-3; Roid et al., 2013), a battery of standardized language assessments, and vision and hearing screenings. All eligibility criteria for the current study were determined by testing conducted and surveys collected as part of the aforementioned larger study of multisensory processing and included: (a) chronological age between 7 and 21 years, (b) autism diagnosis confirmed by research-reliable administrations of the ADOS-2 and clinical judgments of a licensed psychologist or speech-language pathologist on the research team, (c) monolingual English-speaking background (per parent report), (d) normal or corrected-to-normal vision, as confirmed by screening via Pediatric Snellen and/or Tumbling “E” eye chart, (e) normal peripheral hearing, as confirmed by screening in a sound booth at 20 dB at octave intervals from 500 Hz through 4000 Hz, bilaterally, (f) no history of seizure disorders (per parent report), (g) no diagnosed comorbid genetic disorders (per parent report), and (h) previously demonstrated ability to complete psychophysical tasks (as described below). All recruitment and study procedures were approved by the Vanderbilt University Institutional Review Board. Parents or guardians signed written informed consent documents, and all participants provided assent prior to the start of testing. Financial compensation for participation was provided.

Table 1.

Description of Participant Characteristics

| | M (SD) | Range |
|---|---|---|
| Chronological Age (Years) | 14.27 (3.54) | 7.92 – 20.33 |
| Biological Sex | 26 male, 6 female | |
| Nonverbal IQ | 113.6 (19.4) | 45 – 147 |
| Core Language Standard Score | 94.7 (20.8) | 40 – 120 |
| SRS-2 Total Raw Score | 93.4 (28.3) | 2 – 148 |
| SP Touch Index | 63.6 (9.8) | 45 – 83 |
| SEQ Tactile Index | 23.2 (6.8) | 10 – 37 |
| Perception of the McGurk Illusion (%) | 78.3 (35.0) | 0 – 100 |
| Temporal Binding Window (ms) | 457.1 (224.8) | 126.2 – 1166.9 |

Note. Nonverbal IQ = Leiter International Performance Scale, 3rd edition (Roid et al., 2013), Core Language Standard Score = Standard scores for the core language index from the Clinical Evaluation of Language Fundamentals, 4th edition (CELF-4; n = 27; Semel et al., 2004) or the total language score from the Preschool Language Scale, 5th edition (PLS-5; n = 4; Zimmerman et al., 2011), SRS-2 = Social Responsiveness Scale, 2nd edition (Constantino & Gruber, 2012), SP = Sensory Profile (Dunn, 1999), SEQ = Sensory Experiences Questionnaire (Baranek et al., 2006).

Measures of Putative Moderators

Table 2 summarizes the constructs, measures, and variables utilized in the analyses, including all the putative moderators of RM system effects evaluated. With the exception of chronological age, which was ascertained in years according to the date of each child’s participation in the present study, putative moderators are described in detail below.

Table 2.

Summary of Constructs, Measures, and Variables Utilized in Analyses

| Construct | Task | Variable(s) | Role |
|---|---|---|---|
| Listening-in-Noise Accuracy | Listening-in-Noise Task | Percentage of syllables, words, and words in sentences correctly repeated, and questions answered correctly about passage-level stimuli | Dependent variable |
| Listening Effort | Listening-in-Noise Task | Response time to pressing button following presentation of visual probe (in ms) | Dependent variable |
| Chronological Age | NA | Chronological age on the day of listening-in-noise testing | Putative moderator |
| Nonverbal Cognitive Ability | Leiter-3 | Nonverbal IQ on the Leiter-3 | Putative moderator |
| Language Ability | CELF-4 or PLS-5 | Standard scores for the core language index from the CELF-4 (n = 27) or the total language score from the PLS-5 (n = 4) | Putative moderator |
| Broader Features of Autism | SRS-2 | Total raw score from the SRS-2 | Putative moderator |
| Tactile Responsiveness | SP, SEQ | Aggregate of z-scores from the touch index of the SP and the tactile index of the SEQ | Putative moderator |
| Magnitude of Integration for Audiovisual Speech | McGurk Task | Percentage of “ta” and “ha” percepts in response to incongruent audiovisual stimuli (i.e., visual “ka” and auditory “pa”) | Putative moderator |
| Temporal Binding of Audiovisual Speech | SJ Task | Temporal binding window for audiovisual speech (see Feldman et al., 2020b; Powers et al., 2009 for more information) | Putative moderator |

Note. Leiter-3 = Leiter International Performance Scale, 3rd edition (Roid et al., 2013), CELF-4 = Clinical Evaluation of Language Fundamentals, 4th edition (Semel et al., 2004), PLS-5 = Preschool Language Scale, 5th edition (Zimmerman et al., 2011), SRS-2 = Social Responsiveness Scale, 2nd edition (Constantino & Gruber, 2012), SP = Sensory Profile (Dunn, 1999), SEQ = Sensory Experiences Questionnaire (Baranek et al., 2006), SJ = Simultaneity judgment.

Language and Nonverbal Cognitive Ability

Standard scores for the core language index from the Clinical Evaluation of Language Fundamentals, 4th edition (CELF-4; n = 27; Semel et al., 2004) or for the total language score from the Preschool Language Scale, 5th edition (PLS-5; n = 4; Zimmerman et al., 2011) were used to quantify participants’ language ability. Note that one participant did not complete the language assessment and this missing value was imputed (see Data Analysis). Standard scores for nonverbal IQ from the Leiter-3 were used to quantify nonverbal cognitive ability.

Broader Features of Autism

The Social Responsiveness Scale, 2nd edition (SRS-2; Constantino & Gruber, 2012) was completed by a parent electronically to quantify participants’ broader features of autism. The total raw score from this measure was utilized in analyses.

Tactile Responsiveness

Parents also completed the Sensory Profile (Dunn, 1999) and the Sensory Experiences Questionnaire (Baranek et al., 2006) electronically to measure participants’ tactile responsiveness at entry to the present study. An aggregate measure of tactile responsiveness was generated from the touch index of the Sensory Profile and the tactile index of the Sensory Experiences Questionnaire following z-score transformation of each index. This aggregate was generated to increase the stability, and thus potential construct validity, of the putative moderator (Rushton et al., 1983). Component scores from the Sensory Experiences Questionnaire and Sensory Profile were sufficiently intercorrelated (i.e., r > .5) to warrant aggregation.
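The aggregation described above can be illustrated with a minimal sketch. It assumes that the aggregate is the mean of the two z-scored indices and that both indices are oriented in the same direction before aggregation; neither detail is specified in the text, and the function names are hypothetical.

```python
from statistics import mean, stdev


def z_scores(values):
    """Standardize raw scores using the sample mean and sample SD."""
    m, s = mean(values), stdev(values)
    return [(v - m) / s for v in values]


def pearson_r(x, y):
    """Pearson correlation computed from z-scores (sample SD, n - 1)."""
    zx, zy = z_scores(x), z_scores(y)
    return sum(a * b for a, b in zip(zx, zy)) / (len(x) - 1)


def tactile_aggregate(sp_touch, seq_tactile):
    """Return (r, aggregate), where aggregate is the mean of the two z-scores.

    Assumption: the aggregate is a simple mean of z-scores; a sum would
    rank participants identically.
    """
    r = pearson_r(sp_touch, seq_tactile)
    aggregate = [(a + b) / 2 for a, b in zip(z_scores(sp_touch), z_scores(seq_tactile))]
    return r, aggregate
```

In this sketch, the returned correlation would be compared against the r > .5 criterion before the aggregate is used as a moderator.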

Magnitude of Integration for Audiovisual Speech

Participants’ magnitude of integration for audiovisual speech was measured using a previously developed McGurk task (see Dunham et al., 2020; Feldman et al., 2020a, 2020b for task development and details). Briefly, stimuli for this task were auditory-only, visual-only, or audiovisual clips of a female speaker saying the syllables “pa” and “ka” at a natural rate and volume with neutral affect.

Participants were presented with 10 trials of each syllable in the auditory-only, visual-only, and matched audiovisual conditions and 10 trials of the incongruent audiovisual stimuli (i.e., visual “ka” + auditory “pa”, which frequently induces a “fused” McGurk percept of “ta” or “ha”) in a randomized order (70 trials per run). After each trial, participants reported the syllable they perceived using the four-button response box. The percentage of illusory McGurk percepts was utilized to index magnitude of integration for audiovisual speech stimuli. Lower scores for this index reflect a tendency towards a reduced magnitude of integration for audiovisual speech stimuli, whereas higher scores for this index reflect a tendency towards increased magnitude of integration for audiovisual speech stimuli. To keep participants motivated, before testing they each chose images of special interests (e.g., Pokémon, vacuum cleaners), which were presented randomly between trials. Five or six total images (from a bank of 15 per theme) were randomly inserted among stimuli during each run of each task.
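As a minimal sketch of how this index could be computed, the function below takes the syllables reported on the incongruent trials and returns the percentage that were fused percepts. The function name and the response coding (lowercase syllable strings) are assumptions made for illustration.

```python
FUSED_PERCEPTS = {"ta", "ha"}  # percepts counted as illusory (McGurk) responses


def mcgurk_percent(incongruent_responses):
    """Percentage of incongruent trials on which a fused percept was reported."""
    if not incongruent_responses:
        raise ValueError("at least one incongruent trial is required")
    fused = sum(1 for r in incongruent_responses if r in FUSED_PERCEPTS)
    return 100.0 * fused / len(incongruent_responses)
```

For the 10 incongruent trials in a run, eight “ta” or “ha” reports would correspond to a score of 80%.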

Temporal Binding of Audiovisual Speech

A simultaneity judgment task was used to measure participants’ temporal binding of audiovisual speech using procedures and stimuli described in detail elsewhere (Feldman et al., 2020b; Powers et al., 2009). During each run, videos of the same female speaker saying “ba” were presented synchronously and at 12 stimulus onset asynchronies (i.e., differences in the relative timing of the onsets of the auditory and visual components of the stimuli, with negative values indicating auditory-first and positive values indicating visual-first presentation): ±500 ms, ±400 ms, ±350 ms, ±300 ms, ±250 ms, and ±150 ms. Each stimulus onset asynchrony (including synchrony) was presented two times in each run in a random order.

From this task, the temporal binding window (TBW; in ms) was calculated as described in previous reports (Feldman et al., 2020b; Powers et al., 2009). Briefly, each participant’s rate of perceived synchrony at each stimulus onset asynchrony (i.e., the number of times the participant reported that the stimuli were “synchronous” divided by the total number of trials presented at that asynchrony) was fit to two psychometric functions using the glmfit function in MATLAB, one for auditory-leading trials and another for visual-leading trials, after normalizing the data by setting the maximum value to 100%. The point at which each psychometric function reached 75% reported synchrony was derived, and the TBW was calculated as the difference between those points (in ms).
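The TBW derivation can be sketched in Python as follows. This is an illustrative approximation, not the MATLAB code used in the study: it assumes a logistic psychometric function fit by Newton's method to the normalized synchrony rates, and it assumes that the synchronous (0 ms) condition is included in both the auditory-leading and visual-leading fits. All function names are hypothetical.

```python
import math


def fit_logistic(x, y, iterations=50):
    """Fit p = 1 / (1 + exp(-(b0 + b1 * x))) to proportions y by Newton's method."""
    b0 = b1 = 0.0
    for _ in range(iterations):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for xi, yi in zip(x, y):
            p = 1.0 / (1.0 + math.exp(-(b0 + b1 * xi)))
            w = p * (1.0 - p)
            g0 += yi - p              # gradient of the log-likelihood
            g1 += (yi - p) * xi
            h00 += w                  # entries of the information matrix
            h01 += w * xi
            h11 += w * xi * xi
        det = h00 * h11 - h01 * h01
        b0 += (h11 * g0 - h01 * g1) / det
        b1 += (h00 * g1 - h01 * g0) / det
    return b0, b1


def threshold(b0, b1, level=0.75):
    """Value of x at which the fitted function reaches the given level."""
    return (math.log(level / (1.0 - level)) - b0) / b1


def temporal_binding_window(soas_ms, sync_rates):
    """TBW in ms: distance between the 75% points of the two fitted functions."""
    peak = max(sync_rates)
    normalized = [r / peak for r in sync_rates]  # maximum set to 100%
    # SOAs are rescaled to units of 100 ms to keep the Newton steps well behaved.
    left = [(s / 100.0, r) for s, r in zip(soas_ms, normalized) if s <= 0]
    right = [(s / 100.0, r) for s, r in zip(soas_ms, normalized) if s >= 0]
    left_75 = 100.0 * threshold(*fit_logistic(*zip(*left)))
    right_75 = 100.0 * threshold(*fit_logistic(*zip(*right)))
    return right_75 - left_75
```

Fitting the two sides separately allows the window to be asymmetric, which is why the auditory-leading and visual-leading trials are not pooled into a single function.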

Larger TBWs indicate that participants tend to integrate or combine auditory and visual information over a wider window of time, whereas smaller TBWs indicate that participants tend to integrate or combine auditory and visual information over a narrower window of time. Prior work indicates the TBW for audiovisual speech tends to be extended in youth with autism relative to non-autistic peers (e.g., Woynaroski et al., 2013).

Listening-in-Noise Testing

Effects of the RM system on listening-in-noise accuracy and listening effort were evaluated for stimuli that varied in complexity across syllable, word, sentence, and passage levels. Levels of stimulus complexity were counterbalanced across participants and RM and No-RM system conditions.

Stimuli

Syllable level.

Syllable-level stimuli were videos of the same female talker utilized in the McGurk and temporal binding tasks described above. The videos displayed the talker saying “ba,” “ga,” “da,” “ta,” “pa,” or “ka.” Two lists of 24 syllables each were presented in RM and No-RM system conditions.

Word level.

Word-level stimuli were four lists, each comprising 25 monosyllabic concrete nouns produced by a female talker. The approximately equivalent lists have been used in previous studies of listening effort (Picou et al., 2019) and are described in detail elsewhere (Picou et al., 2017b). Examples include nouns such as “bee”, “tongue”, and “moon”. Two lists were used in each (RM and No-RM system) condition.

Sentence level.

Connected Speech Test sentences were utilized for the sentence-level stimuli (Cox et al., 1987, 1988). This test battery consists of 48 passages of conversationally connected speech about a specific topic (e.g., giraffes). Each passage comprises 10 simple sentences, ranging in length from seven to ten words. The authors identified 25 key words in each 10-sentence passage that were essential for understanding the meaning of the passage; these were used for scoring purposes. Two passage pairs (comprising 50 key words from 20 sentences in total) were used in each (RM and No-RM system) condition.

Passage level.

Recorded stories spoken by a female talker served as passage level stimuli. Each story was approximately 15 minutes in duration and had been translated from a foreign language fairy tale. The story stimuli were based on work by Lewis and colleagues (2015), and details of their development are described elsewhere (Picou et al., 2020a; 2020b). Each 15-minute story was stored on the experimental computer as 25 short segments. One story was used in each (RM and No-RM system) condition. Following presentation of each story passage (all 25 segments), research personnel queried the participant about the characters, plot, and meaning of the story with 10 questions, six factual and four inferential, for a total possible score of 10 points.

Dual-task Paradigm

All listening-in-noise testing was completed at a fixed SNR of −4 dB (60 dB speech, 64 dB noise) based on previous work indicating participants could be expected to repeat 80% of the word-level stimuli accurately at this SNR (Picou et al., 2019). During dual-task testing, the primary task was listening in noise, and the secondary task was a timed response to a visual probe. For syllable, word, and sentence level stimuli, the participant was instructed to listen to the stimuli presented from the front loudspeaker and repeat what was heard (i.e., syllables, words, or sentences). The participant was able to see a video of the talker via a computer monitor placed atop the loudspeaker. For passage-level stimuli, the participant was instructed to listen intently to the story and verbally answer questions from the examiner at the end of the story. Accuracy was scored as the number of syllables, words, or key words from sentences correctly repeated, and the number of questions about the passage correctly answered.

The secondary task was a physical response to a visual probe. Participants were instructed to press a button on the computer keyboard as quickly as possible when a red oval appeared. The red oval, when present, was approximately 28(h) x 38(w) cm, was displayed on a black background, and appeared immediately after the conclusion of the stimulus video. The oval was visible until the participant pressed the button, at which point the oval disappeared. If no response was recorded after three seconds, the visual probe disappeared automatically. During trials with no probe, only a black background was present. Visual probes appeared randomly following 25% of the listening-in-noise trials in a given list. Response time (in ms) to the visual probe was used as the index of listening effort. Practice sessions were completed prior to the start of the first experimental condition, using only syllable-level stimuli, and entailed: (a) pressing the keyboard key alone, (b) repeating the stimuli and pressing the keyboard key with no background noise, and (c) repeating the stimuli and pressing the keyboard key in the presence of background noise. All participants showed evidence of mastery of the aforementioned procedures during practice sessions prior to proceeding with test sessions. The same images selected by participants for use during the psychophysical tasks were presented before and after dual-task testing with each list to encourage and reinforce engagement with the task.
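To illustrate how the response-time index might be summarized, the sketch below averages probe response times within a condition and expresses the RM system effect as a within-participant difference. The treatment of probes that timed out after three seconds is not described in the text; here they are coded as `None` and excluded, which is an assumption, as are the function names.

```python
def mean_probe_rt(response_times_ms):
    """Mean response time (ms) across probes with a recorded response.

    Assumption: probes with no response within 3 s are coded as None
    and left out of the mean.
    """
    valid = [rt for rt in response_times_ms if rt is not None]
    if not valid:
        raise ValueError("no valid probe responses")
    return sum(valid) / len(valid)


def rm_effect_on_effort(rm_rts_ms, no_rm_rts_ms):
    """RM minus No-RM mean response time; negative values suggest reduced effort."""
    return mean_probe_rt(rm_rts_ms) - mean_probe_rt(no_rm_rts_ms)
```

Under this convention, a participant whose responses were faster with the RM system would have a negative difference score.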

RM System Fitting

All participants were fit with an ear-level device (Phonak Roger™ Focus) with the RM receiver on their right ear. The Focus device was coupled to a slim tube and occluding, non-custom foam tip for signal routing and retention. Slim tubes and tips were selected for appropriate fit for each participant. We chose occluding eartips because venting can reduce the level of the signal from the remote microphone (e.g., Tharpe et al., 2004; Whitmer et al., 2011). We chose a unilateral receiver because it has been previously suggested that bilateral receivers with occluding eartips would be inappropriate for listeners with normal hearing bilaterally (Tharpe et al., 2004). The Focus was paired to a Roger™ TouchScreen, which was positioned on a stand 8 inches from the front loudspeaker throughout RM system testing and set to “lanyard mode” to ensure directionality toward the speech signal. Listening checks were completed prior to each test session by the examiner, and function was also verified with each participant. The ear-level device was removed from the participant’s ear during No-RM system testing.

Test Environment

Participants completed the two psychophysical tasks (measuring magnitude of integration and temporal binding of audiovisual speech) in a sound- and light-attenuated room (WhisperRoom Inc., Morristown, TN, USA) using a computer monitor (22” Samsung Syncmaster 2233RZ) and supra-aural headphones (Sennheiser HD559). Stimulus presentation was managed by E-prime software.

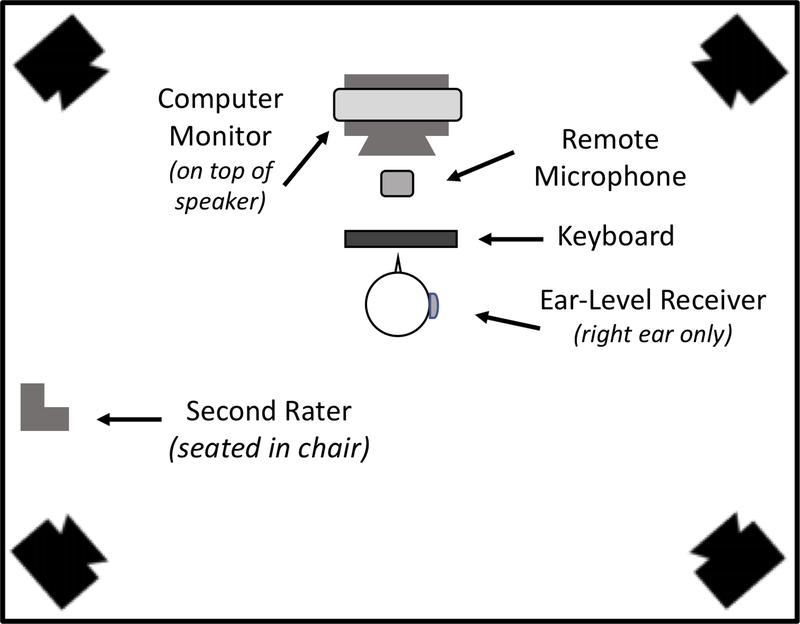

Listening-in-noise testing was completed in a moderately reverberant test room (5.5 × 6.5 × 2.25 m) consisting of a concrete floor with walls and ceiling having semirandom angles covered in reflective paint. Acoustic blankets (Sound Stopper 124) were hung on the ceiling and walls to reduce the reverberation to a moderate level (T30 = 475 ms as verified with Larson Davis 824S). The participant was seated in the center of the room at a desk with a computer keyboard (Dell). A single speech loudspeaker was located at 0 degrees azimuth at a distance of 1.5 meters from the participant (see gray speaker in Figure 1). A computer monitor (Dell 2408WFPb) was placed atop the speech loudspeaker at a height optimal for visual access by the participant. All target stimuli were presented via custom programming in Presentation software (Neurobehavioral Systems, v 12) on a computer (Dell). Signal level was controlled by an attenuator (Tucker Davis Technologies PA5), and then signals were routed to the speech loudspeaker at the front of room (Tannoy System 60). Background noise was presented from four loudspeakers (Tannoy System 600) located at 45, 135, 225, and 315 degrees around the room, 3.5 meters from the participant. Routing of the background noise stimulus was accomplished via a multitrack sound editing software (Adobe Audition CS16) to an amplifier (Crown CT 8200) prior to presentation through the noise loudspeakers.

Figure 1.

Diagram of test room set-up.

Participants wore a lapel microphone (Belden-M B451; 1.75 inches long) to ensure the primary tester could hear and record the verbal responses accurately. For one of every five participants, a trained research assistant was seated behind the participant to record verbal responses as a reliability check on scored responses (further detail regarding interrater reliability calculations is provided below).

Procedures

Following study intake, informed consent, and participant assent, each participant completed the study protocol, which included the evaluation of the putative moderators and listening-in-noise testing. Test order of the moderator assessments and listening-in-noise testing was counterbalanced across participants. All moderators were evaluated without the use of an RM system. The listening-in-noise tasks were completed in two test conditions: (a) RM system and (b) No-RM system. Counterbalancing of RM and No-RM system conditions was utilized to control for possible order effects; half of the participants completed the RM system condition first. In each test session, participants listened to the four different stimulus types (i.e., syllables, words, sentences, passages), also counterbalanced across participants. List order within a stimulus level was randomized. Stimulus and background noise calibration was completed prior to the start of each participant’s session using steady-state noises with the same long-term-average spectrum as the stimulus and noise signals and a sound level meter in the approximate location of a participant’s ear.

Data Analysis

Prior to conducting analyses, interrater reliability was evaluated for accuracy scores derived from the listening-in-noise task by deriving intraclass correlation coefficients (ICCs) for the 20% of samples scored by both a primary and secondary coder. ICCs reflecting absolute agreement amongst the raters of interest to the present study were calculated via two-way mixed effects models using the irr package (Gamer et al., 2019) in R (R Core Team, 2020; see Koo & Li, 2016). ICCs represent the proportion of variance in scores attributable to between-participant differences (Shavelson & Webb, 1991; Yoder et al., 2018). Higher ICCs represent greater coding reliability, with an ICC of .7 considered a benchmark for “very good” reliability (Mitchell, 1979).
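The reliability index described above can be sketched in a few lines. This is a minimal illustration rather than the authors' analysis, which used the irr package in R. It assumes a single-measure, two-way, absolute-agreement ICC (often written ICC(A,1)); the source does not state whether single or average measures were used. The function name `icc_a1` and the example scores are hypothetical.

```python
def icc_a1(scores):
    """Two-way, absolute-agreement, single-measure ICC.

    scores: list of rows, one row per participant, one column per rater.
    Assumption: single-measure form; the paper does not specify this.
    """
    n = len(scores)          # number of participants
    k = len(scores[0])       # number of raters
    grand = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(scores[i][j] for i in range(n)) / n for j in range(k)]

    # Sums of squares from a two-way ANOVA without replication
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    # Absolute agreement penalizes systematic rater offsets via ms_cols
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


# Hypothetical percent-correct scores from a primary and a secondary coder
example = [[90, 100], [70, 80], [50, 60]]
print(icc_a1(example))
```

In this hypothetical example the second coder scores every participant 10 points higher. The two coders rank participants identically, yet the absolute-agreement coefficient falls below 1 because the constant offset counts as disagreement.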

All variables of interest to analyses (see Table 2) were subsequently evaluated for normality, specifically for |skewness| > 1.0 and |kurtosis| > 3.0. Missing data (ranging from 0–13% across variables) were then imputed using the missForest package (Stekhoven & Bühlmann, 2012) in R (R Core Team, 2020). This package uses a random forest algorithm (see Breiman, 2001) to impute missing values in datasets with continuous and categorical values (Stekhoven & Bühlmann, 2012). No data were missing due to participant noncompliance. Discrete missingness, rather, was due to selected standardized assessments in some instances not being collected as a part of the larger study (e.g., due to participant fatigue prior to completion of the entire comprehensive assessment protocol), incomplete or missing parent questionnaires, technical difficulties encountered during the listening-in-noise task, and errors obtained in response to curve fitting procedures in Matlab; thus, data could be considered missing at random. Imputation of missing values in such cases is preferred to other methods of handling missing data (e.g., pairwise or listwise deletion) as it minimizes bias and preserves power to detect effects of interest (Enders, 2010).
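The normality screen described above can be illustrated with a short sketch. It assumes simple moment-based estimators and treats kurtosis as excess kurtosis (normal distribution = 0). The source does not say which estimators or kurtosis convention were used, so both choices, along with the function names and example data, are assumptions made for illustration only.

```python
def _central_moment(xs, order):
    """Population central moment of the given order."""
    mean = sum(xs) / len(xs)
    return sum((x - mean) ** order for x in xs) / len(xs)


def skewness(xs):
    """Moment-based skewness (assumed estimator). Requires non-constant data."""
    return _central_moment(xs, 3) / _central_moment(xs, 2) ** 1.5


def excess_kurtosis(xs):
    """Moment-based excess kurtosis (assumed convention). Requires non-constant data."""
    return _central_moment(xs, 4) / _central_moment(xs, 2) ** 2 - 3.0


def flag_non_normal(xs, skew_limit=1.0, kurt_limit=3.0):
    """Flag a variable whose skewness or kurtosis exceeds the stated limits in absolute value."""
    return abs(skewness(xs)) > skew_limit or abs(excess_kurtosis(xs)) > kurt_limit


# Hypothetical variables: one symmetric, one with a single extreme value
symmetric = [1, 2, 3, 4, 5]
skewed = [1, 1, 1, 1, 1, 1, 1, 1, 1, 20]
print(flag_non_normal(symmetric), flag_non_normal(skewed))
```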

To evaluate the effects of RM system use on listening-in-noise accuracy (percent correct) and listening effort (response time), 2 (RM system versus No-RM system) × 4 (stimulus complexity) repeated measures analyses of variance (ANOVAs) were conducted. When data violated the assumption of sphericity, Greenhouse-Geisser corrections were applied.

A series of multiple regression analyses was then carried out to assess the effects of the putative moderators on listening-in-noise accuracy and listening effort as averaged across levels of stimulus complexity in RM system versus No-RM system conditions using the Mediation and Moderation for Repeated Measures (MEMORE) macro in SPSS (Montoya, 2019; Montoya & Hayes, 2017). In tests of moderation, interaction effects were probed using Johnson-Neyman tests at a .05 probability level (Hayes, 2017). Only cut-points along the continuously quantified moderators within the range of observed data were interpreted (Aiken & West, 1991).
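The logic of the Johnson-Neyman probe can be sketched as follows. This is a minimal illustration, not the MEMORE implementation. It assumes the conditional effect of RM system use on the between-condition difference score is linear in the moderator, θ(w) = b0 + b1·w, and it finds the moderator values at which the confidence interval around θ(w) touches zero. The function name, all coefficient and covariance values, and the critical t value are hypothetical and are not taken from the study.

```python
import math


def johnson_neyman_points(b0, b1, v00, v01, v11, t_crit):
    """Return moderator values where |theta(w)| / SE(theta(w)) equals t_crit.

    theta(w) = b0 + b1 * w
    SE(theta(w))^2 = v00 + 2 * w * v01 + w^2 * v11
    where v00, v01, v11 are the coefficient variances and covariance.
    """
    t2 = t_crit ** 2
    # Setting theta(w)^2 = t2 * SE^2 gives a quadratic a*w^2 + b*w + c = 0
    a = b1 ** 2 - t2 * v11
    b = 2.0 * (b0 * b1 - t2 * v01)
    c = b0 ** 2 - t2 * v00
    if abs(a) < 1e-12:
        # Degenerate case: the equation is linear in w
        return [] if abs(b) < 1e-12 else [-c / b]
    disc = b ** 2 - 4.0 * a * c
    if disc < 0:
        return []  # no transition points anywhere on the moderator scale
    root = math.sqrt(disc)
    return sorted([(-b - root) / (2.0 * a), (-b + root) / (2.0 * a)])


# Hypothetical regression output (NOT the study's estimates)
points = johnson_neyman_points(
    b0=-290.0, b1=3.0, v00=9243.0, v01=-95.0, v11=1.0, t_crit=2.042
)
print(points)
```

Transition points computed this way would then be interpreted only if they fall within the observed range of the moderator, as noted above.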

Results

Listening-in-Noise Accuracy

Secondary scoring was completed for 7 of 32 participants (21.9%). ICCs derived to index interrater reliability ranged from .80 to .98 (M = .90) in the RM system condition and from .76 to 1.00 (M = .92) in the No-RM system condition across levels of stimulus complexity. These values indicate very good to excellent interrater reliability for accuracy scores derived from the listening-in-noise task.

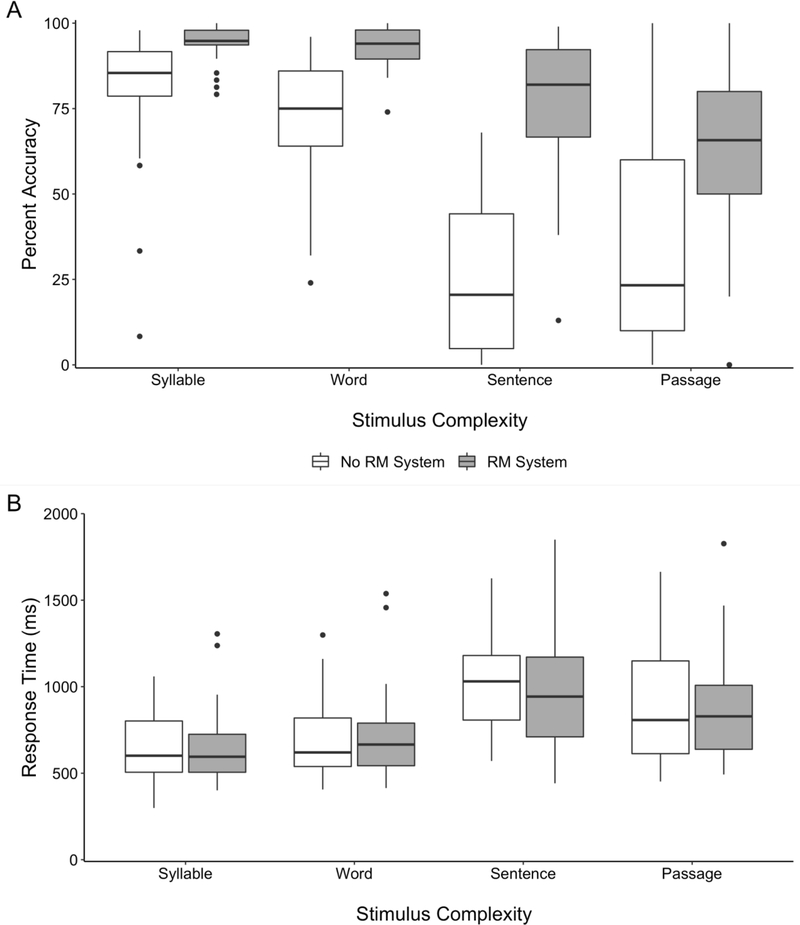

Results with listening-in-noise accuracy as the dependent variable indicated that there were significant main effects of RM system (F [1, 31] = 104.03, p < .001, $\eta_p^2$ = .77) and stimulus complexity (F [1.661, 51.486] = 111.80, p < .001, $\eta_p^2$ = .78), in addition to a significant RM system × stimulus complexity interaction (F [2.126, 65.9] = 22.61, p < .001, $\eta_p^2$ = .42). These results indicate that the effect of the RM system varied across levels of stimulus complexity. According to post-hoc Tukey tests, the difference in accuracy between the RM system and No-RM system conditions was statistically significant at the word level ($M_{\text{aided}}$ = 93.0%, $M_{\text{unaided}}$ = 73.2%, p = .002, d = 1.45), the sentence level ($M_{\text{aided}}$ = 78.0%, $M_{\text{unaided}}$ = 26.5%, p < .001, d = 2.46), and the passage level ($M_{\text{aided}}$ = 61.3%, $M_{\text{unaided}}$ = 34.0%, p < .001, d = 0.95), but not at the syllable level ($M_{\text{aided}}$ = 94.4%, $M_{\text{unaided}}$ = 79.8%, p = .074, d = 0.95). Effect sizes, on average, tended to be larger at higher versus lower levels of stimulus complexity.
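The effect sizes reported alongside each F test above are consistent with partial eta squared, which can be recovered from an F statistic and its degrees of freedom as F·df_effect / (F·df_effect + df_error). The sketch below applies that standard identity to the reported values as a consistency check. The function name is illustrative, and the identification of the effect size as partial eta squared is an inference from the numbers rather than something stated in this section.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Reported accuracy ANOVA results: (label, F, df_effect, df_error)
reported = [
    ("RM system", 104.03, 1, 31),
    ("Stimulus complexity", 111.80, 1.661, 51.486),
    ("RM system x complexity", 22.61, 2.126, 65.9),
]
for label, f_value, df1, df2 in reported:
    print(label, round(partial_eta_squared(f_value, df1, df2), 2))
```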

Response Times

Results with secondary-task response times as the dependent variable indicated no significant main effect of the RM system (F [1, 31] = 0.41, p = .53, $\eta_p^2$ = .01) and no stimulus complexity × RM system interaction (F [2.113, 65.517] = 0.38, p = .70, $\eta_p^2$ = .01). However, there was a significant main effect of stimulus complexity (F [2.252, 69.81] = 36.62, p < .001, $\eta_p^2$ = .54), indicating that response times varied on average across levels of stimulus complexity. Post-hoc Tukey tests indicated that nearly all of the conditions significantly differed from one another (p < .05; after correcting for multiple comparisons, the contrast between passage- and sentence-level and the contrast between word- and syllable-level were the only two non-significant pairwise differences). Response times were longest for sentence- (M = 979.5 ms) and passage-level stimuli (M = 878.7 ms), and shortest for word- (M = 702.5 ms) and syllable-level stimuli (M = 653.4 ms; see Figure 2b).

Figure 2.

Listening-in-noise accuracy (panel A) and listening effort (panel B) for each level of stimulus complexity. Lines represent median scores; boxes represent 1st-3rd quartiles; dots represent data points outside the interquartile range. Note: For listening-in-noise accuracy, there were significant main effects of both remote microphone (RM) system and stimulus complexity, as well as a significant interaction effect. For listening effort, there was only a significant main effect of stimulus complexity.

Moderation Analyses

Table 3 summarizes the results of tests of all putative moderators of RM system effects on listening-in-noise accuracy and listening effort. None of the tested variables significantly moderated the results observed for listening-in-noise accuracy.

Table 3.

Results of Tests of Putative Moderators of Remote Microphone (RM) System Effects on Listening-in-Noise Accuracy and Listening Effort

| Putative Moderator | β | SE | 95% CI |
|---|---|---|---|
| Effect of Moderator on Listening-in-Noise Accuracy | | | |
| Chronological Age | −0.01 | 0.01 | −0.02, 0.01 |
| Language Ability | 0.00 | 0.00 | −0.01, 0.01 |
| Nonverbal Cognitive Ability | 0.00 | 0.00 | 0.00, 0.01 |
| Broader Features of Autism | 0.02 | 0.03 | −0.04, 0.08 |
| Tactile Responsiveness | −0.02 | 0.03 | −0.08, 0.05 |
| Magnitude of Integration for Audiovisual Speech | −0.01 | 0.03 | −0.07, 0.05 |
| Temporal Binding of Audiovisual Speech | 0.05 | 0.03 | −0.01, 0.10 |
| Effect of Moderator on Listening Effort | | | |
| Chronological Age | 11.1 | 6.15 | −1.48, 23.7 |
| Language Ability | 3.14** | 0.94 | 1.23, 5.06 |
| Nonverbal Cognitive Ability | 2.92** | 1.05 | 0.78, 5.07 |
| Broader Features of Autism | −4.23 | 22.9 | −51.0, 42.5 |
| Tactile Responsiveness | 9.12 | 26.2 | −44.4, 62.7 |
| Magnitude of Integration for Audiovisual Speech | 45.0* | 21.4 | 1.36, 88.7 |
| Temporal Binding of Audiovisual Speech | 15.6 | 22.7 | −30.8, 62.0 |

Note. Results derived via the Mediation and Moderation for Repeated Measures (MEMORE) macro in SPSS (see Data Analysis). * p < 0.05, ** p < 0.01.
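The confidence intervals in Table 3 can be approximately reproduced from each coefficient and its standard error as β ± t·SE. The sketch below is a rough consistency check under a stated assumption: a two-sided critical t of about 2.042, which corresponds to 30 residual degrees of freedom (32 participants minus two estimated parameters). The degrees of freedom are not reported in this section, so that value and the function name are assumptions. Small discrepancies in the last digit are expected because the tabled β and SE are rounded.

```python
def confidence_interval(beta, se, t_crit=2.042):
    """Two-sided 95% CI as beta +/- t_crit * se (t_crit assumes df = 30)."""
    half_width = t_crit * se
    return beta - half_width, beta + half_width


# Coefficients and standard errors as reported in Table 3 (listening effort)
for label, beta, se in [
    ("Language Ability", 3.14, 0.94),
    ("Nonverbal Cognitive Ability", 2.92, 1.05),
]:
    low, high = confidence_interval(beta, se)
    print(label, round(low, 2), round(high, 2))
```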

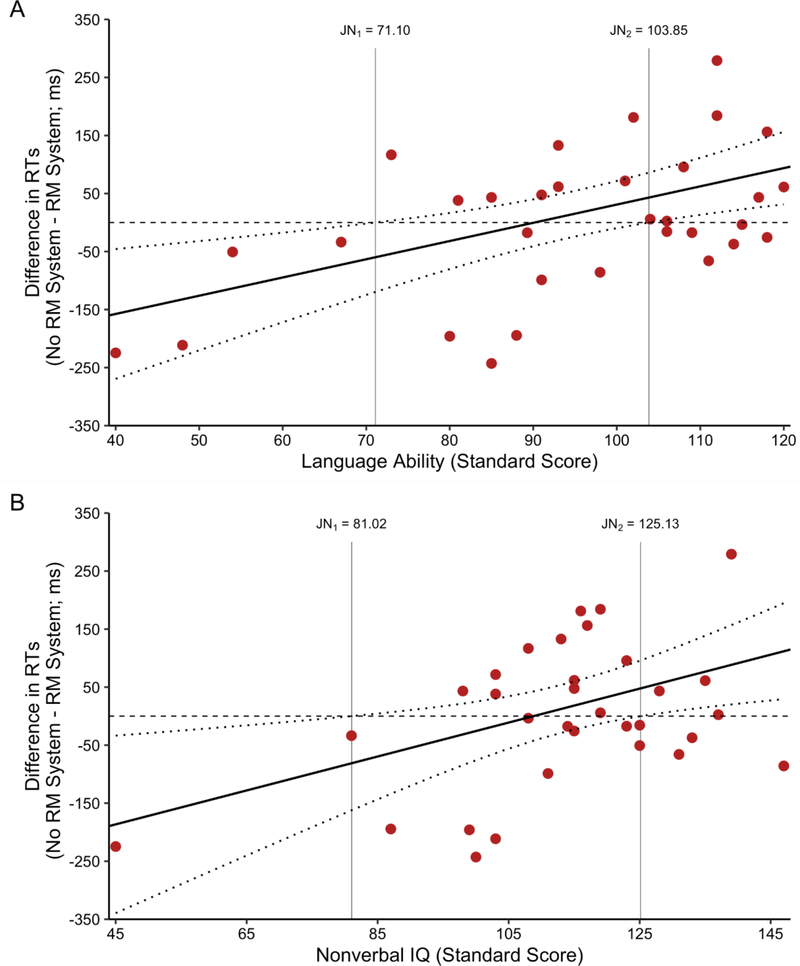

However, for response times, results indicated that the effect of the RM system on listening effort was moderated by several of the tested variables. Both language (p = .002) and nonverbal cognitive ability (p = .009) moderated the effect of RM system on listening effort. In both cases, the calculated difference in response time between RM and No-RM conditions increased with increasing standard scores. Johnson-Neyman tests utilized to derive precise cut points along the continuous moderators indicated that at nonverbal IQs below 71.10 and language scores below 81.02, the RM system significantly increased listening effort over No-RM system (i.e., increased response times in RM versus No-RM system conditions). At nonverbal IQs above 103.85 and language scores above 125.13, the RM system significantly decreased listening effort over No-RM system (i.e., decreased response times in RM versus No-RM system conditions; see Figure 3). Thus, RM systems increased listening effort (increased response times) for youth with below average nonverbal cognitive ability and low-average language ability, but reduced listening effort (reduced response times) for youth with at least average nonverbal cognitive ability and above average language ability.

Figure 3.

Depiction of moderated effects of remote microphone (RM) system use on listening effort as indexed by response times (RTs) with language score as moderator (panel A) and nonverbal IQ as moderator (panel B). Note: The main effect of RM system use on listening effort was significantly moderated by (a) language ability and (b) nonverbal cognitive ability. For language scores, individuals with language standard scores below 81.02 (Johnson-Neyman transition point 1; JN1; where the confidence interval [dotted line] around the conditional effect [solid line] intersects zero [dashed line] on the y-axis) experienced a significant increase in listening effort as a result of RM system use, while individuals with a language standard score above 125.13 (JN2) experienced a significant reduction in listening effort as a result of RM system use. Results for nonverbal cognitive ability were similar: individuals with nonverbal IQ scores below 71.10 (JN1) experienced a significant increase in listening effort as a result of RM system use, while individuals with nonverbal IQ scores above 103.85 (JN2) experienced a significant reduction in listening effort as a result of RM system use.

The effect of RM systems on listening effort was also significantly moderated by magnitude of integration for audiovisual speech as indexed by susceptibility to the McGurk effect (p = .044), with a trend towards reduced listening effort in RM versus No-RM system conditions for those with relatively greater ability to integrate auditory and visual speech cues. However, the Johnson-Neyman test did not identify a region of significance within the observed data, limiting our ability to interpret this finding. None of the other tested variables significantly moderated the effect of RM system use.

Discussion

The purpose of this study of youth with autism was three-fold: (a) to evaluate the effects of RM system use in the laboratory on listening-in-noise accuracy and listening effort using a dual-task paradigm, (b) to evaluate whether RM system benefits varied according to the level of stimulus complexity, and (c) to evaluate whether RM system effects on outcomes of interest differed according to participant characteristics. Results indicate that RM systems indeed have the potential to yield effects on listening-in-noise accuracy that vary according to stimulus complexity, and to yield effects on listening effort that vary according to characteristics of youth with autism.

Effects of RM System Use on Listening-in-Noise Accuracy Tend to Be Larger for More Complex Stimuli

RM systems improved listening-in-noise accuracy in youth with autism. The observed benefits were all large in magnitude; however, on average the benefits were greater for the more complex stimuli, most notably key words embedded in sentences, and relatively smaller for less complex stimuli, such as syllables. Although it is not clear whether stimulus complexity or unaided accuracy contributed more to this significant interaction, this study highlights the importance of investigating multiple levels of stimulus complexity, which have been relatively understudied in both the autism and RM system literature on listening-in-noise performance. Notably, the effects of the RM system on listening-in-noise accuracy did not vary according to any of the participant characteristics tested. Instead, RM system benefits were robust across a rather heterogeneous sample of participants, suggesting most youth with autism in this study benefited from the RM system in this classroom-like environment.

Effects on Listening Effort Vary According to Participant Profile

On average, RM system use did not produce an effect on listening effort across all youth with autism in this study. Rather, RM system use yielded differential effects on listening effort that varied according to participant profile. Moderated effects on the whole suggest that RM systems have the potential to decrease (positively impact) listening effort for children with average to above average nonverbal cognitive and language ability (at a nonverbal IQ above about 104 and language scores above about 125). However, RM systems might increase listening effort as indexed by secondary-task response times for youth with autism who present with co-occurring cognitive and language impairments (i.e., nonverbal IQs below 71 and language scores below 81). It is worth noting that these moderated effects are similar to those recently reported for a novel intervention targeting integration of audiovisual speech (Feldman, 2020).

Whether the observed differences in listening effort with RM use are favorable or unfavorable for these youth with more limited cognitive and linguistic ability remains an open question. Typically, increased listening effort is interpreted as an unfavorable outcome. Increased effort indicates more cognitive resources are necessary to understand the linguistic input, leaving fewer resources for other important tasks (Pichora-Fuller et al., 2016). However, an alternative interpretation in this case is that the increased response times when using an RM system, in light of robust accuracy benefits, indicate these youth are more engaged in the task with the RM system in place. This explanation is supported to some degree by existing evidence demonstrating that when tasks become too difficult, participants disengage from the task, resulting in faster response times (e.g., Wu et al., 2016). It is, therefore, possible to interpret these data as increased accuracy (as indicated by percent correct) and increased task engagement (as indicated by increased response times) with the RM system for youth with comorbid cognitive and language impairments. However, this interpretation of the results requires future exploration.

Limitations and Future Directions

First, the present study focused on short-term effects of RM system use in a sample that, although variable in cognitive and language abilities, was limited to relatively older youth who could attend to the behavioral tasks over a somewhat extended period of time and actively report their perceptions orally during the listening-in-noise task and via button press during the McGurk and simultaneity judgment tasks. More research is therefore needed to determine whether the present results will generalize to the broader population of persons with autism, and to evaluate effects of more extended RM system use (e.g., use over extended periods of time throughout a school day, use over one or more school years). Additionally, although participant compliance in the present study was in general quite high across tasks, future work should evaluate whether attentional factors, such as attention to study stimuli and adherence to study procedures, could partially explain these findings.

Second, the current study examined only a single RM system configuration. Notably, the receiver was always in the participant’s right ear with an occluding eartip. Existing studies represent a mixture of fitting strategies. Some prior investigations used bilateral receivers with the RM system (e.g., Schafer et al., 2016, 2019), while others used a unilateral receiver (e.g., Rance et al., 2017). It is possible the addition of a second RM system receiver would provide additional benefits, as has been noted in the past for children with mild hearing loss (Tharpe et al., 2004). However, the combination of closed eartip with bilateral receivers could be undesirable for youth with normal hearing, given that use of a unilateral receiver would allow one ear to remain open to hearing voices from those who are not using an RM system (e.g., fellow students; Tharpe et al., 2004). Furthermore, some evidence suggests youth with autism may have significant interaural asymmetry in complex listening tasks (e.g., Khalfa et al., 2001; Moossavi & Moallemi, 2019; Prior & Bradshaw, 1979); it is possible benefits would be maximized by individualizing which ear was fit with the receiver in a unilateral fitting. Thus, additional work is necessary to evaluate the optimal fitting strategy for youth with autism (e.g., number of receivers, microphone settings, optimal ear, etc.).

Furthermore, additional research is needed to determine the mechanisms by which RM system use yields improvements in listening-in-noise accuracy and, for some youth, in listening effort. RM systems are intended to improve listening-in-noise by improving SNRs, and thereby increasing the audibility of the speech signal (e.g., Boothroyd, 2004; Sherbecoe & Studebaker, 2002). A related possibility is that the RM system improves the efficiency by which the brain processes the speech signal, which is made clearer and more accessible by virtue of the RM system and the more favorable SNR it confers (Benítez-Barrera, 2020). Another possibility is that use of an RM system improves accuracy and/or effort by reducing distraction or frustration and thereby boosting the child’s attention to or engagement with the listening-in-noise task. Our research team is presently working to test these hypotheses.

Conclusions

This study extends prior work by showing that RM systems have the potential to increase listening-in-noise accuracy for youth with autism, as well as to reduce listening effort for youth with average to above-average cognitive and linguistic skills. In contrast, listening effort increased with RM system use for those with below-average cognitive and linguistic skills, perhaps suggesting greater engagement in the listening-in-noise tasks. These findings have important implications for clinical practice, suggesting RM system use in classrooms could potentially improve listening-in-noise performance for at least some youth with autism.

Acknowledgements

We would like to thank the children and families who participated in this project. Anne Marie Tharpe, Erin Picou, and Tiffany Woynaroski designed the study and secured funding for this project. Jacob I. Feldman and Tiffany Woynaroski recruited and characterized the participants. All authors piloted the procedures and collected the data. Jacob Feldman, Emily Thompson, Hilary Davis, and Erin Picou scored and organized data from the listening-in-noise task. Jacob Feldman, Kacie Dunham, Bahar Keceli-Kaysili, and Tiffany Woynaroski scored and organized data for tests of putative moderators. Jacob Feldman and Tiffany Woynaroski completed data analyses. All authors assisted in the interpretation of data and generation of the manuscript. All authors signed off on and accepted responsibility for this research report.

Conflicts of interest and source of funding:

This work was funded by Sonova USA and supported in part by NIH U54 HD083211 (PI: Neul), NIH T32 MH064913 (PI: Winder), NIH/NCATS UL1 TR000445 (PI: Bernard), and NSF NRT grant DGE 19-22697 (PI: Wallace). Anne Marie Tharpe serves as Chair of the Phonak Pediatric Research Advisory Board. The other authors have no conflicts of interest to disclose. The contents of this article are solely the responsibility of the authors and do not necessarily represent the official views of the funding agencies.

References

- Aiken LS, & West SG (1991). Multiple regression: Testing and interpreting interactions. Sage Publications.

- Alcántara JI, Weisblatt EJ, Moore BC, & Bolton PF (2004). Speech-in-noise perception in high-functioning individuals with autism or Asperger’s syndrome. Journal of Child Psychology and Psychiatry, 45(6), 1107–1114. 10.1111/j.1469-7610.2004.t01-1-00303.x

- American Psychiatric Association. (2013). Diagnostic and statistical manual of mental disorders-5.

- Anderson KL, & Goldstein H (2004). Speech perception benefits of FM and infrared devices to children with hearing aids in a typical classroom. Language, Speech, and Hearing Services in Schools, 35(2), 169–184. 10.1044/0161-1461(2004/017)

- Anderson KL, Goldstein H, Colodzin L, & Iglehart F (2005). Benefit of S/N enhancing devices to speech perception of children listening in a typical classroom with hearing aids or a cochlear implant. Journal of Educational Audiology, 12, 14–28.

- Baranek GT, David FJ, Poe MD, Stone WL, & Watson LR (2006). Sensory Experiences Questionnaire: Discriminating sensory features in young children with autism, developmental delays, and typical development. Journal of Child Psychology and Psychiatry, 47, 591–601. 10.1111/j.1469-7610.2005.01546.x

- Benítez-Barrera CR (2020). Cortical auditory evoked potentials of children with normal hearing following a short auditory training with a remote microphone system [Doctoral dissertation, Vanderbilt University]. Vanderbilt University Institutional Repository. http://hdl.handle.net/1803/16060

- Benítez-Barrera CR, Angley GP, & Tharpe AM (2018). Remote microphone system use at home: Impact on caregiver talk. Journal of Speech, Language, and Hearing Research, 61(2), 399–409. 10.1044/2017_JSLHR-H-17-0168

- Benítez-Barrera CR, Thompson EC, Angley GP, Woynaroski T, & Tharpe AM (2019). Remote microphone system use at home: Impact on child-directed speech. Journal of Speech, Language, and Hearing Research, 62(6), 2002–2008. 10.1044/2019_JSLHR-H-18-0325

- Boothroyd A (2004). Hearing aid accessories for adults: The remote FM microphone. Ear and Hearing, 25(1), 22–33.

- Breiman L (2001). Random forests. Machine Learning, 45(1), 5–32. 10.1023/A:1010933404324

- Ching TY, Hill M, Brew J, Incerti P, Priolo S, Rushbrook E, & Forsythe L (2005). The effect of auditory experience on speech perception, localization, and functional performance of children who use a cochlear implant and a hearing aid in opposite ears. International Journal of Audiology, 44(12), 677–690. 10.1080/00222930500271630

- Constantino JN, & Gruber CP (2012). Social Responsiveness Scale (2nd ed.). Western Psychological Services.

- Cox R, Alexander G, & Gilmore C (1987). Development of the Connected Speech Test (CST). Ear and Hearing, 8(5 Suppl), 119s–126s. 10.1097/00003446-198710001-00010

- Cox RM, Alexander GC, Gilmore C, & Pusakulich KM (1988). Use of the Connected Speech Test (CST) with hearing-impaired listeners. Ear and Hearing, 9(4), 198–207.

- Desjardins JL, & Doherty KA (2014). The effect of hearing aid noise reduction on listening effort in hearing-impaired adults. Ear and Hearing, 35(6), 600–610. 10.1097/AUD.0000000000000028

- Dunham K, Feldman JI, Liu Y, Cassidy M, Conrad JG, Santapuram P, Suzman E, Tu A, Butera IM, Simon DM, Broderick N, Wallace MT, Lewkowicz DJ, & Woynaroski TG (2020). Stability of variables derived from measures of multisensory function in children with autism spectrum disorder. American Journal on Intellectual and Developmental Disabilities, 125(4), 287–303. https://doi.org/d2xz

- Dunn W (1999). The Sensory Profile: User’s manual. Psychological Corporation.

- Enders CK (2010). Applied missing data analysis. Guilford Press.

- Feldman JI (2020). Multisensory perceptual training in youth with autism spectrum disorder: A randomized controlled trial [Doctoral dissertation, Vanderbilt University]. Vanderbilt University Institutional Repository. http://hdl.handle.net/1803/16133

- Feldman JI, Conrad JG, Kuang W, Tu A, Liu Y, Simon DM, Wallace MT, & Woynaroski TG (2020a). Relations between the McGurk effect, social and communication skill, and autistic features in children with and without autism. Journal of Autism and Developmental Disorders. Advance online publication. 10.1007/s10803-021-05074-w

- Feldman JI, Dunham K, Cassidy M, Wallace MT, Liu Y, & Woynaroski TG (2018). Audiovisual multisensory integration in individuals with autism spectrum disorder: A systematic review and meta-analysis. Neuroscience & Biobehavioral Reviews, 95, 220–234. 10.1016/j.neubiorev.2018.09.020

- Feldman JI, Dunham K, Conrad JG, Simon DM, Cassidy M, Liu Y, Tu A, Broderick N, Wallace MT, & Woynaroski TG (2020b). Plasticity of temporal binding in children with autism spectrum disorder: A single-case experimental design perceptual training study. Research in Autism Spectrum Disorders, 74, 1–13. 10.1016/j.rasd.2020.101555

- Feuerstein J (1992). Monaural versus binaural hearing: Ease of listening, word recognition, and attentional effort. Ear and Hearing, 13(2), 80–86.

- Flynn TS, Flynn MC, & Gregory M (2005). The FM advantage in the real classroom. Journal of Educational Audiology, 12, 37–44.

- Foxe JJ, Molholm S, Del Bene VA, Frey H-P, Russo NN, Blanco D, Saint-Amour D, & Ross LA (2015). Severe multisensory speech integration deficits in high-functioning school-aged children with autism spectrum disorder (ASD) and their resolution during early adolescence. Cerebral Cortex, 25(2), 298–312. 10.1093/cercor/bht213

- Francis AL, & Love J (2019). Listening effort: Are we measuring cognition or affect, or both? Wiley Interdisciplinary Reviews: Cognitive Science, 11(0), Article e1514. 10.1002/wcs.1514

- Fraser S, Gagne J, Alepins M, & Dubois P (2010). Evaluating the effort expended to understand speech in noise using a dual-task paradigm: The effects of providing visual speech cues. Journal of Speech, Language, and Hearing Research, 53(1), 18–33. 10.1044/1092-4388(2009/08-0140)

- Gagne J-P, Besser J, & Lemke U (2017). Behavioral assessment of listening effort using a dual-task paradigm: A review. Trends in Hearing, 21, 1–25. 10.1177/2331216516687287

- Gamer M, Lemon J, Fellows I, & Singh P (2019). irr: Various coefficients of interrater reliability and agreement (Version 0.84.1). https://CRAN.R-project.org/package=irr

- Gustafson S, McCreery R, Hoover B, Kopun JG, & Stelmachowicz P (2014). Listening effort and perceived clarity for normal-hearing children with the use of digital noise reduction. Ear and Hearing, 35(2), 183–194. 10.1097/01.aud.0000440715.85844.b8

- Hayes AF (2017). Introduction to mediation, moderation, and conditional process analysis: A regression-based approach. Guilford Press.

- Hicks C, & Tharpe A (2002). Listening effort and fatigue in school-age children with and without hearing loss. Journal of Speech, Language, and Hearing Research, 45(3), 573–584. 10.1044/1092-4388(2002/046)

- Holt RF, Kirk KI, Eisenberg LS, Martinez AS, & Campbell W (2005). Spoken word recognition development in children with residual hearing using cochlear implants and hearing aids in opposite ears. Ear and Hearing, 26(4), 82S–91S.

- Irwin J, & DiBlasi L (2017). Audiovisual speech perception: A new approach and implications for clinical populations. Language and Linguistics Compass, 11(3), 77–91.

- Irwin JR, Tornatore LA, Brancazio L, & Whalen D (2011). Can children with autism spectrum disorders “hear” a speaking face? Child Development, 82(5), 1397–1403. 10.1111/j.1467-8624.2011.01619.x

- Kahneman D (1973). Attention and effort. Prentice-Hall.

- Khalfa S, Bruneau N, Roge B, Georgieff N, Veuillet E, Adrien JL, Barthélémy C, & Collet L (2001). Peripheral auditory asymmetry in infantile autism. European Journal of Neuroscience, 13(3), 628–632. 10.1046/j.1460-9568.2001.01423.x [DOI] [PubMed] [Google Scholar]

- Koo TK, & Li MY (2016). A guideline of selecting and reporting intraclass correlation coefficients for reliability research. Journal of Chiropractic Medicine, 15(2), 155–163. 10.1016/j.jcm.2016.02.012 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lewis DE, Valente DL, & Spalding JL (2015). Effect of minimal/mild hearing loss on children’s speech understanding in a simulated classroom. Ear and Hearing, 36(1), 136–144. 10.1097/AUD.0000000000000092 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lord C, Rutter M, DiLavore P, Risi S, Gotham K, & Bishop SL (2012). Autism Diagnostic Observation Schedule, second edition (ADOS-2) manual (Part I): Modules 1–4. Western Psychological Services. [Google Scholar]

- Maenner MJ, Shaw KA, & Baio J (2020). Prevalence of autism spectrum disorder among children aged 8 years—autism and developmental disabilities monitoring network, 11 sites, United States, 2016. MMWR Surveillance Summaries, 69(4), 1–12. [DOI] [PMC free article] [PubMed] [Google Scholar]

- McGarrigle R, Munro KJ, Dawes P, Stewart AJ, Moore DR, Barry JG, & Amitay S (2014). Listening effort and fatigue: What exactly are we measuring? A British Society of Audiology Cognition in Hearing Special Interest Group ‘white paper’. International Journal of Audiology, 53(7), 433–445. 10.3109/14992027.2014.890296 [DOI] [PubMed] [Google Scholar]

- Megnin O, Flitton A, Jones CRG, de Haan M, Baldeweg T, & Charman T (2012). Audiovisual speech integration in autism spectrum disorders: ERP evidence for atypicalities in lexical-semantic processing. Autism Research, 5, 39–48. 10.1002/aur.231 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mitchell SK (1979). Interobserver agreement, reliability, and generalizability of data collected in observational studies. Psychological Bulletin, 86(2), 376–390. 10.1037/0033-2909.86.2.376 [DOI] [Google Scholar]

- Mongillo EA, Irwin JR, Whalen D, Klaiman C, Carter AS, & Schultz RT (2008). Audiovisual processing in children with and without autism spectrum disorders. Journal of Autism and Developmental Disorders, 38, 1349–1358. 10.1007/s10803-007-0521-y

- Montoya AK (2019). Moderation analysis in two-instance repeated measures designs: Probing methods and multiple moderator models. Behavior Research Methods, 51(1), 61–82. 10.3758/s13428-018-1088-6

- Montoya AK, & Hayes AF (2017). Two-condition within-participant statistical mediation analysis: A path-analytic framework. Psychological Methods, 22(1), 6–27. 10.1037/met0000086

- Moore TM, & Picou EM (2018). A potential bias in subjective ratings of mental effort. Journal of Speech, Language, and Hearing Research, 61, 2405–2421. 10.1044/2018_JSLHR-H-17-0451

- Moossavi A, & Moallemi M (2019). Auditory processing and auditory rehabilitation approaches in autism. Auditory and Vestibular Research, 28(1), 1–13. 10.18502/avr.v28i1.410

- Patten E, Watson LR, & Baranek GT (2014). Temporal synchrony detection and associations with language in young children with ASD. Autism Research and Treatment, 2014, Article 678346. 10.1155/2014/678346

- Pichora-Fuller MK, Kramer SE, Eckert MA, Edwards B, Hornsby BW, Humes LE, Lemke U, Lunner T, Matthen M, & Mackersie CL (2016). Hearing impairment and cognitive energy: The Framework for Understanding Effortful Listening (FUEL). Ear and Hearing, 37, 5S–27S. 10.1097/AUD.0000000000000312

- Picou EM, Bean B, Marcrum SC, Ricketts TA, & Hornsby BWY (2019). Moderate reverberation does not affect behavioral listening effort, subjective listening effort, or self-reported fatigue in school-aged children with normal hearing. Frontiers in Psychology, 10, Article 1749. 10.3389/fpsyg.2019.01749

- Picou EM, Charles LM, & Ricketts TA (2017b). Child–adult differences in using dual-task paradigms to measure listening effort. American Journal of Audiology, 26, 143–154. 10.1044/2016_AJA-16-0059

- Picou EM, Davis H, Lewis D, & Tharpe AM (2020a). Contralateral routing of signals systems can improve speech recognition and comprehension in dynamic classrooms. Journal of Speech, Language, and Hearing Research, 63, 2468–2482. 10.1044/2020_JSLHR-19-00411

- Picou EM, Lewis D, Angley G, & Tharpe AM (2020b). Rerouting hearing aid systems for overcoming limited useable unilateral hearing in dynamic classrooms. Ear and Hearing, 41(4), 790–803. 10.1097/AUD.0000000000000800

- Picou EM, Moore TM, & Ricketts TA (2017a). The effects of directional processing on objective and subjective listening effort. Journal of Speech, Language, and Hearing Research, 60, 199–211. 10.1044/2016_JSLHR-H-15-0416

- Picou EM, & Ricketts TA (2017). The relationship between speech recognition, behavioral listening effort, and subjective ratings. International Journal of Audiology, 57(6), 457–467. 10.1080/14992027.2018.1431696

- Powers AR, Hillock AR, & Wallace MT (2009). Perceptual training narrows the temporal window of multisensory binding. Journal of Neuroscience, 29(39), 12265–12274. 10.1523/JNEUROSCI.3501-09.2009

- Prior MR, & Bradshaw JL (1979). Hemisphere functioning in autistic children. Cortex, 15(1), 73–81. 10.1016/S0010-9452(79)80008-8

- R Core Team (2020). R: A language and environment for statistical computing (Version 4.0.2). R Foundation for Statistical Computing, Vienna, Austria. https://www.R-project.org/

- Rance G (2014). Wireless technology for children with autism spectrum disorder. Seminars in Hearing, 3, 217–226.

- Rance G, Chisari D, Saunders K, & Rault J-L (2017). Reducing listening-related stress in school-aged children with autism spectrum disorder. Journal of Autism and Developmental Disorders, 47(7), 2010–2022. 10.1007/s10803-017-3114-4

- Rance G, Saunders K, Carew P, Johansson M, & Tan J (2014). The use of listening devices to ameliorate auditory deficit in children with autism. The Journal of Pediatrics, 164(2), 352–357. 10.1016/j.jpeds.2013.09.041

- Roid GH, Miller LJ, Pomplun M, & Koch C (2013). Leiter International Performance Scale (3rd ed.). Western Psychological Services.

- Rönnberg J, Lunner T, Zekveld A, Sörqvist P, Danielsson H, Lyxell B, Dahlström Ö, Signoret C, Stenfelt S, Pichora-Fuller MK, & Rudner M (2013). The Ease of Language Understanding (ELU) model: Theoretical, empirical, and clinical advances. Frontiers in Systems Neuroscience, 7, Article 31. 10.3389/fnsys.2013.00031

- Rönnberg J, Rudner M, Foo C, & Lunner T (2008). Cognition counts: A working memory system for ease of language understanding (ELU). International Journal of Audiology, 47(s2), S99–S105. 10.1080/14992020802301167

- Rushton JP, Brainerd CJ, & Pressley M (1983). Behavioral development and construct validity: The principle of aggregation. Psychological Bulletin, 94, 18–38. 10.1037/0033-2909.94.1.18

- Russo N, Zecker S, Trommer B, Chen J, & Kraus N (2009). Effects of background noise on cortical encoding of speech in autism spectrum disorders. Journal of Autism and Developmental Disorders, 39(8), 1185–1196. 10.1007/s10803-009-0737-0

- Sarampalis A, Kalluri S, Edwards B, & Hafter E (2009). Objective measures of listening effort: Effects of background noise and noise reduction. Journal of Speech, Language, and Hearing Research, 52(5), 1230–1240. 10.1044/1092-4388(2009/08-0111)

- Schafer EC, Gopal KV, Mathews L, Thompson S, Kaiser K, McCullough S, Jones J, Castillo P, Canale E, & Hutcheson A (2019). Effects of auditory training and remote microphone technology on the behavioral performance of children and young adults who have autism spectrum disorder. Journal of the American Academy of Audiology, 30(5), 431–443. 10.3766/jaaa.18062

- Schafer EC, Mathews L, Mehta S, Hill M, Munoz A, Bishop R, & Moloney M (2013). Personal FM systems for children with autism spectrum disorders (ASD) and/or attention-deficit hyperactivity disorder (ADHD): An initial investigation. Journal of Communication Disorders, 46(1), 30–52. 10.1016/j.jcomdis.2012.09.002

- Schafer EC, & Thibodeau LM (2006). Speech recognition in noise in children with cochlear implants while listening in bilateral, bimodal, and FM-system arrangements. American Journal of Audiology, 15(2), 114–126. 10.1044/1059-0889(2006/015)

- Schafer EC, Wright S, Anderson C, Jones J, Pitts K, Bryant D, Watson M, Box J, Neve M, & Mathews L (2016). Assistive technology evaluations: Remote-microphone technology for children with autism spectrum disorder. Journal of Communication Disorders, 64, 1–17. 10.1016/j.jcomdis.2016.08.003

- Semel EM, Wiig EH, & Secord WA (2004). Clinical Evaluation of Language Fundamentals (4th ed.). The Psychological Corporation.

- Shavelson RJ, & Webb NM (1991). Generalizability theory: A primer. Sage.

- Sherbecoe RL, & Studebaker GA (2002). Audibility-index functions for the Connected Speech Test. Ear and Hearing, 23(5), 385–398. https://journals.lww.com/ear-hearing/Fulltext/2002/10000/Audibility_Index_Functions_for_the_Connected.1.aspx

- Smith EG, & Bennetto L (2007). Audiovisual speech integration and lipreading in autism. Journal of Child Psychology and Psychiatry, 48(8), 813–821. 10.1111/j.1469-7610.2007.01766.x

- Smith EG, Zhang S, & Bennetto L (2017). Temporal synchrony and audiovisual integration of speech and object stimuli in autism. Research in Autism Spectrum Disorders, 39, 11–19. 10.1016/j.rasd.2017.04.001

- Stekhoven DJ, & Bühlmann P (2012). MissForest—Non-parametric missing value imputation for mixed-type data. Bioinformatics, 28, 112–118. 10.1093/bioinformatics/btr597

- Stevenson RA, Baum SH, Segers M, Ferber S, Barense MD, & Wallace MT (2017). Multisensory speech perception in autism spectrum disorder: From phoneme to whole-word perception. Autism Research, 10, 1280–1290. 10.1002/aur.1776

- Stevenson RA, Segers M, Ncube BL, Black KR, Bebko JM, Ferber S, & Barense MD (2018). The cascading influence of multisensory processing on speech perception in autism. Autism, 22, 609–624. 10.1177/1362361317704413

- Stevenson RA, Siemann JK, Schneider BC, Eberly HE, Woynaroski TG, Camarata SM, & Wallace MT (2014). Multisensory temporal integration in autism spectrum disorders. Journal of Neuroscience, 34, 691–697. 10.1523/JNEUROSCI.3615-13.2014

- Strand JF, Brown VA, Merchant MB, Brown HE, & Smith J (2018). Measuring listening effort: Convergent validity, sensitivity, and links with cognitive and personality measures. Journal of Speech, Language, and Hearing Research, 61, 1463–1486. 10.1044/2018_JSLHR-H-17-0257

- Tharpe AM, Ricketts T, & Sladen DP (2004). FM systems for children with minimal to mild hearing loss. ACCESS: Achieving Clear Communication Employing Sound Solutions. Chicago: Phonak AG, 191–197.

- Thompson EC, Benítez-Barrera CR, Angley GP, Woynaroski T, & Tharpe AM (2020). Remote microphone system use in the homes of children with hearing loss: Impact on caregiver communication and child vocalizations. Journal of Speech, Language, and Hearing Research, 63, 633–642. 10.1044/2019_JSLHR-19-00197

- van der Kruk Y, Wilson WJ, Palghat K, Downing C, Harper-Hill K, & Ashburner J (2017). Improved signal-to-noise ratio and classroom performance in children with autism spectrum disorder: A systematic review. Review Journal of Autism and Developmental Disorders, 4(3), 243–253.

- Whitmer WM, Brennan-Jones CG, & Akeroyd MA (2011). The speech intelligibility benefit of a unilateral wireless system for hearing-impaired adults. International Journal of Audiology, 50(12), 905–911.

- Woynaroski TG, Kwakye LD, Foss-Feig JH, Stevenson RA, Stone WL, & Wallace MT (2013). Multisensory speech perception in children with autism spectrum disorders. Journal of Autism and Developmental Disorders, 43(12), 2891–2902. 10.1007/s10803-013-1836-5

- Wu Y-H, Stangl E, Zhang X, Perkins J, & Eilers E (2016). Psychometric functions of dual-task paradigms for measuring listening effort. Ear and Hearing, 37(6), 660–670.

- Yoder PJ, Lloyd BP, & Symons FJ (2018). Observational measurement of behavior (2nd ed.). Brookes Publishing.

- Zimmerman IL, Steiner VG, & Pond RE (2011). Preschool Language Scale (5th ed.). Harcourt.