Abstract

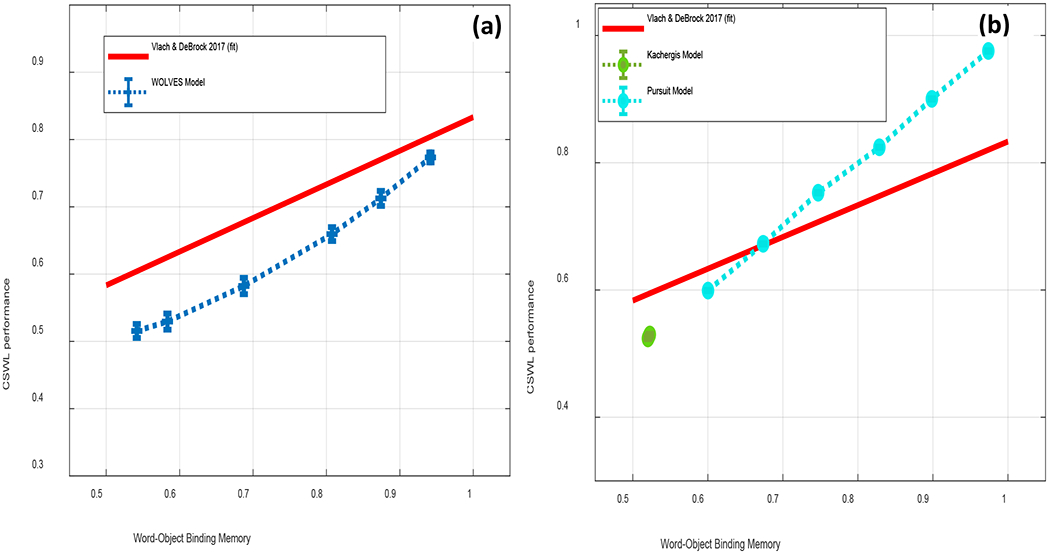

Infants, children and adults have been shown to track co-occurrence across ambiguous naming situations to infer the referents of new words. The extensive literature on this cross-situational word learning (CSWL) ability has produced support for two theoretical accounts—associative learning (AL) and hypothesis testing (HT)—but no comprehensive model of the behaviour. We propose WOLVES, an implementation-level account of CSWL grounded in real-time psychological processes of memory and attention that explicitly models the dynamics of looking at a moment-to-moment scale and learning across trials. We use WOLVES to capture data from 12 studies of CSWL with adults and children, thereby providing a comprehensive account of data purported to support both AL and HT accounts. Direct model comparison shows that WOLVES performs well relative to two competitor models. In particular, WOLVES captures more data than the competitor models (132 vs. 69 data values) and fits the data better than the competitor models (e.g., lower percent error scores for 12 of 17 conditions). Moreover, WOLVES generalizes more accurately to three ‘held-out’ experiments, although a model by Kachergis and colleagues (2012) fares better on another metric of generalization (AIC/BIC). Critically, we offer the first developmental account of CSWL, providing insights into how memory processes change from infancy through adulthood. WOLVES shows that visual exploration and selective attention in CSWL are both dependent on and indicative of learning within a task-specific context. Further, learning is driven by real-time synchrony of words and gaze and constrained by memory processes over multiple timescales.

Keywords: cross-situational learning, word learning, neural process model, dynamic field theory (DFT), attention and memory

Words are the building blocks of language. Thus, word learning forms a central challenge in language acquisition. The difficulty of this challenge becomes apparent while attempting to make sense of people conversing in an unknown language. In such a conversation, every spoken word can potentially refer to a seemingly infinite set of referents, thus challenging the learner to determine and learn the speaker-intended mapping (termed the indeterminacy of reference problem; Quine, 1960). Furthermore, the size of the vocabulary to learn and retain over multiple learner-environment interactions is very large. Despite these difficulties, humans are adept at acquiring vocabulary from infancy, and do so at a remarkable speed. By two years of age, infants are typically well-skilled and efficient at word learning (Bloom, 2000; Fenson et al., 2007; McMurray, 2007) quickly mapping a word to its correct referent in relatively few learning trials (e.g., Carey & Bartlett, 1978, but see Bion, Borovsky, & Fernald, 2013; Kalashnikova, Escudero, & Kidd, 2018; Kucker, Mcmurray, & Samuelson, 2015; Horst & Samuelson, 2008 and Kucker, McMurray & Samuelson, 2015 for recent qualifications of this ability). In fact, by age six, children know approximately 14,000 words (Templin, 1957), many learned from hearing other people use them in noisy and ambiguous contexts (Carey, 1978; Gaskell & Marslen-Wilson, 1999; Newman & Hussain, 2006). Word knowledge estimates jump to numbers ranging between 50,000 to 100,000 distinct words in adulthood (Bloom, 2000).

How do children learn and retain this large vocabulary from often ambiguous day-to-day conversational data? There is structure in the way words and objects co-occur in our daily conversations, especially with infants: words more often co-occur with their referents than with other objects. Learners can therefore capitalize on this word-referent co-occurrence to infer the intended referent of a word. This ability is often termed cross-situational word learning (CSWL; Gleitman, 1990; Pinker, 2009). The first empirical results showing that children could learn words by tracking information across multiple separately ambiguous occasions came from Akhtar and Montague (1999). However, a set of papers from Yu and Smith (2007; Smith & Yu, 2008) sparked the recent explosion of interest in CSWL. Yu and Smith (2007) presented adults (and later infants, see Smith & Yu, 2008) with a number of novel objects and an equal number of novel names, with no other clue about correct word-object mappings. Across several trials, however, a word and its ‘true’ referent always co-occurred while the co-occurrence of all other word-object pairs was lower. Following these training trials, participants showed above-chance accuracy when asked to select a word’s referent from a set of possible choices, suggesting cross-situational statistics were sufficient to support learning.

Statistical learning, the detection and extraction of reliable patterns in the stream of incoming sensory inputs, has been shown to operate over different linguistic subdomains such as word segmentation (Estes, Evans, Alibali, & Saffran, 2007), and voice-pitch tracking (Saffran, Reeck, Niebuhr, & Wilson, 2005; Saffran & Thiessen, 2003), in addition to other non-linguistic modalities and types of stimuli including shapes (Fiser & Aslin, 2001), scenes (Brady & Oliva, 2008), tactile stimuli (Conway & Christiansen, 2005), and spatial locations (Mayr, 1996). Further, recent studies exploring the underlying structure in audio and video recordings of infants in common everyday activities reveal significant structure in the word-object co-occurrence data outside of the laboratory (Frank, Goodman, & Tenenbaum, 2009; Yu, 2008; Yu & Ballard, 2007; Yu, Ballard, & Aslin, 2005; Yu & Smith, 2012; Yurovsky, Smith & Yu, 2013). But what is the nature of the statistical computations that support this learning?

The literature suggests two alternatives: hypothesis testing and associative learning. Table 1 summarizes 19 existing models in the CSWL literature. Models are grouped according to theoretical accounts – hypothesis testing (HT), associative learning (AL), and models that integrate both perspectives (Mixed). The table compares models in terms of Input—the form of data the model processes, for example, sub-symbolic data such as human utterances or artificially generated symbolic stimuli, and the computational Formalism that the model uses, e.g., connectionist or Bayesian. The table also highlights key model features, the main constraints or biases the model assumes, the experimental data and key behaviours it captures, its main implications, and some of its limitations. Below, we evaluate these models and the theoretical accounts they formalize.

Table 1:

CSWL models in literature.

| Paper | Input | Formalism | Key features | Constraints/Biases | Captures | Implications | Limitations |

|---|---|---|---|---|---|---|---|

| Hypothesis Testing Models | |||||||

| Siskind (1996) | Symbolic | Discrete rule-based inference; incremental | Pre-defined rules detect noise and homonymy; Heuristic functions disambiguate word senses under homonymy. | Mutual exclusivity and coverage (to narrow down the set of meanings for a word); composition |

Data: Artificially generated corpus Behaviour: Learns under variable vocabulary size and degree of referential uncertainty; fast mapping; bootstrapping from partial knowledge |

Incremental systems of CSWL and mutual exclusivity (ME) can solve lexical acquisition problems like of children | Not possible to revise the meaning of a word once it is considered learned; sensitive to noise and missing data |

| Frank, Goodman & Tenenbaum (2009) | Sub-symbolic audiovisual | Bayesian | Batch processing; Uses speaker’s intention to derive mappings | Speaker’s intent is known |

Data: Small corpus of mother-infant interactions CHILDES dataset Behaviour: ME; Fast map; Generalizes from social cues |

Some language-specific constraints such as ME may not be necessary | Learns small lexicon; arbitrary representation of speaker’s intention |

| Truesell et al. (2013) | Symbolic | Mathematical | Incremental; Retains one referent per word at a time; minimal free parameters | Some degree of failure to recall |

Data:

Trueswell et al. (2013) Behaviour: Captures participant’s knowledge (or lack) of referents from preceding trials |

Adults retain only one hypothesis about a word’s meaning at each learning instance | Arbitrary assumptions of recall probability |

| Sadegi, Scheutz & Krause (2017) | Sub-symbolic audiovisual | Bayesian, Embodied | Incremental; Uses speaker’s referential intentions; Robotic implementation; Robust to noise | Speaker’s Intent; ME; Limited memory |

Data: Simple utterances from a human to the robot Behaviour: Learns under referential uncertainty |

Incremental models help avoid a need for excessive memory | Tested on a very limited data set and an artificial experiment only |

| Najnini & Banerjee (2018) | Symbolic | Connectionist | Integrates socio-pragmatic theory; Batch processing; Deep reinforcement learning; Uses four reinforcement algorithms | Novel-Noun Novel-Category (N3C); Attentional, prosodic cues |

Data: Artificial experiments on two transcribed video clips of mother-infant interaction from CHILDES corpus Behaviour: Referential uncertainty; N3C bias |

Reinforcement learning models are well-suited for word-learning | Learns one-to-one mappings only; No modelling of empirical experiments |

| Associative Learning Models | |||||||

| Yu & Ballard (2007) | Sub-symbolic Audio-symbolic visual | Probabilistic | Batch processing; Uses expectation maximization; Adds speaker’s visual attention and social cues in speech | Visual Attentional and Social cues |

Data: 600 mother utterances from CHILDES database corpus Behaviour: Referential uncertainty; Role of prosodic and visual cues |

Speakers’ attentional and prosodic cues guide CSWL learning | No modelling of empirical results |

| Fazly, Alishahi & Stevenson (2010) | Sub-symbolic audio; Symbolic visual | Probabilistic | Incremental; Calculates and accumulates probability for each word-object pair | Prior knowledge bias |

Data: Artificial experiments on the CHILDES database corpus Behaviour: Referential uncertainty; ME bias |

Inbuilt biases such as ME not necessary; Primarily, input shapes development | Basic CSWL; no modelling of empirical results |

| Yu & Smith (2011) | Eye-tracking data | Mathematical | Incremental; Moment-by-moment modelling; Uses eye fixations to build associations | Selective visual attention |

Data:

Yu & Smith (2011) Behaviour: Learning under referential uncertainty in infants; Selective attention |

Visual attention drives learning; Learners actively select word–object mappings to store | Mathematical treatment of infant gaze data; Not a model of audiovisual input |

| Nematzadeh, Fazly & Stevenson (2012) | Sub-symbolic audio Symbolic visual | Probabilistic | Extension from Fazly et.al. (2010) forgetting and attention to novelty | Prior knowledge; Attention to novelty; Memory |

Data : Artificial experiments on a small corpus from CHILDES database Behaviour: Referential uncertainty; spacing effect |

Memory and attention processes underlie spacing effect behaviours | No modelling of empirical results |

| Kachergis, Yu & Shiffrin (2012, 2013, 2017) | Symbolic | Mathematical | Incremental; Learned associations and novel items compete for attention; Associations decay; WM supports successive repeated associations | Familiarity/prior knowledge; Novelty/entropy for attentional shifting |

Data:

Kachergis, Yu & Shiffrin (2012, 2013, 2017), Behaviour: ME as well as its relaxation; Sensitivity to variance in input frequency and contextual diversity |

ME can arise in associative mechanisms through attentional shifting and memory decay | Bias to associate uncertain words with uncertain objects similar to ME; Unexplained parametric variations |

| Yurovsky, Fricker, Yu, & Smith (2014) | Symbolic | Mathematical, Bayesian | Compares role of full and partial knowledge in generating mutual exclusivity behaviour | Prior knowledge bias |

Data:

Yurovsky et al (2014) Behaviour: ME; Bootstrapping from partial knowledge |

Partial knowledge can help disambiguate word meanings | Specific to analysis of the role of prior knowledge reported in this work |

| Rasanen & Rasilo (2015) | Sub-symbolic audiovisual | Probabilistic | Transition probability-based; Joint learning of word segmentation and word-object mappings from continuous speech | Transition probability (TP) analysis |

Data : Yu and Smith (2007), Yurovsky, Yu, & Smith (2013) Caregiver Y2 UK corpus; Behaviour: ME, Sensitivity to varying degrees of referential uncertainty |

CSWL can aid bootstrapping of speech segmentation and vice versa | Hard allocation of TPs into disjoint referential contexts; No experiments on development |

| Bassani & Araujo (2019) | Sub-symbolic audiovisual | Modular connectionist | Incremental trial-by-trial learning; Raw images of objects, streams of phonemes as input data | Time-Varying Self-Organizing Maps |

Data:

Yurovsky et al. (2013), Yu and Smith (2007), Trueswell et al. (2013); Behaviour: Referential uncertainty, Local/global competition, Context-sensitive association learning |

Time-Varying Self-Organizing Maps are better at capturing co-variations than Hebbian learning | Does not benefit from prior knowledge in forming new associations |

| Mixed Models | |||||||

| Fontanari, Tikhanoff, Cangelosi, & Perlovsky (2009) | Symbolic | Neural Modelling Fields | Batch processing of input; NMF categorization mechanism | Noise/Clutter detection; Parametric models |

Data: Small artificial dataset Behaviour: Referential uncertainty |

Fuzziness in noise can be exploited to find the correct associations | The number of models is chosen a priori; No modelling of any empirical data |

| Kachergis & Yu (2018) | Symbolic | Mathematical | Extends Kachergis e.al. (2012) with uncertain responses during training | Uncertain response probability |

Data:

Kachergis & Yu (2018) Behaviour: Captures participant accuracy and uncertainty in learning trials |

Neither pure HT or extreme AL models can account for CSWL behaviours | Processes / representations that generate uncertain responses not specified |

| Smith, Smith, & Blythe (2011) | Symbolic | Probabilistic analysis | Comparison of different possible strategies in an associative model |

Data:

Smith et al. (2011) Behaviour: Learning under varying referential uncertainty and interleaving trials |

Continuum of possible strategies used, modulated task difficulty | Mathematical treatment is specific to the empirical work by the authors | |

| Stevens, Gleitman, Trueswell, & Yang (2017)) | Sub-Symbolic audio Symbolic visual | Probabilistic | Incremental; Combines selection, ME, reward based learning and associative learning; | Mutual exclusivity |

Data: CHILDES; Cartmill et al. (2013); Yu & Smith (2007); Trueswell et al. (2013); Koehne et al. (2013) Behaviour: CSWL under varying uncertainty |

Adults can retain multiple associations but always a single favoured hypothesis | Does not account for retaining multiple hypotheses for one word |

| Taniguchi, Taniguchi & Cangelosi (2017) | Sub-symbolic audiovisual | Embodied, Bayesian, Generative | Unsupervised machine learning based on a Bayesian generative model; Robotic implementation; Word learning for objects and actions | Mutual exclusivity; Taxonomic bias |

Data: Artificial experiment on a limited word-referent set Behaviour: learning under referential uncertainty; Learning of objects and actions |

Mutual exclusivity constraint is effective for lexical acquisition in CSL | Does not deal with major issues in CSWL |

| Yurovsky & Frank (2015) | Symbolic | Probabilistic | Incremental; shares intention/attention to create AL to HT spectrum | Intention distribution and memory decay |

Data:

Yurovsky & Frank (2015) Behaviour: CSWL under varying within and between trial uncertainty |

CSWL distributional but modulated by limited attention and memory | Even distribution of attention among non-hypothesized is arbitrary |

Hypothesis Testing Accounts

Hypothesis testing (HT) accounts of CSWL suggest that learners form a single hypothesis about word-object mappings on each presentation that is either verified by later consistent encounters or discontinued causing the learner to build and test a new hypothesis (Medina, Snedeker, Trueswell, & Gleitman, 2011; Trueswell, Medina, Hafri, & Gleitman, 2013). For example, Trueswell et al. (2013) exposed adult participants to a set of everyday objects and a novel word and asked them to choose the most-likely referent. If learners responded incorrectly to a given word, they were found on later trials to be equally likely to choose any of the alternatives, even though some of those alternatives had co-occurred with the tested word in prior trials and were, therefore, more likely candidates for the word. Trueswell et al. (2013) interpreted this finding as showing that participants had not tracked multiple possible referents for a given word, as they did not have a preferred second choice. This argument was also supported by eye-tracking data showing that participants did not look significantly more at the statistically more-frequent alternative referent (but see Roembke & McMurray, 2016 for conflicting data). It appeared that learners simply restarted from scratch if their previous guess was wrong. Using a similar paradigm with 2- and 3-year-old children, Woodard, Gleitman, and Trueswell (2016) concluded that children also hypothesize a single meaning that is tested on subsequent encounters.

HT models represent word learning as an instance-by-instance selection, induction and inference computation, guided by (presumably) built-in language-specific constraints such as one-trial fast mapping (Trueswell et al., 2013), mutual exclusivity (Markman, 1990), or the novel name nameless category principle (Golinkoff, Mervis, & Hirsh-Pasek, 1994). These constraints help limit the set of possible initial hypotheses about a word’s correct referent. For example, Trueswell et al.’s (2013) Propose-but-Verify (PbV) model stores only one hypothesized mapping for a word at the first instance. This hypothesis is recalled with some probability when the same word is encountered again and is compared against the currently available referent set. If the hypothesized referent is present, the model infers the hypothesis is correct and stores it with an increased probability for recall. Otherwise, the model removes the current hypothesis from memory and makes a new hypothesis by selecting one from any of the available referents at random.

A strength of HT models is that they are memory efficient, storing a limited number of associations per word. More importantly, HT models highlight referent selection as a core process which makes the model an active learner whose selection decisions impact its future learning (shown empirically by Trueswell et al., 2013). HT models are limited, however, in that forming a single hypothesis means missing a lot of structure in the data; for example, HT models cannot learn homophones (Stevens et al. 2017). HT models often require that strong constraints like mutual exclusivity or N3C pre-exist, and these models are specified at a computational, rather than process, level. Furthermore, to date, HT models of CSWL have been applied to either artificially generated corpuses (Najin & Banerjee, 2018; Siskind, 1996; see Table 1), small sets of utterances (Frank, Goodman & Tenenbaum, 2009; Sadehgi, Scheutz & Krause, 2017), or a single empirical study (Trueswell et al., 2013). Thus, while these models demonstrate the possibility that HT could be used to learn multiple word-object mappings, they have not been generalized widely across studies. Furthermore, these models have not been applied to the range of infant studies that have demonstrated the importance of basic cognitive processes such as visual exploration (Yu & Smith, 2011; Smith & Yu, 2013) or memory (Vlach & Johnson, 2013) in CSWL.

Associative Learning Accounts of CSWL

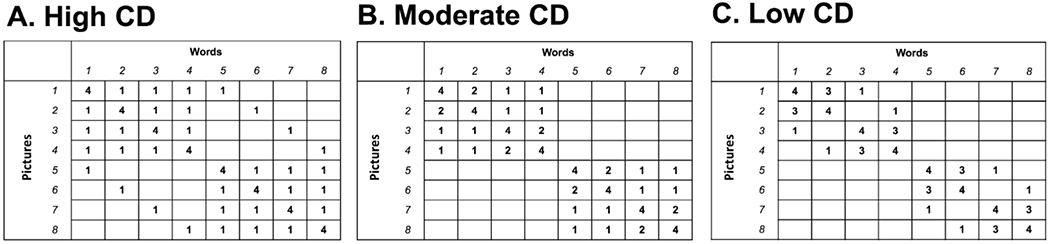

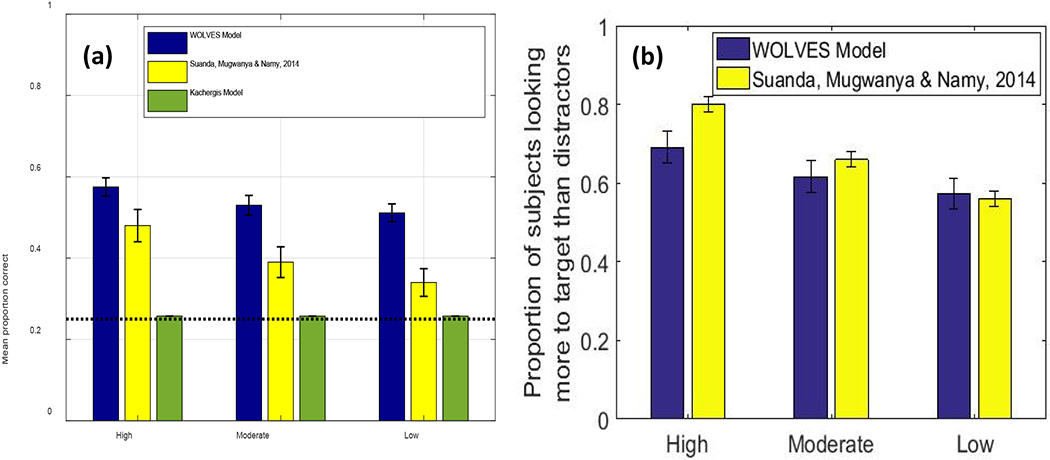

In contrast to HT accounts, associative learning (AL) accounts suggest that learners store information about the multiple possible word-referent mappings that are available in each word-learning situation (Smith, 2000; Yu, 2008). Correct mappings then emerge from strengthening and weakening of associations over repeated exposures. These accounts, therefore, suggest that CSWL is a gradual, parallel accumulation of statistical regularities in the input as information about multiple word-object co-occurrences are tracked simultaneously (Yu & Ballard, 2007; Yu & Smith, 2007). For example, Suanda, Mugwanya, & Namy (2014) exposed children to a set of novel images and words with two pairings per trial while varying the frequency with which a word co-occurred with a distractor in training. They found that children’s learning of a word was directly proportional to the frequency of its co-occurrence with a target image (and inversely proportional to distractor frequency). Suanda et al. (2014) concluded that children’s responses reflected an accumulation of the statistical structure of the learning environment. Similarly, Yu and Smith (2007) controlled within-trial uncertainty in their study with adults by varying the maximum possible word-object associations per trial from four to sixteen. Adults’ performance at test was directly related to within-trial uncertainty. Yu and Smith (2007) suggested that adult performance reflected the statistical structure in the input capped by the real-time processing demands of limited attention and memory.

The core of AL models of CSWL is a set of mappings between words and referents with strengths that, over trials, come to reflect the statistical structure in the input data (Kachergis, Yu, & Shiffrin, 2012; Rasanen & Rasilo, 2015; see Table 1). Most AL models, however, bias this statistical accumulation over trials using cognitive constraints of attention, memory, prior knowledge, and so on (Table 1). For example, a very successful biased AL model proposed by Kachergis and colleagues (2012, 2013, 2017) distributes attention among possible associations in a trial based on a competition between a bias toward known associations (prior knowledge) and a bias toward unknown stimuli (novelty). This allows learning of multiple associations for each word (or object). These associations are also modified by memory decay that diminishes highly infrequent associations over trials. Together these computations enable the model to retain the ‘essential’ statistical structure in the input. This constrained associative learning allows the model to capture, for example, the role of sensitivity to variance in CSWL input frequency (Kachergis et al., 2017) and relaxing of mutual exclusivity (Kachergis et al., 2012) seen in adult studies of CSWL.

Some AL models have been formulated with reference to psychological processes of attention and memory, such as Kachergis et al. (2012) and Nametzadeh et al. (2012) (see Table 1). Another strength is that AL models preserve multiple associations to learn homophones and even show the emergence of constrains like mutual exclusivity (Fazly et al., 2010; Kachergis et al. 2012; Yurovsky et al. 2014). However, AL models lack any form of selection process which is necessary to unpack how decision-making unfolds during learning. Furthermore, like HT models of CSWL, the majority of AL models have been developed in the context of, and applied to, single empirical studies (see Yu & Ballard, 2007; Yurovsky, Fricker, Yu & Smith, 2014 in Table 1) or limited sets of data such as small utterance corpuses rather than the results of empirical studies (see Fazly et al.; 2010; Yu & Smith, 2011; Nematzadeh, Fazly & Stevenson, 2012 in Table 1). A few AL models have captured data from multiple studies (Bassani & Araujo, 2019; Kachergis et al., 2012; Kachergis, Yu, & Shiffrin, 2013, 2017; Rasanen & Rasilo, 2015), suggesting that they are better able to generalize across specific CSWL paradigms. Yet, while promising, no AL model has been applied to the full range of CSWL studies from infants to adults, and thus no AL account has explained changes in CSWL over development.

Mixed Hypothesis Testing / Associative Learning Models

Several recent models bridge the HT and AL distinction by combining aspects of associative learning with constraints on how candidate referents are selected (see Mixed Models in Table 1). For example, Stevens, Gleitman, Trueswell and Yang (2017) proposed a HT model that uses an associative learning mechanism to weigh the different hypotheses at each instance of the word-learning task. In this model, a word is only added to the model’s lexicon if the conditional probability of its hypothesised referent exceeds a threshold value. As a second example, Kachergis and Yu (2018) extended their biased AL model (Kachergis et al. 2012) with a probabilistic selection computation that makes uncertain responses at every word learning instance. This allows the model to capture participant accuracy and uncertainty on learning trials which is not possible with the original AL model. Similarly, Yurovsky and Frank’s (2015) model incorporates a parameter to control how attention (or intention) is distributed across associations. At one extreme of this parameter, this model can focus attention narrowly and behave in an HT fashion. At the other, it can distribute attention and behave more like an AL model; although a mid-range value of this parameter fit participant data best (Yurovsky & Frank, 2015).

Similar to HT and AL models, a number of mixed models have been applied to small artificial datasets (see Fontanari, Tinkhanoff, Cangelosi & Perlovsky, 2009; Taniguchi, Taniguchi & Cangelosi, 2017 in Table 1), or single empirical studies (Smith, Smith & Blythe, 2011; Kachergis & Yu 2018, Yurovsky & Frank, 2015 in Table 1). Nevertheless, some models that bridge HT and AL, such as Stevens et al. (2017), provide more coverage of the literature, suggesting the possibility that the full breadth of CSWL findings might only be captured by an approach that blends aspects of HT and AL.

But are mixed models the best way forward? Yu and Smith (2012) demonstrated that depending on the specific information selection and decision computations employed, a model with an associative learning core can perform strict hypothesis testing and vice versa. Yu and Smith (2012) concluded that the debate between hypothesis testing and associative learning in the context of statistical word learning is not well formed because accounts to date have been proposed at what Marr (1982) called the “computational” level—dealing only with the nature of the information available to the learner—and not at the “algorithmic” level (or below) to explicitly specify the (neural) representations and psychological processes used to build and manipulate those representations (Smith, Suanda, & Yu, 2014).

Beyond HT and AL: Implementing A Different Approach

Inspired by Yu and Smith (2012), the over-arching goal of the present paper is to propose an implementation-level theory that is comprehensive and takes time seriously—real time (millisecond by millisecond), learning time (trial to trial), and developmental time (from infancy into adulthood). This goal is motivated by prior empirical work showing that time matters for what is learned at the level of real-time looking behaviours, trial-to-trial task structure, and over the longer timescale of development. We are also motivated by the fact that while there are numerous models of CSWL, the field lacks a consistent narrative linking the influence of cognitive processes across CSWL tasks, behaviours, and participant populations.

A growing body of data demonstrate that real-time selection and visual exploration matter for learning in CSWL. We seek to explain why and to unpack the processes involved. For example, we know that infant learning in CSWL tasks is affected by the patterns of looking demonstrated during training: strong learners tend to have fewer longer looks while weak learners have more shorter looks (Colosimo, Forbes, & Samuelson, 2020; Yu & Smith, 2011). No models explain how these looking patterns – these real-time shifts of attention – are generated or provide a mechanistic account of how they influence learning.

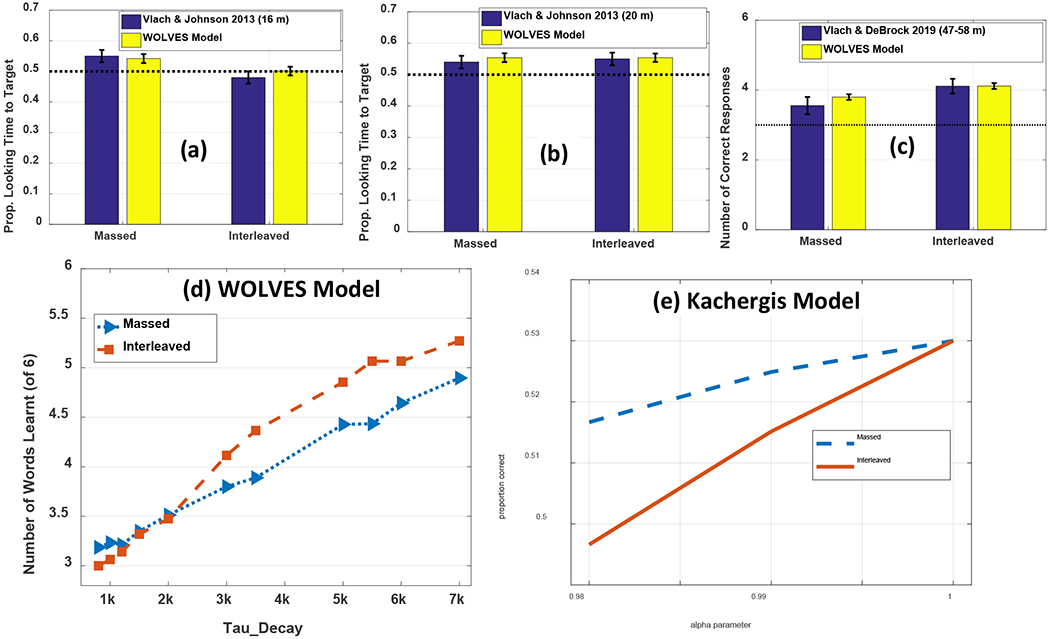

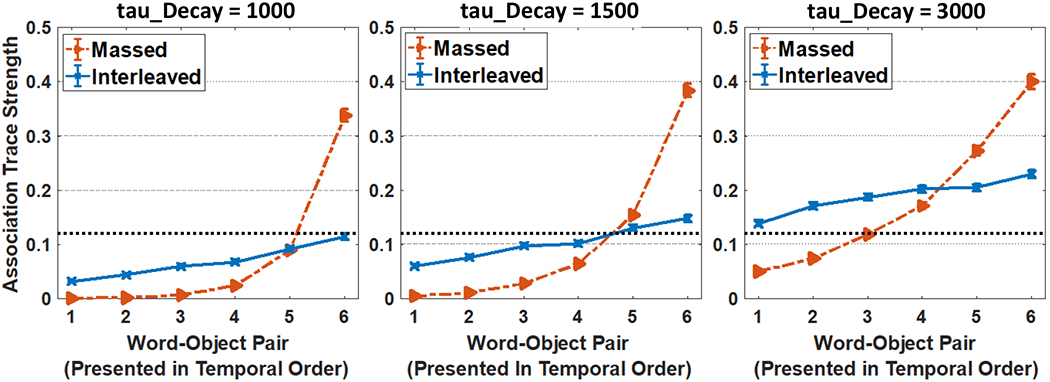

The literature also demonstrates that the order of training trial matters for what is learned. If trials are structured such that objects repeat from trial to trial, 12- to 14-month-old infants habituate to repeating items and learn less (Smith & Yu, 2013). Furthermore, studies examining the influence of massed versus interleaved presentation show differential effects over learning. Vlach and colleagues have found that 16-month-old toddlers learn best when there is little delay between presentations of a word-object pair in a CSWL task, while 20-month-old’s learn best with more delay (Vlach & DeBrock, 2017, 2019; Vlach & Johnson, 2013). Benitez, Zettersten & Wojcik (2020) found that 4- to 7-year-old children learned equally successfully with massed or interleaved presentation while adults benefited substantially from massed object presentation (see also, Kachergis et al., 2009; Smith et al., 2011; Yurovsky & Frank, 2015). In all of these cases, what people learn over time is affected by the trial sequence because the sequence of trials changes what learners do over time on each trial.

This creates an important distinction because many models in the literature conceptualize each trial in a ‘one-shot’ manner. For example, in Kachergis et al.’s (2012) model, attention is distributed via normalization across a set of stimuli. This requires that the learner knows the set of objects and words to be presented up front so the model can make a single computation over these stimuli on each trial. If real-time processes constrain what is learned, theories that simplify these processes into a single ‘shot’ are limited.

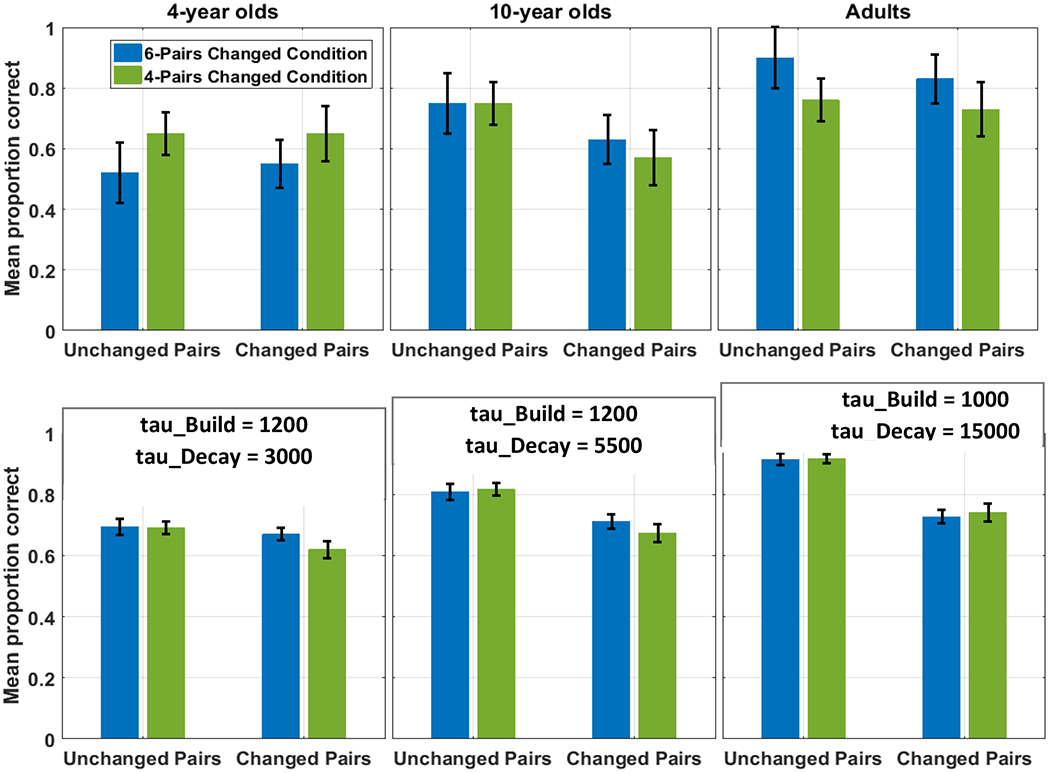

We also seek to capture developmental differences in CSWL. While few studies directly compare the performance of adults and children in the same task (see Benitez et al., 2020; Bunce & Scott, 2017; Fitneva & Christiansen, 2017, for exceptions), it is clear from the literature that there are developmental differences in CSWL. In addition to the example of massed or interleaved presentation above, adults and children differ in the influence of initial accuracy on final learning outcome. Fitneva and Christiansen (2017) found that 4-year-old children’s learning outcome was best when their initial accuracy on a subset of word-referent pairings was high, 10-year-old children’s outcome was similar when initial accuracy was high or low, and adults did best when initial accuracy was low. As a second example, Vlach and DeBrock (2017) have related differences in CSWL performance in a group of 2.5- to 6-year-old children to differences in memory abilities. No current models have explained these developmental effects. It is certainly fine for theories to focus only on adult (or child) data, but if a theory can reach into development and offer a systematic account of such differences, such a theory would be notable in moving beyond current accounts.

Finally, we seek to provide a comprehensive theory of CSWL that explains multiple findings from multiple paradigms / tasks. Most prior models reproduce only a handful of empirical results from the CSWL domain (see Table 1). Furthermore, previous models fit parameters to each task or condition individually without any restrictions as to how parameter changes are made from report to report (Fazly, Alishahi, & Stevenson, 2010; Kachergis et al., 2012). Thus, there is currently little theoretical specification of why parameter values change across tasks, even for the same group of participants. In this context, our goal is to test a theoretical account of CSWL by simulating data from 12 experiments including data from infants, young children, and adults across a variety of task procedures – ideally with a constrained parameter set. We also seek a theory that compares favourably to current models. Thus, in addition to simulating our own model, we fit the same data with two other models from the literature, comparing results using multiple metrics including mean absolute percent error (MAPE), the Akaike Information Criterion (AIC), and the Bayesian Information Criterion (BIC). These latter two criteria penalize more complex models such as ours. We also probe the generalizability of the models using the generalization criterion methodology (GNCM) proposed by Busemeyer and Wang (2000).

Because we seek to ground our understanding of CSWL in terms of the real-time processes that underlie memory, attention, and the building of word-object associations, our model – Word Object Learning via Visual Exploration in Space (WOLVES) – is built from two previously established process models: one on word-object association mapping (Samuelson, Smith, Perry, & Spencer, 2011; Samuelson, Spencer, & Jenkins, 2013) and the other on visual attention and memory (Johnson, Spencer, Luck, & Schöner, 2009; Perone & Spencer, 2013; Schneegans, Spencer, & Schöner, 2016). As we will demonstrate, the integration of these models provides a process-level account of CSWL that simulates in-the-moment visual behaviours and trial-by-trial looking and learning, mechanistically explaining differences across tasks and over development. Furthermore, the theoretical framework WOLVES is embedded within – Dynamic Field Theory (DFT) – offers a neurally-grounded set of concepts for understanding the emergence of cognition in embodied systems (Schöner, Spencer & The DFT Research Group, 2016), and provides direct connections to related processes such as visual working memory, visual search, visual exploration, and word learning biases.

We start the present report by introducing WOLVES via an overview of the two prior models upon which it is based (a more detailed introduction to the core concepts of DFT is provided in Appendix A). We then detail the WOLVES architecture, stepping through how the model captures cycles of autonomous looking in real time (millisecond by millisecond), and how these cycles map words and object features together from trial to trial over learning. This includes a discussion of both bottom-up and top-down influences in the model, that is, how looking structures word-object learning and how word-object learning influences looking. Simulations of the model show how the time-extended nature of learning in CSWL tasks has implications for both the AL v HT debate and our understanding of how contextual factors and individual differences shape performance in the task.

We then establish that WOLVES is a comprehensive theory of CSWL via quantitative simulations of data from 12 studies of CSWL. This includes adult studies purported to support both sides of the AL v HT debate, as well as developmental studies which have not been the focus of prior modelling work. We show that WOLVES compares favourably to two other models by simulating the same set of experiments with models from Kachergis et al. (2012) and Stevens et al. (2017). This model comparison highlights that WOLVES captures more data from the literature (132 data values vs. 69 for the comparator models), captures the same data more accurately (i.e., lower percent error scores in 12 of 17 conditions), generalizes more accurately to three ‘held out’ experiments, and provides the only systematic account of development. In terms of overall model evaluation metrics (AIC/BIC), however, the Kachergis et al. model fares better. We conclude with a discussion of the key findings from the model comparison exercise as well as broader implications of WOLVES, highlighting several future directions for this line of work including tests of novel predictions.

Word-Object Learning via Visual Exploration in Space

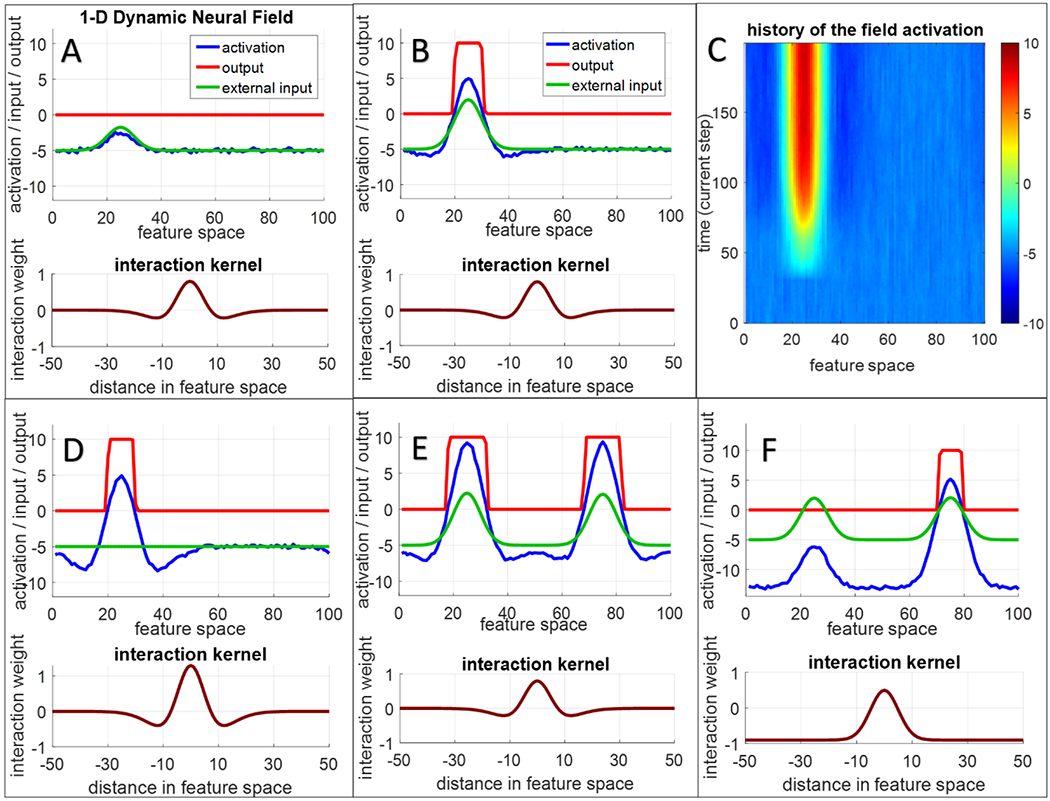

Dynamic Field Theory (DFT) is a framework that provides an embodied, dynamic systems approach to understanding and modelling cognitive-level processes and their interaction with the external world via sensorimotor systems (Schöner et al., 2016; Spencer & Schöner, 2003). DFT has been used to test predictions about early visual processing, attention, working memory, response selection, spatial cognition, and word learning (Erlhagen & Schöner, 2002; Johnson, Spencer, & Schöner, 2009; Samuelson, Schutte, & Horst, 2009; Samuelson et al., 2011; Schutte & Spencer, 2009) at behavioural and brain levels using multiple neuroscience technologies (Bastian, Schöner, & Riehle, 2003; Buss et al., 2021; Erlhagen et al., 1999; Markounikau, Igel, Grinvald, & Jancke, 2010; McDowell, Jeka, Schöner, & Hatfield, 2002). Since learning in CSWL scenarios is directly related to these cognitive processes, DFT offers a good framework for understanding how these processes come together in the CSWL task.

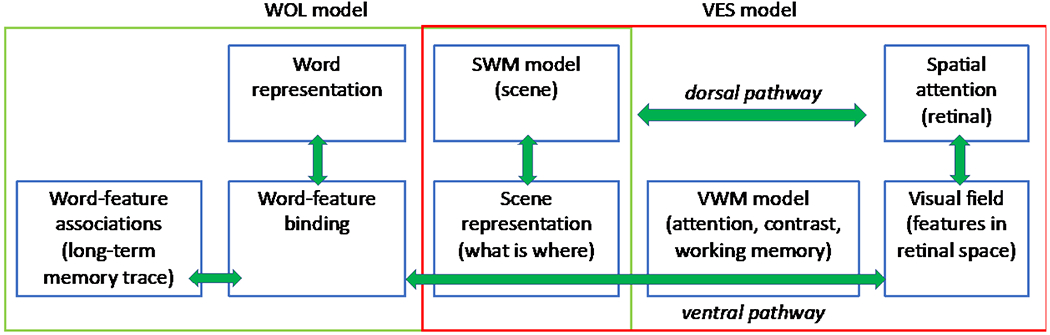

Figure 1 shows a schematic of WOLVES. The model integrates the word-object learning (WOL) model shown in green (Samuelson, Smith, Perry, & Spencer, 2011; Samuelson, Spencer, & Jenkins., 2013) with a model of visual exploration in space (VES) shown in red (Schneegans, Spencer, & Schöner, 2016). These two models share the common elements in the overlapping shaded boxes (aspects of spatial working memory and a scene representation). Note that the VES model is also an integrative model in its own right, bringing together earlier models of the neural processes that operate in early visual processing (Jancke et al., 1999; Markounikau Igel, C., and Jancke, D., 2008), models of spatial attention (Schneegans et al., 2014; Wilimzig, Schneider, & Schöner, 2006), a model of visual working memory (Johnson, Spencer, Luck, & Schöner, 2009; Perone & Spencer, 2013), and a model of spatial working memory (Schutte & Spencer, 2009; Schutte, Spencer, & Schöner, 2003). These models are integrated in a way that is consistent with neural evidence for dorsal (‘where’ or ‘how’) and ventral (‘what’) pathways in the brain (Deco, Rolls, & Horwitz, 2004; Hickok & Poeppel, 2004; Schneegans et al., 2016)

Figure 1:

A schematic of WOLVES which integrates two previous models: the Word-Object Learning (WOL; green box) model and the Visual Exploration in Space (VES; red box) model. Note that the VES model is also an integration of earlier models of visual processing, including models of the neural dynamics in early visual fields, spatial attention, visual working memory (VWM) and spatial working memory (SWM).

To make our discussion of WOLVES as simple as possible we first describe the architecture and functionality of the two component models—WOL and VES—before discussing their integration. We keep this discussion brief as these models have been presented elsewhere (Johnson, Spencer, Luck, & Schöner, 2009; Perone & Spencer, 2013; Schneegans, Spencer, & Schöner, 2016; Samuelson, Smith, Perry, & Spencer, 2011; Samuelson, Spencer, & Jenkins., 2013). Readers unfamiliar with Dynamic Field Theory may find the primer in Appendix A to be a useful starting point.

The Word-Object Learning (WOL) model

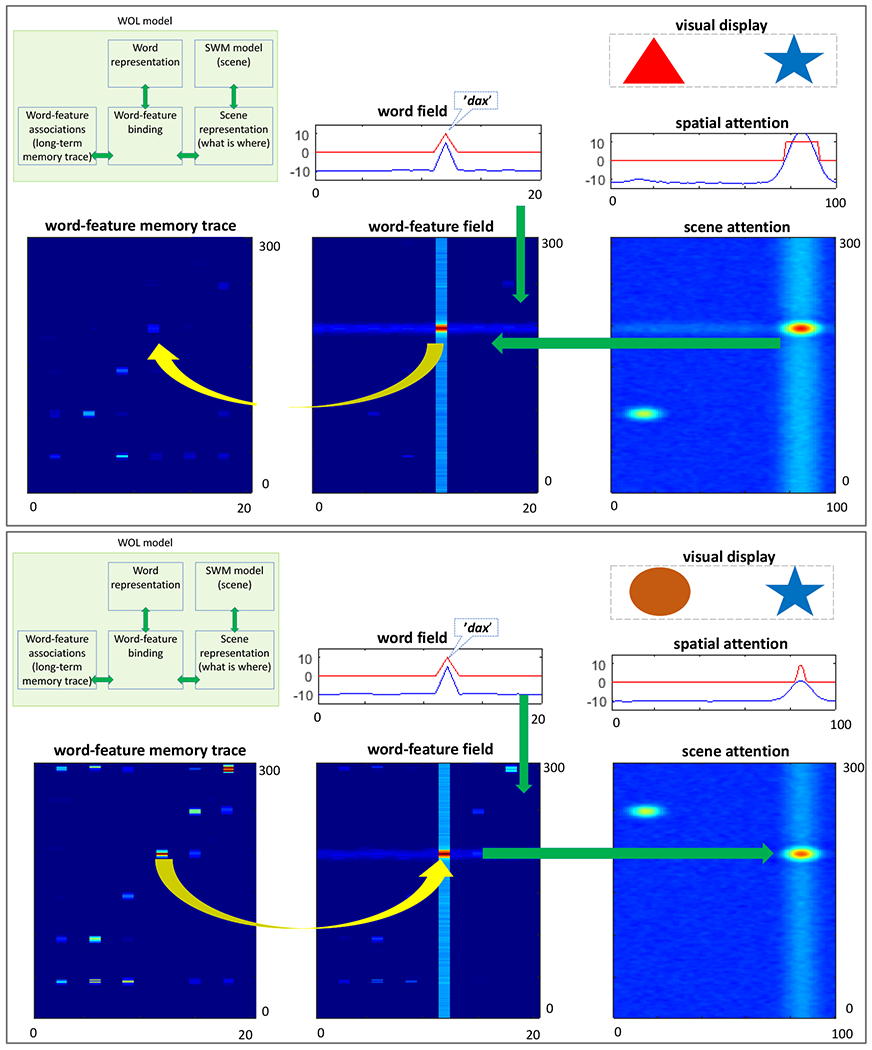

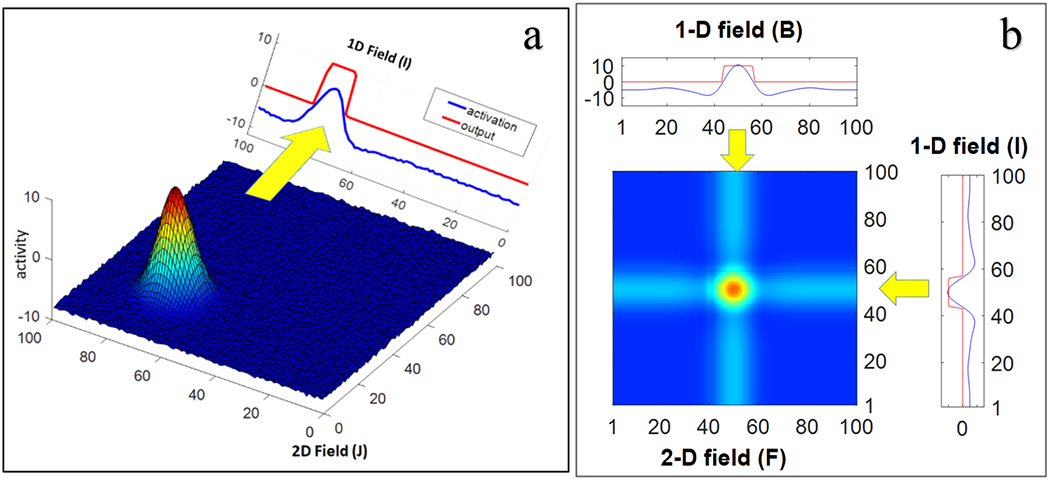

The core elements of the WOL model are shown in the top panel of Figure 2; two one-dimensional (1D) dynamic fields – word and spatial attention (part of a spatial working memory model, see Schutte & Spencer, 2009) – and two two-dimensional (2D) fields – a scene representation field (aka scene-attention) and a word-feature binding field. The final layer is the memory trace of word-feature associations which is the primary contributor to word learning over trials.

Figure 2:

Operation of the WOL part of the model. In the top panel, input from the word field and scene attention field intersect in the word-feature field and form a new memory trace (indicated by the yellow arrow) in the memory trace field. The bottom panel shows a later time-point when the word corresponding to this trace is again presented to the model. The word input activates the trace forming a peak that signifies a recall of the encoded association and drives attention to the corresponding object.

The word field captures the representation of external word input, that is, which word is presented to the model. Note that words in this model are represented as abstract units (a layer of discrete nodes as in many connectionist models) rather than as a sequence of auditory inputs. The activation peak in shown in the field (blue line) indicates that the ‘dax’ (arbitrarily assigned to unit 12) has been activated in response to input. Note that the red line indicates which unit is above a threshold value (activation = 0). Only neurons that are above threshold contribute to neural interactions within and between layers (see Appendix A for overview and sigmoidal function in Appendix B for details).

Visual stimuli are input to the scene attention field. Here, each field site is ‘tuned’ to a particular object feature (colour in Figure 2) at a specific location in the scene (e.g., left or right in horizontal space). Thus, each neuron in the 2D scene attention field has a predefined tuning curve, and the neurons are arranged such that neurons with similar tuning curves are near one another. Concretely, neurons that ‘prefer’ orange items on the left will be nearby neurons that ‘prefer’ red items on the far left. Activation in the 2D field is captured by the colour scale with ‘hotter’ colours indicating more intense activation. The red hotspot in the scene attention field indicates that a peak has formed from the detection of the blue item to the right. The scene attention field also has activation on the left caused by the red item, but this activation profile is weaker / less intense.

The reason that the activation associated with the blue item is more intense is that the scene attention field is reciprocally coupled to the spatial attention field. This is a ‘winner-take-all’ field, that is, there can only be one focus of attention (one peak) at any moment in time. Here, ‘winner-take-all’ refers to the ‘rule’ governing how neural activation changes from millisecond-to-millisecond. In particular, above-threshold neurons that are close to one another are mutually-excitatory, while above-threshold neurons that are far apart are mutually-inhibitory. In some fields, inhibition follows a Gaussian rule, so there is an inhibitory trough around each excitation peak. In a ‘winner-take-all’ field, inhibition is global; this suppresses activation everywhere except at the centre of excitation, ensuring, for instance, that there is only one attentional focus at each moment in time.

As seen in the spatial attention field, the model is currently attending to the right item (see blue activation curve). Consequently, the spatial attention field passes a ‘ridge’ of activation into the scene attention field at the right location. This vertical ridge (the blurry blue line in the scene attention field) boosts the activation of the blue item, leading to selection of this item in scene attention. That is, as excitation was approaching threshold in the attention layer, random fluctuations caused some neurons to go above threshold, engaging local excitation and causing a peak to emerge. Thus, there is nothing special about the blue item in this example; rather, neural noise helped the model select the blue item in spatial attention.

Object selection in the scene attention field causes a horizontal ridge of activation at the feature value of the attended object (blue in this case) to be passed to the 2-dimensional word-feature field (see leftward green arrow). The word-feature field also receives vertical ridge input from the 1D word-field after it has detected the presence of the word input (‘dax’; see downward green arrow). If ridges from the scene attention field and the word field overlap through time, their intersection will form a peak in the word-feature field (red dot in the word-feature field).

The word-feature peak engages the last piece of the architecture – the memory trace layer (see yellow arrow). In particular, when a peak goes above threshold in the word-feature field, it leaves a trace at the associated position in the memory layer. Memory traces are association strengths that vary between 0 and 1, much like a connection weight in a connectionist model. This enables learning of word-feature mappings, that is, which object features go with each word. Note that there are many localized memory traces in the word-feature memory trace layer as this exemplary simulation is multiple trials into a word learning paradigm. To anticipate the discussion below, it is useful to highlight here that many of the words have memory traces for multiple object features. Similarly, the same object features have memory traces linked to multiple words.

What is the function of these memory traces? Because the memory trace layer and the word-feature field are bi-directionally coupled, the memory trace can impact real-time ‘decisions’ in the word-feature field. This is evident if we run the simulation in a different scenario. Rather than starting with a visual input and an auditory word, we can present a word and ask the model to pick from one of two objects in the task space. This is shown in the lower panel of Figure 2. Here we present a word (again, the ‘dax’ or unit 12); we also boost the resting level of the word-feature field to bring the influence of the memory traces closer to threshold (activation = 0). Consequently, the strongest memory trace associated with the word pierces threshold (see yellow arrow), forming a peak in the word-feature field. This sends a horizontal ridge to the scene attention field (see rightward green arrow), amplifying the feature that matches the recalled item. This causes the model to form a peak in the scene attention field and drives attention to the right item, effectively choosing this item as the object that matches the word.

Note that associations in the memory trace layer build over a slow, learning timescale that is typically several times slower than the ‘real’ or millisecond timescale of the activation dynamics in the neural fields. In addition, memory traces decay over a very long timescale. For a detailed overview of these memory trace dynamics, see Appendix A.

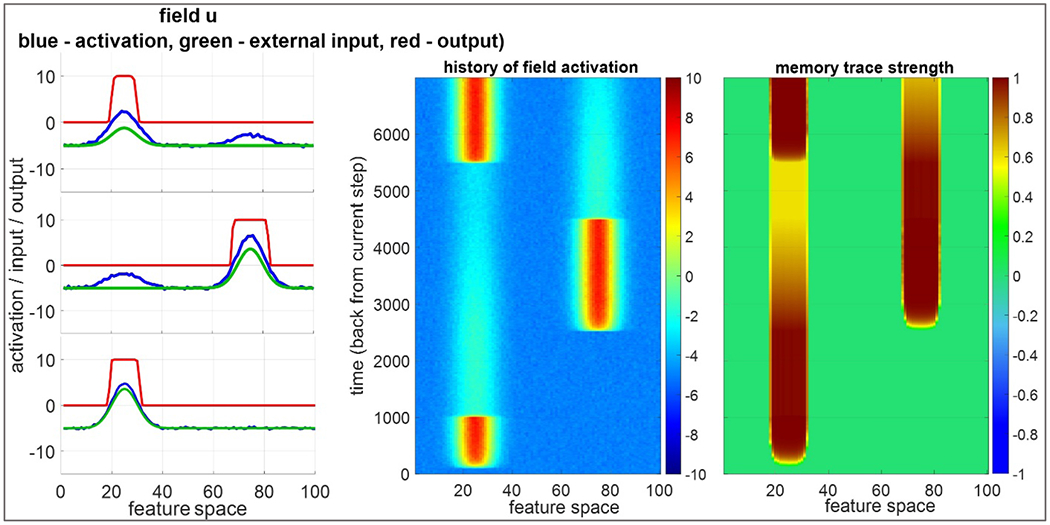

The Visual Exploration in Space (VES) model

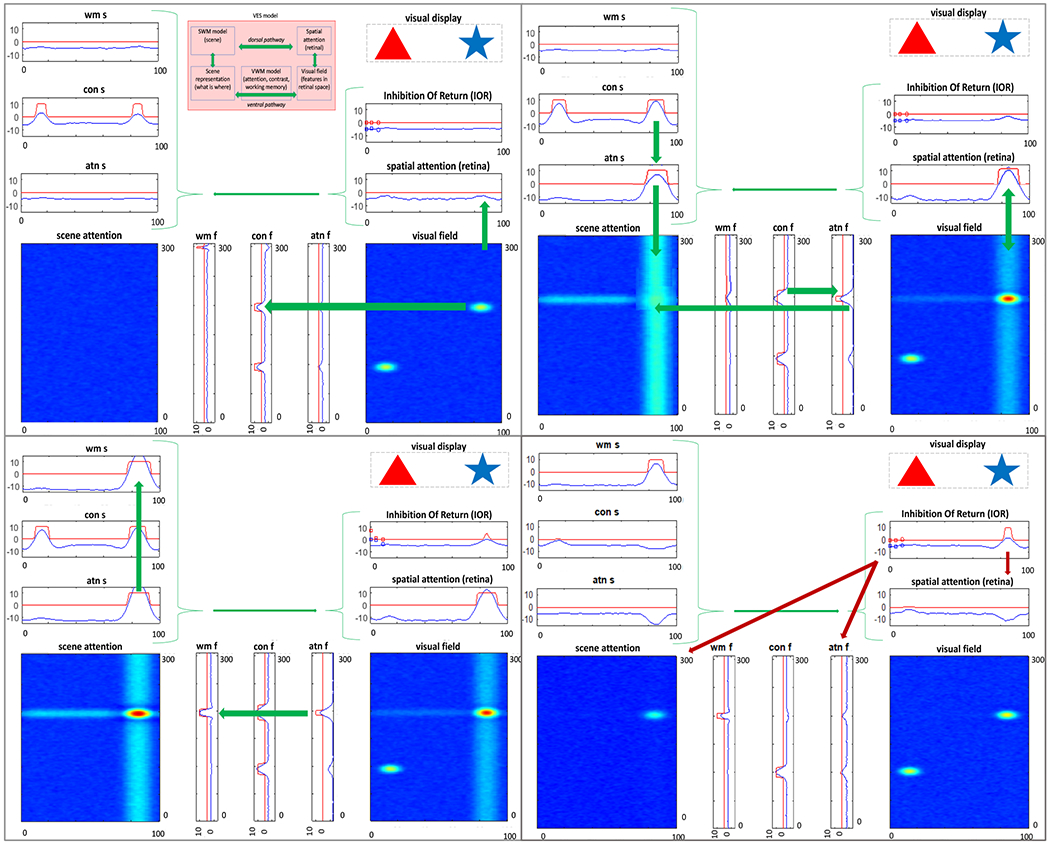

The four panels of Figure 3 show the architecture and functionality of the VES model. As indicated in the schematic of WOLVES in Figure 1, VES shares two fields with the WOL model – the scene attention field and the spatial attention field. The other parts of this model capture how visually presented items become part of a scene representation, that is, how lower-level features are perceived in a retinal frame of reference and become ‘bound’ together in a scene representation. The model also captures the reverse operation – how items at the level of the scene representation are selected such that an eye movement can be directed to the item’s location in the world.

Figure 3:

VES model in four stages of an autonomous looking cycle. The top-left panel shows the model detecting novel objects in the scene. The top-right panel shows the model attending to one object. The bottom-left panel shows the model having consolidated the object in working memory. The bottom-right panel show model releasing attention to begin a new looking cycle.

In the top-left panel, stimuli (see visual display) are input to the VES model via a 2D visual field that responds to the presence of visual features (e.g., colour) at particular locations on the retina. The two ovals in the visual field show the activation produced by the visual display after the first few milliseconds when the display is turned ‘on’. The visual field passes activity to a retinal spatial attention field, as well as three 1D fields along a feature (e.g., wm f, con f, and atn f) and a spatial (wm s, con s, and atn s) pathway. Attention Fields (atn s and atn f) represent what object the model is currently attending to in terms of its spatial position (atn s) and its feature (atn f). Working Memory Fields (wm s and wm f) maintain short-term memories of the spatial locations and features of objects the model has recently attended. Contrast Fields (con s, con f) detect novelty in the scene where novelty is defined as locations and features in the scene that are not currently maintained in Working Memory.

Neural activity flows through VES in a four-stage cycle:

Input & Novelty Detection, top-left panel: The model receives two localized inputs to the visual field, detecting the red item on the left and the blue item on the right. Output from the visual field is input to the feature-contrast field (green arrow from visual field to con f) which builds multiple peaks. This signifies detection of two novel colours in the scene. Similarly, the spatial-contrast field (con s) detects the positions of these objects.

Object Attention, top-right panel: After the model has detected the novel objects, the contrast fields pass activation to the 1D attention fields. The attention fields are winner-take-all (WTA) fields that allow the model to attend to only one object at a given time. As peaks form in the attention fields, this results in selection of the corresponding object in the 2D visual field through reciprocal connectivity (see red hot spot in visual field). The attention peaks also project ridges of activation into the scene attention field.

Consolidation in WM and binding in an allocentric scene representation, bottom-left panel: Attention to features and locations passes activation to the 1D working memory fields and results in consolidation, indicated by peaks in these fields (wm f, wm s). These 1D WM fields forward their output to a 2D WM field (not shown). The 2D WM field forms a robust scene-level working memory of what is where in the world, passing its activation to the scene attention field. The convergence of inputs from the attention fields as well as input from the 2D WM field form peaks in the scene attention field, binding the feature and the location of the attended object into a unified allocentric representation (red peak in scene attention).

Release of Attention, bottom-right panel: Peaks in the scene attention field are detected by the Inhibition of Return (IOR) field via input to an IOR detector node. Once activation of the IOR detector node goes above threshold, this boosts the resting level of the IOR field, allowing input from the retinal spatial attention field to build a peak at the currently attended location in the IOR field. The peak in the IOR field then inhibits the attentional peak. In addition, a global inhibitory signal is sent to the other attentional fields (see red arrows). These inhibitory influences release the model from its current attentional focus.

The sequence of events in Figures 3 capture how the model consolidates one object (blue star) in working memory; once this is complete, the system is ready to explore another item in the visual scene. Note that the WM layers in VES have memory traces (Perone & Spencer, 2013). These traces influence the cycle of visual exploration by speeding consolidation in working memory over learning. This, in turn, speeds the release from fixation for familiar items, leading to habituation (Perone & Spencer, 2013). Prior work shows that these dynamics capture the details of habituation and preferential looking performance across a variety of paradigms (Perone, Simmering, & Spencer, 2011; Perone & Spencer, 2013, 2014).

One additional feature of the VES model is that it specifies the neural mechanisms that transform between retinal and allocentric space (see Lipinski, Schneegans, Sandamirskaya, Spencer, & Schöner, 2012; Sandamirskaya, Zibner, Schneegans, & Schöner, 2013; Schneegans & Schöner, 2012). To simplify the presentation of the model here, we treat shifts of attention in space as shifts of covert, rather than overt, attention. Many adult experiments modelled using VES are covert attention tasks with gaze fixed at a central location (Johnson, Spencer, Luck, et al., 2009; Johnson, Spencer, & Schöner, 2009; Schneegans, Spencer, & Schöner, 2016); thus, this simplification maps onto simplifications used in the adult literature.

Integration via WOLVES

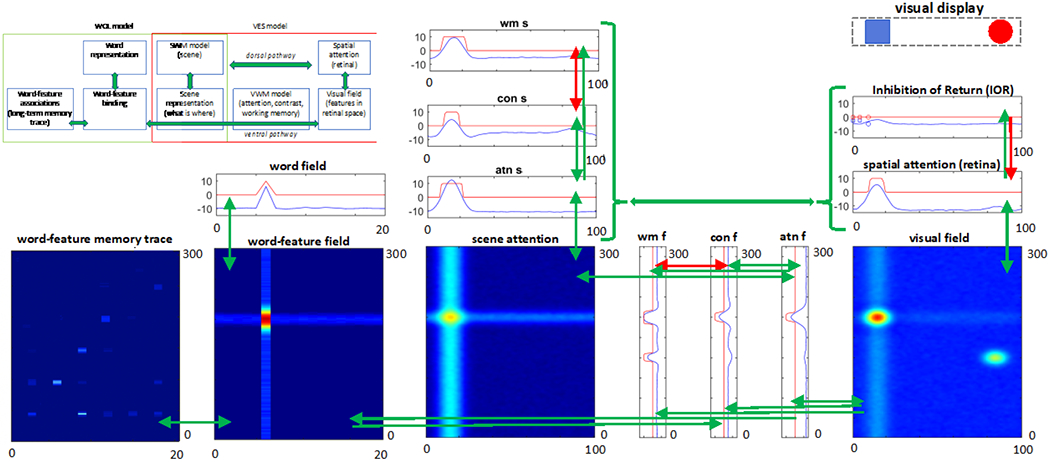

The integration of these models into a single architecture, WOLVES (see Figure 4), is straightforward since both WOL and VES models share both scene attention and spatial attention. To enable information flow between the two component models in WOLVES, we first add a bottom-up connection from the feature attention field (atn f) in VES to the word-feature field of WOL (green arrow). In addition, word-feature associations must also be able to drive looking. Thus, we add a top-down connection from the word-feature field to the feature contrast field (con f) in VES. Through these bottom-up and top-down connections, looking can influence what the model learns about word-feature mappings and this learning can influence what the model finds ‘interesting / novel’ and, consequently, where the model looks. This means that processing in the full model evolves over two cycles and two timescales: a real-time cycle of autonomous looking and a learning-based cycle of word-driven attention.

Figure 4:

The overall architecture of WOLVES. Scene WMs and memory traces are not shown for representational simplicity. Arrows represent uni-/bi-directional (green: excitatory, red: inhibitory) connectivity in the model. See text for additional details.

Cycle of autonomous looking: VES ➔ WOL.

During an individual trial of a CSWL task, WOLVES cycles through a regular set of processes. First, the 2D visual field responds to the presence of feature inputs at particular locations in the visual scene. This field passes feature-specific activation along the feature pathway and location specific activation along the spatial pathway to the contrast fields (con s and con f). Activity in the contrast fields project activation to the ID attention fields (atn s and atn f). Objects that build peaks first in these winner-take-all fields will be attended, leading to the consolidation of these features in the ID working memory fields (wm s and wm f) and at the level of the 2D scene representation. Following object consolidation in WM, peaks in the scene attention fields drive release from fixation and the autonomous cycle of input detection, novelty detection, attention, consolidation, and release can start again. Over repeated trials, this cycle becomes more efficient as the memory traces of the working memory layers speed up consolidation, leading to habituation. In addition, this cycle becomes increasingly influenced by a cycle of word-driven attention happening over the longer timescale of learning.

Cycle of word-driven attention: WOL ➔ VES.

In a CSWL task, as objects are presented on individual trials, words are presented as well. The word field sends an activation ridge into the word-feature field that intersects with a ridge sent simultaneously along the feature pathway as a feature is attended. This intersection of activation ridges results in the formation of peaks in the word-feature field and the build-up of memory-traces at associated sites in the memory trace layer. Over the course of multiple trials in a CSWL task, the same objects are presented along with the same words. Thus, memory traces of the same word-feature mappings are repeatedly strengthened, resulting in a pre-shaping of the activity in the word-feature field. This pre-shaping leads to the formation of a peak in the word-feature field when a feature ridge hits strong memory traces. Therefore, in later training trials, the presentation of a previously encountered word can cause the formation of a peak at the corresponding word-feature mapping in the word-feature field. Such peaks can then send top-down activation to the ID feature contrast field and bias the model to selectively attend to the associated object.

Critically, the details of how accumulated word-object mappings drive attention depend on the current state of the attentional system. If WOLVES is not currently attending to any object, the top-down input from word-feature fields will bias attentional selection to the object features associated with a presented word. Likewise, if WOLVES is already looking at the associated object, once consolidation and release of attention occurs, the top-down influence of words will again bias attention to the associated object, effectively creating two bouts of sustained attention to the same object. However, if WOLVES is looking at an object not associated with the word, strong associations in the word-feature fields can only push the next look once the current object is consolidated and released from attention.

Note that – as we discuss in greater detail below – we operate the word-feature fields in a competitive winner-take-all mode. Consequently, the model will only form a single peak in each word-feature field at any moment in time. While this has important consequences for CSWL that we discuss below, it is important to emphasize that this is about the real-time dynamics of the word-feature fields – only one peak at any moment – and not a statement about how peaks evolve on the trial-to-trial timescale typically emphasized in CSWL.

In what sense are these cycles ‘autonomous’?

By ‘autonomous’ behaviour, we literally mean that the model does its own thing on the millisecond timescale. Our job when running a simulation experiment is to turn inputs on and off to reproduce the timing of external events in the task. We then just track what the model does through time. Critically, every object it attends to and every association and decision it makes happen ‘internally’ without any intervention from us (beyond ‘tuning’ parameters; see discussion below). Thus, if the model is a good model with all of the necessary processes in place, it should mimic or reproduce patterns of looking and learning in detail. This would give us confidence that the autonomous model we have created can faithfully reproduce all the behaviours of the autonomous system we are trying to model – the participant.

Interestingly, autonomy also means that from trial-to-trial, the model ‘behaves’ differently, that is, it can show a different pattern of looking and learning as events unfold during a trial and over the course of the task. This is because all the fields operate with a small amount of noise that can change how they respond to the same stimuli from run to run. This means we have to run many simulations to track what the model does and why. We discuss this in greater detail below where we embed the model in a CSWL paradigm. In particular, the next section presents simulated data from two of the first studies to use the canonical CSWL paradigm – Smith and Yu (2008) and Yu and Smith (2011). Later in the paper, we demonstrate that WOLVES is a comprehensive model of CSWL by simulating results from 5 canonical studies with adults and 5 additional developmental studies. Note that in all simulations below, we used a model with two feature pathways in the ventral stream – one set of fields for colours and one set of fields for shapes. While this makes the model more complex, it allows us to capture the details of object features in the different experiments. Critically, the dynamics we summarise above operate comparably in this larger model.

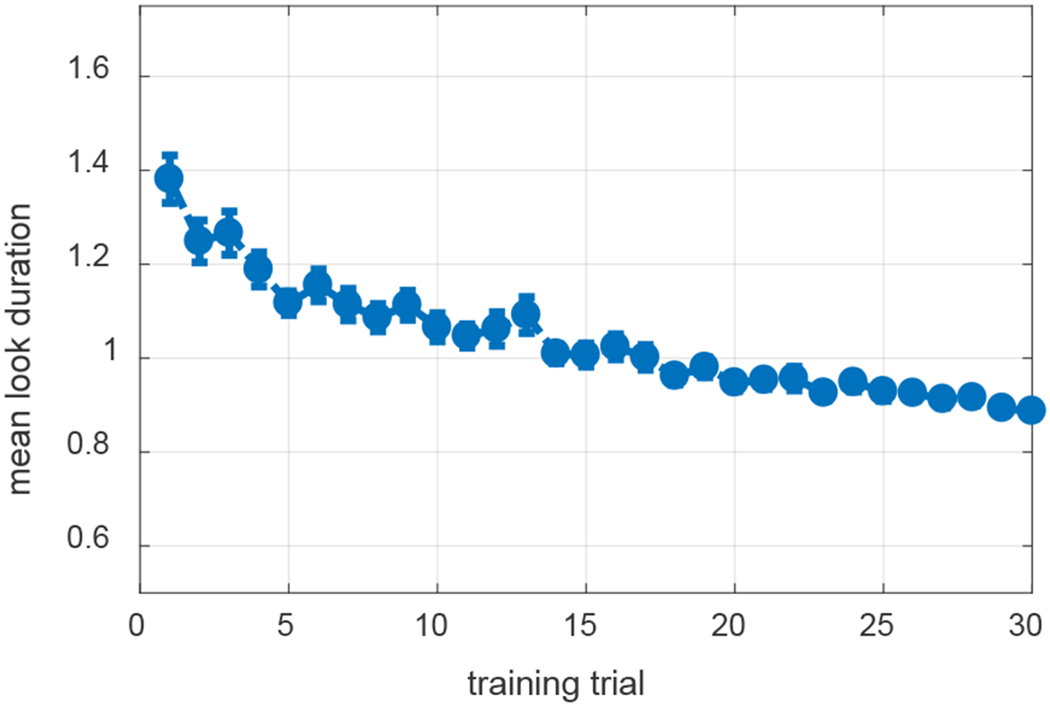

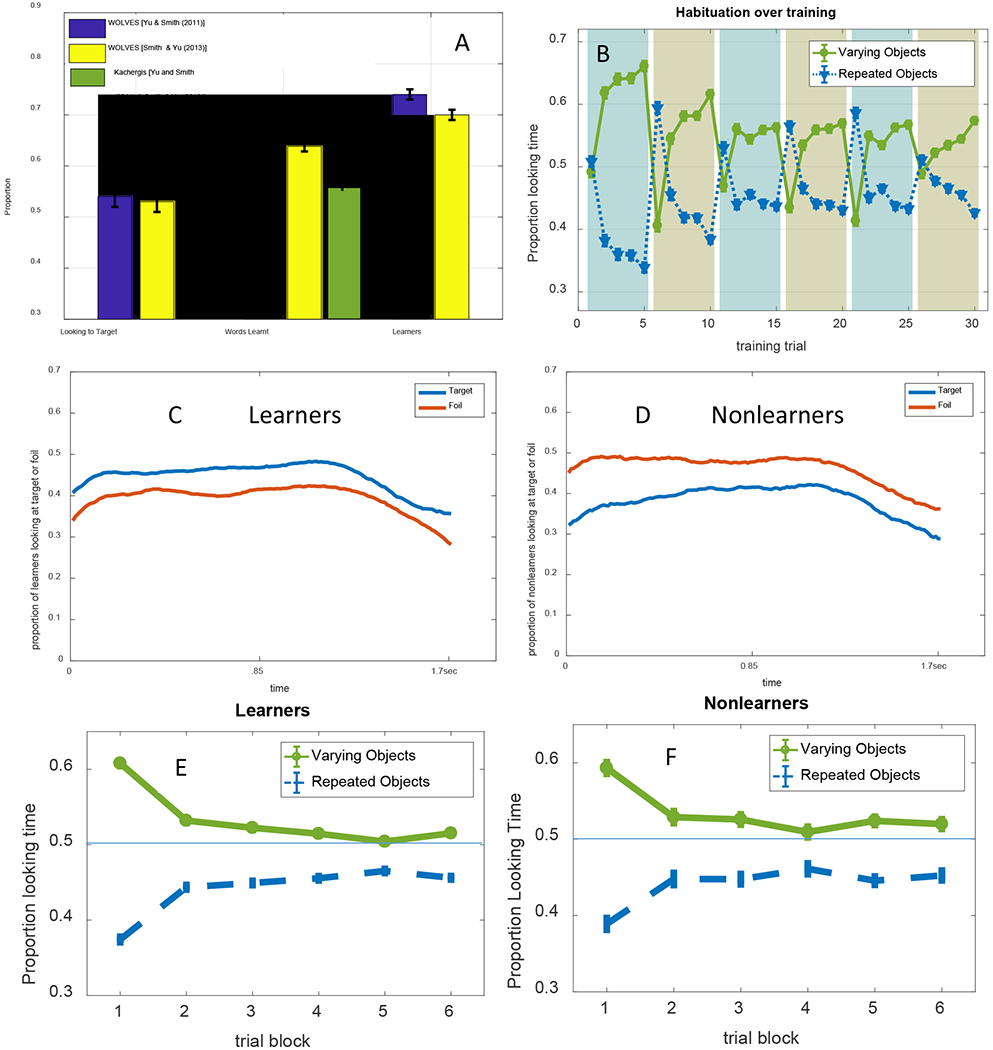

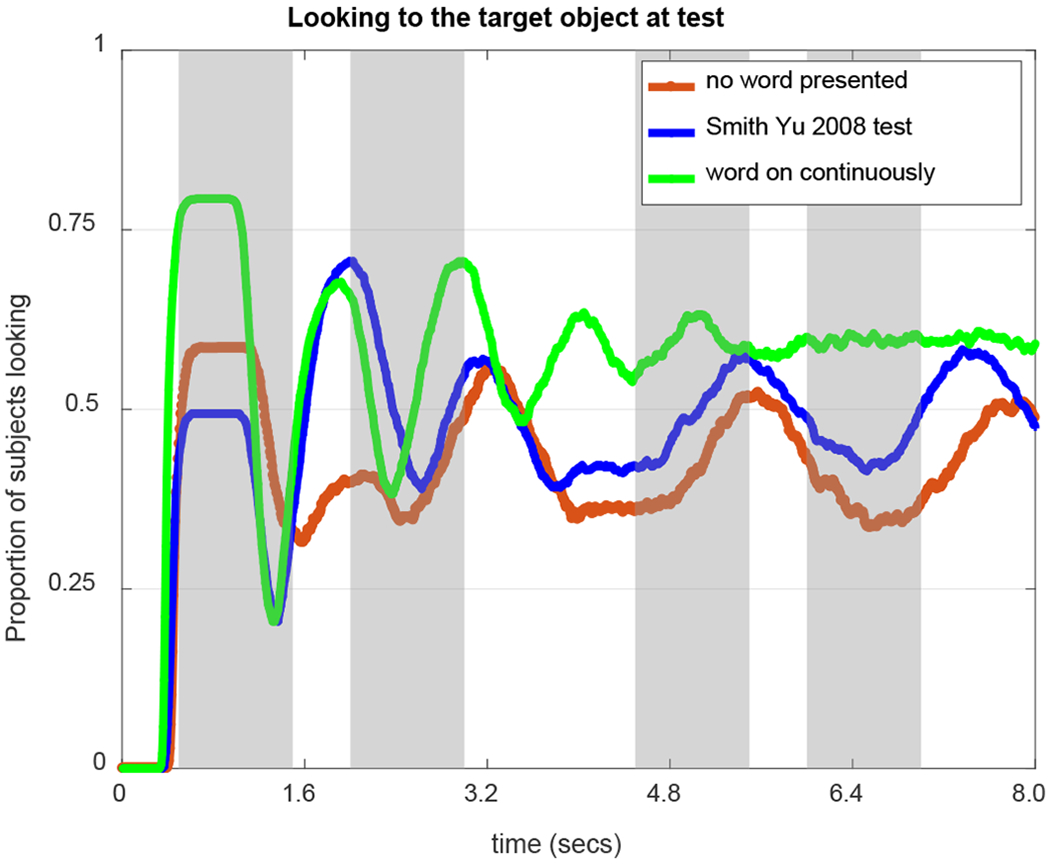

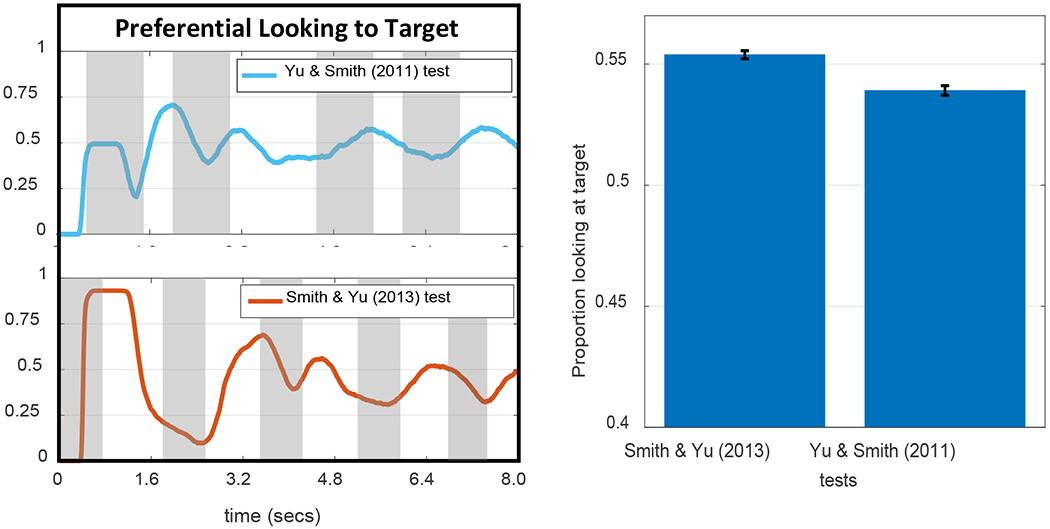

Experiments 1 and 2: Simulations of Infant Cross-Situational Word Learning

In their canonical examination of infant CSWL, Smith and Yu (2008) used preferential looking to ask whether 12- and 14-month-old infants could learn words from a series of naming events that provided ambiguous information about mappings in the moment, but correct pairings via co-occurrences over time. Infants saw 30 4-second training slides that each presented two novel objects and were accompanied by two novel words. Across the training slides, six word-object pairs were presented. Immediately after training, word-object mappings were tested by presenting two objects for 8 seconds along with a single word repeated four times. Greater looking to the labelled object (the target) was taken to indicate learning. Each mapping was tested twice across 12 test trials.

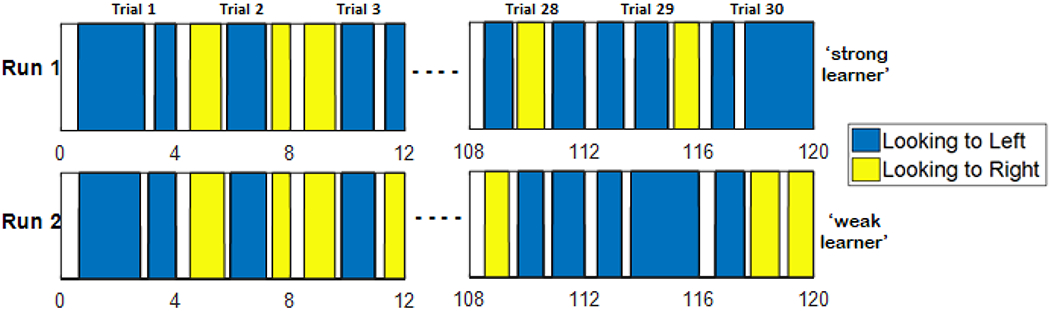

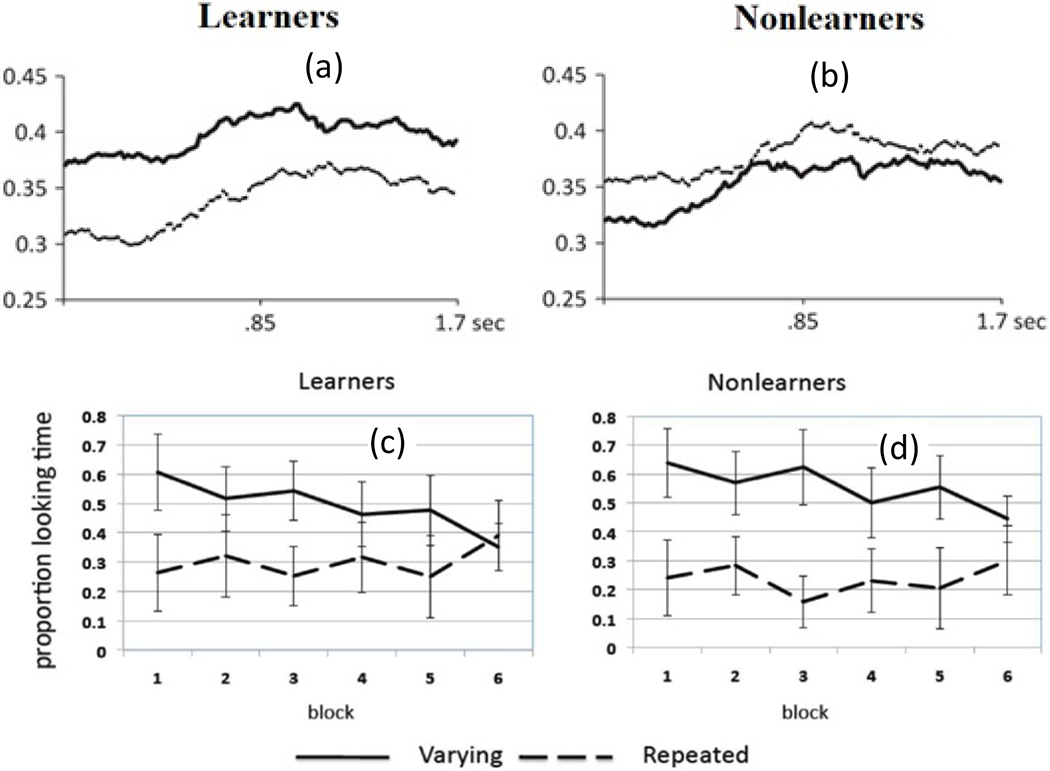

As summarized in Table 2, infants looked more to the targets than distractors and learned about four of the six words. In a follow-up study, Yu and Smith (2011) used an eye-tracker in the same task to explore the relationship between selective attention and learning in infants. Individual infants who looked more to target objects than distractors at test were classified as ‘strong’ learners and infants who looked more to distractors were ‘weak’ learners. Yu and Smith (2011) reported that strong learners tended to have fewer, longer looks during training whereas weak learners had more, shorter looks (see Table 2).

Table 2:

Summary of Infant and WOLVES model performance in a canonical CSWL task

| Measure | Smith & Yu (2008) | Yu & Smith (2011) | Range | WOLVES | RMSE | MAPE | |

|---|---|---|---|---|---|---|---|

| TEST TRIALS | |||||||

| Mean looking time per 8s trial | 6.10 | 5.92 | 5.92 – 6.10 | 6.26 | 0.26 | 4.22 | |

| Preferential looking time ratio | 0.60 | 0.54 | 0.54 – 0.60 | 0.54 | 0.04 | 6.10 | |

| Mean words learned (of 6) | 4.0 | 3.5 | 3.5 – 4 | 4.0 | 0.35 | 7.14 | |

| Proportion of Strong (S) vs Weak (W) Learners | N/A | 0.67 | N/A | 0.74 | 0.07 | 10.45 | |

| Mean looking per trial to Target | 3.6 | 3.25 | 3.25 – 3.6 | 3.36 | 0.19 | 5.03 | |

| Mean looking per trial to Distractor | 2.5 | 2.67 | 2.5 – 2.67 | 2.89 | 0.32 | 11.92 | |

|

| |||||||

| TRAINING TRIALS | S | W | |||||

| Mean looking time per 4s trial | 3.04 | 2.96 | 3.07 | 2.96 – 3.07 | 3.01 | 0.02 | 0.71 |

| Mean fixations per trial | N/A | 2.75 | 3.82 | 2.75 – 3.82 | 2.89 | 0.22 | 6.98 |

| Mean fixation duration | N/A | 1.69 | 1.21 | 1.21 – 1.69 | 1.31 | 0.22 | 14.38 |

We situated WOLVES in Smith and Yu’s task—the same 30 training slides and 12 test slides presented for the same durations. On each trial, WOLVES was allowed to autonomously explore the two presented objects in the context of two words (training) or one word (test). Each object was represented as two Gaussian inputs, one for each feature, that were spatially co-located but presented to the two different visual feature fields (colour, shape). The model then autonomously cycled through bouts of detecting novelty, attending to one object, consolidating that object in a scene representation, and releasing attention.

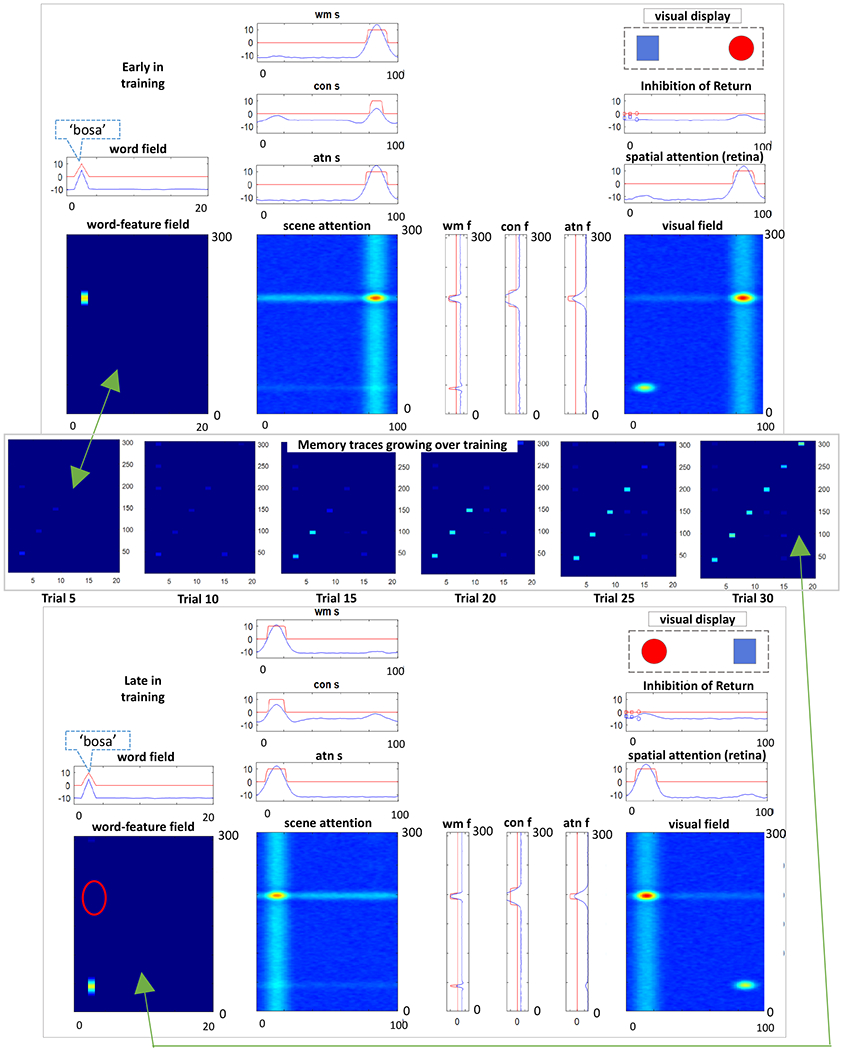

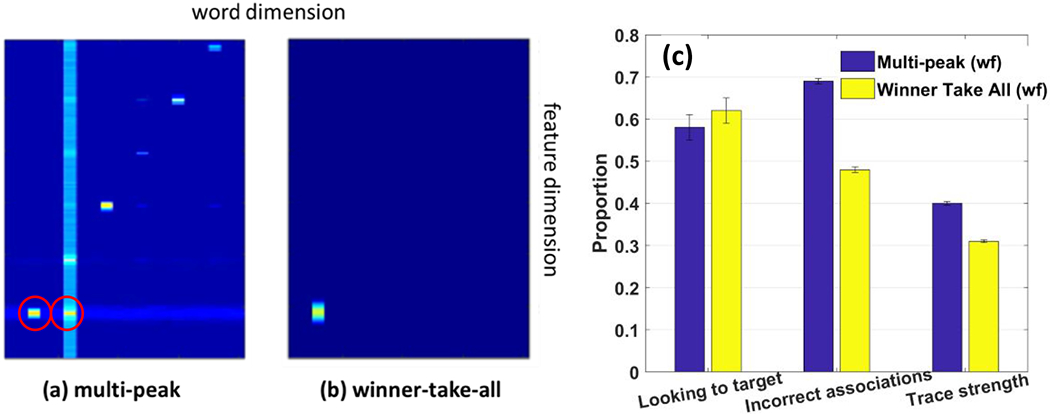

Importantly, as the model attended to a feature pair, ridges were projected horizontally along the feature pathway to the word-feature fields. WOLVES was also presented with words with timing matching the experiment. The word field sent activity ridges along the word dimension of the word-feature fields (top panel Figure 5, see vertical blurry blue line in scene attention). As the feature (horizontal) and word (vertical) ridges crossed each other, a peak built in each word-feature field corresponding to a potential word-object mapping. These peaks laid down memory traces at the site corresponding to the word-feature association. Critically, the associations formed may be correct, if the model happened to be attending to the right object, or incorrect, if the model happened to be attending to the ‘distractor’ object. For instance, the top panel of Figure 5 shows the model attending to the red object (the ‘blicket’ – word 4) while hearing the name for the blue object (the ‘bosa’ – word 1). Because this is early in learning, there is nothing to stop the model from forming an incorrect association. Thus, the model lays down an incorrect association between red and ‘bosa’.

Figure 5.

Top: early in training the model registers an ‘incorrect’ association between the red object and the word ‘bosa’ (word 1). Middle: snapshots of the model’s memory traces taken every 5 training trials show gradual learning of the correct associations. Bottom: late in training the model does not form the incorrect association as in the top panel.

Over training, however, correct associations tend to form because the statistics of the input reinforce the correct mappings most often. This is shown in the middle panel of Figure 5 which plots the memory trace layer for feature 1 (colour) after every batch of five training trials. Notice that early in learning there are many feature associations for each word (i.e., faint memory traces aligned vertically) and some features are associated with multiple words (i.e., faint memory traces aligned horizontally). By the 30th trial, however, most words have a single, strong word-feature memory trace along the diagonal (which are all correct mappings in this example).

Critically, these memory traces exert a strong influence on the behaviour of the model. The bottom panel of Figure 5 shows the model later in training again attending to the red object while hearing the name for the blue object (‘bosa’). Notice how the model late in learning does not form an association between red and ‘bosa’; rather, when the model heard ‘bosa’ a vertical ridge was sent down into the word-feature field, and this ridge intersected a strong memory trace indicating that blue is associated with ‘bosa’. This formed a word-feature peak at the intersection of ‘bosa’ and blue (see peak in word feature field) that blocked the formation of a peak at ‘bosa’ and red (empty red oval). This ‘blocking’ occurs due to the winner-take-all dynamics in the word-feature fields – in the moment, only one peak can form, and the strongest activation occurred at the intersection of blue and ‘bosa’. Once formed, the blue-’bosa’ peak can then influence the model’s looking behaviour, quickly driving attention to the blue object once attention has been released from the red item.

Such top-down influences are necessary to direct looking at test. Specifically, during a test trial, the model is presented with a word and two objects (a target and a distractor). Each time the word is presented, the word field sends down a ridge to the word-feature fields. If the ridge encounters memory traces of word-features associations, a peak will form, sending top-down activation to the contrast fields and biasing the system to look more to the corresponding object (the target).

As in the empirical study, we can calculate the proportion of time the model spends looking at the target, divided by the total overall looking. Likewise, we can record the moment-to-moment history of looking during training trials and can, thus, extract the same measures reported by Yu and Smith (2011). This allowed us to quantitatively compare the model’s performance to the empirical findings in Table 2. It also gives us the opportunity to use WOLVES to understand why strong learners have different fixation dynamics than weak learners.

Simulation methods.

Simulations were conducted in Matlab 2016b via the COSIVINA framework, a modelling package for designing DF models (Schneegans, 2012; Schöner et al., 2016). Note that all of our code is available on www.dynamicfieldtheory.org along with tutorial videos explaining how to run WOLVES in both interactive mode using a graphic user interface (GUI) and in batches of simulations required to quantitatively fit data.

Two machines both using intel i5 processors were used to run all the simulations: a PC with 36 parallel processing cores and a High Performance Cluster with 28 parallel processing cores. Gaussian inputs were used to represent the words and the colour and shape features of the novel objects. Based on the stimuli used by Smith and Yu (2008), we assumed the objects and words were all distinct and evenly spaced across the shape, colour, and word fields. While Smith and Yu (2008) included attention getters between some trials, our simulations use a one-second gap between every two trials for simplicity. The timing between the model and experiment time was scaled such that each simulation step equals eight real-time milliseconds. Simulation results for each experiment were aggregated over 300 runs (i.e., 300 individuals). To evaluate the model’s performance, we computed the root mean squared error (RMSE) and mean absolute percentage error (MAPE) between the simulated and empirical data, two common metrics used to quantitatively evaluate the quality of model fits to data. Additional simulation method details are discussed in the Quantitative Simulations section below and in Appendix C.

Results.

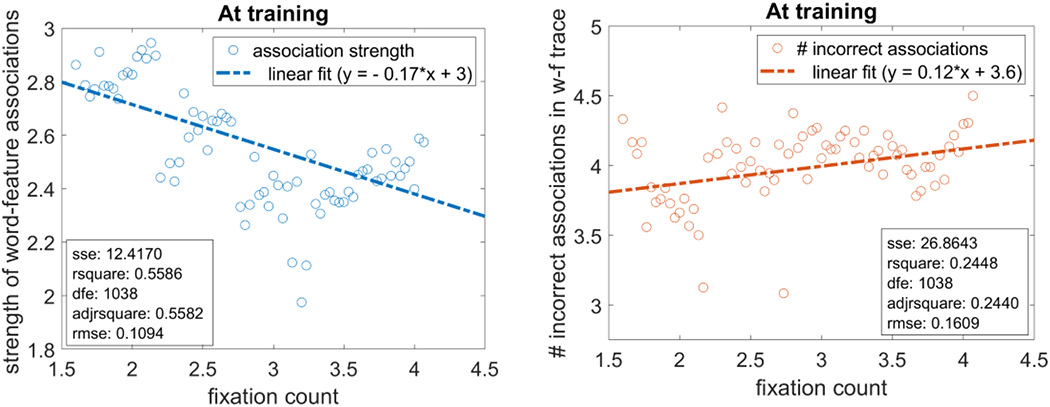

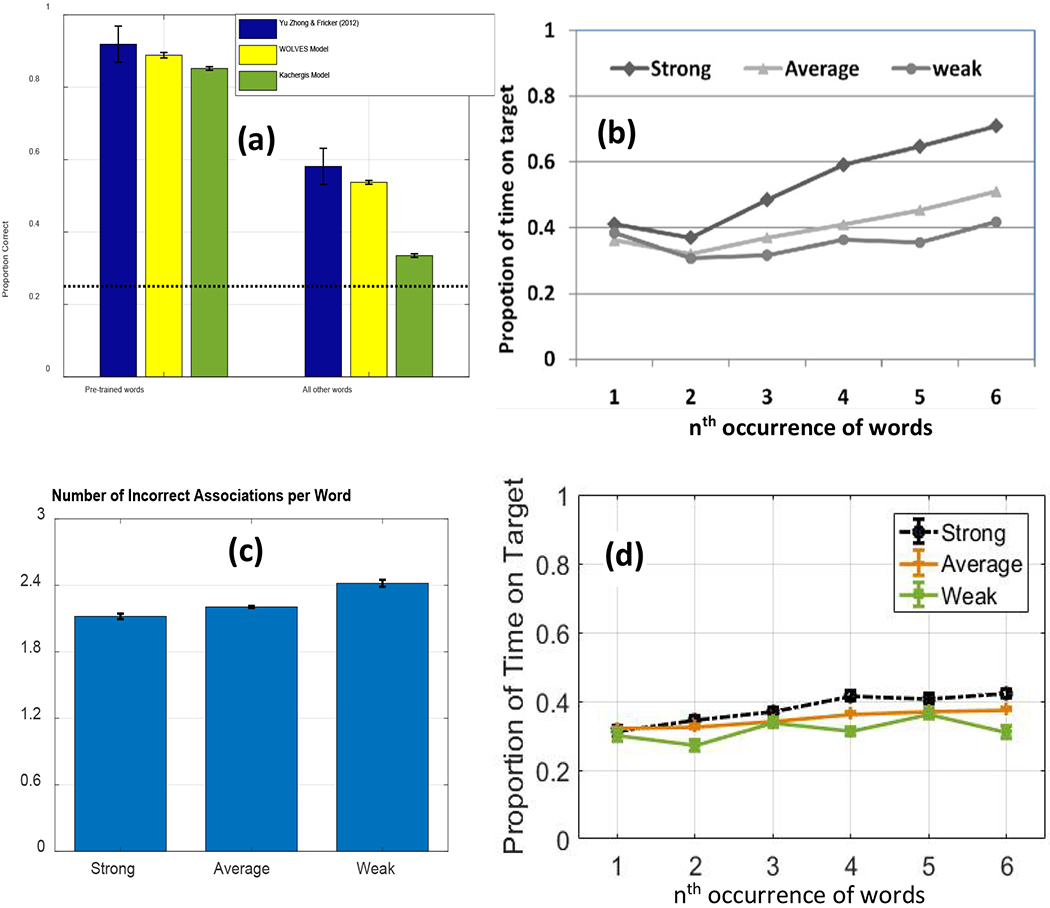

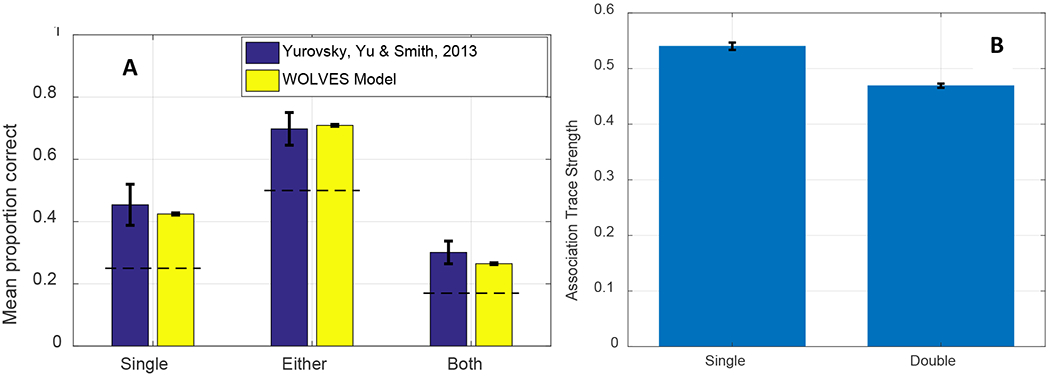

Smith and Yu (2008) and Yu and Smith (2011) found that 12- to 14-month-old infants looked more to the target than the distractor at test, suggesting they had learned the word-object mappings. WOLVES shows a preference for the target within the range found in Smith and Yu’s studies and has a low MAPE and RMSE (see Table 2). Individual runs of WOLVES can be classified as strong and weak learners as in Yu and Smith (2011). Doing so reveals a similar, although somewhat higher, proportion of strong learning models compared to infants. WOLVES also matches the infant data on a range of other measures (Table 2) with low RMSEs and MAPEs.

Table 2 shows that WOLVES reproduces key indices of performance in the CSWL task, including a lower number of longer-duration fixations for strong learners. But why does this happen, that is, why do models with fewer, longer-duration fixations during training learn more? The advantage of having a model like WOLVES is that we can manipulate the fixation dynamics artificially – by changing key model parameters – to create models that tend to have more fixations per trial or fewer fixations per trial. We can then probe why these models learn different numbers of words. This accomplishes two things: it establishes that fixation dynamics are lawfully related to learning in the model and it helps us understand why this might be the case with participants in CSWL, that is, why real-time visual exploration in CSWL affects trial-to-trial learning.

Spatial processing is one of the key features of WOLVES, affecting how the model attends to objects on the retina and binds object features together at the level of the scene representation. Critically, the details of how the spatial pathway is ‘tuned’ modulate visual exploration. For example, strengthening spatial attention by increasing the input from the spatial attention fields into scene attention fields helps the model build scene representations faster and release attention from the current object more quickly. This decreases the duration of each look and increases fixation counts per trial. Note that more switching back-and-forth between objects also affects total looking because there are more ‘off-looking’ gaps between the looks.

Given that the strength of input from spatial attention to scene attention can modulate fixation dynamics, we set up batches of simulations where we ran Yu and Smith’s (2011) CSWL paradigm and varied the strength of this parameter across 5 steps (5 spatial attention strengths by 300 runs each = 1500 simulations). Note that all other parameter values were held constant. This should yield models that vary in the number of fixations during training. We can then ask if these variations are lawfully related to learning at test and, if so, why.

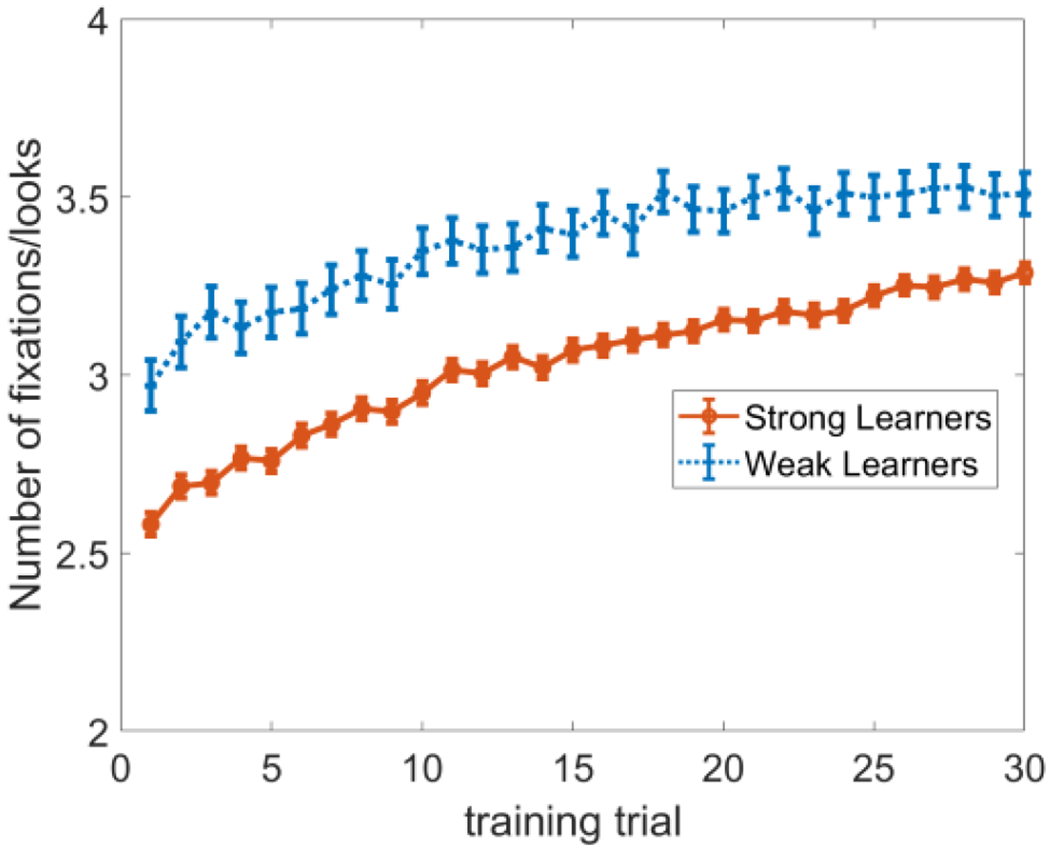

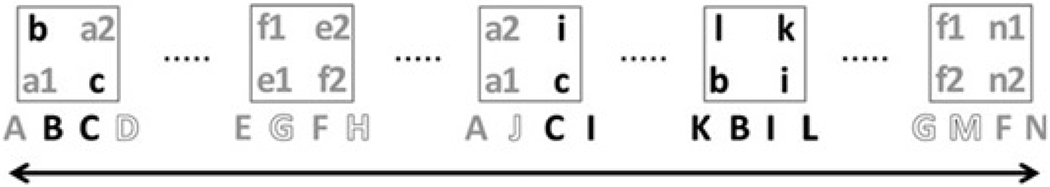

Manipulating spatial attention in WOLVES did indeed create large variations in fixation dynamics during training across the 1500 models, and these differences in looking dynamics had an impact on performance during test. To illustrate this in a way that allows for direct comparisons to data from Yu and Smith, we sorted the 1500 models into strong and weak learners based on test performance. Figure 6 shows that weak learning models have more fixations (and shorter look durations) than strong learning models. This replicates findings from Yu and Smith (2011) but extends this pattern over a broader range of looking dynamics so we can explore why this relationship holds. Note that Yu and Smith did not report an increase in number of fixations over trials, although previous studies with infants have shown such effects (see, e.g., Rose et al., 2002).

Figure 6:

The effect of spatial attention on fixation dynamics during Smith & Yu’s CSWL task. We varied the strength of spatial attention which increased the number of looks made by the model thereby changing how many words were learned. After classifying models as strong (red) and weak (blue) learners as Yu and Smith (2011) did in their experiment, we see that these models have different numbers of fixations per training trial.

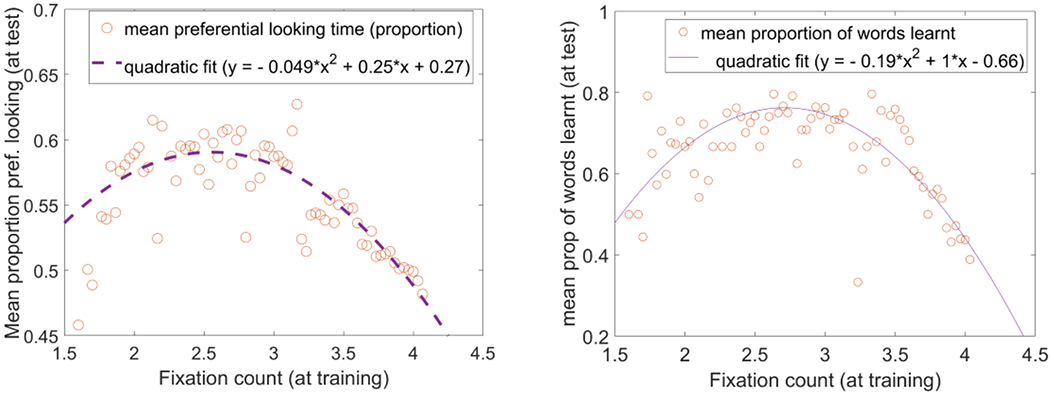

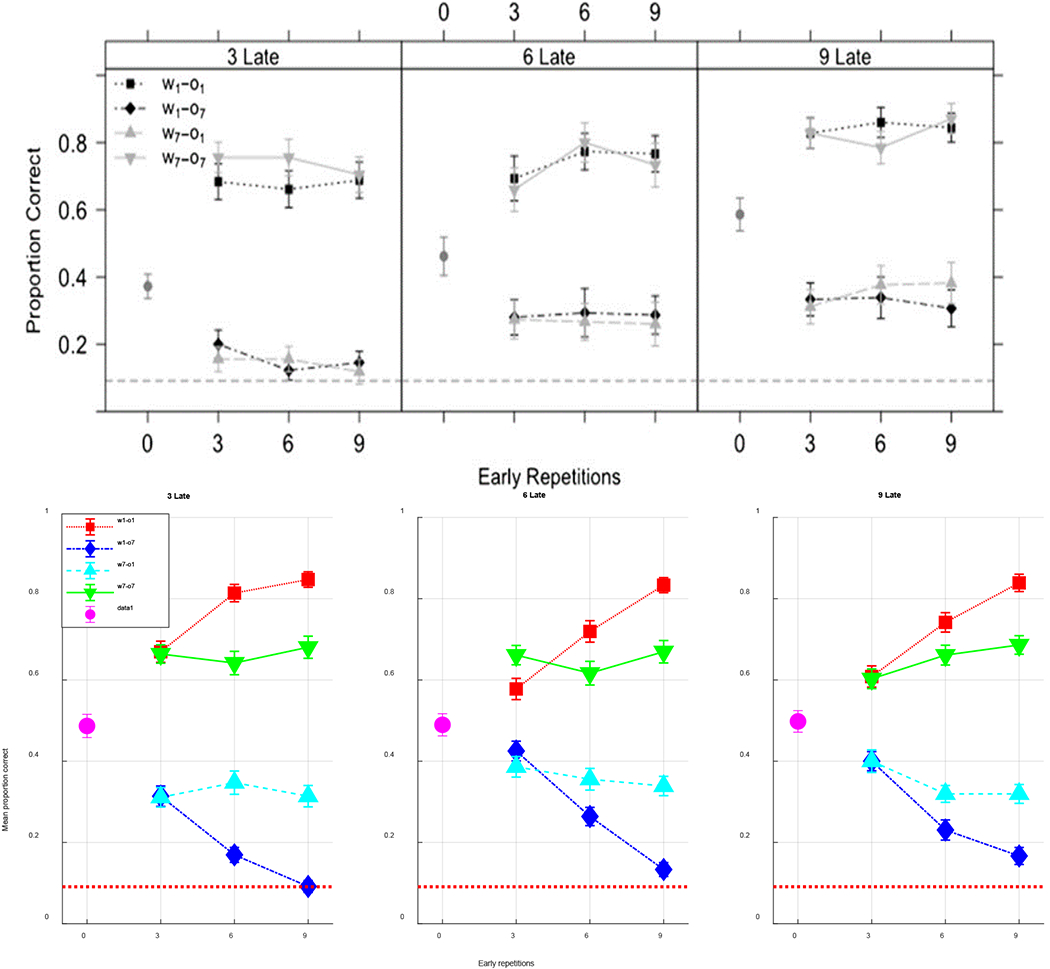

We first looked at how differences in fixation dynamics were related to the build-up of word-feature associations during training. The left panel of Figure 7 shows that as stronger spatial attention increased the number of fixations (see fixation count on x axis), the strength of word-feature associations decreased. Conversely, the right panel shows that as the number of fixations WOLVES made during training increased, the number of incorrect word-feature associations increased. This makes intuitive sense: if WOLVES makes a single fixation per training trial, it is likely to form only one or two associations on that trial (roughly, one per word presented). If the model makes two fixations per training trial, it is likely to form between two and four associations. Clearly then, fixation dynamics should be a critical determinant of learning; this is indeed the case in WOLVES.

Figure 7.

Left: Relation between the number of fixations a model makes during a training trial and the average strength of the word-feature associations formed by the model. The blue line shows a linear fit of the data with an RMSE=0.11. Right: Growth of the average number of erroneous associations formed with increasing fixations at training. The red line shows a linear fit of the data with an RMSE=0.16. Other statistical measures are indicated in both plots. Note that each value plotted on the Y-axis is averaged (after binning) over models within a bin-width of 0.03 in fixation counts.

Interestingly, when we look at how differences in fixation dynamics were related to performance during test, we see a more nuanced relationship. The left panel of Figure 8 plots the mean proportion of looking to the target at test against the average number of fixations per model during training. The data are best fit with a quadratic curve, indicating that 2.25 to 3 fixations per training trial results in the best test performance compared to higher or lower numbers of fixations. A similar relationship is seen between fixation dynamics and the number of words learned (right panel).

Figure 8:

Relation between mean proportion of preferential looking to the target at test (left panel), mean proportion of words learned (right panel), and the number of fixations during training. Note that each value plotted on Y-axes is averaged (after binning) over models within a bin-width of 0.03 in fixation counts.

Discussion.

Overall, WOLVES fit multiple measures of the empirical data from Smith and Yu’s experiments quite well with low MAPEs. To our knowledge, this is the first process model to reproduce the looking measures reported from these canonical CSWL studies. A strength of the model is that it generates real-time looking behaviour; consequently, all the measures reported by Smith and Yu can be calculated for the model as well. This provides strong constraints on modelling as parameter changes necessarily impact how the entire pattern of looking cascades over trials.

A fascinating finding from this initial simulation experiment is that we reproduced the empirical patterns for strong and weak learners from models that were all identical at the start of the experiment (i.e., identical parameters). This arises in the model because each model is autonomous. Every field has internal noise that affects the decisions the model makes as activation grows toward threshold. Critically, looking behaviours early in learning lay down associations that can bias attention on subsequent trials. Consequently, each model follows its own trajectory of looking and learning. This is true even when noise is very weak. For instance, Figure 9 shows two runs of the model with the same parameters and a very tiny amount of noise (our canonical noise value in all simulations = 1.0; here we used 0.125). We gave both models the same order of object-word presentations. The panels on the left plot looking to the object on the left side (blue bars) and right side (yellow bars) of the scene on the first three trials of training. The panels on the right side show looking behaviour for the final three training trials. While looking across both runs during the first two trials was similar, looking during the last three trials is very different. Performance of the two runs at test was also different: run 1 was a strong learner, while run 2 was a weak learner. Thus, learning trajectories initially directed by noise will quickly be influenced by other factors as memory traces build, leading to emergent differences. This suggests that ‘strong’ and ‘weak’ learning effects in experiment could arise via learning in the experiment rather than due to individual differences in infants.

Figure 9:

Model looking trial by trial over the course of training. Each row shows the looking patterns of a particular run of the model, blue indicates looks to the left and yellow to the right of the scene. White indicates off/centre looking. Both runs included the same model parameters and the same fixed order of object presentations. Differences arise due to noise in the system and the autonomous learning of the model.

This finding of emergent individual differences without parameter changes on one hand, and that a parameter change can create the best learning by generating a ‘sweet spot’ of 2.25-3 fixations per training trial, on the other, have critical implications for empirical work. First, WOLVES predicts that individual differences in spatial attention and fixation dynamics should manifest in differential learning, such that participants with stronger spatial attention / faster visual processing learn less in CSWL. This could be tested by first assessing individual differences in a spatial attention / visual processing task and then running participants in the Yu and Smith (2011) CSWL task. WOLVES also predicts that direct manipulations of fixation dynamics during training should yield a curvilinear relation between fixation dynamics during training and learning at test. This could be tested empirically by, for instance, inserting attentional cues during training in CSWL to manipulate fixation switching. Cueing attention in a manner consistent with the ‘sweet spot’ for fixations should lead to good learning. Cueing attention outside of this ‘sweet spot’ should lead to less learning at test.

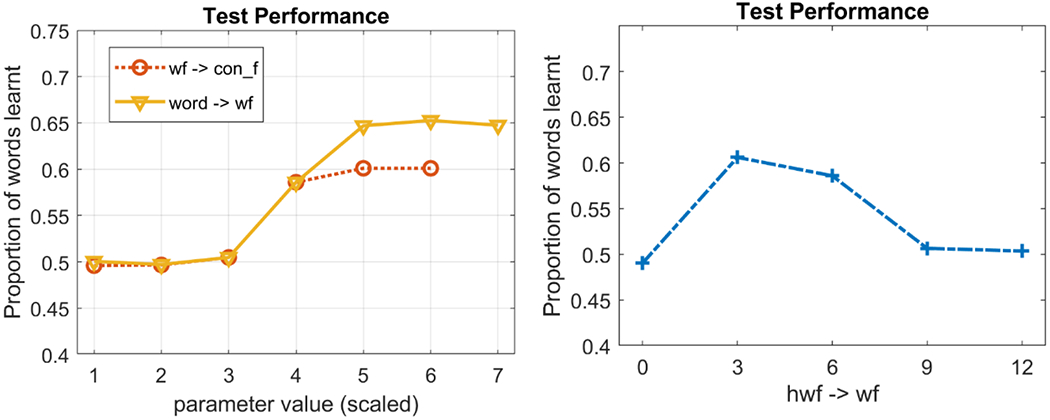

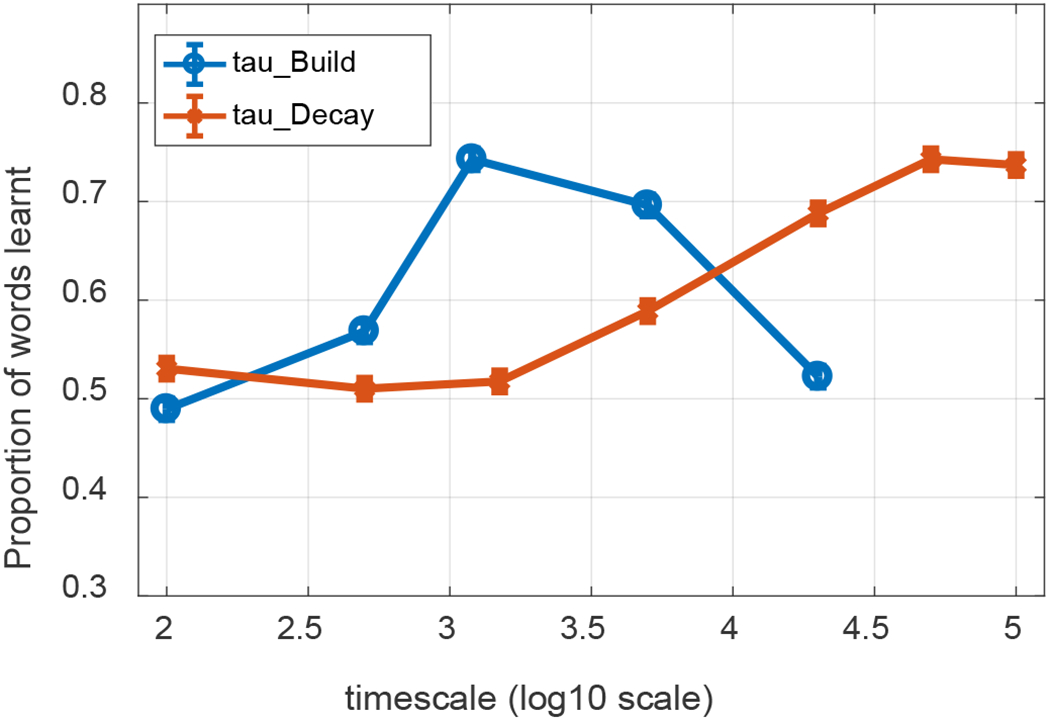

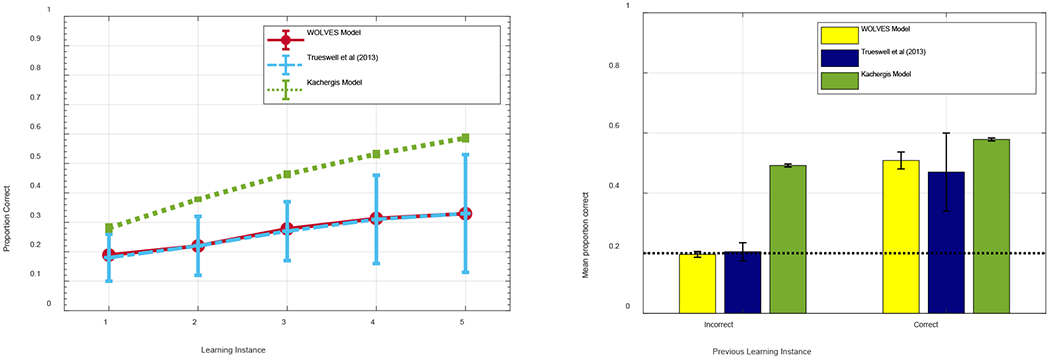

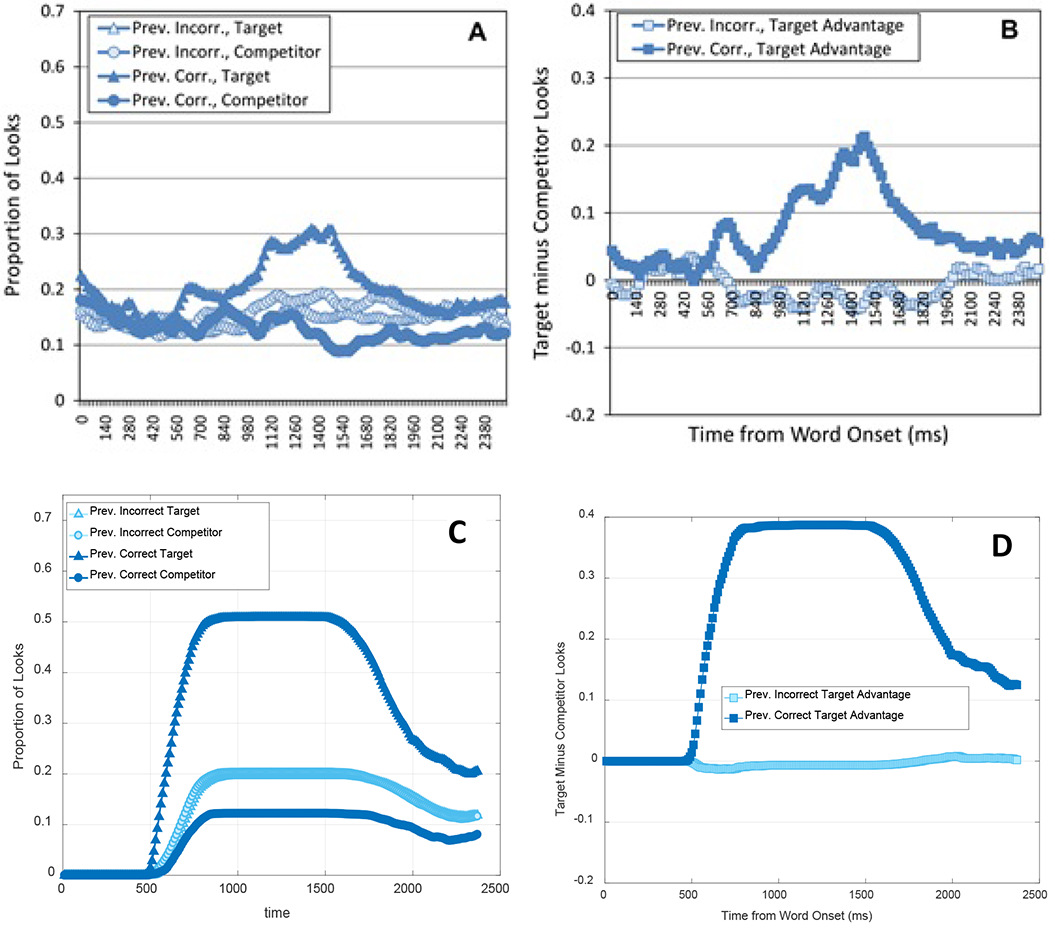

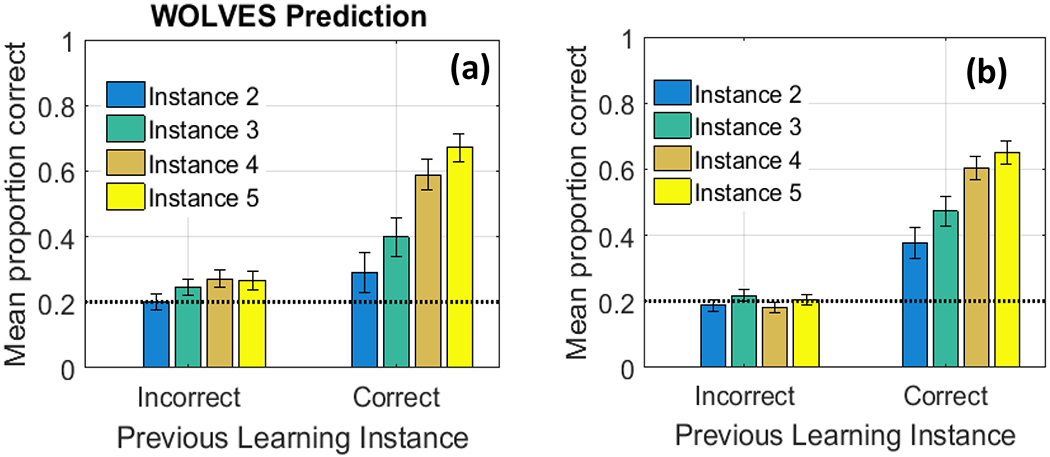

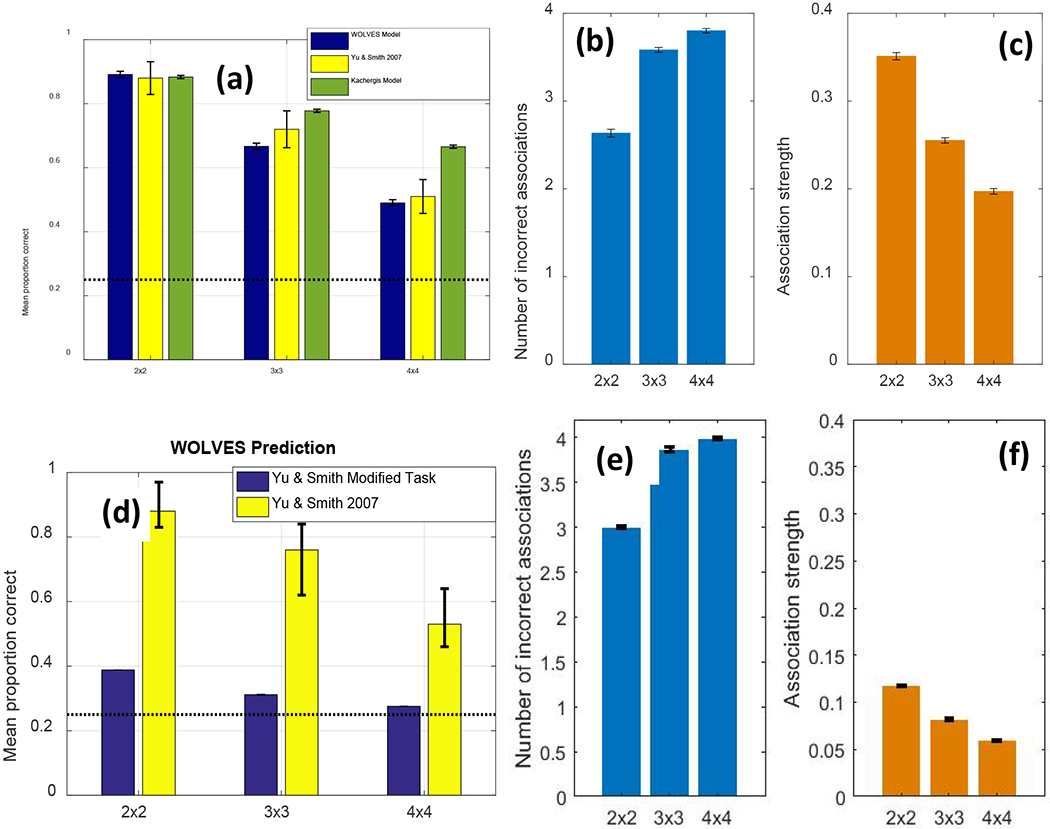

Interim summary: Is WOLVES an HT or AL model?