Within the school-age population, 5% to 17% of students experience severe reading difficulties (RD; Grigorenko et al., 2020). Without effective instructional support, early difficulties in reading may worsen, resulting in a host of poor academic and occupational outcomes (Snowling & Hulme, 2021). Although interventions have been developed for struggling readers, even the best of these fail to meet the needs of some students (e.g., Peng et al., 2020). More intensive and individualized support is needed to fully address the needs of students with RD.

In this article, we explain how data-based individualization (DBI) can be used to support students with RD. After first describing the purpose and principles of DBI, we illustrate the process with an example. We follow with a discussion of how DBI fits within the context of multi-tiered systems of support (MTSS). We conclude by reviewing evidence for the efficacy of DBI for reading and providing recommendations for implementation.

What Is DBI?

DBI is a form of data-based decision making (DBDM) used to systematically intensify academic and behavioral support for students with intensive learning and behavioral difficulties (D. Fuchs et al., 2014). The origins of DBI can be traced to the work of Stanley Deno and his colleagues at the University of Minnesota in the 1970s (Jenkins & Fuchs, 2012). At the time, federal reforms in the United States were rapidly expanding special education services. With the increased identification of students with disabilities came the practical challenge of designing and delivering intensive academic and behavioral interventions on a large scale. In response, Deno and colleagues developed the DBI process (Deno & Mirkin, 1977). This involved a systematic, problem-solving approach for identifying a student’s instructional needs, developing short- and long-term instructional goals, selecting interventions to address these goals, and making adaptations to those interventions based on frequent progress monitoring data.

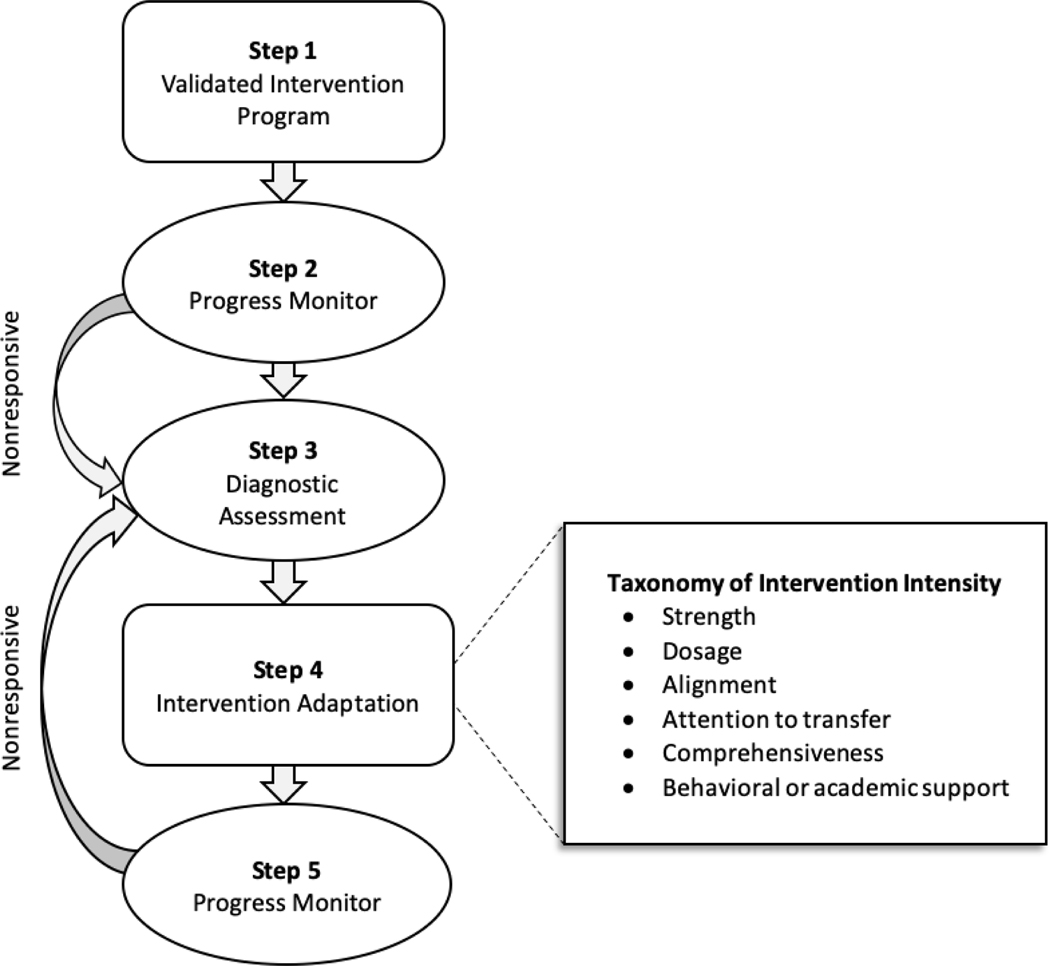

Recently, the National Center on Intensive Intervention (NCII, 2013), a technical assistance center funded by the Office of Special Education Programs within the U.S. Department of Education, developed a five-step DBI process (see Figure 1) based on findings amassed from research in the intervening years. For illustrative purposes, we describe the NCII DBI process with Ms. Martinez, a fictional first-grade reading intervention teacher, and her student Diego.

Figure 1.

The five-step data-based individualization process and the Taxonomy of Intervention Intensity.

Note. Adapted from National Center on Intensive Intervention (2013) and Fuchs et al. (2017).

The DBI Process

Diego began first grade as an engaged and curious student. However, he encountered difficulty learning letter-sound correspondences, which led to inaccurate and dysfluent word reading. Results from a universally administered fall screening assessment brought his difficulties to the attention of Ms. Flores, his general education teacher. Ms. Flores faithfully implemented her district’s literacy curriculum (Tier 1), which aligned with research-based instructional principles (e.g., Castles et al., 2018). Although this proved effective for most of the children in the district, for Diego and several other students it was not. Following the school’s MTSS procedures, Ms. Flores recommended Diego, along with the other students, for supplemental, small-group reading instruction with Ms. Martinez (Tier 2). Ms. Martinez worked with the students for the first and second quarters. The other students in the group responded well to this added support, each progressing to average performance levels. However, Diego made little progress. At the end of the second quarter, it became clear to Ms. Martinez that he required additional support. Therefore, she decided to use DBI to intensify his intervention (Tier 3).

Step 1: Validated Intervention Program

As illustrated in Figure 1, the first step in the DBI process is to select a validated program as the intervention platform. Based on the nature of Diego’s difficulties, Ms. Martinez sought an intervention that targeted letter-sound correspondences, decoding, and word recognition fluency. She reviewed the reading interventions listed in the Academic Intervention Tools Chart on the NCII website. There she found an intervention that met her criteria in terms of instructional content. She was careful also to ensure that empirical evidence indicated the program’s efficacy for students with challenges similar to Diego’s. Once the intervention program has been selected, a goal needs to be set for how much progress the student is expected to achieve. To this end, the student’s baseline performance level first needs to be assessed. Given Diego’s difficulty with word recognition, Ms. Martinez chose Word Identification Fluency (WIF; Zumeta et al., 2012) to assess his baseline performance level. As we explain later in Step 2, she also used the WIF to monitor his progress throughout the intervention. Importantly, WIF data have been shown to be reliable and valid for these dual purposes (Zumeta et al., 2012).

To determine Diego’s baseline performance level, Ms. Martinez administered three alternate (i.e., equivalent) WIF forms. Across these forms, Diego achieved a median score of four words correct per minute (WCPM), placing him well below even the typical fall first-grade performance level (~33 WCPM; Zumeta et al., 2012). With his baseline performance level now established, Ms. Martinez needed to determine a long-term performance goal for him. To this end, she considered the three methods described below for setting Diego’s year-end goal.

Average level. The first and simplest method would be to select as his goal the average spring WIF performance level for first-grade students. With this year-end goal, Diego would be expected to achieve at least average reading performance.

Average rate of improvement. The second method would base the goal on an average weekly rate of improvement (ROI). For example, say that on average, first-grade students improved by 1 WCPM every week on the WIF. To determine the goal, the teacher would multiply the average ROI by the number of weeks remaining in the intervention (e.g., 10 weeks). The product is then added to the student’s baseline performance level.

Individual rate of improvement. The final method is similar to the second. However, instead of using an average ROI, the student’s own baseline (before intervention) ROI is calculated. This is done over a period of several weeks, during which the student’s performance is assessed three or more times. For example, in this approach, Ms. Martinez would administer at least three more alternate forms of WIF to Diego. To determine his ROI, she would then enter these data into a computer spreadsheet (e.g., Microsoft Excel) and use the slope function. As with the second method, she would multiply his ROI by the number of weeks remaining in the intervention, then add the product to his baseline performance level.
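The arithmetic behind the second and third methods can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names and all score values below are hypothetical (only the median baseline of 4 WCPM, the example ROI of 1 WCPM per week, and the 10 remaining weeks come from the text), and the least-squares slope plays the role of the spreadsheet slope function.

```python
from statistics import median


def least_squares_slope(weeks, scores):
    """Ordinary least-squares slope of scores on weeks (WCPM gained per week)."""
    n = len(weeks)
    mean_x = sum(weeks) / n
    mean_y = sum(scores) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(weeks, scores))
    sxx = sum((x - mean_x) ** 2 for x in weeks)
    return sxy / sxx


def roi_goal(baseline, roi, weeks_remaining):
    """Goal = baseline + (rate of improvement x weeks remaining)."""
    return baseline + roi * weeks_remaining


# Hypothetical scores on three alternate WIF forms; the median is the baseline.
baseline = median([3, 4, 6])

# Method 2: average ROI (example values from the text: 1 WCPM/week, 10 weeks).
average_roi_goal = roi_goal(baseline, roi=1.0, weeks_remaining=10)

# Method 3: the student's own pre-intervention ROI, estimated from
# hypothetical scores collected over three weeks before the intervention.
pre_weeks = [0, 1, 2]
pre_scores = [4, 4, 5]
individual_roi = least_squares_slope(pre_weeks, pre_scores)
individual_goal = roi_goal(baseline, individual_roi, weeks_remaining=10)

print(baseline, average_roi_goal, round(individual_roi, 2), round(individual_goal, 1))
```

With these made-up numbers, the average-ROI goal is noticeably higher than the goal based on the student's own ROI, which illustrates how much the choice of method can matter.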

These three methods can produce dramatically different goals, and there are strengths and appropriate uses for each. Ms. Martinez first considered the method of basing Diego’s goal on the average performance level. However, she felt that the amount of growth that would be needed for Diego to achieve this goal was unrealistic. Similarly, she felt that even with intensive support, Diego would not likely progress at an average rate. Therefore, she chose the third method and calculated a goal based on Diego’s own baseline ROI.

Step 2: Progress Monitor

The intervention commences in the second step of the DBI process. In our example, Ms. Martinez began by implementing the selected intervention with close fidelity to its design. Each week thereafter, she monitored Diego’s progress using an alternate form of WIF. As with goal setting, there are different options for how frequently to monitor students’ progress (e.g., weekly, bi-weekly), and for the decision rules that can be applied to these data (Pentimonti et al., 2019). The latter is a point of critical importance. Simply collecting and graphing data is of little value if systematic rules are not used to guide instructional decisions.

The easiest decision rule involves examining where a student’s four most recent data points fall in relation to their goal line. If the four points fall above the line, then the goal is increased (see Step 1 for goal setting), but the intervention continues unchanged. If instead the four points overlap with the goal line, or are distributed around it, then both the goal and the intervention are unaltered. Finally, if all four points fall below the line, the goal is maintained but the intervention is adapted (Pentimonti et al., 2019).
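As a rough sketch, the four-point rule can be written as a small Python function. The function names, the straight goal line drawn from baseline to goal, and the scores are all hypothetical choices made for illustration; they are not taken from Pentimonti et al. (2019).

```python
def goal_line_value(week, baseline, goal, total_weeks):
    """Expected score at a given week on a straight line from baseline to goal."""
    return baseline + (goal - baseline) * week / total_weeks


def four_point_rule(recent_scores, expected_scores):
    """Compare the four most recent scores with the goal line at the same weeks."""
    if len(recent_scores) != 4 or len(expected_scores) != 4:
        raise ValueError("the four-point rule needs exactly four data points")
    pairs = list(zip(recent_scores, expected_scores))
    if all(score > expected for score, expected in pairs):
        return "raise goal, continue intervention"
    if all(score < expected for score, expected in pairs):
        return "keep goal, adapt intervention"
    # Points overlap or are distributed around the goal line.
    return "keep goal, continue intervention"


# Hypothetical example: baseline 4 WCPM, goal 14 WCPM over 10 weeks.
weeks = [3, 4, 5, 6]
expected = [goal_line_value(w, baseline=4, goal=14, total_weeks=10) for w in weeks]
scores = [5, 5, 6, 6]
decision = four_point_rule(scores, expected)
print(decision)
```

Here all four hypothetical scores fall below the goal line, so the function recommends keeping the goal and adapting the intervention.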

An alternative approach is to consider the slope (trendline) of the student’s 6–8 most recent data points (Pentimonti et al., 2019). Just as the ROI was determined in Step 1 for goal setting, the slope of these data can be calculated using spreadsheet software. The same decisions used with the four-point rule are then applied to the trendline. If the trendline falls above the goal line, the intervention continues as is, but toward an increased goal. If the trendline overlaps or closely parallels the goal line, then the goal and the intervention remain unaltered. Should the trendline fall beneath the goal line, then the goal is maintained but an intervention adaptation is considered.
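One way to operationalize the trendline analysis is sketched below in Python. It rests on an assumption that is ours, not the source's: the trendline and goal line are compared by slope, and a hypothetical tolerance of 0.1 WCPM per week defines what counts as "closely parallel." The function names and scores are likewise illustrative.

```python
def least_squares_slope(weeks, scores):
    """Ordinary least-squares slope of scores on weeks (WCPM gained per week)."""
    n = len(weeks)
    mean_x = sum(weeks) / n
    mean_y = sum(scores) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(weeks, scores))
    sxx = sum((x - mean_x) ** 2 for x in weeks)
    return sxy / sxx


def trendline_decision(weeks, scores, goal_slope, tolerance=0.1):
    """Compare the slope of the 6-8 most recent points with the goal-line slope.

    The tolerance is a hypothetical band for treating the two lines as parallel.
    """
    if len(weeks) != len(scores) or not 6 <= len(scores) <= 8:
        raise ValueError("expected 6 to 8 paired data points")
    trend = least_squares_slope(weeks, scores)
    if trend > goal_slope + tolerance:
        return "raise goal, continue intervention"
    if trend < goal_slope - tolerance:
        return "keep goal, adapt intervention"
    return "keep goal, continue intervention"


# Hypothetical example: the goal line rises from 4 to 14 WCPM over 10 weeks.
goal_slope = (14 - 4) / 10
weeks = [1, 2, 3, 4, 5, 6]
scores = [4, 5, 5, 6, 6, 6]
trend = least_squares_slope(weeks, scores)
decision = trendline_decision(weeks, scores, goal_slope)
print(round(trend, 2), decision)
```

In this made-up case the student gains about 0.4 WCPM per week against a goal-line slope of 1.0, so the sketch flags the intervention for adaptation. Comparing the position of the fitted line relative to the goal line, rather than the slopes, would be an equally defensible reading of the rule.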

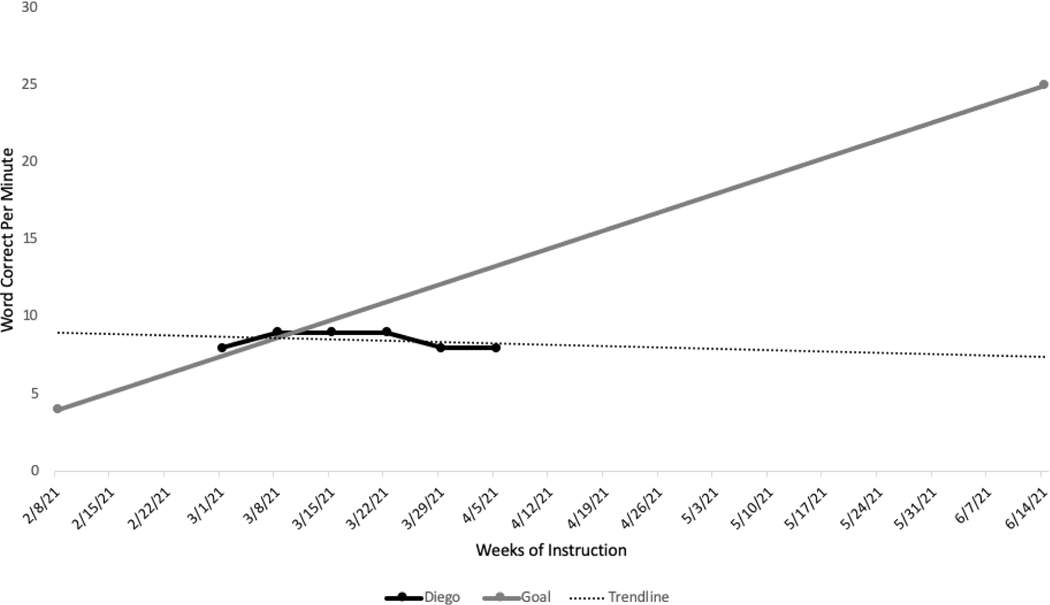

A third decision-rule approach combines the four-point rule and the trendline analysis (Pentimonti et al., 2019). For its reliability, Ms. Martinez selected this option. As illustrated in Figure 3, the trendline based on Diego’s six most recent data points fell well below his goal line. His four most recent points also fell beneath the line. Taken together, Ms. Martinez could reliably conclude that the intervention was not adequately addressing Diego’s needs. To achieve his goal, an adaptation was needed.

Figure 3.

Four-point rule and trendline analysis of Diego’s Word Identification Fluency progress monitoring data.

Step 3: Diagnostic Assessment

Although progress monitoring metrics like words correct per minute (WCPM), as used with WIF, are useful for identifying whether an intervention is working, they do not explain why and therefore cannot inform the nature of specific instructional adaptations. To understand why the intervention no longer met Diego’s needs, Ms. Martinez conducted a diagnostic assessment. She began by assessing his knowledge of phonics concepts taught up to that point in the intervention. Results revealed that Diego had successfully learned the target concepts. Moreover, he was able to successfully apply these concepts when reading real words and pseudowords. However, even when reading real words, Diego relied exclusively on a decoding strategy (i.e., sounding out and then blending together the letter-sound correspondences). Although this strategy is common in the early phases of reading development, failure to transition from decoding to automatic retrieval often portends serious reading difficulties (Castles et al., 2018).

Step 4: Intervention Adaptation

The information learned in Step 3 is put into action in Step 4. There are many potential ways to adapt an intervention. The Taxonomy of Intervention Intensity (Fuchs et al., 2017), shown in Figure 1, outlines six dimensions of adaptation: strength, dosage, alignment, attention to transfer, comprehensiveness, and behavioral support. Any one or combination of these may be appropriate for a particular student. For example, a student might be able to effectively decode words presented in isolation yet struggle to apply this strategy to similar words encountered in connected texts. For this student, the teacher might need to provide instruction that explicitly attends to transfer. In Diego’s case, Ms. Martinez concluded from the diagnostic assessment conducted in Step 3 that he required additional practice with word recognition fluency. She therefore increased the intervention dosage by adding time to each session for additional practice and feedback.

Step 5: Progress Monitor

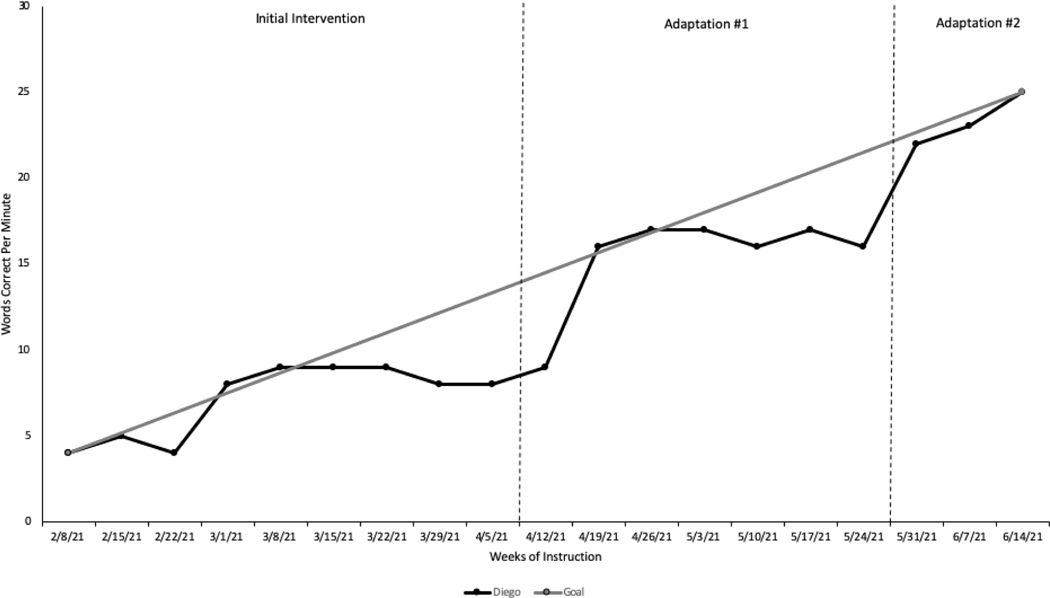

After adapting the intervention, Ms. Martinez continued to monitor Diego’s progress weekly with WIF. As shown in Figure 2, the adaptation she applied in Step 4 initially proved effective. However, over time, ongoing data indicated that his progress gradually slowed. Using the combined four-point and trendline analysis decision rule, Ms. Martinez determined that another adaptation was needed (Step 2). She again conducted a diagnostic assessment to better understand Diego’s difficulties (Step 3). Based on what she learned from the diagnostic data, she adapted the intervention (Step 4) and continued to monitor his performance (Step 5). Through this iterative process, Diego ultimately achieved his goal.

Figure 2.

Diego’s weekly performance on the Word Identification Fluency measure is graphed against his goal line.

Summary

After Ms. Martinez’s initial intervention (Tier 2) proved unsuccessful, she intensified instruction for Diego using DBI (Tier 3). She began by selecting a validated intervention as the platform from which to individualize Diego’s support. She approached this by first determining his baseline performance level and projecting a long-term goal for him (Step 1). She then began implementing the intervention and monitoring his progress toward the goal (Step 2). When the data revealed that he was not progressing adequately, she conducted a diagnostic assessment to identify a solution to accelerate his progress (Step 3). She then adapted the intervention’s dosage (Step 4) while continuing to monitor his progress frequently (Step 5). When the data again indicated that he was progressing inadequately, she repeated Steps 3 and 4. This iterative process of intervention refinement continued until Diego achieved his goal.

DBI and Multi-Tiered Systems of Support

Over the past two decades, many K-12 interventions have coalesced under the MTSS framework. This prevention system comprises three progressively intensive support levels: universal/primary (Tier 1), selected/secondary (Tier 2), and indicated/tertiary/intensive (Tier 3; Schulte, 2016). Universal level supports are intended for the entire population (e.g., core reading program). Selected level interventions provide more intensive and targeted support (e.g., small-group reading instruction) to students with higher probabilities of experiencing academic difficulties. When implemented consistently and with fidelity, high-quality universal intervention can be effective for many students (Greenberg & Abenavoli, 2017). Similarly, high-quality selected level interventions have been shown to be effective at reducing the frequency and magnitude of learning difficulties (Wanzek et al., 2016). However, to sustain positive effects, students need continuous access to high-quality universal level instruction and sometimes several doses of selected level interventions (Bailey et al., 2020).

Even with universal and selected interventions in place, some students require more intensive and ongoing support (Tier 3; Fuchs et al., 2014); this marks the divide between prevention and treatment. From our description above, it should be clear that DBI requires a level of intensity untenable and unnecessary at the universal level (Tier 1). Furthermore, DBI is not intended as a selected level short-term intervention. Rather, DBI should be reserved for students, like Diego, whose needs demand intensive and long-term support (Tier 3; Fuchs et al., 2014).

How Effective Is DBI?

In the years since Deno and colleagues first developed DBI, it has become the standard approach for providing specially designed instruction (Jenkins & Fuchs, 2012). It is important to ask, then, how effective is it? The major components of DBI (curriculum-based assessment and academic interventions) draw from some of the richest veins in education research (Wanzek et al., 2016). However, considerably fewer studies have examined the combined effects of these components. Nonetheless, a sizable literature on the efficacy of DBI has emerged.

In a systematic review of the DBI literature, Stecker and colleagues (2005) found that, in comparison to other Tier 3 approaches, the DBI process produced superior student outcomes in both reading and math (see McMaster et al., 2020, for an RCT reporting positive effects of DBI in writing). Similar results were reported in two recent meta-analyses (Filderman et al., 2018; Jung et al., 2018). In both, reading outcomes were significantly stronger for students whose teachers used DBI in comparison to those in control group conditions. Across these two reviews, average effects in reading were significant but modest. However, these averages represent aggregates of both strong and weak implementations of DBI. Furthermore, although modest, these effects may be practically meaningful for students, like Diego, with severe RD.

DBI Implementation

Certain conditions must be in place to realize the potential of DBI for improving instructional planning and student performance (Lemons et al., 2017). Foremost, teachers must understand the DBI process and be able to orchestrate its components. Fortunately, much has been learned about how to cultivate these competencies through pre- and in-service teacher professional development (Gesel et al., 2021). Even trained teachers require ongoing coaching and benefit from technological systems that support data analysis and decision making (Fuchs et al., 2021).

For example, Fuchs and colleagues (1984) found that students of teachers who received coaching on DBI performed better in reading than students whose teachers received a similar amount of coaching but for other instructional skills. Fuchs and colleagues (1989) also examined whether technological systems help teachers with analyzing student data and making instructional decisions. Teachers in their study were randomly assigned to one of two groups. In one group, a computer program graphed student progress monitoring data. In the other, the program also provided diagnostic reports and organized students into groups focused on specific instructional skills. The addition of these two features for the second group had a significant effect on student reading outcomes. Similarly, Fuchs and colleagues (1992) found that, in comparison to students whose teachers received graphed or even graphed and analyzed data, those whose teachers received computerized instructional recommendations performed significantly better on measures of reading fluency and comprehension. In sum, the effectiveness of DBI is greatly enhanced when teachers receive ongoing coaching and technological support.

Conclusion

In this article, we provided an overview of the DBI process, its effectiveness, and important factors to consider for implementation. Additional information about DBI and resources for implementation are freely available on the websites for the NCII (www.intensiveintervention.org) and Progress Center (www.promotingprogress.org).

Acknowledgments

The authors were supported by Grant H325D180086 from the Office of Special Education Programs in the U.S. Department of Education to Vanderbilt University and Grant P20 HD075443 from the Eunice Kennedy Shriver National Institute of Child Health & Human Development to Vanderbilt University. The content is solely the responsibility of the authors and does not necessarily represent official views of the Office of Special Education Programs, the Eunice Kennedy Shriver National Institute of Child Health & Human Development, or the National Institutes of Health.

References

- Bailey DH, Duncan GJ, Cunha F, Foorman BR, & Yeager DS (2020). Persistence and fade-out of education-intervention effects: Mechanisms and potential solutions. Psychological Science in the Public Interest, 21(2), 55–97. 10.1177/1529100620915848

- Castles A, Rastle K, & Nation K. (2018). Ending the reading wars: Reading acquisition from novice to expert. Psychological Science in the Public Interest, 19(1), 5–51. 10.1177/1529100618772271

- Deno SL, & Mirkin PK (1977). Data-based program modification: A manual. Reston, VA: Council for Exceptional Children.

- Filderman MJ, Toste JR, Didion LA, Peng P, & Clemens NH (2018). Data-based decision making in reading interventions: A synthesis and meta-analysis of the effects for struggling readers. The Journal of Special Education, 52(3), 174–187. 10.1177/0022466918790001

- Fuchs D, McMaster KL, Fuchs LS, & Al Otaiba S. (2014). Data-based individualization as a means of providing intensive instruction to students with serious learning disorders. In Swanson HL, Harris KR, & Graham S. (Eds.), Handbook of learning disabilities (2nd ed., pp. 526–544). New York, NY: Guilford Press.

- Fuchs LS, Deno SL, & Mirkin PK (1984). The effects of frequent curriculum-based measurement and evaluation on pedagogy, student achievement, and students’ awareness of learning. American Educational Research Journal, 21(2), 448–460. 10.3102/00028312021002449

- Fuchs LS, Fuchs D, & Hamlett CL (1989). Computer and curriculum-based measurement: Effects of teacher feedback systems. School Psychology Review, 18(1). 10.1080/02796015.1989.12085405

- Fuchs LS, Fuchs D, Hamlett CL, & Ferguson C. (1992). Effects of expert system consultation within curriculum-based measurement using a reading maze task. Exceptional Children, 58(5), 436–450. 10.1177/001440299205800507

- Fuchs L, Fuchs D, Hamlett CL, & Stecker P. (2021). A call for next-generation technology of teacher supports. Journal of Learning Disabilities. Advanced online publication. 10.1177/0022219420950654

- Fuchs LS, Fuchs D, & Malone AS (2017). The taxonomy of intervention intensity. TEACHING Exceptional Children, 50(1), 35–43. 10.1177/0040059918758166

- Gesel SA, LeJeune LM, Chow JC, Sinclair AC, & Lemons CJ (2021). A meta-analysis of the impact of professional development on teachers’ knowledge, skill, and self-efficacy in data-based decision-making. Journal of Learning Disabilities. Advanced online publication. 10.1177/0022219420970196

- Greenberg MT, & Abenavoli R. (2017). Universal interventions: Fully exploring their impacts and potential to produce population-level impacts. Journal of Research on Educational Effectiveness, 10(1), 40–67. 10.1080/19345747.2016.1246632

- Grigorenko EL, Compton DL, Fuchs LS, Wagner RK, Willcutt EG, & Fletcher JM (2020). Understanding, educating, and supporting children with specific learning disabilities: 50 years of science and practice. American Psychologist, 75(1), 37–51. 10.1037/amp0000452

- Jenkins JR, & Fuchs LS (2012). Curriculum-based measurement: The paradigms, history, and legacy. In Espin CA, McMaster KL, Rose S, & Wayman MM (Eds.), A measure of success: The influence of curriculum-based measurement on education (pp. 7–26). University of Minnesota Press.

- Jung P-G, McMaster KL, Kunkel AK, Shin J, & Stecker PM (2018). Effects of data-based individualization for students with intensive learning needs: A meta-analysis. Learning Disabilities Research & Practice, 33(3), 144–155. 10.1111/ldrp.12172

- Lemons CJ, Sinclair AC, Gesel S, Gandhi AG, & Danielson L. (2017). Supporting implementation of data-based individualization: Lessons learned from NCII’s first five years. Washington, DC: National Center on Intensive Intervention at American Institutes for Research.

- McMaster KL, Lembke ES, Shin J, Poch AL, Smith AR, Pyung-Gang P, … & Wagner K. (2020). Supporting teachers’ use of data-based instruction to improve students’ early writing skills. Journal of Educational Psychology, 112(1), 1–21. 10.1037/edu0000358

- National Center on Intensive Intervention. (2013). Data-based individualization: A framework for intensive intervention. U.S. Department of Education.

- Peng P, Fuchs D, Fuchs LS, Cho E, Elleman AM, Kearns DM, Patton S, & Compton DL (2020). Is “response/no response” too simple a notion for RTI frameworks? Exploring multiple response types with latent profile analysis. Journal of Learning Disabilities, 53(6), 454–468. 10.1177/0022219420931818

- Pentimonti JM, Fuchs LS, & Gandhi AG (2019). Issues of assessment with intensive intervention. In Edmonds RZ, Gandhi AG, & Danielson L (Eds.), Essentials of intensive intervention (pp. 30–51). New York, NY: Guilford Press.

- Schulte AC (2016). Prevention and response to intervention: Past, present, and future. In Jimerson SR, Burns MK, & VanDerHeyden AM (Eds.), Handbook of response to intervention: The science and practice of multi-tiered systems of support (pp. 59–73). Springer.

- Snowling MJ, & Hulme C. (2021). Annual research review: Reading disorders revisited – the critical importance of oral language. The Journal of Child Psychology and Psychiatry. Advanced online publication. 10.1111/jcpp.13324

- Stecker PM, Fuchs LS, & Fuchs D. (2005). Using curriculum-based measurement to improve student achievement: Review of research. Psychology in the Schools, 42(8), 795–819. 10.1002/pits.20113

- Wanzek J, Vaughn S, Scammacca N, Gatlin B, Walker MA, & Capin P. (2016). Meta-analyses of the effects of tier 2 type reading interventions in grades K-3. Educational Psychology Review, 28(3), 551–576. 10.1007/s10648-015-9321-7

- Zumeta RO, Compton DL, & Fuchs LS (2012). Using word identification fluency to monitor first-grade reading development. Exceptional Children, 78(2), 201–220. 10.1177/001440291207800204