Abstract

Human facial analysis (HFA) has recently become an attractive topic for computer vision research due to technological progress and mobile applications. HFA explores several issues, such as gender recognition (GR), facial expression, age, and race recognition, for automatically understanding social life. This study explores HFA from the angle of recognizing a person’s gender from their face. Several hard challenges arise, such as illumination, occlusion, facial emotions, image quality, and camera capture angle, making gender recognition more difficult for machines. The Archimedes optimization algorithm (AOA) was recently designed as a population-based metaheuristic optimization method, inspired by the physical notion of Archimedes’ principle. Compared to other swarm algorithms in the realm of optimization, this method promotes a good balance between exploration and exploitation. The convergence area is increased by incorporating extra data into the solution, such as volume and density. Because of these benefits of AOA and the fact that it has not been used to choose the best area of the face, we propose utilizing it as a wrapper feature selection technique, which is a real motivation in the field of computer vision and machine learning. The paper’s primary purpose is to automatically determine the optimal face area using AOA to recognize the gender of a person, categorized into two classes (men and women). In this paper, the facial image is divided into several subregions (blocks), where each area provides a vector of characteristics using one handcrafted technique, such as the local binary pattern (LBP), histogram of oriented gradient (HOG), or gray-level co-occurrence matrix (GLCM). Two experiments assess the proposed method (AOA). The first employs two benchmark datasets: the Georgia Tech Face dataset (GT) and the Brazilian FEI dataset. The second experiment is more challenging and uses Gallagher’s large uncontrolled dataset.
The experimental results show the good performance of AOA compared to other recent and competitive optimizers for all datasets. In terms of accuracy, the AOA-based LBP outperforms the state-of-the-art deep convolutional neural network (CNN) with 96.08% for the Gallagher’s dataset.

Keywords: Archimedes optimization algorithm (AOA), Human facial analysis (HFA), Wrapper feature selection (FS), Handcrafted methods, Automatic selection

Introduction

Human vision allows performing several tasks in parallel and rapidly, particularly facial detection, gender recognition, and recognizing the state of mind, which differentiates the human being from others (Khan et al. 2020).

The automation of gender recognition represents a real challenge for scientific researchers, and it has a significant impact on the commercial field and video surveillance (Greco et al. 2021). For example, shopping centers are interested in knowing the sales rate and the category of people who buy their products, particularly the gender, age, and origins, to increase the sales rate. Another area requires gender recognition to detect suspected people captured by surveillance cameras in large spaces such as airports, shopping malls, and gas stations. Gender recognition can substantially reduce the time spent searching for a target suspect, especially in critical situations such as a suicide bombing or an airport attack. Also, the Covid-19 pandemic obliged people to wear masks, so the automation of gender recognition plays a vital role in our life (Fitousi et al. 2021).

Automatic gender recognition has grown critical in recent years, particularly in crime detection. Numerous modalities have been employed to identify female and male subjects, including the face (Hsu et al. 2021), voice (Livieris et al. 2019), gait (Lee et al. 2022), hand (Afifi 2019), fingerprint (Jalali et al. 2021), and electrocardiogram (ECG) signals (Uçar et al. 2021).

For this study, we selected visual information, which is more direct and intuitive than more abstract signals such as biosignals (Comas et al. 2020). According to the literature, the human detection rate of GR is lower than 95%, which underlines the difficulty of automatic gender recognition (Boon Ng 2012). In this field, several challenges arise, such as rotated or occluded faces and men with long hair who resemble women, which strongly affect the performance of gender recognition.

In general, the task of gender recognition requires three crucial steps (Greco et al. 2020). The first step detects the facial area, and the second extracts the features from faces. The last step is reserved for classification into binary classes using machine learning approaches such as support vector machines (SVM), extreme learning machines (ELM), and multilayer perceptron (MLP). As a case study, Goel and Vishwakarma (2016) applied kernel principal component analysis (KPCA) to reduce the size of intensity vectors and provide 64 principal components for gender classification. This vector is used as input to an SVM evaluated with 2-fold cross-validation on three datasets. The quantitative study has shown accuracies of 97.375%, 99.7%, and 96.67% for the GT, AT&T, and Faces94 datasets, respectively. Several handcrafted descriptors have been introduced for face recognition, gender, and age identification during the last decades. This family includes mainly four categories: texture features, facial shape features, intensity pixels, and geometric features. The first type includes predominantly local binary pattern (LBP) (Sajja and Kalluri 2019), local phase quantization (LPQ) (Nguyen and Huong 2017), and local ternary patterns (LTP) (Osman and Viriri 2020), while the second type employs basically histogram of oriented gradient (HOG) (Khalifa and Şengül 2018), pyramid HOG (PHOG), and multi-level HOG (ML-HOG) (Bekhouche et al. 2017). The third type uses mostly gray-level co-occurrence matrix (GLCM) and rotated GLCM. Finally, the fourth type is extracted by the scale-invariant feature transform (SIFT). It is important to highlight that many algorithms are designed to enhance the basic handcrafted methods by fusing textural information with facial shape features for gender recognition (Micheal and Geetha 2019).
That work extracts two textural descriptors (dominated RLBP and rotation-invariant LPQ), combined with pyramid HOG, to determine the gender of persons automatically. In addition, the authors employed an SVM classifier based on three kernel functions (linear, polynomial, and RBF). The experimental study was validated on three datasets: FEI, LFW, and Adience. The obtained results showed that the SVM with the RBF kernel achieved the highest accuracy for FEI, LFW, and Adience with 95.3, 98.7, and 96%, respectively. In the same context, Geetha et al. (2019) designed a new gender descriptor based on edge features, texture features, and intensity characteristics. The first part is encoded by the 8-local directional pattern (8-LDP), LBP implements the second part, and the final part represents the pixel values of the image. The authors used FEI and a self-designed dataset to treat gender classification tasks. The obtained results reached an accuracy of 99% for FEI and 94% for the self-designed dataset using an SVM classifier based on the multi-block combined descriptor.

Nowadays, deep-learned features are increasingly used in machine learning, especially in computer vision for facial analysis (Singh et al. 2021), including gender, age, emotion and alignment (Aspandi et al. 2021), affect estimation (Aspandi et al. 2020), biosignal analysis (Comas et al. 2020), biomedical application (Peimankar and Puthusserypady 2021; Yu et al. 2021), and remote sensing (Peker 2021; Alhichri et al. 2021). Recently, several architectures have been built on pretrained CNNs such as VGG16, ResNet, GoogleNet, CaffeNet, AlexNet, and Inception for gender recognition (Huynh and Nguyen 2020; Savchenko 2019; Lapuschkin et al. 2017; Silva 2019; Abirami et al. 2020; Lin et al. 2020). Furthermore, a comparative study of several pretrained CNNs such as MobileNet, DenseNet, Xception, and SqueezeNet was carried out by Greco et al. (2020) for gender recognition. Hence, a great competition between handcrafted features and deep-learned (DL) features has been highlighted. For instance, Lapuschkin et al. (2017) used three pretrained CNNs called CaffeNet, VGG16, and GoogleNet for estimating age and gender information.

The great number of attributes generated by both methods (handcrafted and deep features) prompts researchers to develop new methods called wrapper feature selection based on metaheuristics (MHs). MHs are derived from different disciplines, allowing the development of many optimization algorithms that can be merged with machine learning techniques.

Metaheuristics inspired by animal behavior and by mathematical and physical laws have developed significantly in the field of feature selection. Examples include the manta-ray foraging optimizer (MRFO) (Ghosh et al. 2021), emperor penguin optimizer (EPO) (Dhiman et al. 2021), Harris hawks optimizer (HHO) (Thaher et al. 2020), gray wolf optimizer (GWO) (Al-Tashi et al. 2020), whale optimization algorithm (WOA) (Mafarja and Mirjalili 2018), Henry gas solubility optimization (HGSO) (Neggaz et al. 2020), multi-verse optimizer (MVO), equilibrium optimizer (EO) (Gao et al. 2020), and sine cosine algorithm (SCA) (Taghian and Nadimi-Shahraki 2019). Despite this development, the integration of these methods in complex problems such as gender recognition remains limited.

The theory of “No-free lunch” (Wolpert and Macready 1997) allows us to introduce a novel issue in the field of optimization. Furthermore, AOA is a physical-based population metaheuristic published in 2020. Also, AOA is a particular algorithm due to its encoding solutions. It includes the search space representation in D dimension with complementary information such as volume and density, which improves the convergence speed compared to other metaheuristics. This algorithm is more suitable for applying to real applications, and it contains fewer control parameters than other physical algorithms such as HGSO (Hashim et al. 2019), MVO (Mirjalili et al. 2016), and EPO (Dhiman and Kumar 2018). Despite its recent creation, this algorithm has grown significantly by affecting several application areas, such as optimization and engineering problems (Hashim et al. 2020), heart disease diagnosis (Anand 2021), industry design (Yıldız et al. 2021), WSN performance prediction (Preeti 2021), wind energy generation systems (Fathy et al. 2021), plant disease identification (Annrose et al. 2021), and PEM fuel estimation (Sun et al. 2021; Yao and Hayati 2021). Due to the prior merits of AOA and the fact that it has not been utilized to select the optimal area of interest on the face, this issue can be handled by utilizing a wrapper feature selection technique, which is a significant motivation in the field of computer vision and machine learning. Thus, gender recognition from face images is still a big challenge to this day, i.e., how to determine the most significant areas from face images characterized by local binary pattern (LBP), histogram of oriented gradient (HOG) or gray-level co-occurrence matrix (GLCM) descriptors intelligently and automatically?

This paper automatically determines the significant areas of the face based on handcrafted features (LBP, HOG, or GLCM) using the Archimedes optimization algorithm (AOA), in order to solve gender recognition problems with an optimal number of extracted face areas.

The major contributions of this paper are as follows:

Designing a novel wrapper physical algorithm AOA for predicting gender identification using an automatic selection of the optimal and significant areas of face images.

Comparing the performance of AOA with several recent and robust optimizers for facial analysis based on feature selection (FS).

Evaluating the impact of three handcrafted features based on LBP, HOG, and GLCM.

Testing the efficiency of AOA for gender recognition over small datasets (FEI (Thomaz and Giraldi 2010) and GT (Nefian 2013)) and a large dataset (Gallagher’s database (Gallagher and Chen 2009)).

Comparing the efficiency of handcrafted methods based on AOA to a deep convolutional neural network (CNN) on the large dataset (Gallagher’s database).

The rest of the paper is organized as follows. Section 2 reviews works that treat gender recognition based on handcrafted features, deep features, hybrid descriptors, and automatic feature extraction methods. In Sect. 3, three handcrafted descriptors are explained, including local binary pattern (LBP), histogram of oriented gradient (HOG), and gray-level co-occurrence matrix (GLCM). Section 4 gives the concept of the Archimedes optimization algorithm in detail. Section 5 then proposes our architecture of AOA wrapper feature selection for gender recognition by defining the structure of the encoding solution of an immersed object, the score function, and the designed framework. Section 6 represents the kernel of our paper, which includes the description of the datasets, the parameters of the algorithms, and the quantitative and graphical results, supported by statistical analysis using Wilcoxon’s rank-sum test (Neggaz et al. 2020). Finally, Sect. 7 presents our conclusion with some future horizons.

Related work

This part summarizes the relevant literature related to facial analysis.

Firstly, we give a recap of handcrafted features for gender recognition. Secondly, a short description of deep-learned features is shown for human facial analysis. Thirdly, hybrid features are described. Finally, an overview of wrapper feature selection-based gender recognition is presented.

Handcrafted features

Dago-Casas et al. (2011) proposed a combination of Gabor jets, pixels, and LBP, tested on the largest datasets captured in a real-world environment, including LFW and Gallagher’s datasets. The authors used two classifiers (SVM and LDA) to recognize gender as male and female. We noticed that the dimensionality is reduced using PCA. The obtained results show that Gabor+PCA+SVM is better than Gabor+PCA+LDA in terms of accuracy. The numerical results indicate that the accuracies are 86.61% for the Gallagher’s dataset and 94.01% for the LFW dataset.

Castrillón-Santana et al. (2013) proposed a combination of global and local features for recognizing gender in the FERET dataset. LBP and 2D-DCT were used as local features, while the authors used PCA as a global feature. The classification task is realized by k-NN, which reached 98.16% in terms of accuracy.

Surinta and Khamket (2013) used a combination of LBP and HOG features taken from multiple resolutions of the head and shoulders pattern. The experimental study is conducted following Dago’s procedure on Gallagher’s dataset (inter-ocular distance greater than 20 pixels, with 5-fold cross-validation). Two classifiers are used to accomplish the gender identification task: SVM and Bagging. In terms of accuracy, Bagging+LBP+HOG exceeds SVM+LBP+HOG, with the best model reaching 88.1%.

Ng et al. (2015) presented a review of gender recognition in facial images. They discussed several methods for extracting features, including LBP, SIFT, Gabor wavelets, and external cues. Additionally, they mentioned numerous datasets that pose difficulties for GR, including AR, FERET, BioID, CMU-PIE, FRGC, MORPH, LFW, and Gallagher’s. Because of its large number of facial photographs (28,231), collected in uncontrolled situations, the last dataset poses a significant challenge in the field of gender identification.

Castrillón-Santana et al. (2016) first extracted the periocular area and then determined the set of features using handcrafted methods including HOG, LBP, local ternary patterns (LTP), Weber local descriptor (WLD), and local oriented statistics information booster (LOSIB). Their idea consists of creating a grid of cells and then applying the handcrafted methods to each cell. The experimental study is validated on Gallagher’s dataset under Dago’s protocol (Dago-Casas et al. 2011). The best descriptor is a handcrafted combination of HOG, uniform LBP, LTP, and WLD, using SVM as the classifier. This combination achieved an accuracy of 82.91%.

Recently, several methods called handcrafted techniques have been developed in the literature. The extracted features are determined from the whole face or some regions by computing local gradient parameters, such as the histogram of oriented gradients (HOG) and the scale-invariant feature transform (SIFT) (Surinta et al. 2019). The authors used a support vector machine as a classifier for identifying the two classes, female and male, from the Color FERET dataset. The experimental study has shown that HOG outperformed the SIFT descriptor with SVM when the size of the training data is reduced.

Zhang et al. (2018) developed a novel fusion of facial features for gender recognition. The vector of characteristics is obtained by combining local binary pattern (LBP) and local phase quantization (LPQ) in a multi-block scheme. The classification task is realized using a support vector machine (SVM) and tested on Gallagher’s dataset. The experimental results show that the proposed method outperformed the basic versions (LBP and LPQ).

Ghojogh et al. (2018) designed four frameworks for gender identification using facial images. The first framework extracts features using the LBP texture method and reduces the dimension of the feature vector using PCA, which serves as input for a multilayer perceptron (MLP). The second framework uses Gabor filters to provide the feature vector, which is reduced by PCA and served as input for a kernel SVM classifier. The third framework extracts the lower part of the face as a sub-image, which is reshaped into a column vector and served as input for a kernel SVM classifier. The last framework extracts 34 landmarks from the face, classified by the linear discriminant algorithm (LDA). All proposed frameworks are assessed on the FEI dataset, and the experimental results show that the third framework outperforms the others with 90% accuracy. However, the accuracy increases to 94% when a weighted vote takes the decision. Also, gender identification is solved by texture and geometric features, which can be determined by local binary pattern (LBP) and gray-level co-occurrence matrices (GLCM) (Omer et al. 2019). More recently, several enhanced versions of LBP have been developed for face and gender recognition, such as local directional pattern (LDP) and local phase quantization (LPQ). In the same context, a novel variant of LBP named adaptive patch-weight LBP (APWLBP) was proposed by Chen and Jeng (2020). Their method used a pyramid structure to compute the gradient using weight parameters determined by Eigen theory. The main objective of APWLBP is to determine the optimal projection on the hyperplane with a high value of variance for gender recognition. The performance of APWLBP-based SVM is very competitive against CNN on the three-dimensional Adience and LFW datasets (Gary et al. 2007). In the same context, scale-invariant feature transform (SIFT) is combined with trainable features (CROSSFIRE) (Pai and Shettigar 2021).

Deep-learned features

In recent years, deep learning CNNs have dominated many computer vision applications.

Mansanet et al. (2016) developed a local-deep neural network (LDNN) model for gender recognition; this model was constructed using a feed-forward NN without dropout to extract features from the input photographs. After detecting the edges of the facial picture, small image patches were selected around these edges. All the image patches were used to train the neural networks. The final output was calculated by averaging the expectations for all the patches in the input test picture. The LDNN model achieves an accuracy of 96.25% and 90.58% on the large LFW and Gallagher’s datasets, respectively.

Orozco et al. (2017) proposed a deep CNN architecture to recognize gender on the two largest datasets, named LFW (13233 pictures) and Gallagher’s dataset (28231 images), captured in real-world conditions. The authors first applied a face detection step using Haar features embedded in the AdaBoost detector. Then, the classification task is realized by Ubunsa CNN. The obtained results show that the accuracies on the LFW and Gallagher’s datasets are 95.42% and 91.48%, respectively.

A novel synergy between CNN and ELM is illustrated by the work of Duan et al. (2018) for age and gender identification. The CNN is used as a feature extractor, whereas ELM is used as a classifier to simultaneously determine the person’s age and distinguish between male and female from face images. Acien et al. (2018) employed two pretrained CNN architectures named VGG16 and ResNet for measuring ethnicity and gender information. Ito et al. (2018) designed four pretrained CNN architectures, AlexNet, VGG-16, ResNet-152, and Wide-ResNet-16-8, for predicting age and gender over the IMDB-WIKI dataset. The experimental study showed that Wide-ResNet presented a high accuracy compared to the other pretrained CNNs. Mane and Shah (2019) explored human facial analysis using three points: face recognition, gender, and expression recognition. This study focuses only on gender recognition, for which the authors used a CNN. Agrawal and Dixit (2019) used a CNN as a feature extractor for predicting age and gender from facial images. Then, dimensionality reduction is applied using principal component analysis (PCA), and the classification task is implemented using a feed-forward neural network.

Haider et al. (2019) proposed a specific deep CNN architecture for real-time gender identification using smartphones. The architecture comprises 4 convolutional layers, 3 max-pooling layers, 2 fully connected layers, and a single layer for regression. The training is realized by fusing two datasets, FEI and CAS-PEAL, including 200 persons with 2800 faces and 1040 persons with 30,871 faces, respectively. For the FEI dataset, the deep-gender model reached an accuracy of 98.75% by considering a specific process, including alignment, before reducing the size of the facial image. However, the proposed method reached an accuracy of 97.73% on the CAS-PEAL-R1 dataset. It is important to indicate that the authors evaluated their method with 5-fold cross-validation.

Abdalrady and Aly (2020) used a straightforward feature fusion technique based on two simple CNN architectures. The authors created a simple CNN called PCANet that replaces the complex convolutional layer filters with a bank of PCA filters in each stage. Based on Gallagher’s dataset, the suggested model “2-stage PCANet” performance was analyzed and found to be 89.65%.

Deep-learned assisted by handcrafted features

A significant number of studies have investigated deep-learned features and their impact compared to handcrafted features and fused features (handcrafted with deep features) for gender identification (Simanjuntak and Azzopardi 2020).

Dwivedi and Singh (2019) first summarized some handcrafted methods, such as LBP, HOG, SIFT, weighted HOG, and the CROSSFIRE filter. Secondly, the authors explained the role of CNN, which can serve a double task, i.e., as both feature extractor and classifier, to recognize age and gender from the face.

Althnian et al. (2021) have employed three methods, including LBP, HOG, and PCA as handcrafted features, deep CNN features, and fused features based on three combinations named LBP-DL, HOG-DL, and PCA-DL. Furthermore, gender identification task is realized by two classifiers, SVM and CNN. The experimental results showed a high average accuracy of 88.1% obtained by fused features (LBP-DL) and SVM classifier, tested on two datasets, LFW and Adience. Additionally, Hsu et al. (2021) designed three occlusion methods assisted by AdienceNet and VGG16 for recognizing age and gender tasks.

Automatic feature extraction methods using evolutionary algorithms

A few works have been published in this area. For example, Zhou and Li (2019) used a genetic algorithm (GA) to classify gender-based faces automatically. Their idea involves applying GA in order to determine the optimal set of Eigen-features extracted from faces by PCA and classified by neural network. The experimental study is validated using two datasets, including FEI and FERET. The obtained results achieved 96% and 94% as accuracy rates, respectively.

Ghazouani (2021) applied genetic programming (GP) for HFA based on feature selection, specifically facial expression recognition. The author applied several steps, such as face detection using HOG as descriptor and SVM as binary classifier. Then, the feature extraction step is realized using the texture method (LBP) and geometric methods (linear and eccentricity features). Finally, the author applied GP to select the relevant features of the fused set to recognize the emotions.

Features extraction

Several methods have been proposed to describe texture characteristics. In this section, we present a brief study of some existing techniques for extracting texture features, which are applied to the analysis of facial images. The purpose of extracting descriptors (characteristics) in pattern recognition is to express primitives in a numerical or symbolic form called encoding. This part introduces the descriptors used in the experiments and results part. These are first of all the local binary patterns (LBP), then the histogram of oriented gradient (HOG) descriptors, and finally the gray-level co-occurrence matrix (GLCM).

Local binary patterns (LBP)

Texture descriptors based on local binary patterns were initially proposed by Ojala et al. (2002). The computation of the resulting image from the LBP application is akin to a correlation operation while applying a filter to a digital image. It suffices to process each image pixel by considering the eight pixels of its immediate neighborhood. The neighborhood of a pixel forms a matrix of pixels where the pixel to be processed is in the center, and its neighborhood is around. Figure 1 shows an example of the execution of the LBP algorithm relating to the steps described below.

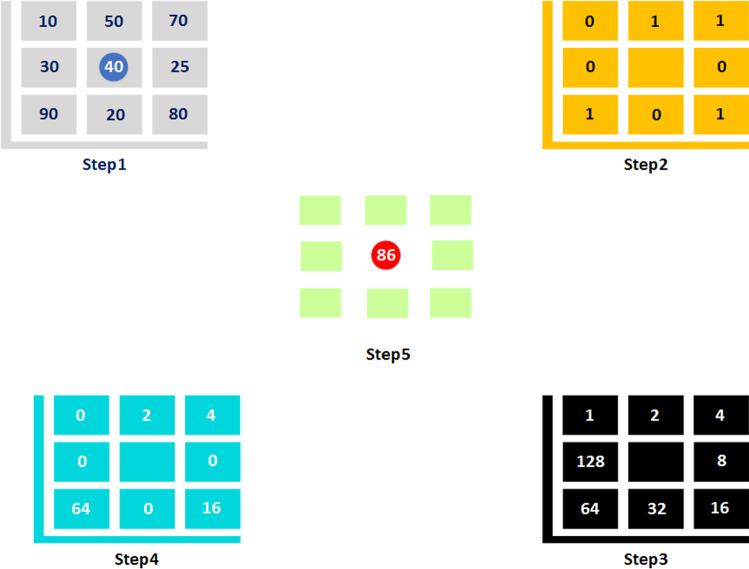

Fig. 1.

Basic LBP operator

Step 1 Extraction of the neighborhood of the pixel to be processed. The eight intensity values of pixel’s neighborhood to be processed are extracted from a matrix of pixels. In this example, each pixel has a different gray intensity value. The pixel being processed has the value of intensity 40.

Step 2 A thresholding is performed on the intensity value of the neighboring pixels. Any pixel with an intensity value greater than or equal to the intensity value of the processed pixel is assigned the value 1. The value 0 is assigned to any intensity value lower than that of the processed pixel.

Step 3 A multiplier matrix is stored. This matrix will describe the resulting local binary form uniquely in the next step of the algorithm.

Step 4 Element-by-element multiplication. This operation is carried out between the matrix resulting from the thresholding of step 2 and the multiplying matrix of step 3.

Step 5 The summation of the values of the resulting matrix from step 4 is performed. This sum is related in the output image to the corresponding coordinates of the pixel to be processed in the input image. The algorithm re-executes steps 1 to 5 until all the input image pixels are processed. According to the procedure for identifying LBP, a histogram is calculated to characterize the frequency of appearance of various patterns. The computed number for each pixel in step 5 uniquely identifies a gray intensity pattern among the possible patterns. The shape of the resulting histogram is characteristic of the texture studied by the LBP algorithm.
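The five steps above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function names (`lbp_code`, `lbp_image`, `lbp_histogram`) are hypothetical, and the clockwise neighbor ordering that fixes the multiplier weights is an assumed convention, since the steps do not specify it.

```python
import numpy as np

# Clockwise neighbor offsets (row, col), starting at the top-left pixel.
# The ordering, and hence the weight of each neighbor, is an assumption.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(patch):
    """LBP code of the center pixel of a 3x3 patch (steps 1 to 5)."""
    patch = np.asarray(patch)
    center = patch[1, 1]
    code = 0
    for bit, (dr, dc) in enumerate(OFFSETS):
        # Step 2: threshold the neighbor against the center pixel.
        s = 1 if patch[1 + dr, 1 + dc] >= center else 0
        # Steps 3 to 5: multiply by the weight 2**bit and accumulate.
        code += s << bit
    return code


def lbp_image(gray):
    """Apply lbp_code to every interior pixel of a 2-D grayscale array."""
    gray = np.asarray(gray)
    h, w = gray.shape
    out = np.zeros((h - 2, w - 2), dtype=np.uint8)
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            out[r - 1, c - 1] = lbp_code(gray[r - 1:r + 2, c - 1:c + 2])
    return out


def lbp_histogram(gray):
    """256-bin histogram of the LBP codes, characterizing the texture."""
    return np.bincount(lbp_image(gray).ravel(), minlength=256)
```

Border pixels are skipped here because they lack a full eight-pixel neighborhood; padding the image would be an equally reasonable choice.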

In general, extracting features from facial images using LBP starts by dividing the input image into several blocks. Then, we extract the histogram for each block based on LBP. The final step consists of concatenating all histograms to realize gender recognition. To calculate the LBP code in a neighborhood of $P$ pixels with a radius $R$, we simply encode which gray levels are greater than or equal to the central value using Eq. (1).

$$LBP_{P,R} = \sum_{p=0}^{P-1} S(g_p - g_c)\, 2^{p} \tag{1}$$

where $g_p$ and $g_c$ are the gray levels of a neighboring pixel and the central pixel, respectively. $S$ indicates the Heaviside function defined by Eq. (2):

$$S(x) = \begin{cases} 1, & x \ge 0 \\ 0, & x < 0 \end{cases} \tag{2}$$

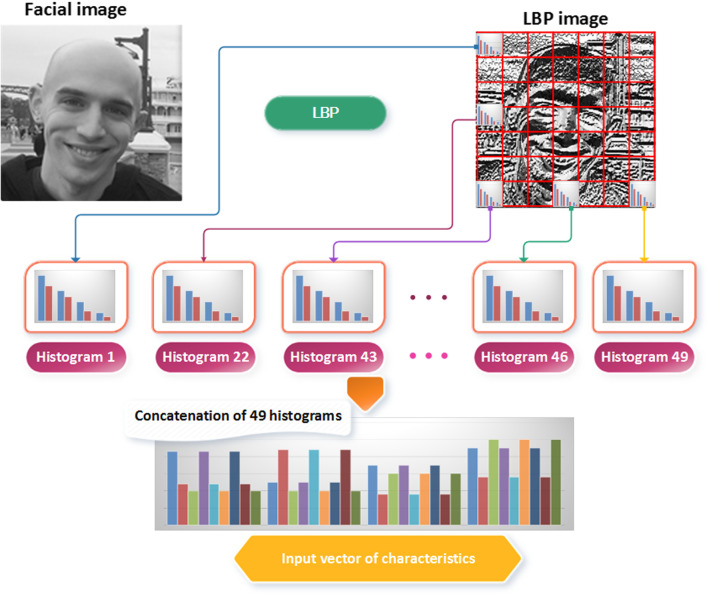

According to the work of Ghazouani (2021), the uniform LBP operator with eight neighbors provides a total of 58 uniform patterns and a total of 59 bins. As depicted in Fig. 2, the cropped face is divided into blocks. The 59 bins are computed for each block and concatenated to construct a global histogram for the entire face. Therefore, each face is represented by a histogram of 59 bins per block.

Fig. 2.

Face coding using a set of LBP histograms
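The block-wise coding described above can be sketched as follows. This is a hedged illustration: the 4 x 4 grid is an arbitrary placeholder (the number of blocks used in the paper is not recoverable here), the names `is_uniform`, `BIN_OF_CODE`, and `blockwise_histogram` are hypothetical, and the input is assumed to be an image of 8-bit LBP codes whose bits follow the circular order of the neighbors.

```python
import numpy as np


def is_uniform(code):
    """True if the circular 8-bit pattern has at most two 0/1 transitions."""
    bits = [(code >> k) & 1 for k in range(8)]
    transitions = sum(bits[k] != bits[(k + 1) % 8] for k in range(8))
    return transitions <= 2


# Map each of the 256 codes to one of 59 bins:
# one bin per uniform pattern (58 of them) plus one shared bin for the rest.
UNIFORM_CODES = [c for c in range(256) if is_uniform(c)]
BIN_OF_CODE = np.full(256, len(UNIFORM_CODES), dtype=np.int64)
BIN_OF_CODE[UNIFORM_CODES] = np.arange(len(UNIFORM_CODES))


def blockwise_histogram(codes, grid=(4, 4)):
    """Concatenate the 59-bin histograms of every block of an LBP code image."""
    codes = np.asarray(codes)
    rows, cols = grid
    features = []
    for band in np.array_split(codes, rows, axis=0):
        for block in np.array_split(band, cols, axis=1):
            hist = np.bincount(BIN_OF_CODE[block.ravel()], minlength=59)
            features.append(hist)
    return np.concatenate(features)
```

With this layout, the descriptor length is 59 times the number of blocks, and each contiguous slice of 59 values corresponds to one face block, which is what makes block-level selection possible.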

Histogram of oriented gradient (HOG)

HOG is a powerful descriptor proposed by Dalal and Triggs in 2005, initially developed for human detection (Dalal et al. 2005). It was later extended and applied to other computer vision problems, including facial recognition (Hung 2021), gender and age estimation (Patil 2021), detection of plant pathologies (Pattnaik and Parvathi 2021), and facial expression recognition (Lakshmi and Ponnusamy 2021). HOG describes the appearance and local shape of the object in an image using the distribution of gradients. The characteristic vector of an image I(x, y) using the HOG technique is obtained by the following procedure:

Step 1 Divide the image $I(x, y)$ into equal blocks, where each block contains regular cells of a fixed size in pixels.

Gradient values are computed for each pixel using a centered derivative filter in the horizontal and vertical directions. For this, the following masks and gradients are defined by Eqs. (3) to (6):

$$D_x = \begin{bmatrix} -1 & 0 & 1 \end{bmatrix} \tag{3}$$

$$D_y = \begin{bmatrix} -1 & 0 & 1 \end{bmatrix}^{T} \tag{4}$$

$$G_x(x, y) = I(x, y) * D_x \tag{5}$$

$$G_y(x, y) = I(x, y) * D_y \tag{6}$$

Step 2 The magnitude and gradient orientation of each pixel $(x, y)$ are calculated using Eqs. (7) and (8):

$$|G(x, y)| = \sqrt{G_x(x, y)^2 + G_y(x, y)^2} \tag{7}$$

$$\theta(x, y) = \arctan\left(\frac{G_y(x, y)}{G_x(x, y)}\right) \tag{8}$$

$G_x(x, y)$ and $G_y(x, y)$ represent the horizontal gradient and the vertical gradient at pixel $(x, y)$, respectively.

Step 3 The histogram of the orientation based on the gradient inside each cell is calculated by quantizing unsigned gradients at each pixel into 9 orientation channels (bins). The histogram bins are uniformly spaced from 0° to 180° (unsigned case) or from 0° to 360° (signed case).

Step 4 The characteristic vector for each cell is normalized using the histograms of its block. In this work, we use the L2-norm for the normalization of the blocks; the normalization is calculated using the following equation:

$$Hist_{norm} = \frac{Hist}{\sqrt{\|Hist\|_2^2 + \epsilon^2}} \tag{9}$$

where $Hist$ is the non-normalized vector containing all the histograms in a block, $\|Hist\|_2$ is the L2 norm of the descriptor vector, and $\epsilon$ is a regularization term.

Step 5 The characteristic vector of each block is formed by concatenating the histogram vectors of all the cells in the block. The HOG characteristic vector is formed by concatenating the characteristic vectors of all the blocks for a given image.
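The HOG procedure above can be sketched with NumPy alone. This is an illustrative sketch under stated assumptions rather than the paper's implementation: the function name `hog_descriptor` is hypothetical, the cell size (8 pixels), block size (2 x 2 cells), and regularization value are placeholder choices, blocks are assumed to overlap with a stride of one cell, and each pixel votes into a single orientation bin with a weight equal to its gradient magnitude.

```python
import numpy as np


def hog_descriptor(image, cell=8, block=2, bins=9, eps=1e-5):
    """HOG feature vector of a 2-D grayscale image (unsigned gradients)."""
    img = np.asarray(image, dtype=np.float64)

    # Centered differences with the mask [-1, 0, 1]; border gradients stay 0.
    gx = np.zeros_like(img)
    gy = np.zeros_like(img)
    gx[:, 1:-1] = img[:, 2:] - img[:, :-2]
    gy[1:-1, :] = img[2:, :] - img[:-2, :]

    # Gradient magnitude and unsigned orientation in [0, 180) degrees.
    magnitude = np.hypot(gx, gy)
    orientation = np.degrees(np.arctan2(gy, gx)) % 180.0

    # Quantize each orientation into one of `bins` channels.
    bin_index = np.minimum((orientation / (180.0 / bins)).astype(int), bins - 1)

    # Magnitude-weighted orientation histogram of every cell.
    n_rows, n_cols = img.shape[0] // cell, img.shape[1] // cell
    cell_hist = np.zeros((n_rows, n_cols, bins))
    for r in range(n_rows):
        for c in range(n_cols):
            rows = slice(r * cell, (r + 1) * cell)
            cols = slice(c * cell, (c + 1) * cell)
            cell_hist[r, c] = np.bincount(
                bin_index[rows, cols].ravel(),
                weights=magnitude[rows, cols].ravel(),
                minlength=bins,
            )

    # L2-normalize each block of cells and concatenate all blocks.
    features = []
    for r in range(n_rows - block + 1):
        for c in range(n_cols - block + 1):
            v = cell_hist[r:r + block, c:c + block].ravel()
            features.append(v / np.sqrt(np.sum(v ** 2) + eps ** 2))
    return np.concatenate(features)
```

For a 32 x 32 image with these defaults there are 4 x 4 cells and 3 x 3 overlapping blocks, so the descriptor has 3 x 3 x 2 x 2 x 9 = 324 values. The image must contain at least one full block for the concatenation to succeed.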

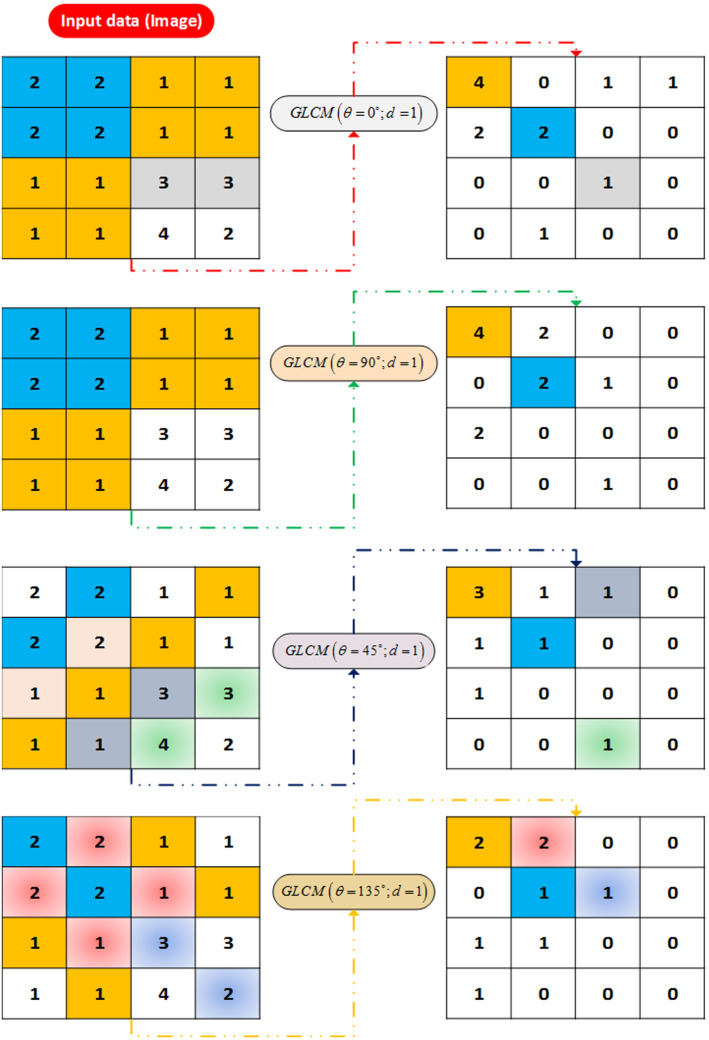

Gray-level co-occurrence matrix (GLCM)

GLCM is a method widely used in image processing that belongs to the class of texture-based statistical methods. Textural content is expressed differently depending on the distance $d$ and the orientation $\theta$ of the displacement considered between the pairs of sites, which provides 4 GLCMs due to the 4 orientations defined by $\theta$: $0^\circ$, $45^\circ$, $90^\circ$, and $135^\circ$ (Vimal et al. 2021). Figure 3 illustrates the configuration of 4 GLCMs that correspond to the 4 directions with a fixed distance $d$. The extracted features from GLCMs include mainly the energy, the contrast, entropy, correlation, homogeneity, dissimilarity, the cluster shade, and the cluster prominence, which are explained briefly below. Here, $p(i, j)$ denotes the normalized GLCM entry for the gray levels $i$ and $j$, and $\mu_i$, $\mu_j$, $\sigma_i$, and $\sigma_j$ are the means and standard deviations of its row and column marginals.

- The energy: expresses the regularity of the texture, which can be computed by:

$$\mathrm{Energy} = \sum_{i}\sum_{j} p(i, j)^2 \tag{10}$$

It is important to note that a higher value of energy signifies a completely homogeneous image.

- The contrast: It measures the rate of local variation in the picture (I). The formula of the contrast is given by:

$$\mathrm{Contrast} = \sum_{i}\sum_{j} (i - j)^2\, p(i, j) \tag{11}$$

- The entropy: is the inverse of energy and characterizes the irregular appearance of the image, hence a strong correlation between these two attributes. The formula of the entropy is computed by:

$$\mathrm{Entropy} = -\sum_{i}\sum_{j} p(i, j)\, \log p(i, j) \tag{12}$$

- Correlation: It can be compared to a measure of the linear dependence of gray levels in the image. It is calculated by:

$$\mathrm{Correlation} = \sum_{i}\sum_{j} \frac{(i - \mu_i)(j - \mu_j)\, p(i, j)}{\sigma_i\, \sigma_j} \tag{13}$$

- Homogeneity: The homogeneity changes inversely to the contrast and takes on high values if the differences between the analyzed pixel pairs are weak. Therefore, it is more sensitive to the diagonal elements of the GLCM, unlike the contrast, which depends more on the elements far from the diagonal. It is measured by:

$$\mathrm{Homogeneity} = \sum_{i}\sum_{j} \frac{p(i, j)}{1 + (i - j)^2} \tag{14}$$

- Dissimilarity: It expresses the same characteristics of the image as contrast, with the difference that the weight of the GLCM inputs increases linearly as they move away from the diagonal rather than quadratically as in the case of contrast. It is calculated by:

$$\mathrm{Dissimilarity} = \sum_{i}\sum_{j} |i - j|\, p(i, j) \tag{15}$$

- The cluster shade and the cluster prominence give information on the degree of symmetry of the GLCM. The cluster shade is defined by:

$$\mathrm{Shade} = \sum_{i}\sum_{j} (i + j - \mu_i - \mu_j)^3\, p(i, j) \tag{16}$$

whereas the cluster prominence is given by:

$$\mathrm{Prominence} = \sum_{i}\sum_{j} (i + j - \mu_i - \mu_j)^4\, p(i, j) \tag{17}$$

Fig. 3.

An example of GLCMs based on different orientations

Similar to multi-blocks LBP/HOG, the whole image is divided into blocks, where from each block, the statistical moments GLCM are extracted and then combined to generate the descriptors vectors.
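As a rough illustration of the GLCM features described above, the following sketch builds a symmetric, normalized co-occurrence matrix for one orientation and derives a few moments from it. The formulas are the commonly used Haralick-style definitions and are an assumption here, since the paper's exact expressions are not reproduced in this text; in particular, the homogeneity variant with `1 + (i - j)^2` in the denominator is one of several in use. The names `glcm`, `features`, and `OFFSETS` are illustrative.

```python
import math

# (row, column) displacement for each orientation at distance 1.
OFFSETS = {0: (0, 1), 45: (-1, 1), 90: (-1, 0), 135: (-1, -1)}


def glcm(img, levels, angle, d=1):
    """Symmetric, normalized co-occurrence matrix of an integer-valued image."""
    dr, dc = OFFSETS[angle][0] * d, OFFSETS[angle][1] * d
    counts = [[0.0] * levels for _ in range(levels)]
    h, w = len(img), len(img[0])
    for r in range(h):
        for c in range(w):
            r2, c2 = r + dr, c + dc
            if 0 <= r2 < h and 0 <= c2 < w:
                counts[img[r][c]][img[r2][c2]] += 1
                counts[img[r2][c2]][img[r][c]] += 1  # count both directions
    total = sum(map(sum, counts))
    return [[v / total for v in row] for row in counts]


def features(p):
    """A subset of the texture moments computed from a normalized GLCM p."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n)]
    return {
        "energy": sum(v * v for _, _, v in cells),
        "contrast": sum((i - j) ** 2 * v for i, j, v in cells),
        "entropy": -sum(v * math.log(v) for _, _, v in cells if v > 0),
        "homogeneity": sum(v / (1 + (i - j) ** 2) for i, j, v in cells),
        "dissimilarity": sum(abs(i - j) * v for i, j, v in cells),
    }
```

On a constant image the energy is 1 and the contrast is 0, whereas a checkerboard pattern gives a lower energy and a higher contrast, which matches the qualitative description of these attributes.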

Archimedes optimization algorithm (AOA)

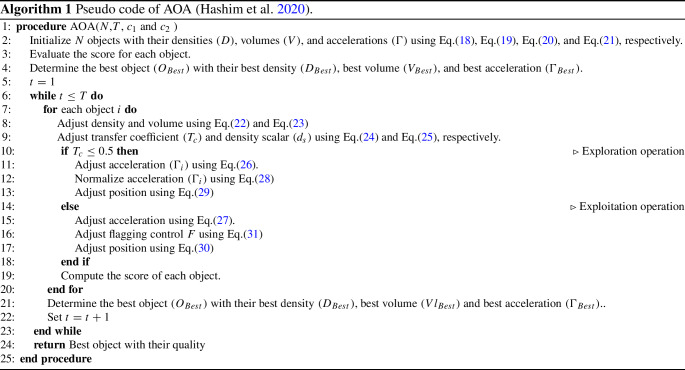

AOA is a physics-inspired algorithm based on Archimedes’ principle. It was introduced by Fatma Hashim in 2020 and belongs to the class of metaheuristics (Hashim et al. 2020). Its particularity lies in the encoding of the solution, which attaches three additional pieces of information to each basic agent: volume (V), density (D), and acceleration. The group of agents is first generated randomly in Dim dimensions, together with random values of V, D, and acceleration. Each object is then evaluated to determine the best object.

During the AOA process, the density and volume are updated to change the acceleration based on the concept of collision between objects, which plays an important role in determining the new position of the current solution. The general steps of AOA are described as follows:

The first step–Initialization: This step randomly initializes the real-valued population of N objects using Eq. (18). Each object is also characterized by its density, volume, and acceleration, which are initialized randomly using Eqs. (19), (20), and (21):

| 18 |

| 19 |

| 20 |

| 21 |

where the index i denotes the i-th object, and the upper and lower bounds are the maximal and minimal limits of the search space, respectively. The random vectors used in Eqs. (18) to (21) are drawn from [0, 1].

The population is then evaluated by computing the score of each object, and the best object is recorded together with its density, volume, and acceleration.

The second step–The update of densities and volumes: In this step, the density and volume of each object are updated under the control of the best density and best volume using Eqs. (22) and (23):

| 22 |

| 23 |

where are random scalars in [0, 1].

The third step–Transfer coefficient and density scalar: In this step, collisions between objects occur until an equilibrium state is reached. The principal role of the transfer coefficient is to switch from exploration to exploitation mode; it is defined by Eq. (24):

| 24 |

The transfer coefficient increases exponentially over time until reaching 1. Here, t is the current iteration, while T denotes the maximum number of iterations. In addition, the density scalar decreases over time, which helps AOA converge toward an optimal solution; it is given by Eq. (25):

| 25 |

The fourth step–Exploration phase: In this step, a collision between agents occurs using a randomly selected material (Mr). The acceleration of each object is updated using Eq. (26) when the transfer coefficient is less than or equal to 0.5.

| 26 |

The fifth step–Exploitation phase: In this step, no collision between agents occurs. The acceleration of each object is updated using Eq. (27) when the transfer coefficient is greater than 0.5.

| 27 |

where is the acceleration of the optimal object .

The sixth step–Normalization of acceleration: In this step, we normalize the acceleration to determine the rate of change using Eq. (28):

| 28 |

where the upper and lower normalization bounds are fixed to 0.9 and 0.1, respectively. The normalized acceleration gives the proportion of the step that each agent changes. A lower acceleration value indicates that the object is operating in the exploitation mode; otherwise, the object is operating in the exploration mode.

The seventh step–The update process: In the exploration phase, the position of each object at the next iteration is modified by Eq. (29), whereas in the exploitation phase the position is updated by Eq. (30).

| 29 |

where $C_1$ is fixed to 2.

| 30 |

where $C_2$ is fixed to 6.

The parameter is positively correlated with the time and this parameter is proportionally linked to the transfer coefficient , i.e., . The main role of this parameter is to ensure a good balance between exploration and exploitation operations. During the first iterations, the margin between the best object and the other object is higher, which provides a high random walk. However, the margin will be reduced in last iterations and provide a low random walk.

F is a flag that controls the search direction, defined by Eq. (31):

| 31 |

where .

The eighth step–The evaluation: In this step, we evaluate the new population using the score index Sc to determine the best object and its associated density, volume, and acceleration.
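The eight steps can be put together in a compact sketch. Because Eqs. (18) to (31) are not reproduced in this text, the update rules below follow the AOA formulation as commonly published (Hashim et al. 2020) to the best of our understanding and should be read as an assumption, not as the authors' exact implementation. The constants 2 and 6 in the position updates and the normalization bounds 0.9 and 0.1 come from the text; `c3`, `c4`, the population-wide min-max normalization, and the function name `aoa_maximize` are illustrative choices.

```python
import math
import random


def aoa_maximize(score, dim, n_objects=10, max_iter=100, lb=0.0, ub=1.0,
                 c1=2.0, c2=6.0, c3=2.0, c4=0.5, u=0.9, l=0.1, seed=0):
    """Sketch of an AOA loop that maximizes `score`; returns (best_x, best_score)."""
    rng = random.Random(seed)

    def rand_vec(lo=0.0, hi=1.0):
        return [lo + rng.random() * (hi - lo) for _ in range(dim)]

    # Step 1: random positions, densities, volumes, and accelerations.
    pos = [rand_vec(lb, ub) for _ in range(n_objects)]
    den = [rand_vec() for _ in range(n_objects)]
    vol = [rand_vec() for _ in range(n_objects)]
    acc = [rand_vec(lb, ub) for _ in range(n_objects)]
    fit = [score(p) for p in pos]
    b = max(range(n_objects), key=fit.__getitem__)
    best = {"x": pos[b][:], "den": den[b][:], "vol": vol[b][:],
            "acc": acc[b][:], "fit": fit[b]}

    for t in range(1, max_iter + 1):
        tf = math.exp((t - max_iter) / max_iter)                # Step 3: transfer coefficient
        d = math.exp((max_iter - t) / max_iter) - t / max_iter  # Step 3: density scalar
        explore = tf <= 0.5
        for i in range(n_objects):
            # Step 2: move density and volume toward those of the best object.
            for j in range(dim):
                den[i][j] += rng.random() * (best["den"][j] - den[i][j])
                vol[i][j] += rng.random() * (best["vol"][j] - vol[i][j])
            # Steps 4 and 5: acceleration from a random object or from the best one.
            if explore:
                m = rng.randrange(n_objects)
                src = (den[m], vol[m], acc[m])
            else:
                src = (best["den"], best["vol"], best["acc"])
            acc[i] = [(src[0][j] + src[1][j] * src[2][j])
                      / (den[i][j] * vol[i][j] + 1e-12) for j in range(dim)]
        # Step 6: min-max normalization of the accelerations.
        flat = [a for row in acc for a in row]
        a_min = min(flat)
        span = (max(flat) - a_min) or 1.0
        for i in range(n_objects):
            for j in range(dim):
                a_norm = u * (acc[i][j] - a_min) / span + l
                step = rng.random() * a_norm * d
                if explore:   # Step 7: move relative to a random object
                    x_rand = pos[rng.randrange(n_objects)][j]
                    x_new = pos[i][j] + c1 * step * (x_rand - pos[i][j])
                else:         # Step 7: move around the best object
                    flag = 1.0 if 2.0 * rng.random() - c4 <= 0.5 else -1.0
                    target = c3 * tf * best["x"][j] - pos[i][j]
                    x_new = best["x"][j] + flag * c2 * step * target
                pos[i][j] = min(max(x_new, lb), ub)  # keep inside the search space
            # Step 8: re-evaluate and record the best object.
            fit[i] = score(pos[i])
            if fit[i] > best["fit"]:
                best = {"x": pos[i][:], "den": den[i][:], "vol": vol[i][:],
                        "acc": acc[i][:], "fit": fit[i]}
    return best["x"], best["fit"]
```

With this schedule the transfer coefficient stays below 0.5 for roughly the first 30% of the iterations, so the search starts in exploration mode and then switches to exploitation.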

AOA-based FS for gender recognition

This section explains our gender recognition system using Archimedes optimization algorithm (AOA)-based feature selection. The system relies on three key components: the encoding of the solution, the score evaluation, and the overall architecture of the gender recognition system.

Structure of immersed object

The encoding of the solution plays a vital role in any optimization process based on physical or swarm algorithms.

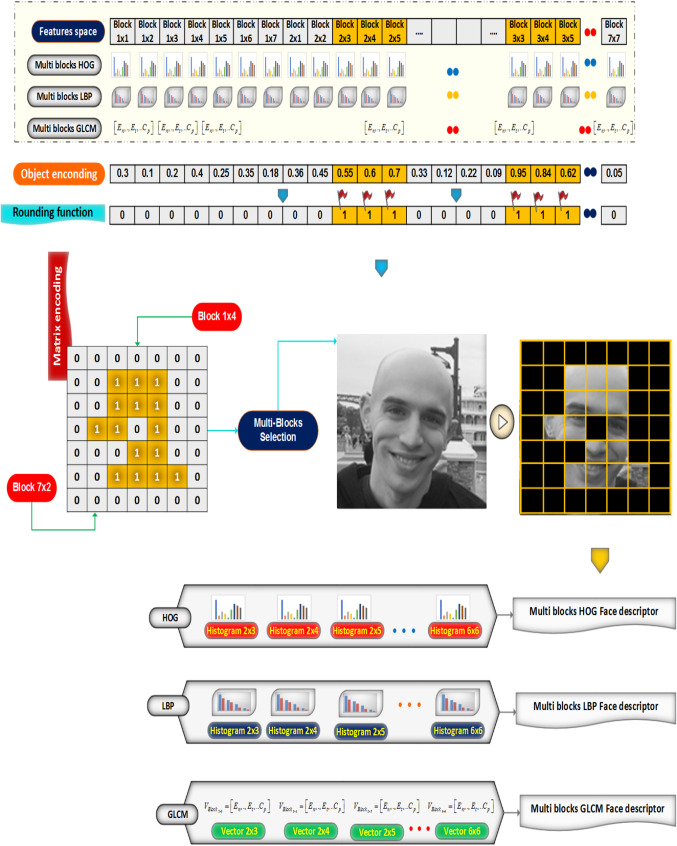

Each object in AOA is a 1-D vector with 49 elements, one per block. The vector components are randomly generated in the range [0, 1]. A component greater than or equal to 0.5 is rounded to one; the corresponding block is then considered a relevant feature and encoded by a histogram (LBP, HOG, or GLCM). Conversely, the block is ignored when the value is rounded to zero. This encoding selects the informative areas by concatenating the activated multi-block histograms (LBP, HOG, or GLCM). The encoded object is shown in Fig. 4.

Fig. 4.

Encoding solution

In Fig. 4, the first component (0.3) through the 9th component (0.45) are less than 0.5, so the corresponding blocks are deactivated, while the 10th (0.55), 11th, 12th, 46th, 47th, and 48th (0.62) components are greater than 0.5. As a result, the corresponding blocks are selected to extract features using HOG, LBP, or GLCM. The vector is reshaped into a 7 × 7 matrix to project the encoding onto the image. For LBP and HOG, the final feature vector is the concatenation of the activated histograms; for GLCM, a vector of parameters is used instead.

According to this figure, the current object selects fifteen block-based histograms from the 49 face areas, which are concatenated and serve as the input of the neural network classifier.
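The encoding can be sketched as follows. The threshold of 0.5 and the 49-element vector come from the text; the 7 × 7 layout, the row-major block order, and the names `decode` and `select_features` are assumptions made for illustration.

```python
def decode(obj, threshold=0.5, grid=7):
    """Binarize a continuous object and lay the mask out as a grid of blocks."""
    mask = [1 if value >= threshold else 0 for value in obj]
    rows = [mask[r * grid:(r + 1) * grid] for r in range(grid)]
    return mask, rows


def select_features(block_features, mask):
    """Concatenate the feature vectors of the activated blocks only."""
    selected = []
    for feats, keep in zip(block_features, mask):
        if keep:
            selected.extend(feats)
    return selected


# Toy object: components 10 to 12 and 46 to 48 (1-based) are above the threshold.
obj = [0.3] * 9 + [0.55] * 3 + [0.1] * 33 + [0.62] * 3 + [0.2]
mask, rows = decode(obj)
```

In this toy object six blocks are activated, so `select_features` returns the concatenation of six block descriptors and ignores the remaining 43.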

Score evaluation

To apply the process of gender recognition using a wrapper feature selection assisted by AOA, a good compromise between accuracy and a lower number of features must be assured. Hence, the score for each object is computed by:

| 32 |

where (Acc) and (d) are the accuracy obtained by multilayer perceptron neural network (MLP) and the size of selected histograms, respectively.

In Eq. (32), D is the total number of multi-blocks-based histogram extracted from original image.
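A small sketch of the score may help. Since Eq. (32) is not reproduced in this text, the weighted form below, with weights 0.99 and 0.01, is an assumption inferred from the reported numbers: it reproduces the fitness values of Table 3 from the accuracies of Table 5 and the selection ratios of Table 6. The name `wrapper_score` and the parameter `alpha` are illustrative.

```python
def wrapper_score(acc, d, D, alpha=0.99):
    """Weighted trade-off between accuracy and the share of discarded blocks."""
    return alpha * acc + (1.0 - alpha) * (1.0 - d / D)


# AOA with HOG on GT: accuracy 0.9034 and 14 of 49 blocks selected.
aoa_gt_hog = round(wrapper_score(0.9034, 14, 49), 4)
# HHO with HOG on GT: accuracy 0.8990 and 26 of 49 blocks selected.
hho_gt_hog = round(wrapper_score(0.8990, 26, 49), 4)
```

Under this assumption the two values round to 0.9015 and 0.8947, which are the fitness values reported for these configurations in Table 3.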

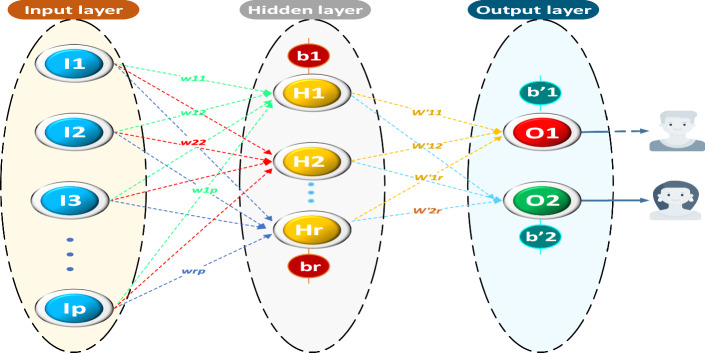

The MLP is integrated as a classifier in the FS process using k-fold cross-validation. In this study, the value of k is fixed to 5 to ensure a fair comparison. Thus, 80% of the samples are used for training in each fold, while the rest are used for testing. The architecture of the MLP is described in Fig. 5.

Fig. 5.

The architecture of MLP

This architecture includes three layers:

Input layer It corresponds to the multi-blocks-based histograms (LBP/HOG) input features or GLCM vector. Hence, the number of neurons in this layer for LBP, HOG, and GLCM is equal to bins, bins and , respectively.

Hidden layer It contains twice as many neurons as the input layer.

Output layer It contains two neurons; the first corresponds to males, while the second is reserved for females.

In our study, the AOA aims to determine only the optimal patches for distinguishing between males and females. Thus, the MLP is designed for a classification task, knowing that the male output is coded by and the female output is coded by .

The hidden neurons use the sigmoid function, while the output neurons use the softmax function. The weights of the MLP are updated using the basic backpropagation algorithm, and training stops when either the maximum number of iterations (500) is reached or the mean square error (MSE) falls below a preset threshold.

Note that the object with the highest score among all objects is retained as the best object.

Design framework

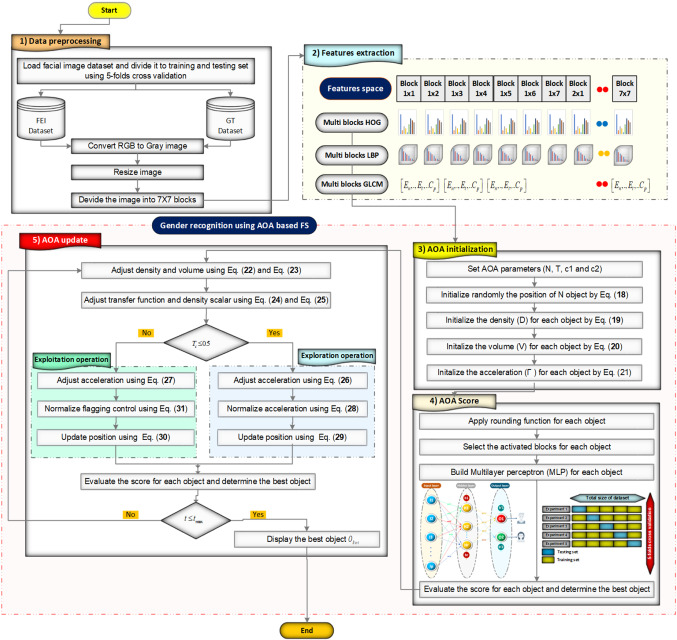

This part represents the core of our work, which consists of applying the AOA algorithm in gender recognition based on the selection of attributes. To better understand the proposed architecture, we preferred to explain the essential ingredients in bullet points:

Initialization: This step starts by creating a random population of N immersed objects, each with 49 elements.

Encoding solution: This step transforms the random objects into binary vectors to select the relevant block-based histograms or GLCM features.

Selection of subset features: After decoding the object as illustrated in Fig. 4, the corresponding blocks are extracted from the datasets based on LBP, HOG, or GLCM.

Score evaluation: Each object generated by AOA encodes a subset of selected features, which is assessed using an MLP classifier. The score reflects the trade-off between accuracy and the number of selected features, computed by Eq. (32).

The update of position: The most important step in our architecture applies a sequence of operators, namely the update of densities and volumes, exploration/exploitation, and normalization of the acceleration, to produce better solutions with a higher score, as shown in Algorithm 1.

Stop condition: The cycle of AOA is an iterative process, controlled by the maximum number of iterations as a stop condition.

Figure 6 illustrates the overall steps of the AOA-based gender recognition and FS process. It is important to indicate that the MLP is performed using 5-fold cross-validation, which means that the MLP is trained 5 times and the average fitness evaluation is computed.

Fig. 6.

The design framework of AOA-based FS for gender recognition
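The 5-fold evaluation used inside the wrapper can be sketched as below. The callback `train_and_score` is hypothetical: it stands for training the MLP on the training indices and returning its accuracy on the test indices. The shuffling seed and the interleaved fold assignment are illustrative choices.

```python
import random


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train, test) index lists for k roughly equal folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        test = folds[i]
        train = [j for fold in folds[:i] + folds[i + 1:] for j in fold]
        yield train, test


def cross_validated_accuracy(train_and_score, n_samples, k=5):
    """Average the accuracy returned by `train_and_score` over the k folds."""
    scores = [train_and_score(train, test)
              for train, test in k_fold_indices(n_samples, k)]
    return sum(scores) / len(scores)
```

With k = 5, each fold trains on 80% of the samples and tests on the remaining 20%, and every sample is used for testing exactly once.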

Experimental results

To ensure a fair analysis, the efficiency of AOA is compared with recent computational algorithms inspired by swarm intelligence, mathematics, and physics, including HHO, MRFO, EPO, SCA, EO, HGSO, and MVO, in two scenarios.

The first scenario uses small datasets captured in controlled conditions (the GT and FEI datasets).

The second scenario uses a larger, more challenging dataset captured in a real environment (Gallagher’s dataset). All algorithms run under the same conditions: the population size is fixed to ten solutions (N = 10), and the maximum number of iterations is fixed to 100 (T = 100). Three textural descriptors based on multi-block HOG, LBP, and GLCM are implemented.

Simulation setup

Statistical metrics

To investigate the efficiency of the AOA algorithm in facial analysis-based FS, especially in gender recognition, the confusion matrix defined in Table 1 is used. From it, several measures are computed: Accuracy (Ac), Recall (Re), Precision (P), and F-score.

Table 1.

Confusion matrix

| Actual \ Predicted | Male | Female |
|---|---|---|
| Male | TrP | FaN |
| Female | FaP | TrN |

where:

- TrP: the classifier correctly identifies a person whose class is male;
- TrN: the classifier correctly identifies a person whose class is female;
- FaP: the classifier assigns the person to the male class although the example belongs to the female class;
- FaN: the classifier assigns the person to the female class although the example belongs to the male class.

- Accuracy (Ac): one of the most important metrics; it measures the rate of correctly classified samples:

$$Ac = \frac{TrP + TrN}{TrP + TrN + FaP + FaN} \qquad (33)$$

- Recall (Re): also called the true positive rate (TPR); it indicates the proportion of male persons that are correctly predicted:

$$Re = \frac{TrP}{TrP + FaN} \qquad (34)$$

- Precision (P): the proportion of samples predicted as male that are actually male:

$$P = \frac{TrP}{TrP + FaP} \qquad (35)$$

- Fitness value (Sc): evaluates the performance of the algorithms by jointly maximizing the accuracy and the rate of elimination of irrelevant features, as computed in Eq. (32).

- Size of selected features: the proportion of relevant features, computed as:

$$\text{Selection ratio} = \frac{d}{D} \qquad (36)$$

where d is the number of relevant face blocks, which increase the performance of gender recognition.

- F-score: the harmonic mean of recall and precision, computed by Eq. (37):

$$F\text{-}score = \frac{2 \times P \times Re}{P + Re} \qquad (37)$$

- CPU time (Cpu): the time required by each algorithm, measured in seconds (s).
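The classification metrics can be computed directly from the four cells of the confusion matrix in Table 1. The sketch below uses the standard definitions with male as the positive class; the function name and the example counts are illustrative and do not come from the experiments.

```python
def classification_metrics(trp, fan, fap, trn):
    """Accuracy, recall, precision, and F-score with 'male' as the positive class."""
    accuracy = (trp + trn) / (trp + trn + fap + fan)
    recall = trp / (trp + fan)
    precision = trp / (trp + fap)
    f_score = 2 * precision * recall / (precision + recall)
    return {"accuracy": accuracy, "recall": recall,
            "precision": precision, "f_score": f_score}


# Hypothetical counts: 100 males (90 recognized) and 100 females (95 recognized).
example = classification_metrics(trp=90, fan=10, fap=5, trn=95)
```

For these counts the accuracy is 0.925, the recall is 0.9, the precision is 90/95, and the F-score is 180/195.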

Parameters settings

It is important to enumerate the list of algorithms used for realizing the task of gender recognition from faces. This subsection defines all parameters used for each optimizer. The parameters settings of HHO, MRFO, EPO, SCA, EO, HGSO, and MVO are defined in Table 2.

Table 2.

Parameters settings of physical, mathematical and swarm inspired algorithms

| Algorithms | Parameters setting |

|---|---|

| Common settings | Population size (N = 10) |

| Maximum number of iterations (T = 100) | |

| Maximal limit=1 | |

| Minimal limit=0 | |

| Dimension corresponds to the number of blocks | |

| AOA (Hashim et al. 2020) | and |

| and | |

| EO (Faramarzi et al. 2020) | |

| Generation Probability | |

| MVO (Mirjalili et al. 2016) | Wormhole Existence Prob |

| and | |

| Traveling Distance Rate (TDR [0.5; 1]) | |

| HGSO (Hashim et al. 2019) | Clusters number=2 |

| and | |

| and | |

| , and | |

| EPO (Dhiman and Kumar 2018) | Temperature Profile [1; 1000] ) |

| A | |

| f [2, 3] | |

| l [1.5, 2] | |

| Function S() [0, 1.5] | |

| SCA (Mirjalili 2016) | a [2, 0] |

| MRFO (Zhao et al. 2020) | |

| HHO (Heidari et al. 2019) |

Description of datasets

FEI dataset It is a Brazilian dataset that contains 200 individuals, each with 14 images, for a total of 2800 images. The color images were captured against a white background. The subjects are between 19 and 40 years old, with variations in appearance such as hairstyle and adornments. The dataset is balanced: half of the subjects are men and half are women. The resolution of each image is 640 × 480 pixels.

Georgia Tech Face dataset (GT) It contains 50 people captured in two sessions between 04/06/99 and 11/15/99. Each person has 15 images, for a total of 750 images of size 640 × 480 pixels. The average size of a face is 150 × 150 pixels. The images are frontal, with variations in lighting conditions, scale, and expression. This dataset contains 7 women and 43 men.

Gallagher’s dataset This dataset is a large, publicly accessible database that categorizes people by gender and age. It comprises almost 28,000 low-resolution photographs collected from Flickr images taken in natural light. The images reflect real-world scenarios, including variations in appearance, pose, dark glasses, lighting conditions, and image quality (Gallagher and Chen 2009). According to Grother et al. (2019), this database presents a major challenge for determining gender and age.

Results and discussion of smallest datasets (FEI and GT datasets)

This subsection shows the first scenario, which applies wrapper FS using optimization algorithms, tested on standard benchmarking datasets (FEI and GT datasets) and taken in controlled situations with few images.

In terms of fitness and Cpu time Table 3 summarizes the fitness obtained by the AOA-based wrapper FS for each extraction method (HOG, LBP, and GLCM) on the GT and FEI databases for gender recognition.

Table 3.

The impact of features descriptors on the performance of AOA against other recent optimizers over fitness measures

| Fitness | GT dataset | FEI dataset | ||||

|---|---|---|---|---|---|---|

| Algorithms | HOG | LBP | GLCM | HOG | LBP | GLCM |

| HHO | 0.8947 | 0.8644 | 0.8913 | 0.9776 | 0.9262 | 0.9849 |

| SCA | 0.8914 | 0.8653 | 0.8873 | 0.9820 | 0.9518 | 0.9841 |

| EO | 0.9002 | 0.8642 | 0.8927 | 0.9853 | 0.9461 | 0.9829 |

| EPO | 0.8966 | 0.8658 | 0.8887 | 0.9804 | 0.9351 | 0.9780 |

| MRFO | 0.8950 | 0.8593 | 0.8902 | 0.9837 | 0.9412 | 0.9853 |

| HGSO | 0.8902 | 0.8543 | 0.8833 | 0.9837 | 0.9298 | 0.9834 |

| MVO | 0.8981 | 0.8589 | 0.8954 | 0.9805 | 0.9335 | 0.9825 |

| AOA | 0.9015 | 0.8690 | 0.8969 | 0.9882 | 0.9534 | 0.9858 |

From Table 3, we first notice that AOA obtains the highest fitness with all three extraction methods on both the GT and FEI databases. Second, AOA-based HOG achieves a higher fitness value than AOA based on LBP and GLCM for both datasets.

Furthermore, AOA-based HOG and AOA-based GLCM give comparable results for both datasets. With the HOG descriptor, EO ranks second for both datasets.

The time consumed by the wrapper FS optimization methods for each extraction method is shown in Table 4. SCA is the fastest algorithm on the FEI database with all three descriptors (HOG, LBP, and GLCM). On the GT database, EO requires less time than the other optimizers with LBP and GLCM, while HOG-based EPO ranks first in execution time.

Table 4.

The impact of features descriptors on the performance of AOA against other recent optimizers over Cpu Time measures

| CPU time | GT dataset | FEI dataset | ||||

|---|---|---|---|---|---|---|

| Algorithms | HOG | LBP | GLCM | HOG | LBP | GLCM |

| HHO | 446.6000 | 466.0300 | 363.8200 | 249.3200 | 295.8900 | 258.3600 |

| SCA | 301.8600 | 211.4300 | 264.7200 | 181.5400 | 118.8400 | 144.1000 |

| EO | 324.7700 | 182.8200 | 221.4100 | 250.8200 | 214.4500 | 295.8000 |

| EPO | 285.5800 | 272.2800 | 324.0800 | 248.1200 | 126.0400 | 211.3700 |

| MRFO | 301.8600 | 445.4700 | 468.4100 | 302.8600 | 226.5200 | 218.7900 |

| HGSO | 454.8300 | 398.6100 | 312.1300 | 282.4900 | 199.3700 | 229.3200 |

| MVO | 368.0900 | 430.3200 | 369.4800 | 375.0500 | 272.1800 | 229.4900 |

| AOA | 391.3700 | 332.3200 | 388.8600 | 197.0700 | 231.6700 | 340.9200 |

In terms of Accuracy and Selection ratio Tables 5 and 6 illustrate the accuracy and selection ratio results based on wrapper AOA and other optimization algorithms by varying descriptors features such as HOG, LBP, and GLCM.

Table 5.

The impact of features descriptors on the performance of AOA against other recent optimizers over Accuracy measures

| Accuracy | GT dataset | FEI dataset | ||||

|---|---|---|---|---|---|---|

| Algorithms | HOG | LBP | GLCM | HOG | LBP | GLCM |

| HHO | 0.8990 | 0.8689 | 0.8960 | 0.9831 | 0.9320 | 0.9887 |

| SCA | 0.8914 | 0.8660 | 0.8887 | 0.9841 | 0.9534 | 0.9864 |

| EO | 0.9031 | 0.8651 | 0.8953 | 0.9889 | 0.9506 | 0.9864 |

| EPO | 0.8993 | 0.8669 | 0.8917 | 0.9840 | 0.9370 | 0.9815 |

| MRFO | 0.9028 | 0.8636 | 0.8944 | 0.9893 | 0.9456 | 0.9889 |

| HGSO | 0.8944 | 0.8578 | 0.8873 | 0.9881 | 0.9349 | 0.9886 |

| MVO | 0.9020 | 0.8624 | 0.8997 | 0.9865 | 0.9392 | 0.9875 |

| AOA | 0.9034 | 0.8701 | 0.9014 | 0.9916 | 0.9561 | 0.9904 |

Table 6.

The impact of features descriptors on the performance of AOA against other recent optimizers over Selection ratio measures

| Selection ratio | GT dataset | FEI dataset | ||||

|---|---|---|---|---|---|---|

| Algorithms | HOG | LBP | GLCM | HOG | LBP | GLCM |

| HHO | 0.5306 | 0.5714 | 0.5714 | 0.5714 | 0.6122 | 0.3878 |

| SCA | 0.3673 | 0.2041 | 0.2449 | 0.2245 | 0.2041 | 0.2449 |

| EO | 0.3878 | 0.5306 | 0.3673 | 0.3673 | 0.4898 | 0.3673 |

| EPO | 0.3673 | 0.2449 | 0.4082 | 0.3673 | 0.2449 | 0.3673 |

| MRFO | 0.8776 | 0.5714 | 0.5306 | 0.5714 | 0.4898 | 0.3673 |

| HGSO | 0.5306 | 0.4898 | 0.5102 | 0.4490 | 0.5714 | 0.5306 |

| MVO | 0.4898 | 0.4898 | 0.5306 | 0.6122 | 0.6327 | 0.5102 |

| AOA | 0.2857 | 0.2449 | 0.5510 | 0.3469 | 0.6327 | 0.4694 |

According to the results of Table 5, AOA based on the three descriptors (AOA-HOG, AOA-LBP, and AOA-GLCM) produces the highest accuracy for both datasets compared to the other algorithms, including HHO, SCA, EO, EPO, MRFO, HGSO, and MVO. AOA-based HOG recognizes the gender of persons with a rate of 90.34% and 99.16% for GT and FEI, respectively. This behavior can be explained by the good balance between exploration and exploitation in AOA and by the efficiency of HOG descriptors based on orientation histograms.

From Table 6, AOA-based HOG keeps the smallest face area for GT, i.e., only 14 of the 49 blocks are selected, which is the best block-selection performance. For the FEI dataset, AOA-based HOG ranks second by keeping 17 of the 49 blocks, while SCA eliminates the most blocks with HOG, LBP, and GLCM. SCA-based LBP and GLCM also retain fewer face areas for the GT dataset than the other optimizers.

In terms of recall and precision The comparison of the eight wrapper FS algorithms using the three descriptors (HOG, LBP, and GLCM) based on recall and precision is given in Tables 7 and 8. For the FEI dataset, AOA- and MRFO-based HOG provide the same precision of 99.51% (Table 8). HGSO- and AOA-based GLCM attain the same recall of 99.50% for the FEI dataset (Table 7).

Table 7.

The impact of features descriptors on the performance of AOA against other recent optimizers over Recall measures

| Recall | GT dataset | FEI dataset | ||||

|---|---|---|---|---|---|---|

| Algorithms | HOG | LBP | GLCM | HOG | LBP | GLCM |

| HHO | 0.9040 | 0.8747 | 0.9000 | 0.9875 | 0.9425 | 0.9900 |

| SCA | 0.8960 | 0.8693 | 0.8907 | 0.9850 | 0.9550 | 0.9900 |

| EO | 0.9067 | 0.8693 | 0.8987 | 0.9925 | 0.9475 | 0.9900 |

| EPO | 0.9040 | 0.8813 | 0.8960 | 0.9875 | 0.9400 | 0.9825 |

| MRFO | 0.9107 | 0.8707 | 0.8973 | 0.9950 | 0.9500 | 0.9925 |

| HGSO | 0.9000 | 0.8613 | 0.8880 | 0.9925 | 0.9400 | 0.9950 |

| MVO | 0.9080 | 0.8693 | 0.9040 | 0.9925 | 0.9450 | 0.9925 |

| AOA | 0.9040 | 0.8733 | 0.9080 | 0.9950 | 0.9475 | 0.9950 |

Table 8.

The impact of features descriptors on the performance of AOA against other recent optimizers over Precision measures

| Precision | GT dataset | FEI dataset | ||||

|---|---|---|---|---|---|---|

| Algorithms | HOG | LBP | GLCM | HOG | LBP | GLCM |

| HHO | 0.9014 | 0.8677 | 0.8949 | 0.9903 | 0.9347 | 0.9951 |

| SCA | 0.8891 | 0.8400 | 0.8870 | 0.9880 | 0.9560 | 0.9877 |

| EO | 0.9018 | 0.8414 | 0.8927 | 0.9928 | 0.9642 | 0.9904 |

| EPO | 0.8960 | 0.8475 | 0.8883 | 0.9882 | 0.9383 | 0.9881 |

| MRFO | 0.9052 | 0.8352 | 0.8913 | 0.9951 | 0.9510 | 0.9929 |

| HGSO | 0.8908 | 0.8361 | 0.8874 | 0.9928 | 0.9417 | 0.9927 |

| MVO | 0.8974 | 0.8399 | 0.8977 | 0.9927 | 0.9478 | 0.9927 |

| AOA | 0.9099 | 0.8529 | 0.8980 | 0.9951 | 0.9457 | 0.9951 |

From Table 8, AOA-based HOG and GLCM match or exceed the precision of the other optimizers for both datasets. Moreover, MRFO-based HOG matches AOA-based HOG for FEI, whereas it takes the second rank for the GT dataset.

In terms of F-score Table 9 reports the F-score values obtained by AOA and the other optimizers with the three descriptors (HOG, LBP, and GLCM) for both datasets. The F-score values on FEI are clearly higher than on GT, which can be attributed to the balanced gender classes in FEI. For the GT dataset, MRFO- and AOA-based HOG compete closely, with a slight advantage for MRFO: the margin is only 0.0008. In addition, AOA-based GLCM remains better on the GT dataset than the other algorithms, including HHO, SCA, EO, EPO, MRFO, HGSO, and MVO. Finally, wrapper FS techniques based on LBP show lower F-score values than the other descriptors for both datasets (GT and FEI).

Table 9.

The impact of features descriptors on the performance of AOA against other recent optimizers over F-score measures

| F-score | GT dataset | FEI dataset | ||||

|---|---|---|---|---|---|---|

| Algorithms | HOG | LBP | GLCM | HOG | LBP | GLCM |

| HHO | 0.8977 | 0.8427 | 0.8953 | 0.9887 | 0.9379 | 0.9924 |

| SCA | 0.8892 | 0.8428 | 0.8866 | 0.9862 | 0.9549 | 0.9888 |

| EO | 0.9026 | 0.8400 | 0.8922 | 0.9925 | 0.9547 | 0.9900 |

| EPO | 0.8981 | 0.8496 | 0.8898 | 0.9875 | 0.9388 | 0.9848 |

| MRFO | 0.9058 | 0.8328 | 0.8896 | 0.9950 | 0.9499 | 0.9925 |

| HGSO | 0.8918 | 0.8359 | 0.8854 | 0.9925 | 0.9397 | 0.9938 |

| MVO | 0.9004 | 0.8351 | 0.8967 | 0.9925 | 0.9449 | 0.9925 |

| AOA | 0.9050 | 0.8422 | 0.9009 | 0.9950 | 0.9453 | 0.9950 |

For FEI, AOA- and MRFO-based HOG reach an F-score of 99.50%, higher than the other algorithms (HHO, SCA, EO, EPO, HGSO, and MVO). AOA-based GLCM reaches the same performance.

Results and discussion of largest dataset (Gallagher’s dataset)

This subsection shows the second scenario, which applies wrapper FS using optimization algorithms, tested on the challenging real-world Gallagher’s dataset, captured in uncontrolled conditions with a large number of images.

Table 10 summarizes the results of GR using AOA-HOG against other optimizers, including HHO, SCA, EO, EPO, MRFO, HGSO, and MVO. AOA-HOG obtains the best results in terms of fitness, accuracy, recall, precision, Fscore, and selection ratio. AOA based on the HOG descriptor thus ensures a good compromise between higher accuracy and a lower selection ratio. The numerical results indicate that the rate of correct gender classification is 95.51% with a reduced number of patches, more precisely 20 patches out of 49, i.e., 40.82%. The second-best optimizer is MRFO, which achieves 95.01% accuracy but retains more patches, with a selection ratio of 53.06% (26 zones out of 49) in Table 10.

Table 10.

The performance results of AOA based on HOG descriptor against other recent optimizers for Gallagher’s dataset

| Gallagher’s dataset – HOG Descriptor | |||||||

|---|---|---|---|---|---|---|---|

| Algorithms | Fitness | Accuracy | Recall | Precision | Fscore | Selection ratio | CPU time (s) |

| HHO | 0.9336 | 0.9405 | 0.9476 | 0.9477 | 0.9476 | 0.5510 | 18317.6000 |

| SCA | 0.9125 | 0.9137 | 0.9165 | 0.9143 | 0.9152 | 0.4490 | 19271.4500 |

| EO | 0.9387 | 0.9405 | 0.9416 | 0.9426 | 0.9420 | 0.4898 | 8490.3050 |

| EPO | 0.9100 | 0.9120 | 0.9130 | 0.9144 | 0.9136 | 0.5306 | 16416.4600 |

| MRFO | 0.9451 | 0.9501 | 0.9549 | 0.9553 | 0.9550 | 0.5306 | 9257.9900 |

| HGSO | 0.9167 | 0.9183 | 0.9212 | 0.9198 | 0.9204 | 0.4898 | 1449.6810 |

| MVO | 0.9169 | 0.9196 | 0.9202 | 0.9230 | 0.9213 | 0.5918 | 1319.9640 |

| AOA | 0.9515 | 0.9551 | 0.9600 | 0.9583 | 0.9588 | 0.4082 | 573.8000 |

Regarding Fscore, AOA-HOG reached a higher rate with 95.88%, which ensures a good balance between the binary classes (female and male).

In terms of CPU time, AOA-HOG is faster than the other competitors, with 573.8 s, while the second-fastest optimizer is MVO, with 1319.964 s.

Table 11 reports the results of the different optimizers based on the LBP descriptor in terms of fitness, accuracy, recall, precision, Fscore, selection ratio, and CPU time. AOA is the best algorithm in terms of correct gender classification compared to the other competitors, with 96.08% accuracy. AOA-LBP also selects the lowest number of patches, with only 10 areas out of 49, i.e., 20.41%. The second rank is obtained by SCA in terms of accuracy and selection ratio, with 95.28% and 26.53%, respectively.

Table 11.

The performance results of AOA based on LBP descriptor against other recent optimizers for Gallagher’s dataset

| Gallagher’s dataset – LBP Descriptor | |||||||

|---|---|---|---|---|---|---|---|

| Algorithms | Fitness | Accuracy | Recall | Precision | Fscore | Selection ratio | CPU time (s) |

| HHO | 0.9364 | 0.9419 | 0.9475 | 0.9482 | 0.9475 | 0.6122 | 1564.6520 |

| SCA | 0.9506 | 0.9528 | 0.9550 | 0.9578 | 0.9550 | 0.2653 | 745.7800 |

| EO | 0.8754 | 0.8742 | 0.8693 | 0.8744 | 0.8708 | 0.3061 | 6460.6420 |

| EPO | 0.9461 | 0.9481 | 0.9500 | 0.9503 | 0.9500 | 0.3878 | 615.9800 |

| MRFO | 0.9383 | 0.9422 | 0.9450 | 0.9504 | 0.9463 | 0.4490 | 615.8400 |

| HGSO | 0.9192 | 0.9233 | 0.9275 | 0.9287 | 0.9276 | 0.4898 | 6460.6400 |

| MVO | 0.9310 | 0.9342 | 0.9375 | 0.9383 | 0.9375 | 0.3878 | 1449.0000 |

| AOA | 0.9592 | 0.9608 | 0.9625 | 0.9626 | 0.9625 | 0.2041 | 391.6900 |

In terms of Fscore, AOA still performs better than the other competitors, with 96.25%.

In terms of CPU time, AOA-LBP is faster than the rest of the algorithms, with 391.69 s.

Table 12 reports the gender recognition results tested on Gallagher’s dataset using AOA, HHO, SCA, EO, EPO, MRFO, HGSO, and MVO based on the GLCM descriptor. From this table, we can see great competition between SCA and AOA in terms of fitness, accuracy, precision, and selection ratio.

Table 12.

The performance results of AOA based on GLCM descriptor against other recent optimizers for Gallagher’s dataset

| Gallagher’s Dataset – GLCM Descriptor | |||||||

|---|---|---|---|---|---|---|---|

| Algorithms | Fitness | Accuracy | Recall | Precision | Fscore | Selection ratio | CPU time (s) |

| HHO | 0.9425 | 0.9475 | 0.9525 | 0.9533 | 0.9524 | 0.5510 | 1861.5200 |

| SCA | 0.9486 | 0.9505 | 0.9525 | 0.9535 | 0.9525 | 0.2449 | 1106.1770 |

| EO | 0.9470 | 0.9501 | 0.9520 | 0.9530 | 0.9520 | 0.4490 | 1566.2000 |

| EPO | 0.9408 | 0.9441 | 0.9475 | 0.9486 | 0.9475 | 0.3878 | 1600.2400 |

| MRFO | 0.9445 | 0.9457 | 0.9495 | 0.9496 | 0.9495 | 0.5714 | 1560.8800 |

| HGSO | 0.9417 | 0.9452 | 0.9500 | 0.9483 | 0.9488 | 0.4082 | 453.2250 |

| MVO | 0.9437 | 0.9493 | 0.9524 | 0.9534 | 0.9525 | 0.6122 | 2045.6700 |

| AOA | 0.9482 | 0.9503 | 0.9525 | 0.9537 | 0.9525 | 0.2653 | 406.5800 |

For example, in terms of accuracy and fitness, SCA outperforms AOA by small margins of 0.02% and 0.04%, respectively. In addition, the selection ratio obtained by SCA is slightly lower than that of AOA, with a margin of 2.04% (24.49% versus 26.53%). Conversely, AOA outperforms SCA by a small margin of 0.02% in terms of precision.

In terms of recall and Fscore, both algorithms AOA and SCA based on GLCM achieve the same performance with 95.25%.

In terms of CPU time, AOA is faster than SCA with a margin of 699.597s.
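The margins discussed here can be re-derived directly from the SCA and AOA rows of Table 12. The following is a minimal sketch of that arithmetic; the dictionary layout and the `margin` helper are illustrative names, not part of the original study.

```python
# Rows copied from Table 12 (Gallagher's dataset, GLCM descriptor).
TABLE_12 = {
    "SCA": {"fitness": 0.9486, "accuracy": 0.9505, "recall": 0.9525,
            "precision": 0.9535, "fscore": 0.9525,
            "selection_ratio": 0.2449, "cpu_time_s": 1106.1770},
    "AOA": {"fitness": 0.9482, "accuracy": 0.9503, "recall": 0.9525,
            "precision": 0.9537, "fscore": 0.9525,
            "selection_ratio": 0.2653, "cpu_time_s": 406.5800},
}


def margin(metric, a="SCA", b="AOA"):
    """Return value(a) - value(b) for one column of Table 12."""
    return TABLE_12[a][metric] - TABLE_12[b][metric]


# Ratios are reported in percentage points, CPU time in seconds.
for metric in ("fitness", "accuracy", "recall", "precision",
               "fscore", "selection_ratio"):
    print(f"{metric}: {100 * margin(metric):+.2f} points (SCA - AOA)")
print(f"cpu_time_s: {margin('cpu_time_s'):+.3f} s (SCA - AOA)")
```

A positive value favours SCA for the quality metrics, whereas for the selection ratio and CPU time a lower value is preferable. From the table values, the selection-ratio gap evaluates to roughly 2.04 percentage points in SCA's favour.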

Graphical analysis


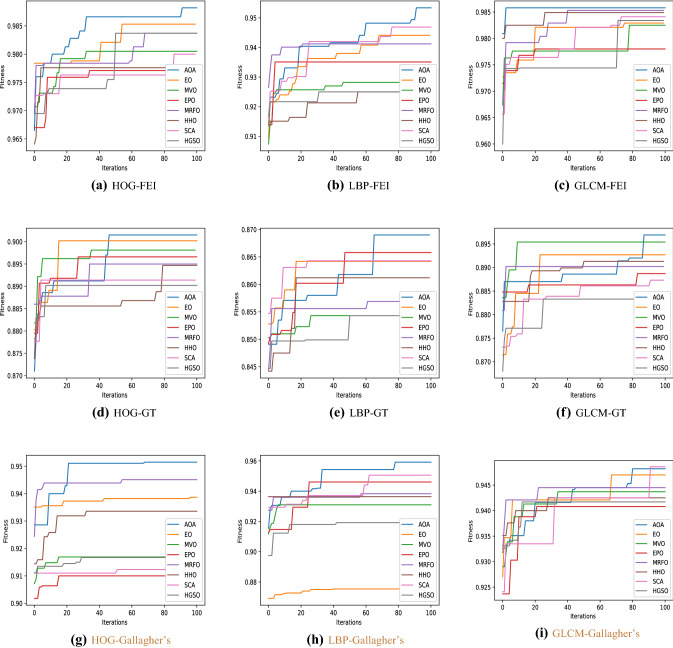

Fitness curve analysis: The fitness curves obtained by the different optimizers, including AOA, HHO, SCA, EO, EPO, MRFO, HGSO, and MVO are shown in Fig. 7.

Analyzing the convergence behavior of the AOA algorithm on the smallest databases (FEI and GT) with the different descriptors shows a clear growth in fitness as the number of iterations increases, compared to the other algorithms (EO, MVO, EPO, MRFO, HHO, SCA, and HGSO). This behavior is explained by a better balance between exploitation and exploration, which makes it possible to avoid convergence toward local optima. However, for the largest database (Gallagher’s dataset), AOA-HOG and AOA-LBP maintain a clear superiority throughout the iterations, while AOA based on GLCM shows clear growth only in the earlier iterations, after which SCA-GLCM takes over and finishes in first position with a margin of 0.04%.

For the smallest datasets (FEI and GT), we can conclude that AOA based on the HOG descriptor provides a higher fitness value than the other descriptors, GLCM and LBP. For the largest dataset (Gallagher’s dataset), AOA-LBP reaches the highest fitness value, 95.92%, compared to 95.15% for AOA-HOG and 94.82% for AOA-GLCM.


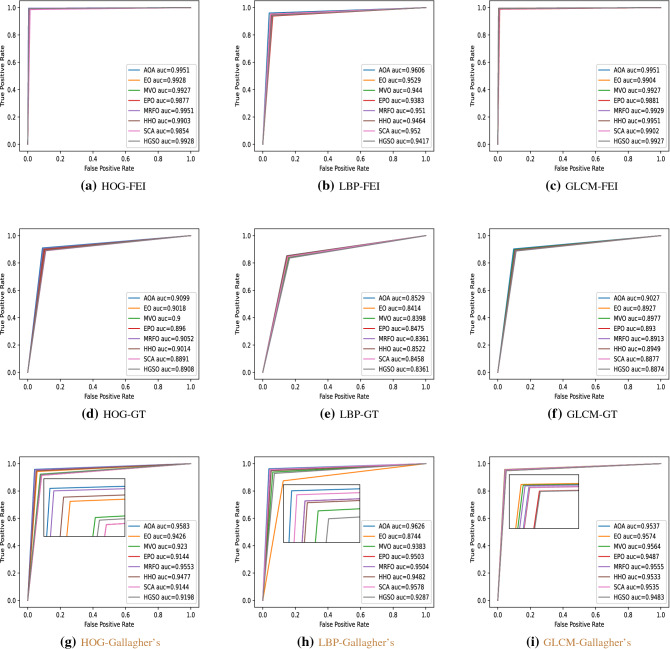

ROC curve analysis: In addition, Fig. 8 shows the ROC curves, which plot the true positive rate (TPR) as a function of the false positive rate (FPR), for the different algorithms based on the three descriptors (HOG, LBP, and GLCM) over all the images of the three corpora (FEI, GT, and Gallagher’s datasets).

We notice that AOA shows a clear advantage in terms of AUC compared to the other optimizers. For the FEI dataset, the AUC values obtained by AOA based on HOG, LBP, and GLCM are 0.9951, 0.9606, and 0.9951, respectively. For the GT dataset, the corresponding values are 0.9099, 0.8529, and 0.9027, respectively.

For the largest dataset (Gallagher’s dataset), the AUC values obtained by AOA-LBP, AOA-HOG, and AOA-GLCM are 0.9626, 0.9583, and 0.9537, respectively.
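To make the relation between the ROC curve and the AUC concrete, the sketch below builds the (FPR, TPR) points by sweeping a decision threshold over classifier scores and integrates them with the trapezoidal rule. It is a generic illustration, not the authors' evaluation code; the function names and the toy labels and scores are hypothetical.

```python
def roc_points(labels, scores):
    """Return (FPR, TPR) points obtained by sweeping a threshold over scores.

    labels: 1 for the positive class, 0 for the negative class.
    scores: classifier outputs, higher meaning "more likely positive".
    """
    pos = sum(labels)
    neg = len(labels) - pos
    # Sort by decreasing score so each step lowers the decision threshold.
    ranked = sorted(zip(scores, labels), key=lambda pair: -pair[0])
    points = [(0.0, 0.0)]
    tp = fp = 0
    i = 0
    while i < len(ranked):
        # Samples sharing the same score are processed together (ties).
        j = i
        while j < len(ranked) and ranked[j][0] == ranked[i][0]:
            tp += ranked[j][1]
            fp += 1 - ranked[j][1]
            j += 1
        points.append((fp / neg, tp / pos))
        i = j
    return points


def auc(points):
    """Area under the ROC curve by the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


# Toy data (hypothetical, not taken from the paper's experiments).
labels = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
print(f"AUC = {auc(roc_points(labels, scores)):.4f}")
```

An AUC of 1.0 corresponds to perfect separation of the two classes and 0.5 to chance level, which is why values close to 1 indicate a strong classifier.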


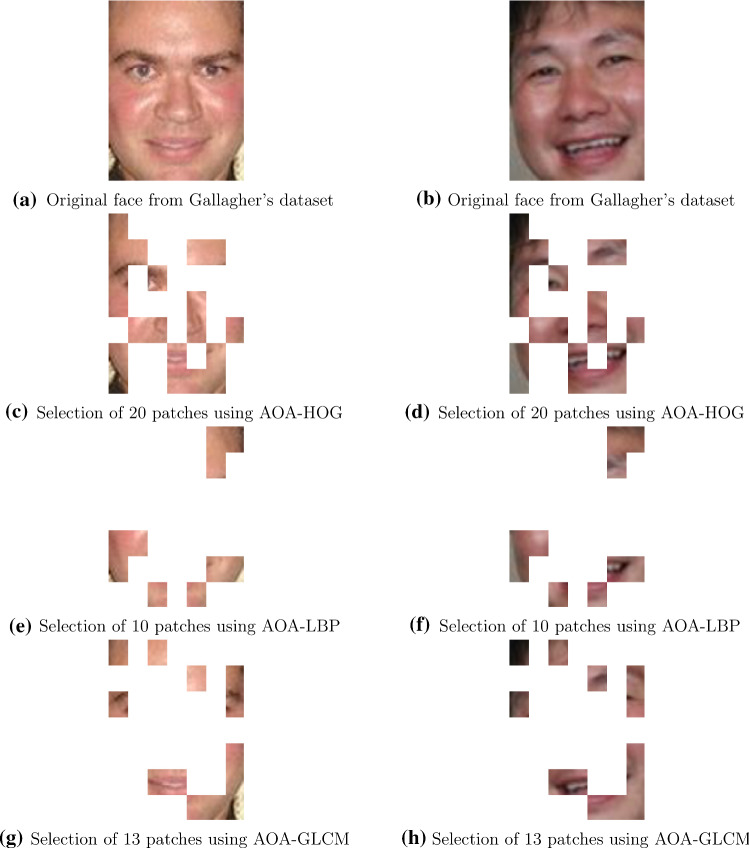

Visual examples of selected patches: Fig. 9 shows the optimal patches obtained by the proposed method AOA based on three handcrafted descriptors: HOG, LBP, and GLCM for two individuals from Gallagher’s dataset. It can be seen clearly that AOA-LBP finds a lower number of patches, with 10 zones out of 49, compared to AOA-HOG with 20 and AOA-GLCM with 13.

AOA-HOG appears to cover more important facial areas for gender recognition, such as the eyes, nose, and mouth, whereas LBP and GLCM detect sparse areas and focus on the edges. As shown in Table 5, these behaviors reflect HOG’s superior overall accuracy.
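The patch counts quoted above are consistent with the selection ratios in the results tables, assuming the ratio is the number of selected patches divided by the 49 blocks per face. A short sketch of that conversion follows; the helper names are illustrative.

```python
TOTAL_PATCHES = 49  # number of blocks per face, as stated in the text


def patches_from_ratio(selection_ratio, total=TOTAL_PATCHES):
    """Convert a selection ratio into a whole number of selected patches."""
    return round(selection_ratio * total)


def ratio_from_patches(selected, total=TOTAL_PATCHES):
    """Convert a patch count into a selection ratio (4 decimals, as in the tables)."""
    return round(selected / total, 4)


print(patches_from_ratio(0.2041))  # AOA-LBP selection ratio  -> 10
print(patches_from_ratio(0.2653))  # AOA-GLCM selection ratio -> 13
print(ratio_from_patches(20))      # AOA-HOG patch count      -> 0.4082
```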

Fig. 7.

Convergence curves of AOA versus other swarm intelligence algorithms over the smallest and largest datasets

Fig. 8.

ROC curves of AOA versus other swarm intelligence algorithms over the smallest and largest datasets

Fig. 9.

Visual examples of selected patches from Gallagher’s dataset using AOA

Statistical analysis

To validate the efficiency of AOA relative to the other competitive algorithms, a statistical study is required. Thus, the Wilcoxon rank-sum test is applied to the fitness values obtained by AOA and the other algorithms, including HHO, SCA, EO, EPO, MRFO, HGSO, and MVO.

From Table 13, we can observe that, for the smallest datasets (FEI and GT), AOA is statistically significant against all competitors in the case of the GLCM descriptor. The same behavior is observed for the FEI dataset when the descriptor is HOG. However, AOA is not significant against MVO-based HOG and EPO-based LBP in the case of the GT dataset. Also, EO-based LBP dominates AOA in the case of the FEI dataset.

Table 15.

Comparative performance in terms of accuracy with the existing methods – GT dataset

| References | Classifier | Extracted features | Accuracy |

|---|---|---|---|

| Goel and Vishwakarma (2016a) | SVM (2-folds) | DCT | 98.96% |

| Goel and Vishwakarma (2016) | SVM (2-folds) | KPCA | 97.38% |

| Goel and Vishwakarma (2016b) | SVM (2-folds) | DWT+DCT | 99% |

| Proposed method | AOA-BPNN (2-folds) | Multi-blocks HOG | 99.50% |

| Proposed method | AOA-BPNN (2-folds) | Multi-blocks LBP | 97.25% |

| Proposed method | AOA-BPNN (2-folds) | Multi-blocks GLCM | 99.15% |

For the largest dataset (Gallagher’s dataset), AOA is statistically significant in the case of the HOG and LBP descriptors against HHO, SCA, EO, EPO, MRFO, HGSO, and MVO. Furthermore, in the case of GLCM, AOA is significant against all optimizers except HHO and MRFO. In general, AOA shows good performance in terms of Wilcoxon’s test.
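As an illustration of how such p-values can be obtained, the sketch below implements a two-sided Wilcoxon rank-sum test with the usual normal approximation, using only the Python standard library. It is not the authors' code: the function name and the toy fitness samples are hypothetical, and the 0.05 significance level is a conventional assumption.

```python
import math


def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank-sum test using the normal approximation.

    Returns (z, p). Tied values receive average ranks; no tie correction is
    applied to the variance, so this is a sketch for near-continuous samples.
    """
    n1, n2 = len(x), len(y)
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y])
    # Assign average 1-based ranks to runs of equal values.
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        while j < len(pooled) and pooled[j][0] == pooled[i][0]:
            j += 1
        average_rank = (i + 1 + j) / 2  # mean of ranks i+1 .. j
        for k in range(i, j):
            ranks[k] = average_rank
        i = j
    # Rank sum of the first sample, compared with its expectation under H0.
    w = sum(r for r, (_, group) in zip(ranks, pooled) if group == 0)
    mean = n1 * (n1 + n2 + 1) / 2
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value
    return z, p


# Hypothetical fitness values over repeated runs (not the paper's data).
aoa_fitness = [0.959, 0.960, 0.958, 0.961, 0.957]
other_fitness = [0.938, 0.940, 0.937, 0.941, 0.939]
z, p = rank_sum_test(aoa_fitness, other_fitness)
print(f"z = {z:.3f}, p = {p:.4f}, significant at 0.05: {p < 0.05}")
```

With small samples an exact test is preferable to the normal approximation; the sketch only shows where the reported p-values come from and how they are compared with the chosen significance level.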

Comparative study

Comparative study with the existing works

A comparative study with works from the literature was carried out to further explore the power of the proposed system, AOA-based HOG. We selected works from the literature based on machine learning, deep learning, and genetic-based FS. It is important to note that the comparison is difficult because most authors used different experimental conditions.

FEI dataset: A close analysis of Table 14 indicates that the proposed method, AOA based on multi-blocks HOG with BPNN as the classifier, achieves the highest accuracy, i.e., 99.16% correct gender predictions from faces. Most machine learning methods mainly use an SVM classifier with multiple handcrafted features inspired by LBP, LDP, and HOG. In this category, the SVM proposed by Geetha et al. (2019) is the best classifier, predicting gender with an accuracy of 99%. The Deepgender model provided by Haider et al. (2019) shows a good accuracy of 98.75%. Furthermore, a wrapper FS based on a genetic algorithm (Zhou and Li 2019) is employed to predict gender from the face using an eigenspace of features: the authors use BPNN as the classifier, whereas the GA selects the significant eigenvectors, obtaining an accuracy of 96%. In conclusion, AOA-based HOG and AOA-based GLCM are the best approaches for predicting gender from the face compared to the other approaches inspired by ML and deep CNN.

Table 13.

Statistical study using Wilcoxon’s test (best values in bold, which implies that AOA is significant against algorithm X)

| AOA | GT dataset | | | FEI dataset | | | Gallagher’s dataset | | |

|---|---|---|---|---|---|---|---|---|---|

| versus | HOG | LBP | GLCM | HOG | LBP | GLCM | HOG | LBP | GLCM |

| HHO | 4.40E-21 | 2.06E-14 | 5.00E-02 | 1.26E-32 | 3.59E-30 | 1.23E-39 | 9.57E-27 | 1.22E-21 | 5.12E-01 |

| SCA | 2.20E-02 | 1.71E-02 | 6.86E-31 | 9.74E-27 | 1.40E-04 | 2.62E-38 | 3.58E-36 | 4.20E-14 | 3.37E-04 |

| EO | 7.20E-03 | 3.37E-02 | 1.50E-05 | 2.70E-13 | 2.64E-01 | 1.33E-38 | 8.42E-25 | 4.76E-36 | 2.93E-04 |

| EPO | 1.04E-02 | 8.09E-01 | 6.97E-21 | 8.87E-23 | 2.86E-17 | 7.37E-42 | 4.43E-38 | 1.47E-10 | 2.77E-16 |

| MRFO | 5.11E-07 | 2.38E-10 | 1.13E-06 | 7.25E-19 | 1.91E-05 | 5.49E-39 | 3.58E-36 | 5.06E-20 | 1.17E-01 |

| HGSO | 3.58E-25 | 5.65E-26 | 1.11E-36 | 1.15E-19 | 4.36E-30 | 1.08E-39 | 7.35E-37 | 7.13E-35 | 2.30E-03 |

| MVO | 9.91E-02 | 2.20E-04 | 3.16E-17 | 1.11E-19 | 1.30E-17 | 1.31E-40 | 4.45E-38 | 1.21E-28 | 4.22E-05 |

GT dataset: For this dataset, a new run was performed because of the choice of k-folds, since some works in the literature used 2-fold cross-validation. Hence, Table 15 compares the accuracy of AOA with some machine learning (ML) methods under the 2-fold protocol.

Table 14.

Comparative performance in terms of accuracy with the existing approaches–FEI dataset

| References | Classifier | Extracted features | Accuracy |

|---|---|---|---|

| Micheal and Geetha (2019) | SVM | DRLBP++RILPQ+PHOG | 95.30% |

| Geetha et al. (2019) | SVM | 8-LDP+LBP | 99% |

| Ghojogh et al. (2018) | LDA+weighting vote | Intensity of lower part of face | 94% |

| Haider et al. (2019) | Deepgender | * | 98.75% |

| Zhou and Li (2019) | GA-BPNN | Eigen-features based on PCA | 96% |

| Khan et al. (2019) | MSFS-CRFs | Segmentation based on Super-Pixels | 93.70% |

| Kumar et al. (2019) | SVM | Multi-features (BoW+SIFT) | 98% |

| Proposed method | AOA-BPNN | Multi-blocks HOG | 99.16% |

| Proposed method | AOA-BPNN | Multi-blocks LBP | 95.61% |

| Proposed method | AOA-BPNN | Multi-blocks GLCM | 99.04% |

For the GT dataset, the accuracy findings reveal that the AOA-based multi-blocks HOG approach (99.50%) outperforms other ML methods such as SVM based on combined DWT (discrete wavelet transform) and DCT (discrete cosine transform). It is also worth noting that the AOA-based multi-blocks LBP reached a lower performance, with a correct gender identification rate of 97.25%. The AOA-based multi-blocks GLCM is ranked second with 99.15% accuracy, followed by the SVM-based methods of Goel and Vishwakarma (2016b) with DWT+DCT (99%) and Goel and Vishwakarma (2016a) with DCT (98.96%).

Gallagher’s dataset: Table 16 shows the results obtained by the proposed methods (AOA-HOG, AOA-LBP, and AOA-GLCM) as well as by the state of the art, including machine learning (ML) and deep learning (DL) techniques, tested on Gallagher’s dataset, the largest one, captured in uncontrolled conditions. From this table, it can be observed that AOA-LBP outperforms the other variants of AOA, i.e., AOA-HOG and AOA-GLCM. Furthermore, AOA-LBP provides better accuracy than the ML and DL methods. The numerical results indicate that AOA-LBP reached 96.08% accuracy, while Ubunsa CNN and Bagging+LBP+HOG achieved 91.48% and 88.01%, respectively.

Table 16.

Comparative performance in terms of accuracy with the existing approaches–Gallagher’s dataset under Dago’s protocol

| References | Classifier | Extracted features | Accuracy |

|---|---|---|---|

| Dago-Casas et al. (2011) | SVM | Gabor+PCA | 86.01% |

| Castrillón-Santana et al. (2013) | Bagging | LBP+HOG | 88.01% |

| Castrillón-Santana et al. (2016) | SVM | HOG++LTP+WLD | 82.91% |

| Mansanet et al. (2016) | Local DNN | * | 90.58% |

| Orozco et al. (2017) | Ubunsa CNN | * | 91.48% |

| Abdalrady and Aly (2020) | 2-stage PCANet | * | 89.65% |

| Proposed method | AOA-BPNN | Multi-blocks HOG | 95.51% |

| Proposed method | AOA-BPNN | Multi-blocks LBP | 96.08% |

| Proposed method | AOA-BPNN | Multi-blocks GLCM | 95.03% |

Comparative study with the basic MLP

To confirm the effectiveness of AOA, an ablation study is carried out between AOA and MLP. Here, the MLP takes all patches as input, with features extracted using the HOG, LBP, or GLCM descriptors. The backpropagation algorithm stops when either the maximum number of iterations (500) or the mean square error criterion is reached.
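The stopping rule for backpropagation described above can be sketched as a logical OR between the two criteria. In this sketch, the iteration cap of 500 comes from the text, whereas the MSE threshold value, the "MSE at or below threshold" reading, and the `step` callback are assumptions introduced purely for illustration.

```python
MAX_ITERATIONS = 500   # stated in the text
MSE_THRESHOLD = 1e-3   # hypothetical placeholder, not the paper's value


def should_stop(iteration, mse,
                max_iterations=MAX_ITERATIONS, mse_threshold=MSE_THRESHOLD):
    """Stop as soon as either criterion is met (logical OR)."""
    return iteration >= max_iterations or mse <= mse_threshold


def train(step, max_iterations=MAX_ITERATIONS, mse_threshold=MSE_THRESHOLD):
    """Call `step(iteration)` (one training pass returning the MSE) until stopping.

    Returns the number of passes performed and the last MSE observed.
    """
    iteration, mse = 0, float("inf")
    while not should_stop(iteration, mse, max_iterations, mse_threshold):
        mse = step(iteration)
        iteration += 1
    return iteration, mse


# Toy error curves standing in for real backpropagation passes.
print(train(lambda i: 0.5 ** (i + 1)))  # error halves each pass: stops on MSE
print(train(lambda i: 1.0))             # error never improves: stops at the cap
```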

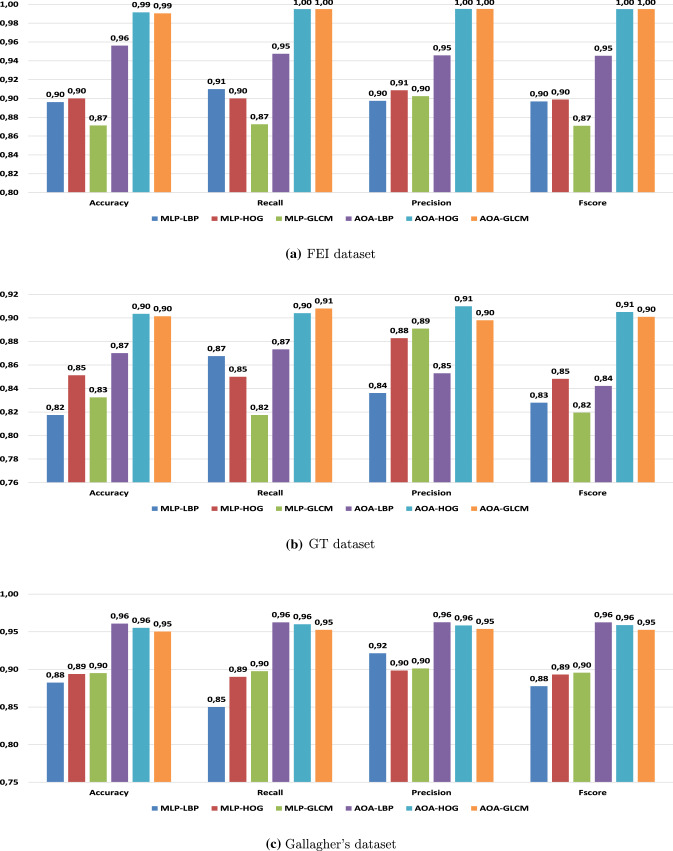

Figure 10 illustrates the performance of AOA based on HOG, LBP, and GLCM against MLP in terms of accuracy, recall, precision, and Fscore.

Fig. 10.

The performance of AOA against MLP

In terms of accuracy on the FEI dataset, MLP based on LBP, HOG, and GLCM achieves 90%, 90%, and 87%, respectively, while AOA based on LBP, HOG, and GLCM reaches 96%, 99%, and 99%, respectively.

For the GT dataset, MLP-LBP, MLP-HOG, and MLP-GLCM achieve accuracies of 82%, 85%, and 83%, respectively, whereas AOA-LBP, AOA-HOG, and AOA-GLCM attain 87%, 90%, and 90%, respectively.

For the largest dataset (Gallagher’s dataset), the accuracy margin between AOA and MLP is 8%, 7%, and 5% using LBP, HOG, and GLCM, respectively.

In terms of Fscore, AOA-HOG and AOA-GLCM outperform the conventional MLP-HOG and MLP-GLCM for the smallest datasets (FEI and GT). In addition, the Fscore values obtained by AOA using the three descriptors are very close to 96% for Gallagher’s dataset, compared to approximately 90% for MLP.

Results analysis and discussion

The main objective of this study is to design a new gender recognition model based on AOA, a metaheuristic inspired by Archimedes’ law of physics. The study uses three descriptors (HOG, LBP, and GLCM) along with an MLP classifier. The experimental results show the efficiency of the AOA algorithm compared to other competitors in the field of GR-based FS for the smallest (FEI and GT) and largest (Gallagher’s) datasets. In addition, the way AOA is integrated to select the best patches from a face is a real contribution.

The existing AOA has several benefits.

For the smallest datasets (GT and FEI datasets), it can be seen that AOA based on three descriptors, HOG, LBP, and GLCM, provides better performance in terms of accuracy (see Table 5).

Also, it is important to highlight that AOA-HOG achieved high accuracies for GT and FEI datasets.

Concerning the optimal number of selected patches for the smallest datasets, AOA and SCA detect a lower number of selected patches (see Table 6).

For the smallest datasets, EPO-HOG is the fastest algorithm on the GT dataset, and SCA-HOG is the fastest on the FEI dataset. The main reason lies in the simple operators these algorithms use to explore the search space: EPO determines the temperature profile in the neighborhood of the huddle and locates the mover, while SCA uses only trigonometric operators based on sine and cosine functions (see Table 4).

From the results obtained on the smallest datasets, we notice that gender recognition performance on the GT dataset is lower than on FEI. This is due to the complexity of the dataset, which involves several challenging factors such as lighting variation, facial expressions, different poses, and occluded eye areas.