Abstract

Background

We performed a trial to evaluate the efficacy of a blended intervention combining personalized health coaching and virtual cardiac rehabilitation to improve medication adherence and cardiovascular risk factor control. The trial was terminated early. Here, we describe findings from a root cause analysis and lessons learned.

Methods

SmartGUIDE was an open-label, single-center trial that randomized participants with coronary artery disease who were prescribed a statin and/or a P2Y12 inhibitor 1:1 to usual care or to usual care plus a mobile app with components of cardiac rehabilitation paired with personal virtual coaching. The primary outcome was medication adherence, measured as the proportion of days covered (PDC). The planned sample size was 132. We performed a root cause analysis to evaluate processes from study development to closure.

Results

During trial conduct, the technology start-up withdrew the intervention. The study was terminated early with 63 participants randomized and data from 26 available for analysis. The median PDC was high in both groups (intervention group: 94%, interquartile range [IQR] 88%–96%; control group: 99%, IQR 95%–100%). Root cause analysis identified key factors that prevented the trial from achieving its objectives, involving enrollment (inclusion criteria, low penetration of compatible smartphones), participant retention and engagement (poor app product, insufficient technology support), and suboptimal choice of a technology partner (technology start-up’s inexperience in health care, poor product design, inadequate fundraising).

Conclusion

We identified important and preventable factors leading to trial failure. These factors may be common across digital health trials and may explain prior observations that many such trials are never completed. Careful vetting of technology partners and more pragmatic study designs may prevent these missteps.

Keywords: Adherence, Cardiovascular risk factor, Coronary artery disease, Digital health, Digital platform, mHealth, Mobile health

Key Findings

- We performed a trial to evaluate the efficacy of a blended intervention with a custom-designed mobile application and personalized health coaching to improve adherence to cardiovascular medications and control of cardiovascular risk factors. The trial was terminated early.
- A root cause analysis identified important and preventable factors leading to trial failure, involving enrollment (inclusion criteria, low penetration of compatible smartphones), participant retention and engagement (poor app product, insufficient technology support of the health coaching), and suboptimal choice of a technology partner (technology start-up’s inexperience in health care, poor product design, inadequate fundraising).
- These factors may be common across digital health trials and may explain prior observations that many such trials are never completed.
- Careful vetting of technology partners and more pragmatic study designs may prevent these missteps.

Introduction

Cardiac rehabilitation aims to improve survival after a cardiac event through cardiovascular risk factor management and medication adherence. Medication adherence is crucial for long-term clinical outcomes in patients with cardiovascular disease.1,2 Yet most cardiac rehabilitation solutions are designed for major cardiovascular events or treatments, such as myocardial infarction or coronary artery bypass grafting, rather than for the general secondary prevention population. Low-cost solutions that blend technological interventions with personalized health coaching to address medication adherence, risk factor management, and exercise have the potential to improve cardiovascular outcomes compared with current care models and to overcome the poor referral and low retention rates of traditional rehabilitation. A systematic review of mobile adherence platforms found that text messaging can improve medication adherence.3 However, text messaging–only interventions have limited capacity to monitor adherence, and their effects are small.4 A limitation of these studies is that they tested digital therapeutics in isolation (eg, as simple reminders) without involving the patient’s physicians or care team. Smartphone applications can provide real-time education and adherence monitoring to participants and providers, both of which may be critical for adherence.5 Personalized coaching has the potential to further improve adherence and the management of cardiovascular risk factors.6,7

The objective of the smartphone Guided Medication Adherence and Rehabilitation in Patients with Coronary Artery Disease (smartGUIDE) trial was to evaluate the effectiveness of an intervention with a smartphone-based heart disease therapeutic for virtual health coaching, compared with physician- or nurse-guided standard of care, to improve cardiovascular medication adherence and cardiovascular risk factors in participants with coronary artery disease. The trial was terminated early owing to a number of factors. Here, we report the trial results, describe findings from a root cause analysis, and share lessons learned.

Methods

Study design and oversight

The full rationale and study design can be found in the protocol (Supplemental Appendix). In brief, SmartGUIDE was a 1:1 randomized, 2-arm, open-label, controlled, single-center trial. Participants were randomly assigned to usual physician- or nurse-guided care (control arm) or usual care plus the BrightHeart® program, a mobile therapeutic intervention (WellnessMate, Cupertino, CA) of personal health coaching with components of cardiac rehabilitation. All participants were recruited at inpatient or outpatient sites (Stanford Health Care, Stanford, CA). The lead investigator generated the random allocation sequence with Sealed Envelope (London, UK) in blocks of 4, and the sequence was concealed from the research coordinator until assignment. AstraZeneca funded the trial but was not involved in the design, the selection of the intervention, data collection, or analysis and interpretation of the results. The protocol and amendments were approved by the local ethics committee, the privacy and security offices, and the institutional review board (Stanford IRB 4). The study was registered at ClinicalTrials.gov (NCT03207646), and the record was posted on July 5, 2017.
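For readers unfamiliar with block randomization, the sketch below illustrates the general idea of a 1:1 permuted-block sequence with blocks of 4. It is a minimal, hypothetical illustration: it does not reproduce how Sealed Envelope generates sequences, the function name `permuted_block_sequence` and its `seed` parameter are assumptions introduced here, and allocation concealment, which the trial handled separately, is not modeled.

```python
import random
from typing import List, Optional


def permuted_block_sequence(n_participants: int, block_size: int = 4,
                            seed: Optional[int] = None) -> List[str]:
    """Generate a 1:1 allocation sequence from randomly permuted blocks.

    Each block holds block_size // 2 "intervention" and block_size // 2
    "control" labels in random order, so the arms are balanced after every
    completed block.
    """
    if block_size <= 0 or block_size % 2 != 0:
        raise ValueError("block_size must be a positive even number for 1:1 allocation")
    rng = random.Random(seed)  # seeded generator so the list can be regenerated for audit
    sequence: List[str] = []
    while len(sequence) < n_participants:
        block = ["intervention"] * (block_size // 2) + ["control"] * (block_size // 2)
        rng.shuffle(block)  # permute allocations within the block
        sequence.extend(block)
    # The last block is truncated if n_participants is not a multiple of block_size
    return sequence[:n_participants]


# Hypothetical usage: a sequence long enough for the planned 132 participants
allocations = permuted_block_sequence(132, block_size=4, seed=2017)
print(allocations[:8])
```

Because every complete block contains two allocations to each arm, the arms can differ by at most 2 at any point during enrollment; the usual trade-off is that a fixed, known block size can make the last allocations within a block predictable.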

Trial population

Patients were eligible if they had a diagnosis of coronary artery disease, owned a compatible smartphone (iPhone 5s or newer), and had an active prescription for a statin and/or an oral P2Y12 inhibitor. All participants provided written informed consent. The main exclusion criteria were anticipated inability to use the mobile application (eg, owing to visual impairment or disorders of fine motor skills) in the investigator’s judgment, current use of adherence tracking devices (standard daily pill dispenser boxes were permitted), and life expectancy of less than 3 months. Detailed inclusion and exclusion criteria are listed in Supplemental Table S1.

Intervention

The control group received usual care as determined by the treating physician. Participants randomized to the intervention arm received the virtual health care intervention in addition to usual care. First, the study research coordinator or investigator installed the BrightHeart mobile application on the participant’s smartphone. The participant was trained in menu navigation and received a program brochure describing the features of the app. The program included components of home-based cardiac rehabilitation, specified in the program coaching guide (Supplemental Appendix), that were consistent with the target health behaviors for cardiac rehabilitation identified in a recent scientific statement8: tobacco counseling for smoking cessation, medication management for adherence, an exercise plan for physical activity, dietary education for healthy eating, and psychosocial assessment for stress management.

Participants randomized to the intervention were paired with a cardiac rehabilitation coach (J.H., registered nurse with a master’s degree in physiology, a certified health coach, member of the Motivational Interviewing Network of Trainers [MINT], and former president of the American Association of Cardiovascular and Pulmonary Rehabilitation). The coach delivered a structured coaching intervention that followed nationally accepted standards for both the Health Coach Scope of Practice and Code of Ethics set forth by the National Board for Health and Wellness Coaching (NBHWC). Coaching interactions adhered to the standards for coaching process and structure defined jointly by the NBHWC and the National Board of Medical Examiners. Details of the program are outlined in Supplemental Appendix 1. Briefly, the participant and the coach jointly created an action plan after a telephone interview. Data such as medication adherence, lifestyle, and vital signs could be entered by the participant or collected passively (eg, through connection to the smartphone’s step counter). The platform generated insights on performance and engagement, and the coach modified the action plan as needed. Participants assigned to the intervention received recommendations for physical activity; a detailed description of the physical activity plan can be found in Supplemental Appendix 2. The program was created by the company and J.H.

Study endpoints, follow-up, and statistical considerations

The primary endpoint was the proportion of days covered (PDC, expressed as a percentage9) for the composite of the P2Y12 inhibitor and/or the statin. Adherence to these medications is crucial for long-term outcomes in patients with cardiovascular disease.1 Follow-up lasted 90 ± 10 days and concluded with the end-of-study visit. Supplemental Appendix 3 details secondary and exploratory endpoints, and Supplemental Appendix 4 describes statistical considerations. The research reported in this paper adhered to the CONSORT guidelines.10
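PDC is generally computed as the number of days in an observation window on which medication was available, divided by the number of days in the window, times 100. The sketch below implements that ratio for a single medication. It assumes a fixed, inclusive observation window and that supply from an early refill carries over (it starts when the previous supply runs out), a common convention that may differ from the trial’s operational definition in the supplemental material; the composite statin/P2Y12 inhibitor definition is not reproduced, and `proportion_of_days_covered` and the example fills are hypothetical.

```python
from datetime import date, timedelta
from typing import Iterable, Tuple


def proportion_of_days_covered(fills: Iterable[Tuple[date, int]],
                               start: date, end: date) -> float:
    """Return the PDC (%) for one medication over the inclusive window [start, end].

    fills: (fill_date, days_supply) pairs. Supply from an early refill is
    shifted to begin when the previous supply runs out (assumed convention),
    so overlapping days are not double-counted.
    """
    window_days = (end - start).days + 1
    covered = set()
    next_free_day = date.min  # first day not already covered by an earlier fill
    for fill_date, days_supply in sorted(fills):
        supply_start = max(fill_date, next_free_day)
        for offset in range(days_supply):
            day = supply_start + timedelta(days=offset)
            if start <= day <= end:  # count only days inside the observation window
                covered.add(day)
        next_free_day = supply_start + timedelta(days=days_supply)
    return 100.0 * len(covered) / window_days


# Hypothetical example: three 30-day fills over a 90-day window
window_start, window_end = date(2017, 8, 1), date(2017, 10, 29)
fills = [(date(2017, 8, 1), 30), (date(2017, 8, 25), 30), (date(2017, 10, 5), 30)]
pdc = proportion_of_days_covered(fills, window_start, window_end)
print(f"PDC = {pdc:.1f}%")
```

In this toy example the early second refill is shifted to start on August 31, leaving a 5-day gap before the third fill, so 85 of 90 days are covered (PDC of about 94%), which would count as adherent under the PDC ≥ 80% threshold used in Table 1.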

Recruitment and early termination of the trial

Enrollment commenced on July 19, 2017. Patients were initially recruited from the cardiac catheterization laboratory and the inpatient service. During the first 6 weeks, mean recruitment was 1.3 participants per week, below our goal of 3.4. Traditional referral patterns (ie, by the treating physician) were ineffective for this study, because interventionalists did not feel comfortable enrolling patients in a program when medications were managed by the referring physician. Additionally, the penetration of iPhones among eligible people with smartphones was lower than expected (50%). Consequently, in week 10 of the study, we obtained IRB approval to broaden the inclusion criterion from coronary artery disease by angiographic evidence to ischemic heart disease as defined by ICD-10 codes I20–I25, and screening was extended to outpatient clinics. These changes were associated with only a minimal increase in enrollment, to a mean of 1.4 participants per week. In week 29, we used a mass email to recruit potentially eligible patients from the Stanford Health Care network based on inpatient and outpatient ICD-10 code I25.X. The email campaign was conducted through a third-party honest-broker system and approved by the university privacy and security offices. Six email batches were sent to a total of 3000 health care system patients, increasing mean enrollment to 4.7 participants per week.

On April 2, 2018 (week 37 of enrollment), we were notified that the start-up company that provided the tested mobile application, BrightHeart, had been shut down: in the absence of funding or revenue prospects, the company’s board of directors decided to discontinue the product and dissolve the company. Enrollment was closed immediately, and enrolled participants were followed until study termination. Participants within the end-of-study visit window or with ≥6 weeks in the study continued until their end-of-study visit; participants with <6 weeks since enrollment were terminated early. These changes were approved by the IRB.
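The recruitment shortfall can be made concrete with simple arithmetic. Assuming, purely for illustration, a constant weekly rate from the start of enrollment and the planned sample size of 132 given in the Abstract, the hypothetical helper `weeks_to_target` below estimates how many weeks each reported mean rate would need to reach that target. Actual accrual varied over time, so these are rough projections, not estimates from the trial.

```python
import math

PLANNED_SAMPLE_SIZE = 132  # planned sample size reported in the Abstract


def weeks_to_target(rate_per_week: float, target: int = PLANNED_SAMPLE_SIZE,
                    already_enrolled: int = 0) -> float:
    """Weeks needed to reach the target, assuming a constant weekly enrollment rate."""
    if rate_per_week <= 0:
        raise ValueError("rate_per_week must be positive")
    remaining = max(target - already_enrolled, 0)
    return remaining / rate_per_week


# Mean weekly rates reported in the text
rates = {
    "goal": 3.4,
    "first 6 weeks": 1.3,
    "after broadened criteria": 1.4,
    "after email outreach": 4.7,
}
for label, rate in rates.items():
    weeks = math.ceil(weeks_to_target(rate))
    print(f"{label}: {rate}/week -> about {weeks} weeks to enroll {PLANNED_SAMPLE_SIZE}")
```

Under these assumptions, the goal rate of 3.4 participants per week corresponds to roughly 39 weeks of enrollment, whereas the initial rate of 1.3 per week would have required roughly 102 weeks.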

Root cause analysis of trial failure

Investigators (M.P.T., C.B.O.) summarized unstructured feedback from participants, investigators, clinical research coordinators, the coach, and study start-up staff, collected through interviews or written communication, and performed a root cause analysis11,12 to evaluate processes from study development to closure. We applied the “five whys” technique, a qualitative interrogative method first developed in industrial manufacturing to determine cause-and-effect relationships and now widely used to identify the root causes of complex operational failures. Root causes were categorized and summarized in an Ishikawa (also known as fishbone) cause-and-effect diagram.13

Data availability statement

The data that support the findings of this study are not publicly available owing to the nature of the restrictions in the informed consent form. The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

Results

Patient population

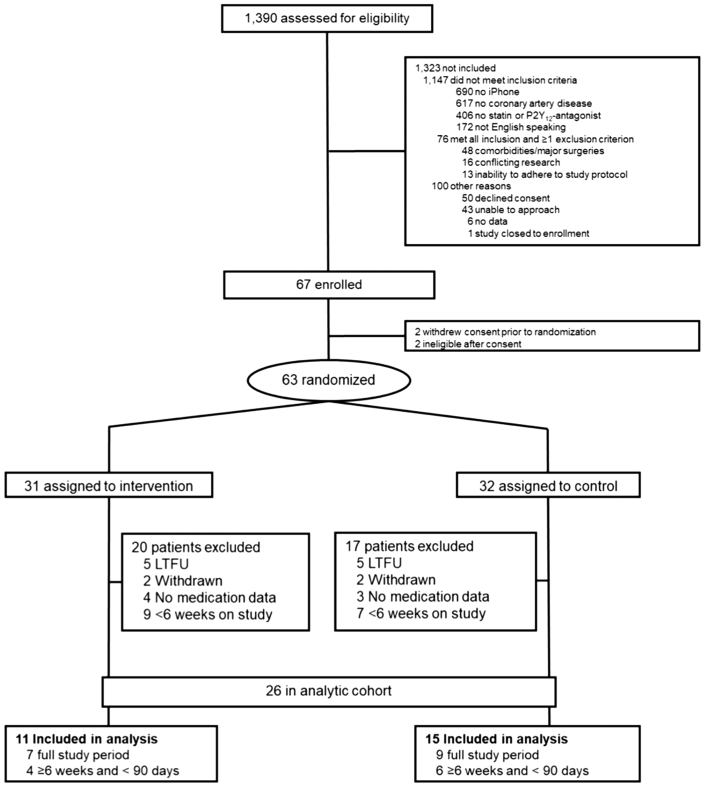

From July 2017 to April 2018, 1390 patients were screened and 63 patients were randomized (31 assigned to have the mobile application installed on their smartphone and 32 assigned to control). None of the patients randomized to the control group participated in cardiac rehabilitation during the intervention period. The main reason for screening failure was lack of a compatible smartphone (690/1390 [50%]). The study flow chart is presented in Figure 1. Of the 63 randomized patients, 37 were excluded from the analysis (10 lost to follow-up, 4 withdrawn, 7 with no primary outcome data available, 16 terminated early [<6 weeks in the study]). The final analytic cohort consisted of 26 patients, 11 in the intervention arm and 15 in the control arm. No significant differences in baseline characteristics were observed between patients in the analytic cohort and those randomized but not analyzed (Supplemental Table S2). Clinical baseline characteristics of the analyzed patients by treatment arm are shown in Supplemental Table S3.

Figure 1.

Flow chart of the study: overview of the patients screened, randomized, and analyzed in the smartGUIDE trial. Because the company that provided the tested mobile application folded and withdrew the study intervention, the trial was terminated early. Patients who were in the study for less than 6 weeks were not included in the analysis. LTFU = lost to follow-up.

Primary and secondary endpoints

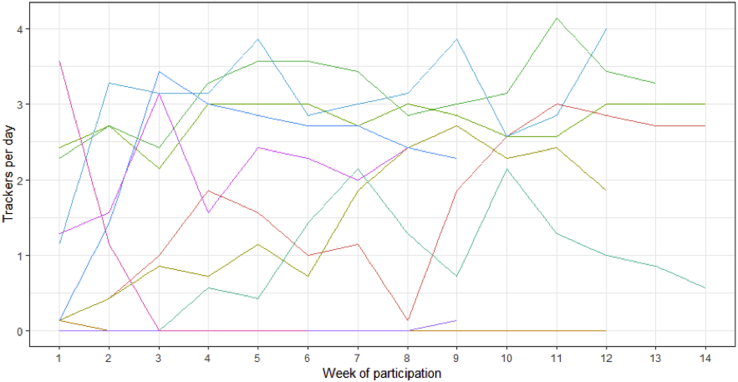

The outcomes are presented in Table 1. Because the target sample size was not achieved and patients were lost to follow-up, the findings are inconclusive regarding the study hypothesis. Adherence to the P2Y12 inhibitor and statin (primary outcome) was high in both arms, and the secondary outcomes of LDL cholesterol, blood pressure at target, and HbA1c ≤ 7% did not differ significantly between arms. There were no adverse events. App engagement among patients in the analytic cohort randomized to the intervention group is shown in Supplemental Table S4. All participants successfully installed the app. The mean number of patient-entered data points toward the care plan, per patient on study with app access, was 1.6. Supplemental Figure S1 shows consistent engagement with the app over time in most participants.

Table 1.

Primary and secondary outcomes

| Outcome | Control (n = 15) | Intervention (n = 11) | P value |
|---|---|---|---|
| Adherence | | | |
| Median PDC, composite (primary) [%] | 99 (95, 100) | 94 (88, 96) | .034 |
| PDC ≥ 80%, composite, n (%) | 14 (93%) | 9 (82%) | .56 |
| Median PDC, statin [%] | 99 (96, 100) | 96 (91, 99) | .14 |
| Median PDC, P2Y12 inhibitor [%] | 99 (97, 100) | 92 (74, 100) | .73 |
| PDC ≥ 80%, statin, n (%) | 15 (100%) | 10 (91%) | .42 |
| PDC ≥ 80%, P2Y12 inhibitor, n (%) | 5 (33%) | 3 (27%) | 1.00 |
| Time to first fill [days] | 3 (n = 2) | 0 (n = 1) | NA |
| Persistence, n (%) | | | |
| Persistent to prescribed therapy | 15 (100%) | 11 (100%) | NA |
| Cardiovascular risk factors | | | |
| Median serum LDL-C [mg/dL] | 46 (23, 78) | 54 (34, 97) | .44 |
| Participants within target BP, n (%) | 11 (79%) | 7 (70%) | .67 |
| Diabetics with HbA1c ≤ 7%, n (%) | 1/2 (50%) | 1/2 (50%) | 1.00 |
| Absolute change in body weight [kg] | 0.2 (-5.5, 5.5) | 0.3 (-6.7, 2.8) | .93 |
| Relative change in body weight [%] | 0.3 (-2.0, 1.0) | 0.3 (-2.0, 1.2) | .84 |
| Quality of life (self-reported) | n = 12 | n = 8 | |
| EQ-5D-3L, reporting no problems, n (%) | | | |
| Mobility | 11 (92%) | 5 (63%) | .26 |
| Self-care | 11/11 (100%) | 8 (100%) | NA |
| Usual activities | 12 (100%) | 7 (88%) | .40 |
| Pain/discomfort | 9 (75%) | 6 (75%) | 1.00 |
| Anxiety/depression | 10 (83%) | 7 (88%) | 1.00 |
| Change in EQ VAS | 4 (-28, 26) | 0 (-16, 20) | .96 |
| Patient activation (self-reported) | n = 12 | n = 8 | |
| Change in PAM® level, n (%) | | | .82 |
| -2 | 1 (8%) | 0 | |
| -1 | 0 | 1 (13%) | |
| 0 | 8 (67%) | 5 (63%) | |
| 1 | 1 (8%) | 1 (13%) | |
| Weekly physical activity (self-reported) | n = 10 | n = 8 | |
| Miles walked | 7 (3.5, 19.3) | 11 (5.8, 18.4) | .86 |
| Stairs climbed | 315 (175, 735) | 700 (508, 875) | .21 |
| Self-perception, exercise participation, n (%) | | | 1.00 |
| Sufficient exercise to keep healthy | 4 (40%) | 4 (50%) | |
| Insufficient exercise to keep healthy | 6 (60%) | 4 (50%) | |
| Activity hours on a typical day | | | |
| Vigorous | 1 (0, 6) | 1 (0, 2.5) | .76 |
| Moderate | 2 (0, 8) | 2 (0.5, 6) | .96 |
| Light | 4.5 (2, 6) | 5 (3.5, 9) | .29 |
| Sitting | 5 (1, 12) | 7 (4, 10) | .15 |
| Sleeping or reclining | 8 (4, 9) | 8 (7, 9) | .46 |
| Exploratory outcomes | | | |
| 30-day hospitalization for any reason | 1 (7%) | 1 (9%) | 1.00 |
| Major adverse cardiac events (MACE) | 1 (7%) | 0 | NA |

Continuous and categorical variables are reported as median (IQR) and count (percent) unless otherwise noted. MACE is a composite of death, myocardial infarction, acute coronary syndrome, out-of-hospital cardiac arrest, stent thrombosis, or repeat revascularization after 90 days.

EQ-5D-3L = European quality of life 5 dimensions 3 level version; NA = not applicable; PDC = proportion of days covered; PAM = patient activation measure; VAS = visual analogue scale.

P values for continuous and categorical variables are based on the Mann-Whitney U and Fisher exact test, respectively.
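To illustrate how the categorical P values in Table 1 are obtained, the sketch below implements a two-sided Fisher exact test for a 2 × 2 table in plain Python, using the common convention of summing the probabilities of all tables with the same margins that are no more probable than the observed table. The function name is hypothetical; in practice a vetted implementation such as `scipy.stats.fisher_exact` would normally be used. Applied to counts taken from Table 1 (for example, PDC ≥ 80% for the composite: 14 of 15 control vs 9 of 11 intervention participants), it reproduces the reported P values to two decimals.

```python
from math import comb


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher exact test for the 2 x 2 table [[a, b], [c, d]].

    Rows are groups and columns are outcome (yes, no). The P value sums the
    hypergeometric probabilities of every table with the observed margins
    that is no more probable than the observed table.
    """
    row1, col1, n = a + b, a + c, a + b + c + d

    def weight(x: int) -> int:
        # Probability of x in the top-left cell, times the shared denominator comb(n, row1);
        # comparing these integers avoids floating-point ties
        return comb(col1, x) * comb(n - col1, row1 - x)

    observed = weight(a)
    lowest, highest = max(0, row1 + col1 - n), min(row1, col1)
    extreme = sum(w for w in (weight(x) for x in range(lowest, highest + 1)) if w <= observed)
    return extreme / comb(n, row1)


# Counts from Table 1 as (control yes, control no, intervention yes, intervention no)
print(round(fisher_exact_two_sided(14, 1, 9, 2), 2))   # PDC >= 80%, composite: 0.56
print(round(fisher_exact_two_sided(15, 0, 10, 1), 2))  # PDC >= 80%, statin: 0.42
print(round(fisher_exact_two_sided(5, 10, 3, 8), 2))   # PDC >= 80%, P2Y12 inhibitor: 1.0
```

Working with integer numerators over the common denominator keeps the "no more probable than observed" comparison exact, which matters when several tables have nearly equal probabilities.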

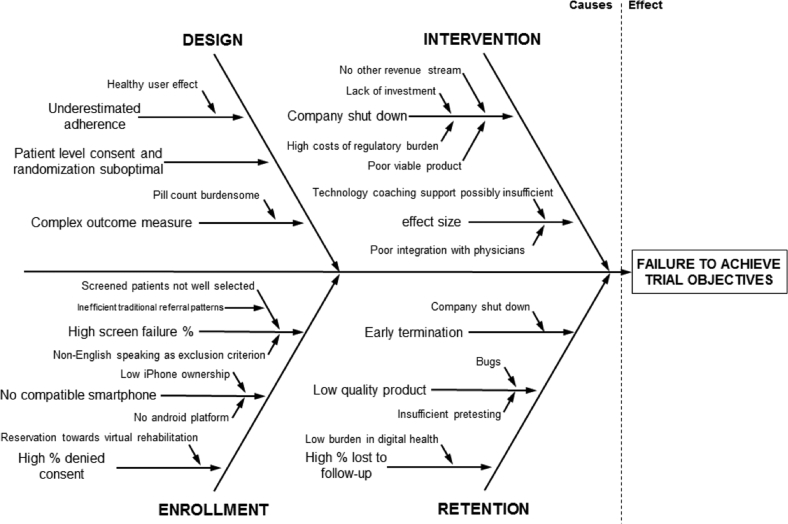

Root cause analysis

Figure 2 shows a cause-and-effect diagram (also called Ishikawa or fishbone diagram) for root cause analysis of trial failure. Root causes included aspects of study design, enrollment, intervention, and retention.

Figure 2.

Fishbone diagram for causes of failure of the smartGUIDE trial. Root causes of trial failure were categorized into aspects of study design, intervention, enrollment, and retention.

Design

We did not anticipate the high baseline level of adherence or the healthy user effect. Other key factors for trial failure included patient-level consent and randomization (rather than cluster randomization) and complex outcome measures. Reliable collection of the primary outcome measure is crucial for any trial; however, no primary outcome data were available for more than 10% of randomized patients (7/63). Pill count ascertainment had several flaws, such as patients not bringing in all pills despite previous reminders, keeping pill bottles in several locations, sharing pill bottles with a spouse, or having unreported pill bottles filled months in advance.

Enrollment

Our enrollment rate was lower than anticipated, in large part owing to a high proportion of screening failures. Of the 1390 patients screened, 690 (50%) did not have a compatible smartphone, because the intervention application was available only for iOS (iPhone). Because traditional referral patterns were insufficient, we used information technology to screen outpatient clinic patients scheduled for treatment by ICD code. The low specificity of this approach contributed to the low ratio of enrolled to screened patients. Despite the low risk of this study, 50 individuals who met all inclusion criteria and no exclusion criteria declined consent.

Intervention

Other key reasons for trial failure related to the start-up partner’s product used as the intervention. Users repeatedly reported software bugs and app malfunctions, which reflected insufficient pretesting. Eighteen percent of participants did not set up their care plan, and 45% did not allow the app to access the phone’s integrated step counter (Supplemental Table S4). Per day on study with app access, the median number of “trackers” (ie, medication taken, exercise description, sleep time quantified, or nutrition specified) was 1.6 and the median number of values (ie, heart rate, blood pressure, weight, glucose) was 0.8. We did not collect information on whether patients who reported app malfunctions were less engaged with the study app than users who did not provide negative feedback. During trial conduct, the provider of the mobile application withdrew the intervention, leading to early termination of the trial.
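The engagement figures above are rates normalized to time on study with app access. A minimal sketch of one way such a median could be computed is shown below. It assumes that a per-participant rate (entries divided by days with app access) is calculated first and the median is then taken across participants; the text does not state whether the trial aggregated the data this way, and the function names and toy data are hypothetical, not trial data.

```python
from statistics import median
from typing import Iterable, Tuple


def per_day_rate(entries: int, days_with_app_access: int) -> float:
    """Entries (trackers or values) per day on study with app access for one participant."""
    if days_with_app_access <= 0:
        raise ValueError("days_with_app_access must be positive")
    return entries / days_with_app_access


def median_daily_rate(participants: Iterable[Tuple[int, int]]) -> float:
    """Median, across participants, of each participant's per-day entry rate.

    participants: (entry_count, days_with_app_access) pairs, one per participant.
    """
    return median(per_day_rate(entries, days) for entries, days in participants)


# Hypothetical toy data (not trial data): (tracker entries, days with app access)
toy_trackers = [(180, 90), (45, 60), (0, 30), (140, 70)]
print(median_daily_rate(toy_trackers))
```

Normalizing per participant before taking the median keeps participants with short app access (for example, those terminated early) from being under- or over-weighted relative to a pooled total.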

Retention

Retention may also have been influenced by the quality of the product among patients randomized to the intervention. Among patients randomized to the control group, the barrier to loss to follow-up or withdrawal of consent appeared low. Many patients could not be included in the analysis owing to early termination after the start-up partner withdrew the intervention. In addition, we observed a high rate of loss to follow-up (10/63 [16%]). We speculate that the barrier to disengaging from the study or withdrawing consent is lower in digital health studies than in conventional pharmaceutical trials. This might be explained by (1) the more abstract nature of virtual health coaching compared with a concrete compound in pharmaceutical or device trials and/or (2) the open-label design of this digital health trial compared with placebo-controlled pharmaceutical trials. Unfortunately, reasons for withdrawal were not systematically collected.

Discussion

This was a randomized controlled trial to investigate whether a blended intervention with technology and health coaching can improve adherence outcomes in a secondary-prevention population. The trial had difficulties in recruitment, enrollment, and engagement, and ultimately was terminated early because the partner digital health company ran out of funding and stopped supporting the study app. Owing to a reduced sample size, the study was underpowered to draw conclusions on the study hypothesis. We identified root causes of trial failure, including aspects of study design, intervention, enrollment, and retention.

Although no statements can be made regarding the intervention’s efficacy, there are many lessons to be learned for the conduct of digital health intervention studies. First, as site-based trials in a local environment, digital health trials share many challenges with conventional trials: effect sizes in open-label trials may be much smaller than those in real-world data, patient referral may be insufficient, and screen failure rates may be high. A cluster (site-level) randomized trial, rather than patient-level consent and randomization, could be considered to test these types of interventions. Cluster randomization can be helpful for testing interventions that fall within the range of standard of care.14 Because digital health intervention studies are typically open-label, cluster-level randomization can minimize differential effects owing to unblinding or the Hawthorne effect at the patient level. A cluster-crossover design, in which a single site evaluates outcomes during both a control period and an intervention period, can be useful to decrease variance across sites. These designs may also yield better estimates of the effect of implementing such an intervention in a health care setting.
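As a concrete illustration of the cluster-crossover idea, the sketch below randomizes each participating site to the order of its control and intervention periods, balancing the two orders across sites. It is a hypothetical design sketch: the site names, the two-period structure, and the function name are assumptions, and a real trial would also need to plan transition periods between phases and an analysis that accounts for clustering, neither of which is shown.

```python
import random
from typing import Dict, List, Optional, Tuple

PeriodOrder = Tuple[str, str]


def cluster_crossover_schedule(sites: List[str],
                               seed: Optional[int] = None) -> Dict[str, PeriodOrder]:
    """Randomize each site (cluster) to the order of its two study periods.

    Every site runs one control and one intervention period; only the order is
    randomized. The two orders are balanced across sites (they differ by at most
    one site when the number of sites is odd).
    """
    rng = random.Random(seed)
    orders: List[PeriodOrder] = [("control", "intervention"), ("intervention", "control")]
    pool = [orders[i % 2] for i in range(len(sites))]  # alternate orders to balance them
    rng.shuffle(pool)  # then randomly assign the balanced orders to sites
    return dict(zip(sites, pool))


# Hypothetical clinic names for illustration only
schedule = cluster_crossover_schedule(["clinic_A", "clinic_B", "clinic_C", "clinic_D"], seed=7)
for site, (first_period, second_period) in schedule.items():
    print(f"{site}: period 1 = {first_period}, period 2 = {second_period}")
```

Because each site serves as its own control, between-site differences in patient mix and practice patterns largely cancel out of the comparison, which is the variance reduction the text refers to.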

Compared with conventional trials (ie, pharmaceutical or device trials), the loss-to-follow-up rate in this trial was high and might indicate reservations regarding virtual health coaching.15 Treating physicians and participants might perceive digital health interventions as more abstract and potentially less effective than the interventions in pharmaceutical or device trials, which may decrease engagement and retention. Low retention may also reflect a poor app or software experience, as we identified a poor product as a root cause. For this app, cursory usability testing was performed by the company, but not by the trial investigators. Some digital health studies require participant-owned hardware to test interventions such as software. This can increase screen failure rates, introduce selection bias, and reduce the generalizability of findings. Compared with pharmaceutical or device companies, tech start-ups can face a relatively low barrier to introducing a technology intervention. Many such companies lack sufficient health care experience and may not be familiar with clinical trial processes and regulations. The live health coaching component of this intervention met rigorous standards. However, malfunctioning of the app did not allow the evidence-based coaching to succeed. Because the coach was the only live person in contact with participants, many conversations were dominated by participants expressing frustration with the app. This suggests that technical support should be separated from the content of a health coaching intervention, so that coaching time can be devoted to delivering evidence-based cardiac rehabilitation and risk reduction services that combine high-tech and high-touch interventions to address the challenges faced by people living with cardiovascular disease, including the impact of lifestyle choices and decisions.
For all of these reasons, a multidisciplinary team that includes behavioral scientists, user interface and design experts, and clinical experts should work together to develop and pretest the final product before proceeding with a more typical trial launch.

A major preventable risk of the current study was proceeding without assurance of sufficient funding for trial completion. Future studies should ensure the financial stability and viability of start-up partners in digital medicine. Companies should factor clinical trial costs into their fundraising strategy. Data security and privacy requirements can also be barriers that prevent start-ups’ innovations from being tested in trials. Start-ups may not have the experience or resources to verify Health Insurance Portability and Accountability Act compliance, adhere to International Organization for Standardization (ISO) standards, maintain compliance with university and health care system policies, or execute business associate agreements. Start-ups may also over-promise their capabilities, their expertise, or simply their product.

Many registered digital health studies are small, possibly because they are framed as pilots and not powered to detect true treatment effects, and many of them are never completed.16 The causes of trial failure identified in our study may explain why many of these digital health studies remain incomplete.16 However, other start-ups in the cardiovascular risk modification space have successfully completed trials.17, 18, 19

Conclusion

A root cause analysis of this failed digital health trial identified important and preventable factors. These factors may be common across digital health trials and may explain why many digital health studies remain as yet incomplete. Careful vetting of technology partners, use of implementation study designs (eg, cluster trials) rather than patient-level consent and randomization, and improved data and outcomes collection methodologies may be important lessons for others embarking on digital health trials.

Funding Sources

This investigator-initiated trial was funded by a grant from AstraZeneca. This work was supported by a grant from the German Research Foundation (OL371/2-1) to Christoph B. Olivier.

Disclosures

Christoph B. Olivier reports research support from the German Research Foundation and speaker honoraria from Bayer Vital GmbH.

Kenneth W. Mahaffey’s financial disclosures can be viewed at http://med.stanford.edu/profiles/kenneth-mahaffey.

Mintu P. Turakhia: Research Grant; Significant; Apple Inc, Janssen Pharmaceuticals, Medtronic Inc, AstraZeneca, Veterans Health Administration, Cardiva Medical Inc. Other Research Support; Modest; AliveCor Inc, American Heart Association; iRhythm Technologies Inc. Honoraria; Significant; Abbott. Honoraria; Modest; Medtronic Inc, Boehringer Ingelheim, Precision Health Economics, iBeat Inc, Cardiva Medical Inc.

Stephanie K. Middleton, Natasha Purington, Sumana Shashidhar, and Jody Hereford report no competing interests.

Authorship

All authors attest they meet the current ICMJE criteria for authorship.

Patient consent

All patients provided written informed consent.

Ethics statement

The authors designed the study and gathered and analyzed the data according to the Helsinki Declaration guidelines on human research. The research protocol used in this study was reviewed and approved by the institutional review board.

Footnotes

Supplementary data associated with this article can be found in the online version at https://doi.org/10.1016/j.cvdhj.2021.01.003.

Appendix. Supplementary data

Supplemental Figure S1.

References

1. Kurlansky P., Herbert M., Prince S., Mack M. Coronary artery bypass graft versus percutaneous coronary intervention clinical perspective. Circulation. 2016;134:1238–1246. doi: 10.1161/CIRCULATIONAHA.115.021183.

2. Ho P.M., Spertus J.A., Masoudi F.A., et al. Impact of medication therapy discontinuation on mortality after myocardial infarction. Arch Intern Med. 2006;166:1842–1847. doi: 10.1001/archinte.166.17.1842.

3. Anglada-Martinez H., Riu-Viladoms G., Martin-Conde M., Rovira-Illamola M., Sotoca-Momblona J.M., Codina-Jane C. Does mHealth increase adherence to medication? Results of a systematic review. Int J Clin Pract. 2015;69:9–32. doi: 10.1111/ijcp.12582.

4. Thakkar J., Kurup R., Laba T.-L., et al. Mobile telephone text messaging for medication adherence in chronic disease: a meta-analysis. JAMA Intern Med. 2016;176:340–349. doi: 10.1001/jamainternmed.2015.7667.

5. Shore S., Ho P.M., Lambert-Kerzner A., et al. Site-level variation in and practices associated with dabigatran adherence. JAMA. 2015;313:1443–1450. doi: 10.1001/jama.2015.2761.

6. Laustsen S., Oestergaard L.G., van Tulder M., Hjortdal V.E., Petersen A.K. Telemonitored exercise-based cardiac rehabilitation improves physical capacity and health-related quality of life. J Telemed Telecare. 2020;26:36–44. doi: 10.1177/1357633X18792808.

7. Widmer R.J., Allison T.G., Lennon R., Lopez-Jimenez F., Lerman L.O., Lerman A. Digital health intervention during cardiac rehabilitation: a randomized controlled trial. Am Heart J. 2017;188:65–72. doi: 10.1016/j.ahj.2017.02.016.

8. Thomas R.J., Beatty A.L., Beckie T.M., et al. Home-based cardiac rehabilitation: a scientific statement from the American Association of Cardiovascular and Pulmonary Rehabilitation, the American Heart Association, and the American College of Cardiology. Circulation. 2019;140:e69–e89. doi: 10.1161/CIR.0000000000000663.

9. Shore S., Carey E.P., Turakhia M.P., et al. Adherence to dabigatran therapy and longitudinal patient outcomes: Insights from the Veterans Health Administration. Am Heart J. 2014;167:810–817. doi: 10.1016/j.ahj.2014.03.023.

10. Schulz K.F., Altman D.G., Moher D.; CONSORT Group. CONSORT 2010 statement: updated guidelines for reporting parallel group randomized trials. Ann Intern Med. 2010;152:726–732. doi: 10.7326/0003-4819-152-11-201006010-00232.

11. Serrat O. The five whys technique. In: Serrat O., editor. Knowledge Solutions: Tools, Methods, and Approaches to Drive Organizational Performance. Springer Singapore; 2017. pp. 307–310.

12. Ohno T. The Toyota Production System: Beyond Large-Scale Production. Productivity Press; 1988.

13. Ishikawa K. Guide to Quality Control. Asian Productivity Organization; 1974.

14. Donner A., Klar N. Pitfalls of and controversies in cluster randomization trials. Am J Public Health. 2004;94:416–422. doi: 10.2105/ajph.94.3.416.

15. Kerkhoff L.A., Butler J., Kelkar A.A., Shore S., Speight C.D., Wall L.K. Trends in consent for clinical trials in cardiovascular disease. J Am Heart Assoc. 2016;5. doi: 10.1161/JAHA.116.003582.

16. Chen C.E., Harrington R.A., Desai S.A., Mahaffey K.W., Turakhia M.P. Characteristics of digital health studies registered in ClinicalTrials.gov. JAMA Intern Med. 2019;179:838–840. doi: 10.1001/jamainternmed.2018.7235.

17. Hallberg S.J., McKenzie A.L., Williams P.T., et al. Effectiveness and safety of a novel care model for the management of type 2 diabetes at 1 year: an open-label, non-randomized, controlled study. Diabetes Ther. 2018;9:583–612. doi: 10.1007/s13300-018-0373-9.

18. Bollyky J.B., Bravata D., Yang J., Williamson M., Schneider J. Remote lifestyle coaching plus a connected glucose meter with certified diabetes educator support improves glucose and weight loss for people with type 2 diabetes. J Diabetes Res. 2018;2018:3961730. doi: 10.1155/2018/3961730.

19. Sepah S.C., Jiang L., Ellis R.J., McDermott K., Peters A.L. Engagement and outcomes in a digital Diabetes Prevention Program: 3-year update. BMJ Open Diabetes Research and Care. 2017;5. doi: 10.1136/bmjdrc-2017-000422.
