Abstract

Adaptive optics (AO) is a technique that corrects for optical aberrations. It was originally proposed to correct for the blurring effect of atmospheric turbulence on images in ground-based telescopes and was instrumental in the work that resulted in the Nobel prize-winning discovery of a supermassive compact object at the centre of our galaxy. When AO is used to correct for the eye’s imperfect optics, retinal changes at the cellular level can be detected, allowing us to study the operation of the visual system and to assess ocular health in the microscopic domain. By correcting for sample-induced blur in microscopy, AO has pushed the boundaries of imaging in thick tissue specimens, such as when observing neuronal processes in the brain. In this primer, we focus on the application of AO for high-resolution imaging in astronomy, vision science and microscopy. We begin with an overview of the general principles of AO and its main components, which include methods to measure the aberrations, devices for aberration correction, and how these components are linked in operation. We present results and applications from each field along with reproducibility considerations and limitations. Finally, we discuss future directions.

High-resolution optical imaging relies upon the high-fidelity focusing of light. Light can be described by its optical field, whose properties at a given wavelength are parameterized at each point in space and time by amplitude, phase and polarization. However, these fields can be perturbed (in amplitude, phase and polarization) as they propagate through optical systems and other media, and the performance of imaging systems can be highly sensitive to those perturbations. For instance, astronomical image quality is limited by atmospheric turbulence; microscopes produce blurred images when samples have a non-uniform refractive index distribution; and ophthalmoscopes that image the back of the eye are detrimentally affected by the eye’s imperfect optics. Adaptive optics (AO) is an ensemble of electro-optical and computational methods that aim to recover the optimal performance of an optical system1–6. This has brought benefits to a range of applications. For example, by integrating AO in their telescopes, astronomers have been able to observe celestial bodies in much finer detail7. Implemented into microscopes, AO has enabled neuroscientists to monitor the activity of neurons embedded deep inside the living mammalian brain8,9. And integrated into ophthalmoscopes, it has enabled vision scientists and ophthalmologists to visualize, quantify and track in situ the many different types of cell that compose the retina, offering significant clinical potential10–14.

Optical field.

Describes the distribution of light as an electric field across space and time in terms of amplitude, phase, frequency and polarization.

There are many imaging applications in which AO has a significant impact, and the above list is far from exhaustive. Compensation through modulation of the optical field is the fundamental working principle through which AO alleviates the effect of optical aberrations15. Most commonly, we consider aberrations as variations in the phase of the optical field. Such phase variations are equivalent to changes in the wavefront shape16. In general, aberrations can be understood as deformations of the light’s wavefront from the ideal form that produces the sharpest image: planar for a collimated beam or spherical for a focusing beam1,2. Wavefront aberrations, such as those caused by refractive index variations in biological tissue or air motion, can be rectified by locally modulating the phase of the light such that the effects of the aberrations and the applied modulation cancel out16. In other words, the optimal wavefront shape is recovered by applying a phase modulation that is the phase conjugate of the aberration, that is, equal in magnitude but opposite in sign. This process of correction is one of the two essential components shared by all AO methods. The other is sensing, that is, evaluating the aberrations to determine the optimal phase compensation. Instead of a dedicated sensor, the image itself can also be used to determine the appropriate correction. There is wide diversity in approaches to realize both sensing and correction1–4. In addition, implementation requirements can vary widely between the fields in which AO is used, making it challenging to translate key concepts and methods across applications. However, connecting these concepts is worthwhile, as fundamental advances in AO originate from all these application areas, and developers in one field can benefit from important advances made in others.
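As a minimal illustration of compensation by phase conjugation, the sketch below uses the fact that, in the Fraunhofer (far-field) approximation and for a uniformly illuminated pupil, the on-axis focal field is proportional to the mean of the pupil field. Any non-uniform phase lowers that peak; adding the equal-and-opposite correction restores it. The six phase samples are hypothetical values chosen for illustration, not measurements.

```python
import cmath

def peak_intensity(pupil_phase):
    """Normalized on-axis focal intensity for a uniformly illuminated pupil.

    In the Fraunhofer approximation the on-axis field is proportional to the
    mean of the pupil field exp(i*phase), so a flat wavefront gives 1.0 and
    any non-uniform phase gives a smaller value.
    """
    mean_field = sum(cmath.exp(1j * phi) for phi in pupil_phase) / len(pupil_phase)
    return abs(mean_field) ** 2

# Hypothetical aberration: phase in radians at six pupil samples.
aberration = [0.0, 0.8, 2.1, -1.3, 0.4, -0.6]

# Phase-conjugate correction: equal in magnitude, opposite in sign.
correction = [-phi for phi in aberration]
residual = [a + c for a, c in zip(aberration, correction)]

print(f"aberrated peak: {peak_intensity(aberration):.3f}")
print(f"corrected peak: {peak_intensity(residual):.3f}")
```

Adding the same constant to every sample (a piston term) leaves the peak unchanged, so a correction only needs to match the aberration up to a constant offset.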

Compensation.

Reduction of an effect by modulation of the optical field through introducing the opposite effect.

Focusing.

All rays being brought to meet at one point.

In this Primer, we aim to provide a unified perspective on AO for imaging applications by highlighting commonalities, and differences where necessary, in experimentation between fields. We present results from astronomy, vision science and microscopy to illustrate the potential of AO for improving images in a range of imaging modalities and then discuss how the improvements afforded by AO facilitate and enable new scientific discoveries in diverse applications. For this purpose, we select exemplar results and applications and discuss them in varying levels of detail to reflect intrinsic properties of AO in different fields and modalities. We give information about external resources to ease the adoption of AO and support reproducibility. Finally, we consider current limitations of AO methods before we present an outlook on anticipated technology development.

Experimentation

Different application fields using AO for imaging have their particular technical requirements and AO implementations. However, there are many underpinning concepts that are common to all applications. In this section, we review the fundamental principles and methods of implementing AO and how and why this varies for different fields.

Generic AO system for imaging

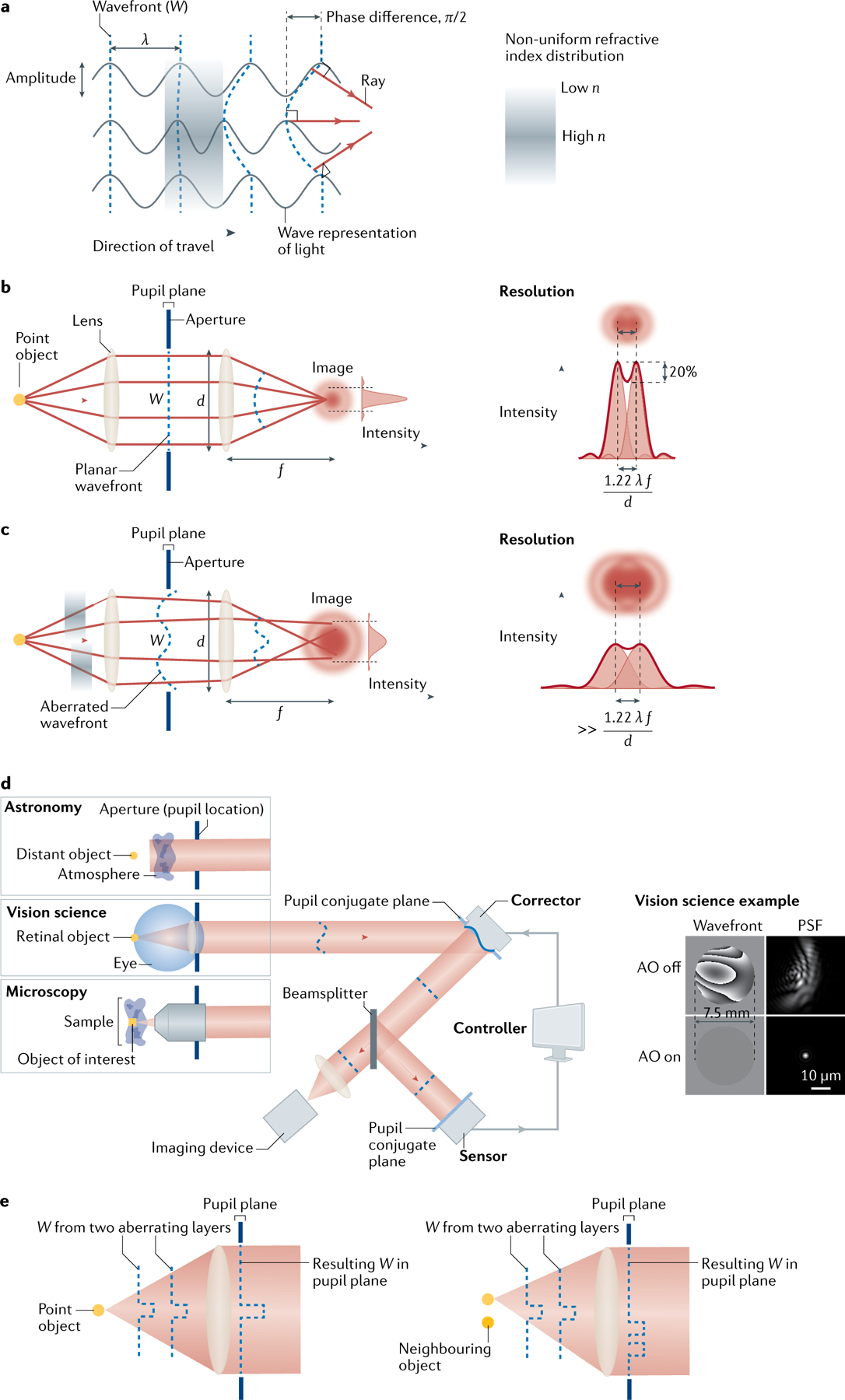

The image quality of an optical system is typically characterized by its point spread function (PSF). The PSF describes how the image of a point is blurred when light passes through the optical system. The degree of blurring depends upon the shape of the wavefront. FIGURE 1a illustrates the concept of a wavefront and its distortion. The propagation of light is represented conceptually by multiple adjacent waves. The shape of the wavefront, W, is found by joining up the peaks of each wave, which is where the light has the same phase. Note that the ray directions are locally orthogonal to the wavefront. Initially the wavefront is planar. When the light reaches an object that has a non-uniform refractive index distribution, the different waves travel at different speeds, causing them to be delayed with respect to each other. In the example shown, the central wave travels through a medium with a relatively high refractive index, which causes the light in that region to travel more slowly. The consequence of this is that the outgoing waves are now out of phase owing to the difference in optical path length. The PSF of an imaging system is directly related to the shape of the wavefront in the pupil plane of the system, where the limiting optical aperture is typically placed. Although the above example is two-dimensional for simplicity, it should be kept in mind that a wavefront is a surface in a three-dimensional space. For an aberration-free system, the wavefront is planar in the pupil plane as the light is typically collimated. This results in a spherical wave when the light is focusing to form the image. The resulting PSF is diffraction-limited, as shown in FIG. 1b. The diffraction-limited PSF is the smallest obtainable image of a point and sets the resolution limit of an imaging system (unless super-resolution techniques are employed, such as structured illumination)17.
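The link between the pupil-plane wavefront and the PSF can be made concrete numerically: in the Fraunhofer approximation the focal-plane field is the Fourier transform of the pupil field. To stay self-contained, the sketch below uses a one-dimensional (slit) pupil and a direct discrete Fourier transform in plain Python; a realistic model would use a two-dimensional circular pupil and an FFT library. The quadratic, defocus-like phase with a 2 rad edge value is an assumption chosen for illustration.

```python
import cmath
import math

def psf_1d(pupil_phase, pad_factor=4):
    """Intensity PSF of a 1-D pupil whose phase (radians) is given per sample.

    The pupil is zero-padded by pad_factor to sample the PSF more finely.
    Intensities are normalized so that an aberration-free pupil peaks at 1.0.
    """
    n = len(pupil_phase)
    m = n * pad_factor
    field = [cmath.exp(1j * phi) for phi in pupil_phase]
    psf = []
    for k in range(m):
        # Discrete Fourier transform; padded samples are zero, so sum over n only.
        value = sum(field[x] * cmath.exp(-2j * math.pi * k * x / m) for x in range(n))
        psf.append(abs(value) ** 2 / n ** 2)
    return psf

n = 32
flat = [0.0] * n
# Hypothetical defocus-like aberration: quadratic phase reaching 2 rad at the pupil edges.
defocus = [2.0 * (2 * x / (n - 1) - 1) ** 2 for x in range(n)]

psf_flat, psf_aberrated = psf_1d(flat), psf_1d(defocus)
print(f"peak intensity: {max(psf_flat):.3f} -> {max(psf_aberrated):.3f}")
print(f"total energy:   {sum(psf_flat):.3f} -> {sum(psf_aberrated):.3f}")
```

Both PSFs carry the same total energy (Parseval's theorem); the aberration only redistributes light away from the peak, which is why peak intensity, and hence signal and contrast, fall.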
According to the Rayleigh resolution criterion, for two point objects to be resolved, the intensity maximum of the PSF of one object must lie at or beyond the first minimum of the PSF of the other. The narrower the PSF, the closer two objects can be while still being resolved. This resolution, R, is set by the diffraction limit and given by

$$R = 1.22\,\frac{\lambda f}{d} \qquad (1)$$

where λ is the wavelength, f is the focal length and d is the pupil diameter.
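The Rayleigh limit R = 1.22λf/d is straightforward to evaluate. The sketch below also expresses it as an angle, R/f = 1.22λ/d, which is the form usually quoted for telescopes. The wavelength, focal length and aperture values are illustrative assumptions, not parameters of any particular instrument.

```python
import math

RAD_TO_ARCSEC = 180 / math.pi * 3600

def rayleigh_resolution(wavelength, focal_length, pupil_diameter):
    """Smallest resolvable separation in the image plane, R = 1.22 * λ * f / d.

    All arguments share the same length unit; the result is in that unit.
    """
    return 1.22 * wavelength * focal_length / pupil_diameter

def rayleigh_angle(wavelength, pupil_diameter):
    """Equivalent angular resolution R / f = 1.22 * λ / d, in radians."""
    return 1.22 * wavelength / pupil_diameter

# Illustrative values: 500 nm light, 10 mm focal length, 5 mm pupil (metres).
print(f"R = {rayleigh_resolution(500e-9, 10e-3, 5e-3) * 1e6:.2f} μm")
# Illustrative 8 m telescope aperture at 500 nm, in arcseconds.
print(f"angle = {rayleigh_angle(500e-9, 8.0) * RAD_TO_ARCSEC:.4f} arcsec")
```

Expressing the limit as an angle removes the dependence on focal length, which is convenient when comparing telescopes of different design.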

Fig. 1 |. The nature and effect of wavefront aberrations and how they are corrected.

a | The relationship between the wavefront and how it is affected by changes in optical path. The central light wave is slowed down relative to the outer light waves owing to it passing through a medium with a higher refractive index in that region. The result is the wavefront becoming distorted. b | In an aberration-free system, the wavefront is planar in the pupil plane and resolution is diffraction-limited. c | When aberrations are present, the pupil plane wavefront is distorted and resolution decreases. The thick red lines outlining the point spread functions (PSFs) represent the normalized sum of the individual PSFs. d | General adaptive optics (AO) system. The corrector shown here is a deformable mirror, but could in principle be another device. Wavefront aberrations at the eye’s pupil and corresponding PSFs at the retina for a typical eye with and without perfect AO. Wavefront maps are shown with a modulo-2π greyscale. e | How multiple aberration layers affect the pupil wavefront for different points in the imaged field.

Optical path length.

The length of the path followed by a light ray multiplied by the refractive index of the medium.

Pupil plane.

Aperture stop location.

Collimated.

All rays are parallel to each other.

Diffraction-limited.

There are no aberrations present in the focus. The minimum focal diameter is limited by diffraction owing to the wave nature of light.

Focal length.

The distance between a lens and where the rays meet the optical axis for incoming collimated light.

When aberrations are present, for example, owing to refractive index inhomogeneities in the medium through which the light must pass, as in the case of microscopy and astronomy, or to optical components having a non-ideal shape, as in the case of vision science, the wavefront is no longer described by a plane or spherical wave, as shown in FIG. 1c. Consequently, the maximum intensity of the PSF is reduced and the width of the PSF is increased, resulting in reduced image resolution and contrast, as shown by comparing the right-hand sides of FIG. 1b,c. The goal of AO is to introduce a distortion that is equal in magnitude but opposite in sign to that of the aberrated wavefront, to correct for aberrations and achieve diffraction-limited image quality with maximal signal. This is achieved by changing the optical path length, for example, using deformable mirrors. The magnitude of the wavefront distortion is typically specified in micrometres (μm). To achieve diffraction-limited resolution, which is equivalent to a Strehl ratio of 0.8 or above, AO must reduce the rms wavefront error to less than λ/14. Note that amplitude variations, which produce intensity variations (the amplitude corresponds to the height of the wave in FIG. 1a), are typically neglected. This is primarily because phase distortions have a more pronounced effect on the imaging properties. In addition, intensity correction with current devices would involve introducing losses. Similarly, distortions of the polarization state are also often neglected, although polarization control is gaining interest in microscopy, as it is important in some imaging modalities18,19.
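The λ/14 threshold and the Strehl ratio of 0.8 are commonly linked through the Maréchal approximation, S ≈ exp[−(2πσ/λ)²], where σ is the rms wavefront error. This approximation is not stated in the text above and only holds for small aberrations; the sketch below uses it to check that σ = λ/14 gives S ≈ 0.82, and to find the largest σ compatible with S = 0.8.

```python
import math

def strehl_marechal(rms_error, wavelength):
    """Extended Maréchal approximation S ≈ exp(-(2π σ / λ)^2), σ = rms wavefront error."""
    return math.exp(-(2 * math.pi * rms_error / wavelength) ** 2)

def max_rms_for_strehl(strehl, wavelength):
    """Largest rms wavefront error compatible with a target Strehl ratio (same approximation)."""
    return wavelength * math.sqrt(-math.log(strehl)) / (2 * math.pi)

wavelength = 0.5  # μm, illustrative
print(f"S at λ/14: {strehl_marechal(wavelength / 14, wavelength):.3f}")
print(f"rms allowed for S = 0.8: λ/{wavelength / max_rms_for_strehl(0.8, wavelength):.1f}")
```

Because σ enters as a ratio with λ, the same rms error in micrometres costs much more Strehl ratio at short wavelengths than at long ones.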

Strehl ratio.

The ratio of the intensity of the peak of the aberrated point spread function (PSF) to that of the diffraction-limited PSF.

A general AO imaging system is shown in FIG. 1d. It consists of three main components: a sensor to measure the aberrations, a corrector to compensate the aberrations and a controller that calculates, from the sensor measurements, the signals sent to the corrector. The corrector and sensor are typically conjugate to the pupil plane of the imaging system, which means that the pupil is imaged on to the corrector and sensor. In the case of microscopy, the pupil is the aperture of the objective lens that transfers light to and from the specimen. For vision science, it is the eye’s pupil and for astronomy, it is the telescope aperture. Conjugation of the corrector, sensor and system pupil is achieved via pairs of lenses or curved mirrors, called relay telescopes, that reimage each one on to the next20–22. AO systems are typically designed using commercial optical ray tracing software such as Zemax and constructed from a myriad of mostly high-end stock lenses and mirrors. In some AO systems, there is no dedicated wavefront sensor and the quality of the image is used to control the corrector. Although this method has some advantages, such as reduced system complexity, it is slow and unsuitable for situations in which the aberrations evolve rapidly. Consequently, this technique is mainly confined to microscopy. Note that the system shown in FIG. 1d is generic; each field uses its own imaging systems with field-specific AO implementations.
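The sensor, corrector and controller form a feedback loop. The sketch below models it with an integral (integrator) controller, the control law TABLE 1 lists as the most widely used. It assumes an ideal sensor that reports the residual modal coefficients directly (an identity interaction matrix), with no noise, no latency and a static aberration; real systems calibrate an interaction matrix and must contend with all of these. Under these assumptions the residual shrinks by a factor (1 − gain) per iteration.

```python
import math

def rms(coefficients):
    """rms of a wavefront given as normalized modal coefficients."""
    return math.sqrt(sum(c * c for c in coefficients))

def run_integral_loop(aberration, gain=0.5, iterations=20):
    """Closed-loop AO with an ideal sensor and an integral controller.

    Assumptions: the sensor reports the residual modal coefficients directly
    (identity interaction matrix), with no noise, no latency and a static aberration.
    """
    correction = [0.0] * len(aberration)
    history = []
    for _ in range(iterations):
        # Sensor: measure the residual wavefront after the corrector.
        residual = [a + c for a, c in zip(aberration, correction)]
        history.append(rms(residual))
        # Controller: integrate a fraction (gain) of the measurement into the command.
        correction = [c - gain * r for c, r in zip(correction, residual)]
    return correction, history

aberration = [0.30, -0.12, 0.05, 0.08]  # hypothetical modal coefficients, μm
correction, history = run_integral_loop(aberration)
print(f"rms: {history[0]:.3f} μm -> {history[-1]:.2e} μm")
```

A higher gain converges faster in this idealized model, but in practice loop delay and sensor noise limit how high the gain can be set.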

Across all fields, the aberrations that are induced originate from multiple layers. In astronomy the turbulence varies with altitude, while in the eye aberrations are introduced mostly by the surfaces of the crystalline lens and cornea. For microscopy, there can be a volume of inhomogeneous tissue in front of the object of interest. This means that the shape of the wavefront varies depending upon the location of the object of interest as shown in Fig. 1e. The most straightforward method for aberration compensation is to update a single corrector accordingly. Use of a single corrector is referred to as single-conjugate AO (SCAO) and is the most widely used implementation of AO. A less commonly employed method is to use multiple correctors, in which each corrector is conjugate to a different layer or depth. This is referred to as multiconjugate AO (MCAO)23–25. This adds significant cost and complexity to an AO system.

The area over which the aberrations can be considered invariant is referred to as the isoplanatic patch. Typical values for the isoplanatic patch for astronomy are 1.5 arcsec to 2 arcsec at a wavelength of 0.5 μm, varying with seeing and turbulence layer heights, and scaling with wavelength to the 6/5 power3,26. For vision science it is approximately 1°, or 300 μm at the retina27. In the case of microscopy, the patch size ranges from hundreds of microns, in mouse brain for example8,28,29, to a few microns, such as inside tissue with high curvature or complexity, for example, zebrafish larvae30 or Caenorhabditis elegans29,31. Although a single corrector is commonly placed in a pupil conjugate plane, it can be more advantageous to place a single corrector conjugate to the layer introducing the most significant aberrations. This can widen the field of view over which sharp images are obtained. This is referred to as ground-layer AO in astronomy and sample-conjugate AO in microscopy32.
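The λ^(6/5) scaling quoted above for astronomy means that the isoplanatic patch grows substantially at longer observing wavelengths. The sketch below applies it to the typical 1.5–2 arcsec range at 0.5 μm; the 2.2 μm target wavelength is an illustrative assumption, and the simple scaling ignores changes in seeing and turbulence layer heights.

```python
def isoplanatic_patch(theta_ref, wavelength_ref, wavelength):
    """Scale an isoplanatic angle from wavelength_ref to wavelength using the λ^(6/5) law."""
    return theta_ref * (wavelength / wavelength_ref) ** (6 / 5)

for theta_500nm in (1.5, 2.0):  # arcsec at 0.5 μm, the typical range quoted above
    theta_long = isoplanatic_patch(theta_500nm, 0.5, 2.2)  # 2.2 μm chosen for illustration
    print(f"{theta_500nm} arcsec at 0.5 μm -> {theta_long:.1f} arcsec at 2.2 μm")
```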

In all cases, the temporal dynamics of the perturbations must be considered, as they have implications for the way in which AO is implemented. Some perturbations are relatively stable, as is often the case in microscopy, whereas those due to dynamic processes in the eye can vary significantly, and those due to atmospheric turbulence evolve even more quickly. We note that in astronomy there is a distinction between active optics and AO. Active optics involves changing the shape of the telescope mirrors to account for environmental factors and to align and collimate the telescope; its role is not to correct aberrations due to turbulence, and it operates much more slowly than AO33. There is no such semantic distinction in vision science and microscopy.

Aberration characteristics

When implementing AO, it is important to consider the properties of the aberrations to be corrected. This includes what type of aberrations are present and how rapidly the wavefront changes shape over time. The shape of the wavefront, W, can be considered to consist of a sum of shapes or modes. It is common for these modes to be expressed in terms of Zernike polynomials34:

$$W(\rho, \theta) = \sum_{n,m} c_n^m \, Z_n^m(\rho, \theta) \qquad (2)$$

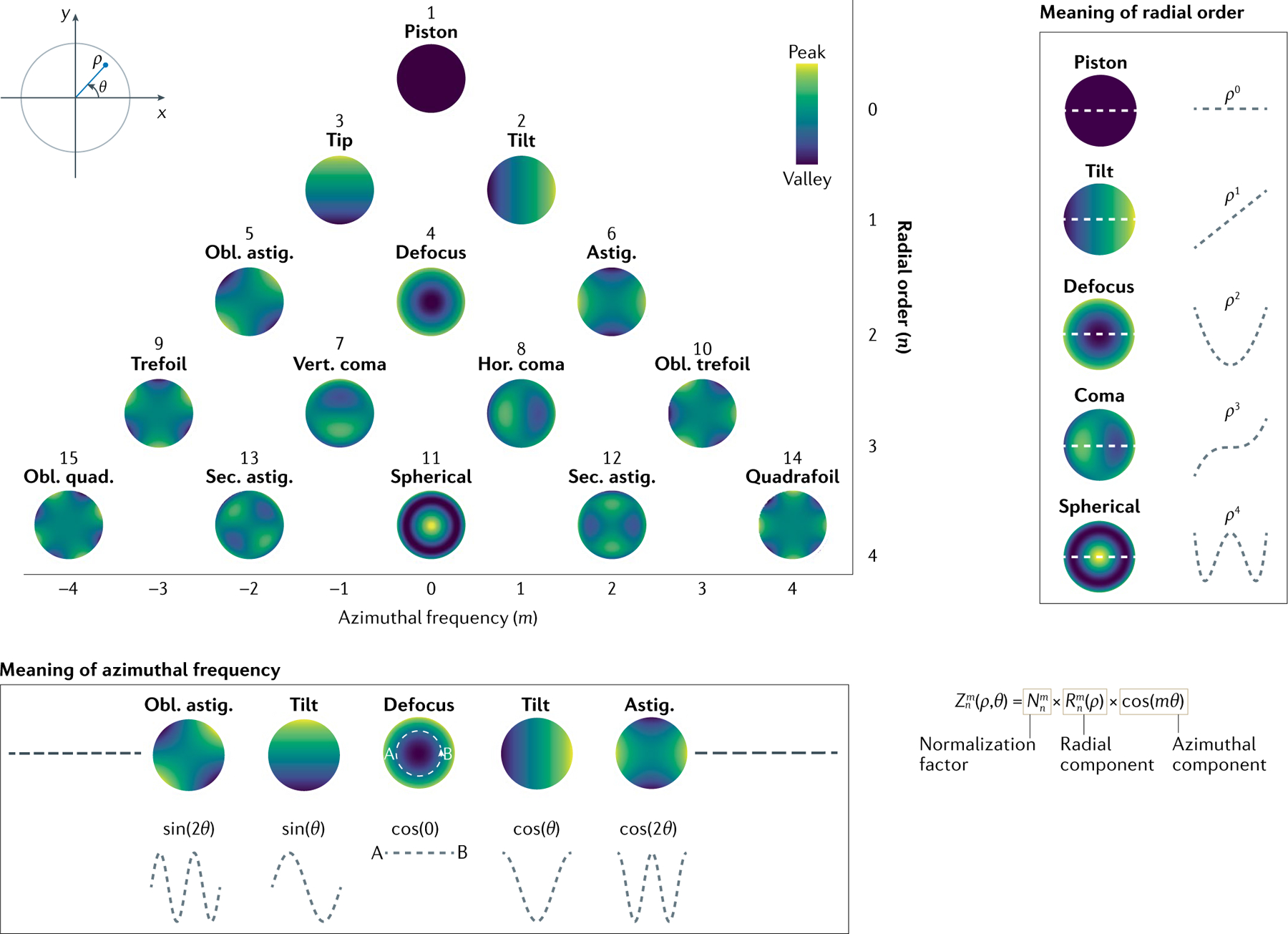

where ρ is the normalized pupil radius ranging from zero to one, θ is the angle around the optical axis, and $c_n^m$ is the coefficient (or magnitude) of the Zernike polynomial $Z_n^m$ with azimuthal frequency m and radial order n. We note that there are certain constraints on the allowable values of m and n: |m| ≤ n, and n − |m| must be even. Each polynomial describes the shape of the wavefront for a given aberration (or mode). Each of these modes is shown in FIG. 2. Several Zernike modes correspond closely to aberrations used in classical optics, such as astigmatism and coma. An advantage of Zernike polynomials is that they are orthogonal over the unit circle, a convenient mathematical property that permits us to consider them as having independent effects on the optical system. There are slight variations on the definition of Zernike polynomials, such as whether the angles are measured clockwise or anticlockwise. FIGURE 2 shows the Zernike aberrations as defined by the Noll convention35,36, which is typically used in astronomy and microscopy. In vision science, the OSA ANSI convention is commonly used because the way in which the angle is measured matches the way it is defined when determining a patient’s spectacle or contact lens prescription37. The piston mode, a constant phase offset shown at the top of FIG. 2, is not considered when correcting the wavefront, because it merely represents a translation of the wavefront along the optical axis and so does not affect the image. Tip and tilt represent a shift in the position of the image and so are not usually corrected for in microscopy with stationary samples. In vision science, even when the eye is fixated on a static object, miniature eye movements cause the retina to move rapidly. In scanning imaging systems for the eye, in which the image is built up line by line, this can cause image distortion. In astronomy, tip and tilt changes due to atmospheric turbulence also cause image blur that needs to be corrected.
Note that in the literature, aberration modes are often broadly categorized as being of lower order or higher order. The definition of lower order and higher order varies between fields. Higher order is typically defined as modes with a radial order above three for astronomy, above two for vision science and above four for microscopy.
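The Noll ordering and normalization can be made explicit in code. The sketch below maps a Noll index j to (n, m) and evaluates the normalized polynomial on the unit disk. The index mapping follows the Noll convention as it is commonly implemented (even j for cosine terms, odd j for sine terms); the function names are our own. Conventions differ between sources, for example in the direction of the angle, so it is worth checking against the convention in use (OSA ANSI in vision science) before exchanging coefficients.

```python
import math

def noll_to_nm(j):
    """Map a Noll index j (1 = piston) to (radial order n, azimuthal frequency m)."""
    if j < 1:
        raise ValueError("Noll indices start at 1")
    n = (math.isqrt(8 * (j - 1) + 1) - 1) // 2
    p = j - n * (n + 1) // 2          # position of j within radial order n (1-based)
    k = n % 2
    m = ((p + k) // 2) * 2 - k        # |m| grows with p and shares the parity of n
    if m != 0:
        m = m if j % 2 == 0 else -m   # even j -> cosine (m > 0), odd j -> sine (m < 0)
    return n, m

def radial(n, m_abs, rho):
    """Zernike radial polynomial R_n^|m|(rho)."""
    return sum(
        (-1) ** s * math.factorial(n - s)
        / (math.factorial(s)
           * math.factorial((n + m_abs) // 2 - s)
           * math.factorial((n - m_abs) // 2 - s))
        * rho ** (n - 2 * s)
        for s in range((n - m_abs) // 2 + 1)
    )

def zernike_noll(j, rho, theta):
    """Noll-normalized Zernike polynomial Z_j(rho, theta) on the unit disk."""
    n, m = noll_to_nm(j)
    if m == 0:
        return math.sqrt(n + 1) * radial(n, 0, rho)
    amplitude = math.sqrt(2 * (n + 1)) * radial(n, abs(m), rho)
    return amplitude * (math.cos(m * theta) if m > 0 else math.sin(-m * theta))

for j in range(1, 12):
    print(j, noll_to_nm(j))
```

With this normalization each polynomial has unit rms over the pupil, which is what makes the rms wavefront error a simple function of the coefficients.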

Fig. 2 |. Modal representation of aberrations using Zernike polynomials according to the Noll notation35.

The polynomials are organized according to their radial order n and azimuthal frequency m. The radial component describes how the polynomial varies with the radius ρ. For example, a mode with a radial order of two means that the polynomial describing the mode has a mathematical term where the highest power is two, that is, it has a ρ² term. The azimuthal frequency describes how the polynomial varies with angle θ. The positive numbers represent a cosinusoidal variation, with negative numbers representing sinusoidal variation. For example, a value of 2 means that the polynomial varies with cos(2θ). Astig., astigmatism; Hor., horizontal; Obl., oblique; Sec., secondary; Vert., vertical. Adapted with permission from REF.36, Zenodo. CC BY-NC-ND 4.0.

Noll convention.

Mathematical description of aberrated wavefront shapes as proposed by Noll.

A summary of the properties of the aberrations for each field is shown in TABLE 1. In astronomy and vision science, there are statistical models of the aberrations38–40. These mathematical models generate the sort of aberrations that are likely to occur based on experimental parameters such as the pupil diameter of the eye or the telescope41. In astronomy, the number of Zernike modes corrected varies from facility to facility and depends on the type of AO implementation, but ranges from the order of one hundred to several thousand, equivalent to radial orders from 15 to ~70. The higher the radial order, the lower the magnitude of the aberration35. The wavefront error is typically 1–3 μm root mean square (rms). The dominant source of temporal evolution is wind-driven motion of the atmosphere42. Depending on telescope diameter and wind speed, aberrations can vary with frequencies up to 100 Hz (REF.43). The variation in aberration magnitude with temporal frequency follows a power law with an exponent of −17/3 (REFS44,45). In vision science, the aberrations increase with pupil size and, typically, the higher the Zernike radial order, the lower the magnitude of the aberration46. The pupil of the eye is often dilated when imaging the retina to increase resolution. For a 7.5 mm pupil, achieving diffraction-limited imaging in 95% of the population requires correction of the Zernike radial orders from two to ten40. The peak-to-valley of the wavefront is around 11 μm, but is much higher if the individual requires a spectacle prescription (radial order two). These aberrations also vary temporally, most likely owing to dynamics in the optics of the eye caused by the heartbeat, tear film instabilities and movement of the eye. The power law exponent of these fluctuations is about −1.3 (REFS47,48). Today’s ophthalmic AO systems are generally designed to handle dynamic aberrations up to a couple of hertz, but a recent study that included many more individuals suggests that correcting fluctuations at higher frequencies may be necessary to achieve diffraction-limited performance in most eyes49.
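Radial order n contains n + 1 Zernike modes, so the number of modes up to and including order n is (n + 1)(n + 2)/2. This simple count connects the mode numbers and radial orders quoted above: radial orders up to 15 and 70 correspond to 136 and 2,556 modes, and correcting orders two to ten in the eye involves 63 modes. The helper names below are our own.

```python
def modes_through_order(n_max):
    """Number of Zernike modes with radial order 0..n_max, piston included."""
    return (n_max + 1) * (n_max + 2) // 2

def modes_in_orders(n_min, n_max):
    """Number of Zernike modes with radial order from n_min to n_max inclusive."""
    return modes_through_order(n_max) - modes_through_order(n_min - 1)

print(modes_through_order(15), modes_through_order(70))  # astronomy range
print(modes_in_orders(2, 10))                             # eye, orders 2 to 10
```

Because the count grows quadratically with radial order, each additional order corrected demands disproportionately more corrector actuators and sensor subapertures.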

Table 1 |. Comparison of aberration characteristics and their correction across fields

| Field | Aberrations | Sensors | Correctors | Control |
|---|---|---|---|---|
| Astronomy | Main source is wind-driven motion of the atmosphere; wavefront variance decays with Zernike radial order following a power law with exponent −11/3; temporal PSD varies with f^(−17/3); wavefront error is 1–3 μm rms; isoplanatic patch size is 1.5–2 arcsec at 500 nm; correcting between 15 and 70 Zernike radial orders is typical | SH sensor most widely used; SH lenslets to actuators ratio ~1:1; pyramid sensors increasingly used | Continuous surface DMs most widely used; typical number of DM actuators: 100s–1,000s | Closed-loop systems by far the most common; closed-loop bandwidth of 50 Hz is typical; integral controller is most widely used |
| Vision science | Main source is optical imperfections in the crystalline lens and cornea; wavefront variance decays exponentially with Zernike radial order in the range −1.1 to −1.8; temporal PSD varies with f^(−1.3) to f^(−1.5); peak-to-valley wavefront is 7–11 μm for a dilated pupil with no refractive error; isoplanatic patch size is about 300 μm; correcting 10 Zernike radial orders is typical for a dilated pupil | SH sensor most widely used; SH lenslets to actuators ratio 3:1–6:1 | Continuous surface DMs most widely used; typical number of DM actuators <100 | Closed-loop systems by far the most common; closed-loop bandwidth of <2 Hz is typical; integral controller is most widely used |
| Microscopy | Main source is shape and refractive index inhomogeneity of cells and tissue; aberration magnitudes vary with sample; aberrations are mostly temporally static; peak-to-valley wavefront varies from submicrometre to several microns; isoplanatic patch size is several to 100s of microns; correcting between 7 and 11 Zernike radial orders is typical | Indirect sensing and SH are both common; SH lenslets to actuators ratio ~1:1; indirect sensing employs both modal and zonal aberration representation | Continuous DMs most widely used; LCSLMs used in laser illumination paths; segmented DMs used for high-order scattering compensation; typical number of DM actuators <100; typical number of SLM pixels 512 × 512 | Open- and closed-loop systems are both common; as aberrations are mostly static, closed-loop dynamics are determined by imaging speed |

DM, deformable mirror; f, frequency; LCSLM, liquid crystal spatial light modulator; PSD, power spectral density; rms, root mean square; SH, Shack–Hartmann.

Although the aberration characteristics of some microscopy samples have been shown to be similar to those in astronomy with regard to the decrease in magnitude of the Zernike aberrations with increasing radial order50, there are no comprehensive statistical models for microscopy owing to the vast range of specimen types for which AO might be used. Aberrations of samples with flat geometries and a homogeneous refractive index distribution are dominated by Zernike modes up to and including the fourth radial order, whereas samples with more complex surface shapes and/or heterogeneous refractive index distributions require correction of many aberration modes beyond these. Similarly, the magnitudes of aberrations in microscopy depend on the sample, and can vary from submicrometre to several microns. Even for microscopy of live specimens, sample-induced aberrations usually do not vary rapidly over time. Therefore, AO measurement and correction do not have to be carried out at high speed.

A useful descriptor of the severity of the aberrations in a system is the rms wavefront error. For normalized Zernike polynomials, as in the Noll and OSA ANSI conventions, this is given by the square root of the sum of the squared Zernike coefficients, excluding piston. An aim of AO is to reduce this value to less than λ/14 to obtain diffraction-limited resolution. Although Zernike modes are commonly used, they are not the only, nor necessarily the best, representation for a particular application. Other analytically or empirically defined mode sets that form a more efficient basis than Zernike modes can also be used; for vision science, see, for example, REFS40,51. Furthermore, when operating an AO system, it is often convenient to think of the wavefront as consisting of discrete non-overlapping zones, or segments, as opposed to a sum of superimposed modes. The choice of modes or zones often depends on the implementation of sensing and correction, which is discussed further in the relevant sections.
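A minimal sketch of the rms calculation from normalized Zernike coefficients, followed by a comparison against the λ/14 target. The coefficient values and the 0.85 μm wavelength are hypothetical, chosen only to show the arithmetic.

```python
import math

def rms_wavefront_error(coefficients):
    """rms wavefront error from normalized Zernike coefficients {Noll index: value}.

    Piston (j = 1) is excluded because it does not affect the image.
    """
    return math.sqrt(sum(c * c for j, c in coefficients.items() if j != 1))

# Hypothetical measurement: coefficients in μm, keyed by Noll index.
coefficients = {1: 0.50, 4: 0.10, 5: 0.03, 6: -0.02, 11: 0.015}
wavelength = 0.85  # μm, assumed imaging wavelength

sigma = rms_wavefront_error(coefficients)
target = wavelength / 14
print(f"rms = {sigma:.3f} μm, target < {target:.3f} μm, diffraction-limited: {sigma < target}")
```

Here the large piston term contributes nothing, while the 0.10 μm defocus term alone already exceeds the target, so defocus would be the first mode to correct.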

Aberration measurement

Aberrations can be measured most directly using a dedicated wavefront sensor, or they can be determined indirectly from the images. We refer to these two methods as direct sensing and indirect sensing, respectively. The indirect method is often referred to as sensorless AO as there is no dedicated wavefront sensor. Indirect methods are significantly slower than sensor-based methods at determining the magnitude of the aberrations present and the required correction. This is because they typically require collection of many images. Depending on the imaging speed, indirect methods can take seconds to minutes to determine the required correction in comparison with milliseconds for a dedicated wavefront sensor. As the aberrations in microscopy are mostly static, indirect methods are more suited to this field. Indirect methods have also been used to some extent in vision science — see for example, REF.52 — owing to the advantage of requiring no extra sensing hardware. However, for astronomy, in which the aberrations due to atmospheric turbulence evolve at high rates, the slow speed of indirect sensing would present a significant problem. A summary of the typical sensors used for each field and their properties is shown in TABLE 1.
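To see why indirect sensing needs many images, consider one common modal strategy: for each mode, record images with a small negative, zero and positive bias of that mode, then fit a parabola to an image-quality metric to estimate the best correction. The sketch below assumes a hypothetical metric that is exactly quadratic in the residual modal coefficients (a small-aberration idealization; real metrics such as image brightness or sharpness are only approximately so) and orthogonal modes. It uses three images per mode.

```python
def image_metric(residual):
    """Hypothetical image-quality metric, quadratic near its maximum (1.0 when corrected)."""
    return 1.0 - sum(r * r for r in residual)

def sensorless_correct(measure, n_modes, bias=0.5):
    """Estimate a modal correction from three biased images per mode.

    measure(correction) returns the metric of an image taken with that correction.
    Returns the estimated correction and the number of images used.
    """
    correction = [0.0] * n_modes
    images = 0
    for mode in range(n_modes):
        samples = []
        for b in (-bias, 0.0, bias):
            trial = list(correction)
            trial[mode] += b
            samples.append(measure(trial))
            images += 1
        m_minus, m_zero, m_plus = samples
        curvature = 2 * m_zero - m_plus - m_minus
        if curvature > 0:  # only update when the fitted parabola has a maximum
            # Vertex of the parabola through (-b, m_minus), (0, m_zero), (b, m_plus).
            correction[mode] += bias * (m_plus - m_minus) / (2 * curvature)
    return correction, images

aberration = [0.30, -0.20, 0.10]  # hypothetical modal coefficients

def measure(correction):
    """Simulated image acquisition: metric of the residual aberration."""
    return image_metric([a + c for a, c in zip(aberration, correction)])

correction, images = sensorless_correct(measure, len(aberration))
print([round(c, 3) for c in correction], images)
```

Even in this idealized case the number of images scales with the number of modes corrected, and each image takes as long as the imaging system needs to acquire it, which is why indirect methods are slow compared with a dedicated sensor.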

Direct sensing.

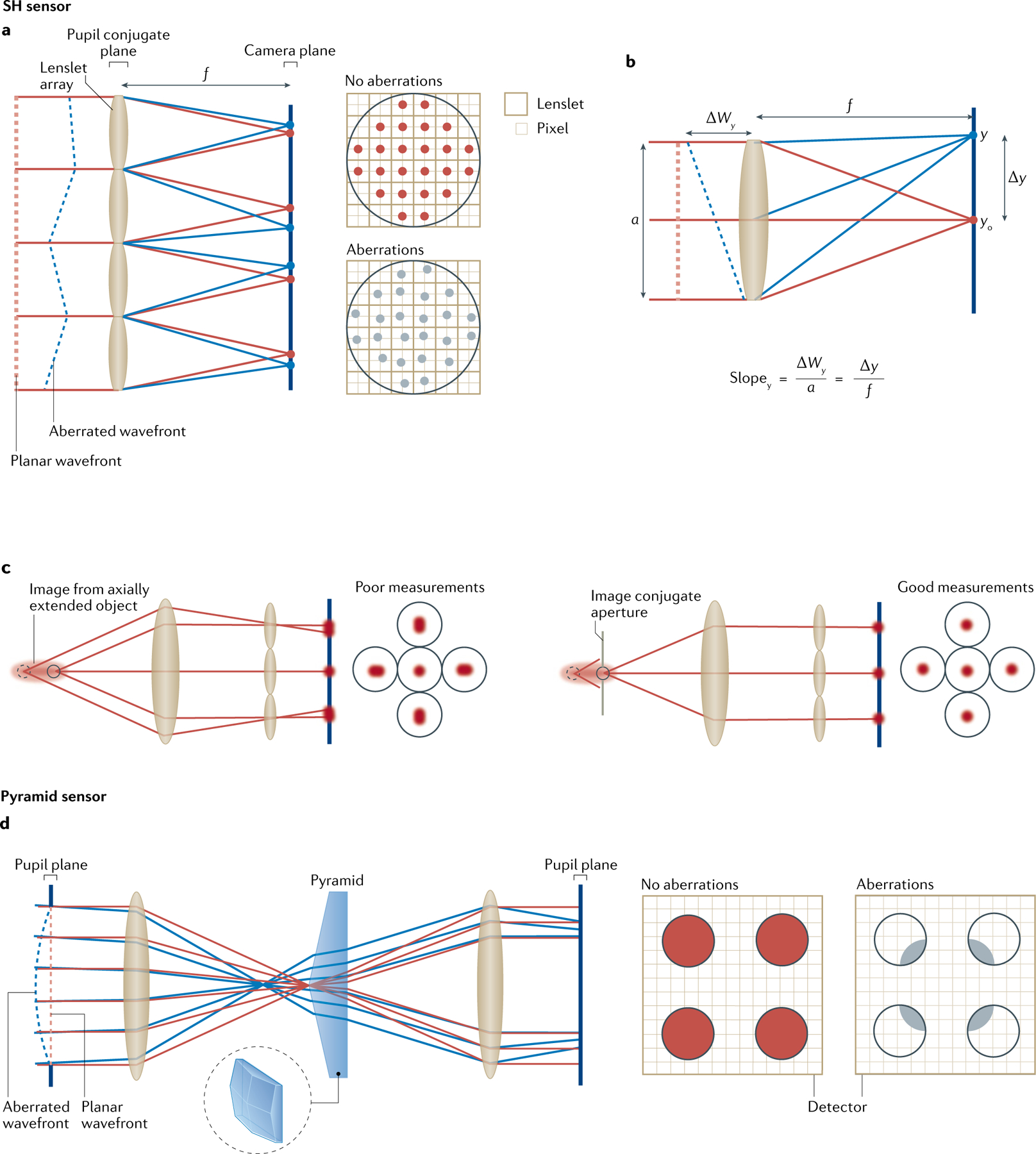

The most widely used sensor to measure the aberrations across all fields in an AO system is the Shack–Hartmann (SH) sensor, as it can be quick, simple and effective16. The principle of operation is shown in FIG. 3a. It consists of an array of lenslets placed in a pupil conjugate plane and a camera at the focal plane of the lenslets53. For an aberration-free wavefront, a regular and evenly spaced array of spots is formed on the camera. For an aberrated wavefront, the location of each spot is shifted according to the local tilt (or slope) of the wavefront across that given lenslet as shown in FIG. 3b. Typically, a minimum of four camera pixels per spot is required to accurately determine the location of a spot. There are various algorithms for determining spot locations, with the centre of mass being one of the most frequently used54. Finding the centre of the spot using the centre of mass is often referred to as centroiding.
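A minimal centre-of-mass centroiding step for a single lenslet is sketched below. The spot image, pixel size, focal length and lenslet diameter are hypothetical, and practical implementations usually add background subtraction or thresholding, which are omitted here. The local slope is the spot shift divided by the lenslet focal length; multiplying it by the lenslet diameter gives the wavefront difference across the lenslet.

```python
def centroid(sub_image):
    """Centre of mass (x, y) of one subaperture image, in pixel units."""
    total = sum(sum(row) for row in sub_image)
    if total <= 0:
        raise ValueError("no light in this subaperture")
    x = sum(value * ix for row in sub_image for ix, value in enumerate(row)) / total
    y = sum(value * iy for iy, row in enumerate(sub_image) for value in row) / total
    return x, y

def local_slope(sub_image, reference, pixel_size, focal_length):
    """Local wavefront slopes (radians): spot shift divided by lenslet focal length."""
    cx, cy = centroid(sub_image)
    shift_x = (cx - reference[0]) * pixel_size
    shift_y = (cy - reference[1]) * pixel_size
    return shift_x / focal_length, shift_y / focal_length

# Hypothetical 4 x 4 pixel subaperture with the spot displaced to the right.
spot = [
    [0, 0, 0, 0],
    [0, 1, 3, 0],
    [0, 1, 3, 0],
    [0, 0, 0, 0],
]
reference = (1.5, 1.5)  # aberration-free spot position (subaperture centre)
slope_x, slope_y = local_slope(spot, reference, pixel_size=10e-6, focal_length=5e-3)
lenslet_diameter = 200e-6  # metres, hypothetical
print(f"slope_x = {slope_x:.1e} rad, ΔW_x = {slope_x * lenslet_diameter * 1e9:.0f} nm")
```

The reference positions are normally recorded with an aberration-free (or calibration) wavefront rather than assumed to be the geometric subaperture centres.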

Fig. 3 |. Principles of the Shack–Hartmann wavefront sensor and pyramid wavefront sensor.

a | The Shack–Hartmann (SH) sensor consists of a lenslet array conjugate to the pupil plane and a camera placed at the focal plane of the array. For an aberration-free wavefront, a regularly spaced array of spots is formed on the camera. In the example shown there are four camera pixels behind each lenslet. b | Aberrations shift each spot according to the local wavefront slope, $\mathrm{slope}_y$ (and $\mathrm{slope}_x$), across each lenslet. The slope is given by the shift in the spot, Δy (and Δx), divided by the focal length, f, and the wavefront difference, $\Delta W_y$ (and $\Delta W_x$), across a lenslet of diameter a is this slope multiplied by a. c | To obtain the slope using conventional SH algorithms, the light returning from the object must be point-like, that is, confined axially and laterally. Otherwise, the SH spots will be elongated, which can adversely affect the algorithm used to determine their precise location. For light that is axially elongated, an image conjugate aperture can be used to alleviate these problems by reducing the amount of out-of-focus light reaching the sensor. d | Pyramid wavefront sensor. A four-faceted prism is placed at the focal plane and forms four images of the pupil. For an aberration-free or planar wavefront, four pupil images with identical intensity distributions are formed on the detector. Aberrations result in changes to the intensity distribution of each pupil image. An example for defocus is shown.

Lenslets.

Miniature lenses usually as part of an array.

Note that the traditional implementation of the SH sensor requires light returning from a point source to inform the sensor measurements. In microscopy, this could be light originating from a point object, for example, a fluorescent bead. In vision science, the retina is typically illuminated with a point of light from an infrared (IR) light source. If the light informing the SH sensor is not confined axially and laterally, the SH spots become elongated. FIGURE 3c shows an example of spot elongation caused by an axially extended object, where light returns from multiple depths. This effect is more pronounced at higher numerical apertures and therefore occurs more in microscopy than in vision science55,56. When elongated spots are present, either an image conjugate aperture can be used (as shown in FIG. 3c57) or more advanced algorithms must be implemented. Spot elongation is also inherent in astronomy when laser guide stars (LGSs) provide the light for wavefront sensing.

Some astronomical AO systems implement image-based SH wavefront sensing58. In this approach, an image of the object of interest, such as the surface of the Sun, forms behind each lenslet, and the shift of each of these images determines the local slope of the wavefront. An advantage of this technique is that the SH needs no additional light source. This technique is starting to be adopted in microscopy59 but has yet to be used in vision science.

In astronomy, multiple wavefront sensors can be used to reconstruct the turbulence from measurements of multiple guide stars, even if only a single corrector (SCAO) is to be used. This technique, called tomographic AO60, is used to calculate the shape of the corrector that gives aberration-free imaging of an object of interest within the field of view. Multiple guide stars are necessary because light from a single guide star can take a very different path through the turbulence from the light of the object. Consequently, the aberrations measured with a single guide star may not be appropriate for correcting the object.
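The slope measurement in FIG. 3b can be illustrated with a short centroiding routine. The Python sketch below makes several simplifying assumptions:

- a square lenslet grid that maps onto equal blocks of camera pixels;
- reference (aberration-free) spot positions at the block centres;
- a plain centre-of-mass estimator, with no background subtraction or thresholding.

The function name `sh_slopes` and all numbers are illustrative. Elongated spots of the kind described above would need the more advanced algorithms mentioned in the text.

```python
import numpy as np


def sh_slopes(image, n_sub, pixel_size, focal_length):
    """Estimate local wavefront slopes (rad) from a Shack-Hartmann image.

    Assumes an n_sub x n_sub lenslet grid mapped onto equal square pixel
    blocks, with each reference spot at the centre of its block.
    """
    npix = image.shape[0] // n_sub                  # camera pixels per lenslet side
    coords = np.arange(npix) - (npix - 1) / 2.0     # pixel offsets from block centre
    slope_x = np.full((n_sub, n_sub), np.nan)
    slope_y = np.full((n_sub, n_sub), np.nan)
    for i in range(n_sub):                          # lenslet row (y direction)
        for j in range(n_sub):                      # lenslet column (x direction)
            block = image[i * npix:(i + 1) * npix, j * npix:(j + 1) * npix]
            total = block.sum()
            if total <= 0:
                continue                            # no light: slope left undefined
            dx = (block.sum(axis=0) * coords).sum() / total  # centroid shift (pixels)
            dy = (block.sum(axis=1) * coords).sum() / total
            # Small-angle approximation: slope = spot shift / focal length.
            slope_x[i, j] = dx * pixel_size / focal_length
            slope_y[i, j] = dy * pixel_size / focal_length
    return slope_x, slope_y


# Synthetic frame: every spot displaced by +2 pixels in x and -1 pixel in y.
n_sub, npix, sigma = 4, 16, 1.5
yy, xx = np.mgrid[0:npix, 0:npix]
c = (npix - 1) / 2.0
spot = np.exp(-((xx - c - 2.0) ** 2 + (yy - c + 1.0) ** 2) / (2 * sigma ** 2))
image = np.tile(spot, (n_sub, n_sub))
sx, sy = sh_slopes(image, n_sub, pixel_size=5e-6, focal_length=5e-3)
print(sx[0, 0], sy[0, 0])  # approximately 2e-3 and -1e-3 rad
```

In practice, reference positions are typically recorded with a flat wavefront rather than assumed, and windowing or thresholding is used to limit the effect of noise on the centroids.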

When using a deformable mirror as the corrector, astronomical and microscopy AO systems typically use a ratio of the total number of lenslets to the total number of actuators of around one, owing to limited light9,16. For vision science AO systems, where considerably more light is available for wavefront sensing, a minimum ratio of about two has been reported as optimal61, but typical ratios in actual systems are between three and six. Once the ratio has been set, the lenslet focal length should be as long as possible without the SH spots crossing into the regions behind neighbouring lenslets. A longer focal length produces a larger spot shift for a given wavefront slope and hence higher sensitivity. However, it also reduces the largest slope that can be measured before a spot crosses into a neighbouring region, which reduces the dynamic range. Consequently, there is a trade-off between sensitivity and dynamic range.
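The trade-off can be expressed as a rough sizing rule. This sketch is a simplification and not a published design procedure. It rests on three assumptions:

- The spot centre moves by f·θ for a local slope θ.
- The spot half-width is taken as the Airy radius, 1.22λf/a, for a lenslet of pitch a.
- The whole spot must stay inside its own half-pitch for the largest expected slope, θmax.

Under these assumptions the longest allowed focal length is f = (a/2)/(θmax + 1.22λ/a). The function names are hypothetical.

```python
def max_focal_length(pitch, wavelength, max_slope):
    """Longest lenslet focal length (m) that keeps the spot inside its subaperture.

    Hypothetical sizing rule: spot-centre shift (f * max_slope) plus the Airy
    radius (1.22 * wavelength * f / pitch) must fit within half a pitch.
    """
    return (pitch / 2.0) / (max_slope + 1.22 * wavelength / pitch)


def spot_shift(focal_length, slope):
    """Spot displacement (m) on the camera for a local wavefront slope (rad)."""
    return focal_length * slope


f_max = max_focal_length(pitch=150e-6, wavelength=800e-9, max_slope=0.01)
print(f"{f_max * 1e3:.2f} mm")                     # about 4.54 mm
print(f"{spot_shift(f_max, 1e-3) * 1e6:.2f} um")   # sensitivity: shift for a 1 mrad slope
```

Doubling the required dynamic range (`max_slope`) shortens the allowed focal length. That lowers the spot shift per unit slope, which is the sensitivity versus dynamic range trade-off described above.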

Actuators.

Elements that deform the mirror.

Dynamic range.

The range between the smallest and largest measurable values.

Although the SH is the most widely used sensor, there are several alternatives16. For instance, curvature and pyramid sensors are used in astronomy, with curvature sensors being phased out and pyramid sensors being increasingly used. FIGURE 3d shows the principle of operation of the pyramid wavefront sensor, which uses a different principle to encode phase variations into intensity measurements62. The tip of a four-faceted prism is placed at the image plane and the prism forms four images of the pupil. The aberrations present are determined from the relative intensity distributions of the pupil images. For a planar, that is, aberration-free, wavefront, each pupil image is illuminated identically. When an aberration such as defocus is present, the light is refracted by the prism such that each pupil image is illuminated differently. An advantage of this sensor over the SH is that its dynamic range and sensitivity can be adjusted independently63, and a wider range of aberration magnitudes, from low to high, can be measured accurately. The pyramid sensor has been demonstrated across fields63–65 but is not yet used as extensively as the SH sensor, perhaps because it was introduced to AO later. In the future, there may be other direct-sensing alternatives to the SH sensor, such as diffusive plates, for which the local wavefront slope is determined from the local shift in the resulting speckle pattern66. Another approach is to use machine learning to improve sensor performance under heavy scattering or scintillation, or when sensor spots are distorted or obscured66,67.
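The way the four pupil images encode the aberration can be sketched by forming normalized difference signals. The assumptions are:

- the quadrant labelling (A top-left, B top-right, C bottom-left, D bottom-right);
- the sign convention;
- per-pixel normalization.

Some systems normalize by the mean pupil intensity instead, and the function name is illustrative. Within the sensor's linear range, these signals are approximately proportional to the local wavefront slopes and can be passed to a reconstructor in the same way as SH slopes.

```python
import numpy as np


def pyramid_signals(pupils):
    """Normalized pyramid wavefront sensor signals from four pupil images.

    `pupils` maps 'A' (top-left), 'B' (top-right), 'C' (bottom-left) and
    'D' (bottom-right) to 2D arrays of equal shape. Labelling and signs are
    assumptions; a real system fixes them during calibration.
    """
    a, b, c, d = (np.asarray(pupils[k], dtype=float) for k in "ABCD")
    total = a + b + c + d
    with np.errstate(invalid="ignore", divide="ignore"):
        sx = ((a + c) - (b + d)) / total   # left-right intensity imbalance
        sy = ((a + b) - (c + d)) / total   # top-bottom intensity imbalance
    return sx, sy


flat = np.ones((8, 8))
sx, sy = pyramid_signals({"A": flat, "B": flat, "C": flat, "D": flat})
print(sx.max(), sy.max())  # identical pupils (planar wavefront): zero signals
```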

Indirect sensing.

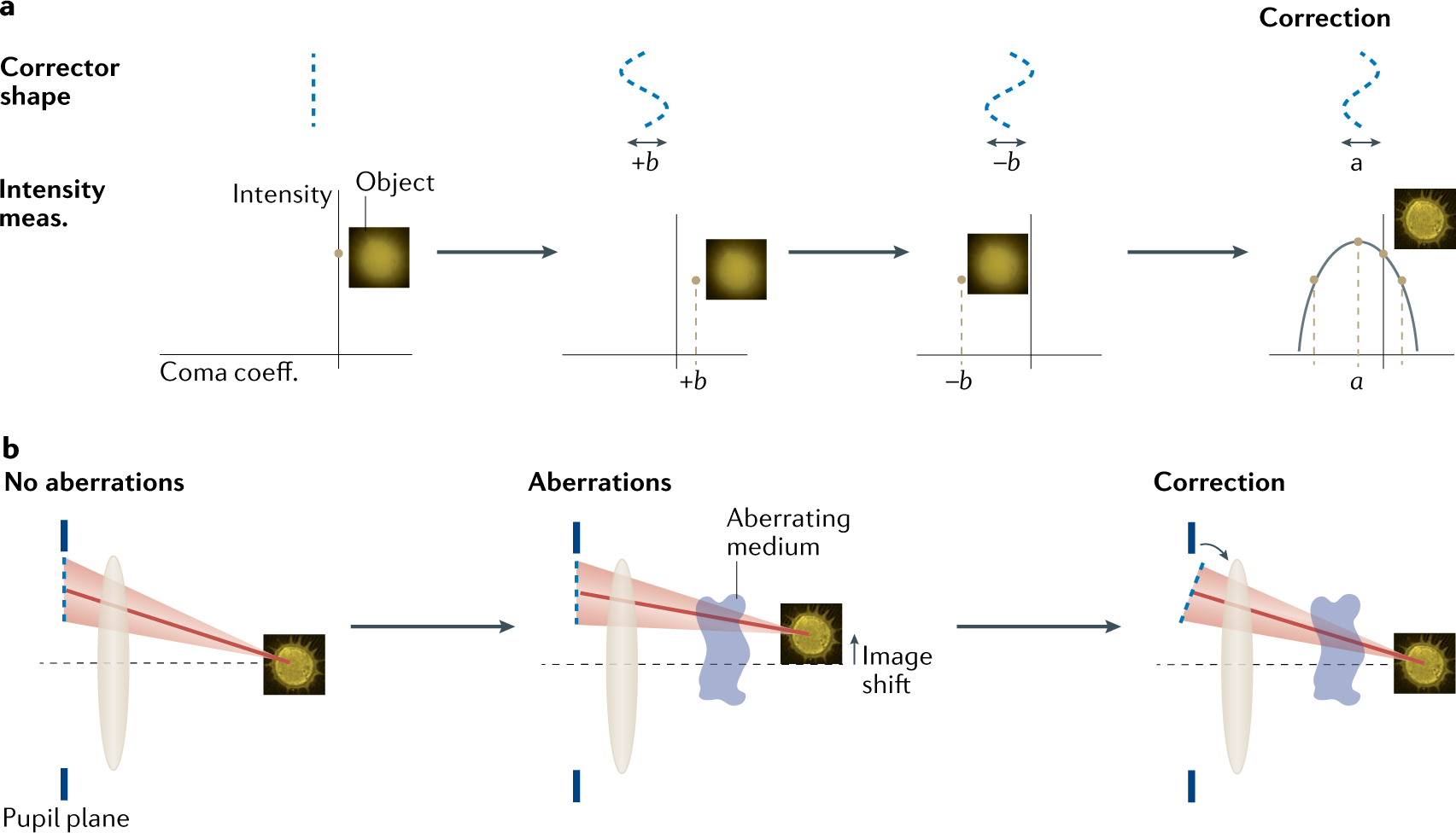

Two main types of indirect sensing are modal and zonal. In the modal case, a continuous surface wavefront corrector is used, and the wavefront is represented as a sum of aberration modes, as illustrated in FIG. 2. Individual aberration modes, such as Zernike modes, are applied sequentially to the wavefront corrector. The resulting changes in the image are quantified using an image quality metric such as intensity or image sharpness1. FIGURE 4a shows a simple example in which coma is present. Different magnitudes of coma are applied with the corrector and the intensity in the image is measured. A parabolic curve fitted to these data points then gives the amount of coma that must be introduced to obtain a clear image. Other modes can be corrected in a similar way. Recent work has shown that wavelet decomposition of images provides a versatile way to define optimization metrics for indirect sensing68. Several algorithms have been used to determine the correction in modal schemes5,69.
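The three-point parabolic fit of FIG. 4a has a closed form. Fitting I(x) = c0 + c1x + c2x² through the metric values at −b, 0 and +b puts the maximum at x* = −b(I+ − I−)/[2(I+ + I− − 2I0)]. The sketch below applies this to each mode in turn. `measure_metric` is a hypothetical stand-in for "apply these mode amplitudes and compute the image metric". The simulated metric is exactly quadratic, whereas real metrics are only approximately parabolic near their peak.

```python
import numpy as np


def parabolic_optimum(b, metric_minus, metric_zero, metric_plus):
    """Mode amplitude that maximizes a parabola through three metric values.

    The metric is measured with the corrector applying -b, 0 and +b of one
    mode. Returns None if the points do not describe a maximum.
    """
    curvature = metric_plus + metric_minus - 2.0 * metric_zero  # 2*b^2 times the x^2 coefficient
    if curvature >= 0:
        return None
    return -b * (metric_plus - metric_minus) / (2.0 * curvature)


def correct_modes(measure_metric, n_modes, b):
    """Optimize each mode in turn; measure_metric(coeffs) is a hypothetical callable."""
    coeffs = np.zeros(n_modes)
    for m in range(n_modes):
        values = []
        for delta in (-b, 0.0, b):
            trial = coeffs.copy()
            trial[m] += delta
            values.append(measure_metric(trial))
        step = parabolic_optimum(b, *values)
        if step is not None:
            coeffs[m] += step
    return coeffs


# Simulated metric that peaks when the applied modes cancel the aberration.
target = np.array([0.3, -0.2, 0.5])


def metric(coeffs):
    return 10.0 - np.sum((coeffs - target) ** 2)


print(correct_modes(metric, n_modes=3, b=1.0))  # close to target
```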

Fig. 4 |. Indirect sensing schemes.

a | In modal adaptive optics (AO) schemes, different modes, which are equivalent to different shapes, are sequentially applied to the corrector. If an aberration such as the coma shown here is present, the maximum intensity occurs when the coma applied by the corrector has a magnitude equal but opposite to that introduced by the aberrating medium. The required correction can be determined from a parabolic fit to three intensity measurements: one with the corrector introducing a plane wavefront, and one each with the corrector introducing coma of a chosen magnitude −b or +b. b | In zonal schemes, each zone is modulated. An example of the zone-based pupil segmentation method is shown, in which the required tip and tilt of each segment is determined from shifts in the image. The object is a pollen grain. For simplicity, only a single zone is shown. The blur in the full image arises because the aberrations shift the image by different amounts for different zones. coeff., coefficient; meas., measurement.

Wavelet.

A mathematical function basis that is confined in both space and frequency.

In the zonal case, the wavefront is not treated as a sum of aberration modes across the whole pupil. Instead, it is considered to consist of discrete non-overlapping zones. This method is often used with segmented correctors. FIGURE 4b shows an example of the pupil segmentation zonal method. Here, the tilt required for each zone is determined from shifts in the image, so that the rays from all zones meet at the focus. To ensure that the rays arriving at the focus are also in phase, the piston (equivalent to the forwards–backwards position) of each segment is determined from intensity measurements with differing amounts of piston applied70,71. Note that some wavefront correctors, such as liquid crystal spatial light modulators (LCSLMs), can only perform piston modulation. They achieve this by changing the refractive index of their pixels rather than by moving mirrored segments back and forth. The required piston for every mirrored segment or pixel can also be determined simultaneously. To do so, the piston of each is modulated at a different frequency, and the corresponding frequency components of the measured intensity signal are used29. Note that indirect sensing has also been used in vision science with various modal-based algorithms52.
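The frequency-multiplexing idea can be shown with an idealized model. This is a sketch, not the implementation of ref. 29. It assumes the following:

- A strong, unmodulated reference field interferes with weak segment fields.
- Each segment receives a linear piston ramp at its own integer frequency.
- The frequencies are chosen so that no modulation frequency coincides with a difference frequency between segments.

With NumPy's DFT sign convention, the argument of the spectral component at each segment's frequency equals that segment's phase relative to the reference. `measure_intensity` is a hypothetical stand-in for the detector.

```python
import numpy as np


def multiplexed_piston(measure_intensity, n_segments, n_samples):
    """Estimate each segment's phase from one frequency-multiplexed sequence.

    Segment k receives a piston ramp at integer frequency
    f_k = n_segments + k (cycles per sequence). With n_samples >= 4 * n_segments,
    no f_k coincides with a difference frequency f_j - f_k or an aliased image.
    `measure_intensity(pistons)` is a hypothetical callable returning the
    focal-spot intensity for the applied piston phases (rad).
    """
    if n_samples < 4 * n_segments:
        raise ValueError("n_samples must be at least 4 * n_segments")
    freqs = n_segments + np.arange(n_segments)
    times = np.arange(n_samples) / n_samples
    signal = np.array([measure_intensity(2 * np.pi * freqs * t) for t in times])
    spectrum = np.fft.fft(signal)
    # Argument of the component at f_k = phase of segment k relative to the reference.
    return np.angle(spectrum[freqs])


# Idealized simulation: strong unmodulated reference field plus weak segments.
rng = np.random.default_rng(0)
n_segments = 8
true_phase = rng.uniform(-np.pi, np.pi, n_segments)  # unknown aberration per segment
segment_amp = 0.05


def measure_intensity(pistons):
    field = 1.0 + segment_amp * np.sum(np.exp(1j * (true_phase + pistons)))
    return np.abs(field) ** 2


estimate = multiplexed_piston(measure_intensity, n_segments, n_samples=64)
correction = -estimate  # piston that brings every segment into phase with the reference
```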

Phase retrieval and phase diversity are other methods of indirect wavefront sensing72. Phase retrieval uses an iterative algorithm to estimate the wavefront from intensity images of a point source. Its simplest implementation consists of acquiring two images of a fluorescent bead with different but known amounts of defocus. It is commonly used in microscopy to correct for static aberrations introduced by the optical system30,73. Phase diversity operates on images of a spatially extended source and requires images recorded with multiple known phase masks at the pupil. The wavefront is then estimated from the differences in intensity between these images. Phase diversity has been employed in astronomy to correct aberrations originating from the system, in this case the telescope itself74.
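The defocus-diversity approach to phase retrieval can be sketched with a multi-plane Gerchberg–Saxton-type iteration on simulated data. This is not the specific algorithm of refs 30,73. It assumes:

- scalar Fraunhofer propagation modelled by a single FFT;
- a known pupil amplitude;
- two known, opposite defocus diversities;
- noise-free images.

The loop alternates between two steps. First, it imposes the measured moduli in the image plane. Second, it enforces the pupil amplitude while averaging over the planes. With this update, the amplitude mismatch cannot increase from one iteration to the next.

```python
import numpy as np


def retrieve_phase(pupil_amp, measured_amps, diversities, n_iter=100):
    """Gerchberg-Saxton-type phase retrieval from several diversity images.

    pupil_amp: known pupil amplitude (zero outside the aperture).
    measured_amps: square roots of the measured point-spread-function images.
    diversities: known pupil phases (rad) added for each image, e.g. +/- defocus.
    Pupil-to-image propagation is modelled as one FFT (Fraunhofer regime).
    Returns the estimated pupil phase and the amplitude mismatch per iteration.
    """
    phase = np.zeros_like(pupil_amp)
    errors = []
    for _ in range(n_iter):
        back = []
        mismatch = 0.0
        for meas, div in zip(measured_amps, diversities):
            field = np.fft.fft2(pupil_amp * np.exp(1j * (phase + div)))
            mismatch += np.sum((np.abs(field) - meas) ** 2)
            # Keep the estimated image-plane phase but impose the measured modulus.
            field = meas * np.exp(1j * np.angle(field))
            # Propagate back to the pupil and remove the known diversity phase.
            back.append(np.fft.ifft2(field) * np.exp(-1j * div))
        errors.append(mismatch)
        # Combine the planes and keep only the phase inside the known aperture.
        phase = np.angle(np.mean(back, axis=0)) * (pupil_amp > 0)
    return phase, errors


# Simulated bead images with +/- defocus diversity.
n, radius = 64, 16
y, x = (np.mgrid[0:n, 0:n] - n / 2) / radius        # normalized pupil coordinates
rho2 = x ** 2 + y ** 2
pupil_amp = (rho2 <= 1).astype(float)
true_phase = pupil_amp * (0.8 * (3 * rho2 - 2) * x + 0.5 * (x ** 2 - y ** 2))  # coma + astigmatism
defocus = 2.0 * rho2 * pupil_amp
diversities = [defocus, -defocus]
measured = [np.abs(np.fft.fft2(pupil_amp * np.exp(1j * (true_phase + d))))
            for d in diversities]
estimate, errors = retrieve_phase(pupil_amp, measured, diversities, n_iter=200)
```

The estimated phase is defined only up to a constant piston. Noise, sampling and model errors in real images slow convergence and can leave residual errors.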

Aberration correctors

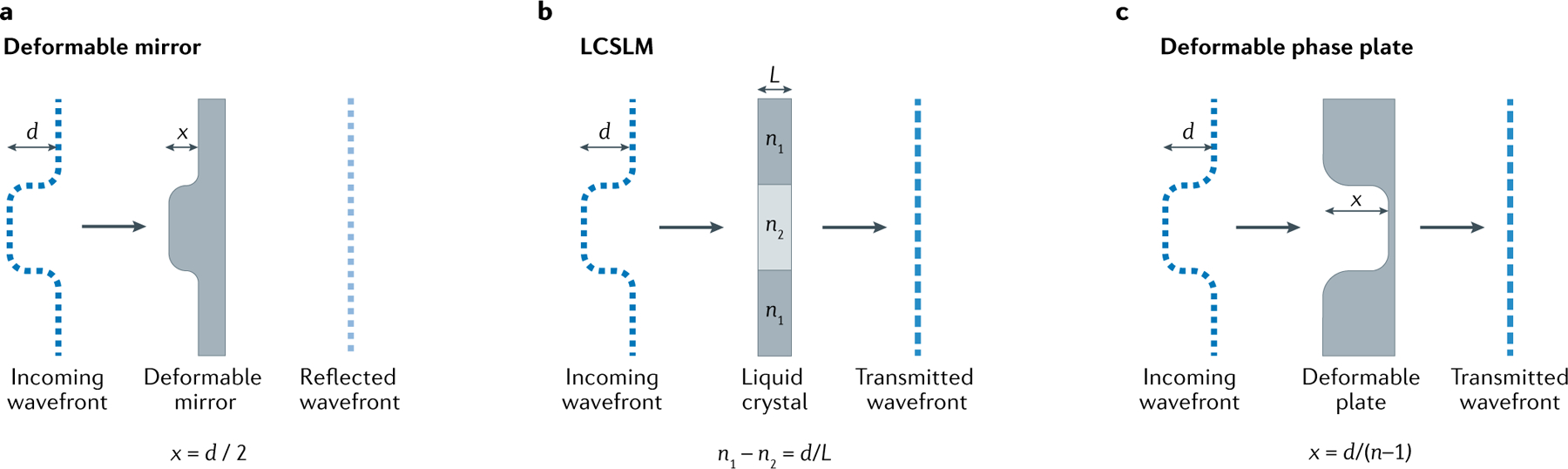

Correctors are devices that correct wavefront aberrations by changing the optical path length, which in turn modulates the wavefront. There are three main types of corrector, as shown in FIG. 5. Deformable mirrors consist of a reflective surface that is either continuous (as shown in FIG. 5) or segmented. Continuous surface deformable mirrors are the most commonly employed corrector across fields. In a continuous surface mirror, an actuator that pushes or pulls on one area of the surface creates a particular smooth shape known as an influence function. The overall surface shape is, to a good approximation, the sum of these influence functions weighted by the actuator commands. For a segmented corrector, each mirror facet can either change piston only (move forwards or backwards) or change piston, tip and tilt16. Deformable mirrors correct the wavefront by changing the physical distance travelled by the light. LCSLMs instead use changes in refractive index to alter the optical path length; they can be constructed in reflective or transmissive designs75. Care must be taken when using LCSLMs, as they require (quasi-)monochromatic, linearly polarized light. Their effect on the wavefront depends on wavelength, and they are designed for use with linearly polarized light. In cases where multiple wavelengths require correction, for example, in some fluorescence systems, a deformable mirror may be more appropriate because it does not suffer from chromatic effects. Deformable phase plates are fluidic devices that change their shape as the fluid moves in response to localized pressure76,77. Current designs have far fewer actuators than deformable mirrors, or pixels than LCSLMs. Even so, deformable phase plates are an attractive option because their size and transmissivity allow them to be easily integrated into existing imaging systems.
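The superposition of influence functions on a continuous surface mirror can be sketched numerically. The Gaussian influence function and its `coupling` parameter, the fractional deflection at a neighbouring actuator, are modelling assumptions. They are not properties of any particular device, and the function names are hypothetical. For a reflective device, the change in the wavefront is twice the surface deformation.

```python
import numpy as np


def gaussian_influence(x, y, act_x, act_y, pitch, coupling=0.15):
    """Hypothetical Gaussian influence function for one actuator (unit command).

    `coupling` is the deflection produced at a neighbouring actuator one pitch
    away; the Gaussian shape is a common modelling approximation only.
    """
    width2 = pitch ** 2 / np.log(1.0 / coupling)  # gives exp(-pitch**2 / width2) == coupling
    return np.exp(-((x - act_x) ** 2 + (y - act_y) ** 2) / width2)


def mirror_surface(commands, actuator_xy, x, y, pitch):
    """Surface deformation as the command-weighted sum of influence functions."""
    surface = np.zeros_like(x, dtype=float)
    for command, (ax, ay) in zip(commands, actuator_xy):
        surface += command * gaussian_influence(x, y, ax, ay, pitch)
    return surface


# 3 x 3 actuators on a unit pitch, surface sampled on a 31 x 31 grid.
actuator_xy = [(ax, ay) for ay in (-1, 0, 1) for ax in (-1, 0, 1)]
x, y = np.meshgrid(np.linspace(-1.5, 1.5, 31), np.linspace(-1.5, 1.5, 31))
poke_centre = np.zeros(9)
poke_centre[4] = 1.0  # push only the central actuator
surface = mirror_surface(poke_centre, actuator_xy, x, y, pitch=1.0)
```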

Fig. 5 |. Three main types of corrector.

a | Deformable mirrors consist of a reflective surface that may be continuous or segmented. b | Liquid crystal spatial light modulators (LCSLMs) consist of pixels that are able to change their refractive index, n. They can be transmissive or reflective. c | Deformable phase plates are fluidic devices that are able to change their shape.

Influence function.

The shape of modulation produced by a device when a signal, such as voltage, is sent to one actuator or pixel.

Monochromatic polarized light.

Light of a single wavelength with a structured oscillation of the electric field.

TABLE 1 summarizes the typical correctors used in each field. Selecting the most suitable corrector for your application depends on the properties of the aberrations you will encounter. An important consideration is the maximum peak-to-valley of the wavefront that the device can correct. For a deformable mirror, for example, this value is dictated by the stroke, which is the physical distance that an adaptive element surface can move. The maximum peak-to-valley of the wavefront that a deformable mirror can correct is twice the stroke, because the additional path length is imparted to both the incident and the reflected light. For vision science, for example, the required peak-to-valley correction is around 11 μm, as discussed above, which calls for a stroke of at least about 5.5 μm. Note that for segmented (pixelated) correctors such as LCSLMs, phase wrapping is typically used to increase the effective modulation range. Another consideration is the number of actuators (or pixels, in the case of an LCSLM), which depends on the number of Zernike aberrations to be corrected. In astronomy, for example, a large number of Zernike aberrations are corrected, so a deformable mirror with thousands of actuators may be required. Note that segmented and continuous surface devices can require very different numbers of actuators to correct the same aberrations78. This is related to how well a device can match the incoming wavefront; for relatively smooth wavefronts, for example, segmented devices require many more actuators. When correcting aberrations that change rapidly with time, as in ophthalmology and astronomy, the temporal response of a corrector is another factor to consider. For the aberrations of the eye, most correctors are faster than the fluctuations that need to be corrected49. By contrast, astronomical AO systems must run fast enough to keep up with the bulk flow of turbulence driven by wind above the observatory. System update rates of 1,000 Hz or faster may be required79 for best performance on bright stars. Deformable mirrors are therefore better suited as correctors, because they are generally faster than LCSLMs and deformable phase plates. There are also secondary factors that affect corrector suitability, such as cost, physical size, transmissivity or reflectivity, stability and linearity. Several of these factors relate not just to the corrector but to how the corrector interfaces with other parts of the AO system. This interfacing, termed control, is the topic of the next section.
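The stroke requirement and phase wrapping can both be checked numerically. In this sketch, the stroke is half the peak-to-valley wavefront because reflection doubles the path length. Wrapping keeps the displayed phase within one 2π range. The example also shows why a wrapped LCSLM correction is exact only at its design wavelength. For simplicity, it assumes that the refractive-index change does not depend on wavelength. The function names and wavelengths are illustrative.

```python
import numpy as np


def required_stroke(pv_wavefront):
    """Minimum mirror stroke for a peak-to-valley wavefront error (same units).

    Reflection doubles the path-length change, so the stroke is PV / 2.
    """
    return pv_wavefront / 2.0


def wrap_phase(phase):
    """Wrap phase (rad) into [-pi, pi) by adding or subtracting multiples of 2*pi."""
    return (phase + np.pi) % (2.0 * np.pi) - np.pi


def wrapped_opd(opd, design_wavelength):
    """Optical path difference actually displayed after wrapping at one wavelength.

    Assumes the LCSLM refractive-index change does not depend on wavelength.
    """
    return wrap_phase(2.0 * np.pi * opd / design_wavelength) * design_wavelength / (2.0 * np.pi)


print(required_stroke(11e-6))        # about 5.5e-6 m for an ~11 um peak-to-valley correction

opd = np.linspace(0.0, 3e-6, 7)      # desired correction, 0 to 3 um
displayed = wrapped_opd(opd, 800e-9)
for wavelength in (800e-9, 600e-9):
    # Phase error left at this wavelength when the wrapping was designed for 800 nm.
    error = wrap_phase(2.0 * np.pi * (displayed - opd) / wavelength)
    print(wavelength, np.round(error, 3))
```

At 800 nm the residual is zero modulo 2π. At 600 nm, every 2π jump introduced by wrapping leaves a phase error. This is one reason why a deformable mirror is preferred when several wavelengths must be corrected.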

Stroke.

Maximal physical distance that an adaptive element can move, which limits the optical path length of phase modulation that can be imparted.

Phase wrapping.

Representation of the phase information within the range [0,2π] or [−π, π] radians by adding or subtracting multiples of 2π.

Control

We discuss here some of the most important factors in calibration and control of an AO system. The main focus is on the control of continuous surface correctors using direct sensing. Note that zonal and modal wavefront control using indirect sensing is presented in FIG. 4 and so is not discussed in this section. A summary of the typical control schemes for each field can be found in TABLE 1.

Calibration.

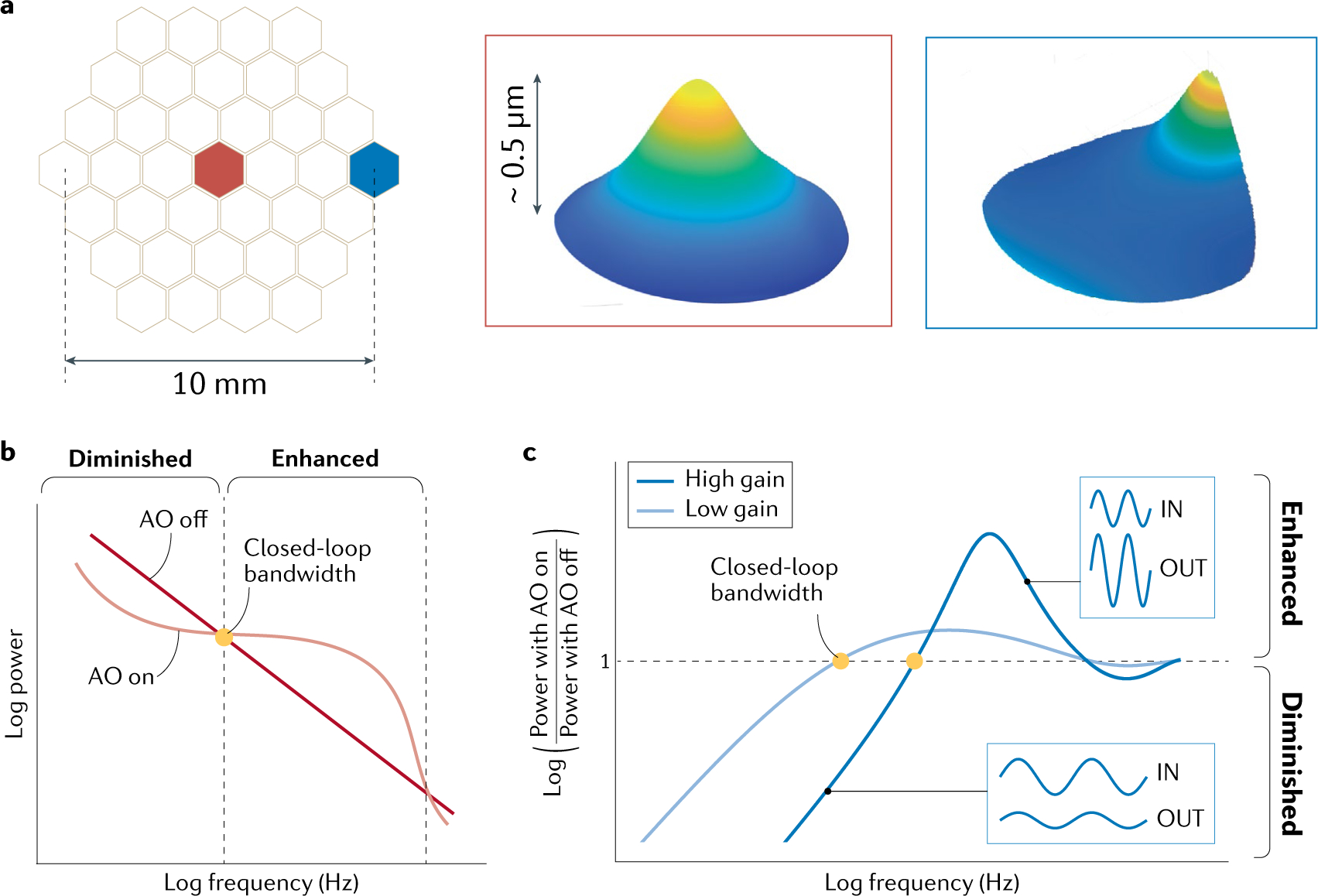

Before operating a corrector, it is important to determine the relationship between the control signal applied to each actuator, such as the voltage, and the measured wavefront. This relationship is called the influence function, and the calibration procedure measures these influence functions. An example influence function for the central actuator of a 37-element transmissive phase plate24 is shown in FIG. 6a. The corrector and sensor are usually assumed to form a linear system. The overall effect of the corrector on the wavefront is then a linear superposition of these influence functions, and the sensor is also assumed to respond linearly.
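One common way to measure the influence functions is to poke each actuator in turn and record the sensor response. This sketch uses a push–pull scheme, pushing and pulling by the same amount and differencing. The differencing cancels static aberrations present during calibration. The linear sensor model, the poke amplitude and the callable `measure` are hypothetical stand-ins for real hardware. The resulting matrix has one column per actuator, matching the influence-function matrix used in the equations below.

```python
import numpy as np


def measure_influence_matrix(measure, n_actuators, poke=0.1):
    """Build the influence-function matrix one column per actuator (push-pull).

    `measure(commands)` is a hypothetical callable returning the sensor vector
    (for example, SH slopes) for a vector of actuator commands. Differencing a
    push and a pull cancels the static aberrations present during calibration.
    """
    columns = []
    for k in range(n_actuators):
        command = np.zeros(n_actuators)
        command[k] = poke
        response = (measure(command) - measure(-command)) / (2.0 * poke)
        columns.append(response)               # sensor response per unit command
    return np.column_stack(columns)


# Simulated linear corrector + sensor with an unknown static aberration.
rng = np.random.default_rng(1)
true_IF = rng.normal(size=(60, 12))            # 60 measurements, 12 actuators
static = rng.normal(size=60)


def measure(commands):
    return static + true_IF @ commands


IF = measure_influence_matrix(measure, n_actuators=12)
```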

Fig. 6 |. Influence functions and dynamic control.

a | Actuator layout and two example influence functions for a 37-element transmissive phase plate24. b | Typical power spectrum of the fluctuations in the aberrations (root mean square (rms) wavefront error) of the eye or atmospheric turbulence with adaptive optics (AO) off, equivalent to no correction (aberrations only), and with AO on (aberrations + correction). c | The ratio of the power spectra for two gains. The closed-loop bandwidth is the maximum frequency at which the magnitude of the aberration fluctuations can be reduced (diminished). Beyond this frequency, the amplitude of the aberration fluctuations is magnified (enhanced). A higher gain results in a higher closed-loop bandwidth. Although the magnitude of a larger range of frequencies can be reduced, the higher temporal frequencies are enhanced more significantly. The curves are referred to as power rejection curves.

Implementation.

From the calibration procedure, we now have:

$$\mathbf{A}_{\mathrm{Meas}} = \mathbf{IF}\,\mathbf{C} \tag{3}$$

where $\mathbf{A}_{\mathrm{Meas}}$ represents a vector of aberration measurements, $\mathbf{IF}$ is the matrix containing the influence functions and $\mathbf{C}$ is a vector of control signals. $\mathbf{A}_{\mathrm{Meas}}$ can be slopes, modal coefficients or any other measurement, provided that the influence functions in $\mathbf{IF}$ are expressed in the same quantity. The control signals to be sent to the corrective device to generate a given wavefront can be found from:

$$\mathbf{C} = \mathbf{IF}^{+}\,\mathbf{A}_{\mathrm{Meas}} \tag{4}$$

where $\mathbf{IF}^{+}$ is the pseudo-inverse of the matrix containing the influence functions. $\mathbf{IF}^{+}$ is referred to as the control matrix and is often calculated using a matrix decomposition technique called singular value decomposition (SVD)16. The control matrix is also called the reconstructor, because multiplying $\mathbf{A}_{\mathrm{Meas}}$ by this matrix reconstructs the required control signal values. It is called a direct-slope reconstructor if slopes are used and a modal reconstructor if aberration coefficients are used. The advantage of SVD is that components that can lead to instability of the system, or to other problems such as actuator saturation, can be removed from the inverse. These are the modes with the smallest singular values, which the inversion would amplify most. Other methods are also available77. For an indirect sensing modal control scheme, the voltages required to implement a given aberration mode can be calculated from Eq. 4.
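A truncated-SVD control matrix is short to write with NumPy. In this sketch, `n_drop` discards the modes with the smallest singular values. The same kind of choice is reported in the caption of FIG. 8, where 12 modes were removed. The simulated matrix sizes and the function name are illustrative. With `n_drop=0`, the result matches `numpy.linalg.pinv` for a full-rank matrix.

```python
import numpy as np


def control_matrix(IF, n_drop=0):
    """Pseudo-inverse of the influence-function matrix by truncated SVD.

    The n_drop modes with the smallest singular values, which the inversion
    would amplify most, are discarded.
    """
    U, s, Vt = np.linalg.svd(IF, full_matrices=False)   # s is sorted in descending order
    s_inv = np.zeros_like(s)
    keep = len(s) - n_drop
    s_inv[:keep] = 1.0 / s[:keep]
    return Vt.T @ np.diag(s_inv) @ U.T


rng = np.random.default_rng(2)
IF = rng.normal(size=(60, 12))        # simulated: 60 slope measurements, 12 actuators
CM = control_matrix(IF, n_drop=2)     # reconstructor with the 2 weakest modes removed
A_meas = rng.normal(size=60)
C = CM @ A_meas                       # Eq. 4: control signals for this measurement
```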

Consider the general AO system shown in FIG. 1d. Before the light reaches the sensor, it first passes via the deformable mirror. Consequently, the sensor measures the sum of the wavefront owing to the aberrations present and the wavefront imparted by the corrector. This is referred to as a closed-loop system and is how the vast majority of AO systems are operated. If the sensor is positioned so that the light reaching it does not pass via the corrector, the system is referred to as open loop. A major advantage of closed-loop systems is that the sensor continuously checks whether the wavefront imparted by the corrective device is correct. This is very important, because the linear relationship between the signal sent to the corrector and the wavefront it imparts, which is assumed during calibration, then need not hold exactly. Closed-loop sensor-based AO systems are typically controlled using an integral controller, although other controllers can be used16. The integral controller is implemented as:

$$\mathbf{C}_{t_0+\Delta t} = \mathbf{C}_{t_0} - g\,\mathbf{IF}^{+}\mathbf{A}_{t_0} \tag{5}$$

where $\mathbf{C}_{t_0}$ are the control signals at time $t_0$, $\mathbf{A}_{t_0}$ are the aberrations measured at time $t_0$, and $\mathbf{C}_{t_0+\Delta t}$ are the new control signals to be implemented. The control gain g is a value between 0 and 1 that controls the temporal response and stability of the correction, and it determines which temporal frequencies can be mitigated. This is particularly important for astronomy and vision science, in which the aberrations vary rapidly over short timescales and the correction needs to keep up with the changes. As discussed above, the magnitude of the aberration dynamics of the eye and the atmosphere can be characterized by the variations in the rms wavefront error. It typically follows an inverse power law in frequency16,49, as shown schematically in FIG. 6b. Also shown is the residual power of the fluctuations with dynamic correction of the aberrations. The ratio of these two spectra is shown in FIG. 6c and is referred to as the power rejection curve.
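The integral controller can be exercised on a simulated linear system. This sketch makes the following assumptions:

- the influence matrix is perfectly known and has full rank;
- the aberration is static and can be reproduced exactly by the corrector;
- there is no measurement noise.

Under these assumptions, each iteration multiplies the residual by (1 − g). The names `run_integrator`, `IF` and `CM` are illustrative, and the sign convention follows Eq. 5 as written above.

```python
import numpy as np


def run_integrator(IF, CM, aberration, gain, n_steps):
    """Closed-loop integral control (Eq. 5) on a simulated linear AO system.

    The sensor sees the sum of the aberration and the corrector contribution;
    each step applies C <- C - gain * CM @ A. Returns the residual rms per step.
    """
    C = np.zeros(IF.shape[1])
    history = []
    for _ in range(n_steps):
        A = aberration + IF @ C          # closed loop: sensor measures the residual
        history.append(np.sqrt(np.mean(A ** 2)))
        C = C - gain * CM @ A            # integral controller update
    return np.array(history)


rng = np.random.default_rng(3)
IF = rng.normal(size=(60, 12))
CM = np.linalg.pinv(IF)
aberration = IF @ rng.normal(size=12)    # a correctable (in-range) static aberration
rms = run_integrator(IF, CM, aberration, gain=0.3, n_steps=30)
```

A larger gain converges in fewer steps on a static aberration. With time-varying aberrations, however, the gain also shapes the power rejection curve of FIG. 6c.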

AO systems measure and correct temporally fluctuating aberrations up to a maximum frequency, or cut-off, that defines their closed-loop bandwidth. Aberrations fluctuating at frequencies below the cut-off are reduced by the AO, which improves image quality. Aberrations fluctuating above the cut-off are amplified and degrade image quality. The closed-loop bandwidth is affected by many AO parameters. For example, increasing the control gain g increases the closed-loop bandwidth. The cost is stronger amplification of aberration fluctuations at frequencies above this bandwidth (FIG. 6c). The gain is therefore chosen as a trade-off between a wide closed-loop bandwidth and limited amplification of higher-frequency fluctuations. This choice is often based on both empirical results and theoretical predictions. Note that to correct aberration fluctuations up to a given closed-loop bandwidth, the aberrations must be measured and the corrector updated at rates significantly faster than that bandwidth. For example, to achieve good correction, astronomical AO systems require updates at around 1 kHz or more.
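The gain trade-off can be reproduced with the simplest discrete model of the loop. This is an idealization that ignores the extra latencies of real systems. The integrator C[t+1] = C[t] − g·e[t], acting on the residual e[t] = φ[t] + C[t], gives an error transfer function E/Φ = (z − 1)/(z − 1 + g). Its squared magnitude is a power rejection curve like that of FIG. 6c. In this model, the closed-loop bandwidth, where |E/Φ| = 1, satisfies cos(2πf/f_loop) = 1 − g/2. The function names are illustrative.

```python
import numpy as np


def rejection(freq, loop_rate, gain):
    """Magnitude of the error transfer function E/Phi for a simple integrator.

    Model assumption: C[t+1] = C[t] - gain * e[t], with residual
    e[t] = phi[t] + C[t], which gives E/Phi = (z - 1) / (z - 1 + gain)
    with z = exp(2j * pi * freq / loop_rate).
    """
    z = np.exp(2j * np.pi * np.asarray(freq, dtype=float) / loop_rate)
    return np.abs((z - 1.0) / (z - 1.0 + gain))


def bandwidth(loop_rate, gain):
    """Frequency at which |E/Phi| = 1; in this model cos(2*pi*f/loop_rate) = 1 - gain/2."""
    return loop_rate * np.arccos(1.0 - gain / 2.0) / (2.0 * np.pi)


for g in (0.2, 0.5):
    f_c = bandwidth(1000.0, g)
    peak = rejection(500.0, 1000.0, g)   # largest amplification, at the Nyquist frequency
    print(f"g = {g}: bandwidth {f_c:.1f} Hz, peak amplification {peak:.2f}")
```

For g = 0.2 this model gives a bandwidth of about 7% of the loop rate (roughly 72 Hz at 1 kHz), with a peak amplification of about 1.11. For g = 0.5, the bandwidth rises to about 115 Hz, but the peak amplification grows to about 1.33. This reproduces both the trade-off and the need for update rates well above the bandwidth. Additional latency in real systems lowers the bandwidth further.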

Closed-loop bandwidth.

The maximum frequency of aberration fluctuations that an adaptive optics system can fully or partially correct.

Results

The different applications of AO imaging have reached different levels of maturity. In astronomy, AO is routinely incorporated into new ground-based telescopes and upgrades. These are usually one-off systems that are dedicated to a particular telescope and given specific names. The use of AO in vision science and clinical applications continues to increase, driven by the need to elucidate structural and functional changes in the microscopic domain of the intact eye. As in astronomy, the nature of ophthalmic aberrations is well understood. AO in microscopy is somewhat newer and presents a different challenge owing to the vast range of microscope modalities and specimen types. In this section, we outline the advances in AO optical instrumentation that have been made in each area, focusing on what AO can achieve in terms of image quality enhancement.

Astronomy

The original proposal for astronomical AO was made in 1953 (REF.15). After a period of development by the military, AO began to be used on astronomical telescopes and is now routinely used on large telescopes around the world. Here, we present just a few examples of such systems. For more on the history and development of AO in astronomy, see reviews by Beckers3, Davies and Kasper7, Rigaut and Neichel25, and the books by Hardy26 and Duffner80.

In astronomical AO, a star (typically called the guide star) is used as the reference for sensing the wavefront. The main source of aberrations to be corrected is atmospheric turbulence, which is blown across the telescope aperture by wind. The output of the wavefront sensor is compared with the signal expected for a flat wavefront (that is, a wavefront with no turbulence), and the resulting correction is applied to a deformable mirror. The strength of the turbulence and the speed of its evolution are not fixed. AO systems are therefore tuned to maximize correction in the current conditions by adjusting the control gain (see FIG. 6c).

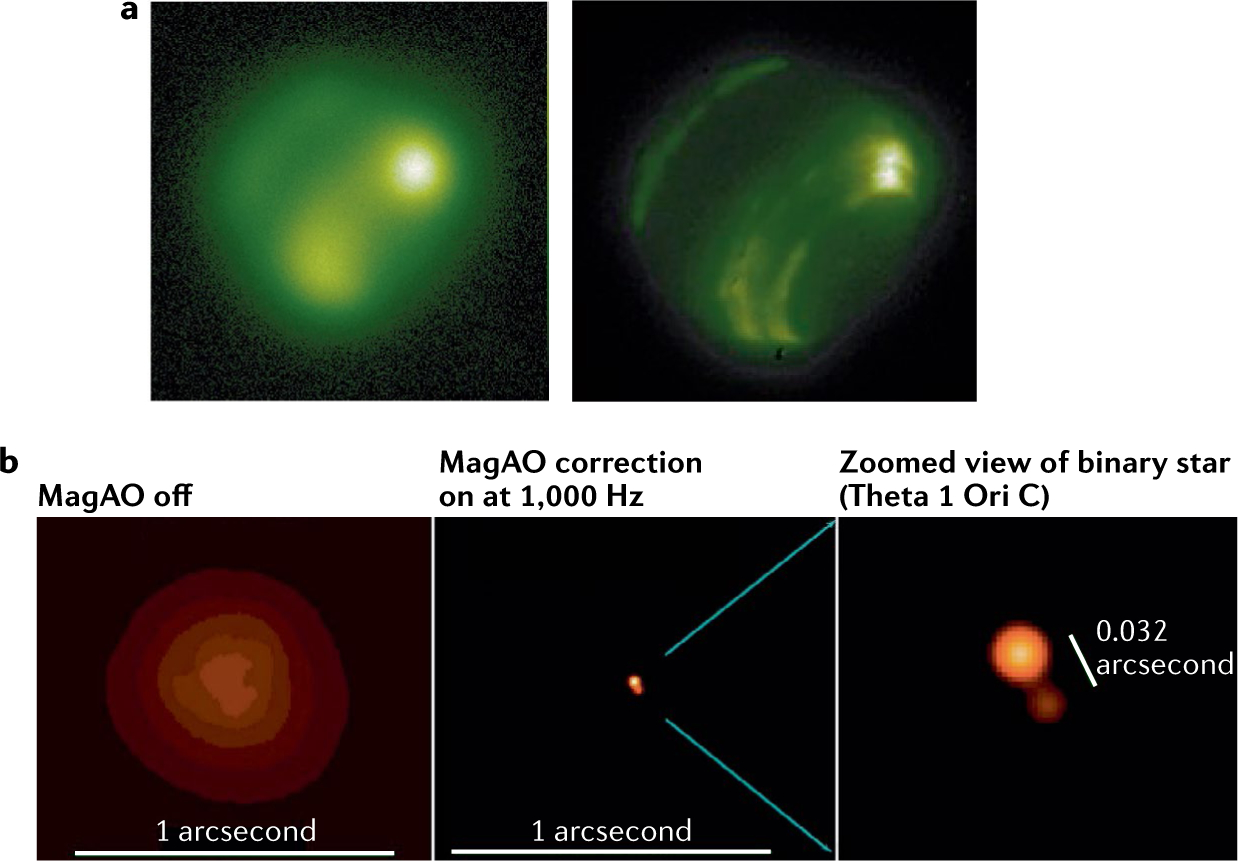

The Keck telescopes, twin 10 m diameter segmented telescopes on the summit of Mauna Kea, Hawaii, USA, host some of the best-known and most productive general-purpose astronomical AO systems. First light for AO on the Keck II telescope occurred on 4 February 1999 (REF.79). This natural guide star system was the first of a new generation of AO systems on 8–10 m telescopes. It delivered Strehl ratios of up to 0.37 in the H band (1.6 μm), demonstrating the dramatic improvements in image quality afforded by AO on large ground-based telescopes. The original Keck AO systems consisted of a separate tip–tilt corrector, a 349-actuator deformable mirror and an SH wavefront sensor80. The AO systems on the two telescopes were identical. In addition to imaging and spectroscopy, AO was used to feed the Keck interferometer, which combined the light from the two telescopes. See FIG. 7a for a demonstration of Keck imaging with AO81.

Fig. 7 |. Image improvements from astronomical AO systems.

a | Early Keck adaptive optics (AO) system demonstrating the benefits of AO for astronomy. Here, Neptune is shown in a narrow filter at 1.17 μm showing methane absorption. The image on the left is uncorrected, in very good 0.4 arcsec seeing. The image on the right is with AO correction81. b | Demonstration of high-resolution AO in visible light on a 6.5 m telescope with Magellan AO (MagAO). The star Theta 1 Ori C, a known tight binary, is the brightest star in the Orion Trapezium cluster. The left panel shows the seeing-limited image with AO off. The middle panel shows the same star after closing the AO loop (AO on), with the same image field of view. Note the significant concentration of light once diffraction-limited performance is achieved. The right panel is zoomed in on the star, demonstrating the spatial resolution of AO on large telescopes92. Panel a reprinted with permission from REF.81, IOP. Panel b, image courtesy of Laird Close.

Keck AO and the Nasmyth AO System & CONICA instrument (NAOS-Conica)82,83 at the European Southern Observatory (ESO) Very Large Telescope (VLT) were instrumental in the study of the Milky Way galaxy’s supermassive black hole, which was the subject of the 2020 Nobel Prize in Physics. We further discuss the contributions of AO to this research in the Applications section below.

The Keck AO systems were upgraded to use a sodium LGS, which was installed in 2001 and began science operations in 2004 on Keck II84. The LGS upgrade significantly improved sky coverage because a bright natural star was no longer required in the field. The systems received a wavefront sensor and control upgrade in 2008, which improved the sampling, frame rate and latency85. This upgrade delivered further improvements to Strehl ratio and faint star limits for natural guide star and LGS modes86.

The latest upgrades to Keck AO are currently in progress. The Keck Planet Imager and Characterizer (KPIC)87 includes an IR pyramid wavefront sensor as well as a planned 1,000-actuator deformable mirror88. A key science goal for KPIC is the characterization of exoplanets orbiting late-type stars. For these stars, the IR pyramid wavefront sensor will provide significant gains owing to their higher IR flux. The pyramid wavefront sensor makes use of light interfered across the entire telescope pupil rather than smaller sub-apertures. As a result, it is more sensitive than the SH sensor to low-order aberrations such as tip and tilt, focus and astigmatism, and takes full advantage of the diffraction limit of large telescopes89,90. Another advantage is that its sensitivity and dynamic range can be selected. With charge-coupled device (CCD) detectors, on-camera binning lowers the contribution of detector noise, allowing higher sensing speeds on fainter stars. The dynamic range of the pyramid wavefront sensor can be adjusted by varying the modulation amplitude62. Larger amplitudes provide a wider linear range, whereas smaller amplitudes provide more sensitivity and precision. These advantages have led to the adoption of the pyramid wavefront sensor in many recent SCAO systems. Examples include the Large Binocular Telescope (LBT) AO systems and the Subaru Coronagraphic Extreme Adaptive Optics (SCExAO) system, and the sensor is under consideration for the upgrade of the Gemini Planet Imager (GPI). Furthermore, each of the coming generation of extremely large telescopes (ELTs) will use pyramid wavefront sensors in its AO architecture.

A typical example of a more recently developed astronomical AO system is the Magellan AO system (MagAO). The corrector for MagAO is a 585-actuator adaptive secondary mirror (ASM), and the wavefront is sensed by a pyramid wavefront sensor (see FIG. 3d). ASMs minimize the need for optical relays to separate deformable mirrors. This minimizes both optical losses and the thermal background noise for IR imaging, and it maximizes the field of view of the imaging system. MagAO is installed on the 6.5 m Magellan Clay telescope91 at Las Campanas Observatory in Chile. The high number of actuators in the ASM enables excellent correction down to visible wavelengths. As shown in FIG. 1, spatial resolution depends on wavelength, so working at visible wavelengths improves spatial resolution, as demonstrated by MagAO in FIG. 7b (REF.92).
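The wavelength dependence of resolution can be made concrete with the diffraction-limited angular radius of the Airy core, 1.22λ/D. The 0.65 μm visible wavelength below is an illustrative choice, not a MagAO specification. The result converts radians to arcseconds for comparison with seeing values such as the 0.4 arcsec quoted in FIG. 7.

```python
import math

RAD_TO_ARCSEC = 180.0 / math.pi * 3600.0


def diffraction_limit_arcsec(wavelength, diameter):
    """Angular radius of the Airy core, 1.22 * wavelength / diameter, in arcsec."""
    return 1.22 * wavelength / diameter * RAD_TO_ARCSEC


# Illustrative wavelengths on a 6.5 m aperture such as Magellan Clay.
for label, wavelength in (("0.65 um (visible)", 0.65e-6), ("1.6 um (H band)", 1.6e-6)):
    print(label, f"{diffraction_limit_arcsec(wavelength, 6.5):.3f} arcsec")
```

On a 6.5 m aperture this gives roughly 0.025 arcsec at 0.65 μm against 0.062 arcsec in the H band. Both are more than an order of magnitude finer than typical seeing, provided the AO reaches the diffraction limit at that wavelength.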

The above results are examples of correction derived from a single guide star, in what is called SCAO. SCAO only works well for objects near the guide star. Wavefronts from objects farther away, say 10–50 arcsec depending on wavelength, propagate through slightly different turbulence, so their correction degrades rapidly with distance from the guide star. MCAO can significantly enlarge the corrected field of view. MCAO provides good correction over a wide field of view, but it samples the turbulence above the telescope imperfectly. Rigaut and Neichel25 provide an overview of the error sources inherent in MCAO that result in a lower Strehl ratio on any single object compared with SCAO.

A wider field of view can be corrected using multiple guide stars, either natural stars or artificial stars created with lasers. The power of this technique is exemplified by the Multi-Conjugate Adaptive Optics Demonstrator (MAD) on the 8 m VLT in Chile. Another example of MCAO is the Gemini MCAO system (GeMS) on the Gemini South Telescope93. GeMS provides uniform correction over a field as large as 85 arcsec × 85 arcsec, with a sky coverage of 55%.

AO is also used for solar astronomy94, most recently at the Daniel K. Inouye Solar Telescope (DKIST)95. The earliest use of AO was by the US military, for observing and tracking objects orbiting the Earth96,97.

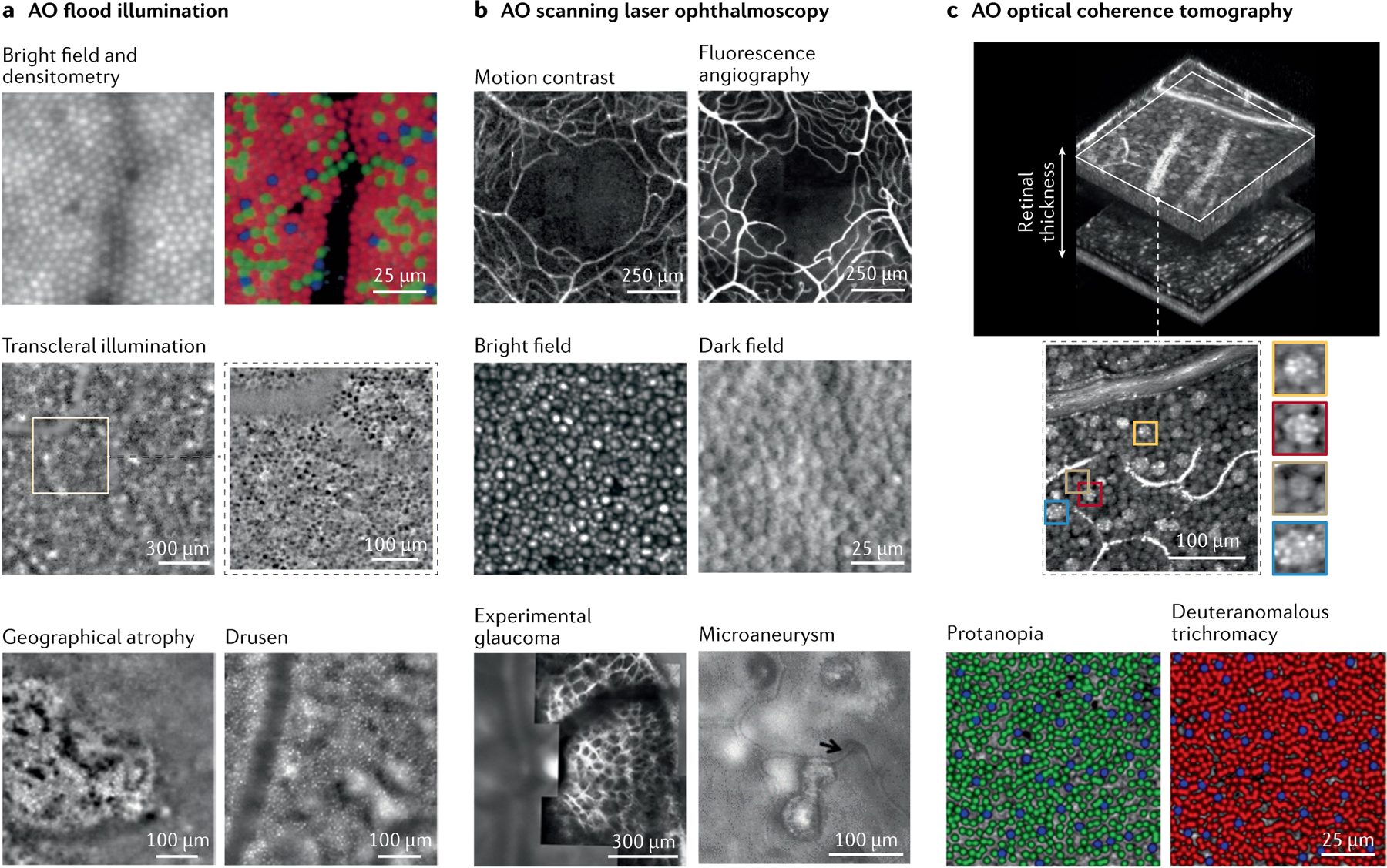

Vision science

It has been known since at least the mid-nineteenth century that the eye has many aberrations, but methods to effectively measure and correct them did not materialize until the end of the twentieth century. The first methods for correcting the eye’s aberrations borrowed heavily from the ground-based astronomical and military AO communities. In 1997, AO was first used to correct high-order aberrations of the eye, both to improve vision and for high-resolution retinal imaging98. Spurred by this success, AO has been integrated into various types of ophthalmoscope, the principal ones being flood illumination, scanning laser ophthalmoscopy (SLO) and optical coherence tomography (OCT)10,11,99,100. Because AO is a highly scalable technology, it has been integrated into large laboratory-based ophthalmoscopes, small hand-held devices and systems designed for different species, especially human, monkey and mouse. AO is now routinely used in many scientific and clinical research laboratories around the world. For more on the history and development of AO for vision science and ophthalmology, see REFS4,101. Here, we present how AO is applied to the eye and what it can achieve.

Flood illumination.

A traditional ophthalmoscopy modality based on flash photography in which the image of the illuminated retina is captured by an area detector.

The eye’s imperfect optics and diffraction caused by the finite size of the eye’s pupil (1–8 mm diameter) limit the lateral resolution at the retina to a size larger than most retinal cells and cell components, preventing their visualization. Diffraction can be reduced by dilating the pupil with mydriatic drops. However, this benefit comes at the cost of additional aberrations exposed by dilation40,46. AO increases lateral resolution by a factor of up to five over commercial ophthalmoscopes. After pupil dilation, this permits resolution of retinal details as small as 2–3 μm, which is sufficient to resolve most major cell types in the retina, including the densely packed cone photoreceptor cells in the fovea. AO also increases sensitivity, as it allows a larger pupil to be used and more reflected light to be captured by the ophthalmoscope. The theoretical improvement is up to 20-fold, depending on pupil size and the scattering properties of the retinal tissue type. This permits more weakly reflecting retinal structures to be detected.

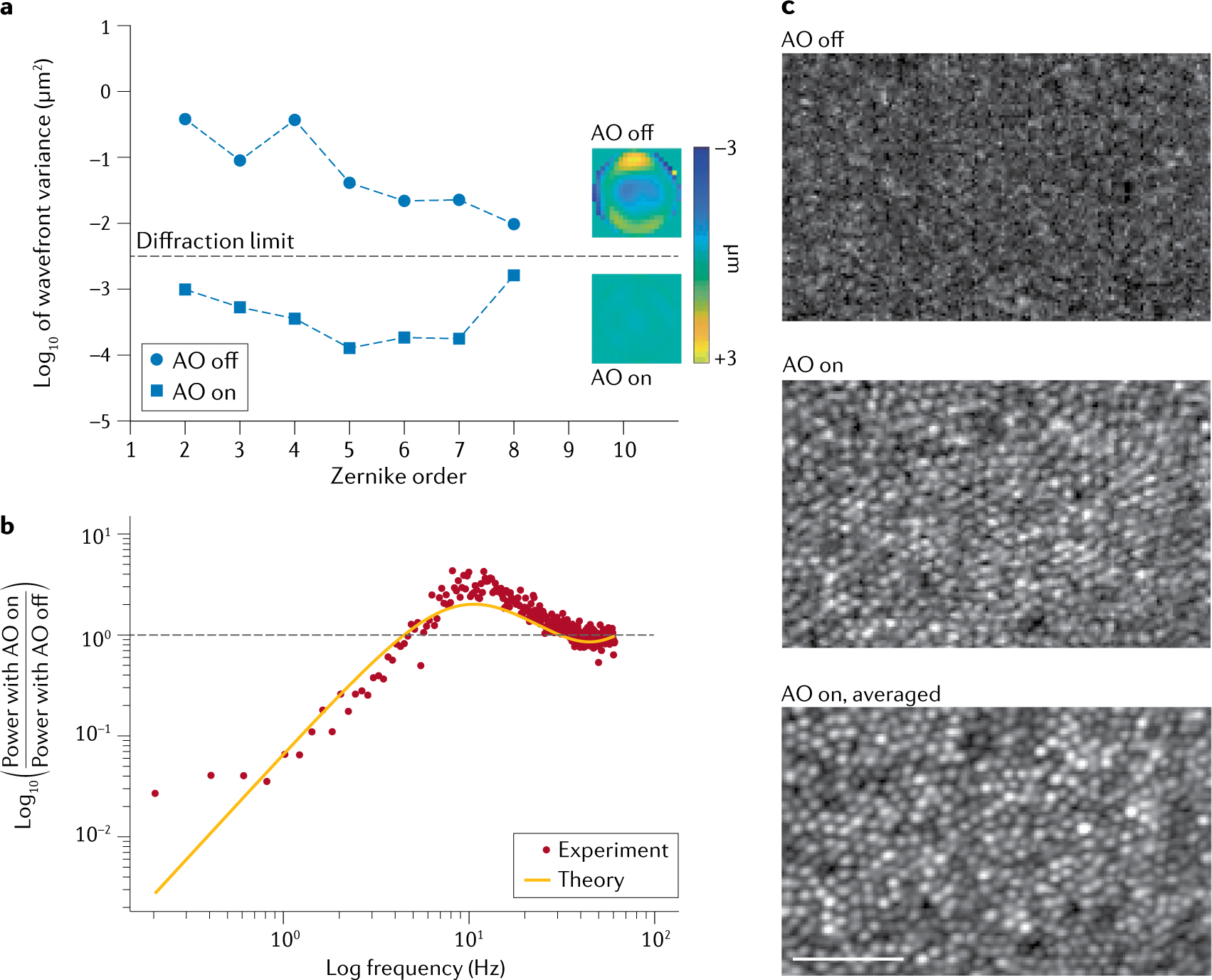

FIGURE 8 shows the performance of a representative AO system that was integrated into an OCT system at Indiana University21,102,103. As depicted in FIG. 8a, the AO reduces the wavefront variance of the first seven Zernike orders by two to three orders of magnitude. The result is diffraction-limited resolution, as measured by the wavefront sensor (less than λ/14 rms wavefront error). FIGURE 8b demonstrates that the AO can track and correct temporally fluctuating aberrations up to 4.5 Hz, fast enough for the vast majority of the aberrations in the eye (<2 Hz)47,48,104. Finally, FIG. 8c shows the benefit of AO for OCT retinal imaging. It reveals thousands of individual cone photoreceptor cells spaced 4.5 μm apart that would otherwise not be seen. A powerful consequence of resolving cells is the ability to track them over time to observe their dynamic behaviour. It also allows images to be registered and averaged to increase the signal-to-noise ratio over that of a single image, as illustrated in FIG. 8c and Supplementary Fig. 1.
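The λ/14 rms threshold is the Maréchal criterion for diffraction-limited performance. It can be related to the Strehl ratio with the extended Maréchal approximation, S ≈ exp[−(2πσ/λ)²], where σ is the rms wavefront error. The approximation is reasonable only for small residual errors. The function names are illustrative.

```python
import math


def strehl_marechal(rms_error, wavelength):
    """Extended Marechal approximation: S ~ exp(-(2*pi*sigma/lambda)**2)."""
    return math.exp(-(2 * math.pi * rms_error / wavelength) ** 2)


def rms_from_strehl(strehl, wavelength):
    """Invert the same approximation to obtain an rms wavefront error."""
    return wavelength / (2 * math.pi) * math.sqrt(-math.log(strehl))


print(round(strehl_marechal(790e-9 / 14, 790e-9), 3))   # lambda/14 rms -> S of about 0.82
print(rms_from_strehl(0.37, 1.6e-6))                     # about 2.54e-7 m
```

Under the same approximation, the Strehl ratio of 0.37 in the H band quoted for early Keck AO corresponds to an rms residual of roughly 254 nm.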

Fig. 8 |. AO performance on a subject with high myopia.

The adaptive optics (AO) system dynamically measured and corrected aberrations over a 6.7 mm pupil at the eye using a 300-lenslet Shack–Hartmann wavefront sensor, a 97-actuator deformable mirror and a direct-slope reconstructor running at a loop rate of 122 Hz (REF.103). The measurement and imaging wavelength was 790 nm. The AO is part of the high-resolution AO-optical coherence tomography (OCT) imaging system developed at Indiana University21,102. A static pre-correction of −6.5 dioptres was applied to the deformable mirror to compensate for the subject’s spectacle prescription. For loop stability, the control gain g was set to 0.2 and the 12 singular value decomposition (SVD) mirror modes of highest gain (most unstable) were removed from the control matrix. a | Spatial performance of the AO is quantified in terms of variance in wavefront height by Zernike order and wavefront aberration map across the eye’s pupil with AO off and on. b | Temporal performance of the AO is quantified in terms of the power rejection magnitude. Measurement and theoretical prediction are given. c | Single and averaged AO-OCT images allow visualization of cone photoreceptor cells at 1° from the fovea with AO on, but not off. By registering and averaging images acquired of the same retinal patch, the image signal-to-noise ratio increases and visualization of cellular structures in the image improves. Images are cropped from 1° by 1° acquired images and the scale bar is 50 μm. The associated Supplementary Video 1 shows the uncropped patch of cone photoreceptors during image acquisition with AO off and on, and Supplementary Fig. 1 shows the full extent of the registered and averaged image.

Over the past two decades, a large number of customized AO systems have been developed in the vision science community. A 2017 survey105 found that the most common AO platform in today’s ophthalmoscopes is a traditional SH wavefront sensor and a deformable mirror. This combination is used in FIG. 8. In these systems, the SH wavefront sensor typically samples a large 6.5–8 mm eye pupil with 300–600 lenslets and employs a near-IR beacon (up to a wavelength of 940 nm) to be less distracting to the individual. Although SH sensors dominate today’s ophthalmological AO systems, indirect sensing52 is garnering increased interest as it requires no hardware sensor, reducing both cost and system complexity. Numerous types of wavefront corrector (discrete-actuator deformable mirrors, LCSLMs, deformable phase plates, bimorph mirrors, magnetic membrane mirrors, microelectromechanical systems (MEMS) mirrors and combinations of these corrector types) have been tested on the eye, revealing the need for high-stroke, high-actuator-count correctors76,78. The most commonly used corrector today is a ±50 μm-stroke, 97-actuator voice-coil deformable mirror (DM97; ALPAO). The combination of a large actuator stroke and count is unique to this corrector. The ±50 μm stroke is nearly ideal, allowing correction of the refractive error in most individuals and thereby removing the need for auxiliary lenses to correct for the individual’s spectacle prescription. The dense pattern of 97 actuators is well matched to the aberration content of the eye, delivering sharp images. However, AO does not perform well on all individuals. More challenging individuals include those with high refractive errors, unclear or highly aberrated optics owing to pathology or surgery (for example, dry eye, keratoconus, cataract, keratoplasty or refractive surgery), elevated eye motion (strabismus) and reduced fixation.
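To see why stroke matters for refractive error, consider the peak-to-valley wavefront of pure defocus over a circular pupil, which in the paraxial limit is W = D·r²/2 for a defocus of D dioptres and pupil radius r. This optical path difference is preserved through an afocal pupil relay (power scales as 1/m² and radius as m), so the value applies at the mirror plane as well. The sketch below evaluates it for the −6.5 D pre-correction and 6.7 mm pupil of FIG. 8, assuming a normal-incidence reflection so that the mirror surface moves by half the wavefront. Whether a quoted "±50 μm stroke" refers to surface or wavefront displacement is an assumption to check against the manufacturer's specification; astigmatism and higher-order terms consume additional stroke.

```python
def defocus_wavefront_pv_um(dioptres, pupil_diameter_mm):
    """Peak-to-valley wavefront of pure defocus over a circular pupil (paraxial).

    W = D * r**2 / 2, with D in dioptres (1/m) and r the pupil radius in metres.
    Only the magnitude of D matters for the peak-to-valley value.
    """
    r = pupil_diameter_mm * 1e-3 / 2
    return abs(dioptres) * r ** 2 / 2 * 1e6   # metres -> micrometres

def mirror_surface_um(wavefront_um):
    """Normal-incidence reflection doubles the optical path, so surface = W / 2."""
    return wavefront_um / 2

w = defocus_wavefront_pv_um(-6.5, 6.7)   # pre-correction and pupil size from Fig. 8
print(f"wavefront PV {w:.1f} um, mirror surface PV {mirror_surface_um(w):.1f} um")
```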

Unlike in astronomical AO, the lenslet-to-actuator ratio of AO systems for vision science is typically high (3:1 to 6:1). Oversampling the wavefront with several lenslets per actuator makes the measurements more robust to pupil edge effects, eye motion, system noise, drying of the tear film and other local inhomogeneities in the ocular media that can occur with ageing. Many ophthalmological AO systems use zonal control of the corrector via a direct-slope reconstructor, which has been shown to be more effective than modal control106. This is typically accompanied by a separate modal reconstructor that computes Zernike coefficients for real-time AO diagnostics during retinal image acquisition. Most laboratories develop their own AO control software or partner with laboratories that do. Open-source107 and commercial software108,109 are also available, but options are limited.
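A direct-slope reconstructor maps measured SH slopes straight to actuator commands through a pseudo-inverse of the measured influence (poke) matrix. The sketch below assumes that this pseudo-inverse is formed by SVD, with the weakest mirror modes (smallest singular values, hence highest reconstructor gain) discarded, and that the loop is a leaky-free integrator. This mirrors the 12-mode truncation and g = 0.2 in the FIG. 8 caption but is not the actual control code of that system. The influence matrix here is random, a hypothetical stand-in for a calibrated 300-lenslet (600-slope), 97-actuator system.

```python
import numpy as np

def direct_slope_reconstructor(influence, n_discard=12):
    """Truncated-SVD pseudo-inverse of the influence matrix.

    influence: (n_slopes, n_actuators); column j holds the SH slopes measured
    when actuator j is poked with a unit command.
    n_discard: number of weakest mirror modes (highest gain) removed for stability.
    """
    u, s, vt = np.linalg.svd(influence, full_matrices=False)  # s sorted descending
    s_inv = np.zeros_like(s)
    keep = len(s) - n_discard
    s_inv[:keep] = 1.0 / s[:keep]
    # R = V * diag(1/s) * U^T maps slopes to actuator commands
    return (vt.T * s_inv) @ u.T

def integrator_step(commands, slopes, reconstructor, gain=0.2):
    """One closed-loop update: remove a fraction of the reconstructed residual."""
    return commands - gain * (reconstructor @ slopes)

# Hypothetical simulation: 300 lenslets (x and y slopes) and 97 actuators.
rng = np.random.default_rng(0)
influence = rng.normal(size=(600, 97))
aberration = influence @ rng.normal(size=97)   # an aberration the mirror can represent
R = direct_slope_reconstructor(influence)
cmds = np.zeros(97)
for _ in range(100):
    slopes = influence @ cmds + aberration      # residual slopes seen by the sensor
    cmds = integrator_step(cmds, slopes, R)
```

The residual left after convergence lies in the discarded modes, which is the price paid for stability; a separate least-squares fit of the same slopes to Zernike derivatives would provide the diagnostic coefficients mentioned above.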

Commercialization of AO instruments for the eye is ongoing110–112, while the scientific and clinical discoveries made with AO instruments continue to grow exponentially.

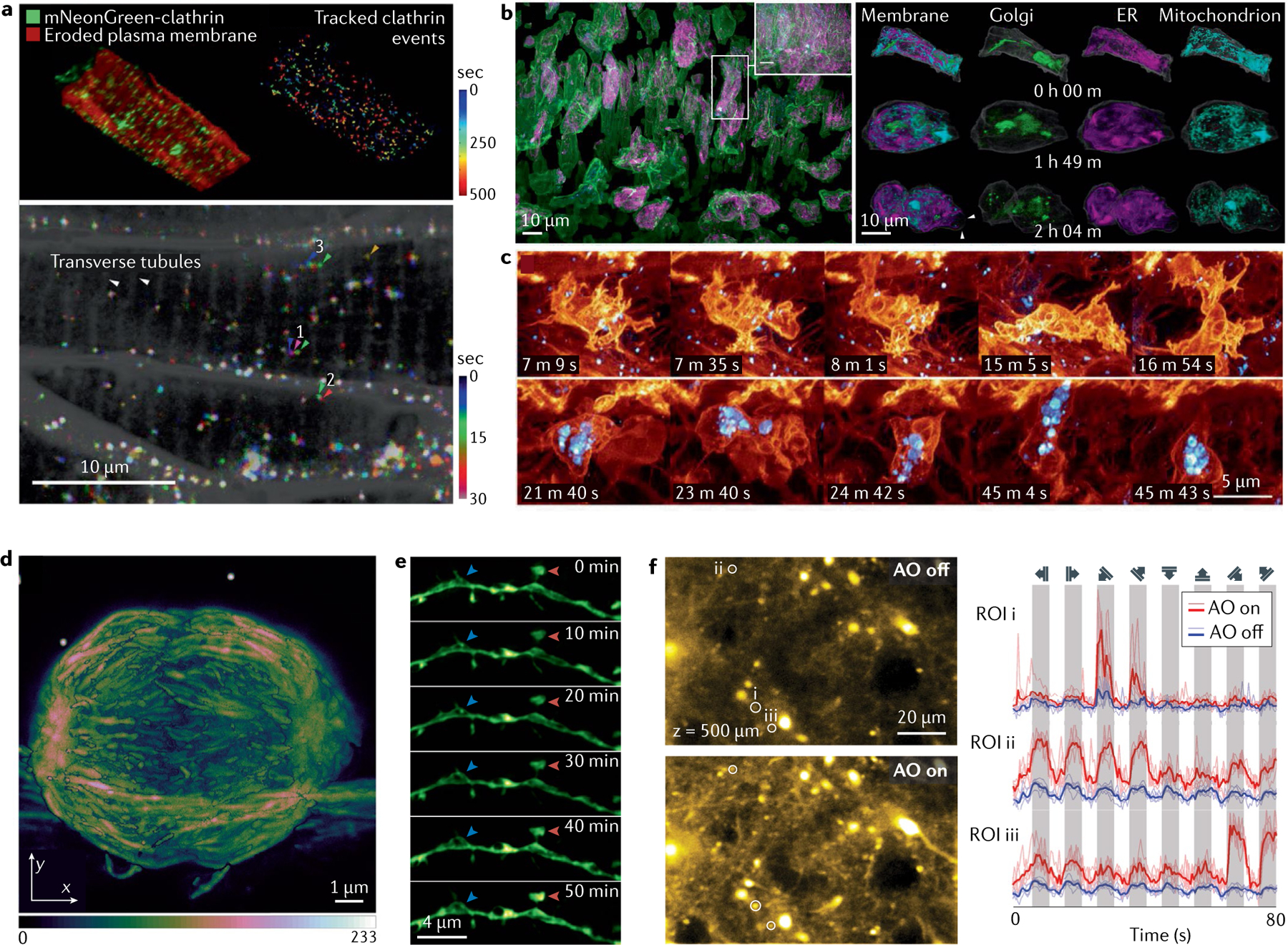

Microscopy

Adaptive optics was first extended to the field of microscopy around the year 2000. The first implementation of specimen-induced aberration compensation was in a laser scanning fluorescence confocal microscope using an indirect sensing method113. Since then, various AO schemes have been developed for a wide range of high-resolution microscopes1,2 and super-resolution fluorescence methods114 for applications ranging from neuroscience to cell biology. Although AO is becoming more widespread in the research environment, it has not yet been widely adopted in the commercial sphere.

Two types of optical field are typically involved in microscopy and can become aberrated during the imaging process — the illumination light and the emission light. Depending on the modality of the microscope, the aberrations on the wavefront of one or both optical fields need to be corrected (Supplementary Fig. 2). Most widefield microscopy, whereby emitted light propagates through the sample and forms an image on a camera (Supplementary Fig. 2a), requires aberrations to be removed from the detection path. By contrast, two-photon fluorescence microscopy, a point-scanning modality, focuses the excitation light field and collects (but does not image) emitted photons115 (Supplementary Fig. 2b). To ensure the tightest focus leading to the highest resolution, signal and contrast, aberrations of the excitation wavefront need to be removed.

The performance of some microscopy methods depends on both the excitation and emission PSFs. For example, a widefield microscopy method, lattice light sheet microscopy116, uses structured light to illuminate a single plane and image the fluorescence from this plane on a camera. Confocal microscopy scans a focused excitation laser spot across a sample and detects the emission light that passes through a pinhole, which blocks emission originating from outside the focus; its PSF is equivalent to the product of the excitation and detection PSFs. As a result, diffraction-limited focusing of both the excitation and emission light is required for optimal performance. For these methods, aberrations need to be removed from both the excitation and the emission wavefronts. In the case of lattice light sheet microscopy, because the excitation and emission wavefronts go through different objectives and experience distinct aberrations (Supplementary Fig. 2c), AO corrections have to be carried out for the two wavefronts separately.
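The statement that the confocal PSF is the product of the excitation and detection PSFs has a direct consequence: degrading either factor broadens the result. The sketch below approximates both PSFs as Gaussians (a common simplification of the Airy pattern) and assumes an ideal, point-like pinhole; the widths are hypothetical. For Gaussians, the product has 1/W² = 1/W_exc² + 1/W_det², so two equal PSFs give a confocal FWHM narrower by √2.

```python
import numpy as np

def fwhm(x, profile):
    """Full width at half maximum of a single-peaked, sampled profile."""
    above = x[profile >= profile.max() / 2]
    return above[-1] - above[0]

def gaussian(x, fwhm_width):
    """Unit-peak Gaussian with the requested FWHM."""
    sigma = fwhm_width / (2 * np.sqrt(2 * np.log(2)))
    return np.exp(-x ** 2 / (2 * sigma ** 2))

x = np.linspace(-1.0, 1.0, 20001)    # lateral position (micrometres)
psf_exc = gaussian(x, 0.25)          # hypothetical excitation PSF FWHM
psf_det = gaussian(x, 0.28)          # hypothetical detection PSF FWHM (longer emission wavelength)
psf_confocal = psf_exc * psf_det     # ideal-pinhole confocal PSF
print(f"confocal FWHM ~ {fwhm(x, psf_confocal):.3f} um")
```

Replacing `psf_exc` with a broader, aberrated profile widens `psf_confocal` even if the detection path is perfect, which is why both wavefronts must be corrected.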

Both the optics in the microscope itself and the samples it investigates can introduce aberrations. The optical system aberration originates from the components of the microscope and does not vary with time. Its presence can be detected from a distorted PSF, usually obtained by imaging a small fluorescent bead, and the aberration itself can be derived from this PSF using phase retrieval approaches. Optical system aberration should be corrected before the imaging experiment so that only sample aberrations can degrade image quality. Sample aberrations arise from mismatches between the refractive index of the sample and that of the immersion medium of the microscope objective, and can severely degrade imaging performance, especially for high-resolution microscopy. Super-resolution microscopy, a collection of methods that can achieve resolution beyond the diffraction limit and reach spatial resolution of tens of nanometres, is even more sensitive to optical aberrations than the diffraction-limited methods: biological samples only a few micrometres thick can substantially reduce image quality.

Sample aberrations may vary spatially and sometimes evolve over time, for example, when imaging a developing embryo28,30,117, and must be measured and corrected in situ. AO has been extensively applied to optical microscopy to remove both system and sample aberrations. Both direct and indirect wavefront sensing methods are used for aberration measurement. In direct wavefront sensing, an SH sensor measures the aberrations and a wavefront corrector corrects them; this approach is applicable to transparent samples or to shallow depths in opaque samples. In indirect wavefront sensing, the same corrector is typically employed for both measurement and correction of aberrations, and the approach can be applied to samples with or without substantial light scattering. Deformable mirrors, being insensitive to polarization and highly reflective over a broad band, can efficiently remove aberrations from both illumination and emission wavefronts. For illumination that is monochromatic and polarized, LCSLMs can be used for wavefront correction to take advantage of their large number of pixels.
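In direct wavefront sensing with an SH sensor, each lenslet focuses its portion of the wavefront to a spot, and the spot's displacement from its reference position divided by the lenslet focal length gives the local wavefront slope. The minimal sketch below computes this for one lenslet using an intensity-weighted centroid with crude median background removal; the pixel size, focal length and synthetic spot are hypothetical values chosen for illustration.

```python
import numpy as np

def spot_centroid(subimage):
    """Intensity-weighted centroid (row, col) of one lenslet's spot, in pixels."""
    img = np.clip(subimage - np.median(subimage), 0, None)  # crude background removal
    rows, cols = np.indices(img.shape)
    total = img.sum()
    return (rows * img).sum() / total, (cols * img).sum() / total

def local_slopes(subimage, reference, pixel_size, focal_length):
    """Wavefront slopes (dW/dy, dW/dx): spot shift on the detector divided by f."""
    cy, cx = spot_centroid(subimage)
    return ((cy - reference[0]) * pixel_size / focal_length,
            (cx - reference[1]) * pixel_size / focal_length)

# Synthetic spot displaced from the reference (16, 16) to (12, 18).
rows, cols = np.indices((32, 32))
spot = np.exp(-((rows - 12.0) ** 2 + (cols - 18.0) ** 2) / (2 * 1.5 ** 2))
slopes = local_slopes(spot, reference=(16.0, 16.0), pixel_size=5e-6, focal_length=5e-3)
```

Collecting these slopes over all lenslets gives the measurement vector that a reconstructor converts into corrector commands.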

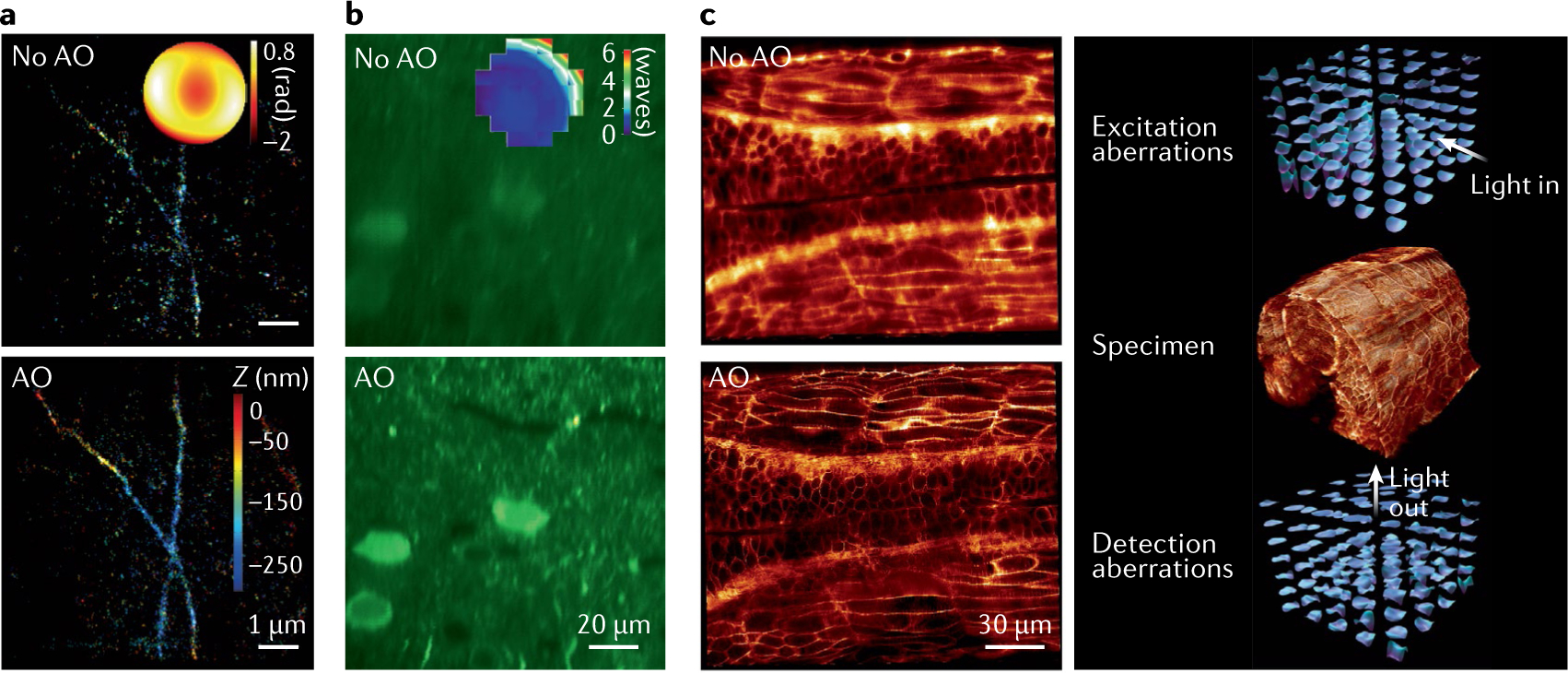

By correcting both system and sample-induced aberrations, AO enables optical microscopy to achieve optimal performance in optically complex samples. Here, we list a few examples. In one example118 (FIG. 9a; Supplementary Fig. 2d), modal indirect sensing was used to measure the aberration encountered by the fluorescence emission of microtubule structures through a mammalian cell in a widefield super-resolution single-molecule localization microscope. A deformable mirror was used as the corrector to remove the cell-induced aberrations from the emitted fluorescence before image formation on a camera, which increased the number of detected fluorescent molecules and improved the measurement accuracy of their positions in 3D.
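Modal indirect sensing can be illustrated with a generic "2N+1" scheme: for each corrector mode, an image-quality metric is measured with the mode biased by −b, 0 and +b, and the peak of a parabola through the three points gives the correction for that mode. The sketch below is this generic scheme, not necessarily the procedure used in REF.118; `metric` is a hypothetical callback standing in for acquiring an image and computing, for example, its total intensity or sharpness. Real metrics are only approximately quadratic near the optimum, so several rounds may be needed.

```python
import numpy as np

def sensorless_modal_correction(metric, n_modes, bias=0.5, n_rounds=1):
    """Estimate corrector mode amplitudes by maximizing an image-quality metric.

    metric(coeffs) -> float, evaluated with the corrector set to `coeffs`.
    Each mode is sampled at -bias, 0, +bias around the current estimate and
    moved to the vertex of the parabola through the three samples.
    """
    coeffs = np.zeros(n_modes)
    for _ in range(n_rounds):
        for i in range(n_modes):
            trial = coeffs.copy()
            samples = []
            for b in (-bias, 0.0, bias):
                trial[i] = coeffs[i] + b
                samples.append(metric(trial))
            m_minus, m_0, m_plus = samples
            curvature = m_plus - 2 * m_0 + m_minus
            if curvature < 0:  # only update when the samples describe a maximum
                coeffs[i] += bias * (m_minus - m_plus) / (2 * curvature)
    return coeffs

# Hypothetical modal aberration (e.g. radians rms) and a toy quadratic metric.
true_aberration = np.array([0.3, -0.2, 0.1, 0.05])

def toy_metric(coeffs):
    residual = coeffs + true_aberration
    return 1.0 - np.sum(residual ** 2)

estimate = sensorless_modal_correction(toy_metric, n_modes=4)
```

With N modes, one round costs 2N + 1 distinct metric evaluations if the unbiased measurement is shared, which is why fast metrics and well-chosen modes matter for live samples.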

Fig. 9 |. AO in optical microscopy.

Adaptive optics (AO) correction on 3D super-resolution widefield microscopy118 (panel a), two-photon fluorescence microscopy29 (panel b) and lattice light sheet microscopy117 (panel c). Panel a reprinted with permission from REF.118 © The Optical Society. Panel b reprinted from REF.29, Springer Nature Limited. Panel c reprinted with permission from REF.117, AAAS.

In another example (FIG. 9b; Supplementary Fig. 2e), a zonal indirect sensing method was used to measure the tissue-induced aberrations encountered by the excitation light of a two-photon fluorescence microscope as it travelled through a living mouse brain29. A corrective wavefront was then applied to an LCSLM to form a diffraction-limited focus in vivo, which increased the fluorescence intensity and contrast of neurons in the brain.
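One simple way to perform zonal indirect sensing is to divide the pupil into segments on the corrector and, for each segment, step its phase relative to the rest of the pupil while recording the focal signal. The signal then varies as a cosine of the phase step, and the step that maximizes it is the segment's correction. The sketch below is a simplified, non-multiplexed version of this idea and is not presented as the method of REF.29. It uses four equally spaced steps, so the second harmonic introduced by the quadratic intensity dependence of two-photon signal does not bias the phase estimate. The 16-segment pupil and random aberration are hypothetical.

```python
import numpy as np

def segment_phase(signal_at, n_steps=4):
    """Phase-stepping estimate for one pupil segment.

    signal_at(theta) returns the focal signal with the segment's phase offset by
    theta. With n_steps >= 4 equally spaced steps, the first-harmonic phase is
    recovered exactly even if the signal is (A + B*cos(theta - phi))**2.
    Returns phi, the offset that maximizes the signal.
    """
    thetas = 2 * np.pi * np.arange(n_steps) / n_steps
    signals = np.array([signal_at(t) for t in thetas])
    return np.arctan2(np.sum(signals * np.sin(thetas)), np.sum(signals * np.cos(thetas)))

def two_photon_signal(phases):
    field = np.sum(np.exp(1j * phases))  # focal field: coherent sum over segments
    return np.abs(field) ** 4            # two-photon signal ~ intensity squared

n_segments = 16
rng = np.random.default_rng(1)
aberration = rng.uniform(-np.pi, np.pi, n_segments)  # hypothetical per-segment phase error
correction = np.zeros(n_segments)
for _ in range(2):                                   # two sequential passes over the pupil
    for k in range(n_segments):
        def signal(theta, k=k):
            trial = correction.copy()
            trial[k] += theta
            return two_photon_signal(aberration + trial)
        correction[k] += segment_phase(signal)
```

Measuring segments one at a time is slow; multiplexed schemes modulate many segments simultaneously at different frequencies to shorten the measurement.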

Finally, AO was applied to a lattice light sheet microscope117 (FIG. 9c; Supplementary Fig. 2f) to image highly curved zebrafish embryos. A 1D lattice of light excited the fluorescence in a thin optical section of the sample through one objective, and the emitted fluorescence was collected by another objective and imaged on a camera. Direct wavefront sensing with two SH sensors was used to measure the aberrations encountered by both the excitation and the emission light. An LCSLM was used to correct the excitation wavefront, while a deformable mirror was used to correct the emission wavefront. The high speed of direct wavefront sensing allowed the excitation and detection aberrations to be measured in 140 local volumes. These localized corrections were required to achieve diffraction-limited resolution throughout the image volume within the zebrafish embryo, whose high curvature led to small isoplanatic patches.

Applications

We elaborate here on a range of applications in which the AO methods described above have been deployed to tackle imaging challenges and provide improved understanding in scientific areas ranging from the role of molecules in biological specimens to the nature of the cosmos.

Astronomy

Here, we review two of the major areas of astronomy and astrophysics affected by the use of AO, both related to night-time observations.

The Milky Way supermassive black hole.

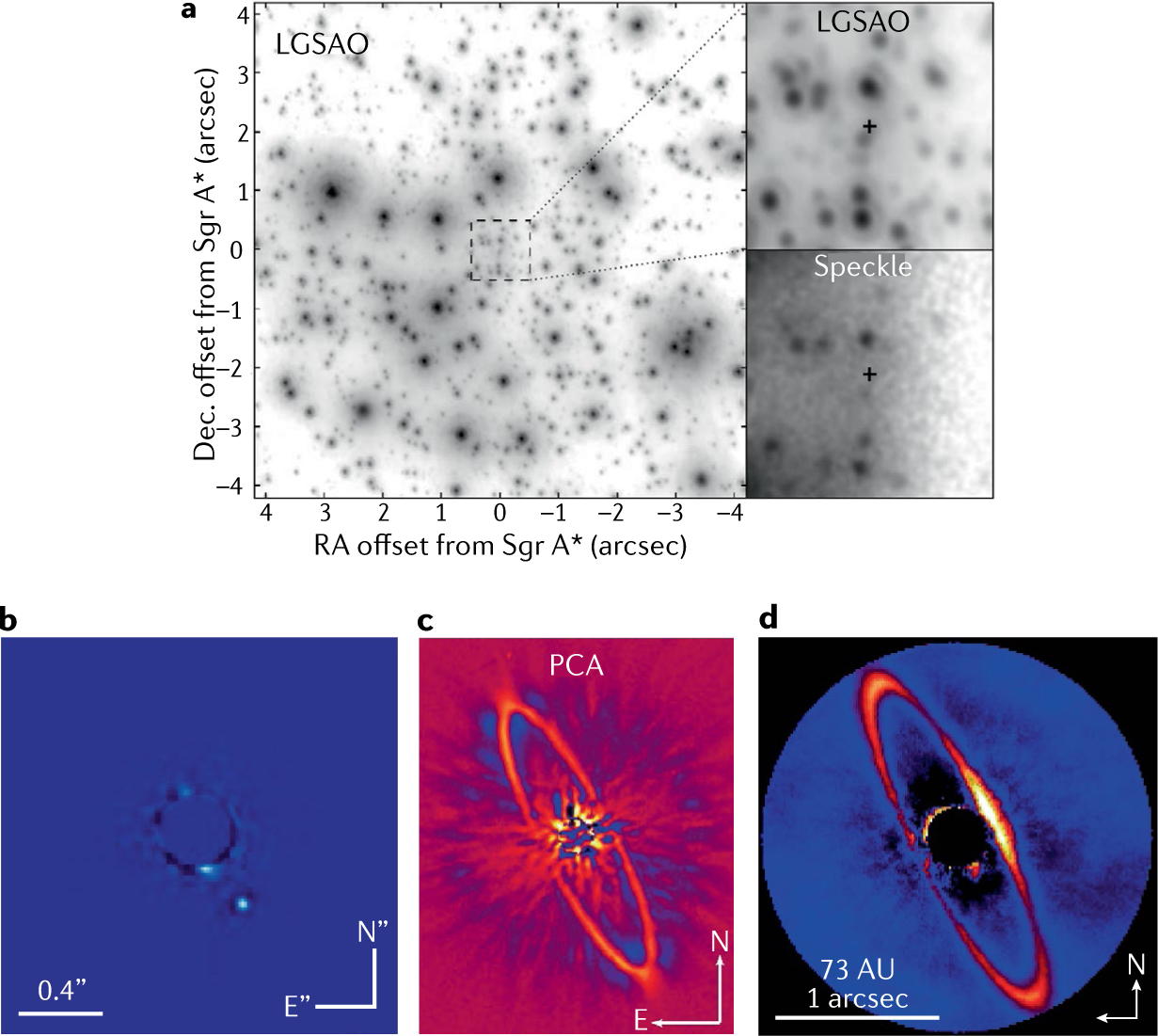

AO has made a key contribution to the study of the supermassive black hole (SMBH) at the centre of the Milky Way galaxy. The centre of the Milky Way was long suspected to harbour an SMBH, expected to coincide with the compact radio source Sagittarius A* (see Melia and Falcke119 for a review). It was the use of AO, with the IR wavefront sensor on NACO at the VLT120 and with artificial LGSs at Keck121, that allowed the precise measurement of the central mass of the Milky Way and its concentration, and confirmed its correspondence with Sagittarius A*. This research led to the 2020 Nobel Prize in Physics being awarded to Andrea Ghez and Reinhard Genzel for their discovery of the SMBH at the centre of our galaxy. Compared with speckle imaging techniques, AO significantly improved the image quality, dynamic range and astrometric precision of such observations122 (see FIG. 10a). Furthermore, AO has enabled spatially resolved spectroscopy123 with sufficient spectral resolution to measure the radial velocities of individual stars in the nuclear cluster of the Milky Way122,124. AO continues to make significant contributions to the study of the Milky Way SMBH; see, for instance, the AO-fed GRAVITY interferometer125, which measured the gravitational redshift of a star at its closest approach to the Milky Way SMBH126.
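The mass measurement rests on Kepler's third law applied to stellar orbits resolved by AO astrometry. In solar units, with the semi-major axis a in AU and the period P in years, the enclosed mass is M = a³/P². The minimal sketch below assumes a Keplerian orbit around a point mass, a star of negligible mass and the small-angle conversion in which 1 arcsec at 1 pc subtends 1 AU. The function names are illustrative, and no measured orbital elements are supplied here.

```python
def enclosed_mass_msun(semi_major_axis_au, period_yr):
    """Kepler's third law in solar units: M = a**3 / P**2 (a in AU, P in years).

    Assumes the orbiting star's mass is negligible compared with the central mass.
    """
    return semi_major_axis_au ** 3 / period_yr ** 2

def au_from_arcsec(angle_arcsec, distance_pc):
    """Small-angle conversion: 1 arcsec at a distance of 1 pc subtends 1 AU."""
    return angle_arcsec * distance_pc
```

Because the physical semi-major axis scales with the assumed distance, the inferred mass scales as distance cubed, so the distance to the Galactic Centre is a key ingredient of the analysis.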

Fig. 10 |. AO in astronomy.

a | The centre of the Milky Way galaxy, as revealed by laser guide star adaptive optics (LGSAO) on the Keck 10 m telescopes. The right-hand panels compare speckle imaging (bottom) with the remarkable improvement in image quality and sensitivity afforded by LGSAO (top). The cross marks the location of the supermassive black hole (SMBH) at the galactic centre. AO-enabled observations such as this have been used to confirm the SMBH, measure its mass and test general relativity122. b–d | The extrasolar planet beta Pictoris b, as imaged by the Gemini Planet Imager (GPI)130 (panel b), and the HR 4796A debris disc as seen by the SPHERE instrument on the VLT129 (panel c) and the GPI instrument on Gemini South155 (panel d). These instruments are optimized for high-contrast imaging close to bright stars to study exoplanets and circumstellar discs. The well-defined ring of the HR 4796A disc strongly suggests the presence of a planet, although none has yet been detected. Panel a reprinted with permission from REF.122, IOP. Panel b reprinted with permission from REF.130, PNAS. Panel c reprinted with permission from REF.129, EDP Sciences. Panel d reprinted with permission from REF.155, IOP.

Extreme AO for exoplanets and discs.

One key driver of AO performance has been the study of extrasolar planets and their environments. The first confirmed exoplanet orbiting a main-sequence star, detected through the orbital reflex motion of its host star, was announced in 1995 (REF.127). This radial velocity technique and the similarly indirect transit method have accounted for most exoplanet discoveries. By their nature, the radial velocity and transit methods are biased towards close-in planets. The study of more widely separated planets can be accomplished with direct imaging, which requires AO on ground-based telescopes128.
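A quick calculation shows why direct imaging of wide-orbit planets needs AO. The projected separation in arcseconds is the orbital radius in AU divided by the distance in parsecs, whereas the diffraction scale of a telescope is λ/D; atmospheric seeing at good sites is typically of order 0.5 to 1 arcsec. The sketch below uses a hypothetical planet at 10 AU around a star 20 pc away, observed at 1.6 μm with an 8 m telescope.

```python
import math

RAD_TO_ARCSEC = 180 / math.pi * 3600   # ~206265 arcsec per radian

def separation_arcsec(orbit_au, distance_pc):
    """Projected angular separation (small-angle): 1 AU at 1 pc subtends 1 arcsec."""
    return orbit_au / distance_pc

def diffraction_limit_arcsec(wavelength_m, aperture_m):
    """Angular scale lambda/D of a telescope, in arcseconds."""
    return wavelength_m / aperture_m * RAD_TO_ARCSEC

sep = separation_arcsec(10.0, 20.0)            # hypothetical system: 0.5 arcsec
res = diffraction_limit_arcsec(1.6e-6, 8.0)    # ~0.041 arcsec for an 8 m telescope at 1.6 um
print(f"separation {sep:.2f} arcsec = {sep / res:.0f} lambda/D")
```

The planet lies only about a dozen λ/D from its host, well inside a seeing-limited halo, so only an AO-corrected image can separate the two; suppressing the much brighter starlight additionally requires high-contrast techniques such as those in the instruments of FIG. 10.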