Abstract

Objective:

Due to the recent COVID-19 pandemic, the field of neuropsychology must rapidly evolve to incorporate assessments delivered via telehealth, or teleneuropsychology (TNP). Given the increasing demand to deliver services electronically due to public health concerns, it is important to review available TNP validity studies. This systematic review builds upon the work of Brearly and colleagues’ (2017) meta-analysis and provides an updated review of the literature, with special emphasis on test-level validity data.

Method:

Using methodology similar to that of Brearly and colleagues (2017), three internet databases (PubMed, EBSCOhost, PsycINFO) were searched for relevant articles published since 2016. Studies with older adults (aged 65+) who underwent face-to-face and TNP assessments in a counterbalanced cross-over design were included. After review, 10 articles were retained. Combined with 9 articles from Brearly and colleagues' (2017) analysis, a total of 19 studies were included in the systematic review.

Results:

Retained studies included samples from 5 different countries, various ethnic/cultural backgrounds, and diverse diagnostic populations. Test-level analysis suggests there are cognitive screeners (MMSE, MoCA), language tests (BNT, Letter Fluency), attention/working memory tasks (Digit Span Total), and memory tests (HVLT-R) with strong support for TNP validity. Other measures are promising but lack sufficient support at this time. Few TNP studies have conducted in-home assessments, and most studies rely on a PC or laptop.

Conclusions:

Overall, there appears to be good support for TNP assessments in older adults. Challenges to TNP in the current climate are discussed. Finally, a provisional outline of viable TNP procedures used in our clinic is provided.

Keywords: Telehealth, Teleneuropsychology, Systematic Review, Validity Study

On March 11, 2020, the World Health Organization characterized the outbreak of COVID-19, the disease caused by the novel coronavirus SARS-CoV-2, as a pandemic (Adhanom Ghebreyesus, T., 2020). COVID-19 is a respiratory disease that can cause mild to severe illness. As of April 26, 2020, there were 2,804,796 cases worldwide with 193,710 deaths; in the United States there were 899,281 confirmed cases and 38,509 deaths (World Health Organization, 2020). Because the virus is highly contagious, it has spread widely throughout the world, with "hotspots" emerging in densely populated areas (e.g., New York City). According to the CDC, adults aged 65 and older are at particular risk of severe illness from COVID-19 and have a higher mortality rate than their younger counterparts (Centers for Disease Control and Prevention, 2020). Of the adults aged 65 and older in the U.S. with confirmed cases of COVID-19, 31–59% required hospitalization and 11–31% required admission to an intensive care unit. Eight out of ten deaths due to COVID-19 in the U.S. have been among older adults. Thus, worldwide efforts are underway to protect the public and "flatten the curve" of COVID-19 incidence, including social distancing, self-quarantine, and "stay at home" orders. Consequently, telehealth has become critical for maintaining access to medical care during this pandemic.

In response to the increased demand for health care delivered via telehealth, Medicare has relaxed some pre-existing regulations for telehealth services and will reimburse them at the same rate as in-person visits (Coronavirus Preparedness and Response Supplemental Appropriations Act, 2020). Similarly, the Department of Health and Human Services (HHS) has relaxed enforcement of HIPAA privacy rules such that a provider practicing in good faith can use any available non-public-facing remote communication product (Office for Civil Rights, 2020).

Diagnostic and interventional telehealth services have been well established for age-related cognitive decline and dementia, especially in underserved and rural communities. A recent review found good support for telehealth in the assessment and management of patients with Parkinson's disease (PD) and Alzheimer's disease (AD) (Adams, Myers, Waddell, Spear, & Schneider, 2020). Similarly, a recent systematic review found almost perfect correspondence between in-person and telehealth assessments when diagnosing AD via clinical interview. Telehealth may also be useful for early detection of mild cognitive impairment (MCI) and preclinical dementia (Costanzo et al., 2020).

Despite its growing support, neuropsychological assessment delivered via telehealth (i.e., teleneuropsychology; TNP) has not been used by the vast majority of practitioners. This may largely reflect the previous lack of reimbursement from Medicare and private insurers. Additionally, TNP assessments face several challenges and criticisms, including limited access to or familiarity with technology (e.g., high-speed internet, web cameras), the inability to perform "hands-on" portions of an assessment, and reduced opportunities for behavioral observation due to camera angles (Barton, Morris, Rothlind, & Yaffe, 2011; Brearly et al., 2017; Harrell, Wilkins, Connor, & Chodosh, 2014; Parikh et al., 2013; Turner, Horner, Vankirk, Myrick, & Tuerk, 2012). Most studies of TNP assessment take place in a satellite clinic, where a technician can set up and configure the equipment and necessary test stimuli. However, satellite-clinic TNP assessments may not be possible under appropriate social distancing practices, so the in-home model of TNP assessment may be favored instead. In-home TNP assessments occur in an uncontrolled environment where interruptions are more likely and the clinician has no control over test materials the patient produces, raising concerns for test security. In addition, there is concern that normative data, derived from standardized test procedures, may not be appropriate for TNP evaluations (Brearly et al., 2017). Given these concerns, some clinicians believe TNP evaluations may be unethical, especially for high-stakes evaluations (e.g., forensic and competency evaluations).
Finally, there is the ethical consideration of Justice: it is unclear whether all patients can be equally served via TNP given inadequate computer or internet access (e.g., among patients of low socioeconomic status) or disability (e.g., visual or hearing impairment), potentially exacerbating existing problems in the healthcare delivery system.

While there are notable concerns about TNP evaluations, proponents point to several benefits. First, patients and caregivers generally give positive feedback regarding these services (Barton et al., 2011; Harrell et al., 2014; Parikh et al., 2013; Turner et al., 2012). TNP assessments can also reach a wider population, including individuals who have restricted mobility or live far from the clinic (Brearly et al., 2017). During the ongoing pandemic, private insurers and Medicare are temporarily reimbursing telehealth visits at the same rate as in-person services (Coronavirus Preparedness and Response Supplemental Appropriations Act, 2020), allowing neuropsychologists to continue serving patients in their homes without the added risk of virus exposure. TNP may also foster connectedness with patients, many of whom need services and interpersonal contact. Finally, TNP may facilitate other medical treatment when stay-at-home orders are lifted.

With regard to TNP validity, some validity studies showed subtle differences in task-performance when comparing face-to-face (FTF) with TNP assessments (Cullum, Weiner, Gehrmann, & Hynan, 2006; Grosch, Weiner, Hynan, Shore, & Cullum, 2015; Hildebrand, Chow, Williams, Nelson, & Wass, 2004; Wadsworth et al., 2018; Wadsworth et al., 2016); though many other studies showed no such performance differences (Ciemins, Holloway, Coon, McClosky-Armstrong, & Min, 2009; DeYoung & Shenal, 2019; Galusha-Glasscock, Horton, Weiner, & Cullum, 2015; McEachern, Kirk, Morgan, Crossley, & Henry, 2008; Menon et al., 2001; Turkstra, Quinn-Padron, Johnson, Workinger, & Antoniotti, 2012; Vahia et al., 2015; Vestal, Smith-Olinde, Hicks, Hutton, & Hart, 2006). To evaluate potential performance differences between TNP and FTF assessments, Brearly and colleagues (2017) conducted a meta-analysis of 12 studies published between 1997 and 2016 (see Table 1). The included studies were counter-balanced cross-over designs (FTF, virtual) with adult patient samples (>17 years of age). Studies were excluded if active involvement of an assistant was required during testing (e.g., more than just showing a participant how to adjust volume).

Table 1.

Results of Brearly et al. (2017) Meta-Analysis

| Test | k | N | Hedges' g | Q | I² (%) |
|---|---|---|---|---|---|
| BNT or BNT-15 | 4 | 329 | −0.12*** | 1.76 | 0 |
| Semantic Fluency | 3 | 319 | −0.08 | 5.77 | 65.34 |
| Clock Drawing | 5 | 335 | −0.13 | 12.6 | 68.25 |
| Digit Span | 5 | 359 | −0.05 | 9.38 | 57.34 |
| List Learning (total) | 3 | 313 | 0.1 | 4.59 | 56.46 |
| MMSE | 7 | 380 | −0.4 | 3.36*** | 80.24 |
| Letter Fluency | 5 | 356 | −0.02 | 1.41 | 0 |
| Synchronous Dependent Tests | NR | NR | NRᵃ | 56.42*** | 82.28 |
| Non-Synchronous Dependent Test | NR | NR | −0.10*** | 12.99 | 38.43 |
| Overall Results | 12 | 497 | −0.03 | 55.67*** | 80.24 |

Note. Adapted from Brearly et al. (2017), published in Neuropsychology Review.

BNT = Boston Naming Test; MMSE = Mini Mental State Examination; NR = not reported. Synchronous refers to timed tests or single-trial tests where repetition could confound results (e.g., digit span).

Significance: * p < .05, ** p < .01, *** p < .001.

ᵃ Authors did not provide an effect size estimate due to significant between-study heterogeneity.

The 12 included studies comprised a total of 497 participants and patients. The overall effect size distinguishing TNP from FTF performance was small and non-significant (g = −0.03; SE = 0.03; 95% CI [−0.08, 0.02], p = .253). Across all 79 scores from included studies, 26 mean scores were higher for the videoconference condition (32.91%), 48 mean scores were higher for the FTF condition (60.76%), and five mean scores were identical in both conditions (6.33%). Further analysis showed a small but significant effect size (g = −0.10; SE = 0.03; 95% CI [−0.16, −0.04], p < .001) for timed tests or tests where a disruption of stimulus presentation may affect test results (e.g., digit span, list-learning tests), with TNP performance approximately one-tenth of a standard deviation lower than FTF performance. A similar magnitude of difference was found for the BNT-15 items (g = −0.12; SE = 0.03, p < .001). Finally, a moderator analysis showed no difference between FTF and virtual performance for adults aged 65–75 (g = 0.00, SE = 0.01, p = .162). Further moderator analyses were not interpreted due to significant heterogeneity between subgroups. The authors concluded that "the current findings did not reveal a clear trend towards inferior performance when tests were administered via videoconference. Consistent differences were found for only one test (BNT-15) and the effect size was small" (Brearly et al., 2017, p. 183).
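Hedges' g, the effect size reported throughout Brearly and colleagues' (2017) meta-analysis, is Cohen's d multiplied by a small-sample correction factor. The sketch below illustrates the calculation with the independent-groups pooled standard deviation; whether Brearly and colleagues used this form or a within-subject variant for cross-over data is an assumption not confirmed here, and the summary statistics are hypothetical. For tests where higher scores indicate worse performance (e.g., completion times or the ADAS-cog), the sign must be read in reverse.

```python
import math


def hedges_g(mean_tnp, sd_tnp, n_tnp, mean_ftf, sd_ftf, n_ftf):
    """Standardized mean difference (TNP minus FTF) with Hedges' small-sample correction.

    Uses the independent-groups pooled SD; when higher scores mean better
    performance, negative values indicate lower scores under videoconference.
    """
    df = n_tnp + n_ftf - 2
    pooled_sd = math.sqrt(((n_tnp - 1) * sd_tnp ** 2 + (n_ftf - 1) * sd_ftf ** 2) / df)
    d = (mean_tnp - mean_ftf) / pooled_sd  # Cohen's d
    correction = 1 - 3 / (4 * df - 1)      # Hedges' J = 1 - 3 / (4(n1 + n2) - 9)
    return correction * d


# Hypothetical summary statistics for one test (not taken from any reviewed study).
g = hedges_g(mean_tnp=26.0, sd_tnp=3.0, n_tnp=30, mean_ftf=26.3, sd_ftf=3.0, n_ftf=30)
print(f"g = {g:.3f}")
```

With a 0.3-point mean difference and an SD of 3, Cohen's d is −0.10; the correction factor shrinks it slightly toward zero, which matters most for the small samples typical of TNP validity studies.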

The meta-analysis conducted by Brearly and colleagues (2017) was a critical first step to demonstrate the relative validity and utility of TNP. While this review was quite useful in objectively and quantitatively demonstrating the utility of various neurocognitive assessments in the TNP environment, it lacked a qualitative analysis of the available validity data for each assessment. As such, it may be difficult to critically appraise the available validity evidence (e.g., sample size, demographic composition) of each test when selecting a test battery for TNP.

The current project is a limited systematic review that builds on the important work of Brearly and colleagues (2017). Using similar methodology, the present review provides an updated qualitative analysis and test-level data from each validity study. Given older adults' particular susceptibility to COVID-19, we limited our analysis to studies of adults aged 65 and older. While test-level validity data for various measures delivered via TNP were the primary objective of this systematic review, we also conducted a critical (non-systematic) review of the modality in which TNP services were delivered, as well as an appraisal of validity studies that included ethnic minority populations.

Methods

This review was conducted in accordance with Preferred Reporting Items for Systematic Review and Meta-analyses guidelines (PRISMA; Moher, Liberati, Tetzlaff, & Altman, 2009) and was pre-registered with PROSPERO, an international prospective register of systematic reviews (PROSPERO ID: 175521).

Article Search and Selection

For consistency, article search and selection largely mirrored the methodology used by Brearly and colleagues (see Brearly et al., 2017 for full details). Briefly, the same three internet databases (PubMed, EBSCO (PsycINFO), ProQuest) were searched for relevant articles using the terms "(tele OR remote OR video OR cyber) AND cognitive AND (testing OR assessment OR evaluation)."¹ Diverging from their methodology, additional specifiers were included to align with the aims of the present systematic review and identify studies involving older adults (ages 65+). In addition, the article search was limited to studies published after 1/1/2016, the endpoint of Brearly and colleagues' (2017) article search.

Articles were included if the average age of the study sample was 65 or greater and neuropsychological assessments were conducted with a counter-balanced cross-over design where participants were assessed FTF and via videoconference. Articles were excluded if inferential statistics for test-level data were not included, if participants required significant in-person assistance from a technician or test administrator, or if studies “utilized proprietary software or hardware specifically designed for test administration (e.g., touchscreen kiosks, mobile applications).” (Brearly et al., 2017, pg. 176). Studies from Brearly’s analysis were also included in the following qualitative analysis when the average age of participants was 65+.

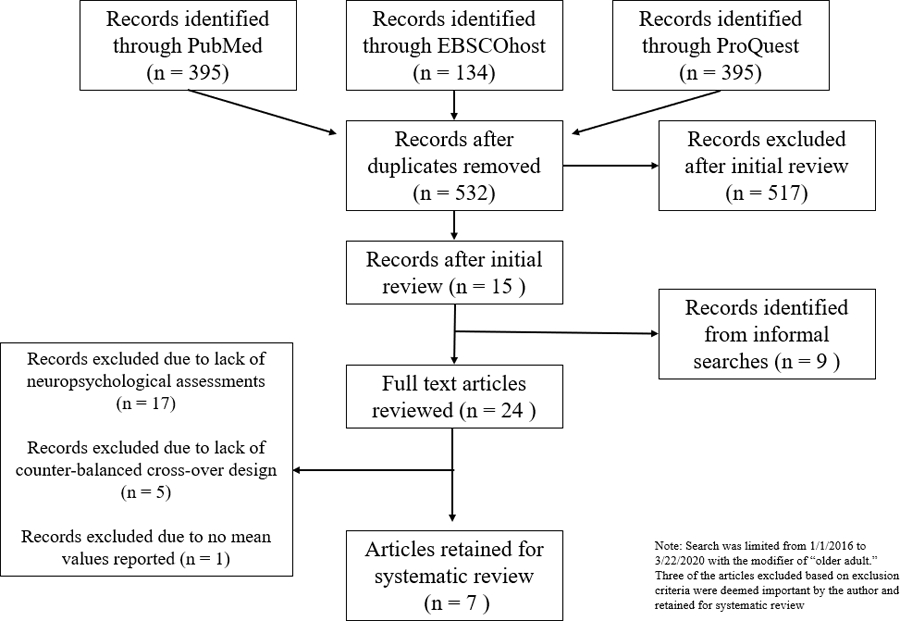

Article extraction took place on 3/21/2020. A total of 591 articles were extracted across the three internet databases, with 532 remaining after duplicates were removed. Nine additional articles were identified for review from informal searches and from the reference sections of published articles. Titles and abstracts were reviewed by the primary author for potential inclusion. The initial screening process was managed with the open-source software abstrackr (Wallace, Small, Brodley, Lau, & Trikalinos, 2012). Fifty-four of the 532 abstracts (10%) from the initial screening were double-coded by the primary author to establish reliability, which was perfect (κ = 1.00, p < .0001). After initial review, 24 articles were selected for full-text review. Seven unique articles were included after full-text review (see Figure 1).
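The screening reliability check compares two coding passes over the same abstracts. Cohen's kappa contrasts observed agreement with the agreement expected by chance from each pass's marginal proportions. A minimal pure-Python sketch, assuming include/exclude decisions coded as 1/0; the decisions shown are hypothetical, and no particular abstrackr export format is assumed. The p-value reported above is not computed here.

```python
from collections import Counter


def cohens_kappa(codes_a, codes_b):
    """Cohen's kappa for two sets of categorical codes assigned to the same items."""
    if len(codes_a) != len(codes_b) or not codes_a:
        raise ValueError("need two equally long, non-empty lists of codes")
    n = len(codes_a)
    observed = sum(a == b for a, b in zip(codes_a, codes_b)) / n
    freq_a, freq_b = Counter(codes_a), Counter(codes_b)
    # Chance agreement from the marginal proportions of each coding pass.
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / n ** 2
    if expected == 1:
        return float("nan")  # only one category was ever used; kappa is undefined
    return (observed - expected) / (1 - expected)


# Hypothetical include (1) / exclude (0) decisions from two coding passes.
first_pass = [1, 0, 0, 1, 0, 0, 0, 1]
second_pass = [1, 0, 0, 1, 0, 0, 0, 1]
print(cohens_kappa(first_pass, second_pass))
```

Because kappa subtracts chance agreement, a screening set in which nearly every abstract is excluded can show high raw agreement but a much lower kappa; perfect agreement on both categories, as in the example, yields κ = 1.00.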

Figure 1.

Flowchart of Study Selection

Three articles did not meet the full inclusion/exclusion criteria outlined by Brearly et al. (2017) but were included because the authors felt they were informative to the systematic review. In two of these studies (Abdolahi et al., 2016; Stillerova, Liddle, Gustafsson, Lamont, & Silburn, 2016) the cross-over design was not counterbalanced; that is, participants completed FTF assessments followed by remote assessments. However, these studies were retained because the study samples included participants with movement disorders and, uniquely, the assessments were conducted in participants' homes. A third study (Vahia et al., 2015) provided inferential statistics for the overall analyses but did not provide test-level data and inferential statistics. However, this study was retained because it included an exclusively Hispanic sample, with tests administered in Spanish.

After full-text review, 10 studies published since 2016 were retained for systematic review. Nine articles from Brearly and colleagues' (2017) analysis were also included for qualitative review. In sum, a total of 19 articles were included in the systematic review.

Study Quality and Risk of Bias

PRISMA guidelines suggest an analysis of study quality and risk of bias. However, checklists to assess these domains, such as the Cochrane Review Checklist, are ill-equipped for assessing bias in cross-over designs (Brearly et al., 2017; Ding et al., 2015). An alternate checklist for cross-over designs was proposed by Ding and colleagues (2015). However, this checklist is problematic because neither clinicians nor study participants can be blinded to condition (FTF vs. TNP). Based on the items that could be applied to the studies in this review, almost all studies were deemed to be of moderate to high quality, given the requirement that all included studies be counterbalanced cross-over designs with all outcomes reported. The three studies described immediately above (Abdolahi et al., 2016; Stillerova et al., 2016; Vahia et al., 2015) were of lower quality due to a lack of counterbalancing in the cross-over design and failure to report all outcomes.

Publication Bias

Publication bias arises when studies with larger effect sizes are more likely to be published, whereas studies with null findings or smaller effect sizes are less likely to be published (i.e., the file-drawer effect). However, as noted by Brearly and colleagues (2017), the risk of publication bias is likely low in this literature, because small differences between FTF and TNP performance are the finding that supports TNP validity, so authors are more likely to publish articles in which effect sizes are small. Nonetheless, a quantitative analysis of the effect sizes from Brearly and colleagues' (2017) study (9 of the 19 articles in the current review) showed no evidence of bias (symmetry around the funnel plot; Kendall's tau-b = −.227, p = .304). Formal assessment of publication bias (e.g., funnel plot, Egger's test) was not conducted for the present review, as effect sizes were not calculated here; they were qualitatively reviewed only when provided by study authors.
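The funnel-plot symmetry check cited above rests on a rank correlation between study effect sizes and their variances. The sketch below follows Begg and Mazumdar's rank-correlation approach, under the assumption (not confirmed by the source) that this is the procedure behind the reported Kendall's tau-b: each effect is standardized against the fixed-effect pooled estimate and then rank-correlated with its variance. It returns only the tau-b statistic, not its p-value, and the effect sizes and variances are hypothetical.

```python
import math
from itertools import combinations


def kendall_tau_b(x, y):
    """Kendall's tau-b rank correlation, with the standard correction for ties."""
    concordant = discordant = tied_x_only = tied_y_only = 0
    for (x1, y1), (x2, y2) in combinations(zip(x, y), 2):
        dx, dy = x1 - x2, y1 - y2
        if dx == 0 and dy == 0:
            continue  # tied on both variables: excluded from both denominator terms
        if dx == 0:
            tied_x_only += 1
        elif dy == 0:
            tied_y_only += 1
        elif dx * dy > 0:
            concordant += 1
        else:
            discordant += 1
    # Each factor counts the pairs that are not tied on the corresponding variable.
    denom = math.sqrt((concordant + discordant + tied_y_only) *
                      (concordant + discordant + tied_x_only))
    return (concordant - discordant) / denom if denom else float("nan")


def begg_rank_correlation(effects, variances):
    """Begg-Mazumdar style statistic: tau-b between standardized effects and variances."""
    weights = [1 / v for v in variances]
    pooled = sum(w * t for w, t in zip(weights, effects)) / sum(weights)  # fixed-effect mean
    pooled_var = 1 / sum(weights)
    # Variance of (t_i - pooled) is v_i - pooled_var, which is positive when k >= 2.
    standardized = [(t - pooled) / math.sqrt(v - pooled_var)
                    for t, v in zip(effects, variances)]
    return kendall_tau_b(standardized, variances)


# Hypothetical Hedges' g values and sampling variances for five studies.
effects = [-0.12, -0.05, 0.10, -0.02, -0.20]
variances = [0.010, 0.020, 0.015, 0.030, 0.050]
print(round(begg_rank_correlation(effects, variances), 3))
```

A tau-b near zero means small (high-variance) studies do not systematically report larger or smaller effects than precise studies, which is the pattern expected when the funnel plot is symmetric.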

Assessing Teleneuropsychology Validity

The validity of TNP was assessed via several facets. The first was the authors' report of mean performance differences across testing environments (FTF vs. TNP). Effect sizes (Cohen's d and Hedges' g, which measure the standardized difference between two means, and Pearson's r) were interpreted according to conventions established by Cohen (1988) (i.e., Cohen's d and Hedges' g of .20 = small, .50 = medium, .80 = large; Pearson's r of .10 = small, .30 = medium, .50 = large). The absolute-agreement intraclass correlation coefficient (ICC), a metric of test-retest reliability (Koo & Li, 2016), was used to describe the validity of TNP testing performance relative to FTF performance. ICC was interpreted based on conventions established by Cicchetti (1994) (i.e., ICC 0–.39 = poor, .40–.59 = fair, .60–.74 = good, .75–1.00 = excellent). Like the ICC, Cohen's kappa measures agreement, but for categorical ratings, and it corrects for agreement that may have occurred by chance (Cohen, 1988). Interpretation of Cohen's kappa was based on conventions established by Cohen (1988) (i.e., kappa 0–.20 = none, .21–.39 = minimal, .40–.59 = weak, .60–.79 = moderate, .80–.90 = strong, > .90 = almost perfect). Finally, the Bland-Altman method, which plots the difference between two assessment methods against their mean, assesses systematic bias through the mean difference between methods (Bland & Altman, 1986). This method yields 95% limits of agreement (the mean difference ± 1.96 standard deviations of the differences). If the 95% limits of agreement include 0, then there is no evidence of systematic bias favoring performance in FTF or TNP.
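The agreement statistics described above can be computed from paired FTF and TNP scores roughly as in the sketch below. It assumes complete paired data for each participant and uses McGraw and Wong's two-way, absolute-agreement, single-measure ICC, ICC(A,1); individual studies in this review may have used other ICC forms. Many Bland-Altman analyses also report a 95% confidence interval around the mean difference, so the sketch returns that interval alongside the limits of agreement, using a normal approximation. The scores are hypothetical.

```python
import math
from statistics import mean, stdev


def icc_absolute_agreement(ftf, tnp):
    """Two-way, single-measure, absolute-agreement ICC (McGraw & Wong's ICC(A,1)), k = 2."""
    n, k = len(ftf), 2
    rows = list(zip(ftf, tnp))
    grand = mean(list(ftf) + list(tnp))
    ss_rows = k * sum((mean(r) - grand) ** 2 for r in rows)        # between participants
    ss_cols = n * sum((mean(c) - grand) ** 2 for c in (ftf, tnp))  # between modalities
    ss_total = sum((x - grand) ** 2 for r in rows for x in r)
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = (ss_total - ss_rows - ss_cols) / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n)


def cicchetti_label(icc):
    """Map an ICC onto the Cicchetti (1994) bands used in this review."""
    if icc < 0.40:
        return "poor"
    if icc < 0.60:
        return "fair"
    if icc < 0.75:
        return "good"
    return "excellent"


def bland_altman(ftf, tnp):
    """Mean TNP - FTF difference (bias), 95% limits of agreement, and a 95% CI for the bias."""
    diffs = [t - f for f, t in zip(ftf, tnp)]
    bias, sd = mean(diffs), stdev(diffs)
    limits = (bias - 1.96 * sd, bias + 1.96 * sd)
    half_width = 1.96 * sd / math.sqrt(len(diffs))  # normal approximation; a t quantile is more exact
    return bias, limits, (bias - half_width, bias + half_width)


# Hypothetical MMSE scores for six participants tested in both modalities.
ftf_scores = [28, 24, 29, 21, 26, 30]
tnp_scores = [27, 25, 29, 20, 26, 29]
icc = icc_absolute_agreement(ftf_scores, tnp_scores)
print(round(icc, 3), cicchetti_label(icc), bland_altman(ftf_scores, tnp_scores))
```

Under absolute agreement, a constant offset between modalities lowers the ICC even when participants keep the same rank order (scores of 1 to 4 versus 2 to 5 give an ICC of about .77 rather than 1), which is the relevant property when asking whether TNP scores can be interpreted like FTF scores.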

Each measure was qualitatively judged to have strong, moderate, or limited/insufficient evidence of TNP validity based on a tiered review of: 1) the number of available validity studies for the measure and the between-study agreement; and 2) the sample size and diagnostic characteristics of those validity studies. That is, a measure was considered to have strong evidence of TNP validity if there were multiple validity studies, some with large sample sizes and diagnostically diverse patient populations, that showed relatively good between-study agreement. A measure was considered to have moderate evidence of TNP validity if there were multiple validity studies with some between-study variability, or a few large studies with diagnostically diverse patient populations that showed good between-study agreement. Finally, a measure was considered to have limited/insufficient evidence of TNP validity if there were few validity studies with small sample sizes or little diagnostic diversity, or if there was extreme between-study variability.

Results

Study Characteristics

Diagnostic Groups.

The 19 TNP validity studies represent a wide range of diagnostic samples. Diagnostic groups included participants with movement disorders (Abdolahi et al., 2016; Stillerova et al., 2016), stroke/cerebrovascular accident (Chapman et al., 2019), psychiatric diagnoses (Grosch et al., 2015), and mild cognitive impairment (MCI) or Alzheimer's disease (AD) (Carotenuto et al., 2018; Cullum et al., 2006; Loh, Donaldson, Flicker, Maher, & Goldswain, 2007; Vestal et al., 2006). Other samples mixed healthy controls (HC) with patients with psychiatric conditions or memory disorders (Cullum, Hynan, Grosch, Parikh, & Weiner, 2014; Lindauer et al., 2017; Loh et al., 2004; Montani et al., 1997; Wadsworth et al., 2018; Wadsworth et al., 2016). Of the 930 study participants across the 19 validity studies, n = 410 (44.09%) were healthy controls, n = 359 (38.60%) were patients with memory disorders, n = 28 (3.01%) were patients with movement disorders, n = 78 (8.39%) were patients with stroke/cerebrovascular accident, n = 30 (3.22%) were psychiatric patients, and n = 34 (3.65%) were patients from mixed clinical groups with no further diagnostic differentiation.

Diagnosis was a potential confound to TNP validity in only one study: Abdolahi and colleagues (2016) found the psychometric properties of the Montreal Cognitive Assessment (MoCA) to be relatively poor in a group of participants with PD (ICC = .37; Cronbach's alpha = .54; Pearson's r = .37) compared to a group with Huntington's disease (HD) (ICC = .65; Cronbach's alpha = .79; Pearson's r = .65). However, the sample size of this study was small (n = 8 PD; n = 9 HD), the interval before follow-up assessment differed between groups (7 months PD vs. 3 months HD), and the cross-over design was not counterbalanced. Finally, the authors did not control for the time of day of assessment, potentially introducing variability related to the timing of dopaminergic medication. In contrast, a study with similar methodology and PD participants found relatively good reliability, with a median difference score of only 2 (IQR = 1.0–2.5) out of 30 points (Stillerova et al., 2016). Thus, with this minor exception, diagnosis does not seem to affect TNP validity (see Disease Severity).

Cultural and Racial Groups.

Seven of the included studies were conducted in countries outside of the United States. One study was conducted in Italy (Carotenuto et al., 2018), three in Australia (Loh et al., 2007; Loh et al., 2004; Stillerova et al., 2016), one in Korea (Park, Jeon, Lee, Cho, & Park, 2017), one in Canada (Hildebrand et al., 2004), and one in Japan (Yoshida et al., 2019).

Studies conducted in the United States underrepresented ethnic minorities. However, two studies included samples that were 100% ethnic minorities. Vahia and colleagues (2015) conducted a study with monolingual and bilingual Hispanics, with testing completed exclusively in Spanish. Wadsworth and colleagues (2016) conducted a validity study with a sample of American Indians. In the studies with mixed demographic compositions, non-Hispanic Caucasians were overrepresented, with little representation of Hispanics or other ethnic minorities; African Americans had little or no representation in these validity studies.

Teleneuropsychology Test Validity²

Cognitive Screeners.

The brief cognitive screener with the most support is the Mini Mental State Examination (MMSE), with nine of the ten studies reporting no mean differences in test scores when comparing TNP to FTF administration (see Table 2). In the one study where mean differences were observed, the effect size was small-to-medium (g = −.40, p < .001), but scores in the two testing modalities were strongly correlated (r = .95) (Montani et al., 1997). Reliability statistics were generally excellent (ICC ranged from .42 to .92; Pearson's r ranged from .90 to .95). The strongest support comes from Cullum and colleagues (2014), whose large sample of MCI/AD patients (n = 83) and healthy controls (n = 119) showed excellent reliability (ICC = .798). The MMSE was also valid during longitudinal assessments. Carotenuto and colleagues (2018) conducted serial MMSE assessments at baseline, 6, 12, 18, and 24 months in a sample of 28 Italian patients with AD. Across the whole AD group, they found no mean differences in performance across testing modalities at any time point. Taken together, the MMSE appears to be a valid telehealth measure for screening cognitive status across different clinical populations and also has utility as a longitudinal assessment measure.

Table 2.

Brief Tests of Global Cognitive Functioning

| Test Name | Study | Population Characteristics | Video Modality | Connection Speed | Delay Between Assessments | Findings | Administration Notes |

|---|---|---|---|---|---|---|---|

| MoCA | Lindauer (2017) | N = 66, n = 33 AD, n =33 caretakers; Mage AD = 71.6 (SD = 11.6); 61% Female; “mostly” Caucasian | Using their computer and a camera via Cisco’s Jabber TelePresence platform; iPads loaned if patients did not have a computer | - | 2 weeks | ICC = 0.93 (excellent) | Visuospatial materials enlarged and mailed to patient; patient held up stimuli to camera for scoring |

| Abdolahi (2018)a | N = 17 PD/HD patients; Mage = 61.18, 70.61% Female | Patient’s own in-home equipment | High Speed | 7 months for PD patients; 3 months for HD patients | Slight, but non-significant increase in scores from FTF to VC, moreso in PD group. ICC = .59 (good), Cronbach’s alpha = .74 (adequate), Pearson’s r = .59 (strong) | Visuosptial and naming sub-section emailed to patient | |

| Chapman (2019) | N = 48 stroke survivors from Australia; Mage = 64.6 (SD = 10.1), Meducation = 13.7 (SD = 3.3); 46% Female | Two laptops, provided by researchers, located in separate rooms at the same location; ideoconference sessions were conducted using the cloud-based videoconferencing Zoom | 384 kbit/s | 2 weeks | No difference in total scores across testing modalities (t(47) = .44, p - .658, d = 0.06); similar findings across MoCA domain scores. ICC = .615 (good); 72.9% of participants classified consistently across conditions (normal vs. impaired). Neither age, computer literacy, or hospital depression/anxiety predicted difference scores between conditions | A MoCA response form including only the visuospatial/executive and naming items was in an envelope at the participant’s location. | |

| Stillerova (2016) a | N = 11 Austrailian patients with PD; Median age = 69; 36% Female | Patient’s own devices using Skype or Google+ Hangouts; Completed at their own home | - | 7 days | Median difference in scores was 2.0 points out of 30 (IQR = 1.0–2.5); 2 patients changed from ‘impaired’ to ‘normal’ from FTF to videoconference, 1 patients went from ‘normal’ to ‘impaired’ | Non-randomized design (FTF then Videoconference); Patients given a sealed envolope with the visual stimuli; | |

|

| |||||||

| MMSE | Cullum (2014) * | N = 202, n = 83 MCI/AD, n = 119 healthy controls; Mage = 68.5 (SD = 9.5), Meducation = 14.1 (SD = 2.7); 63% Female | H.323 PC - Based Videoconferencing System (Polycom™ iPower 680 Series) that was set up in two non-adjacent rooms | High Speed | Same Day | Similar mean scores between two testing modalities. ICC = .798 (excellent) | The visuospatial measures were scored via the television monitor by asking participants to hold up their paper in front of camera |

| Cullum (2006) * | N = 33, n = MCI, n = AD; Mage = 73.5 (SD = 6.9), Meducation = 15.1 (SD = 2.7); 33% Female; 97% Caucasian | An H.323 PC-based Videoconferencing System was set up in two nonadjacent rooms using a Polycom iPower 680 Series videoconferencing system (2 units) | High Speed | Same Day | Similar mean scores between two testing modalities. ICC = .88 (excellent) | The visuospatial measures were scored via the television monitor by asking participants to hold up their paper in front of camera | |

| Loh (2004) * | N = 20 Mixed Clinical Sample from Australia; Mage = 82; 80% Female | VCON cruiser, version 4.0, videoconferencing unit with Sony D31 PTZ camera; used onsite | 128–384 kb/s | - | Similar mean scores between two testing modalities. Correlation = .90. Limits of agreement = −4.6 – 4.0; Reliability increased when 4 patients with delerium were removed from analyses | ||

| Montani (1997) * | N = 14 Mixed rehabilitation; Mage = 88 (SD = 5), 46.7% Female | Camera, television screen, and microphone in an adjacent room | Coaxial | 8 days | Small, but significant difference between testing modalities with better FTF performance (p = .003); strong correlation of scores between testing modalities (r = .95) | ||

| Loh (2007) * | N = 20 Memory Disorder Sample from Australia; Mage = 79; 55% Female | PC-based videoconferencing equipment (Cruiser, version 4, VCON). Conducted on-site | 384 kbit/s | - | Similar mean scores between two testing modalities. ICC = .89 (excellent). Limits of agreement = −1.89 – 0.04). Kappa of physicians making AD diagnosis face-to-face and in person = .80 (p < 0.0001) | ||

| Carotenuto (2018) | N = 28 AD patients from Italian Memory Disorders Clinic; Mage = 75.39; Meducation = 7.61 (SD = 4.07); 71% Female | Sony VAIO laptops contained an IntelCore Duo CPU P8400 2.26 GHz processor, 4 GB memory, Intel Media Accelerator X3100 graphics card, and a 17.3″ LCD LED (1920×1080) integrated screen. Completed at the hospital | 100 Mbit/s | Video and FTF done at baseline, 6, 12, 18, and 24 months. Video and FTF assessments done 2 weeks apart | No mean differences in performance across testing modalities at any timepoint (p > .05); Video performance lower (worse) than FTF at baseline and 24 months for severe patients (MMSE 15–17), but not moderate (MMSE = 18–20) or slight (MMSE = 21–24) AD patients | ||

| Park (2017) | N = 30 Korean patients with stroke, Mage = 68.83 (SD = 12.95), Meducation = 11.70 (SD = 5.38); 66.6% Female | martphone (iPhone 5S) with communication via FaceTime; assessment conducted in adjacent room | 100 Mbit/s | 3 days | No significant differences in scores between testing modalities for Total Score (Z = −1.574, p = .116) or across subdomains (all p > .05). Spearman correlation significant (.949, p < .001). No significant differences between patitents with mild aphasia or dysarhtira (n = 11, p = .039) or patients with cognitive defict (MMSE < 25; n = 4, p = 0.104) | ||

| Vahia (2015) a | N = 22 spanish-speaking Hispanics living in US who were referred for cognitive testing by psychiatrist; Mage = 70.75, Meducation = 5.54 ; 22.75% Female; 0% Caucasian; 100% Hispanic | CODEC (coder-decoder) capable of simultaneously streaming video and content (i.e., laptop screen) on side by side monitors, remotely controlled Pan Tilt and Zoom cameras, a tablet PC laptop, videoconference microphone; and dual 26 inch LCD TVs | 512 kbit/s | 2 weeks | Using mixed-effects models, no significant difference between testing modalities on overall Cognitive Composite score derived from entire test battery (F(1,37) = .31, p =.0579), with slightly better (but not significantly better) performance at second testing session, irrespective of modality. No significant difference in MMSE scores (differences in test scores across testing modalities not provided) | Administered in Spanish | |

| Wadsworth (2016) * | N = 84, n = 29 MCI/dementia, n = 55 HC; Mage = 64.89 (SD = 9.73), Meducation = 12.58 (SD = 2.35); 63% Female; 0% Caucasian; 100% American Indian | Polycom iPower 680 series videoconferencing system in same facility | High Speed | Same Day | No differences in mean performance. ICC = .92 (excellent) | Subjects were asked to hold up their drawing to the camera so that the examiner could score it immediately | |

| Grosch (2015) * | N = 8 geropsychiatric VA patients; 12.5% Female | An H.323 PC-based Videoconferencing System at VAMC | 384 kb/s | Same Day | No difference in mean test score across conditions. ICC was non-significant and just above “poor” (ICC = .42) | After completing the MMSE pentagons and Clock Drawings, subjects were asked to hold their paper in front of the camera so as to allow scoring by the examiner via television monitor. | |

|

| |||||||

| ADAS-cog | Carotenuto (2018) | N = 28 AD patients from Italian Memory Disorders Clinic; Mage = 75.39; Meducation = 7.61 (SD = 4.07); 71% Female | Sony VAIO laptops contained an Intel Core Duo CPU P8400 2.26 GHz processor, 4 GB memory, Intel Media Accelerator X3100 graphics card, and a 17.3″ LCD LED (1920×1080) integrated screen. Completed at the hospital | 100 Mbit/s | Video and FTF done at baseline, 6, 12, 18, and 24 months. Video and FTF assessments done 2 weeks apart | No mean differences in performance across testing modalities at any timepoint (p > .05); Video performance worse (higher) than FTF at all timepoints for severe patients (MMSE 15–17), but not moderate (MMSE = 18–20) or slight (MMSE = 21–24) AD patients | |

| Yoshida (2019) | N = 73, n = 34 MCI/AD, n = 48 HC; Mage = 76.3 (SD = 7.6), Meducation = 13; 50.7% Female; 100% Japanese sample | Cisco TelePresence System EX60, DX80, SX20 and Roomkit in an adjacent examiner room | High Speed | 2 weeks to 3 months | ICC = .86 for whole sample (excellent); ICC (MCI) = .63 (good); ICC (dementia) = .80 (excellent); ICC (HC) = .74 (good) | ||

|

| |||||||

| RBANS | Galusha-Glassock (2016) * | N = 18, n = 11 MCI/AD, n = 7 HC; Mage = 69.67 (SD = 7.76), Meducation = 14.28 (SD = 2.76), MMMSE = 26.72 (SD = 2.89); 38.8% Female; 78% Caucasian | Polycom iPower 680 series videoconferencing system in two nonadjacent rooms in the same facility | High Speed | Same Day | Mean scores for RBANS Total and all index scores were statistically similar across testing modalities. ICC for Total Score = .88 (excellent). ICC for the Visuospatial/Constructional index was fair (ICC = .59); ICCs for all other index scores were excellent (ICC range .75 to .90) | Examiner held up the stimulus in front of the camera for RBANS Figure Copy, Line Orientation, Picture Naming, and Coding. Blank paper and a pen were available in the testing room for the participant, as was a copy of the Coding sheet from the test protocol. |

Notes.

Studies marked with an asterisk (*) are from Brearly et al. (2017).

= not a counter-balanced cross-over design (FTF then videoconference administration)

The Montreal Cognitive Assessment (MoCA) was used in four validity studies, although, as described above, two of them (Abdolahi et al., 2018; Stillerova et al., 2016) did not use a counter-balanced cross-over design. Nonetheless, the psychometrics appear sound, with generally strong reliability metrics (ICCs ranged from .59 to .93) and no study finding mean TNP vs. FTF differences. In a study of 48 stroke survivors, neither age, computer literacy, nor self-reported anxiety/depression predicted differences in scores between testing conditions (Chapman et al., 2019), suggesting that these individual factors may not account for TNP vs. FTF differences. Taken together, although only two of the four validity studies used counter-balanced cross-over designs, there appears to be good support for the validity of the MoCA in TNP assessments.

Two validity studies used the Alzheimer’s Disease Assessment Scale – Cognitive Subscale (ADAS-cog): one with a mixed sample of AD, MCI, and HC participants (Yoshida et al., 2019) and one two-year longitudinal study of AD patients from Italy (Carotenuto et al., 2018). Yoshida and colleagues reported excellent reliability metrics (ICC = .86). Similarly, Carotenuto and colleagues (2018) found no mean differences in performance at any time point (baseline, 6, 12, 18, and 24 months) for the whole group of AD patients. Although there are only two validity studies, one had a relatively large sample (N = 73) and the other showed relatively good validity for mild-to-moderate AD patients across five assessment periods.

A single study used the Repeatable Battery for the Assessment of Neuropsychological Status (RBANS; Galusha-Glassock et al., 2016). In this mixed sample of MCI, AD, and HC participants (N = 18), the average RBANS Total score and all index scores were statistically similar across testing environments. The ICC for the Total score was excellent (ICC = .88). Reliability of the Visuospatial/Constructional index score was fair (ICC = .59), whereas the ICC for every other index score was excellent (ICC range .75 to .90). Notably, however, the record form for the Coding subtest was left with the participant, who was assessed in a non-adjacent room of the same facility. Administering the full RBANS may therefore be impractical when the patient is assessed from home, unless materials are mailed to the patient before the appointment. Given the small sample of this single validity study and the difficulty of getting the appropriate record forms to patients, there is limited support for the validity of the RBANS in TNP assessments. Instead, it may be beneficial to use select RBANS subtests (e.g., Line Orientation) to supplement a neurocognitive assessment.
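The qualitative ICC labels used throughout these tables (poor, fair, good, excellent) appear consistent with the widely used cutoffs of Cicchetti (1994): below .40 poor, .40 to .59 fair, .60 to .74 good, and .75 or above excellent. That mapping is our assumption, not a rule stated by every reviewed study. The minimal sketch below applies those cutoffs to the RBANS values reported by Galusha-Glassock and colleagues (2016); `classify_icc` is a hypothetical helper, not part of any published scoring tool.

```python
def classify_icc(icc: float) -> str:
    """Label an ICC with Cicchetti-style cutoffs (assumed mapping; see text)."""
    if icc > 1.0:
        raise ValueError("an ICC cannot exceed 1.0")
    if icc < 0.40:
        return "poor"  # includes negative ICCs
    if icc < 0.60:
        return "fair"
    if icc < 0.75:
        return "good"
    return "excellent"


# ICCs reported for the RBANS (Galusha-Glassock et al., 2016)
rbans_iccs = {"Total": 0.88, "Visuospatial/Constructional": 0.59}
for index_name, icc in rbans_iccs.items():
    print(f"{index_name}: ICC = {icc:.2f} -> {classify_icc(icc)}")
```

Applied to other values in the tables, the same cutoffs reproduce the labels given there (e.g., .58 fair, .72 good, .78 excellent), which is why they are a reasonable working assumption.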

Intelligence.

Only one study (see Table 3) assessed intellectual functioning, using the Matrix Reasoning and Vocabulary subtests of the Wechsler Adult Intelligence Scale – Third Edition (WAIS-III; Hildebrand et al., 2004). This relatively small sample (N = 29) consisted of healthy controls (HC) from Canada. There were no TNP vs. FTF differences in mean scores on either subtest. Furthermore, the limits of agreement did not suggest performance bias in either domain (95% limits of agreement, Matrix Reasoning: −4.56 to 6.08; Vocabulary: −3.07 to 3.13). Although measures of intellectual functioning are an instrumental part of a neuropsychological assessment, research in this domain is lacking, and there is presently limited support for the telehealth validity of such measures, especially in clinical samples.
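Limits of agreement of the kind reported by Hildebrand and colleagues (2004) come from the Bland-Altman approach: the mean of the paired differences (the bias) plus or minus 1.96 times the standard deviation of those differences. The sketch below assumes differences are computed as TNP minus FTF and uses made-up scores purely for illustration; they are not data from any reviewed study, and `limits_of_agreement` is a hypothetical helper.

```python
from statistics import mean, stdev


def limits_of_agreement(ftf, tnp, z=1.96):
    """Bland-Altman bias and 95% limits of agreement for paired scores.

    Differences are taken as TNP minus FTF (an assumed sign convention).
    """
    if len(ftf) != len(tnp) or len(ftf) < 2:
        raise ValueError("need two equal-length score lists with at least 2 pairs")
    diffs = [t - f for f, t in zip(ftf, tnp)]
    bias = mean(diffs)   # systematic difference between modalities
    sd = stdev(diffs)    # sample SD of the paired differences
    return bias, bias - z * sd, bias + z * sd


# Hypothetical paired scores for five examinees (illustration only)
ftf_scores = [10, 12, 9, 14, 11]
tnp_scores = [11, 11, 9, 13, 12]
bias, lower, upper = limits_of_agreement(ftf_scores, tnp_scores)
print(f"bias = {bias:.2f}; 95% LoA = {lower:.2f} to {upper:.2f}")
```

A bias near zero with limits that straddle zero is consistent with no systematic advantage for either modality; how wide the limits can be before they matter clinically remains a separate judgment.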

Table 3.

Test of Intelligence

| Test Name | Study | Population Characteristics | Video Modality | Connection Speed | Delay Between Assessments | Findings | Administration Notes |

|---|---|---|---|---|---|---|---|

| Matrix Reasoning | Hildebrand (2004) * | N = 29 HC from Canada, Mage = 68 (SD = 8), Meducation = 13 (SD = 3), MMMSE = 28.9 (SD = 1.3); 73% Female | Videoconferencing systems (LC5000, VTEL) with two 81 cm monitors and far-end camera control. Conducted on-site | 336 kbit/s | 2–4 weeks apart | No difference in mean score across conditions (limits of agreement −4.56 to 6.08) | Pictures of the visual test material were presented using a document camera (Elmo Visual Presenter, EV400 AF). |

|

| |||||||

| Vocabulary | Hildebrand (2004) * | N = 29 HC from Canada, Mage = 68 (SD = 8), Meducation = 13 (SD = 3), MMMSE = 28.9 (SD = 1.3); 73% Female | Videoconferencing systems (LC5000, VTEL) with two 81 cm monitors and far-end camera control. Conducted on-site | 336 kbit/s | 2–4 weeks apart | No difference in mean score across conditions (limits of agreement −3.07 to 3.13) | |

Notes.

Studies marked with an asterisk (*) are from Brearly et al. (2017).

Attention/Working Memory.

Six unique validity studies used the Digit Span task (Digit Span Forward, Digit Span Backwards, Digit Span Total; see Table 4). These studies included minority samples (American Indians, Hispanics) and different diagnostic groups (MCI, AD, HC, psychiatric samples). For Digit Span Forward, three of the four studies found no TNP vs. FTF difference in mean performance. The one study that did find a significant difference (Wadsworth et al., 2016) reported a small effect size favoring FTF performance. Of similar concern, the largest validity study (Cullum et al., 2014), consisting of 202 MCI, AD, and HC participants, reported only fair validity statistics (ICC = .590). In this study, the authors used alternate versions of the digit span task to reduce practice effects, though they did not indicate which versions were used. They acknowledged that “it is possible that our choice of alternate digit strings resulted in lower correlations, and other versions (e.g., WAIS-4, RBANS) may show higher correlations” (Cullum et al., 2014, p. 6). By contrast, another large study (N = 197 AD, MCI, HC) found no main effect of TNP vs. FTF administration after controlling for age, education, gender, and depression, with a very small effect size for the clinical group (d = .007; Wadsworth et al., 2018). Thus, there appears to be moderate evidence of validity for using Digit Span Forward in TNP assessments.
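When a study reports only a test statistic, a rough standardized effect size can be recovered from it. For a paired (within-subject) comparison, Cohen's d_z equals |t| divided by the square root of the number of pairs. The sketch below applies that conversion to the Wadsworth et al. (2016) Digit Span Forward result in Table 4 (|t| = 2.98, N = 84), assuming the reported statistic is a paired t-test over all 84 participants. The value it produces is our illustration, not the effect size the authors reported, which may use a different standardizer; `cohens_dz_from_paired_t` is a hypothetical helper.

```python
import math


def cohens_dz_from_paired_t(t: float, n: int) -> float:
    """Standardized mean difference for a paired design: d_z = |t| / sqrt(n)."""
    if n < 2:
        raise ValueError("n must be at least 2")
    return abs(t) / math.sqrt(n)


# Wadsworth et al. (2016), Digit Span Forward: |t| = 2.98, N = 84 (assumed paired t)
dz = cohens_dz_from_paired_t(2.98, 84)
print(f"d_z = {dz:.2f}")  # prints: d_z = 0.33
```

Because the helper takes the absolute value of t, the sign convention used for the difference score does not affect the magnitude.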

Table 4.

Tests of Attention/Working Memory

| Test Name | Study | Population Characteristics | Video Modality | Connection Speed | Delay Between Assessments | Findings | Administration Notes |

|---|---|---|---|---|---|---|---|

| Digit Span Forward | Cullum (2014) * | N = 202, n = 83 MCI/AD, n = 119 healthy controls; Mage = 68.5 (SD = 9.5), Meducation = 14.1 (SD = 2.7); 63% Female | H.323 PC-based Videoconferencing System (Polycom™ iPower 680 Series) that was set up in two non-adjacent rooms | High Speed | Same Day | Similar mean performance. ICC = .590 (fair) | |

| Wadsworth (2016) * | N = 84, n = 29 MCI/dementia, n = 55 HC; Mage = 64.89 (SD = 9.73), Meducation = 12.58 (SD = 2.35); 63% Female; 0% Caucasian; 100% American Indian | Polycom iPower 680 series videoconferencing system | High Speed | Same Day | Small but significant difference with better face-to-face performance (t = 2.98, p = .004). ICC = .75 (good) | ||

| Vahia (2015) | N = 22 Spanish-speaking Hispanics living in US who were referred for cognitive testing by psychiatrist; Mage = 70.75, Meducation = 5.54; 22.75% Female; 0% Caucasian; 100% Hispanic | CODEC (coder-decoder) capable of simultaneously streaming video and content (i.e., laptop screen) on side by side monitors, remotely controlled Pan Tilt and Zoom cameras, a tablet PC laptop, videoconference microphone; and dual 26 inch LCD TVs | 512 kbit/s | 2 weeks | Using mixed-effects models, no significant difference between testing modalities on overall Cognitive Composite score derived from entire test battery (F(1,37) = .31, p = .0579), with slightly better (but not significantly better) performance at second testing session, irrespective of modality. No significant difference in digit forward scores (differences in test scores across testing modalities not provided) | Administered in Spanish | |

| Wadsworth (2018) | N = 197, n = 78 MCI/AD, n = 119 Healthy Controls; Mage MCI/AD = 72.71 (SD = 8.43), Meducation MCI/AD = 14.56 (SD = 3.10); 46.2% Female; 61.5% Caucasian, 29.5% American Indian | Polycom iPower 680 series videoconferencing system | High Speed | Same Day | No main effect of administration modality (ANCOVA controlling for age, education, gender, and depression scores p = .276). | ||

|

| |||||||

| Digit Span Backwards | Cullum (2014) * | N = 202, n = 83 MCI/AD, n = 119 healthy controls; Mage = 68.5 (SD = 9.5), Meducation = 14.1 (SD = 2.7); 63% Female | H.323 PC-based Videoconferencing System (Polycom™ iPower 680 Series) that was set up in two non-adjacent rooms | High Speed | Same Day | Similar mean performance across testing modalities. ICC was significant, but fair (.545) | |

| Wadsworth (2016) * | N = 84, n = 29 MCI/dementia, n = 55 HC; Mage = 64.89 (SD = 9.73), Meducation = 12.58 (SD = 2.35); 63% Female; 0% Caucasian; 100% American Indian | Polycom iPower 680 series videoconferencing system in same facility | High Speed | Same Day | No differences in mean performance (t = .31, p = .760). ICC = .69 (good) | ||

| Vahia (2015) | N = 22 Spanish-speaking Hispanics living in US who were referred for cognitive testing by psychiatrist; Mage = 70.75, Meducation = 5.54; 22.75% Female; 0% Caucasian; 100% Hispanic | CODEC (coder-decoder) capable of simultaneously streaming video and content (i.e., laptop screen) on side by side monitors, remotely controlled Pan Tilt and Zoom cameras, a tablet PC laptop, videoconference microphone; and dual 26 inch LCD TVs | 512 kbit/s | 2 weeks | Using mixed-effects models, no significant difference between testing modalities on overall Cognitive Composite score derived from entire test battery (F(1,37) = .31, p = .0579), with slightly better (but not significantly better) performance at second testing session, irrespective of modality. No significant difference in digit backward scores (differences in test scores across testing modalities not provided) | Administered in Spanish | |

| Wadsworth (2018) | N = 197, n = 78 MCI/AD, n = 119 Healthy Controls; Mage MCI/AD = 72.71 (SD = 8.43), Meducation MCI/AD = 14.56 (SD = 3.10); 46.2% Female; 61.5% Caucasian, 29.5% American Indian | Polycom iPower 680 series videoconferencing system | High Speed | Same Day | No main effect of administration modality (ANCOVA controlling for age, education, gender, and depression scores p = .635) | ||

|

| |||||||

| Digit Span Total | Cullum (2006) * | N = 33, n = MCI, n = AD; Mage = 73.5 (SD = 6.9), Meducation = 15.1 (SD = 2.7); 33% Female; 97% Caucasian | An H.323 PC-based Videoconferencing System was set up in two nonadjacent rooms using a Polycom iPower 680 Series videoconferencing system (2 units) | High Speed | Same Day | Similar mean scores between two testing modalities. ICC = .78 (excellent) | |

| Grosch (2015) * | N = 8 geropsychiatric VA patients; 12.5% Female | An H.323 PC-based Videoconferencing System at VAMC | 384 kb/s | Same Day | No difference in mean test score across conditions. ICC = .72 (good) | ||

|

| |||||||

| Brief Test of Attention | Hildebrand (2004) * | N = 29 HC from Canada, Mage = 68 (SD = 8), Meducation = 13 (SD = 3), MMMSE = 28.9 (SD = 1.3); 73% Female | Videoconferencing systems (LC5000, VTEL) with two 81 cm monitors and far-end camera control. Conducted on-site | 336 kbit/s | 2–4 weeks apart | No difference in mean score across conditions (limits of agreement −5.09 to 6.95) | |

Notes.

Studies marked with an asterisk (*) are from Brearly et al. (2017).

A very similar pattern emerges for studies that used Digit Span Backwards. No study found significant TNP vs. FTF differences in mean performance, but the largest study (Cullum et al., 2014) observed only fair validity metrics (ICC = .545). By contrast, another large study with a mixed sample (Wadsworth et al., 2018) found no modality effect after controlling for age, education, gender, and depression scores, with a very small effect size for the clinical group (d = .048). Again, there is moderate evidence of validity for using Digit Span Backwards in TNP assessments.

Finally, for the two studies that reported Digit Span Total (Cullum et al., 2006; Grosch et al., 2015), reliability metrics were excellent (ICC = .78) and good (ICC = .72), respectively. Although the samples were small, they were mixed samples of AD, MCI, and geropsychiatric patients. Thus, there appears to be good evidence of validity for using Digit Span Total in TNP assessments.

One study of healthy controls from Canada (Hildebrand et al., 2004) used the Brief Test of Attention (BTA) and found no mean differences in scores between TNP and FTF assessments. There was also no evidence of bias toward either testing modality (95% limits of agreement: −5.09 to 6.95). Given the small sample and the absence of clinical patients, there is limited evidence for the validity of the BTA in TNP assessments of clinical populations.

Processing Speed.

Only one validity study used a measure of processing speed (see Table 5): Oral Trails A (Wadsworth et al., 2016). This mixed-sample study (AD, MCI, HC) found a significant difference in completion time, with faster performance in person. The authors maintain that the difference was small (mean time = 8.9 [SD = 2.4] vs. 11.1 [SD = 3.0]) and not clinically meaningful, as it fell within the normal range of test-retest change. Moreover, the validity metrics for this test were excellent (ICC = .83). Taken together, there is some support for the validity of Oral Trails A in TNP assessments.

Table 5.

Tests of Processing Speed

| Test Name | Study | Population Characteristics | Video Modality | Connection Speed | Delay Between Assessments | Findings | Administration Notes |

|---|---|---|---|---|---|---|---|

| Oral Trails A | Wadsworth (2016) * | N = 84, n = 29 MCI/dementia, n = 55 HC; Mage = 64.89 (SD = 9.73), Meducation = 12.58 (SD = 2.35); 63% Female; 0% Caucasian; 100% American Indian | Polycom iPower 680 series videoconferencing system | High Speed | Same Day | Small but significant difference with better face-to-face performance (t = −9.60, p < .001). ICC = .83 (excellent) | |

Notes.

Studies marked with an asterisk (*) are from Brearly et al. (2017).

Language.

In a small study of ten Veterans referred for a memory disorders evaluation, the full 60-item Boston Naming Test (BNT) showed no difference in same-day performance between TNP and FTF assessment modalities (Vestal et al., 2006). The 15-item BNT (BNT-15) was used in four studies with medium to large samples (N = 33 to 202) of mixed clinical and healthy participants, which showed excellent reliability metrics (ICCs ranged from .812 to .930). A significant mean TNP vs. FTF performance difference was found in only one of the four studies (Wadsworth et al., 2016), and the effect size was small, favoring FTF assessment (g = −0.15, p < .001). Overall, there appears to be good support for the validity of the BNT in TNP assessments (see Table 6).

Table 6.

Tests of Language

| Test Name | Study | Population Characteristics | Video Modality | Connection Speed | Delay Between Assessments | Findings | Administration Notes |

|---|---|---|---|---|---|---|---|

| BNT | Vestal (2006) * | N = 10 Memory Disorder Referral at VA; Mage = 73.9 (SD = 3.7), MMMSE = 26.1 (SD = 1.4) | Television monitor and a microphone completed at VA; technician in room to help | 384 kbit/s | Same Day | Wilcoxon signed-rank test non-significant (z = −0.171, p = 0.864) | |

|

| |||||||

| BNT-15 | Cullum (2014) * | N = 202, n = 83 MCI/AD, n = 119 healthy controls; Mage = 68.5 (SD = 9.5), Meducation = 14.1 (SD = 2.7); 63% Female | H.323 PC-based Videoconferencing System (Polycom™ iPower 680 Series) that was set up in two non-adjacent rooms | High Speed | Same Day | Similar mean scores between testing modalities. ICC = .812 (excellent) | |

| Cullum (2006) * | N = 33, n = MCI, n = AD; Mage = 73.5 (SD = 6.9), Meducation = 15.1 (SD = 2.7); 33% Female; 97% Caucasian | An H.323 PC-based Videoconferencing System was set up in two nonadjacent rooms using a Polycom iPower 680 Series videoconferencing system (2 units) | High Speed | Same Day | Similar mean scores between testing modalities. ICC = .87 (excellent) | ||

| Wadsworth (2016) * | N = 84, n = 29 MCI/dementia, n = 55 HC; Mage = 64.89 (SD = 9.73), Meducation = 12.58 (SD = 2.35); 63% Female; 0% Caucasian; 100% American Indian | Polycom iPower 680 series videoconferencing system | High Speed | Same Day | Small but significant difference with better face-to-face performance (t = 3.21, p = .002). ICC = .93 (excellent) | ||

| Wadsworth (2018) | N = 197, n = 78 MCI/AD, n = 119 Healthy Controls; Mage MCI/AD = 72.71 (SD = 8.43), Meducation MCI/AD = 14.56 (SD = 3.10); 46.2% Female; 61.5% Caucasian, 29.5% American Indian | Polycom iPower 680 series videoconferencing system | High Speed | Same Day | No main effect of administration modality (ANCOVA controlling for age, education, gender, and depression scores). Healthy controls performed better than MCI/AD | ||

|

| |||||||

| Ponton-Satz Spanish Naming Test | Vahia (2015) | N = 22 Spanish-speaking Hispanics living in US who were referred for cognitive testing by psychiatrist; Mage = 70.75, Meducation = 5.54; 22.75% Female; 0% Caucasian; 100% Hispanic | CODEC (coder-decoder) capable of simultaneously streaming video and content (i.e., laptop screen) on side by side monitors, remotely controlled Pan Tilt and Zoom cameras, a tablet PC laptop, videoconference microphone; and dual 26 inch LCD TVs | 512 kbit/s | 2 weeks | Using mixed-effects models, no significant difference between testing modalities on overall Cognitive Composite score derived from entire test battery (F(1,37) = .31, p = .0579), with slightly better (but not significantly better) performance at second testing session, irrespective of modality. No significant difference in naming scores (differences in test scores across testing modalities not provided) | Administered in Spanish |

|

| |||||||

| Letter Fluency | Cullum (2014) * | N = 202, n = 83 MCI/AD, n = 119 healthy controls; Mage = 68.5 (SD = 9.5), Meducation = 14.1 (SD = 2.7); 63% Female | H.323 PC-based Videoconferencing System (Polycom™ iPower 680 Series) that was set up in two non-adjacent rooms | High Speed | Same Day | Similar mean scores between testing modalities. ICC = .848 (excellent) | |

| Cullum (2006) * | N = 33, n = MCI, n = AD; Mage = 73.5 (SD = 6.9), Meducation = 15.1 (SD = 2.7); 33% Female; 97% Caucasian | An H.323 PC-based Videoconferencing System was set up in two nonadjacent rooms using a Polycom iPower 680 Series videoconferencing system (2 units) | High Speed | Same Day | Similar mean scores between testing modalities. ICC = .83 (excellent). | ||

| Hildebrand (2004) * | N = 29 HC from Canada, Mage = 68 (SD = 8), Meducation = 13 (SD = 3), MMMSE = 28.9 (SD = 1.3); 73% Female | Videoconferencing systems (LC5000, VTEL) with two 81 cm monitors and far-end camera control. Conducted on-site | 336 kbit/s | 2–4 weeks apart | No difference in mean score across conditions (limits of agreement −4.34 to 4.82) | ||

| Vestal (2006) * | N = 10 Memory Disorder Referral at VA; Mage = 73.9 (SD = 3.7), MMMSE = 26.1 (SD = 1.4) | Television monitor and a microphone completed at VA; technician in room to help | 384 kbit/s | Same Day | Wilcoxon signed-rank test non-significant (z = −1.316, p = 0.188) | ||

| Wadsworth (2016) * | N = 84, n = 29 MCI/dementia, n = 55 HC; Mage = 64.89 (SD = 9.73), Meducation = 12.58 (SD = 2.35); 63% Female; 0% Caucasian; 100% American Indian | Polycom iPower 680 series videoconferencing system in same facility | High Speed | Same Day | No differences in mean performance (t = −0.10, p = .920; Bonferroni correction set alpha to .004). ICC = .93 (excellent) | ||

| Vahia (2015) | N = 22 Spanish-speaking Hispanics living in US who were referred for cognitive testing by psychiatrist; Mage = 70.75, Meducation = 5.54; 22.75% Female; 0% Caucasian; 100% Hispanic | CODEC (coder-decoder) capable of simultaneously streaming video and content (i.e., laptop screen) on side by side monitors, remotely controlled Pan Tilt and Zoom cameras, a tablet PC laptop, videoconference microphone; and dual 26 inch LCD TVs | 512 kbit/s | 2 weeks | Using mixed-effects models, no significant difference between testing modalities on overall Cognitive Composite score derived from entire test battery (F(1,37) = .31, p = .0579), with slightly better (but not significantly better) performance at second testing session, irrespective of modality. No significant difference in letter fluency performance (differences in test scores across testing modalities not provided) | Administered in Spanish | |

| Wadsworth (2018) | N = 197, n = 78 MCI/AD, n = 119 Healthy Controls; Mage MCI/AD = 72.71 (SD = 8.43), Meducation MCI/AD = 14.56 (SD = 3.10); 46.2% Female; 61.5% Caucasian, 29.5% American Indian | Polycom iPower 680 series videoconferencing system | High Speed | Same Day | No main effect of administration modality (ANCOVA controlling for age, education, gender, and depression scores p = .814). | ||

|

| |||||||

| Category Fluency | Cullum (2014) * | N = 202, n = 83 MCI/AD, n = 119 healthy controls; Mage = 68.5 (SD = 9.5), Meducation = 14.1 (SD = 2.7); 63% Female | H.323 PC-based Videoconferencing System (Polycom™ iPower 680 Series) that was set up in two non-adjacent rooms | High Speed | Same Day | Similar mean performance across testing modalities. ICC = .719 (good) | |

| Cullum (2006) * | N = 33, n = MCI, n = AD; Mage = 73.5 (SD = 6.9), Meducation = 15.1 (SD = 2.7); 33% Female; 97% Caucasian | An H.323 PC-based Videoconferencing System was set up in two nonadjacent rooms using a Polycom iPower 680 Series videoconferencing system (2 units) | High Speed | Same Day | Similar mean scores between testing modalities. ICC = .58 (fair), which was below the .60 threshold. | ||

| Vahia (2015) | N = 22 Spanish-speaking Hispanics living in US who were referred for cognitive testing by psychiatrist; Mage = 70.75, Meducation = 5.54; 22.75% Female; 0% Caucasian; 100% Hispanic | CODEC (coder-decoder) capable of simultaneously streaming video and content (i.e., laptop screen) on side by side monitors, remotely controlled Pan Tilt and Zoom cameras, a tablet PC laptop, videoconference microphone; and dual 26 inch LCD TVs | 512 kbit/s | 2 weeks | Using mixed-effects models, no significant difference between testing modalities on overall Cognitive Composite score derived from entire test battery (F(1,37) = .31, p = .0579), with slightly better (but not significantly better) performance at second testing session, irrespective of modality. No significant difference in category fluency performance (differences in test scores across testing modalities not provided) | Administered in Spanish | |

| Wadsworth (2016) * | N = 84, n = 29 MCI/dementia, n = 55 HC; Mage = 64.89 (SD = 9.73), Meducation = 12.58 (SD = 2.35); 63% Female; 0% Caucasian; 100% American Indian | Polycom iPower 680 series videoconferencing system in same facility | High Speed | Same Day | No differences in mean performance (t = 2.27, p = .026; Bonferroni correction set alpha to .004). ICC = .74 (good) | ||

| Wadsworth (2018) | N = 197, n = 78 MCI/AD, n = 119 Healthy Controls; Mage MCI/AD = 72.71 (SD = 8.43), Meducation MCI/AD = 14.56 (SD = 3.10); 46.2% Female; 61.5% Caucasian, 29.5% American Indian | Polycom iPower 680 series videoconferencing system | High Speed | Same Day | Small but significant effect of administration modality (p < .001; d = .184 for MCI/AD) | ||

|

| |||||||

| Token Test | Vestal (2006) * | N = 10 Memory Disorder Referral at VA; Mage = 73.9 (SD = 3.7), MMMSE = 26.1 (SD = 1.4) | Television monitor and a microphone completed at VA; technician in room to help | 384 kbit/s | Same Day | Wilcoxon signed-rank test non-significant (z = −1.084, p = 0.279) | A clinician-generated Token Test template (Appendix B) was used to help participants correctly re-form the tokens during administration of the Token Test |

|

| |||||||

| Picture Description | Vestal (2006) * | N = 10 Memory Disorder Referral at VA; Mage = 73.9 (SD = 3.7), MMMSE = 26.1 (SD = 1.4) | Television monitor and a microphone completed at VA; technician in room to help | 384 kbit/s | Same Day | Wilcoxon signed-rank test non-significant (z = 0.0, p = 1.00) | Cookie Theft and Picnic scenes |

|

| |||||||

| Aural Comprehension of Words and Phrases | Vestal (2006) * | N = 10 Memory Disorder Referral at VA; Mage = 73.9 (SD = 3.7), MMMSE = 26.1 (SD = 1.4) | Television monitor and a microphone completed at VA; technician in room to help | 384 kbit/s | Same Day | Wilcoxon signed-rank test non-significant (z = −1.20, p = 0.230) | |

Notes.

Studies marked with an asterisk (*) are from Brearly et al. (2017).

Vahia and colleagues (2015) administered the Ponton-Satz Spanish Naming Test to 22 Spanish-speaking Hispanics who were referred for a memory disorders evaluation by their psychiatrist. Using a mixed-effects model, the authors found no significant TNP vs. FTF performance differences (though means and standard deviations were not provided). Although limited to a single study, this provides some evidence of validity for TNP naming assessment in this population.

With regard to letter fluency, seven validity studies with sample sizes ranging from small (N = 10) to large (N = 202) included multi-ethnic participants (American Indians, Spanish-speaking Hispanics) and various clinical samples (MCI, AD, HC, psychiatric). No study reported mean differences between TNP and FTF evaluations, and validity metrics were excellent (ICC = .83 to .93). There appears to be strong support for the validity of letter fluency in TNP assessments.

Category fluency results were slightly more variable. Five validity studies administered category fluency, with demographic and clinical compositions similar to those for letter fluency. However, one study (Wadsworth et al., 2018) found a small but significant effect of testing modality (d = .184 for the MCI/AD group), and validity metrics were only fair to good (ICCs ranged from .58 to .74). This may reflect the use of a single-trial semantic fluency measure (e.g., Animals), which likely yields more variable performance. Thus, there is moderate evidence of validity for using category fluency in TNP assessments, though a category fluency test with multiple trials (e.g., Animals, Vegetables, Fruits) may be preferable.

As part of their language evaluation, Vestal and colleagues (2006) administered the Token Test, Picture Description, and Aural Comprehension of Words and Phrases to 10 Veterans referred for memory disorders evaluations. There were no significant TNP vs. FTF performance differences. Notably, the Veterans were given the tokens and a template to re-organize the stimuli for the Token Test, making this test unlikely to be useful in telehealth evaluations unless examiners find creative ways to provide distant examinees with the appropriate stimulus materials before the assessment. Given the small sample of this single study, there is insufficient evidence for the validity of these measures in TNP assessments.

Memory.³

Five studies examined the validity of the Hopkins Verbal Learning Test – Revised (HVLT-R). Sample sizes ranged from medium (N = 22) to large (N = 202), and the studies included multi-ethnic participants (American Indians, Spanish-speaking Hispanics) and various clinical samples (MCI, AD, HC, psychiatric). For HVLT-R Immediate Recall Total, only one study found a significant TNP vs. FTF performance difference (Cullum et al., 2014), favoring video administration, though the effect size was small (g = .13, p = .004). Validity metrics were excellent (ICC = .77 to .88). Three of the studies also examined HVLT-R Delayed Recall and found no significant performance differences; validity metrics were good to excellent (ICCs = .61 and .90). Taken together, the HVLT-R has strong support for validity in TNP assessments (see Table 7).
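The Hedges' g cited for Cullum et al. (2014) can be approximately reproduced from the HVLT-R Immediate Recall means and SDs in Table 7 (video 23.4 [SD = 6.90] vs. FTF 22.5 [SD = 6.98], N = 202). The sketch below treats the two administrations as independent groups of 202 and applies the usual small-sample correction. That simplification ignores the paired design, so it is an approximation, not the authors' exact computation; `hedges_g` is a hypothetical helper.

```python
import math


def hedges_g(m1, sd1, n1, m2, sd2, n2):
    """Pooled-SD standardized mean difference with small-sample correction."""
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2)
    d = (m1 - m2) / math.sqrt(pooled_var)      # Cohen's d
    correction = 1 - 3 / (4 * (n1 + n2) - 9)   # Hedges' small-sample factor
    return d * correction


# Cullum et al. (2014), HVLT-R Immediate Recall: video vs. FTF (independent-groups assumption)
g = hedges_g(23.4, 6.90, 202, 22.5, 6.98, 202)
print(f"g = {g:.2f}")  # prints: g = 0.13
```

With samples this large the correction factor is close to 1, so g and Cohen's d are nearly identical here.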

Table 7.

Tests of Memory

| Test Name | Study | Population Characteristics | Video Modality | Connection Speed | Delay Between Assessments | Findings | Administration Notes |

|---|---|---|---|---|---|---|---|

| HVLT Immediate | Cullum (2014) * | N = 202, n = 83 MCI/AD, n = 119 healthy controls; Mage = 68.5 (SD = 9.5), Meducation = 14.1 (SD = 2.7); 63% Female | H.323 PC-based Videoconferencing System (Polycom™ iPower 680 Series) that was set up in two non-adjacent rooms | High Speed | Same Day | Significantly higher test performance for video administration (23.4 (SD = 6.90) vs. 22.5 (SD = 6.98), p = .005). ICC was still excellent (.798) | |

| Cullum (2006) * | N = 33, n = MCI, n = AD; Mage = 73.5 (SD = 6.9), Meducation = 15.1 (SD = 2.7); 33% Female; 97% Caucasian | An H.323 PC-based Videoconferencing System was set up in two nonadjacent rooms using a Polycom iPower 680 Series videoconferencing system (2 units) | High Speed | Same Day | Similar mean scores between two testing modalities. ICC = .77 (excellent) | ||

| Wadsworth (2016) * | N = 84, n = 29 MCI/dementia, n = 55 HC; Mage = 64.89 (SD = 9.73), Meducation = 12.58 (SD = 2.35); 63% Female; 0% Caucasian; 100% American Indian | Polycom iPower 680 series videoconferencing system in same facility | High Speed | Same Day | No differences in mean performance (t = −2.11, p = .038; Bonferonni correction set alpha to .004). ICC = .88 (excellent) | ||

| Vahia (2015) | N = 22 spanish-speaking Hispanics living in US who were referred for cognitive testing by psychiatrist; Mage = 70.75, Meducation = 5.54 ; 22.75% Female; 0% Caucasian; 100% Hispanic | CODEC (coder-decoder) capable of simultaneously streaming video and content (i.e., laptop screen) on side by side monitors, remotely controlled Pan Tilt and Zoom cameras, a tablet PC laptop, videoconference microphone; and dual 26 inch LCD TVs | 512 kbit/s | 2 weeks | Using mixed-effects models, no significant difference between testing modalities on overall Cognitive Composite score derived from entire test battery (F(1,37) = .31, p =.0579), with slightly better (but not significantly better) performance at second testing session, irrespective of modality. No significant difference in HVLT scores (differences in test scores across testing modalities not provided). | Administered in Spanish | |

| Wadsworth (2018) | N = 197, n = 78 MCI/AD, n = 119 Healthy Controls; Mage MCI/AD = 72.71 (SD = 8.43), Meducation MCI/AD = 14.56 (SD = 3.10); 46.2% Female; 61.5% Caucasian, 29.5% American Indian | Polycom iPower 680 series videoconferencing system | High Speed | Same Day | No main effect of administration modality (ANCOVA controlling for age, education, gender, and depression scores p = .457) | ||

|

| |||||||

| HVLT Delay | Cullum (2006) | N = 33, n = MCI, n = AD; Mage = 73.5 (SD = 6.9), Meducation = 15.1 (SD = 2.7); 33% Female; 97% Caucasian | An H.323 PC-based Videoconferencing System was set up in two nonadjacent rooms using a Polycom iPower 680 Series videoconferencing system (2 units) | High Speed | Same Day | Slightly higher, but non-significant performance for face-to-face testing. ICC = .61 (good) | |

| Wadsworth (2016) * | N = 84, n = 29 MCI/dementia, n = 55 HC; Mage = 64.89 (SD = 9.73), Meducation = 12.58 (SD = 2.35); 63% Female; 0% Caucasian; 100% American Indian | Polycom iPower 680 series videoconferencing system in same facility | High Speed | Same Day | No differences in mean performance (t = −2.25, p = .027; Bonferonni correction set alpha to .004). ICC = .90 (excellent) | ||

| Wadsworth (2018) | N = 197, n = 78 MCI/AD, n = 119 Healthy Controls; Mage MCI/AD = 72.71 (SD = 8.43), Meducation MCI/AD = 14.56 (SD = 3.10); 46.2% Female; 61.5% Caucasian, 29.5% American Indian | Polycom iPower 680 series videoconferencing system | High Speed | Same Day | No main effect of administration modality (ANCOVA controlling for age, education, gender, and depression scores p = .735) | ||

|

| |||||||

| BVMT-R | Vahia (2015) | N = 22 spanish-speaking Hispanics living in US who were referred for cognitive testing by psychiatrist; Mage = 70.75, Meducation = 5.54 ; 22.75% Female; 0% Caucasian; 100% Hispanic | CODEC (coder-decoder) capable of simultaneously streaming video and content (i.e., laptop screen) on side by side monitors, remotely controlled Pan Tilt and Zoom cameras, a tablet PC laptop, videoconference microphone; and dual 26 inch LCD TVs | 512 kbit/s | 2 weeks | Using mixed-effects models, no significant difference between testing modalities on overall Cognitive Composite score derived from entire test battery (F(1,37) = .31, p =.0579), with slightly better (but not significantly better) performance at second testing session, irrespective of modality. No significant difference in BVMT performance (differences in test scores across testing modalities not provided). | Administered in Spanish |

Notes.

An asterisk (*) indicates a study from Brearly et al. (2017).

One study used the Brief Visuospatial Memory Test – Revised (BVMT-R) with a sample of 22 Spanish-speaking Hispanics. Mixed-effects models showed no significant TNP v. FTF differences, although mean scores were not provided. Based on this single study, there appears to be some evidence of validity for using the BVMT-R in TNP evaluations.
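Two Table 7 entries for Wadsworth et al. (2016) report p-values below .05 yet are summarized as showing no differences. This follows from the Bonferroni correction noted in the table, which divides the family-wise alpha by the number of comparisons. The sketch below simply compares the reported p-values with the reported corrected threshold of .004; the helper names are illustrative, and because the number of comparisons behind that threshold is not stated in this section, it is not reconstructed here.

```python
def bonferroni_alpha(family_alpha: float, n_comparisons: int) -> float:
    """Per-comparison alpha under a Bonferroni correction (alpha / m)."""
    if n_comparisons < 1:
        raise ValueError("need at least one comparison")
    return family_alpha / n_comparisons


def is_significant(p_value: float, alpha: float) -> bool:
    """True if the p-value falls below the chosen alpha level."""
    return p_value < alpha


# Corrected alpha as reported for Wadsworth et al. (2016) in Table 7.
# The number of comparisons behind it is not given in this section.
CORRECTED_ALPHA = 0.004

reported_p = {
    "HVLT-R Immediate (t = -2.11)": 0.038,
    "HVLT-R Delay (t = -2.25)": 0.027,
}

for label, p in reported_p.items():
    # Each p clears an uncorrected .05 threshold but not the corrected one.
    print(
        f"{label}: p = {p}; "
        f"significant at .05: {is_significant(p, 0.05)}; "
        f"significant at {CORRECTED_ALPHA}: {is_significant(p, CORRECTED_ALPHA)}"
    )
```

For example, `bonferroni_alpha(0.05, 10)` gives .005; any threshold in that neighborhood leaves both HVLT-R p-values well above the cut-off, which is why the table reports no significant modality effect.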

Executive Functioning.

Many traditional measures of executive functioning (e.g., Wisconsin Card Sorting Test, Trail Making Test B, Stroop) were not assessed in the available studies. Instead, the most widely used measure of “executive functioning” was the Clock Drawing Test, which involves multiple component processes, including visuospatial skills and language as well as executive skills such as planning and inhibitory control.

The Clock Drawing Test was used in eight validity studies, with sample sizes ranging from small (N = 8) to large (N = 202), different ethnic compositions (American Indian, Hispanic), and varied clinical populations (MCI, AD, HC, rehabilitation, psychiatric). Although no study reported significant differences in mean performance between testing conditions, findings and validity metrics varied. For example, two studies reported large but non-significant differences between TNP and FTF evaluations (Hildebrand et al., 2004; Montani et al., 1997). Furthermore, validity metrics ranged from near-poor to good (ICC range = .42–.71), and agreement was only moderate (kappa = .48). However, the studies reporting weaker validity metrics tended to be much smaller than the others. For example, Grosch and colleagues (2015) found weak validity metrics (ICC = .42, just above the “poor” range) in a sample of only eight patients seen in a VA geropsychiatry clinic. The three largest studies (Cullum et al., 2014; Wadsworth et al., 2016, 2018) reported no significant TNP v. FTF differences and good validity metrics (ICC = .65 and .71). Given the variability in findings and the only moderate validity metrics, there appears to be only moderate evidence for the validity of the Clock Drawing Test in TNP (see Table 8).
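The qualitative ICC labels used in the text (e.g., .61 and .65 as good, .77 and above as excellent) appear consistent with the widely used cut-offs proposed by Cicchetti (1994): below .40 poor, .40 to .59 fair, .60 to .74 good, and .75 or higher excellent. This section does not state which guideline was applied, so the mapping below is an assumption, included only to make the labels for the Clock Drawing ICCs reproducible. Under these cut-offs, Grosch's ICC of .42 falls in the "fair" band, just above "poor".

```python
# Assumed cut-offs (Cicchetti, 1994): lower bound of each band, highest first.
ICC_BANDS = [
    (0.75, "excellent"),
    (0.60, "good"),
    (0.40, "fair"),
]


def classify_icc(icc: float) -> str:
    """Return a qualitative label for an ICC value under the assumed cut-offs."""
    for lower_bound, label in ICC_BANDS:
        if icc >= lower_bound:
            return label
    return "poor"  # anything below .40, including negative estimates


# Clock Drawing Test ICCs reported in Table 8.
clock_iccs = {"Cullum (2014)": 0.709, "Wadsworth (2016)": 0.65, "Grosch (2015)": 0.42}
for study, icc in clock_iccs.items():
    print(f"{study}: ICC = {icc} -> {classify_icc(icc)}")
```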

Table 8.

Tests of Executive Functioning

| Test Name | Study | Population Characteristics | Video Modality | Connection Speed | Delay Between Assessments | Findings | Administration Notes |
|---|---|---|---|---|---|---|---|
| Clock Drawing Test | Cullum (2014) * | N = 202, n = 83 MCI/AD, n = 119 healthy controls; Mage = 68.5 (SD = 9.5), Meducation = 14.1 (SD = 2.7); 63% Female | H.323 PC-based videoconferencing system (Polycom™ iPower 680 Series) set up in two non-adjacent rooms | High Speed | Same Day | Similar mean performance. ICC = .709 (good) | Visuospatial measures were scored by asking participants to hold up their paper in front of the camera |
| | Cullum (2006) * | N = 33, n = MCI, n = AD; Mage = 73.5 (SD = 6.9), Meducation = 15.1 (SD = 2.7); 33% Female; 97% Caucasian | H.323 PC-based videoconferencing system set up in two non-adjacent rooms using a Polycom iPower 680 Series videoconferencing system (2 units) | High Speed | Same Day | Kappa was moderate (0.48, p < .0001) | Visuospatial measures were scored via the television monitor by asking participants to hold up their paper in front of the camera |
| | Wadsworth (2016) * | N = 84, n = 29 MCI/dementia, n = 55 HC; Mage = 64.89 (SD = 9.73), Meducation = 12.58 (SD = 2.35); 63% Female; 0% Caucasian; 100% American Indian | Polycom iPower 680 Series videoconferencing system | High Speed | Same Day | No differences in mean performance. ICC = .65 (good) | Subjects were asked to hold up their drawing to the camera so the examiner could score it immediately |
| | Wadsworth (2018) | N = 197, n = 78 MCI/AD, n = 119 healthy controls; Mage MCI/AD = 72.71 (SD = 8.43), Meducation MCI/AD = 14.56 (SD = 3.10); 46.2% Female; 61.5% Caucasian; 29.5% American Indian | Polycom iPower 680 Series videoconferencing system | High Speed | Same Day | No main effect of administration modality (ANCOVA controlling for age, education, gender, and depression scores, p = .520) | |
| | Grosch (2015) * | N = 8 geropsychiatric VA patients; 12.5% Female | H.323 PC-based videoconferencing system at a VAMC | 384 kbit/s | Same Day | No difference in mean test score across conditions. ICC was non-significant and just above “poor” (ICC = .42) | Clock held up to the camera for scoring; alternate times used for test-retest |
| | Vahia (2015) | N = 22 Spanish-speaking Hispanics living in the US who were referred for cognitive testing by a psychiatrist; Mage = 70.75, Meducation = 5.54; 22.75% Female; 0% Caucasian; 100% Hispanic | CODEC (coder-decoder) capable of simultaneously streaming video and content (i.e., laptop screen) on side-by-side monitors, remotely controlled pan-tilt-zoom cameras, a tablet PC laptop, a videoconference microphone, and dual 26-inch LCD TVs | 512 kbit/s | 2 weeks | Mixed-effects models showed no significant difference between testing modalities on the overall Cognitive Composite score derived from the entire test battery (F(1,37) = .31, p = .579), with slightly (but not significantly) better performance at the second testing session, irrespective of modality. No significant difference in clock drawing performance (differences in test scores across testing modalities not provided). | Administered in Spanish |
| | Hildebrand (2004) * | N = 29 HC from Canada; Mage = 68 (SD = 8), Meducation = 13 (SD = 3), MMMSE = 28.9 (SD = 1.3); 73% Female | Videoconferencing systems (LC5000, VTEL) with two 81 cm monitors and far-end camera control; conducted on-site | 336 kbit/s | 2–4 weeks | Large but non-significant difference in mean scores across conditions (mean difference = −1.93; 95% CI = −5.76 to 1.90); large limits of agreement (−22.07 to 18.21) | |
| | Montani (1997) * | N = 14 mixed rehabilitation; Mage = 88 (SD = 5); 46.7% Female | Camera, television screen, and microphone in an adjacent room | Coaxial | 8 days | Large but non-significant difference in mean scores across conditions (22.4 vs. 19.8), with better FTF performance. Correlation of test scores significant (r = 0.55) | |
| Oral Trails B | Wadsworth (2016) * | N = 84, n = 29 MCI/dementia, n = 55 HC; Mage = 64.89 (SD = 9.73), Meducation = 12.58 (SD = 2.35); 63% Female; 0% Caucasian; 100% American Indian | Polycom iPower 680 Series videoconferencing system in the same facility | High Speed | Same Day | No differences in mean performance (t = −.35, p = .726). ICC = .79 (excellent) | |

Notes.

An asterisk (*) indicates a study from Brearly et al. (2017).
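Hildebrand and colleagues (2004) reported Clock Drawing agreement as Bland–Altman limits of agreement, which are conventionally the mean paired difference plus or minus 1.96 standard deviations of the differences. Working backwards from the reported interval (−22.07 to 18.21) recovers the reported mean difference (−1.93) and implies an SD of the paired differences of roughly 10.3 points. This is why the limits are described as large even though the mean difference was not significant. The function names are illustrative; the raw paired scores are not available here, so the first function is shown only to make the definition concrete.

```python
import statistics


def limits_of_agreement(differences: list[float], z: float = 1.96) -> tuple[float, float, float]:
    """Bland-Altman bias and limits of agreement from paired differences (TNP - FTF)."""
    bias = statistics.mean(differences)
    sd = statistics.stdev(differences)  # sample SD of the paired differences
    return bias, bias - z * sd, bias + z * sd


def recover_from_limits(lower: float, upper: float, z: float = 1.96) -> tuple[float, float]:
    """Back out the mean difference and SD of differences from reported limits."""
    bias = (lower + upper) / 2  # the limits are symmetric around the bias
    sd = (upper - lower) / (2 * z)  # half-width divided by z
    return bias, sd


# Hildebrand et al. (2004), Clock Drawing Test (Table 8).
bias, sd = recover_from_limits(-22.07, 18.21)
print(f"mean difference = {bias:.2f}, SD of differences = {sd:.2f}")
```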

Disease Severity

Two studies examined TNP validity as a function of disease severity. Corotenuto and colleagues (2018) assessed AD patients with the MMSE and ADAS-Cog at baseline and at 6, 12, 18, and 24 months. When patients were grouped by severity (mild, moderate, and severe), MMSE videoconference performance for the severe AD patients (MMSE 15–17) was worse than FTF performance at baseline and at 24 months, whereas the mild (MMSE 21–24) and moderate (MMSE 18–20) AD patients did not show performance differences at any time point. Similarly, patients with severe AD had significantly higher (worse) ADAS-Cog scores during videoconference evaluation, whereas there were no performance differences among patients with mild or moderate AD. In contrast, Park and colleagues (2017) did not find differences in MMSE performance for post-stroke patients with cognitive deficits. However, Park and colleagues defined cognitive deficit as MMSE < 25; of the 11 patients with cognitive deficits, seven had MMSE scores between 18 and 25 and four had MMSE scores below 18. Thus, the difference in findings may be because the patients with cognitive deficits in Park’s (2017) study were, as a group, less impaired than the “severe AD” group in Corotenuto’s (2018) study. Considering these findings, the MMSE may be insufficiently valid for TNP with patients who are severely cognitively impaired or in the late stages of AD or other dementias.

Teleneuropsychology Equipment

Nearly all validity studies used desktop or laptop computers set up in a room inside a clinic or hospital. Only two studies were conducted in patients’ homes (Abdolahi et al., 2018; Stillerova et al., 2016), and only one study used a smartphone (Park et al., 2017). In that study, testing was done in the clinic, and the smartphone, which was owned by the researchers, was mounted on a tripod for “hands-free” interaction. Thus, the patient did not have direct control of the smartphone during the assessment, and distractions from notifications were not a concern. Of the 11 patients who used their own equipment in Stillerova and colleagues’ (2016) study, nine used computers and only two used a smartphone or tablet.

There is a clear temporal trend in which the technology used in these validity studies becomes increasingly sophisticated and convenient for patients: from the television unit used by Montani (1997), to the PC-based teleconferencing system used by Loh (2007), the tablet laptop used by Vahia (2015), the smartphone used by Park (2017), and the patients’ own in-home equipment used by Abdolahi (2018) and Stillerova (2016). Recent studies have also begun to use cloud-based videoconferencing. For example, Lindauer used Cisco’s Jabber Telepresence platform, Chapman (2019) used Zoom, and Stillerova (2016) used Skype or Google+. Generally, studies published after 2007 had sufficiently high-speed internet connections (> 25 Mbit/s).

Taken together, there is sufficient evidence for the utility of PC and laptop computers in TNP but insufficient evidence for the use of smartphones. More recent studies have begun to use cloud-based communication services, which do not appear to be contraindicated provided there is a sufficiently fast and reliable internet connection.

Discussion

The COVID-19 pandemic requires the field of neuropsychology to evolve rapidly in response to public health concerns and social distancing directives. TNP, which was in its nascent stages when the outbreak began, is likely to become the preferred modality for both research and clinical practice. This systematic review updates the validity literature published since Brearly and colleagues’ (2017) meta-analysis. Importantly, it also offers a comprehensive outline of test-level validity data to support neuropsychologists’ informed decision-making as they select neuropsychological measures for TNP assessments with older adults.