Abstract

A debate surrounding modularity—the notion that the mind may be exclusively composed of distinct systems or modules—has held philosophers and psychologists captive for nearly 40 years. Concern about this thesis—which has come to be known as the massive modularity debate—serves as the primary grounds for skepticism of evolutionary psychology’s claims about the mind. In this article we argue that the entirety of this debate, and the very notion of massive modularity itself, is ill-posed and confused. In particular, it is based on a confusion about the level of analysis (or reduction) at which one is approaching the mind. Here we provide a framework for clarifying at what level of analysis one is approaching the mind and explain how a systemic failure to distinguish between different levels of analysis has led to profound misunderstandings of not only evolutionary psychology but also of the entire cognitivist enterprise of approaching the mind at the level of the mechanism. We furthermore suggest that confusions between different levels of analysis are endemic throughout the psychological sciences—extending well beyond issues of modularity and evolutionary psychology. Therefore, researchers in all areas should take preventive measures to avoid this confusion in the future.

Keywords: cognition, evolutionary psychology, Fodorian modularity, levels of analysis, modularity, philosophy

Although most psychologists and philosophers of mind would grant some degree of modularity to the mind, evolutionary psychology in particular is considered extreme in its endorsement of massive modularity—the notion that the mind is mostly, if not entirely, composed of different systems or modules (Bechtel, 2003; Samuels, 2000, 2012). Massive modularity serves as perennial grounds for skepticism or outright rejection of evolutionary psychology (for a review, see Goldfinch, 2015), and a lengthy debate on whether the mind is massively modular—or even in principle could be—has raged for nearly 40 years with no resolution in sight (e.g., Barrett, 2005, 2007, 2015; Barrett et al., 2006; Barrett & Kurzban, 2006, 2012; Bechtel, 2003; Carruthers, 2003, 2005, 2006, 2007; Chiappe & Gardner, 2012; DeSteno et al., 2002; Ermer et al., 2007; Fodor, 1983, 1985, 1998, 2000; Frankenhuis & Ploeger, 2007; Goldfinch, 2015; Hagen, 2016; MacDonald, 2008; Newcombe et al., 2009; Samuels, 2000, 2012; Seok, 2006; Sperber, 1994, 2001; Tooby et al., 2005).

Oddly, given the length of this debate, there is not only disagreement about the degree of modularity within the mind but also about what is even meant by modularity. For at least 3 decades, successive waves of ink have been spilled in an attempt to clarify that evolutionary psychology does not subscribe to Fodor’s well-known criteria for modules (e.g., encapsulation and automaticity; Fodor, 1983). Rather, evolutionary psychology uses modularity simply to mean functional specialization—that is, that the mind is not an undifferentiated mass of equipotential associationist connections but is instead composed of heterogeneous functions (Barrett, 2015; Barrett & Kurzban, 2006; Ermer et al., 2007). The response to this clarification has been to (a) ignore it (for a review, see Goldfinch, 2015), (b) suggest that this is not what evolutionary psychology meant in the past and that evolutionary psychology is now making a banal, shift-the-goalpost claim that applies to everything (Chiappe & Gardner, 2012; Morin, 2016), or (c) stipulate that functional specialization applies only to certain kinds of cognitive processes (such as peripheral, System 1 processes) but not to others (such as central, System 2 processes; Chiappe & Gardner, 2012; Fodor, 1983, 2000). In turn, the counterresponse from evolutionary psychology has been to (a) assert that functional specialization has been what evolutionary psychology meant all along (Barrett et al., 2006; Tooby et al., 2005), (b) question why dual-systems theory (i.e., System 1 and System 2) and Fodorian modularity seem to be interwoven concepts for these critics (Barrett, 2015), and (c) argue that functional specialization can apply to processes that land on either side of the distinctions made by both Fodor and dual-systems theory (Barrett, 2015; Barrett & Kurzban, 2012; Carruthers, 2003).

Who’s on First?

The upshot of all this back-and-forth is that both sides in this debate believe that the other side is patently absurd in its convictions. Evolutionary psychologists cannot imagine what else could exist but functional specialization in the mind. Meanwhile, critics on the other side believe that the bottom has been pulled out from their understanding of evolutionary psychology if it does not intend the attributes of modularity that it now seems to be backing away from. Both sides are left, understandably, exasperated and at a seeming impasse.

The cost of this state of affairs cannot be overstated. It has misled an entire generation of scientists about how to think about the relationship between evolution and the mind, and it actively hinders progress in understanding how the mind works. However, this crisis represents a unique and powerful opportunity for clarification: Chronic intransigence typically indicates that there is not really a debate at all but rather a profound misunderstanding. Indeed, when reading through the enormous literature comprising the modularity debate it becomes increasingly clear that one is looking at a giant—but patently unfunny and scientifically tragic—version of Abbott and Costello’s “Who’s on First?” comedy routine (for video link, see NYYGehrig, 2012). For anyone unfamiliar with this routine, Costello attempts to ask Abbott about the names of players on a baseball team. However, the players all have names like Who, What, I Don’t Know, etc., which are then misinterpreted as evasive answers to Costello’s questions about the players’ identities. So when Costello asks, “Who’s on first?” Abbott replies, “Yes,” leading to an extended argument based on repeated misunderstandings of the meaning of the players’ names (and one of the classic comedy routines of the 20th century). Although Abbott and Costello never resolve their misunderstanding, the modularity debate is not doomed to the same fate. Instead, the vicious cycle of misunderstanding can be brought to an end by clearly articulating what both sides are arguing—which turns out to be decidedly different from what either side has believed the other to be saying up until now.

Our goal in this article is to dismantle the modularity debate entirely and show that it rests on a “Who’s on First?”–style misunderstanding—what we refer to as the modularity mistake. The modularity mistake can be succinctly summarized as follows. The debate until now has appeared as if two sides are quarreling about the extent of modularity within the mind and about what criteria should be assigned to modules. That is, it seems as if two sides are referring to the same entity—a module—but disagreeing about where it is and what it is like. But this is not what is happening. Rather, the two sides are simply talking past one another because each side is approaching the mind at a fundamentally different level of description or, as we prefer—and following Marr (1982)—a different level of analysis.

A level of analysis is a level of reduction or a level of explanation. In philosophical terms, each level constitutes its own ontology: a set of entities and rules stipulating how those entities can interact. As has been pointed out perennially by philosophers and scientists (e.g., Aristotle, ca. 350 B.C.E./1994; Dennett, 1987; Lorenz, 1948/1996; Marr, 1982), a full understanding of any complex entity or phenomenon requires description at more than one level. Moreover, each level of analysis complements the others. Different levels are not in direct competition with each other, and a description at one level does not obviate the need for a description at another. However, care must be taken to not confuse different levels of analysis because each level constitutes its own closed system. For this reason, unknowingly operating at different levels of analysis can create significant problems.

Take, for example, vanilla ice cream. One can describe (a) the way vanilla ice cream tastes when you eat it, (b) the structure of the vanillin molecule that is responsible for the vanilla taste, and (c) the physics of the particles that constitute the vanillin molecule. All three levels of description (the taste, the chemistry, and the physics) are valid scientific ways of describing the ice cream. Each constitutes what philosophers would refer to as a distinct ontology, meaning that each is its own independent causal framework, featuring a different set of entities and rules governing how those entities can interact. Thus, it is a category mistake (i.e., a confusion about what kind of thing something is) to combine levels or to think that the entities residing at one level can causally interact with entities residing at another. For example, it would be a mistake to think that the taste of vanilla can causally interact with a vanillin molecule or to think that a drawing of a vanillin molecule is somehow incomplete because the vanilla taste is not depicted somewhere within it.

Here, we argue that the levels of analysis framework is essential for understanding the debate surrounding modularity and why it has lasted for so long. Building on the previous ontologies of Dennett and Marr (e.g., Dennett, 1987; Marr, 1982), we present three levels of analysis—intentional, functional, and implementational. These three different ways of thinking about the mind each represent a different level of reduction, and each has its own validity. We argue that Fodor was operating mainly at the intentional level of analysis, whereas evolutionary psychology operates at the functional level of analysis. Neither side’s formulation of modularity makes sense within the other side’s level of analysis, and we show how much, if not all, of the controversy surrounding modularity is simply a consequence of each side operating at these different levels. We furthermore suggest that the unqualified concept of modularity be abandoned—not only by evolutionary psychologists but also whenever the term is applied to the mind—and be replaced with terminology that clearly denotes the level of analysis at which one is approaching the mind.

Our goal goes beyond simply articulating how damaging the “Who’s on First?”–style modularity mistake has been for evolutionary psychology or demonstrating how our new framework resolves these issues. Rather, we use the modularity mistake as an illustrative case study of what can happen when different levels of analysis are confused with one another. This most basic of starting points, being clear about the level at which one is describing the mind, has been given remarkably short shrift by psychologists and philosophers of mind. This state of affairs is all the more troubling once one begins to notice that different levels of analysis are confounded frequently, and often without awareness, throughout the behavioral and psychological sciences. Explaining the modularity mistake is therefore the first step of a broader movement to resolve confusions stemming from unmarked shifts in perspective within psychology and philosophy of mind. If successful, this enterprise can tidy up nearly every area of inquiry in which humans seek to study themselves by clarifying at what level of analysis one is (and is not) operating when approaching the mind.

Levels of Analysis

If one hopes to achieve a full understanding of a system as complicated as a nervous system, a developing embryo, a set of metabolic pathways, a bottle of gas, or even a large computer program, then one must be prepared to contemplate different kinds of explanation at different levels of description.

—Marr, 1982 (p. 20)

One of the great achievements of 20th-century psychology was the establishment of the kinds of descriptions and explanations that will be necessary for a complete science of the mind. There are two main components of this framework. First, a complete science of the mind cannot just describe measurable outcomes (i.e., experimental effects)—it must also appeal to latent variables (psychological entities) within the organism (e.g., Chomsky, 1959; Fodor, 1987a; Kendler, 1987; Tolman, 1925). Second, these psychological entities can be described at three different levels of reduction or analysis (Dennett, 1987, 1991a; see Fig. 1). Each level hosts different kinds of entities and rules of causation (i.e., each has a different ontology), and each sits at a different level of reduction than the others.

Fig. 1.

The causal processes within the mind responsible for producing measurable outcomes (such as observable behaviors or empirical data) can be understood at three different levels of analysis. These measurable outcomes afford inferences about the structure or content of the mind at any one of these three levels.

Intentional level of analysis

The intentional level of analysis is the least reductive of the levels and the default ontology that humans adopt when thinking about the mind. Elements of this level are studied under various names, including theory of mind and psychological essentialism, and the entirety of this level corresponds to what Dennett (1987) has called taking the intentional stance. At this level, a unitary agency or a “you” (i.e., an internal impetus; Starmans & Bloom, 2011) resides within the mind. This is the “self,” the “you,” or the “I” that peers out from the privileged location inside of our skulls, thinking, feeling, and making decisions. This agency—which we refer to here as the central agency—is sometimes thought of as a little person living inside of one’s head (a homunculus) or as a place where various operations of the mind come together to be evaluated and acted on by some holistic decision-making entity. This is the part of the mind where “you” are, where information has to arrive before “you” see it, and where “you” are in control (what Dennett [1991a] has called the Cartesian theater). This level also contains the first-person phenomenology of how things feel (e.g., being easy or effortful, under volitional control or automatic) and includes mental states such as emotions, beliefs, thoughts, desires, and so on (see Russell, 1921/2013).

Although this level can be scientific, it is intrinsically dualistic, meaning that some entities that fall within the body envelope are not seen as a part of the central agency but rather as separate from it (Starmans & Bloom, 2011). For example, one can lose one’s arm and not lose one’s self. The same applies to mental entities. For example, if I cannot retrieve a memory, that memory (at least for the time being) resides outside of my purview and becomes something separate from me. Thus, at this level, there is an agent who directs their attention, who consults their memories, and who uses strategies. These mental entities (e.g., attention, memories, strategies) are separate from the agent but are used by it (or interface with it). That is, in philosophical parlance, interactionism (Radner, 1971) is an essential feature of this ontology. Causation at this level involves an impetus that originates either from the agent (i.e., “I” meant to do it) or from any of the entities residing outside of it. These entities may be something in the external world or something internal but not within the purview of the homunculus (e.g., a reflex, or mechanisms that do not share my goal of weight loss and therefore tempt me with chocolate cake). A description of visual processes at this level would be, for example, that “I see objects in the world automatically but attend to different parts of a scene effortfully.”

Functional level of analysis

The next level down—the functional level—is mechanistic (Bechtel, 2008), which means that there is no longer any agent present or impetus involved. Rather, the entities that exist at this level, mechanisms, work by executing functions that are abstract input/output, or if/then, contingencies and rules of causation (Fodor, 1976, p. 83). This level corresponds to what Dennett (1987) has called the design stance and encompasses both Marr’s computational level and his algorithmic and representational level.¹ Causation at this level occurs because of the particular constellation of functions being carried out across different mechanisms and the abstract if/then causal relationships between mechanisms (it is at this level that input/output equivalence exists; Dennett, 1995²).

At this level, there is no “you” or “I”; there is no “central” location where the operations of the mind come together—nor any exogenous agent or impetus sitting outside of any mechanism and acting on its outputs. Instead, only mechanisms exist. A description of vision at this level would feature descriptions of mechanistic input/output steps and the representations necessary for parsing objects in the world, including the abstract computational logic of color, size, and shape constancy, scene analysis, and so on.

Likewise, the intentional level description above that “I see objects in the world automatically but attend to different parts of a scene effortfully” would at this level be described exclusively in terms of the operation of mechanisms, whose functions in aggregate produce the intentional level description. For example, “I see” corresponds to the activation of a complex array of mechanistic functions—systems for representing lines, colors, occlusions, depths, and objects; classifying objects; communicating to conspecifics; and so on—none of which in themselves “see” and none of which ever becomes or interfaces with a unitary “I,” as the unitary “I” is itself another complex array of mechanistic functions at this level.

Implementational level of analysis

The third and most reductive level is the implementational level (Marr, 1982). This level describes the interactions between entities defined by their physical locations and attributes (e.g., the anatomical features and electrochemical processes of different brain regions) and corresponds to what Dennett (1987) has called the “physical stance.” Causation at this level occurs through the unfolding of physical events. A description of vision at this level would articulate how visual processes are physically instantiated. For example, electromagnetic radiation hits rhodopsin molecules housed within photoreceptors, leading to the electrochemical excitation of particular kinds of cells in the visual cortex, and so on (eventually all the way down to descriptions of the chemistry and physics of these steps).

The three levels

These three levels exhaust all known levels of description for the mind (see Adolphs, 2015), and we already intuitively appeal to these different levels when we think about ourselves from the neck down. For example, if you go to the doctor complaining of pain (an intentional-level description), you expect to hear about what system is malfunctioning (a functional-level description) and may be prescribed some kind of drug or surgery (to provide an implementational-level intervention). In other words, you might complain of searing pain in your back; your doctor might then explain that this pain is caused by the physical pinching of a nerve cell whose function is to relay pain signals, which causes the cell to (mis)fire; and you may be prescribed an anti-inflammatory to reduce the swelling around the nerve. As this example demonstrates, (a) all three levels are complementary (one does not have to choose between feeling pain or having a pinched nerve), (b) the higher level gives meaning or significance to the next lower level, and (c) all three levels are important for a complete medical (or, in our case, scientific) account of what is happening.

The Modularity Mistake

Although there is no alternative to using some combination of these three levels of analysis to describe the mind, there has not yet been adequate attention paid to making explicit the level at which one is operating or to avoiding cross-contamination (particularly between intentional and functional levels). Consequently, the adoption of these levels in psychology and philosophy of mind has been largely implicit, piecemeal, and confused. The functional level of analysis seems to be the most fragile and the least likely to be adopted. Indeed, in our experience, an appreciable number of behavioral scientists fail to recognize it entirely. This combination of factors has caused endless confusion about the claims different research traditions are making about the modularity of mind.

Notably, Fodor’s articulation of modularity exists at an intentional level of analysis. As we articulate in detail below, the criteria that Fodor considered most important for modularity are only coherent at this level.³ Although he never explicitly summarized it in this way, Fodorian modules are the subset of entities in the mind that fall outside of the purview of a central agency. In contrast, evolutionary psychology’s notion of modularity—including the “updated” notion of functional specialization—is a discussion of entities falling entirely within the functional level of analysis (see Fig. 2).

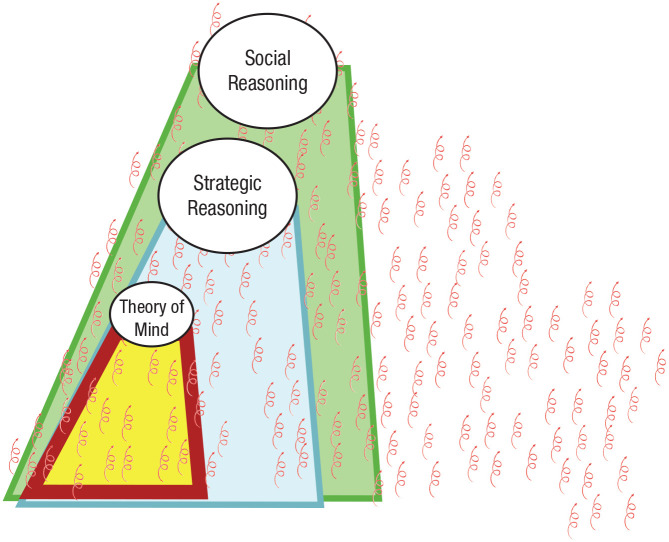

Fig. 2.

A visual depiction of the modularity mistake. One side of the debate, Fodorian modularity (on the left), conceives of modules as those entities that reside outside of the purview of a central agency—which is coherent at an intentional level of analysis. The other side of the debate, evolutionary psychology (on the right), conceives of modules as descriptions of the mind at a functional level of analysis. Using the same word, “module,” has sowed profound confusion in the back-and-forth debates surrounding modularity. In particular, criticism of evolutionary psychology’s claims of “massive modularity” in almost all cases stems from perceiving those claims through the lens of Fodorian modularity, which is simply incorrect.

Although Fodor had good reasons for articulating his conception of modularity at the intentional level, his use of this level was entirely implicit. Therefore, when evolutionary psychologists began to adopt the language of modularity (as a way of talking about the functional level of analysis; e.g., Cosmides & Tooby, 1992; Tooby & Cosmides, 1992⁴), Fodorian-informed philosophers and psychologists incorrectly interpreted this language through the lens of the intentional level of analysis.

This confusion of levels of analysis—what we call the modularity mistake—unleashed a cascade of profound misunderstandings that has wreaked havoc for decades. It has led to a perverse view of what evolutionary psychology is and what it is trying to do and, even more broadly, a perverse view of what is entailed by claims (coming from any theoretical perspective) that something is a function or a mechanism within the mind. Consequently, being explicit about the different levels of analysis at which claims or theoretical arguments are made will be vital not only for dispensing with the modularity mistake and arriving at an accurate view of the goals and worldview of evolutionary psychology but also for the long-term viability of the entire interdisciplinary cognitivist enterprise. No less is at stake.

Fodorian Modularity and the Intentional Level of Analysis

To understand why Fodorian modularity exists at the intentional level of analysis, it is important to recognize where Fodorian modularity came from and why it was created. Fodor’s conception did not arise out of a vacuum. An implicit notion of modularity (in the form of a “faculty”) has played an important role in physiology and medicine since at least Galen’s writings in the second century (Galen, 1523/1916) and later in psychology, beginning (as pointed out by Fodor⁵) with Gall in the 19th century. The explicit concept of modularity began to appear in developmental and evolutionary biology around the 1930s (Needham, 1933), and by the 1960s it began to appear in artificial intelligence and software engineering (Simon, 1962, 1969/1996). We return to this original conception of modularity (which we argue is perfectly coherent) later, but for our purposes here it is sufficient to say that it had relatively little impact on psychologists’ and philosophers’ conceptualizations of the mind (Carruthers, 2003; although there were exceptions, e.g., Minsky, 1974/1975).

This would all change with the publication of Fodor’s (1983) book The Modularity of Mind. Fodor’s book arrived at a critical inflection point in psychology and philosophy of mind: Debates over behaviorism had recently run their course, and there was rekindled openness to the idea that the mind would be composed of a large number of diverse processes (H. Gardner, 1985; Minsky, 1974/1975, 1986). Researchers were settling into the enterprise of identifying what these processes might be and establishing how to talk about them (Kendler, 1987; Marr, 1982; Miller, 2003). Within this scientific context, Fodor proposed that some aspects of cognition have the attribute of being modular. In point of fact, however, much of the book is not about modularity but rather about “input systems” (see Box 1). Fodor’s analysis of input systems is beautiful, and there remains an active debate about their nature (e.g., Firestone & Scholl, 2016).

Box 1.

Modularity of Mind

Anyone who comes to read Modularity of Mind by way of the modularity debate is likely to be surprised at just how little of the book is actually about modularity. Instead, the vast majority of the book (the first two thirds) is concerned with characterizing “input systems.”

Input systems sit downstream from sensory transducers (for example, the structures in the cochlea or retina that transduce electromagnetic or mechanical energy into nerve conductance) and translate distal stuff out in the world into a format that can be used by the mind. Moreover,

since, in the general case, transducer outputs underdetermine perceptual analysis . . . we can think of each input system as a computational mechanism which projects and confirms a certain class of hypotheses on the basis of a certain body of data. (Fodor, 1983, p. 68)

Critically for Fodor, these hypotheses (guesses within the system about what is being seen or said) are drawn from “considerably less than the organism may know. That is, the confirmation function for input systems does not have access to all of the information that the organism internally represents” (p. 69). This is for a very good reason: Input analysis should be independent of “what the perceiver presumes or desires . . . at least for a fallible organism . . . it generally sees what’s there, not what it wants or expects to be there. Organisms that don’t do so become extinct” (p. 68).

Fodor refers to this “cordoning off” from the organism as “information encapsulation” (pp. 41, 67, 69, 71–73, 77, 80, etc.).

What is most important for our purposes here is that Fodor used the attributes of input systems as a vehicle to argue for a property—modularity—within the mind. In his words, “input systems constitute a natural kind” (Fodor, 1983, p. 99). For Fodor, input systems are by their nature informationally encapsulated, and it is this property that picks out a natural kind: modules. As he put it: “The key to modularity is information encapsulation” (p. 98). An entity is encapsulated if it “does not have access to all of the information that the organism internally represents” (p. 69; see also Box 1). Encapsulation is “the heart” (Fodor, 2000, p. 63) and “the essence” (Fodor, 1983, p. 71) of modularity. To fully understand what a Fodorian module is, it is also instructive to know what a Fodorian module is not. Fodor contrasted modules with central systems (see Box 2). These systems, like modules, are defined with respect to encapsulation. Unlike modules, however, central systems are not encapsulated—which means that they have full access to the organism’s beliefs, desires, and goals.

Box 2.

“Central” Systems

As Fodor pointed out, “Mechanisms that operate as modules presuppose mechanisms that don’t” (Fodor, 2005, p. 71). He called these nonmodular, unencapsulated mechanisms central systems:

I assume that there must be relatively nondenominational (i.e., domain-inspecific) psychological systems which operate, inter alia, to exploit the information that input systems provide. Following the tradition, I shall call these “central” systems, and I will assume that it is the operation of these sorts of systems that people have in mind when they talk, pretheoretically, of such mental processes as thought and problem-solving. (Fodor, 2000, p. 103)

For Fodor, what is critical to these central systems is that they are for “belief fixation” (Fodor, 2000, pp. 112, 115, etc.), and that they have the attributes of being Quineian (“sensitive to properties of the entire belief system”; p. 107) and isotropic, meaning that propositions (beliefs, mental representations, etc.) are equally accessible—that is, “facts relevant” to a hypothesis “may be drawn from anywhere in the field” (p. 105).

Why did Fodor carve up the mind in this way? Scholars have suggested that Fodor was arguing against a prevailing assumption at the time of his writing that there would be some uniform “grand design” to all mental phenomena (Callebaut, 2005; Sperber, 1994). In other words, Fodorian modularity was an argument against content-blind, domain-general faculties (H. Gardner, 1985), or what Minsky (1974/1975) called logistic architectures, in which propositions embodying knowledge are separable from the rules of inference operating on them (Bechtel, 2003⁶).

Fodor’s modules codified a set of phenomena in which propositions are in fact cordoned off from the rest of the cognitive architecture. For example, in visual illusions, relevant explicit knowledge about what one is seeing cannot affect the outputs of visual perception (Fodor, 1983; Pinker, 2005; Rozin, 1976; Scholl & Leslie, 1999). Fodor’s modules thus served as an existence proof that the notion of a uniform, grand design could not be correct. Essentially, Fodor was asking whether any portions of the mind are closed off from its free-floating propositions. It is those entities that are “restricted” from these propositions (Fodor, 2000, p. 63) that are modules, whereas those entities that are not restricted are central systems.

Although Fodor did not state outright at which level he was operating in his writing, there is very little room for interpretation on this matter. Encapsulation meant isolation from the organism’s background beliefs, desires, and goals. This description could have been perfectly coherent at a functional level of analysis: Mechanisms, defined according to their function, have a particular purview. Therefore, Fodor could have meant that the mechanisms that underwrite input systems do not take as inputs any of the outputs coming from the mechanisms underwriting beliefs, desires, or goals. This would be similar to the way that the mechanisms that represent lines on the retina do not take as inputs any of the outputs of mechanisms for representing skin temperature. Encapsulation could thus be defined functionally as the scope of a mechanism’s computational purview.

But this is not what Fodor meant. Fodor acknowledged this would be a possible way to understand encapsulation:

It is a point of definition that distinct functional components cannot interface everywhere on pain of their ceasing to be distinct. It is this consideration that flow-chart notation captures by drawing boxes around the processing systems it postulates. That only the inputs and outputs of functionally individuated systems can mediate their information exchanges is tautological. (Fodor, 1983, p. 87)

In other words, at a functional level, each mechanism is defined according to what class of things it takes as inputs. Consequently, each mechanism is tautologically encapsulated: By definition, it cannot take as inputs anything other than the inputs it uses to execute its function.

But Fodor was decidedly against this understanding of modularity:

There is a usage according to which anything that is or purports to be a functionally individuated cognitive mechanism—anything that would have its proprietary box in a psychologist’s information flow diagram—thereby counts as a module. . . . In contrast . . . I shall simply take it for granted that cognition is typically the interaction of many functionally individuated parts, and use the “modularity thesis” as the name of something more tendentious. (Fodor, 2000, pp. 56–57)

Later he stated that “confusions of modularity with functional individuation have embarrassed the cog. sci. literature for several decades now; it really is time to stop” (Fodor, 2005, p. 29). Fodor was painfully, abundantly clear on this point; modules are not equivalent to functionally individuated entities.

So if Fodor did not mean functionally individuated entities in his distinction between modules and central systems, what did he mean, and at what level was he operating?

Fodorian modularity exists at the intentional level

In fact, Fodor’s notion of informational encapsulation is meaningful only at the intentional level of analysis. This is because Fodor accepted the premise that a central agency exists in the form of central systems but then went on to argue that there are parts of the mind (i.e., modules) that do not fall within this region (see Fig. 3).

Fig. 3.

Three conceptions of the mind. Fodorian modularity is an argument against the view of the mind on the left—that “you” (i.e., a central agency) extends to cover all of the mind. It makes this argument by adopting the view in the center—that there are parts of the mind that do not include a central agency. However, at a functional level of analysis (the view on the right) even the phenomenological experience of a central agency—the “you”—is the result of a collection of mechanistic systems.

This fact can be demonstrated by examining a canonical example of modularity: visual illusions (Fodor, 1983; Pinker, 2005; Rozin, 1976; Scholl & Leslie, 1999). Figure 4 depicts a well-known visual illusion drawn by the cognitive scientist Roger Shepard. In this illusion, a large monster appears to be chasing a smaller monster down a corridor. However, the two monsters are in fact identically sized ink blotches; they subtend the same amount of visual angle on the retina. As is explained in introductory textbooks, the reason why the monsters are perceived as being different sizes has to do with an assumption of the visual system: In the real world, size and distance are conflated. Closer objects become optically larger, and objects farther away become smaller. To establish actual size, the visual system must take into account both the angle subtended on the retina and relative depth cues. In Shepard’s illusion, proximity to the vanishing point serves as a monocular depth cue. Therefore, the monster closer to the vanishing point appears farther away from the viewer. And because the two monsters subtend the same amount of visual angle on the retina, the one farther away is represented as being larger because in a real, three-dimensional scene it would be.
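The size-distance reasoning above can be made concrete with elementary viewing geometry. This is a sketch under an idealizing assumption that the text does not itself make: the visual system is treated as if it applied exact geometry to an inferred distance. An object of physical size $S$ at distance $D$ subtends a visual angle $\theta$ satisfying

$$
\tan\frac{\theta}{2} = \frac{S}{2D}
\quad\Longrightarrow\quad
S = 2D\tan\frac{\theta}{2}.
$$

Because the two blotches subtend the same $\theta$, inferred size scales linearly with inferred distance. If, purely for illustration, depth cues place the monster nearer the vanishing point at twice the distance of the other ($D_2 = 2D_1$), then $S_2 = 2(2D_1)\tan(\theta/2) = 2S_1$: the same retinal image is represented as an object twice as large.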

Fig. 4.

Roger Shepard’s Terror Subterra (copyright R. Shepard; reprinted with permission).

This illusion captures the point of Fodor’s modularity: One can learn from a textbook that the monsters are the same objective size and understand the reasons why, but that declarative knowledge cannot change the first-person experience of seeing a larger monster chasing a smaller one (or, as Fodor put it, “one simply cannot see the world under its retinal projection”; Fodor, 1983, p. 54). This phenomenon demonstrates that propositions are not uniformly accessible across the mind. Specifically, the proposition that the two monsters are the same size does not reach those parts of the mind responsible for producing conscious visual experience. Therefore, the mind is not purely isotropic (Fodor, 1983; Pinker, 2005; Rozin, 1976; Scholl & Leslie, 1999).

A skeptic might note that there is nothing yet in this example to indicate that Fodorian modularity exists at the intentional level of analysis. That is, the observation that content in one portion of the mind does not affect content in another is also perfectly compatible with adopting a functional level of analysis. But where the intentional level reveals itself is in Fodor’s treatment of encapsulation as being an intrinsic property of modules (as opposed to a relational property, as we discuss in detail below). Fodor used visual illusions to argue that visual input analyzers are modular because they have the property of being encapsulated. Recall that, for Fodor, something is encapsulated if it “has access, in the course of its computations, to less than all of the information at the disposal of the organism whose cognitive faculty it is” (Fodor, 1987a, p. 25); “encapsulated systems are prohibited by definition from considering all things” (Fodor, 1983, p. 89). That is, encapsulation makes sense only if there is a place where everything else comes together—what Fodor called central systems, which are “sensitive, at least in principle, to everything that the organism knows” (Fodor, 1983, p. 70).

Although such statements are perfectly coherent at an intentional level of analysis, they are perfectly incoherent at a functional level of analysis 6 : At a functional level of analysis, it is impossible for any mechanism to ever have access to all of the information at the disposal of the entire organism because the entire organism is itself composed of mechanisms—even processes that would fall under Fodor’s rubric of central systems (see Fig. 3). Therefore, it is not possible for there to be a place where all (or most) of the information will otherwise come together (see also Sperber, 2001). It is then meaningless to define a cognitive mechanism at the functional level according to whether it has access to all of the information at the disposal of the organism because this will never happen. It would be like defining a physiological process in the body—such as gas exchange across alveolar tissues in the lungs—according to whether that process had access to all of the other physiological processes occurring within the body. It is simply an incoherent statement.

Later we show how the notion of intrinsic encapsulation (or of intrinsic separateness) has wreaked havoc on the evidentiary standards held against researchers who adopt a functional level of analysis, such as evolutionary psychologists. For now, it is sufficient to note that appealing to encapsulation as an intrinsic property requires implicitly invoking a central agency from which an entity is isolated. No such thing exists at a functional level, but it does exist at an intentional level. In other words, the pitting of modules against central systems is simply a rebranding of the me/not me distinction within the intentional level of analysis. Something is intrinsically encapsulated (i.e., a module) when it resides outside of the purview of the central agency. Nonencapsulated central systems, in turn, are the central agency (for an explicit depiction of a Cartesian theater in a synopsis of Fodorian modularity, see, for instance, Coltheart, 1999, p. 116).

To be clear, we are not arguing that encapsulation (which is coherent at an intentional level of analysis) cannot have a corresponding functional level of analysis account. To return to an earlier example: Just as the taste of vanilla has a corresponding molecular description, so too does encapsulation have a corresponding functional-level description. In other words, the perfectly coherent intentional-level attribute encapsulated (or unencapsulated) is necessarily made possible by a set of cognitive mechanisms. Therefore, it has to be the case that each instance of an encapsulated (or unencapsulated) cognitive phenomenon can be described at a functional level of analysis. But what is important is that those mechanisms underwriting those phenomena do not themselves work by virtue of being encapsulated. That is, intrinsic encapsulation is meaningless at the functional level of analysis because all mechanisms at this level are equally encapsulated: None is any more or less isolated from everything else in the mind.

In sum, then, encapsulation from the homunculus or Cartesian theater is perfectly coherent at an intentional level of analysis, but there is no coherent way to make Fodor’s attribute of encapsulation an intrinsic property of a mechanism at a functional level of analysis. 8 Yet, to our knowledge, this simple fact has been missed by critics and proponents of Fodorian modularity alike.

Unfortunately, and even more confusingly from the perspective of trying to keep levels of analysis clear, Fodor included an additional set of attributes for modules (see Box 3). None did he deem as important as encapsulation (e.g., Fodor, 1983, pp. 71, 98, 110). Rather, he suggested that these attributes might co-occur with encapsulated modules in a fuzzy-set, family-resemblance kind of way (e.g., Fodor, 1983, pp. 99–101). The reason why these attributes create additional confusion about levels of analysis is that some are obviously at the intentional level of analysis (e.g., automaticity), whereas others appear as if they might exist at a functional level of analysis (e.g., domain specificity). For example, automaticity, like encapsulation, is treated as an intrinsic property of mechanisms (e.g., Fodor, 1983, 2000; Samuels, 2000, 2012) and thus is meaningless at a functional level of analysis. Automaticity, by definition, entails the lack of some agency that is present for nonautomatic processes (e.g., an automatic transmission in a car is the kind that does not need to be directly operated by the driver, unlike a manual transmission). 9 In contrast, domain specificity—which describes a class of inputs to which a mechanism is sensitive (see Boyer & Barrett, 2016)—does not require a central agency to be coherent.

Box 3.

Additional Attributes of Fodor’s Modules

In addition to informational encapsulation, Fodor also—reluctantly at times—suggested that modules may tend to have the following other attributes, which may more or less stick together:

Automatic

“Because these processes are automatic, you save computation (hence time) that would otherwise have to be devoted to deciding whether, and how, they ought to be performed” (Fodor, 1983, p. 64).

Fast

“Eyeblink is a fast response because it is a reflex—i.e., because you don’t have to decide whether to blink your eye” (Fodor, 1983, p. 64; generally pp. 61–64).

Domain-specific

“The more eccentric a stimulus domain, the more plausible the speculation that it is computed by a special-purpose mechanism” (Fodor, 1983, p. 51).

Fixed neural architecture

“Hardwired connections indicate privileged paths of informational access; the effect of hard-wiring is thus to facilitate the flow of information from one neural structure to another” (Fodor, 1983, p. 98).

Characteristic development

“The neural mechanisms subserving input analysis develop according to specific, endogenously determined patterns under the impact of environmental releasers” (Fodor, 1983, p. 100).

Shallow outputs

Outputs are not elaborated on by “background knowledge” (Fodor, 1983, p. 87) but are not so shallow that they are not “phenomenologically accessible” (p. 88; see also pp. 93–94, 96).

Characteristic breakdown

“Input systems exhibit characteristic and specific breakdown patterns” (Fodor, 1983, p. 99).

Because this article is not a complete review of Fodorian modularity, we will not go any further into Fodor’s other modularity criteria, aside from noting that encapsulation and automaticity are widely considered the key, defining elements of Fodor’s concept of modularity (the latter primarily by authors other than Fodor; e.g., Bechtel, 2003; Coltheart, 1999; Fodor, 1983, 1985, 1998, 2000; Frankenhuis & Ploeger, 2007; Samuels, 2000, 2012; Seok, 2006; Sperber, 1994). This is precisely because Fodor was arguing against a purely isotropic mind, and therefore the attribute of being cordoned off from what seems to be an otherwise open pool of propositions is central to Fodor’s argument.

But we would argue that Fodor’s true argument was something far more profound—an argument against the notion that “you” are in complete control of how your mind works. Modules are those things in the mind that are not “you.” This is why, according to Fodor’s criteria for identifying modules, modules are automatic or mandatory—because “you” cannot change them. This is why there is limited central access—because you cannot get to them. This is why there are shallow inputs—because deep inputs would get to “you,” and “you” would be able to elaborate and act on those inputs. And this is why modules are separated from central systems (i.e., “you”). To see Fodor’s criteria used in this manner, see, for instance, Coltheart (1999), Fodor (1983, 2000), and Samuels (2000, 2012). 10

Indeed, although it has been pointed out elsewhere that Fodor’s notion of modularity is intrinsically dualistic—for example, Barrett (2015) referred to it as an “igloo model” of the mind—we would argue that this is a feature of Fodor’s approach, not a bug. Fodor’s conceptualization of modularity did not gain traction because of wide-ranging interest in issues of isotropy. Rather, Fodorian modularity became wildly popular because it confronted near-universal, everyday intuitions about how the mind works—that “you” are more or less in complete control of your mind. Fodor’s modules did the important work of retracting the boundaries of where “you” (the central agency) resides—and did so in a compelling way by addressing the issue at the intentional level of analysis, which is the way that people intuitively and naturally approach the mind. Indeed, Fodor himself seemed to acknowledge the continued existence of something like a central agency in his theoretical framework:

A lot is known about the transformations of representations which serve to get information into a form appropriate for central processing; practically nothing is known about what happens after the information gets there. The ghost has been chased further back into the machine, but it has not been exorcised. (Fodor, 2000, p. 127)

Evolutionary Psychology and the Functional Level of Analysis

Cognitive processes, like electrons, are entities defined solely by input-output relations.

—Cosmides (1985, p. 2)

In contrast to Fodor’s framework for carving up processes in the mind, evolutionary psychology approaches the mind primarily at a functional level of analysis 11 (e.g., Barrett, 2015; Buss, 1995; Conway & Schaller, 2002; Cosmides, 1985; Cosmides & Tooby, 1987, 1994a, 1994b; Daly & Wilson, 1986, 1988; Pinker, 1997; Smith & Winterhalder, 1992; Symons, 1979, 1987, 1992; Tooby & Cosmides, 1990, 1992, 2016). At this level of analysis, the entity making decisions is not a central agency but instead a constellation of mechanisms. Mechanisms are material things that execute some function, and the function is defined by the problem to be solved (e.g., holding open a door, digesting meat, vacuuming a room, or avoiding predators; see Cosmides & Tooby, 1987, 1994b; Dennett, 1995).

Within the functional level of analysis, mechanisms and their functions can be described at different degrees of abstraction. For example, the entire digestive system is in some sense a mechanism because it can be described as having the function of digesting food: taking in food and then performing digestive operations that eventually lead to the output of delivering bioavailable raw materials out of which the organism is built, maintained, and run. Moreover, the small intestine can equally be described as a mechanism—executing the more specific function of absorbing nutrients and minerals. So too can villi, specialized microfingers within the small intestine that capture particular classes of nutrients via diffusion. Villi are in turn composed of microvilli, and so on. Each description here meaningfully constitutes a mechanism because each description captures the execution of a particular function.

Consequently, there is no one scale at which “the mechanism” exists. There are as many mechanisms as there are ways of describing functions. Therefore, it is not terribly meaningful to ask how many mechanisms there are. Rather, it is more meaningful to ask what the functions are and to answer that question as precisely as possible. In an evolutionary framework, these bits of functional mechanism are called adaptations and are characterized or described in terms of their purpose and how they work (Buss, 1995, 2016; Cronk et al., 2000; Davies et al., 2012; Dennett, 1995; A. Gardner, 2009; Grafen, 2007; Lorenz, 1948/1996; Pinker, 1997; Smith & Winterhalder, 1992; Tooby & Cosmides, 1990, 1992, 2016; Williams, 1966; Winterhalder & Smith, 2000). What evolutionary psychologists have meant by the functional specialization of the mind, then, is that the mind is composed of many different mechanisms, each of which can be described according to its function (e.g., Barrett, 2006, 2015; Barrett & Kurzban, 2012; Tooby et al., 2005).

Adopting this functional level of analysis—which of course is not unique to an evolutionary approach—becomes particularly critical for applying evolution to psychology and behavior (Buss, 1995; Cosmides & Tooby, 1987; Daly & Wilson, 1988; Symons, 1979). Intuitively, we often think of the whole person as the entity making decisions and that attributes of the person modify those decision-making proclivities: Some people are stingy, some generous, and so on. Evolution applied to behavior is often (incorrectly) thought of in terms of these kinds of traits. That is, it can be tempting to think that evolution—or more precisely, a history of evolution—modifies or exerts a pull on what the individual would otherwise choose or do. This way of thinking is often characterized by the language of “bias” or “predisposition.” However, this is not the correct way to think about evolution applied to behavior. Evolution cannot be a partial contribution to the person because all of the processes that make up the person—everything that allows the person to think, plan, feel, learn, decide, and so on—are the result of adaptations, 12 by-products of those adaptations, or noise (Barrett, 2015; Buss et al., 1998; Neuberg et al., 2010; Tooby & Cosmides, 1990, 1992). Moreover, evolution cannot directly act on behavior. Thus, the link between evolution and behavior is found in the form and function of the mechanisms for producing behavior—the organism control systems typically studied under the rubric of psychology (Cosmides & Tooby, 1987; Daly & Wilson, 1986, 1988; Dennett, 1995; Smith & Winterhalder, 1992; Symons, 1979, 1987, 1992). In evolutionary biological terms, these control systems are called the proximate phenotype, or more specifically, the proximate psychology (see also Scott-Phillips et al., 2011).

One of the insights of the 20th century was that all information-processing devices, including the behavioral control systems within organisms, can be characterized as a set of mechanistic if/then contingency rules (Turing, 1950; see also Pietraszewski, 2020). Consequently, all of the psychological mechanisms for producing behavior can also be described as sets of nested if/then contingency rules. At each scale, such mechanisms or systems take particular classes of entities in as inputs, perform some operation or process on those inputs, and then generate some output. This input/output level of description is the functional level of analysis (Dennett, 1987) and mirrors exactly how one can describe how the rest of the body works (from cells to organs or to entire systems, such as the digestive system) in terms of each mechanism’s role or function (Block, 1998; Cosmides & Tooby, 1987; Dennett, 1995; Tooby & Cosmides, 1992).
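As a toy illustration of the if/then, input/process/output description above (a sketch under stated assumptions, not a model proposed in the works cited here), the Python below treats each mechanism as a function from inputs to outputs. The mechanism names, the `expansion_rate` input, and the threshold of 1.0 are all hypothetical. What it shows is structural: composing mechanisms yields another mechanism that is described in exactly the same input/output terms, which is why such descriptions nest at any scale.

```python
from typing import Callable

# A "mechanism" in this toy model is nothing more than an input -> output mapping.
# All names and values below are hypothetical illustrations.
Mechanism = Callable[[dict], dict]


def looming_detector(stimulus: dict) -> dict:
    # IF the image is expanding quickly, THEN flag an approaching object.
    return {"approaching": stimulus.get("expansion_rate", 0.0) > 1.0}


def startle_controller(signal: dict) -> dict:
    # IF something is approaching, THEN output a defensive response.
    return {"response": "flinch" if signal["approaching"] else "none"}


def compose(*parts: Mechanism) -> Mechanism:
    """Chain mechanisms so each one's output becomes the next one's input.

    The composite is itself just another input -> output mechanism, so the
    same kind of description applies at every level of nesting.
    """
    def composite(x: dict) -> dict:
        for part in parts:
            x = part(x)
        return x
    return composite


defensive_system = compose(looming_detector, startle_controller)
print(defensive_system({"expansion_rate": 2.5}))  # {'response': 'flinch'}
print(defensive_system({"expansion_rate": 0.2}))  # {'response': 'none'}
```

No part of `defensive_system`, including the composite itself, consults a pool of everything the system represents; each part is specified only by what it takes in and what it passes on.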

Evolutionary processes dictate the form of the if/then contingencies for each mechanism. Because natural selection is the only known force that creates biological complexity (A. Gardner, 2009), and because natural selection works in a particular way (Grafen, 2007), all if/then contingency rules are built according to the same fundamental logic: They will take as inputs those features of the environment that were reliably present over multiple generations of evolutionary time and generate outputs that would have been selected for within that environment (Buss, 1995; Cosmides & Tooby, 1987; Daly & Wilson, 1988; Tooby & Cosmides, 1992; see Fig. 5). The “environment” here refers to those features that are stable enough to have interacted with mechanisms over multiple generations, thereby shaping the structure of those mechanisms (Lewis et al., 2017; Symons, 1992; Tooby & Cosmides, 1990; Wertz & Moya, 2019). Despite frequent misconceptions, “learning” is not an exception to this principle. Rather, learning mechanisms are themselves a class of evolved if/then contingency rules and fall squarely within an evolutionary analysis (for some examples, see Barrett, 2015, 2016; Boyd & Richerson, 1985; Gallistel, 2000; Oña et al., 2019; Tooby & Cosmides, 1992, 2016; Wertz, 2019).

Fig. 5.

An axiom of adaptationism. All biologically evolved contingency systems within organisms, including the human brain, are constrained by natural selection to embody the following relationship: They take as inputs features of the environment that were reliably present over evolutionary time and generate outputs that would have been selected for over evolutionary time. All nonentropic phenomena produced by organisms are the result of some combination of such systems (adapted from Pietraszewski, 2020).

This conceptualization of mechanisms as evolved input/output devices, as depicted in Figure 5, is simply another way of restating the principles that (a) all mechanisms at a functional level of analysis can be described in terms of their inputs, processing, and outputs, and (b) all biologically evolved mechanisms described at this level are the product of natural selection, which constrains what these inputs, processing, and outputs can be (see also Buss, 1995). Properly understood, evolutionary psychology is then simply the wing of the evolutionary social sciences that is concerned with proposing and testing for what the input/process/output mechanisms in the mind might be (Barrett, 2015; Buss, 1995; Conway & Schaller, 2002; Cosmides & Tooby, 1987, 1994b; Lewis et al., 2017; Neuberg et al., 2010; Symons, 1992). In other words, it is an approach to the mind that marries the functional level of analysis to adaptationism (Tooby & Cosmides, 1992, 2016).

Two additional concepts are critical for understanding evolutionary psychology: evolvability and computational adequacy. Evolvability asks whether a postulated input/process/output mechanism could in principle have evolved (Andrews et al., 2002; Tooby & Cosmides, 1992). In particular, evolutionary psychologists constrain themselves to positing only biological mechanisms for dealing with inputs that would in principle have been recurrent over evolutionary time (and therefore cannot propose mechanisms that take as inputs evolutionary novelties, unless these inputs are taken in as a side effect or by-product of the mechanism’s evolved structure; e.g., Park, 2007; Tooby et al., 2003). This is why evolutionary psychologists are explicit in their assumptions about ancestral environments (Symons, 1992; Tooby & Cosmides, 1990; Winterhalder & Smith, 2000). Operating within the constraints of evolvability also means that evolutionary psychologists constrain themselves to positing mechanisms that generate outputs that would have been selected for on average over evolutionary time (what outputs would be selected for are in turn informed by optimality analyses from behavioral ecology and models of evolvability from evolutionary modeling and game theory; e.g., see Buss, 2016; Cronk et al., 2000; A. Gardner, 2009; Grafen, 2007; Smith & Winterhalder, 1992; Winterhalder & Smith, 2000). This is why evolutionary psychologists focus on adaptive problems (i.e., problems that have consequences for the long-term differential reproductive success of mechanisms; Tooby & Cosmides, 1992).

Computational adequacy refers to whether a specified mechanism can adequately solve a particular problem (Chomsky, 1980; Marr, 1982; Minsky, 1961, 1974/1975; Tooby & Cosmides, 1992). That is, if the description of the mechanism were to be implemented, would that implementation produce the same kinds of observables or behaviors that we see in the biological system, without the help of an intervening central agency or experimenter? To the degree the answer is “yes,” then the mechanism is computationally adequate.

The twin issues of evolvability and computational adequacy explain why evolutionary psychology has historically posited much more structure to the mind than many of its contemporaries. Adaptive problems are specific, real-world problems—such as finding food; finding a mate; staying alive and thriving in the face of resource exchanges, conflicts, and ever-shifting social alliances; and so on at every stage of the life span (for reviews, see Buss, 2016; Cronk et al., 2000; Davies et al., 2012). When one’s goal is to address these problems in a computationally adequate way, one quickly realizes the inadequacy of logistic, content-neutral (i.e., “domain-general”) architectures, or of positing that high-level abstractions such as “memory,” “attention,” and so on can adequately describe how these problems are solved. Indeed, whenever researchers have the long-term goal of completely solving the information-engineering problems of the real world, regardless of whether they take an evolutionary approach (such as in artificial intelligence; e.g., Minsky, 1961, 1974/1975, 2006; Mueller, 2015; Wall & Brock, 2019), one finds similar views regarding the number and heterogeneity of information-processing functions that must be accounted for.

The above concepts are essential to understanding what evolutionary psychologists have had in mind when they have appealed to the concept of modularity in the past. Consider the well-known cheater-detection work of Cosmides and colleagues (e.g., Cosmides, 1985, 1989; Cosmides & Tooby, 1989, 1992, 2015). The goal of the research program is not to show that cheater detection meets the criteria for a Fodorian module (Tooby et al., 2005) but rather to empirically examine whether the predicted design solutions for a particular information-processing problem—in this case, not being exploited in social exchanges—do in fact exist in the mind (for evidence collected thus far, see Cosmides, 1985, 1989; Cosmides et al., 2010; Cosmides & Tooby, 1989, 1992, 2015). To claim that a cheater-detection module exists in the mind is to claim that social exchange is (a) an evolutionarily recurrent problem with a clear set of selection pressures (i.e., it satisfies evolvability constraints) and (b) not being exploited in social exchanges requires specifying a set of information-processing functions within the mind that are adequate for solving this problem (i.e., the proposed solutions must be computationally adequate; for an in-depth task analysis of the information-processing requirements, see Cosmides & Tooby, 1989). The cheater-detection “module” is therefore defined according to its inputs and outputs: It takes as input those events in which an agent intentionally attempts to acquire a benefit without paying a cost or satisfying a requirement and then outputs the detection of those events to other systems involved in orchestrating antiexploitation responses (see Cosmides & Tooby, 2015).
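To make the input/output framing concrete, here is a deliberately minimal Python sketch. It is hypothetical throughout: the `Exchange` record, its fields, and `detect_cheating` are illustrative names, and the single Boolean rule stands in for the far richer information processing laid out in the task analysis cited above. It shows only that the mechanism is characterized by the class of events it takes in and the signal it hands on to other systems.

```python
from dataclasses import dataclass


@dataclass
class Exchange:
    # Hypothetical encoding of one social-exchange event.
    agent: str
    took_benefit: bool      # did the agent acquire the benefit?
    met_requirement: bool   # did the agent pay the cost / satisfy the requirement?
    intentional: bool       # was the shortfall deliberate?


def detect_cheating(event: Exchange) -> bool:
    # Output is a detection flag that other (unmodeled) antiexploitation
    # systems would consume; this function does nothing beyond classifying.
    return event.took_benefit and not event.met_requirement and event.intentional


events = [
    Exchange("A", took_benefit=True, met_requirement=True, intentional=True),
    Exchange("B", took_benefit=True, met_requirement=False, intentional=True),
    Exchange("C", took_benefit=True, met_requirement=False, intentional=False),
]
flagged = [e.agent for e in events if detect_cheating(e)]
print(flagged)  # ['B']
```

Because the rule conditions on intent, the accidental shortfall by agent C is not flagged, mirroring the input description in the text. Nothing in the sketch requires the mechanism to be encapsulated or automatic.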

In sum, evolutionary psychology operates at the functional level of analysis, and modularity has been evoked within evolutionary psychology to describe mechanisms defined according to their functions (i.e., functional specialization; Barrett & Kurzban, 2006, 2012). These functionally specified mechanisms can be described in the language of inputs and outputs and are constrained by the principles of evolvability and computational adequacy. The nature of each adaptive problem determines the attributes of the mechanism(s) that solves it—the form of the mechanism fits its function (Barrett, 2015). Moreover, there is no entailment that cheater detection, or any other proposed mechanism, should have the intentional-level attributes of being encapsulated or automatic, as would be the interpretation under Fodorian modularity (Tooby et al., 2005). These tenets have been central to evolutionary psychology since its inception and continue to be: “When thinking about the design features of mechanisms, we can think about the design of all three of these aspects—inputs, operations, and outputs—in adaptationist terms” (Barrett, 2015, p. 273). Evolutionary psychology makes no other axiomatic claims about the mind.

Fodorian Modularity, Evolutionary Psychology, and the Modularity Mistake

Given that Fodorian modularity approaches the mind largely at an intentional level of analysis and evolutionary psychology approaches the mind largely at a functional level of analysis, how did the two become mixed up with one another? The modularity mistake emerged in part because of word choice. Both Fodor and evolutionary psychologists used the same word, “module,” to refer to an entity at each of their respective levels of analysis. As Carruthers (2006) pointed out, Fodor had an outsized impact on philosophers of mind and psychologists, many of whom first encountered modularity by way of his book. Fodor’s book also arrived earlier than most of the foundational publications in evolutionary psychology. So, by the time evolutionary psychologists began to appeal to modularity (e.g., Cosmides & Tooby, 1997; Gallistel, 2000; Tooby & Cosmides, 1992), the concept was already widely understood through the lens of Fodor’s intentional level of analysis. 13

However, word choice should not be given too much credit (or blame) for the confusion between the intentional and functional levels of analysis. An intentional level of analysis is the default way that people naturally think about the mind (Churchland, 1981; Dennett, 1987; Knobe, 2006; Starmans & Bloom, 2011), and this default is exceedingly difficult to overcome, even for scientists (Braitenberg, 1984; Cosmides & Tooby, 1994b). If Fodor had approached the mind at a functional level of analysis, he, too, would likely have struggled against an interpretation of his version of modularity through the lens of the intentional level of analysis, just as evolutionary psychology has.

Once one understands that the modularity mistake takes place amid a fundamental confusion between intentional and functional levels of analysis, the content of the modularity debate becomes predictable (almost uninterestingly so). A main point of contention is evolutionary psychology’s claim that the mind is composed entirely of modules rather than just containing a handful at the periphery—a thesis that came to be known as massive modularity (e.g., Bechtel, 2003; Samuels, 2000, 2012; Sperber, 1994, 2001). Massive modularity is then considered a radical position, sparking decades of debate (e.g., Chiappe & Gardner, 2012; DeSteno et al., 2002; Ermer et al., 2007; Fodor, 1998, 2000; Frankenhuis & Ploeger, 2007; Hagen, 2016; MacDonald, 2008; Newcombe et al., 2009; Samuels, 2000, 2012; Seok, 2006).

Of course, viewed within the correct functional level of analysis, evolutionary psychology’s claims of so-called massive modularity are not radical at all. If anything, they are boringly axiomatic. The claim is simply a logical entailment of Darwin’s theory of natural selection: If one is a materialist, then one must accept that organisms are composed of packets of evolved functions, their by-products, and noise (for an extended discussion, see Barrett, 2015; Buss et al., 1998; Dennett, 1995; Park, 2007; Williams, 1966). There is currently no known viable alternative. Therefore, the mind must also be composed entirely of modules—if by “modules” one means evolved functions (i.e., mechanisms)—by-products of their operation, and noise. Of course, there is plenty of room for debate and rancor over exactly what those evolved functions are and the degree to which particular outcomes reflect true biological adaptations rather than by-products or noise, but these were not the issues being debated. Instead, it was the very notion that the mind could in principle be massively modular that was treated as problematic (e.g., Chiappe & Gardner, 2012; DeSteno et al., 2002; Fodor, 1998, 2000; Goldfinch, 2015; MacDonald, 2008; Newcombe et al., 2009). In other words, evolutionary psychologists were happy to argue about which functional systems exist and how those systems may be structured, but they found it absurd that they must defend the very notion of modularity itself.

In contrast, from Fodor’s intentional level of analysis, a massively modular mind would be problematic. Within this framework, modules are inflexible, autonomous, and independent. Thus, a massively modular mind would be composed of largely isolated, inflexible units with limited ability to communicate with one another or with “central systems” (the homunculus or Cartesian theater). This kind of mind would be a many-headed monster, and it would be exceedingly reasonable to question the plausibility (or even the logical coherence) of such a mental architecture.

Evolutionary psychologists did defend—and try to explain—their position to critics who misunderstood their approach. But at no point in time did evolutionary psychologists explicitly point out that they were adopting a different level of analysis than was Fodor. Instead, the debate centered around what attributes modules should have. As a result, the misunderstandings persisted.

For example, in what is probably the strongest and most recent attempt to clarify what evolutionary psychologists mean when they invoke modularity, Barrett and Kurzban (2006) explicitly rejected Fodor’s long list of attributes and instead offered (again) the simpler notion of functional specialization: “that mental phenomena arise from the operation of multiple distinct processes rather than a single undifferentiated one” (p. 628). This argument is exactly correct at a functional level of analysis and so would be the right argument to make if everyone were already clear about the level of analysis at which they were operating. However, because not everyone was clear on this issue, critics of evolutionary psychology simply continued to interpret (and therefore misunderstand) this clarification through the lens of their intentional level of analysis (for an example, see Box 4). 14

Box 4.

An Example of the Modularity Mistake

The following back-and-forth between Chiappe and Gardner (2012) and Barrett and Kurzban (2012) exemplifies the confusion between different levels of analysis in the modularity debate. In the first quote, Chiappe and Gardner criticize the Barrett and Kurzban (2006) clarification that by “module” evolutionary psychology simply means a unit of functional specialization:

Barrett and Kurzban (2006) fail to adequately deal with the challenges posed by novelty. The reason is because they attempt to deal with it using only the System 1 processes traditionally discussed by EP. Specifically, they try to reduce the problem of novelty to one that can be dealt with by relying on Sperber’s (1994) distinction between the proper and actual domain of modules. The proper domain of a module is the set of inputs that a module evolved to process. The actual domain refers to stimuli that are similar in relevant respects to the proper domain of a module. . . . However, this does not eliminate the problem of novelty . . . one cannot always rely on the lucky coincidence where a novel stimulus just happens to fit the input criteria of a module, and whose operation is going to produce a suitable response to that stimulus. In other words, sometimes we have to deal with novelty by engaging in problem solving. Sometimes we actually have to think about a problem and gain insight into it so that we can improvise a solution. We can’t rely on a prepared response produced by natural selection. This can require considerable effort and ingenuity. (Chiappe & Gardner, 2012, p. 679)

Barrett and Kurzban responded as follows:

Our view is not that the System 1/System 2 distinction is necessarily useless, at least when defined in terms of “automaticity”: for example, some kinds of processes do appear to respond to subjects’ self-reported “volition” more than others (Wegner, 2002). However, we believe that it is a mistake to think of the “automatic” systems as being the result of evolution by natural selection, and the other systems as being the result of something else. . . . If this is right, then an evolutionary “modular” view is likely to illuminate both the functions and functional design features of System 2 processes. (Barrett & Kurzban, 2012, p. 685)

In the first quote, Chiappe and Gardner are operating at the intentional level of analysis: They suggest that mechanisms limited by their inputs are not flexible enough to deal with novelty, so that instead a “one” or a “we” must “think” about the problem using “effort” and “ingenuity.” Of course, at a functional level of analysis, there are only mechanisms and their inputs. The ontology in which there exist mechanisms limited by their inputs on the one hand and a flexible agent (denoted by the personal pronouns “one” and the plural “we”) on the other is the intentional level of analysis. As a result, this is a clear instance of misunderstanding evolutionary psychology through the incorrect lens of the intentional level of analysis.

In the second quote, Barrett and Kurzban are operating at the functional level of analysis: They state that both sides of the me/not-me distinction at the intentional level of analysis have a corresponding functional-level description and that the entirety of that description is composed of evolved mechanisms. However, because they charitably switch back and forth between levels (i.e., meet Chiappe and Gardner halfway by granting that some systems are “automatic” and others are not), the difference in the level of analysis between the two sides remains obscure, even though what they say in this response is exactly correct.

In short, this is the heart of the modularity mistake: Both sides were arguing about the extent and meaning of modularity but all the while were referring to completely different levels of analysis. Such a debate has all the scientific merit of two people looking at a long, rectangular box from two different vantage points—one looking at the long side, the other at the short side—and then arguing about its dimensions.

The Consequences of the Modularity Mistake

The modularity mistake has not only prolonged what is, essentially, an unnecessary debate over what is meant by (massive) modularity. It has also actively hindered progress on what we are all ostensibly interested in doing: figuring out how the mind works. In particular, the modularity mistake has led an appreciable number of researchers (possibly even the majority of behavioral scientists) to fundamentally misunderstand the goals and worldview of evolutionary psychology. In its most charitable form, this misunderstanding has caused researchers who share the same goals as evolutionary psychologists to believe instead that the approach is something else entirely, thereby robbing both sides of opportunities for mutually informative collaboration. In its most cynical form, this misunderstanding serves either as a misdirection, used by theories that lack evolvability or computational adequacy to draw attention away from these real issues, or as a way to reassure oneself of one’s own theoretical sophistication by being “at least not as bad as those evolutionary psychologists.”

Although the harms caused by the modularity mistake specifically (let alone confusions surrounding different levels of analysis broadly) have been immeasurable, the following sections address what are to us the two broadest problematic consequences relevant specifically to evolutionary psychology. These consequences capture the heart of the modularity mistake’s impact and serve as a cautionary tale for other areas of the cognitive sciences in which confusing levels of analysis results in fundamental misunderstandings.

Misunderstanding evolutionary psychology through the lens of an intentional level of analysis

Through the incorrect intentional-level lens, evolutionary psychology has come to be seen by many researchers as an enterprise in which one is trying to demonstrate that something is an evolved mechanism by showing that it falls outside the purview of the central agency (e.g., DeSteno et al., 2002, 2006). In other words, from this viewpoint, the influence of evolution on the mind becomes (incorrectly) narrowed to just those processes that are inflexible, autonomous, and independent of “you.”

What follows logically from this flawed way of thinking is that researchers can (and should) empirically test for the influence of evolution on the mind by determining which mental phenomena fall outside of one’s control (i.e., seem “automatic” or “inflexible”). If the phenomenon is under “your” control, it is placed into the nonevolved, nonmodular bin. If instead the phenomenon is not under “your” control, then it is placed into the evolved, modular bin (e.g., see Chiappe & Gardner, 2012; DeSteno et al., 2002, 2006; MacDonald, 2008; see also Box 4). 15 Any theoretical framing in which evolution is pitted against flexibility, rationality, or conscious deliberation is invariably an example of approaching claims about evolved cognitive processes through the lens of the intentional level of analysis (for many additional examples, see the work reviewed in Goldfinch, 2015).

Evolutionary psychologists have responded to this mistaken perspective and in the process have at times acted as if they accept its premise. That is, evolutionary psychologists have sometimes adopted the habit of explicitly trying to document effects that are automatic, unconscious, mandatory, and not intuitively rational (e.g., Haley & Fessler, 2005; cf. Dear et al., 2019; for a discussion, see Jung et al., 2012). Likewise, manuscripts informed by evolutionary theorizing are frequently rejected during the review process if the results can be described as originating from a central agency (e.g., reasoning, deliberative thought).

To be clear, there is nothing inherently mistaken about documenting cognitive processes that, from an intentional-level perspective, have attributes of automaticity, irrationality, and so on. The error is to assume (and thereby perpetuate the misunderstanding) that only those processes that bear these attributes can be considered the result of evolutionary processes. There is nothing in the theoretical framework of the evolutionary social sciences that says that adaptations have to be fast, encapsulated, and automatic or have limited central access and shallow outputs, even if we consider each one of these characteristics within its appropriate level of analysis. Natural selection does not obey Jerry Fodor’s rules or, for that matter, anyone else’s. A clear and cogent application of evolutionary principles entails that the products of evolved cognitive mechanisms can, in principle, take any form and have any attributes, including the attributes of being slow, deliberative, conscious, and so on. As Barrett (2015) noted, the central mantra of adaptationism is “it depends.” In other words, the functional properties one should expect to find in adaptations and by-products of adaptations will reflect the structure of the adaptive problem that the mechanism evolved to solve. 16 That is, form follows function (Williams, 1966). How could it be otherwise?

Holding evolutionary psychology to standards of evidence appropriate only for Fodorian modularity

The consequences of the modularity mistake are not limited to a misunderstanding of the theoretical claims evolutionary psychologists make. Confusing different levels of analysis has also led to a misapplication of standards of evidence for the resulting empirical work. As outlined above, it is possible for encapsulation and automaticity—key features of Fodor’s modules—to be intrinsic properties only if one appeals to a central agency from which modules are isolated and separated, whereas at evolutionary psychology’s functional level of analysis the central agency does not exist. However, if one fails to recognize this disconnect, then one can (mistakenly) insist that evolutionary psychology’s claims of modularity be accompanied by evidence that the proposed module is intrinsically isolated and separate. In essence, this mistake then leaves one with the notion that a module is a functional mechanism that is intrinsically isolated and separated from something else—but the “something else” is left completely unspecified.

This transposition of a standard of evidence appropriate to the intentional level but incoherent at the functional level explains a very common misunderstanding: that evolutionary psychology proposes that an entire bounded computer exists for each problem that the mind is designed to solve (see Goldfinch, 2015; Fig. 6). If one misunderstands evolutionary psychology’s claims about the mind in this way, then showing that cognitive processes are shared between, for example, social exchange (e.g., Cosmides & Tooby, 1989, 1992, 2015) and coalitional psychology (e.g., Pietraszewski et al., 2014), or between coalitional psychology and theory of mind (e.g., Baron-Cohen et al., 1985; Ermer et al., 2006; Scholl & Leslie, 1999), would seemingly invalidate the entire enterprise of evolutionary psychology (for this view in action, see the work reviewed in Goldfinch, 2015).

Fig. 6.

The fallacy of separate, bounded computers. The modularity mistake invites the perception that there are separate bounded computers responsible for each phenomenon studied by evolutionary psychologists (e.g., Barrett, 2016; Boyer et al., 2015; Boyer & Liénard, 2006; Cosmides et al., 2018; Ermer et al., 2006; Lopez et al., 2011; New et al., 2007).