Abstract

Face recognition has become a significant challenge as an increasing number of people wear masks to avoid infection with the novel coronavirus (COVID-19), and because of the virus's rapid spread the problem has attracted growing attention. The technique proposed in this paper seeks to detect unconstrained generic actions in video. Conventional anomaly detection is difficult in this setting because the need for real-time processing rules out the direct use of computationally expensive features, and activities must be located and classified even before they have been fully observed. This paper proposes an extended Mask R-CNN (Ex-Mask R-CNN) architecture that addresses these issues, achieving high accuracy by using robust convolutional neural network (CNN)-based features. The technique consists of two steps. First, a video surveillance algorithm determines whether or not a person is wearing a mask. Second, a multi-CNN classifier predicts suspicious (conventionally anomalous) behaviour of the people in each frame. Experiments on challenging datasets indicate that our approach outperforms state-of-the-art online conventional anomaly detection systems while maintaining the real-time efficiency of existing classifiers.

Keywords: Mask R-CNN, Image detection, Video surveillance, Resnet-152, Apache MXNet

1. Introduction

Numerous strategies have been attempted to control the spread of the coronavirus in view of the increasing number of cases, and Artificial Intelligence (AI) is frequently employed in this effort. Keeping social distance and wearing masks have become common practice. However, in the rush to achieve rapid results, certain factors were compromised and important capabilities of AI were not exploited. The World Health Organization (WHO) has declared mask use mandatory during the COVID-19 pandemic (McIntosh, Hirsch, & Bloom, 2020), and the use of face masks in public places has risen accordingly. Before the pandemic, face masks were worn temporarily or for personal reasons; researchers have noted a marked increase in mask use during COVID-19 (Feng et al., 2020, Pradhan et al., 2020). The notation used in Algorithms 1 and 2 is summarized in Table 1.

Table 1.

Notation of Algorithm 1 and Algorithm 2.

| Symbol | Description |
|---|---|
| I | image dataset |
| LABEL | label on the image |
| MASK | person in image wearing a mask |
| ResNet-152 | backbone architecture |
| F | features from image I |
| RPN | region proposal network |
| R | region |
| ROIW | region of interest warping |
| IN | interesting regions |
| HOG | Histogram of Oriented Gradients (HOG) classifier |
| NB | nonlinear Bayesian filter |
| ROI | region of interest |
| A | annotations |
| MCNN | multi-CNN |

This epidemic has resulted in unprecedented levels of scientific cooperation throughout the world. Machine learning and deep learning algorithms powered by artificial intelligence may play a significant role in combating the pandemic, since machine learning techniques facilitate the analysis of the large volumes of data generated by COVID-19 research (Loey, Manogaran, Taha, & Khalifa, 2021).

Detecting suspicious persons by observing their behaviour requires emerging technologies such as artificial intelligence (AI), the Internet of Things (IoT), big data, and machine learning (Phule & Sawant, 2017).

In this paper, we present a method for detecting face masks based on Mask R-CNN with ROI warping and a ResNet-152 backbone, and we implement and evaluate the proposed model using Apache MXNet. Additionally, we devise an algorithm for detecting anomalous human behaviour. The model can also be used in conjunction with video surveillance systems to detect individuals wearing masks. Our work targets high accuracy together with short running times for both system training and recognition.

We examine mask detection using the proposed technique, which identifies whether or not people are wearing face masks in real images or videos collected by IoT-based cameras. Following that, the proposed Ex-Mask R-CNN method is applied together with standard anomaly detection to detect humans. The motivation for this work is the increase in social gatherings during the COVID-19 pandemic.

We propose Ex-Mask R-CNN to verify human face masks and to perform conventional anomaly detection using real images or videos. The paper's primary contributions are as follows:

1. Recognizing human face masks in image and video datasets, which helps locate individuals who are not wearing a mask during the COVID-19 epidemic.
2. A face mask detection approach based on Mask R-CNN with ROI warping and a ResNet-152 backbone.
3. Applying conventional anomaly detection techniques to image and video datasets, which can aid social distancing during COVID-19.
4. Conventional anomaly detection using an optical-flow stacked difference image (OFSDI).

The novelty of our approach is that it combines end-to-end feature extraction with machine learning algorithms for recognizing facial masks. The paper is structured as follows: Section 2 summarizes the reviewed literature, Section 3 presents the proposed model, Section 4 describes and analyses the experiments, and Section 6 concludes with a discussion of prospective future work.

2. Related works

The purpose of this paper is to enable automatic action recognition in surveillance systems in order to help people with alerting, retrieval, and summarization of data (Parwez et al., 2017, Shahroudy et al., 2016, Vu et al., 2018). Our work uses CNNs to detect actions in video surveillance systems. Earlier approaches detected actions using silhouette or shape sequences. Motion Energy Images (MEI) and Motion History Images (MHI) are the most prevalent appearance-based temporal features (Brox et al., 2004, Lowe, 2004). Their main advantages are speed, simplicity, and the ability to function in controlled settings. The drawback of MHI is that it cannot record inner motions; only the human silhouette is captured (Vu et al., 2018). The active shape model, the prior motion model, and the learned prior dynamic model are all appearance-based temporal approaches. Additionally, movement is consistent, and some features follow a distinct space-time trajectory, which makes them straightforward to characterize. Several methods track the motion trajectories of predefined regions or specific parts of the human body for action recognition (Ali et al., 2007, Fathi and Mori, 2008).

Local spatiotemporal feature-based approaches compute appearance-based descriptors (for example, HOG (Dalal & Triggs, 2005), Cuboids (Dollár, Rabaud, Cottrell, & Belongie, 2005), or SIFT (Lowe, 2004)) in addition to features on spatiotemporal interest-point trajectories (for example, optical flow (Brox et al., 2004), HoF (Dalal, Triggs, & Schmid, 2006), or MBH (H. Wang …)). Hidden Markov models (HMMs), dynamic Bayesian networks (DBNs), and dynamic time warping (DTW) are also used to account for variations in the speed at which actions are performed. In particular, when actions of multiple types must be detected, their appearance and motion patterns vary considerably. The results obtained with deep architectures provide a foundation for training deep CNNs to classify actions.

Numerous researchers have attempted to detect facial masks (Dhiman and Vishwakarma, 2019, Mukhopadhyay, 2015, Pezzini and Padovani, 2020, Wang et al., 2020, Rahman et al., 2020, Chen et al., 2020, Chavda, Dsouza, Badgujar, & Damani, 2020, Chen et al., 2020). Wang, Zheng, Peng, De, and Song (2014) studied the distributed detection of deviant behaviour in intelligent environments. B. Wang, Ye, Li, and Zhao (2011) pioneered the use of size-adapted spatiotemporal features to detect anomalous crowd behaviour.

Elsayed, Mohamed Marzouky, Atef, and Salem (2019) discussed conventional anomaly detection in video surveillance. B. Zhang et al. (Zhang, Wang, Wang, Qiao, & Wang, 2016) demonstrated real-time action detection with a CNN. Jin (2017) demonstrated the detection of activities on a real-world surveillance video dataset. However, to our knowledge, no technique can accurately detect the actions of people wearing masks in real time, and there is no uniform methodology for assessing the security risk associated with people wearing masks.

The proposed approach for action detection is based on a two-stage framework. The crucial distinction from earlier two-stage approaches is that their networks are optimized for a single actor and produce inaccurate predictions when several actors are present. Our integration of appearance-based temporal features is also distinct, and the proposed approach can detect the activities of multiple actors who are wearing masks. We offer a face mask detection method based on Mask R-CNN with ROI warping and a ResNet-152 backbone. Below is the mathematical formulation of ResNet that underlies the proposed method (Yin, Li, Zhang, & Wang, 2019).

ResNet with Path-Integral Formula (Yin et al., 2019).

| (1) |

Given , Eq. (1) can be rewritten as follows:

| (2) |

In Eq. (2),. Under the influence of ReLU, k applied to f leads to or 0 depending on u(x). As such, we can define. Using the inverse discrete Fourier transform, the functions can be expressed in the frequency domain as follows:

| (3) |

| (4) |

Substituting Eq. (4) into Eq. (2) gives:

| (5) |

Based on the assumption that the skip-connection weights are much larger than the convolution kernel, we have such that and, leading to:

| (6) |

With the definition of the Hamiltonian, Eq. (6) can be rewritten as:

| (7) |

This corresponds to Eq. (15), which defines the short-time propagator. In essence, H defines the energy of a specific path. After N residual convolution steps, the output of the network can be formulated as:

| (8) |

In Eq. (8), we define, where =. This implies that summing along every path leads to. More strictly speaking, is the functional integral over trajectory functions. Analogously, can be derived. Eq. (8) can be equivalently written as follows:

| (9) |

Comparing Eq. (9) with Eq. (16), Eq. (9) can be regarded as the phase-space path integral of ResNet. Given the mathematical equivalence between residual convolution and PDEs discussed in Eq. (18), the residual convolution results in in the frequency domain. Obviously, corresponds to:

| (10) |

The second-order form of the Hamiltonian H guarantees that it can be integrated by an inverse Fourier transform over the frequency p, so that a path-integral formula in position space can be obtained:

| (11) |

By defining, with kinetic energy T and potential energy V, the Lagrangian L = T - V can be obtained:

| (12) |

| (13) |

As such, the evolution of ResNet can be written in the form of an integral over the action S:

| (14) |

This formulation is equivalent to the Feynman path integral formulation in Eq. (17) and is regarded as the path integral formulation of ResNet, which helps us better understand the ResNet architecture. The ResNet output is obtained by adding together the contributions along all paths through which information flows. The contribution of a path is proportional to $e^{iS}$, where the action S is the time integral of the Lagrangian along the path. The Lagrangian is defined from the kinetic energy Eq. (14) and the potential energy Eq. (13), i.e.:

| (15) |

| (16) |

| (17) |

| (18) |

Equations (1) to (18) give the Feynman path integral formulation of ResNet and show how ResNet works mathematically. Under this formulation, the final state is determined by combining the contributions of all paths in the configuration space. The contribution of a path is proportional to $e^{iS}$, where S is the action, given by the time integral of the Lagrangian L along the path.
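Two properties used in the path-integral view can be checked numerically in a heavily simplified setting. The NumPy sketch below is our own illustration, not Yin et al.'s construction: it assumes 1-D signals, linear residual branches (ReLU omitted), circular boundary conditions, and arbitrary kernel and weight values. Part (a) checks that one residual convolution step f + k * f (circular convolution) multiplies each Fourier mode p by 1 + K(p), where K is the DFT of the kernel; part (b) checks that a stack of n linear residual blocks equals the sum of the 2^n path contributions, where each path either skips a block or passes through its branch.

```python
import itertools

import numpy as np

rng = np.random.default_rng(0)

# (a) One linear residual step f + k (*) f with circular convolution (ReLU omitted).
n = 16
f = rng.standard_normal(n)
k = np.zeros(n)
k[0], k[1], k[-1] = -0.2, 0.1, 0.1  # assumed symmetric 3-tap kernel, wrap-around indexing

conv = np.array([sum(k[j] * f[(i - j) % n] for j in range(n)) for i in range(n)])
spatial = f + conv

# The same step in the frequency domain: every mode p is scaled by (1 + K(p)).
K = np.fft.fft(k)
frequency = np.fft.ifft((1.0 + K) * np.fft.fft(f)).real
print("frequency-domain step matches:", np.allclose(spatial, frequency))

# (b) A stack of linear residual blocks x <- x + W_i x equals a sum over 2**n paths.
d, n_blocks = 4, 3
Ws = [0.1 * rng.standard_normal((d, d)) for _ in range(n_blocks)]  # assumed random branch weights
x = rng.standard_normal(d)

y = x.copy()
for W in Ws:
    y = y + W @ y

total = np.zeros(d)
paths = list(itertools.product([False, True], repeat=n_blocks))
for path in paths:
    z = x.copy()
    for through_branch, W in zip(path, Ws):
        if through_branch:  # otherwise the signal takes the skip (identity) connection
            z = W @ z
    total += z

print(len(paths), "paths; path sum matches:", np.allclose(y, total))
```

With nonlinear branches the exact path decomposition in part (b) no longer holds, which is why the sketch drops ReLU.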

2.1. Problem identification

After a detailed study of the literature, the following experimental setup was identified, covering the testing and training environment, the dataset names, and the training and testing data.

a. Testing and training environment: The system was implemented in Python on a laptop with a 15.6 in HD WLED touch screen (1366×768) with 10-finger multi-touch support, a 10th Generation Intel Core i7-1065G7 processor (1.3 GHz, up to 3.9 GHz), 8 GB DDR4 SDRAM at 2666 MHz, a 512 GB SSD, no optical drive, Intel Iris Plus Graphics, HD audio with stereo speakers, an HP True Vision HD camera, and a Realtek RTL8821CE 802.11b/g/n/ac wireless adapter, running 64-bit Windows 10.

b. Dataset names: ICVL dataset, KTH dataset, SGSITS College (custom dataset), Indore Railway Station (custom dataset), Guwahati Railway Station (custom dataset), New Delhi-Howrah Train (custom dataset), and a video dataset (custom dataset).

c. Dataset for training:
   - 2500 images, each approximately 3 MB in size
   - 8 h of real-time video

d. Dataset for testing:
   - 1500 images
   - 2 h of real-time video

3. Proposed work

This work addresses the real-time detection of individual actions in video surveillance systems. Human activity regions are detected using a nonlinear Bayesian filter. Three CNNs classify three categories of cues, namely shape, motion history, and combined cues. The classifier predicts an output for each region based on the sub-action descriptor.

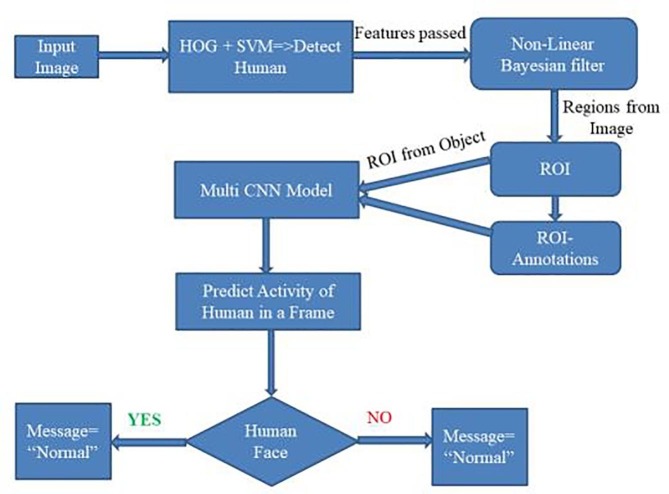

Motivated by the increase in social gatherings during COVID-19, we propose Ex-Mask R-CNN to verify human face masks and perform conventional anomaly detection using live images or videos. Fig. 1 depicts the proposed architecture, which includes mask identification and conventional detection of anomalous human behaviour, and shows the general design of the real-time conventional anomaly detection model.

Fig. 1.

Proposed Architectural Diagram.

The classifiers' outputs are post-processed to obtain the final results and judgments. Three CNNs are fed appearance-based temporal features of Regions of Interest (ROIs), which are obtained with motion-detection, human-detection, and multiple-tracking algorithms. The CNNs make predictions from shape, motion-history, and combined cues. The sub-action descriptor subdivides each action into three sub-action categories, which provides a comprehensive description of human activity. Fig. 1 depicts the complete proposed architecture, including mask identification and conventional human anomaly detection, and Fig. 2 details the image-processing (training) part of Fig. 1.

Fig. 2.

Facial Mask Detection with Instance Segmentation using Extended Mask R-CNN.

The main features of the Extended Mask R-CNN are:

- Finding the region of interest by warping instead of pooling.
- Generating the region matrix using P, as defined in Algorithm 1.
- Introducing interpolation when finding the proposal region.
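The ROI warping step in the list above can be sketched as follows. This is a minimal single-channel NumPy illustration, assuming ROI coordinates (x1, y1, x2, y2) already expressed in feature-map units and one bilinear sample at the centre of each output cell; the function names and the default 7×7 output size are assumptions, not details from the paper. Unlike ROI pooling, the ROI boundaries are never rounded to integer cells.

```python
import numpy as np

def bilinear_sample(fmap: np.ndarray, y: float, x: float) -> float:
    """Bilinearly interpolate a 2-D feature map at a real-valued location (y, x)."""
    h, w = fmap.shape
    y = min(max(y, 0.0), h - 1.0)  # clamp to the map borders
    x = min(max(x, 0.0), w - 1.0)
    y0, x0 = int(np.floor(y)), int(np.floor(x))
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    dy, dx = y - y0, x - x0
    top = (1 - dx) * fmap[y0, x0] + dx * fmap[y0, x1]
    bottom = (1 - dx) * fmap[y1, x0] + dx * fmap[y1, x1]
    return float((1 - dy) * top + dy * bottom)

def roi_warp(fmap: np.ndarray, roi, out_size=(7, 7)) -> np.ndarray:
    """Crop roi = (x1, y1, x2, y2), in feature-map units, and warp it to out_size."""
    x1, y1, x2, y2 = roi
    out_h, out_w = out_size
    out = np.empty(out_size)
    for i in range(out_h):
        for j in range(out_w):
            # No rounding of the ROI: sample the centre of each output cell.
            y = y1 + (i + 0.5) * (y2 - y1) / out_h
            x = x1 + (j + 0.5) * (x2 - x1) / out_w
            out[i, j] = bilinear_sample(fmap, y, x)
    return out

feature_map = np.tile(np.arange(10.0), (10, 1))  # toy map: value equals the column index
warped = roi_warp(feature_map, (1.0, 2.0, 8.0, 6.0), out_size=(4, 7))
print(warped.shape)  # (4, 7)
print(warped[0])     # [1.5 2.5 3.5 4.5 5.5 6.5 7.5]
```

Because the toy map is a linear ramp, bilinear interpolation reproduces the sampled column positions exactly, which makes the behaviour easy to check.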

3.1. Sub-action descriptor

Unlike a geometric measurement problem (e.g., measuring an image or camera motion), describing an activity is not a well-defined problem. To provide extensive information on human activities and to clarify action information, this research models an action using a sub-action descriptor. The descriptor has three levels: posture, mobility, and gesture. A line connecting two sub-actions at different levels denotes that they are independent of one another, whereas no connection implies that the two sub-actions are incompatible. Each level has its own CNN; hence, for each action performed by an individual, three CNNs operate concurrently.

The design is further enhanced by adding a fourth proposed network, the Optical-Flow Stacked Difference Image (OFSDI). The descriptor is constructed so that it subdivides the general problem of action recognition into several simple, multi-level sub-action identification problems. It is motivated by the observation that a large number of activities arise from a relatively small number of discrete sub-actions. Combined, the three levels can express a wide variety of actions with a high degree of freedom.

3.2. Multi-CNN action classifier

During the training phase, the ROI and sub-action annotations are manually determined for each frame of the training videos, and the ROI is then used to compute three appearance-based temporal features: Binary Difference Image (BDI), Motion History Image (MHI), and Weighted Average Image (WAI).

BDI: Binary Difference Image.

The BDI feature captures the actor's static shape cue in two-dimensional frames; it is denoted b(x, y, t) and defined by Eq. (19):

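$$
b(x,y,t) = \begin{cases} 255, & \text{if } \lvert f(x,y,t) - f(x,y,t_0) \rvert > \xi \\ 0, & \text{otherwise} \end{cases} \tag{19}
$$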

where the BDI value is set to 255 if the difference between the current frame f(x, y, t) and the background frame f(x, y, t0) of the input video exceeds a threshold ξ and to 0 otherwise, and x and y are indexes in the image domain. The BDI is therefore a binary image that shows the silhouette of the posture.
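A minimal NumPy sketch of the BDI for grayscale frames follows. The threshold value ξ = 30 (the `xi` argument) and the synthetic frames are assumptions for illustration only.

```python
import numpy as np

def binary_difference_image(frame: np.ndarray, background: np.ndarray, xi: int = 30) -> np.ndarray:
    """BDI: 255 where |f(x, y, t) - f(x, y, t0)| > xi, otherwise 0 (xi is an assumed value)."""
    # Use a signed type so that subtracting uint8 frames cannot wrap around.
    diff = np.abs(frame.astype(np.int16) - background.astype(np.int16))
    return np.where(diff > xi, 255, 0).astype(np.uint8)

background = np.full((5, 5), 40, dtype=np.uint8)  # synthetic grey background frame f(x, y, t0)
frame = background.copy()
frame[1:4, 2:4] = 200                              # an "actor" occupying a 3x2 block
bdi = binary_difference_image(frame, background)
print(int((bdi == 255).sum()))  # 6 silhouette pixels
```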

MHI: Motion History Image.

In a motion history image, the intensity of each pixel is a function of the temporal history of motion at that point. The MHI captures the actor's motion history patterns, is denoted h(x, y, t), and is defined in Eqs. (20)–(22) using a simple replacement and decay operator.

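$$
D(x,y,t) = \begin{cases} 1, & \text{if } \lvert f(x,y,t) - f(x,y,t-1) \rvert > \xi \\ 0, & \text{otherwise} \end{cases} \tag{20}
$$

$$
h(x,y,t) = \begin{cases} 255, & \text{if } D(x,y,t) = 1 \\ \max\bigl(0,\, h(x,y,t-1) - \delta\bigr), & \text{otherwise} \end{cases} \tag{21}
$$

$$
\delta = \frac{255}{n} \tag{22}
$$

Here ξ is a threshold on the frame difference, and D(x, y, t) marks the pixels that changed between consecutive frames.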

The MHI is used to measure locomotion, which includes standing, walking, and running. It is formed in Eq. (20) by subtracting the current frame f(x, y, t) from the previous frame f(x, y, t-1). The MHI at time t is computed from the MHI of the preceding frame, so this temporal feature does not need to be recalculated over the entire set of frames. In Eq. (22), n denotes the number of frames taken into account when computing the motion history. The hyperparameter n therefore sets the temporal range of an action: an MHI with a large n covers a long action history but is insensitive to current activity, whereas an MHI with a small n emphasizes recent actions and overlooks earlier ones. Selecting a suitable n can therefore be challenging.
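The replacement-and-decay update can be sketched as below, assuming the common motion-history formulation of Bobick and Davis, in which pixels that changed between consecutive frames are set to 255 and all other pixels decay by 255/n per frame. The values n = 4 and ξ = 30 (`xi`) are illustrative assumptions, and `update_mhi` is a hypothetical helper name.

```python
import numpy as np

def update_mhi(mhi, frame, prev_frame, n=4, xi=30):
    """One MHI update: moving pixels are set to 255, others decay by delta = 255 / n."""
    delta = 255.0 / n
    # Motion mask from the difference between consecutive frames.
    moving = np.abs(frame.astype(np.int16) - prev_frame.astype(np.int16)) > xi
    # Replacement for moving pixels, linear decay (floored at 0) elsewhere.
    return np.where(moving, 255.0, np.maximum(mhi - delta, 0.0))

# Synthetic sequence: a bright pixel moves one column to the right per frame.
frames = []
for t in range(5):
    frame = np.zeros((1, 8), dtype=np.uint8)
    frame[0, t] = 200
    frames.append(frame)

mhi = np.zeros((1, 8))
for prev, cur in zip(frames, frames[1:]):
    mhi = update_mhi(mhi, cur, prev)
print(mhi[0])  # older positions have lower intensity, which encodes the direction of motion
```

Each call needs only the previous MHI and two frames, so the feature is updated incrementally, as the text notes; a larger `n` makes the trail of older positions fade more slowly.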

WAI: Weighted Average Image.

Weighted Average Images (WAIs) are used at the gesture level of the sub-action descriptor, which includes inactivity, texting, and smoking. The simplest way to recognize subtle actions (e.g., texting and smoking) would be to examine the actor's shape or motion history alone. The disadvantages of this approach are that it cannot capture detailed information about minor actions and that it is sensitive to background movement, such as camera shake. Combining the shape and motion-history cues yields a spatiotemporal feature for subtle actions. The WAI, denoted s(x, y, t), is constructed as a linear combination of the BDI and MHI, as given by Eq. (23):

$$
s(x,y,t) = w_1\, b(x,y,t) + w_2\, h(x,y,t) \tag{23}
$$

The information in the WAI is not entirely lost even as activities become more intricate. The weight vector w = [w1, w2]^T is an additional hyperparameter.
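The WAI of Eq. (23) is a per-pixel weighted sum of the BDI and MHI. In the sketch below, the weights w1 = w2 = 0.5 are assumed values (the paper treats w as a hyperparameter), and the result is clipped back to the 8-bit range.

```python
import numpy as np

def weighted_average_image(bdi: np.ndarray, mhi: np.ndarray, w=(0.5, 0.5)) -> np.ndarray:
    """WAI s = w1 * b + w2 * h; the default weights are assumed, not taken from the paper."""
    w1, w2 = w
    s = w1 * bdi.astype(np.float64) + w2 * mhi.astype(np.float64)
    return np.clip(s, 0, 255).astype(np.uint8)  # keep the result a valid 8-bit image

bdi = np.array([[255, 0], [0, 0]], dtype=np.uint8)
mhi = np.array([[255, 128], [0, 0]], dtype=np.uint8)
print(weighted_average_image(bdi, mhi))
```

A pixel that is both part of the current silhouette and recently moving stays at full intensity, while a pixel with only motion history contributes at half weight.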

Three appearance-based temporal features are derived from human behaviour for the BDI, MHI, and WAI CNNs. The first CNN takes the BDI as input and captures the shape of the actor. The second uses the MHI to capture the actor's motion history. The third, based on the WAI, captures both the actor's motion history and shape. The CNNs classify actions, and each CNN is trained in turn. Selecting a CNN architecture is difficult because the right choice depends heavily on the application. To achieve fast computation, we retain a lightweight CNN architecture suited to real-time human action detection. All CNNs have identical architectures, except that the number of output units of each CNN equals the number of sub-actions at its descriptor level. A softmax regression layer is used as the final layer.

3.3. Post processing

The post-processing stage revises the predictions that the multi-CNN action classifier makes for each action. As indicated in Fig. 1, two connected sub-actions at different levels of the descriptor are independent, while no connection indicates that the two sub-actions are incompatible. Because the multi-CNN classifier makes its predictions from a single frame, the links in the sub-action descriptor are used to validate those predictions (Jin, 2017).
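One way to apply the descriptor links during post-processing is to choose the most probable combination of sub-actions among the compatible ones only. The sketch below uses the mobility and gesture sub-actions named in Section 3.2 and omits the posture level for brevity; the compatibility set `COMPATIBLE` is hypothetical, and scoring a pair by the product of the two softmax probabilities assumes the CNN outputs are independent.

```python
import itertools

import numpy as np

LOCOMOTION = ["standing", "walking", "running"]  # mobility level, sub-actions named in Section 3.2
GESTURE = ["inactivity", "texting", "smoking"]   # gesture level, sub-actions named in Section 3.2

# Hypothetical descriptor links: a (locomotion, gesture) pair missing from this set is incompatible.
COMPATIBLE = {
    ("standing", "inactivity"), ("standing", "texting"), ("standing", "smoking"),
    ("walking", "inactivity"), ("walking", "texting"), ("walking", "smoking"),
    ("running", "inactivity"),
}

def validate(p_locomotion, p_gesture):
    """Return the most probable compatible (locomotion, gesture) pair.

    The softmax outputs of the two CNNs are treated as independent, so a pair is
    scored by the product of its two probabilities (an assumption of this sketch).
    """
    best_pair, best_score = None, -1.0
    for (i, a), (j, b) in itertools.product(enumerate(LOCOMOTION), enumerate(GESTURE)):
        score = p_locomotion[i] * p_gesture[j]
        if (a, b) in COMPATIBLE and score > best_score:
            best_pair, best_score = (a, b), score
    return best_pair

p_locomotion = np.array([0.10, 0.40, 0.50])  # per-CNN argmax would pick "running"
p_gesture = np.array([0.30, 0.60, 0.10])     # per-CNN argmax would pick "texting"
print(validate(p_locomotion, p_gesture))     # ('walking', 'texting')
```

Taking each CNN's argmax independently would give ("running", "texting"), which has no link in this hypothetical descriptor, so the validation step falls back to the best compatible pair.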

3.4. Algorithmic design

We propose two methods for detecting suspicious actions performed by people wearing masks in video frames. First, the Extended Mask R-CNN algorithm (Ex-Mask R-CNN) detects individuals wearing masks. Second, a technique called the Optical-Flow Stacked Difference Image (OFSDI) determines whether an individual is engaging in suspicious activity. Both algorithms are described in the subsections that follow.

3.4.1. Facial mask detection with instance segmentation using Extended Mask R-CNN

The approach presented here extends the Mask R-CNN framework (He, Gkioxari, Dollár, & Girshick, 2020), a state-of-the-art method for object detection, with the aim of increasing accuracy at several stages of detection. The approach has two branches: one detects the face, and the other performs face and background segmentation on the given image. ResNet-152 extracts facial features from the input image. ROI warping is then applied to the feature map to preserve precise spatial coordinates and to output a fixed-size feature map. In the detection branch, the bounding box is located and classification is performed. In the segmentation branch, a Fully Convolutional Network (FCN) (Long et al., 2014) creates the corresponding facial mask on the image.

The entire model is trained with Apache MXNet, which significantly reduces the time required to train Ex-Mask R-CNN; the dotted outer line in Fig. 2 represents Apache MXNet. The region-based detectors on which Mask R-CNN (He et al., 2020) builds use ROI pooling, whose disadvantage is that spatial resolution is lost before the features are passed to the fully connected and softmax layers. ROI Align was then introduced in Mask R-CNN (He et al., 2020), although ROI Align performs better on datasets with smaller bounding boxes and fewer recognized objects. To address this issue, our model applies ROI warping to the ResNet-152 features in place of ROI pooling (or the ROI Align of Mask R-CNN); ROI warping crops a specific ROI on the feature map and warps it to a fixed dimension. The distinction between ROI warping and ROI Align is that warping modifies the contour of the feature map: it uses bilinear interpolation to stretch or shrink the region to the same fixed dimension that ROI Align produces.

Algorithm 1: Ex-Mask R-CNN.

Data: image I. Result: the face-mask pixels are masked by the algorithm.


Algorithm 1 lists the steps involved in implementing the proposed Ex-Mask R-CNN. The algorithm initializes Apache MXNet in step 1 and starts Ex-Mask R-CNN in step 2. In steps 3–5, ResNet-152 extracts features from image I and returns them as F. In steps 6–8, the region proposal network returns a list of candidate regions, which are passed to ROI warping; ROI warping crops and warps them and returns the regions of interest. The mask is obtained in step 11, and the algorithm terminates at step 12.

Numerical computation in step 9 of Algorithm 1: calculating the region of interest P.

P is computed because we are interested only in the region of the frame that contains the object of interest. In P, x and y are the locator coordinates associated with Q, and the value of P gives the intersection over union (IoU), which enables the algorithm to select only the regions whose IoU is greater than a defined threshold.
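The IoU-based selection of regions in step 9 can be sketched as follows. Boxes are (x1, y1, x2, y2); treating Q as a single reference box and using a threshold of 0.5 are assumptions, since the paper does not give them, and `select_regions` is a hypothetical name.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def select_regions(proposals, reference, threshold=0.5):
    """Keep only proposals whose IoU with the reference box exceeds the threshold."""
    return [p for p in proposals if iou(p, reference) > threshold]

reference = (10, 10, 50, 50)
proposals = [(12, 12, 48, 52), (30, 30, 70, 70), (100, 100, 120, 120)]
print(select_regions(proposals, reference))  # [(12, 12, 48, 52)]
```

Here the first proposal overlaps the reference with IoU of about 0.82, the second only about 0.14, and the third not at all.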

Numerical computation in step 5 of Algorithm 2: the Bayesian filter takes the feature x as input, where t denotes the time instance, k is a constant ranging from 1 to n, z_t is the output feature, and w_t is the weight. It returns a matrix that gives the regions for annotation. This annotation-region matrix is then updated over time t with a given update rate, which returns a matrix containing the motion feature O. This motion-feature matrix supports the final decision on whether the motion of an action is normal or abnormal.

3.4.2. Optical-flow stacked difference image (OFSDI)

The original Mask R-CNN (He et al., 2020) uses a Kalman filter, which we replace with a nonlinear Bayesian filter. The Kalman filter has two important limitations:

- It makes the assumption that both the system and observation models are linear, which is not true in many real-world circumstances.
- It makes the assumption that state beliefs are Gaussian distributed.
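The paper does not say which nonlinear Bayesian filter it uses. As one common example, the sketch below implements a bootstrap particle filter, which makes neither the linearity assumption nor the Gaussian-belief assumption listed above. The 1-D motion model, the range-style measurement sqrt(x^2 + h^2), the prior, and all noise levels are illustrative assumptions, not the paper's models.

```python
import numpy as np

def bootstrap_particle_filter(observations, n_particles=1000, h=2.0, q=0.3, r=0.5, seed=0):
    """Estimate x_t for the assumed model
         x_t = x_{t-1} + 1 + N(0, q^2)          (motion model)
         z_t = sqrt(x_t^2 + h^2) + N(0, r^2)    (nonlinear range measurement)
    """
    rng = np.random.default_rng(seed)
    particles = rng.normal(5.0, 1.0, n_particles)  # assumed prior belief around x_0 = 5
    estimates = []
    for z in observations:
        # Predict: push every particle through the motion model.
        particles = particles + 1.0 + rng.normal(0.0, q, n_particles)
        # Update: weight particles by the likelihood of z (log space for numerical stability).
        predicted = np.sqrt(particles**2 + h**2)
        log_w = -0.5 * ((z - predicted) / r) ** 2
        weights = np.exp(log_w - log_w.max())
        weights /= weights.sum()
        estimates.append(float(np.sum(weights * particles)))  # posterior mean
        # Resample so that particles concentrate where the likelihood is high.
        particles = particles[rng.choice(n_particles, size=n_particles, p=weights)]
    return np.array(estimates)

# Simulated track: true positions 6, 7, ..., 25 seen through the noisy range sensor.
rng = np.random.default_rng(42)
truth = 5.0 + np.arange(1, 21)
observations = np.sqrt(truth**2 + 4.0) + rng.normal(0.0, 0.5, truth.size)
estimates = bootstrap_particle_filter(observations)
print("mean absolute error:", np.abs(estimates - truth).mean())
```

The belief is represented by samples rather than a mean and covariance, so the same loop works for any motion and measurement functions that can be evaluated per particle.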

The proposed appearance-based features are incorporated into the OFSDI CNN. Combining local, global, and spatiotemporal information yields a robust and discriminative feature from infrared action data. The OFSDI architecture is depicted in Fig. 3, where FP denotes the feature passed. Algorithm 2 describes the OFSDI approach.

Fig. 3.

Flow for human activity detection in video frame.

Algorithm 2: Activity Algorithm: OFSDI.


Algorithm 2 begins at step 1, and the dataset is loaded in step 2. Step 3 iterates over each image in the dataset. In step 4, image I is processed by a Histogram of Oriented Gradients (HOG) descriptor combined with a Support Vector Machine (SVM) to extract the image features F. In step 5, the nonlinear Bayesian filter uses F to refine positions and returns the regions of interest (ROI). In step 6, annotations are generated over these regions. The annotated regions are then fed to the appearance-based multi-CNN (MCNN) models to detect suspicious human activity. In step 8, behaviour is classified as normal or abnormal, and the algorithm terminates at step 9. Table 1 lists the notation used in Algorithms 1 and 2.

4. Experimental results

This section evaluates the recognition rate and processing time of the proposed approach for real-time conventional anomaly detection in surveillance videos. We investigate the systematic estimation of several hyperparameters associated with the appearance-based temporal features, and we evaluate the proposed OFSDI, a CNN-based technique built on these features. Conventional anomaly detection results are demonstrated on the ICVL dataset, which is the only dataset suited to conventional anomaly identification for multiple individuals in surveillance videos. The processing time covers the localization and recognition of the actors in each video. We also apply the proposed method to the KTH dataset (Schüldt, Laptev, & Caputo, 2004) to compare its performance with several current methods.

We collected action recognition data covering different human actions from different sources, in two forms: images and videos. The data were collected in four cases: SGSITS College events in Indore (first case), the Indore Railway Station (second case), the Guwahati Railway Station (third case), and the Indore Railway Station, Indore (Madhya Pradesh), India (fourth case). From these four cases, we have 2500 training images, each approximately 3 MB in size, and 1500 testing images. To detect activity, we recorded a video dataset in full HD quality comprising 10 h of real-time footage, of which 8 h were used for training and 2 h for testing. The experimental results indicate that combining the OFSDI appearance-based temporal features with a multi-CNN classifier enables more accurate detection in surveillance videos. The next subsection describes the dataset used in the experiments in detail.

4.1. Dataset description

We used two types of datasets, an image dataset and a video dataset; both are used for human object detection with mask detection and for activity analysis. The image dataset corresponds to four distinct scenarios: images obtained from SGSITS College events in Indore (first case), the Indore Railway Station (second case), the Guwahati Railway Station (third case), and the Indore Railway Station (fourth case). The Splendid New Delhi-Howrah Train and video datasets are divided into three categories: the first includes videos collected at SGSITS College events in Indore; the second includes data collected at the Indore Railway Station and the Guwahati Railway Station; and the third includes data collected at ICVL. The data are split into a training set and a testing set with an 80:20 training-to-testing ratio.

4.2. Hardware/software used for implementation

The implementation was written in Python and run on a laptop with a 15.6 in HD WLED touch screen (1366×768) with 10-finger multi-touch support, a 10th Generation Intel Core i7-1065G7 processor (1.3 GHz, up to 3.9 GHz), 8 GB DDR4 SDRAM at 2666 MHz, a 512 GB SSD, no optical drive, Intel Iris Plus Graphics, HD audio with stereo speakers, an HP True Vision HD camera, and a Realtek RTL8821CE 802.11b/g/n/ac wireless adapter, running 64-bit Windows 10. The following Python libraries were used during implementation: NumPy, Pandas, Matplotlib, and SciPy.

Evaluation Metrics.

We quantify results with video-level average precision (video-AP), which provides an informative measure for action detection in video-based evaluation. Under video-AP, a detection is counted as correct if it satisfies the frame-AP condition in the spatial domain (sufficient intersection with the ground truth) and if the fraction of frames correctly predicted for a single action exceeds the temporal threshold τ.

Additionally, the Mean Average Precision (mAP) for all action categories at video-based measurement is used to evaluate the presented approach, as the number of activities visible in a single video is limited, and the distribution of instances within each category is significantly unbalanced on the test and validation sets. In all approaches and throughout experiments, an intersection-over-union threshold of σ = 0.5 and an intersection-over-frames threshold of τ = 0.5 were used.
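A simplified sketch of the video-AP computation follows. It assumes that each detection and ground-truth tube is a dictionary from frame index to box, that the temporal criterion is the fraction of frames (over the union of both tubes) whose boxes reach IoU ≥ σ, and that AP is the non-interpolated average of precision at each true positive; these are our assumptions about details the text leaves open, and the function names are hypothetical. The thresholds σ = τ = 0.5 are the values stated above, and the mAP reported in the paper would be the mean of the per-category APs.

```python
import numpy as np

def box_iou(a, b):
    """IoU of two boxes (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def video_detection_correct(pred, gt, sigma=0.5, tau=0.5):
    """pred, gt: dicts mapping frame index -> box.

    Correct if the fraction of frames (over the union of both tubes) whose boxes
    overlap with IoU >= sigma reaches tau.
    """
    frames = set(pred) | set(gt)
    if not frames:
        return False
    good = sum(1 for f in frames if f in pred and f in gt and box_iou(pred[f], gt[f]) >= sigma)
    return good / len(frames) >= tau

def average_precision(scores, correct, n_ground_truth):
    """Non-interpolated AP: precision averaged over the ranks of the true positives."""
    order = np.argsort(-np.asarray(scores, dtype=float))
    hits = np.asarray(correct, dtype=float)[order]
    precision = np.cumsum(hits) / np.arange(1, len(hits) + 1)
    return float(np.sum(precision * hits) / n_ground_truth)

gt = {f: (0, 0, 10, 10) for f in range(10)}
print(video_detection_correct({f: (0, 0, 10, 10) for f in range(2, 10)}, gt))  # True: 8/10 frames
print(video_detection_correct({f: (0, 0, 10, 10) for f in range(4)}, gt))      # False: 4/10 frames
print(average_precision([0.9, 0.8, 0.7, 0.6], [True, False, True, True], n_ground_truth=4))
```

In the last call, the true positives sit at ranks 1, 3, and 4 with precisions 1, 2/3, and 3/4, and their sum is divided by the four ground-truth instances.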

4.3. Results and discussions

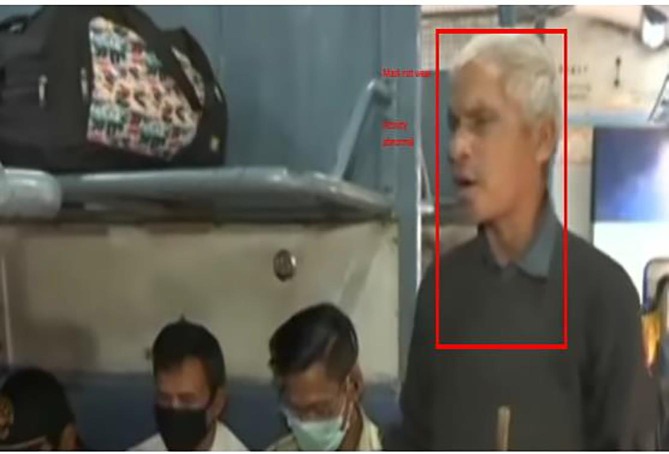

This section evaluates the proposed algorithm for mask detection and human activity analysis on the ICVL dataset and on the video dataset. Fig. 4 shows scenes captured by two distinct cameras at the Indore Railway Station, in which a person wearing a mask is engaged in routine tasks. In contrast, Fig. 5 shows a person who is not wearing a mask, as determined by the proposed Ex-Mask R-CNN algorithm and OFSDI method. Figs. 6 and 7 illustrate human activity together with face mask detection.

Fig. 4.

Human mask detected with normal action.

Fig. 5.

Human without mask detected with suspicious action.

Fig. 6.

Human mask detected with normal action.

Fig. 7.

Human wearing a mask detected with abnormal action.

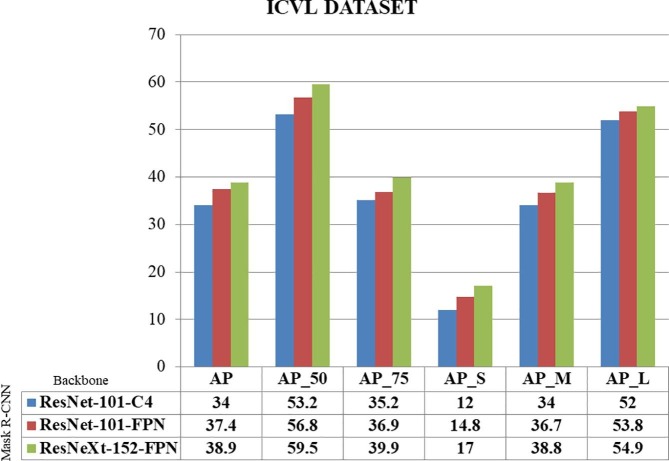

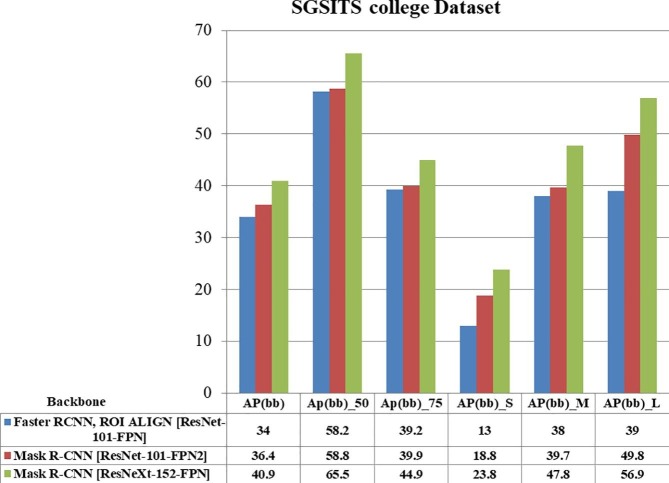

4.3.1. Results of Ex-Mask R-CNN

This subsection reports Ex-Mask R-CNN results on a series of test images using a ResNet-152-FPN backbone, which runs at 6 frames per second with a mask AP of 36.3. Fig. 8, Fig. 9, and Fig. 10 illustrate object detection, in which the proposed Ex-Mask R-CNN determines whether or not the object in the frame is a human face. Fig. 8 shows the ResNeXt-152-FPN results of the proposed Ex-Mask R-CNN method on the ICVL dataset without a face mask. Fig. 9 shows the results on the Railway Station dataset with a human face mask, and Fig. 10 shows the results on the SGSITS college dataset with a human face mask. Fig. 11, Fig. 12, and Fig. 13 illustrate whether or not a mask covers a human face, with the proposed Ex-Mask R-CNN detecting an object as a human face covered by a mask.
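The mask AP quoted above is computed from the overlap of instance masks rather than bounding boxes. The sketch below, assuming binary NumPy masks of equal shape, computes the IoU between a predicted and a ground-truth mask and lists the COCO-style IoU thresholds (0.50 to 0.95 in steps of 0.05) at which the prediction would count as a match. Whether the reported 36.3 mask AP follows the COCO protocol is an assumption, and the function name is hypothetical.

```python
import numpy as np


def mask_iou(pred: np.ndarray, gt: np.ndarray) -> float:
    """IoU of two binary instance masks of the same height and width."""
    pred = pred.astype(bool)
    gt = gt.astype(bool)
    union = np.logical_or(pred, gt).sum()
    if union == 0:
        return 0.0
    return float(np.logical_and(pred, gt).sum() / union)


# COCO-style IoU thresholds 0.50, 0.55, ..., 0.95 (rounded to avoid float drift).
IOU_THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]

pred = np.zeros((4, 4), dtype=np.uint8)
gt = np.zeros((4, 4), dtype=np.uint8)
pred[0:2, 0:2] = 1  # 4 predicted pixels
gt[0:2, 0:4] = 1    # 8 ground-truth pixels
iou = mask_iou(pred, gt)  # 4 / 8 = 0.5
passed = [t for t in IOU_THRESHOLDS if iou >= t]
print(iou, passed)  # 0.5 [0.5]
```

Under a COCO-style protocol, AP is computed separately at each of these thresholds and then averaged, so a mask that only reaches IoU 0.5 contributes a match at the loosest threshold alone.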

Fig. 8.

ICVL dataset without a face mask.

Fig. 9.

Railway Station dataset with a face mask.

Fig. 10.

SGSITS college dataset with a face mask.

Fig. 11.

ICVL dataset without a human face mask.

Fig. 12.

Railway Station dataset with the human face mask.

Fig. 13.

SGSITS college dataset with a human face mask.

Fig. 11 shows the performance of ResNet-152-FPN with the proposed Ex-Mask R-CNN method on the ICVL dataset without a face mask. Fig. 12 shows the results on the Railway Station dataset with a human face mask, and Fig. 13 shows the results on the SGSITS college dataset with a human face mask.

The graph above shows the performance of Mask R-CNN with several ResNet backbones. It also shows that the ResNet-152 backbone used in our proposed model outperforms all other ResNet backbones in terms of average precision.

4.3.2. Action detection

Video-AP is reported for BDI-CNN, MHI-CNN, WAI-CNN, and the proposed OFSDI. OFSDI achieves the highest abnormal-action AP on all three datasets and the highest mAP on the College dataset, while remaining within one point of WAI-CNN in mAP on the ICVL and Railway Station datasets, which indicates the value of the combined cues for action recognition. Table 2, Table 3, and Table 4 compare the proposed method with previously published action recognition methods on each dataset.

Table 2.

Action recognition results on the ICVL dataset.

| Method | Normal Video-AP (%) | Abnormal Video-AP (%) | mAP (%) |
|---|---|---|---|
| Novel Spatiotemporal Network | 56 | 51 | 53.5 |
| NEUCOM21 | 59 | 52 | 55.5 |
| Improved Robust Video Saliency Detection based on Long-term Spatial-temporal Information | 69 | 47 | 58 |
| BDI-CNN | 77.8 | 53.9 | 65.8 |
| MHI-CNN | 70.6 | 64.3 | 67.4 |
| WAI-CNN | 82.9 | 77.1 | 80 |
| OFSDI | 80.1 | 78.4 | 79.3 |

Table 3.

Action recognition results on the Railway Station dataset.

| Method | Normal Video-AP (%) | Abnormal Video-AP (%) | mAP (%) |
|---|---|---|---|
| Novel Spatiotemporal Network | 68 | 72 | 70 |
| NEUCOM21 | 59 | 71 | 65 |
| Improved Robust Video Saliency Detection based on Long-term Spatial-temporal Information | 69 | 76 | 72.5 |
| BDI-CNN | 77.8 | 53.9 | 65.8 |
| MHI-CNN | 70.6 | 64.3 | 67.4 |
| WAI-CNN | 82.9 | 77.1 | 80 |
| OFSDI | 80.1 | 78.4 | 79.3 |

Table 4.

Action recognition results on the SGSITS College dataset.

| Method | Normal Video-AP (%) | Abnormal Video-AP (%) | mAP (%) |
|---|---|---|---|
| Novel Spatiotemporal Network | 68 | 61 | 64.5 |
| NEUCOM21 | 71 | 52 | 61.5 |
| Improved Robust Video Saliency Detection based on Long-term Spatial-temporal Information | 69 | 51 | 60 |
| BDI-CNN | 75.8 | 51.9 | 63.85 |
| MHI-CNN | 69.6 | 61.3 | 65.4 |
| WAI-CNN | 79.9 | 74.1 | 77 |
| OFSDI | 82.1 | 78.4 | 80.25 |

Table 2 summarizes the conventional anomaly detection performance of the proposed OFSDI model on the ICVL dataset. Here, Normal refers to an individual performing a normal activity, whereas Abnormal refers to an individual performing an abnormal activity or otherwise behaving abnormally. Conventional anomaly detection in each image frame is evaluated with average precision.
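In all three tables, the mAP column equals the mean of the Normal and Abnormal Video-AP values, up to rounding to one decimal place. The sketch below transcribes the Table 2 (ICVL) values, checks this relation, and reports the best method for a chosen column; the method label for the saliency-detection baseline is shortened, and the function names are hypothetical.

```python
# (Normal Video-AP, Abnormal Video-AP, reported mAP) transcribed from Table 2 (ICVL).
TABLE_2 = {
    "Novel Spatiotemporal Network": (56.0, 51.0, 53.5),
    "NEUCOM21": (59.0, 52.0, 55.5),
    "Improved Robust Video Saliency Detection": (69.0, 47.0, 58.0),
    "BDI-CNN": (77.8, 53.9, 65.8),
    "MHI-CNN": (70.6, 64.3, 67.4),
    "WAI-CNN": (82.9, 77.1, 80.0),
    "OFSDI": (80.1, 78.4, 79.3),
}


def inconsistent_rows(table, tol=0.05 + 1e-9):
    """Rows whose reported mAP differs from mean(Normal, Abnormal) by more than rounding."""
    return [
        name for name, (normal, abnormal, reported) in table.items()
        if abs((normal + abnormal) / 2 - reported) > tol
    ]


def best_method(table, column):
    """Method with the highest value in column 0 (Normal), 1 (Abnormal) or 2 (mAP)."""
    return max(table, key=lambda name: table[name][column])


print(inconsistent_rows(TABLE_2))   # []
print(best_method(TABLE_2, 1))      # OFSDI
print(best_method(TABLE_2, 2))      # WAI-CNN
```

For example, OFSDI's mean is (80.1 + 78.4) / 2 = 79.25, reported as 79.3, and it leads the Abnormal column, while WAI-CNN has the highest mAP on this dataset.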

Table 3 summarizes the conventional anomaly detection performance of the proposed OFSDI model on the Railway Station dataset. This dataset contains images of individuals at various locations in the railway station, including inside the train, on the platform, and near the platform. As with ICVL, conventional anomaly detection in each image frame is evaluated with average precision.

Table 4 summarizes the conventional anomaly detection performance of the proposed OFSDI model on the SGSITS College dataset. This dataset consists of images collected at various locations on the SGSITS campus, such as the entrance and the green areas. Conventional anomaly detection in each image frame is again evaluated with average precision.

5. Conclusion

This paper presented a new real-world surveillance video dataset and a method for real-time anomaly detection in video surveillance systems. We expect the datasets used in the experimental evaluation to support future research on detecting multiple conventional anomalies. Our experiments indicate that the sub-action descriptor provides comprehensive information about human actions and reduces misclassifications of activities that are composed of several distinct sub-actions at different levels. In addition, the proposed approach localizes and recognizes the actions of many individuals at low computational cost and with high accuracy. For face mask recognition, the proposed Ex-Mask R-CNN outperforms Mask R-CNN with the other ResNet backbones evaluated. In future work, we plan to adapt the proposed approach to big data platforms and to additional datasets.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgement

The authors would like to express their gratitude to the anonymous referees for their constructive comments, which helped improve the quality of the paper.

References

- Ali, S., Basharat, A., & Shah, M. (2007). Chaotic invariants for human action recognition. Proceedings of the IEEE International Conference on Computer Vision.

- Brox, T., Bruhn, A., Papenberg, N., & Weickert, J. (2004). High accuracy optical flow estimation based on a theory for warping. Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), 3024, 25–36. https://doi.org/10.1007/978-3-540-24673-2_3.

- Chavda, A., Dsouza, J., Badgujar, S., & Damani, A. (2020). Multi-Stage CNN Architecture for Face Mask Detection. http://arxiv.org/abs/2009.07627.

- Dalal, N., & Triggs, B. (2005). Histograms of oriented gradients for human detection. Proceedings – 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, CVPR 2005, I, 886–893. https://doi.org/10.1109/CVPR.2005.177.

- Dalal, N., Triggs, B., & Schmid, C. (2006). Human detection using oriented histograms of flow and appearance. Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics).

- Dhiman, C., & Vishwakarma, D. K. (2019). A review of state-of-the-art techniques for abnormal human activity recognition. Engineering Applications of Artificial Intelligence, 77, 21–45. https://doi.org/10.1016/j.engappai.2018.08.014.

- Dollár, P., Rabaud, V., Cottrell, G., & Belongie, S. (2005). Behavior recognition via sparse spatio-temporal features. Proceedings – 2nd Joint IEEE International Workshop on Visual Surveillance and Performance Evaluation of Tracking and Surveillance, VS-PETS 2005, 65–72. https://doi.org/10.1109/VSPETS.2005.1570899.

- Elsayed, O. A., Mohamed Marzouky, N. A., Atef, E., & Salem, M. A. M. (2019). Abnormal action detection in video surveillance. Proceedings – 2019 IEEE 9th International Conference on Intelligent Computing and Information Systems, ICICIS 2019.

- Fathi, A., & Mori, G. (2008). Action recognition by learning mid-level motion features. 26th IEEE Conference on Computer Vision and Pattern Recognition.

- Feng, S., Shen, C., Xia, N., Song, W., Fan, M., & Cowling, B. J. (2020). Rational use of face masks in the COVID-19 pandemic. In The Lancet Respiratory Medicine (Vol. 8, Issue 5, pp. 434–436). Lancet Publishing Group. https://doi.org/10.1016/S2213-2600(20)30134-X.

- He, K., Gkioxari, G., Dollár, P., & Girshick, R. (2020). Mask R-CNN. IEEE Transactions on Pattern Analysis and Machine Intelligence, 42(2), 386–397. https://doi.org/10.1109/TPAMI.2018.2844175.

- Jin, C.-B., et al. (2017). Real-Time Action Detection in Video Surveillance using Sub-Action Descriptor with Multi-CNN. ArXiv abs/1710.03383.

- Loey, M., Manogaran, G., Taha, M. H. N., & Khalifa, N. E. M. (2021). A hybrid deep transfer learning model with machine learning methods for face mask detection in the era of the COVID-19 pandemic. Measurement: Journal of the International Measurement Confederation, 167. https://doi.org/10.1016/j.measurement.2020.108288.

- Lowe, D. G. (2004). Distinctive image features from scale-invariant keypoints. International Journal of Computer Vision, 60(2), 91–110. https://doi.org/10.1023/B:VISI.0000029664.99615.94.

- McIntosh, K., Hirsch, M., & Bloom, A. (2020). Coronavirus disease 2019 (COVID-19): Epidemiology, virology, and prevention. UpToDate.com.

- Mukhopadhyay, S. C. (2015). Wearable sensors for human activity monitoring: A review. In IEEE Sensors Journal (Vol. 15, Issue 3, pp. 1321–1330). Institute of Electrical and Electronics Engineers Inc. https://doi.org/10.1109/JSEN.2014.2370945.

- Parwez, M. S., Rawat, D. B., & Garuba, M. (2017). Big data analytics for user-activity analysis and user-anomaly detection in mobile wireless network. IEEE Transactions on Industrial Informatics, 13(4), 2058–2065. https://doi.org/10.1109/TII.2017.2650206.

- Pezzini, A., & Padovani, A. (2020). Lifting the mask on neurological manifestations of COVID-19. Nature Reviews Neurology, 16(11), 636–644. https://doi.org/10.1038/s41582-020-0398-3.

- Phule, S. S., & Sawant, S. D. (2017). Abnormal activities detection for security purpose unattainded bag and crowding detection by using image processing. Proceedings of the 2017 International Conference on Intelligent Computing and Control Systems.

- Pradhan, D., Biswasroy, P., Kumar Naik, P., Ghosh, G., & Rath, G. (2020). A Review of Current Interventions for COVID-19 Prevention. In Archives of Medical Research (Vol. 51, Issue 5, pp. 363–374). Elsevier Inc. https://doi.org/10.1016/j.arcmed.2020.04.020.

- Rahman, M. M., Manik, M. M. H., Islam, M. M., Mahmud, S., & Kim, J. H. (2020, September 1). An automated system to limit COVID-19 using facial mask detection in smart city network. IEMTRONICS 2020 – International IOT, Electronics and Mechatronics Conference, Proceedings. https://doi.org/10.1109/IEMTRONICS51293.2020.9216386.

- Schüldt, C., Laptev, I., & Caputo, B. (2004). Recognizing human actions: A local SVM approach. Proceedings – International Conference on Pattern Recognition, 3, 32–36. https://doi.org/10.1109/ICPR.2004.1334462.

- Shahroudy, A., Liu, J., Ng, T. T., & Wang, G. (2016). NTU RGB+D: A large scale dataset for 3D human activity analysis. Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition.

- Vu, H. Q., Li, G., Law, R., & Zhang, Y. (2018). Tourist Activity Analysis by Leveraging Mobile Social Media Data. Journal of Travel Research, 57(7), 883–898. https://doi.org/10.1177/0047287517722232.

- Wang, B., Ye, M., Li, X., & Zhao, F. (2011). Abnormal crowd behavior detection using size-adapted spatio-temporal features. In International Journal of Control, Automation and Systems (Vol. 9, Issue 5, pp. 905–912). Springer. https://doi.org/10.1007/s12555-011-0511-x.

- Wang, J., Pan, L., Tang, S., Ji, J. S., & Shi, X. (2020). Mask use during COVID-19: A risk adjusted strategy. In Environmental Pollution (Vol. 266, Issue Pt 1). Elsevier Ltd. https://doi.org/10.1016/j.envpol.2020.115099.

- Wang, C., Zheng, Q., Peng, Y., De, D., & Song, W.-Z. (2014). Distributed Abnormal Activity Detection in Smart Environments. International Journal of Distributed Sensor Networks, 10(5). https://doi.org/10.1155/2014/283197.

- Yin, M., Li, X., Zhang, Y., & Wang, S. (2019). On the Mathematical Understanding of ResNet with Feynman Path Integral. ArXiv. http://arxiv.org/abs/1904.07568.

- Zhang, B., Wang, L., Wang, Z., Qiao, Y., & Wang, H. (2016). Real-Time Action Recognition with Enhanced Motion Vector CNNs. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2718–2726. https://doi.org/10.1109/CVPR.2016.297.

Further reading

- Chen, C., Wang, G., Peng, C., Zhang, X., & Qin, H. (2020). Improved Robust Video Saliency Detection Based on Long-Term Spatial-Temporal Information. IEEE Transactions on Image Processing, 29, 1090–1100. https://doi.org/10.1109/TIP.2019.2934350.

- Chen, Y., Hu, M., Hua, C., Zhai, G., Zhang, J., Li, Q., & Yang, S. X. (2020). Face Mask Assistant: Detection of Face Mask Service Stage Based on Mobile Phone. http://arxiv.org/abs/2010.06421.

- Chen, C., Wang, G., Peng, C., Fang, Y., Zhang, D., & Qin, H. (2021). Exploring Rich and Efficient Spatial Temporal Interactions for Real-Time Video Salient Object Detection. IEEE Transactions on Image Processing, 30, 3995–4007. https://doi.org/10.1109/TIP.2021.3068644.

- Gkioxari, G., & Malik, J. (2015). Finding action tubes. Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition.

- Gupta, P., et al. (2021). People detection and counting using YOLOv3 and SSD models. Materials Today: Proceedings. https://doi.org/10.1016/j.matpr.2020.11.562.

- Improving Video Anomaly Detection Performance by Mining Useful Data from Unseen Video Frames, NEUCOM21(early access).

- Perronnin, F., Sánchez, J., & Mensink, T. (2010). Improving the Fisher kernel for large-scale image classification. Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), 6314 LNCS(PART 4), 143–156. https://doi.org/10.1007/978-3-642-15561-1_11.

- Shelhamer, E., Long, J., & Darrell, T. (2017). Fully Convolutional Networks for Semantic Segmentation. IEEE Transactions on Pattern Analysis and Machine Intelligence, 39(4), 640–651. https://doi.org/10.1109/TPAMI.2016.2572683.

- Wang, H., Kläser, A., Schmid, C., & Liu, C. L. (2013). Dense trajectories and motion boundary descriptors for action recognition. International Journal of Computer Vision, 103(1), 60–79. https://doi.org/10.1007/s11263-012-0594-8.