Abstract

We show that a new design criterion, i.e., the least squares on subband errors regularized by a weighted norm, can be used to generalize the proportionate-type normalized subband adaptive filtering (PtNSAF) framework. The new criterion directly penalizes subband errors and includes a sparsity penalty term, and it is minimized using the damped regularized Newton’s method. The impact of the proposed generalized PtNSAF (GPtNSAF) is studied for the system identification problem via computer simulations. Specifically, we study the effects of using different numbers of subbands and various sparsity penalty terms for quasi-sparse, sparse, and dispersive systems. The results show that the benefit of increasing the number of subbands is larger than that of promoting sparsity of the estimated filter coefficients when the target system is quasi-sparse or dispersive. On the other hand, for sparse target systems, promoting sparsity becomes more important. More importantly, the two aspects provide complementary and additive benefits to the GPtNSAF for speeding up convergence.

Index Terms—PtNSAF, LMS, system identification, sparsity

I. Introduction

The classic least mean square (LMS) and normalized LMS (NLMS) algorithms [1]–[3] both converge slowly when the input signal is colored. This problem can be addressed by whitening the colored input with a family of conventional subband adaptive filters (SAFs) [4], in which each subband runs its own adaptive filter independently. However, conventional SAFs are known to suffer from aliasing and band-edge effects [4]. To address this issue, a family of new SAFs has been proposed in [5]–[7], where each subband error signal is normalized by the corresponding subband input power and the normalized errors are aggregated to update a single set of fullband filter taps. This family can be derived from three different perspectives: i) gradient descent on weighted subband errors [5], ii) polyphase decomposition [6], and iii) constrained subband updates [7]. Because these derivations lead to algorithms with identical behavior, they are collectively termed the normalized SAF (NSAF). The NSAF can be viewed as a subband generalization of the NLMS [8].

In [9], the proportionate NLMS (PNLMS) was introduced to improve convergence by heuristically assigning each filter tap a step size proportional to the magnitude of its estimated coefficient. Unfortunately, PNLMS tends to slow down after the initial fast convergence [10]. Many PNLMS variants were later proposed to address this issue; [11] provides a good review. Among them, the pNLMS [12] was proposed as a generalization of PNLMS and was derived by minimizing a modified mean squared error criterion regularized by the p-norm-like diversity measure. The value of p can be chosen to promote different degrees of sparsity, and its effectiveness has been verified in adaptive feedback cancellation [13].

A family of proportionate-type NSAFs (PtNSAFs) [14]–[17] has been built on top of the NSAF to speed up convergence by simultaneously exploiting the sparse structure of the fullband filter taps and decorrelating the colored input signal. However, these PtNSAFs were proposed heuristically, and no theoretical convergence analysis was conducted. In [18], a zero-attracting PNSAF (ZA-PNSAF) was derived from an optimization criterion; however, the proportionate matrix it uses lacks theoretical support and cannot adapt to different degrees of sparsity. Moreover, all these previous works use a decimation factor equal to the number of subbands. Because the proportionate matrix is a function of the current filter taps, an analysis of PtNSAF without decimation is needed.

In this paper, we propose a generalized PtNSAF (GPtNSAF) that is derived from a well-posed optimization criterion reflecting the filtering objectives, using well-founded optimization algorithmic principles. Furthermore, the proposed filtering structure can operate with any decimation factor. We show that GPtNSAF generalizes the PtNSAF, the proportionate-type affine projection algorithm (PtAPA), NSAF, PtNLMS, and NLMS. The effectiveness of the proposed adaptive filter is verified via computer simulations in different environments, including quasi-sparse (compressible), sparse, and dispersive target systems.

Signal Model:

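Before deriving the proposed adaptive filter, we define some useful notation and the signal model shown in Fig. 1. $\mathbf{H} = [\mathbf{h}_1\ \mathbf{h}_2\ \cdots\ \mathbf{h}_M] \in \mathbb{R}^{N\times M}$ is an M-channel analysis filter bank matrix, where each column $\mathbf{h}_i$ is an analysis filter of length N. $\mathbf{e}(n) = [e(n), e(n-1), \ldots, e(n-N+1)]^T \in \mathbb{R}^N$ is the fullband error vector, where e(n) is the fullband error at time n. $\mathbf{U}(n) = [\mathbf{u}(n), \mathbf{u}(n-1), \ldots, \mathbf{u}(n-N+1)] \in \mathbb{R}^{L\times N}$ is the fullband input data matrix, where $\mathbf{u}(n) = [u(n), u(n-1), \ldots, u(n-L+1)]^T \in \mathbb{R}^L$ is the fullband input vector. $\mathbf{e}_H(n) = \mathbf{H}^T\mathbf{e}(n) = [e_1(n), \ldots, e_M(n)]^T \in \mathbb{R}^M$ is the subband error vector, where $e_i(n) = \mathbf{h}_i^T\mathbf{e}(n)$ is the error in the ith subband at time n. $\mathbf{U}_H(n) = \mathbf{U}(n)\mathbf{H} = [\mathbf{u}_1(n), \ldots, \mathbf{u}_M(n)] \in \mathbb{R}^{L\times M}$ is the subband input data matrix, where $\mathbf{u}_i(n) = \mathbf{U}(n)\mathbf{h}_i$ is the input vector of the ith subband. Typically, we require L ≥ M to avoid an overcomplete representation of the input signal and a singular subband input correlation matrix $\mathbf{U}_H^T(n)\mathbf{U}_H(n)$ [7]. Next, by defining the fullband desired vector as $\mathbf{d}(n) = [d(n), d(n-1), \ldots, d(n-N+1)]^T$ with $d(n) = \mathbf{u}^T(n)\mathbf{s}_0 + v(n)$, we can expand the fullband error vector as $\mathbf{e}(n) = \mathbf{d}(n) - \mathbf{U}^T(n)\mathbf{s}(n)$, where $\mathbf{s}_0 \in \mathbb{R}^L$ denotes the target system, v(n) is the system noise, and $\mathbf{s}(n) \in \mathbb{R}^L$ is the vector of adaptive filter taps at time n. We define the proportionate matrix $\mathbf{W}(n) \in \mathbb{R}^{L\times L}$ as a positive definite matrix that promotes a sparse structure in $\mathbf{s}(n+1)$; it is computed from the current filter $\mathbf{s}(n)$, so W(n) is known and fixed within each iteration. Finally, $\mathbf{s} \in \mathbb{R}^L$ denotes the adaptive filter as an optimization variable.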
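To make the notation concrete, the sketch below builds U(n), the fullband error vector e(n), and the subband quantities U_H(n) = U(n)H and e_H(n) = H^T e(n) from a toy input sequence, assuming NumPy. The sizes, the random "analysis bank" H, and the helper name `data_matrix` are hypothetical illustrations, not the filter bank used in the experiments.

```python
import numpy as np

def data_matrix(u, n, L, N):
    """Fullband input data matrix U(n) = [u(n), u(n-1), ..., u(n-N+1)], shape (L, N).

    Column k is the regressor u(n-k) = [u(n-k), ..., u(n-k-L+1)]^T.
    Requires n >= L + N - 2 so that all sample indices are non-negative.
    """
    U = np.empty((L, N))
    for k in range(N):
        m = n - k
        U[:, k] = u[m - L + 1 : m + 1][::-1]  # newest sample first
    return U

rng = np.random.default_rng(0)
L, N, M, n = 8, 4, 2, 50             # toy sizes (hypothetical)
u = rng.standard_normal(100)          # fullband input u(0), ..., u(99)
s0 = rng.standard_normal(L)           # target system s_0
s = np.zeros(L)                       # current estimate s(n)
H = rng.standard_normal((N, M))       # placeholder analysis bank, columns h_i

U = data_matrix(u, n, L, N)
d_vec = U.T @ s0                      # desired vector d(n) (noise-free here)
e_vec = d_vec - U.T @ s               # fullband error vector e(n)
U_H = U @ H                           # subband inputs u_i(n) = U(n) h_i
e_H = H.T @ e_vec                     # subband errors e_i(n) = h_i^T e(n)
print(U_H.shape, e_H.shape)           # (8, 2) (2,)
```

Each column of U_H(n) holds the last L samples of the ith subband signal, i.e. the input filtered by h_i, which is why u_i(n) = U(n)h_i needs no explicit convolution.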

Fig. 1:

Block diagram of the GPtNSAF.

II. The Proposed Generalized Proportionate-Type Normalized Subband Adaptive Filter

In this section, we propose a novel optimization criterion and derive the GPtNSAF, which exploits the structures of both the input signal and the underlying unknown (target) system. We show that the PtNSAF, PtAPA, NSAF, PtNLMS, and NLMS are all special cases obtained with different settings of the GPtNSAF hyperparameters. We focus on the derivation without decimation; the proposed adaptive filter can be readily extended to an arbitrary decimation factor.

A. The Proposed Criterion: The Least Squares on Subband Errors Regularized by a Weighted Norm

Instead of minimizing the fullband squared error [19], we minimize the sum of the squared subband errors plus a sparsity penalty term. We propose the following cost function:

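$$J(\mathbf{s}) = \frac{1}{2}\left\|\mathbf{H}^T\left(\mathbf{d}(n) - \mathbf{U}^T(n)\mathbf{s}\right)\right\|_2^2 + \frac{\tau}{2}\|\mathbf{s}\|_{\mathbf{W}^{-1}(n)}^2 \tag{1}$$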

where $\mathbf{H}^T(\mathbf{d}(n) - \mathbf{U}^T(n)\mathbf{s})$ is the subband error vector for a candidate filter s, and $\|\mathbf{s}\|^2_{\mathbf{W}^{-1}(n)}$ denotes the weighted squared norm $\mathbf{s}^T\mathbf{W}^{-1}(n)\mathbf{s}$. This regularization term is designed to expedite the system identification process by weighting the filter taps through W(n). In this paper, we use the W(n) suggested in [12], [13], [19] for promoting different degrees of sparsity because of its theoretical support. Since correctly identifying the underlying unknown system is more important than promoting sparsity of the filter taps, the regularization parameter τ > 0 is set to a very small number. To obtain an LMS-like adaptation, we minimize the cost function (1) using the damped regularized Newton’s method.
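A minimal sketch of evaluating the cost, assuming NumPy and the scaling J(s) = ½‖H^T(d(n) − U^T(n)s)‖² + (τ/2)‖s‖²_{W^{-1}(n)}. The toy data and the diagonal example W(n) are hypothetical. The last lines illustrate how the weighted norm penalizes energy placed on taps whose proportionate weight is small.

```python
import numpy as np

def weighted_norm_sq(s, W):
    """||s||^2_{W^{-1}} = s^T W^{-1} s, computed with a linear solve instead of an inverse."""
    return s @ np.linalg.solve(W, s)

def cost_J(s, U, H, d_vec, W, tau):
    """Cost (1): 0.5*||H^T (d - U^T s)||^2 + 0.5*tau*||s||^2_{W^{-1}}."""
    eps_H = H.T @ (d_vec - U.T @ s)      # subband error vector for the candidate s
    return 0.5 * eps_H @ eps_H + 0.5 * tau * weighted_norm_sq(s, W)

rng = np.random.default_rng(1)
L, N, M = 8, 4, 2                        # toy sizes (hypothetical)
U = rng.standard_normal((L, N))
H = rng.standard_normal((N, M))
s0 = rng.standard_normal(L)
d_vec = U.T @ s0                         # noise-free desired vector
W = np.diag(np.abs(s0) + 1e-3)           # example positive definite W(n)

# Unit vectors on the tap with the largest and the smallest weight:
a = np.eye(L)[np.argmax(np.diag(W))]
b = np.eye(L)[np.argmin(np.diag(W))]
print(weighted_norm_sq(a, W) < weighted_norm_sq(b, W))   # True: small weights cost more
```

Because τ is tiny, the data term dominates, and the penalty mainly steers the solution toward taps that W(n) already marks as significant.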

B. Deriving GPtNSAF

To proceed, we perform the affine scaling transform (AST) [20] on the optimization variable s:

$$\mathbf{s} = \mathbf{W}^{1/2}(n)\,\mathbf{q} \tag{2}$$

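Substituting (2) into (1) gives the equivalent optimization problem in the q domain, $J(\mathbf{q}) = \frac{1}{2}\|\mathbf{H}^T(\mathbf{d}(n) - \mathbf{U}^T(n)\mathbf{W}^{1/2}(n)\mathbf{q})\|_2^2 + \frac{\tau}{2}\|\mathbf{q}\|_2^2$, in which the weighted penalty becomes an ordinary squared ℓ2 norm because $\mathbf{s}^T\mathbf{W}^{-1}(n)\mathbf{s} = \mathbf{q}^T\mathbf{q}$. We define the a posteriori AST variable at time n as $\mathbf{q}(n+1) = \mathbf{W}^{-1/2}(n)\mathbf{s}(n+1)$ and the a priori AST variable as $\mathbf{q}(n) = \mathbf{W}^{-1/2}(n)\mathbf{s}(n)$.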
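The affine scaling transform can be checked numerically: for a diagonal positive definite W(n), W^{1/2}(n) is the elementwise square root, and the cost evaluated at s equals the q-domain cost evaluated at q = W^{-1/2}(n)s. This is a sketch assuming NumPy, a diagonal W(n), and random toy data; the function names `J_s` and `J_q` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(2)
L, N, M, tau = 8, 4, 2, 1e-2                 # toy sizes (hypothetical)
U = rng.standard_normal((L, N))
H = rng.standard_normal((N, M))
d_vec = rng.standard_normal(N)
w = np.abs(rng.standard_normal(L)) + 1e-3    # diagonal of W(n), strictly positive
W_half = np.diag(np.sqrt(w))                 # W^{1/2}(n) for a diagonal W(n)

def J_s(s):
    """Cost (1) in the s domain."""
    e = H.T @ (d_vec - U.T @ s)
    return 0.5 * e @ e + 0.5 * tau * np.sum(s**2 / w)   # s^T W^{-1} s

def J_q(q):
    """Same cost after the AST s = W^{1/2} q: the penalty becomes ||q||^2."""
    e = H.T @ (d_vec - U.T @ (W_half @ q))
    return 0.5 * e @ e + 0.5 * tau * q @ q

s_n = rng.standard_normal(L)                 # a priori filter s(n)
q_n = s_n / np.sqrt(w)                       # a priori AST variable q(n) = W^{-1/2} s(n)
print(np.isclose(J_q(q_n), J_s(s_n)))        # True
```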

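Now, we minimize J(q) with the damped regularized Newton’s method, i.e., $\mathbf{q}(n+1) = \mathbf{q}(n) - \mu\left[\delta\mathbf{I}_L + \nabla^2 J(\mathbf{q}(n))\right]^{-1}\nabla J(\mathbf{q}(n))$, where μ > 0 is the step size for adaptation and δ > 0 is a regularization parameter. Using $\mathbf{W}^{1/2}(n)\mathbf{q}(n) = \mathbf{s}(n)$, the gradient of J(q) evaluated at q(n) is given by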

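$$\nabla J(\mathbf{q}(n)) = -\mathbf{W}^{1/2}(n)\mathbf{U}(n)\mathbf{H}\mathbf{H}^T\mathbf{e}(n) + \tau\,\mathbf{q}(n) \tag{3}$$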

Next, the Hessian is given by

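$$\nabla^2 J(\mathbf{q}) = \mathbf{W}^{1/2}(n)\mathbf{U}(n)\mathbf{H}\mathbf{H}^T\mathbf{U}^T(n)\mathbf{W}^{1/2}(n) + \tau\,\mathbf{I}_L \tag{4}$$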
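A quick way to sanity-check the gradient and Hessian is a central finite-difference comparison on the q-domain cost. The sketch below assumes NumPy, a diagonal W(n), and the ½-scaled cost; because J(q) is quadratic, central differences match the analytic expressions up to rounding. All data are random toy values.

```python
import numpy as np

rng = np.random.default_rng(3)
L, N, M, tau = 6, 4, 2, 1e-2                 # toy sizes (hypothetical)
U = rng.standard_normal((L, N))
H = rng.standard_normal((N, M))
d_vec = rng.standard_normal(N)
W_half = np.diag(np.sqrt(np.abs(rng.standard_normal(L)) + 1e-3))
Ub = W_half @ U @ H                          # W^{1/2} U H, shape (L, M)

def J_q(q):
    r = H.T @ d_vec - Ub.T @ q               # subband error at s = W^{1/2} q
    return 0.5 * r @ r + 0.5 * tau * q @ q

def grad(q):                                 # analytic gradient, cf. (3)
    return -Ub @ (H.T @ d_vec - Ub.T @ q) + tau * q

hess = Ub @ Ub.T + tau * np.eye(L)           # analytic Hessian, cf. (4)

q = rng.standard_normal(L)
h = 1e-5
I = np.eye(L)
fd_grad = np.array([(J_q(q + h * I[k]) - J_q(q - h * I[k])) / (2 * h) for k in range(L)])
fd_hess = np.column_stack([(grad(q + h * I[k]) - grad(q - h * I[k])) / (2 * h) for k in range(L)])
print(np.allclose(fd_grad, grad(q), atol=1e-6), np.allclose(fd_hess, hess, atol=1e-6))
```

Note that the Hessian does not depend on q, so the Newton step is exact for this quadratic cost apart from the damping μ and the regularization δ.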

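Therefore, the update rule in the q domain is given by

$$\mathbf{q}(n+1) = \mathbf{q}(n) + \mu\,\bar{\mathbf{U}}_H(n)\left[(\delta+\tau)\mathbf{I}_M + \bar{\mathbf{U}}_H^T(n)\bar{\mathbf{U}}_H(n)\right]^{-1}\mathbf{H}^T\mathbf{e}(n) - \frac{\mu\tau}{\delta+\tau}\left\{\mathbf{I}_L - \bar{\mathbf{U}}_H(n)\left[(\delta+\tau)\mathbf{I}_M + \bar{\mathbf{U}}_H^T(n)\bar{\mathbf{U}}_H(n)\right]^{-1}\bar{\mathbf{U}}_H^T(n)\right\}\mathbf{q}(n) \tag{5}$$

where we have applied the Woodbury matrix identity to avoid inverting a large L-by-L matrix in the damped regularized Newton’s method, and

$$\bar{\mathbf{U}}_H(n) = \mathbf{W}^{1/2}(n)\mathbf{U}(n)\mathbf{H} \tag{6}$$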
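The Woodbury step can be verified numerically: the sketch below computes one damped regularized Newton step with a direct L-by-L solve and compares it with the M-by-M form (5). It assumes NumPy, a diagonal W(n), and random toy data with L ≫ M; `eH` stands in for H^T e(n).

```python
import numpy as np

rng = np.random.default_rng(4)
L, N, M = 32, 8, 4                           # L >> M, toy sizes (hypothetical)
mu, delta, tau = 0.5, 1e-3, 1e-2
U = rng.standard_normal((L, N))
H = rng.standard_normal((N, M))
w = np.abs(rng.standard_normal(L)) + 1e-3
Ub = np.sqrt(w)[:, None] * (U @ H)           # (6): W^{1/2} U H for a diagonal W
eH = rng.standard_normal(M)                  # subband error H^T e(n)
q = rng.standard_normal(L)                   # a priori AST variable q(n)

# Direct damped regularized Newton step: one L-by-L solve.
grad = -Ub @ eH + tau * q
hess = Ub @ Ub.T + tau * np.eye(L)
q_direct = q - mu * np.linalg.solve(delta * np.eye(L) + hess, grad)

# Woodbury form (5): only an M-by-M system is solved.
a = delta + tau
R = a * np.eye(M) + Ub.T @ Ub
q_wood = (q + mu * Ub @ np.linalg.solve(R, eH)
          - (mu * tau / a) * (q - Ub @ np.linalg.solve(R, Ub.T @ q)))
print(np.allclose(q_direct, q_wood))         # True
```

The second form costs one M-by-M solve per sample, which is the practical reason for applying the identity when L = 256 and M ≤ 8.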

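Notice that the inverse of the regularized weighted subband correlation matrix, i.e.,

$$\mathbf{P}(n) = \left[(\delta+\tau)\mathbf{I}_M + \mathbf{H}^T\mathbf{U}^T(n)\mathbf{W}(n)\mathbf{U}(n)\mathbf{H}\right]^{-1} \tag{7}$$

requires only an M-by-M matrix inversion (L ≫ M in most cases). Converting q back to the s domain via (2), we have

$$\mathbf{s}(n+1) = \mathbf{s}(n) + \mu\,\mathbf{W}(n)\mathbf{U}(n)\mathbf{H}\,\mathbf{P}(n)\,\mathbf{H}^T\mathbf{e}(n) - \frac{\mu\tau}{\delta+\tau}\left[\mathbf{s}(n) - \mathbf{W}(n)\mathbf{U}(n)\mathbf{H}\,\mathbf{P}(n)\,\mathbf{H}^T\mathbf{U}^T(n)\mathbf{s}(n)\right] \tag{8}$$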
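The s-domain form can be cross-checked against the q-domain Newton step mapped back through s = W^{1/2}(n)q. This is a sketch assuming NumPy, a diagonal example W(n) built from the current taps, and a noise-free toy system; `np.linalg.inv` is used only for readability, and a linear solve would be preferable in practice.

```python
import numpy as np

rng = np.random.default_rng(5)
L, N, M = 16, 6, 3                            # toy sizes (hypothetical)
mu, delta, tau = 0.5, 1e-3, 1e-2
U = rng.standard_normal((L, N))
H = rng.standard_normal((N, M))
s0 = rng.standard_normal(L)
s = rng.standard_normal(L)                    # a priori filter s(n)
w = np.abs(s) + 1e-3                          # example diagonal of W(n)
W = np.diag(w)
e = U.T @ s0 - U.T @ s                        # fullband error e(n), noise-free

UH = U @ H
P = np.linalg.inv((delta + tau) * np.eye(M) + UH.T @ W @ UH)   # (7), M-by-M
s_next = (s + mu * W @ UH @ P @ (H.T @ e)
          - (mu * tau / (delta + tau)) * (s - W @ UH @ P @ UH.T @ s))  # (8)

# Reference: Newton step in the q domain, then map back with s = W^{1/2} q.
Wh = np.diag(np.sqrt(w))
Ub = Wh @ UH
q = s / np.sqrt(w)
grad = -Ub @ (H.T @ e) + tau * q
hess = Ub @ Ub.T + tau * np.eye(L)
q_next = q - mu * np.linalg.solve(delta * np.eye(L) + hess, grad)
print(np.allclose(s_next, Wh @ q_next))       # True
```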

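Finally, letting τ → 0+ (the penalty-induced term vanishes since δ > 0) yields the GPtNSAF update rule s(n + 1) = s(n) + μg(n), where

$$\mathbf{g}(n) = \mathbf{W}(n)\mathbf{U}(n)\mathbf{H}\left[\delta\mathbf{I}_M + \mathbf{H}^T\mathbf{U}^T(n)\mathbf{W}(n)\mathbf{U}(n)\mathbf{H}\right]^{-1}\mathbf{H}^T\mathbf{e}(n) \tag{9}$$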
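A minimal sketch of the GPtNSAF direction (9), assuming NumPy and a diagonal example W(n); the function name `gptnsaf_direction` and the toy data are hypothetical. The demo checks an affine-projection-like property that follows algebraically from (9): with μ = 1 and δ → 0, the a posteriori subband errors H^T(d(n) − U^T(n)s(n+1)) vanish, because H^T U^T(n) g(n) = A[δI_M + A]^{-1} H^T e(n) with A = H^T U^T(n) W(n) U(n) H.

```python
import numpy as np

def gptnsaf_direction(U, H, W, e, delta):
    """GPtNSAF direction g(n) from (9).

    U: (L, N) fullband input data matrix, H: (N, M) analysis bank,
    W: (L, L) proportionate matrix, e: (N,) fullband error vector.
    """
    UH = U @ H                                        # subband inputs, (L, M)
    R = delta * np.eye(H.shape[1]) + UH.T @ W @ UH    # M-by-M regularized matrix
    return W @ UH @ np.linalg.solve(R, H.T @ e)

rng = np.random.default_rng(6)
L, N, M = 16, 6, 3                # toy sizes (hypothetical)
U = rng.standard_normal((L, N))
H = rng.standard_normal((N, M))
s0 = rng.standard_normal(L)
s = np.zeros(L)
W = np.diag(np.abs(s) + 1e-3)     # example W(n); a scaled identity when s(n) = 0
e = U.T @ s0 - U.T @ s            # noise-free fullband error e(n)

# With mu = 1 and a tiny delta, the a posteriori subband errors vanish:
mu, delta = 1.0, 1e-12
s_next = s + mu * gptnsaf_direction(U, H, W, e, delta)
post = H.T @ (U.T @ s0 - U.T @ s_next)
print(np.linalg.norm(post) < 1e-6 * np.linalg.norm(H.T @ e))   # True
```

In a full filter, this direction would be recomputed every sample with the current U(n), e(n), and W(n), and scaled by a step size μ below 1 to trade convergence speed against steady-state error.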

C. Special Cases of the GPtNSAF

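PtNSAF: Choosing the columns of H as eigenvectors of the weighted correlation matrix UT(n)W(n)U(n) makes $\mathbf{H}^T\mathbf{U}^T(n)\mathbf{W}(n)\mathbf{U}(n)\mathbf{H}$ diagonal, which yields the PtNSAF: $\mathbf{g}(n) = \sum_{i=1}^{M}\frac{\mathbf{W}(n)\mathbf{u}_i(n)e_i(n)}{\delta + \mathbf{u}_i^T(n)\mathbf{W}(n)\mathbf{u}_i(n)}$, where $\mathbf{u}_i(n) = \mathbf{U}(n)\mathbf{h}_i$ and $e_i(n) = \mathbf{h}_i^T\mathbf{e}(n)$.

PtAPA: Choosing H = I (so that M = N) gives the PtAPA: $\mathbf{g}(n) = \mathbf{W}(n)\mathbf{U}(n)\left[\delta\mathbf{I}_M + \mathbf{U}^T(n)\mathbf{W}(n)\mathbf{U}(n)\right]^{-1}\mathbf{e}(n)$. Setting W(n) = I further yields the APA.

NSAF: Setting W(n) = I in the PtNSAF gives the NSAF: $\mathbf{g}(n) = \sum_{i=1}^{M}\frac{\mathbf{u}_i(n)e_i(n)}{\delta + \|\mathbf{u}_i(n)\|_2^2}$.

PtNLMS: Setting M = N = 1 with H = 1 yields U(n) = u(n) and H^T e(n) = e(n); thus we get the PtNLMS: $\mathbf{g}(n) = \frac{\mathbf{W}(n)\mathbf{u}(n)e(n)}{\delta + \mathbf{u}^T(n)\mathbf{W}(n)\mathbf{u}(n)}$.

NLMS: Setting W(n) = I in the PtNLMS gives the NLMS: $\mathbf{g}(n) = \frac{\mathbf{u}(n)e(n)}{\delta + \|\mathbf{u}(n)\|_2^2}$.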
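Two of the special cases can be confirmed numerically with a short sketch, assuming NumPy and random toy data (all names hypothetical): when H holds the eigenvectors of U^T(n)W(n)U(n), the general direction (9) coincides with the per-subband PtNSAF sum, and with M = N = 1 and H = 1 it reduces to the PtNLMS direction.

```python
import numpy as np

def gptnsaf_direction(U, H, W, e, delta):
    """GPtNSAF direction g(n) from (9)."""
    UH = U @ H
    R = delta * np.eye(H.shape[1]) + UH.T @ W @ UH
    return W @ UH @ np.linalg.solve(R, H.T @ e)

def ptnsaf_direction(U, H, W, e, delta):
    """PtNSAF: sum of per-subband normalized updates."""
    g = np.zeros(U.shape[0])
    for i in range(H.shape[1]):
        u_i = U @ H[:, i]                     # subband input u_i(n)
        e_i = H[:, i] @ e                     # subband error e_i(n)
        g += W @ u_i * e_i / (delta + u_i @ W @ u_i)
    return g

rng = np.random.default_rng(7)
L, N, delta = 16, 4, 1e-6                     # toy sizes (hypothetical)
U = rng.standard_normal((L, N))
W = np.diag(np.abs(rng.standard_normal(L)) + 1e-3)
e = rng.standard_normal(N)

# PtNSAF: H = eigenvectors of U^T W U, so H^T U^T W U H is diagonal (M = N here).
_, H_eig = np.linalg.eigh(U.T @ W @ U)
print(np.allclose(gptnsaf_direction(U, H_eig, W, e, delta),
                  ptnsaf_direction(U, H_eig, W, e, delta)))          # True

# PtNLMS: M = N = 1 with H = 1 reduces to W u e / (delta + u^T W u).
u, e0 = U[:, :1], e[:1]
g_ptnlms = (W @ u[:, 0]) * e0[0] / (delta + u[:, 0] @ W @ u[:, 0])
print(np.allclose(gptnsaf_direction(u, np.ones((1, 1)), W, e0, delta), g_ptnlms))  # True
```

Replacing W with the identity in either call gives the corresponding NSAF and NLMS directions.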

III. Simulation Results

We study the convergence performance of the proposed GPtNSAF and some of its special cases including PtNSAF, NSAF, PtNLMS, and NLMS in a system identification scenario via computer simulations.

A. Experimental Setup

The impulse responses (IRs) of the target systems are shown in Fig. 2. The input signal is a first-order autoregressive (AR) process defined by u(n) = ρu(n − 1) + x(n), where ρ = 0.9 and x(n) is zero-mean, unit-variance white Gaussian noise. In the simulations, we discarded the first 2000 samples of u(n) to ensure stationarity of the AR process. The system noise v(n) is zero-mean white Gaussian noise whose variance σv² gives a −30 dB noise level (Jmin). The length of the adaptive filter, L = 256, was set equal to the length of the IRs in Fig. 2, and all taps were initialized to 0.
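The input generation can be sketched as follows, assuming NumPy. The AR(1) recursion and the 2000-sample burn-in follow the text; the helper `noise_std_for_level` and the decaying example IR are hypothetical, and interpreting the −30 dB level as relative to the clean output power u^T(n)s₀ is an assumption, since the exact reference is not stated here.

```python
import numpy as np

def ar1_input(num_samples, rho=0.9, burn_in=2000, rng=None):
    """u(n) = rho*u(n-1) + x(n), x(n) ~ N(0, 1); the first burn_in samples are discarded."""
    rng = np.random.default_rng() if rng is None else rng
    x = rng.standard_normal(num_samples + burn_in)
    u = np.empty_like(x)
    u[0] = x[0]
    for n in range(1, len(x)):
        u[n] = rho * u[n - 1] + x[n]
    return u[burn_in:]

def noise_std_for_level(clean_output, level_db=-30.0):
    """Hypothetical helper: std of v(n) giving a power level_db below the clean output power."""
    return np.sqrt(np.mean(clean_output**2) * 10.0 ** (level_db / 10.0))

rng = np.random.default_rng(8)
u = ar1_input(100_000, rng=rng)
r1 = np.dot(u[1:], u[:-1]) / np.dot(u, u)      # lag-1 autocorrelation estimate, near rho
print(r1)

s0 = rng.standard_normal(256) * np.exp(-np.arange(256) / 30)   # hypothetical IR
y = np.convolve(u, s0)[: len(u)]                                # clean output u^T(n) s_0
sigma_v = noise_std_for_level(y)
d = y + sigma_v * rng.standard_normal(len(u))                   # desired signal d(n)
```

With ρ = 0.9 the input power spectrum is strongly low-pass, which is the colored excitation that slows NLMS and motivates subband decorrelation.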

Fig. 2:

(a), (b), and (c) are three different IRs (target systems) of length L = 256 with different degrees of sparsity. (a) is a measured acoustic feedback path IR. (b) and (c) are artificial IRs. Notice that the IRs in (a), (b), and (c) have the same energy.

The analysis bank H is a cosine-modulated pseudo-quadrature mirror filter (QMF) bank. We maintain the same transition bandwidth of the analysis filters for M = 2, 4, 8 so that the comparison is fair. Therefore, the length of the analysis filter N goes up with the number of subbands M. For M = 1, 2, 4, 8, we use N = 1, 16, 30, 60, respectively.
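For illustration only, the sketch below builds an M-channel cosine-modulated analysis bank with the standard pseudo-QMF modulation h_k(n) = 2p(n)cos((2k+1)(π/2M)(n − (N−1)/2) + (−1)^k π/4), assuming NumPy. The prototype p(n) here is a Hamming-windowed sinc with cutoff π/(2M); this is an assumption standing in for an optimized pseudo-QMF prototype, not the design used in the experiments. The case M = 1 (N = 1) corresponds to no filtering and is excluded.

```python
import numpy as np

def cosine_modulated_bank(M, N):
    """Sketch of an M-channel cosine-modulated analysis bank H (N x M), M >= 2.

    Assumption: a Hamming-windowed sinc prototype with cutoff pi/(2M) stands in
    for an optimized pseudo-QMF prototype.
    """
    if M < 2:
        raise ValueError("M = 1 corresponds to no filtering (H = 1)")
    n = np.arange(N)
    c = (N - 1) / 2                                   # linear-phase center
    wc = np.pi / (2 * M)                              # prototype cutoff
    proto = (wc / np.pi) * np.sinc(wc / np.pi * (n - c)) * np.hamming(N)
    proto /= proto.sum()                              # unit DC gain
    H = np.empty((N, M))
    for k in range(M):
        theta = (-1) ** k * np.pi / 4
        H[:, k] = 2 * proto * np.cos((2 * k + 1) * wc * (n - c) + theta)
    return H

for M, N in [(2, 16), (4, 30), (8, 60)]:             # lengths used in the text
    H = cosine_modulated_bank(M, N)
    dc = np.abs(H.sum(axis=0))                        # |H_k(e^{j0})|
    ny = np.abs(((-1.0) ** np.arange(N)) @ H)         # |H_k(e^{j pi})|
    print(M, H.shape, dc[0] > dc[-1], ny[-1] > ny[0])
```

The printed checks confirm that the first channel passes low frequencies and the last channel passes high frequencies; band edges and overlap depend on the prototype, which is where an optimized design would differ from this sketch.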

We used the sparsity promoting proportionate matrix

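$$\mathbf{W}(n) = \operatorname{diag}\left(|s_1(n)|^{2-p} + c,\ |s_2(n)|^{2-p} + c,\ \ldots,\ |s_L(n)|^{2-p} + c\right) \tag{10}$$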

suggested in [12], [13], [19], which allows us to adjust the degree of sparsity promotion with a single scalar p ∈ [1.0, 2.0] and a regularization parameter c > 0.
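A sketch of a p-controlled proportionate matrix, assuming NumPy and the diagonal form W(n) = diag(|s_l(n)|^{2−p} + c); this exact form (in particular the absence of any normalization) is an assumption for illustration. The function name `proportionate_matrix` and the example taps are hypothetical.

```python
import numpy as np

def proportionate_matrix(s, p, c=1e-3):
    """Diagonal W(n) = diag(|s_l(n)|^(2-p) + c); assumed form, see lead-in."""
    if not 1.0 <= p <= 2.0:
        raise ValueError("p is expected in [1, 2]")
    return np.diag(np.abs(s) ** (2.0 - p) + c)

s = np.array([0.0, 0.01, 0.1, 1.0])           # hypothetical current taps s(n)
for p in (1.0, 1.5, 2.0):
    print(p, np.round(np.diag(proportionate_matrix(s, p)), 4))
```

Under this form, p = 2 gives W(n) = (1 + c)I, i.e. no sparsity promotion, while smaller p assigns relatively larger weights to large taps; c keeps W(n) positive definite when some taps are zero, as they are at initialization.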

The mean squared error (MSE) at time n is defined as E{e²(n)}, where E{·} denotes mathematical expectation. The MSE curves were obtained as the ensemble average over 1000 Monte Carlo runs and normalized to start from 0 dB. For all MSE simulations, we used a common step size μ, δ = 10⁻⁶, and c = 10⁻³.
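The ensemble-averaged, 0 dB-normalized MSE curve can be computed as below, assuming NumPy and that the per-run fullband errors are stored as an array of shape (runs, samples). The function name and the synthetic decaying errors are hypothetical; the example uses errors whose squares are known exactly, so the resulting curve is easy to check.

```python
import numpy as np

def normalized_mse_db(errors):
    """Ensemble MSE curve in dB, normalized so that it starts at 0 dB.

    errors: array of shape (num_runs, num_samples) holding e(n) for each run.
    """
    mse = np.mean(np.asarray(errors) ** 2, axis=0)   # Monte Carlo estimate of E{e^2(n)}
    mse_db = 10.0 * np.log10(mse)
    return mse_db - mse_db[0]

# Hypothetical example: error amplitudes decaying from 1 to 0.1 (-20 dB in power).
rng = np.random.default_rng(9)
T = 5
decay = 10.0 ** (-np.arange(T) / (T - 1))            # amplitudes 1, ..., 0.1
errs = rng.choice([-1.0, 1.0], size=(1000, T)) * decay
print(np.round(normalized_mse_db(errs), 6))          # approximately [0, -5, -10, -15, -20]
```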

B. Studying M and W(n) in GPtNSAF

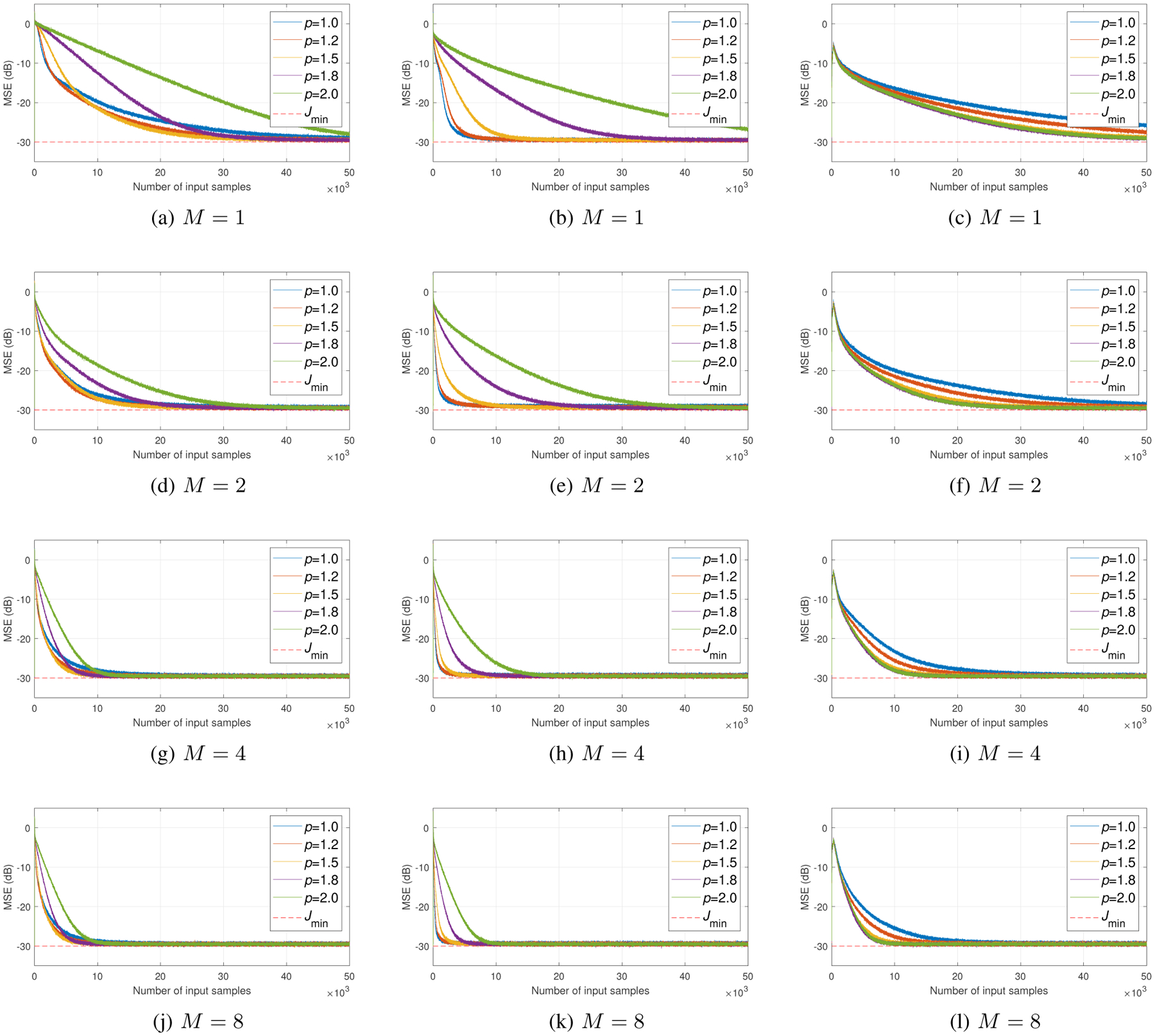

We aim to show that the benefits of increasing the number of subbands M and incorporating the proportionate matrix W(n) are complementary and additive for fast convergence. Fig. 3 shows the MSE curves of GPtNSAF using the sparsity promoting proportionate matrix with different p values for M = 1, 2, 4, 8. According to the convergence behaviors in Fig. 3, the best p value for each target system is consistent across different numbers of subbands. Therefore, we suggest p ∈ [1.2, 1.5], p ∈ [1.0, 1.2], and p ∈ [1.8, 2.0] for quasi-sparse, sparse, and dispersive target systems, respectively. Notice that the convergence speed improves significantly for all target systems as the number of subbands increases. However, the performance gain saturates at M = 8. This is mainly because the analysis filter bank does not emphasize any particular band; instead, the cosine-modulated pseudo-QMF bank divides the spectrum equally.

Fig. 3:

The MSE curves of GPtNSAF using sparsity promoting proportionate matrix with different p values for M = 1, 2, 4, 8. The target system for (a), (d), (g), and (j) is in Fig. 2(a); (b), (e), (h), and (k) is in Fig. 2(b); (c), (f), (i), and (l) is in Fig. 2(c). For the sake of comparing the different W(n), M, and target systems which have different degrees of sparsity, we visualize these MSE curves with the same number of input samples here.

In Fig. 4, we use the suggested p values for M = 1, 2, 4, 8. As the number of subbands increases, the MSE curves for the colored input signal approach the ideal case, i.e., the GPtNSAF with M = 1 driven by a white input signal, which is equivalent to the proportionate-type NLMS with white input.

Fig. 4:

The MSE curves of GPtNSAF using sparsity promoting proportionate matrix with the suggested p values for M = 1, 2, 4, 8. Note that the curve for M = 8 in (b) is overlapped with the ideal case.

Fig. 5(a) compares the convergence behaviors of GPtNSAF and its special cases for the quasi-sparse target system of Fig. 2(a). Interestingly, NSAF converges faster than PtNLMS over the entire signal duration, even in the initial stage. This indicates that, for a quasi-sparse target system, the benefit of increasing the number of subbands is larger than that of promoting fullband sparsity in the time domain. In addition, PtNSAF and PtNLMS slow down as they approach steady state, whereas the convergence of NSAF does not slow down.

Fig. 5:

The comparison of convergence behaviors for GPtNSAF and its special cases in the quasi-sparse, sparse, and dispersive target systems of Fig. 2. We use different p for the proportionate matrix but the same M = 8 for NSAF.

On the other hand, according to Fig. 5(b), promoting sparsity is more important than increasing the number of subbands for sparse target systems; in this case, PtNLMS outperforms NSAF. Still, we observe the same slowdown of PtNLMS after its initial fast convergence. For the dispersive case in Fig. 5(c), PtNLMS and PtNSAF almost reduce to NLMS and NSAF, respectively, since p = 1.8 yields W(n) ≈ I.

Lastly, Fig. 5 shows that PtNSAF closely approximates GPtNSAF under all degrees of sparsity, since the magnitude responses of the analysis filters do not overlap significantly. In summary, as expected, GPtNSAF achieves the fastest convergence in all cases.

IV. Conclusion

A generalized PtNSAF is proposed to further improve convergence speed by directly minimizing subband errors with a sparsity penalty term. Different adaptive filters, including the PtNSAF, PtAPA, NSAF, PtNLMS, and NLMS, can be obtained by choosing the corresponding GPtNSAF hyperparameters. The benefits of increasing the number of subbands and of promoting different degrees of sparsity in the estimated filter coefficients are compared in various environments. The simulation results show that the proposed GPtNSAF is suitable for identifying quasi-sparse, sparse, and dispersive systems under colored excitation. At the cost of inverting a small M-by-M matrix, the proposed GPtNSAF converges faster than its special cases.

Acknowledgment

This work was supported by NIH/NIDCD grants R01DC015436 and R33DC015046.

References

- [1] Widrow B and Stearns SD, Adaptive Signal Processing. Upper Saddle River, NJ, USA: Prentice-Hall, Inc., 1985.

- [2] Haykin SS, Adaptive Filter Theory. Pearson Education India, 2008.

- [3] Sayed AH, Adaptive Filters. John Wiley & Sons, 2011.

- [4] Lee K-A, Gan W-S, and Kuo SM, Subband Adaptive Filtering: Theory and Implementation. John Wiley & Sons, 2009.

- [5] De Courville M and Duhamel P, “Adaptive filtering in subbands using a weighted criterion,” IEEE Transactions on Signal Processing, vol. 46, no. 9, pp. 2359–2371, Sep. 1998.

- [6] Pradhan SS and Reddy VU, “A new approach to subband adaptive filtering,” IEEE Transactions on Signal Processing, vol. 47, no. 3, pp. 655–664, March 1999.

- [7] Lee K-A and Gan W-S, “Improving convergence of the NLMS algorithm using constrained subband updates,” IEEE Signal Processing Letters, vol. 11, no. 9, pp. 736–739, Sep. 2004.

- [8] Lee K-A and Gan W-S, “Inherent decorrelating and least perturbation properties of the normalized subband adaptive filter,” IEEE Transactions on Signal Processing, vol. 54, no. 11, pp. 4475–4480, Nov 2006.

- [9] Duttweiler DL, “Proportionate normalized least-mean-squares adaptation in echo cancelers,” IEEE Transactions on Speech and Audio Processing, vol. 8, no. 5, pp. 508–518, 2000.

- [10] Benesty J and Gay SL, “An improved PNLMS algorithm,” in 2002 IEEE International Conference on Acoustics, Speech, and Signal Processing, vol. 2, May 2002, pp. II–1881–II–1884.

- [11] Wagner K and Doroslovački M, Proportionate-type Normalized Least Mean Square Algorithms. John Wiley & Sons, 2013.

- [12] Rao BD and Song B, “Adaptive filtering algorithms for promoting sparsity,” in 2003 IEEE International Conference on Acoustics, Speech, and Signal Processing, 2003. Proceedings. (ICASSP ‘03)., vol. 6, April 2003, pp. VI–361.

- [13] Lee C-H, Rao BD, and Garudadri H, “Sparsity promoting LMS for adaptive feedback cancellation,” in 2017 25th European Signal Processing Conference (EUSIPCO), Aug 2017, pp. 226–230.

- [14] Abadi MSE, “Proportionate normalized subband adaptive filter algorithms for sparse system identification,” Signal Processing, vol. 89, no. 7, pp. 1467–1474, 2009.

- [15] Abadi MSE and Kadkhodazadeh S, “A family of proportionate normalized subband adaptive filter algorithms,” Journal of the Franklin Institute, vol. 348, no. 2, pp. 212–238, 2011.

- [16] See X, Lee K-A, Gan W-S, and Li H, “Proportionate subband adaptive filtering,” in 2008 International Conference on Audio, Language and Image Processing, July 2008, pp. 128–132.

- [17] Pradhan S, Patel V, Somani D, and George NV, “An improved proportionate delayless multiband-structured subband adaptive feedback canceller for digital hearing aids,” IEEE/ACM Transactions on Audio, Speech, and Language Processing, vol. 25, no. 8, pp. 1633–1643, Aug 2017.

- [18] Vasundhara, Puhan NB, and Panda G, “Zero attracting proportionate normalized subband adaptive filtering technique for feedback cancellation in hearing aids,” Applied Acoustics, vol. 149, pp. 39–45, 2019.

- [19] Lee C-H, Rao BD, and Garudadri H, “Proportionate adaptive filters based on minimizing diversity measures for promoting sparsity,” in 2019 Asilomar Conference on Signals, Systems, and Computers (ACSSC), 2019.

- [20] Rao BD and Kreutz-Delgado K, “An affine scaling methodology for best basis selection,” IEEE Transactions on Signal Processing, vol. 47, no. 1, pp. 187–200, Jan 1999.