ABSTRACT

In order to explore the effect of normal aging on executive function, we tested 25 younger adults and 25 neurologically healthy older adults on the Wisconsin Card Sorting Test (WCST) and the Brixton Spatial Anticipation Test (BRXT), two classic tests of executive function. We found that older participants were more likely than younger participants to err on both tasks, but the additional errors of older participants tended to be related to task set maintenance and rule inference rather than perseveration. We further found that the tendency to perseverate (across all participants) on the WCST was related to the tendency to produce stimulus or response perseverations on the BRXT, rather than any tendency to perseverate on BRXT rule application. Finally, on both tasks, older participants were also slower, particularly on trials following an error, than younger participants. To explore the neurocomputational basis for the observed behaviours we then extended an existing model of schema-modulated action selection on the WCST to the BRXT. We argue on the basis of the model that the performance of older participants on both tasks reflects a slower update of schema thresholds within the basal ganglia, coupled with a decrease in sensitivity to feedback.

The efficiency of executive functioning – the ability to modulate over-learned behaviors in the service of complex tasks and higher-order goals – is known to decline with age. For example, Pettigrew & Martin (2014) found that older adults (aged 64 to 87) were more susceptible to interference in a range of tasks (such as the Stroop task and a flanker task) than young adults (aged 18 to 32), while when comparing participants aged 64–87 with younger participants, Treitz, Heyder, & Daum (2007) found specific deficits in the inhibition of prepotent responses and the ability to divide attention between tasks in the older group. Many other studies, including some reviewed below, have found broadly similar effects, and some authors have even argued that cognitive performance of executive tasks in older populations can be compared to the neuropsychological profile of patients with mild frontal damage (Greenwood, 2000).

However, although there is consensus on their specific cognitive vulnerability in aging, it is unclear how the pattern of declining efficiency of these function unfolds during normal aging (Jurado & Rosselli, 2007). Moreover, while there is clear evidence of neurobiological changes with increasing age (decreased prefrontal gray matter volume: Raz et al. 1997); decreased concentration of dopamine, acetylcholine, and norepinephrine: Jacques, Ebinger, & Vauquelin 1990), the link between neurobiological changes and declining efficiency of the cognitive-level constructs involved in executive functioning is less well developed (but see Chao & Knight, 1997; Persson et al., 2006). This paper uses experimental and neurocomputational methods to investigate how potential neurobiological changes with age result in changes in executive function.

We focus on executive functions underlying performance in two related rule-induction tasks – the Wisconsin Card Sorting Test (WCST; Milner, 1963) and the Brixton Spatial Anticipation Test (BRXT; Burgess & Shallice, 1996). Both tests involve presenting participants with multiple stimuli in sequence, and require participants to induce a rule or regularity that: a) holds over the stimuli of the sequence; but b) periodically changes. Participants must do this using feedback provided after each stimulus. The tests are described in more detail below, but for current purposes it is sufficient to note that the former is widely used in the clinical assessment of executive function deficits, while the latter was designed to mimic certain aspects of the WCST while reducing the susceptibility of behavior to perceptually salient stimulus features (specifically, the color, number, and shape of a stimulus – the distinguishing features of WCST stimuli).

It is generally accepted that both the WCST and the BRXT tap a variety of executive functions, including set switching, and response inhibition, as well as processes involved in task-set maintenance and rule induction (e.g., Esther et al., 2009; Miyake et al., 2000; Reverberi, Lavaroni, Gigli, Skrap, & Shallice, 2005; Stuss et al., 2000). Moreover, at a broad level, both tasks are also known to be sensitive to age (e.g., Bielak, Mansueti, Strauss, & Dixon, 2006; Haaland, Vranes, Goodwin, & Garry, 1987), in that in both tasks older participants generally make fewer correct responses than younger participants. However, given the similarities and differences between the tests, fine grained analysis of participant performance on them offers the prospect of shedding light on whether (or how) age affects those processes required in performance of both tasks or those involved in performance of one or the other task.

Analyses of behavior on tasks such as the WCST and BRXT can also help to formulate or validate cognitive/information processing level theories of task performance, but as argued by Marr (1982), implementational level theories (or neurocomputational models, as considered here) further allow such cognitive-level theories to be linked with the neurobiological level. For example, theories that propose that behavior is the product of selection between competing schemas (as adopted here) may be further constrained with neurobiological data by supplementing such theories with computational accounts that associate the cognitive constructs of schemas and competition resolution with, for example, corticostriatal circuits, together with computational accounts of corticostriatal/basal ganglia function and dysfunction.

In the light of this, the current paper reports a study in which younger and older participants completed both the WCST and the BRXT. We find both similarities and differences in the behavior of the two participant groups on the tests. We then demonstrate how an existing neurobiological model of the modulation of schema-driven behavior can be applied to model each task, and consider how effects of aging may be incorporated into the model. More specifically, we demonstrate first that the behavior of older participants on each task may be simulated by reducing the values of two learning parameters (which we associate with cortical and subcortical learning) from the values associated with younger participant behavior, and second that within the model different values of the learning parameters can, to a certain extent, be compensated for by different levels of executive control.

Experiment

Introduction

As noted, our interest here is in two specific executive function tasks: the Wisconsin Card Sorting Test (WCST) and the Brixton Spatial Anticipation Test (BRXT).

The WCST requires that a participant sort a series of cards according to criteria that a) must be inferred from feedback after each trial by the participant, and that b) change without warning after a series of correct responses. The test is generally held to measure some level of executive functioning and is frequently used to assess executive (dys)function in clinical populations such as frontal patients as well as in healthy elderly participants (Heaton, 1981). Participants can commit several distinct kinds of error on the task, two of which are of particular interest with respect to executive functioning: perseverative errors (PE) and set loss errors (SL). These two forms of error are mutually exclusive, in that perseverative errors are scored when a participant continues to apply a rule that is defunct, while set loss errors are scored when the participant switches rules (despite positive feedback suggesting that the rule applied on the previous trial is correct). Consistent with some previous research (e.g., the electrophysiological study of Lange et al., 2016), we argue that these two error types depend on partially separable cognitive processes, the first of which is primarily a function of subcortical processing while the second is primarily cortical in nature. This position is also consistent with neuropsychological studies that have found different patient groups (as defined by lesion location) to be susceptible to different error types (most notably Stuss et al., 2000).

A first necessary (but not sufficient) step to argue in support of this dissociation is to show that the error types are independent, at least in some populations. An aging population is ideal, because functional decline of cognitive control in the elderly covaries with the degree of overlap in task representation (Mayr, 2001), which presumably is high across the possible rules involved in the WCST. In this regard, prior research has also found that in older populations there is an overall decline of proactive control (Paxton, Barch, Racine, & Braver, 2007; Pettigrew & Martin, 2014), defined as the ability to sustain goal-relevant information. Within the WCST, proactive control is required to maintain task set (i.e., to avoid set loss errors).

Reactive control, that is the ability to mobilize resources once interference or error is detected, is instead thought to be spared by aging. In the WCST, reactive control is required to avoid perseverative errors. Yet the findings concerning the relation between perseveration and aging are mixed. Heaton (1981) reported that individuals over 60 produce more perseverative errors than younger controls. However, Boone, Ghaffarian, Lesser, Hill-Gutierrez, & Berman (1993) failed to replicate this effect, reporting that individual older than 70 did not, and Haaland et al. (1987) found that increased perseveration appears only after the age of 80. A potential reconciliation of these findings has been offered by Rhodes (2004), who argued that age-related perseveration is moderated by the number of years of education, with more educated participants tending to commit fewer perseverative errors. Consistent with this, and with the potential dissociation of perseverative and set loss errors, Plumet, Gil, & Gaonac’h (2005) found that women over 70 with fewer than 12 years of education committed more perseverative errors than similarly aged participants with more education, but set loss errors were higher in older participants irrespective of educational level.

For current purposes, a critical element of the BRXT is that, like the WCST, it affords two distinct error types – perseverative errors and non-perseverative errors. In the standard version of the BRXT, participants are presented with a series of cards showing 10 disks, with the disks arranged in two rows of five columns, and numbered 1 to 10. One disk on each card is shaded black. The position of the shaded disk changes from one card to the next, and the participant’s task is to predict where s/he suspects the shaded disk will appear on the next card, given its location on the previous card(s). The shaded locations follow various simple temporal rules (e.g., alternating within a column, or moving left-to-right along the upper row and then right-to-left along the lower row, etc.). Burgess & Shallice (1996) classify errors on the test (i.e., incorrect predictions) into three types: perseverative errors, application of an incorrect rule, and participant responses that “are unconstrained by their previous history of performance on the task” (p. 247). Within the category of perseverative errors, Burgess & Shallice (1996) further distinguish between perseveration of the previous response, perseveration of the previous stimulus, and perseveration of the previously applied rule.

As noted, previous studies have shown that age is a predictor of errors on the BRXT. Thus, in a large norming study with participants aged 53 to 90, Bielak et al. (2006) found a strong positive correlation between total errors on the BRXT and age (, ), but they did not decompose error score into the various error types. The authors also found correlations between total error score on the BRXT and performance on other tests of executive function. In particular, in addition to the BRXT, their participants completed the Hayling sentence completion test (a timed test requiring participants to generate both likely and unlikely completions to sentence frames; Burgess & Shallice, 1997) and tests of crystallized and fluid intelligence. BRXT total error score was found to correlate positively with age and negatively with education (with both effects being independently significant), but also positively with time to complete each part of the Hayling test, and negatively with the measure of fluid intelligence. For present purposes, however, and despite its suggestive nature, the theoretical import of the Bielak et al. (2006) study is unclear as it does not establish whether the increasing tendencies with age and education to err on the BRXT reflects an increase in perseverative or non-perseverative errors.

While the BRXT evidence relating to age and specific error types is unclear, the WCST evidence suggests that while set loss errors (and attentional failure errors more generally) may be a hallmark of aging, perseveration appears to be dependent on other factors that are potentially less clearly connected with the underlying neurobiology of the frontal cortex alone (such as level of education). This position is also consistent with the possibility that perseverative errors arise from an inability or reluctance to use feedback to update task representations – an inability that might be dependent on the failure to generate or apply an efficient strategy (which might in turn be dependent upon education) rather than one that is solely dependent on unspecified frontal dysfunction.

In order to examine whether, and if so how, performance on the WCST and BRXT changes with age, we therefore asked 25 younger and 25 older participants to complete a computerized version of each task (as described below). We hypothesized that in WCST we would observe significantly more set loss errors in the behavior of the older group compared to the younger group, but that there would be no significant difference in the number of perseverative errors produced by the two groups. With regard to the BRXT, we hypothesized an increase in non-perseverative errors in the older group compared to the younger group (meaning that only the total error score would be significantly greater for older participants). Furthermore, we hypothesized a positive correlation between perseverative errors in the WCST and the BRXT (and specifically perseverative rule application errors in the BRXT), on the assumption that both types of error result from suboptimal functioning of the same underlying mechanisms.

Method

Participants

Participants consisted of 25 younger adults (9 men and 16 women) and 25 older adults (8 men and 17 women). The age of younger participants ranged from 19 to 53 years (, ). The age of older participants ranged from 62 to 84 years (, ). A chi-square test found no relationship between gender and age group (, ). Younger participants were recruited mainly through the university participant database while older participants were recruited via charities for the elderly such as Age UK and the University of the Third Age, in London (UK). All participants were required to be free of any neurological or psychiatric diagnosis, although these conditions were not formally assessed.

Materials and measures

The Wisconsin Card Sorting Test (WCST)

We used a version of the Wisconsin Card Sorting Test in which participants were presented with a touch screen tablet computer showing four “target” cards arranged from left to right across the upper half of the screen, and a “test” card centered in the lower half of the screen. The images on the target cards showed, from left to right, one red triangle, two green stars, three yellow crosses, and four blue circles. The image on the test card varied in number, color, and shape across trials. Participants were instructed that the test card could be matched to one or more target cards according to the number, color, or shape of figures on the cards (i.e., they were informed of the potential sorting rules), and that their task was to determine the rule being followed by the computer by dragging the test card to beneath one of the four target cards, whereupon feedback (“Correct” or “Incorrect”) would be given both on screen and auditorily. Once the test card was released it remained visible on screen for one second before it disappeared. The complete task required participants to sort 64 cards (i.e., all combinations of the four numbers, colors, and shapes), with the computer’s sorting rule changing after every run of six correct responses.

The version of the WCST administered here thus differed from the standard clinical one in two ways. First, explicit instruction on the possible rules was given (as, e.g., in the 64A condition of Stuss et al., 2000). Second, each test card was removed from view once it had been sorted. The latter was included both to prevent participants from using the position of last test card as a mnemonic for the current rule, and to prevent participants who might do this from confusing the last test card with one of the earlier test cards and thereby inferring the wrong rule from cues present on the screen.

Responses were registered by the tablet in order to compute several performance measures, including: the number of categories achieved (CA), the number of total errors (TE), the number of perseverative errors (), and the number of set loss errors ().

The number of categories achieved (CA) was the number of rules correctly inferred by the participant (i.e., the number of times the participant was correct six times in succession). With 64 cards, CA could in principal range from 0 to 10. The number of total errors (TE) was the total number of times negative feedback was given. The maximum score was 64.

Perseverative errors were calculated as indicated in the manual of Heaton (1981). Thus, each response that would have been correct according to the previous sorting rule (or “set”) was counted as a . Moreover, if a participant selected an incorrect rule consistently and unambiguously on more than three successive trials, then immediate subsequent errors were considered to be perseverative. Set loss errors were calculated as in Stuss et al. (2000): as errors following three correct and unambiguous responses (hence we refer to this as , in contrast to Heaton, 1981, where five correct and unambiguous responses are required to establish a set). All errors after a set loss error were not counted as such. Perseverative errors and set loss errors are mutually exclusive, as the former occur following a change in the target sorting rule, while the latter occur in the absence of a change in the target sorting rule, but other errors are also possible, so TE may be more than the sum of and .

The Brixton Spatial Anticipation Test (BRXT)

We also used a touch-screen-based variant of the Brixton Spatial Anticipation Test devised by Burgess & Shallice (1996). In our version of the task, participants were presented with a set of nine small disks arranged in a circular fashion around a central point. One of the disks was always filled (i.e., it was a black). Participants were asked to touch the location on the screen where they believed the next filled disk would appear. The filled disk moved after each touch following a series of five sequential rules (e.g., move counter-clockwise), with each rule comprising ten successive disk locations. We did not disallow selection of locations outside of the nine disks, but we carefully instructed participants that targets could only occur in the nine disk locations and whenever they tapped outside of those locations text was shown on screen reminding participants to tap on one of the nine disks. Responses were registered by the device in order to compute correct and incorrect predictions, as well as response times.

Our version of the BRXT differed from that of Burgess & Shallice (1996) in the spatial arrangement of the small disks – in Burgess & Shallice (1996) 10 disks were arranged in a two-by-five rectangle – and in that we recorded response times.

Beyond response times, four measures were calculated from the series of responses. The number of Total Errors () was the number of incorrect predictions (including responses where the selected location was not one of the nine disks). This could range from 0 to 50. Stimulus perseverative errors () were counted whenever a participant selected the current disk (which was also the previous target). Response perseverative errors () were scored whenever a participant selected the disk corresponding to their previous response. Note that in some circumstances, a error could also count as error. Finally, perseverative rule errors () were counted whenever a participant selected the response that would have been correct under the previously active rule. Since the participant was unaware of when the rule would change, some errors were inevitable whenever the rule changed.

Procedure

The study took place in an acoustically isolated booth, with each participant tested separately. All 50 participants completed both the WCST and the BRXT, with task order randomized by participant. In order to minimize distractions, participants were asked to switch off their phone, and to try to focus on the task as much as they could. If participants normally wore glasses for close vision they were asked to wear them. Participants also were asked to avoid overthinking or rushing, and to complete the tasks at their normal pace. Participants were further advised that they could withdraw from the study without penalty at any point if they so wished.

The procedure was approved by the local ethics committee, and all aspects of the study conformed to the Helsinki declaration.

Results

WCST

Table 1 shows descriptive statistics for the two groupsof participants on the WCST. We first ran non-parametric tests on each of the performance measures (Total Errors, Perseverative Errors, Set Loss Errors) to observe whether and how they were affected by age. We also performed correlational analyses between the error types to explore potential associations between them. We then analyzed mean response times for trials following positive and negative feedback, by performing a log-transformed 2 2 ANOVA, with age group as a between-subjects factor and feedback as a within-subjects factor. This was primarily to examine the interaction between age group and feedback type for response time.

Table 1.

Means (and standard deviations) for key dependent measures on the WCST for the two groups of participants

| Younger | 6.2 (SD = 1.9) | 11.3 (SD = 4.29) | 0.64 (SD = 1.00) | 17.0 (SD = 5.59) |

| Older | 4.5 (SD = 2.8) | 13.3 (SD = 6.40) | 1.48 (SD = 1.56) | 20.9 (SD = 8.97) |

Performance measures

All dependent measures were non-normally distribution, and significantly so (Shapiro–Walk test: in all cases). Given the relatively small sample, the non-normality, and the poor response to data transformation, we proceeded with non-parametric tests for the performance variables. In addition to the traditional statistics, we calculated the Bayes Factor () using a Cauchy distribution centered on zero and with scale as a prior. The value indicates how many times the alternative hypothesis is more likely than the point null hypothesis (Wagenmakers et al., 2018). Independent two-tailed Mann–Whitney tests were conducted between groups for each dependent variable.

Comparing Total Errors in Younger (, ) and Older (, ) participants revealed that the difference was not significant (, , ). Comparing Perseverative Errors in Younger (, ) and Older (, ) participants also revealed that this difference was not significant (, , ). However, Set Loss errors were found to be significantly less frequent in Younger ( ) participants than in Older (, ) participants (, ), albeit with a only marginally higher than 1, (robust). Similarly (and perhaps as a consequence), the number of Categories Achieved by Younger (, ) participants was significantly greater than that of Older (, ) participants (, , ).

Consistent with the results of these between-subjects comparisons, the correlation between age in years and was not significant (, , two-tailed), but the correlation between Age and was (, , two-tailed). There was also a significant correlation between and (, , two-tailed), and this correlation remained significant when the effects of Age were partialled out (, , two-tailed).

Response times

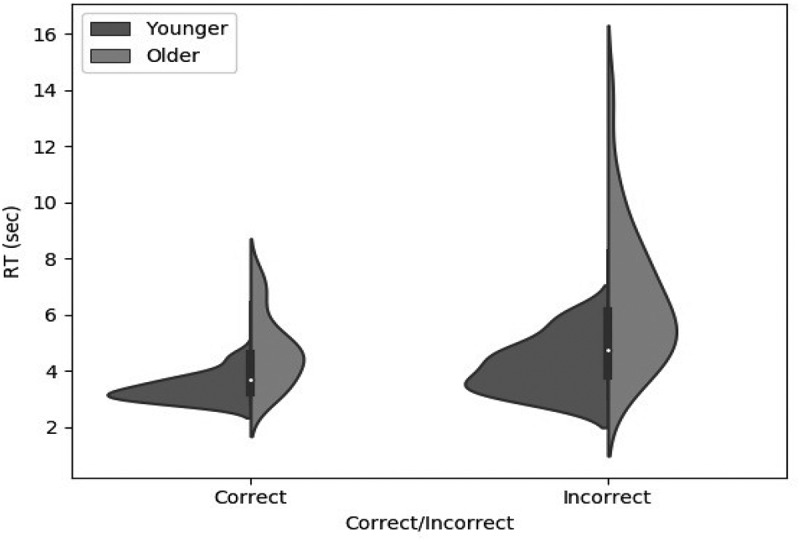

Figure 1 shows mean response time as a function of age and feedback on the previous trial. A two-way ANOVA on the log-transformed data showed that Older participants took significantly longer than Younger participants (, , ), and that response time was significantly longer on the trial after an incorrect response than on the trial after a correct response (, , ). The interaction between age group and feedback approached significance (, , ), but Bayesian analysis showed that including the interaction term did not bring any substantive improvement to the model ().

Figure 1.

Response time violin plot as a function of age group in the WCST, on trials following “correct” versus “incorrect” feedback. Older participants were in general slower than younger participants, and both groups were slower following negative than positive feedback.

BRXT

Possibly due to a misunderstanding of the instructions, one older participant did not give any correct answer in the BRXT, and was therefore excluded from the analysis of the BRXT data. The data reported here is therefore from 25 younger participants and 24 older participants. Table 2 shows descriptive statistics for the two groups of participants on the BRXT. We ran non-parametric tests on each of the performance measures (total errors, perseverative response errors, perseverative stimulus errors, perseverative rule errors) to observe whether and how age affected them. We also performed correlational analyses between the error types to explore potential associations between them. Like the WCST, we then analyzed response times by performing a log-transformed ANOVA, with age group as a between-subjects factor and feedback type as a within-subjects factor, again primarily to examine the interaction between age group and feedback type on response times.

Table 2.

Means (and standard deviations) for key dependent measures on the BRXT for each group of participants

| Younger | 1.36 (SD = 1.04) | 0.44 (SD = 0.91) | 4.16 (SD = 1.48) | 10.44 (SD = 4.74) |

| Older | 1.63 (SD = 1.74) | 0.17 (SD = 0.64) | 3.79 (SD = 0.78) | 13.83 (SD = 7.03) |

Performance measures

All performance variables analyzed (total errors, perseverative response errors, perseverative stimulus errors, perseverative rules errors) were not normally distributed, as shown by a Shapiro–Wilk test . Again, given the relatively small sample, the non-normality, and the poor response to data transformation, we used non-parametric tests to analyze these performance variables. In addition to the traditional statistics, we again calculated the Bayes Factor () using a Cauchy distribution centered on and with scale as a prior.

Independent two-tailed Mann–Whitney tests were conducted. The difference in total errors produced by Younger participants (, ) and Older participants (, ) was significant (, , (robust)). However, the difference in perseverative response errors in Younger (, ) and Older (, SD = 1.74) participants was not significant (, , ). Similarly, the number of perseverative stimulus errors produced by Younger (, ) and Older (, ) participants did not differ (, , ), and neither did the number of perseverative rule errors (Younger: , ; Older: , ; , , ).

Correlational analysis revealed that while total errors correlated significantly with Age in years (, ), this was not reflected in any of the more specific perseverative error measures (Age against : , ; Age against : , ; Age against : , ). However, across all participants the correlation between and was significant (, ), while the correlations between and (, ) and and (, ) were not.

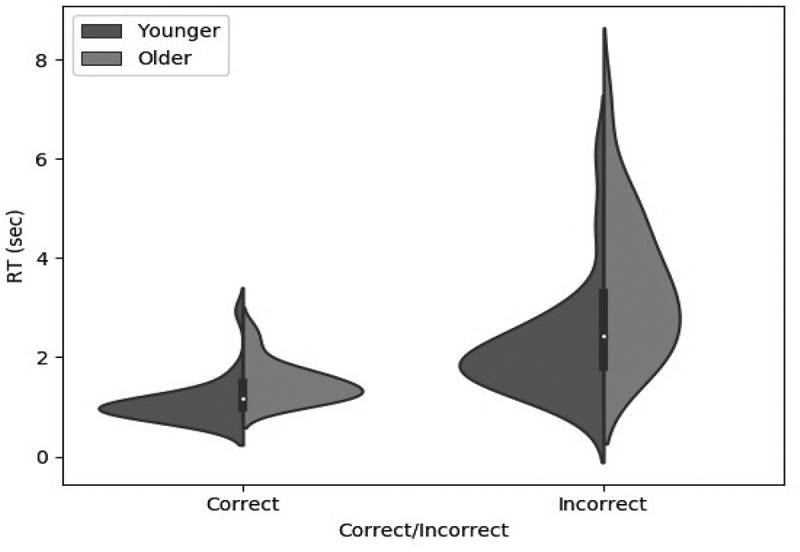

Response times

Figure 2 shows the mean response time across trials as a function of age group and feedback on the previous trial. A two-way ANOVA on the log-transformed data showed that Older participants took significantly longer than Younger participants (, , ), and that response time was significantly longer after an incorrect response than after a correct response (, , ). The interaction between the effects of age and feedback was not significant (, ), and Bayesian analysis showed that including the interaction term did not bring any substantive improvement to the model ().

Figure 2.

Response time violin plot as a function of age group in the BRXT, on trials following “correct” versus “incorrect” feedback. Older participants were in general slower than younger participants, and both groups were slower following negative than positive feedback.

Cross-task correlations

The correlation (across all participants) between the total number of errors on the WCST and on the BRXT was significant and positive (, , ). This correlation remained significant when the effect of age was partialled out (, , ).

Table 3 shows the correlations between the numbers of each type of error (across all participants) for each task. While none of the correlations between SL3 on the WCST and any of the BRXT perseverative error types are significant, PE on the WCST correlates significantly with PRESP and PSTIM on the BRXT, but not with PRULE Given the small sample size and the relevance to the discussion, we computed the Bayes Factor for three of these correlations. Bayesian analysis using the aforementioned priors yielded a BF10 of 5.94, 3.32, and a BF01 of 1.53 for PE correlations with PRESP, PSTIM, and PRULE, respectively.

Table 3.

Pearson correlations between types of WCST error (rows) and BRXT error (columns). All probabilities are two-tailed

| PRESP | PSTIM | PRULE | TE | |

|---|---|---|---|---|

| PE | r = .367 | r = .322 | r = −.088 | r = .384 |

| p = .009* | p = .024* | p = .547 | p = .006* | |

| SL3 | r = .104 | r = −.006 | r = −.110 | r = .535 |

| p = .477 | p = .968 | p = .454 | p = .001* |

Note. Values of p < .05 are marked with *

Discussion

In the WCST, we found that older participants produced more set loss errors, but not more perseverative errors, than younger participants. The significant increase in set loss errors with age is consistent with the detrimental effect of aging in proactive control, conceptualized as the process by which information in working memory biases attention toward goal-relevant schemas (Braver, 2012). Thus, we attribute the tendency toward increased set loss errors in older participants to a reduction with age in the ability to maintain or sustain attention to an ongoing task. This interpretation for the origin of set loss errors is consistent with, for example, that of Stuss et al. (2000) and deriving from the neuropsychological literature, who found that patients with inferior medial frontal lesions (as opposed to those with superior medial frontal lesions or with lateral lesions) were particularly prone to producing such errors. They attributed this to a deficit in sustained attention to an ongoing task (see also Shallice, Stuss, Picton, Alexander, & Gillingham, 2008; Stuss et al., 2005).

The absence of a significant effect of age on perserverative errors within the WCST was also predicted, though only on the assumption that our older participants were unrepresentative of the general population. Older participants recruited through age charities, and in particular the University of the Third Age (which characterizes itself as “an international movement whose aims are the education and stimulation of mainly retired members of the community”), are unlikely to constitute a representative sample of the elderly population in that such participants are likely to be more active and better educated than average. This interpretation is consistent with prior research cited above, but is of particular interest when considered in conjunction with the age effect on set loss errors, for together the results show that, at least in this population, the two error types dissociate.

Results from the BRXT were also consistent both with prior literature (e.g., Bielak et al., 2006; Esther et al., 2009) and the above interpretation of the WCST results, in that there was no statistical difference between the number of perseverative errors made by older participants and younger participants, while the total number of errors made by older participants was significantly greater than that made by younger participants. Recall that prior literature concerned with this task and aging has focussed only on total errors. Thus, Bielak et al. (2006) reported that while their 50–59 year olds () produced 16.5 errors on average (), their 70–79 year olds (N = 97) produced 20.5 errors on average (). Similarly, Esther et al. (2009), who also assessed years of education, derived the following regression equation relating age to total errors:

This was based on a group of 283 healthy controls with mean age 67.4. On this equation, a 65 year old adult with 14 years education would be predicted to produce 17.71 errors, while an education-matched 85 year old would be predicted to produce 22.31 errors. While the absolute total error scores in these two studies are higher than observed in the current study, their relative values are consistent with the pattern observed here. Absolute differences may be explained by procedural differences in the current study (e.g., our use of a circular arrangement of disks in place of the rectangular array, and the use of 9, rather than 10, disks). The absence of any significant effect of age on perseverative errors in the current study suggests that it is processes related to the production of non-perseverative errors, such as those involved in sustaining attention to the current goal, maintaining representations of recent trials, and inducing rules via the identification of temporospatial patterns across those representations, that are affected by aging.

With regard to response times, as expected, in both tasks latency to response was greater on trials following incorrect responses (and hence negative feedback) than on trials following correct responses (and hence positive feedback), and for older participants than for younger participants. However, while the difference between response time after correct and incorrect responses was numerically larger in both tasks for older participants than younger participants, the interaction effect was not significant. The slowed response times following negative feedback presumably reflect processes related to response inhibition and subsequent rule induction. These processes would seem to be shared by the two tasks.

Turning to the relation between performance on the WCST and the BRXT, the main outcomes of our correlational analyses were that (across participants of all ages): a) in WCST is predicted by (and to a lesser extent ) in BRXT (or vice versa), but not by ; and b) in WCST is predicted by in BRXT (or vice versa), but not by any of the three measures of BRXT perseverative errors. The former suggests that the processes underlying production of perseverative errors in the WCST are related to those underlying production of stimulus or response perseveration in the BRXT (which were also found to correlate), but not perseveration of rule application, while the latter further supports the claim that set loss errors and perseverative errors have different origins, or more precisely, that set loss errors in the WCST are the result of (failure of) processes that are separable from those involved in the production (or avoidance) of perseverative errors.

Computational modeling

In the second part of this paper, we consider how a recently developed neurocomputational model of schema-driven behavior, that of Caso & Cooper (2020), which has previously been used to help understand the deficit of Parkinson’s patients on the WCST, can be applied to our data to elucidate how putative neurobiological effects of aging might impact upon the learning and performance of rule-based tasks. The model has been described in full elsewhere. Therefore, rather than repeat that description here, we instead focus only on the aspects of the model critical to understanding its application to the two tasks considered here.1

Model description

The model is based on the work of Norman & Shallice (1986), who developed a schema-theoretic account of sequential action selection. Their theory proposes that hierarchically organized action schemas – abstractions over instances of action sequences – compete with each other through activation-based processes for control of action. This functional description is not committed to a specific neural implementation or a specific task, but application of the model to a specific task (e.g., the WCST or the BRXT) may be achieved by providing the model with appropriate task-specific schemas.

Model structure and operation

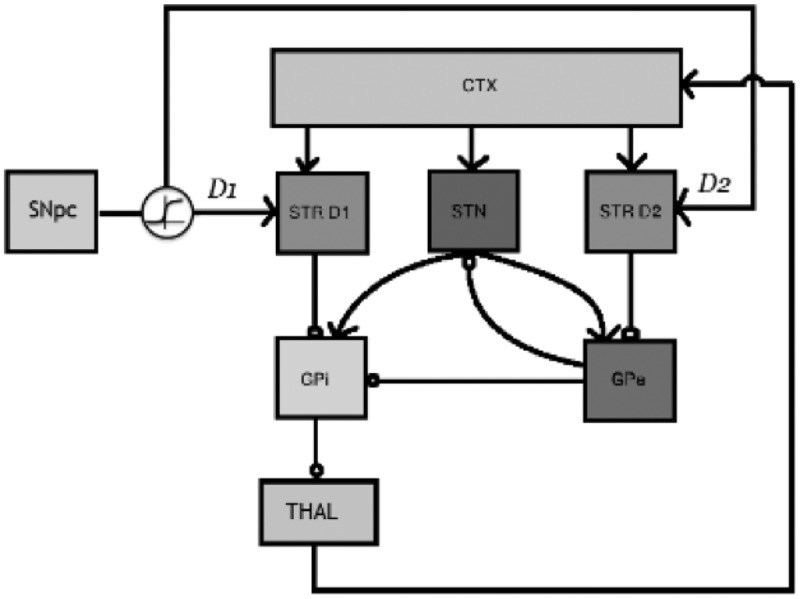

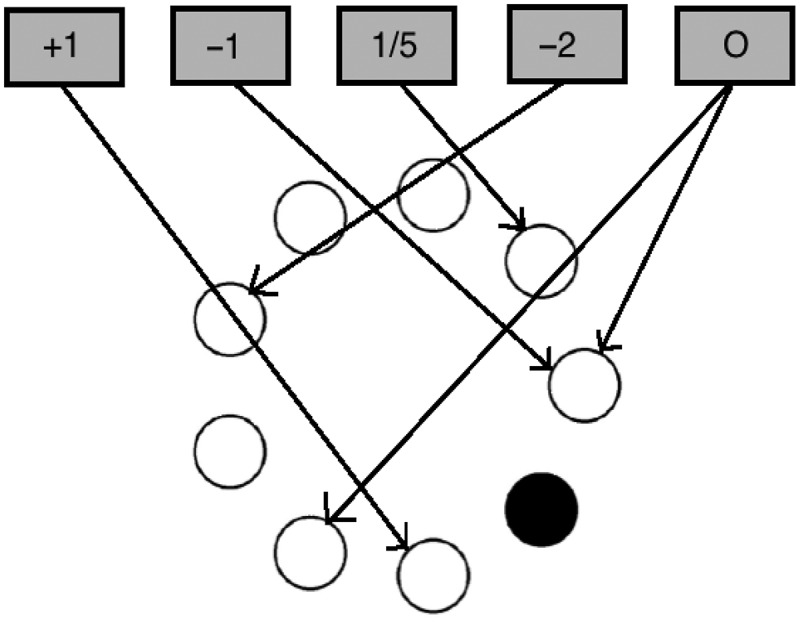

Our general model (see Caso & Cooper, 2020, for full details) features two sets of schemas: cognitive schemas and motor schemas (conceptualized as localized in prefrontal and premotor areas, respectively). The former encode higher level regularities of a task (e.g., sort by color, or alternate between positions) while the latter correspond to specific sensorimotor or response schemas (e.g., select pile 1 in the WCST, or select the disk at the “4 o’clock” location in the BRXT). Each schema has an associated activation value that varies between zero and one and that is maintained by a simulated cortico-thalamic loop. Each such loop comprises a schema node and a set of nodes corresponding to the nuclei of the basal ganglia, following the functional organization of the neuroanatomically detailed model of Gurney, Prescott, & Redgrave (2001), as shown in Figure 3. The basal ganglia units implement the multiple sequential probability ratio test (Bogacz & Gurney, 2007), an optimal method of simultaneously testing multiple hypotheses by estimating the probability of each on the basis of their support as judged by the input data.

Figure 3.

Schematic of the basal ganglia. STR D1: Striatum D1 receptors, STN: Subthalamic Nucleus, STR D2: Striatum D2 receptors, GPi: Globus pallidus internal segment, GPe: Globus Pallidus external segment, THAL: Thalamus, CTX: Cortex, SNpc: Substantia Nigra pars compacta. Standard arrow heads indicate excitatory connections. Circular arrow heads indicate inhibitory connections.

All units function according to standard activation-based processing principles, with the activation of each unit at any point in time being a function of the weighted sum of its inputs, and with the saturation function by which a node’s activation is calculated being the standard logistic function:

| (1) |

where is the unit’s summed weighted input, and and are parameters that control the unit’s gain and threshold, respectively.

Each cortico-thalamic loop operates in parallel, with the organization of Figure 3 ensuring tonic inhibition of all schema nodes through the inhibitory connection from each schema’s globus pallidus internal segment (GPi) unit to its thalamus (THAL) unit. In addition, however, each schema’s GPi unit also receives excitation from the output of the subthalamic nuclei (STN) units of all schemas within each competing set. Because unit activation is thresholded (as per Equation 1), this acts to release tonic inhibition from the more active schema nodes, allowing the activation of those nodes to increase. With appropriate parameter settings the dynamics of the system ensures that at any point in time only one schema node from each set is highly active. If this activation is sustained, the schema is then selected, and begins to control behavior. If, subsequently, the threshold of that node is increased or its gain is decreased (e.g., as a result of negative feedback), the node’s activation will reduce, allowing some other node within the set to become active.

As described thus far, schemas within each set compete with each other. Thus, cognitive-level schemas compete with each other and sensorimotor schemas compete with each other. Schemas across sets, however, cooperate. Thus, cognitive-level schema nodes may also excite corresponding sensorimotor schema nodes, with that excitation dependent on schema selection at the cognitive level and the input stimulus (e.g., if in the WCST “sort by color” is selected and the color of stimulus card is red, then excitation will be passed from the “sort by color” node to the sensorimotor schema node corresponding to the red target location).

Cortical learning

We assume that the slope or gain of the saturation functions of cortical units dynamically adapts to the level of conflict between those units. In particular, when the activation of several cortical representations is very similar, and the basal ganglia alone cannot arbitrate between different representations (e.g., because feedback/reward has not yet been received and computed), a mechanism is required to resolve this conflict so as to allow the simulated basal ganglia to make a decision. At the same time, the stability of cognitive representations must be sensitive to the need to trade off exploration and exploitation at different levels of the schema hierarchy (Goschke & Bolte, 2014). Allowing the gain of the saturation functions at each level of the schema hierarchy to vary in response to conflict provides a mechanism for this.

Here, we implement a mechanism that allows the cortical sensorimotor/response units to change the gain/slope of their saturation function, , via the free parameter εsms according to Equation 2:

| (2) |

where is sensorimotor unit noise, the product is over all sensorimotor units, and is the output signal of the sensorimotor unit. For simplicity, we do not include the analogous dynamic slope adjustment for cognitive schemas.

Increasing the parameter is comparable to being more confident at responding, provided that the subject has accrued the same level of evidence and has the properties desired of a conflict construct, as shown by Berlyne (1957; see also Botvinick, Braver, Barch, Carter, & Cohen, 2001). Conflict should increase when the number and the activation of competing representations increase, and it should have its maximum value once all units reach theirs. Many functions satisfy these criteria, but the product of activation values is the simplest analytical form, and it is therefore the one we use here. The presence of conflict stabilizes or destabilizes schema activation as a function of their input, driving a change to schema activation values in a dynamic fashion. The change of slope of the saturation function in the sensorimotor units provides this implementation. Cognitive control can be construed as a detection mechanism of suboptimal performance (or anticipation of such performance in the case of proactive control), followed by a change in attentional focus. At the level of evidence accumulation, conflict resolution can be achieved by slowing the accumulation of activation in specific units. The value of is updated each time feedback is received.

Basal ganglia learning

The basal ganglia units are regulated in a different fashion from the cortical units. While cortical units are solely regulated by their online state, regardless of history of activation and external stimuli, basal ganglia units change their characteristics with a history-based and reward-driven time course. Within the model this is reflected by adjusting , the threshold of the saturation function in striatal units, which is assumed to be related to the level of striatal dopamine. On each processing cycle and within each cortico-thalamic loop, , is adjusted according to Equation 3:

| (3) |

where the calculated value of is clipped to within the range [0, 1] if it falls below 0 or above 1.

The in Equation 3 is the reward prediction error, expressed as the difference between the actual reward and the median activation value in the last trial , as per Equation 4:

| (4) |

where is either or , according to whether feedback following the previous action/response was positive (e.g., a correct answer) or negative (e.g., an incorrect answer).

In Equation 4, is calculated as follows:

| (5) |

The index indicates the values of the variables on the previous iteration, while the parameters and determine the reward for mismatching schemas. If (mismatch reward sensitivity) is zero then the baseline reward is , meaning that prediction error ( in Equation 3) will be zero when the median activation of schema on the previous trial is . As increases, this baseline increases. The parameter (memory for negative feedback) determines the extent to which feedback from previous trials modulates the baseline given by . If it is zero, feedback from previous trials is ignored, but if it is greater than zero then feedback from previous trials persists across trials.

Together, Equation 3, Equation 4 and Equation 5 allow the model to bias in the correct direction following feedback.

Key model parameters

The model has a relatively large number of parameters (see Caso & Cooper, 2020, for a complete list), but in the simulations reported here we consider four of them as free parameters: mismatch reward sensitivity (), memory for negative feedback (), striatal learning rate (εstr) and sensorimotor schema learning rate (εsma). As noted above, the first two parameters affect the sensitivity of mismatching schemas to feedback. When and are zero, in is for mis-matching schemas, meaning that if the correct schema was selected (so is ), the thresholds of mismatching schemas will be increased (making them even less likely to be selected), while if the incorrect schema is selected (so is ), the thresholds of mis-matching schemas will be decreased, making them more likely to be selected. As mismatch reward sensitivity () increases, the magnitude of the threshold adjustment for mismatching schemas will decrease. Moreover, as memory for negative feedback () increases, the threshold adjustment for mismatching schemas is increasingly modulated by reward on the previous trial. The third and fourth parameters affect the efficacy of learning in the model’s striatal and the cortical components. The striatal learning rate (εstr) controls the rate of learning of biases/thresholds in the activation functions of the basal ganglia units for the cognitive schemas, while the sensorimotor schema learning rate (εsma) modulates the extent to which the information entropy of sensorimotor schemas drives the change of slope in their activation functions.

Relation to other neurobiological models

Rule induction tasks such as the WCST, and frontal functioning more generally, have been the target of a number of computational models, both at the cognitive level and the neurobiological level. Our model bears similarities to some of these existing models, but also incorporates notable differences. Bishara et al. (2010)’s cognitive-level of the WCST, for example, effectively maintains a representation of the probability of each rule (or more specifically each stimulus dimension) controlling the response, with this representation being updated following feedback such that the probabilities of rules consistent with the response/feedback are increased and the probabilities of rules inconsistent with the response/feedback are decreased following each trial. Model parameters determine sensitivity to reward, punishment, and decision consistency. The model has subsequently been applied to clinical data from a range of neurological conditions (e.g., Parkinsonism: Steinke, Lange, Seer, & Kopp, 2018), with moderate success. Response/feedback pairs in our model can similarly be understood as providing evidence for or against rules, but unlike the Bishara et al. (2010) model, our model operates at a more mechanistic level (by incorporating a neurobiologically plausible model of the basal ganglia) and incorporates lower-level sensorimotor schemas. Such low-level schemas effectively provide our model with a direct route whereby a stimulus can drive a response in the absence of top-down input.

An alternative cognitive-level account of rule induction is provided by Steinke, Lange, & Kopp (2020), who provide a reinforcement learning model of the WCST which combines model-free (habitual) and model-based (deliberative) subsystems. Like the model of Bishara et al. (2010), Steinke et al. (2020)’s model produces a probability distribution over responses on each trial, and like the model of Bishara et al. (2010) it produces perseverative errors because negative feedback does not reduce the probability of applying the previously most likely rule to zero, while it produces set loss errors because even with positive feedback there is a non-zero probability of failing to apply the most probable rule. The innovation of the Steinke et al. (2020) model lies in its combination of model-based and model-free “routes” to action. This is based on the authors’ demonstration of learning at the response level, whereby detailed analysis of behavioral data suggests that negative feedback results in an increased likelihood of participants avoiding the specific (punished) response on the next trial. Steinke et al. (2020) argue that their model provides a superior account, compared with the model of Bishara et al. (2010). The reinforcement learning model does not directly refer to the operation of cortico-subcortical loops, but nevertheless obtains a neurobiological interpretation through the identification of model-based processing with frontal functions and model-free processing with habitual action selection primarily associated with the basal ganglia (e.g., Daw, Niv, & Dayan, 2006). The model therefore bears a more abstract relation to neural processes than our model presented here, though both models include elements of learning from reinforcement (in our model, through Equation 4.) More crucially both models include two levels of processing – model-based processing is equivalent to application of rule schemas while model-free processing is equivalent to application of sensorimotor schemas. It is, however, beyond the scope of the current work to determine whether or how our model might capture the specific effects of perseverative response avoidance (what they term “the modulation of perseveration propensity by response demands”) seen by Steinke et al. (2020) to be a critical aspect of behavior.

Several other authors have also developed neurobiologically-grounded models of rule induction tasks which incorporate cortico-subcortical loops (e.g., Frank & Badre, 2012; Monchi, Taylor, & Dagher, 2000), and the operation of striatal learning (Equation 3, Equation 4, and Equation 5) in our model bears a resemblance to those present in that proposed by Frank & Badre (2012). However, our model differs from that of Frank & Badre (2012) in several substantial details. First, our model assumes that participants come to tasks such as the WCST with schemas for the classification of objects based on visually distinct dimensions (such as number, color, and form). This is similar to the assumption of Monchi et al. (2000), but connectionist network models such as that of Rougier & O’Reilly (2002) (and subsequently that of Frank & Badre, 2012) achieve this by pretraining the model with color, form, and number classification subtasks (see also Rougier, Noelle, Braver, Cohen, & O’Reilly, 2005). Perhaps more critically, in our model, the basal ganglia do not gate information through a series of weights adjusted by experience (in order words, it is not a connectionist network in the PDP tradition). Rather, the basal ganglia implement a control system that operates on time-continuous variables. Furthermore, while motor and premotor areas are separated and hierarchically related (i.e., information is passed downward) in both our model and that of Frank & Badre (2012), our model does not distinguish between a maintenance layer, deep lamina layer, and even a visual input layer, but instead incorporates all of them in sensorimotor schemas.

Most recently, Barceló (2020) has argued for a reframing of the WCST in terms of the predictive coding framework of Friston (2009). This approach argues that the function of the brain is to “infer the causes of its sensory inputs.” When applied to the WCST and rule induction more generally, the predictive processing approach attempts to infer the underlying rule by minimizing unanticipated feedback. Barceló (2020) argues that this results in two phases of operation. Early in the induction process, when there is greatest uncertainty in the rule, the system must explore the various options, while later in the induction process, when the rule is known, the system must exploit its knowledge (and predictions). These two phases are held by Barceló (2020) to be reflected in electrophysiological studies which have found different ERP profiles associated with the early and late trials within each run of a rule, with early processing being associated with prefrontal negativity and later processing associated with more posterior (and possibly parietal or subcortical) negativity (e.g., Lange, Seer, & Kopp, 2017; Stuss & Picton, 1978). The explore/exploit dimension has echoes of reinforcement learning, but within the predictive processing account exploration is not unguided or random, but aimed at reducing the uncertainty in the current rule, thereby improving the generative model guiding behavior, and decreasing the likelihood of negative feedback. An assumption that is common to our approach and the predictive processing approach is that both effectively rely on predicting feedback and attempting to minimize prediction error – note that on the assumption that activation in our model encodes an expectation, the central term in Equation 4, , represents the error between actual reward feedback and anticipated feedback. Hence, when prediction error is zero, changes in striatal gain occur only as a result of noise. Indeed, ongoing changes in striatal gain during the latter stages of a run of trials with the same rule, through Equation 4, work to reduce prediction error.

Simulation 1: modeling the WCST

Introduction: application of the model to the WCST

To model performance on the WCST, the model was configured with three cognitive schemas, corresponding to the three WCST rules (sort by color, sort by number, sort by form), and four sensorimotor/response schemas, corresponding to the four target locations where stimulus cards might be placed. When a cognitive schema is selected, activation is passed (via the corresponding response-level STN unit) into the cortico-thalamic loop for the appropriate target location (which is determined with reference to the stimulus card and the selected cognitive schema). If the selection is correct (i.e., experimenter feedback is positive, and so in Equation 4 is ), feedback to cognitive-level schemas (as per Equation 5) is positive if at least one feature of the stimulus card matches the selected target card and negative otherwise. Alternatively, if the selection is incorrect (i.e., experimenter feedback is negative, and so in Equation 4 is ), feedback to cognitive-level schemas (as per Equation 5) is negative when at least one feature of the stimulus card matches the selected target card and positive otherwise.

Method

In order to fit the model to the data from the WCST and to explore the effects of the model parameters, we conducted a series of data-fitting studies, where TE, PE, and were the target variables. More specifically, we sought to minimize the norm of the scores for the three variables (i.e., the square root of the sum of squared errors for the three variables) by using simulated annealing (Peter JM & Aarts, 1987). Additionally, we calculated the Bayes Factor, , for each dependent measure,2 given a standard Cauchy prior with and . While there is a negative correlation between values and , the simulated annealing algorithm does not minimize efficiently, but operates better on a function of the values, perhaps owing to the fact that the space is less smooth than the space, and it is therefore easier when minimizing to get stuck in local minima. Therefore, if two parameter sets yielded a similarly low value, the one with higher was chosen. This additional criterion improves model selection by taking into account distributions as well as providing a more intuitive fitting index.

We found the best-fitting values for each age group separately. In each case, simulated annealing was initialized with a parameter set obtained through qualitative analysis to ensure faster convergence. Moreover, we observed that for both groups very good fits were obtained with at its default value of zero, and so this parameter was fixed at this value while the other three parameters were varied in order to fit the group data.

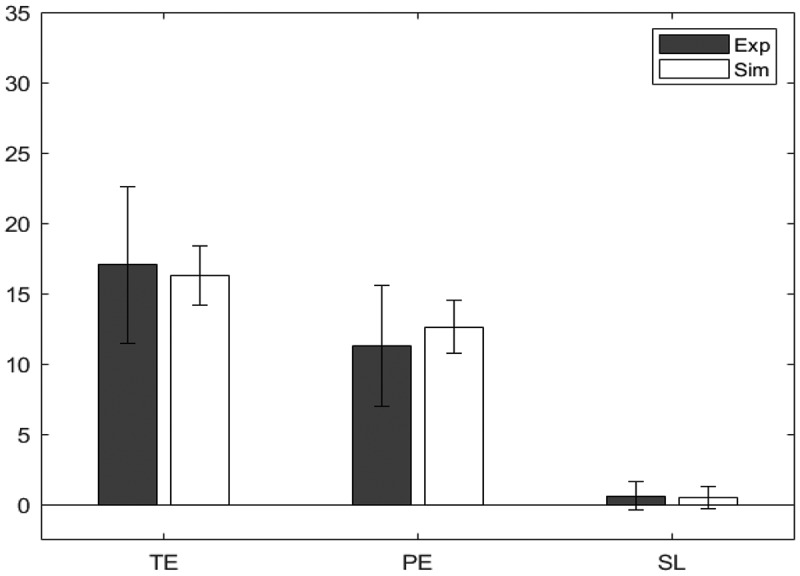

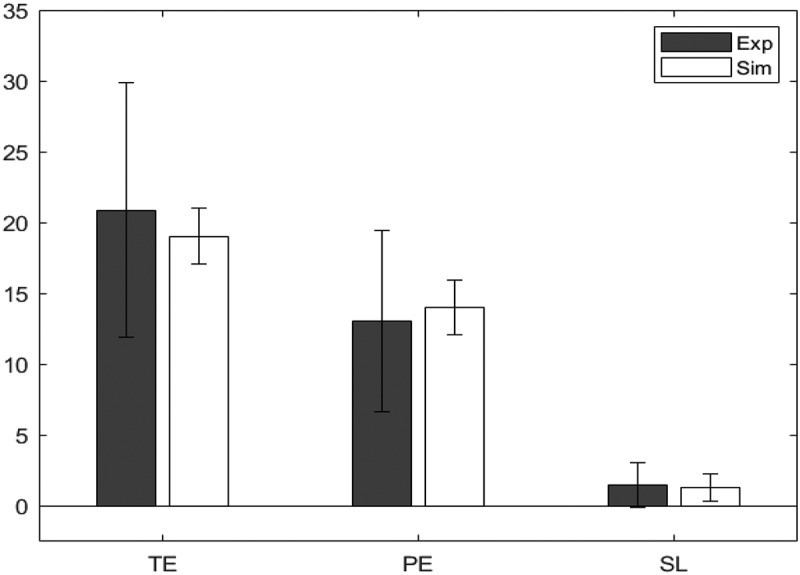

Results

Table 4 shows the minimized absolute scores for the three dependent measures, across the two groups. A value closer to 0 indicates a better fit. Also shown in the table are Bayes Factors for each dependent measure. A value greater than 1 indicates support for the null hypothesis, and therefore a better fit (Wagenmakers et al., 2018). The resultant values of the parameters of interest for the two groups are shown in Table 5, while Figure 4 and Figure 5 show bar charts for the dependent measures for the experimental and simulated younger and older participants, respectively.

Table 4.

Z statistics for the model fit for the two age groups in the WCST. A value closer to 0 indicates that experimental and simulated values have closer means, and therefore a better model fit. The subscripts near the z value are the Bayes Factor (BF01). A value higher than 1 indicates greater support for the null hypothesis, which suggests a good model fit

| Younger | |||

| Older |

Table 5.

Values of the parameters , , εstr, and εsma for the simulations of the two age groups in the WCST

| εstr | εsma | |||

|---|---|---|---|---|

| Younger | ||||

| Older |

Figure 4.

Model fit for younger participants, comparing the experimental (Exp) values and the simulated (Sim) values for the WCST.

Figure 5.

Model fit for older participants, comparing the experimental (Exp) values and the simulated (Sim) values for the WCST.

Importantly, swapping the parameter values determined for each age group results in substantially poorer overall fits for both groups, with larger values, and values generally smaller than 1 (see Table 6). This suggests that the model adequately simulates the responses of the two participant groups as being distinct.

Table 6.

Z and Bayes Factor BF01 as a subscript for model fits obtained by swapping young and old simulated data. The overall increase in Z values and the presence of three BFs below zeros suggest that the two models are not interchangeable and they capture the differences between the two groups

| SL3 | |||

|---|---|---|---|

| , | |||

| , | .2630.30 |

(and Bayes Factor as a subscript) for model fits obtained by swapping young and old simulated data. The overall increase in Z values and the presence of four BFs below one suggest that the two models are not interchangeable and they capture the differences between the two groups.

Recall that in the experimental study we found that response time was affected by both age and feedback on the previous trial, with older participants slower than younger ones, and responses after negative feedback slower than responses after positive feedback. Table 7 shows the number of cycles to selection of a sorting action for the model given the parameter settings determined for younger and older groups, and as a function of feedback on the previous trial. The simulations reproduce the effect of age (, ), with the simulated older participant group taking more cycles to respond than the simulated younger participant group, but not the effect of feedback type (, ). Moreover, the factors do not interact (, ).

Table 7.

Mean (standard deviation) simulated response time (i.e., number of cycles to generate a response) for each simulated group for trials following positive feedback and trials following negative feedback. Data are based on 25 simulated runs with parameters set as in Table 5

| Positive | Negative | |

|---|---|---|

| Younger | 132.3 (1.4) | 132.2 (2.9) |

| Older | 146.9 (5.5) | 144.5 (8.8) |

Discussion

The model is able to fit, near perfectly, the error data of both participant groups. Despite a similar number of perseverative errors in both groups, older participants are simulated with a reduction of 0.042 in the value of the striatal learning rate (εstr, down from 0.139 to 0.097), which suggests a slower update of basal ganglia thresholds in older participants than in younger participants. The corresponding reduction from 0.833 to 0.000 in the sensorimotor learning rate (εsma) reflects instead a reduction in cognitive control. With regard to mismatch reward sensitivity, the value of for younger participants (of ) suggests substantial insensitivity to negative feedback. The value for older participants (of ) suggests that older individuals are more sensitive to negative feedback than their younger counterparts. Since the fit was obtained with fixed at zero for both groups, these results assume that both groups are insensitive to reward from previous trials – an assumption that appears reasonable given the fits obtained.

With respect to the response time data, the model accounts well for the difference in response time due to age, but not for the difference due to feedback type. This contrasts with our earlier application of the model to data from Parkinson’s patients on a slightly modified version of the WCST (Caso & Cooper, 2020), where the number of model cycles to a response was greater following negative feedback than following positive feedback. The lack of effect in the model behavior here is in part due to a difference in the version of the WCST and differences in parameter values. More specifically, the version considered in the earlier work followed that of Barceló (2003) and included only stimulus cards that matched target cards on a single feature. The version considered here includes all possible cards, including ones which match target cards on two and even three features. The difference in RT on trials following positive and negative feedback is less when ambiguous cards are present, possibly because negative feedback after a card that matches on two dimensions (i.e., ambiguous cards) allows the model to effectively and efficiently rule out both dimensions on which the card matches. The difference in RT also depends in a nontransparent way on the values of the parameters (and in the simulations reported here is positive in some regions of the parameter space and negative in others). We return to this issue below in the discussion of simulation 3.

Simulation 2: modeling the BRXT

Introduction: application of the model to the BRXT

To model performance on the BRXT, the model was configured with five high-level schemas and nine lower-level sensorimotor schemas (see Figure 6). Four of the high-level schemas represented the four possible rules from the empirical study (clockwise, counter clockwise, alternate between positions 1–5, counter clockwise skipping one circle). One additional schema was included to represent all the other potential rules. All high-level schemas were fed with a constant input and uniformly distributed noise. The nine lower-level sensorimotor schemas represented the nine possible response options. These low-level schemas were also activated by environmental cues (stimuli) in much the same way as low-level schemas in the WCST model were activated by environmental cues. In this way, in absence of top-down control, environmental cues have the potential to drive the choice of pattern.

Figure 6.

Schematic of the BRXT model without the basal ganglia arbitration device. For instance, given that specific filled-in circle as an input, the schema (clockwise) excites the following circle, whereas the schema (counter-clockwise) excites the preceding one.

The present simulation is not concerned with rule inference per se, since this is assumed to happen higher up in the mental processing hierarchy, but is focused instead on the cognitive control of these rules. Thus, we assume that individuals have acquired similar pattern schemas, such as progressing clockwise around a circle, throughout their life. Nevertheless, some additional comment is required on the rule represented by the fifth cognitive schema. This was randomized to a different rule for each simulated participant, and contributes to inter-subject variability in the simulation. Different participants may have different concepts that are not necessarily triggered by the stimuli presented. For example, some individuals might overcomplicate rules and infer that clockwise motion of the circles is mirrored anticlockwise after a semi-circle is completed or infer bizarre responses that do not reflect any of the most common rules that neurologically healthy individuals seem to employ. Adding this schema also produces meaningful variations in responses.

For the BRXT, schema matching (as required for Equation 5) is determined by comparing the two most recent responses (in order) with the patterns corresponding to each of the known rules. If, for example, two consecutive filled disks appear in counter-clockwise fashion, then the schema corresponding to this pattern will be considered to match (and will receive bottom-up activation). If this arrangement also has features in common with the random schema, then the latter will also be considered to match (and be activated). If feedback is positive then the saturation functions of matching schemas are biased to increase the likelihood of them being selected, as per the first clause in Equation 5. The saturation function of non-matching schemas is biased in the opposite way – to decrease that likelihood (as per the second clause in Equation 5). The reverse applies if feedback is negative, ensuring that on the following trial matching rules are less likely to be selected but mis-matching rules are more likely to be selected.

Method

The model fitting studies for the BRXT were conducted in the same way as for the WCST, with one exception, namely that when fitting the data from older participants the value of the memory for negative feedback parameter () was also allowed to vary (for reasons given below). This time , , , and were the target variables. Again, fitting was performed using simulated annealing (Peter JM & Aarts, 1987), by minimizing the norm of the values of the four target variables.

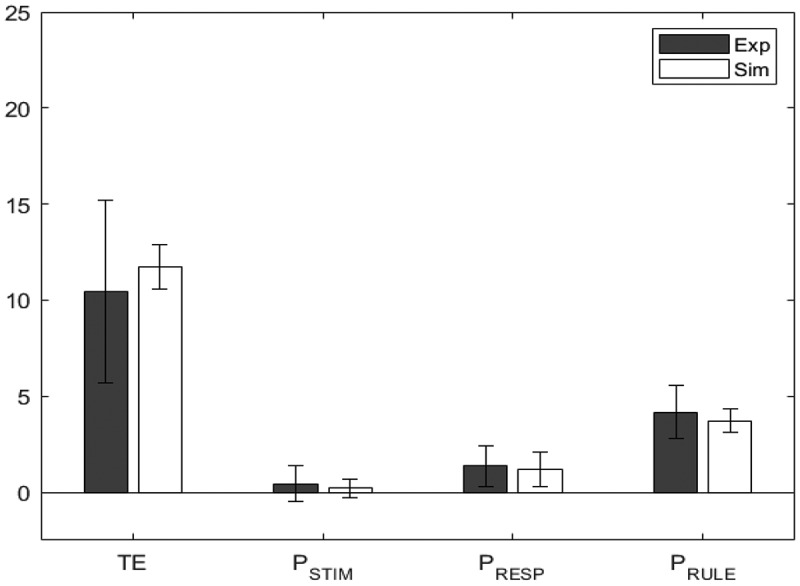

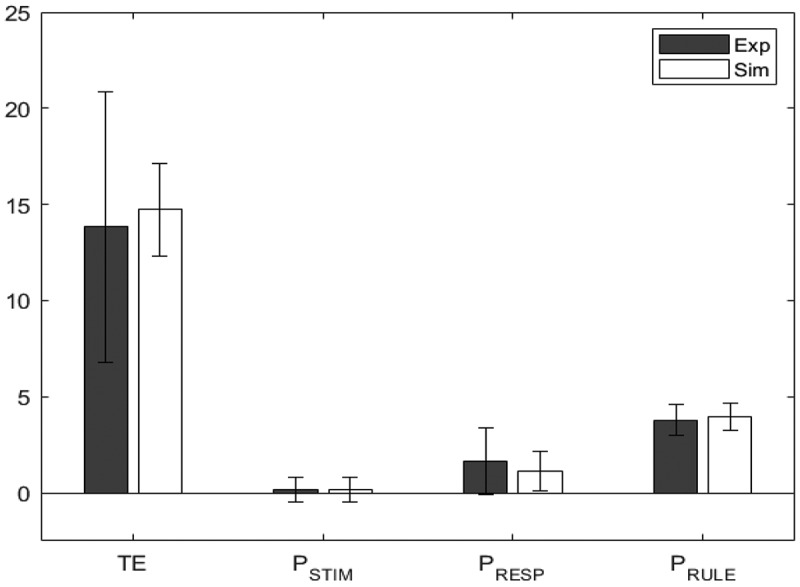

Results

Table 8 shows the minimized absolute values for the two groups. As before, a value closer to 0 indicates a better fit. Table 9 shows the resultant values of the parameters of interest for the two groups, while Figure 7 and Figure 8 show bar charts for the dependent measures for the experimental and simulated younger and older participants, respectively. As in the WCST, swapping the age groups worsened the overall fits producing much smaller than 1 for Total Errors (see Table 10). For this task (unlike in the case of WCST) and values were almost unchanged for the other variables. This is expected, given that TE is the only error whose value differed significantly across the two groups.

Table 8.

Z statistics for the model fit for the two age groups in the BRXT. A value closer to 0 indicates that experimental and simulated value have closer means, and therefore a better fit. The subscripts near the z value represent the Bayes Factor BF01. A value higher than 1 indicates greater support for the null hypothesis, which suggests a good model fit

| Younger | ||||

| Older |

Table 9.

Value of the parameters , , εstr, and εsma for the two age groups in the BRXT. Note that for the simulation of the Younger data, mr was fixed at zero and not a free parameter

| εstr | εsma | |||

|---|---|---|---|---|

| Younger | 0.162 | 0.000 | 0.621 | 0.512 |

| Older | 0.048 | 0.165 | 0.377 | 0.089 |

Figure 7.

Model fit for younger participants, comparing the experimental (Exp) values in blue/black and the simulated (Sim) values in orange/white, for the BRXT.

Figure 8.

Model fit for older participants, comparing the experimental (Exp) values in blue/black and the simulated (Sim) values in orange/white, for the BRXT.

Table 10.

Z and Bayes Factor BF01 as a subscript for model fits obtained by swapping young and old simulated data. The presence of one BFs below zeros suggest that the two models are not intercheangable and they capture the differences between the two groups

Paralleling the results reported for the WCST model Table 11 shows the number of cycles to selection of a sorting action for the model of the BRXT given the parameter settings determined for younger and older groups, and as a function of feedback on the previous trial. In contrast to the WCST simulations, the BRXT simulations reproduce both the effect of age (, ), with simulated older participant group taking more cycles to respond than the simulated younger participant group, and the effect of feedback type (, ), with responses following negative feedback taking longer than those following positive feedback. However, and in contrast to the empirical study, the interaction between the factors is significant (, ).

Table 11.

Mean (standard deviation) simulated response time (i.e., number of cycles to generate a response) for each simulated group for trials following positive feedback and trials following negative feedback. Data are based on 25 simulated runs with parameters set as in Table 9

| Positive | Negative | |

|---|---|---|

| Younger | 110.1 (1.3) | 112.8 (2.7) |

| Older | 111.2 (1.4) | 128.8 (21.4) |

Discussion

Whilst the younger group yields an excellent fit when memory for negative feedback () is held at 0 and the other three parameters are allowed to vary freely, this is not true for the older group, which in addition requires that memory for negative feedback ( be greater than 0. Recall that this parameter regulates the extent to which feedback from the previous trial persists to the next trail. Allowing its value to increase when simulating older participants yields an overall model fit that is commensurate with the one observed in the WCST (i.e., with small and ), and again, inverting the age groups in experimental and simulated data worsens the overall fit, albeit to a lesser extent than in the WCST.3 Older participants are simulated with a reduction in the striatal learning rate (εstr, from 0.621 to 0.337), which corresponds to a slower update of basal ganglia threshold, and a reduction in the sensorimotor schema learning rate (εsma, from 0.512 to 0.089), which corresponds to a sizable decrease in cognitive control. The mismatch reward sensitivity () is also lower for older individuals than younger ones, indicating that, as in the WCST, older participants appear to be more sensitive to negative feedback than their younger counterparts. Fitting the model to older adults also requires a higher value of the memory for negative feedback parameter () than the default of 0 for younger participants, suggesting that older participants weigh negative feedback from previous trials more than younger ones.

The simulated RT data may be understood as a prediction of the model based on the best fitting parameter values for the two groups. In this sense, the model fits correctly predict that older participants will take longer than younger participants across all trials, and that responses following negative feedback will be slower than responses following positive feedback for both groups. This is all consistent with the empirical effects, though the magnitude of the RT differences (in model processing cycles) is generally small (of the order of 1 to 2 cycles). It is unclear whether this apparent difference in effect size should be of concern, particularly when, as shown in subsequent simulations, a sizable volume of parameter space yields a good fit (within 1 standard error) to the error data, and when response time following both positive and negative feedback varies substantially in that volume.

Simulations 3 and 4: model sensitivity and compensatory mechanisms in WCST and BRXT

Introduction

Inspection of results during simulated annealing revealed that for both tasks there was often more than one set of parameter values yielding similar scores. In order to explore the sensitivity of the model’s behavior to the values of the key parameters we ran two additional series of simulations (one for each task) in which the key parameter values were systematically varied across their entire range. Firstly, we expected to find extended regions in parameter space resulting in good fits of the model to the data from each participant group, but a key question of interest was how large such regions might be. Clearly, the answer to this question depends on what is considered a “good fit” to the data. For simplicity, we assume here that a good fit is one where the score difference between the model and the target participant group is less than 1.00.

A second question addressed by the parameter sensitivity studies concerns the generality of the broad statements relating to the effects of aging and derived from simulations 1 and 2, namely that older participants are better simulated with decreased levels of the three key parameters – mismatch reward sensitivity (), striatal learning rate (εstr) and sensorimotor schema learning rate (εsma) – relative to younger participants.

Thirdly, we hypothesized the existence of compensatory processes within the model, whereby variation in one parameter might be approximately countered by a compensatory adjustment in another parameter. More specifically, we hypothesized that an increase in mismatch reward sensitivity () should be compensated by an increase in cognitive control, reflected in an increased striatal learning rate (εstr) and/or increased sensorimotor schema learning rate (εsma) in both tasks, and with comparable model fits.

Method

The model was run 25 times (to simulate 25 virtual participants) for each tasks at every point in the multi-dimensional parameter space defined by varying , εstr, and εsma from 0.0 to 1.0 in increments of 0.1. For the WCST simulations, this three-dimensional scan was performed twice, once with (memory for negative feedback) fixed at 0.0 and once with fixed at 0.1. For the BRXT simulations, the three-dimensional parameter space scan was performed four times, once each with at 0.0, 0.1, 0.2, and 0.3. For each point in parameter space, the norm of the -score of the dependent variables (the three error measures for WCST and the four error measures for BRXT) was calculated (i.e., the square root of the sum of squared standard error scores, as in the simulations 1 and 2) with respect to each participant group (i.e., for the data from the younger group and the older group) in order to determine the goodness of fit for each group.

Results: WCST

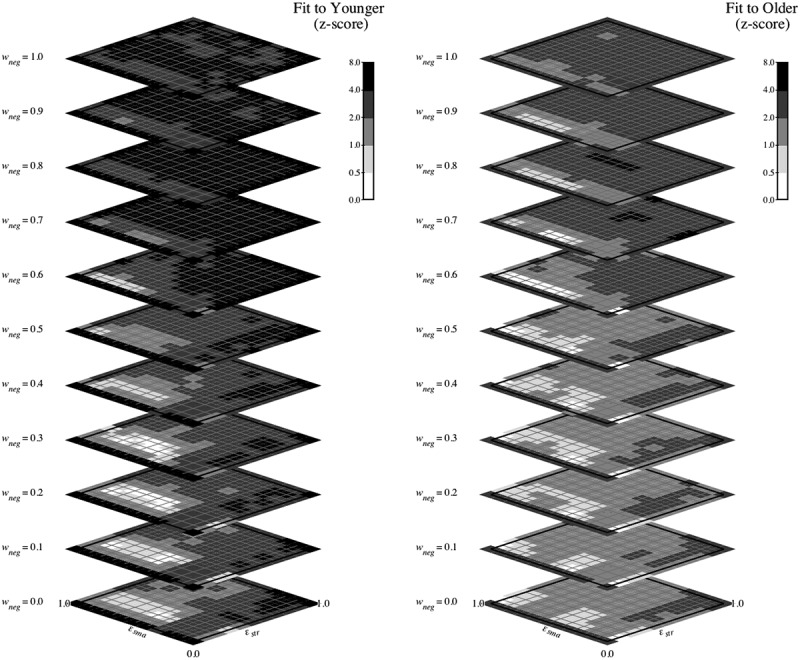

Figure 9 shows a heatmap representation of the -score fits of the model configured for the WCST task to the younger (left panel) and older (right panel) data when was 0.0. Each panel of the figure shows 11 planes/slices (one for each value of from 0.0 to 1.0 in increments of 0.1), with each plane/slice showing the fit (using logarithmic coding) at each value of εstr and εsma.

Figure 9.

Model fit for younger (left) and older (right) participants, across the 3-dimensional parameter space defined by wneg, ϵstr and ϵsma with mr = 0.0 for the WCST, based on 25 simulated participants at each of 113 points in parameter space. The greyscale shows the z-score fit as described in simulation 1, with red/white representing fits with a z-score of less than 0.5 and bluer/darker values representingprogressively poorer fits (i.e., z ≥ 0.5).

The figure shows that good model fits, corresponding to the lighter regions (light gray and white, for ), occur over a region of parameter space that extends beyond the fits obtained by simulated annealing in simulation 1. For the younger group, this region extends approximately from values of mismatch reward sensitivity () of to , striatal learning rate (εstr) of to , and sensorimotor schema learning rate (εsma) of to . For the older group, the region of best fit is less easily defined, but extends to higher values of mismatch reward sensitivity ( up to ), lower values of striatal learning rate (εstr down to ), and includes points with zero sensorimotor schema learning (εsma= 0.0). Inspection of the corresponding plots when memory for negative feedback () was revealed a) that there were fewer points of good fit of the model to the younger data, but b) that the fit to the older data was similar for without and with memory for negative feedback ( and , respectively).

Results: BRXT

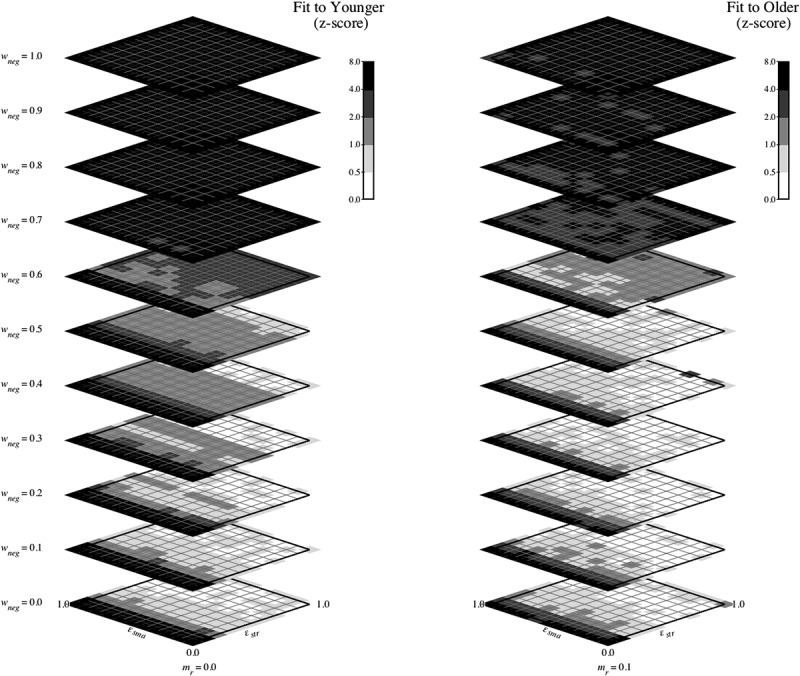

Figure 10 shows a heatmap representation of the -score fits of the model configured for the BRXT task to the younger (left panel) and older (right panel) data. As in Figure 9, each panel of the figure shows 11 planes/slices (one for each value of from 0.0 to 1.0 in increments of 0.1), with each plane/slice showing the fit (again using logarithmic coding) at each value of εstr and εsma. Note that the figure only shows data for a subset of the BRXT simulations, namely those with yielding the best fits. For the younger panel, . For the older panel, .

Figure 10.

Model fit for younger (left) and older (right) participants, across the 3-dimensional parameter space defined by wneg, ϵstr and ϵsma for the BRXT, based on 25 simulated participants at each of 113 points in parameter space and with mr = 0.0 for the younger / left panel and mr = 0.1 for the older / right panel. The greyscale shows the z-score fit as described in simulation 2, with white representing fits with a z-score of less than 0.5 and darker values representing progressively poorer fits (i.e., z ≥ 0.5).