Abstract

Artificial intelligence (AI) for the purpose of this review is an umbrella term for technologies emulating a nephropathologist’s ability to extract information on diagnosis, prognosis, and therapy responsiveness from native or transplant kidney biopsies. Although AI can be used to analyze a wide variety of biopsy-related data, this review focuses on whole slide images traditionally used in nephropathology. AI applications in nephropathology have recently become available through several advancing technologies, including (i) the widespread introduction of glass slide scanners, (ii) data servers in pathology departments worldwide, and (iii) greatly improved computer hardware that enables AI training. In this review, we explain how AI can enhance the reproducibility of nephropathology results for certain parameters in the context of precision medicine, using advanced architectures such as convolutional neural networks, which are currently the state of the art in machine learning software for this task. Because AI applications in nephropathology are still in their infancy, we illustrate the power and potential of AI applications mostly using the example of oncopathology. Moreover, we discuss the technological obstacles as well as current stakeholder and regulatory concerns about developing AI applications in nephropathology from the perspective of nephropathologists and the wider nephrology community. We expect a gradual introduction of these technologies into routine diagnostics and research for selected tasks, suggesting that this technology will enhance the performance of nephropathologists rather than make them redundant.

Keywords: artificial intelligence, computer, convolutional neural network, image recognition, nephropathology

Artificial intelligence (AI) has become an umbrella term for technologies emulating human “natural intelligence” or cognitive functions. In the context of nephropathology, we are interested in a machine’s ability to function as a nephropathologist by extracting clinically useful information from histological images of conventional and ancillary stains, transmission electron microscopy, and omics data. We can therefore quantify AI performance on the basis of its ability to inform about diagnosis, prognosis, and potential therapy responsiveness. This narrow definition excludes other AI-related research focusing on emotional or social intelligence, which, although certainly appreciated by a nephropathologist, is irrelevant to this review. We focus on AI for primary tissue analysis: although AI also plays a successful role in integrating information from downstream histopathological reports,1 our focus is on the precursor analysis of raw histological images, more recently in the form of whole slide images (WSIs), which are the cornerstone of diagnostic and experimental nephropathology.

Computers have been used in histopathology for decades, usually for repetitive and cumbersome tasks and to enhance reproducibility. Compared with modern image analysis, these first applications were simple and offered pixel-based planimetry solutions for the quantification of fibrosis (e.g., Sirius red stains2–4), capillary rarefaction,5 or immunohistological stains (e.g., C4d6,7) by using public domain software packages such as Fiji,8 ImageJ (both National Institutes of Health, Bethesda, MD),9 or commercially available software.

Since these early days, the transition of the histopathology work environment from desk microscopes to the “virtual” slides of WSIs, together with dramatic improvements in hardware and software, has enabled complex AI analyses of WSIs (~300–500 MB per slide for a kidney biopsy core scanned with a 40× objective) of both native and transplant biopsies, online and off-line.10 The application of AI, and of its subfield machine learning, in nephropathology is now within reach.

The case for machine learning in nephropathology and precision medicine

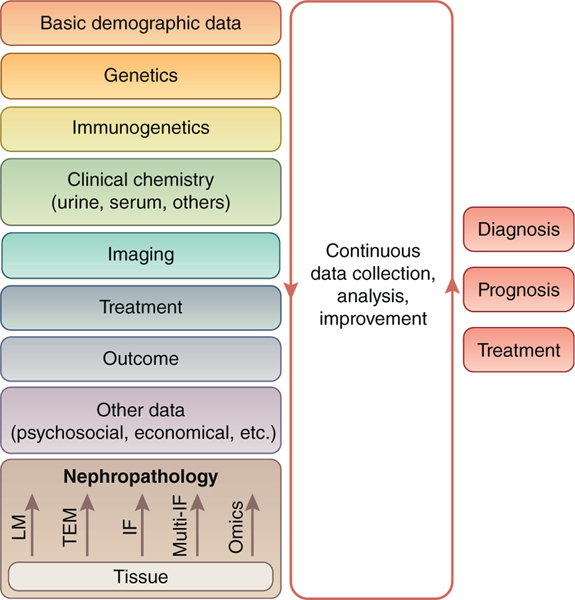

Precision medicine shows promise for major improvements in native11,12 and transplant nephrology.13 The foundation of precision medicine is the accumulation, integration, and analysis of massive, comprehensive, and precise data. As shown in Figure 1, a kidney biopsy WSI contains a wealth of information from conventional staining, single or multichannel immunohistological stains, and omics data. Over the past 100 years, nephropathology has become a powerful diagnostic tool integrating these data into a structured report, indispensable for the management of most native and transplant kidney diseases. However, even in a standardized report, many of these data are filtered out and are therefore not available for assessment in precision medicine. Successful examples of integrating nephropathology into big data–driven tools are the risk score for IgA glomerulonephritis14 using histopathological parameters from the Oxford Classification15–17 and the iBox risk score for transplant kidney survival (http://www.paristransplantgroup.org/; accessed July 3, 2019) using Banff Lesion Scores.18,19 Reproducibility of nephropathology parameters is crucial for both the development and the clinical application of these tools. In fact, reproducibility of the relevant histopathological parameters is good among expert nephropathologists for the Oxford Classification16 (Table 1).16,18,20–30

Figure 1 |. Nephropathology in the context of other disciplines contributing to big data in precision medicine.

Artificial intelligence can be used in all steps of tissue analysis and during the integration and analysis of data from other disciplines for the big data–based determination of diagnosis, prognosis, and treatment of an individual patient. IF, immunofluorescence; LM, light microscopy; Multi-IF, multichannel immunofluorescence; TEM, transmission electron microscopy.

Table 1 |. Interobserver variation in nephropathological scoring in native and transplant kidneys

| Disease | Parameter | Reproducibility | Reference |
|---|---|---|---|
| SLE | GN class | 0.19–0.60 | (20–25) |
| | GN activity index | 0.39–0.72 | (21–25) |
| | Endocapillary hypercellularity | 0.10–0.64 | (20, 21, 23, 24) |
| | Leukocyte infiltration | 0.28–0.32 | (20, 21, 23) |
| | Subendothelial hyaline deposits | 0.39–0.53 | (21, 23) |
| | Fibrinoid necrosis/karyorrhexis | 0.27–0.49 | (21, 23, 24) |
| | GN chronicity index | 0.38–0.69 | (21–24) |
| | Cellular crescents | 0.51–0.55 | (21, 23) |
| | Glomerulosclerosis | 0.37–0.40 | (21, 23) |
| | Fibrous crescents | 0.23–0.59 | (21, 23) |
| | Interstitial fibrosis | 0.20–0.43 | (21, 23, 24) |
| | Tubular atrophy | 0.08–0.51 | (21, 23, 24) |
| IgAN (Oxford Classification) | M for mesangioproliferation | Interexpert: ICC > 0.54; expert vs. nonexpert: κ = 0.28 | Interexpert (16); expert vs. nonexpert (26) |
| | E for endocapillary hypercellularity | Interexpert: ICC > 0.57; expert vs. nonexpert: κ = 0.19 | |
| | S for segmental glomerulosclerosis | Interexpert: ICC > 0.46; expert vs. nonexpert: κ = 0.51 | |
| | T for tubular atrophy and interstitial fibrosis | Interexpert: ICC > 0.78; expert vs. nonexpert: κ = 0.53 | |
| | C for active crescents | Interexpert: ICC > 0.64; expert vs. nonexpert: κ = 0.24 | |
| Transplant rejection (Banff Classification) | i for cortical tubulointerstitial infiltrates | κ = 0.320 | (27) |
| | t for tubulitis | Not re-examined since the redefinition in the 2017 Banff update (18) | |
| | v for endarteritis | κ = 0.327 | (27) |
| | g for glomerulitis | κ = 0.39 | (28) |
| | ptc for peritubular capillaritis | κ = 0.38 | |
| | ci for interstitial fibrosis | κ = 0.306 | (27) |
| | ct for tubular atrophy | κ = 0.314 | |
| | cv for arterial intimal fibrosis | κ = 0.360 | |
| | cg for glomerular basement membrane splitting | κ = 0.48 | (28) |

GN, glomerulonephritis; ICC, intraclass correlation coefficient; IgAN, IgA nephropathy; SLE, systemic lupus erythematosus.

Although nephropathology is and will remain the criterion standard for diagnosing most native and transplant kidney diseases, certain histopathological parameters required for prognostication and therapy planning have unsatisfactory reproducibility, particularly among nonexperts. These shortcomings could be addressed by fully transparent artificial intelligence solutions in the hands of nephropathologists. By convention, ICC < 0.40 indicates poor interrater reliability, ICC = 0.40–0.59 moderate, ICC = 0.60–0.79 substantial, and ICC > 0.80 outstanding.29,30
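The κ values in Table 1 measure agreement beyond chance. As a minimal sketch, assuming 2 raters who score the same biopsies on a nominal scale, Cohen’s κ and the verbal scale quoted above could be computed as follows. The function names and the example scores are hypothetical, applying the ICC scale to κ follows the usage in this review, and studies with more than 2 raters typically use other statistics (e.g., Fleiss’ κ or the ICC).

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters scoring the same cases on a nominal scale."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same non-empty set of cases")
    n = len(rater_a)
    # Observed agreement: fraction of cases with identical scores.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance from each rater's marginal frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / n**2
    if expected == 1.0:
        # Both raters used one identical category for every case; kappa is
        # undefined here, so we report perfect agreement by convention.
        return 1.0
    return (observed - expected) / (1 - expected)


def reliability_label(value):
    """Verbal scale quoted in the text (< 0.40 poor ... > 0.80 outstanding).

    Assumption: the quoted scale leaves 0.80 itself open; we count it as outstanding.
    """
    if value < 0.40:
        return "poor"
    if value < 0.60:
        return "moderate"
    if value < 0.80:
        return "substantial"
    return "outstanding"


# Hypothetical Oxford M scores (0/1) from two pathologists for 10 biopsies.
pathologist_1 = [1, 1, 0, 0, 1, 0, 1, 1, 0, 0]
pathologist_2 = [1, 0, 0, 0, 1, 0, 1, 1, 1, 0]
kappa = cohens_kappa(pathologist_1, pathologist_2)
print(round(kappa, 2), reliability_label(kappa))  # 0.6 substantial
```

Here the raters agree on 8 of 10 biopsies (80%), but because 50% agreement is expected by chance from their marginal frequencies, κ is only 0.6.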

This illustrates the value and potential of traditional light microscopy in the hands of the experts who developed the scores.26 However, nonexperts struggle to apply the Oxford Classification with the same accuracy.26 Matters become even more complicated for lupus nephritis (LN), the leading cause of morbidity and mortality in patients with systemic lupus erythematosus.31 Nephropathology is still the criterion standard for diagnosing LN and the best predictor of end-stage kidney disease.32–34 Nephropathologists are expected to assign a class from I to VI for lupus glomerulonephritis on the basis of light microscopy of a battery of stains, immunohistology, and transmission electron microscopy, according to the recently revised International Society of Nephrology/Renal Pathology Society classification, including activity and chronicity indices.35 These 3 parameters (class, activity index, and chronicity index) inform prognosis and treatment decisions.36–38 Because LN has a much wider histopathological spectrum than IgA nephropathy, it is not surprising that the reproducibility of these key parameters is suboptimal. The interpathologist reproducibility for class I to VI ranges from 0.19 to 0.60, with a median of 0.33 (Table 1), qualifying as poor to moderate.29,30 Likewise, the interpathologist reproducibility for the activity and chronicity indices ranges from ~0.4 to 0.7.20–22,24,25 The situation is similar in transplant nephropathology. Here, the relevant parameters are defined in the predominant39 Banff Classification,18,19 and they are considered in the iBox prognostic tool.40 The reproducibility of the Banff Lesion Scores g, ptc, i, t, cg, ci, and ct relevant for the iBox ranges from 0.306 to 0.48 (Table 1).27,28
Even the reproducibility of the prognostically most important diagnosis, antibody-mediated rejection (category 2 of the Banff Classification), was considered unsatisfactory by highly trained nephropathologists from eminent centers.28 These examples from native and transplant nephropathology illustrate the urgent need to improve reproducibility, so that existing and future tools, which are expected to be extremely useful for diagnostic classification, prognostication, and prediction of therapy responsiveness in precision medicine, can be supplied with high-quality histopathological data beyond the standardized report. Although educational efforts in nephropathology might help in the interim, AI-based technological solutions in the hands of nephropathologists could provide a long-term solution. Given identical input, a trained algorithm delivers perfectly reproducible results, fit for precision medicine. In turn, nephropathology will continuously improve through the analysis of increasingly comprehensive databases containing laboratory, serological, imaging, genetic, treatment, and follow-up data. These tools can be tuned to reflect the progress of precision medicine, harnessing it for the advancement of nephropathology (Figure 1).

In addition to extracting information from routine WSIs, AI applications can be useful for other tasks. For example, AI and machine learning may be better suited than human observers for the precise and exhaustive extraction of multidimensional information from multichannel immunofluorescence images, or for the integration of omics data (mRNA, microRNA, protein, and others) from biopsy tissue.

Machine learning in image analysis and histopathology

Many nephropathological features or descriptors integrate subtle variations in local color and texture that are difficult to quantify directly. Attempts have been made to create robust features using texture filters,41 gray-level co-occurrence matrices,42 speeded-up robust features,43 and many other approaches. However, these handcrafted features are context independent, that is, not tailored to a specific task, and may therefore be suboptimal for any given classification problem.

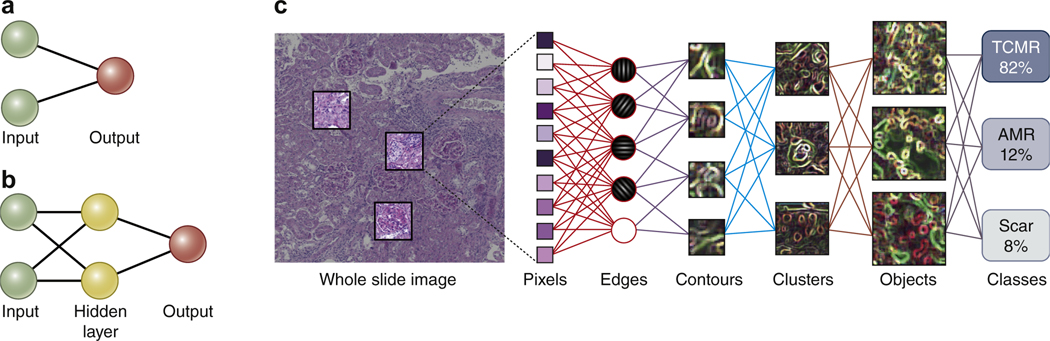

Machine learning, a subfield of AI, gives computers the ability to learn without being explicitly programmed, by adjusting themselves in response to the data they are exposed to. Most current machine learning approaches in image analysis are based on artificial neural networks (ANNs), which are inspired by, but not identical to, the biological neural networks of the brain. ANNs learn from large data sets by automatically extracting features that they find useful for a particular task or classifier (Figure 2). During training, an ANN identifies the features relevant for the classification task. Training can be supervised (e.g., replicating a manual annotation of podocyte nucleus vs. endothelial nucleus in a glomerular tuft), semi-supervised (e.g., WSI with rejection vs. WSI without rejection), or unsupervised. At present, the decisive features that an ANN acquires during training cannot be extracted in a human-interpretable form and thus remain a “black box.” This shortcoming may explain the rare but sometimes blatant classification errors by ANNs that seem obvious to a human observer. However, the image regions decisive for a classification in a given image can be visualized (see Figure 3) with software such as Grad-CAM44 or LIME,45 providing the transparency necessary for building trust, which is essential particularly for clinical applications.
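To make “adjusting themselves in response to the data” concrete, the following minimal sketch trains the simplest ANN, a single perceptron (compare Figure 2a), on supervised labels using the classic perceptron learning rule. The 2 input features per sample and their values are hypothetical stand-ins for image-derived descriptors; a CNN differs in that it learns the features themselves from the pixels.

```python
def train_perceptron(samples, labels, epochs=20, learning_rate=0.1):
    """Supervised training of a single perceptron (labels must be 0 or 1)."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        errors = 0
        for x, y in zip(samples, labels):
            prediction = predict(weights, bias, x)
            # Perceptron rule: shift the decision boundary only after a mistake.
            update = learning_rate * (y - prediction)
            if update != 0:
                errors += 1
                weights = [w + update * xi for w, xi in zip(weights, x)]
                bias += update
        if errors == 0:  # every training sample is classified correctly
            break
    return weights, bias


def predict(weights, bias, x):
    """Binary output: 1 if the weighted sum plus bias exceeds zero, else 0."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


# Hypothetical two-feature descriptors (scaled to 0..1) with labels 0 or 1.
samples = [(0.2, 0.1), (0.3, 0.2), (0.8, 0.9), (0.9, 0.7)]
labels = [0, 0, 1, 1]
weights, bias = train_perceptron(samples, labels)
print([predict(weights, bias, x) for x in samples])  # [0, 0, 1, 1]
```

A single perceptron can only separate classes with a straight line (a hyperplane), which is why the hidden layers of later architectures were needed for complex image data.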

Figure 2 |. Development of machine learning algorithms and their architecture and functionality.

(a) The first neural networks used in image analysis were simple perceptrons with a single input layer connected to a binary output. (b) Radial basis function networks represent an intermediate stage with a hidden layer between the input and the output. (c) The current state of the art in the analysis of whole slide images is the convolutional neural network (CNN), which links the pixel input to the classification output via several interconnected hidden layers, as shown in this fictional example for the classification of the severity of T cell–mediated rejection (TCMR), antibody-mediated rejection (AMR), and scar in a rodent kidney allograft. Note that the hidden layers in such CNNs currently remain a black box. However, the transparency mandatory for building trust can be achieved with additional software, as shown in Figure 3. To optimize viewing of this image, please see the online version of this article at www.kidney-international.org.

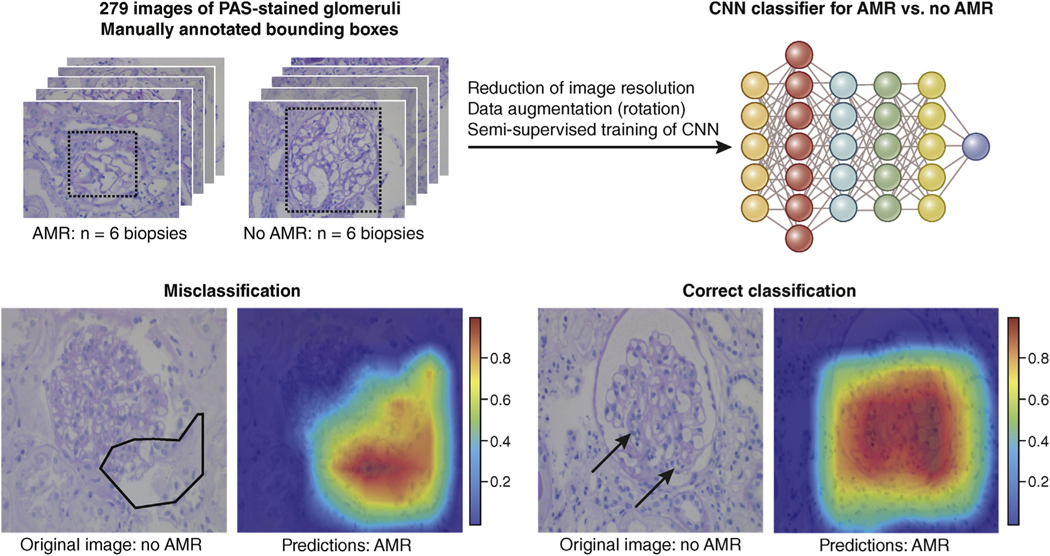

Figure 3 |. Example of a preliminary convolutional neural network (CNN) solution for a classification task in transplant nephropathology.

The CNN (schematically depicted in the top right) was trained on 279 images of glomerular transections on periodic acid–Schiff (PAS)–stained slides from 6 transplant biopsies with antibody-mediated rejection (AMR) and 6 biopsies without AMR (no AMR). Data augmentation on this relatively small sample was achieved with rotation. With this semi-supervised training on the augmented data set, the classification accuracy for individual glomeruli reached 91.3%. Interestingly, data augmentation through cropping resulted in lower accuracy. Grad-CAM was used to superimpose a heat map highlighting the areas decisive for classification. A trained human nephropathologist would look for the features of glomerulitis, glomerular basement membrane splitting, and perhaps also secondary focal and segmental glomerulosclerosis for this classification task. The misclassification example shows the advantage of the superimposed heat maps: the decisive areas (superimposed as a polygon on the PAS image) lie largely outside the glomerular tuft, alerting the pathologist to a possible misclassification. In contrast, the heat map in the example with the correct classification highlights tuft segments with glomerulitis (arrows) indicative of AMR. To optimize viewing of this image, please see the online version of this article at www.kidney-international.org.
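Data augmentation by rotation, as used for the CNN in Figure 3, enlarges a small training set by adding rotated copies of each image with an unchanged label. The sketch below is an assumption about how such augmentation can be done, not the pipeline behind Figure 3: it restricts itself to lossless 90° rotations of an image stored as a list of pixel rows (arbitrary angles would require interpolation), and the function names are hypothetical.

```python
def rotate90(image):
    """Rotate an image (list of pixel rows) by 90 degrees clockwise, without loss."""
    return [list(row) for row in zip(*image[::-1])]


def augment_with_rotations(images, labels):
    """Return each image in 4 orientations (0, 90, 180, 270 degrees) with its label."""
    augmented = []
    for image, label in zip(images, labels):
        current = image
        for _ in range(4):
            augmented.append((current, label))
            current = rotate90(current)
    return augmented


# A tiny 2 x 2 "image"; pixels could equally be (R, G, B) tuples.
glomerulus = [[1, 2],
              [3, 4]]
pairs = augment_with_rotations([glomerulus], ["AMR"])
print(len(pairs), pairs[1][0])  # 4 [[3, 1], [4, 2]]
```

Because a glomerular transection has no preferred orientation on the slide, rotated copies are plausible new examples, whereas cropping can remove the very lesions that define the label.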

State-of-the-art machine learning approaches focus on supervised methods, that is, methods that are dependent on training with particularly large data sets labeled by nephropathologists. When trained properly and with reliable data, these methods yield high accuracy for the respective classification. However, the annotation of large numbers of WSIs often requires significant time and effort by nephropathologists. A solution to this problem is offered by semi-supervised or unsupervised methods, with the latter not requiring any labeling at all. Although it is more challenging and less reliable to train networks without supervision, the reduced costs and time required for training might in some instances outweigh the reduced accuracy.

The output of a convolutional neural network (CNN) is typically a likelihood and thus a continuous parameter. Although nephropathology reports typically consist of discrete parameters such as diagnosis, grade, and stage, we do not think this discrepancy will be problematic. Nephropathologists are used to constantly filtering visual input into output parameters, such as nodes on the diagnostic decision tree and nominal or ordinal histopathological classifiers. This is done in full knowledge of the subjectivity of these decisions and the inevitable loss of information. Continuous AI output can likewise be simplified, alone or in combination, into nominal scales (e.g., diagnostic classes) or ordinal scales (e.g., activity or chronicity). This categorization should be informed by meaningful big data analysis, taking into account the usual statistical performance parameters.
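As a minimal sketch of such a categorization, assuming cutoffs that have already been derived from outcome data, a continuous CNN output could be mapped onto an ordinal grade as follows. The cutoff values are hypothetical placeholders, not validated thresholds.

```python
from bisect import bisect_right


def to_ordinal_grade(probability, cutoffs):
    """Map a continuous CNN output in [0, 1] to an ordinal grade 0..len(cutoffs).

    A value equal to a cutoff is assigned to the higher grade.
    """
    if not 0.0 <= probability <= 1.0:
        raise ValueError("expected a probability in [0, 1]")
    if list(cutoffs) != sorted(cutoffs):
        raise ValueError("cutoffs must be in ascending order")
    # bisect_right counts how many cutoffs are <= probability.
    return bisect_right(cutoffs, probability)


HYPOTHETICAL_CUTOFFS = [0.25, 0.50, 0.75]  # placeholders; yields grades 0, 1, 2, 3
print([to_ordinal_grade(p, HYPOTHETICAL_CUTOFFS) for p in (0.10, 0.25, 0.60, 0.90)])
# [0, 1, 2, 3]
```

Keeping the continuous output alongside the grade preserves the information that the categorization discards.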

Because digital pathology is still a young and developing field, it has a number of limitations. Of particular concern is the lack of a common acquisition protocol for histological images, which results in wide inter- and intralaboratory variability in slide appearance owing to, for example, section thickness, stains from different manufacturers, and scan quality.

Despite these limitations, digital pathology and the large-scale automation of tasks promise huge benefits and will likely transform diagnostic and experimental nephropathology in the coming years. Pathomics, a recently introduced concept,46 encompasses this idea of extracting subvisual patterns using feature analysis methods, with the aim of characterizing morphological and behavioral patterns specific to different pathologies.

Machine learning architectures

A wide range of ANN architectures is available to automate classification tasks on the basis of a set of input features. These features can be quantitative measurements, such as glomerular number and diameter, the presence and density of infiltrates, or the strength of various immunohistochemical signals. They can also derive from segmentation tasks, such as identifying glomeruli, arteries, cortex, and medulla. Commercial applications are regularly used to perform such classifications on the basis of quantitative features.47

The current state of the art in feature extraction is the CNN, a subtype of ANN.48 Note that ANN is an umbrella term for these artificial networks with multiple layers, whereas CNN refers to a special ANN architecture defined by convolutional operations that increase efficiency in image analysis. A CNN is a deep learning approach that jointly optimizes a set of feature extraction filters and the associated classification problem. Figure 2 shows the architecture of a CNN in comparison with its much simpler predecessors. A CNN produces a bank of feature extraction filters that are ideally optimized to provide feature values tailored to the nephropathological problem at hand. CNN architectures are still an active area of research and have shown state-of-the-art performance in computer vision tasks.
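The convolutional operation itself is simple. The sketch below applies one 3 × 3 filter to a small grayscale image in “valid” mode (no padding) and then a rectified linear unit (ReLU); as in most deep learning libraries, the filter is not flipped, so this is technically a cross-correlation. The filter values are set by hand to respond to a vertical dark-to-bright edge. In a CNN, they would instead be learned during training, and many such filters would be stacked in successive layers.

```python
def conv2d_valid(image, kernel):
    """Slide kernel over image (lists of rows) without padding and without flipping."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(feature_map):
    """Element-wise rectified linear unit: negative responses become 0."""
    return [[max(0, v) for v in row] for row in feature_map]


# 3 x 5 image: dark left part (0), bright right part (1).
image = [[0, 0, 0, 1, 1],
         [0, 0, 0, 1, 1],
         [0, 0, 0, 1, 1]]
vertical_edge = [[-1, 0, 1],
                 [-1, 0, 1],
                 [-1, 0, 1]]
print(relu(conv2d_valid(image, vertical_edge)))  # [[0, 3, 3]]
```

The resulting feature map is high only where the filter’s pattern occurs, which is what a learned filter bank exploits for glomeruli, nuclei, or tubular outlines.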

CNNs are the most widely used ANNs for the analysis of WSIs. This section discusses popular deep learning architectures that can reliably perform these tasks.

For the detection of important image structures, Faster R-CNN49 is a popular architecture. It consists of 2 networks: (i) a region proposal network that finds potential regions (each represented by a rectangular bounding box) containing the structures of interest and (ii) a refinement network that classifies whether important structures are present in these proposed regions. Another popular choice is the YOLO (an acronym for “you only look once”) detector,50 which divides the input image into a grid of regions, passes the whole image once through a CNN, and predicts class probabilities and bounding boxes for each grid region. In contrast to Faster R-CNN, which is a 2-stage detector, YOLO is a 1-stage detector.

Two-stage detectors commonly achieve higher detection accuracy, whereas 1-stage detectors are significantly more computationally efficient. For an overview, we refer the reader to a comprehensive review of recent object detection algorithms.51 Training these detectors requires data sets consisting of images (or WSIs) and rectangular bounding boxes around the important structures within the images (see Figure 3).
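Both 2-stage and 1-stage detectors typically output several overlapping candidate boxes for the same structure, which are commonly reduced with greedy non-maximum suppression (NMS) based on intersection over union (IoU). The sketch below illustrates this generic post-processing step; it is not taken from the Faster R-CNN or YOLO code bases, and the box coordinates and scores are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = min(box_a[2], box_b[2]) - max(box_a[0], box_b[0])
    inter_h = min(box_a[3], box_b[3]) - max(box_a[1], box_b[1])
    intersection = max(0, inter_w) * max(0, inter_h)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the best-scoring box, drop boxes overlapping it, repeat."""
    remaining = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while remaining:
        best = remaining.pop(0)
        keep.append(best)
        remaining = [i for i in remaining if iou(boxes[best], boxes[i]) < iou_threshold]
    return keep


# Hypothetical glomerulus candidates: two near-duplicates and one separate box.
boxes = [(10, 10, 60, 60), (12, 12, 62, 62), (100, 100, 150, 150)]
scores = [0.9, 0.8, 0.7]
print(non_max_suppression(boxes, scores))  # [0, 2]
```

The IoU threshold trades off merging duplicates against suppressing genuinely adjacent structures, such as neighboring glomeruli.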

In addition to detecting structures of interest, recent networks also perform semantic segmentation to separate the detected structures from the background. Specifically, another network predicts pixel-wise labels (background or foreground). Common choices of semantic segmentation networks include fully convolutional networks,52 U-Net,53 and V-Net.54 Training semantic segmentation networks requires a data set consisting of images and pixel-wise labels (also known as label masks). Recent architectures such as Mask R-CNN55 and Mask Scoring R-CNN jointly train object detection and semantic segmentation (pixel-based labeling of, e.g., podocyte nucleus vs. cytoplasm). This setting is commonly known as instance segmentation. Training instance segmentation algorithms requires input images (typically WSIs), bounding boxes, and pixel-wise labels.
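Segmentation output is usually compared with the pixel-wise label masks used for training. A minimal sketch, assuming binary masks stored as lists of rows, computes the Dice coefficient, one common overlap measure (IoU is another); the masks are hypothetical.

```python
def dice_coefficient(pred_mask, true_mask):
    """Dice overlap 2|A and B| / (|A| + |B|) between two binary masks (lists of rows)."""
    pred = [bool(v) for row in pred_mask for v in row]
    truth = [bool(v) for row in true_mask for v in row]
    if len(pred) != len(truth):
        raise ValueError("masks must contain the same number of pixels")
    intersection = sum(p and t for p, t in zip(pred, truth))
    total = sum(pred) + sum(truth)
    # Two empty masks agree perfectly.
    return 1.0 if total == 0 else 2 * intersection / total


# Hypothetical label mask and network prediction for a small tissue patch.
label_mask = [[0, 1, 1],
              [0, 1, 1],
              [0, 0, 0]]
predicted_mask = [[0, 1, 1],
                  [0, 1, 0],
                  [0, 0, 0]]
print(round(dice_coefficient(predicted_mask, label_mask), 3))  # 0.857
```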

After detection and semantic segmentation, specific regions can be cropped from the images and categorized into different disease types (e.g., IgA nephropathy vs. membranous nephropathy) or histopathological features (e.g., tubulitis and endarteritis). State-of-the-art architectures for this image classification task include ResNet,56 DenseNet,57 and Capsule Networks.58,59 Recent work has shown that high-performing architectures can be discovered through automatic hyperparameter optimization techniques. Hyperparameters are settings that steer the training algorithm rather than being learned from the data. Examples of such automatically discovered effective networks include AmoebaNet,60 which achieves top performance in image classification, and MobileNet,61 which is optimized for speed. Of course, the classification task can also be trained in parallel with detection and semantic segmentation.
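The simplest automatic hyperparameter optimization strategy is random search, sketched below; the architecture searches behind networks such as AmoebaNet use far more elaborate (evolutionary) methods. The objective function is a hypothetical stand-in for the expensive step of training a network and measuring its validation accuracy, and the search space is illustrative.

```python
import math
import random


def random_search(objective, search_space, n_trials=20, seed=0):
    """Evaluate randomly sampled hyperparameter settings and keep the best one."""
    rng = random.Random(seed)
    best_params, best_score = None, float("-inf")
    for _ in range(n_trials):
        params = {name: rng.choice(values) for name, values in search_space.items()}
        score = objective(params)  # in practice: train a network, return validation accuracy
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_validation_score(params):
    """Hypothetical stand-in for training + validation, peaking at lr=1e-3, depth=50."""
    return -abs(math.log10(params["learning_rate"]) + 3) - abs(params["depth"] - 50) / 100


search_space = {"learning_rate": [1e-1, 1e-2, 1e-3, 1e-4], "depth": [18, 34, 50, 101]}
best_params, best_score = random_search(toy_validation_score, search_space)
print(best_params, round(best_score, 3))
```

Because each real evaluation means training a network, the number of trials, not the search logic, dominates the cost.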

In our experience, classifying disease types from entire WSIs without first detecting important image structures is ineffective because of the high dimensionality of the images and the typically small number of training examples (compared with the hundreds of thousands or millions of images typically used to train deep networks).
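A common way around the size of WSIs is to process them as a grid of smaller, partially overlapping tiles, on which detection, segmentation, or classification is then run. The sketch below only computes the tile positions; the 512-pixel tile size, the 64-pixel overlap, and the image dimensions are hypothetical choices, and reading the pixels themselves would require a WSI library.

```python
def tile_starts(length, tile, stride):
    """Start offsets along one axis so that tiles of size `tile` cover [0, length)."""
    if length <= tile:
        return [0]  # a single (possibly padded) tile covers the whole axis
    starts = list(range(0, length - tile + 1, stride))
    if starts[-1] + tile < length:
        starts.append(length - tile)  # extra tile flush with the far edge
    return starts


def tile_grid(width, height, tile=512, overlap=64):
    """Top-left (x, y) corners of overlapping tiles covering a width x height image."""
    if not 0 <= overlap < tile:
        raise ValueError("overlap must satisfy 0 <= overlap < tile")
    stride = tile - overlap
    return [(x, y)
            for y in tile_starts(height, tile, stride)
            for x in tile_starts(width, tile, stride)]


print(tile_grid(1200, 512))  # [(0, 0), (448, 0), (688, 0)]
```

The overlap ensures that structures cut at one tile border appear whole in a neighboring tile; duplicate detections can then be merged afterward.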

Prerequisites for machine learning in pathology

The prerequisite for any machine learning approach in nephropathology is the availability of digital images or WSIs. The capability of generating WSIs in a high-throughput manner has stimulated the emerging field of digital pathology and the rapid development of CNN approaches that we are currently experiencing.

Training a CNN for image classification typically requires a modern multicore computer with capable graphics processing units (GPUs), such as the Tesla V100, Titan V, Titan X, or GTX 1080 (NVIDIA, Santa Clara, CA). GPUs substantially speed up training through massively parallel computation, which can make training up to several orders of magnitude faster than on traditional central processing units. In a clinical or research setting, such equipment will render classifications for a WSI with acceptable computational delays; they could be computed, for example, during the lag time between the finalization of the WSI and its review by a nephropathologist.

Machine learning in neoplastic cytopathology and histopathology

Most research effort in histopathology is devoted to neoplasia. Consequently, machine learning applications are most advanced in tumor pathology. In this section, we give a short overview of the achievements in this field, illustrating the potential of AI in histopathology in general and its promise for nephropathology.

The first applications of machine learning were in cytopathology. The analysis of solitary cells or tissue fragments instead of whole slides was well suited to the less powerful computing methods of the past. Methods reducing the inherent subjectivity of cytopathological examination by introducing automated objective measurements have been developed since the 1950s and have recently included ANNs (reviewed in Pouliakis et al.62). In histopathology, CNN applications on WSIs have been developed for the detection of lymph node metastases. Under the time constraints common in routine diagnostics, CNNs performed better than histopathologists, whereas without time constraints the performance was similar.62 A symbol-based machine learning approach performed well in classifying micrographs of follicular lymphomas.63 Similarly, CNN-based approaches have shown promise in the recognition of breast carcinoma,64 adenocarcinoma infiltrates in prostate biopsies, breast cancer metastasis in sentinel lymph nodes,65 and non–small cell lung carcinoma on WSIs.66 Because of the recent success of immunotherapy in oncology, researchers have also developed deep learning models to label tumor-infiltrating mononuclear cells on hematoxylin and eosin (HE)–stained sections. For this, the training set consisted of virtual slides of testicular tumors in which a researcher manually marked mononuclear cells under the supervision of a pathologist. The algorithm was trained using 812 marked mononuclear cells and counted these mononuclear infiltrating cells in the test set with an accuracy of 0.89 compared with the pathologist’s assessment.67 Similar deep learning approaches appear suitable for assessing mononuclear infiltrates when diagnosing T cell–mediated rejection and the microvascular inflammation relevant for antibody-mediated rejection.18 In native kidney biopsies, the scoring of glomerulocapillary leukocytes, as in the Oxford Classification’s E parameter, would benefit from such tools.
This E parameter in particular, although prognostically important in the hands of reference pathologists,15 has shown poor reproducibility when scored by less experienced pathologists.26 Immunohistochemical staining for the macrophage marker CD68 has been reported to be helpful, providing better reproducibility for surrogate markers of E.68 Still, a deep learning algorithm trained on expert-annotated data that provides E scores with perfect reproducibility, without the need for additional immunostains, would be highly desirable. The same argument could be made for the activity parameters endocapillary hypercellularity and interstitial inflammation in the revised National Institutes of Health activity grading of LN and, of course, also for the other parameters that are not based on leukocyte margination or infiltration.35
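Once a network has produced a per-pixel mask of mononuclear cells, counting can be as simple as counting connected foreground regions. The sketch below assumes that such a binary mask is already available (the mask shown is hypothetical) and is not the method of the cited study. Touching cells would merge into a single region, which is one reason why instance segmentation is preferred in practice.

```python
from collections import deque


def count_objects(mask):
    """Count 4-connected foreground regions in a binary mask (lists of rows of 0/1)."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] and not seen[r][c]:
                count += 1  # a new, not yet visited region starts here
                queue = deque([(r, c)])
                seen[r][c] = True
                while queue:  # breadth-first flood fill of this region
                    y, x = queue.popleft()
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
    return count


# Hypothetical cell mask with three separate cells.
cell_mask = [[1, 1, 0, 0, 0],
             [1, 0, 0, 1, 1],
             [0, 0, 0, 1, 0],
             [0, 1, 0, 0, 0]]
print(count_objects(cell_mask))  # 3
```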

Even underlying biological mechanisms can be unraveled with machine learning models. A CNN was able to classify tumors according to their underlying biology, such as microsatellite instability in gastrointestinal carcinomas, on the basis of their appearance on HE-stained WSIs.69 Usually, this would require ancillary staining or molecular pathology tests. Interestingly, although trained on 1 type of cancer, the CNN was also able to predict the molecular alterations in other types of cancer. In a similar approach, deep learning was used to predict virus-induced carcinogenesis with reasonable performance.70 In another study, an attempt to visualize how the neural network perceives the typical features of virus- versus non–virus-induced cancer showed clearly distinct forms, which human pathologists were not able to translate into reproducible features. Similarly, CNNs have shown good performance when classifying breast cancer into luminal A and B subtypes, which are related to distinct molecular subtypes.71 Moreover, estrogen receptor positivity, a critical marker for therapy response, could be predicted with 75% certainty on the basis of HE morphology.72 These striking examples illustrate 4 points. First, pathology and pathogenesis reveal themselves in histomorphology. Second, traditionally derived histopathological parameters can be reproducibly classified by machine learning models. Third, machine learning models can outperform histopathologists in some of these highly specialized tasks. Fourth, in the hands of pathologists, these machine learning models can complement, simplify, and enhance traditional histopathological assessment.

Machine learning in nephropathology

In comparison with oncopathology, the development of machine learning applications in nontumor pathology is still in its infancy. Published data on machine learning analysis in non-neoplastic histopathology are relatively rare and devoted to finding solutions to common and sometimes cumbersome tasks, such as detecting Helicobacter pylori in gastric biopsies.73

Machine learning and ANNs have been used in nephrology for many years, for example, to personalize anemia management in end-stage kidney disease,74 improve estimation of the glomerular filtration rate,75 refine tacrolimus dosing in kidney transplantation,76 and facilitate the early diagnosis of acute kidney injury77 or chronic kidney disease.78

Applications in nephropathology have been published for the automated recognition (segmentation) of open or sclerosed glomerular transections in tissue sections of rodent and human kidneys, both after paraffin embedding and on frozen sections.79–83 A recent publication has shown that ANNs are capable not only of glomerular segmentation but also of classifying diabetic nephropathy with sufficient precision.84 Similar pipelines have been created that enable nephropathologists to rapidly train a CNN for tasks such as the segmentation of glomeruli, glomerular cell types, and cortical tubular atrophy and interstitial fibrosis on mouse and human biopsy tissue.85 Similarly, 2 groups have been able to train a CNN for the segmentation of all relevant tissue compartments of transplant kidney biopsies86 and for the differentiation of glomerular components in diabetic nephropathy.84 Recently, CNNs have shown better performance than pathologists in fibrosis staging of native kidney biopsies.87 Figure 3 shows preliminary results from training a CNN to distinguish between images of glomeruli from transplant biopsies with and without antibody-mediated rejection. The accuracy of 91.3% (vs. 50% for a coin flip in this binary classification) is already remarkably good, considering that only a fraction of the glomeruli show lesions that a human nephropathologist would consider indicative of antibody-mediated rejection, and additional training data are expected to improve it further. It will be interesting for nephropathologists to review the decisive image hotspots from this semi-supervised training and compare them with traditional diagnostic features.
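Accuracy is only 1 of the usual performance parameters; with unbalanced classes, sensitivity and specificity are more informative. A minimal sketch for a binary glomerulus-level task (1 = AMR, 0 = no AMR) follows, using hypothetical labels rather than the data behind Figure 3.

```python
def binary_metrics(y_true, y_pred):
    """Accuracy, sensitivity, and specificity for labels 1 (AMR) and 0 (no AMR)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        # Sensitivity: share of true AMR glomeruli that were detected.
        "sensitivity": tp / (tp + fn) if tp + fn else float("nan"),
        # Specificity: share of no-AMR glomeruli correctly classified as such.
        "specificity": tn / (tn + fp) if tn + fp else float("nan"),
    }


# Hypothetical reference labels and CNN predictions for 8 glomeruli.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0]
print(binary_metrics(y_true, y_pred))
# {'accuracy': 0.875, 'sensitivity': 0.75, 'specificity': 1.0}
```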

Obstacles: integration, standardization, data availability, and annotation

Diagnostic nephropathology has a unique position in anatomic pathology, because it is based on the integration of a battery of conventional paraffin stains, immunostains, and transmission electron microscopy. Considering the relatively high number of routine paraffin stains required for nephropathology (at least HE, periodic acid–Schiff, Jones, and trichrome-elastica), staining variability poses another serious problem for machine learning algorithms in our field and is a well-known, sometimes irritating, problem even for the human nephropathologist.10 Color normalization techniques, which match the color distributions of the source and target images in different ways, offer a solution to this problem. However, they commonly work only when the distribution of objects in both images is roughly the same, such as in consecutive level sections. Several technologies have successfully addressed this problem for the task of glomerular segmentation88,89 without resorting to large databases with different staining methods. Another limitation is the current lack of standard data sets, as well as of data sets that include all possible variations relevant for a certain classifier. Ultimately, the effectiveness of any CNN will depend largely on the data available for training. Advances in transfer learning,90 enabling knowledge transfer from, for example, more abundant animal tissue of a given disease to human biopsy tissue, could provide a solution to this problem. Transfer learning allows features learned from one set of images to be transferred to more specific networks, which then typically require dramatically fewer biopsies for training. Basic autoencoders trained to identify, for example, glomeruli on HE- or periodic acid–Schiff–stained WSIs can be used as a starting point and retrained with relatively little effort for other or more specific classifiers, such as the distinction between entities of glomerulonephritis.
Large data sets annotated for specific lesions (e.g., mesangial proliferation, focal and segmental glomerulosclerosis, and tubular atrophy) may not always be needed. A recently described approach does not train the detection of these features per se. Instead, it flags deviations from normal kidney histology.91 Examples of normal histology are usually abundant in urological nephrectomy specimens.
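
The principle of flagging deviations from normal can be sketched with a much simpler model than the conditional variational autoencoders of the cited work.91 The example below substitutes principal component analysis for the autoencoder. This is a deliberate swap: a linear autoencoder trained with squared error learns the same subspace. The model is fitted only on "normal" samples and scores new samples by their reconstruction error. It assumes that each image patch has already been reduced to a numeric feature vector. The class name, the percentile-based threshold, and the synthetic data are all hypothetical.

```python
import numpy as np


class NormalTissueModel:
    """Hypothetical anomaly detector fitted on normal samples only.

    PCA stands in for an autoencoder: it learns a low-dimensional subspace of
    normal feature vectors and scores new samples by reconstruction error.
    """

    def __init__(self, n_components: int = 2, percentile: float = 99.0):
        self.n_components = n_components
        self.percentile = percentile

    def fit(self, normal: np.ndarray) -> "NormalTissueModel":
        # Center the normal data and keep the leading principal directions.
        self.mean_ = normal.mean(axis=0)
        _, _, vt = np.linalg.svd(normal - self.mean_, full_matrices=False)
        self.components_ = vt[: self.n_components]
        # Calibrate the alarm threshold on the normal training data themselves.
        self.threshold_ = np.percentile(self.score(normal), self.percentile)
        return self

    def score(self, x: np.ndarray) -> np.ndarray:
        # Project onto the normal subspace, reconstruct, and measure the residual.
        centered = x - self.mean_
        reconstructed = centered @ self.components_.T @ self.components_
        return np.sum((centered - reconstructed) ** 2, axis=1)

    def flag(self, x: np.ndarray) -> np.ndarray:
        # True where a sample deviates more than almost all normal samples.
        return self.score(x) > self.threshold_


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Synthetic "normal" feature vectors that vary mainly in two of five dimensions.
    normal = np.zeros((400, 5))
    normal[:, :2] = rng.normal(size=(400, 2))
    normal += 0.01 * rng.normal(size=(400, 5))
    model = NormalTissueModel(n_components=2).fit(normal)

    # "Lesions": normal samples shifted along a direction absent from normal data.
    lesions = normal[:5].copy()
    lesions[:, 4] += 3.0
    print(model.flag(lesions))  # all True
```

No lesion labels are used during fitting. The model only needs to know what normal tissue looks like, which is what makes the approach attractive when annotated lesions are scarce.
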

The major bottleneck in creating suitable training sets is time-consuming manual annotation by highly trained nephropathologists. For specific tasks, this bottleneck could be avoided through nonexpert annotation on crowdsourcing platforms such as Amazon Mechanical Turk.92 This requires that the relevant basic features can be explained effectively by example. As always in research, the quality of the input data determines the quality of the results, and this should inform the project strategy. In most cases, high-level annotations will likely require trained nephropathologists, or even panels of them. Platforms such as Labelbox (www.labelbox.com, last accessed May 2020) are useful for conveniently distributing such tasks among pathologists.
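
When several nonexpert annotators label the same image region, a common first step is to aggregate their votes. Items with low agreement are then routed to an expert. The sketch below assumes simple majority voting with an agreement threshold. The function name and the default threshold of 0.7 are hypothetical choices. More elaborate schemes that weight annotators by their estimated reliability, such as the Dawid–Skene model, also exist.

```python
from collections import Counter
from typing import Dict, List, Tuple


def aggregate_crowd_labels(
    votes: Dict[str, List[str]], min_agreement: float = 0.7
) -> Tuple[Dict[str, str], List[str]]:
    """Majority-vote aggregation of nonexpert labels, one entry per item.

    Returns (accepted, referred): consensus labels for items whose majority
    share reaches `min_agreement`, and the IDs of all other items, which are
    meant to be reviewed by a nephropathologist.
    """
    accepted: Dict[str, str] = {}
    referred: List[str] = []
    for item_id, labels in votes.items():
        if not labels:
            # No votes at all: nothing to aggregate, so refer the item.
            referred.append(item_id)
            continue
        label, count = Counter(labels).most_common(1)[0]
        if count / len(labels) >= min_agreement:
            accepted[item_id] = label
        else:
            referred.append(item_id)
    return accepted, referred


if __name__ == "__main__":
    votes = {
        "tile_001": ["glomerulus", "glomerulus", "glomerulus", "tubule"],
        "tile_002": ["glomerulus", "tubule", "tubule", "glomerulus"],
    }
    accepted, referred = aggregate_crowd_labels(votes)
    print(accepted)  # {'tile_001': 'glomerulus'}
    print(referred)  # ['tile_002']
```

The threshold sets the balance between crowd throughput and expert workload. Raising it sends more items to the nephropathologist but lowers the risk of accepting a wrong consensus.
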

Generating extremely large data sets will require a joint international effort by the nephrology and nephropathology communities, merging the current efforts of several institutions and consortia. For comparison, a recent proposal for oncopathology called for >40,000 histological cancer samples.93 For example, the Banff community has established a Working Group on Digital Pathology, chaired by Kim Solez and Alton “Brad” Farris, that is devoted to the application of AI in transplant pathology.

Outlook and summary

Although we are clearly in the early stages of AI application in nephropathology, the potential demonstrated above in the field of oncopathology provides a promising outlook for the next decade. Nephropathology is arguably more complicated than neoplastic histopathology. It requires grading across a continuous spectrum of inflammatory and degenerative diseases with multifactorial (genetic, environmental, degenerative, autoimmune, etc.) causes. At a minimum, a nephropathologist must integrate multimodal tissue diagnostics together with serological, immunogenetic, and genetic data, among others.95,96 These tissue diagnostics include conventional light microscopy with special stains, immunohistology for Ig and complement split products, other special immunostains (e.g., DNAJB9 for fibrillary glomerulonephritis),94 and transmission electron microscopy. Each of these modalities poses challenges regarding standardization and reproducibility within and between laboratories. This introduces additional barriers to AI integration that must be controlled. One cannot expect AI to offer, at once, a complete diagnostic solution that emulates a skilled nephropathologist. Rather, we expect AI to be introduced gradually into routine practice for selected tasks and diagnostic scenarios. For example, AI-aided flagging of leukocytes could help in diagnosing rejection in transplants. Similarly, flagging of focal and segmental glomerulosclerosis could help in the diagnostic workup of steroid-resistant nephrotic syndrome. Even simple tools, such as automated counting and presentation of glomeruli, might already be helpful in daily practice.
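
Once a segmentation network has produced a binary glomerulus mask, automated counting of glomeruli, as mentioned in the text, reduces to counting connected regions. The sketch below assumes such a mask already exists as a 2-D list of 0/1 values; the segmentation step itself is not shown. It uses 4-connectivity and discards regions smaller than a hypothetical `min_size` to suppress speckle. The function and parameter names are illustrative only.

```python
from collections import deque
from typing import List, Tuple


def count_objects(mask: List[List[int]], min_size: int = 1) -> Tuple[int, List[int]]:
    """Count 4-connected foreground regions in a binary mask.

    Returns the number of regions with at least `min_size` pixels and their
    sizes, in the order in which they are found (row-major scan).
    """
    rows = len(mask)
    cols = len(mask[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    sizes: List[int] = []

    for r in range(rows):
        for c in range(cols):
            if mask[r][c] and not seen[r][c]:
                # Breadth-first flood fill of one connected region.
                size = 0
                queue = deque([(r, c)])
                seen[r][c] = True
                while queue:
                    y, x = queue.popleft()
                    size += 1
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and mask[ny][nx] and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
                if size >= min_size:
                    sizes.append(size)
    return len(sizes), sizes


if __name__ == "__main__":
    mask = [
        [1, 1, 0, 0, 0],
        [1, 1, 0, 0, 1],
        [0, 0, 0, 0, 0],
        [0, 1, 1, 1, 0],
    ]
    print(count_objects(mask, min_size=2))  # (2, [4, 3])
```

On a real WSI, the mask would be far larger and the minimum size would be expressed in pixels at a known resolution. Touching glomeruli would also need to be separated before counting.
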

We do not expect AI to make nephropathologists redundant in the foreseeable future. Rather, AI will transform the nephropathologist’s work profile, for example, by offering solutions for repetitive work that requires high levels of attention. Nephropathologists will need a new set of skills to manage the information that AI applications provide during the diagnostic workup. The concern that medical professionals supported by machine learning could lose skills97 therefore reflects only one side of the constant advancement of medicine. We urge more optimism: so far, the gains from new technologies have been far greater than the loss of the human skills they replaced. Understanding the functions, benefits, and limitations of AI and its classifiers will become crucial for nephropathologists. Moreover, procedures and rules will have to be devised and implemented to identify uncertainty and to override questionable AI outputs. The human nephropathologist, who will ultimately be held accountable, must be able to do so whenever this is deemed necessary. To this end, AI developers must ensure that the decision-making process remains transparent. AI applications should complement traditional nephropathology in the hands of nephropathologists, mitigating human flaws and weaknesses. They should not be expected to substitute for nephropathology or skilled nephropathologists.
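
One simple way to identify uncertainty is to defer any case in which the classifier's predicted class probabilities are not decisive. The sketch below computes the normalized Shannon entropy of each probability vector and refers cases above a threshold to the nephropathologist. The function names, the class labels, and the 0.5 threshold are hypothetical. The sketch also assumes that the model outputs well-calibrated probabilities, which is not guaranteed for neural networks, so any such rule would need validation on local data.

```python
import math
from typing import Dict, List, Sequence, Tuple


def normalized_entropy(probs: Sequence[float]) -> float:
    """Shannon entropy of a probability vector, scaled to [0, 1]."""
    if len(probs) < 2:
        return 0.0
    h = -sum(p * math.log(p) for p in probs if p > 0)
    return h / math.log(len(probs))


def triage(
    predictions: Dict[str, Sequence[float]],
    class_names: Sequence[str],
    max_entropy: float = 0.5,
) -> Tuple[Dict[str, str], List[str]]:
    """Split cases into AI suggestions and cases deferred to the nephropathologist.

    A suggestion still requires human sign-off; deferral only marks the cases
    in which the model itself is uncertain.
    """
    suggested: Dict[str, str] = {}
    deferred: List[str] = []
    for case_id, probs in predictions.items():
        if len(probs) != len(class_names):
            raise ValueError(f"{case_id}: expected {len(class_names)} probabilities")
        if abs(sum(probs) - 1.0) > 1e-6:
            raise ValueError(f"{case_id}: probabilities do not sum to 1")
        if normalized_entropy(probs) > max_entropy:
            deferred.append(case_id)
        else:
            best = max(range(len(probs)), key=lambda i: probs[i])
            suggested[case_id] = class_names[best]
    return suggested, deferred


if __name__ == "__main__":
    labels = ["no rejection", "borderline", "rejection"]  # hypothetical classes
    outputs = {"case_1": [0.95, 0.03, 0.02], "case_2": [0.40, 0.35, 0.25]}
    suggested, deferred = triage(outputs, labels)
    print(suggested)  # {'case_1': 'no rejection'}
    print(deferred)   # ['case_2']
```

Entropy is only one possible uncertainty signal. Whatever rule is chosen, the final decision on both suggested and deferred cases stays with the nephropathologist.
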

Moreover, the regulatory environment must be considered. For example, diagnostic applications using virtual slides have to meet the European Union directive on in vitro diagnostic medical devices (https://ec.europa.eu/growth/single-market/european-standards/harmonisedstandards/iv-diagnostic-medical-devices_en; accessed October 14, 2019) for Conformité Européenne (CE) certification. In the United States, conventional diagnostic applications using WSIs are currently covered only by recommendations issued by the College of American Pathologists98 and the Food and Drug Administration (http://www.fda.gov/downloads/MedicalDevices/DeviceRegulationandGuidance/GuidanceDocuments/UCM435355.pdf; accessed October 14, 2019). At present, any clinical application of AI in nephropathology can only be considered “for research use only.” Meeting the full CE certification requirements will require close collaboration between researchers, commercial partners, legislators, and regulators. Establishing these novel technologies in an ethical fashion can only be achieved by involving all stakeholders, including physicians, patients, and institutional review boards.99 This is particularly important for the continuous updating and improvement of clinical AI applications. For these applications, issues of consent and data ownership must be settled with all stakeholders, including patients.99 For example, the European Union’s General Data Protection Regulation provides that patients have the right not to be subject to decisions based solely on automated processing (https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX%3A32016R0679; accessed May 20, 2020).

We are skeptical of the promise that AI can alleviate the shortage of trained nephropathologists in under-resourced countries. For the foreseeable future, AI applications in nephropathology can be expected to perform adequately only under certain conditions. Preanalytical procedures must be tightly controlled, and AI integration and supervision by skilled nephropathologists must be ensured. These requirements will also prevent nonspecialists from applying these tools, and patients from applying them to their own biopsies. AI for diagnostic purposes in nephropathology should remain in the hands of nephropathologists.

In summary, AI is a promising technology that can be expected to enhance the histopathological examination of native and transplant kidney biopsies. It will be exciting to see this technology develop. Nephropathologists should not fear these new technologies but should embrace and use them in the global fight against kidney disease.

DISCLOSURE

All the authors declared no competing interests.

ACKNOWLEDGMENTS

JUB receives funding from Deutsche Forschungsgemeinschaft (BE3801/3). PB receives funding from the German Research Foundation (DFG; SFB/TRR57 and SFB/TRR219, BO3755/3-1 and BO3755/6-1), the German Federal Ministry of Education and Research (BMBF; STOPFSGS-01GM1901A), and the RWTH Interdisciplinary Centre for Clinical Research (IZKF; O3-7). DM receives funding from the National Institutes of Health/National Heart, Lung, and Blood Institute (#R01HL146745) and the Cancer Prevention and Research Institute of Texas (#RR140013). Two authors of this publication (JUB and PB) are members of the European Rare Kidney Disease Network (ERKNet; project ID no. 739532).

REFERENCES

1. Burger G, Abu-Hanna A, de Keizer N, Cornet R. Natural language processing in pathology: a scoping review [e-pub ahead of print]. J Clin Pathol. 10.1136/jclinpath-2016-203872. Accessed April 20, 2020.

2. De Heer E, Sijpkens YW, Verkade M, et al. Morphometry of interstitial fibrosis. Nephrol Dial Transplant. 2000;15(suppl 6):72–73.

3. Pape L, Henne T, Offner G, et al. Computer-assisted quantification of fibrosis in chronic allograft nephropathy by picosirius red-staining: a new tool for predicting long-term graft function. Transplantation. 2003;76:955–958.

4. Farris AB, Adams CD, Brousaides N, et al. Morphometric and visual evaluation of fibrosis in renal biopsies. J Am Soc Nephrol. 2011;22:176–186.

5. Babickova J, Klinkhammer BM, Buhl EM, et al. Regardless of etiology, progressive renal disease causes ultrastructural and functional alterations of peritubular capillaries. Kidney Int. 2017;91:70–85.

6. Brazdziute E, Laurinavicius A. Digital pathology evaluation of complement C4d component deposition in the kidney allograft biopsies is a useful tool to improve reproducibility of the scoring. Diagn Pathol. 2011;6(suppl 1):S5.

7. Jen KY, Nguyen TB, Vincenti FG, Laszik ZG. C4d/CD34 double-immunofluorescence staining of renal allograft biopsies for assessing peritubular capillary C4d positivity. Mod Pathol. 2012;25:434–438.

8. Schindelin J, Arganda-Carreras I, Frise E, et al. Fiji: an open-source platform for biological-image analysis. Nat Methods. 2012;9:676–682.

9. ImageJ. Available at: https://imagej.nih.gov/ij/index.html. Accessed April 20, 2020.

10. Niazi MKK, Parwani AV, Gurcan MN. Digital pathology and artificial intelligence. Lancet Oncol. 2019;20:e253–e261.

11. Saez-Rodriguez J, Rinschen MM, Floege J, Kramann R. Big science and big data in nephrology. Kidney Int. 2019;95:1326–1337.

12. Pesce F, Pathan S, Schena FP. From -omics to personalized medicine in nephrology: integration is the key. Nephrol Dial Transplant. 2013;28:24–28.

13. Dafoe DC, Tantisattamo E, Reddy U. Precision medicine and personalized approach to renal transplantation. Semin Nephrol. 2018;38:346–354.

14. Barbour SJ, Coppo R, Zhang H, et al. Evaluating a new international risk-prediction tool in IgA nephropathy. JAMA Intern Med. 2019;179:942–952.

15. Cattran DC, Coppo R, Cook HT, et al. The Oxford classification of IgA nephropathy: rationale, clinicopathological correlations, and classification. Kidney Int. 2009;76:534–545.

16. Roberts IS, Cook HT, Troyanov S, et al. The Oxford classification of IgA nephropathy: pathology definitions, correlations, and reproducibility. Kidney Int. 2009;76:546–556.

17. Trimarchi H, Barratt J, Cattran DC, et al. Oxford Classification of IgA nephropathy 2016: an update from the IgA Nephropathy Classification Working Group. Kidney Int. 2017;91:1014–1021.

18. Haas M, Loupy A, Lefaucheur C, et al. The Banff 2017 Kidney Meeting Report: revised diagnostic criteria for chronic active T cell-mediated rejection, antibody-mediated rejection, and prospects for integrative endpoints for next-generation clinical trials. Am J Transplant. 2018;18:293–307.

19. Roufosse C, Simmonds N, Groningen MC, et al. A 2018 Reference Guide to the Banff Classification of Renal Allograft Pathology. Transplantation. 2018;102:1795–1814.

20. Wilhelmus S, Cook HT, Noel LH, et al. Interobserver agreement on histopathological lesions in class III or IV lupus nephritis. Clin J Am Soc Nephrol. 2015;10:47–53.

21. Wernick RM, Smith DL, Houghton DC, et al. Reliability of histologic scoring for lupus nephritis: a community-based evaluation. Ann Intern Med. 1993;119:805–811.

22. Schwartz MM, Lan SP, Bernstein J, et al.; Lupus Nephritis Collaborative Study Group. Irreproducibility of the activity and chronicity indices limits their utility in the management of lupus nephritis. Am J Kidney Dis. 1993;21:374–377.

23. Oni L, Beresford MW, Witte D, et al. Inter-observer variability of the histological classification of lupus glomerulonephritis in children. Lupus. 2017;26:1205–1211.

24. Grootscholten C, Bajema IM, Florquin S, et al. Interobserver agreement of scoring of histopathological characteristics and classification of lupus nephritis. Nephrol Dial Transplant. 2008;23:223–230.

25. Furness PN, Taub N. Interobserver reproducibility and application of the ISN/RPS classification of lupus nephritis—a UK-wide study. Am J Surg Pathol. 2006;30:1030–1035.

26. Bellur SS, Roberts ISD, Troyanov S, et al. Reproducibility of the Oxford classification of immunoglobulin A nephropathy, impact of biopsy scoring on treatment allocation and clinical relevance of disagreements: evidence from the VALidation of IGA study cohort. Nephrol Dial Transplant. 2019;34:1681–1690.

27. Furness PN, Taub N, Assmann KJ, et al. International variation in histologic grading is large, and persistent feedback does not improve reproducibility. Am J Surg Pathol. 2003;27:805–810.

28. Smith B, Cornell LD, Smith M, et al. A method to reduce variability in scoring antibody-mediated rejection in renal allografts: implications for clinical trials—a retrospective study. Transpl Int. 2019;32:173–183.

29. Koch GG, Landis JR, Freeman JL, et al. A general methodology for the analysis of experiments with repeated measurement of categorical data. Biometrics. 1977;33:133–158.

30. Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics. 1977;33:159–174.

31. Bernatsky S, Boivin JF, Joseph L, et al. Mortality in systemic lupus erythematosus. Arthritis Rheum. 2006;54:2550–2557.

32. Moroni G, Pasquali S, Quaglini S, et al. Clinical and prognostic value of serial renal biopsies in lupus nephritis. Am J Kidney Dis. 1999;34:530–539.

33. Contreras G, Pardo V, Cely C, et al. Factors associated with poor outcomes in patients with lupus nephritis. Lupus. 2005;14:890–895.

34. Rijnink EC, Teng YKO, Wilhelmus S, et al. Clinical and histopathologic characteristics associated with renal outcomes in lupus nephritis. Clin J Am Soc Nephrol. 2017;12:734–743.

35. Bajema IM, Wilhelmus S, Alpers CE, et al. Revision of the International Society of Nephrology/Renal Pathology Society classification for lupus nephritis: clarification of definitions, and modified National Institutes of Health activity and chronicity indices. Kidney Int. 2018;93:789–796.

36. Bertsias GK, Tektonidou M, Amoura Z, et al. Joint European League Against Rheumatism and European Renal Association-European Dialysis and Transplant Association (EULAR/ERA-EDTA) recommendations for the management of adult and paediatric lupus nephritis. Ann Rheum Dis. 2012;71:1771–1782.

37. Hahn BH, McMahon MA, Wilkinson A, et al. American College of Rheumatology guidelines for screening, treatment, and management of lupus nephritis. Arthritis Care Res (Hoboken). 2012;64:797–808.

38. KDIGO Clinical Practice Guideline for Glomerulonephritis. Chapter 12: Lupus nephritis. Kidney Int Suppl (2011). 2012;2:221–232.

39. Becker JU, Chang A, Nickeleit V, et al. Banff borderline changes suspicious for acute T-cell mediated rejection: where do we stand? Am J Transplant. 2016;16:2654–2660.

40. Loupy A, Aubert O, Orandi BJ, et al. Prediction system for risk of allograft loss in patients receiving kidney transplants: international derivation and validation study. BMJ. 2019;366:l4923.

41. Oberholzer M, Ostreicher M, Christen H, Bruhlmann M. Methods in quantitative image analysis. Histochem Cell Biol. 1996;105:333–355.

42. Chan KL. Quantitative characterization of electron micrograph image using fractal feature. IEEE Trans Biomed Eng. 1995;42:1033–1037.

43. Qiao G, Zong G, Wang J, Sun M. Automatic toxic granulation detection and grading based on speeded up robust features. Cytometry A. 2011;79:887–890.

44. Selvaraju RR, Cogswell M, Das A, et al. Grad-CAM: visual explanations from deep networks via gradient-based localization. Paper presented at: 2017 IEEE International Conference on Computer Vision (ICCV). October 22–29, 2017; Venice, Italy.

45. Ribeiro MT, Singh S, Guestrin C. “Why should I trust you?” Explaining the predictions of any classifier. Paper presented at: 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. August 13–17, 2016; San Francisco, CA.

46. Gupta R, Kurc T, Sharma A, et al. The emergence of pathomics. Curr Pathobiol Rep. 2019;7:73–84.

47. Beck AH, Sangoi AR, Leung S, et al. Systematic analysis of breast cancer morphology uncovers stromal features associated with survival. Sci Transl Med. 2011;3:108ra113.

48. Krizhevsky A, Sutskever I, Hinton GE. ImageNet classification with deep convolutional neural networks. Commun ACM. 2017;60:84–90.

49. Ren S, He K, Girshick R, Sun J. Faster R-CNN: towards real-time object detection with region proposal networks. IEEE Trans Pattern Anal Mach Intell. 2017;39:1137–1149.

50. Redmon J, Divvala S, Girshick R, Farhadi A. You only look once: unified, real-time object detection. Paper presented at: 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). June 27–30, 2016; Las Vegas, NV.

51. Liu L, Ouyang W, Wang X, et al. Deep learning for generic object detection: a survey. 2018. arXiv:1809.02165 [cs.CV].

52. Long J, Shelhamer E, Darrell T. Fully convolutional networks for semantic segmentation. Paper presented at: IEEE Conference on Computer Vision and Pattern Recognition. June 7–12, 2015; Boston, MA.

53. Ronneberger O, Fischer P, Brox T. U-Net: convolutional networks for biomedical image segmentation. In: Navab N, Hornegger J, Wells WM, Frangi AF, eds. Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015. Cham, Switzerland: Springer; 2015. Image Processing, Computer Vision, Pattern Recognition, and Graphics Series 9350.

54. Milletari F, Navab N, Ahmadi S. Fully convolutional neural networks for volumetric medical image segmentation. Paper presented at: Fourth International Conference on 3D Vision (3DV). October 25–28, 2016; Stanford University, Stanford, CA.

55. He K, Gkioxari G, Dollár P, Girshick R. Mask R-CNN. 2017. arXiv:1703.06870 [cs.CV].

56. He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. Paper presented at: IEEE Conference on Computer Vision and Pattern Recognition. June 27–30, 2016; Las Vegas, NV.

57. Huang G, Liu Z, van der Maaten L, Weinberger KQ. Densely connected convolutional networks. Paper presented at: IEEE Conference on Computer Vision and Pattern Recognition. July 21–26, 2017; Honolulu, HI.

58. Sabour S, Frosst N, Hinton GE. Dynamic routing between capsules. In: von Luxburg U, Guyon I, Bengio S, et al., eds. NIPS’17: Proceedings of the 31st International Conference on Neural Information Processing Systems. Red Hook, NY: Curran Associates Inc.; 2017:3856–3866.

59. Mobiny A, Van Nguyen H. Fast CapsNet for Lung Cancer Screening. Cham, Switzerland: Springer; 2018:741–749.

60. Real E, Aggarwal A, Huang Y, Le QV. Regularized evolution for image classifier architecture search. In: The Thirty-Third AAAI Conference on Artificial Intelligence. Vol 33. Palo Alto, CA: AAAI Press; 2019:4780–4789.

61. Sandler M, Howard A, Zhu M, et al. MobileNetV2: inverted residuals and linear bottlenecks. Paper presented at: IEEE Conference on Computer Vision and Pattern Recognition. June 18–23, 2018; Salt Lake City, UT.

62. Pouliakis A, Karakitsou E, Margari N, et al. Artificial neural networks as decision support tools in cytopathology: past, present, and future. Biomed Eng Comput Biol. 2016;7:1–18.

63. Zorman M, Sanchez de la Rosa JL, Dinevski D. Classification of follicular lymphoma images: a holistic approach with symbol-based machine learning methods. Wien Klin Wochenschr. 2011;123:700–709.

64. Goudas T, Maglogiannis I. Cancer cells detection and pathology quantification utilizing image analysis techniques. Conf Proc IEEE Eng Med Biol Soc. 2012;2012:4418–4421.

65. Litjens G, Sanchez CI, Timofeeva N, et al. Deep learning as a tool for increased accuracy and efficiency of histopathological diagnosis. Sci Rep. 2016;6:26286.

66. Vu QD, Graham S, Kurc T, et al. Methods for segmentation and classification of digital microscopy tissue images. Front Bioeng Biotechnol. 2019;7:53.

67. Linder N, Taylor JC, Colling R, et al. Deep learning for detecting tumour-infiltrating lymphocytes in testicular germ cell tumours. J Clin Pathol. 2019;72:157–164.

68. Soares MF, Genitsch V, Chakera A, et al. Relationship between renal CD68+ infiltrates and the Oxford Classification of IgA nephropathy. Histopathology. 2019;74:629–637.

69. Kather JN, Pearson AT, Halama N, et al. Deep learning can predict microsatellite instability directly from histology in gastrointestinal cancer. Nat Med. 2019;25:1054–1056.

70. Kather JN, Schulte J, Grabsch HI, et al. Deep learning detects virus presence in cancer histology [e-pub ahead of print]. bioRxiv. 10.1101/690206. Accessed April 20, 2020.

71. Ha R, Mutasa S, Karcich J, et al. Predicting breast cancer molecular subtype with MRI dataset utilizing convolutional neural network algorithm. J Digit Imaging. 2019;32:276–282.

72. Shamai G, Binenbaum Y, Slossberg R, et al. Artificial intelligence algorithms to assess hormonal status from tissue microarrays in patients with breast cancer. JAMA Netw Open. 2019;2:e197700.

73. Martin DR, Hanson JA, Gullapalli RR, et al. A deep learning convolutional neural network can recognize common patterns of injury in gastric pathology. Arch Pathol Lab Med. 2020;144:370–378.

74. Brier ME, Gaweda AE, Aronoff GR. Personalized anemia management and precision medicine in ESA and iron pharmacology in end-stage kidney disease. Semin Nephrol. 2018;38:410–417.

75. Liu X, Li N, Lv L, et al. Improving precision of glomerular filtration rate estimating model by ensemble learning. J Transl Med. 2017;15:231.

76. Tang J, Liu R, Zhang YL, et al. Application of machine-learning models to predict tacrolimus stable dose in renal transplant recipients. Sci Rep. 2017;7:42192.

77. Molitoris BA. Beyond biomarkers: machine learning in diagnosing acute kidney injury. Mayo Clin Proc. 2019;94:748–750.

78. Almansour NA, Syed HF, Khayat NR, et al. Neural network and support vector machine for the prediction of chronic kidney disease: a comparative study. Comput Biol Med. 2019;109:101–111.

79. Kato T, Relator R, Ngouv H, et al. Segmental HOG: new descriptor for glomerulus detection in kidney microscopy image. BMC Bioinformatics. 2015;16:316.

80. Bukowy JD, Dayton A, Cloutier D, et al. Region-based convolutional neural nets for localization of glomeruli in trichrome-stained whole kidney sections. J Am Soc Nephrol. 2018;29:2081–2088.

81. Marsh JN, Matlock MK, Kudose S, et al. Deep learning global glomerulosclerosis in transplant kidney frozen sections. IEEE Trans Med Imaging. 2018;37:2718–2728.

82. Simon O, Yacoub R, Jain S, et al. Multi-radial LBP features as a tool for rapid glomerular detection and assessment in whole slide histopathology images. Sci Rep. 2018;8:2032.

83. Gadermayr M, Dombrowski AK, Klinkhammer BM, et al. CNN cascades for segmenting sparse objects in gigapixel whole slide images. Comput Med Imaging Graph. 2019;71:40–48.

84. Ginley B, Lutnick B, Jen KY, et al. Computational segmentation and classification of diabetic glomerulosclerosis. J Am Soc Nephrol. 2019;30:1953–1967.

85. Lutnick B, Ginley B, Govind D, et al. An integrated iterative annotation technique for easing neural network training in medical image analysis. Nat Mach Intell. 2019;1:112–119.

86. Hermsen M, de Bel T, den Boer M, et al. Deep learning-based histopathologic assessment of kidney tissue. J Am Soc Nephrol. 2019;30:1968–1979.

87. Kolachalama VB, Singh P, Lin CQ, et al. Association of pathological fibrosis with renal survival using deep neural networks. Kidney Int Rep. 2018;3:464–475.

88. Gadermayr M, Gupta L, Appel V, et al. Generative adversarial networks for facilitating stain-independent supervised and unsupervised segmentation: a study on kidney histology. IEEE Trans Med Imaging. 2019;38:2293–2302.

89. Bentaieb A, Hamarneh G. Adversarial stain transfer for histopathology image analysis. IEEE Trans Med Imaging. 2018;37:792–802.

90. Chang H, Han J, Zhong C, et al. Unsupervised transfer learning via multiscale convolutional sparse coding for biomedical applications. IEEE Trans Pattern Anal Mach Intell. 2018;40:1182–1194.

91. Uzunova H, Schultz S, Handels H, Ehrhardt J. Unsupervised pathology detection in medical images using conditional variational autoencoders. Int J Comput Assist Radiol Surg. 2019;14:451–461.

92. Albarqouni S. Fine-tuning deep learning by crowd participation. IEEE Pulse. 2018;9:21.

93. Campanella G, Hanna MG, Geneslaw L, et al. Clinical-grade computational pathology using weakly supervised deep learning on whole slide images. Nat Med. 2019;25:1301–1309.

94. Dasari S, Alexander MP, Vrana JA, et al. DnaJ heat shock protein family B member 9 is a novel biomarker for fibrillary GN. J Am Soc Nephrol. 2018;29:51–56.

95. Chang A, Gibson IW, Cohen AH, et al. A position paper on standardizing the nonneoplastic kidney biopsy report. Clin J Am Soc Nephrol. 2012;7:1365–1368.

96. Sethi S, Haas M, Markowitz GS, et al. Mayo Clinic/Renal Pathology Society Consensus Report on Pathologic Classification, Diagnosis, and Reporting of GN. J Am Soc Nephrol. 2016;27:1278–1287.

97. Cabitza F, Rasoini R, Gensini GF. Unintended consequences of machine learning in medicine. JAMA. 2017;318:517–518.

98. Pantanowitz L, Sinard JH, Henricks WH, et al. Validating whole slide imaging for diagnostic purposes in pathology: guideline from the College of American Pathologists Pathology and Laboratory Quality Center. Arch Pathol Lab Med. 2013;137:1710–1722.

99. Prabhu SP. Ethical challenges of machine learning and deep learning algorithms. Lancet Oncol. 2019;20:621–622.